- 1 Kaiser Permanente Washington Health Research Institute, Seattle, WA, United States

- 2 Department of Psychological and Brain Sciences, Indiana University, Bloomington, IN, United States

- 3 Department of Psychiatry and Behavioral Sciences, University of Washington, Seattle, WA, United States

- 4 Department of Health Policy and Management, Gillings School of Global Public Health, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States

- 5 Public Health Sciences Division, Fred Hutchinson Cancer Research Center, Seattle, WA, United States

- 6 Department of Global Health, University of Washington, Seattle, WA, United States

Background: The science of implementation has offered little toward understanding how different implementation strategies work. To improve outcomes of implementation efforts, the field needs precise, testable theories that describe the causal pathways through which implementation strategies function. In this perspective piece, we describe a four-step approach to developing causal pathway models for implementation strategies.

Building causal models: First, it is important to ensure that implementation strategies are appropriately specified. Some strategies in published compilations are well defined but may not be specified in terms of their core components that can have a reliable and measurable impact. Second, linkages between strategies and mechanisms need to be generated. Existing compilations do not offer mechanisms by which strategies act, that is, the processes or events through which an implementation strategy operates to affect desired implementation outcomes. Third, it is critical to identify proximal and distal outcomes the strategy is theorized to impact, with the former being direct, measurable products of the strategy and the latter being one of eight implementation outcomes (1). Finally, articulating effect modifiers, like preconditions and moderators, allows for an understanding of where, when, and why strategies have an effect on outcomes of interest.

Future directions: We argue for greater precision in use of terms for factors implicated in implementation processes; development of guidelines for selecting research designs and study plans that account for practical constraints and allow for the study of mechanisms; psychometrically strong and pragmatic measures of mechanisms; and more robust curation of evidence for knowledge transfer and use.

Background: Why Build Causal Pathway Models?

In recent years, there has been growing recognition of the importance of implementing evidence-based practices as a way to improve the quality of health care and public health. However, the results of implementation efforts have been mixed. About two-thirds of efforts fail to achieve the intended change (2), and nearly half have no effect on outcomes of interest (3). Implementation strategies are often mismatched to barriers [e.g., training, a strategy that could affect implementation outcomes through changes in an individual’s knowledge (intrapersonal-level), is used inappropriately to address an organizational-level barrier like poor culture] (4), and implementation efforts are increasingly complex and costly without enhanced impact (5). These suboptimal outcomes are due, in large part, to the dearth of tested theory in the field of implementation science (6). In particular, the field has a limited understanding of how different implementation strategies work, that is, of the specific causal mechanisms through which implementation strategies influence care delivery [7; Lewis et al. (under review; see text footnote 1)]. As a consequence, implementation science has been limited in its ability to effectively inform implementation practice by providing guidance about when and in what contexts specific implementation strategies should be used and, just as importantly, when they should not.

The National Academy of Sciences defines “science” as “the use of evidence to construct testable explanations and predictions of natural phenomena, as well as the knowledge generated through this process” (8). The field of implementation has spent the past two decades building and organizing knowledge, but we are far from having testable explanations that afford us the ability to generate predictions. To improve outcomes of implementation efforts, the field needs testable theories that describe the causal pathways through which implementation strategies function (6, 9). Unlike frameworks, which offer a basic conceptual structure underlying a system or concept (10), theories provide a testable way of explaining phenomena by specifying relations among variables, thus enabling prediction of outcomes (10, 11).

Causal pathway models represent interrelations among variables and outcomes of interest in a given context (i.e., the building blocks of implementation theory). Specifying the structure of causal relations enables scientists to empirically test whether the implementation strategies are operating via theorized mechanisms, how contextual factors moderate the causal processes through which implementation strategies operate, and how much variance in outcomes is accounted for by those mechanisms. Findings from studies based on causal models can, over time, both help the field develop more robust theories about implementation processes and advance the practice of implementation by addressing key issues. For instance, causal models can do the following: (1) inform the development of improved implementation strategies, (2) identify mutable targets for new strategies, (3) increase the impact of existing strategies, and (4) prioritize which strategies to use in which contexts.

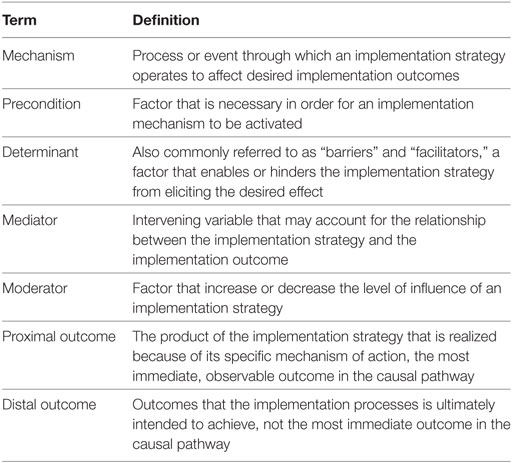

In this perspective piece, we propose an approach to theory development by specifying, in the form of causal pathway models, hypotheses about the causal operation of different implementation strategies in various settings, so that these hypotheses can be tested and refined. Specifically, we offer a four-step process to developing causal pathway models for implementation strategies. Toward this end, we argue the field must move beyond having lists of variables that can rightly be considered determinants [i.e., factors that obstruct or enable change in provider behavior or health-care delivery processes (12)], and toward precise articulation of mediators, moderators, preconditions, and (proximal versus distal) outcomes (see Table 1 for definitions).

Building Causal Pathway Models

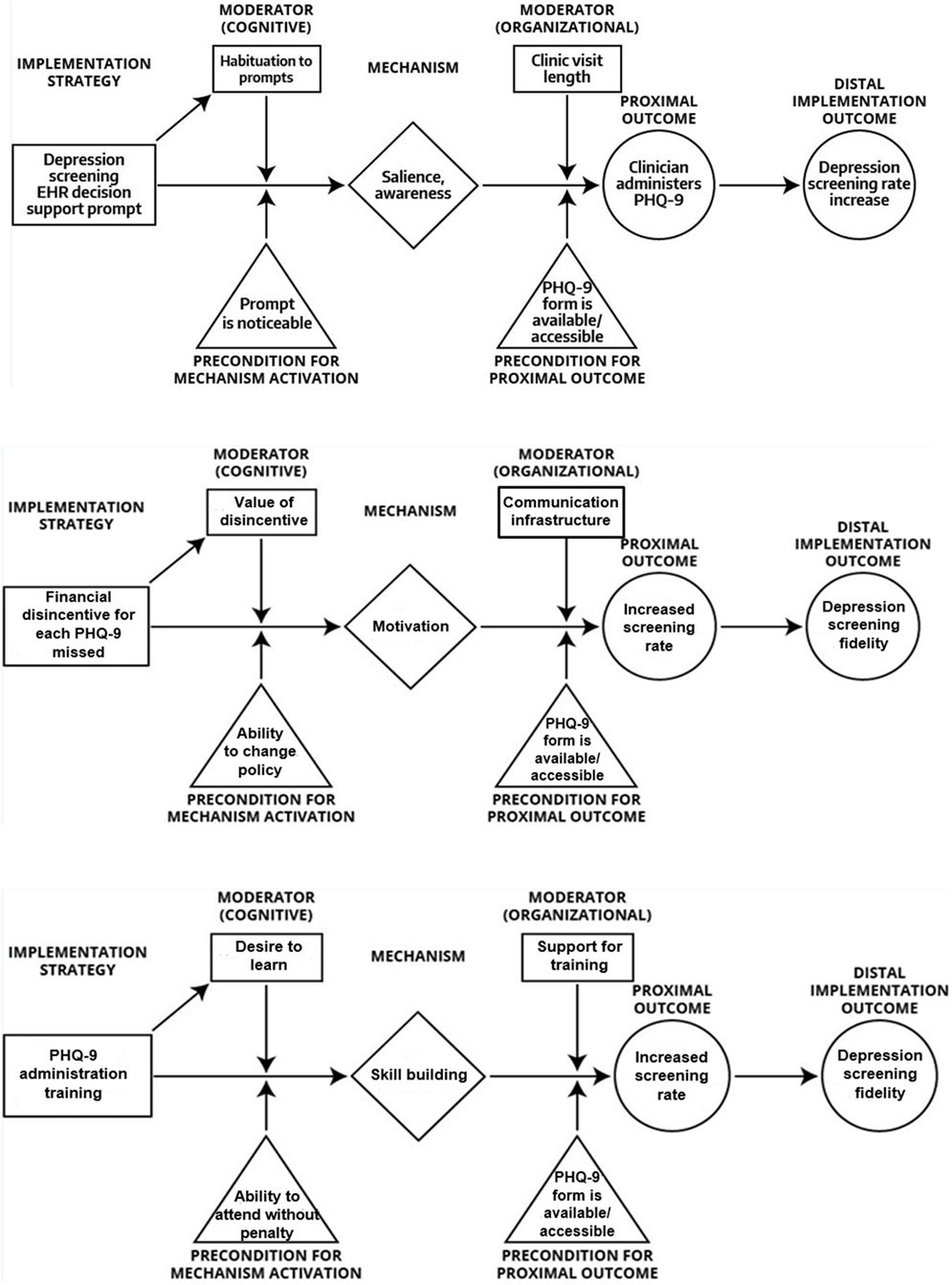

Our perspective draws upon Agile Science (13, 14)—a new method for developing and studying behavioral interventions that focuses on intervention modularity, causal modeling, and efficient evaluations to generate empirical evidence with clear boundary conditions (in terms of population, context, behavior, etc.) to maximize knowledge accumulation and repurposing. Agile Science has been used to investigate goal-setting interventions for physical activity, engagement strategies for mobile health applications, depression interventions for primary care, and automated dietary cues to promote weight loss (13, 15). Applied to implementation strategies, Agile Science-informed causal pathway diagram modeling consists of at least four steps: (1) specifying implementation strategies; (2) generating strategy-mechanism linkages; (3) identifying proximal and distal outcomes; and (4) articulating moderators and preconditions. To demonstrate this approach, we offer examples of causal pathway models for a set of three diverse implementation strategies (see Figure 1). The strategies are drawn from the following example. A community mental health center is planning to implement measurement-based care in which providers solicit patient-reported outcome data [e.g., Patient Health Questionnaire 9-item depression symptom severity measure (16)] prior to clinical encounters to inform treatment (17). The community mental health center plans to use training, financial penalty (disincentives), and audit and feedback as they are common strategies used to support measurement-based care implementation (18).

Step 1: Specifying Implementation Strategies

The Expert Recommendations for Implementing Change study yielded a compilation of 73 implementation strategies (19) developed by a multidisciplinary team through a structured literature review (20), Delphi process, and concept mapping exercise (19, 21, 22). Thus, there exists a solid foundation of strategies that are conceptually clear and well defined. However, the compilation was never explicitly linked to mechanisms. Following Kazdin (7), we define “mechanisms” as the processes or events through which an implementation strategy operates to effect desired implementation outcomes. Upon careful examination, it seems many strategies are not well enough specified to be linked to mechanisms in a coherent manner, a key step in causal model building. For instance, the compilation of 73 strategies lists “learning collaboratives,” a general approach for which the discrete strategies or core components are underspecified. This makes it difficult to identify their precise mechanisms of action (23). Underspecified strategies also leave the field vulnerable to inappropriately synthesizing data across studies (24, 25).

In our case example, training is a strategy that is underspecified. We adapted procedures from Michie et al. (26) to guide strategy specification, recommending that each strategy be assessed for whether it: (1) aims to promote the adoption, implementation, sustainment, or scale-up of an evidence-based practice; (2) is a proposed “active ingredient” of adoption, implementation, sustainment, or scale-up; (3) represents the smallest component while retaining the proposed active ingredient; (4) can be used alone or in combination with other discrete strategies; (5) is observable and replicable; and (6) can have a measurable impact on specified mechanisms of implementation (and, if so, whether putative mechanisms can be listed). If strategies do not meet these criteria, they require revision and further specification. This could involve suggesting alternative definitions, eliminating an implementation strategy altogether, or articulating a new, narrower strategy that is a component or a type of the original strategy. Training would meet all but the third and sixth criteria, because training can be composed of several active ingredients (e.g., didactics, modeling, role play/rehearsal, feedback, shadowing), each of which may operate on a unique mechanism. In this case, training ought to be more narrowly defined to make clear its core components.
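The six criteria can be read as a simple checklist. The Python sketch below is illustrative only and is not part of the procedures adapted from Michie et al. (26); the names `CRITERIA` and `unmet_criteria` are hypothetical, the criterion wording is abbreviated, and the pass/fail judgments for training simply restate the assessment given in the text.

```python
# Hypothetical checklist for judging whether a strategy is sufficiently specified.
# Criterion wording is abbreviated from the six criteria listed in the text.
CRITERIA = {
    1: "aims to promote adoption, implementation, sustainment, or scale-up",
    2: "is a proposed active ingredient",
    3: "represents the smallest component retaining the active ingredient",
    4: "can be used alone or in combination with other discrete strategies",
    5: "is observable and replicable",
    6: "can have a measurable impact on specified mechanisms",
}


def unmet_criteria(assessment):
    """Return the sorted numbers of criteria judged unmet (False or missing)."""
    return [number for number in sorted(CRITERIA) if not assessment.get(number, False)]


# Judgments for "training" as stated in the text: all met except criteria 3 and 6.
training = {1: True, 2: True, 3: False, 4: True, 5: True, 6: False}

needs_revision = unmet_criteria(training)
print(needs_revision)  # [3, 6]
```

A non-empty result signals that the strategy needs revision or narrower specification before it can be linked to mechanisms.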

Step 2: Generating Strategy-Mechanism Linkages

Once specified, an implementation strategy needs to be linked to the mechanisms hypothesized to underlie its functioning. Mechanisms explain how an implementation strategy has an effect by describing the actions that lead from the administration of the strategy to the implementation outcomes (see Table 1 for definitions). Statistically speaking, mechanisms are always mediators, but mediators may not be mechanisms. Similarly, moderators can point toward mechanisms but are not themselves reliably mechanisms. Determinants may explain why an implementation strategy did or did not have an effect, but mechanisms explain how a strategy had an effect, by, for example, altering the status of a determinant. Determinants are naturally occurring factors, often but not always malleable, that could prevent or enable the strategy to affect the desired outcomes. Mechanisms are intentionally activated by the application of an implementation strategy and can operate at different levels of analysis, such as at the levels of intrapersonal (e.g., learning), interpersonal (e.g., sharing), organizational (e.g., leading), community (e.g., restructuring), and macro policy (e.g., guiding) (27). For an implementation effort to be successful, chosen strategies should be compatible with and able to act on the local determinants [e.g., provider habit (determinant) is addressed with clinical decision support (strategy) via self-reflection/reflecting (mechanism)]. Although the term is commonly used in implementation science, we propose that the notion of a determinant is insufficiently specific, as researchers have used it to refer to at least two types of variables in a causal process: proximal outcomes and effect modifiers (see text footnote 1). Our discussion below uses these more precise terms instead.

Most implementation strategies likely act via multiple mechanisms, although it remains an empirical question whether one mechanism is primary and others are ancillary. It is also likely that the same mechanism might be involved in the operation of multiple implementation strategies. Initial assessment of strategy-mechanism linkages is made in the context of the broader scientific knowledge base about how a strategy produces an outcome (7). For instance, many strategies have their own literature base (e.g., audit and feedback) (28) that offers theoretical and empirical insights about which mechanisms might be underlying the functioning of those strategies [e.g., reflecting, learning, and engaging (28)]. Effort should always be made to draw upon and test existing theories, but if none offer sufficient guidance, hypothesizing variables that may have causal influence remains critical. In this way, over time, the initially formulated strategy-mechanism linkages can be reassessed and refined as studies begin to test them empirically. While such empirical evaluations are currently rare (across two systematic reviews of implementation mechanisms, only 31 studies were identified and no mechanisms were empirically established; see text footnote 1; 29), the causal pathway models we propose here are explicitly intended to facilitate evaluations of the mechanistic processes through which implementation strategies operate.
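The many-to-many relation between strategies and mechanisms can be sketched as a small lookup table. The Python example below is an illustrative assumption rather than a data structure proposed in this article; `STRATEGY_MECHANISMS` and `strategies_by_mechanism` are hypothetical names, and the entries are limited to linkages mentioned in the text, all of which remain hypotheses to be tested.

```python
from collections import defaultdict

# Strategy -> hypothesized mechanisms, restricted to linkages named in the text.
STRATEGY_MECHANISMS = {
    "audit and feedback": ["reflecting", "learning", "engaging"],
    "clinical decision support": ["reflecting"],
}


def strategies_by_mechanism(linkages):
    """Invert the mapping to show which strategies may share a mechanism."""
    inverted = defaultdict(list)
    for strategy, mechanisms in linkages.items():
        for mechanism in mechanisms:
            inverted[mechanism].append(strategy)
    return dict(inverted)


shared = strategies_by_mechanism(STRATEGY_MECHANISMS)
print(shared["reflecting"])  # ['audit and feedback', 'clinical decision support']
```

Inverting the table makes it easy to see where one mechanism might be involved in several strategies, which is one of the empirical questions raised above.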

Step 3: Identifying Proximal and Distal Outcomes

Implementation scientists have isolated eight outcomes as the desired endpoints of implementation efforts: acceptability, feasibility, appropriateness, adoption, penetration, fidelity, cost, and sustainability (1). Many of these outcomes are appropriately construed as latent variables, but others are manifest/observable in nature (30); a recent systematic review offers measures of these outcomes and measure meta-data (31). In terms of the causal processes through which implementation strategies operate, these outcomes are often best conceptualized as distal outcomes that the implementation process is intended to achieve, and each of them may be more salient at one phase of implementation than another. For instance, with the Exploration, Preparation, Implementation, Sustainment Framework (32), acceptability of an evidence-based practice may be most salient in the exploration phase, whereas fidelity may be the goal of an implementation phase. Despite the plausible temporal interrelations among the outcomes, mounting evidence indicates that not all implementation strategies influence each of the aforementioned outcomes (e.g., workshop training can influence adoption but not fidelity) (33). To fully establish the plausibility of an implementation mechanism and a testable causal pathway, proximal outcomes must be expounded.

Proximal outcomes are direct, measurable, and typically observable products of the implementation strategy that occur because of its specific mechanism of action. That is, affecting a proximal outcome in the intended direction can confirm/disconfirm activation of the putative mechanism, offering a low-inference way to establish evidence for a theorized mechanism. Most often, mechanisms themselves cannot be directly measured, forcing either high-inference assessment or reliance on the observation of change in a proximal outcome of interest. For instance, didactic education, as an active ingredient of training, acts primarily through the mechanism of learning on the proximal outcome of knowledge to influence the distal implementation outcome of perceived acceptability or even adoption. Practice with feedback acts through the mechanism of reflecting on proximal outcomes of skills and confidence to influence the distal implementation outcome of adoption or even fidelity. To identify proximal outcomes, one must answer the question, “How will I know if this implementation strategy had an effect via the mechanism that I think it is activating?” or “What will be different if the hypothesized mechanism for this strategy is at play?” It is very common for mechanisms and proximal outcomes to be conflated in the literature, given that researchers often test mediation models examining the impact of a strategy on a distal implementation outcome via a more proximal outcome. The way we are using the terms, a mechanism is a process through which an implementation strategy operates, and a proximal outcome is a measurable effect of that process that is in the causal pathway toward the distal implementation outcomes.
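To keep mechanisms and proximal outcomes distinct, it can help to record each as its own field in a pathway record. The following Python sketch is a hypothetical representation, not a tool described in this article; the class name `CausalPathway` and its `describe` method are assumptions, and the two instances restate the didactic education and practice-with-feedback examples from the text.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CausalPathway:
    """One hypothesized chain: strategy -> mechanism -> proximal -> distal."""

    strategy: str
    mechanism: str                      # process; usually not directly measurable
    proximal_outcomes: Tuple[str, ...]  # measurable products of the mechanism
    distal_outcomes: Tuple[str, ...]    # implementation outcomes of interest

    def describe(self) -> str:
        proximal = " and ".join(self.proximal_outcomes)
        distal = " or ".join(self.distal_outcomes)
        return f"{self.strategy} -> [{self.mechanism}] -> {proximal} -> {distal}"


didactic = CausalPathway(
    strategy="didactic education",
    mechanism="learning",
    proximal_outcomes=("knowledge",),
    distal_outcomes=("acceptability", "adoption"),
)
practice = CausalPathway(
    strategy="practice with feedback",
    mechanism="reflecting",
    proximal_outcomes=("skills", "confidence"),
    distal_outcomes=("adoption", "fidelity"),
)

print(didactic.describe())
print(practice.describe())
```

Separating the `mechanism` field from `proximal_outcomes` mirrors the distinction drawn above: the former names a process, the latter names what would be measured to infer that the process occurred.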

Step 4: Articulating Effect Modifiers

Finally, there are two types of effect modifiers that are important to articulate, both of which can occur across multiple levels of analysis: moderators and preconditions. Moderators are factors that increase or decrease the level of influence of an implementation strategy on an outcome. See Figure 1, in which examples of intra-individual and organizational-level moderators for audit and feedback are articulated. Theoretically, moderators are factors that interact with a strategy’s mechanism of action, even if exactly how they interact mechanistically is not understood. Preconditions are factors that are necessary for an implementation mechanism to be activated at all (see Figure 1). They are necessary conditions that need to be in place for the causal process that leads from an implementation strategy to its proximal and distal outcomes to take place. Both moderators and preconditions are most often mischaracterized as “determinants” in the implementation science literature base, which may limit our ability to understand the nature of the relations between a strategy and the individual and contextual factors that modify its effects, and, in turn, where, when, and why strategies have an effect on outcomes of interest.
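One conventional way to make the difference between these two kinds of effect modifiers concrete is a regression with an interaction term. The notation below is an illustrative assumption rather than a model proposed in this article: $X \in \{0,1\}$ indicates whether the strategy was delivered, $W$ is a moderator, $P \in \{0,1\}$ indicates whether a precondition is met, and $Y$ is a proximal or distal outcome.

```latex
\begin{align}
Y &= \beta_0 + \beta_1 X + \beta_2 W + \beta_3 X W + \varepsilon
  && \text{(moderation)} \\
\mathbb{E}[Y \mid X = 1, W] - \mathbb{E}[Y \mid X = 0, W] &= \beta_1 + \beta_3 W
  && \text{(strategy effect varies with } W\text{)} \\
Y &= \beta_0 + P\,(\beta_1 + \beta_3 W)\,X + \beta_2 W + \varepsilon
  && \text{(precondition gates the effect)}
\end{align}
```

Under this sketch, a moderator changes the size of the strategy's effect through $\beta_3$, whereas an unmet precondition ($P = 0$) removes the effect entirely, whatever the value of $W$.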

Future Directions: What the Field of Implementation Needs to Fully Establish Itself as a Science

In order to fully establish itself as a science by offering testable explanations and enabling the generation of predictions, we offer four critical steps for the field of implementation: (1) specify implementation strategies; (2) generate implementation strategy-mechanism linkages; (3) identify proximal and distal outcomes; and (4) articulate effect modifiers. In addition to these steps, we suggest that future research should strive to generate precise terms for factors implicated in implementation processes and use them consistently across studies. In a systematic review of implementation mechanisms, researchers conflated preconditions, predictors, moderators, mediators, and proximal outcomes (see text footnote 1). In addition, there is room for the field to develop guidelines for selecting research designs and study plans that account for practical constraints of the contexts in which implementation is studied and allow for mechanism evaluation. The types of causal pathway models that we advocate for here, paired with an understanding of the constraints of a particular study site, would enable researchers to select appropriate methods and designs to evaluate hypothesized relations by carefully considering temporal dynamics, such as how often a mechanism should be measured and how much, and when, the outcome is expected to change.

In order to truly advance the field, much work needs to be done to identify or develop psychometrically strong and pragmatic measures of implementation mechanisms. Empirically evaluating causal pathway models requires such measures, yet none of the seven published reviews of implementation-relevant measures focuses on mechanisms, so new measure development will likely be necessary. Finally, implementation science could benefit from more robust curation of evidence for knowledge transfer and use. Other fields house web-based databases for collecting, organizing, and synthesizing empirical findings [e.g., Science of Behavior Change (34)]. In doing so, fields can accumulate knowledge more rapidly and users of knowledge can determine what is working, when, and why, as well as what generalizes and what does not. Such curation of evidence can more efficiently lead to the development of improved implementation strategies (e.g., through strategy specification), identification of mutable targets for new strategies (e.g., mechanisms revealed for existing strategies that may not be pragmatic), and prioritization of strategy use for a given context (e.g., given knowledge of preconditions and moderators).

Author Contributions

CL and PK are co-first authors, who co-led manuscript development. CL and BW are co-PIs on an R01 proposal that led to the inception of this manuscript. All authors (CL, PK, BP, AL, LT, SJ, CW-B, and BW) contributed to idea development, writing, and editing of this manuscript and agreed with its content.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer TW declared a past co-authorship with one of the authors, BP, to the handling Editor.

Acknowledgments

BP would like to acknowledge funding from the National Institute of Mental Health (K01MH113806).

Footnote

1. Lewis CC, Boyd MR, Walsh-Bailey C, Lyon AR, Beidas RS, Mittman B, et al. A systematic review of empirical studies examining mechanisms of dissemination and implementation in health. Implement Sci (under review).

References

1. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health (2011) 38(2):65–76. doi:10.1007/s10488-010-0319-7

2. Damschroder L, Aron D, Keith R, Kirsh S, Alexander J, Lowery J. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci (2009) 4:50. doi:10.1186/1748-5908-4-50

3. Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Res Soc Work Pract (2014) 24(2):192–212. doi:10.1177/1049731513505778

4. Bosch M, van der Weijden T, Wensing M, Grol R. Tailoring quality improvement interventions to identified barriers: a multiple case analysis. J Eval Clin Pract (2007) 13(2):161–8. doi:10.1111/j.1365-2753.2006.00660.x

5. Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci (2012) 7(1):50. doi:10.1186/1748-5908-7-50

6. Grol RP, Bosch MC, Hulscher ME, Eccles MP, Wensing M. Planning and studying improvement in patient care: the use of theoretical perspectives. Milbank Q (2007) 85(1):93–138. doi:10.1111/j.1468-0009.2007.00478.x

7. Kazdin AE. Evidence-based treatment and practice: new opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. Am Psychol (2008) 63:146–59. doi:10.1037/0003-066X.63.3.146

8. National Academy of Sciences. Definitions of Evolutionary Terms [Internet]. Washington, DC: National Academy of Sciences (2017) [updated 2018; cited 2017 Nov 14]. Available from: http://www.nas.edu/evolution/Definitions.html

9. Eccles M; Improved Clinical Effectiveness through Behavioural Research Group. Designing theoretically-informed implementation interventions. Implement Sci (2006) 1(1):4. doi:10.1186/1748-5908-1-4

10. Merriam-Webster Inc. Dictionary [Internet]. Springfield, MA: Merriam-Webster, Inc (2017) [updated 2018; cited 2017 2 Nov]. Available from: https://www.merriam-webster.com/dictionary/

11. Glanz K, Bishop DB. The role of behavioral science theory in development and implementation of public health interventions. Annu Rev Public Health (2010) 31:399–418. doi:10.1146/annurev.publhealth.012809.103604

12. Krause J, Van Lieshout J, Klomp R, Huntink E, Aakhus E, Flottorp S, et al. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci (2014) 9:102. doi:10.1186/s13012-014-0102-3

13. Hekler EB, Klasnja P, Riley WT, Buman MP, Huberty J, Rivera DE, et al. Agile science: creating useful products for behavior change in the real world. Transl Behav Med (2016) 6(2):317–28. doi:10.1007/s13142-016-0395-7

14. Klasnja P, Hekler EB, Korinek EV, Harlow J, Mishra SR. Toward usable evidence: optimizing knowledge accumulation in HCI research on health behavior change. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. Denver, CO: ACM (2017). p. 3071–82.

15. Patrick K, Hekler EB, Estrin D, Mohr DC, Riper H, Crane D, et al. The pace of technologic change: implications for digital health behavior intervention research. Am J Prev Med (2016) 51(5):816–24. doi:10.1016/j.amepre.2016.05.001

16. Kroenke K, Spitzer RL. The PHQ-9: a new depression diagnostic and severity measure. Psychiatr Ann (2002) 32(9):509–15. doi:10.3928/0048-5713-20020901-06

17. Lewis CC, Scott KE, Hendricks KE. A model and guide for evaluating supervision outcomes in cognitive–behavioral therapy-focused training programs. Train Educ Prof Psychol (2014) 8(3):165–73. doi:10.1037/tep0000029

18. Lewis CC, Puspitasari A, Boyd MR, Scott K, Marriott BR, Hoffman M, et al. Implementing measurement based care in community mental health: a description of tailored and standardized methods. BMC Res Notes (2018) 11(1):76. doi:10.1186/s13104-018-3193-0

19. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci (2015) 10:21. doi:10.1186/s13012-015-0209-1

20. Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev (2012) 69(2):123–57. doi:10.1177/1077558711430690

21. Waltz TJ, Powell BJ, Chinman MJ, Smith JL, Matthieu MM, Proctor EK, et al. Expert recommendations for implementing change (ERIC): protocol for a mixed methods study. Implement Sci (2014) 9(1):39. doi:10.1186/1748-5908-9-39

22. Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci (2015) 10:109. doi:10.1186/s13012-015-0295-0

23. Nadeem E, Olin SS, Hill LC, Hoagwood KE, Horwitz SM. Understanding the components of quality improvement collaboratives: a systematic literature review. Milbank Q (2013) 91(2):354–94. doi:10.1111/milq.12016

24. Michie S, Fixsen D, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement Sci (2009) 4(1):40. doi:10.1186/1748-5908-4-40

25. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci (2013) 8:139. doi:10.1186/1748-5908-8-139

26. Michie S, Carey RN, Johnston M, Rothman AJ, de Bruin M, Kelly MP, et al. From theory-inspired to theory-based interventions: a protocol for developing and testing a methodology for linking behaviour change techniques to theoretical mechanisms of action. Ann Behav Med (2016):1–12. doi:10.1007/s12160-016-9816-6

27. Weiner BJ, Lewis MA, Clauser SB, Stitzenberg KB. In search of synergy: strategies for combining interventions at multiple levels. J Natl Cancer Inst Monogr (2012) 2012(44):34–41. doi:10.1093/jncimonographs/lgs001

28. Colquhoun HL, Carroll K, Eva KW, Grimshaw JM, Ivers N, Michie S, et al. Advancing the literature on designing audit and feedback interventions: identifying theory-informed hypotheses. Implement Sci (2017) 12:117. doi:10.1186/s13012-017-0646-0

29. Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Adm Policy Ment Health (2016) 43(5):783–98. doi:10.1007/s10488-015-0693-2

30. Lewis CC, Proctor E, Brownson RC. Measurement issues in dissemination and implementation research. 2nd ed. In: Brownson RC, Colditz GA, Proctor E, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press (2018). p. 229–45.

31. Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci (2015) 10:155. doi:10.1186/s13012-015-0342-x

32. Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health (2011) 38(1):4–23. doi:10.1007/s10488-010-0327-7

33. Jensen-Doss A, Cusack KJ, de Arellano MA. Workshop-based training in trauma-focused CBT: an in-depth analysis of impact on provider practices. Community Ment Health J (2008) 44(4):227–44. doi:10.1007/s10597-007-9121-8

34. Columbia University Medical Center, National Institutes of Health. Science of Behavior Change [Internet]. Columbia University Medical Center [cited 2017 Nov 6]. Available from: https://scienceofbehaviorchange.org/

Keywords: implementation, mechanism, mediator, moderator, theory, causal pathway, strategy

Citation: Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, Walsh-Bailey C and Weiner B (2018) From Classification to Causality: Advancing Understanding of Mechanisms of Change in Implementation Science. Front. Public Health 6:136. doi: 10.3389/fpubh.2018.00136

Received: 01 December 2017; Accepted: 20 April 2018;

Published: 07 May 2018

Edited by:

Thomas Rundall, University of California, Berkeley, United States

Reviewed by:

Carolyn Berry, New York University, United States

Thomas J. Waltz, Eastern Michigan University, United States

Copyright: © 2018 Lewis, Klasnja, Powell, Lyon, Tuzzio, Jones, Walsh-Bailey and Weiner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cara C. Lewis, lewis.cc@ghc.org

†Joint first authorship.
