- 1 Prevention Research Center in St. Louis, Brown School, Washington University in St. Louis, St. Louis, MO, United States

- 2 Focus Pointe Global, St. Louis, MO, United States

- 3 School of Public Health, The University of Alabama at Birmingham, Birmingham, AL, United States

- 4 JED Foundation, New York, NY, United States

- 5 Division of Public Health Sciences, Department of Surgery and Alvin J. Siteman Cancer Center, Washington University School of Medicine, Washington University in St. Louis, St. Louis, MO, United States

Background: Evidence-based decision making (EBDM) in health programs and policies can reduce population disease burden. Training in EBDM for the public health workforce is necessary to continue capacity building efforts. While in-person training for EBDM is established and effective, gaps in skills for practicing EBDM remain. Distance and blended learning (a combination of distance and in-person) have the potential to increase reach and reduce costs for training in EBDM. However, evaluations to date have focused primarily on in-person training. Here we examine the effectiveness of in-person training compared to distance and blended learning.

Methods: A quasi-experimental pre-post design was used to compare gaps in skills for EBDM among public health practitioners who received in-person training, distance and blended learning, and controls. Nine training sites agreed to replicate a course in EBDM with public health professionals in their state or region. Courses were conducted either in-person (n = 6) or via distance or blended learning (n = 3). All training participants, along with controls, were asked to complete a survey before the training and 6 months post-training. Paired surveys were used in linear mixed models to assess the effectiveness of each training format relative to controls.

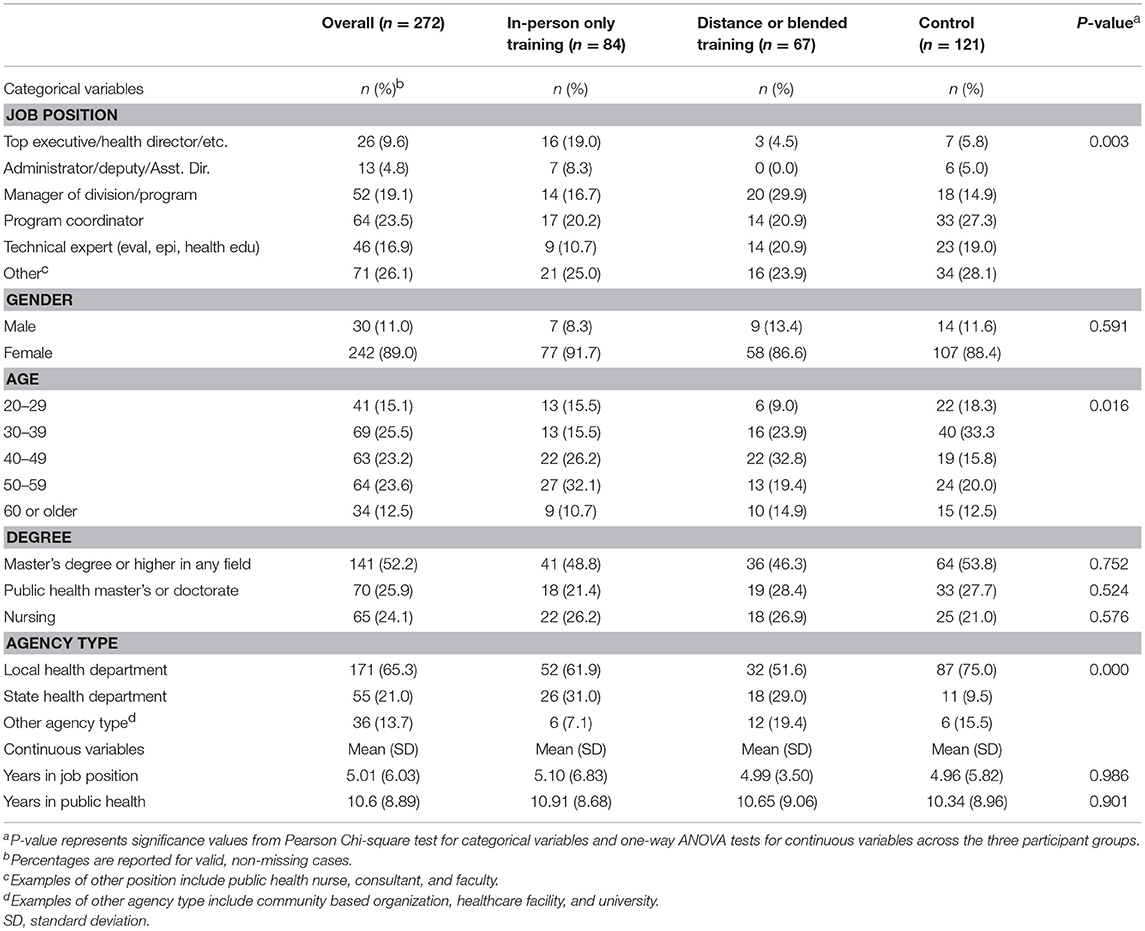

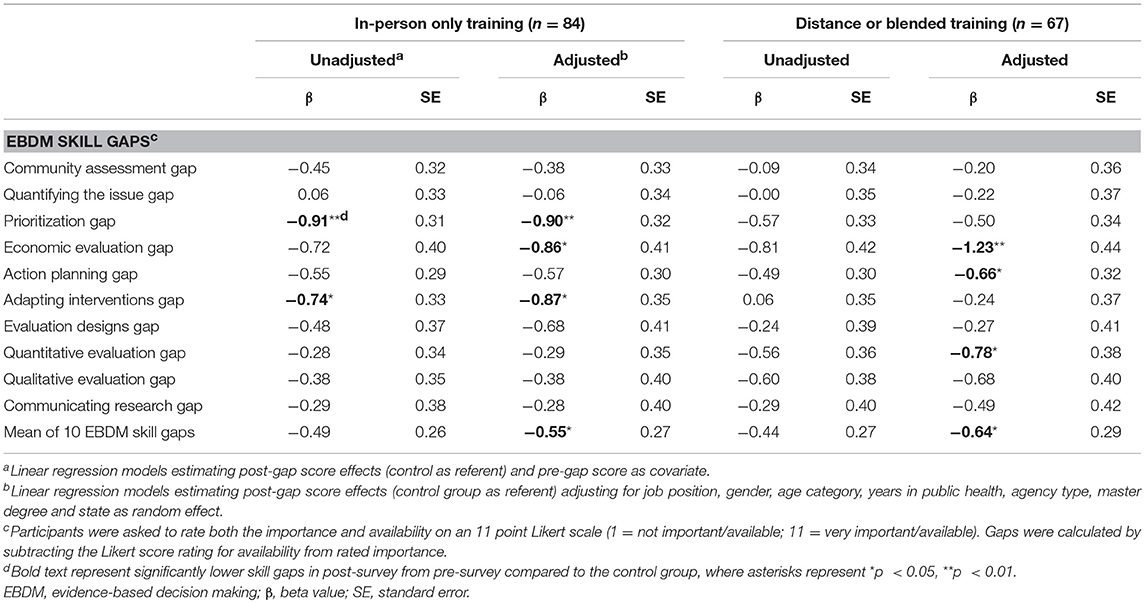

Results: Response rates for pre and post-surveys were 63.9 and 48.8% for controls and 81.6 and 62.0% for training groups. Participants who completed both pre and post-surveys (n = 272; 84 in-person, 67 distance or blended, and 121 controls) were mostly female (89.0%) and about two-thirds (65.3%) were from local health departments. In comparison to controls, overall gaps in skills for EBDM were reduced for participants of both in-person training (β = −0.55, SE = 0.27, p = 0.041) and distance or blended training (β = −0.64, SE = 0.29, p = 0.026).

Conclusions: This study highlights the importance of using diverse methods of learning (including distance or blended in-person approaches) for scaling up capacity building in EBDM. Further exploration into effective implementation strategies for EBDM trainings specific to course delivery type and understanding delivery preferences are important next steps.

Introduction

The US public health system is complex and includes several key organizations with diverse functions. Governmental health departments (state, local, tribal and territorial) hold primary responsibility for health under the US and state constitutions and directly or indirectly provide disease prevention services to communities, such as health screenings, health education, and surveillance. The 51 state health departments (1 per state and the District of Columbia) along with nearly 3,000 local health departments are diverse in the populations they serve (rural, urban, etc.) and in funding and resources available to them (number of full-time employees, partner organizations, etc.) (1, 2). Health departments play a key role in determining programs and policies to keep local communities or larger populations healthy.

Making programmatic and policy decisions based upon the best available research evidence, or evidence-based decision making (EBDM) (3–6), can further the ability to decrease the burden of disease in populations. The systematic application of principles of EBDM and training and capacity building for EBDM vary widely across the globe (7). Many of the fundamental tenets of EBDM originated in Australia, Canada, and the US. Among four countries recently surveyed regarding knowledge and use of EBDM principles, knowledge was highest in the US, followed closely by Australia and Brazil, with much lower knowledge in China (8). There is consensus in the public health field that EBDM is a Core Competency needed among the US workforce (9). The US Public Health Accreditation Board's Standards and Measures also emphasize the importance of having a skilled workforce by requiring accreditation-seeking health departments to demonstrate that they “identify and use the best available evidence for making informed public health practice decisions” (standard 10.1) and “promote understanding and use of the current body of research results, evaluations, and evidence-based practices with appropriate audiences” (standard 10.2) (10). Even so, gaps in skills for EBDM remain (11). The spread of EBDM is crucial among the public health workforce charged with planning, implementing, and evaluating programs and policies for disease prevention. However, the EBDM process, particularly within the context of US governmental public health agencies, is met with several barriers. Individual-level barriers include gaps in the skills needed to carry out EBDM, e.g., prioritizing programs or policies and adapting interventions for various populations or settings. Organizational barriers include a lack of leadership and/or organizational culture supportive of EBDM, and limited access to resources for EBDM (12–14). Health departments in rural areas often have less access to resources for EBDM (15).
Health departments in all locations also experience high staff turnover (16–18), and few employees (fewer than one quarter) enter the workforce with formal training in public health (17, 19), leading to the need for periodic training to build and maintain a skilled workforce. In addition, system-level barriers can include funding, external political influence, and competing priorities. Organizational and system-level barriers may be difficult to address in the short term, though individual skill deficits can be addressed through training relatively quickly (<1 year) (7, 20, 21). Thus, training is a suitable first step agencies can take to support EBDM and build capacity (22). Understanding effective training methods for public health professionals within the context of unique barriers is important. Higher education institutions, which have expertise in formally preparing the public health workforce and are equipped with training technology resources, can provide an advantageous training partnership for health departments.

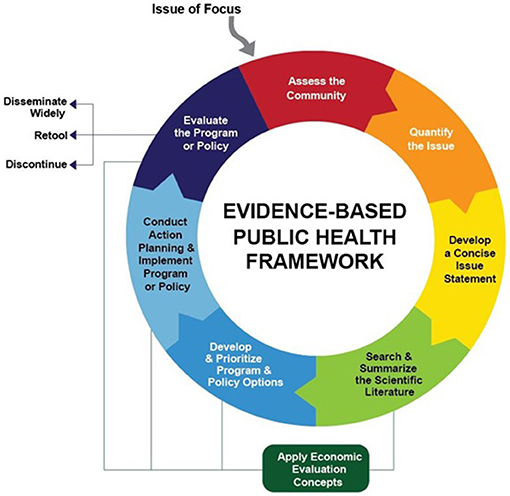

For nearly two decades, the Prevention Research Center in St. Louis has offered Evidence-based Public Health training to public health professionals nationally and internationally on the EBDM process (Figure 1) (13, 23). The standard course provided by researchers is an in-person, 3.5-day mix of didactic and small group learning, and is effective at increasing individual skills for EBDM (20, 21, 23–25). With staff turnover, training demands are ongoing and sustainability of training programs is needed (16–18, 26). To address this, train-the-trainer models for the course were developed and utilized to scale up trainings and reduce EBDM skill gaps (20, 27, 28). Evaluations of train-the-trainer models thus far have focused on fidelity to the original in-person delivery format, and participants have noted advantages such as small group work, use of local examples, networking with other colleagues, and in-person interaction with trainers (27, 29). Offering the course in other formats (e.g., distance learning or some combination of in-person and online) could potentially reach larger audiences such as staff in rural or satellite locations and international audiences. Distance learning methods can provide cost savings, offer a better fit for employee schedules, and/or prove more sustainable for agencies to offer regularly to staff (30–32). Scaling up capacity building approaches is a significant issue in implementation science (33). Much of the literature on public health workforce training in distance or blended learning (combination of in-person and distance) formats focuses on single topics or specific skills (31, 32, 34–43), with few studies focusing on the utility of training modes for scale-up potential (44, 45).

The Public Health Foundation's TRAIN Learning Network, which currently includes 25 state health departments and/or associated health divisions, provides a national web-based platform for the public health workforce to access, develop, and share trainings in various topics (46). In addition, learning networks and centers charged with educating the US public health workforce, such as Public Health Training Centers, Regional Public Health Training Centers, and the Public Health Learning Network provide distance and blended training options (47, 48). With the growing availability of web-based platforms within national and regional learning networks for public health professionals (46, 49) and increasing interest in making trainings continuously available with reduced costs, further exploring multiple modes of trainings for EBDM is necessary and timely.

The purpose of this study was to evaluate the effectiveness of distance and blended training delivery methods to reduce skill gaps in making evidence-based decisions, as compared to in-person training, as a potential option for public health professionals.

Materials and Methods

This study used a quasi-experimental design (pre-post with comparison group) to examine the effect of EBDM training on gaps in EBDM skills. The following describes the training sites, the comparison or control group, data collection and analysis, and the EBDM training modules and learning objectives.

Training Site Selection and Study Overview

In 2014, we identified training sites that had previously requested training in EBDM and had existing collaborations across academic and health department settings. After an initial pool of 12 was identified, research staff contacted each site to determine the level of interest in collaborating as well as experience with web-based approaches for training. In total, the purposeful sample included nine training sites within Missouri, Washington, Colorado, North Carolina, Nebraska, Oklahoma, Tennessee, Vermont, and New York. Washington and Colorado included participants from outside of their state, though they were considered participants of the Washington or Colorado sites' course. The nine sites were led by five state health departments, one in-state Public Health Training Center, two Regional Public Health Training Centers, and one school of public health.

Invited site representatives included chronic disease and health promotion directors, workforce development staff, school of public health faculty, and training center staff. Site representatives agreed to: (1) recruit in-state staff and faculty to attend a train-the-trainer course in EBDM provided by the study team; (2) replicate the course in their own states or regions; and (3) support data collection for evaluation. Sites agreed to include both public health practitioners and academicians as course instructors. The study team provided technical assistance and financial support for course replication. While sites replicated the course at varying intervals in 2015–2018, and planned to continue beyond the timeframe of the funded project, this study examines pre and post-evaluations for the initial course replication at each site.

We recruited control participants through snowball sampling in health departments. We contacted directors from local health departments that were similar to participating departments based on jurisdiction population size and service to urban or rural communities. Directors identified department staff to participate in pre-post survey data collection as controls. In addition, representatives from the nine training sites identified possible contacts for controls. Control participants did not take part in the EBDM training.

Training Description

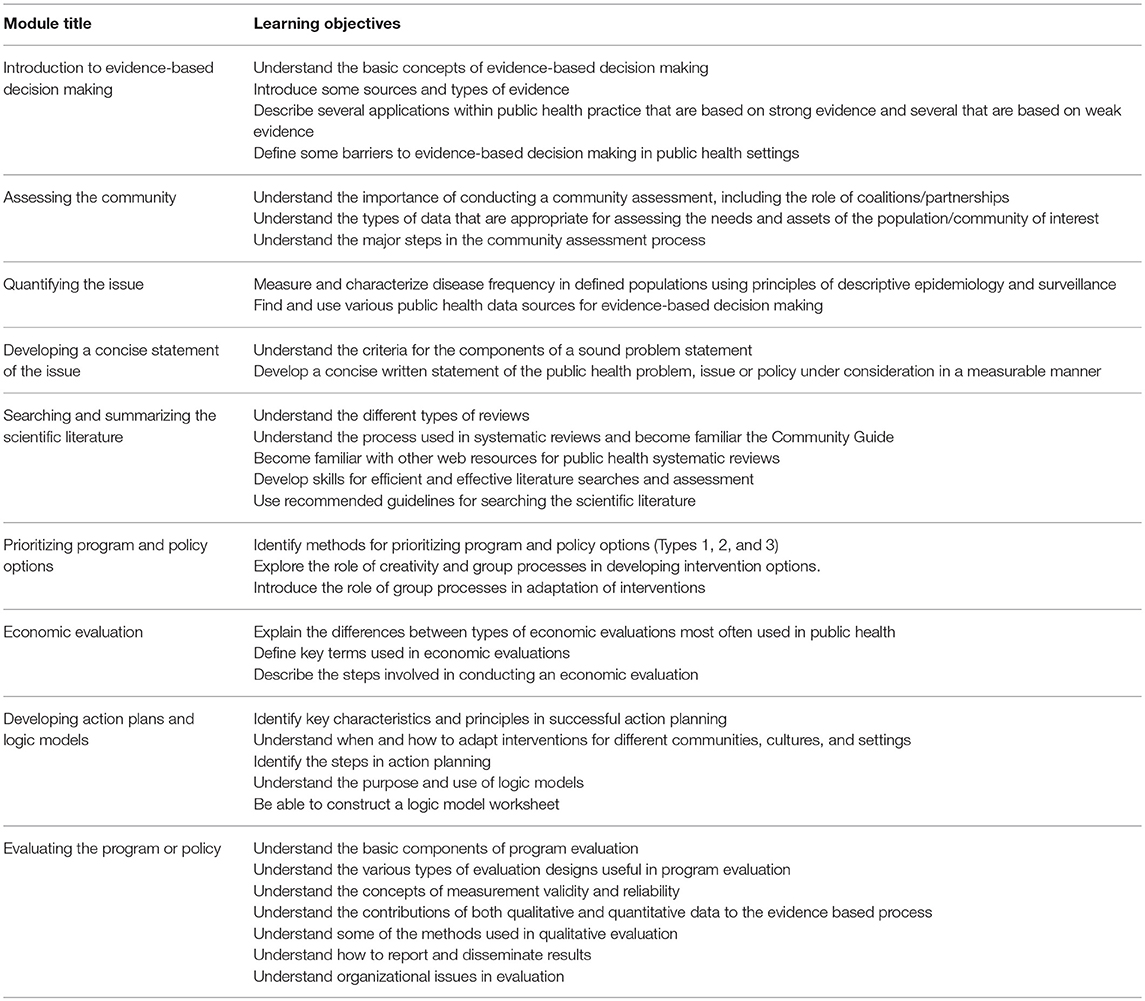

The evidence-based public health course, as originally designed and evaluated (23, 25, 27), is a 3.5-day in-person interactive training provided by university-based researchers that includes a mix of both didactic lectures and small group exercises. The course is organized into nine modules with accompanying learning objectives (Table 1) centered on the EBDM process. The nine modules cover foundational-level public health skills needed to make evidence-based decisions such as assessing needs in the community and using quantitative methods to quantify a public health issue. For example, in module three, “Quantifying the Issue,” participants complete a hands-on exercise in finding online data available for their state/county/city and become familiar with querying databases with terms for various disease rates, indicators, and outcomes. Each state has different public health governance and thus, for each course, exercises are tailored to reflect locally relevant circumstances and data and/or public health issues. While the content for exercises and/or examples varied based on relevance to states' and attendees' local health department jurisdictions, main components and learning objectives in the EBDM training are cross-cutting (Table 1) and apply to all health departments. Training site representatives attended the train-the-trainer course (27) and, along with support from study staff, planned a replication course in EBDM within their networks and recruited additional trainers from health departments and universities in their states. As courses and plans for replication developed, the core study team provided technical assistance to each site. This assistance varied from site to site and included input on updating modules, tips on choosing course trainers, ideas for local examples, and logistics for organizing the courses.
Six of the nine total sites used the in-person format and three delivered their course in a distance or blended format that combined distance and in-person components.

Table 1. Nine Modules of the Evidence-Based Public Health Course and Accompanying Learning Objectives.

In-person Training

Six sites provided in-person courses following the format of the original evidence-based public health course using a mix of didactic lectures, large- and small-group discussion, and small-group exercises. Most sites shortened the in-person course from the original 3.5 consecutive days to 2–2.5 consecutive days by trimming time from each module in consultation with the study team. One site delivered the in-person course over 4 days, each a week apart. The six in-person sites included local or in-state examples in didactic materials and exercises to make the learning relevant to current priority issues, but otherwise retained module content as provided in their train-the-trainer session.

Distance and Blended Training

Three sites implemented their course either solely via online web modules, or through some combination of web modules and in-person sessions. The first site engaged participants through nine self-paced online modules on EBDM. Each module was followed by a quiz that had to be taken in order to “pass” the module. Optional activities and reflection questions were available for participants to complete. Participants also had the option to discuss any questions through a user forum as they progressed through the course. In the second site, participants completed two online modules individually, followed by a 2-day in-person meeting and additional online modules afterwards. For the third site, training participants watched pre-recorded videos (archived online) for each module, completed homework assignments, and then attended scheduled live online learning sessions. The live distance learning sessions featured a facilitator who led module-specific activities and guided participants in further discussion.

Survey Tool

Informed by previous work (50, 51), the online survey tool comprised 51 total survey items and was used for all groups: in-person, distance or blended, and control. The survey assessed participants' agency type and university affiliation, job position, length of employment in position and in public health, gender, age, and educational degrees earned (Table 2). Participants rated each of ten skills in EBDM on their importance (1: not important to 11: very important) and “how available you feel each skill is to you when you need it either in your own skill set or among others' in your agency” (1: not available to 11: very available). We assessed the following EBDM skills:

1. Community assessment: understand how to define the health issue according to the needs and assets of the population/community of interest.

2. Quantifying the issue: understand the uses of descriptive epidemiology (e.g., concepts of person, place, and time) in quantifying a public health issue.

3. Prioritization: understand how to prioritize program and policy options.

4. Economic evaluation: understand how to use economic data in the decision making process.

5. Action planning: understand the importance of developing an action plan for how to achieve goals and objectives.

6. Adapting interventions: understand how to modify programs and policies for different communities and settings.

7. Evaluation designs: understand the various designs useful in program or policy evaluation.

8. Quantitative evaluation: understand the uses of quantitative evaluation approaches (e.g., surveillance or surveys).

9. Qualitative evaluation: understand the value of qualitative evaluation approaches (e.g., focus groups, key informant interviews) including the steps involved in conducting qualitative evaluations.

10. Communicating research to policy makers: understand the importance of communicating with policy-makers about public health issues.

Skills for EBDM are established as a reliable measure of effect for the in-person course in EBDM (21, 51, 52), and thus are used in this study to assess the relative effectiveness of distance or blended formats. The differences between importance and availability scores were calculated to represent gaps in EBDM skills and are the main dependent or outcome variable in this study.
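The gap calculation described above can be illustrated with a minimal sketch. It assumes that each gap is the importance rating minus the availability rating, so that a larger positive value means a larger unmet need, and that the overall gap is the unweighted mean of the ten skill gaps. The skill keys, function names, and respondent ratings below are hypothetical and are not taken from the study's data or code.

```python
from statistics import mean

# Short keys for the ten EBDM skills listed in the survey (key names are illustrative).
SKILLS = [
    "community_assessment", "quantifying_the_issue", "prioritization",
    "economic_evaluation", "action_planning", "adapting_interventions",
    "evaluation_designs", "quantitative_evaluation", "qualitative_evaluation",
    "communicating_research",
]

def skill_gaps(importance, availability):
    """Per-skill gap for one respondent: importance minus availability (assumed direction)."""
    return {skill: importance[skill] - availability[skill] for skill in SKILLS}

def overall_gap(gaps):
    """Unweighted mean of the ten per-skill gaps (assumed summary measure)."""
    return mean(gaps.values())

# Hypothetical respondent: ratings on the 1-11 scale used in the survey.
importance = {skill: 9 for skill in SKILLS}
availability = {skill: 7 for skill in SKILLS}
importance["economic_evaluation"] = 10
availability["economic_evaluation"] = 4

gaps = skill_gaps(importance, availability)
total = overall_gap(gaps)
print(gaps["economic_evaluation"], total)
```

In this sketch, a respondent who rates a skill as highly important but rarely available contributes a large gap, and a training effect would appear as a smaller gap at the post-survey.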

Data Collection

Staggered pre-post data collection based on training date was completed between December 2014 and May 2017 with in-person, distance or blended training groups, and controls. Email invitations with a link to participate in the baseline survey (administered via Qualtrics online software) were sent 1 month or less prior to each site's training. Follow-up surveys were sent 6 months post-training to all who completed the baseline survey, except in one training site where follow-up surveys were not administered. Participants who moved to another organization or retired after the baseline survey were deemed ineligible and were not invited to complete the follow-up. Survey invitees (n = 857 invited at baseline and n = 545 invited at follow-up) received both reminder emails and phone calls in order to increase response rates. The Washington University in St. Louis Institutional Review Board granted human subjects exempt approval.

Data Analysis

We sought to examine the relative effectiveness of distance and blended training approaches compared to in-person courses in reducing skill gaps; thus only paired responses from individuals who completed both surveys were used for analysis in this study. Because of similarities in distance learning approaches and small numbers, the distance learning and blended trainings were combined into one group. A previous meta-analysis of internet training also assigned trainings with any type of distance learning into a single group for analysis (53) and a recent review of distance learning for professional development of rural health professionals included studies that compared blended modalities to entirely face-to-face trainings (54), lending support for the group assignment in the present study. We used descriptive statistics to examine participant characteristics and mean skill gaps at pre and post-surveys across the three participant groups: in-person only training, distance or blended training, and control. Mixed effects linear models, fit with the MIXED procedure in IBM SPSS 25, were used to assess the effect of in-person training and distance or blended training on EBDM skill gaps at post-survey compared to controls (55). Smaller gaps at post-survey indicate reduced skill gaps and/or increased skills. Adjusted models accounted for baseline EBDM skill gap, job position, years in job position, gender, age group, master's degree earned, and public health agency type, chosen based on previous research (20, 21, 31) and/or a statistically significant difference at baseline between the control and training groups. Participants' state was included as a random effect to account for any between-state variation. Data analysis was completed between September and December 2017.
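One plausible way to write the adjusted model described above is sketched here; the notation is ours and is an assumption rather than the authors' published specification. For participant $i$ in state $j$, $G^{\text{post}}_{ij}$ and $G^{\text{pre}}_{ij}$ are the skill gaps at post-survey and baseline, $\text{InPerson}_{ij}$ and $\text{DistBlend}_{ij}$ are indicators for the two training groups (controls are the reference), $\mathbf{x}_{ij}$ collects the remaining covariates (job position, years in position, gender, age group, master's degree, agency type), and $u_j$ is a random intercept for state.

```latex
\[
G^{\text{post}}_{ij}
  = \beta_0
  + \beta_1\,\text{InPerson}_{ij}
  + \beta_2\,\text{DistBlend}_{ij}
  + \beta_3\,G^{\text{pre}}_{ij}
  + \mathbf{x}_{ij}^{\top}\boldsymbol{\gamma}
  + u_j + \varepsilon_{ij},
\qquad
u_j \sim \mathcal{N}(0, \sigma_u^2),\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\]
```

Under this reading, $\beta_1$ and $\beta_2$ are the training effects relative to controls, and a negative estimate indicates a smaller post-survey gap in the training group than in the control group after adjustment.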

Results

Response to the baseline survey was 63.9% (454 invited; 290 completed) for control and 81.6% (403 invited; 329 completed) for training groups. For the follow-up survey, the response rates for control and training groups were 48.8% (287 invited; 137 completed) and 62.0% (258 invited; 160 completed), respectively. In total, 272 post-surveys matched baseline surveys (pairs) and were used in all analyses.

Overall, most (89.0%) respondents were female, nearly two-thirds (65.3%) were from local health departments, and more than half (52.2%) held a graduate degree (Table 2). The largest (26.1%) category of selected job position was “Other,” which included a wide range of occupations, such as public health nurse, consultant, faculty, and planner. Between-group differences at baseline were found in job position (p = 0.003), age (p = 0.016), and agency type (p < 0.001) and were adjusted for in multivariate modeling.

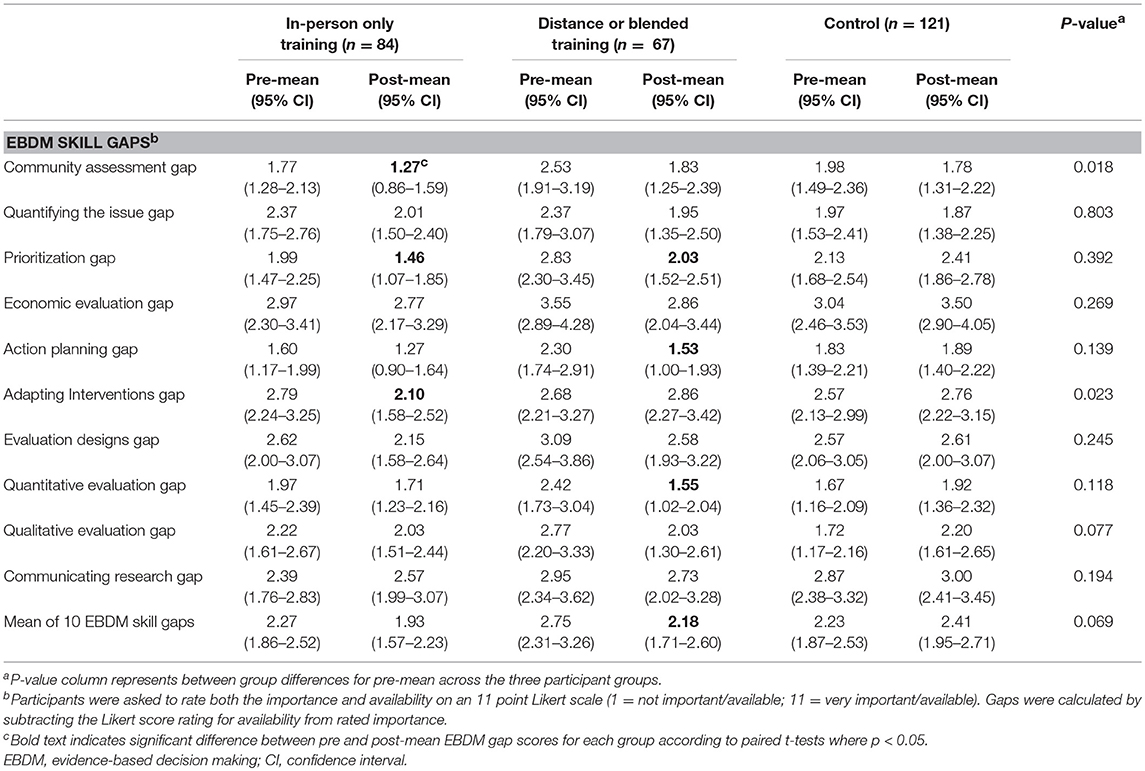

At baseline, EBDM skill gaps differed significantly across the three participant groups in two skills: community assessment (p = 0.018) and adapting interventions (p = 0.023; Table 3). Economic evaluation was the largest EBDM skill gap across all three groups at baseline and remained among the largest at follow-up. Overall skill gap estimates for both in-person and distance/blended training were smaller at post-survey than those of the control group (Table 4). The total mean of 10 EBDM skill gaps was significantly reduced for participants in the in-person (β = −0.55, SE = 0.27, p = 0.041) and distance/blended trainings (β = −0.64, SE = 0.29, p = 0.026) compared to controls. The gap in economic evaluation skills was also significantly reduced for both in-person (β = −0.86, SE = 0.41, p = 0.037) and distance/blended (β = −1.23, SE = 0.44, p = 0.005) training groups. Gaps in two skills, prioritization and adapting interventions, were significantly reduced (β = −0.90, SE = 0.32, p = 0.005 and β = −0.87, SE = 0.35, p = 0.013, respectively) in the in-person training group but not in the distance/blended training group. In addition, skill gaps were reduced in quantitative evaluation (β = −0.78, SE = 0.38, p = 0.04) and in action planning (β = −0.66, SE = 0.32, p = 0.039) in the distance/blended group but not in the in-person group.
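As a rough reading aid for the estimates above, the sketch below converts a coefficient and its standard error into an approximate two-sided p-value using a standard normal (Wald) approximation. This is an assumption made for illustration: the reported p-values come from the fitted mixed models, which rely on unrounded estimates and model-based degrees of freedom, so the approximations here will not match the published values exactly. The function and dictionary names are hypothetical.

```python
import math

def wald_p_value(beta, se):
    """Return (z, two-sided p) under a standard normal approximation."""
    z = beta / se
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# Overall skill-gap estimates (beta, SE) as reported in the text, rounded to two decimals.
estimates = {
    "in_person_overall": (-0.55, 0.27),
    "distance_blended_overall": (-0.64, 0.29),
}

results = {name: wald_p_value(beta, se) for name, (beta, se) in estimates.items()}
for name, (z, p) in results.items():
    print(f"{name}: z = {z:.2f}, approximate p = {p:.3f}")
```

Both overall estimates sit roughly two standard errors below zero, which is why each is statistically significant at the 0.05 level while the difference between the two formats is small relative to their standard errors.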

Table 3. Evidence-based decision making skill gaps pre vs. post-training by training delivery method.

Table 4. Intervention effects for evidence-based decision making skill gaps by training delivery method.

Discussion

Overall, our findings are in line with previous studies (20, 21) in that training in evidence-based public health can reduce EBDM skill gaps. In this study, we specifically sought to test the utility of distance and blended training models featuring web-based learning components. This fills a significant gap in the literature. In a recent review of 20 training programs for EBDM, only three used a distance learning approach (7). We found that, like in-person training, distance and blended training models significantly reduced overall skill gaps in EBDM. The results also support continuation of the study team's train-the-trainer approach, since surveyed course participants were instructed by state-level trainers who first attended a train-the-trainer course. Below, we discuss the implications of distance and blended trainings for public health professionals as a possible supplement to in-person approaches to building capacity for EBDM as well as next steps to further apply the learnings.

Comparing Delivery Methods

We found that training via in-person or distance and blended approaches had similar effects in reducing overall gaps in EBDM skills. Similarly, Sitzmann and colleagues' meta-analysis of the comparative effectiveness of web-based and classroom-style trainings shows that overall, effects tend to be similar across the trainings (44). While we did not examine each variety of distance or blended trainings separately due to small subgroup numbers, Sitzmann et al.'s findings suggest a potentially larger effect for combination trainings that integrate both web-based formats and in-person components. Sitzmann et al. caution training developers against completely replacing in-person instruction with web-based modalities. Instead, enhancing existing components with either in-person or web-based counterparts could be an effective alternative, as could offering a choice of delivery methods. For example, the meta-analysis showed larger effects in studies where learners self-selected into web-based trainings when compared to experimental designs. Thus, autonomy may be key. Our study did not inherently offer a choice to each individual, but sites themselves were autonomous in creating the delivery method that would be most advantageous for their staff and partners. Allowing sites to select their training delivery method may have contributed to the overall success of the training in reducing EBDM skill gaps. Limitations that Sitzmann et al. pose as possible next steps for examination are level of interaction with learners, learners' experience with computers or other web-based trainings, and course quality, as these may also contribute to effectiveness of delivery method.

Offer Diversity in Training Delivery Methods

The public health workforce comes from many professional backgrounds, and less than one quarter have formal training in public health (17, 19). Training in EBDM is thus a common need across the workforce spectrum. With funding traditionally attached to specific programs (e.g., grant awards for diabetes and colorectal cancer screening programs), public health has become a workforce with diverse but program-specific skills. The EBDM process cuts across silos of public health funding to examine foundational principles such as involving stakeholders in a community assessment, designing evaluations before programs are underway that capture the full impact of services, and effectively communicating the value of programs to policy makers who can sustain or allocate funding. This process is integral to public health practice and is why increasing skills in EBDM among the workforce has the potential to affect population health. If training is a key ingredient to enhancing EBDM skills, any training approach should consider the end user. Our study suggests that distance and/or blended learning for public health professionals can reduce skill gaps in EBDM similarly to in-person training. Kaufman and colleagues conducted key informant interviews with public health agency employees regarding sustainable change through trainings at health departments; a common theme that arose for producing sustainable change was to offer combinations of approaches (in-person training, on-site learning, distance learning, peer learning collaboratives, etc.) (56). Training modalities are not one-size-fits-all. Formative research with groups before development and implementation should be used to determine what is needed by the training's target audience (30, 34). Unique individuals make up the public health workforce, and we should be cognizant of the diverse needs of those individuals.
This includes assessing the advantages and/or disadvantages of training modalities for the various public health settings in which professionals operate.

Train-the-Trainer Model

Regardless of delivery style, the instructors for the EBDM courses evaluated in this study were state-level trainers who attended a train-the-trainer course provided by the study team. Benefits of the EBDM course as delivered by the study team have been documented previously (23, 25). Similar benefits were found in four sites with courses led by state-level instructors following training by the study team (20, 27). This study's assessment was more detailed and shows the EBDM courses reduced specific participant skill gaps when taught by state-level trainers who were previously taught by the core trainers in St. Louis. Reduced skill gaps were found both among attendees of in-person courses and participants in distance and/or blended courses. This has positive implications for scale-up of ongoing EBDM training delivered via in-person, distance, or blended formats. Partly because of staff turnover in public health agencies (16, 18), ongoing periodic EBDM trainings will continue to be needed.

Training Delivery Considerations

EBDM is not a process carried out by a sole worker. EBDM requires collaboration with colleagues, stakeholders, and community members. Therefore, learning the basics of EBDM among peers, in-person, has many advantages, such as small group work (learning from each other), having one-on-one questions answered by trainers in the room, and networking with other colleagues, all of which have been cited as facilitators of learning in in-person courses (23, 27). However, online training platforms are increasingly able to integrate more interactive features which allow for similar experiences. In addition, web-based training has the potential to reach parts of the public health workforce that may not otherwise have access to in-person training (e.g., rural workforce, not in close proximity to schools of public health/trainings). Web-based trainings also provide flexibility for those whose schedules are too demanding for a 3.5-day intensive in-person training but who could learn at their own pace, though immersion offers a chance to focus solely on training without the distraction of day-to-day work activities. Finally, purely web-based models have the potential to reach larger audiences and can perhaps do so more quickly if they are offered frequently or on demand. In-person trainings traditionally accommodate about 35 individuals. One site in our study, which offered its web-based training without any in-person component, trained well over three times that number. Web-based trainings offer the opportunity to quickly and simultaneously push consistent and standardized messaging around a topic or skill to all employees.

Costs to participants can be reduced without full-day trainings (that often require travel, food, etc.), though upfront costs to an organization to develop and launch web-based training can be high and money should be budgeted for ongoing updates to trainings. One way organizations can minimize their costs (which some sites did in this study) is to integrate web-based modules or training components into already existing web-training platforms. One example is TRAIN, a national learning collaborative that allows web-access options to develop and disseminate professional development trainings with an organizational subscription. Currently, 25 state health departments are affiliated TRAIN sites, and subscriptions for local public health agencies are also available (46). Regional and in-state Public Health Training Centers can also potentially offer needed resources for training infrastructure that could reduce upfront costs to organizations. To support sustainability of any ongoing training programs, regardless of format, formal plans to acquire and designate resources are needed (39).

Next Steps

This is an exciting time to be in public health. Much focus is placed on creating and maintaining a competent workforce that is capable of, and passionate about, keeping populations healthy. Lessons from this study can shape current plans to spread capacity for EBDM through training efforts and, more importantly, can inform further, more rigorous studies that explore in greater depth the implementation variations in blended or distance course delivery.

Limitations

While our study provides important findings regarding alternatives to in-person training for public health professionals, the findings should be interpreted within the context of several limitations. All data presented are self-reported, which introduces the potential for social desirability bias. This study did not ascertain behavior change (level of using EBDM processes before and after training), an important piece of evaluating training outcomes (57). Three participant characteristics differed across training and control groups, but additional analyses indicated that these differences did not drive the reported adjusted findings. Sites also differed in their implementation of the training, and detailed implementation differences were not directly measured. Because of small numbers, the distance learning and blended trainings were combined; thus, we were not able to fully isolate differences between full distance learning and either partial or full in-person training. Future studies are needed to determine the level of effect for each particular distance and/or blended model.

Conclusions

The public health workforce is a diverse group of professionals. The spread of EBDM within the workforce has the potential to decrease disease burden in the population. Building capacity for EBDM through training is not a one-size-fits-all approach; offering multiple training modes increases the potential for scaling up and sustaining EBDM across the public health workforce.

Availability of Data and Material

Raw data will be made available by the authors, without undue reservation, to any qualified researcher upon request.

Author Contributions

RB and PA: conceptualization and design; RB, PA, PE, and KD: survey instrument development; KA and SY: data collection; RJ: data management and data analyses. All authors read and approved the final manuscript.

Funding

This study was funded by the Robert Wood Johnson Foundation (Award # 71704).

Conflict of Interest Statement

RB is currently a guest associate editor for Frontiers in Public Health, Public Health Education and Promotion. KD is employed by Focus Pointe Global.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank the members of our LEAD Research Team, including Janet Canavese and Kathleen Wojciehowski, Missouri Institute for Community Health; Jenine Harris, Brown School, Washington University in St. Louis; Carolyn Leep, National Association of County and City Health Officials; and Katherine Stamatakis, College for Public Health and Social Justice, Saint Louis University. We are also extremely grateful to the participating training sites.

Abbreviations

EBDM, evidence-based decision making

References

1. National Association of County and City Health Officials (NACCHO). 2016 National Profile of Local Health Departments. Washington, DC (2017). Available online at: http://nacchoprofilestudy.org/wp-content/uploads/2017/10/ProfileReport_Aug2017_final.pdf (Accessed July 30, 2018).

2. Association of State and Territorial Health Officials (ASTHO). ASTHO Profile of State and Territorial Public Health, Volume Four. Arlington, VA (2017). Available online at: http://www.astho.org/Profile/Volume-Four/2016-ASTHO-Profile-of-State-and-Territorial-Public-Health/ (Accessed July 30, 2018).

3. Kohatsu ND, Robinson JG, Torner JC. Evidence-based public health: an evolving concept. Am J Prev Med. (2004) 27:417–21. doi: 10.1016/j.amepre.2004.07.019

4. Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental concept for public health practice. Annu Rev Public Health (2009) 30:175–201. doi: 10.1146/annurev.publhealth.031308.100134

5. Brownson RC, Baker EA, Deshpande AD, Gillespie KN. Evidence-Based Public Health, 3rd edn. New York, NY: Oxford University Press (2018).

6. Glasziou P, Longbottom H. Evidence-based public health practice. Aust N Z J Public Health (1999) 23:436–40.

7. Brownson RC, Fielding JE, Green LW. Building capacity for evidence-based public health: reconciling the pulls of practice and the push of research. Annu Rev Public Health (2018) 39:27–53. doi: 10.1146/annurev-publhealth-040617-014746

8. DeRuyter AJ, Ying X, Budd EL, Furtado K, Reis R, Wang Z, et al. Comparing knowledge, accessibility, and use of evidence-based chronic disease prevention processes across four countries. Front Public Health (2018) 6:214. doi: 10.3389/fpubh.2018.00214

9. The Council on Linkages Between Academia and Public Health Practice. Modified Version of the Core Competencies for Public Health Professionals. (2017). Available online at: http://www.phf.org/resourcestools/Documents/Modified_Core_Competencies.pdf.

10. Public Health Accreditation Board (PHAB). Public Health Accreditation Board Standards and Measures Version 1.5. Alexandria, VA (2013). Available online at: http://www.phaboard.org/wp-content/uploads/PHABSM_WEB_LR1.pdf.

11. Jacob RR, Baker EA, Allen P, Dodson EA, Duggan K, Fields R, et al. Training needs and supports for evidence-based decision making among the public health workforce in the United States. BMC Health Serv Res. (2014) 14:564. doi: 10.1186/s12913-014-0564-7

12. Jacobs JA, Dodson EA, Baker EA, Deshpande AD, Brownson RC. Barriers to evidence-based decision making in public health: a national survey of chronic disease practitioners. Public Health Rep. (2010) 125:736–42. doi: 10.1177/003335491012500516

13. Brownson RC, Gurney JG, Land GH. Evidence-based decision making in public health. J Public Health Manag Pract. (1999) 5:86–97.

14. Brownson RC, Allen P, Duggan K, Stamatakis KA, Erwin PC. Fostering more-effective public health by identifying administrative evidence-based practices: a review of the literature. Am J Prev Med. (2012) 43:309–19. doi: 10.1016/j.amepre.2012.06.006

15. Harris JK, Beatty K, Leider JP, Knudson A, Anderson BL, Meit M. The double disparity facing rural local health departments. Annu Rev Public Health (2016) 37:167–84. doi: 10.1146/annurev-publhealth-031914-122755

16. Leider JP, Harper E, Shon JW, Sellers K, Castrucci BC. Job satisfaction and expected turnover among federal, state, and local public health practitioners. Am J Public Health (2016) 106:1782–8. doi: 10.2105/AJPH.2016.303305

17. Sellers K, Leider JP, Harper E, Castrucci BC, Bharthapudi K, Liss-Levinson R, et al. The public health workforce interests and needs survey: the first national survey of state health agency employees. J Public Health Manag Pract. (2015) 21:S13–27. doi: 10.1097/PHH.0000000000000331

18. Beck AJ, Boulton ML, Lemmings J, Clayton JL. Challenges to recruitment and retention of the state health department epidemiology workforce. Am J Prev Med. (2012) 42:76–80. doi: 10.1016/j.amepre.2011.08.021

19. Fields RP, Stamatakis KA, Duggan K, Brownson RC. Importance of scientific resources among local public health practitioners. Am J Public Health (2015) 105:S288–94. doi: 10.2105/AJPH.2014.302323

20. Jacobs JA, Duggan K, Erwin P, Smith C, Borawski E, Compton J, et al. Capacity building for evidence-based decision making in local health departments: scaling up an effective training approach. Implement Sci. (2014) 9:124. doi: 10.1186/s13012-014-0124-x

21. Brownson RC, Allen P, Jacob RR, deRuyter A, Lakshman M, Reis RS, et al. Controlling chronic diseases through evidence-based decision making: a group-randomized trial. Prev Chronic Dis. (2017) 14:E121. doi: 10.5888/pcd14.170326

22. DeCorby-Watson K, Mensah G, Bergeron K, Abdi S, Rempel B, Manson H. Effectiveness of capacity building interventions relevant to public health practice: a systematic review. BMC Public Health (2018) 18:684. doi: 10.1186/s12889-018-5591-6

23. Dreisinger M, Leet TL, Baker EA, Gillespie KN, Haas B, Brownson RC. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision making. J Public Health Manag Pract. (2008) 14:138–43. doi: 10.1097/01.PHH.0000311891.73078.5

24. Baker EA, Brownson RC, Dreisinger M, McIntosh LD, Karamehic-Muratovic A. Examining the role of training in evidence-based public health: a qualitative study. Health Promot Pract. (2009) 10:342–8. doi: 10.1177/1524839909336649

25. Gibbert WS, Keating SM, Jacobs JA, Dodson E, Baker E, Diem G, et al. Training the workforce in evidence-based public health: an evaluation of impact among US and international practitioners. Prev Chronic Dis. (2013) 10:E148. doi: 10.5888/pcd10.130120

26. Drehobl PA, Roush SW, Stover BH, Koo D, Centers for Disease Control and Prevention. Public health surveillance workforce of the future. MMWR Suppl. (2012) 61:25–9.

27. Yarber L, Brownson CA, Jacob RR, Baker EA, Jones E, Baumann C, et al. Evaluating a train-the-trainer approach for improving capacity for evidence-based decision making in public health. BMC Health Serv Res. (2015) 15:547. doi: 10.1186/s12913-015-1224-2

28. Douglas MR, Lowry JP, Morgan LA. Just-in-time training of the evidence-based public health framework, Oklahoma, 2016–2017. J Public Health Manag Pract. (2018). doi: 10.1097/PHH.0000000000000773. [Epub ahead of print].

29. Brown KK, Maryman J, Collins T. An evaluation of a competency-based public health training program for public health professionals in Kansas. J Public Health Manag Pract. (2017) 23:447–53. doi: 10.1097/PHH.0000000000000513

30. Ballew P, Castro S, Claus J, Kittur N, Brennan L, Brownson RC. Developing web-based training for public health practitioners: what can we learn from a review of five disciplines? Health Educ Res. (2013) 28:276–87. doi: 10.1093/her/cys098

31. Morshed AB, Ballew P, Elliott MB, Haire-Joshu D, Kreuter MW, Brownson RC. Evaluation of an online training for improving self-reported evidence-based decision-making skills in cancer control among public health professionals. Public Health (2017) 152:28–35. doi: 10.1016/j.puhe.2017.06.014

32. Demers AL, Mamary E, Ebin VJ. Creating opportunities for training California's public health workforce. J Contin Educ Health Prof. (2011) 31:64–9. doi: 10.1002/chp.20102

33. Milat AJ, Bauman A, Redman S. Narrative review of models and success factors for scaling up public health interventions. Implement Sci. (2015) 10:113. doi: 10.1186/s13012-015-0301-6

34. Alexander LK, Horney JA, Markiewicz M, MacDonald PD. 10 Guiding principles of a comprehensive Internet-based public health preparedness training and education program. Public Health Rep. (2010) 125:51–60. doi: 10.1177/00333549101250S508

35. Steckler A, Farel A, Bontempi JB, Umble K, Polhamus B, Trester A. Can health professionals learn qualitative evaluation methods on the World Wide Web? A case example. Health Educ Res. (2001) 16:735–45. doi: 10.1093/her/16.6.735

36. Bell M, MacDougall K. Adapting online learning for Canada's Northern public health workforce. Int J Circumpolar Health (2013) 72:21345. doi: 10.3402/ijch.v72i0.21345

37. Macvarish K, Kenefick H, Fidler A, Cohen B, Orellana Y, Todd K. Building professionalism through management training: New England public health training center's low-cost, high-impact model. J Public Health Manag Pract. (2018) 24:479–86. doi: 10.1097/PHH.0000000000000693

38. Salinas-Miranda AA, Nash MC, Salemi JL, Mbah AK, Salihu HM. Cutting-edge technology for public health workforce training in comparative effectiveness research. Health Inform J. (2013) 19:101–15. doi: 10.1177/1460458212461366

39. Kenefick HW, Ravid S, MacVarish K, Tsoi J, Weill K, Faye E, et al. On your time: online training for the public health workforce. Health Promot Pract. (2014) 15:48S–55S. doi: 10.1177/1524839913509270

40. Yost J, Mackintosh J, Read K, Dobbins M. Promoting awareness of key resources for evidence-informed decision-making in public health: an evaluation of a webinar series about knowledge translation methods and tools. Front Public Health (2016) 4:72. doi: 10.3389/fpubh.2016.00072

41. Jaskiewicz L, Dombrowski RD, Barnett GM, Mason M, Welter C. Training local organizations to support healthy food access: results from a year-long project. Community Dev J. (2016) 51:285–301. doi: 10.1093/cdj/bsv022

42. Chan L, Mackintosh J, Dobbins M. How the “Understanding Research Evidence” web-based video series from the national collaborating centre for methods and tools contributes to public health capacity to practice evidence-informed decision making: Mixed-methods evaluation. J Med Internet Res. (2017) 19:e286. doi: 10.2196/jmir.6958

43. Liu Q, Peng W, Zhang F, Hu R, Li Y, Yan W. The effectiveness of blended learning in health professions: systematic review and meta-analysis. J Med Internet Res. (2016) 18:e2. doi: 10.2196/jmir.4807

44. Sitzmann T, Kraiger K, Stewart D, Wisher R. The comparative effectiveness of web-based and classroom instruction: a meta-analysis. Pers Psychol. (2006) 59:623–64. doi: 10.1111/j.1744-6570.2006.00049.x

45. Eysenbach G, Kaewkungwal J, Nguyen C, Zhan X, Zhang Z, Sun F, et al. Effects of improving primary health care workers' knowledge about public health services in rural China: a comparative study of blended learning and pure e-learning. J Med Internet Res. (2017) 19:e116. doi: 10.2196/jmir.6453

46. Public Health Foundation. What is TRAIN? Available online at: http://www.phf.org/programs/TRAIN/Pages/default.aspx (Accessed December 6, 2017).

47. Health Resources and Services Administration. Regional Public Health Training Centers (2017). Available online at: https://bhw.hrsa.gov/grants/publichealth/regionalcenters (Accessed December 6, 2017).

48. National Network of Public Health Institutes. Public Health Learning Network: Educating Professionals, Elevating Practice (2017). Available online at: https://nnphi.org/phln/ (Accessed December 6, 2017).

49. Millery M, Hall M, Eisman J, Murrman M. Using innovative instructional technology to meet training needs in public health: a design process. Health Promot Pract. (2014) 15:39S–47S. doi: 10.1177/1524839913509272

50. Brownson RC, Reis RS, Allen P, Duggan K, Fields R, Stamatakis KA, et al. Understanding administrative evidence-based practices: findings from a survey of local health department leaders. Am J Prev Med. (2014) 46:49–57. doi: 10.1016/j.amepre.2013.08.013

51. Jacobs JA, Clayton PF, Dove C, Funchess T, Jones E, Perveen G, et al. A survey tool for measuring evidence-based decision making capacity in public health agencies. BMC Health Serv Res. (2012) 12:57. doi: 10.1186/1472-6963-12-57

52. Jacobs JA, Jones E, Gabella BA, Spring B, Brownson RC. Tools for implementing an evidence-based approach in public health practice. Prev Chronic Dis. (2012) 9:E116. doi: 10.5888/pcd9.11032

53. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA (2008) 300:1181–96. doi: 10.1001/jama.300.10.1181

54. Berndt A, Murray CM, Kennedy K, Stanley MJ, Gilbert-Hunt S. Effectiveness of distance learning strategies for continuing professional development (CPD) for rural allied health practitioners: a systematic review. BMC Med Educ. (2017) 17:117. doi: 10.1186/s12909-017-0949-5

55. Gueorguieva R, Krystal JH. Move over ANOVA: progress in analyzing repeated-measures data and its reflection in papers published in the archives of general psychiatry. Arch Gen Psychiatry (2004) 61:310–7. doi: 10.1001/archpsyc.61.3.310

56. Kaufman NJ, Castrucci BC, Pearsol J, Leider JP, Sellers K, Kaufman IR, et al. Thinking beyond the silos: emerging priorities in workforce development for state and local government public health agencies. J Public Health Manag Pract. (2014) 20:557–65. doi: 10.1097/PHH.0000000000000076

Keywords: evidence-based decision making, public health workforce training, training approaches, evidence-based practice, public health department

Citation: Jacob RR, Duggan K, Allen P, Erwin PC, Aisaka K, Yang SC and Brownson RC (2018) Preparing Public Health Professionals to Make Evidence-Based Decisions: A Comparison of Training Delivery Methods in the United States. Front. Public Health 6:257. doi: 10.3389/fpubh.2018.00257

Received: 22 June 2018; Accepted: 20 August 2018;

Published: 13 September 2018.

Edited by:

Connie J. Evashwick, George Washington University, United States

Reviewed by:

Margo Bergman, University of Washington Tacoma, United States

Krista Mincey, Xavier University of Louisiana, United States

Copyright © 2018 Jacob, Duggan, Allen, Erwin, Aisaka, Yang and Brownson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rebekah R. Jacob, rebekahjacob@wustl.edu
