- 1 Division of Research and Innovation, Department of Computer Science and Engineering, Saveetha School of Engineering, SIMATS, Chennai, Tamil Nadu, India

- 2 School of Information Technology and Engineering, Vellore Institute of Technology, Vellore, India

- 3 School of Computer Science and Engineering, Vellore Institute of Technology, Vellore, Tamil Nadu, India

- 4 Department of Computer Science Engineering, Sahyadri College of Engineering and Management, Mangaluru, India

- 5 Department of Computer Science and Information Engineering, National Yunlin University of Science and Technology, Yunlin, Taiwan

- 6 Service Systems Technology Center, Industrial Technology Research Institute, Hsinchu, Taiwan

Introduction: Cancer incidence in humans is gradually rising for a variety of reasons, and accurate detection and management are essential to reduce disease rates. The kidney is a vital organ in human physiology; kidney cancer is a medical emergency that requires accurate diagnosis and well-organized management.

Methods: The proposed work aims to develop a framework to classify renal computed tomography (CT) images into healthy/cancer classes using pre-trained deep-learning schemes. To improve the detection accuracy, this work suggests a threshold filter-based pre-processing scheme that removes the artefact from the CT slices. The stages of this scheme are: (i) image collection, resizing, and artefact removal, (ii) deep feature extraction, (iii) feature reduction and fusion, and (iv) binary classification using five-fold cross-validation.

Results and discussion: The experimental investigation is executed separately for (i) CT slices with the artefact and (ii) CT slices without the artefact. The K-Nearest Neighbor (KNN) classifier achieves 100% detection accuracy on the pre-processed CT slices. Therefore, this scheme is clinically significant and can be considered for examining clinical grade renal CT images.

1. Introduction

Infectious and acute diseases are increasingly prevalent worldwide, and appropriate clinical procedures are necessary to detect and treat them as early as possible. Untreated diseases can cause various complications, including death, and may also place a substantial burden on the healthcare system. Acute diseases are usually more severe than infectious diseases and are more likely to be fatal. According to the current literature, cancer is a severe acute disease that accounts for a substantial number of deaths worldwide (1–3).

A report published by the World Health Organization (WHO) shows that cancer was a leading cause of death worldwide in 2020 and is expected to remain so (footnote 1). Estimates indicate that approximately 10 million people died worldwide in 2020 from various cancer-related causes, including cancers of the internal and external organs. According to this report, lung and colon cancer are the leading causes of cancer death worldwide.

The Global Cancer Observatory (GCO) report lists cancer cases in various body organs (footnote 2). The report ranks cancers by occurrence rate; kidney cancer is ranked 14th, and untreated renal cancer will lead to death. The report also confirms that 431,288 new kidney cancer cases were diagnosed in 2020 alone. According to the GCO projections, kidney (renal) cancer is severe, and its occurrence rate is gradually rising due to various causes. Early recognition and management are essential to cure the disease completely using appropriate medications. Kidney cancer (KC) is commonly assessed by automatic methods applied to a chosen medical imaging dataset (renal CT images), and the achieved results are analyzed and recorded for further investigation.

Earlier studies confirm that renal CT (RCT)-based examination is a recommended procedure for precisely detecting kidney abnormalities during disease screening. Usually, the RCT is collected as a three-dimensional (3D) image, and a 3D-to-2D conversion is then employed to reduce the computational complexity of the RCT analysis (4, 5). Axial-plane 2D slices are commonly adopted in the literature because they provide the necessary information about abdominal conditions, including kidney health. Hence, this study also uses axial-plane 2D RCT slices to examine the KC. Before implementing the detection task, every image is resized to a recommended dimension.

The ultimate aim of this investigation is to prepare a disease detection framework that accurately identifies the KC in RCT images with the help of a chosen deep-learning scheme. To achieve better detection accuracy, this study implements an image preprocessing procedure that treats the raw renal CT with a threshold filter approach discussed in earlier research. In earlier studies, this approach was used to strip the skull region from brain MRI slices (6, 7) and to remove the artifact in lung CT slices (8–10). A similar procedure is adopted in this study to remove the artifact in RCT slices and improve the visibility of the kidney section. The proposed cancer detection framework consists of the following phases:

i. Image resizing and artifact removal using threshold filter.

ii. Deep feature extraction using chosen pre-trained methods.

iii. Dual-deep feature generation using serially concatenated deep features.

iv. Binary classification and verification using a 5-fold cross-validation.

The merit of a computerized scheme depends on its explainability and robustness; hence, this study adopts a framework that is simple and robust (11). MATLAB is used for the initial image processing, and the developed framework is implemented in Python. The experimental exploration is carried out separately using (i) RCT with the artifact and (ii) RCT without the artifact, and the achieved performance values are compared. The comparison confirms that the classification accuracy obtained with the artifact-removed RCT is better than that obtained with the raw RCT. Furthermore, this study employs pre-trained schemes, such as VGG16, VGG19, ResNet50, ResNet101, DenseNet121, and DenseNet201, to obtain better detection in the considered task. The results confirm that VGG19 and DenseNet121 perform better on the chosen RCT; hence, the proposed scheme is implemented using the deep features of (i) VGG19, (ii) DenseNet121, and (iii) the serially concatenated features of VGG19 and DenseNet121 after a 50% dropout. The deep feature-based classification achieves an accuracy of 100% with the RCT without the artifact. This indicates that the proposed framework is clinically noteworthy and can be considered for identifying the KC in RCT collected from actual patients.

The key contributions of this framework include the following:

i. Threshold filter-supported preprocessing is executed to eliminate artifacts in RCT.

ii. Implementation of the proven deep-learning schemes to detect the KC using RCT.

iii. Implementation of serially concatenated deep features to enhance the KC detection accuracy.

This study is divided into the following sections: Section 2 reviews related studies, Section 3 describes the methodology, and Sections 4 and 5 present the results and conclusions.

2. Related studies

Computerized disease screening and diagnosis is a recent advancement adopted in a variety of hospitals and disease screening laboratories to reduce the diagnostic burden on doctors and lab technicians. Rising disease occurrence rates call for a faster and more accurate system to detect disease from the chosen medical data. Bio-image-supported disease screening is a common and widely adopted procedure for verifying the condition of the internal organs. Furthermore, bio-image-supported methods provide more accurate disease information than other medical modalities; hence, they are widely employed to screen patients with cancer.

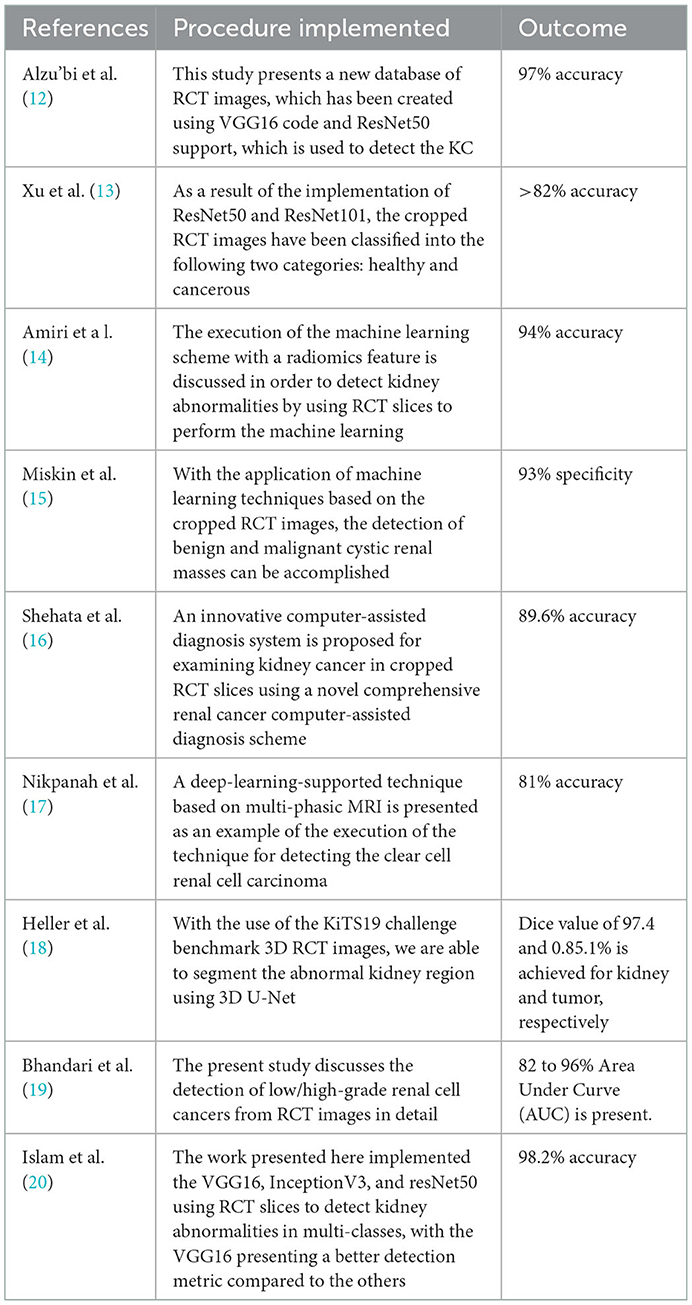

Kidney cancer is an acute disease and is ranked 14th in the 2020 reports of the WHO and GCO. Appropriate diagnosis and treatment help the patient recover from the disease. Due to its importance, researchers have discussed a number of computerized schemes for detecting the KC in RCT images. Table 1 summarizes a few chosen KC detection procedures found in the literature.

In addition to the studies considered above, the research by Abdelrahman and Viriri (21) presents a detailed survey of traditional and deep-learning segmentation of the abnormal fragment in the kidney in RCT images. The research by Wang et al. (22) also presents a thorough evaluation of deep-learning-supported schemes for biomedical image examination, including the RCT. These studies confirm the need for a well-organized methodology to detect abnormality in the chosen medical image. Hence, this research proposes a deep-learning-based framework to accurately detect the KC in axial-plane RCT slices.

3. Methodology

This section demonstrates how axial-plane RCT slices are classified into healthy and cancerous classes using a binary classifier. When a patient visits the nephrologist to verify the condition of the kidney, the doctor follows a recommended clinical protocol to examine the kidney and, based on the observations and disease symptoms, recommends a bio-imaging-based examination to obtain complete information about the kidney. A CT scan provides a 3D picture of the abdominal region, which is then converted into 2D slices to reduce the computational complexity. Furthermore, personal verification of the kidney section by the doctor needs a 2D picture printed on a specialized film. A similar procedure is followed when a computer-supported diagnosis is implemented.

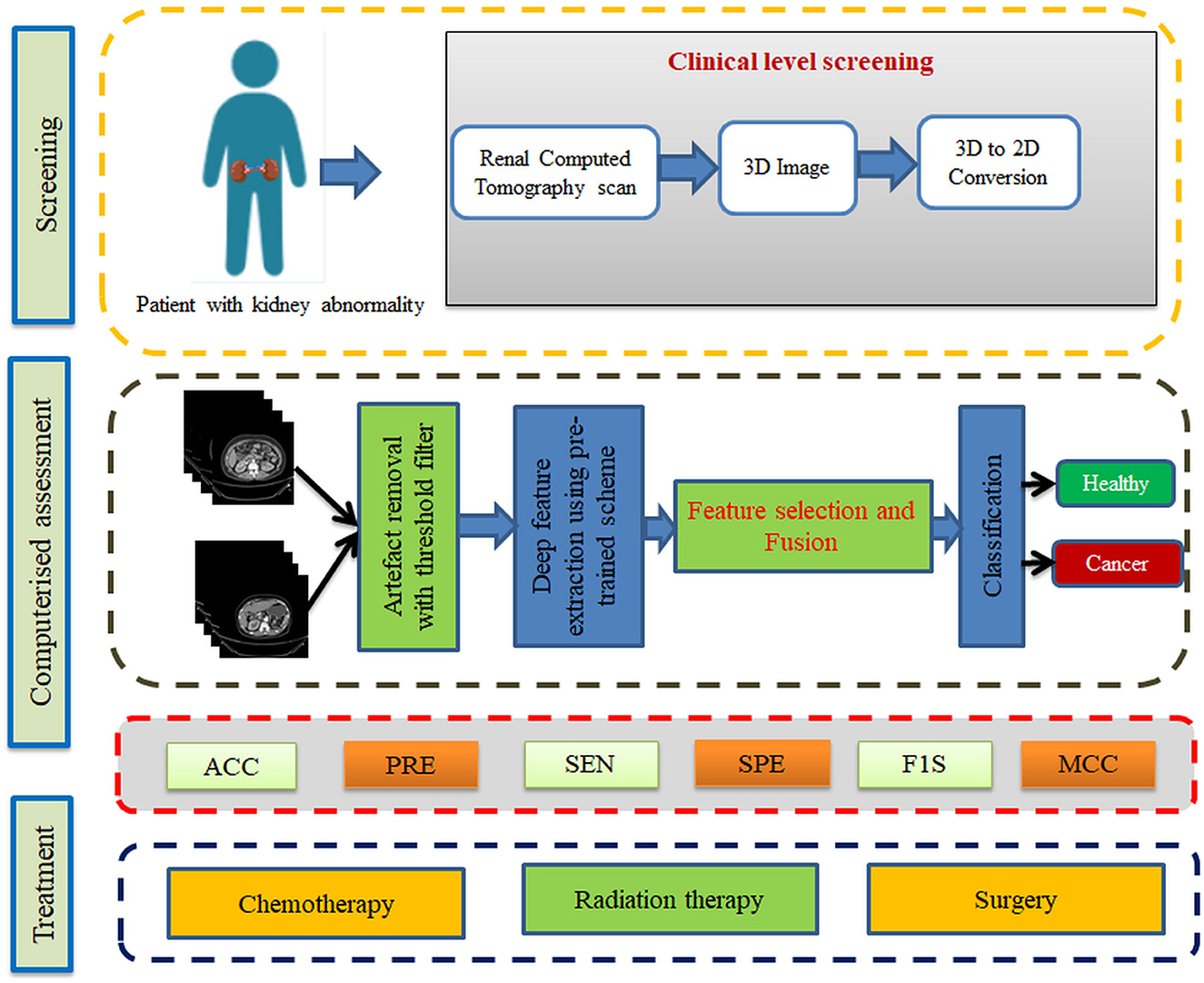

Figure 1 depicts the proposed scheme, from data collection to decision-making. The scheme involves the following procedures: image collection and preprocessing for improved detection accuracy; feature extraction using a selected deep-learning technique; feature reduction and serial feature concatenation to produce the fused feature vector; binary classification with 5-fold cross-validation; and verification of the scheme's performance based on the results obtained. In this study, the fused feature vector is constructed by integrating the deep features of VGG19 and DenseNet121. The merit of the proposed scheme is confirmed by computing performance measures for these features with SoftMax and other binary classifiers.

3.1. Image database

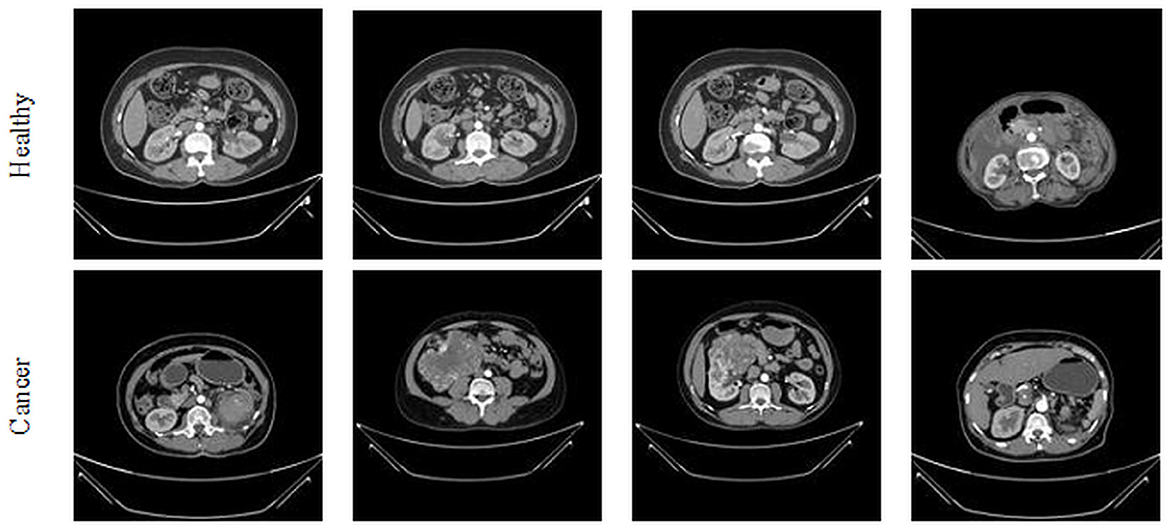

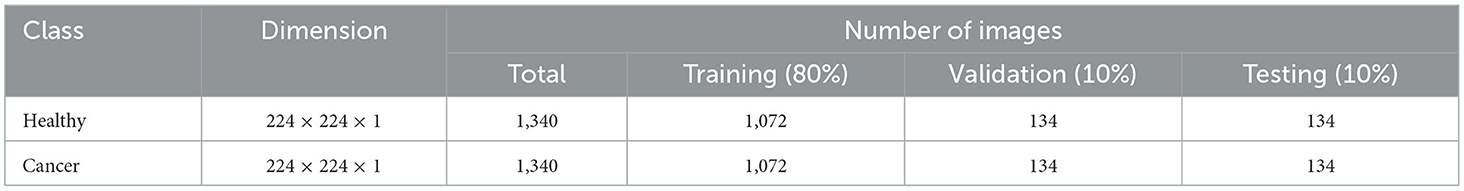

This study uses the axial-plane RCT slices provided by Islam et al. (19). The dataset consists of both axial-plane and coronal-plane images in categories such as cyst, stone, cancer, and healthy; only the healthy and cancer axial-plane images are considered here. To obtain a balanced database, this study uses 2,680 images (1,340 healthy and 1,340 cancer). Before implementing the classification task, every image is resized to 224 × 224 × 1 pixels. The proposed detection task is implemented using the RCT with and without the artifact, and the obtained results are examined and verified separately. Figure 2 shows sample images considered in this study, and Table 2 lists the number of images. Of these images, 80% are used to train the deep-learning scheme, 10% are used for validation, and the remaining 10% are used to test the performance of the scheme with a 5-fold cross-validation on individual and fused features.
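The fold construction for a balanced two-class dataset of this size can be sketched as follows. This is a minimal illustration and not the authors' code: the function name `stratified_k_fold`, the round-robin assignment, and the seed are assumptions, and the separate 10% validation hold-out described above is not modeled.

```python
import random


def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs with each class spread evenly over k folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)  # deal indices round-robin into the folds
    for f in range(k):
        test_idx = sorted(folds[f])
        train_idx = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train_idx, test_idx


# 1,340 healthy (label 0) and 1,340 cancer (label 1) slices, matching the dataset size.
labels = [0] * 1340 + [1] * 1340
splits = list(stratified_k_fold(labels, k=5))
```

With 2,680 images and five folds, each test fold holds 536 images (268 per class), and the remaining 2,144 images form the training portion of that fold.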

3.1.1. Artifact removal

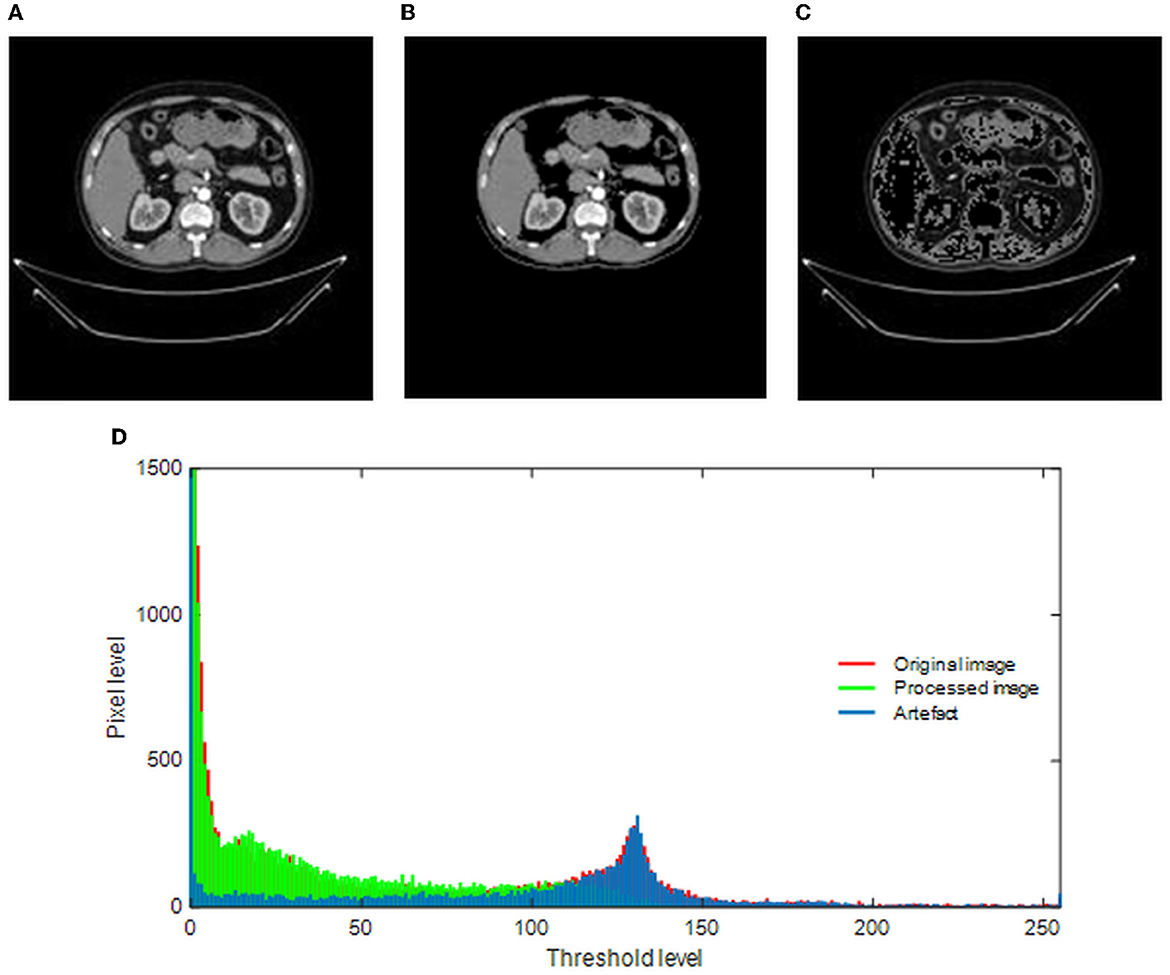

The merit of an automatic medical image examination procedure depends mainly on the image database considered during the experimental investigation. Earlier studies verify that images without the artifact help in achieving better accuracy than images with the artifact (23). This study implements a threshold filter-supported method to remove the artifact from the chosen RCT, and this task is executed using MATLAB software as discussed in (24). In this process, the threshold value (Th), which separates the image into the processed image and the artifact, is identified manually. Once an appropriate Th is obtained, it is applied to divide the raw test image into two sections, as shown in Figure 3. Figure 3A shows the raw RCT, and Figures 3B, C show the processed picture and the removed artifact. The separation depends on the threshold level of the image, which is shown in Figure 3D. The original histogram (red) depicts the pixel distribution of the raw RCT, the green histogram depicts the pixel distribution of the processed image, and the remaining section (blue) shows the pixel values of the artifact.

Figure 3. Implementation of threshold filter to eliminate the artifact. (A) Original image. (B) Processed image. (C) Artifact. (D) Gray-scale histogram.
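The threshold-based separation can be illustrated with a short NumPy sketch. It is not the MATLAB routine used in the study: the function name `threshold_filter`, the `keep_below` switch, and the toy pixel values are assumptions, and the source does not state on which side of Th the artifact lies.

```python
import numpy as np


def threshold_filter(image, th, keep_below=True):
    """Split a grayscale slice into a processed image and the removed artifact.

    Pixels on the kept side of `th` stay in the processed image; all other
    pixels are moved to the artifact image. Which side holds the artifact is
    an assumption controlled by `keep_below`.
    """
    image = np.asarray(image)
    keep_mask = image <= th if keep_below else image > th
    processed = np.where(keep_mask, image, 0)
    artifact = np.where(keep_mask, 0, image)
    return processed, artifact


# Toy 8-bit "slice": bright values (above 180) stand in for the artifact.
slice_ = np.array([[10, 50, 220],
                   [90, 240, 30],
                   [255, 70, 120]], dtype=np.uint8)
processed, artifact = threshold_filter(slice_, th=180)
```

Because every pixel goes to exactly one of the two outputs, adding `processed` and `artifact` recovers the raw slice, which mirrors the way the raw histogram splits into the processed and artifact sections.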

3.2. Deep-learning model

Recently, pre-trained and customized deep-learning procedures have been widely implemented in various data analytic tasks due to their performance, ease of implementation, and significance. Compared with traditional and machine-learning schemes, deep-learning procedures provide better results on moderate and large datasets. Furthermore, most of these methods can be practically implemented on a chosen hardware system, which improves their performance (25–27).

Researchers have recently made wide use of pre-trained models to achieve better results in medical image examination tasks. The proposed study also implements well-known pre-trained models, such as VGG16, VGG19, ResNet50, ResNet101, DenseNet121, and DenseNet201, to examine the KC in RCT slices. Complete details of these schemes can be found in the literature (28–32); in this study, they are considered along with chosen binary classifiers. The following initial parameters are assigned for these models: learning rate = 1 × 10⁻⁵, training with linear dropout rate (LDR), Adam optimization, ReLU activation, total iterations = 2,000, total epochs = 150, and classification with a SoftMax unit using a 5-fold cross-validation.

Before implementing the developed scheme, image augmentation is applied to increase the learning capability of the chosen deep-learning systems. The augmentation process involves horizontal and vertical flips, an angle-based rotation, and zoom-in and zoom-out. This helps the system learn the image features better.
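A few of these augmentations can be sketched with NumPy alone. The sketch below is illustrative: the function name `augment` is hypothetical, the 90-degree rotation stands in for the unspecified rotation angle, the 2x zoom-in by pixel repetition is an assumed simplification, and zoom-out is omitted.

```python
import numpy as np


def augment(image):
    """Return simple augmented variants of a square 2D slice."""
    image = np.asarray(image)
    h, w = image.shape
    # Zoom-in: crop the central half and upsample 2x by pixel repetition.
    crop = image[h // 4: h // 4 + h // 2, w // 4: w // 4 + w // 2]
    zoom_in = np.repeat(np.repeat(crop, 2, axis=0), 2, axis=1)
    return {
        "hflip": np.fliplr(image),   # horizontal flip
        "vflip": np.flipud(image),   # vertical flip
        "rot90": np.rot90(image),    # 90-degree counterclockwise rotation
        "zoom_in": zoom_in,
    }


slice_ = np.arange(16).reshape(4, 4)
variants = augment(slice_)
```

For a 224 × 224 slice, the same crop-and-repeat step takes the central 112 × 112 region and returns a 224 × 224 image, so every variant keeps the input size.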

3.3. Feature vector generation and classification

Each deep-learning procedure implemented in this study provides a deep feature vector of dimension 1 × 1 × 1,000, which is then used to evaluate the merit of the classifiers. Applying a 50% dropout to this vector yields a reduced feature vector of dimension 1 × 1 × 500. The reduced VGG19 and DenseNet121 vectors are then serially concatenated to obtain a fused feature vector of dimension 1 × 1 × 1,000, which helps in achieving better classification accuracy during the RCT-based KC detection task. The feature vectors of this system are depicted in Equations (1)–(3) (33, 34):
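The three vectors can be sketched from the dimensions stated above. The bracketed element notation and the subscript "fused" are assumptions made for illustration and are not taken from the source:

```latex
\begin{align}
\mathrm{DLF}_{\mathrm{VGG}\,(1\times 1\times 1000)} &= \left[\mathrm{VGG}_{(1,1)},\ \mathrm{VGG}_{(1,2)},\ \ldots,\ \mathrm{VGG}_{(1,1000)}\right] \tag{1}\\
\mathrm{DLF}_{\mathrm{DN}\,(1\times 1\times 1000)} &= \left[\mathrm{DN}_{(1,1)},\ \mathrm{DN}_{(1,2)},\ \ldots,\ \mathrm{DN}_{(1,1000)}\right] \tag{2}\\
\mathrm{DLF}_{\mathrm{fused}\,(1\times 1\times 1000)} &= \left[\mathrm{DLF}_{\mathrm{VGG}\,(1\times 1\times 500)},\ \mathrm{DLF}_{\mathrm{DN}\,(1\times 1\times 500)}\right] \tag{3}
\end{align}
```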

where DLF = deep-learning features, VGG = VGG19, and DN = DenseNet121.
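The reduction and serial concatenation can be illustrated with a minimal NumPy sketch. The function names `reduce_features` and `fuse` are hypothetical, the random vectors are stand-ins for real network outputs, and keeping the larger half of the value-sorted features is an assumption, since the source does not state which half survives the 50% dropout.

```python
import numpy as np


def reduce_features(features, keep=0.5):
    """Sort a 1D feature vector by value (descending) and keep the top fraction."""
    features = np.asarray(features, dtype=float).ravel()
    n_keep = int(round(features.size * keep))
    return np.sort(features)[::-1][:n_keep]


def fuse(vgg_features, dn_features, keep=0.5):
    """Serially concatenate the reduced VGG19 and DenseNet121 feature vectors."""
    return np.concatenate([reduce_features(vgg_features, keep),
                           reduce_features(dn_features, keep)])


rng = np.random.default_rng(0)
dlf_vgg = rng.random(1000)   # stand-in for a 1 x 1 x 1,000 VGG19 vector
dlf_dn = rng.random(1000)    # stand-in for a 1 x 1 x 1,000 DenseNet121 vector
fused = fuse(dlf_vgg, dlf_dn)
```

The fused vector has 1,000 elements: the first 500 come from VGG19 and the last 500 from DenseNet121. In a real pipeline, the same feature positions would need to be retained for every image so that the classifier sees consistent columns; this sketch handles a single vector only.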

3.4. Performance metric computation

Performance metrics obtained during the classification task are used to verify the merit of the proposed scheme. First, the true-positive (TP), false-positive (FP), true-negative (TN), and false-negative (FN) counts are obtained from the confusion matrix. These values are then substituted into Equations (4)–(9) to calculate the necessary measures: accuracy (ACC), precision (PRE), sensitivity (SEN), specificity (SPE), F1-score (F1S), and Matthews correlation coefficient (MCC). For the binary classification task in this study, SoftMax, Naïve Bayes (NB), decision tree (DT), random forest (RF), KNN, and support vector machine (SVM) classifiers are used (35–37).
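The six measures follow directly from the four confusion-matrix counts. The sketch below uses the standard textbook definitions; the function name `binary_metrics` and the example counts are hypothetical and are not results from the study.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Compute ACC, PRE, SEN, SPE, F1S, and MCC from confusion-matrix counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    pre = tp / (tp + fp)
    sen = tp / (tp + fn)
    spe = tn / (tn + fp)
    f1s = 2 * tp / (2 * tp + fp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den
    return {"ACC": acc, "PRE": pre, "SEN": sen, "SPE": spe, "F1S": f1s, "MCC": mcc}


# Hypothetical counts for illustration only; they are not results from the study.
m = binary_metrics(tp=130, fp=4, tn=130, fn=4)
perfect = binary_metrics(tp=10, fp=0, tn=10, fn=0)
```

The function does not guard against empty rows or columns in the confusion matrix (for example, no predicted positives), which would cause a division by zero; a production version would need to handle those cases.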

4. Results and discussions

The proposed study is implemented with MATLAB and Python on a workstation equipped with an Intel i7 2.9 GHz processor, 20 GB RAM, and 4 GB VRAM.

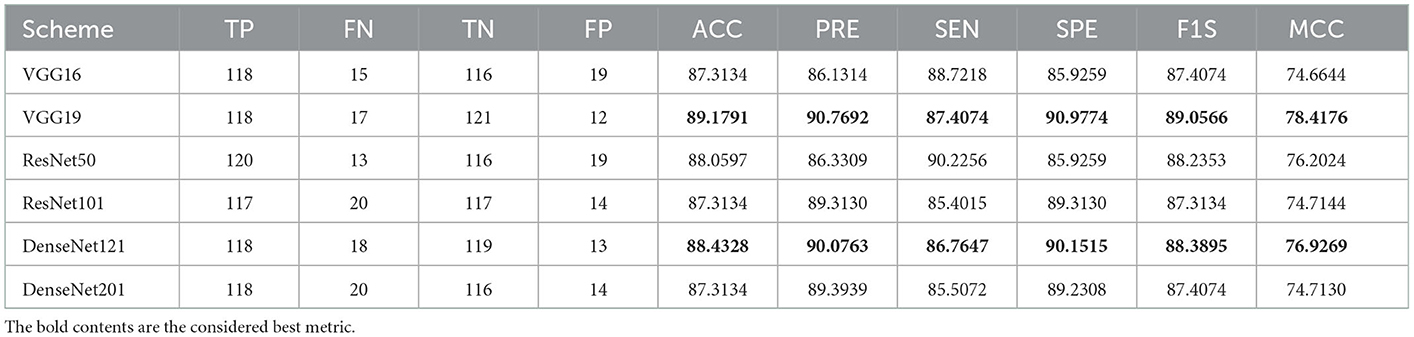

Initially, the proposed framework is implemented on the raw RCT images with the artifacts, and the classification performance is verified using the chosen binary classifiers. Then, the RCT classification performance of the chosen pre-trained models is verified using the raw axial-plane images, and the outcomes are compared. The outcome of this experiment confirms that the SoftMax-based binary classification with a 5-fold cross-validation provides better detection performance with VGG19 and DenseNet121 than with VGG16, ResNet50, ResNet101, and DenseNet201. Furthermore, along with the detection accuracy, the MCC achieved with these schemes is also better; this information is shown in Table 3.

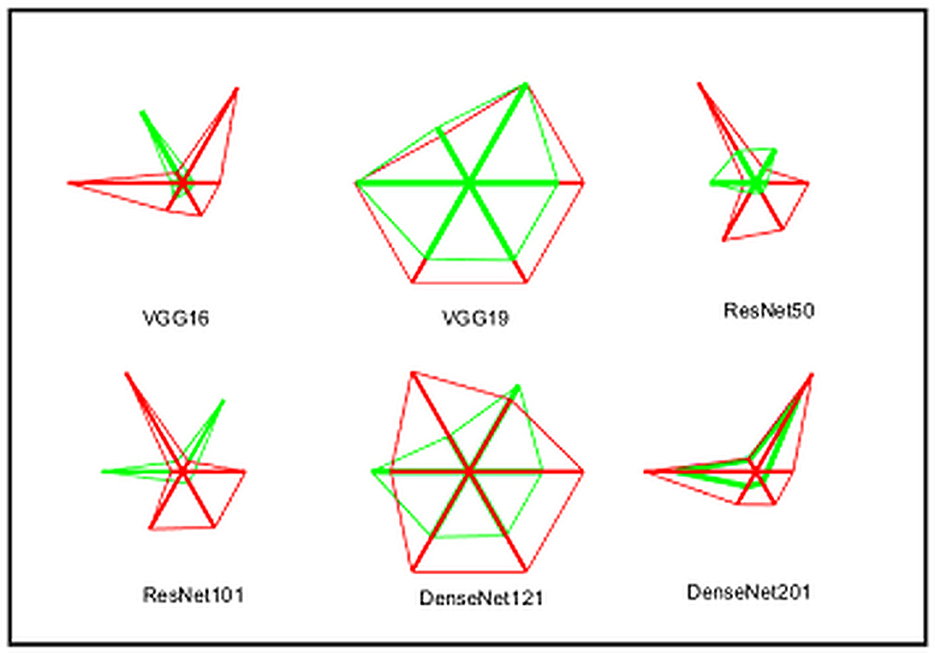

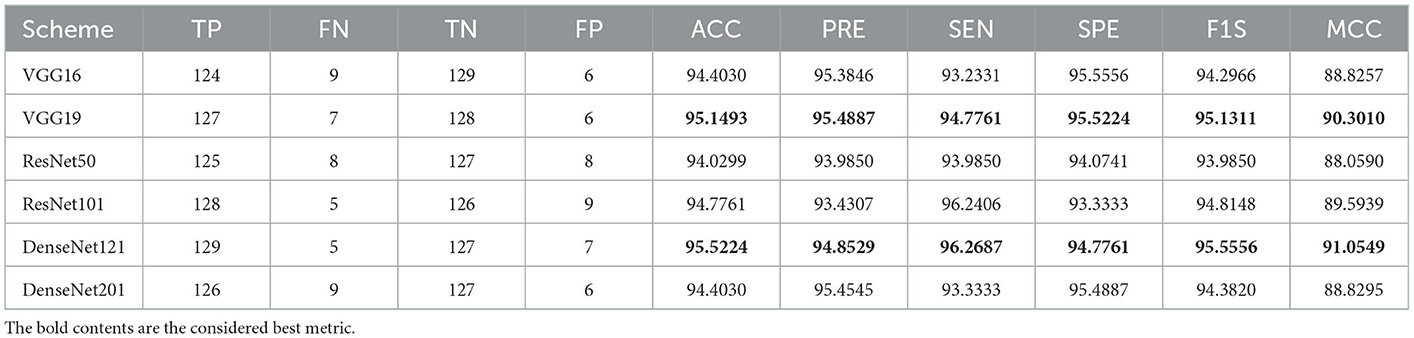

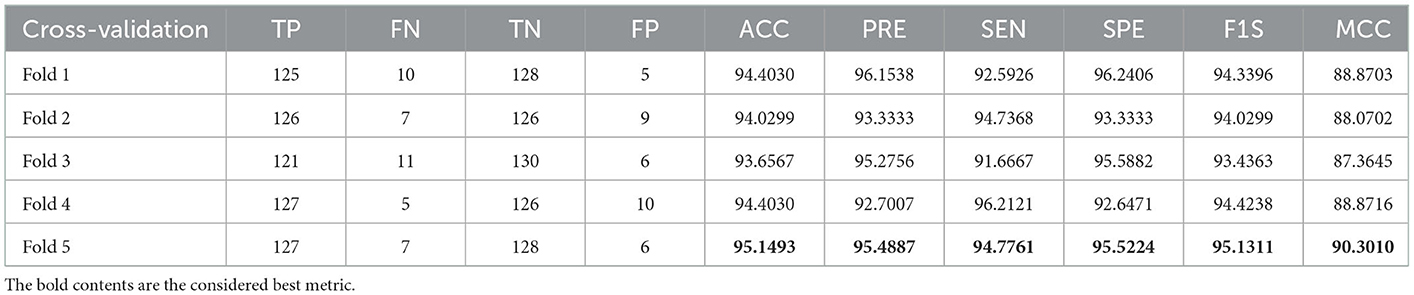

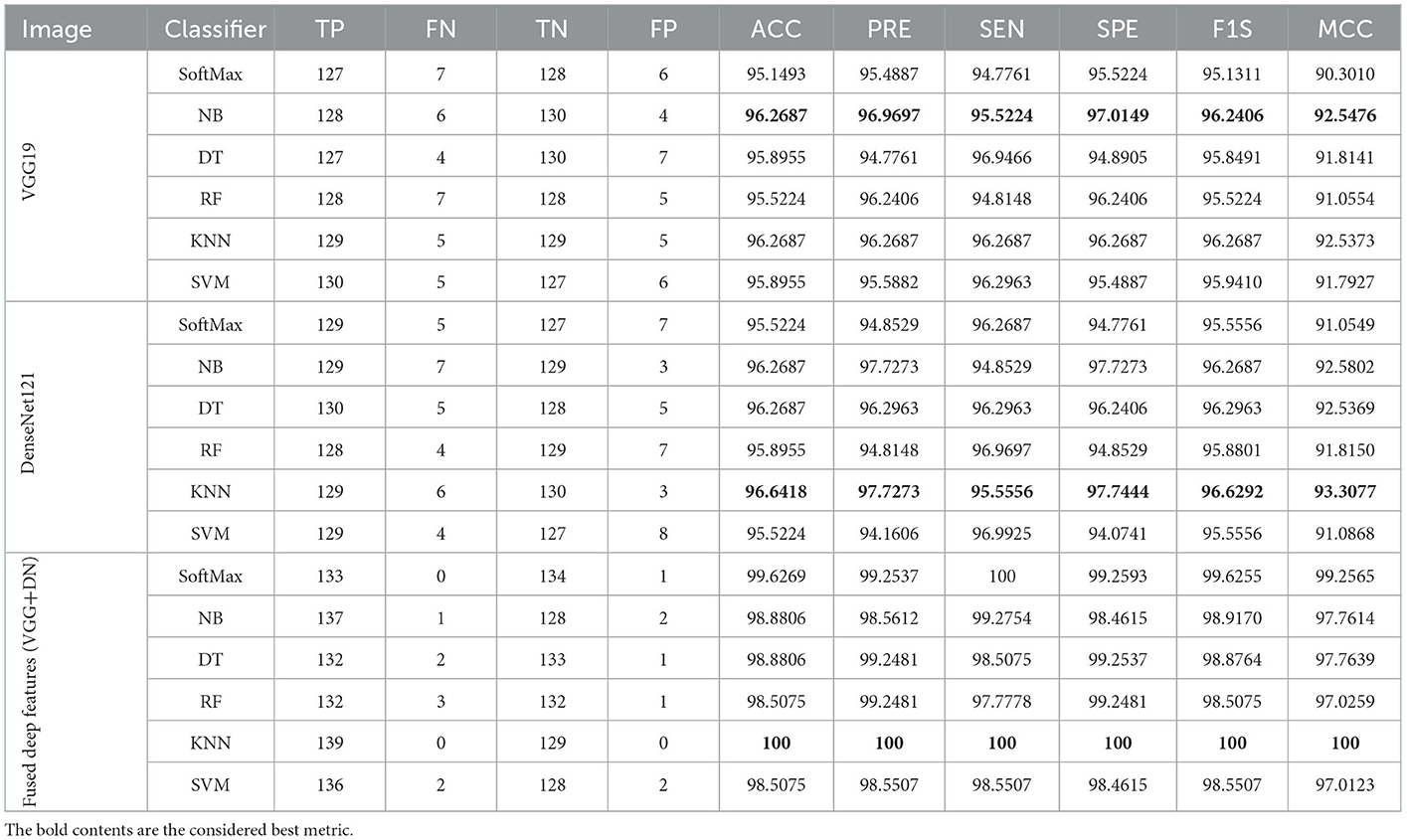

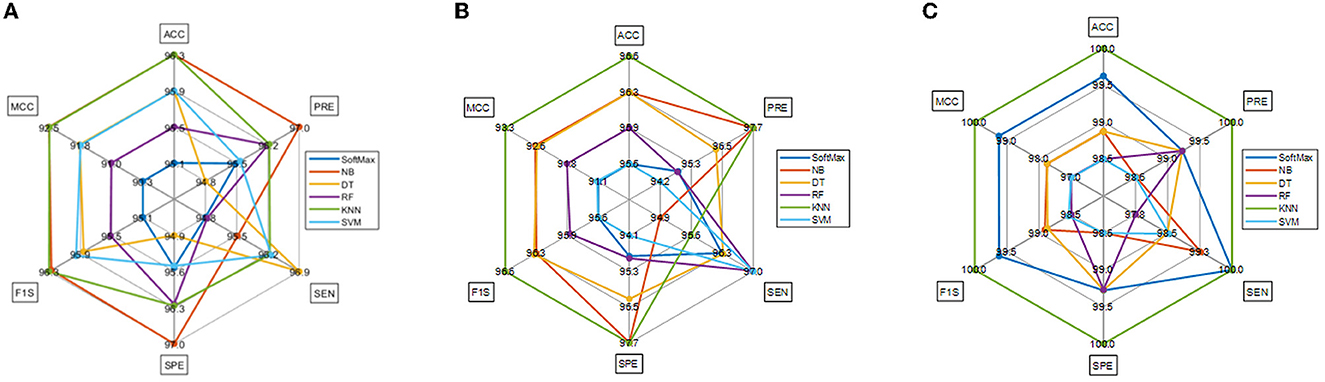

A similar experiment is repeated using the images whose artifacts are eliminated with a threshold filter. The results confirm that this process offers better ACC and MCC than the raw images, as presented in Table 4. This table also confirms that VGG19 and DenseNet121 offer better performance. Table 5 presents the outcome for VGG16 with SoftMax for various folds, and the best fold value is chosen as the outcome. The result of a chosen cross-validation run is also presented in Figure 4, in which the glyph plots of Tables 3 and 4 are developed separately and merged. These plots confirm the overall merit of this scheme and show that the artifact-removed RCT provides a better result than the raw RCT. In addition, the result confirms that this framework works well on the chosen RCT images.

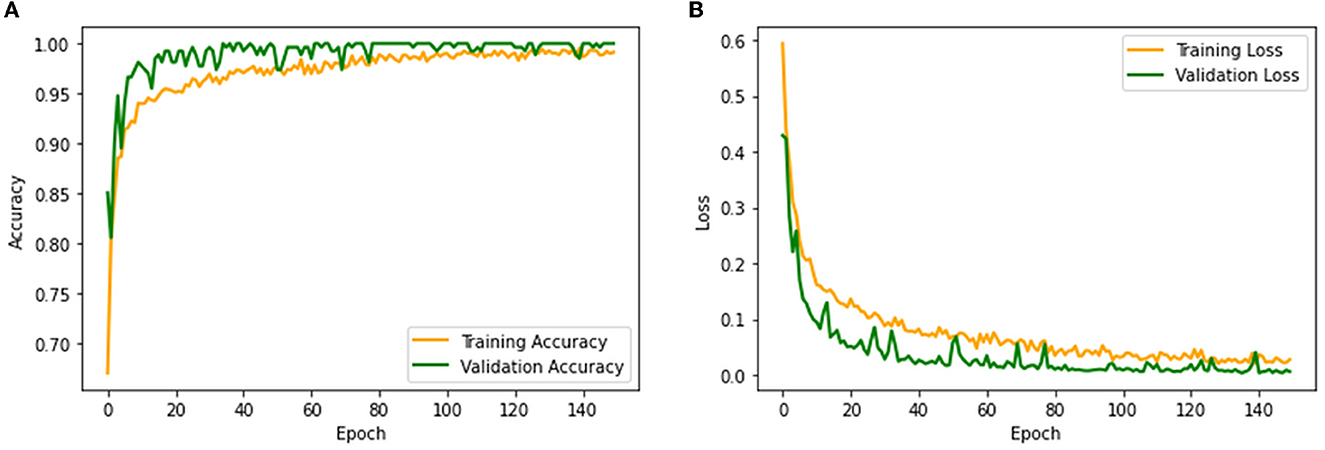

The performance of the proposed system is then verified using the fused deep features of dimension 1 × 1 × 1,000. For this task, the VGG19 and DenseNet121 features are considered. Their features are sorted based on their value, and a 50% dropout of these features is then employed. To execute the classification task, the retained features are serially fused to obtain a fused feature vector of dimension 1 × 1 × 1,000. The result of this experiment with fused features is presented in Figures 5–7. Figure 5 presents the convergence achieved with the RCT image databases and confirms that the proposed method helps to achieve better detection accuracy (100%) than other methods. Figures 5A, B show the experimental results achieved in this study.
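Classification on fused vectors can be illustrated with a small k-nearest-neighbor sketch, since KNN is the classifier this study reports as reaching the best accuracy. The sketch is not the authors' implementation: the function name `knn_predict`, the value k = 3, the Euclidean distance, and the synthetic 8-dimensional toy vectors are all assumptions.

```python
import numpy as np


def knn_predict(train_x, train_y, test_x, k=3):
    """Classify each test vector by majority vote among its k nearest training vectors."""
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y)
    test_x = np.asarray(test_x, dtype=float)
    # Pairwise Euclidean distances, shape (n_test, n_train).
    dists = np.linalg.norm(test_x[:, None, :] - train_x[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    votes = train_y[nearest]  # labels of the k nearest neighbors
    return (votes.mean(axis=1) > 0.5).astype(int)  # binary labels 0/1


# Synthetic, well-separated toy vectors; not data from the study.
rng = np.random.default_rng(0)
healthy = rng.normal(0.0, 0.1, size=(20, 8))
cancer = rng.normal(1.0, 0.1, size=(20, 8))
x = np.vstack([healthy, cancer])
y = np.array([0] * 20 + [1] * 20)
pred = knn_predict(x[::2], y[::2], x[1::2], k=3)
```

An odd k avoids tied votes in the two-class case. On real fused features, the same function would be called once per cross-validation fold with that fold's training and test vectors.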

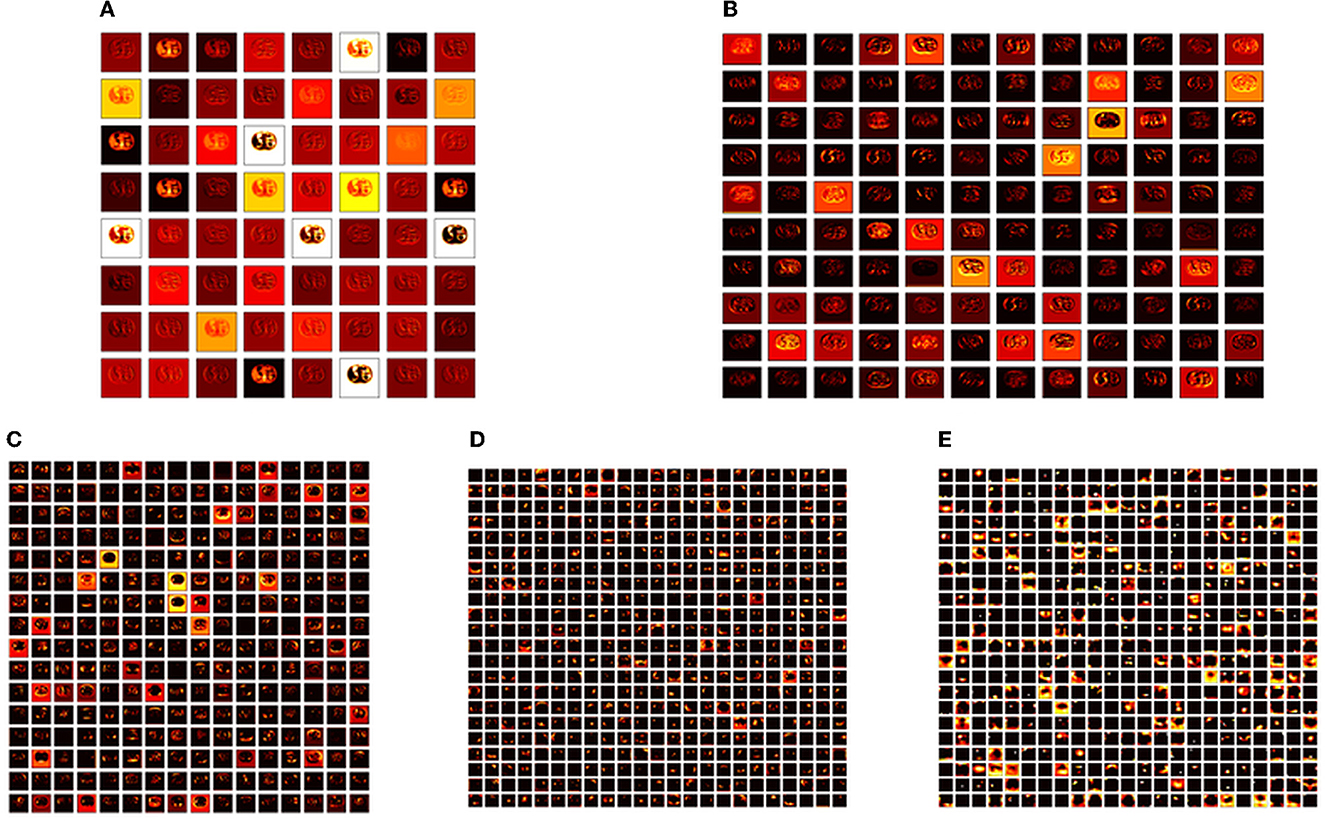

Figure 6. Intermediate layer outcomes collected from VGG19. (A) Conv1. (B) Conv2. (C) Conv3. (D) Conv4. (E) Conv5.

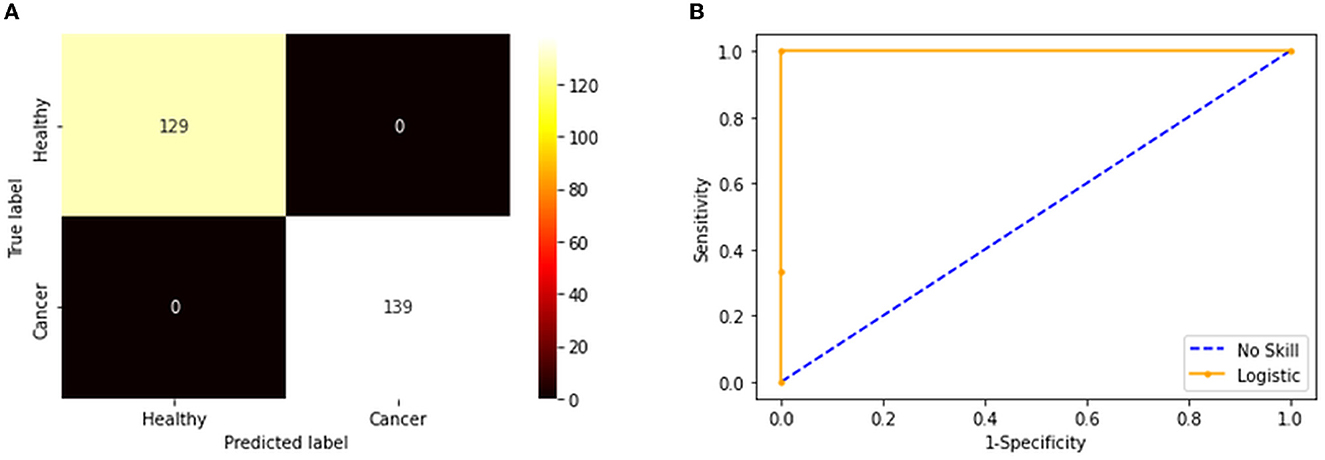

Figure 7. Confusion matrix and ROC curve achieved with fused features. (A) Confusion matrix. (B) ROC curve.

The convolutional layer outputs were extracted alongside these results to verify the framework's performance on the chosen database. The outcomes in Figure 6 indicate that the method processes the images effectively. Figure 7 presents the confusion matrix and the receiver operating characteristic (ROC) curve of the proposed technique, both of which depend mainly on the test images considered. The ROC value achieved is improved compared with the alternatives.

The results confirm that this system achieves a better outcome; the measures for the experiments with conventional and fused features are shown in Table 6. The initial results in this table are achieved using VGG19 and DenseNet121, which confirms the merit of the proposed technique. Finally, a spider plot is constructed to present the result in graphical form, and the best result is highlighted.

The proposed scheme is successfully implemented using the fused features and achieves an improved recognition accuracy (100%) compared with other methods found in the literature. The performance evaluation of Table 6, presented in Figure 8, confirms its overall merit on various classifiers. Figures 8A–C present the classification performance for the different feature vectors. The main limitation of this research is the threshold filter, which needs a manually verified Th. Nevertheless, the merit of the proposed scheme is verified using a clinical grade CT database, and the experimental outcome shows that the proposed technique improves detection accuracy. A further limitation is that the scheme needs an artifact removal process; this could be replaced by a chosen image enhancement scheme to achieve better disease detection accuracy.

Figure 8. Spider plot achieved using the results of Table 6. (A) VGG19. (B) DenseNet121. (C) Fused deep features (VGG+DN).

5. Conclusion

The literature confirms that cancer is a severe disease in human communities and that early diagnosis and treatment are necessary. When cancer is accurately diagnosed, it can be controlled using a recommended clinical protocol. Due to its importance, researchers have proposed and executed a substantial number of automatic cancer detection techniques based on bio-images (38). This study aims to develop a framework to effectively detect the KC in RCT images with the help of pre-trained deep-learning procedures. It considers VGG19 and DenseNet121 schemes to classify the RCT into healthy/cancer classes with improved accuracy. Individual DLFs and fused DLFs are employed to perform the binary classification task, and the results are compared to identify the most appropriate KC detection scheme. According to the results, binary classification with a KNN classifier achieves an accuracy of 100% for RCT slices preprocessed using a threshold filter. Based on these results, the proposed framework appears to be effective, and its performance can be tested and validated on clinically collected RCT slices in the future.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

KS and C-YC conceptualized and supervised the research and carried out the project administration and validated the results. VR contributed to the development of the model, data processing, training procedures, and implementation of the model. VR, PV, and KS wrote the manuscript. VR, PV, KS, GA, and C-YC reviewed and edited the manuscript. C-YC carried out the funding acquisition. All authors contributed to the article and approved the submitted version.

Funding

This research was partially funded by the Intelligent Recognition Industry Service Research Center from the Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE) in Taiwan and the Ministry of Science and Technology in Taiwan (Grant No. MOST 109-2221-E-224-048-MY2).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

References

1. Amin J, Sharif M, Fernandes SL, Wang SH, Saba T, Khan AR. Breast microscopic cancer segmentation and classification using unique 4-qubit-quantum model. Microsc Res Tech. (2022) 85:1926–36. doi: 10.1002/jemt.24054

2. Fernandes SL, Martis RJ, Lin H, Javadi B, Tanik UJ, Sharif M. Recent advances in deep learning, biometrics, health informatics, and data science. Expert Systems. (2022) 39:e13060. doi: 10.1111/exsy.13060

3. Wang SH, Fernandes SL, Zhu Z, Zhang YD. AVNC: attention-based VGG-style network for COVID-19 diagnosis by CBAM. IEEE Sens J. (2021) 22:17431–8. doi: 10.1109/JSEN.2021.3062442

4. Ueda T. Estimation of three-dimensional particle size and shape characteristics using a modified 2D−3D conversion method employing spherical harmonic-based principal component analysis. Powder Technol. (2022) 404:117461. doi: 10.1016/j.powtec.2022.117461

5. Krishnamoorthy S, Zhang Y, Kadry S, Yu W. Framework to segment and evaluate multiple sclerosis lesion in MRI slices using VGG-UNet. Comput Intell Neurosci. (2022) 2022:8096. doi: 10.1155/2022/4928096

6. Rajinikanth V, Fernandes SL, Bhushan B, Sunder NR. Segmentation and analysis of brain tumor using Tsallis entropy and regularised level set. In: Proceedings of 2nd International Conference on Micro-Electronics, Electromagnetics, and Telecommunications. (pp. 313-321). Springer, Singapore (2018). doi: 10.1007/978-981-10-4280-5_33

7. Arunmozhi S, Raja NS, Rajinikanth V, Aparna K, Vallinayagam V. Schizophrenia detection using brain MRI - A study with watershed algorithm. In: 2020 International Conference on System, Computation, Automation and Networking (ICSCAN). (pp. 1-4). IEEE (2020). doi: 10.1109/ICSCAN49426.2020.9262281

8. Rajinikanth V, Dey N, Raj AN, Hassanien AE, Santosh KC, Raja N. Harmony-search and otsu based system for coronavirus disease (COVID-19) detection using lung CT scan images. arXiv preprint arXiv. (2020).

9. Dey N, Zhang YD, Rajinikanth V, Pugalenthi R, Raja NS. Customized VGG19 architecture for pneumonia detection in chest X-rays. Pattern Recognit Lett. (2021) 143:67–74. doi: 10.1016/j.patrec.2020.12.010

10. Kadry S, Rajinikanth V. Computer assisted detection of low/high grade nodule from lung CT scan slices using handcrafted features. Det Sys Lung Cancer Imag. (2022) 1:3–1. doi: 10.1088/978-0-7503-3355-9ch3

11. Holzinger A. The next frontier: AI we can really trust. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, Cham (2021). doi: 10.1007/978-3-030-93736-2_33

12. Alzu'bi D, Abdullah M, Hmeidi I, AlAzab R, Gharaibeh M, El-Heis M, et al. Kidney tumor detection and classification based on deep learning approaches: a new dataset in CT scans. J Healthcare Engin. (2022) 2022:1161. doi: 10.1155/2022/3861161

13. Xu L, Yang C, Zhang F, Cheng X, Wei Y, Fan S, et al. Deep learning using CT images to grade clear cell renal cell carcinoma: development and validation of a prediction model. Cancers. (2022) 14:2574. doi: 10.3390/cancers14112574

14. Amiri S, Akbarabadi M, Abdolali F, Nikoofar A, Esfahani AJ, Cheraghi S. Radiomics analysis on CT images for prediction of radiation-induced kidney damage by machine learning models. Comput Biol Med. (2021) 133:104409. doi: 10.1016/j.compbiomed.2021.104409

15. Miskin N, Qin L, Matalon SA, Tirumani SH, Alessandrino F, Silverman SG, et al. Stratification of cystic renal masses into benign and potentially malignant: applying machine learning to the Bosniak classification. Abdom Radiol. (2021) 46:311–8. doi: 10.1007/s00261-020-02629-w

16. Shehata M, Alksas A, Abouelkheir RT, Elmahdy A, Shaffie A, Soliman A, et al. A comprehensive computer-assisted diagnosis system for early assessment of renal cancer tumors. Sensors. (2021) 21:4928. doi: 10.3390/s21144928

17. Heller N, Isensee F, Maier-Hein KH, Hou X, Xie C, Li F, et al. The state of the art in kidney and kidney tumor segmentation in contrast-enhanced CT imaging: results of the KiTS19 challenge. Med Image Anal. (2021) 67:101821. doi: 10.1016/j.media.2020.101821

18. Bhandari A, Ibrahim M, Sharma C, Liong R, Gustafson S, Prior M. CT-based radiomics for differentiating renal tumours: a systematic review. Abdom Radiol. (2021) 46:2052–63. doi: 10.1007/s00261-020-02832-9

19. Islam MN, Hasan M, Hossain M, Alam M, Rabiul G, Uddin MZ, et al. Vision transformer and explainable transfer learning models for auto detection of kidney cyst, stone and tumor from CT-radiography. Sci Rep. (2022) 12:1–4. doi: 10.1038/s41598-022-15634-4

20. Nikpanah M, Xu Z, Jin D, Farhadi F, Saboury B, Ball MW, et al. Deep-learning based artificial intelligence (AI) approach for differentiation of clear cell renal cell carcinoma from oncocytoma on multi-phasic MRI. Clin Imag. (2021) 77:291–8. doi: 10.1016/j.clinimag.2021.06.016

21. Abdelrahman A, Viriri S. Kidney tumor semantic segmentation using deep learning: a survey of state-of-the-Art. J Imaging. (2022) 8:55. doi: 10.3390/jimaging8030055

22. Wang J, Zhu H, Wang SH, Zhang YD. A review of deep learning on medical image analysis. Mob Networks Appl. (2021) 26:351–80. doi: 10.1007/s11036-020-01672-7

23. Khan MA, Rajinikanth V, Satapathy SC, Taniar D, Mohanty JR, Tariq U, et al. VGG19 network assisted joint segmentation and classification of lung nodules in CT images. Diagnostics. (2021) 11:2208. doi: 10.3390/diagnostics11122208

24. T Krishnan P, Balasubramanian P, Krishnan C. Segmentation of brain regions by integrating meta heuristic multilevel threshold with markov random field. Curr Med Imag. (2016) 12:4–12. doi: 10.2174/1573394711666150827203434

25. Liu S, Liu S, Zhang S, Li B, Hu W, Zhang YD. SSAU-net: a spectral-spatial attention-based u-net for hyperspectral image fusion. IEEE Transact Geosci Remote Sens. (2022). doi: 10.1109/TGRS.2022.3217168

26. Arco JE, Ortiz A, Ramírez J, Martínez-Murcia FJ, Zhang YD, Górriz JM. Uncertainty-driven ensembles of multi-scale deep architectures for image classification. Inform Fusion. (2023) 89:53–65. doi: 10.1016/j.inffus.2022.08.010

27. Mohan R, Kadry S, Rajinikanth V, Majumdar A, Thinnukool O. Automatic detection of tuberculosis using VGG19 with seagull-algorithm. Life. (2022) 12:1848. doi: 10.3390/life12111848

28. Nancy AA, Ravindran D, Raj Vincent PD, Srinivasan K, Gutierrez Reina D. IoT-cloud-based smart healthcare monitoring system for heart disease prediction via deep learning. Electronics. (2022) 11:2292. doi: 10.3390/electronics11152292

29. Nandhini Abirami R, Durai Raj Vincent PM, Srinivasan K, Manic KS, Chang CY. Multimodal medical image fusion of positron emission tomography and magnetic resonance imaging using generative adversarial networks. Behav Neurol. (2022) 2022:8783. doi: 10.1155/2022/6878783

30. Mahendran N, PM DR. A deep learning framework with an embedded-based feature selection approach for the early detection of the Alzheimer's disease. Comput Biol Med. (2022) 141:105056. doi: 10.1016/j.compbiomed.2021.105056

31. Tiwari RS, Das TK, Srinivasan K, Chang CY. Conceptualising a channel-based overlapping CNN tower architecture for COVID-19 identification from CT-scan images. Sci Rep. (2022) 12:1–5. doi: 10.1038/s41598-022-21700-8

32. Bhardwaj P, Gupta P, Guhan T, Srinivasan K. Early diagnosis of retinal blood vessel damage via deep learning-powered collective intelligence models. Comput Math Meth Med. (2022) 5:2022. doi: 10.1155/2022/3571364

33. Gudigar A, Raghavendra U, Rao TN, Samanth J, Rajinikanth V, Satapathy SC, et al. FFCAEs: an efficient feature fusion framework using cascaded autoencoders for the identification of gliomas. Int J Imag Sys Technol. (2022) 3:2820. doi: 10.1002/ima.22820

34. Kadry S, Srivastava G, Rajinikanth V, Rho S, Kim Y. Tuberculosis detection in chest radiographs using spotted hyena algorithm optimized deep and handcrafted features. Comput Intell and Neurosci. (2022) 3:2022. doi: 10.1155/2022/9263379

35. Srinivasan K, Cherukuri AK, Vincent DR, Garg A, Chen BY. An efficient implementation of artificial neural networks with K-fold cross-validation for process optimization. J Internet Technol. (2019) 20:1213–25. doi: 10.3966/160792642019072004020

36. Amin J, Sharif M, Yasmin M, Fernandes SL. A distinctive approach in brain tumor detection and classification using MRI. Pattern Recognit Lett. (2020) 139:118–27. doi: 10.1016/j.patrec.2017.10.036

37. Fernandes SL, Gurupur VP, Sunder NR, Arunkumar N, Kadry S. A novel non-intrusive decision support approach for heart rate measurement. Pattern Recognit Lett. (2020) 139:148–56. doi: 10.1016/j.patrec.2017.07.002

Keywords: kidney cancer, renal CT slices, deep learning, KNN classifier, validation

Citation: Rajinikanth V, Vincent PMDR, Srinivasan K, Ananth Prabhu G and Chang C-Y (2023) A framework to distinguish healthy/cancer renal CT images using the fused deep features. Front. Public Health 11:1109236. doi: 10.3389/fpubh.2023.1109236

Received: 27 November 2022; Accepted: 04 January 2023;

Published: 30 January 2023.

Edited by:

Steven Fernandes, Creighton University, United States

Reviewed by:

Jabez Christopher, Birla Institute of Technology and Science, India

Feras Alattar, National University of Science and Technology, Oman

Kameswara Buragapu, Gandhi Institute of Technology and Management (GITAM), India

Copyright © 2023 Rajinikanth, Vincent, Srinivasan, Ananth Prabhu and Chang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chuan-Yu Chang, chuanyu@yuntech.edu.tw

Venkatesan Rajinikanth, P. M. Durai Raj Vincent, Kathiravan Srinivasan, G. Ananth Prabhu, Chuan-Yu Chang