- ¹School of Electronic Engineering and Computer Science, Queen Mary University of London, London, United Kingdom

- ²Robotics Research, Ocado Technology, London, United Kingdom

- ³The Alan Turing Institute, Programme – Artificial Intelligence, London, United Kingdom

- ⁴Department of Bioengineering, Imperial College of Science, Technology and Medicine, London, United Kingdom

This paper demonstrates how tactile and proximity sensing can be used to perform automatic detection of mechanical fractures (surface cracks). For this purpose, a custom-designed integrated tactile and proximity sensor has been implemented. Using fiber optics, the sensor measures the deformation of its body when interacting with the physical environment, as well as the distance to objects in the environment. The sensor slides across different surfaces and records data, which are then analyzed to detect and classify fractures and other mechanical features. The proposed method uses machine learning techniques (handcrafted features and state-of-the-art classification algorithms). An average crack detection accuracy of ~94% and a width classification accuracy of ~80% are achieved. Kruskal-Wallis results (p < 0.001) indicate statistically significant differences among the results obtained when analyzing only integrated deformation measurements, only proximity measurements, and both deformation and proximity data. A real-time classification method has been implemented for online classification of explored surfaces. In contrast to previous techniques, which mainly rely on the visual modality, the proposed approach based on optical fibers might be more suitable for operation in extreme environments (such as nuclear facilities), where radiation may damage the electronic components of commonly employed sensing devices, such as standard force sensors based on strain gauges and video cameras.

1. Introduction

An important task often performed in remote hazardous environments is the detection of mechanical fractures on objects such as containers, tanks, pipes, and other technical systems used for storing chemical and radioactive waste. A crack may be caused by physical damage, by material degradation over time, or by environmental changes (e.g., in temperature or pressure). An undetected fracture may weaken the cracked surface so that it can no longer perform its function, and may lead to larger, macro-scale catastrophic failures.

Conventional automatic crack detection methods applied in industry to inspect large mechanical structures rely on acoustic methods (Chakraborty et al., 2019), X-ray scanning (Barhli et al., 2017; Naragani et al., 2017), eddy current techniques (Yao et al., 2014), or changes in a system's motion dynamics (Lu and Chu, 2011; Nicoletti et al., 2018). Such techniques require specialized, costly equipment and well-trained technical staff, which makes their use in extreme environments (e.g., during the decommissioning of radioactive waste facilities) less beneficial or even impossible.

The rapid development of computer vision and machine learning has led to multiple vision-based tools for mechanical fracture detection, which we briefly review below. Chen and Jahanshahi (2017) propose a fusion of a convolutional neural network and a Naïve Bayes data fusion scheme to analyze video frames for crack detection in nuclear reactors; the framework achieves a 98.3% hit rate at 0.1 false positives per frame. Schmugge et al. (2016) suggested a crack detection method for nuclear power plant inspection videos in which a fine-tuned deep neural network detects local patches containing cracks; the patches are then grouped in space and time for group-level classification, increasing the F1-score by 40% with respect to the compared methods. Iliopoulos et al. (2015) analyzed the evolution of cracks in a concrete structure using Digital Image Correlation, Acoustic Emission, and Ultrasonic Pulse Velocity techniques. The results reveal the time and location at which the crack started to form, as well as the width and depth of the cracks.

Vision-based methods demonstrate high detection accuracy and are easy to implement in telerobotics applications, as cameras are essential parts of remote inspection robots. However, vision-based methods can fail in remote environments with limited lighting, and video cameras cannot operate reliably in the presence of strong radiation. Furthermore, vision-based methods cannot acquire material properties such as texture and hardness.

Our work proposes to use tactile and proximity sensing for mechanical crack detection. In contrast to the visual modality, tactile and proximity sensing can provide important information on material properties, such as shape, texture, and hardness (Huet et al., 2017; Yuan et al., 2017; Kaboli and Cheng, 2018). Tactile sensors have been used effectively to characterize different materials in robotic teleoperation. Liu et al. (2012, 2015) implemented a 6-axis force/torque finger-shaped sensor capable of estimating the instantaneous friction and normal forces to recognize the physical properties of the surfaces of unknown objects; an average classification accuracy of 88.5% was obtained with a naïve Bayes classifier on 12 different texture surfaces. Feng et al. (2018) proposed a method called Active Prior Tactile Knowledge Transfer (APTKT), which reuses tactile knowledge of previously explored objects and improves discrimination accuracy by over 20%. A multi-modal tactile sensor (BioTac, developed by SynTouch¹) was used by Wong et al. (2014) to estimate the order of curvature and the footprint dimensions of objects explored with various movements of the robotic finger (distal-proximal stroke, radial-ulnar stroke, etc.). Fishel and Loeb (2012) proposed a Bayesian exploration strategy that selects the optimal movements based on previous experience to recognize 117 different textures. Kaboli et al. (2016) proposed an online tactile transfer learning method that reuses previously learned tactile models to discriminate new textures from a limited number of training samples. Lee et al. (2006) implemented an expanded tactile sensor module for recognizing alphanumeric characters inscribed on rubber stamps. Konstantinova et al. (2017) investigated object stiffness with a hybrid force and proximity finger-shaped sensor, achieving 87% classification accuracy on a set of household objects with different stiffness values. Drimus et al. (2014) proposed a method to classify objects as rigid or deformable, using dynamic time warping to compute the distance between time series of signals. Huang et al. (2018) implemented an optical sensor to detect target objects in dynamic environments prior to contact; this allows the teleoperator to feel the object before any physical contact occurs, retaining the benefits of touch interaction without the negative consequences of the robot contacting unknown geometric structures. Tomo et al. (2017, 2018) introduced uSkin, a soft skin sensor that measures applied force from changes in a magnetic field, and applied it to object shape recognition. Few approaches, however, use tactile sensing for crack detection and characterization. For additional research on tactile sensing and texture recognition, please refer to Kappassov et al. (2015) and Luo et al. (2017).

In this work, we propose a novel tactile sensing-based technique for mechanical fracture detection, with potential application to nuclear decommissioning tasks performed by remotely operated robots. The nuclear power industry has been among the slowest to adopt advanced technologies (Wood, 2004; Bogue, 2011). Any instrumentation used in a nuclear environment must be robust to nuclear radiation, meet safety requirements, and satisfy the highest industrial standards. The effects of radiation vary greatly and depend on several parameters, including the type of radiation and the total dose (Bogue, 2013). Our approach relies on optical fibers to transmit data from the sensor's measurement elements to a remotely located electronic unit. Optical fibers are among the devices least affected by a nuclear environment, since gamma radiation does not interfere with their basic sensing mechanism (Berghmans et al., 1999; Inaudi et al., 2001; Phéron et al., 2012). Berghmans and Decreton (1998) compared the gamma radiation response of three types of optical fiber temperature sensors; for all three, the transducer mechanism did not appear to be affected by gamma radiation. Fiber optic cables are expected to see greater use in the nuclear power industry, replacing electrical cables (Berthold, 1994; Hashemian, 2009), and several applications have already been realized. Kim et al. (2017) developed a fiber optic-based system for monitoring the water temperature, water level, and radiation level of a spent nuclear fuel pool (SNFP) at a nuclear power plant; performance tests showed that the individual sensors can measure these changes in real time. Ball et al. (2012) described several measurement technologies with potential application to gas reactors, among which an optical pressure sensor based on the path of light through glass is analyzed. Pressure induces stress in the glass, which changes the polarization of the light crossing it, so the pressure can be obtained from the intensity of the polarized light delivered by the fiber optic sensor.

The present work demonstrates how tactile and proximity sensing can be used effectively to perform automatic crack detection. The proposed method uses machine learning techniques to detect cracks and bumps from the deformation and proximity signals recorded during physical interaction between a custom-designed robotic finger (Konstantinova et al., 2017) and the remote environment. When a crack is detected, the proposed technique also classifies its width. Both offline and online classification are performed. A fiber optic sensor was chosen for data acquisition because of its small size (~55 mm), low weight (~200 g), low cost, strong immunity to electromagnetic interference, and improved environmental resistance. The approach may therefore also be applicable in extreme environments (e.g., nuclear plants), since gamma radiation does not interfere with the basic sensing mechanism of fiber optic-based sensors (Berghmans et al., 1999). In addition, the nylon component of the sensor can be used under irradiation, within the limitations reported by Morita and Seguchi (1983). To the best of the authors' knowledge, this is one of the first works on fracture recognition based on hybrid fiber optic force/proximity sensors. The present work builds on our previous results (Palermo et al., 2020), which demonstrated the feasibility of a tactile sensor for crack detection. The novelty of this work lies in more accurate mechanical fracture detection and classification methods and a corresponding comparative study. Additionally, this paper provides a detailed description of the tactile data collection, processing, and real-time classification implementation.

2. Experimental Methodology

2.1. Tactile and Proximity Sensor

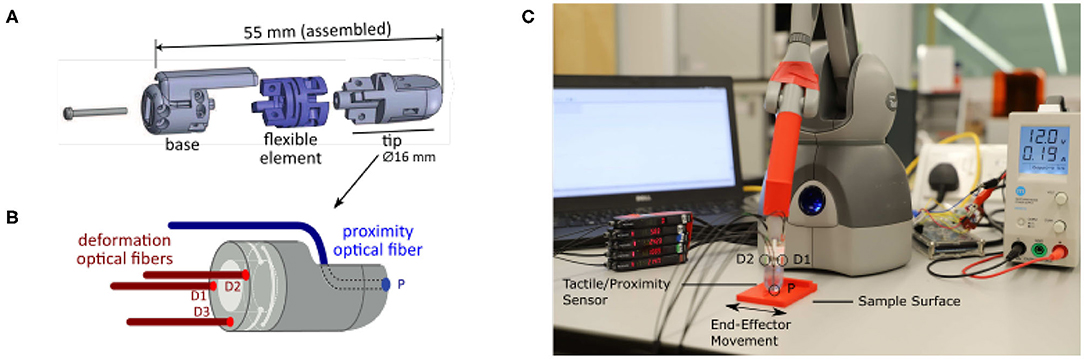

In this work, the integrated force and proximity finger-shaped sensor described by Konstantinova et al. (2017) is used. The sensor is made of 3D-printed rigid (VeroClear Glossy) and soft (Nylon PA2200) components, which allow it to bend during interaction with the environment, as shown in Figures 1A,B. All the components are printed with an EOS P100 SLS printer. The sensor employs three pairs of optical fiber cables (D1, D2, D3) to measure the deformation of the flexible middle part from changes in the reflected light intensity. A fourth pair of optical fiber cables (P) measures proximity, i.e., the distance between the fingertip and nearby objects. The proximity measurement permits a 2D reconstruction of the profile of the explored surface. The sensor is capable of measuring bending torque and normal contact force during physical interaction with the environment; as described in Konstantinova et al. (2017), it detects three-axis force/torque signals and measures the distance to the explored object. The sensor measures normal forces up to 4.5 N, and the lateral torques (around the x- and y-axes) reach a maximum of ±18 N·mm. Printing the flexible structure in nylon resulted in low hysteresis and high robustness. The proximity sensor (P) can measure distances up to 30 mm. The calibration method is described in Konstantinova et al. (2016). Each pair of fiber optic cables is connected to a Keyence FS-N11MN light-to-voltage transducer; the changes in light intensity are thus measured as voltages and converted, using a calibration matrix, into force, torque, and distance measurements.
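The voltage-to-measurement conversion can be illustrated with a small sketch. The actual calibration procedure is the one in Konstantinova et al. (2016); here we simply assume a linear model with an offset term, fitted by least squares from reference readings. The function names, the column layout (D1, D2, D3, P), and the choice of targets are hypothetical. A reflective proximity channel is likely nonlinear in distance, so this linear form is only illustrative.

```python
import numpy as np


def fit_calibration(voltages: np.ndarray, targets: np.ndarray) -> np.ndarray:
    """Least-squares fit of a linear calibration matrix with an offset (assumed model).

    voltages: (k, 4) transducer outputs for D1, D2, D3, P (hypothetical layout).
    targets:  (k, q) reference quantities, e.g. [Fz, Tx, Ty, distance].
    Returns C of shape (5, q) such that [v, 1] @ C approximates the targets.
    """
    ones = np.ones((voltages.shape[0], 1))
    augmented = np.hstack([voltages, ones])  # append a bias column for the offset
    C, *_ = np.linalg.lstsq(augmented, targets, rcond=None)
    return C


def apply_calibration(voltages: np.ndarray, C: np.ndarray) -> np.ndarray:
    """Convert raw voltages into calibrated quantities with the fitted matrix."""
    ones = np.ones((voltages.shape[0], 1))
    return np.hstack([voltages, ones]) @ C
```

In practice, the reference targets would come from a force/torque sensor and a known distance stage during calibration; the bias column absorbs the zero-load voltage of each transducer.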

Figure 1. Hybrid Fiber Optical Force/Proximity fingertip sensor: (A) Visualization of the three different components of the sensor; (B) Close-up visualization of the fiber optic operating principles. D1, D2, D3 indicate the three deformation optical fibers; P indicates the proximity optical fiber. (C) The complete setup for data acquisition. From left to right: laptop, Keyence sensors, Touch haptic device with 3D-printed end effector, Hybrid Fiber Optical Force/Proximity Sensor, Arduino board, and power supply.

2.2. Experimental Setup

To collect data and test the proposed crack detection algorithm, the tactile and proximity sensor described in section 2.1 was attached to the end-effector of a Touch desktop haptic interface (formerly known as the Geomagic Phantom Omni), as shown in Figure 1C. The Phantom Omni was programmed to slide the tactile sensor along a static sample surface with a periodic movement. Data from the tactile and proximity sensors were recorded at 400 Hz through an Arduino Mega ADK microcontroller connected via USB. These data were later synchronized with the absolute position of the tactile sensor tip, calculated from the encoder readings of the Phantom Omni. Data acquisition and control were implemented with dedicated software libraries (OpenHaptics and Robot Operating System) running on an Ubuntu desktop computer. The material samples and the Phantom Omni interface were fixed to a laboratory desk to minimize vibrations and unwanted displacements.
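The synchronization step can be sketched as follows, assuming that both the Arduino samples and the haptic-device positions carry timestamps on a common clock (an assumption; the text does not state how timing was shared). The tip position is linearly interpolated at each 400 Hz sensor timestamp, producing one row per sample in the order used later for preprocessing. The function name is hypothetical.

```python
import numpy as np


def synchronize(sensor_t, sensor_vals, pos_t, pos_x):
    """Attach an interpolated sensor-tip position to every sensor sample.

    sensor_t:    (m,) sensor timestamps in seconds (assumed shared clock).
    sensor_vals: (m, 4) D1, D2, D3, P readings.
    pos_t, pos_x: timestamps and 1-D tip positions from the haptic encoders.
    Returns an (m, 5) array with columns [x, d1, d2, d3, p].
    """
    pos_t = np.asarray(pos_t, dtype=float)
    pos_x = np.asarray(pos_x, dtype=float)
    order = np.argsort(pos_t)  # np.interp requires increasing sample points
    x = np.interp(sensor_t, pos_t[order], pos_x[order])
    return np.column_stack([x, sensor_vals])
```

Linear interpolation is adequate here because the sliding velocity is low (a few mm/s) compared with the sampling rates of both streams.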

2.3. Data Acquisition Protocol

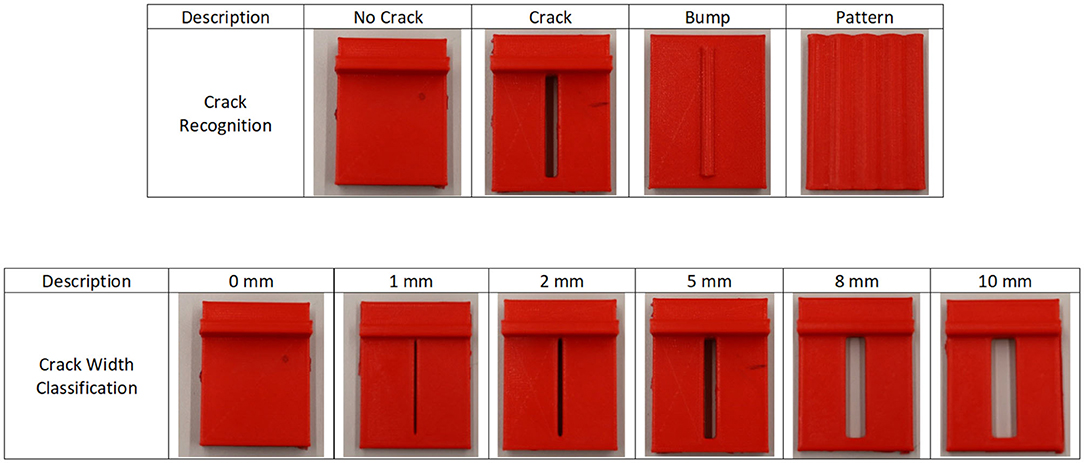

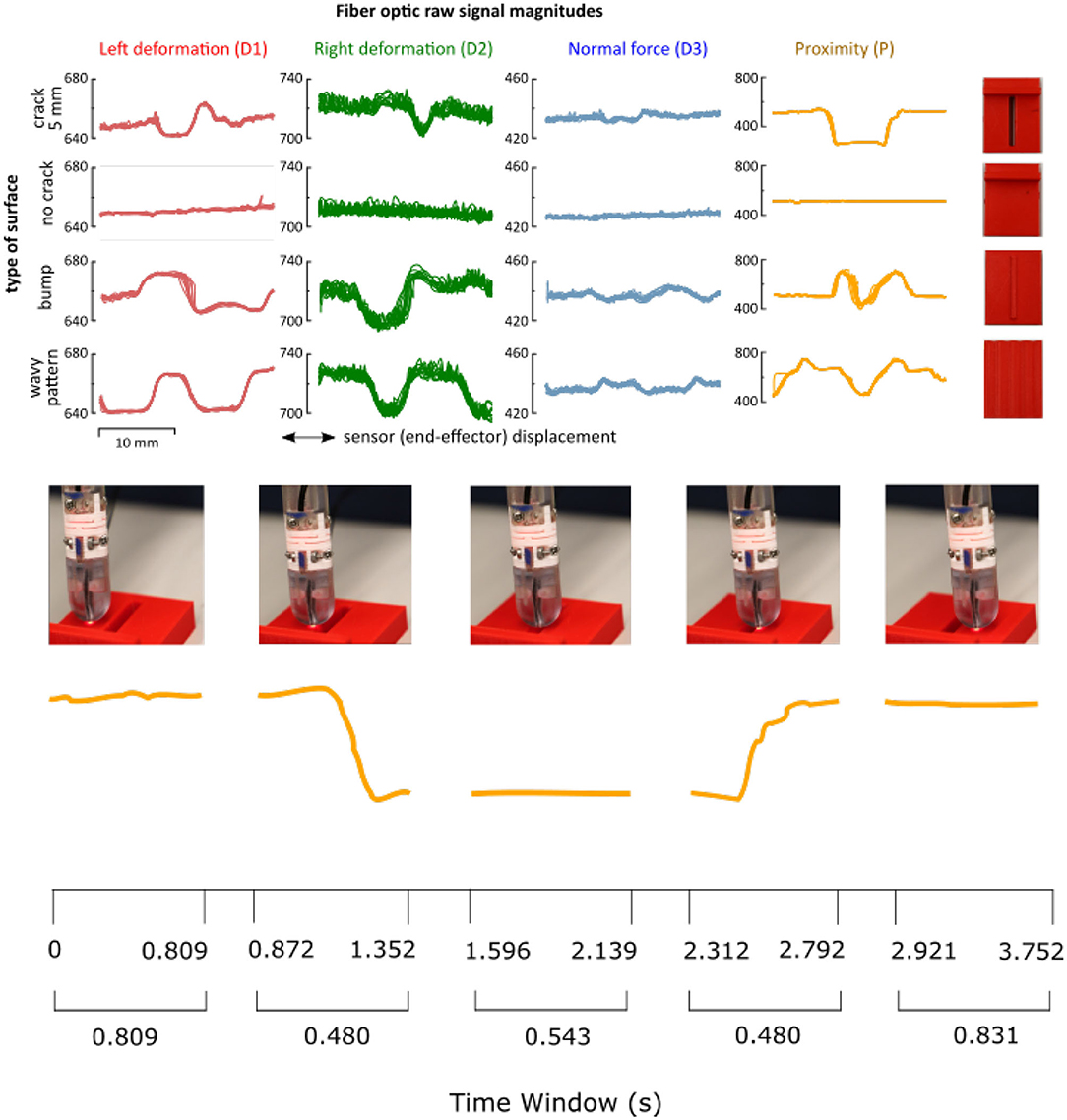

In this work, machine learning techniques are employed for crack detection and crack width classification. A set of 10 objects with different surfaces (no crack, cracks of different widths, a bump, and a wavy pattern) was manufactured from PLA plastic by 3D printing (Ultimaker III, 0.2 mm layer height, 0.4 mm nozzle diameter). The wavy pattern consists of repeated sine waves of 1 mm amplitude and 5 mm magnitude. The samples are shown in Figure 2. The sample types correspond to the classes used for training and testing the classifier. The Phantom Omni moved the tactile sensor across the sample objects; the periodic sliding movement had a magnitude of 1.6 cm and a frequency of 1,000 Hz, and the average sliding velocity was 3.89 mm/s. The initial position of the tactile sensor was not precisely controlled: it varied from trial to trial, at ~5–10 mm from the crack edge. The only normal force applied to the sample surfaces was that caused by the sensor's weight (~200 g). Tactile and proximity signals were recorded for 12 repeated continuous sliding movements, and this continuous recording was repeated five times. Figure 3 shows an example of raw data acquired on the “no crack,” “crack,” “bump,” and “wavy pattern” surfaces during a continuous recording. For brevity, only the data acquired while sliding on the different surfaces are shown.

Figure 2. Visualization of the set of objects explored during the experiments. The set for the Crack Recognition analysis consists of no crack, crack, bump, and wavy pattern surfaces. The set for the Crack Width classification experiment consists of the same fractured surface with widths of 0, 1, 2, 5, 8, and 10 mm.

Figure 3. (Top) Raw measurements from the four sensing elements of the sensor (deformations D1, D2, D3, and proximity P) for the set of surface patterns: “no crack,” “crack,” “bump,” and “wavy pattern.” Each column shows a different optical fiber signal: red, left deformation (D1); green, right deformation (D2); blue, normal deformation (D3); yellow, proximity (P). (Bottom) The movement of the sensor together with the corresponding proximity data.

2.4. Experimental Dataset

The dataset generated and used in this study is publicly available in the figshare repository “Automatic Fracture Database”². The data are organized in nine columns, in the following order: sensor displacement; the signals of the four sensing elements (D1, D2, D3, P); the identification number of the current experiment; the number of the measurement trial (a single sensor movement); the type of surface explored (0 = no crack, 1 = crack, 2 = bump, 3 = wavy pattern); and the direction of movement (0 = right, 1 = left).
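A minimal loader for the nine-column layout described above might look like the sketch below. The column names, the comma delimiter, and the absence of a header row are assumptions about the file format, not details taken from the repository; the integer codes are mapped to the labels listed in the text. The example row in the `__main__` block is made up purely to show the format.

```python
import io

import pandas as pd

# Hypothetical names for the nine documented columns, in the documented order.
COLUMNS = ["displacement", "D1", "D2", "D3", "P",
           "experiment_id", "trial", "surface", "direction"]
SURFACE_LABELS = {0: "no crack", 1: "crack", 2: "bump", 3: "wavy pattern"}
DIRECTION_LABELS = {0: "right", 1: "left"}


def load_fracture_data(source, sep=","):
    """Read one recording file (path or buffer); assumes no header row."""
    df = pd.read_csv(source, sep=sep, header=None, names=COLUMNS)
    df["surface_name"] = df["surface"].map(SURFACE_LABELS)
    df["direction_name"] = df["direction"].map(DIRECTION_LABELS)
    return df


if __name__ == "__main__":
    # Made-up row, only to illustrate the column layout.
    sample = io.StringIO("0.10,0.5,0.5,0.5,0.2,1,1,1,0\n")
    frame = load_fracture_data(sample)
    # One group per single sensor movement (trial) within each experiment.
    for (exp_id, trial), rows in frame.groupby(["experiment_id", "trial"]):
        print(exp_id, trial, len(rows))
```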

3. Data Analysis for Crack Detection

The goal of the proposed algorithm is to detect and characterize mechanical fractures, such as cracks, from the deformation and proximity data recorded by the sensor. The time histories of the deformation and proximity signals are recorded and preprocessed, and features are then extracted; the resulting features are the input to the classification algorithm.

3.1. Pre-processing

The goal of preprocessing was to prepare datasets containing the information on mechanical features, such as a crack, bump, or wavy pattern, and to exclude irrelevant data points (i.e., recordings of the sensor sliding on a flat surface before and after interacting with the bump or crack). The preprocessed, labelled datasets were then used for training and cross-validation. Preprocessing was fully automatic and was performed on the data collected from the haptic manipulator (sensor displacement) and from the optical sensing elements integrated in the sensor (deformations D1, D2, D3 and proximity P). The sensor's position data (obtained from the Geomagic haptic device) and the sensing element data (D1–D3, P) were synchronized and sampled at 400 Hz. Prior to preprocessing, the measurements of each trial (a single sensor movement along the explored surface) were arranged in the following matrix:

$$\begin{bmatrix} \mathbf{x} & \mathbf{d}_1 & \mathbf{d}_2 & \mathbf{d}_3 & \mathbf{p} \end{bmatrix} \in \mathbb{R}^{m \times 5}$$

with x, d1, d2, d3, and p vectors of size m × 1 representing the single-trial recordings (time histories) of the sensor's displacement, the three deformation signals, and the proximity signal, respectively, and m the number of data points in the trial. The proximity data (p) of each measurement trial were used to extract the data points corresponding directly to a specific mechanical feature (crack, bump, wavy pattern). This made it possible to create a subset of data containing only the information specific to the mechanical feature and to exclude the data points at the start and end of the recording. This process was performed automatically by analyzing the discrete-time derivative of the proximity signal over a given time window and extracting the data for which the derivative exceeded a predefined threshold. The average discrete-time derivative of the proximity measurement was computed as

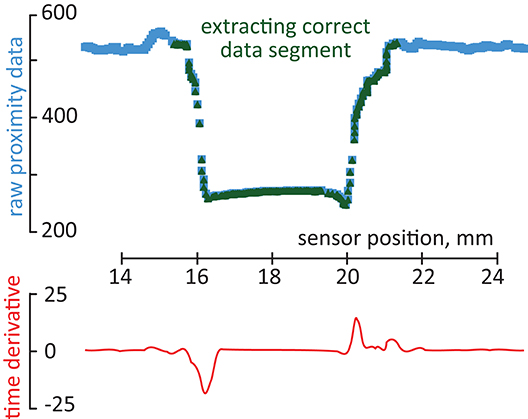

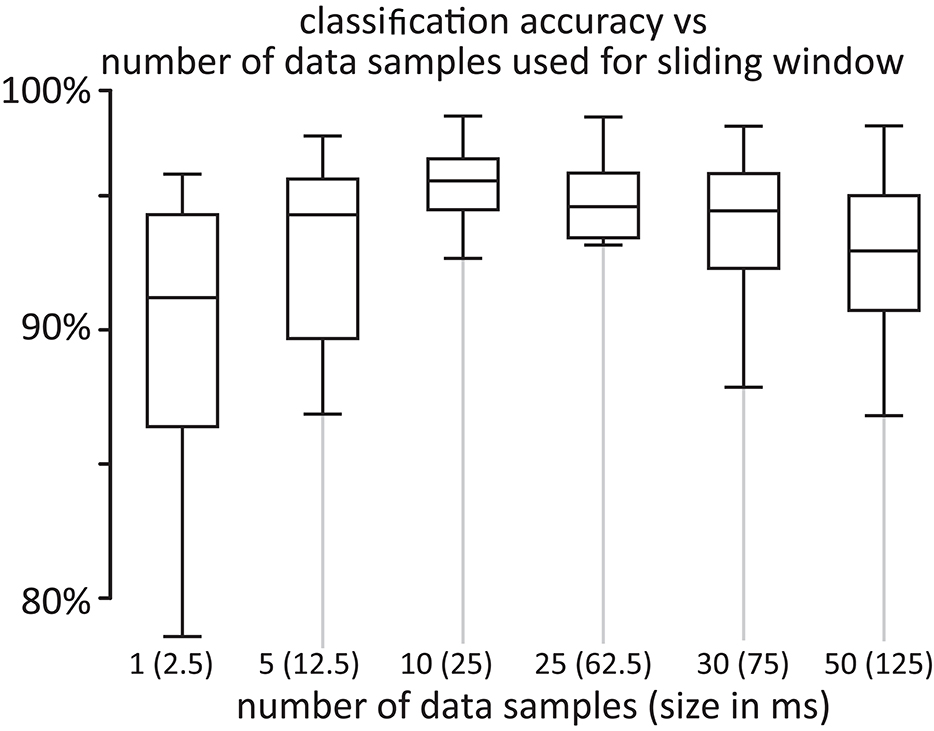

with Δpi the local discrete derivative of the proximity signal at the ith measurement, computed over n data points, and pi the ith element of the proximity measurement vector; the resulting average is the averaged discrete-time derivative of the proximity measurements. The data points of all measurement signals for which the averaged derivative exceeds the predefined threshold are then extracted from each trial, as they represent the changes in the sensor-sample interaction mechanics. An additional 10% of the original measurement data is included before and after the extracted points to ensure that the segment is complete and represents the explored mechanical feature well. Figure 4 shows an example of the data extraction for one sliding movement on a crack object: the complete measurement trial is displayed in blue, the data points corresponding to changes in the sensor-sample interaction mechanics in green, and the discrete-time derivative of the proximity measurement in red. To determine the appropriate moving window size for computing the derivatives, we performed sample classification tests with different window sizes. Figure 5 shows the results of this test, which demonstrated that a time window of 25 ms (n = 10 data samples) is sufficient to achieve good classification accuracy.
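The segmentation procedure can be sketched as below. Because the exact derivative definition is given only in the equations above, this sketch assumes the local derivative is the absolute first difference of the proximity signal, averaged over a moving window of n = 10 samples; the threshold value and the function name are hypothetical. The 10% padding on each side follows the text.

```python
import numpy as np


def extract_feature_segment(trial, n=10, threshold=0.05, pad_fraction=0.10):
    """Return the rows of `trial` around the mechanical feature (sketch).

    trial: (m, 5) array with columns [x, d1, d2, d3, p].
    n: moving-window length in samples (10 samples = 25 ms at 400 Hz).
    threshold: hypothetical cut-off on the averaged proximity derivative.
    pad_fraction: share of the trial length kept before and after the segment.
    """
    p = trial[:, 4]
    local = np.abs(np.diff(p, prepend=p[0]))       # assumed local derivative |p_i - p_(i-1)|
    kernel = np.ones(n) / n
    avg = np.convolve(local, kernel, mode="same")  # moving average over n samples
    active = np.flatnonzero(avg > threshold)
    if active.size == 0:
        return trial[:0]                            # no sample exceeds the threshold
    pad = int(round(pad_fraction * len(trial)))     # extra 10% on each side
    start = max(active[0] - pad, 0)
    stop = min(active[-1] + pad + 1, len(trial))
    return trial[start:stop]
```

Taking the first and last above-threshold samples keeps the whole feature as one contiguous segment, even if the derivative briefly drops below the threshold inside a wide crack.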

Figure 4. Example of correct segment extraction. The complete measurement trial is displayed in blue; the data points corresponding to changes in the sensor-sample interaction mechanics in green; and the discrete-time derivative of the proximity measurement in red.

Figure 5. Comparison of classification accuracy for different moving-window sizes used to compute the derivatives in the automatic preprocessing step. A time window of n = 10 data samples achieves better classification accuracy.

3.2. Feature Extraction

Feature extraction was performed on successive 25 ms time windows with an increment of 5 ms, i.e., on windows of 10 data points shifted by 2 data points at the 400 Hz sampling frequency. The window length was chosen empirically through a grid search. Time-domain features, namely Mean Absolute Value (MAV) and Root Mean Square (RMS), were computed. Time-domain features are fast to calculate because they do not require any mathematical transformation (e.g., into the frequency domain); on the other hand, they are sensitive to noise. These features have shown high performance in previous surface electromyography (sEMG) studies (Hakonen et al., 2015; Palermo et al., 2017).
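The windowed MAV and RMS computation can be sketched as follows, with MAV the mean of the absolute values in a window and RMS the square root of the mean squared value. The window of 10 samples and step of 2 samples follow the text; the helper names and the default channel selection (D1, D2, P, the combination reported as best) are illustrative choices.

```python
import numpy as np


def window_features(signal, window=10, step=2):
    """MAV and RMS for each sliding window of a 1-D signal.

    window=10 and step=2 correspond to 25 ms windows shifted by 5 ms at 400 Hz.
    Returns an array of shape (num_windows, 2) with columns [MAV, RMS].
    """
    signal = np.asarray(signal, dtype=float)
    starts = range(0, len(signal) - window + 1, step)
    feats = [(np.mean(np.abs(signal[s:s + window])),          # Mean Absolute Value
              np.sqrt(np.mean(signal[s:s + window] ** 2)))    # Root Mean Square
             for s in starts]
    return np.array(feats).reshape(-1, 2)


def trial_features(trial, channels=(1, 2, 4)):
    """Concatenate per-channel features for a [x, d1, d2, d3, p] trial.

    The default channels select D1, D2, and P. Output columns are
    [MAV, RMS] for each selected channel, in order.
    """
    return np.hstack([window_features(trial[:, c]) for c in channels])
```

Since the paper evaluates MAV and RMS separately, either the even or the odd columns of the `trial_features` output can be selected to reproduce a single-feature input.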

3.3. Classification Algorithm

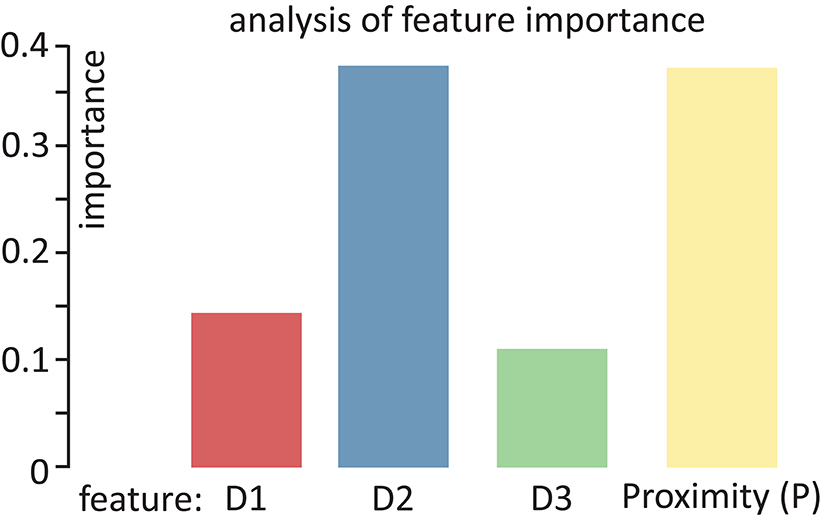

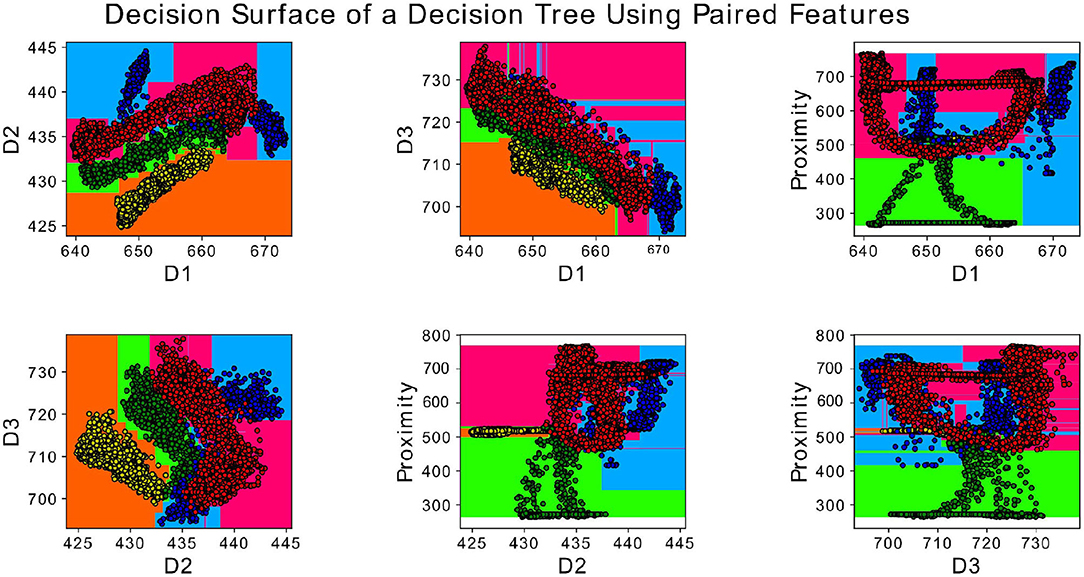

A set of classifiers was employed for the classification step: Random Forest with 100 trees, K-Nearest Neighbors (KNN) with five neighbors, and Quadratic Discriminant Analysis (QDA). The Random Forest classifier (Breiman, 2001) handles high-dimensional data well, since it is fast and relatively robust to over-fitting; it has also been evaluated extensively for remote sensing (Belgiu and Drăguţ, 2016). A Random Forest with 1,000 trees was tested first, but its results did not differ significantly from those of a Random Forest with 100 trees, so the 100-tree forest was used to increase classification speed. The classes correspond to the type of surface explored (no crack, crack, bump, and wavy pattern) for the surface crack recognition experiment, and to the crack width (0, 1, 2, 5, 8, and 10 mm) for the crack width classification experiment. The complete dataset was split into 70% for training and 30% for testing. Figure 6 shows an example of the decision surface of one decision tree of the Random Forest for pairs of features, combining proximity (P) and deformation data (D1, D2, and D3) with the MAV feature. Raw, MAV, and RMS data were first classified using only the proximity data (P) or only the deformation signals (D1, D2, D3). During the experiments, it was found that using all four signals together (P, D1, D2, D3) over-fitted the classifier, so a feature importance analysis was performed to avoid over-fitting. Figure 7 shows the calculated feature importance: among the four features, D3 is the least decisive for the Random Forest classifier. The Random Forest was therefore later trained and tested on the proximity data (P) together with the D1 and D2 deformation signals. For the inter-session investigation, a model was trained on each observation (continuous recording) and tested against each of the remaining observations one at a time (e.g., the model trained on observation 2 was tested against observations 1, 3, 4, and 5). In total, 20 results (5 training observations × 4 test observations) were obtained for each analyzed feature. A Kruskal-Wallis test, which assesses whether data samples originate from the same distribution, was performed on the whole set of results.

Figure 6. Decision surface of random forest classifier with four classes (no crack, crack, bump, and wavy pattern) for paired features: D1, D2, D3, and Proximity.

4. Results

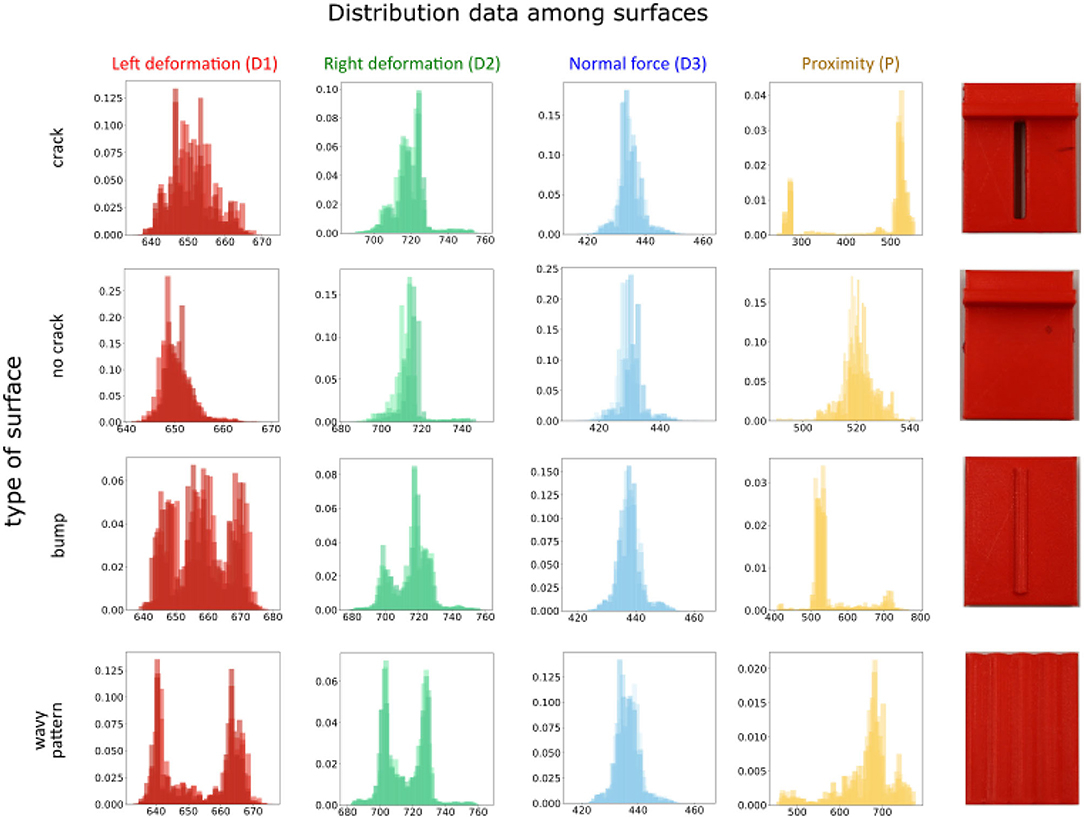

For brevity, Figure 8 shows the distribution of the data on the different surfaces for 6 of the 12 repetitions. The similar responses across repetitions suggest that the measurements do not depend on the sensor's starting position and movement.

Figure 8. Measurements distribution for sensing elements D1, D2, D3, and P for “crack,” “no crack,” “bump,” and “wavy pattern” samples. Each plot shows distribution collected from six measurement trials.

4.1. Crack Recognition

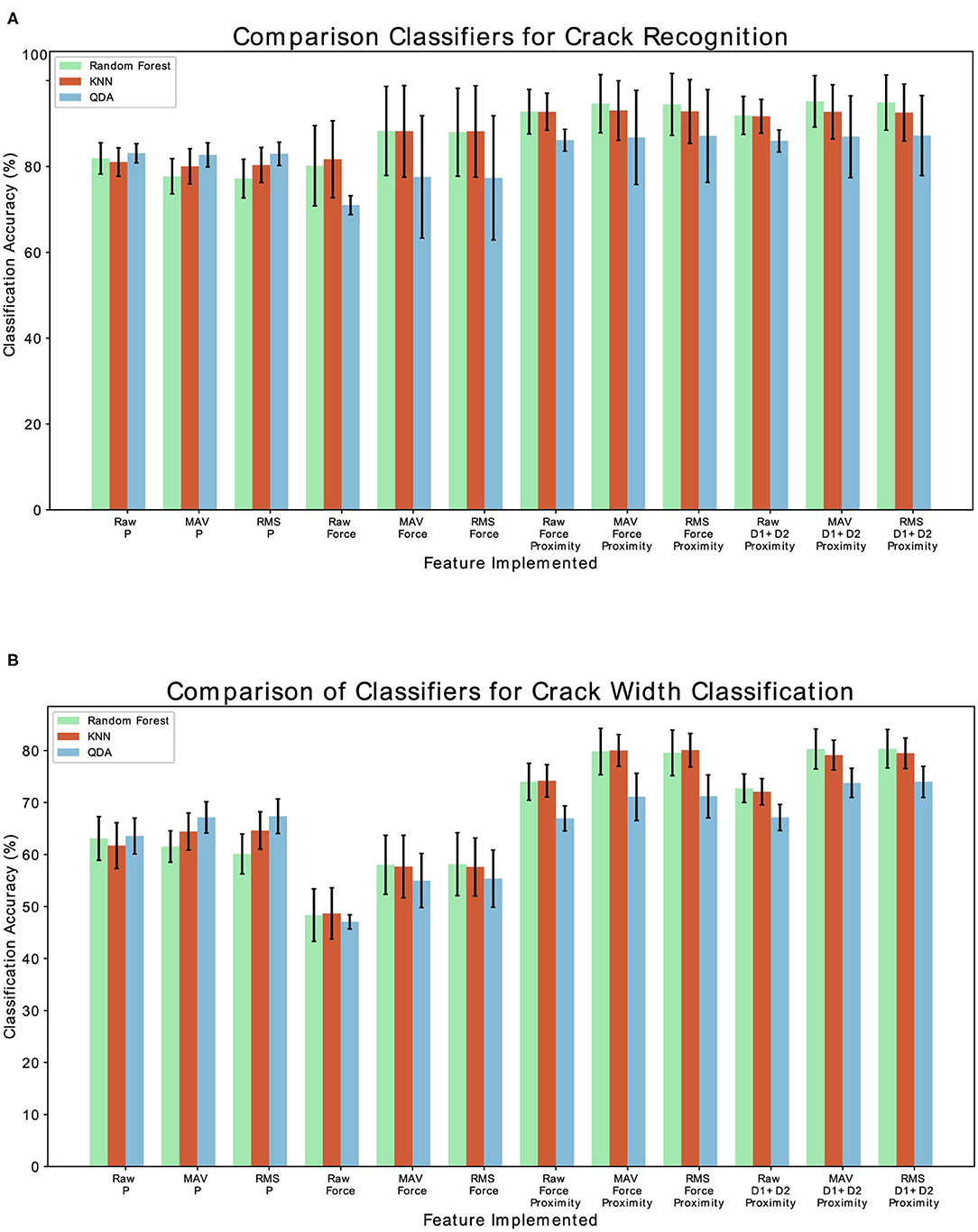

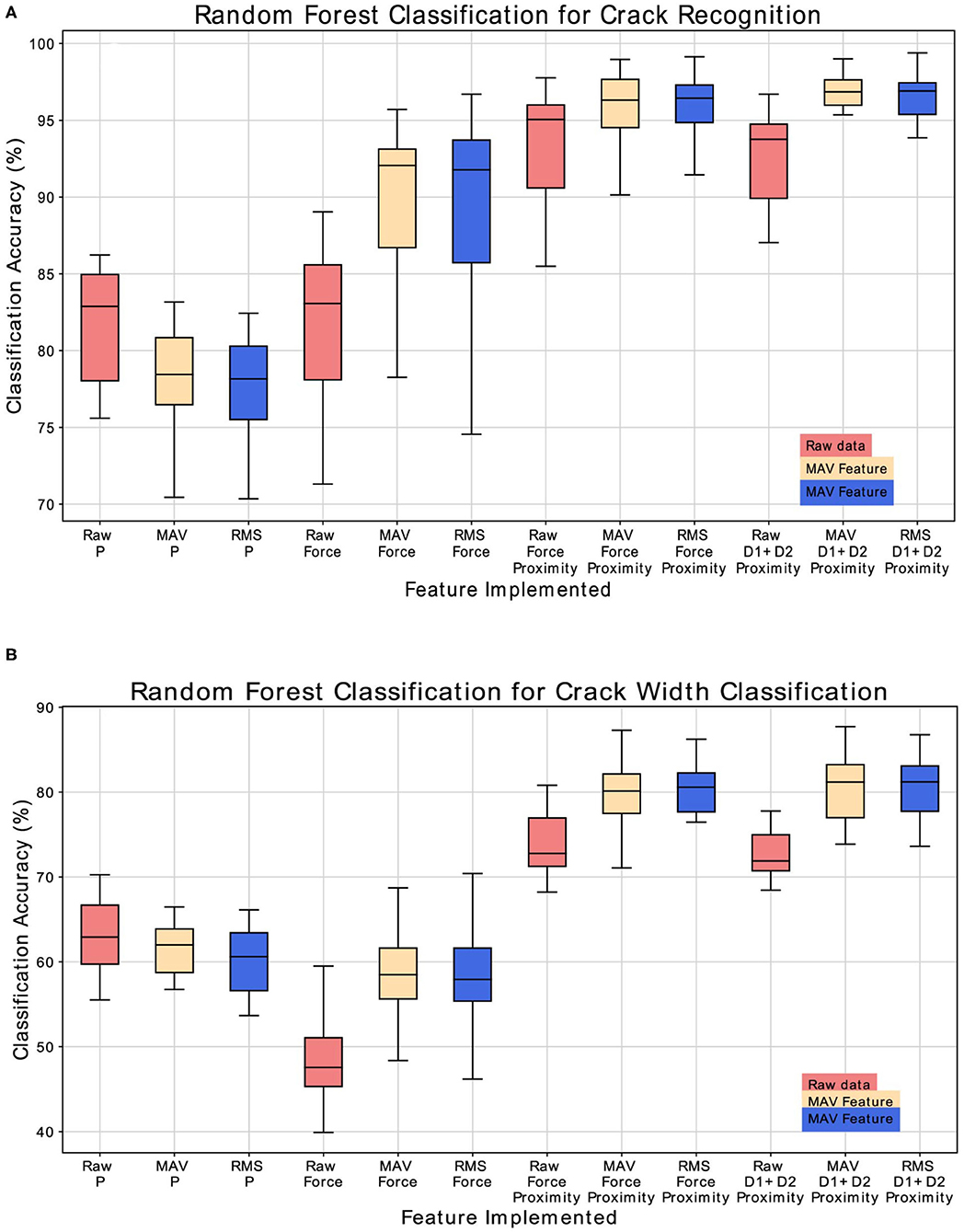

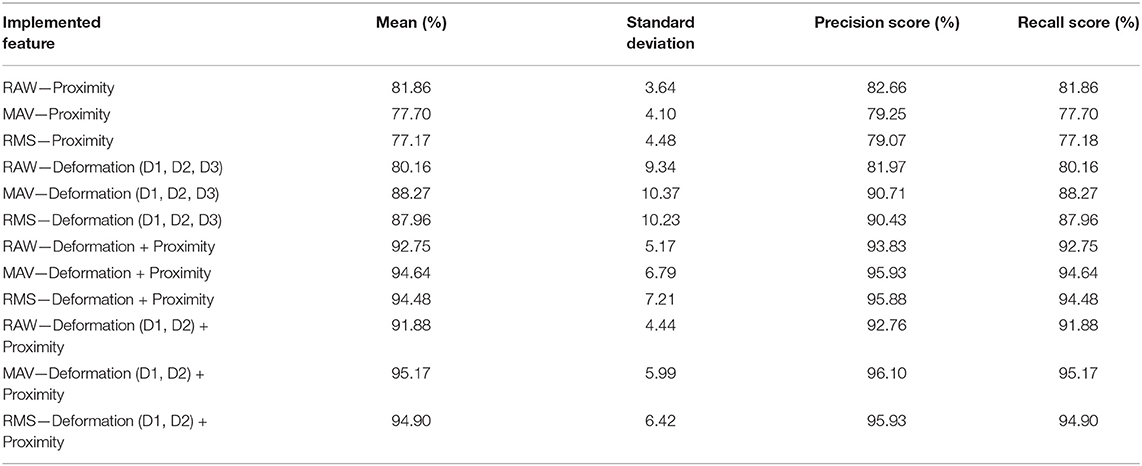

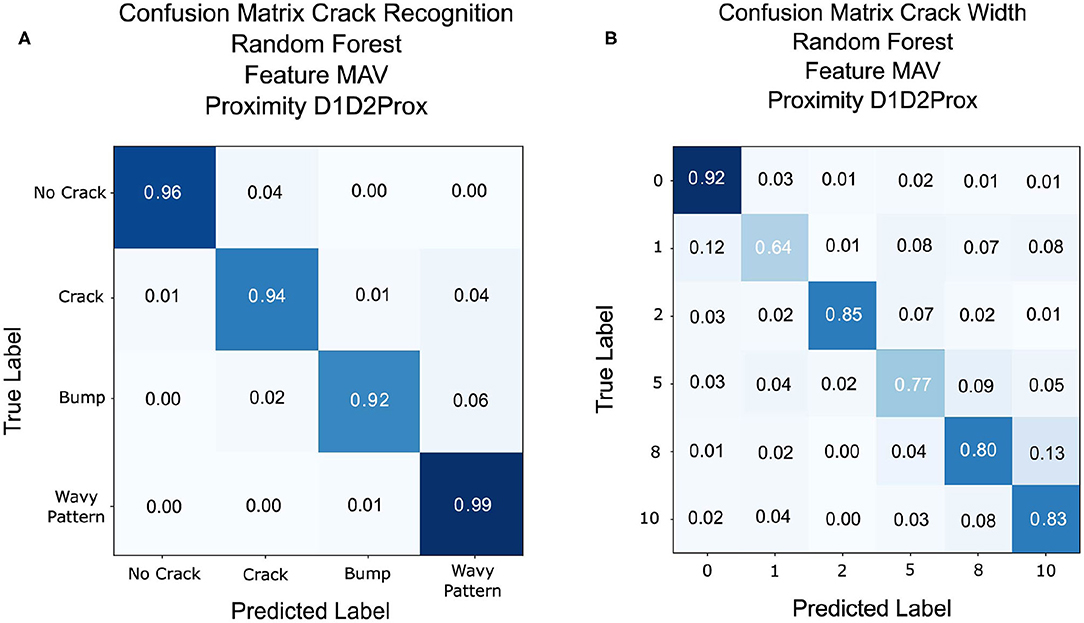

The goal of the Crack Recognition experiment is to recognize the presence of a crack in the object. Figure 9A shows the classification results of the implemented classifiers. Random Forest achieves the best classification accuracy using the MAV and RMS features of the left and right deformation signals (D1, D2) together with the proximity data (P). The second-best classifier is KNN; this is expected, since the data points of each class (no crack, crack, bump, wavy pattern) lie close to one another, as shown in Figure 6. For brevity, only the results of the Random Forest classifier are shown in the following tables and figures. Figure 10A shows the complete results of the classification analysis. Table 1 shows that the lowest classification accuracy, 77%, is obtained when classifying MAV or RMS features of the proximity data alone, whereas the best classification accuracy, 94%, is achieved with the MAV or RMS features of the left and right deformation signals combined with the proximity data. Using only deformation or only proximity data may be sufficient to train the classifier; however, better results are obtained when increasing the dimensionality of the input and considering proximity (P) together with the left and right deformation signals (D1, D2). Conversely, using all three deformation signals together with the proximity data brings little or no improvement in classification accuracy with respect to this lower-dimensional combination. Figure 11A shows the confusion matrix for crack recognition. The most difficult surface for the algorithm to classify is the bump, since it is similar to the wavy pattern.
The Kruskal-Wallis test was performed on the classification results obtained with the different features; the resulting value (p < 0.001) rejects the null hypothesis that all data samples come from the same distribution. Thus, there are significant differences between the results obtained with the implemented features.
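The inter-session protocol and the statistical test can be sketched together: each session's model is tested on every other session (5 × 4 = 20 accuracies for five sessions), and the accuracy distributions obtained with different feature sets are compared with `scipy.stats.kruskal`. The session structure, the fixed seed, and the function names are assumptions made for illustration.

```python
import numpy as np
from scipy.stats import kruskal
from sklearn.ensemble import RandomForestClassifier


def intersession_scores(sessions, seed=0):
    """Train on each session and test on every other one.

    sessions: list of (X, y) pairs, one per continuous recording.
    With five sessions this yields 5 x 4 = 20 accuracies.
    """
    scores = []
    for i, (X_tr, y_tr) in enumerate(sessions):
        model = RandomForestClassifier(n_estimators=100, random_state=seed).fit(X_tr, y_tr)
        for j, (X_te, y_te) in enumerate(sessions):
            if j != i:  # never test on the training session
                scores.append(model.score(X_te, y_te))
    return np.array(scores)


def compare_feature_sets(results_by_set):
    """Kruskal-Wallis H-test across the accuracy distributions of several feature sets.

    results_by_set: mapping from a feature-set name to its array of accuracies.
    Returns (H statistic, p-value).
    """
    statistic, p_value = kruskal(*results_by_set.values())
    return statistic, p_value
```

Because the Kruskal-Wallis test is rank-based, it does not assume normally distributed accuracies, which suits small samples of 20 results per feature set.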

Figure 9. (A) Comparison of the three implemented classifiers (Random Forest, QDA, and KNN) for fracture recognition classification. (B) Comparison of the three implemented classifiers (Random Forest, QDA, KNN) for crack width classification.

Figure 10. (A) Classification accuracy results for the crack recognition. (B) Classification accuracy results for the crack width classification analysis.

Table 1. Complete classification accuracy for crack recognition experiment with Random Forest classifier.

Figure 11. (A) Confusion matrix for crack recognition with the Random Forest classifier, using the MAV feature of the left and right deformation signals combined with proximity data. (B) Confusion matrix for crack width classification with the Random Forest classifier, using the MAV feature of the left and right deformation signals combined with proximity data.

4.2. Crack Width Classification

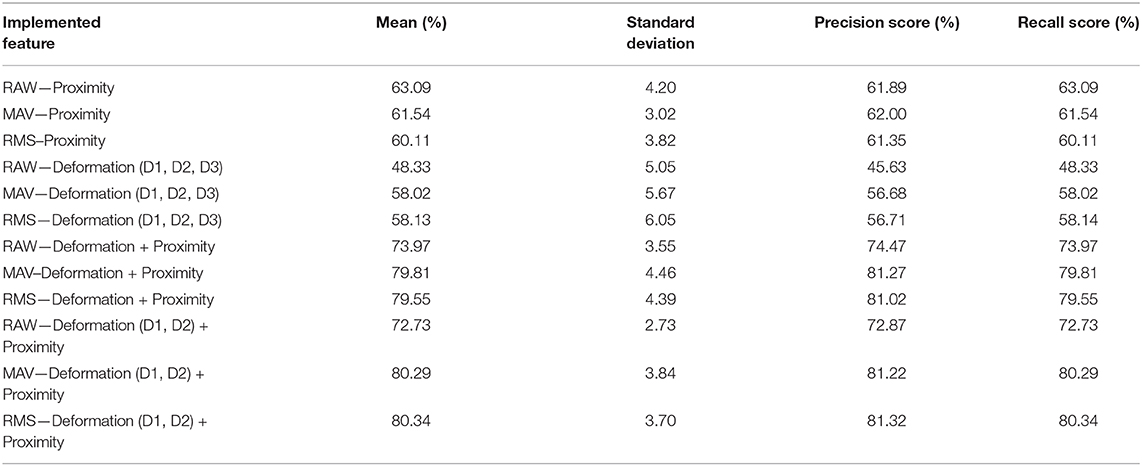

The goal of the crack width classification experiment is to classify the width, in millimeters (mm), of the fracture in the explored object. Figure 9B compares the accuracy, recall, and precision of the implemented classifiers. The Random Forest classifier achieves the best classification accuracy, followed by KNN; as described in the previous section, the data points of each class lie close to one another, which explains the good KNN results in this experiment. Figure 10B and Table 2 show the complete results of the classification analysis. The lowest classification accuracy, 48.19%, is obtained when classifying raw data with only deformation signals, whereas the best, ~80%, is achieved with MAV or RMS features of the left and right deformation signals (D1 and D2) together with the proximity data. In this case, using only deformation or only proximity data is not sufficient to train the classifier. Figure 11B shows that the most difficult label to classify is the 1 mm fracture, which can be mislabeled as a flat surface. This may be because the fracture is so narrow that the left and right deformations are not large enough for the crack to be recognized. Kruskal-Wallis results (p < 0.001) indicate statistically significant differences among the results obtained when analyzing only deformation signals, only proximity data, and both deformation and proximity data. Because crack width is a continuous quantity, a regressor may be more appropriate than a classifier with discrete width classes.
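The regression alternative mentioned above was not implemented in this work. A minimal sketch of what it could look like swaps in scikit-learn's `RandomForestRegressor`, predicting the width in millimeters directly and scoring it by mean absolute error. The split ratio mirrors the classification setup; everything else (function name, seed, metric) is an illustrative assumption.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split


def fit_width_regressor(X, widths_mm, seed=0):
    """Regress crack width (mm) from windowed features; return model and test MAE.

    X: (samples, features), e.g. MAV of D1, D2, and P.
    widths_mm: continuous targets such as 0, 1, 2, 5, 8, or 10 mm.
    """
    X_tr, X_te, w_tr, w_te = train_test_split(
        X, np.asarray(widths_mm, dtype=float), test_size=0.3, random_state=seed)
    model = RandomForestRegressor(n_estimators=100, random_state=seed).fit(X_tr, w_tr)
    mae = mean_absolute_error(w_te, model.predict(X_te))  # error in millimeters
    return model, mae
```

A regressor reports how far off a prediction is (e.g., 1 mm predicted as 2 mm) rather than counting every confusion as equally wrong, which fits the ordered nature of crack widths.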

Table 2. Complete classification accuracy for crack width classification experiment with Random Forest classifier.

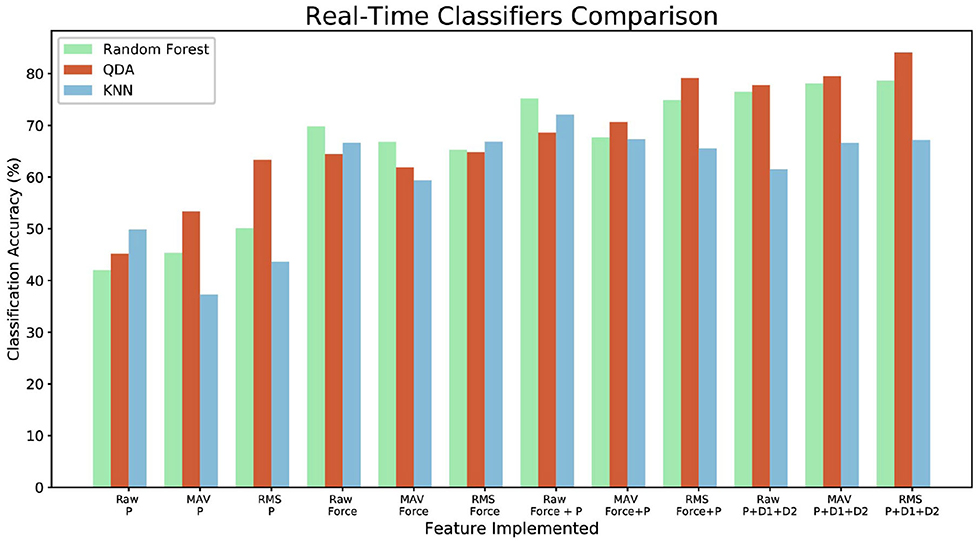

4.3. Real-Time Implementation

To further test the classifiers, an online application was developed for the crack recognition analysis. During this experiment, it was found that the position and twisting of the fiber optic cables influence the sensor data. Thus, an additional acquisition was necessary to obtain a model for real-time classification. Random Forest, KNN, and QDA models were trained offline on the newly acquired data and were then used to predict the class of the data acquired in real time while sliding the sensor over different surfaces. The software marks the start and end positions of a detected crack relative to the position measured by the Geomagic device. The same analysis as for the offline classifier was applied. Three continuous sliding movements were performed on each of the crack recognition surfaces shown in the first row of Figure 2, each in a different section of the surface (top, center, bottom). All combinations of input signals (only proximity, only deformation, deformation + proximity, and P + D1 + D2) and features (Raw Data, MAV, RMS) were investigated for each classifier, for a total of 432 classified movements (4 input sets × 3 features × 3 classifiers × 4 surfaces × 3 movements). Figure 12 shows the real-time classification accuracy. In this case, the KNN classifier achieves better results than the Random Forest; increasing the number of classified sliding movements may reduce this difference. The use of 3D-printed objects with regular shapes may limit training and testing; this will be addressed in future analysis.
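The online marking of crack start and end positions can be sketched as a sliding buffer that classifies a new window every few samples. The class below is hypothetical: it assumes any trained model exposing scikit-learn's `predict`, a user-supplied feature function, the 10-sample window and 2-sample step from the offline analysis, and label 1 for "crack" as in the dataset coding. The position reported for each window is that of the sample at the middle of the buffer.

```python
from collections import deque

import numpy as np


class OnlineCrackDetector:
    """Sliding-window online classification sketch (hypothetical interface)."""

    def __init__(self, model, feature_fn, window=10, step=2, crack_label=1):
        self.model = model            # any object with a scikit-learn style predict()
        self.feature_fn = feature_fn  # maps a (window, channels) array to a 1-D feature vector
        self.window, self.step, self.crack_label = window, step, crack_label
        self.buffer = deque(maxlen=window)
        self.since_last = 0
        self.crack_start = None
        self.events = []              # (start_position, end_position) of detected cracks

    def push(self, position, sample):
        """Add one sample; return the predicted label when a window is classified."""
        self.buffer.append((position, np.asarray(sample, dtype=float)))
        self.since_last += 1
        if len(self.buffer) < self.window or self.since_last < self.step:
            return None
        self.since_last = 0
        block = np.stack([s for _, s in self.buffer])
        label = self.model.predict(self.feature_fn(block)[None, :])[0]
        centre = self.buffer[len(self.buffer) // 2][0]  # position at the window centre
        if label == self.crack_label and self.crack_start is None:
            self.crack_start = centre                    # crack begins
        elif label != self.crack_label and self.crack_start is not None:
            self.events.append((self.crack_start, centre))  # crack ends
            self.crack_start = None
        return label
```

A crack that is still being detected when the stream stops is left open in `crack_start`; a full implementation would close it at the last received position.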

Figure 12. Real-time classification comparison for Random Forest, QDA, and KNN for the complete set of implemented features.

5. Conclusion and Future Work

This work demonstrates how tactile and proximity sensing can be used efficiently to perform automatic crack detection. The proposed method uses machine learning techniques to detect surface fractures and bumps on explored objects from fiber optic deformation and proximity signals, which are recorded during physical interaction between a custom-designed robotic finger and the remote environment. Experimental validation has shown that the proposed method achieves around 94% accuracy for crack detection and 80% for crack width classification. To achieve better results for crack width classification, a regressor may be more appropriate than the implemented classifiers. Real-time classification results on three sliding movements show that the surface of the investigated object can be characterized correctly. During this experiment, it was found that the position and twisting of the fiber optic cables influence the sensor data; further analysis will therefore be required to compensate for these changes in the signals. In contrast to previous techniques, which rely on the visual modality, the proposed approach based on optical fibers may be more suitable for operation in extreme environments (such as nuclear facilities), where radiation can damage the electronic components of video cameras.

Future research will focus on a multi-modal approach that integrates visual patches, and on implementing the proposed system on a teleoperated mobile manipulation system. We plan to demonstrate how automatic fracture characterization can be efficiently integrated with the mobile manipulator controller (Farkhatdinov and Ryu, 2008) and how the obtained tactile data can be visualized in a dedicated virtual reality-based human-operator interface (Omarali et al., 2020). Dimensionality reduction with principal component analysis, which may increase classification accuracy, will also be studied. Additional features, such as local min-max values, which may better characterize the data, will be analyzed. Finally, an alternative bio-inspired ciliary force sensor will be investigated for detecting small cracks (Ribeiro et al., 2017).

Data Availability Statement

The datasets generated for this study can be found in the figshare repository “Automatic Fracture Database” available at https://figshare.com/s/14deb00d874400e34d67.

Author Contributions

FP developed the classification algorithm and experimental methods, performed the experiments, and wrote the draft of the paper. IF developed the experimental methods, contributed to the data analysis, and provided input to the paper. KA advised on the experimental methods and corrected the paper. JK and SP assisted with the paper writing. All authors contributed to the article and approved the submitted version.

Funding

This work has been funded by the National Centre for Nuclear Robotics (UK EPSRC grant NCNR EP/R02572X/1), UK Innovate iGRASP project, by the European Community's Seventh Framework Programme grant agreement no. 610532 (SQUIRREL), and by the Alan Turing Fellowships to KA and IF.

Conflict of Interest

JK was employed by the company Ocado Technology.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.


References

Ball, S. J., Holcomb, D. E., and Cetiner, S. (2012). HTGR Measurements and Instrumentation Systems. ORNL/TM-2012/107. Oak Ridge, TN: Oak Ridge National Laboratory.

Barhli, S., Saucedo-Mora, L., Jordan, M., Cinar, A., Reinhard, C., Mostafavi, M., et al. (2017). Synchrotron X-ray characterization of crack strain fields in polygranular graphite. Carbon 124, 357–371. doi: 10.1016/j.carbon.2017.08.075

Belgiu, M., and Drăguţ, L. (2016). Random forest in remote sensing: a review of applications and future directions. ISPRS J. Photogramm. Rem. Sens. 114, 24–31. doi: 10.1016/j.isprsjprs.2016.01.011

Berghmans, F., and Decreton, M. (1998). Evaluation of three different optical fibre temperature sensor types for application in gamma radiation environments. IEEE Trans. Nucl. Sci. 45, 1537–1542. doi: 10.1109/23.685236

Berghmans, F., Fernandez, A. F., Brichard, B., Vos, F., Decreton, M. C., Gusarov, A. I., et al. (1999). “Radiation hardness of fiber optic sensors for monitoring and remote handling applications in nuclear environments,” in Process Monitoring With Optical Fibers and Harsh Environment Sensors, Vol. 3538 (Boston, MA: International Society for Optics and Photonics), 28–39. doi: 10.1117/12.335757

Berthold, J. W. III. (1994). “Overview of prototype fiber optic sensors for future application in nuclear environments,” in Optical Fibre Sensing and Systems in Nuclear Environments, Vol. 2425 (Mol: Society of Photo-Optical Instrumentation Engineers), 74–83. doi: 10.1117/12.198644

Bogue, R. (2011). Robots in the nuclear industry: a review of technologies and applications. Industrial Robot: An International Journal.

Bogue, R. (2013). Radiation hardening and sensors for radioactive environments. Sens. Rev. 33, 191–196. doi: 10.1108/02602281311324636

Chakraborty, J., Katunin, A., Klikowicz, P., and Salamak, M. (2019). Early crack detection of reinforced concrete structure using embedded sensors. Sensors 19:3879. doi: 10.3390/s19183879

Chen, F.-C., and Jahanshahi, M. R. (2017). NB-CNN: deep learning-based crack detection using convolutional neural network and naïve bayes data fusion. IEEE Trans. Ind. Electron. 65, 4392–4400. doi: 10.1109/TIE.2017.2764844

Drimus, A., Kootstra, G., Bilberg, A., and Kragic, D. (2014). Design of a flexible tactile sensor for classification of rigid and deformable objects. Robot. Auton. Syst. 62, 3–15. doi: 10.1016/j.robot.2012.07.021

Farkhatdinov, I., and Ryu, J.-H. (2008). “Teleoperation of multi-robot and multi-property systems,” in 2008 6th IEEE International Conference on Industrial Informatics (Daejeon: IEEE), 1453–1458. doi: 10.1109/INDIN.2008.4618333

Feng, D., Kaboli, M., and Cheng, G. (2018). Active prior tactile knowledge transfer for learning tactual properties of new objects. Sensors 18:634. doi: 10.3390/s18020634

Fishel, J. A., and Loeb, G. E. (2012). Bayesian exploration for intelligent identification of textures. Front. Neurorobot. 6:4. doi: 10.3389/fnbot.2012.00004

Hakonen, M., Piitulainen, H., and Visala, A. (2015). Current state of digital signal processing in myoelectric interfaces and related applications. Biomed. Signal Process. Control 18, 334–359. doi: 10.1016/j.bspc.2015.02.009

Hashemian, H. (2009). The state of the art in nuclear power plant instrumentation and control. Int. J. Nucl. Energy Sci. Technol. 4, 330–354. doi: 10.1504/IJNEST.2009.028597

Huang, K., Lancaster, P., Smith, J. R., and Chizeck, H. J. (2018). “Visionless tele-exploration of 3D moving objects,” in 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO) (Kuala Lumpur: IEEE), 2238–2244. doi: 10.1109/ROBIO.2018.8665228

Huet, L. A., Rudnicki, J. W., and Hartmann, M. J. (2017). Tactile sensing with whiskers of various shapes: determining the three-dimensional location of object contact based on mechanical signals at the whisker base. Soft Robot. 4, 88–102. doi: 10.1089/soro.2016.0028

Iliopoulos, S., Aggelis, D., Pyl, L., Vantomme, J., Van Marcke, P., Coppens, E., et al. (2015). Detection and evaluation of cracks in the concrete buffer of the belgian nuclear waste container using combined ndt techniques. Constr. Build. Mater. 78, 369–378. doi: 10.1016/j.conbuildmat.2014.12.036

Inaudi, D., Glisic, B., Fakra, S., Billan, J., Redaelli, S., Perez, J. G., et al. (2001). Development of a displacement sensor for the CERN-LHC superconducting cryodipoles. Meas. Sci. Technol. 12:887. doi: 10.1088/0957-0233/12/7/324

Kaboli, M., and Cheng, G. (2018). Robust tactile descriptors for discriminating objects from textural properties via artificial robotic skin. IEEE Trans. Robot. 34, 985–1003. doi: 10.1109/TRO.2018.2830364

Kaboli, M., Walker, R., and Cheng, G. (2016). “Re-using prior tactile experience by robotic hands to discriminate in-hand objects via texture properties,” in 2016 IEEE International Conference on Robotics and Automation (ICRA) (Stockholm: IEEE), 2242–2247. doi: 10.1109/ICRA.2016.7487372

Kappassov, Z., Corrales, J.-A., and Perdereau, V. (2015). Tactile sensing in dexterous robot hands. Robot. Auton. Syst. 74, 195–220. doi: 10.1016/j.robot.2015.07.015

Kim, R., Park, C. H., Yoo, W. J., and Moon, J. H. (2017). Development and characterization of a fiber-optic monitoring system for the key environment variables of the spent nuclear fuel pool at a nuclear power plant. Ann. Nucl. Energy 99, 183–192. doi: 10.1016/j.anucene.2016.08.020

Konstantinova, J., Cotugno, G., Stilli, A., Noh, Y., and Althoefer, K. (2017). “Object classification using hybrid fiber optical force/proximity sensor,” in 2017 IEEE Sensors (Glasgow: IEEE), 1–3. doi: 10.1109/ICSENS.2017.8234057

Konstantinova, J., Stilli, A., Faragasso, A., and Althoefer, K. (2016). “Fingertip proximity sensor with realtime visual-based calibration,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Daejeon: IEEE), 170–175. doi: 10.1109/IROS.2016.7759051

Lee, H.-K., Chang, S.-I., and Yoon, E. (2006). A flexible polymer tactile sensor: fabrication and modular expandability for large area deployment. J. Microelectromech. Syst. 15, 1681–1686. doi: 10.1109/JMEMS.2006.886021

Liu, H., Nguyen, K. C., Perdereau, V., Bimbo, J., Back, J., Godden, M., et al. (2015). Finger contact sensing and the application in dexterous hand manipulation. Auton. Robots 39, 25–41. doi: 10.1007/s10514-015-9425-4

Liu, H., Song, X., Bimbo, J., Seneviratne, L., and Althoefer, K. (2012). “Surface material recognition through haptic exploration using an intelligent contact sensing finger,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (Vilamoura: IEEE), 52–57. doi: 10.1109/IROS.2012.6385815

Lu, W., and Chu, F. (2011). Shaft crack identification based on vibration and AE signals. Shock Vibr. 18, 115–126. doi: 10.1155/2011/460178

Luo, S., Bimbo, J., Dahiya, R., and Liu, H. (2017). Robotic tactile perception of object properties: a review. Mechatronics 48, 54–67. doi: 10.1016/j.mechatronics.2017.11.002

Morita, Y., and Seguchi, T. (1983). Radiation resistance of nylon. Denki Gakkai Zetsuen Zairyo Kenkyukai Shiryo 83, 47–52.

Naragani, D., Sangid, M. D., Shade, P. A., Schuren, J. C., Sharma, H., Park, J.-S., et al. (2017). Investigation of fatigue crack initiation from a non-metallic inclusion via high energy X-ray diffraction microscopy. Acta Mater. 137, 71–84. doi: 10.1016/j.actamat.2017.07.027

Nicoletti, R., Cavalini, A. A., and Steffen, V. (2018). Detection of cracks in rotating shafts by using the combination resonances approach and the approximated entropy algorithm. Shock Vibr. 2018:4094631. doi: 10.1155/2018/4094631

Omarali, B., Denoun, B., Althoefer, K., Jamone, L., Valle, M., and Farkhatdinov, I. (2020). “Virtual reality based telerobotics framework with depth cameras,” in 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN) (Naples: IEEE). doi: 10.1109/RO-MAN47096.2020.9223445

Palermo, F., Cognolato, M., Gijsberts, A., Müller, H., Caputo, B., and Atzori, M. (2017). “Repeatability of grasp recognition for robotic hand prosthesis control based on sEMG data,” in 2017 International Conference on Rehabilitation Robotics (ICORR) (London: IEEE), 1154–1159. doi: 10.1109/ICORR.2017.8009405

Palermo, F., Konstantinova, J., Poslad, S., Althoefer, K., and Farkhatdinov, I. (2020). “Implementing tactile and proximity sensing for crack detection,” in 2020 IEEE International Conference on Robotics and Automation (ICRA) (Paris: IEEE). doi: 10.1109/ICRA40945.2020.9196936

Phéron, X., Girard, S., Boukenter, A., Brichard, B., Delepine-Lesoille, S., Bertrand, J., et al. (2012). High γ-ray dose radiation effects on the performances of brillouin scattering based optical fiber sensors. Opt. Express 20, 26978–26985. doi: 10.1364/OE.20.026978

Ribeiro, P., Khan, M. A., Alfadhel, A., Kosel, J., Franco, F., Cardoso, S., et al. (2017). Bioinspired ciliary force sensor for robotic platforms. IEEE Robot. Autom. Lett. 2, 971–976. doi: 10.1109/LRA.2017.2656249

Schmugge, S. J., Rice, L., Nguyen, N. R., Lindberg, J., Grizzi, R., Joffe, C., et al. (2016). “Detection of cracks in nuclear power plant using spatial-temporal grouping of local patches,” in 2016 IEEE Winter Conference on Applications of Computer Vision (WACV) (Lake Placid, NY: IEEE), 1–7. doi: 10.1109/WACV.2016.7477601

Tomo, T. P., Regoli, M., Schmitz, A., Natale, L., Kristanto, H., Somlor, S., et al. (2018). A new silicone structure for uSkin—a soft, distributed, digital 3-axis skin sensor and its integration on the humanoid robot icub. IEEE Robot. Autom. Lett. 3, 2584–2591. doi: 10.1109/LRA.2018.2812915

Tomo, T. P., Schmitz, A., Wong, W. K., Kristanto, H., Somlor, S., Hwang, J., et al. (2017). Covering a robot fingertip with uSkin: a soft electronic skin with distributed 3-axis force sensitive elements for robot hands. IEEE Robot. Autom. Lett. 3, 124–131. doi: 10.1109/LRA.2017.2734965

Wong, R. D. P., Hellman, R. B., and Santos, V. J. (2014). “Haptic exploration of fingertip-sized geometric features using a multimodal tactile sensor,” in Next-Generation Robots and Systems, Vol. 9116 [Baltimore: Society of Photo-Optical Instrumentation Engineers (SPIE)], 911605.

Wood, R. (2004). Advanced Reactor Licensing: Experience With Digital I and C Technology in Evolutionary Plants. Technical report, ORNL (US). US Department of Energy (United States).

Yao, Y., Tung, S.-T. E., and Glisic, B. (2014). Crack detection and characterization techniques—an overview. Struct. Control Health Monit. 21, 1387–1413. doi: 10.1002/stc.1655

Keywords: sensing, haptic exploration, crack recognition, extreme environment, optical sensing, fiber-optics

Citation: Palermo F, Konstantinova J, Althoefer K, Poslad S and Farkhatdinov I (2020) Automatic Fracture Characterization Using Tactile and Proximity Optical Sensing. Front. Robot. AI 7:513004. doi: 10.3389/frobt.2020.513004

Received: 26 November 2019; Accepted: 19 October 2020;

Published: 02 December 2020.

Edited by:

Kushal Mukherjee, United Technologies Research Center, Ireland

Reviewed by:

Zhanat Kappassov, Nazarbayev University, Kazakhstan

Mohsen Kaboli, Radboud University Nijmegen, Netherlands

Copyright © 2020 Palermo, Konstantinova, Althoefer, Poslad and Farkhatdinov. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Francesca Palermo, f.palermo@qmul.ac.uk; Ildar Farkhatdinov, i.farkhatdinov@qmul.ac.uk
