Abstract

Adoption of human–robot collaboration is hindered by barriers in collaborative task design. A new approach for solving these problems is to empower operators in the design of their tasks. However, how this approach may affect user welfare or performance in industrial scenarios has not yet been studied. Therefore, this research presents the results of an experiment designed to identify the influence of an operator’s self-designed task on physical ergonomics and task performance. First, a collaborative framework able to accept operator task definition via parts’ locations and to monitor the operator’s posture is presented. Second, the framework is used to tailor a collaborative experience favoring decision autonomy using the SHOP4CF architecture. Finally, the framework is used to investigate how this personalization influences collaboration through a user study with personnel untrained in physical ergonomics. The results from this study are twofold. On one hand, operators perceived a high degree of decision autonomy when they were allowed to allocate the parts. On the other hand, high decision autonomy was not found to change either task efficiency or the musculoskeletal disorder (MSD) risk level. Therefore, this study emphasizes that allowing operators to choose the position of the parts may help task acceptance without changing operators’ physical ergonomics or task efficiency. However, the test was limited to 16 participants, and the measured risk level was medium. Therefore, this study also stresses that operators should be allowed to choose their own work parameters, but some guidelines should be followed to further reduce MSD risk levels.

1 Introduction

Small-batch manufacturing is becoming increasingly important for the competitive advantage of European factories, especially within small and medium enterprises (SMEs) (Bayha et al., 2020). Technologies proposed in Industry 4.0 (I4.0) can enable economically feasible small-batch manufacturing (Rüßmann et al., 2015). One of the promising technologies within I4.0 is human–robot collaboration (HRC). In HRC, humans are foreseen to collaborate with robots in a shared workspace to achieve higher flexibility and throughput. However, its introduction is still hampered by issues of safety, interfaces, and design (Villani et al., 2018). To address these problems, including the human operator in the design via human-driven design paradigms can be beneficial (Kaasinen et al., 2019). In such scenarios, the design paradigms known as human-centered design (HCD) (Deutschen Instituts für Normung, 2020) and value-sensitive design (VSD) (Friedman, 1996) have helped to improve the usability of robotic systems for novice operators (Coronado et al., 2021; Eiband et al., 2022), reduce workload by using custom-designed interfaces (Pantano et al., 2020), or improve acceptance by changing the appearance of humanoid robots (Kahn et al., 2007). However, to achieve these results, design must aim to establish means of communication that enable humans to build good mental models of the application (Rook, 2013; Sofge, 2013; Hoff and Bashir, 2015; Teo et al., 2018; Demir et al., 2019; Kolbeinsson et al., 2019; Shahrdar et al., 2019). A recent example of the benefit of good mental models is the work of Tausch and Kluge (2020), who found that greater satisfaction in human–robot interaction can be achieved if operators design their own sequence of tasks. However, their study did not investigate industrial scenarios, so further research in this sector is needed.
Considering the adoption of HRC in industry, the applications that could benefit most from these design suggestions are those known as cooperation and collaboration (Weiss et al., 2021). In these modes, teammates (i.e., human and robot) perform tasks in a shared workspace on different components or on the same components (Bauer et al., 2016). If these modes are successfully implemented through good mental models, several benefits can be achieved, one of which is the improvement of the operators’ physical ergonomics (Gualtieri et al., 2021). Therefore, the following paragraphs review how mental models have been implemented in HRC applications that take the physical ergonomics of operators into account.

To consider the physical ergonomics of operators in HRC, an assessment must be carried out. In the literature, two assessment methodologies are available: simulation via computer-aided engineering (CAE) and digital human models (DHMs) (Sanchez-Lite et al., 2013; Baizid et al., 2016; Mgbemena et al., 2020), or in situ process surveys (Lidstone et al., 2021). For CAE simulations, the digital workcell, the digital task workflow, and the DHM must be available (Gläser et al., 2016). This is often the case when planning new automotive production lines (Ruiz Castro et al., 2017; Zhu et al., 2019), and several commercial tools are available on the market, such as IPS IMMA™¹ or Siemens Tecnomatix™². However, given the complexity of such commercial systems, they cannot always be applied in the context of SMEs, and the level of freedom for the operator is rather limited (Ballestar et al., 2020). For in situ measurements, experts are asked to monitor the task and provide evaluations, often by classifying operator postures through observation (Namwongsa et al., 2018). Not surprisingly, this method can be subject to errors due to its observational nature (Diego-Mas et al., 2017). Nevertheless, in situ measurement is more flexible and does not require the intensive digitization of the CAE method. Hence, the in situ approach, combined with proper technologies for reducing errors, has been widely adopted for estimating and improving physical ergonomics in HRC. Rahal et al. (2020) proposed a haptic control based on an inverse kinematics (IK) model of the human arm to derive user comfort and change the control strategy accordingly. By including a physical ergonomics measurement in the algorithm, this strategy leads to lower muscular loads for operators.
Shafti et al. (2019) presented a robot-assisted interaction that reduces the operator’s arm load by controlling the robot arm positioning in accordance with muscular and physical ergonomics measurements. Their approach tunes the robot response according to the physical ergonomics obtained through computer vision (CV). Makrini et al. (2019) suggested that human and robot task allocation based on physical ergonomics can improve the overall working conditions of operators. Their result relied on a visual module for estimating the operator position, but it was affected by the suboptimal performance of the human posture tracking algorithm. A similar approach, but with an improved visual algorithm based on OpenPose (Cao et al., 2017), can be used to derive compliant robot motions that follow the postures of different operators, thus reducing the overloading of operators’ joint torques (Kim et al., 2019).

The in situ methodologies proposed so far mainly present robot control algorithms that adapt to the human posture to improve physical ergonomics in the hand-over task. Therefore, individuals cannot explicitly program the robot behavior but must trust the robot control algorithm to choose the most comfortable position. Although beneficial to the user’s physical ergonomics, this could raise feelings of uncertainty that can influence team dynamics (Friedman et al., 2000; Kolbeinsson et al., 2019; Tausch and Kluge, 2020). However, studies investigating the effect of individuals’ explicit decisions on task design in industrial scenarios are missing. Thus, this work investigates whether mental models based on a self-designed task in an industrial scenario influence the operator in terms of physical ergonomics and task performance. To study these influences, we measure physical ergonomics in two experiments with different levels of task autonomy and formulate the following research hypotheses.

Hypothesis 1 (H1): The worker, through the ability to explicitly define the location of objects to be manipulated, has a high task decision autonomy.

Hypothesis 2 (H2): When the worker can explicitly define the location of the objects and perceives more autonomy, the physical ergonomics of the operator is better.

To test these research hypotheses, this article is structured as follows. In Section 2, the use case and the envisioned novel control method, based on an adaptive control architecture that leaves the decision on where to place the parts to the operator, are described. In Section 3, the results of a user test with personnel untrained in physical ergonomics are presented. In Section 4, the results are discussed with respect to the research hypotheses and possible factors influencing the outcomes. Finally, in Section 5, the conclusions and future research directions are given.

2 Materials and methods

This section discusses the materials and methods used to test the research hypotheses through experiments. First, the task and the interaction envisioned for the experiments are described in Section 2.1. Second, the methods to estimate the physical ergonomics are described in Section 2.2. Third, Section 2.3 and Section 2.4 describe how the user could specify the location of the objects and operate safely with the robot. Finally, Section 2.5 describes the experiment and the procedure for gathering data from the user study.

2.1 Task description and envisioned interaction

To efficiently produce parts that require sintering processes, batch production is applied. Therefore, stacks composed of several insulating layers and parts must be prepared before thermal sintering (Verlee et al., 2012). However, due to the fragility of some parts, this process is often performed manually, resulting in production errors and strain for human operators (Murrell, 1961; Hansen et al., 2003).

To integrate the self-designed task while reducing operator effort, a VSD-based approach was used to ensure a holistic solution. Users were therefore observed during the manual task, and two conclusions were drawn. On the one hand, the stakeholders are the human operators involved in the stacking process. On the other hand, users move the pieces as they please, each following their own way of performing the task. Therefore, autonomy was identified as the most important value, since operators already exercise it in the manual task. Moreover, human well-being was identified as the second most important value, considering that the task can be strenuous for operators.

Considering these values and the limitations of SMEs, where CAE modeling and robot offline programming as in Baizid et al. (2016) are difficult to apply, the following twofold approach was selected. A human–robot collaboration approach was chosen to alleviate operators’ effort, and CV was chosen to identify where the robot should pick up parts, giving operators the freedom to customize the task. Therefore, the envisioned interaction was composed of two parts: first, a teach-in phase in which the user could exert autonomy by placing parts at preferred locations; second, the collaboration phase for fulfilling the task of stack creation. The final task workflow is shown in Figure 1.

FIGURE 1

Graphical representation of the envisioned interaction. The task is composed of a teach-in phase and a collaboration phase. First, the user decides where the parts should be placed. Afterward, the collaboration unfolds in 5 sub-steps in which the robot and the user share some tasks. Step ➀: the robot moves the part to the insulating layer. Step ➁: the user places the distancers on the insulating layer. Step ➂: the robot moves another insulating layer while the user removes the paper sheet. Step ➃: the robot moves the part while the user adjusts the insulating layer stack. Step ➄: the user removes the paper sheet. The actions marked in light green are taken over by the human operator. The actions marked in pink are taken over by the robot.

2.2 Posture evaluation

To investigate the influence of human decisions on posture, an evaluation method was necessary. As pointed out previously, an approach based solely on expert observation can carry errors. Therefore, an approach based on convolutional neural networks (CNNs) and CV was selected. The method was integrated via the pretrained limb detection algorithm OpenPose (Cao et al., 2017) and a calculation library for joint angles. These two tools were used to evaluate the operator’s distances to the workspace and the exposure to ergonomic risk factors related to musculoskeletal disorders (MSDs) following the Rapid Upper Limb Assessment (RULA) method (McAtamney and Nigel Corlett, 1993), due to its better performance in calculating risks ranging from low to high (Yazdanirad et al., 2018).

To implement the RULA calculation, an approach similar to that of Makrini et al. (2019) was used. The operators were therefore monitored from two points of view via different cameras, one recording the upper limbs (front) and one recording the lower limbs (side). Afterward, the views were synchronized, and all relevant limb positions were extracted and evaluated as shown in Figure 2. More precisely, to calculate the RULA-relevant angles, the limb end points listed in Table 1 were used, and angles were calculated using Eq. 1 (nomenclature refers to labels in Figure 2; the numerator is the dot product of the two vectors representing two adjacent limbs, and the denominator is the product of the lengths of the two limb vectors).
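The angle computation described above (Eq. 1, whose displayed form is not reproduced here) amounts to θ = arccos(a·b / (‖a‖‖b‖)) for two limb vectors a and b. The sketch below is a minimal Python illustration of that formula, assuming key points arrive as 2D pixel coordinates from one camera view; the function names are hypothetical and not taken from the authors’ released code. For the reference-line case of Figure 2B, it assumes that “vertical” in the image is (0, −1), because pixel y coordinates grow downward.

```python
import math
from typing import Sequence, Tuple


def limb_vector(start: Sequence[float], end: Sequence[float]) -> Tuple[float, ...]:
    """Vector from one key point to the next, e.g. shoulder -> elbow."""
    return tuple(e - s for s, e in zip(start, end))


def angle_between(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle in degrees between two vectors: arccos(u . v / (|u| |v|))."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("degenerate limb vector: key points coincide")
    # Clamp: rounding can push the cosine slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


# Adjacent limbs (Figure 2A): elbow flexion from shoulder, elbow, wrist pixels.
shoulder, elbow, wrist = (100.0, 100.0), (100.0, 200.0), (200.0, 200.0)
print(angle_between(limb_vector(shoulder, elbow), limb_vector(elbow, wrist)))

# Reference line (Figure 2B): trunk vs. a vertical line; image y grows downward,
# so "up" is (0, -1) in pixel coordinates (an assumption about the camera setup).
hip, neck = (150.0, 400.0), (170.0, 200.0)
print(angle_between(limb_vector(hip, neck), (0.0, -1.0)))
```

Using vectors that point along consecutive limbs (shoulder to elbow, then elbow to wrist) makes a fully straight arm read as 0°, which matches how RULA expresses flexion.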

FIGURE 2

Example for the evaluation of the operator angles using front and side views. The view from the front is necessary to get information about the upper limbs, and the view from the side is necessary to get information about lower limbs and trunk position. The calculation of the angles is performed between adjacent limbs (A). In case no adjacent limbs were present, a reference line had to be selected to create a fictitious line for the calculation (B).

TABLE 1

| RULA criterion | Relevant key points of limbs | Reference line |
|---|---|---|
| Upper arm position | Shoulder–elbow | Trunk |
| Shoulder raise | Neck–shoulder | Trunk |
| Lower arm position | Elbow–wrist | Trunk |
| Wrist position | Wrist–palm | Elbow–wrist |
| Neck position | Neck–ear | Trunk |
| Trunk position | Trunk | Perpendicular line to the ground |

Key points used for the RULA evaluation using the side view. The first column specifies the RULA criterion considered. The second column lists the key points from the OpenPose output that were used for the angle calculation. The last column identifies the reference line used to create the fictitious line when the relevant key points were not adjacent.

Finally, the physical ergonomics score was calculated according to the RULA criteria, using lookup tables to convert the quantitative limb angle information into the ordinal data needed for RULA (this conversion assumed a constant load under 0.5 kg and non-repetitive actions, owing to the task structure). More precisely, the angles calculated using Eq. 1 were used to assign intermediate risk levels to the different limbs (e.g., the upper arm). Then, the intermediate risk levels were used to select the target rows and columns in the RULA tables, and the cell identified by the row and column gave the final MSD risk level. For the sake of clarity, an example following the two steps of the RULA evaluation is presented here: the first step yields an intermediate MSD risk level for the wrist and arm, and the second yields an intermediate MSD risk level for the neck, trunk, and legs. First, if the upper arm had an angle between +20° and -20°, the lower arm was bent more than 100°, and the wrist was parallel to the ground and without twisting, intermediate limb risk levels of 1, 2, 1, and 1 were obtained for the upper arm, lower arm, wrist, and wrist twist, respectively. This yielded an intermediate risk level of 2 for the wrist and arm. Second, if the neck and trunk were straight and the legs were supported, intermediate limb risk levels of 1, 1, and 1 were obtained. This yielded an intermediate risk level of 3 for the neck, trunk, and legs. Finally, by combining the results of the first and second steps, the final MSD risk level was obtained from the second row and third column of the RULA evaluation table³, which in this case results in a final MSD risk level of 3.
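To make the lookup step concrete, here is a partial Python sketch of the RULA scoring chain. It encodes only the base upper-arm and lower-arm scores, the final score table (Table C of the standard RULA worksheet), and the RULA action levels. RULA Tables A and B, the posture adjustments (e.g., shoulder raise, abduction), and the muscle-use and force scores are deliberately omitted. For that reason, the intermediate wrist/arm and neck/trunk/leg scores (2 and 3) are passed in directly from the worked example above. The function names are hypothetical.

```python
# RULA Table C: rows = wrist/arm score 1..8+, columns = neck/trunk/leg score 1..7+.
TABLE_C = (
    (1, 2, 3, 3, 4, 5, 5),
    (2, 2, 3, 4, 4, 5, 5),
    (3, 3, 3, 4, 4, 5, 6),
    (3, 3, 3, 4, 5, 6, 6),
    (4, 4, 4, 5, 6, 7, 7),
    (4, 4, 5, 6, 6, 7, 7),
    (5, 5, 6, 6, 7, 7, 7),
    (5, 5, 6, 7, 7, 7, 7),
)


def upper_arm_score(flexion_deg: float) -> int:
    """Base upper-arm score; negative angles denote extension (adjustments omitted)."""
    if -20.0 <= flexion_deg <= 20.0:
        return 1
    if flexion_deg < -20.0 or flexion_deg <= 45.0:
        return 2
    if flexion_deg <= 90.0:
        return 3
    return 4


def lower_arm_score(flexion_deg: float) -> int:
    """Base lower-arm score: 60-100 degrees of flexion scores 1, anything else 2."""
    return 1 if 60.0 <= flexion_deg <= 100.0 else 2


def grand_score(wrist_arm_score: int, neck_trunk_leg_score: int) -> int:
    """Final RULA score from Table C; scores beyond the table are capped (8+, 7+)."""
    row = min(max(wrist_arm_score, 1), 8) - 1
    col = min(max(neck_trunk_leg_score, 1), 7) - 1
    return TABLE_C[row][col]


def action_level(score: int) -> int:
    """RULA action level: 1 for scores 1-2, 2 for 3-4, 3 for 5-6, 4 for 7."""
    if score <= 2:
        return 1
    if score <= 4:
        return 2
    if score <= 6:
        return 3
    return 4


# Worked example from the text: upper arm within +/-20 deg, lower arm bent > 100 deg.
print(upper_arm_score(10.0), lower_arm_score(110.0))  # 1 2
print(grand_score(2, 3))  # 3 (second row, third column)
```

Indexing Table C from 1 and capping at the table edges mirrors how the paper-based worksheet treats scores of 8+ and 7+.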

In addition to the main RULA assessment, the posture assessment was supplemented with a measurement of the distance between the robot’s work area and the operator, to obtain an overview of work area utilization. For this purpose, another CV method was applied, using the following pipeline. First, the OpenPose output from the front view was used to calculate the operator’s average position from the shoulder and hip positions, as shown in Eq. 2.

Next, the boundary between the working areas (the table edge) was identified. Finally, the distance between this boundary and the operator was calculated for each frame, and its average value was obtained using Eq. 3.
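Eq. 2 and Eq. 3 are not reproduced in this text, so the following Python sketch only approximates the pipeline above under stated assumptions. It assumes that Eq. 2 is the centroid of the two shoulder and two hip key points and that the distance is the perpendicular point-to-line distance from that centroid to the table edge. It also assumes that Eq. 3 is the arithmetic mean over frames and that coordinates have already been converted to a metric unit; the pixel-to-centimeter mapping is not described. The key-point names are hypothetical; OpenPose itself reports body parts by index.

```python
import math
from statistics import mean
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float]

# Hypothetical key-point names used only for this sketch.
TORSO_KEYPOINTS = ("r_shoulder", "l_shoulder", "r_hip", "l_hip")


def operator_position(keypoints: Dict[str, Point]) -> Point:
    """Centroid of the shoulder and hip key points (assumed form of Eq. 2)."""
    xs = [keypoints[name][0] for name in TORSO_KEYPOINTS]
    ys = [keypoints[name][1] for name in TORSO_KEYPOINTS]
    return (mean(xs), mean(ys))


def distance_to_edge(p: Point, edge_a: Point, edge_b: Point) -> float:
    """Perpendicular distance from p to the line through the two table-edge points."""
    dx, dy = edge_b[0] - edge_a[0], edge_b[1] - edge_a[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("table-edge points must be distinct")
    # |2D cross product| / |edge| = height of the parallelogram = distance.
    return abs(dx * (p[1] - edge_a[1]) - dy * (p[0] - edge_a[0])) / length


def mean_operator_distance(
    frames: Iterable[Dict[str, Point]], edge_a: Point, edge_b: Point
) -> float:
    """Average per-frame distance over one trial (assumed form of Eq. 3)."""
    return mean(distance_to_edge(operator_position(kp), edge_a, edge_b) for kp in frames)


frame = {"r_shoulder": (40.0, 50.0), "l_shoulder": (60.0, 50.0),
         "r_hip": (40.0, 30.0), "l_hip": (60.0, 30.0)}
print(mean_operator_distance([frame], (0.0, 0.0), (100.0, 0.0)))  # 40.0
```

Averaging shoulders and hips together gives a torso center that is less sensitive to arm movements than any single key point, which suits a measure of where the operator stands.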

To perform these evaluations during the experiment, the information was processed using a script written in Python and run on a Linux computer that had access to camera video streams and robot status using the robot operating system (ROS) middleware. The source code for performing these evaluations has been made available4.

2.3 Adaptive robot control architecture

To achieve robot control that could easily be adapted to the requirements of the operator, a distributed cyber-physical system was conceived. The system consisted of four main parts: an application controller, a manipulator trajectory planner and executor, an object detector, and a low-level end-effector controller. The requirements for this structure were determined by the need to handle objects placed at user-defined locations; therefore, the capability for adaptation had to be considered. In this case, flexibility was integrated through a parametrizable solution of the robot IK via target coordinate frames. For the sake of clarity, the problem is shown in Figure 3. The pipeline to solve the IK started from the object detector, which first analyzed the images and identified the different objects via an appropriately trained CNN (Redmon et al., 2015). Afterward, the pixel coordinates of the identified objects were transformed to the camera coordinate system using the camera intrinsic parameters and assuming a pinhole camera model. This was achieved using Eq. 4, whose terms are the pixel coordinates of the identified projected object, the coordinates of the principal point of the camera frame in the image center, and the focal lengths of the camera expressed in pixels.
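The pinhole back-projection referred to as Eq. 4 can be sketched as follows. This is a minimal Python illustration under the standard distortion-free pinhole model, with pixel coordinates (u, v), principal point (cx, cy), and focal lengths (fx, fy) in pixels. It assumes the depth Z of the object along the optical axis is known, for example because the parts lie on a table at a fixed distance from the camera; the source does not state how depth was obtained. The intrinsic values in the usage line are made up for illustration.

```python
from typing import Tuple


def pixel_to_camera(
    u: float, v: float, depth: float, fx: float, fy: float, cx: float, cy: float
) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) at known depth Z into camera coordinates.

    Ideal pinhole model without lens distortion:
    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy.
    """
    if depth <= 0.0:
        raise ValueError("depth must be positive (point in front of the camera)")
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def camera_to_pixel(
    x: float, y: float, z: float, fx: float, fy: float, cx: float, cy: float
) -> Tuple[float, float]:
    """Forward pinhole projection; inverts pixel_to_camera for a fixed depth."""
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical intrinsics for a 640 x 480 image and a table 0.8 m from the camera.
print(pixel_to_camera(470.0, 240.0, 0.8, fx=600.0, fy=600.0, cx=320.0, cy=240.0))
```

The forward projection is included so that a round trip (pixel to camera to pixel) can serve as a quick sanity check of the intrinsics.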

FIGURE 3

Coordinate transform problem. For the robot to be able to handle the parts, three transformations are needed. The first two points, marked in green in the figure, define the position of the parts in the camera coordinate system and are obtained through a CNN algorithm. The second two, marked in pink in the figure, define the position of the parts in the robot coordinate system; their position is necessary for the robot to handle the parts. To obtain this last information, the transformation between the robot and camera, marked in orange, was obtained via the Perspective-n-Point algorithm (Lepetit et al., 2009).

Next, given the coordinates of the object in the camera coordinate system, the coordinates in the robot coordinate system were needed. To obtain them, the homogeneous transformation matrix between the camera and robot frames (Figure 3) was obtained via a camera calibration routine⁵ using the Perspective-n-Point algorithm (Lepetit et al., 2009). The obtained transformation is expressed by Eq. 5, whose terms are the number of singular values obtained from different camera poses, the initial coefficients, and the right singular vectors.

Finally, the target positions were calculated through Eq. 6, whose terms are the camera-dependent scaling factor and the coordinates of the objects in the robot coordinate frame, and point-to-point trajectories for reaching them were generated by solving the robot’s IK problem.
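Mapping an object from the camera frame to the robot base frame uses the camera–robot transformation of Figure 3 as a 4 × 4 homogeneous matrix. The minimal Python sketch below shows how such a matrix is assembled and applied to a point. It is not a reproduction of Eq. 5 or Eq. 6, which are not shown here. The rotation (a camera looking straight down) and the translation in the usage example are invented for illustration and are not the calibrated values of the workcell.

```python
from typing import List, Sequence


def make_transform(R: Sequence[Sequence[float]], t: Sequence[float]) -> List[List[float]]:
    """Assemble [[R, t], [0, 0, 0, 1]] from a 3x3 rotation R and a translation t."""
    return [
        list(R[0]) + [t[0]],
        list(R[1]) + [t[1]],
        list(R[2]) + [t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(T: Sequence[Sequence[float]], p: Sequence[float]) -> List[float]:
    """Apply a 4x4 homogeneous transform T (e.g. camera -> robot) to a 3D point p."""
    if len(T) != 4 or any(len(row) != 4 for row in T):
        raise ValueError("T must be a 4x4 matrix")
    ph = (p[0], p[1], p[2], 1.0)  # homogeneous coordinates of the point
    out = [sum(T[i][k] * ph[k] for k in range(4)) for i in range(4)]
    # Divide by the homogeneous coordinate (1.0 for a rigid transform).
    return [out[0] / out[3], out[1] / out[3], out[2] / out[3]]


# Illustrative camera pose: rotated 180 deg about x (looking down), 1 m above the base.
T_robot_camera = make_transform(((1, 0, 0), (0, -1, 0), (0, 0, -1)), (0.4, 0.0, 1.0))
print(transform_point(T_robot_camera, (0.2, 0.1, 0.8)))
```

The resulting robot-frame coordinates are what an IK solver would then receive as the target position for a point-to-point move.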

2.4 Cyber-physical system implementation

To ensure safe collaboration between the robot and the operator, the implemented cyber-physical system had to comply with current safety regulations. Hence, ISO/TS 15066 (International Organization for Standardization, 2016) and ISO 12100 (International Organization for Standardization, 2010) were followed. The safety modality known as power and force limiting (PFL) was selected to reduce the risks identified by the hazard analysis conducted according to ISO 12100 (2010). To implement these modalities, a Siemens® S7-1500 safety Programmable Logic Controller (PLC) and a Sick® microScan3 were used, with the network topology shown in Figure 4. Moreover, to enable PFL, a robot certified according to ISO 10218-1 (International Organization for Standardization, 2011) had to be selected; in this case, a Universal Robot® UR10 was used. Finally, to complete the integration of PFL, a custom gripper was developed for the needs of the use case, and collision tests were performed to determine a safe operating speed, as proposed by Pantano et al. (2021). The final workcell with the implemented features is shown in Figure 5.

FIGURE 4

Network topology. The connections within the cyber-physical system allowed the implementation of speed and separation monitoring (SSM) and other risk reduction measures. The implemented hardware was Siemens® S7-1500, Sick® microScan3, Universal Robot® UR10, Siemens® Unified Control Panel, and an ethernet camera to get the images of the part displacement. Moreover, to control the different systems, the SHOP4CF architecture was adopted, employing the Manufacturing Process Management System (MPMS) component and the Task data model.

FIGURE 5

Implemented cyber-physical system and the gripper. The cyber-physical system is an ensemble of safety devices to guarantee safe cooperation and actuators for handling the parts of the use case. The robot is placed on one side of the table and the user had to stand in front of it to have a clear view of the robot motions (A). Moreover, the laser scanner was placed in front of the table so that it could promptly measure the presence of operators in the workspace and drive the robot to a slower operating speed. The specially designed gripper tested for safe human–robot collaboration was made using 3D printing and implemented mechanical principles which allowed grasping of the components in the use case (B).

To coordinate the collaboration and enable the transfer of information, the SHOP4CF⁶ architecture was adopted to design and execute the scenario (Zimniewicz, 2020). More precisely, the Task data model⁷ and the Manufacturing Process Management System (MPMS) component (Erasmus et al., 2020) were employed to coordinate the robot controller and the operator. On one hand, the MPMS provided a process modeler to design the process models and a process engine to execute these models automatically. On the other hand, the Tasks were published and monitored in a shared Context Broker (CB), allowing the robot controller to receive triggers on when to perform actions and to send triggers on operator actions to the MPMS Human Machine Interface (HMI). The sequence diagram depicting the interaction among these components is shown in Figure 6. By adopting this architecture, it was possible to integrate operator inputs while ensuring safety and coordination with the cyber-physical system components.
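In a FIWARE context broker, publishing a Task amounts to creating or updating an entity. The Python sketch below is a hedged illustration that builds an entity in the NGSI-v2 normalized representation, in which each attribute carries a `type` and a `value`. A payload of this shape could be POSTed to the `/v2/entities` endpoint of an NGSI-v2 broker such as FIWARE Orion. Two assumptions apply: the broker is taken to speak NGSI-v2, and the attribute names (`assignee`, `action`, `status`) are hypothetical placeholders, not fields of the SHOP4CF Task data model.

```python
import json
from typing import Any, Dict


def _ngsi_type(value: Any) -> str:
    """Map a Python value to a basic NGSI-v2 attribute type."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "Boolean"
    if isinstance(value, (int, float)):
        return "Number"
    if isinstance(value, str):
        return "Text"
    return "StructuredValue"


def ngsi_v2_entity(entity_id: str, entity_type: str, **attributes: Any) -> Dict[str, Any]:
    """Build an NGSI-v2 entity in normalized form: {"type": ..., "value": ...} per attribute."""
    entity: Dict[str, Any] = {"id": entity_id, "type": entity_type}
    for name, value in attributes.items():
        entity[name] = {"type": _ngsi_type(value), "value": value}
    return entity


# Hypothetical Task for step 1 of Figure 1 (robot moves the part); attribute
# names are placeholders, not the SHOP4CF Task data model.
task = ngsi_v2_entity(
    "urn:ngsi-ld:Task:step-1",
    "Task",
    assignee="robot",
    action="movePartToInsulatingLayer",
    status="pending",
)
print(json.dumps(task, indent=2))
```

Both the MPMS and the robot controller can subscribe to changes of such an entity. This lets the broker, rather than point-to-point connections, carry the triggers shown in Figure 6.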

FIGURE 6

Sequence diagram for the interaction of the different technological components. The user could execute the task through the MPMS interface. The MPMS then publishes and monitors the tasks in the FIWARE context broker for either the operator or the robot controller (ROBOT CTRL.). The robot controller does the same, waiting for triggers on when to execute certain actions, which include moving the robot, gathering position data from the camera, and controlling the gripper.

2.5 Experiment design

To test the hypotheses, an experiment following the SHOP4CF guidelines for user studies was created (Aromaa and Heikkilä, 2020). The study was designed to compare interactions between a priori defined parts’ positions and operator-defined parts’ positions. Therefore, a 2 × 2 mixed design with two subsequent balanced randomized user interactions was used. Through this design, two variables were manipulated: the positioning of the parts (user defined vs. a priori defined) and the degree of familiarity with the application (first interaction vs. second interaction). To distinguish across the experiments, the following abbreviations are used: std for the test with a priori part positioning and usr for the test with operator-defined part positioning, and Interaction I for the first interaction and Interaction II for the second interaction.
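One way to realize the “balanced randomized” ordering described above is sketched below in Python. It assumes that balancing means each condition order (std then usr, or usr then std) is used equally often (±1 for an odd number of participants) and that orders are assigned to participants at random. The participant IDs and the seed are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence, Tuple

CONDITIONS = ("std", "usr")


def assign_orders(participant_ids: Sequence[str], seed: int = 0) -> Dict[str, Tuple[str, str]]:
    """Randomly assign condition orders so that both orders occur equally often (+/-1)."""
    orders = [CONDITIONS, CONDITIONS[::-1]]  # (std, usr) and (usr, std)
    pool: List[Tuple[str, str]] = [orders[i % 2] for i in range(len(participant_ids))]
    random.Random(seed).shuffle(pool)  # seeded for a reproducible allocation
    return dict(zip(participant_ids, pool))


ids = [f"P{i:02d}" for i in range(1, 17)]
plan = assign_orders(ids, seed=42)
print(Counter(plan.values()))
```

Shuffling a pre-balanced pool, instead of drawing each order independently, guarantees the balance while keeping the assignment random.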

With this design, the experimental procedure carried out by the authors was as follows (nomenclature and visual representation are shown in Figure 7). First, between times t0 and t1, the users were presented with the robotic cell and given a short explanation of the robot’s safety. Afterward, a script describing the human–robot interaction was read, and a video describing the collaboration in the experiment was shown. Then, at time t1, informed consent, general user demographics, technology fitness, and level of trust in automation (Jian et al., 2000) were collected. Next, between t1 and t2, Interaction I was performed. During the interaction, the position of the operator was monitored and evaluated as outlined in Section 2.2 to collect data for H2. This part concluded at t2 with the user answering the task autonomy section of the work design questionnaire (WDQ) (Morgeson and Humphrey, 2006) to collect data for H1. Finally, between t2 and t3, Interaction II was performed, and, as in Interaction I, the position of the operator was monitored. The experiment concluded at t3 with the user answering the task autonomy section of the WDQ.

FIGURE 7

Experiment structure. Between t0 and t1, the user was presented with the robot cell, and the experiment was explained. Afterward, at t1, the trust level was measured along with user demographics and informed consent. Between t1 and t2, the user performed the first interaction, which ended at t2 with the WDQ. Then, between t2 and t3, the user performed the second interaction, which ended at t3 with the WDQ.

3 Results

This section reports the results collected during the study. The analysis was conducted on the datasets collected during the experiments. Some of the datasets are available in the Supplementary Materials of this article.

3.1 User demographics

The user group taking part in the study was composed of 17 individuals not trained in physical ergonomics (age: M = 33.05 years, SD = 12.89; height: M = 180.11 cm, SD = 9.26). Within this group, technology fitness, measured as average hours per week spent with electronic devices, was M = 31.15 h (SD = 8.38), and the group expressed an average trust in automation of M = 4.87 (SD = 1.02) on a scale from 1 to 7. Of the 17 test subjects, 16 performed the task correctly; therefore, one test was discarded.

3.2 Task autonomy

To measure task autonomy, two WDQ criteria were used: Criteria 1 (“The system gives me a chance to use my personal initiative or judgment in carrying out the work”) and Criteria 2 (“The system provides me with significant autonomy in making decisions”). The responses to these criteria were collected after each trial, and the results are shown in Figure 8. For both criteria, the ratings differ between std and usr, with usr rated higher. Because Levene’s test indicated that the homogeneity-of-variance assumption of the t-test did not hold (p < 0.05, 95% confidence level), a Mann–Whitney U test was performed instead. The test yielded p < 0.05 for both Criteria 1 and Criteria 2. Therefore, a statistically significant difference between std and usr responses was found for both criteria, with usr scoring better than std.

FIGURE 8

Histogram plots showing the WDQ ratings for Criteria 1 (A) and Criteria 2 (B). The USR interaction is shown in blue and the STD interaction in pink. Both criteria received better ratings in the USR interaction.

3.3 Operators’ posture

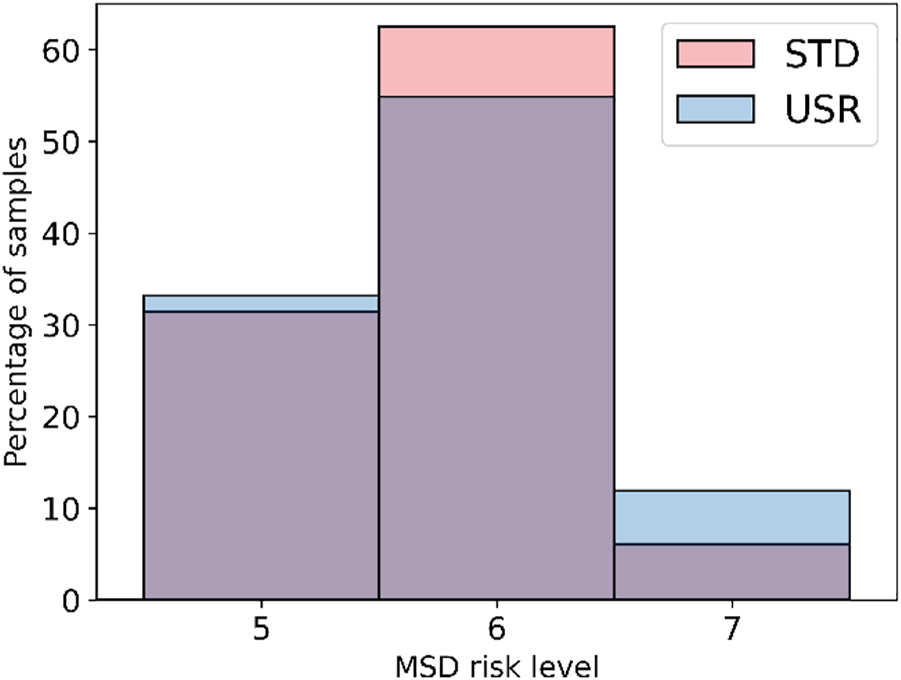

To measure the operators’ posture, the RULA assessment was monitored. Since the RULA assessment was calculated for each frame in each test, the average MSD risk level was used to analyze the change between std and usr. The calculated risk levels across the trials are shown in Figure 9. The figure shows similar risk levels for usr and std. To test for a difference between the two distributions, a Mann–Whitney U test was performed, because Levene’s test indicated that the homogeneity-of-variance assumption of the t-test did not hold (p < 0.05, 95% confidence level). The Mann–Whitney U test yielded p > 0.05; therefore, no significant difference in MSD risk level was found between std and usr, and there is no evidence that the two distributions differ.

FIGURE 9

Histogram plot representing MSD risk levels for the two tests calculated through the RULA assessment, the USR in blue and the STD in pink. The figure shows that the STD interaction had a larger number of samples classified with the risk level 6 when compared to the USR interaction. Moreover, the USR interaction reported more samples with risk level 7.

Furthermore, to measure the usage of the workspace during the experiment, the distance between the robot workspace and the operator position was used. Since the distance was calculated for each frame, its average value was monitored. The measured distances across the trials are shown in Figure 10. The figure shows similar distances in usr and std. A Mann–Whitney U test was performed, because Levene’s test indicated that the homogeneity-of-variance assumption of the t-test did not hold (p < 0.05, 95% confidence level). The Mann–Whitney U test yielded p < 0.05; therefore, a statistically significant difference between the samples was found. Since the mean distance was M = 275.05 cm (SD = 46.88) in std and M = 277. cm (SD = 44.75) in usr, the distance in usr is larger than in std.

FIGURE 10

Boxplots representing the distances for the two tests, the means in green and the medians in orange. The means and medians between the two interactions are similar. However, the STD interaction displays a slightly smaller mean, meaning that on average, the users were closer to the robot workspace in the STD interaction.

3.4 Robot performance

To measure task performance, the time taken to complete the collaboration was monitored. The completion times are shown in Figure 11. The figure suggests that usr completion times are longer than std completion times. To test this observation, a Mann–Whitney U test was performed, because Levene’s test indicated that the homogeneity-of-variance assumption of the t-test did not hold (p < 0.05, 95% confidence level). The test yielded p > 0.05. Therefore, no statistically significant difference in completion time was found, and there is no evidence that the two distributions differ.

FIGURE 11

Boxplots representing the time necessary to complete the whole test, the means in green and the medians in orange. The figure displays that the time to complete the activity with STD interaction was shorter than that of USR.

4 Discussion

4.1 Task autonomy

The responses to the WDQ criteria show that letting operators decide where to place the parts leads to higher perceived task decision autonomy. We therefore conclude that allowing this decision can create a good mental model for task autonomy, and H1 can be accepted. This is consistent with the findings of Tausch and Kluge (2020), whose user tests were performed without real robots. Consequently, we infer that leaving decisions on robot tasks to the operator benefits the operator's perceived autonomy.

4.2 Posture of the operators during the tests

The analysis of the operators' postures shows that the MSD risk level in usr is slightly higher (Δ = +0.05), although the difference is not statistically significant. This outcome contradicts the hypothesis that higher autonomy should lead to a lower MSD risk level; therefore, H2 must be rejected. Because no statistically significant difference was found between the tests, the operators' decisions do not appear to have influenced the MSD risk level. Nevertheless, the risk level measured in both tests is medium according to the RULA assessment (McAtamney and Nigel Corlett, 1993). To examine the results of the RULA ergonomic assessment further and identify any shortcomings, an experiment was performed to compare the calculated MSD risk values with a ground truth. To do so, the angles of the main arm joints calculated with the method explained in Section 2.2 were compared with the angles of an arm simulation model developed in Matlab™. For this comparison, a positional tracking experiment was performed: an operator stood in front of the cameras and, holding the tracker in the hand, assumed different arm positions. The recorded dataset was then used to simulate the arm joint angles through an inverse kinematics (IK) algorithm, and the results were compared with the angles calculated by the method outlined in this work. The comparisons are reported in Figure 12. Although the values differ, the trends of the two measurements are similar. Considering that RULA uses thresholds of 20° and 45° for the upper arm, and that the relative difference between the two tests was measured, the evaluation through our method can be considered good enough to estimate the risk levels, as also identified by Kim et al. (2019), thus ruling out the hypothesis of an incorrectly performed ergonomic assessment.
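The robustness argument above rests on the fact that RULA scores the upper arm in angle bands, so small angular errors only change the score near a band boundary. The sketch below illustrates this under stated assumptions and is not the Section 2.2 method: it estimates the upper-arm angle from hypothetical 2D keypoints (hip, shoulder, elbow) in image coordinates, treats that angle as flexion with the arm in front of the trunk, and maps it to the RULA upper-arm base score (1 for 20° extension to 20° flexion, 2 for more than 20° extension or 20°–45° flexion, 3 for 45°–90° flexion, 4 above 90°). The shoulder-raised, abduction, and arm-support adjustments are omitted, and the handling of exact boundary values is an assumption.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def angle_between(u: Point, v: Point) -> float:
    """Unsigned angle in degrees between two 2D vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def upper_arm_angle(hip: Point, shoulder: Point, elbow: Point) -> float:
    """Angle between the trunk direction (shoulder -> hip) and the upper arm
    (shoulder -> elbow); 0 deg means the arm hangs along the trunk."""
    trunk = (hip[0] - shoulder[0], hip[1] - shoulder[1])
    upper_arm = (elbow[0] - shoulder[0], elbow[1] - shoulder[1])
    return angle_between(trunk, upper_arm)


def rula_upper_arm_score(flexion_deg: float) -> int:
    """RULA upper-arm base score; negative angles denote extension.
    Adjustments for raised shoulder, abduction, or arm support are omitted."""
    if -20 <= flexion_deg <= 20:
        return 1
    if flexion_deg < -20 or flexion_deg <= 45:
        return 2
    if flexion_deg <= 90:
        return 3
    return 4


# Hypothetical keypoints in image pixels (y grows downward), arm raised forward.
angle = upper_arm_angle(hip=(0, 100), shoulder=(0, 0), elbow=(30, 50))
print(round(angle, 1), rula_upper_arm_score(angle))
```

Two estimates that differ by several degrees, for instance a hypothetical 31° from one method and 38° from another, both yield a score of 2; only estimates that straddle 20°, 45°, or 90° would change the score, which is why a matching trend matters more than exact agreement.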
Consequently, we conclude that letting the operator decide where to place the parts does not significantly increase the MSD risk level in situations similar to the experiment conducted.

FIGURE 12

Comparison between the OpenPose angular values and the IK ones for the upper arm. Although differences exist, if the threshold values of the RULA assessment are considered (20° and 45°), the trend between the two evaluations is comparable. Therefore, the final MSD risk level calculated with the two approaches can be considered similar.

In addition, the results showed that operators in usr kept a larger distance from the robot than those in std. This suggests that operators learned the robot's behavior better (the further away, the faster) when they had higher task autonomy. Unfortunately, this was not reflected in different completion times, as shown in Section 3.4. Further studies are therefore necessary to investigate why users did not move even farther away from the robot.

4.3 Robot performances

The tests showed that std led to shorter completion times than usr. However, this difference was not statistically significant (p > 0.05). Therefore, further tests should be conducted to investigate the matter.

4.4 Influences of task familiarity on the interaction

To investigate whether autonomy interacted with other factors in the experiments, a correlation analysis based on the Pearson correlation coefficient was conducted on the available measures for each of the two groups. The results for the group that performed std as Interaction I are given in Table 2, and the results for the group that performed usr as Interaction I are given in Table 3.
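The coefficient reported in Tables 2, 3 can be computed as below. This minimal sketch, which is not the authors' analysis script, computes Pearson's r for two paired lists of per-participant measures and converts it to the t statistic used to test whether the population correlation is zero, with n − 2 degrees of freedom. It also inverts that relation to give the smallest |r| that reaches significance for a given critical t value. Python 3.10 and later also provide `statistics.correlation` for r itself.

```python
import math
from statistics import mean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two paired samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two paired samples with at least 3 observations")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    if abs(r) >= 1:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1 - r * r))


def critical_r(t_crit: float, n: int) -> float:
    """Smallest |r| whose t statistic reaches t_crit with n - 2 degrees of freedom."""
    df = n - 2
    return t_crit / math.sqrt(t_crit ** 2 + df)


# Hypothetical group of 8 participants; 2.447 is the two-sided 5% critical t for 6 df.
print(critical_r(2.447, 8))
```

For example, with a hypothetical group of n = 8 participants, only correlations with |r| of roughly 0.71 or more would reach p < 0.05, which is why small groups require large coefficients before a correlation is reported as meaningful.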

TABLE 2

| | | M | SD | Trust scale | Tech. fitness | Dist. std | Dist. usr | Erg. std | Erg. usr | Time std | Time usr | Aut. std | Aut. usr |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Trust scale | 5.00 | 1.19 | - | | | | | | | | | |
| 2 | Tech. fitness | 34.0 | 6.21 | 0.50 | - | | | | | | | | |
| 3 | Dist. std | 295.22 | 35.49 | 0.24 | 0.12 | - | | | | | | | |
| 4 | Dist. usr | 276.06 | 38.05 | −0.27 | −0.23 | 0.66 | - | | | | | | |
| 5 | Erg. std | 5.57 | 0.60 | 0.04 | 0.03 | −0.22 | −0.35 | - | | | | | |
| 6 | Erg. usr | 5.69 | 0.54 | −0.30 | −0.39 | 0.70 | −0.60 | 0.59 | - | | | | |
| 7 | Time std | 129.13 | 17.63 | −0.64 | −0.15 | −0.76 | −0.37 | −0.16 | 0.53 | - | | | |
| 8 | Time usr | 123.50 | 23.29 | −0.15 | −0.08 | −0.68 | −0.80 | 0.52 | 0.86 | 0.59 | - | | |
| 9 | Aut. std | 2.75 | 1.06 | −0.11 | −0.03 | −0.11 | −0.12 | 0.49 | 0.02 | −0.17 | 0.29 | - | |
| 10 | Aut. usr | 3.50 | 0.72 | 0.21 | 0.32 | 0.29 | 0.01 | 0.02 | −0.36 | −0.38 | −0.05 | 0.70 | - |

Correlation analysis among the metrics gathered during the experiment for the group that performed std as Interaction I, calculated with the Pearson correlation coefficient. The meaningful correlations (p < 0.05) are highlighted in bold. The following abbreviations are used: Dist. for distance, Erg. for physical ergonomics, and Aut. for autonomy.

TABLE 3

| | | M | SD | Trust scale | Tech. fitness | Dist. usr | Dist. std | Erg. usr | Erg. std | Time usr | Time std | Aut. usr | Aut. std |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Trust scale | 4.75 | 0.88 | - | | | | | | | | | |
| 2 | Tech. fitness | 28.31 | 9.67 | 0.40 | - | | | | | | | | |
| 3 | Dist. usr | 276.42 | 50.39 | −0.22 | 0.09 | - | | | | | | | |
| 4 | Dist. std | 254.30 | 47.41 | −0.21 | 0.52 | 0.82 | - | | | | | | |
| 5 | Erg. usr | 5.92 | 0.70 | −0.35 | −0.15 | −0.04 | −0.11 | - | | | | | |
| 6 | Erg. std | 5.83 | 0.52 | −0.25 | −0.26 | −0.32 | 0.06 | 0.09 | - | | | | |
| 7 | Time usr | 127.63 | 20.80 | −0.08 | −0.02 | −0.31 | −0.67 | −0.62 | 0.30 | - | | | |
| 8 | Time std | 118.00 | 16.10 | −0.23 | −0.54 | −0.64 | −0.78 | 0.07 | 0.23 | 0.66 | - | | |
| 9 | Aut. usr | 4.41 | 0.44 | 0.02 | −0.05 | 0.37 | 0.28 | −0.22 | 0.13 | −0.21 | 0.11 | - | |
| 10 | Aut. std | 3.91 | 0.88 | −0.08 | −0.25 | 0.12 | 0.16 | −0.05 | 0.40 | 0.15 | 0.13 | 0.60 | - |

Correlation analysis among the metrics gathered during the experiment for the group that performed usr as Interaction I, calculated with the Pearson correlation coefficient. The meaningful correlations (p < 0.05) are highlighted in bold. The following abbreviations are used: Dist. for distance, Erg. for physical ergonomics, and Aut. for autonomy. For readability, the usr and std rows have been swapped with respect to Table 2 to show the differences between Interaction I (usr) and Interaction II (std).

Looking for similarities across the two groups, the following can be noted. In both cases, the average distance from the operator to the robot workspace decreased in the second interaction (see the means of Dist. std and Dist. usr in Tables 2, 3). Similarly, in both cases, the average time to complete the task decreased in the second interaction (see the means of Time std and Time usr in Tables 2, 3). This can be related to the fact that error-free robot interaction, which in this case is always Interaction II, leads to better acquaintance and familiarity, as also identified by Miller et al. (2021). Another important factor is the time taken to complete the task. In both groups, the completion time was negatively correlated with the distance kept by the operator during std. This suggests that users correctly learned the robot safety strategy, which adopted slower speeds when they were closer, only when std was active. It therefore suggests that users should first interact with a standard configuration to learn the safety operation modality before being allowed to customize their own interaction. Last, the MSD risk level is always slightly higher when usr is active, regardless of the interaction order, as also shown in Section 3.3.
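The safety behavior described above (the closer the operator, the slower the robot) is what links distance and completion time. This section does not specify the exact rule or thresholds, so the sketch below assumes a hypothetical linear ramp: at or below a distance `d_min` the robot moves at a reduced `floor` speed factor, at or above `d_full` it moves at full programmed speed, and in between the factor grows linearly. The function names, parameters, and threshold values are all hypothetical; the sketch only illustrates the mechanism and is not an ISO/TS 15066-compliant speed and separation monitoring implementation.

```python
import math


def speed_scale(distance_cm: float, d_min: float, d_full: float, floor: float = 0.0) -> float:
    """Hypothetical speed-override factor in [floor, 1] from operator distance.

    distance <= d_min  -> floor (slowest allowed motion; 0.0 means stop)
    distance >= d_full -> 1.0   (full programmed speed)
    otherwise          -> linear interpolation between the two
    """
    if d_full <= d_min:
        raise ValueError("d_full must be greater than d_min")
    if not 0.0 <= floor <= 1.0:
        raise ValueError("floor must lie in [0, 1]")
    if distance_cm <= d_min:
        return floor
    if distance_cm >= d_full:
        return 1.0
    fraction = (distance_cm - d_min) / (d_full - d_min)
    return floor + (1.0 - floor) * fraction


def motion_time(nominal_s: float, distance_cm: float, d_min: float, d_full: float, floor: float) -> float:
    """Duration of a motion that takes nominal_s at full speed, assuming the
    operator stays at a constant distance; infinite if the robot is stopped."""
    scale = speed_scale(distance_cm, d_min, d_full, floor)
    if scale == 0:
        return math.inf
    return nominal_s / scale


# Hypothetical thresholds: a 10 s motion stretches when the operator stands closer.
print(motion_time(10.0, 200.0, d_min=100.0, d_full=300.0, floor=0.25))
```

Under such a rule, an operator who stands closer to the robot directly lengthens every robot motion, which is consistent with the negative correlation between distance and completion time observed during std.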

Looking for differences across the groups, the following can be identified. First, in the group with usr as Interaction I (Table 3), the distance kept by the operator in Interaction I (usr) is positively correlated with the distance kept in Interaction II (std); this suggests that the distance kept in the first interaction influenced the second interaction. This might be connected to the higher feeling of task autonomy given by the location selection in the first collaboration instance. Second, in the group with std as Interaction I (Table 2), the MSD risk level in Interaction II (usr) is positively correlated with the time elapsed in Interaction II (usr): the longer the time, the worse the MSD risk level. Moreover, the distance in Interaction II (usr) is negatively correlated with the time taken. Taken together, these two points indicate a connection between the distance to the table, the time, and the MSD risk level. On the one hand, this can be connected to the programming of the robot, in which the closer the user, the slower the robot, as identified in the previous paragraph. On the other hand, a closer distance to the table might have led to uncomfortable positions for the users, which prolonged the time in usr and thus led to an overall worse physical ergonomics score in usr. This is similar to the findings of Kar et al. (2015), where faster movements led to lower operator risk. However, this held true in only one group, and the overall analysis showed that operators in usr were, on average, farther away, as shown in Section 3.3. Therefore, further tests are necessary to investigate this last finding, also considering that no statistically significant difference in completion times was found between std and usr.

4.5 Study limitations

During the study, some limitations were observed. In this section, the two main drawbacks are explained.

The experiment script read to the participants contained a detailed step-by-step description of the experiment in the following order: first, how std worked; second, how usr worked and how the positioning of the parts might influence the user; and finally, how the robot was programmed (i.e., the closer, the slower). However, no goal other than completing the activity was assigned to the users. During the tests, this resulted in high variability in the placement of the parts: some users placed the parts closer to the robot, and others placed them farther away. The authors asked each participant, in an open-ended question, why they had done so, and some of the answers were as follows: “I placed the part closer to the robot so I can be faster although this leads to a bad position for me,” “I placed the parts closer to me so I can handle them better,” or “I like the parts in the center of the table, and they are easy to handle.” These observations indicate that the lack of a clear objective on what to optimize for (i.e., robot speed, safety, or physical ergonomics) had some degree of impact. Users were therefore free to choose what to optimize for, and this choice was probably influenced by their individual backgrounds, as already identified by Miller et al. (2021). This might have led to the unexpected results observed in this study, such as the increase in the time necessary to complete the task with usr. Therefore, the results of this study should be considered valid only for situations in which users are asked to accomplish a pick-and-place task without any objective other than completing the activity.

Aside from this, it is important to underline that the MSD risk levels were calculated with the RULA assessment through a software pipeline that analyzed camera images. The pipeline was developed by the authors, and although it proved sufficiently accurate (Section 4.2), some limitations might still be present due to the CV approach based on OpenPose. Other studies using the same approach, such as Kim et al. (2021), reported that the results could be affected by the placement and resolution of the cameras. Nevertheless, since the same software pipeline was used for both usr and std, the relative differences in physical ergonomics between usr and std should still be valid.

5 Conclusion

In this work, a user study investigating the influence of task autonomy on operator physical ergonomics and robot performance in industrial human–robot collaboration has been presented. The results showed that higher task autonomy can be achieved by letting operators decide the position of the handled parts, and that this leads neither to statistically significant differences in overall task efficiency nor to an increase in the MSD risk level. However, this result might have been influenced by two drawbacks in the experiment design. First, no clear objective for the task was communicated to the participants. Therefore, users might have optimized for different aspects (e.g., posture or speed), leading to the observed results. Second, the estimation of the MSD risk level based on RULA might have been affected by the resolution and placement of the cameras, as identified in previous literature. Therefore, the measured risk level might not be accurate. Despite these limitations, this study highlighted that robotic systems that let operators decide on some task parameters, such as the positioning of the parts, can be beneficial, and that the SHOP4CF architecture allows such scenarios to be integrated. However, care should be taken to understand how users make decisions about certain application aspects, and further research is needed to ensure user well-being in this respect. With the published open-source software and dataset for physical ergonomics, the authors would like to encourage other researchers to study this topic further.

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author contributions

MP contributed to the conceptualization, software, methodology, resources, investigation, formal analysis, data curation, and writing—original draft and editing. QY contributed to software and data curation. AB contributed to resources. RR contributed to conceptualization and writing—editing. TH contributed to conceptualization and writing—editing. BL contributed to conceptualization and writing—editing. DR contributed to project administration, funding acquisition, and supervision. TK contributed to project administration, funding acquisition, and supervision. KT contributed to software and writing—editing. DL contributed to supervision, conceptualization, and writing—editing.

Funding

This research has received partial funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 873087. The results obtained in this work reflect only the authors’ view and not the ones of the European Commission; the Commission is not responsible for any use that may be made of the information they contain.

Acknowledgments

The authors would like to acknowledge the technical and formal advice provided by Kuula Timo, Dr. Aromaa Susanna, and Dr. Heikkilä Päivi from the VTT Technical Research Centre of Finland and Seyedamir Ahmadi from Tampere University. Moreover, the authors would like to thank the users taking part in the tests.

Conflict of interest

Authors MP, AB, RR, TH, BL, DR, and TK were employed by the company Siemens Aktiengesellschaft.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2022.943261/full#supplementary-material

Footnotes

1. http://www.fcc.chalmers.se/software/ips/ips-imma/

2. https://www.plm.automation.siemens.com/global/en/products/manufacturing-planning/human-factors-ergonomics.html

3. https://ergo-plus.com/wp-content/uploads/RULA.pdf

4. https://github.com/matteopantano/human-ergonomic-module

5. https://ros-planning.github.io/moveit_tutorials/doc/hand_eye_calibration/hand_eye_calibration_tutorial.html

References

Aromaa, S., and Heikkilä, P. (2020). Deliverable 2.3 - Design and Evaluation Framework for User Studies. Available at: https://live-shop4cf.pantheonsite.io/wp-content/uploads/2021/07/SHOP4CF-WP2-D23-DEL-201217-v1.0.pdf

Baizid, K., Ćuković, S., Iqbal, J., Yousnadj, A., Chellali, R., Meddahi, A., et al. (2016). IRoSim: Industrial Robotics Simulation Design Planning and Optimization Platform Based on CAD and Knowledgeware Technologies. Robotics Computer-Integrated Manuf. 42, 121–134. doi:10.1016/j.rcim.2016.06.003

Ballestar, M. T., Díaz-Chao, Á., Sainz, J., and Torrent-Sellens, J. (2020). Knowledge, Robots and Productivity in SMEs: Explaining the Second Digital Wave. J. Bus. Res. 108, 119–131. doi:10.1016/j.jbusres.2019.11.017

Bauer, W., Bender, M., Braun, M., Rally, P., and Scholtz, O. (2016). Lightweight Robots in Manual Assembly – Best to Start Simply: Examining Companies' Initial Experiences with Lightweight Robots. Stuttgart: Fraunhofer-Institut für Arbeitswirtschaft und Organisation IAO.

Bayha, A., Bock, J., Boss, B., Diedrich, C., and Malakuti, S. (2020). Describing Capabilities of Industrie 4.0 Components. Berlin: Federal Ministry for Economic Affairs and Energy (BMWi).

Cao, Z., Simon, T., Wei, S. E., and Sheikh, Y. (2017). “Realtime multi-person 2d pose estimation using part affinity fields,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 7291–7299.

Coronado, E., Deuff, D., Carreno-Medrano, P., Tian, L., Kulic, D., Sumartojo, S., et al. (2021). Towards a Modular and Distributed End-User Development Framework for Human-Robot Interaction. IEEE Access 1, 12675–12692. doi:10.1109/ACCESS.2021.3051605

Demir, K. A., Döven, G., and Sezen, B. (2019). Industry 5.0 and Human-Robot Co-working. Procedia Comput. Sci. 158, 688–695. doi:10.1016/j.procs.2019.09.104

Deutschen Instituts für Normung (2020). DIN EN ISO 9241-210:2020-03, Ergonomie der Mensch-System-Interaktion: Teil 210: Menschzentrierte Gestaltung interaktiver Systeme (ISO 9241-210:2019); Deutsche Fassung EN ISO 9241-210:2019. 9241-210. Berlin: Beuth Verlag GmbH. Available at: https://www.beuth.de/en/standard/din-en-iso-9241-210/313017070

Diego-Mas, J.-A., Alcaide-Marzal, J., and Poveda-Bautista, R. (2017). Errors Using Observational Methods for Ergonomics Assessment in Real Practice. Hum. Factors 59, 1173–1187. doi:10.1177/0018720817723496

Eiband, T., Willibald, C., Tannert, I., Weber, B., and Lee, D. (2022). Collaborative Programming of Robotic Task Decisions and Recovery Behaviors. Auton. Robot. (in press).

Erasmus, J., Vanderfeesten, I., Traganos, K., Keulen, R., and Grefen, P. (2020). The HORSE Project: The Application of Business Process Management for Flexibility in Smart Manufacturing. Appl. Sci. 10, 4145. doi:10.3390/app10124145

Friedman, B., Khan, P. H., and Howe, D. C. (2000). Trust Online. Commun. ACM 43, 34–40. doi:10.1145/355112.355120

Friedman, B. (1996). Value-sensitive Design. interactions 3, 16–23. doi:10.1145/242485.242493

Gläser, D., Fritzsche, L., Bauer, S., and Sylaja, V. J. (2016). Ergonomic Assessment for DHM Simulations Facilitated by Sensor Data. Procedia CIRP 41, 702–705. doi:10.1016/j.procir.2015.12.098

Gualtieri, L., Rauch, E., and Vidoni, R. (2021). Emerging Research Fields in Safety and Ergonomics in Industrial Collaborative Robotics: A Systematic Literature Review. Robotics Computer-Integrated Manuf. 67, 101998. doi:10.1016/j.rcim.2020.101998

Hansen, M. Å., Kaergaard, A., Hviid Andersen, J., and Netterstrøm, B. (2003). Associations between Repetitive Work and Endocrinological Indicators of Stress. Work & Stress 17, 264–276. doi:10.1080/02678370310001628899

Hoff, K. A., and Bashir, M. (2015). Trust in Automation: Integrating Empirical Evidence on Factors that Influence Trust. Hum. Factors 57, 407–434. doi:10.1177/0018720814547570

International Organization for Standardization (2011). ISO 10218-1:2011: Robots and Robotic Devices - Safety Requirements for Industrial Robots - Part 1: Robots. Geneva: ISO.

International Organization for Standardization (2010). ISO 12100:2010: Safety of Machinery - General Principles for Design - Risk Assessment and Risk Reduction. Geneva: ISO.

International Organization for Standardization (2016). ISO/TS 15066:2016: Robots and Robotic Devices - Collaborative Robots. Geneva: ISO.

Jian, J.-Y., Bisantz, A. M., and Drury, C. G. (2000). Foundations for an Empirically Determined Scale of Trust in Automated Systems. Int. J. Cognitive Ergonomics 4, 53–71. doi:10.1207/S15327566IJCE0401_04

Kaasinen, E., Liinasuo, M., Schmalfuß, F., Koskinen, H., Aromaa, S., Heikkilä, P., et al. (2019). “A Worker-Centric Design and Evaluation Framework for Operator 4.0 Solutions that Support Work Well-Being,” in Human Work Interaction Design. Designing Engaging Automation. Editors B. R. Barricelli, V. Roto, T. Clemmensen, P. Campos, A. Lopes, F. Gonçalves, et al. (Cham: Springer International Publishing), 263–282.

Kahn, P. H., Ishiguro, H., Friedman, B., Kanda, T., Freier, N. G., Severson, R. L., et al. (2007). Psychological Benchmarks of Human–Robot Interaction. Interact. Stud. 8, 363–390. doi:10.1075/is.8.3.04kah

Kar, G., Vu, A., Juliá Nehme, B., and Hedge, A. (2015). Effects of Mouse, Trackpad and 3d Motion and Gesture Control on Performance, Posture, and Comfort. Proc. Hum. Factors Ergonomics Soc. Annu. Meet. 59, 327–331. doi:10.1177/1541931215591068

Kim, W., Lorenzini, M., Balatti, P., Nguyen, P. D., Pattacini, U., Tikhanoff, V., et al. (2019). Adaptable Workstations for Human-Robot Collaboration: A Reconfigurable Framework for Improving Worker Ergonomics and Productivity. IEEE Robot. Autom. Mag. 26, 14–26. doi:10.1109/MRA.2018.2890460

Kim, W., Sung, J., Saakes, D., Huang, C., and Xiong, S. (2021). Ergonomic Postural Assessment Using a New Open-Source Human Pose Estimation Technology (OpenPose). Int. J. Industrial Ergonomics 84, 103164. doi:10.1016/j.ergon.2021.103164

Kolbeinsson, A., Lagerstedt, E., and Lindblom, J. (2019). Foundation for a Classification of Collaboration Levels for Human-Robot Cooperation in Manufacturing. Prod. Manuf. Res. 7, 448–471. doi:10.1080/21693277.2019.1645628

Lepetit, V., Moreno-Noguer, F., and Fua, P. (2009). EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 81, 155–166. doi:10.1007/s11263-008-0152-6

Lidstone, J., Malone, G., Porto, R., Stephens, A., Smets, M., Banning, M., et al. (2021). A Survey of Right-Angle Power Tool Use in Canadian Automotive Assembly Plants. Appl. Ergon. 90, 103171. doi:10.1016/j.apergo.2020.103171

Makrini, I. E., Merckaert, K., Winter, J. d., Lefeber, D., and Vanderborght, B. (2019). Task Allocation for Improved Ergonomics in Human-Robot Collaborative Assembly. Interact. Stud. 20, 102–133. doi:10.1075/is.18018.mak

McAtamney, L., and Nigel Corlett, E. (1993). RULA: a Survey Method for the Investigation of Work-Related Upper Limb Disorders. Appl. Ergon. 24, 91–99. doi:10.1016/0003-6870(93)90080-S

Mgbemena, C. E., Tiwari, A., Xu, Y., Prabhu, V., and Hutabarat, W. (2020). Ergonomic Evaluation on the Manufacturing Shop Floor: A Review of Hardware and Software Technologies. CIRP J. Manuf. Sci. Technol. 30, 68–78. doi:10.1016/j.cirpj.2020.04.003

Miller, L., Kraus, J., Babel, F., and Baumann, M. (2021). More Than a Feeling-Interrelation of Trust Layers in Human-Robot Interaction and the Role of User Dispositions and State Anxiety. Front. Psychol. 12, 592711. doi:10.3389/fpsyg.2021.592711

Morgeson, F. P., and Humphrey, S. E. (2006). The Work Design Questionnaire (WDQ): Developing and Validating a Comprehensive Measure for Assessing Job Design and the Nature of Work. J. Appl. Psychol. 91, 1321–1339. doi:10.1037/0021-9010.91.6.1321

Murrell, K. F. H. (1961). Operator Variability and its Industrial Consequences. Int. J. Prod. Res. 1, 39–55. doi:10.1080/00207546108943088

Namwongsa, S., Puntumetakul, R., Neubert, M. S., Chaiklieng, S., and Boucaut, R. (2018). Ergonomic Risk Assessment of Smartphone Users Using the Rapid Upper Limb Assessment (RULA) Tool. PLoS One 13, e0203394. doi:10.1371/journal.pone.0203394

Pantano, M., Blumberg, A., Regulin, D., Hauser, T., Saenz, J., and Lee, D. (2021). “Design of a Collaborative Modular End Effector Considering Human Values and Safety Requirements for Industrial Use Cases,” in 14th International Workshop on Human-Friendly Robotics (HFR 2021).

Pantano, M., Regulin, D., Lutz, B., and Lee, D. (2020). A Human-Cyber-Physical System Approach to Lean Automation Using an Industrie 4.0 Reference Architecture. Procedia Manuf. 51, 1082–1090. doi:10.1016/j.promfg.2020.10.152

Rahal, R., Matarese, G., Gabiccini, M., Artoni, A., Prattichizzo, D., Giordano, P. R., et al. (2020). Caring about the Human Operator: Haptic Shared Control for Enhanced User Comfort in Robotic Telemanipulation. IEEE Trans. Haptics 13, 197–203. doi:10.1109/TOH.2020.2969662

Redmon, J., Divvala, S., Girshick, R., and Farhadi, A. (2015). “You only look once: Unified, real-time object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 779–788.

Rook, L. (2013). Mental Models: a Robust Definition. Learn. Organ. 20, 38–47. doi:10.1108/09696471311288519

Ruiz Castro, P., Mahdavian, N., Brolin, E., Högberg, D., and Hanson, L. (2017). “IPS IMMA for designing human-robot collaboration workstations,” in 5th International digital human modeling symposium, Bonn, Germany, June 26–28 (Federal Institute for Occupational Safety and Health), 263–273.

Rüßmann, M., Lorenz, M., Gerbert, P., Waldner, M., Justus, J., Engel, P., et al. (2015). Industry 4.0: The Future of Productivity and Growth in Manufacturing Industries. Boston Consult. Group 9, 54–89.

Sanchez-Lite, A., Garcia, M., Domingo, R., and Angel Sebastian, M. (2013). Novel Ergonomic Postural Assessment Method (NERPA) Using Product-Process Computer Aided Engineering for Ergonomic Workplace Design. PLoS One 8, e72703. doi:10.1371/journal.pone.0072703

Shafti, A., Ataka, A., Lazpita, B. U., Shiva, A., Wurdemann, H. A., and Althoefer, K. (2019). “Real-time Robot-Assisted Ergonomics,” in 2019 International Conference on Robotics and Automation (ICRA), 1975–1981.

Shahrdar, S., Menezes, L., and Nojoumian, M. (2019). “A Survey on Trust in Autonomous Systems,” in Intelligent Computing. Editors K. Arai, S. Kapoor, and R. Bhatia (Cham: Springer International Publishing), 368–386.

Sofge, D. (2013). Trust and Autonomous Systems: Papers from the AAAI Spring Symposium. Palo Alto, California, USA: AAAI Press.

Tausch, A., and Kluge, A. (2020). The Best Task Allocation Process Is to Decide on One’s Own: Effects of the Allocation Agent in Human–Robot Interaction on Perceived Work Characteristics and Satisfaction. Cogn. Technol. Work 24, 39–55. doi:10.1007/s10111-020-00656-7

Teo, G., Reinerman-Jones, L., Matthews, G., Szalma, J., Jentsch, F., and Hancock, P. (2018). Enhancing the Effectiveness of Human-Robot Teaming with a Closed-Loop System. Appl. Ergon. 67, 91–103. doi:10.1016/j.apergo.2017.07.007

Verlee, B., Dormal, T., and Lecomte-Beckers, J. (2012). Density and Porosity Control of Sintered 316L Stainless Steel Parts Produced by Additive Manufacturing. Powder Metall. 55, 260–267. doi:10.1179/0032589912Z.00000000082

Villani, V., Pini, F., Leali, F., and Secchi, C. (2018). Survey on Human–Robot Collaboration in Industrial Settings: Safety, Intuitive Interfaces and Applications. Mechatronics 55, 248–266. doi:10.1016/j.mechatronics.2018.02.009

Weiss, A., Wortmeier, A.-K., and Kubicek, B. (2021). Cobots in Industry 4.0: A Roadmap for Future Practice Studies on Human–Robot Collaboration. IEEE Trans. Human-Mach. Syst. 51, 335–345. doi:10.1109/THMS.2021.3092684

Yazdanirad, S., Khoshakhlagh, A. H., Habibi, E., Zare, A., Zeinodini, M., and Dehghani, F. (2018). Comparing the Effectiveness of Three Ergonomic Risk Assessment Methods-RULA, LUBA, and NERPA-To Predict the Upper Extremity Musculoskeletal Disorders. Indian J. Occup. Environ. Med. 22, 17–21. doi:10.4103/ijoem.IJOEM_23_18

Zhu, W., Fan, X., and Zhang, Y. (2019). Applications and Research Trends of Digital Human Models in the Manufacturing Industry. Virtual Real. Intelligent Hardw. 1, 558–579. doi:10.1016/j.vrih.2019.09.005

Zimniewicz, M. (2020). Deliverable 3.2 - SHOP4CF Architecture. Available at: https://ec.europa.eu/research/participants/documents/downloadPublic?documentIds=080166e5dd538506&appId=PPGMS.


Keywords

physical ergonomics, autonomy, human-robot collaboration (HRC), RULA, musculoskeletal disorders

Citation

Pantano M, Yang Q, Blumberg A, Reisch R, Hauser T, Lutz B, Regulin D, Kamps T, Traganos K and Lee D (2022) Influence of task decision autonomy on physical ergonomics and robot performances in an industrial human–robot collaboration scenario. Front. Robot. AI 9:943261. doi: 10.3389/frobt.2022.943261

Received

13 May 2022

Accepted

28 July 2022

Published

27 September 2022

Volume

9 - 2022

Edited by

Kadir Alpaslan Demir, Turkish Naval Center Research Command, Turkey

Reviewed by

Khelifa Baizid, National Research Council (CNR), Italy

Lucia Botti, University of Modena and Reggio Emilia, Italy


Copyright

© 2022 Pantano, Yang, Blumberg, Reisch, Hauser, Lutz, Regulin, Kamps, Traganos and Lee.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matteo Pantano, matteo.pantano@siemens.com

This article was submitted to Field Robotics, a section of the journal Frontiers in Robotics and AI
