- AI and Robotics (AIR), Institute of Material Handling and Logistics (IFL), Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany

Diffusion generative models have demonstrated remarkable success in visual domains such as image and video generation. They have also recently emerged as a promising approach in robotics, especially in robot manipulations. Diffusion models leverage a probabilistic framework, and they stand out with their ability to model multi-modal distributions and their robustness to high-dimensional input and output spaces. This survey provides a comprehensive review of state-of-the-art diffusion models in robotic manipulation, including grasp learning, trajectory planning, and data augmentation. Diffusion models for scene and image augmentation lie at the intersection of robotics and computer vision for vision-based tasks to enhance generalizability and data scarcity. This paper also presents the two main frameworks of diffusion models and their integration with imitation learning and reinforcement learning. In addition, it discusses the common architectures and benchmarks and points out the challenges and advantages of current state-of-the-art diffusion-based methods.

1 Introduction

Diffusion Models (DMs) have emerged as highly promising deep generative models in diverse domains, including computer vision (Ho et al., 2020; Song J. et al., 2021; Nichol and Dhariwal, 2021; Ramesh et al., 2022; Rombach et al., 2022a), natural language processing (Li et al., 2022; Zhang et al., 2023; Yu et al., 2022), and robotics (Chi et al., 2023; Urain et al., 2023). DMs intrinsically posses the ability to model any distribution. They have demonstrated remarkable performance and stability in modeling complex and multi-modal distributions1 from high-dimensional and visual data surpassing the ability of Gaussian Mixture Models (GMMs) or Energy-based models (EBMs) like Implicit behavior cloning (IBC) (Chi et al., 2023). While GMMs and IBCs can model multi-modal distributions, and IBCs can even learn complex discontinuous distributions (Florence et al., 2022), experiments (Chi et al., 2023) show that in practice, they might be heavily biased toward specific modes. In general, DMs have also demonstrated performance exceeding generative adversarial networks (GANs) (Krichen, 2023), which were previously considered the leading paradigm in the field of generative models. GANs usually require adversarial training, which can lead to mode collapse and training instability (Krichen, 2023). Additionally, GANs have been reported to be sensitive to hyperparameters (Lucic et al., 2018).

Since 2022, there has been a noticeable increase in the implementation of diffusion probabilistic models within the field of robotic manipulation. These models are applied across various tasks, including trajectory planning, e.g. (Chi et al., 2023), and grasp prediction, e.g., (Urain et al., 2023). The ability of DMs to model multi-modal distributions is a great advantage in many robotic manipulation applications. In various manipulation tasks, such as trajectory planning and grasping, there exist multiple equally valid solutions (redundant solutions). Capturing all solutions improves generalizability and robots’ versatility, as it enables generating feasible solutions under different conditions, such as different placements of objects or different constraints during inference. Although in the context of trajectory planning using DMs, primarily imitation learning is applied, DMs have been adapted for integration with reinforcement learning (RL), e.g., (Geng et al., 2023). Research efforts focus on various components of the diffusion process adapted to different tasks in the domain of robotic manipulation. To give just some examples, developed architectures integrate different or even multiple input modalities. One example of an input modality could be point clouds (Ze et al., 2024; Ke et al., 2024). With the provided depth information, models can learn more complex tasks, for which a better 3D scene understanding is crucial. Another example of an additional input modality could be natural language (Ke et al., 2024; Du et al., 2023; Li et al., 2025), which also enables the integration of foundation models, like large language models, into the workflow. In Ze et al. (2024), both point clouds and language task instructions are used as multiple input modalities. Others integrate DMs into hierarchical planning (Ma X. et al., 2024; Du et al., 2023) or skill learning (Liang et al., 2024; Mishra et al., 2023), to facilitate their state-of-the-art capabilities in modeling high-dimensional data and multi-modal distributions, for long-horizon and multi-task settings. Many methodologies, e.g., (Kasahara et al., 2024; Chen Z. et al., 2023), employ diffusion-based data augmentation in vision-based manipulation tasks to scale up datasets and reconstruct scenes. It is important to note that one of the major challenges of DMs is its comparatively slow sampling process, which has been addressed in many methods, e.g., (Song J. et al., 2021; Chen K. et al., 2024; Zhou H. et al., 2024), also enabling real-time prediction.

To the best of our knowledge, we provide the first survey of DMs concentrating on the field of robotic manipulation. The survey offers a systematic classification of various methodologies related to DMs within the realm of robotic manipulation, regarding network architecture, learning framework, application, and evaluation. Alongside comprehensive descriptions, we present illustrative taxonomies.

To provide the reader with the necessary background information on DMs, we will first introduce their fundamental mathematical concepts (Section 2). This section provides a general overview of DMs rather than focusing specifically on robotic manipulation. Then, network architectures commonly used for DMs in robotic manipulation will be discussed (Section 3). Next (Section 4), we explore the three primary applications of DMs in robotic manipulation: trajectory generation (Section 4.1), robotic grasp synthesis (Section 4.2), and visual data augmentation (Section 4.3). This is followed by an overview of commonly used benchmarks and baselines (Section 5). Finally, we discuss our conclusions and existing limitations, and outline potential directions for future research (Section 6).

2 Preliminaries on diffusion models

2.1 Mathematical framework

The key idea of DMs is to gradually perturb an unknown target distribution

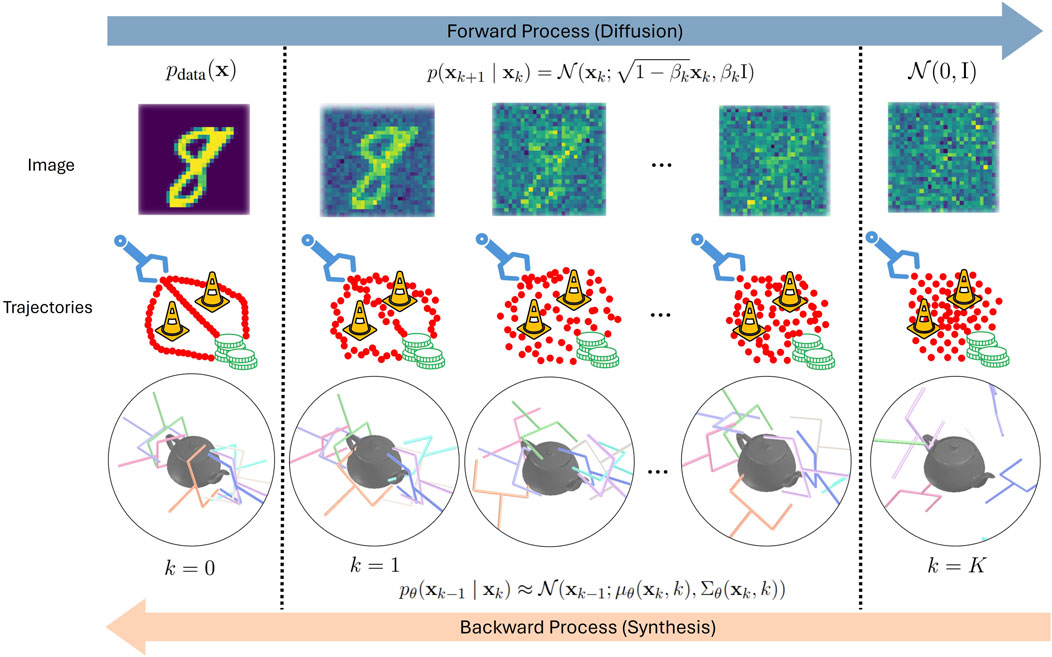

Figure 1. Illustrations of diffusion (forward) processes on image, trajectories, and grasp poses (Urain et al., 2023) and their corresponding synthesis (backward) processes.

The original score-based DM by Song and Ermon (2019) is rarely used in the field of robotic manipulation. This could be due to its inefficient sampling process. However, as it forms a crucial mathematical framework and baseline for many of the later developed DMs, e.g., (Song Y. et al., 2021; Karras et al., 2022), including DDPM Ho et al. (2020), we describe the main concepts in the following section. While DDPM is rarely used as well, the commonly used method Denoising Diffusion Implicit Models (DDIM) (Song J. et al., 2021) originates from DDPM. DDIM only alters the sampling process of DDPM while keeping its training procedure. Hence, understanding DDPM is crucial for many applications of DMs in robotic manipulation.

In the following sections, we first introduce score-based DMs, then DDPM, before addressing their shortcomings.

2.1.1 Denoising score matching using Noise Conditional Score Networks

One approach to estimate perturbations in the data distribution is to use denoising score matching with Lagenvin dynamics (SMLD), where the score of the data density of the perturbed distributions is learned using a Noise Conditional Score Network (NCSM) (Song and Ermon, 2019). This method is described in this section, and for more details, please refer to their original work. During the forward diffusion process, data

2.1.1.1 Forward process

Let

2.1.1.2 Reverse process

Starting with randomly drawn noise samples

where

2.1.2 Denoising Diffusion Probabilistic Models (DDPM)

In DDPM (Ho et al., 2020), instead of estimating the score function directly, a noise prediction network, conditioned on the noise scale, is trained. Similarly to SMLD with NCSN, new points are generated by sampling Gaussian noise and iteratively denoising the samples using the learned noise prediction network.

Notably, there is one step per noise scale in the denoising process instead of recursively sampling from each noise scale.

2.1.2.1 Forward process

To train the noise prediction network

where

with

Adding the noise in closed form facilitates training a noise prediction network

2.1.2.2 Reverse process

Similar to the reverse process described in Section 2.1.1, new samples are generated from random noise

In DDPM, the variance-schedule is fixed and thus

which is repeated until

2.2 Architectural improvements and adaptations

One of the main disadvantages of DMs is the iterative sampling, leading to a relatively slow sampling process. In comparison, using GANs or variational autoencoders (VAEs), only a single forward pass through the trained network is required to produce a sample. In both DDPM and the original formulation of SMLD, the number of time steps (noise levels) in the forward and reverse processes is equal. While reducing the number of noise levels leads to a faster sampling process, it comes at the cost of sample quality. Thus, there have been numerous works to adapt the architectures and sampling processes of DDPM and SMLD to improve both the sampling speed and quality of DMs, e.g., (Nichol and Dhariwal, 2021; Song J. et al., 2021; Song Y. et al., 2021).

2.2.1 Improving sampling speed and quality

The forward diffusion process can be formulated as a stochastic differential equation (SDE). Using the corresponding reverse-time SDE, SDE-solvers can then be applied to generate new samples (Song Y. et al., 2021). Song et al. (2021b) shows that the diffusion process from SMLD corresponds to an SDE where the variance of the perturbation kernels

One group of methods aimed at improving sampling speed (Jolicoeur-Martineau et al., 2021; Song J. et al., 2021; Lu et al., 2022; Karras et al., 2022) designs samplers that operate independently of the specific training process. Using an SDE/ODE-based formulation allows choosing different discretizations of the reverse process than for the forward process. Larger step sizes reduce computational cost and sampling time but introduce greater truncation error. The sampler operates independently of the specific noise prediction network implementation, enabling the use of a single network, such as one trained with DDPM, with different samplers.

Denoising Diffusion Implicit Models (DDIM) (Nichol and Dhariwal, 2021) is the dominant method used for robotic manipulation. It uses a deterministic sampling process and outperforms DDPM when using only a few (10–100) sampling iterations. DDIM can be formulated as a first-order ODE solver. In Diffusion Probabilistic Models-solver (DPM-solver) (Lu et al., 2022), a second-order ODE solver is applied, which decreases the truncation error, thus further increasing performance on several image classification benchmarks for a low number of sampling steps. In contrast to DDIM, Karras et al. (2022); Lu et al. (2022) use non-uniform step sizes in the solver. In a detailed analysis Karras et al. (2022) empirically shows that compared to uniform step-sizes, linear decreasing step sizes during denoising lead to increased performance (Karras et al., 2022), indicating that errors near the true distribution have a larger impact.

Even though DPM-solver (Lu et al., 2022) shows superior performance over DDIM. It should be noted that in the original papers (Song J. et al., 2021; Lu et al., 2022), only image-classification benchmarks are considered to compare both methods. Therefore, more extensive tests should be performed to validate these results.

A second group of methods addressing sampling speed also adapts the training process or requires additional fine-tuning. Examples are knowledge distillation of DMs to gradually reduce the number of noise levels (Salimans and Ho, 2022), or finetuning of the noise schedule (Nichol and Dhariwal, 2021; Watson et al., 2022). While in DDPM and DDIM, the noise schedule is fixed, in improved Denoising Diffusion Probabilistic Models (iDDPM) (Nichol and Dhariwal, 2021), the noise schedule is learned, resulting in better sample quality. They also suggest changing from a linear noise schedule, like in DDPM, to other schedules, e.g., a cosine noise schedule. In particular, for low-resolution samples, a linear schedule leads to a noisy diffusion process with too rapid information loss, while the cosine noise schedule has smaller steps during the beginning and end of the diffusion process. Already after a fraction of around 0.6 diffusion steps, the linear noise schedule is close to zero (and the data distribution close to white noise). Thus, the first steps of the reverse process do not strongly contribute to the data generation process, making the sampling process inefficient. Although iDDPM (Nichol and Dhariwal, 2021) also outperforms DDIM, it requires fine-tuning, which might be a reason why it is less popular.

There are also several methods (Zhou H. et al., 2024; Li X. et al., 2024; Wang et al., 2023b; Chen K. et al., 2024) regarding sampling speed, specifically for applications in robotic manipulation, which is different from the previously named methodologies, which were developed in the context of image processing. For example, Chen K. et al. (2024) samples from a more informed distribution than a Gaussian. They point out that even initial distributions approximated with simple heuristics result in better sample quality, especially when using few diffusion steps or when only a limited amount of data is available. Others (Prasad et al., 2024) use teacher–student distillation techniques (Tarvainen and Valpola, 2017), where pretrained diffusion models serve as teachers, guiding student models to operate with larger denoising steps while preserving consistency with the teacher’s results at smaller steps. While this increases training effort, it decreases sampling time at inference, which is especially important in (near) real-time control.

Recently, flow matching (Lipman et al., 2023) has been used as an alternative method to diffusion. Like with diffusion, the true distribution is estimated starting from a noise distribution. However, instead of learning the time-dependent score or noise, and then deriving the velocity from noise to data distribution from it, in flow matching, the time-dependent velocity field is learned directly. This leads to a simpler training objective, using the interpolation between the noise sample and true data point, without requiring a noise schedule. Thus, flow matching is usually more numerically stable and requires less hyperparameter tuning. However, when using few sampling steps, with flow matching, there is a risk of mode-collapse and infeasible solutions, as the ODE-solver averages over the velocity field. Thus, Frans et al. (2025) conditions the model not only on the time-step, but also on the step-size. By using the fact that one large step should lead to the same point as two consecutive steps of half the size, they maximize a self-consistency objective in addition to the flow-matching objective. Thus, the model can sample with a single step, with only a small drop in performance, far surpassing the performance of DDIM, when only a small number of sampling steps are used. While this is similar to the above-mentioned distillation techniques (Prasad et al., 2024), here only a single model has to be trained.

2.3 Adaptations for robotic manipulation

Two main points must be considered to apply DMs to robotic manipulation. Firstly, in the diffusion processes described in the previous sections, given the initial noise, samples are generated solely based on the trained noise prediction network or conditional score network. However, robot actions are usually dependent on simulated or real-world observations with multi-modal sensory data and the robot’s proprioception. Thus, the network used in the denoising process has to be conditioned on these observations (Chi et al., 2023). Encoding observations varies in different algorithms. Some use ground truth state information, such as object positions (Ada et al., 2024), and object features, like object sizes (Mishra et al., 2023; Mendez-Mendez et al., 2023). In this case, sim-to-real transfer is challenging due to sensor inaccuracies, object occlusions, or other adversarial settings, e.g., lightning conditions, Therefore, most methods directly condition on visual observations, such as images (Si et al., 2024; Bharadhwaj et al., 2024a; Vosylius et al., 2024; Chi et al., 2023; Shi et al., 2023), point clouds (Liu et al., 2023c; Li et al., 2025), or feature encodings and embeddings (Ze et al., 2024; Ke et al., 2024; Li X. et al., 2024; Pearce et al., 2022; Liang et al., 2024; Xian et al., 2023; Xu et al., 2023), where the robustness to adversarial setting can be directly addressed.

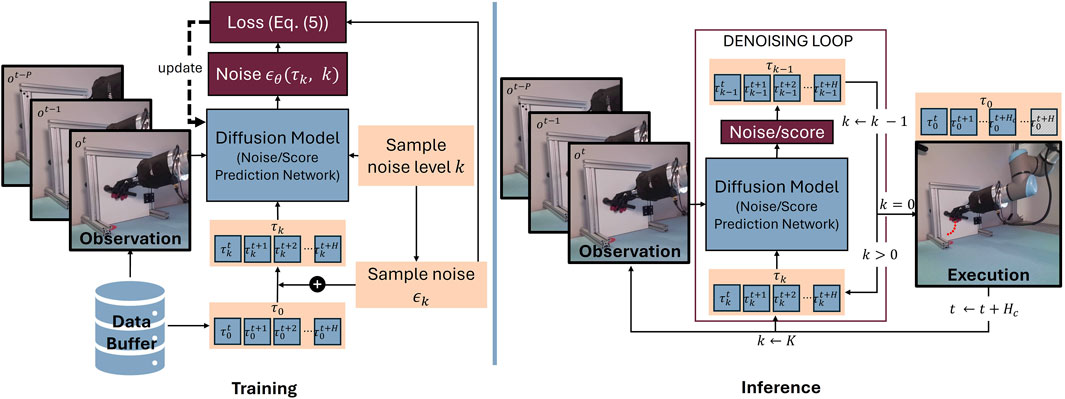

Secondly, unlike in image generation, where the pixels are spatially correlated, in trajectory generation for robotic manipulation, the samples of a trajectory are temporally correlated. On the one hand, generating complete trajectories may not only lead to high inaccuracies and error accumulation of the long-horizon predictions, but also prevent the model from reacting to changes in the environment. On the other hand, predicting the trajectory one action at a time increases the compounding error effect and may lead to frequent switches between modes. Accordingly, trajectories are mostly predicted in subsequences, with a receding horizon, e.g., (Chi et al., 2023; Scheikl et al., 2024), which will be discussed in more detail in Section 4.1 and is visualized in Figure 2. In receding horizon control, the diffusion model generates only a subtrajectory with each backward pass. The subtrajectory is executed before generating the next subtrajectory on the updated observations. In comparison, grasps are generated similarly to images. As here only a single action, usually the grasp pose, is generated, this is done using a single backward pass of the diffusion model. Moreover, the grasp pose is usually predicted from a single initial observation. During execution, possible changes in the scene are not being taken into account. The backward pass for generating one action is visualized in Figure 1.

Figure 2. Illustrations of the iterative trajectory generation using receding horizon control. At inference, the trajectory is planned up to a planning horizon

3 Architecture

3.1 Network architecture

For the implementation of the DM, it is essential to select an appropriate architecture for the noise prediction network. There exist three predominant architectures used for the denoising diffusion networks: Convolutional neural networks (CNNs), transformers, and Multi-Layer Perceptrons (MLPs).

3.1.1 Convolutional neural networks

The most frequently employed architecture is the CNN, more specifically the Temporal U-Net that was first introduced by Janner et al. (2022) in their algorithm Diffuser, a DM for robotics tasks. The U-Net architecture (Ronneberger et al., 2015) has shown great success in image generation with DMs, e.g., (Ho et al., 2020; Dhariwal and Nichol, 2021; Song Y. et al., 2021). U-net, in general, is proven to be sample efficient and can even generalize well with small training datasets (Meyer-Veit et al., 2022b; Meyer-Veit et al., 2022a). Thus, it has been adapted to robotic manipulation by replacing two-dimensional spatial convolutions with one-dimensional temporal convolutions (Janner et al., 2022).

The temporal U-Net is further adapted by Chi et al. (2023) in their CNN-based Diffusion Policy (DP) for robotic manipulation. While in Diffuser, the state and action trajectories are jointly denoised, only the action trajectories are generated in DP. To ensure temporal consistency, the diffusion process is conditioned on a history of observations using feature-wise linear modification (FiLM) (Perez et al., 2018). This formulation allows for an extension to different and multiple conditions by concatenating them in feature space before applying FiLM (Li X. et al., 2024; Si et al., 2024; Ze et al., 2024; Li et al., 2025; Wang L. et al., 2024). Moreover, it also enables the incorporation of constraints embedded with an MLP (Ajay et al., 2023; Zhou et al., 2023; Power et al., 2023).

Discussed in more detail in Section 4.1.1.6, Janner et al. (2022) formulates conditioning as inpainting, where during inferences at each denoising step, specific states from the currently being generated sample are replaced with states from the condition. For example, the final state of a generated trajectory may be replaced by the goal state, for goal-conditioning. This only affects the sampling process at inference and, thus, does not require any adaptations of the network architecture. However, it only supports point-wise conditions, severely limiting its applications. Multiple frameworks (Saha et al., 2024; Carvalho et al., 2023; Wang et al., 2023b; Ma X. et al., 2024) directly employ the temporal U-Net architecture introduced by Janner et al. (2022). However, as this type of conditioning is highly limited in its applications, FiLM conditioning is more common. A different but less-used architecture incorporates conditions via cross-attention mapped to the intermediate layers of the U-Net (Zhang E. et al., 2024), which is more complicated to integrate than FiLM conditioning.

3.1.2 Transformers

Another commonly used architecture for the denoising network are transformers. A history of observations, the current denoising time step, and the (partially denoised) action are input tokens to the transformer. Additional conditions can be integrated via self-and cross-attention, e.g., (Chi et al., 2023; Mishra and Chen, 2024). The exact architecture of the transformer varies across methods. The more commonly used model is a multi-head cross-attention transformer as the denoising network, e.g., (Chi et al., 2023; Pearce et al., 2022; Wang et al., 2023b; Mishra and Chen, 2024). Others (Bharadhwaj et al., 2024b; Mishra et al., 2023) use architectures based on the method Diffusion Transformers (Peebles and Xie, 2023), which is the first method combining DMs with transformer architectures. There are also less commonly used architectures, such as using the output tokens of the transformer as input to an MLP, which predicts the noise (Ke et al., 2024).

For completeness, we provide a list of works, using transformer architectures: (Chi et al., 2023; Pearce et al., 2022; Scheikl et al., 2024; Wang et al., 2023b; Ze et al., 2024; Feng et al., 2024; Bharadhwaj et al., 2024b; Mishra et al., 2023; Liu et al., 2023b; Xu et al., 2024; Mishra and Chen, 2024; Liu et al., 2023c; Vosylius et al., 2024; Reuss et al., 2023; Iioka et al., 2023; Huang T. et al., 2025).

3.1.3 Multi-Layer Perceptrons

Predominantly used for applications in RL, MLPs are employed as denoising networks, e.g., (Suh et al., 2023; Ding and Jin, 2023; Pearce et al., 2022), which take concatenated input features, such as observations, actions, and denoising time steps, to predict the noise. Although the architectures vary, it is common to use a relatively small number of hidden layers (2–4) (Wang et al., 2023b; Kang et al., 2023; Suh et al., 2023; Mendez-Mendez et al., 2023), using e.g., Mish activation (Misra, 2019), following the first method (Wang et al., 2023a), integrating DMs with Q-learning. It is important to note that most of these methods do not use visual input. An exception from this is Pearce et al. (2022), which also evaluates using high-resolution image inputs with an MLP-based DM. However, for this, a CNN-based image encoder is first applied to the raw image observation, before the encoding is fed to the DM.

3.1.4 Comparison

An ongoing debate exists concerning the relative merits of different architectural choices, with each architecture exhibiting distinct advantages and disadvantages. Chi et al. (2023) implemented both a U-Net-based and a transformer-based denoising network with the application of trajectory planning. They observed that the CNN-based model exhibits lower sensitivity to hyperparameters than transformers. Moreover, they report that when using positional control, the U-net results in a slightly higher success rate for some complex visual tasks, such as transport, tool hand, and push-t. On the other hand, U-nets may induce an over-smoothing effect, thereby resulting in diminished performance for high-frequency trajectories and consequently affecting velocity control. Thus, in these cases, transformers will likely lead to more precise predictions. Furthermore, transformer-based architectures have demonstrated proficiency in capturing long-range dependencies and exhibit notable robustness when handling high-dimensional data, surpassing the abilities of CNNs, which is particularly significant for tasks involving long horizons and high-level decision-making (Janner et al., 2022; Dosovitskiy et al., 2021).

While MLPs typically exhibit inferior performance, especially when confronted with complex problems and high-dimensional input data, such as images, they often demonstrate superior computational efficiency, which facilitates higher-rate sampling and usually requires fewer computational resources. Due to their training stability, they are a commonly used architecture in RL. In contrast, U-Nets, and especially transformers, are characterized by substantial resource consumption and prolonged inference times, which may hinder their application in real-time robotics (Pearce et al., 2022).

In summary, transformers are the most powerful architecture for handling high-dimensional input and output spaces, followed by CNNs, while MLPs have the highest computational efficiency. For processing visual data, such as raw images, an important task in robotic manipulation, a CNN or a Transformer architecture should be chosen. Also, while MLPs are most computationally efficient, real-time control is possible with the other two architectures, integrating, for example, receding horizon control (Mattingley et al., 2011) in combination with a more efficient sampling process, like DDIM.

3.2 Number of sampling steps

In addition to the network architecture, a crucial decision is the choice of the number of training and sampling iterations. As described in Section 2.2, each sample must undergo iterative denoising over several steps, which can be notably time-consuming, especially in the context of employing larger denoising networks with longer inference durations, such as transformers. Within the framework of DDPM, the number of noise levels during training is equal to the number of denoising iterations at the time of inference. This hinders its use in many robotic manipulation scenarios, especially those necessitating real-time predictions. Consequently, numerous methodologies employ DDIM, where the number of sampling iterations during inference can be significantly reduced compared to the number of noise levels used during training. Common choices of noise levels are 50–100 during training, but only a subset of five to ten steps during inference (Chi et al., 2023; Ma X. et al., 2024; Huang T. et al., 2025; Scheikl et al., 2024). Only a few works used less sampling (3–4) (Vosylius et al., 2024; Reuss et al., 2023) or more (20–30) (Mishra and Chen, 2024; Wang L. et al., 2024) sampling steps. Ko et al. (2024) documented a slight decline in performance when the number of sampling steps is reduced to

4 Applications

In this section, we explore the most dominant applications of DMs in robotic manipulation: trajectory generation for robotic manipulation, robotic grasping, and visual data augmentation for vision-based robotics manipulations.

4.1 Trajectory generation

Trajectory planning in robotic manipulation is vital for enabling robots to move from one point to another smoothly, safely, and efficiently while adhering to physical constraints, like speed and acceleration limits, as well as ensuring collision avoidance. Classical planning methods, like interpolation-based and sampling-based approaches, can have difficulty handling complex tasks or ensuring smooth paths. For instance, Rapidly Exploring Random Trees (Martinez et al., 2023) might generate trajectories with sudden changes because of the discretization process. As already discussed in the introduction, although popular data-driven approaches, such as GMMs and EBMs, theoretically pertain to the ability to model multi-model data distributions, in reality, they show suboptimal behavior, such as biasing modes or lack of temporal consistency (Chi et al., 2023). In addition, GMMs can struggle with high-dimensional input spaces (Ho et al., 2020). Increasing the number of components and covariances also increases the models’ ability to model more complex distributions and capture complex and intricate movement patterns. However, this can negatively impact the smoothness of the generated trajectories, making GMMs highly sensitive to their hyperparameters. In contrast, denoising DMs have demonstrated exceptional performance in processing and generating high-dimensional data. Furthermore, the distributions generated by denoising DMs are inherently smooth (Ho et al., 2020; Sohl-Dickstein et al., 2015; Chi et al., 2023). This makes DMs well-suited for complex, high-dimensional scenarios where flexibility and adaptability are required. While most methodologies that apply probabilistic DMs to robotic manipulation focus on imitation learning, they have also been adapted to their application in RL, e.g., (Janner et al., 2022; Wang et al., 2023a).

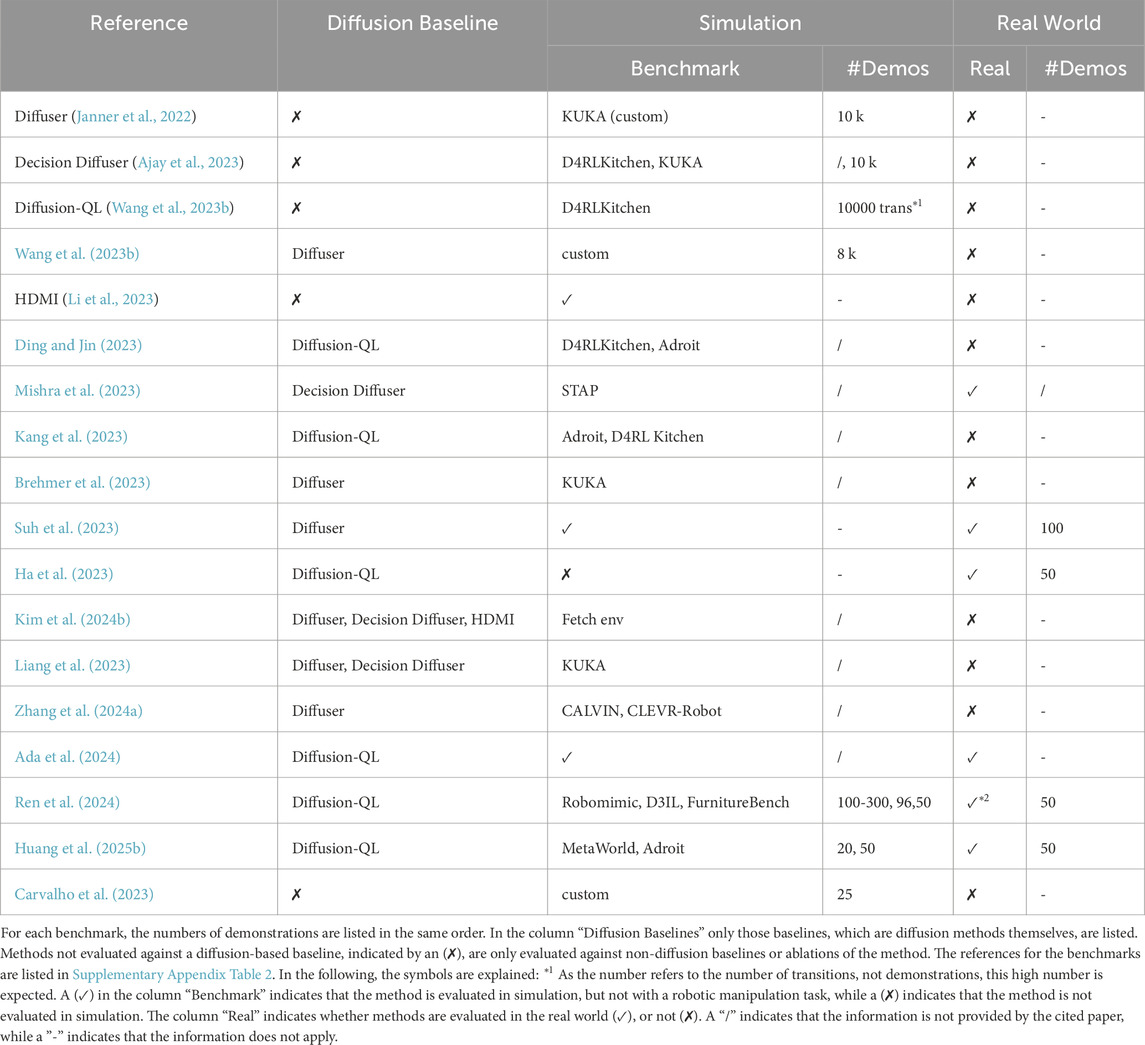

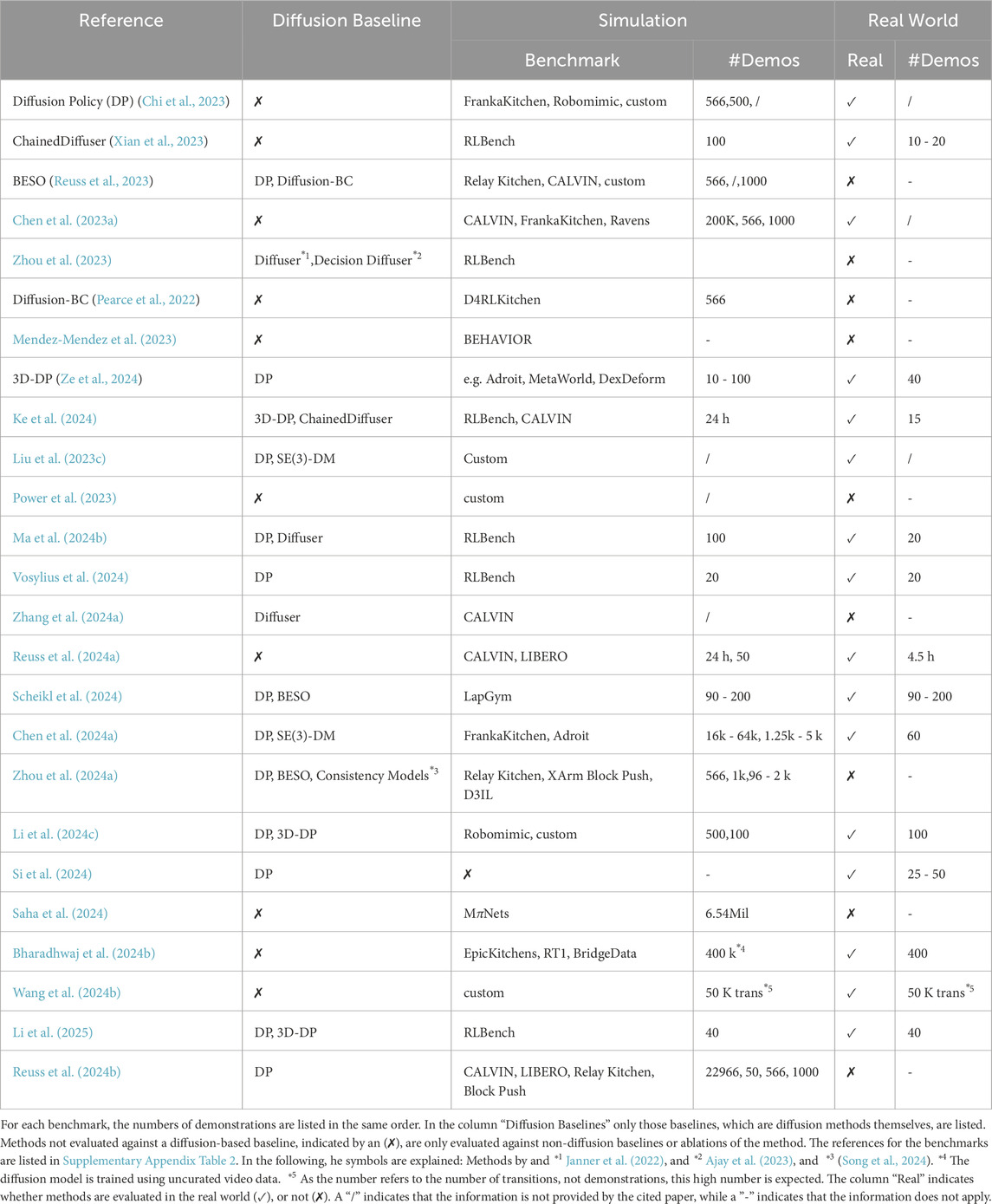

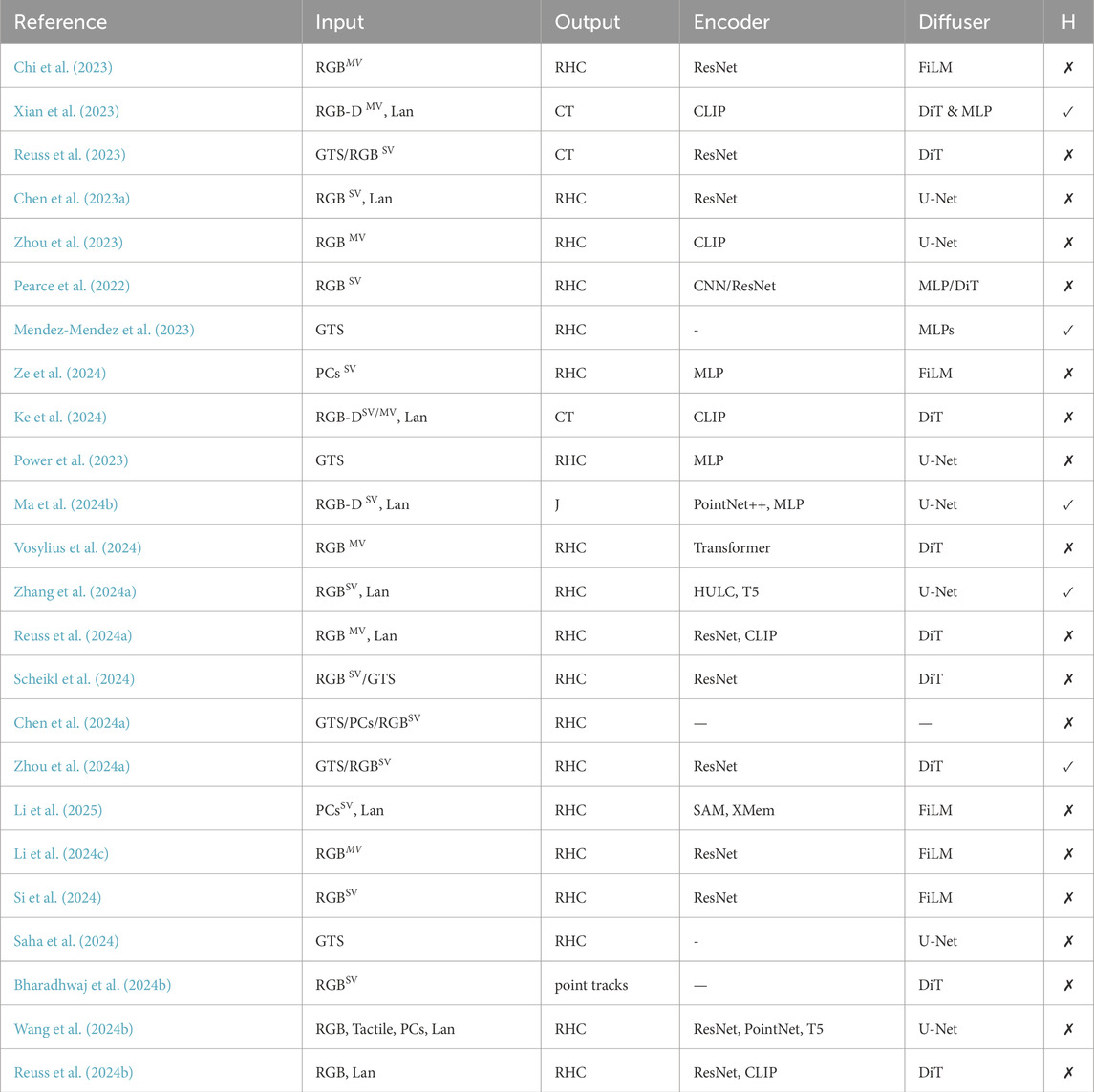

In the following sections, the methodologies of DMs for trajectory generation will be further discussed and categorized. We will first explain their applications in imitation learning, followed by a discussion on their use in reinforcement learning. For an overview of the method architectures in imitation learning, see Table 2, and for reinforcement learning, see Table 3.

4.1.1 Imitation learning

In imitation learning (Zare et al., 2024), robots attempt to learn a specified task by observing multiple expert demonstrations. This paradigm, commonly known as Learning from Demonstrations (LfD), involves the robot observing expert examples and attempting to replicate the demonstrated behaviors. In this domain, the robot is expected to generalize beyond the specific demonstrations, which allows the robot to adapt to variations in tasks or changes in configuration spaces. This may include diverse observation perspectives, altered environmental conditions, or even new tasks that share structural similarities with those previously demonstrated. Thus, the robot must learn a representation of the task that allows flexibility and skill acquisition beyond the specific scenarios it was trained on. Recent advancements in applying DMs to learn visuomotor policies (Chi et al., 2023) enable the generation of smooth action trajectories by modeling the task as a generative process conditioned on sensory observations. Diffusion-based models, initially popularized for high-dimensional data generation such as images and natural languages, have demonstrated significant potential in robotics by effectively learning complex action distributions and generating multi-modal behaviors conditioned on task-specific inputs. For instance, combining with recent progress in multiview transformers (Gervet et al., 2023; Goyal et al., 2023) that leverage the foundation model features (Radford et al., 2021; Oquab et al., 2023), 3D diffuser actor (Ke et al., 2024) integrates multi-modal representations to generate the end-effector trajectories. As another example, GNFactor (Ze et al., 2023) renders multiview features from Stable Diffusion (Rombach et al., 2022b) to enhance 3d volumetric feature learning. Very similar to diffusion, recently (Rouxel et al., 2024) flow-matching-based policies have emerged for trajectory generation, generally leading to a more stable training process with fewer hyperparameters, as already mentioned in Section 2.2.1. Nguyen et al. (2025) additionally includes second-order dynamics into the flow-matching objective, learning fields on acceleration and jerk to ensure smoothness of the generated trajectories.

In terms of the type of robotic embodiment, most works use parallel grippers or simpler end-effectors. However, few methods perform dexterous manipulation using DMs (Si et al., 2024; Ma C. et al., 2024; Ze et al., 2024; Chen K. et al., 2024; Wang C. et al., 2024; Freiberg et al., 2025; Welte and Rayyes, 2025), to facilitate their stability and robustness, also in this high-dimensional setting.

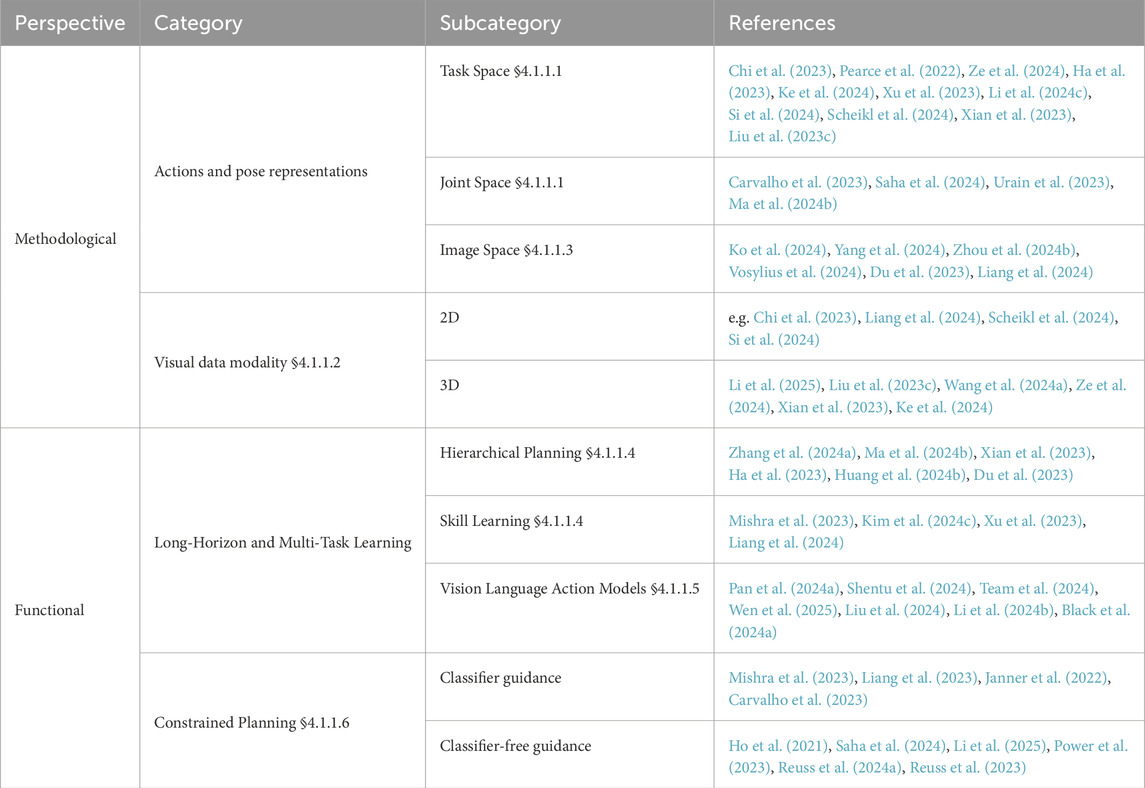

In the following sections, we will first repeat the process of sampling actions for trajectory planning with DMs and discuss common pose representations. Then we shortly address different visual data modalities, in particular 2D vs. 3D visual observations. Afterwards, we look at methods formulating trajectory planning as image generation, before looking at applications in hierarchical, multi-task, and constrained planning, also looking at multi-task planning with vision language action models (VLAs). A visualization of the taxonomy is provided in Table 1. More details on the individual method architectures are provided in Table 2.

Table 2. Technical details of trajectory diffusion using imitation learning. The references for the encoders are provided in Supplementary Appendix Table 1. In the following, the symbols and abbreviations are explained: H: Whether the method is hierarchical (✓) or not (✗). PCs: Point Clouds, Lan: Language, GTS: Ground Truth State, and whether the visual input modality is from single view or (SV) multi-view (MV). U-Net: temporal U-Net (Janner et al., 2022), FiLM: Convolutional Neural Networks with Feature-wise Linear Modulation (Perez et al., 2018), DiT: Diffusion Transformer, RHC: sub-trajectories with receding horizon control, CT: complete trajectory in task space, J: complete trajectory in joint space. A“/” indicates that the information is not provided by the cited paper, while a “-” indicates that no specialized encoder is required as ground truth state information is used.

4.1.1.1 Actions and pose representation

As briefly discussed in Section 2.3, the entire trajectory can be generated as a single sample, multiple subsequences can be sampled using receding horizon control, or the trajectory can be generated by sampling individual steps. Only in a few methods (Janner et al., 2022; Ke et al., 2024) the whole trajectory is predicted at once. Although this enables a more efficient prediction, as the denoising has to be performed only once, it prohibits adapting to changes in the environment, requiring better foresight and making it unsuitable for more complex task settings with dynamic or open environments. On the other hand, sampling of individual steps increases the compounding error effect and can negatively affect temporal correlation. Instead of predicting micro-actions, some use DMs to predict waypoints (Shi et al., 2023). This can decrease the compounding error, by reducing the temporal horizon. However, it relies on preprocessing or task settings that ensure that the space in between waypoints is not occluded. Thus, typically, DMs generate trajectories consisting of sequences of micro-actions represented as end-effector positions, generally encompassing translation and rotation depending on end-effector actuation (Chi et al., 2023; Ze et al., 2024; Xu et al., 2023; Li X. et al., 2024; Si et al., 2024; Scheikl et al., 2024; Ke et al., 2024; Ha et al., 2023). Once the trajectory is sampled, the proximity of the predicted positions enables computing the motion between the positions with simple positional controllers without the need for complex trajectory planning techniques. The control scheme is visualized in detail in Figure 2. Although more commonly applied in grasp prediction, here the pose is sometimes also represented in special Euclidean group

Although not common, sometimes actions are predicted directly in joint space (Carvalho et al., 2023; Pearce et al., 2022; Saha et al., 2024; Ma X. et al., 2024), allowing for direct control of joint motions, which, e.g., reduces singularities.

4.1.1.2 Visual data modalities

As already discussed in Section 2.3 to ground the robots actions in the physical world, they are dependent on sensory input. Here, in the majority of methods visual observations are used. In the original work (Chi et al., 2023), combining visual robotic manipulation with DMs for trajectory planning, the DM is conditioned on RGB-image observations. Many methods, e.g., (Si et al., 2024; Pearce et al., 2022; Li X. et al., 2024), adopt using RGB inputs, also developing more intricate encoding schemes (Qi et al., 2025).

However, 2D visual scene representations may not provide sufficient geometrical information for intricate robotic tasks, especially in scenes containing occlusions. Thus, multiple later methods used 3D scene representations instead. Here, DMs are either directly conditioned on the point cloud (Li et al., 2025; Liu et al., 2023c; Wang C. et al., 2024) or point cloud feature embeddings (Ze et al., 2024; Xian et al., 2023; Ke et al., 2024), from singleview (Ze et al., 2024; Li et al., 2025; Wang C. et al., 2024), or multiview camera setups (Ke et al., 2024; Xian et al., 2023). While multiview camera setups provide more complete scene information, they also require a more involved setup and more hardware resources.

These models outperform methods relying solely on 2D visual information, on more complex tasks, also demonstrating robustness to adversarial lighting conditions.

4.1.1.3 Trajectory planning as image generation

Another category formulates trajectory generation directly in image space, leveraging the exceptional generative abilities of DMs in image generation. Here (Ko et al., 2024; Zhou S. et al., 2024; Du et al., 2023), given a single image observation, a sequence of images, or a video, sometimes in combination with a language-task-instruction, the diffusion process is conditioned to predict a sequence of images, depicting the change in robot and object position. This comes with the benefit of internet-wide video training data, which facilitates extensive training, leading to good generalization capabilities. Especially in combination with methods (Bharadhwaj et al., 2024b) agnostic to the robot embodiment, this highly increases the amount of available training data. Moreover, in robotic manipulation, the model usually has to parse visual observations. Predicting actions in image space circumvents the need for mapping from the image space to a usually much lower-dimensional action space, reducing the required amount of training data (Vosylius et al., 2024). However, predicting high-dimensional images may also prevent the model from successfully learning important details of trajectories, as the DM is not guided to pay more attention to certain regions of the image, even though usually only a low fraction of pixels contain task-relevant information. Additionally, methods generating complete images must ensure temporal consistency and physical plausibility. Hence, extensive training resources are required. As an example (Zhou S. et al., 2024), uses 100 V100 GPUs and 70k demonstrations for training. While still operating in image space, some methods do not generate whole image sequences, but instead perform point-tracking (Bharadhwaj et al., 2024b) or diffuse imprecise action-effects on the end-effector position directly in image space (Vosylius et al., 2024). This mitigates the problem of generating physically implausible scenes. However, point-tracking still requires extensive amounts of data. Bharadhwaj et al. (2024b), e.g., uses 0.4 million video clips for training.

4.1.1.4 Long-horizon and multi-task learning

Due to their ability to robustly model multi-model distributions and relatively good generalization capabilities, DMs are well suited to handle long-horizon and multi-skill tasks, where usually long-range dependencies and multiple valid solutions exist, especially for high-level task instructions (Mendez-Mendez et al., 2023; Liang et al., 2024). Often, long-horizon tasks are modeled using hierarchical structures and skill learning. Usually, a single skill-conditioned DM or several DMs are learned for the individual skills, while the higher-level skill planning does not use a DM (Mishra et al., 2023; Kim W. K. et al., 2024; Xu et al., 2023; Liang et al., 2024; Li et al., 2023). The exact architecture for the higher-level skill planning varies across methods, being, for example, a variational autoencoder (Kim W. K. et al., 2024) or a regression model (Mishra et al., 2023). Instead of having a separate skill planner that samples one skill, Wang L. et al. (2024) develops a sampling scheme that can sample from a combination of DMs trained for different tasks and in different settings.

To forego the skill-enumeration, which brings with it the limitation of a predefined finite number of skills, some works employ a coarse-to-fine hierarchical framework, where higher-level policies are used to predict goal states for lower-level policies (Zhang E. et al., 2024; Ma X. et al., 2024; Xian et al., 2023; Ha et al., 2023; Huang Z. et al., 2024; Du et al., 2023).

The ability of DMs to stably process high-dimensional input spaces enables the integration of multi-modal inputs, which is especially important in multi-skill tasks, to develop versatile and generalizable agents via arbitrary skill-chaining. Methodologies use videos (Xu et al., 2023), images, and natural language task instructions (Liang et al., 2024; Wang L. et al., 2024; Zhou S. et al., 2024; Reuss et al., 2024b), or even more diverse modalities, such as tactile information and point clouds (Wang L. et al., 2024), to prompt skills.

Although these methods are designed to enhance generalizability, achieving adaptability in highly dynamic environments and unfamiliar scenarios may require the integration of continuous and lifelong learning. This is a widely unexplored field in the context of DMs, with only very few works (Huang J. et al., 2024; Di Palo et al., 2024) exploring this topic. Moreover, these methods are still limited in their applications. Di Palo et al. (2024) are utilizing a lifelong buffer to accelerate the training of new policies for new tasks. In contrast, Mendez-Mendez et al. (2023) continually updates its policy. However, they only conduct training and experiments in simulation. Additionally, their method requires precise feature descriptions of all involved objects and is limited to predefined abstract skills. Moreover, for the continual update, all past data is replayed, which is not only computationally inefficient but also does not prevent catastrophic forgetting.

4.1.1.5 Multi-task learning with vision language action models

Another approach to enhance generalizability in multi-task settings is the incorporation of pretrained VLAs. As a specialized class of multimodal language model (MLLM), VLAs combine the perceptual and semantic representation power of the vision language foundation model and the motor execution capabilities of the action generation model, thereby forming a cohesive end-to-end decision-making framework. Being pretrained on internet-scale data, VLAs exhibit great generalization capabilities across diverse and unseen scenarios, thereby enabling robots to execute complex tasks with remarkable adaptability (Firoozi et al., 2025).

A predominant line of approaches among VLAs employs next-token prediction for auto-regressive action token generation, representing a foundational approach to end-to-end VLA modeling, e.g., (Brohan et al., 2023b; Brohan et al., 2023a; Kim M. J. et al., 2024). However, this approach is hindered by significant limitations, most notably the slow inference speeds inherent to auto-regressive methods (Brohan et al., 2023a; Wen et al., 2025; Pertsch et al., 2025). This poses a critical bottleneck for real-time robotic systems, where low-latency decision-making is essential. Furthermore, the discretizations of motion tokens, which reformulates action generation as a classification task, introduces quantization errors that lead to a decrease in control precision, thus reducing the overall performance and reliability (Zhang et al., 2024g; Pearce et al., 2022; Zhang S. et al., 2024).

To address these limitations one line of research within VLAs focuses on predicting future states and synthesizing executable actions by leveraging inverse kinematics principles derived from these predictions, e.g., (Cheang et al., 2024; Zhen et al., 2024; Zhang et al., 2024c). While this approach addresses some of the limitations associated with token discretization, multimodal states often correspond to multiple valid actions, and the attempt to model these states through techniques such as arithmetic averaging can result in infeasible or suboptimal action outputs.

Thus, showing strong capabilities and stability in modeling multi-modal distributions, DMs have emerged as a promising solution. Leveraging their strong generalization capabilities, a VLA is used to predict coarse action, while a DM-based policy refines the action, to increase precision and adaptability to different robot embodiments, e.g. (Pan C. et al., 2024; Shentu et al., 2024; Team et al., 2024). For instance, TinyVLA (Wen et al., 2025) incorporates a diffusion-based head module on top of a pretrained VLA to directly generate robotic actions. More specifically, DP (Chi et al., 2023) is connected to the multimodal model backbone via two linear projections and a LayerNorm. The multimodal model backbone jointly encodes the current observations and language instruction, generating a multimodal embedding that conditions and guides the denoising process. Furthermore, in order to better fill the gap between logical reasoning and actionable robot policies, a reasoning injection module is proposed, which reuses reasoning outputs (Wen et al., 2024). Similarly, conditional diffusion decoders have been leveraged to represent continuous multimodal action distributions, enabling the generation of diverse and contextually appropriate action sequences (Team et al., 2024; Liu et al., 2024; Li Q. et al., 2024).

Addressing the disadvantage of long inference times with DMs, in some recent works instead, flow matching is used to generate actions from observations preprocessed by VLMs to solve flexible and dynamic tasks, offering a robust alternative to traditional diffusion mechanisms (Black et al., 2024a; Zhang and Gienger, 2025). While Black et al. (2024a) takes a skill-based approach, where the vision-language model is used to decide on actions, Zhang and Gienger (2025) uses a vision-language model to generate waypoints. In both approaches, flow matching is used as the expert policy, generating precise trajectories.

VLAs offer access to models trained on huge amounts of data and with strong computational power, leading to strong generalization capabilities. To mitigate some of their shortcomings, such as imprecise actions, specialized policies can be used for refinement. To not restrict the generalizability of the VLA, DMs offer a great possibility, as they can capture complex multi-model distributions and process high-dimensional visual inputs. However, both VLAs and DMs have a relatively slow inference speed. Thus, especially in this combination with VLAs, increasing the sampling efficiency of DMs is important. One example was provided in the previous paragraph. But the topic of higher sampling speed with DMs is also discussed in more detail in Section 2.2.1.

4.1.1.6 Constrained planning

Another line of methods focuses on constrained trajectory learning. A typical goal is obstacle avoidance, object-centric, or goal-oriented trajectory planning, but other constraints can also be included. If the constraints are known prior to training, they can be integrated into the loss function. However, if the goal is to adhere to various and possibly changing constraints during inference, another approach has to be taken. For less complex constraints, such as specific initial or goal states (Janner et al., 2022), introduces a conditioning, where, after each denoising time step (Equation 7), the particular state from the trajectory is replaced by the state from the constraint. However, this can lead the trajectory into regions of low likelihood, hence decreasing stability and potentially causing mode collapse. Moreover, this method is not applicable to more complex constraints.

One approach, also addressed by Janner et al. (2022), is classifier guidance (Dhariwal and Nichol, 2021). Here, a separate model is trained to score the trajectory at each denoising step and steer it toward regions that satisfy the constraint. This is integrated into the denoising process by adding the gradient of the predicted score. It should be noted that for sequential data, such as trajectories, classifier guidance can also bias the sampling towards regions of low likelihood (Pearce et al., 2022). Thus, the weight of the guidance factor must be carefully chosen. Moreover, during the start of the denoising process the guidance model must predict the score on a highly uninformative output (close to Gaussian noise) and should have a lower impact. Therefore, it is important to inform the classifier of the denoising time step, train it also on noisy samples, or adjust the weight with which the guidance factor is integrated into the reverse process. Classifier guidance is applied in several methodologies (Mishra et al., 2023; Liang et al., 2023; Janner et al., 2022; Carvalho et al., 2023). However, it requires the additional training of a separate model. Furthermore, computing the gradient of the classifier at each sampling step adds additional computational cost. Thus, classifier-free guidance (Ho et al., 2021; Saha et al., 2024; Li et al., 2025; Power et al., 2023; Reuss et al., 2024a; Reuss et al., 2023) has been introduced, where a conditional and an unconditional DM per constraint are trained in parallel. During sampling, a weighted mixture of both DMs is used, allowing for arbitrary combinations of constraints, also not seen together during training. However, it does not generalize to entirely new constraints, as this would necessitate the training of new conditional DMs.

As both classifier and classifier-free guidance only steer the training process, they do not guarantee constraint satisfaction. To guarantee constraint satisfaction in delicate environments, such as surgery (Scheikl et al., 2024), incorporate movement primitives with DMs to ensure the quality of the trajectory. Recent advances in diffusion models also delve into constraint satisfaction (Römer et al., 2024), integrating constraint tightening into the reverse diffusion process. While this outperforms previous methods (Power et al., 2023; Janner et al., 2022; Carvalho et al., 2024) in regards to constraint satisfaction, also in multi-constraint settings and constraints not seen during training, the evaluation is done only in simulation on a single experiment setup. Thus, constraint satisfaction with DMs remains an interesting research direction to further explore.

Few methods also perform affordance-based optimization for trajectory planning (Liu et al., 2023c). However, most work in affordance-based manipulation concentrates on grasp learning, which is discussed in more detail in Section 4.2.

4.1.2 Offline reinforcement learning

To apply diffusion policies in the context of RL the reward term has to be integrated. Diffuser (Janner et al., 2022), one early work adapting diffusion to RL, uses classifier-based guidance, which is based on classifier guidance described in Section 4.1.1.6. Let

Moreover, to ensure that the current state observation

In Diffuser (Janner et al., 2022) and Diffuser-based methods (Suh et al., 2023; Liang et al., 2023), the DM is trained independently of the reward signal, similar to methods in imitation learning with DM. Not leveraging the reward signal for training the policy can lead to misalignment of the learned trajectories with optimal trajectories and thus suboptimal behavior of the policy. In contrast, leveraging the reward signal already during training of the policy, can steer the training process, consequently increasing both quality of the trained policy and sample efficiency.

To mitigate these shortcomings, one approach, Decision Diffuser (Ajay et al., 2023), directly conditions the DM on the return of the trajectory using classifier-free guidance. This method outperforms Diffuser on a variety of tasks, such a block-stacking task. However, both methods have not been evaluated on real-world tasks. Directly conditioning on the return, limits generalization capabilities. Different to Q-learning, where the value function is approximated, which generalizes across all future trajectories, here only the return of the current trajectory is considered. Sharing some similarity to on-policy methods, this limits generalization as the policy learns to follow trajectories from the demonstrations with high return values. Thus, this can also be interpreted as guided imitation learning.

A more common method (Wang et al., 2023a) integrates offline Q-learning with DMs. The loss function from Equation 5 is a behavior cloning loss, as the goal is to minimize error with respect to samples taken via the behavior policy. Wang et al. (2023a) suggests including a critic in the training procedure, which they call Diffusion Q-learning (Diffusion-QL). In Diffusion-QL a Q-function is trained, by minimizing the Bellman-Operator using the double Q-learning trick. The actions for updating the Q-function are sampled from the DM. In turn a policy improvement step

where

Table 3. Technical details of trajectory diffusion using reinforcement learning. The references for the encoders are provided in Supplementary Appendix Table 1. In the following, the symbols and abbreviations are explained: H/S: Whether the method is hierarchical/skill-based (✓) or not (✗). Lan: Language, GTS: Ground Truth State, and whether the visual input modality is from single view (SV) or multi-view (MV). U-Net: temporal U-Net (Janner et al., 2022), Eq.: Equivariant FiLM: Convolutional Neural Networks with Feature-wise Linear Modulation (Perez et al., 2018), DiT: Diffusion Transformer, RHC: sub-trajectories with receding horizon control, Sia = single actions. A “-” indicates that no specialized encoder is required as ground truth state information is used.

One characteristic of methodologies combining RL with DMs is that they are offline methods, with both the policy, i.e., the DM, and the return prediction model/critic being trained offline. This introduces the usual advantages and disadvantages of offline RL (Levine et al., 2020). The model relies on high-quality existing data, consisting of state-action-reward transitions, and is unable to react to distribution shifts. If not tuned well, this may also lead to overfitting. On the other hand, it has increased sample efficiency and does not require real-time data collections and training, which decreases computational cost and can increase training stability. Compared to imitation learning (Levine et al., 2020; Pfrommer et al., 2024; Ho and Ermon, 2016), offline RL requires data labeled with rewards, the training of a reward function, and is more prone to overfitting to suboptimal behavior. However, confronted with data containing diverse and suboptimal behavior, offline RL has the potential of better generalization compared to imitation learning, as it is well suited to model the entire state-action space. Thus, combining RL with DMs has the potential of modeling highly multi-modal distributions over the whole state-action space, strongly increasing generalizability (Liang et al., 2023; Ren et al., 2024). In contrast, if high-quality expert demonstrations are available, imitation learning might lead to better performance and computational efficiency. To overcome some of the shortcoming of imitation learning, such as the covariate shift problem (Ross and Bagnell, 2010), which make it difficult to handle out of distribution situations, some strategies are devised to finetune behavior cloning policies using RL (Ren et al., 2024; Huang T. et al., 2025).

Skill-composition is a common method, to handle long-horizon tasks. To leverage the abilities of RL to learn from suboptimal behaviors multiple methodologies (Ajay et al., 2023; Kim W. K. et al., 2024; Venkatraman et al., 2023; Kim S. et al., 2024) combine skill-learning and RL with DMs.

Only little research (Ding and Jin, 2023; Ajay et al., 2023) in online and offline-to-online RL with DMs has been conducted, leaving a wide field open for research. Moreover, in the context of skill-learning (Ajay et al., 2023), the DMs, used for the lower-level policies, are trained offline and remain frozen, while the higher-level policy are trained using online RL.

It should be noted that, apart from Ren et al. (2024); Huang T. et al. (2025), none of the aforementioned methods process visual observations and instead rely on ground-truth environment information, which is only easily available in simulation. Moreover, while all methods have also been tested on robotic manipulation tasks, only a few (Ren et al., 2024; Huang T. et al., 2025) have been deliberately engineered for these specific applications. Expanding the scope to encompass all methodologies devised for robotics at large, there is a more substantial body of work that integrates diffusion policies with RL.

4.2 Robotic grasp generation

Grasp learning, as one of the crucial skills for robotic manipulation, has been studied over decades (Newbury et al., 2023). Starting from hand-crafted feature engineering to statistical approaches (Bohg et al., 2013), accompanied by the recent progress in deep neural networks that are powered by massive data collection either from real-world (Fang et al., 2020) or simulated environments (Gilles et al., 2023; Gilles et al., 2025; Shi et al., 2024). The current trend in grasp learning incorporates semantic-level object detection, leveraging open-vocabulary foundation models (Radford et al., 2021; Liu et al., 2025), and focuses on object-centric or affordance-based grasp detection in the wild (Qian et al., 2024; Shi et al., 2025). To this end, DMs, known for their ability to model complex distributions, allow for the creation of diverse and realistic grasp scenarios by simulating possible interactions with objects in a variety of contexts (Rombach et al., 2022b). Furthermore, these models contribute to direct grasp generation by optimizing the generation of feasible and efficient grasps (Urain et al., 2023), particularly in environments where real-time decision-making and adaptability are critical.

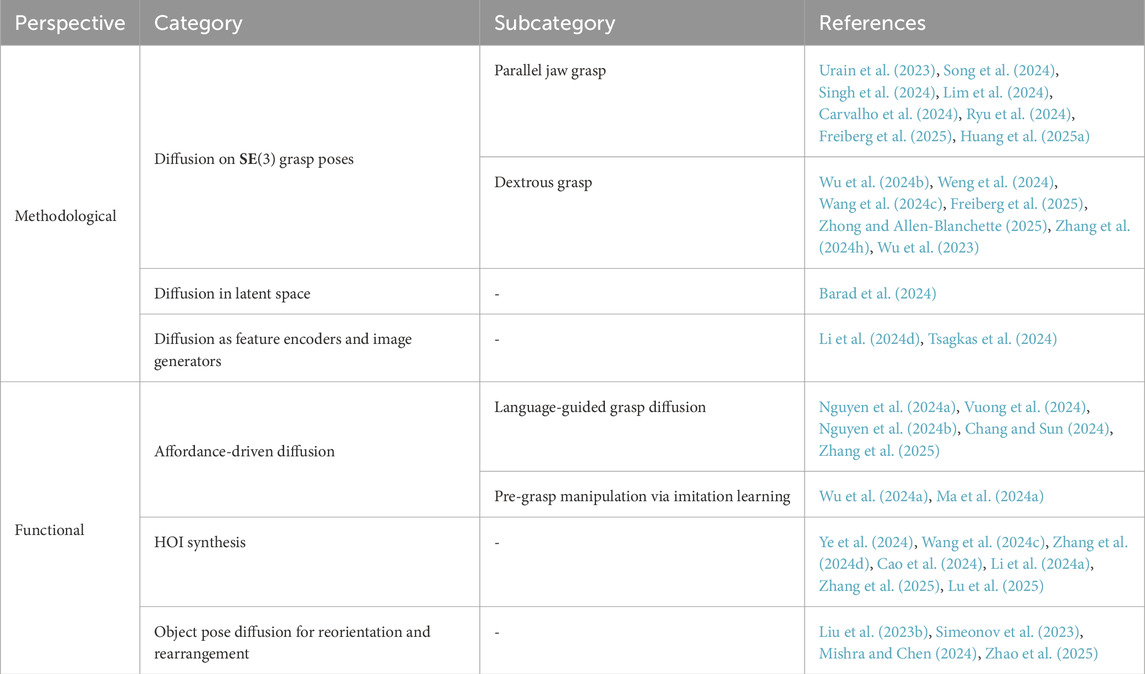

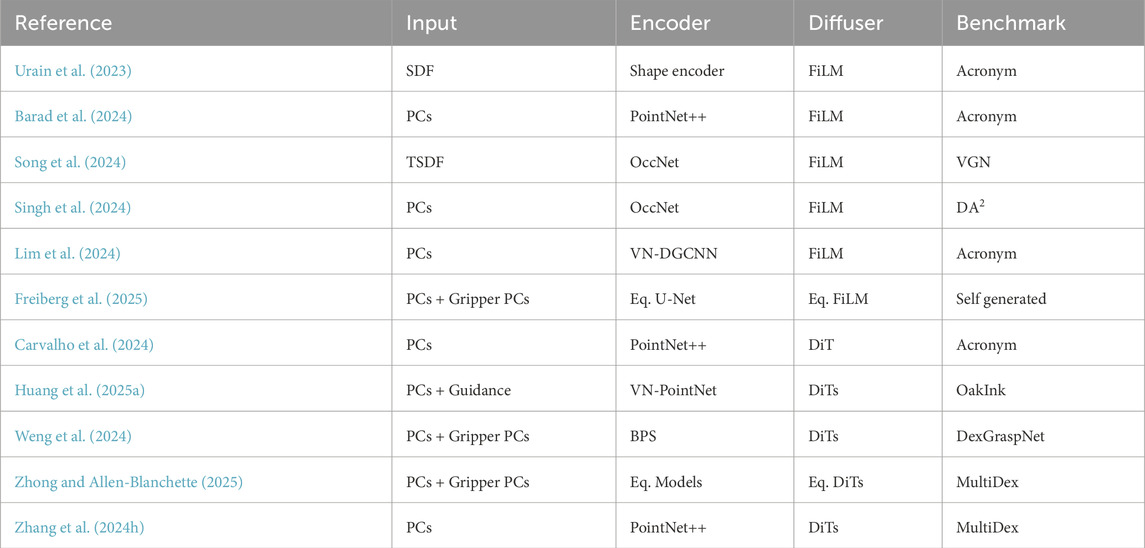

Grasp generation with DMs can be categorized into several key approaches: From methodological perspective, one category focuses on explicit diffusion on 6-DoF grasp poses that lie on the

Table 5. Technical details of grasp diffusion methodologies on

4.2.1 Diffusion as

Since the standard diffusion process is primarily formulated in Euclidean space, directly extending it to

To tackle this,

In contrast to explicit pose diffusion, latent DMs for grasp generation (GraspLDM (Barad et al., 2024)) explore latent space diffusion with VAEs, which does not explicitly account for the

Furthermore, the

4.3 Visual data augmentation

One line of methodologies focuses on employing mostly pretrained DMs for data augmentation in vision-based manipulation tasks. Here, the strong image generation and processing capabilities of diffusion generative models are utilized to augment data sets and scenes. The main goals of the visual data augmentation are scaling up data sets, scene reconstruction, and scene rearrangement.

4.3.1 Scaling data and scene augmentation

A challenge associated with data-driven approaches in robotics relates to substantial data requirements, which are time-consuming to acquire, particularly for real-world data. In the domain of imitation learning, it is essential to accumulate an adequate number of expert demonstrations that accurately represent the task at hand. While, by now, many methods, e.g., (Reuss et al., 2024a; Ze et al., 2024; Ryu et al., 2024) only require a low number of five to fifty demonstrations, there are also methods, e.g., (Chen L. et al., 2023; Saha et al., 2024) relying on more extensive data sets. Especially offline RL methods, e.g. (Carvalho et al., 2023; Ajay et al., 2023) usually require extensive amounts of data to accurately predict actions over the complete state-action space, also from suboptimal behavior. Moreover, increasing the variability in training data also has the potential to increase the generalizability of the learned policies. Thus, to automatically increase the variety and size of datasets, without additional costs on researchers and staff, or other more engineering-heavy autonomous data collection pipelines (Yu et al., 2023), many methodologies, e.g., (Chen Z. et al., 2023; Mandi et al., 2022), use DMs for data augmentation. In comparison to other strategies, such as domain randomization (Tremblay et al., 2018; Tobin et al., 2017), data augmentation with DMs directly augments the real-world data, making the data grounded in the physical world. In contrast, domain randomization requires complex tuning for each task, to ensure physical plausibility of the randomized scenes, and to enable sim-to-real transfer (Chen Z. et al., 2023).

Given a set of real-world data, DM-based augmentation methods perform semantically meaningful augmentations via inpainting, such as changing object colors and textures (Zhang X. et al., 2024), or even replacing whole objects, as well as corresponding language task descriptions (Chen Z. et al., 2023; Yu et al., 2023; Mandi et al., 2022). This enables both the augmentation of objects, which are part of the manipulation process, and backgrounds. The former increases the generalizability to different tasks and objects, while the latter increases robustness to scene information, which should not influence the policy. Some (Zhang X. et al., 2024) also augment object positions and the corresponding trajectories to generate off-distribution demonstrations for DAgger, thus addressing the covariate shift problem in imitation learning. Others (Chen L. Y. et al., 2024) augment camera view, robot embodiments, or even (Katara et al., 2024) generate whole simulation scenes from given URDF files, prompted by a Large Language Model (LLM). Targeted towards offline RL methods, Di Palo et al. (2024) combines data augmentation with a form of hindsight-experience replay (Andrychowicz et al., 2017) to adapt the visual observations to the language-task instruction. This increases the number of successful executions in the replay buffer, which potentially increases the data efficiency. The method is used to learn policies for new tasks, on previously collected data, to align the data with the new task instructions.

From a methodological perspective the methods mostly employ frozen web-scale pretrained language (Yu et al., 2023), and vision-language models, for object segmentation (Yu et al., 2023), or text-to-image synthesis (Stable Diffusion) (Rombach et al., 2022a; Mandi et al., 2022), or finetune (Zhang X. et al., 2024; Di Palo et al., 2024) pretrained internet-scale vision-language models. Apart from Zhang X. et al. (2024) the methods, do not augment actions, but only observations. Thus, the methodologies must ensure augmentations, for which the demonstrated actions do not change, which highly limits the types of augmentations. Moreover, large-scale data scaling via scene augmentation also requires additional computational cost. While this might not be a severe limitation, if it is applied once before the training, it may highly increase training time for online-RL methods.

4.3.2 Sensor data reconstruction

A challenge in vision-based robotic manipulation pertains to the incomplete sensor data. Especially single-view camera setups lead to incomplete object point clouds or images, making accurate grasp and trajectory prediction challenging. This is exacerbated by more complex task settings, with occlusion, as well as inaccurate sensor data.

Multiple methods (Kasahara et al., 2024; Ikeda et al., 2024) reconstruct camera viewpoints with DMs. Given an RGBD image and camera intrinsics Kasahara et al. (2024) generates new object views without requiring CAD models of the objects. For this, the existing points are projected to the new viewpoint. The scene is segmented using the vision foundation model SAM (Kirillov et al., 2023), to create object masks. On these masks missing data points are inpainted using the pretrained diffusion model for image generation Dall

In the field of robotic manipulation, not many methods consider scene reconstruction. A possible reason for this is its relatively high computational cost. However, expanding to the areas of robotics and computer vision, more methodologies in the field of scene reconstruction exist. In robotic manipulation instead more methods focus on making policies more robust to incomplete or noisy sensor information, e.g., (Ze et al., 2024; Ke et al., 2024). However, the limited number of occlusion in the experimental setups indicate that strong occlusion are still a major challenge. Moreover, scene reconstruction is unable to react to completely occluded objects.

4.3.3 Object rearrangement

The ability of DMs for text-to-image synthesis offers the possibility to generate plans from high-level task descriptions. In particular, given an initial visual observation, one group of methods uses such models to generate target-arrangement of objects in the scene, specified by a language-prompt (Liu et al., 2023b; Kapelyukh et al., 2023; Xu et al., 2024; Zeng et al., 2024; Kapelyukh et al., 2024). Examples of applications could be setting up a dinner table or clearing up a kitchen counter. While the earlier methodologies (Kapelyukh et al., 2023; Liu et al., 2023b) use the pretrained VLM Dall

5 Experiments and benchmarks

In this section, we focus on the evaluation of the various DMs for robotic manipulation. Details on the employed benchmarks and baselines are listed in the separate tables for imitation learning (Table 6), reinforcement learning (Table 7) in the Appendix, and grasp learning (Table 5). Separately, the references for all applied benchmarks are listed in Supplementary Appendix Table 2.

Various benchmarks are used to evaluate the methods. Common benchmarks are CALVIN (Mees et al., 2022), RLBench (James et al., 2020), RelayKitchen (Gupta et al., 2020), and Meta-World (Yu et al., 2020). Primarily in RL, the benchmark D4RL Kitchen (Fu et al., 2020) is used. One method (Ren et al., 2024) uses FurnitureBench (Heo et al., 0) for real-world manipulation tasks. Adroit (Rajeswaran et al., 2017) is a common benchmark for dexterous manipulation, LIBERO (Liu B. et al., 2023) for lifelong learning, and LapGym (Maria Scheikl et al., 2023) for medical tasks.

Many methods are only being evaluated against baselines, which are not based on DMs themselves. However, there are some common DM-based baselines. For methods operating in

The majority of methods are evaluated in simulation as well as in real-world experiments. For real-world experiments, most policies are directly trained on real-world data. However, some are trained exclusively in simulation and applied in the real world in a zero shot (Yu et al., 2023; Mishra et al., 2023; Ren et al., 2024; Liu et al., 2023b; Kapelyukh et al., 2024; Liu et al., 2023c), utilizing domain randomization, or real-world scene reconstruction in simulation. Few, predominately RL methods, are only evaluated in simulation (Yang et al., 2023; Power et al., 2023; Wang et al., 2023a; Janner et al., 2022; Pearce et al., 2022; Wang et al., 2023b; Mendez-Mendez et al., 2023; Kim S. et al., 2024; Brehmer et al., 2023; Liang et al., 2023; Zhou H. et al., 2024; Mishra and Chen, 2024; Ajay et al., 2023; Ding and Jin, 2023; Zhang E. et al., 2024).

6 Conclusion, limitations and outlook

Diffusion models (DMs) have emerged as state-of-the-art methods in robotic manipulation, offering exceptional ability in modeling multi-modal distributions, high training stability, and stability to high-dimensional input and output spaces. Several tasks, challenges, and limitations in the domain of robotic manipulation with DMs remain unsolved. A prevalent issue is the lack of generalizability. The slow inference time for DMs also remains a major bottleneck.

6.1 Limitations

6.1.1 Generalizability

While a lot of methods demonstrate relatively good generalizability in terms of object types, lightning conditions, and task complexity, they still face limitations in this area. This prevalent limitation shared across almost all methodologies in robotic manipulation remains a crucial research question.

The majority of methods using DMs for trajectory generation rely on imitation learning, using mostly behavior cloning. Thus, they inherit the dependence on the quality and diversity of training data, making it difficult to handle out-of-distribution situations due to the covariate shift problem (Ross and Bagnell, 2010). As most methodologies combining DMs with RL use offline RL, they still rely on existing data, mapping a sufficient amount of the state-action space, and are thus also unable to react to distribution shifts. Moreover, offline RL requires more careful fine-tuning than imitation learning to ensure training stability and prevent overfitting. Still, the advantage of RL is that it can handle suboptimal behavior Levine et al. (2020).

While data scaling offers improved generalizability, it typically demands large training datasets and substantial computational resources. One recent solution is to use pre-trained foundation models. Moreover, as the majority of current methods for data augmentation in DMs do not augment trajectories, e.g., (Yu et al., 2023; Mandi et al., 2022), it only increases robustness to slightly different task settings, such as changes in colors, textures, distractors, and background. VLAs can generalize to multi-task and long-horizon settings but often lack action precision, thus requiring finetuning and the combination with more specialized agents (Zhang et al., 2024g).

6.1.2 Sampling speed

The principal limitation inherent to DMs can be attributed to the iterative nature of the sampling process, which results in a time-intensive sampling procedure, thus impeding efficiency and real-time prediction capabilities. Despite recent advances that improve sampling speed and quality (Chen K. et al., 2024; Zhou H. et al., 2024), a considerable number of recent methods use DDIM (Song J. et al., 2021), although other methods, such as DPM-solver (Lu et al., 2022) have shown better performance. However, this comparison has only been performed using image generation benchmarks and would need to be verified for applications in robotic manipulation. Numerous works have demonstrated competitive task performance using DDIM, but do not directly investigate the decrease in task performance associated with a lower number of reverse diffusion steps. Ko et al. (2024) analyzes their approach using both DDPM and DDIM sampling, reporting a sampling process that is ten times faster with only a 5.6% decrease in task performance when using DDIM. Although such a decline might appear negligible, its significance is highly task-dependent. Consequently, there is a need for efficient sampling strategies and a more comprehensive analysis of existing sampling methods, particularly regarding the domain of robotic manipulation. It should, however, be noted that already in DP (Chi et al., 2023), one of the earlier methods combining DMs with receding-horizon control for trajectory planning, real-time control is possible. Using DDIM with 10 denoising steps during inference, they report an inference latency of 0.1 s on a Nvidia 3080 GPU.

6.2 Conclusion and outlook

This survey, to the best to our knowledge, is the first survey reviewing the state-of-the-art methods diffusion models (DMs) in robotics manipulation. This paper offers a thorough discussion of various methodologies regarding network architecture, learning framework, application, and evaluation, highlighting limits and advantages. We explored the three primary applications of DMs in robotic manipulation: trajectory generation, robotic grasping, and visual data augmentation. Most notably, DMs offer exceptional ability in modeling multi-modal distributions, high training stability, and robustness to high-dimensional input and output spaces. Especially in visual robotic manipulation, DMs provide essential capabilities to process high-resolution 2D and 3D visual observations, as well as to predict high-dimensional trajectories and grasp poses, even directly in image space.

A key challenge of DMs is the slow inference speed. In the field of computer vision, fast samplers have been developed that have not yet been evaluated in the field of robotic manipulation. Testing those samplers and comparing them against the commonly used ones, could be one step to increase sampling efficiency. Moreover, there are also methods for fast sampling, specifically in robotic manipulation, that are not broadly used, e.g. BRIDGeR (Chen K. et al., 2024). While the generalizability of DMs remains also an open challenge, the image generation capabilities of DMs open new avenues in data augmentation for data scaling, making methods more robust to limited data variety. Generalizability could be also improved by the integration of advanced vision-language, and vision-language action models.

We believe continual learning could be a promising approach to improve generalizability and adaptability in highly dynamic and unfamiliar environments. This remains a widely unexplored problem domain for DMs in robotic manipulation, exceptions are (Di Palo et al., 2024; Mendez-Mendez et al., 2023). However, these methods have strong limitations. For instance, Di Palo et al. (2024) relies on precise feature descriptions of all involved objects and is restricted to predefined abstract skills. Moreover, their continual update process involves replaying all past data, which is both computationally inefficient and does not prevent catastrophic forgetting. Morover, to handle complex and cluttered scenes, view planning and iterative planning strategies, also considering complete occlusions, could be combined with existing DMs using 3D scene representations. Leveraging the semantic reasoning capabilities of vision language and vision language action models could be a possible approach.

Author contributions

RW: Writing – original draft, Writing – review and editing. YS: Writing – review and editing, Writing – original draft. SL: Writing – original draft. RR: Writing – original draft, Supervision, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – SFB-1574 – 471687386.

Acknowledgments

We thank our colleague Edgar Welte for providing the video data for the illustration of the diffusion process in Figure 2.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2025.1606247/full#supplementary-material

Footnotes

1In the context of probability distributions, “multi-modal” does not refer to multiple input modalities but rather to the presence of multiple peaks (modes) in the distribution, each representing a distinct possible outcome. For example, in trajectory planning, a multi-modal distribution can capture multiple feasible trajectories. Accurately modeling all modes is crucial for policies, as it enables better generalization to diverse scenarios during inference

References

Ada, S. E., Oztop, E., and Ugur, E. (2024). Diffusion policies for out-of-distribution generalization in offline reinforcement learning. IEEE Robotics Automation Lett. 9, 3116–3123. doi:10.1109/LRA.2024.3363530

Ajay, A., Du, Y., Gupta, A., Tenenbaum, J., Jaakkola, T., and Agrawal, P. (2023). “IS conditional generative modeling all you need for decision-making?,” in The Eleventh International Conference on Learning Representations.

Andrychowicz, M., Wolski, F., Ray, A., Schneider, J., Fong, R., Welinder, P., et al. (2017). Hindsight experience replay. Adv. Neural Inf. Process. Syst. 30. Available online at: https://proceedings.neurips.cc/paper_files/paper/2017.

Barad, K. R., Orsula, A., Richard, A., Dentler, J., Olivares-Mendez, M. A., and Martinez, C. (2024). GraspLDM: generative 6-DoF grasp synthesis using latent diffusion models. IEEE Access 12, 164621–164633. doi:10.1109/ACCESS.2024.3492118

Bharadhwaj, H., Gupta, A., Kumar, V., and Tulsiani, S. (2024a). “Towards generalizable zero-shot manipulation via translating human interaction plans,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), 6904–6911. doi:10.1109/ICRA57147.2024.10610288

Bharadhwaj, H., Mottaghi, R., Gupta, A., and Tulsiani, S. (2024b). “Track2Act: predicting point tracks from internet videos enables generalizable robot manipulation,” in 1st Workshop on X-Embodiment Robot Learning, 306–324. doi:10.1007/978-3-031-73116-7_18

Black, K., Brown, N., Driess, D., Esmail, A., Equi, M., Finn, C., et al. (2024a). π0: a vision-language-action flow model for general robot control. arXiv preprint arXiv:2410.24164.