- 1 The National Research Center for Giftedness and Creativity, King Faisal University, Al-Ahsa, Saudi Arabia

- 2 Faculty of Educational Sciences, Yarmouk University, Irbid, Jordan

This study aimed to find out how science teachers use formative assessment to enhance children's learning. Data were collected through observing 45 male and female teachers in the classroom using an observation checklist based on international standards to assess teachers' practices in some aspects, such as planning the learning objectives, gathering evidence from activities, providing feedback, self-assessment, peer assessment, and promoting classroom collaboration. The results revealed that teachers' performance in applying formative assessment practices was at a low level across all criteria, as there were weaknesses in planning learning objectives and aligning them with lesson content, as well as a lack of effective use of educational activities to assess student progress. The feedback provided by teachers was insufficient to motivate students to improve their performance, and classroom interaction was limited. Furthermore, there was a lack of opportunities for students to assess themselves and their peers, indicating weaknesses in organizing self-assessment activities. In addition, there were no statistically significant differences in teachers' formative assessment practices based on gender and teaching experience, highlighting the importance of professional development for all teachers to enhance their teaching practices. These results suggest a significant need to improve teachers' skills in applying formative assessment and providing appropriate training.

1 Introduction

Teachers are one of the most important pillars of students' learning, and modern strategies place great emphasis on improving their skills to enhance students' academic performance, especially in the case of science teachers (AlAli and Al-Barakat, 2024; Bani Irshid et al., 2023; Bataineh et al., 2013). Teachers' use of the right techniques to measure their students' progress is vital for productive learning activities, and as initiatives to transform science education are undertaken globally, attention is increasingly being given to implementing new methods of measurement in teaching and learning activities (Darling-Hammond et al., 2019; Dayal, 2021; Duraiappah et al., 2021). These new methods help create better climates for learning and make students more accountable for their own learning, which ultimately places less strain on teachers. Well-developed measurement techniques are functional and simple to apply, which is a precondition for improving learners' achievement (Al-Hassan et al., 2025; Black, 2014; Hawamdeh et al., 2025; Kim et al., 2019).

In keeping with the international perspective on science education, learning effectiveness depends greatly on the way students are assessed. Thus, students need to master the knowledge and skills listed in the science curriculum (Alonzo, 2018; Majeed et al., 2023). Among the many forms of assessment in use, formative assessment is particularly significant because it enables the improvement of both teaching and learning through ongoing feedback. This is in contrast with summative assessments, which seek to compare and assess overall student competency at the end of a unit or course and are concerned mainly with practitioners' feedback on students' in-depth knowledge mastery (Al-Hassan et al., 2025; Chand and Pillay, 2024; Stanja et al., 2023).

Summative assessments mostly deal with evaluative results, which provide a useful but rather limited picture of how skills can be further improved. This style of assessment does not aim at changing the curriculum, pedagogy, or any subsequent educational activities (Majeed et al., 2023). Formative assessment, on the other hand, gives teachers the best opportunity to adjust their earlier planning and instruction to improve effectiveness. Formative assessment will be more useful in achieving respect for students as part of academic achievement (Stanja et al., 2023; Wondim, 2025).

Formative assessment has enhanced student participation since it provides distinct and practical feedback to learners about their performance while specifying areas and aspects that need improvement. It indeed creates constructive dialogue within the teacher-student relationship. Besides, it helps the teachers modify the support and instruction they give in class to fit the needs of the student. Moreover, it provides feedback in real time to address the student's shortcomings before they become a problem, thereby promoting a viable environment for learning (Al-Barakat et al., 2025a; Bataineh et al., 2017; Black and Wiliam, 2009).

Traditionally, educators have assumed that formative assessment only gathers information about the learning process rather than acting on that information. In fact, it is employed purposefully to manage changes in instruction and give students support in all aspects of their learning (Al-Halalat et al., 2024; Fraihat et al., 2022; Guhn et al., 2024; Khasawneh et al., 2022; Kulasegaram and Rangachari, 2018). Furthermore, Black and Wiliam (2009) explain a particular example in which the educator uses results from an assessment to change a practice while concentrating on the progression of a particular child and other children in the classroom. The assessment in this instance did not change the pedagogical actions or ways of supporting the learners, thus negating its relevance to formative assessment and making it purely evaluative (Al-Barakat et al., 2025b; Al-Hassan et al., 2022).

When learning any science subject, formative assessment plays an important role in the achievement of learning goals. It helps the teacher identify and work on students' strengths and weaknesses and achieve better academic results. For formative assessment to be properly carried out, it is important to adhere to specific standards that offer guidance to teachers on improving their practices and focusing on the improvement of students' learning outcomes (Al-Barakat and Al-Hassan, 2009; Menéndez et al., 2019). These standards underpin teacher development, explain why formative assessment strategies work, and enhance educational outcomes in general. Likewise, Schildkamp et al. (2020) elaborate on these standards, saying that formative assessment, if implemented correctly in the teaching-learning process, can result in better learning because students' educational needs are emphasized and sought before, during, and after the teaching-learning process. Therefore, such adjustments need continuous feedback that lets teachers reflect on their teaching approach to provide more assistance to their students.

Conderman et al. (2020) remark that it is common to find reform movements in science education that recommend the use of integrated standards to improve teacher effectiveness in primary education, paying particular attention to formative assessment of student learning. To fulfill these criteria, teachers are expected to gain skills in providing regular and varied feedback and in engaging students with the feedback provided to achieve high-quality learning results (Al-Barakat et al., 2025a). There are generic international standards of quality in formative assessment that support the application of a multiplicity of assessments to improve the learning of science (AlAli and Al-Barakat, 2023a; Bataineh and Mayyas, 2017; Khasawneh et al., 2023; Stanja et al., 2023). Methods of teaching and learning incorporated in these systems are targeted at students and focus on effective assessment as an essential factor for desirable learning outcomes. These assessment forms are required to measure students' ability to apply the concepts learned in real-life situations while thinking critically (Huang et al., 2023; Adarkwah, 2021; Ajjawi et al., 2019).

To engage students with their learning through continuous assessment, teachers need to change their pedagogical strategies and focus on achieving the intended science learning outcomes rather than evaluating or measuring learning (AlAli et al., 2024; Al-Hassan et al., 2012; Huang et al., 2023; Schildkamp et al., 2020). This interaction is in line with Ozan and Kincal (2018), who reported that formative assessment is a two-way process that improves the learning experience for both students and teachers. As teaching practices change, there is a greater need to adapt these practices to align with the increasing prominence of formative assessment, which serves the purpose of educational accountability. This notion is called assessment-driven accountability (Adarkwah, 2021; Al-Barakat and Bataineh, 2011).

Learning and assessment are inseparable, as they enhance one another's effectiveness (Ajjawi et al., 2019). Therefore, effective assessment methods must be interactive, formative, and collaborative, aiming at improving students' understanding, skills, and attitudes in various areas of cognition (AlAli, 2021; Meng, 2023). Adarkwah (2021) emphasized that assessment refers to all actions undertaken by the teacher to evaluate the level of students' learning, including self-assessment, as the feedback and information contribute to enhancing the teaching and learning process. Assessment methods include various collecting strategies that determine what teachers should teach and what learners should learn. This determination requires the use of varied methods to legitimately address educational challenges. Additionally, assessments should be closely aligned with curricula to provide students with opportunities to apply scientific knowledge in real life (AlAli and Al-Barakat, 2022; Hattie and Yates, 2014).

Teachers' professional identity plays a role in implementing formative assessment, as it influences how formative assessment activities are conducted and how the classroom is arranged (Almazroa et al., 2022; Majeed et al., 2023). Teachers with a strong sense of professional identity, who consider themselves facilitators of knowledge, tend to create more engaging and motivating learning environments where students actively participate. Such teachers are most likely to accept inclusive pedagogical methods geared toward equity and diversity while focusing on the prerequisite learning needs of the students. When teachers use formative assessment as an iterative and observational tool, they are able to give feedback tailored to specific students and consequently enhance their learning progress and academic development (AlAli and Al-Barakat, 2023b; Ambusaidi and Al-Farei, 2017).

Teachers' gender also plays a role in influencing formative assessment practices. As noted by Almazroa et al. (2022), there are gender concerns related to the teaching of science and mathematics due to the perspective that considers these fields of knowledge more suitable for males than for females. Gender identity may determine the level of participation of students in formative assessment, as they may be more willing to take part in assessments when their teachers are of the same gender. In addition, gender biases can result in stereotyping and misinterpretation of assessments, which may negatively or positively influence students' achievement. Accordingly, teachers of scientific disciplines, especially science teachers, need to pay more attention to professional identity and gender, as these social constructs influence the forms and practices of their formative assessment (Ambusaidi and Al-Farei, 2017).

Formative assessment is known to provide substantial value in improving learning outcomes. This research aims to explore the level of implementation of formative assessment by science teachers at the primary school level in Jordan, in addition to exploring the effect of gender and teaching experience on the implementation of formative assessment. The importance of this research lies in providing a deeper understanding of the role of formative assessment in teaching and learning science and of how teachers' related practices affect children's learning. The research also aims to offer recommendations to the Ministry of Education to enhance teacher training and improve formative assessment practices in schools. Finally, it aims to develop formative assessment strategies that align with the educational context in Jordan, ultimately improving the quality of education and learning in schools. Accordingly, the research raises the following questions:

1. What is the level of implementing formative assessment practices by science teachers to improve children's science learning?

2. Are there statistically significant differences at (p ≤ 0.05) in the formative assessment practices related to the teacher's gender and teaching experience?

2 Materials and methods

2.1 Research design

A descriptive-analytical methodology was used for this research, and the primary method of data collection was direct observation. The observation instrument was created to measure how science teachers use formative assessment strategies in the classroom and the effectiveness of such strategies across teacher variables such as gender and teaching experience. Observations were conducted in various learning environments, with the focus on capturing the formative assessment practices that teachers used during science instruction, in particular how and why these practices are employed in routine classroom teaching. This research used focused, systematic, and guided observation techniques designed for detailed analysis of teacher-student interactions and for recording the practical workings of formative assessment in different school settings.

2.2 Research sample

The research sample consisted of 45 male and female teachers who teach the science curriculum at the childhood stage in northern Jordan. These teachers were selected based on criteria including their willingness to participate in the research, which would serve as valuable feedback for them. The selection of participants also took sociocultural factors into account: for female teachers in particular, no researchers are permitted into any lessons for teaching or field research without their written consent. This is because the traditional and cultural context of Jordanian society places social barriers on researchers trying to enter the learning environment. Thus, the teachers' willingness to cooperate was one of the main factors in the participant selection process, ensuring that these cultural and social concerns were addressed. The sample was also chosen according to the teachers' ability to provide adequate information through direct observation of the teaching and learning process, so that a complete and fair picture of formative assessment practice in different educational contexts could be formed.

2.3 Classroom observation checklist

A standardized classroom observation checklist that measures formative assessment practices in a science classroom was prepared. The checklist includes 30 indicators distributed over six criteria: planning learning objectives and success criteria, gathering evidence and assessment through activities, feedback and enhancing classroom interaction, self-assessment and peer assessment, promoting collaboration in the classroom, and redesigning instruction based on student feedback. Teachers were evaluated on a verbal rating scale with four levels of performance: Beginner (1), Growing (2), Progressing (3), and Exemplary (4). The scale was suitable for describing teaching behaviors in which the teacher shows differing degrees of skill or proficiency in performing formative assessment practices.

2.4 Validity and reliability

The observation instrument was validated by 11 experts in early childhood education, measurement and assessment, and science teaching. These experts reviewed the checklist items and provided their comments and views on the suitability of the items to the criteria. Given the comments made, it was ascertained that the instrument adequately met the research's requirements. Furthermore, the researchers performed a pilot test with 20 teachers and made revisions based on their comments. McDonald's Omega, Composite Reliability (CR), convergent validity, and discriminant validity were among the measures used to verify construct validity. The McDonald's Omega and CR values ranged from 0.893 to 0.961 and from 0.872 to 0.938, respectively. The Average Variance Extracted (AVE) values ranged from 0.514 to 0.721. Moreover, the discriminant validity of the tool was confirmed using Fornell and Larcker's method: the square root of the AVE for each construct was higher than the correlations between that construct and the other constructs in the model. Based on these results, it was confirmed that the instrument is highly reliable and valid (AlAli and Al-Barakat, 2022; AlAli and Saleh, 2022).
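As an illustration of the measures named above, the following minimal sketch computes AVE and CR from standardized factor loadings and checks the Fornell and Larcker condition. It is not the authors' analysis script: the function names, the loadings, and the inter-construct correlation are hypothetical, and only the AVE values 0.514 and 0.721 are taken from the reported range.

```python
import math


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # error variance of each indicator
    return total * total / (total * total + error)


def fornell_larcker_ok(ave_by_construct, correlations):
    """True if sqrt(AVE) of each construct exceeds its correlation with every other one."""
    for (a, b), r in correlations.items():
        if abs(r) >= math.sqrt(ave_by_construct[a]):
            return False
        if abs(r) >= math.sqrt(ave_by_construct[b]):
            return False
    return True


# Hypothetical loadings for a single construct (assumption, not study data).
example_loadings = [0.70, 0.80, 0.75]
ave = average_variance_extracted(example_loadings)
cr = composite_reliability(example_loadings)

# AVE endpoints from the reported range; the correlation of 0.60 is hypothetical.
aves = {"planning": 0.514, "feedback": 0.721}
discriminant = fornell_larcker_ok(aves, {("planning", "feedback"): 0.60})
```

With these illustrative inputs the condition holds because the square roots of both AVE values exceed the assumed correlation; a correlation of 0.75 would violate it for the construct with the lower AVE.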

2.5 Data collection

The data were collected by attending teaching and learning situations in science learning environments after obtaining consent from the participants and arranging classroom visits, each of which lasted ~45 min. During each visit, the focus was on evaluating the implementation of formative assessment practices across the six established criteria. Specific behaviors were also monitored, such as whether the teacher asked timely questions, provided clear feedback, and encouraged peer collaboration. These practices were assessed according to predefined performance levels. Feedback from the students regarding teacher performance, class discipline, and classroom interactions, together with behavioral aspects of student participation during formative assessment, such as self- and peer assessment, was also taken into consideration in ascertaining the effectiveness of the teacher's formative assessment. A dual-observer system was used to enhance the validity and reliability of the results: every session included a second observer who was responsible for cross-checking the assessments. Each teacher was observed in four sessions, and both observers completed the assessment forms for all four sessions. The scores given by each observer were then computed.

Inter-rater reliability was verified using relevant statistical techniques. The intraclass correlation coefficient (ICC) was used as a measure of how consistently observers rated formative assessment practices; the agreement level was 97%. Cohen's Kappa coefficient was also used to measure the degree of agreement between observers for all the teaching-learning activities. Cohen's Kappa is especially valuable in investigating agreement between observers because it accounts for the probability of agreement by chance (Walter et al., 2019). The Kappa statistic of 0.88 indicates a high level of agreement in the data collection procedure.
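To make the chance correction concrete, the sketch below computes an unweighted Cohen's Kappa for two observers who rate the same indicators on the four-level scale. The ratings are hypothetical and the function name is illustrative; the study does not state whether a weighted or unweighted variant was used, so the unweighted form here is an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's Kappa for two equally long lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same level by both observers.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement by chance, from each observer's marginal frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1:
        return 1.0  # both observers used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings (1 = Beginner ... 4 = Exemplary) for ten indicators.
observer_1 = [1, 1, 2, 2, 3, 3, 4, 4, 1, 2]
observer_2 = [1, 1, 2, 2, 3, 3, 4, 4, 2, 2]
kappa = cohens_kappa(observer_1, observer_2)
```

In this example the observers agree on nine of ten indicators (90%), but the Kappa value is lower than 0.90 because part of that agreement would be expected by chance alone.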

In each observed session, teachers used a single planned lesson to cover all assessment standards and benchmarks, as they were certain that all the set requirements could be achieved in this way. In a science classroom where activities and participation are interdependent, a single lesson can include a multitude of formative assessment methods. For example, right at the beginning of the lesson, the teacher may set the learning objectives to be attained and restate them later during the lesson. The learners' success criteria are also set up in such a manner that reference can be made to them during the activities. During these activities, the teacher may monitor student learning through responses, and students' work may be analyzed for evidence of learning progress. The teacher may include self- and peer assessment along with group work, as well as activities in which students reflect on what they have learned.

2.6 Data analysis

The means and standard deviations were calculated for each specific indicator associated with the criteria in the observation instrument. The maximum possible score for each indicator was 4, and the minimum was 1. The score of formative assessment practices was determined based on an arithmetic mean that ranges from 1 to 4 points. When the arithmetic mean is < 2, formative assessment practices are considered either absent or weak. If the arithmetic mean falls between 2 and 2.99, the practices are present but not fully developed or consistent. If the arithmetic mean is 3 or more, the practices are considered good and integrated into the lesson. This calculation provides a numerical representation of the data and makes it easier to present the results in an accurate and realistic manner. These means were then converted into percentages for analysis, using the following formula: Percentage = (Calculated arithmetic mean of the item × 100%)/4. To address the second research question, non-parametric tests were used, including the Mann-Whitney U test for pairwise comparisons.
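The scoring rules described above can be sketched as two small functions. The function names and level labels are illustrative, and treating every mean below 3 (for example 2.995) as belonging to the middle band is an assumption, since the text only specifies the range 2 to 2.99.

```python
def percentage_score(mean, max_score=4):
    """Convert an indicator mean on the 1-4 scale into a percentage."""
    return mean * 100 / max_score


def practice_level(mean):
    """Map an indicator mean to the interpretation bands used in the analysis."""
    if not 1 <= mean <= 4:
        raise ValueError("mean must lie on the 1-4 rating scale")
    if mean < 2:
        return "absent or weak"
    if mean < 3:  # assumption: anything below 3 counts as the middle band
        return "present but not fully developed"
    return "good and integrated"


# Lowest and highest means reported for the first criterion (1.75 and 1.96).
low_pct = percentage_score(1.75)
high_level = practice_level(1.96)
```

Under these rules, a mean of 1.75 corresponds to 43.75% of the maximum score, and both reported endpoints for the first criterion fall in the "absent or weak" band.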

3 Research results

3.1 Using formative assessment in science learning environments

The first research question aimed at determining how science teachers implement formative assessment practices to enhance the science learning of primary children. Based on the classroom observations, the arithmetic means and standard deviations of the practice scores for each main criterion of formative assessment were calculated separately for each participant to determine the level of practice.

3.1.1 Planning learning objectives and success criteria

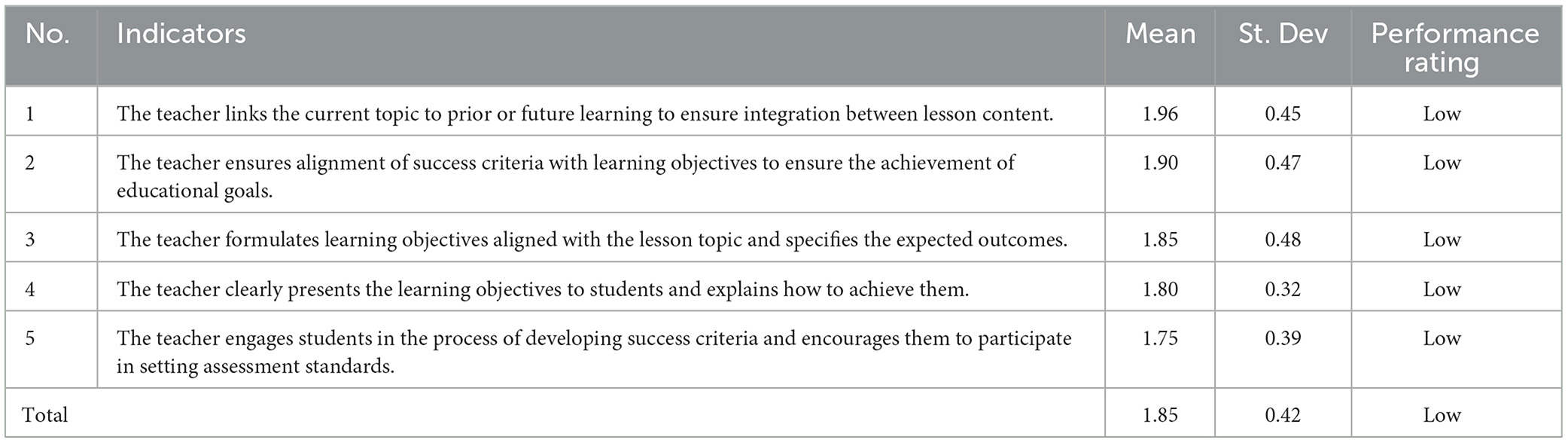

This criterion focuses on ensuring that learning objectives are clearly delivered to students, aligned with lesson content, and linked to prior and future knowledge. It also involves engaging students in defining success criteria to help them understand how to achieve the educational goals. Table 1 presents the means and standard deviations for science teachers' performance on this criterion, ranked in descending order according to their means.

Table 1. Means and standard deviations of science teachers' performance on planning learning objectives and success criteria.

Table 1 showed that the science teachers' performance on this criterion is consistently low across all indicators. The means, ranging from 1.75 to 1.96, indicated that teachers need to improve on all indicators.

3.1.2 Gathering evidence and assessment through activities

This criterion focuses on how teachers collect evidence of student learning through activities that are aligned with the learning objectives. Ongoing assessment enables teachers to adjust their teaching methods to better address students' needs. Table 2 shows the means and standard deviations for science teachers' performance on this criterion, organized in descending order according to their average scores.

Table 2. Means and standard deviations of science teachers' performance on gathering evidence and assessment through activities.

Table 2 showed low performance by science teachers across all indicators of the gathering evidence and assessment through activities criterion, confirming the need to improve teachers' practices related to this criterion.

3.1.3 Feedback and enhancing classroom interaction

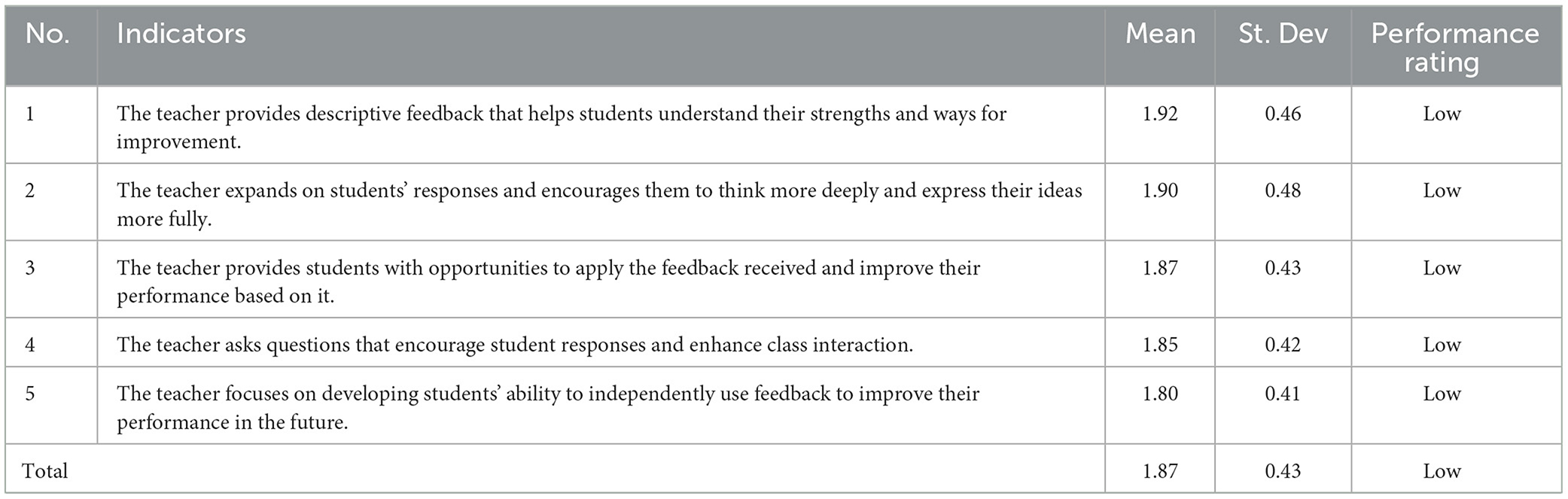

This criterion concerns giving descriptive feedback and facilitating interaction within the classroom, both of which are essential for enabling learners to assess their achievement and learn better. Feedback and interaction are productive for understanding and improving the learner's performance. Table 3 shows the means and standard deviations for science teachers' performance on this criterion, organized in descending order according to their average scores.

Table 3. Means and standard deviations of science teachers' performance on feedback and enhancing classroom interaction.

Table 3 showed low performance across all listed indicators. The mean values reveal that all aspects of this criterion need to be improved. The results highlight the urgent need to improve teachers' strategies for providing feedback and enhancing classroom interaction by increasing opportunities for students to apply feedback and by developing their ability to use it to improve their performance continuously.

3.1.4 Self-assessment and peer assessment

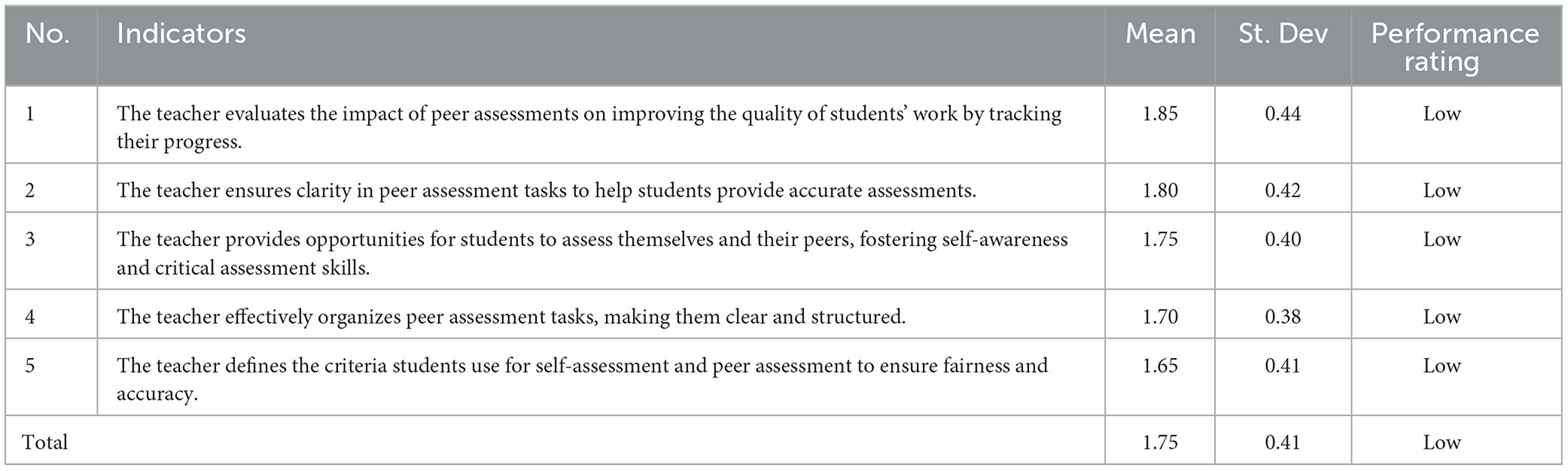

This criterion emphasizes the importance of encouraging students to assess both their own and their peers' work. Such assessments promote critical thinking, accountability, and contribute to the improvement of the quality of their work and achievement. Moreover, this practice fosters greater independence in students' learning. Table 4 shows the means and standard deviations of science teachers' performance on this criterion, ranked in descending order based on their average scores.

Table 4. Means and standard deviations of science teachers' performance on self-assessment and peer assessment.

Table 4 illustrates science teachers' performance on the self-assessment and peer assessment criterion, with results showing low performance across all indicators. The performance rating is classified as “Low” for all listed points, with the average scores ranging between 1.65 and 1.85. This indicates a significant need for improvement in the aspects of this criterion.

3.1.5 Promoting collaboration in the classroom

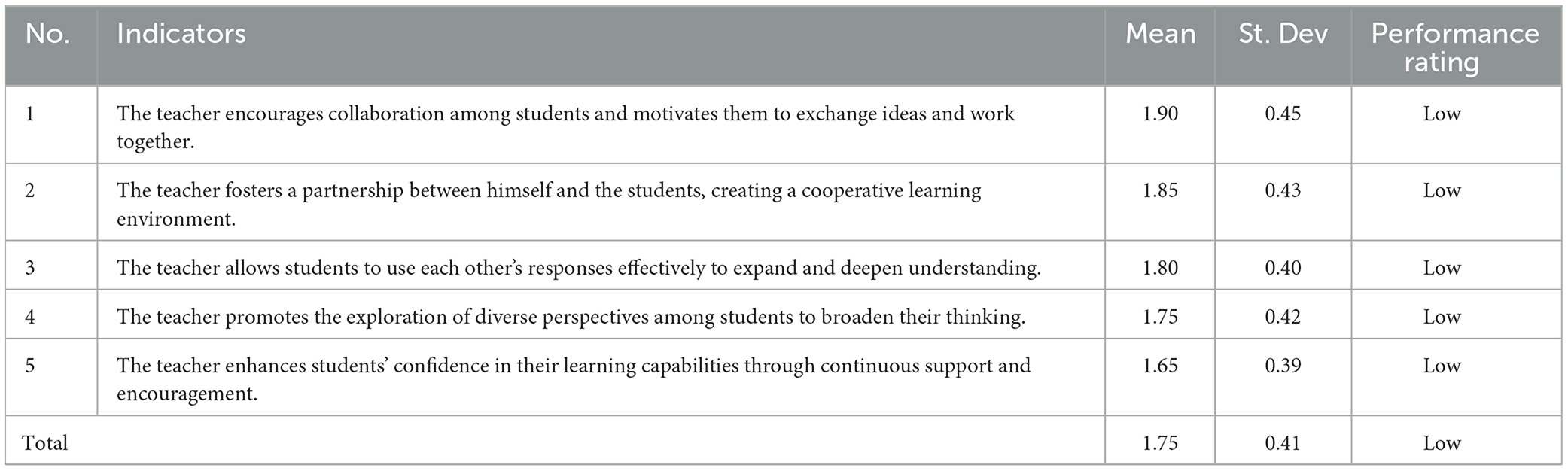

This criterion emphasizes fostering a collaborative environment in the classroom, where students engage in teamwork to reach shared objectives. Through exchanging ideas and perspectives, students enhance their understanding and learning through mutual collaboration. Table 5 illustrates the means and standard deviations of science teachers' performance on this criterion, organized in descending order according to their average scores.

Table 5. Means and standard deviations of science teachers' performance on promoting collaboration in the classroom.

Table 5 showed science teachers' performance on the promoting collaboration in the classroom criterion, with all indicators showing low performance. The average scores range from 1.65 to 1.90, indicating a consistent need for improvement in creating a collaborative learning environment.

3.1.6 Redesigning instruction based on student feedback

This criterion analyzes how teachers change their teaching practices in light of students' feedback. By meeting students' expectations and changing their teaching methods in response to students' feedback, teachers can enhance the quality of their teaching as well as the students' learning. The means and standard deviations of science teachers' performance on this criterion, arranged in descending order, are shown in Table 6.

Table 6. Means and standard deviations of science teachers' performance on redesigning instruction based on student feedback.

As illustrated in Table 6, the participants showed low performance on all indicators of this criterion. The average scores for all indicators were between 1.60 and 1.95. These low scores suggest that while teachers appear to gather evidence of student learning, they find it difficult to use this information to revise their instructional strategies and approaches.

3.2 Effect of teachers' gender and experience on their use of formative assessment

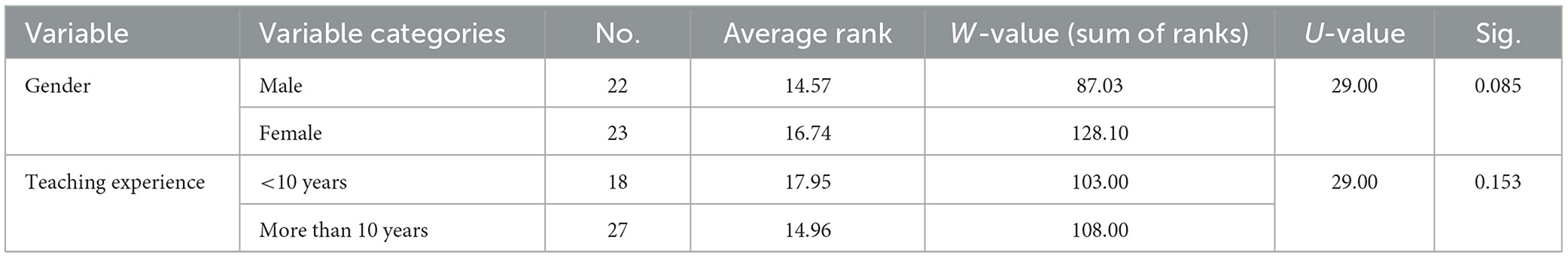

This section focuses on the variables of teacher gender and teaching experience as potential factors influencing the use of formative assessment practices in science learning environments. To examine this, non-parametric tests, specifically the Mann-Whitney U test, were used. The results of this test are presented in Table 7.

Table 7. Mann-Whitney U test results for formative assessment practices by gender and teaching experience.

Table 7 reveals that the mean rank of formative assessment practices was 14.57 for male participants and 16.74 for female participants. Descriptively, this suggests that female teachers tend to apply assessment methods and practices more effectively than male teachers. However, the Mann-Whitney U test produced a U-value of 29.0 and a significance value of 0.085, which means that this difference is not statistically significant.

For participants with over 10 years of experience, the mean rank of formative assessment practices was 14.96, while for those with < 10 years of experience it was 17.95. Descriptively, this suggests that teachers with less experience are more willing to use formative assessment methods. However, the Mann-Whitney U test (U = 29.00) indicated no significant difference in formative assessment practices according to years of teaching experience.

These findings indicate that even though there are some differences in the use of formative assessment practices between male and female teachers, or between experienced and novice teachers, these differences are not statistically significant. This implies that there might be other variables that have a stronger influence on the use of formative assessment in the classroom.
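The comparison reported above rests on ranking all teachers' scores together and comparing the two groups' ranks. The following sketch shows how the U statistic and the mean ranks are obtained. The scores are hypothetical rather than the study's data, and the p-value uses a plain normal approximation without tie or continuity correction (an assumption), so it will differ somewhat from the exact values a statistical package reports for small samples.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return U, both mean ranks, and an approximate two-sided p-value."""
    n1, n2 = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    r1, r2 = sum(ranks[:n1]), sum(ranks[n1:])
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    u = min(u1, u2)
    # Normal approximation (no tie or continuity correction; an assumption).
    z = (u - n1 * n2 / 2) / math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    p = math.erfc(abs(z) / math.sqrt(2))
    return {"U": u, "mean_rank_a": r1 / n1, "mean_rank_b": r2 / n2, "p_approx": p}


# Hypothetical overall means for two small groups of teachers.
male_scores = [1.60, 1.70, 1.80, 1.90]
female_scores = [1.75, 1.85, 1.95, 2.00]
result = mann_whitney_u(male_scores, female_scores)
```

In this toy example the second group has the higher mean rank, yet the approximate p-value stays above 0.05, which mirrors the pattern described above: a descriptive difference in mean ranks that does not reach statistical significance.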

4 Discussion

Although formative assessment holds extensive promise for fostering learning, the findings showed gaps in all practices which, if addressed, would yield better educational results. One such opportunity lies in linking students' prior learning more closely with the new content to be taught. The findings showed that although formative assessment has the strength of building on prior knowledge, this advantage was underexploited, which means that teachers need additional measures to reach the targeted level of linking previous concepts to new ones. This finding could be attributed to problems in applying linking strategies, caused by the limited training devoted to this area. Earlier research (AlAli, 2021; Alt et al., 2023; Atasoy and Kaya, 2022; Xu et al., 2023; Yan et al., 2022; Zeng et al., 2018) has noted that emphasizing linkages between what is already known and what is to be learned enhances students' overall performance.

In terms of assessment criteria and benchmarks, the results revealed that these criteria need to be communicated to students in a clearer and more precise manner. This can be attributed to the different ways in which the marking criteria are given to students, as these have a bearing on students' comprehension of the teacher's expectations. This confirms the need to direct students' efforts more specifically toward performing better. These findings are consistent with prior research conducted by AlAli (2021), Atasoy and Kaya (2022), Dah et al. (2024), and Van der Steen et al. (2023), which emphasized that clarity of assessment criteria is useful to students in their learning activities and academic performance and enables teachers to assess students' progress more effectively.

The findings showed that the tasks and activities carried out for assessment purposes did not always coincide with the projected learning outcomes. This indicates that the assessment instruments need to be better aligned with the objectives. Earlier studies (AlAli, 2021; Dah et al., 2024; Van der Steen et al., 2023; Yan et al., 2022) agree with this conclusion, indicating that using more than one assessment instrument or strategy appropriate to a particular learning objective provides more valid and accurate evidence of student achievement, which helps increase students' attention to the material and direct their learning in a more purposeful manner.

Participants did not employ questioning approaches as assessment methods for student development; students may not have had enough time to reply, or only a limited number of responses may have been allowed. This finding is consistent with other research (AlAli, 2021; Atasoy and Kaya, 2022; Basilio and Bueno, 2021; Jett, 2009; Yan et al., 2022), which reported that when students were encouraged to think more deeply, enhanced questioning techniques increased their understanding of the studied concepts. Accordingly, teachers must learn how to ask questions that promote students' active participation in the learning process.

The findings also showed that students were rarely involved in peer assessment, which is well known to enhance critical thinking and student collaboration. This was attributed to the absence of activities in which students could assess the work of other students, because new methods of working and thinking in a collaborative and independent manner have not been cultivated. This is consistent with Basilio and Bueno (2021), Jett (2009), Moyo et al. (2022), Obeido (2016), Ryan (2015), and Van der Steen et al. (2023), who reported that peer assessment encourages collaborative learning and understanding of the subject matter. In addition, the results showed that more self-assessment practices must be introduced in classrooms. This can be explained by a lack of changes that would allow students to examine their own learning. This is further corroborated by Almazroa et al. (2022), Ambusaidi and Al-Farei (2017), Bouchaib (2016), and Chemeli (2019), who stated that self-assessment helps students track their progress while adjusting their effort and self-control toward independent learning.

It was further noted that collaboration between teachers and students was lower than expected, which left students unable to view and address problems from different angles. This result can be attributed to a gap in teacher-student collaboration, which causes students to lose out on the advantages that the changing environment offers. Various studies (Almazroa et al., 2022; Ambusaidi and Al-Farei, 2017; Wondim, 2025; Yan et al., 2022) reported that students' collaboration with teachers increases students' participation, collaboration efforts, and even socialization skills.

The findings indicated that some students' difficulties in science need to be handled better. This can be explained by the fact that students do not receive enough feedback on their performance, which makes it difficult for teachers to know what outcomes to work toward. Earlier studies (Basilio and Bueno, 2021; Jett, 2009; Moyo et al., 2022; Obeido, 2016) confirmed that consistent performance data on students' learning challenges help teachers plan better and meet students' needs effectively.

The outcomes related to the second question showed that there was no statistically significant difference between the formative assessment practices of male teachers and those of their female counterparts. This can be explained by the fact that teachers have equal training and experience in using formative assessment instruments. This finding conflicts with earlier studies by Almazroa et al. (2022) and Ambusaidi and Al-Farei (2017), which found that gender has a significant impact on the use of formative assessment in the classroom. Providing equal training and professional experience to both male and female teachers contributes to the existence of equal practices in the field. When professional development programs are equitable and aim to improve the formative assessment skills of all teachers, teachers will be able to apply the same assessment methods and approaches according to the requirements of the training program, and gender will not have a significant impact on how these practices are employed.
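To make the logic of such a two-group comparison concrete, the following is a minimal sketch of a permutation test for a difference in mean scores between two groups of teachers. It is not the procedure used in this study, and the scores in it are hypothetical values invented purely for illustration; the function name `permutation_test` and its parameters are likewise assumptions of the sketch.

```python
import random


def _mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns (observed_difference, estimated_p_value).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = _mean(group_a) - _mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        # Reassign group labels at random by shuffling the pooled scores.
        rng.shuffle(pooled)
        diff = _mean(pooled[:n_a]) - _mean(pooled[n_a:])
        # Count relabelings at least as extreme as the observed difference
        # (small tolerance guards against floating-point rounding).
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical observation-checklist scores (NOT the study's data).
male_scores = [2.1, 1.8, 2.4, 2.0, 1.9, 2.2, 2.3, 1.7]
female_scores = [2.0, 2.2, 1.9, 2.1, 2.3, 1.8, 2.0, 2.4]

diff, p = permutation_test(male_scores, female_scores)
print(f"mean difference = {diff:.3f}, estimated p = {p:.3f}")
```

A large estimated p-value in a test of this kind would mean only that the data give no evidence of a difference between the groups; it would not by itself show that the groups' practices are identical.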

Moreover, the findings showed that teachers' experience had no statistically significant impact on formative assessment practices. This result can be explained by the fact that both novice and experienced teachers seem to face similar constraints in using formative assessment strategies due to insufficient professional development. This is consistent with earlier studies by Al-Barakat et al. (2023), Almazroa et al. (2022), Ambusaidi and Al-Farei (2017), and Wondim (2025), which reported that experience alone is insufficient to increase the use of formative assessment, and that it is regular additional training that enables teachers to use these strategies.

5 Conclusions, recommendations, limitations, and future research directions

This study highlighted the need to enhance formative assessment practices in children's learning of science education, especially in linking previous concepts with new scientific content and enhancing self-assessment and student collaboration. Additionally, it was noted that questioning techniques, peer assessment, and self-assessment were inefficiently applied, indicating the need to strengthen these practices to nurture critical thinking and cooperation among children.

Considering these concerns, it is necessary to create professional development courses for teachers that cover how to connect students' existing knowledge with new learning and how to present assessment criteria in a more understandable way. Devising more reliable evidence collection procedures is also essential so that teachers can modify their instruction in response to students' varying needs. There is a need to increase the variety of questions posed to students, with the aim of developing their critical thinking. Furthermore, increasing opportunities for students to assess their peers and themselves would help promote a collaborative and independent learning culture. At the same time, the interaction between teachers and learners should be enhanced by establishing a classroom where ideas can be shared freely and participation is encouraged.

As with all research, this study has limitations that need to be discussed, and these affect how far the findings can be generalized. One limitation is the sample, which was drawn from a very small educational region, so the findings may not be representative of all educational regions or students. Another limitation is that the research was based primarily on direct classroom observations, which might not provide adequate evidence of formative assessment practices. There might be other factors, not accounted for here, that affect how some of these practices are carried out. Consequently, there is a need to employ more than one research instrument, such as semi-structured interviews, to capture a robust and detailed account of teachers' formative assessment practices.

In terms of future work, there are plenty of topics that need to be investigated. To begin with, it would be interesting to conduct research on the use of formative assessment techniques in other educational disciplines like math or literature to find out if those disciplines employ the strategies differently. Second, future research could focus on the impact of ongoing professional development programs on improving formative assessment practices, identifying how teachers' skills and performance in implementing these strategies can be developed effectively. Third, it would be useful to conduct comparative studies between schools that have successfully implemented formative assessment strategies and those that have not, to identify factors that contribute to the improvement of these practices.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The research involving human participants was reviewed and approved by the Deanship of Scientific Research at King Faisal University, ensuring compliance with ethical guidelines and standards for research involving human and/or animal subjects. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

RA: Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization. AA-B: Conceptualization, Investigation, Validation, Writing – review & editing, Data curation, Formal analysis, Funding acquisition, Methodology, Project administration, Resources, Software, Supervision, Visualization, Writing – original draft.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Financial support was received from the Deanship of Scientific Research at King Faisal University, Saudi Arabia under annual research grant number KFU251145.

Acknowledgments

The authors thank the Deanship of Scientific Research at King Faisal University, Saudi Arabia for the financial support.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adarkwah, M. A. (2021). The power of assessment feedback in teaching and learning: a narrative review and synthesis of the literature. SN Soc. Sci. 1:75. doi: 10.1007/s43545-021-00086-w

Ajjawi, R., Tai, J., Nghia, T., and Patrick, C. (2019). Aligning assessment with the needs of work-integrated learning: the challenges of authentic assessment in a complex context. Assess. Eval. Higher Educ. 45, 521–534. doi: 10.1080/02602938.2019.1639613

AlAli, R. (2021). Assessment for learning at Saudi universities: an analytical study of actual practices. J. Instit. Res. South East Asia 19, 20–41. Available online at: https://www.researchgate.net/publication/359045533

AlAli, R., and Al-Barakat, A. (2022). Using structural equation modeling to assess a model for measuring creative teaching perceptions and practices in higher education. Educ. Sci. 12:690. doi: 10.3390/educsci12100690

AlAli, R., and Al-Barakat, A. (2023a). Role of teacher understanding about instructional visual aids in developing national and international student learning experiences. J. Int. Stud. 13, 331–354. Available online at: https://ojed.org/jis/article/view/6576/2797

AlAli, R., and Al-Barakat, A. (2023b). Instructional illustrations in children's learning between normative and realism: an evaluation study. PLoS ONE 18:e0291532. doi: 10.1371/journal.pone.0291532

AlAli, R., and Al-Barakat, A. (2024). Young children's attitudes toward science learning in early learning grades. Asian Educ. Dev. Stud. 13, 340–355. doi: 10.1108/AEDS-02-2024-0036

AlAli, R., Al-Hassan, O., Al-Barakat, A., Alakashee, B., Kanaan, E., Alqatawna, M., et al. (2024). Effectiveness of utilizing gamified learning in improving creative reading skills among primary school students. Forum Ling. Stud. 6, 816–830. doi: 10.30564/fls.v6i6.7518

AlAli, R., and Saleh, S. (2022). Towards constructing and developing a self-efficacy scale for distance learning and verifying the psychometric properties. Sustainability 14:13212. doi: 10.3390/su142013212

Al-Barakat, A., and Al-Hassan, O. (2009). Peer assessment as a learning tool for enhancing student teachers' preparation. Asia-Pac. J. Teach. Educ. 37, 399–413. doi: 10.1080/13598660903247676

Al-Barakat, A., Al-Hassan, O., Alakashee, B., Al-Saud, K., and Saleh, S. (2025b). Promoting the belief in God among Muslim youth through primary science learning. Eur. J. Philos. Relig. 17, 527–552. doi: 10.1371/journal.pone.0291532

Al-Barakat, A., Al-Hassan, O., AlAli, R., Al-Hassan, M., and Al sharief, R. (2023). Role of female teachers of childhood education in directing children towards effective use of smart devices. Educ. Inform. Technol. 28, 7065–7087. doi: 10.1007/s10639-022-11481-y

Al-Barakat, A., Al-Hassan, O., Bataineh, R., Al Ali, R., Aboud, Y., and Ibrahim, N. A. (2025a). Shaping young minds: How teachers foster social interaction, psychological security, and motivational support in the primary language classroom. Int. J. Learn. Teach. Educ. Res. 24, 359–378. doi: 10.26803/ijlter.24.1.18

Al-Barakat, A., and Bataineh, R. F. (2011). Preservice childhood education teachers' perceptions of instructional practices for developing young children's interest in reading. J. Res. Childhood Educ. 25, 177–193. doi: 10.1080/02568543.2011.556520

Al-Halalat, K., Beichi, A., Al-Barakat, A., Al-Saud, K., and Aboud, Y. (2024). Factors influencing the formation of intellectual security among university students: a field study. Int. J. Cyber Criminol. 18, 108–129. Available online at: https://cybercrimejournal.com/menuscript/index.php/cybercrimejournal/article/view/344

Al-Hassan, O., Al-Barakat, A., and Al-Hassan, Y. (2012). Pre-service teachers' reflections during field experience. J. Educ. Teach. 38, 419–434. doi: 10.1080/02607476.2012.707918

Al-Hassan, O., Al-Hassan, M., Almakanin, H., Al-Rousan, A., and Al-Barakat, A. (2022). Inclusion of children with disabilities in primary schools and kindergartens in Jordan. Education, 3-13 52, 1089–1102. doi: 10.1080/03004279.2022.2133547

Al-Hassan, O. M., Alhasan, L. M., AlAli, R., Al-Barakat, A., and Al-Saud, K. (2025). Enhancing early childhood mathematics skills learning through digital game-based learning. Int. J. Learn. Teach. Educ. Res. 24, 186–205. doi: 10.26803/ijlter.24.2.10

Almazroa, H., Alrwythi, E., and Alshaya, F. (2022). “Science teaching performance: investigating gender, education, and teaching experience,” in Paper Presented at the NARST Annual International Conference (Vancouver, BC).

Alonzo, A. (2018). An argument for formative assessment with science learning progressions. Appl. Meas. Educ. 31, 104–112. doi: 10.1080/08957347.2017.1408630

Alt, D., Naamati-Schneider, L., and Weishut, D. J. (2023). Competency-based learning and formative assessment feedback as precursors of college students' soft skills acquisition. Stud. Higher Educ. 48, 1901–1917. doi: 10.1080/03075079.2023.2217203

Ambusaidi, A., and Al-Farei, K. (2017). Investigating Omani science teachers' attitudes towards teaching science: the role of gender and teaching experiences. Int. J. Sci. Mathem. Educ. 15, 71–88. doi: 10.1007/s10763-015-9684-8

Atasoy, V., and Kaya, G. (2022). Formative assessment practices in science education: a meta-synthesis study. Stud. Educ. Eval. 75:101186. doi: 10.1016/j.stueduc.2022.101186

Bani Irshid, M., Khasawneh, A., and Al-Barakat, A. (2023). The effect of conceptual understanding principles-based training program on enhancement of pedagogical knowledge of mathematics teachers. Eur. J. Mathem. Sci. Technol. Educ. 19:em2277. doi: 10.29333/ejmste/13215

Basilio, M., and Bueno, D. (2021). Instructional supervision and assessment in the 21st century and beyond. Online Subm. 4, 1–8. Available online at: https://files.eric.ed.gov/fulltext/ED629212.pdf

Bataineh, R., Al-Qeyam, F., and Smadi, O. (2017). Does form-focused instruction really make a difference? Potential effectiveness in Jordanian EFL learners' linguistic and pragmatic knowledge acquisition. Asian-Pac. J. Second Foreign Lang. Educ. 2:17. doi: 10.1186/s40862-017-0040-0

Bataineh, R., and Mayyas, M. (2017). The utility of blended learning in EFL reading and grammar: a case for Moodle. Teach. Engl. Technol. 17, 35–49. Available online at: https://files.eric.ed.gov/fulltext/EJ1149423.pdf

Bataineh, R., Rabadi, R., and Smadi, O. (2013). Fostering Jordanian university students' communicative performance through literature-based instruction. TESOL J. 4, 655–673. doi: 10.1002/tesj.61

Black, G. (2014). Say cheese! A snapshot of elementary teachers' engagement and motivation for classroom assessment. Action Teach. Educ. 36, 377–388. doi: 10.1080/01626620.2014.977689

Black, P. J., and Wiliam, D. (2009). Developing the theory of formative assessment. Educ. Assess. Eval. Acc. 21, 5–31. doi: 10.1007/s11092-008-9068-5

Bouchaib, B. (2016). Exploring teachers' assessment practices and skills. Int. J. Assess. Tools Educ. 4, 1–18. doi: 10.21449/ijate.254581

Chand, S., and Pillay, K. (2024). Understanding the fundamental differences between formative and summative assessment. Global Sci. Acad. Res. J. Educ. Literat. 2, 6–9. Available online at: https://gsarpublishers.com/wp-content/uploads/2024/02/GSARJEL052024-Gelary-script.pdf

Chemeli, J. (2019). Impact of the Five Key Formative Assessment Strategies on Learner's Achievement in Mathematics Instruction in Secondary Schools: A Case of Nandi County, Kenya. Moi University, Kenya.

Conderman, G., Pinter, E., and Young, N. (2020). Formative assessment methods for middle level classrooms. Clear. House J. Educ. Strat. Issues Ideas 93, 233–240. doi: 10.1080/00098655.2020.1778615

Dah, N., Noor, M., Kamarudin, M., and Azziz, S. (2024). The impacts of open inquiry on students' learning in science: a systematic literature review. Educ. Res. Rev. 43:100601. doi: 10.1016/j.edurev.2024.100601

Darling-Hammond, L., Flook, L., Cook-Harvey, C., Barron, B., and Osher, D. (2019). Implications for educational practice of the science of learning and development. Appl. Dev. Sci. 24, 97–140. doi: 10.1080/10888691.2018.1537791

Dayal, H. (2021). How teachers use formative assessment strategies during teaching: evidence from the classroom. Austr. J. Teach. Educ. 46, 1–21. doi: 10.14221/ajte.2021v46n7.1

Duraiappah, A., Van Atteveldt, N., Asah, S., Borst, G., Bugden, S., Buil, J. M., et al. (2021). The international science and evidence-based education assessment. NPJ Sci. Learn. 6:7. doi: 10.1038/s41539-021-00085-9

Fraihat, M., Khasawneh, A., and Al-Barakat, A. (2022). The effect of situated learning environment in enhancing mathematical reasoning and proof among tenth grade students. Eur. J. Mathem. Sci. Technol. Educ. 18:em2120. doi: 10.29333/ejmste/12088

Guhn, M., Gadermann, A., and Wu, A. (2024). “Trends in international mathematics and science study (TIMSS),” in Encyclopedia of Quality of Life and Well-Being Research, ed. F. Maggino (Cham: Springer International Publishing), 7309–7311. doi: 10.1007/978-3-031-17299-1_3063

Hattie, J., and Yates, G. (2014). Visible Learning and the Science of How We Learn. Milton Park, Abingdon, Oxon, New York, NY: Routledge.

Hawamdeh, M., Khaled, M., Al-Barakat, A., and Alali, R. (2025). The effectiveness of Classpoint technology in developing reading comprehension skills among non-native Arabic speakers. Int. J. Inform. Educ. Technol. 15, 39–48. doi: 10.18178/ijiet.2025.15.1.2216

Huang, L. S., Khatri, R., and Alhemaid, A. (2023). “Enhancing learning through formative assessment: evidence-based strategies for implementing learner reflection in higher education,” in Global Perspectives on Higher Education: From Crisis to Opportunity, eds. J. S. Stephen, G. Kormpas, and C. Coombe (Cham: Springer International Publishing), 59–73. doi: 10.1007/978-3-031-31646-3_5

Jett, P. M. (2009). Teachers Valuation and Implementation of Formative Assessment Strategies in Elementary Science Classrooms (Doctoral dissertation). University of Louisville, USA.

Khasawneh, A., Al-Barakat, A., and Almahmoud, S. (2022). The effect of error analysis-based learning on proportional reasoning ability of seventh-grade students. Front. Educ. 7:899288. doi: 10.3389/feduc.2022.899288

Khasawneh, A., Al-Barakat, A., and Almahmoud, S. (2023). The impact of mathematics learning environment supported by error-analysis activities on classroom interaction. Eur. J. Mathem. Sci. Technol. Educ. 19:em2227. doi: 10.29333/ejmste/12951

Kim, S., Raza, M., and Seidman, E. (2019). Improving 21st-century teaching skills: the key to effective 21st-century learners. Res. Compar. Int. Educ. 14, 99–117. doi: 10.1177/1745499919829214

Kulasegaram, K., and Rangachari, P. (2018). Beyond “formative”: assessments to enrich student learning. Adv. Physiol. Educ. 42, 5–14. doi: 10.1152/advan.00122.2017

Majeed, S., Yasmin, F., and Ahmad, R. (2023). Inquiry-based instruction and students' science process skills: an experimental study. Pak. J. Soc. Sci. 43, 155–166. Available online at: https://pjss.bzu.edu.pk/index.php/pjss/article/view/1248

Menéndez, I., Napa, M., Moreira, M., and Zambrano, G. (2019). The importance of formative assessment in the learning-teaching process. Int. J. Soc. Sci. Human. 3, 238–249. doi: 10.29332/ijssh.v3n2.322

Meng, S. (2023). Enhancing teaching and learning: aligning instructional practices with education quality standards. Res. Adv. Educ. 2, 17–31. doi: 10.56397/RAE.2023.07.04

Moyo, S., Combrinck, C., and Van Staden, S. (2022). Evaluating the impact of formative assessment intervention and experiences of the standard 4 teachers in teaching higher-order-thinking skills in mathematics. Front. Educ. 7:771437. doi: 10.3389/feduc.2022.771437

Obeido, M. (2016). Science teachers' beliefs about employing alternative assessment in teaching basic stage students laboratory skills (Unpublished PhD thesis). Yarmouk University, Irbid, Jordan.

Ozan, C., and Kincal, R. Y. (2018). The effects of formative assessment on academic achievement, attitudes toward the lesson, and self-regulation skills. Educ. Sci. Theory Pract. 18, 85–118. Available online at: https://files.eric.ed.gov/fulltext/EJ1179831.pdf

Ryan, A. (2015). Assessment practices for learning among primary school mathematics teachers in Hebron government schools from their point of view. J. Islam. Univ. Educ. Psychol. Stud. 23, 300–272.

Schildkamp, K., van der Kleij, F. M., Heitink, M. C., Kippers, W. B., and Veldkamp, B. P. (2020). Formative assessment: a systematic review of critical teacher prerequisites for classroom practice. Int. J. Educ. Res. 103:101602. doi: 10.1016/j.ijer.2020.101602

Stanja, J., Gritz, W., Krugel, J., Hoppe, A., and Dannemann, S. (2023). Formative assessment strategies for students' conceptions—the potential of learning analytics. Br. J. Educ. Technol. 54, 58–75. doi: 10.1111/bjet.13288

Van der Steen, J., van Schilt-Mol, T., Van der Vleuten, C., and Joosten-ten Brinke, D. (2023). Designing formative assessment that improves teaching and learning: what can be learned from the design stories of experienced teachers? J. Form. Design Learn. 7, 182–194. doi: 10.1007/s41686-023-00080-w

Walter, S., Dunsmuir, W. T., and Westbrook, J. I. (2019). Inter-observer agreement and reliability assessment for observational studies of clinical work. J. Biomed. Inform. 100:103317. doi: 10.1016/j.jbi.2019.103317

Wondim, M. G. (2025). Unveiling formative assessment in Ethiopian higher education institutions: practices and socioeconomic influences. Front. Educ. 10:1515335. doi: 10.3389/feduc.2025.1515335

Xu, X., Shen, W., Islam, A. Y. M., and Zhou, Y. (2023). A whole learning process-oriented formative assessment framework to cultivate complex skills. Human. Soc. Sci. Commun. 10, 1–15. doi: 10.1057/s41599-023-02200-0

Yan, Z., Chiu, M. M., and Cheng, E. (2022). Predicting teachers' formative assessment practices: teacher personal and contextual factors. Teach. Teacher Educ. 114:103718. doi: 10.1016/j.tate.2022.103718

Keywords: formative assessment, science learning, learning environments, practices, children

Citation: AlAli RM and Al-Barakat AA (2025) Enhancing young children's science learning through science teachers' formative assessment practices. Front. Educ. 10:1503088. doi: 10.3389/feduc.2025.1503088

Received: 28 September 2024; Accepted: 12 May 2025;

Published: 04 June 2025.

Edited by:

Eduardo Encabo-Fernández, University of Murcia, Spain

Reviewed by:

Valerie Harlow Shinas, Lesley University, United States

Saif Saeed Alneyadi, Al Ain University, United Arab Emirates

Arlyne C. Marasigan, Philippine Normal University, Philippines

Copyright © 2025 AlAli and Al-Barakat. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rommel Mahmoud AlAli, ralali@kfu.edu.sa; Ali Ahmad Al-Barakat, alialbarakat@yu.edu.jo

†ORCID: Rommel Mahmoud AlAli orcid.org/0000-0001-7375-4856

Ali Ahmad Al-Barakat orcid.org/0000-0002-2709-4962
