- ¹Center of Methods in the Social Sciences, University of Goettingen, Göttingen, Germany

- ²Campus Institute Data Science, University of Goettingen, Göttingen, Germany

- ³Hector Research Institute of Education Sciences and Psychology, University of Tuebingen, Tübingen, Germany

- ⁴Chair of Application Systems and E-Business, University of Goettingen, Göttingen, Germany

In a world changed by the COVID-19 pandemic, universities had to completely rethink their teaching in 2020 and immediately transform it to a fully online setting. As a result, students had to learn from home and organize their learning by themselves. In a natural experiment, data about learning processes indicate that students were more engaged in learning and achieved higher learning success in this new situation than in the traditional learning process. However, the students experienced a three-fold isolation: (1) physical isolation, (2) social isolation, and (3) learning isolation, which resulted in a stressful learning experience. In conclusion, these affective challenges indicate that this exceptional learning setting should not be normalized, even though positive outcomes were achieved during COVID-19. Beyond the pandemic situation, it can be deduced that students who learn at a distance need additional support so that they do not learn (only) in isolation.

1 Introduction

Starting in early 2020, the COVID-19 pandemic altered the familiar trajectories of many aspects of life. Its effects were felt not only on the health front but also in people’s social lives through lockdowns, social distancing, and other safety measures (Desvars-Larrive et al., 2020; World Health Organization, 2020). To prevent the further spread of SARS-CoV-2, education also underwent a fundamental change, especially at the beginning of the pandemic (Sun et al., 2020; Weeden and Cornwell, 2020). Schoolchildren were taught from home, often for the first time in their lives, which was a major challenge for parents, teachers, and learners alike (Saavedra, 2020). The pandemic also transformed learning at universities worldwide (Chatziralli et al., 2020; Liu, 2020). In-class lectures, seminars, practice units, and labs, which had previously dominated university learning, were closed. Within a short space of time, the entire teaching of universities shifted to a digital learning space. Learning in person was replaced by synchronous and asynchronous digital teaching formats, organized via video recordings, real-time video conferences, forum discussions, chats, and many more (Johnson et al., 2020; Wayne et al., 2020).

This rapid transformation poses a fundamental question about learning: How did the pandemic situation affect students’ learning?

On the one hand, answering this question provides insights into the pandemic situation and its influence on learning at universities and on students as individuals. On the other hand, viewing the pandemic as a natural experiment can help to understand the effects of students having to learn at a distance and/or completely digitally, either involuntarily or at least contrary to their actual preference. After all, the pandemic situation was characterized by the fact that students who had opted to study in person on campus now had to learn entirely online and at a distance. And while we know that there are systematic differences between students who choose to study on-campus and those who choose to study remotely (e.g., DeVaney, 2010; Gundlach et al., 2015), there is no complete separation between these groups, as external circumstances also lead students to study remotely who would not otherwise have chosen to do so (Fidalgo et al., 2020). Analyzing the pandemic as a natural experiment helps to identify possible risks of remote learning, such as stress or loneliness, for students who would not otherwise prefer it. In this sense, the analysis can serve as a warning to those who prophesy a completely digital university and the superfluousness of campuses.

2 Background

2.1 Online learning

The use of digital tools and the delivery of teaching online may at first lead one to believe that teaching and learning during COVID-19 followed patterns that have long been studied in the field of online learning. Many findings in this field have indeed provided guidance for teaching and learning during COVID-19 (Bragg et al., 2021). For example, it has been shown that proactive communication by instructors is especially important in online teaching in order to cultivate a relationship with the learners and thus ensure learning success (Beege et al., 2022; Flanigan et al., 2022).

These and many other findings provided indications for the design of remote teaching, but did not generally answer the question of what effects remote learning has. One step in this direction is research carried out before the pandemic that examined courses offered both online and face-to-face in parallel, most recently referred to as dual mode teaching (Soesmanto and Bonner, 2019). Simple comparisons of the two modes, however, have not yet yielded clear results. Some studies found no differences in learning behavior and test results (Shotwell and Apigian, 2015; Soesmanto and Bonner, 2019), while in other studies online students performed better (Pei and Wu, 2019) or worse (Gundlach et al., 2015) than face-to-face students. One reason for these differences may be that in general, and also in the studies mentioned, assignment to online or face-to-face teaching does not happen randomly, but is usually freely chosen by the learners. As a result, the learners differ considerably at the beginning of the courses (DeVaney, 2010; McPartlan et al., 2021). However, if learners who choose an online course differ from learners who attend in person, it becomes obvious that findings on online learning are also not directly comparable to the situation during COVID-19. After all, during the pandemic neither teachers nor learners could choose; all had to teach and learn online. Moreover, since this digital teaching had to be set up at very short notice, the term Emergency Remote Teaching (Bozkurt and Sharma, 2020) became established.

2.2 Emergency remote teaching

As early as 2020, the term Emergency Remote Teaching (ERT) emerged for the digitally prepared and online delivered teaching during the pandemic-related lockdowns and restrictions (Bozkurt and Sharma, 2020). ERT differs from other online formats in at least three respects: First, teachers and learners alike did not freely choose this format, were inexperienced in it, and many of them had not been trained for it (Trust and Whalen, 2020). Second, there was little time to prepare for this format and to adapt previously prepared content and materials. Third, online learning was embedded in a life situation that was also substantially changed by the pandemic and its social consequences. Thus, learners were not only learning at a distance for a particular course, but were severely hindered in continuing their previous daily lives as a whole (Bond et al., 2021; Bozkurt and Sharma, 2020). The consequences of Emergency Remote Teaching for learning must therefore be described and studied as different from other experiences with online learning and, thus, are a novel phenomenon (Tang et al., 2021).

Therefore, very early on, first studies appeared that asked students about their experience during Emergency Remote Teaching via surveys or interviews. The results indicated that ERT had negative effects on motivation and perceived sense of belonging, while it increased stress and anxiety (Ezra et al., 2021; Petillion and McNeil, 2020). In addition, some students reported that concerns outside of learning, for example about health or economic development, also negatively impacted their learning experience (Alvarez, 2020). The only positive aspect seen by some students was increased flexibility in organizing their learning (Matarirano et al., 2021). But students also noted that increased flexibility paradoxically posed significant challenges to learning (Rahiem, 2020). As a consequence, comparisons of satisfaction between the new Emergency Remote Teaching and the teaching before usually resulted in a negative evaluation, for example in Knudson’s (2020) study. Aldhahi et al. (2022) added that satisfaction depends particularly strongly on personal time management skills. Oinas et al. (2022) broadened the view to other self-regulatory abilities and also found strong effects on learning behavior and satisfaction.

Moreover, dissatisfaction with the learning process was not the only negative outcome for students. In various studies, students reported that they perceived their own learning progress in Emergency Remote Teaching to be lower (Sharma et al., 2021; Shin and Hickey, 2021). Interestingly, however, Bawa (2020) and Iglesias-Pradas et al. (2021) did not report any negative effects on the measured learning success in their studies. Bawa (2020) did not find significant differences between ERT and the teaching before (within the examined sample, ERT even performed slightly better). Iglesias-Pradas et al. (2021) compared ERT and the teaching before across as many as 43 courses, finding significantly higher learning success in ERT. For both studies, however, it should be noted that comparing learning success before ERT with learning success during ERT is not without problems, as it remains unclear to what extent the instruments measuring success were comparable and to what extent teaching changed beyond ERT in terms of personnel and pedagogy.

Moreover, it remains open how learning behavior has changed beyond the necessary modifications of ERT, and which of these changes are possible explanations for not finding a decline in success. Thus, an important gap remains, which this research fills by answering the following four research questions:

• RQ1: What was the impact of ERT on students’ learning behaviors?

• RQ2: What was the impact of ERT on students’ learning success?

• RQ3: Can correlations between learning behavior and success be found that suggest explanations for students’ learning success?

• RQ4: How can students’ personal experience be related to the above?

These questions will be answered using a natural field experiment with ERT being the treatment in a course otherwise kept as comparable as possible. Learning behavior is examined using learning analytics, learning success is measured via the official course exams, and students’ experience is collected via a survey at the end of the semester.

2.3 Learning analytics as an approach in educational research

New research areas of technology-enhanced learning, particularly learning analytics and educational data mining, can address these questions in a special way. Educational data mining aims to analyze large learning-related datasets with statistical and data science methods in order to understand learning (Chatti et al., 2012; Kavitha and Raj, 2017). Learning analytics with a comparable approach aims to understand individual learning success to improve learning processes (Chatti et al., 2012; Gašević et al., 2022; Long and Siemens, 2011). Both approaches observe learning non-invasively and use data generated in the learning process, for example, using e-learning systems. In this way, learning can be observed not only in limited physical spaces and over short periods of time, but also in real learning processes that may last for months (Schumacher and Ifenthaler, 2021; Zhang et al., 2018). Prerequisite for the application of such approaches is the existence of large amounts of learning-related data, which requires a technical infrastructure whose process data provide this insight into learning (Avella et al., 2016; Gray et al., 2022). The digital behavioral data stored by, for example, a learning management system can then be counted to operationalize them into meaningful variables of learning behavior. These reflect learning without having to rely on self-reports of questionable data quality.

3 Methods

3.1 Setting of the experiment

The reported natural field experiment took place at a large public university covering various academic disciplines that is among the oldest and strongest research universities in Germany. Teaching at this university regularly takes place in lecture periods of 14 weeks, followed by an additional two to three weeks for examinations. Attending lectures, tutorial sessions, or office hours is not a prerequisite to attend or pass an examination. Thus, the entire teaching is intended to support the students’ learning processes. Students may decide on their own whether to make use of these offers or to study independently. The only thing that matters for students to pass the course and get a grade is one final written exam.

The conducted study aims to investigate the learning behavior of students in a course on introductory statistics. The course targets students from many different undergraduate study programs related to social sciences (the biggest groups being political science, sociology, and sports science), covers basic statistical concepts, and intends to build a basic understanding of statistical principles. The course takes place every summer semester, i.e., every year from mid-April to mid-July. Each year approximately 700–800 students enroll for the course at the beginning of the semester by registering in the course’s learning management system. Registration for the final exam is due 1 week before it takes place. On average, 30 to 40% of those who registered for the course in April register for the exam in July. The 60 to 70% who do not register for the exam have to take the course again the following year. There is no limit to the number of times a course can be repeated this way in this university system; only those who take the exam three times without passing it cannot continue their studies.

In regular years (including 2019), the course consists of five elements of teaching. This is a typical setting that exists in other courses as well. The five elements are:

1. The lecture takes place weekly in person from April to July and lasts 90 min. In 2019, the lecture was given by the first author of this paper and took place on Tuesdays starting at 04:15 pm.

2. Tutorial sessions of 90 min are given in person by experienced student assistants for smaller subgroups and take place weekly (ideally 20–30 students actively participate). In 2019, eight (identical) sessions were offered on Thursdays: two tutorials at 8:15 am, four tutorials at 12:15 pm, and two tutorials at 04:15 pm. Due to a bank holiday in Germany (in both 2019 and 2020), one of the first 13 weeks has no tutorial sessions, resulting in a total of twelve tutorial weeks.

3. Exercises are provided by the lecturer via a digital learning management system so that students can deepen the content and prepare for the examination. In 2019, students got access to about 20 quizzes a week via a new e-learning system (see next section), which added up to 249 quizzes during the semester. Students could use these for learning following their own schedule and repeat the exercises as often as they wanted to.

4. At the end of the lecture period, students were given the opportunity to self-assess their state of learning using a gamified quiz played in person in the lecture hall (Hobert and Berens, 2023). Additionally, instead of classic tutorial sessions, the teaching assistants repeated the course content in three 90-min in person presentations. Further, the students were provided with 152 additional online quizzes for self-study using the e-learning app.

5. The examination takes place as an electronic assessment in the university’s examination center. The examination is intended to be solved in 90 min.

3.2 Technical infrastructure used for the study

In spring 2019, the authors of this research introduced a novel e-learning app they developed for the course (Hobert and Berens, 2021, 2024) that replaced the learning management system used before. This app was supposed to support students in as many parts of learning as possible. Whereas the previous learning management system mainly focused on management tasks (i.e., sharing documents, managing participants, and making announcements), the novel e-learning app extended these management tasks by providing actual e-learning tools, such as formative quizzes with automated feedback, an audience response system to collect instant, anonymous feedback, access to a glossary for looking up definitions and important terms used in the lecture, and a video player to provide recordings via the e-learning app.

Besides these functionalities provided to students, the e-learning app also integrates learning analytics functionalities. Learning analytics was incorporated into the system by design and offers opportunities for collecting data about the students’ learning activities. For instance, data about system usage can be collected if the students opted in.

3.3 Intervention induced by the pandemic situation

Shortly after the decision in March 2020 for a lockdown, the authors designed the experiment. As the authors started researching the learning behavior of the students participating in the course in the summer term 2019 to evaluate the e-learning app (Hobert and Berens, 2024), the planned continuation in 2020 only needed to be adjusted slightly due to the COVID-19 situation. Due to the required transformation of teaching in 2020, the second observation period (summer semester 2020 started in April) incidentally became a treatment group.

To provide the students in 2020 with an adequate learning environment during the COVID-19 situation, the authors decided to keep the overall structure of the course by offering all five components described above. For each component, it was analyzed how it could best be provided to support the students. Whereas components 3 and 5 were offered exactly as before, adjustments needed to be made for components 1, 2, and 4.

As the university recommended switching large-scale lectures with more than 100 students to asynchronous video recordings, the lecturer provided the lecture content via the e-learning app described above. Fortunately, the lectures had been recorded in 2019 to provide them for exam preparation in later cohorts, which enabled the lecturer to post-process and upload the recordings to replace the lecture during ERT. This ensured that the students in 2020 got access to exactly the same lecture contents as in 2019. Only the presentation changed from synchronous in-class teaching in 2019 to asynchronous video recordings. In 2020, the video recordings were made available weekly following the 2019 schedule. The videos were available to the students until the examination.

As the tutorial sessions offered by student assistants are held in smaller groups, they could still be provided synchronously in 2020 as real-time video conferences. Thus, they were held in a synchronous setting like in 2019, and only the format changed from in-class sessions to real-time video conferences. If students could not attend the synchronous video conference, they could watch a recording of the tutorial session afterward, which only a few students did (less than 10% of tutorial participations). For organizational reasons, the tutorials also had to be moved to a different weekday and now took place on Mondays between 10 am and 4 pm. Since students in 2019 made great use of the opportunity to ask the tutors individual subject-related questions after the tutorials, and this was not possible in the same way in 2020, the tutors offered a weekly open office hour. The offerings for examination preparation were not changed, but they also had to be shifted from in-class settings to video conferences.

These changes from in-class teaching in 2019 to online teaching in 2020 are to be considered the intervention of the natural field experiment, while all other factors (including the e-learning app) were kept as stable as possible. In particular, all 401 quizzes offered in the e-learning app for practice (see section 3.1) remained exactly the same. Also for the examination, the same instrument was used in 2019 and 2020 as it had not been made accessible after its use in 2019.

3.4 Operationalization and data

To operationalize students’ learning behavior, data generated by the e-learning app was used. The e-learning app generates a pseudonymized log entry for each individual action taken by a student who opted-in to participate in research activities. The log entries encompass the type of action, a timestamp, and some (technical) metadata.

For each student, the following operationalization was used in this study based on data gathered using the e-learning app:

• Completed quizzes: number of quizzes solved by a student including all repetitions.

• Distinct completed quizzes: number of distinct quizzes solved by a student excluding all repetitions.

• Success rate of completed quizzes: percentage of correctly completed quizzes.

• Success rate of distinct completed quizzes: percentage of distinct quizzes completed correctly at least once.

• Number of participated tutorials: number of tutorial sessions a student attended. In 2020, watching the recording of a tutorial session was also counted as participation.

• Rate of active tutorial participations: number of tutorial sessions a student actively took part in. In contrast to the variable above, active participation was counted only if a student participated in audience response activities.

• Number of watched videos (only 2020): number of videos a student started to watch including all repetitions.

• Number of distinct watched videos (only 2020): number of videos a student started to watch excluding all repetitions.

• Number of active lecture participations (only in 2019): number of lectures a student actively took part in measured by participation in the audience response questions.
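The counting rules above can be illustrated with a minimal Python sketch. The log schema, the action labels (`quiz_completed`, `video_started`), and the helper `summarize` are assumptions made for illustration; the source only states that each log entry contains the type of action, a timestamp, and some metadata, so this is not the app's actual format.

```python
from collections import defaultdict

# Hypothetical log entries: (student_id, action, item_id, correct).
# Field names and action labels are invented for this sketch.
LOG = [
    ("s1", "quiz_completed", "q1", False),
    ("s1", "quiz_completed", "q1", True),
    ("s1", "quiz_completed", "q2", True),
    ("s1", "video_started", "v1", None),
    ("s1", "video_started", "v1", None),
    ("s2", "quiz_completed", "q1", False),
]


def summarize(entries):
    """Aggregate per-student learning-behavior variables from log entries."""
    quiz_attempts = defaultdict(list)  # student -> [(quiz_id, correct), ...]
    video_starts = defaultdict(list)   # student -> [video_id, ...]
    for student, action, item, correct in entries:
        if action == "quiz_completed":
            quiz_attempts[student].append((item, bool(correct)))
        elif action == "video_started":
            video_starts[student].append(item)

    stats = {}
    for student in sorted(set(quiz_attempts) | set(video_starts)):
        attempts = quiz_attempts.get(student, [])
        videos = video_starts.get(student, [])
        distinct = {quiz for quiz, _ in attempts}
        solved = {quiz for quiz, ok in attempts if ok}
        stats[student] = {
            # all attempts, repetitions included
            "completed_quizzes": len(attempts),
            # each quiz counted once
            "distinct_completed_quizzes": len(distinct),
            # share of attempts that were correct
            "success_rate": (
                sum(ok for _, ok in attempts) / len(attempts) if attempts else None
            ),
            # share of distinct quizzes solved correctly at least once
            "distinct_success_rate": (
                len(solved) / len(distinct) if distinct else None
            ),
            "watched_videos": len(videos),
            "distinct_watched_videos": len(set(videos)),
        }
    return stats


stats = summarize(LOG)
print(stats["s1"])
```

Tutorial and lecture participation could be counted in the same way from attendance and audience response entries, if the log records them as separate action types.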

3.5 Additional information on the conducted survey at the end of the digital semester

During the semester in 2020, conversations with students, student teaching assistants, and other lecturers indicated that the transformation to digital teaching induced by COVID-19 could be a threat to the students’ mental wellbeing. For instance, increased stress, insecurity, and anxiety were discussed as possible effects. To analyze whether these effects were relevant for the students in the digital experimental group (i.e., the 2020 cohort), the authors decided to approach these affective consequences of the transformed digital learning with a survey. This survey was designed by the authors of this research and made available via the e-learning app during the synchronously played game described as element four of the five course elements above. A total of 97 students responded to this survey, which corresponds to a response rate of over 90% of students present in the video conference in which the survey was advertised and approx. 13.5% of all students enrolled in the course.

In addition to questions to evaluate the course and the e-learning app, six items concerning the transformation of teaching were asked: The six statements to be evaluated were (originally posed in German): 1. I could spend less time on university issues than in other semesters due to (corona-related) care duties. 2. It was very burdensome for me to work so much at home instead of being able to leave the house and meet people at the university. 3. It was harder for me to motivate myself during this semester than it was in traditional semesters. 4. I had more problems with my self-organization this semester. 5. This semester, I benefited from more freedom in the organization of my studies. 6. The digital studies have increased my level of stress compared to a traditional semester. Students were asked to rate their agreement with these statements on a 7-point Likert scale labeled only at the endpoints from “agree absolutely” to “not agree at all.”

4 Results

In the pre-pandemic semester in 2019, 710 students registered for the course in April 2019 in the newly developed e-learning app whereas in April 2020, 722 students signed up in the digital course. In 2019, seven students chose not to opt in for research activities on their data; in 2020, only one student did so. Therefore, this study operates with n1 = 703 students for 2019 and n2 = 721 students for 2020. For each of these 1,424 students, extensive data collected with the e-learning app on their individual learning behavior is available (e.g., on practicing with the provided 401 distinct formative quizzes). In total, this provides a data basis of 10,957,536 learning-related log entries.

4.1 Students’ learning engagement

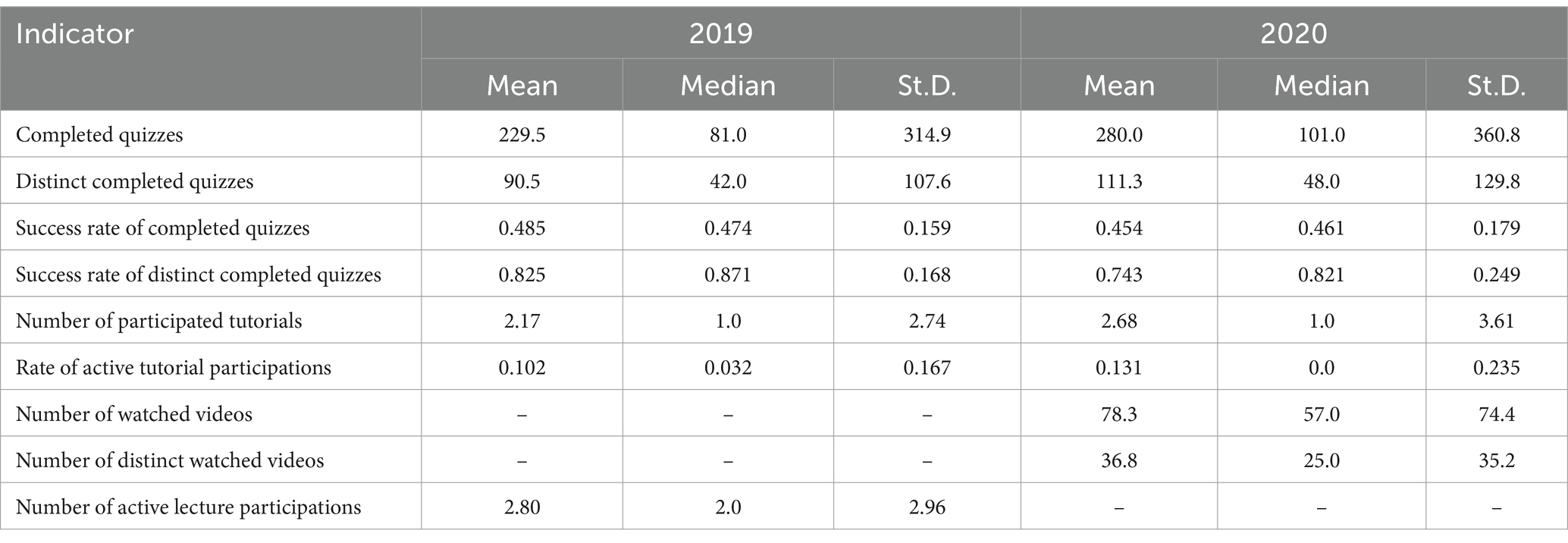

We base the first comparison of the two experimental groups on the students’ learning behavior. In 2019, students completed a total of 161,373 quizzes (including repetitions) using the 401 unique quizzes, compared to 201,893 quizzes completed in the digital semester. Adjusted for the number of participants, this means that in 2020 almost 22% more quizzes were completed per person, as can be seen in Table 1. Focusing on distinct quizzes, the difference rises to 22.9%. A contrary pattern was observed for the tutorial sessions. In 2019, 30.5% of the students on average took part in the tutorial sessions, with 19.8% of the students being active participants. In 2020, only 22.4% of students participated in the tutorial video conferences, with 13.1% being active participants.
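The per-person adjustment can be retraced from the totals and cohort sizes reported in this section. The following Python sketch uses only those reported figures; the variable names are illustrative.

```python
# Totals and cohort sizes as reported in the text (Section 4).
quizzes_completed = {2019: 161_373, 2020: 201_893}
students = {2019: 703, 2020: 721}

# Average number of completed quizzes per student in each year.
per_student = {year: quizzes_completed[year] / students[year] for year in students}

# Relative increase from 2019 to 2020 after adjusting for cohort size.
relative_increase = per_student[2020] / per_student[2019] - 1

for year, value in per_student.items():
    print(f"{year}: {value:.1f} quizzes per student")
print(f"relative increase: {relative_increase:.1%}")
```

The result comes out just under 22%, in line with the "almost 22%" stated above.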

In 2019, an average of 28.2% of the students attended the in-person lectures. In the digital semester, an average of 44.7% of the students watched lecture recordings each week. This advantage of the digital semester is also reflected in the fact that students rewatched videos on average 1.1 times. Relevant differences in heterogeneity between the two years cannot be found in any of the variables considered. The first observations about the learning behavior thus show that the learning engagement of the students was higher in almost every aspect in the Emergency Remote Teaching. Only the participation in the last remaining live element, the tutorial sessions, was lower than before. This interesting finding will be examined further in the following by looking at the distribution of learning.

4.2 Distribution of students’ learning

Figure 1 shows that the differences identified above between both cohorts regarding learning engagement can be observed over the entire period after week one. Students in the digital semester completed more quizzes in each single week and worked on more distinct quizzes. Additionally, more students watched lecture videos throughout the entire semester, compared with in-class lectures in 2019. Only participation and activity in tutorials were consistently lower in the digital semester. Although this finding is surprising, it does not appear to be an isolated case. Jeffery and Bauer (2020) in their research also find that participation in the remaining live events is declining and students are withdrawing more than necessary into self-study.

Figure 1. Development of the learning behavior during the lecture periods on a weekly basis. (A) Average number of completed quizzes (including repetitions) per student. (B) Average number of completed distinct quizzes (without repetitions) per student. (C) Rate of participation in tutorials and in grey rate of active participation. (D) Rate of participants in lectures (2019)/watching videos (2020).

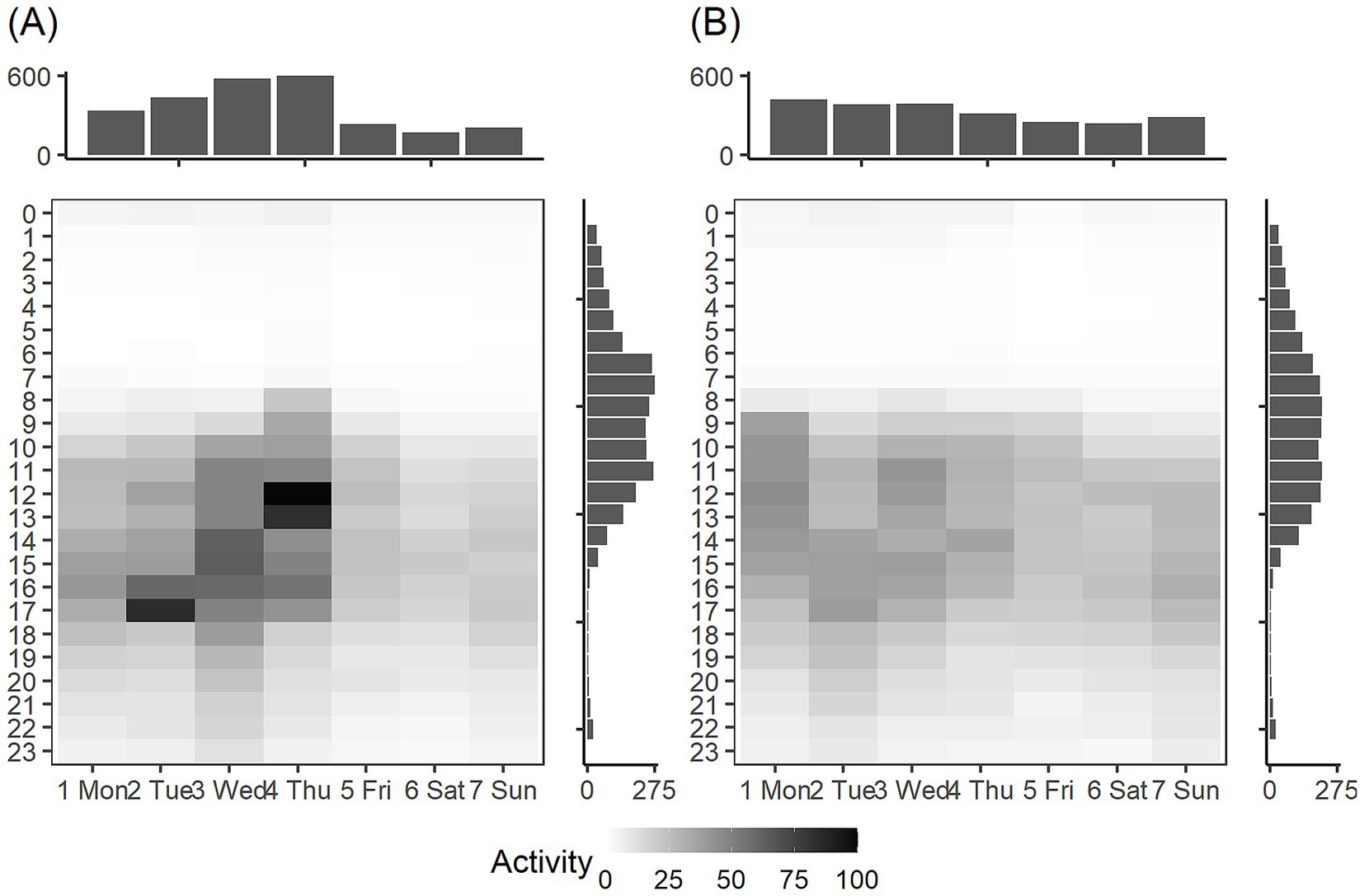

Figure 2 supports the impression gained by illustrating that students in the digital semester learned in a much more distributed manner across the day and the week and were much more engaged in learning on the weekends. The heatmaps visualize the distribution of learning activities across weekdays and times of the day.

Figure 2. Heatmap visualizing the distribution of learning activities across weekdays and time. (A) Activity distribution in 2019. (B) Activity distribution in 2020.

In 2019, the highest activity could be recognized on Tuesdays and Thursdays during synchronous learning activities (lecture resp. tutorial sessions). Additionally, increased activity could be detected on days before the synchronous learning activities took place (Monday and Wednesday). This can be explained by preparatory activities of the students (e.g., repeating the contents of the previous week on Mondays or solving quizzes as preparation for the tutorial sessions on Wednesdays). It is visible that the learning activity followed the schedule of the course and that learning decreased rapidly during the weekend.

In 2020, the learning activity was more homogeneously distributed, as there are no clear maximum points. This can be explained by the absence of synchronous lectures and lower participation in tutorial sessions. In contrast to 2019, there was no substantial drop in the learning activities at the weekend, even though the activity decreased slightly.
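A weekday-by-hour distribution such as the one shown in the heatmaps can be obtained by binning log timestamps. The Python sketch below is a minimal illustration; the three timestamps are invented examples, and the source does not describe how the figure was actually produced.

```python
from collections import Counter
from datetime import datetime

# Invented example timestamps (ISO 8601); not taken from the study's logs.
TIMESTAMPS = [
    "2020-05-04T10:15:00",  # a Monday
    "2020-05-04T10:45:00",  # same Monday, same hour
    "2020-05-09T21:05:00",  # a Saturday
]


def activity_grid(stamps):
    """Return a 7 x 24 grid of counts: rows Monday..Sunday, columns hour 0..23."""
    counts = Counter()
    for stamp in stamps:
        moment = datetime.fromisoformat(stamp)
        counts[(moment.weekday(), moment.hour)] += 1
    return [[counts[(day, hour)] for hour in range(24)] for day in range(7)]


def weekend_share(grid):
    """Share of all logged actions that fall on Saturday or Sunday."""
    total = sum(sum(row) for row in grid)
    weekend = sum(grid[5]) + sum(grid[6])
    return weekend / total if total else 0.0


grid = activity_grid(TIMESTAMPS)
print(grid[0][10], grid[5][21], round(weekend_share(grid), 2))
```

Comparing `weekend_share` between cohorts would be one simple way to quantify the weekend pattern described here.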

Regarding learning behavior, we draw the overall conclusion that students were substantially more engaged in the asynchronous phases of learning than in the traditional year and spread their learning more evenly across the week. However, they were less active in synchronous tutorial sessions. Considering that substantially less synchronous teaching was offered in the digital semester, this finding is counterintuitive. It was assumed that students would attend as much synchronous teaching and social interaction as possible instead of learning individually. Instead, however, students apparently responded to physical isolation by isolating themselves even further than necessary and learning in isolation.

4.3 Students’ learning success

Given the generally higher asynchronous learning engagement in the digital semester, the consequences for students’ learning success must be studied. We consider three indicators important for students’ learning success. The first indicator is course dropout. Since all course offerings are voluntary and there is no fixed schedule for the exam either, students do not have to take the exam but can postpone it without any negative consequences if they prefer. As a result, in the year of traditional teaching, only 34.0% of the 703 students registered in the e-learning app took the examination, which must generally be considered a normal dropout for the course. In the digital semester, 37.6% of the 721 students took the examination. Consequently, the digital semester scores slightly better on this indicator (although the difference is not significant, p = 0.15). The second indicator is the pass rate in the exam. In both years, students had to earn at least 40% of the achievable points to pass the exam. In the traditional semester, 77% of the students passed the examination, whereas the pass rate in the digital semester was higher at 83.0% (although the difference is not significant, p = 0.09). The third indicator is the average number of points received on the examination, which points in the same direction. In the traditional semester, an average of 54.5% of the points were achieved, whereas in the digital semester it was 60.5%. This represents a highly significant difference between the two cohorts. Students in the digital semester therefore performed better on all three success indicators, with one of the differences being highly significant. In this sense, the Emergency Remote Teaching can be considered successful, and the counterintuitive finding of Bawa (2020) and Iglesias-Pradas et al. (2021) is confirmed in our data as well. At the same time, the increased learning engagement offers a possible explanation for the increased learning success in ERT. To examine this more closely, we investigate in the following to what extent the learning behavior variables can be linked to learning success.
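A comparison of two participation rates like the first indicator can be sketched as a two-proportion test. The source does not name the test that was used, so the pooled two-proportion z-test below is an assumption, and the counts (239 of 703 and 271 of 721) are reconstructed from the rounded percentages rather than taken from the data. The resulting p-value therefore need not match the reported one exactly.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Assumed counts, reconstructed from 34.0% of 703 and 37.6% of 721.
z, p_value = two_proportion_z_test(239, 703, 271, 721)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

Under these assumptions the difference is not significant at the 5% level, which agrees with the conclusion drawn in the text.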

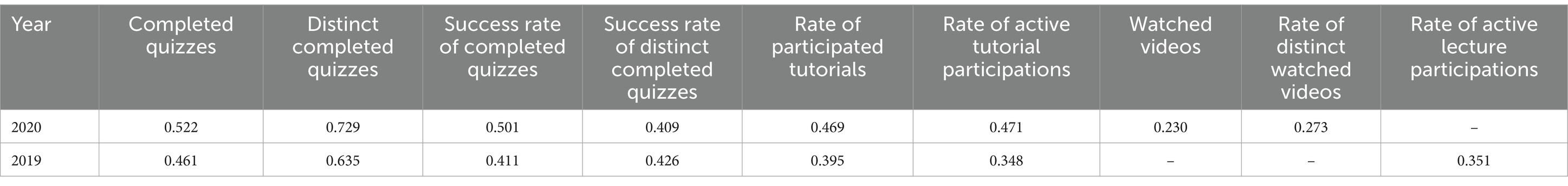

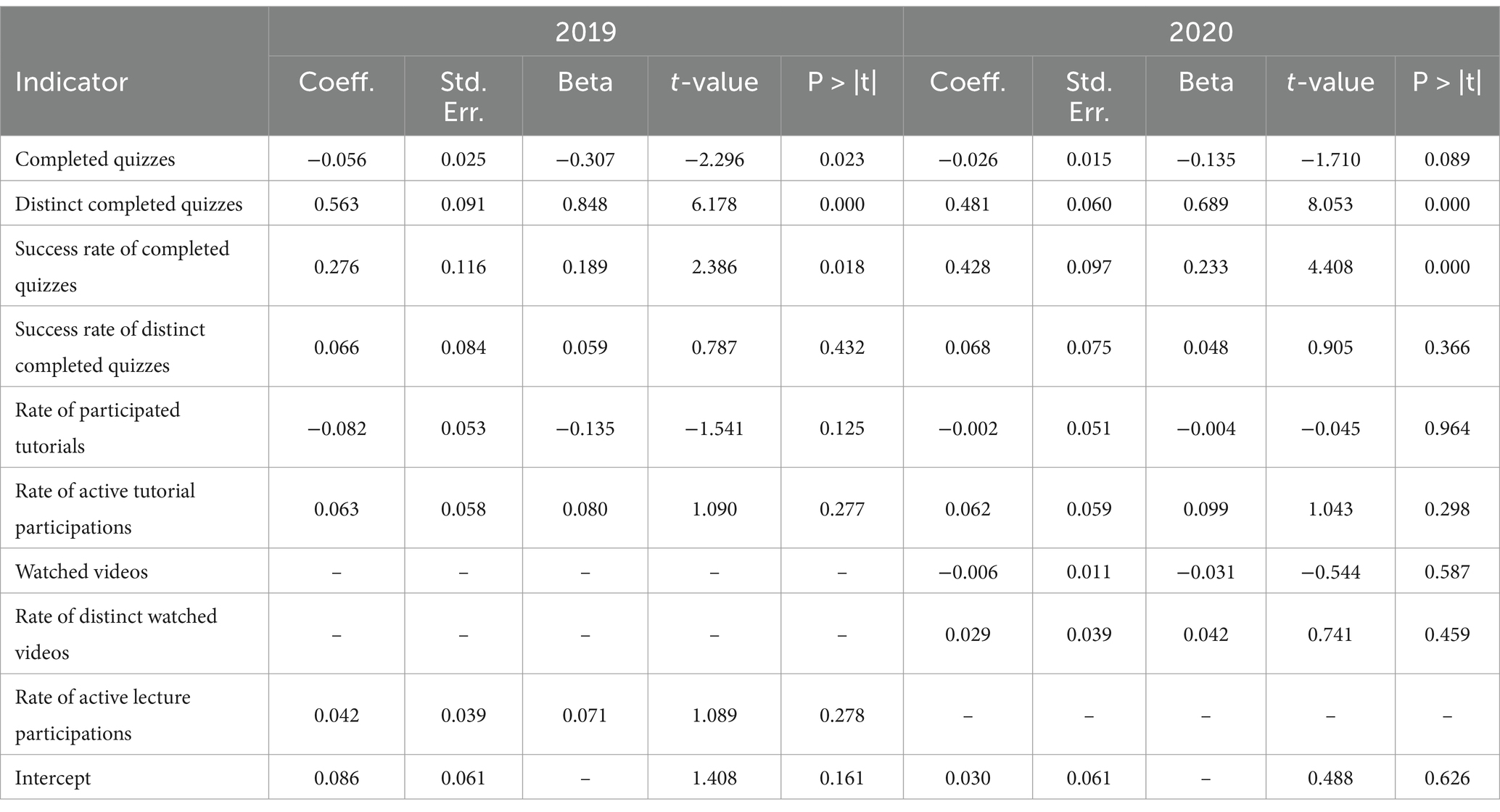

4.4 Explanations of the learning success

Thus, students in the digital semester were not only more engaged in asynchronous activities but also more successful in their examinations. The smaller number of synchronous sessions and the lower participation in them do not seem to have had a strongly negative impact on success, as the correlation between synchronous learning engagement in tutorials and exam score also shows. The correlations in Table 2 indicate that our operationalizations of self-study correlate most highly with success in the examination. The number of distinct quizzes completed stands out as the most highly correlated indicator, but the total number of completed quizzes also correlates highly. (Active) participation in synchronous events, in both tutorials and lectures, correlates with examination success only moderately. Watching lecture videos correlates only slightly. The comparison of the two years shows that self-study is even more important in the digital semester, as it correlates more strongly with examination success than in the traditional semester. The corresponding linear regression model in Table 3 shows that, because of the many correlations among the predictors, the importance of the number of distinct completed quizzes as a predictor increases even further, while many other elements of student learning have no significant link to examination success. Overall, it can be assumed that the students increased their total learning engagement and focused (whether consciously or unconsciously) on the more predictive activity of practicing quizzes. In this way, the higher learning success may indeed be explained by changed learning behavior.

Table 2. Pearson correlation matrix between learning behavior and score in the final examination in both years.

Table 3. Multiple linear regressions explaining score in the final examination by all learning behavior measures at the same time in both years.
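The observation that correlated predictors shift importance toward a single variable in a joint regression can be illustrated with the closed-form solution for two standardized predictors. This is a sketch under stated assumptions: the correlation values below are hypothetical and are not taken from Table 2, the function name `standardized_betas` is invented for illustration, and the actual models in Table 3 include more than two predictors.

```python
def standardized_betas(r_y1, r_y2, r_12):
    """Standardized OLS coefficients for two predictors.

    r_y1, r_y2: Pearson correlations of each predictor with the outcome.
    r_12: Pearson correlation between the two predictors.
    """
    if abs(r_12) >= 1.0:
        raise ValueError("Predictors must not be perfectly collinear.")
    denominator = 1.0 - r_12 ** 2
    beta_1 = (r_y1 - r_y2 * r_12) / denominator
    beta_2 = (r_y2 - r_y1 * r_12) / denominator
    return beta_1, beta_2


# Hypothetical values (NOT from Table 2): both predictors correlate clearly
# with the exam score, but they also correlate strongly with each other.
beta_distinct, beta_total = standardized_betas(r_y1=0.6, r_y2=0.5, r_12=0.8)
print(f"distinct quizzes: {beta_distinct:.3f}, total quizzes: {beta_total:.3f}")
```

With these hypothetical inputs, the first predictor keeps a coefficient of about 0.56 while the second drops to about 0.06, even though both correlate with the outcome at 0.5 or higher. This mirrors the pattern described above, where one quiz measure dominates the regression once the overlap between predictors is accounted for.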

5 Discussion

Summarizing the data-driven learning analytics, digital teaching and learning during the rapid transformation caused by the COVID-19 pandemic seems to have been successful from a cognitive perspective. This, however, neglects the affective perspective on students’ lives in general and on learning in particular (Dhawan, 2020; Fairlie and Loyalka, 2020). Research on previous crises and the latest insights on the COVID-19 situation indicate major effects on affective layers (Mucci et al., 2016; Salari et al., 2020). Not only concerns about personal health but also societal changes such as physical distancing result in increased stress, insecurity, and even anxiety (Barzilay et al., 2020). Ignoring these effects does not seem appropriate when analyzing learning. Therefore, a holistic evaluation of learning during COVID-19 should incorporate an analysis of the affective layers.

This is reflected in a survey of the students who participated in the digital course in 2020, which we decided to add to our natural experiment while the second cohort was running. The students reported an increased burden due to lack of social contact (65.6% very high or high burden). They further experienced an increased level of stress (54.2%), and 58.3% of the students experienced difficulties motivating themselves to learn.

Although these results cannot be directly compared with the 2019 cohort, the retrospective comparison of the 2020 students shows that besides the physical isolation caused by the COVID-19 lockdowns, the students experienced problematic social isolation. Surprisingly, they did not react by increasing their participation in synchronous learning. They put themselves in a ‘learning isolation’ instead of participating in the offered cooperative tutorial sessions. Combining both perspectives, an overall evaluation of learning during COVID-19 shows positive impacts in terms of students’ learning engagement and examination success, but negative consequences for students’ affective life due to a (partially self-chosen) three-fold isolation.

This finding can be interpreted in two ways with regard to the COVID-19 lockdowns. On the one hand, the emergency remote teaching formats were effective to the extent that students learned something and, in the course studied, even more than before. ERT was therefore a successful response to the situation. On the other hand, ERT was a burden for many students and put their resilience to the test. It is unclear whether a much longer phase of ERT would have produced worse results, as resilience cannot be maintained indefinitely in an exceptional situation.

At the same time, these results are highly relevant for students who, in dual-mode teaching situations or in other formats, do not have the choice of attending classes in person because, for example, they have care work to do or commuting distances in rural areas are unreasonable. These students could find themselves in a similar situation of involuntary distancing. This study shows that greater support for these students could be necessary, but also leaves open the need for research into what support could best help these students.

Data availability statement

Preprocessed data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical approval was not required for the studies involving humans because the study was approved by the institution’s data privacy officer. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

FB: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Validation, Visualization, Writing – original draft, Writing – review & editing. SH: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The development and introduction of the e-learning system were funded by the Stifterverband (H120 5228 5008 32762).

Acknowledgments

The authors thank the students of both courses and the teaching assistants for their active participation and the enjoyable courses. We acknowledge support in funding the publication of this work by the Open Access Publication Funds of the Göttingen University.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. https://www.deepl.com/de/translator was used to help formulate parts of the paper by translating parts of the text back and forth between English and the first language of the authors.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aldhahi, M. I., Alqahtani, A. S., Baattaiah, B. A., and Al-Mohammed, H. I. (2022). Exploring the relationship between students' learning satisfaction and self-efficacy during the emergency transition to remote learning amid the coronavirus pandemic: a cross-sectional study. Educ. Inf. Technol. 27, 1323–1340. doi: 10.1007/s10639-021-10644-7

Alvarez, A. (2020). The phenomenon of learning at a distance through emergency remote teaching amidst the pandemic crisis. Asian J. Distance Educ. 15, 144–153. Available online at: https://zenodo.org/record/3881529#.YppzjOzP1EY

Avella, J. T., Kebritchi, M., Nunn, S. G., and Kanai, T. (2016). Learning analytics methods, benefits, and challenges in higher education: a systematic literature review. Online Learn. 20, 13–29. doi: 10.5281/zenodo.3881528

Barzilay, R., Moore, T. M., Greenberg, D. M., DiDomenico, G. E., Brown, L. A., White, L. K., et al. (2020). Resilience, COVID-19-related stress, anxiety and depression during the pandemic in a large population enriched for healthcare providers. Transl. Psychiatry 10:291. doi: 10.1038/s41398-020-00982-4

Bawa, P. (2020). Learning in the age of SARS-COV-2: a quantitative study of learners’ performance in the age of emergency remote teaching. Comput. Educ. Open 1:100016. doi: 10.1016/j.caeo.2020.100016

Beege, M., Krieglstein, F., and Arnold, C. (2022). How instructors influence learning with instructional videos - the importance of professional appearance and communication. Comput. Educ. 185:104531. doi: 10.1016/j.compedu.2022.104531

Bond, M., Bedenlier, S., Marín, V. I., and Händel, M. (2021). Emergency remote teaching in higher education: mapping the first global online semester. Int. J. Educ. Technol. High. Educ. 18:50. doi: 10.1186/s41239-021-00282-x

Bozkurt, A., and Sharma, R. C. (2020). Emergency remote teaching in a time of global crisis due to coronavirus pandemic. Asian J. Distance Educ. 15, 1–6. doi: 10.5281/ZENODO.3778083

Bragg, L. A., Walsh, C., and Heyeres, M. (2021). Successful design and delivery of online professional development for teachers: a systematic review of the literature. Comput. Educ. 166:104158. doi: 10.1016/j.compedu.2021.104158

Chatti, M. A., Dyckhoff, A. L., Schroeder, U., and Thüs, H. (2012). A reference model for learning analytics. Int. J. Technol. Enhanced Learn. 4, 318–331. doi: 10.1504/IJTEL.2012.051815

Chatziralli, I., Ventura, C. V., Touhami, S., Reynolds, R., Nassisi, M., Weinberg, T., et al. (2020). Transforming ophthalmic education into virtual learning during COVID-19 pandemic: a global perspective. Eye (London, England) 35, 1459–1466. doi: 10.1038/s41433-020-1080-0

Desvars-Larrive, A., Dervic, E., Haug, N., Niederkrotenthaler, T., Chen, J., Di Natale, A., et al. (2020). A structured open dataset of government interventions in response to COVID-19. Sci. Data 7:285. doi: 10.1038/s41597-020-00609-9

DeVaney, T. A. (2010). Anxiety and attitude of graduate students in on-campus vs. online statistics courses. J. Stat. Educ. 18. doi: 10.1080/10691898.2010.11889472

Dhawan, S. (2020). Online learning: a panacea in the time of COVID-19 crisis. J. Educ. Technol. Syst. 49, 5–22. doi: 10.1177/0047239520934018

Ezra, O., Cohen, A., Bronshtein, A., Gabbay, H., and Baruth, O. (2021). Equity factors during the COVID-19 pandemic: difficulties in emergency remote teaching (ERT) through online learning. Educ. Inf. Technol. 26, 7657–7681. doi: 10.1007/s10639-021-10632-x

Fairlie, R., and Loyalka, P. (2020). Schooling and Covid-19: lessons from recent research on EdTech. NPJ Sci. Learn. 5:13. doi: 10.1038/s41539-020-00072-6

Fidalgo, P., Thormann, J., Kulyk, O., and Lencastre, J. A. (2020). Students’ perceptions on distance education: a multinational study. Int. J. Educ. Technol. High. Educ. 17:18. doi: 10.1186/s41239-020-00194-2

Flanigan, A. E., Akcaoglu, M., and Ray, E. (2022). Initiating and maintaining student-instructor rapport in online classes. Internet High. Educ. 53:100844. doi: 10.1016/j.iheduc.2021.100844

Gašević, D., Tsai, Y.-S., and Drachsler, H. (2022). Learning analytics in higher education – stakeholders, strategy and scale. Internet High. Educ. 52:100833. doi: 10.1016/j.iheduc.2021.100833

Gray, G., Cooke, G., Murnion, P., Rooney, P., and O'Rourke, K. C. (2022). Stakeholders’ insights on learning analytics: perspectives of students and staff. Comput. Educ. 187:104550. doi: 10.1016/j.compedu.2022.104550

Gundlach, E., Richards, K. A. R., Nelson, D., and Levesque-Bristol, C. (2015). A comparison of student attitudes, statistical reasoning, performance, and perceptions for web-augmented traditional, fully online, and flipped sections of a statistical literacy class. J. Stat. Educ. 23. doi: 10.1080/10691898.2015.11889723

Hobert, S., and Berens, F. (2021). “Learning analytics for students: a field study on giving students access to their own data” in Visualizations and dashboards for learning analytics: advances in analytics for learning and teaching. eds. M. Sahin and D. Ifenthaler (Cham: Springer), 213–231.

Hobert, S., and Berens, F. (2023). Play to get instant feedback: using game-based apps to overcome the feedback gap in lectures and online teaching. Commun. Assoc. Inf. Syst. 53, 491–507. doi: 10.17705/1CAIS.05321

Hobert, S., and Berens, F. (2024). Developing a chatbot-based digital tutor as intermediary between students, teaching assistants, and lecturers. Educ. Technol. Res. Dev. 72, 797–818. doi: 10.1007/s11423-023-10293-2

Iglesias-Pradas, S., Hernández-García, Á., Chaparro-Peláez, J., and Prieto, J. L. (2021). Emergency remote teaching and students' academic performance in higher education during the COVID-19 pandemic: a case study. Comput. Hum. Behav. 119:106713. doi: 10.1016/j.chb.2021.106713

Jeffery, K. A., and Bauer, C. F. (2020). Students’ responses to emergency remote online teaching reveal critical factors for all teaching. J. Chem. Educ. 97, 2472–2485. doi: 10.1021/acs.jchemed.0c00736

Johnson, N., Veletsianos, G., and Seaman, J. (2020). U.S. faculty and administrators’ experiences and approaches in the early weeks of the COVID-19 pandemic. Online Learn. 24, 6–21. doi: 10.24059/olj.v24i2.2285

Kavitha, G., and Raj, L. (2017). Educational data mining and learning analytics educational assistance for teaching and learning. Int. J. Comput. Organ. Trends 41, 21–25. doi: 10.14445/22492593/IJCOT-V41P304

Knudson, D. (2020). A tale of two instructional experiences: student engagement in active learning and emergency remote learning of biomechanics. Sports Biomech. 22, 1485–1495. doi: 10.1080/14763141.2020.1810306

Liu, K. (2020). How I faced my coronavirus anxiety. Science 367:1398. doi: 10.1126/science.367.6484.1398

Long, P., and Siemens, G. (2011). Penetrating the fog: analytics in learning and education. Educ. Rev. 46, 30–40.

Matarirano, O., Gqokonqana, O., and Yeboah, A. (2021). Students’ responses to multi-modal emergency remote learning during COVID-19 in a South African higher institution. Res. Soc. Sci. Technol. 6, 199–218. doi: 10.46303/ressat.2021.19

McPartlan, P., Rutherford, T., Rodriguez, F., Shaffer, J. F., and Holton, A. (2021). Modality motivation: selection effects and motivational differences in students who choose to take courses online. Internet High. Educ. 49:100793. doi: 10.1016/j.iheduc.2021.100793

Mucci, N., Giorgi, G., Roncaioli, M., Fiz Perez, J., and Arcangeli, G. (2016). The correlation between stress and economic crisis: a systematic review. Neuropsychiatr. Dis. Treat. 12, 983–993. doi: 10.2147/NDT.S98525

Oinas, S., Hotulainen, R., Koivuhovi, S., Brunila, K., and Vainikainen, M.-P. (2022). Remote learning experiences of girls, boys and non-binary students. Comput. Educ. 183:104499. doi: 10.1016/j.compedu.2022.104499

Pei, L., and Wu, H. (2019). Does online learning work better than offline learning in undergraduate medical education? A systematic review and meta-analysis. Med. Educ. Online 24:1666538. doi: 10.1080/10872981.2019.1666538

Petillion, R. J., and McNeil, W. S. (2020). Student experiences of emergency remote teaching: impacts of instructor practice on student learning, engagement, and well-being. J. Chem. Educ. 97, 2486–2493. doi: 10.1021/acs.jchemed.0c00733

Rahiem, M. D. H. (2020). The emergency remote learning experience of university students in Indonesia amidst the COVID-19 crisis. Int. J. Learn. Teach. Educ. Res. 19, 1–26. doi: 10.26803/ijlter.19.6.1

Saavedra, J. (2020). Educational challenges and opportunities of the coronavirus (COVID-19) pandemic. Available online at: https://blogs.worldbank.org/education/educational-challenges-and-opportunities-covid-19-pandemic (Accessed September 29, 2020).

Salari, N., Hosseinian-Far, A., Jalali, R., Vaisi-Raygani, A., Rasoulpoor, S., Mohammadi, M., et al. (2020). Prevalence of stress, anxiety, depression among the general population during the COVID-19 pandemic: a systematic review and meta-analysis. Glob. Health 16:57. doi: 10.1186/s12992-020-00589-w

Schumacher, C., and Ifenthaler, D. (2021). Investigating prompts for supporting students' self-regulation: a remaining challenge for learning analytics approaches? Internet High. Educ. 49:100791. doi: 10.1016/j.iheduc.2020.100791

Sharma, M., Onta, M., Shrestha, S., Sharma, M. R., and Bhattarai, T. (2021). The pedagogical shift during COVID-19 pandemic: emergency remote learning practices in nursing and its effectiveness. Asian J. Distance Educ. 16, 98–110.

Shin, M., and Hickey, K. (2021). Needs a little TLC: examining college students’ emergency remote teaching and learning experiences during COVID-19. J. Furth. High. Educ. 45, 973–986. doi: 10.1080/0309877X.2020.1847261

Shotwell, M., and Apigian, C. H. (2015). Student performance and success factors in learning business statistics in online vs. on-ground classes using a web-based assessment platform. J. Stat. Educ. 23. doi: 10.1080/10691898.2015.11889727

Soesmanto, T., and Bonner, S. (2019). Dual mode delivery in an introductory statistics course: design and evaluation. J. Stat. Educ. 27, 90–98. doi: 10.1080/10691898.2019.1608874

Sun, L., Tang, Y., and Zuo, W. (2020). Coronavirus pushes education online. Nat. Mater. 19:687. doi: 10.1038/s41563-020-0678-8

Tang, Y. M., Chen, P. C., Law, K. M. Y., Wu, C. H., Lau, Y., Guan, J., et al. (2021). Comparative analysis of student's live online learning readiness during the coronavirus (COVID-19) pandemic in the higher education sector. Comput. Educ. 168:104211. doi: 10.1016/j.compedu.2021.104211

Trust, T., and Whalen, J. (2020). Should teachers be trained in emergency remote teaching? Lessons learned from the COVID-19 pandemic. J. Technol. Teach. Educ. 28, 189–199. doi: 10.70725/307718pkpjuu

Wayne, D. B., Green, M., and Neilson, E. G. (2020). Medical education in the time of COVID-19. Sci. Advances 6:eabc7110. doi: 10.1126/sciadv.abc7110

Weeden, K., and Cornwell, B. (2020). The small-world network of college classes: implications for epidemic spread on a university campus. Sociol. Sci. 7, 222–241. doi: 10.15195/v7.a9

World Health Organization. (2020). Coronavirus disease 2019 (COVID-19): situation report – 72. Available online at: https://www.who.int/docs/default-source/coronaviruse/situation-reports/20200401-sitrep-72-covid-19.pdf?sfvrsn=3dd8971b_2 (Accessed September 29, 2020).

Zhang, J.-H., Zhang, Y.-X., Zou, Q., and Huang, S. (2018). What learning analytics tells us: group behavior analysis and individual learning diagnosis based on long-term and large-scale data. J. Educ. Technol. Soc. 21, 245–258. Available online at: http://www.jstor.org/stable/26388404

Keywords: COVID-19 pandemic, distance education, online learning, emergency remote teaching, learning analytics, natural experiment, higher education

Citation: Berens F and Hobert S (2025) Learning during COVID-19: (Too) isolated yet successful. Front. Educ. 10:1549202. doi: 10.3389/feduc.2025.1549202

Edited by: Mei Tian, Xi’an Jiaotong University, China

Reviewed by: Francis Thaise A. Cimene, University of Science and Technology of Southern Philippines, Philippines; Denice Hood, University of Illinois at Urbana-Champaign, United States

Copyright © 2025 Berens and Hobert. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Florian Berens, florian.berens@uni-tuebingen.de

†Present addresses: Sebastian Hobert, TH Lübeck, Lübeck, Germany

‡ORCID: Florian Berens, orcid.org/0000-0002-4271-9086

Sebastian Hobert, orcid.org/0000-0003-3621-0272
