- 1Department of Education, Literatures, Intercultural Studies, Languages and Psychology (FORLILPSI), University of Florence, Florence, Italy

- 2Department of Political and Social Sciences, University of Bologna, Bologna, Italy

Introduction: Recent decades have seen an exponential increase in information and robotic technologies for remote learning and rehabilitation. Such procedures are associated with a decrease in human interaction and “in person” control of responses, characteristics that, especially when children or youth are involved, can affect learning performance. Thus, online quantitative and qualitative indicators of a child's psychological engagement are essential to personalize the interaction with the technological device. According to the literature, studies on child engagement during digitalized or robotic tasks vary in terms of underpinning constructs, technological tools, measures, and results obtained.

Methods: This systematic review was conducted with the general aim to provide a theoretical and methodological framework of children's engagement during digitalized and robotic tasks. The review included 27 studies conducted between 2014 and 2023. The sample size ranged from 5 to 299, including typically and atypically developing children, aged between 6 and 18 years.

Results: The results suggest the need for a transversal approach that simultaneously covers the emotional, behavioral, and cognitive dimensions of engagement, using diverse tools such as self-report questionnaires, video recordings, and eye-tracking. Although few studies have examined the relationship between children's engagement and task performance, existing evidence suggests a positive association between emotional, behavioral, and cognitive engagement and both task performance and skill acquisition.

Discussion: These results have implications for setting adequate protocols when using information and robotic technologies in child education and rehabilitation.

Systematic review registration: https://www.crd.york.ac.uk/PROSPERO/view/CRD42024528719, identifier CRD42024528719.

1 Introduction

Compared to previous generations, today's children demonstrate a high level of digital proficiency and knowledge: they embrace technology as a means of learning and entertainment, dedicating a substantial amount of time to technological devices (Duradoni et al., 2022). Besides the acknowledged risks associated with this prevailing trend, information and communication technologies (ICTs) are demonstrating promising potential for enhancing learning (Di Lieto et al., 2021; Groccia, 2018; Ruffini et al., 2022; Stephen et al., 2008) and cognitive processes (Drigas et al., 2015; Noorhidawati et al., 2015) in children with typical development or special needs, within everyday settings such as households and schools. These contexts are particularly important since they are the places where children spend most of their time during development, from infancy through adolescence (Horvat, 1982; Pecini et al., 2019; Nadeau et al., 2020).

Additionally, ICTs have the potential to make the learning process easier and more enjoyable, for example by gamifying learning content (Brewer et al., 2013; De Aguilera and Mendiz, 2003). Gamifying refers to the application of game design elements—such as points, challenges, and rewards—in non-game contexts to increase motivation and engagement. This strategy has been shown to improve learning outcomes by making educational activities more interactive and rewarding (Deterding et al., 2011; Albertazzi et al., 2019). It is important to distinguish between formal game-based learning, which employs structured digital games with clear goals, rules, and feedback, and more open-ended or creative digital play activities that utilize technology in less structured ways. Such activities include exploratory, imaginative, or collaborative tasks that often emphasize creativity, social interaction, or free expression rather than competition or explicit challenges. These varied forms of digital play are widely used in educational settings and contribute differently to children's learning and development, offering benefits such as fostering creativity, problem-solving, and social skills (Clark et al., 2016). Among these, digital games stand out as a particularly influential modality due to their structured nature and strong potential for engagement. Digital games represent a fundamental and developmentally appropriate modality through which children explore, learn, and interact with the world. They possess core features, such as defined goals, rules, feedback systems, challenges, interactivity, and narrative structures that naturally support attention, emotional involvement, and intrinsic motivation. These characteristics allow digital games to create immersive and engaging learning environments where children are encouraged to participate actively, solve problems, and persist in the face of difficulty. 
As such, game-based activities have become increasingly relevant in educational contexts, especially those involving technology, due to their capacity to enhance engagement and promote meaningful learning experiences (Breien and Wasson, 2021). Indeed, children often lack motivation to continue learning if the process is tedious, cognitively demanding, and lacks stimulation (Dykstra Steinbrenner and Watson, 2015; Macklem, 2015). Thus, using innovative technology in learning not only enhances children's knowledge and skills but also provides enjoyable experiences through activities like gaming, fostering a sense of joy and pleasure (Hui et al., 2014). Furthermore, the concept of “fun” or “enjoyment,” frequently associated with digital games and play, warrants critical consideration. While positive affect and motivation can enhance engagement and learning, effective educational experiences do not necessarily depend on constant feelings of fun. Play-based learning may also involve moments of challenge, frustration, and sustained effort, which are essential for deep learning and cognitive growth (Whitton, 2018). Therefore, the educational value of digital play lies not solely in entertainment, but in its capacity to engage learners emotionally, cognitively, and behaviorally through meaningful and sometimes demanding experiences.

Finally, the incorporation of technology within educational and intervention settings holds promising prospects even in terms of efficiency and efficacy in time and cost for families, educational and clinical institutions, and policy practitioners involved. Especially in remote format, technology can offer significant advantages by facilitating low-cost, intensive, and personalized exercises (Alexopoulou et al., 2019; Pecini et al., 2019; Rivella et al., 2023; Sandlund et al., 2009; Paneru and Paneru, 2024).

Notwithstanding, to make remote ICT services as useful and personalized as possible for students and young pupils, it is imperative to gather information concerning children's engagement during the interaction with the device, that is, online quantitative and qualitative indicators of the learning process and of the child's emotional and cognitive status. Indeed, engagement research is fundamental for the creation of digital interventions (Nahum-Shani et al., 2022) and in the field of human-computer interaction (Doherty and Doherty, 2019). In this context, children's actions are strongly influenced both by situational factors, linked to the characteristics of the digital task, and by individual factors, such as personal cognitive skills, emotional needs, and motivational tendencies. The combination of these contextual and personal factors can determine different forms of engagement, which then predict success in digital learning. This can be particularly important in developmental ages or in the case of neurodevelopmental disorders, as they are characterized by high intersubject variability in children's needs that can affect the success of the remote intervention (Di Lieto et al., 2020). In virtual environments, particularly during play or learning activities, monitoring engagement can help address critical issues such as content personalization, improved accessibility, integration with assistive technologies, and the optimization of strategies involving augmented reality and immersive educational environments to support more effective treatment approaches. Augmented reality refers to technology that overlays digital content (such as images, sounds, or information) onto the real world, enhancing the user's perception of their environment. 
Immersive and interactive educational environments, on the other hand, are digitally mediated settings designed to deeply engage learners through multisensory input and real-time feedback, encouraging active participation and experiential learning. Tracking children's engagement also contributes to ensuring the reliability of data collected during interactions with robots, digital tasks, or immersive environments. This is especially important for applications involving atypical developmental conditions and for the development of algorithms for assessment and intervention (Paneru and Paneru, 2024; Paneru et al., 2024).

Nevertheless, researchers continue to face numerous challenges in understanding engagement, both due to its definition, which yields various and sometimes conflicting interpretations, and its multidimensional nature, which makes measurement challenging.

Difficulties in defining and measuring engagement can hinder the development of practical applications aimed at supporting children's learning and skill acquisition through more targeted and informed use of technology. It is therefore essential to systematize the conceptualization of the “child's engagement” within robotic and digital contexts, in order to clarify its components, identify the influencing factors, and determine the most effective methodologies for measuring it accurately and consistently. A systematic review of the existing literature can offer a theoretical framework for the construct, as well as support the identification and selection of reliable and valid tools to monitor children's engagement during interactions with digital and robotic technologies. Moreover, findings from the literature can provide valuable insights into the role of emotional, cognitive, and behavioral engagement in influencing children's performance, thereby contributing to the optimization of educational technologies in both typical and atypical development.

1.1 Engagement definition

Existing definitions of engagement vary depending on the context and the individuals involved, illustrating the lack of a universal definition (Nahum-Shani et al., 2022).

Within the frame of conversation between two agents, engagement is defined as the process through which two or more participants establish, maintain, and interrupt their perceived connection (Sidner et al., 2005). This process includes initial contact, negotiating a collaboration, verifying that the other is still taking part in the interaction, evaluating whether to remain involved, and deciding when to end the connection.

In other fields, such as education research and healthcare, engagement can be defined as the effort children devote to educationally beneficial activities in order to achieve desired learning outcomes (Hu and Kuh, 2002) or as actions taken by subjects to support their health (Cunningham, 2014). Furthermore, in adulthood, engagement can also be seen as a stable characteristic of the individual that is based on personality traits, and therefore as a propensity to engage or to be engaged (Barco et al., 2014).

Nowadays, engagement can have a broader meaning if one considers a single user interacting with a screen-based interface, a technological device, or a robot. A technological device refers to an integrated hardware-software system—such as a tablet, an augmented reality headset, or an educational robot—that enables users to access, navigate, and interact with digital content or immersive environments. These devices often serve as the physical interface through which playful or learning experiences take place. In the context of social media, Jaimes et al. (2011) define engagement as the phenomenon of people being fascinated and motivated by developing a relationship with a social platform and integrating it into their lives. In human-computer interaction (HCI) it is defined as the quality of users' experiences when interacting with a digital or robotic system. This includes aspects such as challenge, positive affect, usability, attention attraction and maintenance, aesthetic and sensory appeal, feedback, variety/novelty, interactivity, and user-perceived control (O'Brien and Toms, 2008). In the definition of O'Brien and Toms, engagement is therefore a dynamic process within which four discrete events are identified: the point of involvement, the period of involvement, disengagement, and re-engagement.

Beyond defining engagement as a unitary construct, it must be acknowledged that engagement is multifaceted and can include multiple processes at different levels (Bouta and Retalis, 2013; Islas Sedano et al., 2013). In fact, although there is no consensus on which dimensions are most important in defining engagement (Lee, 2014), it is acknowledged that engagement represents the simultaneous investment of emotional, cognitive, and physical energies (Rich et al., 2010).

Definitions of emotional engagement tend to emphasize the subjective nature of the experience, including attitudes and emotions that reflect intrinsic motivation, positive affect, and a sense of pleasure and interest in the task (Fredricks et al., 2004). Cognitive engagement instead primarily refers to the appropriate use of several cognitive processes such as attention, information processing, and memory (Fredricks et al., 2004). Finally, behavioral/physical engagement implies action, participation, and individual conduct during the interaction with a person or a device (Bouta and Retalis, 2013). To date, there is a growing literature supporting bodily engagement in learning contexts. Proponents of “embodied cognition,” in fact, agree that the way people think and reason about the world is closely related to the body's interaction with the physical environment (Lindgren et al., 2016). As a consequence, body movement can have an impact on learning processes (Goldin-Meadow et al., 2009) and on the degree of engagement (Anastopoulou et al., 2011).

Considering the diverse definitions of engagement and its multifaceted underlying constructs, the first objective of this review is to describe how engagement with digital tasks is conceptualized and operationalized in the educational and intervention contexts in childhood.

1.2 Tools and procedures to measure engagement

One of the most recent approaches that attempts to measure child's engagement within the educational setting is called Learning Analytics. Learning Analytics (LA) involves the measurement, collection, analysis, and reporting of data about learners and their contexts to understand and optimize learning and its environments (LAK, 2011). It offers educators and practitioners valuable information to enhance the learning experience, improve instructional design, and support performance success. LA can supply powerful tools for teachers and researchers to improve the effectiveness and the quality of children's performance as well as inform, extract and visualize real-time data about learners' engagement and their success (Macfadyen and Dawson, 2010).

In line with the LA approach, several innovative technologies and valid and reliable tools can be used to investigate engagement in technology- or game-mediated learning experiences (Abbasi et al., 2023). Researchers have used various methods to measure it such as quantitative self-report surveys, semi-structured interviews, qualitative observation, eye-trackers, artificial intelligence (AI), video recordings, physiological measures (Crescenzi-Lanna, 2020a; Sharma et al., 2020), as reported by previous literature reviews (Boyle et al., 2012; Henrie et al., 2015; Sharma et al., 2020).

Quantitative self-report surveys, often utilizing tools like the Likert scale, have been widely employed to assess learners' engagement. Survey inquiries span from evaluating self-perceived levels of engagement (Gallini and Barron, 2001) to delving into behavioral, cognitive, and emotional aspects of engagement (Chen et al., 2010; Price et al., 2007; Yang, 2011). While surveys are valuable for older students, they may not always be suitable with younger children who may struggle to comprehend and respond to the questions directly. Additionally, data are typically collected at the end of a learning activity, and this may not be ideal for those interested in developing systems that provide researchers with real-time feedback on child engagement over the course of an activity. This need may be particularly relevant in rehabilitative contexts, when the child's response to the intervention should be constantly monitored.

The second most common approach involves qualitative measures, which include direct observations via video, capturing screenshots of children's behavior during learning, interviews or focus groups, and texting by other digital communication tools. These qualitative measures are particularly useful for exploratory studies characterized by uncertainty about how to measure or define engagement. Although qualitative methodologies offer in-depth data regarding student engagement, they are limited in their ability to generalize findings to a larger population. This lack of generalizability impedes the establishment of a common strategy for defining or assessing engagement.

Regarding quantitative observational measures, researchers use various indicators obtained through direct human observation, video recording, and computer-generated user activity data (e.g., log data). These methods have the advantage of allowing researchers to assess engagement in real time, avoiding interruptions or subsequent measurements.

Finally, another approach to measuring engagement involves the use of physiological sensors, which can detect children's physical responses during learning. Eye-tracking technologies, skin conductance sensors, blood pressure, and electrophysiological data (e.g., EEG) are used to assess the impact of various technological devices and interactive lessons on engagement and learning (Boucheix et al., 2013). Physiological sensors have the advantage of not interrupting the task, but their readings need confirmation through self-reported data. In addition, one challenge in using physiological sensors concerns the complexity of the technology and the associated cost. Finally, it is critical to pay attention to sensor placement and to the subject's limitations while conducting monitoring. To date, physiological sensor technology is advancing with simpler and more affordable options, making this type of measurement increasingly viable for studying engagement (Henrie et al., 2015).

It must be noted that a multimodal approach integrating various interaction modalities, such as verbal language, gestures, facial expression, body posture, and sensory data, could provide a more complete understanding of the individual's engagement. The importance of multimodal approaches in evaluating engagement in digital tasks lies precisely in their ability to capture a wider range of behavioral and emotional signals, giving educators the information they need to evaluate student engagement more accurately and to adapt and optimize online learning experiences. Additionally, advanced data analytics techniques can help identify patterns and trends in children's engagement, allowing teachers to intervene in real time to improve learning. For these reasons, multimodal assessments could be widely used in learning and rehabilitation, not only because they allow for a better understanding of learning behaviors, but also because they have the potential to improve intervention and adaptation to special educational needs by supporting cognitive, affective, and metacognitive processes (Emerson et al., 2020; Checa Romero and Jiménez Lozano, 2025).

However, it is not always feasible to implement multiple and valid tools to study engagement in an online learning environment. Studies often measure only one dimension of engagement, study engagement in general (without operationalizing it in terms of cognitive, emotional, and behavioral components), or study it within a single subject area (e.g., math; Henrie et al., 2015). Additionally, the tools mentioned above were often developed to measure students' engagement in real, face-to-face classrooms rather than in the online learning environment. Most importantly, the studies reported in previous reviews (Henrie et al., 2015) have examined the engagement of university students, thus leaving school age uncovered.

To date, although various tools can be used to measure engagement, little is known about which tools and procedures are most used to evaluate the different types of engagement, i.e., cognitive, emotional and behavioral, during childhood and adolescence. However, considering the evident benefits of utilizing technology in gathering relevant information, it is imperative to identify informative and valuable methodologies and tools to be used by practitioners and researchers with children.

Thus, the second aim of the review is to describe the tools and procedures used to measure engagement during children's and adolescents' activities with digital technologies and interactions with robots, differentiating the emotional, cognitive, and behavioral components.

1.3 Relationships between engagement, performances, and characteristics of the digital tasks

In recent decades, there has been a growing interest in understanding the role of engagement in children's development, especially for special educational needs (Fredricks et al., 2004). In particular, student engagement is universally recognized as one of the best indicators of success in the learning process and in personal development (Skinner et al., 2008). In the early school years, engagement predicts academic achievement and test performance, while subsequently it affects students' patterns of attendance, continuity, and academic resilience (Sinclair et al., 2003), creating an important gateway to better academic achievement while in school (O'Farrell and Morrison, 2003). Engagement has also been found to act as a protective factor against risky behaviors typical of adolescence, such as substance abuse, risky sexual behavior, and delinquency (Skinner et al., 2008).

Despite substantial investments in the digitization of education, which have made information and communication technologies (ICTs) an integral part of learning, current research is rather limited when considering the impact of engagement on performance during digital learning tasks (Ferrer et al., 2011). In fact, although it is assumed that the engagement induced by digital and game-based tasks positively affects learning and task performance in children, no systematic analysis of this relationship has been conducted. Moreover, it remains unclear whether different types of engagement are differently affected by the use of digital learning tasks. While emotional engagement is expected to be positively related to performance in digital tasks (Tisza et al., 2022), some hypotheses suggest that the use of technology for learning may induce cognitive fatigue, potentially negatively impacting cognitive engagement (Giannakos et al., 2020), with larger effects on the accessibility of digital learning for subjects with neurodevelopmental disabilities. In addition, it is of interest to clarify which characteristics of digital and game-based learning tasks (Crescenzi-Lanna, 2020a) favor engagement across typical and atypical developmental populations, as this may have important implications for informed choices in the educational and interventional fields.

Given their influence on children's engagement, the design features of digital tasks deserve careful attention to ensure they effectively support motivation and learning.

Accordingly, the third aim of the review was to verify whether the degree and type of children's engagement correlated with their task performances and if it varied according to task characteristics.

2 Methods and procedure

2.1 Eligibility criteria

Studies were included if they met the following criteria: (i) being written in English, (ii) reporting measurements of children's engagement in terms of emotion, cognition or behavior, (iii) being a peer-reviewed article, (iv) reporting quantitative data, qualitative data, or a mixed-method study design, (v) having a sample between 0 and 18 years old, (vi) having children complete a digital task, (vii) being published between 2000 and 2024.

2.2 Search methodology

The review was conducted in accordance with the recommendations of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) to organize all the data retrieved from potentially eligible studies (Page et al., 2021). The electronic bibliographic databases PsycINFO, PubMed, and Scopus were searched up to January 2024 using the following full string:

child* AND engage* AND ((“learning analytics” OR “embodied learning” OR “immersive learning”) AND (“game*” OR “computer*” OR “robot*” OR “digital*” OR “tablet” OR “multimod*” OR “educational technology”)).

The search strategy string was informed by previous literature on children's engagement and utilizing commonly used key terms pertaining to each of the three categories of engagement (e.g., Crescenzi-Lanna, 2020b; Lee-Cultura et al., 2021; Kosmas et al., 2019). Population was identified by the keyword “child*,” thus including typical and atypical development; the keyword “engage*” defined the variable of interest; the keywords “learning analytics,” “embodied learning,” and “immersive learning” were used to target the procedures used to measure engagement; the keywords “game*,” “computer*,” “robot*,” “digital*,” “tablet,” “multimod*,” and “educational technology” were used to define the type of task within which engagement was measured.
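The way the four keyword groups combine into the full string can be sketched in a few lines of Python. This is only an illustration of the Boolean structure described above: the variable and function names are hypothetical, straight quotes replace the typographic ones, and database-specific syntax (field tags, filters) is not modeled.

```python
# Keyword groups as described in the text; all names here are hypothetical.
POPULATION = "child*"
OUTCOME = "engage*"
PROCEDURES = ['"learning analytics"', '"embodied learning"', '"immersive learning"']
TASKS = ['"game*"', '"computer*"', '"robot*"', '"digital*"', '"tablet"',
         '"multimod*"', '"educational technology"']


def any_of(terms):
    """Join alternative terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query():
    """Combine population, outcome, procedure, and task groups with AND."""
    nested = "(" + any_of(PROCEDURES) + " AND " + any_of(TASKS) + ")"
    return " AND ".join([POPULATION, OUTCOME, nested])


print(build_query())
```

Keeping each group in its own list makes it easy to see which construct a term belongs to and to rerun the search with an added synonym.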

Filters were applied to include only English-language publications. The reference lists of all included studies were screened to identify additional citations of interest.

2.3 Review process

Papers were screened according to the following procedure: the principal reviewer (XX) fully compiled a list of all the papers obtained through the keywords and selected them as eligible based on the reading of the abstracts. A second reviewer (XX) independently carried out the same task and reported which papers were deemed eligible according to them based on their abstracts. A third reviewer (XX) intervened if there were any discrepancies in selecting a paper between the two other reviewers.
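The reconciliation rule described above (two independent decisions, with a third reviewer consulted only on disagreement) can be expressed as a short Python sketch. The function name, the dictionary layout, and the paper identifiers are hypothetical and serve only to illustrate the logic; they do not reproduce the tooling actually used in the review.

```python
def reconcile(first, second, third):
    """Return the final include/exclude decision for each paper.

    first, second: dicts mapping a paper ID to True (eligible) or False,
    one per independent reviewer, covering the same papers.
    third: dict with the third reviewer's decision for disputed papers.
    """
    final = {}
    for paper_id, decision in first.items():
        if decision == second[paper_id]:
            # Both reviewers agree, so their shared decision stands.
            final[paper_id] = decision
        else:
            # Disagreement: the third reviewer's decision is used.
            final[paper_id] = third[paper_id]
    return final


# Hypothetical example with three abstracts.
reviewer_1 = {"p1": True, "p2": False, "p3": True}
reviewer_2 = {"p1": True, "p2": True, "p3": False}
reviewer_3 = {"p2": False, "p3": True}
print(reconcile(reviewer_1, reviewer_2, reviewer_3))
```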

2.4 Data extraction

Data were also extracted in duplicate by two independent reviewers (XX, XX). The following information was reported for each of the included papers: reference, journal, main aim and scope, study methods, population characteristics, intervention setting, tools used, and main findings.

2.5 Study collection

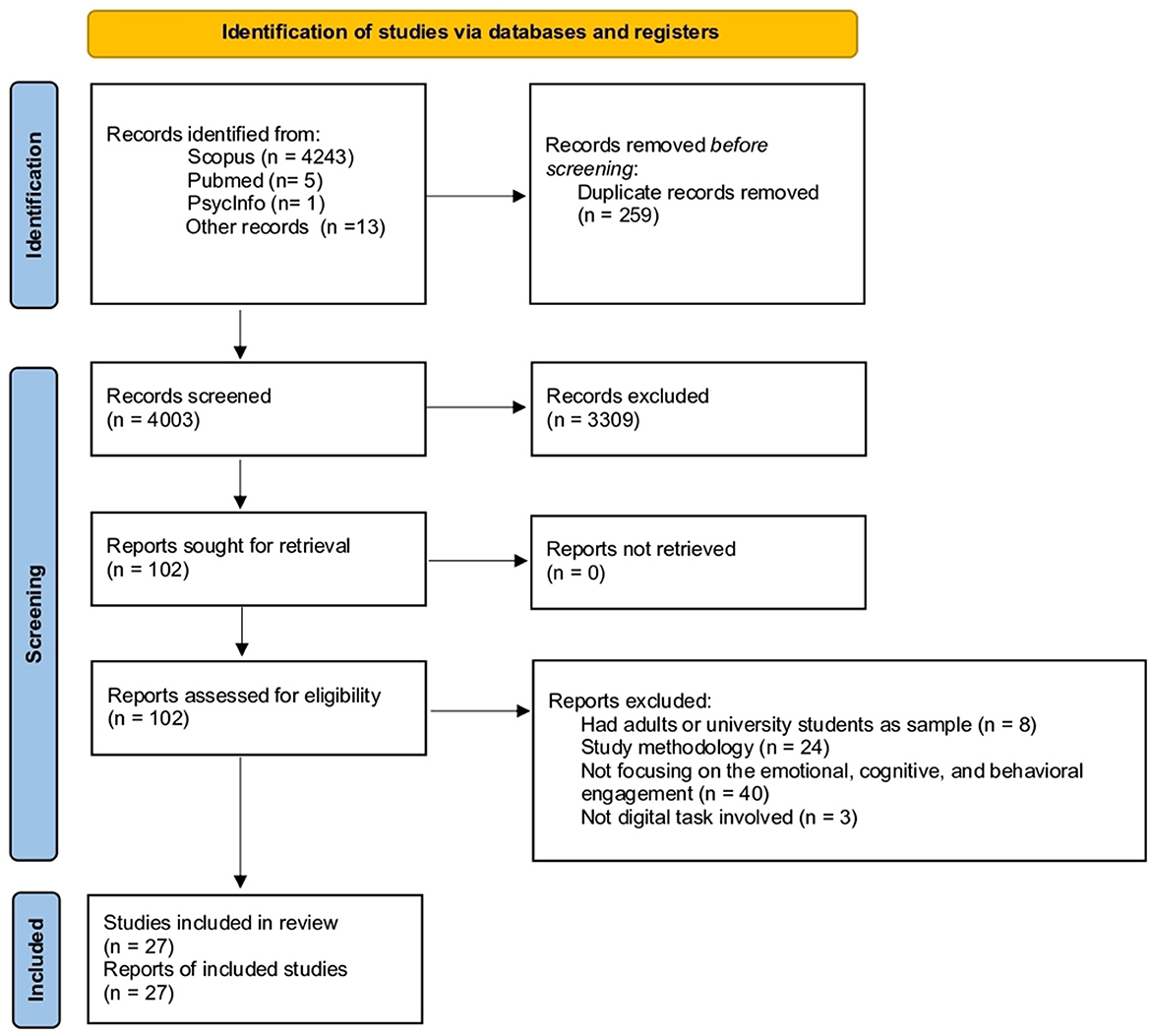

Four thousand abstracts were screened to assess the potential eligibility of the studies to be included in the systematic review. During this screening process, 89 papers were accepted based on their titles and abstracts only and were thus read in full text. Seventy-five papers were removed because they did not meet the eligibility criteria: 8 presented a sample of either university students or adults, 24 did not present any empirical data, 40 did not focus on evaluating emotional, behavioral, or cognitive engagement, and 3 did not present any digital task. As a result, 17 papers were included through this process. References were also analyzed through other sources (e.g., forward citation searches, contact with authors) to find potentially eligible studies. A total of 13 references were screened and 10 papers were added in the end. Finally, 27 papers were deemed eligible for this PRISMA systematic review (Figure 1).

Figure 1. Flow diagram showing the search methodology to identify papers about the engagement of children and instruments used to assess it.
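As a reading aid, the counts reported for the selection process can be tallied with a small Python sketch. The numbers are transcribed from the paragraph above, while the variable names and the idea of an automated check are assumptions added for illustration. With the figures as transcribed, the exclusion reasons sum to 75 and the two inclusion routes sum to 27, whereas the full-text step gives 89 - 75 = 14 rather than the reported 17; the sketch reports that step as a mismatch and does not attempt to resolve it.

```python
# Counts transcribed from the study-collection paragraph (names are hypothetical).
full_text_read = 89
exclusion_reasons = {
    "university or adult sample": 8,
    "no empirical data": 24,
    "engagement not evaluated": 40,
    "no digital task": 3,
}
reported_excluded = 75
reported_included_from_databases = 17
added_from_other_sources = 10
reported_total = 27


def check_flow():
    """Compare each reported count with the arithmetic it should follow from."""
    return {
        "reasons sum to exclusions":
            sum(exclusion_reasons.values()) == reported_excluded,
        "full texts minus exclusions":
            full_text_read - reported_excluded == reported_included_from_databases,
        "both routes sum to total":
            reported_included_from_databases + added_from_other_sources == reported_total,
    }


for step, consistent in check_flow().items():
    print(f"{step}: {'ok' if consistent else 'mismatch'}")
```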

2.6 Quality assessment

A risk of bias assessment was conducted independently by two reviewers (XX, XX) using a quality appraisal tool, the Mixed Methods Appraisal Tool (MMAT) version 2018 (Hong et al., 2018), which has been validated and tested on different methodologies including quantitative, qualitative, and mixed-methods study designs. The tool begins with two screening questions (i.e., “Are there clear research questions?” and “Do the collected data allow for addressing the research questions?”). If both questions receive affirmative responses, five additional questions are posed regarding sample characteristics, study design, measurement efficacy, statistical analysis, and outcome data. These five questions vary based on the study design: qualitative, quantitative, or mixed methods. For mixed-method studies, all types of questions are used, totaling 15 questions. Total scores are computed as the percentage of MMAT criteria met: 0% (no quality), 20% (very low quality), 40% (low quality), 60% (moderate quality), 80% (good quality), and 100% (very high quality).
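The percentage scoring described above can be illustrated with a minimal Python sketch. It assumes a study appraised on five design-specific criteria, each recorded as met or not met; the function name and the input format are hypothetical, and the sketch does not cover how the 15 questions of a mixed-methods appraisal are aggregated, since the text does not specify this.

```python
# Quality labels for each possible percentage, as listed in the text.
QUALITY_LABELS = {
    0: "no quality",
    20: "very low quality",
    40: "low quality",
    60: "moderate quality",
    80: "good quality",
    100: "very high quality",
}


def mmat_score(criteria_met):
    """Return (percentage, label) for a list of five True/False criteria."""
    if len(criteria_met) != 5:
        raise ValueError("this sketch expects exactly five criteria")
    # Each criterion met contributes 20 percentage points.
    percent = 100 * sum(bool(c) for c in criteria_met) // 5
    return percent, QUALITY_LABELS[percent]


# Hypothetical study meeting four of the five criteria.
print(mmat_score([True, True, True, True, False]))
```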

3 Results

3.1 Studies' setting

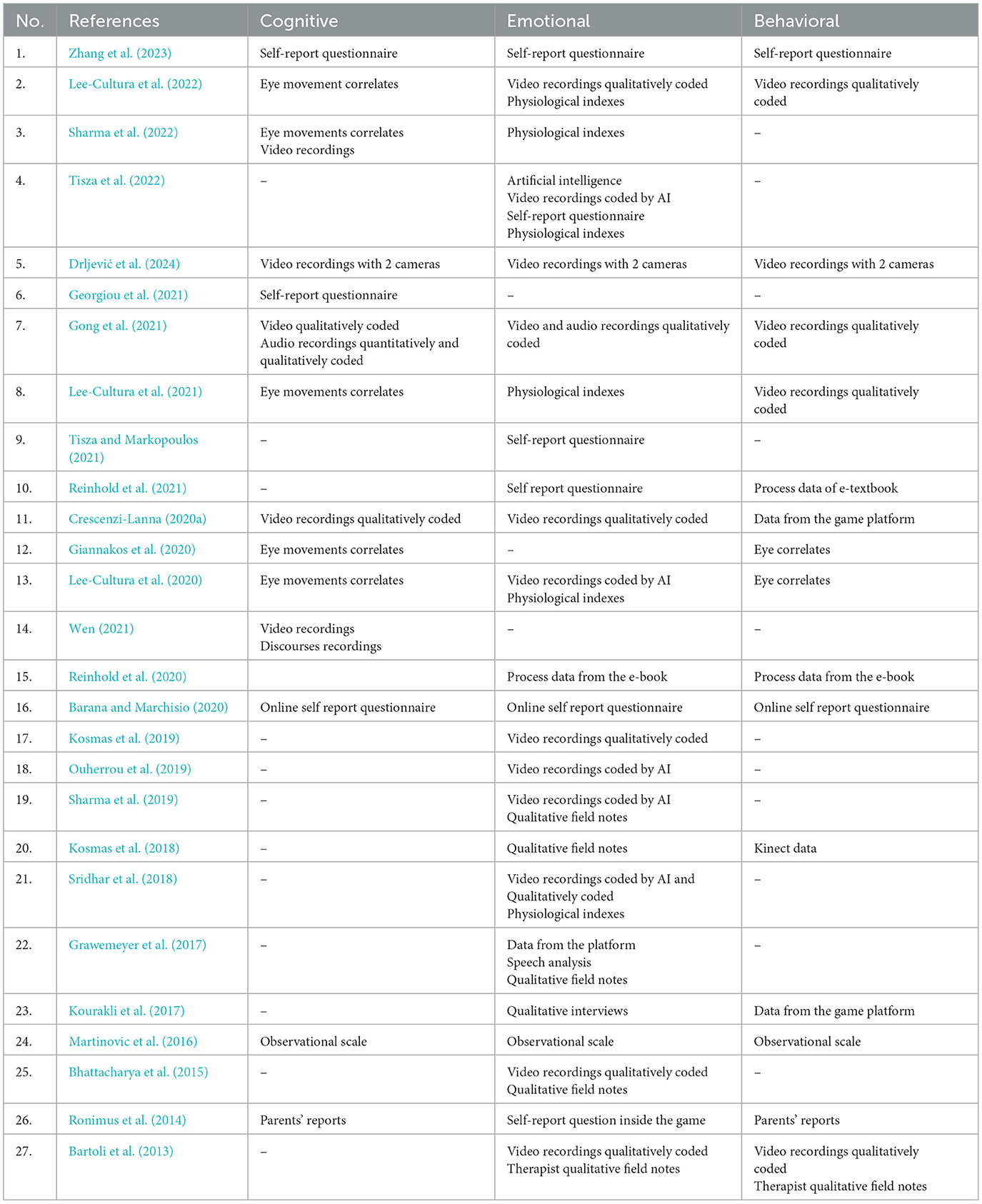

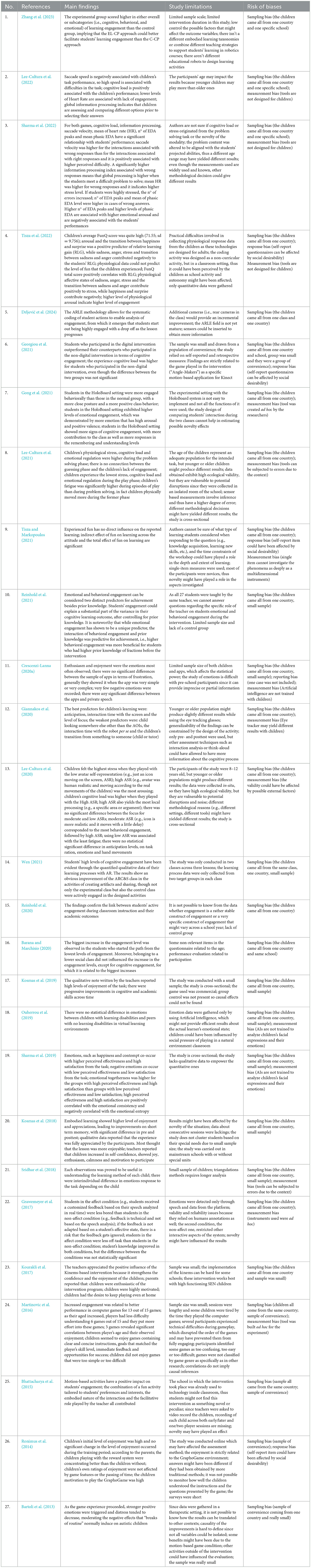

This systematic review synthesizes data from 27 studies on the assessment of children's emotional, cognitive, and behavioral engagement during the completion of digital activities. A brief summary of the included studies, with the reference numbers used in Section 3, is provided in Tables 1, 2.

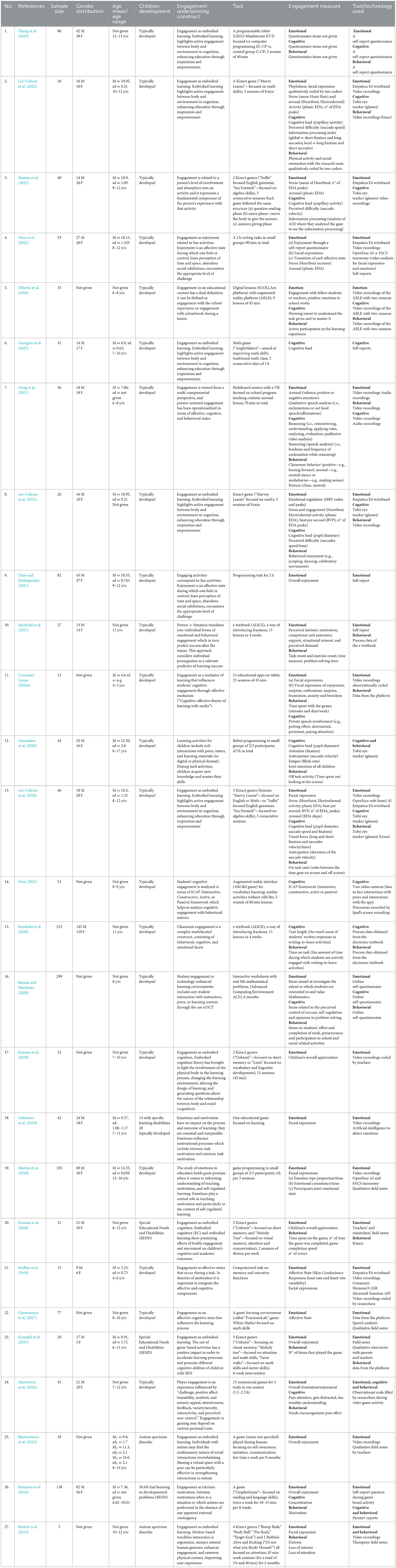

Table 1. Main characteristics of the studies included: APA reference, sample size, and its characteristics; type of task; type of engagement measured and the tool/technology used in the studies.

All studies were published between 2014 and 2023. Studies were cross-sectional in nature and most of them were conducted within the school setting. Specifically, 22 were carried out inside a school context, 2 were carried out in a university lab [12, 19], 1 was in a home setting [7], and 1 in a therapeutic center [27]. One paper did not provide any setting details [24]. Additionally, 2 papers used a double setting by carrying out the experiments in a school and in a museum room [3, 13]. By location, studies were conducted in Norway (n = 6) [2, 3, 8, 12, 13, 18], Cyprus (n = 3) [6, 17, 20], United States (n = 1) [25], the Netherlands (n = 2) [4, 9], United Kingdom (n = 1) [22], China (n = 2) [1, 7], Brazil (n = 1) [11], Morocco (n = 1) [18], Singapore (n = 2) [14, 21], Greece (n = 1) [23], Canada (n = 1) [24], Finland (n = 1) [26], Croatia (n = 1) [5], Germany (n = 2) [10, 15], and Italy (n = 2) [16, 27].

3.1.1 Studies' participants

Sample sizes ranged from 5 to 299. In total, across the 27 studies there were 1,666 participants. Twenty-five studies included primary and middle school children, with ages varying from 6 to 18 years. Only two studies had a sample of preschool children, aged between 4 and 6 years [11, 21]. Twenty-four studies included children with typical development, whereas two included children with Autism Spectrum Disorder (ASD) [25, 27], three focused exclusively on children with Special Educational Needs or Disabilities (SEND) [20, 23, 26], and one study included both typically and atypically developing children (i.e., Learning Disabilities) [18]. In most studies children were predominantly White, whereas one study was conducted in Morocco [18] and another in China [7]. As for gender distribution, most samples comprised over 50% male children. Gender was not reported in seven studies [5, 11, 14, 17, 22, 25, 27].

3.1.2 Description of the digital, game-based, and robotic tasks

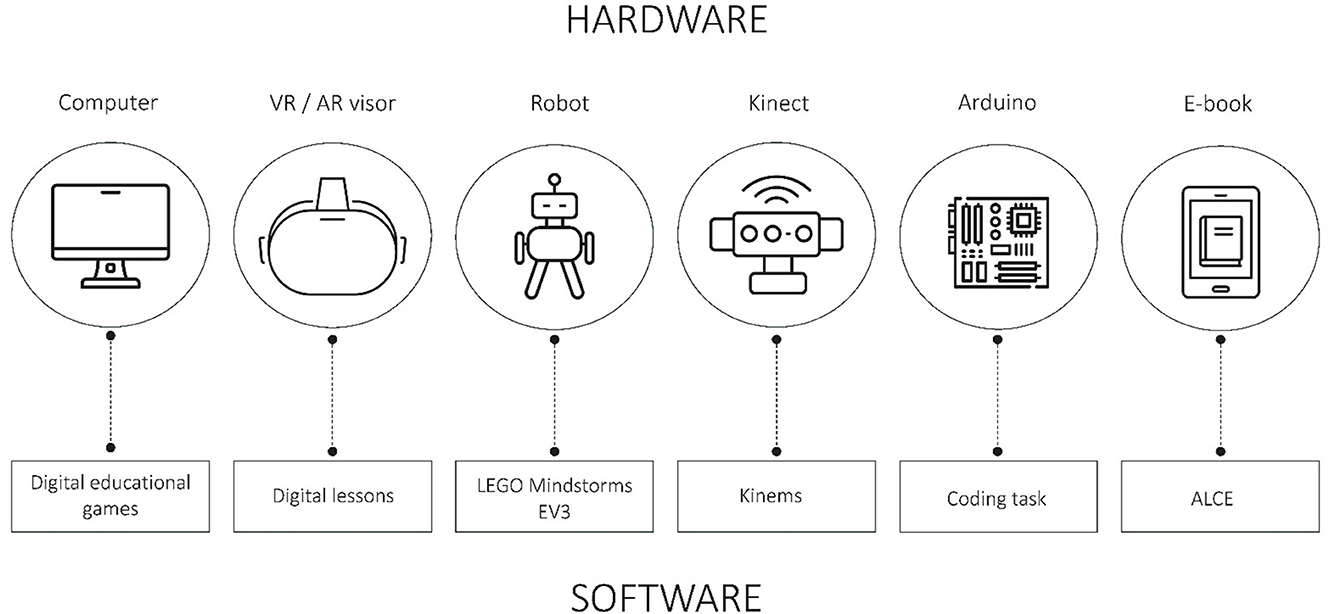

All studies engaged students in a digital task, through a game, a robot, or a computer to complete an activity (Figure 2). In particular, nine papers used educational games or digital exercises, whose purpose was to gamify the school learning content and make the learning process easier for the student. The game content among studies was broad, touching different topics such as math [e.g., 6] and language [e.g., 21]. Two studies [5, 14] used augmented reality (AR) technology for creating and presenting digital lessons to students and for promoting vocabulary learning in young children. One study used robot interactions [1] through a learning instrument called LEGO Mindstorms EV3, a combination of a physical robot and a computer programming environment, including LEGO building blocks, sensors, and programmable hardware.

Figure 2. Graphical representation of task types categorized by associated hardware and software components used across the reviewed studies+. “Other” records: forward citation searches, contact with authors.

Game-based tasks typically rely on software interfaces emphasizing goal-oriented play and feedback. In contrast, robotic tasks engage users through embodied, multimodal human-robot interaction and involve physical interaction, sensorimotor feedback, and richer multisensory engagement, which differentiates them from purely screen-based or software-driven learning experiences.

Eight papers used Kinect games, also called “kinems.” Kinems are motion-based games that can strengthen a variety of skills such as math (e.g., “Sea Formuli,” [13]), second language (e.g., “Suffiz,” [13]), memory (e.g., “Melody Tree,” [20]), and attention (e.g., “Body Ball,” [27]). Kinect uses a camera and sensors to detect the actions carried out by the participants in order to interact with the games. The platform can also record data about joint movements and jerks, as well as descriptive data such as time spent playing. The gameplay activity usually varies based on the type of game, whereas the difficulty can be set by the player or the researcher, so that the games can be adapted to children's needs.

In four studies children were asked to complete a coding task within small groups of two or three people [4, 9, 12, 19]. During this activity, children were expected to use the Arduino platform and, after watching a tutorial, to code on a computer with the aim of crafting a small robot or creating a game. In one study children attended a lesson designed with VR technology [7]. During the study children were presented with a giant board that could project holograms and with which they could interact through the VR visor. Two studies [10, 15] used an e-book platform (i.e., ALCE). Lastly, one study used a simple computerized task on memory and executive functions [21].

3.2 Study findings

3.2.1 Engagement: definitions and construct

Through careful examination of the studies, a diverse range of interpretations and conceptualizations of engagement in digital contexts has been identified. A summary of the definitions used in each study is reported in Table 1.

Specifically, 12 studies [1, 2, 3, 6, 8, 13, 16, 17, 20, 23, 25, 27] define engagement as linked to the concept of “embodied cognition” or “embodied learning,” that is, as mentioned in the introduction, an educational approach that emphasizes the importance of physical experience in learning, where active and bodily participation becomes fundamental.

Two studies [4, 9] define engagement as enjoyment, an affective state during which the user feels in control, loses perception of time and space, abandons social inhibition, and encounters the appropriate level of challenge.

In five studies [11, 18, 19, 21, 22] engagement is represented by all emotions and affective states an individual experiences during the task, including enjoyment, boredom, happiness, anger, and excitement.

One study [5] states that in the educational context, engagement includes a wide range of different dimensions including behaviors (such as perseverance, commitment, attention, and participation in challenging courses), emotions (such as interest, pride in success), and cognitive processes (such as problem solving, use of metacognitive strategies).

Two studies [7, 15] defined engagement by distinguishing the behavioral (i.e., active participation and involvement in activities), emotional (i.e., positive reactions and feelings toward teachers and work), and cognitive (i.e., effort and concentration on completing work) dimensions.

One study [14] focuses on cognitive engagement, defining it in terms of four modes of the subject: Interactive, Constructive, Active, and Passive (i.e., the ICAP framework).

One study [24] used O'Brien and Toms' (2008) definition that, as described in the introduction, postulates engagement as the quality of the subject's experience when interacting with a digital or robotic system.

Finally, one study implies that engagement is the intrinsic motivation of an individual interacting with a specific device [26]. Intrinsic motivation refers to situations in which actions are undertaken without any perceivable external influence. For example, an intrinsically motivated individual derives satisfaction from the activity itself and does not anticipate specific gains, such as extrinsic rewards.

In sum, conceptualizations vary greatly, ranging from affective states and emotions experienced during interaction with digital content, to active participation, embodied learning, enjoyment, and intrinsic motivation in completing digital tasks.

3.2.2 Tools and procedures

Tools and procedures have been grouped according to the type of engagement, specifically emotional, behavioral, and cognitive. No studies directly compared the results obtained by different methodologies, as most of them used one tool at a time for different emotional, cognitive, and behavioral aspects.

A detailed description of the tools used in each of the three categories is provided in the following sections and is reported in Tables 1, 2.

3.2.2.1 Emotional/affective engagement

Studies evaluating children's emotional engagement gathered data on the affective state of the children during the completion of a digital task. Overall, emotional engagement was assessed by 22 papers, making it the most thoroughly explored category. All included studies focused on measuring a wide range of positive and negative affective states (e.g., boredom, happiness, anger, excitement, etc.). Nine studies measured emotional engagement through qualitative notes [2, 5, 7, 11, 17, 20, 21, 25, 27], five used video recording analysis through Artificial Intelligence [4, 13, 18, 19, 21], two speech analysis [22, 26], six self-report methods [1, 4, 9, 10, 16, 26], six physiological indexes [2, 3, 4, 8, 13, 21], one semi structured interview [23], one observational scale [24], and one log data gathered directly from the platform [22].

Video recordings focused on children's facial expressions, vocal and verbal cues (e.g., laughing, smiling), and body movements, analyzed either through qualitative analysis of the notes reported by researchers and teachers or through AI (e.g., trained on the Facial Action Coding System taxonomy, FACS; Cohn et al., 2007).

Self-reports consisted of Likert-based questionnaires or surveys referring to children's or parents' perceptions during the game-based learning activity. They were administered after completing the activity or online, while the child was playing the game or after each play session. The FunQ questionnaire (Tisza and Markopoulos, 2023) consists of 18 items grouped into six dimensions: (a) autonomy (i.e., experiencing control over the activity), (b) challenge (i.e., feeling challenged by the activity), (c) delight (i.e., experiencing positive emotions), (d) immersion (i.e., feeling immersed in the activity and losing the sense of time and space), (e) loss of social barriers (i.e., social connectivity), and (f) stress (i.e., experiencing negative emotions during the activity). The questionnaire used by Reinhold et al. (2021, [10]) investigated perceived intrinsic motivation, competence support and autonomy, situational interest, and perceived demand.

In the study by Barana and Marchisio (2020, [16]) the questionnaire was composed of 35 questions inspired by the PISA 2012 student questionnaire, including items investigating emotional engagement with specific school topics (e.g., “I like lectures about Mathematics.”).

Finally, Ronimus et al. (2014, [26]) asked the parents to complete online a single quantitative item about the children's motivation in doing the task (i.e., “How eagerly did the child play the GraphoGame during the study?”).

Physiological indexes were used to measure children's stress and arousal (e.g., heartbeat, heart rate, and electrodermal activity); they were collected with the Empatica E4 wristband [2, 3, 4, 8, 13, 21] or the Consensys Shimmer 3 GSR [21]. The Empatica E4 wristband is an unobtrusive bracelet that can be worn by children while they are completing the task. The Shimmer is composed of electrodes that must be worn along with a few probes and a sensor.

Qualitative field notes were collected from teachers, experienced therapists or researchers who were instructed to provide an overall evaluation of children's enjoyment or to focus on children's facial expressions and emotions [20, 25, 27]. Notes could be time-sampled (e.g., Baker-Rodrigo Ocumpaugh Monitoring Protocol method, BROMP, Ocumpaugh, 2012) and computer-assisted (e.g., Human Affect Recording Tool, HART; Ocumpaugh, 2012).

Semi-structured interviews were used with teachers and parents to verify the enjoyment experienced by children in using the Kinems intervention [23].

Speech analysis was used by two studies [22, 26] to evaluate the emotional characteristics of the interaction with the digital task, platform or robot provided. The affective states were analyzed through specific keywords pronounced by the children and prosodic signals that were then clustered by an algorithm in the different categories (i.e., frustration, in flow, boredom, confusion and surprise).

An observational scale, used by Martinovic et al. (2016, [24]), was compiled by the researchers to assess emotional engagement during digital tasks. The items assessed whether the child showed amusement (i.e., laughing or smiling), frustration (i.e., sighing, groaning) or anxiety and nervousness during the task.

Log data were acquired by Grawemeyer et al. (2017, [22]) to infer emotional engagement on the basis of the child's interaction with the digital platform (e.g., exploring the functions of the platform to find a solution, pausing without interacting with the platform to think about what to do).

3.2.2.2 Behavioral engagement

Behavioral engagement was explored by 16 studies, making it the second most thoroughly investigated category. Considering the fact that the reviewed studies analyzed diverse types of behavioral signs to determine behavioral engagement, results in this category are heterogeneous.

Precisely, the behavioral signs indicating engagement that were included in this category were as follows: the time spent looking and not looking at the screen [12, 13]; time spent on the activity and other information related to it (e.g., errors, speed, completion time; [10, 11, 15, 16, 20, 23]); socially interacting and being physically active while performing the task [2, 5, 8]; behavioral reactions (e.g., making noise in the classroom) and somatic posture while completing the task (e.g., leaning forward or keeping a neutral stand; [7]); behavioral distress [27]; behavioral signs of loss of attention [27]; and behavioral signs of loss of interest [27].

The most common methodologies to assess behavioral engagement were video recordings analyzed through qualitative notes [2, 5, 7, 8, 27] and log data acquired from the digital platform [10, 11, 15, 20, 23]. Other methods included eye correlates [12, 13], qualitative notes [27], a single quantitative item [27], observational scales [24], and self-report questionnaires [1, 16].

Video recordings were used to explore diverse signs of behavioral engagement such as: how much the children moved during the activity (e.g., too much, not at all, lazily) and whether they autonomously sought social interaction with the researchers to also engage them in that activity [2]; specific behaviors, such as jumping, dancing, and making celebratory movements [8]; purposeful and intentional movements toward the device or to reach the next goal [5]; overall behavior during virtual reality sessions (i.e., positive, normal or misbehavior) as well as children's posture while completing the task [7]. The only study with children with Autism Spectrum Disorder [27] measured behavioral distress (e.g., clothing manipulation, teeth grinding, wobbling), loss of interest (e.g., verbal manifestation of tiredness), and loss of attention (e.g., child overstimulation with loss of movement control).

Log data from the digital platforms were collected automatically by the games and included the time spent playing on the platform, the number of mistakes, and the speed of completion of the game [10, 11, 15, 20, 23].

Eye correlates were measured with the Tobii eye-tracker device [12, 13]; the skewness of saccade velocity and the blink rate were used to calculate the level of anticipation and of tiredness, respectively.
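As a minimal illustration of the two eye metrics named above, the sketch below computes the skewness of a series of saccade velocities and a blink rate per minute. It is not the pipeline used in the reviewed studies, which is not described here: the function names, the choice of the population (Fisher-Pearson) skewness formula, and the sample values are all assumptions made for illustration.

```python
def skewness(values):
    """Population (Fisher-Pearson) skewness: third central moment / variance^1.5."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    m3 = sum((v - mean) ** 3 for v in values) / n  # third central moment
    if m2 == 0:
        return 0.0  # constant series: skewness is undefined, reported as 0 here
    return m3 / m2 ** 1.5


def blink_rate_per_minute(blink_count, duration_seconds):
    """Number of blinks normalized to a one-minute window."""
    return 60.0 * blink_count / duration_seconds


# Hypothetical saccade velocities (deg/s) for one task segment; not real data.
saccade_velocities = [100.0, 110.0, 120.0, 130.0, 400.0]

print(skewness(saccade_velocities))    # positive: a long right tail of fast saccades
print(blink_rate_per_minute(30, 120))  # 15.0 blinks per minute
```

A positive skewness indicates that a few saccades were much faster than the rest; how such a value maps onto anticipation depends on the operationalization chosen in each study.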

Qualitative therapist notes were used with children with Autism Spectrum Disorder to record the presence of inappropriate movements (i.e., genital manipulation, clothing manipulation, teeth grinding, running in place, wobbling, putting hands on the mouth) [27].

Observational scales filled in by the researchers were used to evaluate whether the child was distracted by looking around or eating while carrying out the task [24].

Self-reported questionnaires were used by Barana and Marchisio (2020, [16]) and included items on students' effort, completion of work, perseverance, and participation in school and social activities.

3.2.2.3 Cognitive engagement

The “cognitive engagement” category refers to all those studies that tried to assess diverse cognitive aspects of the participating children, such as attention or cognitive load, while they were completing the digital task. Overall, 14 studies evaluated cognitive engagement. The majority of the studies assessed more than one cognitive aspect: seven papers assessed cognitive load [1, 2, 3, 6, 8, 12, 13, 15], three attention/focus [12, 13, 24], three perceived difficulty [2, 3, 8], two information processing (global and local) [2, 3], two anticipation of the task (i.e., anticipation of the stimuli's appearance during the task) [12, 13], two reasoning processes (e.g., remembering, understanding, analyzing, evaluating) [5, 7], one private speech (i.e., language directed to oneself that guides cognitive execution) (Zivin, 1979) [11], one fatigue [12], one joint attention of all children [12], one the ICAP framework (interactive, constructive, active or passive) [14], one perceived control of success and self-regulation [15], and one concentration [26].

The methods used to evaluate cognitive engagement included eye correlates and movements (n = 5) [2, 3, 8, 12, 13], questionnaires and self-reports (n = 5) [1, 6, 16, 24, 26], video recordings of each game session (n = 5) [3, 5, 7, 11, 14], and speech analysis [15].

Eye correlates were detected through Tobii eye-tracking glasses, an unobtrusive tool considered reliable for obtaining a wide and precise range of eye data (Tobii, 2023). Several eye-tracker metrics were used to evaluate specific aspects of cognitive engagement: pupil diameter for cognitive load [2, 3, 8, 12, 13]; saccade velocity for perceived difficulty [2, 8, 12] and cognitive anticipation [12, 13]; fixations and saccade velocity to distinguish between global and local information processing [2, 3]; blink rate for cognitive fatigue [12]; simultaneous gazing for joint attention [12].

Self-reported questionnaires were used by three studies [1, 6, 16] to evaluate, through Likert scales (e.g., 1 = extremely easy to 7 = extremely difficult) or direct questions (e.g., “How difficult was it for you to successfully accomplish the activity?”), the perceived cognitive load and students' effort in completing the task [16].

Parents were asked to rate children's concentration on a Likert scale and to answer a final overall question (i.e., “How well did the child concentrate while playing the GraphoGame?”) [26].

An observational scale was used by one study [24] to assess comprehension of the game instructions and attention/distraction during gameplay.

Video recordings were made with one [3] or more devices (e.g., one device to record the entire body of the children, one a mirror in front of the children and another through the tablet camera, [11]). Movements and postures were used to analyze the intent to understand the given task and to master it, such as careful reading of instructions, reasoning, actively attempting to solve problems presented in the digital lesson, and trying to understand task-related issues in discussion with fellow students or teachers [5, 7]. In the study by Wen (2021, [14]) the researchers used video recordings to see whether the children displayed passive behavior (e.g., listening to the lecture but not taking notes), active behavior (e.g., turning or inspecting objects), constructive behavior (e.g., generating new ideas) or interactive behavior (e.g., interacting with the platform).

Speech and discourse analysis was used in three studies: audio recordings of exclamations and other phrases related to cognitive reasoning [7]; private speech, as language directed to oneself to guide cognitive execution and regulate social behavior [11]; and e-text length (i.e., the word count of students' written responses in writing-to-learn activities), used as an operationalization of the cognitive effort exerted [15].

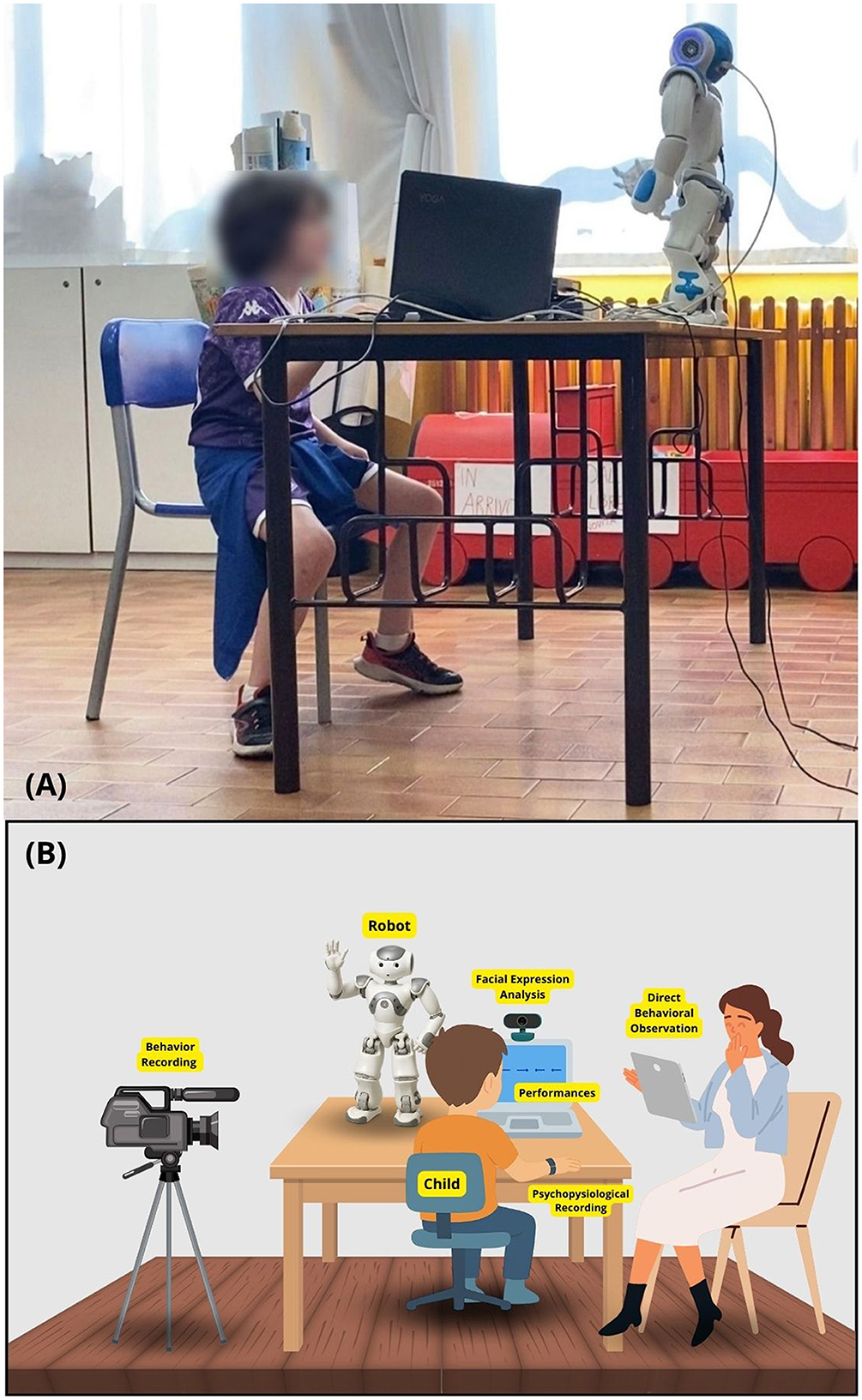

Figure 3 provides an illustrative overview of the various engagement measures during a child-robot interaction.

Figure 3. Example setting of (A) a child interacting with a robot and (B) procedure and tools used to measure different dimensions of engagement.

3.2.3 Does engagement predict performances and vary according to digital tasks?

Through different study designs and methodologies, including qualitative, quantitative (mainly descriptive analyses) and mixed-methods studies, it has been possible to evaluate the presence of a relationship between engagement and task performance.

Out of the 22 studies that evaluated emotional engagement, only a minority examined its correlation with performance. Through data obtained from self-report questionnaires, the results of one study [4] indicate that there is a link between the fun that children experienced during the digital task and their learning outcomes. Indeed, the FunQ questionnaire total score correlated significantly with learning to code, suggesting that having fun while completing a digital task contributes to learning outcomes. Similarly, two studies [17, 20] suggested that the pleasure of embodied learning, through the use of motion-based educational games, can help to improve children's short-term memory. Another study [10] found that emotional engagement, measured with a self-report questionnaire, could be a unique predictor explaining a substantial portion of the variance in cognitive learning outcomes. Furthermore, through the use of an observational scale, Martinovic et al. (2016, [24]) demonstrated that children performed better in games in which they showed higher levels of fun (e.g., smiling, verbal expression of enjoyment).
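To make concrete what a correlation between a questionnaire total score and a learning outcome involves, the following sketch computes a Pearson correlation coefficient by hand. It is only an illustration under stated assumptions: the function name `pearson_r` and the paired values are invented for the example and do not reproduce data or analyses from any reviewed study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Hypothetical paired scores for five children; not data from the review.
questionnaire_totals = [40, 55, 60, 72, 80]  # e.g., a fun questionnaire total score
learning_scores = [2, 3, 5, 6, 8]            # e.g., a post-task learning measure

r = pearson_r(questionnaire_totals, learning_scores)
print(round(r, 3))
```

A coefficient close to 1 would indicate that children reporting more fun also tended to score higher; with samples as small as those in many of the reviewed studies, such a value should be read together with its significance test and confidence interval.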

Many other studies did not investigate learning improvements but analyzed the link between digital game characteristics and emotional engagement, suggesting that the use of digital tasks or robotic activities, in comparison to traditional tasks, can favor positive emotions [7] and reduce stress levels [3, 4, 8] and boredom [7, 20, 22, 27]. Moreover, Zhang et al. (2023, [1]) found that average emotional engagement scores in embodied learning contexts are higher than those obtained in non-embodied learning contexts. Two studies reported that children tend to feel more frustrated when the digital activity is too easy or too difficult [11, 24]. Three studies showed that tailoring the characteristics of an intervention to children's preferences improves their emotional engagement [20, 22, 25]. One study [13] examined the effect of different modes of self-representation of avatars on children's participation, and another study [18] analyzed emotional engagement in typically developing children and those with learning disabilities; neither study found differences between conditions in terms of emotional engagement.

Considering the high level of heterogeneity, studies on behavioral engagement have reported different conclusions. Specifically, only five studies [10, 12, 15, 24, 27] investigated the relationship between behavioral engagement and cognitive performance. Through data obtained from digital platforms, three studies found that the interaction time with the screen [10, 12] or the time on the task [15] predicted student learning and their academic performance. Lastly, through data obtained from video recordings and qualitative notes, Bartoli et al. (2013, [27]) observed that children with ASD tended to reduce their repetitive behaviors associated with discomfort, loss of attention and concentration when playing Kinect games [27].

Nevertheless, most studies have not analyzed this relationship but investigated the link between digital task characteristics and behavioral engagement. Gong et al. (2021, [7]) reported that students participating in the digital activity were more behaviorally engaged when there was positive class behavior and a closer posture to the device. Similarly, Lee-Cultura et al. (2021, [8]) and Lee-Cultura et al. (2020, [13]) found that when children enjoyed the activity they tended to move more. Barana and Marchisio (2020, [16]) reported that the digital learning environment had a strong impact on the levels of behavioral engagement of initially poorly engaged students. Kosmas et al. (2018, [20]) and Kourakli et al. (2017, [23]) found that embodied Kinect game lessons, which involve motor movements during the completion of the task, were in general enjoyable for children. Finally, five studies that utilized data acquired automatically by the digital platform [10, 11, 20, 23] or a single quantitative item [26] found that if digital activities are well-liked by children, they tend to play more and express the desire to repeat the experience [11, 20, 23, 26].

Considering the 14 studies assessing children's cognitive engagement (i.e., putting effort into remembering and applying the activity rules), those that investigated the relationship between cognitive engagement and task performance used eye-tracker data (saccade speed, fixation time, pupil diameter) to assess cognitive load, perceived difficulty and anticipation. Two studies [2, 3] found that saccade speed was negatively associated with children's task performance. Three studies found that cognitive load, measured by pupil diameter and fixation time, was positively associated with children's performance, with higher cognitive load related to overall better performance in the digital task [2, 8, 13]. Lastly, Giannakos et al. (2020, [12]) suggested that anticipation and attention levels, measured by fixation time and saccade speed, had a high predictive value for performance. Regarding the use of self-report questionnaires and observational scales, Georgiou et al. (2021, [6]) found that students who participated in the digital intervention outperformed those who participated in the non-digital intervention in terms of cognitive load, and Martinovic et al. (2016, [24]) found that increased cognitive engagement (e.g., paying attention and putting in effort) was related to better performance in computer games. Finally, Barana and Marchisio (2020, [16]) found that cognitive engagement, measured by self-reported questionnaire, is linked to self-regulation and persistence with schoolwork, and that cognitively engaged students are less likely to give up their learning and more likely to stay engaged with school.

3.3 Methodological quality of the studies, limitations, and risk of biases

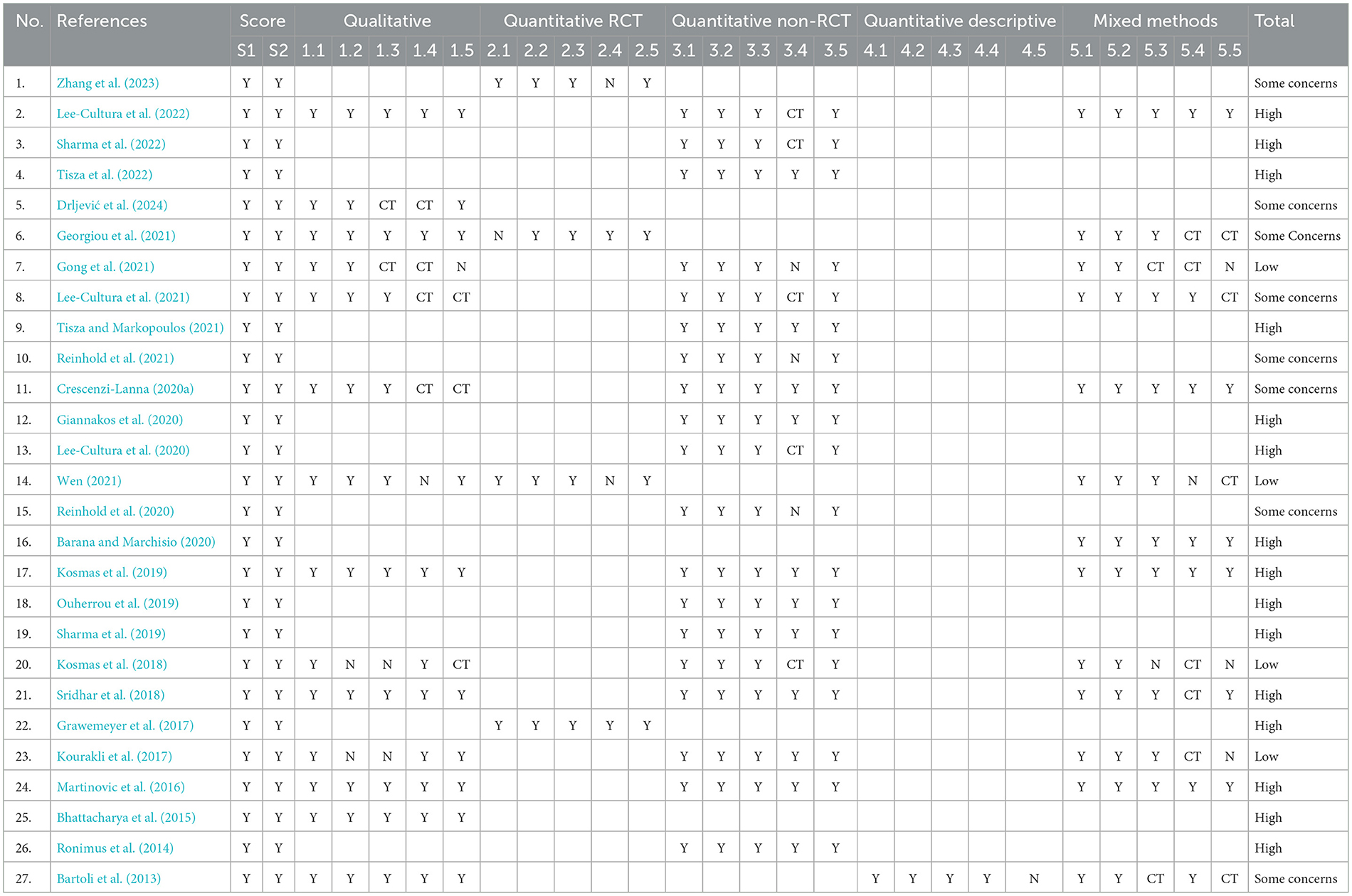

The methodological quality assessment of the included studies was carried out following the MMAT instructions. Table 3 reports in full detail the MMAT analysis of each included study.

Table 3. Methodological quality for the included studies as conducted with the Mixed Methods Appraisal Tool (Version 2018).

Fifteen studies out of 27 obtained high-quality MMAT scores. Those studies show several characteristics that allow them to be considered reliable and valid, such as research designs appropriate to the research question, well-defined and representative sample populations, adequate control of confounding factors, and appropriate analysis methodologies. Eight studies obtained MMAT scores indicating some concerns, as they present some characteristics of the best research studies but also show limitations or weaknesses that distinguish them from high-quality ones (e.g., the study design is adequate but not optimal, the population is representative but the sample size is small, the data have been collected with methods that are not entirely appropriate, the results are presented in an incomplete or complete but not exhaustive manner). Finally, four studies were of low quality, as they exhibited characteristics such as weak or inappropriate study designs, non-representative populations, unreliable data collection methods, failure to control for confounding factors, inappropriate statistical analysis, and incomplete presentation of results.

The most common limitation of the aforementioned studies was the cross-sectional study design. All papers except one had a relatively small sample size. Furthermore, studies that used self-reported methodologies acknowledged that these methods can be affected by social desirability or other individual variables. Six studies reported that findings may differ based on children's age, such that older or younger participants could have yielded different results [2, 3, 8, 11, 12, 13]. Five studies also noted that using a different methodology could have given different findings [3, 8, 12, 13, 26]. Notably, five studies recognized that many of the technical tools used (e.g., AI, Empatica E4) were not designed for children and that findings may have been affected by this [4, 8, 13, 18, 22]. Carrying out the intervention within the school setting provided high ecological validity to the findings, yet external variables were harder to monitor [4, 8, 9, 12, 18, 20], and the implementation of the intervention tools within schools could sometimes be challenging [7, 23].

In two studies the authors stated that quantitative data should be paired up with qualitative data to provide more in-depth findings concerning engagement [4, 19].

Overall, the majority of studies were affected by the following risks of bias: (a) potential sampling bias due to the relatively small sample sizes and to the recruitment of children from the same country and often the same school setting, which makes findings less generalizable; (b) response bias due to the self-report measures used; (c) measurement bias due to the fact that the digital tools used (e.g., AIs, Empatica E4) were not designed or adapted for children. More specific details about limitations and risks of bias are reported in Table 4.

4 Discussion

The main research objectives of this systematic review were: (1) to describe the most commonly used conceptualizations of children's engagement in digital and robotic contexts; (2) to understand which tools and procedures are widely used to measure three main types of engagement, that is, emotional, behavioral and cognitive, in children and adolescents performing digital and robotic tasks; (3) to investigate the relationship between engagement, children's performance, and task characteristics.

Through a selection process conducted according to the PRISMA method, 27 studies were deemed eligible.

The review includes a diverse selection of studies from different continents, albeit mainly Europe: America (n = 3), Asia (n = 4), Africa (n = 1), and Europe (n = 19). Except for Norway, which accounts for 6 of the 27 studies considered, the distribution across countries is fairly even, with only a few studies from each.
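The continent totals above can be checked against the per-country counts reported in Section 3.1 with a short tally. The sketch below is only an arithmetic cross-check: the country counts are copied from the text, while the country-to-continent mapping (including counting Cyprus under Europe, which is what makes the European total equal 19) is an assumption made here.

```python
from collections import Counter

# Number of studies per country, as reported in the description of the included studies.
studies_per_country = {
    "Norway": 6, "Cyprus": 3, "United States": 1, "Netherlands": 2,
    "United Kingdom": 1, "China": 2, "Brazil": 1, "Morocco": 1,
    "Singapore": 2, "Greece": 1, "Canada": 1, "Finland": 1,
    "Croatia": 1, "Germany": 2, "Italy": 2,
}

# Assumed mapping from country to the continent labels used in the Discussion.
continent_of = {
    "Norway": "Europe", "Cyprus": "Europe", "Netherlands": "Europe",
    "United Kingdom": "Europe", "Greece": "Europe", "Finland": "Europe",
    "Croatia": "Europe", "Germany": "Europe", "Italy": "Europe",
    "United States": "America", "Brazil": "America", "Canada": "America",
    "China": "Asia", "Singapore": "Asia",
    "Morocco": "Africa",
}

# Sum the study counts within each continent.
studies_per_continent = Counter()
for country, count in studies_per_country.items():
    studies_per_continent[continent_of[country]] += count

print(dict(studies_per_continent))
print(sum(studies_per_continent.values()))  # 27 studies in total
```

Under this mapping the tally reproduces the figures in the text (America 3, Asia 4, Africa 1, Europe 19), which sum to the 27 included studies.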

Regarding the population included in the selected studies, the review examined a wide age range, from 6 to 18 years, while only two studies (Crescenzi-Lanna, 2020a; Sridhar et al., 2018) had a sample of preschool children (4–6 years). While no study has investigated the effect of age on the results obtained, such a wide age range may prevent the generalization of the findings to different developmental periods. Indeed, both the conceptualization of engagement and the tools and methodologies used to measure it may vary between preschoolers or school-age children and adolescents, thus not being uniformly adaptable to all ages included in the review. Additionally, most of the studies focused on typically developing children, with only a few considering children with atypical development (Bartoli et al., 2013; Bhattacharya et al., 2015; Ouherrou et al., 2019; Ronimus et al., 2014), leaving open the issue of whether the engagement conceptualization and measures are suitable also for different needs. Future studies should include a larger number of children with atypical development. In fact, with the advancements in ICTs, tele-intervention, tele-assessment, and tools like AI or sensors, it is now possible to gather more information about these children's level of engagement and improve their accessibility and training. Another important consideration is that most of the tools used in these studies were not specifically designed for children (Grawemeyer et al., 2017; Lee-Cultura et al., 2020, 2021; Ouherrou et al., 2019; Tisza et al., 2022). This could have impacted the quality of the data and the suitability of the tools for assessing engagement in children. Future research should focus on developing and utilizing tools that are specifically tailored to the needs, characteristics, and different ages of children.

Additionally, none of the included studies compared different settings, as most of them were conducted in schools. Despite the high ecological validity of carrying out the studies in such settings, the children's engagement might have been influenced by external factors, such as environmental noise or unexpected events (Giannakos et al., 2020; Lee-Cultura et al., 2021; Ouherrou et al., 2019; Tisza and Markopoulos, 2021; Tisza et al., 2022). Participants from different regions and age groups might also produce different research results; it is therefore important to consider and control these external factors to obtain more reliable and valid data.

In the future, more specific studies focusing on interindividual differences or different subgroups might help implement engagement measurements that are customizable to each individual child.

The review also encompasses four studies classified as low quality (Gong et al., 2021; Wen, 2021; Kosmas et al., 2018; Kourakli et al., 2017); their results are therefore difficult to interpret due to methodological limitations.

4.1 Engagement conceptualizations

The first research question focused on analyzing the conceptualization of engagement, with particular attention to the developmental age, a period in which the concept of engagement may have different facets compared to adulthood.

The presence of multiple interpretations has highlighted numerous theories and approaches that analyze different aspects of the same construct. This variety of perspectives and theoretical approaches provides a rich and complex framework for understanding the nature and the importance of engagement in different digital contexts, thus contributing to a deeper and more articulate view of this fundamental phenomenon in educational dynamics.

Although many approaches are used, it is important to note that no theory of engagement is universally recognized as the most effective. The complexity of the phenomenon requires a more transversal exploration that takes numerous factors into account and captures the complexity of interactions between children and digital platforms. Engagement is a multifaceted, multicomponent construct: it involves different dimensions and manifestations, and it therefore cannot be fully understood from a single perspective or through a single aspect (Tisza et al., 2022; Ronimus et al., 2014).

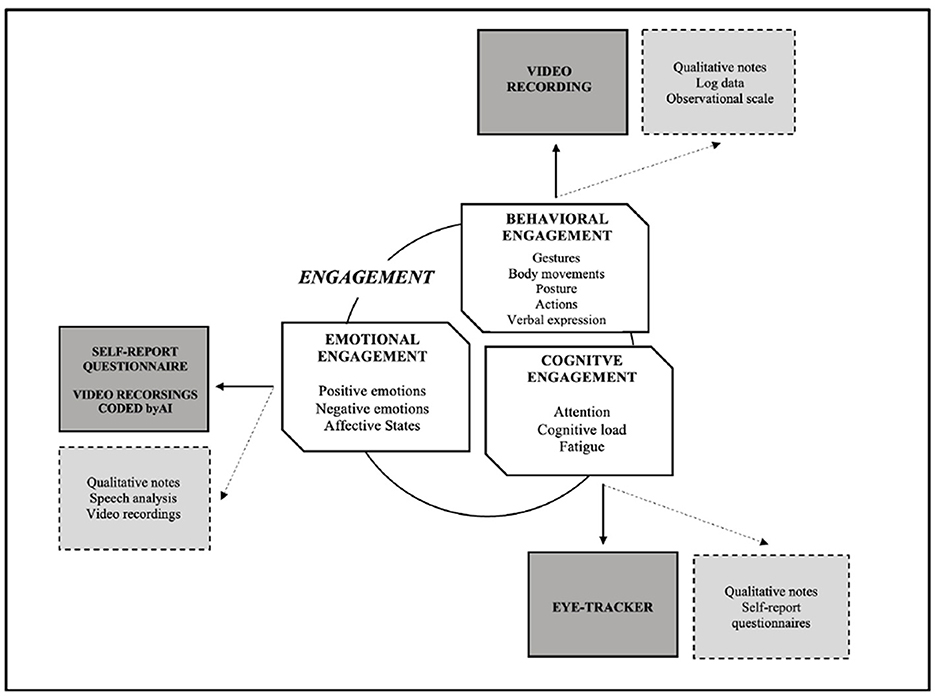

As represented in Figure 4, the constructs utilized in the selected studies can be conceptualized through three main components of engagement.

Figure 4. A three-dimension model of engagement and the respective measurement tools. Solid arrows indicate the most frequent and evidence-based measures.

The first component is emotional engagement (Tisza et al., 2022; Drljević et al., 2024; Tisza and Markopoulos, 2021; Crescenzi-Lanna, 2020a; Reinhold et al., 2020; Ouherrou et al., 2019; Sharma et al., 2019; Sridhar et al., 2018; Grawemeyer et al., 2017). It concerns the emotions perceived by children while they are performing a task and interacting with a device, or at the end of the interaction. It includes both positive and negative emotions, such as sadness, happiness, fear, surprise, anger, and disgust, and other affective states, such as enjoyment, fun, boredom, frustration, and motivation. Measuring emotional engagement during interaction with digital tasks and robotics can be particularly important in children. Unlike adults, who generally have clear goals they want to achieve and therefore have their own motivation to perform tasks, children rely on emotional activation as a driving factor in exercise and learning (Tisza et al., 2022). In fact, positive emotions are an essential feature in promoting engagement, and they also shape how children and adolescents imagine goals and challenges, guide their behavior, and form group dynamics and interactions (Sharma et al., 2019). Therefore, when designing effective interventions aimed at promoting engagement and improving children's experiences, it is important to consider the role of affective states.

The second dimension is behavioral/bodily engagement (Barana and Marchisio, 2020; Bartoli et al., 2013; Bhattacharya et al., 2015; Drljević et al., 2024; Georgiou et al., 2021; Kosmas et al., 2019; Lee-Cultura et al., 2020, 2021, 2022; Reinhold et al., 2021; Sharma et al., 2022; Zhang et al., 2023). It refers to the physical actions and active participation of the child during the activity. It includes the time spent in front of the screen or the robot, the interaction time with the task, the posture (e.g., closeness to the device), the verbal expressions used, the frequency of movements, and the actions performed. As illustrated by some studies (Bartoli et al., 2013; Giannakos et al., 2020; Reinhold et al., 2021), log data can be indicators of this type of engagement in both adults and children; in children, however, movements during task performance could also be a positive indicator of engagement and promote learning through processes of embodied cognition.

Lastly, the third fundamental aspect of engagement is the cognitive dimension, which focuses on mental processing and understanding of the task (Drljević et al., 2024; Martinovic et al., 2016; Reinhold et al., 2020). Cognitive engagement involves attentional aspects, goes beyond simple superficial participation, and translates into the ability to analyze, synthesize, and apply information in a meaningful way. Digital and robotic environments could induce higher levels of cognitive engagement (such as attention and cognitive load), which are needed for a real acquisition of knowledge and skills and allow individuals to develop the critical thinking and problem-solving skills that are essential for learning. However, in the developmental age, the appropriateness of using digital tools and robotics for learning and intervention, given the cognitive immaturity of children, is still a debated issue (Vedechkina and Borgonovi, 2021). Although none of the included studies assessed the effects of age on different dimensions of cognitive engagement, such as attentional engagement and cognitive fatigue, the results of the selected studies emphasize the importance of measuring cognitive engagement during children's interaction with digital tools and robotics.

As suggested by the model proposed in Figure 4, these three main dimensions of engagement are all relevant in childhood, as they can interact dynamically and influence each other as well as the child's overall level of engagement.

4.2 Tools and procedures to measure engagement in childhood

The second research question focused on the evaluation of the tools used to measure the three main components of engagement. In this analysis, a dual approach was adopted. First, we evaluated the extent to which the tools were a direct and sensitive measure of engagement, with particular attention to the interpretability of the results. Subsequently, we considered feasibility by examining the ease of use with children of different ages.

Alongside the visual depiction of the three components of engagement described in the section above, the model represented in Figure 4 offers an overview of the most commonly used tools and measures for each component.

In terms of the emotional dimension, there is broad consensus regarding the tools and methodologies mainly used to measure it. Indeed, the operationalization of emotions is firmly established, as emotions like joy or sadness have clear definitions and known behavioral indicators (e.g., smiling, laughing). The majority of the studies (Barana and Marchisio, 2020; Lee-Cultura et al., 2020; Ouherrou et al., 2019; Reinhold et al., 2021; Ronimus et al., 2014; Sharma et al., 2019; Sridhar et al., 2018; Tisza et al., 2022; Tisza and Markopoulos, 2021; Zhang et al., 2023) used self-report questionnaires or video recordings coded by AI to measure the emotions and/or facial expressions the children experienced or displayed during the execution of the digital tasks.

Self-report questionnaires offer children the opportunity to directly express their perceptions and communicate autonomously, without external interpretation. Furthermore, by completing the questionnaires, children are encouraged to reflect on their educational experiences, preferred learning methods, and any obstacles encountered. From a usability point of view, self-report questionnaires are also a particularly suitable option for children, as they are easy to use and understand. Their intuitive structure and clear questions make them accessible even to younger children or those with limited language skills. Moreover, visual formats with images or smileys are often used, which further simplify completion and encourage the active participation of all children.

Video recordings coded by AI allow the coding of children's facial expressions associated with the emotions they feel (Lee-Cultura et al., 2020; Ouherrou et al., 2019; Sharma et al., 2019; Tisza et al., 2022). The use of facial detection systems provides continuous monitoring of the emotional state with real-time feedback. Furthermore, these tools only require the child to be present and visible to the camera, and there is no need to integrate them with sensors. Lastly, they can be configured to respect children's privacy by not storing or recording facial images and by processing data anonymously or pseudonymously.

In addition to self-report questionnaires and video recordings coded by AI, other tools are used to assess emotional engagement, such as speech analysis, observational scales, and qualitative notes (Bartoli et al., 2013; Crescenzi-Lanna, 2020a; Drljević et al., 2024; Grawemeyer et al., 2017; Kosmas et al., 2019; Lee-Cultura et al., 2022; Martinovic et al., 2016; Sharma et al., 2019; Sridhar et al., 2018). These tools provide valuable information on the child's facial and verbal expressions, but they require a certain level of expertise and preparation from researchers, and the interpretation of the information collected can be influenced by the researchers' perspective. They are therefore best used in combination with self-report questionnaires or video recordings to provide a more complete and in-depth view of children's emotional engagement (see Figure 4).

Finally, physiological tools, although they offer an innovative approach to assessing engagement in children, present significant challenges related to their complexity of use. Furthermore, the interpretation of physiological data can be subjective and vary depending on different factors. For instance, the study by Sharma et al. (2022) suggested that higher arousal is associated with greater stress, while another (Tisza et al., 2022) suggested that higher arousal is associated with a higher level of engagement. Therefore, despite their potential to provide detailed information about children's emotional engagement, physiological tools are often considered more complex to use and require more care when interpreting results.

Although only a few of the selected studies involved children with special educational needs, the tools mentioned above could be particularly useful for measuring emotional engagement in children with emotional dysregulation, for whom the use of digital or robotic devices for learning could be highly challenging (Paneru and Paneru, 2024; Ribeiro Silva et al., 2024).

In terms of behavioral engagement, researchers collected heterogeneous data by assessing different behaviors depending on the digital tasks used. Indeed, in contrast to the standardized operationalization of emotional engagement, the literature lacks consensus on the optimal motor or bodily indicators of behavioral engagement in childhood. The most commonly used tool in this category is video recording (Bartoli et al., 2013; Drljević et al., 2024; Lee-Cultura et al., 2021, 2022), which records children's interactions with the digital environment accurately and comprehensively, capturing gestures, body movements, reactions, verbal expressions, and postures. Thanks to its ability to capture a wide range of behaviors, video recording could be a valuable tool for understanding children's behavioral engagement and interactive dynamics with digital and robotic devices. From a usability point of view, it offers direct and non-invasive observation of children's actions, and it allows researchers to analyze behaviors both during interaction with digital devices and at a later time.

In addition, there are other important methodologies that, although less direct, can provide valuable insights into children's behavioral engagement in digital contexts. These tools include observational scales, qualitative therapist notes, and log data, and they could be used in combination with video recordings to obtain a more complete measure (see Figure 4). Among the studies that analyzed data from digital platforms (Reinhold et al., 2020, 2021), some considered the execution time, while others considered the number of tasks completed, the number of exercises carried out, and the time taken to solve the problem.
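To make the log-based indicators above more concrete, the following is a minimal sketch of how time on task and task counts might be aggregated per child. It is not taken from any of the reviewed studies: the `LogEvent` record, its fields, and the `summarize_logs` helper are hypothetical and assume a platform that logs one record per attempted task.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogEvent:
    """Hypothetical platform log record: one row per attempted task."""
    child_id: str
    task_id: str
    start_s: float   # seconds since session start
    end_s: float     # seconds since session start
    completed: bool  # whether the child finished the task


def summarize_logs(events):
    """Aggregate simple behavioral indicators per child.

    Returns a dict keyed by child_id with total time on task,
    tasks attempted, tasks completed, and completion rate.
    """
    totals = defaultdict(
        lambda: {"time_on_task_s": 0.0, "tasks_attempted": 0, "tasks_completed": 0}
    )
    for e in events:
        stats = totals[e.child_id]
        stats["time_on_task_s"] += e.end_s - e.start_s
        stats["tasks_attempted"] += 1
        stats["tasks_completed"] += int(e.completed)

    summary = {}
    for child_id, stats in totals.items():
        rate = stats["tasks_completed"] / stats["tasks_attempted"]
        summary[child_id] = {**stats, "completion_rate": rate}
    return summary


if __name__ == "__main__":
    demo = [
        LogEvent("c1", "t1", 0.0, 30.0, True),
        LogEvent("c1", "t2", 30.0, 50.0, False),
        LogEvent("c2", "t1", 0.0, 10.0, True),
    ]
    print(summarize_logs(demo))
```

Such counts describe what the child did on the platform, not why; as noted above, they are best read alongside video recordings or observational scales.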

As with emotional engagement, most of the studies showed a shared consensus regarding the definition and operationalization of cognitive engagement, as well as its associated indicators, such as attention, cognitive load, and fatigue (e.g., Lee-Cultura et al., 2022; Giannakos et al., 2020; Sharma et al., 2022).

The most frequently used tool for this type of engagement was the eye tracker, which allowed the collection of data on fixation time, saccade speed, and pupil diameter (Giannakos et al., 2020; Lee-Cultura et al., 2020, 2021, 2022; Sharma et al., 2022). This tool provides objective and quantitative measurements of children's eye movements as they engage in digital tasks. It collects accurate data on the child's attention and fatigue and allows researchers to assess children's cognitive engagement in real time. By analyzing fixation and saccade patterns, it is possible to identify which elements of the digital interface capture the child's attention the most and which may be less stimulating or engaging. Modern eye trackers are also designed to be non-invasive and easy to use. They can be integrated into devices, allowing children to engage naturally in tasks without feeling disturbed or restricted, and they can be used with children of different ages and needs.
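As an illustration of how such eye-tracking data might be summarized, the sketch below totals fixation time per interface element and averages pupil diameter over a session. It is a simplified example under stated assumptions, not the procedure of any reviewed study: the `Fixation` record, the area-of-interest (AOI) labels, and both helper functions are hypothetical, and real eye-tracker exports differ by vendor.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    """Hypothetical fixation record exported by an eye tracker."""
    aoi: str            # label of the interface element being looked at
    duration_ms: float  # fixation duration in milliseconds
    pupil_mm: float     # mean pupil diameter during the fixation


def fixation_time_by_aoi(fixations):
    """Total fixation time (ms) spent on each area of interest."""
    totals = {}
    for f in fixations:
        totals[f.aoi] = totals.get(f.aoi, 0.0) + f.duration_ms
    return totals


def mean_pupil_diameter(fixations):
    """Average pupil diameter (mm) across all fixations in a session."""
    return mean(f.pupil_mm for f in fixations)


if __name__ == "__main__":
    session = [
        Fixation("target", 200.0, 3.0),
        Fixation("menu", 100.0, 3.5),
        Fixation("target", 300.0, 4.0),
    ]
    print(fixation_time_by_aoi(session))
    print(mean_pupil_diameter(session))
```

Longer total fixation time on an element suggests that it attracts more of the child's attention, while pupil diameter is sensitive to lighting and other factors, so such summaries would need baseline correction before being read as cognitive load.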

Besides the eye tracker, other tools are used to evaluate cognitive engagement in children, such as self-report questionnaires and qualitative notes (Barana and Marchisio, 2020; Georgiou et al., 2021; Martinovic et al., 2016; Zhang et al., 2023). Self-report questionnaires can be easily administered to children in formats suited to their age and level of understanding. However, it is important to note that these tools are based on subjective responses and depend on children's abilities, which could vary with age and individual experiences. Therefore, integrating these questionnaires with objective tools, such as the eye tracker, can provide a more complete and accurate assessment of cognitive engagement in children during digital tasks (see Figure 4).

4.3 Engagement-performances relationship