- ¹Department of Bioengineering, Civil Engineering, and Environmental Engineering, U.A. Whitaker College of Engineering, Florida Gulf Coast University, Fort Myers, FL, United States

- ²The Water School, Florida Gulf Coast University, Fort Myers, FL, United States

- ³DENDRITIC: A Human-Centered Artificial Intelligence and Data Science Institute, Florida Gulf Coast University, Fort Myers, FL, United States

As artificial intelligence (AI) tools evolve, a growing challenge for educators is how to leverage AI-assisted learning while maintaining rigorous assessment. AI tools, such as ChatGPT and the Jupyter AI coding assistant, enable students to tackle advanced tasks and real-world applications. However, they also risk overreliance, which can diminish cognitive and skill development and complicate assessment design. To address these challenges, the Fundamental, Applied, Conceptual, critical Thinking (FACT) assessment was implemented in an Environmental Data Science course for upper-level undergraduate and graduate students from civil and environmental engineering and Earth sciences. By balancing traditional and AI-based assessments, the FACT assessment includes: (1) fundamental skills assessment (F) through assignments without AI assistance to build a strong coding foundation, (2) applied project assessment (A) through AI-assisted assignments and term projects to engage students in authentic tasks, (3) conceptual-understanding assessment (C) through a traditional paper-based exam to independently evaluate comprehension, and (4) critical-thinking assessment (T) through a complex multi-step case study using AI to assess critical problem-solving skills. Analysis of student performance shows that AI tools combined with guidance on their use improved student performance and allowed students to tackle complex tasks and real-world applications, compared with AI tools alone without guidance. Survey results show that many students found AI tools beneficial for problem solving, yet some students expressed concerns about overreliance. By integrating assessments with and without AI tools, the FACT assessment promotes AI-assisted learning while maintaining rigorous academic assessment to prepare students for their future careers in the AI era.

1 Introduction

The integration of artificial intelligence (AI) into environmental data science education, and science, technology, engineering, and mathematics (STEM) education more broadly, is transforming traditional teaching and assessment paradigms, offering both significant opportunities and challenges (Hopfenbeck et al., 2023; Oliver et al., 2024). AI tools, such as generative AI and coding assistants, enable students to obtain tutoring during learning and to engage in complex tasks. By providing real-time feedback, these tools can make learning more dynamic and effective, as evidenced by applications in environmental data science and engineering education (Baalsrud Hauge and Jeong, 2024; Ballen et al., 2024). AI advancements have the potential to equip students with skills that support their careers (Mosly, 2024; Oliver et al., 2024), especially as industries increasingly integrate AI tools into their activities (Aladağ et al., 2024). Additionally, Google reports that 25% of its new code is AI-generated, which underscores the need for computer science and engineering education to shift focus toward higher-order skills such as quality assurance and collaborative workflows (Talagala, 2024). While AI tools have demonstrated the ability to support student learning in programming and computational courses, careful integration of AI tools in STEM education is necessary to avoid diminishing student development and to maintain academic rigor.

The increasing sophistication of AI tools raises concerns about overreliance, the challenge of assessing learning outcomes, and ethical implications. Regarding overreliance, Frankford et al. (2024) note that while AI tutoring systems improved accessibility and personalized feedback, there is a need to balance AI use with independent skill development. For example, in data science education, student dependence on AI tools has the potential to diminish the development of basic coding and problem-solving skills necessary for their professional growth (Camarillo et al., 2024; Wilson and Nishimoto, 2024). Similarly, research has shown that increased confidence in AI-generated output often leads to reduced engagement in critical thinking, as users tend to rely on AI responses rather than independently evaluating information (Lee et al., 2025). Regarding the challenge of assessing learning outcomes, the misuse of AI in academic settings raises concerns about difficulties with plagiarism detection and learning outcome assessment (Baalsrud Hauge and Jeong, 2024; Williams, 2024). For example, widespread misuse of AI tools has led to significant increases in plagiarism and honor code violations, with some educators spending substantial time detecting AI-driven misconduct (McMurtrie, 2024). In addition, large language models like ChatGPT and Gemini have been shown to propagate biases, homogenize knowledge, and occasionally produce misleading information (Oliver et al., 2024). These practical and ethical considerations require structured pedagogical approaches to mitigate these risks and ensure alignment with academic and industry standards (Baidoo-anu and Ansah, 2023). For example, Oliver et al. (2024) highlight the importance of critical assessment of AI outputs and addressing ethical considerations, particularly in environmental data science, where generative AI is increasingly employed to synthesize data and design workflows. 
Therefore, there is a need for holistic assessment frameworks that can effectively leverage AI opportunities and mitigate AI risks (Ateeq et al., 2024).

In response to these challenges, the academic community is actively exploring strategies to reshape assessment in higher education, recognizing that traditional methods may be increasingly inadequate (Xia et al., 2024). One response involves redesigning assessments to emphasize higher-order thinking skills such as critical analysis, creativity, and problem solving, often through authentic tasks that focus on the learning process, where the AI role is carefully managed (Awadallah Alkouk and Khlaif, 2024; Khlaif et al., 2025; Xia et al., 2024). Common strategies include using personalized applications (e.g., local data with local interpretation), real-world scenarios (e.g., case studies), multimodal responses (using text, visuals, audio, etc.), oral defenses, and evaluating the learning journey, including responsible student interaction with AI tools (Ardito, 2024; Awadallah Alkouk and Khlaif, 2024; Corbin et al., 2025; Xia et al., 2024). In addition to redesigning assessments to make them AI-resistant, another response is building structured guidelines or scales for permitted AI use. For example, the AI Assessment Scale (AIAS) outlines levels for permitted AI integration (Perkins et al., 2024), supporting a balanced approach that combines AI capabilities while ensuring human evaluation measures student understanding and skills (Xia et al., 2024). Similarly, the HEAT-AI framework categorizes AI applications into four risk levels, which are unacceptable, high, limited, and minimal, offering institutions a structured, risk-based model to guide ethical and pedagogically sound AI use in assessment contexts (Temper et al., 2025). Alongside, universities are urged to revise assessment policies, provide professional development for faculty and students on AI and assessment literacy, and promote teaching innovations reflecting these shifts (Chan, 2023). 
For example, many top-ranking universities have begun issuing specific guidelines on AI use in assessments (Moorhouse et al., 2023). For more detail on strategies to redefine assessment in higher education due to AI, Xia et al. (2024) review these changes in student, teacher, and institutional contexts. Despite these efforts, a significant gap persists in developing holistic assessment frameworks (Swiecki et al., 2022), and defining clear boundaries for AI use remains complex (Corbin et al., 2025; Dabis and Csáki, 2024; Doenyas, 2024). Specifically, a hybrid approach that seamlessly integrates traditional assessment methods with AI-assisted projects to evaluate a full spectrum of skills from foundational knowledge to critical thinking within specialized contexts like environmental data science remains largely unexplored, especially with empirical application.

Given the call for hybrid assessment frameworks that balance AI-assisted learning with rigorous academic standards (Pham et al., 2023; Swiecki et al., 2022), this study introduces and evaluates the FACT (Fundamental, Applied, Conceptual, critical Thinking) assessment as a practical approach in an upper-level environmental data science course. The main objective is to evaluate the impact of FACT on student performance and to assess the perceptions of students about AI integration within a structure that combines traditional and AI-assisted methods for holistic assessment. Specifically, FACT uses AI tools for applied projects (A) and critical thinking (T), while maintaining traditional assessments for foundational skills (F) and conceptual understanding (C), ensuring that students develop both technical skills and higher-order cognitive skills. Such integrated approaches can enhance student engagement and real-world application (Baalsrud Hauge and Jeong, 2024; Ballen et al., 2024). This paper contributes to the growing literature on AI in STEM education, particularly within environmental data science (Gibert et al., 2018; Leal Filho et al., 2024; Pennington et al., 2020). By preparing students for AI-integrated professions while maintaining rigorous academic standards, this study addresses the dual objectives of advancement and integrity in STEM education under the AI paradigm through the following research questions: (1) How does student performance vary across FACT assessment components with differing levels of permitted AI assistance and task complexity? (2) What are student perceptions regarding their reliance on AI coding assistance when engaged in tasks under the FACT framework? (3) How do students perceive the positive and negative impacts of integrated AI coding assistance on their learning experience within the FACT framework?

2 Literature review

2.1 Rationale for component selection

The FACT framework components of fundamental skills (F), applied projects (A), conceptual understanding (C), and critical thinking (T) were chosen to ensure comprehensive student assessment across a wide learning spectrum. These assessment components address different learning stages by reflecting the cognitive levels in Bloom’s taxonomy, as Bloom’s taxonomy undergoes adaptation for the AI era (Gonsalves, 2024; Jain and Samuel, 2025; Lubbe et al., 2025; Philbin, 2023). The deliberate progression from foundational knowledge (F, C) to application and higher-order thinking (A, T) is central to this design. Assessing fundamental skills (F) and conceptual understanding (C) corresponds to Bloom’s foundational ‘remembering’ and ‘understanding’ levels (Anderson and Krathwohl, 2001). It is necessary to verify genuine comprehension as AI can mask knowledge gaps (Jain and Samuel, 2025; Lubbe et al., 2025). Yet beyond assessing comprehension, building core skills (F) and understanding key concepts (C) provide the foundation students need to effectively and efficiently apply their knowledge in real-world scenarios, often with AI tools (A), while simultaneously engaging in critical judgment (T) as described below. The applied project (A) component directly assesses the ‘applying’ and ‘analyzing’ levels of Bloom’s taxonomy in authentic contexts. Finally, critical thinking (T) is a cornerstone of the assessment because it evaluates higher-order cognitive processes such as the ‘evaluating’ and ‘creating’ levels of Bloom’s taxonomy (Jain and Samuel, 2025; Lubbe et al., 2025). This includes the skill of discerningly evaluating and interacting with AI outputs (Gerlich, 2025; Gonsalves, 2024; Lubbe et al., 2025).
In an age where AI can perform not only routine but also increasingly complex tasks, a key pedagogical goal with respect to our assessment is to prepare learners who possess not just procedural skills (F, A), but more importantly a solid conceptual understanding and discerning critical judgment (C, T). Thus, the overarching goal of the FACT framework is to help students build the skills and knowledge needed to oversee and collaborate with AI to enhance their thinking and co-generate knowledge. This is achieved while aligning with calls for effective and responsible use of AI (Zhao et al., 2025) and maintaining individual accountability (Lin, 2025). The remainder of this section describes how the components of the FACT framework are grounded in pedagogical theory and practice.

2.2 FACT in pedagogical theory and practice

Fundamental skills assessment (F) and conceptual understanding assessment (C), conducted without AI assistance, are needed to verify student abilities, especially in the AI era. Foundational knowledge (F, C), which corresponds to the ‘remembering’ and ‘understanding’ levels of the Bloom’s taxonomy, is a prerequisite for higher-order cognitive tasks. Given AI efficiency at lower Bloom’s levels (Gerlich, 2025; Lubbe et al., 2025) and increasing capabilities at higher levels (Lin, 2025), verifying independent capabilities is needed. This ensures academic rigor by distinguishing genuine competence from AI use that could mask foundational weaknesses (Jain and Samuel, 2025; Lubbe et al., 2025). Beyond rigorous academic assessment, we argue that the F and C components are becoming more critical in the era of AI to ensure that students develop key skills rather than over-relying on AI. For example, Jain and Samuel (2025) caution against “ventriloquizing,” where learners might merely replicate AI-generated information without genuine internalization. The F assessment counters this by ensuring students develop their own fundamental skills. Similarly, the conceptual understanding assessment (C) is equally critical as it focuses on verifying student comprehension of core principles to ensure transferability and adaptability of knowledge (Jain and Samuel, 2025). Transferability of knowledge is applying knowledge to similar tasks, while adaptability of knowledge is evolving knowledge to handle unfamiliar tasks. On the other hand, recent studies (Gonsalves, 2024; Jose et al., 2025) indicate that overreliance on AI could impede the development of critical thinking if foundational learning is bypassed. Specifically, Gonsalves (2024) warns that easy access and overreliance on AI outputs can lead to superficial learning. 
Forms of superficial learning include rushing to finish tasks without critical thinking, disengagement from the material through AI overuse, and completing steps without understanding the underlying concepts (Dergaa et al., 2024; Jose et al., 2025). The F and C components therefore not only ensure academic rigor and mitigate the risk of superficial engagement with AI (Jose et al., 2025; Philbin, 2023), but also help students build a strong base to progress in their learning, a principle further supported by cognitive load and constructivist learning theories discussed below.

The need for students to acquire basic foundational skills before engaging with AI tools is further supported by cognitive load theory (Sweller, 1988) and constructivist learning theory (Brown et al., 1989). We suggest that mastering foundational skills first reduces the cognitive burden when AI tools are introduced. Cognitive load theory suggests that human working memory has limited capacity. Poor instructional design or insufficient prerequisite knowledge can introduce extraneous cognitive load, which diverts cognitive resources away from intrinsic cognitive load and germane cognitive load (Sweller, 1988; Sweller et al., 2011). Intrinsic cognitive load and germane cognitive load refer to cognitive resources for handling the inherent complexity of the material and schema development, respectively. Extraneous load increases when students simultaneously learn the AI tool and the subject matter, manage overwhelming or inaccurate AI output without evaluative skills, or frequently switch attention (de Jong, 2010; Jose et al., 2025; Zhao et al., 2025). Without a solid foundation, AI might even impede deep learning by reducing genuine cognitive engagement (Jose et al., 2025). In parallel, constructivist learning theory argues that learners actively build knowledge from experiences (Brown et al., 1989; Kim et al., 2025; Tan and Maravilla, 2024). As such, the mental world is actively constructed with a developmental path from some initial state (Elshall and Tsai, 2014; Riegler, 2012). Thus, basic understanding and fundamental skills are needed so that AI will be used to assist learning rather than merely performing cognitive tasks for students (Jose et al., 2025; Tan and Maravilla, 2024). 
As effective AI-based education involves applying existing knowledge in authentic, real-world contexts (Khlaif et al., 2025; Kim et al., 2025), developing independent skills first allows students to construct their understanding, mitigates the risk of superficial learning (Jose et al., 2025) and reduces cognitive offloading (Gerlich, 2025). It should be noted that essential foundational skills should extend beyond subject-specific knowledge and basic technical literacy, to critical thinking, which is a core skill for effective AI use (Zhao et al., 2025).

Applied projects assessment (A), which incorporates AI assistance, aligns with constructivist learning theories, project-based learning, and authentic assessment principles in the context of the AI era. These frameworks emphasize that students learn best by actively applying knowledge to solve complex, real-world problems (Gonsalves, 2024; Khlaif et al., 2025; Ye et al., 2017). The A component targets higher-order thinking skills such as applying, analyzing, and creating, as defined by Bloom’s taxonomy. However, the A component situates these skills within a human-AI knowledge co-production paradigm, where students extensively use AI for research, coding, analysis, and communication in authentic contexts. This co-production approach aligns with frameworks such as the “intelligence augmentation” model (Jain and Samuel, 2025), which describes the process as “co-curating” knowledge through human-AI collaboration. The co-production approach also aligns with other studies (Gonsalves, 2024; Philbin, 2023) characterizing AI as a “cognitive partner” and “intelligent collaborator,” respectively. As such, students collaborate with AI as epistemic partners or, as one student put it, with “my AI friend” to co-produce knowledge. Accordingly, the A component assesses student ability to produce high-quality deliverables through this human-AI collaboration, emphasizing the critical and responsible management of AI contributions.

As AI literacy becomes increasingly integral to professional practice, co-production competence, which is the ability to collaborate with AI both effectively and ethically towards a tangible outcome, is needed to prepare students for workplace demands (Cheah et al., 2025). Accordingly, the A component does not evaluate students in a traditional sense, but rather under the human-AI knowledge co-production paradigm (Gonsalves, 2024; Jain and Samuel, 2025; Philbin, 2023). Thus, the A component attempts to pair AI computational capabilities with human critical judgment for knowledge co-production. This is done with emphasis on maintaining human accountability to ensure that students retain ownership and oversight of the knowledge produced through human-AI collaboration (Jain and Samuel, 2025; Lubbe et al., 2025). As one student reflected during his term presentation, “I was able to complete my project by applying what I learned in this class, by working with my AI friend, and by collaborating with the instructor.” This example illustrates the overarching goal of the A component, which is to promote responsible and effective human-AI collaboration while maintaining student ownership and agency in both the learning process and the final product (Jain and Samuel, 2025; Lubbe et al., 2025).

Critical thinking assessment (T) is arguably the most important part of AI-based education. Critical thinking focuses on the highest levels of Bloom’s Taxonomy of ‘analyzing’, ‘evaluating’, and ‘creating’. Traditionally, critical thinking involves the ability to analyze, evaluate, and synthesize information to question assumptions, interpret evidence, solve problems, and make independent, well-reasoned decisions (Lubbe et al., 2025; Melisa et al., 2025). However, in the context of generative AI, this cognitive process needs to be redefined. While AI can produce large volumes of information, AI lacks critical judgment, ethical awareness, and the capacity to refine and integrate knowledge meaningfully (Gerlich, 2025; Gonsalves, 2024). In response to these limitations, Gonsalves (2024) proposes an updated Bloom’s taxonomy that includes AI-specific competencies like melioration, ethical reasoning, and reflective thinking. Melioration refers to improving AI output by combining it with reliable sources to make the information more accurate and relevant. In addition, Jain and Samuel (2025) introduce the notion of “critical understanding,” which means adding human judgment shaped by real-life experience to AI content. Building on this, Lubbe et al. (2025) suggest that in an AI era, “evaluate” should replace “create” at the apex of Bloom’s taxonomy, reflecting the increasing need to critically judge AI outputs before using them in knowledge construction.

These AI-specific competencies closely align with the T component of the FACT framework, which assesses student ability to critically assess and integrate AI outputs and apply them to problem-solving contexts. Gonsalves (2024) and Yusuf et al. (2024) refer to this dual role as “critical thinking toward the AI” and “critical thinking for the assignment,” respectively. Recent studies (Jose et al., 2025; Zhao et al., 2025) show that students with well-developed critical thinking skills benefit more from AI to improve their learning, while a lack of these skills may cause students to accept flawed or biased AI outputs without questioning. These findings directly address the growing concerns that AI could weaken critical thinking if AI outputs are unexamined (Gerlich, 2025; Jose et al., 2025). Consequently, there are calls to redesign assessments to either resist AI interference altogether (Khlaif et al., 2025) or to deliberately integrate AI to cultivate higher-order thinking (Cheah et al., 2025; Philbin, 2023). The A and T components aim to address this challenge by making critical engagement with and through AI a central focus of learning, so that students develop the AI-specific competencies required for the AI era. The F and C components, in contrast, are mainly designed to resist AI interference.

As a whole, the FACT framework addresses the “cognitive paradox of AI in education” (Jose et al., 2025), which refers to the tension between the AI potential to assist learning and its simultaneous risk of undermining key cognitive skills such as memory, critical thinking, and creativity if overused or misused. Through four distinct yet interconnected components, the FACT framework offers a balanced approach to assessment in the AI era. The framework acknowledges the transformative potential of AI as a learning and productivity tool (A, T) while actively safeguarding and cultivating the essential human competencies of fundamental skills (F), conceptual understanding (C), and most importantly the capacity for independent critical thought and ethical judgment (T). The selection and emphasis of these components is a direct response to pedagogical challenges and opportunities identified in current research (Gonsalves, 2024; Jain and Samuel, 2025; Jose et al., 2025; Khlaif et al., 2025; Kim et al., 2025; Lubbe et al., 2025; Zhao et al., 2025) calling for the evolution of assessment practices in the AI era. This development aims to help students become not only AI-literate, but also strong in fundamental skills, solid in their understanding of basic concepts, and capable of thinking critically. In this way, the FACT framework presents a holistic model of assessment tailored for the AI era.

3 Methods

AI coding assistance is integrated into an Environmental Data Science course. The course was designed to balance the opportunities and challenges associated with AI tools while maintaining rigorous academic standards through applying the FACT assessment. This section describes the course design, FACT assessment, and data collection from student surveys to evaluate the impact of AI integration. By examining these components, the study aims to evaluate the effectiveness of the FACT assessment in addressing the dual objectives of leveraging AI tools, while maintaining rigorous academic standards and avoiding AI overdependence.

3.1 Course design and structure

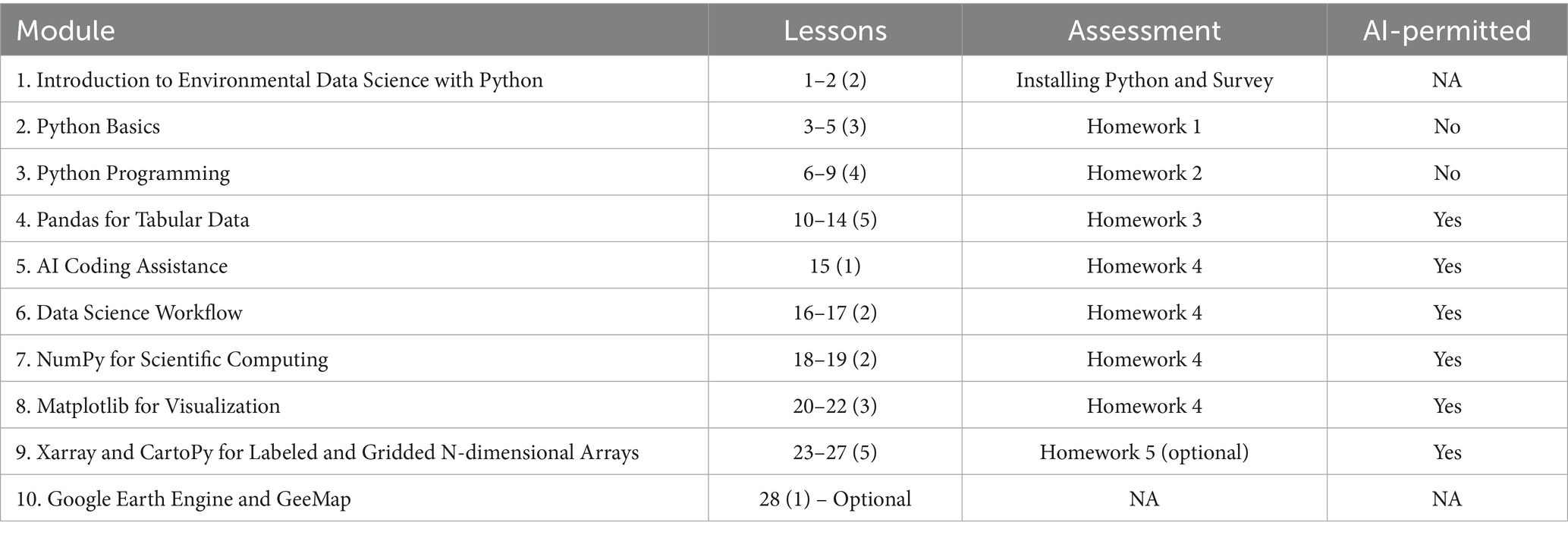

The Environmental Data Science course is offered to upper-level undergraduate and graduate students from civil and environmental engineering, as well as Earth, ocean, and environmental sciences. The course syllabus (Elshall, 2025) indicates that the curriculum introduces students to water and environmental data analysis using Python, a versatile programming language equipped with powerful libraries for data science and scientific computing. Key libraries include Pandas for spreadsheet-like data manipulation, Matplotlib for visualization, NumPy for scientific computing, Xarray for multi-dimensional geospatial data analysis, and CartoPy for geospatial visualization. The course curriculum included instruction on using Python to analyze and visualize water and environmental datasets, working with data from sources such as NOAA, NASA, Copernicus, USGS, and Data.Gov, in formats like CSV, shapefiles, and NetCDF. Additionally, the course is project-based and offers self-directed learning opportunities. The course was designed with no prior programming prerequisites and aimed to prepare students for data analysis and visualization to address real-world challenges in water resources and environmental management. Past students have explored and utilized specialized resources tailored to their interests, such as the Climate Data Store API for accessing CMIP6 datasets for climate projections and remote sensing data; sciencebasepy for programmatic interaction with the USGS ScienceBase platform; Geemap for using the Google Earth Engine catalog of satellite imagery and geospatial datasets; Python in ArcGIS Pro to extend and customize GIS functionality; statsmodels for statistical analysis; Scikit-learn and TensorFlow for machine learning analysis of water and environmental datasets; and FloPy, a Python API for MODFLOW, for groundwater modeling. Examples of student projects completed in the course are available in the project assignment (Elshall, 2025).
Assessment in the course emphasized hands-on learning and practical applications. The course follows a structured syllabus with distinct stages of assessment as shown in Table 1. Each lesson is 75 min. Details about these modules are available online via a Jupyter book (Elshall, 2025) that contains course material.

Table 1. Course modules and assessment stages illustrating the structured progression from foundational skills (no AI permitted) to applied tasks (AI permitted) within the FACT framework implementation.

3.2 FACT assessment

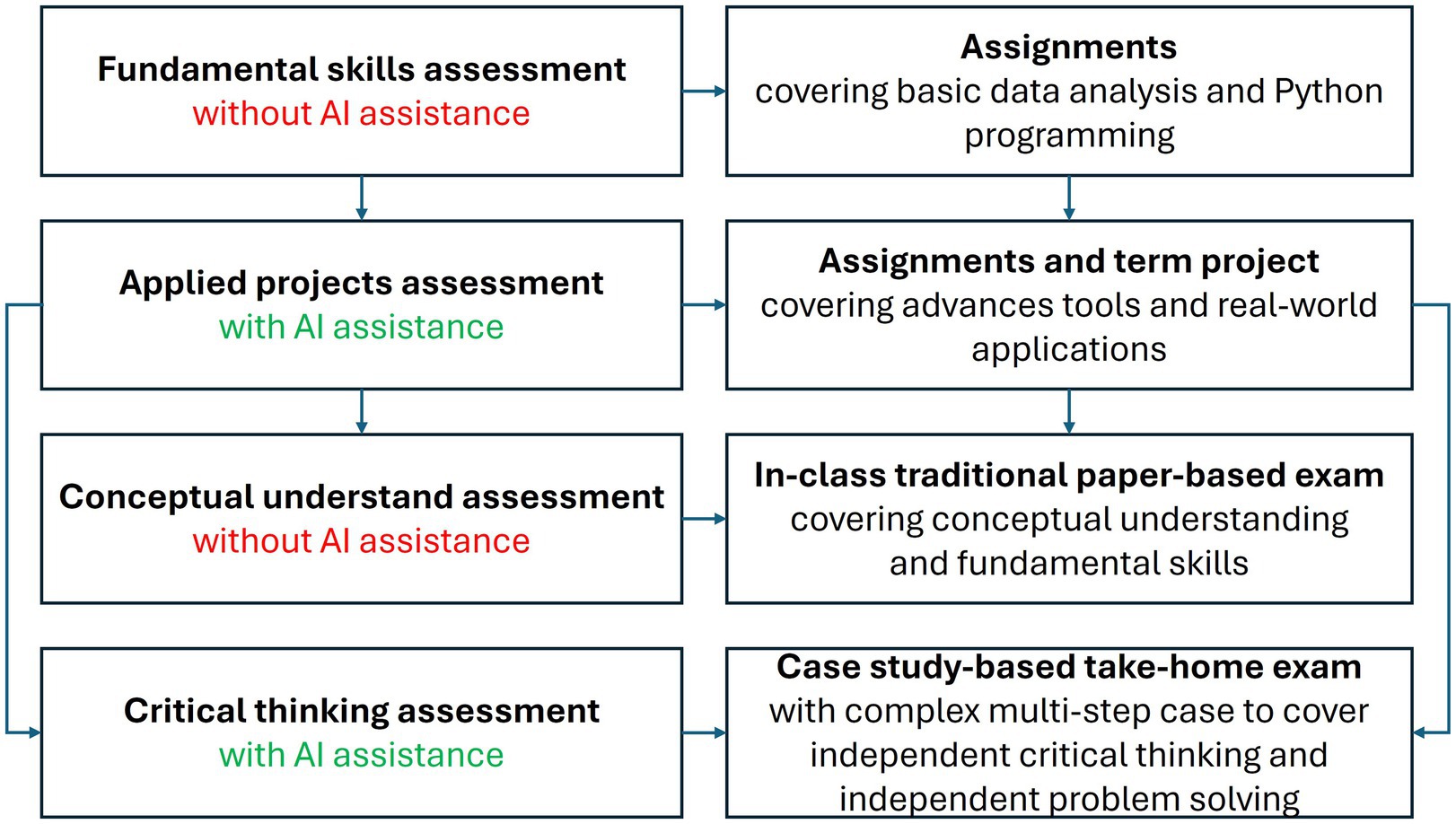

The FACT (Fundamental, Applied, Conceptual, critical Thinking) assessment is a structured approach that combines traditional and AI-assisted learning techniques to assess fundamental skills, applied project performance, conceptual understanding, and critical thinking as summarized in Figure 1. The FACT assessment aims to leverage AI tools, while avoiding overdependence and maintaining academic standards.

Figure 1. Conceptual summary of the FACT assessment framework, illustrating the integration of assessment instruments with and without AI assistance across fundamental, applied, conceptual, and critical thinking domains to achieve a balanced approach.

Fundamental-skills assessment (F) ensures that students build a solid foundation before progressing to more advanced concepts and tools. In the first nine lessons (75 min each), students focus on mastering basic Python programming and data analysis techniques, and they complete two assignments without the aid of AI tools. Homework 1 (Elshall, 2025) assessed Python basics (e.g., variables, formulas, data structures, and formatting). Homework 2 focused on programming concepts (e.g., loops, functions). These assignments are designed to assess fundamental skills before students progress to advanced tools like Pandas, NumPy, Xarray, CartoPy, scikit-learn, and Geemap.
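To make the scope of these fundamental skills concrete, the following is a minimal sketch of the kind of task that combines variables, a dictionary, a loop, a function, and string formatting. It is not taken from Homework 1 or Homework 2; the function name `classify_dissolved_oxygen`, the thresholds, and the sample readings are hypothetical and chosen only for illustration.

```python
# Hypothetical exercise in the spirit of the fundamental-skills assignments.
# The function name, thresholds, and readings are illustrative assumptions,
# not material from the actual course homework.

def classify_dissolved_oxygen(do_mg_per_l):
    """Return a coarse label for a dissolved-oxygen reading in mg/L."""
    if do_mg_per_l < 2.0:
        return "hypoxic"
    elif do_mg_per_l < 5.0:
        return "stressed"
    return "healthy"


# Made-up readings stored in a basic data structure (a dictionary).
readings = {"site_a": 1.4, "site_b": 4.2, "site_c": 7.9}

# Loop over the sites, call the function, and format the result.
labels = {}
for site, value in readings.items():
    labels[site] = classify_dissolved_oxygen(value)
    print(f"{site}: {value:.1f} mg/L -> {labels[site]}")
```

A task of this size can be solved without AI assistance once the basics are in place, which is the point of assessing these skills before AI tools are permitted.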

Applied-project assessment (A) ensures that students engage in advanced tasks and real-world applications. Starting in lesson 10, students work on advanced, project-based assignments that incorporate AI coding assistance using AI tools such as Jupyter AI and ChatGPT 3.5 Turbo. Homework 3 (Elshall, 2025), which focuses on using Pandas as a programmable spreadsheet for the analysis of big data, emphasizes practical applications. The students were required to apply techniques demonstrated in lessons to a dataset of their interest and present information and insights uncovered from their data exploration. Graduate students had an additional problem on analyzing water quality affected by harmful algal blooms. The students were also required to document their use of AI tools, such as large language models (LLMs), in improving their learning and productivity. Learning objectives of Homework 3 are detailed in Box 1.

Box 1. Learning objectives of the first homework where students are permitted to use AI.

As outlined in the syllabus, this course emphasizes project-based learning and self-directed study opportunities. This problem provides you with the chance to explore a dataset of personal interest. The objectives of this problem are to:

• Facilitate your learning of Pandas by engaging with a dataset that aligns with your interests

• Enhance your proficiency in accessing, wrangling, analyzing, and visualizing large CSV datasets

• Provide hands-on practice to strengthen your ability to analyze tabular data with mixed data types

• Engage in critical thinking to extract useful information from raw data

• Practice articulating your findings clearly and concisely using visualizations or narrative explanations

• Improve your skills in using AI-LLM for independent and self-directed learning
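The access, wrangle, and analyze steps named in these objectives can be sketched with Pandas as follows. This is a hedged illustration rather than the homework solution: the inline CSV text stands in for a real file, and its column names and values are synthetic assumptions.

```python
import io

import pandas as pd

# Synthetic stand-in for a CSV file with mixed data types.
# Column names and values are made up for illustration only.
csv_text = """date,station,parameter,value,flag
2024-01-01,S1,chlorophyll,3.2,ok
2024-01-01,S2,chlorophyll,,missing
2024-01-02,S1,chlorophyll,4.1,ok
2024-01-02,S2,chlorophyll,5.0,ok
"""

# Access: read the CSV and parse the date column as datetimes.
df = pd.read_csv(io.StringIO(csv_text), parse_dates=["date"])

# Wrangle: drop rows where the measurement itself is missing.
clean = df.dropna(subset=["value"])

# Analyze: mean value per station.
station_mean = clean.groupby("station")["value"].mean()
print(station_mean)
```

With a real dataset, `io.StringIO(csv_text)` would be replaced by a file path or URL, and a visualization step (for example with Matplotlib) would typically follow the summary.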

In Homework 3, students were permitted to use AI tools without guidance. Subsequently, Lesson 15 introduced AI tools to ensure that students could use these tools effectively while working on real-world applications of interest. The integration of AI tools was guided by principles of responsible AI usage. Students were encouraged to balance AI assistance with independent problem-solving, and class discussions addressed ethical concerns, including overreliance and potential biases and errors in AI outputs. The lesson also emphasized the importance of prompt engineering to effectively use AI tools. Detailed examples of effective prompt engineering are shown in Module 6 (Data Science Workflow), covered in Lessons 16 and 17, including Exercise 6 on air quality index (AQI) data preparation (Elshall, 2025). For the rest of the semester, there was continuous demonstration and discussion of the use of AI in coding and in learning new topics and packages not covered in class, including statistical analysis methods. These assignments and term projects allowed students to focus on real-world problem-solving, while managing the complexities of advanced coding and data analysis techniques through AI assistance.

Conceptual-understanding assessment (C) ensures independent evaluation of student understanding without the use of AI tools. The course culminates in a traditional, paper-based final exam designed to test student understanding of core concepts without the assistance of AI tools. The conceptual understanding assessment consisted of a 75-min exam with 60 multiple-choice questions, primarily assessing student grasp of key concepts, with less emphasis on critical thinking and independent problem-solving skills. The exam focused on basic materials from the 10 course modules, with an emphasis on assessing general knowledge discussed in class and class participation. The exam was administered on paper under open-book conditions, with exam instructions prohibiting AI tool use and referencing academic honesty policies. Academic honesty was strictly enforced, with violations resulting in a grade of zero. The exam study guide (Elshall, 2025), including sample questions, was provided to students.

Critical-thinking assessment (T) ensures the assessment of independent problem-solving through a multi-step case study. Examples of such multi-step case studies are Homework 4 (Elshall, 2025), which studies the impact of COVID-19 on air quality in South Florida, and the optional Homework 5 (Elshall, 2025), which compares changes in rainfall patterns under different CMIP6 future scenarios for North and South Florida. Another example would be a take-home exam. While the final in-class exam format allows students to demonstrate their conceptual understanding, there is an additional need to assess individual critical thinking and independent problem-solving abilities in real-world contexts. Given that AI tools can inadvertently shift the cognitive burden from problem-solving to simple validation of AI-generated responses, assessments must explicitly encourage independent judgment and decision-making (Lee et al., 2025). To address this gap, in addition to Homework 4 and Homework 5, a recommended future addition is a take-home exam based on a multi-step case study. AI tools would assist with tasks like data cleaning or analysis, but students would need to make independent decisions and apply critical thinking to connect the steps, interpret results, draw conclusions, and integrate insights into a cohesive solution. While this approach would effectively assess student independent problem-solving abilities and ensure they can navigate complex environmental science data challenges, designing such exams will become increasingly challenging as AI tools advance. Another concern is that this exam can be time-consuming, constituting an extra load on students. In this course, students were given the choice of splitting the final exam into two parts, where the first part would be the traditional paper-based in-class exam as described above, and the second part would be a take-home exam covering a case study as described. They chose to do the in-class exam only.

3.3 Student survey for data collection

At the end of the course, an anonymous survey (Elshall, 2025) was distributed to gather feedback on student experiences with AI coding assistance and the course structure. Clear instructions were given that the survey was not an evaluation of the instructor, but rather of the learning experience, irrespective of whether students liked or disliked the instructor. Clear instructions were also given that the survey was for research purposes. Questions focused on student perceptions of how AI impacted their learning, problem-solving abilities, and reliance on technology (Survey Questions 6–9). These survey questions included three quantitative questions and one qualitative question as follows: (1) AI Coding Assistance: When I solve an environmental data science problem, I heavily rely on AI? (2) AI Coding Assistance: After I study a topic in this course and feel that I understand it, I have difficulty solving problems on the same topic; (3) AI Coding Assistance: When I get stuck on an environmental data science problem, rank how you seek help in order: Seek help from classmates, consult online resources, review lecture notes, experiment on my own, reach out instructor for guidance; (4) AI Coding Assistance: How has the integration of AI coding assistance, such as Jupyter AI or ChatGPT, impacted your learning experience in the course, both positively and negatively? The purpose of these survey questions is to learn the positive and negative impacts of AI as perceived by students, including ethical considerations and pedagogical implications. Survey results were collected from all 12 students who took this course in Spring 2024, comprising 9 undergraduate students and 3 graduate students.

3.4 Data analysis and AI-assisted research

We used boxplots to analyze student performance with and without AI assistance under the FACT assessment, and histograms to analyze the quantitative survey questions. Semantic analysis was used to summarize the main themes in student responses to the qualitative survey question. In addition, to reduce cognitive biases such as confirmation bias, anchoring bias, and the overconfidence effect, semantic analysis was also conducted with AI assistance from GPT-4o, and the results were verified for accuracy. Data analysis and plotting were conducted using standard Python packages, including pandas and matplotlib, with assistance from GPT-4o and GPT-3.5 Turbo via Jupyter AI. Beyond data analysis and plotting, AI assistance from GPT-4o was used for providing review comments, refining text for succinctness and clarity, restructuring paragraphs to improve logical flow, and performing semantic analysis of qualitative survey responses.

4 Results and discussion

4.1 Student performance with and without AI assistance

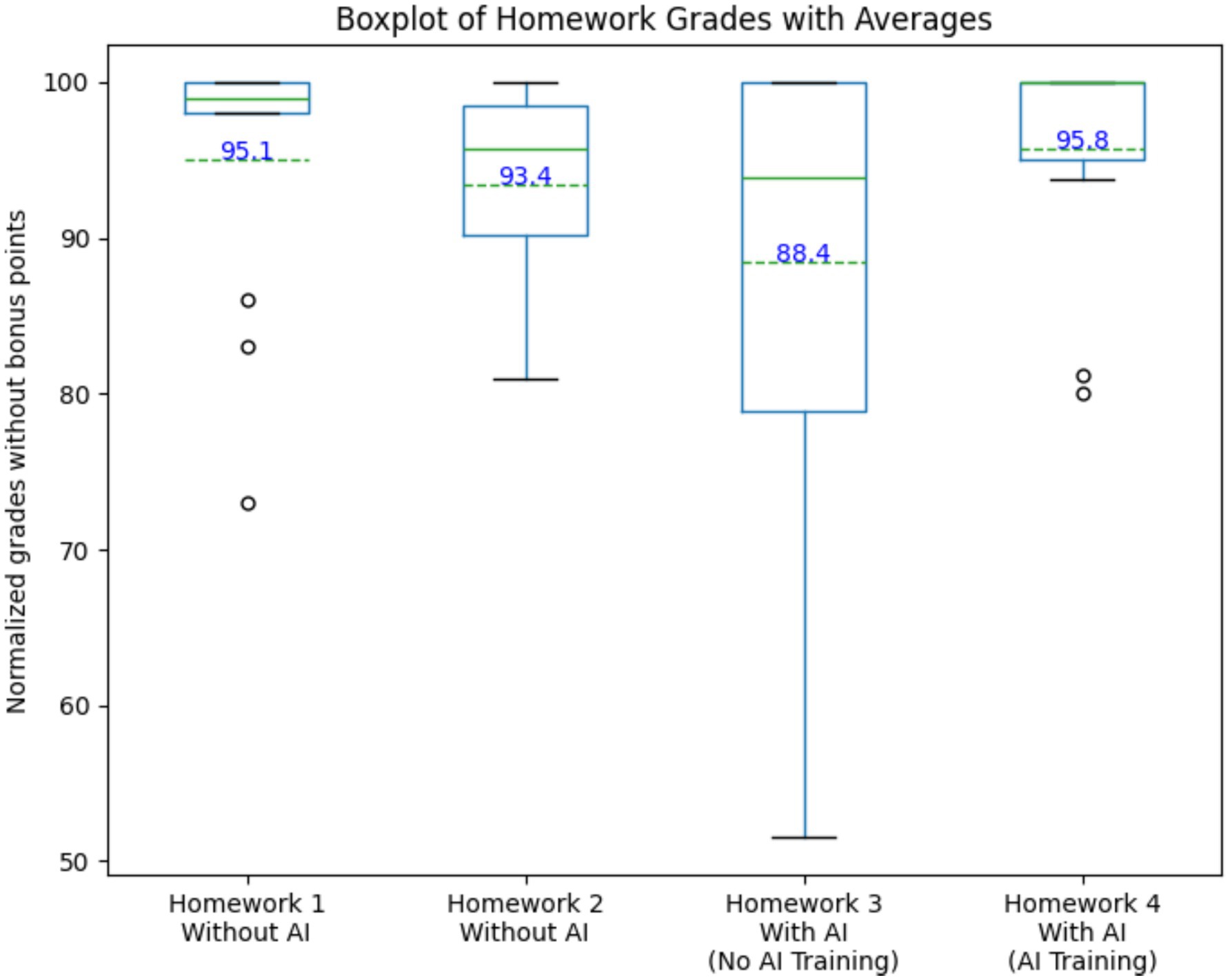

The boxplot analysis of normalized homework grades without bonus points shows differences in student performance under varying levels of AI assistance and task complexity from Homework 1 to Homework 4 (Figure 2). For Homework 1 and Homework 2, where AI tools were not permitted, students demonstrated consistent performance with relatively narrow interquartile ranges (IQRs) and high average scores of 95.3 and 93.4, respectively. These assignments, which focused on foundational skills like basic data analysis and Python programming, provided a solid baseline for students to build their technical competence. However, the presence of a few outliers in Homework 1 suggests that some students faced challenges completing these tasks independently, likely due to differences in their prior experience with coding.

Figure 2. Student performance across homework assignments, comparing grades without AI assistance (Homework 1, Homework 2), with AI assistance but no training (Homework 3), and with AI assistance plus training (Homework 4) to illustrate the impact of AI guidance. The difficulty increased progressively from Homework 1 to Homework 4.

In contrast, Homework 3 and Homework 4, which incorporated more advanced, real-world tasks and permitted AI assistance, generally exhibit greater variability in performance. Homework 3, with a wider IQR and a lower mean score of 88.4, highlights the adjustment period as students learned to integrate AI tools effectively. This variability might suggest that AI enabled some students to tackle complex tasks. Students then received AI guidance in Lesson 15, after Homework 3. By Homework 4, the mean increased to 95.8 and the IQR narrowed, indicating that students became more adept at leveraging AI for practical applications after this guidance. These findings suggest that while AI assistance supports engagement with challenging tasks, it requires careful scaffolding and guidance to ensure it complements independent problem-solving skills.
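The quantities discussed above (mean, interquartile range, and outliers of normalized grades) can be illustrated with a minimal sketch. It uses only the Python standard library rather than the pandas and matplotlib workflow used in the study, and the raw scores, assignment names, and helper functions (`normalize`, `boxplot_summary`) are hypothetical and not taken from the course data. Outliers are flagged with the common 1.5 × IQR convention, which is an assumption about how the boxplots were drawn.

```python
from statistics import mean, quantiles


def normalize(raw_scores, max_points):
    """Rescale raw scores to a 0-100 scale so assignments are comparable."""
    return [100.0 * score / max_points for score in raw_scores]


def boxplot_summary(grades):
    """Return the mean, quartiles, IQR, and 1.5*IQR outliers of a grade list."""
    q1, median, q3 = quantiles(grades, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [g for g in grades if g < lower or g > upper]
    return {"mean": mean(grades), "q1": q1, "median": median,
            "q3": q3, "iqr": iqr, "outliers": outliers}


# Hypothetical raw scores (NOT the study data): (scores, maximum points)
hypothetical_raw = {
    "assignment_a": ([48, 49, 50, 47, 50, 49, 48, 50, 30, 49, 50, 48], 50),
    "assignment_b": ([70, 95, 88, 100, 60, 92, 85, 98, 75, 90, 80, 96], 100),
}

summaries = {
    name: boxplot_summary(normalize(scores, max_points))
    for name, (scores, max_points) in hypothetical_raw.items()
}

for name, s in summaries.items():
    print(f"{name}: mean={s['mean']:.1f}, IQR={s['iqr']:.2f}, "
          f"outliers={s['outliers']}")
```

In this illustrative data, the first assignment has a narrow IQR with one low outlier, while the second has a wider IQR and no outliers, which mirrors the kind of contrast a boxplot makes visible.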

4.2 Tackling real-world applications with AI assistance

AI tools and AI guidance improved student performance and enabled students to tackle complex tasks and real-world applications. In particular, they helped students to tackle and excel in Homework 4 and the term project. Homework 4 is a pre-defined project focusing on comparing air quality improvement in selected major cities due to the pandemic lockdown order. Students were encouraged to leverage AI tools to learn about statistical analysis and to develop code for this analysis using Python packages, such as statsmodels and SciPy, that were not covered in class. This prepared students to work on their term projects. These projects focus on location-based real-world applications including plasma proteomics of loggerhead sea turtles in the Gulf of Mexico, nutrient analysis in the Sanibel Slough in Sanibel Island, upwelling events and red tide blooms in the west Florida shelf, impact of hurricanes on surface water-groundwater salinity levels in southwest Florida, Naples Botanical Garden plant biodiversity alignment with global databases, machine learning models for red tide prediction in Charlotte Harbor in southwest Florida, and groundwater modeling with FloPy. More details about student projects can be found on the student project page (Elshall, 2025).

4.3 Student reliance on AI assistance

In Homework 3, Homework 4, and the final project, AI tools were used to handle complex tasks efficiently, and students were advised to balance AI assistance with their own problem-solving skills. However, there is no guarantee that students will not over-rely on AI, diminishing their technical proficiency and independent thinking. The end-of-course survey results indicated that students appreciated the efficiency that AI tools provided in managing complex coding tasks but recognized the importance of not becoming overly reliant on AI.

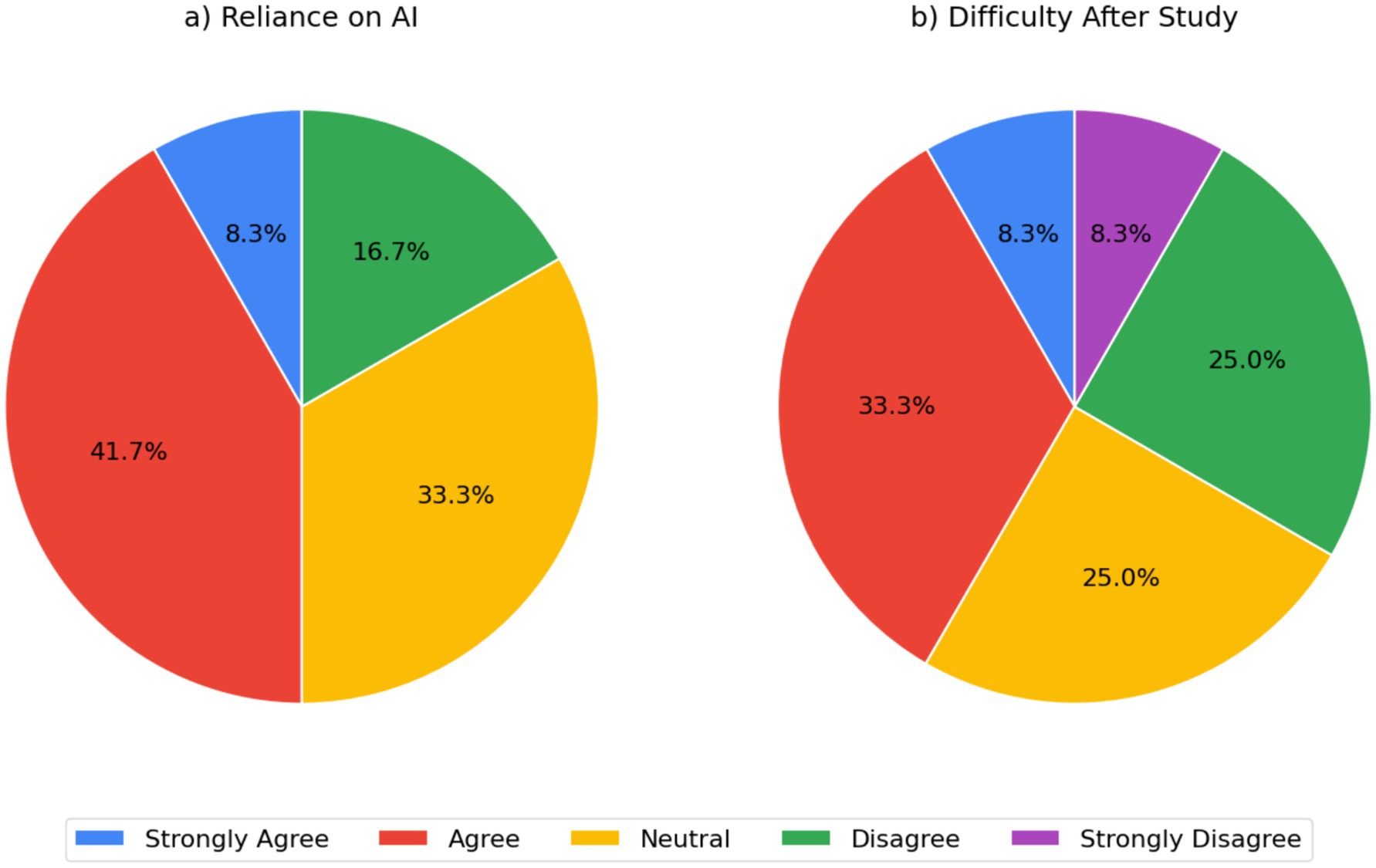

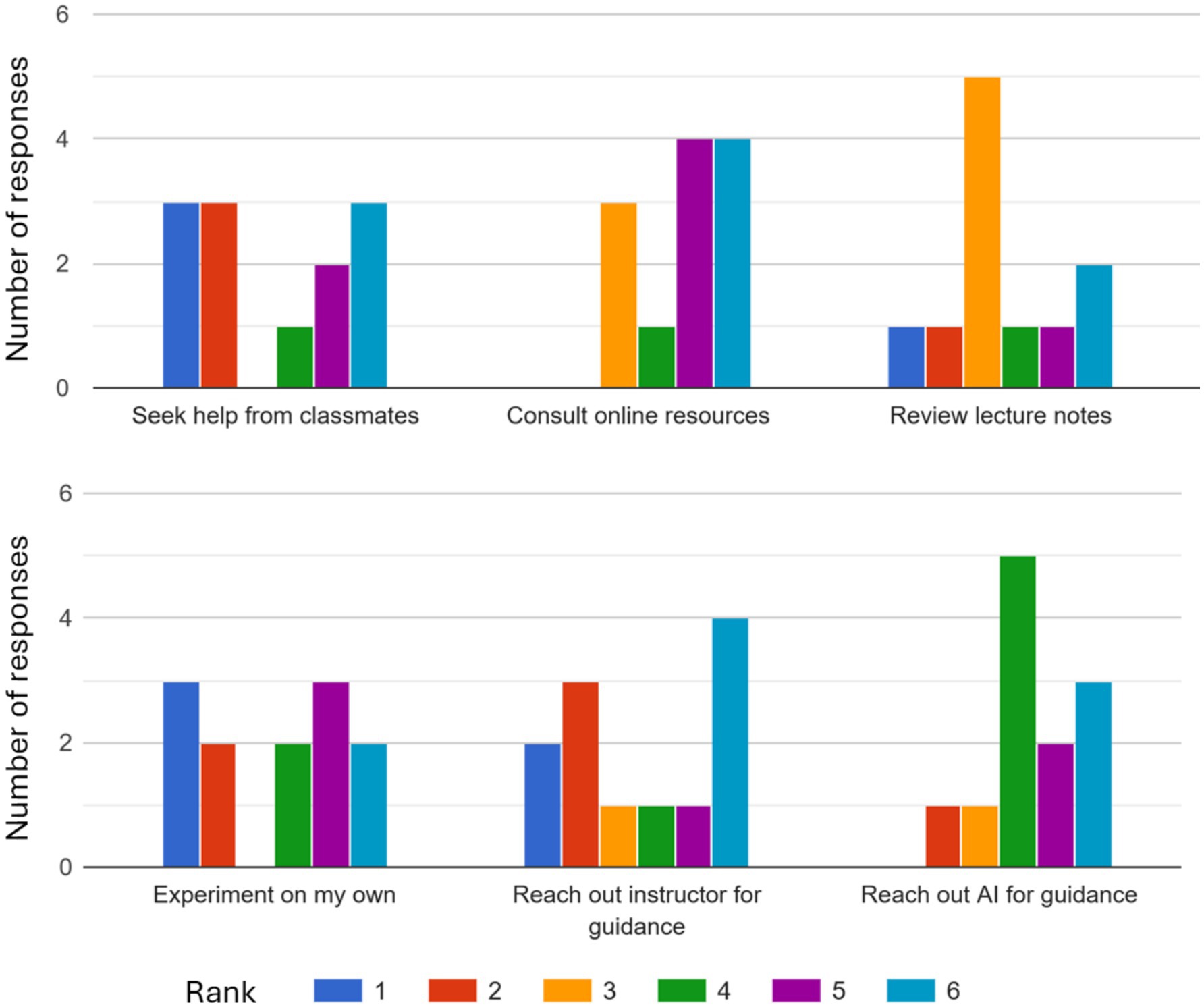

While AI tools allowed students to efficiently manage complex tasks, survey results indicate varying degrees of reliance on these tools. Figure 3A shows that 50% of students either strongly agreed or agreed that they heavily rely on AI for solving environmental data science problems, while 33.3% were neutral and 16.7% disagreed, indicating that some students maintain a balance between AI use and their own problem-solving abilities. Survey results indicate that while AI tools assist in handling complex tasks, students risk becoming passive recipients of information rather than active problem-solvers, a concern echoed in recent research on AI’s impact on cognitive effort (Lee et al., 2025). Despite the widespread use of AI, the survey also showed that 50% of students disagreed or strongly disagreed with the statement that they have difficulty solving problems independently after studying a topic (Figure 3B). This suggests that while AI tools were widely used, many students retained their ability to think critically and solve problems independently. This is supported by Figure 4, which shows that students primarily sought help through online resources and lecture notes when stuck on a problem, with some seeking assistance from classmates as well. However, the risk of dependency on AI tools for routine tasks can also be a concern, as noted in other studies (Ballen et al., 2024; Camarillo et al., 2024). Yet the definition of “routine tasks” is contextual. For example, generating a boxplot is a routine task for upper-level undergraduate and graduate students, yet in lower-level courses on computational tools, the same task is a learning objective.

Figure 3. Student self-reported reliance on AI and difficulty solving problems independently. Panel (A) shows the distribution of perceived reliance on AI, supporting findings on student usage patterns. Panel (B) illustrates student confidence in independent problem-solving after studying topics, providing insight into perceived skill retention despite AI use.

Figure 4. Student ranking of help-seeking behaviors when encountering problems. This figure supports findings on independent problem-solving by illustrating the relative preference for different resources, including AI guidance, compared to self-experimentation or consulting notes/peers.
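As a quick arithmetic check, the percentages reported for Figure 3A can be related to the 12 survey respondents. The sketch below is illustrative only: the respondent counts are not reported directly in the text, so they are inferred here from the stated percentages and the sample size, and the category labels are shorthand introduced for this example.

```python
# Sample size and percentages as reported in the text for Figure 3A.
n_respondents = 12
reported_pct = {
    "agree_or_strongly_agree": 50.0,
    "neutral": 33.3,
    "disagree": 16.7,
}

# Inferred (not reported) respondent counts implied by each percentage.
implied_counts = {
    label: round(pct / 100 * n_respondents)
    for label, pct in reported_pct.items()
}

# Recompute percentages from the inferred counts, rounded to one decimal.
recomputed_pct = {
    label: round(100 * count / n_respondents, 1)
    for label, count in implied_counts.items()
}

print(implied_counts)
print(recomputed_pct)
print("counts sum to sample size:",
      sum(implied_counts.values()) == n_respondents)
```

With a sample of 12, each respondent accounts for about 8.3 percentage points, so small shifts in individual answers change the reported percentages noticeably.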

4.4 Student perception of AI assistance

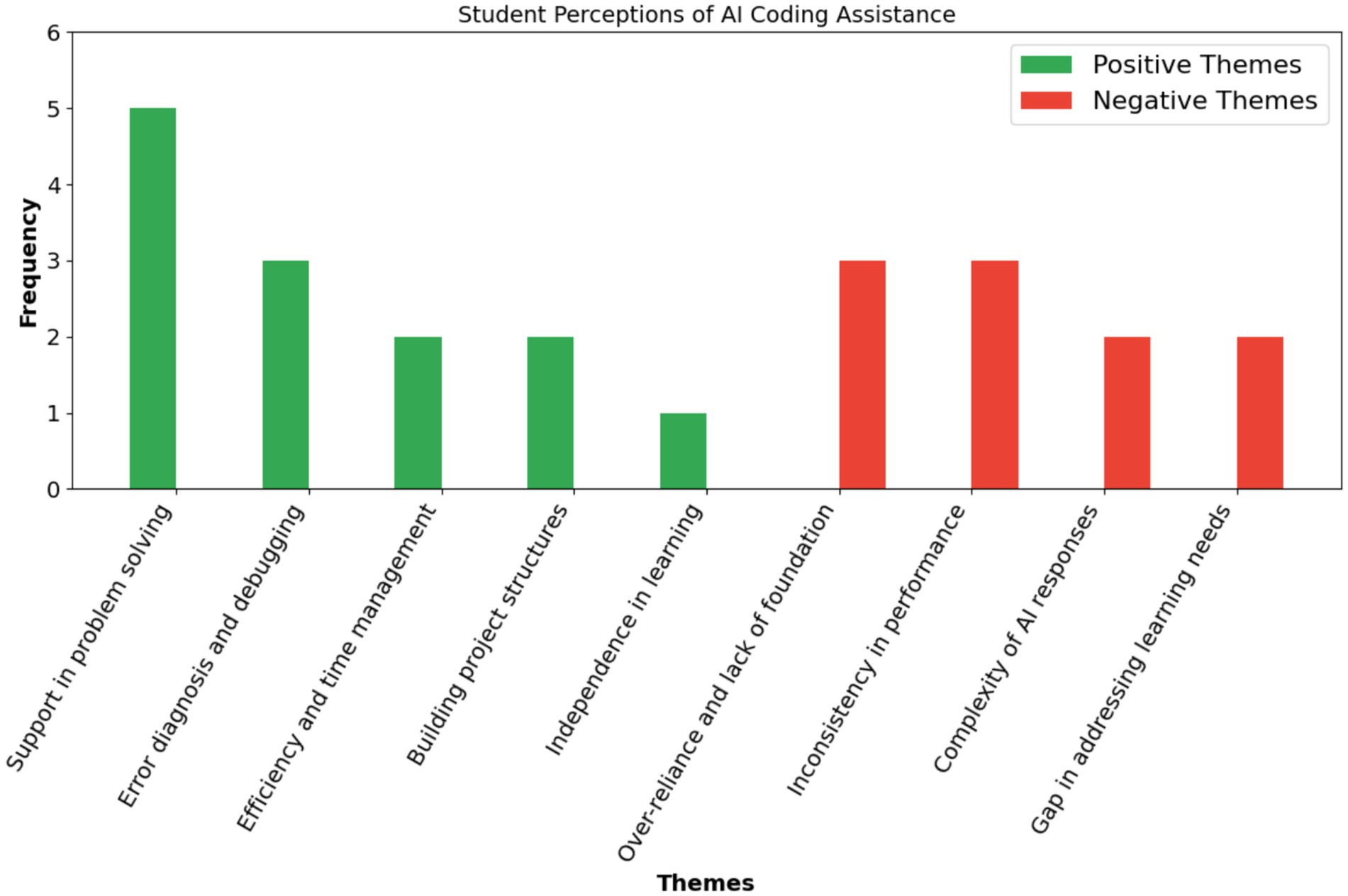

To gain further insights about student experience with AI-assisted learning from student perspectives, semantic analysis was conducted to analyze student responses to the survey question: “How has the integration of AI coding assistance, such as Jupyter AI or ChatGPT, impacted your learning experience in the course, both positively and negatively?” We conducted semantic analysis with and without AI assistance. For semantic analysis with AI-assistance, Figure 5 summarizes student responses by semantically analyzing and categorizing responses into nine distinct themes, divided into positive and negative perceptions. Positive themes include support in problem solving, error diagnosis and debugging, efficiency and time management, building project structures, and independence in learning. These highlight how AI tools facilitated learning, with problem solving being the most frequently mentioned benefit. Negative themes, such as overreliance on AI, inconsistency in performance, complexity of AI responses, and gaps in addressing learning needs, reflect challenges students encountered, particularly in maintaining foundational understanding and addressing advanced or nuanced tasks. AI analysis of data in Figure 5 states: “The balanced representation of positive and negative aspects underscores the dual role of AI: a valuable learning tool with limitations that require careful integration into the curriculum. This visualization provides actionable insights to refine AI-assisted education by leveraging its strengths and mitigating potential drawbacks” (AI-generated text).

Figure 5. Thematic analysis of student perceptions of AI coding assistance. This visualization supports findings on the student experience by showing the frequency of positive themes (e.g., problem-solving support, efficiency) and negative themes (e.g., overreliance, inconsistency) identified in qualitative survey responses.

For semantic analysis without AI-assistance, responses show that while AI coding tools were generally appreciated for making complex tasks more manageable, there was concern that overreliance on AI might hinder deeper learning and diminish technical proficiency. For example, one student noted, “AI coding assistance has helped me build the skeleton of my project and assisted me during homework problems,” while another appreciated its ability to “diagnose errors when other resources, such as Stack Exchange, are unclear.”

Although students appreciated the efficiency of AI tools, some expressed concerns about overreliance and their potential to undermine deeper learning. Some students felt that relying too much on AI could prevent them from building a strong foundation in coding and problem-solving, with one student explicitly wishing for less dependency on AI tools. For example, one student stated, “I feel like while I am using ChatGPT I am not learning as much as I could, so I wish I had a stronger foundation without it instead of heavily relying on it.” These survey results align with existing literature highlighting the need to balance AI-assisted learning with independent problem-solving to avoid reducing student fundamental skills and impeding long-term professional development (Oliver et al., 2024; Wilson and Nishimoto, 2024). One student explicitly stated the fear of overreliance stating “chatgpt was slightly helpful but it would be bad if i became too reliant on it.” Overreliance on AI tools can lead to shallow learning, as students may prioritize completing tasks over understanding underlying concepts. This is consistent with findings that AI use can lead to diminished confidence in one’s own critical thinking abilities (Lee et al., 2025). These findings are also consistent with concerns raised by Oliver et al. (2024) about the potential homogenization of knowledge and biases introduced by large language models.

4.5 Efficacy of the FACT assessment

4.5.1 Student performance and perception

The FACT (Fundamental, Applied, Conceptual, critical Thinking) assessment combines traditional and AI-based assessments to address the challenges of AI integration in education. With respect to fundamental skills assessment (F), by requiring students to complete foundational assignments without AI tools, this component ensured the development of basic Python programming and data analysis skills. Analysis of student grades and survey responses suggests that these initial exercises were critical for building confidence before advancing to more complex tasks. With respect to applied project assessment (A), AI-assisted projects allowed students to focus on real-world applications, such as predictive modeling, remote sensing analysis, phenology analysis, and data services to local stakeholders, among others. This part of the course included discussions on the ethical implications of AI and provided training to students on prompt engineering to effectively use AI tools. While students appreciated the efficiency of AI tools in managing complex tasks, survey feedback revealed concerns about balancing AI use with independent problem-solving. This highlights the importance of a more structured scaffolding of AI usage with clear expectations for independent contributions.

Regarding the conceptual understanding assessment (C), the traditional, paper-based final exam effectively tested student understanding of key concepts without AI assistance. However, this format had limited capacity to assess higher-order skills like critical thinking and problem-solving in real-world contexts. This highlights the need for a case study-based take-home exam to assess this component individually, rather than through a group project. Yet with respect to critical thinking assessment (T), all students opted out of the optional take-home exam and preferred the in-class paper exam only. Students justified this choice by stating that higher-order skills were already assessed by Homework 4 and the term project, and by their preference to reduce the course load. Although not implemented, the proposed take-home exam based on a multi-step case study can be employed to assess individual problem-solving skills and critical thinking in general.

However, the above evaluation of the FACT assessment is subject to several limitations. The main drawback of this study is the small sample size of only 12 students, which limits the generalizability of the findings. However, one advantage is the diversity of student cohort of graduate and undergraduate students from both engineering and geoscience. Additionally, while the survey responses provided valuable qualitative insights, the results may be influenced by self-reporting biases, such as students overestimating or underestimating the role of AI in their learning. Although the survey is anonymous, and clear instructions were given that this is for research purposes, there is a possibility that students under-reported their AI use fearing that it might impact evaluation and grade. Another limitation is the variability in student prior coding experience, which may have impacted their ability to engage with both AI-assisted and non-AI-assisted assignments. However, this might not be a concern because the top four scoring students in this class had no prior coding experience. In addition, not conducting a critical thinking assessment, due to student preference, suggests the need to re-evaluate this component in future iterations of the course. Despite these limitations, the study offers general guidance on integration of AI-assisted learning and highlights the potential of the FACT assessment as a balanced approach to assess foundational skills, applied learning, conceptual understanding, and critical thinking in environmental data science education. Future studies with larger sample sizes, and additional iterations of the FACT assessment can further validate and refine these findings. Also, while guidance was provided to students on prompt engineering to effectively use AI tools, more explicit and structured guidance on critical assessment of AI outputs is needed as suggested by Oliver et al. (2024).

4.5.2 Evaluating AI use in assessment

The FACT framework functions not only as an assessment design tool but also as a mechanism for evaluating how AI is integrated into learning. By excluding AI from foundational and conceptual tasks (F, C) and incorporating AI into applied and critical thinking components (A, T), instructors can monitor how students build independent skills and how their reliance on AI evolves. For example, results from this study indicate that students perform better in applied assessments following structured AI guidance (e.g., Homework 4), consistent with the evidence on the benefits of pedagogically grounded AI use (Chan, 2023). However, student self-reports reflecting reduced confidence in problem-solving suggest that scaffolding alone may not sufficiently mitigate the risk of cognitive offloading or reduced self-efficacy (Jose et al., 2025). These findings highlight the importance of ongoing calibration of assignment design by, for example, ensuring that students analyze and critique AI outputs rather than passively accepting them. The aim is to maintain student autonomy (Cullen and Oppenheimer, 2024) by preventing overreliance on AI, and to support epistemic agency (Kang, 2024) by encouraging students to take ownership and responsibility in the human-AI knowledge co-production process. As Xia et al. (2024) suggest, institutions need to adopt flexible and feedback-driven strategies to adjust AI use based on observed learning outcomes and evolving ethical considerations. The FACT framework provides a practical structure to support this process. However, further empirical studies, especially across different student groups and academic disciplines, are warranted to evaluate the long-term effectiveness of the FACT framework.

4.5.3 Ethical considerations

The FACT framework can support ethical AI use, but only if assessments and instructions are designed to promote it. Dergaa et al. (2024) warn of “AI-chatbot-induced cognitive atrophy,” in which students who over-rely on AI without guidance skip important thinking steps, which can result in reduced critical thinking and problem-solving skills. At the same time, when properly guided, AI can support ethical reasoning and critical reflection, especially when students are asked to evaluate, revise, and explain their AI use (Gonsalves, 2024). For example, tasks that ask students to reflect on the accuracy, bias, or influence of AI in their work can help students to use AI more responsibly (Gonsalves, 2024; Jose et al., 2025; Khlaif et al., 2025; Melisa et al., 2025; Zhao, 2020). Yet instructors often lack clear strategies for embedding these practices in coursework (Cheah et al., 2025), especially when AI is heavily used in authentic tasks.

This challenge reflects a deeper ethical uncertainty. The ethical uncertainty is not only due to the limited institutional guidance and professional development training (Anthuvan and Maheshwari, 2025; Cheah et al., 2025; Dabis and Csáki, 2024; Doenyas, 2024), but also a lack of consensus on how to ethically position human–AI co-generation in education. At one end, human-AI co-generation is viewed as a threat to academic integrity. For example, using AI to develop ideas or synthesize outputs can be viewed as a form of plagiarism that undermines authorship, originality, and academic integrity (Dabis and Csáki, 2024; Doenyas, 2024). At the other end, human-AI co-generation is seen as a legitimate and evolving mode of shared knowledge production, where students and AI systems act as cognitive collaborators, challenging traditional notions of authorship and creativity in education (Anthuvan and Maheshwari, 2025; Richter, 2025). This tension reflects a deeper lack of ethical and pedagogical consensus on how generative AI should be used, limited, or credited in education as driven by unclear norms, inconsistent faculty training, and institutional ambiguity (Lee et al., 2024; Nguyen, 2025). More fundamentally, this tension may indicate that higher education is entering a new paradigm shaped by the disruptive and still-evolving role of AI (O’Dea, 2024). Whether higher education is navigating a policy gap or a paradigm shift, the FACT framework does not resolve these ethical dilemmas. Rather the FACT framework creates space for students and instructors to directly face the ethical challenges of human–AI collaboration and learn from them.

However, to be more effective, the FACT framework should go beyond designing assessment tasks to examine how students use AI and provide opportunities for structured reflection, transparency, and ownership. Embedding ethical engagement more explicitly would better support students in developing responsible and transparent AI practices. Forms of this embedding include structured AI-use statement (Perkins et al., 2024), reflection prompts that promote critical evaluation of AI outputs (Melisa et al., 2025; Zhao et al., 2025), and rubrics that assess student learning processes rather than just final products (Moorhouse et al., 2023; Xia et al., 2024). In this course, students were asked to reflect on their AI use through Homework 3 and Homework 4, project reports, and the end-of-term survey. Also, the student learning process in the project was assessed through project summary, interim report, class presentation, and final report submissions. However, these activities were scattered across the term without consistent structure, design, or guidelines. Without such integrations with a clear structure, ethics remains peripheral to assessment rather than a core learning outcome, especially with the rapid advancement of AI tools.

4.6 FACT in assessment literature in AI era

The findings from implementing the FACT assessment align with several key emerging themes in the recent literature on AI in higher education and assessment. First, the necessity for hybrid or balanced approaches, which integrate traditional assessment and AI-assisted learning to cover a spectrum of skills, resonates with calls for assessment transformation (Xia et al., 2024). Other examples include the development of structured frameworks like AIAS (Perkins et al., 2024) or HEAT-AI risk-based models (Temper et al., 2025). Risk-based models categorize AI applications based on potential risks (e.g., to academic integrity, fairness) to guide appropriate institutional policies and usage in assessment contexts. The FACT framework complements the AIAS by operationalizing its principles through differentiated assessment components that specify when and how AI can be used, thereby offering clarity on AI’s pedagogical role at each stage of learning (Perkins et al., 2024). Similarly, the FACT framework aligns with the HEAT-AI model by integrating risk awareness into course design, distinguishing between low-risk foundational tasks completed without AI to verify student learning, and higher-risk application tasks that involve AI use and require critical oversight to ensure ethical and accountable assessment (Temper et al., 2025). Second, our results showing improved student performance on complex applied tasks following AI guidance (Homework 4 vs. Homework 3) provide empirical support for the importance of scaffolding AI use and developing AI literacy (Chan, 2023; Xia et al., 2024). Third, the student survey responses reflected appreciation for AI efficiency in problem-solving and debugging. These findings, alongside student concerns about overreliance and potential negative impacts on foundational learning, mirror complex student perceptions documented in recent studies (Khlaif et al., 2025). This duality highlights the ongoing boundary negotiations students face when deciding how and when to use AI ethically and effectively (Fu and Weng, 2024; Han et al., 2025; Nguyen, 2025), often in the absence of clear institutional guidelines (Corbin et al., 2025).

While the literature broadly discusses the need for AI-resistant strategies (Ardito, 2024; Awadallah Alkouk and Khlaif, 2024; Khlaif et al., 2025) and institutional guidelines (Moorhouse et al., 2023), this study contributes a specific, empirically applied framework (FACT) within environmental data science. Unlike general guidelines or scales, FACT integrates distinct assessment types (fundamental, applied, conceptual, critical thinking) to address the dual goals of leveraging AI for advanced tasks while ensuring that fundamental skill development and conceptual understanding are assessed independently. Thus, the FACT assessment responds to the call by Swiecki et al. (2022) for assessment models suited to the age of AI. Finally, the student reluctance towards the optional critical thinking exam component suggests practical challenges in implementing comprehensive multi-component assessments. This perhaps reflects workload concerns or perceived overlap with project-based evaluations, highlighting the ongoing complexity in defining assessment boundaries noted by Corbin et al. (2025).

4.7 Study limitations

Several limitations should be considered when interpreting the findings of this study. First, the small sample size (n = 12), although diverse in including undergraduate/graduate students from engineering and geosciences, restricts the generalizability of the results to broader student populations or different institutional contexts. Second, the critical thinking (T) component of the FACT framework with respect to the take-home exam, was not implemented due to student preference for the in-class exam only. This absence limits the full empirical validation of the framework across all four intended assessment domains. Third, the study design lacked a control or comparison group, making it difficult to definitively attribute observed changes in performance or perception solely to the FACT framework compared to other pedagogical approaches or the natural progression of learning. Finally, as noted previously, the reliance on student self-reported survey data introduces potential biases, and variability in student prior coding experience could have influenced engagement with different assignment types. These limitations highlight the need for future research involving larger sample sizes, more structured implementation of FACT components, and potentially comparative study designs to further validate and refine the efficacy of the framework.

4.8 Supporting fairness, accountability, and transparency

The FACT framework has the potential to support fairer, more accountable, and more transparent assessment, but its success depends on how it is implemented. In terms of fairness, separating non-AI-based components (F and C) from AI-based components (A and T) helps ensure that students are evaluated on their own skills and not just what AI can produce. This reduces the chance that students with more advanced AI tools or experience have an unfair advantage. However, fairness is not guaranteed by structure alone. Khlaif et al. (2025) caution that unequal access to AI training may unintentionally advantage some students, especially in applied components. This highlights the need not only for adequate resources and training, but also for systemic investment in digital inclusion (Khlaif et al., 2025). With respect to accountability, the FACT structure allows instructors to see what students can do on their own versus with AI help. This aligns with calls to preserve academic agency in the face of increasing AI sophistication (Jose et al., 2025). However, there is still no built-in way to verify how much students relied on AI. Relying on student honesty is not enough, and further iterations of the framework should include ways to track or reflect on AI use.

Transparency is one of the main advantages of the FACT framework. Each of the FACT components has a clear purpose, and students know when AI is allowed and how their work will be evaluated. Melisa et al. (2025) note that transparency helps build trust and supports student understanding of their own thinking and learning goals when learning with AI. In this course, the instructor built this trust by clearly stating throughout the term that Homework 1 and 2 must be done without AI to develop foundational skills and core concepts. Students were told that while AI use in these assignments could not be monitored, using it would undermine their own learning. In contrast, for Homework 3 to 5 and the final project, students were told that AI use was not just permitted but required, in line with the applied and critical thinking components of the framework. In addition, Tan and Maravilla (2024) note that assessments should focus not just on the final product, but also on how students think, make decisions, and use AI responsibly during the learning process. Thus, as previously discussed, more detailed rubrics, structure, and guidance on documenting AI use would make the process even clearer. Overall, the FACT framework facilitates designing assessments that are fairer, more accountable, and more transparent in the AI era. However, this requires ongoing refinement of assessment design as AI tools and our ethical understanding of them evolve. The FACT framework will continue to evolve in what Kuhn (1962) would describe as a “transitional phase” in a “paradigm shift,” where traditional models are challenged by disruptive changes and new consensuses are still being formed. Note that this does not represent a paradigm shift in the ontological sense, such as the transition from Newtonian mechanics to Einsteinian relativity, but rather in the epistemic sense, reflecting a shift from the traditional model of human-dominated knowledge production to a model of human–AI co-production of knowledge.

4.9 Cross-disciplinary applicability of the FACT framework

Although it was developed for environmental data science, the FACT framework, comprising fundamental skills (F), applied use (A), conceptual understanding (C), and critical thinking (T), offers a transferable structure for AI-based education. Its cognitive alignment with Bloom’s Taxonomy and grounding in constructivist learning theory make it adaptable to varied domains. In STEM disciplines such as biology or engineering, F can involve checking lab or modeling skills (versus coding skills); A can involve using AI to assist with data analysis; C can involve checking understanding of systems or principles; and T can involve asking students to evaluate AI-generated hypotheses. For example, in groundwater hydrology, F can involve using Darcy’s Law and developing a MODFLOW numerical model using a graphical user interface such as ModelMuse (Winston, 2019); A could include developing a MODFLOW numerical model with uncertainty analysis using the Python packages FloPy (Hughes et al., 2024) and pyemu (White et al., 2021), respectively, with AI coding assistance; C can involve evaluating understanding of subsurface flow dynamics and developing conceptual models; and T can involve asking students to question the reliability of AI-generated predictions under uncertainty. In non-STEM disciplines such as history, F could focus on students analyzing primary or secondary sources; A could involve using AI tools to assist with translating historical texts or summarizing archival materials; C could assess student understanding of historical causation and context; and T could ask students to critically examine biased interpretations in AI-generated historical narratives. These are just examples of balanced assessments that combine foundational skill-building with ethically guided human-AI co-production. Thus, the FACT framework can be generally useful for designing assessments across many disciplines in the AI era. Further study is warranted to collect data about the potential broader use of the FACT framework in other STEM and non-STEM disciplines.
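
To make the groundwater hydrology example more concrete, the following is a minimal sketch of the kind of task a fundamental-skills (F) assignment could ask students to code without AI assistance: applying Darcy’s Law for one-dimensional flow, Q = K A (h_up − h_down) / L. This sketch is not taken from the course materials; the function name `darcy_discharge` and all numerical values are hypothetical and chosen only for illustration.

```python
def darcy_discharge(k, area, h_up, h_down, length):
    """Return the volumetric discharge Q from Darcy's Law for 1D flow.

    k      : hydraulic conductivity (e.g., m/day)
    area   : cross-sectional area perpendicular to flow (e.g., m^2)
    h_up   : hydraulic head at the upstream end (e.g., m)
    h_down : hydraulic head at the downstream end (e.g., m)
    length : flow-path length between the two heads (e.g., m)
    """
    if length <= 0:
        raise ValueError("length must be positive")
    # Head drop per unit length, positive in the direction of flow
    gradient = (h_up - h_down) / length
    return k * area * gradient


if __name__ == "__main__":
    # Hypothetical aquifer values, for illustration only
    q = darcy_discharge(k=10.0, area=50.0, h_up=12.0, h_down=10.0, length=100.0)
    print(f"Q = {q:.1f} m^3/day")
```

A corresponding applied (A) task could then extend such a hand calculation to a full numerical model with AI coding assistance, as described above.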

4.10 Practical and societal implications

This study offers practical implications for educators and institutions navigating the integration of AI into higher education. The FACT assessment framework provides a practical, adaptable model for instructors, particularly in STEM fields like environmental data science, seeking to balance the pedagogical benefits of AI tools with the need to ensure students master foundational knowledge and develop independent critical thinking skills. It moves beyond broad guidelines or solely AI-resistant strategies to offer a structure for course and assessment design in the AI era.

Societally, as AI tools become increasingly integrated into professional practice across various sectors, including environmental management and engineering, there is a pressing need to prepare graduates who can both perform fundamental tasks independently, and leverage AI effectively and ethically. By fostering both foundational competence and AI-assisted applied skills, the FACT framework contributes to developing a workforce better prepared for the complexities of modern, AI-integrated professions, ultimately enhancing their ability to address critical environmental and societal challenges.

Furthermore, this research advances knowledge by providing empirical evidence on the implementation of a specific, integrated AI assessment framework, offering insights into student performance trajectories with guided AI use and documenting nuanced student perceptions of AI reliance versus its utility. These findings can inform educational practice by demonstrating a workable approach to AI integration and highlighting the importance of explicit AI guidance. For policy, the FACT model serves as an empirically tested example that can inform institutional discussions and the development of guidelines that support balanced and pedagogically sound assessment strategies in the AI era.

5 Conclusion

The fundamental, applied, conceptual, critical thinking (FACT) assessment demonstrates a balanced approach to integrating AI coding assistance in environmental data science education. First, while some students appreciated the use of AI tools in projects involving real-world environmental data science applications, others expressed concerns that these tools can diminish their intellectual and skill development and create overreliance. These findings emphasize the need for structured and longitudinal studies to understand the impact of these tools on the development of critical thinking and problem-solving skills. Second, these concerns suggest that structured integration of AI tools with clear ethical and pedagogical guidelines can help balance AI benefits with independent skill development. Third, the FACT assessment addresses a practical concern that is growing among educators. As AI tools continue to evolve, designing assessments that ensure both technical proficiency and critical thinking will remain a pressing challenge for educators across disciplines and levels. The FACT assessment addresses this challenge by balancing AI-assisted projects with traditional assessments that test conceptual understanding and fundamental skills. The FACT assessment and similar assessment frameworks will keep emerging as educators continue to adapt their teaching and assessment strategies to prepare students for emerging AI-integrated professions.

Impact statement

The FACT assessment framework addresses the challenge of balancing AI-assisted learning with cognitive and skill development in higher education. By integrating foundational, applied, conceptual, and critical-thinking assessments, FACT mitigates overreliance on AI while enhancing student engagement and performance. The paper offers educators a scalable approach to promote AI-assisted learning while ensuring that students develop critical and independent problem-solving skills. This framework provides a practical approach for integrating AI into education, to support the development of skills for workforce readiness in the AI era.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/supplementary material.

Ethics statement

The studies involving humans were approved by Florida Gulf Coast University, Ciris Pelin, Program Coordinator/Assistant Professor, U.A. Whitaker College of Engineering. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AE: Validation, Data curation, Resources, Methodology, Visualization, Conceptualization, Project administration, Supervision, Formal analysis, Writing – original draft, Funding acquisition, Writing – review & editing, Software, Investigation. AB: Writing – review & editing, Writing – original draft.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work is funded by the U.S. Environmental Protection Agency (EPA) under Award Number AWD-03D09024.

Acknowledgments

We thank Leonora Kaldaras and the two reviewers for their constructive feedback that helped to significantly improve the manuscript. We are grateful to students for completing the course surveys and insightful discussions on AI tools in environmental data science education throughout the semester.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. Data analysis and plotting were conducted using standard Python packages including Pandas and Matplotlib with code generation assistance from GPT-4o and GPT-3.5 Turbo via Jupyter AI. AI assistance from GPT-4o was utilized for providing review comments, refining text for succinctness and clarity, providing suggestions for restructuring paragraphs to improve logic flow, and performing semantic analysis of qualitative survey responses. For the literature review, ChatGPT-4o, Gemini Pro 2.5 and Perplexity (ChatGPT-4o) were used to develop ideas and synthesize content. Consensus Pro Analysis and ChatGPT-4o web search features were used to identify several sources. AI contributions were verified and contextualized to ensure accuracy and relevance to the study's objectives.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aladağ, H., Güven, İ., and Balli, O. (2024). Contribution of artificial intelligence (AI) to construction project management processes: state of the art with scoping review method. Sigma J. Eng. Nat. Sci. 42:1654. doi: 10.14744/sigma.2024.00125

Anderson, L. W., and Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. 1st Edn. New York: Longman.

Anthuvan, T., and Maheshwari, K. (2025). AI-C2C (conscious to conscience): a governance framework for ethical AI integration. AI Ethics. doi: 10.1007/s43681-025-00736-2

Ardito, C. G. (2024). Generative AI detection in higher education assessments. New Dir. Teach. Learn. 1, 1–18. doi: 10.1002/tl.20624

Ateeq, A., Alzoraiki, M., Milhem, M., and Ateeq, R. A. (2024). Artificial intelligence in education: implications for academic integrity and the shift toward holistic assessment. Front. Educ. 9:1470979. doi: 10.3389/feduc.2024.1470979

Awadallah Alkouk, W., and Khlaif, Z. N. (2024). AI-resistant assessments in higher education: practical insights from faculty training workshops. Front. Educ. 9:1499495. doi: 10.3389/feduc.2024.1499495

Baalsrud Hauge, J., and Jeong, Y. (2024). “Does the improvement in AI tools necessitate a different approach to engineering education?” in Sustainable production through advanced manufacturing, intelligent automation and work integrated learning, SPS 2024. eds. J. Andersson, S. Joshi, L. Malmskold, and F. Hanning, vol. 52 (Amsterdam: IOS Press BV), 709–718. doi: 10.3233/ATDE240211

Baidoo-anu, D., and Ansah, L. O. (2023). Education in the era of generative artificial intelligence (AI): understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 7, 52–62. doi: 10.61969/jai.1337500

Ballen, S. D., Abril, D. E., and Guerra, M. A. (2024). Board 40: work in progress: generative AI to support critical thinking in water resources students. Available online at: https://peer.asee.org/board-40-work-in-progress-generative-ai-to-support-critical-thinking-in-water-resources-students (Accessed November 17, 2024).

Brown, J. S., Collins, A., and Duguid, P. (1989). Situated cognition and the culture of learning. Educ. Res. 18, 32–42. doi: 10.3102/0013189X018001032

Camarillo, M. K., Lee, L. S., and Swan, C. (2024). Student perceptions of artificial intelligence and relevance for professional preparation in civil engineering. Available online at: https://peer.asee.org/student-perceptions-of-artificial-intelligence-and-relevance-for-professional-preparation-in-civil-engineering (Accessed November 17, 2024).

Chan, C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. High. Educ. 20:38. doi: 10.1186/s41239-023-00408-3

Cheah, Y. H., Lu, J., and Kim, J. (2025). Integrating generative artificial intelligence in K-12 education: examining teachers’ preparedness, practices, and barriers. Comput. Educ. Artif. Intelligence 8:100363. doi: 10.1016/j.caeai.2025.100363

Corbin, T., Dawson, P., Nicola-Richmond, K., and Partridge, H. (2025). ‘Where’s the line? It’s an absurd line’: towards a framework for acceptable uses of AI in assessment. Assess. Eval. High. Educ. 1–13. doi: 10.1080/02602938.2025.2456207

Cullen, S., and Oppenheimer, D. (2024). Choosing to learn: the importance of student autonomy in higher education. Sci. Adv. 10:eado6759. doi: 10.1126/sciadv.ado6759

Dabis, A., and Csáki, C. (2024). AI and ethics: investigating the first policy responses of higher education institutions to the challenge of generative AI. Human. Soc. Sci. Commun. 11, 1–13. doi: 10.1057/s41599-024-03526-z

de Jong, T. (2010). Cognitive load theory, educational research, and instructional design: some food for thought. Instr. Sci. 38, 105–134. doi: 10.1007/s11251-009-9110-0