- 1Centro de Investigación en Mecatrónica y Sistemas Interactivos (MIST), Facultad de Ingenierías, Universidad Tecnológica Indoamérica, Ambato, Ecuador

- 2Facultad de Ciencias Sociales y Humanas, Universidad Tecnológica Indoamérica, Ambato, Ecuador

Technologies based on artificial intelligence are transforming teaching practices in higher education. However, many university faculty members still face difficulties in incorporating these tools in a critical, ethical, and pedagogically meaningful way. This review addresses the issue of limited artificial intelligence literacy among educators and the main obstacles to its adoption. The objective was to analyze the perceptions, resistance, and training needs of faculty members in the face of the growing presence of artificial intelligence in educational contexts. To this end, a narrative review was conducted, drawing on recent articles from Scopus and other academic sources, prioritizing empirical studies and reviews that explore the relationship between intelligent systems, university teaching, and the transformation of academic work. Out of 757 records initially retrieved, nine empirical studies met the inclusion criteria. The most frequently examined tools were generative artificial intelligence systems (e.g., ChatGPT), chatbots, and recommendation algorithms. Methodologically, most studies employed survey-based designs and thematic qualitative analysis. The main findings reveal a persistent ambivalence: faculty members acknowledge the usefulness of such technologies, but also express ethical concerns, technical insecurity, and fear of professional displacement. The most common barriers include lack of training, limited institutional support, and the absence of clear policies. A shift in the teaching role is observed, with greater emphasis on mediation, supervision, and critical analysis of output generated by artificial intelligence applications. Additionally, ethical debates are emerging around algorithmic transparency, data privacy, and institutional responsibility. Effective integration in higher education demands not only technical proficiency but also ethical grounding, regulatory support, and critical pedagogical development. 
This review was registered in Open Science Framework (OSF): 10.17605/OSF.IO/H53TC.

1 Introduction

In recent years, artificial intelligence (AI) has experienced unprecedented growth, expanding into various sectors, including labor, healthcare, social dynamics, and education (Ayala-Chauvin and Avilés-Castillo, 2024). In the educational domain, it has emerged as a key driver of pedagogical innovation (Su et al., 2023). Particularly, Generative AI (GenAI) has gained prominence. This type of AI can create new content such as text, images, or code, based on patterns learned from large datasets. Its applications include process automation, personalized learning support, and assistance in assessment and academic monitoring (Zhang and Aslan, 2021; Wang et al., 2024).

Historically, the integration of digital technologies into education has been gradual, punctuated by moments of disruption, such as the rise of virtual learning environments and the proliferation of open educational resources (Yildirim et al., 2018). However, AI marks a qualitative leap by enabling algorithms to process large volumes of data and tailor educational content to individual learners’ needs (Özer, 2024).

Particularly since the COVID-19 pandemic, the surge in emerging technologies has significantly transformed teaching practices in higher education (Schön et al., 2023). AI-based tools, including GenAI platforms such as ChatGPT, DeepSeek, Copilot, and Meta AI, now support students and faculty by generating content, providing answers, and enabling personalized learning pathways (Schön et al., 2023). Intelligent platforms enhanced with AI have also optimized instruction through automated tutoring, assisted assessment, and adaptive interactive resources (Xia et al., 2024). Nevertheless, the integration of AI into teaching presents significant challenges. One of the most pressing issues is the need for faculty training to ensure the effective pedagogical use of these tools (Sperling et al., 2024). Many educators lack the skills required to engage with these tools.

In this rapidly evolving context, AI literacy has emerged as an essential competency. Commonly defined as the ability to understand, critically evaluate, and effectively interact with artificial intelligence systems, AI literacy is part of a broader framework of multiple literacies (Tuominen et al., 2005; Ilomäki et al., 2023). Its democratic function lies in enabling individuals from diverse fields, such as health, computing, mathematics, education, or engineering, to comprehend how these technologies work and what their implications are. This emphasis places formal education at the center of the debate, highlighting the role of educators and their professional expertise in guiding responsible integration.

Ethical and social implications also demand attention. A study by Ayanwale et al. (2024), involving 529 prospective teachers, underscores the need to prepare educators for responsible use. It warns of potential errors and biases from poor implementation and highlights the dual function of AI ethics: positively predicting emotional regulation and shaping perceptions of persuasive AI, often without aligning with actual competencies. Complementing these findings, Buele et al. (2025) note that many faculty members lack the epistemic resources to critically assess algorithmic processes, which limits their ability to mentor students on responsible use.

The large-scale collection and analysis of data raise concerns about the privacy and security of information belonging to both faculty and students (Ismail, 2025). Data ownership is often unclear, potentially falling under the control of educational institutions, AI providers, or even third parties. This lack of clarity introduces risks concerning how data is used, stored, and shared. In parallel, limited training opportunities and resistance to change remain key barriers to adoption. Notably, higher levels of anxiety have been associated with greater difficulty in adapting to intelligent tools, particularly among less digitally fluent educators (Shahid et al., 2024).

Given the accelerated emergence of these technologies and the ambivalence they generate among faculty, it becomes necessary to synthesize current evidence on how they are reshaping academic work. This narrative review explores recent literature on faculty perceptions, adoption barriers, ethical considerations, and the evolving roles of university instructors. By identifying key patterns and research gaps, the study contributes to a broader understanding of how higher education is adapting to artificial intelligence.

2 Materials and methods

2.1 Design and search strategy

This review was conducted using a systematic approach for the selection and analysis of scientific literature, focusing on the perceptions, attitudes, and barriers faced by faculty in the adoption of artificial intelligence in higher education. The literature search was carried out using scientific databases recognized for their relevance in the educational and technological fields, including Scopus, Web of Science, IEEE Xplore, ERIC, EBSCOhost, and ProQuest.

Search terms were defined to align closely with the objective of this review. The keywords (“faculty attitudes,” “teacher perceptions,” “teacher barriers,” “artificial intelligence,” “AI in education,” and “higher education”) were selected based on their recurrence in previous studies and their relevance to the intersection of AI and academic work in higher education. Boolean operators were applied to structure the search queries. No publication year limits were set in the search strategy.
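The exact query strings submitted to each database are not reported here. As a purely illustrative sketch, the keywords listed above could be combined with Boolean operators as follows. The grouping of terms (faculty-related terms OR-ed together, AI-related terms OR-ed together, and the groups joined with AND) and the helper name `build_query` are assumptions made for this example, not the authors' documented procedure.

```python
def build_query(groups):
    """Join the terms of each group with OR, then join the groups with AND."""
    clauses = []
    for terms in groups:
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical grouping of the keywords named in the text.
FACULTY_TERMS = ["faculty attitudes", "teacher perceptions", "teacher barriers"]
AI_TERMS = ["artificial intelligence", "AI in education"]
CONTEXT_TERMS = ["higher education"]

query = build_query([FACULTY_TERMS, AI_TERMS, CONTEXT_TERMS])
print(query)
```

In practice, each database uses its own field tags and syntax, so a string of this kind would likely need to be adapted per source.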

2.2 Inclusion and exclusion criteria

To ensure the relevance of the selected studies, the following inclusion criteria were applied: (i) studies addressing the relationship between artificial intelligence and the transformation of academic work in higher education; (ii) empirical research with a solid methodological foundation; (iii) studies analyzing changes in work structure, decision-making processes, or regulation of artificial intelligence use in teaching; (iv) publications written in English.

Exclusion criteria included: (i) studies focused exclusively on students or on pedagogical uses of artificial intelligence without considering its impact on faculty; (ii) use of artificial intelligence without evaluating its effects on teaching practices; (iii) research conducted at educational levels other than higher education.

2.3 Study selection process

The article selection was carried out in two phases. The first phase involved reviewing the titles and abstracts of the studies retrieved from the databases. During this phase, duplicates were removed, and studies that did not meet the inclusion criteria were discarded. This was followed by a full-text review, in which the preselected articles were thoroughly analyzed to confirm their relevance to the objectives of this study.

2.4 Data analysis

The selected articles were organized into a synthesis table that included the following information: authors, study objectives, methodology used, type of artificial intelligence examined, educational level, main findings, limitations, and implications for teaching work. Although no formal quality appraisal tools were applied (as this is a narrative review), studies were selected based on their empirical rigor and relevance to the objectives of the review.

2.5 Ethical considerations

As this review is based on previously published studies and does not involve the collection of primary data, ethical approval was not required. Nevertheless, scientific integrity was ensured through the selection of articles from reputable sources and proper acknowledgment of the original authors.

3 Results

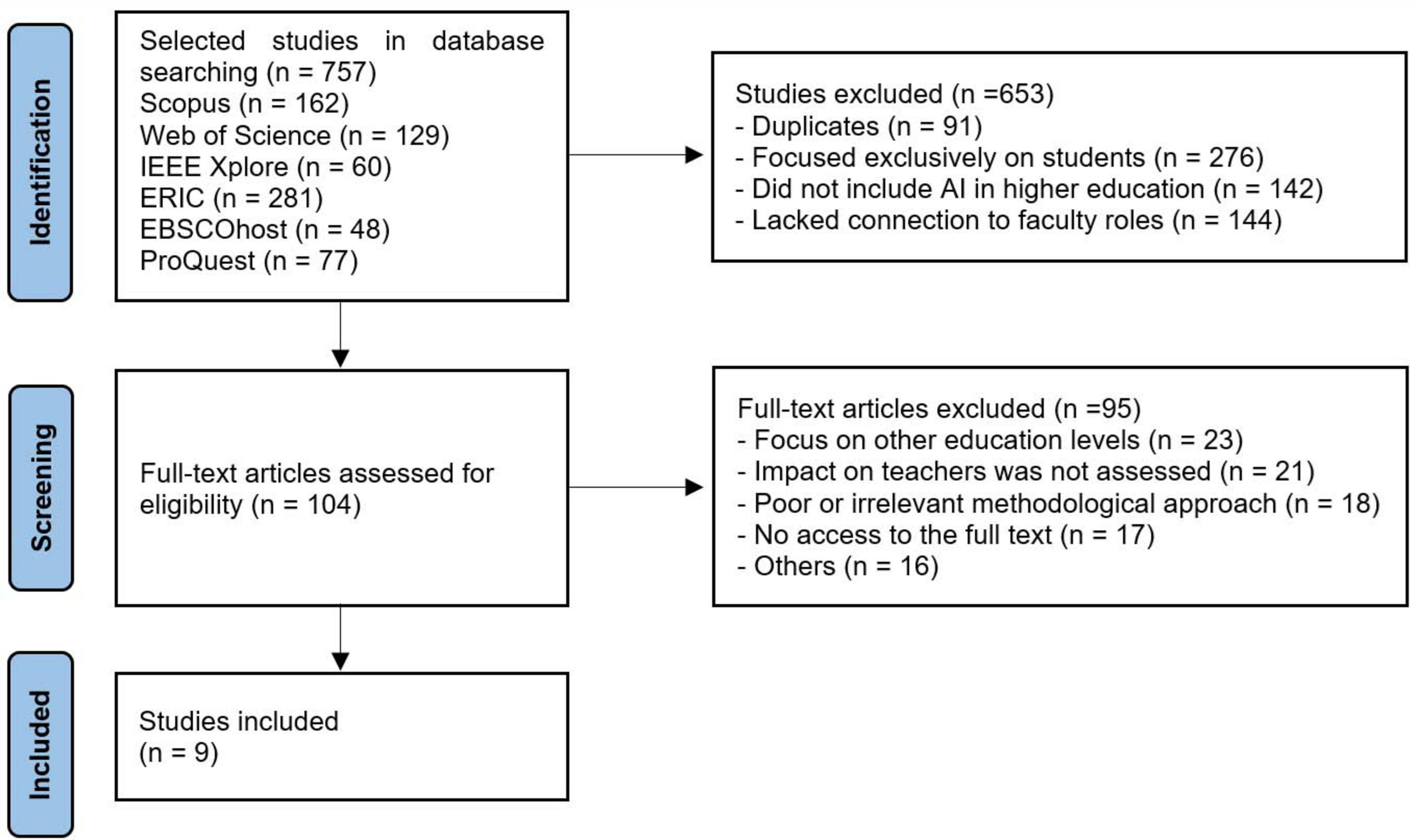

The search process yielded a total of 757 records from six databases. After removing duplicates and screening titles and abstracts based on predefined inclusion and exclusion criteria, 104 full-text articles were assessed for eligibility. Of these, 95 were excluded for reasons such as focus on other education levels, lack of assessment of impact on faculty, methodological issues, or inaccessibility of the full text. Ultimately, 9 studies were included in the review. The selection process is summarized in Figure 1.
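The counts reported above can be cross-checked with simple arithmetic. The short sketch below uses only the figures stated in the text; the variable names are illustrative, and the combined figure for records removed before full-text review is derived here, since duplicates and title/abstract exclusions are not reported separately.

```python
# Figures reported in the selection process.
records_identified = 757
full_text_assessed = 104
full_text_excluded = 95

# Studies remaining after full-text review.
studies_included = full_text_assessed - full_text_excluded

# Derived value: duplicates plus title/abstract exclusions combined
# (the text does not break these two steps down separately).
removed_before_full_text = records_identified - full_text_assessed

print(studies_included, removed_before_full_text)
```

The result is consistent with the nine studies reported as included in the review.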

3.1 Types of artificial intelligence used in higher education

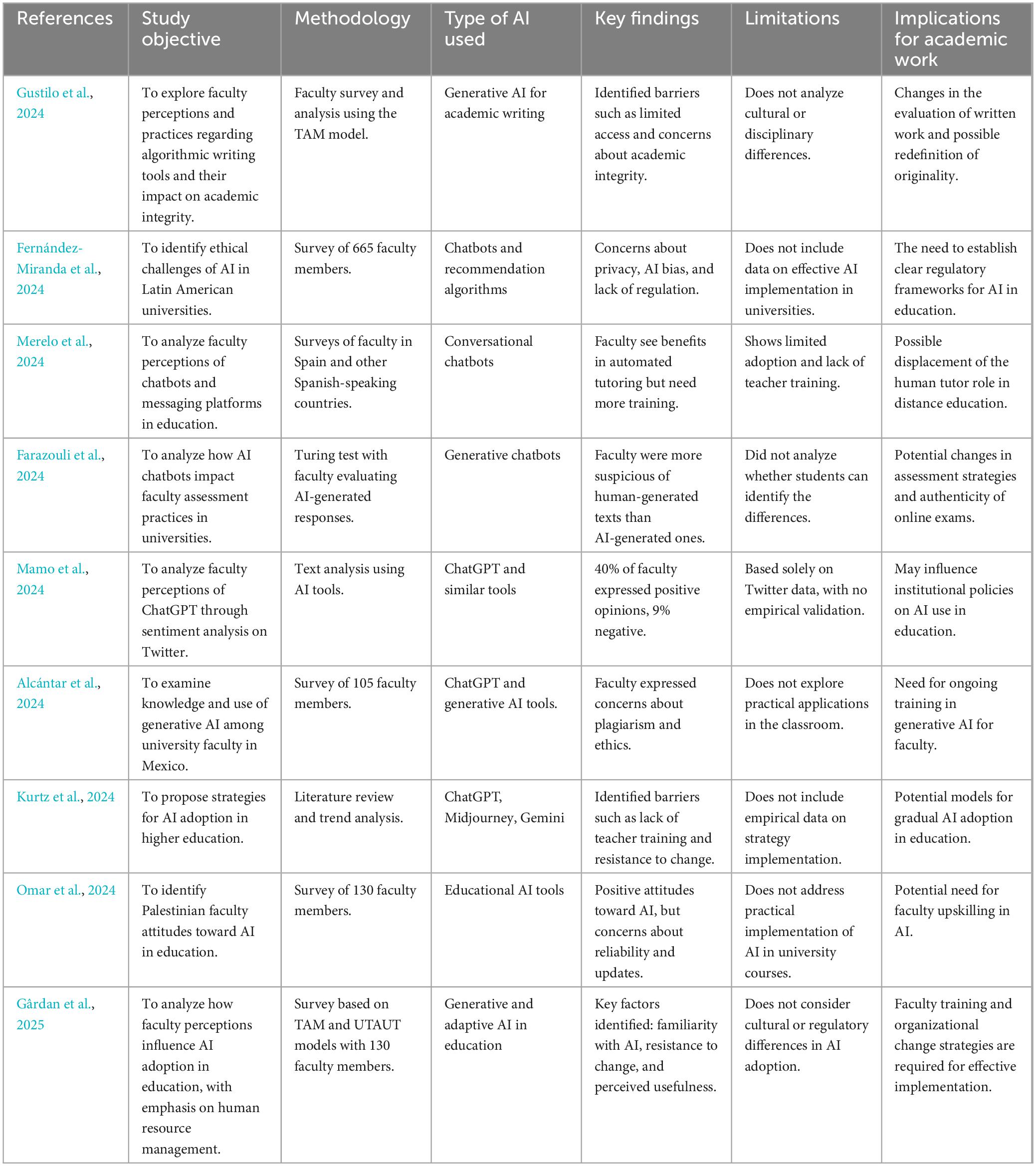

The selected studies analyzed various applications of artificial intelligence in higher education (Table 1), with a particular focus on generative tools, chatbots, and recommendation algorithms. Generative artificial intelligence was used in n = 4 studies, focusing on academic writing and teaching support (Alcántar et al., 2024; Gustilo et al., 2024; Kurtz et al., 2024; Gârdan et al., 2025). Conversational and generative chatbots were examined in n = 3 studies, with an emphasis on automated tutoring and academic assessment (Farazouli et al., 2024; Mamo et al., 2024; Merelo et al., 2024). Recommendation algorithms and data analysis were used in n = 2 studies to explore personalized learning and the optimization of teaching (Fernández-Miranda et al., 2024). Automation tools for research and teaching were evaluated in n = 1 study, exploring their impact on human resource management and academic output (Omar et al., 2024).

3.2 Faculty perceptions and barriers regarding artificial intelligence

The reviewed literature highlights a range of attitudes that faculty hold toward artificial intelligence in higher education. Positive perceptions: (n = 4) studies found that faculty recognize the potential of artificial intelligence to enhance personalized learning and administrative efficiency (Gustilo et al., 2024; Kurtz et al., 2024; Omar et al., 2024; Gârdan et al., 2025). (n = 3) studies reported that faculty view artificial intelligence as a useful tool for academic writing and assisted teaching (Alcántar et al., 2024; Mamo et al., 2024; Merelo et al., 2024).

Identified barriers: (n = 6) studies reported concerns regarding ethics and academic integrity, specifically related to plagiarism and the lack of regulatory frameworks (Alcántar et al., 2024; Farazouli et al., 2024; Fernández-Miranda et al., 2024; Gustilo et al., 2024; Mamo et al., 2024; Omar et al., 2024). (n = 3) studies noted that the lack of faculty training constitutes a significant obstacle to adoption (Kurtz et al., 2024; Merelo et al., 2024; Gârdan et al., 2025). (n = 3) studies identified resistance to change among faculty, based on the perception that artificial intelligence could replace certain teaching functions (Farazouli et al., 2024; Mamo et al., 2024; Omar et al., 2024).

3.3 Organizational impact and changes in teaching work

The reviewed literature suggests that the implementation of artificial intelligence in higher education is reshaping the structure of academic work in several ways. Academic assessment and authenticity of student work: (n = 3) studies addressed how artificial intelligence is transforming the way instructors design and evaluate exams and academic assignments (Farazouli et al., 2024; Gustilo et al., 2024; Mamo et al., 2024). One study in particular (Farazouli et al., 2024) found that faculty had more difficulty identifying texts written by humans than those generated by artificial intelligence, highlighting challenges in assessing academic authenticity.

Shifts in teaching roles and task automation: (n = 3) studies emphasized that artificial intelligence can take on functions such as automated tutoring, student performance analysis, and instructional material generation (Kurtz et al., 2024; Merelo et al., 2024; Gârdan et al., 2025). Kurtz et al. (2024) and Gârdan et al. (2025) examined how instructors may reconfigure their roles, transitioning from knowledge transmitters to facilitators of learning in AI-enhanced environments. These findings suggest that artificial intelligence is not only influencing teaching methodologies but also altering how educators allocate their time and define their professional responsibilities.

3.4 Ethical and regulatory considerations

The impact of artificial intelligence in higher education extends beyond operational and methodological changes, raising important ethical and regulatory issues. (n = 5) studies addressed concerns related to data privacy and algorithmic bias in artificial intelligence tools (Alcántar et al., 2024; Fernández-Miranda et al., 2024; Gustilo et al., 2024; Mamo et al., 2024; Omar et al., 2024). (n = 3) studies noted the lack of clear regulations governing the use of artificial intelligence in teaching, which contributes to uncertainty among faculty members (Farazouli et al., 2024; Fernández-Miranda et al., 2024; Omar et al., 2024). (n = 1) study identified a gap in equitable access to artificial intelligence tools between institutions with differing levels of resources (Kurtz et al., 2024).

4 Discussion

4.1 Ambivalent perceptions and artificial intelligence literacy

One of the most consistent findings across the reviewed literature is the ambivalence in faculty perceptions of artificial intelligence. On one hand, many university instructors acknowledge the potential of these technologies to automate repetitive tasks, provide personalized feedback, and facilitate access to new educational resources. On the other hand, they express uncertainty, fear, and rejection, particularly when they do not understand how artificial intelligence works or its ethical and pedagogical implications. Gustilo et al. (2024) found that many faculty members hold contradictory opinions: they value artificial intelligence for content generation but question its reliability and fear it may undermine students’ critical thinking.

To move beyond a descriptive account and toward a more robust interpretation, this ambivalence can be examined through established models of technology adoption. The Technology Acceptance Model (TAM) explains user behavior based on perceived usefulness and perceived ease of use (Davis, 1989). While faculty members may find these tools useful for instructional efficiency, they often struggle with ease of use due to limited training, which reduces their intention to adopt. Similarly, the Unified Theory of Acceptance and Use of Technology (UTAUT) highlights the influence of social expectations and the availability of institutional support (Venkatesh et al., 2003). Across the reviewed studies, the lack of peer collaboration, administrative backing, and pedagogical guidelines emerges as a critical barrier to adoption.

In this context, the concept of AI literacy becomes especially relevant. Artificial intelligence literacy should not be limited to the technical operation of tools but should also include a critical understanding of their foundations, potential, limitations, risks, and ethical frameworks. As Lin et al. (2022) note, the lack of specific didactic and technical knowledge about artificial intelligence hinders the design of sustainable learning experiences and limits educators’ ability to meaningfully integrate these tools. Furthermore, Heyder and Posegga (2021) propose a typology that includes three dimensions of literacy: technical, cognitive, and socio-emotional. The literature suggests that many faculty members score low across all three, limiting their engagement in institutional or curricular decisions about AI implementation.

Institutional environments also play a decisive role. The absence of structured training programs and clear experimentation spaces deepens uncertainty and stagnation. Although the literature on faculty professional development increasingly acknowledges these challenges, specific evidence targeting the higher education sector and intelligent technologies remains scarce (Chan, 2023; Kurtz et al., 2024; Walter, 2024).

Beyond institutional dynamics, contextual and demographic variables also shape the adoption of AI tools. Factors such as academic discipline, age, digital fluency, and organizational culture influence both perceived usefulness and actual use. However, most of the reviewed studies lack detailed characterization of these dimensions (Celik, 2023; Zhang, 2023; Ding et al., 2024). Faculty adoption patterns are also mediated by demographic traits: younger instructors and those with prior experience in digital tools are more open to integration, whereas older faculty or those less digitally literate often exhibit skepticism or anxiety.

Recent studies have found that younger faculty members, or those with more prior experience in digital technologies, tend to adopt AI tools with greater ease and perceive them as pedagogically valuable. In contrast, older instructors or those with limited digital exposure often exhibit skepticism or require more intensive support (Chen et al., 2020). Academic rank also plays a role, with early-career faculty showing more willingness to experiment (Heyder and Posegga, 2021).

4.2 Institutional barriers and faculty resistance

National policy frameworks and institutional governance play a critical role in shaping faculty engagement with AI. Countries that have implemented clear AI strategies and ethical guidelines tend to foster more structured institutional responses, which positively affect faculty confidence and adoption (Fernández-Miranda et al., 2024; Mah and Groß, 2024). In contrast, where such frameworks are absent or poorly implemented, faculty often encounter ambiguity and lack of institutional support.

Institutional digital maturity also influences faculty attitudes. Universities with robust infrastructures and ongoing digital transformation efforts offer more consistent training opportunities, which reduce uncertainty and facilitate AI adoption (Qadhi et al., 2024). Conversely, in low-resource environments, the lack of coordination and continuity may amplify resistance.

Several studies indicate that educators perceive the introduction of artificial intelligence as a top-down technological imposition rather than a pedagogical tool (Mah and Groß, 2024). This perception leads to defensive or indifferent attitudes, especially in the absence of institutional spaces for critical reflection or continuous professional development related to artificial intelligence. In addition, Farazouli et al. (2024) identified that implementing intelligent technologies without clear usage policies or shared ethical criteria creates an environment of ambiguity and insecurity, prompting instructors to avoid using artificial intelligence in order to protect their professional autonomy.

A critical factor is the absence of inclusive organizational models that involve faculty in techno-pedagogical decision-making. As shown by Omar et al. (2024), when artificial intelligence adoption processes exclude faculty input, feelings of exclusion, surveillance, and loss of agency are intensified. This situation is also linked to what Bernhardt et al. (2023) describe as conflicts over symbolic and practical control in the workplace. To counter these barriers, institutions should implement bottom-up policy models that involve faculty in decision-making processes related to AI adoption. For instance, participatory workshops, co-designed pilot programs, and interdisciplinary advisory boards can help align the implementation of artificial intelligence with pedagogical goals. International examples, such as the institutional initiatives of The University of Edinburgh (2024) and Stanford University (2024), provide valuable reference models for such alignment.

Resistance is not always expressed as open opposition; it can also take passive forms such as non-use, minimal use, or avoidance of the more powerful features of intelligent technologies (Karataş et al., 2025). This resistance becomes more pronounced when instructors do not perceive a clear benefit to their teaching practices or feel that the learning effort required is not sufficiently rewarded (Ayanwale et al., 2022; Jatileni et al., 2024).

Another key point is the perception of replacement. Many instructors fear that extensive use of artificial intelligence may lead to a diminished value of their professional roles, particularly in assessment, feedback, or content development (Chan and Tsi, 2023). This perception has been cited as a factor contributing to technological anxiety or even professional disidentification (McGrath et al., 2023). Disciplinary cultures also shape the extent and manner in which AI is adopted. Faculty in STEM and technology-driven fields tend to exhibit greater enthusiasm and openness, whereas those in humanities or critical pedagogy domains express more skepticism, often due to concerns over epistemic integrity or automation of reflective practice (Holmes and Porayska-Pomsta, 2022).

Finally, it is essential to highlight that institutional barriers also include lack of infrastructure, insufficient technical training, and unstable or absent policies regarding the ethical use of artificial intelligence in university contexts (Gkrimpizi et al., 2023). These organizational gaps hinder informed and critical adoption and perpetuate a superficial or purely instrumental view of artificial intelligence (Zhai, 2022; Michel-Villarreal et al., 2023).

4.3 Reconfiguration of academic work

The integration of intelligent technologies in higher education not only transforms instructional tools but also brings about a structural reconfiguration of academic work. This transformation is reflected in the redefinition of roles, the displacement of traditional tasks toward automated processes, and the emergence of new professional competencies.

Recent studies, such as Kurtz et al. (2024), suggest that educators are transitioning from the role of knowledge transmitters to that of mediators, supervisors, resource curators, and providers of emotional support, especially in environments where artificial intelligence systems generate content, assess assignments, or propose personalized learning pathways.

This professional shift is not without friction. The review indicates that many educators do not feel prepared to take on these new roles, as they were not part of their initial training and there are few institutional programs to support this transition (Ng et al., 2023). This creates a tension between the expectations of digital environments and faculty members’ perceived capabilities (Celik et al., 2022).

Moreover, as noted by Machado et al. (2025), faculty perceptions of workload associated with artificial intelligence vary depending on the level of automation in educational platforms. In their experiment with automated, manual, and semi-automated scenarios, instructors reported greater cognitive effort and frustration in contexts with higher levels of human control, especially when technical support was lacking. This finding reveals a paradox: while artificial intelligence is promoted as a tool to ease workload, its implementation without clear support strategies may have the opposite effect, generating overload, stress, and a sense of lost control.

Simultaneously, the transformation of academic work introduces new demands for advanced digital literacy, not only in technical terms but also in interpreting and validating algorithm-generated outputs, managing adaptive systems, and making decisions in artificial intelligence-mediated environments. These tasks have become increasingly complex as current systems do not possess human-like awareness. As noted by Bouschery et al. (2023) and Dwivedi et al. (2023), generative models are often specialized in specific tasks and struggle with adaptability in more complex scenarios (Lee et al., 2024).

Nonetheless, the reviewed literature suggests that this reconfiguration also presents an opportunity to redefine the purpose of academic work, highlighting human interaction, pedagogical creativity, and professional judgment in contrast to the standardization of educational processes. However, for this potential to be realized, institutional spaces for dialog and policies that acknowledge and support the emerging profile of faculty are essential (Ng et al., 2023; Adzkia and Refdinal, 2024).

4.4 Ethical dimensions and institutional responsibility

The incorporation of artificial intelligence in higher education raises a series of ethical challenges that have yet to be clearly or consistently addressed by university institutions. Among the most common concerns are data privacy, algorithmic bias, lack of system transparency, and the unclear attribution of responsibility when errors or unintended consequences arise. Additionally, overreliance on AI could undermine teacher autonomy and creativity, raising concerns about the standardization of instruction and the diminishing of the human role in education (Sperling et al., 2024).

Building on these concerns, the concept of algorithmic accountability deserves further attention. This principle refers to the obligation of developers, institutions, and users to ensure that AI systems are explainable, auditable, and aligned with ethical standards, especially in environments like education where algorithmic outputs can affect learning trajectories and evaluations (Memarian and Doleck, 2023; Pawlicki et al., 2024). Equally important is faculty agency: instructors are not merely passive users of AI tools but can act as critical mediators who validate, contextualize, or even challenge algorithmic recommendations. As Buele et al. (2025) emphasize, when educators exercise intentional control over the use of generative AI, they contribute to fostering a culture of responsible innovation in academic environments.

Many instructors report feeling unprepared to deal with these ethical dilemmas, not only due to limited digital literacy but also because of the absence of clear institutional guidelines. Lin et al. (2022) show that the ethical dimension of artificial intelligence education often takes a backseat to the technical or instrumental approach that dominates many faculty training programs.

Likewise, Alcántar et al. (2024) point to a disconnect between the rapid development of intelligent technologies in education and the normative and governance capacities of universities, leaving instructors in an ambiguous position regarding what they can or cannot do with artificial intelligence tools.

A recurring issue in the literature is algorithmic responsibility: who is accountable when an automated system makes an erroneous or discriminatory decision? How can it be ensured that these systems uphold principles of equity, inclusion, and educational justice? These questions often go unanswered in current university policies (Baker and Hawn, 2022; Salleh, 2023; Salvagno et al., 2023).

The lack of transparency in how artificial intelligence systems are designed and operate also contributes to faculty distrust. Many instructors are unaware of how models used by students, such as automated grading systems or recommendation engines, are trained or what data they process (Halaweh, 2023). This “algorithmic black box” limits the capacity to audit or question system outputs, weakening pedagogical agency (Felzmann et al., 2020; von Eschenbach, 2021; Chowdhury and Oredo, 2023).

Moreover, the ethical digital divide becomes more pronounced when only certain faculty groups, typically those with stronger technological backgrounds, possess the competencies to critically assess these systems. Others, lacking such preparation, are excluded from decision-making and pedagogical innovation (Chiu et al., 2023). This epistemic inequality has emerged as a new source of professional exclusion, yet remains underexplored in current research (Kasinidou et al., 2025; Liu, 2025).

Beyond concerns about algorithmic opacity and data governance, the implications of generative AI for academic integrity are gaining urgency. As Lo et al. (2025) observe, AI tools may enhance student engagement and improve writing quality during revisions. However, they also challenge conventional notions of authorship and originality, blurring the line between acceptable assistance and academic misconduct. These dilemmas extend to faculty as well, particularly in relation to the use of AI in preparing teaching materials, scholarly writing, or providing feedback. Addressing this ambiguity demands clear institutional policies on AI use in academic settings, including guidelines for disclosure, authorship attribution, and acceptable practices.

To translate ethical principles into practice, higher education institutions must adopt clear and adaptable policy frameworks. Recent analyses show that universities such as MIT, University College London, and the University of Edinburgh have developed institutional guidelines for the responsible use of generative AI in teaching and learning contexts (Ullah et al., 2024). These documents typically address transparency, academic integrity, authorship, and appropriate use of AI in assessment and course design. Implementing similar policies can reduce ambiguity and foster consistency in ethical standards across departments. In parallel, faculty development should be sustained and multidimensional, integrating technical, ethical, and pedagogical training. Programs focused on prompt design, bias detection, and case-based ethical reasoning are essential to promote responsible AI use in classrooms. Frameworks like the AI Literacy for Educators model (Chiu, 2024) can support faculty confidence and critical engagement.

Additionally, peer mentoring, interdisciplinary collaboration, and reflective teaching communities contribute to a culture of experimentation and pedagogical renewal. To further support innovation, institutions might consider incentives such as pilot project grants, teaching relief, or support for research dissemination. Latin American universities, in particular, could adapt these international frameworks to fit their specific socio-educational contexts, drawing on references such as UNESCO (2021) and broader standards from the International Organization for Standardization (2023), the National Institute of Standards and Technology (2024), and the OECD (2024).

4.5 Methodological reflections and limitations

This review was conducted using a narrative approach to synthesize emerging insights on faculty perceptions of AI in higher education. While this design allows for thematic flexibility and conceptual depth, several methodological limitations must be acknowledged. First, there is a risk of publication bias, as studies reporting positive attitudes or successful implementations may be more likely to be published and indexed, while critical or null findings remain underreported (Boell and Cecez-Kecmanovic, 2015). This can skew the thematic balance and overrepresent adoption-oriented perspectives.

Second, the rapid evolution of generative AI tools poses a challenge for literature reviews. Tools like ChatGPT, Copilot, or Bard are being updated continuously, meaning that the perceptions captured in current research may soon become outdated or incomplete (Michel-Villarreal et al., 2023). As new capabilities and ethical concerns emerge, longitudinal and iterative research designs will be needed to track these shifts over time. Finally, the exclusion of gray literature and non-English sources may have limited the scope of this review. Reports, policy briefs, and institutional case studies, often found outside academic databases, could provide valuable insights into real-world implementation processes, particularly in underrepresented regions. Future reviews should consider broader inclusion criteria and adopt dynamic frameworks that respond to the evolving nature of AI in education.

5 Conclusion

This narrative review synthesized recent empirical literature to examine how artificial intelligence is reshaping academic work in higher education, with a focus on faculty perceptions, adoption barriers, ethical concerns, and evolving teaching roles. The findings reveal persistent ambivalence among instructors: while many recognize the potential of intelligent tools to enhance pedagogical efficiency, concerns remain regarding ethical use, professional displacement, and the erosion of academic autonomy. Adoption appears to be shaped by more than just technical familiarity. Organizational culture, the presence of clear institutional policies, and disciplinary traditions strongly influence faculty engagement with AI. Moreover, the absence of robust training opportunities and ethical guidance continues to limit meaningful integration into academic practices.

By framing the findings through models such as TAM and UTAUT, this review moves beyond description to offer explanatory insight into the mechanisms driving resistance or acceptance. It also underscores the need to foster AI literacy through multidimensional strategies that span pedagogical, ethical, and institutional dimensions. The review further emphasizes adapting faculty development and policy frameworks to specific regional contexts, particularly in underrepresented areas such as Latin America. Institutions are also encouraged to take a proactive role in fostering responsible, equitable, and critically informed uses of AI in education.

Author contributions

JB: Conceptualization, Investigation, Methodology, Visualization, Writing – original draft, Writing – review and editing. LL-A: Conceptualization, Investigation, Supervision, Validation, Writing – original draft, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by Universidad Tecnológica Indoamérica, under the project “Innovación en la Educación Superior a través de las Tecnologías Emergentes,” Grant Number: IIDI-022-25.

Acknowledgments

We extend our gratitude to the EDUTEM research network for its support in the dissemination of results.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Generative AI was used in the creation of this manuscript. The author(s) verify and take full responsibility for the use of generative AI in the preparation of this manuscript. Generative AI was used solely to revise English grammar and syntax.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adzkia, M. S., and Refdinal, R. (2024). Teacher readiness in terms of technological skills in facing artificial intelligence in the 21st century education era. JPPI 10, 1048–1057. doi: 10.29210/020244152

Alcántar, M. R. C., González, G. G. M., Rodríguez, H. G., Padilla, A. A. J., and Montes, F. M. J. (2024). Percepciones docentes sobre la integración de aplicaciones de IA generativa en el proceso de enseñanza universitario. REDU. Rev. Docencia Univer. 22, 158–176. doi: 10.4995/redu.2024.22027

Ayala-Chauvin, M., and Avilés-Castillo, F. (2024). Optimizing natural language processing: A comparative analysis of GPT-3.5, GPT-4, and GPT-4o. Data Metadata 3, 359–359. doi: 10.56294/dm2024.359

Ayanwale, M. A., Adelana, O. P., Molefi, R. R., Adeeko, O., and Ishola, A. M. (2024). Examining artificial intelligence literacy among pre-service teachers for future classrooms. Comput. Educ. Open 6:100179. doi: 10.1016/j.caeo.2024.100179

Ayanwale, M. A., Sanusi, I. T., Adelana, O. P., Aruleba, K. D., and Oyelere, S. S. (2022). Teachers’ readiness and intention to teach artificial intelligence in schools. Comput. Educ. Artif. Intell. 3:100099. doi: 10.1016/j.caeai.2022.100099

Baker, R. S., and Hawn, A. (2022). Algorithmic bias in education. Int. J. Artif. Intell. Educ. 32, 1052–1092. doi: 10.1007/s40593-021-00285-9

Bernhardt, A., Kresge, L., and Suleiman, R. (2023). The data-driven workplace and the case for worker technology rights. ILR Rev. 76, 3–29. doi: 10.1177/00197939221131558

Boell, S. K., and Cecez-Kecmanovic, D. (2015). On being ‘systematic’ in literature reviews in IS. J. Information Technol. 30, 161–173. doi: 10.1057/jit.2014.26

Bouschery, S. G., Blazevic, V., and Piller, F. T. (2023). Augmenting human innovation teams with artificial intelligence: Exploring transformer-based language models. J. Product Innov. Manag. 40, 139–153. doi: 10.1111/jpim.12656

Buele, J., Sabando-García, ÁR., Sabando-García, B. J., and Yánez-Rueda, H. (2025). Ethical use of generative artificial intelligence among Ecuadorian university students. Sustainability 17:4435. doi: 10.3390/su17104435

Celik, I. (2023). Exploring the determinants of Artificial intelligence (AI) literacy: Digital divide, computational thinking, cognitive absorption. Telematics Informatics 83:102026. doi: 10.1016/j.tele.2023.102026

Celik, I., Dindar, M., Muukkonen, H., and Järvelä, S. (2022). The promises and challenges of Artificial intelligence for teachers: A systematic review of research. TechTrends 66, 616–630. doi: 10.1007/s11528-022-00715-y

Chan, C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. High. Educ. 20:38. doi: 10.1186/s41239-023-00408-3

Chan, C. K. Y., and Tsi, L. H. Y. (2023). The AI revolution in education: Will AI replace or assist teachers in higher education? arXiv [Preprint] doi: 10.48550/arXiv.2305.01185

Chen, L., Chen, P., and Lin, Z. (2020). Artificial intelligence in education: A review. IEEE Access 8, 75264–75278. doi: 10.1109/ACCESS.2020.2988510

Chiu, T. K. F. (2024). Future research recommendations for transforming higher education with generative AI. Comput. Educ. Artif. Intell. 6:100197. doi: 10.1016/j.caeai.2023.100197

Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., and Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Comput. Educ. Artif. Intell. 4:100118. doi: 10.1016/j.caeai.2022.100118

Chowdhury, T., and Oredo, J. (2023). AI ethical biases: Normative and information systems development conceptual framework. J. Dec. Syst. 32, 617–633. doi: 10.1080/12460125.2022.2062849

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quart. 13, 319–340. doi: 10.2307/249008

Ding, A.-C. E., Shi, L., Yang, H., and Choi, I. (2024). Enhancing teacher AI literacy and integration through different types of cases in teacher professional development. Comput. Educ. Open 6:100178. doi: 10.1016/j.caeo.2024.100178

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., et al. (2023). Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Information Manag. 71:102642. doi: 10.1016/j.ijinfomgt.2023.102642

Farazouli, A., Cerratto-Pargman, T., Laksov, K., and McGrath, C. (2024). Hello GPT! Goodbye home examination? An exploratory study of AI chatbots impact on university teachers’ assessment practices. Assess. Eval. High. Educ. 49, 363–375. doi: 10.1080/02602938.2023.2241676

Felzmann, H., Fosch-Villaronga, E., Lutz, C., and Tamò-Larrieux, A. (2020). Towards transparency by design for artificial intelligence. Sci. Eng. Ethics 26, 3333–3361. doi: 10.1007/s11948-020-00276-4

Fernández-Miranda, M., Román-Acosta, D., Jurado-Rosas, A. A., Limón-Dominguez, D., and Torres-Fernández, C. (2024). Artificial intelligence in Latin American universities: Emerging challenges. Comput. Sist. 28, 435–450. doi: 10.13053/cys-28-2-4822

Gârdan, I. P., Manu, M. B., Gârdan, D. A., Negoiţă, L. D. L., Paştiu, C. A., Ghiţă, E., et al. (2025). Adopting AI in education: Optimizing human resource management considering teacher perceptions. Front. Educ. 10:1488147. doi: 10.3389/feduc.2025.1488147

Gkrimpizi, T., Peristeras, V., and Magnisalis, I. (2023). Classification of barriers to digital transformation in higher education institutions: Systematic literature review. Educ. Sci. 13:746. doi: 10.3390/educsci13070746

Gustilo, L., Ong, E., and Lapinid, M. R. (2024). Algorithmically-driven writing and academic integrity: Exploring educators’ practices, perceptions, and policies in AI era. Int. J. Educ. Integr. 20, 1–43. doi: 10.1007/s40979-024-00153-8

Halaweh, M. (2023). ChatGPT in education: Strategies for responsible implementation. Contemp. Educ. Technol. 15:e421. doi: 10.30935/cedtech/13036

Heyder, T., and Posegga, O. (2021). Extending the Foundations of AI Literacy. In ICIS 2021 Proceedings. Austin: AIS eLibrary. 1–9. Available online at: https://aisel.aisnet.org/icis2021/is_future_work/is_future_work/9

Holmes, W., and Porayska-Pomsta, K. (eds) (2022). The Ethics of Artificial Intelligence in Education: Practices, Challenges, and Debates. New York, NY: Routledge.

Ilomäki, L., Lakkala, M., Kallunki, V., Mundy, D., Romero, M., Romeu, T., et al. (2023). Critical digital literacies at school level: A systematic review. Rev. Educ. 11:e3425. doi: 10.1002/rev3.3425

International Organization for Standardization (2023). ISO/IEC 42001:2023 Information Technology — Artificial Intelligence — Management System. Geneva: International Organization for Standardization.

Ismail, I. A. (2025). “Protecting privacy in AI-Enhanced education: A comprehensive examination of data privacy concerns and solutions in AI-based learning,” in Impacts of Generative AI on the Future of Research and Education, (Pennsylvania: IGI Global Scientific Publishing), 117–142. doi: 10.4018/979-8-3693-0884-4.ch006

Jatileni, C. N., Sanusi, I. T., Olaleye, S. A., Ayanwale, M. A., Agbo, F. J., and Oyelere, P. B. (2024). Artificial intelligence in compulsory level of education: Perspectives from Namibian in-service teachers. Educ. Inf. Technol. 29, 12569–12596. doi: 10.1007/s10639-023-12341-z

Karataş, F., Eriçok, B., and Tanrikulu, L. (2025). Reshaping curriculum adaptation in the age of artificial intelligence: Mapping teachers’ AI-driven curriculum adaptation patterns. Br. Educ. Res. J. 51, 154–180. doi: 10.1002/berj.4068

Kasinidou, M., Kleanthous, S., and Otterbacher, J. (2025). Cypriot teachers’ digital skills and attitudes towards AI. Discov. Educ. 4:1. doi: 10.1007/s44217-024-00390-6

Kurtz, G., Amzalag, M., Shaked, N., Zaguri, Y., Kohen-Vacs, D., Gal, E., et al. (2024). Strategies for integrating generative AI into higher education: Navigating challenges and leveraging opportunities. Educ. Sci. 14:503. doi: 10.3390/educsci14050503

Lee, Y., Son, K., Kim, T. S., Kim, J., Chung, J. J. Y., Adar, E., et al. (2024). “One vs. many: Comprehending accurate information from multiple erroneous and inconsistent AI generations,” in Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency, (New York, NY: Association for Computing Machinery), 2518–2531. doi: 10.1145/3630106.3662681

Lin, X.-F., Chen, L., Chan, K. K., Peng, S., Chen, X., Xie, S., et al. (2022). Teachers’ perceptions of teaching sustainable Artificial intelligence: A design frame perspective. Sustainability 14:7811. doi: 10.3390/su14137811

Liu, N. (2025). Exploring the factors influencing the adoption of artificial intelligence technology by university teachers: The mediating role of confidence and AI readiness. BMC Psychol. 13:311. doi: 10.1186/s40359-025-02620-4

Lo, N., Wong, A., and Chan, S. (2025). The impact of generative AI on essay revisions and student engagement. Comput. Educ. Open 2025:100249. doi: 10.1016/j.caeo.2025.100249

Machado, A., Tenório, K., Santos, M. M., Barros, A. P., Rodrigues, L., Mello, R. F., et al. (2025). Workload perception in educational resource recommendation supported by artificial intelligence: A controlled experiment with teachers. Smart Learn. Environ. 12:20. doi: 10.1186/s40561-025-00373-6

Mah, D.-K., and Groß, N. (2024). Artificial intelligence in higher education: Exploring faculty use, self-efficacy, distinct profiles, and professional development needs. Int. J. Educ. Technol. High. Educ. 21:58. doi: 10.1186/s41239-024-00490-1

Mamo, Y., Crompton, H., Burke, D., and Nickel, C. (2024). Higher education faculty perceptions of ChatGPT and the influencing factors: A sentiment analysis of X. TechTrends 68, 520–534. doi: 10.1007/s11528-024-00954-1

McGrath, C., Cerratto Pargman, T., Juth, N., and Palmgren, P. J. (2023). University teachers’ perceptions of responsibility and artificial intelligence in higher education - An experimental philosophical study. Comput. Educ. Artificial Intell. 4:100139. doi: 10.1016/j.caeai.2023.100139

Memarian, B., and Doleck, T. (2023). Fairness, accountability, transparency, and ethics (FATE) in Artificial intelligence (AI) and higher education: A systematic review. Comput. Educ. Artificial Intell. 5:100152. doi: 10.1016/j.caeai.2023.100152

Merelo, J. J., Castillo, P. A., Mora, A. M., Barranco, F., Abbas, N., Guillén, A., et al. (2024). Chatbots and messaging platforms in the classroom: An analysis from the teacher’s perspective. Educ. Inf. Technol. 29, 1903–1938. doi: 10.1007/s10639-023-11703-x

Michel-Villarreal, R., Vilalta-Perdomo, E., Salinas-Navarro, D. E., Thierry-Aguilera, R., and Gerardou, F. S. (2023). Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 13:856. doi: 10.3390/educsci13090856

National Institute of Standards and Technology (2024). NIST-AI-600-1, Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile. Gaithersburg, MD: National Institute of Standards and Technology.

Ng, D. T. K., Leung, J. K. L., Su, J., Ng, R. C. W., and Chu, S. K. W. (2023). Teachers’ AI digital competencies and twenty-first century skills in the post-pandemic world. Educ. Tech. Res. Dev. 71, 137–161. doi: 10.1007/s11423-023-10203-6

Omar, A., Shaqour, A. Z., and Khlaif, Z. N. (2024). Attitudes of faculty members in Palestinian universities toward employing artificial intelligence applications in higher education: Opportunities and challenges. Front. Educ. 9:1414606. doi: 10.3389/feduc.2024.1414606

Özer, M. (2024). Potential benefits and risks of Artificial intelligence in education. BUEFAD 13, 232–244. doi: 10.14686/buefad.1416087

Pawlicki, M., Pawlicka, A., Uccello, F., Szelest, S., D’Antonio, S., Kozik, R., et al. (2024). Evaluating the necessity of the multiple metrics for assessing explainable AI: A critical examination. Neurocomputing 602:128282. doi: 10.1016/j.neucom.2024.128282

Qadhi, S. M., Alduais, A., Chaaban, Y., and Khraisheh, M. (2024). Generative AI, research ethics, and higher education research: Insights from a scientometric analysis. Information 15:325. doi: 10.3390/info15060325

Salleh, H. M. (2023). Errors of commission and omission in artificial intelligence: Contextual biases and voids of ChatGPT as a research assistant. DESD 1:14. doi: 10.1007/s44265-023-00015-0

Salvagno, M., Taccone, F. S., and Gerli, A. G. (2023). Artificial intelligence hallucinations. Crit. Care 27:180. doi: 10.1186/s13054-023-04473-y

Schön, E.-M., Neumann, M., Hofmann-Stölting, C., Baeza-Yates, R., and Rauschenberger, M. (2023). How are AI assistants changing higher education? Front. Comput. Sci. 5:1208550. doi: 10.3389/fcomp.2023.1208550

Shahid, M. K., Zia, T., Bangfan, L., Iqbal, Z., and Ahmad, F. (2024). Exploring the relationship of psychological factors and adoption readiness in determining university teachers’ attitude on AI-based assessment systems. Int. J. Manag. Educ. 22:100967. doi: 10.1016/j.ijme.2024.100967

Sperling, K., Stenberg, C.-J., McGrath, C., Åkerfeldt, A., Heintz, F., and Stenliden, L. (2024). In search of artificial intelligence (AI) literacy in teacher education: A scoping review. Comput. Educ. Open 6:100169. doi: 10.1016/j.caeo.2024.100169

Stanford University (2024). Analyzing the Implications of AI for Your Course. California: Stanford University.

Su, J., Ng, D. T. K., and Chu, S. K. W. (2023). Artificial intelligence (AI) literacy in early childhood education: The challenges and opportunities. Comput. Educ. Artificial Intell. 4:100124. doi: 10.1016/j.caeai.2023.100124

The University of Edinburgh (2024). Guidance for Working with Generative AI (“GenAI”) in Your Studies. Scotland: The University of Edinburgh.

Tuominen, K., Savolainen, R., and Talja, S. (2005). Information literacy as a sociotechnical practice. Library Quart. 75, 329–345. doi: 10.1086/497311

Ullah, M., Bin Naeem, S., and Kamel Boulos, M. N. (2024). Assessing the guidelines on the use of generative artificial intelligence tools in universities: A survey of the world’s top 50 universities. Big Data Cogn. Comput. 8:194. doi: 10.3390/bdcc8120194

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quart. 27, 425–478. doi: 10.2307/30036540

von Eschenbach, W. J. (2021). Transparency and the black box problem: Why we do not trust AI. Philos. Technol. 34, 1607–1622. doi: 10.1007/s13347-021-00477-0

Walter, Y. (2024). Embracing the future of Artificial Intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. Int. J. Educ. Technol. High. Educ. 21:15. doi: 10.1186/s41239-024-00448-3

Wang, S., Wang, F., Zhu, Z., Wang, J., Tran, T., and Du, Z. (2024). Artificial intelligence in education: A systematic literature review. Expert Syst. Appl. 252:124167. doi: 10.1016/j.eswa.2024.124167

Xia, Q., Weng, X., Ouyang, F., Lin, T. J., and Chiu, T. K. F. (2024). A scoping review on how generative artificial intelligence transforms assessment in higher education. Int. J. Educ. Technol. High. Educ. 21:40. doi: 10.1186/s41239-024-00468-z

Yildirim, G., Elban, M., and Yildirim, S. (2018). Analysis of use of virtual reality technologies in history education: A case study. Asian J. Educ. Training 4, 62–69. doi: 10.20448/journal.522.2018.42.62.69

Zhai, X. (2022). ChatGPT User Experience: Implications for Education. Available online at: http://dx.doi.org/10.2139/ssrn.4312418 (Accessed December 27, 2022)

Zhang, J. (2023). EFL teachers’ digital literacy: The role of contextual factors in their literacy development. Front. Psychol. 14:1153339. doi: 10.3389/fpsyg.2023.1153339

Keywords: artificial intelligence, higher education, university teaching, faculty perceptions, digital literacy

Citation: Buele J and Llerena-Aguirre L (2025) Transformations in academic work and faculty perceptions of artificial intelligence in higher education. Front. Educ. 10:1603763. doi: 10.3389/feduc.2025.1603763

Received: 01 April 2025; Accepted: 18 June 2025; Published: 07 July 2025.

Edited by:

Indira Boutier, Glasgow Caledonian University, United Kingdom

Reviewed by:

Dulce Fernandes Mota, Instituto Superior de Engenharia do Porto (ISEP), Portugal

Noble Lo, Lancaster University, United Kingdom

Copyright © 2025 Buele and Llerena-Aguirre. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jorge Buele, jorgebuele@uti.edu.ec
