- Department of Psychology, Rutgers University, Piscataway, NJ, United States

Innovative technologies like AI need to be brought into education in ways that will support best pedagogical practices. Examining the history of adoption of innovations shows that their impact often is hard to predict. Future use of AI must be accompanied by clarity about the educational purposes that AI is intended to enhance. Key to ensuring that AI’s impact is positive is recognizing that AI is operator dependent, and that the social–emotional and character competencies of those implementing and using AI innovations, along with the prosocial value structure of their schools (particularly around academic integrity), will determine the impact of AI. This is illustrated with examples of cyberbullying and the presence of Chromebooks in classrooms. Policy and practice recommendations are provided, centered around the prioritization of collaborative and experiential pedagogy and systematic, intentional social–emotional and character development for all children in all schools.

Introduction

AI is happening. As with many technological innovations before it, AI is unfolding in ways that cannot be predicted at the time of this writing. However, the process through which AI will unfold, and its impact on people’s well-being, can be illuminated by examining the processes that have characterized innovations before it. One thing that can be counted on: “technological change always produces winners and losers” (Postman, 1995).

Cottoms (2025) is among many writers who have concerns about the impact of AI on education. She notes potential harms when AI does people’s work for them: “AI requires people who know how to use it…AI’s most revolutionary potential is helping experts apply their expertise better and faster. But for that to work, there has to be experts” (p. SR 3). She identified cheating as the number one concern about AI among academic institutions across grade levels. It is not possible to prevent all avenues for cheating. What must be conveyed in our schools is the vital importance of academic integrity. This should not be narrowly conceptualized in terms of personal dishonesty. Lack of academic integrity needs to be communicated as a social danger. Imagine relying on AI to help you get an air traffic controller position without the requisite experience for dealing with sudden crises. Or becoming a lawyer who is facing courtroom situations without the luxury of looking up AI-generated information on one’s phone. Or becoming a teacher without actually having digested most of the books you were assigned, thanks to AI reading, summarizing, and writing for you. There are consequences to being a passenger, client, or student of people whose lack of academic integrity has led them to be underprepared for when their best efforts are needed.

AI is not the first technological innovation to exercise life-altering influences on people and on the process of education. Much can be learned from the stories and impact of specific innovations, such as the alphabet, the printing press, the telegraph, radio and television, and smart phones. Cyberbullying and bringing Chromebooks into many classrooms are particularly relevant. As we will see, most technological innovations—and certainly AI—should be conceptualized as, to a greater or lesser extent, operator dependent (Rossi, 1978). A clear way to understand this is to consider a Stradivarius, certainly an innovation in the creation of violins. Stradivari are operator dependent—the sound they produce depends on the human being “operating” the instrument. Different humans will generate different sounds from the same instrument. It is therefore appropriate to consider the instrument and the player as a single unit of analysis. Evaluating the impact of either depends on the joint impact of both.

The same is true for other innovations, including AI. We must look not only at the innovation but also the “operator.” As we will see, characteristics of the operator tend to be neglected in considering the impact of innovations, particularly those that are technological in nature. This cannot be the case with AI.

A review of the impact of selected technology innovations

Writing and literacy

Consider first writing and literacy. For centuries, knowledge and information were transmitted via an oral tradition. Drawing was mainly used for storytelling, representing visual reality or fantasy. The introduction of writing—symbols containing widely shared meaning—allowed institutional procedures to be codified and knowledge to be captured. Yet writing was not universally welcomed. Some worried that memory capacities would become limited and individual reasoning skills and creativity would be compromised by looking at documented ways prior problems were addressed. Perhaps these concerns are familiar, as they have been raised about smart phones.

When the printing press was created in the 15th century, there were concerns that mass access to books would weaken religious authority, allow ideas threatening existing power and authority (a subjective judgment, to be sure) to spread widely, and lead to harmful “free thinking” by individuals. These concerns have been raised about the internet.

Telegraph

The telegraph is a particularly interesting case example. Building on discoveries by Benjamin Franklin and Hans Christian Orsted in electricity and magnetism and Faraday in electromagnetic induction, Samuel Morse and Alfred Vail created a successful telegraph in 1837. According to Postman (1994), the idea of sending a message via electricity over a wire occurred to Morse in 1832, while he was on an ocean voyage and found himself unable to communicate in any way. As with most technological advances, the true implications of his invention were not known to Morse at the time. What the telegraph did was to change the way people communicated and especially the way “news” was transmitted. No longer reliant on human conveyance and delivery, messages now traveled much more quickly. The human element, with all its potential for error, omission, nuance, etc., yielded to information without emotion. The Associated Press was created in 1848 and grew in size as more and more of the country was wired for telegraphic messages. Postman (1994) notes that this began the process of more intrusive information, outside the control of the recipient, or at least, more unexpected and of variable relevance. Letters, typically thoughtfully crafted but rarely timely, were deemphasized.

Television

Television, as an innovation, was the progenitor of concerns about “screens” and likely was the impetus for the term “moral panic” (Cohen, 1972). Moral panic refers to societal reactions to perceived threats to existing moral norms, reactions that lead to fears that tend to be disproportionate to the actual impact. Postman (1994, 1995, 2005) exemplified this with his great skepticism about the benefits of television compared with its harms. Foremost among his concerns was that, by 1950, when the television became ubiquitous in American homes only 23 years after its invention by Philo Farnsworth, acquiring information became even less dependent on being able to read than with the advent of radio. He also observed that there was subtle cognitive rewiring going on. Visual aspects of television shaped the perception of information in ways that did not happen via radio. While written messages were conveyed visually before the invention of the alphabet, the alphabet revolutionized visual messaging. Postman felt television would affect cognitive wiring related to reading.

Learning how to read involves a number of skills and is an area of status and success differentiation within our education system and society. Foremost among these skills is the decoding of patterns of letters into units of meaning. Television requires even more instantaneous pattern recognition (quick perception, not analytic decoding) because the images change so quickly. It does not require the linear and sequential logic of the printed word. Programs as typically viewed through computer/tablet/smart phone screens require scanning processes that often are antithetical to reading, and some maintain that the different eye movement processes have reduced stamina for reading, as well as accuracy of decoding and inference (Kostyrka-Allchorne et al., 2017; Rayner and Fischer, 1996).

Thus, television was anticipated to distract young people (and adults!) from reading, shorten attention spans, and expose children (and adults!) to images and stories that were not part of existing, local, previously accepted norms. Hence the idea of a “moral panic.” Another overarching concern was that television-watching would promote greater violence, though this was less an inherent property of the medium than a resulting interaction of medium and content (Huesmann, 2007). Indeed, it always has been difficult to connect rises in aggression and acting-out behavior to the role of television, though research tends to support these relationships, especially in cases where violent television content is viewed and regular television watching begins in infancy (Huesmann, 2007).

Information via radio, and especially television, is more intrusive upon young children and children do not always grasp what is being communicated. As James Comer has said, children spend more time outside of the company of adults (whose developmental purpose included filtering information and messages, primarily to restrict students’ access to morally undesirable behaviors), with the result being greater access to mass and social media that is not filtered through adults (Darling-Hammond et al., 2018). This, in turn, leads to a change in how children acquire information. In another indirect effect, children become more exposed to and interactive with buying habits of their parents, increasing their consumerism in ways that generally are not interpreted as beneficial (Chhatwal, 2025).

Challenges in mitigating the harms of technologies

Both the potential harms of innovative technologies and ways to mitigate those harms are well known. The literature on children’s television watching has noted, for decades, that adults watching together with children and discussing the content while doing so can mitigate many of the harms of television watching. Similarly, there are guidelines about how to limit the damage to children from screen time. Organizations like the Mayo Clinic and the American Academy of Child and Adolescent Psychiatry are sources of clear and sensible advice,1 but while this information is accessible to parents, relatively few follow its recommendations. Where the guidance falls short is in not specifying how it can be adapted to the current context of each family’s life.

Postman (1994) anticipated the tremendous challenges facing parents or educators who wish to resist the relentless march of technology. “To insist that one’s children learn the discipline of delayed gratification, or modesty in their sexuality, or self-restraint in manners, language, and style is to place oneself in opposition to almost every social trend” (p. 152). “But most rebellious of all is the attempt to control the media’s access to one’s children… [doing so requires] a level of attention that most parents are not prepared to give to child-rearing” (p. 153). Gessen (2025) suggests that the overwhelming amount of information adults deal with, as well as ongoing concerns about its veracity, continues to keep adults from providing the in-depth focus on technology needed for childrearing and for education.

What we can say about AI, based on the impact of the prior innovations just discussed, is that its influence is likely to be transformative. Yet, AI is a technology still looking for a platform, just as telegraphs, radios, and televisions took their particular form as holders of their technologies. At this stage, it would be folly to predict specific forms that future platforms for AI might take. The following three quotes, from Peters (1987, p. 244), are useful reminders about how hard it is to predict the future, except in retrospect:

Harry Warner, a founder of Warner Brothers Studios, 1927: “Who in the hell wants to hear actors talk?”

Thomas Watson, a founder of IBM, 1943: “I think there is a world market for about five computers.”

Ken Olsen, a president of Digital Equipment, 1977: “There is no reason for any individual to have a computer in their home.”

Understanding the user-technology dyad: operator dependence

Holding back technologies has not proven to be a viable long-term strategy in the past. The pervasiveness of all the innovations just discussed is proof of that. A more viable strategy is to focus on the “operator” aspect of the user-technology dyad. First, we will take a closer look at the concept and functional significance of the “operator dependent” approach. This will lead us into two questions: what educational purposes will new technologies advance, and how do we best prepare the human operators to use technology in the service of those purposes?

Operator dependent

Rossi (1978) coined this term to refer to the fact that innovation and social change rely on human beings as the means of change. For instance, consider a school intending to introduce a new reading curriculum to students. Teacher/staff attitudes and commitment to the program and their enthusiasm, or lack of it, play an important role. Outside consultants or speakers may enhance the program’s impact, depending on how they are selected, prepared for the local context, and used. Program leaders may use curriculum activities carefully or they may devise their own approach, perhaps making culturally sensitive adaptations. Student reaction to the innovation may or may not be solicited and/or attended to.

Gager and Elias (1997) engaged in a comprehensive assessment and analysis of how acclaimed, evidence-based social and emotional learning programs were carried out in New Jersey public schools. Each of the 125 programs examined could be considered an “innovation” entering the school adopting it. What they found was that the quality and attributes of a program were not the deciding factor in its success, or lack thereof. Outstanding programs were found on either side of the success-failure continuum. The key factor determining where a particular program wound up was the way in which it was implemented. It was the action of the human operators and their contexts that determined the innovation’s adoptive process.

One under-considered aspect of operator dependence is that an innovation must mesh with the developmental stage and self-conceptions of the staff who will implement it (Kress and Elias, 2006). Skilled staff in any setting take pride in their craft and view their work with a sense of ownership. To gain their approval, an innovation must fit their values and identity: for instance, a Dean of School Discipline’s sense of how it is that students bring their behavior under better personal control. At the same time, an innovation must also offer something new that increases the staff’s effectiveness as they define it. Staff members of different ages, ranks in the organization, or levels of seniority may support or resist an innovation, depending on how they understand their work and roles.

Context dependent

Staff members or operators are not the only humans involved in an innovation. The recipients of the initiative also influence its impact, as does the social ecology of the setting. Regarding a school-based innovation, consideration must be given to the school and to the students. School and classroom culture and climate may undermine the impact of any innovation. Each school, workplace, or community has a mix of ages, genders, races and ethnicities, income levels, and other forms of diversity and personal identity that an innovation must address. These affect the social norms of the setting and the skills and resources its members need to adapt, and therefore the goals of an innovation. Furthermore, an innovation may draw a different response in a setting with a strong sense of community among its members, compared to one without it.

Greenhalgh et al. (2004) extensively studied processes that influence the success of innovations in human service systems. Consistent with the concept of operator dependence, they identify six features of adopters that must be considered when an innovation is brought into a system: needs, motivation, values and goals, skills, learning style, and social networks. If you consider educators as the “operators,” then this list makes sense. Does the innovation address real needs? Is there some motivation to find a new way to address those needs? Is the approach consistent with staff values and goals? Do they have a clear vision of how the innovation will be put into regular practice and do they have the competencies necessary to operate the innovation effectively? How much information do they need to feel confident in making a decision to commit to the innovation? Do they have a learning style that is tolerant of ambiguity? Finally, are there available social networks of other adopters that can support the innovation’s use?

What is much less often conceptualized, however, is that students also are operators of AI innovations. And the same set of processes can be applied to them. Their needs for and motivation for the innovation have to be carefully cultivated. The values and goals needed for effective use of AI for learning must be explicit and communicated by the culture of the school. These include academic integrity, curiosity, inclusiveness, and collaboration. Do they have the skills to engage in behaviors consistent with these key values? Is the way AI is used consistent with students’ learning styles? To what extent are students connected with other students who can support their positive, constructive use of AI?

A case example: cyberbullying

We can anticipate much about potential trajectories of AI use from examining cyberbullying. Cyberbullying involves the use of social media technology to spread harmful, often vindictive, degrading, insulting, and false information about other people. Often, those individuals are members of “protected classes” (such as LGBTQ+, people with disabilities, racially minoritized students) who are reluctant to disclose what is happening to them (National Association of School Psychologists, 2023). Estimates are that almost 30% of students in the United States have experienced cyberbullying at least once (Patchin, 2022).

Responses to cyberbullying have included monitoring of children’s use of technology and social media, but the critical factors that matter can be summarized as the moral compass and social–emotional problem-solving skills of the bullies. Do they understand that what they are doing is harmful and wrong? Do they understand the short and long-term consequences of their actions, as well as the risks to their own reputation, freedoms, and privileges? And do they understand why they are acting toward others in these unkind and disrespectful ways? If they have a problem or issue with their targets, is cyberbullying the best way to resolve the difficulties? Do they know their own emotions and their own goals? Evidence suggests that the answer to these questions is most often, “No” (Fanti et al., 2012).

We also must ask where they received the idea that abusing others is a good and reasonable thing to do. Is bullying tolerated in their classroom and school environments? Have they been victimized themselves without protection from or consequences to their bullies? Have they heard messages from influential adults in their lives that certain individuals “deserve” to be maltreated because they are somehow “less than” others? Have they considered that they may be interacting with one or more of their victims and might even find themselves dependent on those individuals’ knowledge or cooperation?

It is likely safe to say that the idea of using social media-related technology to cyberbully someone else would not occur to the vast majority of students, and that among those who would consider it, their social–emotional competencies would prevent most of them from following through on that impulse. Among those who did perpetrate an act of cyberbullying, most of those would likely not repeat it out of a sense of shame. For growing children, this is how their moral compass becomes clearer and stronger. Few indeed are the young people who do not transgress in any way. What matters is that they take the correct prosocial messages from the consequences of having done so, even if there are no clear extrinsic negative outcomes to themselves. That still leaves us with 30% of students who are victimized, and that is an unacceptable degree of harmful behavior. We must ensure the collective social–emotional strengths of our students and the corresponding health of their classroom and school environments are a priority for all schools in all communities (Elias, 2025).

Preparing educators and students to use AI for constructive purposes

This leads us into two interrelated questions: what educational purposes will the new AI technology advance, and how do we best prepare the human operators to use AI technology in the service of those purposes? One way to begin to understand potential answers is to look at an informative case example, that of bringing Chromebooks into classrooms.

Lessons learned from the Chromebook innovation

Indeed, the Chromebook serves as a useful example for how new technological innovations can arrive at schools. What often happens is that the technology creates the vision of its use (Coleman and Kleiner, 2000). The impact of the ever-present Chromebooks on instruction and group interaction is substantial. The technology of individual personal computers tends to favor individualized learning and problem solving. Yet, despite ongoing concerns, initial data on bringing Chromebooks into classrooms suggest that they are an asset when resources are available to help with their absorption and diffusion into existing pedagogical systems (Albataineh et al., 2024; Education First, 2025). They have been successful especially where they have been used in the service of a vision of collaborative pedagogy. Such a pedagogy includes real-time document sharing, editing, and teamwork; promoting collaborative learning experiences; and greater time on task due to screen monitoring. The technology was not used to define the vision. This mirrors Gager and Elias (1997), noted earlier, where the “technology” represented by evidence-based SEL curriculum programs was not the key factor in determining their impact. The latter was most strongly predicted by implementation considerations, especially the role of leadership and the preparation and support of teachers who carry out the SEL programs. Like the Stradivarius, the Chromebook in the hands of educators skilled in collaborative pedagogy can result in beautiful educational music.

For what purposes will AI technology be used in education?

One of the most unheralded aspects of educational innovation contexts is how they will put the innovation to use. November (2010) predicted that schools will be in a chaotic state in which a cascading array of technology-related innovations transform the nature of teaching and learning. One constant, he noted, will be the value of certain skills: management of overwhelming amounts of information, empathy, collaboration, and goal-setting and self-monitoring (November, 2010). Students must understand the nature of their exposure to (often unwanted or misleading) information, and how to use it, in concert with other people, toward their own or shared goals in considerate ways. They must know where they are headed and whether they are on track.

AI will enter schools carried in a series of innovations/platforms (just as the smart phone was the carrier of technologies and is now a carrier of AI). The laws that apply to the dissemination of innovation harken back to what already has been discussed: technologies are operator dependent. There are two aspects of this: individual and collective. Both aspects relate to the questions, “For what purposes will the technology be used and what will be the interpersonal and value structures guiding its use?” This is connected to one’s view of what public education is for, i.e., to one’s vision of schools and education.

Almost a century ago, Dewey (1938) viewed schools as preparing students for democratic participation. To him and others (Westheimer, 2015), educators must design educational activities that students will experience, which means that they will engage in cognitive, social, emotional, spatial, physical, artistic, and contextual ways. In other words, instruction must intentionally activate students’ multiple intelligences. This is neither easy nor automatic.

From a vision of education similar to that of Dewey, the path forward must involve rules and guideposts animated by a spirit of inquiry. Schools should be open to questioning and to change. This reflects an understanding that groups of people who are part of a collective effort (in classrooms, schools, work groups, field experiences, families) have a shared purpose, interests, and sets of goals. They are part of a common enterprise in which everyone’s contributions matter. Rules are in the interest of goal attainment, not control. Therefore, openness to change is essential for adapting to changing circumstances. For successful adaptation to happen, educators must know who the students are as people, and they must know one another. Cultures and contexts must be shared and respected. There must be an ethic of inclusiveness (Elias and Leverett, 2021).

The challenge of AI is that it can give students answers in ways that are not “earned.” Put another way, a certain vision of education would impel educators to learn to use AI to design more engaging and sophisticated experiential educational activities. There is another element to this. Students must understand that having AI generate the “right” answer—or an “adequate” answer—does not foster their own learning and productive growth. More than being a matter of honesty, misuse of AI is self-harm. This speaks to the virtue of integrity, as well as skills of persistence and problem solving.

Being a citizen in a democracy requires both knowledge and skills (Westheimer and Kahne, 1998). Citizens are required to engage in actions necessary within democratic institutions in an intentional, positive, and reflective way. Dewey (1938) made a point of saying that the ability to “stop and think” puts a damper on automatic, impulsive responding and opens learners to new, unexpected experiences. Well before emotional intelligence/SEL was formulated, Dewey understood that greater access to content did not correlate with more effective, lasting, practical action. Postman (1995) noted that, regardless of technology, there is a “tradition of teaching children how to behave in groups. You cannot have a democratic—indeed, civilized—community unless people have learned how to participate in a disciplined way as part of a group” (p. 45).

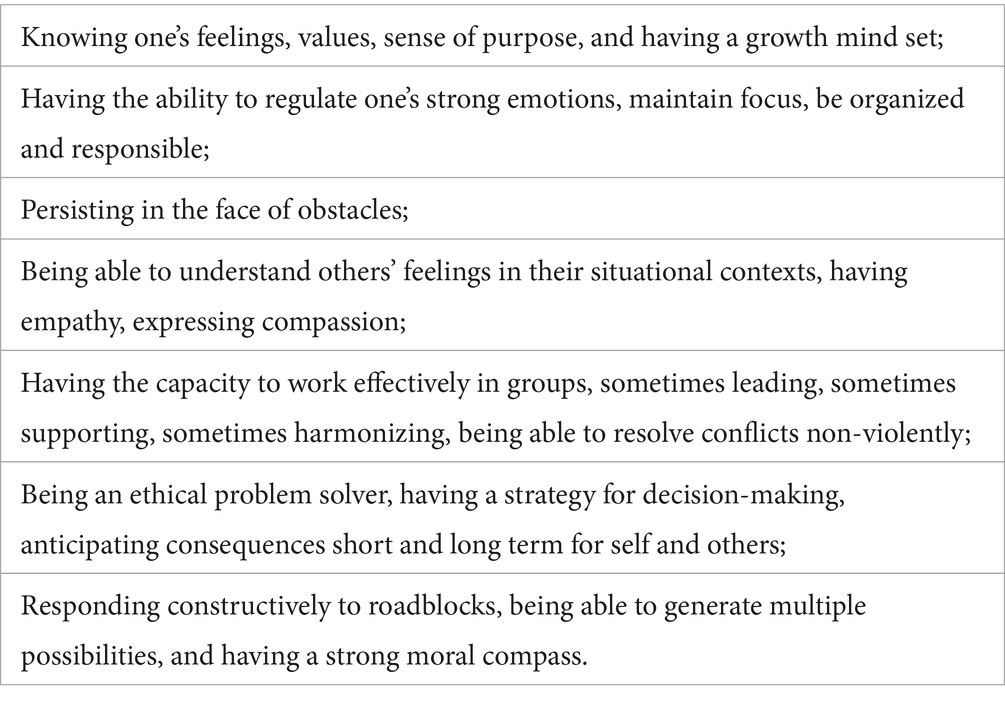

Convergent with these intuitive observations, Westheimer and Kahne (1998), Goleman (1995), and Mahoney et al. (2025) have articulated a wider range of essential social–emotional competencies/emotional intelligence skills. These go beyond those disseminated by the Collaborative for Academic, Social, and Emotional Learning2 and include seven skill areas (see Table 1) needed for active engagement in democratic citizenship.

Table 1. Essential social–emotional competencies/emotional intelligence skills for democratic citizenship.

Recommendations: how do we systematically prepare students to use AI constructively and ethically?

While education systems are far from embracing the systematic teaching of the skills mentioned above, preparing students to use AI constructively and ethically will take an even wider focus. This is why recent work in the area of social–emotional learning (SEL) has given way to SEL 2.0: social–emotional and character development (SECD; Elias, 2009). We cannot assume that children, or anyone, will direct their social–emotional skills for prosocial ends. As Theodore Roosevelt said, in a speech in Harrisburg, PA, October 4, 1906: “To educate a person in mind and not in morals is to create a menace to society.” Martin Luther King, Jr. updated this in 1947: “The function of education, therefore, is to teach one to think intensively and to think critically. But education which stops with efficiency may prove the greatest menace to society. The most dangerous criminal may be the person gifted with reason, but with no morals.”

Foster positive purpose

Hatchimonji et al. (2017) maintain that schools must take active, explicit, and systematic efforts to help students connect with their sense of positive purpose and of potential constructive contributions in the future. David Brooks (2025) refers to these as “annunciation moments”—times when we feel called to pursue a commitment intensively. As researchers have shown (Chen and Cheng, 2020; Malin, 2018), one’s calling does not necessarily remain steady throughout life, and the nature of a “calling” for young children or adolescents is typically not the same as it is for adults or senior adults. The commonality, though, is that mental health and well-being tend to suffer during those times when we are unanchored to a sense of purpose (ideally positive, but not necessarily so). A corollary to having a positive purpose is that one tends to be willing to endure many hardships in the service of achieving that purpose—one is not looking for the “easy way out,” but rather the most strengthening way possible (Brooks, 2025; Frankl, 1985). The analogy to AI could not be clearer.

Provide clear moral direction

As Dewey (1938) saw so presciently, the hours, days, and years spent in schools can and should send young people a strong message about how to live a productive, moral, contributory life as adults. Such a life should include compassion for others, an appreciation for the common good, an understanding of the give and take of democratic functioning, and many opportunities to engage in roles that allow these proclivities to develop (Westheimer, 2015). Also included is curiosity, which fosters an examination of the status quo toward efforts at continuous improvement. A corollary is healthy skepticism, which serves as a set of guard rails that keeps us within the boundaries of the law, considerate of others, and honest in recognizing and accounting for our own shortcomings (Brooks, 2025). This circles back to integrity: we must care about the truth of our work and impact more than we care about our own status or reputation. Those who cheat create harm based on the outcomes and influence of their work and damage the bonds of trust in their conduct and words—and those of their colleagues. This is true for grand things, such as scientific research, and smaller things, such as when people say that they washed their hands properly when they really did not do so.

Build social–emotional and character competencies, especially intellectual honesty

So it will be with AI. Its impact on education will depend on the vision of education held by policymakers and school leaders. Also influential will be the extent to which students have social–emotional and character competencies and the adults in schools send clear messages about prosocial core values. A well-formed moral compass is an essential skill that can enable children to reach a high standard of ethical behavior, as long as other social–emotional skills are developing in concert (Kasler and Elias, 2014). Along with self-awareness, decision making, and problem-solving skills, developing a strong personal moral compass can help promote the goal of confident, skilled, and moral individuals. Young people are stepping into a world that is as complex as it is challenging, and need a personal commitment to sustain the good health needed to follow the direction of that compass. In turn, educators at every level must strive to create the conditions in schools that will allow social–emotional and character development to thrive.

One of the most critical areas of anticipated concern is intellectual honesty. AI has the capacity to help students find answers, create responses, and short-circuit the learning process. As we saw with Chromebooks, it is essential that a vision of learning drives the technology, not vice versa. With all the resources that will be devoted to bringing AI into schools, and with the tremendous profits that will accrue to technology services and consultants, a proportional fund should be set aside to promote social–emotional and character development approaches in schools. There are clear guidelines for how this can occur (Elias and Berkowitz, 2016), and tremendous human resources to assist the process around the United States and worldwide. Foremost among these resources are SEL4US (see footnote 3), the New Jersey Alliance for Social–Emotional and Character Development, and the SEL Providers Association. Their work is fueled and supported by powerful research (e.g., Cipriano et al., 2023; Hatchimonji et al., 2022; Mahoney et al., 2025; Yuan et al., 2025).

I must note two essential caveats. While I believe my recommendations have relevance outside of the United States, the U.S. educational system and its wider context have been the source of my inferences. This may limit generalizability. Additionally, as of this writing, the state of evolution of both AI and SEL in schools is accelerating, volatile, and subject to political considerations. This has limited the relevant empirical and experiential work upon which I have been able to draw. My intention is that this article will be a catalyst for research, action, and policy related to preparing for the inevitable impact of AI in education.

Indeed, looking ahead, using AI ethically and with integrity, academic and otherwise, represents at least as great a challenge as implementing the technology itself. The human operators of AI-infused learning systems must learn to use them in support of a human vision of education, i.e., for collaborative and experiential pedagogy, and must be given support in that use. They must engage students in the work, and the ethics, of finding information and arriving at answers, rather than having it all provided by AI engines. And the recipients (and users) of those systems, the students, must have a sensitive and active moral compass and the social–emotional competencies to interact with those systems and their classmates and teachers in ways consistent with a positive sense of purpose. This requires schools to invest in systematic efforts to improve their culture and climate, articulate core values, and developmentally build students’ social–emotional skills and their application to all academic subject areas (Elias, 2009; Elias and Berkowitz, 2016). Schools also must approach this in the spirit of continuous improvement, to allow for proactive adaptations as inevitable changes in technology and circumstances require.

Author contributions

ME: Writing – review & editing, Writing – original draft, Project administration, Investigation, Conceptualization.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author declares that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^https://www.aacap.org/AACAP/FamiliesandYouth/FactsforFamilies/FFF-Guide/Children-And-Watching-TV-054.aspx

3. ^SEL4US.org

References

Albataineh, M., Warren, B., and Al-Bataineh, A. (2024). The effects of Chromebook use on student engagement. Int. J. Technol. Educ. Sci. 8, 138–151. doi: 10.46328/ijtes.530

Brooks, D. (2025). A surprising route to the best life possible. New York Times, 2025, SR 9. Available online at: https://www.nytimes.com/2025/03/27/opinion/persistence-work-difficulty.html (Accessed October 10, 2025).

Chen, H. Y., and Cheng, C. L. (2020). Developmental trajectory of purpose identification during adolescence: links to life satisfaction and depressive symptoms. J. Adolesc. 80, 10–18. doi: 10.1016/j.adolescence.2020.01.013

Chhatwal, G. (2025). The evolution of kids’ media consumption habits. Kadence International blog. Available online at: https://kadence.com/en-us/the-evolution-of-kids-media-consumption-habits/ (Accessed October 10, 2025).

Cipriano, C., Strambler, M. J., Naples, L. H., Ha, C., Kirk, M., Wood, M., et al. (2023). The state of evidence for social and emotional learning: a contemporary meta-analysis of universal school-based SEL interventions. Child Dev. 94, 1181–1204. doi: 10.1111/cdev.13968

Coleman, J., and Kleiner, A. (2000). “The future of the company” in Schools that learn: A fifth discipline field book for educators, parents, and everyone who cares about education. eds. P. Senge, N. Cambron-McCabe, T. Lucas, B. Smith, J. Dutton, and A. Kleiner (New York: Currency-Doubleday), 510–517.

Cottoms, T. M. (2025). The tech fantasy that powers A.I. is running on fumes. New York Times, 2025, SR 3. Available online at: https://www.nytimes.com/2025/03/29/opinion/ai-tech-innovation.html (Accessed October 10, 2025).

Darling-Hammond, L., Cook-Harvey, C., Flook, L., Gardner, M., and Melnick, H. (2018). With the whole child in mind: Insights from the Comer School Development Program. Alexandria, VA: ASCD.

Education First. (2025). AI coherence. Available online at: https://www.education-first.com/strategies/accelerate-academic-success/ai-x-coherence/ (Accessed October 10, 2025).

Elias, M. J. (2025). Reinvigorating classroom climate: Everyday strategies to inspire teachers and students. New York: Routledge.

Elias, M. J. (2009). Social-emotional and character development and academics as a dual focus of educational policy. Educ. Policy 23, 831–846. doi: 10.1177/0895904808330167

Elias, M. J., and Berkowitz, M. W. (2016). Schools of social-emotional competence and character: Actions for school leaders, teachers, and school support professionals. Lake Worth, FL: National Professional Resources, Inc.

Elias, M. J., and Leverett, L. (2021). Addressing equity through culturally responsive education and SEL. Lake Worth, FL: National Professional Resources, Inc.

Fanti, K. A., Demetriou, A. G., and Hawa, V. V. (2012). A longitudinal study of cyberbullying: examining risk and protective factors. Eur. J. Dev. Psychol. 9, 168–181. doi: 10.1080/17405629.2011.643169

Gager, P. J., and Elias, M. J. (1997). Implementing prevention programs in high risk environments: application of the resiliency paradigm. Am. J. Orthopsychiatry 67, 363–373.

Gessen, M. (2025). The barrage of Trump’s awful ideas is doing exactly what it’s supposed to. New York Times, Feb. 15, 2025, A18.

Greenhalgh, T., Robert, G., Macfarlane, F., Bate, P., and Kyriakidou, O. (2004). Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 82, 581–629. doi: 10.1111/j.0887-378X.2004.00325.x

Hatchimonji, D. R., Linsky, A. C. V., and Elias, M. J. (2017). Cultivating noble purpose in urban middle schools: a missing piece in school transformation. Education 138, 162–178.

Hatchimonji, D. R., Vaid, E., Linsky, A. C. V., Nayman, S. J., Yuan, M., MacDonnell, M., et al. (2022). Exploring relations among social-emotional and character development targets: character virtue, social-emotional learning skills, and positive purpose. Int. J. Emotional Educ. 14, 20–37.

Huesmann, L. R. (2007). The impact of electronic media violence: scientific theory and research. J. Adolesc. Health 41, S6–S13. doi: 10.1016/j.jadohealth.2007.09.005

Kasler, J., and Elias, M. J. (2014). “Promoting school health by means of social emotional and character education” in Emotional intelligence: Current evidence from psychophysiological, educational and organizational perspectives. eds. L. Zysberg and S. Raz (Hauppauge, New York: Nova Science Publishers, Inc), 87–104.

Kostyrka-Allchorne, K., Cooper, N. R., and Simpson, A. (2017). The relationship between television exposure and children’s cognition and behaviour: a systematic review. Dev. Rev. 44, 19–58. doi: 10.1016/j.dr.2016.12.002

Kress, J. S., and Elias, M. J. (2006). “Implementing school-based social and emotional learning programs: navigating developmental crossroads” in Handbook of child psychology. eds. I. Sigel and A. Renninger. Rev. ed (Hoboken, NJ: Wiley), 592–618.

Mahoney, J., Domitrovich, C., and Durlak, J. (Eds.) (2025). Handbook of social and emotional learning. 2nd Edn. New York: Guilford.

Malin, H. (2018). Teaching for purpose: Preparing students for lives of meaning. Boston: Harvard Education Press.

National Association of School Psychologists. (2023). Cyberbullying: prevention and intervention strategies [handout]. Available online at: https://www.nasponline.org/resources-and-publications/resources-and-podcasts/school-safety-and-crisis/school-violence-resources/bullying-prevention (Accessed October 10, 2025).

November, A. (2010). “Technology rich, information poor” in 21st century skills: Rethinking how students learn. eds. J. Bellanca and R. Brandt (Bloomington, IN: Solution Tree), 275–284.

Patchin, J. (2022). Summary of our cyberbullying research (2007–2021). Cyberbullying Research Center. Available online at: https://cyberbullying.org/summary-of-our-cyberbullying-research (Accessed October 10, 2025).

Postman, N. (2005). Amusing ourselves to death: Public discourse in the age of show business. New York: Penguin.

Rayner, K., and Fischer, M. H. (1996). Mindless reading revisited: eye movements during reading and scanning are different. Percept. Psychophys. 58, 734–747. doi: 10.3758/BF03213106

Westheimer, J. (2015). What kind of citizen? Educating our children for the common good. New York: Teachers College Press.

Westheimer, J., and Kahne, J. (1998). “Education for action: preparing youth for participatory democracy” in Teaching for social justice. eds. W. Ayers, J. A. Hunt, and T. Quinn (New York: Teachers College Press), 1–20.

Keywords: social–emotional learning (SEL), artificial intelligence (AI), operator dependent factors, adoption of technological innovations, social–emotional and character development, intellectual honesty, academic integrity

Citation: Elias MJ (2025) Social–emotional competencies and character are at the foundation of education regardless of technology. Front. Educ. 10:1607639. doi: 10.3389/feduc.2025.1607639

Edited by:

Peter Adeniyi Alaba, University of Malaya, Malaysia

Reviewed by:

Ilaria Viola, University of Salerno, Italy

Urszula Soler, John Paul II Catholic University of Lublin, Poland

Copyright © 2025 Elias. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maurice J. Elias, melias@psych.rutgers.edu
