- 1 Institute of Human-Centred Computing, Graz University of Technology, Graz, Austria

- 2 Faculty II: Engineering & Technology, Tuttlingen Campus, Furtwangen University, Furtwangen, Germany

Introduction: Remote teaching often feels unnatural and restricted compared to on-site lectures, as traditional teaching aids are reduced to a 2D interface. The increasing adoption of virtual reality (VR) expands online teaching platforms by offering new possibilities for educational content and enabling teachers to teach more intuitively. While the potential of VR for learners is well investigated in the academic literature, VR tools for educators have hardly been explored. In this paper, we introduce VRTeaching, a platform that enables presenters to give immersive lectures using VR glasses and integrated tools such as an interactive whiteboard that can display slides, built-in chat integration for communication, and interactivity features such as polls and audience questions.

Methods: The presented study includes an expert evaluation assessing the usability and potential of the teaching and learning platform, and an investigation of the mental demand and psychophysiological responses of teachers and students giving presentations, depending on the teaching environment.

Results: The results indicate a significantly higher mental demand for the VR environment than the online environment, with no significant effects on the psychophysiological measures.

Discussion: Despite the increased perceived mental demand, participants recognized the VR lecture room as having strong potential for enhancing teaching and learning experiences. These findings highlight the potential of VR-based platforms for remote education while underlining the importance of considering cognitive load aspects in their design.

1 Introduction

Teaching remotely has become an increasingly popular alternative to in-person lectures. While the integration of digital technologies has enabled remote teaching (Abdurashidova and Balbaa, 2022), many challenges have emerged alongside the opportunities: social isolation, technical deficits, and difficult psychological demands (Nalaskowski, 2023). Students often feel they learn less and that lecturers do not teach them anything (Venton and Pompano, 2021). According to Cardullo et al. (2021), the interaction between learners and educators is the challenge most frequently stated by teachers who educate online. The increasing use of virtual reality (VR) is expanding the offerings of online teaching platforms. It provides new possibilities for presenting educational content and improves the quality of educational processes (Prasolova-Førland and Estrada, 2021). It has also been demonstrated that VR can be an effective method for managing psychological stress and can be used to reduce the overall stress level (McGarry et al., 2023). While the subjective workload inside VR remains the same as in non-VR environments (Chao et al., 2017), the workload can be influenced by the teaching environment itself (Luong et al., 2019), as well as by the feeling of presence and experience in using the work equipment (Maneuvrier et al., 2023). VR technologies are already known as valuable tools and are used for many educational applications (Cheng et al., 2017; Dunnagan et al., 2019; Ota et al., 1995; Pellett and Zaidi, 2019; Pirker et al., 2017; Pulijala et al., 2018; Heinemann et al., 2023; Koolivand et al., 2024). Most VR education applications are designed to directly support students in their learning process, with limited literature focusing on VR applications that assist educators in conducting lectures and teaching larger audiences.
There are only a few approaches that focus on the asynchronous exchange between students and teachers using virtual reality (Bruza et al., 2021) and virtual production and live streaming systems for immersive teaching experiences (Nebeling et al., 2021).

In previous studies, we explored various motivating and engaging learning methods for STEM education. Using an immersive learning environment, we evaluated the engagement and learning experiences from the student and teacher perspectives and potential use cases for classroom settings (Holly et al., 2021). In this paper, we introduce the tool VRTeaching, a virtual reality experience designed to give livestream presentations in a VR environment, e.g., teachers giving a lecture or students presenting a selected topic to classmates. It enables livestreams via the popular platform Twitch (https://www.twitch.tv/). The interactivity of the audience is maintained through chat functions and surveys. To evaluate the suitability for teaching, we conducted an expert evaluation with a focus on the usability and the potential of the platform as well as a user evaluation to compare the VR teaching environment directly with on-site environments and online teaching platforms and to investigate whether there are differences between lecturers and students giving presentations. This resulted in the following research questions:

• RQ1: How do users perceive the suitability and effectiveness of the VR teaching streaming tool compared to traditional video conferencing tools?

• RQ2: How does the mental demand vary for lecturers and students across different teaching environments, such as VR, online teaching platforms, and on-site teaching?

• RQ3: How do psychophysiological reactions differ between lecturers and students presenting in different teaching environments?

Contribution: This paper introduces a new tool designed specifically for teachers to give remote lectures in VR that can be livestreamed to students. The work discusses the findings of an expert evaluation that mainly provided qualitative feedback, as well as the results of a user study that provides psychophysiological response measurements of the study participants (teachers and students). Both groups gave presentations in three scenarios: in the VR environment, on-site, and via an online video conferencing tool. The measurements include heart rate, heart rate variability, and the subjectively reported mental load.

2 Background and related work

Innovative technologies have significantly transformed educational methodologies, enhancing the learning experience and accessibility. This section addresses the integration of virtual reality teaching methods, the utilization of recordings and livestreams in lectures, and the implementation of technological interactivity in educational settings.

2.1 VR in education

VR in an educational context has been the topic of various research works, mostly focused on students acquiring knowledge or understanding abstract concepts by using a VR application. There are various VR applications available, such as physics laboratories (Pirker et al., 2017), chemistry laboratories (Dunnagan et al., 2019), microbiology laboratories (Chitra et al., 2024), applications for learning a language (Cheng et al., 2017), or learning about geography (Stojsić et al., 2017). Yépez et al. (2020) demonstrated a VR-based e-learning application to simulate telepresence in a virtual classroom for students in distance education, allowing interaction with a virtual teacher and peers from any location. It supports synchronized, socially interactive environments and shows positive results in terms of user engagement and experience. Xue-qin et al. (2016) developed a multi-user virtual campus system using VR to simulate a real-life campus, where students can participate in activities such as attending classes, exercising, and making friends; and teachers can deliver lectures, review students' work, and conduct examinations. As a persistent and collaborative digital environment, it has great potential to deliver personalized and adaptive learning experiences. It enables educators to create and deliver immersive virtual experiences that deeply engage learners, offering a sense of presence and social connection that traditional classroom environments cannot replicate (Sá and Serpa, 2023; Onu et al., 2024).

VR and its potential for education have been discussed for several decades (Hoffman and Vu, 1997; Moore, 1995). Bailenson et al. (2025) analyzed findings from the last 30 years of psychological experimentation in virtual reality and identified that procedural training consistently outperforms abstract learning in VR environments. Potential positive influences include the reduction of psychological stress, such as speech anxiety and the overall stress level (Yadav et al., 2020; Lim et al., 2023; McGarry et al., 2023). Chao et al. (2017) compared different training methods and showed that VR led to a lower physiological workload compared to technical manuals and multimedia films, with no difference in the subjective workload. Other studies indicate no significant difference between the workload in VR and reality for both subjective and physiological measures (Luong et al., 2019). The workload can be influenced by the teaching environment itself, as well as by the feeling of presence and experience in using the work equipment (Maneuvrier et al., 2023). Psychophysiological measures, such as an electrocardiogram (ECG) to measure cardiac activity, can be used for objective measurement (Luong et al., 2019; Haapalainen et al., 2010; Ryu and Myung, 2005). The work by Bardach et al. (2023) concerns a simulation of a classroom and its students inside VR and the resulting psychophysiological stress symptoms of pre-service teachers in relation to neuroticism. While much of the existing research focuses on the learner's experience, it is equally important to consider the implications for educators. Ripka et al. (2020) interviewed educators and pre-service teachers regarding their wishes and expectations from VR. Saalfeld et al. (2020) describe an application in which students use a VR device while a personal teacher guides the students by using a tablet device to annotate certain objects in the student's experience.
Even more focused on the teacher's role within a VR environment is the work by Nebeling et al. (2021). They developed an instructional VR application called XRStudio, intended for holding lectures inside a virtual environment. The software allows the user to display slides and draw in a 2D or 3D space. A streaming setup, enabling lectures to be accessed remotely by many students simultaneously, was part of their work as well. The lectures can either be streamed to established streaming or video conferencing tools, or students can join the lesson by using the so-called XRStudio student client, access the chat, share their video and audio feed, or even participate and be co-present by using their own VR device. However, requiring a special client to access interaction abilities could present a hurdle to some users. Additionally, some students who participated in XRStudio's study found the transitions between different camera presets exhausting, and some preferred the conventional sharing of slides over the Mixed-Reality or First-Person VR perspective.

2.2 Recordings and livestreams

Nonetheless, traditional video lectures can provide insights and suggestions for lecturing inside a virtual reality environment. A disadvantage of recorded lectures in an educational context is the lack of interactivity since interactivity can lead to higher attention spans (Geri et al., 2017). Livestreaming has gained popularity within the last few years and can enable interactivity to at least some degree by sharing video content with almost no delay from its source and often providing a chat functionality. Sharing lectures using a video conferencing tool can be used to enable more advanced forms of interaction, such as sharing voice and audio feeds. However, these solutions often do not support public lectures available to anyone, even people not enrolled in a certain course. And for some, they might lack preferred anonymity, as Ohno et al. (2013) indicate that providing semi-anonymity may increase engagement and openness to discussions among some students. Crook and Schofield (2017) composed a list of categories for video lecture content. Although their list of categories is non-exhaustive, it offers a vast overview of the most popular video lecture formats. Most lectures use at least one of the following elements: Slides, a whiteboard, a video feed of the lecturer, or a screencast of the presenter's computer screen. Wang et al. (2015) compare different video formats as well, and found picture-in-picture formats, i.e., showing the lecturer's webcam video feed on top of a slide deck, to increase learning results slightly compared to only sharing slides. The work done by Steinbeck et al. (2021) discusses livestreaming on the streaming platform Twitch and compares different educational and non-educational livestreams. Pirker et al. (2021) show that Twitch can be a valuable educational platform. However, viewing or streaming VR content offers more challenges compared to traditional video formats. Emmerich et al. 
(2021) compared streaming VR applications from the VR user's point of view to using a so-called static third-person camera setup, meaning the camera faces the back of the streamer, giving viewers an “over-the-shoulder” perspective. The latter, also called mixed reality view, takes more effort and equipment to set up properly. The survey mentioned in the paper has shown that most people prefer the first-person point of view. The results vary between the different games of the paper, but the first-person point of view is always preferred by the majority of viewers. However, susceptibility to motion sickness or a higher interest in the streaming person themselves rather than the streamed content might also sway users into liking the third-person camera more. This issue of perspective in VR content recalls discussions around machinima recording in 3D virtual worlds, where creators often have to choose between different camera views to maximize narrative and viewer engagement (Mercado, 2016). VR tools for recording lectures could benefit in a similar way. Thomas and Schneider (2018) and Shrestha and Harrison (2019) identified machinima as a potentially effective tool for supporting language learning and fostering interaction and feedback. By immersing students in a 3D environment, machinima can promote greater engagement and encourage learners to be more interactive and expressive than they might be in traditional face-to-face classroom settings. A tool described by Bruza et al. (2021) offers the ability to record and capture VR experiences. The exported lectures can be entered by a student using a VR device or a 2D video can be generated as well; the instructor can specify a camera trajectory path by setting waypoints before exporting it to an MP4 video file. This approach reflects machinima techniques where camera paths are scripted to direct the viewer's attention. 
However, the application is focused on preparing and recording lectures rather than livestreaming them and allowing immediate audience interaction.

2.3 Technological interactivity in lectures

Researchers have tried to introduce technological tools into lectures to increase audience interaction, as it has been shown that these tools can positively influence learning results (Cain et al., 2009). Baecker et al. (2003) suggested making online lectures interactive by enabling a polling mechanism to ask the presenter questions and by implementing chat functionality. Geri et al. (2017) have shown that interactive elements in recorded videos can lead to longer average watch times. So-called Audience Response Systems (ARS) try to engage students by asking single-choice questions scattered throughout a lecture. Students can answer these questions on their mobile devices and reflect on the material they have learned. The lecturer, in turn, is able to adjust the lecture to the students' needs. Cain et al. (2009) have shown that students themselves prefer lectures using an ARS. Pradhan et al. (2005) argue that learning results can be significantly improved by using an ARS. More specialized applications can enable interactivity only for a very specific set of lessons. For instance, the software described by Varnavsky (2017) allows for student interactivity in a course about the theory of probability and mathematical statistics.

3 VRTeaching

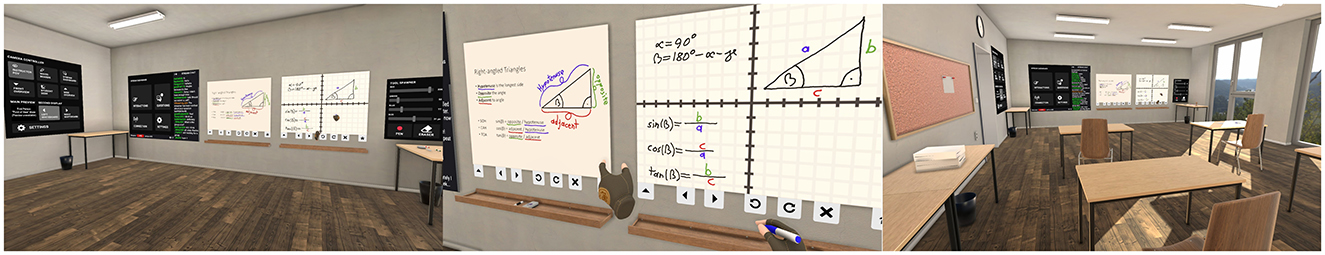

VRTeaching enables presentations via a VR application and allows interactions with various teaching aids. The goal is to create an environment that enables instructors and presenters to use supporting tools in an intuitive way, similar to how they use them in traditional classroom settings, but also enables interactions with the audience. Figure 1 shows an overview of the virtual environment VRTeaching uses.

Figure 1. VRTeaching enables educational personnel to give lectures in an intuitive Virtual Reality environment without being isolated from their audience by providing livestream integration and interaction features.

3.1 Design process and requirements

VRTeaching was initially designed for a university master course at Graz University of Technology, in which the learning contents are livestreamed to more than 100 students via the online streaming platform Twitch, as it provides a proven and stable system to host livestreams. Many students are already familiar with the interface of Twitch and how it works, as it acts as a reliable source of entertainment for many people (Sjöblom and Hamari, 2017). Furthermore, using a well-established public platform also facilitates access of the general public to the lecture. The platform also provides extensive chat and moderation functionality, which are essential requirements for public lectures. The focus of the aforementioned lecture is to encourage a high level of personal interactivity with students despite the number of viewers being relatively high. This is achieved by asking questions to the viewership, who then respond in the text chat. Questions include discussion topics, prompting viewers for their ideas or opinions, and polls to assess the current state of the audience's knowledge. Besides basic VR functionalities, two core pillars have been identified:

3.1.1 Whiteboard

The user should be able to draw or write on the whiteboard with various tools, such as pens and erasers. In addition, the whiteboard should support loading images or PDF files as a background while still allowing the user to write on it. The user should be able to select files in an integrated file browser. Drawing and writing should work relatively smoothly compared to drawing using the mouse of a computer. The teaching experience should be intuitive, natural, and user-friendly and should remind the users of a traditional teaching experience. Additionally, the program should feature supporting tools and functions that are only possible in a digital environment, like undoing flawed pen strokes or exporting drawings or writings as digital images.

3.1.2 Livestreaming

The second design pillar is the ability to live-stream the application and integrate certain functionalities of the Twitch stream. The stream transmission to the streaming platform should be handled by an external broadcasting program, such as OBS Studio (https://obsproject.com/). The VR application should interact with the Twitch platform. In particular, the text chat should be visible to the lecturer, and it should support Twitch moderation features. While moderation can be proactive or reactive (Cai et al., 2021), it usually involves deleting inappropriate messages or banning disrespectful viewers to some extent (Wohn, 2019). Furthermore, the number of viewers and whether the stream is currently live should also be visible. To increase engagement, certain interactivity features should be implemented as well, such as polls and word clouds. Students should also be able to ask questions without them being overlooked by the teacher.

3.2 VR classroom setup

VRTeaching is implemented using Unity (https://unity.com/) and the XR Interaction Toolkit (https://docs.unity3d.com/Packages/com.unity.xr.interaction.toolkit@2.0/manual/). Using a well-established game engine and VR framework significantly helped reduce the required development effort and also enabled us to use its cross-platform features to support a wide range of VR devices. The Twitch integration has been achieved by using the open-source software library TwitchLib for Unity (https://github.com/TwitchLib/TwitchLib.Unity). The setting of the application is modeled after a spacious and well-lit classroom with large windows and features basic furniture, as well as a clock model showing the real world's time. The application contains multiple tools that act as teaching aids and ways to interact with learners. At the current stage, VRTeaching does not incorporate full-body tracking or avatar-based representations of instructors or learners. In the following section, the various features are discussed in more detail.

3.3 Locomotion and object interaction

When implemented poorly, VR locomotion can induce motion sickness, especially for females (Munafo et al., 2017; Stanney et al., 2020). Boletsis (2017) gives an overview of different literature regarding VR locomotion and possible implementation techniques that have been taken into account during our development process. VRTeaching's movement system consists of two parts: physical walking and teleportation locomotion using a curved projectile line. The application also features snap-turn behavior, meaning the user can instantly change the direction they are facing by 45° by pushing the joystick of the right-hand controller in the desired direction.
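The two locomotion mechanics described above can be illustrated with a short Python sketch. It is not the Unity/XR Interaction Toolkit implementation used in VRTeaching; only the 45° snap angle comes from the text, while the function names, the joystick dead zone, the launch speed, and the simple stepwise integration of the curved line are assumptions made for illustration.

```python
import math

SNAP_ANGLE_DEG = 45.0  # from the text: one snap turn rotates the view by 45 degrees


def snap_turn(yaw_deg: float, stick_x: float, deadzone: float = 0.5) -> float:
    """Return the new yaw after a snap turn.

    stick_x is the horizontal joystick axis in [-1, 1]. The dead zone is a
    hypothetical parameter so that small stick drift does not trigger a turn.
    """
    if abs(stick_x) < deadzone:
        return yaw_deg % 360.0
    step = SNAP_ANGLE_DEG if stick_x > 0 else -SNAP_ANGLE_DEG
    return (yaw_deg + step) % 360.0


def teleport_target(origin, direction, speed=6.0, gravity=9.81, dt=0.02, max_steps=500):
    """Follow a projectile arc from the controller and return the (x, z) floor hit.

    origin is (x, y, z) with y as height above the floor (y = 0); direction is a
    non-zero pointing vector. Returns None if the arc never reaches the floor
    within max_steps.
    """
    x, y, z = origin
    norm = math.sqrt(sum(c * c for c in direction))
    vx, vy, vz = (speed * c / norm for c in direction)
    for _ in range(max_steps):
        nx, ny, nz = x + vx * dt, y + vy * dt, z + vz * dt
        if ny <= 0.0:
            # Interpolate linearly between the last two samples to find y = 0.
            t = y / (y - ny)
            return (x + (nx - x) * t, z + (nz - z) * t)
        x, y, z = nx, ny, nz
        vy -= gravity * dt  # gravity bends the line downward on every step
    return None
```

For example, `snap_turn(0.0, -1.0)` yields a yaw of 315°, and `teleport_target((0.0, 1.5, 0.0), (0.0, 0.0, 1.0))` returns a point on the floor in front of the user.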

Different types of interactions with virtual objects have certain advantages and disadvantages, as described by Li et al. (2019), Pfeuffer et al. (2017), and Yu et al. (2018). Based on that, we decided that users can either grab virtual items by moving their hand into the vicinity of an interactable object and pressing the grip button on the respective controller with their middle finger, or cast a ray out of their hand for the selection process. Interacting with UI elements works similarly to interacting with virtual objects.

3.4 Whiteboard interaction

The whiteboards act as the centerpiece of the room and enable showing slides as well as drawing on them. The classroom setup features two identical whiteboards next to each other, enabling teachers to show slides on one whiteboard and make drawings or auxiliary calculations on the other board. Markers can be used to draw on a whiteboard and can have different colors, sizes, and brush shapes. A whiteboard eraser can be used to remove any drawings that have been made using a marker. While using a physical surface can make writing inside VR more precise (Kern et al., 2021), VRTeaching allows the presenter to move freely within the virtual classroom. Therefore, the system simply tracks the user's hand position in the air for drawing. A whiteboard can be in two states, either in the Presenting Mode or in the Full Board Menu mode. The lecturer can use any of the whiteboard drawing tools to interact with the board during the Presenting Mode and change its contents.

The Full Board Menu is mainly used to create a new drawing, open files, and access auxiliary features. Drawing, in this case, refers to a background with drawings or writings on it and can consist of multiple pages as well. The lecturer has three options when choosing a background for their drawings: Either a solid color, a template, or loading a file. Templates are predefined backgrounds, including horizontal lines similar to a ruled paper, a simple grid, a coordinate system, or a template for sheet music. When choosing to load a file as the background, a file browser is shown on the board, allowing the user to navigate through the computer's file system. Any .jpg, .png, or .pdf file can be selected. Loading .pdf files requires Ghostscript (https://www.ghostscript.com/releases/index.html) to be installed and happens in background threads to increase performance. The menu can also be used to export a single page or all pages of the current presentation, enabling the educational content to be shared after the lecture. Finally, the Full Board Menu may also be used to access the options, allowing the user to adjust the height of the whiteboard.
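As an illustration of how PDF pages might be rasterized with Ghostscript off the main thread, the following Python sketch builds a Ghostscript command line and runs it in a background thread. The text only states that Ghostscript is required and that loading happens in background threads; the chosen output device (`png16m`), the resolution, the output file pattern, and all function names are assumptions, and the actual application is written in Unity rather than Python.

```python
import subprocess
import threading
from pathlib import Path


def ghostscript_command(pdf_path: str, out_dir: str, dpi: int = 150,
                        executable: str = "gs") -> list[str]:
    """Build a Ghostscript call that writes one PNG per PDF page.

    The executable name is an assumption: "gs" is common on Linux/macOS,
    while Windows installations typically ship "gswin64c".
    """
    out_pattern = str(Path(out_dir) / "page-%03d.png")  # page-001.png, page-002.png, ...
    return [
        executable,
        "-dBATCH", "-dNOPAUSE", "-dSAFER",   # non-interactive, restricted file access
        "-sDEVICE=png16m",                   # 24-bit colour PNG output
        f"-r{dpi}",                          # render resolution in dpi
        f"-sOutputFile={out_pattern}",
        pdf_path,
    ]


def render_in_background(pdf_path: str, out_dir: str, on_done) -> threading.Thread:
    """Rasterize the PDF in a worker thread and report success via on_done(bool)."""
    def worker():
        Path(out_dir).mkdir(parents=True, exist_ok=True)
        try:
            result = subprocess.run(ghostscript_command(pdf_path, out_dir),
                                    capture_output=True)
            on_done(result.returncode == 0)
        except FileNotFoundError:
            on_done(False)  # Ghostscript is not installed or not on the PATH

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread
```

Running the conversion in a separate thread keeps the render loop responsive while larger slide decks are processed, which matches the motivation given above.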

In the Presenting Mode, a single-color background, a slide deck, or an image is shown on the whiteboard, and a quick-access menu appears below the whiteboard. The menu includes a button to hide and display the menu, navigation buttons, redo and undo buttons, a clear button, and a home button. The navigation buttons allow the presenter to step backward and forward through the loaded slide set. Pages can either be actual pages inside a PDF file or virtual pages. If the background is a solid color or an image, a new page will maintain the same background but can have different writings and drawings. The undo button can be used to remove the last stroke on the whiteboard, while the redo button reinserts the stroke that was removed. Using the clear button the presenter can erase all drawings from the board. Finally, the home button allows access to the Full Board Menu.
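The undo, redo, and clear behavior described above maps naturally onto two stacks of strokes. The following Python sketch shows one possible way to model a single whiteboard page; the class and method names are hypothetical, and the rule that a new stroke discards the redo history is an assumption that the text does not specify.

```python
class WhiteboardPage:
    """Stroke history of one whiteboard page (illustrative model only)."""

    def __init__(self):
        self.strokes = []   # strokes currently visible on the page
        self._redo = []     # strokes removed by undo, most recent last

    def add_stroke(self, stroke):
        self.strokes.append(stroke)
        self._redo.clear()  # assumption: drawing again invalidates redo history

    def undo(self):
        """Remove the last stroke, if any, and remember it for redo."""
        if self.strokes:
            self._redo.append(self.strokes.pop())

    def redo(self):
        """Reinsert the stroke most recently removed by undo, if any."""
        if self._redo:
            self.strokes.append(self._redo.pop())

    def clear(self):
        """Erase all drawings from the page."""
        self.strokes.clear()
        self._redo.clear()
```

A multi-page drawing could then be represented as a list of such pages that share one background, so that stepping to a new page keeps the background but starts with an empty stroke history.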

3.5 Stream integration

VRTeaching features an intuitive integration with the popular streaming platform Twitch (https://www.twitch.tv/). The integration can be managed using the Stream Dashboard, as shown in Figure 2. The Twitch integration uses the Unity version of the TwitchLib library (https://github.com/TwitchLib/TwitchLib.Unity). The official Twitch game engine plugins had not yet been announced when development on VRTeaching started. The stream integration connects to Twitch in two ways: with a chat client and with the API. The chat client manages all functionality regarding sending or receiving messages, as well as updates regarding deleted messages. The API is used for gathering information about the stream, such as the number of current viewers. The Stream Dashboard is divided into the Stream Chat, the Connection Info Bar, and the main panel where various actions can be selected.

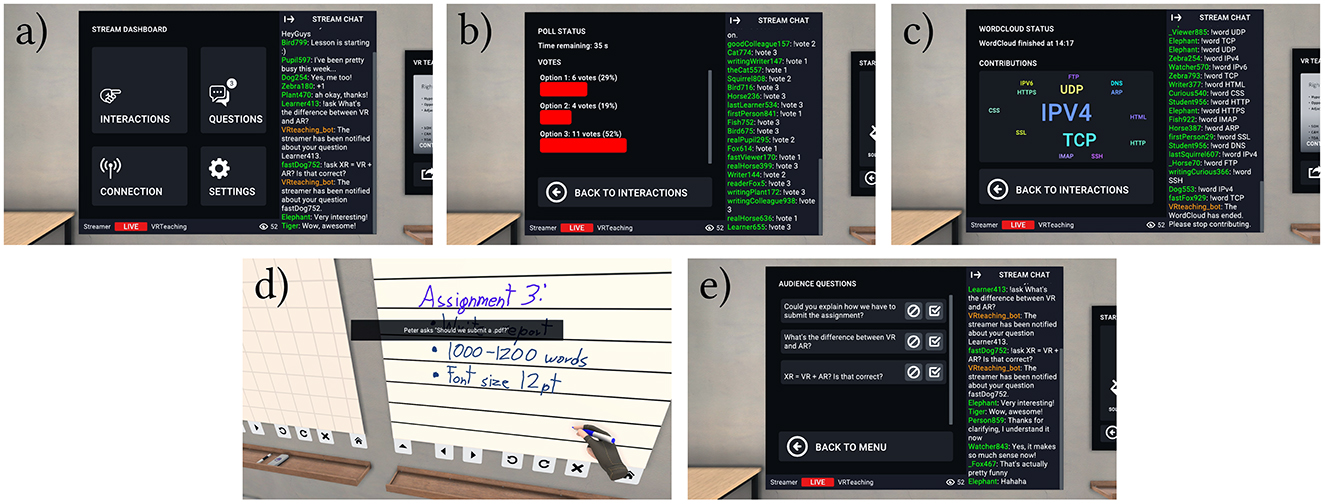

Figure 2. The Stream Dashboard can be used to interact with Twitch viewers: (a) The main menu of the Stream Dashboard, the chat being visible on the right side and the Connection Info Bar at the bottom; (b) A poll interaction; (c) A word cloud interaction; (d) Notification for an Audience Question; (e) Audience Questions can be marked as answered or inappropriate.

Connection info bar: At the bottom of the Stream Dashboard is a horizontal bar showing the Twitch channel name, a connection indicator, the title of the stream, and the number of viewers.

Chat integration: The chat panel can be seen on the right side of the Stream Dashboard and displays the chat of the livestream by connecting a chat client to the chatroom of the streamer. If the presenter wishes, the in-game chat panel can also be hidden by using the corresponding button in the top left corner of the chat panel. Should a message be deleted by a moderator of the Twitch chat, the chat panel removes the corresponding message in VRTeaching as well. If a user gets banned or suspended from using the chat, all of their messages will be deleted from the VRTeaching chat panel.
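The mirroring of moderation actions can be sketched as a small state container that reacts to three kinds of chat events. The Python code below is a simplified model and does not use TwitchLib; the event handler names and the message fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ChatMessage:
    message_id: str  # hypothetical identifier assigned by the chat service
    user: str
    text: str


@dataclass
class ChatPanel:
    """In-VR copy of the stream chat that follows moderation actions."""
    messages: list[ChatMessage] = field(default_factory=list)

    def on_message(self, msg: ChatMessage) -> None:
        self.messages.append(msg)

    def on_message_deleted(self, message_id: str) -> None:
        # A moderator deleted one message: drop exactly that message.
        self.messages = [m for m in self.messages if m.message_id != message_id]

    def on_user_banned(self, user: str) -> None:
        # A ban or suspension removes every message of that user.
        self.messages = [m for m in self.messages if m.user != user]
```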

Polls: Twitch supports polls natively on its platform; however, this feature is only available for Partner/Affiliate channels (Twitch, n.d.). Since we want VRTeaching to be open and unrestricted, we implemented our own version of polls. The streamer can choose how many options to offer the audience and how long voting should remain open. Once the poll has started, each viewer can vote for one option by typing “!vote X” in the chat, with X being the option the user wants to vote for. The results of past polls can also be viewed in the Stream Dashboard's Interactions menu. The second graphic of Figure 2 depicts an active poll.
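A chat-driven poll of this kind can be sketched in a few lines of Python. The “!vote X” command and the time limit come from the description above; treating X as a 1-based option number, counting only a viewer's first vote, and the class and method names are assumptions for illustration.

```python
import re
import time

# Assumption: X is the 1-based number of the chosen option.
VOTE_PATTERN = re.compile(r"^!vote\s+(\d+)\s*$", re.IGNORECASE)


class Poll:
    def __init__(self, options, duration_s, now=None):
        self.options = list(options)
        start = time.monotonic() if now is None else now
        self.closes_at = start + duration_s
        self.votes = {}  # user name -> 0-based option index

    def handle_chat(self, user, text, now=None):
        """Return True if the chat message was accepted as a valid vote."""
        now = time.monotonic() if now is None else now
        if now >= self.closes_at:
            return False                      # voting period is over
        match = VOTE_PATTERN.match(text.strip())
        if not match:
            return False                      # not a vote command
        choice = int(match.group(1))
        if not 1 <= choice <= len(self.options):
            return False                      # option does not exist
        if user in self.votes:
            return False                      # assumption: only the first vote counts
        self.votes[user] = choice - 1
        return True

    def results(self):
        """Return a mapping from option label to number of votes."""
        counts = [0] * len(self.options)
        for index in self.votes.values():
            counts[index] += 1
        return dict(zip(self.options, counts))
```

Because votes arrive as ordinary chat messages, such a poll does not depend on platform features that are restricted to certain channel types.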

Word clouds: Similar to polls, the lecturer can also start a word cloud, limited to a specified duration. This feature is intended to support brainstorming and similar activities. Viewers may then contribute words by using a chat command. Terms entered by multiple viewers appear larger in the word cloud. The third graphic in Figure 2 shows a Word Cloud interaction.
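The scaling of terms by popularity can be illustrated with the following Python sketch. The text does not name the chat command or the scaling rule, so the `!word` command, the linear mapping between a minimum and a maximum font size, and counting each viewer at most once per term are all assumptions; the time limit is omitted for brevity.

```python
class WordCloud:
    COMMAND = "!word"  # hypothetical command name, not taken from the paper

    def __init__(self, min_size=12.0, max_size=48.0):
        self.min_size = min_size
        self.max_size = max_size
        self.viewers_by_term = {}  # normalized term -> set of viewers who sent it

    def handle_chat(self, user, text):
        """Return True if the message was a word cloud contribution."""
        parts = text.strip().split(maxsplit=1)
        if len(parts) != 2 or parts[0].lower() != self.COMMAND:
            return False
        term = " ".join(parts[1].lower().split())  # normalize case and spacing
        self.viewers_by_term.setdefault(term, set()).add(user)
        return True

    def font_sizes(self):
        """Map each term to a font size that grows with the number of viewers."""
        counts = {t: len(users) for t, users in self.viewers_by_term.items()}
        if not counts:
            return {}
        top = max(counts.values())
        if top == 1:
            return {t: self.min_size for t in counts}
        span = self.max_size - self.min_size
        return {t: self.min_size + (c - 1) / (top - 1) * span
                for t, c in counts.items()}
```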

Connection status panel: One tile in the main panel of the Stream Dashboard leads to more in-depth connection status information. This menu features additional information about the stream, for example, the stream's thumbnail, when the stream started, and how much time has passed since the last API update message from Twitch has been received.

Audience questions: Viewers of an educational livestream who have a question are usually free to ask it in the chat. However, the streamer might overlook the question in a fast-paced chat, leaving it unanswered and the viewer frustrated. To prevent this, we propose a feature called “Audience Questions.” At any time during the stream, a viewer may type the command “!ask,” followed by their question. VRTeaching then notifies the streamer by displaying a pop-up notification in their field of view, as can be seen in the fourth graphic of Figure 2. Inside the Audience Questions menu, the streamer can view all unanswered questions and mark them as answered after responding to the inquiry. To minimize abuse of this system, a user has to wait a certain amount of time between questions. Additionally, the streamer may mark a question as spam, which results in a temporary ban that prevents the user from asking further questions. The fifth graphic of Figure 2 depicts the Audience Questions menu.
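The interplay of the “!ask” command, the waiting time between questions, and the temporary ban for spam can be sketched as follows in Python. The concrete cooldown and ban durations are not given in the text, so the default values below, like the class and method names, are placeholders.

```python
import time


class AudienceQuestions:
    def __init__(self, cooldown_s=60.0, ban_s=600.0):
        self.cooldown_s = cooldown_s   # placeholder: wait time between questions
        self.ban_s = ban_s             # placeholder: length of the temporary ban
        self.open = []                 # unanswered questions as (user, question)
        self._next_allowed = {}        # user -> earliest time of the next question

    def handle_chat(self, user, text, now=None):
        """Return True if a new question was queued (the caller shows the pop-up)."""
        now = time.monotonic() if now is None else now
        parts = text.strip().split(maxsplit=1)
        if len(parts) != 2 or parts[0].lower() != "!ask":
            return False
        if now < self._next_allowed.get(user, float("-inf")):
            return False               # user is still in cooldown or banned
        self.open.append((user, parts[1]))
        self._next_allowed[user] = now + self.cooldown_s
        return True

    def mark_answered(self, index):
        """Remove a question from the open list after it has been answered."""
        return self.open.pop(index)

    def mark_spam(self, index, now=None):
        """Remove a question and block its author for the ban duration."""
        now = time.monotonic() if now is None else now
        user, _ = self.open.pop(index)
        blocked_until = now + self.ban_s
        self._next_allowed[user] = max(
            self._next_allowed.get(user, float("-inf")), blocked_until)
```

Keeping a single "next allowed" timestamp per user lets the cooldown and the temporary ban share one check.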

Camera Controller: The Camera Controller panel is next to the Stream Dashboard and can be used to change the rendered output of the main monitor to a different camera preset, meaning it can be used to change what the students see. Presets include the default first-person point-of-view, just the whiteboards, or an overview of the whole classroom. Furthermore, the streamer can choose whether a second window should be opened by the application, depicting only the contents of a whiteboard, allowing for picture-in-picture livestream arrangements. If the lecturer enables the respective setting, the Twitch chat users may also change the camera preset by sending a chat command.

4 Study design

The goal of the study was to receive feedback for the application and assess how lectures in VR perform compared to on-site lectures and online video conferencing lectures. The study is split into two parts. In the expert evaluation, we asked participants to rate the usability of VRTeaching, provide feedback, and generally discuss the advantages and disadvantages of VR for teaching purposes. In the second part, the user evaluation, we compare the mental load and psychophysiological response of teachers as well as students who give presentations, depending on the lecture environment (VRTeaching, an online video conferencing tool, or an in-person classroom).

4.1 Material and setup

To run VRTeaching for the studies, we used a Meta Quest 2 and an HTC Vive Focus 3. The VR devices were connected via WiFi to a high-performance computer running the application. The virtual reality roomscale play area was 3 m × 3 m. The psychophysiological response (ECG) was measured with the EcgMove4 (https://docs.movisens.com/Sensors/EcgMove4/) sensor. For the online teaching condition of the user study, the video conferencing tool alfaview (https://alfaview.com/) was installed on a regular laptop. The same laptop was also used by the participants in the on-site teaching condition, together with a projector and a screen behind or next to the presenter.

4.2 Expert evaluation

We conducted an expert review with users who have teaching experience or a high proficiency with VR to evaluate the potential applications and usefulness of VRTeaching, get suggestions for further development efforts, and improve our understanding of the general advantages and disadvantages of teaching inside a VR application. To answer research question RQ1, the participants were asked to explore all features implemented in VRTeaching and to provide feedback. After the sessions, a discussion with the experts was held regarding the usability and the teaching experience in VR.

4.2.1 Methodology

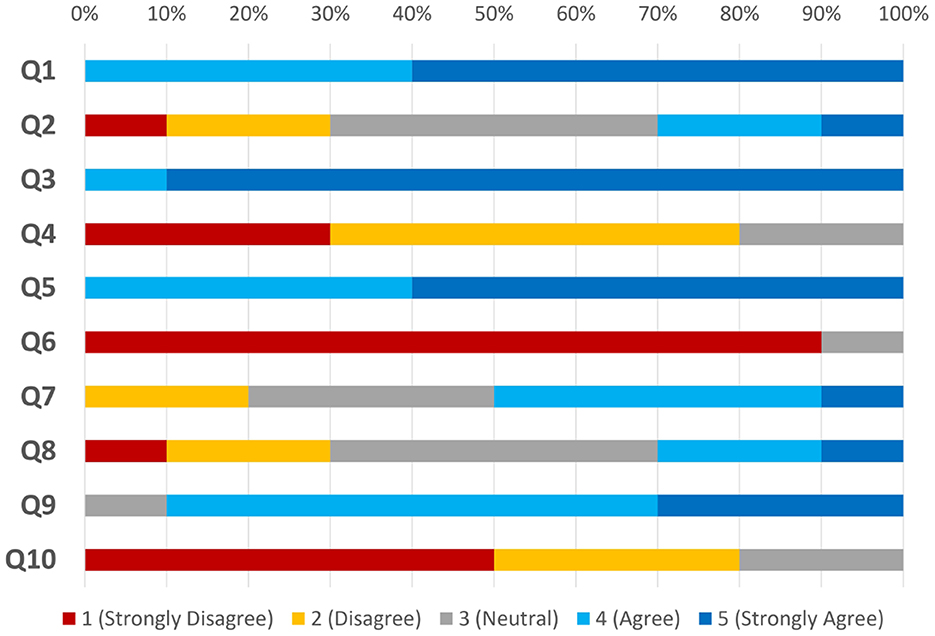

The experts tested the application individually after filling out a pre-questionnaire that included demographic data and questions regarding their VR and teaching experience. The experts were free to take as long as they wanted to explore and test all features of VRTeaching. The sessions lasted between 30 min and 2 h. The experts commented verbally while using VRTeaching, and the study instructors transcribed these comments. After the experiment, the experts were asked to fill out a post-questionnaire with ten questions regarding their user experience, rated on a Likert scale from 1 (strongly disagree) to 5 (strongly agree). Afterward, a discussion with the experts was held regarding teaching in VR in general, and its advantages and disadvantages.

4.2.2 Participants

To conduct the study, we recruited ten experts (4 female, 6 male) via e-mail or in person. The participants were aged between 21 and 35 (AVG = 27.7, SD = 4.17). All participants indicated having prior experience with VR, with five participants rating their VR experience as high. Four had developed VR applications before, and eight participants had previous teaching experience: five as teaching assistants, one as a lecturer and researcher, one as a high school or middle school teacher, and one through teaching lectures as part of a university course. All participants were right-handed. Six participants indicated some sort of vision impairment. Three were wearing glasses while testing the VR application.

4.3 User evaluation

To investigate the effect of the teaching environment on presenters, we conducted a user study measuring mental demand and psychophysiological response. To address research questions RQ2 and RQ3, the study used a mixed measurement design with two independent variables: the user group [lecturers, students who present] and the teaching environment [VR, online teaching platform, on-site teaching; measurement repetition factor]. The three teaching environments were presented in randomized order. Subjective and objective measures served as dependent variables: mental demand [subjective measure; operationalized using the raw scale of the NASA Task Load Index, NASA-TLX (Hart and Staveland, 1988; Hart, 2006)] and cardiovascular psychophysiological measures [objective measures derived from the ECG: heart rate (HR) in bpm and heart rate variability (HRV; RMSSD)]. The psychophysiological parameters were baseline-corrected.
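To make the two cardiovascular measures concrete, the following minimal sketch computes mean HR and RMSSD from a series of RR intervals and applies a baseline correction. It assumes that RR intervals are given in milliseconds and that baseline correction means subtracting the baseline value from the condition value; the exact preprocessing and correction procedure used in the study is not specified here, and all function names and numbers are illustrative.

```python
import math
from typing import Sequence


def mean_heart_rate_bpm(rr_ms: Sequence[float]) -> float:
    """Mean heart rate in beats per minute, derived from the mean RR interval (ms)."""
    mean_rr = sum(rr_ms) / len(rr_ms)
    return 60000.0 / mean_rr


def rmssd_ms(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between adjacent RR intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def baseline_corrected(condition_value: float, baseline_value: float) -> float:
    """Assumed correction: difference between condition and baseline measurement."""
    return condition_value - baseline_value


if __name__ == "__main__":
    # Illustrative RR intervals only, not study data.
    baseline_rr = [800.0, 810.0, 790.0, 800.0]
    lecture_rr = [750.0, 750.0, 750.0, 750.0]
    hr_delta = baseline_corrected(mean_heart_rate_bpm(lecture_rr),
                                  mean_heart_rate_bpm(baseline_rr))
    hrv_delta = baseline_corrected(rmssd_ms(lecture_rr), rmssd_ms(baseline_rr))
    print(f"baseline-corrected HR: {hr_delta:.2f} bpm")
    print(f"baseline-corrected RMSSD: {hrv_delta:.2f} ms")
```

Under this convention, a negative baseline-corrected RMSSD means that heart rate variability during the lecture was lower than at rest.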

4.3.1 Methodology

After a short welcome and briefing, the participants filled out a pre-questionnaire (e.g., sociodemographic data, subjective wellbeing) and provided their informed consent. Afterward, the participants were equipped with the electrodes for the psychophysiological measurements, followed by an electrode check and a 5-min baseline measurement. Then, the participants completed the Simulator Sickness Questionnaire (SSQ) (Bimberg et al., 2020). After that, the three conditions (VR, online teaching platform, on-site teaching) were run one after the other in randomized order. Within each condition, the participants went through a five-minute familiarization period, followed by a resting measurement (3 min) before starting their presentation/lecture (8 min). The presented content was selected by the participants, reflecting their everyday behavior in such situations. During the presentations, the audience asked questions at fixed times, 4 min and 7 min after the start of the presentation. Within each condition, one context-dependent question (e.g., asking the presenter to provide an example) and one context-independent question (e.g., asking the presenter to please speak up) were asked. The selection of the questions was randomized. After the lecture, the participants went through a short interview to provide feedback on the condition and assessed it using the NASA-TLX questionnaire (Hart and Staveland, 1988; Hart, 2006). Afterward, they answered the SSQ, followed by another resting measurement. Overall, the participants needed approximately 130 min to complete the study.
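The randomized procedure can be sketched as a per-participant session plan. This is only an illustration: the function `build_session_plan`, the use of a participant-specific seed, and the assumption that the two question types are randomly assigned to the 4-min and 7-min slots are ours and are not taken from the study protocol.

```python
import random
from typing import Dict, List

CONDITIONS = ["VR", "online", "on-site"]
QUESTION_TIMES_MIN = [4, 7]  # minutes after the start of the presentation
QUESTION_TYPES = ["context-dependent", "context-independent"]


def build_session_plan(seed: int) -> List[Dict]:
    """Return the randomized condition order and question slots for one participant."""
    rng = random.Random(seed)  # hypothetical: one seed per participant for reproducibility
    order = CONDITIONS[:]
    rng.shuffle(order)
    plan = []
    for condition in order:
        types = QUESTION_TYPES[:]
        rng.shuffle(types)  # assumption: question type per time slot is randomized
        plan.append({
            "condition": condition,
            "questions": list(zip(QUESTION_TIMES_MIN, types)),
        })
    return plan


if __name__ == "__main__":
    for block in build_session_plan(seed=1):
        print(block)
```

Each plan contains every condition exactly once, and each condition contains one question of each type.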

4.3.2 Participants

In total, 15 participants took part in the user study, comprising two user groups: lecturers and presenting students. To be included in the lecturer group, participants needed at least one semester of teaching experience. Students had to be at least 18 years old and currently enrolled. Seven lecturers participated in the study, two female and five male. The average age of the lecturers was 43.71 years (SD = 15.11). The group of students consisted of eight participants, four female and four male. The students had an average age of 23.7 years (SD = 1.67). Participants were recruited either in person or by email. In addition to socio-demographic data, the participants' attitudes and experiences with the three teaching environments and with giving lectures, in general, were assessed on a 5-point scale. All lecturers and students reported an average to very high level of experience in giving lectures. All lecturers stated that giving lectures was average to very easy for them. Seven of the students found giving lectures to be average to easy, while one student found it difficult. Four of the lecturers and one of the students stated that they had an average to very good experience with VR. The remaining seven students had little to no experience with VR. The attitude toward VR was rated as good to very good by all lecturers and as average to very good by all students. The attitude toward on-site teaching was rated as good to very good by all participants. Regular non-VR online courses were rated as average to very good by all lecturers and from bad to good by all students, with three students having a bad attitude toward online courses.

5 Results

The results focus on the expert feedback for the application and the mental demand and psychophysiological responses collected in the user evaluation.

5.1 Expert feedback

Each of the ten experts tested the application and its features. One person expressed physical discomfort while testing the VR application because of their glasses underneath the VR headset and took a break during testing. The other participants performed the tasks without any interruptions. The overall impression was described as intuitive and easy to learn. Three participants stated that they would need assistance to set up the application and familiarize themselves with all the features. Eight out of ten experts reported they would like to use VRTeaching regularly and felt confident in using it. The experts rated their general satisfaction and user experience on a five-point Likert scale. Most participants found the whiteboard functionality useful and liked the teaching environment in VR. They also felt confident completing certain tasks in VR and rated it as a good compromise between using VR technologies and including a high number of students. Figure 3 shows the results rated by the expert group.

Figure 3. The answers to questions specific to VRTeaching, using a five-point Likert scale. The questions were:

Q1) I think the whiteboard drawing tools are useful.

Q2) I prefer giving remote lectures with conventional video conferencing tools, such as Zoom or WebEx.

Q3) I like the Stream Integration possibilities with Twitch.

Q4) I think VRTeaching lacks some necessary features.

Q5) I like the room in which VRTeaching is set.

Q6) I felt confused about how to achieve certain tasks in VR.

Q7) Using the whiteboard feels natural and intuitive.

Q8) Despite chat functionalities and interaction features, not being able to hear or see students directly is a disadvantage.

Q9) VRTeaching is a good compromise between using VR technologies and still including a high number of students who are unable to afford or use VR devices themselves.

Q10) I had troubles with the movement system in VR.

A significant part of the discussion with the experts after testing VRTeaching concerned the general advantages and disadvantages of teaching in VR compared to on-site teaching and to online lectures that use video conferencing software. According to the feedback, one advantage of VR is the possibility to integrate interactive 3D experiments, which could be demonstrated more easily. These experiments could also include scenarios that would be impossible, too expensive, or too dangerous in a classroom. The perceived increase in motivation due to the novelty of the technology in lectures, the variety that VR can offer (for example, by simply changing the 3D environment), and the more gamified experience were also stated as positive aspects of VR. As disadvantages of VR lectures, the experts mentioned the lack of real physical interactions, a potentially noticeable delay in live lectures, the increased difficulty of assessing the students' attention, special hardware and space requirements, potential cybersickness, accessibility concerns, and the lack of real facial expressions. Furthermore, drawing or writing with a common VR controller might still be more difficult than using real chalk on a blackboard or a drawing tablet.

The experts also provided examples of scenarios and lessons that might be well suited for VRTeaching specifically. A participant stated, “Especially in lectures where I need to work a lot with the whiteboard or need to switch often between the whiteboard and my slides, it would be beneficial to use such an immersive display.” Another expert mentioned that they see the potential of VRTeaching, especially for lessons containing many pictures, formulas, or plots, “For example in a history lesson, one could use the whiteboard to make more immersive lessons about a specific war, drawing military troop movements on a map” or “In a programming course, showing source code, I would use the marker to easily explain every single line and draw what is happening in the memory when some part of the code is being executed.” Another suggestion was to use VRTeaching for biology lessons, for example to annotate illustrations of a human cell.

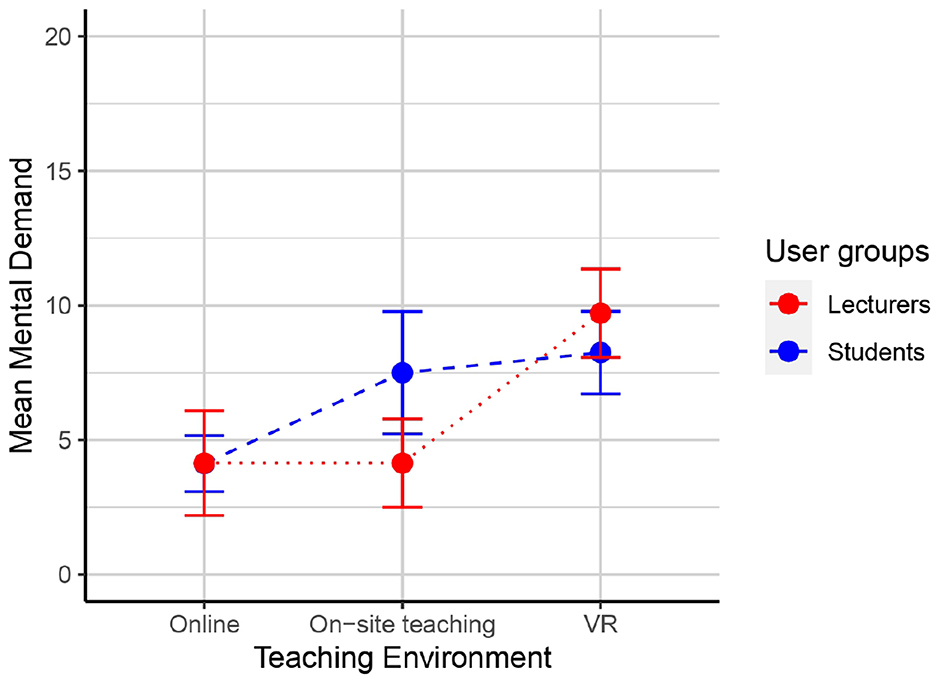

5.2 Mental demand and psychophysiological reactions

The statistical analyses were conducted using IBM SPSS Statistics and R, based on a significance level of 5%. Repeated-measures analyses of variance (ANOVA) were chosen as the statistical procedure because they largely control for individual variation between participants. Reducing the variance caused by the participants makes it possible to detect effects attributable to the experimental conditions even with smaller sample sizes and to identify the actual effect more accurately (Lakens and Caldwell, 2021). G*Power (Faul et al., 2007, 2009) was used to calculate the minimum number of required participants. An a priori power analysis (ANOVA, repeated measures, within-between interaction, 2 groups, 3 measurements, α = 0.05) for detecting a large effect (effect size f = 0.5) with a power of 0.95 yields a minimum sample size of 14.

A central part of the user evaluation was the subjectively perceived mental demand. The average mental demand can be seen in Figure 4. The ANOVA showed a significant main effect of the lecture environment (). Post-hoc analyses (Šidák) indicate significantly higher mental demand in the virtual reality environment than in the online environment (p = 0.002). All other effects did not reach the level of significance.
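For readers unfamiliar with the Šidák procedure used in the post-hoc analyses, the following sketch shows the standard adjustment of p-values for m comparisons, p_adj = 1 − (1 − p)^m. With three lecture environments there are three pairwise comparisons. The function name and the input p-values are purely illustrative and are not values from this study.

```python
from typing import List, Sequence


def sidak_adjust(p_values: Sequence[float]) -> List[float]:
    """Šidák-adjusted p-values for a family of m comparisons: 1 - (1 - p)^m."""
    m = len(p_values)
    return [1.0 - (1.0 - p) ** m for p in p_values]


if __name__ == "__main__":
    # Illustrative unadjusted p-values for the three pairwise comparisons
    # (VR vs. online, VR vs. on-site, online vs. on-site); not study data.
    raw = [0.01, 0.20, 0.50]
    for p, p_adj in zip(raw, sidak_adjust(raw)):
        print(f"raw p = {p:.3f} -> Sidak-adjusted p = {p_adj:.4f}")
```

An adjusted p-value is then compared against the unchanged significance level (here 5%).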

Figure 4. Average mental demand rating according to NASA-TLX of teachers (n = 7) and presenting students (n = 8) in different teaching environments. The error bars represent the standard error of the mean.

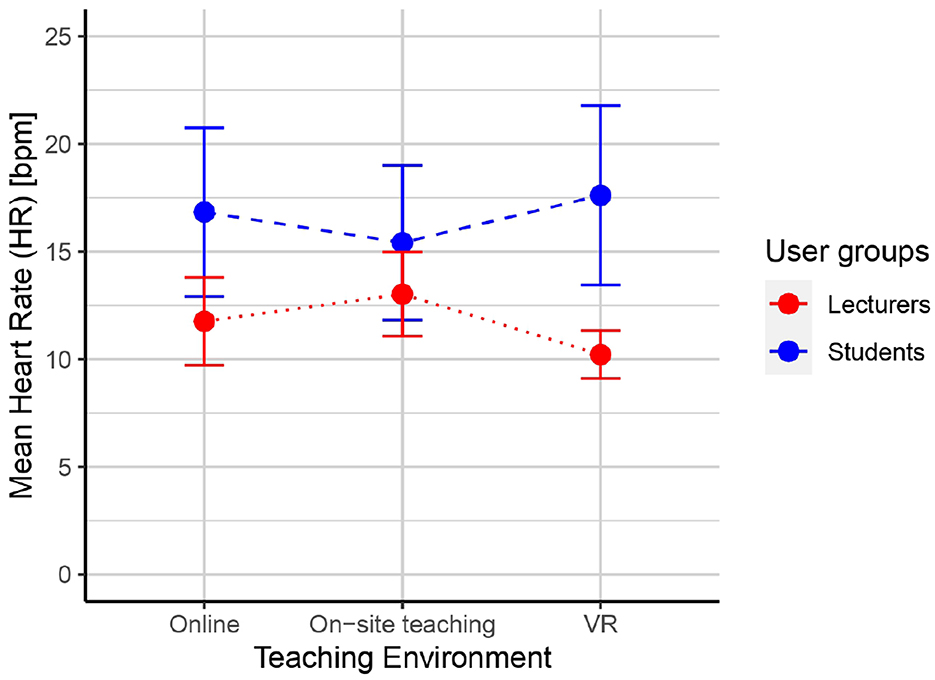

The first measure of the psychophysiological responses was the heart rate (HR in bpm). The values were baseline-corrected. Figure 5 displays the average baseline-corrected heart rates. Analysis of variance with repeated measures showed that neither the main effect of the lecture environment (), nor the main effect of the user group (), nor the interaction effect user group × lecture environment () reached the level of significance.

Figure 5. Average baseline-corrected heart rate of teachers (n = 7) and presenting students (n = 8) in different teaching environments. The error bars represent the standard error of the mean.

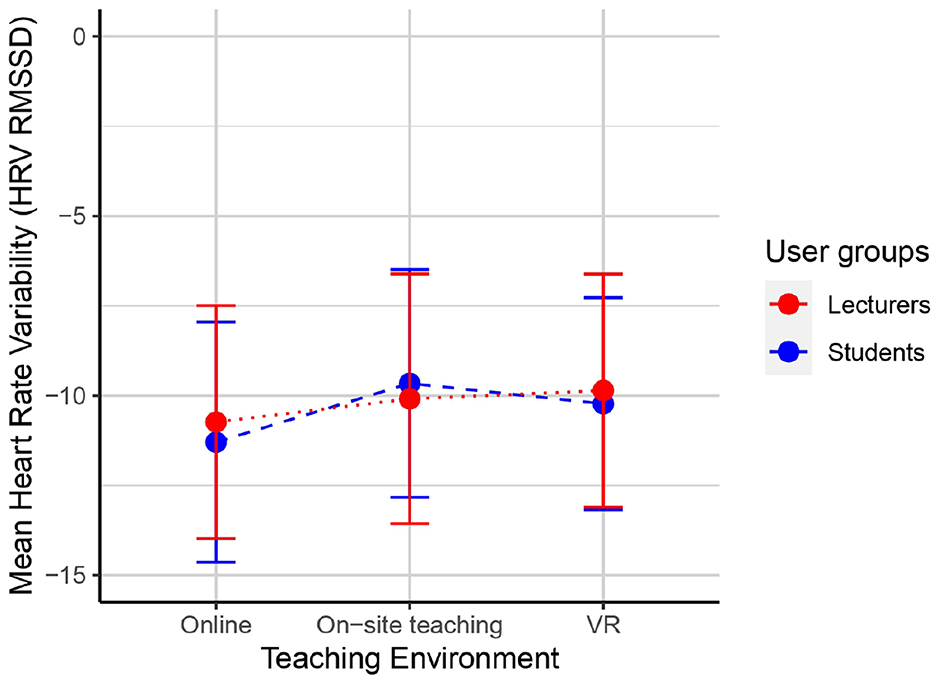

In addition to the heart rate, HRV (RMSSD) was calculated from the ECG data. The mean values and standard errors of the mean can be seen in Figure 6. The results of an ANOVA with repeated measures showed no significant main effect of the lecture environment (), no significant main effect of the user group (), and no significant interaction user group × lecture environment ().

Figure 6. Average baseline-corrected heart rate variability of teachers (n = 7) and presenting students (n = 8) in different teaching environments. The error bars represent the standard error of the mean.

6 Discussion

The main goals of our studies were to evaluate our VR tool VRTeaching and to compare VR with online and on-site lecturing environments based on the users' mental demand and psychophysiological response. The first research question RQ1 dealt with whether VRTeaching is a suitable and effective teaching tool compared to regular online lectures that use video conferencing software. The results of the expert evaluation indicate that users are willing to use VRTeaching and that its features are promising. The whiteboard tools and Twitch integration were found to be useful by all participants. The experts generally liked the room in which VRTeaching takes place and had few to no problems using the locomotion system. Some participants found that not hearing or seeing students directly was a strong disadvantage. However, almost all considered VRTeaching a good compromise that offers the advantages of VR for lectures while still reaching a large audience, including people who are unable to afford VR devices.

Besides the survey questions, we gained valuable insights from oral feedback during the sessions. The participants generally liked the visual environment of the virtual classroom. While two participants highlighted how impressed they were by the accuracy of writing on the whiteboard, one found writing rather difficult. An expert suggested giving students the ability to create custom avatars and placing them in the classroom. Another participant proposed adding the possibility for advanced VR users to move by using the joystick. Further suggestions mentioned by multiple participants were to add a feature that would help clean up all pens that might be lying around, to play videos on the whiteboard, to add the ability to name the options of a poll, and to add a laser pointer tool.

Further qualitative feedback was given by the participants of the user evaluation, who compared VR, online, and on-site lectures. Thirteen out of 15 participants indicated they would have liked the classroom to be filled with virtual avatars of the audience. A significant advantage of in-person lectures is non-verbal communication, which allows a professor to assess the attentiveness and understanding of their students. It might also be useful to provide educators with a starting point by developing a formal pedagogical concept for integrating a VR lecture room into lessons.

To address the second research question RQ2, we focused on the mental demand scale of the NASA-TLX. The statistical analysis of the user evaluation showed a significant main effect of the lecture environment on the subjectively experienced mental demand. However, there was neither a main effect of the user group (teachers, presenting students) nor an interaction effect. The perceived mental demand when teaching in VR was significantly higher than when teaching with online video conferencing tools. Regular non-VR online lectures were associated with the lowest mental demand, possibly because many users indicated in the pre-questionnaire that they had a lot of experience with online teaching. Furthermore, some participants mentioned that the perceived absence of spectators in the online and VR conditions reduced their nervousness, which would also align with studies showing that virtual reality applications can reduce speech anxiety (Lim et al., 2023). VRTeaching still resulted in the highest perceived mental demand, which could be due to the limited experience of the 15 participants with virtual reality and the novelty of the virtual environment. Even though the participants were given some time to familiarize themselves with the VR environment, it was still a rather novel experience for them, which can be a reason for increased mental demand (Maneuvrier et al., 2023). However, the average mental demand values of all conditions were below the midpoint of the scale, hinting at a moderate mental demand overall, possibly also because all participants indicated having at least some experience giving presentations.

Regarding the third research question RQ3, the results indicate no significant connection between the psychophysiological response and the lecture environment. There was no significant difference in heart rate or heart rate variability between the conditions. This is consistent with the findings of Luong et al. (2019). However, the baseline-corrected HRV was negative, so it is quite possible that at least some level of stress was present during the lectures, despite the low subjective mental demand reported by the participants. This stress may also have resulted from the new VR environment or been a by-product of the lengthy study.

6.1 Limitations

The study has several limitations that should be considered when interpreting the results. The main limitation is the small sample size; in addition, the study does not cover long-term effects. A larger and more diverse sample would lead to more meaningful and broadly applicable conclusions. The user evaluation in particular was rather long, at over 2 h per person, which might have been taxing on the participants. It also cannot be ruled out that the limited ability to move and gesticulate during the online lecture condition influenced the participants' psychophysiology. Furthermore, the wireless connection of the VR devices sometimes resulted in flickering or frame drops, which might have influenced the virtual reality results.

7 Conclusion

Remote online lectures are indispensable for many educators and students. However, traditional video lectures can pose challenges for teaching personnel and students, as user interfaces can be unintuitive, access can sometimes be restricted, and audience interaction is often limited. Our results suggest that the VR application we developed, VRTeaching, can compensate for some of the disadvantages of remote teaching. Our program features whiteboards that can show slides and that the user can annotate, draw on, or write on intuitively with various tools. Drawings and writings can also be exported. The application is intended to be livestreamed to the streaming platform Twitch. It integrates with the streaming platform's chat and API, allowing the streamer to see the Twitch chat in-game and enabling audience interactions, such as polls and word clouds, or notifying the lecturer about questions from viewers. These features can be customized and support Twitch moderation. We conducted an expert evaluation with ten participants who were proficient in VR applications or had previous teaching experience. According to them, VRTeaching has a lot of potential and could be an improvement over traditional remote teaching in certain areas. Besides feedback on the application, they also described potential advantages and disadvantages of VR as a lecturing environment. Furthermore, a second study with 15 participants (7 educators and 8 presenting students) was conducted to compare the mental demand and psychophysiological response across different lecture environments (VRTeaching, online video conferencing tool, and on-site classroom setting). According to the results, teaching inside VR is associated with a significantly higher subjective mental demand than the video conferencing environment but does not affect the psychophysiological measures significantly.
In the future, VRTeaching could be improved and extended by considering the study participants' feedback, such as adding more interactive experiments to the application or supporting more streaming platforms. A common request was also the development of a pedagogical concept for teaching inside virtual reality. Further research needs to be conducted, especially regarding the audience's acceptance of the application. Ideally, a future study compares two identical groups, which are taught about the same subject: one group with non-VR online livestream lectures and one group with lessons livestreamed via VRTeaching. The subjective enjoyment of lessons and the learning results could then be assessed and compared. An additional and currently underexplored area of development concerns the representation of learners and teachers within the virtual space. Currently, the audience is not visually present in VRTeaching, and the application does not support avatar-based interaction. Future iterations of the system could include avatars to foster a greater sense of social presence and identity in the virtual environment. This would require careful consideration of the design and implementation of avatar systems, ranging from abstract, stylized representations to photorealistic models, along with mechanisms for conveying emotions and nonverbal communication. This area opens up a broad field of research with implications for the quality of interaction, immersion, and emotional engagement in virtual learning scenarios.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical approval was not required for the studies involving humans because our study was an exploratory approach. An informed consent was obtained from all subjects involved in the study. The study was conducted in accordance with the Declaration of Helsinki. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

FG: Conceptualization, Investigation, Software, Writing – original draft, Writing – review & editing. MH: Writing – original draft, Writing – review & editing. JS: Investigation, Writing – original draft, Writing – review & editing. HS: Investigation, Writing – original draft, Writing – review & editing. FL: Investigation, Writing – review & editing. EC: Investigation, Writing – review & editing. JDP: Investigation, Writing – review & editing. VW-H: Investigation, Methodology, Supervision, Writing – original draft, Writing – review & editing. JP: Methodology, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by the TU Graz Open Access Publishing Fund.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

References

Abdurashidova, M. S., and Balbaa, M. E. (2022). “The impact of the digital economy on the development of higher education,” in International Conference on Next Generation Wired/Wireless Networking (Springer), 411–422. doi: 10.1007/978-3-031-30258-9_36

Baecker, R., Moore, G., and Zijdemans Boudreau, A. (2003). “Reinventing the lecture: Webcasting made interactive,” in Proceedings of HCI International, 896–900.

Bailenson, J. N., DeVeaux, C., Han, E., Markowitz, D. M., Santoso, M., and Wang, P. (2025). Five canonical findings from 30 years of psychological experimentation in virtual reality. Nat. Hum. Behav. 8, 1328–1338. doi: 10.1038/s41562-025-02216-3

Bardach, L., Huang, Y., Richter, E., Klassen, R. M., Kleickmann, T., and Richter, D. (2023). Revisiting effects of teacher characteristics on physiological and psychological stress: a virtual reality study. Sci. Rep. 13:22224. doi: 10.1038/s41598-023-49508-0

Bimberg, P., Weissker, T., and Kulik, A. (2020). “On the usage of the simulator sickness questionnaire for virtual reality research,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 464–467. doi: 10.1109/VRW50115.2020.00098

Boletsis, C. (2017). The new era of virtual reality locomotion: a systematic literature review of techniques and a proposed typology. Multimodal Technol. Inter. 1:24. doi: 10.3390/mti1040024

Bruza, V., Byska, J., Mican, J., and Kozlíková, B. (2021). Vrdeo: creating engaging educational material for asynchronous student-teacher exchange using virtual reality. Comput. Graph. 98, 280–292. doi: 10.1016/j.cag.2021.06.009

Cai, J., Wohn, D. Y., and Almoqbel, M. (2021). “Moderation visibility: Mapping the strategies of volunteer moderators in live streaming micro communities,” in ACM International Conference on Interactive Media Experiences, IMX '21 (New York, NY, USA: Association for Computing Machinery), 61–72. doi: 10.1145/3452918.3458796

Cain, J., Black, E. P., and Rohr, J. (2009). An audience response system strategy to improve student motivation, attention, and feedback. Am. J. Pharm. Educ. 73:21. doi: 10.5688/aj730221

Cardullo, V., Wang, C.-H., Burton, M., and Dong, J. (2021). K-12 teachers' remote teaching self-efficacy during the pandemic. J. Res. Innov. Teach. Learn. 14, 32–45. doi: 10.1108/JRIT-10-2020-0055

Chao, C.-J., Wu, S.-Y., Yau, Y.-J., Feng, W.-Y., and Tseng, F.-Y. (2017). Effects of three-dimensional virtual reality and traditional training methods on mental workload and training performance. Hum. Factors Ergon. Manuf. Serv. Industr. 27, 187–196. doi: 10.1002/hfm.20702

Cheng, A., Yang, L., and Andersen, E. (2017). “Teaching language and culture with a virtual reality game,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, CHI '17 (New York, NY, USA: Association for Computing Machinery), 541–549. doi: 10.1145/3025453.3025857

Chitra, E., Mubin, S. A., Nadarajah, V. D., Se, W. P., Sow, C. F., Er, H. M., et al. (2024). A 3-d interactive microbiology laboratory via virtual reality for enhancing practical skills. Sci. Rep. 14:12809. doi: 10.1038/s41598-024-63601-y

Crook, C., and Schofield, L. (2017). The video lecture. Internet Higher Educ. 34, 56–64. doi: 10.1016/j.iheduc.2017.05.003

Dunnagan, C. L., Dannenberg, D. A., Cuales, M. P., Earnest, A. D., Gurnsey, R. M., and Gallardo-Williams, M. T. (2019). Production and evaluation of a realistic immersive virtual reality organic chemistry laboratory experience: infrared spectroscopy. J. Chem. Educ. 97, 258–262. doi: 10.1021/acs.jchemed.9b00705

Emmerich, K., Krekhov, A., Cmentowski, S., and Krueger, J. (2021). “Streaming VR games to the broad audience: A comparison of the first-person and third-person perspectives,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI '21 (New York, NY, USA: Association for Computing Machinery). doi: 10.1145/3411764.3445515

Faul, F., Erdfelder, E., Buchner, A., and Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Geri, N., Winer, A., and Zaks, B. (2017). Challenging the six-minute myth of online video lectures: Can interactivity expand the attention span of learners? Online J. Appl. Knowl. Manag. 5, 101–111. doi: 10.36965/OJAKM.2017.5(1)101-111

Haapalainen, E., Kim, S., Forlizzi, J. F., and Dey, A. K. (2010). “Psycho-physiological measures for assessing cognitive load,” in Proceedings of the 12th ACM International Conference on Ubiquitous Computing, 301–310. doi: 10.1145/1864349.1864395

Hart, S. (2006). “NASA-Task Load Index (NASA-TLX); 20 years later,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Los Angeles, CA: Sage Publications), 904–908. doi: 10.1177/154193120605000909

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (Task Load Index): results of empirical and theoretical research,” in Advances in Psychology (Elsevier), 139–183. doi: 10.1016/S0166-4115(08)62386-9

Heinemann, B., Görzen, S., and Schroeder, U. (2023). Teaching the basics of computer graphics in virtual reality. Comput. Graph. 112, 1–12. doi: 10.1016/j.cag.2023.03.001

Hoffman, H., and Vu, D. (1997). Virtual reality: teaching tool of the twenty-first century? Acad. Med. 72, 1076–1081. doi: 10.1097/00001888-199712000-00018

Holly, M., Pirker, J., Resch, S., Brettschuh, S., and Gütl, C. (2021). Designing VR experiences: expectations for teaching and learning in VR. Educ. Technol. Soc. 24, 107–119. Available online at: https://www.jstor.org/stable/27004935

Kern, F., Kullmann, P., Ganal, E., Korwisi, K., Stingl, R., Niebling, F., et al. (2021). Off-the-shelf stylus: Using XR devices for handwriting and sketching on physically aligned virtual surfaces. Front. Virt. Real. 2:684498. doi: 10.3389/frvir.2021.684498

Koolivand, H., Shooreshi, M. M., Safari-Faramani, R., Borji, M., Mansoory, M. S., Moradpoor, H., et al. (2024). Comparison of the effectiveness of virtual reality-based education and conventional teaching methods in dental education: a systematic review. BMC Med. Educ. 24:8. doi: 10.1186/s12909-023-04954-2

Lakens, D., and Caldwell, A. R. (2021). Simulation-based power analysis for factorial analysis of variance designs. Adv. Methods Pract. Psychol. Sci. 4:2515245920951503. doi: 10.1177/2515245920951503

Li, Y., Huang, J., Tian, F., Wang, H.-A., and Dai, G.-Z. (2019). Gesture interaction in virtual reality. Virt. Reality Intell. Hardw. 1, 84–112. doi: 10.3724/SP.J.2096-5796.2018.0006

Lim, M. H., Aryadoust, V., and Esposito, G. (2023). A meta-analysis of the effect of virtual reality on reducing public speaking anxiety. Curr. Psychol. 42, 12912–12928. doi: 10.1007/s12144-021-02684-6

Luong, T., Martin, N., Argelaguet, F., and Lécuyer, A. (2019). “Studying the mental effort in virtual versus real environments,” in 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (IEEE), 809–816. doi: 10.1109/VR.2019.8798029

Maneuvrier, A., Ceyte, H., Renaud, P., Morello, R., Fleury, P., and Decker, L. M. (2023). Virtual reality and neuropsychological assessment: an analysis of human factors influencing performance and perceived mental effort. Virtual Real. 27, 849–861. doi: 10.1007/s10055-022-00698-4

McGarry, S., Brown, A., Gardner, M., Plowright, C., Skou, R., and Thompson, C. (2023). Immersive virtual reality: an effective strategy for reducing stress in young adults. Br. J. Occupat. Ther. 86, 560–567. doi: 10.1177/03080226231165644

Mercado, J. (2016). Machinima Filmmaking: The Integration of Immersive Technology for Collaborative Machinima Filmmaking. PhD thesis, Drexel University.

Moore, P. (1995). Learning and teaching in virtual worlds: implications of virtual reality for education. Austr. J. Educ. Technol. 11:2078. doi: 10.14742/ajet.2078

Munafo, J., Diedrick, M., and Stoffregen, T. A. (2017). The virtual reality head-mounted display Oculus Rift induces motion sickness and is sexist in its effects. Exper. Brain Res. 235, 889–901. doi: 10.1007/s00221-016-4846-7

Nalaskowski, F. (2023). COVID-19 aftermath for educational system in Europe. Positives. Dialogo 9, 59–67. doi: 10.51917/dialogo.2023.9.2.4

Nebeling, M., Rajaram, S., Wu, L., Cheng, Y., and Herskovitz, J. (2021). “XRStudio: a virtual production and live streaming system for immersive instructional experiences,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, 1–12. doi: 10.1145/3411764.3445323

Ohno, A., Yamasaki, T., and Tokiwa, K.-I. (2013). “A discussion on introducing half-anonymity and gamification to improve students' motivation and engagement in classroom lectures,” in 2013 IEEE Region 10 Humanitarian Technology Conference, 215–220. doi: 10.1109/R10-HTC.2013.6669044

Onu, P., Pradhan, A., and Mbohwa, C. (2024). Potential to use metaverse for future teaching and learning. Educ. Inf. Technol. 29, 8893–8924. doi: 10.1007/s10639-023-12167-9

Ota, D., Loftin, B., Saito, T., Lea, R., and Keller, J. (1995). Virtual reality in surgical education. Comput. Biol. Med. 25, 127–137. doi: 10.1016/0010-4825(94)00009-F

Pellett, K., and Zaidi, S. F. M. (2019). “A framework for virtual reality training to improve public speaking,” in 25th ACM Symposium on Virtual Reality Software and Technology, 1–2. doi: 10.1145/3359996.3364727

Pfeuffer, K., Mayer, B., Mardanbegi, D., and Gellersen, H. (2017). “Gaze + pinch interaction in virtual reality,” in Proceedings of the 5th Symposium on Spatial User Interaction, SUI '17 (New York, NY, USA: Association for Computing Machinery), 99–108. doi: 10.1145/3131277.3132180

Pirker, J., Lesjak, I., and Guetl, C. (2017). “Maroon VR: a room-scale physics laboratory experience,” in 2017 IEEE 17th International Conference on Advanced Learning Technologies (ICALT) (IEEE), 482–484. doi: 10.1109/ICALT.2017.92

Pirker, J., Steinmaurer, A., and Karakas, A. (2021). “Beyond gaming: the potential of Twitch for online learning and teaching,” in Proceedings of the 26th ACM Conference on Innovation and Technology in Computer Science Education, 74–80. doi: 10.1145/3430665.3456324

Pradhan, A., Sparano, D., and Ananth, C. V. (2005). The influence of an audience response system on knowledge retention: an application to resident education. Am. J. Obstet. Gynecol. 193, 1827–1830. doi: 10.1016/j.ajog.2005.07.075

Prasolova-Førland, E., and Estrada, J. G. (2021). “Towards increasing adoption of online VR in higher education,” in 2021 International Conference on Cyberworlds (CW) (IEEE), 166–173. doi: 10.1109/CW52790.2021.00036

Pulijala, Y., Ma, M., Pears, M., Peebles, D., and Ayoub, A. (2018). An innovative virtual reality training tool for orthognathic surgery. Int. J. Oral Maxillofac. Surg. 47, 1199–1205. doi: 10.1016/j.ijom.2018.01.005

Ripka, G., Tiede, J., Grafe, S., and Latoschik, M. (2020). “Teaching and learning processes in immersive VR – comparing expectations of preservice teachers and teacher educators,” in Proceedings of Society for Information Technology & Teacher Education International Conference 2020 (Association for the Advancement of Computing in Education (AACE)), 1863–1871.

Ryu, K., and Myung, R. (2005). Evaluation of mental workload with a combined measure based on physiological indices during a dual task of tracking and mental arithmetic. Int. J. Ind. Ergon. 35, 991–1009. doi: 10.1016/j.ergon.2005.04.005

Sá, M. J., and Serpa, S. (2023). Metaverse as a learning environment: some considerations. Sustainability 15:2186. doi: 10.3390/su15032186

Saalfeld, P., Schmeier, A., D'Hanis, W., Rothkötter, H.-J., and Preim, B. (2020). Student and teacher meet in a shared virtual reality: a one-on-one tutoring system for anatomy education. arXiv preprint arXiv:2011.07926.

Shrestha, S., and Harrison, T. (2019). “Using machinima as teaching and learning materials: a Nepalese case study,” in International Journal of Computer-Assisted Language Learning and Teaching (IJCALLT), 37–52. doi: 10.4018/IJCALLT.2019040103

Sjöblom, M., and Hamari, J. (2017). Why do people watch others play video games? An empirical study on the motivations of Twitch users. Comput. Human Behav. 75, 985–996. doi: 10.1016/j.chb.2016.10.019

Stanney, K., Fidopiastis, C., and Foster, L. (2020). Virtual reality is sexist: but it does not have to be. Front. Robot. AI 7:4. doi: 10.3389/frobt.2020.00004

Steinbeck, H., Teusner, R., and Meinel, C. (2021). “Teaching the masses on Twitch: an initial exploration of educational live-streaming,” in Proceedings of the Eighth ACM Conference on Learning @ Scale, L@S '21 (New York, NY, USA: Association for Computing Machinery), 275–278. doi: 10.1145/3430895.3460157

Stojšić, I., Ivkov Džigurski, A., Maričić, O., Ivanović Bibić, L., and Đukičin Vučković, S. (2017). Possible application of virtual reality in geography teaching. J. Subject Didact. 1, 83–96. doi: 10.5281/zenodo.438169

Thomas, M., and Schneider, C. (2018). “Language teaching in 3D virtual worlds with machinima: reflecting on an online machinima teacher training course,” in International Journal of Computer-Assisted Language Learning and Teaching (IJCALLT), 20–38. doi: 10.4018/IJCALLT.2018040102

Twitch (n.d.). How to use polls. Twitch Help Center. Available online at: https://help.twitch.tv/s/article/how-to-use-polls (Accessed April 7, 2025).

Varnavsky, A. N. (2017). “Software for interactive lectures on the theory of probability and mathematical statistics software for interactive lectures,” in 2017 6th Mediterranean Conference on Embedded Computing (MECO), 1–4. doi: 10.1109/MECO.2017.7977140

Venton, B. J., and Pompano, R. R. (2021). Strategies for Enhancing Remote Student Engagement Through Active Learning. Cham: Springer. doi: 10.1007/s00216-021-03159-0

Wang, W.-F., Chen, C.-M., and Wu, C.-H. (2015). “Effects of different video lecture types on sustained attention, emotion, cognitive load, and learning performance,” in 2015 IIAI 4th International Congress on Advanced Applied Informatics, 385–390. doi: 10.1109/IIAI-AAI.2015.225

Wohn, D. Y. (2019). “Volunteer moderators in Twitch micro communities: how they get involved, the roles they play, and the emotional labor they experience,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI '19 (New York, NY, USA: Association for Computing Machinery), 1–13. doi: 10.1145/3290605.3300390

Xue-qin, C., Dao-hua, Z., and Xin-xin, J. (2016). Application of virtual reality technology in distance learning. Int. J. Emerg. Technol. Learn. 11:76. doi: 10.3991/ijet.v11i11.6257

Yadav, M., Sakib, M. N., Nirjhar, E. H., Feng, K., Behzadan, A. H., and Chaspari, T. (2020). Exploring individual differences of public speaking anxiety in real-life and virtual presentations. IEEE Trans. Affect. Comput. 13, 1168–1182. doi: 10.1109/TAFFC.2020.3048299

Yépez, J., Guevara, L., and Guerrero, G. (2020). “AulaVR: virtual reality, a telepresence technique applied to distance education,” in 2020 15th Iberian Conference on Information Systems and Technologies (CISTI) (IEEE), 1–5. doi: 10.23919/CISTI49556.2020.9141049

Keywords: virtual reality, teaching, Twitch, livestreaming, instructional experience

Citation: Glawogger F, Holly M, Stang JT, Schumm H, Lang F, Criscione E, Pham JD, Wagner-Hartl V and Pirker J (2025) VRTeaching: a tool for virtual reality remote lectures. Front. Educ. 10:1608151. doi: 10.3389/feduc.2025.1608151

Received: 08 April 2025; Accepted: 04 August 2025; Published: 22 August 2025.

Edited by:

Yu-Tung Kuo, North Carolina Agricultural and Technical State University, United States

Reviewed by:

Earlisha J. Whitfield, University of Central Florida, United States

Stylianos Mystakidis, Hellenic Open University, Greece

Copyright © 2025 Glawogger, Holly, Stang, Schumm, Lang, Criscione, Pham, Wagner-Hartl and Pirker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Florian Glawogger, glawogger@tugraz.at; Michael Holly, michael.holly@tugraz.at
