- College of Medical Information and Artificial Intelligence, Shandong First Medical University and Shandong Academy of Medical Sciences, Taian, China

While studies on generative artificial intelligence (GAI) in medical education have attracted increased attention, there exists a gap in the literature in this field. To gain insight into the research trends and focal areas in this field, a bibliometric analysis of studies on GAI-related medical education was conducted using the Web of Science database over the past 2 years. Based on a search strategy, 281 relevant articles were selected for analysis using the analytical tool CiteSpace. The aim of these analyses was to identify the main trends, categories, countries, institutions, journals, and keywords in this field, while also assessing the impact of these GAI-related medical education studies. This approach ultimately revealed that the GAI technologies are integrated with medical education, as evidenced by the CiteSpace analysis. The analysis of noun phrases and keyword co-occurrence provides insights into specific clusters of interest, important themes, and relationships in the GAI-related medical education field. Together, the results of this bibliometric analysis provide high-level insights into the progression, development, and broader implications of research focused on GAI-related medical education, providing a foundation that can help guide studies and the direction of GAI-related medical education research in the future.

1 Introduction

Since the release of ChatGPT in November 2022, GAI, which can generate high-quality and contextually relevant content from human-created work, has emerged as a revolutionary technology (Banh and Strobel, 2023). Understanding GAI first requires an understanding of the large language model (LLM). The LLM is the outcome of continued research and development in artificial intelligence (AI). It is a deep learning model that is capable of unsupervised training. Its core component is a transformer model comprising many neural networks, in which the encoder and decoder have the capability of self-attention. The transformer model can learn to understand sentences, paragraphs, articles, and other data. It processes data in parallel, and its calculations can be performed on graphics processing units, which reduces the training time for the LLM (Zyda, 2024; Kumar, 2024; Zhao et al., 2024). A fully pretrained LLM is called a foundation model, which can be specialized to create a generative AI application. The generative AI application, commonly abbreviated to GAI, can generate new text, images, audio, and other synthetic items. In the GAI model, prompting is an interaction technique that enables end users to use natural language to engage with and instruct the GAI application to create the desired output (Ross et al., 2024). Depending on the application, prompts vary in their modality and directly influence the mode of operation.
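The self-attention mechanism mentioned above can be illustrated with a minimal sketch of scaled dot-product attention. This is a simplification under stated assumptions: real transformer models apply learned projection matrices to obtain queries, keys, and values and use multiple attention heads, whereas here the token vectors are used directly and the function names are chosen only for illustration.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, weights)."""
    d_k = len(keys[0])
    weights = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights.append(softmax(scores))
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Toy input: three token vectors of dimension 2 (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Because every row of `weights` is computed independently of the others, the rows can be evaluated in parallel, which is the property that makes the transformer well suited to graphics processing units.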

GAI has become a novel tool and is involved in multidisciplinary fields, such as medicine (Babl and Babl, 2023), engineering (Bordas et al., 2024), education (Cogo et al., 2024), business and economics (Kshetri, 2023a,b), and agriculture (Pallottino et al., 2025). At the same time, there have been a growing number of research efforts in the education field in recent years, including business education (Huo and Siau, 2024), medical education (Parente, 2024), tourism and hospitality education (Dogru et al., 2024), ideological education (Xing, 2024), language education (Cogo et al., 2024), chemistry education (Tassoti, 2024), and management education (Ratten and Jones, 2023). In this study, we concentrate on the application of GAI in medical education.

Medicine develops continuously through discoveries and innovations concerning different diseases and advanced technologies (Tokuc and Varol, 2023). The rapid development of technology affects medicine through its influence on medical education and patient care (Tokuc and Varol, 2023; Altintas and Sahiner, 2024). GAI is one such advanced technology that is beginning to affect the field of medical education. Janumpally et al. (2025) investigated aspects of graduate medical education using GAI. Cervantes et al. (2024) investigated the perception of GAI among medical educators and gained insights into its major advantages and concerns in medical education. Miao et al. (2024a) discussed integrating GAI into nephrology education, highlighting its importance and potential applications. Despite this broad array of relevant topics, however, few studies have sought to broadly explore the dynamic features of GAI in medical education. To improve medical education, there is a pressing need to clarify the major developments and hotspots in this research field through a bibliometric analysis.

The above results underline the need for systematic review efforts to explore the development of the GAI in medical education. This study is designed to fill the gap in the literature regarding an overview of the GAI in medical education by systematically analyzing extant studies and exploring trends in the ongoing development of the GAI in medical education. Through analyses of large numbers of scholarly reports, this bibliometric analysis will provide a foundation for evidence-based guidance to support the future development of this area.

2 Study goals

This study is designed to explore trends in GAI in medical education, based on documents published from 1 January 2023 to 31 December 2024. The primary goal of this investigation is to clarify the development trends and distinctive features of this research field and to better understand the current state of GAI-related medical education by answering the following questions:

• What are the key trends of GAI-related medical education in accordance with annual publications and citations?

• What are the primary disciplines that have joined in GAI-related medical education research?

• What are the most prominent keywords and their associated themes in GAI-related medical education research?

• What are the most prolific journals that have contributed to research in GAI-related medical education?

• What are the most prolific institutes, countries/regions in GAI-related medical education research?

These questions follow those of similar studies (Lu, 2024) and guide the execution of this bibliometric review.

3 Methodology

To answer the above questions, the Web of Science database was selected to conduct a rigorous review of GAI-related medical education studies. Titles, keywords, and abstracts of articles published from 1 January 2023 to 31 December 2024 were searched with the following strategy: [TS = (OpenAI) OR TS = (ChatGPT) OR TS = (GPT-3) OR TS = (GPT-3.5) OR TS = (GPT-4) OR TS = (GAI) OR TS = (Large Language Model)] AND TS = (Medical Education). Studies were excluded if they were letters, early access papers, editorials, corrections, meeting abstracts, proceedings papers, or review articles. Only articles published in English were eligible for inclusion.
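The structure of this search strategy can be sketched in a few lines of Python. The sketch uses only the terms that are legible in the strategy above; the function names, the record fields (`language`, `doc_types`), and the document-type labels are hypothetical and do not reproduce the Web of Science export format.

```python
def build_topic_query(ai_terms, domain_term):
    """Join the AI-related terms with OR, then AND them with the domain term."""
    ai_clause = " OR ".join(f"TS = ({term})" for term in ai_terms)
    return f"[{ai_clause}] AND TS = ({domain_term})"


# Terms legible in the search strategy described in the text.
AI_TERMS = [
    "OpenAI", "ChatGPT", "GPT-3", "GPT-3.5", "GPT-4", "GAI",
    "Large Language Model",
]

# Hypothetical labels for the excluded document types.
EXCLUDED_TYPES = {
    "letter", "early access", "editorial", "correction",
    "meeting abstract", "proceedings paper", "review article",
}


def is_eligible(record):
    """Keep English-language records that carry no excluded document type."""
    has_excluded_type = bool(set(record["doc_types"]) & EXCLUDED_TYPES)
    return record["language"] == "English" and not has_excluded_type


query = build_topic_query(AI_TERMS, "Medical Education")
print(query)
```

Keeping the term list separate from the query builder makes it straightforward to extend the strategy when new GAI models appear.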

CiteSpace is widely used for studies examining relationships in the scientific literature (Chen, 2017; Chen et al., 2012; Rawat and Sood, 2021; Lu, 2024). It provides an effective tool for bibliometric analysis by generating co-occurrence knowledge maps that clarify connections among various authors, articles, and knowledge areas. Analyses were conducted using CiteSpace, covering the study period from 1 January 2023 to 31 December 2024. In CiteSpace, the Top 50 was chosen as the selection criterion, and the time slice was set as 1 year.

4 Results

4.1 Annual publication trends

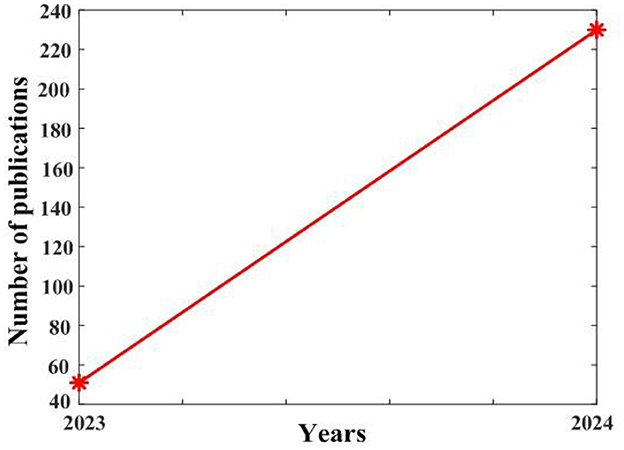

Based on the results from the Web of Science database, an overview of the trends in annual publication output in GAI-related medical education over the past 2 years is presented in Figure 1. In total, 281 relevant articles were found based on the above search method.

These results reveal a clear upward trajectory in the output of GAI-related medical education research over time. On the whole, this trajectory suggests ongoing growth in GAI-based medical education technology in the future.

4.2 Identification of key GAI-related medical education disciplines

In previous studies (Lu, 2024), CiteSpace was employed to generate diagrams for different disciplines associated with the intelligent medical engineering field.

Based on the subject categories exported from CiteSpace, 71 disciplines are associated with GAI-related medical education research, and 8 of these are associated with more than 10 publications: Health Care Sciences and Services, Education and Scientific Disciplines, General and Internal Medicine, Surgery, Medical Informatics, Education and Educational Research, Multidisciplinary Sciences, and Computer Science and Information Systems. The remaining disciplines include Nursing, Pharmacology and Pharmacy, Telecommunications, Emergency Medicine, Medical Ethics, and Transportation Science and Technology.

Based on the above analysis, the results underline the predominant role that medicine, education, and computer sciences play in this field, suggesting that computer science and medical education will continue to shape advances in GAI-related medical education research output in the future. On the whole, these results emphasize the multidisciplinary characteristics of the GAI-related medical education research.

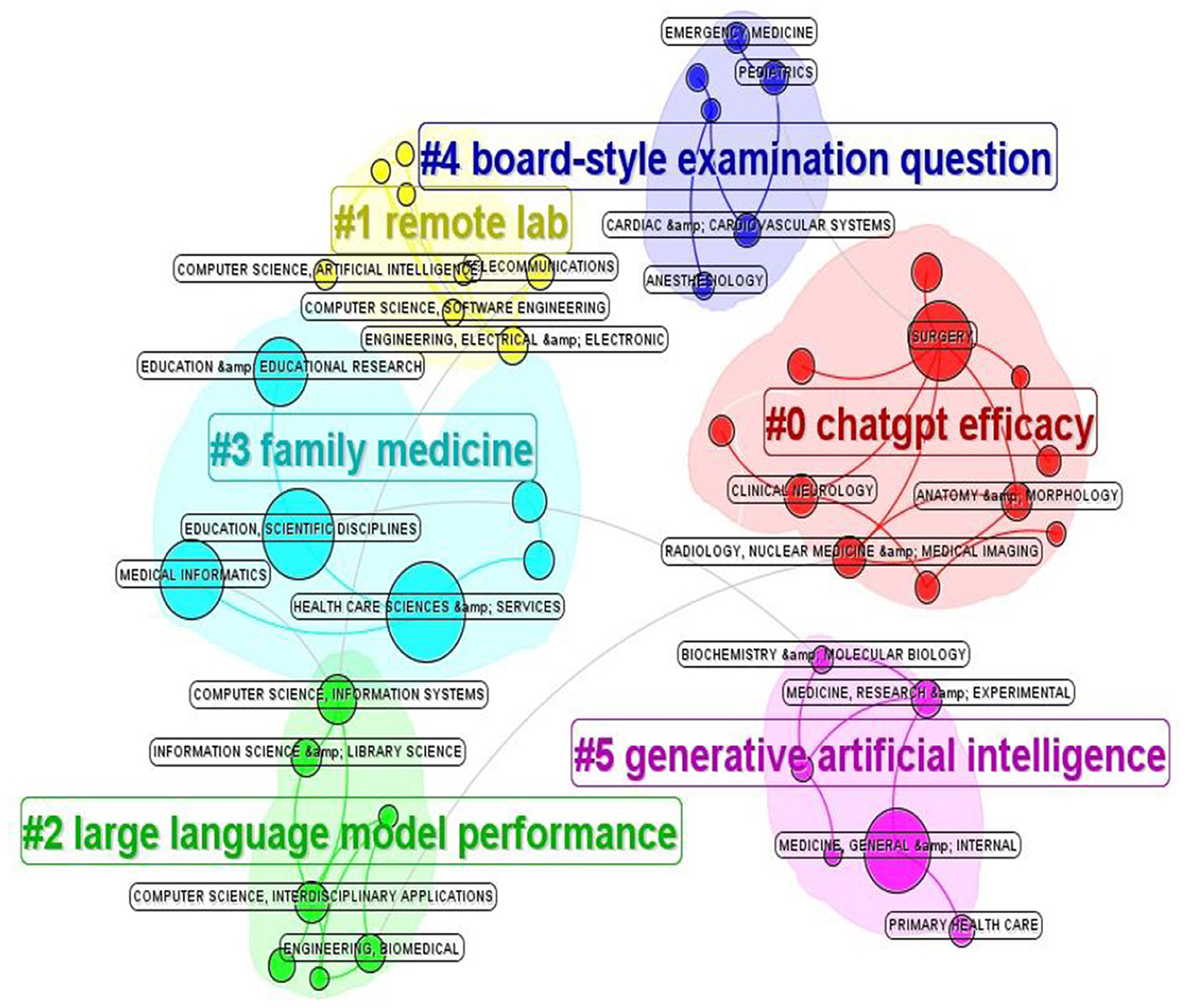

Figure 2 shows the cluster analysis of categories based on title words. This cluster analysis reveals that the main directions include ChatGPT efficacy, remote lab, large language model performance, family medicine, board-style examination questions, and generative artificial intelligence. These results emphasize the specific directions within the GAI-related medical education space, while also underscoring the importance of collaboration among various directions to propel the field forward.

4.3 Identification of prolific institutions and countries/regions

Analyzing international cooperation in a given research field can clarify how relationships among countries have evolved and how they have shaped the research in the GAI-related medical education field. Based on the analyzed studies retrieved above, 65 countries/regions were found to contribute to GAI-related medical education research over the 2 years.

Based on the geographic distributions for the retrieved studies, the United States was identified as the most prominent contributor to the field with 105 publications. Other important contributors to this research field include China, England, Germany, and Canada, with 61, 18, 18, and 15 publications, respectively. These results emphasized the global nature of the GAI-related medical education field, suggesting that, while the United States and China remain the leaders in this field, there is also a broad global interest in ongoing, extensive collaborative research efforts.

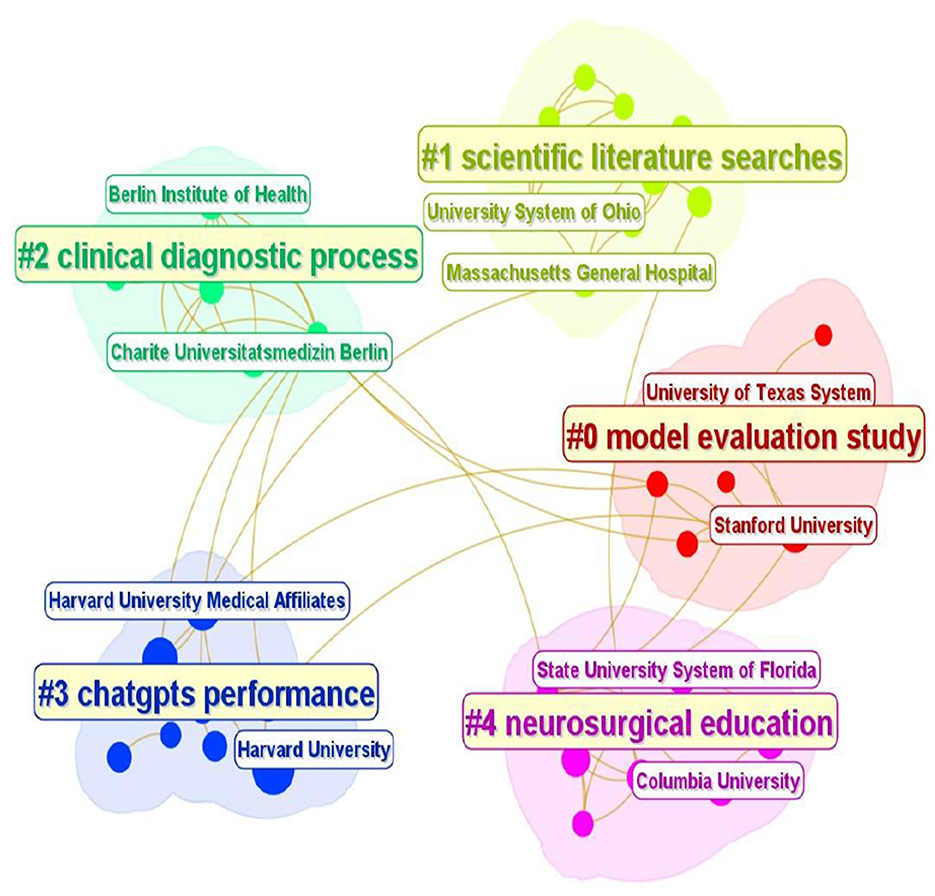

Over the past 2 years, 106 institutions have been identified as having contributed to the GAI-related medical education field. The leading institutions include Harvard University, Harvard University Medical Affiliates, Harvard Medical School, the National University of Singapore, Sichuan University, Stanford University, the University of California System, the University of Texas System, the State University System of Florida, and Chongqing Medical University. According to the QS World University Rankings 2026, half of the top 10 universities have contributed to the GAI-related medical education field, and they include the Massachusetts Institute of Technology, Stanford University, Harvard University, ETH Zurich, and the National University of Singapore. The results further indicate that the GAI-related medical education is a research hotspot. Nodes that form the resultant network exhibit close connections, emphasizing the high degree of collaboration and interactivity among various institutions. A cluster analysis of these institutions is additionally performed based on subject disciplines (Figure 3).

This cluster analysis reveals that the main research directions included model evaluation study, scientific literature searches, clinical diagnostic process, ChatGPT's performance, and neurosurgical education. These results emphasize the expertise of particular institutions in specific directions within the overall GAI-related medical education space, while also underscoring the importance of collaboration among various directions and institutions to propel the field forward.

4.4 Identification of the most prominent journals

The journals with the highest numbers of GAI-related medical education research publications are analyzed. The results reveal that several prominent journals are responsible for publishing a considerable proportion of the studies in this field over the past 2 years.

JMIR Medical Education is the most prominent journal in terms of GAI-related medical education research output with 148 publications, followed by the Cureus Journal of Medical Science, PLoS Digital Health, the Journal of Medical Internet Research, Nature, Healthcare-Basel, Medical Teacher, Radiology, Academic Medicine, and BMC Medical Education (101, 91, 83, 82, 82, 75, 67, 67, and 66 publications, respectively). Based on the Impact Factor (IF) of the 2025 Journal Citation Reports, the top ten journals are CA-A Cancer Journal for Clinicians (IF = 232.4), LANCET (IF = 88.5), New England Journal of Medicine (IF = 78.5), JAMA-Journal of The American Medical Association (IF = 55), Nature Medicine (IF = 50), Nature (IF = 48.5), Science (IF = 45.8), Circulation (IF = 38.6), LANCET Infectious Diseases (IF = 31), and ACM Computing Surveys (IF = 28). The widely distributed nature of these publications across journals emphasizes the importance of collaborations among researchers from various disciplines as a means of advancing efforts in the GAI-related medical education field.

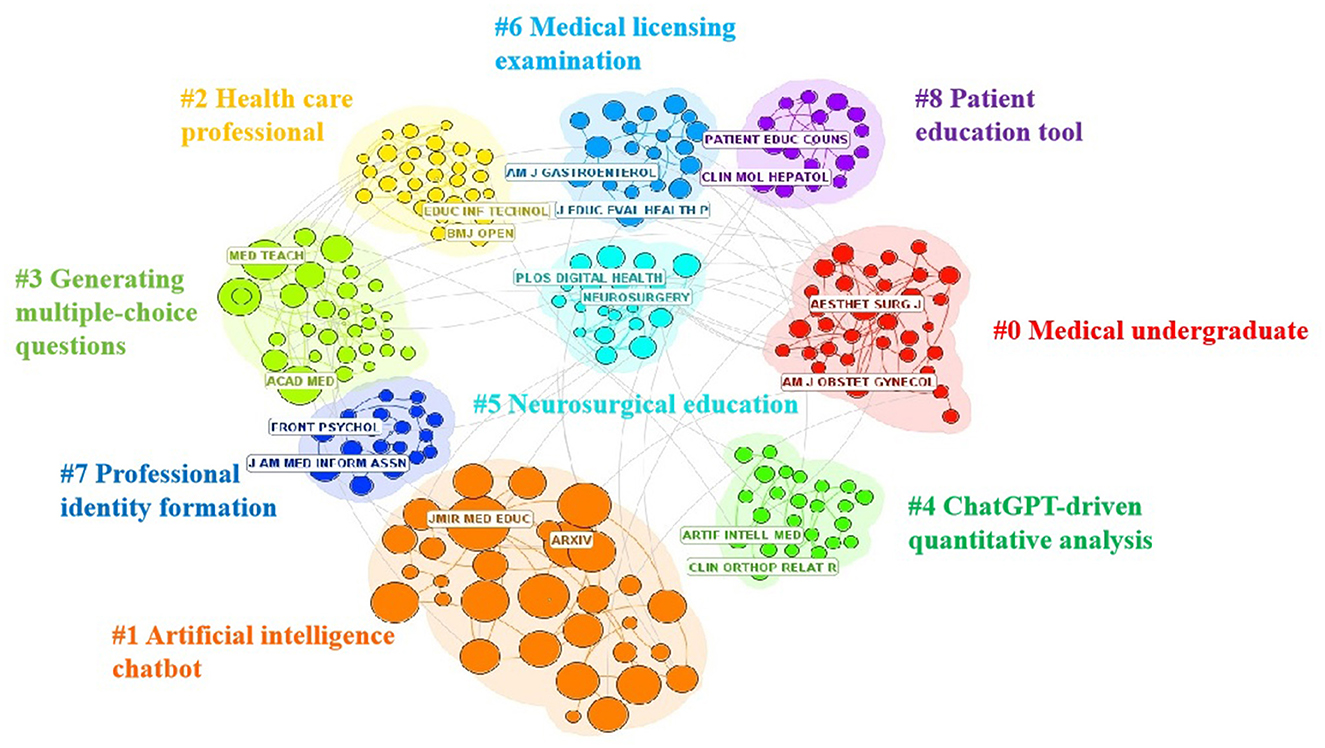

Cluster analysis of journals is performed based on title words (Figure 4).

This cluster analysis reveals that the predominant subject categories for these journals include medical undergraduate, artificial intelligence chatbot, health care professional, generating multiple-choice questions, ChatGPT-driven quantitative analysis, neurosurgical education, medical licensing examination, professional identity formation, and patient education tool, highlighting the importance of multidimensional direction for the publication of studies in the GAI-related medical education.

4.5 Developmental paths

To provide further insights into particular clusters of interest, CiteSpace can extract noun phrases from titles, keyword lists, or abstracts. To better understand the structure and homogeneity of the generated network, the resultant clusters can then be assessed based on their modularity (Q = 0.6169) and weighted mean silhouette (S = 0.8549) scores.
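To make the modularity score concrete, the following minimal sketch computes the standard Newman modularity Q for a small undirected graph. It assumes the textbook definition, Q = Σ_c [L_c / m − (d_c / 2m)²], where m is the number of links, L_c is the number of links inside cluster c, and d_c is the total degree of its nodes; the source does not state how CiteSpace computes the score internally, and the toy graph and node names are purely illustrative.

```python
from collections import defaultdict


def modularity(edges, community_of):
    """Newman modularity Q for an undirected, unweighted graph."""
    m = len(edges)
    internal = defaultdict(int)    # links with both endpoints in the cluster
    degree_sum = defaultdict(int)  # total degree of the cluster's nodes
    for u, v in edges:
        degree_sum[community_of[u]] += 1
        degree_sum[community_of[v]] += 1
        if community_of[u] == community_of[v]:
            internal[community_of[u]] += 1
    return sum(
        internal[c] / m - (degree_sum[c] / (2 * m)) ** 2 for c in degree_sum
    )


# Toy network: two triangles joined by a single bridging link.
edges = [
    ("a", "b"), ("b", "c"), ("a", "c"),
    ("d", "e"), ("e", "f"), ("d", "f"),
    ("c", "d"),
]
community_of = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}

q = modularity(edges, community_of)
print(round(q, 4))  # 0.3571, i.e. 5/14
```

Higher values of Q indicate that links fall within clusters more often than would be expected if the same node degrees were connected at random.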

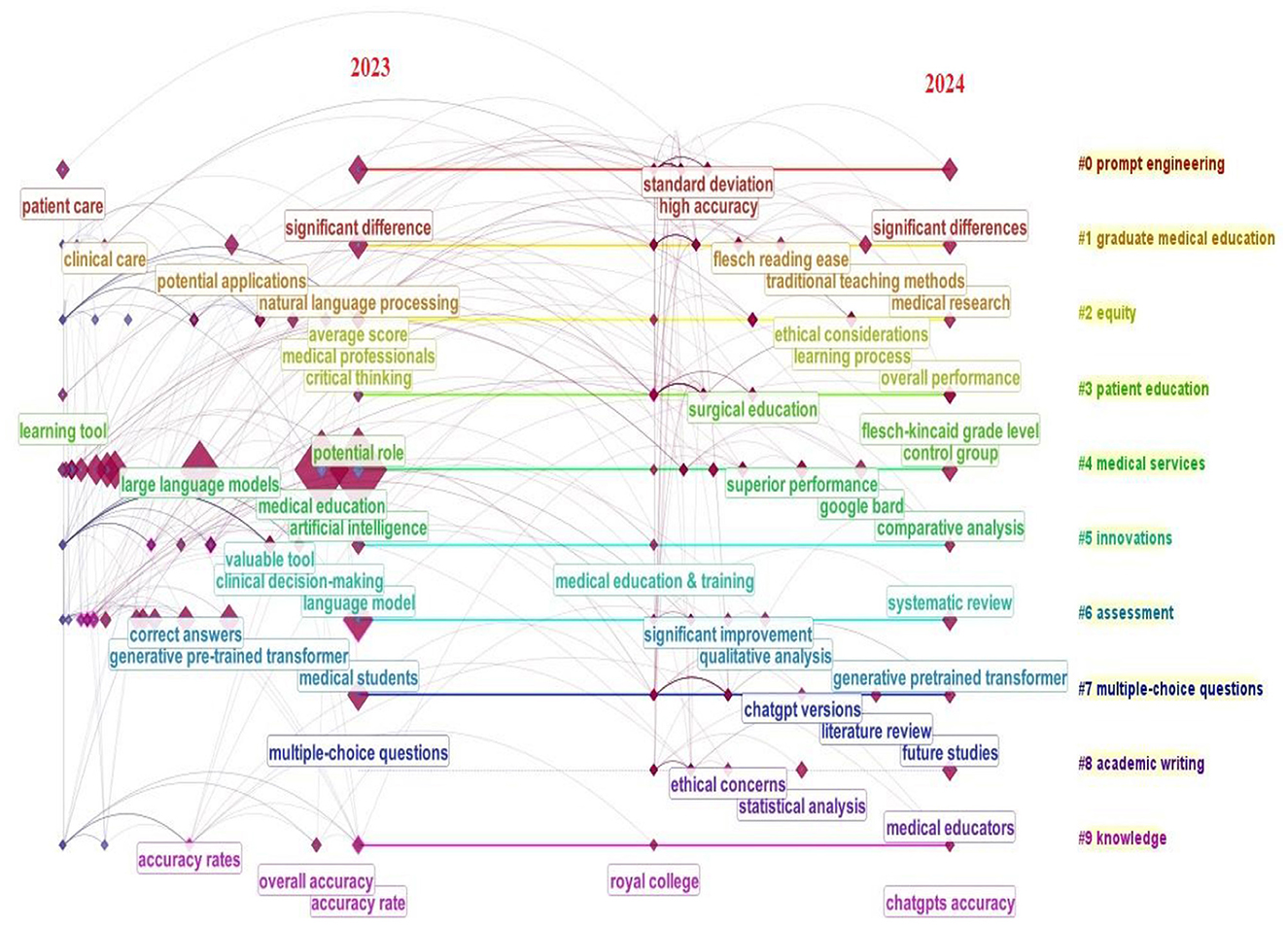

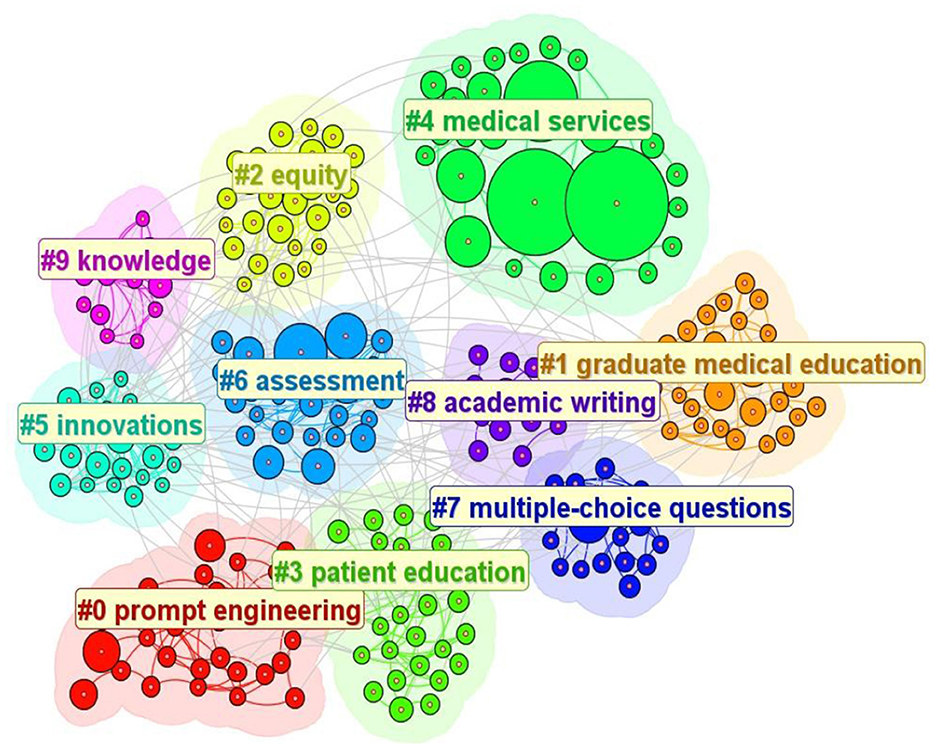

In this study, clusters are numbered in descending order of size, such that the largest cluster (size = 30) is numbered #0 and is related to prompt engineering. Other top clusters in this analysis include #1 graduate medical education, #2 equity, #3 patient education, #4 medical service, #5 innovations, #6 assessment, #7 multiple-choice questions, #8 academic writing, and #9 knowledge. Cluster analysis is performed based on keywords (Figure 5).

Figure 5. Keyword clustering map based upon noun phrases for studies published from 1 January 2023 to 31 December 2024.

These clusters allow for the effective categorization of research topics in the GAI-related medical education field, providing key insights into the major areas of research interest.

Timeline analyses can offer a visual representation of progress pertaining to particular research keywords and themes over time (Chen, 2017; Lu, 2024). Here, the timeline visualization reveals the profound evolution of GAI-related medical education research over the past 2 years (Figure 6).

Based on Figure 6, the development of GAI-related medical education can be categorized as follows:

GAI has been applied to different stages of higher medical education, including undergraduate (Luke et al., 2024), master's (Li et al., 2024a), and doctoral education (Turchoie et al., 2024). Besides students, the target learners also include medical and healthcare professionals (Patel et al., 2024), interns and residents (Lower et al., 2023), medical clinicians (Patil et al., 2024), and patients (Srinivasan et al., 2024). At the same time, GAI has been integrated with different disciplines of medicine, including preclinical medicine (Luke et al., 2024), clinical medicine (Miao et al., 2024b), anesthesiology (Khan et al., 2024), biomedicine (Khosravi et al., 2024), medical imaging (Monroe et al., 2024), ophthalmology and optometry medicine (Ciekalski et al., 2024), psychiatry (Li et al., 2024b), radiation medicine (Pandey et al., 2024), pediatrics (Ramgopal et al., 2024), stomatology (Balel, 2023), preventive medicine (Kassab et al., 2024), traditional Chinese medicine (Li et al., 2024a), laboratory medicine (Meyer et al., 2024), nursing science (Gosak et al., 2024), rehabilitation therapy (Sivarajkumar et al., 2024), pharmacy (Pradhan et al., 2024), obstetrics (Riedel et al., 2023), and emergency medicine (Liu et al., 2024). In clinical medicine education, the disciplines include nephrology (Miao et al., 2024b), neurosurgery (Arfaie et al., 2024), orthopedics (Vaishya et al., 2024), otolaryngology (Grimm et al., 2024), rheumatology (Madrid-García et al., 2023), cardiology (Madaudo et al., 2024), urology (Park et al., 2024), gastroenterology (Gravina et al., 2024), and andrology (Ergin and Sanci, 2024). These results indicate that GAI has been integrated across medical education.

In these applications, the main fields include medical exams (Zong et al., 2024; Danehy et al., 2024; Moglia et al., 2024; Lubitz and Latario, 2024), the performance assessment of GAI models (McGrath et al., 2024; Yamaguchi et al., 2024; Chen et al., 2024), the improvement of teaching methods and modes (Wojcik et al., 2024; Naamati-Schneider, 2024), and curriculum reform (Houssaini et al., 2024).

Medical exams play a significant role in improving the ability of medical professionals and students and contribute to the development of medical education, in which GAI models act as virtual teaching assistants and tutors (Zong et al., 2024). To pass these exams, a deep understanding of medical knowledge, clinical skills, and medical scenarios is needed. The medical exams cover various types, including licensing examinations (Danehy et al., 2024), qualifying examinations (Vaishya et al., 2024), entrance exams (Khosravi et al., 2024), didactic tests (Moglia et al., 2024), and training examinations (Lubitz and Latario, 2024). These medical exams generally take the form of single-choice questions (Liu et al., 2024), multiple-choice questions (Khan et al., 2024; Vaishya et al., 2024), or a structured questionnaire (McGrath et al., 2024). Data sources for the medical exams include medical textbooks and documents, medical content on the web (Khan et al., 2024), question banks (Yang et al., 2024), question archive databases (Ciekalski et al., 2024), and online platforms (Yamaguchi et al., 2024).

In these studies, one concern is the helpfulness of GAI for learning; another is the evaluation of the performance of the GAI models. The GAI models can effectively understand medical knowledge and provide contextually relevant and appropriate responses (Oh et al., 2023; Li et al., 2024c; Sengar et al., 2025; Sikarwar et al., 2025; Kumar et al., 2023; Balakrishnan and Sengar, 2024; Sengar et al., 2023). However, they also have limitations, such as limited knowledge, inaccuracies, and the necessity for verification (Mu and He, 2024; Boscardin et al., 2024; Li et al., 2024c). Researchers have employed various techniques, such as prompt engineering, fine-tuning, and low-rank adaptation, to increase the performance of GAI models (Maitin et al., 2024). Among these techniques, prompt engineering has gained increased attention (Mesko, 2023). A prompt (Liu et al., 2023) is a set of instructions provided to the GAI that programs the GAI by customizing it and/or enhancing or refining its capabilities. Prompt engineering is the means by which GAI is programmed via prompts and is employed to optimize the performance of GAI models (Maharjan et al., 2024).

The GAI models help to enrich the teaching methods in medical education. According to the reviewed studies, the GAI model is considered an assistance tool for academic writing, homework assignments, exam preparation (Wojcik et al., 2024), understanding of course knowledge and clinical information (Luke et al., 2024; Oh et al., 2023), case study (Bakkum et al., 2024), assessment of medical literature (Parente, 2024), and development of clinical skills (Ba et al., 2024). It can also simulate medical settings (Parente, 2024; Gosak et al., 2024), make narrative assessments of clinical learners, generate learning assessments, and create lesson plans (Meşe et al., 2024). In particular, some researchers have investigated customized innovative GAI models to enhance medical education (Collins et al., 2024; Kiyak and Kononowicz, 2024; Huang et al., 2024).

The GAI models can promote innovation in teaching modes and improve educational outcomes. Naamati-Schneider (2024) proposed a novel pedagogical framework that integrated problem-based learning (PBL) with the use of ChatGPT for undergraduate students. Divito et al. (2024) addressed the factors of implementation and described how ChatGPT can be responsibly utilized to support key elements of PBL.

The curriculum is one of the most important parts of the medical education system. Curriculum development is also being integrated with GAI, in combination with constructive alignment principles and the design thinking method. Houssaini et al. (2024) designed a new medical curriculum to guide educators in generating student-centered learning experiences. Huang and Lin (2024) proposed a GAI-based model to design a professional identity formation course. Turchoie et al. (2024) generated teaching activities to introduce GAI to students enrolled in a nursing data science and visualization course. Benboujja et al. (2024) employed GAI language models to produce a multilingual curriculum for online medical education.

Overall, this period reflects important ongoing efforts to explore and expand the GAI to meet medical education needs.

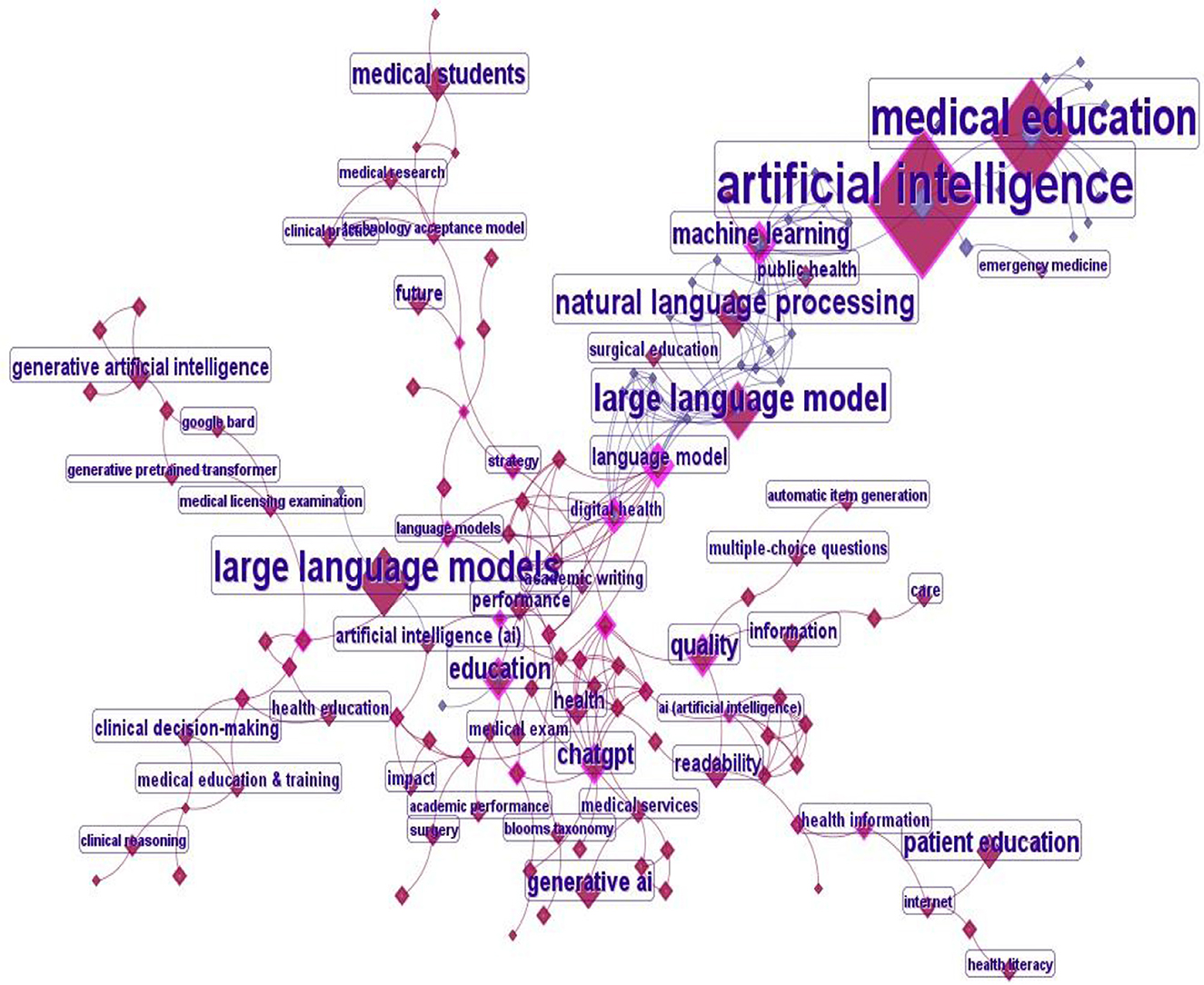

4.6 Research topics

Subsequently, a keyword co-occurrence analysis is carried out, and a keyword co-occurrence network is obtained (Figure 7). The network consists of 161 nodes and 257 co-occurrence links for studies published from 2023 to 2024. The density of this keyword co-occurrence network is 0.02. The links among these keywords represent their co-occurrence relationships, with thicker lines indicating closer relations (Lu, 2024).
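The reported density can be checked from the node and link counts. The short sketch below assumes the usual definition for an undirected network without self-loops, in which density is the number of observed links divided by the number of possible node pairs, N(N − 1)/2; the function name is illustrative.

```python
def network_density(num_nodes, num_links):
    """Density of an undirected simple graph: observed links / possible links."""
    possible_links = num_nodes * (num_nodes - 1) / 2
    return num_links / possible_links


density = network_density(161, 257)
print(f"{density:.4f}")  # prints 0.0200
```

With 161 nodes there are 12,880 possible keyword pairs, so 257 links correspond to a density of about 0.02, which agrees with the reported value and indicates a sparse network.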

In the generated keyword co-occurrence map, denser, thicker interactions are observed among certain keywords, including “artificial intelligence”, “large language model”, “ChatGPT”, “medical education”, “generative artificial intelligence”, “patient education”, and “natural language processing”, suggesting that these keywords are areas of notable interest within medical education. Furthermore, “machine learning”, “surgical education”, “medical exam”, “medical students”, “medical licensing examination”, “performance”, “health information”, and “public health” are identified as keywords that are closely linked to core keywords.
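As a rough illustration of how such a map is derived, the sketch below counts how often pairs of keywords appear together in the same article. The three keyword lists are invented for illustration and are not drawn from the analyzed dataset, and CiteSpace applies additional selection and pruning steps that are not reproduced here.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(keyword_lists):
    """Count, for each keyword pair, the number of articles containing both."""
    pair_counts = Counter()
    for keywords in keyword_lists:
        # Normalize case and drop duplicates so each pair counts once per article.
        unique = sorted({k.lower() for k in keywords})
        pair_counts.update(combinations(unique, 2))
    return pair_counts


# Hypothetical keyword lists for three articles.
articles = [
    ["ChatGPT", "medical education", "artificial intelligence"],
    ["ChatGPT", "medical education", "medical licensing examination"],
    ["large language model", "medical education", "patient education"],
]

counts = cooccurrence_counts(articles)
for pair, n in counts.most_common(3):
    print(pair, n)
```

Each pair with a nonzero count becomes a link in the network, and the count itself can be mapped to line thickness, so frequently co-occurring keywords such as the pair ("chatgpt", "medical education") in this toy example are drawn with thicker links.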

Keyword co-occurrence maps can provide detailed insight into important themes and relationships in the context of GAI-related medical education research, emphasizing important areas of study and opportunities for additional collaboration and development. In the keyword co-occurrence analysis, the two top fields in GAI-related medical education are identified as AI-related technologies and the research in medical education, accounting for 46% and 35%, respectively.

In the field of AI-related technologies, the five top keywords are identified as follows:

• Artificial intelligence,

• LLM and GAI,

• Natural language processing,

• Machine learning, and

• Deep learning.

These keywords are all related to the AI discipline field, in which the GAI is one of the most advanced fields. In addition, the LLM and GAI are the outcomes of AI technologies, which encompass natural language processing, deep learning, and machine learning. The most frequently mentioned GAI models are ChatGPT and Bard from Google (Vaishya et al., 2024).

In the GAI-related medical education fields, the five top keywords are identified as follows:

• Medical education,

• Health education,

• Clinical skills,

• Medical examination,

• Medical students.

These keywords are all related to medical education, in which the frequently mentioned fields include surgical education, patient education, nursing education, and continuing medical education. In the field of health education, researchers pay more attention to public health, digital health, health literacy, and health care. For medical students, clinical skills training and medical knowledge are equally important. The topics that are frequently discussed include clinical decision-making, clinical reasoning, clinical practice, and clinical management. Moreover, the medical examination is one of the most effective teaching methods, and it is deeply integrated with GAI technology. It includes multiple-choice questions, the medical licensing examination, and the clinical informatics board examination. Other typical keywords include curriculum development, learning outcomes, problem-based learning, educational technology, and interactive learning.

Overall, these results suggest that a large proportion of GAI-related medical education studies have focused on AI-related technologies and medical education. Their deep integration improves the development of these disciplines.

5 Discussion

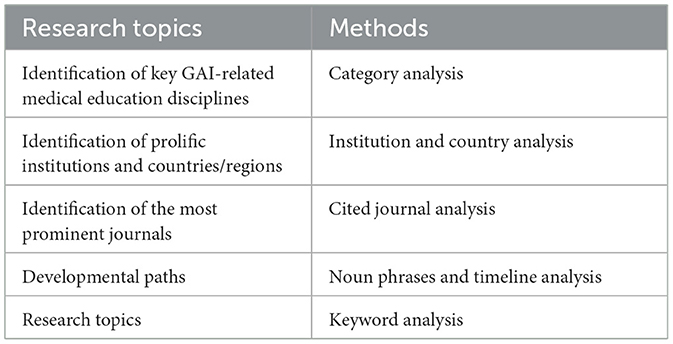

To gain insight into the research trends and focal areas in the GAI-related medical education, a bibliometric analysis is conducted in the article. The methods for different research topics in CiteSpace are shown in Table 1.

Based on the methods in CiteSpace, the results are summarized below. In the studies of GAI-related medical education disciplines, the results reveal that medicine, education, and computer sciences play important roles in this field. Eight major subjects are listed, and they are associated with the GAI-related medical education field. The major subjects include Health Care Sciences and Services, Education and Scientific Disciplines, General and Internal Medicine, Surgery, Medical Informatics, Education and Educational Research, Multidisciplinary Sciences, and Computer Science and Information Systems. At the same time, the numbers of disciplines associated with more than 5 publications are calculated. The results show that 69.2% of disciplines are related to medical science. Therefore, medical science is the foundation of the research field.

Among the top five countries contributing to this research field, 80% (four of five) are developed countries. These results point to a gap between developed and developing countries in this advanced research field. Therefore, developing countries should be helped to improve their AI-related technologies and medical education.

The journals with the greatest publication output in this field over the analyzed period include JMIR Medical Education, the Cureus Journal of Medical Science, PLoS Digital Health, the Journal of Medical Internet Research, Nature, Healthcare-Basel, Medical Teacher, Radiology, Academic Medicine, and BMC Medical Education. Based on the investigations, the results can help researchers to select appropriate journals for publishing their related research findings. At the same time, the results can help researchers and students to find references that are related to GAI-related medical education.

In the studies of developmental paths, the results show that GAI has been applied to medical stakeholders at different levels and integrated with different disciplines of medicine. In the field of medical education, the main fields include medical exams, teaching methods and modes, and curriculum building. At the same time, the studies of GAI-related medical education are focused on AI-related technologies and medical education.

Although GAI is applied to medical education and many achievements are obtained, the researchers also pay more attention to the limitations of GAI and the GAI-related problems in medical education. The disadvantages of GAI (Katsamakas et al., 2024) include hallucination, poor-quality content, algorithmic bias, and unpredictability. When GAI is applied in medical education, the researchers concentrate on many problems, which include copyright, unpredictable control, pseudo imagination, privacy, security, quality, consistency, and triggering emotions (Mittal et al., 2024). Therefore, the researchers should take measures to address these GAI-related risks. The explainability, transparency, robustness, and fairness (Bogina et al., 2022) of GAI should be ensured. Supervision and education on the ethical use of GAI (Stahl and Eke, 2024) are crucial.

6 Conclusion

To gain insight into the research trends and focal areas in the GAI-related medical education field, a detailed bibliometric analysis is conducted. There has been a noticeable increase in publication output in the field of GAI-related medical education over the past 2 years, along with the emergence of several prominent topics during this time. Through keyword co-occurrence map analyses, AI-related technologies and the research in medical education are identified as the top topics of interest, providing insights that can help improve the teaching effects in GAI-related medical education. Given the inherently multidisciplinary nature of this field, universities should focus on appropriate directions for its development. The analysis results provide a foundation for future research in the GAI-related medical education field.

6.1 Limitation of the study

Although this detailed bibliometric analysis yields useful findings, the article has some limitations. First, only the Web of Science database is used in the investigation. The analysis could be expanded to other databases, including DOAJ, EBSCO, and Scopus. With the expansion of the database, the number of relevant articles will continue to increase, and the analysis results will be enriched. Second, the search strategy is designed using typical terms. However, many new generative artificial intelligence models will appear with the development of advanced technologies. With the emergence of different generative artificial intelligence models, the keywords and the analysis results will be enriched.

6.2 Implications of the study

In summary, these results highlight the importance of future comprehensive, systematic research focused on multidisciplinary collaboration and the implementation of various methodologies and perspectives to study GAI-related medical education. The research fields can include understanding the abilities of GAI models, further integrating GAI technologies into medical education, addressing resistance from educators or students, and ensuring ethically responsible use of GAI. Further studies will also be needed to explore mutual relationships between different areas of the GAI-related medical education field. The additional elucidation of these relationships has the potential to offer insight into how best to improve GAI-related medical education.

Author contributions

QL: Validation, Data curation, Conceptualization, Supervision, Methodology, Project administration, Investigation, Resources, Writing – review & editing, Funding acquisition, Writing – original draft, Software, Formal analysis, Visualization.

Funding

The author declares that financial support was received for the research and/or publication of this article. This work was supported by the Shandong Provincial Undergraduate Teaching Reform Research Project, China, under Grant M2024140.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author declares that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Altintas, L., and Sahiner, M. (2024). Transforming medical education: the impact of innovations in technology and medical devices. Expert Rev. Med. Devices 21, 797–809. doi: 10.1080/17434440.2024.2400153

Arfaie, S., Mashayekhi, M. S., Mofatteh, M., Ma, C., Ruan, R., MacLean, M. A., et al. (2024). ChatGPT and neurosurgical education: a crossroads of innovation and opportunity. J. Clin. Neurosci. 129:110815. doi: 10.1016/j.jocn.2024.110815

Ba, H. J., Zhang, L. L., Enhancing, Z., and Yi, Z. (2024). clinical skills in pediatric trainees: a comparative study of ChatGPT-assisted and traditional teaching methods. BMC Med. Educ. 24:558. doi: 10.1186/s12909-024-05565-1

Babl, F. E., and Babl, M. P. (2023). Generative artificial intelligence: Can ChatGPT write a quality abstract? Emerg. Med. Aust. 35, 809–811. doi: 10.1111/1742-6723.14233

Bakkum, M. J., Hartjes, M. G., Piet, J. D., Donker, E. M., Likic, R., Sanz, E., et al. (2024). Using artificial intelligence to create diverse and inclusive medical case vignettes for education. Br. J. Clin. Pharmacol. 90, 640–648. doi: 10.1111/bcp.15977

Balakrishnan, T., and Sengar, S. S. (2024). Repvgg-gelan: Enhanced gelan with vgg-style convnets for brain tumour detection. arXiv [Preprint] arXiv: 2405.03541. Available online at: https://arxiv.org/abs/2405.03541

Balel, Y. (2023). Can ChatGPT be used in oral and maxillofacial surgery? J. Stomatol. Oral Maxillofac. Surg. 124:101471. doi: 10.1016/j.jormas.2023.101471

Banh, L., and Strobel, G. (2023). Generative artificial intelligence. Electron. Mark. 33:63. doi: 10.1007/s12525-023-00680-1

Benboujja, F., Hartnick, E., Zablah, E., Hersh, C., Callans, K., and Villamor, P. (2024). Overcoming language barriers in pediatric care: a multilingual, AI-driven curriculum for global healthcare education. Front. Public Health. 12:1337395. doi: 10.3389/fpubh.2024.1337395

Bogina, V., Hartman, A., Kuflik, T., Shoval, P., and Jbara, A. (2022). Educating software and AI stakeholders about algorithmic fairness, accountability, transparency and ethics. Int. J. Artif. Intell. Educ. 32, 808–833. doi: 10.1007/s40593-021-00248-0

Bordas, A., Le Masson, P., Thomas, M., and Weil, B. (2024). What is generative in generative artificial intelligence? A design-based perspective. Res. Eng. Des. 35, 427–443. doi: 10.1007/s00163-024-00441-x

Boscardin, C. K., Gin, B., Golde, P. B., and Hauer, K. E. (2024). ChatGPT and generative artificial intelligence for medical education: potential impact and opportunity. Acad. Med. 99, 22–27. doi: 10.1097/ACM.0000000000005439

Cervantes, J., Smith, B., Ramadoss, T., et al. (2024). Decoding medical educator's perceptions on generative artificial intelligence in medical education. J. Invest. Med. 72, 633–639. doi: 10.1177/10815589241257215

Chen, C. (2017). Science mapping: a systematic review of the literature. J. Data Inform. Sci. 2, 1–40. doi: 10.1515/jdis-2017-0006

Chen, C., Hu, Z., Liu, S., and Tseng, H. (2012). Emerging trends in regenerative medicine: a scientometric analysis in CiteSpace. Expert Opin. Biol. Ther. 12, 593–608. doi: 10.1517/14712598.2012.674507

Chen, S., Li, Y. Y., Lu, S., Van, H., Aerts, H. J., Savova, G. K., et al. (2024). Evaluating the ChatGPT family of models for biomedical reasoning and classification. J. Am. Med. Inform. Assoc. 31, 940–948. doi: 10.1093/jamia/ocad256

Ciekalski, M., Laskowski, M., Koperczak, A., Smierciak, M., and Sirek, S. (2024). Performance of ChatGPT and GPT-4 on Polish National Specialty Exam (NSE) in ophthalmology. Postepy Higieny Medycyny Doswiadczalnej 78, 111–116. doi: 10.2478/ahem-2024-0006

Cogo, A., Patsko, L., and Szoke, J. (2024). Generative artificial intelligence and ELT. ELT J. 78, 373–377. doi: 10.1093/elt/ccae051

Collins, R., Black, E. W., and Rarey, K. E. (2024). Introducing AnatomyGPT: A customized artificial intelligence application for anatomical sciences education. Clin. Anat. 37, 661–669. doi: 10.1002/ca.24178

Danehy, T., Hecht, J., Kentis, S., Schechter, C. B., and Jariwala, S. P. (2024). ChatGPT performs worse on USMLE-Style ethics questions compared to medical knowledge questions. Appl. Clin. Inform. 15, 1049–1055. doi: 10.1055/a-2405-0138

Divito, C. B., Katchikian, B. M., Gruenwald, J. E., and Shappell, E. (2024). The tools of the future are the challenges of today: the use of ChatGPT in problem-based learning medical education. Med. Teach. 46, 320–322. doi: 10.1080/0142159X.2023.2290997

Dogru, T., Line, N., Hanks, L., et al. (2024). The implications of generative artificial intelligence in academic research and higher education in tourism and hospitality. Tour. Econ. 30, 1083–1094. doi: 10.1177/13548166231204065

Ergin, E., and Sanci, A. (2024). Can ChatGPT help patients understand their andrological diseases? Revista Internacional Andrologia. 22, 14–20. doi: 10.22514/j.androl.2024.010

Gosak, L., Pruinelli, L., Topaz, M., and Štiglic, G. (2024). The ChatGPT effect and transforming nursing education with generative AI: discussion paper. Nurse Educ. Pract. 75:103888. doi: 10.1016/j.nepr.2024.103888

Gravina, G., Pellegrino, R., Palladino, G., Imperio, G., Ventura, A., and Federico, A. (2024). Charting new AI education in gastroenterology: Cross-sectional evaluation of ChatGPT and perplexity AI in medical residency exam. Digest. Liver Dis. 56, 1304–1311. doi: 10.1016/j.dld.2024.02.019

Grimm, D. R., Lee, Y. J., Hu, K., Liu, L., Garcia, O., Balakrishnan, K., et al. (2024). The utility of ChatGPT as a generative medical translator. Eur. Arch. Oto-Rhino-Laryngol. 281, 6161–6165. doi: 10.1007/s00405-024-08708-8

Houssaini, M. S., Aboutajeddine, A., Toughrai, I., and El Hajjami, A. (2024). Development of a design course for medical curriculum: using design thinking as an instructional design method empowered by constructive alignment and generative AI. Think. Skills Creat. 52:101491. doi: 10.1016/j.tsc.2024.101491

Huang, H., and Lin, H. C. (2024). ChatGPT as a life coach for professional identity formation in medical education: a self-regulated learning perspective. Educ. Technol. Soc. 27, 374–389.

Huang, Y., Xu, B. B., Wang, X. Y., and Li, J. (2024). Implementation and evaluation of an optimized surgical clerkship teaching model utilizing ChatGPT. BMC Med. Educ. 24:1540. doi: 10.1186/s12909-024-06575-9

Huo, X. N., and Siau, K. L. (2024). Generative artificial intelligence in business higher education: a focus group study. J. Global Inform. Manage. 32:364093. doi: 10.4018/JGIM.364093

Janumpally, R., Nanua, S., Ngo, A., et al. (2025). Generative artificial intelligence in graduate medical education. Front. Med. 11:1525604. doi: 10.3389/fmed.2024.1525604

Kassab, J., El Hajjar, A. H., Wardrop III, R. M., and Brateanu, A. (2024). Accuracy of online artificial intelligence models in primary care settings. Am. J. Prev. Med. 66, 1054–1059. doi: 10.1016/j.amepre.2024.02.006

Katsamakas, E., Pavlov, O. V., and Saklad, R. (2024). Enhancing Artificial intelligence and the transformation of higher education institutions: a systems approach. Sustainability. 16:6118. doi: 10.3390/su16146118

Khan, A., Yunus, R., Sohail, M., Rehman, T. A., Saeed, S., Bu, Y., et al. (2024). Artificial intelligence for anesthesiology board-style examination questions: role of large language models. J. Cardiothorac. Vasc. Anesth. 38, 1251–1259. doi: 10.1053/j.jvca.2024.01.032

Khosravi, T., Al Sudani, Z. M., and Oladnabi, M. (2024). To what extent does ChatGPT understand genetics? Innov. Educ. Teach. Int. 61, 1320–1329. doi: 10.1080/14703297.2023.2258842

Kiyak, Y. S., and Kononowicz, A. A. (2024). Case-based MCQ generator: A custom ChatGPT based on published prompts in the literature for automatic item generation. Med. Teach. 46, 1018–1020. doi: 10.1080/0142159X.2024.2314723

Kshetri, N. (2023a). Generative artificial intelligence and the economics of effective prompting. Computer 56, 112–118. doi: 10.1109/MC.2023.3314322

Kshetri, N. (2023b). Generative artificial intelligence in marketing. IT Prof. 25, 71–75. doi: 10.1109/MITP.2023.3314325

Kumar, P. (2024). Large language models(LLMs): survey, technical frameworks, and future challenges. Artif. Intell. Rev. 57:260. doi: 10.1007/s10462-024-10888-y

Kumar, S., Mallik, A., and Sengar, S. S. (2023). Community detection in complex networks using stacked autoencoders and crow search algorithm. J. Supercomput. 79, 3329–3356. doi: 10.1007/s11227-022-04767-y

Li, D. J., Kao, Y. C., Tsai, S. J., Bai, Y. M., Yeh, T. C., Chu, C. S., et al. (2024b). Comparing the performance of ChatGPT GPT-4, Bard, and Llama-2 in the Taiwan Psychiatric Licensing Examination and in differential diagnosis with multi-center psychiatrists. Psychiatry Clin. Neurosci. 78, 347–352. doi: 10.1111/pcn.13656

Li, K. C., Bu, Z. J., Shahjalal, M., He, B. X., Zhuang, Z. F., Li, C., et al. (2024a). Performance of ChatGPT on Chinese master's degree entrance examination in clinical medicine. PLoS ONE 19:e0301702. doi: 10.1371/journal.pone.0301702

Li, Z., Yhap, N., Liu, L. P., Zhengjie, W., Zhonghao, X., Xiaoshu, Y., et al. (2024c). Impact of large language models on medical education and teaching adaptations. JMIR Med. Inform. 12:e55933. doi: 10.2196/55933

Liu, C. L., Ho, C. T., and Wu, T. C. (2024). Custom GPTs enhancing performance and evidence compared with GPT-3.5, GPT-4, and GPT-4o? A study on the emergency medicine specialist examination. Healthcare. 12:1726. doi: 10.3390/healthcare12171726

Liu, P., Yuan, W., Fu, J., Jiang, Z., Hayashi, H., and Neubig, G. (2023). Pretrain, prompt, and predict: a systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 55, 1–35. doi: 10.1145/3560815

Lower, K., Seth, I., Lim, B., and Seth, N. (2023). ChatGPT-4: transforming medical education and addressing clinical exposure challenges in the post-pandemic era. Indian J. Orthop. 57, 1527–1544. doi: 10.1007/s43465-023-00967-7

Lu, Q. (2024). Development of intelligent medical engineering discipline over the past decade. IEEE Access 12, 169124–169135. doi: 10.1109/ACCESS.2024.3498312

Lubitz, M., and Latario, L. (2024). Performance of two artificial intelligence generative language models on the orthopaedic in-training examination. Orthopedics. 47, e146–e150. doi: 10.3928/01477447-20240304-02

Luke, W. A. N. V., Chong, L. S., Ban, K. H., Wong, A. H., Zhi Xiong, C., Shuh Shing, L., et al. (2024). Is ChatGPT ‘ready' to be a learning tool for medical undergraduates and will it perform equally in different subjects? Comparative study of ChatGPT performance in tutorial and case-based learning questions in physiology and biochemistry. Med. Teach. 46, 1441–1447. doi: 10.1080/0142159X.2024.2308779

Madaudo, C., Parlati, A. L. M., Di Lisi, D., Carluccio, R., Sucato, V., Vadalà, G., et al. (2024). Artificial intelligence in cardiology: a peek at the future and the role of ChatGPT in cardiology practice. J. Cardiovasc. Med. 25, 766–771. doi: 10.2459/JCM.0000000000001664

Madrid-García, A., Rosales-Rosado, Z., Freites-Nuñez, D., Pérez-Sancristóbal, I., Pato-Cour, E., Plasencia-Rodríguez, C., et al. (2023). Harnessing ChatGPT and GPT-4 for evaluating the rheumatology questions of the Spanish access exam to specialized medical training. Sci. Rep. 13:22129. doi: 10.1038/s41598-023-49483-6

Maharjan, J., Garikipati, A., Singh, N. P., Cyrus, L., Sharma, M., Ciobanu, M., et al. (2024). OpenMedLM: prompt engineering can out-perform fine-tuning in medical question-answering with open-source large language models. Sci. Rep. 14:14156. doi: 10.1038/s41598-024-64827-6

Maitin, M., Nogales, A., Fernandez-Rincon, S., Aranguren, E., Cervera-Barba, E., Denizon-Arranz, S., et al. (2024). Application of large language models in clinical record correction: a comprehensive study on various retraining methods. J. Am. Med. Inform. Assoc. 32, 341–348. doi: 10.2139/ssrn.4772540

McGrath, S. P., Kozel, B. A., Gracefo, S., Sutherland, N., Danford, C. J., and Walton, N. (2024). A comparative evaluation of ChatGPT 3.5 and ChatGPT 4 in responses to selected genetics questions. J. Am. Med. Inform. Assoc. 31, 2271–2283. doi: 10.1093/jamia/ocae128

Meşe, I., Taşliçay, C. A., Kuzan, B. N., Kuzan, T. Y., and Sivrioglu, A. K. (2024). Educating the next generation of radiologists: a comparative report of ChatGPT and e-learning resources. Diag. Interven. Radiol. 30, 163–174. doi: 10.4274/dir.2023.232496

Mesko, B. (2023). Prompt engineering as an important emerging skill for medical professionals: tutorial. J. Med. Internet Res. 25:e50638. doi: 10.2196/50638

Meyer, A., Ruthard, J., and Streichert, T. (2024). Dear ChatGPT-can you teach me how to program an app for laboratory medicine? J. Lab. Med. 48, 197–201. doi: 10.1515/labmed-2024-0034

Miao, J., Thongprayoo, C., Valencia, O. A. G., et al. (2024b). Performance of ChatGPT on nephrology test questions. Clin. J. Am. Soc. Nephrol. 19, 35–43. doi: 10.2215/CJN.0000000000000330

Miao, J., Thongprayoon, C., Craici, I. M., et al. (2024a). How to incorporate generative artificial intelligence in nephrology fellowship education. J. Nephrol. 37, 2491–2497. doi: 10.1007/s40620-024-02165-6

Mittal, U., Sai, S., Chamola, V., Guizani, M., and Niyato, D. (2024). A comprehensive review on generative AI for education. IEEE Access 12, 142733–142759. doi: 10.1109/ACCESS.2024.3468368

Moglia, A., Georgiou, K., Cerveri, P., Mainardi, L., Satava, R. M., and Cuschieri, A. (2024). Large language models in healthcare: from a systematic review on medical examinations to a comparative analysis on fundamentals of robotic surgery online test. Artif. Intell. Rev. 57:231. doi: 10.1007/s10462-024-10849-5

Monroe, C. L., Abdelhafez, Y. G., Atsina, K., Aman, E., Nardo, L., and Madani, M. H. (2024). Evaluation of responses to cardiac imaging questions by the artificial intelligence large language model ChatGPT. Clin. Imag. 112:110193. doi: 10.1016/j.clinimag.2024.110193

Mu, Y., and He, D. (2024). The potential applications and challenges of ChatGPT in the medical field. Int. J. Gener. Med. 17, 817–826. doi: 10.2147/IJGM.S456659

Naamati-Schneider, L. (2024). Enhancing AI competence in health management: students' experiences with ChatGPT as a learning Tool. BMC Med. Educ. 24:598. doi: 10.1186/s12909-024-05595-9

Oh, N., Choi, G. S., and Lee, W. Y. (2023). ChatGPT goes to the operating room: evaluating GPT-4 performance and its potential in surgical education and training in the era of large language models. Ann. Surg. Treat. Res. 104, 269–273. doi: 10.4174/astr.2023.104.5.269

Pallottino, F., Violino, S., Figorilli, S., Pane, C., Aguzzi, J., and Colle, G. (2025). Applications and perspectives of generative artificial intelligence in agriculture. Comput. Electron. Agricult. 230:109919. doi: 10.1016/j.compag.2025.109919

Pandey, V. K., Munshi, A., Mohani, B. K., Bansal, K., and Rastogi, K. (2024). Evaluating ChatGPT to test its robustness as an interactive information database of radiation oncology and to assess its responses to common queries from radiotherapy patients: a single institution investigation. Cancer Radiotherapie 28, 258–264. doi: 10.1016/j.canrad.2023.11.005

Parente, D. J. (2024). Generative artificial intelligence and large language models in primary care medical education. Fam. Med. 56, 534–540. doi: 10.22454/FamMed.2024.775525

Park, H. J., Kim, E. J., and Kim, J. Y. (2024). Exploring large language models and the metaverse for urologic applications: potential, challenges, and the path forward. Int. Neurourol. J. 28, S65–S73. doi: 10.5213/inj.2448402.201

Patel, D., Raut, G., Zimlichman, E., Cheetirala, S. N., Nadkarni, G. N., and Glicksberg, B. S. (2024). Evaluating prompt engineering on GPT-35′s performance in USMLE-style medical calculations and clinical scenarios generated by GPT-4. Sci. Rep. 14:17341. doi: 10.1038/s41598-024-66933-x

Patil, R., Heston, T. F., and Bhuse, V. (2024). Prompt engineering in healthcare. Electronics 13:2961. doi: 10.3390/electronics13152961

Pradhan, T., Gupta, O., and Chawla, G. (2024). The Future of ChatGPT in medicinal chemistry: harnessing AI for accelerated drug discovery. Chemistryselect. 9:e202304359. doi: 10.1002/slct.202304359

Ramgopal, S., Varma, S., Gorski, J. K., Kester, K. M., Shieh, A., and Suresh, S. (2024). Evaluation of a large language model on the American academy of pediatrics' PREP emergency medicine question bank. Pediatr. Emerg. Care. 40, 871–875. doi: 10.1097/PEC.0000000000003271

Ratten, V., and Jones, P. (2023). Generative artificial intelligence(ChatGPT): implications for management educators. Int. J. Manage. Educ. 21:100857. doi: 10.1016/j.ijme.2023.100857

Rawat, K. S., and Sood, S. K. (2021). Knowledge mapping of computer applications in education using CiteSpace. Comput. Appl. Eng. Educ. 29, 1324–1339. doi: 10.1002/cae.22388

Riedel, M., Kaefinger, K., Stuehrenberg, A., Ritter, V., Amann, N., Graf, A., et al. (2023). ChatGPT's performance in German OB/GYN exams-paving the way for AI-enhanced medical education and clinical practice. Front. Med. 10:1296615. doi: 10.3389/fmed.2023.1296615

Ross, K., McGrow, D., Zhi, G., et al. (2024). Foundation models, generative AI, and large language models. CIN-Comput. Inform. Nurs. 42, 377–387. doi: 10.1097/CIN.0000000000001149

Sengar, S. S., Hasan, A. B., Kumar, S., Yadav, S. K., and Singh, A. (2025). Generative artificial intelligence: a systematic review and applications. Multimed. Tools Appl. 84, 23661–23770. doi: 10.1007/s11042-024-20016-1

Sengar, S. S., Meulengracht, C., Boesen, M. P., and Nielsen, M. (2023). Multi-planar 3D knee MRI segmentation via UNet inspired architectures. Int. J. Imaging Syst. Technol. 33, 985–998. doi: 10.1002/ima.22836

Sikarwar, S. S., Rana, A. K., and Sengar, S. S. (2025). Entropy-driven deep learning framework for epilepsy detection using electro encephalogram signals. Neuroscience 577, 12–24. doi: 10.1016/j.neuroscience.2025.05.003

Sivarajkumar, S., Gao, F. Y., Denny, P., Aldhahwani, B., Visweswaran, S., Bove, A., et al. (2024). Mining clinical notes for physical rehabilitation exercise information: natural language processing algorithm development and validation study. JMIR Med. Inform. 12:e52289. doi: 10.2196/52289

Srinivasan, N., Samaan, J. S., Rajeev, N. D., et al. (2024). Large language models and bariatric surgery patient education: a comparative readability analysis of GPT-3.5, GPT-4, Bard, and online institutional resources. Surg. Endosc. Other Intervent. Tech. 38, 2522–2532. doi: 10.1007/s00464-024-10720-2

Stahl, B. C., and Eke, D. (2024). The ethics of ChatGPT-Exploring the ethical issues of an emerging technology. Int. J. Inf. Manage. 74:102700. doi: 10.1016/j.ijinfomgt.2023.102700

Tassoti, S. (2024). Assessment of students use of generative artificial intelligence: prompting strategies and prompt engineering in chemistry education. J. Chem. Educ. 101, 2475–2482. doi: 10.1021/acs.jchemed.4c00212

Tokuc, B., and Varol, G. (2023). Medical education in the era of advancing technology. Balkan Med. J., 40, 395–399. doi: 10.4274/balkanmedj.galenos.2023.2023-7-79

Turchoie, M. R., Kisselev, S., Van Bulck, L., and Bakken, S. (2024). Increasing generative artificial intelligence competency among students enrolled in doctoral nursing research coursework. Appl. Clin. Inform. 15, 842–851. doi: 10.1055/a-2373-3151

Vaishya, R., Iyengar, K. P., Patralekh, M. K., Botchu, R., Shirodkar, K., Jain, V. K., et al. (2024). Effectiveness of AI-powered Chatbots in responding to orthopaedic postgraduate exam questions-an observational study. Int. Orthop. 48, 1963–1969. doi: 10.1007/s00264-024-06182-9

Wojcik, S., Rulkiewicz, A., Pruszczyk, P., Lisik, W., Poboży, M., and Domienik-Karłowicz, J. (2024). Reshaping medical education: Performance of ChatGPT on a PES medical examination. Cardiol. J. 31, 442–450. doi: 10.5603/cj.97517

Xing, Y. (2024). The influence of responsible innovation on ideological education in universities under generative artificial intelligence. IEEE Access 12, 133008–133017. doi: 10.1109/ACCESS.2024.3459469

Yamaguchi, S., Morishita, M., Fukuda, H., Muraoka, K., Nakamura, T., and Yoshioka, I. (2024). Evaluating the efficacy of leading large language models in the Japanese national dental hygienist examination: a comparative analysis of ChatGPT, Bard, and Bing Chat. J. Dental Sci. 19, 2262–2267. doi: 10.1016/j.jds.2024.02.019

Yang, W. H., Chan, Y. H., Huang, C. P., and Chen, T. J. (2024). Comparative analysis of GPT-3.5 and GPT-4.0 in Taiwan's medical technologist certification: a study in artificial intelligence advancements. J. Chin. Med. Assoc. 87, 525–530. doi: 10.1097/JCMA.0000000000001092

Zhao, H. Y., Chen, H. J., Yang, F., et al. (2024). Explainability for large language models: a survey. ACM Transac. Intell. Syst. Technol. 15:20. doi: 10.1145/3639372

Zong, H., Wu, R., Cha, J., Wang, J., Wu, E., Li, J., et al. (2024). Large language models in worldwide medical exams: platform development and comprehensive analysis. J. Med. Internet Res. 26:e66114. doi: 10.2196/66114

Keywords: generative artificial intelligence, medical education, bibliometrics, CiteSpace, bibliometric analysis

Citation: Lu Q (2025) Development of generative artificial intelligence in medical education: a bibliometric profiling. Front. Educ. 10:1613067. doi: 10.3389/feduc.2025.1613067

Received: 16 April 2025; Accepted: 30 September 2025;

Published: 17 October 2025.

Edited by:

Tisni Santika, Universitas Pasundan, Indonesia

Reviewed by:

John Mark R. Asio, Gordon College, Philippines

Sandeep Singh Sengar, Cardiff Metropolitan University, United Kingdom

Waqar M. Naqvi, Datta Meghe Institute of Higher Education and Research, India

Copyright © 2025 Lu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qiang Lu