- ¹Office of Research and Development, Jordan Hu College of Science and Liberal Arts, New Jersey Institute of Technology, Newark, NJ, United States

- ²Department of Humanities and Social Sciences, Jordan Hu College of Science and Liberal Arts, New Jersey Institute of Technology, Newark, NJ, United States

- ³Facultad de Ciencias Económicas y Administrativas, Universidad de las Américas Ecuador, Quito, Ecuador

Introduction: Artificial intelligence (AI) has reshaped STEM education by influencing instructional design, learner agency, and ethical frameworks. However, the integration of AI into educational ecosystems raises critical questions regarding pedagogical coherence, assessment reform, and algorithmic ethics.

Methods: This study conducted a systematic review of 41 peer-reviewed publications to examine how AI has been integrated into STEM educational ecosystems. The review focused on peer-reviewed studies published between 2020 and 2025 that addressed AI applications in STEM education, transdisciplinary approaches to AI integration, and the ethical challenges inherent in AI-driven learning environments. A transdisciplinary communication (TDC) framework guided the synthesis of findings. The review followed PRISMA protocols for transparency and used NVivo, Excel, and VOSviewer to support thematic coding and bibliometric mapping.

Results: The analysis identified three emergent themes: (1) the evolving role of student agency in AI-enhanced learning, (2) shifts in assessment paradigms toward adaptive, AI-mediated models, and (3) ethical tensions surrounding algorithmic transparency, equity, and automation in pedagogical design. Divergent disciplinary perspectives were noted, with some emphasizing efficiency and others prioritizing inclusive access and epistemic reflexivity.

Discussion: Drawing on the Universal Design for Learning (UDL) framework and trustworthy AI principles, this review offers a critical lens on inclusivity and design ethics in AI-mediated learning environments. The results offer a conceptual foundation and a set of actionable strategies for institutions, educators, and policymakers seeking to implement AI technologies in ways that are ethically sound, inclusive, and informed by epistemic plurality in STEM education.

1 Introduction

1.1 Research topic and importance

Artificial intelligence (AI) fundamentally transforms STEM education by reshaping how students learn, educators teach, and institutions design curricula. AI-driven systems1—such as adaptive learning platforms, intelligent tutoring systems, and automated assessment tools—offer unprecedented opportunities to personalize instruction, boost student engagement, and narrow educational disparities. Nevertheless, the integration of AI also raises critical ethical concerns, including algorithmic inconsistencies, risks of deepening the digital divide, and the concentration of decision-making authority among a limited number of entities (Craig, 2023).

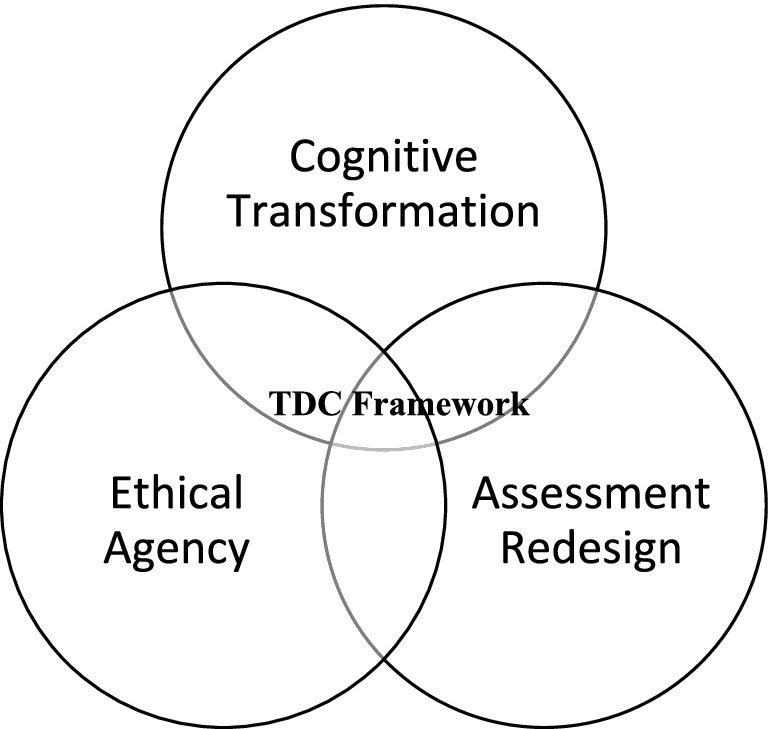

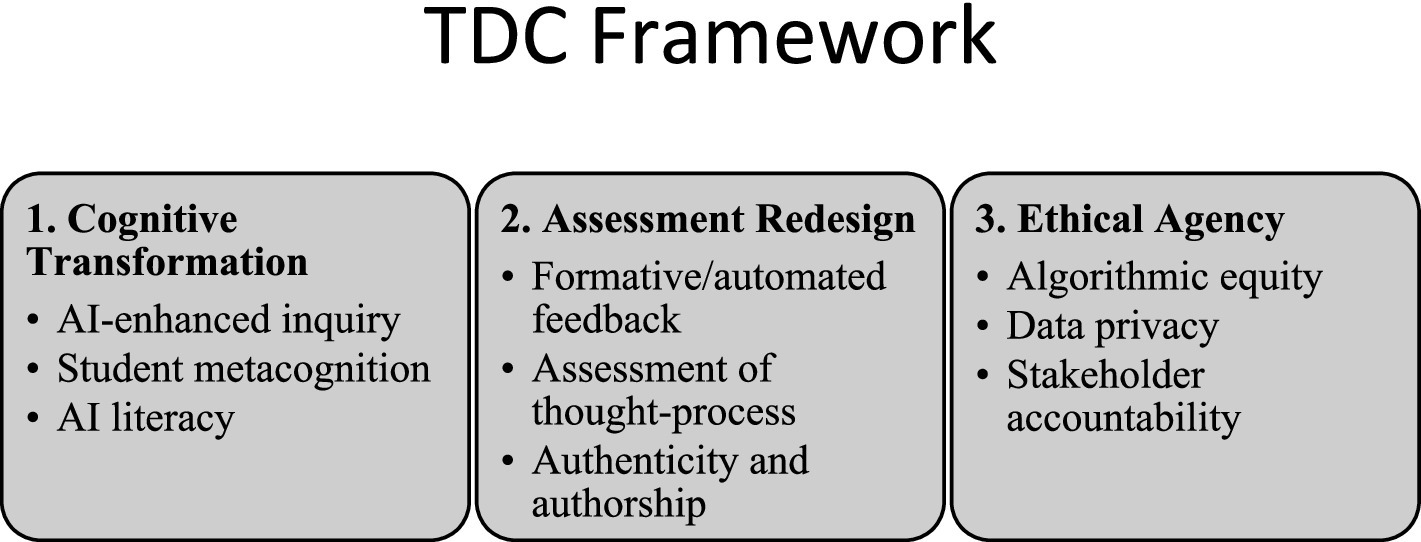

This paper adopts a transdisciplinary communication (TDC) framework (see Figure 1) to examine how AI can be harnessed to promote high-quality learning experiences that expand participation and align with Sustainable Development Goal 4 (SDG4) for broad access to education. In previous work, we have defined TDC as a process of iterative meaning-making and epistemic translation that enables multiple disciplinary actors—educators, technologists, ethicists, and policymakers—to co-construct shared language, goals, and frameworks for action (León, 2024; León et al., 2024; Lipuma and León, 2024b). This conceptualization builds upon Piaget’s constructivist epistemology (1972), the integrative logic of transdisciplinarity proposed by Nicolescu (2002, 2008), and our engagement with dialogical communication models advanced by Callaos (2022) and Callaos and León (2024). TDC provides a foundational mechanism for fostering convergence and co-governance in AI-integrated STEM education.

Figure 1. The TDC lens in AI-driven STEM education. This conceptual model illustrates the role of the transdisciplinary communication (TDC) framework as a unifying meta-lens. TDC mediates across three thematic domains—cognitive transformation, assessment redesign, and ethical agency—identified in the systematic review. The framework fosters cross-stakeholder engagement, enabling integrative insights and actionable strategies at the intersection of pedagogy, technology, and policy.

1.2 Existing research on AI in STEM education

The scholarly inquiry into artificial intelligence (AI) in education is extensive, yet it remains notably fragmented across various disciplines. Research in computer science has primarily focused on technological advancements, including machine learning algorithms, natural language processing (NLP), and educational data analytics (Zawacki-Richter et al., 2020; Baker and Hawn, 2022; Doğan and Şahin, 2024). For instance, bibliometric analyses indicate a marked increase in publications from 2021 onward, with a focus on predictive analytics, adaptive learning, and generative feedback systems in higher education.

Educational researchers, meanwhile, have highlighted the pedagogical affordances of AI, particularly in enhancing student engagement, inquiry-based learning (IBL), and formative assessment. Studies emphasize that intelligent tutoring systems and AI-enhanced simulations provide personalized and interactive learning experiences (Chen et al., 2020; Holmes et al., 2019; Tilepbergenovna, 2024). These systems are particularly beneficial in STEM contexts, where conceptual abstraction and iterative problem-solving are central to learning efficacy.

From a normative perspective, scholars in philosophy, education policy, and cognitive science have examined the ethical implications of AI, addressing algorithmic bias, transparency, and equitable access. Recent edited volumes (Kumar et al., 2024) and case-based contributions (Singh and Thakur, 2024) argue for ethical guardrails, inclusive governance models, and the development of explainable AI (XAI) that supports trust and interpretability in educational decision-making (Adadi and Berrada, 2018; West et al., 2019).

Despite this progress, disciplinary siloing persists. Technical studies often exclude pedagogical theory, educational innovations overlook algorithmic limitations, and ethical discourses are rarely grounded in the realities of the classroom. This lack of integration constrains the development of inclusive and context-aware AI solutions. Addressing this fragmentation requires a transdisciplinary communication (TDC) framework—one that facilitates coordinated action among educators, technologists, ethicists, and policymakers (Lipuma and León, 2024a). This paper adopts such a framework to synthesize disparate insights and promote AI integration that is pedagogically sound, ethically responsible, and socially responsive.

1.3 Research gap and unresolved issues

Despite its promise, AI’s application in STEM education is hindered by unresolved issues that threaten broad and fair implementation. First, AI systems often reflect the patterns and distortions inherent in their training data, which can lead to algorithmic unfairness and uneven learning outcomes. Second, many AI applications are designed in isolation, lacking collaborative input from educators, ethicists, and policymakers, which results in platforms that fail to address the diverse cognitive, cultural, and social needs of all learners. Third, the centralization of AI development among a few large corporations raises concerns about power imbalances and the reinforcement of disciplinary silos. Addressing the existing gaps in “fairness, accountability, transparency, and ethics of AI-based interventions in society” (Prabhakaran et al., 2022) is critical to ensuring that AI functions as a force multiplier for innovation and as a catalyst for broadening access rather than reinforcing existing disparities.

1.4 How this paper addresses the problem

This paper leverages a transdisciplinary communication (TDC) framework to guide the responsible integration of AI in STEM education. The study employs constructivist grounded theory (CGT) to analyze current AI education models, ethical frameworks, and policy guidelines, fostering collaboration among educators, technologists, ethicists, and policymakers. The goal is to propose actionable strategies that promote inquiry-based learning, cognitive development, and accessibility, ensuring that AI is effective and fair. The discussion sections of this paper are structured as follows: the Background and Importance section outlines the evolution of AI in education and underscores the necessity for transdisciplinary collaboration; the Literature Review synthesizes current research and identifies key gaps; the Actionable Strategies for AI-Driven STEM Education section proposes practical frameworks to support inclusive and ethical AI integration; the Implications and Future Directions section discusses policy considerations and emerging research trajectories; and finally, the Conclusion offers closing insights and recommendations to foster engagement, innovation, and ethical practice in AI-powered STEM education.

1.5 Research design and PICOS framework

To ensure clarity and methodological rigor, this study employs a research design aligned with a modified PICOS framework—commonly used in systematic reviews—to define the scope and relevance of the inquiry. While this framework originates in health sciences, its adaptation here reflects the growing need for structured evidence synthesis in educational research, particularly in fields influenced by emerging technologies.

• Population: The review focuses on students and educators operating within STEM education environments, especially those encountering generative AI tools as part of their academic or instructional experiences. Special consideration is given to populations navigating issues of access, digital literacy, and institutional policy shifts.

• Intervention: The central intervention examined is the integration of generative AI tools (such as ChatGPT, Google Gemini, and domain-specific AI platforms) into teaching, learning, and assessment practices. These tools represent a transformative presence in the educational landscape, warranting both scrutiny and pedagogical innovation.

• Comparison: While not always explicitly stated in the primary literature, traditional non-AI instructional approaches serve as the implicit comparator throughout the study. Contrasts are drawn between AI-mediated and conventional methods to highlight shifts in cognitive agency, authorship norms, and assessment integrity.

• Outcomes: Key outcomes analyzed include the promotion of cognitive integrity (students’ ability to retain and demonstrate authentic intellectual labor), the maintenance of academic integrity in an AI-rich context, and the redesign of instructional and evaluative practices to reflect transparency, ethical reasoning, and reflective learning.

• Study designs: The review incorporates peer-reviewed empirical studies, policy analyses, and systematic reviews published in the past decade. Studies were selected based on relevance to STEM education and AI integration, methodological transparency, and their contributions to theory, practice, or policy discourse.

This structured approach ensures that the evidence synthesized is not only comprehensive and thematically organized but also directly aligned with the broader goals of equitable, effective, and ethically grounded AI adoption in STEM education.

2 Background and importance

Integrating artificial intelligence (AI) into STEM education marks a transformative shift in pedagogical practice. AI-driven tools increasingly redefine instructional models by supporting personalization, formative assessment, and scalable delivery. These technologies respond to current demands for inquiry-based, interdisciplinary, and competency-driven learning (Frey, 2018, p. 1134). In this context, AI enhances problem-solving skills and computational thinking, fostering ethical decision-making among learners.

However, these innovations also surface critical challenges, including inequitable access and the digital divide, algorithmic opacity and inconsistency, and the over-centralization of AI design and development. These issues risk undermining the inclusive potential of educational AI and its promise of expanded educational access. Addressing them requires a transdisciplinary approach that unites perspectives from education, technology, the cognitive sciences, ethics, and policy-making, so that AI systems are both technologically sophisticated and socially responsible.

Understanding AI’s evolving role in STEM education involves examining three interrelated developments: (1) the historical trajectory of AI-based educational technologies, (2) the emergence of AI literacy as a core competency, and (3) the pedagogical transition toward AI-assisted inquiry-based learning (IBL). These developments show how AI has reshaped educational competencies and underscore the need for integrative frameworks that balance innovation with ethical oversight and broad accessibility. The following subsections examine each development in more detail.

2.1 Historical development and key AI-driven educational tools

The integration of artificial intelligence into education has evolved from foundational innovations in computer-assisted instruction to today’s highly adaptive, data-driven platforms. Early systems such as PLATO (1960s) and LOGO (1970s) introduced programmable logic and interactive learning environments, setting the stage for the incorporation of machine learning and natural language processing in contemporary applications (Bond et al., 2024). Modern AI tools—including adaptive learning systems like Knewton and Carnegie Learning and conversational agents such as IBM Watson and Google’s AI tutor—now offer dynamic personalization, formative assessment, and on-demand feedback (Dutta et al., 2024).

These advances mark a shift from static digital content to responsive educational ecosystems. However, as AI tools gain influence over instructional decisions, concerns about algorithmic bias, unequal access, and the transparency of learning analytics have intensified. The historical trajectory reveals not only technical progress but also the need for ethical frameworks that evolve in tandem with technological capabilities.

2.2 From learning literacy to digital literacy to AI literacy

The concept of literacy in education has undergone a fundamental transformation. Where once it referred primarily to reading, writing, and numeracy, digital technologies expanded this scope to include digital literacy—skills such as computational thinking, information evaluation, and media fluency. As artificial intelligence becomes a pervasive element in education, AI literacy emerges as a crucial competency. This entails not only the ability to use AI tools but also to interpret algorithmic outputs, recognize their limitations, and evaluate their social and ethical consequences.

Developing AI literacy2 requires a transdisciplinary approach that integrates perspectives from computer science, ethics, education, and the social sciences. Such integration fosters the capacity for students to engage critically and reflexively with AI systems, preparing them for both professional environments and participatory citizenship in AI-mediated societies.

2.3 The shift from teacher-centered to AI-assisted inquiry-based learning (IBL)

Traditional STEM education has often relied on teacher-centered methodologies, where content is delivered in a fixed, hierarchical format. While such approaches can be practical for foundational knowledge acquisition, they may constrain student engagement and limit opportunities for exploratory learning. The emergence of AI technologies has accelerated a pedagogical shift toward inquiry-based learning (IBL), emphasizing student agency, critical thinking, and problem-solving.

AI-driven platforms support this transition by offering real-time analytics, adaptive feedback, and personalized learning trajectories that align with individual learner needs. These tools also democratize access to high-quality STEM education by mitigating barriers related to geography and socioeconomic status. However, the deployment of AI-assisted IBL must be approached with critical attention to issues of algorithmic bias, accessibility, and ethical oversight to ensure equitable implementation.

Building on this foundation, the following literature review analyzes current research on AI in STEM education, with attention to how these technologies have been integrated, their instructional and policy implications, and the ethical considerations they raise. This literature synthesis informs a broader understanding of the field and identifies key gaps that future transdisciplinary efforts must address to develop inclusive, context-sensitive AI learning environments.

2.4 Research objectives and innovation rationale

Given the rapid evolution and complex implications of artificial intelligence in STEM education, this study aims to:

a) Identify and synthesize current research trends and key contributions across technical, pedagogical, ethical, and policy domains;

b) Assess the extent to which these contributions reflect transdisciplinary integration and address concerns of broad access and algorithmic equity; and

c) Develop actionable, research-informed strategies for advancing inclusive, AI-powered STEM learning environments. By combining bibliometric and systematic methods, the research seeks to establish a foundation for responsible and context-sensitive innovation in educational AI.

This study introduces three interlinked innovations that contribute substantively to the discourse on AI in STEM education:

• Theoretical innovation: It operationalizes Transdisciplinary Communication (TDC) not solely as a conceptual framework but as an applied methodology for aligning multiple epistemic communities—technologists, educators, ethicists, and policymakers—toward collaborative problem-solving. Through this approach, the study foregrounds iterative meaning-making and epistemic translation as core practices that enable ethical and context-sensitive AI implementation.

• Methodological innovation: The study employs a dual-method design that integrates bibliometric analysis and systematic literature review (SLR). This hybrid model enables both macro-level mapping of publication trends and micro-level thematic synthesis of content. Such integration allows for simultaneous observation of structural patterns and interpretive depth—a synthesis rarely executed in STEM-AI educational research.

• Practical and ethical innovation: The project embraces open science principles by publicly sharing its protocols, inclusion/exclusion criteria, and curated datasets via the Open Science Framework (OSF). This commitment to transparency enhances reproducibility, encourages interdisciplinary dialogue, and democratizes access to research outputs—particularly for educators and policymakers operating outside elite research institutions.

Together, these innovations respond to the need for a more inclusive, critically reflective, and epistemologically pluralistic approach to AI in STEM education. They serve not only as academic contributions but also as practical guides for institutional adoption, aligning with the broader goals of equitable innovation and reflexive governance.

To realize these objectives and articulate these innovations in actionable terms, the study follows a rigorous methodological approach that blends bibliometric and systematic review procedures. The following section details the strategies used for literature identification, data extraction, coding, and thematic synthesis, with attention to transparency, reliability, and reproducibility.

3 Methodological approach

To comprehensively examine the integration of artificial intelligence (AI) in STEM education, this study employed a sequential two-phase methodological approach combining bibliometric analysis and a systematic literature review (SLR). This design enabled both the quantitative mapping of research trends and the qualitative synthesis of scholarly findings. The methodological rationale is rooted in the complex and transdisciplinary nature of the topic, which necessitates convergence across technical, pedagogical, ethical, and policy-oriented domains.

3.1 Phase 1: Bibliometric analysis for corpus refinement

The initial corpus comprised over 3,700 records retrieved from the Web of Science, Scopus, and ERIC databases using a broad Boolean query that encompassed AI, STEM education, ethics, inquiry-based learning, and transdisciplinary communication. A bibliometric analysis was conducted to identify publication trends, disciplinary clusters, and temporal distribution (Donthu et al., 2021). This analysis revealed that approximately 72% of the literature had been published between 2021 and 2025, indicating the field’s recent and rapid evolution. Appendix Table 1 lists the key resources identified (Fischer et al., 2020, 2023; Freeman, 2025; Luckin, 2018), and encyclopedias such as The SAGE Encyclopedia of Educational Research, Measurement, and Evaluation (Frey, 2018) provide a comprehensive review of academic frameworks. Co-word analysis and citation mapping helped identify central themes and underrepresented areas, providing a data-driven basis for refining the inclusion criteria for the subsequent systematic review.
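The temporal-distribution step described above can be illustrated with a minimal sketch. It assumes each retrieved record carries a publication year; the `years` list and the function name `share_in_window` are hypothetical and are not taken from the study's dataset or tooling.

```python
from collections import Counter


def share_in_window(years, start, end):
    """Return the fraction of publication years that fall in [start, end]."""
    if not years:
        raise ValueError("years must not be empty")
    in_window = sum(1 for y in years if start <= y <= end)
    return in_window / len(years)


# Hypothetical publication years, one per record (illustrative only,
# not the corpus analysed in this review).
years = [2016, 2018, 2019, 2020, 2021, 2021, 2022, 2023, 2024, 2025]

per_year = Counter(years)  # number of records per publication year
recent_share = share_in_window(years, 2021, 2025)

print(sorted(per_year.items()))
print(f"{recent_share:.0%} of records published 2021-2025")
```

Applied to a full export from the three databases, the same calculation would yield a share comparable to the figure reported in the text.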

3.2 Phase 2: Systematic literature review

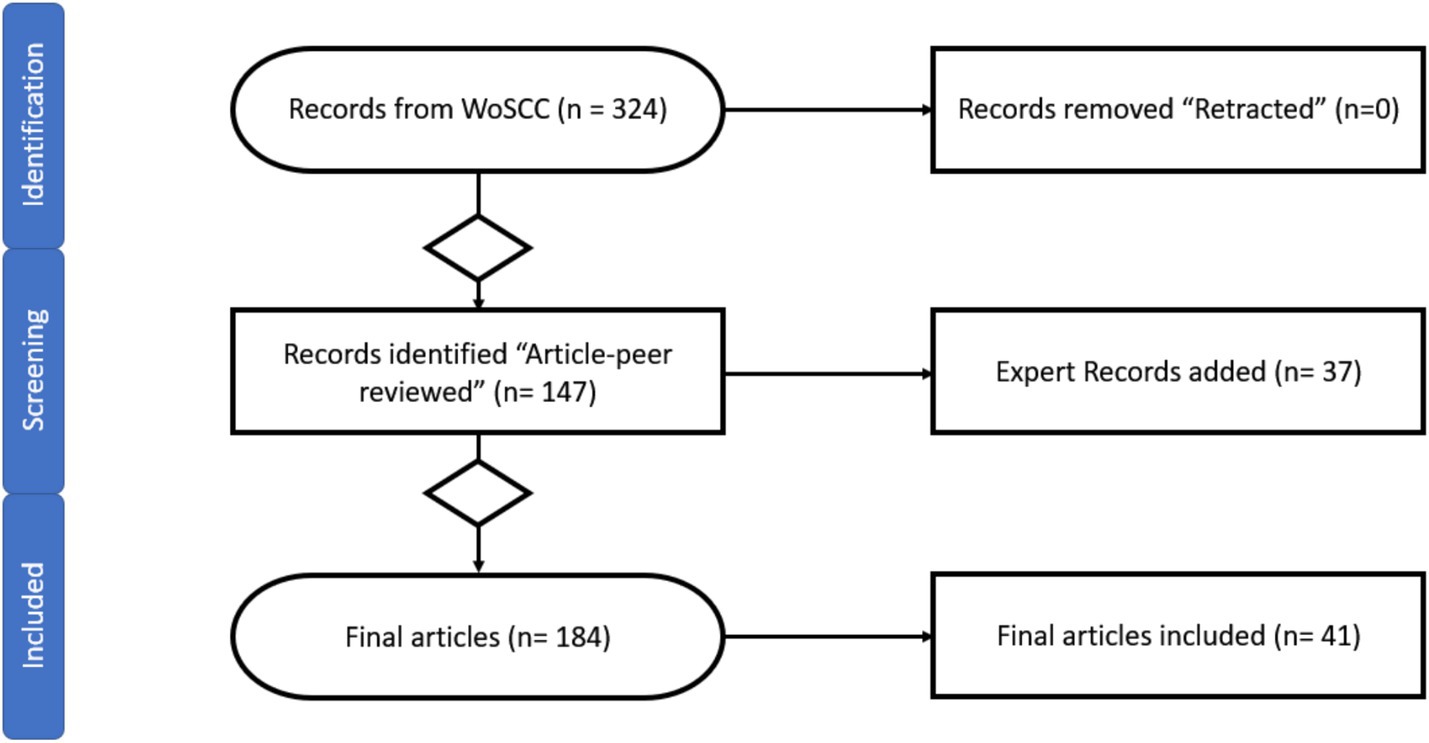

Building on the bibliometric insights, a focused and systematic literature review (Higgins et al., 2019; Zawacki-Richter et al., 2020; Bandara et al., 2015) was conducted using the PRISMA 2020 guidelines to ensure methodological transparency and reproducibility (see Appendix Table 5). The refined search string was applied across the three databases, yielding 766 unique records (324 from Web of Science, 397 from Scopus, and 45 from ERIC). After deduplication, title and abstract screening, and full-text assessment, 147 records met the initial eligibility criteria. An additional 37 sources were included based on expert recommendations obtained during a transdisciplinary focus group session, resulting in a total pool of 184 articles. Following a final screening phase, 41 studies were selected for qualitative synthesis, and a list of the types of documents is provided (see Appendix Table 2). The complete process is visualized in the PRISMA flow diagram (see Figure 2).

Figure 2. PRISMA diagram for literature review. This diagram outlines the multi-stage process used to identify and include studies in the systematic review. An initial set of 324 records was retrieved from the Web of Science Core Collection (WoSCC). No retracted records were found or removed. Of these, 147 were identified as peer-reviewed articles. An additional 37 expert-identified records were incorporated, resulting in a total of 184 records for full-title and abstract screening. Ultimately, 41 articles met the inclusion criteria and were retained for final analysis. This process was adapted to align with the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines.
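The record counts reported in the text of Section 3.2 can be checked with simple arithmetic. The sketch below is one possible way to encode them; the class name `PrismaFlow` and its field names are illustrative assumptions, and only the numbers come from the text.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Record counts at each stage of a PRISMA-style screening flow."""
    per_database: dict             # records retrieved per database
    eligible_after_screening: int  # records meeting initial eligibility criteria
    expert_additions: int          # sources added on expert recommendation
    included: int                  # studies retained for qualitative synthesis

    @property
    def identified(self):
        return sum(self.per_database.values())

    @property
    def pool(self):
        return self.eligible_after_screening + self.expert_additions

    @property
    def excluded_in_final_screening(self):
        return self.pool - self.included


# Counts as reported in the text of Section 3.2.
flow = PrismaFlow(
    per_database={"Web of Science": 324, "Scopus": 397, "ERIC": 45},
    eligible_after_screening=147,
    expert_additions=37,
    included=41,
)

print(flow.identified)                   # 324 + 397 + 45 = 766
print(flow.pool)                         # 147 + 37 = 184
print(flow.excluded_in_final_screening)  # 184 - 41 = 143
```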

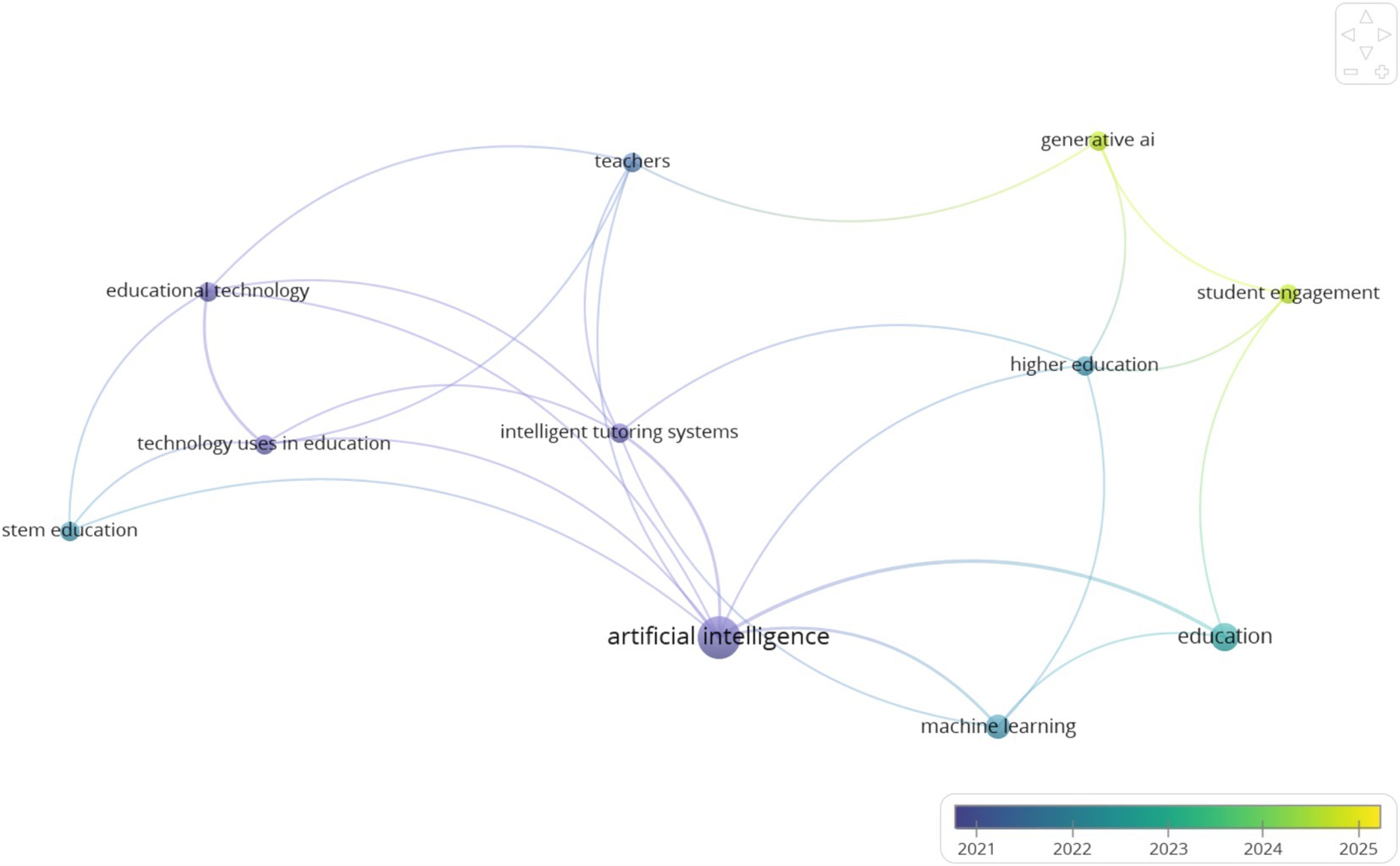

To further contextualize the thematic synthesis, we generated a co-occurrence map using VOSviewer based on keywords from the included studies. The map illustrates clustering around dominant concepts such as artificial intelligence, STEM education, educational technology, student engagement, and generative AI, together with their temporal progression from 2021 to 2025 (see Figure 3). This visualization supports the three synthesized themes by highlighting the evolving conceptual architecture of the field and how student-centered and ethical AI use has gained salience in recent years. A list of journals is provided (see Appendix Table 3).

Figure 3. Co-occurrence map of key terms in AI-STEM education literature. The VOSviewer map shows three distinct clusters centered on AI, STEM education, and ethics, indicating emerging thematic convergence in the literature from 2021 to 2025.
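The idea behind a keyword co-occurrence map can be sketched as raw pair counting: two keywords co-occur once for every study that lists both. The example below illustrates only that counting step. It does not reproduce VOSviewer's own normalization, clustering, or layout, and the keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(keyword_lists):
    """Count how many documents list each unordered pair of keywords."""
    counts = Counter()
    for keywords in keyword_lists:
        # Normalize case and whitespace, and drop duplicates within a document.
        unique = sorted({k.strip().lower() for k in keywords})
        counts.update(combinations(unique, 2))
    return counts


# Hypothetical author keywords for three studies (illustrative only).
docs = [
    ["artificial intelligence", "STEM education", "ethics"],
    ["artificial intelligence", "STEM education", "student engagement"],
    ["generative AI", "ethics", "artificial intelligence"],
]

pairs = cooccurrence_counts(docs)
for pair, n in pairs.most_common(3):
    print(pair, n)
```

Pairs are stored in alphabetical order, so each unordered pair has exactly one key in the resulting counter.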

To ensure methodological rigor, we employed a modified version of the Critical Appraisal Skills Programme (CASP, 2018) checklist during the full-text screening stage. This adaptation was tailored to assess the clarity of research aims, methodological transparency, ethical considerations, and data relevance in studies related to education and policy. Each study was assigned a quality rating—high, moderate, or low—through consensus coding conducted by two reviewers. Any discrepancies were adjudicated through discussion with a third author. Appraisal results were systematically recorded and managed in Microsoft Excel to support reproducibility and transparency. All inclusion and exclusion decisions were tracked using Zotero (v7.0.15) for reference management and Microsoft Excel (v2108) for classification coding. In alignment with open science best practices, all data—including search queries, screening protocols, and bibliometric outputs—have been made publicly accessible via the Open Science Framework (OSF) to support transparency, reproducibility, and global scholarly engagement (see Section 14: Data Availability Statement).
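The two-reviewer appraisal with third-author adjudication described above can be expressed as a small decision rule. This is a minimal sketch under stated assumptions: the function name `final_rating` and the `adjudicate` callback are hypothetical, and in the study itself discrepancies were resolved through discussion rather than by any automated rule.

```python
RATINGS = ("low", "moderate", "high")


def final_rating(reviewer_a, reviewer_b, adjudicate):
    """Return (rating, was_adjudicated) for one appraised study.

    `adjudicate` is a callable standing in for the discussion with a
    third author; it is only invoked when the two reviewers disagree.
    """
    for rating in (reviewer_a, reviewer_b):
        if rating not in RATINGS:
            raise ValueError(f"unknown rating: {rating!r}")
    if reviewer_a == reviewer_b:
        return reviewer_a, False
    decided = adjudicate(reviewer_a, reviewer_b)
    if decided not in RATINGS:
        raise ValueError(f"unknown adjudicated rating: {decided!r}")
    return decided, True


# Hypothetical adjudication policy for illustration: keep the more
# conservative (lower) of the two ratings.
def conservative(a, b):
    return min(a, b, key=RATINGS.index)


print(final_rating("high", "high", conservative))
print(final_rating("high", "moderate", conservative))
```

Recording the `was_adjudicated` flag alongside each rating keeps an audit trail of which decisions required a third opinion.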

The thematic synthesis was guided by Saldaña’s (2021) qualitative coding framework and proceeded in three structured stages. First, open coding was conducted using NVivo to identify recurring concepts and semantic descriptors within the titles and abstracts of the selected studies. Second, these initial codes were grouped into descriptive themes that captured surface-level patterns in how artificial intelligence (AI) was implemented, interpreted, or discussed in educational contexts. Third, analytical themes were developed through iterative interpretation, aligning emerging categories with the core dimensions of transdisciplinary communication (TDC) and the ethical integration of AI in STEM education.

This dual-method approach facilitated a structured understanding of how AI is being conceptualized and deployed in STEM education, enabling the identification of key research gaps to inform the actionable strategies presented in later sections.

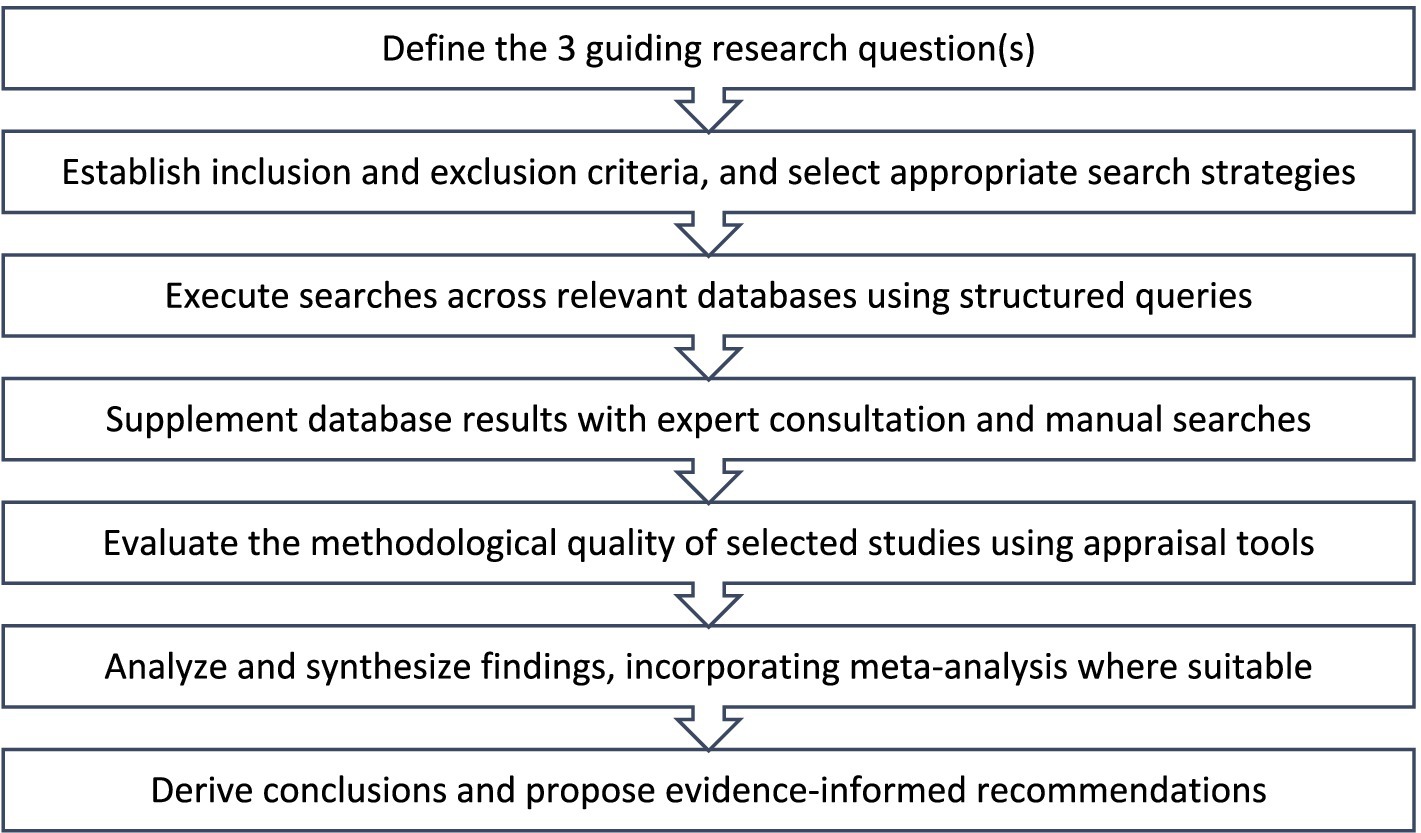

As outlined by Frey (2018, p. 2185), this study follows a structured approach to systematic review to address the following research questions (see Figure 4):

1. What instructional strategies have been proposed to ensure responsible and effective integration of generative AI in STEM education?

2. How does the use of AI tools influence cognitive and academic integrity in learning environments?

3. What institutional policies and ethical frameworks are being developed to guide the adoption of AI in educational settings?

Figure 4. Sequential steps in the systematic review methodology applied in this study. This schematic is informed by the structured review procedures outlined in The SAGE Encyclopedia of Educational Research, Measurement, and Evaluation (1st ed.), edited by Bruce B. Frey, 2018, Thousand Oaks, CA: SAGE Publications. © 2018 SAGE Publications. Adapted under fair use for academic and educational purposes.

3.3 Framework selection: PICOS and alternatives

While PICOS is traditionally used in clinical research, it has been adapted here for its structural clarity in identifying populations, interventions, comparisons, outcomes, and study designs—especially in fields influenced by policy and emerging technologies. We recognize that frameworks such as SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) and PEO (Population, Exposure, Outcome) are more commonly used in qualitative educational research. In this review, PICOS was used for its alignment with PRISMA guidelines and to accommodate the mixed study types (empirical, review, policy) examined. Future systematic reviews focused exclusively on qualitative findings may benefit from SPIDER-based categorization.

3.4 Inter-rater reliability, inter-rater agreement and thematic consistency

To ensure analytic consistency, coding was reviewed collaboratively by the research team using a predefined agreement scale ranging from 1 to 5, with 5 representing complete conceptual alignment between coders. Inter-rater agreement was operationally defined as either identical ratings or a discrepancy of no more than one point—an approach consistent with best practices in qualitative reliability assessment (Frey, 2018, p. 1926).

During the full-text screening phase, the first and second authors independently reviewed all candidate studies and reached a consensus on 32 out of 41 included sources. The remaining nine cases were discussed in detail with the third author, who served as a moderator to resolve interpretive discrepancies and document the rationale for final inclusion decisions. This collaborative protocol enhanced both the reflexivity and methodological rigor of the review, ensuring that decisions reflected a plurality of disciplinary perspectives and thematic alignment.

Our independent assessments demonstrated high inter-rater reliability3 and inter-rater agreement,4 as scoring patterns were either identical or differed by no more than one point. Moreover, there was sufficient variability among study characteristics to confirm the discriminatory capacity of our coding criteria, affirming the robustness of the selection process.
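The agreement definition used in this section (identical ratings, or ratings differing by no more than one point on the 1 to 5 scale) can be computed as follows. The score lists are hypothetical and serve only to show the calculation; the final line uses the consensus count reported in the text (32 of 41 sources).

```python
def agreement_rates(scores_a, scores_b, tolerance=1):
    """Return (exact, within_tolerance) agreement rates for two raters."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("score lists must be non-empty and of equal length")
    diffs = [abs(a - b) for a, b in zip(scores_a, scores_b)]
    exact = sum(d == 0 for d in diffs) / len(diffs)
    within = sum(d <= tolerance for d in diffs) / len(diffs)
    return exact, within


# Hypothetical ratings on the 1-5 agreement scale (illustrative only).
rater_1 = [5, 4, 3, 5, 2, 4]
rater_2 = [5, 3, 3, 4, 2, 2]

exact, within_one = agreement_rates(rater_1, rater_2)
print(f"exact agreement: {exact:.1%}")
print(f"agreement within one point: {within_one:.1%}")

# Initial consensus reported in the text: 32 of 41 included sources.
initial_consensus = 32 / 41
print(f"initial consensus: {initial_consensus:.1%}")
```

Percent agreement of this kind does not correct for chance agreement; a chance-corrected statistic would require the full rating data.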

3.5 Search strategy and exclusion tracking

The initial corpus was derived using comprehensive Boolean search strings across three databases: Web of Science, Scopus, and ERIC. Search parameters included combinations of keywords such as “artificial intelligence,” “STEM education,” “ethics,” “student learning,” and “Generative AI.” Detailed search strings and database-specific filters are provided in the Supplementary Material and are available via OSF (see Appendix B).

Inclusion and exclusion decisions were recorded using Zotero and Excel. All excluded studies from the full-text review phase—along with justifications—have been documented and made publicly accessible in the OSF repository to support replicability and auditability.

This systematic review was conducted in the field of education and emerging technologies, which falls outside the current scope of the PROSPERO registry. Nonetheless, we followed PRISMA 2020 guidelines and adopted open-science protocols to ensure transparency.

4 Literature review

The rapid integration of artificial intelligence (AI) into STEM education has generated a growing and increasingly diverse body of research (Straw and Callison-Burch, 2020). This literature spans three major thematic domains: technical applications, pedagogical innovations, and ethical frameworks. Technical studies investigate the development of intelligent tutoring systems, adaptive algorithms, and large language models that support data-driven instruction and real-time feedback mechanisms (Fan et al., 2023; Valeri et al., 2025; Fuller et al., 2024). Pedagogical research, by contrast, focuses on AI’s capacity to support inquiry-based learning, formative assessment, and personalized instruction, particularly in STEM contexts that require high levels of abstraction and iterative problem-solving (Toyokawa et al., 2023; Lin et al., 2023).

Ethical concerns are also prominent, particularly those surrounding algorithmic bias, transparency, and data governance. Scholars argue that without intentional design and regulation, AI systems risk exacerbating existing educational inequities (Raji et al., 2022). In response, transdisciplinary communication (TDC) has emerged as a critical framework that calls for inclusive collaboration among educators, technologists, policymakers, and ethicists to ensure that AI systems are pedagogically sound and socially responsible (Williamson and Eynon, 2020).

This study employed a systematic literature review to examine these three thematic areas in depth, adhering to PRISMA 2020 guidelines to ensure methodological transparency and reproducibility. The review focused on peer-reviewed studies published between 2020 and 2025 that addressed AI applications in STEM education, transdisciplinary approaches to AI integration, and the ethical challenges inherent in AI-driven learning environments. Only English-language publications were included. Following the initial database screening, a focus group session resulted in the expert-guided addition of 37 relevant sources. No records were removed. In line with best practices for research transparency and open data (Serrano-Torres et al., 2025, p. 5), all review materials—including search queries, screening documentation, and bibliometric data—have been made publicly accessible through the Open Science Framework (see Section 14: Data Availability Statement). The following subsection details the search strategy, database coverage, and procedural steps used to identify and curate the reviewed literature.

4.1 Search strategy and review procedure

This systematic literature review adhered to the PRISMA 2020 guidelines to ensure methodological transparency and reproducibility. The primary objective was to synthesize current interdisciplinary research on the integration of artificial intelligence (AI) in STEM education, with a focus on transdisciplinary communication (TDC), pedagogical innovation, and ethical governance.

The search was conducted in January 2025 across the Web of Science Core Collection, Scopus, and ERIC databases. The following Boolean string was used to identify relevant articles: (“artificial intelligence” OR “AI”) AND (“STEM education” OR “science education” OR “technology in education”) AND (“ethics” OR “inquiry-based learning” OR “personalized learning” OR “transdisciplinary communication”).
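As a minimal sketch of how the three concept groups in the string above combine, the query can be assembled programmatically. The helper names `or_group` and `build_query` and the list names are illustrative assumptions; database-specific field tags and filters are documented in the OSF materials and are not reproduced here.

```python
# Illustrative sketch: assemble the Boolean search string from its three concept groups.
# `or_group` and `build_query` are hypothetical helpers, not part of any database API.

AI_TERMS = ["artificial intelligence", "AI"]
CONTEXT_TERMS = ["STEM education", "science education", "technology in education"]
FOCUS_TERMS = [
    "ethics",
    "inquiry-based learning",
    "personalized learning",
    "transdisciplinary communication",
]


def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Join the OR-groups with AND, so a record must match at least one term per group."""
    return " AND ".join(or_group(group) for group in groups)


QUERY = build_query(AI_TERMS, CONTEXT_TERMS, FOCUS_TERMS)
print(QUERY)
```

Keeping each concept group in its own list makes it easier to see which terms broaden the search (OR within a group) and which narrow it (AND across groups).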

The inclusion criteria were:

• Peer-reviewed journal articles published between 2020 and 2025.

• English-language publications.

• Empirical or conceptual studies focusing on AI applications in STEM education contexts.

• Studies discussing ethical, pedagogical, or access-related implications of AI.

• Open Access.

Exclusion criteria included:

• Conference abstracts without full texts.

• Non-English or non-peer-reviewed publications or gray literature (unless added via expert recommendation).

• Studies solely addressing technical algorithm development without educational context.

The refined categories were:

• Education Educational Research

• Education Scientific Disciplines

• Computer Science Artificial Intelligence

A total of 324 records were initially retrieved from the Web of Science database. The search was then replicated in Scopus, yielding 397 records, and in ERIC, which returned 45 records. Following the removal of duplicates, titles and abstracts of 543 unique entries were screened for relevance. Of these, 147 articles were selected for full-text review based on inclusion criteria. Subsequently, 37 additional records were incorporated based on expert recommendations gathered during a transdisciplinary focus group session, bringing the total pool to 184 articles. After a second round of title, abstract, and full-text assessment, 41 studies were deemed eligible and included in the final synthesis (see Figure 2, PRISMA flow diagram). We categorized the documents by STEM discipline: Technology (n = 16), Education (n = 15), Cross-Disciplinary/Integrated STEM (n = 6), and General STEM (n = 4).
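The record counts reported above can be checked for internal consistency with a few lines of arithmetic. The sketch below uses only the figures stated in the text; the derived values (total retrieved, duplicates removed, records excluded in the second round) are not reported in the text and assume that no other removals occurred at those steps. Variable names are illustrative.

```python
# Sketch: verify that the reported PRISMA counts add up.
# Derived quantities are implied by the arithmetic, not stated in the review.

retrieved = {"Web of Science": 324, "Scopus": 397, "ERIC": 45}
total_retrieved = sum(retrieved.values())

unique_screened = 543  # unique entries after duplicate removal
duplicates_removed = total_retrieved - unique_screened  # derived

full_text_selected = 147  # selected for full-text review
expert_added = 37  # added via the focus group session
assessment_pool = full_text_selected + expert_added

included = 41  # final synthesis
excluded_second_round = assessment_pool - included  # derived

by_discipline = {
    "Technology": 16,
    "Education": 15,
    "Cross-Disciplinary/Integrated STEM": 6,
    "General STEM": 4,
}

# The pool and the discipline breakdown should match the reported totals.
assert assessment_pool == 184
assert sum(by_discipline.values()) == included

print(total_retrieved, duplicates_removed, assessment_pool, excluded_second_round)
```

Under these assumptions, the three databases returned 766 records in total, 223 of which were duplicates, and 143 of the 184 pooled articles were excluded in the second round.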

Zotero version 7.0.15 (64-bit) was used for reference management, while Excel version 2108 (Build 14332.21040 Click-to-Run) was employed to track inclusion/exclusion decisions. Complete data records, including search terms and selection criteria, are publicly available via the Open Science Framework (see Section 14: Data Availability Statement).

4.2 AI applications in STEM education

AI technologies have significantly transformed STEM education by enabling adaptive learning environments, automated assessments, and personalized learning pathways. For example, AI-powered adaptive systems utilize machine learning algorithms to tailor educational content based on students’ abilities and learning styles, offering individualized instruction and real-time feedback (Zawacki-Richter et al., 2019). Automated assessment tools, exemplified by intelligent tutoring systems (ITS) such as Carnegie Learning’s Cognitive Tutor, simulate one-on-one tutoring experiences and provide immediate, data-driven feedback (Van Lehn, 2011). Furthermore, predictive analytics derived from machine learning have enabled educators to identify learning gaps early and design targeted interventions (Holmes et al., 2019). Despite these promising applications, challenges remain in ensuring that these models are fair and transparent; inconsistencies in training datasets can disproportionately affect students from a variety of backgrounds (Baker and Hawn, 2022).

4.3 Transdisciplinarity and AI integration

The successful implementation of AI in STEM education hinges on transdisciplinary collaboration that bridges computer science, cognitive psychology, ethics, pedagogy, and social sciences. Additionally, it is essential to involve broader societal stakeholders, such as parents and students, to ensure that AI integration is aligned with community needs and values. Transdisciplinary communication (TDC) frameworks are instrumental in aligning technological innovation with educational theory. The adaptation of theoretical models such as Vygotsky’s “Zone of Proximal Development (ZPD)” (Lasmawan and Budiarta, 2020) and Piaget’s “Constructivist Learning Theory (CLT)” (Piaget, 1972) has demonstrated how AI can function as an instructional scaffold (Luckin and Holmes, 2016). Recent studies have also shown that AI-driven virtual labs and simulations can enhance inquiry-based learning (IBL) by replicating real-world experimentation (Chen et al., 2020), while collaborative learning platforms foster interdisciplinary teamwork and critical thinking (Hidiroglu and Karakas, 2022; Parviz, 2024). However, the efficacy of these initiatives depends on ongoing dialogue not only among educators, technologists, and policymakers but also with parents and students to ensure that AI systems adhere to ethical guidelines and pedagogical objectives while reflecting the needs of the society they aim to serve (Selwyn, 2019).

4.4 Ethics, algorithmic integrity, and access considerations

Integrating AI into education raises complex ethical questions about algorithmic decision-making, data privacy, and the digital divide (see Appendix Table 4). AI models trained on prior data may unintentionally reproduce existing societal disparities, leading to inconsistencies across demographic and economic dimensions (West et al., 2019). Additionally, some scholars argue that excessive reliance on AI-generated recommendations may inhibit students’ development of independent critical thinking (Williamson, 2019). Others contend that personalized feedback from AI can enhance metacognitive skills when designed appropriately (Karatas and Arpaci, 2021). Initiatives such as “AI for Social Good” underscore the need for fair, transparent, and accountable AI systems in education, advocating for robust regulatory frameworks and open-source models (Dignum, 2018). Moreover, emerging research in Explainable AI (XAI) emphasizes making AI processes interpretable so educators and students can understand and trust AI-driven decisions (Adadi and Berrada, 2018).

Despite AI’s potential, further research is needed to develop comprehensive inconsistency detection, fairness auditing, and governance mechanisms to ensure that AI enhances broad and accessible learning outcomes. While addressing these ethical challenges remains a critical priority, it is equally essential to translate research into tangible solutions. To fully realize AI’s potential in STEM education, it is necessary to move from theoretical concerns to actionable strategies that not only ensure fairness but also capitalize on AI’s transformative capabilities. The next step involves developing a strategic framework that integrates broad access, engagement, and pedagogical effectiveness into the development and deployment of AI technologies. As AI continues to influence the evolution of education, it is essential to adopt a multi-stakeholder approach—uniting educators, technologists, policymakers, and ethicists—to collaboratively shape AI-driven learning environments that foster innovation and uphold social responsibility.

4.5 Risk of bias and limitations

While this review adhered to PRISMA guidelines to ensure methodological rigor and transparency, several potential sources of bias warrant consideration. First, language bias is present, as the review was limited to English-language publications. This criterion may have excluded significant insights from regional or non-English sources, particularly those from the Global South. Second, a disciplinary imbalance is evident: the included literature predominantly represents fields such as computer science and educational technology, while contributions from sociology, anthropology, and public policy remain underrepresented. Third, although the inclusion of 37 additional articles based on expert recommendations enriched the review, it also introduces selection bias, particularly due to the purposive inclusion of highly cited or institutionally visible studies.

Furthermore, publication bias may have influenced the dataset, as studies reporting favorable or novel outcomes are more likely to be published in indexed academic databases. To promote inclusivity and reduce barriers to collaboration, the authors adopted open-access availability as a guiding principle, ensuring that all included sources were accessible to all members of the research team, including a co-author based in Ecuador. This strategy supported equitable participation in data review and analysis.

These limitations underscore the importance of future systematic reviews that incorporate multilingual sources, gray literature, and formal bias assessment tools, such as ROBIS, to enhance comprehensiveness and global representativeness.

4.6 Thematic patterns across the corpus: agency, assessment, and cognitive transformation

Analysis of the 41 included studies yielded three dominant and interrelated thematic patterns: (1) Student Agency and AI-Augmented Learning, (2) Reimagining Assessment in AI-Native Classrooms, and (3) Cognitive and Ethical Transformation through AI Integration. These themes collectively reflect the evolution of discourse and empirical work within the intersection of artificial intelligence and STEM education. The thematic results are grounded in both narrative coding and bibliometric clustering, supported by the co-occurrence map generated using VOSviewer (Figure 3).
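To make the idea of a keyword co-occurrence map concrete, the sketch below counts how often pairs of keywords appear together in the same document. It illustrates the general counting technique only and does not reproduce VOSviewer's own normalization, clustering, or layout. The function name `cooccurrence` and the toy keyword lists are hypothetical and are not drawn from the review corpus.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(keyword_lists):
    """Count, for each unordered keyword pair, the number of documents containing both."""
    counts = Counter()
    for keywords in keyword_lists:
        # Normalize case and drop repeats so each pair counts once per document.
        unique = sorted({keyword.lower() for keyword in keywords})
        counts.update(combinations(unique, 2))
    return counts


# Hypothetical toy data: one keyword list per document.
DOCS = [
    ["ethics", "generative AI", "equity"],
    ["student engagement", "generative AI"],
    ["ethics", "equity", "student engagement"],
]

PAIRS = cooccurrence(DOCS)
for pair, count in PAIRS.most_common():
    print(pair, count)
```

In a map built from such counts, pairs with higher counts would be drawn closer together or linked more strongly, which is what produces visible clusters of related terms.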

4.6.1 Theme 1: Student agency and AI-augmented learning

Across the dataset, 28 studies examined how AI technologies influence learner autonomy, self-regulation, and personalization. Of these, 19 highlighted AI’s potential to support self-directed learning through adaptive feedback systems, intelligent tutoring, and personalized content pathways. However, nine studies reported concerns regarding reduced cognitive engagement, with some documenting over-reliance on generative tools as a substitute for deep learning. Variation in agency was observed across educational levels, with higher education studies reporting greater use of co-design and scaffolding mechanisms compared to K–12 settings.

4.6.2 Theme 2: Reimagining assessment in AI-native classrooms

Assessment-related concerns were addressed in 22 studies, with 14 explicitly discussing the limitations of traditional testing in the context of generative AI. Emerging assessment models include process-oriented evaluations, performance-based tasks, and AI-detection-aware rubrics. Several studies emphasized the need to evaluate thinking processes rather than outputs alone. Notably, comparative findings indicate a lack of alignment between institutional policy responses and the pace of student adoption of AI tools, especially in STEM disciplines.

4.6.3 Theme 3: Cognitive and ethical transformation

Ethical implications and shifts in cognitive strategies were identified in 21 studies. Key concerns included the normalization of biased AI outputs, lack of algorithmic transparency, and the marginalization of students with limited digital access or literacy. At the same time, 11 studies reported that structured AI integration can promote critical thinking, metacognitive reflection, and cross-disciplinary learning. Ethical discourse increased notably in post-2023 publications, consistent with the trend visualized in the VOSviewer map showing clustering around terms such as “ethics,” “student engagement,” and “equity.”

4.6.4 Synthesis across themes

Comparative synthesis reveals disciplinary divergences in the treatment of these themes: engineering and computer science studies prioritize automation and performance metrics, whereas education and psychology studies emphasize cognitive, social, and ethical dimensions. The combined use of bibliometric mapping and structured thematic coding highlights a growing convergence around student-centered approaches but also uncovers significant gaps—particularly the limited presence of transdisciplinary frameworks and empirically tested interventions.

These results underscore the importance of designing AI-powered STEM education systems that prioritize learner agency, reconfigure assessment for authenticity, and remain vigilant to the cognitive and ethical complexities introduced by AI technologies.

The thematic synthesis reveals a field in flux, marked by experimentation, ethical reconsideration, and evolving pedagogical models. However, while the literature offers valuable insights, it also underscores persistent gaps in application, equity, and coherence across disciplines. In response, Section 5 translates these thematic patterns into a set of actionable, evidence-informed strategies. These recommendations are designed to guide educators, policymakers, and institutional leaders in navigating the opportunities and risks of AI implementation while fostering an inclusive and reflexive educational ecosystem.

4.7 Ethical agency and inclusive design in AI-driven STEM education

This section examines how ethical design principles and inclusion frameworks are—or are not—embedded in the implementation of AI tools in STEM education. While numerous studies highlight the potential of AI for personalized and adaptive learning, far fewer address how these systems can reproduce structural inequities, reinforce epistemic power asymmetries, or marginalize already vulnerable student populations.

In response to these gaps, a growing body of perspective literature, particularly work grounded in the Universal Design for Learning (UDL) framework, has argued that inclusive design must transcend the technical layer of accessibility and be adopted as a foundational pedagogical philosophy. UDL serves as a framework for curriculum development that emphasizes instructional flexibility, enabling learners with diverse needs, backgrounds, and abilities to access, engage with, and express an understanding of content (Kapil et al., 2024). It proposes three core principles: providing multiple means of engagement, representation, and expression, each essential for inclusive pedagogical planning in AI-enhanced environments.

To provide additional clarity, UDL is defined as a research-based framework to improve and optimize teaching and learning based on what we know about the human brain. According to CAST (2024), learner variability is predictable and ubiquitous. The UDL Guidelines support educators in designing for this variability through three main categories:

• Engagement (the why of learning): recruiting interest, sustaining effort and persistence, and promoting self-regulation;

• Representation (the what of learning): perception, language and symbols, and comprehension;

• Action and expression (the how of learning): physical action, expression and communication, and executive function.

These principles guide educators in addressing diverse student needs and in reducing barriers to learning through anticipatory and inclusive design. In the context of AI-enhanced education, these categories provide critical design prompts for building systems that respect neurodiversity, language variation, and cognitive diversity at scale.

Glass et al. (2013) extend this argument by illustrating that UDL is not a retroactive accessibility fix but a proactive design mindset that leverages learner diversity as a pedagogical asset. Their work demonstrates how educators can shift from deficit-based models to strength-based practices through creative, multimodal instructional approaches. In the context of AI, this reconceptualization calls for systems that are not merely compliant with accessibility standards but are epistemically responsive—enabling students to co-construct their learning experiences in ways that affirm agency and identity.

Building on this, recent analyses of equity-focused AI systems emphasize the importance of aligning algorithmic design with the ethical requirements articulated by the European Commission’s “Trustworthy AI” guidelines (Isop, 2025). These include human agency and oversight, technical robustness, transparency, and fairness. UDL-aligned AI systems operationalize many of these principles by empowering students to navigate content on their terms, choose from multiple pathways for assessment, and receive meaningful, personalized feedback without compromising transparency or accountability.

Thus, the integration of UDL within AI-driven STEM education not only enhances technical adaptability but also reinforces ethical agency (Priyadharsini and Mary, 2024). It ensures that inclusion is not an afterthought but an active design commitment—one that addresses systemic exclusions and promotes learner autonomy, cultural relevance, and just learning ecologies. By foregrounding frameworks like UDL, the review reveals how ethical design and transdisciplinary collaboration are essential to the future of equitable AI in education.

In sum, the reviewed literature illustrates that ethical and inclusive design in AI-powered STEM education requires more than equitable access—it demands intentional, transdisciplinary frameworks that align pedagogical flexibility with algorithmic accountability. The following section builds upon this conceptual foundation by offering actionable strategies that operationalize these insights across practice, policy, and research domains.

5 Actionable strategies for AI-driven STEM education

As AI continues to shape the STEM education landscape, developing actionable strategies that support broad participation, promote transdisciplinary collaboration, and harness AI’s capabilities for enhanced learning experiences is essential. AI-driven STEM education must be ethically sound, accessible, and pedagogically practical, requiring a strategic, multi-stakeholder approach that involves educators, AI developers, policymakers, and ethicists. This section outlines key strategies for designing AI systems that address access-related challenges, foster transdisciplinary collaboration in AI integration, and implement AI-driven solutions to enhance STEM education.

5.1 Designing AI for access and engagement

AI has the potential to personalize education and improve access (Devi-Doddi, 2023), but without proper oversight, it can also reinforce algorithmic inconsistencies and widen disparities in educational outcomes. To support broad AI adoption in STEM education, it is critical to develop inconsistency detection algorithms, responsive AI models, and strategies to expand access to underserved learning environments.

One of the foremost challenges in AI-driven education is algorithmic inconsistency, which arises when AI models are trained on datasets that reflect prior disparities in how educational resources and assessments have been distributed. AI inconsistency detection algorithms must be developed and implemented to regularly audit and correct imbalanced patterns in automated grading systems, adaptive learning platforms, and AI-generated recommendations (West et al., 2019). AI-driven educational tools must be tested across a range of learner profiles to ensure they do not produce uneven outcomes based on individual characteristics or contextual learning needs.
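One simple form such an audit could take is a comparison of automated and human-assigned scores across learner profiles. The sketch below is a minimal illustration under stated assumptions, not a validated fairness-auditing method: the function `audit_score_gaps`, the threshold of 0.5 points, the profile labels, and the toy records are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def audit_score_gaps(records, threshold=0.5):
    """Compare automated and human scores per learner profile.

    Returns the mean (automated - human) gap for each profile and a sorted
    list of profiles whose gap exceeds the threshold in absolute value.
    """
    differences = defaultdict(list)
    for profile, human_score, automated_score in records:
        differences[profile].append(automated_score - human_score)
    gaps = {profile: mean(values) for profile, values in differences.items()}
    flagged = sorted(p for p, gap in gaps.items() if abs(gap) > threshold)
    return gaps, flagged


# Hypothetical toy records: (learner profile, human score, automated score).
SAMPLE = [
    ("profile_a", 8.0, 8.0),
    ("profile_a", 7.0, 7.5),
    ("profile_b", 8.0, 7.0),
    ("profile_b", 6.0, 5.0),
]

GAPS, FLAGGED = audit_score_gaps(SAMPLE)
print(GAPS)
print(FLAGGED)
```

Here the automated grader scores `profile_b` a full point lower than human raters on average, so that profile is flagged for review. A real audit would need larger samples, appropriate statistical tests, and careful decisions about which learner characteristics to examine.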

Expanding AI access to underserved learning environments requires targeted policy interventions and infrastructure investments. Many AI-driven learning platforms rely on high-speed internet and advanced computing resources, often unavailable in underprivileged schools and low-income communities (Holmes and Burgess, 2022). Governments, universities, and private sector partners must invest in affordable AI-powered educational tools, low-bandwidth adaptive learning technologies, and community-based digital literacy programs to democratize AI-driven education. Initiatives such as open-source AI models and low-cost machine learning platforms can provide students from a wide range of learning contexts with access to AI-enhanced educational experiences without financial or technological limitations.

Additionally, AI must be designed to support students with varying learning needs, ensuring that educational tools comply with Universal Design for Learning (UDL) principles. The UDL framework promotes inclusive and flexible learning environments by providing multiple means of engagement, representation, and expression—principles that are essential for addressing systemic inequities and fostering accessibility for neurodiverse and historically underserved learners (Alasadi and Baiz, 2023; Bray et al., 2024; Garcia Ramos and Wilson-Kennedy, 2024). Complementing UDL, the European Commission’s “Requirements of Trustworthy AI” offers a foundational ethical scaffold, emphasizing human agency and oversight, technical robustness, transparency, fairness, and accountability (Isop, 2025). Technologies, such as speech recognition software, real-time transcription services, and AI-generated personalized learning pathways, can provide tailored support for students with visual, auditory, and cognitive impairments (Grenier et al., 2025). By centering educational equity and responsible innovation, AI-powered learning environments can help close existing gaps rather than deepen them.

5.2 Transdisciplinary collaboration framework

The integration of AI into STEM education requires a transdisciplinary collaboration framework that brings together the ‘Mode 3’ and ‘Quadruple Helix’ stakeholders—such as educators, technologists, ethicists, policymakers, and social scientists—to co-design AI systems that are technically robust and pedagogically effective (Carayannis and Campbell, 2009). These stakeholders often embody multiple roles simultaneously; for example, a single individual may function as a parent, educator, and civic participant within overlapping educational and governmental systems. Recognizing these intersecting identities is critical to developing ethical frameworks and policy guidelines that authentically reflect the complexity of real-world educational ecosystems.

Technology developers alone must not dictate how AI is used in education; its implementation should be a co-designed process that aligns with educational goals, ethical considerations, and societal needs. This approach resonates with the ideas discussed in Ostrom (2015, p. 137), who underscores the value of collaborative governance in managing shared resources such as educational technologies. She argues that sustainable systems emerge through collective action, where diverse stakeholders contribute their expertise, resources, and decision-making capacities. In this spirit, AI in education must be shaped by cooperative processes that prioritize accountability, transparency, and the public good.

Establishing multi-stakeholder networks ensures that diverse disciplinary perspectives inform AI development. Universities, research institutions, and industry leaders must work together to create AI education task forces that evaluate how AI technologies align with curriculum goals, support ethical learning environments, and foster cognitive development. Collaborative partnerships between educators and AI developers can effectively bridge the divide between technological innovation and classroom realities, ensuring that AI tools remain accessible, contextually appropriate, and pedagogically robust (Luckin and Holmes, 2016).

Embedding transdisciplinary communication (TDC) principles in AI policy design ensures that AI is transparent, explainable, and aligned with human-centered education values. AI systems in STEM education must be accountable to educators and students, requiring policies that mandate algorithmic transparency, open-access data, and explainable AI (XAI) models. These policies should also include ethical guidelines for AI-driven decision-making in student assessments, learning analytics, and automated grading systems, ensuring that AI does not function as an opaque, unchallengeable educational authority (Selwyn, 2019).

Another crucial aspect of transdisciplinary collaboration is public engagement and AI literacy development. Many educators and students remain unfamiliar with AI systems, leading to skepticism or overreliance on AI-generated outputs. Integrating AI literacy programs within STEM curricula can help students and teachers critically understand AI models, ethical considerations, and data-driven decision-making (Holmes et al., 2019). By fostering AI literacy across disciplines, institutions can help students become active co-creators of AI-enhanced learning experiences rather than passive consumers.

A transdisciplinary approach to AI integration ensures that AI is developed with—not just for—educators and learners, leading to a more ethical, transparent, and broadly accessible AI-driven education ecosystem.

5.3 Practical AI applications in STEM education

The successful integration of AI in STEM education relies on practical applications that enhance learning engagement, provide hands-on experiences, and automate administrative processes. AI-powered tools such as virtual laboratories, intelligent tutoring systems, and AI-driven research assistants have demonstrated significant potential in transforming STEM education.

AI-enhanced laboratories enable students to perform experiments in virtual and augmented reality settings, allowing them to explore complex scientific concepts without the constraints of traditional lab environments. AI-powered simulations can model chemical reactions, physics experiments, and engineering prototypes, giving students real-time feedback on their hypotheses and procedural accuracy (Chen et al., 2020). These virtual labs democratize access to high-quality STEM education, particularly for institutions that lack funding for specialized lab equipment and resources.

Intelligent tutoring systems (ITS) leverage natural language processing (NLP) and machine learning to provide students with personalized, real-time academic support. AI-driven tutors analyze student responses, learning patterns, and areas of difficulty to offer customized explanations and problem-solving strategies. Systems such as IBM Watsonx and Socratic AI have improved student comprehension and engagement, particularly in mathematics, physics, and computer science (Van Lehn, 2011). These AI tutors do not replace human educators but instead serve as complementary tools that enhance individualized learning experiences. These patterns align with the evolving thematic architecture illustrated in the VOSviewer map (see Figure 3), where terms like student engagement and generative AI emerge as increasingly central, underscoring the practical urgency of the issues identified in this study.

AI research assistants can automate time-consuming academic tasks, such as grading assignments, generating lesson plans, and compiling research summaries. AI-powered grading tools provide instant feedback on student submissions, allowing teachers to allocate more time to interactive discussions and hands-on instruction. Additionally, AI-driven recommendation engines can help students identify relevant research articles, suggest STEM career pathways, and personalize study plans based on their learning history (Baker and Hawn, 2022).

Integrating AI-driven labs, tutors, and research assistants makes STEM education more interactive, efficient, and student-centered. This allows educators to focus on mentorship, inquiry-driven learning, and interdisciplinary exploration.

Implementing AI-driven STEM education requires intentional design choices prioritizing broad access, transdisciplinary collaboration, and practical application. AI technologies must be designed to detect and mitigate inconsistencies, ensuring that underserved learning environments have broad access to AI-powered learning tools. Effective AI adoption also depends on transdisciplinary collaboration, where educators, technologists, and ethicists work together to create AI systems that are transparent, ethical, and pedagogically sound. Finally, AI must be applied strategically in STEM education, leveraging virtual laboratories, intelligent tutors, and research automation to enhance student engagement and learning outcomes.

When institutions adopt these actionable strategies, AI can be a transformative force in STEM education, fostering innovation, engagement, and interdisciplinary knowledge-building. Looking ahead, the integration of AI in education must undergo ongoing evaluation, refinement, and adaptation to guarantee that it functions as a means of empowerment and not as a mechanism of exclusion.

5.4 Disciplinary landscape of AI applications at a research-intensive polytechnic institution

Within the reviewed literature, the distribution of AI applications reflects the evolving priorities and capacities of research-intensive polytechnic institutions. The most prominent disciplinary category is Technology (n = 16), underscoring the emphasis on engineering, computer science, and data-driven innovation in AI research and pedagogy. This trend aligns with institutional goals to advance technical infrastructure, digital transformation and applied machine learning across curricula.

The Education category (n = 15) captures scholarship focused on pedagogical integration, instructional design, and student engagement. These studies often explore how AI can support formative assessment, metacognition, and adaptive learning, all critical concerns at institutions committed to educational innovation and learner-centered practices.

A smaller but significant number of studies focus on Cross-Disciplinary or Integrated STEM approaches (n = 6), highlighting the importance of collaborative pedagogical ecosystems. These contributions reflect how AI tools can scaffold interdisciplinary projects, data visualization, and collaborative problem-solving across STEM fields—particularly valuable in project-based learning environments.

Finally, the General STEM category (n = 4) encompasses broader overviews and domain-neutral applications. These works address AI’s potential to enhance core STEM competencies, support career readiness, and foster inquiry-driven education without focusing on specific subfields.

Collectively, these distributions illustrate the strategic value of AI at R1 polytechnic universities: promoting innovation in technological domains, driving inclusive and adaptive education practices, and supporting the convergence of disciplines through transdisciplinary problem-solving. Rather than siloing AI within narrow use cases, the reviewed literature reveals a pattern of purposeful diffusion: AI is increasingly understood as both a technological enabler and a pedagogical catalyst across the STEM ecosystem, including research and development.

6 Student use of generative AI: a case illustration of emerging themes in practice

As artificial intelligence becomes increasingly interwoven into STEM education, the ways students interact with these tools provide a real-time reflection of the review’s core findings—particularly regarding learner agency, evolving assessment paradigms, and ethical engagement. Rather than viewing student AI use as a peripheral issue, this section treats it as an applied case of how technological integration unfolds in practice, echoing themes identified across the literature.

At the heart of these discussions is the principle that students must remain the central stakeholders in educational design. AI should be positioned not as a replacement for authentic learning but as a cognitive partner that augments educator capacity, scaffolds learner autonomy, and enhances access to tailored support. However, AI’s perceived neutrality often masks structural inequities and behavioral adaptations that may deviate from pedagogical intent. As such, the ethical integration of AI demands reflexive dialogue, not only about its functionality but also about its influence on learning norms and student values.

Several recent surveys have explored student engagement with AI, including the extent to which students use AI tools to complete assignments rather than engage authentically in learning tasks. These surveys investigate student perceptions, motivations, ethical considerations, and experiences with AI in academic contexts. For example, a Harvard Graduate School of Education study found that while a majority of teens perceive the use of AI for schoolwork as a form of cheating, many also recognize AI’s potential to enhance academic experiences, such as aiding in starting papers or creating individualized learning plans (Nagelhout, 2024).

While a definitive percentage is difficult to pinpoint due to varying survey methodologies and the evolving nature of AI use, research suggests that a significant portion of students, ranging from 10% to over 50%, utilize AI tools in some capacity for writing essays and academic work. For example, one study showed that 47% of students would have used AI for their college admissions essays had it been available (ACT, 2023). Other studies indicate that a large percentage (around 50%) use AI for brainstorming, outlining, or generating first drafts (Chan et al., 2025; Fahira et al., 2024) and that AI-generated text is generally difficult to distinguish from student-written work (Fleckenstein et al., 2024). Additional surveys provide further insights into the types and extent of AI use reported by students (Digital Education Council, 2024; The State of Higher Education 2024, 2024).

A survey of teens found that 44% were likely to use AI to do their schoolwork, with 60% considering it cheating, indicating both the prevalence and ethical concerns surrounding AI use in education (Citizens, 2023). The HEPI/Kortext AI Survey (2025) revealed an “explosive increase” in the use of generative AI tools by students, with significant disparities in AI use among different groups (e.g., males and students in STEM courses). The results show how rapidly students have taken up generative AI chatbots and other AI tools. As AI becomes embedded in education, students see it as a core part of the learning process as well as a differentiator within the workforce, signaling a vital need for training and preparation.

From a transdisciplinary perspective, student use of AI mirrors broader systemic shifts: a recalibration of trust in automated processes, new forms of peer and platform-mediated knowledge validation, and a restructuring of what counts as “learning.” The findings suggest that without clear pedagogical scaffolds and transparent, ethical guidelines, students may default to optimizing for performance rather than engaging deeply with disciplinary knowledge or collaborative problem-solving.

These findings underscore the importance of designing evaluative metrics and instructional practices that align with authentic cognitive processes, not just outcomes. As AI tools become standard fixtures in educational ecosystems, institutions must adopt student-centered metrics that reflect formative learning, ethical reasoning, and epistemic engagement. Doing so requires a shift from punitive surveillance to participatory governance, where students contribute to shaping norms around appropriate AI use.

This case analysis reinforces the urgency of proactive, transdisciplinary strategies that treat student experience not as an ancillary concern but as a diagnostic site for educational reform. The following section draws on this synthesis to propose implications for policy, instructional design, and institutional leadership while outlining avenues for future research that center student voices and advance ethical, equitable AI integration in STEM education.

7 Implications and future directions

Integrating artificial intelligence (AI) in STEM education can redefine learning environments, personalize instruction, and democratize access to quality education. However, as AI advances, critical challenges related to governance, ethics, broad access, and long-term impact must be addressed to ensure that AI-driven education remains sustainable, transparent, and accessible to all learners. This section outlines key policy recommendations, future research directions, and strategies for scaling AI-based STEM education globally to maximize AI’s positive impact while mitigating potential risks.

The VOSviewer-generated map (see Figure 3) reinforces the findings of this review by visually demonstrating the field’s shifting priorities—highlighting the growing prominence of generative AI, student engagement, and transdisciplinary education as emergent clusters and signaling a need for frameworks that can address both convergence and divergence in future research agendas.

7.1 Policy and governance recommendations

The successful implementation of AI in education requires a robust governance framework that promotes ethical AI development, transparency, and broad access. Current AI policies in education remain fragmented, with disparities in AI adoption, lack of oversight, and limited guidelines for ensuring bias-free AI models. Developing transdisciplinary AI governance models is critical to fostering an engaging, fair, and responsible AI ecosystem for STEM education.

A transdisciplinary AI governance model should integrate educators, technologists, policymakers, ethicists, and social scientists in developing and overseeing AI-driven educational tools. This model would ensure that AI systems align with learning objectives, cognitive development principles, and ethical standards while addressing concerns about data privacy, algorithmic transparency, and AI accountability (Dignum, 2018). Governments and educational institutions must create clear policies for AI integration, establish guidelines for the ethical collection, use, and storage of student data, and ensure that AI-driven assessment tools are interpretable and auditable.

Encouraging open-source AI tools ensures broad access to AI-driven education technologies, particularly for underfunded schools and underserved learning environments. Open-source AI models allow for greater transparency, adaptability, and cost-effective deployment, making AI-based STEM education accessible to a broader range of learners (Holmes et al., 2019). Universities, research institutions, and technology firms should collaborate on open-source AI initiatives, prioritizing broad access to education and ethical AI development. By fostering a globally shared AI knowledge base, institutions can ensure that AI in education is shaped by collective expertise rather than being monopolized by a few large corporations.

7.2 Future research directions

AI’s role in STEM education is still in its early stages, and many critical questions remain unanswered. How does AI influence cognitive development, inquiry-based learning, and long-term career pathways in STEM fields?

One important avenue for exploration is AI’s impact on metacognition in STEM learning. AI-powered tools are often designed to provide answers and optimize efficiency, but they must also support higher-order thinking, self-regulated learning, and critical inquiry. Research is needed to determine whether AI enhances or hinders metacognitive skills, such as reflection, problem-solving strategies, and self-directed learning behaviors (Guilherme, 2019). Future studies should examine how AI can be intentionally designed to foster metacognition, ensuring students engage in deep, meaningful learning rather than passive knowledge consumption.

Additionally, investigating AI’s long-term impact on STEM career pathways is essential for understanding how early exposure to AI-driven learning environments influences students’ career trajectories. AI-driven STEM education could increase interest in AI-related careers and reshape workforce demands by automating specific skills while emphasizing others. Future research should explore: Does AI-based learning foster long-term engagement in STEM fields? How do AI tools influence students’ decision-making processes regarding career choices? Will AI literacy skills become a prerequisite for success in future STEM professions (Williamson, 2019)?

Further, longitudinal studies could examine whether AI-driven learning environments narrow or widen achievement gaps over time, particularly among students from varying educational backgrounds in STEM disciplines. Understanding AI’s impact on student diversity, retention rates, and long-term academic achievement is crucial for refining AI-based educational policies and interventions.

7.3 Scaling AI-based STEM education

For AI-driven STEM education to have a meaningful global impact, it must be scalable, adaptable, and responsive to diverse educational needs. Current AI implementations in education are often limited to well-funded institutions, high-income regions, and technologically advanced infrastructures, leaving many students without access to AI-enhanced learning experiences. Expanding AI-based STEM education requires global collaboration, open-access initiatives, and investment in digital infrastructure.

International organizations, governments, and educational institutions must collaborate to develop AI-for-education initiatives prioritizing global engagement and broad access to education. Partnerships between AI researchers, policymakers, and non-governmental organizations (NGOs) can facilitate the deployment of affordable AI-driven educational tools in low-income and rural communities (Kitsara, 2022). These efforts should focus on adapting AI technologies to different linguistic, cultural, and economic settings, ensuring that AI-based learning is relevant and accessible across a wide range of learner communities.

Moreover, integrating AI literacy into national education curricula will be essential for preparing students for AI-driven industries and future STEM careers. Governments should establish AI education policies that provide teacher training programs, curriculum frameworks, and AI-focused learning resources to support AI literacy at all levels of education (Selwyn, 2019). Special attention should be given to closing the digital divide, ensuring that students in underprivileged schools and resource-limited settings can access the same AI-enhanced learning opportunities as their peers in more developed regions.

To scale AI-based STEM education effectively, policymakers must continuously evaluate and refine AI-driven learning models. AI systems should be monitored for their educational efficacy, ethical implications, and societal impact, focusing on iterative improvements based on student and educator feedback.

Education systems can leverage AI’s transformative potential by fostering global AI collaborations, prioritizing open-access AI tools, and investing in AI literacy programs while ensuring broad, engaging, and ethically responsible STEM education for all learners.

As AI continues to shape the future of STEM education, it is critical to establish governance policies that promote transparency, accountability, and accessibility. Developing transdisciplinary AI governance models and encouraging open-source AI tools will help ensure that AI benefits a broad range of learners rather than reinforcing existing disparities. Research into how AI affects metacognitive development and STEM career pathways can provide deeper insight into AI’s long-term role in shaping education and workforce readiness. Furthermore, global collaboration is essential to ensure that AI-driven STEM education is both scalable and accessible, particularly in under-resourced educational settings. By fostering an interdisciplinary and engaging approach to AI integration, policymakers, educators, and researchers can collectively build a future where AI enhances education, encourages innovation, and supports broad learning opportunities worldwide.

7.4 Meta-inference

As global collaboration and inclusive AI governance gain traction, a deeper understanding of the epistemic and ethical dimensions underlying educational AI becomes necessary. While transdisciplinary integration offers fertile ground for innovation, it also exposes systemic tensions—between personalization and surveillance, automation and human agency, access and asymmetry. These tensions are not merely operational but ontological, challenging the assumptions that various disciplines bring to concepts like intelligence, learning, and fairness. Across disciplinary boundaries, the integration of generative AI in STEM education reflects both convergence—such as shared commitments to personalization, accessibility, and improved engagement—and contradiction, especially in terms of authorship, cognitive agency, and assessment design (Arnold and Greer, 2016; León et al., 2024). The transdisciplinary communication (TDC) framework functions as a diagnostic and generative tool, illuminating these tensions and enabling the formulation of co-regulatory mechanisms that are both epistemically plural and ethically responsive. By surfacing these interdependencies, TDC strengthens the foundation for ongoing dialogue and design across stakeholder communities (see Figure 5).