- Palestine Technical University-Kadoorie, Tulkarem, Palestine

Introduction: Fostering Artificial Intelligence (AI) literacy and equipping college students with 21st-century skills in the generative AI era have become a global educational priority. In this context, generative AI offers opportunities for development in higher education institutions. Thus, this study investigates the influence of AI literacy and 21st-century skills on generative AI acceptance.

Methods: For data collection, the study employed a quantitative design with three scales, and the study sample included 260 college students selected randomly.

Results: Results revealed that AI literacy and 21st-century skills are present at a moderate level among college students. Both AI literacy and 21st-century skills influence the level of generative AI acceptance.

Discussion: Based on the results, the study recommends enriching the curriculum with AI literacy and equipping students with 21st-century skills while using generative AI applications.

1 Introduction

Artificial intelligence (AI) has long been a cornerstone of computer science research, but its application in education has seen a dramatic surge in recent years (Humble and Mozelius, 2022). Among AI’s transformative branches, generative AI stands out for its ability to create original content—including text, images, music, and videos—by learning patterns from existing data (Ooi et al., 2025). Unlike traditional AI, which focuses on classification or prediction, generative AI mimics human creativity, offering novel outputs that are reshaping industries and daily life. Its growing ubiquity is compelling individuals to adapt their skills and even reconsider career trajectories (Chui et al., 2023; Derakhshan, 2025; Feuerriegel et al., 2024).

Generative AI holds immense promise for societal and economic advancement (Zhu et al., 2025), yet its integration is not without challenges. While it can enhance education by fostering student engagement, self-directed learning, and critical thinking (Maphoto et al., 2024), it also raises ethical concerns, such as privacy violations, bias amplification, and accountability gaps (Amankwah-Amoah et al., 2024; Chan and Lo, 2025; Ismail, 2025). These issues underscore the need for AI literacy—a competency encompassing not only technical proficiency but also ethical awareness (Chiu et al., 2024). Educational institutions now face the dual challenge of harnessing generative AI’s potential while upholding academic integrity and addressing its risks (Zlateva et al., 2024).

In the 21st century, where learning goals are increasingly complex, generative AI presents both an opportunity and an imperative. Despite its rapid evolution, students’ foundational skills, namely critical thinking, creativity, and collaboration, remain underdeveloped (Fadli and Iskarim, 2024; Papadimitriou and Virvou, 2025). Bridging this gap requires a deliberate focus on integrating AI tools in ways that complement, rather than replace, human ingenuity and ethical judgment.

This study bridges that gap by investigating how AI literacy and 21st century skills influence generative AI acceptance among Palestinian university students, using the Unified Theory of Acceptance and Use of Technology (UTAUT) framework. Unlike prior work focused on high-income settings (e.g., Kong et al., 2024), we highlight disparities in infrastructure, gender, and academic discipline that mediate AI adoption—factors often overlooked in Global North-centric literature. Our findings offer actionable insights for educators and policymakers to design equitable AI integration strategies while advancing theoretical debates on technology acceptance in developing economies.

1.1 Significance of the study

In the 21st century, accomplishing learning goals has become increasingly difficult. Even though generative AI technology is widely available, the skills needed to use it still need improvement. Generative AI literacy, including knowledge of generative AI applications and tools, must therefore be included as an axis in preparing students to use generative AI. In addition, 21st century skills include communication, critical thinking, collaboration, and creativity. Students with these skills can lead change to keep pace with scientific and technological progress and confront the life problems they face while they learn. This study supports students in developing their future skills and can guide educators and policymakers in adapting their teaching strategies and policies in higher education institutions. Moreover, its outcomes can benefit the advancement of generative AI in education for college instructors, researchers, and policymakers. Theoretically, this study is distinctive because the influence of AI literacy and 21st century skills on generative AI acceptance has rarely been explored by other researchers.

Therefore, the importance of the study stems from theoretical and applied considerations. It highlights the importance of AI literacy and 21st century skills in accepting generative AI applications in higher education. Also, this study contributes to closing the gap concerning AI literacy and 21st century skills, which have rarely been investigated, by applying a technology acceptance model (UTAUT) to determine how these factors influence students’ acceptance of using generative AI in learning. Additionally, the results and recommendations of this study might help university policymakers and college instructors to enhance the acceptance of generative AI.

1.2 Problem statement

Educational institutions, especially universities, keep pace with scientific and technological development by recognizing the importance of generative AI in education. In line with the recommendations of many researchers, it is necessary to explore AI literacy and 21st-century skills to improve and develop the educational process. It is necessary to enhance its competitive advantage to keep pace with such developments and benefit from them in teaching. This requires paying attention to students’ needs and preparing them to possess the necessary skills and experience to accept this technology. Equally important, more attention should be given to preparing a generation that can deal with the challenges of the times, be aware of the potential of this technology, and invest in generative AI. Living in the 21st century requires students to deal safely and effectively with generative AI data. Future 21st-century skills qualify and enable students to accept this modern technology.

AI will influence various aspects of human life beyond just the computer industry, making it essential for everyone to understand AI. Today, AI is used across diverse sectors such as business, science, art, and education to enhance user experience and boost efficiency (Ng et al., 2021). AI applications, including smartphones and virtual assistants, are integrated into many aspects of our daily lives. People recognize AI services and devices; however, they often lack knowledge about the underlying concepts and technologies, and awareness of potential usage of AI (Ng et al., 2021). As learning becomes more infused with AI tools, it is crucial to support AI literacy for all (Sperling et al., 2024). AI literacy has become an essential skill set that everyone should acquire in response to this new era of technology advancement.

Moreover, 21st-century skills are needed to solve practical problems using generative AI. Hence, this study is partially similar to Jing et al. (2024), Schiavo et al. (2024), and Strzelecki (2024), who explore AI literacy and generative AI adoption. Based on the foregoing, this study aims to explore the influence of college students’ AI literacy and 21st century skills on generative AI acceptance.

1.3 Research gap

AI literacy is the ability to critically assess generative AI tools and apply them in a safe, ethical, and successful manner in various settings, including personal, professional, and educational ones. Various measures of AI literacy have been employed to capture these elements. Some instruments have been designed to assess these competencies in a particular population, such as college students (Tseng et al., 2025). Measures for computer science novices were created in other studies (Laupichler et al., 2023). Other work offered a wider variety of assessments that consider the psychological and social components of AI literacy. As far as the researchers know, few studies have investigated the influence of AI literacy and 21st-century skills on generative AI acceptance. Conducting this study is therefore necessary, as it is crucial to assess these variables in a developing country like Palestine. The study also addresses three gaps tied to its research questions. First, there is limited focus on developing countries: most studies (e.g., Kong et al., 2024) focus on high-resource settings, neglecting regions like Palestine. Second, demographic nuances are underexplored: few studies examine how gender, field of study, and academic level intersect with AI acceptance (e.g., Stöhr et al., 2024). Third, existing research (e.g., Zhu et al., 2025) lacks longitudinal insights into how AI literacy evolves with the use of generative AI.

1.4 Context of study

Limited infrastructure and resources may hinder AI integration (e.g., lack of standardized ICT policies, as noted by Ndibalema, 2025). Gender disparities in AI acceptance were observed, aligning with Jang et al. (2022). By comparison, Mansoor et al. (2024) found higher AI literacy among Malaysian students due to robust STEM policies, while in Sub-Saharan Africa, Ndibalema (2025) highlighted challenges similar to those in Palestine (e.g., digital gaps). In Europe, Strzelecki and ElArabawy (2024) noted higher acceptance in Poland due to better institutional support.

Thus, this study attempts to identify the influence of AI literacy and 21st-century skills by addressing the following questions:

1. What is the overall state of AI literacy among Palestine Technical University-Kadoorie (PTUK) students?

2. What is the level of 21st-century skills among college students?

3. To what degree do PTUK students accept generative AI in their learning process?

4. What is the influence of AI literacy, 21st century skills, and demographic variables (gender, field of study, study level) on students’ acceptance of applications of generative AI from the students’ perspectives?

2 Literature review

2.1 AI literacy

AI literacy is acknowledged as an essential skill in the modern era of AI. Traditionally, literacy encompasses specific ways of thinking about and participating in reading and writing to comprehend or communicate ideas within a particular context (Laupichler et al., 2023). It involves maintaining a balanced perspective on technology to ensure responsible and healthy usage, understanding and addressing issues related to privacy, security, legal and ethical concerns, and the societal role of digital technologies (Khoo et al., 2024). Literacy is closely tied to knowledge, while competency refers to the ability to perform tasks successfully. Literacy centers on understanding, while competency highlights the practical application of knowledge with confidence. Furthermore, AI competence is an essential skill that enables users to interact with AI-driven applications while maintaining a reliable understanding of the underlying algorithms (Ng et al., 2021). AI literacy, on the other hand, can be defined as the ability to identify, use, and critically assess AI products while adhering to ethical principles (Wang et al., 2009).

Ng et al. (2023) developed an AI Literacy scale that outlines four key constructs: using and applying AI tools, understanding what AI is, recognizing when AI is integrated into a system, and AI ethical awareness. Even though some studies have investigated AI literacy, most used qualitative methods and were exploratory in nature (Ng et al., 2023). These studies have focused on improving AI literacy rather than quantifying its relationship with other variables. Long and Magerko (2020) describe AI literacy as a variety of competencies that motivate students to evaluate AI applications critically, communicate and cooperate effectively with AI tools, and use AI as a system across diverse contexts. They indicate that AI literacy has 17 skills, highlighting its connections to digital, data, and computational literacy. While these literacies may be interdependent, they remain distinct. For example, AI literacy builds on basic computer skills, making digital literacy a foundational requirement. Data literacy, which involves the ability to understand, analyze, evaluate, and debate data, significantly overlaps with AI literacy due to the integral role of data in machine learning.

Later, Ng et al. (2023) expanded on this concept by framing AI literacy around four core dimensions. The first dimension, comprehension, involves grasping fundamental AI concepts, such as how AI operates, machine learning algorithms, data training, and AI biases. The second dimension focuses on using AI tools to solve problems and accomplish objectives, which often requires coding and the capacity to handle large datasets. The third dimension is evaluation, which entails assessing the quality and reliability of AI applications and ethically designing and developing AI systems. This demands technical skills and an awareness of AI’s ethical and societal impacts. The final dimension is AI ethics, which centers on understanding the moral and ethical considerations surrounding AI, enabling individuals to make informed decisions about its use. This includes issues such as fairness, transparency, privacy, and the broader societal implications. For example, Chan and Lo (2025) highlighted that the proliferation of AI technologies such as facial recognition and predictive policing has exposed significant gaps in legal protections and ethical frameworks, particularly concerning privacy and autonomy. Their study also warns against the erosion of privacy norms through continuous monitoring. To mitigate these risks, higher education institutions must adopt privacy-by-design principles and ensure algorithmic transparency in AI tools.

Moreover, Tseng et al. (2025) assessed the AI literacy level of nursing students using an AI literacy scale with four categories: using and applying AI, understanding AI, detecting AI, and making ethical considerations about AI. They analyzed the influence of ChatGPT by OpenAI and Copilot tools compared with traditional teaching methods and found that generative AI tools enhanced students’ AI literacy.

Meanwhile, a study conceptualized AI literacy as a person’s capability to understand how AI technology functions and influences society comprehensively. It also involves using these technologies ethically. Similarly, Salhab (2024) explored AI literacy in the college curriculum and found that integrating AI literacy into the curriculum can enhance AI literacy for college students to foster essential 21st century skills. From a broader perspective, Mansoor et al. (2024) investigated AI literacy levels by surveying university students across four countries in Asia and Africa. They found variations in AI literacy levels influenced by nationality, field of study, and academic specialization, while gender did not significantly influence AI literacy. Malaysian participants demonstrated higher AI literacy levels compared to other students. Results also revealed that demographic and academic variables significantly shaped participants’ perceptions of AI literacy.

2.2 21st century skills

The 21st century skills equip students to develop different thinking types, make sense of learning experiences, and employ approaches that can be applied in many different life situations. 21st-century skills encompass collaboration, communication, critical thinking, and creativity (Kain et al., 2024). These learning skills were developed by educators, government officials, and business leaders to equip students for a constantly changing life and work environment (Cristea et al., 2024). Collaboration involves the ability to cooperate effectively and respectfully with diverse teams, with the flexibility and willingness to achieve a common goal. It assumes shared responsibility, collaborative work, and valuing each team member’s contributions (Barrett et al., 2021; He and Chiang, 2024). Communication includes the ability to listen and interpret meaning effectively. Strong communication skills are crucial for sharing knowledge, principles, feelings, and objectives. They are essential for informing, instructing, motivating, and persuading. Creativity is characterized by generating valuable ideas through techniques such as brainstorming. Students elaborate, refine, analyze, and evaluate original ideas to enhance and maximize creative thinking (Saleem et al., 2024). Critical thinking includes the ability to reason effectively, utilize systems thinking, make decisions, and solve problems (Liu et al., 2021).

These skills are important for reaching diverse audiences and conveying information to multilingual and multicultural populations (Shadiev and Wang, 2022). Moreover, a wide range of knowledge, capabilities, and work habits, like creative thinking, problem solving, innovation, and creativity skills, were practically operationalized in a specific setting, like a project-based STEM classroom using AI. For example, Hu (2024) documented a 6-month blended learning program where students used collaborative platforms (e.g., Padlet, GitHub) to solve community-based problems, illustrating applied critical thinking and creativity.

Over the past decade, educational systems have focused on helping students acquire these 21st century skills through various strategies and new technologies. These strategies include inquiry-based learning, AI-powered simulations, and gamification (Celik et al., 2024; Jing et al., 2024; Samala et al., 2024). Research literature shows that many studies have investigated these skills in light of using AI like robotics (Gratani and Giannandrea, 2022), and specific approaches like game-based learning and stimulating learning environments to develop these skills.

2.3 Generative AI acceptance

The extensive adoption of technology has led to an increasing preference for generative AI applications in education (Choung et al., 2023). Generative AI is an emerging technology comprising machine learning algorithms that produce original content like text, images, and sound (Alier et al., 2024). However, the implementation success of generative AI in educational settings largely hinges on students’ readiness to accept and embrace the technology (Li et al., 2024; Yilmaz et al., 2023). The UTAUT model provides insights into attitudes and intentions regarding using generative AI applications in education. For instance, students are more likely to use generative AI applications if they perceive them as intuitive and easy to use. Strzelecki and ElArabawy (2024) used UTAUT to investigate the key factors influencing students’ usage of ChatGPT. The authors reported that performance expectancy, effort expectancy, and social influence significantly impact behavioral intention to adopt ChatGPT. The study explores two factors that might affect students’ acceptance of generative AI, specifically within higher educational settings. Moreover, previous studies have shown that the UTAUT is an effective framework to evaluate users’ acceptance of modern technologies (Teng et al., 2022; Ustun et al., 2024). Therefore, the UTAUT could offer insights into how users evaluate these technologies. There is a noticeable gap in research grounded in theoretical frameworks like the UTAUT model that examines students’ acceptance of generative AI.

Generative AI applications can transform the roles and responsibilities assigned to students. The widespread adoption of these technologies can offer students enhanced access to information, deeper understanding of their learning progress, and more tailored educational experiences (Yilmaz et al., 2023). For example, a study by Marrone et al. (2022) investigated the relationship between AI and creativity in terms of four key concepts: social, affective, technological, and learning factors. Results revealed that AI could help develop students’ creativity. These findings suggest that the effectiveness of generative AI in education largely hinges on students’ creativity, making their acceptance and utilization of the technology a critical factor (Li et al., 2024; Yilmaz et al., 2023). Moreover, generative AI is a powerful instrument for tackling urgent global issues related to enhancing societal wellbeing and promoting sustainable development. It is a valuable partner in tackling environmental issues such as mitigating climate change, managing resources, and developing clean energy solutions (Shafik, 2024). It also has economic impacts, as it is transforming industries by automating content creation, enhancing productivity, and enabling new business models. Its economic implications affect labor markets, business efficiency, and innovation (Chui et al., 2023).

2.4 Theoretical framework

The UTAUT2 model, created by Venkatesh et al. (2012), is a theoretical framework businesses use to examine the factors influencing customer acceptance and adoption of new technology. According to this theory, behavioral intentions are influenced by four key factors: performance expectancy, social influence, effort expectancy, and facilitating conditions, which in turn influence actual usage behavior (Venkatesh et al., 2003). Performance expectancy refers to the belief that the technology will help users accomplish tasks, aligning with perceived usefulness (PU) (Venkatesh et al., 2012). Social influence involves the degree to which individuals perceive that important others approve or disapprove of their use of the technology (Wang et al., 2009). Effort expectancy relates to how easy the technology is to use, comparable to perceived ease of use (PEOU) (Wang et al., 2009). Facilitating conditions encompass the support and resources available to users when adopting the technology (Venkatesh et al., 2012). Furthermore, Chen et al. (2020) introduced perceived enjoyment as an additional factor influencing perceived ease of use, suggesting that if users find generative AI enjoyable, they are likely to perceive it as easier to use, thereby impacting its adoption.

The present study uses the UTAUT since it is an effective framework for investigating the acceptance of generative AI and predicts actual usage behavior. The authors will explore the relationships between the components of the UTAUT as applied to generative AI. The study’s novelty lies in incorporating two new variables, AI literacy and 21st century skills, into the model. Therefore, this study adds to the existing literature on factors influencing the acceptance of generative AI in higher education institutions.

2.5 Ethical use of generative AI

Ethical use is an important domain that should be investigated in order to navigate generative AI (Ray, 2023; Tan and Maravilla, 2024). Previous studies showed that it is imperative to explicitly address concerns related to fairness, accountability, transparency, bias, and integrity (Jang et al., 2022). Jang et al. (2022) developed an instrument that identified five dimensions of AI ethics: fairness, transparency, privacy, responsibility, and non-maleficence. The fairness dimension encompasses elements like considering diversity during data collection for AI development and ensuring universal disclosure of the developed AI without discrimination. The transparency dimension comprises items gauging attitudes regarding the importance of AI explainability. The privacy dimension includes items about attitudes toward safeguarding privacy during the collection and utilization of data for creating AI. The responsibility dimension seeks opinions on whether responsibilities should be allocated based on social consensus in situations involving AI-related issues or whether specific groups, such as developers and users, should bear complete responsibility. Lastly, the non-maleficence dimension gathers responses on the significance of preventing abuse by various agencies associated with AI (Ryan and Stahl, 2020).

3 Materials and methods

3.1 Research design

This study employed a quantitative research design to investigate the influence of AI literacy and 21st century skills on college students’ acceptance of generative AI. The design utilized three validated scales to measure the key variables: AI literacy, 21st century skills, and generative AI acceptance. A cross-sectional survey was administered to collect self-reported data from participants, and statistical analyses were performed to examine the relationships between the variables.

3.2 Research context

The study was conducted at Palestine Technical University-Kadoorie (PTUK), a higher education institution in Palestine. PTUK was selected due to its diverse student population across multiple disciplines, including Information Technology, Engineering, Applied Sciences, Business, and Humanities. The university’s focus on integrating technology into education made it an ideal setting for exploring generative AI acceptance among students.

3.3 Sample size

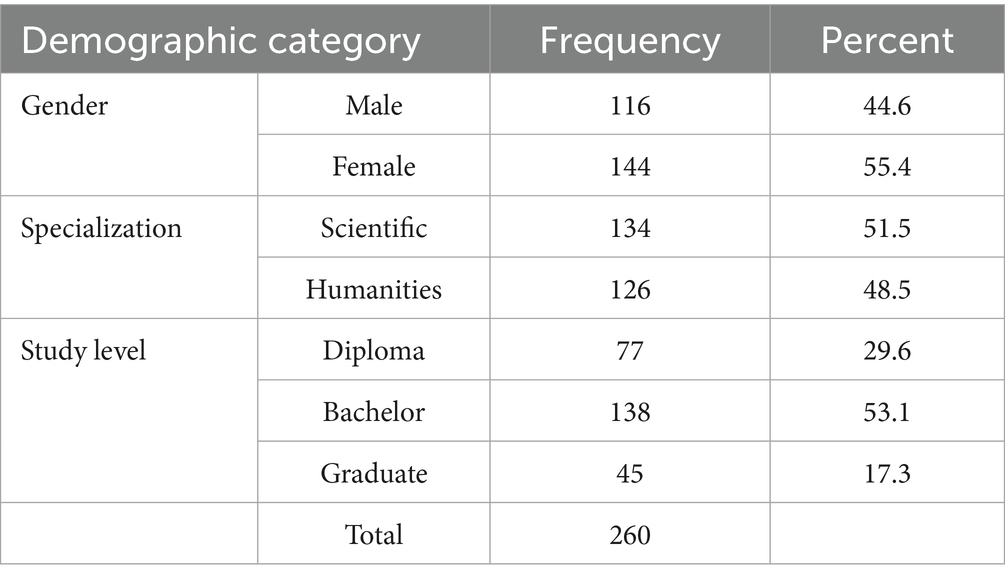

A sample of 260 college students was selected using random sampling to ensure representativeness across faculties, genders, and academic levels. The sample size was determined based on the following considerations. First, statistical power: a sample size of 260 was deemed sufficient to achieve adequate statistical power (≥0.80) for detecting medium effect sizes in regression analyses, as recommended by Cohen (1988). Second, population diversity: the sample included students from nine faculties, with proportional representation of males (44.6%, *n* = 116) and females (55.4%, *n* = 144), as well as Diploma (29.6%, *n* = 77), Bachelor (53.1%, *n* = 138), and Graduate (17.3%, *n* = 45) students. Third, pilot testing: a pilot study with 30 respondents was conducted to validate the adapted scales and refine the survey instrument, ensuring clarity and relevance to the Palestinian context.

3.4 Data collection and instrumentation

This study evaluates the influence of AI literacy and 21st century skills on college students’ acceptance of generative AI. The researchers used a Likert-type rating scale. Participants self-reported their levels of AI literacy, 21st century skills, and acceptance of integrating generative AI in educational settings. To adapt the three existing scales to the Palestinian context, a pilot study with 30 respondents was used, and exploratory factor analysis was applied to the three scales. A pre-existing AI literacy scale developed by Ng et al. (2023) was utilized and adapted. The original scale, a 32-item self-reported questionnaire on AI literacy, was developed and validated to measure students’ literacy development in the four dimensions. The scale adapted for this study of generative AI was validated and included 14 items after validation.

For assessing 21st century skills, an adapted version of the scale by Jia et al. (2016) was used in the Palestinian context. This scale originally comprised 16 items covering information literacy, collaboration, communication, innovation and creativity, and problem-solving skills. A pilot study of 30 students validated the scale in the Palestinian context. Further, the exploratory factor analysis was used to validate and adapt the scale.

For generative AI acceptance, a pre-existing scale by Tugiman et al. (2023) was used and modified to fit the study context. The scale was adapted by rewording the items so that they refer to the acceptance of generative AI. The acceptance scale consists of five constructs: performance expectancy, effort expectancy, facilitating conditions, behavioral intention, and social influence.

Researchers employed random sampling and pilot-tested the survey with a diverse subgroup to minimize respondents’ bias. Questions used neutral phrasing and balanced scales. Respondent anonymity was ensured by omitting identifiers, using encrypted platforms, and reporting aggregated data. No names, IP addresses, or other traceable data were collected. Google Forms, which is a secure platform, was used. The study received IRB approval (No. 04/2025), and participants provided informed consent acknowledging confidentiality.

4 Data analysis

For data analysis, the researchers used the Statistical Package for the Social Sciences (SPSS) to calculate descriptive statistics, skewness, and kurtosis, and to run the Kolmogorov–Smirnov test to check that the data follow a normal distribution. Multiple regression was used to investigate the influence of AI literacy and 21st century skills on the acceptance of generative AI.
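As an illustration of the normality screening described above, the following minimal sketch computes moment-based skewness and excess kurtosis for one variable. It is not the authors' SPSS procedure: SPSS applies small-sample corrections, so its values differ slightly from these simple population formulas, and the `scores` list is hypothetical.

```python
def central_moment(values, order):
    """Population central moment of the given order."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** order for v in values) / n


def skewness(values):
    """Moment-based skewness: m3 / m2^1.5 (0 for a symmetric sample)."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Moment-based excess kurtosis: m4 / m2^2 - 3 (0 for a normal curve)."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3.0


# Hypothetical mean scale scores for five respondents (illustration only).
scores = [1, 2, 3, 4, 5]
sample_skew = skewness(scores)
sample_kurt = excess_kurtosis(scores)
```

Values of both statistics close to zero are usually read as consistent with an approximately normal distribution.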

4.1 Scales’ validity and reliability

Content validity or face validity evaluates an instrument to ensure that the questionnaire has an adequate and representative group of questions that reflect the real meaning of the concept for the three pre-existing scales (Sekaran and Bougie, 2003). The respondents were asked to judge the questions’ appropriateness and suggest any items that should be included in the instrument. Pearson’s correlation for each variable assessed construct validity.

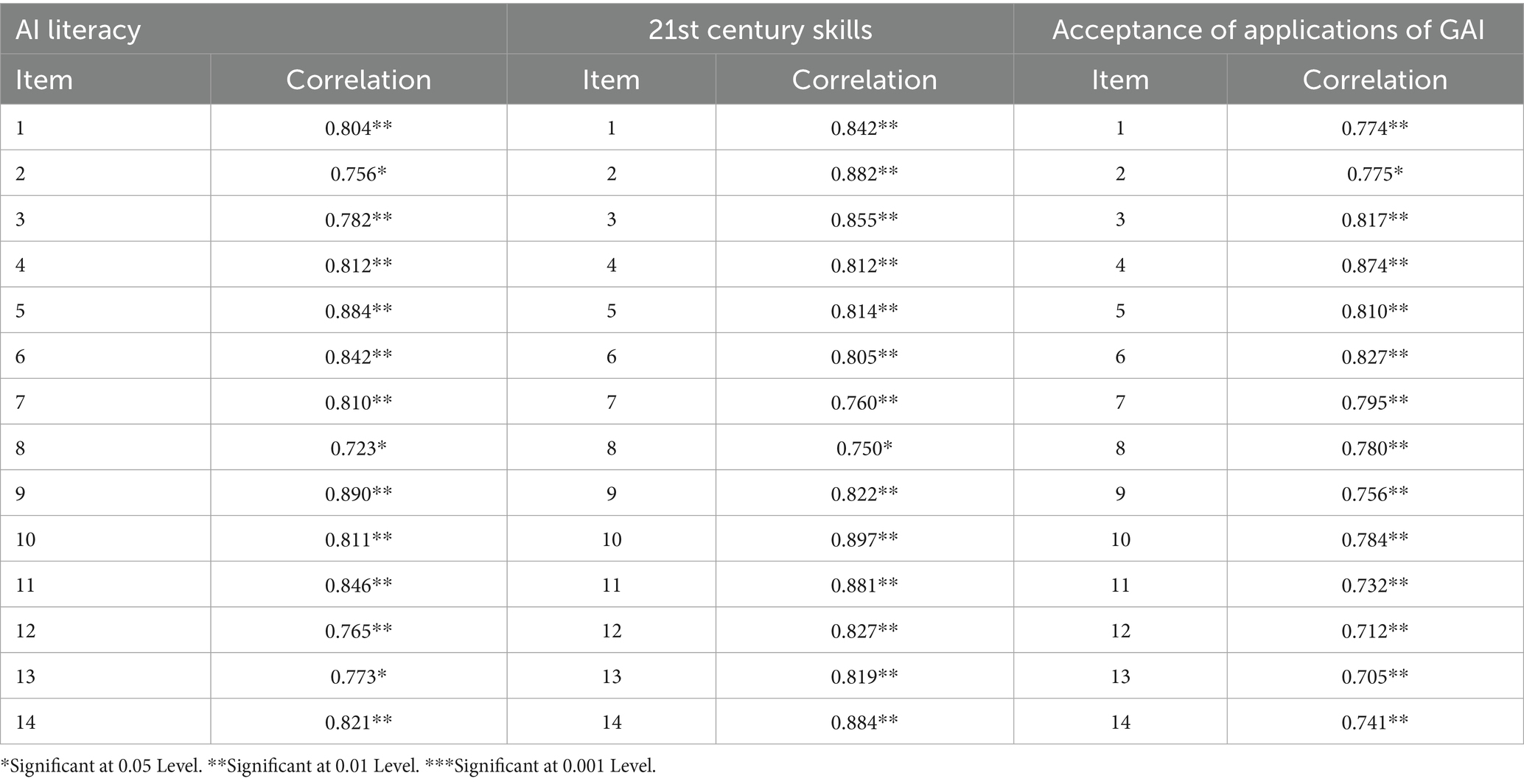

For internal consistency validity, the researchers applied the study tools to a pilot sample of 30 students. Pearson’s correlation coefficient was computed between each item and the total score of the construct it relates to, and the results are shown in Table 1.

Table 1 shows that the correlation coefficients of each item with its dimension for each scale were all statistically significant at the 0.05 level, *p < 0.05, **p < 0.01, and ***p < 0.001, which confirms an appropriate degree of internal validity for the three scales.
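The item-total check reported in Table 1 can be sketched as follows. This is a minimal illustration, assuming the total score includes the item itself (an uncorrected item-total correlation); the `responses` matrix is hypothetical and does not reproduce the pilot data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def item_total_correlations(rows):
    """Correlate each item column with the respondents' total scores."""
    totals = [sum(row) for row in rows]
    n_items = len(rows[0])
    return [pearson_r([row[j] for row in rows], totals) for j in range(n_items)]


# Hypothetical 5-point Likert answers: one row per respondent, one column per item.
responses = [
    [4, 5, 4],
    [3, 3, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 2],
    [4, 4, 3],
]
correlations = item_total_correlations(responses)
```

Each coefficient would then be tested for significance, which is the step that the asterisks in Table 1 summarize.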

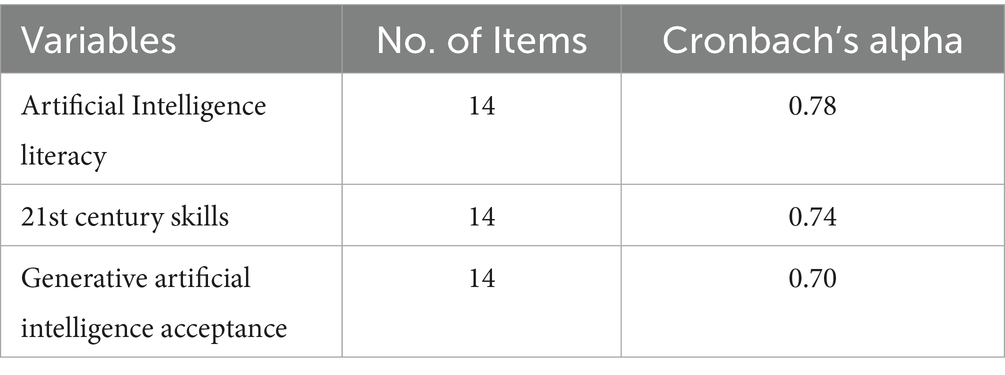

A coefficient of at least 0.70 is required to indicate acceptable reliability (Baumgartner et al., 2002). Table 2 reports Cronbach’s alpha for each variable. Table 2 shows that Cronbach’s alpha varied from 0.70 to 0.78, which is acceptable.
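For reference, Cronbach’s alpha can be computed from the item variances and the variance of the total score, alpha = k / (k - 1) * (1 - sum of item variances / variance of totals). The sketch below uses the standard formula with sample variances; the `responses` matrix is hypothetical and is not the study's data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix of scores."""
    k = len(rows[0])  # number of items in the scale
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert answers: one row per respondent, one column per item.
responses = [
    [4, 5, 4],
    [3, 3, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 2],
    [4, 4, 3],
]
alpha = cronbach_alpha(responses)
is_acceptable = alpha >= 0.70  # threshold cited in the text
```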

4.2 Sample characteristics

Respondents in this study are from nine faculties (Faculty of Information Technology, Graduate Studies, Engineering and Technology, Applied Sciences, Business and Economics, Art and Education, and Physical Education Faculty) at Palestine Technical University-Kadoorie, comprising 116 males (44.6%) and 144 females (55.4%). There were 77 Diploma students (29.6%), 138 Bachelor students (53.1%), and 45 Graduate students (17.3%). The sample also comprises 134 students in Scientific specializations (51.5%) and 126 in Humanities specializations (48.5%). Table 3 shows the demographic characteristics.
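The reported percentages follow directly from the group counts and the sample size of 260. The short check below recomputes them; the dictionary keys are illustrative labels, while the counts are those given in the text.

```python
SAMPLE_SIZE = 260

# Counts as reported in the text; the grouping keys are illustrative labels.
groups = {
    "gender": {"male": 116, "female": 144},
    "study_level": {"diploma": 77, "bachelor": 138, "graduate": 45},
    "field": {"scientific": 134, "humanities": 126},
}


def percent(count, total=SAMPLE_SIZE):
    """Share of the sample, rounded to one decimal place."""
    return round(100 * count / total, 1)


percentages = {
    variable: {level: percent(count) for level, count in levels.items()}
    for variable, levels in groups.items()
}
# Every demographic variable should account for the whole sample.
totals_match = all(sum(levels.values()) == SAMPLE_SIZE for levels in groups.values())
```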

5 Findings

To answer the first question, “What is the level of AI literacy among PTUK students?,” the average scores for each dimension were calculated, and these mean scores were then evaluated using a pre-existing scale developed by Daher (2019). According to this scale, mean scores between 0.8 and 1.8 are categorized as “very weak,” scores ranging from 1.8 to 2.6 as “weak,” scores between 2.6 and 3.4 as “moderate,” scores ranging from 3.4 to 4.2 as “good,” and scores from 4.2 to 5 as “very good.”
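The interpretation scale can be expressed as a small lookup. This is a sketch only: the source bands share their endpoints, so treating each upper bound as exclusive (except the last) is an assumption, as is the function name `classify_mean`.

```python
# Upper bounds of the interpretation bands attributed to Daher (2019).
# Assumption: a score exactly on a boundary falls into the higher band.
BANDS = [
    (1.8, "very weak"),
    (2.6, "weak"),
    (3.4, "moderate"),
    (4.2, "good"),
]


def classify_mean(score):
    """Map a mean score on the 5-point scale to its verbal category."""
    if score > 5:
        raise ValueError("mean score cannot exceed 5 on a 5-point scale")
    for upper_bound, label in BANDS:
        if score < upper_bound:
            return label
    return "very good"  # 4.2 up to and including 5


# The three overall means reported in the findings.
ai_literacy_level = classify_mean(3.075)
skills_level = classify_mean(2.871)
acceptance_level = classify_mean(3.890)
```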

Table 4 shows the means for AI literacy. The overall mean score for AI literacy was 3.075 out of 5, indicating a “moderate” level of AI literacy (Table 4).

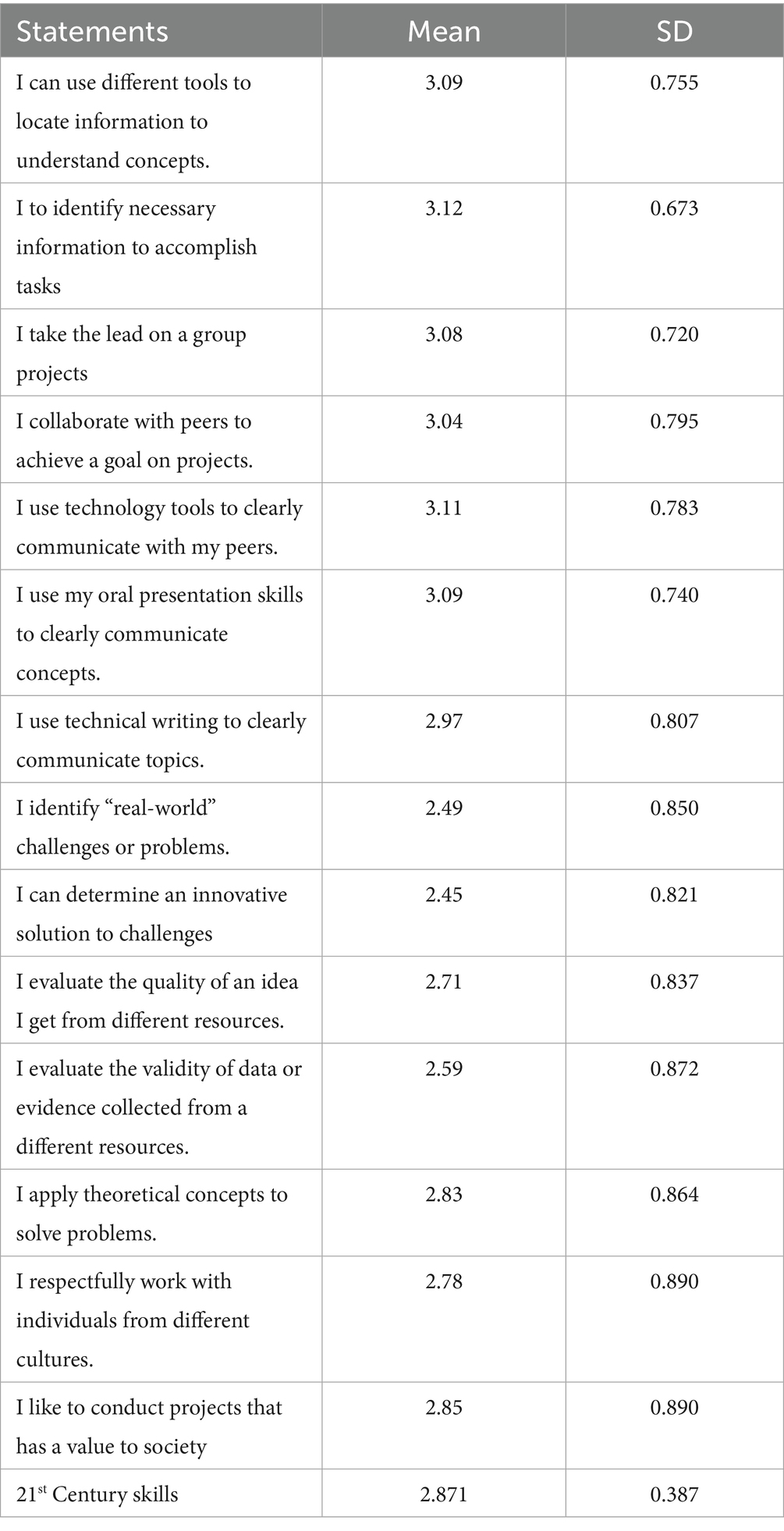

To answer the second question, “What is the level of 21st century skills among college students?,” mean scores were calculated as shown in Table 5. The overall mean score for 21st century skills was 2.871 out of 5, indicating a “moderate” level of 21st-century skills.

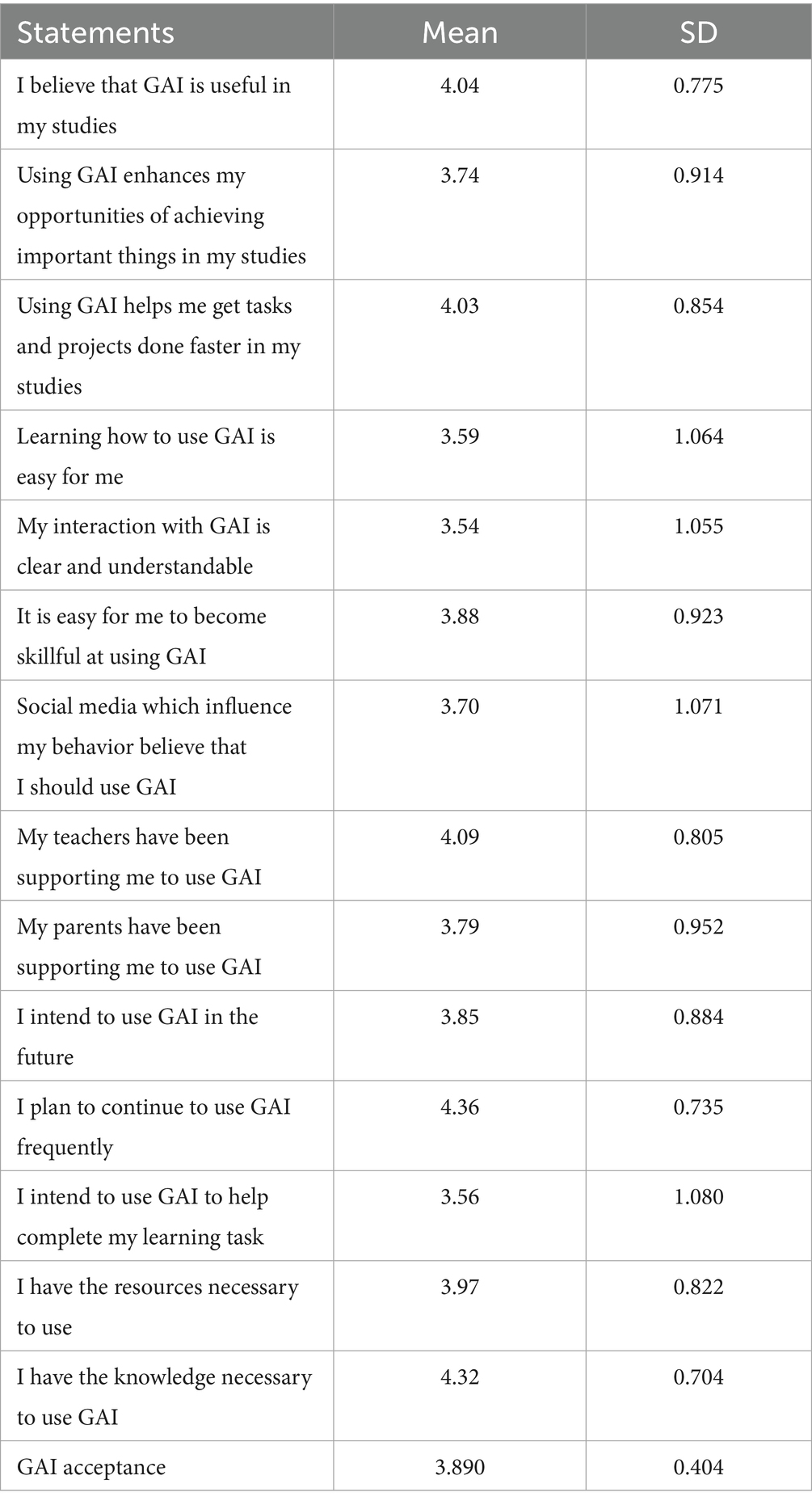

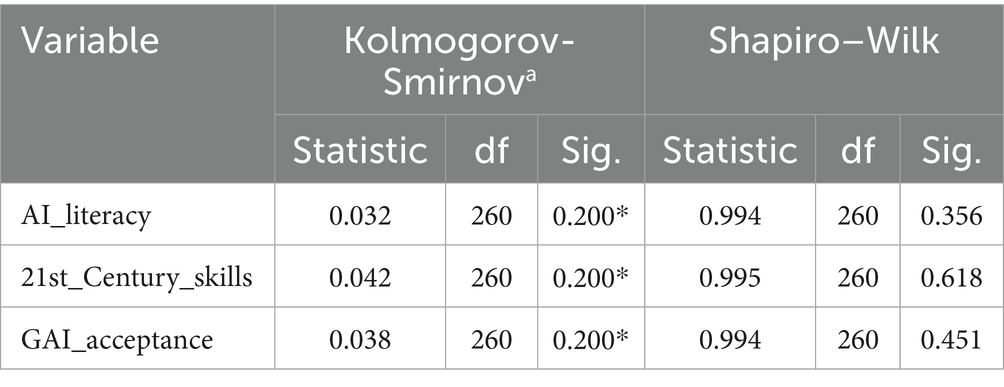

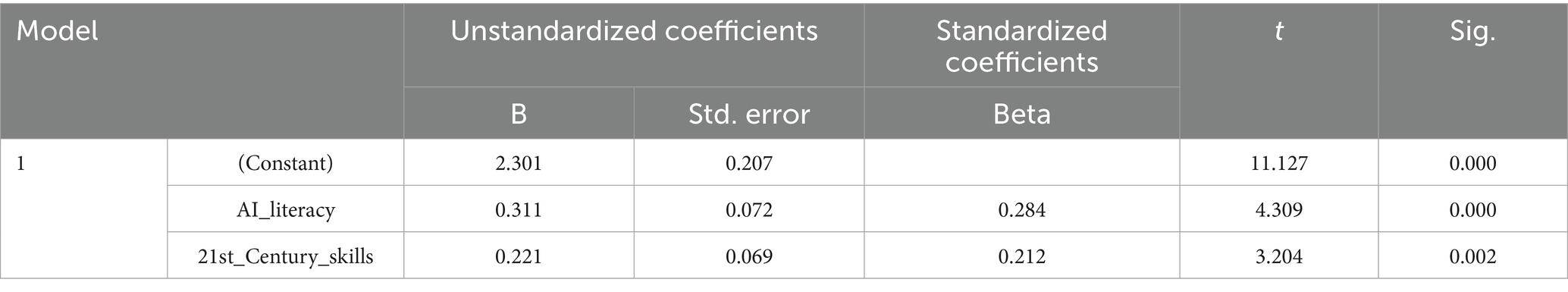

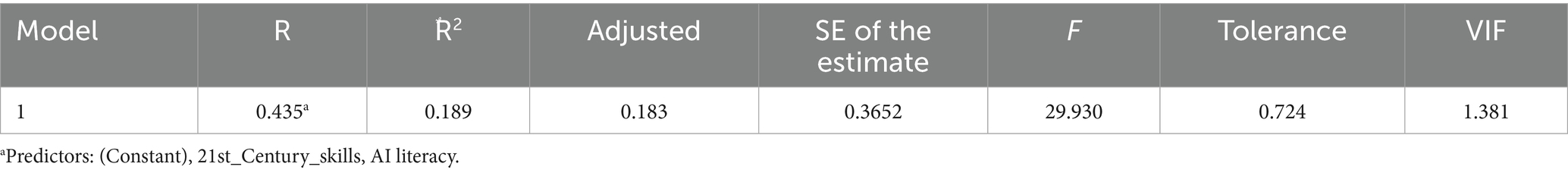

To answer the third question, “What is the level of acceptance of generative AI among PTUK students?,” the means and standard deviations were calculated, as shown in Table 6. The overall mean score for generative AI acceptance was 3.890 out of 5, indicating a “good” level of generative AI acceptance.

Multiple regression was then used to examine the influence of AI literacy and 21st century skills on generative AI acceptance. Before fitting the models, the data were checked against the regression assumptions, including normality and collinearity (Williams et al., 2019). The Shapiro–Wilk test was employed to assess normality, as shown in Table 7. The probability values for the three variables (AI literacy, 21st-century skills, and generative AI acceptance) were not significant, so the hypothesis of normality is retained and all variables are treated as normally distributed. The Variance Inflation Factor (VIF) was examined to evaluate collinearity, with all values falling within the 1 < VIF < 5 range. Values in this range indicate that the predictors are only moderately correlated with each other and that there is no collinearity issue; Table 8 presents the VIF results. After verifying that the regression assumptions were satisfied, multiple regression analyses were used to determine whether the independent variables (AI literacy and 21st century skills) significantly influenced the dependent variable (generative AI acceptance). Table 9 displays the coefficients for the regression analysis.
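The collinearity check can be sketched for the two-predictor case. With exactly two predictors, the VIF of each equals 1 / (1 − r²), where r is the Pearson correlation between the two predictors. The function names and the scores below are hypothetical; the study’s actual VIF values are those in Table 8, and this sketch does not reproduce the statistical software the researchers used.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors in a two-predictor regression."""
    r = pearson_r(x1, x2)
    if abs(r) == 1:
        raise ValueError("predictors are perfectly collinear; VIF is infinite")
    return 1 / (1 - r ** 2)


# Hypothetical mean scores for five students on the two predictors.
ai_literacy = [1, 2, 3, 4, 5]
skills_21st = [2, 1, 4, 3, 5]

vif = vif_two_predictors(ai_literacy, skills_21st)
print(f"VIF = {vif:.2f}, within 1 < VIF < 5: {1 < vif < 5}")
```

With more than two predictors, each VIF would instead be obtained by regressing that predictor on all the others and computing 1 / (1 − R²) from that auxiliary regression.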

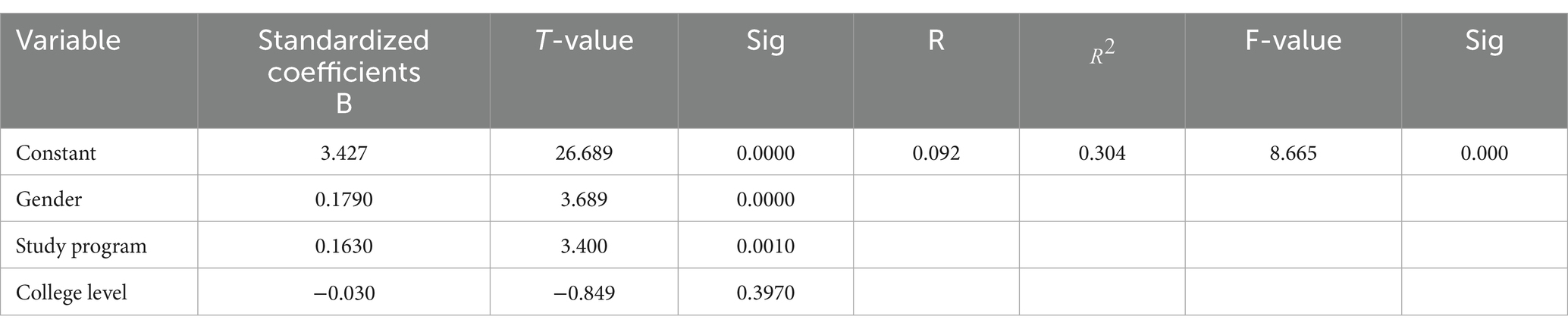

The results in Table 9 indicate that the two predictors (AI literacy and 21st century skills) explained 29.9% of the variation in students’ acceptance. AI literacy has a significant direct influence (t = 4.309, sig = 0.000), and 21st century skills also have a significant direct influence (t = 3.204, sig = 0.002). The regression coefficient for AI literacy is positive (0.311), and the relationship between AI literacy and generative AI acceptance is statistically significant (p < 0.001). The regression coefficient for 21st century skills is also positive (0.221), indicating that students with good 21st century skills accept generative AI better, and the relationship is statistically significant (p = 0.002). The value of the coefficient of determination (R²) for the constant was 19.9%, and the value of F was 29.93, which indicates that the model is statistically significant.

For demographic influence, the results in Table 10 show that gender and field of study have a statistically significant influence on generative AI acceptance, while study level does not. The value of the coefficient of determination (R²) for the constant was 30.4%, and the value of F was 8.665, which indicates that the model is statistically significant.

6 Discussion

This study aimed to measure levels of AI literacy and 21st century skills by surveying 260 university students from different disciplines. The overall mean score for all respondents on the AI literacy scale was 3.07 out of 5, reflecting a moderate level of AI literacy among participants. The moderate AI literacy scores indicate that most participants are familiar with basic AI concepts (e.g., machine learning, chatbots) but may lack deeper technical or ethical understanding. This aligns with studies suggesting that while AI awareness is increasing, many individuals struggle with critical evaluation and responsible use of AI tools (Laupichler et al., 2023). The moderate level supports the argument that current educational and training programs may not yet be sufficient to foster high AI literacy, necessitating more structured curricula emphasizing ethical considerations, bias detection, and practical AI applications (Long and Magerko, 2020).

Moreover, AI literacy involves understanding AI’s capabilities and limitations as well as ethical awareness regarding its use. A moderate level on these dimensions suggests that college students need to revisit what they know and reflect on the adequacy of their current knowledge (Ng et al., 2021). Students must also consider issues such as privacy, intellectual property, and discrimination and bias while using AI platforms (Khoo et al., 2024). Kong et al. (2024) reported that AI literacy is a comprehensive concept that enables students to apply AI concepts, realize their ability to use AI, understand the potential of AI applications, and be aware of the ethical implications of AI tools. Compared with this definition of AI literacy as a multidimensional competency encompassing conceptual understanding, practical application, and ethical awareness, the current results suggest that students may perform adequately in basic AI comprehension but lag in critical evaluation and responsible use. For instance, while students might recognize AI tools like ChatGPT or facial recognition systems, their ability to assess biases in AI outputs or navigate copyright concerns appears limited. This contrast highlights a crucial gap: technical familiarity does not equate to ethical or strategic competence. The moderate scores also imply that educational interventions should teach not only how AI works but also how to question its societal impact, reinforcing the emphasis of Ng et al. (2021) on the applied and ethical dimensions of AI literacy. Future curricula should integrate case studies on AI ethics, bias audits of real-world systems, and reflective exercises to bridge this gap (Chan and Lo, 2025).

Regarding the second research question, which focused on the level of 21st century skills, the study found that college students were at a moderate level in critical competencies like communication, collaboration, creativity, and critical thinking. The moderate scores in 21st-century skills (e.g., collaboration, creativity, digital literacy) suggest that students are reasonably adept at teamwork and communication, skills that are frequently emphasized in recent education systems (Cristea et al., 2024; Kain et al., 2024). However, weaker critical thinking and problem-solving performance implies that many students may still rely on surface-level analysis rather than deeper, innovation-driven approaches. This could be explained by the teaching strategies adopted when generative AI is used (Barrett et al., 2021; He and Chiang, 2024; Saleem et al., 2024). Also, higher education institutions may not yet have structured ways to integrate AI in a manner that fully enhances 21st century skills (Shadiev and Wang, 2022). To address this, university maker spaces should foster environments that encourage collaborative learning and enhance the teaching-learning process.

Additionally, universities should adopt educational models that incorporate quality assessments of learning and skill acquisition related to generative AI and promote meaningful interactions among students. If an institution plans to incorporate generative AI, it should address, assess, and evaluate students’ skills comprehensively, including soft skills and hard skills, and plan strategies to enrich students with 21st century skills. For example, strategies like inquiry-based learning, AI-powered simulations, and gamification (Celik et al., 2024; Hu, 2024; Gratani and Giannandrea, 2022; Jing et al., 2024; Samala et al., 2024) should be implemented. This is in line with Marrone et al. (2022), who found that 21st-century skills are not sufficiently developed due to inadequate digital infrastructure, a lack of qualified instructors, and the absence of clear Information and Communication Technology (ICT) policies, all of which impede the effective integration of technology in teaching.

In this respect, the results also revealed that AI literacy positively influences the acceptance of generative AI. Since students are moderately AI literate, this may facilitate their acceptance of generative AI tools, which in turn contributes to generative AI usage. Schiavo et al. (2024) indicated that as students acquire more knowledge and develop greater competence in using AI, their AI literacy increases, which supports a deeper understanding of and proficiency in generative AI.

Regarding the influence of college students’ 21st-century skills on generative AI acceptance, the results revealed that these skills play a pivotal role in the acceptance of generative AI tools. This finding aligns with the earlier study by Fadli and Iskarim (2024), which identified six components of 21st century skills, including digital skills, communication skills, student connectedness, perceived competence, and cooperativity, as potential factors that positively influence students’ acceptance of modern technologies. However, these findings differ from those of Gómez Niño et al. (2024), who found that AI can equip students with essential 21st century skills like creativity, collaboration, and critical thinking in education. Similarly, Fadli and Iskarim (2024) showed that integrating ChatGPT into the learning process can help students sharpen their communication skills, stimulate critical thinking, and enhance their analytical, evaluative, and reflective skills. The relationship therefore appears to be bidirectional: 21st-century skills influence generative AI acceptance, and generative AI acceptance also influences 21st-century skills. Students should also consider ethical issues related to fairness, accountability, transparency, bias, and integrity while using AI tools (Jang et al., 2022). Moderate levels of AI literacy and 21st-century skills seem to underscore the need for targeted upskilling initiatives. Since generative AI is increasingly integrated into various professions, educational institutions might focus on AI ethics, problem-based learning to strengthen critical thinking alongside digital skills, and merging AI literacy with creativity and analytical reasoning.

These findings could also mean that AI acceptance influences AI literacy and 21st-century skills, that is, the relationship may run in the reverse direction. For example, Tseng et al. (2025) demonstrated that using AI tools like ChatGPT and Copilot in teaching greatly enhanced students’ AI literacy. Additionally, a positive correlation between AI literacy and AI acceptance was observed by Li et al. (2024) and Yilmaz et al. (2023), who revealed that AI literacy and the ability to utilize AI technology are interconnected, suggesting that as AI literacy improves, so does the acceptance of AI. This result also concurs with the study of Ma and Lei (2024), who found that AI literacy influences several components of acceptance in the Technology Acceptance Model (TAM) among students, including perceived usefulness (PU) as a primary factor that affects behavioral intention (BI) to use AI tools. AI applications also appear to have economic implications that affect labor markets, business efficiency, and innovation (Chui et al., 2023; Shafik, 2024).

This study examined the relationship between AI literacy, 21st-century skills, and generative AI acceptance, and three findings stand out. First, students exhibited moderate levels of AI literacy (mean score: 3.075/5) and 21st-century skills (mean score: 2.871/5), indicating room for improvement in these areas. Second, regression analysis revealed that both AI literacy (β = 0.311, p < 0.001) and 21st-century skills (β = 0.221, p = 0.002) significantly and positively influenced students’ acceptance of generative AI, explaining 29.9% of the variance. Third, the relationship appears to be bidirectional: while AI literacy and 21st-century skills enhance acceptance, the use of generative AI tools (e.g., ChatGPT) may also improve these competencies, as supported by studies like Tseng et al. (2025).

Regarding the demographic influence of gender and field of study, gender appears to affect generative AI acceptance. This could be explained by differences in usage between females and males. Jang et al. (2022) revealed that female students may be more apprehensive about utilizing AI-based applications in their learning processes. Gender also plays a complex role in determining an individual’s experience of AI anxiety, and both cognitive and non-cognitive factors can explain this phenomenon. Similarly, Zhang et al. (2023) reported that gender plays a role in AI usage, since a lack of exposure may lead to low confidence in the ability to use and understand AI-based applications, which in turn may lead to anxiety and fear. According to the regression results, the influence of the field of study is also statistically significant; that is, students in different fields of study accept generative AI differently. Students’ specialization could explain this. For example, students in scientific colleges perceive generative AI differently from students in humanities colleges. Technology and engineering schools lead in AI innovation, while liberal arts colleges may focus on its societal impact. The results also align with Hornberger et al. (2023), who noted variations in AI literacy levels among college students, specifically highlighting higher literacy among engineering students and those in science, technology, engineering, and mathematics (STEM).

7 Conclusion

Based on the findings of this study, college students who possess a moderate AI literacy level and moderate 21st century skills tend to accept generative AI applications in their learning process. In the 21st century, achieving learning outcomes is increasingly difficult. The reality is that the learning skills still need to be improved. AI literacy also needs improvement among college students. Therefore, enhancing AI literacy among college students is essential, and this can be achieved by developing targeted instructional materials, educational curricula, and strategies. Integrating AI literacy into college courses across all disciplines is a highly effective approach, as supported by various studies in the field (Laupichler et al., 2023; Mansoor et al., 2024; Salhab, 2024).

7.1 Recommendations and future work

Based on the study’s findings, it is recommended that the use of generative AI among college students be further improved by fostering critical thinking and ensuring its responsible application in education. This approach can lead to higher-quality and more effective learning outcomes. The findings also inform recommendations for curriculum design. In terms of assessment strategies, institutions should redesign assignments to emphasize process over product, such as requiring students to document their use of AI tools and reflect on revisions. In terms of ethical awareness, institutions should offer courses that incorporate case studies on AI-generated plagiarism and copyright dilemmas, echoing the call by Lo et al. (2025) for explicit discussions about originality. As this study found that AI literacy and 21st-century skills influence generative AI use, qualitative studies that investigate the phenomenon in depth are advisable. Moreover, studies that compare AI literacy rates across low-resource and high-resource institutions are recommended.

7.2 Limitations

Some limitations could be addressed in future research. Findings based on the UTAUT model may not generalize as well as those obtained with other models or theoretical frameworks. Moreover, evaluating 21st century skills poses challenges due to the lack of standardized assessment tools, which may limit the depth and accuracy of the results. Future studies could explore alternative frameworks and more comprehensive methods to address these limitations. Additionally, the tool used in this study may not have fully captured the complexity of certain constructs, such as creativity. Also, the score strength scale used in Daher (2019) lacks a clear empirical or theoretical foundation for its categorical thresholds (e.g., weak, moderate, strong). The cutoffs appear to be defined without justification from statistical analysis, prior validation, or expert consensus, which raises concerns about objectivity and reproducibility in this study. This arbitrariness may introduce bias, oversimplify nuanced data, and limit the scale’s validity for comparative research. Future work should employ empirically derived thresholds or continuous scoring to enhance rigor. Moreover, future studies could explore the model developed in this research by employing more detailed and objective data collection methods to measure these skills better. Another limitation is the relatively small sample size of the study, which may influence the robustness and applicability of the results.

Data availability statement

Data is available upon request from the corresponding author.

Ethics statement

The studies involving humans were approved by Dr. Jehad Asad, Palestine Technical University-Kadoorie. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

RS: Writing – original draft, Writing – review & editing, Funding acquisition, Visualization. MA: Data curation, Methodology, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was financially supported by the Palestine Technical University-Kadoorie.

Acknowledgments

The researchers express their appreciation to the Palestine Technical University-Kadoorie for supporting this study financially.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Correction note

This article has been corrected with minor changes. These changes do not impact the scientific content of the article.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.


Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alier, M., García-Peñalvo, F., and Camba, J. D. (2024). Generative artificial intelligence in education: from deceptive to disruptive. Int. J. Interact. Multimed. Artif. Intell. 8, 11–18. doi: 10.9781/ijimai.2024.02.011

Amankwah-Amoah, J., Abdalla, S., Mogaji, E., Elbanna, A., and Dwivedi, Y. K. (2024). The impending disruption of creative industries by generative AI: opportunities, challenges, and research agenda. Int. J. Inf. Manag. 79:102759. doi: 10.1016/j.ijinfomgt.2024.102759

Barrett, M. S., Creech, A., and Zhukov, K. (2021). Creative collaboration and collaborative creativity: a systematic literature review. Front. Psychol. 12:713445. doi: 10.3389/fpsyg.2021.713445

Baumgartner, T., Strong, C., and Hensley, L. (2002). “Measurement issues in research” in Conducting and reading research in health and human performance. ed. E. R. Buhi (New York, NY: McGraw-Hill), 329–350.

Celik, I., Gedrimiene, E., Siklander, S., and Muukkonen, H. (2024). The affordances of artificial intelligence-based tools for supporting 21st-century skills: a systematic review of empirical research in higher education. Australas. J. Educ. Technol. 40, 19–38. doi: 10.14742/ajet.9069

Chan, H. W. H., and Lo, N. P. K. (2025). A study on human rights impact with the advancement of artificial intelligence. J. Posthumanism 5, 1114–1153. doi: 10.63332/joph.v5i2.490

Chen, H. L., Widarso, G. V., and Sutrisno, H. (2020). A chatbot for learning Chinese: learning achievement and technology acceptance. J. Educ. Comput. Res. 58, 1161–1189. doi: 10.1177/0735633120929622

Chiu, T. K., Ahmad, Z., Ismailov, M., and Sanusi, I. T. (2024). What are artificial intelligence literacy and competency? A comprehensive framework to support them. Comput. Educ. Open 6:100171. doi: 10.1016/j.caeo.2024.100171

Choung, H., David, P., and Ross, A. (2023). Trust in AI and its role in the acceptance of AI technologies. Int. J. Hum.-Comput. Interact. 39, 1727–1739. doi: 10.1080/10447318.2022.2050543

Chui, M., Hazan, E., Roberts, R., Singla, A., and Smaje, K. (2023). The economic potential of generative AI: The next productivity frontier. New York, NY: McKinsey & Company.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. 2nd Edn. London: Routledge.

Cristea, T. S., Snijders, C., Matzat, U., and Kleingeld, A. (2024). Do 21st-century skills make you less lonely? The relation between 21st-century skills, social media usage, and students’ loneliness during the COVID-19 pandemic. Heliyon, 10. doi: 10.1016/j.heliyon.2024.e25899

Daher, W. (2019). “Assessing students' perceptions of democratic practices in the mathematics classroom,” in Eleventh Congress of the European Society for Research in Mathematics Education.

Derakhshan, A. (2025). EFL students' perceptions about the role of generative artificial intelligence-mediated instruction in their emotional engagement and goal orientation: a motivational climate theory perspective in focus. Learn. Motiv. 90:102114. doi: 10.1016/j.lmot.2025.102114

Fadli, F., and Iskarim, M. (2024). Students' perceptions of artificial intelligence technology to develop 21st century learning skills. Lentera Pendidikan: Jurnal Ilmu Tarbiyah dan Keguruan 27, 178–190. doi: 10.24252/lp.2024v27n1i11

Feuerriegel, S., Hartmann, J., Janiesch, C., and Zschech, P. (2024). Generative AI. Bus. Inf. Syst. Eng. 66, 111–126. doi: 10.1007/s12599-023-00834-7

Gómez Niño, J. R., Árias Delgado, L. P., Chiappe, A., and Ortega González, E. (2024). Gamifying learning with AI: a pathway to 21st-century skills. J. Res. Child. Educ. 22, 1–16. doi: 10.1080/02568543.2024.2421974

Gratani, F., and Giannandrea, L. (2022). Towards 2030. Enhancing 21st century skills through educational robotics. Front. Educ. 7:955285.

He, W. J., and Chiang, T. W. (2024). From growth and fixed creative mindsets to creative thinking: an investigation of the mediating role of creativity motivation. Front. Psychol. 15:1353271. doi: 10.3389/fpsyg.2024.1353271

Hornberger, M., Bewersdorff, A., and Nerdel, C. (2023). What do university students know about Artificial Intelligence? Development and validation of an AI literacy test. Computers and Education: Artificial Intelligence, 5:100165. doi: 10.1016/j.caeai.2023.100165

Hu, L. (2024). Programming and 21st century skill development in K-12 schools: a multidimensional meta-analysis. J. Comput. Assist. Learn. 40, 610–636. doi: 10.1111/jcal.12904

Humble, N., and Mozelius, P. (2022). The threat, hype, and promise of artificial intelligence in education. AI Soc. 2, 673–683. doi: 10.1007/s44163-022-00039-z

Ismail, I. A. (2025). “Protecting privacy in AI-enhanced education: a comprehensive examination of data privacy concerns and solutions in AI-based learning” in Impacts of generative AI on the future of research and education. ed. A. Mutawa (London: IGI Global Scientific Publishing), 117–142.

Jang, Y., Choi, S., and Kim, H. (2022). Development and validation of an instrument to measure undergraduate students' attitudes toward the ethics of artificial intelligence (AT-EAI) and analysis of its difference by gender and experience of AI education. Educ. Inf. Technol. 27, 11635–11667. doi: 10.1007/s10639-022-11086-5

Jia, Y., Oh, Y. J., Sibuma, B., LaBanca, F., and Lorentson, M. (2016). Measuring twenty-first century skills: development and validation of a scale for in-service and pre-service teachers. Teach. Dev. 20, 229–252. doi: 10.1080/13664530.2016.1143870

Jing, Y. H., Wang, H. M., Chen, X. J., and Wang, C. L. (2024). What factors will affect the effectiveness of using ChatGPT to solve programming problems? A quasi-experimental study. Humanit. Soc. Sci. Commun. 11:319. doi: 10.1057/s41599-024-02751-w

Kain, C., Koschmieder, C., Matischek-Jauk, M., and Bergner, S. (2024). Mapping the landscape: a scoping review of 21st century skills literature in secondary education. Teach. Teach. Educ. 151:104739. doi: 10.1016/j.tate.2024.104739

Khoo, C., Yang, E. C. L., Tan, R. Y. Y., Alonso-Vazquez, M., Ricaurte-Quijano, C., Pécot, M., et al. (2024). Opportunities and challenges of digital competencies for women tourism entrepreneurs in Latin America: a gendered perspective. J. Sustain. Tour. 32, 519–539. doi: 10.1080/09669582.2023.2189622

Kong, S. C., Cheung, M. Y. W., and Tsang, O. (2024). Developing an artificial intelligence literacy framework: evaluation of a literacy course for senior secondary students using a project-based learning approach. Comput. Educ. Artif. Int. 6:100214. doi: 10.1016/j.caeai.2024.100214

Laupichler, M. C., Aster, A., Haverkamp, N., and Raupach, T. (2023). Development of the "scale for the assessment of non-experts' AI literacy" - an exploratory factor analysis. Comput. Hum. Behav. Rep. 12:100338. doi: 10.1016/j.chbr.2023.100338

Li, Y., Wu, B., Huang, Y., and Luan, S. (2024). Developing trustworthy artificial intelligence: insights from research on interpersonal, human-automation, and human-AI trust. Frontiers in psychology, 15:1382693. doi: 10.3389/fpsyg.2024.1382693

Liu, Z., Li, S., Shang, S., and Ren, X. (2021). How do critical thinking ability and critical thinking disposition relate to the mental health of university students? Front. Psychol. 12:704229. doi: 10.3389/fpsyg.2021.704229

Lo, N., Wong, A., and Chan, S. (2025). The impact of generative AI on essay revisions and student engagement. Computers and Education Open 100249:100249. doi: 10.1016/j.caeo.2025.100249

Long, D., and Magerko, B. (2020). “What is AI literacy? Competencies and design considerations,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–16.

Ma, S., and Lei, L. (2024). The factors influencing teacher education students’ willingness to adopt artificial intelligence technology for information-based teaching. Asia Pacific Journal of Education, 44, 94–111. doi: 10.1080/02188791.2024.2305155

Mansoor, H. M., Bawazir, A., Alsabri, M. A., Alharbi, A., and Okela, A. H. (2024). Artificial intelligence literacy among university students: a comparative transnational survey. Front. Commun. 9:1478476. doi: 10.3389/fcomm.2024.1478476

Maphoto, K. B., Sevnarayan, K., Mohale, N. E., Suliman, Z., Ntsopi, T. J., and Mokoena, D. (2024). Advancing students' academic excellence in distance education: exploring the potential of generative AI integration to improve academic writing skills. Open Praxis 16, 142–159. doi: 10.55982/openpraxis.16.2.649

Marrone, R., Taddeo, V., and Hill, G. (2022). Creativity and artificial intelligence—A student perspective. Journal of Intelligence, 10:65. doi: 10.3390/jintelligence10030065

Ndibalema, P. (2025). Digital literacy gaps in promoting 21st century skills among students in higher education institutions in sub-Saharan Africa: a systematic review. Cogent Educ. 12:2452085. doi: 10.1080/2331186X.2025.2452085

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., and Qiao, M. S. (2021). Conceptualizing AI literacy: an exploratory review. Comput. Educ. Artif. Int. 2:100041. doi: 10.1016/j.caeai.2021.100041

Ng, D. T. K., Wu, W., Leung, J. K. L., and Chu, S. K. W. (2023). “Artificial Intelligence (AI) literacy questionnaire with confirmatory factor analysis,” in 2023 IEEE International Conference on Advanced Learning Technologies (ICALT), 233–235.

Ooi, K. B., Tan, G. W. H., Al-Emran, M., Al-Sharafi, M. A., Capatina, A., Chakraborty, A., et al. (2025). The potential of generative artificial intelligence across disciplines: perspectives and future directions. J. Comput. Inf. Syst. 65, 76–107. doi: 10.1080/08874417.2023.2261010

Papadimitriou, S., and Virvou, M. (2025). “21st century skills via prosocial educational games with responsible artificial intelligence: an overview,” in Artificial Intelligence-Based Games as Novel Holistic Educational Environments to Teach 21st Century Skills, 1–24.

Ray, P. (2023). ChatGPT: a comprehensive review of background, applications, key challenges, bias, ethics, limitations, and future scope. Internet Things Cyber-Phys. Syst. 3, 121–154. doi: 10.1016/j.iotcps.2023.04.003

Ryan, M., and Stahl, B. (2020). Artificial intelligence ethics guidelines for developers and users: clarifying their content and normative implications. J. Inf. Commun. Ethics Soc. 19, 61–86. doi: 10.1108/JICES-12-2019-0138

Saleem, S., Dhuey, E., White, L., and Perlman, M. (2024). Understanding 21st century skills needed in response to industry 4.0: Exploring scholarly insights using bibliometric analysis. Telematics and Informatics Reports, 13:100124. doi: 10.1016/j.teler.2024.100124

Salhab, R. (2024). AI Literacy across Curriculum Design: Investigating College Instructors’ Perspectives. Online Learning, 28:n2. doi: 10.24059/olj.v28i2.4426

Samala, A. D., Bojić, L., Vergara-Rodríguez, D., Klimova, B., and Ranuharja, F. (2024). Exploring the impact of gamification on 21st-century skills: insights from DOTA 2. Int. J. Interact. Mob. Technol. 17, 33–54. doi: 10.3991/ijim.v17i18.42161

Schiavo, G., Businaro, S., and Zancanaro, M. (2024). Comprehension, apprehension, and acceptance: understanding the influence of literacy and anxiety on acceptance of artificial intelligence, 77. doi: 10.2139/ssrn.4668256

Sekaran, U., and Bougie, R. (2003). Research methods for business: A skill building approach. 5th Edn. New York, NY: Wiley.

Shadiev, R., and Wang, X. (2022). A review of research on technology-supported language learning and 21st century skills. Front. Psychol. 13:897689. doi: 10.3389/fpsyg.2022.897689

Shafik, W. (2024). “Generative AI for social good and sustainable development” in Generative AI: Current trends and applications. eds. K. Raza, N. Ahmad, and D. Singh (Singapore: Springer Nature Singapore), 185–217.

Sperling, K., Stenberg, C. J., McGrath, C., Åkerfeldt, A., Heintz, F., and Stenliden, L. (2024). In search of artificial intelligence (AI) literacy in teacher education: a scoping review. Comput. Educ. Open 6:100169. doi: 10.1016/j.caeo.2024.100169

Stöhr, C., Ou, A. W., and Malmström, H. (2024). Perceptions and usage of AI chatbots among students in higher education across genders, academic levels and fields of study. Comput. Educ. Artif. Int. 7:100259. doi: 10.1016/j.caeai.2024.100259

Strzelecki, A. (2024). ChatGPT in higher education: investigating bachelor and master students' expectations towards AI tool. Educ. Inf. Technol. 30, 10231–10255. doi: 10.1007/s10639-024-13222-9

Strzelecki, A., and ElArabawy, S. (2024). Investigation of the moderation effect of gender and study level on the acceptance and use of generative AI by higher education students: comparative evidence from Poland and Egypt. Br. J. Educ. Technol. 55, 1209–1230. doi: 10.1111/bjet.13425

Tan, M. J. T., and Maravilla, N. M. A. T. (2024). Shaping integrity: why generative artificial intelligence does not have to undermine education. Front. Artif. Int. 7:1471224. doi: 10.3389/frai.2024.1471224

Teng, M., Singla, R., Yau, O., Lamoureux, D., Gupta, A., Hu, Z., et al. (2022). Health care students’ perspectives on artificial intelligence: countrywide survey in Canada. JMIR Med. Educ. 8:e33390. doi: 10.2196/33390

Tseng, L. P., Huang, L. P., and Chen, W. R. (2025). Exploring artificial intelligence literacy and the use of ChatGPT and copilot in instruction on nursing academic report writing. Nurse Educ. Today 147:106570. doi: 10.1016/j.nedt.2025.106570

Tugiman, T., Herman, H., and Yudhana, A. (2023). The UTAUT model for measuring acceptance of the application of the patient registration system. MATRIK: Jurnal Manajemen, Teknik Informatika dan Rekayasa Komputer 22, 381–392. doi: 10.30812/matrik.v22i2.2844

Ustun, A. B., Karaoglan-Yilmaz, F. G., Yilmaz, R., Ceylan, M., and Uzun, O. (2024). Development of UTAUT-based augmented reality acceptance scale: a validity and reliability study. Educ. Inf. Technol. 29, 11533–11554. doi: 10.1007/s10639-023-12321-3

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478. doi: 10.2307/30036540

Venkatesh, V., Thong, J. Y. L., and Xu, X. (2012). Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q. 36, 157–178. doi: 10.2307/41410412

Wang, Y. S., Wu, M. C., and Wang, H. Y. (2009). Investigating the determinants and age and gender differences in the acceptance of mobile learning. Br. J. Educ. Technol. 40, 92–118. doi: 10.1111/j.1467-8535.2007.00809.x

Williams, M. N., Grajales, C. A. G., and Kurkiewicz, D. (2019). Assumptions of multiple regression: correcting two misconceptions. Pract. Assess. Res. Eval. 18:11. doi: 10.7275/55hn-wk47

Yilmaz, F. G. K., Yilmaz, R., and Ceylan, M. (2023). Generative artificial intelligence acceptance scale: a validity and reliability study. Int. J. Hum.-Comput. Interact. 40, 8703–8715. doi: 10.1080/10447318.2023.2288730

Zhang, C., Schießl, J., Plößl, L., Hofmann, F., and Gläser-Zikuda, M. (2023). Acceptance of artificial intelligence among pre-service teachers: a multigroup analysis. Int. J. Educ. Technol. High. Educ. 20:49. doi: 10.1186/s41239-023-00420-7

Zhu, Y., Liu, Q., and Zhao, L. (2025). Exploring the impact of generative artificial intelligence on students' learning outcomes: a meta-analysis. Educ. Inf. Technol. 30, 16211–16239. doi: 10.1007/s10639-025-13420-z

Keywords: AI literacy, 21st century skills, generative artificial intelligence (generative AI), curriculum, acceptance, college students

Citation: Salhab R and Aboushi MM (2025) Influence of AI literacy and 21st-century skills on the acceptance of generative artificial intelligence among college students. Front. Educ. 10:1640212. doi: 10.3389/feduc.2025.1640212

Edited by:

Walter Alexander Mata López, University of Colima, Mexico

Reviewed by:

Dennis Arias-Chávez, Continental University, Peru

Noble Lo, Lancaster University, United Kingdom

Copyright © 2025 Salhab and Aboushi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Reham Salhab, r.salhab@ptuk.edu.ps
