- National Committee for Qualifications and Academic Accreditation, Ministry of Education and Higher Education, Doha, Qatar

Introduction: Artificial intelligence (AI) is reshaping education by enabling adaptive learning, personalized feedback, and data-driven decision support. However, systematic tools to evaluate the ethical and pedagogical readiness of AI educational platforms remain limited, particularly in culturally specific contexts. This study addresses this gap by operationalizing the UNESCO Ethical Impact Assessment (EIA) Tool through the development of the Gulf-AI Education Tool Evaluation Matrix (G-AIETM), a structured framework designed to assess AI-powered educational platforms against 18 ethical and pedagogical indicators.

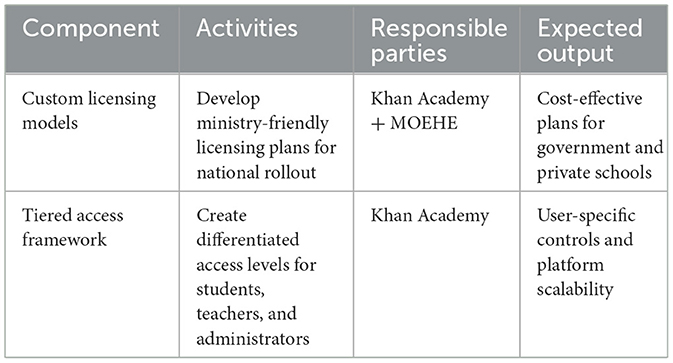

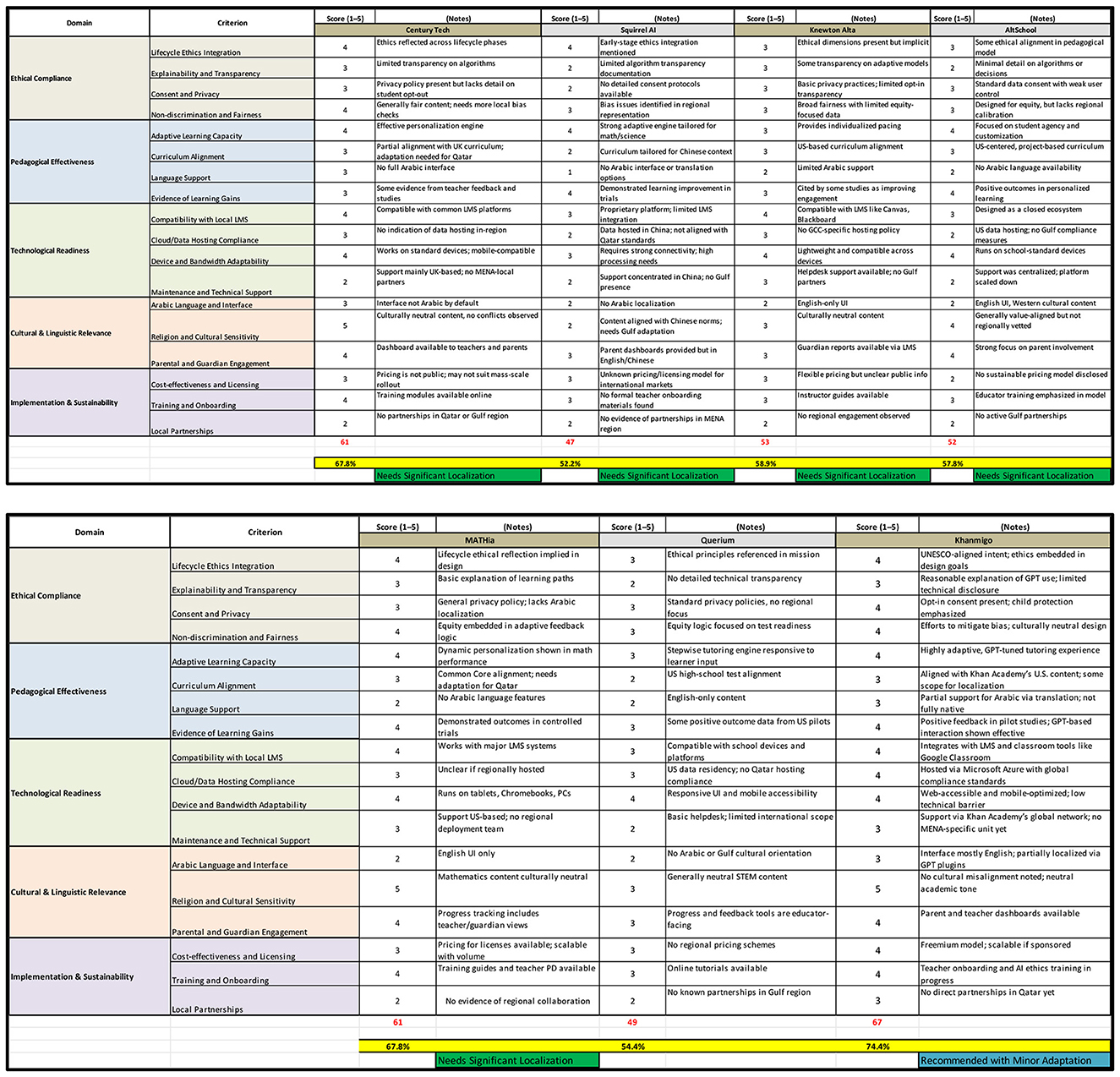

Methods: The G-AIETM framework was applied to evaluate seven globally recognized AI-powered educational platforms: Khanmigo, CENTURY Tech, MATHia, Knewton Alta, AltSchool, Querium, and Squirrel AI. Each platform was assessed against 18 criteria using a 5-point Likert scale, and normalized scores were calculated to generate rankings out of 100. The study further developed an actionable implementation framework specifically for Khanmigo, which included phases such as Arabic natural language integration, curriculum adaptation, ethical AI training for educators, and localized data hosting. Enabling factors for the application of the UNESCO EIA tool, such as cross-disciplinary stakeholder engagement and iterative use, were also identified, alongside persistent barriers including resource limitations and regulatory gaps.

Results: The evaluation revealed that Khanmigo achieved the highest score of 74.4%, qualifying as “Recommended with Minor Adaptation,” due to strengths in adaptive learning, stakeholder dashboards, and ethical integration. However, it was limited by insufficient Arabic language support and local compliance mechanisms. CENTURY Tech and MATHia each scored 67.8%, showing solid technical performance but requiring significant localization in language, curriculum alignment, and data governance. Knewton Alta (58.9%), AltSchool (57.8%), Querium (54.4%), and Squirrel AI (52.2%) were categorized as “Needs Significant Localization,” reflecting deficits in Arabic support, cultural sensitivity, and transparency in algorithmic processes and data privacy.

Discussion: The findings underscore the urgent need for cultural and linguistic localization in AI for education. A critical issue across platforms was the persistent lack of Arabic language integration and Islamic cultural alignment, raising concerns about inclusivity and trust in AI outputs within Gulf classrooms. These limitations highlight the ethical imperative of ensuring context-specific adaptation before large-scale deployment. By grounding the G-AIETM in contemporary theories of responsible innovation and ethics-by-design, the study extends beyond descriptive evaluation to provide a replicable, evidence-based model for policymakers, educators, and developers. This contributes novel insights into the ethical governance of AI in education by combining a globally recognized assessment tool with a culturally responsive matrix, offering practical policy implications for Qatar and comparable education systems worldwide.

1 Introduction

Around the world, education systems are undergoing a profound transformation driven by the rapid integration of artificial intelligence (AI) into teaching, learning, and administrative processes. From personalized tutoring to predictive analytics and automated assessment, AI-powered tools are reshaping how knowledge is delivered, measured, and managed. This technological shift offers unprecedented opportunities for improving access, efficiency, and individualization in education, yet it also raises complex ethical and governance challenges. Concerns over bias, data privacy, cultural alignment, and accountability underscore the need for frameworks that can guide AI adoption in ways that safeguard equity, transparency, and trust. These issues are particularly pressing in regions with distinct linguistic and cultural contexts, where imported technologies may not fully reflect local values or educational priorities. Against this backdrop, the present study applies a structured ethical evaluation framework to analyze leading AI-powered educational platforms, with the aim of identifying their readiness for responsible deployment in the Gulf and similar contexts.

More broadly, AI systems are rapidly permeating critical areas of human life, including public services (Misuraca et al., 2020), education (Holmes and Tuomi, 2022), labor markets (Webb, 2019), and healthcare (Saraswat et al., 2022). As these technologies become increasingly embedded in decision-making processes, they simultaneously introduce significant ethical risks (Douglas et al., 2024). These risks range from algorithmic discrimination and systemic opacity to invasive surveillance practices and the exclusion of marginalized populations from algorithmically mediated opportunities (Fountain, 2022). Left unaddressed, such ethical challenges threaten to erode public trust in AI systems and exacerbate existing social inequalities.

Recognizing these dangers, international organizations and policymakers have issued a series of declarations emphasizing the need for human-centered and rights-based AI governance. Among the most comprehensive is the UNESCO Recommendation on the Ethics of Artificial Intelligence, adopted unanimously by UNESCO's member states in November 2021 (UNESCO, 2021). The Recommendation articulates 10 core principles (human rights and dignity, fairness, inclusiveness, sustainability, privacy, transparency, responsibility, accountability, multi-stakeholder participation, and adherence to the rule of law) that are intended to guide the ethical design, deployment, and use of AI systems (UNESCO, 2021). However, translating these high-level ethical ideals into concrete practices remains a persistent global challenge.

Notably, there are emerging platforms designed specifically for the Arabic-speaking and Islamic educational space. Examples include Noon Academy (Saudi Arabia; AlAteeq et al., 2020), a social learning platform that incorporates Arabic-first content and peer-to-peer tutoring, and Fanar (Qatar), a platform for Arabic-centric multimodal generative AI systems that supports language, speech, and image generation tasks (Abbas et al., 2025). Although these platforms were excluded from the present evaluation due to insufficient publicly available documentation for ethical and technical assessment, their development illustrates the growing momentum toward culturally aligned AI in education. Future research should include such platforms in comparative analyses to build a more representative evidence base for Arabic-language AI education.

The repeated absence of Arabic language support and Islamic studies integration in widely used AI educational platforms also highlights a broader challenge of inclusivity in educational technology design. Research in Arabic natural language processing (NLP) demonstrates that technical barriers such as morphological complexity, dialectal variation, and the scarcity of annotated corpora continue to limit the effective deployment of AI tools in Arabic-speaking contexts (Al-Khalifa et al., 2025). Beyond technical issues, the omission of Islamic cultural content from training datasets, as noted by UNESCO (2023b), raises concerns about stereotyping, cultural erasure, or misrepresentation in educational outputs. These gaps are not trivial: they directly affect pedagogical effectiveness and student trust, particularly in Gulf countries where Arabic is the medium of instruction and Islamic values are embedded in curricula. More broadly, scholars in AI ethics identify such omissions as examples of “design bias,” where systems optimized for dominant cultural and linguistic groups fail to serve minority populations equitably (Jobin et al., 2019). In the case of Arabic-speaking Muslim learners, this bias results in reduced accessibility and misalignment with national education standards, thereby limiting the global applicability of otherwise advanced platforms. Addressing these deficiencies is therefore not only a matter of technical improvement but also an ethical imperative consistent with the UNESCO Ethical Impact Assessment framework, which treats cultural and linguistic relevance as integral to responsible AI deployment in education.

Educational Artificial Intelligence (EAI) refers to the application of AI technologies specifically designed to support and enhance teaching, learning, and educational administration. Its scope encompasses intelligent tutoring systems that adapt to learners' individual needs, natural language processing tools that provide automated feedback and language support, predictive analytics for early identification of learning gaps, and recommender systems that personalize learning pathways. EAI also includes AI-driven administrative tools that streamline grading, content management, and curriculum planning. As noted by Holmes and Tuomi (2022), EAI represents a convergence of technological innovation and pedagogical theory, offering unprecedented opportunities for personalization, scalability, and accessibility in education. However, as the World Economic Forum (2024) emphasizes, these opportunities are accompanied by ethical complexities related to bias, transparency, data privacy, and cultural relevance. Within the framework of this study, EAI is examined not only as a technological enabler but also as a domain requiring governance aligned with UNESCO's vision for human-centered, ethically guided AI in education.

At the same time, scholarship on AI ethics, such as Jobin et al. (2019), Fjeld et al. (2020), and Douglas et al. (2024), has emphasized risks related to bias, opacity, and exclusion, risks that are magnified when platforms are deployed across diverse cultural and linguistic contexts. Despite these insights, there remains a gap in frameworks that integrate ethical evaluation with localized educational priorities. Existing global tools, such as UNESCO's Ethical Impact Assessment, provide valuable guidance but require contextual adaptation to reflect the specific legal, linguistic, and curricular realities of regions such as the Gulf (UNESCO, 2021, 2023b).

Despite significant global efforts to translate high-level ethical principles for AI into actionable practices, there remains a critical gap in practical evaluation tools that can assess the readiness of educational AI systems within localized, culturally specific contexts. Existing frameworks, while comprehensive in scope, often lack the mechanisms to account for linguistic diversity, cultural values, and national curricular requirements, factors that are essential for equitable and effective deployment in non-Western education systems. This study addresses this problem by introducing the Gulf-AI Education Tool Evaluation Matrix (G-AIETM), a context-sensitive framework designed to assess the ethical, pedagogical, and cultural readiness of AI-powered educational platforms in alignment with Qatar's educational policies and societal norms.

Bridging the gap between principles and practice requires not merely ethical awareness but also practical, structured frameworks that can be integrated into the operational processes of AI development. In this context, the UNESCO EIA tool represents a significant innovation (UNESCO, 2023a). Designed as a dynamic, lifecycle-wide instrument, the EIA tool aims to support stakeholders in identifying, assessing, and mitigating ethical risks at every stage of an AI system's conception, development, deployment, use, and decommissioning. Unlike traditional audit mechanisms, which often assess ethical compliance retrospectively, the EIA promotes proactive and iterative ethical reflection, aligning itself with the agile development cycles typical of contemporary AI innovation.

Hence, our study aims to answer the following research questions:

1. How can the UNESCO Ethical Impact Assessment (EIA) Tool be operationalized to evaluate the ethical readiness of AI-powered educational platforms?

2. To what extent do leading AI-powered platforms align with ethical, pedagogical, and cultural standards relevant to Qatar's education system and similar systems?

3. What adaptations are required for these platforms to achieve cultural and linguistic relevance, particularly in Arabic-speaking and Islamic contexts?

4. How can the proposed Gulf-AI Education Tool Evaluation Matrix (G-AIETM) contribute to global discourse on responsible and localized AI in education?

Moreover, this research examines the ethical, pedagogical, and contextual readiness of AI-powered educational tools through the development and application of a novel framework, the Gulf-AI Education Tool Evaluation Matrix (G-AIETM). Grounded in the structure and principles of the UNESCO EIA tool, this matrix operationalizes ethical considerations across the AI lifecycle and adapts them to the specific needs of Qatar's education system. The study applies the matrix to seven globally recognized AI education platforms to assess their alignment with national curriculum standards, cultural and linguistic requirements, and legal data governance policies. Through structured multi-criteria analysis, the research identifies tools with the greatest potential for deployment, highlights ethical and technical gaps, and proposes implementation strategies for localized adaptation. In doing so, the paper not only contributes to the operationalization of UNESCO's human-centered AI vision in the education sector but also offers a scalable and context-sensitive framework for other countries in the Gulf and beyond seeking to integrate AI responsibly into their educational systems.

The significance of this study lies in its dual contribution to both practice and policy. For policymakers, the G-AIETM offers an evidence-based framework to guide procurement, regulation, and ethical oversight of AI-powered educational tools. For school administrators and educators, it provides a structured basis for selecting and adapting platforms that align with local curricula, linguistic needs, and cultural values. For EdTech developers, the findings identify critical adaptation requirements, such as Arabic language integration and cultural sensitivity, that can expand market reach in Arabic-speaking and culturally conservative regions. While tailored to Qatar, the matrix is replicable in other education systems with similar values and governance priorities, thereby extending its utility beyond the national context.

To our knowledge, there are no published quantitative ethical matrices tailored to measure the readiness of AI education platforms for deployment in Arabic-speaking, Islamic-majority contexts. From our perspective as researchers embedded in the Gulf's educational policy landscape, this omission has tangible consequences: without such tools, platform selection risks overlooking issues of cultural alignment, language accessibility, and local data governance compliance. This study addresses that gap by introducing the Gulf-AI Education Tool Evaluation Matrix (G-AIETM), designed to operationalize global ethical principles within the specific policy, cultural, and pedagogical environment of Qatar.

2 Conceptual foundations of the UNESCO Ethical Impact Assessment tool

The adoption of the UNESCO Recommendation on the Ethics of Artificial Intelligence in 2021 marked a watershed moment in global AI governance. For the first time, an international instrument articulated a shared normative framework to guide the development and use of AI systems in ways that prioritize human dignity, environmental sustainability, and social justice. Central to this framework are 10 interdependent values and principles: human rights and fundamental freedoms, human dignity, fairness and non-discrimination, inclusiveness and diversity, environmental and social sustainability, privacy and data protection, transparency and explainability, responsibility and accountability, multi-stakeholder participation, and the rule of law (UNESCO, 2021). These principles are intended not merely as aspirational goals but as actionable foundations for ethical AI governance.

Nevertheless, a significant gap persists between the articulation of ethical principles and their consistent operationalization in AI practices. Numerous analyses have shown that voluntary AI ethics guidelines, often issued by private sector actors, tend to lack enforcement mechanisms and have limited influence on day-to-day design and deployment decisions (Jobin et al., 2019; Fjeld et al., 2020). This phenomenon, sometimes referred to as “ethics washing,” highlights the danger of ethical principles being used rhetorically without substantive impact. Against this backdrop, there is an urgent need for practical tools that enable developers, policymakers, and institutions to embed ethics meaningfully into AI systems throughout their lifecycle.

The UNESCO EIA tool responds directly to this need. It is designed not merely as a compliance checklist, but as a dynamic participatory process that encourages continuous ethical reflection and dialogue among stakeholders. By aligning ethical deliberations with the AI lifecycle, from pre-design to decommissioning, the EIA seeks to ensure that ethical considerations are not an afterthought, but an integral part of AI system conception, development, deployment, and oversight.

Furthermore, AI-powered educational platforms can serve not only as instructional tools but also as decision-support systems for educators and administrators. For example, platforms such as Khanmigo and CENTURY Tech incorporate real-time analytics dashboards that aggregate and visualize learner performance data at the class, group, and individual levels. These analytics enable timely identification of at-risk students, monitoring of mastery levels across learning objectives, and detection of engagement patterns that may require intervention. Decision-makers can use these insights to adjust curriculum pacing, refine assessment schedules, personalize instructional interventions, and allocate resources more efficiently. In doing so, such platforms extend their value beyond direct teaching to facilitate evidence-based decision-making at both classroom and institutional levels. This decision-support role aligns with recent analyses by UNESCO (2023b) and HolonIQ (2025), which highlight AI's potential to transform educational leadership, improve learning outcomes, and inform strategic planning in rapidly digitizing education systems.

The EIA tool is distinctive in several important respects when compared to other existing frameworks for AI ethics operationalization. For example, while the IEEE Ethically Aligned Design framework (IEEE, 2019) and the IBM AI FactSheets approach (IBM Research, 2020) offer valuable mechanisms for documenting ethical considerations, they are often oriented toward technical documentation or organizational transparency rather than iterative ethical reflection. Similarly, algorithmic auditing initiatives, such as those proposed by Raji et al. (2020), tend to focus on retrospective evaluation, often after an AI system has already been deployed.

By contrast, the EIA tool's design encourages a proactive, participatory, and context-sensitive approach to ethics. It emphasizes stakeholder engagement at every stage, asking developers and policymakers to consider not only technical robustness but also social, cultural, and legal impacts. Moreover, by structuring ethical inquiry around critical points in the AI lifecycle, the EIA helps anticipate risks before they materialize, promoting a form of “ethics-by-design” that aligns with emerging trends in responsible AI development.

In this sense, the UNESCO EIA tool can be seen as part of a broader shift toward embedding ethics within agile development processes rather than treating it as a separate or subsequent exercise. As AI systems become more complex and more deeply intertwined with societal infrastructures, such anticipatory and integrated approaches to ethics will be increasingly essential for ensuring that AI serves the common good.

3 The UNESCO Ethical Impact Assessment tool structure

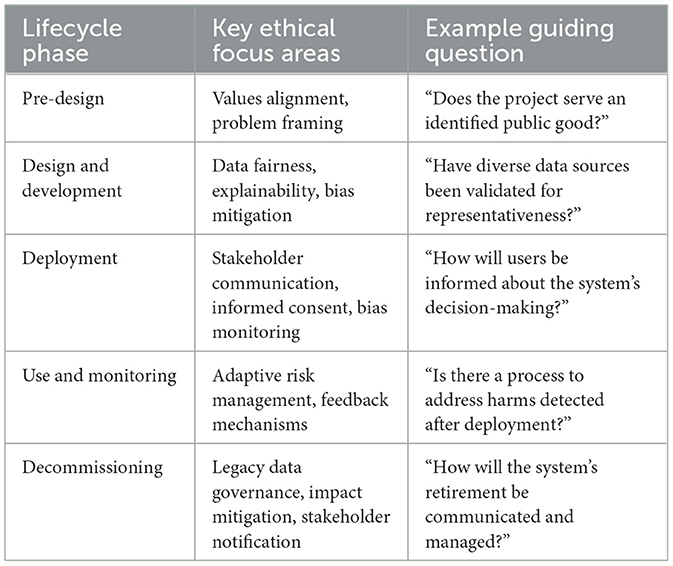

The UNESCO EIA tool is structured around a comprehensive understanding of the AI system lifecycle. Recognizing that ethical risks can arise at different stages of development and deployment, the EIA tool divides the lifecycle into five distinct but interrelated phases: Pre-Design, Design and Development, Deployment, Use and Monitoring, and Decommissioning. Each phase includes a structured set of guiding questions, potential risk indicators, and reflective prompts tailored to the evolving relationship between AI systems and their affected stakeholders.

3.1 Pre-design phase

In the initial conception stage, the EIA tool emphasizes the importance of problem framing and values alignment. Stakeholders are encouraged to ask foundational questions, such as whether the intended use of AI genuinely serves public interest and whether alternative non-AI solutions have been adequately considered. Ethical reflection at this stage seeks to prevent the normalization of harmful objectives and ensure that human rights are embedded from the outset.

The conceptual foundation of this study draws from contemporary theoretical approaches to AI governance, particularly the ethics-by-design (Nussbaumer et al., 2023) and responsible innovation frameworks (Li et al., 2023). Ethics-by-design advocates for the proactive embedding of ethical considerations into every stage of a technology's lifecycle, from conception through deployment and eventual decommissioning, ensuring that societal values, fairness, and inclusivity are integral to system architecture rather than retrofitted after development. Responsible innovation expands on this premise by emphasizing anticipatory governance, stakeholder engagement, and reflexivity in decision-making, encouraging continuous assessment of potential impacts and alignment with the public good. It is worth noting that, while the majority of globally recognized AI educational platforms originate in the U.S. or China, their successful deployment in Arabic-speaking and Islamic-majority contexts requires deliberate localization. This involves not only translation into Modern Standard Arabic, but also the integration of right-to-left (RTL) user interface design, culturally sensitive content filters, and the embedding of Islamic studies and regional history within the learning modules.

3.2 Design and development phase

During system architecture and algorithm design, the EIA tool focuses on data representativeness, fairness in model training, explainability, and robustness. Developers are prompted to assess potential biases in datasets, evaluate transparency mechanisms, and design risk mitigation strategies. Ethical inquiry is aligned with technical decision points, reinforcing the integration of ethics into the design process rather than treating it as an external constraint.

Within the design and development phase, the EIA tool embeds fairness, representativeness, and robustness as core evaluative dimensions. Fairness is addressed by requiring stakeholders to systematically assess dataset diversity, ensuring balanced representation across gender, socio-economic backgrounds, geographic regions, and ability levels, so that the model does not disproportionately favor one group over another. This step parallels the due diligence process in quality control: just as a school textbook is reviewed for cultural inclusivity before printing, AI training data must be checked for representational equity before deployment. Representativeness is reinforced through validation against diverse, context-relevant datasets, thereby improving the system's ability to perform accurately across varied learner profiles. Robustness is promoted by testing models under a range of scenarios, stress conditions, and edge cases to ensure performance remains stable even in less-than-ideal input conditions. The EIA also prompts documentation of explainability features so that decision pathways can be understood and challenged by educators and administrators. These elements are consistent with best practices outlined by Raji et al. (2020) in algorithmic auditing and the IEEE (2019) Ethically Aligned Design framework, both of which advocate lifecycle-based checks to uphold equity and system resilience.
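The dataset-diversity check described above can be illustrated with a minimal sketch. It assumes a list of learner records with categorical attributes and flags groups whose share falls below a chosen floor. The function names, the sample records, and the 20% floor are hypothetical and are not part of the EIA tool or of any evaluated platform.

```python
from collections import Counter

def group_shares(records, attribute):
    """Return each group's share of the records for one attribute."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def underrepresented(shares, min_share):
    """List groups whose share falls below min_share, sorted by name."""
    return sorted(g for g, s in shares.items() if s < min_share)

# Hypothetical records: 8 urban/English, 1 urban/Arabic, 1 rural/English.
records = (
    [{"region": "urban", "language": "en"}] * 8
    + [{"region": "urban", "language": "ar"}]
    + [{"region": "rural", "language": "en"}]
)

MIN_SHARE = 0.2  # illustrative floor only; not a threshold set by the EIA

for attribute in ("language", "region"):
    shares = group_shares(records, attribute)
    print(attribute, underrepresented(shares, MIN_SHARE))
```

A share-based check of this kind only surfaces gaps in representation. Deciding what counts as adequate balance for a given education system remains a judgment for the stakeholders involved in the assessment.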

3.3 Deployment phase

As AI systems are implemented in real-world environments, the EIA tool emphasizes stakeholder communication, monitoring of unintended consequences, and the establishment of recourse mechanisms for affected users. Ethical considerations at this stage involve ensuring informed consent, explaining system functionalities to users, and monitoring for emergent biases that may not have been evident during development.

3.4 Use and monitoring phase

Once deployed, AI systems require ongoing ethical oversight. The EIA tool prompts periodic reviews of system impacts, user feedback channels, and adaptive risk management practices. By institutionalizing continuous monitoring, the tool ensures that ethical evaluation remains dynamic and responsive to evolving conditions.

3.5 Decommissioning phase

Finally, the EIA recognizes that ethical obligations extend beyond an AI system's operational life. Developers and institutions are encouraged to plan for responsible decommissioning, including managing legacy data, mitigating environmental impacts, and communicating the system's retirement to affected stakeholders (Table 1).

The EIA tool is intentionally designed to be flexible and iterative. It recognizes that ethical risks cannot be fully anticipated in advance and that ongoing reflection is necessary. Thus, users of the tool are encouraged to revisit earlier stages as needed, particularly when new risks emerge or project objectives evolve. This approach aligns with contemporary agile development methodologies, fostering a culture of continuous ethical vigilance rather than treating ethics as a one-time compliance exercise.

Moreover, by embedding participatory reflection throughout the lifecycle, the EIA tool underscores the importance of multi-stakeholder engagement. Developers, policymakers, users, and affected communities are all seen as essential contributors to the ethical governance of AI systems. This inclusive methodology not only enriches ethical deliberations but also enhances the legitimacy and societal trustworthiness of AI deployments.

4 Research methodology

This study adopts a qualitative, comparative ethics-based assessment approach to evaluate leading AI-powered educational platforms using the UNESCO EIA Tool. Given the lack of access to primary data or internal system documentation, the research is based solely on publicly available secondary sources, including peer-reviewed literature, developer documentation, and global policy reports.

4.1 Selection of AI education tools

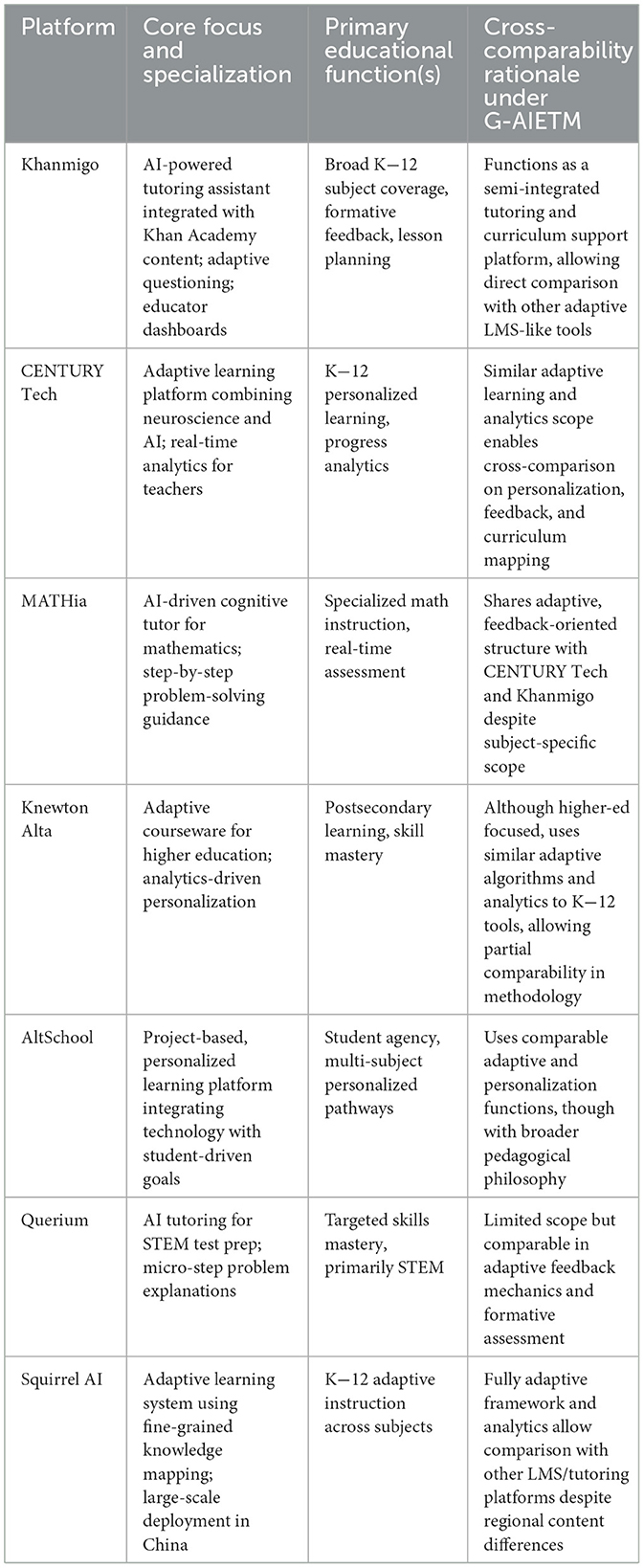

The study focuses on seven globally recognized AI-powered educational platforms that are widely cited for their innovation, reach, and AI-driven capabilities: CENTURY Tech (UK), Squirrel AI (China), Knewton Alta (USA), AltSchool (USA), Querium (USA), MATHia by Carnegie Learning (USA), and Khanmigo (USA). These platforms were selected based on their alignment with core educational functions (personalized learning, real-time assessment, adaptive feedback, and learning analytics) as well as the availability of technical and policy documentation for evaluation.

Furthermore, to ensure a meaningful comparative analysis under the G-AIETM framework, the seven platforms were selected on the basis of their core AI-driven educational functionalities (adaptive learning, real-time analytics, personalized feedback, and curriculum mapping), combined with the availability of verifiable public documentation to support ethical assessment. While some tools, such as MATHia and Querium, are subject-specific (mathematics and STEM), and others, such as Khanmigo, CENTURY Tech, and Squirrel AI, are multi-subject, all employ adaptive algorithms, user analytics, and structured feedback mechanisms that align with the five domains of the G-AIETM. This functional overlap allows for consistent scoring against the same 18 ethical and pedagogical indicators, even where subject focus varies. Platforms primarily dedicated to niche creative or editorial functions (e.g., Canva, Grammarly) were excluded, as their core purpose does not align with the integrated instructional or tutoring scope required for this evaluation. Cross-comparability was thus established on shared operational characteristics, particularly adaptivity, data-driven feedback, and potential for curriculum integration, rather than on identical subject coverage alone (Table 2).

The final selection of seven platforms was intentionally constrained by the requirement for robust, publicly accessible, and verifiable documentation covering both technical and ethical dimensions. While a larger pool could have increased diversity, many AI education tools, particularly those emerging in non-English or niche markets, did not provide sufficient transparency in areas such as algorithmic processes, data governance policies, and curriculum integration. Including such platforms would have necessitated speculative interpretation, undermining the methodological rigor of the G-AIETM-based evaluation.

4.2 Data sources

Given the absence of access to proprietary data, internal system architecture, or direct user feedback, this study relies exclusively on secondary data sources that are publicly accessible and verifiable. These sources were selected based on their relevance, credibility, and ability to support an ethically oriented analysis in line with the UNESCO EIA Tool. The following categories of sources underpin the comparative evaluation of the selected AI education platforms:

a) Official Company Websites and Technical Documentation

The foundational understanding of each platform's AI capabilities, system architecture, intended pedagogical functions, and declared ethical safeguards was derived from the official websites of CENTURY Tech, Squirrel AI, Knewton Alta, Querium, and Carnegie Learning. This included product white papers, FAQs, and platform demos; privacy policies and terms of service; publicly released ethical or AI governance frameworks (where available); and blog articles or thought leadership by the companies' technical teams.

These documents provided critical insight into how each platform communicates its ethical commitments, data handling policies, and technical decision-making to users and stakeholders.

b) Independent Product Reviews and Third-Party Market Evaluations

To complement the companies' self-reported data, the study reviewed third-party evaluations and independent product comparisons from respected edtech analysts and review sites such as HolonIQ (2025), EdSurge (2024), Common Sense Education (2025), and EdTech (2025). These reviews offered external perspectives on usability, equity implications, algorithmic performance, and user experience, often citing direct feedback from educators or school systems. Particular attention was paid to recurring critiques regarding data transparency, accessibility, or explainability.

c) Policy Reports from International Organizations

The study also incorporated key documents from leading global policy and standards-setting organizations, particularly:

• UNESCO: Including the 2021 Recommendation on the Ethics of Artificial Intelligence and the subsequent Ethical Impact Assessment Tool (UNESCO, 2021, 2023b).

• OECD: Reports on AI in education and policy guidelines for trustworthy AI (OECD, 2020).

• World Economic Forum (WEF): Analyses of edtech trends, risks of algorithmic bias in education, and governance toolkits (World Economic Forum, 2024).

These sources were used both to contextualize the ethical criteria applied and to benchmark platform practices against international expectations for responsible AI use in education.

4.3 Evaluation framework: applying the UNESCO EIA tool

The UNESCO EIA Tool is used as a structured framework to evaluate the ethical strengths and weaknesses of each platform. The tool's five lifecycle stages (Pre-design, Design and Development, Deployment, Use and Monitoring, and Decommissioning) serve as the backbone of the analysis. For each stage, key questions and risk indicators from the EIA guidance are applied to the extent that public data allows.

Evaluation dimensions include:

i. Problem framing and value alignment (Pre-design)

ii. Data fairness and algorithmic explainability (Design and Development)

iii. Consent practices and stakeholder communication (Deployment)

iv. Feedback mechanisms and dynamic risk management (Use and Monitoring)

v. Transparency around system retirement and data handling (Decommissioning)

Each AI tool is scored qualitatively across these stages, and comparative insights are drawn to identify best practices, ethical gaps, and areas for policy intervention.

4.4 Analytical strategy

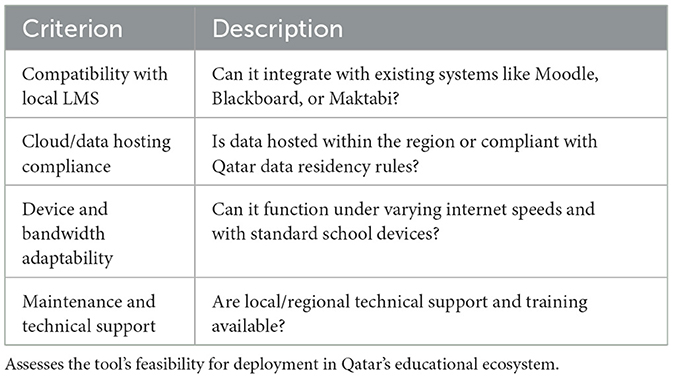

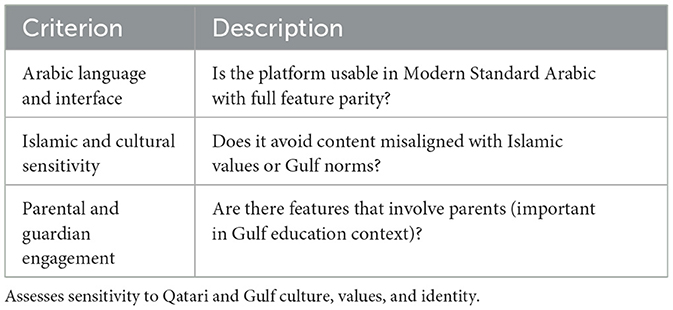

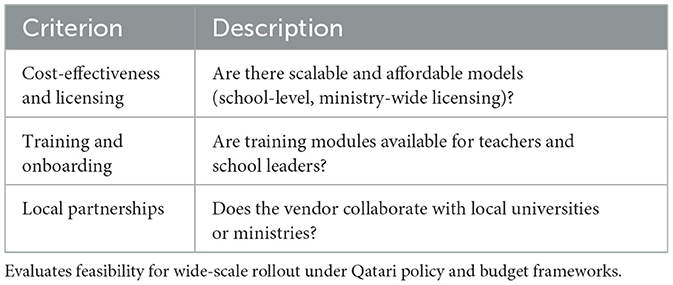

To evaluate the suitability of AI-powered educational tools for deployment in the Gulf region, specifically within Qatar's education system, a structured multi-criteria assessment was conducted using the G-AIETM scale. This matrix was developed in alignment with the UNESCO EIA Tool and localized to reflect national educational priorities, cultural norms, and legal standards.

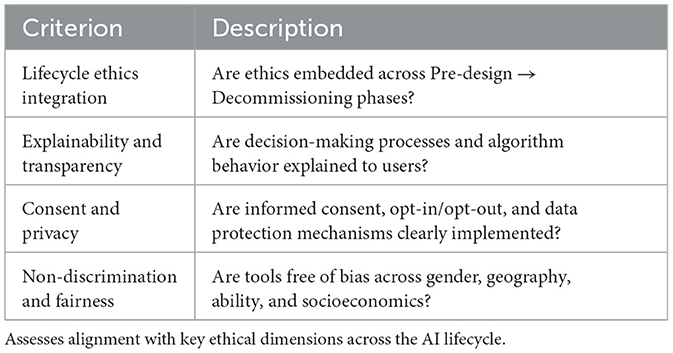

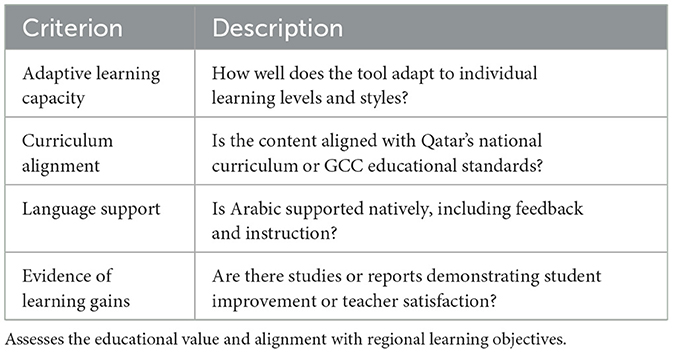

The G-AIETM matrix comprises 18 qualitative indicators organized across five evaluative domains:

1. Ethical Compliance (4 criteria)

2. Pedagogical Effectiveness (4 criteria)

3. Technological Readiness (4 criteria)

4. Cultural and Linguistic Relevance (3 criteria)

5. Implementation and Cost Sustainability (3 criteria)

Each AI tool was assessed against these indicators on a five-point Likert scale (1 = Poor; 5 = Excellent). The scoring was informed by a desk-based document review of publicly available information, including company technical documentation, academic studies, global edtech reports, and policy frameworks.

For each tool, two additional columns were used to capture:

• Quantitative Scores: Numeric rating for each indicator.

• Qualitative Notes: Narrative justifications explaining the score and highlighting key strengths or limitations.

The scores were summed to calculate a total out of 90, then normalized to a percentage score. Based on the normalized score, each tool was categorized as follows:

• 85–100%: Highly Recommended

• 70–84%: Recommended with Minor Adaptation

• 50–69%: Needs Significant Localization

• Below 50%: Not Recommended
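As a minimal sketch of the normalization and banding described above, the following Python snippet converts a raw total out of 90 into a percentage and assigns a category. The function names are illustrative. Treating each band's lower limit as an inclusive cutoff (so that a fractional score such as 84.4% falls in the 70–84% band) is an assumption, since the bands are stated only for whole percentages.

```python
MAX_RAW_TOTAL = 18 * 5  # 18 indicators, each rated 1-5, so 90 points at most

# (inclusive lower bound in percent, category label), highest band first.
# Using the lower limits as cutoffs is an assumption about fractional scores.
BANDS = [
    (85.0, "Highly Recommended"),
    (70.0, "Recommended with Minor Adaptation"),
    (50.0, "Needs Significant Localization"),
]


def normalize(raw_total: int) -> float:
    """Convert a raw total (18-90) to a percentage of the 90-point maximum."""
    if not 18 <= raw_total <= MAX_RAW_TOTAL:
        raise ValueError("raw total must lie between 18 and 90")
    return raw_total / MAX_RAW_TOTAL * 100


def categorize(percent: float) -> str:
    """Map a normalized score to its recommendation category."""
    for lower_bound, label in BANDS:
        if percent >= lower_bound:
            return label
    return "Not Recommended"


if __name__ == "__main__":
    # Hypothetical raw total, chosen only to illustrate the arithmetic.
    raw = 72
    pct = normalize(raw)
    print(f"{raw}/90 = {pct:.1f}% -> {categorize(pct)}")
```

Because every indicator receives at least 1 point, the lowest attainable raw total is 18, which corresponds to 20%; the normalized scale therefore effectively runs from 20% to 100%.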

This structured approach facilitated comparative evaluation and informed context-specific adaptation memos for each tool. The results guided the formulation of policy recommendations on localization, data compliance, and curriculum integration for Qatar's education sector. Moreover, this indirect, desk-based approach is appropriate given current limitations in data access and the early maturity of many AI governance frameworks. It enables a first-of-its-kind, ethics-centered comparison of AI education tools through the lens of a UNESCO-endorsed methodology. The findings aim to guide future policy discussions, vendor selection processes, and adaptation of the EIA tool to educational contexts.

4.5 Novel AI education tool evaluation matrix

The evaluation matrix assigns a score from 1 to 5 to every criterion within the five main domains (Tables 3–7).

4.6 Score range and final composite score

4.6.1 Score range (1–5)—ethical compliance

1 = Very Poor (Non-Compliant or Superficial Engagement)

• No visible integration of ethical considerations in design or implementation.

• Lack of any public documentation on privacy, fairness, or data protection.

• Ethics is treated as a symbolic gesture or a checkbox, with no operational mechanisms in place.

• No transparency regarding AI decision-making or user consent.

2 = Weak (Minimal or Incomplete Ethical Attention)

• Ethics is mentioned in mission statements or policy, but operational integration is limited or inconsistent.

• Basic privacy policies exist, but consent is implicit or non-interactive.

• Limited or non-specific disclosures on how data is handled, stored, or protected.

• Ethical risks are not reviewed iteratively; assessments occur only once or under pressure.

3 = Moderate (Partially Aligned with UNESCO EIA Principles)

• Some phases of the AI lifecycle (e.g., design or deployment) incorporate ethical review, but not all.

• Consent is present but may not be fully informed, easy to understand, or accessible to minors.

• Some mechanisms exist for addressing bias or risk, but without formal monitoring systems.

• Ethical oversight may exist at the organization level but lacks external accountability or auditing.

4 = Strong (Operationally Ethical and Transparent)

• Ethical practices are embedded across most lifecycle stages (pre-design, development, deployment).

• Clear policies for consent, data privacy, fairness, and algorithmic accountability are published.

• The tool includes teacher or user-facing explanations for AI behavior and decisions.

• There is stakeholder engagement (e.g., parents, teachers) in ethical evaluation or policy feedback.

5 = Excellent (Fully Integrated and Aligned with UNESCO EIA Standards)

• Comprehensive ethical governance is implemented across all five stages of the AI lifecycle.

• Informed consent mechanisms are transparent, accessible, and user-friendly, especially for children.

• The system demonstrates active mitigation of bias, periodic ethical audits, and dynamic risk monitoring.

• Ethical decisions are guided by inclusive stakeholder consultation and made transparent through documentation or dashboards.

• There is a demonstrable culture of ethical reflection embedded in the organization's values, training, and reporting.

Each domain could carry equal weight or be weighted differently (e.g., Ethics and Pedagogy weighted more heavily). Total possible score:

• Raw total: sum of the 18 indicator scores (maximum 90 points)

• Conversion to percentage = (total score / 90) × 100

• Score thresholds can categorize tools as:

° 85–100: Highly Recommended

° 70–84: Recommended with Minor Adaptation

° 50–69: Needs Significant Localization

° < 50: Not Recommended for Deployment
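The option of weighting domains differently can be illustrated with a short Python sketch. It assumes one possible weighting scheme: each domain's subtotal is expressed as a fraction of that domain's own maximum, and the fractions are combined using weights that are rescaled to sum to 1. The criteria counts come from the matrix description; the "ethics-heavy" weights and the example subtotals are hypothetical and are not taken from the study.

```python
# Number of criteria per domain, as listed in the G-AIETM description.
CRITERIA_PER_DOMAIN = {
    "Ethical Compliance": 4,
    "Pedagogical Effectiveness": 4,
    "Technological Readiness": 4,
    "Cultural and Linguistic Relevance": 3,
    "Implementation and Cost Sustainability": 3,
}


def weighted_percent(domain_totals: dict[str, int], weights: dict[str, float]) -> float:
    """Weighted composite: each domain's share of its own maximum, times its weight."""
    weight_sum = sum(weights.values())
    score = 0.0
    for domain, n_criteria in CRITERIA_PER_DOMAIN.items():
        domain_fraction = domain_totals[domain] / (5 * n_criteria)
        score += weights[domain] / weight_sum * domain_fraction
    return score * 100


# Weights proportional to criteria counts reproduce the unweighted (total / 90) * 100.
baseline_weights = {d: float(n) for d, n in CRITERIA_PER_DOMAIN.items()}

# Hypothetical alternative emphasizing ethics and pedagogy; not taken from the study.
ethics_heavy_weights = {
    "Ethical Compliance": 0.30,
    "Pedagogical Effectiveness": 0.30,
    "Technological Readiness": 0.15,
    "Cultural and Linguistic Relevance": 0.15,
    "Implementation and Cost Sustainability": 0.10,
}

# Hypothetical domain subtotals for one tool (they sum to 63 out of 90).
example_totals = {
    "Ethical Compliance": 16,
    "Pedagogical Effectiveness": 16,
    "Technological Readiness": 14,
    "Cultural and Linguistic Relevance": 8,
    "Implementation and Cost Sustainability": 9,
}

if __name__ == "__main__":
    print(f"unweighted:   {weighted_percent(example_totals, baseline_weights):.1f}%")
    print(f"ethics-heavy: {weighted_percent(example_totals, ethics_heavy_weights):.1f}%")
```

Under this scheme, summing all 18 indicators with equal weight is the special case in which each domain's weight is proportional to its number of criteria, so any other weighting should be reported explicitly alongside the unweighted score.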

5 Results and discussions

5.1 Evaluation matrix outcomes

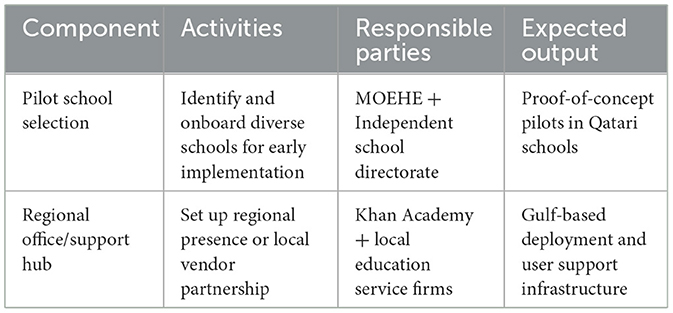

Figure 1 shows the evaluation of seven leading AI-powered educational platforms: CENTURY Tech, Squirrel AI, Knewton Alta, AltSchool, MATHia, Querium, and Khanmigo, using the Gulf-AI Education Tool Evaluation Matrix (G-AIETM). This matrix, grounded in the UNESCO EIA framework, allowed for a structured comparison across five domains: Ethical Compliance, Pedagogical Effectiveness, Technological Readiness, Cultural and Linguistic Relevance, and Implementation and Sustainability. Each tool was assessed on 18 indicators, with a total possible score of 90, subsequently normalized to a percentage score.

Figure 1. Gulf-AI Education Tool Evaluation Matrix (G-AIETM) for evaluation of AI-powered learning platforms.

The evaluation of seven AI-driven educational platforms (Khanmigo, CENTURY Tech, MATHia, Knewton Alta, AltSchool, Querium, and Squirrel AI) was conducted using the G-AIETM scale, which integrates the UNESCO EIA framework with localized educational, cultural, and regulatory criteria. Each tool was assessed on 18 indicators across five domains, and the scores were normalized out of 100. The results reveal a varied landscape of readiness, highlighting both promise and critical gaps in ethical, pedagogical, and contextual alignment.

Khanmigo, developed by Khan Academy and powered by GPT technology, achieved the highest overall score at 74.4%, placing it in the “Recommended with Minor Adaptation” category. The tool demonstrated strong performance in ethical compliance, explainability, adaptive interaction, and compatibility with classroom platforms. However, to be fully deployable in Qatar, Khanmigo still requires significant improvements in Arabic language integration, cultural contextualization, and assurance of data residency compliance. Nonetheless, its pedagogical adaptability and structured support for both students and educators make it a leading candidate for pilot implementation in Qatar's bilingual and digital-first learning environments.

CENTURY Tech and MATHia both scored 67.8%, placing them just below the threshold for minor adaptation and within the “Needs Significant Localization” category. CENTURY Tech stands out for its emphasis on evidence-based instruction and real-time analytics, while MATHia demonstrates depth in personalized mathematics tutoring through intelligent cognitive modeling. Both tools perform strongly in pedagogical effectiveness and technological readiness but lack full Arabic interface support, alignment with Qatari national curricula, and culturally relevant content. Additionally, neither tool currently provides data hosting solutions in compliance with Qatar's privacy law (Law No. 13 of 2016), and neither has established partnerships in the region; both are key factors for scalability and public-sector adoption.

Knewton Alta and AltSchool scored 58.9% and 57.8%, respectively, reflecting moderate potential but limited regional alignment. Knewton offers adaptive pacing and analytics geared toward higher education but is primarily designed for U.S. institutions. AltSchool, despite its innovative project-based design and emphasis on student agency, lacks sustainability as a platform following its organizational restructuring. Both tools would require major adjustments in curriculum, language, cultural framing, and governance to be considered for public deployment in Qatar.

Querium and Squirrel AI, with scores of 54.4% and 52.2%, respectively, ranked lowest in the evaluation. While Querium offers concise, AI-supported tutoring in STEM for test preparation, and Squirrel AI provides powerful adaptivity and algorithmic logic, both are largely misaligned with the linguistic, cultural, and regulatory requirements of the Gulf context. Neither platform supports Arabic, nor do they reflect Islamic values, and both lack transparency around data ethics and local compliance, limiting their feasibility for national rollout.

Hence, Khanmigo emerges as the most promising candidate for near-term deployment with targeted adaptations, while CENTURY Tech and MATHia offer strong technical foundations for future localization. The remaining tools, though innovative in design, require substantial revision to meet the ethical, cultural, and infrastructural standards necessary for use in Qatar's evolving AI-enhanced educational landscape.
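As a consistency check on the figures above, the short Python sketch below back-calculates the raw total (out of 90) implied by each reported percentage and prints the resulting ranking. The percentages are those reported in the text. The raw totals are inferred from them under the assumption that each percentage is an integer total divided by 90 and rounded to one decimal place; they are not values stated in the study.

```python
# Normalized scores as reported in the text (percent).
REPORTED = {
    "Khanmigo": 74.4,
    "CENTURY Tech": 67.8,
    "MATHia": 67.8,
    "Knewton Alta": 58.9,
    "AltSchool": 57.8,
    "Querium": 54.4,
    "Squirrel AI": 52.2,
}


def implied_raw_total(percent: float, max_total: int = 90) -> int:
    """Integer raw total whose percentage rounds (1 decimal) to the reported value."""
    raw = round(percent / 100 * max_total)
    if round(raw / max_total * 100, 1) != percent:
        raise ValueError(f"{percent}% does not match an integer total out of {max_total}")
    return raw


if __name__ == "__main__":
    ranking = sorted(REPORTED.items(), key=lambda item: item[1], reverse=True)
    for rank, (tool, percent) in enumerate(ranking, start=1):
        # Inferred value, shown only to confirm the arithmetic is self-consistent.
        print(f"{rank}. {tool}: {percent}% (implied raw total {implied_raw_total(percent)}/90)")
```

Every reported percentage maps to a whole-number total, which is what the 18-indicator, five-point scoring scheme would be expected to produce.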

5.2 Tool analytical summary

5.2.1 CENTURY Tech

- Pre-Design Phase

• Problem Framing and Value Alignment: CENTURY Tech aims to address challenges in personalized learning and teacher workload by leveraging AI to create adaptive learning pathways and automate administrative tasks.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilizes AI to analyze student interactions and tailor learning experiences. While it emphasizes personalization, specific details on algorithmic transparency and data handling practices are limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: CENTURY Tech provides dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms is not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offers real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates are implied, though specifics on risk management strategies are not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information does not provide clear guidance on data retention policies or procedures for decommissioning the platform.

CENTURY Tech demonstrates a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addresses several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there is a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

5.2.2 Squirrel AI

- Pre-Design Phase

• Problem Framing and Value Alignment: Squirrel AI aims to address challenges in personalized learning and educational equity by leveraging AI to create adaptive learning pathways and automate administrative tasks.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilizes AI to analyze student interactions and tailor learning experiences. While it emphasizes personalization, specific details on algorithmic transparency and data handling practices are limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: Squirrel AI provides dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms is not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offers real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates are implied, though specifics on risk management strategies are not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information does not provide clear guidance on data retention policies or procedures for decommissioning the platform.

Squirrel AI Learning demonstrates a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addresses several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there is a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

5.2.3 Knewton Alta

- Pre-Design Phase

• Problem Framing and Value Alignment: Knewton Alta aims to address challenges in personalized learning and educational equity by leveraging AI to create adaptive learning pathways and automate administrative tasks.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilizes AI to analyze student interactions and tailor learning experiences. While it emphasizes personalization, specific details on algorithmic transparency and data handling practices are limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: Knewton Alta provides dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms is not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offers real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates are implied, though specifics on risk management strategies are not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information does not provide clear guidance on data retention policies or procedures for decommissioning the platform.

Knewton Alta demonstrates a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addresses several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there is a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

5.2.4 AltSchool

- Pre-Design Phase

• Problem Framing and Value Alignment: AltSchool aimed to revolutionize traditional education by integrating technology to personalize learning experiences, focusing on student agency and individualized learning paths.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilized AI to analyze student interactions and tailor learning experiences. While it emphasized personalization, specific details on algorithmic transparency and data handling practices were limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: AltSchool provided dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms was not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offered real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates were implied, though specifics on risk management strategies were not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information did not provide clear guidance on data retention policies or procedures for decommissioning the platform.

AltSchool demonstrated a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addressed several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there was a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

5.2.5 MATHia

- Pre-Design Phase

• Problem Framing and Value Alignment: MATHia aims to address challenges in personalized learning and educational equity by leveraging AI to create adaptive learning pathways and automate administrative tasks.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilizes AI to analyze student interactions and tailor learning experiences. While it emphasizes personalization, specific details on algorithmic transparency and data handling practices are limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: MATHia provides dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms is not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offers real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates are implied, though specifics on risk management strategies are not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information does not provide clear guidance on data retention policies or procedures for decommissioning the platform.

MATHia demonstrates a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addresses several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there is a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

5.2.6 Querium

- Pre-Design Phase

• Problem Framing and Value Alignment: Querium aims to address challenges in STEM education by providing personalized, AI-driven tutoring to help students master critical skills.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilizes AI to analyze student interactions and tailor learning experiences. While it emphasizes personalization, specific details on algorithmic transparency and data handling practices are limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: Querium provides dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms is not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offers real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates are implied, though specifics on risk management strategies are not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information does not provide clear guidance on data retention policies or procedures for decommissioning the platform.

Querium demonstrates a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addresses several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there is a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

5.2.7 Khanmigo

- Pre-Design Phase

• Problem Framing and Value Alignment: Khanmigo aims to democratize access to high-quality education by providing AI-powered tutoring and teaching assistance, aligning with Khan Academy's mission to offer free, world-class education to anyone, anywhere.

- Design and Development Phase

• Data Fairness and Algorithmic Explainability: The platform utilizes AI to analyze student interactions and tailor learning experiences. While it emphasizes personalization, specific details on algorithmic transparency and data handling practices are limited in public documentation.

- Deployment Phase

• Consent Practices and Stakeholder Communication: Khanmigo provides dashboards for educators and guardians, facilitating monitoring of student progress. However, comprehensive information on data privacy policies and user consent mechanisms is not extensively detailed in available sources.

- Use and Monitoring Phase

• Feedback Mechanisms and Dynamic Risk Management: The platform offers real-time insights and analytics to educators, enabling timely interventions. Continuous monitoring and updates are implied, though specifics on risk management strategies are not explicitly outlined.

- Decommissioning Phase

• Transparency Around System Retirement and Data Handling: Publicly available information does not provide clear guidance on data retention policies or procedures for decommissioning the platform.

Khanmigo demonstrates a commitment to enhancing educational outcomes through AI-driven personalization and support for educators. While the platform addresses several ethical considerations outlined in the UNESCO EIA framework, such as improving access to personalized learning and supporting teacher workload, there is a need for greater transparency in areas like data handling, algorithmic explainability, and user consent.

Khanmigo's comparatively strong performance reflects its integration of adaptive feedback and teacher-facing dashboards, features that align with the literature on explainable AI as a key trust-building mechanism in education (Gunning and Aha, 2019). This is consistent with findings by Holmes and Tuomi (2022), who note that adaptive learning systems significantly improve learner engagement and teacher intervention capacity.

6 Implementation framework for Khanmigo deployment in Qatar

In the G-AIETM evaluation, Khanmigo scored 74.4% and was classified as “Recommended with Minor Adaptation.” This section therefore proposes a framework to localize and operationalize Khanmigo for integration into Qatar's public and private school ecosystems while ensuring compliance with legal, linguistic, cultural, and curricular standards. The framework comprises seven phases, each addressing an identified gap that must be closed before deployment in the Gulf region, followed by an eighth phase for monitoring and evaluation.

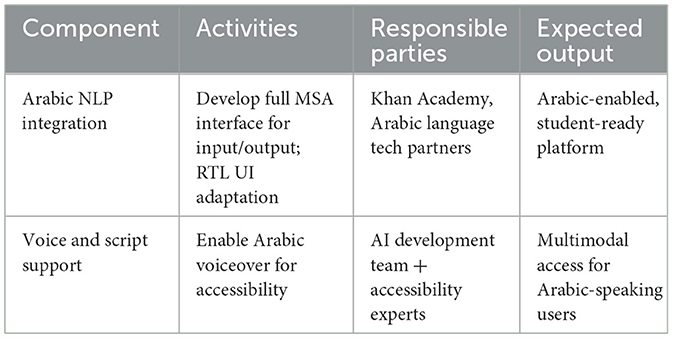

1. Arabic Language and RTL Support

Gap Identified: Interface and conversational outputs are predominantly in English, with only partial Arabic support through translation plugins.

Recommendations:

• Integrate native Modern Standard Arabic (MSA) capability for both input and output.

• Implement right-to-left (RTL) functionality and localized NLP tuning.

• Use Arabic voice and script in alignment with Qatari classroom needs.

Rationale: Arabic is the official language of instruction. Native linguistic support is essential to ensure equitable access and student comprehension across Qatar's public schools (Table 8).

2. Cultural Sensitivity and Islamic Norms

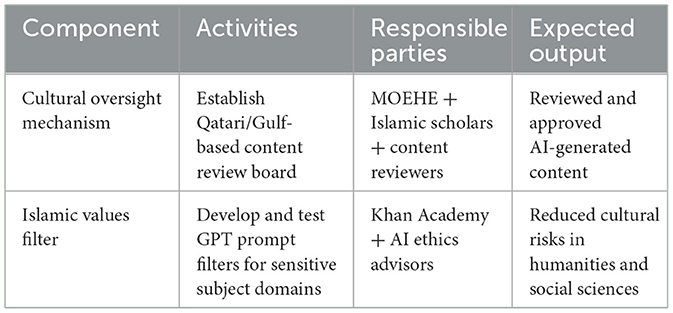

Gap Identified: GPT-generated responses are globally neutral but not tailored to Islamic or Gulf sociocultural values.

Recommendations:

• Integrate filters for cultural and religious alignment, especially in humanities and social science interactions.

• Pre-train or fine-tune localized language models with Qatari-approved education content.

• Establish a Gulf-based content oversight board to review sensitive outputs.

Rationale: Content must uphold Islamic values and reflect Qatari culture to avoid conflict and enhance community trust (Table 9).

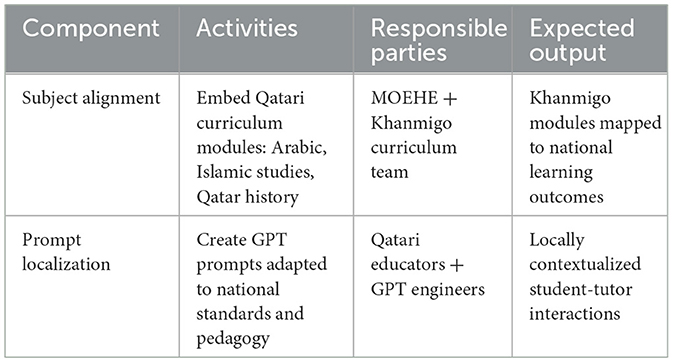

3. Curriculum Alignment

Gap Identified: Content is currently aligned with U.S.-based Khan Academy curricula.

Recommendations:

• Collaborate with MOEHE curriculum teams to develop Qatar-specific Khanmigo modules in math, science, and social studies.

• Embed Qatari history, Arabic grammar, and Islamic studies into GPT prompts and tutoring sessions.

Rationale: Curriculum alignment ensures content relevance, improves adoption by teachers, and enhances student engagement (Table 10).

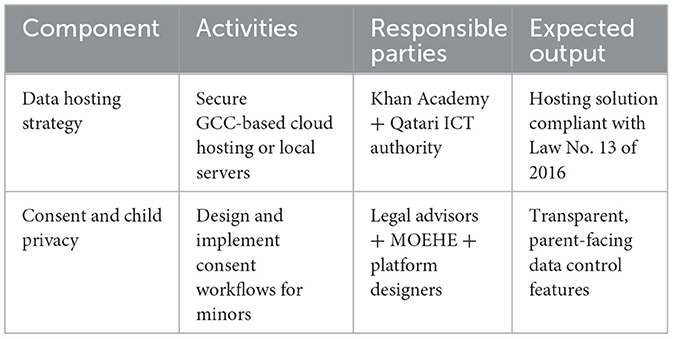

4. Data Protection and Legal Compliance

Gap Identified: Khanmigo operates globally via Microsoft Azure, raising questions about local data residency and compliance.

Recommendations:

• Ensure data residency in compliance with Qatar Law No. 13 of 2016 on Personal Data Protection.

• Offer local data storage options or secure GCC-based cloud partnerships.

• Implement child data handling protocols aligned with UNESCO and national laws.

Rationale: Legal compliance is non-negotiable for nationwide implementation in public education systems (Table 11).

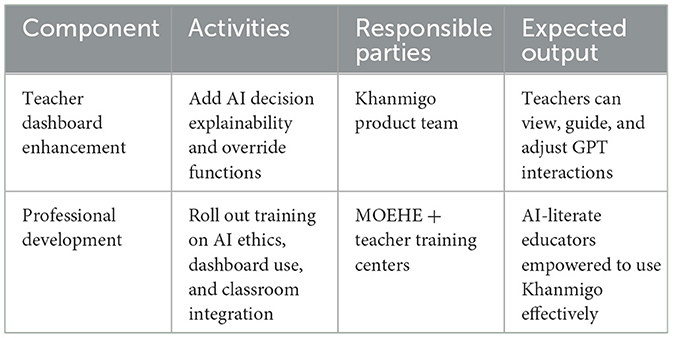

5. Explainability and Teacher Autonomy

Gap Identified: AI decision paths are not fully explainable to educators.

Recommendations:

• Provide dashboard-level transparency on GPT reasoning and logic.

• Enable teachers to override or customize AI feedback during lesson plans.

• Deliver training modules on ethical AI integration and classroom use.

Rationale: Empowering teachers increases trust and encourages ethical use of AI in classrooms (Table 12).

6. Regional Deployment and Support Infrastructure

Gap Identified: No formal partnerships or support channels in the Gulf region.

Recommendations:

• Establish regional partnerships with Qatari universities, tech hubs, or NGOs.

• Set up a local support and training center under the supervision of MOEHE.

• Explore pilot projects in independent and public schools.

Rationale: Local partnerships are critical for contextual responsiveness and long-term sustainability (Table 13).

7. Licensing, Access, and Cost Sustainability

Gap Identified: Khanmigo operates on a freemium model; sustainability in national systems remains unclear.

Recommendations:

• Design a custom licensing model for national education systems.

• Consider subsidized government partnerships or philanthropic models.

• Create tiered access for students, teachers, and administrators.

Rationale: Financial sustainability ensures scalability and equitable access across income levels and school types (Table 14).

8. Monitoring and Evaluation

Evaluation Tools: Annual G-AIETM audit + teacher/student satisfaction surveys

KPIs: Arabic engagement rate, AI ethical compliance incidents, user satisfaction, curriculum alignment index

Review Cycle: Every 12 months post-deployment with MOEHE oversight

7 Enabling factors for EIA adoption

While the UNESCO EIA tool offers a practical and flexible framework for operationalizing AI ethics, its successful adoption and sustained use depend on a range of enabling conditions. Conversely, multiple barriers (technical, organizational, and cultural) can hinder its effective application. This section identifies key success factors that have facilitated EIA implementation and discusses common challenges observed across early real-world applications.

The successful integration of the UNESCO EIA tool into AI development and governance frameworks depends on a constellation of institutional, procedural, and cultural factors. Through the examination of various deployment contexts, five enabling conditions emerged as particularly critical in facilitating the effective use of the EIA tool across education-focused AI initiatives.

1. Early Integration into Project Planning: The timing of EIA implementation significantly influenced its impact. Projects that embedded the EIA tool at the earliest stages, during initial concept development and feasibility planning, were consistently more successful in aligning technical objectives with ethical principles. Early integration allowed project teams to engage in value-sensitive design before the imposition of technical constraints or architectural decisions. By identifying potential ethical dilemmas, privacy concerns, and fairness risks at the outset, institutions avoided costly redesigns later in the development cycle and fostered more ethically coherent AI solutions.

2. Cross-Disciplinary Stakeholder Engagement: Another critical factor was the composition and inclusiveness of the stakeholder group involved in the assessment process. Institutions that brought together multidisciplinary teams, including AI developers, education experts, ethicists, legal advisors, policymakers, and community stakeholders, demonstrated a stronger capacity to identify and negotiate diverse ethical risks and social values. This collaborative and participatory approach not only ensured that ethical considerations reflected the lived experiences of affected populations but also improved the credibility, transparency, and legitimacy of the assessment outcomes.

3. Organizational Ethical Literacy and Capacity Building: The presence of prior ethical training and organizational investment in ethical literacy was found to be a strong predictor of EIA tool effectiveness. Teams that had undergone structured capacity-building programs, such as ethics bootcamps, workshops, or integration of ethics modules in technical training, were better able to engage substantively with the prompts and dilemmas presented in the EIA framework. This shared vocabulary and conceptual grounding allowed for deeper, more nuanced ethical discussions and promoted alignment across technical and governance functions.

4. Institutional Linkage to Funding, Procurement, and Evaluation Processes: A fourth enabler was the formal integration of EIA requirements into institutional mechanisms, such as procurement processes, grant funding, or project evaluation criteria. For instance, in public education systems or donor-funded projects, requiring evidence of EIA tool completion as a prerequisite for awarding tenders or funds served as a tangible incentive for ethical engagement. This linkage reinforced accountability and signaled the institutional importance of ethical due diligence alongside technical feasibility or cost-efficiency.

5. Iterative and Agile Use of the EIA Tool: Finally, how the tool was applied over time influenced its utility. Treating the EIA not as a static checklist but as a dynamic and iterative instrument, updated at multiple project phases, enabled institutions to respond to shifting conditions, emerging risks, and stakeholder feedback. This practice aligned well with agile development methodologies often used in AI projects, ensuring that ethical reflections remained relevant and responsive throughout the project lifecycle.

The comparatively strong performance of Khanmigo, which achieved the highest score in the G-AIETM evaluation, aligns with findings in the explainable AI (XAI) literature that emphasize transparency and user interpretability as critical factors in building educator trust (Gunning and Aha, 2019). Khanmigo's educator-facing dashboards and adaptive questioning features reflect core principles of adaptive learning documented by Holmes and Tuomi (2022), where real-time feedback loops are shown to enhance student engagement and learning outcomes. In contrast, the lower scores for Querium and Squirrel AI, particularly in the domains of cultural and linguistic relevance, are consistent with critiques in the AI ethics field regarding the underrepresentation of minority languages and cultural perspectives in global AI development (Al-Khalifa et al., 2025; Jobin et al., 2019). These results underscore the broader theoretical argument advanced by the responsible innovation framework, which calls for anticipating and addressing cultural misalignments during the design and deployment phases rather than relying solely on post-hoc adaptation (Stilgoe et al., 2013). By situating the evaluation findings within these established and emerging theoretical perspectives, the study not only validates the G-AIETM as a context-sensitive assessment tool but also contributes to ongoing debates on how AI in education can reconcile global standards with local needs.

Collectively, these enabling factors demonstrate that meaningful ethical assessment is not simply a matter of applying a tool, but of embedding supportive structures, expertise, and institutional will to ensure that ethics is integral to innovation rather than an afterthought.

8 Barriers to implementation

Despite these enabling factors, several barriers commonly impede full adoption of the EIA tool:

1. Resource Constraints: Completing a comprehensive EIA can be resource-intensive, requiring time, specialized expertise, and institutional support. Under-resourced organizations, particularly in the Global South, often struggle to allocate sufficient capacity for thorough ethical reflection.

2. Technical Team Resistance: Some technical staff viewed ethics assessments as peripheral or burdensome, particularly when performance optimization was prioritized. Without organizational mandates or incentives, ethical evaluations were sometimes treated as symbolic exercises rather than integral components of project development.

3. Contextual Adaptation Challenges: The UNESCO EIA tool, while flexible, requires contextual tailoring to different legal, cultural, and sectoral environments. Institutions without prior experience in adapting international frameworks often found it challenging to localize ethical criteria meaningfully.

4. Limited Regulatory Enforcement: In the absence of mandatory requirements or external accountability mechanisms, organizations had few external pressures to apply the EIA rigorously. Voluntary adoption alone was insufficient in many cases to drive sustained engagement.

The success of the UNESCO EIA tool ultimately hinges on more than its design; it requires organizational commitment, cultural change, and policy environments that recognize ethics as a fundamental dimension of technological development. Recognizing and addressing the barriers outlined above is therefore crucial for scaling the EIA's impact and realizing the broader vision of rights-based, human-centered AI governance.

In conclusion, the UNESCO Ethical Impact Assessment tool offers not only a practical framework for ethical AI governance but also a blueprint for building public trust, enhancing accountability, and ensuring that AI technologies serve the collective interests of humanity. As the AI landscape continues to evolve, such structured, participatory ethics mechanisms will be indispensable for guiding innovation toward sustainable, inclusive, and rights-respecting futures.

9 Conclusions

This study presents a pioneering effort to evaluate the ethical readiness, pedagogical value, and contextual adaptability of AI-powered educational platforms using a novel framework, the Gulf-AI Education Tool Evaluation Matrix (G-AIETM). Anchored in the principles of the UNESCO EIA tool, the matrix operationalizes ethics across five domains and 18 criteria, offering a structured and localized mechanism for decision-makers in Qatar and the Gulf region to assess AI tools in education.

The application of this matrix to seven prominent platforms, Khanmigo, CENTURY Tech, MATHia, Knewton Alta, AltSchool, Querium, and Squirrel AI, revealed a diverse spectrum of ethical integration and deployment readiness. Notably, Khanmigo scored the highest at 74.4%, followed by CENTURY Tech and MATHia at 67.8% each. These tools demonstrated commendable performance in pedagogical effectiveness and user-facing adaptability but required targeted modifications for cultural, linguistic, and regulatory compliance. The remaining platforms, Knewton Alta (58.9%), AltSchool (57.8%), Querium (54.4%), and Squirrel AI (52.2%), were categorized as needing significant localization, particularly in the areas of Arabic language support, curriculum alignment, and data governance transparency.
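The study reports normalized scores out of 100 without giving the formula. The reported percentages are consistent with dividing a raw Likert total by the maximum of 90 points (18 criteria × 5 points), so a minimal sketch under that assumption is given below. The function name, the 1 to 5 rating range, and the example ratings are hypothetical and are not taken from the study's data.

```python
# Sketch of the score normalization, ASSUMING:
#   normalized = raw Likert total / maximum possible total * 100
# The ratings used in the example are illustrative, not the study's data.

N_CRITERIA = 18   # number of G-AIETM criteria (from the study)
MAX_RATING = 5    # top of the 5-point Likert scale (from the study)
MIN_RATING = 1    # assumed bottom of the scale


def normalized_score(ratings):
    """Return the percentage score for one platform, rounded to one decimal."""
    if len(ratings) != N_CRITERIA:
        raise ValueError(f"expected {N_CRITERIA} ratings, got {len(ratings)}")
    if any(not MIN_RATING <= r <= MAX_RATING for r in ratings):
        raise ValueError("each rating must lie on the 5-point scale")
    return round(100 * sum(ratings) / (N_CRITERIA * MAX_RATING), 1)


# Hypothetical rating profile: 13 criteria rated 4 and 5 criteria rated 3,
# giving a raw total of 67 out of 90.
example_ratings = [4] * 13 + [3] * 5
print(normalized_score(example_ratings))  # 74.4
```

Under this assumption, each one-point change on a single criterion moves the normalized score by about 1.1 percentage points, which is worth bearing in mind when comparing platforms whose scores differ only slightly.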

Beyond scoring, the study offers a practical implementation framework for Khanmigo, detailing phased adaptations for language integration, cultural sensitivity, curriculum mapping, and regional deployment. It also identifies enabling factors such as early-stage ethics integration and stakeholder participation, alongside barriers including limited regulatory enforcement, resource constraints, and resistance from technical teams.

Beyond its application to Qatar's education sector, the G-AIETM has broader implications for educational systems in Arabic-speaking or culturally conservative contexts worldwide. It serves as both a practical assessment tool and a policy model for integrating global ethical AI principles into regionally specific frameworks. Policymakers can use it to establish clear adoption criteria; school leaders can apply it to evaluate and adapt tools for classroom use; and edtech companies can leverage it to design culturally responsive, linguistically inclusive solutions. By bridging global ethics with local realities, the study contributes not only to the responsible deployment of AI in education but also to the global discourse on equitable and inclusive technology adoption.

Despite its contributions, this study faces certain limitations. Chief among them is its reliance on secondary data due to a lack of direct access to proprietary system architectures, internal evaluations, or user-generated feedback. The analysis also captures a snapshot in time, which may not reflect ongoing updates or ethical enhancements made by the platforms after the review. Additionally, while the matrix is grounded in UNESCO's global framework, its weighting of domains was equal, which may not reflect policy priorities across different national contexts.

Another limitation of this study is the restricted number of platforms included in the comparative analysis. This was due to the need for verifiable public documentation to ensure a fair, evidence-based ethical assessment. As a result, potentially relevant tools, especially emerging Arabic-first platforms, regionally localized solutions, and AI tools used in vocational, adult, or informal education, were excluded. Future studies should seek to include such platforms as documentation standards improve, allowing for a broader examination of diversity, accessibility, and cultural adaptability in AI-powered education.

Future research should aim to validate the G-AIETM in real-world pilot deployments, integrating feedback from students, teachers, and policymakers in Qatar. It is also recommended to explore domain-specific weighting schemes and to expand the matrix's applicability beyond K-12 to higher education and lifelong learning contexts. Furthermore, cross-country comparative studies could help examine how regional cultural and legal variables affect the ethical acceptability of AI tools.
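One way such a domain-specific weighting scheme could be explored is sketched below. It is an illustrative assumption rather than a method used in this study: each domain's mean rating is scaled to the 0 to 1 range and combined using policy-defined weights. The function name, domain labels, ratings, and weights are all hypothetical.

```python
# Sketch of a domain-weighted score (hypothetical; the study used equal weighting).
# Each domain's mean Likert rating is scaled by the maximum rating, then the
# domain values are combined with weights that are normalized to sum to 1.

def weighted_score(domain_ratings, weights, max_rating=5):
    """domain_ratings: dict of domain -> list of Likert ratings.
    weights: dict of domain -> non-negative weight (need not sum to 1)."""
    if set(domain_ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same domains")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    score = 0.0
    for domain, ratings in domain_ratings.items():
        domain_mean = sum(ratings) / len(ratings)
        score += (weights[domain] / total_weight) * (domain_mean / max_rating)
    return round(100 * score, 1)


# Hypothetical two-domain example: the first domain is weighted three times
# as heavily as the second.
ratings = {"domain_a": [5, 5], "domain_b": [1, 1]}
weights = {"domain_a": 3, "domain_b": 1}
print(weighted_score(ratings, weights))  # 80.0
```

Because this sketch averages within each domain before weighting, a domain with fewer criteria is not penalized for its size. Whether that property is desirable would depend on the policy priorities of the adopting education system.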

In conclusion, the G-AIETM represents an essential step toward transforming abstract ethical principles into measurable, actionable standards for AI in education. It equips education systems in the Gulf with a strategic tool to select, adapt, and govern AI solutions that are not only effective but also aligned with local values and global ethics. The index is also flexible enough to be tailored to other regions of the world. As education becomes increasingly digitized, embedding structured ethical evaluation will be key to ensuring that innovation serves equity, accountability, and public trust.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

RI: Investigation, Conceptualization, Writing – review & editing, Formal analysis, Writing – original draft, Methodology, Data curation. AT: Writing – review & editing. MH: Conceptualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The article processing charges of this article have been paid by Qatar National Library.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbas, U., Ahmad, M. S., Alam, F., Altinisik, E., Asgari, E., Boshmaf, Y., et al. (2025). Fanar: an Arabic-centric multimodal generative AI platform. arXiv [Preprint]. arXiv:2501.13944. doi: 10.48550/arXiv.2501.13944

AlAteeq, D. A., Aljhani, S., and AlEesa, D. (2020). Perceived stress among students in virtual classrooms during the COVID-19 outbreak in KSA. J. Taibah Univ. Med. Sci. 15, 398–403. doi: 10.1016/j.jtumed.2020.07.004

Al-Khalifa, S., Durrani, N., Al-Khalifa, H., and Alam, F. (2025). The landscape of Arabic large language models (ALLMs): a new era for Arabic language technology. arXiv [Preprint]. arXiv:2506.01340. doi: 10.1145/3737453

Common Sense Education (2025). Navigating AI: Free Resources for Educators. Available online at: https://www.commonsense.org/education (Accessed April 28, 2025).

Douglas, D. M., Lacey, J., and Howard, D. (2024). Ethical risk for AI. AI Ethics 5, 2189–2203. doi: 10.1007/s43681-024-00549-9

EdSurge (2024). Revolutionizing How Educators Find Tech Solutions. EdSurge News. Available online at: https://www.edsurge.com/news/2024-11-13-revolutionizing-how-educators-find-tech-solutions (Accessed April 28, 2025).

EdTech (2025). EdTech Digest—Who's Who and What's Next in EdTech. Available online at: https://www.edtechdigest.com/ (Accessed April 28, 2025).

Fjeld, J., Achten, N., Hilligoss, H., Nagy, A., and Srikumar, M. (2020). Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI. Berkman Klein Center Research Publication No. 2020–1. doi: 10.2139/ssrn.3518482

Fountain, J. E. (2022). The moon, the ghetto and artificial intelligence: reducing systemic racism in computational algorithms. Gov. Inf. Q. 39:101645. doi: 10.1016/j.giq.2021.101645

Gunning, D., and Aha, D. W. (2019). DARPA's explainable artificial intelligence program. AI Mag. 40, 44–58. doi: 10.1609/aimag.v40i2.2850

Holmes, W., and Tuomi, I. (2022). State of the art and practice in AI in education. Euro. J. Educ. 57, 542–570. doi: 10.1111/ejed.12533

HolonIQ (2025). EdTech in 10 Charts. Available online at: https://www.holoniq.com/edtech-in-10-charts (Accessed April 28, 2025).

IBM Research (2020). IBM FactSheets Further Advances Trust in AI. Available online at: https://research.ibm.com/blog/aifactsheets (Accessed April 28, 2025).

IEEE (2019). Ethically Aligned Design—A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. IEEE, 1–294.

Jobin, A., Ienca, M., and Vayena, E. (2019). Artificial intelligence: the global landscape of ethics guidelines. Nat. Machine Intell. 1, 389–399. doi: 10.1038/s42256-019-0088-2

Li, W., Yigitcanlar, T., Browne, W., and Nili, A. (2023). The making of responsible innovation and technology: an overview and framework. Smart Cities 6, 1996–2034. doi: 10.3390/smartcities6040093