- Department of Chemistry, Louisiana State University, Baton Rouge, LA, United States

This brief research report presents exploratory findings from a study examining student-use of a mandatory artificial intelligence (AI) disclosure form in a general chemistry and citizen science honors course. Students documented every instance of AI use, describing the AI tool utilized, their purpose, the context of the assignment and their perceived outcomes. Originally created as a practical solution, the form aligns retrospectively with established frameworks in AI Literacy, Digital Ethics, Universal Design for Learning (UDL), and Metacognitive Reasoning. Qualitative analysis of responses identified major themes: verification, immediate academic aid, procrastination, and material obstacles. Findings underscore the disclosure form’s potential as a pedagogical tool, fostering transparency, ethical engagement, and self-regulation. The author proposes broader adoption of the form as a replicable strategy for instructors integrating AI in the classroom and advocates for exposing students to literacy in AI, ethics, and intellectual property.

1 Introduction

Generative Artificial Intelligence (GAI) tools, such as ChatGPT, have rapidly upended educational environments. The use of such technology and tools is complicated by the challenge of integrating them into the curriculum and education system to meet instructional and learning needs (Xu and Ouyang, 2022). However, there is a lack of systematic and reflective student documentation around AI-use. As stated by Barkas et al. (2022), a deeper understanding of how students are using AI tools will elevate the student voice and aid in developing an evidence base for the use of these tools in teaching and learning. Although studies in education have used AI tools, little to no research has addressed how and why students use AI tools in real-time coursework settings (Barstow et al., 2017; Ledesma and García, 2017; Liu et al., 2017; Perin and Lauterbach, 2018; Zhang et al., 2020). In response to the lack of transparency and ethical scaffolding for AI-use in education, this study presents early insights from a self-reporting disclosure form designed to capture the context, intent, and perceived impact of AI-use.

Generative Artificial Intelligence (AI), specifically ChatGPT, is a current issue of pressing importance and concern in higher education (Mohebi, 2024). ChatGPT and its forecasted use challenge traditional notions of academic and professional ethics. During this emerging period when new technology is being learned and adopted, critical populations may be vulnerable, such as minoritized students being accused of cheating or using AI tools based on racial stereotypes and inequitable access to technology (Murphy et al., 1986; Lawson and Murray, 2014; Lawson et al., 2015; Beasley, 2016; Puckett and Rafalow, 2020; Cwik and Singh, 2022; Holstein and Doroudi, 2022; Rafalow and Puckett, 2022; Surahman and Wang, 2022). A 2023 study surveying 2,728 students revealed that 10% of U.S. college students were frequent users of generative AI tools, with an additional 28% reporting that they were occasional users (Widenhorn et al., 2023). The misuse or overuse of AI can undermine the educational process, raising issues of academic integrity, impairing the cultivation of critical thinking, and introducing concerns about bias and equity. The tension between the positive and negative impacts of AI signifies that the future of AI in higher education will depend largely on how it is implemented. Educational institutions must adapt through updated pedagogy to integrate AI in a way that maximizes benefits for learning while safeguarding academic and ethical values (Garza Mitchell and Parnther, 2018; Bittle and El-Gayar, 2025).

2 Methodology

A qualitative research design was employed in this study. Based on gaps in research at the intersection of AI in education and self-documentation of use, this study aimed to investigate the following research question:

How do students articulate their motivations for AI-use within a structured, mandatory self-disclosure process, and what implications does this hold for academic practices and transparency policies?

2.1 Ethical considerations

The study received ethical approval from the Institutional Review Board (IRB): IRB # IRBAM-24-0850. Virtually signed consent forms were obtained from participants. Student names were redacted and replaced with numerical coding during data collection and analysis to ensure data integrity and maintain participant privacy. Any potentially identifying details were removed from all documentation prior to analysis.

2.2 Participants and study environment

This study was conducted at a predominantly white and historically privileged public flagship research university located in the southern United States. The university has a campus-wide initiative to integrate an AI statement across all curricula; however, instructors can choose to copy and paste the statement the university has provided or customize it as their own. The author chose to customize their statement. The AI Disclosure Form was communicated in the syllabus and in person to the students in the General Chemistry I course and the Honors Citizen Science course that the author taught in the Spring of 2024 and 2025, respectively. Out of 238 students enrolled in the General Chemistry I course, 60 students completed the AI Disclosure Form. Out of the 16 students enrolled in the Honors course, 3 students completed the AI Disclosure Form. Thirty-one students from the General Chemistry I course and all 3 students from the Honors course completed the consent form to participate in the study. Students accessed the AI Disclosure Form via the Learning Management System as an external link to Microsoft Forms. Students were required to completely fill out the form if they used AI within the bounds stated in the syllabus. Failure to report AI-use, when discovered, resulted in a zero for the given task/assignment. Virtually signed consent forms were obtained from the students through Qualtrics after completion of the course(s).
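The participation figures above can be turned into rates with simple arithmetic. The following is a minimal sketch rather than part of the study's analysis: the counts are taken from this section, while the helper name `percent` and the assumption that consenting students are a subset of those who submitted a form are illustrative.

```python
# Counts as reported in Section 2.2 of this report.
enrolled = {"General Chemistry I": 238, "Honors Citizen Science": 16}
completed_form = {"General Chemistry I": 60, "Honors Citizen Science": 3}
consented = {"General Chemistry I": 31, "Honors Citizen Science": 3}


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


for course in enrolled:
    # Share of enrolled students who submitted at least one disclosure form.
    form_rate = percent(completed_form[course], enrolled[course])
    # Share of form submitters who consented (assumes consenters are a subset).
    consent_rate = percent(consented[course], completed_form[course])
    print(f"{course}: {form_rate}% of enrolled students submitted a form; "
          f"{consent_rate}% of those consented")

# Total consented sample across both courses.
total_consented = sum(consented.values())
print(f"Consented sample: N = {total_consented}")
```

The total computed here matches the consented sample size (N = 34) reported in the qualitative analysis.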

2.3 Theoretical frameworks

A synthesis of the following theoretical frameworks was used.

2.3.1 AI literacy

AI Literacy encompasses the knowledge, skills, and dispositions that enable individuals to critically understand, interact with, and create systems powered by artificial intelligence (Lankshear, 2008). The three core dimensions of AI Literacy include conceptual understanding, critical reasoning, and practical application (Zawacki-Richter et al., 2019; Long and Magerko, 2020; Kong et al., 2021; Ng et al., 2021). Empirical studies have emphasized the critical role of metacognition in developing AI literacy (Lankshear, 2008; Ng et al., 2021; Cardona et al., 2023; Avsec and Rupnik, 2025; Lee et al., 2025; Ricciardi Celsi and Zomaya, 2025). In this brief study, AI Literacy is operationalized to include scaffolding, contextualization, and transparency, all of which are core to the AI Disclosure Form’s function of making AI use and the decision-making process visible.

2.3.2 Digital ethics

Digital Ethics frameworks encompass the following four pillars to guide the responsible use of data and technology: Data Rights & Privacy, Fairness & Equity, Transparency & Explainability, and Accountability & Governance (Atenas et al., 2023). This framework was used because it encompasses ethical literacy in the use of technology, emphasizing secure handling of the technology, mitigation of biases, and promotion of transparency alongside accountability (Floridi and Cowls, 2019; Kong et al., 2021; Ng et al., 2021; Atenas et al., 2023). The four pillars of digital ethics guiding this study are defined as follows: (1) Data Rights & Privacy: students’ information and AI-generated data must be protected and used ethically; (2) Fairness & Equity: AI systems and related practices should avoid bias and ensure just outcomes; (3) Transparency & Explainability: the processes and decisions made by AI tools must be understandable to users and stakeholders; and (4) Accountability & Governance: clear roles, responsibilities, and oversight mechanisms should govern AI use (Khan et al., 2022; Mustafa et al., 2024). These align with broader standards in AI ethics emphasizing privacy, fairness, accountability, and transparency.

2.3.3 Universal Design for Learning (UDL)

The Universal Design for Learning framework introduces the creation of flexible learning environments that accommodate the diverse needs of all learners, promoting equity rather than limiting its use strictly to students with disabilities (King-Sears, 2009; Schreffler et al., 2019; Roski et al., 2021; Sewell et al., 2022). Sewell et al. (2022) articulated how the UDL framework supports inclusion and can be extended to support all learners through flexible and inclusive instructional design. The three pillars of UDL are Multiple Means of Engagement, Multiple Means of Representation, and Multiple Means of Action & Expression. Multiple Means of Engagement are strategies that motivate learners by tapping into their interests, offering choices, and supporting self-regulation; Multiple Means of Representation are practices of presenting content in diverse ways to ensure all learners can access and understand it; and Multiple Means of Action & Expression are practices that allow learners to demonstrate knowledge through varied modalities (Al-Azawei et al., 2016; Roski et al., 2021). This framework was used because each pillar aligns with the disclosure form: Multiple Means of Engagement (students chose how they used AI and reflected on its use when answering the form), Multiple Means of Representation (students clarified their learning intentions and tool use), and Multiple Means of Action & Expression (students documented how the tools provided self-regulated support, which promotes metacognitive awareness and accountability). This framework is presented as a conceptual lens for interpreting the intended design of the AI Disclosure Form, rather than as an outcome measure in this study.
Specifically, the form was developed to be consistent with UDL principles: Multiple Means of Engagement—allowing students to choose how they use AI and reflect on its use; Multiple Means of Representation—providing space for students to clarify their learning intentions and tool selection; and Multiple Means of Action & Expression—documenting how AI tools provided self-regulated support, potentially fostering metacognitive awareness and accountability. While these pillars informed the form’s structure, the present analysis focuses on students’ self-reported motivations for AI use, not on directly measuring progression through these processes.

2.3.4 Metacognitive reflection

Metacognitive reflection refers to the active processes of planning, monitoring, and evaluating one’s own learning and decision-making (Schraw and Dennison, 1994; Zimmerman, 2002). Within the context of this brief study, metacognitive reflection is central to how the AI Disclosure Form operates: students are prompted to articulate the purpose, rationale, and perceived outcomes of their AI use. This act of documentation requires learners to pause, assess their choices, and consider the implications of those choices—behaviors that align with established models of metacognitive engagement. In doing so, the form supports students’ self-regulated learning, fosters awareness of their cognitive strategies, and complements the reflective aims embedded within AI Literacy and Universal Design for Learning (UDL) frameworks.

2.4 Development of the AI disclosure form

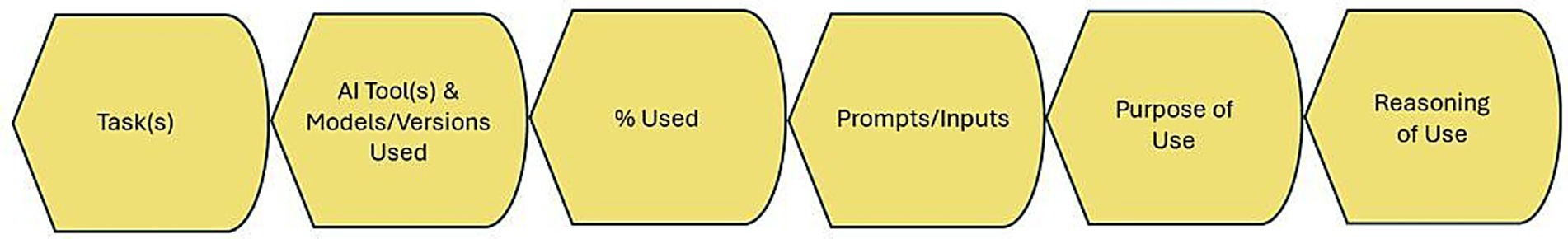

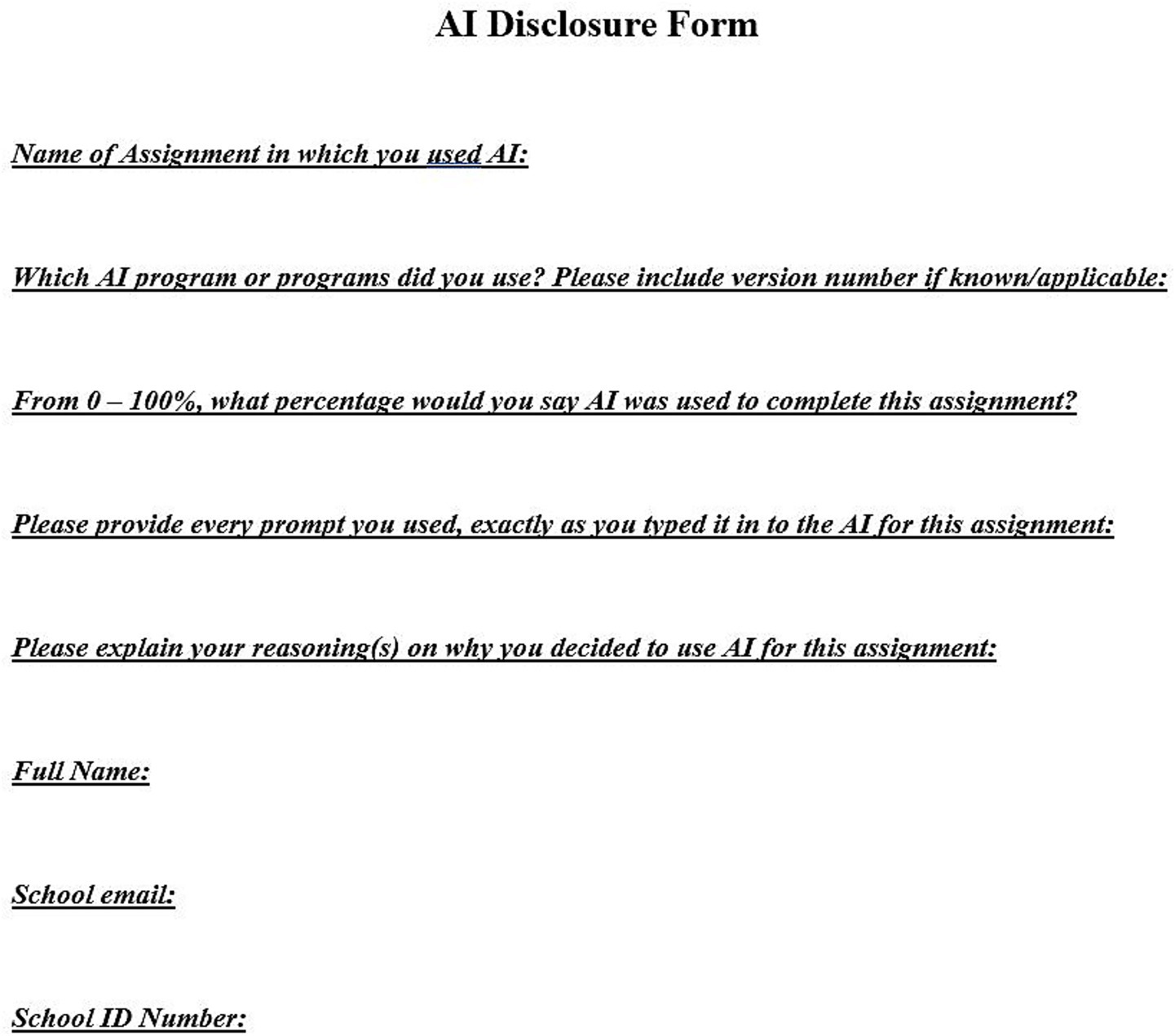

The AI Disclosure Form used in this study was designed by the author, who was also the instructor for the courses described in Section 2.2. It was not initially grounded in a formal theoretical framework, but was rather created in response to emerging needs in higher education for ethical, structured, and transparent documentation of AI-use. The form nonetheless aligns with the educational frameworks described in Section 2.3, particularly in fostering metacognitive reflection and inclusive engagement with emerging technologies. Students were required to complete the form each time they engaged with AI tools for any course-related task, including writing, brainstorming, and research. The students provided information through structured prompts, including the specific course task or assignment associated with AI-use, the specific AI tool(s) utilized, the percentage of AI-use, the explicit prompts/input, the purpose of AI use, and the reasoning behind their decision to employ AI, as displayed in Figure 1. The AI Disclosure Form is enclosed in the Supplementary Material and is displayed in Figure 2. The form served as both a data collection instrument and a pedagogical intervention, promoting transparency, ethical awareness, and student self-regulation in AI-use.
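For instructors who wish to store responses in a structured way, the prompts listed above could be represented as a simple record. The following is a minimal sketch under stated assumptions: the class name `DisclosureEntry`, its field names, and its validation rules are hypothetical and are not taken from the actual form, which is shown in Figure 2 and the Supplementary Material. The example values are illustrative and are not study data.

```python
from dataclasses import dataclass


@dataclass
class DisclosureEntry:
    """One submission of a disclosure form (hypothetical field names)."""
    task: str                # course task or assignment associated with AI-use
    tools: list[str]         # AI tool(s) utilized, with version if known
    percent_ai_use: float    # self-reported percentage of AI-use (0 to 100)
    prompts: list[str]       # prompts exactly as typed into the AI tool
    purpose: str             # purpose of AI use
    reasoning: str           # reasoning behind the decision to employ AI

    def __post_init__(self) -> None:
        # Reject percentages outside the range a student could report.
        if not 0 <= self.percent_ai_use <= 100:
            raise ValueError("percent_ai_use must be between 0 and 100")
        # A disclosure without a named tool cannot be interpreted.
        if not self.tools:
            raise ValueError("at least one AI tool must be listed")


# Illustrative values only; these are not responses from the study.
entry = DisclosureEntry(
    task="CER worksheet",
    tools=["ChatGPT"],
    percent_ai_use=20,
    prompts=["How do I balance a combustion equation?"],
    purpose="Verification",
    reasoning="Wanted to check the steps of my own work.",
)
print(entry.task, entry.percent_ai_use)
```

Keeping the prompts as a list preserves each input separately, which matters when the form asks for every prompt exactly as typed.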

2.5 Qualitative

The qualitative component of this study was conducted through document analysis. The responses on the documents were coded by the author using Delve, an online qualitative data analysis software used to sort, store, and retrieve data during analysis (Delve, 2025). The consented sample size (N = 34) was above the commonly recommended threshold of 20 for theme saturation, and the recurrence of themes across participants and the coherence of shared reflections suggest that thematic saturation was achieved (Braun and Clarke, 2006; Creswell and Clark, 2017). Inductive coding was used for data analysis. The coding process was guided by the frameworks described in Section 2.3. Reflexivity was practiced throughout the study, with the researcher maintaining memo notes and analytic reflections to remain aware of positionality and potential biases during interpretation. Although inter-coder reliability was not applicable due to the single-author design, steps were taken to enhance credibility, including prolonged engagement with the data and triangulation with course materials and context.

2.6 Study limitations

The data gathered in this study are derived from a large R1 institution in the southern United States, in a set of courses taught by one professor, the author. Thus, the data may not be representative of what may result from different courses or even the same courses with different instructors. The data may also not be representative of smaller colleges or universities in different states with varying degrees of permission and/or restriction of AI-use. This study specifically emphasizes student AI-use in general and honors STEM and/or basic STEM-focused courses. In addition, self-report biases and variability in student honesty or thoroughness cannot be ruled out, and these factors may influence generalizability. This is common in research relying on voluntary disclosure and does not negate the internal validity of the thematic findings. We note that this study does not empirically test whether students engaged in sustained metacognitive reflection as a result of completing the form; rather, these theoretical elements frame the intended pedagogical function of the tool. Although the AI Disclosure Form was conceptually aligned with UDL and metacognitive reflection principles, the findings focus on students’ self-reported motivations for AI use. Future studies should examine the degree to which such disclosure processes actively facilitate the reflective and self-regulatory behaviors they are designed to support.

3 Results

The document analysis uncovered a mix of acceptable and problematic patterns, especially in students’ reported frequency of AI use, the content of their inputs, and the rationale they provided.

3.1 Assignment-type

For the General Chemistry I course, the author employs Claim Evidence Reasoning (CER) worksheets throughout the semester, which students were able to complete within a two-week timeframe once assigned. All but one of the chemistry students consenting to the study used AI for the worksheets; the remaining student reported using AI to assist with their online homework. One student in the Honors Citizen Science course used AI for a worksheet, and the remaining two students submitted a joint form since they were partners for a weekly presentation.

3.2 AI program/tool of choice

The AI program/tool of choice was overwhelmingly ChatGPT. However, a few students reported using Bing/Microsoft Copilot, Grammarly, Canva (explicitly for AI image generation by the honors students giving a presentation), Hyperwrite, and chatbots embedded within websites and online homework programs such as CourseHero, Brainly, and Chegg. Although the form asked students to state the program version or model if known, there was a 94% to 6% split: the majority did not state which model of ChatGPT or other AI tool they used, and the minority did. Among the 6% that did state a version (in every case, of ChatGPT), the versions varied between the then-free models (3.0 & 3.5) and the then subscription-based advanced model (4.0).

3.3 Percentage of use

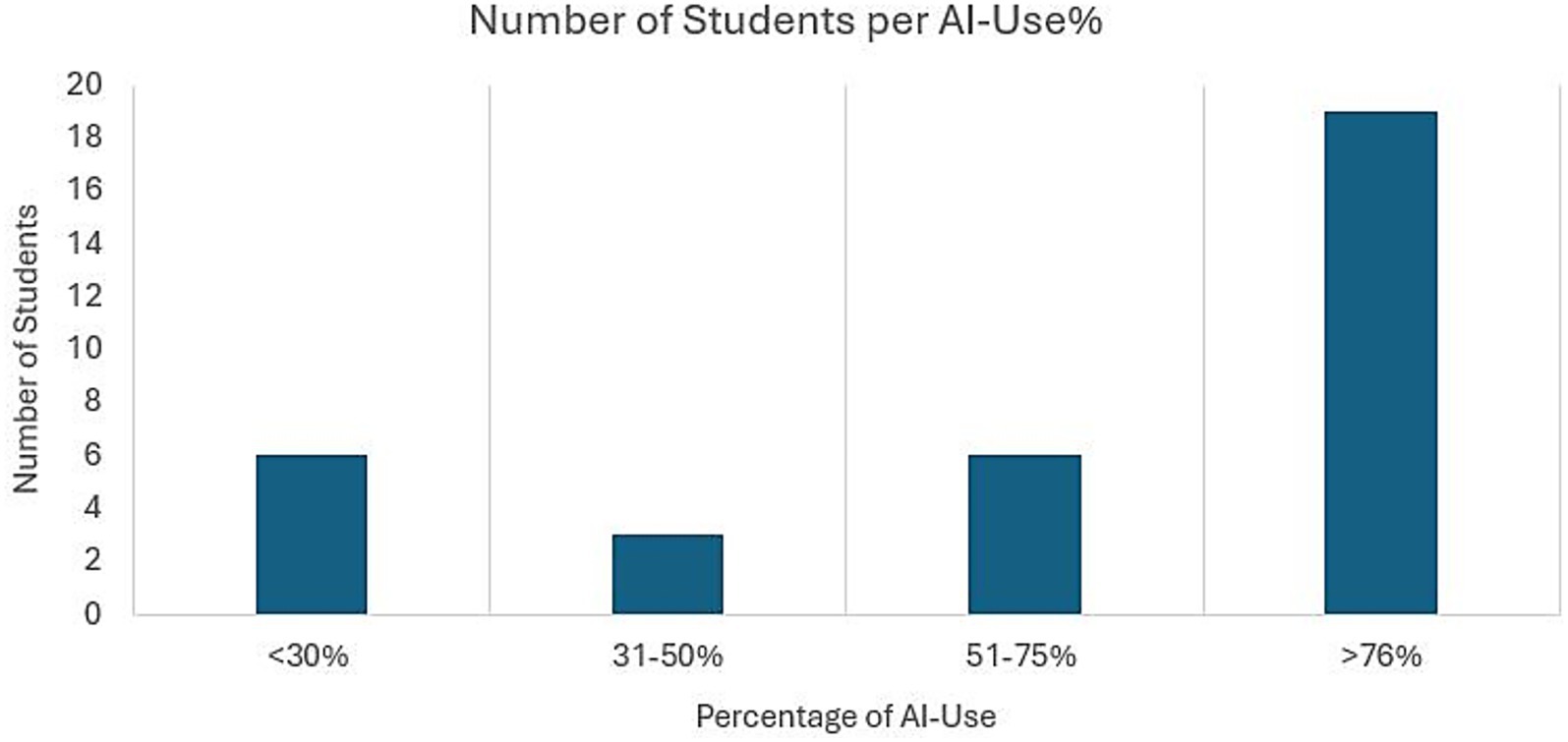

The self-reported percentage of AI-use was extremely high with few exceptions, ranging from a low of 5% to a high of 100%. As displayed in Figure 3, ~17% of students reported using AI for 30% or less of their assignment/task, ~9% reported using it for 31 to 50% of their assignment/task, ~18% reported using it for 51 to 75% of their assignment/task, and ~56% reported using it for 76 to 100% of their assignment/task.

Reported percentages varied by assignment type. Students who reported low percentages of use (5–30%) generally engaged AI for verification or brainstorming, particularly on CER worksheets or honors course presentations. Moderate use (31–50%) was associated with immediate academic aid on problem-solving tasks, such as retrieving formulas, clarifying stoichiometric calculations, or balancing equations. High use (76–100%) was most often tied to repetitive or time-intensive tasks—especially CER worksheets in General Chemistry—where entire question sets were copied into AI tools. In a few cases, high-percentage use was linked to online homework tasks when students lacked confidence in the content. These patterns suggest that higher levels of AI use corresponded to repetitive or high-stakes assignments, while lower levels reflected targeted, supplementary use to verify or extend students’ work.
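The four usage ranges reported above can be reproduced from raw form responses with a short binning routine. The following is a minimal sketch, not the procedure used in this study: the names `BINS`, `bin_label`, and `bin_shares` are hypothetical, whole-number percentages are assumed, and the sample values are illustrative rather than study data.

```python
from collections import Counter

# Ranges matching those reported in Section 3.3 (inclusive bounds).
BINS = [
    (0, 30, "30% or less"),
    (31, 50, "31-50%"),
    (51, 75, "51-75%"),
    (76, 100, "76-100%"),
]


def bin_label(percent: int) -> str:
    """Map a whole-number self-reported percentage to its range label."""
    for low, high, label in BINS:
        if low <= percent <= high:
            return label
    raise ValueError(f"percentage out of range: {percent}")


def bin_shares(percents: list[int]) -> dict[str, float]:
    """Return the share of responses (in %) that falls in each range."""
    counts = Counter(bin_label(p) for p in percents)
    total = len(percents)
    return {label: round(100 * counts[label] / total, 1) for _, _, label in BINS}


# Illustrative values only; these are not responses from the study.
sample = [5, 30, 45, 60, 80, 100, 100, 90]
shares = bin_shares(sample)
for label, share in shares.items():
    print(f"{label}: {share}%")
```

Using inclusive integer bounds keeps boundary values such as 30 and 31 in separate ranges, as in the reported breakdown.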

3.4 Inputs into AI tools

Regardless of the percentage of AI use, students overwhelmingly copied/pasted the assignment/task question/statement word for word into the AI tools. Students either self-reported as such by delineating word for word or by passive and short statements such as “Questions 1–4,” “Every question” or “I copied and pasted the questions”—even though it was stated in the form to provide every prompt used exactly as they typed into the AI tool.

3.5 Themes of reasoning

Analysis of the documents revealed four thematic categories emerging from the data, addressing how students self-report their reasoning on AI-use.

3.5.1 Verification

Students articulated motivations for AI-use centered on verifying accuracy and ensuring the correctness of procedural steps. The students who self-reported using AI tool(s) for 5–30% of their assignment/task were using them to verify their responses or, in the case of the pair of honors students, to create an image without a watermark.

“I wanted to check my work to make sure that it was correct.”

“We needed an image that does not have a watermark or logo. Hard to find since they are everywhere!”

“I forgot the exact steps of how to do some of the things and needed a step by step example I could follow along with.”

3.5.2 Immediate academic aid

The students who self-reported using AI tool(s) for 31–50% of their assignment/task emphasized AI as a readily available assistant for immediate aid. Students also recognized what they specifically needed assistance with or when they needed to quickly retrieve a formula not on hand. They credited the tool as extremely helpful in these instances, as well as for clarifying questions and solving problems efficiently. Students were using AI tools as aids for stoichiometry, balancing equations, quickly calculating molar mass, etc.

“I used this application because my friends are not able to help me, the chemistry tutor does not help me, and a Google search is too diverse and broad. The application is precise and tells me exactly what I want to know, it shows me general steps taken, as well as giving an explanation as to why it is.”

“It was getting late and my brain was getting foggy. I was struggling to remember how to begin each problem. Once I was shown the first step in the process to get started, I attempted to finish the problem and compared my answer to the answer generated in AI.”

“I decided to use AI to help me on this assignment with the balancing of the chemical equation question to better understand it since I did not understand. I also decided to use AI to help me with conversion which would make the process faster for me.”

3.5.3 Procrastination

The students who self-reported using AI tool(s) for 51–100% of their assignment/task acknowledged, often in very brief responses, that they were procrastinating or had lost track of time, highlighting temporal constraints.

“lack of time.”

“short on time. Exam week overload.”

“I used it for this assignment because I have a lot of studying for other classes that I need to focus more on because of the grades in those classes.”

“I was short on time. I did not organize myself effectively today after I accumulated a load of work over the break.”

“Have a lot going on and was on a time crunch.”

“Under a time crunch and needed to get it done.”

3.5.4 Material obstacles

In addition, certain students stated that they were facing severe obstacles in comprehending the material, and their responses were longer. Distinct from procrastination, their comments highlight academic difficulty and/or lack of confidence.

“I struggle to correctly solve conversion problems and lay them out properly so I wanted to ensure that I did them correctly. I switched my majors and am no longer a stem major and am just hoping to pass this class because I really struggle with math, stoiciometry, and balancing.”

“I’m not very good at chemistry and never was I just really need to pass this class it is my second time taking it. A lot of it I plugged in and worked out on my own but following the steps that the AI gave me.”

The honors student who self-reported using AI for a worksheet claimed it was for 30% of the worksheet; however, this was not the case, as they also reported entering each question word for word into the AI. Their reasoning reflected not so much a material obstacle as a concern about meeting assignment requirements.

“I did not know how to expand my thoughts for each question in at least 5 quality sentences, so I used AI to generate some ideas for me.”

4 Discussion and implications

This brief original research report proposes broader implementation of the AI Disclosure Form as a replicable practice for instructors across educational fields. Such practice encourages transparency, promotes ethical reflection, and supports student self-regulation, aligning closely with principles of AI literacy, digital ethics, and UDL. Although students were generally intentional in completing the forms, their disclosures varied in detail, intentionality, and reflectiveness. The results from the students’ responses highlight the importance of the disclosure form in surfacing learning behaviors and ethical engagement. The form and the opportunity for reflection it provides foster agency and metacognition, helping students move beyond passive use of AI. In addition, these findings underscore how the Disclosure Form operates as a UDL-aligned scaffold fostering metacognitive reflection: through documenting AI use, students engage in active planning and self-monitoring (core metacognitive processes). The findings also align with and illuminate the three pillars of UDL in practice. The “Verification” theme reflects Multiple Means of Engagement, as students sought to sustain motivation and confidence by confirming the accuracy of their work. “Immediate Academic Aid” corresponds with Multiple Means of Representation, with students leveraging AI to access alternative explanations, retrieve formulas, and visualize steps that supported comprehension. “Procrastination” and “Material Obstacles” intersect with Multiple Means of Action & Expression by showing how students used AI to complete tasks under time or content pressure, while also revealing moments where deeper conceptual engagement was limited.
These patterns indicate that the AI Disclosure Form not only documents behavior but also serves as a diagnostic tool for educators to identify where UDL-aligned supports are effective and where further scaffolding or targeted intervention may be necessary to enhance equity and inclusion in AI-integrated learning environments. This metacognitive engagement reinforces agency and transparency in AI-enhanced learning environments (Avsec and Rupnik, 2025). Although the form was used in two different science-based courses, the form is not discipline-specific, and can thus be adapted across different courses, disciplines, and institutional requirements. The use of an AI Disclosure Form should be considered and integrated into faculty training and institutional AI policies. Widespread institutional adoption could position the AI Disclosure Form as a mechanism for fostering a culture of accountability and responsible AI use, equipping students to navigate ethical complexities in technological innovation. While the present study did not measure the extent to which the form led students through specific UDL or metacognitive processes, its design is intentionally aligned with these frameworks, and future research could examine these outcomes directly.

However, educators should review their pedagogical approach to course tasks/assignments to promote their intended course outcomes as they integrate ethical and transparent AI-use. The themes of verification and academic aid show students on the borderline between using AI as a guide and focusing solely on achieving a good grade. The themes of academic aid and material obstacles resurface the continuing educational issue of how to effectively make students understand that help and resources are available (Dembo and Seli, 2004). The theme of procrastination shows students simply wanting to complete the assignment with the assumption and hope that the answers are correct, and/or prioritizing certain work and courses over others (Dembo and Seli, 2004). The challenge in reviewing a pedagogical approach is integrating AI into course tasks/assignments in a way that keeps students in an active, agentic role while emphasizing that their creativity and (scientific) judgement remain paramount, with AI simply serving as an assistive tool for their learning. The AI Disclosure Form is positioned to make students’ use of AI and its operations transparent and to position students as the decision-makers who direct and/or interpret the AI’s outputs. In addition, insight into students’ responses on the forms assists educators in deciding how to pivot their pedagogy. Ultimately, the effect of technology and AI is contingent on design and pedagogy.

Collectively, the variance in students reporting the version of AI tool they are using, the dominant trend of inputting the questions word for word from a course assignment/task into the AI, and the reported amount of use per assignment require the academic community to discuss plagiarism, methods of citation for attribution, ethics, and intellectual property with students regarding AI-use (OECD, 2025). Whether or not the students realize that assignments are created entirely by the professor (as the author has done for both courses in this case), is it ethical to upload or prompt an assignment word for word into an AI tool, thereby surrendering a professor’s or online homework company’s intellectual property to that tool? Is it plagiarism to upload or prompt an assignment word for word into an AI tool? How does one prompt an AI to give attribution for an input that was given to the AI by a student or any external source that is not the original source? There is a dire need for students to be more aware of intellectual property and of basic and AI-focused literacy and ethics.

5 Conclusion

This study not only investigates how students interacted with AI through self-reported disclosures but also introduces and advocates for a replicable pedagogical tool that promotes ethical AI literacy and reflective practice in STEM and general education. Given the current uncertainty around AI use in higher education, the AI Disclosure Form offers a timely, accessible, and research-informed tool to help instructors and institutions navigate the balance between innovation, accountability, and ethics in education. Through structured reflection, students become active, autonomous, and responsible agents in their educational process, fostering transparency, ethical literacy, and deeper learning engagement. The AI Disclosure Form offers a scalable, scaffolded, and ethical intervention in an emerging AI-integrated academic environment. This work advocates for broader adoption and ongoing refinement of the disclosure approach, contributing to ethically informed AI practices and use in higher education.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the LSU Institutional Review Board: Dr. Alex Cohen, IRB Chair; Douglas Villien, Assistant Director of Research Compliance & Integrity and IRB Manager. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

JGR: Visualization, Conceptualization, Formal analysis, Resources, Data curation, Validation, Project administration, Funding acquisition, Methodology, Writing – review & editing, Writing – original draft, Supervision, Investigation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was funded by the National Science Foundation Award Number 2327418.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Al-Azawei, A., Serenelli, F., and Lundqvist, K. (2016). Universal design for learning (UDL): a content analysis of peer-reviewed journal papers from 2012 to 2015. J. Scholarsh. Teach. Learn. 16, 39–56.

Atenas, J., Havemann, L., and Timmermann, C. (2023). Reframing data ethics in research methods education: a pathway to critical data literacy. Int. J. Educ. Technol. High. Educ. 20:11. doi: 10.1186/s41239-023-00380-y

Avsec, S., and Rupnik, D. (2025). From transformative agency to AI literacy: profiling Slovenian technical high school students through the five big ideas lens. Systems 13:562. doi: 10.3390/systems13070562

Barkas, L. A., Armstrong, P.-A., and Bishop, G. (2022). Is inclusion still an illusion in higher education? Exploring the curriculum through the student voice. Int. J. Incl. Educ. 26, 1125–1140. doi: 10.1080/13603116.2020.1776777

Barstow, B., Fazio, L., Lippman, J., Falakmasir, M., Schunn, C. D., and Ashley, K. D. (2017). The impacts of domain-general vs. domain-specific diagramming tools on writing. Int. J. Artif. Intell. Educ. 27, 671–693. doi: 10.1007/s40593-016-0130-z

Beasley, E. M. (2016). Comparing the demographics of students reported for academic dishonesty to those of the overall student population. Ethics Behav. 26, 45–62. doi: 10.1080/10508422.2014.978977

Bittle, K., and El-Gayar, O. (2025). Generative AI and academic integrity in higher education: a systematic review and research agenda. Information 16:296. doi: 10.3390/info16040296

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Cardona, M. A., Rodríguez, R. J., and Ishmael, K. (2023). Artificial intelligence and the future of teaching and learning: Insights & recommendations. Washington, D.C.: U.S. Department of Education, Office of Educational Technology.

Creswell, J. W., and Clark, V. L. P. (2017). Designing and conducting mixed methods research. Los Angeles: SAGE Publications.

Cwik, S., and Singh, C. (2022). Developing an innovative sustainable science education ecosystem: lessons from negative impacts of inequitable and non-inclusive learning environments. Sustainability 14:11345. doi: 10.3390/su141811345

Delve (2025). Software tool to analyze qualitative data. Delve. Available online at: https://delvetool.com (Accessed December 17, 2022).

Dembo, M. H., and Seli, H. P. (2004). Students’ resistance to change in learning strategies courses. J. Dev. Educ. 27:2.

Floridi, L., and Cowls, J. (2019). A unified framework of five principles for AI in society. Harv. Data Sci. Rev. 1, 535–545. doi: 10.1162/99608f92.8cd550d1

Garza Mitchell, R. L., and Parnther, C. (2018). The shared responsibility for academic integrity education. New Dir. Community Coll. 2018, 55–64. doi: 10.1002/cc.20317

Holstein, K., and Doroudi, S. (2022). “Equity and artificial intelligence in education: will ‘AIEd’ amplify or alleviate inequities” in The ethics of artificial intelligence in education (New York: Routledge).

Khan, A. A., Badshah, S., Liang, P., Waseem, M., Khan, B., Ahmad, A., et al. (2022). Ethics of AI: a systematic literature review of principles and challenges. Proceedings of the 26th international conference on evaluation and assessment in software engineering, 383–392.

King-Sears, M. (2009). Universal design for learning: technology and pedagogy. Learn. Disabil. Q. 32, 199–201. doi: 10.2307/27740372

Kong, S.-C., Man-Yin Cheung, W., and Zhang, G. (2021). Evaluation of an artificial intelligence literacy course for university students with diverse study backgrounds. Comput. Educ. Artif. Intell. 2:100026. doi: 10.1016/j.caeai.2021.100026

Lankshear, C. (Ed.) (2008). Digital literacies: concepts, policies and practices. New York, Bern, Frankfurt am Main, Berlin, Vienna: Lang.

Lawson, A., Ferrer, L., Wang, W., and Murray, J. (2015). “Detection of demographics and identity in spontaneous speech and writing” in Multimedia data mining and analytics. eds. A. K. Baughman, J. Gao, J.-Y. Pan, and V. A. Petrushin (Cham: Springer International Publishing), 205–225.

Lawson, A., and Murray, J. (2014). “Identifying user demographic traits through virtual-world language use” in Predicting real world behaviors from virtual world data. eds. M. A. Ahmad, C. Shen, J. Srivastava, and N. Contractor (Cham: Springer International Publishing), 57–67.

Ledesma, E. F. R., and García, J. J. G. (2017). Selection of mathematical problems in accordance with student’s learning style. Int. J. Adv. Comput. Sci. Appl. 8. doi: 10.14569/IJACSA.2017.080316

Lee, H.-P., Sarkar, A., Tankelevitch, L., Drosos, I., Rintel, S., Banks, R., et al. (2025). The impact of generative AI on critical thinking: self-reported reductions in cognitive effort and confidence effects from a survey of knowledge workers. Proceedings of the 2025 CHI conference on human factors in computing systems, 1–22.

Liu, M., Li, Y., Xu, W., and Liu, L. (2017). Automated essay feedback generation and its impact on revision. IEEE Trans. Learn. Technol. 10, 502–513. doi: 10.1109/TLT.2016.2612659

Long, D., and Magerko, B. (2020). “What is AI literacy? Competencies and design considerations” in Proceedings of the 2020 CHI conference on human factors in computing systems (New York, NY, USA: Association for Computing Machinery), 1–16.

Mohebi, L. (2024). Empowering learners with ChatGPT: insights from a systematic literature exploration. Discov. Educ. 3:36. doi: 10.1007/s44217-024-00120-y

Murphy, J., Hallinger, P., and Lotto, L. S. (1986). Inequitable allocations of alterable learning variables. J. Teach. Educ. 37, 21–26. doi: 10.1177/002248718603700604

Mustafa, M. Y., Tlili, A., Lampropoulos, G., Huang, R., Jandrić, P., Zhao, J., et al. (2024). A systematic review of literature reviews on artificial intelligence in education (AIED): a roadmap to a future research agenda. Smart Learn. Environ. 11:59. doi: 10.1186/s40561-024-00350-5

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., and Qiao, M. S. (2021). Conceptualizing AI literacy: an exploratory review. Comput. Educ. Artif. Intell. 2:100041. doi: 10.1016/j.caeai.2021.100041

OECD (2025). Intellectual property issues in artificial intelligence trained on scraped data. 33rd Edn. Paris, France.

Perin, D., and Lauterbach, M. (2018). Assessing text-based writing of low-skilled college students. Int. J. Artif. Intell. Educ. 28, 56–78. doi: 10.1007/s40593-016-0122-z

Puckett, C., and Rafalow, M. H. (2020). “From ‘impact’ to ‘negotiation’: educational technologies and inequality” in The Oxford handbook of digital media sociology. eds. D. A. Rohlinger and S. Sobieraj (New York, NY: Oxford University Press).

Rafalow, M. H., and Puckett, C. (2022). Sorting machines: digital technology and categorical inequality in education. Educ. Res. 51, 274–278. doi: 10.3102/0013189X211070812

Ricciardi Celsi, L., and Zomaya, A. Y. (2025). Perspectives on managing AI ethics in the digital age. Information 16:318. doi: 10.3390/info16040318

Roski, M., Walkowiak, M., and Nehring, A. (2021). Universal design for learning: the more, the better? Educ. Sci. 11:164. doi: 10.3390/educsci11040164

Schraw, G., and Dennison, R. S. (1994). Assessing metacognitive awareness. Contemp. Educ. Psychol. 19, 460–475. doi: 10.1006/ceps.1994.1033

Schreffler, J., Vasquez, E. III, Chini, J., and James, W. (2019). Universal design for learning in postsecondary STEM education for students with disabilities: a systematic literature review. Int. J. STEM Educ. 6:8. doi: 10.1186/s40594-019-0161-8

Sewell, A., Kennett, A., and Pugh, V. (2022). Universal design for learning as a theory of inclusive practice for use by educational psychologists. Educ. Psychol. Pract. 38, 364–378. doi: 10.1080/02667363.2022.2111677

Surahman, E., and Wang, T.-H. (2022). Academic dishonesty and trustworthy assessment in online learning: a systematic literature review. J. Comput. Assist. Learn. 38, 1535–1553. doi: 10.1111/jcal.12708

Widenhorn, M., Hardy, D. W., Manuel, J. A., Botha, A., and Robinson, R. (2023). Comparing global university mindsets and student expectations: closing the gap to create the ideal learner experience. The 2nd Global Trends in E-Learning Forum (GTEL 2023).

Xu, W., and Ouyang, F. (2022). The application of AI technologies in STEM education: a systematic review from 2011 to 2021. Int. J. STEM Educ. 9:59. doi: 10.1186/s40594-022-00377-5

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? Int. J. Educ. Technol. High. Educ. 16:39. doi: 10.1186/s41239-019-0171-0

Zhang, Z., Liu, H., Shu, J., Nie, H., and Xiong, N. (2020). On automatic recommender algorithm with regularized convolutional neural network and IR technology in the self-regulated learning process. Infrared Phys. Technol. 105:103211. doi: 10.1016/j.infrared.2020.103211

Keywords: generative AI, education, AI integration, pedagogy, technology, ethics, transparency

Citation: Garcia Ramos J (2025) Development and introduction of a document disclosing AI-use: exploring self-reported student rationales for artificial intelligence use in coursework: a brief research report. Front. Educ. 10:1654805. doi: 10.3389/feduc.2025.1654805

Edited by:

Patsie Polly, University of New South Wales, Australia

Reviewed by:

Nehme Safa, Saint Joseph University, Lebanon
Patricia Milner, University of Arkansas, United States

Copyright © 2025 Garcia Ramos. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jennifer Garcia Ramos, amVubmlmZXJnYXJjaWEyMEBnbWFpbC5jb20=

†ORCID ID: Jennifer Garcia Ramos, orcid.org/0000-0001-5807-8829

Jennifer Garcia Ramos