Abstract

The proliferation of generative artificial intelligence (AI) tools in recent years has transformed the educational landscape. This evolution has increased the need to train teachers to adapt to a context in which this technology is ubiquitous, and to prepare their future students for it. However, the effectiveness of AI training programmes for teachers has not been widely analysed. AI literacy has a positive influence on attitudes and perceptions toward technology. It also improves understanding of the role of technology in everyday life, facilitating the conscious and critical integration of AI, including in educational contexts. In this context, the lack of qualified AI teachers is one of the most significant obstacles to integrating the technology into basic education. This study evaluates the effect of an AI literacy-based intervention on pre-service teachers’ perceptions of AI. To this end, the pre-service teachers’ perceptions were evaluated with the PAICE questionnaire, which measures awareness, attitude, and trust in AI, before and after the training session. Because responses could not be matched at the individual level, pre- and post-test scores were compared as independent samples (Student’s t, Welch’s t, and Mann–Whitney U tests); these comparisons revealed significant improvements in awareness and attitude. Trust increased numerically but did not reach statistical significance. These results suggest that concise, concept-driven instruction can influence awareness of and attitudes toward AI. In line with these results, a comparison with the findings of a larger study shows that self-assessed knowledge about AI is notably higher in the group of pre-service teachers involved in the intervention. The study contributes to the integrative design of training programmes for pre-service and in-service teachers, combining conceptual, ethical, and experiential components. It also highlights the need to promote teacher development so that teachers can respond critically and consciously to an educational system that has been radically transformed by AI.

1 Introduction

Since the autumn of 2022, the emergence of generative models such as GPT has brought artificial intelligence (AI) into close contact with nearly every sector of society (Massachusetts Institute of Technology - MIT, 2023). Moreover, given the scale and speed with which AI has entered society, it has become increasingly necessary for individuals across all sectors to adapt to this new reality. Developing a basic understanding of how AI systems function and how they influence daily life is no longer optional, but essential for meaningful and responsible participation in contemporary society (Casal-Otero et al., 2023).

While the range of possibilities is vast, the constant emergence of new tools can create a sense of information overload, making it difficult for individuals and organizations to keep pace. This often leads to anxiety or resistance to change (Shalaby, 2024). On the other hand, the initial “wow” effect highlights the transformative potential of AI, but it can also foster unrealistic expectations regarding its capabilities and its ethical or practical limitations (Farina and Lavazza, 2023). As with any emerging technology, understanding both the promise and the constraints of AI is essential for promoting a more balanced, responsible, and informed use (Jacovi et al., 2021).

This integration of AI into contemporary societies presents numerous challenges. As more individuals adopt AI tools in their daily lives, it becomes increasingly important that they do so in a conscious and critical manner (Castañeda et al., in press). One key strategy for achieving this is the development of AI literacy: the competence to use AI-based technologies effectively, ethically and responsibly (Bilbao Eraña, 2024; Jacovi et al., 2021; Pinski and Benlian, 2024).

Compulsory education must also respond to the growing societal need for AI literacy (Organization for Economic Co-operation and Development - OECD, 2025a), and within this context, primary education will play a key role in introducing educational programs that incorporate AI from an early age (Yue Yim, 2024). Liu and Zhong (2024) also highlight the importance of providing AI literacy across all levels of K-12 education, using scaffolding strategies and taking into account the cognitive development appropriate to each stage. In this context, teachers play a central role in introducing AI concepts in primary education. As Zhang et al. (2024) found, teacher-led implementation of AI literacy curricula in the classroom can effectively help students develop a conceptual understanding of AI. Teachers are responsible for explaining how AI works in ways that are developmentally appropriate and accessible to their students’ age and background. To fulfill this role, teachers themselves must first be equipped with the necessary knowledge and confidence to address AI in their classrooms (Organization for Economic Co-operation and Development - OECD, 2025a; UNESCO, 2024). This can be achieved through multiple pathways, including in-service training programs designed for active teachers, as well as initial teacher education, which is crucial for preparing future educators to engage with AI from the very beginning of their careers.

Considering the central role of teachers and the need for appropriate training pathways, it becomes crucial to investigate how teacher education programs are currently integrating AI literacy. The aim of this study is to examine the impact of a foundational AI literacy training program on pre-service primary teachers’ awareness, attitudes and trust in relation to AI. In doing so, the study seeks not only to evaluate the effectiveness of the instructional approach, but also to generate insights into the types of training that may best prepare both current and future teachers to promote AI literacy in educational settings.

1.1 Defining and promoting AI literacy

The rapid integration of AI into multiple spheres of everyday life has brought with it a growing consensus around the need to foster AI literacy across the general population (Kong et al., 2024; Pinski and Benlian, 2024). As AI increasingly influences how we work, communicate, learn and make decisions, understanding its functioning, implications and limitations has become a fundamental competence for responsible and informed citizenship in the digital age.

AI literacy goes beyond technical knowledge or coding skills: it includes a combination of cognitive, social, and ethical competencies that enable individuals to make informed decisions about AI in real-world contexts (Lee et al., 2024). In this sense, AI literacy represents not only a response to technological advancement, but also a civic and educational imperative (Long and Magerko, 2020).

Several attempts have been made in recent years to define and structure the concept of AI literacy. Long and Magerko (2020) described AI literacy as the ability to understand, use, and critically evaluate AI technologies, emphasizing not only conceptual knowledge but also ethical reflection and the capacity to interact meaningfully with intelligent systems. Building on this framework, the following list presents an overview of the five dimensions of AI literacy proposed by Pinski and Benlian (2024), together with the main insights and contributions from the scholarly literature associated with each component.

- Cognitive dimension/conceptual understanding: this involves understanding the fundamental concepts of AI, such as machine learning and deep learning, how AI systems function and make decisions, and their inherent strengths and weaknesses (Long and Magerko, 2020). Awareness of AI’s existence and relevance is also part of this dimension (Zhang et al., 2024).
- Affective dimension/psychological readiness: this encompasses individuals’ attitudes toward AI, their perceived confidence or self-efficacy in using AI, and a sense of empowerment to engage with and thrive in an AI-driven world (Kong et al., 2024). A positive attitude and confidence are significant predictors of the intention to use AI (Smarescu et al., 2024).
- Social dimension/ethical and societal awareness: this relates to understanding the broader implications of AI, including its positive and negative societal impacts, key ethical issues and the importance of using AI responsibly (Pinski and Benlian, 2024). It also involves the ability to identify and critically evaluate ethical dilemmas surrounding AI (Long and Magerko, 2020).
- Metacognitive dimension: although less frequently emphasized in some frameworks (Kong et al., 2024), this dimension relates to the ability to use AI for problem solving and potentially includes the awareness and regulation of one’s own understanding and use of AI.
- Practical skills: this involves the ability to identify and use AI tools in various contexts (Long and Magerko, 2020), to apply AI concepts and to use AI systems effectively (Pinski and Benlian, 2024).

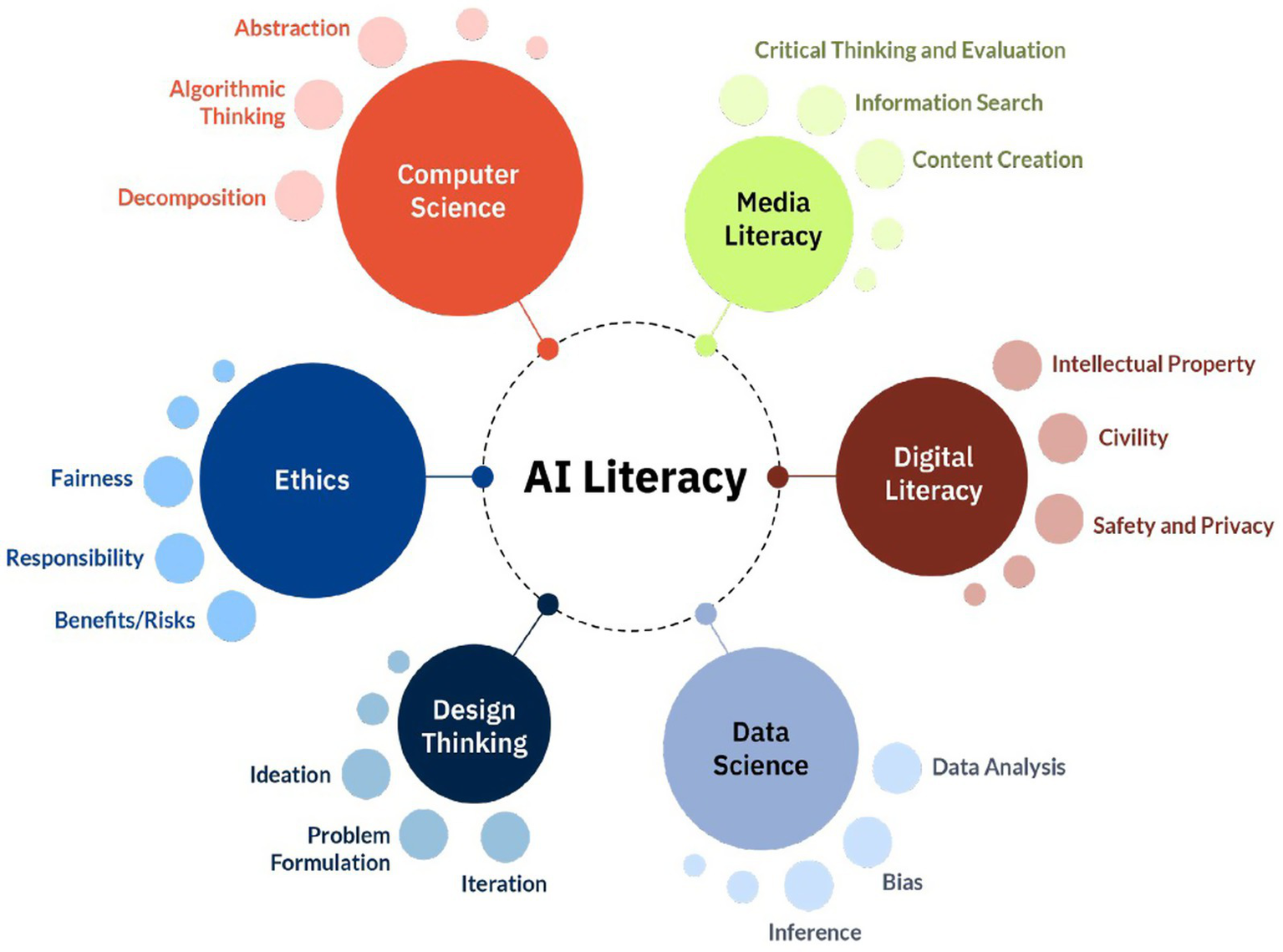

In line with the above, and with the EU AI Act, UNESCO and other organizations, the OECD defined AI literacy as “the technical knowledge, durable skills, and future-ready attitudes required to thrive in a world influenced by AI. It enables learners to engage, create with, manage, and design AI, while critically evaluating its benefits, risks, and ethical implications” (Organization for Economic Co-operation and Development - OECD, 2025a). This definition, considered alongside the five dimensions of AI literacy proposed by Pinski and Benlian (2024), highlights the broad scope of AI literacy (Figure 1).

Figure 1

Areas of AI literacy and relationship to other topics and disciplines. Source: Organization for Economic Co-operation and Development - OECD (2025a, p.17).

According to Schiavo et al. (2024), cultivating AI literacy not only enhances individuals’ ability to identify, use, and critically assess AI technologies, but also fosters more positive attitudes toward their adoption by reducing uncertainty and anxiety. Their findings suggested that higher levels of AI literacy are associated with increased perceptions of ease of use and usefulness, which directly influence acceptance. Similarly, Moravec et al. (2024) emphasized that AI literacy enables individuals to better understand the role of AI in daily life, which is essential for informed adoption, for mitigating potential risks, and also for reducing negative prejudices and encouraging more balanced attitudes (Jacovi et al., 2021). Together, these perspectives underlined the value of AI literacy as a foundation for responsible engagement with technology, empowering citizens to navigate an increasingly AI-driven world with greater confidence and awareness.

1.2 AI literacy in education and teacher training

Promoting comprehensive AI literacy is especially crucial in the field of education, not only for students who are growing up surrounded by AI technologies, but also for the educators responsible for equipping them to navigate and shape the future (Zhang et al., 2024). Educators need AI literacy to understand how AI can be integrated into teaching and learning, how it impacts assessment practices and how to guide students in responsible AI use (Smarescu et al., 2024).

In this regard, as Liu and Zhong (2024) pointed out, introducing AI literacy from early education onwards demands a scaffolding approach, adapted to the cognitive level of each stage of schooling. This approach also highlights the importance of teacher preparation, both in-service and pre-service, as a prerequisite for the meaningful integration of AI literacy into school curricula. This preparation requires not only teachers’ capacity to use AI tools effectively in their practice but also their ability to teach about AI and its implications, including its ethical and social dimensions (Casal-Otero et al., 2023).

Fostering AI literacy in primary teachers involves cultivating a multifaceted set of knowledge and skills (Casal-Otero et al., 2023). This includes a cognitive understanding of basic AI concepts and how AI works, enabling teachers to explain it to students and understand its capabilities and limitations (Castro et al., 2025). Furthermore, AI literacy requires the ability to critically evaluate AI outputs and tools, discerning their quality, appropriateness, and potential biases (Abdulayeva et al., 2025). As Castro et al. (2025) determined, this involves understanding ethical principles, data privacy, potential biases in AI systems, and the broader social and environmental impacts, all of which are essential competencies for teachers aiming to integrate AI meaningfully into their practice. In this regard, Castañeda et al. (in press) identified seven dimensions of the implications of AI in Primary Education that should also be considered: instrumental/technical/functional, ethical, social, epistemological, ideological, political and commercial.

Furthermore, as Zhang et al. (2024) argued, understanding how AI systems function enables educators to help students recognize potential biases and address the ethical challenges these technologies may pose. Ethical awareness is a core component of AI literacy (Organization for Economic Co-operation and Development - OECD, 2025a; UNESCO, 2024), and teachers who possess this understanding are better positioned to guide students in navigating the social implications of AI (Du et al., 2024).

In this context, the use of AI in education should be driven by pedagogical objectives rather than by what is technologically possible (Abdulayeva et al., 2025; Arroyo-Sagasta, 2024). AI has transformative potential in teaching and learning (Holmes and Tuomi, 2022), with specific classroom applications such as promoting critical thinking, facilitating classroom debates, working with prompts alongside students, and designing activities that help distinguish AI-generated content from human production (McDonald et al., 2025). Teachers may also use AI for didactic planning, content creation, personalization of instruction, formative self-assessment tools, and case-based learning scenarios. Castro et al. (2025) reported that teachers increasingly perceive AI as an opportunity to support personalized learning, reduce administrative workload, and address the challenges of multigrade classrooms.

1.3 Teacher training: current practices and gaps

Despite the growing potential of AI in education, one of the main barriers to its meaningful integration in compulsory education is the lack of adequately trained teachers (Liu and Zhong, 2024). The literature does not provide a consensus on how to address this issue. While Chan (2023) emphasized the importance of comprehensive institutional frameworks for integrating AI education at the university level, such initiatives are still rare, especially in teacher education programs. Her model highlighted the need to align technical knowledge, ethical understanding, and policy awareness in order to create a sustainable foundation for AI literacy for professionals. However, when it comes to compulsory education, the implementation of AI-related content often depends on the initiative and preparedness of individual teachers (Ng et al., 2024). This makes teacher training a critical component of AI integration at early stages.

As noted earlier and highlighted in the literature review by Liu and Zhong (2024), the absence of AI-prepared educators is among the most significant obstacles to implementing AI education at early stages. Most teachers have not received specific training in AI, which in turn affects both their confidence and their capacity to engage with AI-related content in their classrooms. In this context, understanding teachers’ perceptions of AI becomes essential (Bae et al., 2024; Cervantes et al., 2024; Nikolic et al., 2024) for identifying the gaps, challenges, and opportunities associated with AI integration in schools.

Recent efforts to introduce AI literacy in secondary education provide useful insights into how these competencies can be meaningfully integrated into school curricula. Ng et al. (2024) identified a range of pedagogical strategies—including project-based learning, inquiry-based approaches, and ethical discussions—that have been employed to engage students in understanding AI concepts and implications. However, they also noted that many of these interventions depend heavily on individual teacher initiative and lack systemic integration into broader educational policies. While their review focused on secondary education, it underscored the need to extend such efforts to earlier stages. If the goal is to cultivate foundational AI literacy from a young age, then primary education must also be considered a strategic entry point—one that requires well-prepared teachers who can introduce AI in developmentally appropriate and pedagogically meaningful ways.

Teachers generally hold a positive perception of AI’s usefulness and perceived benefits for both their teaching and student learning (Emenike and Emenike, 2023; Cervantes et al., 2024; Liu and Zhong, 2024). Key benefits identified include increasing efficiency and saving time in their work (Lee et al., 2024), assisting with lesson preparation, enabling personalized learning and assessment (Liu and Zhong, 2024), and increasing student motivation and engagement (Zulkarnain and Md Yunus, 2023).

Regarding understanding and knowledge of AI, the literature indicates that most teachers have some general knowledge of AI, but their understanding of Generative AI (GenAI) is significantly less comprehensive (Liu and Zhong, 2024). This lack of knowledge and technological skill is perceived as a major challenge (Zulkarnain and Md Yunus, 2023), although some studies found that participants rated their knowledge level as average (Smarescu et al., 2024).

Teachers also perceive significant challenges and concerns associated with AI integration (Liu and Zhong, 2024; Lee et al., 2024). Among the most prominent worries are academic integrity and plagiarism, and concerns about the accuracy and reliability of content generated by AI. There is also apprehension about students potentially becoming overly reliant on technology, leading to a decrease in their creativity and autonomy (Liu and Zhong, 2024). Lack of adequate institutional support and professional development is a frequently mentioned challenge (Smarescu et al., 2024). Practical issues like difficulties with class control, content distraction, poor internet connectivity, lack of infrastructure, teacher burnout/workload, and even computer anxiety are also reported challenges (Zulkarnain and Md Yunus, 2023). Ethical issues are a broader concern, encompassing potential bias in AI, security risks, and the need for establishing responsible standards for AI use (Lee et al., 2024; Yusuf et al., 2024).

Equally important, there is a clear perceived need for more support and training for teachers (Liu and Zhong, 2024): teachers express a desire for free access to AI resources, guidance on usage, technical support, and the development of practical teaching and assessment systems that integrate AI. Similarly, Smarescu et al. (2024) argued that enhancing teacher training can mitigate fears and misconceptions.

Recent studies have increasingly highlighted the urgent need to incorporate AI literacy into teacher education programs. Ravi et al. (2023) pointed out that, while interest in AI among educators is growing, many feel underprepared to integrate it into their teaching practice due to limited exposure and lack of structured training. Similarly, Hur (2024) emphasized that although teachers recognize the relevance of AI, professional development opportunities remain fragmented and insufficient, often focusing more on technical tools than on pedagogical or ethical implications (Arroyo-Sagasta, 2024). Some authors noted that most existing training initiatives still lack a coherent framework and fail to address age-appropriate pedagogical strategies, particularly for compulsory education (Lee et al., 2024; Liu, 2024). In this regard, Dilek et al. (2025) proposed a scaffolded model of teacher training that gradually builds both technical understanding and reflective competence, adapting to teachers’ prior experience and subject area. Finally, Eun and Kim (2024) provided empirical evidence that targeted and practice-based training programs can significantly enhance teachers’ confidence and readiness to engage with AI in the classroom. Together, these contributions suggested that, while awareness of the importance of AI training is growing, there is still a pressing need for more systematic, pedagogically grounded, and context-sensitive approaches to preparing educators for the challenges and opportunities of AI in education.

While much of the current discourse on AI training for teachers focuses on secondary or higher education, there is a growing consensus that AI literacy must be addressed from the earliest stages of schooling. Liu (2024) underscored that neglecting primary education in AI-related teacher training represents a missed opportunity to build foundational understanding and ethical awareness from a young age.

1.4 Measurement approaches in AI literacy research

Understanding how individuals perceive, interact with, and reflect on AI requires the use of valid and reliable measurement instruments. In recent years, various studies have proposed different approaches to operationalize constructs such as AI literacy, awareness, attitude, and trust. These constructs are central to understanding how people engage with AI and how educational interventions might influence such engagement. For instance, Scantamburlo et al. (2025), in the PAICE study, provided a large-scale European framework for measuring AI awareness, attitudes toward AI use in different sectors, and trust in institutions involved in AI governance. Similarly, Nong et al. (2024) developed and validated a multidimensional scale to assess AI literacy, incorporating conceptual knowledge, ethical awareness, and confidence in using AI tools. Krause et al. (2025) also contributed to this field by analyzing how generative AI impacts perceptions and behaviors in higher education, linking constructs such as perceived usefulness, ease of use, and transformative potential. These contributions provide a robust foundation for the design and interpretation of empirical studies in AI literacy and inform the measurement approach adopted in the present research.

2 Materials and methods

This study aims to investigate the effectiveness of a foundational training program on AI delivered within the Artificial Intelligence in Education module of the Digital Innovation specialization in the Primary Education Bachelor’s Degree at Mondragon Unibertsitatea. The training consisted of an 8-h session designed to introduce key AI concepts and systems, fostering AI literacy among pre-service primary school teachers. The study examines how this training influences students’ levels of awareness, attitudes, and trust toward AI and aims to evaluate the effectiveness of the instructional approach implemented.

To this end, the study addresses the following research questions:

- To what extent does participation in an 8-h foundational AI training program affect students’ awareness, attitudes and trust toward AI?
- How do the post-intervention perceptions of AI among students of the Digital Innovation specialization compare to those of the general European population, as reported in large-scale studies?

Based on these questions, the study pursues the following objectives:

- To evaluate the effect of a foundational AI training program on students’ levels of awareness, attitudes and trust regarding AI.
- To compare, at a descriptive level, the post-intervention perceptions of future primary school teachers with those reported by European citizens.
- To reflect on the effectiveness of the instructional approach and identify key elements for designing future training programs aimed at equipping pre-service and in-service teachers to promote AI literacy from early educational stages.

In line with these objectives and grounded in prior research on AI literacy and acceptance, the following hypotheses are proposed:

H1: Participation in the foundational AI training program will lead to a statistically significant increase in students’ awareness of AI.

H2: Participation in the training will result in a statistically significant positive change in attitudes toward AI.

H3: The training will significantly improve students’ trust in AI.

H4: Compared to the general European population, students in this study will demonstrate higher post-intervention levels of awareness and attitude due to the focused nature of the training they received.

2.1 Research analysis

This study adopted a quantitative research approach. A quasi-experimental research design with a pre-test–intervention–post-test structure was employed. Although the pre- and post-intervention questionnaires were administered to the same group of participants, the data were collected anonymously and without individual coding. As a result, responses could not be matched at the individual level. For this reason, statistical analyses comparing pre- and post-test results were conducted using methods appropriate for independent samples, focusing on aggregated group-level changes.

2.2 Sample and participants

This study employed a convenience sample composed of 60 students enrolled in the Digital Innovation specialization of the Primary Education Degree at Mondragon Unibertsitatea (2023/24 and 2024/25). Participation was voluntary and no compensation was provided. All 60 students completed the pre-test questionnaire, but only 48 completed the post-test, a response rate of 80% at the second measurement point. This discrepancy reflects both attrition and voluntary participation patterns, and it was taken into account when interpreting the results. Given that all students enrolled in the specialization were included, no further sampling procedures were implemented.

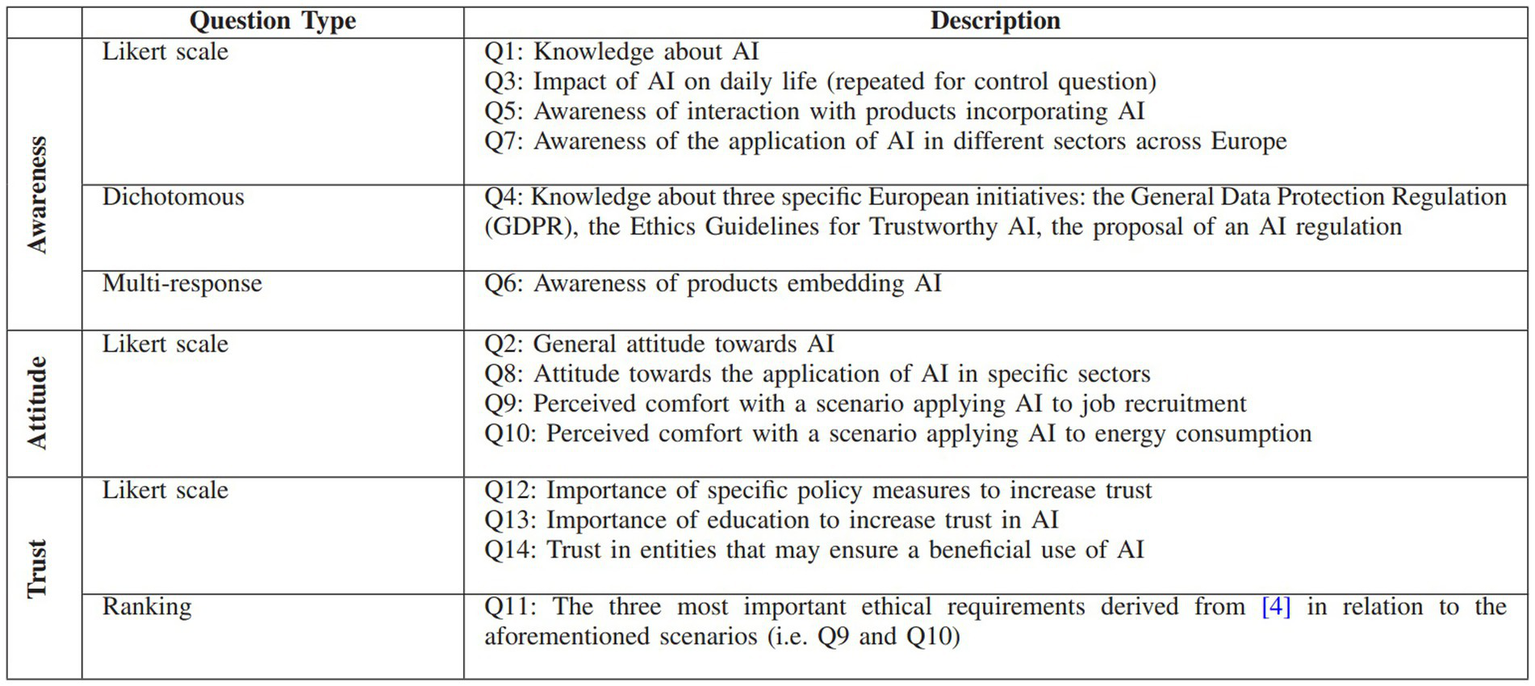

2.3 Data collection tool

Data were collected using a self-administered online questionnaire. The questionnaire, titled “PAICE - Perceptions about AI in Citizens of Europe”, is a validated instrument (Scantamburlo et al., 2025) consisting of 14 items, including Likert-scale, dichotomous, multi-response and ranking items (Figure 2). These items are organized into three dimensions: awareness, attitude, and trust regarding AI. To ensure comparability, identical forms of this questionnaire were administered as the pre-test and the post-test to measure the dependent variables of the study.

Figure 2

PAICE questionnaire design and structure. Source: Scantamburlo et al. (2025, p. 5).

2.4 Intervention design

Between the pre- and post-test, participants completed an 8-h training session focused on AI literacy. The session introduced foundational concepts such as the principles of machine learning, how these systems are trained using data, and the distinction between different types of AI. Rather than focusing on advanced technical details, the training prioritized a clear and accessible understanding of how current AI systems function and are integrated into everyday tools, aligning with Kong et al. (2024), who consistently identify conceptual understanding as the foundational level of AI literacy (cognitive dimension).

Delivered in person, the session interwove explanatory content, illustrative examples, and practical exercises to support comprehension, which simultaneously nurtured the metacognitive and affective dimensions of AI literacy (Kong et al., 2024). Table 1 provides an overview of the content areas covered during the intervention, along with a description of each topic and the corresponding PAICE questionnaire’s dimensions targeted.

Table 1

| Content area | Description | Targeted PAICE dimension |
|---|---|---|
| Introduction to AI and machine learning | Historical context, definitions, basic AI taxonomy | Awareness |
| How AI systems learn from data | Supervised/unsupervised learning, data quality, algorithmic bias | Awareness |
| AI in everyday life | Examples from education, health, entertainment, communication… | Awareness + Attitude |
| Ethical and social implications | Bias, fairness, transparency, misinformation | Awareness + Attitude + Trust |
| Reflective activities and group discussion | Personal experiences, critical perspectives on AI adoption in education | Awareness + Attitude + Trust |

Overview of AI literacy training content and dimensions targeted.

2.5 Data collection procedure

The questionnaire was hosted on a secure online platform (Encuesta.com). Invitations containing a link to the survey were provided to the target student population through the course Learning Management System (LMS) of Mondragon Unibertsitatea. A brief explanation of the study’s purpose, estimated completion time, and clear statements regarding voluntary participation, anonymity, and confidentiality of responses were provided at the beginning. Participants were required to provide electronic informed consent before accessing the questions.

Once the pre-test questionnaire was completed and following the intervention, the identical questionnaire (post-test) was administered to the same participants using the same LMS. Only the research team had access to the collected data.

2.6 Data analysis

All statistical analyses were conducted using Jamovi (version 2.6.26). In addition, Python was employed for data preprocessing, the calculation of Cronbach’s alpha for internal consistency, and the generation of visualizations.
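The paper does not publish its preprocessing script, so the following is only a minimal sketch of how Cronbach’s alpha can be computed in Python. It uses the standard formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ the sample variance of item i and σ²ₜ the variance of the summed scale score. It assumes a complete respondents × items matrix of Likert scores with no missing values; the function name `cronbach_alpha` is hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (hypothetical helper).

    Assumes every respondent answered every item (no missing values).
    """
    k = len(responses[0])  # number of items in the scale
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance (n - 1 denominator) of each item across respondents
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's summed scale score
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)
```

As a sanity check, items that are perfectly identical across respondents give α = 1; the function raises `ZeroDivisionError` if every respondent has the same total score, since alpha is undefined in that case.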

Descriptive statistics (means, standard deviations, frequencies, and percentages) were computed to summarize participant responses at both measurement points. Although the pre- and post-test responses were collected from the same population, the lack of individual identifiers prevented a paired analysis.

Before the specific dimensions and their items are examined, the procedure used to analyze the Likert-scale items is outlined. First, normality was assessed using skewness and kurtosis statistics. For items that met the normality assumption, Levene’s test was conducted to evaluate the homogeneity of variances. The inferential test was then selected according to these assumption checks: the parametric Student’s t-test when both normality and homogeneity of variances held, Welch’s t-test when normality held but equal variances could not be assumed, and the non-parametric Mann–Whitney U test when normality was violated. The significance level for all tests was set at α = 0.05.
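The decision rule above can be sketched with SciPy as a stand-in for the Jamovi workflow the study actually used. Several details are assumptions, because the paper does not report them: the cut-offs of |skewness| ≤ 2 and |excess kurtosis| ≤ 2, the use of the classical mean-centred Levene test, and two-sided alternatives. The names `looks_normal` and `compare_pre_post` are hypothetical. Pre- and post-test scores are passed as two independent samples, matching the unpaired design described in Section 2.1.

```python
from scipy import stats

# Hypothetical cut-offs: the study reports using skewness and kurtosis
# but not the thresholds, so |value| <= 2 is an assumption here.
SKEW_LIMIT = 2.0
KURT_LIMIT = 2.0
ALPHA = 0.05


def looks_normal(x) -> bool:
    """Rule-of-thumb normality check on skewness and excess kurtosis."""
    return (abs(stats.skew(x)) <= SKEW_LIMIT
            and abs(stats.kurtosis(x)) <= KURT_LIMIT)  # Fisher (excess) kurtosis


def compare_pre_post(pre, post):
    """Choose and run the test following the rule described in the text.

    Returns the name of the test applied and its two-sided p-value.
    """
    if looks_normal(pre) and looks_normal(post):
        # Classical Levene test (mean-centred) for equal variances
        _, p_levene = stats.levene(pre, post, center="mean")
        equal_var = p_levene > ALPHA
        name = "Student's t" if equal_var else "Welch's t"
        result = stats.ttest_ind(pre, post, equal_var=equal_var)
    else:
        name = "Mann-Whitney U"
        result = stats.mannwhitneyu(pre, post, alternative="two-sided")
    return name, result.pvalue
```

Setting `equal_var=False` in `ttest_ind` is what turns the Student’s t-test into Welch’s t-test, so a single call covers both parametric branches.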

For dichotomous items, a chi-square test of independence was used to compare the proportion of affirmative responses (“yes”) before and after the intervention. This approach treats the two datasets as independent samples, enabling the detection of significant differences in overall response distributions.
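For a single yes/no item, this test reduces to a 2 × 2 table (measurement point × answer). The sketch below computes Pearson’s chi-square statistic with the Python standard library only. It assumes no Yates continuity correction (the paper does not say whether one was applied) and uses the fact that, with one degree of freedom, the upper-tail probability is erfc(√(χ²/2)). The function name and argument layout are hypothetical.

```python
from math import erfc, sqrt


def chi_square_2x2(yes_pre: int, n_pre: int, yes_post: int, n_post: int):
    """Pearson chi-square test of independence for a yes/no item
    (rows: pre/post, columns: yes/no), without continuity correction.

    Hypothetical helper; returns (chi2 statistic, p-value with 1 df).
    """
    observed = [[yes_pre, n_pre - yes_pre],
                [yes_post, n_post - yes_post]]
    row_totals = [n_pre, n_post]
    col_totals = [yes_pre + yes_post, (n_pre - yes_pre) + (n_post - yes_post)]
    grand = n_pre + n_post
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count under independence of time point and answer
            expected = row_totals[i] * col_totals[j] / grand
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2))
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value
```

With made-up counts, `chi_square_2x2(20, 30, 10, 30)` gives χ² = 20/3 ≈ 6.67 and p ≈ 0.01. The expected counts are undefined (division by zero) if every participant at both time points gives the same answer.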

For multiple-response and ranking items, the proportion of participants who selected each option was calculated separately for the pre- and post-test. These proportions were then compared to identify changes.
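A minimal sketch of this descriptive comparison for a multi-response item is shown below. It assumes each participant’s answer is stored as a set of selected options, so an option counts at most once per person, and proportions are taken over all respondents at each time point. The function names and option labels are hypothetical; for ranking items one could, as an assumption, treat the top-ranked option as the “selected” one.

```python
from collections import Counter


def option_proportions(responses: list[set[str]], options: list[str]) -> dict[str, float]:
    """Share of respondents who selected each option (hypothetical helper)."""
    n = len(responses)
    # Each set contributes each of its options once
    counts = Counter(opt for chosen in responses for opt in chosen)
    return {opt: counts[opt] / n for opt in options}


def proportion_changes(pre: list[set[str]], post: list[set[str]],
                       options: list[str]) -> dict[str, float]:
    """Post-test minus pre-test proportion per option (descriptive, no test)."""
    p_pre = option_proportions(pre, options)
    p_post = option_proportions(post, options)
    return {opt: p_post[opt] - p_pre[opt] for opt in options}
```

Because the pre- and post-test groups differ in size, comparing proportions rather than raw counts keeps the two measurement points on the same scale.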

3 Results

This section presents the findings for the university students enrolled in the Digital Innovation specialization, focusing on their awareness, attitude, and trust regarding AI, the dimensions used by Scantamburlo et al. (2025). The results are presented in two parts: a pre-post analysis of the study group and a comparison of the study group’s perceptions with those of European citizens surveyed by Scantamburlo et al. (2025).

3.1 Pre-post analysis of Digital Innovation students

Descriptive statistics (means and standard deviations) and inferential tests (Student’s t-test, Welch’s t-test and the Mann–Whitney U test) were used to compare pre- and post-intervention scores on each dimension; chi-square tests were used for dichotomous items.
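For the two parametric tests, a standard-library sketch of the statistics and their two-sided p-values might look like this. The t distribution’s tail probability is obtained from the regularised incomplete beta function, and Welch’s degrees of freedom use the Welch–Satterthwaite approximation. Function names and example responses are hypothetical; in practice `scipy.stats.ttest_ind(a, b, equal_var=False)` performs Welch’s test and `equal_var=True` the Student version.

```python
import math
from statistics import mean, variance

_TINY = 1e-300


def _nz(v):
    """Keep continued-fraction terms away from zero (modified Lentz method)."""
    return v if abs(v) > _TINY else _TINY


def _betacf(a, b, x):
    """Continued fraction for the incomplete beta function (Numerical Recipes form)."""
    c, d = 1.0, 1.0 / _nz(1.0 - (a + b) * x / (a + 1.0))
    h = d
    for m in range(1, 500):
        m2 = 2 * m
        for aa in (m * (b - m) * x / ((a - 1 + m2) * (a + m2)),
                   -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1 + m2))):
            d = 1.0 / _nz(1.0 + aa * d)
            c = _nz(1.0 + aa / c)
            h *= d * c
        if abs(d * c - 1.0) < 1e-14:
            break
    return h


def betainc(a, b, x):
    """Regularised incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """P(|T| > |t|) for Student's t with df degrees of freedom: I_{df/(df+t^2)}(df/2, 1/2)."""
    return betainc(df / 2.0, 0.5, df / (df + t * t))


def student_t(a, b):
    """Student's t-test with pooled variance (equal variances assumed)."""
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    df = n1 + n2 - 2
    return t, df, t_two_sided_p(t, df)


def welch_t(a, b):
    """Welch's t-test with Welch-Satterthwaite degrees of freedom."""
    v1, v2 = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (len(a) - 1) + v2 ** 2 / (len(b) - 1))
    return t, df, t_two_sided_p(t, df)


pre = [2, 3, 3, 2, 4, 3, 2, 3, 3, 2]   # hypothetical responses
post = [3, 4, 4, 3, 4, 3, 4, 3, 5, 4]
print(student_t(pre, post))
print(welch_t(pre, post))
```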

3.1.1 Awareness

As shown in Table 2, three general items from the awareness dimension (Q1: Knowledge about AI, Q3: Impact of AI on daily life, and Q5: awareness of interaction with products incorporating AI) showed statistically significant differences (p < 0.05), indicating an overall increase in participants’ self-reported awareness following the intervention.

Table 2

| Likert item | Pre mean | Pre SD | Post mean | Post SD | p | Inferential test |
|---|---|---|---|---|---|---|
| Q1. Knowledge about AI | 2.63 | 0.712 | 3.40 | 0.676 | 0.001 | Student’s t |
| Q3. Impact of AI on daily life | 3.68 | 0.813 | 4.10 | 0.905 | 0.012 | Student’s t |
| Q5. Awareness of interaction with products incorporating AI | 3.05 | 0.877 | 3.54 | 0.874 | 0.003 | Mann–Whitney U |

Changes in AI awareness before and after intervention – Likert items Q1, Q3, Q5.
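Q5 was compared with the Mann–Whitney U test. A minimal sketch using mid-ranks for tied Likert values and the tie-corrected normal approximation is shown below. It is an assumption that the authors used this approximation: `scipy.stats.mannwhitneyu`, for instance, applies a continuity correction by default and can use an exact distribution for small samples without ties, so p-values may differ slightly. Function names and data are hypothetical.

```python
import math
from collections import Counter


def midranks(values):
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """U for sample x, tie-corrected normal approximation, two-sided p (no continuity correction)."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    u1 = sum(midranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var_u <= 0:  # all responses identical: no evidence of a shift
        return u1, 0.0, 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(var_u)
    return u1, z, math.erfc(abs(z) / math.sqrt(2))


pre = [3, 2, 3, 4, 3, 2, 3, 4]   # hypothetical Q5-style answers
post = [4, 3, 4, 4, 5, 3, 4, 3]
print(mann_whitney_u(pre, post))
```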

Q7 explored awareness of AI applications across different sectors in Europe. It was broken down into ten sub-items, each referring to a specific sector: healthcare, insurance, agriculture, finance, military, law enforcement, environmental, transportation, manufacturing industry and human resource management. Five sectors showed statistically significant differences (p < 0.05): healthcare, insurance, finance, law enforcement and environmental. The other five (agriculture, military, transportation, manufacturing industry and human resource management) did not change significantly (p > 0.05).

Q4 assessed participants’ awareness of AI-related regulation (Table 3). Awareness of the General Data Protection Regulation (Q4_1) did not change significantly (p > 0.05). In contrast, awareness of both the Ethics Guidelines for Trustworthy AI (Q4_2) and the proposal for an AI regulation at the European level (Q4_3) increased significantly (p < 0.001 in both cases). These findings suggest that the intervention was particularly effective in raising awareness of recent or less familiar European initiatives in AI governance.

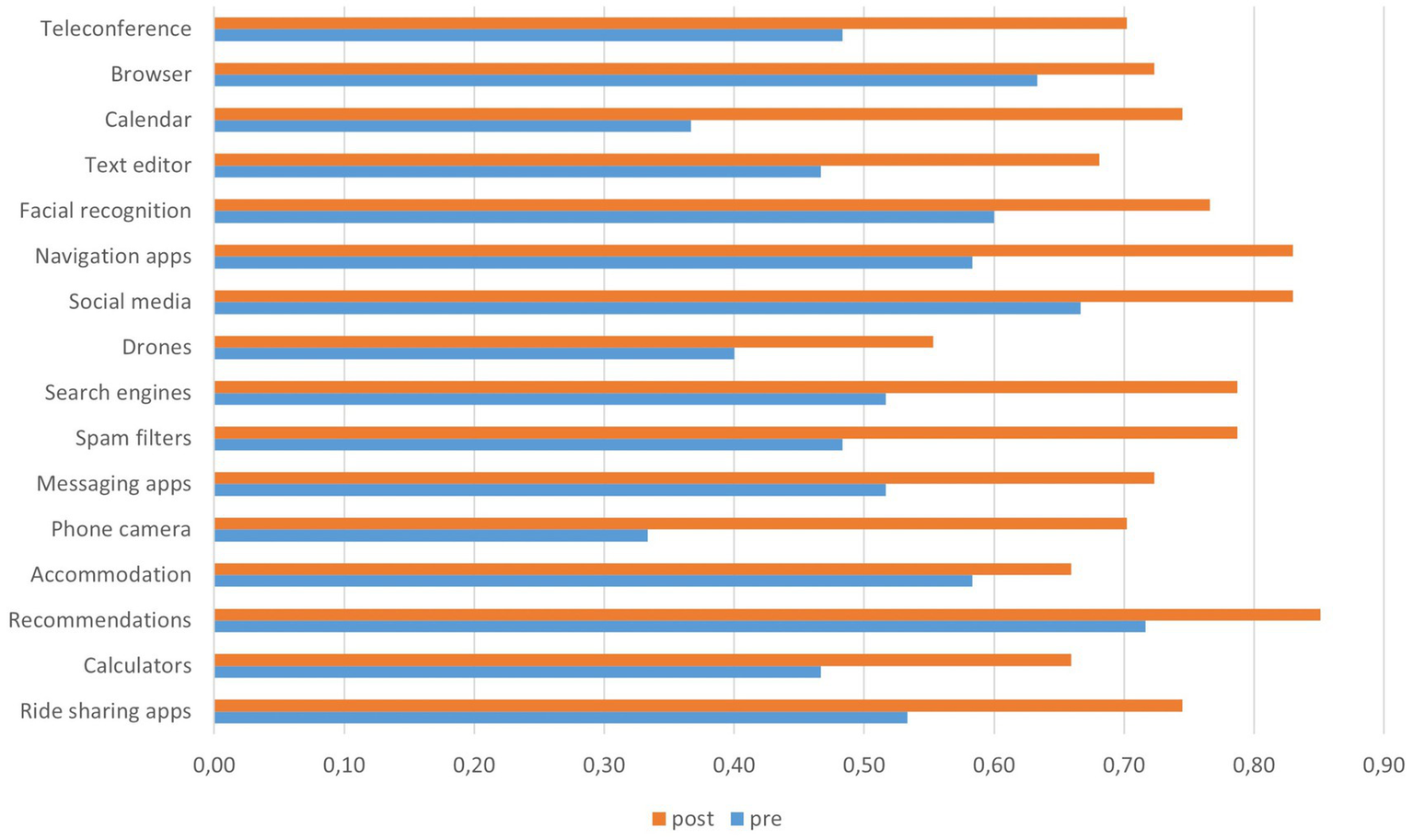

Q6 assessed participants’ awareness of 16 different products or applications embedding AI, using a multiple-response format. As shown in Figure 3, the proportion of participants who reported being aware of each of the 16 AI-enabled products increased after the intervention. This consistent pattern suggests a broad improvement in awareness of how AI is embedded in everyday technologies.

Figure 3

Changes in awareness of AI-enabled products (Q6) before and after the intervention.

Table 3

| Item | χ² | p |
|---|---|---|
| Q4_1. Knowledge about General Data Protection Regulation (GDPR) | 0.477 | 0.490 |
| Q4_2. Knowledge about Ethics Guidelines for Trustworthy AI | **39.9** | **<0.001** |
| Q4_3. Knowledge about Proposal for a Regulation on AI | **32.9** | **<0.001** |

Statistically significant differences in awareness of European AI initiatives.

Values in bold denote statistically significant differences at the p < .05 level.

3.1.2 Attitude

Table 4 displays the results for the general items in this dimension: Q2 (general attitude toward AI), Q9 (perceived comfort with a scenario applying AI to job recruitment) and Q10 (perceived comfort with a scenario applying AI to energy consumption). Statistically significant differences (p < 0.05) were observed for Q2 and Q9, but in opposite directions: the mean general attitude toward AI rose from 3.63 to 3.96, whereas comfort with the job-recruitment scenario fell from 3.37 to 3.02. Q10 did not show a statistically significant difference (p > 0.05).

Table 4

| Likert item | Pre mean | Pre SD | Post mean | Post SD | p | Inferential test |
|---|---|---|---|---|---|---|
| Q2. General attitude toward AI | 3.63 | 0.637 | 3.96 | 0.509 | **0.004** | Welch’s t |
| Q9. Perceived comfort with a scenario applying AI to job recruitment | 3.37 | 0.928 | 3.02 | 0.827 | **0.047** | Welch’s t |
| Q10. Perceived comfort with a scenario applying AI to energy consumption | 3.53 | 0.830 | 3.43 | 0.881 | 0.551 | Student’s t |

Changes in attitude toward AI before and after intervention – Likert items Q2, Q9, Q10.

Values in bold denote statistically significant differences at the p < .05 level.

As with item Q7 in the awareness dimension, the results for Q8 varied across sectors. Participants’ attitudes changed significantly after the intervention (p < 0.05) in some sectors (healthcare, finance, military, environmental, manufacturing industry and human resource management) but not in others (p > 0.05).

3.1.3 Trust

Table 5 presents the results for the trust dimension, including general items and their respective sub-categories. Specifically, Q12 assessed the perceived importance of various policy measures to increase trust in AI, while Q14 focused on trust in different types of entities that may ensure a beneficial use of AI. Q13 measured the perceived importance of education in fostering trust in AI. Across all sub-items of Q12 and Q14, as well as Q13, no statistically significant differences were observed (p > 0.05).

Table 5

| Likert item | Pre mean | Pre SD | Post mean | Post SD | p | Inferential test |
|---|---|---|---|---|---|---|
| Q12. Importance of … to increase trust in AI: | | | | | | |
| Q12_1. A set of laws enforced by a national authority which guarantees ethical standards and social responsibility in the application of AI. | 3.84 | 0.914 | 3.95 | 0.680 | 0.489 | Welch’s t |
| Q12_2. Voluntary certifications released by trusted and competent agencies which guarantee ethical standards and social responsibility in the application of AI. | 3.69 | 0.681 | 3.71 | 0.787 | 0.882 | Student’s t |
| Q12_3. Having independent expert entities that monitor the use and misuse of AI in society, including the public sector, and inform citizens. | 3.84 | 0.768 | 3.93 | 0.654 | 0.538 | Student’s t |
| Q12_4. The adoption and application of a self-regulated code of conduct or a set of ethical guidelines when developing or using AI products. | 3.69 | 0.690 | 3.87 | 0.726 | 0.219 | Student’s t |
| Q12_5. The provision of clear and transparent information by the provider that describes the purpose, limitations and data usage of the AI product. | 3.88 | 0.781 | 3.95 | 0.776 | 0.622 | Student’s t |
| Q12_6. The creation of design teams promoting diversity and social inclusion (e.g., gender wise, different expertise, ethnicity, etc.) and the consultation of different stakeholders throughout the entire lifecycle of the AI product. | 3.93 | 0.753 | 3.87 | 0.757 | 0.676 | Student’s t |
| Q13. Importance of education to increase trust in AI | 4.26 | 0.715 | 4.27 | 0.688 | 0.954 | Student’s t |
| Q14. Trust in entities that may ensure a beneficial use of AI: | | | | | | |
| Q14_1. National Governments and public authorities | 3.16 | 0.812 | 3.40 | 0.863 | 0.143 | Student’s t |
| Q14_2. European Union (including European Commission/European Parliament) | 3.52 | 0.863 | 3.62 | 0.834 | 0.536 | Student’s t |
| Q14_3. Universities and research centres | 4.07 | 0.623 | 4.07 | 0.695 | 0.988 | Student’s t |
| Q14_4. Consumer associations, trade unions and civil society organisations | 3.51 | 0.690 | 3.64 | 0.645 | 0.318 | Student’s t |
| Q14_5. Tech companies developing AI products | 3.70 | 0.925 | 3.84 | 1.07 | 0.471 | Student’s t |
| Q14_6. Social media companies | 3.14 | 1.03 | 3.54 | 1.11 | 0.059 | Student’s t |

Changes in trust in AI before and after intervention – Likert items Q12, Q13, Q14.

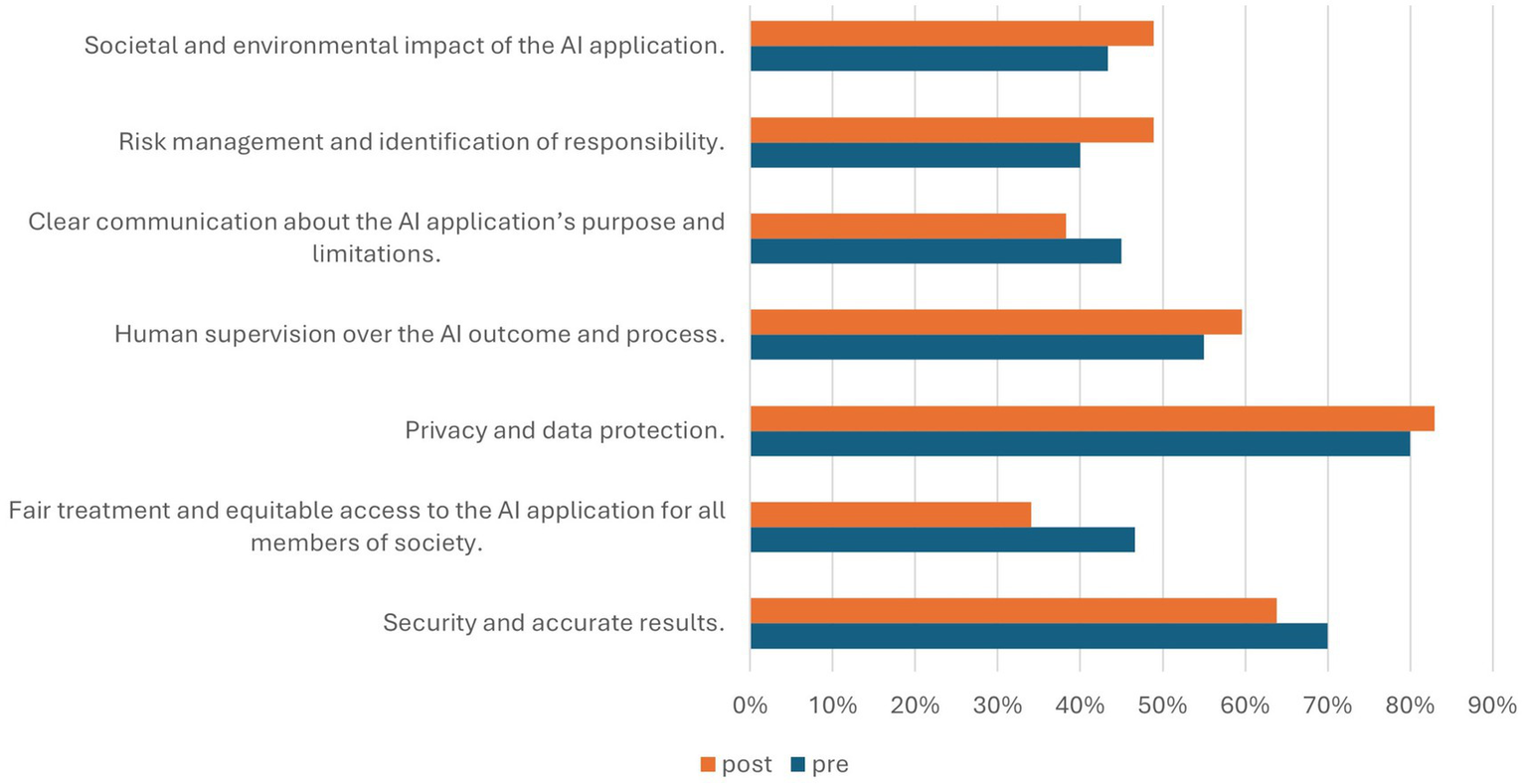

While no statistically significant changes were observed in Likert-scale items related to trust (Q12, Q13 and Q14), Q11—presented as a ranking task rather than a Likert-scale item—provides further insight into participants’ trust-related priorities (see Figure 4). This question asked participants to rank several criteria in terms of their importance for building trust in AI. The results show a mixed pattern: some criteria were ranked more highly after the intervention, suggesting a rise in their perceived importance, while others saw a decrease in their relative position. This lack of uniformity indicates that the intervention may have prompted participants to reconsider their trust-related priorities, although not in a consistent direction across the entire group.

Figure 4

Changes in the ranking of trust-related priorities before and after intervention.

Given the nature of the data (ordinal rankings rather than scale ratings), these changes are interpreted descriptively and are not included in the statistical tests applied to the Likert-based items.
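One descriptive way to summarise such ranking data is the mean position of each criterion before and after the intervention, as sketched below. It assumes every participant ranked all criteria, with position 1 as the most important; the criterion labels, rankings, and function names are hypothetical, not the Q11 options or data.

```python
from statistics import mean


def mean_positions(rankings):
    """Mean position of each criterion (1 = most important).

    Each ranking is a list ordering every criterion from most to least important.
    """
    criteria = rankings[0]
    return {c: mean(r.index(c) + 1 for r in rankings) for c in criteria}


def rank_shift(pre, post):
    """Positive values mean the criterion moved up (toward position 1) after the intervention."""
    before, after = mean_positions(pre), mean_positions(post)
    return {c: before[c] - after[c] for c in before}


# Hypothetical criteria labels and rankings.
pre = [["transparency", "regulation", "education"],
       ["transparency", "education", "regulation"]]
post = [["regulation", "transparency", "education"],
        ["regulation", "education", "transparency"]]
print(rank_shift(pre, post))
```

A mixed pattern of positive and negative shifts, as in this toy example, is the kind of non-uniform movement described for Q11.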

3.2 Comparative analysis with the PAICE European study

To contextualize the findings of the current study, we conducted an additional comparative analysis using aggregated data from the PAICE project (Scantamburlo et al., 2025), which surveyed over 4,000 European citizens across eight countries. While individual-level data were not available, the published aggregate results offer a valuable benchmark for interpreting our local findings.

For each of the three core dimensions (awareness, attitude, and trust), we created visual comparisons between our results and those reported in PAICE. Comparisons were made item by item where possible, using bar charts to highlight patterns and discrepancies in response distributions.

Although no statistical inference is possible due to the lack of raw data from PAICE, this visual and descriptive comparison offers meaningful insights into how our participants’ perceptions align with or diverge from broader European trends. Particular attention was paid to items where our intervention appeared to produce significant shifts, allowing for reflection on context-specific effects and possible implications for AI literacy and policy at the local level.

For this comparison, we used the post-intervention results from our study, as they reflect the participants’ perceptions after being exposed to the educational intervention. This choice allows us to examine whether, and to what extent, the intervention brought participants’ levels of awareness, attitude, and trust closer to or further from those observed in the broader European population, as reported in the PAICE study (Scantamburlo et al., 2025).

3.2.1 Awareness

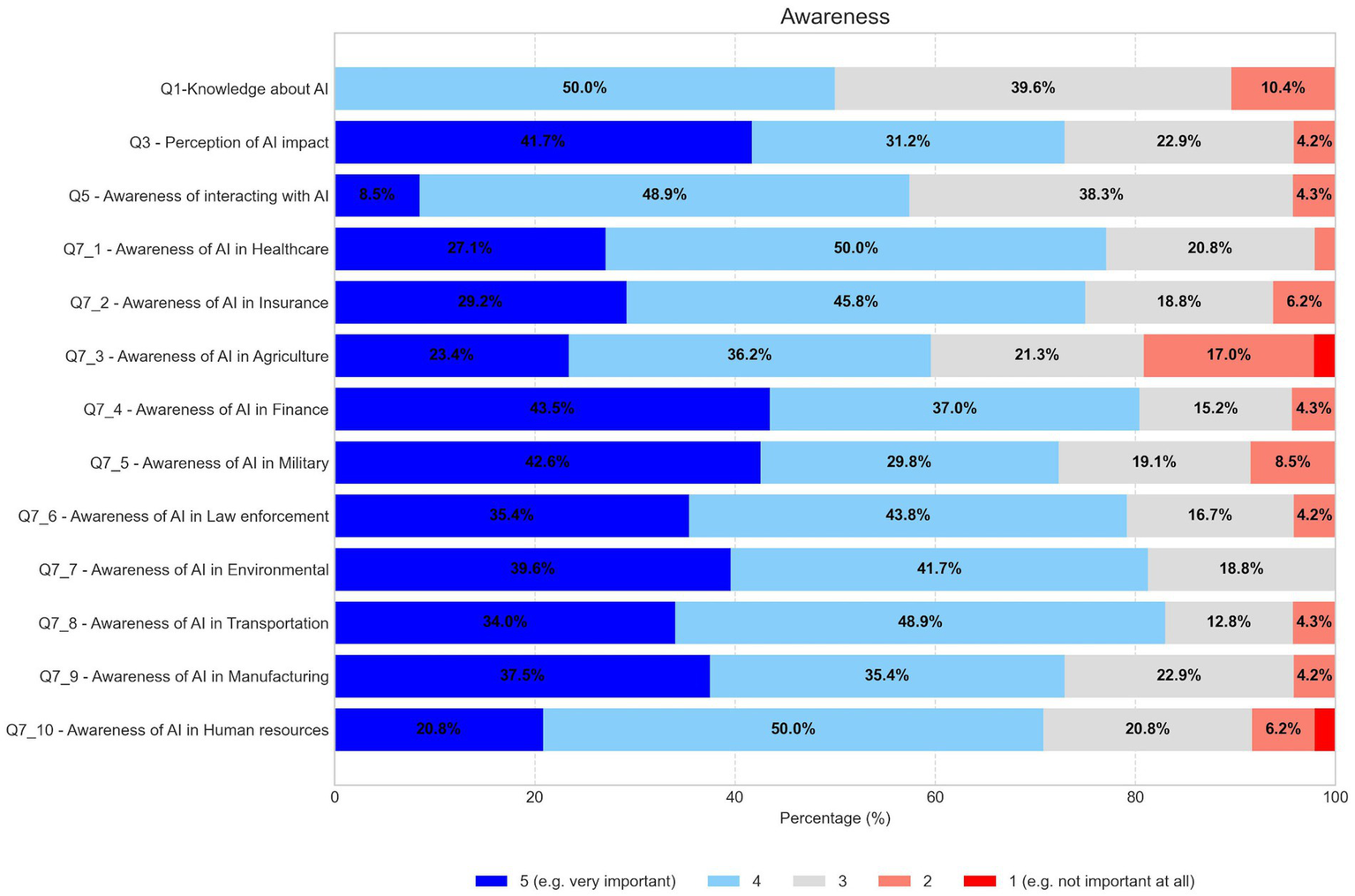

Figure 5 displays our participants’ distribution of responses to the awareness items, in comparison with the corresponding results reported in the PAICE study. Clear differences can be observed between the two.

Figure 5

Responses to Likert scale items associated with awareness.

Notably, self-assessed knowledge about AI (Q1) is substantially higher in Digital Innovation students’ post-test sample, with 50% rating their knowledge as high (levels 4–5), compared to only 20.9% in the European sample. Similar trends are observed in perceived awareness of interacting with AI (Q5) and the perceived impact of AI in daily life (Q3). Moreover, the awareness of AI use across sectors (Q7 items) appears consistently higher in our sample.
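The percentages compared here are “top-two-box” shares, that is, the proportion of responses at levels 4 or 5. A minimal sketch follows; the responses and function names are hypothetical, and 20.9 is the PAICE figure for Q1 quoted above. As noted earlier, the resulting gap is descriptive only, since no raw PAICE data are available for inference.

```python
def top_two_box(responses, threshold=4):
    """Percentage of Likert responses at or above `threshold` (levels 4-5 on a 1-5 scale)."""
    if not responses:
        raise ValueError("no responses")
    return 100 * sum(r >= threshold for r in responses) / len(responses)


def gap_to_benchmark(responses, benchmark_pct):
    """Percentage-point difference from a published aggregate figure (descriptive only)."""
    return round(top_two_box(responses) - benchmark_pct, 1)


# Hypothetical post-test answers to Q1, compared with the PAICE share of 20.9%.
q1_post = [2, 3, 4, 4, 5, 5, 3, 4, 2, 4]
print(top_two_box(q1_post), gap_to_benchmark(q1_post, 20.9))
```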

3.2.2 Attitude

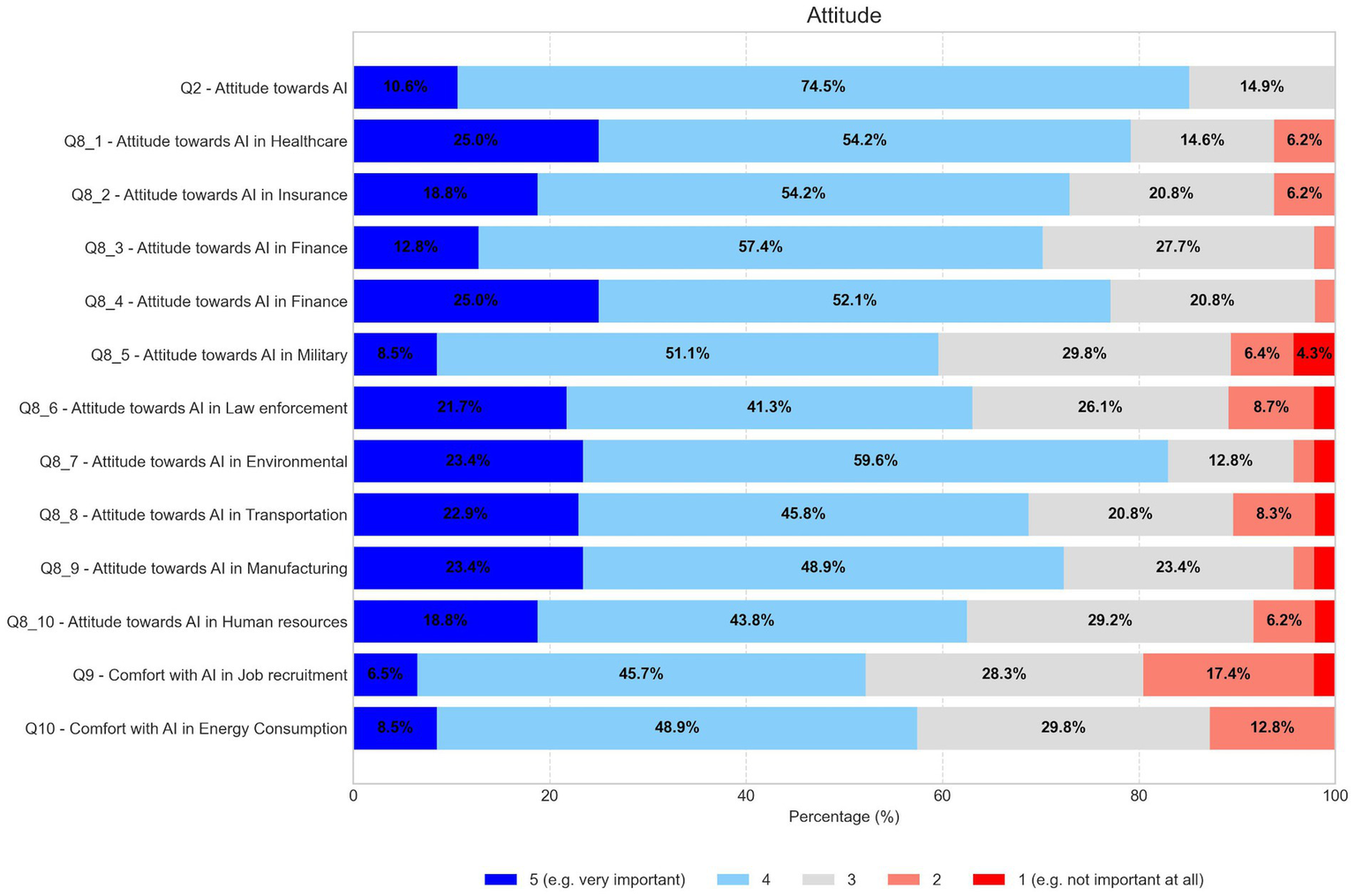

As shown in Figure 6, Digital Innovation students report a consistently more positive attitude toward AI across most items.

Figure 6

Responses to Likert scale items associated with attitude. Low-scale values (1 and 2) are represented by red colors, while high-scale values (4 and 5) are represented by blue colors. Item Q8 is split into subitems regarding the attitude toward AI in ten different sectors.

For instance, general approval of AI (Q2) reached 85.1% in our post-test, considerably higher than the 63.4% reported in PAICE. Additionally, sector-specific attitudes (Q8) were markedly more favorable in our group, particularly in the environmental (83%) and human resources (67.7%) domains, the latter of which had received the lowest approval in PAICE (47.3%). Similarly, levels of comfort with AI applied to job recruitment and energy consumption (Q9, Q10) were either similar or slightly higher in our study.

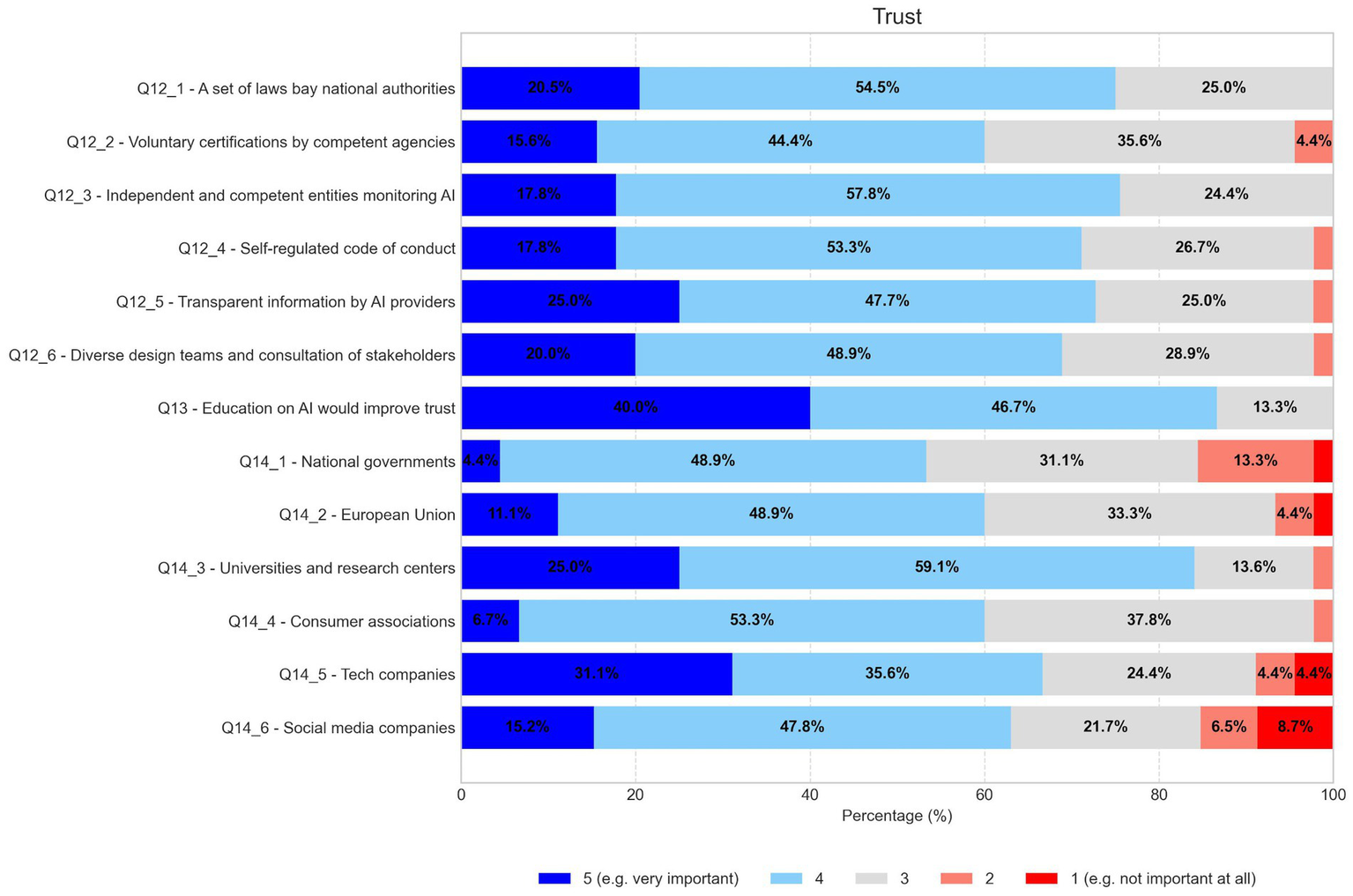

3.2.3 Trust

In the trust dimension, the post-intervention results from our sample of Digital Innovation students were largely aligned with those from the PAICE European study. As shown in Figure 7, the perceived importance of various policy measures (Q12 items) and the role of education (Q13) in building trust were consistently rated highly, with our sample even surpassing the European average in most cases. Regarding Q13, specifically, 86.7% of our participants rated education as important or very important for fostering trust in AI, compared to 71.4% in PAICE.

Figure 7

Responses to Likert scale items associated with trust. Low-scale values (1 and 2) are represented by reddish colors, while high-scale values (4 and 5) are represented by bluish colors. Item Q12 is split into sub-items regarding the perceived importance of six different policy measures. Item Q14 is split into sub-items related to the perceived trust in six different entities.

Similarly, the perceived trustworthiness of universities and research centers (Q14_3) was higher in our group (84%) than in the European sample (67%). Interestingly, trust in social media companies (Q14_6) was also considerably higher in our group (63% vs. 35%), which may reflect demographic or cultural differences (Figure 7).

4 Discussion

The findings of this study indicate that the training program on AI foundations significantly improved participants’ awareness of and attitudes toward AI, whereas the trust dimension did not change significantly. These results offer insights into how different aspects of AI literacy respond to training programmes and highlight areas requiring further pedagogical attention.

The significant improvement in Digital Innovation students’ awareness aligns with the primary objective of AI literacy training: building a conceptual understanding of AI. AI literacy frameworks consistently identify conceptual understanding as a crucial component, enabling individuals to comprehend AI principles and evaluate AI in their lives (Kong et al., 2024; Krause et al., 2025; Nong et al., 2024). Teaching core AI concepts, even without focusing on complex mathematics, is known to lower the entry barrier to AI literacy (Kong et al., 2022; Kong et al., 2024; Organization for Economic Co-operation and Development - OECD, 2025a). Our results suggest that direct instruction on the basics of AI effectively enhances participants’ knowledge of what AI is and what it can do. This improvement is consistent with prior studies. Kong et al. (2022, 2024) reported significant gains in participants’ conceptual understanding of AI and its applications, including ethical awareness and problem-solving ability. Similarly, Zhang et al. (2024) found that students developed a deeper understanding of AI concepts and recognized their relevance to their lives. Finally, the results for item Q6 also indicate greater awareness of the influence of AI in people’s lives: in line with the findings of Zhang et al. (2024), awareness of AI use increased for every application and tool listed after the intervention. Overall, these studies support the improvements in awareness observed in this study.

The positive shift in participants’ attitudes toward AI after the training is consistent with literature linking cognitive understanding with the affective dimension. Attitude is often associated with the affective dimension of AI literacy, which includes psychological readiness and empowerment (Pinski and Benlian, 2024). Kong et al. (2024) emphasized that understanding the value and societal impact of AI contributes to shaping this dimension. By providing foundational knowledge, which Pinski and Benlian (2024) define as the cognitive dimension, the training likely enhanced participants’ perception of AI’s relevance and potential benefits, thereby fostering a more positive attitude. This interpretation is further supported by empirical findings: Zhang et al. (2024) observed a more positive attitude among students toward AI and its role in their future careers; Scantamburlo et al. (2025) found that perceiving greater advantages of AI predicts intention to use it; and Schiavo et al. (2024) confirmed a positive relationship between AI literacy and acceptance. In line with this, Moravec et al. (2024) pointed out that strengthening AI literacy helps individuals better understand the role of AI in daily life, which can also contribute to more favorable attitudes. Although the training program focused primarily on conceptual understanding, the improvement in the attitude dimension suggests that cognitive learning may also influence affective responses to AI.

Unlike the other two dimensions, trust in AI showed no significant change. At first glance, this result may appear to contrast with Kong et al. (2022), who found a significant increase in ethical awareness following an AI literacy intervention. However, two important differences should be considered. First, the training program presented by Kong et al. (2022) lasted 30 h, compared with the 8-h intervention delivered in the present study. Second, that training explicitly addressed ethical considerations as part of the curriculum, whereas the intervention reported here focused primarily on conceptual and technical aspects of AI. These differences in content and scope may explain the contrast in outcomes.

One notable finding regarding the development of trust, which Pinski and Benlian (2024) connect with the affective dimension of AI literacy, concerns the perceived importance of education. This result is consistent with the work of Liu and Zhong (2024), who also highlighted the need for greater support and training for teachers. Participants in this study expressed a strong belief in the role of education in fostering trust in AI, with 86.7% indicating that education on AI would be important or very important for increasing trust. This is well above the 71.4% reported by Scantamburlo et al. (2025), although, as noted above, the difference cannot be tested statistically. It might suggest a particularly strong conviction among the Digital Innovation students regarding the power of educational initiatives to build confidence and mitigate concerns about AI.

Furthermore, universities and research centers are often perceived as highly trusted entities to ensure the beneficial development and use of AI (Scantamburlo et al., 2025). The strong interest in further training observed in other studies (Smarescu et al., 2024) reinforces the idea that educators recognize the need for development in this area. Interestingly, 84% of participants in this study rated their trust in universities and research centers (Q14_3) at 4 or higher on the Likert scale, a notably higher proportion than the 67% reported in the broader European sample of Scantamburlo et al. (2025). This elevated level of trust among pre-service teachers may reflect their closer relationship with academic institutions, these institutions’ commitment to education, and their relevance to the participants’ own professional development. It also resonates with the perspective advanced by Chan (2023), who argues that universities play a key role in promoting a comprehensive and ethical approach to AI education. Through structured training and critical engagement with AI concepts, particularly in initial teacher training, higher education institutions are perceived not only as sources of knowledge but also as agents of responsible and trustworthy AI stewardship.

5 Conclusion

This study aimed to evaluate the impact of foundational AI training on the AI literacy of pre-service teachers in the Digital Innovation specialization, focusing on the dimensions of awareness, attitude, and trust as conceptualized in frameworks such as those proposed by Kong et al. (2024) and Scantamburlo et al. (2025).

The findings show clear gains in awareness and a markedly more positive attitude toward AI. Trust, however, moved only marginally, suggesting that cognitive gains do not automatically translate into affective and social confidence. These results underline a key pedagogical implication: AI literacy requires more than conceptual instruction and must articulate cognitive, social and ethical aspects, as Lee et al. (2024) have already pointed out. They also point to the need for effective pedagogical approaches that translate awareness into increased confidence and trust (Smarescu et al., 2024).

All these findings point to the need for longer-term, multifaceted interventions that combine conceptual knowledge, ethical reflection, and critical thinking. They also suggest that the different dimensions of AI perception (awareness, attitude, and trust) evolve at different paces and may require distinct pedagogical strategies. Moving from “knowing about AI” to “feeling confident in AI” therefore demands learning experiences that integrate ethical reflection, critical dialogue, and opportunities to interrogate real-world use cases (Smarescu et al., 2024).

In line with this, AI literacy should be embedded not only in initial and in-service teacher education but also across the broader school curriculum, from early childhood through primary and secondary education, to foster a critically engaged and AI-literate citizenry (Organization for Economic Co-operation and Development - OECD, 2025a). The urgency of this educational shift is further underscored by the OECD’s announcement that AI literacy will be incorporated into the new PISA 2029 Innovative Domain on Media & Artificial Intelligence Literacy (MAIL), placing new demands on education systems and teacher preparation programs (Organization for Economic Co-operation and Development - OECD, 2025b).

Despite these valuable insights, this study has several limitations that must be acknowledged. First, the relatively small and homogeneous sample —involving students from a single specialization— limits the representativeness of the study and the generalizability of the findings to broader educational or professional populations. Second, the anonymity of the data collection in the pre- and post-intervention phases —while ethically appropriate— prevented the matching of individual responses across time points. As a result, paired-sample statistical tests could not be used, and independent-sample comparisons were employed instead. This limitation reduces the statistical power of the analysis and limits the ability to measure individual change over time. Third, the short duration and specific structure of the intervention may have constrained its impact, particularly regarding the dimension of trust. Trust in AI is inherently complex and context-dependent, influenced by external factors such as media discourse, cultural attitudes or personal experiences with AI systems, elements that were beyond the scope of this study. These limitations highlight the need for further research in order to explore the effectiveness of longer or differently structured programs, especially those explicitly incorporating modules on AI ethics and governance.
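A standard textbook result illustrates why the independent-samples design reduces power; it is not a calculation from this study’s data. Assume, for simplicity, that the same n participants answered at both points, with score variances σ²_pre and σ²_post and a correlation ρ between each participant’s pre and post scores:

```latex
% Independent-samples comparison (the only option with anonymous responses)
\operatorname{Var}\left(\bar{X}_{\text{post}} - \bar{X}_{\text{pre}}\right)
  = \frac{\sigma_{\text{pre}}^{2}}{n} + \frac{\sigma_{\text{post}}^{2}}{n}

% Paired comparison, with D_i = X_{i,\text{post}} - X_{i,\text{pre}}
\operatorname{Var}\left(\bar{D}\right)
  = \frac{\sigma_{\text{pre}}^{2} + \sigma_{\text{post}}^{2}
          - 2\rho\,\sigma_{\text{pre}}\,\sigma_{\text{post}}}{n}
```

Whenever ρ > 0, the paired variance is smaller by 2ρσ_preσ_post/n, so the same mean change would yield a larger test statistic; ρ could not be estimated here precisely because responses could not be matched.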

Building on these findings and limitations, future research should explore the impact of longer-term, multi-phase interventions that combine conceptual understanding with ethical reflection and opportunities for critical engagement. Particular attention should be given to designing interventions or programmes that explicitly address AI governance and ethics, as these may play a crucial role in fostering trust. Moreover, comparative studies across different educational levels, disciplines, and professional backgrounds would help determine how AI literacy influences awareness, attitude, and trust in diverse contexts. To advance a more integrated and holistic approach to AI literacy, future studies should also examine the interrelationships between the cognitive, affective, social (including trust), and metacognitive dimensions using comprehensive frameworks (Kong et al., 2024). Finally, mixed-methods approaches combining quantitative and qualitative tools could shed light on the subtle processes through which learners take in and respond to AI-related knowledge.

Statements

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Ethics Committee of the University of Mondragon. The studies were conducted in accordance with the ethical principles of the Declaration of Helsinki and the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AB-E: Conceptualization, Investigation, Project administration, Writing – original draft, Writing – review & editing, Data curation, Formal analysis, Methodology, Software. AA-S: Writing – original draft, Writing – review & editing, Conceptualization, Investigation, Project administration, Supervision, Validation.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. Generative AI was used to refine the academic style of some sentences, but never to directly create content.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1

Abdulayeva A. Zhanatbekova N. Andasbayev Y. Boribekova F. (2025). Fostering AI literacy in pre-service physics teachers: inputs from training and co-variables. Front. Educ.10:1505420. doi: 10.3389/feduc.2025.1505420

2

Arroyo-Sagasta A. (2024). Inteligencia artificial y Educación: construyendo puentes. Barcelona: Graó.

3

Bae H. Jaesung H. Park J. Choi G. W. Moon J. (2024). Pre-service teachers’ dual perspectives on generative AI: benefits, challenges, and integration into their teaching and learning. Online Learn. J.28, 131–156. doi: 10.24059/olj.v28i3.4543

4

Bilbao Eraña A. (2024). “Entonces, ¿qué necesito saber como docente sobre IA?” in Inteligencia artificial y educación: construyendo puentes. ed. SagastaA. A. (Barcelona: Graó), 29–44.

5

Casal-Otero L. Catala A. Fernández-Morante C. Taboada M. Cebreiro B. Barro S. (2023). AI literacy in K-12: a systematic literature review. Int. J. STEM Educ.10:29. doi: 10.1186/s40594-023-00418-7

6

Castañeda L. Arroyo-Sagasta A. Postigo-Fuentes A. Y. (in press). “When digital literacy must go beyond the screen: further dimensions for analysing the AI impact in education” in The Bloomsbury international handbook of literacy. eds. FlynnN.GarciaP. O.JosephH.PowellD.SlaterW. H. (Dublin: Bloomsbury Press).

7

Castro A. Díaz B. Aguilera C. Prat M. Chávez-Herting D. (2025). Identifying rural elementary teachers’ perception challenges and opportunities in integrating artificial intelligence in teaching practices. Sustainability17:2748. doi: 10.3390/su17062748

8

Cervantes J. Smith B. Ramadoss T. D’Amario V. Shoja M. M. Rajput V. (2024). Decoding medical educators’ perceptions on generative artificial intelligence in medical education. J. Investig. Med.72, 633–639. doi: 10.1177/10815589241257215

9

Chan C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. High. Educ.20:38. doi: 10.1186/s41239-023-00408-3

10

Dilek M. Baran E. Aleman E. (2025). AI literacy in teacher education: empowering educators through critical co-discovery. J. Teach. Educ.76, 294–311. doi: 10.1177/00224871251325083

11

Du H. Sun Y. Jiang H. Islam A. Gu X. (2024). Exploring the effects of AI literacy in teacher learning: an empirical study. Humanit. Soc. Sci. Commun.11:559. doi: 10.1057/s41599-024-03101-6

12

Emenike M. E. Emenike B. U. (2023). Was this title generated by ChatGPT? Considerations for artificial intelligence text-generation software programs for chemists and chemistry educators. J. Chem. Educ.100, 1413–1418. doi: 10.1021/acs.jchemed.3c00063

13

Eun S. Kim A. (2024). The impact of AI literacy on teacher efficacy and identity: a study of Korean English teachers. Int. Conf. Computers Educ. doi: 10.58459/icce.2024.5057

14

Farina M. Lavazza A. (2023). ChatGPT in society: emerging issues. Front. Artif. Intell.6:1130913. doi: 10.3389/frai.2023.1130913

15

Holmes W. Tuomi I. (2022). State of the art and practice in AI in education. Eur. J. Educ.57, 542–570. doi: 10.1111/ejed.12533

16

Hur J. W. (2024). Fostering AI literacy: overcoming concerns and nurturing confidence among preservice teachers. Inform Learn. Sci.126, 56–74. doi: 10.1108/ILS-11-2023-0170

17

Jacovi A. Marasović A. Miller T. Goldberg Y. (2021). Formalizing trust in artificial intelligence: prerequisites, causes and goals of human trust in AI. In FAccT '21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (pp. 624–635). Association for Computing Machinery

18

Kong S.-C. Cheung M.-Y. W. Tsang O. (2024). Developing an artificial intelligence literacy framework: evaluation of a literacy course for senior secondary students using a project-based learning approach. Comp. Educ. Artificial Intell.6:100214. doi: 10.1016/j.caeai.2024.100214

19

Kong S.-C. Cheung W. M.-Y. Zhang G. (2022). Evaluating artificial intelligence literacy courses for fostering conceptual learning, literacy and empowerment in university students: refocusing to conceptual building. Comput. Hum. Behav. Rep.7:100223. doi: 10.1016/j.chbr.2022.100223

20

Krause S. Panchal B. H. Ubhe N. (2025). Evolution of learning: assessing the transformative impact of generative AI on higher education. Front. Digit. Educ.2:21. doi: 10.1007/s44366-025-0058-7

21

Lee D. Arnold M. Srivastava A. Plastow K. Strelan P. Ploeckl F. et al . (2024). The impact of generative AI on higher education learning and teaching: a study of educators’ perspectives. Comp. Educ. Artificial Intell.6:100221. doi: 10.1016/j.caeai.2024.100221

22

Liu L. (2024). Survey and analysis of primary school teachers’ use of generative artificial intelligence. Lecture Notes Educ. Psychol. Public Media74, 43–52. doi: 10.54254/2753-7048/2024.BO17693

23

Liu X. Zhong B. (2024). A systematic review on how educators teach AI in K-12 education. Educ. Res. Rev.45:100642. doi: 10.1016/j.edurev.2024.100642

24

Long D. Magerko B. (2020). What is AI literacy? Competencies and design considerations. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–16.

25

Massachusetts Institue for Technology - MIT (2023). The great acceleration: CIO perspectives on generative AI. MIT Technology Review. Available online at: https://www.technologyreview.com/2023/07/18/1076423/the-great-acceleration-cio-perspectives-on-generative-ai/

26

McDonald N. Johri A. Ali A. Hingle A. (2025). Generative artificial intelligence in higher education: evidence from an analysis of institutional policies and guidelines. Comp. Human Behav. Artificial Humans3. doi: 10.48550/arXiv.2402.01659

Moravec V. Hynek N. Gavurova B. Kubak M. (2024). Everyday artificial intelligence unveiled: societal awareness of technological transformation. Oecon. Copernic. 15:2. doi: 10.24136/oc.2961

Ng D. T. K. Su J. Leung J. K. L. Chu S. K. W. (2024). Artificial intelligence (AI) literacy education in secondary schools: a review. Interact. Learn. Environ. 32, 6204–6224. doi: 10.1080/10494820.2023.2255228

Nikolic S. Wentworth I. Sheridan L. Moss S. Duursma E. Jones R. A. et al. (2024). A systematic literature review of attitudes, intentions and behaviours of teaching academics pertaining to AI and generative AI (GenAI) in higher education: an analysis of GenAI adoption using the UTAUT framework. Australas. J. Educ. Technol. 40, 56–75. doi: 10.14742/ajet.9643

Nong Y. Cui J. He Y. Zhang P. Zhang T. (2024). Development and validation of an AI literacy scale. J. Artif. Intell. Res. 1:1. doi: 10.70891/JAIR.2024.100029

Organization for Economic Co-operation and Development - OECD (2025a). Empowering learners for the age of AI: An AI literacy framework for primary and secondary education (review draft). OECD. Available online at: https://ailiteracyframework.org

Organization for Economic Co-operation and Development - OECD (2025b). PISA 2029 Media and Artificial Intelligence Literacy. Available online at: https://www.oecd.org/en/about/projects/pisa-2029-media-and-artificial-intelligence-literacy.html

Pinski M. Benlian A. (2024). AI literacy for users – a comprehensive review and future research directions of learning methods, components, and effects. Comp. Human Behav. Artificial Humans 2:100062. doi: 10.1016/j.chbah.2024.100062

Ravi P. Broski A. Stump G. Abelson H. Klopfer E. Breazeal C. (2023). Understanding teacher perspectives and experiences after deployment of AI literacy curriculum in middle-school classrooms. Proceedings of ICERI2023 Conference, 6875–6884.

Scantamburlo T. Cortés A. Foffano F. Barrué C. Distefano V. Pham L. et al. (2025). Artificial intelligence across Europe: a study on awareness, attitude and trust. IEEE Trans. Artif. Intell. 6, 477–490. doi: 10.1109/TAI.2024.3461633

Schiavo G. Businaro S. Zancanaro M. (2024). Comprehension, apprehension, and acceptance: understanding the influence of literacy and anxiety on acceptance of artificial intelligence. Technol. Soc. 77:102537. doi: 10.1016/j.techsoc.2024.102537

Shalaby A. (2024). Classification for the digital and cognitive AI hazards: urgent call to establish automated safe standard for protecting young human minds. Digital Econ. Sustain. Develop. 2, 1–17. doi: 10.1007/s44265-024-00042-5

Smarescu N. Bumbac R. Alin Z. Iorgulescu M.-C. (2024). Artificial intelligence in education: next-gen teacher perspectives. Amfiteatru Econ. 26, 145–161. doi: 10.24818/EA/2024/65/145

UNESCO (2024). AI competency framework for teachers. UNESCO. Available online at: https://unesdoc.unesco.org/ark:/48223/pf0000391104

Yue Yim I. H. (2024). A critical review of teaching and learning artificial intelligence (AI) literacy: developing an intelligence-based AI literacy framework for primary school education. Comp. Educ. Artificial Intell. 7:100319. doi: 10.1016/j.caeai.2024.100319

Yusuf A. Pervin N. Román-González M. (2024). Generative AI and the future of higher education: a threat to academic integrity or reformation? Evidence from multicultural perspectives. Int. J. Educ. Technol. High. Educ. 21:21. doi: 10.1186/s41239-024-00453-6

Zhang H. Lee I. Moore K. (2024). An effectiveness study of teacher-led AI literacy curriculum in K-12 classrooms. Proceedings of the AAAI Conference on Artificial Intelligence, 38,

Zulkarnain N. S. Md Yunus M. (2023). Primary teachers’ perspectives on using artificial intelligence technology in English as a second language teaching and learning: a systematic review. Int. J. Acad. Res. Prog. Educ. Dev. 12, 861–875. doi: 10.6007/IJARPED/v12-i2/17119

Keywords

artificial intelligence, AI literacy, teacher training, perception, education

Citation

Bilbao-Eraña A and Arroyo-Sagasta A (2025) Fostering AI literacy in pre-service teachers: impact of a training intervention on awareness, attitude and trust in AI. Front. Educ. 10:1668078. doi: 10.3389/feduc.2025.1668078

Received

17 July 2025

Accepted

06 October 2025

Published

29 October 2025

Volume

10 - 2025

Edited by

Lola Costa Gálvez, Open University of Catalonia, Spain

Reviewed by

Ben Morris, Leeds Trinity University, United Kingdom

Giovanna Cioci, University of Studies G. d'Annunzio Chieti and Pescara, Italy

Copyright

© 2025 Bilbao-Eraña and Arroyo-Sagasta.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Amaia Arroyo-Sagasta, aarroyo@mondragon.edu

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.