- 1 Department of Science and Technology Education, University of South Africa, Pretoria, South Africa

- 2 Department of Computer and Robotics Education, University of Nigeria, Nsukka, Nigeria

- 3 Department of Mathematics, Science and Technology Education, Faculty of Education, University of Johannesburg, Johannesburg, South Africa

In response to the growing demand for innovative instructional strategies in STEM education, we examine the effectiveness of AI-supported Problem-Based Learning (PBL) in improving students’ engagement, intrinsic motivation, and academic achievement. Traditional pedagogies often fail to sustain learner interest and develop problem-solving skills, particularly in computing disciplines; this gap informed our focus on integrating artificial intelligence into PBL. We adopted a quasi-experimental design with a non-equivalent pretest–posttest control group structure, involving 87 second-year undergraduates enrolled in Computer Robotics Programming courses at two Nigerian universities. The experimental group (n = 45, University of Nigeria, Nsukka) received AI-supported PBL instruction, while the control group (n = 42, Nnamdi Azikiwe University, Awka) engaged in traditional PBL. Our instruments showed acceptable reliability and validity, with Cronbach’s alpha values exceeding 0.70, composite reliability > 0.70, and AVE > 0.50. Data were analyzed using a one-way multivariate analysis of covariance (MANCOVA) to assess the combined and individual effects of instructional method, controlling for prior programming experience. Results revealed a significant multivariate effect of instructional method on the combined outcomes, Wilks’ Λ = 0.134, F(3, 82) = 176.93, p < 0.001, partial η² = 0.866. Univariate analyses showed that AI-supported PBL significantly improved engagement (η² = 0.694), intrinsic motivation (η² = 0.690), and achievement (η² = 0.519) compared with traditional PBL. We conclude that integrating AI into active learning environments can substantially improve students’ engagement, motivation, and achievement. We recommend that curriculum designers, educators, and policymakers prioritize AI-enhanced pedagogies and invest in faculty training for sustainable STEM education. 
This approach promises to advance learner-centered instruction and equip graduates for the challenges of a technology-driven future.

1 Introduction

The adoption of Artificial Intelligence (AI) is transforming Science, Technology, Engineering, and Mathematics (STEM) education, offering innovative opportunities for personalized, adaptive, and engaging learning experiences (Leon et al., 2025). Studies indicate that over 51% of students exposed to AI-driven learning environments show improved academic achievement compared with those taught using traditional methods (Pertiwi et al., 2024; Strielkowski et al., 2025), especially in developed regions such as America and Europe. AI tools have also been widely applied to personalize instruction in disciplines such as chemistry and programming, including in developing countries (Iyamuremye et al., 2024; Omeh et al., 2025). These tools not only enhance academic outcomes but also increase learner interest and motivation. Consequently, scholars recommend integrating AI tools within effective pedagogical frameworks rather than using them in isolation to optimize learning (Pertiwi et al., 2024). Problem-Based Learning (PBL) offers such a pedagogical framework. As a student-centered approach, PBL engages learners in solving real-world, open-ended problems to foster critical thinking and creativity (Umakalu and Omeh, 2025). While traditional PBL has been shown to enhance collaboration, problem-solving, and conceptual understanding (Ito et al., 2021; Kurniawan et al., 2025), empirical evidence on integrating AI with PBL in STEM education, especially in African contexts, remains scarce. Notably, Kurniawan et al. (2025) reported a 40% rise in class participation and conceptual understanding through PBL and recommended adopting emerging technologies such as AI to further enrich the learning experience.

AI, defined as the development of systems capable of performing tasks that require human intelligence, such as pattern recognition, decision-making, and language processing, has expanded rapidly across education (Zhu et al., 2023). In STEM, AI promotes personalized learning, adaptive feedback, and intelligent tutoring, fostering creative thinking and cross-disciplinary problem-solving (Xu and Ouyang, 2022; Omeh, 2025). These capabilities are particularly relevant in Computer Robotics Programming (CRP), a multidisciplinary field integrating programming, control systems, and sensor technologies to design intelligent robotic systems (Lozano-Perez, 2005). However, in developing countries like Nigeria, the teaching of CRP faces challenges such as limited access to modern labs, robotics kits, and internet connectivity, coupled with a shortage of instructors with both technical and pedagogical expertise (Bati et al., 2014; Omeh et al., 2025). Consequently, instruction often relies on lecture-based methods that do not adequately foster creativity, practical skills, or problem-solving (Eteng et al., 2022).

To address these challenges, this study proposes integrating AI technology within a PBL framework for teaching CRP, leveraging AI’s ability to provide real-time feedback, adaptive scaffolding, and personalized learning analytics. Such integration is grounded in Self-Determination Theory (Deci and Ryan, 1987), which posits that learning environments that support autonomy, competence, and relatedness enhance intrinsic motivation. AI-supported PBL can fulfill these psychological needs by offering structured guidance while promoting learner autonomy. Additionally, the study draws on Schneiderman’s (2000) engagement theory, emphasizing active participation and meaningful interaction with peers and technology as key to sustained engagement. Despite promising evidence, research findings on AI adoption remain inconsistent. Some studies report significant improvements in knowledge and skills acquisition through AI integration (Pillai and Sivathanu, 2020), while others highlight minimal or no impact (Adewale et al., 2024). This lack of consensus underscores the need for empirical investigations in under-researched contexts like Africa, focusing on how AI-supported PBL influences engagement, intrinsic motivation, and academic achievement in CRP. Thus, this study aims to examine the effect of integrating AI technology with PBL on student outcomes, controlling for prior programming experience, which often influences confidence and task performance (Bowman et al., 2019; Harding et al., 2024). By addressing these gaps, the study seeks to provide actionable insights for designing equitable and motivating learning environments in STEM education.

1.1 Research questions

What is the effect of instructional method (AI-supported PBL vs. traditional PBL) on students’ engagement in computer robotics programming after controlling for prior programming experience?

What is the effect of instructional method on students’ intrinsic motivation after controlling for prior programming experience?

What is the effect of instructional method on students’ academic achievement after controlling for prior programming experience?

2 Related literature review and theoretical framework

2.1 Evolution of teaching computer robotics programming (CRP)

The teaching of Computer Robotics Programming (CRP) has evolved significantly over the last few decades, reflecting its interdisciplinary nature and growing complexity. Robotics education combines programming, control systems, and sensor technologies, requiring both theoretical understanding and practical application (Krishnamoorthy and Kapila, 2016). Traditional lecture-based methods have historically dominated robotics education, particularly in developing countries, where limited infrastructure and resource constraints often necessitate conventional instructional models (Corral et al., 2016; Zhang et al., 2024). While lectures provide a foundational understanding of concepts such as algorithms, sensors, and actuators, they are often insufficient for fostering critical skills like problem-solving, collaboration, and innovation (Thomas and Bauer, 2020). Hands-on experiences using platforms like LEGO Mindstorms and Arduino have been widely recognized for enhancing technical proficiency and practical application in robotics programming (Thomas and Bauer, 2020). However, such benefits are amplified when coupled with active learning approaches, particularly Problem-Based Learning (PBL), which emphasizes real-world problem-solving and learner autonomy (Jonassen and Carr, 2020; Chen and Chung, 2024).

2.2 Problem-based learning (PBL) and emerging technologies

PBL fosters iterative learning cycles, critical thinking, and collaborative problem-solving, positioning students as active participants rather than passive recipients of knowledge (Jonassen and Carr, 2020). In recent years, educational research has highlighted the importance of integrating emerging technologies, such as AI, virtual simulation environments, gamification, and mixed reality, into PBL environments to enhance student engagement and achievement (Marín et al., 2018; Srimadhaven et al., 2020). Robotics software frameworks and simulators such as ROS and Webots have become central to robotics programming, providing scalable, risk-free environments for experimentation (Ahn and Jeong, 2025; Washington and Sealy, 2024). Moreover, AI-driven tools offer adaptive feedback and real-time analytics, which help bridge gaps in learners’ prior knowledge and maintain engagement in complex tasks (Yilmaz and Yilmaz, 2023; Chavez-Valenzuela et al., 2025). AI not only supports personalized learning pathways but also fosters collaborative practices, enabling peer-to-peer programming and shared responsibility for problem-solving. Despite these advancements, few empirical studies have examined AI-supported PBL in CRP within African higher education contexts, underscoring the need for the present study.

2.3 Engagement in robotics programming

Engagement, comprising behavioral, cognitive, and emotional dimensions, is critical to learning outcomes, influencing persistence, creativity, and task performance (Bakır-Yalçın and Usluel, 2024). Prior research suggests that engagement in CRP is often shaped by prior programming experience, as students with prior exposure exhibit higher confidence and navigate tasks more smoothly (Bowman et al., 2019; Harding et al., 2024). Conversely, novice learners may struggle with syntax and logic, which diverts attention from robotics-specific problem-solving (Woodrow et al., 2024). Studies recommend controlling for prior knowledge through stratified grouping or statistical adjustment using pre-test scores to ensure fair measurement of engagement (MacNeil et al., 2023; Nie et al., 2024). Adaptive feedback systems integrated within AI-supported environments can help sustain engagement by tailoring task complexity and providing timely scaffolding (Yilmaz and Yilmaz, 2023; Omeh et al., 2025). PBL further enhances engagement through peer collaboration, enabling novices to learn from experienced peers while experts consolidate their knowledge by teaching. Accordingly, we test the following null hypothesis:

H1: There is no significant difference in engagement between students in AI-supported PBL and those in traditional PBL, controlling for prior programming experience.

2.4 Intrinsic motivation in AI-supported learning

Intrinsic motivation, the internal drive to learn for interest and enjoyment rather than for external rewards, is a key determinant of persistence and academic success in STEM education (Kotera et al., 2023). Robotics programming environments that emphasize autonomy, competence, and relatedness foster intrinsic motivation (Gressmann et al., 2019; Anselme and Hidi, 2024). Research shows that integrating AI technologies, such as real-time hints and adaptive feedback, can enhance learners’ sense of mastery and autonomy, thereby sustaining motivation (Lin and Muenks, 2025; Tozzo et al., 2025). However, concerns exist that overreliance on AI systems may reduce self-reliance and autonomy if not carefully managed (Kotera et al., 2023). The present study addresses this by embedding AI within a student-centered PBL structure, ensuring that technology acts as a scaffold rather than a substitute for human facilitation. Accordingly, we test the following null hypothesis:

H2: There is no significant difference in intrinsic motivation between students in AI-supported PBL and those in traditional PBL, controlling for prior programming experience.

2.5 Academic achievement in robotics programming

Academic achievement in CRP encompasses content mastery, problem-solving ability, and application of programming skills (Omeh et al., 2025). Empirical evidence indicates that PBL significantly enhances these outcomes compared with lecture-based methods by promoting active learning and knowledge transfer (Orhan, 2025; Chen and Yang, 2019). AI integration further amplifies these benefits through personalized scaffolding, real-time analytics, and adaptive prompts, reducing cognitive load and enhancing skill acquisition (Torres and Inga, 2025; Yilmaz and Yilmaz, 2023). Nevertheless, some studies report mixed results regarding AI’s impact on academic performance, citing infrastructure limitations and poor pedagogical alignment as contributing factors (Adewale et al., 2024). Effective implementation therefore requires balancing technological support with human facilitation, a principle incorporated into the present study design. Accordingly, we test the following null hypothesis:

H3: There is no significant difference in academic achievement between students in AI-supported PBL and those in traditional PBL, controlling for prior programming experience.

2.6 Theoretical framework

This study is grounded in Self-Determination Theory (SDT; Deci and Ryan, 1987) and Engagement Theory (Schneiderman, 2000). SDT posits that intrinsic motivation is fostered when learning environments support three core psychological needs: autonomy, competence, and relatedness. In the context of PBL, students are given the freedom to explore open-ended problems (autonomy), develop technical and collaborative skills (competence), and engage meaningfully with peers and mentors (relatedness). These conditions are essential for nurturing intrinsic motivation. However, while SDT provides a strong foundation for understanding motivation, it offers limited insight into the mechanisms of student engagement, particularly in dynamic, technology-enhanced learning environments. Engagement Theory addresses this gap with a complementary perspective that emphasizes purposeful, collaborative, and technology-mediated activities. It suggests that meaningful engagement arises when learners are involved in tasks that are authentic, socially interactive, and supported by digital tools, which makes it especially relevant to the AI-supported PBL framework. The proposed intervention, Artificial Intelligence Meets PBL, operationalizes the principles of both theories by:

- structuring learning around problem-driven tasks that promote autonomy and relevance;

- integrating adaptive AI feedback, which supports competence through personalized guidance and scaffolding; and

- facilitating peer collaboration, which enhances relatedness and social engagement.

3 Methodology

3.1 Participants and design

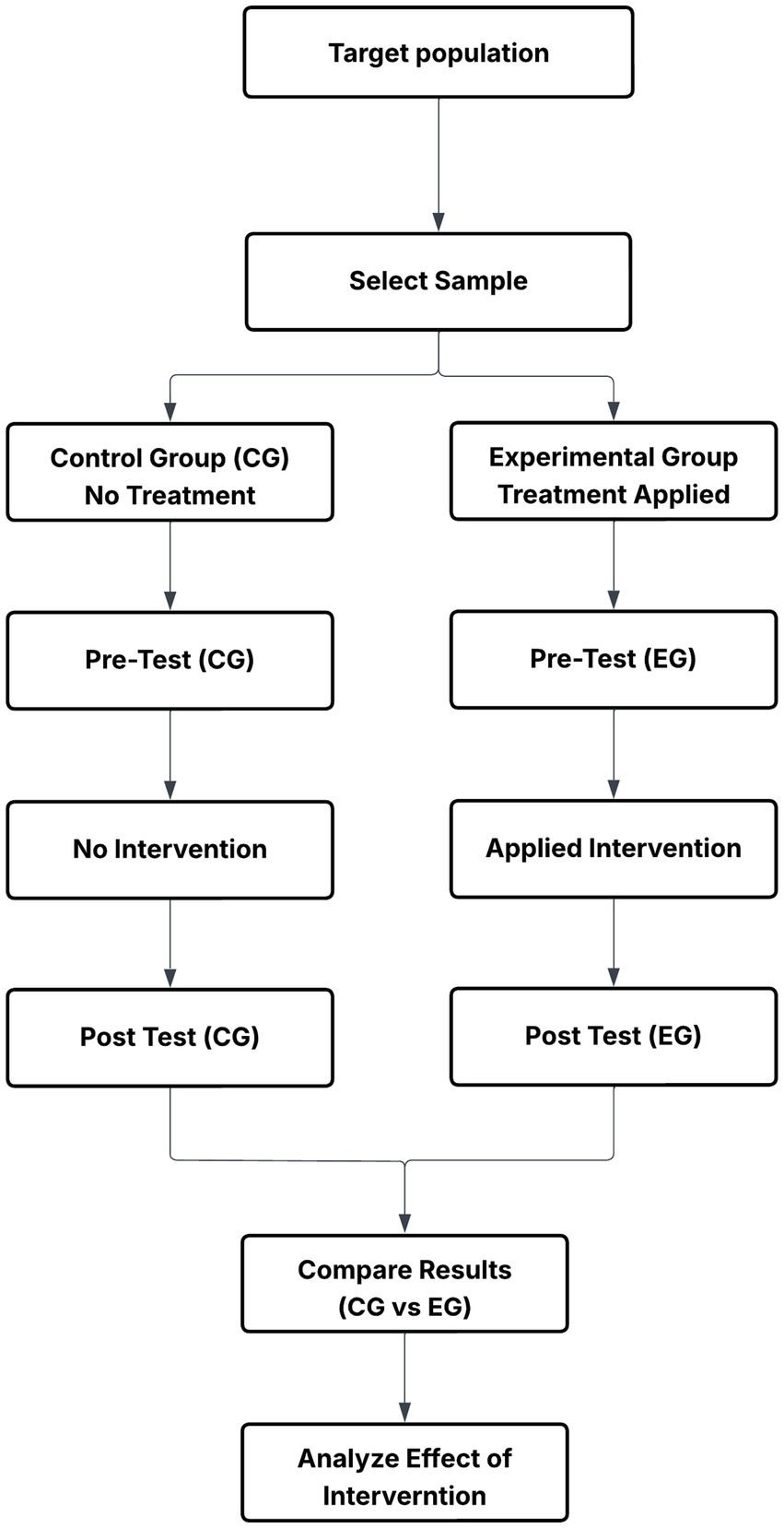

Our sample comprised 87 second-year Computing Education students taking Computer Robotics Programming at two Nigerian universities: the University of Nigeria, Nsukka (n = 45) and Nnamdi Azikiwe University, Awka (n = 42). The sample included 38 males (43.7%) and 49 females (56.3%), a slightly higher representation of females. Most participants were aged 18–20 years (48.3%), followed by 21–23 years (32.2%) and 24 years and above (19.5%), indicating that the majority were traditional-age undergraduates. Regarding prior programming experience, 34 students (39.1%) reported previous exposure to programming, while 53 students (60.9%) had none, underscoring the diversity in baseline skills. Additionally, 63 students (72.4%) reported adequate access to digital devices, whereas 24 students (27.6%) had limited access, reflecting some digital inequality among participants. We adopted a quasi-experimental design with a non-equivalent pretest–posttest control group structure. This design was appropriate for examining the effect of AI-supported Problem-Based Learning (PBL) on students’ engagement, intrinsic motivation, and academic achievement in a computer robotics programming course. It allowed us to compare learning outcomes between an experimental group exposed to AI-enhanced instruction and a control group taught using traditional PBL, while statistically controlling for prior programming experience as a covariate.

3.2 System design of AI-supported PBL learning environment

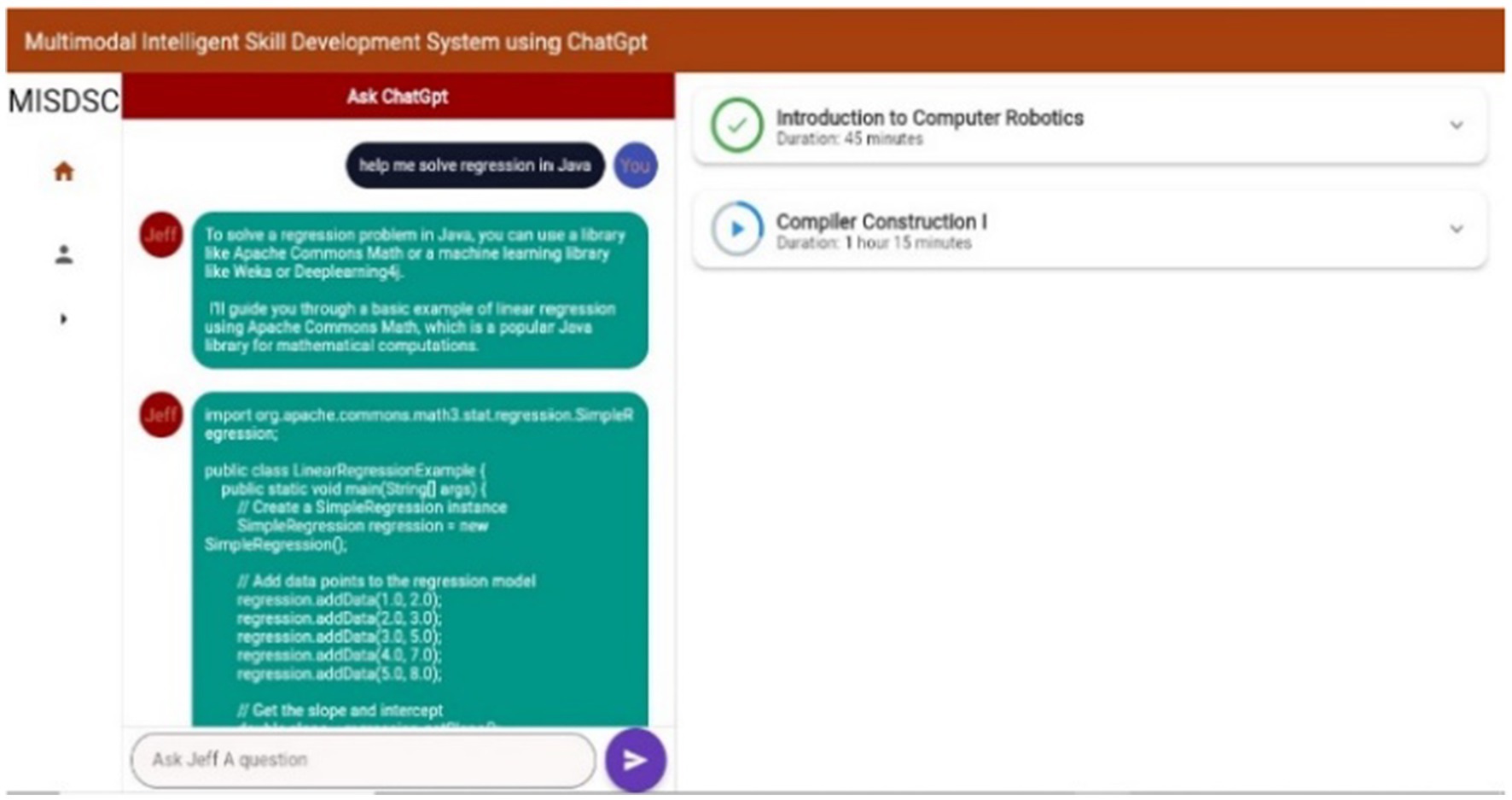

The AI-Supported Problem-Based Learning Environment (APLE) (see Figure 1) was conceptualized and implemented to facilitate active, personalized, and adaptive learning experiences for students enrolled in computer robotics programming. The system was designed to combine intelligent tutoring capabilities with the pedagogical principles of PBL, ensuring students could access real-time support while engaging in structured problem-solving tasks.

3.2.1 System architecture

The APLE was developed using a modular architecture based on the frontend-backend separation model to ensure scalability, maintainability, and smooth integration of AI components.

3.2.1.1 Frontend development

The user interface was developed using Flutter, an open-source UI toolkit for building cross-platform applications from a single codebase. This choice enabled a consistent user experience across desktop and mobile devices, although this version was deployed primarily as a desktop application.

3.2.1.2 Backend development

The backend was implemented with Firebase, which provided a secure and scalable environment for authentication, real-time data synchronization, and API management. Cloud Firestore, a NoSQL database solution within Firebase, was employed to store user profiles, session data, lesson progress, and engagement logs.
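As a minimal sketch of how one engagement-log entry might be shaped before being written to Cloud Firestore, the Python dataclass below validates a record and flattens it into a plain dict. The field names (`student_id`, `lesson_id`, `event`, `task_id`) and the allowed event types are hypothetical: the paper does not publish APLE’s schema, and APLE’s backend code was not necessarily written in Python.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical event vocabulary; APLE's actual event types are not documented.
ALLOWED_EVENTS = {"ai_query", "task_started", "task_submitted", "lesson_opened"}


@dataclass
class EngagementLog:
    """One engagement-log entry (illustrative schema, not APLE's actual one)."""

    student_id: str
    lesson_id: str
    event: str
    task_id: Optional[str] = None
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        # Reject unknown event names so later analytics queries stay consistent.
        if self.event not in ALLOWED_EVENTS:
            raise ValueError(f"unknown event type: {self.event!r}")

    def to_document(self) -> dict:
        # Firestore stores timezone-aware datetimes as Timestamp fields,
        # so the record can be written as a flat dict.
        return asdict(self)
```

With the official Firestore Python client, such a dict could be passed to `CollectionReference.add()`; that call is left out here so the sketch runs offline.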

3.2.1.3 AI integration

To enable intelligent interaction, OpenAI’s API was integrated as the conversational agent responsible for real-time problem-solving assistance. This feature allowed students to query ChatGPT for explanations, coding guidance, and debugging support, simulating an on-demand virtual tutor.
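One plausible way to keep the assistant in a tutoring role, rather than a solution generator, is a fixed system prompt sent with every student query. The Python sketch below only assembles a Chat Completions request body; the prompt wording, the `build_tutor_request` helper, and the default model name are assumptions for illustration, not APLE’s actual configuration.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical system prompt; the study does not publish APLE's prompt.
SYSTEM_PROMPT = (
    "You are a tutor for an undergraduate computer robotics programming course. "
    "Explain concepts, give hints, and help locate bugs, but do not write the "
    "complete solution to the student's assigned problem."
)


def build_tutor_request(
    question: str,
    lesson_title: str,
    history: Optional[Iterable[Tuple[str, str]]] = None,
    model: str = "gpt-4o-mini",  # placeholder model name, not from the paper
) -> dict:
    """Assemble a Chat Completions request body for one student query."""
    messages = [
        {"role": "system", "content": f"{SYSTEM_PROMPT} Current lesson: {lesson_title}."}
    ]
    # Earlier turns let the assistant keep context within a session.
    for role, content in history or []:
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role!r}")
        messages.append({"role": role, "content": content})
    messages.append({"role": "user", "content": question})
    return {"model": model, "messages": messages}
```

With the official `openai` Python package, the returned dict could be sent as `client.chat.completions.create(**request)`, where `client = OpenAI()`.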

3.2.2 Core functionalities

3.2.2.1 Interactive learning pane (left section)

The left pane of the interface (see Figure 1) serves as the AI interaction window, where students input queries and receive context-sensitive responses. This component supports code generation, concept clarification, and personalized hints for robotics programming challenges, promoting active engagement.

3.2.2.2 Lesson navigation panel (right section)

The right pane contains a structured lesson navigation module, organized into weekly topics such as Introduction to Computer Robotics and Compiler Construction I. Each lesson includes instructional materials, practice exercises, and reflective questions aligned with the course objectives.

3.2.2.3 Problem-based tasks with AI support

Students engage in open-ended robotics programming problems, leveraging the AI assistant for guidance without receiving direct solutions, thereby maintaining the constructivist nature of PBL. This design encourages critical thinking while minimizing cognitive overload.

3.2.2.4 Performance analytics and adaptive feedback

Engagement data (e.g., frequency of interaction, task completion) and assessment scores are logged in Firestore. These metrics enable instructors to track student progress and inform adaptive recommendations for further learning.
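The two engagement metrics named above (frequency of interaction and task completion) could be aggregated per student roughly as follows. This is a sketch assuming a hypothetical log schema in which each entry is a dict with `student_id` and `event` keys, plus `task_id` for submissions; the paper does not describe how APLE computed its analytics.

```python
from collections import defaultdict
from typing import Dict, Iterable


def engagement_summary(logs: Iterable[dict], tasks_assigned: int) -> Dict[str, dict]:
    """Per-student AI-query counts and task-completion rates.

    Each log entry is assumed to be a dict with "student_id" and "event"
    keys, plus "task_id" for "task_submitted" events (hypothetical schema).
    """
    if tasks_assigned <= 0:
        raise ValueError("tasks_assigned must be positive")
    queries: Dict[str, int] = defaultdict(int)
    completed: Dict[str, set] = defaultdict(set)
    for entry in logs:
        sid = entry["student_id"]
        if entry["event"] == "ai_query":
            queries[sid] += 1
        elif entry["event"] == "task_submitted":
            # A set ignores resubmissions of the same task.
            completed[sid].add(entry["task_id"])
    students = sorted(set(queries) | set(completed))
    return {
        sid: {
            "ai_queries": queries[sid],
            "completion_rate": len(completed[sid]) / tasks_assigned,
        }
        for sid in students
    }
```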

3.2.3 Design rationale

The system design aligns with Self-Determination Theory (Deci and Ryan, 1987) and Engagement Theory (Schneiderman, 2000) by promoting autonomy (through self-directed problem-solving), competence (via AI-enabled feedback), and relatedness (through collaborative PBL structures). By integrating AI into PBL, APLE addresses limitations of traditional lecture-based instruction—such as lack of immediate feedback and limited personalization—identified in prior studies (Krishnamoorthy and Kapila, 2016; Omeh et al., 2025). While the current implementation is desktop-based, future iterations will prioritize mobile deployment to increase accessibility, particularly in resource-limited environments.

3.3 Measures

To ensure accurate measurement of the key constructs (engagement, intrinsic motivation, and academic achievement), we employed adapted, validated, and reliability-tested instruments. Students’ engagement in computer robotics programming was assessed using a 20-item engagement questionnaire adapted from the University Student Report (National Survey of Student Engagement, 2001) and tailored to robotics programming within PBL environments. The instrument measured behavioral, emotional, and cognitive engagement through items related to persistence, collaboration, and active learning tasks. Responses were collected on a five-point Likert scale: 1 = Never, 2 = Rarely, 3 = Sometimes, 4 = Often, and 5 = Very Often. A sample item is: “How often do you collaborate with peers to solve robotics programming challenges?” This adaptation ensured that the items captured engagement with authentic problem-solving experiences.

Academic achievement was measured using the Computer Robotics Programming Achievement Test (CRPAT), which consisted of 50 multiple-choice questions covering fundamental and applied robotics concepts. Each question had four alternatives (A–D), with one correct answer scored as two points, giving a maximum possible score of 100 marks. The test was constructed following Bloom’s taxonomy, addressing knowledge, comprehension, and application skills. Sample items include: “What is the primary purpose of a robot’s control system in robotics programming?” and “How can a robot use ultrasonic sensors to detect obstacles and adjust its path accordingly?” The CRPAT was reviewed by three experts in computer science education to ensure content validity. In addition to the test, continuous assessment tasks were evaluated using a 30-point rubric derived from a laboratory manual that required students to complete real-world robotics programming exercises.
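The CRPAT scoring rule stated above (50 four-option items, two marks per correct answer, maximum 100) can be sketched as follows. The function name and the convention that a blank answer is `None` and scores zero are assumptions; no penalty for wrong answers is applied because none is mentioned, and the separate 30-point continuous-assessment rubric is not modelled.

```python
from typing import Optional, Sequence

N_QUESTIONS = 50      # CRPAT length reported in the paper
MARKS_PER_ITEM = 2    # 50 x 2 = 100 maximum
OPTIONS = frozenset("ABCD")


def score_crpat(answers: Sequence[Optional[str]], key: Sequence[str]) -> int:
    """Score one CRPAT attempt; unanswered items (None) earn zero marks."""
    if len(key) != N_QUESTIONS or len(answers) != N_QUESTIONS:
        raise ValueError(f"expected {N_QUESTIONS} answers and key entries")
    score = 0
    for given, correct in zip(answers, key):
        if given is not None and given not in OPTIONS:
            raise ValueError(f"invalid option: {given!r}")
        if given == correct:
            score += MARKS_PER_ITEM
    return score
```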

Intrinsic motivation was evaluated using a nine-item scale adapted from Pintrich et al. (1993), contextualized to reflect robotics programming activities. The instrument assessed students’ interest, enjoyment, and perceived competence in problem-solving within the AI-supported and traditional PBL environments. Responses were rated on a five-point Likert scale from 1 = Strongly Disagree to 5 = Strongly Agree. Sample items include: “I experience pleasure when I discover new things in robotics programming” and “I often feel excited when exploring interesting robotics topics.” This scale provided insight into learners’ internal drive to engage in robotics programming tasks.
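Both questionnaires use five-point response scales (20 engagement items and 9 intrinsic-motivation items). The paper does not state whether scale scores are item means or sums, so the sketch below assumes the mean item score and no reverse-coded items; both choices are assumptions.

```python
from statistics import mean
from typing import Sequence

# Scale lengths as reported for the two questionnaires.
ENGAGEMENT_ITEMS = 20
MOTIVATION_ITEMS = 9


def likert_scale_score(
    responses: Sequence[int], n_items: int, low: int = 1, high: int = 5
) -> float:
    """Mean item score for one respondent (assumes no reverse-coded items)."""
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    for r in responses:
        # Every response must lie on the 1-5 anchor range.
        if not low <= r <= high:
            raise ValueError(f"response {r} outside {low}-{high}")
    return float(mean(responses))
```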

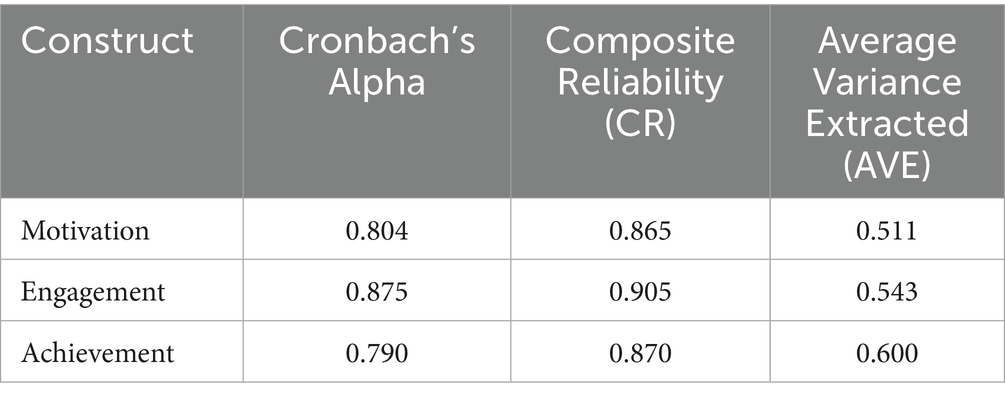

To establish validity and reliability, all instruments were subjected to expert review and pilot testing. Reliability analysis using Cronbach’s alpha indicated high internal consistency, with coefficients of 0.875 for engagement, 0.804 for intrinsic motivation, and 0.790 for the CRPAT. Composite reliability values exceeded 0.70, and Average Variance Extracted (AVE) values were above 0.50 for all constructs, confirming convergent validity. Discriminant validity was also verified using the Fornell-Larcker criterion, ensuring that each construct was distinct.
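The reliability and validity indices reported above follow standard formulas: Cronbach’s α = k/(k − 1) · (1 − Σσᵢ² / σ²_total); composite reliability CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)); AVE = Σλ² / k; and the Fornell–Larcker criterion requires √AVE of each construct to exceed its correlations with other constructs. The Python sketch below implements these textbook definitions, assuming standardized loadings and uncorrelated errors for CR; it is not the authors’ analysis code.

```python
from math import sqrt
from statistics import variance
from typing import Dict, FrozenSet, List, Sequence


def cronbach_alpha(items: List[Sequence[float]]) -> float:
    """items: one column of scores per item, same respondents in the same order."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent totals
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR from standardized loadings, assuming uncorrelated error terms."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return s ** 2 / (s ** 2 + error)


def average_variance_extracted(loadings: Sequence[float]) -> float:
    return sum(l ** 2 for l in loadings) / len(loadings)


def fornell_larcker_ok(ave: Dict[str, float], corr: Dict[FrozenSet[str], float]) -> bool:
    """True if sqrt(AVE) of both constructs exceeds every inter-construct |r|."""
    for pair, r in corr.items():
        a, b = tuple(pair)
        if abs(r) >= min(sqrt(ave[a]), sqrt(ave[b])):
            return False
    return True
```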

3.4 Data collection procedure

We implemented a structured and phased approach to collect data for this study, ensuring systematic execution from pre-intervention through post-intervention.

Phase 1: Pre-Test Administration (Week 1)

At the beginning of the semester, we administered a baseline assessment to both groups using Google Forms. This included:

Engagement Scale (20 items, 5-point Likert scale)

Intrinsic Motivation Scale (9 items, 5-point Likert scale)

Academic Achievement Test (50 multiple-choice questions, 2 marks each)

Demographic Section, which captured gender, age group, prior programming experience, and access to digital devices.

The purpose of the pre-test was twofold: (a) to establish baseline scores for comparison with post-test data, and (b) to use these as covariates in the subsequent statistical analysis, ensuring accurate measurement of treatment effects.

Phase 2: Intervention (Weeks 2–15)

The instructional intervention spanned 14 weeks, with two 2-h contact sessions per week (a total of 56 contact hours). Participants were divided into two groups:

Experimental Group: AI-Supported PBL

Students in this group experienced PBL enhanced with AI tools, integrated into every learning activity:

Weeks 2–4:

Orientation and AI Familiarization: Students were introduced to the AI learning platform and robotics programming basics.

Activity Example: Using an AI-driven coding simulator that provided real-time syntax and logic feedback for simple code exercises.

Weeks 5–8:

AI-Supported Group Problem-Solving: Students tackled intermediate-level robotics tasks with AI-based adaptive hints.

Activity Example: “Develop a line-following robot,” where the AI dashboard analyzed each team’s progress and suggested performance-improving strategies.

Weeks 9–12:

Complex Robotics Projects: Students worked on advanced, real-world problems, receiving AI-generated predictive analytics about potential errors and recommended practice exercises.

Weeks 13–15:

Capstone Project: Collaborative design and testing of robotic systems. AI-assisted peer assessment was introduced, where the system scored submissions based on preloaded rubrics and flagged anomalies for instructor review.

Control Group: Traditional PBL

The control group engaged in identical robotics programming problems and tasks without AI support. All feedback and guidance were provided by the instructor and peers, replicating conventional PBL practices.

Phase 3: Post-Test Administration (Week 16)

At the end of the intervention, we administered the same engagement and intrinsic motivation scales along with a parallel form of the achievement test via Google Forms. This allowed us to measure changes attributable to the instructional method while maintaining test validity and reliability.

3.5 Instructional parity

To address potential experimenter bias and ensure instructional consistency, we engaged the existing computer robotics programming lecturers at the University of Nigeria, Nsukka (UNN) and Nnamdi Azikiwe University, Awka (UNIZIK) to deliver the intervention (see Figure 2). This decision allowed for ecological validity while leveraging institutional structures. The geographic distance between the two universities minimized the risk of treatment diffusion. Two weeks before implementation, the principal investigator conducted a standardized two-day training for all instructors, focusing on research protocols, ethical compliance, and instructional delivery. All instructors received a unified, pre-developed lesson plan, facilitation scripts, and identical problem-based learning (PBL) materials. The control group used conventional PBL, while the experimental group integrated the AI-Supported PBL Environment (APLE), ensuring that the only instructional difference was the presence of the AI agent (ChatGPT). Instructors in the experimental group were trained on the APLE interface but instructed not to provide extra scaffolding beyond the standard plan. This approach reduced variability and supported fidelity. Observations and checklists were used to monitor implementation across sites. While this design ensured parity, we acknowledge that the absence of random assignment and the use of different instructors could introduce bias.

3.6 Data analysis procedure

We conducted a Multivariate Analysis of Covariance (MANCOVA) to assess the effect of the two instructional methods on students’ engagement, intrinsic motivation, and academic achievement, while controlling for prior programming experience. Prior to the main analysis, we confirmed that the necessary statistical assumptions, including normality, homogeneity of variance, equality of covariance matrices, and linearity, were met. Regarding instrument validity and reliability, Cronbach’s alpha and composite reliability values supported construct reliability, while Average Variance Extracted (AVE) values exceeded 0.50, confirming convergent validity. Discriminant validity was established using the Fornell–Larcker criterion, as the square root of each construct’s AVE was greater than its correlations with the other constructs. We then conducted the MANCOVA, reporting Wilks’ Lambda and partial eta squared (η²) to evaluate multivariate effects and effect sizes. Follow-up univariate tests examined the effect of instructional method on each dependent variable separately. Estimated marginal means and Bonferroni-adjusted pairwise comparisons were used to identify specific group differences.
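To make the MANCOVA logic concrete: with two groups and one covariate, Wilks’ Λ for instructional method compares the error sums-of-squares-and-cross-products (SSCP) matrix of the full model (intercept, group, covariate) with that of a reduced model that drops group, Λ = |E_full| / |E_reduced|. The dependency-free Python sketch below illustrates this; it is not the authors’ analysis script (which was presumably run in a statistics package) and omits the univariate follow-ups and Bonferroni comparisons.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def _det(a: Matrix) -> float:
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in a]
    n, det = len(m), 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[piv][col] == 0.0:
            return 0.0
        if piv != col:
            m[col], m[piv] = m[piv], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= f * m[col][c]
    return det


def _solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b for a small, full-rank normal-equations system."""
    n = len(a)
    m = [a[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _error_sscp(x: Matrix, dvs: Sequence[Sequence[float]]) -> Matrix:
    """Residual SSCP matrix after OLS-regressing every DV on design matrix x."""
    p = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(p)] for i in range(p)]
    res = []
    for y in dvs:
        xty = [sum(row[i] * yi for row, yi in zip(x, y)) for i in range(p)]
        beta = _solve(xtx, xty)
        res.append([yi - sum(b * v for b, v in zip(beta, row)) for row, yi in zip(x, y)])
    return [[sum(u * v for u, v in zip(ri, rj)) for rj in res] for ri in res]


def wilks_lambda_group(dvs, group, covariate) -> float:
    """Wilks' lambda for a two-level group effect, adjusting for one covariate."""
    full = [[1.0, float(g), float(c)] for g, c in zip(group, covariate)]
    reduced = [[1.0, float(c)] for c in covariate]
    return _det(_error_sscp(full, dvs)) / _det(_error_sscp(reduced, dvs))


def multivariate_f(lam: float, n: int, p: int) -> tuple:
    """Exact F for a one-df effect: df1 = p, df2 = (n - 3) - p + 1."""
    df2 = (n - 3) - p + 1
    return (1 - lam) / lam * df2 / p, p, df2
```

For the study’s design (n = 87, three outcomes), `multivariate_f` yields df = (3, 82), matching the degrees of freedom reported in the Results.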

4 Results

4.1 Preliminary analysis

Before proceeding with the main analysis, we conducted a series of preliminary checks to ensure that the assumptions underpinning MANCOVA were met. These diagnostic tests were essential for validating the integrity and robustness of our findings regarding the effect of instructional method on student outcomes. First, we assessed the normality of the dependent variables (engagement, intrinsic motivation, and academic achievement) by examining skewness and kurtosis values. Each variable approximated a normal distribution, with skewness and kurtosis values within the acceptable ±2 range (Byrne, 2010; Hair et al., 2010), indicating that the assumption of univariate normality was satisfied. We also examined the linearity of the relationships between the covariate (prior programming experience) and each dependent variable. Scatterplots showed reasonably linear relationships, indicating that the linearity assumption for MANCOVA was met. Next, we tested the homogeneity of variance–covariance matrices using Box’s M test. The test was non-significant (Table 1), Box’s M = 2.11, F(6, 51656.13) = 0.338, p = 0.917, indicating that the covariance matrices of the dependent variables were equal across instructional groups and that the multivariate homogeneity of variance–covariance assumption was upheld.
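The ±2 screening rule for skewness and kurtosis can be sketched as below. This uses the simple moment estimators (skewness m₃/m₂^1.5 and excess kurtosis m₄/m₂² − 3) without the small-sample corrections that packages such as SPSS apply, so values may differ slightly from the authors’ output; the helper names are ours.

```python
from statistics import mean
from typing import Sequence, Tuple


def skewness_kurtosis(x: Sequence[float]) -> Tuple[float, float]:
    """Moment-based skewness and excess kurtosis (no small-sample correction)."""
    n = len(x)
    m = mean(x)
    m2 = sum((v - m) ** 2 for v in x) / n
    if m2 == 0:
        raise ValueError("variance is zero")
    m3 = sum((v - m) ** 3 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def within_pm2(x: Sequence[float]) -> bool:
    """Screening rule used in the text: |skewness| and |kurtosis| both <= 2."""
    s, k = skewness_kurtosis(x)
    return abs(s) <= 2 and abs(k) <= 2
```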

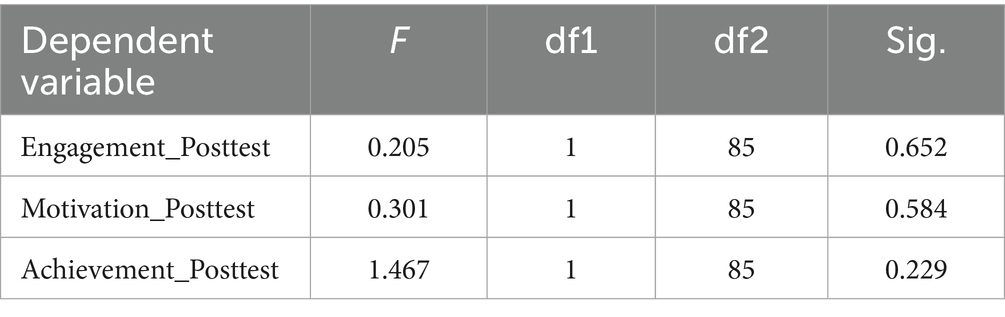

To determine whether the assumption of homogeneity of regression slopes held, we tested the interaction between instructional method and the covariate (prior programming experience) for each dependent variable. The non-significant interaction effects indicated that the regression slopes were homogeneous across groups, satisfying this critical assumption. We further examined Levene’s Test of Equality of Error Variances to assess the homoscedasticity of each dependent variable. As shown in Table 2, Levene’s test was non-significant (p > 0.05) for engagement, motivation, and achievement, confirming that the assumption of equal variances was not violated. Finally, we evaluated multicollinearity by inspecting the intercorrelations among the dependent variables. The correlations ranged from moderate to strong but did not exceed the problematic threshold of 0.85. Thus, the dependent variables shared some variance while remaining distinct constructs, justifying their inclusion in a multivariate analysis. Taken together, these preliminary tests provided a sound basis for proceeding with the MANCOVA, as all assumptions were sufficiently met.

4.2 Assessment of construct reliability and validity

We also evaluated the reliability and validity of our measurement instruments for engagement, motivation, and achievement in the context of AI-supported problem-based learning. As shown in Table 3, all constructs demonstrated strong internal consistency, with Cronbach’s alpha values exceeding the recommended threshold of 0.70 (Hair et al., 2010). Composite reliability (CR) values, ranging from 0.865 to 0.905, supported this conclusion, confirming that the items consistently captured their respective latent constructs. Furthermore, all constructs demonstrated adequate convergent validity: the average variance extracted (AVE) values exceeded the 0.50 benchmark, meaning that more than 50% of the variance in the observed indicators was explained by their underlying constructs (Fornell and Larcker, 1981).
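The three indices can be illustrated with short formulas. This sketch assumes raw item scores for Cronbach’s alpha and standardized factor loadings for CR and AVE, as in Fornell and Larcker (1981); the helper names and the example loadings are hypothetical and are not the values behind Table 3.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents x k_items) score matrix."""
    X = np.asarray(items, dtype=float)
    k = X.shape[1]
    sum_item_var = X.var(axis=0, ddof=1).sum()
    total_var = X.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - sum_item_var / total_var)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    lam = np.asarray(loadings, dtype=float)
    explained = lam.sum() ** 2
    error = np.sum(1 - lam**2)  # error variance of each standardized indicator
    return explained / (explained + error)


def average_variance_extracted(loadings):
    """AVE = mean squared standardized loading; > 0.50 suggests convergent validity."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam**2))


if __name__ == "__main__":
    hypothetical_loadings = [0.78, 0.82, 0.75, 0.80]  # illustrative, not from Table 3
    print(composite_reliability(hypothetical_loadings))
    print(average_variance_extracted(hypothetical_loadings))
```

For four indicators that each load 0.80, for example, AVE = 0.64 and CR = 10.24 / 11.68 ≈ 0.877, so both benchmarks would be met.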

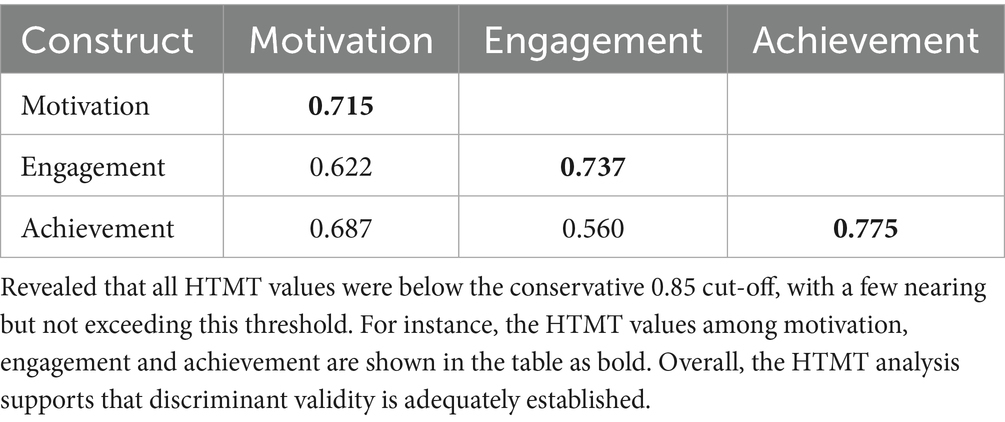

Discriminant validity was assessed using the Fornell–Larcker criterion. Table 4 shows that the square root of the AVE for each construct was greater than its correlations with the other constructs, indicating that the constructs were empirically distinct and not overlapping in meaning. Overall, these results provide strong evidence for the psychometric adequacy of our instruments, justifying their use in the MANCOVA and supporting the credibility of our findings on the effectiveness of AI-supported PBL in enhancing students’ engagement, motivation, and achievement.
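The Fornell–Larcker comparison amounts to checking each construct's row of the correlation matrix against the square root of its AVE. A minimal sketch, assuming the AVEs and the inter-construct correlation matrix are already available; the helper name and the example numbers are hypothetical, not the values in Table 4.

```python
import numpy as np


def fornell_larcker_ok(ave, corr):
    """True if sqrt(AVE) of every construct exceeds its absolute correlation
    with each of the other constructs (Fornell-Larcker criterion)."""
    root_ave = np.sqrt(np.asarray(ave, dtype=float))
    corr = np.asarray(corr, dtype=float)
    for i in range(len(root_ave)):
        others = np.abs(np.delete(corr[i], i))  # drop the diagonal entry
        if np.any(others >= root_ave[i]):
            return False
    return True


if __name__ == "__main__":
    ave = [0.64, 0.60, 0.70]  # hypothetical AVEs for three constructs
    corr = [[1.0, 0.50, 0.40],
            [0.50, 1.0, 0.45],
            [0.40, 0.45, 1.0]]
    print(fornell_larcker_ok(ave, corr))
```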

4.3 Multivariate analysis

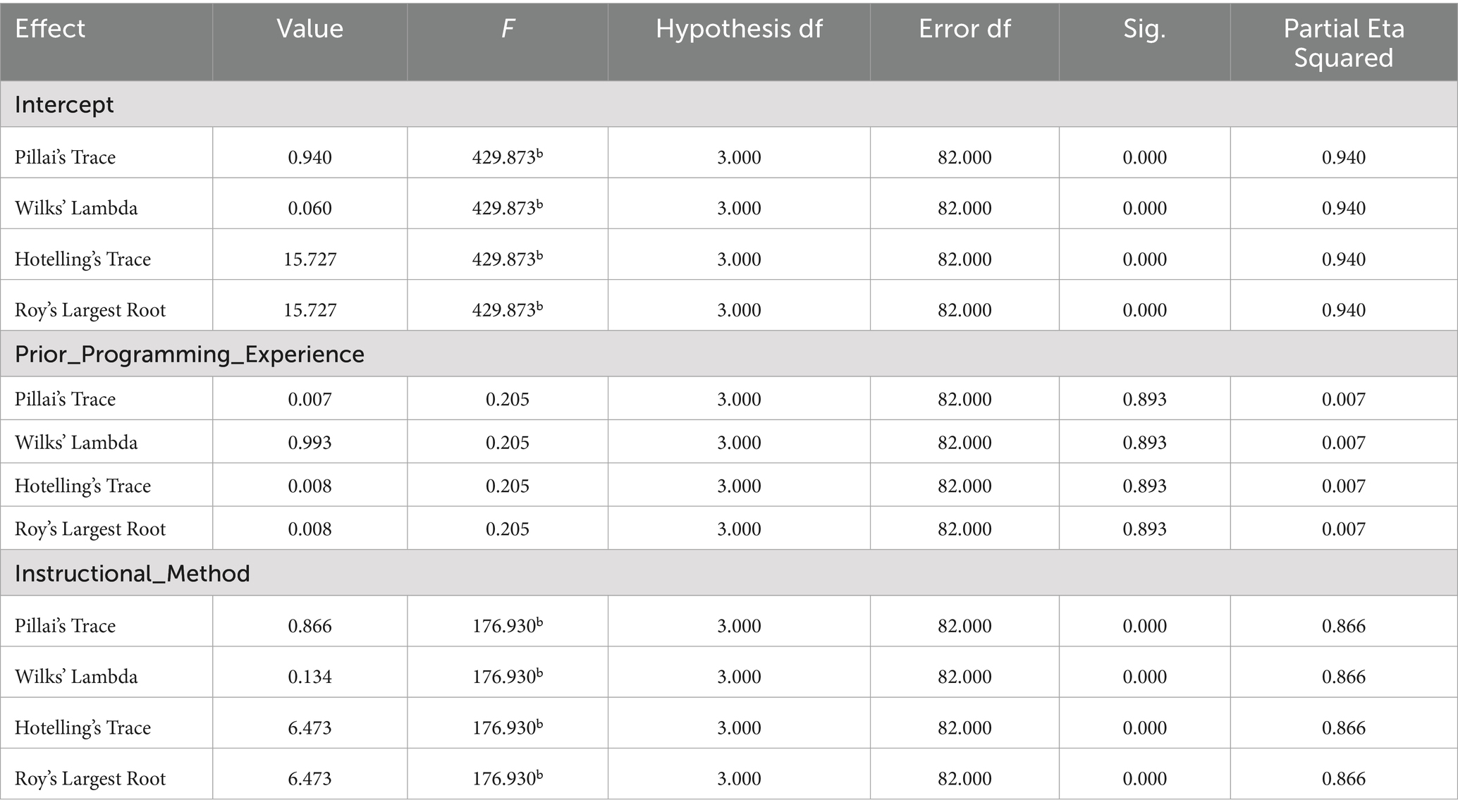

To address our research hypotheses, we conducted a one-way Multivariate Analysis of Covariance (MANCOVA), which allowed us to assess the combined and individual effects of instructional method on the three outcome variables while adjusting for the covariate. The multivariate results revealed a statistically significant effect of instructional method on the combined dependent variables. As shown in Table 5, Wilks’ Lambda was 0.134, F(3, 82) = 176.93, p < 0.001, with a partial eta squared (η2) of 0.866. In other words, after adjusting for prior programming experience, approximately 86.6% of the multivariate variance in the combination of engagement, intrinsic motivation, and achievement was associated with instructional method. On the basis of this significant test, we rejected the main null hypothesis and concluded that the mode of instruction had a significant joint effect on students’ learning outcomes; the large effect size further indicates that this effect was substantial. By contrast, prior programming experience did not yield a statistically significant multivariate effect, Wilks’ Lambda = 0.993, F(3, 82) = 0.205, p = 0.893, η2 = 0.007 (Table 5). This suggests that students’ previous exposure to programming did not substantially affect the combined outcome measures, reinforcing the dominant role of instructional method.
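For an effect with a single hypothesis degree of freedom (two groups, or one covariate), Wilks’ Λ converts to an exact F with df1 = p and df2 = df_error − p + 1, and partial η² = 1 − Λ. The sketch below (hypothetical helper name, not the authors' code) assumes N = 87, two groups, one covariate, and three dependent variables, giving df_error = 84 (as in the univariate tests) and df2 = 82. Recomputing from the rounded Λ = 0.134 gives F ≈ 176.6 rather than 176.93, a gap consistent with Λ having been rounded to three decimals.

```python
from scipy import stats


def wilks_one_df_effect(wilks, n_total, n_groups, n_covariates, n_dvs):
    """Exact F and partial eta squared for Wilks' lambda when the effect has 1 df.

    With a single hypothesis df, s = 1, so F = ((1 - lambda) / lambda) * (df2 / df1)
    is exact and partial eta^2 = 1 - lambda.
    """
    df_error = n_total - n_groups - n_covariates  # univariate error df
    df1 = n_dvs
    df2 = df_error - n_dvs + 1
    F = (1 - wilks) / wilks * df2 / df1
    return F, df1, df2, float(stats.f.sf(F, df1, df2)), 1 - wilks


if __name__ == "__main__":
    # Lambda values as reported in Table 5 (rounded to three decimals there).
    for label, lam in [("instructional method", 0.134), ("prior experience", 0.993)]:
        F, df1, df2, p, eta = wilks_one_df_effect(lam, 87, 2, 1, 3)
        print(f"{label}: F({df1}, {df2}) = {F:.2f}, p = {p:.3g}, partial eta^2 = {eta:.3f}")
```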

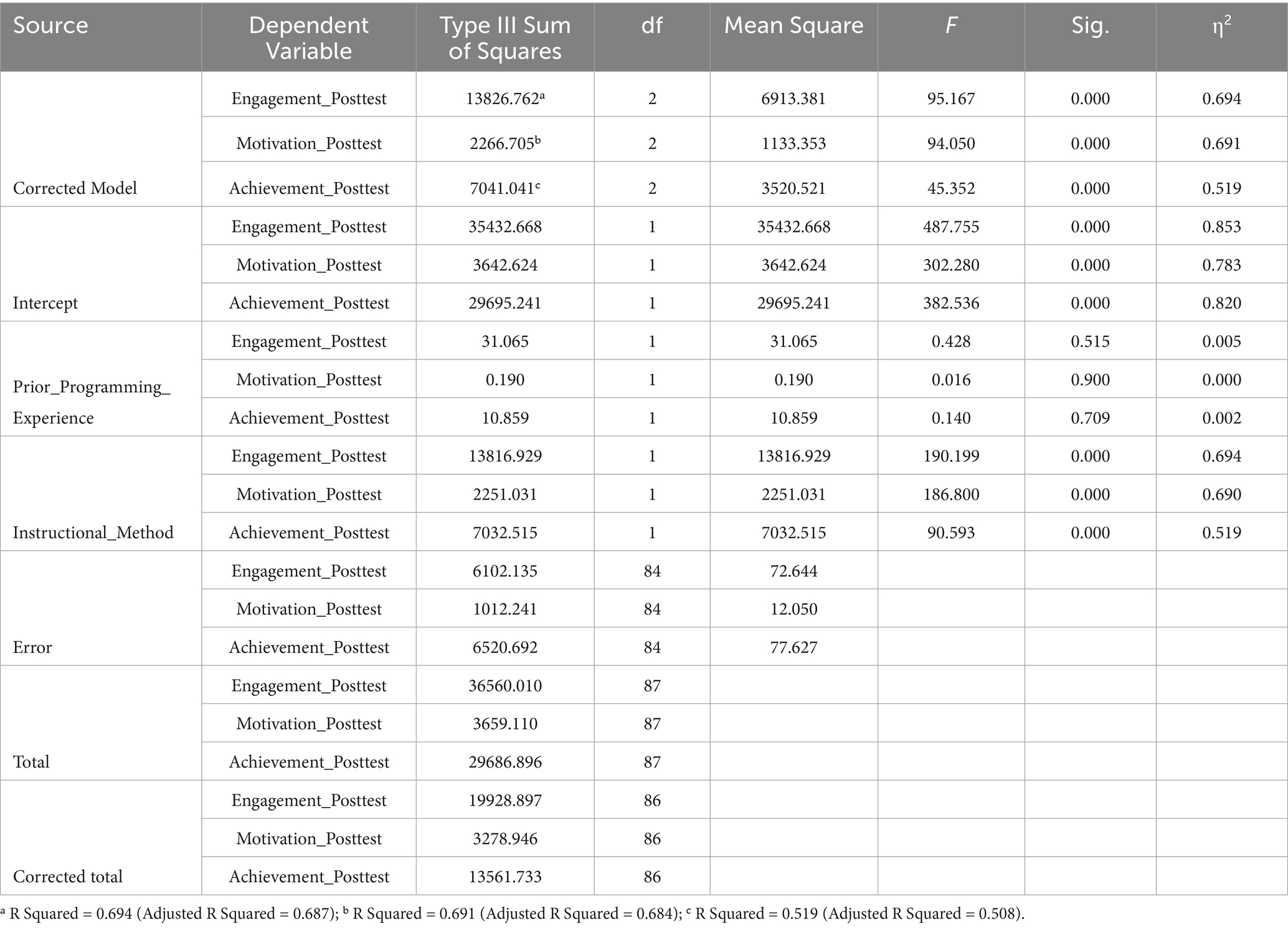

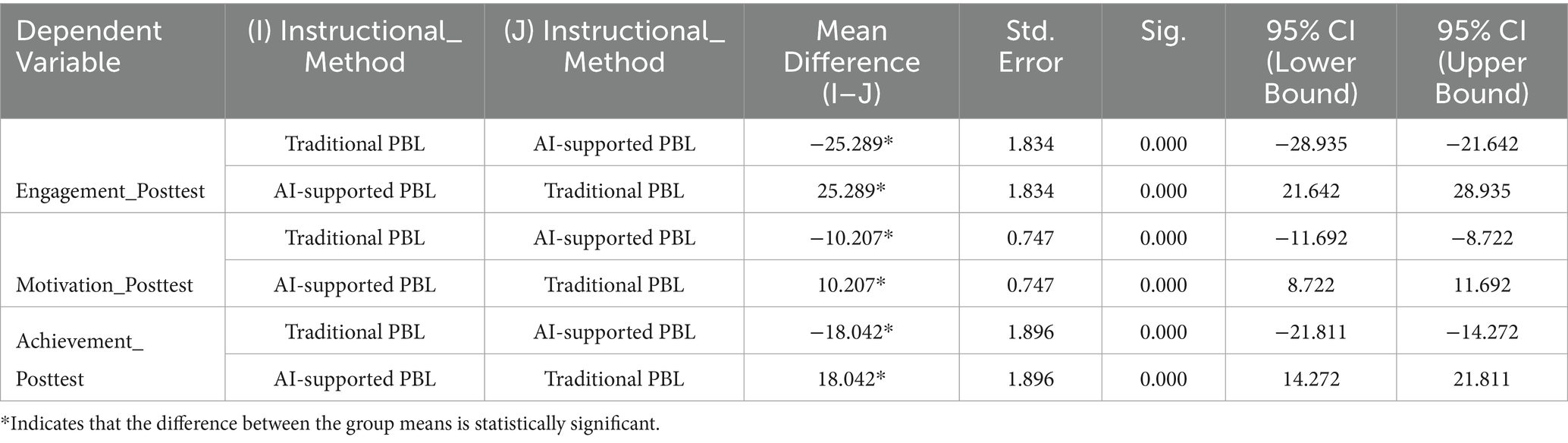

Turning to the tests of between-subjects effects, we found that instructional method had a statistically significant effect on each dependent variable. For engagement, Table 6 shows F(1, 84) = 190.20, p < 0.001, η2 = 0.694, indicating that students in the AI-supported PBL group were significantly more engaged than those taught through traditional PBL. For intrinsic motivation, the effect was also significant, F(1, 84) = 186.80, p < 0.001, η2 = 0.690, confirming that students exposed to the AI-supported instructional method reported notably higher levels of motivation. For academic achievement, a significant effect was observed as well, F(1, 84) = 90.59, p < 0.001, η2 = 0.519, highlighting the advantage of AI-supported PBL in enhancing students’ academic performance. These results support our hypotheses (H1–H3) and demonstrate that instructional method had a strong and consistent effect across all three learning outcomes.
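Partial η² can be recovered from an F statistic and its degrees of freedom, because F·df_effect / df_error equals SS_effect / SS_error. The short check below (hypothetical helper name) applies this identity to the three univariate F values reported above and reproduces the reported 0.694, 0.690, and 0.519.

```python
def partial_eta_squared(F, df_effect, df_error):
    """Partial eta^2 = SS_effect / (SS_effect + SS_error), written in terms of F."""
    return F * df_effect / (F * df_effect + df_error)


if __name__ == "__main__":
    reported = {"engagement": 190.20, "intrinsic motivation": 186.80, "achievement": 90.59}
    for outcome, F in reported.items():
        print(f"{outcome}: partial eta^2 = {partial_eta_squared(F, 1, 84):.3f}")
```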

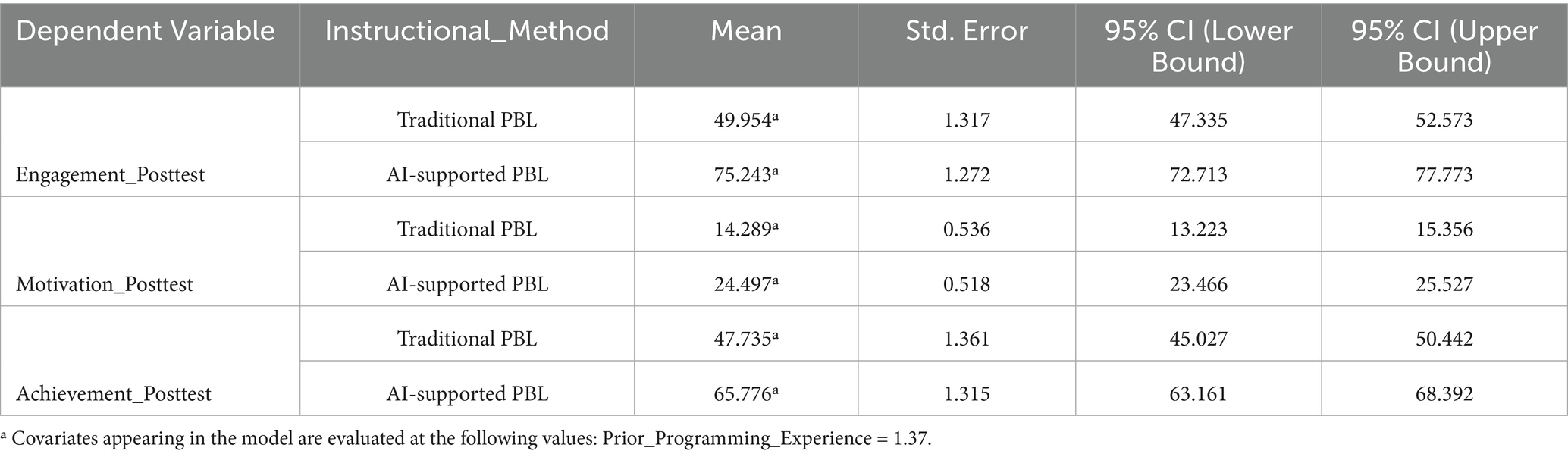

Consistent with these results, the estimated marginal means showed that students in the AI-supported PBL condition scored higher on all outcomes. Specifically, they scored an average of 75.24 on engagement compared with 49.95 in the traditional PBL group, 24.50 on intrinsic motivation compared with 14.29, and 65.78 on academic achievement compared with 47.74 (see Table 7). These means, adjusted for prior programming experience, affirm the positive effect of integrating AI tools into PBL pedagogy.
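With a single covariate, each estimated marginal mean is the group mean shifted along the pooled within-group regression line to the grand mean of the covariate. The sketch below shows that adjustment for one dependent variable; the helper name and the toy data are hypothetical and are not the study data.

```python
import numpy as np


def adjusted_means(y, group, covariate):
    """ANCOVA-adjusted group means evaluated at the grand mean of the covariate.

    adj_mean_j = mean(y_j) - b_w * (mean(x_j) - mean(x)), where b_w is the pooled
    within-group regression slope of y on the covariate x.
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(covariate, dtype=float)
    g = np.asarray(group)
    labels = np.unique(g)
    sxy = sxx = 0.0
    for label in labels:
        m = g == label
        dx = x[m] - x[m].mean()
        sxy += float(dx @ (y[m] - y[m].mean()))
        sxx += float(dx @ dx)
    b_w = sxy / sxx  # pooled within-group slope
    x_grand = x.mean()
    return {
        str(label): float(y[g == label].mean() - b_w * (x[g == label].mean() - x_grand))
        for label in labels
    }


if __name__ == "__main__":
    # Toy data: group B has higher covariate values, so its advantage shrinks after adjustment.
    print(adjusted_means([2, 4, 6, 7, 9, 11], ["A", "A", "A", "B", "B", "B"], [1, 2, 3, 3, 4, 5]))
```

In the toy data the raw means differ by 5, but because group B also starts higher on the covariate, the adjusted means (6 and 7) differ by only 1.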

Lastly, the pairwise comparisons substantiated these group differences, with statistically significant adjusted mean differences of 25.29 for engagement, 10.21 for motivation, and 18.04 for achievement, all p < 0.001 (see Table 8). Because there were only two groups, these comparisons restate the univariate effects as adjusted mean differences. The confidence intervals for these differences did not include zero, consistent with the significance tests.
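With two groups and one numerator degree of freedom, F = t², so the standard error implied by a reported mean difference and F is |difference| / √F. The sketch below (hypothetical helper name) uses this to back-calculate approximate confidence intervals from the rounded values reported above, with 84 error degrees of freedom and an assumed 95% level. These are illustrative approximations, not the intervals in Table 8, and may differ slightly from them.

```python
import math

from scipy import stats


def ci_from_f(mean_diff, F, df_error, level=0.95):
    """Approximate CI for a two-group adjusted mean difference.

    With one numerator df, F = t**2, so the standard error implied by the
    reported statistics is |mean_diff| / sqrt(F).
    """
    se = abs(mean_diff) / math.sqrt(F)
    t_crit = stats.t.ppf(1 - (1 - level) / 2, df_error)
    return mean_diff - t_crit * se, mean_diff + t_crit * se


if __name__ == "__main__":
    for outcome, diff, F in [("engagement", 25.29, 190.20),
                             ("motivation", 10.21, 186.80),
                             ("achievement", 18.04, 90.59)]:
        low, high = ci_from_f(diff, F, 84)
        print(f"{outcome}: {diff} [{low:.2f}, {high:.2f}]")
```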

5 Discussion and implication

The purpose of this study was to evaluate the impact of AI-supported Problem-Based Learning (PBL) on students’ engagement, intrinsic motivation, and academic achievement in Computer Robotics Programming (CRP) compared with traditional PBL, while controlling for prior programming experience. The results showed significant multivariate and univariate effects favoring AI-supported PBL for all three outcomes. Students exposed to AI-supported PBL reported significantly higher engagement than those in traditional PBL environments. This is consistent with Schneiderman’s (2000) engagement theory, which holds that meaningful interaction and adaptive support enhance active learning. The AI integration offered personalized feedback, real-time hints, and analytics that likely sustained students’ persistence in task completion and fostered collaborative interaction, consistent with Kurniawan et al. (2025) and Ahn and Jeong (2025), who observed improved engagement when technology enhanced problem-based learning contexts. However, some scholars argue that heavy reliance on AI tools may diminish learner autonomy and create dependency, potentially reducing authentic engagement (Mehdaoui, 2024). Students might focus on following AI prompts rather than engaging in deeper inquiry or collaborative dialogue. This concern is most pertinent where AI replaces human facilitation rather than complementing it. In this study, AI served as an enhancement rather than a substitute for instructor guidance, offering adaptive scaffolding without restricting learner autonomy. Moreover, the engagement gains observed here align with Woodrow et al. (2024), whose findings suggest that adaptive feedback closes engagement gaps for novices, allowing all students to contribute meaningfully in collaborative PBL environments.

Furthermore, students in AI-supported PBL reported significantly higher intrinsic motivation than those in traditional PBL, consistent with Self-Determination Theory (Deci and Ryan, 1987), which emphasizes that fulfilling learners’ needs for competence and autonomy fosters intrinsic motivation. AI-driven feedback systems and predictive analytics likely gave students a sense of progress and mastery, reducing frustration and promoting self-directed learning. These findings echo Lin and Muenks (2025) and Tozzo et al. (2025), who reported increased motivation through adaptive technologies in STEM settings. Critics argue that excessive AI support can undermine intrinsic motivation by reducing students’ sense of autonomy, as learners may attribute success to the system rather than to their own efforts (Kotera et al., 2023). In addition, students accustomed to real-time feedback may develop dependency, which could weaken motivation in contexts without AI support. While such concerns are plausible, our intervention design balanced AI assistance with learner-driven problem solving and peer collaboration, which was intended to preserve autonomy and encourage active participation. This aligns with Yilmaz and Yilmaz (2023), who argued that AI integration enhances motivation when combined with student-centered pedagogies such as PBL rather than implemented in isolation.

Our analysis also revealed a significant advantage for AI-supported PBL over traditional PBL in improving academic achievement. This is consistent with Chen and Yang (2019), who reported a 12% higher post-test performance among students receiving adaptive feedback, and with Omeh et al. (2025), who highlighted AI’s role in facilitating knowledge transfer and complex problem solving in robotics programming. Personalized scaffolding and automated error detection likely reduced cognitive overload, enabling students to master challenging CRP concepts. However, some studies suggest that AI does not uniformly improve academic achievement, especially in resource-constrained environments where infrastructure limitations and unreliable connectivity impede technology’s effectiveness (Adewale et al., 2024). Additionally, AI-driven systems that provide overly prescriptive guidance may discourage independent problem solving, limiting long-term knowledge retention. This study sought to mitigate these concerns by blending AI tools with instructor facilitation and peer collaboration, so that AI functioned as a support mechanism rather than a prescriptive tutor. Moreover, the performance gains observed in our context suggest that AI-supported PBL can help overcome systemic constraints when implemented strategically, echoing the recommendations of Pillai and Sivathanu (2020) and Torres and Inga (2025) on leveraging AI to enhance structured STEM instruction.

Interestingly, prior programming experience did not significantly affect engagement, motivation, or achievement once instructional method was accounted for. This finding is consistent with claims by MacNeil et al. (2023) and Woodrow et al. (2024) that adaptive technologies can neutralize disparities in prior knowledge through personalized feedback and dynamic scaffolding. One could argue that in highly technical subjects such as CRP, prior experience inevitably shapes task navigation and conceptual understanding, rendering instructional interventions secondary to baseline knowledge (Harding et al., 2024). Although prior knowledge influences initial confidence, our findings suggest that AI-supported PBL may offset these differences by tailoring instructional complexity and offering real-time performance analytics, enabling novice learners to engage effectively and achieve outcomes comparable to those of their more experienced peers.

These findings underscore the potentially transformative role of AI-enhanced pedagogical strategies in promoting equitable and engaging STEM education in developing contexts such as Nigeria. Educators should prioritize integrating AI tools within structured frameworks such as PBL, while policymakers should invest in digital infrastructure and instructor training to ensure scalability.

6 Conclusion and recommendation

This exploratory study provides compelling preliminary evidence that integrating artificial intelligence into Problem-Based Learning (PBL) environments can enhance student engagement, intrinsic motivation, and academic achievement in robotics programming courses. Students in the AI-supported PBL environment showed statistically and practically significant improvements in learning outcomes compared with those in traditional PBL. However, we interpret these findings within the context of a limited, non-random sample in which each instructional condition was drawn from a single institution. Rather than supporting broad generalizations, this study offers a strong proof of concept that AI-driven instructional support can positively affect learning in specific STEM education contexts. It showcases the potential of AI to support personalized, interactive, and data-informed learning experiences that promote cognitive and affective gains. Importantly, it also highlights how AI tools such as ChatGPT can assist students by providing real-time feedback and programming support and by facilitating inquiry-based exploration.

6.1 Limitations and future research

As researchers committed to exploring the transformative potential of AI-supported pedagogies, we recognize several limitations of the current study that provide fertile ground for future inquiry. First, although we employed validated self-report instruments to assess student engagement and motivation, we acknowledge the inherent limitations of perceptual data. Learners may have unintentionally misrepresented their actual behaviors because of social desirability or limited self-awareness. In future iterations of this work, we plan to incorporate behavioral logging directly into the APLE system to track user interactions, time on task, and problem-solving paths, thereby capturing a more nuanced and objective picture of engagement. Second, our quasi-experimental design, while pragmatic given institutional constraints, limits our ability to draw definitive causal conclusions. Despite controlling for prior programming experience, we cannot entirely rule out the influence of pre-existing group differences, including differences between the institutions at which the two conditions were delivered. As we continue this line of research, we are preparing to conduct a randomized controlled trial (RCT) within the same instructional context to strengthen internal validity and provide more robust evidence of AI’s causal impact on learning.

Third, the relatively small sample size, reflecting the limited number of computing students at the participating institutions, restricts the generalizability of our findings. We therefore view this study as a foundational proof-of-concept effort. Future research will involve larger, more diverse samples across multiple universities and STEM disciplines to enhance the external validity of our conclusions. Fourth, we acknowledge a technological limitation: the AI-supported PBL environment was deployed exclusively as a desktop application. This limited mobile accessibility may have constrained some students’ participation. To address this, we are currently developing a cross-platform version with mobile compatibility to ensure broader reach and usability across devices. Lastly, although the quantitative results were compelling, we did not capture students’ lived experiences. As researchers deeply interested in learner agency and affective outcomes, we believe the next critical step is a mixed-methods study. We intend to follow up with focus groups and interviews to understand how AI influenced students’ problem-solving strategies, confidence, and collaboration dynamics. These qualitative insights will enrich the explanatory power of our findings and inform future refinements of the APLE system.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Faculty of Vocational Technical Education Research Ethics Committee at the University of Nigeria, Nsukka (Ref UNN/PG/PhD/2020/95701 on 3/8/2024). We ensured voluntary participation by securing informed consent from all students. Participants were briefed on their right to withdraw at any point without consequence. All responses were anonymized, and the dataset was securely stored. We adhered strictly to ethical principles of autonomy, beneficence, and justice, ensuring that the research complied with both institutional and international ethical standards. The participants provided their written informed consent to participate in this study.

Author contributions

CO: Writing – original draft, Software, Conceptualization, Writing – review & editing. MA: Formal analysis, Visualization, Validation, Writing – review & editing, Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adewale, M. D., Azeta, A., Abayomi-Alli, A., and Sambo-Magaji, A. (2024). Impact of artificial intelligence adoption on students' academic performance in open and distance learning: a systematic literature review. Heliyon 10:e40025. doi: 10.1016/j.heliyon.2024.e40025

Ahn, S. H., and Jeong, H. W. (2025). Exploring nursing students' experiences with virtual patient-based health assessment simulation program: a qualitative study. Nurse Educ. Today 153:106826. doi: 10.1016/j.nedt.2025.106826

Anselme, P., and Hidi, S. E. (2024). Acquiring competence from both extrinsic and intrinsic rewards. Learn. Instr. 92:101939. doi: 10.1016/j.learninstruc.2024.101939

Bakır-Yalçın, E., and Usluel, Y. K. (2024). Investigating the antecedents of engagement in online learning: do achievement emotions matter? Educ. Inf. Technol. 29, 3759–3791. doi: 10.1007/s10639-023-11995-z

Bati, T. B., Gelderblom, H., and Van Biljon, J. (2014). A blended learning approach for teaching computer programming: design for large classes in sub-Saharan Africa. Comput. Sci. Educ. 24, 71–99. doi: 10.1080/08993408.2014.897850

Bowman, N. A., Jarratt, L., Culver, K. C., and Segre, A. M. (2019). How prior programming experience affects students' pair programming experiences and outcomes. In Proceedings of the 2019 ACM Conference on Innovation and Technology in Computer Science Education (170–175). Aberdeen: ACM.

Byrne, B. M. (2010). Structural equation modeling with AMOS: Basic concepts, applications, and programming. New York: Routledge.

Chavez-Valenzuela, P., Kappes, M., Sambuceti, C. E., and Diaz-Guio, D. A. (2025). Challenges in the implementation of inter-professional education programs with clinical simulation for health care students: a scoping review. Nurse Educ. Today 146:106548. doi: 10.1016/j.nedt.2024.106548

Chen, C. H., and Chung, H. Y. (2024). Fostering computational thinking and problem-solving in programming: integrating concept maps into robot block-based programming. J. Educ. Comput. Res. 62, 186–207. doi: 10.1177/07356331231205052

Chen, C. H., and Yang, Y. C. (2019). Revisiting the effects of project-based learning on students’ academic achievement: a meta-analysis investigating moderators. Educ. Res. Rev. 26, 71–81. doi: 10.1016/j.edurev.2018.11.001

Corral, J. R., Morgado-Estevez, A., Molina, D. C., Perez-Pena, F., Amaya Rodríguez, C. A., and Civit Balcells, A. A. (2016). Application of robot programming to the teaching of object-oriented computer languages. Int. J. Eng. Educ. 32, 1823–1832.

Deci, E. L., and Ryan, R. M. (1987). The support of autonomy and the control of behavior. J. Pers. Soc. Psychol. 53, 1024–1037. doi: 10.1037/0022-3514.53.6.1024

Eteng, I., Akpotuzor, S., Akinola, S. O., and Agbonlahor, I. (2022). A review on effective approach to teaching computer programming to undergraduates in developing countries. Sci. Afr. 16:e01240. doi: 10.1016/j.sciaf.2022.e01240

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50.

Gressmann, A., Weilemann, E., Meyer, D., and Bergande, B. (2019). Nao robot vs. lego mindstorms: the influence on the intrinsic motivation of computer science non-majors. In Proceedings of the 19th Koli Calling International Conference on Computing Education Research. The University of Adelaide, Australia

Hair, J., Black, W. C., Babin, B. J., and Anderson, R. E. (2010). Multivariate data analysis. Upper Saddle River, New Jersey: Pearson Educational International.

Harding, J. N., Wolpe, N., Brugger, S. P., Navarro, V., Teufel, C., and Fletcher, P. C. (2024). A new predictive coding model for a more comprehensive account of delusions. Lancet Psychiatry 11, 295–302. doi: 10.1016/S2215-0366(23)00411-X

Ito, T., Tanaka, M. S., Shin, M., and Miyazaki, K. (2021). The online PBL (project-based learning) education system using AI (artificial intelligence). In DS 110: Proceedings of the 23rd International Conference on Engineering and Product Design Education (E&PDE 2021), VIA Design, VIA University in Herning, Denmark.

Iyamuremye, A., Niyonzima, F. N., Mukiza, J., Twagilimana, I., Nyirahabimana, P., Nsengimana, T., et al. (2024). Utilization of artificial intelligence and machine learning in chemistry education: a critical review. Discov. Educ. 3:95. doi: 10.1007/s44217-024-00197-5

Jonassen, D. H., and Carr, C. S. (2020). Mindtools: Affording multiple knowledge representations for learning. In Computers as cognitive tools. 165–196. Routledge.

Kotera, Y., Taylor, E., Fido, D., Williams, D., and Tsuda-McCaie, F. (2023). Motivation of UK graduate students in education: self-compassion moderates pathway from extrinsic motivation to intrinsic motivation. Curr. Psychol. 42, 10163–10176. doi: 10.1007/s12144-021-02301-6

Krishnamoorthy, S. P., and Kapila, V. (2016). Using a visual programming environment and custom robots to learn c programming and k-12 stem concepts. In Proceedings of the 6th Annual Conference on Creativity and Fabrication in Education (41–48). Stanford, CA: ACM.

Kurniawan, D., Masitoh, S., Bachri, B. S., Kamila, V. Z., Subastian, E., and Wahyuningsih, T. (2025). Integrating AI in digital project-based blended learning to enhance critical thinking and problem-solving skills. Multidiscipl. Sci. J. 7:2025552. doi: 10.31893/multiscience.2025552

Leon, C., Lipuma, J., and Oviedo-Torres, X. (2025). Artificial intelligence in STEM education: a transdisciplinary framework for engagement and innovation. Front. Educ. 10:1619888. doi: 10.3389/feduc.2025.1619888

Lin, S., and Muenks, K. (2025). How students' perceptions of older siblings' mindsets relate to their math motivation, behavior, and emotion: a person-centered approach. Soc. Psychol. Educ. 28:65. doi: 10.1007/s11218-024-09968-2

MacNeil, S., Tran, A., Hellas, A., Kim, J., Sarsa, S., Denny, P., et al. (2023). Experiences from using code explanations generated by large language models in a web software development e-book. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education (931–937). Toronto, ON: ACM.

Marín, B., Frez, J., Cruz-Lemus, J., and Genero, M. (2018). An empirical investigation on the benefits of gamification in programming courses. ACM Trans. Comput. Educ. 19, 1–22. doi: 10.1145/3231709

Mehdaoui, A. (2024). Unveiling barriers and challenges of AI technology integration in education: assessing teachers’ perceptions, readiness and anticipated resistance. Fut. Educ. 4, 95–108. doi: 10.57125/FED.2024.12.25.06

National Survey of Student Engagement. (2001). The College Student Report (CRS). Bloomington: Indiana University Center for Postsecondary Research.

Nie, A., Chandak, Y., Suzara, M., Malik, A., Woodrow, J., Peng, M., et al. (2024). The GPT surprise: offering large language model chat in a massive coding class reduced engagement but increased adopters exam performances. Available online at: https://arxiv.org/abs/2407.09975 (Accessed April 24, 2024)

Omeh, C. B. (2025). Chatgogy instructional strategy. Open Access J. Educ. Lang. Stud. 3:555608. doi: 10.19080/OAJELS.2025.03.555608

Omeh, C. B., Olelewe, C. J., and Ohanu, I. B. (2025). Impact of artificial intelligence technology on students' computational and reflective thinking in a computer programming course. Comput. Appl. Eng. Educ. 33:e70052. doi: 10.1002/cae.70052

Orhan, A. (2025). Investigating the effectiveness of problem based learning on academic achievement in EFL classroom: a meta-analysis. Asia-Pac. Educ. Res. 34, 699–709. doi: 10.1007/s40299-024-00889-4

Pertiwi, R. W. L., Kulsum, L. U., and Hanifah, I. A. (2024). Evaluating the impact of artificial intelligence-based learning methods on students' motivation and academic achievement. Int. J. Post Axial 6, 49–58.

Pillai, R., and Sivathanu, B. (2020). Adoption of artificial intelligence (AI) for talent acquisition in IT/ITeS organizations. Benchmarking 27, 2599–2629. doi: 10.1108/BIJ-04-2020-0186

Pintrich, P. R., Smith, D. A., Garcia, T., and McKeachie, W. J. (1993). Reliability and predictive validity of the Motivated Strategies for Learning Questionnaire (MSLQ). Educ. Psychol. Meas. 53, 801–813.

Schneiderman, D. (2000). Investment rules and the new constitutionalism. Law Soc. Inq. 25, 757–787. doi: 10.1111/j.1747-4469.2000.tb00160.x

Srimadhaven, T., AV, C. J., Harshith, N., and Priyaadharshini, M. (2020). Learning analytics: virtual reality for programming course in higher education. Proced. Comput. Sci. 172, 433–437. doi: 10.1016/j.procs.2020.05.095

Strielkowski, W., Grebennikova, V., Lisovskiy, A., Rakhimova, G., and Vasileva, T. (2025). AI-driven adaptive learning for sustainable educational transformation. Sustain. Dev. 33, 1921–1947. doi: 10.1002/sd.3221

Thomas, A., and Bauer, A. S. (2020). Robotics and coding within integrated STEM coursework for elementary pre-service teachers. In Society for Information Technology & Teacher Education International Conference (pp. 1905–1912). North Carolina: Association for the Advancement of Computing in Education.

Torres, I., and Inga, E. (2025). Fostering STEM skills through programming and robotics for motivation and cognitive development in secondary education. Information 16:96. doi: 10.3390/info16020096

Tozzo, M. C., Reis, F. J. J. D., Ansanello, W., Meulders, A., Vlaeyen, J. W., and Oliveira, A. S. D. (2025). Motivational impact, self-reported fear, avoidance, and harm perception of shoulder movements pictures in people with chronic shoulder pain. Eur. J. Physiother. 27, 136–146. doi: 10.1080/21679169.2024.2359968

Umakalu, C. P., and Omeh, C. B. (2025). Impact of teaching computer robotics programming using hybrid learning in public universities in Enugu state, Nigeria. Vocat. Tech. Educ. J. 5, 1–8.

Washington, T. C., and Sealy, W. (2024). Programming by demonstration using mixed reality and simulated kinematics. In 2024 IEEE World AI IoT Congress (AIIoT) (pp. 387–392). Seattle: IEEE.

Woodrow, J., Malik, A., and Piech, C. (2024). AI teaches the art of elegant coding: timely, fair, and helpful style feedback in a global course. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1 (1442–1448). Portland: ACM.

Xu, W., and Ouyang, F. (2022). The application of AI technologies in STEM education: a systematic review from 2011 to 2021. Int. J. STEM Educ. 9:59. doi: 10.1186/s40594-022-00377-5

Yilmaz, R., and Yilmaz, F. G. K. (2023). Augmented intelligence in programming learning: examining student views on the use of ChatGPT for programming learning. Comput. Hum. Behav. Artif. Hum. 1:100005. doi: 10.1016/j.chbah.2023.100005

Zhang, X., Chen, Y., Li, D., Hu, L., Hwang, G. J., and Tu, Y. F. (2024). Engaging young students in effective robotics education: an embodied learning-based computer programming approach. J. Educ. Comput. Res. 62, 312–338. doi: 10.1177/07356331231213548

Keywords: AI-supported learning, problem-based learning, robotics programming, students’ motivation, students’ engagement, STEM education

Citation: Omeh CB and Ayanwale MA (2025) Artificial intelligence meets PBL: transforming computer-robotics programming motivation and engagement. Front. Educ. 10:1674320. doi: 10.3389/feduc.2025.1674320

Edited by:

Sebastian Becker-Genschow, University of Cologne, Germany

Reviewed by:

Muhammed Parviz, Imam Ali University, Iran
Wenchao Zhang, Guangxi Normal University, China

Copyright © 2025 Omeh and Ayanwale. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christian Basil Omeh, christian.omeh@unn.edu.ng

†ORCID: Christian Basil Omeh, orcid.org/0000-0002-1673-2578

Musa Adekunle Ayanwale, orcid.org/0000-0001-7640-9898
