- 1 Department of Welfare and Participation, Western Norway University of Applied Sciences, Sogndal, Norway

- 2 Department of Psychology, University of Inland Norway, Lillehammer, Norway

Background: Feedback practices in higher education are important for student learning and engagement, yet both students and university teachers report dissatisfaction with existing feedback practices. These practices are often summative rather than formative, and it has been proposed that formative feedback can enhance learning and student satisfaction in higher education. This preregistered scoping review (https://doi.org/10.17605/OSF.IO/2J4M5) aimed to map the current literature on dialogic formative feedback in higher education and to provide an overview of the various approaches to dialogic formative feedback and of how the outcomes of these practices are evaluated in the literature.

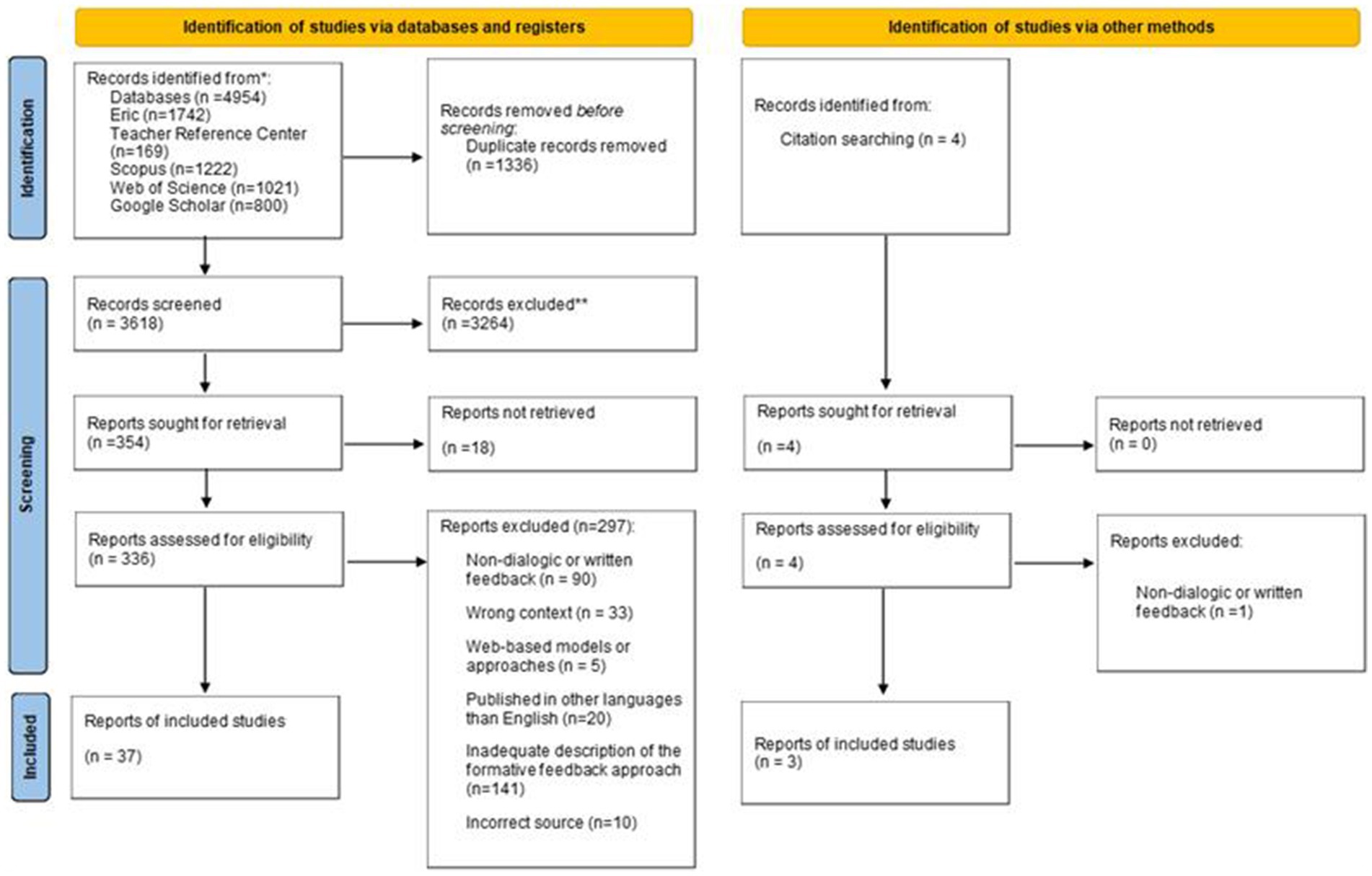

Method: The review followed established methodological frameworks for scoping reviews and is reported according to the PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. We searched ERIC, Scopus, CINAHL, the Teacher Reference Center, and sources of gray literature to identify relevant studies. The search strategy was developed in collaboration with a research librarian, who conducted the final search. Two independent reviewers screened all identified records against predefined inclusion criteria.

Results: A total of 40 sources met the inclusion criteria. The studies varied considerably in purpose, setting, and content. However, most records described aims related to cognitive growth and increased self-regulated learning. Feedback was delivered across diverse contexts, most commonly in small-group settings. The records identified several student- and teacher-related factors that appeared to influence the learning outcomes of formative feedback. Most of the sources focused on cognitive and affective measures (n = 11) or students’ experiences and perceptions (n = 10), while comparatively fewer examined teacher experiences and perceptions (n = 2) or academic achievement outcomes (n = 5).

Conclusion: The findings suggest that dialogic formative feedback can be implemented in a wide range of higher education contexts to promote self-regulated learning and cognitive development. Future research should investigate its effectiveness in improving academic achievement and further explore the impact of dialogic formative feedback on students’ educational outcomes, as well as teachers’ perspectives on applying dialogic feedback practices.

1 Background

Feedback is widely recognized as one of the most powerful influences on learning and achievement (Hattie and Timperley, 2007). Despite this, students in higher education frequently report dissatisfaction with the feedback they receive on their academic work (Bakken, 2018). Teachers likewise express concerns about the adequacy of current feedback practices, highlighting a shared recognition that existing approaches often fail to meet their intended purpose (Nicol, 2010).

In this review, we focus on dialogic formative feedback: feedback delivered during the learning process rather than solely on its outcomes. This type of feedback is characterized by reciprocal dialog between students and the teacher, allowing for clarification, negotiation of meaning, and active engagement in the feedback process. Dialogic formative feedback has been proposed as a promising approach for supporting deeper learning, fostering self-regulation, and strengthening student-teacher relationships (Winstone and Carless, 2019).

The overarching aim of this review was to systematically map the literature on dialogic formative feedback in higher education. In doing so, we sought both to provide a starting point for further research in the field and to offer practical insights for instructors interested in integrating dialogic formative feedback into their own teaching practices.

1.1 The need for new approaches to student feedback

Higher education is undergoing a paradigm shift toward student-centered and lifelong learning, with an increased focus on fostering active, engaged, and self-regulated learners (Bucharest Communiqué, 2012). However, traditional feedback practices, often characterized by delayed, summative comments after a task is completed, frequently fail to support these pedagogical goals. This creates a disconnect between the learning process and feedback, because feedback comes to be perceived as a justification for a grade rather than as a tool for future learning.

One consequence of this disconnect is a high prevalence of procrastination among students, especially younger students, who tend to delay tasks because they find it difficult to take sufficient responsibility for their own learning process (Klingsieck, 2013). These younger students require support and guidance in self-regulated learning (Ferrari and O’Callaghan, 2005; Zacks and Hen, 2018). Drawing on motivational theory, Steel (2007) explains that students are more likely to procrastinate when one of three factors is present: (1) they perceive a low probability of success, (2) they expect neither value nor enjoyment from completing the task, or (3) there is a long time gap between task execution and the feedback that demonstrates its benefits. This long time gap between task (or assignment) execution and feedback is largely due to the summative feedback practices commonly used in higher education (Steel, 2007). Formative feedback, which is provided during the learning process, is an alternative that can reduce this time gap and consequently support learning and reduce procrastination among students. Assessment for learning is well recognized as a crucial driver of student learning, and well-implemented assessment practices provide an important foundation for positive and meaningful learning experiences. Changing feedback practices may therefore be one possible intervention to promote student learning and reduce procrastination.

In higher education, feedback on students’ work is seen as a process whereby students are proactive, making sense of and using comments from others on their performance to aid further learning (Winstone and Carless, 2019). At the same time, a new and more diverse student body, often referred to as Generation Z (Gen Z), is entering higher education. Some literature suggests that this group tends to struggle with traditional teaching and learning methods while being more inclined to take ownership of their own learning (Szymkowiak et al., 2021). Others characterize Gen Z as autonomous, interactive learners who thrive in personalized educational settings but face challenges related to fragmented attention caused by digital distractions (Chardonnens, 2025). Additionally, they are sometimes perceived as impatient, as they are “digital natives” who have always had easy access to information and knowledge through the internet (Szymkowiak et al., 2021). These characteristics highlight the need for educational strategies that balance autonomy with structured support and that encourage student engagement, social-emotional development, and motivation for learning (Chardonnens, 2025). Formative feedback may be one way to address the multifaceted nature of Gen Z learning.

Unlike traditional summative feedback, which is often delayed until after task completion, formative feedback can be provided continuously during tasks and throughout the semester, supporting students during the learning process. This highlights the need to reconsider feedback practices in higher education, moving from delayed summative approaches toward more continuous and formative strategies. It is during the task execution phase that students usually have questions and require feedback and support (Steel, 2007; Zacks and Hen, 2018). Nicol and Macfarlane-Dick (2006) argue that feedback can enhance students’ learning if it is provided during the learning process. Such feedback can also serve as a timely motivation booster, helping students initiate tasks more promptly and maintain consistent progress (Zacks and Hen, 2018). In addition to timing, effective feedback should address both the students’ understanding and the task itself, allowing students to reconstruct their knowledge and develop greater capacity and creativity (Nicol and Macfarlane-Dick, 2004).

To address these challenges and better align feedback with the goals of active learning, there is a clear need to move toward more continuous, formative strategies (Schluer and Brück-Hübner, 2025). A more learning-focused model of feedback represents a paradigm shift in higher education, in which students become active social participants who reflect on and discuss assessment criteria with peers and teachers. This shift aligns with the broader educational context, particularly the second decade of the Bologna Process toward 2020, which emphasized student-centered and lifelong learning (Bucharest Communiqué, 2012). Building on this development, increasing attention has been directed toward dialogic approaches to formative assessment. These approaches reconceptualize feedback as a reciprocal process, grounded in dialog between students and instructors, rather than a unidirectional transmission of information.

1.2 Dialogic formative feedback

To ensure clarity throughout this review, it is important to delineate key terms that are often used interchangeably in the literature. We conceptualize these terms hierarchically.

The broadest concept is formative assessment, a fundamentally collaborative act between teaching staff and students whose primary purpose is to enhance the students’ capability to the fullest extent possible (Yorke, 2003). It is an overarching pedagogical approach focused on assessment for learning, in contrast to summative assessment of learning, which often occurs after a task is completed. The quality of the interaction is at the very core of pedagogical work and is important for a successful outcome (Black and Wiliam, 2009).

Within this broad approach, formative feedback is a core component. It can be defined as information communicated to the learner that is intended to modify the learner’s thinking or behavior for the purpose of improving learning and achieving a particular learning outcome (Shute, 2008). Its purpose is to “move learners forward” by supporting their ongoing work.

In this review, we use the term dialogic formative feedback because it precisely captures the core of the approach, which encompasses key aspects of both formative assessment and formative feedback. The dialogic formative feedback approach reconceptualizes feedback as a reciprocal, dialog-based process between students and instructors, rather than a unidirectional transmission of information or a monolog. Dialog is the “engine” that produces and expresses student engagement. This process is characterized by back-and-forth interaction that allows for clarification, negotiation of meaning, and active student engagement, giving students a voice and agency in their own learning. By selecting this term, we underscore the importance of the relational and collaborative interaction that is foundational to this model of feedback in higher education.

Winstone and Carless (2019) have proposed one framework for formative feedback. They emphasize the importance of first becoming aware of the existing feedback culture and then initiating a change toward more dialogic forms of feedback. They argue for a shift toward a more learning-focused model of feedback, which, in contrast to a unidirectional model, encourages students to actively engage with feedback and implement it in their work (Winstone and Carless, 2019). Supporting students in developing as self-regulated learners is a central pedagogical principle. The construct of self-regulation refers to the degree to which students are capable of regulating aspects of their thinking, motivation, and learning. “Self-regulation is manifested in the active monitoring and regulation of a number of different learning processes, e.g., the setting of, and orientation toward, learning goals; the strategies used to achieve goals; the management of resources; the effort exerted; reactions to external feedback; the products produced” (Nicol and Macfarlane-Dick, 2006, p. 2).

Thus, for the purpose of this review, we define dialogic formative feedback as any information intended to provide dialogic feedback to students so that they can improve and self-regulate their work, in line with the five key strategies described by Black and Wiliam (2009). These five strategies for effective formative feedback are:

1. Provide regular opportunities to clarify and share learning intentions and criteria for success.

2. Engineer effective discussions and other learning tasks that elicit evidence of student understanding.

3. Provide feedback that moves learners forward.

4. Activate students as instructional resources for one another.

5. Activate learners as owners of their own learning.

Introducing collaborative dialogic formative feedback can shift practice from a more teacher-directed feedback approach toward assisting and guiding students in generating their own feedback on their learning.

1.3 Previous reviews of the literature

Several reviews have explored how higher education might use feedback practices more effectively (Black and Wiliam, 2009; Lipnevich and Panadero, 2021; Morris et al., 2021; Panadero and Lipnevich, 2022; Sun et al., 2023). While many definitions of formative feedback have been offered, there is no clear rationale for defining and delimiting it within broader theories of pedagogy. Thus, there is a need for a review scoping approaches and models as a starting point toward unifying the field. Such a review could provide suggestions for further enquiry while also giving teachers a starting point if they want to implement formative practices (Black and Wiliam, 2009).

Black and Wiliam (2009) examined formative assessment across various assessment methods, focusing on quantitative studies and causal evidence. Their findings indicate that feedback to students on their work through quizzes, peers, and instructors is beneficial, but that its effectiveness depends on how the feedback is implemented. However, the effectiveness of formative feedback remains difficult to determine, and it is also important to consider student and teacher experiences, such as those captured in qualitative studies.

In another review, Sun et al. (2023) explored research on student voice in feedback; rather than focusing on specific models, they investigated in general terms whether students’ voices have any impact on feedback. Sun et al. (2023) emphasized that systematically collecting student voices could inform and change teachers’ feedback practices.

In a third review, by Lipnevich and Panadero (2021), 14 models and their empirical evidence were described, and the review highlighted that the field is inconsistent in terms of definitions, inclusion of feedback characteristics, and in terms of empirical evidence. Next, to overcome the identified inconsistencies between models and studies, Panadero and Lipnevich (2022) examined these 14 feedback models and proposed an integrative framework highlighting the interplay between feedback content, implementation, and student engagement. They concluded with an integrated model that includes five components: message, implementation, student, context, and agent (MICA). However, to our knowledge, the MICA model has yet to undergo empirical investigation, and given the recency of this proposed model, its influence on the field remains limited.

Lastly, adding a crucial theoretical dimension to the field, a very recent critical review by Myers and Buchanan (2025) synthesizes 74 articles to examine how dialog is conceptualized within feedback literacies. Instead of mapping feedback models, they offer a nuanced theoretical framework that categorizes dialog into three distinct levels of engagement: clarificatory, questioning, and critical. These levels are aligned with established models of academic literacies, progressing from a ‘study skills’ approach (clarificatory dialog), through ‘academic socialization’ (questioning dialog), to a transformative ‘academic literacies’ approach (critical dialog). Myers and Buchanan argue that “dialog” is not a monolithic concept but rather a cline of activity, ranging from a simple clarification of a teacher’s comments to a critical interrogation of the assessment process itself. This framework provides a powerful lens for analyzing the nature and depth of dialogic interactions, moving beyond their structural implementation (Myers and Buchanan, 2025).

Taken together, these reviews illustrate a field rich with models and evolving theoretical perspectives yet still lacking a unified practical map for educators. While Panadero and Lipnevich (2022) offer an integrative model of feedback elements and Myers and Buchanan (2025) provide a framework for the nature of the dialog itself, there remains a need to systematically scope the available literature on the practical models and approaches used in higher education. Such a review is necessary to provide a comprehensive overview of how dialogic formative feedback is conducted in practice and to determine how these different approaches and models vary.

1.4 The current review

Several gaps in previous literature reviews justify the need for a scoping review on dialogic formative feedback in higher education. First, as noted above, there is a lack of clarity and consensus regarding how dialogic feedback is conceptualized and implemented in higher education, leading to inconsistencies in the research field and uncertainty for teachers seeking to implement formative feedback practices. Research has also highlighted persistent challenges in documenting the outcomes of dialogic feedback on student learning, feedback literacy, and engagement, especially across diverse student populations and learning contexts. These evidence gaps, ranging from theoretical frameworks to the absence of rigorous comparative and causal studies, warrant a comprehensive scoping review to synthesize current knowledge, identify areas for future research, and guide practice.

The aim of this systematic scoping review is to explore, map, and summarize the models and approaches for dialogic formative feedback in higher education described in the literature. Furthermore, it aims to support higher education teachers in developing and implementing dialogic formative feedback by illustrating how these approaches and models vary in their implementation. This knowledge can serve as a starting point for higher education teachers before they further examine studies and approaches directly relevant to their own teaching domain and context. In addition, we aimed to gain applicable knowledge about how the outcomes of dialogic formative feedback are evaluated in the higher education context.

The following research questions guided our review of the literature:

1. Which dialogic formative feedback models and approaches are described in the literature, and how are they conducted?

2. How is dialogic formative feedback evaluated in the literature?

Research question 2 was slightly modified from the preregistered research question: “Which characteristics are essential when evaluating the results of dialogic formative feedback?” During the review process, it became apparent that the original question was too broad given the number of included studies.

2 Methods

We followed the methodological framework for scoping reviews described by Arksey and O’Malley (2005), which consists of five stages: (1) identifying the research question by clarifying and linking the purpose and research question, (2) identifying relevant studies, (3) selecting studies, (4) charting the data in tabular and narrative format, and (5) collating and summarizing the results for practice or further research. We report in accordance with the PRISMA-ScR checklist (Tricco et al., 2018).

2.1 Search process

Prior to the search, a protocol was published on the Open Science Framework (Nedrehagen et al., 2024; DOI: https://doi.org/10.17605/OSF.IO/2J4M5). A librarian implemented the search in ERIC, CINAHL, Scopus, and the Teacher Reference Center, adapting it to the requirements of each database. The following search string was used: Formative AND (Feedback OR evaluation OR assessment*) AND (“Higher education” OR “bachelor OR undergraduate student*” OR “college student”) AND dialogic AND “student evaluation.” Additionally, Google Scholar was searched for gray literature, supplemented with sources identified through pearling of the reference lists of included reports. The initial searches were not limited by source type, study design, language, or publication year.

2.2 Data charting and collation

Screening was conducted in three stages (Figure 1). First, all records were uploaded to Rayyan (Ouzzani et al., 2016), and duplicates were removed.

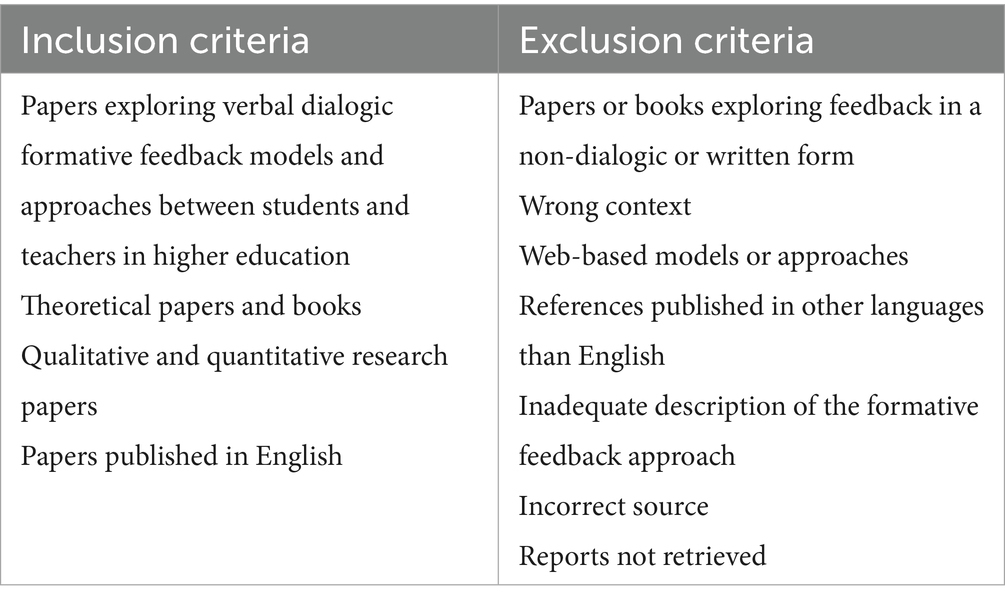

Second, the reviewers calibrated their screening by jointly screening the titles and abstracts of randomly selected papers (n = 50). At this stage, the reviewers judged the eligibility criteria (Table 1) to be precise and suitable.

The reviewers agreed to carry references with sparse or unclear descriptions of the form or context of feedback forward for full-text evaluation. Thereafter, the remaining titles and abstracts (n = 3,568) were screened by two independent, blinded reviewers. To calculate reviewer agreement at this stage, a random sample of papers (n = 636) was screened independently by all three reviewers; of these, 88.7% (n = 564) received unanimous eligibility decisions. The remaining references (n = 2,932) were screened by two independent reviewers. They agreed on 85.5% (n = 2,507); 12% (n = 353) were disagreements resolved through discussion between the two reviewers, and 2.5% (n = 72) were conflicts resolved by 2:1 majority with a third reviewer.
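The screening percentages follow directly from the reported counts. The short Python sketch below recomputes them as a consistency check. Only the counts come from the text; the function name `percent` and the constant names are illustrative choices made for this example.

```python
def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts reported for title/abstract screening.
TOTAL_SCREENED = 3_568
TRIPLE_SCREENED = 636       # random sample screened by all three reviewers
UNANIMOUS = 564             # unanimous eligibility decisions in that sample
DOUBLE_SCREENED = 2_932     # remaining records, two reviewers each
OUTCOMES = {
    "agreed": 2_507,                    # reviewers agreed directly
    "resolved_by_discussion": 353,      # settled by the two reviewers
    "resolved_by_third_reviewer": 72,   # settled 2:1 with a third reviewer
}

# The two screening streams together cover every record...
assert TRIPLE_SCREENED + DOUBLE_SCREENED == TOTAL_SCREENED
# ...and the three outcomes partition the double-screened records.
assert sum(OUTCOMES.values()) == DOUBLE_SCREENED

print(f"unanimous (three reviewers): {percent(UNANIMOUS, TRIPLE_SCREENED)}%")
for label, count in OUTCOMES.items():
    print(f"{label}: {percent(count, DOUBLE_SCREENED)}%")
```

Running it reproduces the 88.7%, 85.5%, 12.0%, and 2.5% figures and confirms that the sample sizes add up to the 3,568 screened records.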

Third, all papers judged eligible by two reviewers were read in full text. To initially assess reviewer agreement, 30 references were independently read and evaluated for eligibility by the three reviewers (ESN, JMS, TSV), forming three independent reviewer pairs. The mean agreement across the pairs was 82.2%, corresponding to substantial agreement (Cohen’s kappa = 0.64). Following this, the reviewers decided to add “inadequate description of the formative evaluation approach” as a fifth exclusion criterion, i.e., a description not detailed enough to allow replication or reproduction of the approach. Early in the screening process, “full text not retrievable” and “incorrect source” were also added as sixth and seventh exclusion criteria, covering references behind paywalls inaccessible to the library and references that did not qualify as primary sources (e.g., book reviews).
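The agreement statistics reported for the full-text calibration can be illustrated with a small Python sketch that computes percent agreement and Cohen's kappa for each reviewer pair and then averages both across pairs. The text does not state exactly how the single kappa value was obtained, so averaging kappa over pairs is an assumption made here. The decisions in `toy` are hypothetical and do not reproduce the reported 82.2% or κ = 0.64.

```python
from collections import Counter
from itertools import combinations
from statistics import mean


def percent_agreement(a: list[str], b: list[str]) -> float:
    """Proportion of items on which two reviewers made the same decision."""
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    p_observed = percent_agreement(a, b)
    n = len(a)
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: for each category, multiply the two reviewers'
    # marginal proportions, then sum over all categories either one used.
    p_expected = sum(
        (counts_a[c] / n) * (counts_b[c] / n)
        for c in set(counts_a) | set(counts_b)
    )
    if p_expected == 1:
        raise ValueError("kappa is undefined when both use the same single category")
    return (p_observed - p_expected) / (1 - p_expected)


def pairwise_summary(ratings: dict[str, list[str]]) -> tuple[float, float]:
    """Mean percent agreement and mean kappa over all reviewer pairs."""
    pairs = list(combinations(sorted(ratings), 2))
    agreements = [percent_agreement(ratings[x], ratings[y]) for x, y in pairs]
    kappas = [cohens_kappa(ratings[x], ratings[y]) for x, y in pairs]
    return mean(agreements), mean(kappas)


# Hypothetical toy decisions for four references; NOT the study's data.
toy = {
    "reviewer_1": ["include", "include", "exclude", "exclude"],
    "reviewer_2": ["include", "exclude", "exclude", "exclude"],
    "reviewer_3": ["include", "include", "exclude", "exclude"],
}
agreement, kappa = pairwise_summary(toy)
print(f"mean agreement = {agreement:.1%}, mean kappa = {kappa:.2f}")
# mean agreement = 83.3%, mean kappa = 0.67
```

Kappa discounts the agreement two reviewers would reach by chance, given how often each of them includes or excludes. This is why it is lower than raw percent agreement, as with the 82.2% and 0.64 reported above.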

Thereafter, the remaining references from the primary search (n = 336) and the additional search (n = 4) were divided equally among the three reviewers for individual full-text eligibility decisions. Of these, 40 sources were retained for data extraction (Figure 1).

Before extracting and compiling the data, the reviewers revised the pre-published charting matrix and developed detailed descriptions of the variables it contained. Next, the reviewers calibrated the matrix by independently extracting and compiling data from the same three references, blinded to each other, before conducting a second revision of the matrix. At this stage, the authors collaborated on extracting and compiling the findings from these three references. Thereafter, each author individually extracted and compiled data from two unique references before a final revision of the matrix.

The analysis followed a basic qualitative content analysis approach with predetermined stages described in detail by Pollock et al. (2023), and it was inductive. First, the reviewers collaboratively established preliminary codes after reading the included evidence sources. Second, the reviewers individually piloted the preliminary coding scheme. Lastly, after gaining further familiarity with the evidence and preliminary codes, the reviewers collaboratively established the final coding scheme. Two reviewers then individually coded the included data before meeting to seek agreement. Coding discrepancies between reviewers were discussed with a third reviewer and resolved by 2:1 majority.

3 Results

This section presents the findings from our scoping review, organized according to the two research questions that guided the study. First, we outline findings relevant to research question 1: Which dialogic formative feedback models and approaches are described in the literature, and how are they conducted? Next, we present findings related to research question 2: How is dialogic formative feedback evaluated in the literature?

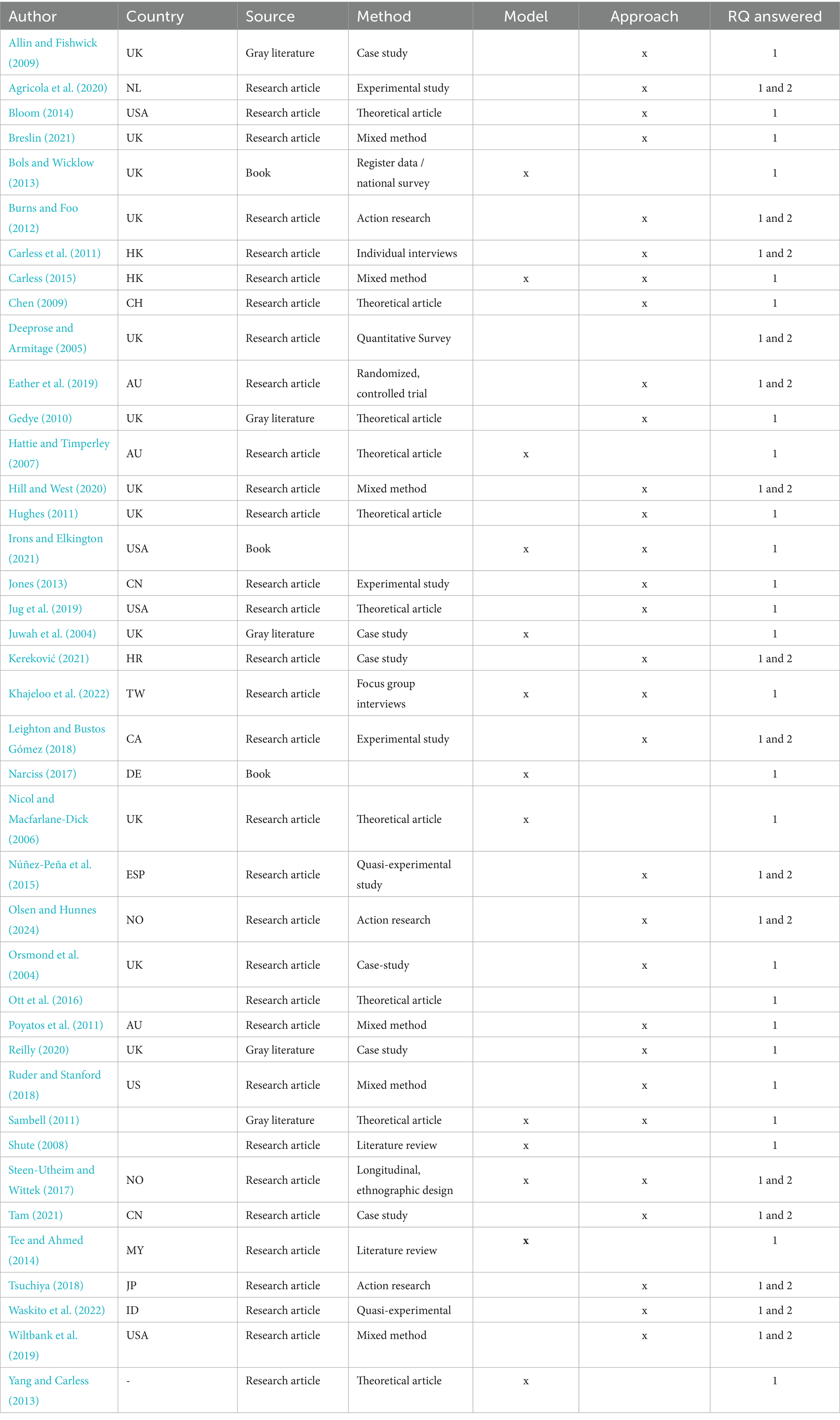

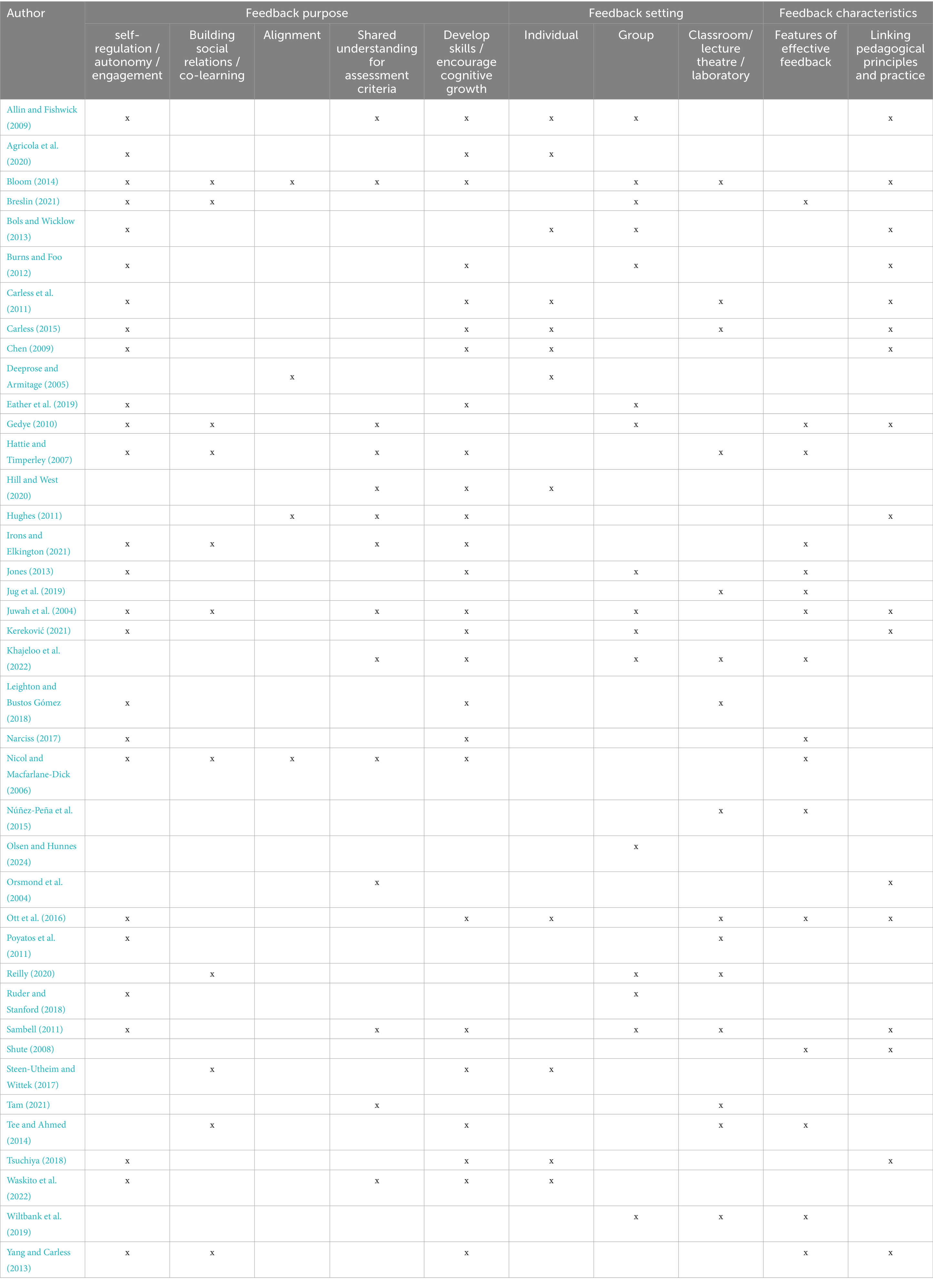

Our review identified 40 sources that present models and approaches for dialogic formative feedback (Table 2).

The models and approaches serve different purposes and are therefore structured differently. The summarizing analysis was conducted inductively. When the reviewers analyzed the data extracted from the models and approaches, it became apparent that they diverged along three main categories: feedback purpose, feedback setting, and feedback content (Table 3).

Table 3. Which verbal dialog-based feedback models and approaches are described in the literature, and how are they conducted?

3.1 Feedback purpose

The identified models and approaches to formative feedback were organized according to their intended purpose as stated by the authors of the respective papers. Our review identified several distinct purposes of dialogic formative feedback in higher education. These can be broadly categorized as promoting self-regulation and cognitive growth (n = 27), promoting shared understanding of assessment criteria (n = 15), and building social relationships and increasing co-learning and alignment between students and teachers (n = 4) (Table 3).

The most common purpose of dialogic formative feedback in the included papers was to promote self-regulated learning and foster cognitive growth, including the development of study skills (Carless, 2015; Hattie and Timperley, 2007; Nicol and Macfarlane-Dick, 2006). The models and approaches with this purpose describe how dialogic feedback helps students reconstruct their understanding and build greater capacity and creativity when addressing academic tasks.

The second most common purpose of dialogic formative feedback in the included papers was to promote shared understanding of assessment criteria (Hattie and Timperley, 2007; Irons and Elkington, 2021). These papers described dialogic formative feedback as helping students reduce the gap between their current understanding and the learning objectives they are expected to reach during a course. Papers describing this purpose emphasized the timing of feedback, highlighting the value of providing guidance during the task execution phase rather than after completion.

Finally, a less common purpose of dialogic formative feedback was to build social relationships between students in order to promote co-learning and alignment between students and teachers (Bloom, 2014; Breslin, 2021; Gedye, 2010). These papers described group- or classroom-based settings for formative feedback (see details in the next section) and emphasized peer discussion and activities as a central part of co-learning (Carless, 2015; Hattie and Timperley, 2007; Nicol and Macfarlane-Dick, 2006).

3.2 Feedback characteristics

Two categories describing feedback characteristics were identified (Table 3). The first category focused on features of effective feedback (n = 16). The sources generally describe effective feedback as a process that enhances learning by providing information about performance. This may involve confirming, contradicting, adding to, removing, or restructuring students’ knowledge or beliefs, with a clear emphasis on the task rather than on personal attributes or preferences. Overall, this literature concentrates on how and when effective feedback should be delivered and what it should contain.

Some sources ground these characteristics in general principles, while others propose more tailored approaches, focusing on specific methods designed to structure the feedback process. For example, the “feedback sandwich” involves delivering positive feedback both before and after constructive criticism (Jug et al., 2019). Similarly, the “Pendleton Method” encourages a sequential dialog between the student and teacher, in which both identify what went well before discussing aspects that could be improved (Jug et al., 2019).

Several sources identify factors that influence how effective feedback should be conducted. These include student characteristics, such as level of commitment, as well as the quality of the interpersonal relationship between teacher and student and the nature of the dialog (Breslin, 2021; Gedye, 2010; Hattie and Timperley, 2007; Jug et al., 2019; Khajeloo et al., 2022; Narciss, 2017; Shute, 2008; Yang and Carless, 2013).

A substantial portion of the literature addresses the timing of feedback (Hattie and Timperley, 2007; Jug et al., 2019; Shute, 2008; Tee and Ahmed, 2014; Yang and Carless, 2013). Some sources distinguish between delayed feedback, delivered after task completion, and feed-forward, provided during the task to guide the next step (Jones, 2013; Ott et al., 2016). Others suggest a combined approach, with immediate feedback for complex tasks and delayed feedback for simpler ones; the content and phrasing of feedback, such as questions, hints, and prompts, are also discussed (Shute, 2008).

In the second category identified (n = 17), the sources emphasize the importance of aligning pedagogical principles with feedback practice frameworks. A recurring theme in this category is the role of feedback in clarifying what constitutes good performance, which can be supported through examples, discussion, and reflective dialog (Gedye, 2010; Ott et al., 2016; Tee and Ahmed, 2014).

In addition, several sources highlight the potential of feedback to promote self-evaluation and reflection, particularly by encouraging students to consider what actions are needed to progress toward their goals (Hattie and Timperley, 2007; Ott et al., 2016). Constructive feedback may also help students identify both strengths and areas for improvement (Bols and Wicklow, 2013). This process may be supported by opportunities for revision and resubmission, which can help close the gap between current and future performance as students work toward completed assignments (Gedye, 2010).

The importance of dialog is consistently highlighted as a key element in clarifying expectations and standards and in helping students construct meaning from feedback (Jug et al., 2019; Ott et al., 2016). Structured feedback methods have been proposed as effective strategies to facilitate such dialog, for example the “Ask-Tell-Ask” method, in which students engage in self-assessment both before and after receiving feedback, and the “Pendleton Method,” in which the student and teacher sequentially identify strengths and areas for improvement (Jug et al., 2019).

3.3 Feedback setting

Three dialogic formative feedback settings were described in the literature: individual, group, and classroom/lectures (Table 3). Formative feedback in groups was the most frequently described setting (n = 16), followed by formative feedback in classroom/lectures (n = 15) and individual formative feedback (n = 12). Because some sources described more than one setting, these counts sum to more than the 40 included sources.

Four different models of formative group feedback were identified (Bols and Wicklow, 2013; Juwah et al., 2004; Khajeloo et al., 2022; Sambell, 2011). These models converged in emphasizing dialog, through student-teacher interaction, as crucial for effective learning, and student self-regulation as fundamental for independent learning. Some combined individual and group feedback (Allin and Fishwick, 2009; Bols and Wicklow, 2013), whereas others combined group and classroom feedback (Khajeloo et al., 2022; Reilly, 2020; Sambell, 2011; Wiltbank et al., 2019). Furthermore, 12 different approaches to group formative feedback were described (Allin and Fishwick, 2009; Bloom, 2014; Breslin, 2021; Burns and Foo, 2012; Eather et al., 2019; Gedye, 2010; Jones, 2013; Kereković, 2021; Olsen and Hunnes, 2024; Reilly, 2020; Ruder and Stanford, 2018; Wiltbank et al., 2019). These approaches all emphasized group activities such as collective reading, modeling, and group assignments, but diverged in that some were oriented toward measuring student performance quantitatively (Breslin, 2021; Olsen and Hunnes, 2024), whereas others were oriented toward fostering qualitative changes in students’ attitudes, self-confidence, engagement, or learning strategies (Burns and Foo, 2012; Kereković, 2021; Reilly, 2020; Wiltbank et al., 2019).

Dialogic formative feedback in classrooms and lectures was the second most frequently described setting (n = 15). Five models for classroom-based formative feedback were identified (Carless, 2015; Hattie and Timperley, 2007; Khajeloo et al., 2022; Sambell, 2011; Tee and Ahmed, 2014), as well as eight approaches (Bloom, 2014; Carless et al., 2011; Jug et al., 2019; Núñez-Peña et al., 2015; Poyatos et al., 2011; Reilly, 2020; Tam, 2021; Wiltbank et al., 2019). The models converged in emphasizing that effective feedback and assessment are integrated, student-centered processes designed to enhance and drive student learning rather than simply measure it. However, the models differed in focus: one addressed how the content and structure of feedback affect its impact (Hattie and Timperley, 2007), some focused on teacher abilities and roles (Carless, 2015; Khajeloo et al., 2022), one on the students (Sambell, 2011), and one on both students and teachers in the feedback process (Tee and Ahmed, 2014).

Individual dialogic formative feedback was the third most commonly described setting (n = 12). We identified three models for conducting individual formative feedback (Bols and Wicklow, 2013; Carless, 2015; Steen-Utheim and Wittek, 2017). In addition, eight approaches to individual formative feedback were described in other sources (Agricola et al., 2020; Allin and Fishwick, 2009; Carless et al., 2011; Chen, 2009; Deeprose and Armitage, 2005; Hill and West, 2020; Tsuchiya, 2018; Waskito et al., 2022). Some held individual, one-to-one feedback sessions within a classroom (Carless, 2015; Chen, 2009), while others used private rooms (Agricola et al., 2020; Allin and Fishwick, 2009; Hill and West, 2020; Steen-Utheim and Wittek, 2017). Several approaches showed that individual feedback can also be given within a group; it differs from group feedback in that it is directed at individual students rather than at the group as a whole (Allin and Fishwick, 2009; Carless, 2015). The content of individual feedback frequently focused on drafts or projects, guiding students on the next steps in their work (Carless, 2015; Hill and West, 2020).

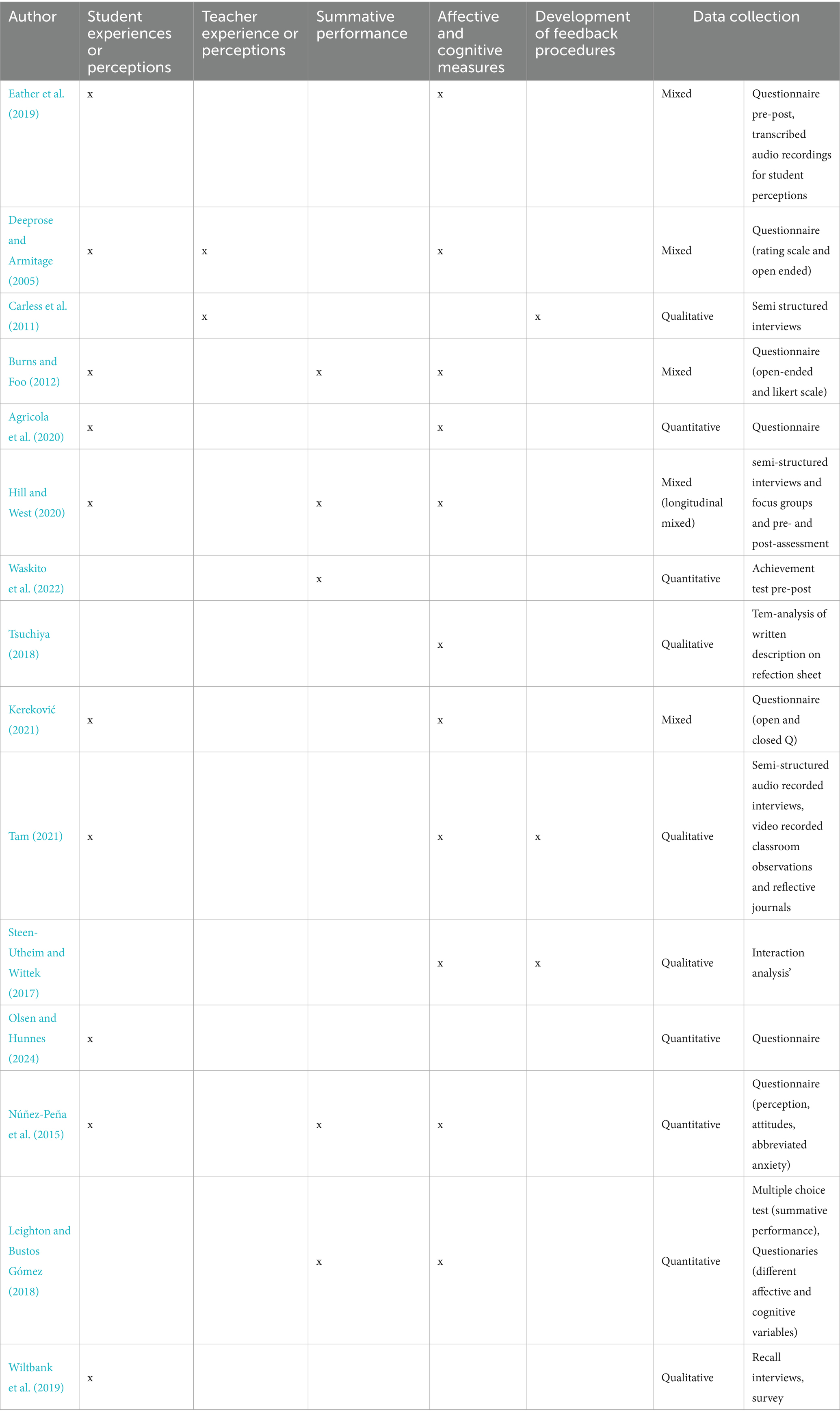

3.4 Evaluation of dialogic formative feedback

This section addresses the second research question: how is dialogic formative feedback evaluated in the literature? Five categories of outcomes related to dialogic formative feedback were identified (Table 4). The most prevalent categories were affective and cognitive measures (n = 11) and student experiences and perceptions (n = 10). These studies suggest that dialogic formative feedback can enhance students’ learning, motivation, engagement, well-being, and learning strategies. Fewer studies explored teacher experiences and perceptions (n = 2); these sources offer insight into teachers’ views on dialogic formative feedback practices. Academic achievement outcomes (n = 5) were explored through a variety of measures, including course grades and performance on different summative assessments. Lastly, a small group of sources (n = 3) focused on the development of feedback procedures, using empirical findings to inform and shape feedback models and guidelines.

4 Discussion

This review sought to identify different models and approaches to dialogic formative feedback and to map the qualitative and quantitative empirical outcomes reported in studies that have evaluated dialogic models and approaches. The overview provided here may offer valuable knowledge for teachers and researchers who intend to implement or evaluate models or approaches to enhance dialogic feedback in higher education. Our findings reveal a range of models and approaches to dialogic formative feedback and show that the current literature on the topic is highly heterogeneous with respect to methods (i.e., qualitative or quantitative) and evaluation domains (e.g., student experiences, perceptions, and affective and cognitive measures).

We identified and analyzed 40 sources describing models and approaches to dialogic formative feedback, which illustrate the variety of research designs and practical implementations. Through our analysis, we arrived at three main categories that summarize the similarities and differences across models and approaches: feedback purpose, feedback characteristics (i.e., content and structure), and feedback setting. In the following, we discuss the findings related to each of these categories before addressing how dialogic formative feedback has been evaluated in the included sources.

4.1 What is the most commonly described pedagogical purpose?

A common feature across the identified models and approaches is the articulation of a clear pedagogical purpose: to foster self-regulation, cognitive development, and collaborative learning through dialog. These models and approaches are deliberately designed with content and structure that support this aim by enabling formative feedback through interaction and reflective dialog between teacher and students. Moreover, the organization of the models and approaches varies, allowing formative assessment to occur at the individual level, in group settings, and as an integrated element of classroom instruction.

With respect to feedback purpose, we found that promoting self-regulation and cognitive growth is the most frequently reported purpose of dialogic formative feedback. This purpose aligns well with the paradigm shift in feedback practices in higher education, in which students are expected to become active social participants who reflect on and discuss assessment criteria with peers and teachers. As mentioned earlier, Generation Z students prefer learning environments that give them more independence and personal relevance. At the same time, they may struggle to stay focused and manage their own learning due to constant distractions and reduced attention spans (Chardonnens, 2025; Szymkowiak et al., 2021). These tendencies call for teaching strategies that not only support self-regulation but also help students develop both academically and emotionally. By encouraging dialog and reflection over time, formative feedback can help students take greater ownership of their learning, strengthen their ability to regulate it, and maintain motivation throughout the learning process. Sambell (2011) argues that feedback must be a relational process integrated into different courses, and that students need to evaluate their work by engaging in challenging dialogs with the teacher and their peers. When the learning environment invites students to engage in the feedback process, it makes them more responsible for their own learning and thus fosters self-regulated learning processes.

4.2 How are feedback characteristics structured to support the pedagogical purpose?

This study identified 25 sources that present different perspectives on dialogic feedback characteristics, categorized as either features of effective feedback or the linking of pedagogical principles and practice. These sources mainly focus on “what to say,” “when to say it,” and “how to say it” (Gedye, 2010; Jug et al., 2019; Juwah et al., 2004). Notably, many of the approaches presented are not standalone techniques but parts of broader pedagogical approaches. A significant share of the sources focuses on linking formative feedback practice with pedagogical principles. This suggests that dialogic formative feedback should be embedded in a broader pedagogical approach rather than serve as an administrative structure in a course (Bols and Wicklow, 2013; Hattie and Timperley, 2007). The findings indicate that there is no universal, “one-size-fits-all” best practice. Rather, the literature points to several moderating factors that influence the effectiveness of feedback. These moderators are closely tied to the nature of the task, the teacher, and the student. Key factors include relational dynamics, levels of trust, academic ability, task complexity, and the degree of feedback literacy (Breslin, 2021; Jug et al., 2019; Khajeloo et al., 2022; Yang and Carless, 2013). Such variables must be considered when implementing dialogic feedback strategies. To respond to the educational expectations of Generation Z, teachers should be aware of these factors so that they can align their feedback practices with the Bologna Process goal of promoting student-centered learning approaches (Bucharest Communiqué, 2012).

4.3 What feedback settings are described?

Dialogic formative feedback in higher education is delivered in three main settings: individual sessions, group work, and classroom/lectures. The most frequently described setting is the small group (16 of the included sources), followed by classroom/lectures (15 sources) and individual feedback (12 sources). This suggests that research on dialogic feedback tends to focus on interactive, group-based learning settings. The variety of settings in which dialogic formative feedback can be practiced indicates that it can be implemented across a broad range of subjects, teaching approaches, and cohort sizes. Dialogic formative feedback does not have to be resource-intensive or demanding for faculty. Delivering feedback directly in group or classroom settings can make the feedback process an efficient alternative to summative one-to-one feedback and thereby ease staff workload. However, this may require coordinating the groups and thus add to the scheduling effort, especially in large cohorts. Facilitating the dialogs may also require specific competence and experience from the facilitator, thereby increasing the demands on staff competence.

While traditional summative feedback often involves a significant time delay and does not optimally promote learning (Steel, 2007; Zacks and Hen, 2018), group- and classroom-based dialogic feedback offers a more learning-focused approach that aligns with the need to serve a more diverse student body (Bucharest Communiqué, 2012; Szymkowiak et al., 2021). In summary, group- and classroom-based approaches to dialogic formative feedback offer efficient and promising alternatives to current summative feedback practice. For students, implementing dialogic formative feedback in class (or in smaller groups) means that they receive more timely and continuous guidance throughout the learning process, which may reduce procrastination and promote self-regulated learning (Ferrari and O’Callaghan, 2005; Steel, 2007; Zacks and Hen, 2018). Through dialogic feedback in groups, students can engage in activities such as collective reading and group assignments, clarify learning objectives, and develop self-evaluative skills. For teachers, a practical implication is the value of designing tasks that promote critical thinking and dialog and of attending to relational support and sustained conversation, which can help create a supportive and stimulating classroom environment.

Yet, considering Gen Z’s expectations for personalized and interactive learning, there is a growing need for educational approaches that combine autonomy with structured support. Such strategies may, however, be limited by time and resource constraints at the institutional level. One possible solution could be to use new technology, such as artificial intelligence (AI). AI-driven educational tools, such as intelligent tutoring systems and adaptive learning platforms, can analyze students’ learning behaviors in real time and provide instant feedback (Chardonnens, 2025). Implementing AI could help alleviate institutional resource constraints and would align with the learning expectations of Gen Z. However, it is essential to ensure that new approaches that use AI also recognize that learning is a social process and that much learning is fostered through human interaction.

4.4 How is dialogic formative feedback evaluated in the literature?

This scoping review systematically mapped a broad spectrum of outcome domains in studies of dialog-based formative feedback in higher education, identifying five principal categories: student experiences and perceptions, affective and cognitive outcomes, academic achievement, teacher experiences and perceptions, and development of feedback procedures. Notably, studies were unevenly distributed across these categories. “Affective and cognitive measures” (n = 11) and “students’ experiences and perceptions” (n = 10) were the most frequently investigated domains, reflecting a research agenda that prioritizes learner-centered perspectives and psychological processes. In contrast, academic achievement (n = 5), the development of feedback procedures (n = 3), and teacher experiences/perceptions (n = 2) were covered less extensively, highlighting persistent gaps in the evidence and suggesting underexplored dimensions relevant to broader educational effectiveness.

This imbalance may mirror prevailing trends in feedback research, where exploration of student experience and cognition takes precedence, and the relational or procedural aspects of feedback delivery are comparatively neglected. The observed underrepresentation of studies focusing on teacher perspectives and direct impacts on academic achievement underscores the need for more systematic investigation into how feedback practices affect both instructors and learning outcomes, and how feedback procedures evolve within different pedagogical contexts.

At the same time as more research on these topics is called for, it is also important to acknowledge the methodological challenges that may have contributed to these research gaps. Research on academic achievement in the context of dialogic formative feedback interventions is complex, as it ideally involves methods that capture dialogic processes in authentic educational contexts while also measuring and quantifying the impact of these processes on academic achievement. Much existing research relies on self-reported student perceptions, which are easy to collect, and/or short-term interventions that do not fully reflect the learning process and the iterative nature of feedback dialog. Experimental studies in naturalistic settings are time- and resource-consuming but would provide important insights into the impact of dialogic formative feedback on students’ academic achievement in different subjects. In contrast, teacher perspectives should be easier to capture, and it is not clear why this topic is understudied. An interesting line of research would be to interview teachers about their experiences with dialogic formative feedback approaches, to learn which approaches university teachers implement more easily and which ethical concerns related to power dynamics they should be aware of. Observational studies of feedback dialogs, capturing the strategies teachers use and how students respond to them, are another avenue for future research.

Beyond the range of topics explored in evaluations of dialogic formative feedback, our review also highlights considerable methodological diversity. The included studies used approaches ranging from surveys to classroom observations and in-depth interviews. This richness in research approaches reflects the multifaceted nature of dialog-based formative feedback. However, this diversity also makes it challenging to synthesize evidence across heterogeneous research designs and signals a need for more comparative and systematic evaluations to advance methodological consistency and enhance the interpretability of findings in this complex field.

4.5 Interpreting dialogic practices through a theoretical lens

While our review has mapped the practical landscape of dialogic formative feedback by categorizing models based on purpose, characteristics, and setting, the recent theoretical framework of Myers and Buchanan (2025) offers a valuable lens for interpreting the pedagogical depth of these practices. Their model, which posits a cline of dialog from clarificatory to questioning and critical dialog, helps to explain the variations we observed and provides theoretical grounding for our key findings.

Our analysis revealed that the most common purpose of dialogic feedback is to promote self-regulation and cognitive growth. Viewed through Myers and Buchanan’s framework, this purpose is primarily achieved through clarificatory dialog and critical reflection, in which students seek to understand and close the “gap” between their performance and the expected standard. Questioning dialog, in which students begin to contextualize criteria and explore the underlying “guild knowledge” of their discipline, helps to bridge this gap. The less common purpose we identified, building social relationships and co-learning, aligns more closely with the collaborative and transformative potential of questioning and critical dialog, in which the assessment process itself can be analyzed.

A second theoretical lens that can help explain why self-regulation was emphasized in the models we reviewed is Myers and Buchanan’s (2025) emphasis on internal dialog. Drawing on Nicol (2019), they argue that for learning to occur, “external information or advice has to be turned into inner feedback.” The numerous group- and classroom-based approaches we identified can be understood as pedagogical structures designed to stimulate this crucial internal dialog.

Finally, the framework presented by Myers and Buchanan (2025) reinforces our finding that there is no “one-size-fits-all” approach to feedback in higher education. Their integrated matrix shows how different dialog types, and the teacher feedback that supports them, are appropriate for different contexts, such as a student’s developmental stage or the complexity of a task. The practical variety we mapped is therefore not necessarily a sign of a fragmented field; it may instead reflect the pedagogical flexibility required to move students from simple clarification toward deeper, critical engagement. Thus, while our scoping review maps what is being done in practice, the framework of Myers and Buchanan (2025) helps to explain how these practices function and what effects they may have on the learning process.

5 Future directions

Both students and teachers have expressed dissatisfaction with current feedback practices in higher education (Bakken, 2018; Klingsieck, 2013; Wiggen, 2020), which highlights the need for alternative approaches. Consequently, an overview of the purpose, characteristics, and setting of dialogic formative feedback can be helpful and serve as a source of inspiration for researchers and teachers who want to develop feedback practices that meet the pedagogical challenges of higher education in the 21st century. A recent review highlights that interventional studies in higher education have yet to fully reflect the conceptual shift toward more formative feedback practices (Schluer and Brück-Hübner, 2025).

While the current review highlights the diversity of models and approaches to dialogic formative feedback, it also underscores several gaps and opportunities for further research. First, the predominance of studies emphasizing self-regulation and cognitive growth as the primary purpose of feedback suggests the need for a more balanced exploration of less common purposes, such as fostering social relationships and co-learning. Future research could investigate how dialogic feedback can be more effectively used to build alignment between students and teachers, especially in collaborative and group-based settings. Several studies have found that the quality of the student-teacher relationship is important for student engagement and satisfaction, which in turn could promote more positive learning outcomes (Hagenauer and Volet, 2014; Snijders et al., 2020).

Additionally, while this review identified a variety of feedback models and approaches, the practical implementation of these models, particularly in diverse educational contexts, remains underexplored. Studies comparing the efficacy of these approaches across disciplines, cultural contexts, and student populations could provide valuable insights. There is also a need for studies with more stringent designs, such as experimental studies, before conclusions can be drawn about the causal impact of dialogic formative feedback on students’ learning outcomes.

Beyond exploring different purposes and using more stringent study designs, another promising area for future research is the timing and delivery of feedback. The literature discusses immediate, delayed, and combined feedback approaches, yet empirical comparisons of their effectiveness in specific learning scenarios (e.g., complex vs. simple tasks) are lacking. Studies are needed to clarify when in the learning process dialogic formative feedback should be initiated to enhance self-regulated learning and related student learning outcomes.

Lastly, while this review highlights the importance of dialog in feedback processes, more research is needed to examine how specific dialogic strategies, such as the “Ask-Tell-Ask” method or the “Pendleton Method,” influence student outcomes. Investigating how interpersonal factors, such as the teacher-student relationship, affect the effectiveness of dialogic feedback could also deepen our understanding of best practices. A good student-teacher relationship may be promoted through dialogic formative feedback, but successful dialogic formative feedback may also depend on a good student-teacher relationship. Advancing research in these areas may not only refine existing feedback models but also support the development of innovative approaches that align with evolving pedagogical needs in higher education.

In summary, this review provides an overview of the literature, focusing on the variety of, and commonalities among, approaches and models. Given the number of different approaches and models, further work in this area should strive toward a unified model that encompasses the most important and widely agreed-upon features of existing models. The MICA model proposed by Panadero and Lipnevich (2022) may be such a unified model, but empirical investigations of its applicability to the diverse contexts in which dialogic formative feedback can be implemented are needed. A unifying model could serve as a starting point for further research, provide the field with a much-needed clarification of key terms, and make the field more accessible to new researchers and teachers interested in dialogic formative feedback.

6 Strengths and limitations

This preregistered scoping review provides a comprehensive overview of dialogic formative feedback models and approaches in higher education; however, several limitations should be acknowledged. First, while we followed the rigorous methodological framework of Arksey and O’Malley (2005) and adhered to the PRISMA-ScR reporting guidelines (Tricco et al., 2018), the inclusion criteria and search strategy may have inadvertently excluded relevant studies. For instance, despite efforts to retrieve gray literature through Google Scholar and by searching the reference lists of relevant sources, studies published in non-indexed journals or inaccessible due to paywalls may have been missed, potentially leading to publication bias. The decision to exclude reports classified as “Full text not retrievable” or “incorrect source” may have omitted valuable sources that could have contributed to a more nuanced understanding of the topic. These were sources that lacked content, contained material that could not be extracted (such as PowerPoint presentations), or were otherwise unobtainable through the university library. Importantly, only a small number of these (n = 2) were excluded specifically because they were behind paywalls inaccessible to the university.

Second, the decision to adjust the second research question during the review process reflects the challenges of managing a large and diverse body of literature. While this decision allowed a more focused synthesis, it may have compromised the original intent of capturing the essential characteristics of how dialogic formative feedback is evaluated, thereby limiting the comprehensiveness of our findings.

Third, the reliance on reviewer agreement for inclusion and coding, despite measures to ensure calibration and consistency, introduces an inherent risk of rater discrepancies. Although interrater reliability was substantial (Cohen’s kappa = 0.64), discrepancies in interpretation across reviewers indicate a potential for bias in the screening and analysis phases.
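To make the reported value easier to interpret, the standard definition of Cohen’s kappa is sketched below. Here $p_o$ is the observed proportion of screening decisions on which two reviewers agree, and $p_e$ is the proportion of agreement expected by chance given each reviewer’s inclusion rate; these symbols are introduced only for illustration. The numbers in the second line are purely hypothetical (the review does not report $p_o$ or $p_e$) and were chosen only to show how a value of 0.64 can arise.

```latex
\begin{aligned}
\kappa &= \frac{p_o - p_e}{1 - p_e} \\[4pt]
\kappa_{\text{hypothetical}} &= \frac{0.928 - 0.80}{1 - 0.80} = \frac{0.128}{0.20} = 0.64
\end{aligned}
```

The hypothetical case also shows why kappa can be noticeably lower than raw agreement when most records are excluded and chance agreement is therefore high. Under commonly used interpretive benchmarks, values between 0.61 and 0.80 are conventionally labeled “substantial,” which is the sense in which the term is used above.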

Fourth, because the analysis was inductive, the categorization of models, approaches, and characteristics is interpretative. Although the collaborative coding process aimed to ensure consistency, the findings are shaped by the reviewers’ analysis of the literature and the data available in the included sources. The current review is therefore less replicable than, for instance, a meta-analysis, and this should be taken into consideration when interpreting our findings and conclusions. However, given the considerable heterogeneity in the field, a meta-analysis (or even a systematic review) would not have been possible, and some degree of interpretation and analysis was necessary to summarize the current literature.

Fifth, we excluded all papers that used digital forms of formative feedback. This was to avoid an overly broad scope, and because technologies such as AI were not yet in common use when the review began. Today, however, different forms of AI are rapidly evolving, and we think it is likely that future forms of dialogic formative feedback can draw on technological advances in this field. At the same time, we think it is important to remember that learning is an inherently social activity and that students (like all other humans) need affiliation with others. As such, AI cannot replace the teacher in feedback practices, but it could supplement teachers’ feedback to support students’ self-regulated learning and thus increase the benefits of dialogic formative feedback. A future scoping review should therefore examine the literature at the intersection of dialogic formative feedback and AI.

Sixth, we also excluded studies with an “inadequate description of the dialogic formative feedback approach.” This exclusion criterion necessarily involves a degree of subjective judgment as to what counts as an adequate or inadequate description. The criterion may therefore have led to the omission of studies presenting vague or less well-articulated approaches to formative feedback and, consequently, biased the selection of studies included in this review. However, because these reports lacked sufficiently precise or detailed accounts, their feedback approaches could not be reliably replicated or tested in practice. Further, all records were screened by two independent reviewers with substantial interrater agreement, and all disagreements were resolved by discussion or by a 2:1 agreement with a third reviewer.

Seventh, although this is not a limitation of the methodology of this scoping review itself, we found that most of the included studies were conducted in Western countries, particularly the UK, the US, Australia, and Norway, most of which are English-speaking. Fewer studies originated from Asian or other non-Western contexts. This geographical concentration may limit the contextual transferability of findings, as feedback practices may be shaped by local educational cultures and norms. Accordingly, the findings of this scoping review should be interpreted with these contextual limitations in mind and as a snapshot of a rapidly evolving field rather than a definitive account.

Finally, given the vast number of studies, the diversity of approaches, and the constant development of the field, a comprehensive review of all formative feedback designs is not possible. These variations and ongoing changes also complicate comparisons across studies and limit the generalizability of systematic reviews and meta-analyses.

Author contributions

EN: Conceptualization, Writing–original draft, Data Curation, Formal analysis, Investigation, Methodology, Software, Validation, Writing–review & editing. TV: Supervision, Formal analysis, Project administration, Conceptualization, Validation, Writing–review & editing, Methodology, Investigation, Writing–original draft, Software, Data curation. JS: Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing–original draft, Writing–review & editing. SO: Conceptualization, Formal analysis, Methodology, Supervision, Writing–original draft, Writing–review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that generative AI was used in the creation of this manuscript. Artificial intelligence (AI) was used as a supportive tool during the linguistic refinement of this article. After the research team had drafted the text, AI was employed to check for spelling errors and suggest improvements to sentence structure and phrasing. This use of AI helped enhance the clarity and flow of the language without influencing the academic content or the analytical interpretations presented in the article. The tools used were ChatGPT (GPT-5) and Microsoft Copilot.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agricola, B. T., Prins, F. J., and Sluijsmans, D. M. A. (2020). Impact of feedback request forms and verbal feedback on higher education students’ feedback perception, self-efficacy, and motivation. Assess. Educ. Princ. Policy Pract. 27, 6–25. doi: 10.1080/0969594x.2019.1688764

Allin, L., and Fishwick, L. (2009). Learning and teaching guides. Engaging sport students in assessment and formative feedback.

Arksey, H., and O’Malley, L. (2005). Scoping studies: towards a methodological framework. Int. J. Soc. Res. Methodol. 8, 19–32. doi: 10.1080/1364557032000119616

Black, P., and Wiliam, D. (2009). Developing the theory of formative assessment. Educ. Assess. Eval. Account. 21, 5–31. doi: 10.1007/s11092-008-9068-5

Bloom, E. M. (2014). A law school game changer: (trans)formative feedback. Ohio N. Univ. Law Rev. 41, 227–259. doi: 10.2139/ssrn.2437060

Bols, A., and Wicklow, K. (2013). “Feedback – what students want” in Reconceptualising feedback in higher education: Developing dialogue with students. eds. S. Merry, M. Price, D. Carless, and M. Taras (New York: Taylor and Francis Group), 19–29.

Breslin, D. (2021). Finding collective strength in collective despair; exploring the link between generic critical feedback and student performance. Stud. High. Educ. 46, 1312–1324. doi: 10.1080/03075079.2019.1688283

Bucharest Communiqué. (2012). Making the most of our potential: Consolidating the European higher education area.

Burns, C., and Foo, M. (2012). Evaluating a formative feedback intervention for international students. Pract. Res. High. Educ. 6, 40–49. Available at: http://insight.cumbria.ac.uk/id/eprint/1331/

Carless, D. (2015). Exploring learning-oriented assessment processes. High. Educ. 69, 963–976. doi: 10.1007/s10734-014-9816-z

Carless, D., Salter, D., Yang, M., and Lam, J. (2011). Developing sustainable feedback practices. Stud. High. Educ. 36, 395–407. doi: 10.1080/03075071003642449

Chardonnens, S. (2025). Adapting educational practices for generation Z: integrating metacognitive strategies and artificial intelligence. Front. Educ. 10:1504726. doi: 10.3389/feduc.2025.1504726

Chen, L. (2009). A study of policy for providing feedback to students on college English. Engl. Lang. Teach. 2:p162. doi: 10.5539/elt.v2n4p162

Deeprose, C., and Armitage, C. (2005). Giving formative feedback in higher education. Psychol. Learn. Teach. 4, 43–46. doi: 10.2304/plat.2004.4.1.43

Eather, N., Riley, N., Miller, D., and Imig, S. (2019). Evaluating the impact of two dialogical feedback methods for improving pre-service teacher’s perceived confidence and competence to teach physical education within authentic learning environments. J. Educ. Train. Stud. 7:32. doi: 10.11114/jets.v7i8.4053

Ferrari, J. R., and O’Callaghan, J. (2005). Prevalence of procrastination in the United States, United Kingdom, and Australia: arousal and avoidance delays among adults. N. Am. J. Psychol. 7, 1–6. Available at: https://psycnet.apa.org/record/2005-03779-001

Gedye, S. (2010). Formative assessment and feedback: a review. Planet 23, 40–45. doi: 10.11120/plan.2010.00230040

Hagenauer, G., and Volet, S. E. (2014). Teacher–student relationship at university: an important yet under-researched field. Oxf. Rev. Educ. 40, 370–388. doi: 10.1080/03054985.2014.921613

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Hill, J., and West, H. (2020). Improving the student learning experience through dialogic feed-forward assessment. Assess. Eval. High. Educ. 45, 82–97. doi: 10.1080/02602938.2019.1608908

Hughes, G. (2011). Towards a personal best: a case for introducing ipsative assessment in higher education. Stud. High. Educ. 36, 353–367. doi: 10.1080/03075079.2010.486859

Irons, A., and Elkington, S. (2021). Enhancing learning through formative assessment and feedback. Abingdon: Routledge.

Jones, D. (2013). Making feedback effective in a presentation skills class. Engl. Teach. China 2, 24–28. Available at: https://www.xjtlu.edu.cn/assets/files/publications/etic/issue-2/2_7_jones_2013.pdf

Jug, R., Jiang, X., and Bean, S. M. (2019). Giving and receiving effective feedback: a review article and how-to guide. Arch. Pathol. Lab Med. 143, 244–250. doi: 10.5858/arpa.2018-0058-RA

Juwah, C., Macfarlane-Dick, D., Matthew, B., Nicol, D., Ross, D., and Smith, B. (2004). Enhancing student learning through effective formative feedback.

Kereković, S. (2021). Formative assessment and motivation in ESP: a case study. Lang. Teach. Res. Q. 23, 64–79. doi: 10.32038/ltrq.2021.23.06

Khajeloo, M., Birt, J. A., Kenderes, E. M., Siegel, M. A., Nguyen, H., Ngo, L. T., et al. (2022). Challenges and accomplishments of practicing formative assessment: a case study of college biology instructors’ classrooms. Int. J. Sci. Math. Educ. 20, 237–254. doi: 10.1007/s10763-020-10149-8

Klingsieck, K. B. (2013). Procrastination in different life-domains: is procrastination domain specific? Curr. Psychol. 32, 175–185. doi: 10.1007/s12144-013-9171-8

Leighton, J. P., and Bustos Gómez, M. C. (2018). A pedagogical alliance for trust, wellbeing and the identification of errors for learning and formative assessment. Educ. Psychol. 38, 381–406. doi: 10.1080/01443410.2017.1390073

Lipnevich, A. A., and Panadero, E. (2021). A review of feedback models and theories: descriptions, definitions, and conclusions. Front. Educ. 6:720195. doi: 10.3389/feduc.2021.720195

Morris, R., Perry, T., and Wardle, L. (2021). Formative assessment and feedback for learning in higher education: a systematic review. Rev. Educ. 9:e3292. doi: 10.1002/rev3.3292

Myers, T., and Buchanan, J. (2025). Dialogism in feedback literacies: a critical review. Assess. Eval. High. Educ. 50, 846–860. doi: 10.1080/02602938.2025.2478159

Narciss, S. (2017). “Conditions and effects of feedback viewed through the lens of the interactive tutoring feedback model” in Scaling up assessment for learning in higher education, the enabling power of assessment. eds. D. Carless, S. M. Bridges, C. K. Y. Chan, and R. Glofcheski (Singapore: Springer Singapore), 173–189.

Nedrehagen, E. S., Vee, T. S., Stokstad, J. M., and Orm, S. (2024). Protocol: approaches for dialogic formative feedback and their outcomes in higher education; a scoping review.

Nicol, D. (2010). From monologue to dialogue: improving written feedback processes in mass higher education. Assess. Eval. High. Educ. 35, 501–517. doi: 10.1080/02602931003786559

Nicol, D. (2019). Reconceptualising feedback as an internal not an external process. Ital. J. Educ. Res. 1, 71–84. Available at: https://ojs.pensamultimedia.it/index.php/sird/article/view/3270

Nicol, D., and Macfarlane-Dick, D. (2004). Rethinking formative assessment in HE: a theoretical model and seven principles of good feedback practice.

Nicol, D., and Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud. High. Educ. 31, 199–218. doi: 10.1080/03075070600572090

Núñez-Peña, M. I., Bono, R., and Suárez-Pellicioni, M. (2015). Feedback on students’ performance: a possible way of reducing the negative effect of math anxiety in higher education. Int. J. Educ. Res. 70, 80–87. doi: 10.1016/j.ijer.2015.02.005

Olsen, T., and Hunnes, J. (2024). Improving students’ learning—the role of formative feedback: experiences from a crash course for business students in academic writing. Assess. Eval. High. Educ. 49, 129–141. doi: 10.1080/02602938.2023.2187744

Orsmond, P., Merry, S., and Callaghan, A. (2004). Implementation of a formative assessment model incorporating peer and self‐assessment. Innov. Educ. Teach. Int. 41, 273–290. doi: 10.1080/14703290410001733294

Ott, C., Robins, A., and Shephard, K. (2016). Translating principles of effective feedback for students into the CS1 context. ACM Trans. Comput. Educ. 16, 1–27. doi: 10.1145/2737596

Ouzzani, M., Hammady, H., Fedorowicz, Z., and Elmagarmid, A. (2016). Rayyan—a web and mobile app for systematic reviews. Syst. Rev. 5:210. doi: 10.1186/s13643-016-0384-4

Panadero, E., and Lipnevich, A. A. (2022). A review of feedback models and typologies: towards an integrative model of feedback elements. Educ. Res. Rev. 35:100416. doi: 10.1016/j.edurev.2021.100416

Pollock, D., Peters, M. D., Khalil, H., McInerney, P., Alexander, L., Tricco, A. C., et al. (2023). Recommendations for the extraction, analysis, and presentation of results in scoping reviews. JBI Evid. Synth. 21, 520–532. doi: 10.11124/JBIES-22-00123

Poyatos, C., Muurlink, O., and Ng, C. (2011). Using the IGCRA (individual, group, classroom reflective action) technique to enhance teaching and learning in large accountancy classes. J. Technol. Sci. Educ. 1, 24–37. doi: 10.3926/jotse.2011.11

Ruder, S. M., and Stanford, C. (2018). Strategies for training undergraduate teaching assistants to facilitate large active-learning classrooms. J. Chem. Educ. 95, 2126–2133. doi: 10.1021/acs.jchemed.8b00167

Sambell, K. (2011). Rethinking feedback in higher education: an assessment for learning perspective.

Schluer, J., and Brück-Hübner, A. (2025). Diversity of pedagogical feedback designs: results from a scoping review of feedback research in higher education. Assess. Eval. High. Educ. 50, 295–307. doi: 10.1080/02602938.2024.2378336

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

Snijders, I., Wijnia, L., Rikers, R. M. J. P., and Loyens, S. M. M. (2020). Building bridges in higher education: student-faculty relationship quality, student engagement, and student loyalty. Int. J. Educ. Res. 100:101538. doi: 10.1016/j.ijer.2020.101538

Steel, P. (2007). The nature of procrastination: a meta-analytic and theoretical review of quintessential self-regulatory failure. Psychol. Bull. 133, 65–94. doi: 10.1037/0033-2909.133.1.65

Steen-Utheim, A., and Wittek, A. L. (2017). Dialogic feedback and potentialities for student learning. Learn. Cult. Soc. Interact. 15, 18–30. doi: 10.1016/j.lcsi.2017.06.002

Sun, S., Gao, X., Rahmani, B. D., Bose, P., and Davison, C. (2023). Student voice in assessment and feedback (2011–2022): a systematic review. Assess. Eval. High. Educ. 48, 1009–1024. doi: 10.1080/02602938.2022.2156478

Szymkowiak, A., Melović, B., Dabić, M., Jeganathan, K., and Kundi, G. S. (2021). Information technology and gen Z: the role of teachers, the internet, and technology in the education of young people. Technol. Soc. 65:101565. doi: 10.1016/j.techsoc.2021.101565

Tam, A. C. F. (2021). Undergraduate students’ perceptions of and responses to exemplar-based dialogic feedback. Assess. Eval. High. Educ. 46, 269–285. doi: 10.1080/02602938.2020.1772957

Tee, D. D., and Ahmed, P. K. (2014). 360 degree feedback: an integrative framework for learning and assessment. Teach. High. Educ. 19, 579–591. doi: 10.1080/13562517.2014.901961

Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., et al. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann. Intern. Med. 169, 467–473. doi: 10.7326/M18-0850

Tsuchiya, M. (2018). The effects of a teacher’s formative feedback on the self-regulated learning of lower-proficiency Japanese university learners of English: a qualitative data analysis using TEM. Annu. Rev. Engl. Lang. Educ. Jpn. 1:97. doi: 10.20581/arele.29.0_97

Waskito, I., Wulansari, R. E., and Kyaw, Z. Y. (2022). The adventure of formative assessment with active feedback in the vocational learning: the empirical effect for increasing students’ achievement. J. Tech. Educ. Train. 14, 54–62. doi: 10.30880/jtet.2022.14.01.005

Wiltbank, L., Williams, K., Salter, R., Marciniak, L., Sederstrom, E., McConnell, M., et al. (2019). Student perceptions and use of feedback during active learning: a new model from repeated stimulated recall interviews. Assess. Eval. High. Educ. 44, 431–448. doi: 10.1080/02602938.2018.1516731

Winstone, N., and Carless, D. (2019). Designing effective feedback processes in higher education: A learning-focused approach. Abingdon: Routledge.

Yang, M., and Carless, D. (2013). The feedback triangle and the enhancement of dialogic feedback processes. Teach. High. Educ. 18, 285–297. doi: 10.1080/13562517.2012.719154

Yorke, M. (2003). Formative assessment in higher education: moves towards theory and the enhancement of pedagogic practice. High. Educ. 45, 477–501. doi: 10.1023/A:1023967026413

Keywords: dialogic formative feedback, formative feedback, formative assessment, shared assessment, higher education

Citation: Nedrehagen E, Vee TS, Stokstad JM and Orm S (2025) A scoping review of dialogic formative feedback practices in higher education. Front. Educ. 10:1696703. doi: 10.3389/feduc.2025.1696703

Edited by:

Halvdan Haugsbakken, Østfold University College, Norway

Reviewed by:

Viktoria Magne, University of West London, United Kingdom
Delia Muste, Babes-Bolyai University, Romania