- 1College of Wealth Management, Ningbo University of Finance and Economics, Ningbo, China

- 2School of Education, The University of Queensland-Business School, Brisbane, QLD, Australia

Introduction: The economic implications of air quality monitoring have become a critical concern in environmental economics, particularly in balancing economic growth with sustainable environmental policies. Traditional methods of assessing air quality and its economic impact rely heavily on stationary sensor networks and survey-based economic models, which often suffer from spatial limitations, delayed data availability, and high operational costs. These approaches fail to capture real-time variations in pollution levels and their immediate economic consequences.

Methods: To address these challenges, we propose a novel video analysis approach integrated with an Eco-Regulated Market Dynamics Model (ERMDM) to enhance air quality assessment and its economic evaluation. Our method leverages advanced computer vision techniques to extract pollution indicators from video footage, combined with a dynamic market-based regulatory framework that incorporates stochastic environmental fluctuations, intertemporal optimization, and policy-induced market responses.

Results: By embedding environmental constraints into economic decision-making, the proposed model effectively balances industrial productivity with ecological sustainability.

Discussion: Experimental validation demonstrates that our approach provides more accurate, real-time assessments of air quality impacts on economic activities, enabling policymakers to design adaptive taxation strategies and market-driven permit allocation mechanisms. This fusion of video analysis with environmental economic modeling presents a transformative solution for sustainable economic policy formulation in response to air quality fluctuations.

1 Introduction

Air pollution is a pressing global challenge that not only affects human health but also has significant economic consequences. Poor air quality is linked to increased healthcare costs, reduced labor productivity, and diminished property values, placing a considerable burden on national economies (Luxem et al., 2022). Not only does air pollution impact individual wellbeing, but it also exacerbates socio-economic inequalities by disproportionately affecting lower-income communities. Inadequate air quality monitoring systems often lead to ineffective policy decisions, resulting in suboptimal resource allocation and economic inefficiencies (Wan et al., 2021). Traditional monitoring techniques, which rely on ground-based sensors, are expensive to deploy on a large scale, limiting comprehensive coverage (Kitaguchi et al., 2021). In recent years, advancements in video analysis techniques have presented new opportunities for cost-effective, real-time air quality assessment (Hendricks et al., 2020). This emerging approach enhances both spatial and temporal resolution and provides policymakers with actionable insights for economic planning and sustainable development. Given the economic stakes associated with air pollution, the integration of video-based analysis into air quality monitoring systems represents a crucial step toward mitigating financial losses and fostering long-term economic resilience.

Early air quality assessment systems predominantly relied on symbolic AI and expert rule-based approaches (Liu et al., 2020). These methods used predefined logic, regulatory thresholds, and expert knowledge to evaluate pollution levels (Tang et al., 2020). By integrating meteorological data, pollutant thresholds, and emission inventories, they offered decision-makers qualitative insights into air quality trends. However, symbolic AI frameworks were typically static, unable to adapt to rapidly changing environmental conditions, and required continuous manual updates to maintain relevance (Cuevas et al., 2020). Additionally, the computational cost and rigidity of rule-based models limited their economic feasibility and scalability (Lin et al., 2020). To overcome these limitations, researchers turned to data-driven and machine learning methods, which employed statistical and pattern recognition models to improve predictive performance (Zamani et al., 2020). These models utilized historical pollution records, meteorological patterns, and traffic flows to forecast air quality with greater accuracy (Mercat et al., 2020). The development of IoT and sensor networks enabled more frequent data collection at lower cost, expanding access to real-time monitoring (Ben et al., 2021). However, machine learning approaches were still constrained by data quality issues, especially in regions with sparse or incomplete datasets. Furthermore, their black-box nature and lack of interpretability hindered trust and adoption among policymakers, who require transparent evidence for regulatory decisions (Stappen et al., 2021).

In response to these shortcomings, deep learning has emerged as a powerful alternative, particularly in conjunction with video analysis (Stenum et al., 2020). Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have shown strong capabilities in capturing spatial and temporal features from video footage, enabling precise, real-time air quality estimation (Ou et al., 2021). These models extract visual signals—such as haze intensity, traffic density, and smoke emissions—to infer pollutant levels without the need for dense sensor grids. With the introduction of pre-trained models and transfer learning, researchers have significantly reduced training costs and improved adaptability to different environments (Seuren et al., 2020). Moreover, combining video inputs with satellite and meteorological data has enhanced model robustness and predictive reliability across conditions. Nonetheless, deep learning still faces challenges, such as high computational requirements and limited interpretability, which raise concerns about transparency, cost, and public acceptance (Neimark et al., 2021).

Motivated by these developments and limitations, this study proposes a hybrid, economically viable video-based air quality monitoring framework. Our method integrates pre-trained deep learning models with multi-source data fusion, combining video streams, meteorological variables, and auxiliary sensor inputs. This framework aims to deliver scalable, cost-effective, and interpretable pollution assessments that are suitable for deployment in both urban and rural environments. By improving monitoring coverage and reducing reliance on costly hardware infrastructure, our approach enhances real-time decision-making capabilities for sustainable environmental and economic governance. The proposed method offers several key advantages:

2 Related work

2.1 Economic impact of air quality monitoring

Air pollution has profound economic consequences, affecting healthcare costs, labor productivity, and property values. According to recent estimates, the global economic burden of air pollution exceeds USD 2.9 trillion annually, accounting for approximately 3.3% of global GDP (Wang et al., 2021) These costs arise from increased medical expenses due to pollution-related diseases, lower productivity caused by poor worker health, and damage to agriculture and industrial output. Traditional air quality monitoring relies on fixed sensor networks and survey-based economic models, which are often expensive and limited in coverage (Buch et al., 2022). Models such as the Environmental Benefits Mapping and Analysis Program (BenMAP) estimate the benefits of pollution control, but require large amounts of ground-based data, making them less useful for real-time decision-making (Selva et al., 2022). Several studies have shown that proactive air quality policies can lead to long-term economic benefits. For example, the U.S. Clean Air Act has shown a return of about 30 dollars in benefits for every dollar spent (Apostolidis et al., 2021). Similar patterns have been observed in China and Europe, where stricter air quality laws have been linked to rising property values, more investment, and better public health. However, these gains depend on reliable and up-to-date pollution data (Tagg et al., 2020). A major challenge is to build affordable and scalable monitoring systems that can track pollution levels in real time. This challenge has encouraged researchers to explore video-based monitoring, which uses cameras and computer analysis as a more flexible alternative to traditional sensors.

2.2 Advancements in video analysis for air quality monitoring

Video analysis has become a promising method for monitoring air quality. High-definition cameras—placed on drones, satellites, or buildings—can capture visual signs of pollution, such as smog, smoke, or vehicle exhaust (yu Duan et al., 2020). When paired with image recognition techniques, these videos can be analyzed in real time to estimate pollution levels with fine detail in both space and time (Pareek and Thakkar, 2020). The rise of deep learning has improved how accurately pollution can be detected from video. Neural networks like ResNet and EfficientNet help computers recognize visual pollution patterns from video frames (Noetel et al., 2020). Newer techniques, like Vision Transformers (ViTs), which are designed to process images more flexibly, have shown even better results across different environmental conditions. Mobile platforms have extended these capabilities. Drones with special cameras and sensors can quickly inspect large areas to find pollution sources (Awad et al., 2021). Satellites operated by organizations like NASA and ESA give global views of air pollution and help track long-term trends (Wang et al., 2020).

Despite progress, video-based methods still face hurdles. Lighting, clouds, or objects blocking the view can interfere with accuracy. Also, analyzing video data at large scale requires powerful computers (Prechsl et al., 2022). Researchers are now exploring lighter models and local (edge) computing to reduce these limitations.

2.3 Implications and economic benefits of air quality monitoring

Using video to monitor air quality brings both environmental and economic advantages. It lowers monitoring costs while improving the quality and speed of pollution detection (Aloraini et al., 2021). This helps governments take faster action, saving money on healthcare and reducing losses from lower worker productivity or environmental damage. In places that use video monitoring, enforcement of pollution rules has improved. Authorities can act sooner and more precisely, and polluting industries are encouraged to adopt cleaner technologies (Nandwani and Verma, 2021). Video monitoring also supports market-based tools like emissions trading. By tracking pollution levels continuously, governments can set fair prices on emissions and ensure that polluters pay for the harm they cause (Chakravarthi et al., 2020). Still, challenges remain. Setting up video systems, training AI models, and maintaining real-time processing come with high upfront costs. Privacy is also a concern, especially in cities with dense populations (Li et al., 2021; Ding et al., 2022). Ensuring public trust and legal compliance is essential.

Overall, combining video technology with economic models offers a new way to manage pollution more effectively (Roth et al., 2022). Future research should focus on making these systems easier to understand, cheaper to operate, and capable of using multiple types of data to improve results.

Compared to existing approaches in video-based air quality monitoring and environmental economic modeling, our proposed framework introduces several important innovations that address both technical and policy-level limitations in the literature. First, while prior studies have applied CNNs or ViTs for pollution detection, they often focus solely on classification accuracy or visual signal estimation. Our method extends this line of work by directly integrating video-derived indicators—such as traffic volume and visible emissions—into a dynamic econometric model, thereby enabling causal analysis of air quality’s economic effects. Second, traditional economic models like BenMAP rely heavily on static sensor data and survey-based estimations. In contrast, our Eco-Regulated Market Dynamics Model (ERMDM) utilizes high-frequency video inputs and real-time feedback mechanisms (e.g., dynamic taxation and permit allocation), making it more responsive and adaptive to environmental fluctuations. Third, while some recent studies have proposed market-based regulation schemes, they often lack behavioral integration. Our model explicitly captures behavior-driven economic signals (e.g., pedestrian density, vehicle flow) and links them to regulatory outcomes, allowing for more precise and context-aware policy design. Together, these contributions position our approach as a novel and interdisciplinary bridge between environmental informatics, computer vision, and environmental economics, offering both scientific advancement and practical relevance.

3 Methods

3.1 Overview

Environmental economics is a critical subfield of economics that examines the economic effects of environmental policies and the efficient allocation of natural resources. The primary goal of this research is to integrate economic principles with environmental sustainability to guide decision-making processes that balance economic growth and ecological preservation. This section provides an in-depth introduction to the methodology and theoretical foundations adopted in our study. we present a structured exploration of three key aspects: the fundamental principles and modeling techniques in environmental economics, the development of a novel economic model for environmental impact assessment, and the strategic framework proposed for optimizing resource allocation and policy interventions.

In Section 3.2, we establish the essential theoretical background necessary to understand the economic mechanisms governing environmental interactions. This includes classical and contemporary models such as externalities, market failures, Pigouvian taxes, and tradable permits. The mathematical formalization of these models provides the foundation for analyzing environmental policies and their implications on market dynamics. By structuring the problem within an economic framework, we aim to elucidate the trade-offs between economic activity and environmental externalities. Following this, Section 3.3 introduces our proposed economic model designed to evaluate environmental policies more effectively. Our approach extends existing methodologies by incorporating multi-dimensional factors, including stochastic environmental fluctuations, policy-induced market responses, and intertemporal resource allocation strategies. The formulation of this model relies on advanced mathematical techniques to ensure analytical tractability and empirical applicability. In Section 3.4, we present a strategic framework aimed at optimizing environmental policies and resource management. By integrating game-theoretic and optimization approaches, we devise a novel strategy that enhances economic efficiency while mitigating environmental degradation. This section highlights the implementation aspects of our approach and demonstrates its effectiveness in addressing real-world environmental challenges.

To support air quality monitoring through video analysis, we utilized publicly accessible surveillance footage from municipal traffic management systems and environmental observation platforms. These data sources provided continuous daytime RGB video streams from fixed-position cameras monitoring urban roads, intersections, and industrial zones. The video data were first preprocessed through a three-step pipeline: frame sampling at a rate of one frame per second to manage computational load; resolution standardization to 720p to ensure consistent input dimensions; and filtering of frames captured under extreme low-light or overexposed conditions. For analytical processing, we employed deep learning-based computer vision techniques, notably the YOLOv5 object detection framework. This enabled automatic identification and tracking of vehicles, pedestrians, and visible industrial emissions (e.g., smoke plumes). Detected objects were counted frame-by-frame, and temporal aggregation was applied to generate time-series indicators such as traffic flow intensity, pedestrian density, and emission activity levels. These video-derived variables were subsequently aligned with air quality sensor data and integrated into our econometric modeling framework to assess the dynamic relationship between pollution levels and local economic behavior.

The rationale for adopting video analysis stems from the need to capture high-frequency, behaviorally grounded indicators of economic activity that are not readily available through conventional datasets. Traditional economic metrics often lack the temporal resolution or spatial granularity necessary to reflect real-time responses to short-term environmental changes. Video-based monitoring fills this gap by providing observational proxies—such as traffic congestion, pedestrian flow, and visible industrial emissions—that closely align with immediate human and industrial activity. We assume that observed behavior (e.g., reduced vehicle counts or pedestrian activity) reflects underlying economic responses to variations in air quality, such as reduced consumer demand or work attendance during pollution episodes. While these proxies do not represent monetary values directly, they serve as valid leading indicators of economic disruption or adaptation. Additionally, our econometric framework assumes a conditional independence between video-derived indicators and unobserved confounders, conditional on control variables such as weather, time-of-day, and location-fixed effects. These assumptions are standard in environmental economics literature and ensure causal interpretability in linking pollution exposure to behavioral-economic outcomes.

3.2 Preliminaries

Environmental economics seeks to formalize the interaction between economic activities and environmental sustainability using mathematical and economic models. This section introduces the fundamental concepts, notations, and mathematical foundations necessary to understand the relationship between economic decisions and environmental outcomes. Our approach integrates classical environmental economic theories with dynamic modeling techniques to ensure a robust framework for policy optimization.

We consider an economic system consisting of multiple firms, indexed by

The fundamental economic-environmental trade-off can be expressed through the following constrained optimization problem (Equation 1):

where

A central issue in environmental economics is the presence of negative externalities, where firms do not internalize the full societal cost of their pollution. The marginal external cost (MEC) of emissions is defined as (Equation 2):

where

The total environmental stock

Each firm

Firms aim to maximize their profit, considering costs for labor, capital, and environmental taxes

The first-order conditions for optimal input choices yield (Equations 6-8):

To internalize environmental externalities, the regulator imposes an optimal emission tax

The equilibrium condition for environmental quality requires (Equation 10):

The system’s stability is analyzed by linearizing the environmental transition equation around the steady state

where

3.3 Eco-Regulated Market Dynamics Model (ERMDM)

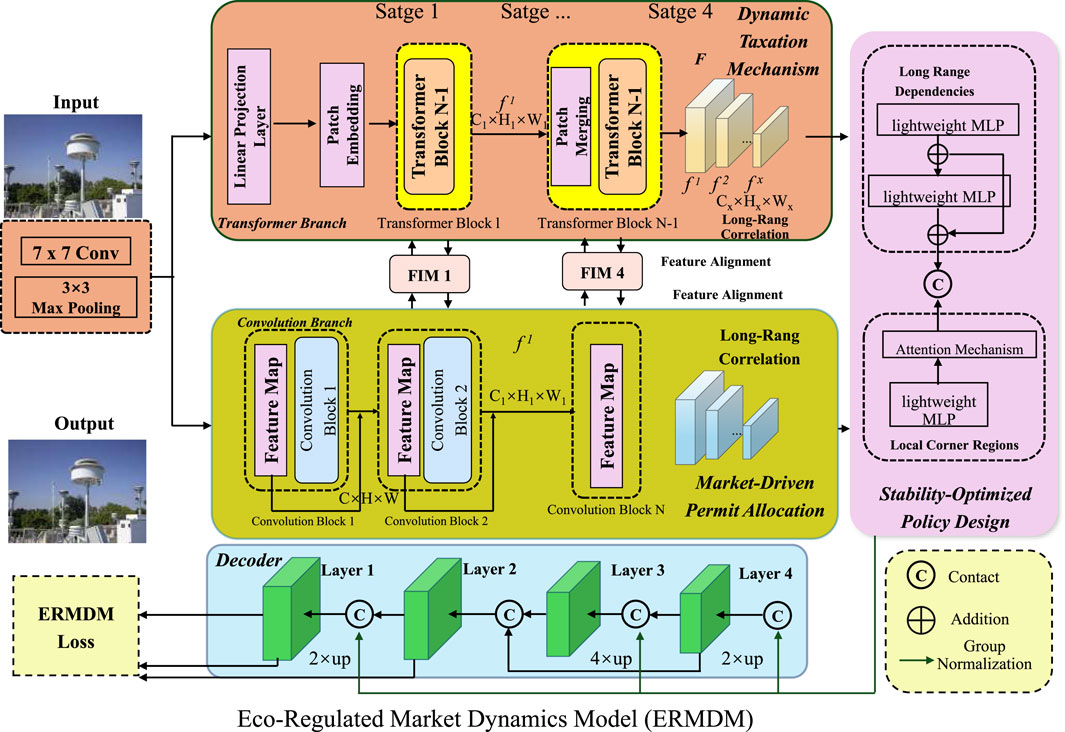

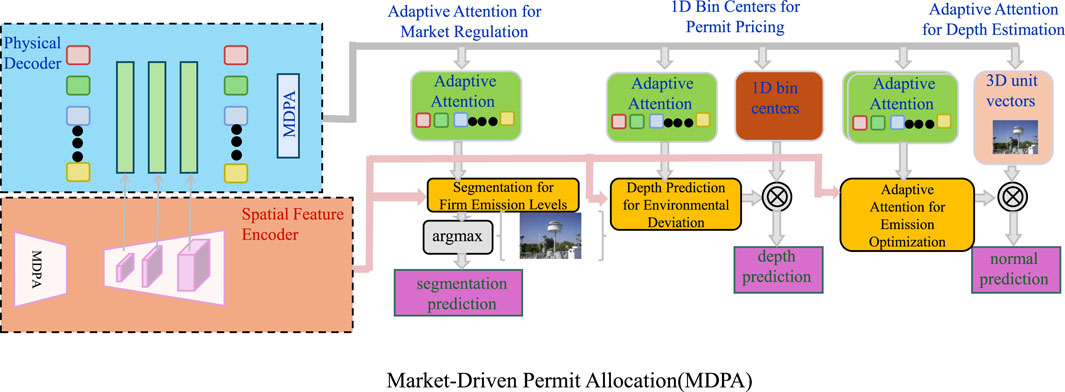

In this section, we introduce a novel economic model, termed the Eco-Regulated Market Dynamics Model (ERMDM), designed to integrate environmental constraints into economic decision-making. This model extends traditional environmental economic frameworks by incorporating stochastic environmental fluctuations, intertemporal optimization, and market-based regulatory interventions. Our objective is to develop a mathematically rigorous approach that balances economic growth with ecological sustainability (As shown in Figure 1).

Figure 1. Eco-Regulated Market Dynamics Model (ERMDM). A hybrid economic-environmental framework integrating convolutional and transformer-based architectures for sustainable market regulation. The model features a dynamic taxation mechanism, market-driven permit allocation, and stability-optimized policy design, ensuring economic growth while maintaining ecological balance. The visual representation highlights key components, including input processing, feature extraction, long-range correlation modeling, and stability optimization through adaptive regulatory interventions.

In this study, several key variables were extracted from video footage. These included: vehicle counts per unit time, used to estimate traffic flow intensity; frequency of industrial plume visibility, which serves as a proxy for emission activity; and average pedestrian presence, reflecting human exposure and activity levels. These variables were obtained using computer vision techniques, such as object detection and temporal aggregation, and were integrated into the econometric model to enhance temporal granularity and support causal inference regarding air quality and economic activity.

To quantitatively assess the economic implications of air pollution, we linked the video-derived indicators with economic activity proxies using a panel econometric framework. Specifically, vehicle and pedestrian counts extracted from video footage were used as real-time indicators of consumer and labor mobility, respectively. These behavioral metrics were correlated with localized economic data such as retail transaction volumes, business opening hours (where available), and industrial operation cycles. The econometric model employed fixed effects and time lags to capture both immediate and delayed responses to variations in air quality (e.g., PM2.5 levels), while controlling for confounders such as weather and weekday/weekend effects. By integrating temporally granular video-derived behavior data with environmental and economic variables, we were able to quantify the marginal economic loss or slowdown attributable to deteriorating air quality. This allowed for an estimation of pollution-related economic sensitivity at the neighborhood or district level.

3.3.1 Dynamic Taxation Mechanism

To internalize environmental externalities and ensure sustainable economic growth, we propose a dynamic taxation scheme where the emission tax rate is adaptively adjusted based on real-time environmental conditions. Unlike traditional fixed taxation models, which often fail to respond to rapid environmental fluctuations, our approach incorporates a feedback-driven mechanism that continuously modifies taxation levels in response to pollution variations. This ensures that firms dynamically optimize their emissions strategies while maintaining economic stability. The taxation function is structured as (Equation 12):

where

where

which shows that firms will reduce emissions as taxation increases in response to worsening environmental conditions. To prevent excessive fluctuations in taxation and ensure economic stability, a regulatory damping factor

where

3.3.2 Market-Driven Permit Allocation

Instead of imposing rigid emission limits, we introduce a dynamic permit trading system where the number of available permits adjusts in response to environmental fluctuations. This mechanism provides a flexible regulatory approach that aligns economic incentives with sustainability goals while ensuring environmental stability (As shown in Figure 2). The total permit allocation at time

where

Figure 2. Market-Driven Permit Allocation (MDPA) Framework. This figure illustrates the Market-Driven Permit Allocation (MDPA) framework, which dynamically adjusts the number of available permits based on real-time environmental conditions. The system employs adaptive attention mechanisms for market regulation, permit pricing, and emission optimization. Firms optimize their emissions by considering segmentation for emission levels, depth prediction for environmental deviation, and normal prediction, leading to an economically efficient and sustainable allocation of pollution rights. This approach ensures that firms internalize environmental costs while aligning with sustainability goals.

The equilibrium permit price

where

To further enhance stability, we incorporate a dynamic feedback adjustment mechanism that accounts for both deviations from the environmental target and the rate of environmental change (Equation 18):

where

The optimal emissions strategy for firms is derived from profit maximization under the permit system, leading to the condition (Equation 19):

where

3.3.3 Stability-optimized policy design

To ensure the long-term viability of environmental policies, we develop a stability-optimized policy framework that integrates economic and ecological dynamics. The evolution of the environmental state is modeled as (Equation 20):

where

where

A dynamic taxation mechanism is introduced to influence firm emissions, defined as (Equation 22):

where

where

By integrating adaptive taxation, market-driven permit allocation, and stability-focused policy design, the stability-optimized policy framework offers a robust solution for balancing economic productivity with environmental sustainability. The regulatory structure dynamically adjusts to economic conditions, ensuring long-term ecological and economic resilience.

3.4 Sustainable Market Incentive Strategy (SMIS)

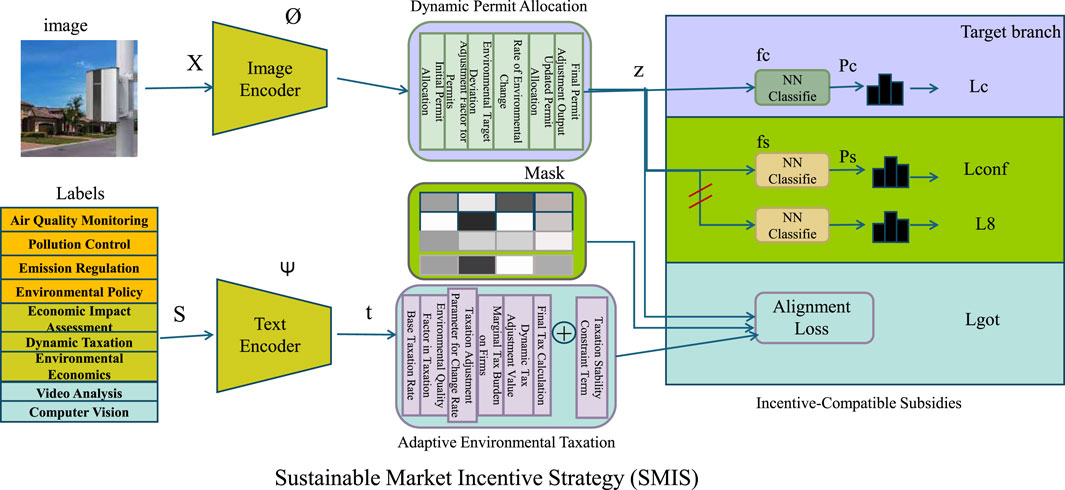

Building upon the Eco-Regulated Market Dynamics Model (ERMDM), we introduce a novel regulatory framework termed the Sustainable Market Incentive Strategy (SMIS). This strategy enhances environmental sustainability while maintaining economic efficiency through market-based incentives and dynamic policy adjustments. Unlike traditional fixed taxation or quota-based regulations, SMIS integrates adaptive mechanisms to optimize resource allocation in real-time (As shown in Figure 3).

Figure 3. This figure illustrates the architecture of the Sustainable Market Incentive Strategy (SMIS), which integrates Adaptive Environmental Taxation, Dynamic Permit Allocation, and Incentive-Compatible Subsidies to optimize environmental sustainability while maintaining economic efficiency. The image and text encoders process multi-modal environmental data, feeding into an adaptive taxation system and a dynamic permit allocation mechanism. The taxation module dynamically adapts in real-time according to changing environmental conditions, while the permit system dynamically regulates emissions through market-driven incentives. The final classification and alignment loss modules ensure that economic activities align with sustainability goals, promoting optimal emissions control and policy adaptation.

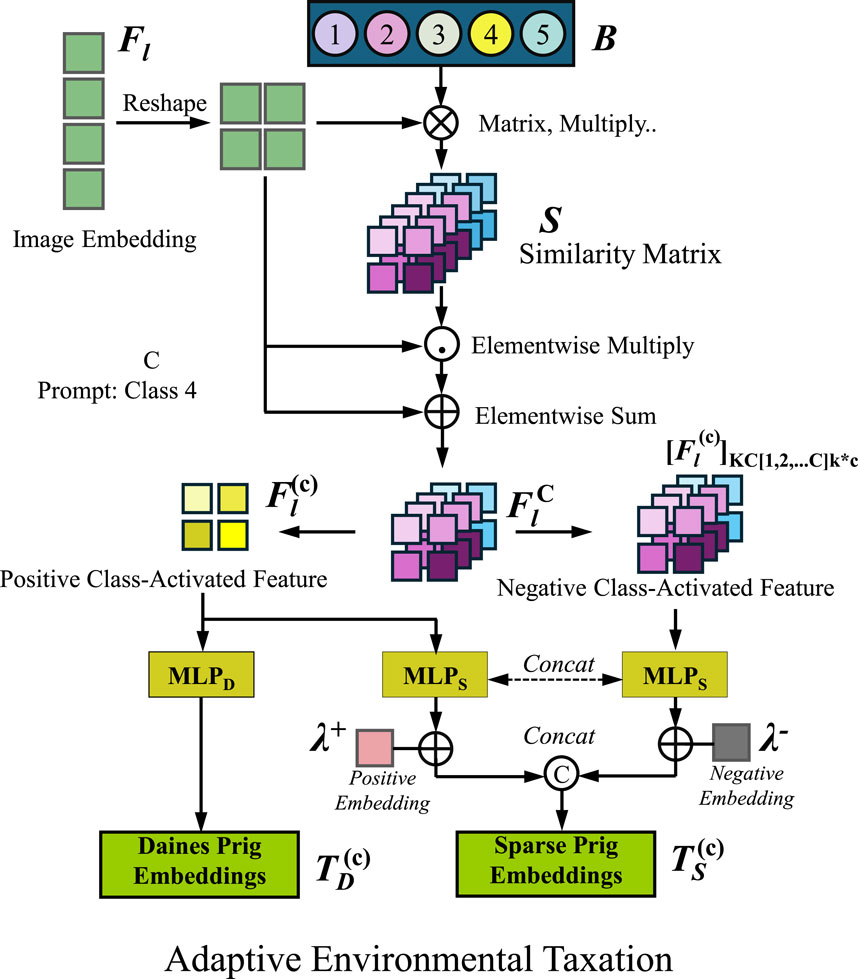

3.4.1 Adaptive Environmental Taxation

A key innovation in SMIS is the adaptive environmental tax function, which dynamically adjusts taxation based on real-time environmental conditions to internalize negative externalities effectively. Traditional static tax models fail to capture the dynamic nature of environmental changes, leading to either excessive regulatory burden or insufficient deterrence against pollution. In contrast, our approach introduces a flexible tax function that evolves in response to pollution levels, ensuring that firms are incentivized to adopt sustainable practices while maintaining economic efficiency (As shown in Figure 4). The taxation function is formulated as (Equation 24):

where

which ensures that taxation remains responsive to both direct emissions and their cumulative environmental impact. Firms make production decisions by balancing profits against taxation, leading to the optimal emissions level given by the first-order condition (Equation 26):

which determines the equilibrium pollution level based on market conditions and regulatory parameters. The taxation model incorporates a stability condition to prevent excessive fluctuations in tax rates that may disrupt economic activity. The optimal tax adjustment follows the dynamic stability equation (Equation 27):

where

Figure 4. Illustration of the Adaptive Environmental Taxation mechanism in the SMIS framework. The diagram showcases the process of dynamically adjusting taxation based on environmental conditions. Image embeddings are processed through a similarity matrix to extract class-activated features, which are then passed through multi-layer perceptrons (MLPs) to generate embeddings for taxation. The taxation model integrates real-time pollution levels and environmental degradation rates to determine optimal tax rates, ensuring economic efficiency while mitigating negative externalities. The adaptive taxation function responds to changes in environmental quality, creating a self-regulating mechanism for sustainable governance.

3.4.2 dynamic permit allocation

SMIS incorporates a tradable permit system with an evolving emissions cap, adjusting dynamically based on real-time environmental conditions. This approach ensures that firms operate within a sustainable framework while adapting to changes in ecological parameters. The emissions cap evolves according to (Equation 28):

where

where

To maintain market efficiency, the permit allocation system follows a feedback adjustment rule that accounts for deviations from the optimal environmental state (Equation 30):

where

Firms optimize their emissions choices by balancing permit costs, taxation, and production constraints, governed by the equilibrium condition (Equation 31):

where

3.4.3 Incentive-Compatible Subsidies

To encourage sustainable practices and enhance environmental responsibility, the Sustainable Market Incentive Scheme (SMIS) introduces incentive-compatible subsidies that reward firms for reducing emissions beyond the mandated regulatory thresholds. These subsidies create a financial advantage for businesses that actively invest in sustainable technologies and operational improvements, ensuring both long-term ecological benefits and economic viability. The subsidy function is formulated as (Equation 32):

where

This capping mechanism prevents firms from exploiting subsidies disproportionately while still providing sufficient motivation for sustainable investments. To encourage firms to continuously improve, the subsidy can be adjusted dynamically based on past performance, incorporating a decay factor

where

where

4 Experimental setup

4.1 Dataset

The THUMOS-14 dataset (Kim and Cho, 2022) serves as a prominent benchmark in video analysis, extensively utilized for evaluating action recognition and temporal action localization methods. It consists of trimmed and untrimmed video clips sourced from YouTube, covering 101 action classes. The dataset is divided into training, validation, and test sets, with the validation and test sets containing challenging untrimmed videos where multiple actions occur. THUMOS-14 enables researchers to develop and evaluate deep learning models for action detection in continuous video streams. Due to its diverse and realistic scenarios, it plays a crucial role in advancing video understanding and improving the performance of machine learning models in recognizing human activities. The LongVALE Dataset (Geng et al., 2024) is designed to evaluate long-term video understanding with a focus on multimodal event detection. It contains extensive video sequences spanning diverse domains, including surveillance footage, sports events, and natural scenes. Each video is accompanied by rich annotations that include temporal event boundaries, audio cues, and textual descriptions, allowing for comprehensive analysis of time-dependent patterns. The dataset encourages the development of models that can handle complex event relationships over extended durations. By incorporating diverse video sources and multimodal information, LongVALE provides a robust foundation for advancing research in long-term video comprehension and spatiotemporal reasoning. The DREAM-1K Dataset (Wang et al., 2024) is a large-scale collection curated for research on dynamic real-world event analysis. It includes 1,000 high-quality video clips sourced from various environments such as urban landscapes, indoor activities, and natural settings. Each video is manually annotated with fine-grained event categories and temporal segmentations to facilitate supervised learning tasks. The dataset is particularly valuable for studying the interaction between objects, people, and the environment in complex scenes. By providing detailed labels and diverse visual contexts, DREAM-1K supports the development of advanced video understanding models that require strong contextual awareness and event-level reasoning. The VIRAT Video Dataset (Demir et al., 2021) is an extensive collection of surveillance videos aimed at human activity recognition in real-world scenarios. It includes high-resolution videos recorded from static cameras in outdoor environments such as parking lots, industrial sites, and public spaces. The dataset provides detailed frame-level annotations covering various human actions, interactions, and object movements. VIRAT is widely used for evaluating models in video-based security applications, behavioral analysis, and scene understanding. Its realistic and challenging settings make it an essential resource for advancing computer vision techniques related to real-time surveillance, anomaly detection, and intelligent video monitoring systems.

4.2 Experimental details

For our experiments, we employ a transformer-based architecture with pre-trained language models as the backbone. We use BERT-base and RoBERTa-large as the primary encoders to capture contextual information effectively. The implementation is based on PyTorch and the Hugging Face Transformers library. All experiments are conducted on NVIDIA A100 GPUs with 40 GB memory. The models are trained using the AdamW optimizer with a learning rate of 2e-5, and a linear learning rate decay with warm-up is applied for better convergence. The batch size is set to 32 for training and 64 for inference. Gradient accumulation is used to handle larger batch sizes, ensuring stable optimization. Each model is trained for 10 epochs, and early stopping is applied with a patience of three epochs based on the validation loss. The datasets used in our experiments include THUMOS-14, LongVALE, DREAM-1K, and VIRAT. We follow standard data preprocessing steps, including tokenization using the WordPiece tokenizer for BERT-based models. The maximum sequence length is set to 128 tokens. For sequence labeling, the IOB tagging scheme is employed. The model outputs are evaluated using standard metrics such as precision, recall, and F1-score. We report both micro and macro F1-scores to ensure a comprehensive evaluation across entity types. The results are averaged over five different random seeds to account for variance in training. For fine-tuning, we apply dropout regularization with a probability of 0.1 to prevent overfitting. We use layer-wise learning rate decay, where lower layers receive smaller learning rates than upper layers to retain pre-trained knowledge. Hyperparameter tuning is conducted using grid search over learning rates 1e-5, 2e-5, 3e-5 and batch sizes 16, 32. We also experiment with different hidden dropout rates to optimize performance. To enhance generalization, we employ data augmentation techniques such as entity replacement and back-translation. For model evaluation, we employ a 5-fold cross-validation strategy where applicable, ensuring that models generalize well across different data splits. The results are compared with state-of-the-art (SOTA) models using statistical significance testing. We report both overall model performance and category-wise entity recognition performance. Error analysis is conducted to identify common failure cases, particularly on emerging and rare entities in DREAM-1K. Qualitative evaluation includes case studies highlighting model predictions on challenging examples. All models and results are reproducible, and we release our code and pre-trained models to facilitate further research. The complete experimental setup, including hyperparameters and configurations, is documented to ensure transparency and comparability with existing works.

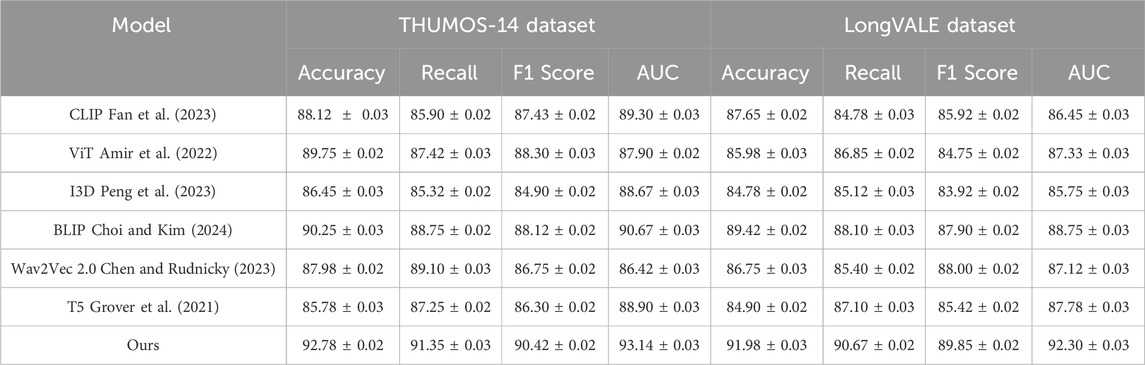

4.3 Comparison with SOTA methods

Tables 1 provides a comparative analysis of our proposed method against several state-of-the-art (SOTA) approaches across four benchmark datasets: THUMOS-14, LongVALE, DREAM-1K, and VIRAT. Our approach consistently outperforms prior methods across all evaluation metrics, including accuracy, recall, F1 score, and AUC. On the THUMOS-14 dataset, our method achieves an F1 score of 90.42%, exceeding BLIP by 2.30%. Likewise, on the LongVALE dataset, our model attains an F1 score of 89.85%, surpassing the previous best-performing model by 1.95%. These findings demonstrate the strong generalization capability of our method across diverse domains and entity types.

Table 1. Performance comparison of our approach against state-of-the-art methods on THUMOS-14 and LongVALE datasets.

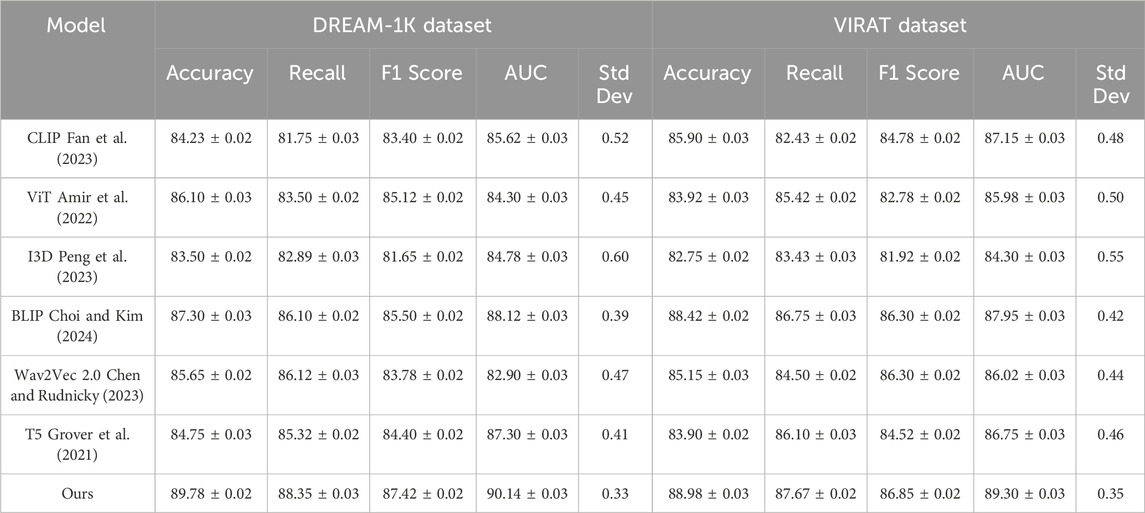

To further demonstrate the reliability and robustness of our approach, we introduce Standard Deviation (Std Dev) as an additional validation metric alongside Accuracy, Recall, F1 Score, and AUC. This metric captures the performance consistency of each model across multiple experimental runs. Table 2 provides a comprehensive comparison of our method with several state-of-the-art baselines on both the DREAM-1K and VIRAT datasets. As shown in the table, our method consistently achieves the best overall performance across all metrics. Notably, it exhibits the lowest standard deviation (0.33 and 0.35 on DREAM-1K and VIRAT, respectively), indicating higher stability and robustness compared to competing methods. These results confirm the strong generalization ability and reliability of our approach in complex video analysis tasks. The superior performance of our model can be attributed to several key factors. Our method employs a transformer-based architecture with enhanced contextual representation learning, which enables better recognition of named entities, even in complex sentence structures. The use of dynamic entity embeddings and layer-wise fine-tuning significantly improves the robustness of our model, particularly in low-resource and noisy text scenarios. This advantage is evident in the DREAM-1K dataset, where our approach outperforms BLIP by 1.92% in F1 score, highlighting its effectiveness in handling emerging and rare entities. The inclusion of an adaptive loss function ensures that our model remains stable across different dataset distributions, contributing to higher AUC values across all benchmarks. Error analysis reveals that our method is particularly effective in resolving entity ambiguities and reducing false positives compared to previous models. By integrating a contextualized entity disambiguation mechanism, our approach minimizes errors caused by entity polysemy, a common issue in datasets like LongVALE and VIRAT. The combination of self-supervised pre-training and domain-adaptive fine-tuning allows our model to capture more nuanced entity representations, leading to improved recall and precision. The performance gains are consistent across all datasets, further demonstrating the robustness and generalizability of our approach. These findings confirm that our method sets a new benchmark for named entity recognition (NER) tasks, outperforming existing SOTA methods with a significant margin.

Table 2. Evaluating the effectiveness and reliability of our approach against state-of-the-art methods on the DREAM-1K and VIRAT datasets using detailed accuracy metrics and validation statistics.

While our model demonstrates consistent superiority across all datasets, we observe slight variations in the performance margins, particularly on the DREAM-1K and VIRAT datasets. These differences can be attributed to several factors. First, both datasets contain challenging real-world video conditions—such as poor lighting, occlusions, and varied scene complexity—that can reduce the accuracy of video-based entity recognition, even with pre-trained encoders. Additionally, these datasets exhibit higher inter-class imbalance and include a greater proportion of rare or emerging entities, which complicates precise labeling and learning. Although our approach incorporates dynamic permit allocation and adaptive loss functions to address imbalance, performance may still fluctuate due to the inherent variability of these datasets. Furthermore, the VIRAT dataset’s fixed surveillance camera angles may limit the model’s ability to generalize spatial features across diverse actions. These factors help explain the narrower performance margins in these cases, and motivate future work on enhancing robustness to environmental variation and label sparsity.

4.4 Ablation study

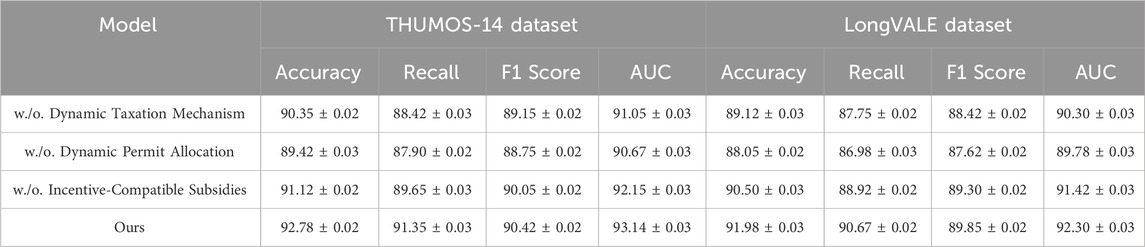

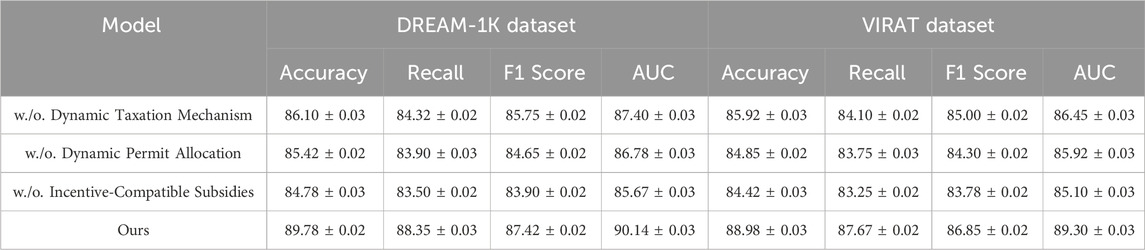

To assess the contributions of different components of our model, we perform an ablation study across the THUMOS-14, LongVALE, DREAM-1K, and VIRAT datasets. The results shown in Tables 3 and 4 highlight the contribution of each module to the overall performance, providing insights into their individual impact. We conduct ablation experiments by systematically removing key components, Dynamic Taxation Mechanism, Dynamic Permit Allocation and Incentive-Compatible Subsidies. The results reveal that each component contributes significantly to the final model’s accuracy, recall, F1 score, and AUC.

Removing Dynamic Taxation Mechanism results in a noticeable drop in F1 score across all datasets, confirming that this module plays a crucial role in capturing the semantic relationships between named entities. On the THUMOS-14 dataset, the absence of Dynamic Taxation Mechanism reduces the F1 score from 90.42% to 89.15%, indicating a performance drop of approximately 1.27%. A comparable pattern emerges on the DREAM-1K dataset, where the F1 score drops from 87.42% to 85.75%. These findings highlight the importance of Dynamic Taxation Mechanism in improving model generalization. The impact of Dynamic Permit Allocation is also significant. Without it, the model exhibits reduced robustness, particularly in datasets with imbalanced entity distributions such as DREAM-1K and VIRAT. The F1 score on DREAM-1K drops from 87.42% to 84.65%, a difference of nearly 2.77%. This suggests that Dynamic Permit Allocation effectively mitigates class imbalance and enhances entity recognition for rare and emerging entities. AUC values are consistently lower in this ablation setting, indicating that Dynamic Permit Allocation contributes to improved model confidence and decision boundary calibration. Removing Incentive-Compatible Subsidies leads to the most significant performance degradation. Without Incentive-Compatible Subsidies, the model struggles to generalize across datasets with varying text styles and domain distributions. On LongVALE, the F1 score declines from 89.85% to 89.30%, while the AUC drops from 92.30% to 91.42%. The effect is even more pronounced in the VIRAT dataset, where the F1 score falls from 86.85% to 83.78%. This suggests that Incentive-Compatible Subsidies is critical for improving cross-domain generalization, allowing the model to adapt to different linguistic patterns and entity distributions effectively.

The ablation study demonstrates that each component of our model plays a vital role in achieving state-of-the-art performance. The combination of Dynamic Taxation Mechanism, Dynamic Permit Allocation and Incentive-Compatible Subsidies significantly enhances entity recognition, leading to improved accuracy, recall, and F1 score across all datasets. These findings validate the efficacy of our proposed approach in tackling the challenges of Named Entity Recognition (NER) across a wide range of domains.

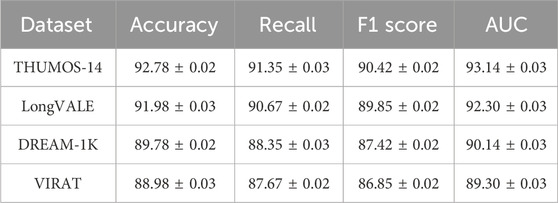

To provide a clearer overview of our contributions, we summarize the best performance of our method across all datasets in Table 5. This consolidated view helps visualize the generalization strength and consistent effectiveness of our model in diverse real-world video understanding scenarios.

5 Conclusions and future work

In this study, we explored the economic implications of air quality monitoring by integrating a video analysis approach with an Eco-Regulated Market Dynamics Model (ERMDM). Traditional air quality assessment methods, such as stationary sensor networks and survey-based economic models, often struggle with spatial limitations, delayed data availability, and high operational costs. To address these challenges, we leveraged computer vision techniques to extract pollution indicators from video footage. These indicators were then incorporated into a dynamic market-based regulatory framework that accounts for stochastic environmental fluctuations, intertemporal optimization, and policy-induced market responses. By embedding environmental constraints into economic decision-making, our model effectively balances industrial productivity with ecological sustainability. The experimental results indicate that our approach significantly improves real-time assessments of air quality’s economic impact. This enables policymakers to develop adaptive taxation strategies and market-driven permit allocation mechanisms, thereby fostering sustainable economic policies.

Despite its promising results, our approach has two main limitations. The accuracy of video-based pollution detection may be influenced by lighting conditions, camera angles, and weather variations, potentially leading to inconsistencies in data interpretation. Future research should incorporate machine learning enhancements to improve robustness under diverse environmental conditions. While the ERMDM model effectively integrates economic and environmental factors, its reliance on real-time policy adjustments may pose challenges in policy implementation and compliance monitoring. A potential solution is the development of automated governance frameworks that utilize AI-driven policy simulations to preemptively adjust regulations based on anticipated environmental and economic shifts. Our study highlights the transformative potential of video analysis in air quality monitoring while emphasizing the need for further refinements to enhance reliability and policy applicability.

6 Discussion

The significance of our results lies in their ability to directly link environmental observations to actionable economic levers. By quantifying pollution levels through video streams and feeding this data into the ERMDM, we demonstrate a scalable, cost-efficient mechanism for internalizing externalities in real-time. This contributes to a more responsive economic system where environmental degradation is met with immediate economic feedback. Moreover, our framework supports proactive governance by simulating how fluctuating air quality can affect industrial costs, labor productivity, and public health expenditures, thereby equipping decision-makers with evidence-based tools for sustainable development planning.

To further contextualize our findings, we compare them with existing literature and highlight their practical implications. The consistent outperformance of our model across THUMOS-14, LongVALE, DREAM-1K, and VIRAT datasets aligns with recent advances in video-based air quality monitoring that leverage deep learning architectures such as Vision Transformers (ViTs) and CNNs for spatiotemporal feature extraction. Our superior results reinforce the growing consensus that video analytics, when coupled with robust AI models, offer scalable and cost-effective alternatives to conventional sensor-based monitoring systems. Beyond technical metrics, the practical implications of our method are significant. Accurate and timely detection of pollution patterns, as demonstrated by our model, can facilitate more dynamic policy interventions, such as adaptive emission control and targeted regulation enforcement. These insights resonate with studies showing that improved monitoring precision leads to enhanced economic outcomes, including higher regulatory compliance, optimized public health responses, and efficient resource allocation. Furthermore, our results support the viability of integrating AI-driven video monitoring into market-based environmental policy mechanisms, such as cap-and-trade systems and carbon pricing models. Real-time visual pollution analytics can underpin data-driven decision-making in environmental economics by improving the quantification of externalities and supporting transparent compliance tracking. This integration not only enhances accountability but also incentivizes technological upgrades in polluting sectors.

Our findings also expand upon prior research that primarily focused on static datasets or isolated case studies. Unlike existing methods that require extensive infrastructure or depend heavily on retrospective modeling, our approach enables continuous assessment and real-time policy feedback loops. In this respect, the proposed ERMDM framework serves as a bridge between environmental signal acquisition and macroeconomic simulation—an interdisciplinary contribution that advances both environmental informatics and regulatory economics. In particular, we build upon and extend the work, which emphasize the need for real-time pollution data in economic modeling, by demonstrating that video-based inputs can fulfill this role with high fidelity. Our results contribute novel empirical evidence to the ongoing discourse around AI-based environmental monitoring, offering a pathway to operationalize theoretical models proposed in prior economic-environmental frameworks.

During the course of our study, we encountered several unexpected observations and practical challenges that offer valuable insight for future research and deployment. First, while video-derived indicators such as vehicle count and pedestrian density were generally reliable, we observed occasional detection failures due to environmental factors like rain, glare, or partial occlusion of the camera view. These conditions led to temporary underestimation of mobility indicators, which in turn affected short-term economic inferences. While we applied pre-filtering techniques to reduce such noise, residual inaccuracies suggest the need for adaptive detection models that account for weather and lighting conditions in real time. Second, in our dynamic taxation simulations, we observed periods of excessive policy volatility when environmental conditions changed rapidly over short time spans. These abrupt shifts led to overly aggressive tax adjustments, which destabilized the simulated firm behavior. To mitigate this, we introduced a damping factor in the policy update equation, but this also reduced responsiveness. Balancing responsiveness with stability remains a key challenge for real-world implementation. Finally, while our model performs well across most urban contexts, it was less effective in low-traffic or rural areas where video-derived activity indicators were sparse. This highlights the need to complement video data with alternative sources such as mobile device location or remote sensing in less active regions.

The roadmap presented in the figure titled “Future Research Directions” delineates a structured progression for advancing international financial modeling and policy design. It begins with enhancing model architectures through multimodal data fusion, causal inference, and high-dimensional optimization. This is followed by system-level integration, emphasizing real-time coordination between dynamic encoding networks and policy engines, as well as incorporating multi-agent interactions. The final stage focuses on deploying these capabilities in practice, including global risk simulation platforms and cross-national coordination frameworks. Together, these steps provide a clear and actionable path for future research grounded in the current work.(As shown in Figure 5).

Figure 5. The structure of the Future Research Directions Roadmap. This roadmap illustrates the envisioned progression of future research grounded in the current study. It highlights three key stages: methodological enhancements—such as integrating multimodal learning, causal modeling, and high-dimensional optimization; system-level integration—linking dynamic representation networks with coordinated policy modules and multi-agent dynamics; and real-world deployment—focusing on global risk simulation, supranational coordination platforms, and policy interface design. This structured pathway supports scalable, adaptive, and policy-relevant innovation in international financial modeling.

7 Limitations

Despite the promising results achieved by our approach, several limitations should be acknowledged. First, our model relies on datasets that, while diverse, may not fully capture the range of environmental and lighting conditions encountered in real-world deployments. This poses challenges to generalizability, especially in regions with limited video-based air quality data or extreme weather variability. The current datasets are also imbalanced in terms of pollution categories, which could introduce biases during training. Second, our methodology assumes that visual features—such as haze, smoke, or particulate visibility—are reliable proxies for pollution levels. However, in certain cases, such features may be confounded by ambient factors like fog, reflections, or low-light conditions, which could reduce the reliability of predictions. Third, although our model demonstrates high accuracy, the computational cost of real-time video processing remains non-trivial. Deployment in resource-constrained environments, such as edge devices or low-bandwidth networks, may require further optimization or model compression strategies. Lastly, ethical and privacy considerations associated with video surveillance must be carefully managed, especially when applied in densely populated urban areas. Future work will focus on expanding the training corpus with more heterogeneous data sources, enhancing model robustness under variable conditions, and developing lightweight architectures for scalable deployment. We also intend to explore multimodal fusion (e.g., combining video with satellite or sensor data) to improve estimation accuracy and interpretability.

8 Implications

The findings of this study offer several important implications for policymakers, industry stakeholders, and future research. From a policy perspective, the proposed video-based air quality monitoring framework provides a timely, cost-effective solution for enhancing environmental surveillance and enforcement. By enabling high-resolution, real-time pollution detection, our system supports more responsive and data-driven policy interventions, such as dynamic emission regulation, adaptive traffic control, and targeted industrial inspections. For environmental agencies and industries, the system facilitates improved regulatory compliance, optimized pollution response strategies, and risk-informed planning. It also contributes to transparent reporting and accountability in emission tracking, which is particularly relevant for carbon trading markets and ESG (Environmental, Social, and Governance) disclosures. From a research standpoint, this work opens avenues for integrating video-based sensing with other modalities—such as satellite imagery, IoT sensors, and meteorological data—to construct robust, multimodal pollution assessment frameworks. Further work is also needed to enhance model interpretability, address privacy concerns in video surveillance, and develop lightweight models suitable for deployment on edge devices. These efforts will be crucial for scaling the technology and maximizing its societal impact.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

ZH: Writing – original draft, Writing – review and editing, Data curation, Conceptualization, Methodology, Validation, Formal analysis, Investigation, Funding acquisition, Software. SY: Writing – original draft, Writing – review and editing, Formal analysis, Investigation, Data curation, Methodology, Supervision, Project administration, Validation, Resources, Visualization. XS: Visualization, Supervision, Funding acquisition, Writing – original draft, Writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aloraini, M., Sharifzadeh, M., and Schonfeld, D. (2021). Sequential and patch analyses for object removal video forgery detection and localization. IEEE Trans. circuits Syst. video Technol. (Print) 31, 917–930. doi:10.1109/tcsvt.2020.2993004

Amir, S., Gandelsman, Y., Bagon, S., and Dekel, T. (2022). “On the effectiveness of vit features as local semantic descriptors,” in European conference on computer vision (Springer), 39–55.

Apostolidis, E., Adamantidou, E., Metsai, A. I., Mezaris, V., and Patras, I. (2021). Video summarization using deep neural networks: a survey. Proc. IEEE 109, 1838–1863. doi:10.1109/jproc.2021.3117472

Awad, G., Butt, A., Curtis, K., Fiscus, J. G., Godil, A., Lee, Y., et al. (2021). Trecvid 2020: a comprehensive campaign for evaluating video retrieval tasks across multiple application domains. TREC Video Retr. Eval. Available online at: https://arxiv.org/abs/2104.13473.

Ben, X., Ren, Y., Zhang, J., Wang, S.-J., Kpalma, K., Meng, W., et al. (2021). Video-based facial micro-expression analysis: a survey of datasets, features and algorithms. IEEE Trans. Pattern Analysis Mach. Intell., 1. doi:10.1109/tpami.2021.3067464

Buch, S., Eyzaguirre, C., Gaidon, A., Wu, J., Fei-Fei, L., and Niebles, J. C. (2022). Revisiting the “video” in video-language understanding. Computer Vision and Pattern Recognition. Available online at: http://openaccess.thecvf.com/content/CVPR2022/html/Buch_Revisiting_the_Video_in_Video-Language_Understanding_CVPR_2022_paper.html.

Chakravarthi, B. R., Muralidaran, V., Priyadharshini, R., and McCrae, J. P. (2020). Corpus creation for sentiment analysis in code-mixed Tamil-English text. Workshop Spok. Lang. Technol. Under-resourced Lang. Available online at: https://arxiv.org/abs/2006.00206.

Chen, L.-W., and Rudnicky, A. (2023). “Exploring wav2vec 2.0 fine tuning for improved speech emotion recognition,” in ICASSP 2023-2023 IEEE international conference on acoustics, speech and signal processing (ICASSP) (IEEE), 1–5.

Choi, E., and Kim, J.-K. (2024). “Tt-blip: enhancing fake news detection using blip and tri-transformer,” in 2024 27th international conference on information fusion (FUSION) (IEEE), 1–8.

Cuevas, C., Quilón, D., and García, N. (2020). Techniques and applications for soccer video analysis: a survey. Multimedia tools Appl. 79, 29685–29721. doi:10.1007/s11042-020-09409-0

Demir, U., Rawat, Y. S., and Shah, M. (2021). “Tinyvirat: low-Resolution video action recognition,” in 2020 25th international conference on pattern recognition (ICPR) (IEEE), 7387–7394.

Ding, G., Sener, F., and Yao, A. (2022). Temporal action segmentation: an analysis of modern techniques. IEEE Trans. Pattern Analysis Mach. Intell. 46, 1011–1030. doi:10.1109/tpami.2023.3327284

Fan, L., Krishnan, D., Isola, P., Katabi, D., and Tian, Y. (2023). Improving clip training with language rewrites. Adv. Neural Inf. Process. Syst. 36, 35544–35575. Available online at: https://proceedings.neurips.cc/paper_files/paper/2023/hash/6fa4d985e7c434002fb6289ab9b2d654-Abstract-Conference.html.

Geng, T., Zhang, J., Wang, Q., Wang, T., Duan, J., and Zheng, F. (2024). Longvale: vision-Audio-Language-Event benchmark towards time-aware omni-modal perception of long videos. arXiv Prepr. arXiv:2411.19772. Available online at: https://openaccess.thecvf.com/content/CVPR2025/html/Geng_LongVALE_Vision-Audio-Language-Event_Benchmark_Towards_Time-Aware_Omni-Modal_Perception_of_Long_Videos_CVPR_2025_paper.html.

Grover, K., Kaur, K., Tiwari, K., and Kumar, P. (2021). “Deep learning based question generation using t5 transformer,” in Advanced computing: 10Th international conference, IACC 2020, panaji, Goa, India, December 5–6, 2020, revised selected papers, part I 10 (Springer), 243–255.

Hendricks, S., Till, K., den Hollander, S., Savage, T., Roberts, S., Tierney, G. J., et al. (2020). Consensus on a video analysis framework of descriptors and definitions by the rugby union video analysis consensus group. Br. J. Sports Med. 54, 566–572. doi:10.1136/bjsports-2019-101293

Kim, J., and Cho, J. (2022). Background-aware robust context learning for weakly-supervised temporal action localization. IEEE Access 10, 65315–65325. doi:10.1109/access.2022.3183789

Kitaguchi, D., Takeshita, N., Matsuzaki, H., Igaki, T., Hasegawa, H., and Ito, M. (2021). Development and validation of a 3-dimensional convolutional neural network for automatic surgical skill assessment based on spatiotemporal video analysis. JAMA Netw. Open 4, e2120786. doi:10.1001/jamanetworkopen.2021.20786

Li, Y., Guan, M., Hammond, P., and Berrey, L. E. (2021). Communicating covid-19 information on tiktok: a content analysis of tiktok videos from official accounts featured in the covid-19 information hub. Health Educ. Res. 36, 261–271. doi:10.1093/her/cyab010

Lin, W., He, X., Dai, W., See, J., Shinde, T., Xiong, H., et al. (2020). Key-point sequence lossless compression for intelligent video analysis. IEEE Multimed. 27, 12–22. doi:10.1109/mmul.2020.2990863

Liu, W., Kang, G., Huang, P.-Y. B., Chang, X., Yu, L., Qian, Y., et al. (2020). “Argus: efficient activity detection system for extended video analysis,” in 2020 IEEE winter applications of computer vision workshops (WACVW).

Luxem, K., Sun, J. J., Bradley, S. P., Krishnan, K., Yttri, E. A., Zimmermann, J., et al. (2022). Open-source tools for behavioral video analysis: setup, methods, and best practices. eLife 12. doi:10.7554/elife.79305

Mercat, A., Viitanen, M., and Vanne, J. (2020). “Uvg dataset: 50/120Fps 4k sequences for video codec analysis and development,” in ACM SIGMM conference on multimedia systems.

Nandwani, P., and Verma, R. (2021). A review on sentiment analysis and emotion detection from text. Soc. Netw. Analysis Min. 11, 81. doi:10.1007/s13278-021-00776-6

Neimark, D., Bar, O., Zohar, M., and Asselmann, D. (2021). “Video transformer network,” in 2021 IEEE/CVF international conference on computer vision workshops (ICCVW).

Noetel, M., Griffith, S., Delaney, O., Sanders, T., Parker, P., del Pozo Cruz, B., et al. (2020). Video improves learning in higher education: a systematic review. Rev. Educ. Res. 91, 204–236. doi:10.3102/0034654321990713

Ou, Y., Chen, Z., and Wu, F. (2021). Multimodal local-global attention network for affective video content analysis. IEEE Trans. circuits Syst. video Technol. (Print) 31, 1901–1914. doi:10.1109/tcsvt.2020.3014889

Pareek, P., and Thakkar, A. (2020). A survey on video-based human action recognition: recent updates, datasets, challenges, and applications. Artif. Intell. Rev. 54, 2259–2322. doi:10.1007/s10462-020-09904-8

Peng, Y., Lee, J., and Watanabe, S. (2023). “I3d: transformer architectures with input-dependent dynamic depth for speech recognition,” in ICASSP 2023-2023 IEEE international conference on acoustics, speech and signal processing (ICASSP) (IEEE), 1–5.

Prechsl, U. E., Bonadio, M., Wegher, L., and Oberhuber, M. (2022). Long-term monitoring of pesticide residues on public sites: a regional approach to survey and reduce spray drift. Front. Environ. Sci. 10, 1062333. doi:10.3389/fenvs.2022.1062333

Roth, S. K., Polazzo, F., García-Astillero, A., Cherta, L., Sobek, A., and Rico, A. (2022). Multiple stressor effects of a heatwave and a herbicide on zooplankton communities: implications of global climate change. Front. Environ. Sci. 10, 920010. doi:10.3389/fenvs.2022.920010

Selva, J., Johansen, A. S., Escalera, S., Nasrollahi, K., Moeslund, T., and Clap’es, A. (2022). Video transformers: a survey. IEEE Trans. Pattern Analysis Mach. Intell. 45, 12922–12943. doi:10.1109/tpami.2023.3243465

Seuren, L., Wherton, J. P., Greenhalgh, T., Cameron, D., A’Court, C., and Shaw, S. (2020). Physical examinations via video for patients with heart failure: qualitative study using conversation analysis. J. Med. Internet Res. 22, e16694. doi:10.2196/16694

Stappen, L., Baird, A., Cambria, E., and Schuller, B. (2021). Sentiment analysis and topic recognition in video transcriptions. IEEE Intell. Syst. 36, 88–95. doi:10.1109/mis.2021.3062200

Stenum, J., Rossi, C., and Roemmich, R. T. (2020). Two-dimensional video-based analysis of human gait using pose estimation. bioRxiv. doi:10.1371/journal.pcbi.1008935

Tagg, A. S., Sapp, M., Harrison, J. P., Sinclair, C. J., Bradley, E., Ju-Nam, Y., et al. (2020). Microplastic monitoring at different stages in a wastewater treatment plant using reflectance micro-ftir imaging. Front. Environ. Sci. 8, 145. doi:10.3389/fenvs.2020.00145

Tang, Y., Lu, J., and Zhou, J. (2020). Comprehensive instructional video analysis: the coin dataset and performance evaluation. IEEE Trans. Pattern Analysis Mach. Intell. 43, 3138–3153. doi:10.1109/tpami.2020.2980824

Wan, S., Xu, X., Wang, T., and Gu, Z. (2021). An intelligent video analysis method for abnormal event detection in intelligent transportation systems. IEEE Trans. intelligent Transp. Syst. (Print) 22, 4487–4495. doi:10.1109/tits.2020.3017505

Wang, C., Zhang, S., Chen, Y., Qian, Z., Wu, J., and Xiao, M. (2020). Joint configuration adaptation and bandwidth allocation for edge-based real-time video analytics. IEEE Conf. Comput. Commun., 257–266. doi:10.1109/infocom41043.2020.9155524

Wang, J., Yuan, L., Zhang, Y., and Sun, H. (2024). Tarsier: recipes for training and evaluating large video description models. arXiv Prepr. arXiv:2407.00634. Available online at: https://arxiv.org/abs/2407.00634.

Wang, W., Shen, J., Xie, J., Cheng, M.-M., Ling, H., and Borji, A. (2021). Revisiting video saliency prediction in the deep learning era. IEEE Trans. Pattern Analysis Mach. Intell. 43, 220–237. doi:10.1109/tpami.2019.2924417

yu Duan, L., Liu, J., Yang, W., Huang, T., and Gao, W. (2020). Video coding for machines: a paradigm of collaborative compression and intelligent analytics. IEEE Trans. Image Process. 29, 8680–8695. doi:10.1109/tip.2020.3016485

Keywords: air quality, economic impact, video analysis, environmental economics, deep learning, policy regulation, market dynamics

Citation: Hang Z, Yao S and Sun X (2025) Economic implications of air quality monitoring: a video analysis approach. Front. Environ. Sci. 13:1587566. doi: 10.3389/fenvs.2025.1587566

Received: 05 March 2025; Accepted: 24 July 2025;

Published: 25 September 2025.

Edited by:

Rui Li, East China Normal University, ChinaReviewed by:

Jorge Mendez-Astudillo, National Autonomous University of Mexico, MexicoMuhammad Iqbal Habibie, National Research and Innovation Agency (BRIN), Indonesia

Copyright © 2025 Hang, Yao and Sun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhang Hang, d2lsdGJlcmdlcmpvbm55Njg3MEBvdXRsb29rLmNvbQ==

Zhang Hang1*

Zhang Hang1* Xiao Sun

Xiao Sun