- 1Department of Pediatrics, West China Hospital Sichuan University Jintang Hospital. Jintang First People’s Hospital, Chengdu, Sichuan, China

- 2Department of Ultrasound, West China Hospital Sichuan University Jintang Hospital. Jintang First People’s Hospital, Chengdu, Sichuan, China

- 3Pediatric Cardiology Center, Sichuan Provincial Women’s and Children’s Hospital/The Affiliated Women’s and Children’s Hospital of Chengdu Medical College, Chengdu, Sichuan, China

Background: The integration of artificial intelligence (AI) into early childhood health management has expanded rapidly, with applications spanning the fetal, neonatal, and pediatric periods. While numerous studies report promising results, a comprehensive synthesis of AI's performance, methodological quality, and translational readiness in child health is needed.

Objectives: This systematic review aims to evaluate the current landscape of AI applications in fetal and pediatric care, assess their diagnostic accuracy and clinical utility, and identify key barriers to real-world implementation.

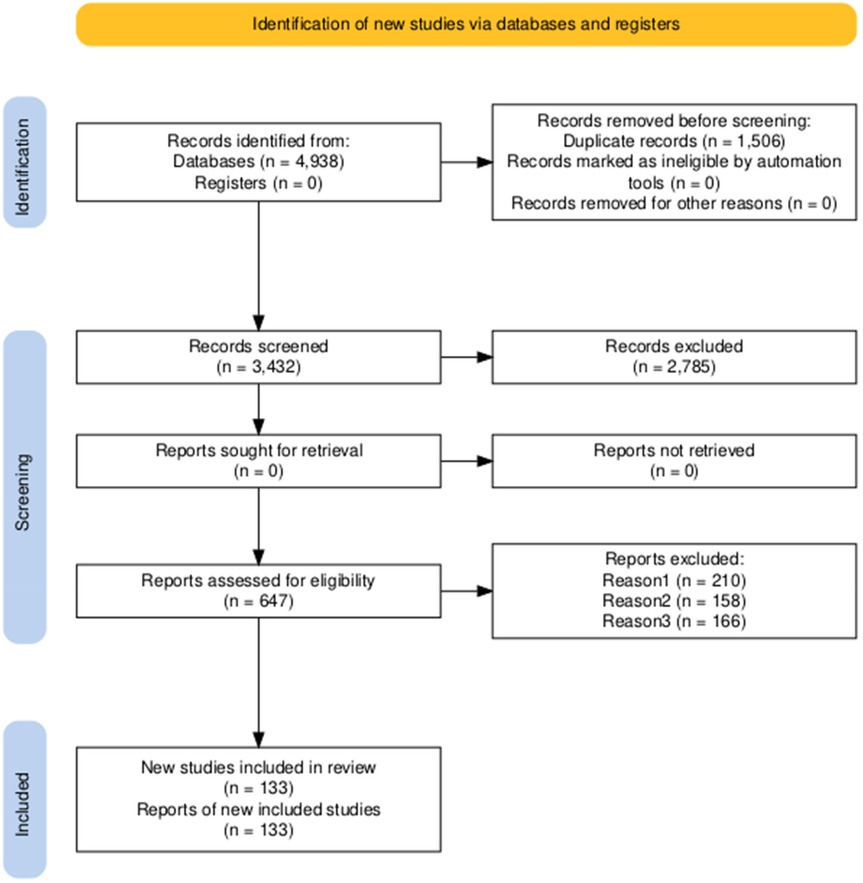

Methods: A systematic literature search was conducted in PubMed, Scopus, and Web of Science for studies published between January 2021 and March 2025. Eligible studies involved AI-driven models for diagnosis, prediction, or decision support in individuals aged 0–18 years. Study selection followed the PRISMA 2020 guidelines. Data were extracted on application domain, AI methodology, performance metrics, validation strategy, and clinical integration level.

Results: From 4,938 screened records, 133 studies were included. AI models demonstrated high performance in prenatal anomaly detection (mean AUC: 0.91–0.95), neonatal intensive care (e.g., sepsis prediction with sensitivity up to 89%), and pediatric genetic diagnosis (accuracy: 85%–93% using facial analysis). Deep learning enhanced consistency in fetal echocardiography and ultrasound interpretation. However, 76% of studies used single-center retrospective data, and only 21% reported external validation. Performance dropped by 15%–20% in cross-institutional settings. Fewer than 5% of models have been integrated into routine clinical workflows, with limited reporting on data privacy, algorithmic bias, and clinician trust.

Conclusion: AI holds transformative potential across the pediatric continuum of care—from fetal screening to chronic disease management. However, most applications remain in the research phase, constrained by data heterogeneity, lack of prospective validation, and insufficient regulatory alignment. To advance clinical adoption, future efforts should focus on multicenter collaboration, standardized data sharing frameworks, explainable AI, and pediatric-specific regulatory pathways. This review provides a roadmap for clinicians, researchers, and policymakers to guide the responsible translation of AI in child health.

1 Introduction

Child health represents a cornerstone of global public health, with long-term implications for national human capital development and societal well-being. However, pediatric healthcare systems worldwide continue to face significant challenges. Approximately 2.3 million neonates die within the first month of life each year, the majority due to preterm birth complications, congenital anomalies, and perinatal conditions—many of which are preventable or treatable with timely intervention (1). Furthermore, the prevalence of chronic pediatric conditions such as congenital heart disease, neurodevelopmental disorders, and inborn errors of metabolism is rising, leading to prolonged functional impairment in affected individuals and imposing substantial economic and psychosocial burdens on families and healthcare systems. These disparities are exacerbated in resource-limited settings, where shortages of specialized pediatric personnel, inadequate diagnostic tools, and delays in early intervention contribute to persistent inequities in health outcomes. Consequently, enhancing the capacity for early detection, precise diagnosis, and individualized management of pediatric diseases has become a critical unmet need in global child health.

Recent advances in artificial intelligence (AI) have opened unprecedented opportunities to address these challenges (2). Machine learning (ML) techniques—including deep learning, natural language processing, and computer vision—have demonstrated exceptional performance in medical image analysis, physiological signal monitoring, genomic interpretation, and clinical decision support (3). In prenatal care, AI applications have enabled automated measurement of fetal biometric parameters, detection of fetal growth restriction (FGR), prediction of preterm birth, and high-accuracy diagnostic support across imaging modalities such as fetal ultrasound, Magnetic Resonance Imaging (MRI), and fetal electrocardiography. In postnatal pediatric care, AI models have been developed to analyze neonatal electroencephalography for seizure prediction, assess jaundice severity using smartphone-captured images, support staging of chronic kidney disease, and improve diagnostic accuracy for rare genetic disorders through facial phenotyping (e.g., Face2Gene) (4). These developments suggest that AI has the potential to shift clinical paradigms from passive documentation to proactive risk prediction and intelligent decision-making, thereby enhancing both the accessibility and precision of pediatric healthcare.

Despite this promise, the clinical translation of AI in fetal and pediatric care remains in its early stages and is hindered by multiple challenges. First, most existing models are trained on small, single-center datasets and lack external validation and robust assessment of generalizability, resulting in a persistent “lab-to-clinic gap”. Second, pediatric data are inherently heterogeneous, developmentally dynamic, and highly sensitive, while high-quality, large-scale, and expertly annotated datasets specific to pediatric populations remain scarce, which limits model robustness and broad applicability. Third, the majority of current AI systems operate as “black boxes” with limited interpretability, which undermines clinician trust and hinders clinical adoption; explainable AI (XAI) methods are intended to address this gap. Additionally, pathways for clinical integration, ethical guidelines, regulatory frameworks, and cost-effectiveness evaluations for AI tools remain poorly defined, impeding their scalable deployment in real-world settings.

Given this context, a systematic evaluation of the current state of AI applications across the fetal-to-pediatric health continuum—assessing their evidence base, technological maturity, and translational potential—is both timely and essential. Recent research and empirical evidence on the implementation of AI in key domains—including fetal monitoring, neonatal intensive care, chronic disease prediction, and genetic disorder diagnosis—are synthesized. Technological bottlenecks and research gaps are identified, and future directions such as multimodal data integration, causal inference modeling, federated learning, and enhanced model interpretability are discussed. Table 1 summarizes the applications of AI across developmental stages, providing an overview of current use cases and clinical domains.

2 Methods

2.1 Literature search strategy

Systematic literature searches were conducted in PubMed, Scopus, Web of Science, and IEEE Xplore to identify studies published between January 1, 2021, and March 15, 2025. The search strategy combined controlled vocabulary terms (e.g., MeSH terms in PubMed) with free-text keywords, adapted to the syntax of each database. Key concepts included artificial intelligence (e.g., “Artificial Intelligence”, “Machine Learning”, “Deep Learning”, “Neural Networks”) and pediatric populations (e.g., “Pediatrics”, “Neonatology”, “Fetus”, “Newborn Infant”, “Child Development”). Truncation symbols (*) were used to capture word variants (e.g., pediatr*, neonat*). Searches were limited to title and abstract fields where applicable.
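The way the two concept blocks combine can be sketched in a few lines of Python. This is an illustration only: the review's actual strategy is the one given in its Appendix 1, the helper names `or_block` and `build_query` are hypothetical, the `[Title/Abstract]` field tag is PubMed-style syntax chosen as an example, and the controlled-vocabulary (MeSH) component is not modeled here.

```python
# Illustrative sketch only; the review's real strategy is in its Appendix 1.
# Term lists are taken from the examples quoted in the text above.
AI_TERMS = ["Artificial Intelligence", "Machine Learning",
            "Deep Learning", "Neural Networks"]
POPULATION_TERMS = ["Pediatrics", "Neonatology", "Fetus",
                    "Newborn Infant", "Child Development"]
TRUNCATED_TERMS = ["pediatr*", "neonat*"]  # truncation captures word variants


def or_block(terms, field=None):
    """Join terms with OR, quoting multi-word phrases and
    optionally appending a field tag (hypothetical helper)."""
    parts = []
    for term in terms:
        text = f'"{term}"' if " " in term else term
        if field:
            text = f"{text}[{field}]"
        parts.append(text)
    return "(" + " OR ".join(parts) + ")"


def build_query(field="Title/Abstract"):
    """Combine the AI concept block and the population block with AND."""
    ai_block = or_block(AI_TERMS, field)
    population_block = or_block(POPULATION_TERMS + TRUNCATED_TERMS, field)
    return f"{ai_block} AND {population_block}"


query = build_query()
print(query)
```

Each database has its own field tags and truncation rules, so in practice the same two blocks would be re-expressed per database rather than reused verbatim.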

In addition to electronic database searches, conference proceedings (via IEEE Xplore and ACM Digital Library) and preprint servers (arXiv and bioRxiv) were screened to capture emerging research. (Note: arXiv and bioRxiv are open-access preprint repositories for preliminary scientific reports prior to peer review.) The full PubMed search strategy is provided in Appendix 1. Study identification and screening were performed independently, with discrepancies resolved through consensus or arbitration by a third party.

2.2 Research questions

The review addresses the following research questions (RQs), formulated using the Population, Intervention, Comparator, Outcome, and Study Design (PICOS) framework:

- (RQ1) the applications of AI/ML in prenatal and perinatal care among pregnant women and fetuses, compared with standard care without AI support, examining the development and application of AI/ML models across any study design;
- (RQ2) the applications of AI/ML in neonatology among newborns and neonates, relative to standard care, focusing on diagnostic accuracy and clinical outcomes in prospective or retrospective cohort, cross-sectional, case–control, or randomized controlled trial (RCT) designs;
- (RQ3) the applications of AI/ML in pediatric disease management among children aged 0–18 years, against standard care, with outcomes of diagnostic accuracy and clinical effectiveness within the same study designs;
- (RQ4) the utilization of AI/ML-based intelligent diagnostic technologies in pediatric populations (0–18 years), compared with conventional care, assessing diagnostic accuracy and clinical outcomes in prospective or retrospective cohort, cross-sectional, case–control, or RCT designs;
- (RQ5) the deployment of AI/ML-supported patient education and clinical decision support systems among patients, families, and healthcare providers, vs. standard care, evaluating knowledge retention and clinical utility within prospective or retrospective cohort, cross-sectional, case–control, or RCT designs; and
- (RQ6) the challenges and future directions for AI/ML in pediatric care, targeting healthcare providers and policymakers, contrasting current practices with proposed strategies to enhance implementation success and future potential, and including any study design reporting methodological or clinical limitations.

2.3 Inclusion and exclusion criteria

Peer-reviewed studies published in English between January 2021 and March 2025 were included if they reported diagnostic accuracy (e.g., sensitivity, specificity, area under the curve [AUC]) or clinical outcomes (e.g., mortality, morbidity, resource utilization) and employed prospective or retrospective cohort, cross-sectional, case–control, or randomized controlled trial (RCT) designs. Non-clinical studies, animal experiments, and studies based on unpublished or inaccessible data were excluded.
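For readers less familiar with the diagnostic accuracy metrics named in the inclusion criteria, the following minimal Python sketch shows how sensitivity, specificity, and AUC are conventionally computed. The function names and the toy scores are assumptions made for illustration and are not drawn from any included study; the AUC is computed in its pairwise (Mann-Whitney) form rather than by integrating a curve.

```python
def sensitivity(tp, fn):
    """True positive rate: proportion of diseased cases the model flags."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate: proportion of healthy cases the model clears."""
    return tn / (tn + fp)


def auc(scores_pos, scores_neg):
    """AUC as the probability that a randomly chosen positive case
    receives a higher score than a randomly chosen negative case
    (ties count as half a win)."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Toy data, invented for illustration only.
toy_pos = [0.9, 0.8, 0.4]   # model scores for cases with the condition
toy_neg = [0.7, 0.3, 0.2]   # model scores for cases without it
print(sensitivity(tp=89, fn=11), specificity(tn=76, fp=24))
print(auc(toy_pos, toy_neg))
```

The pairwise form is quadratic in the number of cases, which is acceptable for a sketch; established statistics libraries provide faster rank-based implementations.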

2.4 Study selection process

The study selection process followed the PRISMA 2020 guidelines and is summarized in Figure 1. An initial search yielded 4,938 records from electronic databases; no additional records were identified through other sources. After removal of 1,506 duplicates and exclusion of zero records flagged as ineligible by automated screening tools, 3,432 unique records remained for title and abstract screening. Of these, 2,785 were excluded as irrelevant, leaving 647 for full-text assessment. All 647 full-text articles were retrieved and evaluated against predefined inclusion criteria. A total of 514 studies were excluded: 210 were non-clinical, 158 involved animal experiments, and 166 were based on unpublished or inaccessible data. Ultimately, 133 studies met all inclusion criteria and were included in the final qualitative synthesis.
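The selection flow can be recomputed as a simple arithmetic check. The sketch below uses only the counts quoted in the paragraph above; the variable names are illustrative. The stage totals reproduce 3,432, 647, and 133. The three per-reason full-text exclusion counts, as printed, sum to 534 rather than the stated 514, so the sketch reports that difference and does not attempt to resolve it.

```python
# Counts as quoted in the study selection paragraph.
identified = 4938
duplicates_removed = 1506
auto_excluded = 0
excluded_title_abstract = 2785
excluded_full_text = 514
full_text_reasons = {
    "non_clinical": 210,
    "animal_experiments": 158,
    "unpublished_or_inaccessible": 166,
}

# Stage-by-stage recomputation of the flow.
screened = identified - duplicates_removed - auto_excluded
full_text_assessed = screened - excluded_title_abstract
included = full_text_assessed - excluded_full_text

# Compare the stated full-text exclusion total with the sum of its reasons.
reason_total = sum(full_text_reasons.values())
reason_gap = reason_total - excluded_full_text

print(screened, full_text_assessed, included)
print(reason_total, reason_gap)
```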

2.5 Quality assessment of included studies

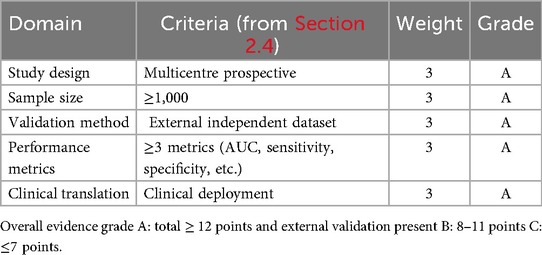

A standardized quality assessment framework was applied to evaluate scientific validity and methodological rigor. The framework assessed study design, sample size, validation methods, performance metrics, and stage of clinical translation. Study designs were classified as prospective, retrospective, or multicenter, with multicenter prospective studies considered more robust. Sample sizes were categorized as large (≥1,000), medium (300–999), or small (<300). Validation methods were distinguished between internal and external validation, with greater weight assigned to studies using independent external datasets. All included studies reported at least one quantitative performance indicator, such as area under the receiver operating characteristic curve (AUC), sensitivity, or specificity. Studies were classified by stage of clinical translation: laboratory development, pilot application, or clinical implementation, with those demonstrating real-world deployment considered to have higher practical relevance.
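The sample-size thresholds above translate directly into a small classification rule. The following Python sketch is one way to express them; the function name `sample_size_category` is hypothetical and the handling of negative input is an added safeguard, not part of the review's framework.

```python
def sample_size_category(n):
    """Classify a study by sample size using the thresholds in the text:
    large (>= 1,000), medium (300-999), small (< 300)."""
    if n < 0:
        raise ValueError("sample size cannot be negative")
    if n >= 1000:
        return "large"
    if n >= 300:
        return "medium"
    return "small"


# Boundary values behave as the stated ranges imply.
for n in (299, 300, 999, 1000):
    print(n, sample_size_category(n))
```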

The methodological quality of all 133 included studies was assessed using a five-domain framework outlined in Table 2. Studies were evaluated according to study design, sample size, validation strategy, reporting of performance metrics, and level of clinical translation, and assigned an overall quality rating: Grade A (≥12 points with external validation), Grade B (8–11 points), or Grade C (≤7 points). Of the 133 studies, 24 (18.0%) received Grade A, 71 (53.4%) Grade B, and 38 (28.6%) Grade C. High-quality examples include Pierucci et al. (4), Chen et al. (10), and Mallineni et al. (19) (all Grade A), while Alqahtani et al. (39) received Grade B. The predominance of single-center datasets and limited external validation highlights the need for more multicenter, prospectively validated pediatric AI research.
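The grading rule and the reported grade distribution can be sketched as follows. The per-domain scoring lives in Table 2 and is not reproduced here, so the function takes a total score as input. One point is an assumption of this sketch rather than something the text states: a study scoring 12 or more points without external validation is assigned Grade B.

```python
def quality_grade(points, external_validation):
    """Map a total quality score to a grade using the cut-offs in the text."""
    if points >= 12 and external_validation:
        return "A"
    if points >= 8:
        # Assumption: >= 12 points WITHOUT external validation falls to B;
        # the text does not specify this case.
        return "B"
    return "C"


# Reported grade counts, used to recompute the quoted percentages.
grade_counts = {"A": 24, "B": 71, "C": 38}
total_studies = sum(grade_counts.values())
grade_shares = {
    grade: round(100 * count / total_studies, 1)
    for grade, count in grade_counts.items()
}
print(total_studies, grade_shares)
```

The recomputed shares match the percentages quoted above, and the counts sum to the 133 included studies.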

2.6 Data extraction and categorization

Data extraction was conducted independently by two reviewers, with discrepancies resolved through consensus or third-party arbitration. Extracted data were categorized according to application domain. Methodological quality was assessed based on study design, sample size, validation approach, performance indicators, and translational stage. The level of evidence for each study was classified to reflect the reliability of findings and potential for real-world applicability. This approach supported reproducibility and transparency in synthesis.

3 Applications of artificial intelligence in prenatal and perinatal care

The integration of AI into prenatal and perinatal care has been associated with advances in risk prediction, imaging interpretation, and decision support. Evidence from recent studies can be organized into four thematic domains: (1) prediction of adverse pregnancy outcomes, (2) automation and standardization of ultrasound interpretation, (3) advanced imaging analysis in fetal MRI and echocardiography, and (4) augmentation of clinical expertise and reduction of diagnostic disparities. This framework reflects functional patterns in AI application and supports a structured synthesis of technological impact—highlighting roles in early detection, diagnostic consistency, and potential improvements in access to expert-level assessment. These applications address the first research question regarding the development and application of AI/ML models in prenatal and perinatal care.

3.1 Prediction of adverse pregnancy outcomes

Early identification of high-risk pregnancies is critical for timely intervention. AI models integrating multimodal data have demonstrated performance in predicting FGR and preterm birth. An AI system incorporating hemodynamic features for placental function assessment achieved a sensitivity of 89% for FGR detection, with clinical alerts generated 2–3 weeks earlier than conventional methods in one study (5). A support vector machine (SVM) model combining maternal physiological parameters and fetal monitoring data reported 91% accuracy and an AUC of 0.89 in predicting preterm birth (6). Multiparameter models analyzing fetal heart rate variability and uterine contraction frequency have identified preterm labor risk up to 48 h in advance (7). These findings suggest potential for earlier clinical actions, including corticosteroid administration or transfer to tertiary centers, although prospective validation in diverse populations remains limited.

3.2 Automation and standardization of ultrasound interpretation

Ultrasound remains the primary modality for fetal assessment, though diagnostic reliability may be affected by image noise, artifacts, and interobserver variability. AI algorithms have been applied to enhance image quality and automate biometric analysis. Deep learning techniques have reduced noise and artifacts, increasing the reported detection rate of spina bifida from 82% to 94% in retrospective evaluations (8, 9). In fetal biometry, AI has improved measurement precision and reduced operator dependence (10). Deep learning models analyzing fetal ultrasound images achieved a classification accuracy of 97.2% in the prenatal detection of congenital heart disease, with lower error rates compared to manual measurements in controlled settings (11). Convolutional neural networks (CNNs) classified fetal renal pelvis dilation with 94% accuracy (95% CI: 93%–95%), and 51% of cases were correctly graded, indicating potential utility in borderline findings (18). These approaches may contribute to greater consistency in diagnostic workflows, particularly in complex or equivocal cases.
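A confidence interval such as the one quoted for renal pelvis dilation depends on both the observed accuracy and the number of cases. The sketch below computes a Wilson score interval for a proportion. The cited study's sample size and interval method are not given in the text, so `n_hypothetical = 2000` is purely an assumption chosen for illustration, and the result should not be read as a reproduction of the published interval.

```python
import math


def wilson_interval(p_hat, n, z=1.96):
    """Wilson score interval for a binomial proportion.
    p_hat: observed proportion, n: number of cases, z: normal quantile
    (1.96 corresponds to a two-sided 95% interval)."""
    if n <= 0:
        raise ValueError("n must be positive")
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / n + z * z / (4 * n * n)
    )
    return center - half_width, center + half_width


n_hypothetical = 2000  # assumption; the study's n is not stated in the text
ci_low, ci_high = wilson_interval(0.94, n_hypothetical)
print(round(ci_low, 3), round(ci_high, 3))
```

With smaller samples the same 94% accuracy would carry a visibly wider interval, which is one reason sample size is part of the quality assessment.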

3.3 Advanced imaging analysis in fetal MRI and echocardiography

Beyond standard ultrasound, AI has been applied to enhance the utility of fetal MRI and echocardiography. In fetal MRI, AI algorithms have enabled organ segmentation, optimization of imaging sequences, and diagnostic support. Organ segmentation models achieved a Dice coefficient of 0.92 in identifying anatomical structures, suggesting improved efficiency in image interpretation (12). In fetal echocardiography, AI has supported image processing, biometric measurement, and anomaly detection, facilitating earlier identification of structural cardiac defects (13). Longitudinal monitoring of fetal growth through serial ultrasound analysis has also been demonstrated (14), with AI compensating for challenges such as fetal motion and maternal body habitus (15). In neuroanatomy, AI-based methods have enabled detailed assessment of brain development and detection of subtle abnormalities that may correlate with neurodevelopmental trajectories (16). Non-invasive fetal electrocardiography (fECG) integrated with AI improved the AUC of cardiac screening across experience levels, with reported values of 0.748 (resident), 0.890 (researcher), and 0.975 (expert) (17). The use of explainable AI (XAI) in cardiac screening, achieving an AUC of 0.975, may support transparency in decision-making (17).
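The Dice coefficient reported for organ segmentation measures the overlap between a predicted mask and a reference mask. A minimal Python sketch follows, assuming masks are supplied as flattened sequences of 0/1 values. The function name and the toy masks are illustrative, and returning 1.0 when both masks are empty is a convention adopted here, not something taken from the cited study.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two binary masks
    given as equal-length flattened sequences of 0/1 values."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same number of elements")
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    size_sum = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    if size_sum == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * intersection / size_sum


# Toy masks invented for illustration: 2 overlapping voxels, 3 in each mask.
toy_pred = [1, 1, 1, 0, 0, 0]
toy_true = [0, 1, 1, 1, 0, 0]
print(dice_coefficient(toy_pred, toy_true))
```

A value of 1 indicates identical masks and 0 indicates no overlap, so 0.92 corresponds to a high but not perfect agreement with the reference segmentation.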

3.4 Augmentation of clinical expertise and reduction of diagnostic disparities

AI-based systems have shown potential to improve diagnostic consistency in settings with limited access to specialized personnel. In low-resource environments, automatic fetal ultrasound screening systems increased the reported detection rate of congenital heart disease from 60% to 89%, suggesting a narrowing of diagnostic performance gaps between high- and low-resource settings (73, 74). By standardizing image acquisition, view recognition, and anomaly detection, AI may assist clinicians across experience levels in achieving higher diagnostic accuracy. This domain highlights AI not only as a technical tool but as a potential contributor to more equitable access to prenatal screening across diverse populations, though real-world implementation evidence remains limited.

In summary, AI applications in prenatal and perinatal care span predictive modeling, imaging standardization, advanced diagnostics, and clinical support. From early risk prediction to image enhancement, advanced imaging analysis, and efforts to reduce diagnostic disparities, these technologies align with key clinical needs. While most models remain in the validation phase and face challenges in generalizability, regulatory approval, and integration into clinical workflows, the collective evidence indicates a trajectory toward earlier detection, reduced variability, and broader access to standardized fetal assessment. These developments suggest that AI is evolving from a supplementary tool to a potentially integral component of modern prenatal care systems.

4 Applications of artificial intelligence in neonatology

This section answers RQ2 by integrating evidence within four complementary functions: (1) real-time physiological monitoring and early warning, (2) neurodevelopmental surveillance and brain-injury prediction, (3) automated imaging interpretation, and (4) clinical decision support. These themes collectively illustrate the technical breadth of AI methodologies and their alignment with core neonatal care priorities: reducing diagnostic delays, minimizing inter-observer variability, and enabling timely, individualized management for high-risk newborns.

4.1 Real-time physiological monitoring and early warning

Detecting early signs of clinical deterioration in unstable neonates remains a critical challenge. Traditional monitoring systems generate frequent false alarms, contributing to alert fatigue among clinical staff. In contrast, AI models have been applied to high-frequency time-series data from electronic health records (EHRs) and bedside monitors to identify pre-symptomatic physiological patterns. Machine learning algorithms integrating heart rate variability, respiratory dynamics, and laboratory trends have demonstrated the ability to predict neonatal sepsis 6–12 h before clinical onset, with reported AUC values ranging from 0.85 to 0.93 in retrospective studies (18, 19). Predictive models for bronchopulmonary dysplasia (BPD) have utilized antenatal, postnatal, and ventilatory data to stratify risk, potentially guiding early pulmonary protective strategies (20, 21). These findings suggest that AI-based monitoring systems may support a shift toward earlier clinical recognition, although prospective validation in diverse neonatal intensive care unit (NICU) settings is still limited.
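To make "heart rate variability" concrete as a model input, the sketch below computes two widely used time-domain summaries, SDNN and RMSSD, from a series of beat-to-beat (RR) intervals. The text does not describe which features the cited sepsis models actually used, so these two are stand-ins chosen for illustration, and the RR values are invented.

```python
import math


def sdnn(rr_ms):
    """Standard deviation of RR intervals in ms (sample form, n - 1)."""
    n = len(rr_ms)
    if n < 2:
        raise ValueError("need at least two RR intervals")
    mean_rr = sum(rr_ms) / n
    return math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (n - 1))


def rmssd(rr_ms):
    """Root mean square of successive RR-interval differences in ms."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [later - earlier for earlier, later in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Invented RR intervals (ms) for illustration only.
toy_rr = [400, 410, 390, 405, 395]
hrv_features = {"sdnn_ms": sdnn(toy_rr), "rmssd_ms": rmssd(toy_rr)}
print(hrv_features)
```

In a monitoring pipeline, features like these would typically be recomputed over sliding windows and passed to a classifier alongside respiratory and laboratory variables.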

4.2 Neurodevelopmental surveillance and brain injury prediction

Timely identification of hypoxic-ischemic encephalopathy (HIE), seizures, and long-term neurodevelopmental impairment is essential for neuroprotective interventions. Given the limited availability of pediatric neurophysiologists, AI-based analysis of electroencephalography (EEG), including amplitude-integrated EEG (aEEG) and raw EEG, has been explored as a scalable solution for continuous neurological monitoring. Deep learning models have achieved sensitivities exceeding 90% and specificities above 85% in automated seizure detection in preterm and term infants, enabling continuous monitoring even in resource-constrained environments (22, 23). Beyond seizure detection, AI frameworks such as dynamic functional connectome learning (DFC-Igloo) have been used to extract predictive biomarkers from resting-state functional magnetic resonance imaging (fMRI), with reported correlations to motor and cognitive outcomes in preterm infants (24). Additionally, AI-powered cranial ultrasound analysis has enabled automated detection of intraventricular hemorrhage (IVH) and quantification of brain volume, providing objective and reproducible metrics for use in neuroprotection trials (25, 26). These approaches may contribute to more standardized and data-informed neurodevelopmental assessments.

4.3 Automated imaging interpretation

AI has been applied to standardize and accelerate diagnostic imaging in neonatal radiology and echocardiography. CNNs have been used for automated segmentation of cardiac structures, measurement of ventricular function, and detection of congenital heart defects in fetal and neonatal echocardiograms, with reported reductions in inter-observer variability (27, 28). In radiology, AI models have assisted in the interpretation of chest x-rays for conditions such as respiratory distress syndrome and pneumothorax, improving diagnostic speed and consistency (29). Computer vision algorithms have also been applied to video laryngoscopy, enabling real-time detection of glottic opening during intubation, which may support procedural accuracy (30). Furthermore, smartphone-based AI applications (e.g., BiliSG) have enabled non-invasive jaundice screening through digital color analysis, facilitating remote monitoring and reducing unnecessary blood sampling (31). These tools may extend diagnostic capabilities beyond tertiary centers, with potential implications for equitable access to neonatal care.

4.4 Clinical decision support

AI has increasingly been integrated into systems designed to support clinical decision-making and operational efficiency. AI-driven clinical decision support systems (CDSS) have been developed to integrate multimodal data for real-time optimization of ventilation settings, nutritional delivery, and antibiotic stewardship (75, 76). Predictive analytics have also been applied to discharge planning, identifying infants at elevated risk for readmission or developmental delay, thereby enabling targeted follow-up (77). At the systems level, AI models have been used to support NICU bed management, staffing forecasts, and patient flow optimization, with preliminary reports indicating improved resource utilization without compromising patient safety (78).

Synthesis across these four thematic areas indicates that AI applications in neonatology are evolving toward more data-driven, proactive, and individualized approaches to newborn care. The thematic clustering reflects a progression from diagnostic assistance (imaging, EEG) to risk prediction (sepsis, BPD, neurodevelopment) and intervention support (ventilation, intubation, nutrition). These developments align with the central aims of this review: AI-based tools have demonstrated high diagnostic accuracy (AUC > 0.85 in multiple studies), shown potential to reduce time-to-treatment and morbidity in observational settings, and exhibited increasing translational maturity. However, the majority of models remain in pilot or laboratory stages, with limited external validation. Key challenges include algorithmic bias, lack of interoperability with existing EHR systems, and insufficient evidence from multicenter prospective trials. Despite these limitations, the cumulative evidence suggests that AI may play an increasingly integral role in neonatal care, provided that future research prioritizes external validation, regulatory compliance, and equitable deployment across diverse healthcare settings.

5 Applications of artificial intelligence in pediatric disease management

This section responds to RQ3 by integrating evidence across two clinically focused domains: (1) the diagnosis and management of common pediatric diseases and (2) the diagnosis of genetic disorders. Within these domains, AI/ML applications consistently enhance diagnostic accuracy, refine risk stratification, and streamline clinical workflows, as exemplified by earlier detection of congenital heart defects, improved prediction of sepsis onset, and accelerated identification of pathogenic variants, thereby enabling more individualized and timely care for children aged 0–18 years.

5.1 Diagnosis and management of common pediatric diseases

In pediatric oncology, ML models have been used to analyze high-dimensional clinical and biological data, identifying patterns associated with disease subtypes and treatment response. These models have demonstrated improved diagnostic precision and risk stratification in several pediatric cancers, supporting more individualized therapeutic approaches (79).

For the prediction of sepsis in children beyond the neonatal period, AI-driven models—primarily logistic regression and ensemble methods—have been applied to dynamic vital sign data, including heart rate variability and respiratory entropy. In a retrospective cohort study, such models predicted sepsis onset 6–8 h in advance with a reported sensitivity of 89% and specificity of 76% (31), suggesting potential for earlier clinical recognition, though prospective validation in real-world settings remains limited.
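What a sensitivity of 89% and specificity of 76% mean at the bedside depends on how common sepsis is in the monitored population. The sketch below applies Bayes' rule to convert the two reported figures into positive and negative predictive values. The prevalence of 5% is an assumption made only for illustration; it is not reported in the text, and the predictive values change substantially with it.

```python
def predictive_values(sens, spec, prevalence):
    """Return (PPV, NPV) from sensitivity, specificity, and prevalence,
    using expected cell proportions of the 2x2 table."""
    true_pos = sens * prevalence
    false_neg = (1 - sens) * prevalence
    true_neg = spec * (1 - prevalence)
    false_pos = (1 - spec) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


assumed_prevalence = 0.05  # assumption for illustration; not from the study
ppv, npv = predictive_values(0.89, 0.76, assumed_prevalence)
print(round(ppv, 3), round(npv, 3))
```

Under a low assumed prevalence, most alerts would be false positives even with the reported accuracy, which connects these metrics to the alert-fatigue concern raised for neonatal monitoring.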

In pediatric malnutrition, ML algorithms have been developed to analyze multifactorial risk factors, including abnormal weight-for-age trajectories, to support early risk stratification. When integrated into web-based platforms, these models have enabled scalable screening tools for deployment in primary care and community health settings (32). Additionally, computer vision-based systems for infant posture tracking have been used to monitor subtle motor changes in real time, with one study reporting an accuracy exceeding 85% in detecting early signs of neurological impairment (33). These tools may enhance surveillance and support timely developmental interventions.

AI has also contributed to advances in the diagnosis of pediatric heart disease. Deep learning models trained on large ECG datasets have demonstrated the ability to detect early signs of congenital heart defects, such as atrial septal defects. In one validation study, the model increased diagnostic accuracy by 15% compared to conventional interpretation and reduced reported misdiagnosis rates to below 3% (34, 35). Furthermore, natural language processing (NLP) techniques have been applied to structured and unstructured echocardiographic reports to develop predictive models for spontaneous closure of perimembranous ventricular septal defects (PMVSD). One such model achieved an AUC of 0.92—18% higher than traditional parameter-based approaches—indicating improved predictive performance (36, 37). These findings suggest potential for earlier diagnosis and more personalized follow-up planning.

In the management of chronic pediatric conditions, AI-based tools have shown promise. Automated image analysis systems for pediatric diabetic retinopathy (DR) have been evaluated in clinical settings, demonstrating high sensitivity and specificity, with reported benefits in reducing clinician workload and improving access to screening in underserved populations (38). AI models have also been applied to assess environmental health risks in children. By integrating respiratory rate, environmental exposure data (e.g., PM2.5 concentration), and medication records, one predictive model achieved a reported accuracy of 91% in forecasting asthma exacerbations. In a pilot community health program, implementation of this model was associated with a 31% reduction in emergency department visits among asthmatic children, although the causal contribution of the AI component remains to be fully disentangled (39, 40).

In autism spectrum disorder (ASD) screening, AI-powered video analysis tools have been developed to detect behavioral markers—including facial expressions, eye contact, and limb movements—during brief (10-minute) interaction sessions. In a validation study of 1,200 children aged 2–5 years, the tool achieved a sensitivity of 94% and specificity of 89%, representing improvements of 20% and 30%, respectively, over the Modified Checklist for Autism in Toddlers (M-CHAT) (41). Another study reported that remote video-based behavioral analysis, combined with explainable AI (XAI) techniques, achieved a screening accuracy of 92% in infants as young as 18 months (42, 43). These approaches may support earlier identification, particularly in resource-limited settings.

In pediatric surgery, AI applications have been explored across preoperative planning, intraoperative guidance, and postoperative assessment. For laparoscopic cholecystectomy (LC), an AI system trained on liver CT imaging data has been used to identify fibrotic tissue and assist in surgical planning. In a clinical evaluation of 50 cases, the use of this system was associated with a reduction in biliary duct injury (BDI) rates from 8% to 1.5%, and a decrease in planning time from 15 to 2 min, potentially reducing the need for intraoperative biopsies (44). In Hirschsprung's disease, an AI-assisted frozen section analysis system using transfer learning achieved a reported accuracy of 98.7% in ganglion cell detection (45). For retroperitoneal neuroblastoma, an AI-based 3D reconstruction model reduced surgical planning time by 40% in a single-center study (46).

Moreover, AI has shown strong performance in image-based differentiation of pediatric solid tumors. Multimodal image fusion techniques based on convolutional neural networks (CNNs) have demonstrated a sensitivity of 95% and specificity of 89% in distinguishing tumor types in retrospective analyses, highlighting the potential of AI in radiological and pathological interpretation (47).

5.2 Diagnosis of genetic diseases

AI has revolutionized the diagnosis of pediatric genetic diseases by enabling more accurate and efficient integration of phenotypic and genotypic data. Through advanced algorithms for phenotype-genotype association analysis and multimodal data fusion, AI tools are significantly reducing diagnostic delays and improving diagnostic yield in rare and complex genetic conditions.

AI-powered diagnostic platforms such as Dx29 integrate clinical phenotypes with genomic data to assist clinicians in rapidly identifying pathogenic variants in pediatric genetic disorders, including neurodevelopmental disorders and hearing impairments (48, 49). These systems streamline the diagnostic workflow by prioritizing candidate genes and reducing reliance on manual data interpretation. Similarly, AI tools like Franklin© AI reanalyze clinical genetic testing data to refine variant classification, thereby increasing diagnostic accuracy (50). In one large-scale study analyzing five years of pediatric genetic testing data, AI reanalysis identified 3,031 previously missed pathogenic variants, demonstrating substantial added value over conventional interpretation methods (51, 52).

AI is also being applied to congenital surgical conditions with genetic underpinnings. By analyzing genomic data, AI models can identify key disease-associated gene variants—such as those linked to Hirschsprung's disease or congenital diaphragmatic hernia—providing critical support for early diagnosis and surgical planning (53). Furthermore, AI-based risk prediction models integrate genetic and environmental factors to estimate the likelihood of pediatric-onset genetic disorders, such as hereditary cancer syndromes and neurodegenerative diseases, enabling earlier surveillance and intervention (54).

A major advancement in AI-assisted genetic diagnosis is the integration of electronic health records (EHRs), medical imaging, and genomic sequencing to support the identification of genetic syndromes. Deep learning algorithms can detect subtle morphological patterns in facial, ocular, and skeletal imaging, transforming phenotypic analysis into a quantitative, data-driven process (54–58). For example, Face2Gene—a widely used AI-based phenotypic analysis tool—analyzes facial photographs to generate a ranked list of up to 30 candidate syndromes, significantly accelerating the diagnostic process for rare diseases (59). It has demonstrated high performance in identifying conditions such as mucolipidosis type II (60) and has been validated in clinical settings in South Korea, showing strong diagnostic expansion potential (61). Studies confirm that Face2Gene's predictions for KBG syndrome and Kabuki syndrome are highly concordant with whole-exome sequencing results (62, 63). The tool also shows robust performance in diagnosing rare conditions such as Thrombocytopenia-Absent Radius (TAR) syndrome and Sotos syndrome, demonstrating its utility across diverse phenotypic spectra.

Beyond facial analysis, AI is being used to interpret ocular and skeletal imaging. Eye2Gene analyzes retinal and ocular images to detect features associated with inherited retinal diseases (IRD), improving variant interpretation and diagnostic confidence (64). Bone2Gene focuses on skeletal radiographs, using x-rays or computed tomography (CT) scans to identify dysmorphic features linked to genetic skeletal disorders, particularly those involving pathogenic variants in ANKRD11, and provides critical diagnostic clues in cases of skeletal dysplasia.

In genomic variant interpretation, AI systems are enhancing both speed and precision. The VAREANT platform improves variant detection and classification through three core modules: preprocessing, variant annotation, and AI/ML-driven data integration (65). The ClinGen Sequence Variant Interpretation (SVI) Working Group has endorsed the use of AI-based prediction tools in clinical variant classification, issuing updated guidelines to standardize their application (66). Deep learning models such as the Nucleic Transformer and Nucleotide Transformer have achieved state-of-the-art performance in DNA sequence classification and phenotype prediction (67–69). These pre-trained models are increasingly used to interpret non-coding region variants, a historically challenging area in clinical genomics (70).

To maximize accuracy, the integration of multiple AI models is recommended (71), and platforms like VarGuideAtlas are being developed to harmonize variant interpretation guidelines and support consensus-based classification (72). Such integrative approaches not only improve diagnostic sensitivity but also promote standardization across laboratories and healthcare systems.

In summary, AI technologies are transforming the landscape of pediatric genetic disease diagnosis. By leveraging facial, ocular, and skeletal imaging, as well as advanced genomic analysis, AI tools are shortening diagnostic odysseys, increasing diagnostic yield, and enabling earlier, more precise interventions. These advancements are laying the foundation for a new era of data-driven, personalized pediatric genetics.

6 Applications of intelligent diagnostic technologies

This section responds to RQ4 by evaluating AI/ML-based diagnostic technologies that enhance accuracy and clinical utility across pediatric medical imaging, pathology, and multi-omics analysis. These tools support automated image interpretation, anomaly detection, and integrated diagnostic reasoning, contributing to faster, more reliable, and standardized diagnostic processes in pediatric healthcare.

The increasing use of medical imaging in pediatric care has driven the integration of deep learning technologies across multiple modalities. AI-driven tools are now being applied to tasks including image classification, segmentation, prediction, and synthesis, with reported improvements in diagnostic consistency and workflow efficiency (47).

In ultrasound imaging, deep learning algorithms have demonstrated high performance in image quality assessment. One model achieved a reported recognition rate of 98.8% for normal pediatric sonographic images in a retrospective validation study, potentially reducing operator-dependent variability and enhancing reproducibility (20, 80). In radiology, AI-based bone age estimation from panoramic x-rays has shown a mean error of ±0.8 years, approximately half the error of the traditional Greulich-Pyle method (±1.5 years), supporting applications in growth assessment and orthodontic planning (81). A retrospective analysis of 3,000 pediatric dental radiographs found that an AI system for caries detection achieved 92% accuracy and 89% sensitivity, outperforming the average 78% accuracy of visual inspection by radiologists (37). More advanced architectures, such as the BAE-ViT visual transformer model, further improve performance by integrating clinical metadata (e.g., sex) with imaging data, representing a promising direction for multimodal diagnostic integration (82).

AI is also being explored in hybrid and cross-modality imaging. The generation of synthetic CT (sCT) from MRI data, when combined with PET, has been shown to reduce ionizing radiation exposure while maintaining diagnostic fidelity—offering a safer alternative for pediatric oncology and neuroimaging applications (83). In MRI, deep learning enables high-quality reconstruction from undersampled k-space data, potentially mitigating long-standing trade-offs between scan duration, spatial resolution, and signal-to-noise ratio (84). When integrated with quantitative MRI techniques, AI models can efficiently generate multi-contrast images, accelerating diagnosis in neurological disorders and supporting precision imaging workflows (85).

Beyond conventional imaging, emerging computational frameworks such as digital twins (DT) are being investigated for personalized pediatric care. By integrating physiological data, imaging phenotypes, and environmental factors, DT models aim to simulate disease progression and optimize treatment strategies. In pediatric neuro-oncology, the fusion of MRI with explainable AI (XAI) has enabled the development of adaptive clinical decision support systems that may improve both diagnostic interpretation and prognostic modeling (86, 87). Similarly, in chronic kidney disease (CKD), machine learning models leveraging multi-omics data have shown potential for early detection and risk stratification, potentially reducing unnecessary interventions (24).

AI is also accelerating biomarker discovery and enabling deeper biological insights. For example, AI-driven analysis of urinary proteomics and epigenetic profiles has identified non-invasive biomarker signatures for bladder cancer, suggesting potential to reduce reliance on invasive procedures (88). In hereditary kidney disease, integration of single-cell RNA sequencing with AI has elucidated molecular mechanisms of tubular injury in coenzyme Q10 deficiency nephropathy, revealing candidate therapeutic targets (89). In neuroblastoma, spatial transcriptomics combined with AI has identified a senescent-associated cancer-associated fibroblast (senes-CAF) subpopulation, offering new insights into tumor microenvironment modulation (90).

7 Applications in patient education and clinical decision support systems

This section responds to RQ5 by synthesizing AI/ML applications designed to improve knowledge delivery and clinical decision-making for patients, families, and healthcare providers. Through personalized education platforms and real-time decision support tools, AI helps bridge information gaps, enhance care coordination, and promote safer, more informed clinical practices.

In patient and medical education, AI-powered systems are being used to translate complex medical information into accessible formats. Text-to-video (T2V) generation models can produce dynamic visualizations of disease mechanisms or surgical procedures, facilitating both patient communication and medical training. In one reported application, such models reduced the production time of instructional videos, such as those demonstrating proper asthma inhaler technique, from 8 h to 30 min, significantly improving content creation efficiency (91). Natural language processing (NLP)-based systems have also been developed to generate personalized health education materials tailored to a child's age, literacy level, and clinical context. In a pilot study involving families of pediatric kidney transplant recipients, the use of an AI-generated education tool was associated with a 35% improvement in caregiver knowledge retention, suggesting potential to enhance engagement and adherence (92). AI is also being explored in professional training; surveys of dental students' attitudes toward AI provide early insights for integrating intelligent technologies into clinical curricula (93).

In clinical operations and decision support, AI-driven systems have shown utility in high-acuity settings. Emergency department clinical decision support systems (ED-CDSSs) incorporating AI have been deployed for early sepsis detection and trauma severity grading. In a multicenter evaluation, such systems were associated with a 28% reduction in time to diagnosis. Challenges remain in processing unstructured clinical notes, however: current models could analyze only 32% of non-standardized text inputs, highlighting the need for more robust NLP integration (94). Augmented reality (AR)-assisted navigation systems have improved procedural accuracy in pediatric lumbar puncture, increasing first-attempt success rates from 68% to 91% in a single-center study by providing real-time anatomical guidance (29).

Beyond bedside care, AI is being applied to hospital operations. One children's hospital implemented an AI-powered surgical scheduling system that analyzes historical procedure durations, resource utilization, and emergency priorities. This system increased operating room utilization from 68% to 85%, reduced average patient wait times by 2.3 days, and was estimated to generate annual cost savings of $1.2 million (95). Such applications illustrate the potential of AI to enhance not only clinical outcomes but also healthcare efficiency and patient satisfaction.

8 Challenges and future development directions

This section responds to RQ6 by examining the methodological, technical, and translational barriers that hinder the clinical integration of AI/ML in pediatric healthcare. Key challenges include data heterogeneity, limited external validation, regulatory uncertainty, and poor alignment with clinical workflows. Addressing these barriers requires multicenter collaboration, standardized data practices, and human-centered design to enable equitable and sustainable implementation.

Despite the transformative potential of AI in pediatric healthcare, its translation from research to clinical practice faces multifaceted challenges that span ethical, methodological, and technological domains. At the ethical forefront, the use of children's sensitive health data raises urgent concerns regarding privacy, informed consent, and algorithmic equity. Only 19% of AI studies in pediatrics explicitly describe data anonymization procedures, and standardized protocols for obtaining meaningful consent—particularly from minors and their guardians—remain underdeveloped, increasing the risk of re-identification and misuse (96). Compounding this, training datasets often reflect racial, socioeconomic, and geographic biases, which can lead to degraded model performance in underrepresented populations, especially in low-income and resource-limited settings (87, 97). Without deliberate efforts to ensure representativeness and accessibility, AI risks exacerbating existing health disparities rather than mitigating them.

Methodologically, the field is hindered by significant heterogeneity in data and analytical approaches. A large proportion of studies rely on single-center, retrospective datasets, which are vulnerable to selection bias and lack external validation, limiting the generalizability of findings. Cross-institutional variations in data collection—such as imaging protocols, electronic health record (EHR) structures, and clinical workflows—can reduce model performance by 15%–20%, underscoring the critical need for standardized data curation and annotation frameworks (47, 98). Furthermore, the high cost of expert labeling and the scarcity of large, annotated pediatric datasets constrain the development of robust models, particularly for rare diseases. This is compounded by inconsistent model evaluation practices: while some studies report high accuracy, many fail to provide essential metrics such as sensitivity, specificity, or confidence intervals, making it difficult to compare performance across models or assess real-world utility.
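To illustrate the kind of reporting whose absence is noted above, the following minimal sketch computes sensitivity and specificity with 95% Wilson score confidence intervals from confusion-matrix counts. The counts, the function names (`wilson_interval`, `report`), and the choice of the Wilson method are assumptions made for illustration; they are not drawn from any included study.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half_width, centre + half_width


def report(tp, fn, tn, fp):
    """Return point estimates and Wilson intervals for sensitivity and specificity."""
    return {
        "sensitivity": (tp / (tp + fn), wilson_interval(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_interval(tn, tn + fp)),
    }


# Hypothetical validation counts, for illustration only.
for name, (estimate, (low, high)) in report(tp=89, fn=11, tn=178, fp=22).items():
    print(f"{name}: {estimate:.3f} (95% CI {low:.3f} to {high:.3f})")
```

Reporting the interval alongside the point estimate makes the effect of sample size visible: the same 89% sensitivity estimated from 100 positive cases carries a noticeably wider interval than it would from 1,000.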

The clinical adoption of AI is further impeded by the “black box” nature of many systems. Despite the introduction of visualization techniques to enhance interpretability, clinicians remain hesitant to trust AI-generated decisions without transparent, clinically meaningful explanations. Regulatory agencies increasingly demand rigorous documentation of model logic, uncertainty, and failure modes (99–101), highlighting the need for explainable AI (XAI) frameworks that align with clinical reasoning. For instance, deep learning models demonstrate high accuracy in detecting congenital heart disease from fetal ultrasound images but demand large datasets and substantial computational resources, whereas machine learning models offer faster inference and are better suited for real-time applications like neonatal sepsis prediction—yet without standardized benchmarks, such trade-offs remain poorly quantified.

Looking ahead, the future of pediatric AI lies in the integration of emerging technologies that enable more personalized, responsive, and secure care. Multimodal large language models (LLMs) hold promise for extracting and synthesizing complex information from unstructured EHRs, while digital twin technology could simulate individualized treatment responses by integrating longitudinal clinical, imaging, and genomic data. Edge computing devices offer the potential for real-time AI inference at the bedside—critical in neonatal and emergency settings—reducing latency and enhancing data privacy. Meanwhile, blockchain-based systems may provide a secure and auditable infrastructure for cross-institutional data sharing, fostering collaboration without compromising patient confidentiality (22, 47). However, the realization of this vision requires more than technological innovation; it demands systemic alignment through multicenter collaborations, standardized validation protocols, regulatory clarity, and human-centered design. Only by addressing these interconnected challenges can AI evolve from a collection of isolated tools into a trusted, equitable, and integral component of pediatric healthcare.

9 Practical implications for stakeholders

The findings of this review carry concrete and actionable implications for key stakeholders in pediatric healthcare, including clinicians, hospital administrators, policymakers, and technology developers. For clinicians, our synthesis highlights the growing maturity of AI tools in high-impact areas such as fetal anomaly detection, neonatal intensive care, and rare disease diagnosis—domains where early intervention is critical and human expertise is often stretched thin. Rather than viewing AI as a replacement, clinicians should position it as a cognitive augmentation tool that enhances diagnostic accuracy, reduces workload, and supports shared decision-making with families. However, this requires ongoing education in AI literacy, including understanding model limitations, interpreting uncertainty, and recognizing potential biases.

For hospital systems and healthcare administrators, the evidence underscores the need to invest in foundational infrastructure: standardized data pipelines, interoperable EHR systems, and secure computing environments (e.g., edge or federated learning platforms). The performance drop of 15%–20% when models are deployed across institutions is not merely a technical issue—it translates into real-world diagnostic delays and missed cases. Proactive investment in data harmonization and multicenter validation frameworks can mitigate this risk and accelerate the safe integration of AI into clinical workflows.

At the policy and regulatory level, our analysis reveals a critical gap: the absence of pediatric-specific AI governance standards. Unlike adult populations, children undergo rapid physiological and cognitive development, rendering adult-trained models potentially unsafe or inaccurate. Regulators must therefore establish developmental-stage-aware evaluation criteria, mandate transparency in training data demographics, and enforce strict privacy safeguards for lifelong pediatric data. Initiatives such as the FDA's Safer Technologies Program (STeP) offer a promising pathway, but they must be explicitly adapted for pediatric use cases.

Finally, for AI developers and researchers, this review calls for a paradigm shift—from building isolated “hero models” to designing clinically integrated, user-centered systems. Future efforts should prioritize external validation, real-world usability testing, and collaboration with frontline pediatric teams. The integration of multimodal LLMs, digital twins, and blockchain-based data sharing is not merely technological innovation; it is a systems-level opportunity to build proactive, personalized, and equitable child health ecosystems.

By aligning these stakeholder actions with the evidence synthesized in this review, the pediatric community can move beyond pilot studies and fragmented tools toward a future where AI is not an exception, but an expected, trusted, and life-enhancing component of routine care. The urgency is clear: every day of delay means missed opportunities for earlier diagnosis, more precise treatment, and better outcomes for the world's most vulnerable patients—children.

10 Conclusion

AI is profoundly reshaping the paradigm of pediatric medical practice. From real-time life monitoring in neonatal intensive care units (NICUs) to intelligent navigation in complex surgical procedures, from early warnings of fetal diseases to precise screening of pediatric diseases, the widespread application of AI technologies in the pediatric field demonstrates strong clinical potential. AI technologies not only improve the accuracy of diagnosis and treatment but also optimize the allocation and utilization of medical resources. However, continuous technological innovation needs to be synchronized with the standardization of methodologies, the improvement of ethical frameworks, and the construction of interdisciplinary collaboration systems. Future research should focus on standardized validation methods and multicenter studies to ensure the robustness and generalizability of AI applications. At the same time, addressing ethical and privacy issues is crucial for the widespread adoption of AI in pediatric healthcare. Looking ahead to the next 5–10 years, with the continuous expansion of pediatric-specific datasets and the application of cutting-edge technologies such as causal inference, innovations and breakthroughs in the pediatric field will bring new hope to the cause of children's health. The further development of AI technologies in the pediatric field is expected to provide more precise and efficient support for the health and well-being of children. These directions not only demonstrate the multi-level application potential of AI technologies from basic research to clinical implementation but also point the way for the future development of pediatric healthcare.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

QW: Writing – original draft, Data curation, Conceptualization, Validation. JY: Validation, Data curation, Conceptualization, Writing – original draft. XZ: Writing – original draft, Data curation, Formal analysis. HO: Writing – original draft, Data curation, Formal analysis. FL: Data curation, Writing – original draft, Formal analysis. YZ: Data curation, Formal analysis, Writing – original draft. WW: Formal analysis, Data curation, Writing – original draft. CG: Formal analysis, Data curation, Writing – original draft. YC: Data curation, Formal analysis, Writing – original draft. XW: Supervision, Writing – review & editing, Validation. TL: Writing – review & editing, Supervision, Validation.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Rahman A, Ray M, Madewell ZJ, Igunza KA, Akelo V, Onyango D, et al. Adherence to perinatal asphyxia or sepsis management guidelines in low- and middle-income countries. JAMA Netw Open. (2025) 8(5):e2510790. doi: 10.1001/jamanetworkopen.2025.10790

2. Till H, Elsayed H, Escolino M, Esposito C, Shehata S, Singer G. Artificial intelligence (AI) competency and educational needs: results of an AI survey of members of the European society of pediatric endoscopic surgeons (ESPES). Children (Basel). (2024) 12(1):6. doi: 10.3390/children12010006

3. Robinson JR, Stey A, Schneider DF, Kothari AN, Lindeman B, Kaafarani HM, et al. Generative artificial intelligence in academic surgery: ethical implications and transformative potential. J Surg Res. (2025) 307:212–20. doi: 10.1016/j.jss.2024.12.059

4. Husain A, Knake L, Sullivan B, Barry J, Beam K, Holmes E, et al. AI models in clinical neonatology: a review of modeling approaches and a consensus proposal for standardized reporting of model performance. Pediatr Res. (2024). doi: 10.1038/s41390-024-03774-4

5. Pierucci UM, Tonni G, Pelizzo G, Paraboschi I, Werner H, Ruano R. Artificial intelligence in fetal growth restriction management: a narrative review. J Clin Ultrasound. (2025) 53:825–31. doi: 10.1002/jcu.23918

6. Davidson L, Boland MR. Towards deep phenotyping pregnancy: a systematic review on artificial intelligence and machine learning methods to improve pregnancy outcomes. Brief Bioinform. (2021) 22(5):bbaa369. doi: 10.1093/bib/bbaa369

7. Bitar G, Liu W, Tunguhan J, Kumar KV, Hoffman MK. A machine learning algorithm using clinical and demographic data for all-cause preterm birth prediction. Am J Perinatol. (2024) 41(S 01):e3115–23. doi: 10.1055/s-0043-1776917

8. He F, Wang Y, Xiu Y, Zhang Y, Chen L. Artificial intelligence in prenatal ultrasound diagnosis. Front Med (Lausanne). (2021) 8:729978. doi: 10.3389/fmed.2021.729978

9. Miskeen E, Alfaifi J, Alhuian DM, Alghamdi M, Alharthi MH, Alshahrani NA, et al. Prospective applications of artificial intelligence in fetal medicine: a scoping review of recent updates. Int J Gen Med. (2025) 18:237–45. doi: 10.2147/IJGM.S490261

10. Ramirez Zegarra R, Ghi T. Use of artificial intelligence and deep learning in fetal ultrasound imaging. Ultrasound Obstet Gynecol. (2023) 62(2):185–94. doi: 10.1002/uog.26130

11. Chen Z, Liu Z, Du M, Wang Z. Artificial intelligence in obstetric ultrasound: an update and future applications. Front Med (Lausanne). (2021) 8:733468. doi: 10.3389/fmed.2021.733468

12. Meshaka R, Gaunt T, Shelmerdine SC. Artificial intelligence applied to fetal MRI: a scoping review of current research. Br J Radiol. (2023) 96(1147):20211205. doi: 10.1259/bjr.20211205

13. Zhang J, Xiao S, Zhu Y, Zhang Z, Cao H, Xie M, et al. Advances in the application of artificial intelligence in fetal echocardiography. J Am Soc Echocardiogr. (2024) 37(5):550–61. doi: 10.1016/j.echo.2023.12.013

14. Alzubaidi M, Agus M, Alyafei K, Althelaya KA, Shah U, Abd-Alrazaq A, et al. Toward deep observation: a systematic survey on artificial intelligence techniques to monitor fetus via ultrasound images. iScience. (2022) 25(8):104713. doi: 10.1016/j.isci.2022.104713

15. Xiao S, Zhang J, Zhu Y, Zhang Z, Cao H, Xie M, et al. Application and progress of artificial intelligence in fetal ultrasound. J Clin Med. (2023) 12(9):3298. doi: 10.3390/jcm12093298

16. Weichert J, Scharf JL. Advancements in artificial intelligence for fetal neurosonography: a comprehensive review. J Clin Med. (2024) 13(18):5626. doi: 10.3390/jcm13185626

17. Sakai A, Komatsu M, Komatsu R, Matsuoka R, Yasutomi S, Dozen A, et al. Medical professional enhancement using explainable artificial intelligence in fetal cardiac ultrasound screening. Biomedicines. (2022) 10(3):551. doi: 10.3390/biomedicines10030551

18. Smail LC, Dhindsa K, Braga LH, Becker S, Sonnadara RR. Using deep learning algorithms to grade hydronephrosis severity: toward a clinical adjunct. Front Pediatr. (2020) 8:1. doi: 10.3389/fped.2020.00001

19. Mallineni SK, Sethi M, Punugoti D, Kotha SB, Alkhayal Z, Mubaraki S, et al. Artificial intelligence in dentistry: a descriptive review. Bioengineering (Basel). (2024) 11(12):1267. doi: 10.3390/bioengineering11121267

20. Hardalaç F, Akmal H, Ayturan K, Acharya UR, Tan RS. A pragmatic approach to fetal monitoring via cardiotocography using feature elimination and hyperparameter optimization. Interdiscip Sci. (2024) 16(4):882–906. doi: 10.1007/s12539-024-00647-6

21. Wang J, Li H, Cecil KM, Altaye M, Parikh NA, He L. DFC-Igloo: a dynamic functional connectome learning framework for identifying neurodevelopmental biomarkers in very preterm infants. Comput Methods Programs Biomed. (2024) 257:108479. doi: 10.1016/j.cmpb.2024.108479

22. McOmber BG, Moreira AG, Kirkman K, Acosta S, Rusin C, Shivanna B. Predictive analytics in bronchopulmonary dysplasia: past, present, and future. Front Pediatr. (2024) 12:1483940. doi: 10.3389/fped.2024.1483940

23. Wang D, Huang S, Cao J, Feng Z, Jiang Q, Zhang W, et al. A comprehensive study on machine learning models combining with oversampling for bronchopulmonary dysplasia-associated pulmonary hypertension in very preterm infants. Respir Res. (2024) 25(1):199. doi: 10.1186/s12931-024-02797-z

24. Lopes MB, Coletti R, Duranton F, Glorieux G, Jaimes Campos MA, Klein J, et al. The omics-driven machine learning path to cost-effective precision medicine in chronic kidney disease. Proteomics. (2025) 25:e202400108. doi: 10.1002/pmic.202400108

25. Ngeow AJH, Moosa AS, Tan MG, Zou L, Goh MMR, Lim GH, et al. Development and validation of a smartphone application for neonatal jaundice screening. JAMA Netw Open. (2024) 7(12):e2450260. doi: 10.1001/jamanetworkopen.2024.50260

26. Bernardo D, Kim J, Cornet MC, Numis AL, Scheffler A, Rao VR, et al. Machine learning for forecasting initial seizure onset in neonatal hypoxic-ischemic encephalopathy. Epilepsia. (2025) 66(1):89–103. doi: 10.1111/epi.18163

27. Ryu S, Back S, Lee S, Seo H, Park C, Lee K, et al. Pilot study of a single-channel EEG seizure detection algorithm using machine learning. Childs Nerv Syst. (2021) 37(7):2239–44. doi: 10.1007/s00381-020-05011-9

28. Baker S, Yogavijayan T, Kandasamy Y. Towards non-invasive and continuous blood pressure monitoring in neonatal intensive care using artificial intelligence: a narrative review. Healthcare (Basel). (2023) 11(24):3107. doi: 10.3390/healthcare11243107

29. Majeedi A, Peebles PJ, Li Y, McAdams RM. Glottic opening detection using deep learning for neonatal intubation with video laryngoscopy. J Perinatol. (2025) 45(2):242–8. doi: 10.1038/s41372-024-02171-3

30. Wilson CG, Altamirano AE, Hillman T, Tan JB. Data analytics in a clinical setting: applications to understanding breathing patterns and their relevance to neonatal disease. Semin Fetal Neonatal Med. (2022) 27(5):101399. doi: 10.1016/j.siny.2022.101399

31. Tennant R, Graham J, Kern J, Mercer K, Ansermino JM, Burns CM. A scoping review on pediatric sepsis prediction technologies in healthcare. NPJ Digit Med. (2024) 7(1):353. doi: 10.1038/s41746-024-01361-9

32. Chen K, Zheng F, Zhang X, Wang Q, Zhang Z, Niu W. Factors associated with underweight, overweight, and obesity in Chinese children aged 3–14 years using ensemble learning algorithms. J Glob Health. (2025) 15:04013. doi: 10.7189/jogh.15.04013

33. Gleason A, Richter F, Beller N, Arivazhagan N, Feng R, Holmes E, et al. Detection of neurologic changes in critically ill infants using deep learning on video data: a retrospective single center cohort study. EClinicalMedicine. (2024) 78:102919. doi: 10.1016/j.eclinm.2024.102919

34. Hussain MA, Grant PE, Ou Y. Inferring neurocognition using artificial intelligence on brain MRIs. Front Neuroimaging. (2024) 3:1455436. doi: 10.3389/fnimg.2024.1455436

35. Leone DM, O’Sullivan D, Bravo-Jaimes K. Artificial intelligence in pediatric electrocardiography: a comprehensive review. Children (Basel). (2024) 12(1):25. doi: 10.3390/children12010025

36. Sun J, Feng T, Wang B, Li F, Han B, Chu M, et al. Leveraging artificial intelligence for predicting spontaneous closure of perimembranous ventricular septal defect in children: a multicentre, retrospective study in China. Lancet Digit Health. (2025) 7(1):e44–53. doi: 10.1016/S2589-7500(24)00245-0

37. Kerth JL, Hagemeister M, Bischops AC, Reinhart L, Dukart J, Heinrichs B, et al. Artificial intelligence in the care of children and adolescents with chronic diseases: a systematic review. Eur J Pediatr. (2024) 184(1):83. doi: 10.1007/s00431-024-05846-3

38. Ahmed M, Dai T, Channa R, Abramoff MD, Lehmann HP, Wolf RM. Cost-effectiveness of AI for pediatric diabetic eye exams from a health system perspective. NPJ Digit Med. (2025) 8(1):3. doi: 10.1038/s41746-024-01382-4

39. Campbell EA, Holl F, Marwah HK, Fraser HS, Craig SS. The impact of climate change on vulnerable populations in pediatrics: opportunities for AI, digital health, and beyond-a scoping review and selected case studies. Pediatr Res. (2025). doi: 10.1038/s41390-024-03719-x

40. Alqahtani MM, Alanazi AMM, Algarni SS, Aljohani H, Alenezi FK, Alotaibi TF, et al. Unveiling the influence of AI on advancements in respiratory care: narrative review. Interact J Med Res. (2024) 13:e57271. doi: 10.2196/57271

41. Ganatra HA. Machine learning in pediatric healthcare: current trends, challenges, and future directions. J Clin Med. (2025) 14(3):807. doi: 10.3390/jcm14030807

42. Jeon I, Kim M, So D, Kim EY, Nam Y, Kim S, et al. Reliable autism spectrum disorder diagnosis for pediatrics using machine learning and explainable AI. Diagnostics (Basel). (2024) 14(22):2504. doi: 10.3390/diagnostics14222504

43. Jin L, Cui H, Zhang P, Cai C. Early diagnostic value of home video-based machine learning in autism spectrum disorder: a meta-analysis. Eur J Pediatr. (2024) 184(1):37. doi: 10.1007/s00431-024-05837-4

44. Orimoto H, Hirashita T, Ikeda S, Amano S, Kawamura M, Kawano Y, et al. Development of an artificial intelligence system to indicate intraoperative findings of scarring in laparoscopic cholecystectomy for cholecystitis. Surg Endosc. (2025) 39(2):1379–87. doi: 10.1007/s00464-024-11514-2

45. Demir D, Ozyoruk KB, Durusoy Y, Cinar E, Serin G, Basak K, et al. The future of surgical diagnostics: artificial intelligence-enhanced detection of ganglion cells for Hirschsprung disease. Lab Invest. (2024) 105(2):102189. doi: 10.1016/j.labinv.2024.102189

46. Wah JNK. Revolutionizing surgery: AI and robotics for precision, risk reduction, and innovation. J Robot Surg. (2025) 19(1):47. doi: 10.1007/s11701-024-02205-0

47. Buser MAD, van der Rest JK, Wijnen MHWA, de Krijger RR, van der Steeg AFW, van den Heuvel-Eibrink MM, et al. Deep learning and multidisciplinary imaging in pediatric surgical oncology: a scoping review. Cancer Med. (2025) 14(2):e70574. doi: 10.1002/cam4.70574

48. Choi IH, Seo GH, Park J, Kim YM, Cheon CK, Kim YM, et al. Evaluation of users’ level of satisfaction for an artificial intelligence-based diagnostic program in pediatric rare genetic diseases. Medicine (Baltimore). (2022) 101(28):e29424. doi: 10.1097/MD.0000000000029424

49. Abdallah S, Sharifa M, I Kh Almadhoun MK, Khawar MM Sr., Shaikh U, Balabel KM, et al. The impact of artificial intelligence on optimizing diagnosis and treatment plans for rare genetic disorders. Cureus. (2023) 15(10):e46860. doi: 10.7759/cureus.46860

50. Reiley J, Botas P, Miller CE, Zhao J, Malone Jenkins S, Best H, et al. Open-source artificial intelligence system supports diagnosis of mendelian diseases in acutely ill infants. Children (Basel). (2023) 10(6):991. doi: 10.3390/children10060991

51. Jackson S, Freeman R, Noronha A, Jamil H, Chavez E, Carmichael J, et al. Applying data science methodologies with artificial intelligence variant reinterpretation to map and estimate genetic disorder prevalence utilizing clinical data. Am J Med Genet A. (2024) 194(5):e63505. doi: 10.1002/ajmg.a.63505

52. Choon YW, Choon YF, Nasarudin NA, Al Jasmi F, Remli MA, Alkayali MH, et al. Artificial intelligence and database for NGS-based diagnosis in rare disease. Front Genet. (2024) 14:1258083. doi: 10.3389/fgene.2023.1258083

53. Lin Q, Tam PK, Tang CS. Artificial intelligence-based approaches for the detection and prioritization of genomic mutations in congenital surgical diseases. Front Pediatr. (2023) 11:1203289. doi: 10.3389/fped.2023.1203289

54. Qadri YA, Ahmad K, Kim SW. Artificial general intelligence for the detection of neurodegenerative disorders. Sensors (Basel). (2024) 24(20):6658. doi: 10.3390/s24206658

55. Huang AE, Valdez TA. Artificial intelligence and pediatric otolaryngology. Otolaryngol Clin North Am. (2024) 57(5):853–62. doi: 10.1016/j.otc.2024.04.011

56. Alkuraya IF. Is artificial intelligence getting too much credit in medical genetics? Am J Med Genet C Semin Med Genet. (2023) 193(3):e32062. doi: 10.1002/ajmg.c.32062

57. Li Q, Zhou SR, Kim H, Wang H, Zhu JJ, Yang JK. Discovering novel cathepsin L inhibitors from natural products using artificial intelligence. Comput Struct Biotechnol J. (2024) 23:2606–14. doi: 10.1016/j.csbj.2024.06.009

58. Raza A, Rustam F, Siddiqui HUR, Diez IT, Garcia-Zapirain B, Lee E, et al. Predicting genetic disorder and types of disorder using chain classifier approach. Genes (Basel). (2022) 14(1):71. doi: 10.3390/genes14010071

59. Carrer A, Romaniello MG, Calderara ML, Mariani M, Biondi A, Selicorni A. Application of the Face2Gene tool in an Italian dysmorphological pediatric clinic: retrospective validation and future perspectives. Am J Med Genet A. (2024) 194(3):e63459. doi: 10.1002/ajmg.a.63459

60. Obara K, Abe E, Toyoshima I. Whole-exome sequencing identified a novel DYRK1A variant in a patient with intellectual developmental disorder, autosomal dominant 7. Cureus. (2023) 15(1):e33379. doi: 10.7759/cureus.33379

61. Park S, Kim J, Song TY, Jang DH. Case report: the success of face analysis technology in extremely rare genetic diseases in Korea: Tatton-Brown-Rahman syndrome and Say-Barber-Biesecker-Young-Simpson variant of Ohdo syndrome. Front Genet. (2022) 13:903199. doi: 10.3389/fgene.2022.903199

62. Bajaj S, Nampoothiri S, Chugh R, Sheth J, Sheth F, Sheth H, et al. KBG syndrome in 16 Indian individuals. Am J Med Genet A. (2025) 197(2):e63907. doi: 10.1002/ajmg.a.63907

63. Jyothi BN, Angel S, Ravi Kumar CP, Tamhankar PM. Child with KBG syndrome. BMJ Case Rep. (2024) 17(12):e260238. doi: 10.1136/bcr-2024-260238

64. Fujinami K, Nishiguchi KM, Oishi A, Akiyama M, Ikeda Y, Research Group on Rare and Intractable Diseases (Ministry of Health, Labour and Welfare of Japan). Specification of variant interpretation guidelines for inherited retinal dystrophy in Japan. Jpn J Ophthalmol. (2024) 68(4):389–99. doi: 10.1007/s10384-024-01063-5

65. Narayanan R, DeGroat W, Peker E, Zeeshan S, Ahmed Z. VAREANT: a bioinformatics application for gene variant reduction and annotation. Bioinform Adv. (2024) 5(1):vbae210. doi: 10.1093/bioadv/vbae210

66. Tejura M, Fayer S, McEwen AE, Flynn J, Starita LM, Fowler DM. Calibration of variant effect predictors on genome-wide data masks heterogeneous performance across genes. Am J Hum Genet. (2024) 111(9):2031–43. doi: 10.1016/j.ajhg.2024.07.018

67. He S, Gao B, Sabnis R, Sun Q. Nucleic transformer: classifying DNA sequences with self-attention and convolutions. ACS Synth Biol. (2023) 12(11):3205–14. doi: 10.1021/acssynbio.3c00154

68. Dalla-Torre H, Gonzalez L, Mendoza-Revilla J, Lopez Carranza N, Grzywaczewski AH, Oteri F, et al. Nucleotide transformer: building and evaluating robust foundation models for human genomics. Nat Methods. (2025) 22(2):287–97. doi: 10.1038/s41592-024-02523-z

69. Ramprasad P, Pai N, Pan W. Enhancing personalized gene expression prediction from DNA sequences using genomic foundation models. HGG Adv. (2024) 5(4):100347. doi: 10.1016/j.xhgg.2024.100347

70. Sindeeva M, Chekanov N, Avetisian M, Shashkova TI, Baranov N, Malkin E, et al. Cell type-specific interpretation of noncoding variants using deep learning-based methods. Gigascience. (2023) 12:giad015. doi: 10.1093/gigascience/giad015

71. Soldà G, Asselta R. Applying artificial intelligence to uncover the genetic landscape of coagulation factors. J Thromb Haemost. (2025) 23:1133–45. doi: 10.1016/j.jtha.2024.12.030

72. Costa M, García SA, Pastor O. VarGuideAtlas: a repository of variant interpretation guidelines. Database (Oxford). (2025) 2025:baaf017. doi: 10.1093/database/baaf017

73. Wang A, Doan TT, Reddy C, Jone PN. Artificial intelligence in fetal and pediatric echocardiography. Children (Basel). (2024) 12(1):14. doi: 10.3390/children12010014

74. Van den Eynde J, Kutty S, Danford DA, Manlhiot C. Artificial intelligence in pediatric cardiology: taking baby steps in the big world of data. Curr Opin Cardiol. (2022) 37(1):130–6. doi: 10.1097/HCO.0000000000000927

75. Priyadarshi A, Tracy M, Kothari P, Sitaula C, Hinder M, Marzbanrad F, et al. Comparison of simultaneous auscultation and ultrasound for clinical assessment of bowel peristalsis in neonates. Front Pediatr. (2023) 11:1173332. doi: 10.3389/fped.2023.1173332

76. Urschitz M, Lorenz S, Unterasinger L, Metnitz P, Preyer K, Popow C. Three years experience with a patient data management system at a neonatal intensive care unit. J Clin Monit Comput. (1998) 14(2):119–25. doi: 10.1023/a:1007467831587

77. Tang BH, Guan Z, Allegaert K, Wu YE, Manolis E, Leroux S, et al. Drug clearance in neonates: a combination of population pharmacokinetic modelling and machine learning approaches to improve individual prediction. Clin Pharmacokinet. (2021) 60(11):1435–48. doi: 10.1007/s40262-021-01033-x

78. Várady P, Micsik T, Benedek S, Benyó Z. A novel method for the detection of apnea and hypopnea events in respiration signals. IEEE Trans Biomed Eng. (2002) 49(9):936–42. doi: 10.1109/TBME

79. Elsayid NN, Aydaross Adam EI, Yousif Mahmoud SM, Saadeldeen H, Nauman M, Ali Ahmed TA, et al. The role of machine learning approaches in pediatric oncology: a systematic review. Cureus. (2025) 17(1):e77524. doi: 10.7759/cureus.77524

80. Cao CL, Li QL, Tong J, Shi LN, Li WX, Xu Y, et al. Artificial intelligence in thyroid ultrasound. Front Oncol. (2023) 13:1060702. doi: 10.3389/fonc.2023.1060702

81. Tanna DA, Bhandary S, Hegde KS. Tech bytes-harnessing artificial intelligence for pediatric oral health: a scoping review. Int J Clin Pediatr Dent. (2024) 17(11):1289–95. doi: 10.5005/jp-journals-10005-2971

82. Zhang J, Chen W, Joshi T, Zhang X, Loh PL, Jog V, et al. BAE-ViT: an efficient multimodal vision transformer for bone age estimation. Tomography. (2024) 10(12):2058–72. doi: 10.3390/tomography10120146

83. Montgomery ME, Andersen FL, Mathiasen R, Borgwardt L, Andersen KF, Ladefoged CN. CT-free attenuation correction in paediatric long axial field-of-view positron emission tomography using synthetic CT from emission data. Diagnostics (Basel). (2024) 14(24):2788. doi: 10.3390/diagnostics14242788

84. Karkalousos D, Išgum I, Marquering HA, Caan MWA. ATOMMIC: an advanced toolbox for multitask medical imaging consistency to facilitate artificial intelligence applications from acquisition to analysis in magnetic resonance imaging. Comput Methods Programs Biomed. (2024) 256:108377. doi: 10.1016/j.cmpb.2024.108377

85. Sharma S, Nayak A, Thomas B, Kesavadas C. Synthetic MR: clinical applications in neuroradiology. Neuroradiology. (2025) 67(3):509–27. doi: 10.1007/s00234-025-03547-8

86. Tortora M, Pacchiano F, Ferraciolli SF, Criscuolo S, Gagliardo C, Jaber K, et al. Medical digital twin: a review on technical principles and clinical applications. J Clin Med. (2025) 14(2):324. doi: 10.3390/jcm14020324

87. Prince EW, Mirsky DM, Hankinson TC, Görg C. Current state and promise of user-centered design to harness explainable AI in clinical decision-support systems for patients with CNS tumors. Front Radiol. (2025) 4:1433457. doi: 10.3389/fradi.2024.1433457

88. Wan X, Wang D, Zhang X, Xu M, Huang Y, Qin W, et al. Unleashing the power of urine-based biomarkers in diagnosis, prognosis and monitoring of bladder cancer (review). Int J Oncol. (2025) 66(3):18. doi: 10.3892/ijo.2025.5724

89. Park PG, Choi S, Ahn YH, Kim SH, Kim C, Kim HJ, et al. Single-cell transcriptomics in a child with coenzyme Q10 nephropathy: potential of single-cell RNA sequencing in pediatric kidney disease. Pediatr Nephrol. (2025) 40(5):1653–62. doi: 10.1007/s00467-024-06611-2

90. Li S, Luo J, Liu J, He D. Pan-cancer single cell and spatial transcriptomics analysis deciphers the molecular landscapes of senescence related cancer-associated fibroblasts and reveals its predictive value in neuroblastoma via integrated multi-omics analysis and machine learning. Front Immunol. (2024) 15:1506256. doi: 10.3389/fimmu.2024.1506256

91. Ihsan MZ, Apriatama D, Pithriani AR. AI-assisted patient education: challenges and solutions in pediatric kidney transplantation. Patient Educ Couns. (2025) 131:108575. doi: 10.1016/j.pec.2024.108575

92. Temsah MH, Nazer R, Altamimi I, Aldekhyyel R, Jamal A, Almansour M, et al. OpenAI’s Sora and Google’s Veo 2 in action: a narrative review of artificial intelligence-driven video generation models transforming healthcare. Cureus. (2025) 17(1):e77593. doi: 10.7759/cureus.77593

93. Elchaghaby M, Wahby R. Knowledge, attitudes, and perceptions of a group of Egyptian dental students toward artificial intelligence: a cross-sectional study. BMC Oral Health. (2025) 25(1):11. doi: 10.1186/s12903-024-05282-7

94. Kareemi H, Yadav K, Price C, Bobrovitz N, Meehan A, Li H, et al. Artificial intelligence-based clinical decision support in the emergency department: a scoping review. Acad Emerg Med. (2025) 32(4):386–95. doi: 10.1111/acem.15099

95. Lopes J, Guimarães T, Duarte J, Santos M. Enhancing surgery scheduling in health care settings with metaheuristic optimization models: algorithm validation study. JMIR Med Inform. (2025) 13:e57231. doi: 10.2196/57231

96. Lin X, Liang C, Liu J, Lyu T, Ghumman N, Campbell B. Artificial intelligence-augmented clinical decision support systems for pregnancy care: systematic review. J Med Internet Res. (2024) 26:e54737. doi: 10.2196/54737