- 1 Prevention Research Center, Brown School, Washington University in St. Louis, St. Louis, MO, United States

- 2 Division of Public Health Sciences, Department of Surgery, Alvin J. Siteman Cancer Center, Washington University School of Medicine, Washington University in St. Louis, St. Louis, MO, United States

- 3 Frederick S. Pardee RAND Graduate School, RAND Corporation, Santa Monica, CA, United States

- 4 National Association of County and City Health Officials, Washington, DC, United States

Background: Local health departments (LHDs) in the United States are charged with preventing disease and promoting health in their respective communities. Understanding and addressing the supports LHDs need to foster a climate and culture supportive of evidence-based decision making (EBDM) processes can enhance the delivery of effective practices and services.

Methods: We employed a stepped-wedge trial design to test staggered delivery of implementation supports in 12 LHDs (Missouri, USA) to expand capacity for EBDM processes. The intervention was an in-person training in EBDM and continued support by the research team over 24 months (March 2018–February 2020). We used a mixed-methods approach to evaluate: (1) individuals' EBDM skills, (2) organizational supports for EBDM, and (3) administered evidence-based interventions. LHD staff completed a quantitative survey at 4 time points measuring their EBDM skills, organizational supports, and evidence-based interventions. We selected 4 LHDs with high contact and engagement during the intervention period to interview staff (n = 17) about facilitators and barriers to EBDM. We used mixed-effects linear regression to examine quantitative survey outcomes. Interviews were transcribed verbatim and coded through a dual independent process.

Results: Overall, 519 LHD staff were eligible and invited to complete quantitative surveys during control periods and 593 during intervention periods (365 unique individuals). A total of 434 completed surveys during control and 492 during intervention (83.6 and 83.0% response, respectively). In both trial modes, half the participants had at least a master's degree (49.7–51.7%) and most were female (82.1–83.8%). No significant intervention effects were found in EBDM skills or in implementing evidence-based interventions. Two organizational support scores decreased in intervention vs. control periods, awareness (−0.14, 95% CI −0.26 to −0.01, p < 0.05) and climate cultivation (−0.14, 95% CI −0.27 to −0.02, p < 0.05), but both improved over time among all participants. Interviewees noted staff turnover and limited time, resources, and momentum as challenges to continuing EBDM work. Setting expectations, programmatic reviews, and pre-existing practices were seen as facilitators.

Conclusions: Challenges (e.g., turnover, resources) may disrupt LHDs' abilities to fully embed organizational processes that support EBDM. This study and related literature provide an understanding of how best to support LHDs in building capacity to use and sustain evidence-based practices.

Introduction

Local health departments (LHDs) serve as an important frontline for chronic disease prevention in the complex US public health system (1, 2). Because LHDs are more localized than state-based and national efforts, they are able to tailor the implementation of important evidence-based programs and policies to their communities' needs and resources. The burden of chronic diseases like diabetes and prediabetes continues to increase, disproportionately impacting underserved communities (3). Supporting the capacity of LHDs to implement effective local strategies is an urgent priority (4). Such capacity requires skilled staff and organizational practices that support evidence-based decision making (EBDM) processes, or strategies to apply the best available scientific evidence and community preferences (5, 6). LHDs face unique challenges to building capacity, such as staff turnover and limited resources and funding (7).

Providing training on EBDM to health department staff has been documented as an important and effective strategy to boost workforce competency and influence organizational practices (8–11). Previous work with state health departments suggests that training plus additional researcher-supported, agency-planned strategies to embed EBDM into systems could also enhance individual and organizational capacity to adopt evidence-based approaches (9). It is unknown whether similar supports yield similar results within LHDs, given differences in governance and other organizational factors. Research on how best to build capacity for EBDM within LHDs has mostly been cross-sectional or longitudinal with pre-post follow-up (with or without control groups). For the current study, we used a stepped-wedge design, which allows pre-post comparisons across intervention and control groups while ensuring that all groups eventually receive any benefits of the intervention.

In 2018, we began the Adoption & Implementation of evidence to Mobilize Local Health (AIM-Local Health) trial and recruited 12 LHDs in Missouri, with the goal of understanding how training, ongoing support, and technical assistance could help LHDs, within their unique contexts, improve their capacity to use EBDM, especially for chronic disease prevention. We report qualitative interview findings from LHD staff participants and quantitative survey results, which are, to our knowledge, the first from a mixed-methods, stepped-wedge cluster randomized trial with the LHD as the cluster.

Methods

This study reports results from Phase 2 of a two-phase dissemination trial, grounded in Diffusion of Innovation Theory (12) and Institutional Theory (13–15), to test the effectiveness of strategies designed to increase capacity for evidence-based diabetes and other chronic disease control efforts among local public health practitioners (16). Phase 1 included a national cross-sectional survey of LHDs and qualitative interviews with key informants. Findings from Phase 1 are reported elsewhere (17–21) and were used to refine the dissemination approach and measures used in Phase 2. Likewise, the full protocol for all phases has been described in detail previously (16). Here we briefly describe the components needed to understand the Phase 2 trial results.

Site Selection and Study Design

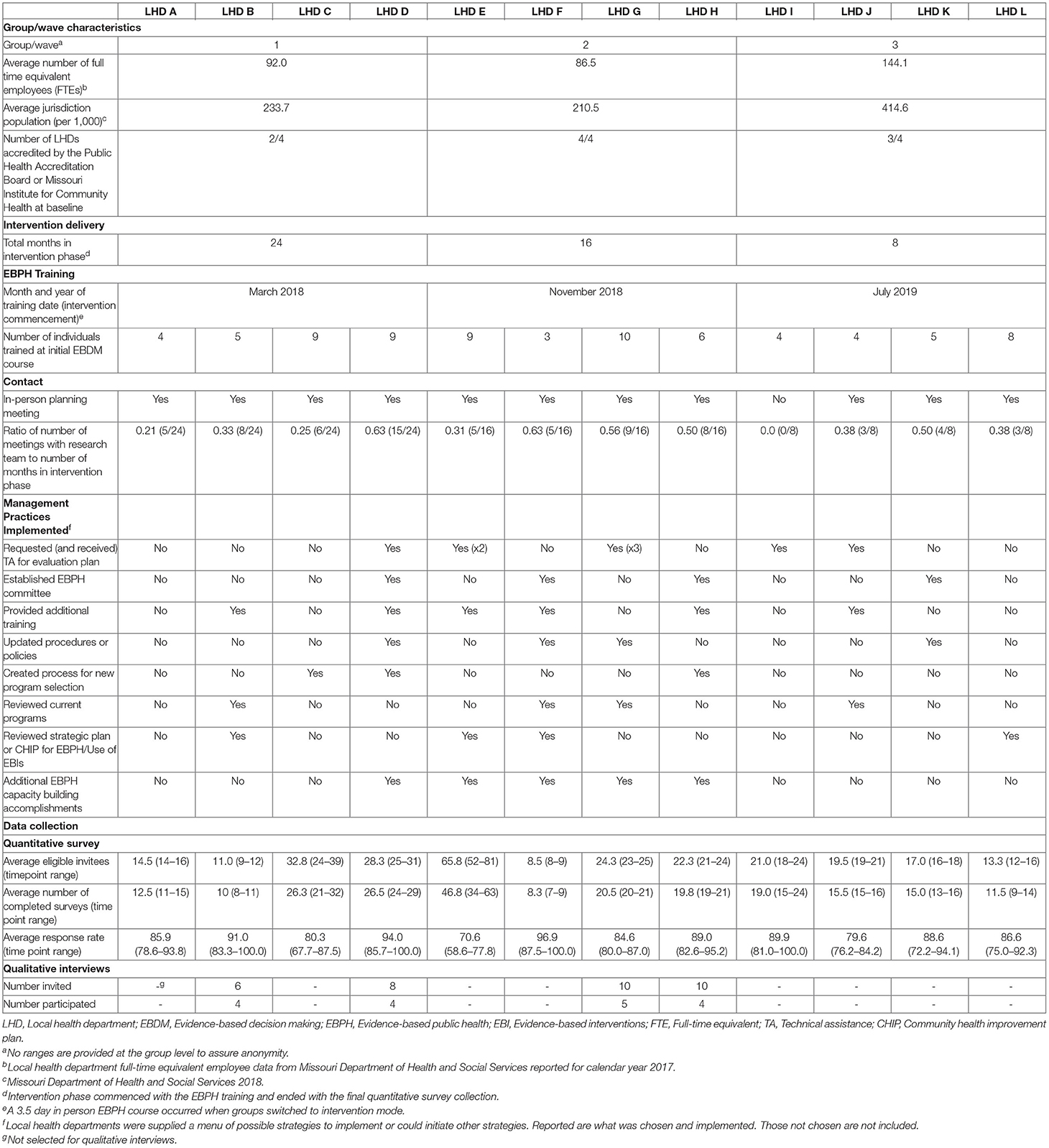

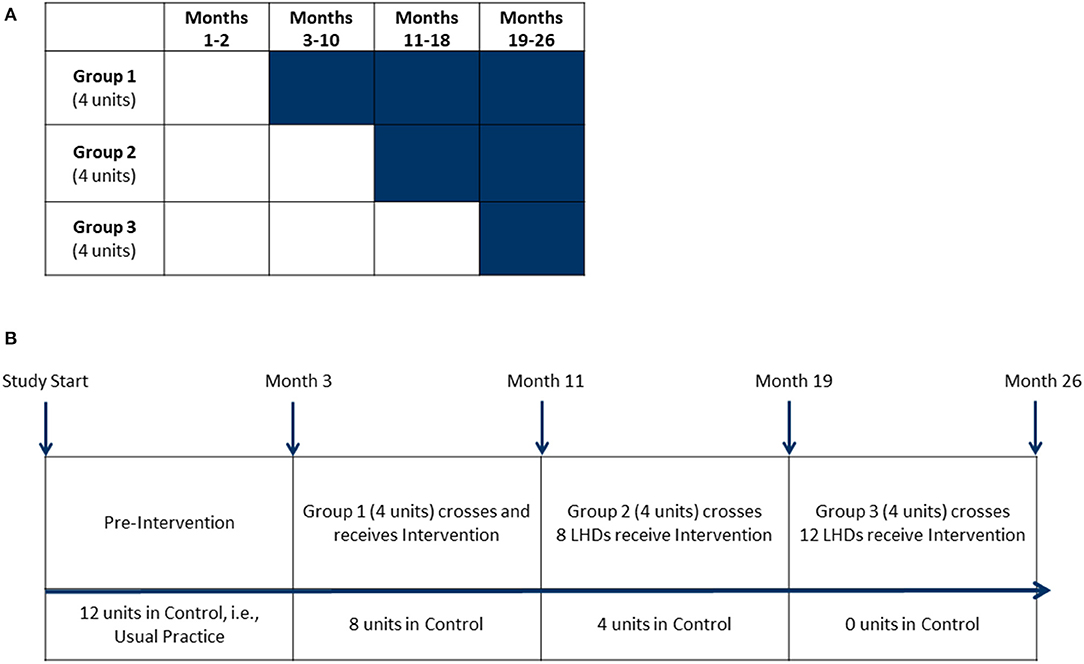

This study used an open cohort stepped-wedge design in which groups of clusters (LHDs) crossed over to the intervention at different intervals. Each cohort of participants included newly recruited and previously recruited individuals within clusters (22, 23). Compared with parallel trial designs, the main strength of a stepped-wedge design is that all groups eventually receive the intervention. We selected 12 of the 115 LHDs in Missouri, USA, based on characteristics such as full-time equivalent employees (a proxy for LHD size), number of people working in diabetes programs (at least 5 required), and diabetes burden (diabetes mortality rate, a disparity measure). After obtaining permission from each LHD's leadership, we randomized the LHDs into three groups of four. LHDs assigned to Group 1 crossed over from control to intervention first and remained in intervention status until the completion of the study (Figure 1).

Figure 1. Stepped-wedge design. (A) This stepped-wedge design featured 12 units (local health departments) randomly assigned into one of three groups. Shaded cells represent intervention periods. Clear cells represent control periods. Group 1's intervention period was 24 months, Group 2's intervention period was 16 months and Group 3's intervention period was 8 months. (B) Baseline measures for all units were taken during the pre-intervention period. Groups crossed over from control to receive intervention activities with measurements at 8-month intervals.
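The allocation and crossover schedule in Figure 1 can be sketched in a few lines. This is an illustrative sketch, not the study's randomization code: the LHD labels, the seed, and the helper names (`randomize_lhds`, `in_intervention`, `intervention_months`) are hypothetical. It assumes, as the caption describes, that Group g is first measured under intervention at survey time g and that surveys are 8 months apart.

```python
import random

N_GROUPS = 3         # randomization groups (from the text)
LHDS_PER_GROUP = 4   # 12 LHDs split evenly
N_TIMEPOINTS = 4     # survey times 0 (baseline) through 3 (final)
MONTHS_PER_STEP = 8  # interval between crossovers/measurements


def randomize_lhds(lhd_ids, seed=None):
    """Shuffle LHD ids and split them into groups 1..N_GROUPS of equal size."""
    if len(lhd_ids) != N_GROUPS * LHDS_PER_GROUP:
        raise ValueError("expected 12 LHDs")
    ids = list(lhd_ids)
    random.Random(seed).shuffle(ids)
    return {g: ids[(g - 1) * LHDS_PER_GROUP:g * LHDS_PER_GROUP]
            for g in range(1, N_GROUPS + 1)}


def in_intervention(group, time):
    """Group g is measured under intervention from survey time g onward."""
    return time >= group


def intervention_months(group):
    """Months a group spends in the intervention period before the final survey."""
    return (N_TIMEPOINTS - group) * MONTHS_PER_STEP


# Hypothetical LHD labels; the real LHDs are not named in the text.
lhds = [f"LHD-{i:02d}" for i in range(1, 13)]
groups = randomize_lhds(lhds, seed=2018)
for g in range(1, N_GROUPS + 1):
    row = "".join("I" if in_intervention(g, t) else "C" for t in range(N_TIMEPOINTS))
    print(f"Group {g}: {row}  ({intervention_months(g)} months)")
# Group 1: CIII  (24 months)
# Group 2: CCII  (16 months)
# Group 3: CCCI  (8 months)
```

The printed rows mirror the shaded (I) and clear (C) cells of Figure 1, and the month totals match the caption's 24, 16, and 8 months.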

Intervention

Previous work has shown capacity building within health departments to be highly specific to each organization because of differing resources (21, 24). As such, the primary intervention component was a tailored evidence-based public health (EBPH) training with each LHD, plus continued follow-up (e.g., email exchanges, phone meetings) to determine specific areas for capacity-building activities. Each LHD's team included 1–3 staff who served as key contacts with the research team. The research team included the study's principal investigator (RCB), the project manager (RGP), and an expert consultant (PA).

Eight to nine weeks after receiving the pre-intervention survey, the intervention group received a 3.5-day, in-person EBPH training led by the research team. The EBPH training was modeled on previously successful workshops to enhance EBDM within local and state health departments (25, 26). Didactic and interactive group work covered 10 modules:

1. Introduction and overview of EBPH,

2. Assessing and engaging communities,

3. Quantifying the issue,

4. Developing a concise statement of the issue,

5. Searching and summarizing the scientific literature,

6. Developing and prioritizing intervention options,

7. Developing an action plan and building a logic model,

8. Understanding and using economic evaluation,

9. Evaluating the program or policy, and

10. Communicating and disseminating evidence to local policymakers.

More information on the course and specific learning objectives for each module can be found at evidencebasedpublichealth.org and in previously published evaluation work (26, 27).

Following the training, each LHD was offered an in-person planning session with the research team to identify priority areas. LHDs chose from a list of strategies developed in previous work or proposed alternate approaches (9, 16) in the areas of accreditation, access to scientific information, workforce development, leadership and management supports, organizational changes, relationships and partnerships, and financial practices. Additional support or “check in” meetings were offered to each LHD at whatever frequency was most helpful to the LHD for their chosen strategies. See Supplementary Material 2 for additional descriptions and examples of types of supports offered to each LHD.

We tracked intervention delivery and implementation in several ways (Table 1). We logged each LHD's training start, post-training in-person planning meeting, and follow-up check-in meetings with key staff. In addition, we tracked each LHD's management strategies that were implemented during the intervention phase. All components of this study received approval by the Washington University in St. Louis Institutional Review Board (#201705026 and #202010031).

Quantitative Survey

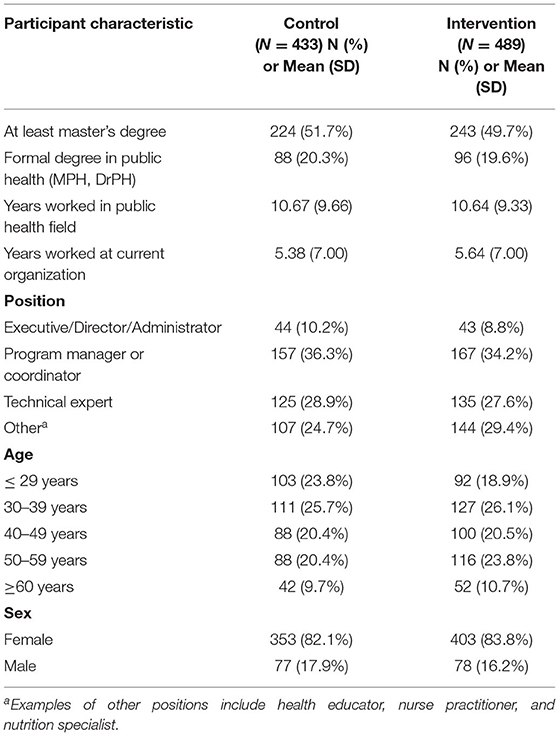

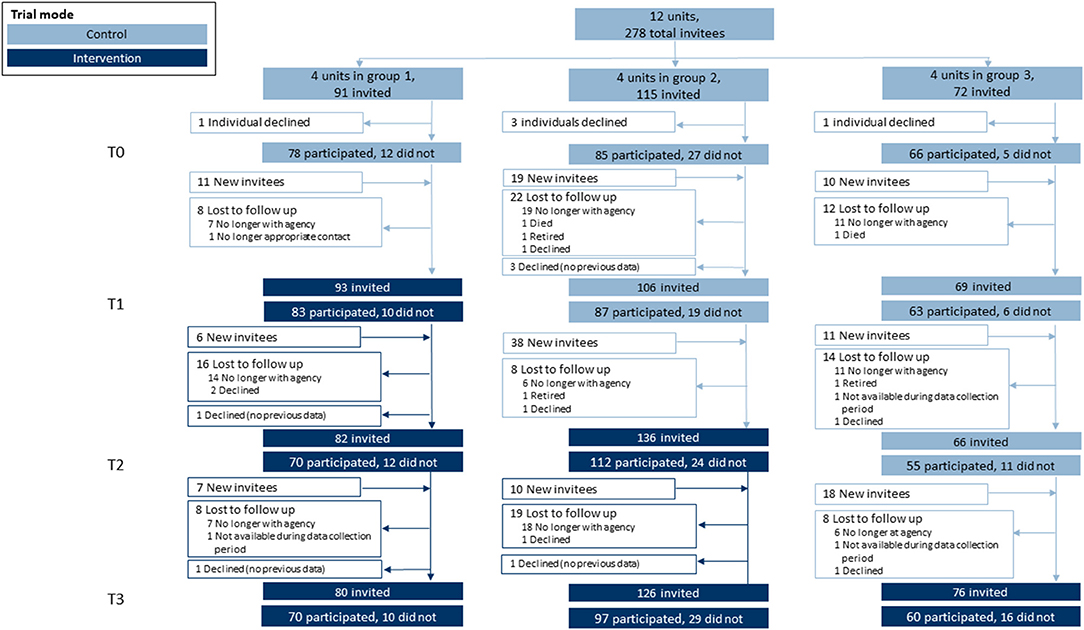

Each LHD developed a list of key staff involved in chronic disease prevention who were invited to complete an online quantitative survey every 8 months during the entirety of the study (26 months). Participants were invited via email and reminded by both email and phone to boost response. Surveys were timed with group cross-over in order to collect control measures before each intervention period. We worked with LHDs to identify individuals to replace participants who were no longer at their respective organization (open cohort design) as shown in Figure 2.

Figure 2. Participation flow diagram. This stepped-wedge design featured 12 units (local health departments) randomly assigned into one of three groups. Within each unit, individuals were invited to participate in a quantitative survey at four separate time points. Each time point included returning survey invitees and newly-invited individuals (open cohort design) where turnover warranted replacements with new hires.

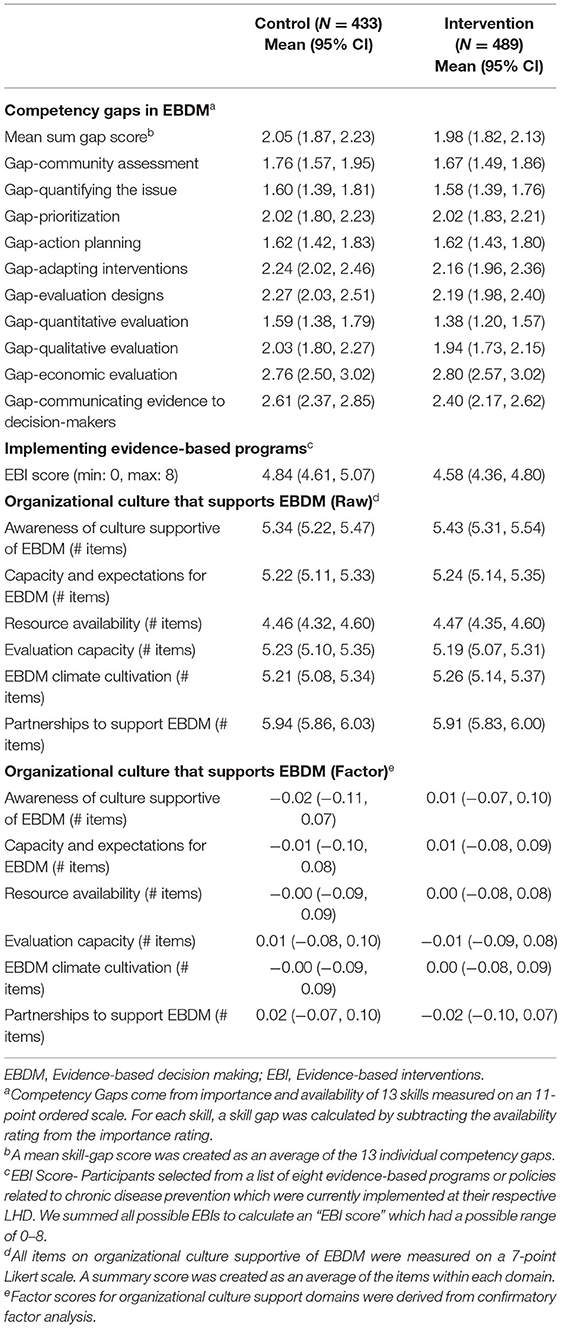

Constructs assessed with the quantitative survey covered three main areas of trial outcomes. The first was competency for EBDM: ten skills, closely aligned with objectives of the EBPH training that each LHD received (listed in Tables 3, 4), were each rated for importance and availability on an 11-point ordered scale. The second was implementation of evidence-based interventions: from a list of eight evidence-based programs or policies related to chronic disease prevention, participants selected those currently implemented at their LHD. The third was organizational culture supportive of EBDM, assessed with 22 items on a 7-point Likert scale (1 = strongly disagree to 7 = strongly agree). The survey also assessed participant characteristics such as education level, length of employment in public health and at their current agency, gender identity, age, race, and ethnicity. The full survey is available in Supplementary Material 2.
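The Analysis section turns these item scales into outcome scores: each skill gap is importance minus availability, the overall gap is their average, the EBI score is a count of the eight listed EBIs, and culture domains are item averages. A minimal sketch of that scoring, assuming the 11-point scale is coded 0–10 (consistent with the stated −10 to +10 gap range). The respondent values and the item-to-domain mapping below are hypothetical; the study's actual domain assignment follows its earlier factor work (20).

```python
from statistics import mean


def skill_gaps(importance, availability):
    """Per-skill gap: importance minus availability, each rated on a 0-10 scale."""
    if len(importance) != len(availability):
        raise ValueError("importance and availability ratings must be paired")
    return [imp - avail for imp, avail in zip(importance, availability)]


def overall_skill_gap(importance, availability):
    """Average of the individual skill gaps (10 skills in this survey)."""
    return mean(skill_gaps(importance, availability))


def ebi_score(implemented):
    """Number of the eight listed EBIs reported as implemented (range 0-8)."""
    return sum(bool(flag) for flag in implemented)


def domain_scores(ratings, domains):
    """Average the 1-7 Likert ratings of the items assigned to each domain."""
    return {name: mean(ratings[i] for i in items) for name, items in domains.items()}


# Hypothetical respondent data (not from the study).
importance = [9, 8, 10, 7, 9, 8, 6, 9, 10, 8]
availability = [7, 7, 6, 7, 8, 5, 6, 6, 7, 8]
print(skill_gaps(importance, availability))         # [2, 1, 4, 0, 1, 3, 0, 3, 3, 0]
print(overall_skill_gap(importance, availability))  # 1.7
print(ebi_score([1, 0, 1, 1, 0, 0, 1, 0]))          # 4

# Hypothetical item-to-domain mapping, for illustration only.
print(domain_scores([6, 5, 7, 4, 5], {"awareness": [0, 1, 2], "resources": [3, 4]}))
```

A positive gap means a skill is rated as more important than it is available, so larger values indicate a larger capacity shortfall.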

Qualitative Interviews

The purpose of qualitative interviews was to understand how LHDs supported evidence-based processes after the initiation of the intervention phase of the study (i.e., how processes were implemented, any impacts, barriers and facilitators that influenced implementation, advice to other LHDs wanting to replicate). We selected four of the 12 LHDs based on trial randomization group, intervention adoption information, and raw change in outcome measures from baseline to final time point (Table 1). Selection in this manner was purposeful to represent LHDs with at least 16 months of intervention time prior to interview, active (high contact) participation in the intervention, and favorable outcomes from the raw quantitative data. The research team worked with each health promotion or chronic disease manager in the four LHDs to obtain leadership approval to participate in qualitative data collection. Participants were invited via email to complete audio-recorded phone interviews between October 2020 and January 2021.

The semi-structured interview guide, based on the team's previous work (24, 28), asked about organizational policies and procedures intended to support EBDM use, organizational environment and norms pertaining to EBDM, impact of instituted organizational policies and procedures on employees' day-to-day work, facilitators and challenges to integration of EBDM into day-to-day work, steps taken to sustain EBDM processes, recommendations for other LHDs, and recommendations for academic research teams for future studies with health departments. The full interview guide is provided in Supplementary Material 3.

Analysis

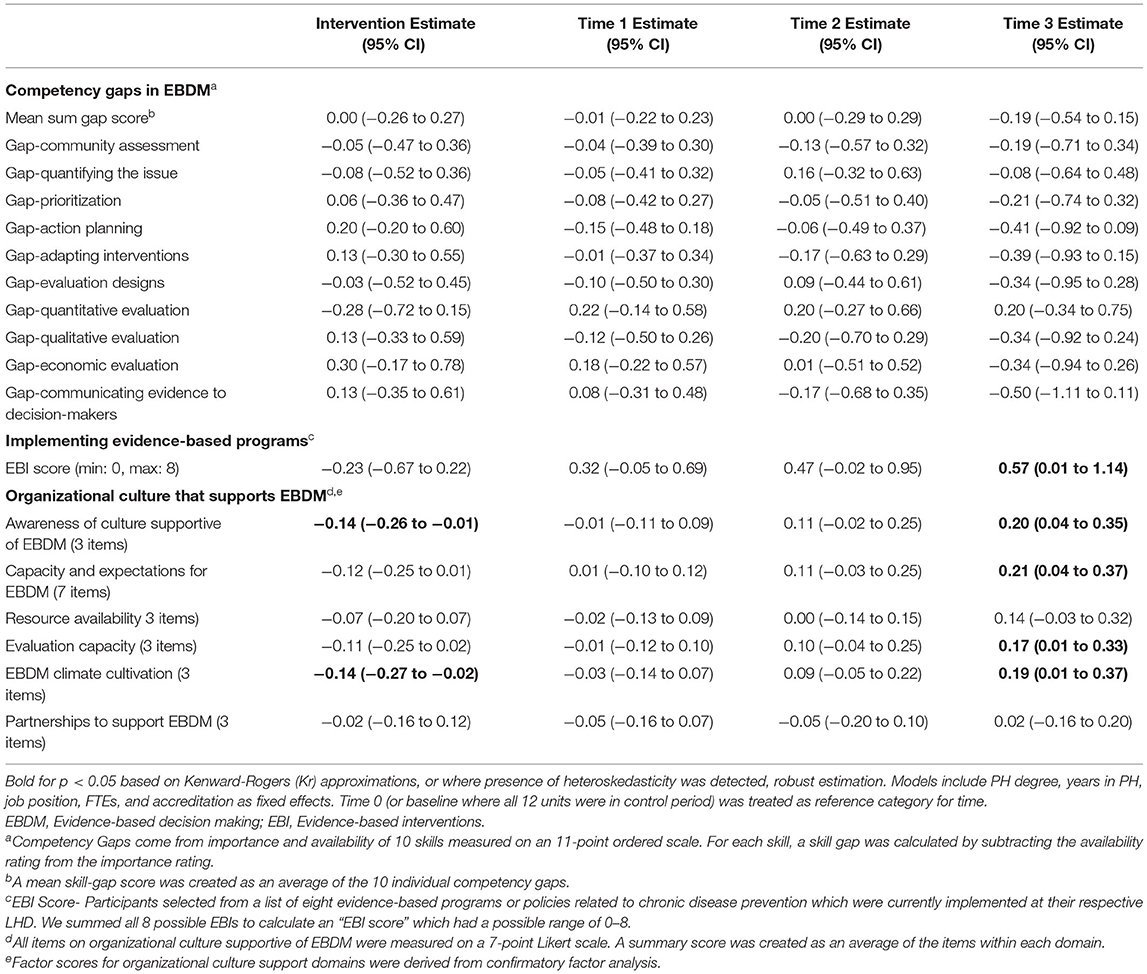

Descriptive statistics for participant and LHD characteristics and main outcomes were compared across trial modes (intervention vs. control). We calculated the gap in each EBDM skill by subtracting the availability rating from the importance rating (possible range −10 to +10). An overall skill gap was created by averaging across all 10 skill items. We summed all 8 possible EBIs to calculate an “EBI score” with a possible range of 0–8. For organizational culture items, we grouped and averaged Likert items within six focus areas based on previous work: awareness of culture supportive of EBDM, capacity and expectations for EBDM, resource availability, evaluation capacity, EBDM climate cultivation, and partnerships to support EBDM (20). A confirmatory factor analysis using data from all four time points, following standard procedures (29–31), demonstrated adequate fit and strict measurement invariance of the factor structure used for the national survey in Phase 1 (20). We fit a linear mixed-effects regression model for each outcome with LHD and participant as random intercepts, trial mode (control or intervention) as a fixed effect, and time as a categorical fixed effect. Public health degree, years worked in the public health field, job position category, and accreditation status were entered as additional fixed effects. Kenward-Roger approximations were used to determine the significance of fixed effects, a common approach for models fit by restricted maximum likelihood that yields acceptable Type I error rates (32, 33). Where models violated the assumption of homoscedasticity, robust models were fit instead. Survey data were managed and analyzed in R.
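The outcome model described above can be written compactly. This is a sketch in our own notation, not notation from the source: for participant $i$ in LHD $j$ at survey time $t \in \{0, 1, 2, 3\}$, with Time 0 as the reference category,

$$
Y_{ijt} = \beta_0 + \beta_1 \,\mathrm{Int}_{jt} + \sum_{k=1}^{3} \gamma_k \,\mathbf{1}[t = k] + \mathbf{x}_{ijt}^{\top}\boldsymbol{\delta} + u_j + v_i + \varepsilon_{ijt},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2),\; v_i \sim \mathcal{N}(0, \sigma_v^2),\; \varepsilon_{ijt} \sim \mathcal{N}(0, \sigma^2)
$$

Here $\mathrm{Int}_{jt} = 1$ when LHD $j$ is in its intervention period at time $t$, $\mathbf{x}_{ijt}$ holds the covariates (public health degree, years in public health, job position category, accreditation status), $u_j$ and $v_i$ are the LHD and participant random intercepts, $\beta_1$ corresponds to the reported trial-mode estimates, and $\gamma_3$ to the final-versus-first time point contrasts. The normal random intercepts and covariate coding are standard linear mixed-model assumptions rather than details stated in the text.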

Each phone interview was audio-recorded, transcribed verbatim by rev.com, and de-identified. A deductive coding approach was used to analyze interview data. Two co-authors (RGP, SK) developed and refined a codebook. They independently coded 20% of the transcripts in NVivo 12 qualitative software and then met to resolve discrepancies and reach consensus (agreement above 95% and kappa of at least 0.70) before one co-author (SK) coded the remaining transcripts (34). The coding team (SK, RGP, PA) conducted content analyses through a dual independent process (34–36). For each topic, pairs of co-authors (PA, SK, RGP) independently reviewed coded text and made notes to identify themes and summarize content. Pairs then met and reached consensus on final themes and subthemes.
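The consensus thresholds above (agreement above 95% and kappa of at least 0.70) combine simple percent agreement with Cohen's kappa, which corrects agreement for chance: κ = (p_o − p_e) / (1 − p_e). A minimal sketch, assuming each coder's decisions are recorded as one code per text segment; this does not reproduce how NVivo 12 computes its own statistics, and the coder data and helper names are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of coding units on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement corrected for chance, (p_o - p_e) / (1 - p_e)."""
    p_o = percent_agreement(coder_a, coder_b)
    n = len(coder_a)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Expected chance agreement from each coder's marginal code proportions.
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when both coders use one identical code")
    return (p_o - p_e) / (1 - p_e)


def meets_consensus_criteria(coder_a, coder_b, min_agreement=0.95, min_kappa=0.70):
    """Thresholds from the text: agreement above 95% and kappa of at least 0.70."""
    return (percent_agreement(coder_a, coder_b) > min_agreement
            and cohens_kappa(coder_a, coder_b) >= min_kappa)


# Hypothetical example: 40 text segments, 1 = code applied, 0 = not applied.
coder_a = [1] * 20 + [0] * 20
coder_b = [1] * 19 + [0] * 21
print(round(percent_agreement(coder_a, coder_b), 3))  # 0.975
print(round(cohens_kappa(coder_a, coder_b), 3))       # 0.95
print(meets_consensus_criteria(coder_a, coder_b))     # True
```

Note that exactly 95% agreement (for example, 19 of 20 segments) would not pass, because the stated criterion is agreement *above* 95%.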

Results

Table 1 provides descriptive information about the three groups of LHDs, including the EBPH training timing for each group, follow-up support, and the management practices each LHD implemented to support EBDM. Overall, Group 3 had a higher average number of full-time equivalent employees (144.1) and a larger average jurisdiction population (414,600 people) than Groups 1 and 2. All but one LHD met in person for strategy planning. During the intervention phase, Group 1 averaged 8.5 follow-up meetings with the research team over 24 months, Group 2 averaged 9.5 over 16 months, and Group 3 averaged 2.5 over 8 months.

Quantitative Results

Overall, 519 LHD staff were eligible and invited to complete quantitative surveys during control periods and 593 during intervention periods (365 unique individuals in total). A total of 434 completed surveys during control periods and 492 during intervention periods, for response rates of 83.6 and 83.0%, respectively. Per time period, LHDs averaged 23.2 eligible staff (range 8.5–65.8) and 19.3 staff who completed surveys (range 8.3–46.8). Of all participants (Table 2), most were female (82.1% control; 83.8% intervention), about half had at least a master's degree (51.7% control; 49.7% intervention), and less than a quarter had completed a formal degree program in public health (20.3% control; 19.6% intervention). Program managers or coordinators made up approximately one-third of participants (36.3% control; 34.2% intervention). Participants had worked in public health for a little more than 10 years on average (mean = 10.7, SD = 9.7 control; mean = 10.6, SD = 9.3 intervention), about double the time they had worked in their current organization (mean = 5.4, SD = 7.0 control; mean = 5.64, SD = 7.0 intervention).
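The response rates and per-period averages above follow from simple ratios of the reported counts. As a quick check, a short sketch; the helper names are hypothetical, and the denominator for the per-period averages (12 LHDs × 4 time points = 48 LHD-periods) is our assumption, though it reproduces the reported 23.2 and 19.3.

```python
# Counts reported in the text.
eligible = {"control": 519, "intervention": 593}
completed = {"control": 434, "intervention": 492}
N_LHDS, N_TIMEPOINTS = 12, 4  # 12 LHDs surveyed at 4 time points


def response_rate(n_completed, n_eligible):
    """Percentage of invited (eligible) staff who completed the survey."""
    return 100 * n_completed / n_eligible


def mean_per_lhd_period(counts):
    """Average count per LHD per time period (assumed 48 LHD-periods)."""
    return sum(counts.values()) / (N_LHDS * N_TIMEPOINTS)


for mode in eligible:
    print(f"{mode}: {response_rate(completed[mode], eligible[mode]):.1f}% response")
print(f"eligible per LHD-period: {mean_per_lhd_period(eligible):.1f}")
print(f"completed per LHD-period: {mean_per_lhd_period(completed):.1f}")
# control: 83.6% response
# intervention: 83.0% response
# eligible per LHD-period: 23.2
# completed per LHD-period: 19.3
```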

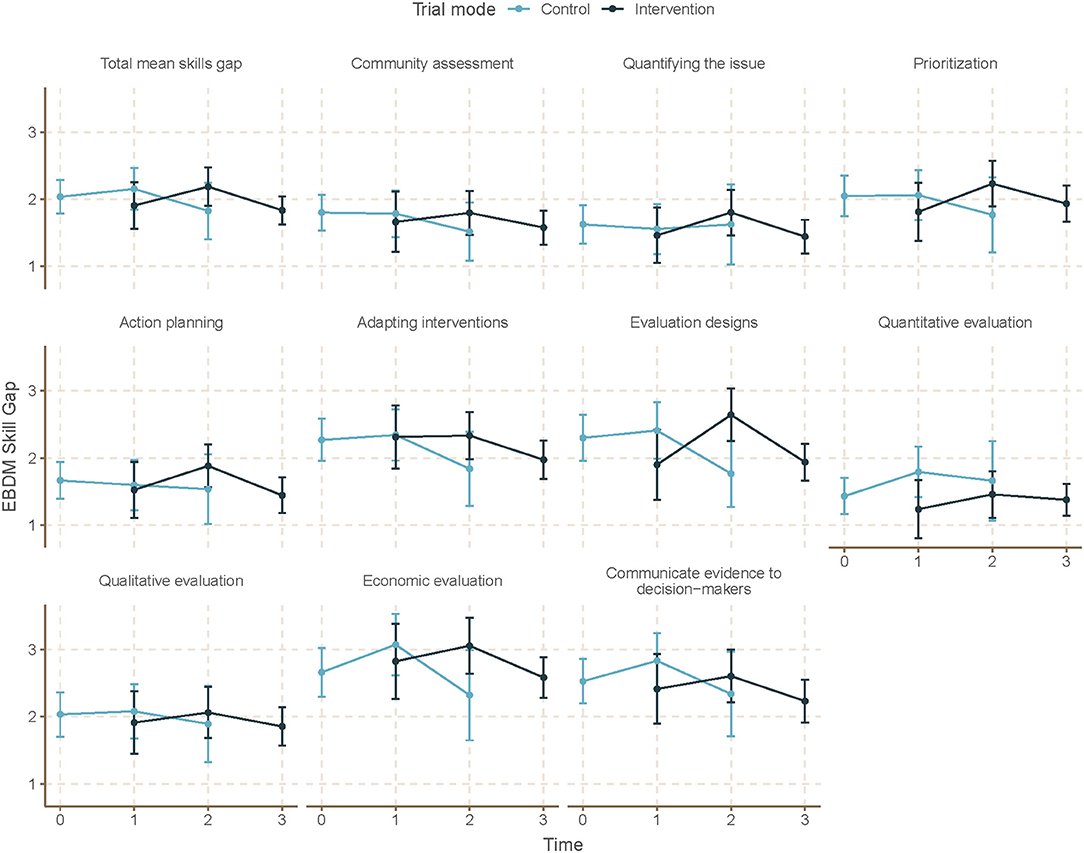

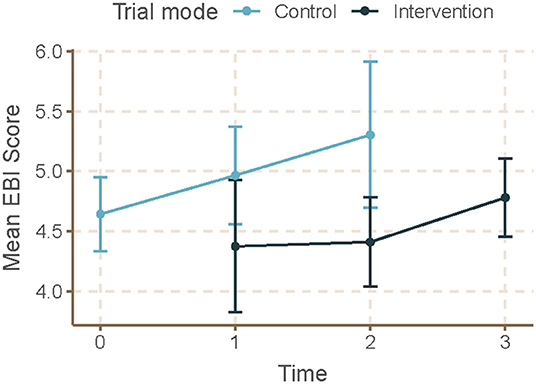

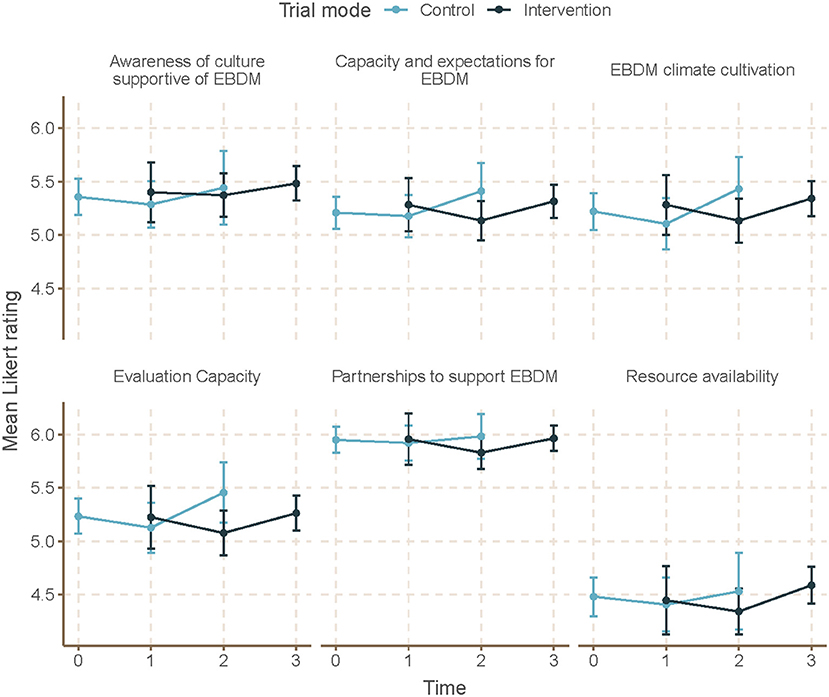

Mean EBDM skill gaps (mean of all 10 skill gaps) were similar in the control and intervention trial modes (control 2.05, 95% CI 1.87–2.23; intervention 1.98, 95% CI 1.82–2.13) (Table 3). Mean EBDM skill gaps (and 95% CIs) for the intervention and control groups at each time point are displayed in Figure 3. Economic evaluation was the largest skill gap (control 2.76, 95% CI 2.50–3.02; intervention 2.80, 95% CI 2.57–3.02), followed by communicating evidence to decision-makers (control 2.61, 95% CI 2.37–2.85; intervention 2.40, 95% CI 2.17–2.62). More EBIs were reported during the control period (control 4.84, 95% CI 4.61–5.07; intervention 4.58, 95% CI 4.36–4.80). Mean EBI scores (and 95% CIs) for the intervention and control groups at each time point are displayed in Figure 4. In both trial modes, partnerships that support EBDM had the highest mean Likert rating among the organizational culture items (control 5.94, 95% CI 5.86–6.03; intervention 5.91, 95% CI 5.83–6.00). The lowest mean Likert ratings in both modes were for resources available to support EBDM (control 4.46; intervention 4.47). Mean organizational culture for EBDM items (and 95% CIs) for the intervention and control groups at each time point are displayed in Figure 5.

Figure 3. Mean EBDM skill gaps by time and trial mode. At each time point, mean and 95% confidence intervals for skill gaps in evidence-based decision making (EBDM) are displayed for individuals during control and intervention phases. EBDM skill gaps come from importance and availability of 10 skills measured on an 11-point ordered scale. For each skill, a skill gap was calculated by subtracting the availability rating from the importance rating. Time 0 represents baseline where all units (local health departments) were in control period. Time 3 is the final data collection point and all individuals are in intervention period. Total mean skill gap score was created as an average of the 10 individual competency gaps.

Figure 4. Mean EBI score by time and trial mode. At each time point, the mean and 95% confidence interval for the evidence-based intervention (EBI) score are displayed for individuals during control and intervention phases. For the EBI score, participants selected, from a list of eight evidence-based programs or policies related to chronic disease prevention, those currently implemented at their respective local health department. We summed all 8 possible EBIs to calculate the EBI score, which had a possible range of 0–8. Time 0 represents baseline, when all units (local health departments) were in the control period. Time 3 is the final data collection point, when all individuals were in the intervention period.

Figure 5. Mean EBDM culture items by time and trial mode. At each time point, means and 95% confidence intervals for organizational culture supportive of evidence-based decision making (EBDM) items are displayed for individuals during control and intervention phases. All items on organizational culture supportive of EBDM were measured on a 7-point Likert scale. A summary score was created as an average of the items within each domain. Time 0 represents baseline, when all units (local health departments) were in the control period. Time 3 is the final data collection point, when all individuals were in the intervention period.

After accounting for clustering by LHD and repeated responses in mixed-effects linear models, we found no significant time or intervention effects on EBDM skill gaps (Table 4). No net intervention effect was found for implementing EBIs, though significantly more EBIs were implemented overall at the last time point than at the first (0.57, 95% CI 0.01–1.14, p < 0.05). Two organizational culture factor scores supporting EBDM were significantly lower during intervention periods: EBDM awareness (−0.14, 95% CI −0.26 to −0.01, p < 0.05) and climate cultivation (−0.14, 95% CI −0.27 to −0.02, p < 0.05). At the final collection (the fourth time point, shown as Time 3 in Figures 3–5), factor scores were significantly higher than at the first time point for awareness (0.20, 95% CI 0.04–0.35, p < 0.05), EBDM capacity and expectations (0.21, 95% CI 0.04–0.35), evaluation capacity (0.17, 95% CI 0.01–0.33), and climate cultivation (0.19, 95% CI 0.01–0.37).

Qualitative Interviews

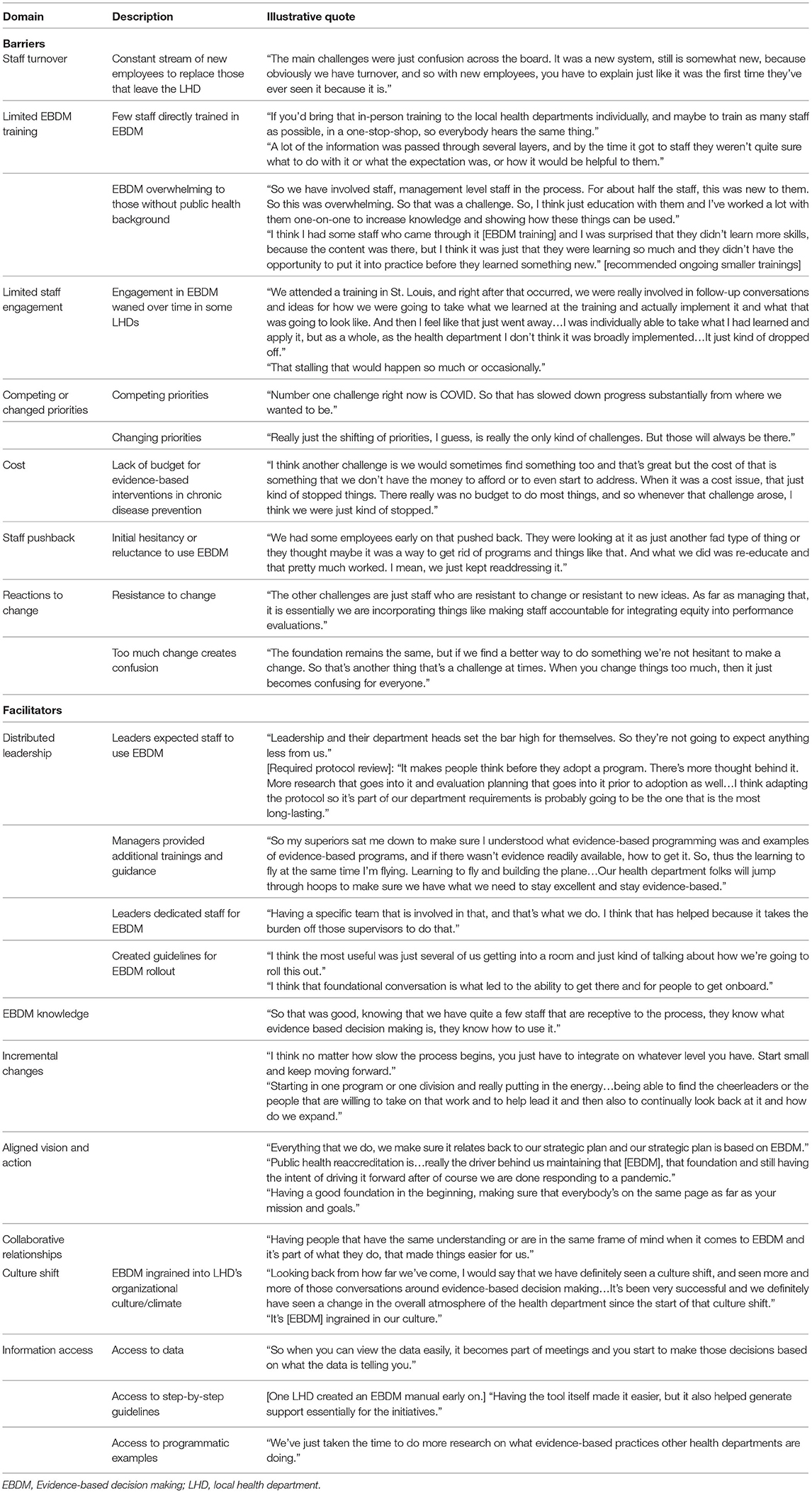

A total of 17 interviews were conducted with LHD staff in the trial, representing 4 of the 12 LHDs. Most interviewees were white (71.0%) and non-Hispanic (94.0%), and they had worked an average of 6.7 years at their current agency. Several themes emerged from the interviews.

Facilitators

Management practices, or the ways in which mechanisms within LHDs operate to either challenge or support EBDM (including leadership, organizational culture, workforce development, agency/system planning, program reviews, partnerships, and accreditation processes/status), were facilitators of EBDM processes within LHDs (Table 5). Leadership activities that were most helpful in supporting EBDM included dedicating staff, creating specific guidelines, setting expectations for use, and providing trainings, resources, and guidance. A culture shift, including dialogue, helped to change the “atmosphere” and “ingrain” EBDM implementation into LHD processes. Specific workforce development practices, such as staff-led training sessions, helped to “introduce new staff to what that [EBDM] is and our processes and what we do.” Incorporating EBDM into job descriptions and performance reviews introduced a level of accountability for “continually working on that [EBDM].” Agency-level planning included incorporating EBDM into strategic plans, performance management systems, and community health improvement plan (CHIP) development. Programmatic reviews also helped support EBDM within LHDs by aligning programs with LHD priorities, identifying evidence-based approaches, comparing programs with the EBDM process, and enhancing or initiating program evaluation. In working with partners, LHDs provided staff time to support partners' EBDM use and facilitated use of EBDM through the CHIP process. Management practices put in place to apply for or maintain Public Health Accreditation Board (PHAB) accreditation also helped support EBDM implementation and served as a “driver” to continue EBDM practices.

Table 5. Barriers and facilitators to use of evidence-based decision making in local health departments.

Barriers

Despite management practices that were described as facilitators, LHD staff also discussed several challenges to EBDM processes (Table 5). Staff turnover, small numbers of staff trained, time needed to fully implement changes, insufficient support, and low levels of involvement after training were all mentioned as barriers to advancing EBDM management practices. Staff turnover was described as especially disruptive to continuing EBDM work: “we'll get going for a little while... we'll have some turnover or something will happen where we have to shift our focus and then it can be difficult sometimes sort of going back and saying, okay, how can we get, how can we keep that momentum going?” Staff turnover also makes it hard to keep enough staff trained in EBDM: “our health department had a whole bunch of people that went through the EBDM training, but most of them have left now…But if there was an opportunity to continue offering the EBDM trainings, just because of that staff turnover, that would be one resource that would be amazing.” Finding time to develop and implement EBDM practices was also a challenge because, as one participant described, “in public health agencies, there's never going to be enough time.” The participant stated that LHDs should “start small and just keep moving forward.” Another participant described how implementing EBDM processes after the training “took a backseat because it did take time and effort and staffing in order to develop a plan, continue training, implement it, evaluate, et cetera.” In terms of additional support, participants wanted more concrete examples and more formalized, continued technical assistance. According to one participant, when the research team did “…provide us with really concrete steps or examples, that was really helpful. So for example, some of the website structure that actually had the list of evidence-based programs that we could then kind of replicate here. Or walking us through how to do a logic model. The evaluation series that they offered us, the online trainings that were made available, those were super helpful. So really just kind of that, that concrete examples and technical assistance is helpful.”

Discussion

Overall, our mixed methods study highlights the challenge of complex interventions and their implementation with heterogeneous organizations. While the quantitative outcomes were not significantly improved in our analysis, the qualitative data highlight challenges and future directions for continued efforts in building EBDM capacity.

We found no intervention effect on skills for EBDM. In a similar randomized controlled trial with 12 state health departments, Brownson et al. (9) found significant reductions in EBDM skill gaps in 6 skill areas (adapting interventions, prioritization, quantifying the issue, communicating to policy makers, community assessment, and qualitative evaluation) at 18–24 months post-training. These improvements were found among the primary intervention group (those who had attended the state-based EBPH training). Because our local health departments and training cohorts were smaller, similar analyses were not possible, though we may have seen improvements among the smaller group who attended the EBPH training. Direct training linked with individual skill-building may not diffuse to others in the department who were unable to attend or who joined later during an extended study period. As such, continuous training opportunities, possibly embedded into health department workflows, are needed. In our study, interview participants wanted additional direct training in how to apply EBDM principles in their day-to-day work, such as examples of evidence-based interventions other LHDs were using and additional hands-on support with program evaluation.

Trial mode was associated with significant reductions in two organizational culture factors: awareness of and climate cultivation for EBDM. Brownson et al. (9) found that, except for access to evidence and skilled staff, the remaining three organizational culture factors did not change for the primary intervention group, with negative, nonsignificant estimates for supervisory expectations and participatory decision-making. In both studies, organizational readiness (and/or readiness to engage in participatory research) was not comprehensively assessed. While we looked for changes in organizational culture supportive of EBDM, we may have missed important contextual influences on implementation of the intervention (37–39). In a review, Willis et al. (39) outlined six guiding principles that influence the sustainability of organizational change: aligned vision and action; incremental changes within a comprehensive transformation strategy; distributed leadership; staff engagement; collaborative relationships; and continuous assessment and evaluation of change. Many of these principles were mentioned by LHD staff in interviews as strategies that helped shift processes to be more EBDM-focused. Developing additional ways to measure incremental change, including measures that capture progress from starting “small and just keep moving forward,” could help explain how change happens and is sustained in LHDs.

Implementing and sustaining organizational change through staff engagement is further complicated by the instability of the public health workforce. Maintaining a stable workforce is a well-documented challenge in public health (40, 41) and is compounded in local health departments with smaller budgets and fewer employees (7, 42). Similar challenges were reported in a mixed-methods study with 31 LHDs in New York (43). Sosnowy et al. (43) found that EBDM philosophy was generally understood and supported within health departments, but operationalizing its concepts was hindered by limited funding, staff, and resources. In our study, turnover was commonly mentioned by interviewees and was also evident to the study team at each data collection point, when tracking sheets and contact information had to be updated to account for staffing changes. Every time a trained staff member leaves, a new staff member must be onboarded and trained. This disrupts efforts to keep “the momentum going” on changing management policies and/or practices. However, strong and determined leadership may be resilient in the face of turnover and limited resources, as was found in our study and in several other studies exploring supports for evidence-based processes (24, 43, 44). In addition, embedding standards for EBDM use into daily departmental activities creates the structure, capacity, and efficiency needed where turnover is high.

Interviewees described how the focus on changing policy and management practices that followed directly after training began to wane over time. Expansion and lag periods make change difficult to capture and reinforce the need for complex interventions with public health departments to account for such context (45, 46). LHDs were the main drivers of the intervention, determining which staff attended the trainings, which approaches to focus on, and how closely to engage with the research team. Engagement varied across LHDs (Table 1), and in interviews even LHDs working closely with our research team cited various barriers to advancing EBDM processes. This reinforces the need for participatory research with practitioners that departs from the notion of a research pipeline in which evidence is “delivered” to practitioners (47). A promising approach involves Academic Health Departments (AHDs): formal or informal arrangements between governmental public health agencies and academic institutions, with the overall goal of shared benefits through research, practice, and development of the next generation of the public health workforce (18, 48). A main benefit of AHDs is sustained partnership, which provides infrastructure for managing organizational culture shifts that demand long-term commitment. LHDs in AHD partnerships have been shown to implement more EBDM processes than other LHDs (18), underscoring the relevance of the still-forming AHD research agenda, which maps to various concepts of EBDM (49, 50). Learning “what works” from other LHDs in forming supportive and efficient AHD partnerships benefits the broader movement toward increasing such partnerships, but external funding for such coordination is limited (51). Our study and the accompanying body of work can be used to inform these efforts.

Limitations

The current study is limited to LHDs in Missouri. LHDs are governed differently in each state and even locally, which has unique implications for building EBDM capacity. Responses to the quantitative survey were self-reported, which introduces the possibility of response bias. Evidence-based programs and policies reported by each individual for their LHD were not verified beyond self-report. Similarly, although interview participants were informed of their anonymity, self-censorship remains a potential limitation. Our final wave of data collection began in February 2020, when our LHDs were in the early stages of responding to the COVID-19 pandemic, which challenged data collection. Response rates dropped in the final data collection period, especially in the third group/wave (those who received the intervention in the last period). The pandemic response was another reason we did not conduct interviews with more LHDs. Our purposive sampling approach allowed the research team to obtain rich contextual information from LHDs with mid- to high levels of participation while avoiding disruption and burden for all 12 LHDs during a demanding period of addressing the COVID-19 pandemic in their communities. It is possible that key participants were uninvolved in the last part of our intervention period. Finally, the stepped-wedge design involves practical trade-offs for its robustness, such as intensive and burdensome data collection, although it allows all 12 LHDs to receive the intervention. For example, each participant received four surveys within the 26-month trial period. Some staff may have been unable to respond, so we may have missed their perspectives.
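To make the design trade-off above concrete, the crossover pattern of a standard stepped-wedge schedule can be sketched in a few lines of Python. This is a hypothetical illustration, not the study's analysis code: it assumes the 12 LHDs were split evenly into three waves of four, with one wave crossing over at each of the second, third, and fourth survey time points, and the function and constant names are invented for illustration. Under these assumptions, every LHD is in the control condition at the first time point and in the intervention condition at the last, and the third wave contributes only one intervention-period survey: the final one, which coincided with the early COVID-19 response.

```python
# Hypothetical sketch of a stepped-wedge crossover schedule.
# Assumptions (not stated in the article): 12 LHDs split evenly into
# 3 waves of 4, with one wave crossing over at each of periods 2, 3, and 4.

N_PERIODS = 4       # survey time points described in the article
N_WAVES = 3         # groups/waves that cross over to the intervention
LHDS_PER_WAVE = 4   # assumption: 12 LHDs / 3 waves


def stepped_wedge_schedule(n_waves: int, n_periods: int) -> list[list[int]]:
    """Return a wave-by-period matrix: 0 = control, 1 = intervention.

    Wave w (0-indexed) crosses over at period w + 1, so the first period
    is all-control and the final period is all-intervention.
    """
    return [
        [1 if period > wave else 0 for period in range(n_periods)]
        for wave in range(n_waves)
    ]


def cluster_periods(schedule: list[list[int]], lhds_per_wave: int) -> tuple[int, int]:
    """Count LHD-periods spent in control and in intervention."""
    control = sum(row.count(0) for row in schedule) * lhds_per_wave
    intervention = sum(row.count(1) for row in schedule) * lhds_per_wave
    return control, intervention


if __name__ == "__main__":
    schedule = stepped_wedge_schedule(N_WAVES, N_PERIODS)
    for wave, row in enumerate(schedule, start=1):
        # C = control period, I = intervention period
        print(f"wave {wave}: " + " ".join("I" if x else "C" for x in row))
    print(cluster_periods(schedule, LHDS_PER_WAVE))
```

Under the even-split assumption, the schedule yields 24 control and 24 intervention LHD-periods, and the last row shows why a low response rate at the final time point weighs most heavily on the third wave's intervention data.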

Conclusions

LHDs are unique in that they represent the frontline of chronic disease prevention and, being local, can engage the communities they serve in evidence-based programs and policies. Our study and related literature inform understanding of how best to build capacity within LHDs to support EBDM processes. LHDs can facilitate integration of EBDM into day-to-day public health practice through leadership support, by fostering a supportive organizational climate and culture, and by embedding EBDM steps into internal written plans, policies, and standardized procedures. Future directions should focus on sustained partnerships that keep LHDs in the driver's seat and account for known challenges (e.g., turnover, limited funding).

Data Availability Statement

The datasets analyzed during the current study are available from the corresponding author upon reasonable request.

Ethics Statement

The studies involving human participants were reviewed and approved by Washington University in St. Louis Institutional Review Board (#201705026 and #202010031). The Ethics Committee waived the requirement of written informed consent for participation.

Author Contributions

RP led intervention planning and implementation, quantitative and qualitative data collection, and data management. PA assisted with intervention planning and implementation, quantitative data collection, and qualitative data analysis. RB led the study conceptualization and design and assisted with intervention planning and implementation. YY assisted with study design, data analysis, and statistical support. RJ assisted with data management and led data analysis and manuscript development. SK supported both quantitative and qualitative data collection and coding and analysis. DD assisted with survey tool development, recruitment, and interpretation of results. SM supported quantitative data analyses. All authors contributed to manuscript development and approved the final version.

Funding

This study was funded by the National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health (Award Nos. R01DK109913, P30DK092949, and P30DK092950), the National Cancer Institute (Award No. P50CA244431), the Centers for Disease Control and Prevention (Award No. U48DP006395), and the Foundation for Barnes-Jewish Hospital.

Author Disclaimer

The findings and conclusions in this article are those of the authors and do not necessarily represent the official positions of the National Institutes of Health or the Centers for Disease Control and Prevention.

Conflict of Interest

SK was employed by RAND Corporation. DD was employed by National Association of County and City Health Officials.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We are especially grateful to our 12 Missouri local health departments for their participation in data collection and also in intervention implementation. We are appreciative of the dedicated work from our student research assistants, Katie Bass and Mackenzie Robinson. We also acknowledge the administrative support of Linda Dix, Mary Adams, and Cheryl Valko at the Prevention Research Center in St. Louis, Brown School, Washington University in St. Louis.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2022.853791/full#supplementary-material

Supplementary Material 1. Menu of organizational supports for evidence-based decision making. This document was presented to units (local health departments) after crossing over to intervention period, following participation in the multi-day evidence-based public health training. The menu offers examples of strategies to support evidence-based processes within health departments.

Supplementary Material 2. Quantitative survey. The quantitative survey was administered at four separate time points over the course of the study. All individuals received the same survey tool regardless of the intervention phase of their respective unit (local health department).

Supplementary Material 3. Qualitative interview guide. These questions and prompts were used in interviews with local health department staff to guide a discussion around facilitators and barriers to implementing evidence-based processes within their respective agencies.

References

1. National Association of County and City Health Officials (NACCHO). National Profile of Local Health Departments Survey: Chapter 7-Programs and Services. (2019). Available online at: https://www.naccho.org/uploads/downloadable-resources/Programs/Public-Health-Infrastructure/NACCHO_2019_Profile_final.pdf (accessed July 13, 2021).

2. Zhang X, Luo H, Gregg EW, Mukhtar Q, Rivera M, Barker L, et al. Obesity prevention and diabetes screening at local health departments. Am J Public Health. (2010) 100:1434–41. doi: 10.2105/AJPH.2009.168831

3. Centers for Disease Control and Prevention. National Diabetes Statistics Report. Atlanta, GA (2020). Available online at: https://www.cdc.gov/diabetes/pdfs/data/statistics/national-diabetes-statistics-report.pdf (accessed July 13, 2021).

4. Golden SH, Maruthur N, Mathioudakis N, Spanakis E, Rubin D, Zilbermint M, et al. The case for diabetes population health improvement: evidence-based programming for population outcomes in diabetes. Curr Diab Rep. (2017) 17:51. doi: 10.1007/s11892-017-0875-2

5. Brownson RC, Reis RS, Allen P, Duggan K, Fields R, Stamatakis KA, et al. Understanding administrative evidence-based practices: findings from a survey of local health department leaders. Am J Prev Med. (2014) 46:49–57. doi: 10.1016/j.amepre.2013.08.013

6. Jacob RR, Baker EA, Allen P, Dodson EA, Duggan K, Fields R, et al. Training needs and supports for evidence-based decision making among the public health workforce in the United States. BMC Health Serv Res. (2014) 14:564. doi: 10.1186/s12913-014-0564-7

7. Robin N, Leep CJ. NACCHO's national profile of local health departments study: looking at trends in local public health departments. J Public Health Manag Pract. (2017) 23:198–201. doi: 10.1097/PHH.0000000000000536

8. Jacobs JA, Duggan K, Erwin P, Smith C, Borawski E, Compton J, et al. Capacity building for evidence-based decision making in local health departments: scaling up an effective training approach. Implement Sci. (2014) 9:124. doi: 10.1186/s13012-014-0124-x

9. Brownson RC, Allen P, Jacob RR, deRuyter A, Lakshman M, Reis RS, et al. Controlling chronic diseases through evidence-based decision making: a group-randomized trial. Prev Chronic Dis. (2017) 14:E121. doi: 10.5888/pcd14.170326

10. Brownson RC, Fielding JE, Green LW. Building capacity for evidence-based public health: reconciling the pulls of practice and the push of research. Annu Rev Public Health. (2017) 39:27–53. doi: 10.1146/annurev-publhealth-040617-014746

11. Muir Gray J. Evidence-Based Healthcare: How to Make Decisions about Health Services and Public Health. 3rd ed. New York, NY; Edinburgh: Churchill Livingstone Elsevier (2009).

13. March JG, Olsen JP. The new institutionalism: organizational factors in political life. Am Polit Sci Rev. (1984) 78:734–49. doi: 10.2307/1961840

14. North DC. Institutions, Institutional Change, and Economic Performance. New York, NY: Cambridge University Press (1990).

15. Scott WR. Institutions and Organizations: Ideas and Interests. 3rd ed. Los Angeles, CA: Sage Publications (2008).

16. Parks RG, Tabak RG, Allen P, Baker EA, Stamatakis KA, Poehler AR, et al. Enhancing evidence-based diabetes and chronic disease control among local health departments: a multi-phase dissemination study with a stepped-wedge cluster randomized trial component. Implement Sci. (2017) 12:122. doi: 10.1186/s13012-017-0650-4

17. Tabak RG, Parks RG, Allen P, Jacob RR, Mazzucca S, Stamatakis KA, et al. Patterns and correlates of use of evidence-based interventions to control diabetes by local health departments across the USA. BMJ Open Diabetes Res Care. (2018) 6:e000558. doi: 10.1136/bmjdrc-2018-000558

18. Erwin PC, Parks RG, Mazzucca S, Allen P, Baker EA, Hu H, et al. Evidence-based public health provided through local health departments: importance of academic–practice partnerships. Am J Public Health. (2019) 109:739–47. doi: 10.2105/AJPH.2019.304958

19. Allen P, Mazzucca S, Parks RG, Robinson M, Tabak RG, Brownson R. Local health department accreditation is associated with organizational supports for evidence-based decision making. Front Public Health. (2019) 7:374. doi: 10.3389/fpubh.2019.00374

20. Mazzucca S, Parks RG, Tabak RG, Allen P, Dobbins M, Stamatakis KA, et al. Assessing organizational supports for evidence-based decision making in local public health departments in the United States: development and psychometric properties of a new measure. J Public Health Manag Pract. (2019) 25:454–63. doi: 10.1097/PHH.0000000000000952

21. Poehler AR, Parks RG, Tabak RG, Baker EA, Brownson RC. Factors facilitating or hindering use of evidence-based diabetes interventions among local health departments. J Public Health Manag Pract. (2020) 26:443–50. doi: 10.1097/PHH.0000000000001094

22. Hemming K, Taljaard M, McKenzie JE, Hooper R, Copas A, Thompson JA, et al. Reporting of stepped wedge cluster randomised trials: extension of the CONSORT 2010 statement with explanation and elaboration. BMJ. (2018) 363:k1614. doi: 10.1136/bmj.k1614

23. Hooper R, Copas A. Stepped wedge trials with continuous recruitment require new ways of thinking. J Clin Epidemiol. (2019) 116:161–6. doi: 10.1016/j.jclinepi.2019.05.037

24. Allen P, Jacob RR, Lakshman M, Best LA, Bass K, Brownson RC. Lessons learned in promoting evidence-based public health: perspectives from managers in state public health departments. J Community Health. (2018) 43:856–63. doi: 10.1007/s10900-018-0494-0

25. Gibbert WS, Keating SM, Jacobs JA, Dodson E, Baker E, Diem G, et al. Training the workforce in evidence-based public health: an evaluation of impact among US and international practitioners. Prev Chronic Dis. (2013) 10:E148. doi: 10.5888/pcd10.130120

26. Jacob RR, Brownson CA, Deshpande AD, Eyler AA, Gillespie KN, Hefelfinger J, et al. Long-term evaluation of a course on evidence-based public health in the U.S. and Europe. Am J Prev Med. (2021) 61:299–307. doi: 10.1016/j.amepre.2021.03.003

27. Jacob RR, Duggan K, Allen P, Erwin PC, Aisaka K, Yang SC, et al. Preparing public health professionals to make evidence-based decisions: a comparison of training delivery methods in the United States. Front Public Health. (2018) 6:257. doi: 10.3389/fpubh.2018.00257

28. Duggan K, Aisaka K, Tabak RG, Smith C, Erwin P, Brownson RC. Implementing administrative evidence based practices: lessons from the field in six local health departments across the United States. BMC Health Serv Res. (2015) 15:221. doi: 10.1186/s12913-015-0891-3

29. van de Schoot R, Lugtig P, Hox J. A checklist for testing measurement invariance. Eur J Dev Psychol. (2012) 9:486–92. doi: 10.1080/17405629.2012.686740

30. Dyer NG, Hanges PJ, Hall RJ. Applying multilevel confirmatory factor analysis techniques to the study of leadership. Leadership Q. (2005) 16:149–67. doi: 10.1016/j.leaqua.2004.09.009

31. Putnick DL, Bornstein MH. Measurement invariance conventions and reporting: the state of the art and future directions for psychological research. Dev Rev. (2016) 41:71–90. doi: 10.1016/j.dr.2016.06.004

32. Luke SG. Evaluating significance in linear mixed-effects models in R. Behav Res Methods. (2017) 49:1494–502. doi: 10.3758/s13428-016-0809-y

33. Leyrat C, Morgan KE, Leurent B, Kahan BC. Cluster randomized trials with a small number of clusters: which analyses should be used? Int J Epidemiol. (2018) 47:321–31. doi: 10.1093/ije/dyx169

34. Saldaña J. The Coding Manual for Qualitative Researchers. 3rd ed. Thousand Oaks, CA: Sage Publications (2016).

35. Miles MB, Huberman AM. Qualitative Data Analysis: A Source Book of New Methods. Beverly Hills, CA: Sage Publications (1994).

36. Strauss AL, Corbin JM. Grounded Theory in Practice. Thousand Oaks, CA: Sage Publications (1997).

37. Birken SA, Bunger AC, Powell BJ, Turner K, Clary AS, Klaman SL, et al. Organizational theory for dissemination and implementation research. Implement Sci. (2017) 12:62. doi: 10.1186/s13012-017-0592-x

38. Leeman J, Baquero B, Bender M, Choy-Brown M, Ko LK, Nilsen P, et al. Advancing the use of organization theory in implementation science. Prev Med. (2019) 129:105832. doi: 10.1016/j.ypmed.2019.105832

39. Willis CD, Saul J, Bevan H, Scheirer MA, Best A, Greenhalgh T, et al. Sustaining organizational culture change in health systems. J Health Organ Manag. (2016) 30:2–30. doi: 10.1108/JHOM-07-2014-0117

40. Beck AJ, Boulton ML. Trends and characteristics of the state and local public health workforce, 2010–2013. Am J Public Health. (2015) 105:S303–S10. doi: 10.2105/AJPH.2014.302353

41. Beck AJ, Boulton ML, Lemmings J, Clayton JL. Challenges to recruitment and retention of the state health department epidemiology workforce. Am J Prev Med. (2012) 42:76–80. doi: 10.1016/j.amepre.2011.08.021

42. Harris JK, Beatty K, Leider JP, Knudson A, Anderson BL, Meit M. The double disparity facing rural local health departments. Annu Rev Public Health. (2016) 37:167–84. doi: 10.1146/annurev-publhealth-031914-122755

43. Sosnowy CD, Weiss LJ, Maylahn CM, Pirani SJ, Katagiri NJ. Factors affecting evidence-based decision making in local health departments. Am J Prev Med. (2013) 45:763–8. doi: 10.1016/j.amepre.2013.08.004

44. Dodson EA, Baker EA, Brownson RC. Use of evidence-based interventions in state health departments: a qualitative assessment of barriers and solutions. J Public Health Manag Pract. (2010) 16:E9–E15. doi: 10.1097/PHH.0b013e3181d1f1e2

45. Hawe P. Lessons from complex interventions to improve health. Annu Rev Public Health. (2015) 36:307–23. doi: 10.1146/annurev-publhealth-031912-114421

46. Spiegelman D. Evaluating public health interventions: 2. stepping up to routine public health evaluation with the stepped wedge design. Am J Public Health. (2016) 106:453–7. doi: 10.2105/AJPH.2016.303068

47. Green LW. Making research relevant: if it is an evidence-based practice, where's the practice-based evidence? Fam Pract. (2008) 25(Suppl. 1):i20–4. doi: 10.1093/fampra/cmn055

48. Erwin PC, Keck CW. The academic health department: the process of maturation. J Public Health Manag Pract. (2014) 20:270–7. doi: 10.1097/PHH.0000000000000016

49. Erwin PC, McNeely CS, Grubaugh JH, Valentine J, Miller MD, Buchanan M. A logic model for evaluating the academic health department. J Public Health Manag Pract. (2016) 22:182–9. doi: 10.1097/PHH.0000000000000236

50. Erwin PC, Brownson RC, Livingood WC, Keck CW, Amos K. Development of a research agenda focused on academic health departments. Am J Public Health. (2017) 107:1369–75. doi: 10.2105/AJPH.2017.303847

Keywords: evidence-based decision making, evidence-based public health, local health department, organizational capacity, evidence-based decision making competency

Citation: Jacob RR, Parks RG, Allen P, Mazzucca S, Yan Y, Kang S, Dekker D and Brownson RC (2022) How to “Start Small and Just Keep Moving Forward”: Mixed Methods Results From a Stepped-Wedge Trial to Support Evidence-Based Processes in Local Health Departments. Front. Public Health 10:853791. doi: 10.3389/fpubh.2022.853791

Received: 13 January 2022; Accepted: 25 March 2022;

Published: 28 April 2022.

Edited by:

Erin Hennessy, Tufts University, United States

Reviewed by:

Christopher Mierow Maylahn, New York State Department of Health, United States

Angela Carman, University of Kentucky, United States

Copyright © 2022 Jacob, Parks, Allen, Mazzucca, Yan, Kang, Dekker and Brownson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rebekah R. Jacob, rebekahjacob@wustl.edu
