- Usher Institute, University of Edinburgh, Edinburgh, Scotland, United Kingdom

Purpose: This paper reviews the applicability of standard epidemiological criteria for causation to the multidisciplinary studies of RF-EMF exposure and various adverse biological and health effects, with the aim of demonstrating that these criteria, although 60 years old, are still helpful in this context, albeit in some cases not entirely straightforward to apply.

Methods: This is a commentary, based on Bradford Hill’s criteria for assessing evidence of causation, applied to recent primary studies and systematic reviews of the RF-EMF/health-effects literature. Every effort has been made to use non-epidemiological language to reach a wide readership of biologists, physicists, and engineers now active in this field.

Results: A rapidly growing number of human observational epidemiological studies have assessed the association of diverse adverse health effects with RF-EMF exposures. However, existing systematic reviews and meta-analyses of these primary studies have substantially diverged in their conclusions. The application of Bradford Hill’s epidemiological criteria for assessing evidence of causation, originally designed for use in occupational and environmental health, casts light on some of the reasons for this divergence, which mostly reflect key weaknesses in the primary literature that are discussed in detail. Two threats to validity stand out: (1) exposure measurement is typically subject to substantial error, and (2) insufficient time has elapsed, since modern cell phone use began in earnest, to allow tumors of longer latency to develop. As a result, most primary studies to date, and therefore many published systematic reviews of them, probably underestimate the true potential for causation, if in fact this association is causal.

Conclusion and recommendations: In view of these findings, international experts representing professional and scientific organizations in this field should convene an independent Guidelines development process to inform future epidemiological studies of associations between RF-EMF exposures and human health outcomes. Wide dissemination of such Guidelines could help researchers, journals and their reviewers in this field to execute, review and publish higher-quality studies to better inform evidence-based policy.

Background

Considerable scientific disagreement has developed in recent years over whether or not existing exposure limits and associated health and safety regulations in most Western countries adequately protect the public from potential health risks putatively associated with radio-frequency electromagnetic fields (RF-EMFs) (1–16). Indeed, over the last few years, a set of about 12 Systematic Reviews (SRs) of these various health effects, commissioned by WHO (17, 18), has led to substantial criticisms in the peer-reviewed literature (19–22), with extensive rebuttals by the Reviews’ authors (23–25) followed by retorts from the critics (8). Central to this controversy is well-established epidemiological guidance for assessing the extent to which observational (typically cohort and case–control) studies of RF-EMF exposure and various health outcomes, as well as systematic reviews of those studies, convincingly demonstrate features of such exposure-disease associations which are typical of causation.

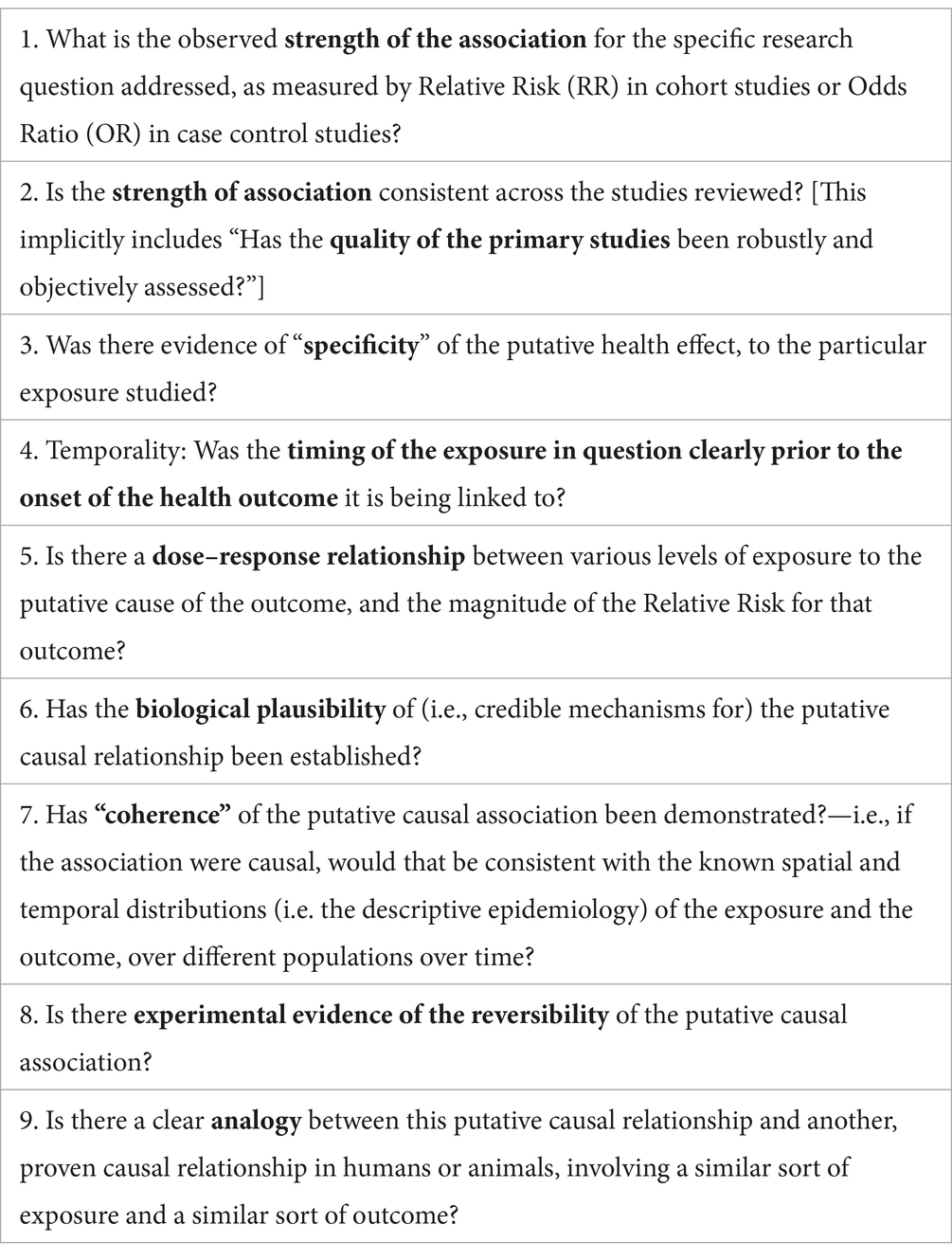

The most widely used set of such causation criteria is that created by the eminent British statistician Austin Bradford Hill in 1965 (26). These criteria are summarized in Table 1, using modern phrasing that reflects more recent thinking on their application to environmental epidemiological studies of ambient hazards, which pose particular challenges of design and analysis (7, 27–31). One publication to date has attempted a similar analysis (32) but is now a dozen years old, since which time the relevant literature has mushroomed. In particular, the entire field of RF-EMF health effects is fraught with major disagreements about which specific measures of exposure (including cell-phone use proxies for such exposures) are most relevant, as well as feasible to implement in human epidemiological studies, so as to facilitate high levels of consistency across study findings and thereby allow the literature to demonstrate replicability (33, 34).

Table 1. Bradford Hill (26) criteria for assessing evidence of causation vs. association in human health [This table is based on previous related materials (7, 39, 40)].

Methods

This paper reviews the relevance of each of the above Bradford Hill criteria for causation to the literature on RF-EMF and human health effects, describing the results of applying each criterion to the primary and secondary (review) literature in this field. A particular effort has been made to explain these findings in plain language suitable for non-epidemiologists, since the field of RF-EMF safety is interdisciplinary.

Results

Strength of association

Epidemiological studies have traditionally quantified the association between an exposure and any dichotomous health outcome (e.g., a disease) as a Relative Risk (RR) in cohort studies that follow large numbers of exposed and unexposed persons to determine what proportion of them develop the disease in question (29, 31, 35, 36). Virtually unique to epidemiology, an alternative study design does the reverse, taking a representative sample of patients with the disease in question, as well as controls without the disease (but at risk of it in future), and analyzing the proportion of each group which has been exposed in the past to the putative hazard under investigation. In such “case–control” studies, an estimator of RR, the odds ratio (OR), closely approximates the RR that would be found in a cohort study when the outcome is relatively rare, or when the study is designed to ensure that the controls are questioned about their past exposure at the same calendar times as the incident cases occur (31).
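The rare-outcome approximation described above can be checked numerically. The following minimal Python sketch computes the RR and the OR from a 2×2 table of counts; the function names and all counts are hypothetical illustrations and are not drawn from any study cited in this paper.

```python
def relative_risk(a, b, c, d):
    """RR from a 2x2 table.

    a: exposed, diseased      b: exposed, not diseased
    c: unexposed, diseased    d: unexposed, not diseased
    """
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    return risk_exposed / risk_unexposed


def odds_ratio(a, b, c, d):
    """OR from the same 2x2 table (the cross-product ratio)."""
    return (a * d) / (b * c)


# Hypothetical counts, chosen only for illustration.
rare_outcome = (30, 9970, 20, 9980)        # risks of 0.3% vs 0.2%
common_outcome = (3000, 7000, 2000, 8000)  # risks of 30% vs 20%

for label, table in (("rare", rare_outcome), ("common", common_outcome)):
    print(label, round(relative_risk(*table), 3), round(odds_ratio(*table), 3))
```

In both hypothetical tables the RR is 1.5, but the OR stays close to 1.5 only when the outcome is rare; for the common outcome the OR overstates the RR.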

Epidemiologists over several decades have developed an informal consensus about how large a relative risk needs to be for it to be solidly suggestive of causation—well over 2, and ideally over 3 or even 4 (31, 37). This is because common weaknesses in cohort and case–control study design and analysis can readily lead to biased RR estimates, and mis-state the strength of small relative risks typical of weak associations (31). Indeed, some decades ago one of the fathers of modern epidemiology, Sir Richard Doll, wrote a critique of unsound preventive advice based primarily on “weak effects” in observational epidemiological studies (38). Subsequently, even stronger critiques have appeared of conclusions about causation or prevention which are derived solely from studies with weak associations (37, 39, 40).

Environmental epidemiologists, however, understand that even small relative risks between 1.1 and 1.5, if unbiased, can lead to large population burdens of disease attributable to the hazard in question, if that hazard is widespread in a susceptible population (27, 29). This is easily demonstrated by the calculation of Levin’s “population attributable fraction” (41), which makes use of both the RR indicating the strength of association of a causal relationship, and the prevalence of any given risk factor with that RR, in a specific population (29). For example, if a putative causal exposure has only a weak RR linking it to an adverse health outcome, such as 1.5, but that exposure affects, say, 50% of the population (as, for example, serious air pollution does in some global settings), then Levin’s formula, p(RR − 1)/[1 + p(RR − 1)] with exposure prevalence p, gives the proportion of the burden of cases in that whole population attributable to the exposure as 0.25/1.25 = 20%, which is hardly trivial. Environmental epidemiological studies of widespread exposures (such as RF-EMF nowadays) must therefore walk a narrow path between maintaining vigilance against bias and remaining open to finding small RRs of potentially great public health importance.
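As a worked illustration of the attributable-fraction reasoning above, the short Python sketch below implements Levin’s formula in its standard form, p(RR − 1)/[1 + p(RR − 1)], alongside the attributable fraction among the exposed, (RR − 1)/RR, with which it is easily confused. The function names are illustrative assumptions. For a prevalence of 0.5 and an RR of 1.5, Levin’s formula gives 0.20, whereas the fraction among the exposed alone is about 0.33.

```python
def levin_paf(prevalence, rr):
    """Population attributable fraction (Levin's formula).

    prevalence: proportion of the population exposed (0 to 1)
    rr: relative risk linking exposure to outcome, assumed causal and unbiased
    """
    excess = prevalence * (rr - 1.0)
    return excess / (1.0 + excess)


def attributable_fraction_exposed(rr):
    """Fraction of cases among the exposed attributable to the exposure."""
    return (rr - 1.0) / rr


# Illustrative inputs only: a widespread exposure with weak relative risks.
for rr in (1.1, 1.5):
    print(rr, round(levin_paf(0.5, rr), 3), round(attributable_fraction_exposed(rr), 3))
```

Even at an RR of 1.1, an exposure affecting half the population would account for nearly 5% of all cases under these assumptions.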

In the case of RF-EMF exposures and the major health outcomes that have been most frequently studied to date, such as brain tumors (a particularly large and longstanding literature), a quick glance at recently published systematic reviews (34, 42, 43) reveals many pooled RR estimates across primary studies of less than 2, with only a handful of sub-analyses of longer-latency associations (i.e., evident only after more than a decade has passed since exposure began, and so apparent only in studies published more recently) approaching, and occasionally exceeding, 3. Indeed, the highest RR reported by Moon et al. (34) is 3.53, from the Interphone study of acoustic neuroma and patients’ habitual past use of cell phones ipsilateral to (on the same side of the head as) the tumor. [We shall return to this finding below, under “dose–response relationships,” because it is arguably an example of evidence supporting such a relationship.] On the other hand, critics of this study have run simulations which indicate that this excess risk in the heaviest user group is likely the result of a combination of systematic and random errors (44).

Consistency of strength of association (across primary studies)

The standard epidemiological approach to assessing whether primary studies of a given association provide consistent estimates of its strength (RR/OR), and therefore credible ones, in the sense that all sound science should be replicable, is the systematic review (SR). SRs may or may not be accompanied by meta-analytic pooling of the various primary studies’ estimates of RR/OR, according to an explicit judgment made by the review authors. That judgment is guided by well-developed and now quite sophisticated substantive and statistical criteria for when such pooling is appropriate, based on formal assessment of the heterogeneity of both designs and analyses, as well as RR/OR findings, across the primary studies.

As may be obvious, however, one cannot proceed to that stage of a systematic review without first: (a) conducting a thorough and well-documented literature search (to allow later replication by others) for all the studies of relevance, ideally both published and unpublished (45); and (b) applying standard, detailed criteria to assess the quality of the primary studies, so as to eliminate those found to have design or analytic flaws likely to have led to significantly biased findings, an issue discussed in the section immediately below. Only then can a valid assessment be made of whether the final short-list of high-quality primary studies of a given exposure-outcome association demonstrates sufficiently homogeneous findings to warrant their being pooled with meta-analytic statistical techniques (31, 36, 45).

Assessing the quality of the primary studies

In the past decade, numerous methodological tools have been developed for assessing the quality of observational epidemiological studies of putative environmental health hazards, as well as for guiding the rest of the process of synthesizing these studies in systematic reviews/meta-analyses: (1) the NIH OHAT “Risk of Bias” tool (46), and the associated “Navigation Guide” (47); (2) a shorter set of 10 “critical appraisal questions” specifically designed for biologists unfamiliar with epidemiology (48); (3) the COSTER framework for systematic reviews in environmental health (49, 50); (4) the international PRISMA guidance for systematic reviews (51); and (5) the relatively short and simple-to-use Oxford Center for Evidence-Based Medicine Critical Appraisal Questions for Systematic Reviews of Observational Studies of Causation (45). Notably, at least one study has shown that applying these tools yields quite different results (52).

Each of these alternative approaches to the quality assessment of both primary studies and SRs in environmental health presents significant challenges even for an experienced epidemiologist. The OHAT tool is overly complex, with considerable internal duplication, as well as much jumping back and forth between experimental and observational studies. Yet non-experimental, observational cohort or case–control studies are almost all the evidence available for serious human health effects of RF-EMFs, such as neoplasms, owing to the ethical constraints on experimental designs for putatively hazardous exposures. In addition, as noted in a recent critique (53), the OHAT risk-of-bias tool does not cover some important aspects of SR methodology of major relevance to observational epidemiological studies. In particular, it omits the need to examine the relevant primary studies carefully for substantive heterogeneity in design, analysis and inference, in addition to conducting formal statistical tests for heterogeneity across the results of those studies (covered in detail below). The PRISMA guidance is helpful but designed primarily for meta-analysis of clinical epidemiological studies of medical treatments, where experimental study designs reign supreme, rather than primarily observational studies of widespread ambient environmental exposures such as RF-EMFs, where randomized controlled trials are typically unethical or impractical.

Finally, many of the recently published SRs of diverse biological and human health effects utilize the GRADE approach to assessing the confidence with which the primary literature on a given health outcome and RF-EMF exposure can be said to show convincing evidence of a consistent association, compatible with causation. However, the GRADE approach was also specifically designed for assessing evidence of medical-treatment efficacy across randomized controlled trials (54) rather than the observational epidemiological studies which almost exclusively form the body of evidence for biological and health effects of RF-EMFs. It is thus not surprising that the GRADE approach systematically discounts the value of non-randomized studies, thereby rendering it less than ideal for assessing the strength of evidence in fields such as the environmental epidemiology of serious health effects such as neoplasms, where RCTs are hardly ever feasible. A promising improvement to the GRADE approach is the well-established STROBE guidance [Strengthening the Reporting of Observational Studies in Epidemiology (55)] for ensuring that key quality indicators are assessed across primary studies and in systematic reviews, in fields such as RF-EMF biological/health effects where virtually all the evidence in human beings is non-experimental.

To reiterate, all of the various international guidelines for conducting, and reviewing the robustness of, SRs in environmental/toxicological epidemiology require, prior to any examination of across-study heterogeneity, a systematic screening of all the relevant primary studies for their quality. However, in the case of RF-EMF exposures and their putative health effects, all the relevant primary studies tend to be either cohort or case–control studies, for which the list of potential weaknesses, leading to biased estimates of the strength of association either higher or lower than the true value, is rather long and methodologically demanding to apply. Indeed, some of these guidelines list as many as a dozen methodological flaws which should be ruled out by reviewers for each primary study, before assembling a shortlist for potential meta-analytic pooling of results. While such schemata for critically appraising relevant primary studies are helpful in reducing reviewers’ own biases, and improving agreement across SRs by different authors, there remains a substantial amount of expert judgment in these assessments of primary study quality. This in turn leads to major discrepancies between various SR shortlists of primary studies suitable for potential pooling of results. Some examples, taken from recently published SRs of RF-EMF exposure and various health effects, illustrate major impediments to widespread scientific agreement about causation:

• Some SRs lump biologically quite different health outcomes in one meta-analysis, typically to try to overcome the problem of insufficient numbers of primary studies for any one specific outcome. A clear example is found in two recently published SRs of brain tumors and RF-EMF exposure. Choi et al. (42) and Moon et al. (34) respectively chose to pool, and not to pool, studies of pathologically quite different brain tumors. Pooling across tumor types would be expected to generally increase across-study heterogeneity in a meta-analysis, thus favoring a decision not to pool. On the other hand, the reviews referenced by Moon and by Choi do not just pool pathologically different cancers; they also pool different exposure metrics, most notably because of different cut-offs in exposure duration and/or cumulative exposure. This makes pooling even less appropriate, as the pooled estimate is uninterpretable.

• Some SRs lump results from cohort and case–control studies, even though these two study designs have substantially different major threats to their internal validity (31, 36, 45). Moon et al. (34) kept these two strata of primary studies separate, but did make use of both strata. Choi et al. (42), on the other hand, chose to exclude from consideration the available cohort studies, on the grounds of serious concerns about the representativeness of the controls, and/or the likely inaccuracy of RF-EMF exposures proxied by the mere recorded possession of a cell phone account [these debates are well covered in the ensuing correspondence with the journal Editors (1, 4, 12) as well as a thorough overview of all these methodological issues (14)]. The approach by Moon et al. (34) of including both case–control and cohort studies is arguably preferable to the Choi et al. (42) approach of excluding cohort studies, since combining them may “even out” different bias structures in the two study designs.

• Some SRs, and primary studies, explicitly consider the issue of tumor latency, while others do not. This matters because, according to the International Agency for Research on Cancer (56), it is scientifically unjustifiable to rule out cancer causation without having studies spanning at least 30 years since the start of exposure. However, only a handful of studies completed to date have significant statistical power (sample size) over more than a decade or two of follow-up. One pooled analysis of case–control studies covering such a long follow-up period (57) has found that mobile phone use increased the risk of glioma, with OR = 1.3 (95% CI = 1.1–1.6) overall, increasing to OR = 3.0 (95% CI = 1.7–5.2) in the >25-year latency group. The OR increased statistically significantly both per 100 h of cumulative use and per year of latency, for mobile and cordless phone use.

Therefore, health effects with long latency cannot be confidently established as clearly not caused by a given exposure until sufficient time has passed since those exposures appeared in a study population (decades, in the case of most solid tumors). For the rapidly changing set of population exposures to RF-EMFs over recent decades, this means in turn that only epidemiological studies of older generations of mobile phone technology (1G through perhaps 2G and early 3G, but certainly not 4G or 5G) are even partially capable of ruling out carcinogenesis, until several more years have passed (7, 10, 19).

It should be noted that both case–control and cohort studies are theoretically capable of studying long-latency outcomes, such as tumors, without waiting for them to occur (the usual strategy in prospective cohort studies). This can be done by asking at baseline in cohort studies about exposures in the more remote past. However, the accuracy of such self-reported exposures is always less than it is for prospectively recorded exposures, due to “recall bias.” It is also important to note that latencies for any outcome will follow a statistical distribution in time (typically log-normal), so that earlier-occurring tumors, for example, can be expected long before the median latency for that tumor type.
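The point about the latency distribution can be made concrete with a small Python sketch. It assumes, purely for illustration, a log-normal latency with a median of 20 years and a log-scale standard deviation of 0.5; neither value is taken from the literature reviewed here, and the function name is hypothetical.

```python
import math


def lognormal_cdf(t_years, median_years, sigma):
    """Proportion of eventual cases expected to have occurred by t_years,
    for a log-normal latency with the given median and log-scale SD."""
    z = (math.log(t_years) - math.log(median_years)) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical parameters, chosen only to illustrate the shape of the curve.
MEDIAN_LATENCY = 20.0
SIGMA = 0.5

for years in (5, 10, 15, 20, 30):
    share = lognormal_cdf(years, MEDIAN_LATENCY, SIGMA)
    print(f"{years:>2} years since first exposure: {share:.1%} of eventual cases")
```

Under these assumed parameters a minority of cases would already be detectable at half the median latency, which is why studies with only a decade of follow-up can observe some excess risk yet still understate it.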

Finally, one important and easily implemented strategy for improving the quality of systematic reviews in this field, and indeed in general, is their preregistration on an appropriate international database of reviews that are planned/in-progress—which helps to reduce the likelihood of undetected publication bias resulting from the failure to publish, especially for studies with largely negative findings (51).

Assessing the heterogeneity of primary studies: forest plots

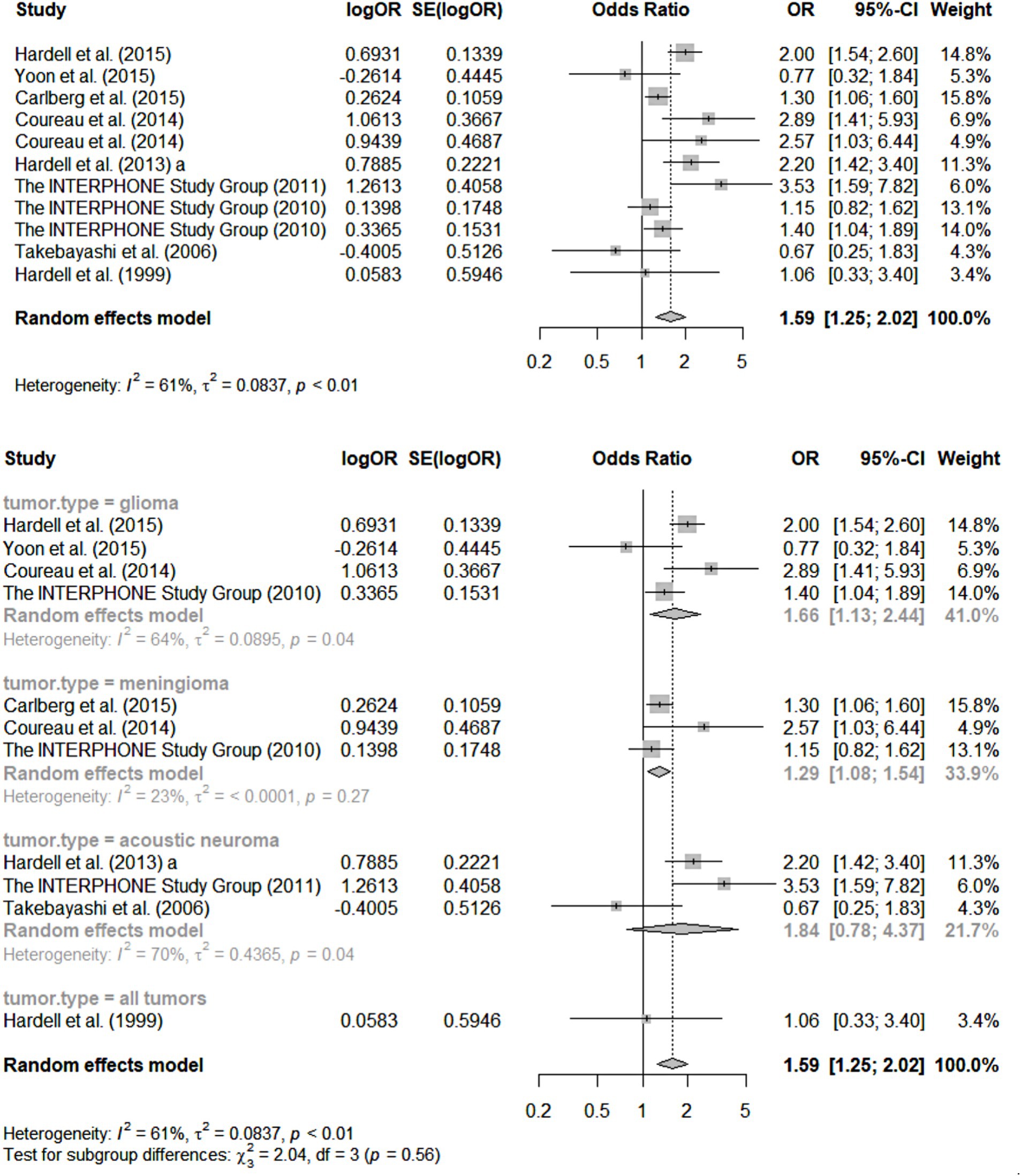

As an illustration of the process used in SRs of assembling only primary studies of reasonable quality and then assessing the consistency of those studies’ methods and findings, a fundamental tool for this purpose, the “forest plot,” is reproduced below (Figure 1) from the SR of Moon et al. (34) focused on RF-EMFs and brain tumors. A well-designed forest plot (such as this one) depicts, across the carefully selected, high-quality primary studies: their main central estimates of the strength of association observed (in this case OR, because the Figure includes case–control studies only), on a log scale (plus the SE of each log OR); each of those OR estimates’ 95% confidence intervals (both numerically, and as horizontal “whiskers” around the graphed central estimate), quantifying the statistical precision of each RR/OR estimate; and the statistical “weight” of each study’s estimate, used in any pooling of the results across the studies (the proportion of any pooled estimate of effect size across studies that is contributed by each study, based on its statistical precision and hence largely on its sample size). Underneath each section of the forest plot is a summary of the meta-analytic statistics for the sub-category of primary studies depicted immediately above, including I-squared and tau-squared heterogeneity statistics (58–60). Finally, on the last line of the graph for each section of the Figure is the pooled estimate of OR and its 95% CI, based on the more conservative “random effects” meta-analytic method of pooling. [For simplicity, since this Figure is intended merely to illustrate and explain the features of a good forest plot, this one covers only one sub-analysis of the case–control studies reviewed by Moon et al. (34), involving these studies’ ORs for total cumulative cell phone use (a metric for total “dose” of RF-EMF exposure, to be further discussed under “Dose–response relationship”) in the highest category analysed: over 896 h.]

Figure 1. Forest plots for meta-analysis of total cumulative use over 896 h [case–control studies; reprinted with permission from Moon et al., licensed under CC BY 4.0, https://doi.org/10.1186/s12940-024-01117-8; Figure 2 of Moon et al. (34)].
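For readers who wish to see how the quantities shown in a forest plot relate to one another, the following Python sketch derives each study’s log OR and its SE from a reported OR and 95% CI, and then pools them with a DerSimonian-Laird random-effects estimator, one common choice that is assumed here rather than confirmed as the method used by Moon et al. (34). The four studies are hypothetical and are not the studies in Figure 1.

```python
import math


def log_or_and_se(or_value, ci_low, ci_high, z=1.96):
    """Log OR and its SE, recovered from a reported OR and 95% CI."""
    log_or = math.log(or_value)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    return log_or, se


def random_effects_pool(studies, z=1.96):
    """DerSimonian-Laird pooling of (OR, CI low, CI high) tuples."""
    pairs = [log_or_and_se(*study) for study in studies]
    ys = [y for y, _ in pairs]
    variances = [se ** 2 for _, se in pairs]

    # Fixed-effect (inverse-variance) step, needed to obtain Cochran's Q.
    w_fixed = [1 / v for v in variances]
    y_fixed = sum(w * y for w, y in zip(w_fixed, ys)) / sum(w_fixed)
    q = sum(w * (y - y_fixed) ** 2 for w, y in zip(w_fixed, ys))

    # Between-study variance (tau-squared), truncated at zero.
    df = len(studies) - 1
    c = sum(w_fixed) - sum(w ** 2 for w in w_fixed) / sum(w_fixed)
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights add tau-squared to each study's variance.
    w_random = [1 / (v + tau2) for v in variances]
    y_random = sum(w * y for w, y in zip(w_random, ys)) / sum(w_random)
    se_random = math.sqrt(1 / sum(w_random))

    return {
        "pooled_or": math.exp(y_random),
        "ci": (math.exp(y_random - z * se_random),
               math.exp(y_random + z * se_random)),
        "q": q,
        "tau2": tau2,
        "weights_pct": [100 * w / sum(w_random) for w in w_random],
    }


# Hypothetical studies: (OR, lower 95% limit, upper 95% limit).
HYPOTHETICAL_STUDIES = [(1.2, 0.8, 1.8), (2.0, 1.2, 3.3),
                        (1.5, 0.9, 2.5), (0.9, 0.5, 1.6)]
result = random_effects_pool(HYPOTHETICAL_STUDIES)
print(result)
```

The returned weights correspond to the “weight” column of a forest plot, and the pooled OR with its interval corresponds to the summary line at the foot of each section.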

For the uninitiated, the following are the key features of this forest plot that not only tell us what this SR/meta-analysis has found, but also reveal quite a bit about those findings’ credibility:

• First and foremost, the number of quality-screened primary studies depicted in Figure 1 for each separate health outcome (type of brain tumor) is relatively small: three for meningioma and acoustic neuroma, and four for glioma. Statistical authorities have recommended that meta-analyses not be conducted (i.e., results across studies pooled) for fewer than five primary studies at a time (61). The rationale for this recommendation is that the statistical power of standard tests for heterogeneity across primary studies (see next point) is very limited when the number of such studies is less than five; indeed, as Ioannidis et al. (62) have pointed out, typical indices of heterogeneity such as I-squared have major uncertainty in their estimates in such circumstances (see the second point below).

• Furthermore, the graphical pooling of all 11 studies in the top section of the Figure reveals a relatively high heterogeneity across their OR central estimates, with an I-squared value of 61% (values above 50% are considered suggestive of potentially concerning heterogeneity) so that the wisdom of pooling them at all can be legitimately questioned (58, 60, 63, 64). Such pooling across pathologically quite different tumors surely is clinically and biologically questionable. In essence, such high heterogeneity indicates that these studies were not actually estimating the relative risk linking precisely the same exposure-outcome combination, or at least not doing so using comparable study designs, analyses and approaches to statistical inference. Again, such “substantive” (as opposed to merely “statistical”) across-study heterogeneity is usually considered a contra-indication to pooling of results using meta-analysis. Another recent peer-reviewed SR of this literature did pool all studies of reasonable quality across these same types of brain tumors (42) and has been criticized for it (1, 4), although the original authors have responded vigorously (12).

• The very small number (fewer than five) of studies for each category of tumor, depicted in the bottom half of Figure 1, has another consequence for those sub-analyses: such small literatures result in I-squared values and other indices of heterogeneity with considerable uncertainty in their estimation. An estimate of that uncertainty can be calculated, but is not provided by Moon et al. (59, 61, 62).

• These weaknesses of the Moon et al. (34) review are compounded by the fact that studies with different high-exposure definitions are also pooled; furthermore, the studies depicted in the forest plot only reflect results for exposures over 896 h—an arguably arbitrary cut-off.

• Overall, there is a clear impression of many studies of insufficient sample size, leading to inadequate statistical precision of the resultant ORs, and contributing to cross-study heterogeneity of findings. While this weakness in a literature can in theory be addressed by—and indeed is the main rationale for—meta-analysis, through the pooling of data across studies, it leads to a kind of instability/fragility of the pooled results, in the sense that just one new, high-quality study of substantial size is quite likely to change the overall result across studies. This translates directly into one of the GRADE criteria for summarizing the confidence one has in the credibility of the meta-analysis, rendering that confidence lower than would otherwise be the case (54).

• Finally, even if we believe the pooled central estimate of OR for, say, the 11 studies of diverse brain tumors from Figure 1 in Moon et al. (34), the resulting overall OR from such pooling is only 1.59 (95% CI 1.25–2.02). This is clearly a “weak effect” which can readily be artefactually created by measurement/information biases, uncontrolled confounding, etc., and is hardly a “smoking gun” for causation.

• It may be thought that this unconvincing evidence of causation for RF-EMFs and brain tumors is not the fault of the SR authors, but rather a reflection of the rather immature and sparse literature available (as of 2024) for this review. However, the authors can be faulted for proceeding with a full meta-analysis, which seems contra-indicated, given the above concerns. The authors should have come to the conclusion that a narrative review rather than a pooling of results was the correct approach. In short, “it is too soon to tell” whether causation may be present, rather than clearly the case that it is not present (20, 21, 65). The Figure thus depicts substantially “iffy” meta-analytic results, of a kind likely to have led some epidemiologists not even to report the results of pooling the primary studies of separate tumor types.
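The uncertainty in I-squared noted in the points above can be illustrated with a short Python sketch. It assumes the test-based interval commonly attributed to Higgins and Thompson, which works on the log of H = sqrt(Q/(k − 1)); the Q values are hypothetical, chosen only so that I-squared is about 61% for both a small (k = 3) and a large (k = 30) set of studies, and the function name is illustrative.

```python
import math


def i_squared_with_interval(q, k, z=1.96):
    """I-squared and an approximate 95% interval from Cochran's Q and k studies.

    Uses a test-based interval on ln(H), where H = sqrt(Q / (k - 1));
    this choice of interval is an assumption of the sketch.
    """
    if k < 3 or q <= 0:
        raise ValueError("requires at least 3 studies and a positive Q")
    df = k - 1
    i2 = max(0.0, (q - df) / q)
    ln_h = 0.5 * math.log(q / df)

    if q > k:
        se_ln_h = (0.5 * (math.log(q) - math.log(df))
                   / (math.sqrt(2 * q) - math.sqrt(2 * k - 3)))
    else:
        se_ln_h = math.sqrt((1 / (2 * (k - 2))) * (1 - 1 / (3 * (k - 2) ** 2)))

    def to_i2(ln_value):
        h = max(1.0, math.exp(ln_value))  # H below 1 means no excess heterogeneity
        return (h * h - 1) / (h * h)

    return i2, to_i2(ln_h - z * se_ln_h), to_i2(ln_h + z * se_ln_h)


# Hypothetical Q statistics giving the same point estimate of I-squared.
few_studies = i_squared_with_interval(q=5.13, k=3)
many_studies = i_squared_with_interval(q=74.36, k=30)
print("k = 3: ", [round(x, 2) for x in few_studies])
print("k = 30:", [round(x, 2) for x in many_studies])
```

With only three studies the interval around the same point estimate is far wider, spanning from no detectable heterogeneity to very high heterogeneity, which is the practical meaning of the caution against pooling very small sets of studies.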

Specificity

Most experienced epidemiologists believe that this criterion for causation is rarely met, simply because Nature so rarely matches just one sort of hazardous exposure to a given disease or health outcome, with just one outcome resulting from that exposure. A commonly used example (29, 36) is cigarette smoking: it is linked to dozens of adverse health outcomes of widely varying pathologies, ranging from chronic obstructive pulmonary disease to coronary heart disease, stroke and peripheral vascular disease, as well as several cancers. Yet all of these outcomes have many other known causal exposures, including air pollution in the case of COPD; poor-quality diet, sedentary lifestyle, hypertension and genetic dyslipidemias in the case of arteriosclerotic outcomes; and a long list of “lifestyle,” environmental and genetic factors in the case of cancers associated with smoking. It is therefore considered a rare epidemiological occurrence when a disease is linked to only one exposure, and vice-versa. Indeed, such specificity is commonly found only among infectious diseases (where the causative organism is typically specific to a given clinical picture, although many host-resistance factors also play a causative role), and toxicological syndromes. For example, the otherwise very rare cancer, mesothelioma, is specific to asbestos exposure (66); however, it has long been known that asbestos exposure also increases the risk of lung cancer and fibrotic lung disease. Often an exposure is wrongly considered to be specific to a rare health outcome only because not enough cohort or case–control studies have been done to investigate other etiological exposures. For example, the industrial pollutant vinyl chloride was long thought to be a specific cause of the rare liver cancer angiosarcoma; however, a more recent review lists several other toxicants as equally implicated and points out that 75% of cases of this rare tumor cannot be linked to any specific toxicant (67). Declaring specificity is therefore inherently fraught, given the impossibility of proving the negative: that no other cause of a disease exists.

There is, however, one particular sense of the term “specific” that is relevant to the literature on the potential causation of adverse health effects, especially neoplasms, by RF-EMF exposure: the occurrence of a unique (i.e., virtually pathognomonic) clinical picture obviously anatomically proximal to the suspect source of EMFs. Miller et al. (10) have collected independent reports of extremely localized, multi-focal breast cancers immediately under the position where the patient had carried her cell phone next to the skin for long periods. Because of the well-known inverse square law (which leads to a 100-fold higher EMF exposure intensity at, say, 1 cm distance from breast tissue, compared to, say, 10 cm distance when the phone is in a jacket pocket), there would seem to be credibility in inferring that the specificity criterion for causation is met. On the other hand, this phenomenon could also be considered merely a specific instance of a “dose–response relationship,” another criterion to be further discussed below.
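The 100-fold figure quoted above follows directly from the inverse square law, as the brief Python sketch below shows. It assumes an idealized point source, for which intensity falls with the square of distance; the function name is illustrative, and real handsets held close to the body may depart from this idealization.

```python
def relative_intensity(near_cm, far_cm):
    """How many times stronger the intensity is at near_cm than at far_cm,
    assuming an idealized point source obeying the inverse square law."""
    if near_cm <= 0 or far_cm <= 0:
        raise ValueError("distances must be positive")
    return (far_cm / near_cm) ** 2


# Distances taken from the example in the text: 1 cm versus 10 cm.
print(relative_intensity(1.0, 10.0))
```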

To be fair, there is substantial scientific disagreement about the accuracy with which subjects report the side of their body on which they habitually kept their cell phones over long periods. Karipidis et al. (23, 43) cite studies of brain tumors suggesting that the information biases associated with patients’ reporting of the side of the head on which they held their phone are too major to rely on such analyses. These studies point out that “compensatory” under-reporting of contralateral tumors, found in some analyses, suggests misreporting of the sidedness of phone use by subjects with tumors. This same sort of recall (“rumination”) bias could have affected breast cancer patients “ruminating” over the possible causes of their tumor.

Temporality

This is by far the easiest criterion to meet, in any causation literature. It merely requires that, as is always the case in cohort studies, and in case–control studies based on historically recorded exposure levels for each subject (as opposed to potentially biased personal recollections), the exposure is measured before the occurrence of the outcome. There are sufficient such studies of RF-EMF exposure and a wide range of health outcomes, including many tumors, to meet this criterion. However, as pointed out above, some potentially important aspects of exposure, such as laterality of usual phone use, can be particularly subject to recall bias in cases within case–control studies.

Dose–response relationship

Environmental epidemiology has long made much of this criterion for causation, because often it is easier to establish empirically for ambient exposures, especially those with well-defined spatiality. Historically, dose for many environmental hazards tends to have been measured by the proxy “distance” of the subject from the source of the hazards, where this can be accurately determined (68).

However, in the case of RF-EMF exposures, recent case–control and some cohort studies have begun to mathematically estimate the precise exposure to specific body organs, such as the brain, based on a number of assumptions. Karipidis et al. (43, 69) made a major effort to do this, using sophisticated models of RF-EMF exposure from cell phones and state-of-the-art biostatistical methods for formally assessing dose–response relationships (70–72). Published criticism of that approach (21) emphasizes the unclear parameterization of those models and the lack of goodness-of-fit statistics in the main WHO SR by Karipidis et al. (43). Karipidis et al. have rebutted those arguments (2024b) in detail. However, the critics have come back with additional concerns (73). The issue is therefore highly contested, and the key evidence around RF-EMF exposure and cancers has now become extremely technical in nature, at least from a policy-maker’s point of view.

An additional challenge to the assessment of primary studies’ handling of dose–response relationships is that virtually all extant primary studies and SRs tend to conflate: (1) true cumulative “dose” of exposure (e.g., as indicated by some measured or modeled strength of the RF-EMF in the relevant body organ/tissue where the tumor under study is located); (2) time elapsed between exposure and the occurrence of the health outcome under study (i.e., “latency”; see discussion above); and (3) age at first exposure (potentially an important factor in carcinogenesis, as various cancers have different “age-windows” of susceptibility to exposure). Because individuals’ mobile-phone use habits tend to remain rather consistent over many years after acquiring a phone, one would expect study subjects with higher “exposure dosages,” as measured by high cumulative hours of use (e.g., above 1,000 h in the Hardell studies, and the Moon et al. SR), to be virtually the same study subjects as those with the longest duration of use (as usually measured in years), and those with the earliest ages of first exposure. Yet these subjects’ data are critical to the analysis of the latency effects expected for cancers (see above). This greatly complicates the assessment of the adequacy of primary studies’ and systematic reviews’ handling of the three conflated issues. There may be lessons to be learned here from tobacco epidemiology, where the most commonly used measure of “dose” for smoking is “pack-years,” which similarly conflates actual dose (e.g., mean packs per day smoked) and latency. Readers are referred to recent theoretical discussions on these issues (74, 75).
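
The conflation described above can be made concrete with a small sketch. It assumes constant daily use over the whole period, and the function names and the two hypothetical users are illustrative only; they are not taken from any of the cited studies.

```python
def cumulative_call_hours(hours_per_day: float, years_of_use: float) -> float:
    """Cumulative call time in hours, assuming constant daily use (a simplification)."""
    return hours_per_day * 365.0 * years_of_use


def pack_years(packs_per_day: float, years_smoked: float) -> float:
    """Standard tobacco 'pack-years': mean packs per day times years smoked."""
    return packs_per_day * years_smoked


# Two hypothetical phone users with very different intensity and duration of use.
heavy_recent_user = cumulative_call_hours(hours_per_day=1.0, years_of_use=5)
light_longterm_user = cumulative_call_hours(hours_per_day=0.25, years_of_use=20)

# Both land in the same ">1,000 h" category, although one has had 5 years
# and the other 20 years for a tumor of long latency to develop.
same_category = heavy_recent_user == light_longterm_user

# The tobacco analogue: 2 packs/day for 10 years equals 1 pack/day for 20 years.
same_pack_years = pack_years(2, 10) == pack_years(1, 20)

print(heavy_recent_user, light_longterm_user, same_category, same_pack_years)
```

A single cumulative summary therefore cannot, by itself, separate intensity of exposure from time since first exposure; an analysis would need both components recorded separately.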

Mention has already been made of some primary studies’ finding of an elevated risk for brain tumors ipsilateral to the side of the head where the cell phone user habitually held his/her phone, compared to the risk for contralateral tumors. The Interphone Study finding of an RR estimate of 3.53 for the highest-exposure group is one of the highest ever observed for any subset of tumors and exposure categories. There are two contrasting views of this finding. As noted above, Karipidis et al. (23, 43) cite studies suggesting that the information biases associated with patients’ reporting of the side on which they held their phone are too large for such analyses to be relied upon. These studies point out that “compensatory” under-reporting of contralateral tumors, found in some analyses, suggests misreporting (recall bias) of the sidedness of phone use by subjects with tumors. Other authors (21) have suggested that such exposure misclassification would generally tend to reduce the observed RR toward the null (RR = 1), making it unlikely that increased RRs would be found purely due to those biases. Certainly, the simplest explanation for an increased ipsilateral risk is the large difference in dose of RF-EMFs emanating from the phone itself, because of the much shorter distance for ipsilateral users between the phone antenna and the side of the brain where the tumor developed, given the inverse square law (7).
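
The two contrasting views can be illustrated with expected-value arithmetic on a hypothetical case–control table. In this minimal sketch, all counts, sensitivities and specificities are invented for illustration, and the function name is an assumption. It shows that exposure misclassification that is equal in cases and controls (non-differential) pulls the odds ratio toward 1, whereas over-reporting confined to cases (differential recall bias) can push it upward.

```python
def observed_odds_ratio(cases_exp, cases_unexp, ctrl_exp, ctrl_unexp,
                        sens_cases, spec_cases, sens_ctrl, spec_ctrl):
    """Expected odds ratio after exposure misclassification.

    sens_* = probability that a truly exposed subject is recorded as exposed.
    spec_* = probability that a truly unexposed subject is recorded as unexposed.
    """
    def reclassify(exposed, unexposed, sens, spec):
        recorded_exposed = sens * exposed + (1 - spec) * unexposed
        recorded_unexposed = (1 - sens) * exposed + spec * unexposed
        return recorded_exposed, recorded_unexposed

    a, b = reclassify(cases_exp, cases_unexp, sens_cases, spec_cases)
    c, d = reclassify(ctrl_exp, ctrl_unexp, sens_ctrl, spec_ctrl)
    return (a * d) / (b * c)


# Hypothetical true table: 200/800 exposed/unexposed cases, 100/900 controls.
table = dict(cases_exp=200, cases_unexp=800, ctrl_exp=100, ctrl_unexp=900)

# Perfect exposure measurement recovers the true odds ratio.
true_or = observed_odds_ratio(**table, sens_cases=1.0, spec_cases=1.0,
                              sens_ctrl=1.0, spec_ctrl=1.0)

# Non-differential error: the same imperfect recall in cases and controls.
nondifferential_or = observed_odds_ratio(**table, sens_cases=0.8, spec_cases=0.9,
                                         sens_ctrl=0.8, spec_ctrl=0.9)

# Differential error: cases over-report exposure more often than controls do.
differential_or = observed_odds_ratio(**table, sens_cases=0.8, spec_cases=0.8,
                                      sens_ctrl=0.8, spec_ctrl=0.9)

print(round(true_or, 2), round(nondifferential_or, 2), round(differential_or, 2))
```

Which scenario better describes laterality reporting in the primary studies is exactly the point in dispute; the sketch only shows why the direction of the bias depends on whether the error differs between cases and controls.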

In summary then, there is substantial scientific disagreement about whether the extant literature on RF-EMFs and the most frequently studied outcomes, such as brain tumors, demonstrates clear dose–response relationships. One reason is that no cohort or case–control studies have been able to observe sufficient numbers of subjects for the expected latency period required for many tumors to develop to the point of clinical presentation, which IARC has deemed to be 30 years. The largest numbers of patients followed for the longest follow-up (more than 20 years) are in the COSMOS cohort studies [albeit based on self-reported exposures at baseline recruitment (76)] and in Hardell’s case–control studies from Sweden (e.g., 57, 77). However, the latter relative risk estimates, while elevated to over 3, have relatively wide confidence intervals. This means that more high-quality studies, with longer follow-up, will be needed to resolve both the latency and dose–response issues.
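
To show why an estimate of about 3 can still be inconclusive, the following minimal sketch computes an odds ratio with an approximate 95% confidence interval from a 2×2 table, using the standard log-odds-ratio (Woolf) method. The counts are hypothetical and are not taken from the Hardell or COSMOS studies; the function name is likewise an assumption.

```python
import math


def odds_ratio_ci(exposed_cases, unexposed_cases, exposed_controls,
                  unexposed_controls, z=1.96):
    """Odds ratio with an approximate 95% CI (Woolf's log method)."""
    a, b, c, d = exposed_cases, unexposed_cases, exposed_controls, unexposed_controls
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical long-latency subgroup with few subjects: the point estimate is 3,
# but the interval is wide and includes 1.
small_or, small_lower, small_upper = odds_ratio_ci(12, 4, 100, 100)

# The same proportions with ten times as many subjects: the point estimate is
# unchanged, but the interval is much narrower and excludes 1.
large_or, large_lower, large_upper = odds_ratio_ci(120, 40, 1000, 1000)

print(small_or, round(small_lower, 2), round(small_upper, 2))
print(large_or, round(large_lower, 2), round(large_upper, 2))
```

The comparison illustrates why larger studies with longer follow-up are needed: the width of the interval is driven by the smallest cell counts, which in this field are typically the long-term, heavily exposed subjects.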

Biological plausibility

It is under this category of evidence for causation that perhaps the most profound disagreements exist in the scientific community. The history of this disagreement goes back several decades, to the establishment of two contrasting views on what sorts of basic (laboratory-science) biological effects are caused by the levels of RF-EMF exposure widely found in modern society. One view, held by ICNIRP (the expert body which has advised WHO and many countries on “safe exposure” limits for decades), is that there is solid scientific evidence only for heating effects (6, 78–80). The opposite view, held by hundreds of independent scientists, is that there is rapidly mounting evidence of many other biological effects from currently-ambient RF-EMF exposures, including: high levels of oxidative stress leading to cellular damage; changes in cell membrane permeability and function (e.g., through bioelectric effects on ion channels); neuronal dysfunction; and even DNA dysfunction leading to cell dysregulation (3, 8, 10, 19, 81–90). On the other hand, there is rather little evidence that these biological effects actually lead to clear disease outcomes.

One of the challenges of using this Bradford Hill criterion for causation is that it was intended to be integrated with the other, more inherently epidemiological criteria. This challenge has become greater in an era of ever-more-specialized science. For example, Karipidis et al. (24) have pointed out that the pre-determined and published scope of their 2024 SR specifically excluded a detailed review of the laboratory and animal primary studies relevant to RF-EMF exposures and the most commonly studied cancers. A separate review of the laboratory evidence on this question, also commissioned by the WHO, should appear shortly, given that its protocol was published nearly 3 years ago (91). IARC is said to be planning an update of its 2011 review in the next few years, likely making major use of the WHO SRs. In the interim, it would seem premature for any reasonable scientist to dismiss a causal relationship on the grounds of inadequate evidence for biological plausibility.

Coherence

This criterion can be most simply stated as “Is the descriptive epidemiological evidence about the spatial and temporal distributions of the exposure, and the outcome, compatible with a causal relationship?” While this may seem a straightforward matter to address empirically in an SR, in practice such a research question is fraught with challenges. A clear example is the relationship between time trends in the incidence and mortality of various brain tumors and preceding changes in RF-EMF exposure prevalence at the population level. Obviously, such data are only available in countries with highly developed systems of national health statistics, especially cancer registries able to consistently tally all cases of all types of tumors reliably over decades, as well as measure the extent of RF-EMF exposure in their entire population at any point in time. Not surprisingly, such countries are few and far between. Some of the most widely cited studies are from Sweden (e.g., 92). These appear to show associations between steadily rising rates of cell phone usage in the population, occurring with a credible time-delay (some years) before observed increases in brain tumor incidence, in particular for glioma, perhaps the malignant brain tumor most strongly linked to RF-EMF exposure in epidemiological observational studies.

However, major disagreement has developed on whether such data can be replicated by other investigators in other settings, some with equally well-developed cancer registries. For example, in their WHO-commissioned SR of the more commonly studied cancers, Karipidis et al. (43) analyzed several prior studies on this question and performed sophisticated time-trend simulations to mimic the effects of various reporting biases on observed time trends in brain tumors’ incidence. They concluded that:

“In particular, based on findings from three simulation studies, we could define a credibility benchmark for the observed risk of glioma in relation to long-term mobile phone use, and perform sensitivity meta-analyses excluding studies reporting implausible effect sizes (>1.5) for this exposure contrast.” Karipidis et al. (43), p.43.

In their lengthy critique of this SR by Karipidis et al., Frank et al. (21) stated that overall cancer time trends which combine all cases of similar histology do not capture the unique equipment use and exposure characteristics of the groups in which brain tumor risks were increased in the case–control studies, such as tumor risks in the ipsilateral areas of the brain (temporal lobe) with the highest absorption of RF radiation emitted from a mobile phone held next to the head. It is also a matter of common epidemiological knowledge that specific population subgroups (such as extremely high phone-users, or persons developing very specific subtypes of cancers or cancer locations) may reflect health effects which would not be observable in national time trends data.

Finally, Frank et al. (21) also claimed that key studies finding recent increases in population-level incidence for such brain cancers (92–96) were omitted by Karipidis et al. In their forceful rebuttal to these assertions, Karipidis et al. (23) argued that:

“In some of these studies [cited by Frank et al. (21)] but are actually accompanied by decreases in brain cancers of unspecified site and/or morphology, while overall brain cancer incidence has remained largely unchanged. This suggests improvements in diagnostic techniques as the reason for increasing trends in certain brain cancer sub-types. There have also been shifts in classifying sub-types in updated editions of the WHO classification of tumors of the central nervous system; for example, the WHO 2000 classification induced a shift from anaplastic astrocytoma to glioblastoma. This is addressed in many of the included time-trend simulation studies, e.g., in (21, 113) where reclassification of unclassified or overlapping brain cancers was shown to reduce increased trends in morphological or topological sub-types (such as in glioblastoma multiforme).”

To summarize, as with the state of the evidence cited above for other key Bradford Hill causation criteria, it seems fair to say that there are qualified scientific experts on both sides of the issue of “coherence,” and only highly-technically-trained methodologists are likely to be able to sort out who is right, based on further research.

Experimental reversibility

Although this criterion for causation is very powerful when met, it is almost impossible to address empirically for disease outcomes generally regarded as “irreversible” once they have been diagnosed—such as cancers. More broadly, one might hope to design a study to show that major reductions in ambient RF-EMF exposures have subsequently led to reductions in related cancers’ incidence. However, no one has yet conducted such a study, largely because no such setting has been identified at the population level, and because of the decades of time-lag required to observe a reduction in cancer incidence after a reduction in exposure, given the long latency involved in carcinogenesis. If we take smoking cessation, for example, as perhaps the best studied reversal of exposure to a proven carcinogen, it is well known from decades-long follow-up of the UK physicians’ cohort of smokers (and quitters) that some common adverse health effects of tobacco show substantially reduced risks within a few months of quitting (e.g., chronic bronchitis) to a few years (e.g., coronary, cerebral and peripheral arteriosclerosis)—whereas the major cancers linked to smoking, such as carcinoma of the lung, persist at elevated incidence rates in quitters, compared to never-smokers, for decades (97).

Analogy

Finally, this last criterion for causation is deceptively easy to state, but not so easy to fulfill: “Is there an analogous causal relationship established for the exposure in question (i.e., to RF-EMF) and the disease outcome of interest (e.g., brain tumors), for example in a laboratory animal or credibly similar in vitro model?” The most compelling published evidence of such analogies, albeit evidence which has been strongly contested (98, 99), comes from the results of the USA NIH National Toxicology Program (81, 86, 87) and very similar results of the Ramazzini Institute studies (82, 100) in laboratory rats, showing elevated rates of gliomas and glial cell hyperplasias in the brain and schwannomas and Schwann cell hyperplasias in the heart of exposed male rats [Schwannoma tumors are histologically closely related to acoustic neuromas in humans, linked in other studies to RF-EMF exposure (see previous sections of this paper)]. Additionally, many new studies of potentially adverse short-term RF-EMF effects, related to abnormal physiology/biochemistry or anatomy observed in the laboratory setting, in either plants or animals, are beginning to appear (3, 8, 10, 83–85, 88–90).

More modern methods for assessing causation

Necessarily absent from the original (1965) Bradford Hill criteria for assessing possible causative relationships between exposures and health outcomes are modern epidemiological methods such as Directed Acyclic Graphs (DAGs) (101, 102). Surprisingly, these newer methodological approaches to demonstrating causation appear not to have been utilized in the RF-EMF literature—as yet. Such methods could materially improve the quality of new primary studies, by quantifying the influence of effect moderation and mediation in complex causal chains.
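
As a minimal sketch of what a DAG-based analysis involves, the example below encodes a small, entirely hypothetical causal graph and lists (a) candidate confounders, i.e., common causes of exposure and outcome with a path to the outcome that does not pass through the exposure, and (b) mediators lying on a causal path from exposure to outcome. The variable names and arrows are assumptions made for illustration, not a proposed causal model for RF-EMF, and this simple listing is not a substitute for a full back-door analysis.

```python
def descendants(graph, node):
    """All nodes reachable from `node` by following arrows."""
    seen = set()
    stack = list(graph.get(node, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph.get(current, []))
    return seen


def ancestors(graph, node):
    """All nodes from which `node` can be reached."""
    return {n for n in graph if node in descendants(graph, n)}


# Hypothetical DAG: each key points to the variables it directly causes.
dag = {
    "age": ["phone_use", "glioma"],
    "ses": ["phone_use", "glioma"],   # socioeconomic status (assumed arrows)
    "phone_use": ["rf_dose"],
    "rf_dose": ["glioma"],
    "glioma": [],
}
exposure, outcome = "phone_use", "glioma"

# Remove arrows leaving the exposure, so that only non-causal routes to the
# outcome remain when looking for common causes.
pruned = {n: ([] if n == exposure else list(children))
          for n, children in dag.items()}
candidate_confounders = ancestors(dag, exposure) & ancestors(pruned, outcome)

# Mediators: caused by the exposure and, in turn, causes of the outcome.
mediators = descendants(dag, exposure) & ancestors(dag, outcome)

print(sorted(candidate_confounders), sorted(mediators))
```

Writing the assumed graph down explicitly forces investigators to state which variables they intend to adjust for and which they regard as part of the causal pathway, which is where disagreements about study design can then be located.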

The broader issue of potential conflicts of interest

Finally, potential conflicts of interest among authors of the primary studies, and some SRs, have recently become perhaps the most hotly contested issue in this whole field. Peer-reviewed publications by Hardell and Carlberg (7, 83, 84, 103), Hardell and Moskowitz (114), Frank et al. (20, 21), Nordhagen and Flydal (104), and Weller and McCredden (105) have claimed that there is widespread under-declaration of such conflicts of interest, particularly related to research grants and other funding from telecommunications companies with a vested interest in mobile phone and related equipment sales. There are also potential conflicts of interest that are more subtle than merely financially incentivized ones, for example those related to vested interests’ influence on a scientist’s thinking (106). On the other hand, vigorous counterarguments have been published, claiming that there is no hard evidence of potential conflicts of interest among, for example, ICNIRP members or the authors of some of the recently published SRs commissioned by WHO (4, 7, 14, 23–25, 106–108).

More worrisome is the finding of Prasad et al. (109) and Myung et al. (110), that funding source predicted study quality, in their systematic reviews of case–control studies of tumor risk and RF-EMF exposure. Government-funded studies were of higher quality, which in turn was associated with finding a statistically significant association between exposure and brain tumor risk, especially in long-term users (>10 years). Similarly, Carpenter (111) found that empirical studies of RF-EMF exposures and childhood leukemia reported systematically different findings, according to the source of the study’s funding:

“By examining subsequent reports on childhood leukemia it is clear that almost all government or independent studies find either a statistically significant association between magnetic field exposure and childhood leukemia, or an elevated risk of at least OR = 1.5, while almost all industry supported studies fail to find any significant or even suggestive association.” [Abstract]

There is no easy answer to this problem. Current guidelines regarding the declaration of potential conflicts of interest are helpful, but there is little “enforcement” and various academic disciplines have varying norms. For example, after decades of published concerns about the undue influence of “Big Pharma” funded researchers in setting public policy, such as the 2014 NICE guidance about who should be prescribed statins (29), medical researchers are quite accustomed to having to declare all potential conflicts of interest, both in their publications and prior to participation in influential policy processes. University departments of Engineering and Applied Sciences would appear, on the other hand, to regard such funding as not only normal and usual, but in fact a key ingredient in being judged a successful professor. Be that as it may, it would seem only reasonable that all such potential conflicts should be declared at the outset of any scientific interchange.

At a minimum, transdisciplinary fields such as RF-EMF exposures and their biological/health effects will require transdisciplinary agreements about what precisely constitutes potential conflicts of interest. How this might be successfully negotiated is not at all clear.

Discussion

Based on the application of Bradford Hill’s criteria for causation to the current literature on RF-EMF exposures and adverse health outcomes (especially brain tumors, for which the literature is more voluminous and “mature”), it is clear that this literature is afflicted by many small primary studies of low quality, failure of consistent replication, and consequent uncertainty about causation. Some epidemiologists (7, 8, 20, 21) have argued that the major methodological weaknesses in this literature, as discussed above, would be expected to bias the observed strength of association toward the null (i.e., underestimation of the relative risk); the weaknesses they emphasize are the ubiquitous challenge of inaccurate exposure measurement at the level of the individual study subject, and latency (7, 19, 27, 28, 34). Other epidemiologists have pointed out that the predominant study design in this field, the case–control study, is well known for exaggeration of effect-sizes (relative risks) due to biased recall of exposure in cases, compared to controls, when no objective measurement of exposure is available (23, 43).

Some authors (3, 7, 10, 19) have argued that it is inherently unethical to wait many years for conclusive evidence of causation, before adopting “safe” exposure limits. These researchers invoke the “precautionary principle” which holds that, pending the availability of conclusive science, exposure limits should be set on the basis of potential if not necessarily proven harms—especially in the case of women of reproductive age and children. Other authorities (7, 79, 80) dispute this view, holding firmly to their long-held conviction that “safe” exposure limits are already in place. There appears to be very little common ground between these views.

Strengths and weaknesses of this commentary

This commentary, which is not intended to be a narrative review, let alone a systematic review, of the pertinent literature on biological and health effects of RF-EMF exposure, has attempted to cover the key issues in that literature related to the assessment of causation, utilizing a wide range of illustrative examples from relevant publications, without any intent or claim to be exhaustive. A strength of this approach is that the detailed discussion above, of all nine original Bradford Hill criteria for assessing causation, does provide a relatively neutral, methodologically sound and comprehensive framework for analyzing the critical deficiencies in the current evidence-base in this highly contested field.

Conclusion and recommendation

Although it would not be a panacea, we propose that a neutral group of international experts in environmental health, nominated by independent scientific and professional bodies, convene a guidelines development process to inform future epidemiological studies, systematic reviews and causal evidence syntheses of associations between RF-EMF and human health outcomes. To overcome entrenched positions identified with various specific experts, an anonymous, Delphi-like consensus-building process might be helpful.

We recognize that several such guidelines are already available, and that the more recent of them (e.g., 49, 50) are much better suited than previously published guidelines to evaluating observational epidemiological literatures about putative environmental health hazards. However, we believe that further specification of the most common but avoidable “pitfalls” in this field would assist investigators and reviewers to be more vigilant against the lower-quality studies which have dominated the field for decades. Wide dissemination of such guidelines could help researchers, journals and their reviewers in this field (many of whom appear to be new to epidemiology) to execute, review and publish higher-quality studies, to better inform evidence-based policy. A useful approach for achieving these ends would be to develop a STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) extension specifically for guiding future systematic reviews and primary studies of RF-EMF biological and health effects (55, 112).

Author contributions

JF: Conceptualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

In the interests of full disclosure, the author is an unpaid Special Advisor to the International Commission on the Biological Effects of Electromagnetic Fields, a not-for-profit global organization dedicated to the use of high-quality science to set evidence-based public health and health protection, as well as broader environmental standards, related to RF-EMFs. He is also providing scientific advice, on an entirely unpaid basis, to the not-for-profit academic organization Physicians’ Health Initiative for Radiation and Environment (PHIRE). This paper is based on a presentation to the 4th International Expert Forum on the Public Health and Environmental Impacts of Cellular and Wireless Radiation Exposure 2024.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Brzozek, C, Abramson, MJ, Benke, G, and Karipidis, K. Cellular phone use and risk of tumors: systematic review and meta-analysis. Int J Environ Res Public Health. (2021) 18:5459.

2. Croft, RJ, McKenzie, RJ, Inyang, I, Benke, GP, Anderson, V, and Abramson, MJ. Mobile phones and brain tumours: a review of epidemiological research. Australasian Physics Eng Sci Med. (2008) 31:255–67. doi: 10.1007/BF03178595

3. Davis, D, Birnbaum, L, Ben-Ishai, P, Taylor, H, Sears, M, Butler, T, et al. Wireless technologies, non-ionizing electromagnetic fields and children: identifying and reducing health risks. Curr Probl Pediatr Adolesc Health Care. (2023) 53:101374. doi: 10.1016/j.cppeds.2023.101374

4. De Vocht, F, and Röösli, M. Cellular phone use and risk of tumors: systematic review and meta-analysis. Int J Environ Res Public Health. (2021) 18:3125.

5. Di Ciaula, A. Towards 5G communication systems: are there health implications? Int J Hyg Environ Health. (2019) 221:367–75. doi: 10.1016/j.ijheh.2018.01.011

6. Foster, KR, and Moulder, JE. Wi-fi and health: review of current status of research. Health Phys. (2013) 105:561–75. doi: 10.1097/HP.0b013e31829b49bb

7. Frank, JW. EMFs, 5G and health: what about the precautionary principle? J Epidemiol & Comm Hlth. (2021) 75:562–6. doi: 10.1136/jech-2019-213595

8. ICBE-EMF (2025). Available online at: https://icbe-emf.org/scientific-response-to-the-rebuttal-of-karipidis-et-al-to-the-icbe-emfs-criticisms-of-the-who-cell-phone-radiation-cancer-review/ (accessed May 5, 2025)

9. Kostoff, RN, Heroux, P, Aschner, M, and Tsatsakis, A. Adverse health effects of 5G mobile networking technology under real-life conditions. Toxicol Lett. (2020) 323:35–40. doi: 10.1016/j.toxlet.2020.01.020

10. Miller, AB, Sears, ME, Morgan, LL, Davis, DL, Hardell, L, Oremus, M, et al. Risks to health and well-being from radio-frequency radiation emitted by cell phones and other wireless devices. Front Public Health. (2019) 7:223. doi: 10.3389/fpubh.2019.00223

11. Moskowitz, JM. (2019). We have no reason to believe 5G is safe. Scientific American blogs. Available online at: https://blogs.scientificamerican.com/observations/we-have-no-reason-to-believe-5g-is-safe/ (Accessed Feb. 26, 2024).

12. Moskowitz, JM, Myung, SK, Choi, YJ, and Hong, YC. Cellular phone use and risk of tumors: systematic review and meta-analysis. Int J Environ Res Public Health. (2021) 18:5581. doi: 10.3390/ijerph18115581. PMCID: PMC8197078

13. Nyberg, NR, McCredden, JE, Weller, SG, and Hardell, L. The European Union prioritises economics over health in the rollout of radiofrequency technologies. Rev Environ Health. (2024) 39:47–64. doi: 10.1515/reveh-2022-0106

14. Röösli, M, Lagorio, S, Schoemaker, MJ, Schüz, J, and Feychting, M. Brain and salivary gland tumors and mobile phone use: evaluating the evidence from various epidemiological study designs. Annu Rev Public Health. (2019) 40:221–38. doi: 10.1146/annurev-publhealth-040218-044037

15. Russell, CL. 5 G wireless telecommunications expansion: public health and environmental implications. Environ Res. (2018) 165:484–95. doi: 10.1016/j.envres.2018.01.016

16. Simkó, M, and Mattsson, M-O. 5G wireless communication and health effects: a pragmatic review based on available studies regarding 6 to 100 GHz. Int J Environ Res Public Health. (2019) 16:3406–28. doi: 10.3390/ijerph16183406

17. Various authors. WHO assessment of health effects of exposure to radiofrequency electromagnetic fields: systematic reviews. Environ Int. (2021–2025)

18. Verbeek, J, Oftedal, G, Feychting, M, van Rongen, E, Scarfi, MR, Mann, S, et al. Prioritizing health outcomes when assessing the effects of exposure to radiofrequency electromagnetic fields: a survey among experts. Environ Int. (2021) 146:106300. doi: 10.1016/j.envint.2020.106300

19. Di Ciaula, A, Petronio, MG, Bersani, F, and Belpoggi, F. Exposure to radiofrequency electromagnetic fields and risk of cancer: epidemiology is not enough! Environ Int. (2025) 196:109275. doi: 10.1016/j.envint.2025.109275

20. Frank, JW, Melnick, RL, and Moskowitz, JM. A critical appraisal of the WHO 2024 systematic review of the effects of RF-EMF exposure on tinnitus, migraine/headache, and non-specific symptoms. Rev Environ Health. (2024). 18. doi: 10.1515/reveh-2024-0069

21. Frank, JW, Moskowitz, JM, Melnick, RL, Hardell, L, Philips, A, Heroux, P, et al. The systematic review on RF-EMF exposure and cancer by Karipidis et al. (2024) has serious flaws that undermine the validity of the study’s conclusions. Environ Int. (2024) 195:109200. doi: 10.1016/j.envint.2024.109200

22. Moskowitz, JM, Frank, JW, Melnick, RL, Hardell, L, Belyaev, I, Héroux, P, et al. International commission on the biological effects of electromagnetic fields. COSMOS: a methodologically-flawed cohort study of the health effects from exposure to radiofrequency radiation from mobile phone use. Environ Int. (2024) 190:108807. doi: 10.1016/j.envint.2024.108807

23. Karipidis, K, Baaken, D, Loney, T, Blettner, M, Brzozek, C, Elwood, M, et al. Response to the letter from members of the ICBE-EMF. Environ Int. (2024) 195:109201. doi: 10.1016/j.envint.2024.109201

24. Karipidis, K, Baaken, D, Loney, T, Blettner, M, Brzozek, C, Elwood, M, et al. Response to the letter from Di Ciaula et al. Environ Int. (2025) 196:109276. doi: 10.1016/j.envint.2025.109276

25. Röösli, M, Dongus, S, Jalilian, H, Eyers, J, Esu, E, Oringanje, CM, et al. The effects of radiofrequency electromagnetic fields exposure on tinnitus, migraine and non-specific symptoms in the general and working population: a systematic review and meta-analysis on human observational studies. Environ Int. (2024) 183:108338. doi: 10.1016/j.envint.2023.108338

26. Hill, AB. The environment and disease: association or causation? Proc R Soc Med. (1965) 58:295–300.

27. Armstrong, BG. Effect of measurement error on epidemiological studies of environmental and occupational exposures. Occup Environ Med. (1998) 55:651–6.

28. Arroyave, WD, Mehta, SS, Guha, N, Schwingl, P, Taylor, KW, Glenn, B, et al. Challenges and recommendations on the conduct of systematic reviews of observational epidemiologic studies in environmental and occupational health. J Expo Sci Environ Epidemiol. (2021) 31:21–30. doi: 10.1038/s41370-020-0228-0

29. Frank, JW, Jepson, R, and Williams, AJ. Disease prevention: A critical toolkit. Oxford: Oxford University Press (2016). 215 p.

30. Gee, D. Establishing evidence for early action: the prevention of reproductive and developmental harm. Basic Clin Pharmacol Toxicol. (2008) 102:257–66. doi: 10.1111/j.1742-7843.2008.00207.x

31. Rothman, KJ, Greenland, S, and Lash, TL. Modern Epidemiology. 3rd ed. Philadelphia: Lippincott Williams & Wilkins (2008). 146 p.

32. Hardell, L, and Carlberg, M. Using the Hill viewpoints from 1965 for evaluating strengths of evidence of the risk for brain tumors associated with use of mobile and cordless phones. Rev Environ Health. (2013) 28:97–106. doi: 10.1515/reveh-2013-0006

33. Auvinen, A, Toivo, T, and Tokola, K. Epidemiological risk assessment of mobile phones and cancer: where can we improve? Eur J Cancer Prevention: Official J Eur Cancer Prev Organisation (ECP). (2006) 15:516–23. doi: 10.1097/01.cej.0000203617.54312.08

34. Moon, J, Kwon, J, and Mun, Y. Relationship between radiofrequency-electromagnetic radiation from cellular phones and brain tumor: meta-analyses using various proxies for RF-EMR exposure-outcome assessment. Environ Health. (2024) 23:82. doi: 10.1186/s12940-024-01117-8

35. Fletcher, GS. Clinical epidemiology: The essentials. 6th ed. Philadelphia: Wolters Kluwer (2021). 264 p.

36. Hennekens, CH, Buring, JE, and Mayrent, SL. Epidemiology in medicine. Boston: Little, Brown (1987). 385 p.

37. Grimes, D, and Schulz, KF. False alarms and pseudo-epidemics: the limitations of observational epidemiology. Obstet Gynecol. (2012) 120:920–7. doi: 10.1097/AOG.0b013e31826af61a

38. Doll, R. Weak associations in epidemiology: importance, detection, and interpretation. J Epidemiol. (1996) 6:11–20.

39. Smith, GD. Reflections on the limitations to epidemiology. J Clin Epidemiol. (2001) 54:325–31. doi: 10.1016/S0895-4356(00)00334-6

40. Szklo, M. The evaluation of epidemiologic evidence for policy-making. Am J Epidemiol. (2001) 154:S13–7. doi: 10.1093/aje/154.12.S13

42. Choi, YJ, Moskowitz, JM, Myung, SK, Lee, YR, and Hong, YC. Cellular phone use and risk of tumors: systematic review and meta-analysis. Int J Environ Res Public Health. (2020) 17:8079. doi: 10.3390/ijerph17218079

43. Karipidis, K, Baaken, D, Loney, T, Blettner, M, Brzozek, C, Elwood, M, et al. The effect of exposure to radiofrequency fields on cancer risk in the general and working population: a systematic review of human observational studies–part I: Most researched outcomes. Environ Int. (2024) 191:108983. doi: 10.1016/j.envint.2024.108983

44. Bouaoun, L, Byrnes, G, Lagorio, S, Feychting, M, Abou-Bakre, A, Béranger, R, et al. Effects of recall and selection biases on modeling cancer risk from mobile phone use: results from a case–control simulation study. Epidemiology. (2024) 35:437–46. doi: 10.1097/EDE.0000000000001749

45. Oxford Centre for Evidence-Based Medicine. (2025). Available online at: https://www.cebm.net/wp-content/uploads/2019/01/Systematic-Review.pdf (Accessed January 8, 2025).

46. Rooney, AA, Boyles, AL, Wolfe, MS, Bucher, JR, and Thayer, KA. Systematic review and evidence integration for literature-based environmental health science assessments. Environ Health Perspect. (2014) 122:711–8. doi: 10.1289/ehp.1307972

47. Woodruff, TJ, and Sutton, P. The navigation guide systematic review methodology: a rigorous and transparent method for translating environmental health science into better health outcomes. Environ Health Perspect. (2014) 122:1007–14. doi: 10.1289/ehp.1307175

48. Nakagawa, S, Noble, DWA, Senior, AM, and Lagisz, M. Meta-evaluation of meta-analysis: ten appraisal questions for biologists. BMC Biol. (2017) 15:18. doi: 10.1186/s12915-017-0357-7

49. Whaley, P, Aiassa, E, Beausoleil, C, Beronius, A, Bilotta, G, Boobis, A, et al. Recommendations for the conduct of systematic reviews in toxicology and environmental health research (COSTER). Environ Int. (2020) 143:105926. doi: 10.1016/j.envint.2020.105926

50. Whaley, P, Piggott, T, Morgan, RL, Hoffmann, S, Tsaioun, K, Schwingshackl, L, et al. Biological plausibility in environmental health systematic reviews: a GRADE concept paper. Environ Int. (2022) 162:107109. doi: 10.1016/j.envint.2022.107109

51. Page, MJ, McKenzie, JE, Bossuyt, PM, Boutron, I, Hoffmann, TC, Mulrow, CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Int J Surg. (2021) 88:105906. doi: 10.1016/j.ijsu.2021.105906

52. Eick, SM, Goin, DE, Chartres, N, Lam, J, and Woodruff, TJ. Assessing risk of bias in human environmental epidemiology studies using three tools: different conclusions from different tools. Syst Rev. (2020) 9:1–3.

53. Boogaard, H, Atkinson, RW, Brook, JR, Chang, HH, and Hoek, G. Evidence synthesis of observational studies in environmental health: lessons learned from a systematic review on traffic-related air pollution. Environ Health Perspect. (2023) 131:115002. doi: 10.1289/EHP11532

54. Guyatt, GH, Oxman, AD, Vist, G, Kunz, R, Brozek, J, Alonso-Coello, P, et al. GRADE guidelines: 4. Rating the quality of evidence—study limitations (risk of bias). J Clin Epidemiol. (2011) 64:407–15. doi: 10.1016/j.jclinepi.2010.07.017

55. von Elm, E, Altman, DG, Egger, M, Pocock, SJ, Gøtzsche, PC, Vandenbroucke, JP, et al. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. Epidemiology. (2007) 18:800–4.

56. IARC. Working group on the evaluation of carcinogenic risks to humans. Non-ionizing radiation, part 2: radiofrequency electromagnetic fields. IARC Monogr Eval Carcinog Risks Hum. (2013) 102:1–460.

57. Hardell, L, and Carlberg, M. Mobile phone and cordless phone use and the risk for glioma – analysis of pooled case-control studies in Sweden, 1997–2003 and 2007–2009. Pathophysiology. (2015) 22:1–13. doi: 10.1016/j.pathophys.2014.10.001

58. Harrer, M, Cuijpers, P, Furukawa, T, and Ebert, D. Doing meta-analysis with R: a hands-on guide. London, UK: Chapman and Hall/CRC (2021).

59. Higgins, JP, and Green, S. Cochrane handbook for systematic reviews of interventions. London: The Cochrane Collaboration (2011).

60. Kanellopoulou, A, Dwan, K, and Richardson, R. Common statistical errors in systematic reviews: a tutorial. Cochrane Ev Synth. (2025) 3:e70013. doi: 10.1002/cesm.70013

61. Valentine, JC, Pigott, TD, and Rothstein, HR. How many studies do you need? A primer on statistical power for meta-analysis. J Educ Behav Stat. (2010) 35:215–47. doi: 10.3102/1076998609346961

62. Ioannidis, JPA, Patsopoulos, NA, and Evangelou, E. Uncertainty in heterogeneity estimates in meta-analyses. BMJ. (2007) 335:914–6. doi: 10.1136/bmj.39343.408449.80

63. Hedges, LV, and Pigott, TD. The power of statistical tests in meta-analysis. Psychol Methods. (2001) 6:203–17. doi: 10.1037/1082-989X.6.3.203

64. Thompson, SG, and Higgins, JP. How should meta-regression analyses be undertaken and interpreted? Stat Med. (2002) 21:1559–73. doi: 10.1002/sim.1187

65. Rosenthal, R, and Rubin, DB. The counternull value of an effect size: a new statistic. Psychol Sci. (1994) 5:329–34. doi: 10.1111/j.1467-9280.1994.tb00281.x

66. Sheldon, J. In: JG Ledingham and D Warrell, editors. Concise Oxford textbook of medicine. Oxford: Oxford University Press (2001). 2056.

67. Chaudhary, P, Bhadana, U, Singh, RAK, and Ahuja, A. Primary hepatic angiosarcoma. Eur J Surg Oncol. (2015) 41:1137–43. doi: 10.1016/j.ejso.2015.04.022

68. Frank, JW, Gibson, B, and MacPherson, M. Information needs in epidemiology: detecting the health effects of environmental chemical exposure In: CD Fowle, AP Grima, and RE Munn, editors. Information needs for risk management. Environmental monograph no. 8. Toronto, Canada: Institute of Environmental Studies, University of Toronto (1988). 129–44.

69. Karipidis, K, Baaken, D, Loney, T, Blettner, M, Brzozek, C, Elwood, M, et al. The effect of exposure to radiofrequency fields on cancer risk in the general and working population: a systematic review of human observational studies–part II: less researched outcomes. Environ Int. (2025) 196:109274. doi: 10.1016/j.envint.2025.109274

70. Crippa, A, Discacciati, A, Bottai, M, Spiegelman, D, and Orsini, N. One-stage dose–response meta-analysis for aggregated data. Stat Methods Med Res. (2019) 28:1579–96. doi: 10.1177/0962280218773122

71. Orsini, N. Weighted mixed-effects dose–response models for tables of correlated contrasts. Stata J. (2021) 21:320–47. doi: 10.1177/1536867X211025798

72. Orsini, N, and Spiegelman, D. Meta-analysis of dose-response relationships In: Handbook of Meta-analysis. London, UK: Chapman and Hall/CRC (2020). 395–428.

73. ICBE-EMF. (2025). International Commission on Biological Effects of Electromagnetic Fields. Available online at: https://icbe-emf.org/resources/ (Accessed February 21, 2025).

74. Burstyn, I, Barone-Adesi, F, De Vocht, F, and Gustafson, P. What to do when accumulated exposure affects health but only its duration was measured? A case of linear regression. Int J Environ Res Public Health. (2019) 16:1896. doi: 10.3390/ijerph16111896

75. De Vocht, F. Rethinking cumulative exposure in epidemiology, again. J Expo Sci Environ Epidemiol. (2015) 25:467–73. doi: 10.1038/jes.2014.58

76. Tettamanti, G, Auvinen, A, Åkerstedt, T, Kojo, K, Ahlbom, A, Heinävaara, S, et al. Long-term effect of mobile phone use on sleep quality: results from the cohort study of mobile phone use and health (COSMOS). Environ Int. (2020) 140:105687. doi: 10.1016/j.envint.2020.105687

77. Hardell, L, Carlberg, M, and Mild, KH. Use of mobile phones and cordless phones is associated with increased risk for glioma and acoustic neuroma. Pathophysiology. (2013) 20:85–110. doi: 10.1016/j.pathophys.2012.11.001

78. Bushberg, JT, Chou, CK, Foster, KR, Kavet, R, Maxson, DP, Tell, RA, et al. IEEE Committee on man and radiation—Comar technical information statement: health and safety issues concerning exposure of the general public to electromagnetic energy from 5G wireless communications networks. Health Phys. (2020) 119:236–46. doi: 10.1097/HP.0000000000001301

79. ICNIRP (International Commission on Non-Ionizing Radiation Protection). Guidelines for limiting exposure to electromagnetic fields (100 kHz to 300 GHz). Health Phys. (2020) 118:483–524. doi: 10.1097/HP.0000000000001210

80. ICNIRP. (2025). International Commission on Non-Ionizing Radiation Protection. Available online at: https://www.icnirp.org/ (Accessed February 20, 2025).

81. Gong, Y, Capstick, MH, Kuehn, S, Wilson, PF, Ladbury, JM, Koepke, G, et al. Lifetime dosimetric assessment for mice and rats exposed in reverberation chambers for the two-year NTP cancer bioassay study on cell phone radiation. IEEE Trans Electromagn Compat. (2017) 59:1798–808. doi: 10.1109/TEMC.2017.2665039

82. Ishai, PB. (2021). Health implications of exposure to electromagnetic radiation for cellphones and their infrastructure. Reproduction. Available online at: https://galweiss.com/wp-content/uploads/2021/08/Health-Implications-of-Exposure-to-Electromagnetic-Radiation-for-Cellphones-and-Their-Infrastrucutre-Paul-Ben-Ishay.pdf (Accessed May 19, 2025).

83. Lin, JC. RF health safety limits and recommendations [health matters]. IEEE Microw Mag. (2023) 24:18–77. doi: 10.1109/MMM.2023.3255659

84. Lin, JC. World Health Organization’s EMF project’s systemic reviews on the association between RF exposure and health effects encounter challenges [health matters]. IEEE Microw Mag. (2024) 26:13–5. doi: 10.1109/MMM.2024.3476748

85. Margaritis, LH, Manta, AK, Kokkaliaris, KD, Schiza, D, Alimisis, K, Barkas, G, et al. Drosophila oogenesis as a bio-marker responding to EMF sources. Electromagn Biol Med. (2014) 33:165–89. doi: 10.3109/15368378.2013.800102

86. Melnick, RL. Commentary on the utility of the National Toxicology Program study on cell phone radiofrequency radiation data for assessing human health risks despite unfounded criticisms aimed at minimizing the findings of adverse health effects. Environ Res. (2019) 168:1–6. doi: 10.1016/j.envres.2018.09.010

87. Melnick, R. Regarding ICNIRP’s evaluation of the National Toxicology Program’s carcinogenicity studies on radiofrequency electromagnetic fields. Health Phys. (2020) 118:678–82. doi: 10.1097/HP.0000000000001268

88. Panagopoulos, DJ. Comparing DNA damage induced by mobile telephony and other types of man-made electromagnetic fields. Mutat Res Rev Mutat Res. (2019) 781:53–62. doi: 10.1016/j.mrrev.2019.03.003

89. Pophof, B, Henschenmacher, B, Kattnig, DR, Kuhne, J, Vian, A, and Ziegelberger, G. Biological effects of radiofrequency electromagnetic fields above 100 MHz on fauna and flora: workshop report. Health Phys. (2023) 124:31–8. doi: 10.1097/HP.0000000000001625

90. Yakymenko, I, Tsybulin, O, Sidorik, E, Henshel, D, Kyrylenko, O, and Kyrylenko, S. Oxidative mechanisms of biological activity of low-intensity radiofrequency radiation. Electromagn Biol Med. (2016) 35:186–202. doi: 10.3109/15368378.2015.1043557

91. Mevissen, M, Ward, JM, Kopp-Schneider, A, McNamee, JP, Wood, AW, Rivero, TM, et al. Effects of radiofrequency electromagnetic fields (RF EMF) on cancer in laboratory animal studies: a protocol for a systematic review. Environ Int. (2022) 161:107106. doi: 10.1016/j.envint.2022.107106

92. Hardell, L, and Carlberg, M. Mobile phones, cordless phones and rates of brain tumors in different age groups in the Swedish National Inpatient Register and the Swedish Cancer Register during 1998-2015. PLoS One. (2017) 12:e0185461. doi: 10.1371/journal.pone.0185461

93. Davis, FG, Smith, TR, Gittleman, HR, Ostrom, QT, Kruchko, C, and Barnholtz-Sloan, JS. Glioblastoma incidence rate trends in Canada and the United States compared with England, 1995-2015. Neuro-Oncology. (2020) 22:301–2. doi: 10.1093/neuonc/noz203

94. Defossez, G, Le Guyader-Peyrou, S, Uhry, Z, and Grosclaude, P. Estimations nationales de l’incidence et de la mortalité par cancer en France métropolitaine entre 1990 et 2018. Étude à partir des registres des cancers du réseau Francim. Volume 1: Tumeurs solides. Paris: Santé publique France (2019). 318 p.

95. Philips, A, Henshaw, DL, Lamburn, G, and O’Carroll, MJ. Brain tumours: rise in glioblastoma multiforme incidence in England 1995-2015 suggests an adverse environmental or lifestyle factor. J Environ Public Health. (2018) 2018:7910754. doi: 10.1155/2018/7910754

96. Zada, G, Bond, AE, Wang, YP, Giannotta, SL, and Deapen, D. Incidence trends in the anatomic location of primary malignant brain tumors in the United States: 1992-2006. World Neurosurg. (2012) 77:518–24. doi: 10.1016/j.wneu.2011.05.051

97. Doll, R, and Peto, R. Mortality in relation to smoking: 20 years’ observations on male British doctors. Br Med J. (1976) 2:1525–36. doi: 10.1136/bmj.2.6051.1525

98. Elwood, JM, and Wood, AW. Animal studies of exposures to radiofrequency fields. N Z Med J. (2019) 132:98–100.

99. ICNIRP. ICNIRP note: critical evaluation of two radiofrequency electromagnetic field animal carcinogenicity studies published in 2018. Health Phys. (2020) 118:525–32. doi: 10.1097/HP.0000000000001137

100. Falcioni, L, Bua, L, Tibaldi, E, Lauriola, M, De Angelis, L, Gnudi, F, et al. Report of final results regarding brain and heart tumors in Sprague-Dawley rats exposed from prenatal life until natural death to mobile phone radiofrequency field representative of a 1.8 GHz GSM base station environmental emission. Environ Res. (2018) 165:496–503. doi: 10.1016/j.envres.2018.01.037

101. Digitale, JC, Martin, JN, and Glymour, MM. Tutorial on directed acyclic graphs. J Clin Epidemiol. (2022) 142:264–7. doi: 10.1016/j.jclinepi.2021.08.001

102. Lipsky, AM, and Greenland, S. Causal directed acyclic graphs. JAMA. (2022) 327:1083–4. doi: 10.1001/jama.2022.1816

103. Hardell, L, and Carlberg, M. Health risks from radiofrequency radiation, including 5G, should be assessed by experts with no conflicts of interest. Oncol Lett. (2020) 20:15.

104. Nordhagen, EK, and Flydal, E. WHO to build neglect of RF-EMF exposure hazards on flawed EHC reviews? Case study demonstrates how “no hazards” conclusion is drawn from data showing hazards. Rev Environ Health. (2024) 10. doi: 10.1515/reveh-2024-0089