- ¹Paone & Associates, LLC, Minneapolis, MN, United States

- ²Johns Hopkins School of Nursing and the Bloomberg School of Public Health, Baltimore, MD, United States

- ³School of Public Health, Texas A&M University, College Station, TX, United States

- ⁴Center for Community Health and Aging, Texas A&M University, College Station, TX, United States

- ⁵Drexel College of Nursing and Health Professions, Philadelphia, PA, United States

Background: Examining the experience of organizations implementing evidence-based programs can help future programs address barriers to effective implementation, sustainment, and scaling. CAPABLE is an evidence-based 4-to-6-month program that improves daily function of older adults and modifies their home environments in modest ways to support their goal attainment. Through a guided process, utilizing an occupational therapist, nurse, and handy worker, the older adult sets goals and a personal action plan. In this study, we examined factors that advanced or impeded implementation and sustainability of CAPABLE. The researchers are embedded in the CAPABLE National Center and Johns Hopkins and provide ongoing technical support in implementation and dissemination of CAPABLE throughout the U.S., Canada, and other countries.

Methods: We chose the RE-AIM and CFIR frameworks based on their robust use in the U.S. for examining implementation of older adult health promotion and prevention programs. We examined the implementation and sustainment experience of 65 organizations adopting CAPABLE across 5 years (2019–2024). Data sources included licensure records, an annual survey, and additional notes collected ad hoc. We identified key components to implement CAPABLE and used self-reported data from the lead program administrator at each organization who replied to the annual survey. These key informants responded to the level of ease or difficulty of these key components required for implementation. They responded each year that their organizations provided CAPABLE. CAPABLE licensure records indicated when the organization began/terminated their service. Notes from monthly office hours calls provided additional contextual information. We performed qualitative thematic and descriptive analysis on the notes. We also reviewed published studies on CAPABLE’s outcomes. The unit of analysis was the organization.

Results: The following factors were consistently reported by these administrators as supporting ease of implementation: getting leadership support, accessing technical assistance, and maintaining fidelity to the program. Conversely, common challenges reported included difficulty with recruitment, hiring/finding the required personnel, and sustainability funding. Internal factors supporting readiness and adoption were perceived value of the program and program manager knowledge and commitment. External factors reported as supporting adoption were initial funding to start a pilot and alignment with “aging in community” strategic goals.

Implication: This examination revealed positive and impeding forces for implementation and sustainment and identified where additional support was needed. Findings are guiding the development of this additional technical support by the CAPABLE National Center. In addition, efforts are underway to improve funding and policy to support CAPABLE to improve sustainment, scaling, and dissemination. This study also provides a use case for employing the RE-AIM and CFIR frameworks together to track ongoing implementation. This helps address a gap in the literature concerning practical ways to monitor, evaluate, and report on ongoing implementation of evidence-based programs.

Introduction

Understanding the implementation and dissemination experience of organizations as they implement and sustain evidence-based programs can help future programs address barriers to sustainment and scaling. Organizations implementing evidence-based programs are motivated to effectively launch, operate, and sustain these programs, but they must overcome internal and external hurdles to do so (1). Studies of implementation case examples have found that the interplay between program features, organizational characteristics, and external environments affects success (2–9).

Community Aging in Place—Advancing Better Living for Elders (CAPABLE) is a structured, 4- to 6-month home visit program to improve physical function among older adults by addressing individual capacity and modifying the home environment (10, 11). It uses an interprofessional team composed of an occupational therapist (OT), registered nurse (RN), and handy worker in an iterative series of home visits to assist the older person to attain self-determined goals and build capacity for self-care, deploying techniques of motivational interviewing and client-directed action.

Since 2009, CAPABLE has been tested in randomized controlled trials and demonstrated improvement in activities of daily living (ADL) such as bathing, dressing, and eating (8 ADLs examined) and instrumental activities of daily living (IADL) such as paying bills and grocery shopping (8 IADLs examined), functional status, and depression (12). Studies about the effect of CAPABLE on healthcare utilization and costs showed cost savings (13–15). In a comparison of Medicare expenditures for CAPABLE participants relative to comparators in a study sample of 5,861 beneficiaries, CAPABLE participants had lower expenditures, driven by reductions in inpatient and outpatient expenditures. Expenditures were lower by $2,765 USD per participant per quarter for eight consecutive quarters, which totaled $22,120 USD per participant over 2 years (14). Medicaid expenditures were lower by an average of $867 USD per participant per month due to lower utilization and spending in all healthcare services except home health (15). For people dually eligible for Medicare and Medicaid, savings would be approximately $43,000 in 2017 US dollars ($2,765 × 8 quarters plus $867 × 24 months, or $22,120 + $20,808).
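The 2-year totals follow directly from the per-quarter and per-month estimates quoted above. The following is a minimal sketch of that arithmetic, not part of the cited analyses; the constant and variable names are illustrative, and adding the Medicare and Medicaid figures assumes, as the text does, that the two estimates are additive for dually eligible people.

```python
# Per-period savings estimates quoted in the text (USD per participant).
MEDICARE_SAVINGS_PER_QUARTER = 2765   # reference (14)
MEDICAID_SAVINGS_PER_MONTH = 867      # reference (15)

# Two-year horizon expressed in each estimate's own unit.
QUARTERS_IN_TWO_YEARS = 8
MONTHS_IN_TWO_YEARS = 24

# Scale each estimate to the full two-year horizon.
medicare_two_year = MEDICARE_SAVINGS_PER_QUARTER * QUARTERS_IN_TWO_YEARS
medicaid_two_year = MEDICAID_SAVINGS_PER_MONTH * MONTHS_IN_TWO_YEARS

# Assumption: the two programs' savings simply add for dual eligibles.
dual_eligible_two_year = medicare_two_year + medicaid_two_year

print(f"Medicare, 2 years: ${medicare_two_year:,}")
print(f"Medicaid, 2 years: ${medicaid_two_year:,}")
print(f"Dual eligible, 2 years: ${dual_eligible_two_year:,}")
```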

While the efficacy and effectiveness of CAPABLE are widely understood, less is reported about the important aspects of program implementation that have facilitated its diffusion into communities across the United States and Canada. In this context, the purposes of this examination were to (1) identify factors that are important (as facilitators or hurdles) to organizations when implementing and sustaining CAPABLE; (2) assess the utility of using two frameworks to study implementation of CAPABLE by a range of organizations at various stages of implementation progress; and (3) provide practical recommendations to advance the dissemination of CAPABLE, which can be adopted/modeled by other evidence-based programs.

Framework selection

We chose the Reach-Effectiveness-Adoption-Implementation-Maintenance (RE-AIM) and the Consolidated Framework for Implementation Research (CFIR) frameworks to guide our examination, given experience by the lead researcher with these frameworks and extensive use in the field, especially in examining implementation and dissemination of health promotion programs for older adults (17–22, 25–27, 41, 42).

The five domains of the RE-AIM framework (i.e., Reach, Effectiveness, Adoption, Implementation, and Maintenance) were important to our examination to provide a macro-view of the 5-year implementation experience of CAPABLE. RE-AIM is used for planning and evaluation and can help determine impact of an evidence-based program (16–22). The Practical, Robust Implementation and Sustainability Model (PRISM) is an evolution of RE-AIM designed to improve translation of research into community practice (23) which we examined but did not use. Researchers in implementation science have noted the importance of continuing to apply RE-AIM iteratively as part of ongoing evaluation in a measurement and improvement cycle (17, 20, 21, 24, 44).

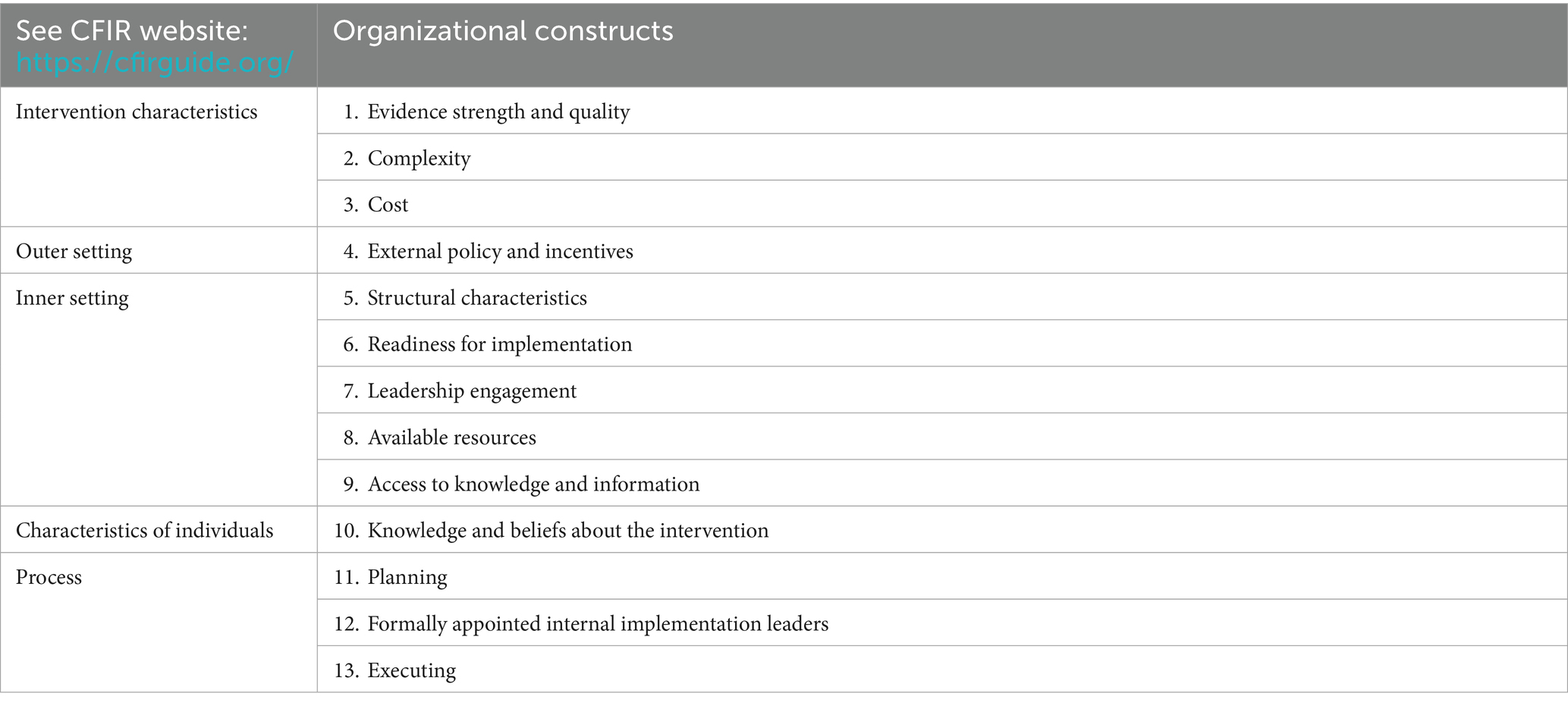

We chose CFIR to examine specific factors that are important at an organizational level. CFIR provides a structured way to examine specific constructs and context as organizations implement a given program. The researcher selects constructs based on understanding program components and internal and external aspects of readiness (25). Since the study team had been involved in shepherding the CAPABLE program, working with organizations directly, we were able to use this knowledge to confidently select internal and external factors, consistent with the guidelines around the use of CFIR. In this way, CFIR gave us a structured way to take a more granular focus at the organizational level. As stated by Damschroder, capturing setting-level barriers and facilitators to predict or explain antecedents and implementation outcomes is the appropriate use of CFIR (26).

Both RE-AIM and CFIR have robust research communities and Internet-based applications with open-access tools and resources. Each framework has been used for more than two decades, and there is a strong body of literature available (footnotes 1, 2). These two frameworks have been used effectively together (27). An addendum to CFIR provides conceptual distinctions and connects RE-AIM and CFIR to guide analysis on outcomes (26).

Our approach follows the recommendation to move beyond the conceptual or theoretical use of a framework to where it is incorporated and operationalized within the implementation effort (28). The 10 recommendations offered by these researchers stress the use of the frameworks in “real-world” implementation projects. Therefore, we attempted to follow their 10 recommendations in this examination which included selecting suitable frameworks, engaging the stakeholder (the organization) directly, having embedded researchers, defining key issues and implementation phases, identifying influences on the organization to inform a logic model, determining the methods for examination that were practical and would continue beyond this study in order to serve as an ongoing monitor, identifying key barriers and enablers that influence the outcome of effective implementation and sustainment, specifying the outcomes and monitoring progression, and using the framework at a micro-level to tailor support. The final step of reporting the implementation effort is this study and article (28).

Methods

Implementation stages

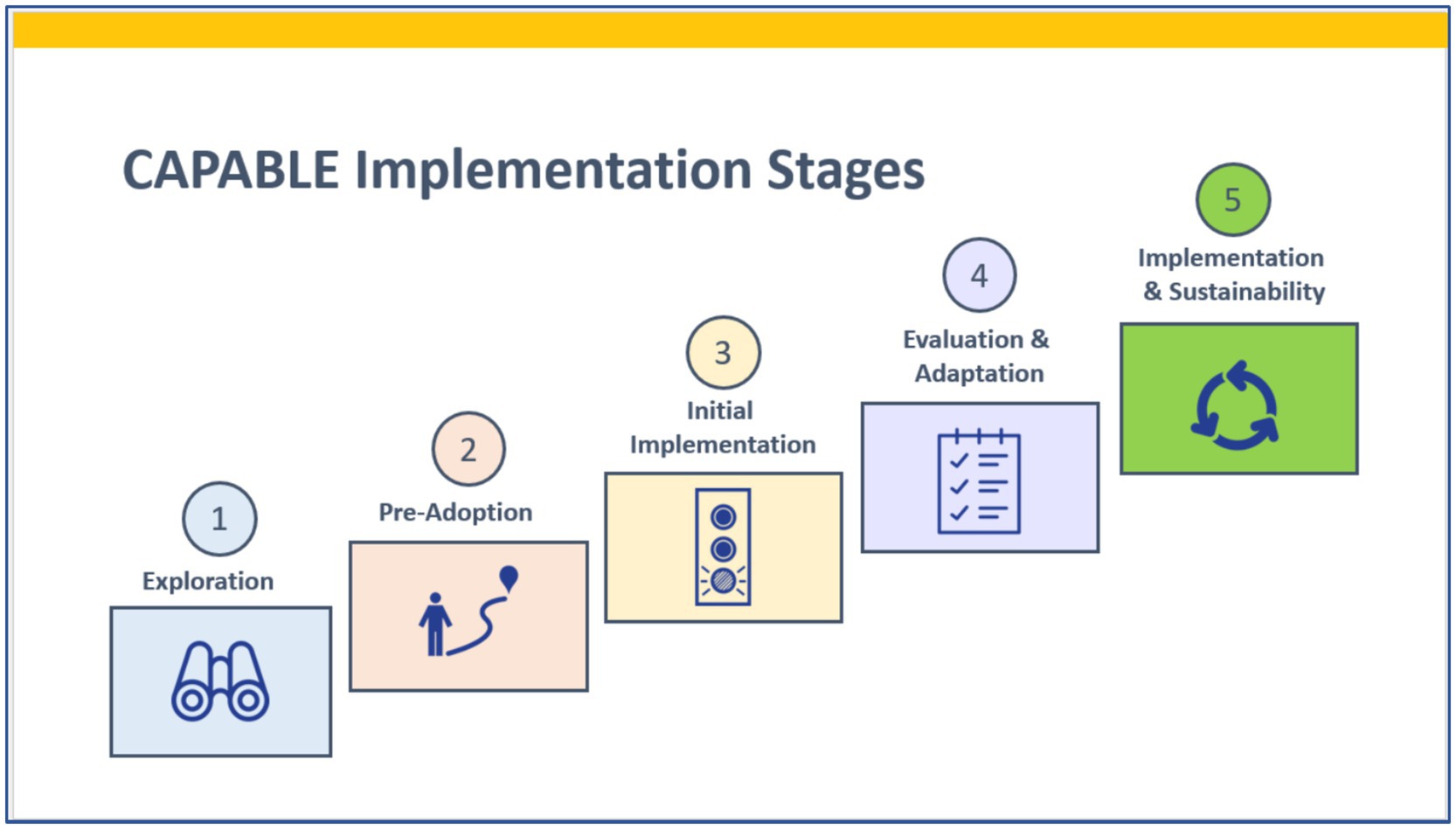

Since 2019, the CAPABLE team has used a five-stage implementation journey map to support each organization as it moves from exploring CAPABLE to sustaining it (Figure 1) (45, 46). During Exploration of CAPABLE (Stage 1), organizational leaders review evidence on expected outcomes, the program protocol, and operational considerations. The Pre-Adoption stage (Stage 2) involves multiple contacts with the CAPABLE technical team to discuss “what it takes to launch CAPABLE.” In Stage 3, Initial Implementation, a program manager is assigned by the organization, and this person is tasked with launching CAPABLE within a defined timeframe, budget, and scope. The hallmark of the Evaluation and Adaptation stage (Stage 4) is a review of the results from the initial implementation of the program. A critical component of Stage 4 is to secure ongoing funding. Leaders from the organization examine the resources and level of effort expended in their actual implementation experience (costs, labor inputs, internal processes required) and compare these to the outcomes attained (service volume, pre/post-health outcomes, receptivity of the program by target groups). Finally, Stage 5 (Sustainability) is reached when the organization has completed its initial implementation stage and made the determination to continue. In this examination, we focus on organizations at Stages 3 through 5.

Figure 1. Implementation journey map. Adapted from the National Implementation Research Network (43, 45, 46).

Data sources

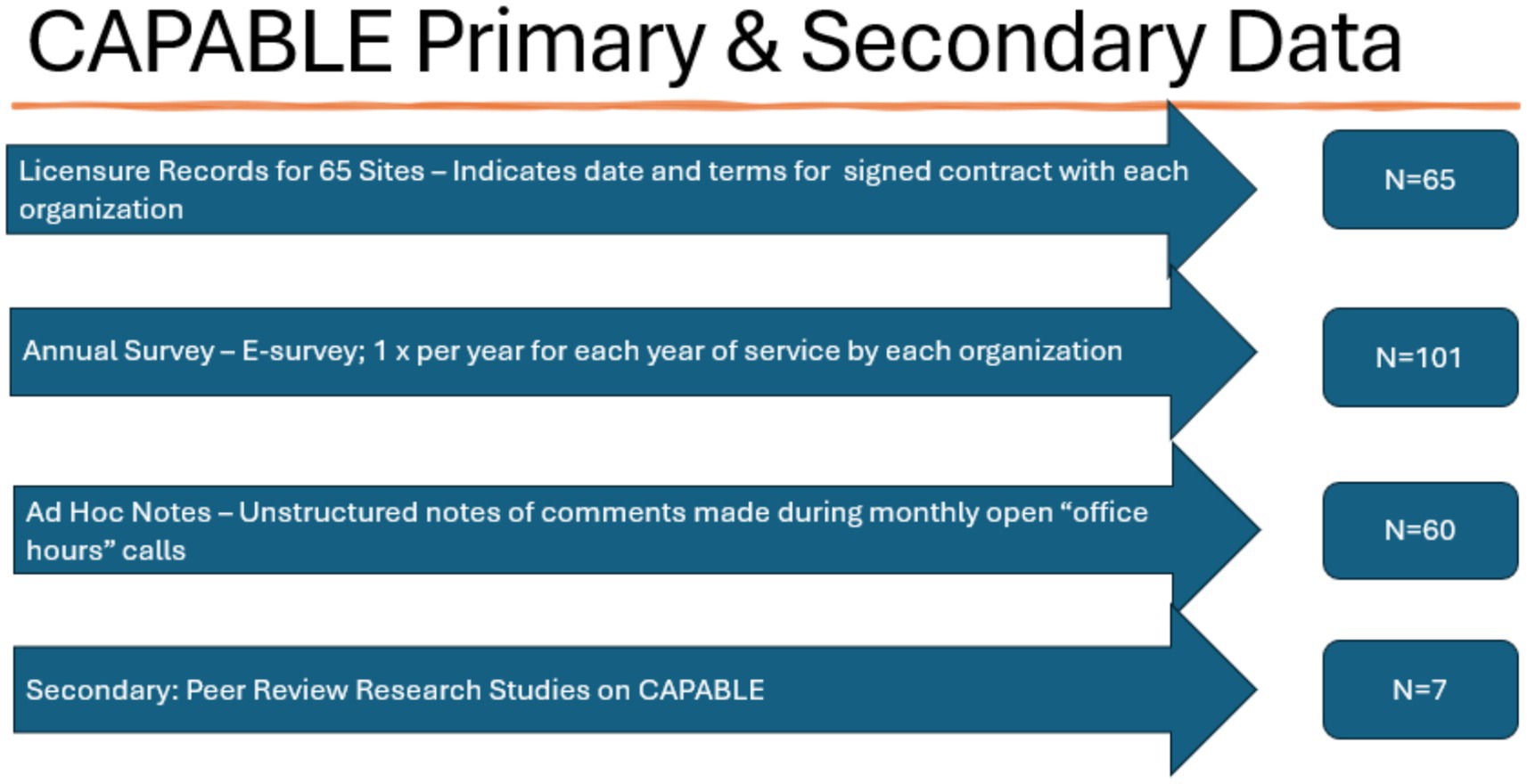

We reviewed all (N = 65) CAPABLE licensure records, which indicate when the organization signed their agreement to allow adoption of CAPABLE under the conditions specified by Johns Hopkins University. Licensure records include the organization address, program administrator name, date when the license contract was signed, payment received, and termination date (when the license expires if it is not renewed). Most licensure agreements were for a term from 2 to 4 years.

We reviewed all (N = 101) responses to our annual CAPABLE Implementation Check-in & Fidelity e-survey, which is required for all licensed sites. The e-survey is conducted at the end of each year, and responses are given at the organization level. Data collected include the number of participants served in that year, the organization’s intent to continue or end the CAPABLE service, staffing changes, cost per participant, funding sources, self-reported ease or difficulty of key implementation steps at the organizational level, self-reported organizational readiness to implement CAPABLE (in retrospect), and attestation that the organization has followed the program protocol with fidelity. The CAPABLE program administrator is the respondent for the organization and is the key informant for this examination. This provides the CAPABLE National Center program office with a core set of information on each site annually.

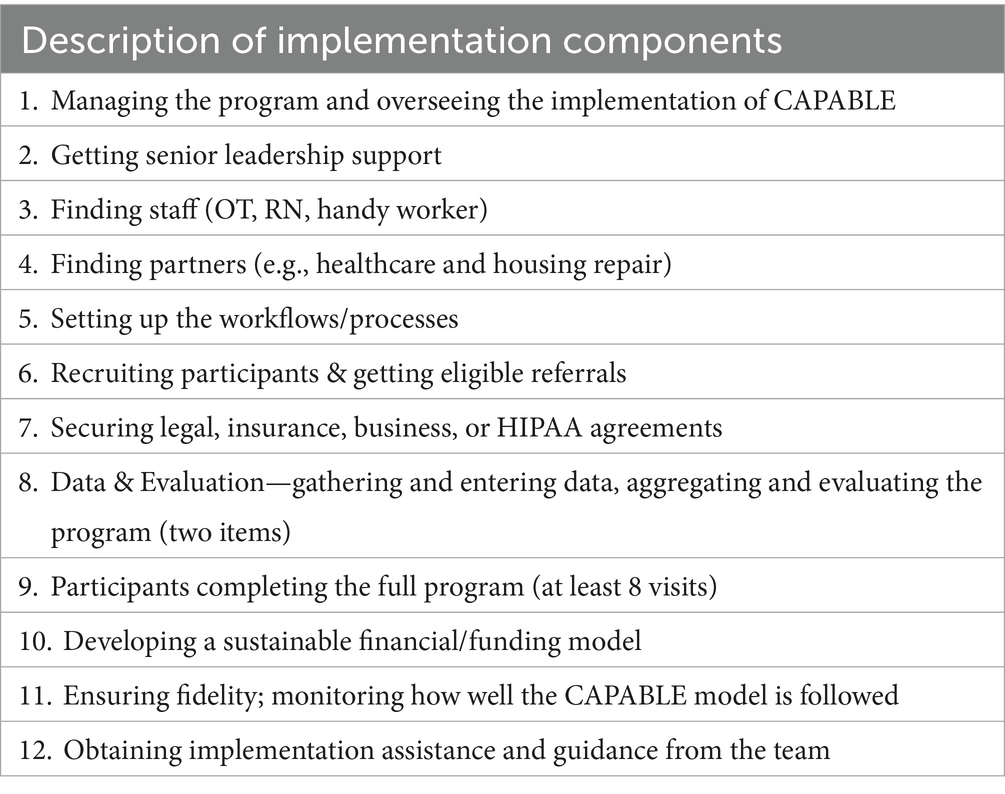

To create the survey items, we used our knowledge about each of the organizations that had adopted CAPABLE up to the point of this study. The lead author is the national program office key contact for technical support on implementation and, with other team members, had extensive first-hand knowledge of each of the 65 organizations. We identified 12 key implementation components for CAPABLE that were common across all implementation sites. These 12 components were probed via the CAPABLE annual e-survey with forced-choice responses on an adjectival Likert scale, from Very Easy/Easy to Difficult/Very Difficult (Table 1). Program administrators are the respondents, and they determine the level of ease or difficulty that they report. The administrators received the survey in December of each year and responded by the end of January to describe implementation progress for the previous calendar year. The lead author exported the Excel spreadsheet generated by the e-survey platform and aggregated the data, calculating the frequency and percentage for each level of the scale, by item.

For additional contextual information, we reviewed notes from the monthly office hours with program administrators, which began in October 2019. There were approximately 60 calls held by June 2024 with brief notes available on most of them. The monthly call is akin to an “open office hour” for a drop-in discussion and open-ended, non-scripted conversation. The purpose of the calls is to foster shared learning between site program administrators and help ensure consistency across geographic settings and over time in how the program operates. All program administrators participated in at least one call during the term of their license agreement. The lead author has facilitated these monthly calls since their inception.

These monthly “office hours” calls have no specific agenda and thus represent an organic capture of questions, issues, and strategies. The calls offer a real-world (not surveyor or researcher-driven) perspective of implementation. As such, the notes are not structured, do not include direct quotes or identify the speaker, and are very brief. We believe these calls offer an important opportunity to the national program office to have a deeper understanding of operational issues, strategies, and concerns in real time. Such calls offer a convenience sample of organizational feedback about CAPABLE implementation strategies, questions, barriers, and facilitators. The notes represent a non-structured qualitative dataset.

In reviewing notes, we identified themes that were consistent with the implementation components and factors queried in the annual survey. We noted the commenter’s perspective on whether the component or issue was a positive or negative factor. This was straightforward as the commenter would indicate a challenging or an emerging enabling issue in the beginning of making their comment. We took at face value the determination of that person who is running the CAPABLE program as to whether a factor or issue they were describing was having a negative or a positive effect on their implementation progress. We did not adjudicate their opinions. We categorized these unstructured, ad hoc comments into the implementation components and constructs probed in the annual survey and used the contextual information to indicate the direction and strength of the factor.

No Institutional Review Board review was required as these program data are collected as part of the licensure agreement between the organization and the CAPABLE National Center.

The primary and secondary data are summarized in Figure 2.

To define effectiveness for this evidence-based program (what program outcomes are defined and proven for CAPABLE), we reviewed peer-reviewed published studies about CAPABLE program results (11–15, 29).

Indicators

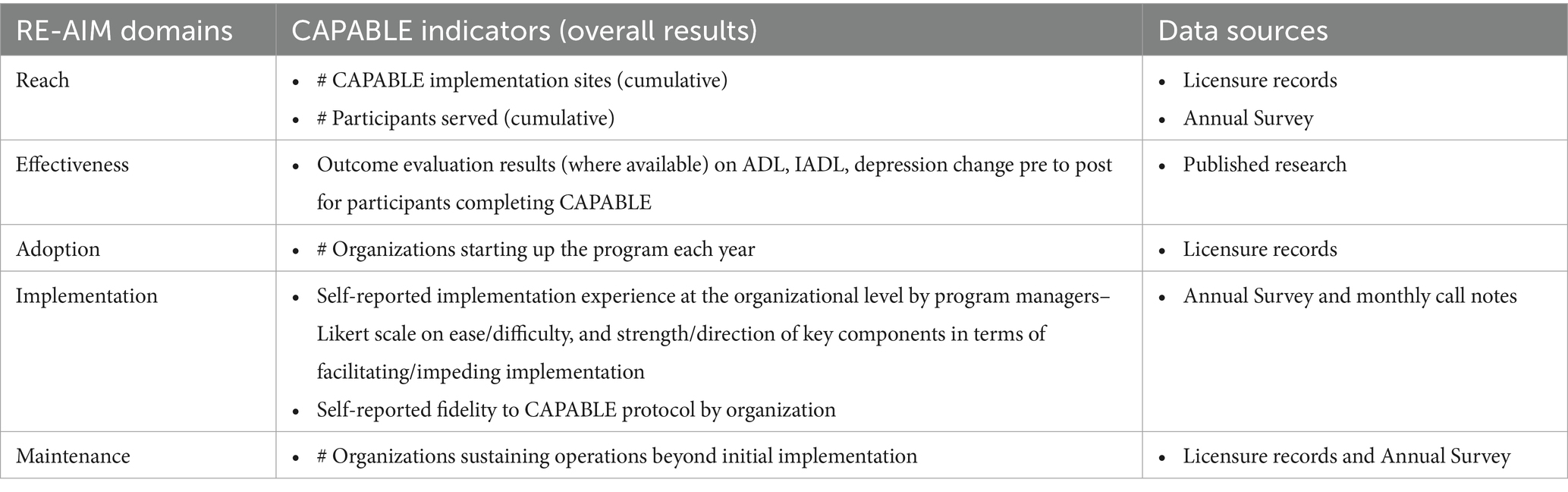

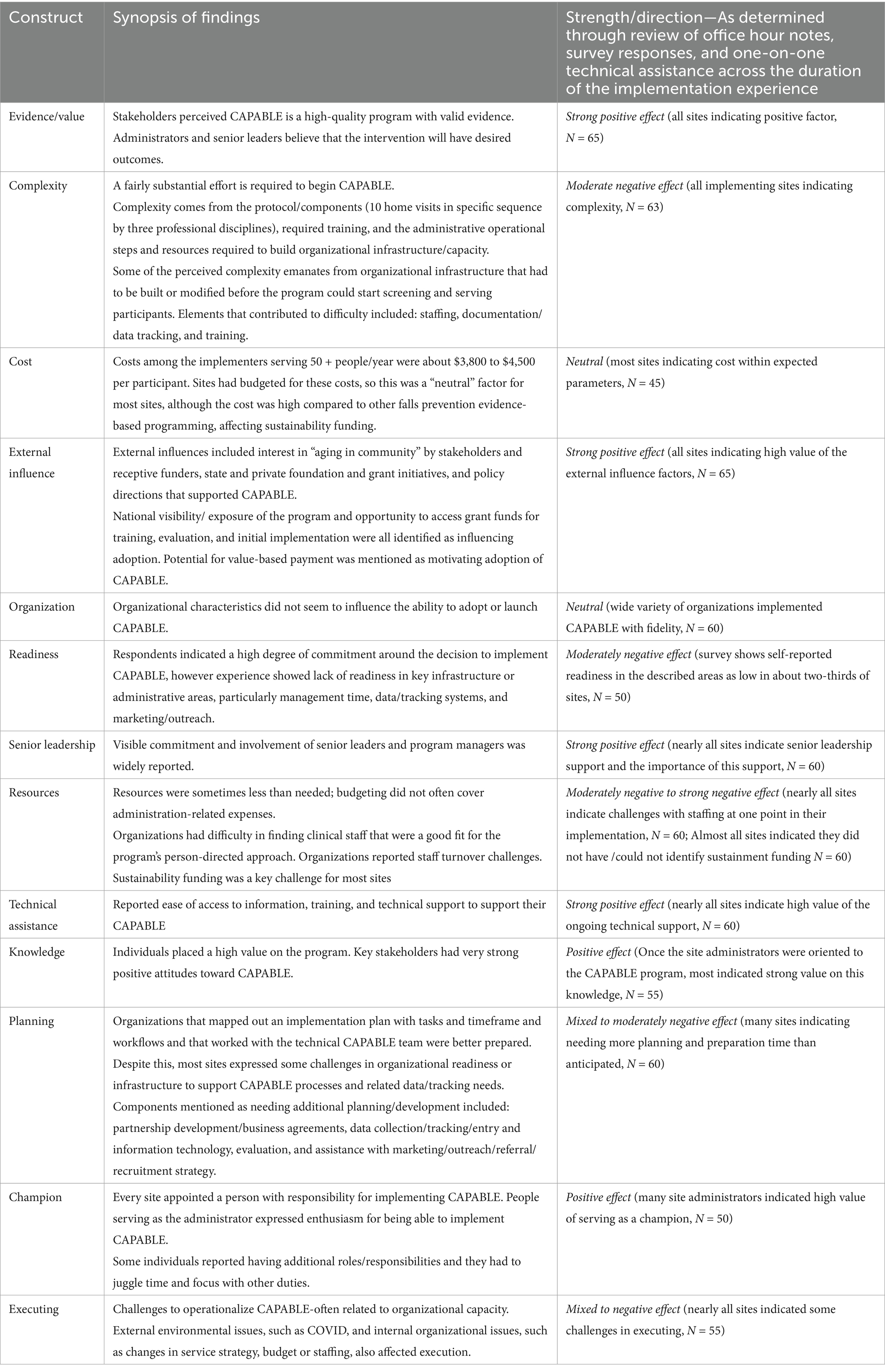

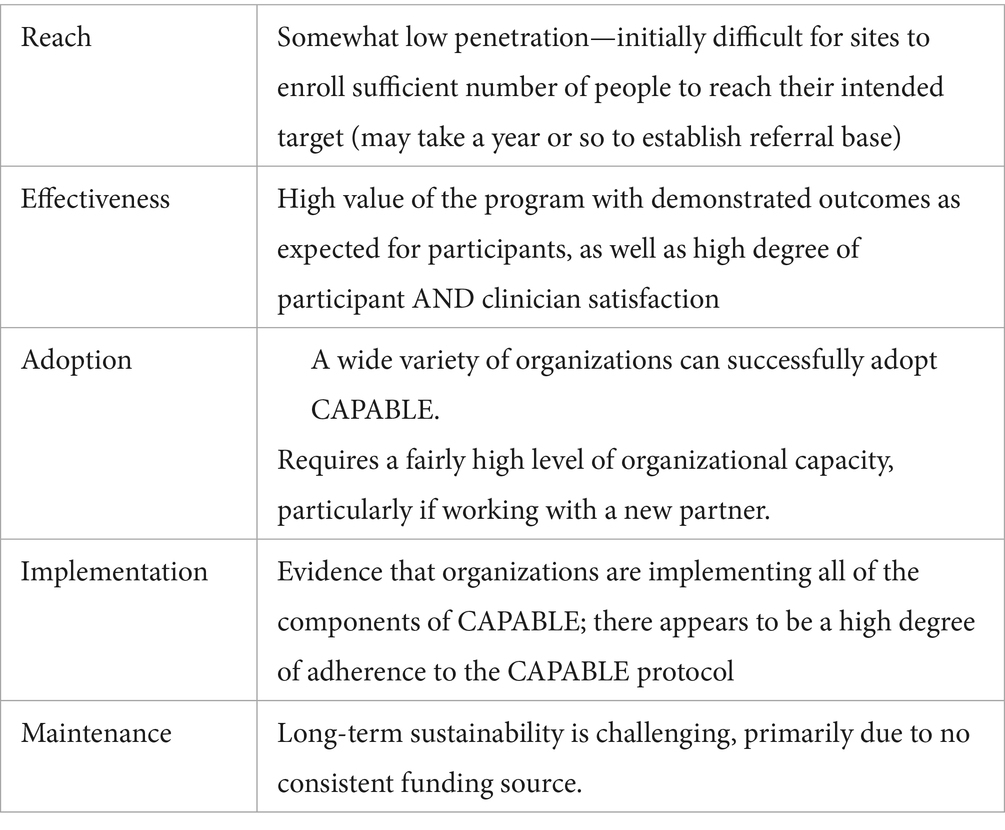

We selected seven indicators for examining macro-level results using the RE-AIM framework across the five domains of Reach, Effectiveness, Adoption, Implementation, and Maintenance (Table 2). We selected 13 constructs across the five areas within CFIR to examine internal characteristics, external environment, and other contextual factors that influenced CAPABLE implementation (Table 3). Construct selection was based on studies of implementation of evidence-based health promotion and disability prevention programs for older adults and our knowledge over 5 years working with the organizations implementing CAPABLE (5–7, 9, 16).

The annual e-survey contained items on the 12 implementation components and 13 CFIR constructs listed in Tables 1, 3. This allowed us to systematically collect consistent data on every licensed CAPABLE site at least annually and round out our understanding with the Program Administrator monthly “office hours” virtual meetings. Every site participated in at least one call, and all except three organizations participated in the annual survey at least once. Since the CAPABLE service for most of these organizations extended from 2 to 4 years, some administrators responded multiple times. There is utility in continuing to ask administrators annually about their implementation experience as ease or difficulty with different components varies from year to year.

Respondents’ ratings for each of the 12 implementation components were tallied, and the percentage distribution across the Likert scale from Very Easy to Very Difficult was calculated. As mentioned, it is the perception of the program administrator of that CAPABLE site that determined the ease or difficulty of that implementation component. In other words, we took at face value the determination of the person running the CAPABLE program as to whether a factor or issue had a negative or a positive effect on their implementation in the survey years and how strong an effect was experienced. We did not adjudicate their opinions. There are no pre-defined cut-point thresholds for each adjective level in the scale.
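The tally described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' actual spreadsheet procedure: the four scale labels, the function name `likert_distribution`, and the example ratings are all hypothetical.

```python
from collections import Counter

# Assumed four-level scale; the survey's exact labels may differ.
SCALE = ["Very Easy", "Easy", "Difficult", "Very Difficult"]


def likert_distribution(ratings):
    """Return {level: (count, percent)} for one implementation component."""
    counts = Counter(ratings)
    unknown = set(counts) - set(SCALE)
    if unknown:
        raise ValueError(f"Unexpected rating(s): {sorted(unknown)}")
    total = len(ratings)
    return {
        level: (
            counts[level],
            round(100 * counts[level] / total, 1) if total else 0.0,
        )
        for level in SCALE
    }


# Hypothetical ratings for a single component in a single survey year.
example_ratings = ["Easy", "Very Easy", "Easy", "Difficult", "Easy"]
distribution = likert_distribution(example_ratings)

for level, (count, percent) in distribution.items():
    print(f"{level}: {count} ({percent}%)")
```

Keeping every scale level in the output, including levels with zero responses, makes year-over-year comparison of the same component straightforward.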

We examined self-reported readiness from high to low for each of the 13 constructs for each organization as indicated in the annual survey. We coupled the respondents’ answers given in open-ended optional response boxes for items in the annual survey with qualitative information gathered through monthly “office hours.” This helped us to determine the strength and direction of the response on these constructs for their organization from “Strongly Positive” to “Strongly Negative.” Given that we were able to observe and interact with these administrators and teams from these organizations over multiple years, we had the opportunity to discuss organizational challenges and strengths several times one-on-one to confirm our categorization around these constructs. The items that were more frequently reported as “Very Difficult to Somewhat Difficult” were considered “Negative,” and the items that were more frequently reported as “Very Easy to Somewhat Easy” were considered “Positive”.
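The frequency rule in the last sentence above can be expressed as a small function. This sketch covers only the survey-frequency part of the categorization; the qualitative judgment of strength drawn from calls and open-ended responses is not modeled. The level groupings, the function name `direction`, and the "Mixed" label for ties are assumptions, since the text does not say how ties were handled.

```python
# Assumed groupings of scale levels into "easy" and "difficult" sides.
EASY_LEVELS = {"Very Easy", "Easy", "Somewhat Easy"}
DIFFICULT_LEVELS = {"Very Difficult", "Difficult", "Somewhat Difficult"}


def direction(ratings):
    """Classify an item by whichever side of the scale was reported more often."""
    easy = sum(rating in EASY_LEVELS for rating in ratings)
    difficult = sum(rating in DIFFICULT_LEVELS for rating in ratings)
    if easy > difficult:
        return "Positive"
    if difficult > easy:
        return "Negative"
    return "Mixed"  # Tie handling is an assumption, not stated in the text.


# Hypothetical ratings for two items.
leadership_support = ["Very Easy", "Somewhat Easy", "Easy", "Somewhat Difficult"]
sustainable_funding = ["Very Difficult", "Somewhat Difficult", "Somewhat Easy"]

print(direction(leadership_support))
print(direction(sustainable_funding))
```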

Analysis

Our analysis for each of the domains in the RE-AIM framework involved examining the indicators shown in Table 2, with quantitative results (frequencies and percentages) and qualitative results (categorized comments and open-ended responses). The indicators for Reach were the cumulative number of implementing organizations and the number of participants they served collectively. The indicators for Effectiveness were the reported outcomes observed in the studies and by each organization in terms of aggregate change in the prescribed pre/post-measures (i.e., the overall aggregate change response observed by the organization). The site reported that their CAPABLE participants showed one of the following: “improvement,” “no change,” or “decline.” The indicator of Adoption was the number of organizations signing a license agreement and starting up each year. The indicators of Implementation were the self-reported implementation experience by the program administrator for each of 12 implementation components and their attestation of fidelity to the protocol. The indicator of Maintenance was the number of organizations sustaining their operations beyond the initial implementation period, which was usually 2 to 4 years.

The analytical strategy for all of the implementation factors was frequency counts and percentages. We examined each year’s survey results and then looked at the trends or patterns year over year. The analytical strategy for the 13 constructs probed was also frequency counts of self-reported organizational readiness as indicated by the program administrator. The analytical strategy for the contextual qualitative information provided ad hoc in the calls was to group the brief notes into common themes aligned with the 12 implementation components and 13 constructs. For the survey and the ad hoc comments in the monthly calls, we took at face value the positive or negative direction of the program administrators’ comments as they indicated their experience on the implementation components or constructs.

We examined the perspective of each organizational respondent one at a time and then aggregated results across all organizations. The data sources to determine the strength and direction in the aggregate were as follows: (1) the annual survey—where the person indicated their experience with the component using the Likert adjectival scale, (2) the annual survey—where the person sometimes provided additional open-ended responses about the organization’s experience, and (3) the monthly “office hours” calls, which the lead author facilitated and where the emphasis on a positive or negative component or construct was clear based on tone and language of the commenter. These data sources allowed us to count frequencies around how often an issue was raised by the 65 organizations (over time) and the direction of the comment. In this way, the strength and direction of the component or construct was determined by the frequency of it being raised and how emphatic the commenter was in terms of it being a barrier or facilitator. Although we did not have transcribed notes, some of the factors were so often raised that we could refer to our casual notes and memory of the conversations. A great advantage was having an embedded researcher facilitate all 60 calls. Directly providing technical assistance and conducting the calls over the 5 years studied provided an in-depth understanding of each organization and its strategy, challenges, and administrator’s perspective in real time and over time.

Results

Adoption

Over the 5 years studied (through June 2024), 65 organizations had secured a license to implement CAPABLE, with 63 (97%) of these organizations progressing to initial implementation. Two organizations were licensed but never implemented the service; therefore, there was no administrator to answer the annual survey and no data to review for these organizations.

Between 4 and 11 organizations became licensed each year in the 5 years studied. We do not have data on how many organizations may have initially considered CAPABLE but did not contact the CAPABLE National Center to indicate their interest in adopting the program.

At the end of each year, the CAPABLE annual survey was e-mailed to the program administrators within all licensed sites. This means that most of the organizations/program administrators responded multiple times to the survey over the 5 years studied. This captures the yearly experience of the organization and the program administrator. Since the implementation start-up (prior to service) period can last from 6 to 12 months, the annual survey is a snapshot that provides a perspective of the administrators and the organizations as they move through the implementation stages and address the operational components to launch and sustain their programs.

Size, organizational structure, geographic region, and primary services provided (e.g., medical care, senior services, and housing repair) varied among these 65 organizations. Types of organizations implementing CAPABLE included the following: healthcare organizations (28–45%) (e.g., home healthcare agencies, integrated hospital and clinic systems, and rehabilitation or long-term care facilities), housing service and home repair or construction organizations (15–39%) (e.g., Habitat for Humanity affiliate chapter), managed care organizations (3–6%) (e.g., Medicare Advantage health plan), community-based organizations (22–28%) (e.g., Area Agencies on Aging and Meals on Wheels service provider), and government agencies and university research centers (6–9%). The size and type of organization did not appear to affect the ability to adopt CAPABLE. A key driver for adoption was initial funding, often from grants or private sources.

Of the 65 licensed organizations examined over 5 years, the stage of implementation and/or conclusion of their programs was as follows:

• 28 (43%) were licensed and were either preparing to or actively providing CAPABLE service, with 13 of these sites (20% of the licensed organizations) progressing from Stage 3 (initial implementation) to sustaining the program (Stage 4 or 5).

• 35 (54%) offered the program, came to the end of their license, and did not renew; that is, they ended after the implementation stage (Stage 3).

• 2 organizations (3%) were licensed—but never advanced and decided not to pursue CAPABLE.
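The percentages in this breakdown can be checked against the counts. The sketch below is illustrative only: the counts are taken from the list above, while the dictionary keys, the `share` helper, and rounding to the nearest whole percent are assumptions.

```python
TOTAL_LICENSED = 65

# Counts of organizations by status, as listed above.
status_counts = {
    "licensed, preparing or actively providing": 28,
    "ended after initial implementation, did not renew": 35,
    "licensed, never implemented": 2,
}


def share(count, total=TOTAL_LICENSED):
    """Percentage of licensed organizations, rounded to the nearest whole percent."""
    return round(100 * count / total)


# The three groups should account for every licensed organization.
assert sum(status_counts.values()) == TOTAL_LICENSED

status_shares = {status: share(count) for status, count in status_counts.items()}
for status, percent in status_shares.items():
    print(f"{status}: {status_counts[status]} ({percent}%)")

# The 13 sites that moved on to sustaining the program, as a share of all 65.
sustaining_share = share(13)
print(f"progressed to sustaining: 13 ({sustaining_share}%)")
```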

One barrier faced by all of the sites operating during this 5-year period was the impact of COVID-19, which caused most of these organizations to pause their CAPABLE service for between 2 and 12 months from 2020 to 2021.

Reach

As of 2024, a total of approximately 4,000 individuals completed CAPABLE. We calculated this total by adding up the reported number of participants who completed the CAPABLE service each year from each organization.

Based on funder and organizational criteria for participant selection set by the organizations studied, it is reasonable to assume that most of these participants were low-income (for example, parameters included criteria such as “less than 80% of the median income in the area”). Sites do not report demographic characteristics of their participants, so we do not have quantitative data on race, ethnicity, or language. However, qualitative information from the monthly calls indicates most (90% or more) of CAPABLE participants served spoke English as their primary language and most (75% or more) were White, with a few exceptions (one site served a majority of Black/African-American participants).

Service volume (number of completed CAPABLE participants) by site was generally between 10 and 40 individuals per year, but three sites provided service to between 80 and 100 people in 1 year and one site served 300 participants in a year.

Service volume was constrained by funding and staffing resources, as well as by funder-prescribed parameters. Since the primary source of funding for most of these 65 organizations was grants, the grantor approved the grantee's proposed target number of people to be served within the defined timeframe. Typical volume targets were between 20 and 50 participants per year, with 1, 2, or 3 years of service funded. After that time, programs were expected to find other funding. However, during this timeframe, the pandemic impacted all programs. For example, in 2020, only eight sites (32% of the number of licensed sites at that time) had served 10 or more participants within the year, compared to the initial organizational targets of between 20 and 50 participants.

Implementation

We examined the 12 implementation components probed in the annual survey. There was one program administrator designated for each organization. Twenty-five program administrators responded to the survey in 2020, 25 in 2021, 23 in 2022, and 28 in 2023. As mentioned, this includes multiple responses from some administrators over the 5 years as they continued the operations of their CAPABLE service. Some organizations responded only once as their site had just become licensed that year and were only beginning their second year at the time of this examination. The 2024 survey was not yet distributed for this examination.

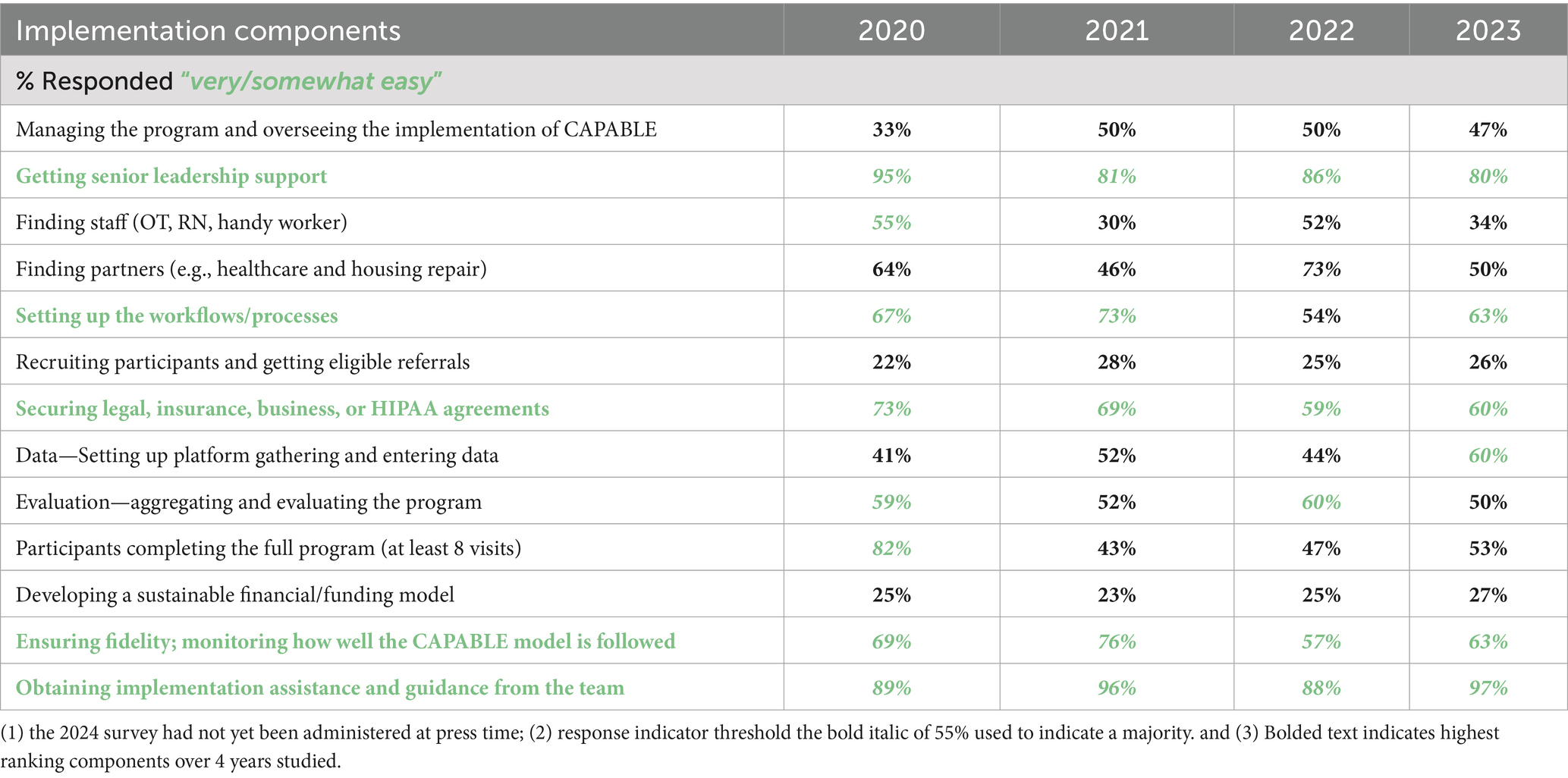

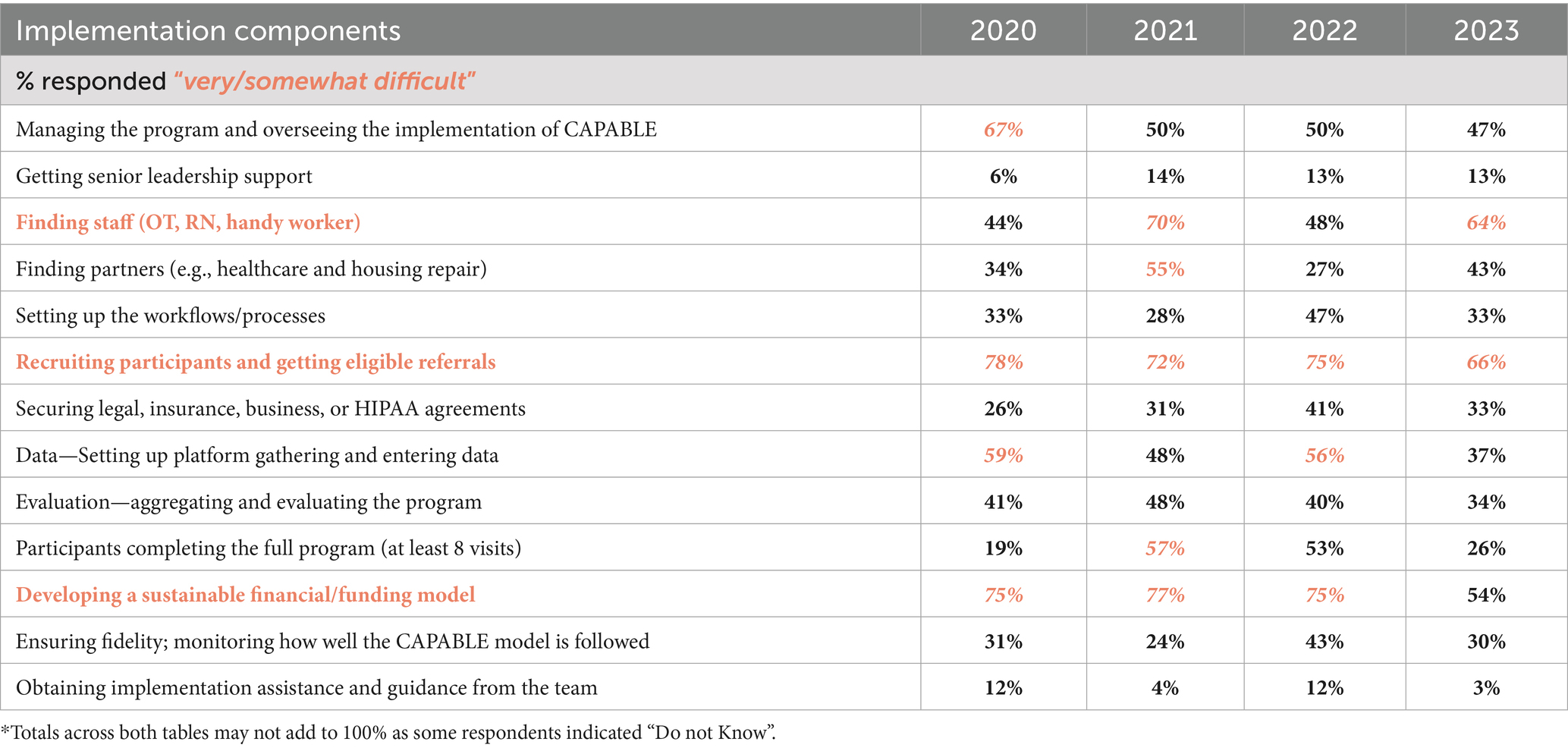

Aggregating survey responses, we found consistency in several component ratings. Respondents most often rated “Getting Senior Leadership Support” and “Maintaining Fidelity to the CAPABLE protocol” as Easy. Respondents most often rated “Recruitment” and “Finding Sustainable Funding” as Difficult.

“Managing the Program” had mixed results in terms of level of effort and difficulty or ease. Program administrators explained in monthly calls and in open-text boxes on the survey that managing CAPABLE required more time than originally estimated to set up/launch this multi-component program.

Respondents identified “Recruitment and Obtaining Eligible Referrals” to CAPABLE as a common challenge. Building awareness within the external environment among referral sources required continual effort. Program administrators explained that their referral sources initially had difficulty understanding CAPABLE; referrals for CAPABLE needed to be fostered one person or referral source at a time. However, mature sites had overcome this hurdle and often had waiting lists. Referrals effectively came from a wide variety of sources, such as clinics, home health agencies, area agencies on aging, rehabilitation centers, care management agencies, home support service providers and housing and other community-based service providers, as well as word-of-mouth from past participants who had “graduated” from CAPABLE.

“Getting Technical Support” was rated as Easy and was an important enabling factor for these organizations in implementing CAPABLE effectively. Any licensed site can request technical support from the CAPABLE National Center or Johns Hopkins key technical support staff for CAPABLE at any time within the course of their license agreement. In addition, all organizations are provided with a comprehensive CAPABLE Implementation Manual, online training and manuals for the clinicians, and bi-monthly, monthly, or quarterly office hours for each of the four key roles in CAPABLE: Program Administrators, Occupational Therapists, Registered Nurses, and Handy workers/Construction Specialists. The CAPABLE National Center provided additional tools and resources as they were developed, such as Evaluation Considerations, Fidelity Frequently Asked Questions, the Readiness Assessment, and Cost/Budget Guide.

Tables 4 and 5 provide more detail on 4 years of survey responses, by the implementation component probed.

The annual survey also included questions on organizational readiness, internal factors, and external factors, corresponding to the 13 chosen CFIR constructs. We found consistency in positive responses around the following: the evidence/value of CAPABLE, external influences around aging in community, senior leadership support, knowledge, and champion acumen—all with a strong-to-moderate positive effect. We determined the direction of the effect by reviewing each organization’s responses to the implementation components (discussed earlier), the notes from “office hours” calls, and our knowledge of the organization over multiple years as we provided technical support and answered their questions. We found consistency in negative responses around the following: complexity of the program, internal infrastructure readiness that was lacking, difficulty executing (particularly with partners), and availability of resources (particularly staffing) with a moderate-to-strong negative effect (Table 6).

Program administrators saw themselves as champions with a strong perceived value of the CAPABLE program. They articulated how CAPABLE was a great fit with organizational strategy to promote “aging in community.” In calls, program managers indicated leadership support had been an important factor for adoption. Senior leaders, who were also occasionally involved in calls with the CAPABLE team, described the strategic importance and evidence base of CAPABLE—qualities that were emphasized early in the exploration and pre-adoption stages.

All CAPABLE program administrators described an operational learning curve to implement CAPABLE. CAPABLE requires a fairly substantial administrative effort to launch and manage this multi-component intervention, involving three professional disciplines and ten coordinated in-home visits over 4 months. Administrators described needing additional data tracking and evaluation infrastructure, staff resources, management time to oversee staff/contractors, planning support to create new policies or procedures to establish workflows, and help in monitoring the sequencing and scheduling of visits to ensure adherence to the protocol. Gaps in readiness around these infrastructure and resource components were not apparent to them prior to adopting the program.

Challenges with lack of infrastructure readiness to support the components of CAPABLE suggest some readiness factors may be under-appreciated. Self-reported organizational readiness was high in the pre-adoption phase. However, as the operational requirements of CAPABLE were better understood, program administrators discussed (in ad hoc and “office hours” monthly calls) areas where they realized their organizations lacked capacity.

Effectiveness

Regarding effectiveness, all organizations use the same pre/post-measures of participant outcomes. In the annual survey, the organizational respondent affirms the measures they are using. These measures were consistent with the suggested measures to monitor participant outcomes pre- and post-service. These include the following:

• 8 ADL activities: level of difficulty from “No Difficulty” to “Unable to Do” on a 5-point scale.

• 8 IADL activities: level of difficulty from “No Difficulty” to “Unable to Do” on a 5-point scale.

• Depressive symptoms: the PHQ-9 instrument, omitting the last item on suicidal ideation (we therefore refer to this as the PHQ-8 item set).

• Falls efficacy: the Falls Efficacy Scale, a 10-item scale developed by Mary Tinetti.

All administrators report on whether they have observed improvement in these participant outcomes, indicating in the aggregate whether they have seen overall positive changes, decline/negative changes, or no change. Where the organization conducted a more formal evaluation and shared that with the National Center (N = 15, unpublished), they reported the following results:

• Improvement in functional status (typically halving the functional status limitations reported comparing pre- and post-scores and aggregating across the participants served),

• Improvement in depression (reducing reported depressive symptoms by 20–40%), and

• Improvement in falls self-efficacy (greater confidence in not falling) among most of the CAPABLE participants served.

These results were similar to those demonstrated in the original CAPABLE studies and in subsequent studies of specific programs (12, 13, 15, 29).
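As an illustration of how a site might aggregate pre- and post-scores, the following minimal Python sketch sums item-level difficulty ratings for each participant and reports the percent change in the mean total. The records, the 0-to-4 item coding, and the function names are hypothetical assumptions made for illustration; they are not the CAPABLE National Center's scoring rules or any site's actual results.

```python
from statistics import mean

# Hypothetical records, one per participant. Each list holds 8 ADL item ratings.
# Assumed coding (not from the source): 0 = "No Difficulty" ... 4 = "Unable to Do".
participants = [
    {"pre": [2, 1, 0, 3, 2, 1, 0, 1], "post": [1, 0, 0, 2, 1, 0, 0, 1]},
    {"pre": [1, 1, 1, 1, 0, 0, 2, 2], "post": [0, 1, 0, 1, 0, 0, 1, 1]},
]


def total_score(items):
    """Sum item ratings into one difficulty score (higher = more limitation)."""
    return sum(items)


def percent_change(pre_mean, post_mean):
    """Percent change from the pre-program mean; negative means less difficulty."""
    if pre_mean == 0:
        raise ValueError("pre-program mean is zero; percent change is undefined")
    return (post_mean - pre_mean) / pre_mean * 100


pre_mean = mean(total_score(p["pre"]) for p in participants)
post_mean = mean(total_score(p["post"]) for p in participants)
change = percent_change(pre_mean, post_mean)

print(f"Mean pre: {pre_mean}, mean post: {post_mean}, change: {change:.1f}%")
```

With these made-up records the mean difficulty score falls by half, which mirrors the kind of aggregate summary described above. A real evaluation would also need to handle missing post-scores and participants who did not complete the program.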

We observed a gap in expertise around evaluation design and methods, data platforms, and the ability to track and aggregate the pre- and post-outcome measure data. Several program administrators identified these as areas of weakness that limited their ability to evaluate results and demonstrate a return on investment of their particular program to potential payers. Potential payers in the United States include managed care/health plans, particularly “Special Needs Plans” (SNPs), a type of Medicare Advantage plan that targets dually eligible individuals. A dually eligible person qualifies for both Medicaid (state administered, for low-income people who meet the level-of-care and financial eligibility guidelines set by the state) and Medicare (for all older adult citizens aged 65+ and for younger people with physical disabilities who meet the federal eligibility guidelines). The term is widely used and understood in the United States but is not relevant to other countries. Other value-based payers in the U.S. include Accountable Care Organizations (ACOs), which are usually integrated health delivery providers with an insurance component. Both SNPs and ACOs are unique to the United States healthcare delivery and payment system. They are designed to create an incentive to manage care better by paying upfront for each enrolled individual rather than paying fee-for-service for that person’s utilization. The idea is to promote prevention upstream, thereby avoiding higher-cost events as the effects of a disease or condition progress.

Regarding return on investment (ROI), CAPABLE has shown a 6- to 10-fold return on investment to the Medicare and Medicaid programs in other research, where a $3,000 (USD) investment resulted in almost $30,000 (USD) savings (14, 15). However, among these 65 sites, only a few had pursued calculating their own CAPABLE program return on investment—in part because it required following the participant for 24 months post-program graduation. The technical support team at the national program office has provided technical assistance on ROI calculation and encourages sites to determine the following:

• “Cost to whom”—Identify the stakeholder(s) of interest. In addition to the current funder/payer, sites are encouraged to identify costs that would be experienced by a potential payer, usually a managed care plan or state government. There are different cost scenarios and inputs based on each potential payer.

• “What costs”—Determine the input costs (other than direct costs of labor and supplies, if any) to be added to the equation.

• “What time period”—Determine the length of time to be used to calculate the cost and value.

• “Value”—Identify the benefits, calculate the value of these benefits, and identify to whom the benefits inure.
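The four considerations above can be sketched as inputs to a simple return-on-investment calculation. This is a minimal illustration only: the `RoiInputs` structure, its field names, and the two functions are hypothetical, and the example values are the rounded per-participant figures cited from prior research (a $3,000 investment and almost $30,000 in savings over 24 months), not results from the 65 sites.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    payer: str                # "Cost to whom": the stakeholder of interest
    program_cost_usd: float   # direct cost of labor and supplies per participant
    other_costs_usd: float    # "What costs": additional input costs, if any
    period_months: int        # "What time period": follow-up window
    avoided_costs_usd: float  # "Value": benefits accruing to this payer


def roi_ratio(inputs: RoiInputs) -> float:
    """Return avoided costs divided by total cost (e.g. 10.0 = 10-fold return)."""
    total_cost = inputs.program_cost_usd + inputs.other_costs_usd
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return inputs.avoided_costs_usd / total_cost


def net_savings(inputs: RoiInputs) -> float:
    """Return avoided costs minus total cost for the chosen payer and period."""
    return inputs.avoided_costs_usd - (inputs.program_cost_usd + inputs.other_costs_usd)


# Rounded illustrative values; the source reports "almost $30,000" in savings.
example = RoiInputs(
    payer="Medicare and Medicaid (combined)",
    program_cost_usd=3_000,
    other_costs_usd=0,
    period_months=24,
    avoided_costs_usd=30_000,
)

print(f"{example.payer}: {roi_ratio(example):.1f}-fold return, "
      f"${net_savings(example):,.0f} net over {example.period_months} months")
```

Keeping the payer and time period explicit in the inputs makes it harder to mix cost scenarios across payers, which is the main point of the "cost to whom" question.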

Maintenance

Organizations attest to following the CAPABLE protocol with fidelity at the end of the annual survey. All but two organizations attested to fidelity. The two organizations that were unable to follow the protocol had reduced the number of visits because of resource constraints. Their participants were therefore not considered to have received the full dose of CAPABLE, and the organizations’ licenses ended.

Regarding sustainability, administrators reported wanting to continue the service; however, sustainability was elusive for most. Among these 65 organizations, most received time-limited grant support to launch their CAPABLE programs. When the grant ended, they often needed to close their CAPABLE program (usually after 3 years). Despite extensive searching and additional grant requests or outreach to potential payers, they were not able to secure sustainment funding. Organizations explored value-based payments from Medicare Advantage plans, Accountable Care Organizations, or state agency grants or waiver programs to secure sustainable funding, but such funding was not forthcoming for most programs as of mid-2024.

Funding was the number one issue in maintenance. Two other key issues were also consistently raised: maintaining the necessary staff and recruiting individuals who fit the criteria to participate in the program.

Based on the detailed data from various sources reported herein, we summarize results at the macro-level across all CAPABLE organizations using the five RE-AIM domains (Table 7).

Discussion

This investigation identified key implementation factors supporting or hindering the delivery and sustainability of CAPABLE, demonstrated successful use of the two frameworks to guide the examination, and offered an approach for ongoing implementation monitoring.

Senior leadership support was a strong driver of adoption. Leadership and funding commitment were critical to promote effective implementation and sustainability. Other studies have reported similar findings pertaining to senior leadership support (1, 3, 6, 27, 30). This underscores the importance of cultivating awareness and building internal support for the evidence-based program prior to launch, particularly in the C-Suite. Additional studies discuss similar readiness factors. For example, in a review of the implementation experience of the Healthy Moves for Aging Well program, a key challenge was engaging adoption by providers, and a key readiness factor was having senior leadership and program administrator support (31). In a comprehensive evaluation by the Centers for Medicare and Medicaid Services of 12 nationally disseminated programs, the evaluators found low existing market demand and program awareness (which affected recruitment) as well as a lack of a sustainable payment model to finance and support the delivery of these programs long term (32). A systematic review of evidence-based program sustainment found multiple facilitators (e.g., alignment) and barriers (funding, other limitations in resources) that affected ability to sustain the program (1, 33).

A program champion and effective program manager also were widely reported as facilitators of implementation success, consistent with other research (3, 34). Champions (program administrators) indicated their strong perceived value of the CAPABLE program. This suggests that to move from adoption to effective implementation, organizations should identify managers with a strong commitment to the program.

Findings around challenges with recruitment were consistent with other research and evaluation of evidence-based programs for older adults or caregivers (7, 31, 32). This suggests an important area for national focus—more resources are needed to achieve name recognition of CAPABLE and other evidence-based programs.

Most respondents needed additional infrastructure and resources compared to initial expectations. This is congruent with other studies probing characteristics and factors present among effective implementers and what readiness and supports are needed to facilitate effective implementation (6, 9, 31, 35). The organizational lift to build this program can be a restraining force in dissemination (36). Among these organizations, there was a frequently reported infrastructure readiness gap in evaluation design and data tracking capacity. A study of three states implementing evidence-based programs also found additional acumen around structured evaluation was needed (37).

A facilitating factor was technical support and training provided by Johns Hopkins and the CAPABLE National Center, as well as peer support through calls and/or learning collaboratives. Technical assistance and peer support have been shown to be important in other studies of implementation effectiveness (7).

Potential impact

Given the strong and consistent participant outcomes observed in the CAPABLE organizations studied, we calculated the potential cost savings to Medicare and Medicaid if just half of the 4,000 participants (2,000 individuals) were dually eligible and had achieved the mean cost savings identified in previous studies (approximately $30,000 USD per person across Medicare and Medicaid combined). This would represent a cost savings of $60 million USD, in 2017 dollars. These savings are driven by better management of function at home, which avoids expensive falls and other healthcare utilization in the 24 months following the intervention. Notably, this figure does not include additional value to the community (for example, the value of avoiding repeated emergency medical and fire department calls when a person who lives alone falls). Moreover, there are important benefits to the participants that should be considered, such as the ability to stay in their residences, avoidance of pain and suffering associated with falls and other injuries, and the emotional and health benefits of the positive behavior change aspects of CAPABLE, which are considered the essence of the program driving improved self-care. As of June 2024, there were 12.8 million dually eligible (for Medicare and Medicaid) individuals in the U.S. (38). If even one-quarter (3.2 million individuals) could access a CAPABLE program, the estimated cost savings to Medicare and Medicaid would be $96 billion USD.
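The arithmetic behind these two projections can be reproduced with a short Python sketch. The constant and function names are hypothetical; the inputs are the figures stated in the paragraph above, and the calculation assumes every dually eligible participant achieves the approximate mean savings.

```python
# Approximate mean savings per dually eligible participant (from prior studies,
# as cited in the text); treated here as a flat per-person amount.
MEAN_SAVINGS_USD = 30_000


def projected_savings(dually_eligible_participants: int) -> int:
    """Multiply the number of dually eligible participants by mean savings."""
    return dually_eligible_participants * MEAN_SAVINGS_USD


# Scenario 1: half of the 4,000 participants served are dually eligible.
study_participants = 4_000 // 2
study_savings = projected_savings(study_participants)

# Scenario 2: one-quarter of the 12.8 million dually eligible individuals.
national_participants = 12_800_000 // 4
national_savings = projected_savings(national_participants)

print(f"Study scenario: {study_participants:,} people, ${study_savings:,}")
print(f"National scenario: {national_participants:,} people, ${national_savings:,}")
```

Both projections scale linearly with the assumed per-person savings, so any change to that single input changes the totals proportionally.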

Despite the strong evidence of substantial positive health outcomes among older adults participating in CAPABLE and of potential cost savings to federal and state governments, policy and payment to ensure sustainment funding have not materialized for most programs. The organizations bearing the cost of CAPABLE do not receive the benefits of costs avoided. This is regrettable, especially given the laudable effort required to stand up a CAPABLE program and achieve the health outcomes demonstrated in the initial research studies. Again and again, organizations small and large, across all regions, implement CAPABLE effectively in their initial implementation (Stage 3), and older adults in their communities who participate describe transformational improvement in their lives. When grant funding ends, however, the organization has to dismantle the program for lack of funding. This barrier is most acutely observed in the United States, where healthcare policy and payment do not support upstream action focused on improving functional ability in people with chronic conditions. This has been described as a “wrong pocket” problem (39). Notably, the one program in Nova Scotia, Canada, has been implemented successfully and is being expanded.

Framework utility

The combination of RE-AIM and CFIR for studying implementation of CAPABLE offered great utility. RE-AIM provided a framework for looking at results. CFIR guided examination of context. The two frameworks helped identify factors important in readiness and effective implementation, factors also found to be important in other studies (19, 24, 31). Others using both frameworks have also identified RE-AIM as being useful for evaluating change to assure external validity, while CFIR helps explain why implementation succeeded or failed (27).

To replicate the methods used, a research team would choose the indicators that are relevant for each of the RE-AIM domains and identify data sources that capture qualitative and quantitative information on those indicators. The research team would also identify key implementation components or actions required to implement the program, and the internal and external factors that are relevant and observed in implementing the program. The research team would then embed the components and constructs into an annual survey that would be completed by the lead administrator or operational manager at each program site. Each site would respond to the survey every year that it operated the program, allowing for identification of trends and patterns over time. There is utility in continuing to ask administrators annually about their implementation experience as ease or difficulty with different components varies from year to year. This provides important information when taking a longitudinal and ongoing approach to studying implementation and is recommended to others as a strategy. Ideally, the research team would provide ongoing technical assistance and facilitate regular shared learning “office hours” calls with all implementation sites to capture contextual information in real time. This unstructured feedback provides many insights into challenges and strategies as they emerge.
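A research team replicating this approach might tally annual survey ratings by component and year to see how ease or difficulty shifts over time. The sketch below is a minimal illustration in Python: the site names, years, component labels, and the two-level "Easy"/"Difficult" rating are hypothetical placeholders, not the actual survey scale or data.

```python
from collections import Counter, defaultdict

# Hypothetical survey rows: (site, survey year, implementation component, rating).
responses = [
    ("site_a", 2021, "Recruitment", "Difficult"),
    ("site_b", 2021, "Recruitment", "Difficult"),
    ("site_a", 2022, "Recruitment", "Easy"),
    ("site_b", 2022, "Recruitment", "Difficult"),
    ("site_a", 2022, "Technical Support", "Easy"),
]


def tally_by_component_and_year(rows):
    """Count ratings for each (component, year) pair across all sites."""
    tallies = defaultdict(Counter)
    for _site, year, component, rating in rows:
        tallies[(component, year)][rating] += 1
    return tallies


tallies = tally_by_component_and_year(responses)

# Print one line per component and year so trends can be read top to bottom.
for (component, year), counts in sorted(tallies.items()):
    print(component, year, dict(counts))
```

Because each site responds every year it operates the program, the same structure can be extended with a site-level view to follow an individual organization's trajectory.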

The CAPABLE National Center will continue to use the two frameworks to take a structured and consistent look at implementation experience. Others have recommended that organizations implementing evidence-based programs regularly gather and report metrics on implementation experience and program outcomes, guided by theories, models, and frameworks (40). We recommend that evidence-based program stewards use frameworks such as RE-AIM and CFIR to set up a structured reporting system that gathers information not only on participant outcomes but also on organizational implementation experience, as demonstrated in this example. Structured annual reporting coupled with capture of qualitative information provides an important feedback loop to the program steward, allows for benchmarking by and for the implementing organizations, and can guide informed decision-making on where adaptations or other changes may be needed. We also encourage other national program offices to hold regular virtual meetings or calls with all implementation sites to facilitate effective implementation and promote ongoing shared learning around a specific evidence-based program such as CAPABLE. Such calls offer real-time insight and an ongoing opportunity to identify barriers and facilitators of progress and to address needs proactively through enhanced technical support.

Strengths and limitations

Strengths include the embedded research approach and use of multiple sources to capture annual implementation experience. Relatedly, identifying key implementation components and important constructs based on first-hand knowledge of organizational experience is a strength. Probing implementation experience and constructs annually through structured data collection from all sites provided consistent snapshots that could be aggregated. Collecting information from the key operational lead within each organization using forced choice response scales and open-ended response provided information from those most directly responsible for the implementation process. In addition, strengths of the research team included having ongoing technical knowledge from working day-to-day with the program sites and extensive experience with the intervention over many years. The primary author (DP) is an embedded researcher and provides day-to-day technical assistance to CAPABLE implementation organizations. The other authors either co-developed the CAPABLE program (SS, LG), have worked with CAPABLE sites around a pilot study or to support a Learning Collaborative (JS), or have evaluated evidence-based programs nationwide using implementation frameworks (MS).

Limitations include our inability to reach five organizations that had ended their programs and the few non-responders (less than 10% per year) to our annual survey. We do not know whether their experience would be substantively different from the organizations included in our analysis.

Conclusion

We examined factors that advanced or impeded implementation and sustainability of the evidence-based program CAPABLE by 65 organizations over 5 years, using the RE-AIM and CFIR frameworks to guide our examination. Through an annual e-survey of all licensed organizations and review of notes from monthly office hours and ad hoc calls, we conducted a structured examination using qualitative thematic and descriptive analysis. Factors consistently supporting implementation included senior leadership support, technical assistance, and the program protocol, which guided fidelity adherence. Conversely, common challenges included difficulty with recruitment and with hiring or finding the required personnel. Internal factors supporting readiness and adoption were the perceived value of the program and program manager knowledge and commitment. External factors supporting adoption were initial funding to start a pilot and a strategic tie to organizational commitments to aging in community.

Lack of sustainability funding was the greatest factor impacting sustainment. This is an ongoing challenge. There are some encouraging signs, as several government agencies and policymakers in multiple U.S. states are working on ways to incorporate CAPABLE into a list of approved benefits under certain circumstances (for example, via a waiver program under the state’s Medicaid funding for low-income older adults). Raising awareness of the program, so that potential participants are easier to reach and more likely to enroll in CAPABLE, is another factor that would enhance sustainability.

Based on the learning acquired and ongoing insights from monthly technical assistance calls, the CAPABLE National Center is adding to the readiness guidance for organizations adopting CAPABLE to promote ease of implementation and to build the organizations’ acumen for conducting evaluations that supply potential payers with cost and benefit calculations as well as evidence on participant outcomes achieved.

This study provides a use case for employing the RE-AIM and CFIR frameworks to track ongoing implementation. We offer practical ways to monitor, evaluate, and report on ongoing implementation of evidence-based programs.

Data availability statement

The datasets presented in this article are not readily available because licensure data is restricted. Requests to access the datasets should be directed to dpaone1@jhu.edu.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

DP: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. JS: Data curation, Writing – review & editing. MS: Writing – review & editing. LG: Writing – review & editing. SS: Funding acquisition, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Funding was provided by Rita and Alex Hillman Foundation and the National Institute on Disability, Independent Living, and Rehabilitation Research, HHS-ACL-NIDILRR-RTGE-0342 Grant # 90RTGE0003. The views expressed here do not represent the views of the Rita and Alex Hillman Foundation, the National Institute on Disability, Independent Living, and Rehabilitation Research, or the federal government.

Conflict of interest

DP and JS were employed by Paone & Associates, under contract with Johns Hopkins University to conduct this study.

SS and LG are inventors of the CAPABLE training program, for which they, Johns Hopkins University, and Thomas Jefferson University are entitled to fees. This arrangement has been reviewed and approved by the Johns Hopkins University in accordance with its conflict of interest policies.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Hailemariam, M, Bustos, T, Montgomery, B, Barajas, R, Evans, LB, and Drahota, A. Evidence-based intervention sustainability strategies: a systematic review. Implement Sci. (2019) 14:57. doi: 10.1186/s13012-019-0910-6

2. Aarons, G, Horowitz, J, Dlugosz, L, and Ehrhart, M. The role of organizational processes in dissemination and implementation research. In: R Brownson, G Colditz, and E Proctor, editors. Dissemination and implementation research in health: Translating science to practice. New York, NY: Oxford University Press (2012). 128–53.

3. Durlak, JA, and DuPre, EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. (2008) 41:327–50. doi: 10.1007/s10464-008-9165-0

4. Fixsen, DL, Naoom, SF, Blasé, KA, Friedman, RM, and Wallace, F. Implementation research: a synthesis of the literature. University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231) (2005). Available online at: https://fpg.unc.edu/

5. Ory, MG, and Smith, ML. Research, practice, and policy perspectives on evidence-based programming for older adults. Front Public Health. (2015) 3:1–9. doi: 10.3389/fpubh.2015.00136

6. Paone, D. Factors supporting implementation among CDSMP organizations. Front Public Health. (2015) 2:237. doi: 10.3389/fpubh.2014.00237

7. Paone, D. Using RE-AIM to evaluate implementation of an evidence-based program: a case example from Minnesota. J Gerontol Soc Work. (2014) 57:602–25. doi: 10.1080/01634372.2014.907218

8. Tomioka, M, and Braun, KL. Examining sustainability factors for organizations that adopted Stanford’s chronic disease self-management program. Front Public Health. (2014) 2:140. doi: 10.3389/fpubh.2014.00140

9. Whitelaw, N. Systems change and organizational capacity for evidence-based practices: lessons from the field. Generations. (2010) 34:43–50.

10. Szanton, SL, Thorpe, RJ, Boyd, C, Tanner, EK, Leff, B, Agree, E, et al. CAPABLE: a bio-behavioral-environmental intervention to improve function and health-related quality of life of disabled, older adults. J Am Geriatr Soc. (2011) 59:2314–20. doi: 10.1111/j.1532-5415.2011.03698.x

11. Szanton, SL, Wolff, JW, Roberts, L, Leff, BL, Thorpe, RJ, Tanner, EK, et al. Preliminary data from CAPABLE, a patient directed, team-based intervention to improve physical function and decrease nursing home utilization: the first 100 completers of a CMS innovations project. J Am Geriatr Soc. (2015) 63:371–4. doi: 10.1111/jgs.13245

12. Szanton, SL, Xue, QL, Leff, B, Guralnik, J, Wolff, JL, Tanner, EK, et al. Effect of a biobehavioral environmental approach on disability among low-income older adults: a randomized clinical trial. JAMA Intern Med. (2019) 179:204–11. doi: 10.1001/jamainternmed.2018.6026

13. Breysse, J, Dixon, S, Wilson, J, and Szanton, S. Aging gracefully in place: an evaluation of the CAPABLE© approach. J Appl Gerontol. (2021) 41:718–28. doi: 10.1177/07334648211042606

14. Ruiz, S, Snyder, LP, Rotondo, C, Cross-Barnet, C, Colligan, EM, and Giuriceo, K. Innovative home visit models associated with reductions in costs, hospitalizations, and emergency department use. Health Aff. (2017) 36:425–32. doi: 10.1377/hlthaff.2016.1305

15. Szanton, SL, Alfonso, YN, Leff, B, Guralnik, J, Wolff, JL, Stockwell, I, et al. Medicaid cost savings of a preventive home visit program for disabled older adults. J Am Geriatr Soc. (2018) 66:614–20. doi: 10.1111/jgs.15143

16. Estabrooks, PA, and Allen, KC. Updating, employing, and adapting: a commentary on what does it mean to “employ” the RE-AIM model. Eval Health Prof. (2013) 36:67–72. doi: 10.1177/0163278712460546

17. Glasgow, RE, Vogt, TM, and Boles, SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. (1999) 89:1322–7. doi: 10.2105/AJPH.89.9.1322

18. Glasgow, R, Harden, S, Gaglio, B, Rabin, B, Smith, ML, Porter, GC, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a twenty-year review. Front Public Health. (2019) 7:64. doi: 10.3389/fpubh.2019.00064

19. Glasgow, R, Battaglia, C, McCreight, M, Aydiko Ayele, R, and Adreinn Rabin, B. Making implementation science more rapid: use of the RE-AIM framework for mid-course adaptations across five health services research projects in the veterans health administration. Front Public Health. (2020) 8:1–13. doi: 10.3389/fpubh.2020.00194

20. Harden, SM, Strayer, TE, Smith, ML, Gaglio, B, Ory, MG, Rabin, B, et al. National working group on the RE-AIM planning and evaluation framework: goals, resources, and future directions. Front Public Health. (2020) 7:390. doi: 10.3389/fpubh.2019.00390

21. Kwan, BM, McGinnes, HL, Ory, MG, Estabrooks, PA, Waxmonsky, JA, and Glasgow, RE. RE-AIM in the real world: use of the RE-AIM framework for program planning and evaluation in clinical and community settings. Front Public Health. (2019) 7:345. doi: 10.3389/fpubh.2019.00345

22. Ory, MG, Altpeter, M, Belza, B, Helduser, J, Zhang, C, and Smith, ML. Perceived utility of the RE-AIM framework for health promotion/disease prevention initiatives for older adults: a case study from the U.S. evidence-based disease prevention initiative. Front Public Health. (2015) 2:143. doi: 10.3389/fpubh.2014.00143

23. Rabin, BA, Cakici, J, Golden, CA, Estabrooks, PA, Glasgow, RE, and Gaglio, B. A citation analysis and scoping systematic review of the operationalization of the practical, robust implementation and sustainability model (PRISM). Implement Sci. (2022) 17:62. doi: 10.1186/s13012-022-01234-3

24. Harden, SM, Smith, ML, Ory, MG, Smith-Ray, RL, Estabrooks, PA, and Glasgow, RE. RE-AIM in clinical, community, and corporate settings: perspectives, strategies, and recommendations to enhance public health impact. Front Public Health. (2018) 6:71. doi: 10.3389/fpubh.2018.00071

25. Damschroder, LJ, Aron, DC, Keith, RE, Kirsh, SR, Alexander, JA, and Lowery, JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:50. doi: 10.1186/1748-5908-4-50

26. Damschroder, LJ, Reardon, CM, Opra Widerquist, MA, and Lowery, J. Conceptualizing outcomes for use with the consolidated framework for implementation research (CFIR): the CFIR outcomes addendum. Implement Sci. (2022) 17:7. doi: 10.1186/s13012-021-01181-5

27. King, D, Shoup, JA, Raebel, M, Anderson, C, Wagner, N, Ritzwoller, D, et al. Planning for implementation success using RE-AIM and CFIR frameworks: a qualitative study. Front Public Health. (2020) 8:59. doi: 10.3389/fpubh.2020.00059

28. Moullin, J, Dickson, K, Stadnick, N, Albers, B, Nilsen, P, Broder-Fingert, S, et al. Ten recommendations for using implementation frameworks in research and practice. Implement Sci Commun. (2020) 1:42. doi: 10.1186/s43058-020-00023-7

29. Szanton, SL, Leff, B, Li, Q, Breysse, J, Spoelestra, S, Kell, J, et al. CAPABLE program improves disability in multiple randomized trials. J. Am. Geriatr. Soc. (2021) 69:3631–40. doi: 10.1111/jgs.17383

30. Aarons, GA, Hurlburt, M, and Horwitz, SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Admin Pol Ment Health. (2011) 38:4–23. doi: 10.1007/s10488-010-0327-7

31. Wieckowski, J, and Simmons, J. Translating evidence-based physical activity interventions for frail elders. Home Health Serv. Quart. (2006) 25:75–94. doi: 10.1300/J027v25n01_05

32. Centers for Medicare & Medicaid Services (CMS). (2013). Report to congress: Evaluation of community-based wellness and prevention programs under section 4202(b) of the affordable care act. Washington, DC: Health and Human Services. Available online at: cms.gov

33. Smith, ML, Towne, SD Jr, Herrera-Venson, A, Cameron, K, Horel, SA, Ory, MG, et al. Delivery of fall prevention interventions for at-risk older adults in rural areas: findings from a national dissemination. Int J Environ Res Public Health. (2018) 15:2798. doi: 10.3390/ijerph15122798

35. Smith, ML, Ory, MG, Ahn, SN, Kulinski, K, Jiang, L, Horel, S, et al. National dissemination of chronic disease self-management education programs: an incremental examination of delivery characteristics. Front Public Health. (2015) 2:2–7. doi: 10.3389/fpubh.2014.00227

36. Szanton, SL, Bonner, A, Paone, D, Atalla, M, Hornstein, E, Alley, D, et al. Drivers and restrainers to adoption and spread of evidence-based health service delivery interventions: the case of CAPABLE. Geriatr Nurs. (2022) 44:192–8. doi: 10.1016/j.gerinurse.2022.02.002

37. Smith, ML, Schneider, EC, Byers, IN, Shubert, TE, Wilson, AD, Towne, SD Jr, et al. Reported systems changes and sustainability perceptions of three state departments of health implementing multi-faceted evidence-based fall prevention efforts. Front Public Health. (2017) 5:120. doi: 10.3389/fpubh.2017.00120

38. Sutton, J., Jacobsen, G., and Leonard, F. (2024). The healthcare experiences of people dually-eligible for Medicare and Medicaid. The Commonwealth Fund. Available online at: https://www.commonwealthfund.org/publications/2024/jun/health-care-experiences-people-dually-eligible-medicare-medicaid

39. McCullough, JM. Declines in spending despite positive returns on investment: understanding public health’s wrong pocket problem. Front Public Health. (2019) 7:159. doi: 10.3389/fpubh.2019.00159

40. Smith, ML, and Harden, SM. Full comprehension of theories, models, and frameworks improves application: a focus on RE-AIM. Front Public Health. (2021) 9:599975. doi: 10.3389/fpubh.2021.599975

41. Brach, JS, Juarez, G, Perera, S, Cameron, K, Vincenzo, JL, and Tripken, J. Dissemination and implementation of evidence-based falls prevention programs: reach and effectiveness. J. Gerontol. (2022) 77:164–71. doi: 10.1093/gerona/glab197

42. Feldstein, AC, and Glasgow, RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. J Qual Patient Safety. (2008) 34:228–43. doi: 10.1016/s1553-7250(08)34030-6

43. Fixsen, A. A. M., Aijaz, M., Fixsen, D., Burks, E., and Schultes, M. T. (2021). Implementation frameworks: An analysis. Chapel Hill, NC: Active Implementation Research Network. Available online at: https://www.activeimplementation.org

44. Glasgow, RE, Green, LW, Klesges, LM, Abrams, DB, Fisher, EB, Goldstein, MG, et al. External validity: we need to do more. Ann Behav Med. (2006) 31:105–8. doi: 10.1207/s15324796abm3102_1

45. Metz, A, Naoom, SF, Halle, T, and Bartley, L. An integrated stage-based framework for implementation of early childhood programs and systems (OPRE Research Brief OPRE 201548). Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

46. Proctor, E, Silmere, H, Raghavan, R, Hovmand, P, Aarons, G, and Bunger, A. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and policy in mental health and mental health services research. (2011) 38:65–76. doi: 10.1007/s10488-010-0319-7

Keywords: implementation, implementation effectiveness, RE-AIM, CFIR, sustainability, CAPABLE, evidence-based, program funding

Citation: Paone DL, Schuller JW, Smith ML, Gitlin LN and Szanton SL (2025) A 5-year examination of CAPABLE implementation using RE-AIM and CFIR frameworks. Front. Public Health. 13:1569320. doi: 10.3389/fpubh.2025.1569320

Edited by:

Azzurra Massimi, Sapienza University of Rome, Italy

Reviewed by:

Frances Horgan, Royal College of Surgeons in Ireland, Ireland

Erika Renzi, Sapienza University of Rome, Italy

Copyright © 2025 Paone, Schuller, Smith, Gitlin and Szanton. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Deborah L. Paone, debpaone1@gmail.com
