- ¹Faculty of Health, Medicine and Life Sciences, Maastricht University, Maastricht, Netherlands

- ²Falck, Copenhagen, Denmark

- ³Faculty of Medical Sciences, Newcastle University, Newcastle upon Tyne, United Kingdom

- ⁴Faculty of Health and Medical Sciences, University of Copenhagen, Copenhagen, Denmark

Introduction: The adoption of artificial intelligence (AI) in prehospital emergency medicine has predominantly been confined to high-income countries, leaving untapped potential in low- and middle-income countries (LMICs). AI holds promise to address challenges in out-of-hospital care within LMICs, thereby narrowing global health inequities. To achieve this, it is important to understand the success factors and challenges in implementing AI models in these settings.

Methods: A scoping review of peer-reviewed studies and semi-structured expert interviews were conducted to identify key insights into AI deployment in LMIC prehospital care. Data collection and analysis took place between June and October 2024. Using thematic analysis, qualitative data was systematically coded to extract common themes within the studies and interview transcripts. Themes were then summarised narratively and supplemented with illustrative quotations in table format.

Results: From 16 articles and nine expert interview transcripts, five core themes emerged: (1) the rapid, iterative development of AI technologies; (2) the necessity of high-quality, representative, and unbiased data; (3) resource gaps impacting AI implementation; (4) the imperative of integrating human-centred design principles; and (5) the importance of cultural and contextual relevance for AI acceptance.

Conclusion: Additional focus on these areas can help drive the sustainable utilisation and ensuing development of AI in these environments. Strengthening collaboration and education amongst stakeholders and focusing on local needs and user engagement will be critical to promoting future success. Moving forwards, research should emphasise the importance of evidence-based AI development and appropriate data utilisation to ensure equitable, impactful solutions for all users.

1 Introduction

Artificial intelligence (AI) can be defined as technology’s ability to learn and perform tasks that simulate human behaviours, often at faster speeds and with higher accuracy than humans (1, 2). The recent exponential growth of this technology, and a global appreciation of the efficiency gains it can generate, coincide with a time when there is an urgent global need for improvements in healthcare systems. Unfortunately, most current research investigating the use of AI in healthcare services is conducted in and for the benefit of high-income countries (HICs) (3), widening the health equity gap between these countries and low- and middle-income countries (LMICs). However, AI has the potential to transform the pursuit of global health equity by expanding essential healthcare coverage to underserved populations, including those in LMICs (4). Although there is comparatively little published research on AI within the healthcare settings of LMICs, this does not reflect current efforts in this field (1). Advances in mobile phone accessibility in rural and urban areas, investments in electronic health records (EHR) and the continued development of Information Technology (IT) infrastructure mean these countries have the capacity to utilise AI within their healthcare systems (1, 5, 6).

One area of healthcare that can particularly benefit from AI is emergency care. This is defined as “the care administered to a patient within the first few minutes or hours of suffering an acute and potentially life-threatening disease or injury” (7). The overall intention of providing emergency care is to minimise complications, including premature mortality and permanent disability (8). Emergency care has the greatest chance of achieving this goal when it is administered within the first hour of the injury or illness, during a period of time known as the golden hour (9). The golden hour usually occurs outside a hospital or healthcare facility, hence the ability of the prehospital emergency medical services (EMS) to react positively to unexpected casualties can have a significant impact on patient morbidity and mortality (9, 10). Moreover, the initial links in the “chain of survival”, such as early recognition and call for help, contribute significantly more to improving patient outcomes than the later, advanced care links (11). Some researchers believe that AI can help overcome some of the barriers commonly associated with prehospital care in low-resource settings, such as shortages in skilled healthcare workers, a lack of medical equipment for diagnosis and management, and an absence of dedicated emergency vehicles. Addressing these challenges can reduce global health inequities (12–14), with AI models allowing EMS to more efficiently assess patients and promote the democratisation of clinical expertise (15, 16). This can help reduce the burden on EMS personnel and healthcare services while simultaneously improving patient outcomes (15, 17).

Within prehospital emergency care systems (PECS) in LMICs, however, there is a lack of literature assessing the current broad applications of AI and the types of AI commonly utilised in these settings. Understanding AI’s current use in LMICs is important to ensure future systems are correctly integrated into the local context. Interventions designed for HICs cannot necessarily be transplanted into the PECS of LMICs. Local context plays a central role in the implementation of effective and sustainable health interventions (4, 18), meaning global health solutions cannot and should not be simply transferred into different communities (19, 20). Similarly, EMS models of care designed for use in HICs are often not sustainable solutions for LMICs (21).

This study seeks to address this research gap by, firstly, identifying themes that exist in the current literature base of AI use within the PECS of LMICs and, secondly, consulting experts in this domain through qualitative interviews to provide an overview of AI utilisation in this field. This topic aligns with research recommendations by Masoumian Hosseini et al. (22), who advised increased focus on the different types of AI systems used and their interactions with healthcare professionals (and patients) to bridge the gap between AI research and practice. By highlighting the outcomes and lessons learnt from previous interventions and their researchers, as well as exploring important considerations and upcoming challenges, this study aims to help future AI developers and prehospital personnel ensure new models benefit local prehospital emergency patients, systems and staff while remaining contextually relevant in the long term (23).

2 Methods

This qualitative scoping review study has been reported according to the Consolidated Criteria for Reporting Qualitative Research (COREQ) guidelines (see Supplementary material for COREQ Checklist) (24). It consists of thematic analysis of two distinct datasets: literature identified through scoping review, and transcripts of the consultations with field experts (25). The study seeks to answer the overarching research question: what is the current state of artificial intelligence utilisation in prehospital emergency care systems in LMICs, and what are the implications for future development? The protocol for this study was developed and registered with the Open Science Framework in June 2024 (26). Data collection for the literature and the expert interviews was conducted independently, but the datasets were combined for thematic analysis. The literature collected via scoping review for thematic analysis in this study, and the methodology used, is comprehensively described in the study by Mallon et al. (27). The literature collection process for the scoping review can also be seen in the PRISMA flowchart in the Supplementary material. In this study, all but one of the interviews were conducted by OM, who had not met any of the participants before commencing this study. At the time of the study, OM was a male medical student from a HIC studying for a Master’s degree in a healthcare-related topic, with limited previous experience working in emergency and prehospital care in sub-Saharan Africa; he made a conscious effort to ensure his previous experiences and personal perspectives did not influence the data analysis process. OM has an interest in prehospital and emergency medicine and was undertaking this work as part of a Master’s project. FL and EP are experienced researchers from HICs with previous involvement in studies focusing on emergency medicine. The remaining interview was conducted by another researcher in a different language using the same interview question guide as the other interviews, and the transcript was translated into English by this interviewer.

Participants were selected and invited for interview via email through a combination of purposive and snowball sampling. This ensured a range of relevant perspectives from participants who possess an understanding of the topic, allowing research aims to be comprehensively addressed (28, 29). Participants were selected based on their experience working with or alongside AI tools designed for use in PECS, with nearly all participants having some relevant experience in LMICs. The sample size of nine was determined based on recommendations provided by Terry et al. of between six and 15 interviews for a Master’s project (30). The limited number of participants in this study reflects that this field is still an emerging research area, with few experts having appropriate experience to act as participants. The existing researchers in this field are also still establishing research networks and therefore may have been missed by our sampling methods. Several potential participants contacted via snowball sampling declined to participate as they did not feel they had enough experience in the field. Interviews were conducted online or at the workplace of the participant, depending on their preference and convenience. This ensured a professional but comfortable environment for participants.

Data was collected between June and August 2024 and analysed between July and October 2024. Participants took part in a one-off semi-structured interview lasting approximately 40 min. Only the interviewer and the participant were present during the interviews. Informed consent for interview and audio recording was acquired before the interview began. The researcher was equipped with a brief interview guide, a notebook for general notetaking during the interview, and an audio recording device. Interview guides were composed to include open questions structured around the overarching research question. This guide was reviewed and approved by all authors before beginning the interview process and further refined throughout the interviews. A copy of the final interview guide can be found in the Supplementary material. Experts were asked to reflect on their experiences in this field, and provide their opinions on the strengths, limitations and potential pitfalls in developing future AI models to address PECS in LMICs. Data collected from all expert consultations was qualitatively analysed from an interpretivist point of view using the thematic analysis method outlined by Braun and Clarke (31). Constructionist positions, which can be characterised as a form of interpretivism (32), were applied during the thematic analysis. These positions make it difficult to ascertain data saturation based solely on sample size (33), and therefore data saturation is not reported in this study. Furthermore, this study primarily seeks to provide an overview of the topic by combining findings from literature and experts. Given this combined approach, the sample size is appropriate for the intentions of the study, and data saturation is less important than it would be for a standalone qualitative analysis of interviews.

Data was transcribed verbatim from audio recordings of the interview and checked by the interviewer to ensure integrity. Transcripts were not returned to the participants. Identifiable information, including names and locations, was removed, and each expert was assigned an ID number. These ID numbers are used throughout the results section to refer to the individual expert interviews. The anonymised transcripts and the articles identified in the scoping review were uploaded to ATLAS.ti 24 Mac (Version 24.1) for thematic analysis by OM (34). The data was then analysed using the iterative process of thematic analysis discussed by Braun and Clarke (31) to produce a narrative synthesis (34). This involved initial familiarisation with the data before generating the initial codes. Coding was conducted by one coder and based on interesting data features that could be meaningfully interpreted within the transcripts and articles (31). These codes were integrated into themes derived from both the selected articles and the transcripts of expert consultations. The identified themes were then discussed amongst all the authors until agreement was reached, before being further refined (31). To ensure trustworthiness, supporting quotations for important themes identified during thematic analysis are included in the final manuscript (24); however, participants did not provide feedback on the findings. Qualitative themes identified through thematic analysis of the literature review and expert consultations are discussed as part of the narrative summary, with selected quotations displayed in table format.

3 Results

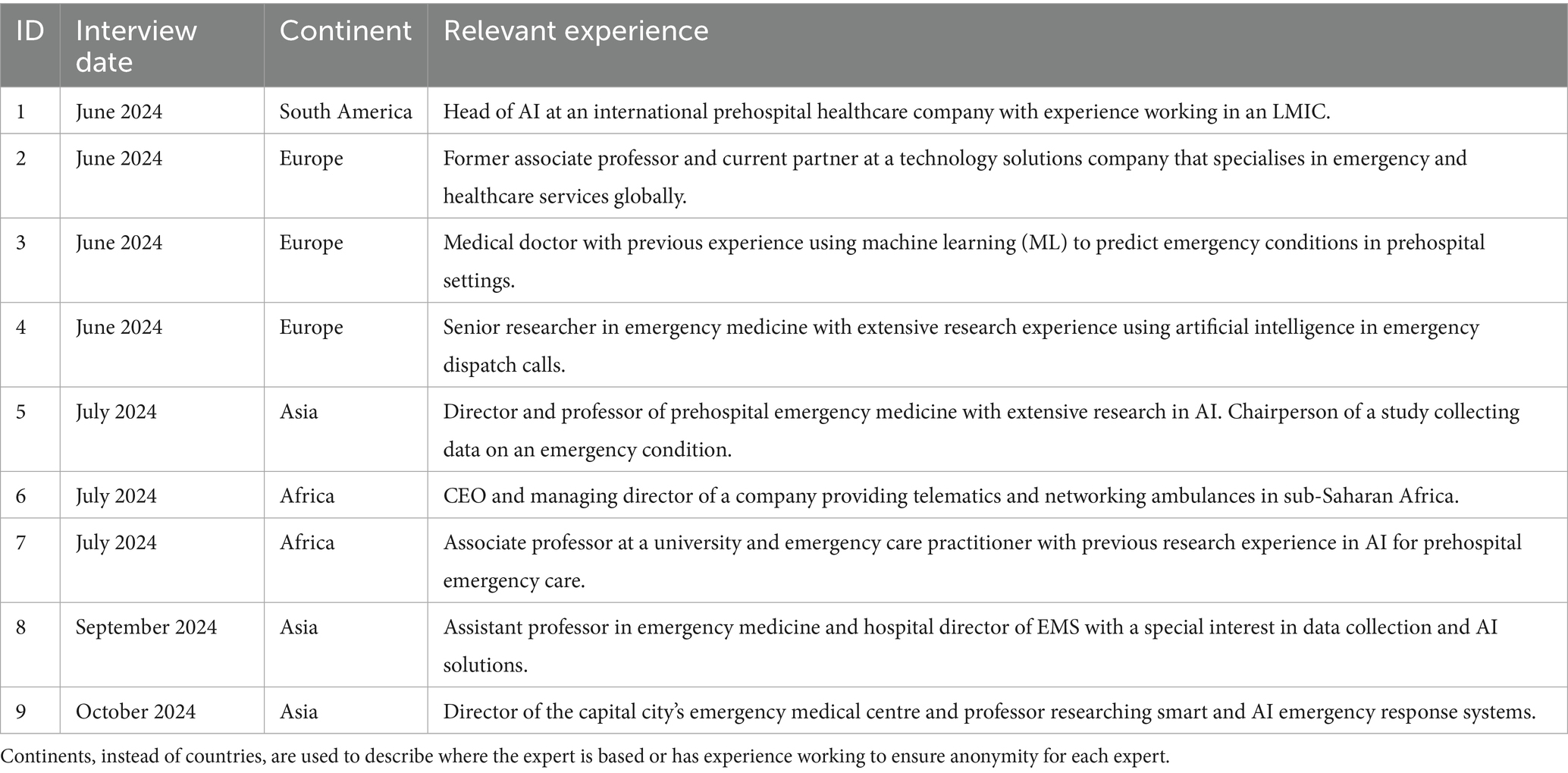

This study involved thematic analysis of the available literature identified by scoping review, and the consultation of nine experts. Table 1 provides a brief, anonymised overview of the characteristics of each of the nine experts. There were 16 studies identified through scoping review that were included for thematic analysis in this study (27). The majority of studies were from China (35–43), with each of the other seven countries represented by a single study. In addition, all but one of the included studies were retrospective (42), with most studies conducted as cohort studies and three using simulation modelling (38, 44, 45). Further characteristics of the selected studies that were analysed can be seen in the previous study by Mallon et al. (27).

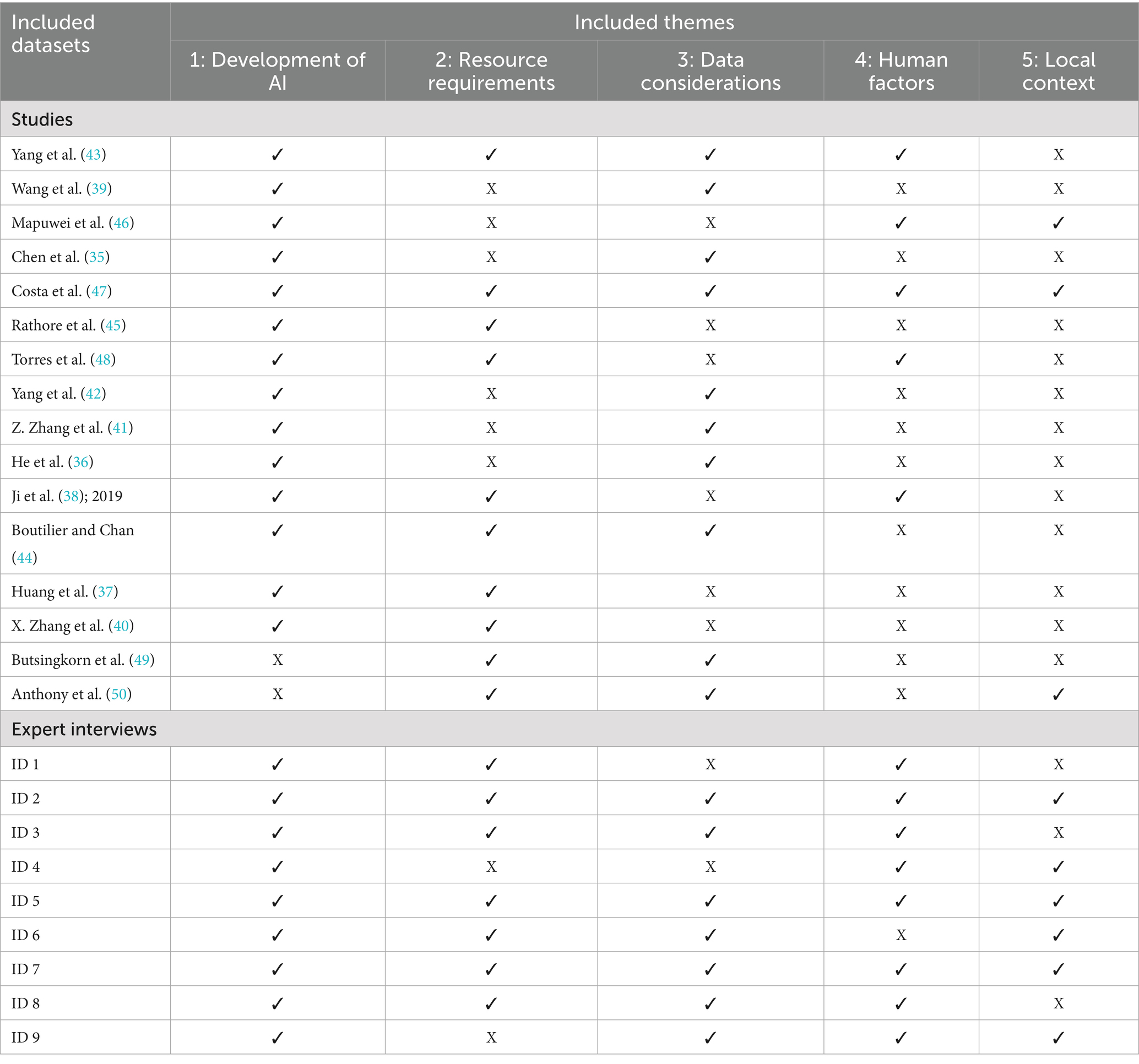

Thematic analysis of the literature and expert consultations identified five overarching themes that need to be considered in the current utilisation and future development of AI in the PECS of LMICs. These themes are (1) the development of AI; (2) resource requirements; (3) data considerations; (4) human factors; and (5) local context. Table 2 provides an overview of the five themes identified and discussed in each included dataset. The findings related to each theme are discussed below and supported by tables displaying relevant quotes, which have been derived from included studies and the transcripts of expert interviews. Words in square brackets ([]) were not spoken by the expert or written by the study author but have been included to indicate the topic of discussion and provide context.

3.1 Theme 1: development of AI

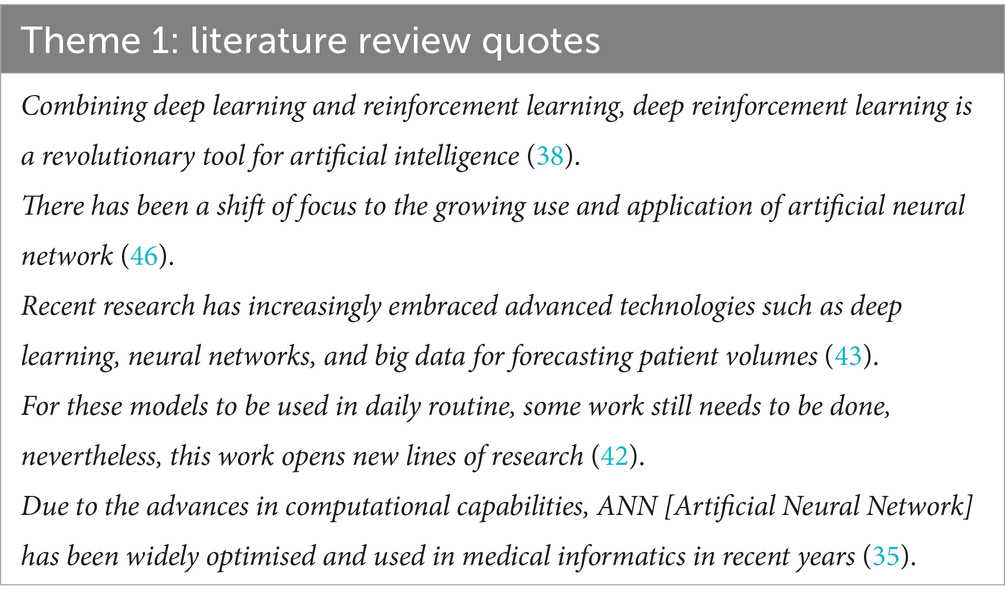

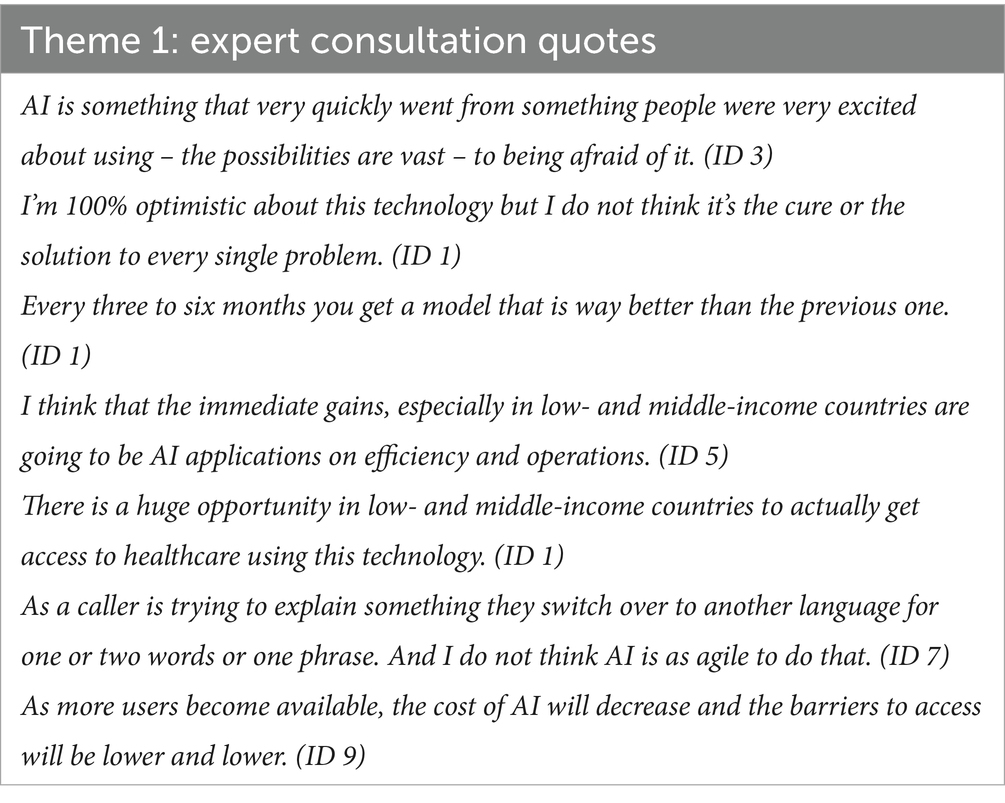

This theme, development of AI, explores how AI and its uses in prehospital care have developed over time and considers the directions they may continue to grow in. Some articles noted how AI technology has progressed to become more advanced and manage larger quantities of data (35, 39, 43, 46). Most authors discussed how the results of their studies can drive future AI applications and research (36, 38, 41–43, 45–48), with some studies forecasting the potential of combining AI tools with statistical or other AI models (37, 38, 40, 43, 44). Key quotes from these studies are shown in Table 3.

Experts also noted the progression towards more advanced AI technology (ID 1, ID 5, ID 7), although some acknowledged there is still a need for further refinement (ID 1, ID 7, ID 9). The majority of experts believed that AI has the potential to bring significant benefits to the PECS of LMICs (ID 1, ID 3, ID 4, ID 5, ID 6, ID 7, ID 8), and almost all experts interviewed discussed the various roles AI may play in this (ID 1, ID 2, ID 3, ID 5, ID 6, ID 7, ID 8, ID 9). Table 4 highlights some relevant quotes from the interviews. Consultations with experts also revealed a divide in opinion on the future progression of AI models in the context of LMICs. Some experts believed that explainability will be a key consideration in the implementation of AI in this context, including simpler models and the development of explainable deep learning AI models (XAI; ID 3, ID 4, ID 5, ID 8), while another expert believed that generative AI applications will transform future AI use in prehospital care (ID 1).

Overall, AI development is on a continued upward trajectory as the technology becomes increasingly complex and sophisticated. While there are some differences in opinion amongst experts around key considerations on the future direction of AI in prehospital care, subsequent research can build on previous studies to promote further innovation.

3.2 Theme 2: resource requirements

Some articles discussed how implementing AI solutions in EMS can reduce response times, save money and minimise resource use (37, 43, 45, 49), allowing for more equitable resource allocation (43, 44). As shown in Table 5, authors referred to the current lack of resources in LMICs as an important barrier to adequate prehospital emergency care (44, 45, 48–50). However, few studies considered whether countries can access the computational resources needed to implement AI in some settings (38, 40, 45, 47). Moreover, Yang et al. (43) proposed using a statistical model in place of AI to combat these computational demands.

Table 6 highlights some relevant quotes related to this theme from the expert interviews. Just under half of experts interviewed acknowledged the advantages of using AI to tackle resource limitations (ID 1, ID 2, ID 3, ID 5). Although several experts noted that current IT infrastructure and expertise may be a limitation for using AI in some settings (ID 1, ID 2, ID 5, ID 8), some suggested these physical infrastructure challenges could be overcome by leveraging cloud architecture and mobile health solutions (ID 2, ID 5). Experts also considered the infrastructure required for adequate data security to protect detailed EHR as a potential limitation in the implementation of AI in LMICs (ID 3, ID 5, ID 7). In addition, experts noted the importance of funding and support for a project (ID 3, ID 5, ID 6, ID 8), with one expert admitting that the economy comes first (ID 3).

AI is generally considered to be able to optimise resource allocation in prehospital care. This is particularly important in LMICs that often lack appropriate levels of resources. However, other factors such as funding and infrastructure must be evaluated before attempting to implement any AI tools.

3.3 Theme 3: data considerations

Several studies noted that model precision could be improved with access to additional high-quality data (36, 42–44, 49, 50). With the majority of studies using retrospective datasets, some papers acknowledged the potential for bias in study design and participant selection (35, 39, 41, 42, 47). Minimising inherent biases in data collection methods and ensuring models are only trained on high-quality data that represents the population they serve were seen by some as two key processes to limit the effect of data bias (39, 43, 47, 50). Relevant quotes from the literature are included in Table 7.

Expert opinions were largely congruent with those of study authors on the importance of data bias and how to limit it (ID 2, ID 3, ID 5, ID 7, ID 8, ID 9). Limited access to a sufficient quantity of high-quality, representative data was considered amongst experts to be one of the most significant limitations to the implementation of AI in PECS in LMICs (ID 2, ID 3, ID 5, ID 7, ID 9). Some experts commented on the need for LMICs to digitise health records before they can embrace AI solutions (ID 5, ID 7, ID 8). Most experts warned of the risk of data bias and the consequences this could have on specific populations (ID 2, ID 3, ID 5, ID 6, ID 7). Examples of applicable quotes can be found in Table 8.

AI model accuracy can be improved by increased focus on data collection procedures. This includes collecting a large quantity of high-quality data and debiasing it so that it better represents the population. LMICs aiming to use AI in their prehospital system can facilitate algorithm access to larger quantities of data by digitising their existing health records.

3.4 Theme 4: human factors

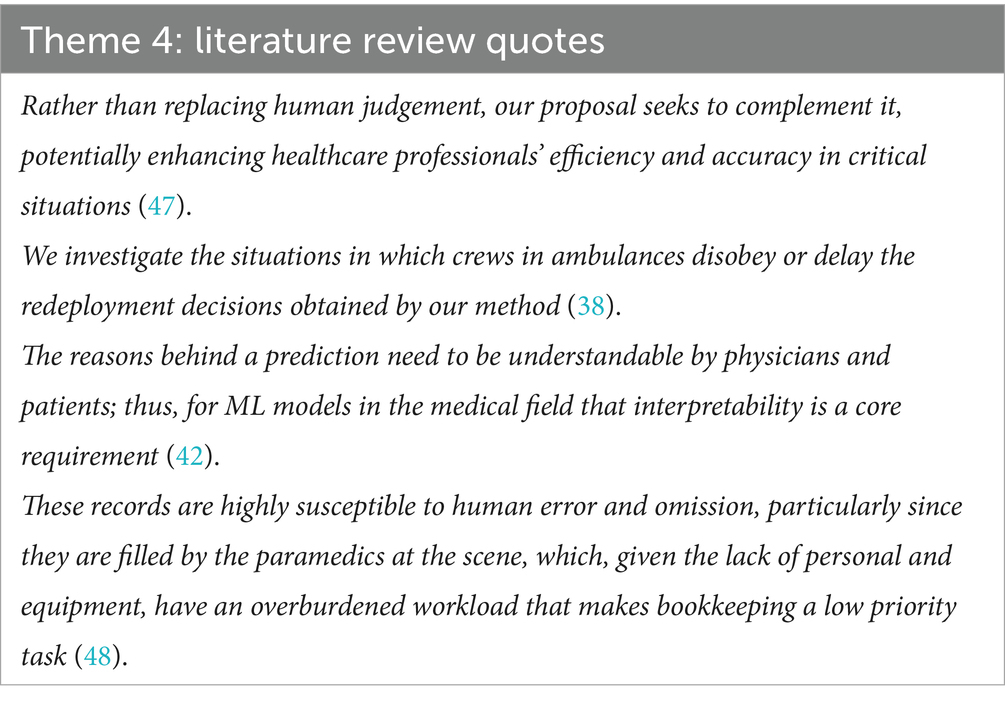

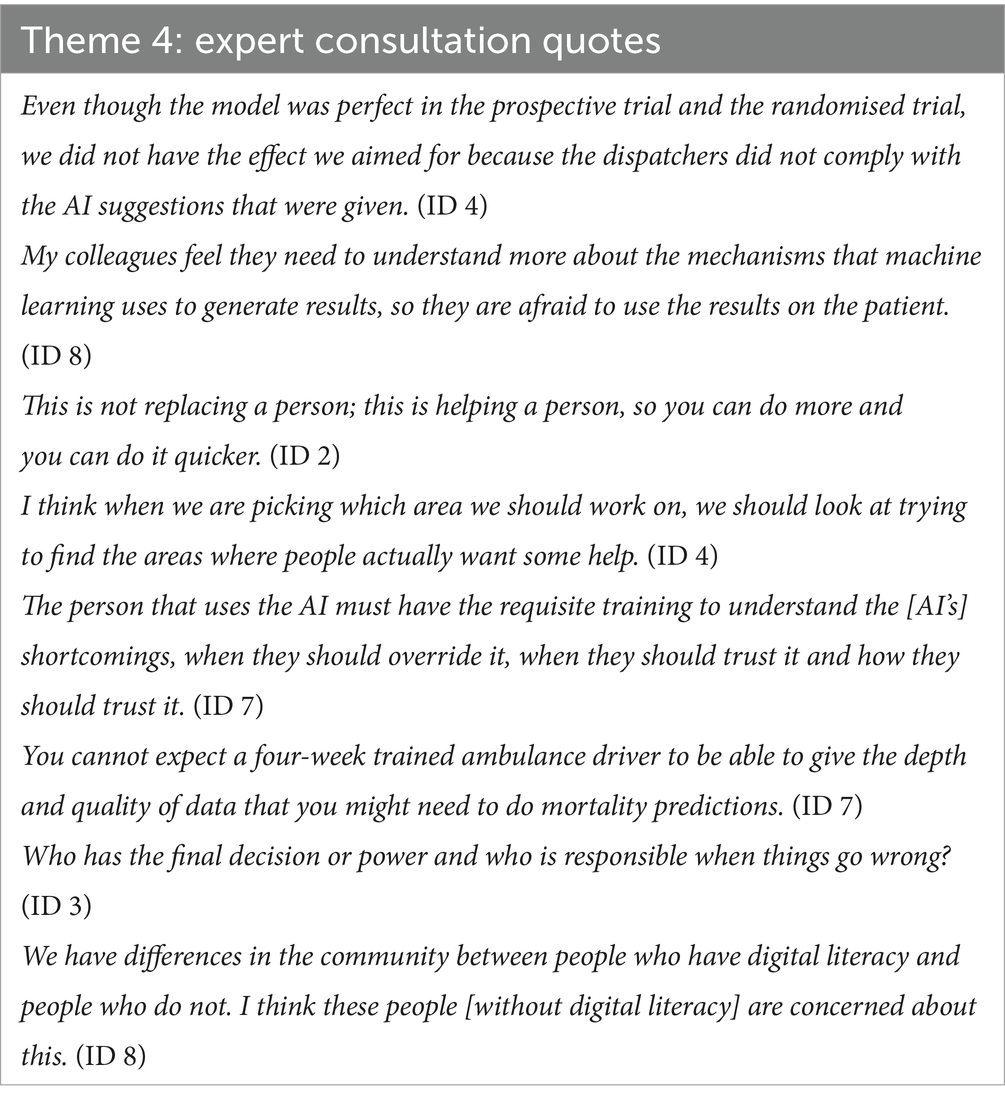

Some studies advise that increased collaboration between researchers, developers and healthcare workers will increase the chance of successful implementation (43, 46, 47). However, factors such as human error in records can also limit the effectiveness of AI (48). Table 9 highlights some applicable quotes around this subject and wider theme. One study accounted for some human factors, such as users ignoring AI decisions, to better reflect the differences between experimental conditions and real life (38).

A majority of experts believe all AI tools should be designed with the human end-user in mind, as model precision can be negatively impacted by human decisions in real life (ID 2, ID 3, ID 4, ID 5, ID 7, ID 9). As demonstrated in some of the quotes shown in Table 10, almost all experts stated that they believe AI tools should be developed to work alongside humans and not replace them (ID 1, ID 2, ID 3, ID 4, ID 5, ID 7, ID 9). To ensure the successful implementation of AI tools into a healthcare system, several experts feel that there is a need to educate the end-user on the purpose and functioning of the model (ID 3, ID 4, ID 5, ID 7). This was believed to build trust in the technology amongst the users (ID 2, ID 3, ID 4). Some experts maintained that XAI can also establish trust in AI (ID 3, ID 5, ID 8). Enhancing collaboration between contributors on future projects was also seen by some experts as an important step towards improving AI tools (ID 4, ID 5, ID 7, ID 8).

Overall, integrating humans in the decision process of AI models is necessary to ensure successful implementation, but this can limit the accuracy of the model. To minimise the risk of this occurring, all AI models should be developed using human-centred design principles.

3.5 Theme 5: local context

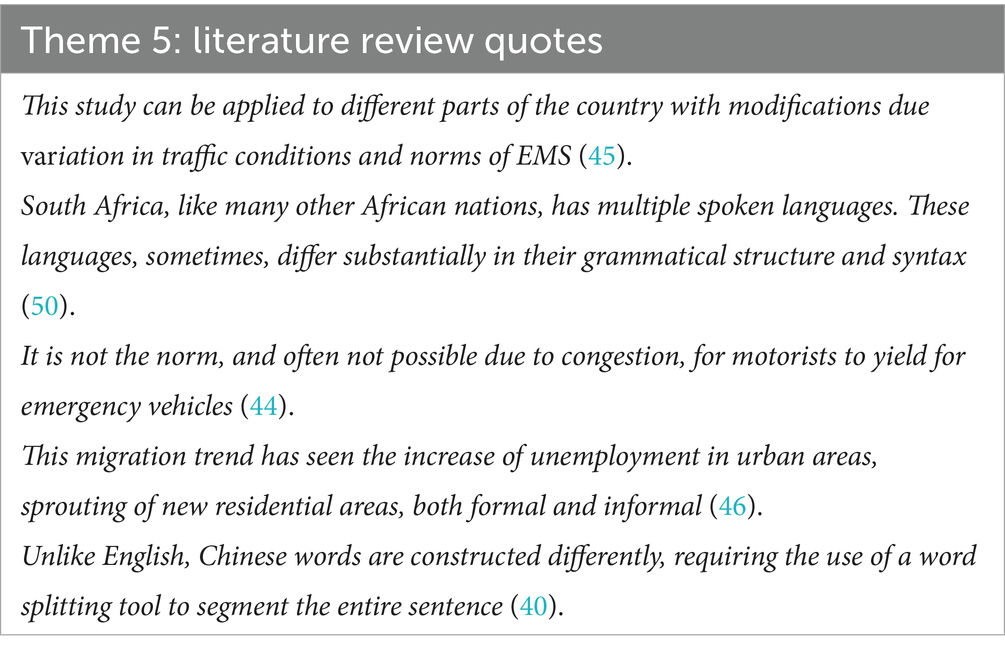

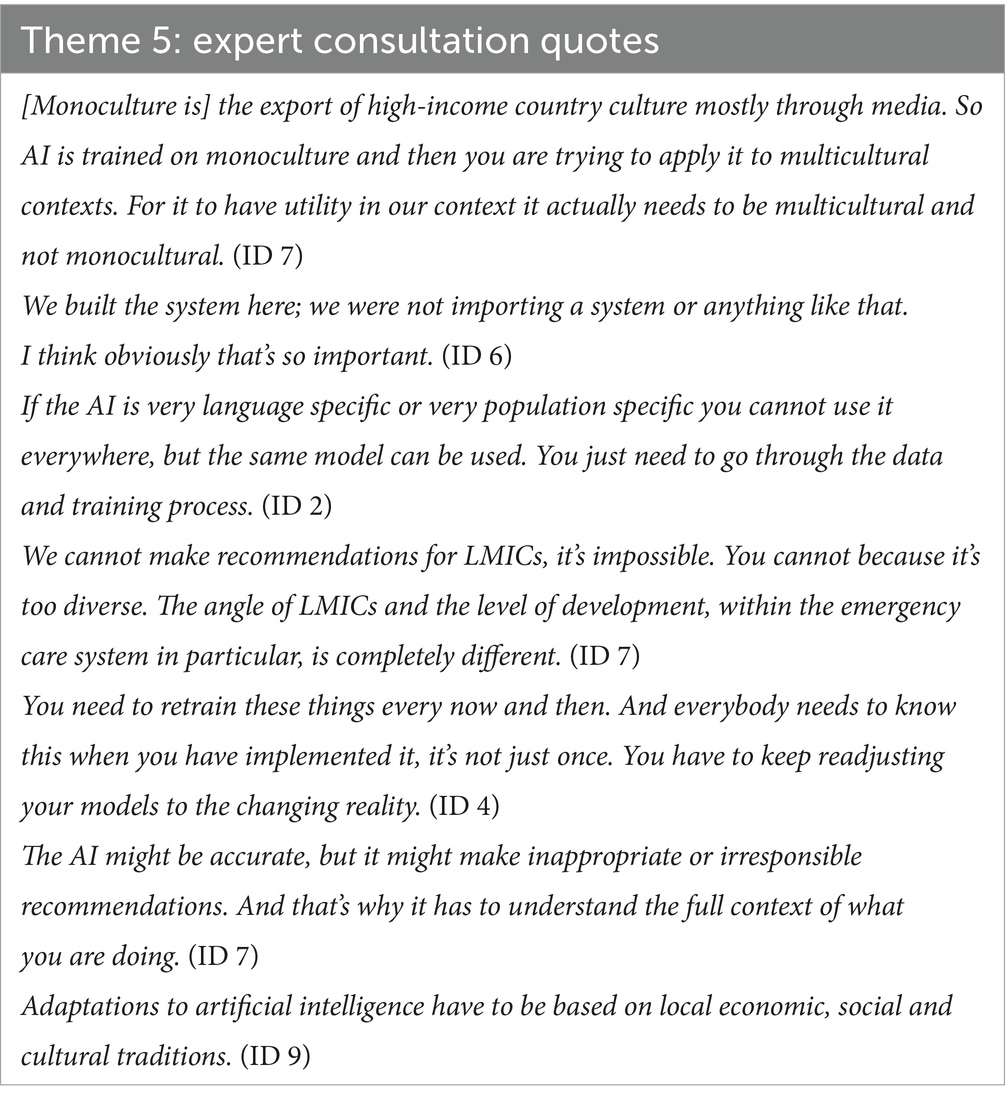

Table 11 displays relevant quotes related to this theme from the literature review. To ensure AI models benefit the population they have been built to help, all models should incorporate local context into their design and function. This is particularly important for models that deal with spoken language (47, 50). Some studies also discussed the importance of using contextually appropriate model designs (46, 47, 50).

Experts were keen to stress that models should only be implemented for the local population they have been trained on, including consideration of important differences between urban and rural populations (ID 5, ID 6, ID 7). Applying the model outside of the local context would require retraining (ID 2, ID 4). Some experts believe social and cultural acceptability must be considered when implementing AI tools (ID 5, ID 6, ID 7, ID 9). Key quotations related to this theme are included in Table 12.

In conclusion, factors such as language and culture cannot be ignored when designing an AI tool. These and other context-specific factors must be taken into consideration to ensure successful implementation.

4 Discussion

In this study, 16 studies and the transcripts of nine interviews with field experts were thematically analysed to assess the current state of AI utilisation in PECS in LMICs and the implications for future development. The findings suggest AI can be an effective tool in these settings; however, researchers and clinicians implementing AI tools should consider the rapid and constant development of this technology; the importance of high-quality, unbiased data; any gaps between current and required resources for implementation; the incorporation of human-centred design into the project; and the importance of ensuring models are acceptable within the local context. This discussion will review the findings from this study and discuss the success factors and challenges in implementing AI models in the PECS of LMICs. By building on the results of this study, this discussion will seek to outline important considerations for a future strategic vision in this field.

4.1 Ensuring high-quality data

AI tools in PECS should have the overarching goal to improve patient outcomes by facilitating the treatment of patients as soon as possible after an acute injury or illness occurs. The results of this qualitative analysis suggest that attaining adequate amounts of high-quality data is fundamental to achieving this goal. However, acquiring the necessary level of detail in data can be difficult for some LMICs (51). Healthcare systems in some of these countries do not have access to personnel with adequate training in data collection and analysis (52). This is typically exacerbated in PECS, many of which often lack trained medical personnel (14). Ensuring workers are skilled and available to appropriately use AI tools has been a challenge in adopting AI previously (53). Even when personnel are appropriately trained, they can lack the system-wide technical infrastructure and therefore have limited capacity to use data on the frontline (52, 54). Organisational challenges such as financial limitations and inadequate government policies further limit accurate data collection (52, 55). In addition to facilitating the integration of AI, addressing structural barriers is important as LMICs seeking to improve the delivery of healthcare generally may benefit from improvements in data utilisation through a cyclical positive feedback process (54). By focusing on improving data utilisation as a means to improve a healthcare system, healthcare services seek to gather additional data. This demand can promote improvements in data collection and analysis methods, through technical infrastructure, training of individuals to ensure correct procedural technique, and additional organisational support (52, 56). The digitisation of written records into EHR is one way to support improved data collection and analysis (52). This should be a priority for health systems in LMICs to allow AI technology to be appropriately trained (57). 
Electronic healthcare systems can also lower costs, boost transparency and lead to efficiency gains (54, 58). These adaptations can result in an increase in information available for stakeholders to make informed decisions. Improving access to this information allows more stakeholders to make evidence-based health decisions, thereby promoting evidence-based policies (56). Adopting policies supported by research is seen as essential for the development of emergency care in LMICs (59). Finally, a greater desire for information to assist decision-making stimulates a greater demand for data, allowing the cycle to continue (56).

AI can also widen the scope of information available for evidence-based decisions by improving the efficiency and accuracy of data analysis, rapidly identifying patterns in large volumes of data (22). However, it is important that the use of information, and therefore the demand for data (56), is based on the needs of the population and not on the current availability of data and information (5). This can be accomplished by implementing AI models that are designed to address the specific and relevant challenges in prehospital care for a defined population or setting. Aside from the advantages directly associated with using AI in LMICs and the positive feedback mechanism discussed, upgrading existing digital healthcare infrastructure to meet the data demands of AI will likely result in other wider benefits for the health system. One example of this is cloud computing, which can provide leverage for AI and simplify its deployment in a healthcare system (60), while simultaneously improving the reliability and reducing the cost of data storage and management (1). Despite these benefits, many LMICs lack the sustained financial resources for AI implementation, with funding being prioritised for wider EMS upgrade costs instead (61). If executed correctly, AI augmentation may reduce healthcare costs through improvements in operational efficiency and quality of care. Resource-limited settings should prioritise areas that are lower cost, offer the greatest gains in efficiency and target diseases with the highest burden to maximise AI investment and simultaneously upgrade PECS (58). However, this can be expensive to undertake, and countries implementing AI tools in PECS should consider both the direct and indirect costs associated with this technology, ensuring rigorous procedures are in place to allow for sustained cost savings and efficiencies (62).

4.2 Human-centred approach

According to the study results, continued technological innovation is believed to improve AI models in PECS in LMICs. The ability to use XAI without limitations to performance is considered by some to play a central role in future applications by making currently hidden deep learning decision processes visible, promoting model reliability and physician trust (57, 63). Future AI models should not only demonstrate transparency in how the data is used to generate a final decision but should also clearly show where the data has been obtained. Algorithms based on data from a HIC will likely not reflect the population of an LMIC, and risk exacerbating established prejudices (64, 65). Even if data is collected from the population of a selected LMIC, factors such as aggregation bias or representation bias can mean the collected data is not a true reflection of the population. Moreover, making an assumption about a single patient based on observations of an entire population is unlikely to properly account for individual differences (66, 67). For example, if an AI tool to predict sepsis is based on data from the general population, a patient with a compromised immune system may be screened as healthy by the algorithm but be, in reality, very unwell. The model trained using observations from “typical” patients, i.e., immunocompetent patients, is unlikely to recognise an immunocompromised patient as unwell. Within local populations, vulnerable and marginalised groups often face more significant challenges in accessing technologies such as AI, meaning populations with a perceived higher social standing are more likely to be able to access and therefore benefit from new health innovations (68). Not only does this improve outcomes for these patients alone, but the resulting algorithm is also trained and refined on this sample. This introduces bias into the tool, which becomes more accurate for the convenient majority, potentially negatively impacting patient outcomes for other historically marginalised demographic groups (66, 69). To minimise this risk, AI tools and the corresponding algorithms must be regularly audited by independent third parties. These auditing processes should be continuous and adapted to appropriately assess the AI tool as it is developed and refined. It is essential that these evaluations assess the model’s performance within these vulnerable communities (70). These processes should follow the framework elements and principles outlined in the World Health Organization’s guidelines on AI ethics and governance (70).
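To illustrate what assessing performance within specific communities could involve, the following is a minimal sketch in Python and not a method used in this study. It assumes a hypothetical audit sample in which each record holds a patient subgroup, whether the patient was truly unwell, and whether a screening tool flagged them; the function name `sensitivity_by_group` and all records are invented for illustration. It compares the proportion of truly unwell patients the tool detects (sensitivity) overall and per subgroup, echoing the sepsis example above.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity}, where sensitivity = TP / (TP + FN).

    Each record is a (group, truly_unwell, flagged_by_model) tuple.
    A group with no truly unwell patients maps to None.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    groups = set()
    for group, truly_unwell, flagged in records:
        groups.add(group)
        if truly_unwell and flagged:
            true_pos[group] += 1
        elif truly_unwell:
            false_neg[group] += 1
    result = {}
    for group in sorted(groups):
        unwell = true_pos[group] + false_neg[group]
        result[group] = true_pos[group] / unwell if unwell else None
    return result


# Hypothetical, hand-made audit sample (not data from the study).
records = [
    ("immunocompetent", True, True),
    ("immunocompetent", True, True),
    ("immunocompetent", True, True),
    ("immunocompetent", True, False),
    ("immunocompetent", False, False),
    ("immunocompromised", True, False),
    ("immunocompromised", True, False),
    ("immunocompromised", True, True),
    ("immunocompromised", False, False),
]

per_group = sensitivity_by_group(records)
# Pooling every record into one group gives the aggregate figure.
overall = sensitivity_by_group([("all", u, f) for _, u, f in records])["all"]

print(f"overall: {overall:.2f}")
for group, value in per_group.items():
    print(f"{group}: {value:.2f}")
```

In this invented sample the aggregate sensitivity (4 of 7 unwell patients detected) hides a large difference between subgroups (3 of 4 versus 1 of 3), which is the kind of gap that only becomes visible when an audit reports results for each subgroup separately. A real audit would need far larger samples, locally agreed subgroup definitions and additional metrics.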

When correctly integrated into a specific setting, AI can improve the quality of and access to healthcare for some populations (65). For example, AI may be particularly useful in multicultural settings where patients speak a diverse set of languages, as it can provide accurate real-time translation of discussions between healthcare provider and patient. Some healthcare professionals view AI as potentially beneficial for patients who are normally isolated by language barriers, as it can improve the standard of care for these patients and provide additional support to the healthcare team, on top of gains in efficiency (71). This function can have further unintended benefits for health systems and staff as well. For dispatch services, AI may also reduce the staffing levels required to address language barriers, relieving emergency care teams in resource-limited settings (72). Moreover, automating certain processes in emergency care can even improve job satisfaction amongst staff by lowering levels of frustration and the perceived time spent documenting notes (73).

4.3 Ethical considerations

Although an essential factor in AI development, ethical considerations for equitable AI use should not be limited to ensuring representative datasets, but should also consider the availability of equipment and staff in the healthcare system (69). For example, should an AI tool advise intubation for a prehospital patient if there are no healthcare personnel available with the skills and knowledge to intubate? What if there are no intensive care beds available for the patient when they arrive at hospital? There is little advantage in improving the ability to diagnose a condition if a particular healthcare system lacks the resources to treat it (1). Ethical implications of AI use in the prehospital setting should be discussed and decisions made with consideration for current system capacity, as well as local culture and customs (69). This point has previously been disregarded in technological research agendas implemented by Western actors who lack local contextual knowledge and instead seek to satisfy the needs of international donors more than those of end-users (74).

Healthcare systems in LMICs that are seeking to implement AI will also need to address data security and patient privacy concerns that accompany the generation of electronically stored datasets (1, 13). AI models are reliant on multiple actors accessing patient information across different databases (13), potentially exposing confidential medical records to malicious threats (52). Previous studies have highlighted the difficulties of establishing privacy policies and enforcing medical law in LMICs (75). Although these issues exist in AI use in all areas of medicine, the diversity of patient presentations in PECS makes it difficult to develop generalised regulations for this domain. In addition, the imminently life-threatening nature of some patient presentations means that the ease of access to vital patient information such as past medical history and drug allergies is an important consideration when developing security measures for EHR. Despite this, the ease of accessing data must never be at the expense of patient confidentiality. AI developers will have to balance these ethical privacy challenges with the accessibility requirements of healthcare staff (1). Governments of LMICs will also have to manage energy security concerns if AI is implemented in the healthcare system. AI systems are reliant on large amounts of energy to process information through their servers, which then require cooling with clean, fresh water. Although some studies suggest AI can improve energy efficiency in LMICs (76), this computational demand combined with the rapid growth of large language models is expected to exceed any potential energy savings in the coming years (77).
Balancing this net increase in energy consumption from AI, coupled with growing international concerns about the effect of greenhouse gas emissions on climate change, against the possible advantages of implementing AI tools will be a challenge for LMICs as they continue to rely heavily on fossil fuels and struggle to match energy supply with demand (76). Power cuts affecting AI tools on which PECS have become reliant may have devastating consequences for patient outcomes.

4.4 Future directions

There is a need to successfully implement AI now so that it can continue to benefit society in the future. This is particularly important in LMICs, where preliminary research is demonstrating AI’s potential to augment current health service distribution (5). To address some of the current issues with AI utilisation in PECS in LMICs, there must be improved international partnership between stakeholders in prehospital EMS, government organisations, research and the medical technology field (15), to empower leadership from those with local, contextual experience to implement necessary changes. This will promote the safe, ethical and socially acceptable use of AI technology and ensure a smooth transition towards programmes funded and run by local people (23). Collaboration should occur early in the design process, allowing governments and investors to make informed decisions about the implementation of this technology and strengthen the sustainability of the intervention (21). These discussions should continue after the implementation of the device, to make sure algorithms are appropriately retrained and adapt to the changing reality of their environment (51). All collaborators should understand a tool’s applications and limitations, and should share collective responsibility for any implemented AI models (70). By ensuring humans remain central to decisions made while using AI, healthcare systems can also ease legal liability and ethical concerns in cases of patient harm (1, 57).

Stakeholders involved in these discussions should also develop a regulatory framework for AI use in these settings. This should set out the legal requirements for this technology to prevent models discriminating against marginalised populations (69). This framework should also consider how to ensure data is securely stored (52). Having governmental support for AI-friendly policies can further motivate healthcare systems to embrace AI solutions (78). In addition, prehospital emergency care staff who will use this technology should be educated on how any future AI tools will work before they are implemented. Improvements in training should also be extended to those involved in data collection and analysis, with an emphasis on transparency at all stages of data collection, model training and implementation to make sure standards are upheld, evidence-based decision-making processes are followed, and future developers learn from previous mistakes (12, 69, 79). There is also a need for more qualitative studies of key actors, including patients, on the use of AI in prehospital settings. This should include studies on the acceptance of AI amongst key prehospital staff in LMICs, as well as how to ensure contextual acceptability.

4.5 Limitations

This study had several limitations. Firstly, participants may have felt obligated to respond to questions in a socially acceptable way instead of sharing their true opinions. To limit this, the interviewer used a semi-structured approach with open and follow-up questions. Secondly, as participants were unable to provide feedback on the findings and therefore validate the results, it must be acknowledged that the results are based on the interviewer’s interpretation of the experts’ spoken words and may not directly mirror the experts’ intentions. Using a single coder to identify themes in the data can also introduce the risk of interpretive bias in the analysis. To reduce this risk of bias, themes were discussed with all co-authors to ensure inter-author agreement. Finally, the use of snowball and purposive sampling means the study sample may not be representative of the range of researchers and clinicians involved in this research area, reducing the external validity of the findings. Moreover, only nine participants were interviewed for the thematic analysis, possibly limiting the depth of identified themes. However, as this research area is only beginning to develop, there are only a limited number of experts working on AI in PECS in LMICs, some of whom may not have fully developed strong international research links, making it difficult to identify experts through the selected sampling methods. This is also reflected in the geographical representation of interviewed experts. Most participants originate from Europe and Asia, with other LMIC regions such as Latin America underrepresented. This sampling bias may limit the applicability of the study’s findings in these underrepresented areas. This lack of expert availability is one of the reasons a scoping review method was applied, as it would be difficult to achieve true data saturation.

5 Conclusion

AI is seen by some to be a key missing piece in the drive towards universal health coverage and the realisation of the United Nations’ Sustainable Development Goal 3: Good Health and Wellbeing (13, 79, 80). Improvements across all areas of medicine, but particularly in emergency care, will be an essential step to achieve this ambitious target (81). LMICs and low-resource settings may be in a good position to improve their public health systems by sustainably implementing this technology (1). Moreover, AI has the potential to facilitate the improvement of data collection and management procedures, enhancing overall data quality and ensuring healthcare decisions are evidence-based. However, AI will not provide the solution many people hope for without consideration for its future directions in development; resource requirements; data needs; human factors; and local context. Without appropriate initial infrastructure to support its use, AI technology cannot be a long-term solution in prehospital settings. Stakeholders must also consider the ethical implications of introducing this technology into the healthcare system. These targets can be achieved through improved collaboration between all involved stakeholders, led by those with context-specific knowledge and experience. This partnership can promote creation of a regulatory framework to ensure AI is used responsibly, as well as provide further education for the healthcare staff using this technology. Continued research into AI use in PECS, particularly within LMICs, involving both qualitative studies and large, prospective, randomised quantitative studies, is required.
As the field of prehospital AI continues to develop and the perceived benefits become more urgently needed, it is essential that future developers, researchers and EMS staff reflect on the success factors and challenges of previously implemented AI tools to ensure that future applications are sustainable and benefit the patient, staff and wider healthcare system.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethics Review Committee at the Faculty of Health, Medicine and Life Sciences (REC-FHML), Maastricht University (Ethical Clearance Approval Number FHML/GH_2024.006). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

OM: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Visualization, Writing – original draft, Writing – review & editing. FL: Conceptualization, Project administration, Resources, Supervision, Validation, Visualization, Writing – review & editing. EP: Conceptualization, Project administration, Resources, Supervision, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors would like to thank the staff at Maastricht University and Falck for their continued support throughout this project. The authors would like to especially thank Yan Wu for their help translating one of the expert interviews into English for analysis.

Conflict of interest

FL was employed by Falck.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2025.1632029/full#supplementary-material

Abbreviations

AI, Artificial Intelligence; ANN, Artificial Neural Network; COREQ, Consolidated Criteria for Reporting Qualitative Research; EHR, Electronic Health Records; EMS, Emergency Medical Services; HIC, High Income Country; IT, Information Technology; LMIC, Low- and Middle-Income Country; ML, Machine Learning; PECS, Prehospital Emergency Care System; XAI, Explainable Artificial Intelligence.

References

1. Wahl, B, Cossy-Gantner, A, Germann, S, and Schwalbe, NR. Artificial intelligence (AI) and global health: how can AI contribute to health in resource-poor settings? BMJ Glob Health. (2018) 3:e000798. doi: 10.1136/bmjgh-2018-000798

2. Liu, G, Li, N, Chen, L, Yang, Y, and Zhang, Y. Registered trials on artificial intelligence conducted in emergency department and intensive care unit: a cross-sectional study on ClinicalTrials.gov. Front Med (Lausanne). (2021) 8:634197. doi: 10.3389/fmed.2021.634197

3. Tran, BX, Vu, GT, Ha, GH, Vuong, Q-H, Ho, M-T, Vuong, T-T, et al. Global evolution of research in artificial intelligence in health and medicine: a bibliometric study. J Clin Med. (2019) 8:360. doi: 10.3390/jcm8030360

4. Weissglass, DE. Contextual bias, the democratization of healthcare, and medical artificial intelligence in low- and middle-income countries. Bioethics. (2022) 36:201–9. doi: 10.1111/bioe.12927

5. Schwalbe, N, and Wahl, B. Artificial intelligence and the future of global health. Lancet. (2020) 395:1579–86. doi: 10.1016/S0140-6736(20)30226-9

6. Fujimori, R, Liu, K, Soeno, S, Naraba, H, Ogura, K, Hara, K, et al. Acceptance, barriers, and facilitators to implementing artificial intelligence–based decision support Systems in Emergency Departments: quantitative and qualitative evaluation. JMIR Form Res. (2022) 6:e36501. doi: 10.2196/36501

7. Hani, M, Christine, N, Gerard, O, Reilly, OK, Vikas, K, et al. Emergency care surveillance and emergency care registries in low-income and middle-income countries: conceptual challenges and future directions for research. BMJ Glob Health. (2019) 4:e001442. doi: 10.1136/bmjgh-2019-001442

8. West, R. Objective standards for the emergency services: emergency admission to hospital. J R Soc Med. (2001) 94:4–8.

9. Henry, JAM, and Reingold, ALM. Prehospital trauma systems reduce mortality in developing countries: a systematic review and meta-analysis. J Trauma Acute Care Surg. (2012) 73:261–8. doi: 10.1097/TA.0b013e31824bde1e

10. Mehmood, A, Rowther, A, Kobusingye, O, and Hyder, A. Assessment of pre-hospital emergency medical services in low-income settings using a health systems approach. Int J Emerg Med. (2018) 11:11. doi: 10.1186/s12245-018-0207-6

11. Deakin, CD. The chain of survival: not all links are equal. Resuscitation. (2018) 126:80–2. doi: 10.1016/j.resuscitation.2018.02.012

12. Ciecierski-Holmes, T, Singh, R, Axt, M, Brenner, S, and Barteit, S. Artificial intelligence for strengthening healthcare systems in low- and middle-income countries: a systematic scoping review. npj Digital Med. (2022) 5:1. doi: 10.1038/s41746-022-00700-y

13. Singh, J. Artificial intelligence and global health: opportunities and challenges. Emerg Top Life Sci. (2019) 3:741–6. doi: 10.1042/ETLS20190106

14. Bhattarai, HK, Bhusal, S, Barone-Adesi, F, and Hubloue, I. Prehospital emergency Care in low- and Middle-Income Countries: a systematic review. Prehosp Disaster Med. (2023) 38:495–512. doi: 10.1017/S1049023X23006088

15. Cimino, J, and Braun, C. Clinical research in prehospital care: current and future challenges. Clin Prac. (2023) 13:1266–85. doi: 10.3390/clinpract13050114

17. Piliuk, K, and Tomforde, S. Artificial intelligence in emergency medicine. A systematic literature review. Int J Med Inform. (2023) 180:105274. doi: 10.1016/j.ijmedinf.2023.105274

18. Adams, V, Burke, NJ, and Whitmarsh, I. Slow research: thoughts for a movement in Global Health. Med Anthropol. (2014) 33:179–97. doi: 10.1080/01459740.2013.858335

19. Engel, N, Van Hoyweghen, I, and Krumeich, A. Making health care innovations work: standardization and localization in Global Health. In: N Engel, I Van Hoyweghen, and A Krumeich, editors. Making Global Health care innovation work: Standardization and localization. New York: Palgrave Macmillan US; (2014). p. 1–14.

20. Razzak, J, Beecroft, B, Brown, J, Hargarten, S, and Anand, N. Emergency care research as a global health priority: key scientific opportunities and challenges. BMJ Glob Health. (2019) 4:e001486. doi: 10.1136/bmjgh-2019-001486

21. Delaney, PG, Moussally, J, and Wachira, BW. Future directions for emergency medical services development in low- and middle-income countries. Surgery. (2024) 176:220–2. doi: 10.1016/j.surg.2024.02.030

22. Masoumian Hosseini, M, Masoumian Hosseini, ST, Qayumi, K, Ahmady, S, and Koohestani, HR. The aspects of running artificial intelligence in emergency care; a scoping review. Arch Acad Emerg Med. (2023) 11:e38. doi: 10.22037/aaem.v11i1.1974

23. Hadley, TD, Pettit, RW, Malik, T, Khoei, AA, and Salihu, HM. Artificial intelligence in Global Health: a framework and strategy for adoption and sustainability. Int J MCH AIDS. (2020) 9:121–7. doi: 10.21106/ijma.296

24. Tong, A, Sainsbury, P, and Craig, J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. (2007) 19:349–57. doi: 10.1093/intqhc/mzm042

25. Levac, D, Colquhoun, H, and O'Brien, KK. Scoping studies: advancing the methodology. Implement Sci. (2010) 5:69. doi: 10.1186/1748-5908-5-69

26. Mallon, O, Pilot, E, and Lippert, F. Utilising artificial intelligence in the prehospital emergency medical services of LMICs: a scoping review. (2024). doi: 10.17605/OSF.IO/9VS2M

27. Mallon, O, Lippert, F, Stassen, W, Ong, MEH, Dolkart, C, Krafft, T, et al. Utilising artificial intelligence in prehospital emergency care systems in low- and middle-income countries: a scoping review. Front Public Health. (2025) 13:2–3. doi: 10.3389/fpubh.2025.1604231

28. Campbell, S, Greenwood, M, Prior, S, Shearer, T, Walkem, K, Young, S, et al. Purposive sampling: complex or simple? Research case examples. J Res Nurs. (2020) 25:652–61. doi: 10.1177/1744987120927206

29. Giacomini, MK, and Cook, DJ, for the Evidence-Based Medicine Working Group. Users' guides to the medical literature: XXIII. Qualitative research in health care A. Are the results of the study valid? JAMA. (2000) 284:357–62.

30. Terry, G, Hayfield, N, Clarke, V, and Braun, V. Thematic analysis In: C Willig and WS Rogers, editors. The SAGE handbook of qualitative research in psychology. 2nd ed. London: Sage (2017). 17–37.

31. Braun, V, and Clarke, V. Using thematic analysis in psychology. Qual Res Psychol. (2006) 3:77–101. doi: 10.1191/1478088706qp063oa

32. Bunniss, S, and Kelly, DR. Research paradigms in medical education research. Med Educ. (2010) 44:358–66. doi: 10.1111/j.1365-2923.2009.03611.x

33. Braun, V, and Clarke, V. To saturate or not to saturate? Questioning data saturation as a useful concept for thematic analysis and sample-size rationales. Qual Res Sport, Exerc Health. (2021) 13:201–16. doi: 10.1080/2159676X.2019.1704846

34. ATLAS.ti Scientific Software Development GmbH. ATLAS.ti Mac. 24.1.1 ed. Lumivero (2024). Available at: https://atlasti.com

35. Chen, Z, Zhang, R, Xu, F, Gong, X, Shi, F, Zhang, M, et al. Novel prehospital prediction model of large vessel occlusion using artificial neural network. Front Aging Neurosci. (2018) 10:181. doi: 10.3389/fnagi.2018.00181

36. He, M, Lu, Y, Zhang, L, Zhang, H, Gong, Y, and Li, Y. Combining amplitude Spectrum area with previous shock information using neural networks improves prediction performance of defibrillation outcome for subsequent shocks in out-of-hospital cardiac arrest patients. PLoS One. (2016) 11:e0149115. doi: 10.1371/journal.pone.0149115

37. Huang, H, Jiang, M, Ding, Z, and Zhou, M. Forecasting emergency calls with a Poisson neural network-based assemble model. IEEE Access. (2019) 7:18061–9. doi: 10.1109/ACCESS.2019.2896887

38. Ji, S, Zheng, Y, Wang, Z, and Li, T. A deep reinforcement learning-enabled dynamic redeployment system for Mobile ambulances. Proc ACM Interact Mob Wearable Ubiquitous Technol. (2019) 3:1–20. doi: 10.1145/3314402

39. Wang, J, Zhang, J, Gong, X, Zhang, W, Zhou, Y, and Lou, M. Prediction of large vessel occlusion for ischaemic stroke by using the machine learning model random forests. Stroke Vasc Neurol. (2022) 7:94–100. doi: 10.1136/svn-2021-001096

40. Zhang, X, Zhang, H, Sheng, L, and Tian, F. DL-PER: deep learning model for Chinese prehospital emergency record classification. IEEE Access. (2022) 10:64638–49. doi: 10.1109/ACCESS.2022.3179685

41. Zhang, Z, Zhou, D, Zhang, J, Xu, Y, Lin, G, Jin, B, et al. Multilayer perceptron-based prediction of stroke mimics in prehospital triage. Sci Rep. (2022) 12:17994. doi: 10.1038/s41598-022-22919-1

42. Yang, L, Liu, Q, Zhao, Q, Zhu, X, and Wang, L. Machine learning is a valid method for predicting prehospital delay after acute ischemic stroke. Brain Behav. (2020) 10:e01794. doi: 10.1002/brb3.1794

43. Yang, P, Cheng, P, Zhang, N, Luo, D, Xu, B, and Zhang, H. Statistical machine learning models for prediction of China's maritime emergency patients in dynamic: ARIMA model, SARIMA model, and dynamic Bayesian network model. Front Public Health. (2024) 12:1401161. doi: 10.3389/fpubh.2024.1401161

44. Boutilier, JJ, and Chan, TCY. Ambulance emergency response optimization in developing countries. Oper Res. (2020) 68:1315–34. doi: 10.1287/opre.2019.1969

45. Rathore, N, Jain, PK, and Parida, M. A sustainable model for emergency medical Services in Developing Countries: a novel approach using partial outsourcing and machine learning. Risk Manag Healthcare Policy. (2022) 15:193–218. doi: 10.2147/RMHP.S338186

46. Mapuwei, TW, Bodhlyera, O, and Mwambi, H. Univariate time series analysis of short-term forecasting horizons using artificial neural networks: the case of public ambulance emergency preparedness. J Appl Math. (2020) 2020:1–11. doi: 10.1155/2020/2408698

47. Costa, DB, Pinna, FCA, Joiner, AP, Rice, B, Souza, JVP, Gabella, JL, et al. AI-based approach for transcribing and classifying unstructured emergency call data: a methodological proposal. PLOS digital health. (2023) 2:e0000406. doi: 10.1371/journal.pdig.0000406

48. Torres, N, Trujillo, L, Maldonado, Y, and Vera, C. Correction of the travel time estimation for ambulances of the red cross Tijuana using machine learning. Comput Biol Med. (2021) 137:104798. doi: 10.1016/j.compbiomed.2021.104798

49. Butsingkorn, T, Apichottanakul, A, and Arunyanart, S. Predicting demand for emergency ambulance services: a comparative approach. J Applied Sci Eng. (2024) 27:3313–8. doi: 10.6180/jase.202410_27(10).0011

50. Anthony, T, Mishra, AK, Stassen, W, and Son, J. The feasibility of using machine learning to classify calls to south African emergency dispatch Centres according to prehospital diagnosis, by Utilising caller descriptions of the incident. Healthcare (Basel, Switzerland). (2021) 9:1107. doi: 10.3390/healthcare9091107

51. Christie, SA, Hubbard, AE, Callcut, RA, Hameed, M, Dissak-Delon, FN, Mekolo, D, et al. Machine learning without borders? An adaptable tool to optimize mortality prediction in diverse clinical settings. J Trauma Acute Care Surg. (2018) 85:921–7. doi: 10.1097/TA.0000000000002044

52. Abdul-Rahman, T, Ghosh, S, Lukman, L, Bamigbade, GB, Oladipo, OV, Amarachi, OR, et al. Inaccessibility and low maintenance of medical data archive in low-middle income countries: mystery behind public health statistics and measures. J Infect Public Health. (2023) 16:1556–61. doi: 10.1016/j.jiph.2023.07.001

53. Vishwakarma, LP, Singh, RK, Mishra, R, and Kumari, A. Application of artificial intelligence for resilient and sustainable healthcare system: systematic literature review and future research directions. Int J Prod Res. (2023) 63:1–23. doi: 10.1080/00207543.2023.2188101

54. O'Neil, S, Taylor, S, and Sivasankaran, A. Data equity to advance health and health equity in low- and middle-income countries: a scoping review. Digit Health. (2021) 7:20552076211061922. doi: 10.1177/20552076211061922

55. Awosiku, OV, Gbemisola, IN, Oyediran, OT, Egbewande, OM, Lami, JH, Afolabi, D, et al. Role of digital health technologies in improving health financing and universal health coverage in sub-Saharan Africa: a comprehensive narrative review. Front Digit Health. (2025) 7:1391500. doi: 10.3389/fdgth.2025.1391500

56. Foreit, K, Moreland, S, and LaFond, A. Data demand and information use in the health sector: a conceptual Framework. (2006):[1–17 pp.]. Available online at: https://www.measureevaluation.org/resources/publications/ms-06-16a.html (Accessed on 08-08-2024)

57. Pagallo, U, O'Sullivan, S, Nevejans, N, Holzinger, A, Friebe, M, Jeanquartier, F, et al. The underuse of AI in the health sector: opportunity costs, success stories, risks and recommendations. Health Technol (Berl). (2024) 14:1–14. doi: 10.1007/s12553-023-00806-7

59. Lecky, FE, Reynolds, T, Otesile, O, Hollis, S, Turner, J, Fuller, G, et al. Harnessing inter-disciplinary collaboration to improve emergency care in low- and middle-income countries (LMICs): results of research prioritisation setting exercise. BMC Emerg Med. (2020) 20:68. doi: 10.1186/s12873-020-00362-7

60. Hosny, A, and Aerts, HJWL. Artificial intelligence for global health. Science. (2019) 366:955–6. doi: 10.1126/science.aay5189

61. López, DM, Rico-Olarte, C, Blobel, B, and Hullin, C. Challenges and solutions for transforming health ecosystems in low- and middle-income countries through artificial intelligence. Front Med (Lausanne). (2022) 9:958097. doi: 10.3389/fmed.2022.958097

62. Taheri, Hosseinkhani N. Economic evaluation of artificial intelligence integration in global healthcare: balancing costs, outcomes, and investment Value. New Brunswick: Auburn University (2025).

63. Kelly, CJ, Karthikesalingam, A, Suleyman, M, Corrado, G, and King, D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. (2019) 17:195. doi: 10.1186/s12916-019-1426-2

64. Peña Gangadharan, S, and Niklas, J. Decentering technology in discourse on discrimination. Inf Commun Soc. (2019) 22:882–99. doi: 10.1080/1369118X.2019.1593484

65. Alami, H, Rivard, L, Lehoux, P, Hoffman, SJ, Cadeddu, SBM, Savoldelli, M, et al. Artificial intelligence in health care: laying the Foundation for Responsible, sustainable, and inclusive innovation in low- and middle-income countries. Glob Health. (2020) 16:52. doi: 10.1186/s12992-020-00584-1

66. Chenais, G, Lagarde, E, and Gil-Jardiné, C. Artificial intelligence in emergency medicine: viewpoint of current applications and foreseeable opportunities and challenges. J Med Internet Res. (2023) 25:e40031. doi: 10.2196/40031

67. Mehrabi, N, Morstatter, F, Saxena, N, Lerman, K, and Galstyan, A. A survey on Bias and fairness in machine learning. ACM Comput Surv. (2021) 54:115. doi: 10.1145/3457607

68. Yao, R, Zhang, W, Evans, R, Cao, G, Rui, T, and Shen, L. Inequities in health care services caused by the adoption of digital health technologies: scoping review. J Med Internet Res. (2022) 24:e34144. doi: 10.2196/34144

69. Fletcher, RR, Nakeshimana, A, and Olubeko, O. Addressing fairness, Bias, and appropriate use of artificial intelligence and machine learning in Global Health. Front Artif Intell. (2021) 3:561802. doi: 10.3389/frai.2020.561802

70. World Health Organization. Ethics and governance of artificial intelligence for health. Geneva: WHO (2021).

71. Barwise, AK, Curtis, S, Diedrich, DA, and Pickering, BW. Using artificial intelligence to promote equitable care for inpatients with language barriers and complex medical needs: clinical stakeholder perspectives. J Am Med Inform Assoc. (2023) 31:611–21. doi: 10.1093/jamia/ocad224

72. Lee, SCL, Mao, DR, Ng, YY, Leong, BS-H, Supasaovapak, J, Gaerlan, FJ, et al. Emergency medical dispatch services across Pan-Asian countries: a web-based survey. BMC Emerg Med. (2020) 20:5. doi: 10.1186/s12873-019-0299-1

73. Skyttberg, N, Chen, R, and Koch, S. Man vs machine in emergency medicine – a study on the effects of manual and automatic vital sign documentation on data quality and perceived workload, using observational paired sample data and questionnaires. BMC Emerg Med. (2018) 18:54. doi: 10.1186/s12873-018-0205-2

74. Leach, M, and Scoones, I. The slow race: Making technology work for the poor. London: Demos (2006). 81 p.

75. Zuhair, V, Babar, A, Ali, R, Oduoye, MO, Noor, Z, Chris, K, et al. Exploring the impact of artificial intelligence on Global Health and enhancing healthcare in developing nations. J Prim Care Community Health. (2024) 15:21501319241245847. doi: 10.1177/21501319241245847

76. Khan, MS, Umer, H, and Faruqe, F. Artificial intelligence for low income countries. Humanities Soc Sci Commun. (2024) 11:1422. doi: 10.1057/s41599-024-03947-w

77. UNCTAD Division on Technology and Logistics. Digital economy report 2024. New York: United Nations Publications (2024).

78. Capasso, M, and Umbrello, S. Responsible nudging for social good: new healthcare skills for AI-driven digital personal assistants. Med Health Care Philos. (2022) 25:11–22. doi: 10.1007/s11019-021-10062-z

79. Parsa, AD, Hakkim, S, Vinnakota, D, Mahmud, I, Bulsari, S, Dehghani, L, et al. Artificial intelligence for global healthcare In: J Moy Chatterjee and SK Saxena, editors. Artificial Intelligence in Medical Virology. Singapore: Springer Nature Singapore (2023). 1–21.

80. Guo, J, and Li, B. The application of medical artificial intelligence Technology in Rural Areas of developing countries. Health Equity. (2018) 2:174–81. doi: 10.1089/heq.2018.0037

Keywords: artificial intelligence, machine learning, prehospital, emergency medical services, LMIC, emergency patient care

Citation: Mallon O, Lippert F and Pilot E (2025) Artificial intelligence in prehospital emergency care systems in low- and middle-income countries: cure or curiosity? Insights from a qualitative study. Front. Public Health. 13:1632029. doi: 10.3389/fpubh.2025.1632029

Edited by:

Deepanjali Vishwakarma, University of Limerick, Ireland

Reviewed by:

Weiqi Jiao, Boston Strategic Partners Inc., United States

Jaideep Visave, University of North Carolina at Greensboro, United States

Copyright © 2025 Mallon, Lippert and Pilot. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eva Pilot, eva.pilot@maastrichtuniversity.nl; Odhran Mallon, o.mallon@alumni.maastrichtuniversity.nl
