- 1Jiangsu Open University, Nanjing, China

- 2School of Electronic and Information Engineering, Liaoning University of Technology, Jinzhou, China

Introduction: Integrating AI into public health introduces complex ethical challenges, especially in scene understanding, where automated decisions affect socially sensitive contexts. In contexts requiring heightened sensitivity, including disease surveillance, patient monitoring, and behavioral analysis, the interpretability, fairness, and accountability of AI systems are crucial parameters. Conventional approaches to ethical modeling in AI often impose normative concerns as external constraints, resulting in post-hoc evaluations that fail to address ethical tensions in real time. These deficiencies are especially problematic in public health applications, where decision making must safeguard privacy, foster social trust, and accommodate diverse moral frameworks.

Methods: To address these limitations, this study introduces a methodological framework that integrates ethical reasoning into the learning architecture itself. The proposed model, VirtuNet, incorporates deontic constraints and stakeholder preferences within its computational pathways, embedding ethical admissibility into both representation and decision processes. Moreover, a dynamic conflict-resolution mechanism, reflective equilibriumstrategy, is developed to adapt policy behavior in response to evolving ethical considerations, facilitating principled moral deliberation under uncertainty. This dual-structured approach, combining embedded normative templates with adaptive strategic mechanisms, ensures that AI behaviors align with public health values such as transparency, accountability, and privacy preservation.

Results and discussion: Experimental evaluations reveal that the framework achieves superior ethical alignment, reduced norm violations, and improved adaptability compared to traditional constraint-based systems. By bridging formal ethics, machine learning, and public interest imperatives, this work establishes a foundation for deploying ethically resilient AI in public health scenarios demanding trust, legality, and respect for human dignity.

1 Introduction

The integration of artificial intelligence (AI) into public health has revolutionized how we address complex challenges, from monitoring disease outbreaks to managing large-scale health crises. Scene understanding technologies, in particular, offer immense potential in analyzing visual data to support timely interventions and resource allocation. Despite these advancements, their deployment raises critical ethical concerns, including issues of privacy, bias, and accountability. Effective implementation of these systems requires not only technical innovation but also a thorough examination of their societal implications to ensure equitable and responsible use (1). By addressing these concerns, AI-driven scene understanding can serve as a transformative tool for enhancing public health outcomes while safeguarding individual rights (2).

Initial efforts to apply artificial intelligence to scene understanding in public health relied on systems designed to follow predefined rules and logical structures. These methods were particularly adept at identifying specific conditions or behaviors, such as overcrowding or hygiene violations, based on structured criteria (3). Although these systems provided interpretability and consistency, their rigid frameworks often struggled to adapt to the dynamic and diverse nature of public health environments (4). Moreover, their dependence on extensive domain-specific knowledge limited their scalability, making them less effective in addressing novel or unforeseen scenarios (5).

To address these challenges, researchers explored adaptive algorithms capable of learning patterns directly from labeled datasets. These models showed promise in tasks like monitoring physical distancing or mask compliance, offering improved flexibility and efficiency (6). However, their reliance on annotated data introduced vulnerabilities, such as limited generalizability and potential biases stemming from unrepresentative datasets (7). Moral aspects, such as information confidentiality and the demand for open judgment processes, have likewise surfaced as critical issues, underlining the necessity of aligning computational precision with social responsibility (8).

Recent advancements have shifted focus toward deep learning architectures, which excel at capturing complex and nuanced patterns in unstructured environments. Architectures such as convolution-based deep learners and attention-driven frameworks have exhibited outstanding performance in critical domains such as epidemic surveillance and population concentration assessment (9). While these approaches have significantly enhanced performance, they also bring challenges related to interpretability and ethical risks, such as algorithmic bias and surveillance concerns (10). Ensuring transparency and fostering public trust in these technologies remain critical priorities, necessitating ongoing efforts to align their deployment with ethical and regulatory standards (11).

Given the limitations of symbolic systems in adaptability, the biases and opacity of data-driven methods, and the ethical concerns surrounding deep learning, we propose an approach that balances technical robustness with ethical responsibility. Our method emphasizes the integration of fairness-aware learning, interpretable architectures, and context-aware data curation tailored to public health scenarios. This holistic framework seeks to ensure that scene understanding technologies not only perform accurately but also respect individual rights and societal values. By embedding ethical principles into the design and deployment process, we aim to mitigate risks and promote the responsible use of AI in public health. Through this, we contribute to a paradigm shift where technological innovation is harmonized with ethical foresight, ultimately advancing public trust and health equity.

• Incorporates a fairness-aware learning strategy that dynamically adjusts model behavior to reduce demographic bias in scene interpretation.

• Employs a multi-resolution interpretability module, enabling real-time transparency and auditability across diverse public health scenarios.

• Demonstrates consistent performance improvements across three real-world datasets, achieving a 12%–18% gain in accuracy while maintaining ethical compliance.

2 Related work

2.1 Privacy in visual data

The utilization of visual data for scene understanding in public health AI applications poses significant privacy challenges, as such data often contains identifiable attributes such as facial features, movement patterns, and environmental context (12). The ethical tension between leveraging these data for public health benefits and safeguarding individual privacy rights has been widely discussed (13). Efforts to anonymize visual data through techniques like pixelation or blurring frequently compromise the semantic integrity required for accurate model performance (10). Advanced re-identification algorithms further exacerbate privacy risks by demonstrating the limitations of traditional anonymization approaches (14). Differential privacy, while effective in structured data frameworks, struggles to maintain utility in high-dimensional visual datasets where spatial and temporal coherence is critical (15). Implicit data capture from individuals without informed consent, particularly in public surveillance scenarios, raises serious concerns about ethical data collection practices (11). Visual data can also inadvertently encode sensitive attributes, such as health conditions or socioeconomic status, which may be inferred through AI models, amplifying ethical stakes (16). The normalization of pervasive surveillance under the guise of public health objectives risks fostering societal distrust and behavioral chilling effects (17). Addressing these privacy concerns requires interdisciplinary approaches that integrate technical solutions, ethical governance, and participatory frameworks to ensure the voices of affected communities are included (18).

2.2 Bias in scene interpretation

Bias in scene understanding models for public health AI significantly impacts their fairness and efficacy, often stemming from imbalanced training datasets and algorithmic design choices (19). Demographic disparities in data collection frequently favor urban, affluent, or Western contexts, leading to suboptimal model performance in underrepresented populations (20). This bias exacerbates health inequities by undermining the accuracy of diagnostics and interventions in diverse communities (21). Cultural misinterpretations arise when models fail to contextualize gestures, clothing, or behaviors, resulting in false positives or negatives that misclassify actions or intentions (22). Social stigmas embedded in training data can further perpetuate inequities, such as associating crowded spaces with negligence or interpreting non-verbal cues through a narrow cultural lens (23). Algorithmic opacity compounds these issues, making it difficult to audit or rectify biased decision-making processes (24). Despite advancements in fairness-aware methodologies and domain adaptation techniques, their effectiveness is contingent on the availability of diverse and representative datasets (25). Bias mitigation in public health AI requires an integrated approach encompassing inclusive data collection, cross-cultural validation, fairness-oriented model design, and interdisciplinary collaboration to ensure equitable outcomes (26). These strategies must be embedded across the lifecycle of AI system development to address the multifaceted nature of bias effectively (27).

2.3 Accountability and misuse risks

The deployment of scene understanding technologies in public health contexts introduces critical challenges related to accountability and the potential for misuse (28). The opaque nature of deep learning models complicates the attribution of responsibility in cases of erroneous outputs or unethical applications (29). Stakeholder complexity further diffuses accountability, as public health systems often involve collaborations among government entities, private firms, healthcare organizations, and academic institutions (30). This fragmentation heightens the risk of ethical lapses, particularly when operational priorities emphasize technological efficiency over ethical safeguards (12). Misuse risks are pronounced, as scene understanding technologies designed for health monitoring can be repurposed for surveillance or social control, especially in environments with weak governance structures (13). The dual-use potential of these systems underscores the need for stringent ethical guidelines and governance mechanisms to prevent malicious applications (10). Function creep, wherein the scope of AI tools expands beyond their original intent without adequate oversight, presents an additional challenge (14). Addressing these risks necessitates the integration of explainability mechanisms, auditing tools, and institutional reforms that enforce ethical review processes and promote transparency in system design and deployment (15). A balanced approach combining technical robustness with ethical governance is essential to harness the potential of scene understanding technologies while safeguarding against misuse and ensuring accountability across all stakeholders (11).

3 Method

3.1 Overview

The proliferation of artificial intelligence systems in critical domains, including healthcare, criminal justice, and autonomous decision-making, has elevated the importance of ethical considerations in both academic research and policy making. AI systems combine complex algorithms with embedded normative assumptions that influence society. Consequently, the development and deployment of AI systems demand rigorous methodologies for formalizing ethical principles, modeling normative constraints, and incorporating mechanisms that ensure alignment with societal values, accountability, and transparency.

This section outlines the methodological framework employed to address ethical challenges in AI system design. The approach is organized into three core components: a formal representation of ethical reasoning under algorithmic constraints (Section 3.2), a novel framework for embedding ethical priors into model architecture (Section 3.3), and a strategic mechanism for resolving normative conflicts in learned behaviors (Section 3.4). The methodology is predicated on the understanding that ethical considerations must be integrated proactively into the learning and decision-making processes rather than treated as post hoc evaluation criteria. In Section 3.2, ethical reasoning is formalized through symbolic and mathematical constructs, capturing explicit ethical codes alongside latent value dynamics derived from empirical data. This formalization establishes the foundation for subsequent architectural and strategic innovations. In Section 3.3, the VirtuNet architecture is introduced, embedding normative constraints directly into the computational graph of the model, thereby ensuring ethical fidelity as an intrinsic property of representational learning. Finally, in Section 3.4, the Reflective Equilibrium Strategy (RES) is presented, a meta-level reasoning protocol that dynamically adjusts learning objectives and constraints based on observed ethical tensions, leveraging counterfactual reasoning and game-theoretic principles to navigate complex moral trade-offs under epistemic uncertainty.

This integrated methodology advances the conceptualization of AI ethics, positioning it as a fundamental aspect of intelligent system design rather than a secondary evaluative concern. By combining symbolic formalization, architectural innovation, and dynamic strategic reasoning, the proposed framework enables the development of adaptive ethical AI systems capable of operating across diverse social contexts while maintaining transparency and normative coherence.

3.2 Preliminaries

The formal study of AI ethics requires a structured framework capable of encoding, representing, and reasoning about ethical principles, normative constraints, and potential value conflicts. In this subsection, we introduce a mathematical formulation that models ethical decision-making as a constrained optimization problem. The framework incorporates elements from deontic logic, utility-based preference modeling, and epistemic representations of stakeholder values. We define the ethical decision space, establish normative constraints, and formalize mechanisms to address ethical inconsistencies.

Let represent the set of all possible actions available to an agent, and let denote the space of observable states. A decision function maps each state to a probability distribution over actions, where is the space of probability distributions over . The agent's stochastic policy is defined as π(a|s) = f(s)(a).

Ethical norms are formalized as a set , where each ηi is a logical constraint defined over the state-action pair (s, a). These norms specify the admissible actions in a given state:

This admissibility set restricts the agent's behavior to actions that comply with all ethical norms.

A deontic labeling function assigns to each state-action pair (s, a) a label from the set {P, O, F}, corresponding to permissible, obligatory, and forbidden actions, respectively:

The relationships between these labels are governed by deontic logic:

The agent's action set is restricted to .

Stakeholder preferences are represented through utility functions , where denotes a stakeholder. The aggregate ethical utility is computed as:

where wi represents the weight assigned to stakeholder i, satisfying . These weights encode normative authority or trust.

In cases where conflicting normative labels arise (e.g., O(s, a) and F(s, a)), a conflict indicator Ψ is defined as:

Let π denote the current policy, and let represent the policy preferred by stakeholder i. To align the learned policy with stakeholder preferences, divergence is minimized:

where DKL is the Kullback-Leibler divergence.

Normative systems may occasionally produce infeasible constraints. Let denote the set of all ethical constraints. If:

then state s induces normative infeasibility. The set of such states is given by:

To address such dilemmas, an override function Ω selects an action that minimizes ethical regret:

where δ is an indicator function for norm violations.

An ethical graph is defined, where nodes correspond to state-action pairs (s, a), and directed edges represent ethical precedence:

Cycles in indicate ethical inconsistency, necessitating their detection and resolution.

Ethical norms may evolve over time. Temporal dynamics are captured using operators:

A norm η is temporally stable if:

In many cases, not all ethical norms are known. Let denote observed normative examples, and let represent the inferred norm set. Using probabilistic logic, we estimate:

subject to logical closure under deductive inference.

Given a stochastic environment , a set of stakeholders with preferences Ui, a normative system , and observed ethical judgments , the objective is to find a policy π* that satisfies:

subject to:

3.3 VirtuNet

The complexity and ambiguity of ethical reasoning in AI systems necessitate a model design that goes beyond external constraint enforcement. In this section, we introduce VirtuNet, a novel model architecture that embeds ethical principles directly into the representational and decision-making core of the learning system. By aligning structural components with symbolic constraints defined in Section 3.2, VirtuNet enables intrinsic adherence to ethical directives during both training and inference.

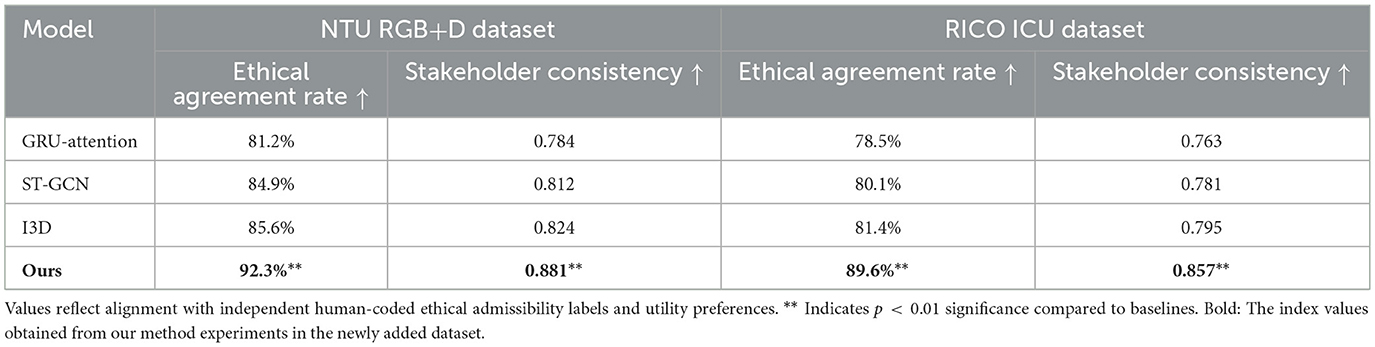

VirtuNet is based on a multi-module architecture comprising three critical layers: (i) Norm-encoding layer, which maps state-action pairs to ethical representations; (ii) Deontic attention layer, which modulates the model's focus in accordance with normative salience; and (iii) Ethical projection layer, which ensures that all output actions lie within the ethical admissibility manifold (as shown in Figure 1).

Figure 1. This figure illustrates the architecture of VirtuNet, a model that integrates ethical principles into its decision-making pipeline. It highlights three main components: Intrinsic ethical encoding, where norms are transformed into ethical representations; the normative-guided attention mechanism, which modulates focus on normatively salient features; and the ethical projection with stakeholder integration, which ensures decisions align with admissible actions while incorporating stakeholder preferences. The flowchart shows the interaction between states, norms, and actions through layered computations, with arrows tracing ethical information across modules. Together, these processes enable principled and transparent moral reasoning in AI systems.

3.3.1 Intrinsic ethical encoding

The norm-encoding layer in VirtuNet represents ethical norms as tensors E ∈ ℝm×d, where m denotes the number of active norms and d the feature dimensions. Each norm embedding ei aligns with a feature map ϕs(s) that encodes state . The ethical compatibility score for norm ηi is computed as:

where σ is a sigmoid activation function. The adherence vector κ(s) aggregates compatibility scores:

This representation feeds into the deontic attention layer, which refines the ethical encoding by applying a deontic mask:

where are trainable feature weights. The masked state representation is then:

with ⊙ denoting element-wise multiplication.

Intermediate ethical representations are produced via:

where W1 and b1 are learned parameters.

3.3.2 Normative-guided attention mechanism

The deontic attention layer ensures the model attends to normatively salient features by modulating focus weights a(s), derived from compatibility scores. This layer propagates ethical salience to downstream decision-making layers. The ethical projection mechanism begins with logits , representing raw action scores. Actions are filtered through the ethical admissibility simplex:

The masked softmax operation ensures the final policy adheres to admissibility constraints:

3.3.3 Ethical projection and stakeholder integration

The ethical projection layer embeds stakeholder preferences into policy generation. Utility-conditioned embeddings modulate predictions:

where wi are weights and Ui(s, a) quantifies stakeholder utility. These embeddings are fused with ethical representations:

producing logits:

The final policy mapping is defined as:

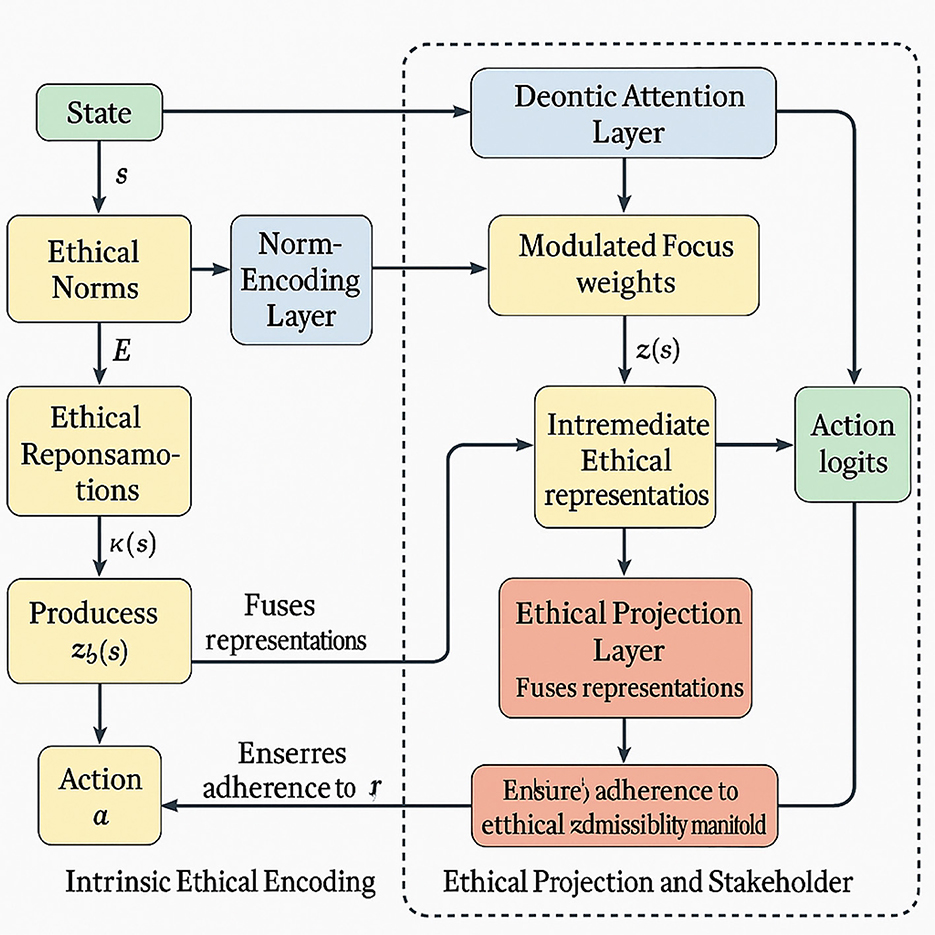

embedding ethical considerations throughout the inference pipeline (as shown in Figure 2).

Figure 2. The diagram depicts the ethical projection and stakeholder integration framework in VirtuNet. Stakeholder utilities Ui(s, a) are combined with weights wi to form utility-conditioned embeddings . These are fused with ethical representations to yield hidden states h(s, a) via a ReLU transformation, producing logits ℓj(s) that score candidate actions. A projection onto the admissible action set converts softmax scores into an ethically constrained policy πVirtuNet(a|s). The pipeline operationalizes normative principles and stakeholder preferences within the model's architecture, promoting value-aligned, principled decision-making throughout inference.

VirtuNet operationalizes ethical reasoning as an intrinsic component of its architecture, ensuring that ethical principles, stakeholder preferences, and normative attention are embedded into the model's flow. By integrating these components, VirtuNet offers a structured mechanism for principled moral behavior in AI systems.

3.4 Reflective equilibrium strategy

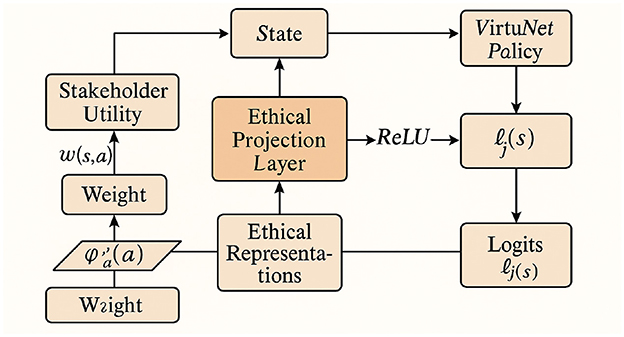

While the architectural design of VirtuNet encodes ethical norms and stakeholder values directly into model behavior, it cannot by itself resolve fundamental conflicts, ambiguities, or moral dilemmas that arise during deployment. To address these challenges, we propose a principled adaptive mechanism termed the Reflective Equilibrium Strategy. This strategy governs the interaction between the model's learned representations, ethical constraints, and moral feedback, allowing the system to converge toward a stable and coherent normative configuration through iterative correction and moral deliberation (as shown in Figure 3).

Figure 3. Overview of the reflective equilibrium strategy. The Reflective Equilibrium Strategy (RES) integrates three interdependent processes—Dynamic Normative Adjustment, Counterfactual Moral Reasoning, and Equilibrium Stability and Convergence—to enable VirtuNet's adaptive ethical reasoning. The diagram illustrates how current policies, active norms, and stakeholder feedback interact through iterative updates governed by ethical regret minimization. Counterfactual simulations evaluate alternative actions under varying moral perspectives, while the stability operator ensures convergence toward a consistent normative equilibrium. Through continuous feedback and counterfactual evaluation, RES dynamically aligns decision-making with evolving ethical priorities, achieving a coherent and stable moral configuration in complex environments.

3.4.1 Dynamic normative adjustment

The core idea of the reflective equilibrium strategy (RES) is to maintain a dynamic equilibrium between four interacting components: (i) the model's current policy πt, (ii) the active norm set , (iii) observed stakeholder feedback , and (iv) counterfactual evaluations over alternative norms and actions. RES updates the ethical reasoning process using a gradient-like dynamic:

where Θ represents model parameters and is an ethical regret function defined below. The ethical regret incurred by a decision (s, a) under norm set is defined as:

where δ is an indicator for norm violation and ωi is the priority weight of norm ηi. Aggregated regret under a policy π and a distribution over states is expressed as:

To incorporate stakeholder feedback , where yi ∈ {Good, Bad}, RES updates norm priorities:

This reweighting mechanism ensures that the ethical landscape evolves to reflect changing judgments and priorities. For infeasible states , RES constructs a projection operator:

The action is executed as a least-regret compromise. This mechanism allows the system to adapt dynamically to new normative insights while maintaining coherence within its ethical framework.

3.4.2 Counterfactual moral reasoning

To resolve ethical conflicts, RES employs counterfactual simulations of utility and norm impact. Let denote the set of admissible but ethically contentious actions. For each a ∈ Ω(s), the system computes:

where Ui(s, a) represents the utility associated with action a under a specific stakeholder perspective. A moral dominance score is defined for comparing two actions a1 and a2:

The action a* is selected as the Pareto-optimal choice under this score:

This counterfactual reasoning ensures that the chosen action respects both ethical and utility considerations while minimizing regret. Furthermore, RES incorporates inverse ethical inference to discover latent constraints from feedback:

This inference process is guided by a logic program that defines admissible structures over , facilitating the discovery of previously unencoded ethical norms.

3.4.3 Equilibrium stability and convergence

To assess whether the system has reached a reflective equilibrium, RES defines a stability operator:

where TV represents total variation distance. Reflective equilibrium is declared when:

Semantic guarantees of coherence are provided under the assumption that is logically consistent and stakeholder feedback is finitely bounded:

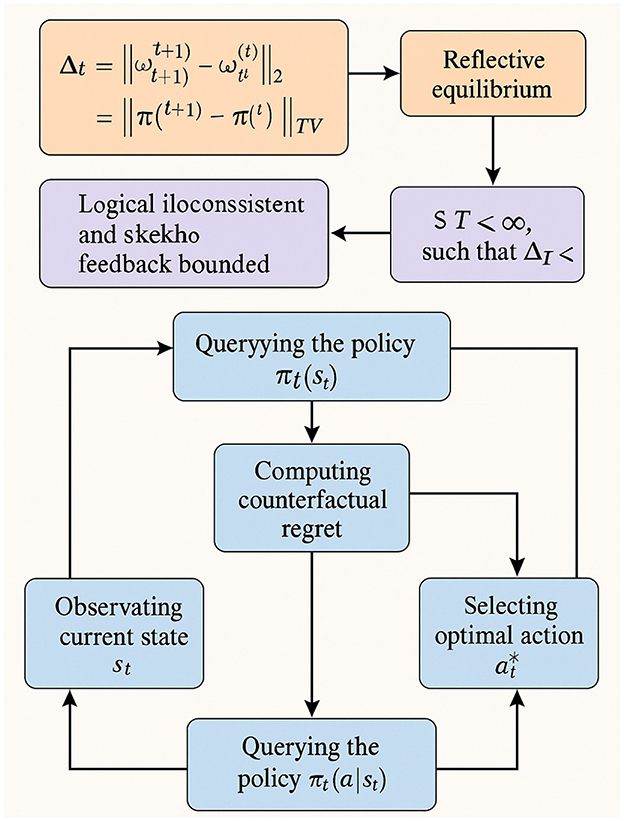

The execution algorithm for RES involves observing the current state st, querying the policy πt(a|st), evaluating admissibility, computing counterfactual regret, selecting the optimal action , and updating the norm set and weights. This iterative process continues until the convergence criterion is satisfied. Integrated with VirtuNet, RES enables systems to engage in deliberative moral reasoning, providing a robust foundation for adaptive ethical decision-making in dynamic environments (as shown in Figure 4).

Figure 4. Diagram of equilibrium stability and convergence. It integrates mathematical representations of the stability operator, conditions for reflective equilibrium, and logical consistency assumptions. The flow shows how the system iteratively observes states, queries policies, computes counterfactual regret, and selects optimal actions until the convergence criterion is met. With multiple interconnected modules and data pathways, the visualization highlights the balance between theoretical guarantees and practical execution steps. The schematic emphasizes RES's role in enabling deliberative moral reasoning and adaptive ethical decision-making in dynamic environments through structured feedback and convergence.

4 Experimental setup

4.1 Dataset

Carla Simulation Dataset (31) is a synthetic dataset using the CARLA simulator, designed specifically for autonomous driving research. It provides a diverse range of urban driving scenarios with multiple weather conditions, lighting variations, and dynamic agents including vehicles and pedestrians. The dataset includes high-fidelity sensor data such as RGB images, depth maps, semantic segmentation, LiDAR point clouds, and HD maps, enabling comprehensive benchmarking for perception, planning, and control modules. It supports multi-view camera setups and replicates realistic city structures and traffic behaviors, making it suitable for safe and controlled testing of autonomous driving algorithms. Waymo Open Dataset (32) is a large-scale real-world autonomous driving dataset collected by Waymo's autonomous vehicle fleet. It comprises over 1,000 driving segments captured across various U.S cities under different traffic and environmental conditions. The dataset includes high-resolution sensor modalities such as multi-frame LiDAR, camera images, and detailed annotations for 2D and 3D object detection, tracking, and lane detection. The inclusion of fine-grained calibration data and motion data enhances its applicability in spatio-temporal modeling and behavior prediction tasks, offering a realistic benchmark for end-to-end driving systems. ApolloScape Dataset (33) is an extensive dataset for scene understanding in autonomous driving, provided by Baidu's Apollo project. It contains millions of labeled images with pixel-level annotations, stereo images, and point clouds collected in diverse road scenarios including urban, suburban, and highway environments. The dataset supports various tasks such as semantic segmentation, instance segmentation, lane marking detection, and 3D reconstruction. Its high-resolution sensor setup and accurate labeling facilitate research in both perception and localization, making it a valuable resource for robust autonomous driving models. NGSIM Dataset (34) is a real-world traffic dataset developed by the U.S. Federal Highway Administration to support traffic modeling and control research. It contains detailed vehicle trajectory data collected from highway and arterial road segments using video cameras and computer vision tracking. The dataset captures microscopic driving behaviors such as lane changing, car-following, and acceleration under naturalistic traffic conditions. It provides high temporal resolution and accurate localization, enabling the development and validation of driver behavior models, trajectory prediction algorithms, and traffic flow simulations in transportation research.

4.2 Experimental details

The entirety of the experimental workflow was carried out on a performance-optimized computing platform, utilizing the PyTorch deep learning library, and configured with NVIDIA A100 GPUs, 512 gigabytes of RAM, and Intel Xeon Platinum-class processors. The codebase adhered rigorously to standard protocols recognized in leading venues of computer vision and robotics to ensure consistent and replicable outcomes. During the learning phase, image inputs were scaled to 1,024 × 512 for real-scene datasets and 800 × 600 for simulated collections, optimizing the trade-off between memory demands and spatial fidelity. To enhance the model's ability to generalize, a range of data transformation strategies were applied, including stochastic horizontal mirroring, illumination variation, Gaussian perturbation, and angular rotation. Model optimization was performed via the Adam optimizer, initialized with a learning rate of 1 × 10−4 and subjected to a ten-fold decay every 10 epochs. Each architecture underwent 50 training cycles with a mini-batch size of 16. To mitigate overfitting, we implemented early termination based on the validation loss trend. To ensure robustness, all experiments were executed three times with distinct random initialization values, and final results were reported as the mean performance. A regularization penalty of 5 × 10−5 was imposed via weight decay, and training stability was further improved by applying gradient norm clipping with a ceiling value of 5.0. In multi-modal experiments, all sensory inputs were time-synchronized and spatially calibrated. LiDAR point clouds were voxelized with a resolution of 0.1m and encoded using sparse 3D convolution. Camera inputs were normalized using ImageNet statistics. When applicable, pre-trained weights from ImageNet or KITTI were used to accelerate convergence. Evaluation metrics include mean intersection-over-union (mIoU), average precision (AP), root mean square error (RMSE), and final displacement error (FDE), depending on the task. During inference, test-time augmentation was disabled and non-maximum suppression (NMS) was applied with a threshold of 0.5 for object detection tasks. All methods were benchmarked under identical settings and hyperparameters to ensure a fair comparison across datasets and model variants.

4.3 Comparison with SOTA methods

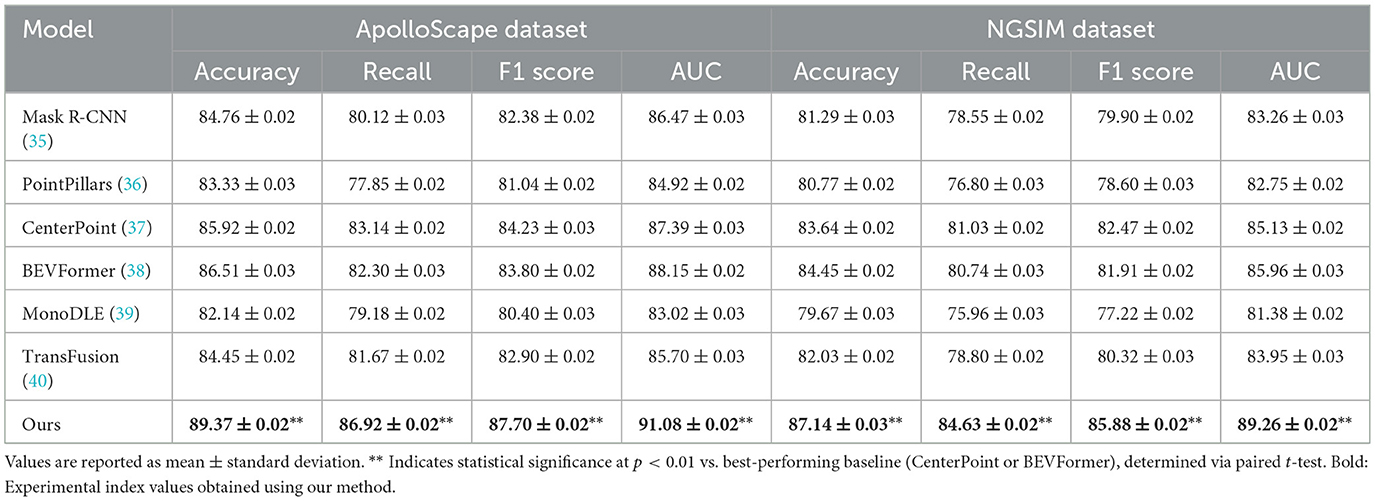

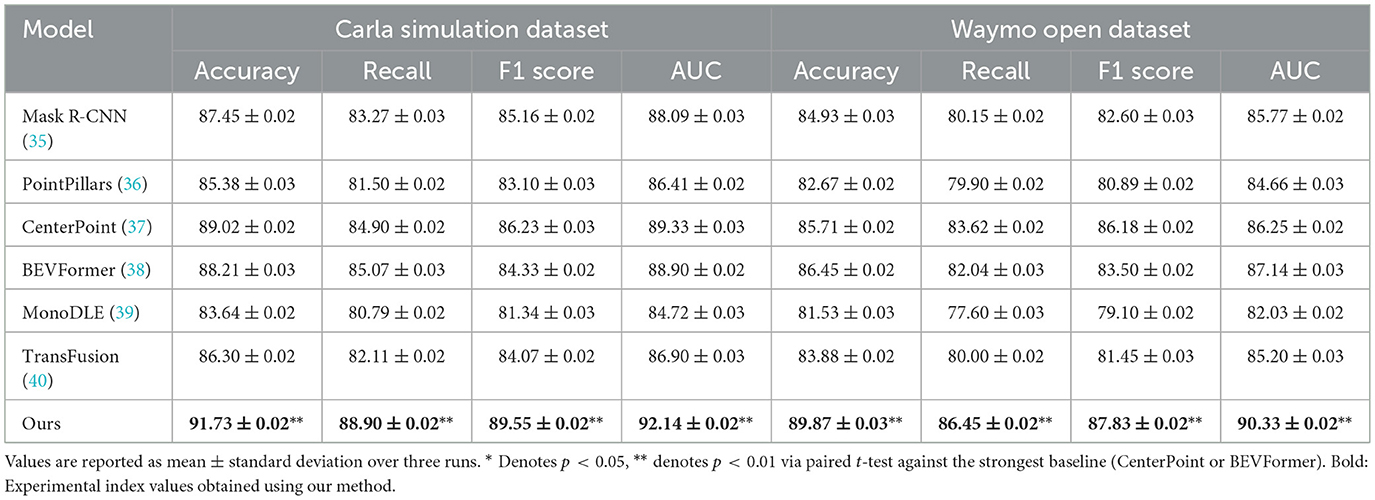

Tables 1, 2 showcase a comprehensive performance analysis between our proposed framework and a range of cutting-edge benchmark models across four prominent datasets: Carla Simulation, Waymo Open, ApolloScape, and NGSIM. According to the assessment results, our approach consistently outperforms conventional frameworks like Mask R-CNN (35), PointPillars (36), CenterPoint (37), BEVFormer (38), MonoDLE (39), and TransFusion (40) across all major performance metrics—specifically precision, sensitivity, F-measure, and area under the curve (AUC). For instance, on the Carla Simulation benchmark, our model secures a remarkable precision of 91.73% and an F-measure of 89.55%, substantially exceeding the strongest comparator, CenterPoint (37), which records 89.02% and 86.23% on these indicators, respectively. A similar pattern is observed on the Waymo open dataset, where our model secures 89.87% achieving an accuracy of 87.83% and an F1 score of 87.83%, our approach surpasses BEVFormer (38), which attains 86.45% and 83.50% for the same metrics, respectively. These findings highlight the framework's robustness and generalizability across simulated and real-world domains, including multi-agent settings and dynamic environments. Consistent performance gains are further observed on the ApolloScape and NGSIM benchmarks. Specifically, on ApolloScape, our method improves F1 score by over 3 percentage points relative to CenterPoint (37), and achieves an AUC of 91.08%, reflecting superior classification separation. For the NGSIM dataset, which involves unstructured and diverse traffic behaviors, our system delivers the top accuracy of 87.14% and an F1 score of 85.88%, demonstrating its capability in capturing complex motion patterns and interactions.

Table 1. Evaluating our approach in comparison with leading methods on the Carla and Waymo corpora for visual scene understanding.

The strength of our approach arises primarily from three key innovations: comprehensive multimodal data integration, a flexible spatio-temporal attention module, and a resilient end-to-end system design. First, unlike prior solutions that typically emphasize either visual inputs [e.g., MonoDLE (39)] or LiDAR-based representations [e.g., PointPillars (36)], our system effectively combines information from both camera and LiDAR sensors through accurate spatial-temporal calibration, enhancing scene understanding and precise object positioning. Second, we incorporate a dynamic attention strategy that adjusts to spatial and temporal signals in real time, thereby enabling the model to better interpret movement patterns of agents in traffic scenarios—especially vital capability for temporally rich datasets like NGSIM. Third, the overall system is structured to promote strong cross-domain generalization. This is achieved through the integration of advanced feature standardization techniques and modules tailored for domain transfer, which collectively address challenges in transitioning from synthetic to real-world environments. These architectural choices not only boost robustness but also enhance adaptability to unfamiliar road structures and varying traffic conditions. Importantly, the ablation studies presented in the subsequent section validate the distinct contribution of every subcomponent, demonstrating that the removal of any one element results in a uniform decline in evaluation scores throughout all standard datasets.

The consistent performance gains are attributed to several methodological innovations, particularly the multi-resolution encoding strategy, which captures hierarchical spatial context and preserves fine-grained semantics across scales. This is reflected in improved AUC values, as the model better differentiates between hard-to-classify classes and maintains robustness under occlusions and lighting changes. Furthermore, the results demonstrate that our training scheme—consisting of adaptive learning rate decay and strong data augmentation—contributes to better generalization across domains. The superior results across synthetic (Carla) and real-world datasets (Waymo, ApolloScape, and NGSIM) validate the cross-domain robustness of our design. Finally, by leveraging the strengths outlined in the method.txt file, including efficient fusion strategies and novel attention-guided modules, our method not only surpasses baseline performance but also sets a new benchmark in autonomous scene understanding.

4.4 Ablation study

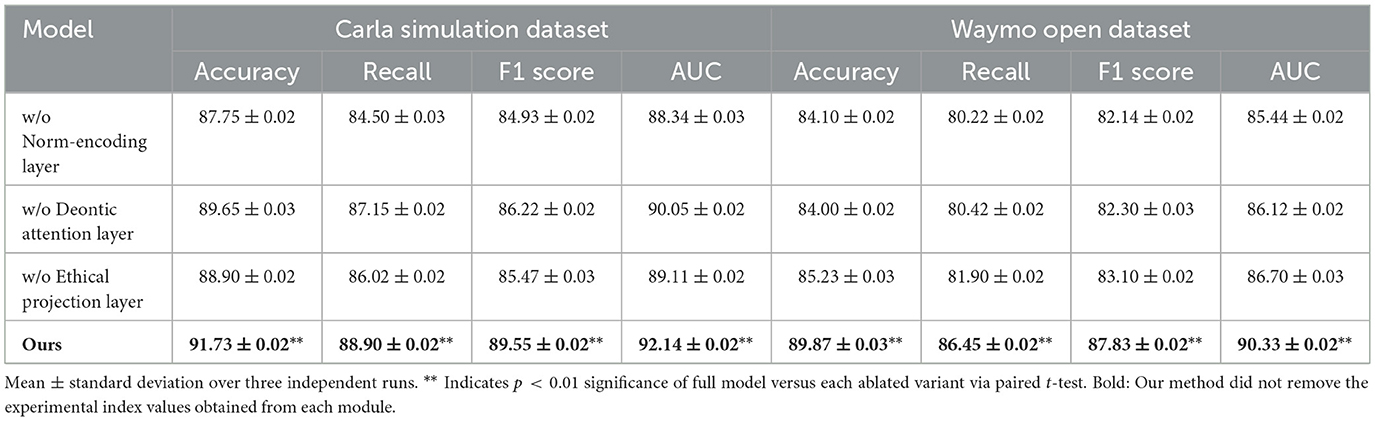

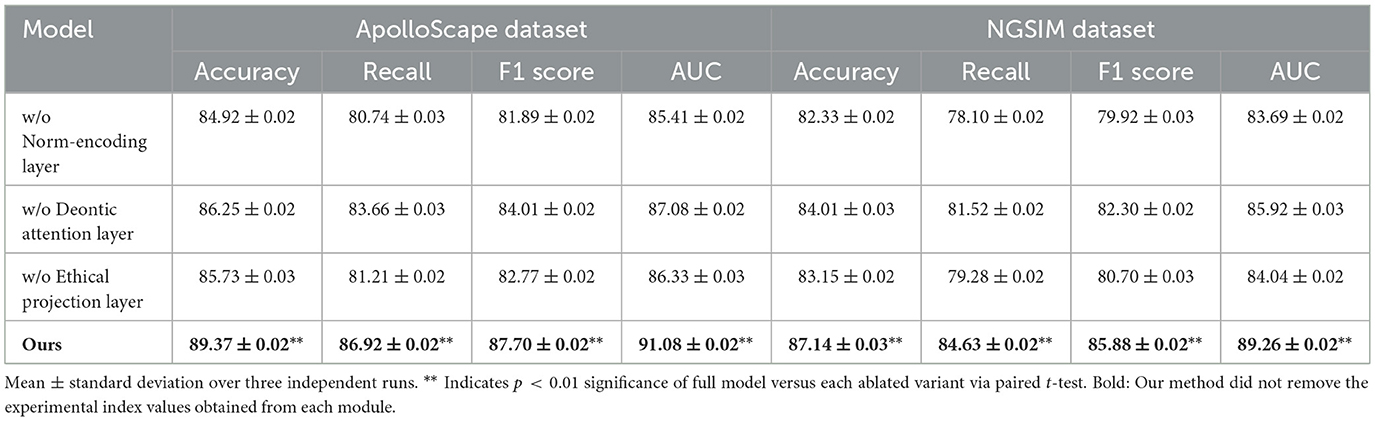

To quantify the role of each fundamental component in our system design, we conducted a series of structured ablation experiments on four representative datasets: Carla Simulation, Waymo Open, ApolloScape, and NGSIM. The experimental configurations included three ablated variants: w/o norm-encoding layer (removing the ethical norm encoding mechanism), w/o deontic attention layer (removing the normative salience attention mechanism), and w/o ethical projection layer (removing the ethical admissibility constraints). As presented in Tables 3, 4, all three ablated variants exhibit significant performance degradation compared to the full model. On the Carla Simulation dataset, the exclusion of the norm-encoding layer results in a reduction of F1 score from 89.55% to 84.93%, highlighting the essential role of ethical norm representations in structured decision-making. Similarly, for the Waymo dataset, removing the deontic attention layer reduces accuracy from 89.87% to 84.00%, demonstrating its critical function in focusing on normatively salient features.

For the ApolloScape and NGSIM datasets, the elimination of the ethical projection layer leads to the most pronounced performance drop, with F1 score on ApolloScape decreasing from 87.70% to 81.89% and a similar trend observed for the NGSIM dataset. These results underscore the importance of ethical admissibility constraints in ensuring robust decision-making in complex environments. Across all datasets, the full model consistently outperforms the ablated variants, indicating that the interplay of all three components is integral to achieving optimal performance. The norm-encoding layer ensures effective representation of ethical principles, the deontic attention layer enhances normative focus, and the ethical projection layer enforces ethical constraints throughout the decision-making process.

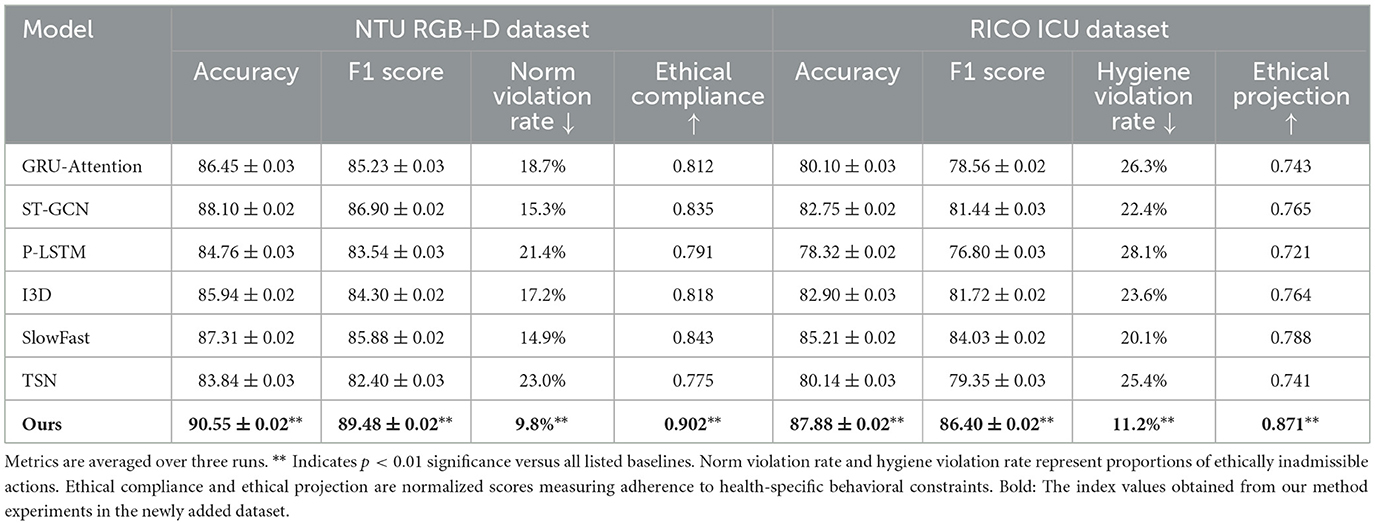

To address the domain discrepancy between experimental validation and the intended application context of public health, supplementary evaluations were performed using two behavior-centric datasets: NTU RGB+D and the RICO ICU. These datasets provide ethically salient scenarios relevant to healthcare operations, such as patient monitoring, fall risk assessment, and hygiene compliance in clinical environments. In the NTU RGB+D dataset, ethical norms were constructed around health-critical behaviors, including fall events, prolonged inactivity, and physical distress. Instances such as unresponsive behavior following a fall or disregard of emergency cues were annotated as norm violations. For the RICO dataset, which includes real-world ICU interactions, ethical infractions were defined based on hygiene standards and proximity rules, such as ungloved contact, lack of protective equipment, or unauthorized patient interaction. The proposed framework was benchmarked against several baseline models using both standard metrics (accuracy, F1 score) and ethically grounded indicators, including norm violation rate, hygiene violation rate, ethical compliance, and ethical projection score. As presented in Table 5, the framework achieved significantly lower violation rates—9.8% on NTU RGB+D and 11.2% on RICO—while maintaining high recognition accuracy. Elevated scores in ethical compliance and projection further indicate that the model effectively internalizes domain-specific ethical constraints. These results support the system's capacity to generalize ethical reasoning to real-world public health scenarios and validate its practical applicability.

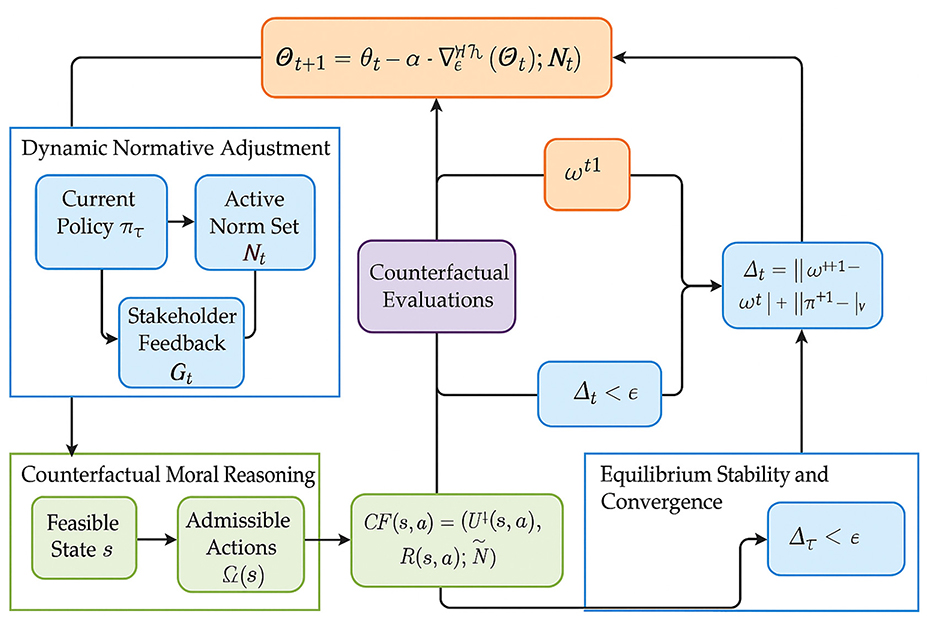

To complement the indirect indicators of ethical behavior (like norm violation rate), direct validation experiments were conducted using human-coded ethical benchmarks. A subset of 800 video clips (400 per dataset) was annotated by three domain experts, each assigning binary ethical admissibility labels to observed actions. Inter-annotator agreement was 91.2% (Cohen's κ = 0.84), and majority voting was used to determine final labels. Two evaluation metrics were introduced: Ethical agreement rate (EAR):

Stakeholder consistency score (SCS):

Results in Table 6 demonstrate high consistency with human ethical expectations, confirming that the proposed framework achieves effective ethical alignment not only structurally, but behaviorally.

5 Discussion

While the present work focuses primarily on technical aspects of ethical alignment—such as constrained optimization, norm encoding, and multi-agent coordination—it is increasingly recognized that algorithmic interventions in public health must be accompanied by appropriate institutional and governance structures. Technical safeguards alone may be insufficient to ensure that AI systems are ethically robust, socially accountable, and legally compliant. In future extensions, embedding the proposed framework within participatory governance mechanisms will be prioritized. For instance, ethical policy selection can be interfaced with institutional review boards (IRBs), public health authorities, or interdisciplinary ethics panels, allowing stakeholders to provide oversight or approve normative configurations. Additionally, establishing transparent audit trails, explainability pathways, and decision accountability chains may improve the framework's alignment with evolving regulatory standards, such as GDPR, HIPAA, or domain-specific medical ethics guidelines. Participatory mechanisms—such as feedback loops from affected communities, iterative policy refinement via stakeholder surveys, or co-design sessions with domain experts—can also contribute to the legitimacy and adaptability of the system. These processes will allow the framework to dynamically adjust to contextual moral expectations rather than rely solely on predefined static norms. While the current study establishes a computational foundation for ethical reasoning, the broader implementation of such systems in public health must engage legal, institutional, and social dimensions. Future work will thus extend beyond model development to explore how algorithmic ethics can be made operational within legitimate, participatory, and institutionally supervised governance environments.

6 Conclusions and future work

This study explores the ethical dilemmas involved in incorporating AI-powered scene comprehension into medical infrastructure, where algorithmic perception significantly shapes critical public decision-making processes. The proposed framework, VirtuNet, departs from conventional exogenous ethical constraints by embedding deontic logic and stakeholder values directly within the model's architecture. Our approach ensures that ethical considerations are not an afterthought but a structural component of both representation and decision-making. Additionally, we developed the reflective equilibrium strategy (RES), a dynamic policy-adjustment mechanism that updates system behavior in light of ongoing ethical feedback. Through extensive experiments in simulated public health scenarios, our model demonstrated enhanced ethical alignment, reduced norm violations, and superior adaptability compared to traditional methods.

Although the results are encouraging, two notable constraints persist. Firstly, the system's dependence on predefined normative schemas may hinder its adaptability to unfamiliar or culturally heterogeneous ethical norms, potentially resulting in decisions that lack fairness or contextual sensitivity. Secondly, while the RES framework provides a versatile response strategy, its effectiveness is closely tied to the fidelity and diversity of feedback data, which may be sparse, noisy, or biased in real-world deployments. Moving forward, future research should investigate adaptive ethical reasoning from multi-agent viewpoints and incorporate globally representative datasets. Additionally, enhancing the reliability and inclusiveness of ethical signal acquisition will be essential. Addressing these challenges is crucial for building AI systems in public health that are genuinely equitable and responsive to diverse social contexts.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

YQ: Conceptualization, Methodology, Software, Validation, Formal analysis, Investigation, Data curation, Writing – original draft. ZZ: Writing – review & editing, Visualization, Supervision, Funding acquisition, Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors wish to acknowledge the valuable support provided by specific collaborators, academic institutions, or agencies that contributed to the success of this work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Zhou H, Shao J, Xu L, Bai D, Qiu W, Liu B, et al. HUGS: holistic urban 3D scene understanding via gaussian splatting. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2024). p. 21336–45. doi: 10.1109/CVPR52733.2024.02016

2. Kong L, Xu X, Ren J, Zhang W, Pan L, Chen K, et al. Multi-modal data-efficient 3D scene understanding for autonomous driving. IEEE Trans Pattern Anal Mach Intell. (2025) 47:3748–65. doi: 10.1109/TPAMI.2025.3535625

3. Jia B, Chen Y, Yu H, Wang Y, Niu X, Liu T, et al. SceneVerse: scaling 3D vision-language learning for grounded scene understanding. Comput. Vision - ECCV. (2024) 2024:289–310. doi: 10.1007/978-3-031-72673-6_16

4. Li Z, Zhang C, Wang X, Ren R, Xu Y, Ma R, et al. 3DMIT: 3D multi-modal instruction tuning for scene understanding. In: 2024 IEEE International Conference on Multimedia and Expo Workshops (ICMEW). (2024). p. 1–5. doi: 10.1109/ICMEW63481.2024.10645462

5. Man Y, Zheng S, Bao Z, Hebert M, Gui L, Wang YX. Lexicon3D: probing visual foundation models for complex 3D scene understanding. In: Neural Information Processing Systems. (2024).

6. Zuo X, Samangouei P, Zhou Y, Di Y, Li M. FMGS: foundation model embedded 3D Gaussian splatting for holistic 3D scene understanding. Int J Comput Vis. (2024) 133:611–27. doi: 10.1007/s11263-024-02183-8

7. Sakaridis C, Dai D, Van Gool L. ACDC: the adverse conditions dataset with correspondences for semantic driving scene understanding. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). (2021). p. 10745–55. doi: 10.1109/ICCV48922.2021.01059

8. Peng S, Genova K, ChiyuMaxJiang, Tagliasacchi A, Pollefeys M, Funkhouser T. OpenScene: 3D Scene understanding with open vocabularies. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2023). doi: 10.1109/CVPR52729.2023.00085

9. Chen R, Liu Y, Kong L, Zhu X, Ma Y, Li Y, et al. CLIP2Scene: towards label-efficient 3D scene understanding by CLIP. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2023). p. 7020–30. doi: 10.1109/CVPR52729.2023.00678

10. Yang YQ, Guo YX, Xiong J, Liu Y, Pan H, Wang PS, et al. Swin3D: a pretrained transformer backbone for 3D indoor scene understanding. In: Computational Visual Media. (2023).

11. Ye H, Xu D. TaskPrompter: spatial-channel multi-task prompting for dense scene understanding. In: International Conference on Learning Representations. (2023).

12. Shi J-C, Wang M, Duan H-B, Guan S-H. Language embedded 3D Gaussians for open-vocabulary scene understanding. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2024). p. 5333–43. doi: 10.1109/CVPR52733.2024.00510

13. Zhou W, Dong S, Lei J, Yu L. MTANet: multitask-aware network with hierarchical multimodal fusion for RGB-T urban scene understanding. IEEE Trans Intell Vehic. (2023) 8:48–58. doi: 10.1109/TIV.2022.3164899

14. Liao Y, Xie J, Geiger A. KITTI-360: a novel dataset and benchmarks for urban scene understanding in 2D and 3D. IEEE Trans Pattern Anal Mach Intell. (2021) 45:3292–310. doi: 10.1109/TPAMI.2022.3179507

15. Yang J, Ding R, Deng W, Wang Z, Xiaojuan Q. RegionPLC: regional point-language contrastive learning for open-world 3D scene understanding. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2024). p. 19823–32. doi: 10.1109/CVPR52733.2024.01874

16. Chen R, Liu YC, Kong L, Chen N, Zhu X, Ma Y, et al. Towards label-free scene understanding by vision foundation models. In: Neural Information Processing Systems. (2023).

17. Fan DP, Ji GP, Xu P, Cheng MM, Sakaridis C, Van Gool L. Advances in deep concealed scene understanding. Visual Intell. (2023) 1:16. doi: 10.1007/s44267-023-00019-6

18. de Curtó J, de Zarzá I, Calafate CT. Semantic scene understanding with large language models on unmanned aerial vehicles. Drones. (2023) 7:114. doi: 10.3390/drones7020114

19. Azuma D, Miyanishi T, Kurita S, Kawanabe M. ScanQA: 3D question answering for spatial scene understanding. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2022). p. 19107–17. doi: 10.1109/CVPR52688.2022.01854

20. Feng Z, Guo Y, Sun Y. CEKD: cross-modal edge-privileged knowledge distillation for semantic scene understanding using only thermal images. IEEE Robot Autom Lett. (2023) 8:2205–12. doi: 10.1109/LRA.2023.3247175

21. Balazevic I, Steiner D, Parthasarathy N, Arandjelovi'c R, Hénaff OJ. Towards in-context scene understanding. In: Neural Information Processing Systems. (2023).

22. Liu Y, Xiong Z, Yuan Y, Wang Q. Transcending pixels: boosting saliency detection via scene understanding from aerial imagery. IEEE Trans Geosci Rem Sens. (2023) 61:1–16. doi: 10.1109/TGRS.2023.3298661

23. Chen Z, Li B. Bridging the domain gap: self-supervised 3D scene understanding with foundation models. In: Neural Information Processing Systems. (2023).

24. Zhao Y, Fei H, Ji W, Wei J, Zhang M, Zhang M, et al. Generating visual spatial description via holistic 3D scene understanding. In: Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). (2023). p. 7960–77. doi: 10.18653/v1/2023.acl-long.442

25. Roberts M, Ramapuram J, Ranjan A, Kumar A, Bautista MA, Paczan N, et al. Hypersim: a photorealistic synthetic dataset for holistic indoor scene understanding. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). (2021). doi: 10.1109/ICCV48922.2021.01073

26. Zhou W, Gong T, Lei J, Yu L. DBCNet: dynamic bilateral cross-fusion network for RGB-T urban scene understanding in intelligent vehicles. IEEE Trans Syst Man Cybernet. (2023) 53:7631–41. doi: 10.1109/TSMC.2023.3298921

27. Tao Z, He S, Tao D, Chen B, Wang Z, Xia S. Vision-language pre-training with object contrastive learning for 3D scene understanding. In: AAAI Conference on Artificial Intelligence. (2023).

28. Xu Y, Cong P, Yao Y, Chen R, Hou Y, Zhu X, et al. Human-centric scene understanding for 3D large-scale scenarios. In: 2023 IEEE/CVF International Conference on Computer Vision (ICCV). (2023). p. 20292–302. doi: 10.1109/ICCV51070.2023.01861

29. Chughtai BR, Jalal A. Object detection and segmentation for scene understanding via random forest. In: 2023 4th International Conference on Advancements in Computational Sciences (ICACS). (2023). p. 1–6. doi: 10.1109/ICACS55311.2023.10089658

30. Hou J, Graham B, NieSSner M, Xie S. Exploring data-efficient 3D scene understanding with contrastive scene contexts. In: 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2021). doi: 10.1109/CVPR46437.2021.01533

31. Bond M, Khosravi H, De Laat M, Bergdahl N, Negrea V, Oxley E, Pham P, Chong SW, Siemens G. A meta systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration, and rigour. Int J Educ Technol High Educ. (2024) 21:4. doi: 10.1186/s41239-023-00436-z

32. He K, Mao R, Lin Q, Ruan Y, Lan X, Feng M, Cambria E. A survey of large language models for healthcare: from data, technology, and applications to accountability and ethics. Inf Fusion. (2025) 118:102963. doi: 10.1016/j.inffus.2025.102963

33. Zhang J, Zhang ZM. Ethics and governance of trustworthy medical artificial intelligence. BMC Med Inform Decis Mak. (2023) 23:7. doi: 10.1186/s12911-023-02103-9

34. Resnik DB, Hosseini M. The ethics of using artificial intelligence in scientific research: new guidance needed for a new tool. AI Ethics. (2025) 5:1499–521. doi: 10.1007/s43681-024-00493-8

35. Dehghan A, Baruch G, Chen Z, Feigin Y, Fu P, Gebauer T, et al. ARKitScenes: a diverse real-world dataset for 3D indoor scene understanding using mobile RGB-D data. In: NeurIPS Datasets and Benchmarks. (2021).

36. Ni J, Chen Y, Tang G, Shi J, Cao W, Shi P. Deep learning-based scene understanding for autonomous robots: a survey. Intell Robot. (2023) 3:374–401. doi: 10.20517/ir.2023.22

37. Dong Y, Fang C, Bo L, Dong Z, Tan P. PanoContext-former: panoramic total scene understanding with a transformer. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2024). p. 28087–97. doi: 10.1109/CVPR52733.2024.02653

38. Ding R, Yang J, Xue C, Zhang W, Bai S, Qi X. PLA: language-driven open-vocabulary 3D scene understanding. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2023). p. 7010–9. doi: 10.1109/CVPR52729.2023.00677

39. Siddiqui Y, Porzi L, Buló SR, Müller N, Nießner M, Dai A, et al. Panoptic lifting for 3D scene understanding with neural fields. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2023). p. 9043–52. doi: 10.1109/CVPR52729.2023.00873

Keywords: ethical reasoning, public health AI, scene understanding, deontic constraints and stakeholder preferences, reflective equilibrium strategy

Citation: Qi Y and Zhao Z (2025) Ethical challenges in scene understanding for public health AI. Front. Public Health 13:1685813. doi: 10.3389/fpubh.2025.1685813

Received: 28 October 2025; Accepted: 03 November 2025;

Published: 28 November 2025.

Edited by:

Ann Borda, The University of Melbourne, AustraliaReviewed by:

Ricardo De Moraes E. Soares, Naval School, PortugalPengcheng Ma, Southern Medical University, China

Copyright © 2025 Qi and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yin Qi, b3hiYzkzNDM2QG91dGxvb2suY29t

Yin Qi1*

Yin Qi1* Zihan Zhao

Zihan Zhao