Abstract

Interactive human–machine systems aim to significantly enhance performance and reduce human workload by leveraging the combined strengths of humans and automated systems. In the state of the art, human–machine cooperation (HMC) systems are modeled in various interaction layers, e.g., the decision layer, trajectory layer, and action layer. The literature usually focuses on the action layer, assuming that there is no need for a consensus at the decision or trajectory layers. Only a few studies deal with the interaction at the trajectory layer. No previous work has systematically examined the structure of communication for interaction between humans and machines beyond the action layer. Therefore, this paper proposes a graph representation of human–machine cooperation based on multi-agent system theory. For this purpose, a layer model for human–machine cooperation from the literature is converted into a graph representation. Using our novel graph representation, the existence of communication loops, which are necessary for emancipated cooperation, can be demonstrated. In contrast, a leader–follower structure does not possess a closed loop in this graph representation. The choice of the communication loop for emancipated cooperation is not unique: it can take place via various closed loops at higher layers of human–machine interaction, which opens new possibilities for the design of emancipated cooperative control systems. In a simulation, it is shown that emancipated cooperation is possible via three variants of communication loops and that a consensus on a common trajectory is found in each case. The results indicate that taking into account cooperative strategies at the trajectory layer can enhance the performance and effectiveness of human–machine systems.

1 Introduction

By using human capabilities synergistically with automation, interactive human–machine systems promise to improve performance and reduce human workload, leading to a symbiotic state where human cooperation seamlessly integrates with automation (Inga et al., 2022). Such interactive human–machine systems have been studied and developed in the past in the context of driver assistance systems (Marcano et al., 2020) and teleoperation systems (Li et al., 2023; Grobbel et al., 2023) under the term shared control. Interactive wheelchair applications are another frequently considered application in this field (Carlson and Demiris, 2012). A common assumption in these works is the existence of a trajectory for the cooperative system and the knowledge of both agents about this trajectory. In the context of assisted driving, for example, this is the center of the lane as a known reference for both agents (Claussmann et al., 2020). According to Schneider et al. (2024), however, this assumption cannot generally be made. To establish a common reference between humans and automation, a cooperation process must take place at the trajectory layer (TL) in advance. For this purpose, an approach for cooperative trajectory planning is proposed in the form of an agreement process, which aims to reach a consensus on a common trajectory (Schneider et al., 2022). The application considered in this context is accompanying a patient to examination rooms.

Efficient and human-centric cooperation requires a communication channel (Lunze, 2019, p. 13). The communication between humans and automation proposed by Abbink et al. (2018) and applied by Rothfuß et al. (2020) should take place as directly as possible and within a single layer via a separate communication interface. According to this design rule, a dedicated communication interface must be provided to agree on a common trajectory. Humans would, therefore, have to communicate their desired trajectory via an additional communication interface. However, an additional communication interface is unfavorable for two reasons. First, the constant communication of the desired trajectory represents an additional task for the person in parallel with the execution of the movement, which can result in performance costs in the form of higher response latency or higher error rates in task execution (Fischer and Plessow, 2015). Second, according to Todorov and Jordan (2002), trajectory planning and execution in humans are often intuitive and combined, which can make it difficult for them to explicitly communicate their trajectory. Based on these limitations, the authors of this paper propose a communication method that occurs intuitively via the action layer (AL) and does not require a separate communication interface. To this end, measured variables (state and control variables) from the action layer can be used to estimate the trajectory intentions of both agents. This approach represents an alternative communication pathway between humans and automation, differing from the approach recommended by Abbink et al. (2018).

In contrast to leader–follower approaches, an emancipated interaction should take place for the investigated cooperation at the trajectory layer as this offers advantages in the synergetic interconnection of humans and automation, e.g., in information gathering and sharing, where humans and automation can complement each other in the sensor perception of the environment in order to be able to react better to environmental influences as a result of the combination (Pacaux-Lemoine and Makoto, 2015). The underlying definition of emancipated cooperation is taken from Rothfuß (2022, p. 9), according to which humans and automation possess equal control authority.

Building on the state of the art in communication for human–machine interactions (Section 2), this paper introduces a graph-based taxonomy for describing different communication paths in Section 3. To comparatively evaluate three proposed communication paths, we conduct a simulative analysis within a cooperative positioning application of a coupled human–robot system (Section 4). Finally, Section 5 provides a summary of our key findings and concludes the work.

2 Related works on communication in human–machine systems

Communication is one of the central aspects of human–machine cooperation (HMC) systems. Abbink et al. (2018) established the link between shared control and communication from the Latin word “communicare,” which means “to share.” Pacaux-Lemoine and Vanderhaegen (2013) described communication between humans and automation as a necessary element of the know-how-to-cooperate (KHC) framework. This KHC, in turn, is central to keeping humans in the control loop and thus avoiding potential dangers that can arise when humans move out of it (Pacaux-Lemoine and Trentesaux, 2019). Marcano et al. (2020) stated that bidirectional communication is a necessary condition for shared control systems. Bidirectional communication is generally understood in such a way that signals can be transmitted in both directions via a communication interface. However, bidirectional communication does not necessarily have to take place via the same channel, but the signal flow only has to represent a circular structure between the sender and the receiver (Marko, 1973). The layer models of human–machine cooperation proposed by Flemisch et al. (2016), Flemisch et al. (2019), Abbink et al. (2018), and Rothfuß et al. (2020) are rather generic in character and draw general signal paths between humans and automation at all layers (Pacaux-Lemoine and Flemisch, 2019). Abbink et al. (2018) specify that communication between humans and automation should be as direct as possible and without detours, e.g., via delaying system dynamics. Losey et al. (2018) discussed different concepts of this direct haptic communication channel (kinesthetic and tactile) and the combination of channels (audio, visual, and haptic).

In multi-agent theory, communication is also a central aspect. Lunze (2019) even stated that “cooperation needs communication.” In contrast to the classic, single-player automation design, the synthesis problem comprises not only the controller design but also the communication concept (which agent exchanges which information with which agent?) (Lunze, 2019; Barrett and Lafortune, 1998). Individual agents that consist of controllers and corresponding actuators are modeled as nodes in a graph. When modeling a decentralized system using a graph, the immediate question that arises is which nodes are connected to each other and what type of energy and/or information is exchanged between them. The matrix that indicates which node communicates with which node is referred to as the adjacency matrix. One advantage of modeling multi-agent systems via a graph is the analysis and calculation of a consensus value, i.e., the agreement on a previously unknown common reference state (Lunze, 2019, p. 57f). Depending on the choice of the exact consensus protocol, the consensus value depends, among other things, on the initial state of the nodes. Leader–follower structures, with respect to the consensus value, are characterized in such a graph by an arrangement in which at least one node has no incoming edges, thus representing a root node of the graph (Lunze, 2019, p. 78). On the other hand, in a strongly connected node arrangement, a path can be found from each node to every other node. Each node has at least one incoming edge, which means that each node contributes to the formation of the consensus value.
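The distinction between a leader–follower arrangement and a strongly connected arrangement can be checked mechanically on the adjacency matrix. The following is a minimal sketch, not taken from the cited works: the function names, the example graphs, and the convention that `adj[i][j] == 1` denotes an edge from node `i` to node `j` are assumptions made for illustration (other texts use the transposed convention).

```python
from collections import deque


def reachable(adj, start):
    """Return the set of nodes reachable from `start` along directed edges."""
    seen = {start}
    queue = deque([start])
    while queue:
        i = queue.popleft()
        for j, connected in enumerate(adj[i]):
            if connected and j not in seen:
                seen.add(j)
                queue.append(j)
    return seen


def is_strongly_connected(adj):
    """True if every node can reach every other node."""
    n = len(adj)
    return all(len(reachable(adj, s)) == n for s in range(n))


def root_nodes(adj):
    """Nodes without incoming edges (assumed convention: adj[i][j] is an edge i -> j)."""
    n = len(adj)
    return [j for j in range(n) if not any(adj[i][j] for i in range(n))]


# Hypothetical three-node examples: a chain 0 -> 1 -> 2 and a ring 0 -> 1 -> 2 -> 0.
leader_follower = [[0, 1, 0],
                   [0, 0, 1],
                   [0, 0, 0]]
ring = [[0, 1, 0],
        [0, 0, 1],
        [1, 0, 0]]

print(root_nodes(leader_follower), is_strongly_connected(leader_follower))
print(root_nodes(ring), is_strongly_connected(ring))
```

In the chain, node 0 has no incoming edge and therefore acts as a root node, whereas in the ring every node has an incoming edge and can contribute to a consensus value.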

The graph representation of multi-agent systems thus enables the description and analysis of a consensus process of an unknown reference state and the representation of different communication structures via different edge connections between nodes. This exactly fulfills the two requirements for the development of cooperation at the trajectory layer, as described in Section 1. The graph representation also enables system analysis using graph theory tools (e.g., identifying loops) and easy extensibility to integrate additional agents, e.g., when a coupled HMC system interacts with another human or agent in the environment (e.g., indicating the evasive direction to another vehicle or human or obtaining information from another robot). Therefore, a graph representation for cooperative human–machine systems will be introduced in the following section, with which three different communication paths will then be proposed in Section 4, and a simulative comparison between the communication paths will take place.

3 Graph-based taxonomy for the representation of communication in human–machine cooperation

From the perspective of multi-agent theory, the combination of humans and automation in the HMC context can be described as a multi-agent system consisting of two agents that communicate with each other using a respective edge configuration. In the context of haptic shared control, this is bidirectional haptic communication at the action layer via the actuator in the form of the steering wheel (Abbink and Mulder, 2010; Flad et al., 2014; Ludwig et al., 2017; Marcano et al., 2020). Communication between humans and automation can also take place symbolically via a tablet input in the case of cooperation at the decision layer (Rothfuß et al., 2020). These two examples clearly demonstrate that in HMC systems, cooperation and, thus, communication take place at different levels of task abstraction.

First, a brief clarification of the terminology used in this paper, particularly the distinction between level and layer, is necessary. As mentioned in Section 2, various layer models have been proposed in the literature for the description of HMC. These models differ in their focus mainly in three dimensions: the inclusion of the perception–action cycle, the degree of task abstraction, and the level of consciousness. What all models have in common is that the behavior of humans and automation is described in hierarchical levels and that these levels are mirrored across both agents. This leads to the following two definitions.

Definition 1. A level is a hierarchical component of the description of human or automation behavior.

Definition 2. A layer comprises a certain level of human behavior and the corresponding mirrored level of automation behavior together. Optionally, the layer also includes a communication interface for interactions within the layer between the two corresponding levels of the human and automation.

The butterfly model presented by Rothfuß et al. (2020) is one form of an HMC layer model that focuses on the dimension of the task abstraction level. It integrates decision-making and action implementation aspects at the same time. This butterfly model was used as the underlying layer model for the present work because of its generic, application-independent character as well as its lack of restrictions with regard to authority contributions (Rothfuß, 2022, p. 26). These features make it suitable for emancipated cooperation. Taking into account Definitions 1 and 2, the model has four layers, namely, the decomposition layer, decision layer, trajectory layer, and action layer (see Figure 1, left). In the decomposition layer, an overall task is broken down into subtasks, the sequence of which is determined in the decision layer below. In the trajectory layer, the trajectory is determined for each subtask, which is executed in the lowest layer, i.e., the action layer.

In contrast to general multi-agent systems, the two agents, humans and automation, are not each modeled as only one node for the proposed taxonomy; rather, the levels from the butterfly model are each modeled as a node (see Figure 1, right: four nodes for each agent, one node for each level). There is also a system node and a further node for each additional communication interface on the individual levels (Figure 1, center). In the general case, there are edges in both directions between all neighboring nodes. A node can only ever be connected to the node from the layer above and/or below it and to a communication interface node. Edges that run across another layer node are not permitted in the HMC graph.

Figure 1 shows the general case of all possible existing nodes and edges of the graph representation of the HMC model. An instantiation of the model for a specific application generally includes only a subset of nodes and edges. An HMC graph can thus be defined as follows:

FIGURE 1

Conversion of the butterfly model (left) as a representation of an HMC model into a graph representation (right). (a) Butterfly model (Rothfuß et al., 2020). (b) HMC graph of the butterfly model.

Definition 3. A human–machine cooperation graph is a directed graph consisting of a node set and an edge set. The node set comprises three subsets: one denotes all human levels contained in an HMC system, one denotes all levels of automation contained in an HMC system, and one denotes all subsystems of the extended system contained in an HMC system. The last subset includes both the system as such and all included communication interfaces. The edge set comprises two subsets: directed physical edges between two nodes, along which energy flows, and directed edges between two nodes that indicate the exchange of information between them. A directed edge runs from a node i to a node j. A node in the HMC graph generally does not have a self-loop, i.e., an edge from a node to itself.

Directed graphs can possess the property that every node can be reached from every other node, which is referred to as strongly connected (Lunze, 2019, p. 31):

Definition 4. A directed HMC graph is said to be strongly connected if there is a directed path from every node to every other node.

3.1 Definition of the communication loop

Emancipated cooperation, as described in Section 1, is characterized by equal interaction between agents (Rothfuß, 2022, p. 9), which is the opposite of a leader–follower structure. A leader–follower structure is represented in the HMC graph as a path-like structure with a root node, i.e., one (or more) node that has only outgoing edges and no incoming edges (Lunze, 2019, p. 78). For emancipated cooperation to exist, no node should only have outgoing edges without any incoming edges. This is the case for a strongly connected graph (see Definition 4), which is a necessary condition for emancipated cooperation. Whether emancipated cooperation takes place at a layer or not can be verified in the HMC graph by identifying a loop that contains the respective level nodes of both agents.

Definition 5. Communication loop for emancipated cooperation: Emancipated cooperation is characterized by a closed, circular signal flow from the node of one agent to the corresponding node of the other agent in the same layer and back. In the HMC graph, this represents a cycle, i.e., a closed path from a node back to the same node, with the condition that the path runs through the corresponding node of the other agent in the same layer. A necessary condition for this communication loop is that the graph must be strongly connected. Depending on the application, there may be several possible communication loops.

Remark: The communication loop can occur either directly between two nodes via a communication interface on the same layer (intra-layer communication) or across several layers (inter-layer communication). The special case of intra-layer communication is the widespread concept of bidirectional communication, which enables the signal flow via one channel in both directions.

In the following section, the presented definition of a communication loop for emancipated cooperation is applied to three examples of possible cooperation at the trajectory layer.
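As an illustration of Definition 5, the search for a communication loop can be sketched as a reachability test in both directions between the two level nodes of one layer. The node names (`H_TL`, `A_TL`, `H_AL`, `A_AL`, `SYS`), the two example graphs, and the function names are hypothetical and not taken from the figures. As a simplification, the sketch returns a closed walk that may pass through intermediate nodes (e.g., the system node) more than once, rather than a strict simple cycle.

```python
from collections import deque


def shortest_path(edges, src, dst):
    """Breadth-first search; returns a list of nodes from src to dst, or None."""
    parents = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in edges.get(node, ()):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def communication_loop(edges, human_node, automation_node):
    """Closed walk human -> automation -> human, or None if it does not exist."""
    there = shortest_path(edges, human_node, automation_node)
    back = shortest_path(edges, automation_node, human_node)
    if there is None or back is None:
        return None
    return there + back[1:]


# Hypothetical inter-layer loop: the trajectory levels communicate via the action layer.
inter_layer = {
    "H_TL": ["H_AL"],
    "H_AL": ["SYS", "H_TL"],
    "SYS": ["H_AL", "A_AL"],
    "A_AL": ["SYS", "A_TL"],
    "A_TL": ["A_AL"],
}

# Hypothetical leader-follower case: no edge leads back into the human trajectory node.
leader_follower = {
    "H_TL": ["H_AL"],
    "H_AL": ["SYS"],
    "SYS": ["H_AL", "A_AL"],
    "A_AL": ["SYS", "A_TL"],
    "A_TL": ["A_AL"],
}

print(communication_loop(inter_layer, "H_TL", "A_TL"))
print(communication_loop(leader_follower, "H_TL", "A_TL"))
```

In the second graph, the human trajectory node has no incoming edge, so it is a root node and no communication loop exists at the trajectory layer.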

3.2 Application of the taxonomy to cooperative approaches from the literature

Bidirectional communication plays a central role in the field of haptic shared control (Abbink et al., 2018; Marcano et al., 2020). Figure 2a shows the concept of haptic shared control in an automotive application for lateral control, represented as an HMC graph; the edge designations are taken from the haptic shared control system structure described by Marcano et al. (2020). In this case, the human and automation act simultaneously on the steering wheel as an actuator, each with their own steering torque. The human senses the feedback torque, and the automated system measures the resulting steering angle as feedback. From the steering wheel actuator, the steering angle acts on the vehicle dynamics. This, in turn, acts as feedback on the steering wheel actuator via the restoring torque. There is a communication loop at the action layer, with the steering wheel as the actuator representing the communication interface.

FIGURE 2

Different communication pathways in HMC graphs of cooperative approaches from the literature. (a) HMC graph of Marcano et al. (2020). (b) HMC graph of Huang et al. (2022). (c) HMC graph of Rothfuß et al. (2020). (d) HMC graph of Varga et al. (2020). (e) HMC graph of Benloucif et al. (2019). (f) HMC graph of Losey and O’Malley (2018).

Huang et al. (2022) presented a cooperative human–machine RRT approach that uses a safety assessment and classification of the human driving style to carry out trajectory planning, which is intended to guide the vehicle back into a defined safe space in the event of a detected unsafe driving style of the human. In the case of a detected unsafe driving style, it provides a corrective control input on the vehicle movement. Figure 2b shows the corresponding HMC graph for the lateral movement of the vehicle (the longitudinal movement is not shown, but it works analogously). Interaction with the human does not take place via a common communication interface. Nevertheless, there is a closed communication loop via the action layer, as the control variable of the human is evaluated in order to determine the error to the safe space and to generate a corrective control input from it (loop shown in yellow). The human’s trajectory request is not taken into account, which means that there is no communication and, thus, no cooperation at the trajectory layer (TL) between the human and automation. The communication of the human’s trajectory shown in green is, therefore, only a path from the trajectory node to the system node. In contrast, the graph shows cooperation at the action layer, where the communication loop is closed via the system node. It has the special feature that the determination of the corrective control signal includes trajectory planning. Based on the leader–follower structure at the trajectory layer, with the human as the leader (green path), and the determination of the corrective control signal using a defined safe space, a control conflict is assumed during the phases of automation intervention.

Rothfuß et al. (2020) developed a model that describes emancipated cooperation between humans and automation at the decision layer in an automotive application. It examines the interaction between a human and an automated system, where both must agree on a common turning direction at a road junction (the maneuver, Figure 2c). The interaction takes place via a tablet. Once an agreement has been reached, the vehicle plans the trajectory of the agreed maneuver and executes it fully autonomously. Figure 2c shows the HMC graph. In this case, the communication path is a loop at the decision layer, with the tablet as the communication interface.

Varga et al. (2020) presented a cooperative approach for vehicle manipulators, in which the vehicle (automation) and the human (as the manipulator operator) cooperate. The special feature of the system dynamics is the unidirectional coupling between the vehicle and manipulator nodes (Figure 2d). The presented limited-information shared control approach results in a communication loop at the action layer, which is closed via the system of vehicle and manipulator (yellow path). The human specifies the trajectory for the manipulator (left green path). Automation estimates its reference based on the human’s control variable. Neither of the two communication paths at the trajectory layer is closed, resulting in a leader–follower structure with the human as the leader.

Benloucif et al. (2019) presented an approach that either takes into account the reference of the human (in the case of , represents the driver attention state) or does not take it into account based on the measurement of a defined driver attention state (Figure 2e). For , an additive compromise is formed from both references. At the trajectory layer, this results in a leader–follower structure for and . A compromise is found for , but this is controlled by the automation (Schneider et al., 2024).

Losey and O’Malley (2018) presented a trajectory deformation approach, which, in addition to impedance control for the movement of a robot end effector (the yellow communication loop in Figure 2f), enables humans to deform the reference of the automation. If a force is measured, this leads to a deformation of the reference, which is shown in Figure 2f with a hat to indicate that it is an estimated value. If the human exerts a force, the human is the leader. If the human exerts no force, automation is the leader.

Based on the HMC graphs shown in Figure 2, it can be observed that communication paths are found in all works, which is a characteristic of cooperation (Lunze, 2019, p. 13). The most common form of cooperation occurs at the action layer (Marcano et al., 2020; Varga et al., 2020; Losey and O’Malley, 2018), with a special feature in Huang et al. (2022), where cooperation at the action layer includes the trajectory planning layer of automation. Rothfuß et al. (2020) is the only work to present a cooperative approach at the decision layer. Intra-layer communication, as defined in Section 3.1, is present in Marcano et al. (2020) and Rothfuß et al. (2020). At the trajectory layer, leader–follower structures are present. A closed communication loop at the trajectory layer is not present in any of the works presented.

The following section presents three different communication loops at the trajectory layer and simulates each using the same application example.

4 Simulative comparison of communication loops for cooperation at the trajectory layer

4.1 Description and motivation of the simulation system

As an application example, a positioning task involving a heavy object is considered, performed jointly by a human and a robot at the action layer. The robot is modeled as a robot arm with three degrees of freedom, which can manipulate its end effector’s position in three Cartesian dimensions. For this simulation, the orientation of the end effector is neglected. Furthermore, one Cartesian component of the positioning task is neglected, reducing the task to a planar problem in the two remaining dimensions. It is assumed that the robot carries the heavy object and compensates for the weight force. The human and robot are coupled via the end effector of the robot arm (see visualization in Figure 3). The robot is operated by means of admittance control, i.e., the human can move the robot arm by exerting a two-dimensional force. The admittance of the robot is preset, but it is not analyzed further in this study. The robot can also adjust the position of the end effector by applying a two-dimensional force.

FIGURE 3

Visualization of the application example.

4.2 System model

The system dynamics result in a double integrator system of a mass-damper system, in which the force applied by the human and that applied by the robot add up and serve as input variables to the system. The state vector consists of four state variables, and the system dynamics are described by a linear state-space model.

The system matrix shows integrating behavior in both planar dimensions, and its damping term models the damping of oscillations. The input matrices of the human and the robot are assumed to be identical, which models the same force effect on the system for both the human and the robot. The two position states form the output. The mass of the object to be moved is , and the initial state is .
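For orientation, one possible form of such a planar mass-damper model is sketched below. This is a reconstruction under stated assumptions, not the model as printed in the original: the symbols $p_1$, $p_2$ (planar positions), $m$ (mass), $d$ (damping coefficient), $\mathbf{u}_{\mathrm{H}}$, $\mathbf{u}_{\mathrm{R}}$ (forces of the human and the robot), and the ordering of the state vector are all assumed for illustration.

```latex
\begin{aligned}
\mathbf{x} &= \begin{bmatrix} p_1 & \dot{p}_1 & p_2 & \dot{p}_2 \end{bmatrix}^{\top}, \\
\dot{\mathbf{x}} &= \mathbf{A}\,\mathbf{x} + \mathbf{B}_{\mathrm{H}}\,\mathbf{u}_{\mathrm{H}} + \mathbf{B}_{\mathrm{R}}\,\mathbf{u}_{\mathrm{R}},
\qquad \mathbf{y} = \mathbf{C}\,\mathbf{x}, \\
\mathbf{A} &= \begin{bmatrix}
0 & 1 & 0 & 0 \\
0 & -d/m & 0 & 0 \\
0 & 0 & 0 & 1 \\
0 & 0 & 0 & -d/m
\end{bmatrix},
\qquad
\mathbf{B}_{\mathrm{H}} = \mathbf{B}_{\mathrm{R}} = \begin{bmatrix}
0 & 0 \\
1/m & 0 \\
0 & 0 \\
0 & 1/m
\end{bmatrix},
\qquad
\mathbf{C} = \begin{bmatrix}
1 & 0 & 0 & 0 \\
0 & 0 & 1 & 0
\end{bmatrix}.
\end{aligned}
```

In this sketch, the zero first column of each $2 \times 2$ diagonal block of $\mathbf{A}$ reflects the integrating behavior of the position, the entries $-d/m$ carry the damping, and the identical input matrices express that both forces act on the object in the same way.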

4.3 Cooperation on the action layer

The interaction of the human and the robot on the action layer is modeled as a cooperative process, according to Flad et al. (2014) and Varga (2024), and it is described and solved using game theory. In this model, the human and the robot are each modeled as model predictive controllers (MPCs) (Flad et al., 2014), whose optimization problems are coupled. For the simulation, it is assumed that the weighting matrices of both cost functions are mutually known to both agents. In a practical application, these could be determined using the method described by Inga et al. (2017). In addition, both agents know the control value of the partner, which can be justified via the end effector as a haptic interface: both agents feel or measure the force of the other agent on the end effector at all times. However, the output references of the two agents differ and are not known to the respective partner. The cost functions of the two agents over the prediction horizon are, therefore, as follows:

The weighting matrices have the following entries:

It is assumed that the human penalizes deviations in state less than the automation does. At the same time, the human assigns a higher penalty to the control variable, which should lead the robot to take on a greater share of the load.

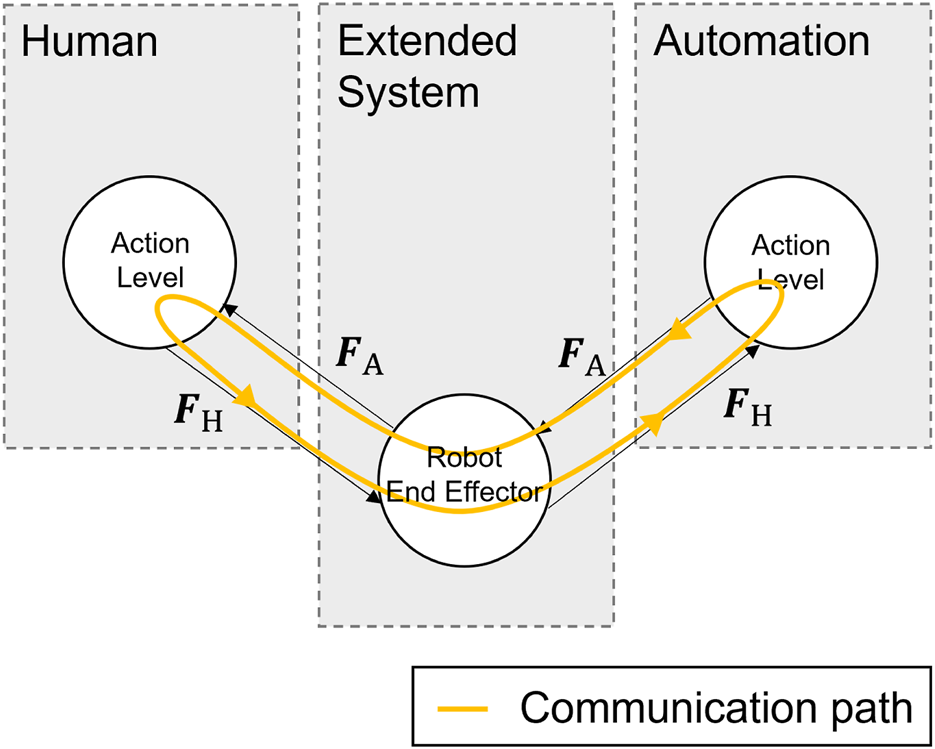

The solution for the control variables of the human and the robot results from the Nash equilibrium. The solutions of the coupled MPC optimization problems are calculated using the iterative best response method, according to which each agent solves its own coupled MPC optimization problem and that of its partner at each time step. In one iteration, the control variable of the partner is kept constant, and the agent's own control variable is calculated. In the next iteration, this control value is kept constant for the partner’s optimization problem, and the partner’s control variable is updated. This update process continues until the control values converge to the Nash equilibrium. The optimization problems were solved in this paper using CasADi (Andersson et al., 2019) and the IPOPT solver. According to the receding horizon strategy, only the first element of the control variable trajectory is fed into the system (Kwon and Han, 2005). The described modeling of the action layer can be depicted using the graph shown in Figure 4. The forces of the human and the robot serve as the communication variables.
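The iterative best response scheme can be illustrated with a deliberately reduced example. The sketch below is not the MPC formulation of this paper: it replaces each agent's MPC problem with a hypothetical one-step scalar quadratic cost `q * (y - r)**2 + rho * u**2` with `y = y0 + b * (u_h + u_r)`, for which the best response has a closed form. All weights, references, and function names are assumptions chosen for illustration.

```python
def best_response(q, rho, r, y0, b, u_partner):
    """Minimizer of q*(y0 + b*(u + u_partner) - r)**2 + rho*u**2 over u."""
    return q * b * (r - y0 - b * u_partner) / (q * b**2 + rho)


def iterative_best_response(params_h, params_r, y0=0.0, b=1.0,
                            tol=1e-10, max_iter=1000):
    """Alternate best responses until the control values stop changing.

    params_h and params_r are (q, rho, r) tuples: state weight,
    control weight, and reference of the human and the robot.
    """
    u_h, u_r = 0.0, 0.0
    for _ in range(max_iter):
        # Human update with the robot's control held constant.
        u_h_new = best_response(*params_h, y0, b, u_r)
        # Robot update with the human's new control held constant.
        u_r_new = best_response(*params_r, y0, b, u_h_new)
        change = max(abs(u_h_new - u_h), abs(u_r_new - u_r))
        u_h, u_r = u_h_new, u_r_new
        if change < tol:
            break
    return u_h, u_r


# Hypothetical weights: the human penalizes the control more and the state less.
human = (1.0, 2.0, 1.0)   # (q, rho, r)
robot = (2.0, 0.5, 1.0)
u_h, u_r = iterative_best_response(human, robot)
print(u_h, u_r)
```

At the fixed point, neither agent can lower its own cost by changing its control unilaterally, which is the defining property of a Nash equilibrium. With these assumed weights, the robot contributes the larger control value, mirroring the intended load sharing.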

FIGURE 4

HMC graph showing the communication loop for cooperation on the action layer.

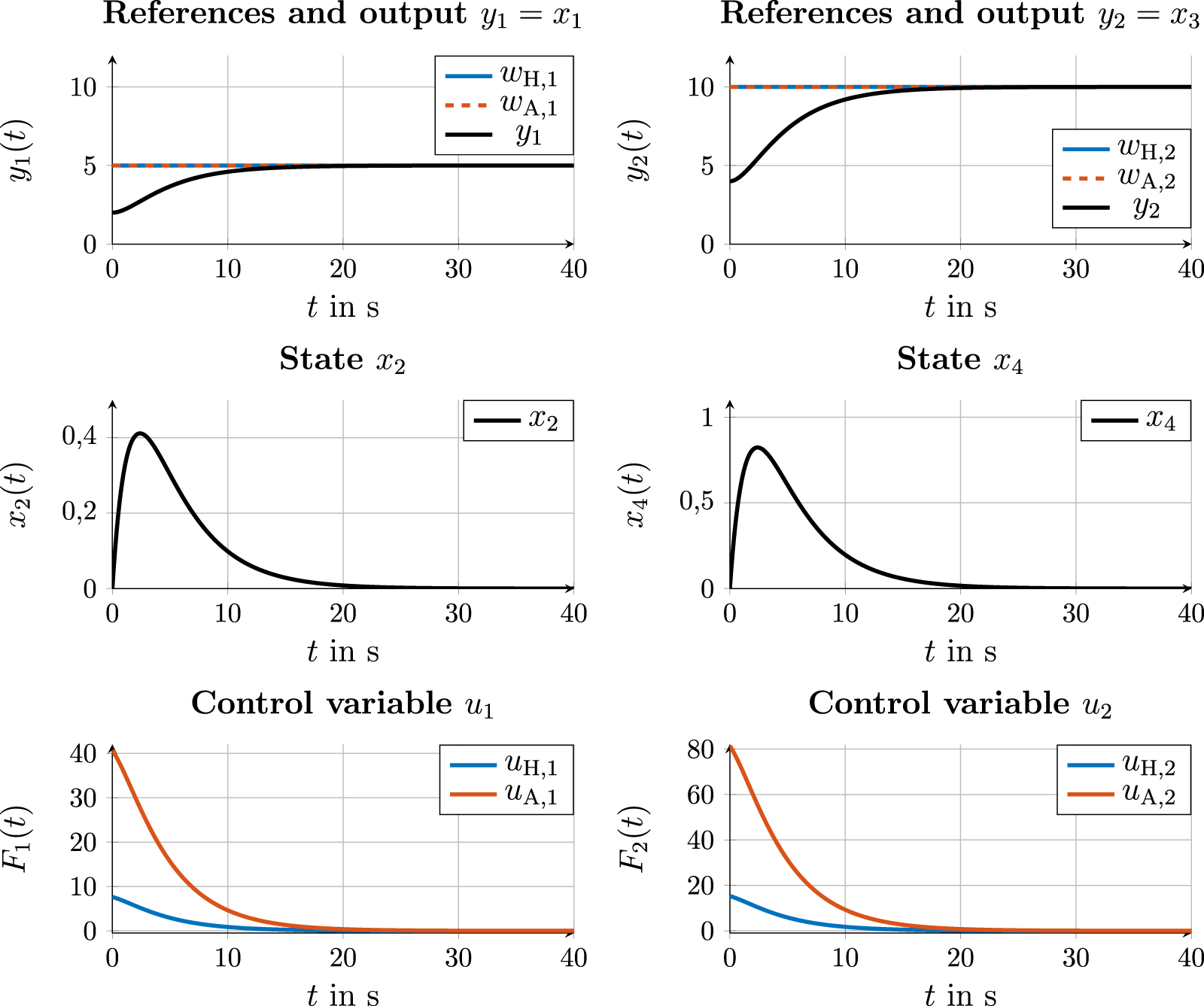

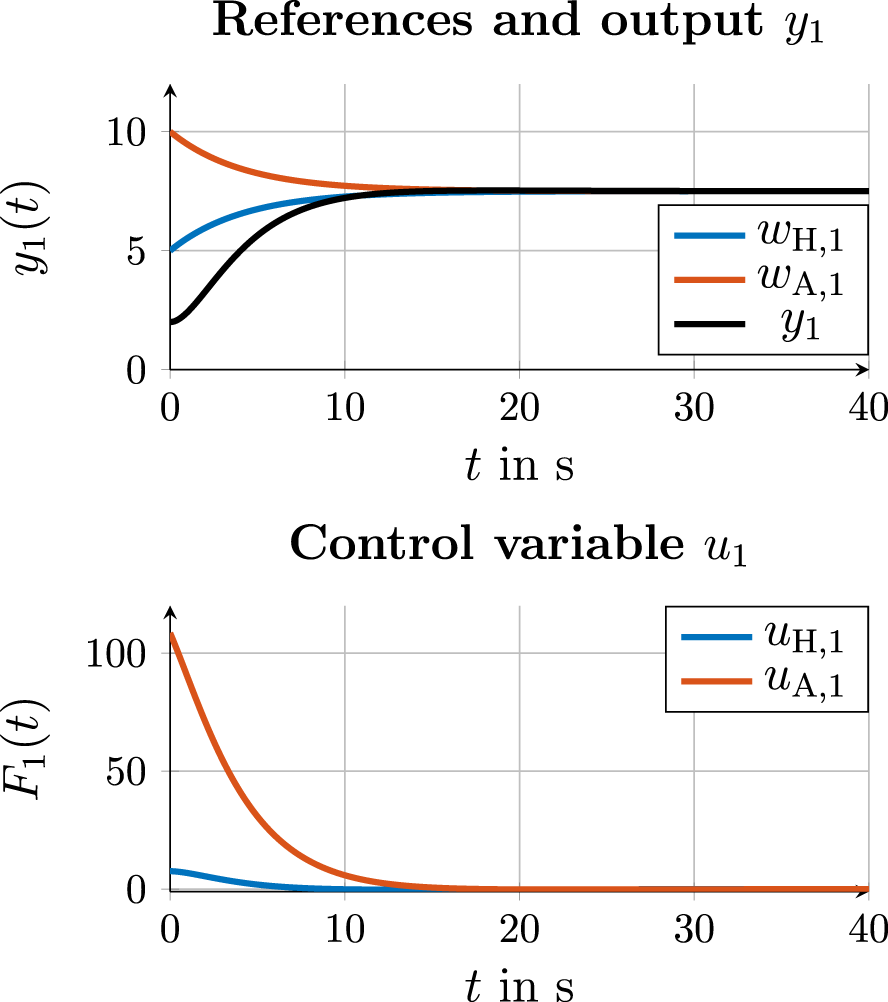

Figure 5 shows a simulation in which the references of the human and the robot initially match. In the state progression, it can be observed that both outputs converge to their references in the steady state. In addition, it can be observed in the control variable curves that the control variables of both agents vanish in the steady state.

FIGURE 5

Simulation results for state and control values on the action layer with human and robot having the same reference for the output states.

Figure 6, on the other hand, shows a simulation in which the references of the human and the robot differ. Both outputs reach a constant steady-state value. However, this value lies between the two references of the human and the robot, and it is closer to the robot's reference in each case. This can be explained by the higher control values of the robot, which are also shown in Figure 6. The higher control values of the robot result from the lower penalty on the control variable in the robot's weighting matrix compared to the human's weighting matrix (see (4)), whereby higher control values are permitted for the robot. In this simulation, this corresponds to the intention that the robot takes on a higher share of the load to be moved than the human. When examining the control values of both agents in both dimensions, it is noticeable that they do not vanish in the steady state; instead, they settle at constant nonzero values. This is the control conflict between the robot and the human described in Section 1. It arises from the Nash equilibrium of the interaction at the action layer, which results in constant steady-state values of the outputs. These constant steady-state values are based on a force equilibrium between the human and the robot. This force equilibrium can be explained by the fact that the control errors of the two agents do not become 0, whereby, according to (3) and (4), a non-vanishing control value results for both agents. This control conflict is to be resolved by reaching a consensus on a common reference so that the control error vanishes in the steady state. This requires cooperation at the trajectory layer, which is introduced and simulated in the following subsection.

FIGURE 6

Simulation results for state and control values on the action layer with the human and robot having different references.

4.4 Cooperation on the trajectory layer

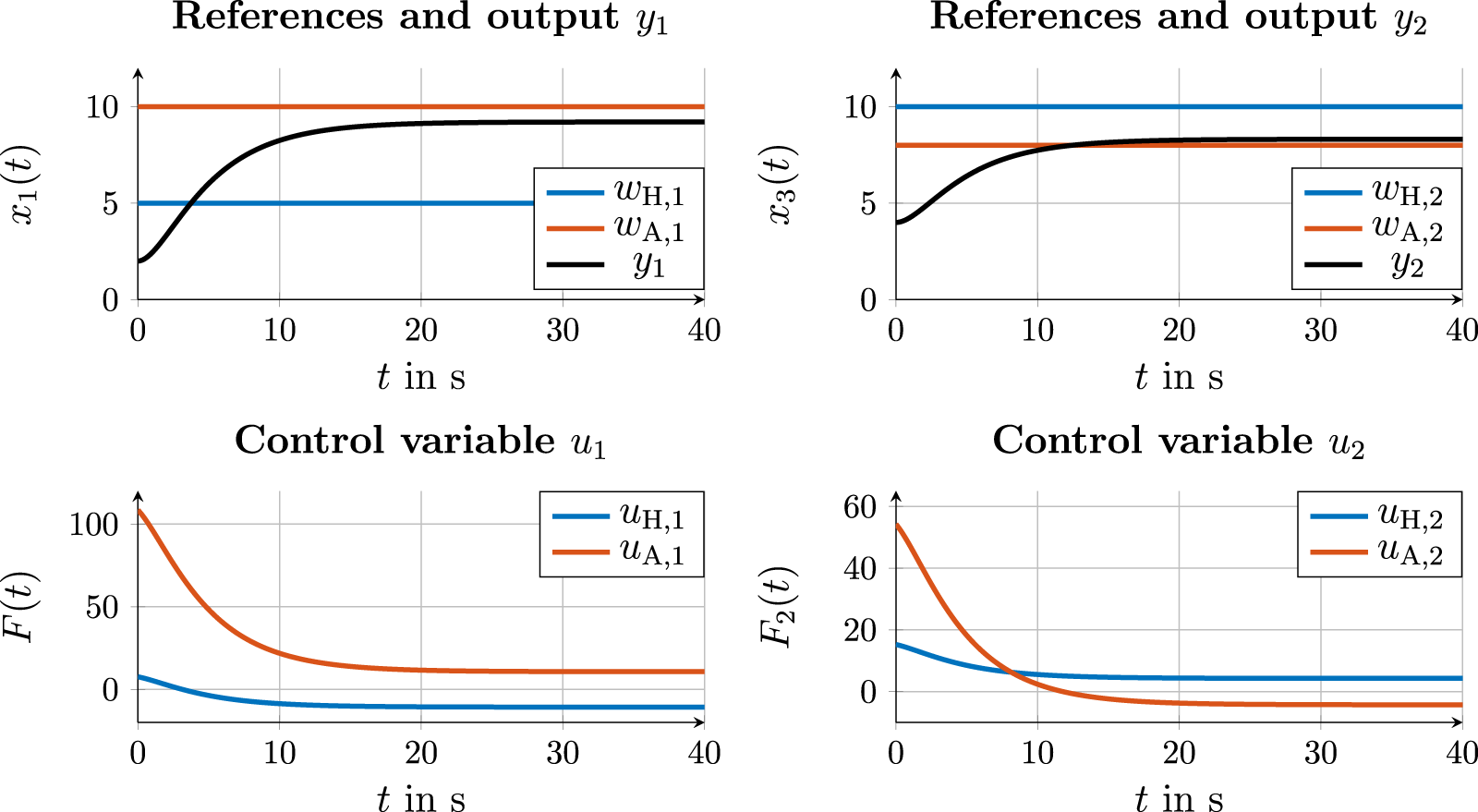

To achieve consensus at the trajectory layer, the graph from Figure 4 must be extended to the trajectory layer. From a development perspective, there are several options for selecting the communication loop, as required in Definition 5. Three such options are shown in Figure 7, which are compared in the following subsections. In addition, a leader–follower simulation is carried out for comparison (see Figure 7d).

FIGURE 7

Communication variants 1, 2, and 3 for emancipated cooperation and a leader–follower structure in (d). (a) Communication variant 1. (b) Communication variant 2. (c) Communication variant 3. (d) Leader–follower structure.

In all cases, a simple consensus protocol from Lunze (2019, p. 58) is used, whose model dynamics are integrating and take the estimated error as input. This consensus protocol can deviate from real human behavior; however, it is suitable for demonstrating the working principle of the proposed communication loops. For a practical application, a human consensus protocol would first have to be identified in a study. The consensus protocol at the trajectory layer for an agent in the present case is defined as follows:

The same protocol parameter was chosen for both agents. The reference values of the human and the robot for the following simulations are as follows:

In both dimensions of the reference vectors, there is, therefore, a disagreement between the human and the robot. Due to the symmetry of the inertia in both dimensions of the heavy object to be moved and the fact that the two dimensions are not coupled, only the first output is considered below for reasons of space. The results for the second output are analogous.
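The working principle of an integrating consensus protocol driven by the estimated error can be sketched for two agents as follows. The concrete form used here, in which each agent's reference changes at a rate proportional to the difference between the partner's reference and its own, is an assumption for illustration, as are the gains `k_h` and `k_r`, the initial references, and the explicit Euler discretization. The partner's reference is taken as exactly known, which corresponds to explicit communication.

```python
def simulate_consensus(r_h0, r_r0, k_h=1.0, k_r=1.0, dt=0.01, steps=2000):
    """Euler simulation of dr_h/dt = k_h*(r_r - r_h), dr_r/dt = k_r*(r_h - r_r)."""
    r_h, r_r = r_h0, r_r0
    history = [(r_h, r_r)]
    for _ in range(steps):
        # Both derivatives are evaluated before either reference is updated.
        dr_h = k_h * (r_r - r_h)
        dr_r = k_r * (r_h - r_r)
        r_h += dt * dr_h
        r_r += dt * dr_r
        history.append((r_h, r_r))
    return history


# Hypothetical initial references of the human and the robot.
equal_gains = simulate_consensus(1.0, 3.0)
unequal_gains = simulate_consensus(0.0, 1.0, k_h=1.0, k_r=3.0)
print(equal_gains[-1], unequal_gains[-1])
```

For this assumed protocol, the quantity `r_h / k_h + r_r / k_r` stays constant over time, so the consensus value is a weighted mean of the initial references. With equal gains, it is their arithmetic mean, and an agent with a larger gain moves further toward its partner.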

4.4.1 Communication variant 1

As the first option, the communication variant proposed by Abbink et al. (2018) is simulated, in which a separate communication interface is established at the trajectory layer. In practice, this can be achieved via a tablet or a second joystick. For this simulation example, it is assumed that the human and the robot communicate their references and directly and explicitly via an unspecified interface. The estimated value in the consensus protocol (5) thus becomes the true value . Figure 8 shows the output and the consensus for the references and . The selected consensus protocol (5) leads, according to equation (3.18) in Lunze (2019, p. 66), to a consensus with the value with , , and . It can be observed that at the action layer, the output takes the found consensus in the reference value as its steady-state value. This, in turn, means that the control values disappear as desired in the steady-state case (see Figure 8).

FIGURE 8

Simulation results for state and control values with cooperation on the trajectory layer in communication variant 1.

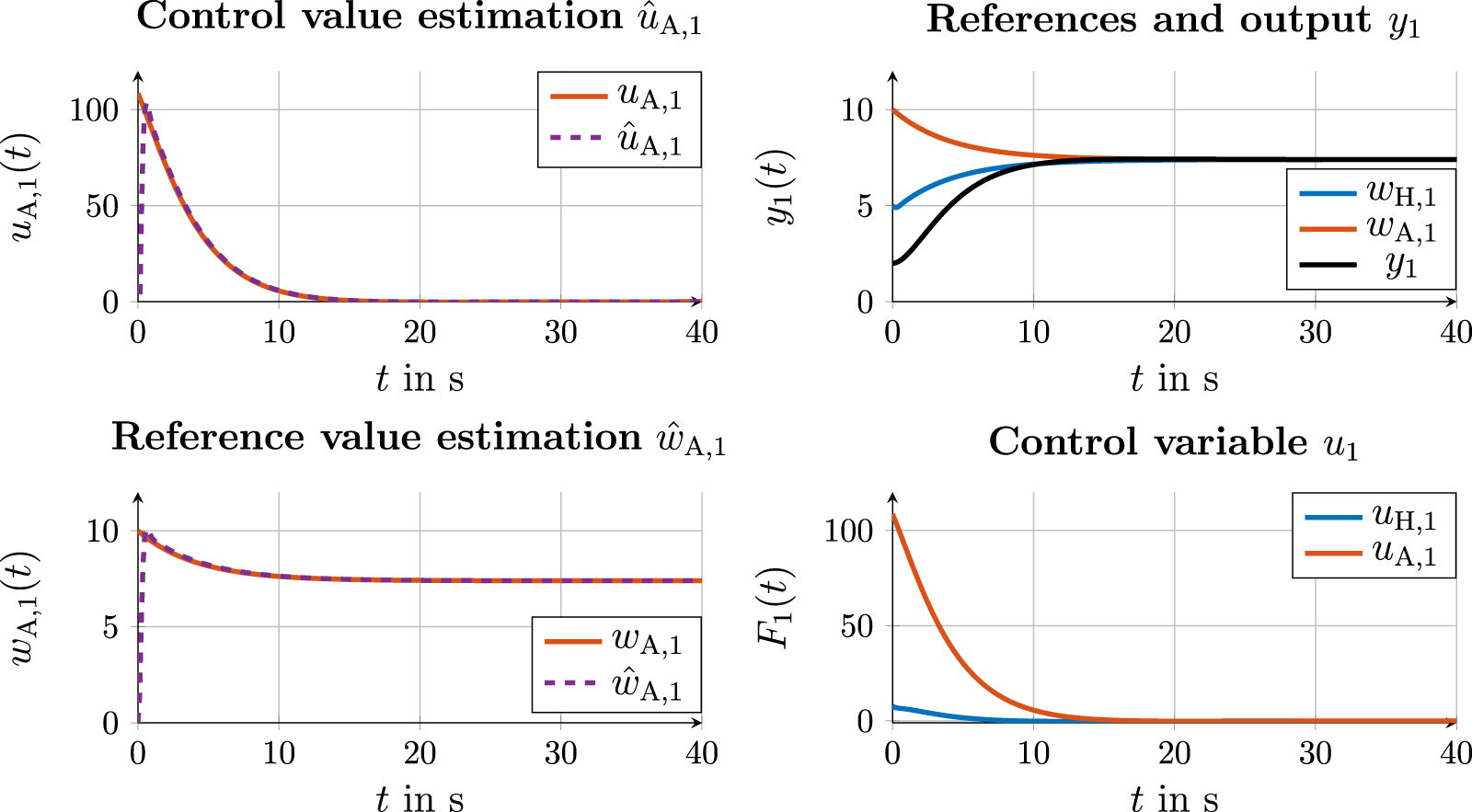

4.4.2 Communication variant 2

The second communication variant is based on the communication loop presented by Schneider et al. (2022), as shown in Figure 7b for this simulation example. Humans communicate their reference to the robot via an interface. The automation in turn responds to its reference request by executing a movement, i.e., via the action layer. Reasons for choosing this communication loop can be safety aspects, as is the case described by Schneider et al. (2022): for safety reasons, the robot arm, as the communication interface from automation to the human, must not be moved. The reference value of the automation has to be reconstructed from the control variables of automation at the action layer. For this purpose, a heuristic was applied that controls the error to . This control error is the difference between the measured control variable of automation and a control variable calculated using the known and matrices of automation with an estimated reference . The error is added to the current estimated value at each time step after multiplication with a constant gain as follows: With the new estimated value , a new input is calculated in the forward simulation via the MPC solution of automation, and this allows the new control error to be measured. The parameter is chosen as . The two diagrams on the left of Figure 9 show the resulting curves for the estimation of and . They show that the estimate after corresponds approximately to the true manipulated variable . The estimate of the reference corresponds to the true reference after . The estimation of results, according to the consensus protocol (Equation 5), in the reference curves for and , as shown at the top right of Figure 9. The resulting control variable curves and are shown on the bottom right. It can be observed that the control variables for disappear as desired, and the output converges to the steady-state value, which corresponds to the found reference consensus.

FIGURE 9

Simulation results for the control value and state estimation of automation (left) and state and control values with cooperation on the trajectory layer in communication variant 2 (right).

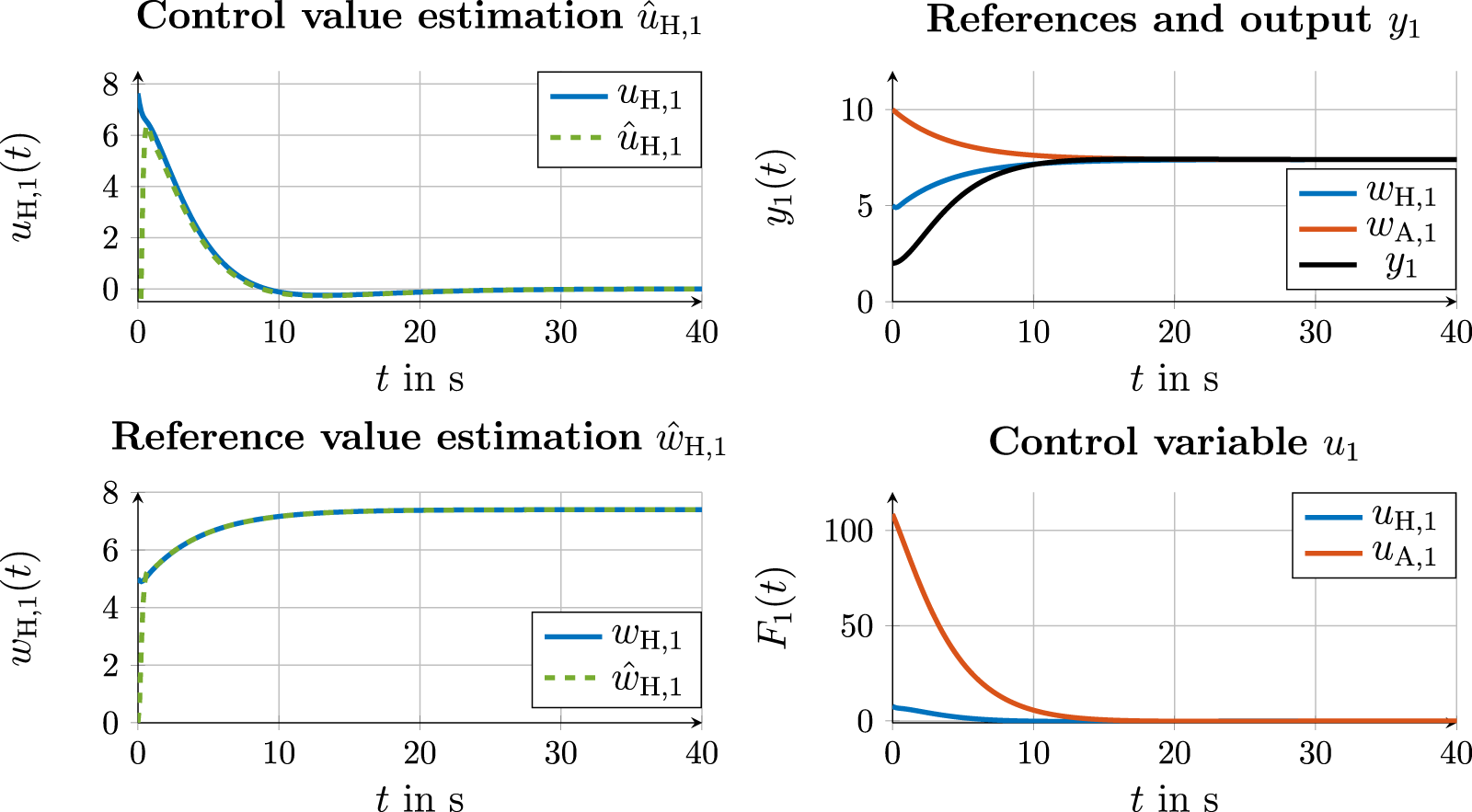
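The estimation heuristic of communication variant 2 can be sketched as follows. This is an illustrative simplification, not the authors' implementation: a static proportional control law (`control_law`, hypothetical) replaces the MPC solution of automation, the output `y` is held constant, and the gain `gamma` and all numerical values are assumptions. The structure follows the description in the text: the error between the measured control variable and the one predicted from the current reference estimate is scaled by a constant gain and added to the estimate at each time step.

```python
def control_law(r, y, gain=2.0):
    """Stand-in for the known control law of automation (assumption)."""
    return gain * (r - y)


def estimate_reference(r_true, y=0.0, gamma=0.2, r_hat0=0.0, steps=100):
    """Iteratively reconstruct the partner's reference from its control value.

    Update rule: r_hat <- r_hat + gamma * (u_measured - u_predicted).
    Returns the history of estimates, starting with the initial guess.
    """
    r_hat = r_hat0
    history = [r_hat]
    for _ in range(steps):
        u_measured = control_law(r_true, y)   # what the partner actually applies
        u_predicted = control_law(r_hat, y)   # what the model predicts for r_hat
        e_u = u_measured - u_predicted        # control error driven to zero
        r_hat = r_hat + gamma * e_u
        history.append(r_hat)
    return history


history = estimate_reference(r_true=2.0)
print(history[0], history[1], history[-1])
```

For this linear stand-in, the estimation error shrinks by the factor `1 - gamma * gain` per step, so the sketch converges only if that factor has a magnitude below one. A comparable condition would have to be checked for any real control law.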

4.4.3 Communication variant 3

The third alternative is the communication shown in Figure 7c. Compared to communication variant 2, humans also communicate their desire to move via the direct execution of their movement. Both agents use the variables from the underlying action layer as input variables for cooperation at the trajectory layer, and each agent reconstructs the reference variables of the partner from the feedback of the error = and = , respectively. The automation also estimates the reference of the human according to (Equation 10). Here, the control error is multiplied with the gain. The estimated values for and are shown in the two diagrams on the left of Figure 10. After , the estimated control value follows its true value. For the reference, the estimate closely follows the true value after . Using these estimated reference values, the consensus at the trajectory layer, along with the resulting state and control values, is shown on the right side of Figure 10.

FIGURE 10

Simulation results for the control value and state estimation of automation (left) and state and control values with cooperation on the trajectory layer in communication variant 3 (right).

4.4.4 Leader–follower communication

In contrast to the emancipated cooperation approaches and the associated communication variants 1–3, the graph in Figure 7d shows a leader–follower structure in which the human is the leader for the reference. The communication path from the human to automation corresponds to that of communication variant 3, in which automation estimates the desired reference of the human from the state and control variables of the action layer. Figure 11 shows the estimation curves for and . Based on the estimated reference of the human, the consensus protocol for consensus finding at the trajectory layer with results in the two trajectories for and , as shown in Figure 11 (right). The reference of automation is adapted to the reference of the human as the leader until the two align after . The output follows the human reference and is adjusted after .

FIGURE 11

Simulation results for the control value and state estimation of the human (left) and state and control values (right) with leader–follower communication on the trajectory layer.
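For contrast with the mutual consensus, the leader–follower case can be sketched with the same assumed first-order protocol, in which only the follower updates its reference. The gain `k`, the initial values, and the use of the leader's true reference instead of an estimate are simplifying assumptions for illustration.

```python
def simulate_leader_follower(r_leader, r_follower0, k=0.5, dt=0.001, t_end=40.0):
    """Only the follower integrates the reference difference.

    The leader's reference stays fixed because no information flows back
    to the leader at the trajectory layer (no closed communication loop).
    """
    r_f = r_follower0
    for _ in range(int(t_end / dt)):
        r_f += k * (r_leader - r_f) * dt   # explicit Euler step
    return r_f


r_follower_final = simulate_leader_follower(r_leader=1.0, r_follower0=2.0)
print(r_follower_final)
```

In this sketch, the agreed reference is always the leader's initial reference, whereas the mutual protocol ends at a value between the two initial references.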

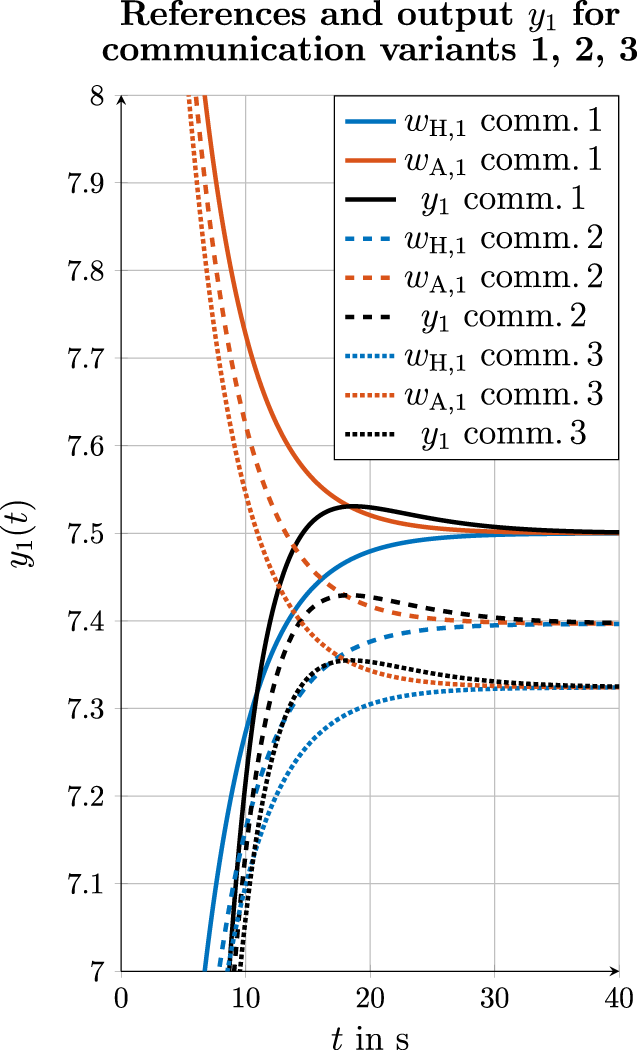

FIGURE 12

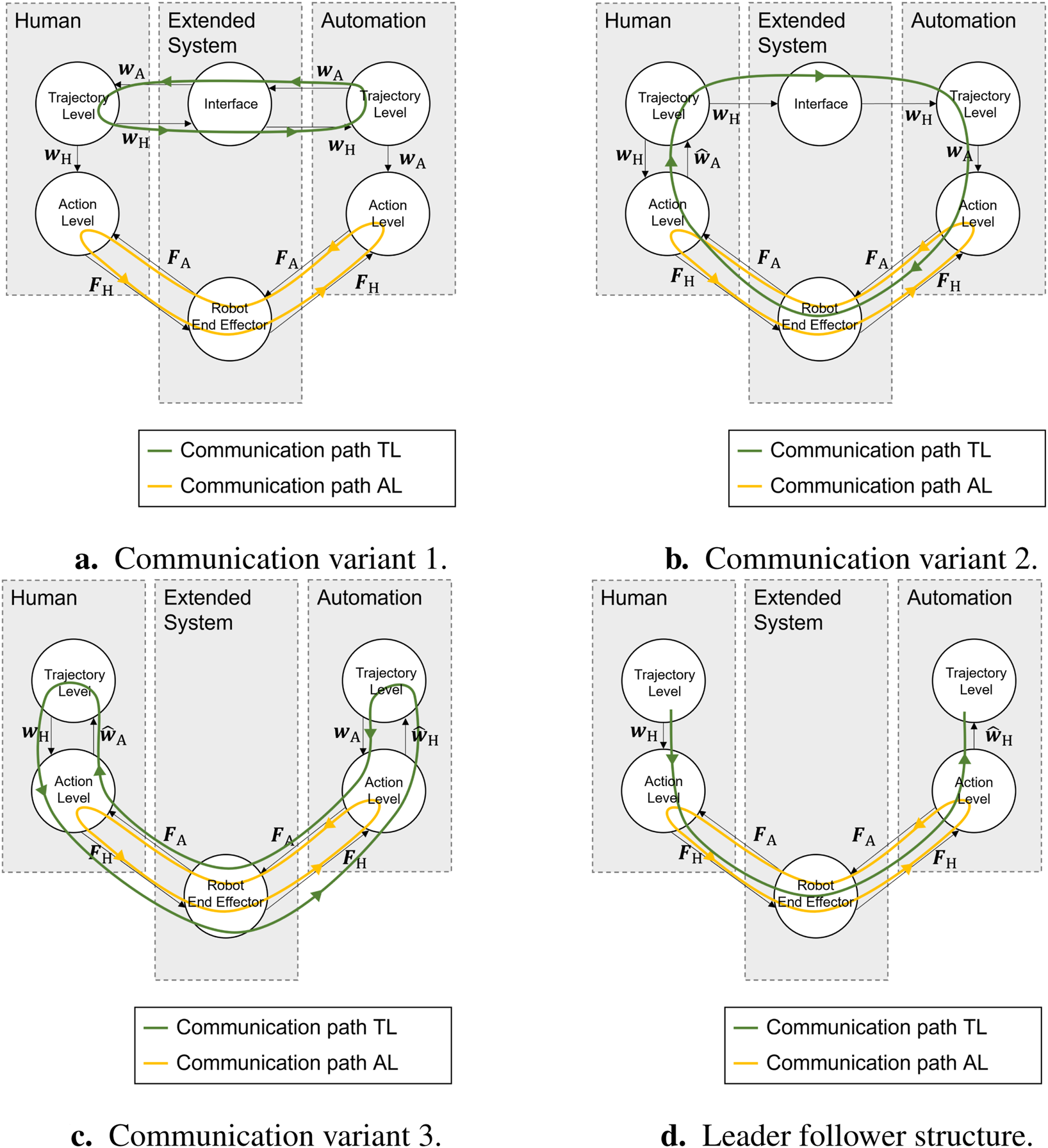

Simulation results for references and output for all three communication variants.

4.5 Comparison of the consensus values in communication variants 1, 2, and 3

As can be observed in the simulation results in Sections 4.4.1, 4.4.2, and 4.4.3, a consensus between the human and automation is found in all three communication variants using the consensus protocol (5). According to Equation 3.18 from Lunze (2019), the consensus exists for the references for output in the case of the exchange of the true reference values and . However, the estimation from the control variables generates an estimation error at the beginning of cooperation for small : As a result, the estimation error (Equation 13) applies to the consensus protocol: As shown in Figures 9 and 10 for the estimation of references, the estimation error disappears for . However, in the comparison of the consensus values of all three communication variants, a difference can be observed that stems from the estimation error . Figure 12 shows the reference of the human, the reference of the automation, and the output for all three communication variants. It can be observed that in the first communication variant, in which the true references and are exchanged, the consensus is reached according to (Equation 8). In the second communication variant, is reached, and in the third communication variant, is reached. Compared to the first communication variant, the third communication variant, therefore, shows a difference of to . At the same time, it must be said that this difference is the result of the present simulation of the human behavior. In practice, it is expected that humans will continue to apply a control variable if they have not yet agreed with the consensus reached with automation.
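The shift of the consensus value caused by a transient estimation error can be reproduced in a small sketch. The assumptions are hypothetical and chosen only for illustration: both agents use the first-order protocol with the same gain `k`, the robot knows the human's true reference, and the human's estimate of the robot's reference carries an exponentially decaying error `e0 * exp(-t / tau)`. Under these assumptions, the sum of both references changes at the rate `k * e(t)`, so the consensus moves away from the error-free value by `k * e0 * tau / 2`.

```python
import math


def consensus_with_estimation_error(r_h0, r_r0, k=0.5, e0=-1.0, tau=1.0,
                                    dt=0.001, t_end=40.0):
    """First-order consensus where the human uses a noisy partner estimate.

    The error model e(t) = e0 * exp(-t / tau) is an illustrative assumption.
    """
    r_h, r_r = r_h0, r_r0
    for i in range(int(t_end / dt)):
        e = e0 * math.exp(-i * dt / tau)
        dr_h = k * ((r_r + e) - r_h)   # human sees r_r plus the estimation error
        dr_r = k * (r_h - r_r)         # robot sees the true human reference
        r_h += dr_h * dt
        r_r += dr_r * dt
    return r_h, r_r


def predicted_consensus(r_h0, r_r0, k=0.5, e0=-1.0, tau=1.0):
    """Error-free mean plus the shift k * e0 * tau / 2 derived from the sum."""
    return 0.5 * (r_h0 + r_r0) + 0.5 * k * e0 * tau


r_h_end, r_r_end = consensus_with_estimation_error(1.0, 2.0)
print(r_h_end, r_r_end, predicted_consensus(1.0, 2.0))
```

The agents still agree on a common value once the error has decayed, but that value differs from the one obtained with exchanged true references, which is qualitatively the effect discussed for variants 2 and 3.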

4.6 Discussion

The simulation results show that for emancipated cooperation at the trajectory layer, a consensus for the common reference is found in all three cases shown, each involving a variation of the communication loops. First, it should be noted that the selected consensus protocol (Equation 5) from Lunze (2019) represents a model that does not always align with human behavior. In practice, this consensus behavior of humans must first be identified since it may vary between human groups, e.g., concerning age and gender. Second, for the first communication variant in Section 4.4.1, a perfect exchange of information about the true references between the two agents was assumed. In practice, however, this always happens via some type of communication interface. Depending on the communication medium used and the ability of humans to communicate their true reference via this medium, an error in the true reference is expected in a practical setup. Finally, it was assumed for all simulations that all necessary parameters, particularly the entries of the and matrices, are known to both agents. These parameters would need to be identified first in practical use. Despite these limitations and simplifications, the simulations provide a successful proof of concept for variants of communication loops for emancipated cooperation at the trajectory layer.

5 Conclusion

In this article, a graph representation for a human–machine cooperation system was presented. For this purpose, the butterfly layer model from Rothfuß et al. (2020) was converted into a graph representation. The graph representation offers several advantages: on the one hand, human–machine cooperation systems can easily be extended to cases involving interactions with other agents. On the other hand, the graph can be examined for closed-loop communication between the human and automation by checking for loops according to Definition 5. For practical application, a human consensus protocol must first be identified through a study. In addition, the next step is to conduct a study to test the proposed communication loops with humans in practice.
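Checking a graph for a closed communication loop can be sketched as a reachability test on a directed graph. The node names and edge lists below are hypothetical simplifications for illustration and do not reproduce the graphs of Figure 7 or the exact wording of Definition 5. Here, a loop between two trajectory-layer nodes is assumed to exist if each node can be reached from the other.

```python
def has_communication_loop(edges, a, b):
    """Return True if b is reachable from a and a is reachable from b."""
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, set()).add(dst)

    def reachable(start, goal):
        # Iterative depth-first search over the directed edges.
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            for nxt in graph.get(node, ()):
                if nxt == goal:
                    return True
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return False

    return reachable(a, b) and reachable(b, a)


# Hypothetical shared action-layer part: both agents act on and observe the system.
action_layer = [
    ("human_TL", "human_AL"), ("robot_TL", "robot_AL"),
    ("human_AL", "system"), ("robot_AL", "system"),
    ("system", "human_AL"), ("system", "robot_AL"),
]
# Direct exchange of references at the trajectory layer.
variant_1 = action_layer + [("human_TL", "robot_TL"), ("robot_TL", "human_TL")]
# Both agents reconstruct the partner's reference from action-layer variables.
variant_3 = action_layer + [("system", "robot_TL"), ("system", "human_TL")]
# Only automation reconstructs the human reference; nothing flows back to human_TL.
leader_follower = action_layer + [("system", "robot_TL")]

for name, edges in [("variant 1", variant_1), ("variant 3", variant_3),
                    ("leader-follower", leader_follower)]:
    print(name, has_communication_loop(edges, "human_TL", "robot_TL"))
```

In this simplified encoding, the two emancipated variants contain a closed loop between the trajectory-layer nodes, while the leader–follower structure does not.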

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

JS: writing – original draft. BV: writing – review and editing. SH: supervision and writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The authors acknowledge support by the KIT-Publication Fund of the Karlsruhe Institute of Technology.

Acknowledgments

The AI-based translation tool DeepL was used to translate parts of the text and for linguistic correction.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. The AI-based translation tool DeepL was used to translate parts of the text and for linguistic correction.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1.^The denotations , , and are used instead of , , and for the three spatial directions in order to avoid confusion with the system states and system outputs .

References

1. Abbink D. Mulder M. (2010). “Neuromuscular analysis as a guideline in designing shared control,” in Advances in haptics (IntechOpen). doi: 10.5772/8696

2. Abbink D. A. Carlson T. Mulder M. de Winter J. C. F. Aminravan F. Gibo T. L. et al. (2018). A topology of shared control systems—finding common ground in diversity. IEEE Trans. Human-Machine Syst. 48, 509–525. doi: 10.1109/THMS.2018.2791570

3. Andersson J. A. E. Gillis J. Horn G. Rawlings J. B. Diehl M. (2019). CasADi: a software framework for nonlinear optimization and optimal control. Math. Program. Comput. 11, 1–36. doi: 10.1007/s12532-018-0139-4

4. Barrett G. Lafortune S. (1998). “A novel framework for decentralized supervisory control with communication,” in SMC’98 conference proceedings. 1998 IEEE international conference on systems, man, and cybernetics cat. No.98CH36218, 1, 617–620. doi: 10.1109/ICSMC.1998.725481

5. Benloucif A. Nguyen A.-T. Sentouh C. Popieul J.-C. (2019). Cooperative trajectory planning for haptic shared control between driver and automation in highway driving. IEEE Trans. Industrial Electron. 66, 9846–9857. doi: 10.1109/TIE.2019.2893864

6. Carlson T. Demiris Y. (2012). Collaborative control for a robotic wheelchair: evaluation of performance, attention, and workload. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 42, 876–888. doi: 10.1109/TSMCB.2011.2181833

7. Claussmann L. Revilloud M. Gruyer D. Glaser S. (2020). A review of motion planning for highway autonomous driving. IEEE Trans. Intelligent Transp. Syst. 21, 1826–1848. doi: 10.1109/TITS.2019.2913998

8. Fischer R. Plessow F. (2015). Efficient multitasking: parallel versus serial processing of multiple tasks. Front. Psychol. 6, 1366. doi: 10.3389/fpsyg.2015.01366

9. Flad M. Otten J. Schwab S. Hohmann S. (2014). “Steering driver assistance system: a systematic cooperative shared control design approach,” in 2014 IEEE international conference on systems, man, and cybernetics (SMC), 3585–3592. doi: 10.1109/SMC.2014.6974486

10. Flemisch F. Abbink D. A. Itoh M. Pacaux-Lemoine M.-P. Weßel G. (2019). Joining the blunt and the pointy end of the spear: towards a common framework of joint action, human–machine cooperation, cooperative guidance and control, shared, traded and supervisory control. Cognition, Technol. & Work 4, 555–568. doi: 10.1007/s10111-019-00576-1

11. Flemisch F. Winner H. Bruder R. Bengler K. (2016). “Cooperative guidance, control, and automation,” in Handbook of driver assistance systems.

12. Grobbel M. Varga B. Hohmann S. (2023). “Shared telemanipulation with VR controllers in an anti slosh scenario,” in 2023 IEEE international conference on systems, man, and cybernetics (SMC), 4405–4410. doi: 10.1109/SMC53992.2023.10393900

13. Huang C. Huang H. Zhang J. Hang P. Hu Z. Lv C. (2022). Human-machine cooperative trajectory planning and tracking for safe automated driving. IEEE Trans. Intelligent Transp. Syst. 23, 12050–12063. doi: 10.1109/TITS.2021.3109596

14. Inga J. Köpf F. Flad M. Hohmann S. (2017). “Individual human behavior identification using an inverse reinforcement learning method,” in 2017 IEEE international conference on systems, man, and cybernetics (SMC), 99–104. doi: 10.1109/SMC.2017.8122585

15. Inga J. Ruess M. Heinrich Robens J. Nelius T. Rothfuß S. Kille S. et al. (2022). Human-machine symbiosis: a multivariate perspective for physically coupled human-machine systems. Int. J. Human-Computer Stud. 170, 102926. doi: 10.1016/j.ijhcs.2022.102926

16. Kwon W. H. Han S. (2005). “Receding horizon control: model predictive control for state models,” in Advanced textbooks in control and signal processing. Berlin; London: Springer.

17. Li G. Li Q. Yang C. Su Y. Yuan Z. Wu X. (2023). The classification and new trends of shared control strategies in telerobotic systems: a survey. IEEE Trans. Haptics 16, 118–133. doi: 10.1109/TOH.2023.3253856

18. Losey D. P. McDonald C. G. Battaglia E. O’Malley M. K. (2018). A review of intent detection, arbitration, and communication aspects of shared control for physical human–robot interaction. Appl. Mech. Rev. 70. doi: 10.1115/1.4039145

19. Losey D. P. O’Malley M. K. (2018). Trajectory deformations from physical human–robot interaction. IEEE Trans. Robotics 34, 126–138. doi: 10.1109/TRO.2017.2765335

20. Ludwig J. Gote C. Flad M. Hohmann S. (2017). “Cooperative dynamic vehicle control allocation using time-variant differential games,” in 2017 IEEE international conference on systems, man, and cybernetics (SMC), 117–122. doi: 10.1109/SMC.2017.8122588

21. Lunze J. (2019). Networked control of multi-agent systems: consensus and synchronisation, communication structure design, self-organisation in networked systems, event-triggered control. Textb. (Münster Ed. MoRa). Available online at: https://www.editionmora.de/ncs/ncs.html

22. Marcano M. Díaz S. Pérez J. Irigoyen E. (2020). A review of shared control for automated vehicles: theory and applications. IEEE Trans. Human-Machine Syst. 50, 475–491. doi: 10.1109/THMS.2020.3017748

23. Marko H. (1973). The bidirectional communication theory - a generalization of information theory. IEEE Trans. Commun. 21, 1345–1351. doi: 10.1109/TCOM.1973.1091610

24. Pacaux-Lemoine M.-P. Flemisch F. (2019). Layers of shared and cooperative control, assistance, and automation. Cognition, Technol. & Work 21, 579–591. doi: 10.1007/s10111-018-0537-4

25. Pacaux-Lemoine M.-P. Makoto I. (2015). “Towards vertical and horizontal extension of shared control concept,” in 2015 IEEE international conference on systems, man, and cybernetics, 3086–3091. doi: 10.1109/SMC.2015.536

26. Pacaux-Lemoine M.-P. Trentesaux D. (2019). Ethical risks of human-machine symbiosis in industry 4.0: insights from the human-machine cooperation approach. IFAC-PapersOnLine 52, 19–24. doi: 10.1016/j.ifacol.2019.12.077

27. Pacaux-Lemoine M.-P. Vanderhaegen F. (2013). “Towards levels of cooperation,” in 2013 IEEE international conference on systems, man, and cybernetics, 291–296. doi: 10.1109/SMC.2013.56

28. Rothfuß S. (2022). Human-machine cooperative decision making. Karlsruhe: Ph.D. thesis, Karlsruher Institut für Technologie KIT. doi: 10.5445/IR/1000143873

29. Rothfuß S. Wörner M. Inga J. Hohmann S. (2020). “A study on human-machine cooperation on decision level,” in 2020 IEEE international conference on systems, man, and cybernetics (SMC), 2291–2298. doi: 10.1109/SMC42975.2020.9282813

30. Schneider J. Rothfuß S. Hohmann S. (2022). Negotiation-based cooperative planning of local trajectories. Front. Control Eng. 3. doi: 10.3389/fcteg.2022.1058980

31. Schneider J. Varga B. Hohmann S. (2024). Cooperative trajectory planning: principles for human-machine system design on trajectory level. A. T. - Autom. 72, 1121–1129. doi: 10.1515/auto-2024-0083

32. Todorov E. Jordan M. I. (2002). Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235. doi: 10.1038/nn963

33. Varga B. (2024). Toward adaptive cooperation: model-based shared control using lq-differential games. Acta Polytech. Hung. 21, 439–456. doi: 10.12700/APH.21.10.2024.10.27

34. Varga B. Hohmann S. Shahirpour A. Lemmer M. Schwab S. (2020). “Limited-information cooperative shared control for vehicle-manipulators,” in 2020 IEEE international conference on systems, man, and cybernetics (SMC), 4431–4438. doi: 10.1109/SMC42975.2020.9283367

Summary

Keywords

communication, human–machine cooperation, shared control, cooperative trajectory planning, human–machine interaction

Citation

Schneider J, Varga B and Hohmann S (2025) Consideration of communication in human–machine interaction for cooperative trajectory planning. Front. Robot. AI 12:1568402. doi: 10.3389/frobt.2025.1568402

Received

29 January 2025

Accepted

02 April 2025

Published

06 May 2025

Volume

12 - 2025

Edited by

Nils Mandischer, University of Augsburg, Germany

Reviewed by

Carlos Francisco Rodriguez, University of Los Andes, Colombia

Marie-Pierre Pacaux-Lemoine, UMR8201 Laboratoire d’Automatique, de Mécanique et d’Informatique Industrielles et Humaines (LAMIH), France

Copyright

© 2025 Schneider, Varga and Hohmann.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Julian Schneider, julian.schneider@kit.edu
