- ¹Department of Biomechanical Engineering, University of Twente, Enschede, Netherlands

- ²Department of Robotics and Mechatronics, University of Twente, Enschede, Netherlands

Identifying kinematic constraints between a robot and its environment can improve autonomous task execution, for example, in Learning from Demonstration. Constraint identification methods in the literature often require specific prior constraint models, geometry or noise estimates, or force measurements. Because such specific prior information or measurements are not always available, we propose a versatile kinematics-only method. We identify constraints using constraint reference frames, which are attached to a robot or ground body and may have zero-velocity constraints along their axes. Given measured kinematics, constraint frames are identified by minimizing a norm on the Cartesian components of the velocities expressed in that frame. Thereby, a minimal representation of the velocities is found, which represents the zero-velocity constraints we aim to find. In simulation experiments, we identified the geometry (position and orientation) of twelve different constraints, including articulated contacts, polyhedral contacts, and contour-following contacts. Accuracy was found to decrease linearly with sensor noise. In robot experiments, we identified constraint frames in various tasks and used them for task reproduction. Reproduction performance with our constraint identification method was similar to that of methods from the literature. Our method can be applied to a large variety of robots in environments without prior constraint information, such as in everyday robot settings.

1 Introduction

Autonomous robotic manipulation has the potential to improve human lives by alleviating physical effort. Robots may offer advantages over human labor regarding consistency, endurance, strength, accuracy, and/or precision in fields such as healthcare, logistics, exploration, and the manufacturing industry. However, autonomous robots are typically designed for one specific task in one specific environment, hence they lack versatility. Tasks in different environments therefore often require different robots, which may be expensive and impractical.

It may therefore be beneficial to develop robots that are versatile in their task execution, allowing a single robot to deal with a variety of tasks and environments. Manipulation tasks may include pick-and-place tasks (e.g., order picking), contact tasks (e.g., wiping, polishing, contour following, and opening/closing compartments) and tool-use tasks (e.g., hammering and screwing). Common environments may include movable objects such as tools, as well as (immovable) physical constraints.

Models of the physical environments may assist in versatile task execution. For example, door opening is a common everyday task involving similar articulation mechanisms across most doors. If the interaction mechanisms (e.g., hinges and slides) can be modeled, a robot may apply the same control to all environments that have the same mechanisms, thereby improving robot versatility. However, manually creating such models may be cumbersome due to many possible variations in the environment, such as object positions, orientations, shapes, and dynamics. There is therefore a need for automatic modeling of physical environments (Kroemer et al., 2020).

Automatically modeling physical environments may occur in a Learning from Demonstration (LfD) context (Kroemer et al., 2020). In LfD, robots learn to perform tasks from human demonstrations, for example, by manually guiding a robot, rather than by explicit programming. From the demonstration data, the physical interactions throughout the demonstration may be modeled, which in turn may be used in autonomous task reproduction.

Physical environments typically contain kinematic constraints that restrict movement. In everyday settings, numerous constraints may be encountered, including articulated/mechanism contacts such as prismatic joints (drawers) and revolute joints (doors), polyhedral contacts such as pin-plane contacts (pen drawing) and plane-plane contacts (box sliding), and contour-following contacts (dusting).

Constraint awareness can benefit task execution in several ways. First, tasks can often be simplified when expressed in the constraints (Bruyninckx and De Schutter, 1996). Second, choosing a suitable control method, e.g., position, velocity, force, or impedance control, can improve both task performance and stability by keeping undesirable interaction forces low (Conkey and Hermans, 2019; De Schutter et al., 1999). Third, planning to avoid constraints can simplify some tasks because there are fewer state transitions to consider, such as in reaching tasks (Oriolo and Vendittelli, 2009). Alternatively, planning to introduce constraints can simplify some tasks by reducing the free space of the robot, for example, during object alignment (Suomalainen et al., 2021). Fourth, differentiating between constrained states in a task provides meaningful, tractable building blocks, which can improve task planning (Ureche et al., 2015; Jain and Niekum, 2018; Holladay et al., 2021) and facilitate learning (Simonič et al., 2024). Fifth, some constraints are common in many robot tasks and can therefore be a basis for generalization between tasks (Li and Brock, 2022; Li et al., 2023).

Kinematic constraints consist of (i) a constraint class, e.g., a type of joint or contact, and (ii) a constraint geometry, e.g., the orientation and position of a rotation axis or surface normal (De Schutter et al., 1999). Identifying constraints from data therefore requires (i) classifying the constraint class and (ii) identifying the constraint geometry, both of which are the topic of this work.

In this work, we make three assumptions about robot manipulation to limit our scope. First, we assume that robots are rigidly attached to a constrained mechanism or object in contact with the environment, and thus leave grasping and environmental compliance out of our scope. Second, we assume that there is a single maintained contact between a robot and the environment, such as a contact point, line, plane, or other continuous contact area. Third, we assume that all unconstrained degrees of freedom are excited, to prevent ambiguity between true physical constraints that do not allow motion and non-observed motions.

Bruyninckx and De Schutter defined kinematic constraint classes based on contact (Bruyninckx and De Schutter, 1993a; Bruyninckx et al., 1993b). They proposed two methods to identify constraints. The first is based on the absence of mechanical power in the direction of constraints and requires position and force measurements. The second is based on whether velocities or forces separately fit candidate constraint models. They used both methods in a Kalman filter with a candidate constraint model of a specific class to estimate constraint geometry, such as contact points, axes of rotation, and polyhedral contact normals (De Schutter et al., 1999).

Several authors extended the methods of Bruyninckx and De Schutter, mainly to multi-contact polyhedral contacts (Meeussen et al., 2007; Cabras et al., 2010; Lefebvre et al., 2005). They identify, classify, and segment data containing arbitrary contacts between two uncertain polyhedral or curved objects using pose and wrench measurements. Where previous methods required approximate geometric models of the polyhedra, Slaets et al. identify arbitrary unknown polyhedra at runtime (Slaets et al., 2007). Although polyhedra are useful to approximate many tasks, these methods are fundamentally limited in modeling rotations.

Alternative methods omit noise models, and fit constraint models of a specific class directly to data, identifying the constraint geometry in the process. Such models are specified manually, and when several candidate models are proposed, the best-fitting model is chosen. For example, several authors identify one of three candidate models (fixed, prismatic, and revolute joints) using kinematic measurements (Sturm et al., 2010; Niekum et al., 2015; Hausman et al., 2015). Subramani et al. identify six candidate models using kinematic measurements (Subramani et al., 2018). They later expand their method with force information and identify eight candidate models (Subramani et al., 2020).

Some methods do not require specific constraint models but use more versatile representations to capture multiple constraints. Sturm et al. fit Gaussian processes in configuration space, but the identified Gaussian process kernel parameters are not straightforward to interpret (Sturm et al., 2011). Mousavi Mohammadi et al. identify “task frames” instead of identifying constraints explicitly (Mousavi Mohammadi et al., 2024). Such frames conveniently describe contact tasks and thereby often align with constraint geometry, but lack constraint classification (Bruyninckx and De Schutter, 1996). They use velocities and/or forces in a multi-step decision process to identify frame properties that result in low or constant velocities and forces with minimal uncertainty. Van der Walt et al. identify constraints from kinematic data by fitting points, lines, planes, and their higher-dimensional equivalents in six-dimensional linear and angular velocity space, after which they select the best-fitting model (Van der Walt et al., 2025).

Because kinematic constraints occur often in robot manipulation tasks, we believe more versatile constraint identification is an important step to advance the applicability of robots in the real world. However, the literature on constraint identification shows two common limitations. First, methods in the literature often require specific prior constraint models and estimates of geometry and noise. In everyday robot settings where many constraints can be encountered, it may be challenging to exhaustively define such models and estimates. Second, various methods require force measurements, which may not be available. This work overcomes both limitations by identifying constraint frames from kinematic data. First, the method can identify a wide variety of constraints without specific prior constraint models or estimates of geometry or noise. The method is used to identify articulated/mechanism contacts, polyhedral contacts, and contour-following contacts. Second, the method only requires kinematic measurements. Therefore, our method can be applied to various robots in everyday settings, without prior information about the environment or task.

The method offers two more useful features. First, the identified constraint frames can be used directly for task reproduction since the constraints are expressed in a task-relevant reference frame. Second, the number of identified parameters is fixed, in contrast to methods that use an increasing number of parameters for each added candidate model.

This paper is organized as follows: Section 2 discusses preliminaries on rigid body kinematics. Section 3 proposes to specify constraints using constraint frames. Section 4 proposes an optimization problem to identify constraint frames from kinematic data. Section 5 evaluates the method on experimental data from simulation and real robot task demonstrations. Section 6 reproduces robot tasks using the identified constraint frames. Section 7 discusses the results and draws conclusions.

2 Preliminaries on kinematics

This section contains preliminaries on rigid body kinematics (Lynch and Park, 2017). Motion between two rigid bodies can be represented by the transformation between two reference frames, one frame rigidly attached to each body. Such reference frames have a position and orientation in space, which can change with body motion. The transformation between two reference frames in three-dimensional space can be parameterized by a distance between their positions

which represents the pose (orientation

The velocity between two rigid bodies can be represented by a twist

where

Expressing the twist

expresses the twist

3 Constraint frames

This section introduces a method to specify constraints that result in constrained kinematics. This method is used in Section 4 for the inverse problem: identifying constraints from kinematic data.

3.1 Degrees of freedom

Kinematic constraints limit a robot’s motion. We consider Pfaffian constraints on the end effector twist

where

We restrict

We also allow constraints on

Here, axis

To specify whether the axes of

By summing the degree of freedom vector

Constraints can thus be defined by specifying

3.2 Frame type and geometry

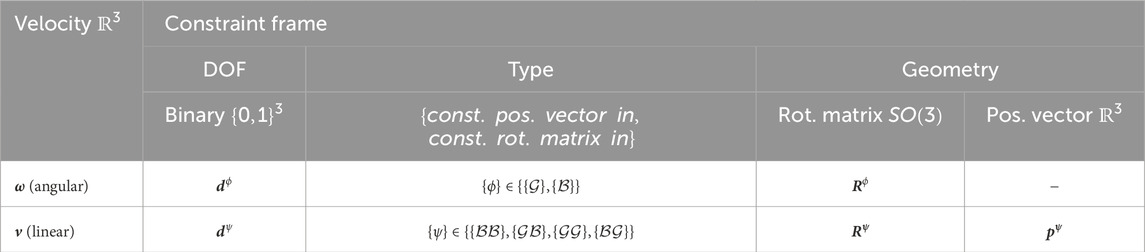

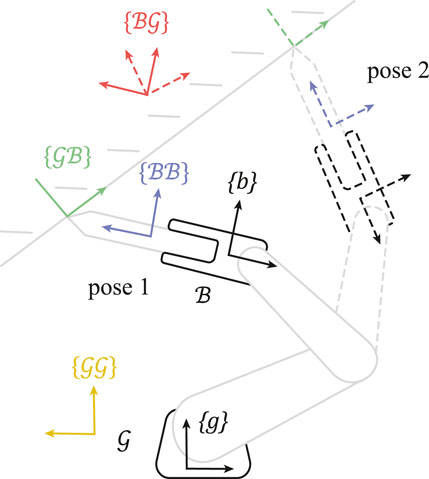

So far, the frame

The

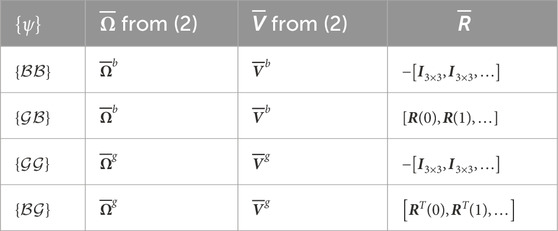

Figure 1. Constraint frame identification following a two-dimensional task demonstration, e.g., by a human guiding the robot. The behavior of the constraint frame types

Because the frames’ orientations and positions are constant in either one of the bodies, they can be parameterized by a constant rotation matrix

For the

3.3 Applied constraint frames

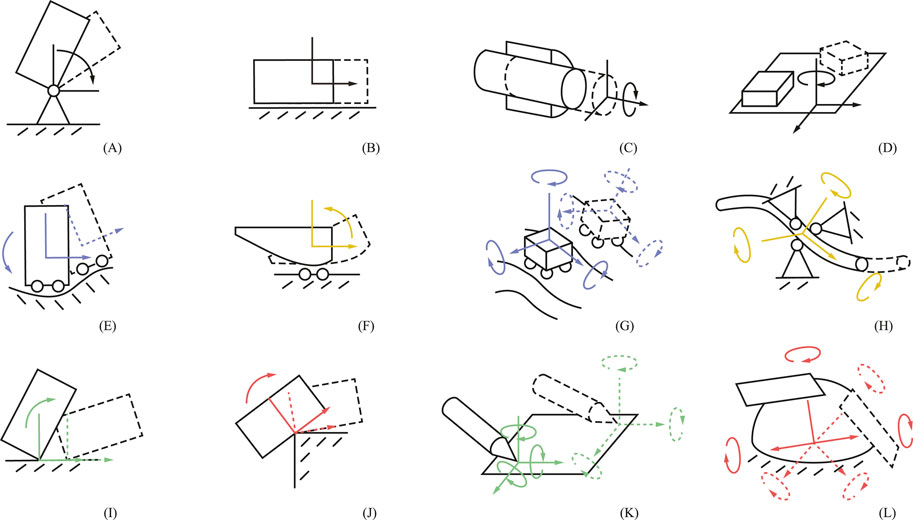

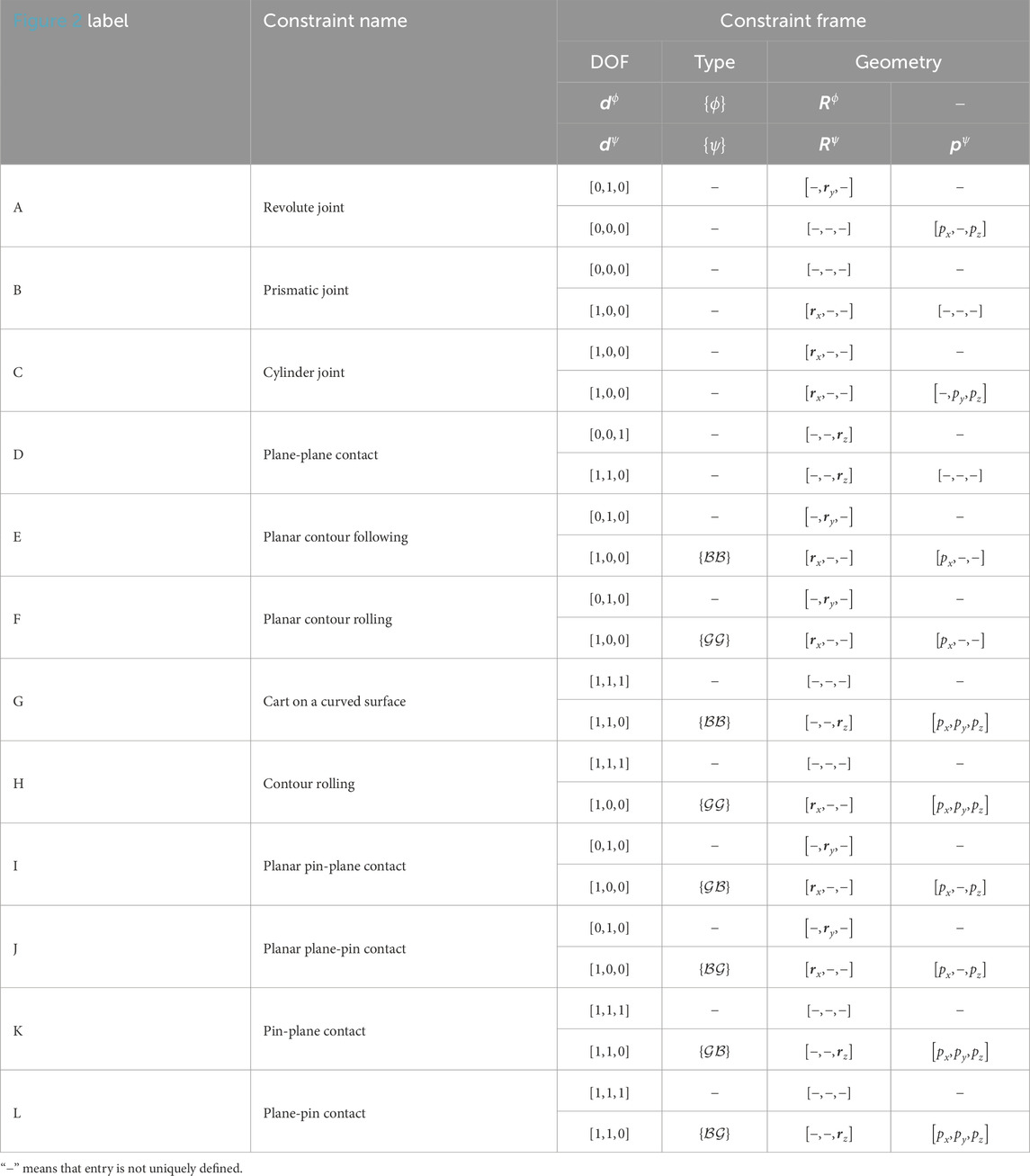

In summary, degrees of freedom

Figure 2. Visualization of twelve (not exhaustive) constraints that can be captured by the method. Each constraint (A–L) is described in Table 2. The left-hand figures are planar, where the rotation axis (y-axis) is normal to the plane. Colors (Table 2) indicate the linear velocity constraint frame type

The presented method does not uniquely define constraints for three reasons. First, the ordering of the axes is undefined. For example,

Table 2. Parameters of the example constraints of Figure 2.

In addition, two types of redundancies can occur depending on the constraint. First, (parts of) the frame

For simplicity, we consider angular velocity constraints where either type

4 Constraint frame identification

Section 3 introduced a method to specify constraints that result in constrained kinematics. This section considers the inverse problem: identifying constraints from constrained kinematic data. Constraint identification is first defined as an optimization problem for angular velocity

Section 3 specified constraints on

Thereby, a minimal representation of

Measuring

as defined by the scaled

for a signal

With noiseless measurements, the constraint condition (Equation 4) can then be tested by

assuming normally distributed noise

Instead of minimizing over

Therefore, we use a

but with

also known as the geometric mean (Bullen, 2003). This results in a quasinorm, because not all conditions for a norm are satisfied. By substituting the

Section 3 proposed two options for

where the first optimization

This method can also be applied to linear velocity

Section 3 proposed four options for

where

Table 3. Kinematic variables to transform (Equation 8).

Because the ground-truth constraint frame types

The inputs to the method are twists

5 Evaluation

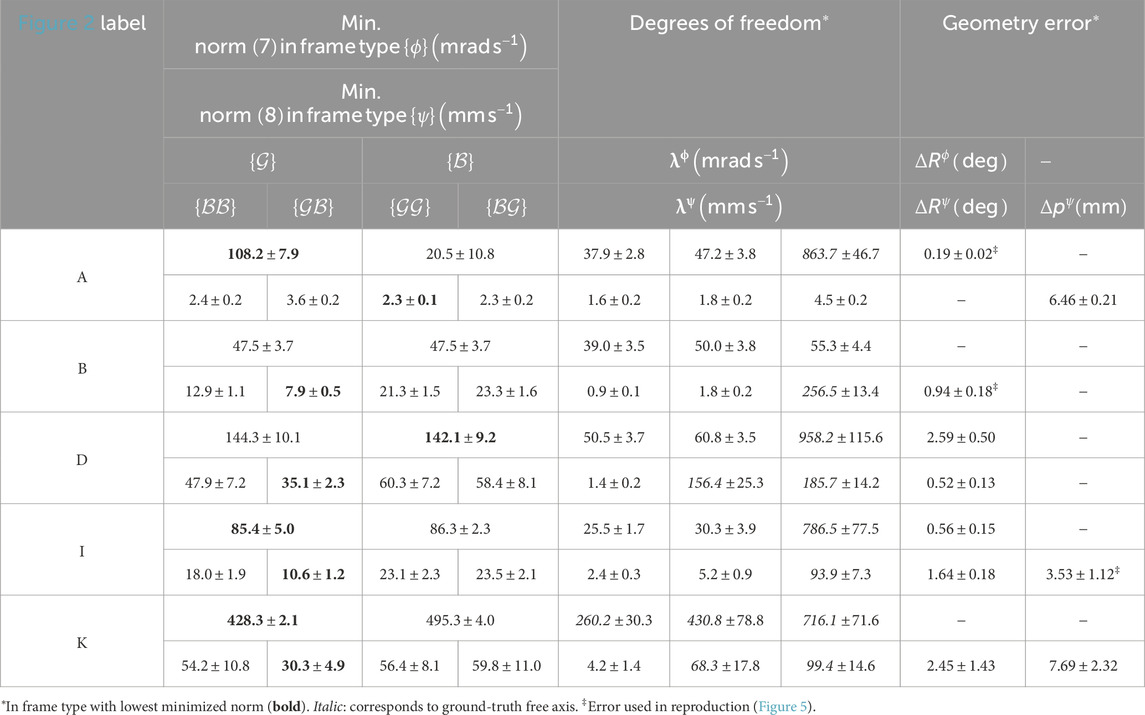

To evaluate the identification method, we simulated kinematic data for all twelve constraints of Figure 2 and gathered experimental robot data for five constraints. For the simulation experiments we used prior knowledge of the constraint frame types

5.1 Simulation experiments

For the simulation experiments, we first generated constrained end effector poses

Poses

Discrete time ran for

Figure 3. (A) Constraint frame identification of a plane-plane constraint (Figure 2D) in simulation experiments. (B) Constraint frame identification of a revolute joint (Figure 2A) in robot experiments. (C) Reproduction of motion in a prismatic joint (Figure 2B) by applying force

Normally distributed noise with standard deviation

5.2 Robot experiments

To test the method on real-world data, a Franka Research 3 (Franka Robotics, Munich, Germany) was used to collect constrained end effector poses

Poses

5.3 Error metrics for evaluation

Section 3.3 noted that the geometric parameters

Section 3.3 and Table 2 noted that

The ground truth constraint frame geometries were

5.4 Implementation

Given experimental poses

The rotation matrices

5.5 Simulation experiment results

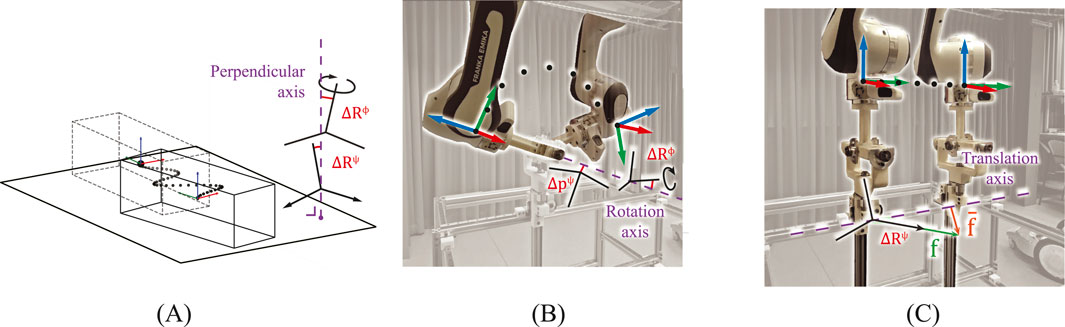

The geometric errors (

Figure 4. Sensitivities of the geometric identification errors (

5.6 Robot experiment results

Identifying constraint frames in demonstrations resulted in several candidate frame types (left, Table 4), each having associated degrees of freedom (middle, Table 4) and geometry (right, Table 4).

Section 4 noted that optimal frame types may be classified as the one with the lowest norms of Equation 7 or Equation 8. For constraints where any frame type is valid, norms are expected to be similar between frame types. This holds for all

After classifying the optimal frame type, the associated degrees of freedom and geometry can be evaluated (middle, right, Table 4). If any threshold between

6 Application to robot task reproduction

This section illustrates how robot tasks can be reproduced using control expressed in the identified constraint frames of Section 4, and how their identification errors (

6.1 Reproduction control

To reproduce the tasks, we used simple control based on the identified constraint frames from the experiments of Table 4, thereby illustrating simple LfD. We sent desired motor torques

Desired motor torques were sent to the robot using the Robot Operating System (ROS, version Noetic Ninjemys) on Ubuntu 20.04 with the franka_ros package. During operation, the user and bystanders were outside the workspace of the robot, and the user monitored the task reproduction with the robot’s emergency stop in hand.

Desired wrenches were set to constant values (

6.2 Effects of identification accuracy

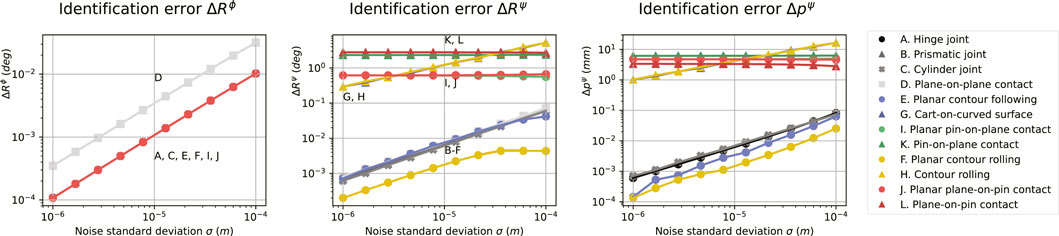

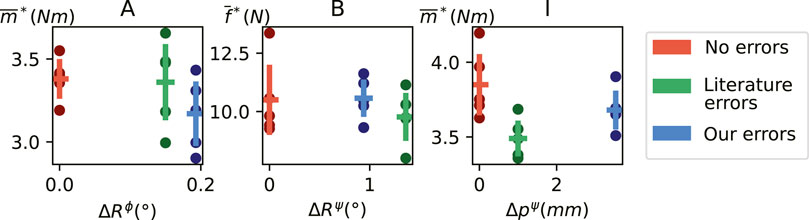

During constrained task reproduction there will be reaction wrenches along the constrained axes, which we consider undesirable in these experiments. Such reaction wrenches can be caused by, among other factors, the control applying wrenches based on imperfect constraint identification. Therefore, we compared the reaction wrenches in case the constraint frames used in the controller contain no errors (

From the measured interaction wrenches (

Figure 5. The peak magnitude reaction wrenches (

7 Discussion and conclusion

This work identifies constraints from kinematic data using constraint frames, consisting of a frame type that determines what body the frame is attached to, geometry that determines the orientation and position, and the degrees of freedom of velocities in that frame. First, frame geometries are identified by minimizing a norm on velocities in all frame types. Second, the optimal frame type is classified as the one with the lowest norm. Third, the degrees of freedom can be classified by thresholding the velocities in that frame.
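The three steps can be illustrated with a minimal planar sketch in Python. It is not the implementation used in this work: the simulated sliding motion, the noise level, the dense grid search, the 0.01 m/s threshold, and all function names are hypothetical, and the objective is assumed to be the geometric mean of the per-axis RMS velocities. Only one frame type is considered, so the frame type comparison of the second step is omitted.

```python
import math
import random


def rms(values):
    """Root-mean-square of a sequence of scalars."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def axis_rms(theta, velocities):
    """Per-axis RMS of planar velocities expressed in a frame rotated by theta."""
    c, s = math.cos(theta), math.sin(theta)
    along_x = [c * vx + s * vy for vx, vy in velocities]
    along_y = [-s * vx + c * vy for vx, vy in velocities]
    return rms(along_x), rms(along_y)


def objective(theta, velocities):
    """Geometric mean of the per-axis RMS values (assumed sparsity measure)."""
    rx, ry = axis_rms(theta, velocities)
    return math.sqrt(rx * ry)


# Hypothetical demonstration: sliding along a line at 30 deg with sensor noise.
random.seed(0)
true_angle = math.radians(30.0)
velocities = []
for k in range(500):
    speed = 0.1 * math.sin(0.05 * k)  # m/s along the free direction
    vx = speed * math.cos(true_angle) + random.gauss(0.0, 0.001)
    vy = speed * math.sin(true_angle) + random.gauss(0.0, 0.001)
    velocities.append((vx, vy))

# Step 1: identify the frame orientation with a dense grid over [0, 90) deg.
grid = [math.radians(0.1 * i) for i in range(900)]
theta_hat = min(grid, key=lambda t: objective(t, velocities))

# Step 3: classify degrees of freedom by thresholding the per-axis RMS.
threshold = 0.01  # m/s, hypothetical value between noise and motion levels
dof = [1 if r > threshold else 0 for r in axis_rms(theta_hat, velocities)]

print(round(math.degrees(theta_hat), 1), dof)
```

Because the ordering of the axes is undefined, the search only spans 90 degrees: a free direction at 120 degrees would be reported as a frame at 30 degrees with its second axis free.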

7.1 Advantages

The method does not require force measurements, and can therefore be applied to any system that measures positions and orientations of constrained objects or manipulators. Examples include other (mobile) robotic arms with different kinematic chains, humanoid robots, and mobile robots constrained by their environments. Besides robotics, the method may also be applied to motion tracking systems, for example, to monitor human movement or estimate human joints (Ancillao et al., 2022). While impedance control was used to collect pose data and reproduce tasks, the constraint identification itself does not depend on force measurements. Because force measurements can improve identification accuracy, incorporating them is a topic of future work (De Schutter et al., 1999; Subramani et al., 2020). Moreover, correct kinematics-based constraint identification requires that all true degrees of freedom are excited. Otherwise, there is no discernible difference between, e.g., a prismatic joint constraint and unconstrained but straight-line translation, which will have the same apparent degrees of freedom.

The method requires no specific prior constraint models, nor estimates of geometry. Hence, there is no need to manually estimate or define constraints, which may be challenging to do exhaustively for all constraints that can be encountered in everyday settings. The method can identify a wide variety of constraints, of which twelve common examples were shown. However, many more can be identified by enumerating over all possibilities, including constraints where the angular and linear velocity frames are not orthogonal.

The method identifies a fixed number of intuitive parameters (Table 1). Methods that fit specific constraint models must identify and choose from multiple candidate models, each with their own parameters. Therefore, our method may be more efficient when many different constraints must be considered, such as in everyday settings.

Our identified constraint frames can be directly interpreted as “task frames” from the literature since both frames conveniently describe contact tasks (Bruyninckx and De Schutter, 1996; Mousavi Mohammadi et al., 2024). Although recent work on task frame identification by Mousavi Mohammadi et al. (2024) also has the above advantages, in this work we also classify constraints by discerning the degrees of freedom associated with our frames. Although we reproduced simple tasks, such frames can also be used to reproduce more complicated contact tasks with hybrid position and force control applied to the task frame axes (Conkey and Hermans, 2019; Ureche et al., 2015). Alternatively, impedance controller stiffness may be varied based on the identified constraints.

7.2 Limitations and future work

In this work, we applied our method to a window of kinematic data which we assume contains a single constraint. In future work, we will investigate how our method can be applied to data containing sequential constraints. Furthermore, we only considered single-contact constraints which can be modeled using a single constraint frame. Multiple-contact constraints, such as the two ends of a stick contacting separate planes, cannot be fully modeled using a single instance of the current method, since only one of the two contact points will be identified. However, a second instance of our method may identify a second contact point, if the second solution is (forced to be) distinct. Therefore, multiple contacts may be identified with multiple instances of our method, which is the topic of future work.

We defined constraint identification as a minimization problem and applied a general global-local optimization method. Improvements in accuracy and time efficiency may be achieved in several ways: by more efficient optimizer implementations, by choosing a different optimization method suited for our specific problem, or by tuning the optimization parameters. Furthermore, prior information may be useful for initialization. For example, a vision system may be used to identify a tool tip as prior information on constraint geometry.
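As an illustration of a global-local search and of initialization from prior information, the following Python sketch combines a coarse grid (global stage) with a golden-section refinement (local stage). It is a sketch under stated assumptions, not the optimizer used in this work: the planar objective is an analytic stand-in for the geometric mean of two per-axis RMS velocities, and `ALPHA`, `SIGMA`, the grid size, and the prior bracket are hypothetical.

```python
import math

ALPHA = math.radians(40.0)  # hypothetical true free-motion direction
SIGMA = 0.01                # hypothetical noise-to-motion RMS ratio


def objective(theta):
    """Geometric mean of two per-axis RMS velocities (analytic stand-in)."""
    rms_x = math.sqrt(math.cos(theta - ALPHA) ** 2 + SIGMA ** 2)
    rms_y = math.sqrt(math.sin(theta - ALPHA) ** 2 + SIGMA ** 2)
    return math.sqrt(rms_x * rms_y)


def golden_section(f, lo, hi, tol=1e-7):
    """Local stage: shrink a bracket [lo, hi] that contains a single minimum."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) < f(d):
            b, d = d, c  # minimum lies in [a, d]
            c = b - inv_phi * (b - a)
        else:
            a, c = c, d  # minimum lies in [c, b]
            d = a + inv_phi * (b - a)
    return 0.5 * (a + b)


def global_local(f, lo, hi, n_grid=36):
    """Global stage: coarse grid, then refine around the best grid point."""
    step = (hi - lo) / n_grid
    best = min((lo + i * step for i in range(n_grid)), key=f)
    return golden_section(f, best - step, best + step)


# Without prior information: search the full 90 deg range.
theta_hat = global_local(objective, 0.0, math.pi / 2.0)

# With prior information (e.g., a rough estimate from vision): skip the
# global stage and refine directly inside a bracket around the prior.
theta_prior = golden_section(objective, math.radians(35.0), math.radians(45.0))

print(math.degrees(theta_hat), math.degrees(theta_prior))
```

With a usable prior, the global stage and its objective evaluations can be skipped entirely, which is where a time saving would be expected.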

Identifying constraint geometry does not require any parameter choice, but classifying the degrees of freedom requires two thresholds on RMS velocities, which can be chosen heuristically or empirically. Heuristically, thresholds may be chosen based on prior information, such as expected noise and expected velocities, which may differ between applications and measurement systems. If such prior information is available, classification may perform as expected without the need for threshold tuning. Empirically, thresholds may be chosen following demonstrations with known ground-truth constraints (Section 5). If such demonstrations are available and representative of future tasks, no explicit prior information about noise and velocities is needed, which may be more convenient depending on the application.
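Both ways of choosing a threshold can be sketched in a few lines of Python. The rule of placing the threshold at the geometric midpoint between the noise level and the motion level is an assumption made for illustration, not a rule from this work, and all function names and numerical values are hypothetical. The sketch handles a single threshold for one velocity type.

```python
import math


def heuristic_threshold(expected_noise_rms, expected_motion_rms):
    """Geometric midpoint between expected noise RMS and expected motion RMS."""
    return math.sqrt(expected_noise_rms * expected_motion_rms)


def empirical_threshold(labeled_rms):
    """Geometric midpoint between the largest constrained-axis RMS and the
    smallest free-axis RMS, taken from demonstrations with known constraints.

    labeled_rms: list of (rms_value, is_free) pairs.
    """
    constrained = [r for r, is_free in labeled_rms if not is_free]
    free = [r for r, is_free in labeled_rms if is_free]
    if max(constrained) >= min(free):
        raise ValueError("constrained and free axes are not separable")
    return math.sqrt(max(constrained) * min(free))


def classify_dof(axis_rms, threshold):
    """Mark an axis as free (1) if its RMS velocity exceeds the threshold."""
    return [1 if r > threshold else 0 for r in axis_rms]


# Heuristic choice from hypothetical prior information (values in m/s).
t_heuristic = heuristic_threshold(0.001, 0.1)

# Empirical choice from hypothetical labeled demonstrations (values in m/s).
labeled = [(0.0012, False), (0.0009, False), (0.05, True), (0.08, True)]
t_empirical = empirical_threshold(labeled)

print(t_heuristic, t_empirical, classify_dof([0.06, 0.001, 0.0011], t_empirical))
```

The empirical variant raises an error when the labeled constrained and free axes overlap, which would indicate that no single threshold separates them for that data.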

Geometric identification errors were found to scale linearly with noise in simulation experiments, and the exact scaling varies with the underlying constraints. Errors in robot experiments were larger than those in simulation experiments, which may be due to unmodeled factors such as structural compliance. Classification of the ground-truth constraint frame type was successful in all robot experiments. An analysis of factors that influence identification and classification performance, such as the constraint, the optimizer, the relative direction and magnitude of motion, and the sample size, is outside the scope of this work.

Constraint identification methods in the literature report geometric identification accuracies using different metrics. For our method, we report the geometric parameter errors between points, lines, and planes. Methods that use similar metrics report similar errors: within 1–7.5 mm and 0.5–5 deg for point-on-plane contacts, prismatic joints, and revolute joints (De Schutter et al., 1999; Sturm et al., 2011; Mousavi Mohammadi et al., 2024). Other methods report the fitness between observations and their candidate constraint models (Sturm et al., 2011; Subramani et al., 2018; Subramani et al., 2020; Van der Walt et al., 2025). Of such methods, the most accurate report sub-millimeter mean fit errors (Subramani et al., 2018). While such sub-millimeter mean model fit errors are not directly comparable to geometric parameter errors, they may correspond to sub-millimeter geometric errors.

While our method may be more versatile, it may yield larger geometric identification errors than methods that fit specific constraint models to data. Regardless, such larger errors (e.g., 5 mm instead of 0.5 mm) did not lead to substantially larger reaction wrenches in simple reproduction experiments with the default Franka controller (Section 6). Furthermore, similar errors have been shown to lead to acceptable performance in more complicated tasks in related work (Mousavi Mohammadi et al., 2024). The obtained accuracy may therefore be sufficient for such tasks, and other factors may have a greater effect on task performance, such as the task definition, environment, robot, controller, and definition of success. For example, control methods that are designed for compliant contact, such as impedance control, may be more robust to misidentified constraint geometry than non-compliant control. We aim to apply such compliant control methods to reproduce constrained tasks through LfD in future work.

7.3 Conclusion

This work identified constraint frames in robot tasks without prior knowledge of the constraints or tasks and without force measurements. Automatically modeling such robot-environment interactions, for example, in the context of Learning from Demonstration, may support versatile autonomous robot applications.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

AO: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Project administration, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review and editing. DD: Supervision, Writing – review and editing. HvdK: Funding acquisition, Resources, Supervision, Writing – review and editing. MV: Conceptualization, Methodology, Project administration, Resources, Supervision, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by the Dutch Ministry of Education, Culture, and Science through the Sectorplan Bèta en Techniek.

Acknowledgments

The authors would like to thank Arvid Q.L. Keemink and Gwenn Englebienne for their insight into sparse optimization. This work has previously appeared as a preprint (Overbeek et al., 2025).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

¹ https://github.com/ET-BE/ReFrameId

References

Ancillao, A., Verduyn, A., Vochten, M., Aertbeliën, E., and De Schutter, J. (2022). A novel procedure for knee flexion angle estimation based on functionally defined coordinate systems and independent of the marker landmarks. Int. J. Environ. Res. Public Health 20, 500. doi:10.3390/ijerph20010500

Bruyninckx, H., and De Schutter, J. (1993a). Kinematic models of rigid body interactions for compliant motion tasks in the presence of uncertainties. Proc. IEEE Int. Conf. Robotics Automation 1, 1007–1012. doi:10.1109/ROBOT.1993.292107

Bruyninckx, H., and De Schutter, J. (1996). Specification of force-controlled actions in the “task frame formalism”-a synthesis. IEEE Trans. Robotics Automation 12, 581–589. doi:10.1109/70.508440

Bruyninckx, H., De Schutter, J., and Dutre, S. (1993b). The “reciprocity” and “consistency” based approaches to uncertainty identification for compliant motions. Proc. IEEE Int. Conf. Robotics Automation 1, 349–354. doi:10.1109/ROBOT.1993.292006

Cabras, S., Castellanos, M. E., and Staffetti, E. (2010). Contact-state classification in human-demonstrated robot compliant motion tasks using the boosting algorithm. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 40, 1372–1386. doi:10.1109/TSMCB.2009.2038492

Conkey, A., and Hermans, T. (2019). Learning task constraints from demonstration for hybrid force/position control. IEEE-RAS Int. Conf. Humanoid Robots 2019-Octob, 162–169. doi:10.1109/Humanoids43949.2019.9035013

De Schutter, J., Bruyninckx, H., Dutré, S., De Geeter, J., Katupitiya, J., Demey, S., et al. (1999). Estimating first-order geometric parameters and monitoring contact transitions during force-controlled compliant motion. Int. J. Robotics Res. 18, 1161–1184. doi:10.1177/02783649922067780

Hausman, K., Niekum, S., Osentoski, S., and Sukhatme, G. S. (2015). “Active articulation model estimation through interactive perception,” in 2015 IEEE international conference on robotics and automation (ICRA), 3305–3312. doi:10.1109/ICRA.2015.7139655

Holladay, R., Lozano-Pérez, T., and Rodriguez, A. (2021). Planning for multi-stage forceful manipulation. arXiv, 6556–6562. doi:10.1109/icra48506.2021.9561233

Jain, A., and Niekum, S. (2018). “Efficient hierarchical robot motion planning under uncertainty and hybrid dynamics,” in Proceedings of the 2nd conference on robot learning (PMLR), 757–766.

Kroemer, O., Niekum, S., and Konidaris, G. (2020). A review of robot learning for manipulation: challenges, representations, and algorithms. arXiv:1907.03146.

Lefebvre, T., Bruyninckx, H., and De Schutter, J. (2005). Online statistical model recognition and state estimation for autonomous compliant motion. IEEE Trans. Syst. Man, Cybern. Part C Appl. Rev. 35, 16–29. doi:10.1109/TSMCC.2004.840053

Li, X., Baum, M., and Brock, O. (2023). “Augmentation enables one-shot generalization in learning from demonstration for contact-rich manipulation,” in 2023 IEEE/RSJ international conference on intelligent robots and systems (IROS), 3656–3663. doi:10.1109/IROS55552.2023.10341625

Li, X., and Brock, O. (2022). Learning from demonstration based on environmental constraints. IEEE Robotics Automation Lett. 7, 10938–10945. doi:10.1109/LRA.2022.3196096

Lynch, K. M., and Park, F. C. (2017). Modern robotics: mechanics, planning, and control. Cambridge University Press.

Meeussen, W., Rutgeerts, J., Gadeyne, K., Bruyninckx, H., and De Schutter, J. (2007). Contact-state segmentation using particle filters for programming by human demonstration in compliant-motion tasks. IEEE Trans. Robotics 23, 218–231. doi:10.1109/tro.2007.892227

Mousavi Mohammadi, S. A., Vochten, M., Aertbeliën, E., and De Schutter, J. (2024). Automatic extraction of a task frame from human demonstrations for controlling robotic contact tasks. arXiv. doi:10.48550/arXiv.2404.01900

Niekum, S., Osentoski, S., Atkeson, C. G., and Barto, A. G. (2015). Online Bayesian changepoint detection for articulated motion models. Proc. - IEEE Int. Conf. Robotics Automation 2015-June, 1468–1475. doi:10.1109/ICRA.2015.7139383

Oriolo, G., and Vendittelli, M. (2009). “A control-based approach to task-constrained motion planning,” in 2009 IEEE/RSJ international conference on intelligent robots and systems. Presented at the 2009 IEEE/RSJ international conference on intelligent robots and systems, 297–302. doi:10.1109/IROS.2009.5354287

Overbeek, A. H. G., Dresscher, D., Van der Kooij, H., and Vlutters, M. (2025). Versatile kinematics-based constraint identification applied to robot task reproduction. Preprint. Available online at: https://research.utwente.nl/en/publications/versatile-kinematics-based-constraint-identification-applied-to-r (Accessed May 31, 2025).

Simonič, M., Ude, A., and Nemec, B. (2024). Hierarchical learning of robotic contact policies. Robotics Computer-Integrated Manuf. 86, 102657. doi:10.1016/j.rcim.2023.102657

Slaets, P., Lefebvre, T., Rutgeerts, J., Bruyninckx, H., and De Schutter, J. (2007). Incremental building of a polyhedral feature model for programming by human demonstration of force-controlled tasks. IEEE Trans. Robotics 23, 20–33. doi:10.1109/TRO.2006.886830

Sturm, J., Jain, A., Stachniss, C., Kemp, C. C., and Burgard, W. (2010). “Operating articulated objects based on experience,” in 2010 IEEE/RSJ international conference on intelligent robots and systems. Presented at the 2010 IEEE/RSJ international conference on intelligent robots and systems, Taipei, Taiwan, 2739–2744. doi:10.1109/IROS.2010.5653813

Sturm, J., Stachniss, C., and Burgard, W. (2011). A probabilistic framework for learning kinematic models of articulated objects. J. Artif. Intell. Res. 41, 477–526. doi:10.1613/jair.3229

Subramani, G., Hagenow, M., Gleicher, M., and Zinn, M. (2020). A method for constraint inference using pose and wrench measurements. arXiv. doi:10.48550/arXiv.2010.15916

Subramani, G., Zinn, M., and Gleicher, M. (2018). “Inferring geometric constraints in human demonstrations,” in Proceedings of the 2nd conference on robot learning. Presented at the conference on robot learning (Zürich, Switzerland: PMLR), 223–236.

Suomalainen, M., Abu-Dakka, F. J., and Kyrki, V. (2021). Imitation learning-based framework for learning 6-D linear compliant motions. Auton. Robot. 45, 389–405. doi:10.1007/s10514-021-09971-y

Ureche, A. L. P., Umezawa, K., Nakamura, Y., and Billard, A. (2015). Task parameterization using continuous constraints extracted from human demonstrations. IEEE Trans. Robotics 31, 1458–1471. doi:10.1109/TRO.2015.2495003

Van der Walt, C., Stramigioli, S., and Dresscher, D. (2025). Mechanical constraint identification in model-mediated teleoperation. IEEE Robotics Automation Lett. 10, 5777–5782. doi:10.1109/LRA.2025.3560893

Keywords: constraint identification, physical constraints, constraint frames, contact modeling, robot manipulation, learning from demonstration, imitation learning

Citation: Overbeek AHG, Dresscher D, van der Kooij H and Vlutters M (2025) Versatile kinematics-based constraint identification applied to robot task reproduction. Front. Robot. AI 12:1574110. doi: 10.3389/frobt.2025.1574110

Received: 10 February 2025; Accepted: 02 June 2025;

Published: 15 July 2025.

Edited by:

Xiaopeng Zhao, The University of Tennessee, Knoxville, United States

Reviewed by:

Changchun Liu, Nanjing University of Aeronautics and Astronautics, China

Dler Salih Hasan, Salahaddin University, Iraq

Copyright © 2025 Overbeek, Dresscher, van der Kooij and Vlutters. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alex H. G. Overbeek, a.h.g.overbeek@utwente.nl
