- ¹College of Biosystems Engineering and Food Science, Zhejiang University, Hangzhou, China

- ²Key Laboratory of Intelligent Equipment and Robotics for Agriculture of Zhejiang Province, Zhejiang University, Hangzhou, China

- ³Chair of Robotics, Artificial Intelligence and Real-time Systems, Technische Universität München, München, Germany

Tasks in the meat processing sector are physically demanding and repetitive, and the sector suffers from labor shortages. Mechanization and automation are therefore essential for reducing labor-intensive work while improving productivity, safety, and operator wellbeing. This review gives an overview of current research on robotic and automated systems in meat processing. The modules of a robotic system are introduced first; the robotic tasks are then divided into three sections according to the processing target: livestock, poultry, and seafood. Furthermore, we analyze the technical details of whole meat processing, including skinning, gutting, abdomen cutting, and half-carcass cutting, and discuss the performance and industrial feasibility of these systems. The review also covers some commercialized automation products in the meat processing industry. Finally, we conclude the review and discuss potential challenges for further robotization and automation in meat processing.

1 Introduction

Meat is widely acknowledged as an indispensable source of nutrition worldwide. Technavio’s market research report indicates that the meat market is poised for substantial expansion, with a projected value of USD 1210.97 billion by 2027 and an anticipated compound annual growth rate of approximately 7% over the forecast period (Technavio, 2023). To meet the heightened demand for meat, an estimated 80 billion animals are slaughtered annually, which has significantly increased the meat processing workload (Ritchie, 2017). This growth has intensified the meat industry’s need for efficiency and productivity, which in turn has accelerated the growth of the automation market across the entire meat industry.

Meat processing poses safety and contamination risks (Hamid et al., 2016; Das et al., 2019). There is a strong demand for automation to address these problems, but automating the whole meat process remains challenging because of high product variability (Romanov et al., 2022), the need for dexterous manipulation, harsh environments, and space constraints (Singh et al., 2012).

Compared with other industries, the working environment of the meat industry is not well suited to robotics. Meat processing automation is constrained by equipment sensitivity to size variations (Barbut, 2014) and by material deformability, which necessitates adaptive robotics. Characterizing the distinctiveness and diversity of meat, together with mechanizing and automating its processing, poses a formidable challenge for Meat Industry 4.0. The initiative’s overarching objective is to secure superior quality, safety, and traceability in the meat sector through the application of advanced Industry 4.0 technologies such as artificial intelligence, big data, robotics, smart sensors, and blockchain (Echegaray et al., 2022; Wang et al., 2024).

Due to the complexity of meat processing, which requires balancing efficiency with operational precision, meat processing automation faces several inherent challenges. Meat, being a typical non-standard flexible material, imposes high demands on robotic manipulation. The variation in the mechanical properties of different meat parts, such as its viscoelasticity, necessitates sophisticated control systems that can adapt to these inconsistencies. For instance, the automation system should manage multi-module coordination, integrating path planning for cutting with real-time force control. The system must be capable of adjusting forces dynamically based on the varying elasticity and stickiness of the meat at different points, ensuring both precision and safety. Moreover, when processing large livestock such as pigs, cows, and sheep, the scale of the equipment required becomes even more significant. These larger animals demand heavier and more robust machinery, which also has to accommodate the increased range of motion necessary for efficient processing. Robotic arms, in particular, must possess greater degrees of freedom to effectively plan and execute complex movements during the cutting and processing stages.

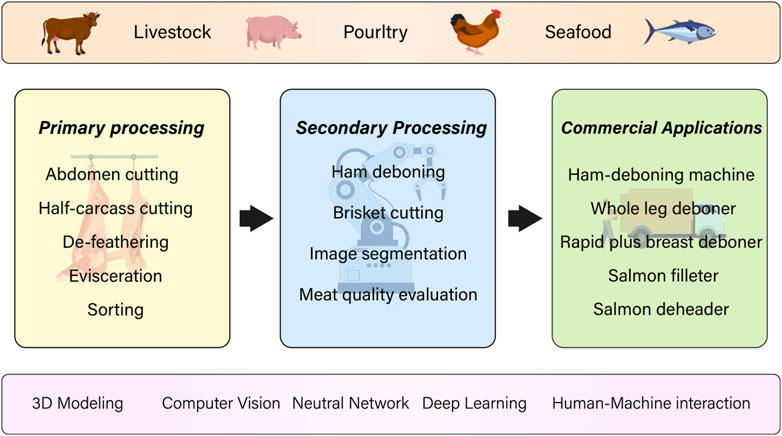

This review presents a comprehensive summary of recent progress in automatic and intelligent meat processing systems, as depicted in Figure 1. The paper is organized as follows. Section 2 gives a concise overview of the key modules that make up a robotic system for meat processing, together with an analysis of the relevant literature. The subsequent three sections discuss specific robotic tasks in meat processing, focusing on livestock (Section 3), poultry (Section 4), and seafood (Section 5), respectively. Section 6 examines recent research trends in meat processing and analysis. Finally, Section 7 concludes the paper by summarizing the main findings and outlining future research directions.

2 Methodology

In principle, a meat-processing robotic system requires three major modules: a sensing and perception module, a control module, and an actuation module. Sensing plays a crucial role in ensuring accurate execution, and making effective use of the relevant sensor information is pivotal to expanding the system’s functionality. According to their sensing requirements, the tasks to be performed before meat processing can be divided into three primary categories: detecting external features, internal features, and tissue boundaries. Research on the practical application of different sensing systems includes beef ear tag detection using color cameras (Kumar et al., 2017), pig organ grasping with force and torque sensors (Takács et al., 2021), laser profiling for sheep head removal (Condie et al., 2007), and feather bone detection along the beef carcass spine.

In meat processing, the main role of the control module is to generate cutting paths from the extracted outline of the animal body. The path derived from the contour may be highly intricate (Meng et al., 2024). Hence, it is discretized into multiple points, and a smooth curve is then fitted through these points. Additionally, the control module handles the alignment among the robot tool coordinate system (TCS), the user coordinate system (UCS), and the camera coordinate system (CCS).
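The alignment among coordinate systems can be illustrated with homogeneous transforms. The following is a minimal sketch, assuming that calibration has already produced a camera-to-user transform and a user-to-tool transform; the function names and all numeric values are hypothetical placeholders and are not taken from any of the cited systems.

```python
import numpy as np

def make_transform(rotation_z_deg, translation):
    """Build a 4x4 homogeneous transform: rotate about z, then translate."""
    theta = np.deg2rad(rotation_z_deg)
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T

def transform_points(T, points):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (homogeneous @ T.T)[:, :3]

# Hypothetical calibration results (placeholder values, for illustration only).
T_ucs_from_ccs = make_transform(90.0, [0.5, 0.0, 1.2])   # camera -> user frame
T_tcs_from_ucs = make_transform(0.0, [1.0, -0.3, 0.0])   # user -> tool frame

# Chaining the two transforms maps camera coordinates directly to the tool frame.
T_tcs_from_ccs = T_tcs_from_ucs @ T_ucs_from_ccs

# Two cutting points detected in the camera frame (metres, made-up values).
cut_points_ccs = np.array([[0.1, 0.0, 0.8], [0.1, 0.2, 0.8]])
cut_points_tcs = transform_points(T_tcs_from_ccs, cut_points_ccs)
print(cut_points_tcs)
```

In practice the transforms would come from hand-eye calibration and from the current robot pose rather than from fixed numbers.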

The actuation module executes the predefined tasks along the trajectory generated by the control module. Typically, the end effector employed in meat processing is highly specialized for its task. Robotic manipulators from established manufacturers such as Motoman, KUKA, FANUC, and ABB are commonly employed as actuators. Fitted with different end effectors, these manipulators can perform a diverse array of tasks.

Thus far, within the agri-food sector, scholars have provided valuable insights regarding the implementation of meat processing robots. In this study, we analyze selected academic papers. The reviewed work also makes clear that equipment development, automation, and intelligent technologies are interdependent.

3 Livestock

Livestock production is a vital component of sustainable food systems. It provides animal protein crucial for proper health, implements environmentally sustainable production methods prioritizing conservation efforts, and fosters the growth of rural communities worldwide. The livestock industry holds a unique position as a leader and substantial contributor to global food system discussions. As the human population and incomes increase, there has been a notable increase in the volume of meat production on a global scale (Godfray et al., 2018; Liu et al., 2023). It is anticipated that by the year 2029, the aggregate growth rate of red meat production will soar by 80% (Schmidhuber et al., 2020). Facing this situation, extending meat processing automation is imperative.

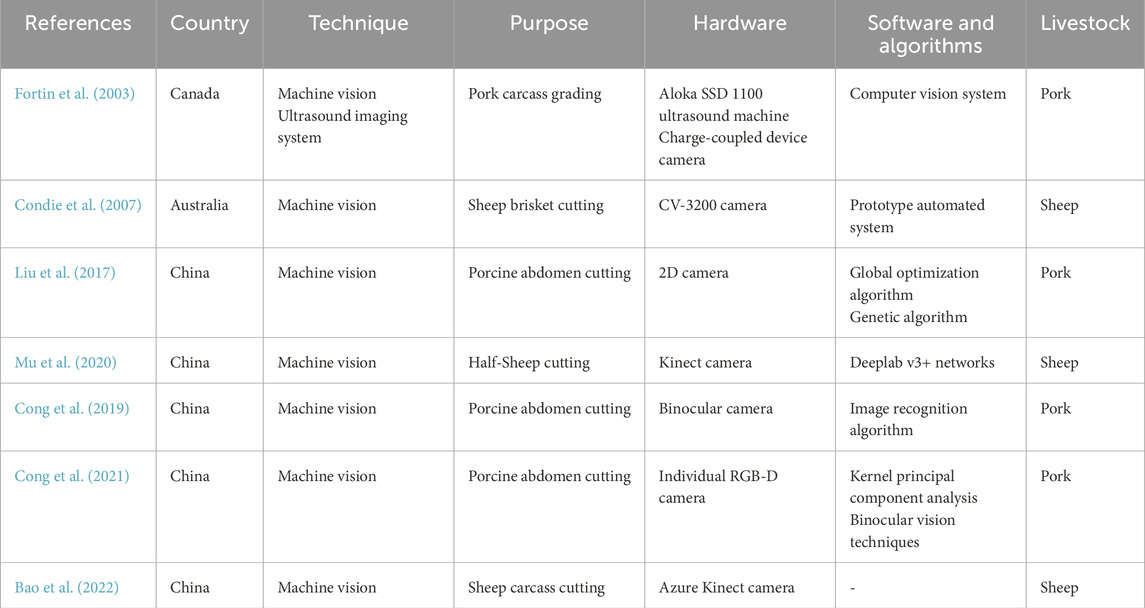

Modern livestock meat processing can be divided into several main steps: livestock handling, primary processing, secondary processing, packaging, and labeling (Esper et al., 2021). In Table 1, recent research on the identification and classification of livestock has been listed. In this section, we provide an overview of the recent advancements in automation within the meat processing industry. This includes abdomen cutting and half carcass cutting in primary processing, and ham deboning, brisket cutting, and carcass image segmentation in secondary processing. Furthermore, current commercialized processing machines and some quality assessment techniques for pork, beef, and mutton are also mentioned.

3.1 Primary processing of livestock

Primary livestock processing is a crucial step that demands high hygiene, quality, and accuracy standards to ensure proper subsequent operations (Cong et al., 2021). It covers activities within a slaughterhouse, including stunning, dressing, viscera removal, and abdomen cutting (Longdell, 1996).

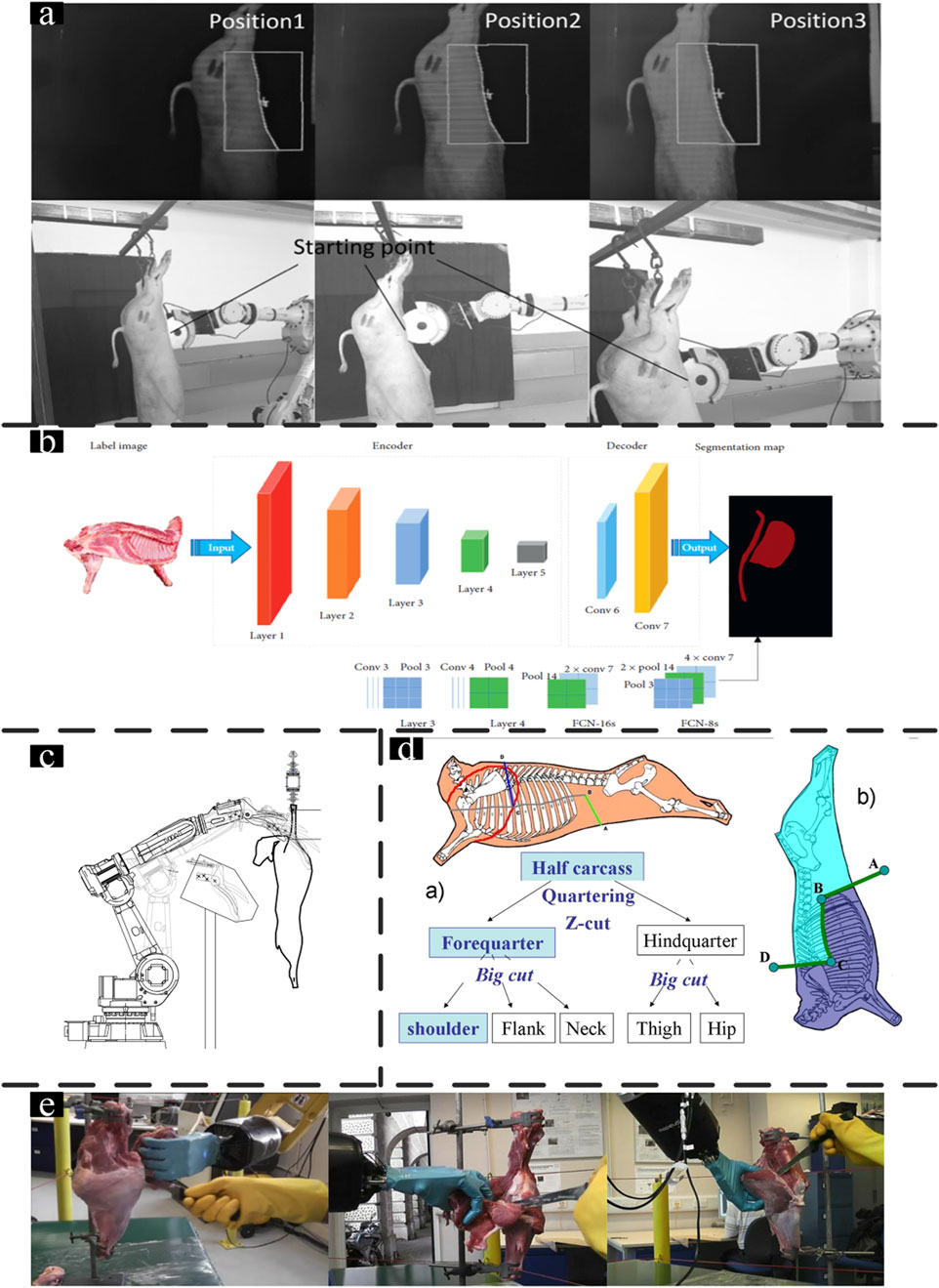

With the increasing application of machine vision to meat analysis and livestock identification, vision-based robots have been studied. Liu et al. (2017) proposed a flexible robotic cutting system whose trajectory planning is based on a genetic algorithm (GA) (Figure 2a). A 2D camera captures the pig’s side view, and MATLAB extracts the abdominal curve, which is fitted with a fifth-order spline. The path is then optimized with the GA to minimize the number of cutting segments and the cutting error. The optimized path is discretized into six segments with a maximum cutting error of 1.6 mm, ensuring accurate cuts through the skin and muscle while avoiding damage to internal organs. However, the recognition accuracy is still low: the abdominal cutting curve obtained through image binarization shows limited alignment with the actual carcass, which constrains the cutting success rate (Esper et al., 2021). Computer vision techniques such as deep learning and 3D modeling can provide higher accuracy than conventional machine vision (Kang et al., 2022) and bring more and better opportunities to meat processing systems. In a robotic 3D vision-guided system for half-sheep cutting, DeepLab v3+ networks were used to acquire the key cutting points, and the system stability was acceptable. Half-carcass cutting is one of the most complicated operations in meat processing, so this result indicates that automation is feasible even for the hardest step in meat production and shows the application potential of 3D vision in carcass cutting (Mu et al., 2020).
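The curve-fitting and discretization steps described for the abdominal cutting path can be sketched as follows. This is only an illustration under stated assumptions: a single fifth-degree polynomial stands in for the fifth-order spline, the segment boundaries are spaced uniformly instead of being optimized by a genetic algorithm, and the contour samples are synthetic. The function name `fit_and_segment` is hypothetical.

```python
import numpy as np

def fit_and_segment(x, y, degree=5, n_segments=6):
    """Fit a polynomial to contour samples and approximate it with straight cuts.

    Returns the segment end points and the largest vertical deviation between
    the fitted curve and the piecewise-linear cutting path.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    span = x.max() - x.min()
    t = (x - x.min()) / span                    # normalise to [0, 1] for a stable fit
    curve = np.poly1d(np.polyfit(t, y, degree))

    knots_t = np.linspace(0.0, 1.0, n_segments + 1)   # uniform knots (not GA-optimised)
    knots_y = curve(knots_t)

    dense_t = np.linspace(0.0, 1.0, 1001)
    path_y = np.interp(dense_t, knots_t, knots_y)     # piecewise-linear cutting path
    max_error = float(np.max(np.abs(curve(dense_t) - path_y)))

    knots_x = x.min() + knots_t * span
    return knots_x, knots_y, max_error

# Synthetic abdominal contour in millimetres (made-up shape, for illustration only).
x_mm = np.linspace(0.0, 600.0, 61)
y_mm = 40.0 * np.sin(x_mm / 200.0)

knots_x, knots_y, err6 = fit_and_segment(x_mm, y_mm, n_segments=6)
_, _, err12 = fit_and_segment(x_mm, y_mm, n_segments=12)
print(f"max error with 6 segments: {err6:.2f} mm, with 12 segments: {err12:.2f} mm")
```

The comparison between six and twelve segments shows the trade-off that a path optimizer has to balance: fewer segments simplify the robot motion but increase the deviation from the fitted curve.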

Figure 2. Livestock processing robot: (a) Feature identification with the profile moved (Liu et al., 2017); (b) FCN image segmentation model (Mu et al., 2020); (c) The axis representation for carcass in operational space (Singh et al., 2012); (d) Beef preparation process and Z-cut (Guire et al., 2010a); (e) Metamorphic hand integrated with ABB robot (Wei et al., 2014).

A 3D vision system can record the 3D features of the carcass as both coordinates and vectors. Based on the New Zealand sheep body segmentation specification, Mu et al. (2020) developed a segmentation robot for half-sheep cutting (Figure 2b). A 3D camera was used to obtain the depth image of the sheep carcass, and deep learning-based image processing algorithms were employed to acquire the key cutting points. The trajectory of the cutting robot is planned according to the spatial coordinates of the processed point clouds. While the system excels in cutting path planning, it still relies on open-loop control and does not incorporate force sensors for feedback, which limits its ability to detect sudden changes in cutting resistance. Additionally, the pre-defined cutting paths may struggle to adapt to the variability in carcass structure, which challenges the system’s overall flexibility and precision. Another method of cutting sheep carcasses, based on a 3D vision system with dual robots, was proposed by Bao et al. (2022). Compared with traditional robotic systems, the dual-robot system has the advantage of a large operating space and is suitable for sheep carcass processing. A fixing device is designed to prevent the carcass from swinging during cutting, and the dual-robot system performs precision cutting according to the trajectory calculated from the point cloud spatial coordinates. These methods have shown promising results: they can improve processing efficiency, accuracy, and consistency while reducing labor costs and improving worker safety.
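Planning a trajectory from the spatial coordinates of detected key points can be sketched with a standard pinhole back-projection. The example below is a simplified illustration, not the pipeline of the cited systems: the camera intrinsics, the flat synthetic depth image, and the key-point pixels are all assumed values, and the helper names are hypothetical.

```python
import numpy as np

def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with known depth to camera coordinates."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.array([x, y, depth_m])

def plan_trajectory(key_pixels, depth_image, intrinsics):
    """Convert ordered key-point pixels into 3D waypoints in the camera frame."""
    fx, fy, cx, cy = intrinsics
    return np.array([
        backproject(u, v, depth_image[v, u], fx, fy, cx, cy)
        for u, v in key_pixels
    ])

# Assumed intrinsics (focal lengths and principal point in pixels).
intrinsics = (600.0, 600.0, 320.0, 240.0)

# Synthetic depth image: a flat surface 1.2 m from the camera.
depth_image = np.full((480, 640), 1.2)

# Key cutting points as (u, v) pixels, e.g. the output of a segmentation network.
key_pixels = [(320, 240), (420, 240), (420, 340)]

waypoints = plan_trajectory(key_pixels, depth_image, intrinsics)
cut_length = float(np.sum(np.linalg.norm(np.diff(waypoints, axis=0), axis=1)))
print(waypoints)
print(f"planned cut length: {cut_length:.2f} m")
```

The resulting waypoints are still expressed in the camera frame; they would be mapped to the robot frame with the calibrated transform before execution.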

The reviewed vision-based robotic systems demonstrate progressive advancements in meat processing automation. While 2D machine vision (Liu et al., 2017) struggles with alignment accuracy (70%–80% success), 3D vision systems (Mu et al., 2020; Bao et al., 2022) achieve superior precision (85%–92%) through point-cloud spatial mapping and dual-robot coordination. Deep learning-enhanced methods (Kang et al., 2022) further improve robustness against anatomical variability but require higher computational resources. Collectively, 3D vision and AI integration represent the most viable path toward fully automated, high-throughput carcass processing, though cost-effectiveness remains a challenge for small-scale operations (Liu et al., 2024).

3.2 Secondary processing of livestock

Secondary processing mainly involves primal brisket cutting and deboning. These tasks are currently performed manually rather than automatically because of the challenges of complicated manipulation and soft tissue characteristics. Examining the manual tasks helps in gaining a comprehensive understanding of the prototype specifications. Brisket cutting is the operation that follows the processing of the viscera. It demands a heightened level of precision, labor expertise, and uniformity to ensure superior meat quality (Singh et al., 2012).

By investigating the manual task of brisket cutting, Condie et al. (2007) developed a brisket-cutting robot with a laser profile analysis system. The sensing system, comprising image acquisition and laser profiling equipment, was controlled via a LabVIEW interface and communicated with the PC and robotic system through an RS-232 serial interface. The execution module used a commercial industrial robot integrated with a pneumatically actuated shear end-effector. However, any unexpected carcass movement during scanning may increase the error of the 3D mapping, and contact with the ground can lead to organ contamination. These issues must be addressed before brisket cutting can be deployed in industrial meat production. Singh et al. (2012) reported another method for sheep brisket cutting (Figure 2c) that mitigates these problems to some extent. It obtains an offset of the carcass entry point from optical and ultrasonic sensors and calculates the cutting path with a statistical method. The system accuracy is limited by its use of a fixed profile for a certain breed type, which may not accommodate different types of carcasses. Moreover, both of these brisket-cutting systems relied solely on visual inputs and lacked the capability to sense cutting forces. This made it difficult to adapt to changes during cutting and often led to damage to internal organs, because cutting forces could not be adjusted in real time. Although precise brisket cutting is still extremely challenging, the above systems lay an essential foundation for future studies.

Automated systems for deboning specific livestock meat sections are still limited to laboratory settings, and full automation remains a long way off. An alternative approach is therefore to establish a human-machine collaboration platform in which a robotic manipulator assists with manual cutting to enhance efficiency and mitigate the risk of human injury (Sørensen et al., 1993). Longdell (1996) discussed various kinds of beef deboning machines for different sections of the animal, which are similar to primalisation pulling arms. Building on this research, promoting robotization and intelligent systems in the deboning process is paramount to improving efficiency, scalability, flexibility, and sustainability. Research has been conducted on replacing the human hand with a robotic arm for deboning operations by learning from the butcher during processing (Figure 2d) (Essahbi et al., 2012; Friedrich et al., 2000; Guire et al., 2010a; Guire et al., 2010b; Zhou et al., 2009; Zhou et al., 2007). Wei et al. (2014) developed a dexterous robotic hand to replace the human operator’s hand in ham deboning (Figure 2e). The robotic hand comprises a reconfigurable palm and four fingers and establishes a highly flexible human-robot co-working platform for meat processing operations including handling, pulling, pushing, and twisting. The four fingers perform abduction as well as flexion and extension, and the palm configuration is adjusted for different tasks and changing environments. Motion trajectories of the operator’s left hand were captured with instrumented data gloves fitted with force/torque and position sensors; these trajectories were used to map the deboning task workspace to the joint space of the robotic hand and to perform human-robot co-working deboning. However, some critical issues, such as reducing friction in the tendon drive of the hand and increasing friction at the contact points between the meat and the robotic hand, still need further investigation.

In recent years, there has been a focus on improving system adaptability, flexibility, and cost-effectiveness. To achieve precise cutting operations, current robotic brisket-cutting systems and deboning systems integrate multi-sensor feedback mechanisms to monitor cutting forces. However, the viscoelastic nature of meat tissues imposes stringent requirements on force-control accuracy during operation, which significantly prolongs processing time. Furthermore, the presence of blood and other fluids in the cutting environment introduces additional challenges for sensor-based recognition and ultimately limits the system’s precision. The ongoing development of artificial intelligence and machine learning is also expected to lead to further improvements in deboning automation technology in the coming years.

3.3 Commercial applications

Building on the technologies described above, commercial systems for livestock cutting have become increasingly common in recent years (Xu et al., 2023).

Mayekawa Co. Ltd. from Japan has developed HAMDAS-RX, the world’s first automated ham-deboning robotic system, with a maximum processing capacity of 500 hams per hour (Mayekawa, 2021). After the pre-cutting processes are complete, HAMDAS-RX performs automated deboning of pork ham, including effective extraction of the hipbone and tailbone, and differentiates between right and left legs automatically. Another company, SCOTT Automation Robotics Co. Ltd., employs a combination of robotic technology, scribing saws, and sensing technologies to accurately identify the position and shape of the beef (Scott, 2021). This design can reduce the workload by two to three workers per shift and increase productivity remarkably.

The integration of advanced technologies, including robotics and sensing, has resulted in diminished reliance on manual labor, increased precision, and heightened productivity. Nonetheless, there is still a long way to go for the wide promotion of meat processing robots.

4 Poultry

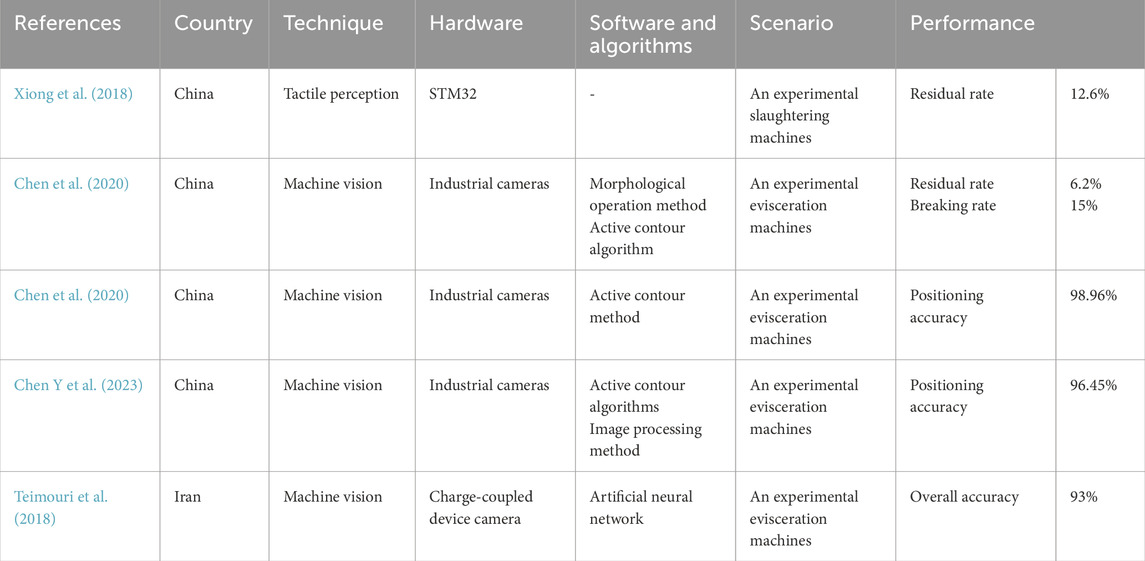

In recent years, the steady rise in annual poultry production has necessitated robotized and mechanized approaches to the processing of poultry meat products (Elahi et al., 2022). Compared with other meat processing industries, poultry processing is relatively highly automated, except for certain challenging operations. Poultry processing consists of live-chicken stunning, slaughter, bloodletting, feather removal, fluff removal, evisceration, trimming, pre-cooling, segmentation, and other processing operations (Casnor and Gavino, 2022; Janssen et al., 2007; Ramírez-Hernández et al., 2017). In Table 2, recent research on the identification and classification of poultry has been listed. In this section, we introduce the relevant techniques involved in poultry processing sequentially.

4.1 Primary processing of poultry

The primary processing of poultry mainly includes defeathering and evisceration. Defeathering has already been automated. In friction-based processes, dehairing and defeathering are typically achieved with rotary rubber blades. Poultry carcasses pass through plucking machines equipped with specially designed rubber “fingers” that effectively remove the feathers.

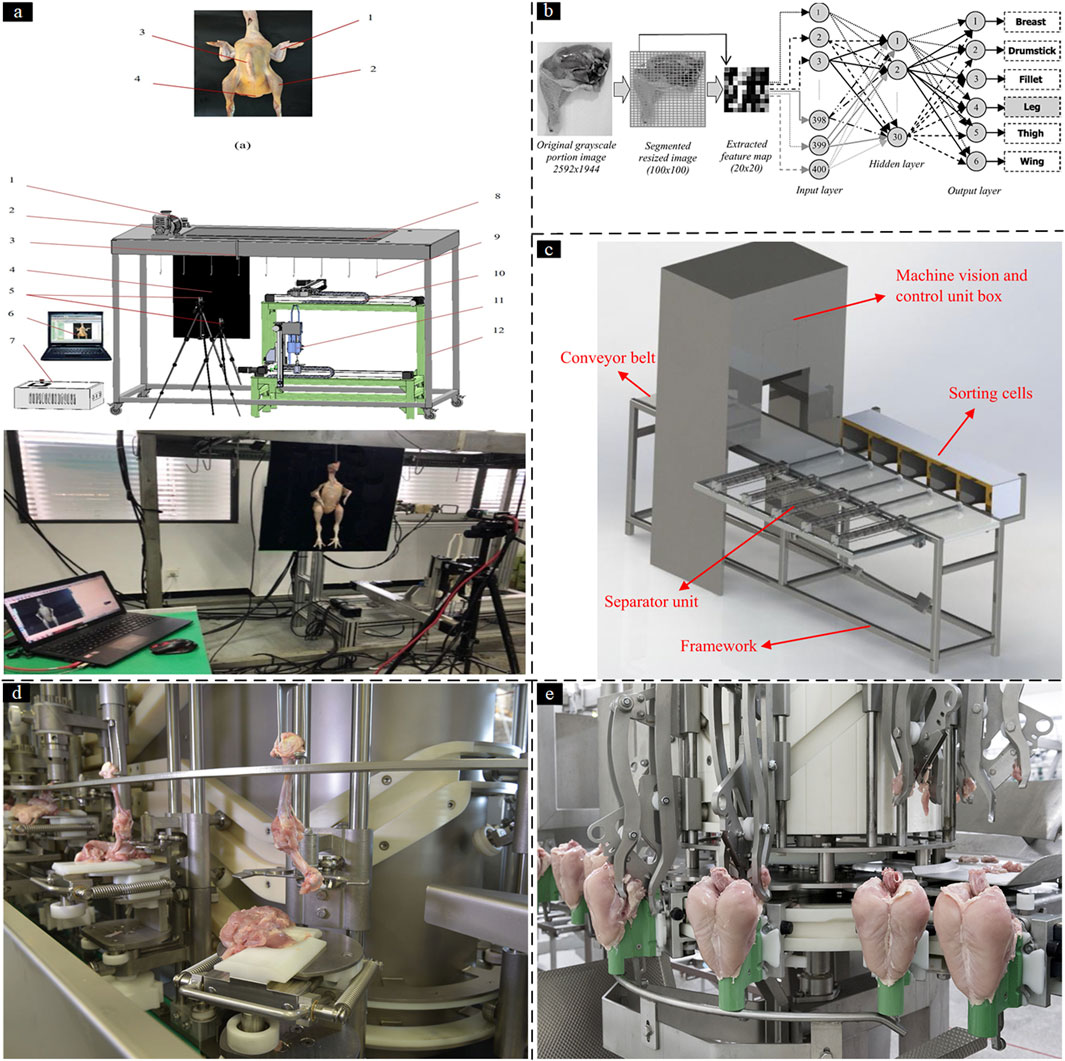

The stage that follows defeathering is evisceration, which is a critical and challenging part of the process. As a result, manual labor is often relied upon to carry out this operation (Chen et al., 2021a). Currently, automated evisceration systems provide superior industrial processing capabilities compared with manual evisceration (Li et al., 2021). Wang et al. (2018) proposed a poultry slaughtering robot system that uses machine vision for position recognition and then positions the robot hand to grab the viscera. The proposed control system incorporates two operational modes: manual and automated control. In manual control mode, the robot arms and carcass conveyor can be controlled independently to facilitate kinematic calibration of the end-effector. In automated control mode, integrated with the vision-guided motion synchronization module, the system implements a closed-loop control architecture to maintain continuous and automated evisceration cycles. However, internal organs are easily damaged when machines perform evisceration in a uniform manner, and most systems do not consider the integrity of the internal organs during robotic grasping. Therefore, another machine-vision-based method was proposed by Chen and Wang (2018). In this method, evisceration is executed by a multi-fingered robot hand mounted on a DELTA robot; the hand simulates a human hand and is more flexible than previously designed manipulators. A threshold segmentation method is applied for poultry carcass recognition. The camera first takes RGB (red, green, blue) images of the poultry on the conveyor, and the images are then processed with a computer vision algorithm. The position of the internal organs can then be calculated; the results indicate that the relative position between carcass and viscera changes significantly with chicken size in the longitudinal direction (Figure 3a) (Chen et al., 2021a). Therefore, computer vision technology can be satisfactorily applied to predict the chicken viscera position.
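Threshold segmentation for carcass recognition can be illustrated with a few lines of array code. The sketch below assumes a bright carcass on a dark conveyor and a fixed global threshold; both are simplifying assumptions, the image is synthetic, and `segment_carcass` is a hypothetical helper and not the algorithm of the cited work.

```python
import numpy as np

def segment_carcass(rgb, threshold=0.5):
    """Segment a bright object from a dark background with a global threshold.

    Returns the binary mask, the (row, col) centroid, and the bounding box
    (row_min, col_min, row_max, col_max), or None values if nothing is found.
    """
    # Luminance-weighted grayscale conversion, scaled to [0, 1].
    gray = rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114]) / 255.0
    mask = gray > threshold
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return mask, None, None
    centroid = (float(rows.mean()), float(cols.mean()))
    bbox = (int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max()))
    return mask, centroid, bbox

# Synthetic conveyor image: dark background with one bright rectangular "carcass".
image = np.full((100, 200, 3), 20, dtype=np.uint8)
image[30:70, 50:150] = 220

mask, centroid, bbox = segment_carcass(image)
carcass_length_px = bbox[3] - bbox[1] + 1   # extent along the conveyor direction
print(centroid, bbox, carcass_length_px)
```

The centroid and longitudinal extent obtained this way are the kind of quantities from which a grasping position could be estimated once a size-dependent relation between carcass and viscera position has been calibrated.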

Figure 3. Poultry processing robot: (a) The acquisition system of the chicken evisceration (Chen et al., 2021b); (b) The poultry portion identification system: Image processing and neural network arbitration (Khashman, 2012); (c) The framework of chicken portion sorting machine simulated in CATIA software (Teimouri et al., 2018); (d) WLD Whole Leg Deboner M3.0 (Meyn, 1993); (e) RAPID plus breast deboner M4.3 (Meyn, 2023a).

4.2 Secondary processing of poultry

In poultry processing, another significant concern is the automation of secondary processing operations such as sorting, deboning, and meat quality evaluation. Unlike other operations, sorting of poultry portions before packaging is still mostly done manually. Manual sorting has inherent problems, including a high error rate and worker fatigue (Nyalala et al., 2021). Building on developments in computer vision and artificial neural networks (ANNs), Teimouri et al. (2018) proposed a new online method based on linear and nonlinear classifiers to categorize chicken portions automatically (Figure 3c). Geometrical, color, and textural features were extracted from the image and selected with the chi-square technique, after which classification was performed using an ANN. This is the first attempt to implement a system capable of sorting chicken portions in real time using vision-based intelligent modeling.
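Chi-square feature selection followed by classification can be sketched as below. This is an illustrative stand-in only: the chi-square statistic is computed for non-negative features against class labels, a nearest-centroid rule replaces the ANN to keep the example short, and the tiny feature table is synthetic. All function names are hypothetical.

```python
import numpy as np

def chi_square_scores(X, y):
    """Chi-square statistic of each non-negative feature against the class labels."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    classes = np.unique(y)
    one_hot = (y[:, None] == classes[None, :]).astype(float)   # (samples, classes)
    observed = one_hot.T @ X                                    # per-class feature sums
    expected = np.outer(one_hot.mean(axis=0), X.sum(axis=0))    # sums under independence
    # Assumes every feature has a positive total, so expected is never zero.
    return ((observed - expected) ** 2 / expected).sum(axis=0)

def select_top_k(scores, k):
    """Indices of the k highest-scoring features, in ascending index order."""
    return np.sort(np.argsort(scores)[::-1][:k])

def nearest_centroid_predict(X_train, y_train, X_new):
    """Simple stand-in classifier (the cited work uses an ANN instead)."""
    classes = np.unique(y_train)
    centroids = np.array([X_train[y_train == c].mean(axis=0) for c in classes])
    distances = np.linalg.norm(X_new[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(distances, axis=1)]

# Synthetic features for two portion classes; the columns could stand for
# an area measure, a constant uninformative feature, and a mean color value.
X = np.array([[1.0, 5.0, 4.0]] * 4 + [[9.0, 5.0, 6.0]] * 4)
y = np.array([0] * 4 + [1] * 4)

scores = chi_square_scores(X, y)
selected = select_top_k(scores, k=2)
predictions = nearest_centroid_predict(
    X[:, selected], y, np.array([[2.0, 4.5], [8.0, 5.5]])
)
print(scores, selected, predictions)
```

The uninformative constant feature receives a score of zero and is dropped, which is the purpose of the selection step before training a classifier.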

As for the deboning process, solutions exist for deboning lines and cutting devices, but they still cannot adjust automatically to variability in poultry size (Daley et al., 1999; Heck, 2006; Guo and Lee, 2011; Woo et al., 2018). To solve this problem, Hu et al. (2012) designed an intelligent poultry shoulder deboning system that uses a knife equipped with a force sensor and attached to a 2-DOF robot arm (Figure 3c). The system adopts a dynamic hybrid position/force control strategy. During the initial stage, position control is applied to track a predefined cutting trajectory. Upon detection of bone contact, the tool path is adjusted in real time to follow the tangential direction of the bone surface while maintaining a constant contact force to prevent bone chip formation. Similarly, Misimi et al. (2016) proposed a novel 3D vision-guided robot for front-half chicken harvesting. A computer vision algorithm locates the grasping point in 3D as the initial contact point for the harvesting procedure, and a feed-forward look-and-move control algorithm then guides the robot on that basis. A humanoid-inspired composite pneumatic gripper with a compliant design was employed as the end effector to adapt to the broiler chicken’s anatomy. A miniature force sensor ensures a precise grip and minimizes damage to the meat during handling. However, because of the restricted DOF, it is difficult to debone the entire carcass, and the force control is also influenced by the shape of the blade. In the longer term, upgrading to a cutting robot with more DOF could allow more versatility in performing the various cuts required for complete poultry deboning. The two systems represent automated solutions for precision cutting and compliant grasping, respectively, and together advance poultry processing automation.
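The switch from position control to force control on bone contact can be illustrated with a small simulation. This is a minimal sketch under strong assumptions: the knife tip is a point moving in a plane, the bone is a flat elastic surface, and the stiffness, gains, and thresholds are made-up values. It is not the controller of the cited system.

```python
def run_hybrid_cut(steps=200, dt=0.01):
    """Simulate a knife tip that tracks a path, then regulates contact force."""
    bone_y = 0.0             # flat bone surface at y = 0 m (assumption)
    stiffness = 2000.0       # contact stiffness in N/m (assumed)
    target_force = 2.0       # desired normal contact force in N (assumed)
    contact_threshold = 0.5  # force in N that signals bone contact (assumed)
    admittance_gain = 2e-4   # metres of normal motion per newton of force error
    feed_rate = 0.05         # m/s along the predefined path

    x, y = 0.0, 0.01         # start 10 mm above the bone
    mode = "position"
    log = []
    for _ in range(steps):
        # Measured normal force: proportional to penetration into the bone.
        force = stiffness * max(0.0, bone_y - y)
        if mode == "position" and force > contact_threshold:
            mode = "force"   # bone contact detected, switch control mode

        if mode == "position":
            # Track the predefined trajectory: advance and descend.
            x += feed_rate * dt
            y -= feed_rate * dt
        else:
            # Advance tangentially while the normal axis regulates the force.
            x += feed_rate * dt
            y -= admittance_gain * (target_force - force)
        log.append((mode, x, y, force))
    return log

log = run_hybrid_cut()
switch_step = next(i for i, entry in enumerate(log) if entry[0] == "force")
print(f"switched to force control at step {switch_step}")
print(f"final contact force: {log[-1][3]:.3f} N")
```

With these values the force error shrinks by a constant factor at every step after contact, so the contact force settles at the target while the tip keeps advancing along the surface.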

Automation of evisceration, breastbone deboning, grading, and packaging has made the overall production system faster and more efficient. However, post-evisceration poultry inspection is still performed manually. Automating the inspection system can eliminate inspector error and reduce the workload of individual carcass inspection, thereby reducing operating costs (Chao et al., 2000). Poultry meat color is also an important quality attribute for the rapid detection of “pale poultry syndrome”. Visual inspection is routinely used to assign grades or quality labels to chicken carcasses. Recently, novel techniques have been investigated for fast, reliable, and reagent-less meat quality assessment. For fast assessment of chicken quality in large-scale processing plants, Barbin et al. (2016) investigated the potential of an identification framework based on color images to predict chicken color attributes and classify chicken breasts accordingly. This study is mainly concerned with detecting PSE (pale, soft, exudative) defects and pale poultry syndrome, which are critical indicators of pre-slaughter animal welfare and meat quality. While this work advances poultry quality assessment by enabling automated, high-throughput defect identification, further refinement is needed to ensure robustness and universal adoption across diverse production environments.

Current poultry processing automation demonstrates varying levels of technological maturity across different operations. While computer vision and ANN-based sorting (Teimouri et al., 2018) achieve real-time classification (with 90% accuracy), deboning automation remains constrained by limited-DOF systems (Hu et al., 2012; Misimi et al., 2016), which struggle with anatomical variability and require hybrid force-vision control. In recent years, there has been a focus on improving system adaptability, increasing DOF, and reducing labor costs. These proposed strategies offer robust and promising alternatives for industrial poultry processing through accurate, rapid, and non-destructive methods.

4.3 Commercial applications

The poultry industry has built large, dedicated processing plants and continuously increased line speed through advancements in automation and mechanization of different processes within the plant (Barbut and Leishman, 2022).

Meyn Food Processing Technology B.V. Co. Ltd. (Meyn, 1993), the leading global manufacturer and marketer of systems and solutions for poultry and egg production, offers solutions for the entire poultry processing chain, including live bird handling, slaughtering, evisceration, chilling, deboning, and packing. The whole leg deboner M3.0 (Meyn, 1993) (Figure 3d) processes left and right anatomical legs at a maximum capacity of 4,200 legs per hour. Furthermore, the rapid plus breast deboner M4.3 (Meyn, 2023b) (Figure 3e) automates the breast deboning process by loading front halves in baskets, transferring them to product carriers, and then to a meat harvesting carousel and a carousel for wishbone cutting and scraping. This system can process both breast caps and front halves into over 15 different high-quality products at a speed of up to 7,000 birds per hour (BPH) and saves up to 34 full-time equivalents (FTE) per shift.

5 Seafood

Seafood products are essential dietary components that are highly appreciated and consumed worldwide (Hassoun et al., 2022). Because seafood is perishable, special attention must be paid to its preservation after harvesting. Automated seafood processing could enable higher profitability and flexibility in production and increase the potential for high-value seafood products (Liu et al., 2022). The seafood industry has come a long way as automation has advanced. In this section, we present the methods involved in seafood processing, which comprise categorization, slicing, fish bone removal, and quality evaluation. Specific commercial implementations for seafood processing are also discussed.

5.1 Primary processing of seafood

Sorting is an integral step in the primary processing of seafood; products can be sorted by a combination of factors such as species, size, and quality. In early studies, fish were classified simply by thickness (Booman et al., 1997). Later, various fish databases were developed to automate the fish discrimination task, and machine vision and imaging technologies became increasingly prevalent in the sorting, grading, and processing of fish and related products (Mathiassen et al., 2011; Hassoun et al., 2023).

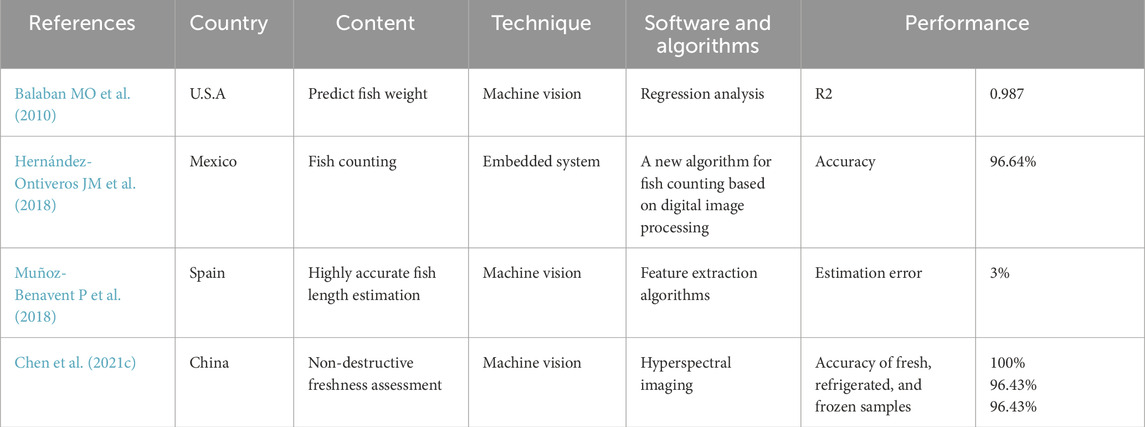

With the rapid development of machine vision technology, represented by convolutional neural networks (CNNs), computer vision-based seafood detection and identification has entered a new stage of development. Compared with traditional vision-based algorithms, emerging machine vision algorithms have considerable advantages in seafood recognition. In Table 3, recent research on the identification and classification of seafood has been listed. Edge analysis enables the identification and removal of malformed items while preserving those with growth potential. To develop more accurate systems for automated fish sorting based on whole-shape characters, Costa et al. (2013) presented an automated sorting system for size, sex, and skeletal anomalies of farmed seabass. A high-resolution camera captures lateral-view images of live fish. Image binarization is performed using both the grayscale (G) channel and the Value (V) channel of the HSV color space. The system is designed for integration with online sorting equipment and achieves a theoretical processing speed of approximately 10 fish per second. However, its real-time performance in dynamic industrial environments remains untested, which may affect operational stability in practical applications. This could be an important step forward both for the routine sorting of deformed fish at different stages and for the implementation of selective breeding programs through efficient selection based on body size and phenotypic sex.
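Binarization that uses both a grayscale channel and the HSV Value channel can be sketched as follows. How the two channels are combined is not specified in the text above, so the sketch assumes a simple logical AND of two fixed thresholds on a dark fish against a bright background; the image is synthetic and the helper names are hypothetical.

```python
import numpy as np

def binarize_fish(rgb, gray_threshold=0.5, value_threshold=0.5):
    """Mark pixels that are dark in both the grayscale and the HSV Value channel."""
    rgb = np.asarray(rgb, dtype=float) / 255.0
    gray = rgb @ np.array([0.299, 0.587, 0.114])   # luminance-weighted grayscale
    value = rgb.max(axis=2)                        # HSV Value = max(R, G, B)
    # Assumed combination rule: a pixel belongs to the fish if both channels agree.
    return (gray < gray_threshold) & (value < value_threshold)

def shape_features(mask):
    """Whole-shape descriptors of the segmented silhouette, in pixels."""
    rows, cols = np.nonzero(mask)
    return {
        "area_px": int(mask.sum()),
        "length_px": int(cols.max() - cols.min() + 1),
        "height_px": int(rows.max() - rows.min() + 1),
    }

# Synthetic lateral-view image: bright background with a dark rectangular "fish".
image = np.full((50, 120, 3), 240, dtype=np.uint8)
image[20:30, 10:110] = 60

mask = binarize_fish(image)
features = shape_features(mask)
print(features)
```

Descriptors of this kind, extracted from the binary silhouette, are the inputs on which size-based sorting decisions could be made.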

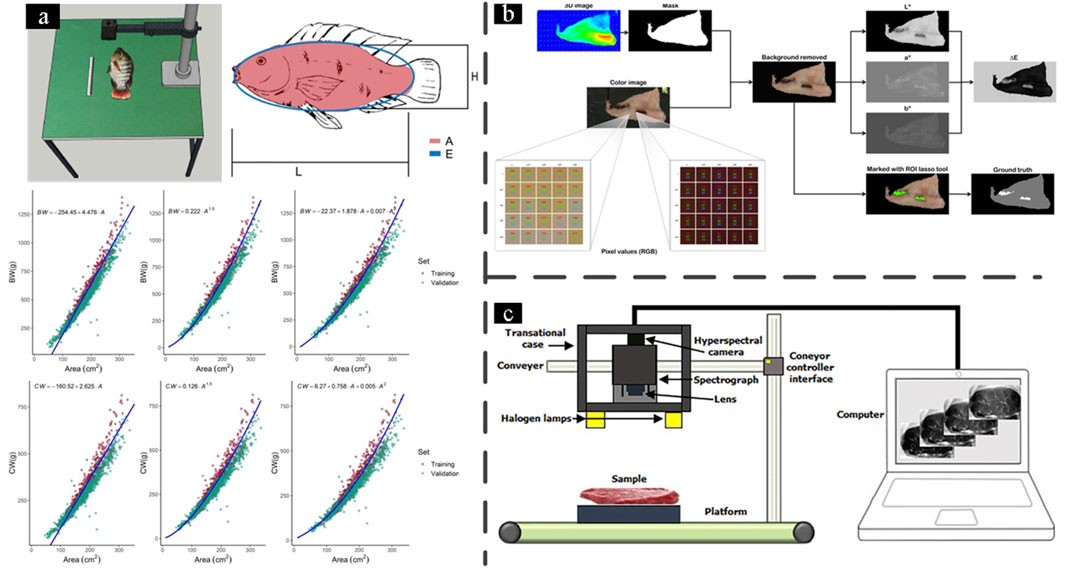

In addition, fish weight estimation based on computer vision and image analysis has been studied (Figure 4a) (Fernandes et al., 2020; Zhang et al., 2020). There is also research on counting systems (Hernández-Ontiveros et al., 2018) and sizing systems (Muñoz-Benavent et al., 2018). Moreover, combining computer vision with acoustic information is expected to enable biomass estimation in more complex situations.
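
As an illustration of how a segmented body area can be mapped to weight, the following sketch fits a power-law model, weight = a · area^b, by least squares in log-log space. The model form, the function names, and the data are assumptions made for illustration only; the cited studies may use different models, and the numbers below are synthetic.

```python
import numpy as np

def fit_power_law(area, weight):
    """Fit weight = a * area**b by linear least squares in log-log space."""
    b, log_a = np.polyfit(np.log(area), np.log(weight), 1)
    return np.exp(log_a), b

def predict_weight(area, a, b):
    """Predict weight from segmented area with the fitted coefficients."""
    return a * np.asarray(area, dtype=float) ** b

# Synthetic, illustrative data only (not taken from the cited studies).
areas = np.array([50.0, 80.0, 120.0, 160.0, 200.0])  # segmented area, cm^2
weights = 0.02 * areas ** 1.5                         # body weight, g
a, b = fit_power_law(areas, weights)
```

Because the synthetic weights were generated from the same model, the fit recovers the generating coefficients; with real measurements the residuals would indicate how well area alone explains weight.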

Figure 4. Seafood processing systems: (a) Representation of background removal, fish identification, and visual evaluation of goodness of fit for live body weight and carcass weight for models that considered only the segmented fish body area (Fernandes et al., 2020); (b) RGB and 3D images of an example fillet and the sequence of computer vision operations used to generate images, features, and the ground truth used for training of the classification algorithms. RGB pixel values of the normal muscle are higher (lighter color) than the pixel values of the blood spots (dark color) (Misimi et al., 2017); (c) Integration of hyperspectral imaging technology with spectroscopy and computing for quality evaluation of seafood products (Ismail et al., 2023).

5.2 Secondary processing of seafood

Different methods have been proposed for the separate procedures in fish processing, such as beheading fish along a positioned cutting plane (Azarmdel et al., 2021), gutting beheaded and non-beheaded fish by aspirating the entrails and extracting blood and water from the abdominal cavity (Grosseholz and Neumann, 2008; Paulsohn et al., 2007), and cutting fish and removing the viscera without damage to either the viscera or the remaining fish product (Ryan, 2015).

In fish processing, previous work on the integration of intelligent sensing, robots, and end-effector tools has resulted in several solutions based on machine vision and robots (Fu et al., 2023; Khodabandehloo, 2022; Mathiassen et al., 2011). Although some single unit operations have been successfully automated and run alongside manual labor, the current mechanical, semi-automated, and fixed automated solutions based on existing technology can hardly achieve a higher degree of automation in handling and processing or higher raw material utilization. Building on 3D vision system research, Mathiassen et al. (2011) established a salmon slaughter line by integrating a laser triangulation device with a robot to complete the salmon bleed-cutting task. The system first takes 3D images of fish on the production line and then completes the 3D segmentation of each fish. The fish head and tail are then graded according to the extracted characteristics. Finally, the entry point is set to direct the robot to complete the trimming process. This system can automatically slaughter 85–95 percent of all fish at an average feed rate of 30–80 salmon/min.

In modern fish processing plants, the trimming operation is performed by a combination of automated trimming systems and manual post-trimming. Post-trimming includes the removal of belly fat, back fat, belly membrane, belly bone, collar bone, tail, blood, wounds, etc. Barbut and Leishman (2022) designed and implemented a prototype robotic post-trimming system for salmon fillets. The system integrated 3D machine vision, a high-speed robot manipulator, and a flexible lightweight cutting knife for enhanced tail-cut grounding. A smooth trajectory was derived from slow-motion analysis of human cutting motions, using cubic Hermite interpolation to optimize the cutting path. A six-degree-of-freedom (DOF) industrial robot enables flexible cutting paths for all relevant trimmed objects in a way that maximizes yield and minimizes waste.
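
Cubic Hermite interpolation, mentioned above for trajectory smoothing, can be sketched as follows. The sketch assumes 2D waypoints and estimates tangents by finite differences (Catmull-Rom style in the interior); the function names and the tangent rule are illustrative assumptions and not the cited system's implementation.

```python
import numpy as np

def hermite_segment(p0, p1, m0, m1, n=20):
    """Sample one cubic Hermite segment from p0 to p1 with tangents m0, m1."""
    t = np.linspace(0.0, 1.0, n)[:, None]
    h00 = 2 * t**3 - 3 * t**2 + 1   # weight of the start point
    h10 = t**3 - 2 * t**2 + t       # weight of the start tangent
    h01 = -2 * t**3 + 3 * t**2      # weight of the end point
    h11 = t**3 - t**2               # weight of the end tangent
    return h00 * p0 + h10 * m0 + h01 * p1 + h11 * m1

def hermite_path(waypoints, n=20):
    """Build a smooth path that passes through every waypoint."""
    pts = np.asarray(waypoints, dtype=float)
    # Finite-difference tangents: central in the interior, one-sided at the ends.
    tangents = np.gradient(pts, axis=0)
    segments = [
        hermite_segment(pts[i], pts[i + 1], tangents[i], tangents[i + 1], n)[:-1]
        for i in range(len(pts) - 1)
    ]
    # Drop each segment's last sample to avoid duplicates, then close the path.
    return np.vstack(segments + [pts[-1:]])
```

For example, `hermite_path([[0, 0], [1, 2], [3, 3], [4, 1]], n=10)` returns 28 points that pass exactly through the four waypoints. Since adjacent segments share the tangent at each waypoint, the tool direction changes continuously along the cut.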

There is also research focusing on blood spot detection. An image analysis method was developed to quantify gaping, bruising, and blood spots in salmon fillets (Balaban et al., 2011; Xu and Sun, 2018). An adaptive lightness threshold, which depends on the average color of the fillet, was used to quantify the area with a luminance below the threshold under polarized light. However, this method cannot distinguish between gaping, bruising, and blood spots. With the development of more advanced image analysis technology, learning-based methods such as CNN (a deep learning model) and SVM (Support Vector Machine, a classical machine learning classifier) were employed to realize a more robust classification approach (Misimi et al., 2017). Aligned RGB and depth images were used for image analysis, as shown in Figure 4b.
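
The adaptive-threshold idea can be sketched in a few lines. The sketch assumes that the threshold is a fixed fraction `k` of the mean lightness of the fillet; the function name, the value of `k`, and the linear scaling rule are hypothetical and are not taken from Balaban et al. (2011).

```python
import numpy as np

def dark_area_fraction(lightness, fillet_mask, k=0.6):
    """Return (fraction of fillet pixels below the threshold, threshold).

    lightness: 2D array of per-pixel lightness values.
    fillet_mask: boolean array marking the pixels that belong to the fillet.
    k: hypothetical scaling factor applied to the mean fillet lightness.
    """
    lightness = np.asarray(lightness, dtype=float)
    fillet_mask = np.asarray(fillet_mask, dtype=bool)
    fillet_pixels = lightness[fillet_mask]
    # The threshold adapts to the overall color of this particular fillet.
    threshold = k * fillet_pixels.mean()
    return float((fillet_pixels < threshold).mean()), threshold
```

As noted above, a single lightness threshold of this kind only measures how much of the fillet is dark; it does not indicate whether a dark region is gaping, bruising, or a blood spot.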

As time passes, the quality of harvested fish may deteriorate due to storage and processing. As a result, evaluating the quality of processed fish has been extensively studied. HSI (Hyperspectral imaging) technology, a rapid and non-destructive tool, exhibits tremendous potential in evaluating the quality of seafood products through online or at-line detection (Ismail et al., 2023), thanks to its ability to provide spatial and spectral information coupled with multivariate analyses. As shown in Figure 4c, the HSI system is widely used in the seafood industry. Recently, artificial intelligence-based deep learning has emerged as a promising solution for data classification of hyperspectral imaging with shift-invariant feature recognition for seafood products. While complete automation remains challenging due to seafood’s biological variability, emerging technologies like hyperspectral imaging (Ismail et al., 2023) and deep learning (Misimi et al., 2017) now enable comprehensive quality assessment, surpassing traditional manual methods. These advances highlight the industry’s shift toward data-driven, high-throughput automation while ensuring product quality.
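
One common multivariate step applied to hyperspectral data is principal component analysis (PCA) of the pixel spectra. The sketch below is a minimal illustration under the assumption that the hypercube is stored as a (height, width, bands) array; the function name `pca_scores` is hypothetical, and the cited studies may rely on other methods.

```python
import numpy as np

def pca_scores(hypercube, n_components=3):
    """Project each pixel spectrum onto the leading principal components."""
    h, w, bands = hypercube.shape
    spectra = hypercube.reshape(-1, bands).astype(float)
    centered = spectra - spectra.mean(axis=0)
    # SVD of the centered data; rows of vt are the principal spectral directions.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt[:n_components].T
    # Reshape back so that each component can be viewed as an image.
    return scores.reshape(h, w, n_components)
```

The resulting score images compress many spectral bands into a few channels, which can then be fed to a regression or classification model for quality prediction.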

5.3 Commercial applications

Marel Co. Ltd. is the leading global supplier of advanced standalone equipment and integrated systems to the fish processing industry, offering products for desliming, beheading, fillet processing, portioning, weighing, grading, and batching of salmon and whitefish.

The salmon are first measured so that the right cuts can be made and optimum yield ensured. They are then manually fixed in the gripper of the beheading machine MS 2730 (Marel, 2018), which can process up to 20 fish per minute. The salmon beheader can be integrated with the filleter MS 2750 (Marel, 2023), with automatic transfer of the salmon straight from the beheader into the filleter, belly down. An additional set of circular knives cuts the fish from vent to tail. For the belly bone cut, four sets of finger pressures secure maximum control of the fish and enable optimum cutting of both pre-rigor and post-rigor fillets. The MS 2730 automatically adjusts to various fish sizes and can process up to 25 fish per minute depending on the length of the fish.

6 Discussion

Fresh meat is an abundant source of high-quality protein and essential trace minerals (Das et al., 2019). Nowadays, the demand for meat has shifted towards personalized and diverse options that prioritize freshness, quality, and safety (Ren et al., 2022). Besides, there is a significant emphasis in the food industry on the unification of all supply chain processes (Barbut, 2020). Within the meat-producing sector in particular, this involves incorporating data obtained by monitoring the multiple stages of the processing plant, from the reception of live animals through the subsequent procedures. Recently, the potential of robotics and automation as a promising method for meat processing has been thoroughly investigated. These technological advancements offer an advantageous foundation for establishing a traceability system within the meat production industry. To advance the robotization and automation of meat processing, future research needs to address the following issues.

Firstly, the software intelligence of robotic systems for meat processing should be improved. This includes boosting the efficiency of perception and recognition algorithms as well as the effectiveness of control systems so that the system functions optimally. Compared with the tasks performed by conventional industrial robots, the operating environment of meat processing robots is considerably more complex and involves greater task uncertainty. The historical progression of meat processing robots, together with a comparison between traditional vision techniques and CNNs, makes clear that the iterative and methodical application of computer vision technology has substantially improved the overall performance of these automated systems. Consequently, innovative software algorithms remain pivotal to the continuing development of such robots. Furthermore, sophisticated control strategies, such as deep reinforcement learning and imitation learning, may hold great potential for further enhancing the resilience and durability of robotic systems.

Secondly, the hardware performance of the robotic system should be raised, for example by designing nimble manipulators and enhancing the precision and robustness of sensors. Advanced meat processing techniques such as evisceration and deboning require robots with highly dexterous end-effectors. Recently, new actuator mechanisms that coordinate the movements of dual robotic arms have emerged. MRS (Multi-Robot Systems) present many advantages over single robots, e.g., improved stability and payload capacity (Kennel-Maushart et al., 2022). There is potential for promising developments in meat processing robotics through the implementation of MRS and HRC (Human-Robot Collaboration).

Thirdly, attention should be paid to the treatment of the biological by-products generated in meat processing in order to achieve sustainable production. During slaughtering and meat processing, a significant amount of meat by-products and co-products is produced. These products need to be managed rationally to ensure ecological disposal (Irshad and Sharma, 2015; Toldrá et al., 2021). Therefore, it is critical to find efficient solutions that support sustainability. Innovative developments in this area can create high added value from meat by-products while minimizing environmental impact and handling and disposal costs, making this an essential component of the transition to a bio-economy.

Additionally, automation enables the implementation of traceability systems, enhancing transparency across the meat supply chain. Leveraging big data analytics and IoT technologies can optimize resource efficiency and support agile food network systems (Lin et al., 2020). Such integration not only improves production monitoring but also advances research in food safety and quality control (Lin et al., 2020).

In summary, the core technological challenge in contemporary meat processing systems is balancing precision and throughput requirements. Even within the same species, substantial individual variation creates significant obstacles to the application of generic algorithms. Current research increasingly focuses on 3D imaging modalities that obtain comprehensive carcass scans, theoretically enabling optimized cutting path planning. However, their high computational intensity prevents these systems from meeting industrial-scale processing rates. The material properties of meat further complicate automation efforts. As a non-uniform material, meat requires precise control of cutting forces to maintain product integrity. While closed-loop control systems incorporating force feedback could potentially improve accuracy, the increased computational overhead and extended processing times decrease throughput. Consequently, existing meat processing systems mainly use open-loop control strategies, sacrificing adaptability to individual variation in favor of operational efficiency through standardized cutting paths. This fundamental trade-off between processing accuracy and operational efficiency remains the central issue in advancing meat processing automation.
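
The accuracy side of this trade-off can be illustrated with a toy simulation. The sketch assumes a deliberately crude model in which the cutting force equals local tissue stiffness times cutting depth, and it compares a fixed, pre-planned depth (open loop) with a simple feedback correction (closed loop). The function name, the stiffness profile, and the gain are hypothetical, and the extra sensing and computation time that feedback would require is not modeled.

```python
def simulate_cut(stiffness, target_force, nominal_stiffness, gain=0.0):
    """Return the mean absolute force error along a cut.

    Force is modeled as stiffness * depth (a hypothetical, simplified model).
    gain = 0 gives open-loop control: the depth planned from the nominal
    stiffness is never changed. gain > 0 corrects the depth after each step
    using the measured force error.
    """
    depth = target_force / nominal_stiffness
    total_error = 0.0
    for k in stiffness:
        force = k * depth                           # "measured" cutting force
        error = target_force - force
        total_error += abs(error)
        depth += gain * error / nominal_stiffness   # feedback correction
    return total_error / len(stiffness)

# Synthetic stiffness profile: nominal tissue, a stiffer region, a softer region.
profile = [1.0] * 20 + [2.0] * 20 + [0.5] * 20
open_loop_error = simulate_cut(profile, target_force=10.0, nominal_stiffness=1.0)
closed_loop_error = simulate_cut(profile, target_force=10.0, nominal_stiffness=1.0, gain=0.5)
```

In this synthetic case the feedback variant tracks the target force far more closely than the fixed-depth variant, but every correction relies on a force measurement and an update within the control cycle, which is the overhead that limits throughput in practice.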

7 Conclusion

The meat processing industry presents significant opportunities for robotic automation, yet substantial challenges remain, particularly in livestock processing. This paper reviews the current status of robotic automation in meat processing. Full automation in livestock processing remains challenging, especially for beef, whose large size variations and complex structure make a universal solution difficult to achieve. For poultry processing, complete automation solutions exist for the whole slaughtering process, such as the products offered by Meyn Poultry Equipment Ltd. However, these systems still place high demands on carcass consistency, and future research can focus on adaptability to variations in size. Fish processing is comparatively simple because of the simple anatomical structure of fish. Methods for beheading, gutting, deboning, and filleting have been applied in the industry. The challenge lies mainly in post-trimming, such as detecting various defects in fillets. Current research also focuses more on classification and quality control. With the development of computer vision techniques, the classification of species and sizes is becoming more accurate, which can enhance the yield of the production line.

Although the meat processing industry has great research value and development potential, it also faces many challenges. From the commercial and organizational perspective, companies need to invest a great deal of capital in purchasing equipment, which is difficult at the outset. From the technical perspective, humans possess sophisticated, integrated sensory abilities with inbuilt reasoning and manipulation capabilities. Matching them demands robotic and automated systems with highly accurate sensing and with flexible processing strategies and methods.

Author contributions

YL: Visualization, Writing – original draft, Writing – review and editing. FW: Writing – original draft, Writing – review and editing, Investigation. QW: Writing – original draft, Writing – review and editing. GL: Writing – review and editing, Visualization. YZ: Writing – original draft. HJ: Supervision, Writing – review and editing. MZ: Conceptualization, Project administration, Supervision, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. We gratefully acknowledge the financial support from the National Key Research and Development Program of China (Grant No. 2023YFD2000901) and the ZJU100 Young Talent Program (Grant No. 194232301).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

References

Azarmdel, H., Mohtasebi, S. S., Jafary, A., Behfar, H., and Rosado Muñoz, A. (2021). Design and simulation of a vision-based automatic trout fish-processing robot. Appl. Sci. 11, 5602. doi:10.3390/app11125602

Balaban, M. O., Unal Sengor, G. F., Gil Soriano, M., and Guillen Ruiz, E. (2010). Using image analysis to predict the weight of Alaskan salmon of different species. J. Food Sci. 75, E157–E162. doi:10.1111/j.1750-3841.2010.01522.x

Balaban, M. O., Unal Sengor, G. F., Soriano, M. G., and Ruiz, E. G. (2011). Quantification of gaping, bruising, and blood spots in salmon fillets using image analysis. J. Food Sci. 76, E291–E297. doi:10.1111/j.1750-3841.2011.02060.x

Bao, X., Leng, J., Mao, J., and Chen, B. (2022). Cutting of sheep carcass using 3D point cloud with dual-robot system. Int. J. Agric. Biol. Eng. 15, 163–171. doi:10.25165/j.ijabe.20221505.7161

Barbin, D. F., Mastelini, S. M., Barbon, S., Campos, G. F. C., Barbon, A. P. A. C., and Shimokomaki, M. (2016). Digital image analyses as an alternative tool for chicken quality assessment. Biosyst. Eng. 144, 85–93. doi:10.1016/j.biosystemseng.2016.01.015

Barbut, S. (2014). Review: automation and meat quality-global challenges. Meat Sci. 96, 335–345. doi:10.1016/j.meatsci.2013.07.002

Barbut, S. (2020). Meat Industry 4.0: a distant future? Anim. Front. 10, 38–47. doi:10.1093/af/vfaa038

Barbut, S., and Leishman, E. M. (2022). Quality and processability of modern poultry meat. Animals 12, 2766. doi:10.3390/ani12202766

Booman, A. C., Parín, M. A., and Zugarramurdi, A. (1997). Efficiency of size sorting of fish. Int. J. Prod. Econ. 48, 259–265. doi:10.1016/S0925-5273(96)00101-6

Casnor, R., and Gavino, R. B. (2022). Development of chicken (Gallus gallus domesticus) defeathering machine. CLSU Int. J. Sci. Technol. 6, 67–92. doi:10.13031/2013.5291

Chao, B. P., Chen, Y. R., Hruschka, W. R., and Wheaton, F. W. (2000). Design of a dual-camera system for poultry carcasses inspection. Appl. Eng. Agric. 16, 581–587. doi:10.13031/2013.529

Chen, Y., Ai, H., and Li, S. (2021a). Analysis of correlation between carcass and viscera for chicken eviscerating based on machine vision technology. J. Food Process Eng. 44. doi:10.1111/jfpe.13592

Chen, Y., Feng, K., Lu, J., and Hu, Z. (2021b). Machine vision on the positioning accuracy evaluation of poultry viscera in the automatic evisceration robot system. Int. J. Food Prop. 24, 933–943. doi:10.1080/10942912.2021.1947315

Chen, Y., Lu, J., Feng, K., Wan, L., and Ai, H. (2023). Nutritional metabolism evaluation and image segmentation of the chicken muscle and internal organs for automatic evisceration. J. Anim. Physiol. Anim. Nutr. (Berl.) 107, 228–237. doi:10.1111/jpn.13693

Chen, Y., Wang, S., Bi, J., and Ai, H. (2020). Study on visual positioning and evaluation of automatic evisceration system of chicken. Food Bioprod. Process 124, 222–232. doi:10.1016/j.fbp.2020.08.017

Chen, Y., and Wang, S. C. (2018). Poultry carcass visceral contour recognition method using image processing. J. Appl. Poult. Res. 27, 316–324. doi:10.3382/japr/pfx073

Chen, Z., Wang, Q., Zhang, H., and Nie, P. (2021c). Hyperspectral imaging (HSI) technology for the non-destructive freshness assessment of pearl gentian grouper under different storage conditions. Sensors 21, 583. doi:10.3390/s21020583

Condie, P., MacRae, K., Ring, P., and Boyce, P. (2007). “Sheep brisket cutting using automated system,” in Proceedings of the 2007 Australasian Conference on Robotics and Automation (ACRA), Brisbane, Australia. Food Science Australia (CSIRO). Available online at: https://www.araa.asn.au/acra/acra2007/papers/paper188final.pdf/.

Cong, M., Wang, H., Ren, X., Du, Y., and Liu, D. (2019). Design of porcine abdomen cutting robot system based on binocular vision. 14th Int. Conf. Comput. Sci. Educ. (ICCSE), 188–193. doi:10.1109/ICCSE.2019.8845406

Costa, C., Antonucci, F., Boglione, C., Finoia, M. G., and Menesatti, P. (2013). Automated sorting for size, sex and skeletal anomalies of cultured seabass using external shape analysis. Aquac. Eng. 52, 58–64. doi:10.1016/j.aquaeng.2012.08.001

Cong, M., Zhang, J., Du, Y., Wang, Y., Yu, X., and Liu, D. (2021). A porcine abdomen cutting robot system using binocular vision techniques based on kernel principal component analysis. J. Intell. Robot. Syst. 101, 4–10. doi:10.1007/s10846-020-01280-3

Daley, W., He, T., Lee, K. M., and Sandlin, M. (1999). “Modeling of the natural product deboning process using biological and human models,” in Proceedings of the 1999 IEEE/ASME International Conference on Advanced Intelligent Mechatronics. IEEE, 49–54. doi:10.1109/AIM.1999.803141

Das, A. K., Nanda, P. K., Das, A., and Biswas, S. (2019). “Chapter 6 - hazards and safety issues of meat and meat products,” in Food safety and human health (Academic Press), 145–168. doi:10.1016/B978-0-12-816333-7.00006-0

Echegaray, N., Hassoun, A., Jagtap, S., Tetteh-Caesar, M., Kumar, M., Tomasevic, I., et al. (2022). Meat 4.0: principles and applications of Industry 4.0 technologies in the meat industry. Appl. Sci. Basel 12, 6986. doi:10.3390/app12146986

Elahi, E., Zhang, Z., Khalid, Z., and Xu, H. (2022). Application of an artificial neural network to optimise energy inputs: an energy- and cost-saving strategy for commercial poultry farms. Energy 244, 123169. doi:10.1016/j.energy.2022.123169

Esper, I. M., From, P. J., and Mason, A. (2021). Robotisation and intelligent systems in abattoirs. Trends Food Sci. Technol. 108, 214–222. doi:10.1016/j.tifs.2020.11.005

Essahbi, N., Bouzgarrou, B. C., and Gogu, G. (2012). Soft material modeling for robotic manipulation. Appl. Mech. Mater. 162, 184–193. doi:10.4028/www.scientific.net/AMM.162.184

Fernandes, A. F., Turra, E. M., de Alvarenga, E. R., Passafaro, T. L., Lopes, F. B., Alves, G. F., et al. (2020). Deep learning image segmentation for extraction of fish body measurements and prediction of body weight and carcass traits in Nile tilapia. Comput. Electron. Agric. 170, 105274. doi:10.1016/j.compag.2020.105274

Fortin, A., Tong, A., Robertson, W., Zawadski, S., Landry, S., Robinson, D., et al. (2003). A novel approach to grading pork carcasses: computer vision and ultrasound. Meat Sci. 63, 451–462. doi:10.1016/S0309-1740(02)00104-3

Friedrich, W., Lim, P., and Nicholl, H. (2000). Sensory gripping system for variable products. Proc. 2000 ICRA Millenn. Conf. IEEE Int. Conf. Robotics Automation Symposia Proc. (Cat No00CH37065) 1982, 1982–1987. doi:10.1109/ROBOT.2000.844885

Fu, J., He, Y., and Cheng, F. (2023). Intelligent cutting in fish processing: efficient, high-quality, and safe production of fish products. Food bioproc. Technol. 17, 828–849. doi:10.1007/s11947-023-03163-5

Godfray, H. C. J., Aveyard, P., Garnett, T., Hall, J. W., Key, T. J., Lorimer, J., et al. (2018). Meat consumption, health, and the environment. Science 361, eaam5324. doi:10.1126/science.aam5324

Grosseholz, W., and Neumann, R. (2008). Method for gutting beheaded and non-beheaded fish and device for implementing the same. Washington, DC: U.S. Patent and Trademark Office.

Guire, G., Sabourin, L., Gogu, G., and Lemoine, E. (2010a). Robotic cell for beef carcass primal cutting and pork ham boning in meat industry. Ind. Robot. Int. J. 37, 532–541. doi:10.1108/01439911011081687

Guire, G., Sabourin, L., Gogu, G., and Lemoine, E. (2010b). “Robotic cell with redundant architecture and force control: application to cutting and boning,” in 19th Int. Workshop Robotics Alpe-Adria-Danube Reg. doi:10.1109/RAAD.2010.5524601

Guo, J., and Lee, K. M. (2011). Musculoskeletal model for analyzing manipulation deformation of an automated poultry-meat deboning system. IEEE/ASME Int. Conf. Adv. Intell. Mechatronics Aim., 1081–1086. doi:10.1109/aim.2011.6027154

Hamid, A., Fatima, S. A., and Khalid, Z. (2016). Occupational health and safety in a meat processing industry. World J. Dairy Food Sci. 11, 163–178. doi:10.5829/idosi.wjdfs.2016.11.2.10598

Hassoun, A., Aït-Kaddour, A., Abu-Mahfouz, A. M., Rathod, N. B., Bader, F., Barba, F. J., et al. (2022). The fourth industrial revolution in the food industry—Part I: industry 4.0 technologies. Crit. Rev. Food Sci. Nutr., 1–17. doi:10.1080/10408398.2022.2034735

Hassoun, A., Cropotova, J., Trollman, H., Jagtap, S., Garcia-Garcia, G., Parra-López, C., et al. (2023). Use of industry 4.0 technologies to reduce and valorize seafood waste and by-products: a narrative review on current knowledge. Curr. Res. Food Sci. 6, 100505. doi:10.1016/j.crfs.2023.100505

Heck, B. (2006). Automated chicken processing: machine vision and water-jet cutting for optimized performance. IEEE Control Syst. Mag. 26, 17–19. doi:10.1109/MCS.2006.1636305

Hernández-Ontiveros, J. M., Inzunza-González, E., García-Guerrero, E. E., López-Bonilla, O. R., Infante-Prieto, S. O., Cárdenas-Valdez, J. R., et al. (2018). Development and implementation of a fish counter by using an embedded system. Comput. Electron. Agric. 145, 53–62. doi:10.1016/j.compag.2017.12.023

Hu, A. P., Bailey, J., Matthews, M., McMurray, G., and Daley, W. (2012). Intelligent automation of bird deboning. IEEE/ASME Int. Conf. Adv. Intell. Mechatronics Aim., 286–291. doi:10.1109/AIM.2012.6265969

Irshad, A., and Sharma, B. (2015). Abattoir by-product utilization for sustainable meat industry: a review. J. Anim. Prod. Adv. 5, 681–696. doi:10.5455/japa.20150626043918

Ismail, A., Yim, D. G., Kim, G., and Jo, C. (2023). Hyperspectral imaging coupled with multivariate analyses for efficient prediction of chemical, biological and physical properties of seafood products. Food Eng. Rev. 15, 41–55. doi:10.1007/s12393-022-09327-x

Janssen, P. C. H., Gerrits, J. G. M., and van den Nieuwelaar, A. J. (2007). Method and apparatus for the separate harvesting of back skin and back meat from a carcass part of slaughtered poultry. U.S. Patent No. 7,967,668.

Kang, Z., Zhao, Y., Chen, L., Guo, Y., Mu, Q., and Wang, S. (2022). Advances in machine learning and hyperspectral imaging in the food supply chain. Food Eng. Rev. 14, 596–616. doi:10.1007/s12393-022-09322-2

Kennel-Maushart, F., Poranne, R., and Coros, S. (2022). Multi-arm payload manipulation via mixed reality. Int. Conf. Robot. Autom. (ICRA), IEEE, 11251–11257. doi:10.1109/ICRA46639.2022.9811580

Khashman, A. (2012). Automatic identification system for raw poultry portions. J. Food Process Eng. 35, 727–734. doi:10.1111/j.1745-4530.2010.00621.x

Khodabandehloo, K. (2022). Achieving robotic meat cutting. Anim. Front. 12, 7–17. doi:10.1093/af/vfac012

Kumar, S., Singh, S. K., Singh, R. S., Singh, A. K., and Tiwari, S. (2017). Real-time recognition of cattle using animal biometrics. J. Real-Time Image Process 13, 505–526. doi:10.1007/s11554-016-0645-4

Li, S., Liu, Y., and Li, K. (2021). Design and analysis of poultry evisceration manipulator mechanism. For. Chem. Rev., 570–578. Available online at: http://www.forestchemicalsreview.com/index.php/JFCR/article/view/378 (Accessed August 12, 2023).

Lin, K., Chavalarias, D., Panahi, M., Yeh, T., Takimoto, K., and Mizoguchi, M. (2020). Mobile-based traceability system for sustainable food supply networks. Nat. Food 1, 673–679. doi:10.1038/s43016-020-00163-y

Liu, J., Chriki, S., Kombolo, M., Santinello, M., Pflanzer, S. B., Hocquette, É., et al. (2023). Consumer perception of the challenges facing livestock production and meat consumption. Meat Sci. 200, 109144. doi:10.1016/j.meatsci.2023.109144

Liu, W., Lyu, J., Wu, D., Cao, Y., Ma, Q., Lu, Y., et al. (2022). Cutting techniques in the fish industry: a critical review. Foods 11, 3206. doi:10.3390/foods11203206

Liu, X., Li, Z., Zhou, Y., Peng, Y., and Luo, J. (2024). Camera-radar fusion with modality interaction and radar Gaussian expansion for 3D object detection. Cyborg Bionic Syst. 5, 0079. doi:10.34133/cbsystems.0079

Liu, Y., Cong, M., Zheng, H., and Liu, D. (2017). Porcine automation: robotic abdomen cutting trajectory planning using machine vision techniques based on global optimization algorithm. Comput. Electron. Agric. 143, 193–200. doi:10.1016/j.compag.2017.10.009

Longdell, G. R. (1996). Recent developments in sheep and beef processing in Australasia. Meat Sci. 43, 165–174. doi:10.1016/0309-1740(96)00063-0

Marel (2018). Salmon desliming and fillet washing. Available online at: https://marel.com/media/i5nn13ok/fish-salmon-desliming-fillet-washing-2018.pdf (Accessed October 24, 2023).

Marel (2023). A new era of salmon filleting is launched with the MS 2750. Available online at: https://marel.com/en/news/a-new-era-of-salmon-filleting-is-launched-with-the-ms-2750/ (Accessed July 16, 2023).

Mathiassen, J. R., Misimi, E., Toldnes, B., Bondo, M., and Ostvik, S. O. (2011). High-speed weight estimation of whole herring (Clupea harengus) using 3D machine vision. J. Food Sci. 76, E458–E464. doi:10.1111/j.1750-3841.2011.02226.x

Mayekawa (2021). HAMDAS-RX - automated pork ham deboning machine. Available online at: https://mayekawa.com/products/deboning_machines/ (Accessed May 19, 2023).

Meng, C., Zhang, T., Zhao, D., and Lam, T. (2024). Fast and comfortable robot-to-human handover for mobile cooperation robot system. Cyborg Bionic Syst. 5, 0120. doi:10.34133/cbsystems.0120

Meyn (2023a). Rapid plus breast deboner M4.3. Available online at: https://www.meyn.com/meyn/s/product-items?c__itemType=product&c__itemName=rapid%20plus%20breast%20deboner%20m4.3&c__itemCategory=deboning (Accessed May 18, 2023).

Meyn (2023b). WLD whole leg deboner M3.0. Available online at: https://www.meyn.com/meyn/s/product-items?c__itemType=product&c__itemName=wld%20whole%20leg%20deboner%20m3.0&c__itemCategory=DEBONING (Accessed May 18, 2023).

Misimi, E., Øye, E. R., Eilertsen, A., Mathiassen, J. R., Åsebø, O. B., Gjerstad, T., et al. (2016). GRIBBOT-Robotic 3D vision-guided harvesting of chicken fillets. Comput. Electron. Agric. 121, 84–100. doi:10.1016/j.compag.2015.11.021

Misimi, E., Øye, E. R., Sture, Ø., and Mathiassen, J. R. (2017). Robust classification approach for segmentation of blood defects in cod fillets based on deep convolutional neural networks and support vector machines and calculation of gripper vectors for robotic processing. Comput. Electron. Agric. 139, 138–152. doi:10.1016/j.compag.2017.05.021

Mu, S., Qin, H., Wei, J., Wen, Q., Liu, S., Wang, S., et al. (2020). Robotic 3D vision-guided system for half-sheep cutting robot. Math. Probl. Eng. 2020, 1–11. doi:10.1155/2020/1520686

Muñoz-Benavent, P., Andreu-García, G., Valiente-González, J. M., Atienza-Vanacloig, V., Puig-Pons, V., and Espinosa, V. (2018). Enhanced fish bending model for automatic tuna sizing using computer vision. Comput. Electron. Agric. 150, 52–61. doi:10.1016/j.compag.2018.04.005

Nyalala, I., Okinda, C., Kunjie, C., Korohou, T., Nyalala, L., and Chao, Q. (2021). Weight and volume estimation of poultry and products based on computer vision systems: a review. Poult. Sci. 100, 101072. doi:10.1016/j.psj.2021.101072

Paulsohn, C., Dann, A., Riisch, R., and Brandt, M. (2007). Tool, device, and method for gutting fish opened at the stomach cavity.

Ramírez-Hernández, A., Varón-García, A., and Sánchez-Plata, M. X. (2017). Microbiological profile of three commercial poultry processing plants in Colombia. J. Food Prot. 80, 1980–1986. doi:10.4315/0362-028X.JFP-17-028

Ren, Q. S., Fang, K., Yang, X. T., and Han, J. W. (2022). Ensuring the quality of meat in cold chain logistics: a comprehensive review. Trends Food Sci. Technol. 119, 133–151. doi:10.1016/j.tifs.2021.12.006

Ritchie, M. R. H. (2017). Meat and dairy production. Our world in data. Available online at: https://ourworldindata.org/ (Accessed May 19, 2023).

Romanov, D., Korostynska, O., Lekang, O. I., and Mason, A. (2022). Towards human-robot collaboration in meat processing: challenges and possibilities. J. Food Eng. 331, 111117. doi:10.1016/j.jfoodeng.2022.111117

Schmidhuber, J., Pound, J., and Qiao, B. (2020). COVID-19: channels of transmission to food and agriculture. Covid. doi:10.4060/ca8430en

Scott (2021). STRIPLOIN SAW. Available online at: https://scottautomation.com/assets/Sectors/Meat-processing/Resources/Beef-Processing/Striploin-Saw-Brochure-EN.pdf (Accessed June 22, 2023).

Singh, J., Potgieter, J., and Xu, W. L. (2012). Ovine automation: robotic brisket cutting. Ind. Robot. Int. J. 39, 191–196. doi:10.1108/01439911211201654

Sørensen, S., Jensen, N., and Jensen, W. (1993). “Automation in the production of pork meat,” in Robotics in meat, fish and poultry processing (Springer), 115–147. doi:10.1007/978-1-4615-2129-7_6

Takács, B., Takács, K., Garamvölgyi, T., and Haidegger, T. (2021). “Inner organ manipulation during automated pig slaughtering—smart gripping approaches,” in 2021 IEEE 21st int. Symp. Comput. Intell. Informatics (CINTI). IEEE, 97–102. doi:10.1109/CINTI53070.2021.9668519

Technavio (2023). Global meat market 2023-2027. London, United Kingdom: Infiniti Research Limited. Available online at: https://www.technavio.com/report/meat-market-industry-analysis (Accessed September 17, 2023).

Teimouri, N., Omid, M., Mollazade, K., Mousazadeh, H., Alimardani, R., and Karstoft, H. (2018). On-line separation and sorting of chicken portions using a robust vision-based intelligent modelling approach. Biosyst. Eng. 167, 8–20. doi:10.1016/j.biosystemseng.2017.12.009

Toldrá, F., Reig, M., and Mora, L. (2021). Management of meat by- and co-products for an improved meat processing sustainability. Meat Sci. 181, 108608. doi:10.1016/j.meatsci.2021.108608

Wang, J., Sarkar, D., Mohan, A., Lee, M., Ma, Z., and Chortos, A. (2024). Deep learning for strain field customization in bioreactor with dielectric elastomer actuator array. Cyborg Bionic Syst. 5, 0155. doi:10.34133/cbsystems.0155

Wang, S., Hawkins, G. L., Kiepper, B. H., and Das, K. C. (2018). Treatment of slaughterhouse blood waste using pilot scale two-stage anaerobic digesters for biogas production. Renew. Energy 126, 552–562. doi:10.1016/j.renene.2018.03.076

Wei, G., Stephan, F., Aminzadeh, V., Würdemann, H., Walker, R., Dai, J. S., et al. (2014). “DEXDEB - application of DEXtrous robotic hands for DEBoning operation,” in Gearing up and accelerating cross-fertilization between academic and industrial robotics research in europe. Editors F. Röhrbein, G. Veiga, and C. Natale (Cham: Springer International Publishing), 217–235. doi:10.1007/978-3-319-03838-4_1

Woo, D. G., Kim, Y. J., Lim, H. K., and Kimm, T. H. (2018). Development of automatic chicken cutting machine. J. Biosyst. Eng. 43 (4), 386–393. doi:10.5307/JBE.2018.43.4.386

Xiong, L. R., Zheng, W., and Luo, S. H. (2018). Design of poultry eviscerated manipulator and its control system based on tactile perception. Trans. Chin. Soc. Agric. Eng. 34 (3), 42–48. doi:10.11975/j.issn.1002-6819.2018.03.006

Xu, J. L., and Sun, D. W. (2018). Computer vision detection of salmon muscle gaping using convolutional neural network features. Food Anal. Methods 11, 34–47. doi:10.1007/s12161-017-0957-4

Xu, W., He, Y., Li, J., Zhou, J., Xu, E., Wang, W., et al. (2023). Robotization and intelligent digital systems in the meat cutting industry: from the perspectives of robotic cutting, perception, and digital development. Trends Food Sci. Technol. 135, 234–251. doi:10.1016/j.tifs.2023.03.018

Zhang, L., Wang, J., and Duan, Q. (2020). Estimation for fish mass using image analysis and neural network. Comput. Electron. Agric. 173, 105439. doi:10.1016/j.compag.2020.105439

Keywords: meat processing system, automated equipment, meat production automation, meat processing robot, robot processing system

Citation: Lyu Y, Wu F, Wang Q, Liu G, Zhang Y, Jiang H and Zhou M (2025) A review of robotic and automated systems in meat processing. Front. Robot. AI 12:1578318. doi: 10.3389/frobt.2025.1578318

Received: 17 February 2025; Accepted: 28 April 2025;

Published: 23 May 2025.

Edited by:

Yantao Shen, University of Nevada, United States

Reviewed by:

Liuyin Wang, University of Nevada, United States

Cong Peng, University of Nevada, United States

Copyright © 2025 Lyu, Wu, Wang, Liu, Zhang, Jiang and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mingchuan Zhou, mczhou@zju.edu.cn

†Present address: Mingchuan Zhou, College of Biosystems Engineering and Food Science, Zhejiang University, Hangzhou, Zhejiang, China

‡These authors share first authorship
