Abstract

The subject of this article is the development of an unmanned surface vehicle (USV) for the removal of floating debris. A twin-hulled boat with four thrusters placed at the corners of the vessel is used for this purpose. The trash is collected in a storage space through a timing belt driven by an electric motor. The debris is accumulated in a funnel positioned at the front of the boat and subsequently raised through this belt into the garbage bin. The boat is equipped with a spherical camera, a long-range 2D LiDAR, and an inertial measurement unit (IMU) for simultaneous localization and mapping (SLAM). The floating debris is identified from rectified camera frames using YOLO, while the LiDAR and IMU concurrently provide the USV’s odometry. Visual methods are utilized to determine the location of debris and obstacles in the 3D environment. The optimal order in which the debris is collected is determined by solving the orienteering problem, and the planar convex hull of the boat is combined with map and obstacle data via the Open Motion Planning Library (OMPL) to perform path planning. Pure pursuit is used to generate the trajectory from the obtained path. Limits on the linear and angular velocities are experimentally estimated, and a PID controller is tuned to improve path following. The USV is evaluated in an indoor swimming pool containing static obstacles and floating debris.

1 Introduction

An estimated 269,000 tons of plastic float on the water surface (Prakash and Zielinski, 2025). Projections indicate that global plastic waste production will surpass one billion metric tons (Subhashini et al., 2024). This debris, which is primarily plastic, persists in the environment and takes hundreds of years to decompose.

During decomposition, plastics break down into microplastics that carry hazardous chemicals and cause lasting damage (Chrissley et al., 2017). Toxins originating from plastic debris disrupt ecosystems and pose threats to human health, including cancers, birth defects, and immune system disorders (Akib et al., 2019).

Traditional labor-intensive methods for waste collection are insufficient due to the scale and dispersed nature of the problem in remote or hazardous locations (Flores et al., 2021), and stationary solutions are hindered by environmental changes (Subhashini et al., 2024). Recently, the focus has shifted from manual cleanup (Chandra et al., 2021) to robotic systems that address ocean pollution (Akib et al., 2019).

Unmanned surface vehicles (USVs) are at the forefront of these efforts (Bae and Hong, 2023; Fulton et al., 2019; Turesinin et al., 2020; Costanzi et al., 2020; Shivaanivarsha et al., 2024; Lazzerini et al., 2024; Suryawanshi et al., 2024); they are designed for collecting floating debris from rivers, ponds, and oceans. Commercial solutions such as WasteShark, Clearbot, and MANTA are used for trash collection.

The development of these robots is underpinned by technologies such as a) edge computing (Carcamo et al., 2024; Chandra et al., 2021; Salcedo et al., 2024), b) computer vision and AI for object classification (Li et al., 2025), c) environmental awareness (Li et al., 2024; Wang et al., 2019), d) intelligent navigation and control (Gunawan et al., 2016; Li et al., 2025; Wang et al., 2019), and e) effective debris collection (Li et al., 2024; Subhashini et al., 2024; Akib et al., 2019).

Marine cleaning robots are often limited by their small scale and restricted operational capacity (Chandra et al., 2021; Flores et al., 2021). The dynamic nature of aquatic environments makes effective and adaptable path planning challenging, and operation in remote or GPS-denied areas is particularly difficult (Wang et al., 2019).

Several challenges remain in developing autonomous debris collection systems, including lighting and weather conditions, efficient path planning, robust navigation, and mechanical designs for handling diverse floating waste.

This article presents Autonomous Trash Retrieval for Oceanic Neatness (ATRON), which addresses these challenges through an integrated approach that combines mechanical design, perception, planning, and control, as shown in Figure 1. The USV is similar to that of Ahn et al. (2022) and uses a robust twin-hulled catamaran design that enables heavy-duty debris collection. The fundamental differences are the use of SLAM and additional sensors (spherical camera, LiDAR) for USV localization, and path-planning algorithms based on the orienteering problem and the rapidly exploring random tree (RRT) algorithm.

FIGURE 1

ATRON: an autonomous USV capable of extracting debris from water surfaces.

The contributions in this article include the following:

The development of a twin-hulled, ROS-based USV, ATRON, for collecting up to 1 of floating debris. Four independent thrusters placed at the corners of the vessel provide a linear velocity of up to 1.47 m/s and an angular velocity of up to 0.3 rad/s.

The development of a visual system for debris and obstacle detection using a spherical camera; YOLOv11 is used for debris classification (Ali and Zhang, 2024).

The utilization of the orienteering problem for task planning and RRT for obstacle avoidance.

Simulation and experimental studies conducted in an indoor pool for debris collection and obstacle avoidance.

The development of a simulation environment in the GPU-based physics simulator Isaac Sim for evaluating several classification algorithms under various sea states and lighting conditions.

2 ATRON design (materials and equipment)

The ATRON is a twin-hulled catamaran measuring m (above the waterline), with a dry weight of 120 kg and a draught of 0.15 m, and it is stable up to sea state 2. Its framework consists of two parallel pontoons connected by an aluminum ( m) platform, while a secondary elevated ( m) platform carries the electronics, including the 360° camera mounted on top of a 1.2 m pole. The conveyor belt used for trash removal, the isolation absorbers, and the four underwater thrusters complete the structure.

Figure 2 illustrates the comprehensive system architecture integrating the mechanical, electrical, and computational subsystems. The USV operates on a hierarchical control structure built on ROS 2 Humble (Abaza, 2025), enabling modular communication between perception, planning, and actuation components.

FIGURE 2

ATRON structural block diagram.

The Insta360 X2 spherical camera provides visual coverage for debris detection, the Slamtec S2P 2D LiDAR is used for SLAM and obstacle avoidance, and the BNO055 9-DOF IMU is used for attitude estimation and sensor fusion. YOLOv11 (Ali and Zhang, 2024) performs real-time debris and obstacle detection on the spherical camera feed, the SLAM Toolbox (Macenski and Jambrecic, 2021) provides GPS-denied localization by fusing LiDAR and IMU data, and components of the OMPL (Şucan et al., 2012) are used for path planning. The system communicates through a Wi-Fi/cellular router.

The ATRON uses a differential-thrust propulsion system with four brushless thrusters positioned at the vessel’s corners on extended aluminum outriggers. Each thruster delivers 1.2 kgf at 12 V and is driven by a bidirectional ESC that accepts 50 Hz PWM for velocity control. A custom controller based on the STM32F103C8T6 microcontroller serves as the interface between high-level ROS 2 commands and low-level actuator control. It provides four PWM output channels for the ESCs, PWM control of the conveyor motor via a solid-state relay, and I2C communication with the IMU. The controller communicates with the onboard computer through a USB-to-UART bridge, exchanging JSON-formatted commands and sensor data at 50 Hz for real-time control.
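The JSON schema exchanged over the USB-to-UART bridge is not given here. The sketch below is a minimal host-side illustration of such a 50 Hz exchange. It assumes newline-delimited messages and hypothetical field names (`thr` for four normalized thruster commands, `conv` for the conveyor duty cycle, `imu` for roll/pitch/yaw telemetry). These field names are not the controller's actual protocol.

```python
import json


def _clamp(x, lo, hi):
    return max(lo, min(hi, x))


def encode_command(thrusters, conveyor_duty):
    """Build one newline-terminated JSON command (hypothetical schema).

    thrusters: four normalized thrust commands in [-1, 1]
    conveyor_duty: conveyor PWM duty cycle in [0, 1]
    """
    if len(thrusters) != 4:
        raise ValueError("expected four thruster commands")
    msg = {
        "thr": [round(_clamp(float(t), -1.0, 1.0), 3) for t in thrusters],
        "conv": round(_clamp(float(conveyor_duty), 0.0, 1.0), 3),
    }
    # Compact separators keep each 50 Hz frame short on the serial link
    return (json.dumps(msg, separators=(",", ":")) + "\n").encode("ascii")


def decode_telemetry(line):
    """Parse one telemetry line into roll/pitch/yaw floats (hypothetical schema)."""
    data = json.loads(line.decode("ascii"))
    imu = data.get("imu", {})
    return {k: float(imu[k]) for k in ("roll", "pitch", "yaw") if k in imu}
```

Clamping on the host side is a design choice. It keeps out-of-range requests from ever reaching the ESCs, although the firmware would normally enforce limits as well.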

The debris collection system is a 300-mm-wide reinforced rubber timing belt with molded cleats spaced at 50 mm intervals. The belt spans a 600 mm incline from the waterline to the collection container, driven by a 500 W brushed DC motor with integrated 10:1 reduction gearing. Drainage holes (10 mm diameter) are distributed across the belt surface at 30 mm intervals to prevent water accumulation that could reduce lifting capacity or destabilize the collected items. The ATRON has a V-shaped extended metal funnel with a 120° opening angle to guide debris toward the collection zone.

All systems are powered by a sealed, waterproof 12 V, 95 Ah lead-acid battery that provides 5.84 h of operation through a DC-to-AC inverter. The computer’s USB ports supply ample power to the LiDAR, the 360° camera, and the ATRON controller, whose onboard voltage regulator powers the IMU and any other circuitry. The thrusters are driven by bidirectional ESCs, and a solid-state relay switches the conveyor mechanism, as shown in Figure 3.
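As a rough consistency check, the quoted capacity and runtime imply the average electrical load shown below. This is our own arithmetic. It assumes the full nameplate capacity is usable and ignores inverter losses.

```latex
E = 12\,\text{V} \times 95\,\text{Ah} = 1140\,\text{Wh}, \qquad
\bar{P} \approx \frac{1140\,\text{Wh}}{5.84\,\text{h}} \approx 195\,\text{W}.
```

Lead-acid batteries are usually not discharged to their full nameplate capacity. The actual average load is therefore likely somewhat lower than this estimate.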

FIGURE 3

ATRON electrical systems.

The ATRON microcontroller relies on the STMicroelectronics STM32F103C8T6, which provides six PWM output channels using dedicated hardware timers. All PWM signals are level-shifted to 5 V using a TXB0104PWR bidirectional voltage-level translator to ensure compatibility between the MCU and the external devices. The high-speed I2C bus is interfaced to a BNO055 IMU. This microcontroller polls the IMU and provides the sensor data in JSON format.
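Producing the 50 Hz ESC signal on a hardware timer comes down to choosing a prescaler (PSC) and an auto-reload value (ARR). They satisfy the standard STM32 relation f_pwm = f_timer / ((PSC + 1)(ARR + 1)). The helper below is an illustrative sketch, not the ATRON firmware. It assumes the timer clock runs at the STM32F103C8T6's 72 MHz maximum and that a 1 µs tick is desired. The pulse widths in the test (1000 to 2000 µs, about 1500 µs neutral for bidirectional ESCs) follow a common ESC convention that the text does not state.

```python
def pwm_timer_config(f_timer_hz, f_pwm_hz, tick_hz=1_000_000):
    """Return (PSC, ARR) with f_pwm = f_timer / ((PSC + 1) * (ARR + 1))."""
    if f_timer_hz % tick_hz:
        raise ValueError("timer clock must be an integer multiple of the tick rate")
    psc = f_timer_hz // tick_hz - 1          # prescaler: timer clock -> tick rate
    arr = round(tick_hz / f_pwm_hz) - 1      # auto-reload: ticks per PWM period - 1
    if not (0 <= psc <= 0xFFFF and 0 <= arr <= 0xFFFF):
        raise ValueError("PSC/ARR outside the 16-bit timer range")
    return psc, arr


def pulse_to_ccr(pulse_us, tick_hz=1_000_000):
    """Capture/compare register value for a pulse width given in microseconds."""
    return round(pulse_us * tick_hz / 1_000_000)
```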

3 ATRON software design (methods)

3.1 Image rectification

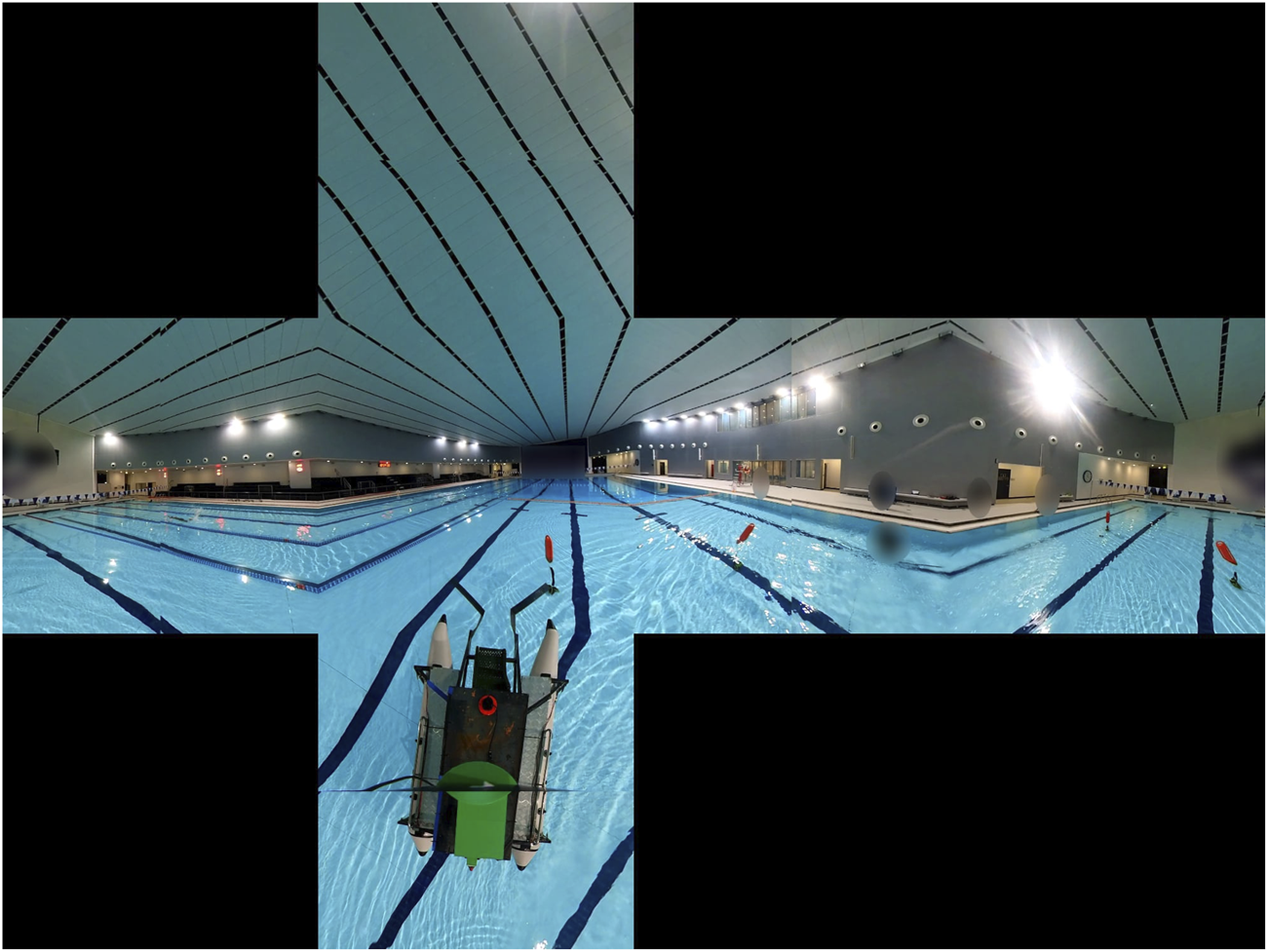

The spherical camera observes the surrounding space and returns dual fisheye images, as shown in Figure 4. The camera’s extrinsic calibration parameters include a) the horizontal and vertical offsets of each hemispherical image from the optical center; b) the crop size; and c) the relative translation and rotation between the front and back hemispherical images.

FIGURE 4

Dual fisheye image to equi-rectangular image.

After calibration, the equirectangular projection is applied as described by Flores et al. (2024). Each pixel in the equirectangular image is first converted to spherical coordinates via Equation 1, which is normalized by the width and height of the equirectangular image. These spherical coordinates are then converted to 3D unit vectors, as described in Equation 2. Points in the front hemisphere are projected into the front fisheye image using Equation 3, which is scaled by the width of the fisheye image and yields the corresponding pixel coordinates. A similar approach is used for points in the back hemisphere. The resulting fisheye-to-equirectangular process is shown in Figure 4.
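The specific form of Equations 1–3 is not reproduced here. The sketch below shows one common formulation of the same three steps: pixel to spherical angles, spherical angles to a unit vector, and unit vector to fisheye pixel. It rests on several assumptions:

- an ideal equidistant fisheye lens (image radius proportional to the angle from the optical axis),
- a square image per lens,
- a hypothetical 190° lens FoV,
- the offsets `dx` and `dy` standing in for the calibrated optical-center deviations.

The function maps one output pixel at a time. A practical implementation would precompute this as a lookup table.

```python
import math


def equirect_to_fisheye(u, v, eq_w, eq_h, fish_w, fov_deg=190.0, dx=0.0, dy=0.0):
    """Map equirectangular pixel (u, v) to a pixel in one of the two fisheye images.

    Assumes an ideal equidistant fisheye, a square fish_w x fish_w image per lens,
    and optical-centre offsets (dx, dy). Returns (hemisphere, x, y).
    """
    # Pixel centre -> longitude in [-pi, pi) and latitude in [-pi/2, pi/2]
    lon = (u + 0.5) / eq_w * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / eq_h * math.pi
    # Spherical -> unit vector (x right, y up, z forward)
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    hemisphere = "front"
    if z < 0.0:
        # The back lens looks along -z; mirroring x keeps its image un-flipped
        hemisphere, x, z = "back", -x, -z
    theta = math.acos(max(-1.0, min(1.0, z)))       # angle from the optical axis
    r = theta / math.radians(fov_deg / 2.0) * (fish_w / 2.0)
    phi = math.atan2(y, x)                          # direction in the image plane
    cx, cy = fish_w / 2.0 + dx, fish_w / 2.0 + dy
    return hemisphere, cx + r * math.cos(phi), cy - r * math.sin(phi)
```

For example, the center of a 3840 × 1920 equirectangular image maps to the center of the front fisheye image.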

The equirectangular format enables the extraction of perspective views at arbitrary viewing angles. Given a field of view (FoV), an azimuth angle, and a zenith angle, each pixel in the output perspective image is mapped to equirectangular coordinates by Equations 4–7.
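Equations 4–7 are likewise not reproduced here. The sketch below extracts a perspective view under three assumptions: a pinhole output view, axes with x right, y up, and z forward, and a zenith parameter that acts as an elevation angle (positive looks up). Each output pixel's ray is tilted by the elevation, then panned by the azimuth. The result is converted to equirectangular (u, v), with longitude spanning the image width and latitude its height. The function name is hypothetical.

```python
import math


def perspective_to_equirect(i, j, n, fov_deg, azimuth_deg, elevation_deg, eq_w, eq_h):
    """Map pixel (i, j) of an n x n perspective view to equirectangular (u, v).

    Assumed conventions: x right, y up, z forward; azimuth pans about y
    (positive to the right), elevation tilts the view upward.
    """
    f = (n / 2.0) / math.tan(math.radians(fov_deg) / 2.0)   # focal length in pixels
    x, y, z = i - n / 2.0 + 0.5, -(j - n / 2.0 + 0.5), f    # ray through pixel centre
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    el, az = math.radians(elevation_deg), math.radians(azimuth_deg)
    # Tilt by the elevation angle (rotation about the x-axis)
    y, z = y * math.cos(el) + z * math.sin(el), -y * math.sin(el) + z * math.cos(el)
    # Pan by the azimuth angle (rotation about the y-axis)
    x, z = x * math.cos(az) + z * math.sin(az), -x * math.sin(az) + z * math.cos(az)
    lon = math.atan2(x, z)
    lat = math.asin(max(-1.0, min(1.0, y)))
    u = (lon + math.pi) / (2.0 * math.pi) * eq_w - 0.5
    v = (math.pi / 2.0 - lat) / math.pi * eq_h - 0.5
    return u, v
```

Calling this for the six combinations of azimuth (0°, ±90°, 180°) and elevation (±90°) with a 90° FoV yields a standard cubemap.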

The camera records = dual fisheye images. The cubemap images in Figure 5 have a FoV of , a width of 960 pixels, and a height of 960 pixels. Figure 5 shows the generated left, front, up, down, right, and back views.

FIGURE 5

Cubemap representation with FoV .

The ATRON uses a Slamtec S2P LiDAR (Madhavan and Adharsh, 2019) with a range of 50 m, an angular resolution of 0.1125°, and a 32 kHz sampling rate, along with a BNO055 9-DOF IMU.
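The two LiDAR figures above are linked. At 32,000 samples per second and 0.1125° between samples, one revolution holds 3,200 points, which implies a 10 Hz scan rate. The short calculation below is our arithmetic, not a reported measurement. It also shows that adjacent beams are roughly 10 cm apart at the 50 m maximum range, so small objects such as cans return very few points at long range.

```python
import math


def points_per_scan(angular_res_deg):
    """Number of samples in one 360-degree revolution."""
    return round(360.0 / angular_res_deg)


def scan_rate_hz(sample_rate_hz, angular_res_deg):
    """Revolutions per second implied by the sample rate and angular resolution."""
    return sample_rate_hz / points_per_scan(angular_res_deg)


def beam_spacing_m(range_m, angular_res_deg):
    """Arc length between adjacent beams at a given range."""
    return range_m * math.radians(angular_res_deg)
```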

3.2 2D to 3D object projection

Since the debris floats on the water’s surface, LiDAR cannot be used to detect it in wavy sea states, and visual methods based on YOLOv11 (Ali and Zhang, 2024) are used instead. Because the debris consists mostly of soda cans and the obstacles are floating buoys, the YOLOv11 model was trained on images containing these two classes.

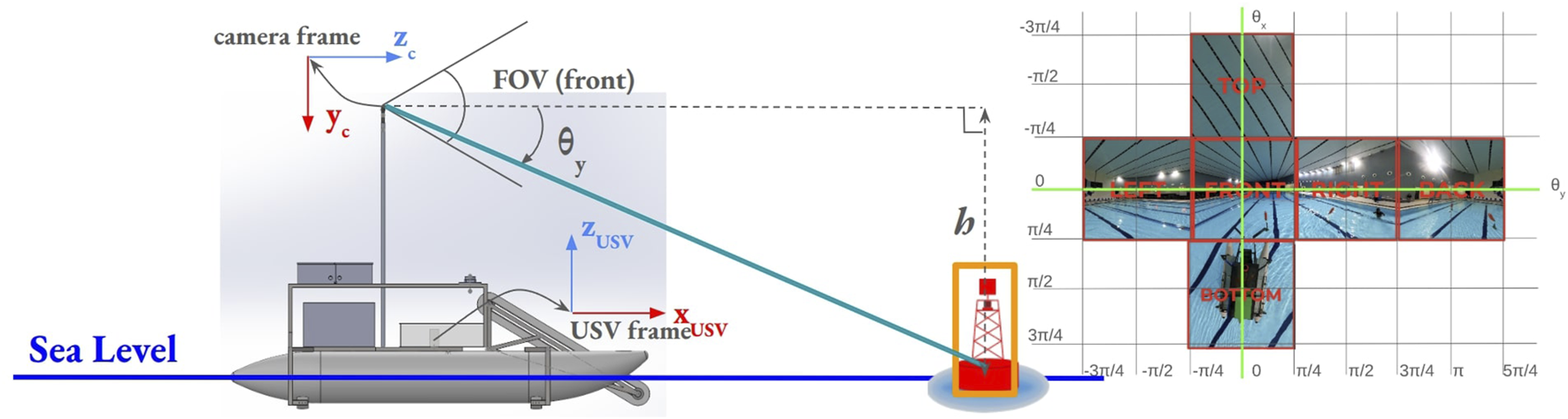

To estimate the distance (depth) of debris and obstacles, a methodology similar to that of Li et al. (2025) was used. It exploits the fact that the camera is mounted at a known height above sea level (Figure 6), with the vertical axis taken as positive downward.

FIGURE 6

2D image to 3D Cartesian coordinate projection (right). , the vertical angle between the camera axis and the detected object is calculated using the heading equation for . and are overlaid over the cubemap projection images (right). Notably, calculations are valid only for m.

Equation 8 gives the transformation from 3D coordinates in the camera frame to pixel coordinates (Wang et al., 2010). The horizontal distance of the object (debris or obstacle) to the camera is obtained from it (Wang et al., 2010).

The heading is the angle between the camera’s principal axis and the ray connecting the camera to the object. The yaw angle of the object with respect to the camera’s x-axis is given by Equation 9.
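A minimal sketch of the flat-water projection, under these assumptions:

- an ideal pinhole cubemap face whose optical axis is horizontal,
- image y pointing down,
- a known camera height above a flat water surface.

The ray through a pixel is intersected with the water plane. The face azimuth then converts the in-face bearing into a bearing around the camera. Function names are hypothetical, and the test values (f = 480 px for a 960-pixel face) are illustrative only. Near the horizon the normalized vertical coordinate approaches zero, so small pixel or attitude errors produce large distance errors. For this reason such projections are usually trusted only over a limited range.

```python
import math


def pixel_to_water_plane(u, v, f, cx, cy, cam_height, face_azimuth_deg=0.0):
    """Intersect the ray through pixel (u, v) with a flat water plane.

    Returns (horizontal_distance_m, bearing_rad), or None if the pixel lies
    on or above the horizon. Bearing is positive to the right.
    """
    xn = (u - cx) / f          # normalized image coordinates
    yn = (v - cy) / f          # positive below the optical axis
    if yn <= 0.0:
        return None            # the ray never meets the water surface
    z = cam_height / yn        # forward distance where the ray hits the water
    x = xn * z                 # lateral offset at that distance
    distance = math.hypot(x, z)
    bearing = math.radians(face_azimuth_deg) + math.atan2(x, z)
    return distance, bearing


def to_local_xy(distance, bearing):
    """Camera-centred planar coordinates (forward, left) of a detection."""
    return distance * math.cos(bearing), -distance * math.sin(bearing)
```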

3.3 USV-kinodynamics

Assuming that the USV’s roll and pitch are small, its state vector in a 2D environment (Fossen, 2021) is $\mathbf{x} = [x, y, \psi, v, \omega]^{\top}$, where:

- a) $(x, y)$ are the USV’s position coordinates in the 2D plane,
- b) $\psi$ is the USV’s heading angle,
- c) $v$ is the linear velocity in the direction of the USV’s heading,
- d) $\omega$ is the USV’s angular velocity about the vertical axis.

The control input vector $\mathbf{u} = [F, \tau]^{\top}$ sets the USV’s speed and turning rate. $F$ is the thrust generated by the propulsion system, and $\tau$ is the rotational torque that changes the USV’s heading.

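In terms of its heading $\psi$, linear velocity $v$, and angular velocity $\omega$, the USV’s differential kinematics are $\dot{x} = v\cos\psi$, $\dot{y} = v\sin\psi$, and $\dot{\psi} = \omega$. Its simplified hydrodynamic model is expressed in Equation 10, where $m$ ($I_z$) is the USV’s mass (moment of inertia about the $z$-axis) and $d_v$ ($d_\omega$) is the linear (angular) drag coefficient.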
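Equation 10 is not reproduced here. The sketch below assumes it takes the common first-order form with linear drag, m·dv/dt = F − d_v·v and I_z·dω/dt = τ − d_ω·ω, combined with the unicycle kinematics. The model is integrated with forward Euler at the 20 ms sampling period reported in Section 4.3. Under this assumption the terminal speed is F/d_v. Apart from the 120 kg dry mass, all parameter values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class UsvParams:
    mass: float      # m [kg]
    inertia: float   # I_z [kg m^2]
    d_lin: float     # linear drag coefficient d_v [N s/m] (assumed linear drag)
    d_ang: float     # angular drag coefficient d_omega [N m s/rad]


def step(state, thrust, torque, p, dt=0.02):
    """One forward-Euler step of unicycle kinematics plus assumed linear drag."""
    x, y, psi, v, w = state
    x += v * math.cos(psi) * dt
    y += v * math.sin(psi) * dt
    psi += w * dt
    v += (thrust - p.d_lin * v) / p.mass * dt
    w += (torque - p.d_ang * w) / p.inertia * dt
    return (x, y, psi, v, w)


def terminal_speed(thrust, p):
    """Steady-state surge speed of the linear-drag model."""
    return thrust / p.d_lin
```

Fitting d_v from a measured terminal velocity under known thrust is one way such a model could be calibrated.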

3.4 USV simultaneous localization and mapping

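The USV uses a 2D LiDAR complemented by its IMU to perform SLAM. To improve the accuracy of the SLAM algorithm, the IMU orientation data are fused using an extended Kalman filter (EKF) (Ribeiro, 2004; Einicke and White, 2002). The EKF recursively estimates the robot state by fusing the planar odometry from scan matching with IMU orientation measurements. The prediction step is given by Equations 11 and 12,

$$\hat{\mathbf{x}}_{k|k-1} = f(\hat{\mathbf{x}}_{k-1|k-1}, \mathbf{u}_k), \tag{11}$$

$$\mathbf{P}_{k|k-1} = \mathbf{F}_k \mathbf{P}_{k-1|k-1} \mathbf{F}_k^{\top} + \mathbf{Q}_k, \tag{12}$$

and the update step by Equations 13–15,

$$\mathbf{K}_k = \mathbf{P}_{k|k-1} \mathbf{H}_k^{\top} \left( \mathbf{H}_k \mathbf{P}_{k|k-1} \mathbf{H}_k^{\top} + \mathbf{R}_k \right)^{-1}, \tag{13}$$

$$\hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + \mathbf{K}_k \left( \mathbf{z}_k - h(\hat{\mathbf{x}}_{k|k-1}) \right), \tag{14}$$

$$\mathbf{P}_{k|k} = \left( \mathbf{I} - \mathbf{K}_k \mathbf{H}_k \right) \mathbf{P}_{k|k-1}. \tag{15}$$

The symbols are defined as follows. $\mathbf{F}_k$ and $\mathbf{H}_k$ are the Jacobians of the motion model $f$ and the measurement model $h$, respectively. $\mathbf{Q}_k$ and $\mathbf{R}_k$ are the process and measurement noise covariances, respectively. $\mathbf{K}_k$ is the Kalman gain, $\mathbf{P}$ is the state covariance, and $\mathbf{z}_k$ is the measurement. The covariance of the LiDAR (IMU) odometry is obtained from its specifications.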
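As a reduced illustration of the EKF prediction and update steps, the sketch below runs them for the yaw angle alone. The prediction adds the yaw increment from LiDAR scan matching, and the update corrects it with the IMU yaw. With these scalar models both Jacobians equal 1, so the filter reduces to a scalar Kalman filter. The noise values are placeholders, not the covariances used on ATRON. Angles are wrapped so that the innovation is taken the short way around ±π.

```python
import math


def wrap(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


class YawEkf:
    """Scalar yaw filter: LiDAR yaw increments predict, IMU yaw corrects."""

    def __init__(self, yaw0=0.0, p0=0.1, q=1e-4, r=4e-3):
        self.yaw, self.p, self.q, self.r = yaw0, p0, q, r

    def predict(self, dyaw_odom):
        # Motion model f(x, u) = x + u, so the Jacobian F = 1
        self.yaw = wrap(self.yaw + dyaw_odom)
        self.p = self.p + self.q

    def update(self, yaw_imu):
        # Measurement model h(x) = x, so the Jacobian H = 1
        k = self.p / (self.p + self.r)              # Kalman gain
        self.yaw = wrap(self.yaw + k * wrap(yaw_imu - self.yaw))
        self.p = (1.0 - k) * self.p
        return self.yaw
```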

3.5 Orienteering problem for USVs

The order in which debris is collected is optimized by treating each piece of debris as a node in a classic orienteering problem (OP). Each debris node $i$ has a score $S_i$. Each edge joining nodes $i$ and $j$ is assigned a binary variable $x_{ij}$, where $x_{ij} = 1$ signifies that the edge is part of the optimized orienteering cycle. The goal is to maximize the objective function in Equation 16 (Gunawan et al., 2016):

$$\max \sum_{i}\sum_{j} S_i\, x_{ij}. \tag{16}$$

Let $t_{ij}$ be the length of edge $(i, j)$; the OP then satisfies the constraint in Equation 17,

$$\sum_{i}\sum_{j} t_{ij}\, x_{ij} \le c, \tag{17}$$

where $c$ is the maximum length of the orienteering cycle.

The USV implements a heuristic approach to the OP (Gunawan et al., 2016). Figure 7 shows how the orienteering path varies with the maximum cycle length c (in meters), with the depot node at (0,0).
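The heuristic of Gunawan et al. (2016) is more elaborate than can be shown here. As a simplified stand-in, the sketch below uses a plain greedy insertion heuristic. It repeatedly inserts the unvisited node with the best score per added meter at its cheapest position in the cycle, as long as the cycle length stays within the maximum c. Node 0 is assumed to be the depot, and the function names are illustrative.

```python
import math


def cycle_length(route, pts):
    """Total length of a route given as a list of node indices."""
    return sum(math.dist(pts[a], pts[b]) for a, b in zip(route, route[1:]))


def greedy_op(pts, scores, c, depot=0):
    """Greedy insertion heuristic for the orienteering problem (closed cycle)."""
    route = [depot, depot]
    unvisited = set(range(len(pts))) - {depot}
    length = 0.0
    while True:
        best = None  # (ratio, node, insert_position, added_length)
        for n in unvisited:
            for k in range(1, len(route)):
                a, b = pts[route[k - 1]], pts[route[k]]
                added = math.dist(a, pts[n]) + math.dist(pts[n], b) - math.dist(a, b)
                if length + added <= c:
                    ratio = scores[n] / max(added, 1e-9)
                    if best is None or ratio > best[0]:
                        best = (ratio, n, k, added)
        if best is None:
            return route, length       # nothing else fits within the budget c
        _, n, k, added = best
        route.insert(k, n)
        unvisited.discard(n)
        length += added
```

Greedy insertion gives a feasible cycle quickly but no optimality guarantee. Local-search heuristics such as the one cited typically improve on it.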

FIGURE 7

OP solution for various distance constraints. (a) OP solution with c = 30 m, (b) OP solution with c = 50 m, (c) Clustered OP solution via heuristic approach for c = 50 m. (a, b) Unclustered output; (c) clustered nodes.

The adopted approach assumes static debris. A static high-level global planner is used, followed by a dynamic low-level local planner for small debris based on a clustered OP (Angelelli et al., 2014; Elzein and Caro, 2022). A greedy algorithm is introduced that creates clusters with a maximum diameter; these clusters represent the regions in which the low-level dynamic follower is applied.

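Given $N$ nodes with positions $\mathbf{p}_i$ and a maximum diameter $D$, clusters $C_1, \dots, C_K$ are produced that satisfy the maximum cluster diameter constraint

$$\max_{i, j \in C_k} \lVert \mathbf{p}_i - \mathbf{p}_j \rVert \le D, \quad k = 1, \dots, K,$$

where $\mathbf{p}_i$ is the position of node $i$.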

The greedy algorithm selects a random unassigned node $i$ to seed a cluster and then inspects each unassigned node $j$. If including $j$ results in a cluster whose diameter is smaller than the maximum diameter $D$, then $j$ is added to that cluster. The algorithm continues until no further clusters can be created and has a complexity of .

Each cluster’s position is its centroid, and its score equals the number of nodes it contains. The original orienteering solution path is then mapped through these clusters, with consecutive duplicate clusters removed, as shown in Figure 7C. This enables hierarchical planning, in which global routes are determined using clusters, while local control manages individual debris.

This allows the planning algorithm in Section 3.6.1 to be agnostic to small deviations in debris positions caused by sea currents.
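The clustering and route-mapping steps described above can be sketched as follows. This is an illustrative implementation under stated assumptions: positions are 2D tuples, the diameter test uses ≤ D, and a seeded random generator picks the seed node of each cluster. All function names are hypothetical.

```python
import math
import random


def greedy_clusters(pts, d_max, seed=0):
    """Greedy clustering with a maximum-diameter constraint."""
    rng = random.Random(seed)
    unassigned = list(range(len(pts)))
    clusters = []
    while unassigned:
        start = unassigned.pop(rng.randrange(len(unassigned)))  # random seed node
        cluster = [start]
        for j in list(unassigned):
            # Adding j must keep every pairwise distance within d_max
            if all(math.dist(pts[j], pts[i]) <= d_max for i in cluster):
                cluster.append(j)
                unassigned.remove(j)
        clusters.append(cluster)
    return clusters


def summarize(clusters, pts):
    """Centroid and score (number of member nodes) of each cluster."""
    out = []
    for c in clusters:
        cx = sum(pts[i][0] for i in c) / len(c)
        cy = sum(pts[i][1] for i in c) / len(c)
        out.append(((cx, cy), len(c)))
    return out


def map_route(route, clusters):
    """Replace each node by its cluster index and drop consecutive duplicates."""
    owner = {i: k for k, c in enumerate(clusters) for i in c}
    mapped = []
    for n in route:
        k = owner[n]
        if not mapped or mapped[-1] != k:
            mapped.append(k)
    return mapped
```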

3.6 Path and trajectory planning

3.6.1 Path planning via OMPL

For path planning and obstacle avoidance, the USV relies on the OMPL (Şucan et al., 2012), using the USV’s footprint ( m). The USV’s configuration space is obtained by extracting the free space from the SLAM map and subtracting the obstacle space. The USV’s motion constraints are then supplied to OMPL’s constrained planner (Kingston et al., 2019). Following Wang et al. (2019), the USV is modeled as a tank-driven system, where $v_L$ ($v_R$) is the velocity generated by the left (right) thruster side, as described by Equation 18.

These constraints are used in OMPL to compute the path with the RRT-Connect algorithm (Karaman and Frazzoli, 2011; Kuffner and LaValle, 2000). In environments with sparse obstacles, paths between consecutive nodes are found in less than 2 s on average, because in most cases the USV can simply drive around the obstacle.
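The pipeline uses OMPL's RRT-Connect planner. The sketch below is not OMPL. It is a self-contained, minimal RRT-Connect for a point robot among circular obstacles, intended only to show the bidirectional extend/connect idea. It ignores the USV footprint and the tank-drive constraints. The step size `eps`, the sampling bounds, and the `(cx, cy, r)` obstacle format are assumptions.

```python
import math
import random


def collision_free(p, q, obstacles, step=0.1):
    """Check segment p-q against circular obstacles (cx, cy, r) by dense sampling."""
    n = max(1, int(math.dist(p, q) / step))
    for k in range(n + 1):
        t = k / n
        x, y = p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def extend(tree, target, obstacles, eps):
    """Grow `tree` (index -> (point, parent)) one step of at most eps toward target."""
    near = min(tree, key=lambda i: math.dist(tree[i][0], target))
    p = tree[near][0]
    d = math.dist(p, target)
    if d <= eps:
        q = target
    else:
        q = (p[0] + eps * (target[0] - p[0]) / d, p[1] + eps * (target[1] - p[1]) / d)
    if not collision_free(p, q, obstacles):
        return None
    idx = len(tree)
    tree[idx] = (q, near)
    return idx


def connect(tree, target, obstacles, eps):
    """Keep extending toward target until it is reached (index) or blocked (None)."""
    while True:
        idx = extend(tree, target, obstacles, eps)
        if idx is None:
            return None
        if tree[idx][0] == target:
            return idx


def branch(tree, idx):
    """Points from node idx back to the tree's root."""
    out = []
    while idx is not None:
        point, idx = tree[idx]
        out.append(point)
    return out


def rrt_connect(start, goal, obstacles, bounds, eps=0.5, iters=5000, seed=1):
    rng = random.Random(seed)
    ta, tb = {0: (start, None)}, {0: (goal, None)}
    for _ in range(iters):
        sample = (rng.uniform(*bounds[0]), rng.uniform(*bounds[1]))
        ia = extend(ta, sample, obstacles, eps)
        if ia is not None:
            ib = connect(tb, ta[ia][0], obstacles, eps)
            if ib is not None:
                path = branch(ta, ia)[::-1] + branch(tb, ib)[1:]
                return path if path[0] == start else path[::-1]
        ta, tb = tb, ta   # alternate which tree explores
    return None
```

The greedy `connect` step is what makes RRT-Connect fast in sparse environments: once a tree has a clear line toward the other, it closes the gap in one call.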

3.6.2 Path tracking

The velocity of the USV is computed with a regulated pure pursuit (RPP) controller (Macenski et al., 2023), an improved version of the classic pure pursuit (PP) controller, which operates at a constant linear velocity. The classic PP controller tracks a look-ahead point defined by the look-ahead radius $L$: the furthest point on the desired path within this radius is selected. The PP algorithm then geometrically determines the curvature required to drive the vehicle from its current position to the look-ahead point as $\kappa = 2y_l/L^2$, where $y_l$ is the lateral offset of the look-ahead point in the vehicle’s coordinate frame. The vehicle’s coordinate system is placed at the rear differential, with the $x$-axis aligned with the vehicle’s heading. Because the USV operates at a constant linear velocity $v$ (except at the start and end points), the required angular velocity is $\omega = v\kappa$. The look-ahead distance acts as a tuning parameter. Larger values yield smoother tracking with less oscillation but slower convergence to the path, whereas smaller values yield tighter path following at the cost of potential oscillations.
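A minimal sketch of the classic pure pursuit step, κ = 2y_l/L² and ω = vκ, for a path given as a list of (x, y) points. Here the look-ahead point is the last path point inside the radius, and its actual distance replaces L in the curvature formula; the two coincide when the point lies on the circle. The fallback to the closest path point when nothing is inside the circle is an assumption. Names are illustrative.

```python
import math


def lookahead_point(path, x, y, radius):
    """Furthest point along the path that lies within the look-ahead radius."""
    idx = None
    for k, p in enumerate(path):
        if math.dist(p, (x, y)) <= radius:
            idx = k
    if idx is None:
        # No point inside the circle: steer toward the closest path point instead
        return min(path, key=lambda p: math.dist(p, (x, y)))
    return path[idx]


def pure_pursuit(pose, path, radius, v):
    """Return (curvature, angular velocity) for pose = (x, y, heading)."""
    x, y, psi = pose
    gx, gy = lookahead_point(path, x, y, radius)
    dx, dy = gx - x, gy - y
    # Lateral offset of the look-ahead point in the body frame (x forward, y left)
    y_body = -math.sin(psi) * dx + math.cos(psi) * dy
    l2 = dx * dx + dy * dy
    kappa = 0.0 if l2 == 0.0 else 2.0 * y_body / l2
    return kappa, v * kappa
```

For example, from the origin facing +x, a look-ahead point at (1, 1) gives κ = 1 m⁻¹. This is the unit circle through both points that is tangent to the current heading.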

In RPP, the linear velocity is additionally scaled to improve performance in tight turns. Let $v_n$ be the desired nominal speed and $r_{\min}$ the minimum turning radius that the USV can achieve at this speed. RPP reduces the commanded linear velocity when the path curvature exceeds a threshold curvature. The implementation uses a modified regulated pure pursuit (MRPP) controller with an additional final threshold that acts as a safety net when computing the linear velocity in Equation 19.
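Equation 19 and the exact form of MRPP's final threshold are not reproduced here. The sketch below shows basic curvature regulation in the spirit of RPP: when the turning radius 1/|κ| falls below the minimum radius, the speed is scaled by radius/r_min. A minimum-speed floor is used as an assumed stand-in for MRPP's final threshold. The angular velocity would then be recomputed as ω = vκ.

```python
def regulated_speed(v_nominal, kappa, r_min, v_floor):
    """Curvature-regulated linear speed (sketch in the spirit of RPP)."""
    v = v_nominal
    if kappa != 0.0:
        radius = 1.0 / abs(kappa)
        if radius < r_min:
            v = v_nominal * radius / r_min   # slow down proportionally in tight turns
    # Assumed stand-in for the final threshold: never drop below v_floor
    return min(v_nominal, max(v, v_floor))
```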

The MRPP was used to follow the path generated by OMPL shown in Figure 8. The USV’s actual path is represented by the blue line using m, m, and m/s.

FIGURE 8

Orienteering (purple), OMPL (green), and actual (blue) path of the USV.

3.6.3 PID control and signal mixing

To achieve the desired behavior, the commanded velocities of the USV are passed to two PID controllers, one for the linear velocity and one for the angular velocity. These controllers are implemented in discrete time with a fixed sampling period.
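A minimal sketch of a discrete PID loop with output clamping and conditional-integration anti-windup. It is followed by a tank-style mixing step that splits the linear and angular efforts into left/right thruster commands. The thruster ordering, the normalized [−1, 1] effort range, and the anti-windup scheme are assumptions; the text does not specify the mixing law.

```python
class DiscretePid:
    """Discrete PID (positional form) with output clamping and conditional integration."""

    def __init__(self, kp, ki, kd, dt, u_min=-1.0, u_max=1.0):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.u_min, self.u_max = u_min, u_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        # Backward-difference derivative; zero on the first sample
        deriv = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate + self.kd * deriv
        if self.u_min < u < self.u_max:
            self.integral = candidate   # only integrate while unsaturated (anti-windup)
        return min(self.u_max, max(self.u_min, u))


def mix(u_lin, u_ang):
    """Split linear/angular efforts into four thruster commands.

    Hypothetical order: front-left, rear-left, front-right, rear-right; both
    thrusters on a side receive the same command (tank-style mixing).
    """
    left = max(-1.0, min(1.0, u_lin - u_ang))
    right = max(-1.0, min(1.0, u_lin + u_ang))
    return [left, left, right, right]
```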

4 Results

4.1 Camera calibration

The camera’s extrinsic parameters are estimated by performing live dual-fisheye to equirectangular mapping while minimizing stitching artifacts, as shown in Figure 4. The camera uses pixel-images. The resulting intrinsic and extrinsic parameters, based on the camera model of Wang et al. (2010), are summarized in Table 1.

TABLE 1

| Parameter | Value |
|---|---|
| Crop diameter | 1,920 pixels |
| Output dimensions | pixels |
| Extrinsic translation | m |
| Extrinsic rotation | |
| Focal length | 509.75 (509.75) pixels |
| Principal point | pixels |
| Distortion coefficients | |

Fisheye camera configuration parameters.

Using these parameters, the equirectangular images are formed in 49 ms and the cubemap images in 79 ms, giving a total per-image processing time of 128 ms at 4K resolution on an Intel i5 processor.

4.2 Object detection results

Images of debris (cans and plastic bottles) and obstacles (buoys) were collected by recording camera frames and extracting four cubemap images representing the front, left, right, and back views. A total of 1,416 images were extracted and annotated. Six image-augmentation steps were applied: a) horizontal flipping, b) rotation, c) saturation, d) exposure, e) random bounding-box flipping, and f) mosaic stitching, as shown in Figure 9. These augmentations increased the training data to 5,192 images. The data were split into training, validation, and test sets in a 70–20–10 ratio.
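Two of the bookkeeping steps above are easy to get wrong, so here is a small sketch of each. It assumes labels in the usual YOLO text format (class, x_center, y_center, width, height, all normalized to [0, 1]). Under that format a horizontal flip only changes x_center to 1 − x_center. The split helper turns the 70–20–10 ratio into integer set sizes, assigning rounding remainders to the training set. Both helpers are illustrative, not the training toolchain's own functions.

```python
def hflip_yolo(labels):
    """Horizontally flip YOLO-format boxes (cls, xc, yc, w, h), normalized to [0, 1]."""
    return [(c, 1.0 - xc, yc, w, h) for c, xc, yc, w, h in labels]


def split_counts(n, ratios=(0.7, 0.2, 0.1)):
    """Integer train/val/test sizes; rounding remainders go to the training set."""
    n_val = int(n * ratios[1])
    n_test = int(n * ratios[2])
    return n - n_val - n_test, n_val, n_test
```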

FIGURE 9

Preprocessed images in YOLO training.

Varying lighting conditions were emulated by adjusting image brightness and saturation. Cubemap face images were also rotated to make the model more robust to USV tilt and yaw, and mosaic stitching was applied. These preprocessing steps are shown in Figure 9.

YOLOv11 was trained using the Adam optimizer with an initial learning rate of 0.001 and a cosine-decay learning-rate scheduler with three warmup epochs. A batch size of 16 was used, and the dataset contained 4,000 randomly selected images split 70–20–10 into training, validation, and test sets. The training and validation results are shown in Figure 10. The model achieves an mAP50 of 0.76 and an mAP50–95 of 0.365; the precision–recall curves are shown in Figure 10 (bottom). Overall, in sea states of 3 and above with poor lighting, YOLO reliably identified buoys (98% accuracy) but produced several false positives for cans (74% accuracy).

FIGURE 10

Model validation and training results.

This model was further investigated using the confusion matrix shown in Figure 11a. Several false positives and false negatives occurred when distinguishing debris (owing to its small size) from the background (owing to poor lighting); sample failure cases are shown in Figure 11b. The false positives were primarily caused by sharp reflections on the water surface, while the false negatives occurred when the debris appeared small in the images. Note two points about Figure 11a:

- a) the debris superclass comprises several classes, including cans, plastics, and others;
- b) each column of the normalized confusion matrix sums to one.

The confusion matrix shows that the buoys were classified correctly and the debris was recognized with 74% accuracy. Small background regions were misclassified as debris in 93% of instances. Inference on an NVIDIA RTX 4050 GPU took 25 ms.
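Because each column of the normalized confusion matrix sums to one, the diagonal entry of a column is the recall of that true class. The sketch below performs that normalization. It assumes rows index the predicted class and columns the true class, the layout assumed to match Ultralytics' confusion-matrix plots. The counts in the test are toy values, not ATRON data.

```python
def column_normalize(counts):
    """Normalize a confusion matrix so that each column (true class) sums to one.

    counts[i][j]: number of samples predicted as class i whose true class is j.
    Columns with no samples are left as zeros.
    """
    n_rows, n_cols = len(counts), len(counts[0])
    col_sums = [sum(counts[i][j] for i in range(n_rows)) for j in range(n_cols)]
    return [
        [counts[i][j] / col_sums[j] if col_sums[j] else 0.0 for j in range(n_cols)]
        for i in range(n_rows)
    ]
```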

FIGURE 11

Confusion matrix and object detection failure cases. (a) Normalized Confusion Matrix, (b) Detection Failure Cases.

Inference is run on the left, front, right, and back image views, as shown in Figure 12, where buoys are represented by orange cylinders and cans by red markers (bottom). Figure 12 also shows a successful 2D-to-3D projection that uses the bounding-box coordinates to estimate the positions of debris and obstacles.

FIGURE 12

Stitched and annotated left, front, right, and back image views inferred with the YOLOv11 model (top) are used to locate debris and obstacles in the 2D map (bottom).

4.3 USV kinodynamic measurements

The data sampling period was 20 ms, while . These were measured from the onboard IMU’s gyroscope readings during rapid rotational maneuvers.

Since no direct velocity sensor was available, the maximum linear velocity was estimated by numerically integrating the IMU’s accelerometer data. The USV started from rest and accelerated until it reached its terminal velocity. For a given control signal, the resulting ATRON thrust and torque are nearly identical for clockwise and counterclockwise propeller rotation.
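A minimal sketch of the velocity estimate. It assumes the forward acceleration has already been expressed in the horizontal body frame, and that the first samples are recorded at rest so that their mean can be subtracted as accelerometer bias. Trapezoidal integration is one reasonable choice; the text does not say which rule was used. Integrated accelerometer data drift quickly, so this approach only suits short runs such as the one described.

```python
def integrate_velocity(accel, dt, n_bias=50):
    """Trapezoidal integration of forward acceleration into velocity.

    The first n_bias samples are assumed to be recorded while stationary and
    are averaged to estimate and remove the accelerometer bias.
    """
    n_bias = max(1, min(n_bias, len(accel)))
    bias = sum(accel[:n_bias]) / n_bias
    v = [0.0]
    for a0, a1 in zip(accel, accel[1:]):
        v.append(v[-1] + 0.5 * ((a0 - bias) + (a1 - bias)) * dt)
    return v
```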

4.4 Odometry and sensor fusion

An EKF was used to fuse LiDAR odometry with IMU data using the robot localization technique (Censi, 2008). Figure 13a shows the yaw angles obtained from LiDAR odometry, from the IMU, and from the fused data. The EKF fuses the smooth LiDAR odometry (blue) with the noisy IMU orientation (red) to produce a robust and accurate fused orientation (yellow). Including the IMU is necessary because the USV can experience small pitch and roll angles caused by the sea state (waves and currents).

FIGURE 13

Experimental USV results: (a) LiDAR fusion with IMU generating yaw angles. (b) USV lateral velocities. (c) Experimental USV path following.

The USV’s lateral velocity is shown in Figure 13b, reflecting tilting of the USV.

4.5 Path following results

To test the path-following capabilities of the USV, it was commanded to follow a path with four corners. The USV’s experimental response is shown in Figure 13c; PID-based control significantly improves path following by reducing overshoot.

Automatic tuning (Åström et al., 1993) was used for obtaining the PID parameters. The ultimate gain and ultimate period were different for the linear and angular (torsional) components of the USV-controller: for the linear controller, and s, while for the angular controller, and s. The PID parameters used were

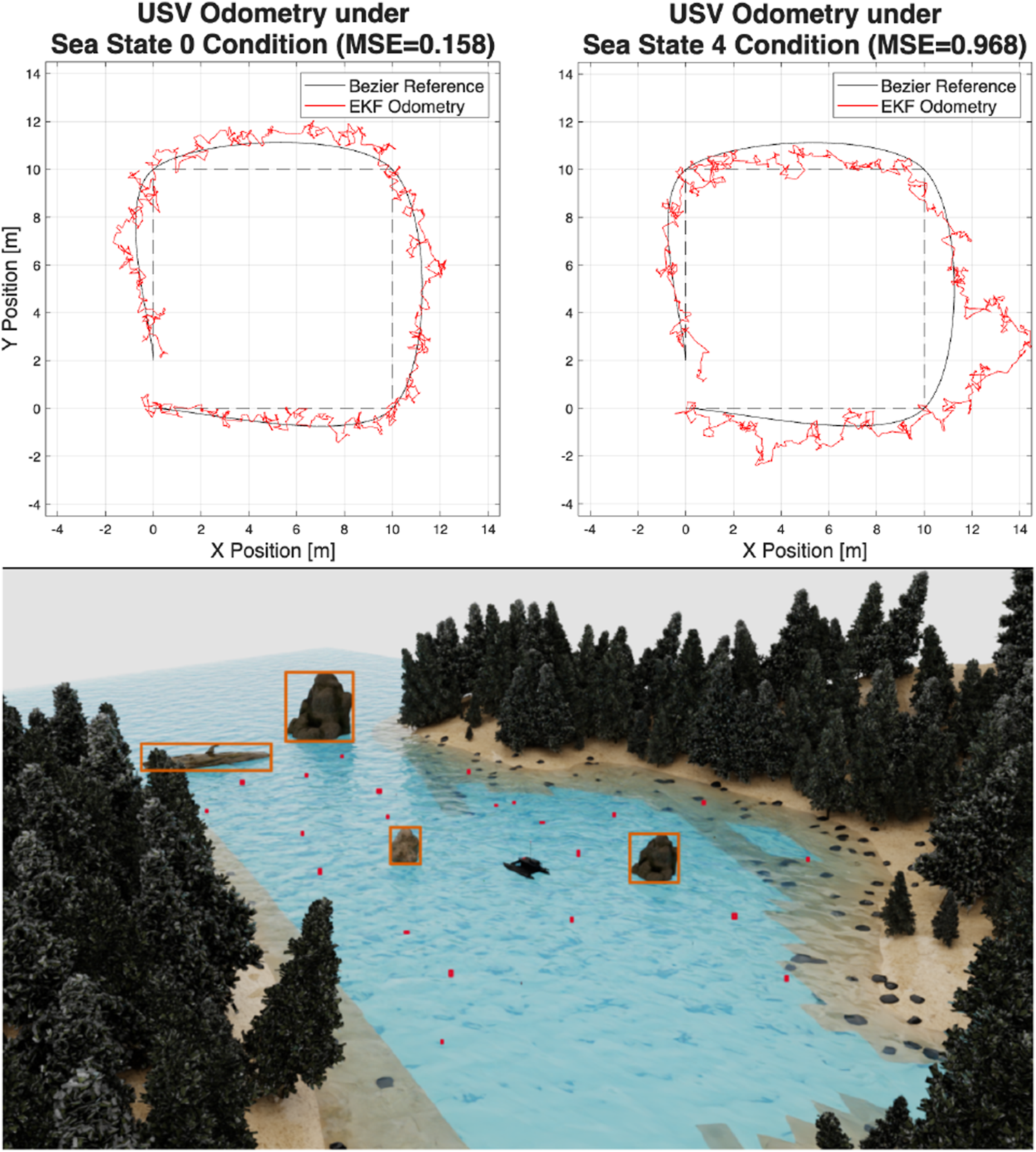
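The text does not state which tuning rule converted the ultimate gain and ultimate period into PID gains. A common choice, assumed here, is the classic Ziegler–Nichols rule: Kp = 0.6 Ku, Ti = Tu/2, Td = Tu/8. With relay feedback, Ku is usually estimated from the describing-function relation Ku ≈ 4d/(πa), where d is the relay amplitude and a is the amplitude of the resulting oscillation. The sketch computes both.

```python
import math


def relay_ultimate_gain(relay_amplitude, oscillation_amplitude):
    """Describing-function estimate of the ultimate gain from a relay experiment."""
    return 4.0 * relay_amplitude / (math.pi * oscillation_amplitude)


def ziegler_nichols_pid(ku, tu):
    """Classic Ziegler-Nichols PID gains (parallel form: Kp, Ki, Kd) from Ku and Tu."""
    kp = 0.6 * ku
    ti, td = 0.5 * tu, 0.125 * tu
    return kp, kp / ti, kp * td
```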

4.6 Path tracking for various sea states

The remote environment used in the simulation studies is shown at the bottom of Figure 14. This environment was built in NVIDIA’s Isaac Sim (Collins et al., 2021), which simulates the USV’s buoyancy along with all onboard sensors and transducers under ROS. Sea states from 0 to 4 on the Beaufort wind scale are emulated (Meaden et al., 2007), with waves of up to 4 m. The induced wind and the resulting nonzero roll and pitch angles degrade the 2D LiDAR, the odometry system, and debris recognition. The varying angles cause erroneous behavior in the MRPP algorithm (Macenski et al., 2023), resulting in rapid turns while tracking a square m, as shown in the upper half of Figure 14. In both cases, Bezier smoothing (Arvanitakis and Tzes, 2012) passing through the vertices of this square was used to improve tracking performance. The maximum attainable velocities were enforced during the simulation while the different sea states were applied (Meaden et al., 2007).
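The smoothing method of Arvanitakis and Tzes (2012) is not detailed here. As a stand-in, the sketch below uses a different but related technique: a closed Catmull–Rom spline written as cubic Bézier segments. A plain Bézier curve with the vertices as control points does not pass through them; this spline passes through every vertex of the polygon. The function name and the per-segment sample count are illustrative.

```python
def catmull_rom_bezier_loop(vertices, samples=20):
    """Smooth a closed polygon with cubic Bezier segments through every vertex."""
    n = len(vertices)
    out = []
    for k in range(n):
        p0, p1, p2, p3 = (vertices[(k + s) % n] for s in (-1, 0, 1, 2))
        # Catmull-Rom tangents converted to the two inner Bezier control points
        b1 = (p1[0] + (p2[0] - p0[0]) / 6.0, p1[1] + (p2[1] - p0[1]) / 6.0)
        b2 = (p2[0] - (p3[0] - p1[0]) / 6.0, p2[1] - (p3[1] - p1[1]) / 6.0)
        for i in range(samples):
            t = i / samples
            a, b, c, d = (1 - t) ** 3, 3 * (1 - t) ** 2 * t, 3 * (1 - t) * t ** 2, t ** 3
            out.append((a * p1[0] + b * b1[0] + c * b2[0] + d * p2[0],
                        a * p1[1] + b * b1[1] + c * b2[1] + d * p2[1]))
    return out
```

For a 4 m square, the curve bulges about 0.5 m outside each side at its midpoint. A tracking controller has to tolerate this overshoot, or the tangent scaling has to be reduced.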

FIGURE 14

USV odometry tracking performance for different sea states (top) within the improved simulated environment (bottom).

5 Discussion

5.1 Summary of the results

This article presented ATRON, a large-scale autonomous USV designed for the removal of floating debris. With its m footprint and 1 collection capacity, ATRON represents a significant advancement over existing small-scale prototypes, demonstrating the feasibility of practical autonomous marine cleanup systems.

The system’s key innovations include the novel use of a camera with projection techniques for debris localization, which avoids the limitations of depth cameras in aquatic environments. The dual fisheye to equirectangular projection relies on mounting the camera at a known height. YOLOv11L detections, combined with this projection, were used to estimate debris positions, while mapping was performed with a 2D LiDAR. The integration of OMPL with the orienteering problem enabled efficient path planning, with the clustered variant reducing computational complexity while maintaining collection efficiency.

Experimental validation in both an indoor pool environment and a simulated environment confirmed the system’s capabilities. The sensor fusion approach combining LiDAR odometry with IMU data through an extended Kalman filter provided robust localization despite the pitch and roll variations common in aquatic environments. The MRPP controller with PID control delivered precise path following, thus validating the overall system architecture.

5.2 Limitations and concluding remarks

Despite the demonstrated effectiveness of ATRON in controlled conditions, several limitations must be acknowledged. The USV has thus far been evaluated only in an indoor pool, where hydrodynamic perturbations are negligible. Although the catamaran’s low center of mass provides inherent passive stability, regulatory constraints and permit requirements have so far prevented trials in open water with currents, waves, and wind. This precludes validation of its performance under realistic operating scenarios.

A further limitation concerns the visual localization pipeline. The 2D-to-3D projection requires approximately 0.13 s per frame for calibration, which, at an angular velocity of 0.3 rad/s and an object distance of 10 m, introduces a positional differential of approximately 0.45 m. In addition, the absence of damping on the camera mount transmits vibrations from the thrusters and conveyor mechanism to the sensor, introducing noise into the projection parameters. This combination of calibration latency and vibration-induced disturbance degrades the accuracy of debris localization.
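The positional error quoted above follows from the arc length swept by a fixed object while the USV yaws during processing, s ≈ ω·t·d. The stated 0.13 s alone gives about 0.39 m. Adding the 25 ms YOLO inference time from Section 4.2 to the 128 ms rectification time gives about 0.46 m, which is closer to the 0.45 m figure. Which latencies enter the 0.45 m estimate is not stated, so this reading is an assumption.

```python
def angular_smear_m(omega_rad_s, latency_s, distance_m):
    """Lateral displacement of a fixed object caused by yawing during processing latency.

    Uses the small-angle arc length s = omega * t * d.
    """
    return omega_rad_s * latency_s * distance_m
```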

Future work will focus on addressing these limitations. The image-processing pipeline is being optimized through reduced resolution and refined projection algorithms to decrease calibration latency. Image stabilization is under development using both software- and hardware-based approaches, including the utilization of the integrated gyroscope in the 360° camera and the addition of physical damping elements. Furthermore, open-water trials are planned once regulatory requirements are satisfied, enabling the assessment of ATRON’s stability and performance in dynamic marine environments and advancing the system toward operational deployment.

Statements

Data availability statement

Publicly available datasets were analyzed in this study. These data can be found at https://github.com/RISC-NYUAD/ATRON.

Author contributions

JoA: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project Administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review and editing. HJ: Conceptualization, Funding acquisition, Investigation, Methodology, Resources, Writing – original draft. BE: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Visualization, Writing – original draft. JaA: Conceptualization, Data Curation, Investigation, Methodology, Resources, Writing – original draft. AT: Conceptualization, Funding acquisition, Project administration, Resources, Supervision, Writing – review and editing.

Funding

The author(s) declared that financial support was received for this work and/or its publication. This work was supported by the NYUAD Center for Artificial Intelligence and Robotics, Tamkeen under the New York University Abu Dhabi Research Institute Award CG010, and the Mubadala Investment Company in the UAE. The latter was not involved in the study design, collection, analysis, interpretation of data, the writing of this article, or the decision to submit it for publication.

Acknowledgments

This project acknowledges support from A. Oralov, D. Al Jorf, and F. Darwish for their contributions to the object detection system. Gratitude is expressed toward N. Evangeliou from NYUAD’s RISC Laboratory for his guidance on the various electromechanical systems.

Conflict of interest

Author JaA was employed by Mechanical Engineering, Egyptian Refining Company.

The remaining author(s) declared that this work was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author AT declared that they were an editorial board member of Frontiers at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declared that generative AI was not used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2025.1718177/full#supplementary-material

References

Abaza B. F. (2025). AI-driven dynamic covariance for ROS 2 mobile robot localization. Sensors 25, 3026. 10.3390/s25103026

Ahn J. Oda S. Chikushi S. Sonoda T. Yasukawa S. (2022). Design and development of ocean debris collecting unmanned surface vehicle and performance evaluation of collecting device in tank. J. Robotics, Netw. Artif. Life 9, 209–215. 10.57417/jrnal.9.3_209

Akib A. Tasnim F. Biswas D. Hashem M. B. Rahman K. Bhattacharjee A. et al. (2019). “Unmanned floating waste collecting robot,” in TENCON 2019 IEEE region 10 conference (IEEE), 2645–2650. 10.1109/TENCON.2019.8929537

Ali M. L. Zhang Z. (2024). The YOLO framework: a comprehensive review of evolution, applications, and benchmarks in object detection. Computers 13, 336. 10.3390/computers13120336

Angelelli E. Archetti C. Vindigni M. (2014). The clustered orienteering problem. Eur. J. Operational Res. 238, 404–414. 10.1016/j.ejor.2014.04.006

Arvanitakis I. Tzes A. (2012). “Trajectory optimization satisfying the robot’s kinodynamic constraints for obstacle avoidance,” in 2012 20th Mediterranean conference on control and automation (MED) (IEEE), 128–133.

Åström K. J. Hägglund T. Hang C. C. Ho W. K. (1993). Automatic tuning and adaptation for PID controllers - a survey. Control Eng. Pract. 1, 699–714. 10.1016/0967-0661(93)91394-c

Bae I. Hong J. (2023). Survey on the developments of unmanned marine vehicles: intelligence and cooperation. Sensors 23, 4643. 10.3390/s23104643

Carcamo J. Shehada A. Candas A. Vaghasiya N. Abdullayev M. Melnyk A. et al. (2024). “AI-powered cleaning robot: a sustainable approach to waste management,” in 2024 16th international conference on human system interaction (IEEE), 1–6.

Censi A. (2008). “An ICP variant using a point-to-line metric,” in 2008 IEEE international conference on robotics and automation (IEEE), 19–25.

Chandra S. S. Kulshreshtha M. Randhawa P. (2021). “A review of trash collecting and cleaning robots,” in 2021 9th international conference on reliability, Infocom technologies and optimization (trends and future directions) (IEEE), 1–5.

Chrissley T. Yang M. Maloy C. Mason A. (2017). “Design of a marine debris removal system,” in 2017 systems and information engineering design symposium (IEEE), 10–15.

Collins J. Chand S. Vanderkop A. Howard D. (2021). A review of physics simulators for robotic applications. IEEE Access 9, 51416–51431. 10.1109/access.2021.3068769

Costanzi R. Fenucci D. Manzari V. Micheli M. Morlando L. Terracciano D. et al. (2020). Interoperability among unmanned maritime vehicles: review and first in-field experimentation. Front. Robotics AI 7, 91. 10.3389/frobt.2020.00091

Einicke G. A. White L. B. (2002). Robust extended Kalman filtering. IEEE Trans. Signal Process. 47, 2596–2599. 10.1109/78.782219

Elzein A. Caro G. A. D. (2022). A clustering metaheuristic for large orienteering problems. PLOS ONE 17, e0271751. 10.1371/journal.pone.0271751

Flores H. Motlagh N. H. Zuniga A. Liyanage M. Passananti M. Tarkoma S. et al. (2021). Toward large-scale autonomous marine pollution monitoring. IEEE Internet Things Mag. 4, 40–45. 10.1109/iotm.0011.2000057

Flores M. Valiente D. Peidró A. Reinoso O. Payá L. (2024). Generating a full spherical view by modeling the relation between two fisheye images. Vis. Comput. 40, 7107–7132. 10.1007/s00371-024-03293-7

Fossen T. I. (2021). Handbook of marine craft hydrodynamics and motion control, 2nd ed. John Wiley and Sons.

Fulton M. Hong J. Islam M. J. Sattar J. (2019). “Robotic detection of marine litter using deep visual detection models,” in 2019 international conference on robotics and automation (IEEE), 5752–5758. 10.1109/icra.2019.8793975

Gunawan A. Lau H. C. Vansteenwegen P. (2016). Orienteering problem: a survey of recent variants, solution approaches and applications. Eur. J. Operational Res. 255, 315–332. 10.1016/j.ejor.2016.04.059

Karaman S. Frazzoli E. (2011). Sampling-based algorithms for optimal motion planning. Int. J. Robotics Res. 30, 846–894. 10.1177/0278364911406761

Kingston Z. Moll M. Kavraki L. E. (2019). Exploring implicit spaces for constrained sampling-based planning. Int. J. Robotics Res. 38, 1151–1178. 10.1177/0278364919868530

Kuffner J. LaValle S. (2000). RRT-connect: an efficient approach to single-query path planning. Proc. 2000 ICRA. Millenn. Conf. IEEE Int. Conf. Robotics Automation. Symposia Proc. (Cat. No.00CH37065) 2, 995–1001. 10.1109/robot.2000.844730

Lazzerini G. Gelli J. Della Valle A. Liverani G. Bartalucci L. Topini A. et al. (2024). Sustainable electromechanical solution for floating marine litter collection in calm marinas. OCEANS 2024 - Halifax, 1–6. 10.1109/oceans55160.2024.10753855

Li X. Chen J. Huang Y. Cui Z. Kang C. C. Ng Z. N. (2024). “Intelligent marine debris cleaning robot: a solution to ocean pollution,” in 2024 international conference on electrical, communication and computer engineering (IEEE), 1–6.

Li R. Zhang B. Lin D. Tsai R. G. Zou W. He S. et al. (2025). Emperor Yu tames the flood: water surface garbage cleaning robot using improved A* algorithm in dynamic environments. IEEE Access 13, 48888–48903. 10.1109/access.2025.3551088

Macenski S. Jambrecic I. (2021). SLAM Toolbox: SLAM for the dynamic world. J. Open Source Softw. 6, 2783. 10.21105/joss.02783

Macenski S. Singh S. Martín F. Ginés J. (2023). Regulated pure pursuit for robot path tracking. Aut. Robots 47, 685–694. 10.1007/s10514-023-10097-6

Madhavan T. Adharsh M. (2019). “Obstacle detection and obstacle avoidance algorithm based on 2-D RPLiDAR,” in 2019 international conference on computer communication and informatics (IEEE), 1–4.

Meaden G. T. Kochev S. Kolendowicz L. Kosa-Kiss A. Marcinoniene I. Sioutas M. et al. (2007). Comparing the theoretical versions of the Beaufort scale, the T-Scale and the Fujita scale. Atmos. Res. 83, 446–449. 10.1016/j.atmosres.2005.11.014

Prakash N. Zielinski O. (2025). AI-enhanced real-time monitoring of marine pollution: part 1 - a state-of-the-art and scoping review. Front. Mar. Sci. 12, 1486615. 10.3389/fmars.2025.1486615

Ribeiro M. I. (2004). Kalman and extended Kalman filters: concept, derivation and properties. Inst. Syst. Robotics 43, 3736–3741.

Salcedo E. Uchani Y. Mamani M. Fernandez M. (2024). Towards continuous floating invasive plant removal using unmanned surface vehicles and computer vision. IEEE Access 12, 6649–6662. 10.1109/access.2024.3351764

Shivaanivarsha N. Vijayendiran A. G. Prasath M. A. (2024). “WAVECLEAN – an innovation in autonomous vessel driving using object tracking and collection of floating debris,” in 2024 international conference on communication, computing and internet of things (IC3IoT), 1–6.

Subhashini K. Shree K. S. Abirami A. Kalaimathy R. Deepika R. Selvi J. T. (2024). “Autonomous floating debris collection system for water surface cleaning,” in 2024 international conference on communication, computing and internet of things (IEEE), 1–6.

Şucan I. A. Moll M. Kavraki L. E. (2012). The open motion planning library. IEEE Robotics and Automation Mag. 19, 72–82. 10.1109/mra.2012.2205651

Suryawanshi R. Waghchaure S. Wagh S. Jethliya V. Sutar V. Zendage V. (2024). “Autonomous boat: floating trash collector and classifier,” in 2024 4th international conference on sustainable expert systems (ICSES), 66–70.

Turesinin M. Kabir A. M. H. Mollah T. Sarwar S. Hosain M. S. (2020). “Aquatic Iguana: a floating waste collecting robot with IoT based water monitoring system,” in 2020 7th international conference on electrical engineering, computer sciences and informatics (IEEE), 21–25.

Wang Y. Li Y. Zheng J. (2010). “A camera calibration technique based on OpenCV,” in The 3rd international conference on information sciences and interaction sciences (IEEE), 403–406.

Wang W. Gheneti B. Mateos L. A. Duarte F. Ratti C. Rus D. (2019). “Roboat: an autonomous surface vehicle for urban waterways,” in 2019 IEEE/RSJ international conference on intelligent robots and systems (IROS), 6340–6347.

Keywords

collision avoidance, path planning, uncrewed marine vessel, YOLO object detection, orienteering problem

Citation

Abanes J, Jang H, Erkinov B, Awadalla J and Tzes A (2026) ATRON: Autonomous trash retrieval for oceanic neatness. Front. Robot. AI 12:1718177. doi: 10.3389/frobt.2025.1718177

Received

03 October 2025

Revised

18 November 2025

Accepted

17 December 2025

Published

22 January 2026

Volume

12 - 2025

Edited by

Mark R. Patterson, Northeastern University, United States

Reviewed by

Alberto Topini, University of Florence, Italy

Wenyu Zuo, University of Houston, United States

Copyright

© 2026 Abanes, Jang, Erkinov, Awadalla and Tzes.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anthony Tzes, anthony.tzes@nyu.edu