Introduction

The basic premise of “teaching students according to their aptitude” is to have a relatively objective and accurate understanding of the students' current learning status (e.g., knowledge mastery level, learning motivation, learning attitude, and learning mode) and of the changes they undergo over time (e.g., whether their knowledge mastery level has improved or their learning motivation has been enhanced). Measuring and improving individual development are topics that are actively tackled in psychological, educational, and behavioral studies.

In the past decades, learning diagnosis, which objectively quantifies students' current learning status and provides diagnostic feedback, has drawn increasing interest (Zhan, 2020). When focusing on fine-grained attributes (e.g., knowledge, skills, and cognitive processes), learning diagnosis can also be regarded as an application of cognitive diagnosis (Leighton and Gierl, 2007) in learning assessment. Although learning diagnosis aims to promote student learning based on diagnostic feedback and the corresponding remedial teaching (intervention), only a few studies have so far evaluated the effectiveness of such feedback or remedial teaching (cf. Tang and Zhan, submitted; Wu, 2019; Wang L. et al., in press; Wang S. et al., 2020). One of the main reasons is that most current learning diagnoses adopt a cross-sectional design, which cannot measure individual growth in learning. This issue may also be reflected in current learning diagnosis models (LDMs), also known as cognitive diagnosis models (for review, see Rupp et al., 2010; von Davier and Lee, 2019), which are the main tools for data analysis in learning diagnosis. Although various LDMs have been proposed in previous research, most of them are only applicable to cross-sectional data analysis, such as the deterministic inputs, noisy “and” gate (DINA) model (Junker and Sijtsma, 2001), the deterministic inputs, noisy “or” gate (DINO) model (Templin and Henson, 2006), the log-linear cognitive diagnosis model (LCDM) (Henson et al., 2009), and the generalized DINA (GDINA) model (de la Torre, 2011).
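To make the cross-sectional case concrete, the DINA model named above can be sketched in a few lines of Python. Under DINA, a respondent is expected to answer an item correctly only when all attributes required by the item (its Q-matrix row) are mastered; a slip parameter and a guessing parameter then add noise. The function names and parameter values below are illustrative assumptions and are not taken from any of the cited studies.

```python
def dina_ideal_response(alpha, q_row):
    """Return 1 if every attribute required by the item is mastered, else 0."""
    return int(all(a >= q for a, q in zip(alpha, q_row)))


def dina_prob_correct(alpha, q_row, slip, guess):
    """P(correct) under DINA: 1 - slip for masters of all required attributes, else guess."""
    eta = dina_ideal_response(alpha, q_row)
    return (1 - slip) ** eta * guess ** (1 - eta)


# Hypothetical item that requires attributes 1 and 2 out of three.
q_row = [1, 1, 0]
p_master = dina_prob_correct([1, 1, 0], q_row, slip=0.1, guess=0.2)
p_partial = dina_prob_correct([1, 0, 1], q_row, slip=0.1, guess=0.2)
print(p_master, p_partial)
```

Because the attribute pattern here is fixed at a single time point, nothing in this sketch can express growth, which is the limitation the longitudinal models discussed below aim to address.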

By contrast, longitudinal learning diagnosis evaluates students' knowledge and skills and identifies their strengths and weaknesses over a period of time. The data collected from longitudinal learning diagnosis provide researchers with the opportunities to develop models for learning tracking, which can be used to track individual growth over time as well as to evaluate the effectiveness of feedback. Compared to cross-sectional learning diagnosis, longitudinal learning diagnosis is more helpful when aiming to promote student learning.

Currently, longitudinal learning diagnosis is a new research direction that remains largely in the model development stage and lacks practical applications and research on related topics (e.g., missing data, measurement invariance, and linking methods). Moreover, although some longitudinal LDMs have been proposed, these models still have limitations that need to be studied further. Thus, for the rest of this opinion article, I will first present a minireview of current longitudinal LDMs and then elaborate on several future research directions that I believe are worth studying. With this opinion article, I hope to elicit more research attention toward longitudinal learning diagnosis.

Minireview

To provide theoretical support for data analysis in longitudinal learning diagnosis, longitudinal LDMs are needed. However, the latent variables (namely, attributes) in LDMs are categorical (typically, binary). Therefore, the methods for modeling growth for continuous latent variables (e.g., longitudinal item response theory models) cannot be directly extended to capture growth in the mastery of attributes. For example, the change in the mastery of attributes cannot be directly modeled by the variance-covariance methods when assuming that multiple continuous latent variables follow a multivariate normal distribution (e.g., von Davier et al., 2011).

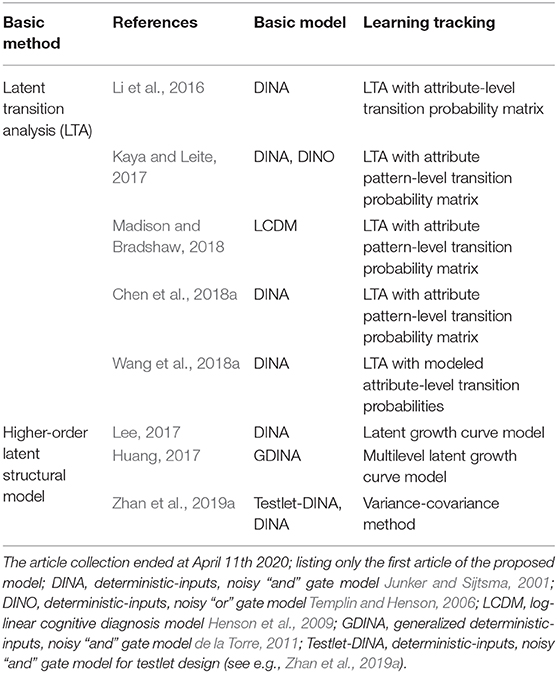

To this end, in recent years, several longitudinal LDMs have been proposed. They are summarized in Table 1. Current longitudinal LDMs can mainly be divided into two categories: the latent transition analysis (Collins and Wugalter, 1992)-based models (e.g., Li et al., 2016; Kaya and Leite, 2017; Chen et al., 2018a; Madison and Bradshaw, 2018; Wang et al., 2018a) and the higher-order latent structural (de la Torre and Douglas, 2004)-based models (e.g., Huang, 2017; Lee, 2017; Zhan et al., 2019a). The diagnostic results of these two model types have high consistency (Lee, 2017).

The latent transition analysis-based methods estimate the transition probabilities from one latent class/attribute to another or the same latent class/attribute. Two main differences exist among these models. First, different measurement models were used. Reduced LDMs, e.g., the DINA model and the DINO model, were used by Li et al. (2016), Kaya and Leite (2017), Chen et al. (2018a), and Wang et al. (2018a), but a generalized LDM, i.e., the LCDM, was used by Madison and Bradshaw (2018). Second, the attribute-level transition probability matrix (i.e., attributes transition independently of one another) was used by Li et al. (2016) and Wang et al. (2018a), but the attribute pattern-level transition probability matrix was used by Kaya and Leite (2017), Chen et al. (2018a), and Madison and Bradshaw (2018). In addition, unlike Li et al. (2016), Kaya and Leite (2017), Chen et al. (2018a), and Madison and Bradshaw (2018), who directly estimated the transition probabilities, Wang et al. (2018a) used a set of covariates, such as a time-invariant general learning ability and intervention indicators, to model the transition probabilities. The effectiveness of different learning interventions was further considered by Zhang and Chang (2019). Additionally, to reduce modeling complexity, Chen et al. (2018a) and Wang et al. (2018a) assumed learning trajectories to be non-decreasing (i.e., respondents did not forget). However, this non-decreasing assumption may only be suitable for assessments separated by short time intervals. Furthermore, several studies (Wang et al., 2018a, 2019; Zhan et al., 2018a; Zhang and Wang, 2018) incorporated response times into LDMs to assist in measuring students' growth in attribute mastery.
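The difference between attribute-level and attribute pattern-level transition matrices can be illustrated with a minimal Python sketch. It assumes binary attributes that transition independently of one another, and builds the pattern-level matrix those attribute-level matrices imply. The function names and the learning probabilities are hypothetical, and setting the forgetting probability to zero is one simple way to encode the non-decreasing assumption.

```python
from itertools import product


def attribute_transition(p_learn, p_forget=0.0):
    """2x2 transition matrix for one binary attribute (rows: state at t, columns: state at t+1)."""
    return [[1 - p_learn, p_learn],
            [p_forget, 1 - p_forget]]


def pattern_transition(attr_mats):
    """Pattern-level transition matrix implied by independently transitioning attributes."""
    patterns = list(product([0, 1], repeat=len(attr_mats)))
    matrix = []
    for old in patterns:
        row = []
        for new in patterns:
            prob = 1.0
            # Independence: multiply the attribute-level transition probabilities.
            for mat, a_old, a_new in zip(attr_mats, old, new):
                prob *= mat[a_old][a_new]
            row.append(prob)
        matrix.append(row)
    return patterns, matrix


# Hypothetical learning probabilities; p_forget=0 means mastered attributes are never lost.
mats = [attribute_transition(0.3), attribute_transition(0.5)]
patterns, T = pattern_transition(mats)
for pattern, row in zip(patterns, T):
    print(pattern, [round(p, 3) for p in row])
```

For K binary attributes and a single pair of adjacent time points, the attribute-level specification has at most 2K free transition probabilities, whereas an unrestricted pattern-level matrix has 2^K × (2^K − 1), which is one reason simplifying assumptions such as non-decreasing trajectories are attractive.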

Meanwhile, the higher-order latent structural model-based methods estimate the changes in a higher-order latent ability over time to further infer the changes of lower-order latent attributes. One of the representative models is the longitudinal higher-order DINA (Long-DINA) model (Zhan et al., 2019a), which is a multidimensional extension of the higher-order DINA model (de la Torre and Douglas, 2004). However, multidimensionality here does not refer to different general abilities, but rather to the same general ability measured at different time points. As noted by Zhan et al. (2019a), the latent growth curve model instead of the variance-covariance method can also be employed in the third order. Lee (2017) proposed a growth curve DINA model, which can be seen as an alternative to the Long-DINA model that incorporates the latent growth curve model but ignores the local item dependence among anchor or repeated items. Furthermore, Huang (2017) proposed a multilevel GDINA model for assessing growth, which can be seen as an extension of Lee's (2017) model in both the measurement model part (i.e., from the DINA model to the GDINA model) and the latent structural model part (i.e., from a one-level growth curve model to a multilevel growth curve model).
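As a sketch of the idea, a higher-order structure links each attribute to the general ability through a logistic function, and growth is then carried by the ability at each time point. The notation below is an assumed, simplified parameterization for illustration only: θ_nt is the general ability of person n at time t, α_nkt is the mastery status of attribute k, and ξ_k and β_k are an attribute slope and location. The cited papers should be consulted for the exact specifications.

```latex
\operatorname{logit} P(\alpha_{nkt} = 1 \mid \theta_{nt}) = \xi_k \theta_{nt} - \beta_k,
\qquad
(\theta_{n1}, \ldots, \theta_{nT})^{\top} \sim \mathrm{MVN}(\boldsymbol{\mu}, \boldsymbol{\Sigma}).
```

Under this sketch, differences among the elements of μ describe average growth in the general ability, and Σ carries the dependence among time points (the variance-covariance method); a latent growth curve for θ_nt would replace the multivariate normal structure.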

Future Research Directions

Although the utility for analyzing the longitudinal learning diagnosis data of these longitudinal LDMs has been evaluated by some simulation studies and a few applications, these models are not without limitations, which need to be further studied. Based on current research on longitudinal learning diagnosis, I believe that the following are directions that are worthy of further study.

(1) A systematic comparison between different longitudinal LDMs, which can provide theoretical suggestions for practitioners in choosing suitable models.

(2) Only binary attributes (e.g., “1” means mastery and “0” means non-mastery) were considered in all current longitudinal LDMs. However, in actual teaching, it is challenging to use binary attributes to describe the growth of students, as they can be classified into only four categories between two adjacent time points, i.e., 0 → 0, 0 → 1, 1 → 0, and 1 → 1. Some small but existing growths are ignored, which in turn may lead students, especially those with low motivation to learn, to conclude that the current diagnostic feedback is ineffective or to abandon remedial action. Thus, further studies can attempt to extend the current models to handle polytomous attributes (Karelitz, 2004) and probabilistic attributes (Zhan et al., 2018b) because they can describe the learning growth in a more refined way than binary attributes.

(3) Only outcome data (or item response accuracy) were considered in most current longitudinal LDMs. Although a few studies have incorporated item response time into current models (e.g., Wang et al., 2018b), future studies may attempt to introduce other types of process data (e.g., number of trial and error and operation process), or even biometric data (e.g., eye-tracking data; Man and Harring, 2019). Utilizing multimodal data can evaluate the growth of students in multiple aspects, which is conducive to a more comprehensive understanding of the development of students (Zhan, 2019).

(4) All current longitudinal LDMs assumed that attributes are structurally independent, in that mastery of one attribute is not a prerequisite to the mastery of another. However, when attribute hierarchy (Leighton et al., 2004) exists, the development trajectory of students is not arbitrary and should be developed in such hierarchical order. Therefore, incorporating the attribute hierarchy into current longitudinal LDMs is worth trying.

(5) Current longitudinal LDMs assume a limited number of attributes at each time point. In practice, however, an assessment may involve more than 10 or 15 attributes at each time point. In such cases, using current longitudinal LDMs with existing parameter estimation algorithms may lead to non-robust parameter estimation. Thus, more powerful or efficient algorithms or special strategies may need to be introduced.

(6) The simultaneity estimation strategy was adopted by almost all current longitudinal LDMs. This involves the reintegration of response data from multiple time points into one large response matrix, which is then analyzed as a whole (Zhan et al., 2019a). However, this strategy requires subjects to wait until all the tests end before an analysis of the results becomes available. Thus, using this strategy cannot provide timely diagnostic feedback to either students or teachers. In light of the foregoing, new estimation strategies for timely diagnostic feedback should be further studied (e.g., Zhan, 2020).

(7) In addition to theoretical and methodological studies, the corresponding applied studies should also be strengthened. For example, only a few studies have evaluated the effectiveness of diagnostic feedback or remedial teaching in promoting learning (cf. Tang and Zhan, submitted). Moreover, effective and systematic intervention methods based on longitudinal diagnostic feedback are also worth studying.

(8) Adaptive learning systems involving LDMs are also worthy of further study (e.g., Chen et al., 2018b; Tang et al., 2019). Such systems can diagnose an individual's latent attribute profile online while the assessment is being conducted.

(9) Compared with cross-sectional learning diagnosis, the diagnostic accuracy and validity of longitudinal learning diagnosis used to depict the learning trajectories are more worthy of attention by researchers and practitioners. In addition to choosing a suitable longitudinal LDM, many factors such as the quality of the longitudinal test itself, the setting of a cognitive model, students' response attitude, cheating, and missing data will also affect the accuracy and validity of the diagnostic results. The impact of these factors on the longitudinal learning diagnosis and the corresponding compensation or detection methods are also worthy of further discussion.
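Two of the directions above, (2) and (5), can be made concrete with a small Python sketch. It labels the four possible binary transitions between adjacent time points, shows how dichotomizing a mastery probability can hide a small gain, and counts the attribute patterns that must be handled as the number of attributes grows. The function names, the 0.5 cutoff, and the example probabilities are hypothetical choices for illustration.

```python
def classify_transition(before, after):
    """Label the change in one binary attribute between two adjacent time points."""
    labels = {(0, 0): "remained non-mastery", (0, 1): "learned",
              (1, 0): "forgot", (1, 1): "remained mastery"}
    return labels[(before, after)]


def dichotomize(prob, cutoff=0.5):
    """Turn a mastery probability into a binary attribute (the cutoff is an assumption)."""
    return int(prob >= cutoff)


def n_patterns(n_attributes):
    """Number of possible binary attribute patterns at one time point."""
    return 2 ** n_attributes


# Hypothetical student whose mastery probability rises from 0.20 to 0.45.
p_before, p_after = 0.20, 0.45
binary_label = classify_transition(dichotomize(p_before), dichotomize(p_after))
growth = p_after - p_before
print(binary_label, round(growth, 2))

# Pattern counts for 5, 10, and 15 attributes at a single time point.
print([n_patterns(k) for k in (5, 10, 15)])
```

In this example the binary description reports no change even though the mastery probability increased, which is the kind of small growth that polytomous or probabilistic attributes could retain. The pattern counts also show why estimation becomes demanding once 10 or 15 attributes are involved.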

Overall, there are still many issues related to longitudinal learning diagnosis that are worthy of discussion. In view of the advantages of longitudinal learning diagnosis compared with cross-sectional learning diagnosis, the former is more in line with the idea of assessment for learning (Wiliam, 2011) and the needs of formative assessments.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 31900795) and the MOE (Ministry of Education in China) Project of Humanities and Social Sciences (Grant No. 19YJC190025).

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Chen, Y., Culpepper, S. A., Wang, S., and Douglas, J. (2018a). A hidden Markov model for learning trajectories in cognitive diagnosis with application to spatial rotation skills. Appl. Psychol. Meas. 42, 5–23. doi: 10.1177/0146621617721250

Chen, Y., Li, X., Liu, J., and Ying, Z. (2018b). Recommendation system for adaptive learning. Appl. Psychol. Meas. 42, 24–41. doi: 10.1177/0146621617697959

Collins, L. M., and Wugalter, S. E. (1992). Latent class models for stage-sequential dynamic latent variables. Multivariate Behav. Res. 27, 131–157. doi: 10.1207/s15327906mbr2701_8

de la Torre, J. (2011). The generalized DINA model framework. Psychometrika 76, 179–199. doi: 10.1007/s11336-011-9207-7

de la Torre, J., and Douglas, J. A. (2004). Higher-order latent trait models for cognitive diagnosis. Psychometrika 69, 333–353. doi: 10.1007/BF02295640

Henson, R., Templin, J., and Willse, J. (2009). Defining a family of cognitive diagnosis models using log-linear models with latent variables. Psychometrika 74, 191–210. doi: 10.1007/s11336-008-9089-5

Huang, H.-Y. (2017). Multilevel cognitive diagnosis models for assessing changes in latent attributes. J. Educ. Meas. 54, 440–480. doi: 10.1111/jedm.12156

Junker, B. W., and Sijtsma, K. (2001). Cognitive assessment models with few assumptions, and connections with nonparametric item response theory. Appl. Psychol. Meas. 25, 258–272. doi: 10.1177/01466210122032064

Karelitz, T. M. (2004). Ordered Category Attribute Coding Framework for Cognitive Assessments (Unpublished doctoral dissertation). University of Illinois at Urbana Champaign, Champaign, IL, United States.

Kaya, Y., and Leite, W. L. (2017). Assessing change in latent skills across time with longitudinal cognitive diagnosis modeling: an evaluation of model performance. Educ. Psychol. Meas. 77, 369–388. doi: 10.1177/0013164416659314

Lee, S. Y. (2017). Growth Curve Cognitive Diagnosis Models for Longitudinal Assessment (Unpublished doctoral dissertation). University of California, Berkeley, CA, United States.

Leighton, J. P., and Gierl, M. J. (2007). Cognitive Diagnostic Assessment for Education: Theory and Applications. Cambridge University Press. doi: 10.1017/CBO9780511611186

Leighton, J. P., Gierl, M. J., and Hunka, S. M. (2004). The attribute hierarchy method for cognitive assessment: a variation on Tatsuoka's rule-space approach. J. Educ. Meas. 41, 205–237. doi: 10.1111/j.1745-3984.2004.tb01163.x

Li, F., Cohen, A., Bottge, B., and Templin, J. (2016). A latent transition analysis model for assessing change in cognitive skills. Educ. Psychol. Meas. 76, 181–204. doi: 10.1177/0013164415588946

Madison, M. J., and Bradshaw, L. P. (2018). Assessing growth in a diagnostic classification model framework. Psychometrika 83, 963–990. doi: 10.1007/s11336-018-9638-5

Man, K., and Harring, J. R. (2019). Negative binomial models for visual fixation counts on test items. Educ. Psychol. Meas. 79, 617–635. doi: 10.1177/0013164418824148

Rupp, A. A., Templin, J., and Henson, R. A. (2010). Diagnostic Measurement: Theory, Methods, and Applications. New York, NY: Guilford Press.

Tang, X., Chen, Y., Li, X., Liu, J., and Ying, Z. (2019). A reinforcement learning approach to personalized learning recommendation system. Br. J. Math. Stat. Psychol. 72, 108–135. doi: 10.1111/bmsp.12144

Templin, J., and Henson, R. A. (2006). Measurement of psychological disorders using cognitive diagnosis models. Psychol. Methods 11, 287–305. doi: 10.1037/1082-989X.11.3.287

von Davier, M., and Lee, Y.-S. (2019). Handbook of Diagnostic Classification Models: Models and Model Extensions, Applications, Software Packages. New York, NY: Springer. doi: 10.1007/978-3-030-05584-4

von Davier, M., Xu, X., and Carstensen, C. H. (2011). Measuring growth in a longitudinal large-scale assessment with a general latent variable model. Psychometrika 76, 318–336. doi: 10.1007/s11336-011-9202-z

Wang, L., Tang, F., and Zhan, P. (in press). Effect analysis of individualized remedial teaching based on cognitive diagnostic assessment: taking “linear equation with one unknown” as an example. J. Psychol. Sci.

Wang, S., Hu, Y., Wang, Q., Wu, B., Shen, Y., and Carr, M. (2020). The development of a multidimensional diagnostic assessment with learning tools to improve 3-D mental rotation skills. Front. Psychol. 11:305. doi: 10.3389/fpsyg.2020.00305

Wang, S., Yang, Y., Culpepper, S. A., and Douglas, J. A. (2018a). Tracking skill acquisition with cognitive diagnosis models: a higher-order, hidden Markov model with covariates. J. Educ. Behav. Stat. 43, 57–87. doi: 10.3102/1076998617719727

Wang, S., Zhang, S., Douglas, J., and Culpepper, S. (2018b). Using response times to assess learning progress: a joint model for responses and response times. Meas. Interdisciplinary Res. Perspect. 16, 45–58. doi: 10.1080/15366367.2018.1435105

Wang, S., Zhang, S., and Shen, Y. (2019). A joint modeling framework of responses and response times to assess learning outcomes. Multivariate Behav. Res. 55, 49–68. doi: 10.1080/00273171.2019.1607238

Wiliam, D. (2011). What is assessment for learning? Stud. Educ. Eval. 37, 3–14. doi: 10.1016/j.stueduc.2011.03.001

Wu, H.-M. (2019). Online individualised tutor for improving mathematics learning: a cognitive diagnostic model approach. Educ. Psychol. 39, 1218–1232. doi: 10.1080/01443410.2018.1494819

Zhan, P. (2019). A Cognitive Diagnosis Model for Analysis Multisource Data in Technology-Enhanced Diagnostic Assessments. Invited Report at School of Mathematics and Statistics, Northeast Normal University, Changchun, China. Retrieved from: http://math.nenu.edu.cn/info/1063/4271.htm (accessed April 11, 2020).

Zhan, P. (2020). A Markov estimation strategy for longitudinal learning diagnosis: providing timely diagnostic feedback. Educ. Psychol. Meas. doi: 10.1177/0013164420912318

Zhan, P., Jiao, H., and Liao, D. (2018a). Cognitive diagnosis modelling incorporating item response times. Br. J. Math. Stat. Psychol. 71, 262–286. doi: 10.1111/bmsp.12114

Zhan, P., Jiao, H., Liao, D., and Li, F. (2019a). A longitudinal higher-order diagnostic classification model. J. Educ. Behav. Stat. 44, 251–281. doi: 10.3102/1076998619827593

Zhan, P., Jiao, H., Man, K., and Wang, L. (2019b). Using JAGS for Bayesian cognitive diagnosis modeling: a tutorial. J. Educ. Behav. Stat. 44, 473–503. doi: 10.3102/1076998619826040

Zhan, P., Wang, W.-C., Jiao, H., and Bian, Y. (2018b). Probabilistic-input, noisy conjunctive models for cognitive diagnosis. Front. Psychol. 9:997. doi: 10.3389/fpsyg.2018.00997

Zhang, S., and Chang, H. (2019). A multilevel logistic hidden Markov model for learning under cognitive diagnosis. Behav. Res. Methods 52, 408–421. doi: 10.3758/s13428-019-01238-w

Keywords: longitudinal cognitive diagnosis, learning diagnosis, cognitive diagnostic assessment, assessment for learning, latent transition analysis

Citation: Zhan P (2020) Longitudinal Learning Diagnosis: Minireview and Future Research Directions. Front. Psychol. 11:1185. doi: 10.3389/fpsyg.2020.01185

Received: 25 November 2019; Accepted: 07 May 2020;

Published: 03 July 2020.

Edited by: Sergio Machado, Salgado de Oliveira University, Brazil

Reviewed by: Shiyu Wang, University System of Georgia, United States; Claudio Imperatori, Università Europea di Roma, Italy

Copyright © 2020 Zhan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peida Zhan, pdzhan@gmail.com
