- Faculty of Business and Information Technology, Ontario Tech University, Oshawa, ON, Canada

This study evaluates the effectiveness of two tutoring approaches: a rule-based conversational agent (SoulsBot) and a traditional handholding tutorial, in teaching players the core mechanics of a mini-boss encounter in Dark Souls—The Board Game. Findings from a mixed-methods user study (n = 16) show that neither tool significantly improved learning or engagement: GEQ components showed no statistical differences, SUS scores were comparable (SoulsBot = 69.5, Tutorial = 76.1), quiz performance did not differ (69.38% vs. 63.75%), and gameplay metrics showed no significant effect of the tutoring method. Qualitative feedback indicated that SoulsBot was valued for its on-demand assistance but struggled with limited ontology coverage and rigid intent matching, leading to frequent unanswered questions. Participants also noted that conversational agents like SoulsBot appear more suitable for strategic, RPG, and dungeon-crawl games, but less effective in fast-paced or exploration-focused genres. To address SoulsBot’s limitations, we introduce SoulsBot+, an enhanced version that integrates a pre-trained large language model with retrieval-augmented generation and a fallback mechanism. SoulsBot+ improves the system’s ability to handle unanticipated questions, generate context-aware responses informed by real-time game state, and provide more flexible rule explanations and strategic guidance. These enhancements aim to overcome the identified shortcomings and support future development of adaptive tutoring in complex digital board games.

1 Introduction

In recent years, the development of board games has diverged along two paths: their transformation into digital formats and the evolution of roll-and-move games to emphasize interaction, strategy, and decision-making. Learning these games, with challenges like managing cards, understanding maps, and remembering various rules, can be daunting for novices (Sato and de Haan, 2016). Tutorials are crucial in this context, offering guidance and potentially enhancing engagement by easing the learning curve.

Board game players have traditionally relied on rulebooks for learning. Although players typically associate handholding tutorials with video games, such tutorials have also been widely employed in digital board games ever since physical board games began to be ported to digital versions. For example, digital tabletop games like Microsoft Solitaire Collection (n.d.), Ascension: Deckbuilding Game on Steam (n.d.) and Puerto Rico (n.d.) use handholding tutorials to teach core game mechanics to players. Another tutoring method typically associated with video games is video tutorials available on platforms like YouTube.

A study by Andersen et al. (2012) on video game tutorials showed that the effectiveness of tutorials depends on the difficulty level of the games. In particular, Andersen et al. did not find that tutorials significantly improve engagement in simple games. Beyond this, little is known about the effectiveness of tutorials in digital board games. Although digital board games come in a wide variety of genres, it is also unclear whether the effectiveness of tutorials is universal across different board game genres (Zhang and Lellan, 2022).

While an interactive tutorial may work for digital board games with a small set of possible moves, it is unclear how effective such tutorials are for learning moderately difficult digital board games. One such game, which we chose to study in our work, is the dungeon crawl board game Dark Souls—The Board Game. It was selected due to the following characteristics:

• Game complexity: The game features a massive number of rules, game mechanics, moves and artifacts, e.g., cards, models, and encounters. As a result, the overload of information can make a novice player overwhelmed.

• Strategic decision-making: While playing the game, the player at times must make important strategic decisions, which may not be obvious in the beginning.

• Randomness: Unlike Eurogames, which are mostly deterministic with no or diminished reliance on luck, in Dark Souls—The Board Game, luck/chance plays an important role. Mechanics like shuffling cards and rolling dice are examples of luck elements incorporated to add randomness to the game. Although this randomness makes the game replayable and unpredictable, it creates a huge state space and increases the complexity.

All these characteristics can demand high mental effort from a novice player and result in a steep learning curve. It is therefore fair to assume that learning this game through a basic tutorial may be sub-optimal: a basic tutorial is not designed to adapt to players’ learning pace and differing needs, and players can become confused and lose track because a lot is happening at once. On the other hand, providing automated feedback adapted to the learner’s behavior is a challenging issue in designing learning systems for games because the analysis of the learner’s traces is complex in games with large amounts of available actions (Muratet et al., 2022).

As a result, players often search online to ask clarifying questions or review game rules (see, e.g., Questions about pushing | Dark Souls: The Board Game, n.d.). Tutorials, however, fall short when responding to players’ questions, as there is no channel for players to communicate with tutorials. To address this limitation, we developed SoulsBot, a rule-based conversational agent designed to answer player questions, provide context-aware guidance, and support learning during gameplay. SoulsBot operates alongside a portion of the digital version of Dark Souls—The Board Game (which we also developed), offering on-demand clarification of rules and mechanics. A detailed description of the system is provided in Section 3.

1.1 Research questions

To address these gaps, our study is guided by the following research questions:

1. What impact does SoulsBot, as a tutoring assistant, have on the gameplay experience, specifically in terms of player engagement and learning, compared to a traditional handholding tutorial?

2. What aspects of SoulsBot do players prefer or find lacking when contrasted with a handholding tutorial, and how might SoulsBot be enhanced based on this feedback? What is the outlook of SoulsBot in other genres of board games?

1.2 Motivation

Although players usually learn digital board games through in-game handholding tutorials, they are more likely to have questions when playing a complex board game involving many entities, rules, and mechanics. A basic tutorial typically cannot adapt to each player’s learning pace, and players are commonly left behind or distracted while following tutorial instructions.

One of the players’ typical actions to resolve this issue is asking questions online. This may not always be straightforward because while players’ questions are tied to the game context and states, external search engines are entirely out of the game context and are blind to players’ states in the game. Furthermore, looking for help outside of the game can break immersion and cause distraction.

It is safe to assume that employing a conversational agent in learning can be beneficial. However, despite promising findings that conversational agents have brought in related fields like educational environments (Schroeder and Craig, 2021) and serious games (Gamage and Ennis, 2018), applying them in tutoring digital board games has not been researched thoroughly.

1.3 Previous work

In our previous work (Allameh and Zaman, 2021), we introduced Jessy, a conversational agent designed to teach players how to play a simple digital board game. Jessy gave players instructive context-sensitive suggestions using an in-game chatbot interface. It could respond to players’ state-free and state-based questions. We evaluated the system in an exploratory study, which showed that our system helped players to engage with the game and learn the game basics in general. The study also provided insights on how to craft the system for a moderately difficult board game like Dark Souls—The Board Game, which we study in this work. Although SoulsBot extends the conversational-agent architecture of our earlier system Jessy, SoulsBot is intentionally named to reflect its domain specificity. Unlike Jessy, which was designed for a simple and generic board game, SoulsBot is tailored to the unique mechanics, combat system, and terminology of Dark Souls—The Board Game. Its name therefore indicates domain adaptation rather than general-purpose applicability.

1.4 Contributions

In this work, we study whether our new conversational agent SoulsBot helps to engage players and improve the learning experience. Furthermore, as tutorials are widely employed in digital board games, we also developed a tutorial against which to compare SoulsBot’s effectiveness. In summary, the following are our contributions:

1. A domain-aware conversational tutoring agent designed for a moderately complex digital board game, extending prior work by incorporating state-based reasoning, improved fallback handling, and multimodal instructional support.

2. A controlled, within-subject comparative study evaluating conversational tutoring versus a traditional step-by-step tutorial in a medium-complexity board game context.

3. Empirical insights showing that, within the limited mini-boss scenario studied, neither approach sufficiently supported novice learning, while players perceived each method as beneficial in different ways.

4. Design implications and generalization analysis, identifying which game genres benefit most from conversational tutoring and outlining requirements for scalable agent-based instruction.

We additionally outline SoulsBot+, a design extension using an LLM fallback, demonstrating how hybrid rule-based + generative models can address limitations of traditional conversational agents. This prototype is presented to motivate future research rather than as an empirically validated system.

2 Related work

2.1 Transition from classic to modern board games

Even millennia after their creation, board games continue to inspire people to play. Competitive interaction, social challenge, sensory experience, intellectual challenge, and imaginative experience are five intrinsic characteristics of board games that make tabletop games appealing (Martinho and Sousa, 2023). The evolution of board games has seen a shift from classic games like ludo, checkers, and chess to more complex and strategic modern board games, exemplified by titles like Settlers of Catan (Teuber, 1995). Instead of relying on traditional “roll-and-move” mechanics, modern board games encourage interaction, involvement, strategy, and more frequent decision-making (Johnson and Lester, 2016).

Modern board games, as defined by Sousa and Bernardo (2019), are distinct from their classic counterparts in several aspects, including gameplay mechanics, design philosophy, target audience, complexity, social interaction, themes, genres, and production value. These games are typically commercial products created in the last five decades, featuring original mechanics and themes, high-quality components, and often having identifiable authors (Sousa and Bernardo, 2019).

In contrast, classic board games are characterized by uncertain authorship (Woods, 2012), simple mechanics, generic themes, and more rudimentary components. They tend to focus more on the board itself rather than on social interactions and appeal to a broader audience, including children and families (Parlett, 2018).

2.2 Classification of modern board games

Woods (2012) classifies board games into classic, mass-market, and hobby games, with hobby games being synonymous with modern board games (Sousa and Bernardo, 2019). These modern board games have evolved significantly and include various types like Wargames with military simulations, Role-Play Games (RPGs) that are narrative-driven, Collectible Card Games where players build unique decks, Eurogames focusing on strategic play with less randomness, and Amerigames that combine elements of wargames and RPGs.

Despite limited research on board game characteristics and taxonomy, further study is essential for understanding and effectively teaching various board game genres. Our focus is on Dark Souls—The Board Game, an RPG game designed for 1–4 players. This game features high-quality miniatures and combines luck, complexity, and depth in gameplay. Players engage in character progression, choosing specific roles and abilities, and experience a game centered around exploration, combat, and character development, typical of RPGs.

2.3 Game tutorials

2.3.1 Traditional rulebook-based learning

Learning new board games traditionally starts with reading rulebooks. However, rulebooks, as the basic format of board game tutorials, are often text-heavy, technical and poorly organized (Sato and de Haan, 2016). Rulebooks usually fall short when it comes to learning modern board games because unlike traditional “roll-and-move” board games, modern board games include many rules, mechanics, artifacts and interrelated entities (Sato and de Haan, 2016). Thus, newcomers, whenever possible, prefer to learn board games from players who are already experienced with the game, such as an expert friend who has already learned the game or at least is excited to learn and share it with others (Jackson, 2020).

Stenros and Montola (2024) discuss the common shortcomings of rulebooks in board games, attributing issues to their complexity, assumptions about players’ prior knowledge, and the difficulty in translating dynamic gameplay into clear text. Poorly organized and technically heavy rulebooks often hinder the learning process, causing new players to seek explanations elsewhere. These insights underscore the necessity for alternative learning tools, such as conversational agents like SoulsBot, which can provide interactive, real-time assistance and improve player engagement and understanding where traditional rulebooks fall short.

2.3.2 Official vs. community-generated tutorials

It is important to distinguish between official, developer-provided tutorials (e.g., rulebooks, in-game tutorials, publisher-produced videos or FAQs) and community-generated resources such as fan-made video tutorials, walkthroughs, and expert player responses on platforms like BoardGameGeek. Official tutorials aim to convey canonical rules and standardized procedures, whereas community resources often arise to clarify ambiguities, offer alternative explanations, or respond to highly specific player questions. The distinction matters because players routinely rely on both sources when learning modern board games.

2.3.3 Digital and in-game tutorials

Along with growing advances in video game technologies, board games were digitized. As a result, computer-driven tutorials were employed in digital board games as well as in video games. Initially, game manuals were the first format of computer-driven tutorials applied in many games, such as Reversi, a board game that was part of Microsoft Windows 1–3.0 (Microsoft, 1990). Later, tutorials became more interactive: game instructions (in various formats, e.g., tooltips, animation, textual dialogues and annotations) were integrated into gameplay rather than overloading players with information in up-front manuals or out of context (Andersen et al., 2012). This type of tutorial, which is referred to as a background in-game tutorial (Shah, 2018), provides “just-in-time” instructions exactly when players need to apply the information. Games like Microsoft Solitaire Collection (n.d.), Ascension: Deckbuilding Game on Steam (n.d.), and Puerto Rico (n.d.) are good examples of digital tabletop games using interactive tutorials.

Shah (2018) examined three different kinds of tutorials (non-interactive tutorials, interactive tutorials, background in-game tutorials) for a complicated educational game named GrAce including more than three puzzle mechanics. They found the background in-game tutorial is superior among the three in engaging players with the game. However, they did not find any significant discrepancy in terms of players’ learning.

2.3.4 Video tutorials and community support platforms

Another kind of tutorial that deserves recognition is the video tutorial. Looking at many acclaimed content creators’ reviews, such as those on the Watch It Played (n.d.) and LetsPlay (n.d.) YouTube channels, reveals how appealing they have been to large audiences. While video tutorials can demonstrate processes in action by combining visual examples with simultaneous auditory explanations, on-screen text, graphics, or animation, they fall short when audiences ask unique questions, as video tutorials cannot adapt to diverse users’ needs, prior experience, or preferences. They do not hold players’ hands during their early in-game decisions. Furthermore, video tutorials are difficult to search when addressing a specific question. In addition, they do not usually include reference materials to look up after instruction. It is not unreasonable to assume that players often start by watching tutorial videos before asking questions in online forums. Depending on the game, many videos can be very clear, while others can be complex to follow and arguably provide only marginal improvement to the comprehension of gameplay, or can even make it more confusing and frustrating if the player never read the rulebook in the first place, which can be particularly true of tabletop games like Dark Souls—The Board Game. Board game forums can play a crucial role in learning as well: whatever players cannot understand through rulebooks or video tutorials, they can query in online communities like the BoardGameGeek (BGG) forums or dedicated subreddits on Reddit. These inquiries can often lead to rulebook reprints for corrections or clarifications.

2.3.5 Personalized and adaptive tutorial systems

Baek et al. (2023) introduced a personalized in-game tutorial generation method that leverages procedural content generation and a player student model to create adaptive tutorials tailored to individual learning progress. This approach aims to address the limitations of traditional tutorials that do not consider player differences in learning abilities and prior knowledge. By integrating procedural context generation with an internal knowledge model, the proposed method generates tutorial contexts that adapt to the player’s current understanding, thus optimizing the learning process. A large-scale user study demonstrated that this personalized tutorial generator significantly improved the players’ learning of game mechanics, reducing the required tutorial time and increasing engagement (Baek et al., 2023). This aligns with current trends in personalized learning and the use of AI to enhance educational outcomes (see, e.g., Hu, 2024; Plass and Froehlich, 2025; Yeo and Lansford, 2025).

2.3.6 Gaps in tutorial research

Despite the long-standing use of tutorials in games, there is limited research on their effectiveness across different game genres. Most studies focus on traditional video games, leaving a gap in understanding how tutorials impact learning and engagement in digital board games. In our work we want to address this gap by evaluating the effectiveness of various tutorial methods specifically within the context of digital board games, thereby providing insights into best practices for tutorial design in this genre.

2.4 Conversational agents

2.4.1 Conversational agents in education

Conversational agents (CAs), encompassing chatbots and virtual assistants, facilitate human-computer interaction through natural language processing, effectively bridging the communication gap (Dale, 2016; Singh and Beniwal, 2021). These agents, when applied in educational contexts, are termed pedagogical agents (Chin et al., 2010). Despite their widespread use, the extent of their effectiveness in various learning environments, especially in games, remains ambiguous due to mixed results (Johnson and Lester, 2016).

Research by Gamage and Ennis (2018) demonstrated the effectiveness of virtual characters in serious games for education, enhancing engagement and knowledge retention. Further reviews (Schroeder et al., 2013; Kuhail et al., 2023) confirm that pedagogical agents generally improve learning outcomes and subjective satisfaction compared to environments without such agents.

Dennis et al. (2024) provide a comprehensive review of the use of chatbots in educational settings with the focus on pedagogical approaches and implementations that enhance learning effectiveness. They highlight how chatbots can increase learner motivation and engagement through gamification elements (badges and progress bars). Their experimental study demonstrated that gamified chatbots could significantly impact cognitive absorption, keeping learners motivated to engage more intensely in learning activities. However, the study also noted the challenges in achieving statistically significant results, indicating the need for further research to optimize the design of conversational agents for educational purposes. This aligns with existing literature on the importance of integrating effective game design elements to support meaningful engagement and learning outcomes in chatbot applications.

2.4.2 Conversational agents in gaming

In gaming, conversational agents often serve as virtual characters within narratives, providing tasks, information, and enhancing realism, particularly in role-playing games (RPGs) (Bellotti et al., 2011; Fraser et al., 2018). The evolution of Dialog Management Systems (DMS) from limited, pre-scripted dialogs to more dynamic natural language interactions has been notable. Mori et al. (2013) introduced a novel DMS in a serious game, allowing free-form interaction with NPCs to enhance learning through realistic conversations. While this system was well-received in terms of usability and engagement, it did not significantly outperform text-based methods in knowledge acquisition. Fraser et al. (2018) developed an emotional spoken conversational AI system using open-domain social conversational AI for the Amazon Alexa and IBM Watson, enabling non-scripted interactions with NPCs in an RPG game. This emotion-driven system was largely favored by players, enhancing the overall conversational experience.

2.4.3 Conversational agents in board games

In board games, studies (Rogerson et al., 2021; Karim et al., 2023) suggest that incorporating CAs is valuable in supporting players in learning board game rules and gameplay. Rogerson et al. (2021) outline eight categories of functions that digital apps and tools can perform in a hybrid board game, among which appear teaching rules, calculating scores, storytelling, and informing.

Besides the rich social capabilities of CAs, they are a promising solution for aiding accessibility by enabling communication in various formats, including text, speech, and embodiment. Karim et al. (2023) explored the design of CAs, particularly Amazon Alexa, in supporting players who are blind or have low vision (BLV) when learning the board game Ticket to Ride. Eventually, they suggested a new Alexa skill developed for BLV players and outlined a design guideline for game designers on how to use Alexa to support rule learning in board games for BLV players.

2.4.4 Technical foundations of conversational agents

In terms of technology, CAs have progressed considerably in recent years, moving from pattern matching based on regular expressions (e.g., mark-up language) to complex systems that use machine learning, e.g., recurrent neural networks (RNNs) and reinforcement learning (RL), for better recognition of the user input. According to systematic reviews examining educational chatbots in recent years (Kuhail et al., 2023; Zhang et al., 2024), chatbots are classified into two categories in terms of interaction styles: chatbot-driven and user-driven. Chatbot-driven conversations are scripted and best represented as linear flows with a limited number of branches that a user can choose from; thus, they rely heavily upon acceptable user answers. Such chatbots are typically programmed with if-else rules. In contrast, user-driven conversations are powered by AI, allowing for flexible free-form dialog as the user chooses the types of questions. As a result, user questions can drift off the chatbot’s script. Such chatbots are usually intent-based.

In general, chatbots employing AI-based methods are either retrieval-based or generative-based in terms of how they respond to a user’s intent (Adamopoulou and Moussiades, 2020; Zhang et al., 2024). While retrieval-based chatbots provide pre-defined responses to user input, generative-based chatbots can reconstruct word by word accurate responses according to the user’s specific input and requirements (Luo et al., 2022). Such chatbots can learn from users’ input in the same context to reconstruct more accurate responses in their next attempts (Kuhail et al., 2023).

2.4.5 Limitations of current CA frameworks and opportunities to address research gap

Despite considerable advances in natural language understanding, existing commercial chatbot frameworks typically follow strict, rule-based interaction scenarios suitable for question-answering, but not for simulating a complex interaction such as learning the rules of a new game, which requires the system to be aware of the game rules and to incorporate game states in its responses.

Given the complexity and potential for confusion in digital board games (The Skills System Instructor’s Guide, n.d.), a conversational tutor could add value by facilitating dialog between the player and the game’s knowledge base. Despite this opportunity, conversational agents have not been extensively explored in the context of tutoring digital board games to enhance learning experience and engagement. Table 1 summarizes related works incorporating CAs in games to enhance engagement and learning.

3 SoulsBot—a conversational agent for tutoring the digital version of ‘Dark Souls—The Board Game’

3.1 Models and design principles guiding the development of SoulsBot and the tutorial

The development of SoulsBot and the tutorial for Dark Souls—The Board Game was informed by a mix of hybrid board game design guidelines (Kankainen and Paavilainen, 2019) and the Hybrid Digital Boardgame Model (Rogerson et al., 2021), enhancing the learning experience for new players in complex mini-boss battles. From the Hybrid Digital Boardgame Model, the ‘Informing’ domain was key, with SoulsBot and the tutorial managing information flow and providing structured, tailored guidance on game rules and mechanics. The ‘Teaching’ domain was directly applied in these tools to educate players on strategies for mini-boss battles, while ‘Remembering’ helped SoulsBot track player progress and interactions for personalized assistance.

The design guidelines focused on ‘Accessibility’, making interfaces user-friendly and simplifying game complexity, particularly through SoulsBot. ‘Added Value’ was achieved via SoulsBot’s interactive, on-demand assistance. ‘Automation’ streamlined learning game rules and mechanics, and ‘Sociability’, though not currently supporting multiplayer gameplay, was considered for future enhancements. Both SoulsBot and the tutorial embodied the ‘Tutorials’ guideline, offering structured guidance on game mechanics. ‘Recovery’ features in both tools allowed players to pause, revisit, or repeat sections, supporting learning at an individual pace, with SoulsBot enabling re-asking of state-free questions. ‘Scalability’ was addressed to cater to varying player skill levels, with customized assistance based on player familiarity. The main goal of both SoulsBot and the tutorial is to convey the basic rules and mechanics of the board game. Regardless of whether a player uses the tutorial or SoulsBot, the knowledge imparted is the same. Both methods provide identical content, differing only in the degree of interactivity, with SoulsBot offering a more interactive experience. This ensures that the comparison between the two methods is based solely on their interactivity levels and not on content differences, allowing for a reliable evaluation of their effectiveness.

3.2 Overview of SoulsBot

SoulsBot, an intelligent interactive assistant, was developed to tutor players in a segment of the digital version of Dark Souls—The Board Game (which we also developed). SoulsBot functions as a conversational agent. It is designed to be aware of both the game rules and the player’s current state, facilitating interactive gameplay through player queries. The primary goal of SoulsBot is to boost player engagement and learning.

The development of SoulsBot utilized Unity (Unity Technologies, n.d.), a widely used game engine, and Rasa (Open source conversational AI, 2020), an open-source natural language processing platform for creating scalable text and voice-based AI assistants. SoulsBot’s architecture integrates these two technologies, with Rasa providing a framework for natural language understanding and dialogue management, and a rule engine developed in Unity to track and store game states. Communication between the Unity and Rasa layers is enabled through a RESTful API, as depicted in Figure 1.
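As a rough illustration of the exchange between the two layers, the sketch below shows the message shape used by Rasa's standard REST channel: a JSON object with `sender` and `message` fields goes in, and a JSON list of bot messages comes back. Whether SoulsBot uses this particular endpoint is an assumption; the paper states only that the layers communicate through a RESTful API. The Unity client would be written in C#, so Python is used here purely for illustration, and the sender id and sample texts are hypothetical.

```python
import json
from typing import Any, Dict, List

# Assumption: Rasa's REST channel at its conventional path; the paper does
# not say which endpoint SoulsBot actually calls.
REST_WEBHOOK_PATH = "/webhooks/rest/webhook"


def build_request(sender_id: str, text: str) -> str:
    """Serialize one player message in the shape the REST channel accepts."""
    return json.dumps({"sender": sender_id, "message": text})


def extract_replies(response_body: str) -> List[str]:
    """Collect the text of every bot message in a REST channel response."""
    messages: List[Dict[str, Any]] = json.loads(response_body)
    # Responses may also carry images or buttons; keep only text messages.
    return [m["text"] for m in messages if "text" in m]
```

The request string would be sent as the body of an HTTP POST to `REST_WEBHOOK_PATH`, and each extracted reply would be shown in the in-game chat box.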

In operation, SoulsBot functions as an in-game conversational agent interface, with the entire dialog management handled by the Rasa Layer. The Rasa Layer encompasses two built-in core modules: Rasa NLU and Rasa Core. The Rasa NLU module is the natural language understanding solution, trained with a diverse list of example questions. As such, Rasa NLU can infer user intents and extract entities. Rasa Core, on the other hand, is a dialogue management solution built upon probability models, enabling it to determine the most appropriate response to the recognized intent.

How an intent is answered, however, depends on whether the answer must take the current game state into account. To understand what kinds of questions players may ask and what kind of answer each question requires (state-based vs. state-free), we conducted an observational study (see Section 5). Consider the question “Why have I lost two red cubes?” We refer to this as a state-based intent because losing red cubes calls for the user’s current score to calculate the damage taken. A second example, “How do I win?”, is a state-free intent because the winning rule is general, regardless of the user’s game state. The developer annotated each intent in the training data as state-based or state-free. As such, the conversational agent’s response (output) varies depending on whether the user’s intent (input query) is state-based or state-free. For state-based queries, Rasa requests the Unity module to transfer current game states via an API. Unity’s Game Rule Engine captures the game data and stores it in a JSON file, which is passed on to the Rasa module. The final response is then determined according to the model and sent back to the Unity interface, where it appears in the chat box. State-free queries, however, are processed entirely within the Rasa Layer, as in this case no specific game state is bound to the bot response.
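The routing described above can be sketched as follows. This is a minimal illustration rather than the SoulsBot implementation: the intent names, the game-state fields (`attack_damage`, `block`), the damage formula, and the answer texts are all hypothetical, and `fetch_state` stands in for the API call through which Rasa obtains the JSON game state from Unity.

```python
from typing import Callable, Dict

GameState = Dict[str, int]

# Hypothetical state-free intents mapped to placeholder answer text.
STATE_FREE_ANSWERS = {
    "ask_how_to_win": "(general winning rule, identical in every game state)",
}


def answer(intent: str, fetch_state: Callable[[], GameState]) -> str:
    """Answer an intent, requesting game state only for state-based intents."""
    if intent in STATE_FREE_ANSWERS:
        # State-free: resolved without contacting the game at all.
        return STATE_FREE_ANSWERS[intent]
    if intent == "ask_why_lost_cubes":
        # State-based: the explanation depends on the current game state.
        state = fetch_state()
        lost = max(0, state["attack_damage"] - state["block"])
        return (
            f"The attack dealt {state['attack_damage']} damage and you "
            f"blocked {state['block']}, so you lost {lost} red cube(s)."
        )
    return "Sorry, I did not understand that."
```

Passing the state lookup as a callable keeps the state-free path free of any round trip to the game, mirroring the description that such queries are handled entirely within the Rasa Layer.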

3.3 Enhancements in SoulsBot

SoulsBot communicates with players through an in-game chatbot interface (Figure 2). It greets players upon game start and allows players to type and submit queries during gameplay. Building on feedback from Jessy (Allameh and Zaman, 2021), we refined SoulsBot for a more complex game through five key improvements. To address the issue of misunderstood queries, we integrated FallbackClassifier in Rasa’s configuration. This allows SoulsBot to either offer a default response or ask the user to clarify their input, reducing frustration from non-responses. See Figure 2a. Anticipating players’ potential queries, we added pre-set questions to guide and engage players, ensuring critical instructions are not missed. See Figure 2b. Initially, Jessy (Allameh and Zaman, 2021) provided automatic instructions (implicit interactions) throughout the game. To prevent repetitiveness and distraction, SoulsBot limits automatic guidance to the first three rounds. After this phase, SoulsBot “sits back,” offering help only upon player request, thus balancing guidance with player autonomy. See Figure 2c.
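The fallback behavior can be illustrated with a short sketch. Rasa's FallbackClassifier predicts the `nlu_fallback` intent when the top intent's confidence is below a threshold or when the two highest-ranked intents are too close to each other. The code below mimics that decision in plain Python; it is not Rasa's implementation, and the threshold values and intent names are illustrative assumptions, not the settings used in SoulsBot.

```python
from typing import Dict

FALLBACK_INTENT = "nlu_fallback"


def resolve_intent(
    confidences: Dict[str, float],
    threshold: float = 0.3,            # assumed minimum confidence
    ambiguity_threshold: float = 0.1,  # assumed minimum lead over runner-up
) -> str:
    """Return the top intent, or the fallback intent if it is unreliable."""
    ranked = sorted(confidences.items(), key=lambda kv: kv[1], reverse=True)
    if not ranked:
        return FALLBACK_INTENT
    top_intent, top_conf = ranked[0]
    if top_conf < threshold:
        # The classifier is not confident enough in any intent.
        return FALLBACK_INTENT
    if len(ranked) > 1 and top_conf - ranked[1][1] < ambiguity_threshold:
        # Two intents are nearly tied, so the query is ambiguous.
        return FALLBACK_INTENT
    return top_intent
```

When the fallback intent is returned, the agent can reply with a default message or ask the player to rephrase, instead of giving a wrong answer or none at all.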

Figure 2. The SoulsBot interface examples: (a) A fallback management example, (b) an example of pre-set questions, (c) a sit back message after the third round ends.

To bridge the gap between textual instructions and in-game objects, we introduced hyperlinks in SoulsBot’s responses. Clicking these links highlights the relevant game objects, enhancing player understanding and interaction. See Figure 3.

Figure 3. (a,b) Hyperlinked keywords in SoulsBot highlight the corresponding game objects during the mini-boss battle in our digital version of Dark Souls—The Board Game.

Recognizing the power of visual aids, SoulsBot now includes related images with its instructions, catering to the human preference for visual information processing. See Figure 4.

3.4 Integration of SoulsBot into Dark Souls—The Board Game

For our evaluation, we selected Dark Souls—The Board Game due to its medium complexity and modern gameplay style. Board Game Geek (BGG), a leading board game database with over 130,000 games (BoardGameGeek, n.d.), rates how difficult each game is to understand. Unlike the Royal Game of Ur from our previous study, rated as ‘light’ in complexity (The Royal Game of Ur, n.d.), Dark Souls—The Board Game is classified as ‘medium’ (Dark Souls: The Board Game, n.d.). This game represents the dungeon crawling genre, known for its moderate level of player engagement, mechanics, communication, interaction, and strategic depth. This contrasts with the simpler “roll-and-move” mechanic of the game used in our previous work (Allameh and Zaman, 2021).

Dark Souls—The Board Game, designed by Steamforged Games, is a cooperative dungeon crawl board game for 1–4 players, inspired by the challenging video game series Dark Souls, known for its demanding gameplay and skill-based rewards (Dark Souls™: The Board Game, n.d.). Players assume roles like Assassin, Knight, Herald, or Warrior, exploring treacherous locations, uncovering treasures, and battling enemies, culminating in a mini-boss fight.

The game typically spans 3–4 h, featuring encounters with regular enemies, a mini-boss, and a main boss. For our study, we recreated a segment of the game in Unity, focusing on the mini-boss encounter in solo mode. This decision was influenced by a BGG survey (A survey regarding to DarkSouls: The Board Game | Dark Souls: The Board Game, n.d.), highlighting the mini-boss battle as a pivotal challenge, with most players preferring solo play.

Players start with a set number of souls (in-game currency for upgrades and treasures) and sparks (representing lives). The game ends when a player loses all their sparks. To keep our study under 2 h, we concentrated on three core player mechanics: blocking, movement, and attack, incorporating a popular house rule of starting with 32 souls and 2 sparks (Puleo, 2021). We simplified the game by automating mini-boss activation and excluding character-building mechanics.

We developed two mini-bosses (Titanite Demon, Gargoyle) and two characters (Warrior, Knight), each with unique health bars, heat-up points, and behavior patterns. Unlike the original tabletop game where players acquire additional cards progressively, our digital version provided two default treasure cards per character, offset by removing the initial 32 souls.

Players manage an endurance bar with a uniform capacity across characters, while each mini-boss’s health bar capacity varies. The game’s original heat-up point, marking a shift in mini-boss behavior, was repurposed as the game’s end point in our study. Gameplay largely revolves around dice rolls, with equipment cards dictating the number and type of dice used during player actions. See Figure 5.

Figure 5. Game setup illustrations for (a) Warrior vs. Titanite Demon and (b) Knight vs. Gargoyle, in our digital implementation of Dark Souls—The Board Game.

3.4.1 Game flow

The game flow is structured around a sequence of mini-boss and player activations. Initially, the mini-boss moves and attacks, followed by the player’s turn to move and attack. This cycle continues until either the mini-boss is defeated or the player dies. See Figure 6.

• Mini-boss defeat: The mini-boss is considered defeated once its health bar is reduced to the heat up point.

• Player death: The player is deemed to have died when they lose all their sparks. A spark is lost when the player’s endurance bar is completely filled with damage (red) and stamina (black) bars.
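The two end conditions can be summarized in a short Python sketch. The function and parameter names are hypothetical, and checking the win condition before the loss condition is our assumption, since the text does not specify the order.

```python
def endurance_bar_full(red_cubes, black_cubes, capacity):
    """A spark is lost when damage (red) and stamina (black) fill the endurance bar."""
    return red_cubes + black_cubes >= capacity


def game_outcome(boss_health, heat_up_point, sparks):
    """Return "win", "lose", or "ongoing" for the mini-boss encounter."""
    # In this implementation the heat-up point doubles as the end of the fight.
    if boss_health <= heat_up_point:
        return "win"
    if sparks <= 0:
        return "lose"
    return "ongoing"
```

For example, with a hypothetical heat-up point of 10, `game_outcome(10, 10, 2)` returns `"win"`, while `game_outcome(15, 10, 0)` returns `"lose"`.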

Figure 6. The interface of our implementation of Dark Souls—The Board Game demonstrating the game state changes in (a) player endurance, and (b) mini-boss health bar.

3.4.2 Game mechanics

In SoulsBot, we employ game mechanics such as resource management, worker placement, and action selection, inspired by popular board games. It is essential to distinguish between mechanics—the actions players can take—and mechanisms—the underlying processes enabling these actions. Sousa et al. (2021) emphasize that mechanisms serve as building blocks for mechanics, which in turn generate the game’s dynamics and player experiences.

3.4.2.1 Blocking

When a player is within a mini-boss’s attack range, they can block the attack, potentially reducing the damage. Each equipment card features two block values, one for physical and one for magical defense, denoted by numbers within colored (black, blue, orange) circles. To block an attack, the player sums the relevant block value (physical or magical, based on the mini-boss’s attack type) and rolls the dice corresponding to the color indicated on their equipment card. Successful blocking occurs if the total rolled is equal to or exceeds the mini-boss’s attack value. Failure to block results in damage, with each point of damage adding a red cube to the player’s endurance bar.
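A minimal sketch of the block check follows. It assumes that the damage on a failed block equals the shortfall between the attack value and the block total, which the text does not state explicitly. All names are illustrative.

```python
def resolve_block(block_values, dice_rolls, attack_value):
    """Resolve one block attempt.

    block_values: relevant (physical or magical) block values from equipped cards.
    dice_rolls:   results of the dice indicated on the equipment cards.
    Returns (blocked, red_cubes_added).
    """
    total = sum(block_values) + sum(dice_rolls)
    blocked = total >= attack_value
    # Assumption: unblocked damage is the shortfall, one red cube per point.
    red_cubes = 0 if blocked else attack_value - total
    return blocked, red_cubes
```

With a block value of 1 and rolls of 2 and 1 against an attack value of 4, the total of 4 meets the attack value, so the attack is blocked and no red cube is added.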

3.4.2.2 Player movement

In our digital implementation of Dark Souls—The Board Game, player movement is a key mechanic. Players can move to any adjacent node on the board, including horizontal, diagonal, and vertical movements. The first move to an adjacent node is free, indicated by nodes highlighted in green (see Figure 7a). Players can opt to move to additional nodes beyond the first free move, but each extra node traversed costs one stamina, with these nodes highlighted in black (see Figure 7b).
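The movement cost rule reduces to a one-line calculation, sketched below. Representing nodes as `(x, y)` grid coordinates for the adjacency check is our simplifying assumption and may not match the actual board layout.

```python
def is_adjacent(a, b):
    """True if node b is one horizontal, vertical, or diagonal step from node a."""
    dx, dy = abs(a[0] - b[0]), abs(a[1] - b[1])
    return max(dx, dy) == 1


def movement_stamina_cost(nodes_moved):
    """The first node is free; every additional node costs one stamina."""
    if nodes_moved < 0:
        raise ValueError("nodes_moved must be non-negative")
    return max(0, nodes_moved - 1)
```

Moving three nodes in one activation therefore costs two stamina, while a single step costs none.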

Figure 7. Our implementation of Dark Souls—the board game, demonstrating (a) player’s free movement (no stamina cost) and (b) player’s movement with stamina cost.

3.4.2.3 Player attack

During their turn, players can attack the mini-boss using a weapon card equipped in either the left or right hand slot. Each weapon card offers a range of attack options, varying in attack power and stamina cost. Players must select one attack option and pay the corresponding stamina cost during their activation (Figure 8).

Figure 8. A list of attack options for a player weapon card in our implementation of Dark Souls—The Board Game.

Weapon cards specify an attack range, indicating the maximum distance from which an attack can be effective. To launch an attack, the player must be within this range of the mini-boss. Additionally, the player’s position relative to the mini-boss is crucial; attacking from the mini-boss’ weak arc grants a bonus to the player’s attack power, represented by an additional black die added to the attack roll.
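The range check and weak-arc bonus can be sketched as follows. The `AttackOption` structure and its fields are hypothetical; only the rules (attack within range, pay the stamina cost, add one black die from the weak arc) come from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class AttackOption:
    """One attack option on a weapon card (illustrative structure)."""
    stamina_cost: int
    dice: dict = field(default_factory=dict)  # e.g. {"black": 2}


def plan_attack(option, weapon_range, distance_to_boss, in_weak_arc):
    """Return the dice to roll and the stamina to pay, or None if out of range."""
    if distance_to_boss > weapon_range:
        return None
    dice = dict(option.dice)  # copy so the card itself is not modified
    if in_weak_arc:
        # Attacking from the weak arc adds one black die to the roll.
        dice["black"] = dice.get("black", 0) + 1
    return {"dice": dice, "stamina_cost": option.stamina_cost}
```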

4 Overview of the evaluation

In our previous work with Jessy (Allameh and Zaman, 2021), we evaluated a conversational agent for the Royal Game of Ur. That exploratory study aimed to validate Jessy’s concept, but its simplicity limited the assessment to basic game mechanics. To extend our research to more complex games, we developed SoulsBot for tutoring Dark Souls—The Board Game, a game with intricate strategic elements, where we focused on the mini-boss battle. We conducted a follow-up study to evaluate SoulsBot’s effectiveness in this context. This study, informed by insights from Jessy, comprised two evaluation phases: observational and comparative.

While the game tutored by Jessy was arguably easy to learn (due to its simple explorative mechanics), the validity of the agent for tutoring other genres of board games was not examined. We therefore crafted SoulsBot to tutor Dark Souls—The Board Game, which engages players with multiple strategic mechanics. We conducted a mixed-methods user study to investigate and compare the usability and usefulness of the tutorial and SoulsBot.

The evaluation was conducted on the mini-boss battle segment of Dark Souls—The Board Game, which we recreated as a digital video game.

4.1 Hypotheses

Our study posits the following hypotheses:

1. Effectiveness of SoulsBot vs. Traditional Tutorials: SoulsBot is hypothesized to be more effective than standard handholding tutorials in engaging newcomers to Dark Souls—The Board Game and facilitating their learning curve. Traditional tutorials, often rigid and non-adaptive, can be seen as intrusive and overwhelming, especially in complex games like Dark Souls—The Board Game, which require understanding numerous rules, mechanics, and their interrelations. Frequent interruptions by such tutorials can impede performance in complex tasks (Speier et al., 2004). In contrast, SoulsBot, as an agent tutor, offers a more natural learning experience, allowing players to seek guidance and clarifications at their own pace, thereby enhancing their understanding and engagement with the game.

2. Applicability of SoulsBot Across Game Genres: SoulsBot is anticipated to be particularly beneficial in board games characterized by intricate rules and detailed mechanics, which are not immediately intuitive. However, its utility might be limited in fast-paced, time-sensitive games where conversational learning could hinder the flow. This hypothesis stems from the work of Zargham et al. (n.d.), who found that RPG games, especially those with a strong narrative focus or player character development, are suitable for speech interaction with NPCs. Their findings suggest using speech-based interactions in single-player video games, whereas multi-player games and action-focused RPGs were deemed unsuitable for NPC interactions. This is relevant since SoulsBot can be considered an NPC. The effectiveness of SoulsBot in various game genres is uncertain, as player experiences can differ significantly across genres. We also foresee a range of suggestions for further enhancements, including UI improvements, ontology development, and usability refinements.

5 Observational study

Based on feedback from the previous work (Allameh and Zaman, 2021), we focused on improving the agent’s ontology to enhance its performance. Jessy’s correct response rate of 58.21% highlights the need for this enhancement. Key to this improvement was training the NLP model with a comprehensive dataset of potential in-game questions. We conducted an observational study to support this, with four primary objectives:

1. Gameplay Observation: Monitoring player interactions, noting common pitfalls, and understanding the range and phrasing of questions players typically ask during gameplay.

2. Intent Identification: Distinguishing between state-based and state-free questions (intents) posed by participants.

3. Response Tailoring: Developing appropriate responses that align with the identified player intents.

4. Interface Feedback: Gathering participant insights on the game interface design to further refine the user experience.

5.1 Participants

We recruited five participants (three females, two males), aged 24–35 (M = 28.6, SD = 5.86), from a graduate computer science student population. A pre-session questionnaire collected their demographic information and familiarity with Dark Souls—The Board Game. Four participants were completely new to the game, and one was aware of it but had not played. In terms of board game experience, three identified as beginners, and two had no prior experience. The preferred method of learning game rules for four participants was through explanation by a friend.

5.2 Apparatus

The game was played on an Apple MacBook Pro with an M1 Pro processor and 32 GB RAM. Conducted remotely, participants accessed the game via Parsec (Connect to Work or Games from Anywhere, n.d.), controlling the laptop’s mouse and keyboard. Communication was through Google Meet, and gameplay was recorded using Open Broadcaster Software | OBS (n.d.).

5.3 Procedure

The task was to defeat the mini-boss, Gargoyle. Participants first completed a demographic questionnaire, then connected to the lab laptop via Parsec. We provided a verbal introduction to the game’s rules and entities before gameplay. Participants were free to ask any game-related questions during play, with responses given verbally. The session was recorded for later review and analysis of questions, answers, and any notable observations such as misunderstandings or follow-up questions. The game continued until the mini-boss was defeated or all sparks were lost.

5.4 Outcomes

We categorized participant questions by their objectives, distinguishing between state-based and state-free intents. We identified game states linked to state-based intents. This classification, including all intents and corresponding answers, was used to train the SoulsBot’s Rasa NLU and Rasa Core modules.

6 Comparative study

6.1 Tutorial

We developed a tutorial to evaluate its usability and effectiveness compared to SoulsBot in an exploratory study. This tutorial offers step-by-step instructions with visual aids, such as hyperlinked keywords to game objects. Players progress through the tutorial by reading instructions and clicking ‘Continue’. Figure 9 shows a comparison between the tutorial and SoulsBot interfaces.

Figure 9. Our implementation of Dark Souls—The Board Game: (a) The tutorial’s interface and (b) SoulsBot’s interface, as they appeared in the study to the participants.

Table 2 details the features of both the tutorial and SoulsBot. Unlike SoulsBot’s interactive, two-way natural language communication, the tutorial provides a one-directional knowledge channel. Although both use the same knowledge base, their teaching strategies differ significantly. The tutorial delivers instructions at predetermined game moments, with player progression controlled by the ‘Continue’ button. In contrast, SoulsBot allows players to actively request assistance or clarification in natural language, in addition to receiving automated guidance in the initial rounds.

6.2 Design

The experiment followed a within-subject design. We presented two tutoring technologies (SoulsBot and Tutorial) to participants to teach them how to play Dark Souls—The Board Game. We refer to these tutoring technologies as Aid Tools. As such, Aid Tool is the independent variable.

In our study, to minimize order effects, we employed a counterbalancing strategy across two game conditions. These conditions were differentiated by a combination of mini-boss characters (Titanite Demon and Gargoyle) and player characters (Knight and Warrior). Specifically, we paired Gargoyle with Knight and Titanite Demon with Warrior. The order of these game conditions and the order of the aid tools (SoulsBot and Tutorial) were systematically varied among participant groups to ensure a balanced distribution. Order was treated as a between-subject variable in our ANOVA tests so that it would not bias the results. We confirmed normality, the absence of outliers, and homogeneity of variances across all group combinations. In accordance with the triangulation guidelines suggested by Pettersson et al. (2018), we employed a variety of data-gathering techniques to test our hypotheses: established questionnaires, namely the GEQ component scores (IJsselsteijn et al., 2013) and the SUS score (Brooke, 1995); custom questionnaires; and the participants’ gaming performance. Additionally, we conducted a qualitative semi-structured interview after the study.
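One way to realize such a counterbalancing scheme is to cross the two aid-tool orders with the two game-condition orders and cycle participants through the resulting four groups. The sketch below is an illustration under that assumption; it is not the assignment procedure actually used in the study.

```python
from itertools import product

AID_ORDERS = [("SoulsBot", "Tutorial"), ("Tutorial", "SoulsBot")]
GAME_ORDERS = [
    ("Knight vs. Gargoyle", "Warrior vs. Titanite Demon"),
    ("Warrior vs. Titanite Demon", "Knight vs. Gargoyle"),
]


def counterbalanced_schedule(n_participants):
    """Assign each participant two sessions as (aid tool, game condition) pairs."""
    groups = list(product(AID_ORDERS, GAME_ORDERS))  # four order combinations
    schedule = []
    for i in range(n_participants):
        aids, games = groups[i % len(groups)]
        schedule.append(list(zip(aids, games)))
    return schedule
```

With 16 participants, each of the four order combinations is used four times, so each aid tool and each game condition appears first in exactly eight sessions.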

6.3 Participants

For our study, we recruited 16 participants aged between 18 and 26 (M = 21.94, SD = 3.23), comprising six females, nine males, and one non-binary individual. These participants were undergraduate and graduate students from game development, computer science, and related fields, and received compensation for their participation. None had prior experience with Dark Souls—The Board Game, ensuring a level playing field in terms of game familiarity.

Regarding their familiarity with Dark Souls—The Board Game, seven participants were completely unaware of it before the study, while nine had heard of it but never played. When asked about their knowledge of dungeon crawl board games, three participants were unfamiliar with the genre, 10 had heard of it but not played, two were familiar, and one considered themselves an expert. The participants’ overall board game expertise varied: one expert, eight with good knowledge, six beginners, and one with no expertise.

Participants also shared their preferred methods for learning board games, with reading rulebooks and asking experienced friends for guidance being the most common approaches.

6.4 Apparatus

The study was conducted in person at the university lab. The apparatus from the first study was reused, and game sessions were recorded.

6.5 Procedure

In our study, participants first completed a demographic questionnaire. They then watched a three-minute warm-up video introducing basic game aspects like entities, controls, and win/lose conditions. This inclusion responded to feedback from Jessy (Allameh and Zaman, 2021), where participants preferred an initial basic game understanding. The video deliberately omitted details on game core mechanics to focus on knowledge transfer during gameplay.

Participants then commenced their first play session, assigned according to a Latin square design. They were instructed not to ask the first author game-related questions during play. Game sessions were recorded, including their duration, and continued until participants either won or lost.

Post-session, participants completed two questionnaires. The first, a self-developed questionnaire, had two sections: one for ranking the game’s complexity and the aid tool’s usability and effectiveness, and another for a quiz assessing learning about core mechanics. The quiz, formatted as case studies with game scene images, required understanding the scenario to answer multiple-choice questions, with a maximum score of 100%.

The second questionnaire comprised GEQ (Game Experience Questionnaire) (IJsselsteijn et al., 2013) and SUS (System Usability Scale) (Brooke, 2013), assessing the aid tools’ usability.
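For reference, the standard SUS scoring procedure can be written as a short function: odd-numbered items contribute their response minus 1, even-numbered items contribute 5 minus their response, and the sum is multiplied by 2.5 to give a score between 0 and 100. The function name is ours.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten 1-5 Likert responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5
```

A participant who answers 3 to every item receives a score of 50.0.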

After a 10-min break, the second game session commenced with the same procedure. The study concluded with a semi-structured interview, focusing on comparing the aid tools. Questions explored tool preferences, features liked and disliked, and potential applications of SoulsBot. Additional observations from the sessions were also discussed.

Participants received compensation for their time. The entire study lasted approximately 2 h.

6.6 Results and conclusions

We chose a within-subjects design because it minimizes variability between participants, as each participant acts as their own control. This approach also requires fewer participants compared to a between-subjects design. We mitigated the potential learning effect by using counterbalancing and pairing different mini-bosses and player characters. Additionally, our participants had limited prior experience with the game. Therefore, we do not believe a significant learning effect occurred. However, we acknowledge that the small sample size may have impacted our results.

6.6.1 Research question 1

6.6.1.1 GEQ

Using IJsselsteijn et al.’s (2013) scoring guidelines, we calculated GEQ component scores. Paired-samples t-tests showed no significant difference between SoulsBot and the tutorial on any component. Participants generally had a positive experience with a moderate challenge level in both conditions. In hindsight, we wish we had used a more robust questionnaire such as the PXI (Abeele et al., 2020) or miniPXI (Haider et al., 2022). However, we do not believe that the results would have been different, since all the methods we used to answer this research question provided consistent results.

6.6.1.2 SUS

SoulsBot scored an average of 69.5 (SD = 16.3), and the tutorial scored 76.1 (SD = 19.1). SUS scores above 68 are considered above average (Brooke, 2013). No significant difference was found (t(14) = 1.17, p > 0.05), indicating both tools are above average in usability.
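As a reminder of how a paired-samples t statistic of the kind reported for the GEQ components is obtained, the sketch below computes it from per-participant score pairs using only the Python standard library. The function name is ours, and no study data are reproduced.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for a paired-samples t-test."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    # t is the mean difference divided by its standard error;
    # stdev() uses the n - 1 denominator (sample standard deviation).
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```

The p-value is then read from a t distribution with n − 1 degrees of freedom, where n is the number of complete pairs.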

6.6.1.3 Self-developed questionnaire

We used a self-developed questionnaire to gather participants’ self-reported experience on the usefulness of the assistive technology they used during their user study. The self-developed questionnaire was composed of two sections. In the first section, we asked participants to rank the difficulty level of the game on a Likert scale, where 1 means “not difficult at all” and 5 means “very difficult.” We asked this question because we wanted to see if SoulsBot could change players’ perception about the game’s complexity. We analyzed the results using a Wilcoxon signed-rank test, which did not reveal any significant difference, (V = 22, p > 0.05). The median ranks for both aid tools were equal at 4, which corresponds to being “difficult.” Indirectly, this can be corroborated with the results from the quiz that the participants took after being exposed to each experimental condition. See below.

We also asked participants to rate the usefulness of the aid tool for learning the game. More precisely, the question was: “To what extent the game assistive technology you used when playing the game helped you to learn the game?” The ratings were on a 5-point Likert scale, with 1 being “not helpful at all” and 5 being “very helpful.” We analyzed the results using a Wilcoxon signed-rank test, which did not reveal any significant difference (V = 19, p > 0.05). The median ratings for both aid tools were equal at 4, which corresponds to “helpful.”
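The V statistic of a Wilcoxon signed-rank test is the sum of the ranks of the positive paired differences. The sketch below assumes the common convention of dropping zero differences and assigning average ranks to ties. We have not verified which convention the analysis software used.

```python
def wilcoxon_v(ratings_a, ratings_b):
    """Sum of ranks of positive differences (a - b) for paired ordinal ratings."""
    # Zero differences carry no sign information and are dropped.
    diffs = [a - b for a, b in zip(ratings_a, ratings_b) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        # Extend the run over tied absolute differences.
        while (end + 1 < len(order)
               and abs(diffs[order[end + 1]]) == abs(diffs[order[start]])):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1
    return sum(rank for rank, d in zip(ranks, diffs) if d > 0)
```

Because Likert ratings produce many ties and zero differences, the effective sample size for this test is often smaller than the number of participants.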

6.6.1.4 Freeform feedback

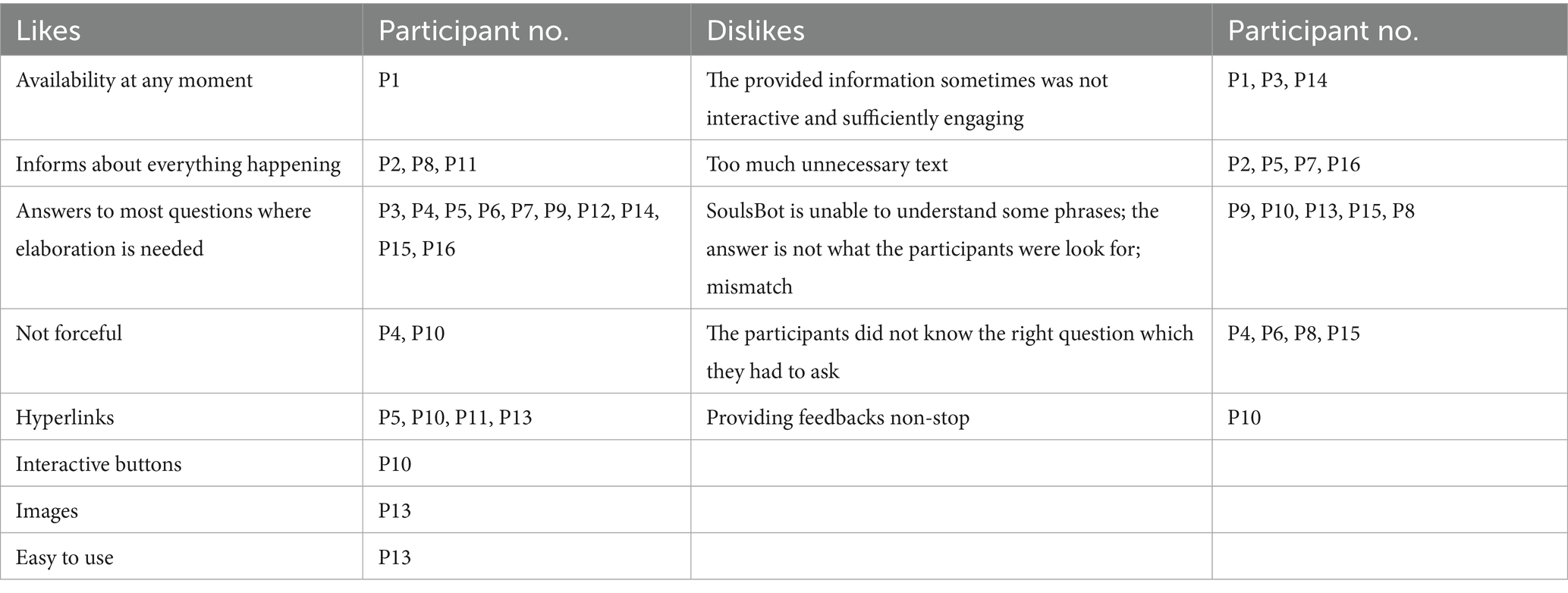

In the qualitative analyses below we grouped similar participant comments for clarity. In the freeform feedback field, we asked participants to provide reasoning pertaining to their ranking of the aid tools. Table 3 summarizes the participants’ feedback on the usefulness of SoulsBot while Table 4 presents feedback on the tutorial.

Participants’ responses highlighted the strengths and weaknesses of both SoulsBot and the tutorial. See below.

6.6.1.5 SoulsBot feedback

Advantages: Participants appreciated SoulsBot for its immediate availability and responsiveness to questions, aiding in basic knowledge transfer during gameplay (Table 3).

Participants described SoulsBot as useful for quick clarifications and for understanding game states:

• “It was useful for knowing where you are at the game.”

• “I liked that I could ask specific questions to know what was going on.”

• “The chatbot… helped with describing what was happening… prompted me to pay attention to certain actions that I could take.”

• “I feel that the chatbot has its usefulness when it comes to explaining situations.”

• “[SoulsBot] was very helpful to learn the rules of the game. I learned more about the game with [SoulsBot] answering most of my questions.”

Limitations: However, six participants noted SoulsBot’s failure to answer some questions. This was attributed to two main issues.

Natural language understanding: Participants had difficulty phrasing questions in a way SoulsBot could understand, as P3 mentioned: “it [SoulsBot] was useful but I do not think I was necessarily asking the right question.” Another participant added: “The answers provided were not always what I was looking for and it was hard to know what to ask to get an answer related to my question.”

Knowledge boundaries: Inadequate ontology to address broader questions, leading to unresponsive follow-ups:

• “As I played in the shoes of a new player who knew nothing, I found that when I asked questions the were some times that certain words did not work with the chatbot. As a new player, I will not think about which key words the robot needs but what the initial question I have and if the chatbot cannot answer it the average user would either try to find it their way or give up.”

• “It was difficult to know what to use to prompt the correct information.”

6.6.1.6 Tutorial feedback

Advantages: Most participants found the tutorial informative and detailed, though some noted that it was overwhelming, with too much information introduced at the start (Table 4). P6 remarked: “it [tutorial] dumps a lot of information at once.” Positive comments included:

• “The tutorial was great. It gave me all the information I needed to start the game.”

• “I found it a little bit easier to learn in this version.”

• “The tutorial taught me everything that I needed to play the game.”

• “I enjoyed that it was going step-by-step and allowed me to understand everything.”

Limitations: Participants found some instructions unclear or ambiguous, noted a lack of clarification for complex game elements, and criticized the tutorial’s repetitiveness:

• “I wish it had more details regarding the ranged of attacks, since I got the correct way to use it at the end of the game!”

• “I would have liked if it was more clear about the boss’s movement pattern, and how the behavior card translated into the movement on screen. I also might’ve liked it to remind me of the resistance system, though that might’ve gotten annoying after some time.”

• “The tutorial pop ups felt repetitive after the first round, I already felt that I knew most of the game rules, so the pop ups began to disrupt the game flow.” These issues were echoed by another participant.

• “It left some parts out or wasn’t clear enough.”

In addition, the majority of participants stated that there was too much text.

6.6.1.7 Comparative analysis

SoulsBot was favored for its conversational ease and quick reference capabilities:

• “I liked that I could ask specific questions…”

• “I feel that the chatbot has its usefulness when it comes to explaining the game to someone is new.”

The tutorial was valued for its comprehensive, though sometimes excessive, detail (as noted above, the majority mentioned that there was too much text, and some mentioned repetitiveness):

• “The descriptions were clear and concise.”

• “I enjoyed that it was going step-by-step and already providing you the information and guidance that you needed.”

Tutorial preference: Seven participants preferred the tutorial for its step-by-step approach, especially beneficial for first-time players (P5, P9, P10, P11, P12, P14, P18).

SoulsBot preference: Three participants favored SoulsBot for its specific question support and ability to revisit previous queries (P6, P15, P17).

Combination preference: Six participants saw value in both, suggesting the tutorial for initial learning and SoulsBot for addressing real-time confusions (P4, P7, P8, P13, P19, P20).

The tutorial was generally preferred for its structured, comprehensive introduction to the game.

Participants expressed a desire for SoulsBot to continue providing implicit instructions throughout the game, with P14 suggesting an interactive Q&A menu: “[I prefer] Tutorial, because it gave me instructions step by step. I think if SoulsBot provided me with [a] menu list of questions and answers it would have been nicer. For learning this type of game I’d rather be aware of my best move and worst move or at least I need to practice it for multiple rounds. I feel like I always miss a part of instruction while using [the] chatbot and I do not know which keyword works exactly or what to ask.”

A blend of both tools was seen as ideal, aligning with research (Alkan and Cagiltay, 2007) indicating that novice players learn through trial and error, underscoring the need for scaffolding in game design.

6.6.1.8 Quiz results

A quiz assessed knowledge acquisition, with no significant difference in scores between SoulsBot (M = 69.38, SD = 18.4) and Tutorial (M = 63.75, SD = 21.3) users, (t(14) = 1.24, p > 0.05). P6’s experience: “I already understood the game’s systems by the second playthrough, so I did not need to interact with the tutorial system as much,” suggests that neither tool fully facilitated learning.

6.6.1.9 Game logs

We logged participants’ game scores, including time spent, game outcome (win or lose), and progress level, to investigate the impact of the aid tool on these metrics.

Time spent: Time spent playing, a proxy for player engagement (Andersen et al., 2012; Wauck and Fu, 2017), showed no significant difference between the SoulsBot (M = 898, SD = 444) and Tutorial (M = 878.3, SD = 318.3) conditions (t(14) = 0.81, ns). One possible explanation is that players idled while under cognitive load, which would weaken time spent as a measure of engagement.

Game outcome: Wins and losses were evenly distributed across both aid tools, with no significant difference in outcomes according to a logistic regression analysis (b = 1.707 × 10⁻¹⁶, z = 0, ns). The game’s difficulty, as indicated by participants and quiz scores, likely influenced performance more than the choice of aid tool. Participant P11 noted, “It was good enough to learn the basic concepts of the game [through the chatbot]. However, I feel that I need to play more than two matches to get the more complicated rules of the game.”

Performance metrics analysis: The study also logged specific performance metrics: the number of rounds played, player red damage bars, player black stamina bars, and the mini-boss’s proportional damage. These metrics were used to analyze player progress and engagement. The mini-boss’s proportional damage was calculated using Equation 1 as follows:

Averages over two sparks were calculated for each metric. However, a correlation analysis and a repeated-measures MANOVA using Wilks’ Lambda statistic on these variables did not reveal a significant effect of the Aid Tool (Λ = 0.12, F(2, 29) = 0.12, ns). This suggests that neither aid tool provided a distinct advantage in terms of player performance.

6.6.2 Research question 2

In our study, participants provided feedback on SoulsBot’s features, as detailed in Table 5. The most liked feature was SoulsBot’s ability to answer questions and explain rules during the game, as highlighted by several participants including P1, P3, P4–P7, P9, P14–P16. The second most appreciated aspect was the design and usability of SoulsBot’s interactive interface. P10 noted, “I liked that it was in the corner of the screen and did not take up space on the screen which helped improve immersion for me so I could focus on the gameboard. I liked that there were interactable elements in the chat such as buttons to explain to me how to move or attack and blue words highlighted.” P7 added, “I liked that it wasn’t forceful and in-your-face too much.” P13 also commented on the ease of use: “I like how easy it was to use and that I could scroll up the chat for previous answers. I also like the options it gives when answering like the blue highlighted words. Some of the explanations even had pictures which was very helpful.”

The most significant issue with SoulsBot was its inability to respond correctly to questions. This was a notable concern since SoulsBot’s correct response rate was only 64.41%. P8 suggested, “Have a small list of the names for different elements of the game, so a glossary of terms used when describing the game would be useful. It [SoulsBot] was not able to respond to specific questions.”

Participants also expressed frustration with phrasing questions to get the correct answer. P4 shared, “As a new player, I will not think about which key words the robot needs but what the initial question I have and if the chatbot cannot answer it the average user would either try to find it their way or give up.” P15 echoed this sentiment: “The answers provided were not always what I was looking for and it was hard to know what to ask to get an answer related to my question.” P8 added, “It [SoulsBot] was not able to respond to specific questions. I also did not have the right vocabulary, so if I did not know what a specific attack or element of the game was called, there was no way I would be able to figure out my answer.”

Suggestions for improvement, summarized in Table 6, included making SoulsBot work with a breakdown list of frequent questions or a glossary. Participants also desired more control over receiving instructions and suggested more visual interactions with less text. P3, P12, and P14 recommended, “Less text (keep the most important stuff) and maybe make prompts for common questions people might have when they play,” “I much prefer to see more graphical pictures rather than text,” and “I think that by showing the player some sort of mini clip on the side of the assistive technology might give the players a better understanding of where things are on the UI.” Lastly, enhancing SoulsBot’s ontology for broader question response was advised by P9, P10, and P16: “More phrases could have been added for the same question, as this would make the player lose less time as well as make SoulsBot seem even smarter” and “I believe if its responses were more catered toward the question asked and condensed into bite size information would have been more helpful.”

In the final interview, participants discussed the suitability of SoulsBot for different game genres. Table 7 summarizes the results. Participants identified strategic board games, RPG/MMORPGs, and RPG subgenres like Dungeons & Dragons as well-suited for SoulsBot’s tutoring. Interestingly, Dark Souls—The Board Game combines these genres. However, genres deemed unsuitable for SoulsBot included exploration-based and fast-paced games like FPS, as well as classic board games such as Ludo, Checkers, Backgammon, and Chess, due to their simpler mechanics. This supports our second hypothesis. Some participants, notably P8 and P13, also saw potential for SoulsBot in video games, including MMOs and story-based action-adventure games, due to their complex mechanics and activities.

6.7 Limitations

This study acknowledges several limitations:

Partial representation of the game: Our adaptation of Dark Souls—The Board Game focused on a subset of the full game, specifically the mini-boss encounters, with some house rules applied. We limited characters to Knight versus Gargoyle and Warrior versus Titanite Demon. Additionally, our study was confined to the solo mode of this inherently cooperative game, not exploring the multiplayer dynamics which could present different challenges.

Digital version impact: Following Rogerson et al. (2015), the digitization of the board game may have influenced the gameplay experience. Automation changes gameplay activities and can affect player enjoyment, game state awareness, and flexibility in gameplay, as noted in Wallace et al. (2012). Our digital adaptation, therefore, might have impacted the results due to these alterations in the play experience.

Generalization of SoulsBot: Generalizing SoulsBot to a broader range of games requires significant effort to integrate new domain knowledge and a new rule engine. SoulsBot needs to understand game rules and track player states and events, making its adaptation to new games a substantial task.

Lack of adaptive instructions in SoulsBot: SoulsBot does not adapt its instructions based on the player’s behavior, prior knowledge, or preferences, unlike interactive tutoring systems. This could affect the balance between learning and enjoyment. Overloading players with information not aligned with their profile may reduce the game’s challenge and interest (Desurvire et al., 2004). Currently, SoulsBot lacks a module to model learner behavior and adapt instructions accordingly.

Sampling bias: The study used convenience sampling, with participants primarily being game development students from our university. This might have influenced their responses to self-reporting measures, potentially leading to more positive feedback due to some familiarity with the researchers.

Sample size and experimental design constraints: This study was exploratory because no previous research was available to guide sample size determination. We assumed a large effect size, as is typical of HCI studies with similar designs (Cohen’s d = 0.8, α = 0.05, 1 − β = 0.8). This resulted in n = 15, which was rounded up to 16 for proper counterbalancing. However, this assumption of a large effect may have been overly optimistic; the resulting non-significant results should be interpreted with caution, as they may represent Type II errors rather than a true lack of difference between conditions. We believe these results can also be explained by our focus on a partial representation of the game, as stated above: the narrow scope likely did not suffice to capture the differences between the two tutoring methods. In hindsight, we should have simplified the study and focused only on qualitative data collection, as the mixed-methods approach is likely more appropriate for investigating differences between tutorial methods in a full game, and possibly longitudinally as well, where differences would likely be more pronounced.

Fairness of comparison: We acknowledge that both SoulsBot and the baseline tutorial were internally developed. Currently, no standardized or widely adopted tutorial benchmark exists for Dark Souls—The Board Game or comparable digital board game tutors. To minimize bias, both tools were constructed to use the same underlying knowledge base and to differ only in their degree of interactivity. Future work should incorporate an external, literature-based baseline such as a standardized interactive tutorial framework or existing adaptive-tutoring systems.
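
The sample-size figure given under the sample size limitation can be reproduced approximately with a short calculation. The sketch below is illustrative only: the paper does not state which test or tool was used, so a two-sided paired t-test is assumed here, and the function name is hypothetical. It uses the normal approximation with Guenther's small-sample correction term.

```python
import math
from statistics import NormalDist


def paired_t_sample_size(d: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate n for a two-sided paired (one-sample) t-test.

    Assumption: normal approximation plus Guenther's correction
    (z_alpha^2 / 2); this is not necessarily the authors' procedure.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for target power
    n = ((z_alpha + z_beta) / d) ** 2 + z_alpha ** 2 / 2
    return math.ceil(n)


# Parameters quoted in the text: d = 0.8, alpha = 0.05, power = 0.8
print(paired_t_sample_size(0.8))  # 15
```

Under the same assumptions, a medium effect (d = 0.5) would require 34 participants, which illustrates why the assumption of a large effect may have left the study underpowered.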

7 SoulsBot+: enhancements with LLM fallback

SoulsBot was effective for basic queries, but it could not handle unanticipated player questions, generate strategic advice, or scale to complex game scenarios. Given these mixed results, we investigate whether large language models (LLMs) can provide a solution. LLMs can encapsulate gameplay knowledge ranging from basic rules to complex contextual information (Xu et al., 2024). Even in imperfect-information games, where knowledge of the game rules is limited, GPT-4 can engage and outperform traditional algorithms such as Neural Fictitious Self-Play (NFSP) without specialized training (Zhang et al., 2019). LLMs offer a promising alternative by enabling natural-language interaction, but they often lack game-state awareness or domain-specific knowledge, leading to irrelevant responses (Karim et al., 2023).

We present SoulsBot+, an extension to the original SoulsBot with a pre-trained LLM as a fallback. The SoulsBot+ system integrates a Cohere-based language model, offering two primary modes of operation: fine-tuned response generation and retrieval-augmented generation (RAG). This dual approach allows the system to address both rule-based and context-sensitive user queries, enhancing flexibility and responsiveness.

SoulsBot+ is presented as a design-concept prototype rather than a fully validated system. A direct quantitative comparison between SoulsBot and SoulsBot+ was beyond the scope of the current work. Our intention in this section is to illustrate a technically feasible extension that addresses the limitations identified in our evaluation, rather than to claim immediate empirical superiority.

7.1 Data layer

At the core of the data layer is a Python-based threaded service, responsible for polling the GAMESTATEDATA.JSON file at 10-s intervals. This service ensures that real-time updates to the game state are continuously monitored. Using this data, the system constructs structured documents that encapsulate key game context, including player statistics (e.g., health, stamina, equipped items), mini-boss characteristics (such as attack patterns and defensive traits), and overall game phase data like round numbers and turn sequences.
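
A minimal sketch of such a polling service is given below, using only the Python standard library. The JSON keys (`player`, `boss`, `phase`), the document layout, and the class and function names are assumptions made for illustration; the actual schema of the game-state file is not specified in this paper.

```python
import json
import threading
from pathlib import Path


def build_documents(state: dict) -> list[dict]:
    """Turn a raw game-state dict into small text documents (hypothetical schema)."""
    player = state.get("player", {})
    boss = state.get("boss", {})
    phase = state.get("phase", {})
    return [
        {"title": "player", "text": f"Health: {player.get('health')}; "
                                    f"stamina: {player.get('stamina')}; "
                                    f"equipped: {player.get('equipped')}"},
        {"title": "mini-boss", "text": f"Name: {boss.get('name')}; "
                                       f"attacks: {boss.get('attacks')}"},
        {"title": "phase", "text": f"Round: {phase.get('round')}; "
                                   f"turn: {phase.get('turn')}"},
    ]


class GameStatePoller(threading.Thread):
    """Background thread that re-reads the game-state file at a fixed interval."""

    def __init__(self, path: str, interval: float = 10.0):
        super().__init__(daemon=True)
        self.path = Path(path)
        self.interval = interval
        self._docs_lock = threading.Lock()
        self._stop_event = threading.Event()
        self._documents: list[dict] = []

    def poll_once(self) -> None:
        try:
            state = json.loads(self.path.read_text(encoding="utf-8"))
        except (OSError, json.JSONDecodeError):
            return  # file missing or half-written: keep the last good snapshot
        docs = build_documents(state)
        with self._docs_lock:
            self._documents = docs

    def run(self) -> None:
        while not self._stop_event.is_set():
            self.poll_once()
            self._stop_event.wait(self.interval)  # sleeps, but wakes early on stop()

    def stop(self) -> None:
        self._stop_event.set()

    def documents(self) -> list[dict]:
        with self._docs_lock:
            return list(self._documents)
```

In this sketch, a caller would create `GameStatePoller("GAMESTATEDATA.JSON")`, call `start()`, and read `documents()` whenever a query arrives. Keeping the last good snapshot on a failed read is one way to tolerate the file being rewritten while it is polled.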

7.2 Processing layer

The processing layer is built around a Flask-based API that exposes a /QUERY endpoint for seamless communication with the Unity game engine. Depending on the nature of the query, the system dynamically selects between two language models: a fine-tuned variant optimized for deterministic rule-based responses, and a RAG-ready model (COMMAND-R-PLUS) that leverages current game state information to deliver nuanced, context-aware answers. The output of these models is post-processed to improve readability by formatting the responses into digestible, eight-word segments.
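
The two processing steps described above, model selection and eight-word segmentation, can be sketched as follows. The keyword-based routing rule is purely an assumption for illustration, since the paper does not specify how the query type is detected; the function names and the `STATE_WORDS` set are likewise hypothetical, and the Flask wiring is omitted.

```python
import re

# Hypothetical cue words suggesting the query depends on the live game state.
STATE_WORDS = {"my", "current", "turn", "round", "health", "stamina"}


def select_model(query: str) -> str:
    """Return "rag" for state-dependent queries, "fine_tuned" otherwise."""
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    return "rag" if tokens & STATE_WORDS else "fine_tuned"


def format_segments(text: str, words_per_segment: int = 8) -> list[str]:
    """Split a model response into consecutive segments of at most eight words."""
    words = text.split()
    return [
        " ".join(words[i:i + words_per_segment])
        for i in range(0, len(words), words_per_segment)
    ]
```

With this heuristic, "What is my health level?" would be routed to the RAG model and "How do I block?" to the fine-tuned model. A 20-word response would be returned as three segments of eight, eight, and four words.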

7.3 Integration layer

Interaction between SoulsBot+ and the game environment is facilitated through a RESTful API, which transmits JSON-encoded messages comprising both user queries and their corresponding, pre-formatted responses. To ensure resilience, the system incorporates a fallback mechanism that identifies and handles malformed input gracefully by returning structured error messages. This layered design highlights three core architectural principles: contextual awareness enabled by dynamic document updates, fault tolerance through background threading, and modular integration with existing game infrastructure.
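
The handling of malformed input can be illustrated with a small, framework-independent sketch. The message fields (`query`, `response`, `status`, `error`) and the error codes are assumptions, as the exact message schema exchanged with Unity is not given here.

```python
import json
from typing import Callable


def _error(code: str, message: str) -> str:
    """Build a structured JSON error message (hypothetical schema)."""
    return json.dumps({"status": "error", "error": {"code": code, "message": message}})


def handle_message(raw: str, answer: Callable[[str], str]) -> str:
    """Validate one JSON-encoded request and return a JSON-encoded reply."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return _error("invalid_json", "Request body is not valid JSON.")
    if not isinstance(payload, dict):
        return _error("invalid_payload", "Request body must be a JSON object.")
    query = payload.get("query")
    if not isinstance(query, str) or not query.strip():
        return _error("missing_query", "Field 'query' must be a non-empty string.")
    return json.dumps({"status": "ok", "query": query, "response": answer(query)})
```

Returning an error object with a stable code, rather than raising, lets the game client distinguish a failed request from a genuine answer without parsing free text.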

7.4 System architecture and design

The architectural framework of SoulsBot+ is centered on a hybrid language understanding system. The RAG component plays a pivotal role by leveraging game-state data—exported every 10 s by Unity in JSON format—to inform context-driven queries. This allows the model to respond to dynamic, state-dependent prompts such as “What is my health level?” Conversely, a fine-tuned model trained on game rules provides consistent answers to canonical questions like “How do I block?” For queries that fall outside these domains, a rules-based Rasa pipeline acts as a fallback, ensuring continuity of assistance through pattern matching.

7.5 Fallback mechanism

As illustrated in the fallback decision flow (see Figure 10), both Rasa and the Cohere model process each query in parallel. The Rasa component specializes in matching user intents with predefined patterns, offering deterministic responses. Simultaneously, the Cohere model generates responses informed by the conversational context. A lightweight decision algorithm then selects the final response: if the Rasa output matches a known error pattern (e.g., “I did not understand”), the system defaults to the Cohere-generated content; otherwise, the Rasa result is returned. This method balances guaranteed accuracy with adaptive flexibility, and requires only basic string-matching logic for integration with the game UI, making it computationally efficient (Table 8).
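
The decision rule described above can be sketched in a few lines. The two backends are passed in as plain callables so that the example stays self-contained; `ask_rasa`, `ask_llm`, and the contents of `RASA_ERROR_PATTERNS` beyond the quoted phrase are assumptions, not the system's actual interfaces.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Known failure phrases in Rasa output; only the first is quoted in the text.
RASA_ERROR_PATTERNS = ("i did not understand",)


def is_rasa_failure(reply: str) -> bool:
    """Basic string matching against known error patterns."""
    lowered = reply.lower()
    return any(pattern in lowered for pattern in RASA_ERROR_PATTERNS)


def answer(query: str,
           ask_rasa: Callable[[str], str],
           ask_llm: Callable[[str], str]) -> str:
    """Query both backends in parallel and prefer Rasa unless it failed."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        rasa_future = pool.submit(ask_rasa, query)
        llm_future = pool.submit(ask_llm, query)
        rasa_reply = rasa_future.result()
        llm_reply = llm_future.result()
    return llm_reply if is_rasa_failure(rasa_reply) else rasa_reply
```

Because both requests are issued at once, the LLM response is already available when Rasa fails, at the cost of one LLM call per query even when the Rasa answer is used.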

7.6 Cohere model training

The Cohere language model used within SoulsBot+ is fine-tuned on a curated set of game-specific question-answer pairs. These pairs are structured using a consistent JSONL format that includes both user input and the bot’s response. Each entry is designed to capture essential gameplay knowledge, from fundamental mechanics to advanced strategies.

This training corpus enables the model to achieve three core competencies: comprehensive rule comprehension (including stamina systems and dice hierarchies), sensitivity to in-game context (such as current player status and equipped items), and strategic advisory capabilities (offering recommendations tailored to the ongoing game situation). The dataset is carefully validated to ensure coverage across combat systems, movement mechanics, and resource management. It progresses in complexity, starting from basic rules and advancing toward more sophisticated decision-making scenarios. The uniform formatting of this dataset enhances model reliability and generalization.
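
To make the uniform formatting concrete, the sketch below writes and validates question-answer pairs in JSONL, one JSON object per line. The key names `user` and `bot` are assumptions chosen for illustration and may differ from the keys used in the actual dataset or required by Cohere's fine-tuning service; the answer texts are placeholders.

```python
import json

REQUIRED_KEYS = {"user", "bot"}  # hypothetical field names


def to_jsonl(pairs: list[dict]) -> str:
    """Serialize question-answer pairs as one JSON object per line."""
    return "\n".join(json.dumps(pair, ensure_ascii=False) for pair in pairs)


def validate_jsonl(text: str) -> list[str]:
    """Return a list of problems; an empty list means the file is uniform."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            problems.append(f"line {number}: not valid JSON")
            continue
        if not isinstance(entry, dict) or set(entry) != REQUIRED_KEYS:
            problems.append(f"line {number}: expected exactly keys {sorted(REQUIRED_KEYS)}")
        elif not all(isinstance(entry[k], str) and entry[k].strip() for k in REQUIRED_KEYS):
            problems.append(f"line {number}: values must be non-empty strings")
    return problems


pairs = [
    {"user": "How do I block?", "bot": "<answer text>"},
    {"user": "What is sweep?", "bot": "<answer text>"},
]
assert validate_jsonl(to_jsonl(pairs)) == []
```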

Through this training regimen and its integration with RAG techniques, the SoulsBot+ architecture is designed to merge deterministic precision with contextual depth, potentially outperforming either method in isolation.

7.7 Comparison with SoulsBot and other language models

To contextualize SoulsBot+ against modern conversational agents, we compared it with (1) the original SoulsBot (Rasa-only), (2) ChatGPT (GPT-5.1), and (3) Gemini Pro. We used a set of 10 representative queries collected from early playtests, balanced between state-aware questions (e.g., “What is my health?,” “Whose turn is it?”) and broader rule/strategy questions (e.g., “What is sweep?,” “How should I approach the Gargoyle boss?”). All models received the same natural-language prompts; only SoulsBot+ had direct access to the game-state JSON exported by Unity.

7.7.1 Methodology

Responses were evaluated on three dimensions by the fourth author of this work as follows: correctness (0–2), helpfulness (0–2), and a binary frustration flag indicating generic failures, disclaimers, or incorrect rule interpretations as shown in Supplementary Table 1. Average scores were computed across all queries as well as within each query type to analyze strengths and weaknesses of each system. This methodological setup allowed us to directly assess the contribution of the hybrid fallback architecture and the impact of explicit state-awareness on answer quality.
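
The aggregation step can be sketched as follows. The rating values in the example are arbitrary placeholders and are not data from this study; the `Rating` class, the system label, and the query-type labels are hypothetical names introduced for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Rating:
    system: str
    query_type: str   # e.g., "state" or "rule"
    correctness: int  # 0-2
    helpfulness: int  # 0-2
    frustrated: bool  # binary frustration flag

    def __post_init__(self):
        if not (0 <= self.correctness <= 2 and 0 <= self.helpfulness <= 2):
            raise ValueError("scores must lie in the range 0-2")


def summarize(ratings: list[Rating]) -> dict:
    """Average scores per system, over all queries and within each query type."""
    groups = defaultdict(list)
    for r in ratings:
        groups[(r.system, "all")].append(r)
        groups[(r.system, r.query_type)].append(r)
    return {
        key: {
            "correctness": sum(r.correctness for r in rs) / len(rs),
            "helpfulness": sum(r.helpfulness for r in rs) / len(rs),
            "frustration_rate": sum(r.frustrated for r in rs) / len(rs),
        }
        for key, rs in groups.items()
    }


# Placeholder ratings for one hypothetical system (not study data).
example = [
    Rating("system_a", "state", 2, 2, False),
    Rating("system_a", "rule", 0, 1, True),
    Rating("system_a", "rule", 2, 1, False),
]
summary = summarize(example)
```

Here `summary[("system_a", "rule")]` holds the within-type averages and `summary[("system_a", "all")]` the averages across all queries.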

7.7.2 Results