- ¹Educational Testing Service, Princeton, NJ, United States

- ²Brighter Research, Thousand Oaks, CA, United States

- ³Department of Educational Psychology, University of Illinois Urbana-Champaign, Urbana, IL, United States

- ⁴School of Teaching and Learning, University of Florida, Gainesville, FL, United States

- ⁵Department of Computer & Information Science & Engineering, University of Florida, Gainesville, FL, United States

Persistence and academic resilience are two non-cognitive skills that are important for students' long-term academic success. To date, there has been a lack of consensus on how to define and measure these critical constructs at the K−12 level. Clear operational definitions and valid measures are essential to assess students' competencies with respect to these two skills and to evaluate how these skills may develop through educational interventions. To address this gap, we conducted a systematic review of 74 studies to synthesize definitions of persistence and academic resilience and evaluate available measures based on evidence of reliability, validity, and fairness. After reviewing the various definitions of persistence and academic resilience, we proposed synthesized definitions. We defined persistence as involving sustained effort toward completion of a goal-directed task despite challenges or difficulties and further broke this down into four components: presence of a goal-directed task, presence of challenges or difficulties, sustained effort, and task completion. Academic resilience was defined as the process of bouncing back or recovering in the face of challenges, adversities, or stressors to achieve successful outcomes (e.g., academic achievement) by using adaptive behaviors or coping strategies over time. Our results revealed wide variation in how existing measures align with these synthesized construct definitions. For persistence, self-report instruments such as the Attitude and Persistence toward STEM Scale and the Academic Self-Efficacy Scale demonstrated strong alignment with all four components. For academic resilience, the Adolescent Resilience Questionnaire and Design My Future Scale encompassed all facets of academic resilience. Behavioral measures were less commonly available, particularly for academic resilience. 
Additionally, our review revealed that both constructs are empirically linked with other social-emotional competencies (e.g., self-efficacy, self-regulation), suggesting an important avenue for future research and intervention development at the K−12 level. We conclude with recommendations for selecting and adapting measures in K−12 settings and offer suggested directions for future research.

Introduction

Educators, researchers, and policymakers have long been concerned with understanding how students recover from setbacks and persist through academic challenges. This concern is particularly pressing today, as students face growing academic demands and many continue to experience systemic inequities. Non-cognitive characteristics, including social-emotional skills and personality traits, have emerged as critical factors influencing students' academic success. A growing body of work has demonstrated that these attributes can help students navigate challenges and recover from setbacks in school (Credé and Kuncel, 2008; Duckworth and Yeager, 2015; Farrington et al., 2012; García-Martínez et al., 2022; Molnár and Kocsis, 2024; Noftle and Robins, 2007; Poropat, 2009; West et al., 2016; Yang and Wang, 2022). Persistence and academic resilience are two particularly important non-cognitive constructs linked to students' long-term academic success (Andersson and Bergman, 2011; Caporale-Berkowitz et al., 2022; Farrington et al., 2012; Hattie, 2009; Hunsu et al., 2023; Kälin and Oeri, 2024; Richardson et al., 2012; Yaure et al., 2021).

However, a challenge for research on persistence and academic resilience is the lack of consensus on “gold standard” definitions or measures (Constantin et al., 2011; DiNapoli, 2023; Rudd et al., 2021; Windle, 2010; Windle et al., 2011). While some researchers use these terms interchangeably (e.g., Zhang et al., 2021), others define one construct as a component of the other. For example, persistence has been conceived both as a subcomponent of academic resilience (e.g., Cassidy, 2016) and as an outcome that is influenced by academic resilience (as well as other contextual factors; Joseph et al., 2017); on the other hand, resilience has also been considered a component of persistence (e.g., Skinner et al., 2022, Figure 1). To some degree, this variety in terminology and conceptualization reflects differences in theoretical orientations, from perspectives grounded in motivational theories (Skinner and Pitzer, 2012) to personality psychology (McCrae and Costa, 1987) and beyond. Differences in how these constructs are conceptualized are reflected in how they are measured, with different instruments including different framings and item content aligned to the underlying definitions (Caporale-Berkowitz et al., 2022). Understanding how to define these constructs and how to measure them reliably, fairly, and validly is vital for ensuring that appropriate assessments are used to evaluate students' levels of these non-cognitive skills, as well as for informing interventions supporting their development (Duckworth and Yeager, 2015; West et al., 2018).

In this article, we argue that the theoretical heterogeneity apparent in the educational literature underscores the need to better understand how persistence and academic resilience are defined and operationalized and which measures are most appropriate to use, especially with diverse samples of K−12 students. Clear definitions and valid measures are necessary for researchers to design high-quality studies and for practitioners and policymakers to make informed decisions about assessing and improving students' persistence and resilience (Farrington et al., 2012). Thus, we conducted a systematic review to synthesize relevant construct definitions and identify the most appropriate measures based on available evidence of validity, reliability, and fairness.

Literature review

The importance of persistence and resilience to student development and academic achievement is widely acknowledged (Farrington et al., 2012; Lee and Shute, 2010; Morrison et al., 2006; Yeager and Dweck, 2012). However, there are a variety of views on how persistence and academic resilience manifest and how they can be measured. In this section, we highlight relevant literature discussing the theoretical foundations of persistence and academic resilience, the reasons why measuring these constructs is critically important, and how the current study advances our understanding of persistence and academic resilience in K−12 education.

Theoretical foundations of persistence and academic resilience

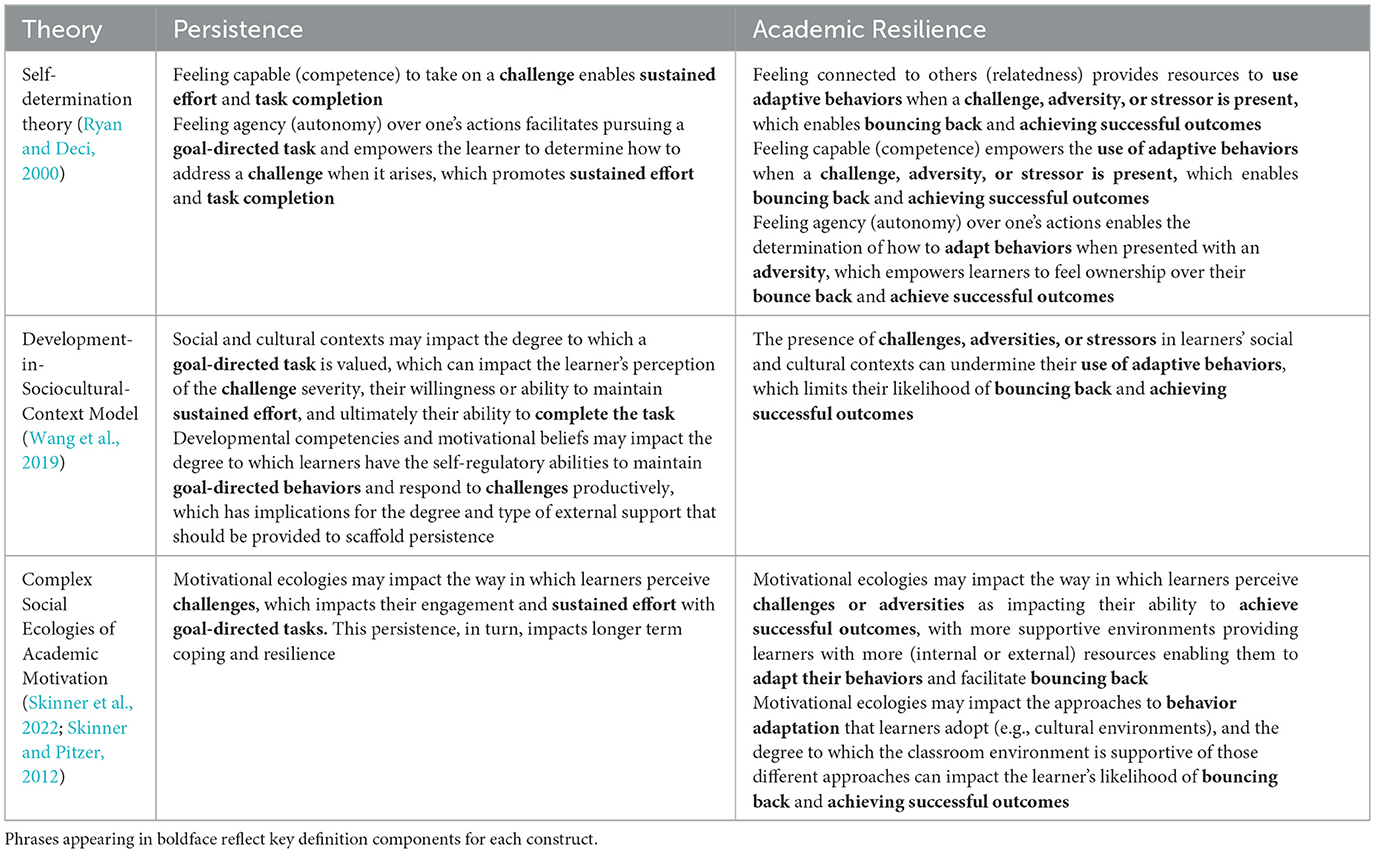

A wide variety of theoretical perspectives speak to the importance of persistence and academic resilience, generally emphasizing learners' capacity to sustain effort and recover from setbacks in pursuit of long-term goals. These frameworks include self-determination theory (SDT; Ryan and Deci, 2000), the Development-in-Sociocultural-Context Model (Wang et al., 2019), and the Complex Social Ecologies of Academic Motivation (Skinner et al., 2022), among many others. Although these theories use similar terminology of “persistence” and “resilience” in academic contexts, they often differ in important, nuanced ways in their rationales for why these constructs matter, how they manifest, and how they should be measured. For example, SDT suggests that students are more likely to persist through challenges when three psychological needs are met: relatedness (e.g., feeling connected to others), competence (e.g., feeling capable of achieving success), and autonomy (e.g., feeling a sense of agency over one's actions; Ryan and Deci, 2000; Deci and Ryan, 2002). These needs serve as the foundation for intrinsic motivation (e.g., inherent enjoyment), which, in turn, supports engagement and the capacity to persist when one encounters challenges or setbacks.

Meanwhile, the Development-in-Sociocultural-Context Model (Wang et al., 2019) and the Complex Social Ecologies of Academic Motivation framework (Skinner et al., 2022; Skinner and Pitzer, 2012) further situate persistence and academic resilience within a broader developmental, social, and ecological system. The Development-in-Sociocultural-Context Model highlights how individual motivational processes are embedded within developmental trajectories shaped by peer, school, and cultural contexts. From this perspective, persistence and resilience are not merely individual characteristics but dynamic processes co-constructed through ongoing interaction with cultural and social factors in the environment. The model proposes that support from these contexts can serve as critical proximal processes that foster persistence, while stressors in these contexts may undermine resilience (Wang et al., 2019). Importantly, Wang et al. (2019) highlight the interplay between developmental competencies (e.g., cognitive strategies) and motivational beliefs (e.g., perceived competence) in supporting persistence. For example, a student may have strong problem-solving skills but decide not to persist if they attribute a setback to a lack of ability. Supportive contexts can help strengthen competence beliefs, thereby fostering persistence and resilience. Similarly, the Complex Social Ecologies framework (Skinner et al., 2022; Skinner and Pitzer, 2012) emphasizes that students' motivation unfolds within overlapping “motivational ecologies,” in which classrooms, peers, schools, and cultural environments jointly shape the balance of support and challenge that students experience. Skinner et al. (2022) show that these dynamics depend on whether students perceive their classrooms as supportive spaces, where teachers provide warmth, clear structure, and meaningful opportunities for autonomy, or as risky spaces, where interactions are marked by coercion, disorder, or peer rejection.
Persistence and resilience, in this view, emerge from the ways students draw on available supports and manage the demands in their environments, with adaptive capacities continually shaped by multiple social and cultural systems over time.

Taken together, these frameworks illustrate the importance of persistence and resilience in academic contexts and show how developmental, social, and ecological contexts shape them. They also suggest that measures of persistence and resilience should capture not only students' individual effort but also the ways in which their social and cultural contexts enable or constrain their ability to persist or “bounce back.” However, the diverse ways in which these constructs are operationalized across theoretical frameworks create challenges for generalization and interpretation. Given that students begin to encounter academic challenges and engage in complex problem-solving in elementary school, a clear understanding of how persistence and resilience are defined, measured, and fostered within the K−12 context is particularly crucial.

Measuring persistence and academic resilience in the K−12 context

A growing body of research has explored interventions aimed at promoting persistence and academic resilience in various learning settings. For example, strategies such as productive failure (Kapur, 2008), erroneous examples (Richey et al., 2019), and affect-aware feedback (Grawemeyer et al., 2017) have been shown to help students navigate confusion and frustration, leading to greater learning gains. These interventions recognize that persistence is not simply about students pushing through difficulties but involves strategically managing effort, receiving timely feedback, and developing metacognitive awareness of their learning processes. Importantly, studies across K−12 and higher education show that such interventions can be effective for diverse learners, although the success of these strategies often depends on contextual factors like task difficulty, teacher support, and student characteristics (Lodge et al., 2018).

While persistence and academic resilience are important at all stages of learning, fostering their development is particularly critical in the K−12 context. During early school experiences throughout childhood and adolescence, students are still forming their academic identities (Fallon, 2010), as well as their beliefs about learning, intelligence, and their ability to overcome challenges (Farrington et al., 2012). Confusion and frustration can often occur when students become stuck and are unsure how to proceed or experience moments of failure (Baker et al., 2025), both of which introduce opportunities for students to leverage their persistence and resilience skills to achieve positive outcomes. Students who learn to work through frustration and remain engaged despite setbacks are more likely to succeed in postsecondary education and the workforce (Jones and Kahn, 2017). Therefore, waiting until postsecondary education to address students' persistence and resilience gaps is too late for many (McClelland et al., 2017). Large-scale reviews have emphasized the need to integrate social, emotional, and motivational skill-building early and consistently throughout K−12 schooling (Dusenbury et al., 2015).

Importantly, students encounter structured academic demands and social norms about performance when they enter elementary school. These environments provide opportunities to practice adaptive responses to failure, confusion, and frustration, which are important conditions for cultivating resilience (Tough, 2016). In fact, several K−12 interventions have aimed to promote persistence and/or resilience (e.g., Irfan Arif and Mirza, 2017; Cook et al., 2019; Gamlem et al., 2019), and in general these interventions have succeeded in supporting students' persistence and resilience. However, the ways in which persistence and resilience are conceptualized and measured tend to differ across studies, making it difficult to draw conclusions across interventions (DiCerbo, 2016; Moore and Shute, 2017; Rudd et al., 2021; Tudor and Spray, 2018; Windle et al., 2011). Thus, as researchers continue to develop interventions targeting persistence and academic resilience, it is imperative that these constructs be conceptualized and measured appropriately.

The present study

Despite their importance, persistence and academic resilience remain complex and variably defined constructs in the literature. Some researchers conceptualize persistence as time-on-task or repeated attempts to solve a problem, while others frame it as a motivational trait involving sustained engagement over time (DiCerbo, 2016; Moore and Shute, 2017). Persistence is sometimes treated as synonymous with constructs like perseverance or grit, while other researchers argue that they are, in fact, distinct in terms of definitions, relevant measures, timescales, and grain size of analyses (see DiNapoli, 2023). Similarly, academic resilience is sometimes defined as the ability to recover from failure in a single learning episode, while other studies treat it as a broader long-term capacity to overcome systemic barriers to success (Bashant, 2014; Tudor and Spray, 2018; Windle, 2010). These varying definitions pose challenges for measurement and intervention design and highlight the need for more precise operationalization and reliable, valid assessments. Addressing this complexity is critical for advancing both theoretical understanding and practical applications in learning environments.

To summarize, despite broad agreement on the importance of developing persistence and academic resilience in K−12 education, there is no clear consensus on how these constructs are defined and measured. Prior studies often use varying terminology, measure overlapping yet distinct constructs (e.g., grit, engagement, perseverance), and draw on diverse theoretical perspectives, making it difficult to synthesize findings or generalize results across age groups. This lack of consistency in construct definition and measurement has implications for both research design and educational practice. To address these gaps, we conducted a systematic review of existing research on persistence and academic resilience in K−12 settings. Specifically, we sought to answer the following research questions:

RQ1. How has persistence been defined and measured in prior research?

RQ2. How has academic resilience been defined and measured in prior research?

Methods

Literature search

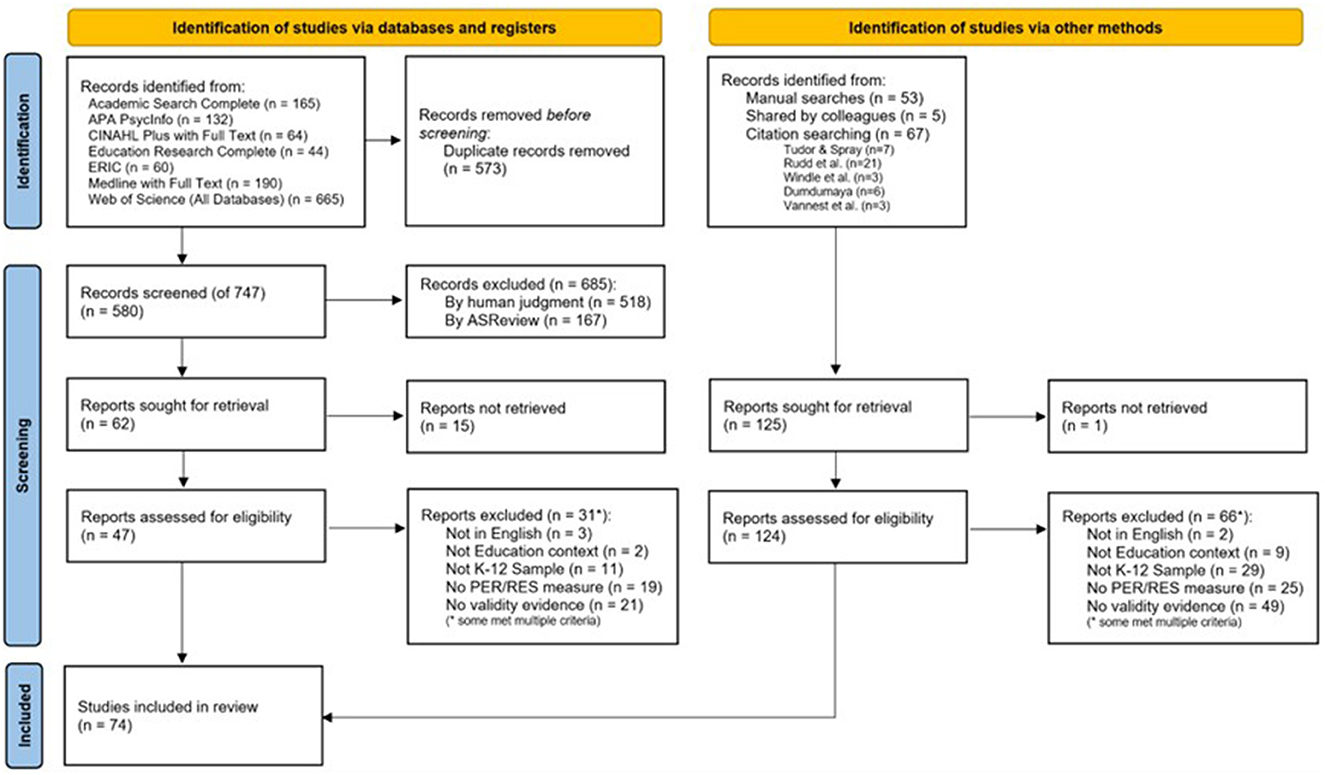

We conducted a systematic review whose methods generally followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Checklist (PRISMA; Stevens et al., 2018). Figure 1 illustrates the identification and screening processes applied during the systematic review.

On October 25, 2023, we searched seven databases and registers for abstracts (see Figure 1) using the following search string: ((persist* OR resil*) AND (learn*) AND (K-12 OR primary* OR secondary* OR grade OR “elementary” OR “middle” OR “high”) AND (measur* OR instru* OR scale* OR surv* OR assess*) AND (valid*)) NOT (teacher). This search yielded 1,320 potential records for inclusion.
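To make the logic of this Boolean query concrete, the Python sketch below applies an approximation of it to a single title or abstract string. It is illustrative only: the function and constant names are hypothetical, `*` is treated as a truncation (prefix) wildcard, and all other terms are treated as exact whole-word matches. Each database applies its own tokenization, stemming, phrase, and field rules, so actual hit counts would differ.

```python
import re

# Hypothetical translation of the review's Boolean search string.
# Assumptions: "*" is a truncation (prefix) wildcard; all other terms must match
# a whole word exactly (no stemming); matching is case-insensitive.
POSITIVE_GROUPS = [
    ["persist*", "resil*"],
    ["learn*"],
    ["K-12", "primary*", "secondary*", "grade", "elementary", "middle", "high"],
    ["measur*", "instru*", "scale*", "surv*", "assess*"],
    ["valid*"],
]
EXCLUDED_TERMS = ["teacher"]


def tokens(text):
    """Lowercase word tokens; internal hyphens are kept so "K-12" stays one token."""
    return re.findall(r"[a-z0-9]+(?:-[a-z0-9]+)*", text.lower())


def has_term(toks, term):
    """Prefix match for wildcard terms, exact whole-word match otherwise."""
    term = term.lower()
    if term.endswith("*"):
        return any(t.startswith(term[:-1]) for t in toks)
    return term in toks


def matches_query(text):
    """True if every OR-group has a hit (AND) and no excluded term appears (NOT)."""
    toks = tokens(text)
    all_groups = all(any(has_term(toks, t) for t in group) for group in POSITIVE_GROUPS)
    excluded = any(has_term(toks, t) for t in EXCLUDED_TERMS)
    return all_groups and not excluded


print(matches_query("Validating a persistence scale for middle school learners"))  # True
print(matches_query("Validating a teacher survey of student persistence in high school learning"))  # False
```

Because non-wildcard terms are matched exactly in this sketch, an abstract mentioning only "teachers" would not be excluded; whether a given database excludes it depends on that database's stemming rules.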

Inclusion and exclusion criteria

In order to be analyzed in this systematic review, studies had to meet the following five inclusion criteria:

1. Be available in English.

2. Be relevant to educational contexts (i.e., the theoretical framing, instruments administered, or study context must connect to educational issues, such as classroom learning, digital learning environments, academic achievement, etc.).

3. Include data sampled from K−12 learners.

4. Include at least one measure of persistence or resilience, including self-report measures, behavioral measures, other-report measures, or observational protocols.

5. Report at least one type of original quantitative evidence of reliability or validity for the measure(s) of persistence or resilience for the given sample.

In terms of reliability and validity evidence, we followed the conceptual definitions and examples put forth in the Standards for Educational and Psychological Testing (AERA, NCME, and APA, 2014). Thus, we looked for reported metrics of internal consistency (e.g., Cronbach's α), inter-rater reliability, test-retest reliability, split-half reliability, and other relevant evidence of scale reliability, as well as evidence of content validity, cognitive validity, dimensionality (i.e., internal factor structure), and convergent and divergent validity (i.e., relationships to conceptually related and conceptually distinct constructs). We also examined evidence of fairness (e.g., appropriateness for use with diverse populations based on results from subgroup analyses of relevant samples) and sensitivity to change (e.g., responsiveness to intervention, change over time) where available.
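Because internal consistency figures prominently in the reliability evidence reviewed here (e.g., α values reported for behavioral indicators), a minimal Python sketch of the standard Cronbach's α formula may help readers interpret those values. The response data are invented for illustration and the function name is our own; in practice, established psychometric software would typically be used.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix.

    scores: list of rows, one per respondent, each a list of k item scores.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*scores))                          # transpose: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in scores])  # variance of summed scale scores
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses: 5 students x 3 Likert-type persistence items.
responses = [
    [3, 4, 3],
    [2, 2, 3],
    [4, 5, 4],
    [1, 2, 1],
    [3, 3, 4],
]
print(round(cronbach_alpha(responses), 3))  # 0.923
```

The same sample-variance function is used for items and totals, so the ratio in the formula is unaffected by the choice of n or n − 1 as the denominator.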

We excluded studies if they did not include a measure of persistence or resilience specifically. Studies measuring persistence were excluded if they only administered the grit scale¹ (Duckworth et al., 2007; Duckworth and Quinn, 2009) or general measures of conscientiousness, engagement, behavioral and emotional strengths, self-concept, or interest that did not include a subscale specific to persistence. Studies were also excluded if they only concerned “pipeline persistence,” such as intent to complete a course of study or to pursue higher education, or variables indicating that students completed a course of study or enrolled in college or a particular major. Studies measuring resilience were excluded if they did not administer any self-report or behavioral instruments but rather categorized or labeled students as “resilient” (or not) based on demographic or socioeconomic risk factors or risk factors in conjunction with demonstrated achievement (e.g., students who demonstrate high achievement despite low socioeconomic status or belonging to a racial/ethnic minority group; Waxman et al., 2003).

Study screening

Abstract screening

After removing duplicates, we used ASReview (ASReview LAB Developers, 2023) to screen the 747 records sourced from databases and registers. ASReview supports more efficient abstract screening by using machine learning to reorder abstracts by predicted relevance, based on a training set (in this case, 2 relevant and 4 irrelevant abstracts) and on subsequent human judgments of each abstract as relevant or irrelevant. Campos et al. (2024) examined what percentage of abstracts needed to be screened to locate 95% of the studies that were later accepted in educational psychology systematic reviews; using logistic regression and Sentence BERT (see Reimers and Gurevych, 2019), they found that screening 60% of studies should be sufficient. Accordingly, we used Sentence BERT for feature extraction and logistic regression as the classification method, along with “maximum” as the query approach and dynamic resampling. In total, we screened 580 (77.6%) of the 747 records, terminating screening once more than 250 consecutive records had been judged irrelevant. This screening process identified 62 relevant records, for which full texts were requested.
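The stopping rule described above can be sketched in a few lines of Python. This is a simplified illustration, not ASReview's implementation: it assumes the ranked order of records and the human relevance judgments are already given, and it omits the model retraining that ASReview performs as judgments accumulate. The function name and the `stop_after` parameter are hypothetical.

```python
def screen_until_stop(labels_in_rank_order, stop_after=250):
    """Simulate a consecutive-irrelevant stopping rule.

    labels_in_rank_order: human judgments (True = relevant) in the order the
    model presented the records. Screening stops once more than `stop_after`
    consecutive records have been judged irrelevant, or the list runs out.
    Returns (number of records screened, number judged relevant).
    """
    screened = relevant = run = 0
    for is_relevant in labels_in_rank_order:
        screened += 1
        if is_relevant:
            relevant += 1
            run = 0      # a relevant record resets the irrelevant streak
        else:
            run += 1
            if run > stop_after:
                break    # streak exceeded the threshold: stop screening
    return screened, relevant


print(screen_until_stop([True] * 5 + [False] * 300))
```

Here screening stops after 256 records: 5 relevant records followed by 251 consecutive irrelevant ones. For comparison, the 580 of 747 records screened in this review corresponds to 77.6%, above the roughly 60% benchmark reported by Campos et al. (2024).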

Full-text screening

In addition to the 62 studies identified through abstract screening, we conducted further searches to locate relevant studies. As shown in Figure 1, informal literature searches, studies shared by colleagues, and citation searching of other articles (including existing reviews) produced an additional 125 studies. We requested full texts of these 125 studies (one could not be retrieved) and of the 62 studies identified from abstract screening (15 could not be retrieved). Altogether, 171 studies were successfully retrieved and subjected to full-text screening based on the inclusion/exclusion criteria described previously. The reasons studies were excluded are detailed in Figure 1.

Ultimately, 74 studies met the criteria for inclusion in the systematic review following this screening process, with 16 studies sourced from abstract screening and 58 studies identified through other means.
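The counts reported across the screening steps can be cross-checked with simple arithmetic. The Python snippet below restates figures from the text (variable names are our own) and derives the implied totals; it adds no new data.

```python
# Counts reported in the Methods text (variable names are illustrative).
identified = 1320               # records from seven databases and registers
after_dedup = 747               # records remaining after duplicate removal
abstracts_screened = 580
abstract_relevant = 62          # full texts requested
abstract_not_retrieved = 15
other_sources = 125             # informal searches, colleagues, citation searching
other_not_retrieved = 1
included_from_abstracts = 16
included_from_other = 58

removed_as_duplicates = identified - after_dedup
pct_screened = round(100 * abstracts_screened / after_dedup, 1)
retrieved = (abstract_relevant - abstract_not_retrieved) + (other_sources - other_not_retrieved)
included = included_from_abstracts + included_from_other
excluded_at_full_text = retrieved - included

print(removed_as_duplicates, pct_screened, retrieved, included, excluded_at_full_text)
# 573 77.6 171 74 97
```

The derived totals agree with the reported values: 47 + 124 = 171 full texts retrieved and 16 + 58 = 74 studies included, implying 97 exclusions at the full-text stage.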

Inter-rater reliability

Two coders participated in the full-text screening process. The coders jointly screened two papers and then independently screened six papers, resolving discrepancies through discussion and refining the inclusion/exclusion criteria as edge cases emerged during coding. Additional rounds of independent coding of six papers, each followed by resolution of disagreements, were conducted until 32 papers (approximately 20–25%) had been double-coded for inclusion/exclusion. The two coders then independently screened 10 additional full texts (approximately 10%), achieving perfect inter-rater reliability for inclusion/exclusion (100% agreement; κ = 1.00), with five papers included and five excluded. Following reliability coding, the remaining full texts were divided between the two researchers for independent screening. The coders met as needed to review select cases and establish agreement on coding for inclusion/exclusion.
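For readers less familiar with Cohen's κ, the sketch below shows how it corrects raw percent agreement for the agreement expected by chance, using the reliability round described above (10 papers, five included and five excluded, with full agreement) as a worked example. The function is an illustrative implementation of the standard two-rater formula and its name is our own; it is not necessarily how κ was computed in this review.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items coded identically by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[c] * count_b[c] for c in set(count_a) | set(count_b)) / n ** 2
    if p_e == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (p_o - p_e) / (1 - p_e)


# Reliability round: 10 full texts, 5 included and 5 excluded by both coders.
coder_1 = ["include"] * 5 + ["exclude"] * 5
coder_2 = ["include"] * 5 + ["exclude"] * 5
print(cohens_kappa(coder_1, coder_2))  # 1.0
```

With a 5/5 split, chance agreement is 0.5, so κ reaches 1.00 only because observed agreement is perfect; a single disagreement would lower κ much more sharply than it lowers percent agreement.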

Data extraction

Data were extracted from studies in two phases. First, operational definitions were extracted for each construct by taking direct quotations from the studies and noting the relevant page number(s) for these definitions. If no explicit definition was stated, this was noted.

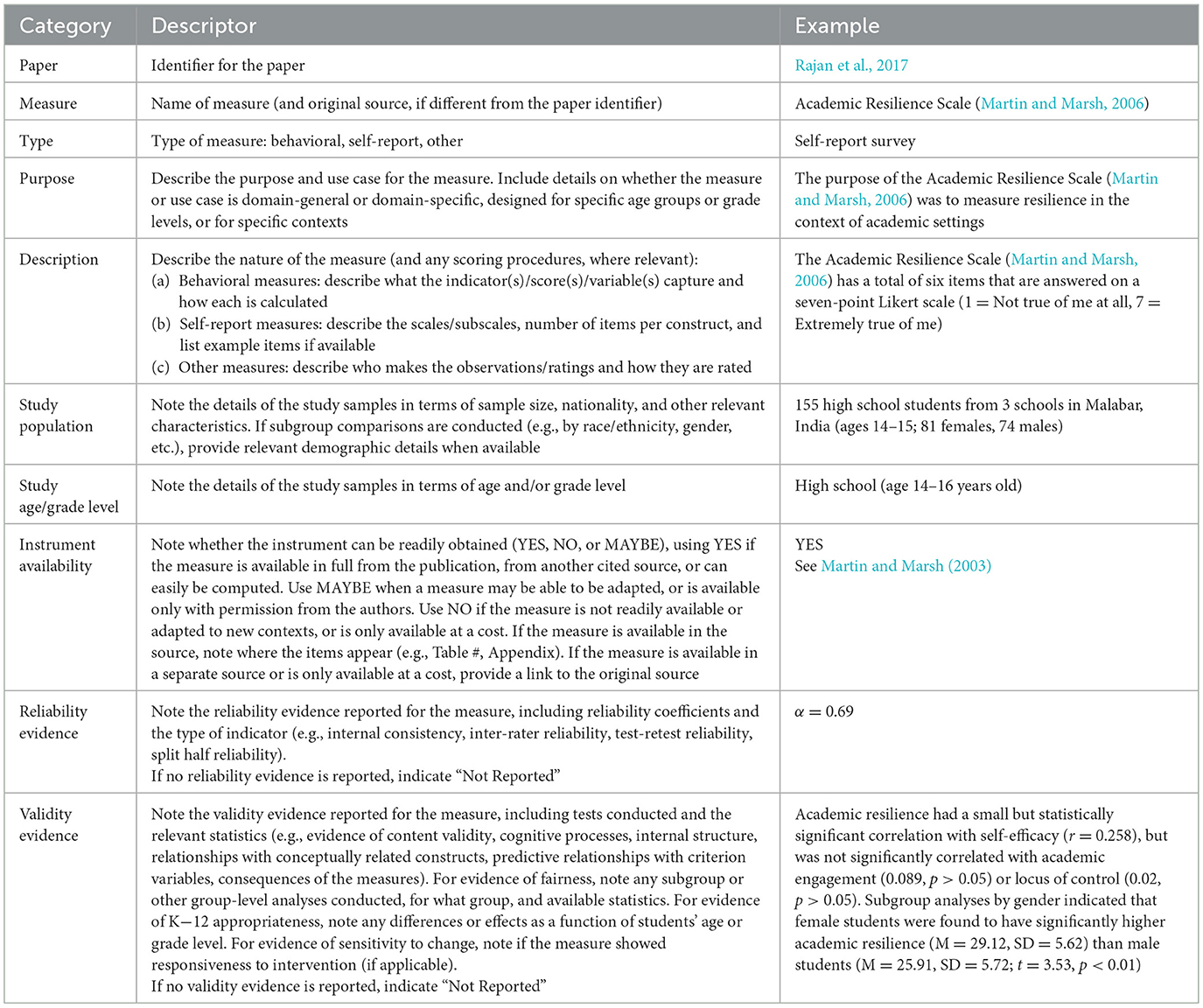

Next, details on available measures (i.e., name, purpose and use case, type of measure, structure of measure) were extracted, first for persistence (RQ1) and then for resilience (RQ2). We considered purpose and use case, as well as available evidence of reliability, validity, and fairness (AERA et al., 2014), to evaluate the technical quality of the measures. Thus, we also extracted information about the samples tested, subgroup comparisons evaluated, and available reliability and validity evidence reported in each study to aid in our analysis of the instruments. See Table 1 for details on the categories of information extracted for each measure.

Two coders participated in data extraction, each extracting data from approximately half the studies. The coders first met and co-coded a few studies to ensure consistency in interpreting the extraction categories (see Table 1). After a trial run of data extraction, the entire team met to review the data extracted thus far and discuss common challenges encountered during extraction. The two coders then continued data extraction, meeting periodically to discuss common challenges and ensure consistency across studies. Due to the iterative nature of this process, we did not formally calculate inter-rater reliability for data extraction.

Data availability

All data used in the analyses reported in this paper are provided in the Results section or in the Supplemental material.

Results

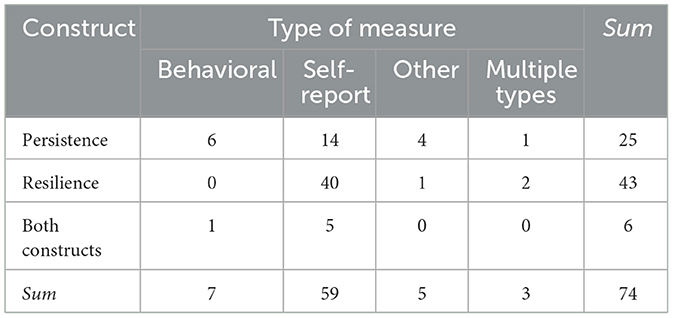

We located 74 papers that contained measures of persistence or resilience. Table 2 reports the distribution of the papers by the construct(s) and the type of measure(s) (self-report, behavioral, other, or multiple types) reported. Most papers reported on self-report (i.e., survey-based) measures of resilience (n = 40), followed by self-report measures of persistence (n = 14). Relatively few behavioral measures (e.g., in-task actions, timing variables, or other behavioral observations) were reported, and most of these measured persistence (n = 6) or both constructs (n = 1). Five papers included measures that did not fall into the self-report or behavioral categories, including teacher-report, parent-report, or observation protocols (i.e., coding schemes applied by researchers). Specifically, two papers reported on teacher-report instruments of persistence, one on a teacher-report instrument of resilience, and two on observation protocols for persistence. Altogether, six papers measured both constructs. Only one paper combined self-report and behavioral measures of persistence in the same study (Ventura and Shute, 2013), and two papers combined self-report and other-report instruments of resilience (Nese et al., 2012; Yang, 2014).

RQ1. How has persistence been defined and measured in prior research?

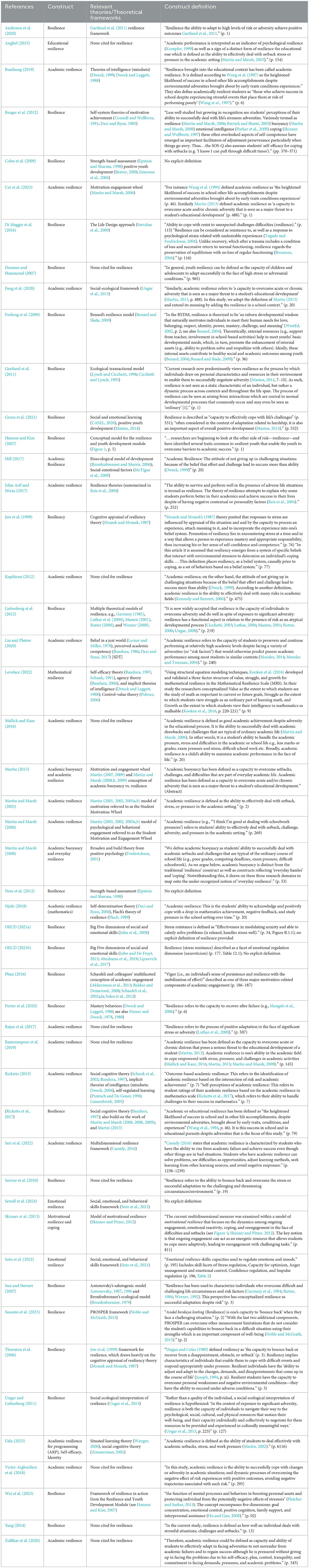

Defining persistence

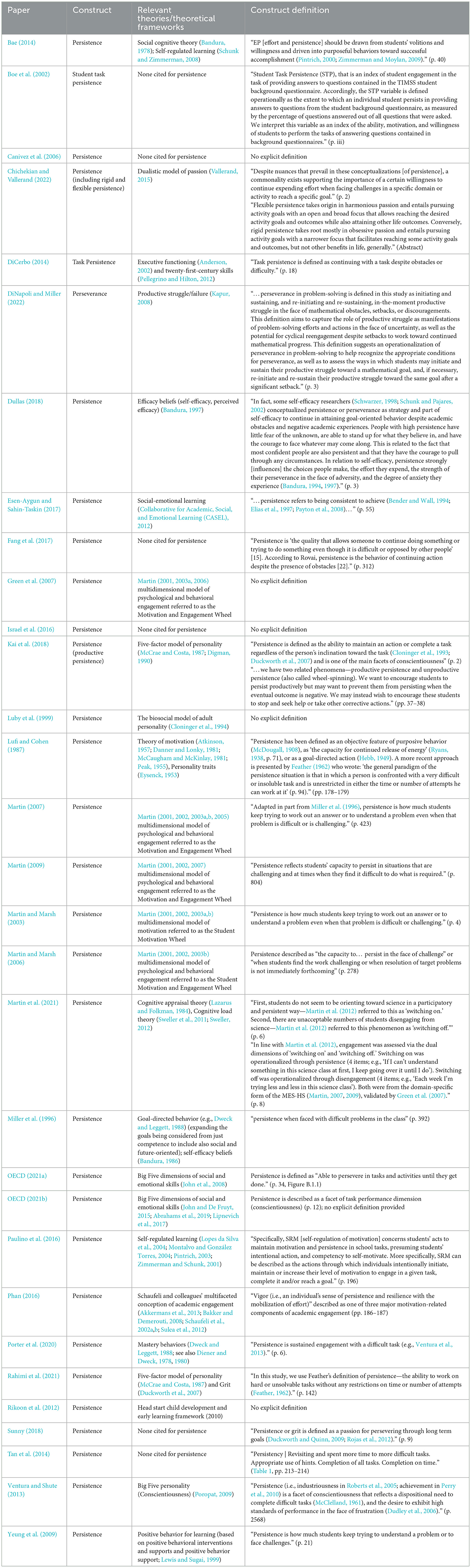

We reviewed the construct definitions cited for persistence (and related constructs), as well as the theories or theoretical frameworks authors cited to inform their perspectives on this construct (see Table 3). Of the 31 papers that measured persistence, six did not include an explicit definition of persistence, and six did not cite a relevant theoretical framework for persistence (two included neither a definition nor a theoretical framework). Of the remaining 25 papers that did cite particular theoretical frameworks, the theories varied, and some papers cited multiple frameworks. The most commonly cited theories were related to motivation and engagement, which characterize persistence as an adaptive state reflecting engaged, effortful behavior (e.g., Martin's Motivation and Engagement Wheel; seven papers), and personality factors, which characterize persistence as a facet of conscientiousness reflecting a disposition to persist (e.g., Big Five; seven papers). Other theories cited included social-emotional learning (three papers), self-regulated learning (three papers), and self-efficacy (two papers). Social cognitive theory, mastery behaviors, executive functioning, cognitive load, and several other frameworks (e.g., twenty-first century skills) were each cited once among the reviewed papers.

Despite diversity in their theoretical bases, the persistence definitions shared some common themes, such as continuation of effort on a goal-directed task in the face of challenge or difficulty (Chichekian and Vallerand, 2022; DiCerbo, 2014; Martin, 2007, 2009; Porter et al., 2020), working until tasks are completed (OECD, 2021a; Tan et al., 2014; Ventura and Shute, 2013), and unlimited attempts or time allotted to work on difficult (or unsolvable) problems (see Feather, 1962, cited in Lufi and Cohen, 1987; Rahimi et al., 2021). Persistence is variously characterized as equivalent to effort, engagement, resilience, perseverance, and grit; as a facet of effort, engagement, resilience, goal-directed behavior, conscientiousness, or grit; or as a multidimensional construct (flexible vs. rigid, productive vs. unproductive). We return to the issue of relationships among persistence and related motivational constructs in the Discussion.

Taken together, we propose a synthesized definition of (task) persistence as involving sustained effort toward completion of a goal-directed task despite challenges or difficulties. Persistence may be especially observable when students have the opportunity to complete multiple tasks or to attempt a task or subtask multiple times (e.g., to revise their work). The obstacles faced may come from the difficulty (or solvability) of the problems themselves, from external forces (“opposed by other people”; Fang et al., 2017), or from internal states (e.g., “inclination toward the task”; Kai et al., 2018). This definition captures the predominant, recurring themes across the available definitions of persistence presented in Table 3.

Review of persistence measures

We reviewed measures of persistence described in 31 papers; one paper (Ventura and Shute, 2013) included three measures of persistence (two behavioral, one self-report), and some measures were used in more than one paper. In total, we reviewed evidence for 28 distinct measures.

We summarize details and reliability evidence for each measure by type, including behavioral measures (n = 9; Supplementary Table 1a), self-report measures (n = 16; Supplementary Table 2a), and other measures (i.e., one teacher-report instrument and two observational measures; Supplementary Table 3a). Validity evidence reported for each measure is summarized in Supplementary Tables 1b–3b. Within each table, measures are sorted roughly by age/grade level, with consideration of availability for use as well as available reliability and validity evidence.

Behavioral measures of persistence

Eight papers in our review described a total of nine behavioral approaches to measuring persistence, derived from students' direct interactions with digital tasks (e.g., time-based measures or other actions and behaviors captured in digital log files or clickstream data). In terms of availability (Supplementary Table 1a), many of these measures can readily be implemented in or adapted to other digital task contexts. Measures spanned a wide range of grade levels, from first to twelfth grade, with some evaluated with middle school students, some with high school students, and several with a combination of elementary, middle, and high school students. Additionally, six of the nine measures were designed for use in domain-specific contexts (i.e., mathematics, science, or physics problem solving), while three were domain-general. Where reliability evidence was reported, it indicated adequate reliability; however, most measures (six of nine) did not report reliability evidence. To the extent possible, future research should therefore compute and report reliability (e.g., internal consistency, inter-rater reliability, test-retest reliability, split-half reliability) to ensure adequate technical quality of behavioral indicators. Despite limited reliability evidence, all measures in this category reported some form of validity evidence in the studies reviewed (Supplementary Table 1b).

The most straightforward behavioral measure to apply is DiCerbo's (2014) behavioral indicators of task persistence (i.e., time on task and number of task events). This measure is readily available for use in digital learning tasks where time and the number of events (actions) can be tracked through log files. The measures showed adequate reliability (α's > 0.80) and evidence of sensitivity to students' development (i.e., scores increased by grade level), and validity evidence showed good fit for models based on this measure. The domain-general nature of this measure and its use across a wide range of ages (grades 1 through 9) imply that it can readily be adapted for use in various interactive task contexts, so long as those tasks include multiple events nested within multiple levels, units, etc. Measures created by Tan et al. (2014) in the context of the ASSISTments mathematics learning platform were also highly reliable (α's > 0.90) and could be promising for many research teams but would likely require some adaptation to the specifics of the target learning task if applied outside of ASSISTments.
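
The two indicators can be sketched from raw log rows as follows, assuming a hypothetical log format of (student, level, timestamp in seconds, event name). Approximating time on task as the span between a student's first and last logged event in a level is a simplifying assumption; DiCerbo (2014) is not claimed to have used this schema or definition.

```python
from collections import defaultdict


def persistence_indicators(log_rows):
    """Return {student: {level: (time_on_task, n_events)}} from log rows.

    log_rows: iterable of (student_id, level, timestamp_seconds, event_name),
    a hypothetical schema. Time on task is the span between the first and
    last logged event for that student within that level.
    """
    stamps = defaultdict(list)
    for student, level, ts, _event in log_rows:
        stamps[(student, level)].append(ts)

    indicators = defaultdict(dict)
    for (student, level), ts_list in stamps.items():
        indicators[student][level] = (max(ts_list) - min(ts_list), len(ts_list))
    return dict(indicators)
```

Keeping the per-level values (rather than only student totals) matters because reliability estimates such as internal consistency treat each level as an item.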

Other behavioral measures developed in domain-specific contexts [i.e., Boe et al., 2002; Fang et al., 2017; Kai et al., 2018; Rahimi et al., 2021; Game-based Assessment of Persistence (GAP), Ventura and Shute, 2013] did not report reliability evidence and therefore should be used with caution. If these measures are selected for use, reliability evidence should be collected and analyzed to aid in the interpretation of results. Porter et al. (2020) also did not report reliability for the domain-general Persistence, Effort, Resilience, and Challenge-Seeking (PERC) task or its subscores, likely due to the small number of observations per subscore, although some validity evidence for the overall task and subscores is reported. In the PERC task, persistence was operationalized as total time spent on four difficult puzzles; this time-based measure was converted into a measure ranging from 0 to 1 to enable summation across subscores to create an overall PERC score. Implementing these tasks would require researchers to program their own versions of the tasks and to apply the specified sample-dependent scoring approaches; available evidence is not sufficient to support implementing the persistence measure alone. Researchers choosing to implement this measure will need to expend additional effort to prepare the tasks for administration, for scoring, and for validation, and therefore may prefer to use other measures not tied to this specific set of puzzle-solving tasks. The final measure in Supplementary Table 1a—the Performance Measure of Persistence (PMP; Ventura and Shute, 2013)—was sufficiently reliable but is not readily available for use and therefore also not recommended.
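
To illustrate why a 0-to-1 conversion of this kind is sample-dependent, the sketch below uses min-max rescaling within the sample and then sums rescaled subscores per student. Min-max rescaling is an assumed stand-in, not Porter et al.'s (2020) documented scoring rule, and the function names are hypothetical.

```python
def rescale_0_1(values):
    """Min-max rescale raw scores (e.g., total seconds) to [0, 1] within a sample.

    The minimum and maximum come from the sample itself, so the same raw
    time can map to different rescaled values in different samples.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # no variation: assign 0 to everyone (a choice)
    return [(v - lo) / (hi - lo) for v in values]


def overall_score(subscores):
    """Sum several rescaled subscores; subscores is a list of per-student lists."""
    return [sum(parts) for parts in zip(*subscores)]
```

For example, raw persistence times of 120, 300, and 480 seconds become 0.0, 0.5, and 1.0, but adding a student who spent 600 seconds would change every other student's rescaled value.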

Among the behavioral measures of persistence, we observed that only the GAP and PMP (Ventura and Shute, 2013) were evaluated against an external survey-based measure of persistence, analyses of which indicated that the behavioral measures and survey measures were uncorrelated (although use of the PMP is not recommended because the items are not readily available). Porter et al. (2020) evaluated the PERC task against a self-report measure of mastery behaviors, but there were not sufficient items within the self-report survey to isolate a persistence subscore. Future research utilizing behavioral measures of persistence should also include relevant and robust self-report survey-based measures of persistence to examine the extent to which behavioral measures assess a similar or different construct from well-established surveys. We evaluate such survey-based measures in the following section.
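
When pairing a behavioral measure with a survey measure in this way, one common summary of convergence is the correlation with a confidence interval. The sketch below uses the Fisher z-transformation for an approximate 95% interval; the function names are hypothetical, and the approach is a general-purpose technique rather than one reported by the reviewed studies.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def r_with_ci(behavioral, survey, z_crit=1.96):
    """Return (r, lower, upper) using the Fisher z approximation."""
    n = len(behavioral)
    r = pearson_r(behavioral, survey)
    if n <= 3 or abs(r) >= 1:
        raise ValueError("need n > 3 and |r| < 1 for the Fisher z interval")
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    return r, math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)
```

An interval that spans zero is consistent with the measures being uncorrelated, though with small samples it is also consistent with a sizable correlation.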

Self-report measures of persistence

Our review included 20 papers that described a total of 16 distinct self-report measures of persistence appropriate for K−12 students (Supplementary Table 2a); some measures were included in two or more studies and are therefore grouped together in the table. When a measure was adapted for a different subject context (i.e., a domain-general instrument that was contextualized for a particular domain; e.g., Green et al., 2007) or was shortened from its original form (e.g., Martin et al., 2021), we considered it a distinct instrument.

Notably, almost all of the self-report measures of persistence reflect a subscale within a larger scale measuring some aspect of motivation, engagement, attitude, social-emotional skills, etc. Depending on additional constructs covered, researchers may opt to use the persistence subscales alone, in conjunction with additional relevant subscales, or the original scale with all subscales. Measures spanned a wide range of ages and grade levels, from first grade through twelfth grade. Seven of the measures were designed for use in domain-specific contexts, such as mathematics, science, or English. All the measures reviewed reported some form of reliability evidence, and most reported adequate reliability; however, four of the persistence measures are not sufficiently reliable to recommend subsequent use (i.e., α < 0.70; Chichekian and Vallerand, 2022; Esen-Aygun and Sahin-Taskin, 2017; Luby et al., 1999; Lufi and Cohen, 1987). All self-report measures reported some form of validity evidence in the studies reviewed (Supplementary Table 2b).
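
The α < 0.70 screening rule used here can be made concrete with a short sketch of Cronbach's alpha, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), for a respondents × items matrix. The function names are hypothetical, and the cutoff simply mirrors the convention applied in this review.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix (list of lists)."""
    k = len(responses[0])
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def adequately_reliable(alpha, cutoff=0.70):
    """Apply the alpha >= 0.70 convention used to screen measures in this review."""
    return alpha >= cutoff
```

For instance, four respondents answering three items as [2, 3, 3], [4, 4, 5], [3, 3, 4], and [5, 4, 5] yield α = 12/13 ≈ 0.92, which passes the screen.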

Altogether, seven measures (reported in eight sources) are readily available for use in subsequent research because the full text of each item is available in the reviewed publication or another source cited within that publication. A further three measures are available in either the reviewed publication or in the International Personality Item Pool (IPIP; Goldberg et al., 2006); however, due to reported adaptations or dropping items for subsequent analysis (e.g., due to low item-total correlation), it is unclear precisely which items should be included in the final scale (Luby et al., 1999; Miller et al., 1996; Ventura and Shute, 2013). Therefore, we do not recommend using these scales at this time due to the degree of inference required about the relevant items. The final nine papers report on survey instruments that are not readily available, either because the instrument is not available in English (Paulino et al., 2016) or because the scale is proprietary and only available at a cost, such as the Motivation and Engagement Scale-High School (MES-HS; Martin, 2007, 2009; Martin et al., 2021; see also Green et al., 2007) and the Student Motivation Scale (Martin and Marsh, 2003, 2006).

Of the seven measures that are readily available, five report adequate reliability evidence for the persistence measure or subscale and are therefore recommended for use in future research. Among these five measures, the number of items ranges from 6 to 15, suggesting that these instruments will be quick to administer. Three of the five instruments were contextualized within a specific content domain (i.e., STEM, mathematics, English). In terms of validity evidence (Supplementary Table 2b), these measures generally showed the expected factor structure, and most showed expected correlations to other motivation and engagement variables (e.g., self-efficacy, academic performance). To aid readers, we group these instruments by the age ranges for which validity and reliability evidence has been reported.

Elementary school and up

The Survey on Social and Emotional Skills (SSES; OECD, 2021a,b) is a domain-general instrument that captures social and emotional skills aligned with the Big Five personality domains. Its persistence subscale comprises seven items (after one poorly performing item was dropped) and offers a domain-general self-report measure of the general tendency to persist. The persistence scale had adequate reliability (α > 0.70 in all cities), and the overall instrument underwent rigorous validation, including multigroup confirmatory factor analyses to examine measurement invariance by age, gender, and sample location, as well as correlations with external measures of other social-emotional variables and student outcomes. Results for persistence indicated scalar invariance by age and gender (suggesting that scale scores can be directly compared across these groups) and metric invariance by city (indicating that within-group associations among variables can be compared across cities, but scale scores cannot be directly compared across cultures). The SSES was specifically designed for international, cross-cultural use with large and diverse samples of 10- and 15-year-olds (approximately 5th and 10th grade) and, provided that its metric-only invariance across cities is taken into account, seems appropriate for use in diverse elementary to high school contexts.

The Attitude and Persistence toward STEM Scale (APT-STEM; Sunny, 2018) is a 24-item scale with subscales for positive STEM attitudes (16 items) and persistence to succeed in STEM (eight items). This measure was highly reliable with a sample of students from 5th to 12th grade (α > 0.80). Although confirmatory factor analysis indicated acceptable fit for the two-factor structure, the APT-STEM was not evaluated against external measures (e.g., for convergent, discriminant, concurrent, or predictive validity); researchers who select this measure should therefore pair it with external measures to provide further validity evidence.

Middle school and up

The Academic Self-Efficacy Scale (ASES; Dullas, 2018) is a domain-general instrument that captures the general tendency to persist in academics and was evaluated with students in grades 7–10 in the Philippines. It consists of 62 items tapping four dimensions of academic self-efficacy: perceived control (12 items), competence (15 items), persistence (15 items), and self-regulated learning (20 items). The persistence subscale was highly reliable (α = 0.90), and the overall scale correlated in the expected directions with external measures of self-efficacy and academic performance.

The Self-Motivated Learning Inventory (SMLI; Bae, 2014) is a 43-item instrument developed to measure adolescents' characteristics relevant to motivation and self-regulated learning within English or mathematics, drawing on several existing scales such as the MSLQ (Pintrich et al., 1991). It has six subscales: self-efficacy (nine items), mastery goal (four items), performance avoidance goal (four items), effort and persistence (seven items), cognitive and metacognitive strategy use (12 items), and resource management (seven items). The SMLI was evaluated with a sample of middle and high school students from South Korea; the effort and persistence subscale was highly reliable (α = 0.89) in both the mathematics and English domains and showed moderate correlations with achievement in both subjects.

High school

Finally, the vigor subscale of the Engagement Scale was evaluated with two samples of Fijian students in years 11 and 12 from multi-ethnic, metropolitan schools (Phan, 2016). The 17-item Engagement Scale, originally developed for undergraduate and adult populations (Schaufeli et al., 2002a,b), includes three facets of engagement: absorption (six items), dedication (five items), and vigor (six items). Vigor was described as reflecting students' persistence and resilience (e.g., “As far as my studies in mathematics are concerned I always persevere, even when things do not go well”; Phan, 2016). The vigor subscale was highly reliable (α > 0.85) and correlated significantly, in the expected directions, with the other subscales, and CFAs showed good model fit.

Other measures of persistence

Our review included four studies that described approaches to measuring persistence that did not fall into the behavioral or self-report categories: two studies used teacher-report surveys of student behavior, and two used behavioral coding schemes that trained researchers apply to video recordings or verbal protocols (Supplementary Table 3a). All four studies reported reliability evidence, and three reported some validity evidence (Supplementary Table 3b). All measures in this group are readily available, and each has reported adequate reliability.

If researchers are interested in collecting teacher reports, the Learning Behaviors Scale (LBS) is recommended for use as a domain-general measure (see McDermott, 1999, for the original instrument). This teacher-report instrument was designed for students up through grade 12, and based on validity evidence summarized here, is appropriate for use with lower elementary (K-2) and elementary-to-middle school students (grades 1–7). The persistence subscale is reliable (α > 0.80), and available validity evidence indicates that the persistence subscale is distinct from three other factors within an overall four-factor structure, although we note that the two studies in our review (Canivez et al., 2006; Rikoon et al., 2012) used somewhat different labels for the four factors (see Supplementary Table 3a).

If researchers are coding student behaviors indicative of persistence, either the 3 Phase Perseverance (3PP) framework (DiNapoli and Miller, 2022) or the Collaborative Computing Observation Instrument (C-COI; Israel et al., 2016) is recommended, depending on purpose and grade level. The 3PP framework may be suitable only for high school students studying mathematics; additional validation is required with other ages, grade levels, and subjects. If the focus is collaboration among elementary students, especially in a computer science context, the C-COI may be more appropriate. Note, however, that Israel et al. (2016) report only percent agreement as reliability evidence and do not report validity evidence for the C-COI (Supplementary Table 3b). Researchers are therefore advised to use this measure with caution and to collect, evaluate, and report more robust reliability (e.g., chance-corrected agreement) and validity evidence, thereby contributing to the evidence base for this measure.
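
The difference between percent agreement and a chance-corrected index can be sketched for two coders as follows. Cohen's kappa, κ = (p_o − p_e)/(1 − p_e), is shown as one common option; the code labels (e.g., "P" for a persistence behavior observed, "N" for not observed) and function names are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Proportion of segments on which two coders assigned the same code."""
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(coder_a)
    p_o = percent_agreement(coder_a, coder_b)
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of each coder's marginal rate, summed over codes
    p_e = sum((counts_a[c] / n) * (counts_b[c] / n)
              for c in set(coder_a) | set(coder_b))
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)
```

With coder A assigning ["P", "P", "N", "N"] and coder B ["P", "N", "N", "N"], percent agreement is 0.75, but kappa is only 0.5 because half of that agreement would be expected by chance given each coder's code frequencies.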

RQ2. How has academic resilience been defined and measured in prior research?

Defining resilience

We reviewed the construct definitions cited for resilience (and related constructs), as well as the theories or theoretical frameworks that authors cited to inform their perspectives on this construct (see Table 4). Of the 49 papers, four did not include an explicit definition of resilience, and 10 did not cite a relevant theoretical framework for resilience (with no overlap between these two groups). Among the 39 papers that did cite theoretical frameworks related to resilience, the theories varied, and some papers cited multiple frameworks. Notably, some of these theories overlapped with those cited for persistence (e.g., theories related to motivation and engagement, social-emotional learning, self-regulated learning, self-efficacy, and social cognitive theory). The most commonly cited theories were related to motivation and engagement, which characterize resilience as an adaptive state and energetic resource that allows students to cope with and recover from challenging tasks (e.g., Martin's Motivation and Engagement Wheel and Skinner and Pitzer's model of motivational resilience; eight papers); social-emotional learning frameworks, which characterize resilience as adapting to hardship or resisting stress (e.g., CASEL's 2020 social and emotional learning framework and OECD's Big Five dimensions of social and emotional skills framework; six papers); and social-ecological theories, which consider how individuals use internal and external resources to cope with adversity through interactions with their environment and context (e.g., Ungar's social-ecological interpretation of resilience and Bronfenbrenner's ecological model; six papers). Other theories cited included developmental approaches (e.g., positive youth development; six papers), self-determination theory (four papers), social cognitive theory (three papers), and theories of intelligence (i.e., growth mindset; three papers). Additional theories, such as academic buoyancy, self-efficacy, self-regulated learning, cognitive appraisal of resiliency, strength-based assessment, and personality theory, were each mentioned in two or fewer papers.

As with persistence, we observed considerable theoretical diversity among approaches to resilience. However, several common themes emerged, most notably the idea of overcoming or experiencing successful outcomes despite adversity (e.g., Anderson et al., 2020; Cui et al., 2023; Liebenberg et al., 2012; Ricketts et al., 2017), adapting to or effectively dealing with stress or setbacks (e.g., Anghel, 2015; Burger et al., 2012; Donnon and Hammond, 2007; Jew et al., 1999; Rajan et al., 2017), coping with challenges (e.g., Green et al., 2021; Skinner et al., 2013; Victor-Aigboidion et al., 2018), and recovery after failure (e.g., Porter et al., 2020; Sari et al., 2022; Zulfikar et al., 2020). Views of academic resilience specifically frame the construct in terms of academic successes and accomplishments (e.g., Liu and Platow, 2020; Mallick and Kaur, 2016) and/or setbacks and challenges specific to academic contexts (e.g., “poor grades, competing deadlines, exam pressure, difficult schoolwork”; Martin and Marsh, 2003, 2006, 2008; Njoki, 2018). These adversities may range from “everyday” academic stressors to more acute or chronic issues that may threaten students' educational development (see discussions of academic buoyancy vs. academic resilience in Martin, 2013; Martin and Marsh, 2008).

Some views frame resilience as an individual capacity or ability (e.g., Porter et al., 2020; Sarwar et al., 2010; Susanto et al., 2023; Zulfikar et al., 2020), whereas social/ecological perspectives frame resilience in terms of how individuals navigate internal and external resources in the context of environmental adversities (e.g., Gartland et al., 2011; Ungar and Liebenberg, 2011). In these perspectives, resilience can be considered an interaction among risks and protective factors (or assets) both internal and external to an individual (Furlong et al., 2009; Gartland et al., 2011; Sun and Stewart, 2007). Resilience is variously characterized as equivalent to persistence (i.e., “the capacity of students to persevere”; Liu and Platow, 2020, p. 240), as a facilitator of persistence (i.e., “resilience… and coping… have emerged as important facilitators of adjustment and perseverance”; Burger et al., 2012, p. 371), or as a higher-level construct than persistence (e.g., Martin and Marsh, 2003, 2006).

Taken together, we propose a synthesized definition of (academic) resilience as involving the process of bouncing back or recovering in the face of challenges, adversities, or stressors to achieve successful outcomes (e.g., academic achievement) by using adaptive behaviors or coping strategies over time. Resilience can be considered a dynamic process (vs. an ability or inherent capacity) involving interactions among an individual's internal assets, their perceptions of task challenges, and the qualities of environmental protective factors and stressors or other internal or external adversities they may face. These adversities may be relatively minor (e.g., everyday academic setbacks, internal motivational issues) or more major (e.g., imminent failure or disaffection from schooling). As with persistence, this definition reflects the major recurring themes and ideas found in the resilience definitions summarized in Table 4.

Review of resilience measures

We reviewed measures of resilience described in the 49 papers retained for the final review; several papers included multiple resilience measures (i.e., Cui et al., 2023; Nese et al., 2012; Yang, 2014), and some papers used common measures, so in total we reviewed evidence for 46 distinct measures of resilience. We note that in two papers (Mallick and Kaur, 2016; Victor-Aigboidion et al., 2018), it was unclear based on the authors' descriptions exactly what measure was administered, and it was not possible to determine the origin of the measure or overlap with other instruments; thus, we treated these two papers as reporting distinct measures. As with persistence, we considered instruments that were adapted to a different domain, shortened, or combined with other instruments to be distinct measures.

We summarized details and reliability evidence for each measure by type, including behavioral measures (n = 1; Supplementary Table 4a), self-report measures (n = 41; Supplementary Table 5a), and other-report measures (three teacher-report surveys and one parent-report survey; Supplementary Table 6a). We also summarized the validity evidence reported for each measure in Supplementary Tables 4b–6b. Within each table, measures are sorted roughly by age/grade level, with consideration of availability for use as well as available reliability and validity evidence.

Behavioral measures of resilience

Our review included only one paper describing a behavioral approach to measuring resilience, derived from students' direct interactions with digital tasks (Supplementary Table 4a). The PERC tasks (Porter et al., 2020) included a measure of resilience, which was operationalized as the percentage of correct responses on three easy puzzles presented after working on four difficult puzzles (i.e., whether students' performance reflects their ability to “bounce back” after experiencing difficult tasks). Porter et al. (2020) do not report reliability for the PERC tasks or their subscores, likely due to the small number of items included; although validity evidence is reported for the subscore (Supplementary Table 4b), there is limited evidence that the resilience items can be administered separately from the entire task. As mentioned when discussing the persistence subscore, administration of PERC would require researchers to program their own version of the tasks. If researchers wish to use these measures with appropriate justification, reliability and validity evidence should be collected and analyzed to aid in the interpretation of results, especially if any modifications are made to the instrument (e.g., administering difficult and then easy tasks to measure persistence and resilience without the other two subcomponents). Given the limitations of PERC, behavioral measures of resilience may be a promising area for future research and instrument development.
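
The resilience operationalization described above (percentage correct on easy puzzles presented after a block of difficult puzzles) can be sketched as follows. The record format of (difficulty, correct) tuples and the "difficult"/"easy" labels are hypothetical, and this is not Porter et al.'s (2020) scoring code.

```python
def resilience_score(attempts):
    """Percent correct on easy puzzles presented after the last difficult puzzle.

    attempts: list of (difficulty, correct) tuples in presentation order,
    where difficulty is "difficult" or "easy" (hypothetical labels) and
    correct is a bool.
    """
    difficult_idx = [i for i, (level, _) in enumerate(attempts) if level == "difficult"]
    if not difficult_idx:
        raise ValueError("no difficult puzzles found")
    easy_after = [ok for level, ok in attempts[difficult_idx[-1] + 1:] if level == "easy"]
    if not easy_after:
        raise ValueError("no easy puzzles follow the difficult block")
    return 100 * sum(easy_after) / len(easy_after)
```

With only three easy puzzles, the score can take just four values (0, 33.3, 66.7, or 100), which illustrates why reliability is hard to estimate for this subscore.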

Self-report measures of resilience

We identified 41 distinct self-report measures of resilience appropriate for K−12 students (Supplementary Table 5a). Our review indicates a wide variety of self-report resilience measures, the majority of which are readily available for use (28 instruments, approximately 68%). Three studies reported on scales for which items are available, but either it is unclear which subset of items was administered (Gartland et al., 2011; Sari et al., 2022) or the items must be requested from the study author (Jew et al., 1999); because the intact instrument is not readily available, it is difficult to recommend these scales for subsequent research. Another 12 papers reported on survey instruments that were not readily available, either because the instrument is not available in English (e.g., Wei et al., 2023), is available only at a cost (e.g., the Social Emotional Assets and Resiliency Scales [SEARS]; Cohn et al., 2009; Nese et al., 2012), or is represented only by a few example items with no further details on accessing the complete instrument (e.g., Burger et al., 2012; Skinner et al., 2013; Thornton et al., 2006). Two papers did not report clear details on the measures (Mallick and Kaur, 2016; Victor-Aigboidion et al., 2018). We focus on instruments that are readily and freely available (i.e., at no cost) for research use.

The measures reflect a mix of standalone resilience scales and resilience subscales reflecting one facet of larger motivational or social-emotional constructs. Measures spanned a wide range of ages and grade levels, from third grade through twelfth grade and into early adulthood. Seven of the self-report measures were designed (or adapted) for use in domain-specific contexts (e.g., mathematics, programming, English as a foreign language). Reliability evidence was not reported for three traditional self-report measures (Sari et al., 2022; Susanto et al., 2023; Victor-Aigboidion et al., 2018) or for the situational judgment tests administered by Yang (2014). While most of the studies that did report reliability evidence had adequate reliability, four of the resilience measures demonstrated inadequate reliability with the students sampled (i.e., α < 0.70; Fang et al., 2020; elementary school sample from Hanson and Kim, 2007; Rajan et al., 2017; Zulfikar et al., 2020). Notably, the Resilience Youth Development Module (RYDM) did not show adequate reliability with one sample of elementary school students (Hanson and Kim, 2007) but did with another elementary sample (Sun and Stewart, 2007) and with secondary school samples (Furlong et al., 2009; Hanson and Kim, 2007), and Martin and Marsh's Academic Resilience Scale did not show adequate reliability in one study (Rajan et al., 2017) but did in all other studies using this instrument (Anghel, 2015; Cui et al., 2023; Kapikiran, 2012; Martin and Marsh, 2003, 2006; Njoki, 2018). Some form of validity evidence was reported for all self-report measures of resilience (Supplementary Table 5b).

Altogether, there are 25 resilience measures (including adaptations) that are readily available and report adequate reliability evidence. To aid readers, we group these instruments by the age ranges for which validity and reliability evidence has been reported.

Elementary school and up

The SSES (OECD, 2021a,b) includes a short six-item measure (after two poorly performing items were dropped) that offers a domain-general measure of the emotional aspects of resilience for 10-year-olds (approximately 5th grade) and 15-year-olds (approximately 10th grade) and could be administered in conjunction with the corresponding persistence scale from the same instrument. Although the resilience (stress resistance) subscale was generally reliable (α = 0.80 for the full sample), values in four cities (i.e., Bogota, Helsinki, Houston, and Manizales) were inadequate (α < 0.70; OECD, 2021a), indicating differential reliability across cultures. As with persistence, measurement invariance tests indicated scalar invariance by age and gender (allowing direct comparison of scale scores across these groups) and metric invariance by city (allowing comparison of within-group associations across cities, but not direct comparison of scale means); thus, this measure seems appropriate for use with diverse samples, provided that its metric-only invariance across cities is taken into account.

The nine-item Academic Resilience for Programming (ARP; Uslu, 2023), an adaptation of the Academic Resilience in Mathematics (ARM; Ricketts, 2015; Ricketts et al., 2017) measure, was successfully used in the context of computer programming with 5th and 6th grade students in Turkey and had good reliability (α > 0.80).

The 21-item elementary school survey of the RYDM (Hanson and Kim, 2007) was designed as a more comprehensive instrument, measuring multiple facets of internal resilience assets and protective factors. However, reliability and validity evidence points to potential challenges with the subscales designed for elementary-level students, perhaps because of the small number of items tapping each internal resilience asset: Hanson and Kim (2007) found that only a few items showed appropriate factor loadings and that these subscales were not sufficiently reliable at the elementary school level (α's < 0.70). Sun and Stewart's (2007) administration of 34 California Healthy Kids Survey (CHKS) items was highly reliable (α > 0.90) and showed good fit in a confirmatory factor analysis, which also included 13 items from the Peer Support Scale of the Perceptions of Peer Support measure (Ladd et al., 1996) as an additional factor. Researchers may not have the flexibility to administer all 47 of these items, and the available data do not address whether administering separate subscales would be appropriate. Thus, this more comprehensive resilience measure should be used with caution in the primary grades, and researchers will need to collect their own reliability and validity evidence to determine whether administering smaller subsets of items is advisable.

Middle school and up

Among the eight instruments for middle school and older students, a wide range of resilience constructs are represented and appear to be measurable with sufficient reliability and validity evidence. Although the CHKS resilience measure showed some low reliabilities at the elementary level, the 51-item secondary school survey performed well with samples of 7th-, 9th-, and 11th-grade students (α > 0.70; Furlong et al., 2009; Hanson and Kim, 2007) and would thus be suitable for measuring internal resilience assets (i.e., self-efficacy, empathy, problem-solving, and self-awareness) among middle school and older students. The Adolescent Resilience Questionnaire (ARQ; Gartland et al., 2011) is a multidimensional, 88-item survey developed as a comprehensive measure of resilience that taps both individual and environmental factors. Anderson et al. (2020) reported on a reduced 49-item version of the ARQ validated with a diverse sample of middle and high school students in the U.S., which showed good model fit and strong correlations with the original instrument. The Child and Youth Resilience Measure (CYRM) was originally designed as a 58-item cross-cultural measure of resilience processes involving individual, relational, community, and cultural resources. Although all but the relational dimension were sufficiently reliable, factor analysis indicated that these dimensions could not be recovered empirically; indeed, the factor structure varied across subgroups of minority world girls and boys, majority world girls, majority world boys with high social cohesion, and majority world boys with low social cohesion (Ungar and Liebenberg, 2011). The 28-item version (CYRM-28; Liebenberg et al., 2012) reflects individual, relational, and contextual factors, retaining items that were theoretically important and/or showed the strongest factor loadings across the four subgroups to ensure cross-cultural relevance. It showed adequate internal consistency (α > 0.70), test-retest reliability ranging from 0.65 to 0.91, and good fit with a three-factor EFA solution for diverse Canadian youth. It is unclear how the reduced version functions with non-Western populations.

The 36-item self-rating Resilience Scale used in the Mission Skills Assessment (Yang, 2014) was highly reliable, and three-factor CFA models (efficacy, emotional stability, and resilience) showed good fit across multiple waves of data from large samples of middle school students. This instrument also showed statistically significant relationships with external academic measures (e.g., GPA, standardized assessment scores) and life outcomes (i.e., life satisfaction). Adaptations of Cassidy's (2015) Academic Resilience Scale, which was designed for higher education populations, also showed adequate reliability with students from middle school age (13–14 years) through high school in multiple countries (Thailand, China, and Iran; Buathong, 2019; Cui et al., 2023; Ramezanpour et al., 2019). Note that Buathong (2019) used a 16-item variation of the scale following exploratory factor analysis, retaining three factors: emotional response, perseverance, and reflecting and adaptive help-seeking. Cui et al. (2023) used 18 items translated from the original scale, plus some item adaptations, to form a 20-item scale measuring four factors: perseverance, self-reflection, adaptive help-seeking, and negative affect and emotional response. All four factors were sufficiently reliable, and a CFA showed adequate fit for this revised four-factor structure.

The Academic Buoyancy Scale (ABS; Martin and Marsh, 2008; Martin, 2013) is an efficient four-item measure of students' “everyday resilience,” that is, their ability to deal with the setbacks, challenges, and pressures that occur in everyday academic experiences. The measure showed adequate internal consistency (α > 0.70) and test-retest reliability (r = 0.67) with large samples of Australian middle and high school students. Martin and Marsh (2008) demonstrated that the measure can be effectively contextualized within a particular subject (i.e., mathematics), suggesting that the instrument can be used not only with a wide range of students (ages 11–19) but also, potentially, within specific subject domains in addition to general academics. Martin (2013) also introduced the Academic Risk and Resilience Scale (ARRS) to capture specific instances of major academic adversity that students might have experienced within the past year; students who respond “yes” to any of these major adversities are then asked four items that parallel the ABS items, addressing their ability to deal with that specific type of setback. The ARRS showed high reliability (α = 0.90), and factor analyses showed good fit with academic buoyancy and academic resilience factors, suggesting that the measure provides complementary information on middle school and older students' experience of resilience following major adversity as well as their academic buoyancy (everyday resilience).
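The ARRS administration described above is essentially a set of screening questions followed by conditional follow-up items. The Python below is a minimal sketch of that skip logic under stated assumptions: the adversity labels and the function name `items_to_administer` are hypothetical, and we assume the four follow-up items (paralleling the ABS) are presented once for each adversity a student endorses. That is one reading of the description, not the published administration protocol.

```python
# Minimal sketch of ARRS-style skip logic. Adversity labels and function names
# are hypothetical; presenting the four follow-up items once per endorsed
# adversity is an assumption, not the published protocol.
FOLLOWUP_ITEMS_PER_ADVERSITY = 4  # parallels the four ABS items


def items_to_administer(screen: dict[str, bool]) -> list[tuple[str, int]]:
    """Return (adversity, follow-up item number) pairs to present.

    `screen` maps each major-adversity screening question to the student's
    yes (True) / no (False) answer for the past year.
    """
    endorsed = [adversity for adversity, yes in screen.items() if yes]
    return [
        (adversity, item)
        for adversity in endorsed
        for item in range(1, FOLLOWUP_ITEMS_PER_ADVERSITY + 1)
    ]


# Hypothetical screening answers for one student.
answers = {"hypothetical adversity A": True, "hypothetical adversity B": False}
plan = items_to_administer(answers)
print(len(plan))  # 4
print(items_to_administer({"hypothetical adversity A": False}))  # []
```

Students who endorse no major adversity receive no follow-up items, which is why the ARRS resilience items complement, rather than replace, the ABS buoyancy items that every student answers.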

The nine-item ARM was sufficiently reliable (α > 0.80; person separation reliability > 0.70) with a diverse sample of 7th- and 8th-grade students (Ricketts, 2015; Ricketts et al., 2017), and CFA showed good fit for a three-factor model (academic buoyancy, access to support, and future goals; Ricketts, 2015). This instrument could therefore be used to assess a multifaceted conception of academic resilience in the mathematics context. In sum, multiple options are appropriate for middle school and older students, depending on how multidimensional or focused a construct one wishes to capture, whether major academic setbacks or everyday stressors are emphasized, and whether the focus is on general academics or domain-specific resilience.

High school and up

We identified nine instruments suitable for use with high school and older students; based on our analyses, we recommend seven of them. Lovelace (2022) reported on an adaptation of Kooken et al.'s (2016) 24-item Mathematical Resilience Scale, originally developed in the context of college-level mathematics, for use with low-income high school students in the U.S.; the Value, Struggle, and Growth dimensions all showed good reliability (α > 0.85) and good fit statistics in a three-factor CFA. Liu and Platow (2020) adapted the mathematics-focused ARM (Ricketts et al., 2017) into a more general measure of academic resilience for Chinese high school students; this adaptation maintained good reliability (α > 0.85), and CFA results showed good fit of the items to the scale. Thus, the nine ARM items could also be administered in a domain-general context.

The Adolescent Resilience Scale, a general psychological resilience instrument created by Oshio et al. (2003), consists of 21 items across three factors: novelty seeking, emotional regulation, and positive future orientation. Anghel (2015) evaluated this measure with a sample of urban Romanian high school students and found that the scale was sufficiently reliable (α = 0.77) and related to students' risk status, suggesting that it might be used when a general psychological notion of resilience is desired rather than a more focused measure of academic resilience. The Behavioral, Emotional, and Social Skills Inventory (BESSI; Soto et al., 2022) is another example of a broader social-emotional skills inventory designed for high school and older students; it includes an emotional resilience facet among other non-cognitive characteristics. Sewell et al. (2024) explored shorter versions of the original 192-item BESSI and offered evidence supporting 96-, 45-, and 20-item versions with different reporting structures and testing times, each of which retained an emotional resilience skill domain. In a high school sample, all of the reduced forms showed strong reliability (α > 0.70) and validity evidence (i.e., good model fit and factor loadings) for emotional resilience; they are recommended when the goal is to measure a constellation of social, emotional, and behavioral characteristics.

The Academic Resilience Scale (Martin and Marsh, 2003, 2006) is a six-item scale that yields a unidimensional overall academic resilience score. It was highly reliable (α = 0.89) with a sample of more than 400 Australian high school students, and its correlations with other motivation and engagement constructs (e.g., self-beliefs, persistence, failure avoidance) were in the expected directions. The measure has been successfully adapted for several other samples, including urban Romanian (Anghel, 2015), Indian (Rajan et al., 2017), Chinese (Cui et al., 2023), and Turkish (Kapikiran, 2012) high school students; Njoki (2018) adapted it for a Kenyan secondary school population with a focus on mathematics. All of these administrations except Rajan et al. (2017) showed adequate reliability (α > 0.70). Where CFAs were conducted, the instrument showed adequate fit to a unidimensional model (Cui et al., 2023; Kapikiran, 2012; Martin and Marsh, 2006), suggesting that the measure may be adapted to many contexts while, for the most part, maintaining its technical properties.

Phan (2016) captured resilience along with persistence using the six-item vigor subscale of Schaufeli et al.'s (2002a,b) Engagement Scale, which was designed to capture motivation-related attributes of engagement. In analyses of two samples of Fijian high school students, the vigor subscale was reliable across time points (α = 0.86–0.87), showed good CFA model fit, and correlated significantly with the other two subscales, absorption (six items) and dedication (five items). The eight-item resilience subscale of the Design My Future (DMF) measure (Di Maggio et al., 2016) showed good reliability (α = 0.80) and good fit in EFA and CFA with two samples of Italian high school students. This measure also correlated significantly with many other student-level variables and therefore may be useful for relating resilience to other individual differences.

An adaptation of the 33-item Resilience Scale that Wagnild and Collins (2009) developed for adult populations was used with secondary school students in Pakistan; all dimensions and the overall scale were sufficiently reliable (all α > 0.70; Sarwar et al., 2010). However, because the study did not report the rating scale, it is unclear which response scale should be used. We would therefore advocate using alternative instruments for which more complete information is available.

Other measures of resilience

Our review included four studies that described approaches to measuring resilience that did not fall into the behavioral or self-report categories. Specifically, we identified three studies that included teacher-report surveys of student behavior and one study that included a parent-report survey with items corresponding to teacher reports (Supplementary Table 6a). Each of the studies reported reliability and validity evidence (Supplementary Table 6b).

Two of the teacher-report measures are readily available, and evidence suggests that both are highly reliable. The Approaches to Learning subscale of the Social Skills Rating System (Gresham and Elliot, 1990; Hill, 2017), administered in the Early Childhood Longitudinal Study, Kindergarten Class (ECLS-K), captures teacher ratings of eight social skills reflecting students' approaches to learning and is appropriate for diverse samples of early elementary students (grade 3). For middle school students, the four-item resilience scale from the Mission Skills Assessment (Yang, 2014) may be an appropriate option that is efficient for teachers to complete. Both measures have been evaluated with large samples of students, and validity evidence shows that academic resilience, as reflected in teacher ratings, is significantly related to key outcomes such as reading achievement and GPA. The remaining measures are the teacher- and parent-report forms of the SEARS (Nese et al., 2012), which are appropriate for a wide range of grade levels (K−12) but are not freely available and are therefore not recommended.

Discussion

This systematic review compiled the operational definitions and theoretical frameworks used to conceptualize persistence and resilience, as well as measures of these constructs that can be leveraged in future research on persistence, resilience, and related constructs with K−12 students. Notably, evidence suggests that measures designed for specific disciplinary contexts may be amenable to adaptation to other domains or to domain-general use (e.g., the ARM was successfully adapted from mathematics to programming as well as to a domain-general version). Similarly, some measures may be adapted for samples whose composition differs from those in the studies we reviewed. For example, although we focused on readily available English-language instruments, several of the reviewed measures were successfully developed, translated, or otherwise adapted for other linguistic and cultural contexts, and similar adaptation processes could be applied in further studies.² Such modifications will require future efforts to collect and evaluate evidence of reliability, validity, and fairness in diverse samples of K−12 students.

Alignment between construct definitions and available measures

A key consideration in selecting a measure is the degree to which it aligns with the researcher's conceptualization of the target construct. Accordingly, to facilitate measure selection in future research, we evaluated each identified measure based on its alignment with our synthesized construct definitions, determining whether each component of the construct definition was addressed by the measure. We limited this alignment evaluation to the measures we recommended based on our analysis of reliability, validity, and fairness evidence and did not separately consider adaptations of those measures for different contexts.

Our task persistence definition was decomposed into four components: presence of a goal-directed task, presence of challenges or difficulties, sustained effort, and task completion (see Table 5 for the alignment between these components and the related theoretical frameworks discussed in the Literature Review). The in-game behavioral indicators (DiCerbo, 2014) directly addressed sustained effort and task completion, while the remaining two components (a goal-directed, challenging task) were implied by the task design. The recommended self-report measures varied in the degree to which they aligned with our persistence definition. APT-STEM (Sunny, 2018) and the persistence subscale of the ASES (Dullas, 2018) were the only two self-report measures that addressed all four components. The presence of challenges and sustained effort were addressed in all self-report measures, whereas task completion was addressed in only three: APT-STEM, ASES, and the persistence subscale of the SSES (OECD, 2021a,b). The two other-report measures from the LBS (Discipline/Persistence, Rikoon et al., 2012; Attention/Persistence, Canivez et al., 2006) addressed only sustained effort, whereas researcher observations of perseverance from the 3PP framework (DiNapoli and Miller, 2022) addressed all four persistence components.
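At its core, the alignment evaluation is a set comparison between the components each measure covers and the four persistence components. The Python below is a minimal sketch of that bookkeeping. It encodes only coverage stated explicitly in the paragraph above (measures whose coverage is not fully spelled out, such as the SSES subscale and the in-game indicators, are left out), and the data structure and function names are illustrative rather than part of the original analysis.

```python
# Minimal sketch: which persistence measures cover all four definition
# components? Coverage encodes only what the review text states explicitly;
# names and structure are illustrative.
COMPONENTS = frozenset(
    {"goal-directed task", "challenges or difficulties", "sustained effort", "task completion"}
)

coverage: dict[str, frozenset[str]] = {
    "APT-STEM (Sunny, 2018)": COMPONENTS,
    "ASES persistence subscale (Dullas, 2018)": COMPONENTS,
    "3PP researcher observations (DiNapoli and Miller, 2022)": COMPONENTS,
    "LBS Discipline/Persistence (Rikoon et al., 2012)": frozenset({"sustained effort"}),
    "LBS Attention/Persistence (Canivez et al., 2006)": frozenset({"sustained effort"}),
}


def missing_components(measure: str) -> set[str]:
    """Definition components that the given measure does not address."""
    return set(COMPONENTS - coverage[measure])


# A measure fully aligns when its covered set is a superset of COMPONENTS.
fully_aligned = [m for m, covered in coverage.items() if covered >= COMPONENTS]
print(len(fully_aligned))  # 3
print(sorted(missing_components("LBS Attention/Persistence (Canivez et al., 2006)")))
```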

Table 5. Proposed relationships between synthesized definition components and relevant theories/frameworks.

Our academic resilience definition was likewise decomposed into four components: presence of a challenge, adversity, or stressor; use of adaptive behaviors or coping strategies; evidence of bouncing back or recovery; and achievement of successful outcomes (see Table 5 for the alignment between these components and the related theoretical frameworks discussed in the Literature Review). As with persistence, the 17 self-report measures varied in their alignment with our resilience definition. Five measures covered all four components: ARQ (Anderson et al., 2020), Adolescent Resilience Scale (Anghel, 2015), DMF (Di Maggio et al., 2016), ARM (Ricketts et al., 2017), and Mission Skills Assessment (Yang, 2014). Five additional measures covered three components (all except successful outcomes): RYDM (Furlong et al., 2009), Academic Resilience Scale (Martin and Marsh, 2006), Resilience Scale (Sarwar et al., 2010), BESSI (Sewell et al., 2024), and Resilience Scale (Sun and Stewart, 2007). The presence of challenges was the component addressed most frequently across self-report surveys (15 measures), followed by evidence of recovery and use of adaptive behaviors (13 measures each); successful outcomes was the least addressed component (six measures). Both teacher-report measures, from the Social Skills Rating System (Gresham and Elliot, 1990; Hill, 2017) and the Mission Skills Assessment (Yang, 2014), addressed evidence of recovery; the presence of challenges and use of adaptive behaviors were addressed only by the Mission Skills Assessment, and successful outcomes only by the Social Skills Rating System.
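The component frequencies reported above (e.g., challenges addressed by 15 of the 17 self-report measures) are simple tallies over a measure-by-component coverage table. A minimal Python sketch of such a tally follows. It is applied only to the two teacher-report measures because their coverage is fully specified in the text; the labels and the `component_tally` helper are illustrative. Applied to a complete coding of all 17 self-report measures, the same function should reproduce the self-report counts.

```python
# Minimal sketch: count how many measures address each academic resilience
# component. Coverage below encodes only the two teacher-report measures as
# described in the text; labels and helper names are illustrative.
from collections import Counter

RESILIENCE_COMPONENTS = (
    "challenge, adversity, or stressor",
    "adaptive behaviors or coping strategies",
    "bounce back or recovery",
    "successful outcomes",
)

teacher_report_coverage: dict[str, set[str]] = {
    "Approaches to Learning subscale (Hill, 2017)": {
        "bounce back or recovery",
        "successful outcomes",
    },
    "Mission Skills Assessment teacher report (Yang, 2014)": {
        "challenge, adversity, or stressor",
        "adaptive behaviors or coping strategies",
        "bounce back or recovery",
    },
}


def component_tally(coverage: dict[str, set[str]]) -> dict[str, int]:
    """Number of measures addressing each component (0 if none do)."""
    counts: Counter = Counter()
    for covered in coverage.values():
        counts.update(covered)  # each component counted once per measure
    return {component: counts[component] for component in RESILIENCE_COMPONENTS}


for component, n in component_tally(teacher_report_coverage).items():
    print(f"{component}: {n}")
```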

We recommend selecting a measure that fully aligns with our definitions, so that the resulting measure of persistence or resilience is robust in terms of both construct conceptualization and evidence of reliability, validity, and fairness. Although we believe that this alignment evaluation is informative for measure selection, we acknowledge that a more in-depth analysis of alignment (e.g., the number of items addressing each definition component) is likely warranted when selecting measures for individual studies. The preceding review is intended as a guide for researchers, who will ultimately make their own judgments about which measures to administer and may create and evaluate novel measures to capture additional construct components as needed for the aims and theoretical orientations of their research.

Individual differences in persistence and resilience