- ¹Department of Human Development and Quantitative Methodology, University of Maryland, College Park, MD, United States

- ²Educational Testing Service, Princeton, NJ, United States

- ³Department of Learning, Data Analytics and Technology, Faculty of Behavioral Management and Social Sciences, University of Twente, Enschede, Netherlands

Editorial on the Research Topic

Process Data in Educational and Psychological Measurement

The increasing use of computer-based testing and learning environments is substantially reforming traditional forms of measurement, as a large amount of additional data is now collected during learning and assessment (Bennett et al., 2007, 2010). Respondents' performance can therefore be described not only by response accuracy, as in traditional tests, but also by their response processes (Ercikan and Pellegrino, 2017).

Recent advances in computer technology make it convenient to collect process data in computer-based assessment. One example is time-stamped action data from an innovative item that allows interaction between a respondent and the item. When a respondent attempts an interactive item, his/her actions are recorded as an ordered sequence of multi-type, time-stamped events. Such data, stored in log files and referred to as process data in this book, provide information beyond response data, which typically show response accuracy only. This additional information holds promise for understanding the strategies that underlie test performance and for identifying key actions that lead to success or failure in answering an item (e.g., Han et al., 2019; Liao et al.; Stadler et al., 2019; He et al., 2021; Ulitzsch et al., 2021a; Xiao et al., 2021).
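To make the notion of "an ordered sequence of multi-type, time-stamped events" concrete, the following is a minimal illustrative sketch in Python. It is not taken from any paper in this Research Topic. The `LogEvent` fields, the event names, the time unit (seconds since item onset), and the `summarize` helper are all hypothetical, chosen only to show how simple process features such as an action sequence and time on item might be derived from a log.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LogEvent:
    """One logged action (hypothetical schema, for illustration only)."""
    timestamp: float  # assumed unit: seconds since the item was presented
    event_type: str   # assumed labels, e.g. "click" or "select"
    target: str       # assumed identifier of the interface element


def summarize(events: List[LogEvent]) -> dict:
    """Derive a few simple process features from one respondent's log."""
    # Log files are not guaranteed to be ordered, so sort by timestamp first.
    ordered = sorted(events, key=lambda e: e.timestamp)
    if not ordered:
        return {"actions": [], "n_actions": 0,
                "time_on_item": 0.0, "time_to_first_action": None}
    return {
        # Ordered action sequence, one token per event.
        "actions": [f"{e.event_type}:{e.target}" for e in ordered],
        "n_actions": len(ordered),
        # Time of the last recorded event, taken here as time on item.
        "time_on_item": ordered[-1].timestamp,
        # Latency before the respondent first interacts with the item.
        "time_to_first_action": ordered[0].timestamp,
    }


# Fabricated toy log for one respondent on one item (not real data).
log = [
    LogEvent(2.4, "click", "menu_open"),
    LogEvent(9.8, "click", "submit"),
    LogEvent(5.1, "select", "option_b"),
]
features = summarize(log)
print(features["actions"])
```

Features of this kind (sequence tokens, action counts, timing) are the sort of raw material that sequence mining or joint response and response-time models could take as input, under whatever schema a given assessment platform actually records.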

With process data available in addition to response data, the measurement field is becoming increasingly interested in borrowing auxiliary information from the response process to serve different assessment purposes. For instance, researchers have recently proposed different models for response time and for the joint modeling of responses and response time (e.g., Bolsinova and Molenaar; Costa et al.; Wang et al.). In addition, other process data, such as gaze paths collected with eye-tracking devices (e.g., Zhu and Feng, 2015; Maddox et al., 2018; Man and Harring, 2021), action sequences in problem-solving tasks (e.g., Chen et al.; Tang et al., 2020; He et al., 2021; Ulitzsch et al., 2021b), and processes in collaborative problem solving (e.g., Graesser et al., 2018; Andrews-Todd and Kerr, 2019; De Boeck and Scalise, 2019), are also worthy of exploration and integration with product data for assessment purposes.

This Research Topic (published as this edited e-book) responds to the need to model new data sources and to incorporate process data into the statistical modeling of multiple types of assessment data. The book presents cutting-edge research on using process data, in addition to product data such as item responses, in educational and psychological measurement to enhance the accuracy of ability parameter estimation (e.g., Bolsinova and Molenaar; De Boeck and Jeon; Engelhardt and Goldhammer; Klotzke and Fox; Liu C. et al.; Park et al.; Schweizer et al.; Wang et al.; Zhang and Wang), to facilitate cognitive diagnosis (e.g., Guo and Zheng; Guo et al.; Jiang and Ma; Zhan, Liao et al.; Zhan, Jiao et al.), and to detect aberrant responding behavior (e.g., Liu H. et al.; Toton and Maynes).

Throughout the book, methods are addressed for analyzing process data from technology-enhanced innovative items in large-scale assessments used for high-stakes decisions (e.g., Lee et al.; Stadler et al.). Methods for extracting useful information from process data in assessments such as serious games and simulations are also discussed (e.g., Liao et al.; Kroehne et al.; Ren et al.; Yuan et al.). Interdisciplinary studies that borrow data-driven methods from computer science, machine learning, artificial intelligence, and natural language processing are highlighted as well (e.g., Ariel-Attali et al.; Chen et al.; Hao and Mislevy; Qiao and Jiao; Smink et al.); these provide new perspectives on data exploration in educational and psychological measurement. Most importantly, the models in this book that integrate process data and product data are of critical significance for linking traditional test data with new features extracted from new data sources. The papers in the book are also an excellent source of shared data and code, which contributes significantly to the application of innovative statistical modeling of assessment data in the measurement field.

The book chapters demonstrate the use of process data and the integration of process and product data (item responses) in educational and psychological measurement. The chapters address issues in adaptive testing, problem-solving strategy, validity of test score interpretation, item pre-knowledge detection, cognitive diagnosis, complex dependence in the joint modeling of responses and response time, and multidimensional modeling of these data types. The originality of this book lies in the statistical modeling of innovative assessment data such as log data, response time data, collaborative problem-solving tasks, dyad data, change process data, testlet data, and multidimensional data. Further, new statistical models and methods are presented for analyzing process data alongside response data, including transition profile analysis, the event history analysis approach, hidden Markov modeling, conditional scaling, multilevel modeling, text mining, Bayesian covariance structure modeling, mixture modeling, and multidimensional modeling. The integration of multiple data sources and the use of process data provide the measurement field with new perspectives on assessment issues and challenges such as problem-solving strategy, cheating detection, and cognitive diagnosis.

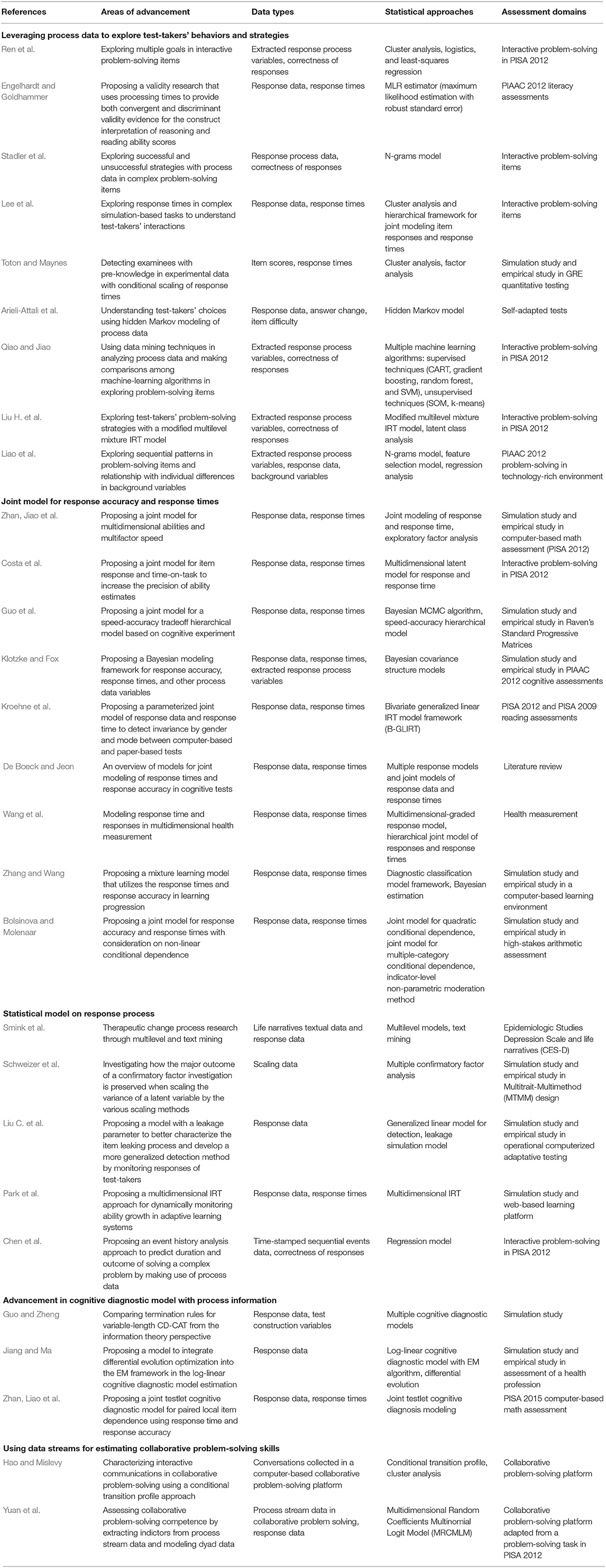

Table 1 gives an overview of all the papers included in this Research Topic with respect to their key features. The scope of the Research Topic can be classified into five major categories:

(1) leveraging process data to explore test-takers' behaviors and problem-solving strategies,

(2) proposing joint modeling for response accuracy and response times,

(3) proposing new statistical models on analyzing response processes (e.g., time-stamped sequential events),

(4) advancing cognitive diagnostic models with new data sources, and

(5) using data streams in estimating collaborative problem-solving skills.

The above categorization focuses on each paper's core contribution, though some papers could be cross-classified. The papers' key findings and advancements represent the current state of the art in process data analysis for educational and psychological assessments. As topic editors, we were happy to receive such a strong collection of papers with various foci and to publish them just as digital assessments are booming. The papers collected in this Research Topic are also diverse in data types, statistical approaches, and assessments, with an extensive scope spanning both high-stakes and low-stakes assessments and covering research fields in education, psychology, health, and other applied disciplines.

As one of the first comprehensive books addressing the modeling and application of process data, this e-book has drawn great attention since its debut, when it was cross-listed in three journals: Frontiers in Psychology, Frontiers in Education, and Frontiers in Applied Mathematics and Statistics. With 29 papers from 77 authors, this book enhances interdisciplinary research in fields such as psychometrics, psychology, statistics, computer science, educational technology, and educational data mining, to name a few. As reported on the e-book webpage (https://www.frontiersin.org/research-topics/7035/process-data-in-educational-and-psychological-measurement#impact) on November 13, 2021, this e-book had accumulated 115,069 total views and 17,940 article downloads since the Research Topic project launched in 2017, and these numbers keep growing daily. The diverse reader demographics provide convincing evidence that the papers in this book have reached the global research community, addressing critical issues in the statistical modeling of multiple types of assessment data in the digital era. This book arrives just in time to provide tools and methods to shape this new measurement horizon.

As more and more data are collected in computer-based testing, process data will become a very important source of information for validating and facilitating the measurement of response accuracy, and for providing supplementary information about test-takers' behaviors, the reasons for missing data, and links with motivation studies. There is no doubt that such research is in high demand in large-scale assessment, both high-stakes and low-stakes, as well as in personalized learning and assessment, where resources and methods are tailored to help people learn and grow. This book is a timely addition to the current literature on psychological and educational measurement. Its methods are expected to be applied more extensively in educational and psychological measurement, such as in computerized adaptive testing and dynamic learning.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

QH was partially supported by the National Science Foundation grant IIS-1633353.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank all authors who have contributed to this Research Topic and the reviewers for their valuable feedback on the manuscript.

References

Andrews-Todd, J., and Kerr, D. (2019). Application of ontologies for assessing collaborative problem-solving skills. Int. J. Testing 19, 172–187. doi: 10.1080/15305058.2019.1573823

Bennett, R. E., Persky, H., Weiss, A., and Jenkins, F. (2010). Measuring problem solving with technology: A demonstration study for NAEP. J. Technol. Learn. Assessment 8, 1–44. Retrieved from https://ejournals.bc.edu/index.php/jtla/article/view/1627

Bennett, R. E., Persky, H., Weiss, A. R., and Jenkins, F. (2007). Problem Solving in Technology-Rich Environments. A Report From the NAEP Technology Based Assessment Project, Research and Development Series (NCES 2007-466). National Center for Education Statistics.

De Boeck, P., and Scalise, K. (2019). Collaborative problem solving: Processing actions, time, and performance. Front. Psychol. 10:1280. doi: 10.3389/fpsyg.2019.01280

Ercikan, K., and Pellegrino, J. W. (Eds.). (2017). Validation of Score Meaning for the Next Generation of Assessments: The Use of Response Processes. New York, NY: Routledge.

Graesser, A. C., Fiore, S. M., Greiff, S., Andrews-Todd, J., Foltz, P. W., and Hesse, F. W. (2018). Advancing the science of collaborative problem solving. Psychol. Sci. Public Interest 19, 59–92. doi: 10.1177/1529100618808244

Han, Z., He, Q., and von Davier, M. (2019). Predictive feature generation and selection using process data from PISA interactive problem-solving items: an application of random forests. Front. Psychol. 10:2461. doi: 10.3389/fpsyg.2019.02461

He, Q., Borgonovi, F., and Paccagnella, M. (2021). Leveraging process data to assess adults' problem-solving skills: Identifying generalized behavioral patterns with sequence mining. Comp. Educ. 166:104170. doi: 10.1016/j.compedu.2021.104170

Maddox, B., Bayliss, A. P., Fleming, P., Engelhardt, P. E., Edwards, S. G., and Borgonovi, F. (2018). Observing response processes with eye tracking in international large-scale assessments: evidence from the OECD PIAAC assessment. Eur. J. Psychol. Educ. 33, 543–558. doi: 10.1007/s10212-018-0380-2

Man, K., and Harring, J. R. (2021). Assessing preknowledge cheating via innovative measures: A multiple-group analysis of jointly modeling item responses, response times, and visual fixation counts. Educ. Psychol. Meas. 81, 441–465. doi: 10.1177/0013164420968630

Stadler, M., Fischer, F., and Greiff, S. (2019). Taking a closer look: an exploratory analysis of successful and unsuccessful strategy use in complex problems. Front. Psychol. 10:777. doi: 10.3389/fpsyg.2019.00777

Tang, X., Wang, Z., He, Q., Liu, J., and Ying, Z. (2020). Latent feature extraction for process data via multidimensional scaling. Psychometrika 85, 378–397. doi: 10.1007/s11336-020-09708-3

Ulitzsch, E., He, Q., and Pohl, S. (2021a). Using sequence mining techniques for understanding incorrect behavioral patterns on interactive tasks. J. Educ. Behav. Statistics 45, 59–96. doi: 10.3102/10769986211010467

Ulitzsch, E., He, Q., Ulitzsch, V., Nichterlein, A., Molter, H., Niedermeier, R., et al. (2021b). Combining clickstream analyses and graph-modeled data clustering for identifying common response process using time-stamped action sequence. Psychometrika 86, 190–214. doi: 10.1007/s11336-020-09743-0

Xiao, Y., He, Q., Veldkamp, B., and Liu, H. (2021). Exploring latent states of problem-solving competence using hidden Markov modeling on process data. J. Comp. Assisted Learn. 37, 1232–1247. doi: 10.1111/jcal.12559

Keywords: process data, psychological assessment, data mining, educational assessment, computer based assessment

Citation: Jiao H, He Q and Veldkamp BP (2021) Editorial: Process Data in Educational and Psychological Measurement. Front. Psychol. 12:793399. doi: 10.3389/fpsyg.2021.793399

Received: 12 October 2021; Accepted: 25 October 2021; Published: 03 December 2021.

Edited and reviewed by: Huali Wang, Peking University Sixth Hospital, China

Copyright © 2021 Jiao, He and Veldkamp. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hong Jiao, hjiao@umd.edu; Qiwei He, qhe@ets.org; Bernard P. Veldkamp, b.p.veldkamp@utwente.nl
