- ¹School of Journalism and Communication, Tsinghua University, Beijing, China

- ²School of Journalism and Communication, Jinan University, Guangzhou, Guangdong, China

- ³Center for Computational Communication Studies, Jinan University, Guangzhou, Guangdong, China

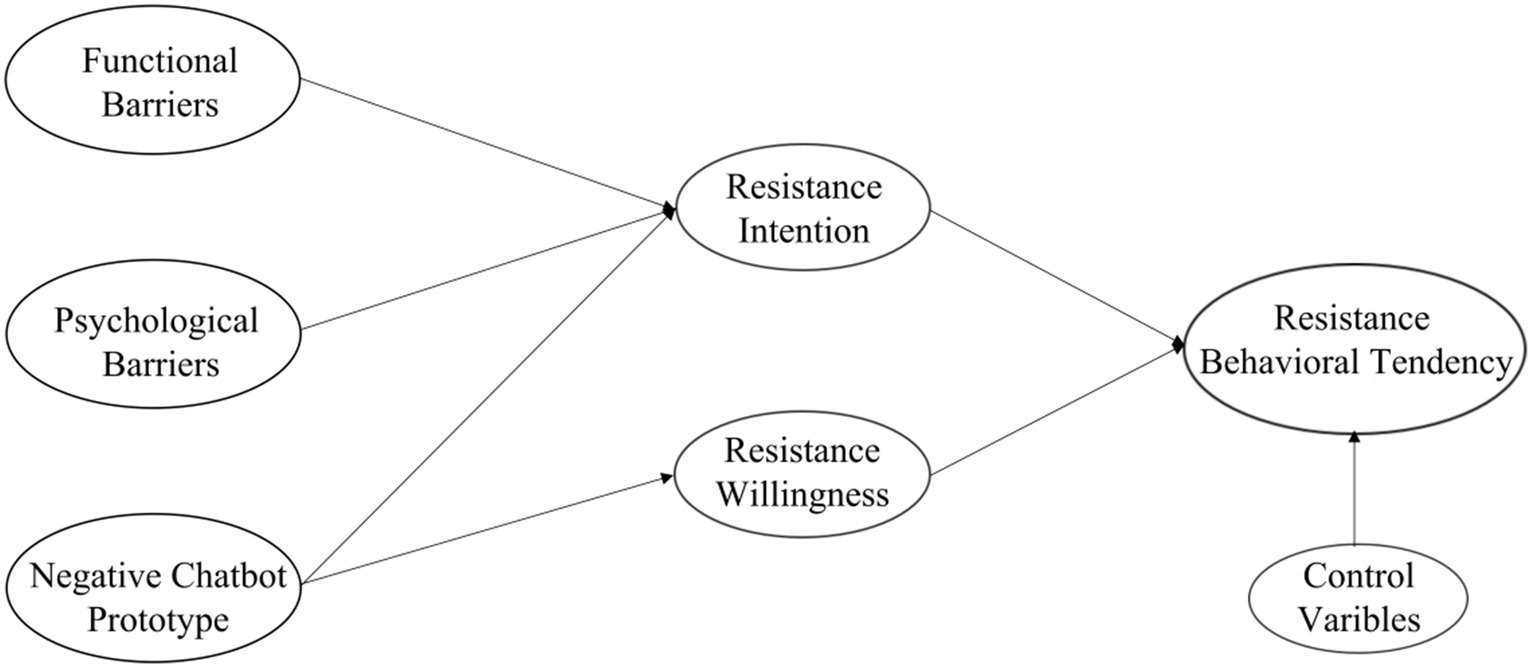

Introduction: Despite the numerous potential benefits of health chatbots for personal health management, a substantial proportion of people oppose the use of such software applications. Building on the innovation resistance theory (IRT) and the prototype willingness model (PWM), this study investigated the functional barriers, psychological barriers, and negative prototype perception antecedents of individuals’ resistance to health chatbots, as well as the rational and irrational psychological mechanisms underlying their linkages.

Methods: Data from 398 participants were used to construct a partial least squares structural equation model (PLS-SEM).

Results: Resistance intention mediated the relationships between functional barriers and resistance behavioral tendency and between psychological barriers and resistance behavioral tendency. Furthermore, the relationship between negative prototype perceptions and resistance behavioral tendency was mediated by resistance intention and resistance willingness. Moreover, negative prototype perceptions were a more effective predictor of resistance behavioral tendency through resistance willingness than functional and psychological barriers.

Discussion: By investigating the role of irrational factors in health chatbot resistance, this study expands the scope of the IRT to explain the psychological mechanisms underlying individuals’ resistance to health chatbots. Interventions to address people’s resistance to health chatbots are discussed.

1 Introduction

Health chatbots are revolutionizing personal healthcare practices (Pereira and Díaz, 2019). Currently, health chatbots are utilized for personal health monitoring and disease consultation, diagnosis, and treatment (Tudor Car et al., 2020; Aggarwal et al., 2023). For example, a virtual nurse named “Molly,” developed by researchers at the Maastricht University Medical Center+ (MUMC+), offers healthcare guidance to patients with heart disease (Zorgenablers, 2019), and chatbots such as “Youper” have been designed to track users’ mood and provide them emotional management advice (Mehta et al., 2021). Further, “Tess” is a mental health chatbot that provides personalized medical suggestions to patients with mental disorders (Gionet, 2018), similar to a therapist. Remarkably, a personal health assistant aimed at preventative healthcare, “Your.MD,” has thus far been used to provide diagnostic services and solutions to nearly 26 million users worldwide (Billing, 2020). According to BIS Research, the global market for healthcare chatbots is expected to reach $498.1 million by 2029 (Pennic, 2019).

Medical artificial intelligence (AI) services, including health chatbots, are expected to be crucial for promoting the quality of healthcare, addressing the inequitable distribution of healthcare resources, reducing healthcare costs, and increasing the level and efficiency of diagnosis (Guo and Li, 2018; Lake et al., 2019; Schwalbe and Wahl, 2020). However, in prior studies, more participants preferred consulting doctors over health chatbots for medical inquiries (Branley-Bell et al., 2023), even when the chatbots operated with the same level of expertise as human doctors (Yokoi et al., 2021); a significant number of users drop out during consultations with health chatbots (Fan et al., 2021), and nearly 40% of people are unwilling to even interact with them (PWC, 2017). Notably, many specialists are worried about the inherent limitations of medical AI relating to potential discriminatory bias, explainability, and safety hazards (Amann et al., 2020). For instance, one survey found that over 80% of professional physicians believe that health chatbots are unable to comprehend human emotions and pose a danger of misleading treatment by providing patients with inaccurate diagnostic recommendations (Palanica et al., 2019). Further, people perceive health chatbots as inauthentic (Ly et al., 2017), inaccurate (Fan et al., 2021), and possibly highly uncertain and unsafe (Nadarzynski et al., 2023), leading to discontinuation or hesitation in circumstances where medical assistance is required. Although overcoming public resistance to AI healthcare technologies is critical for promoting their societal acceptance in the medical field in the future (Gaczek et al., 2023), few studies have investigated how resistance behavior toward AI healthcare technologies (e.g., health chatbots) is formed. Therefore, the first research question of this study was to explore which factors influence people to resist health chatbots.

Resistance is a natural behavioral response to innovative technology, as its adoption may change existing habits and disrupt routines (Ram and Sheth, 1989). The delayed transmission of innovation in the early stages of its growth is primarily attributed to people’s resistance behavior (Bao, 2009). Previous research has primarily focused on the impact of rational calculations underlying individuals’ technology adoption/resistance intentions on behavioral decisions. For example, the technology acceptance model (TAM) proposes that individuals’ desire to accept a certain technology is determined by the degree to which it improves work performance and its ease of use (Davis, 1989; Tian et al., 2024). Furthermore, according to the equity implementation model (EIM), people’s concerns about the ratio of technical inputs to benefits and comparisons with the advantages obtained by others in society have a substantial influence on their adoption behavior (Joshi, 1991). Some scholars, however, have indicated that “utility maximization” does not always serve as a criterion for people’s actions, and the rational paradigm may not competently explain people’s decision-making behavior (Baron, 1994; Yang and Lester, 2008). For example, Parasuraman (2000) found that individuals’ perceived discomfort and insecurity regarding innovative technologies are important limiting factors in their adoption process, and the normative sociocultural pressures of adopting innovative technologies may also lead to resistance behavior (Migliore et al., 2022). However, few studies have examined the influence of irrational motivations and psychological mechanisms on health chatbot resistance behaviors. As such, the second research question of this study was to explore the psychological mechanisms behind people’s resistance to health chatbots.

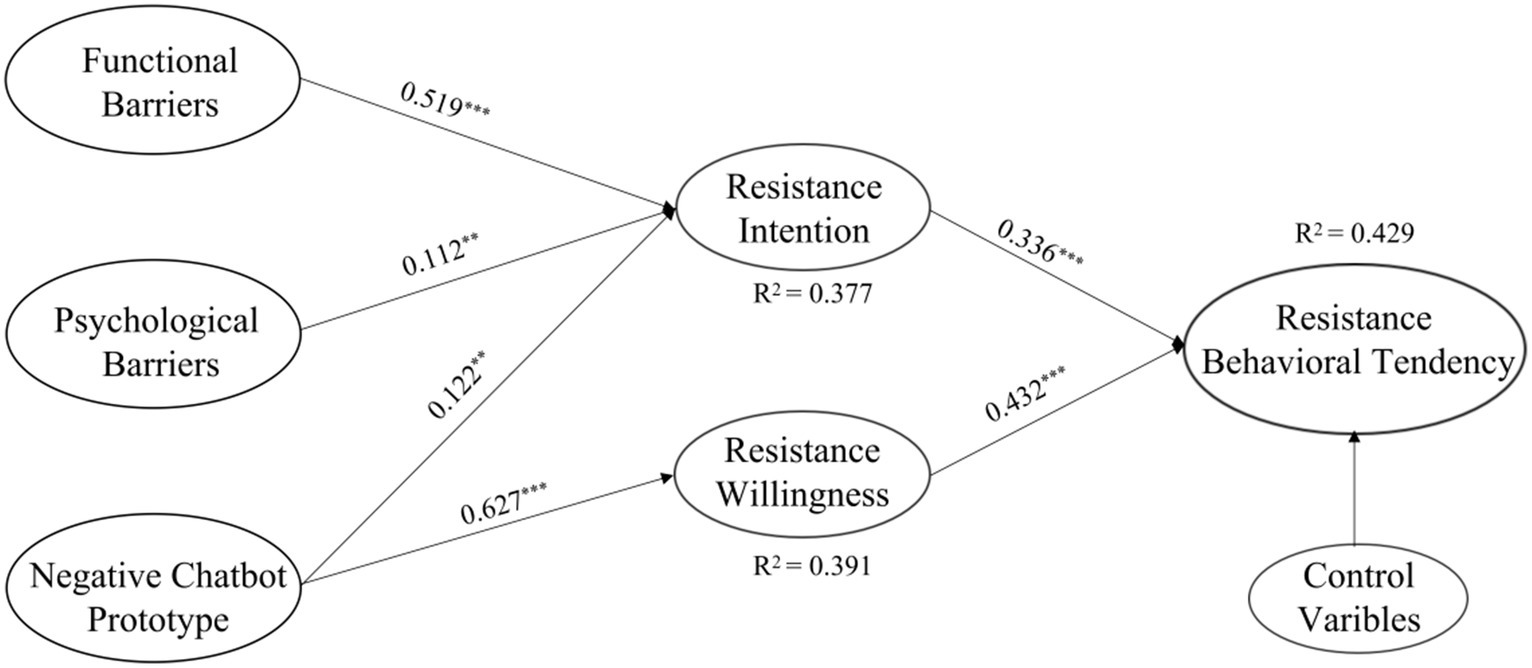

To address the abovementioned research gaps, in this study, we first reviewed prior literature on the innovation resistance theory (IRT) and prototype willingness model (PWM); based on these theories, we then developed a parallel mediation model to investigate the antecedents of people’s resistance behavioral tendency toward health chatbots, as well as the underlying psychological mechanisms. The conceptual framework of the study is illustrated in Figure 1. The current study contributes to the existing literature in the following ways. First, while numerous prior studies have examined attitudes toward AI healthcare technologies and motivations for their adoption (Esmaeilzadeh, 2020; Gao et al., 2020; Khanijahani et al., 2022), the current study investigates people’s behavior in resisting health chatbots and the underlying psychological mechanisms. Second, by identifying the rational and irrational factors influencing individuals’ resistance to health chatbots, this study advances the understanding of resistance behavior toward health chatbots in the established literature. Finally, by combining the IRT and PWM, this study identifies the dual rational/irrational mediating mechanisms that influence people’s resistance behavioral tendency toward health chatbots and provides a valuable perspective for future research on medical AI adoption behavior.

2 Literature review and hypothesis development

2.1 Innovation resistance theory

The IRT, initially proposed by Ram (1987), draws on the diffusion of innovation theory (DIT; Rogers and Adhikarya, 1979) and attempts to explain why people oppose innovation from a negative behavioral perspective. Individual resistance to innovation, according to the IRT, originates from changes in established behavioral patterns and the uncertainty aspect of innovation (Ram and Sheth, 1989). If innovation is likely to disrupt daily routines and conflict with established behavioral patterns and customs, individuals may refuse to utilize it and thus develop resistance behavior (Ram, 1987). Subsequently, Ram and Sheth (1989) revised the IRT by proposing that two particular barriers perceived by individuals when confronted with innovation, namely, functional and psychological barriers, result in their resistance behavioral tendency. Functional barriers refer to potential conflicts between individuals and innovations in terms of usage, value, and risk, whereas psychological barriers relate to the potential impact of individuals’ perceived innovation on established social beliefs, constituting tradition and image barriers (Kleijnen et al., 2009; Kaur et al., 2020).

The IRT provides a comprehensive operationalization framework for examining individual resistance to innovative technologies (Kleijnen et al., 2009). Previous research has demonstrated that functional and psychological barriers can significantly predict people’s resistance intentions and behaviors toward innovative technologies. For example, the IRT explains approximately 60% of the variance in people’s resistance to mobile payment technology (Kaur et al., 2020) and nearly 55% of the variance in their resistance to the online purchase of experience goods (Lian and Yen, 2013). Specifically, in terms of functional barriers, Prakash and Das (2022) discovered that the perceived value barriers, usage complexity, and privacy disclosure risks of digital contact-tracking apps can increase the intentions to resist such apps. Singh and Pandey (2024) indicated that inefficient collaboration with AI devices is also a critical barrier to their usage. Yun and Park (2022), conversely, found that the reliability of chatbot service quality positively impacts users’ satisfaction and repurchase intention. Regarding psychological barriers, Chen et al. (2022) found that individuals’ perceived tradition barriers to changes in established ticketing habits brought about by mobile ticketing services were key predictors of resistance, and Kuisma et al. (2007) found that those with a negative perception of online banking were more likely to display subsequent resistance.

Given that the IRT has previously demonstrated effective predictions of resistance intention and subsequent resistance behavior in innovative technologies such as mobile payments (Kaur et al., 2020), internet banking (Laukkanen et al., 2009), and smart home services (Hong et al., 2020), this study speculates that functional and psychological barriers of health chatbots are positively associated with people’s resistance intention. In short, individuals may be unwilling to engage with health chatbots if they believe that there are more barriers and risks involved than benefits. Similarly, when people have an adverse impression of the actual utility of health chatbots and perceive them as contradictory to their own healthcare-seeking norms, they may develop resistance intention toward health chatbots. Accordingly, this study proposes the following research hypotheses:

H1: Functional barriers have a positive effect on health chatbot resistance intention.

H2: Psychological barriers have a positive effect on health chatbot resistance intention.

2.2 Prototype perception and resistance behavior

Prototypes are social images that represent individuals’ intuitive perceptions of the typical characteristics conveyed by engaging in certain social behaviors, such as the degree to which they evaluate behaviors including smoking (Piko et al., 2007), alcohol abuse (Norman et al., 2007), substance use (Wills et al., 2003), and risky selfies (Chen et al., 2019). In daily life, prototypes are commonly perceived as representations of a particular group that are easily identifiable and visible (Gibbons and Gerrard, 1995). Prototype perceptions of specific groups or social behaviors facilitate or inhibit individual behavioral tendencies (Thornton et al., 2002; Gerrard et al., 2008; Litt and Lewis, 2016; Lazuras et al., 2019). For example, adolescents’ negative prototype perceptions of smoking (e.g., it is “stupid”) significantly predict their resistance to smoking (Piko et al., 2007). Conversely, if they perceive smoking as a positive prototype (e.g., it is “cool”), they are more likely to smoke (Gibbons and Gerrard, 1995). Thus, by adapting to, assimilating, or distancing themselves from specific prototypes, individuals can adopt behaviors that build a desired self-image or resist certain behaviors to avoid a socially unfavorable image (Gibbons et al., 1991; Gibbons and Gerrard, 1995).

Individual attitudes and subsequent behavioral tendencies are commonly thought to be influenced by prototypical similarity and favorability (Lane and Gibbons, 2007; Branley and Covey, 2018). Prototypical similarity is the degree of similarity between the individual’s perceived self and the prototype, and is usually assessed by the individual’s response to the question “How similar are you to the prototype?” (Gerrard et al., 2008). Prototypical favorability is considered to be an individual’s intuitive attitudinal evaluation toward a certain group or behavior, the assessment of which usually involves adjectival descriptors (Gibbons and Gerrard, 1995). For example, prototype favorability is usually measured by evaluating how certain behaviors are consistent with a series of adjectives such as “popular” or “unpleasant.” The more favorable individuals’ attitudes toward particular groups or objects, the greater their likelihood of joining the group or engaging in that behavior, and vice versa (Piko et al., 2007). Yokoi et al. (2021) discovered that the perceived low-value similarity of AI healthcare technologies led to a distrust of AI healthcare systems, and the degree of perceived anxiety and fear regarding health services may also lead to individual resistance behavior (Tsai et al., 2019).

Although initial research on the PWM relied solely on prototypical perceptions to explain behavioral willingness (Blanton et al., 1997; Gibbons et al., 1998), recent studies have shown that individuals’ prototypical perceptions can also explain behavioral intentions (Norman et al., 2007; Zimmermann and Sieverding, 2010). Further research on the effects of prototypical properties by Blanton et al. (2001) suggests that negative prototypical perceptions are more likely to lead to personal behavioral changes. In an investigation of teenage smoking resistance, it was observed that negative prototype perceptions were more likely to profoundly influence behavioral decisions than positive perceptions (Piko et al., 2007).

Based on the studies above, it can be inferred that if people hold negative prototypical beliefs about health chatbots, such as “unsafe” and “unreliable,” their subsequent resistance intention and resistance willingness toward health chatbots are likely to be stronger. Thus, this study speculates that a negative prototype perception regarding health chatbots may increase people’s resistance intention and willingness, and the following research hypotheses are proposed:

H3a: A negative prototype perception regarding health chatbots has a positive effect on resistance intention.

H3b: A negative prototype perception regarding health chatbots has a positive effect on resistance willingness.

2.3 Mediating role of resistance intention and resistance willingness

Previous studies have investigated the acceptance and resistance behaviors of individuals in the context of innovative medical technologies from a rational decision-making perspective (Tavares and Oliveira, 2018; Ye et al., 2019), generally concluding that individuals’ adoption behaviors toward medical technologies are the result of thoughtful deliberation (Deng et al., 2018; Wang et al., 2022). However, individuals’ decisions to accept healthcare innovations are not necessarily reasonable or logical. Irrational elements such as self-related emotions (Sun et al., 2023), social pressure (Jianxun et al., 2021), and specific sociocultural contexts (Hoque and Bao, 2015; Low et al., 2021) have also been found to have a significant impact on decisions to utilize digital health technology.

According to the PWM, “reasoned action” and “social reaction” constitute the two pathways through which individuals process information (Gibbons et al., 1998). “Reasoned action” is considered to be akin to the deductive pathway of the theory of reasoned action (TRA), which refers to people’s behavioral intention based on rational considerations and after thoroughly considering the consequences of a given behavior (Todd et al., 2016). For example, the perceived usefulness and usability of telemedicine technology are critical for promoting usage intentions and behaviors (Rho et al., 2014), whereas the greater the perceived performance and privacy risks of mobile physician procedures, the lower the adoption intentions (Klaver et al., 2021). Meanwhile, according to Todd et al. (2016), the “social reaction” pathway is dominated by irrational causes and is a behavioral reaction based on intuitive or heuristic elements. Thus, in contrast to behavioral intentions, which are built on rational decision-making, behavioral willingness represents reactive actions in response to a specific situational stimulus or social stress (Chen et al., 2019) and is more likely to be profoundly influenced by perceived prototypes (Hyde and White, 2010). For example, in a prior study investigating expert perspectives on the acceptance of chatbots for sexual and reproductive health (SRH) services, half the participants from the total sample expressed low acceptance because of the perceived unreliability of such devices (Nadarzynski et al., 2023).

Based on the aforementioned studies, this study suggests that there is a dual psychological process of resistance intention and resistance willingness behind people’s resistance behavioral tendency toward health chatbots. Resistance intention refers to individuals’ taking action based on rational considerations before engaging in resistance behavior. Resistance willingness is recognized as a relatively emotional and impulsive behavioral tendency behind individuals’ resistance behavior (Gerrard et al., 2008; Todd et al., 2016), where individuals are more likely motivated by social pressure and ambiguous perceptions regarding the typical negative characteristics of a specific technology. In summary, individuals may follow both rational and irrational behavioral paths in the process of innovative medical technology utilization. Consequently, this study delineates the rational and irrational psychological processes behind individuals’ resistance behavior toward health chatbots and investigates the potential influence of two psychological mechanisms, resistance intention and resistance willingness, on their resistance behavioral tendency. The functional and psychological barriers of the IRT stem from the “rational person hypothesis,” which emphasizes that economic gains and cost savings largely influence the likelihood of innovation resistance (Szmigin and Foxall, 1997). Therefore, this study speculates that resistance intention mediates the relationship between functional barriers and resistance behavioral tendency, as well as the relationship between psychological barriers and resistance behavioral tendency. Furthermore, resistance intention and resistance willingness were hypothesized to mediate the relationship between negative prototype perceptions of health chatbots and resistance behavioral tendency. Accordingly, this study proposes the following hypotheses:

H4: Resistance intention and resistance willingness toward health chatbots have positive effects on resistance behavioral tendency.

H5: Resistance intention toward health chatbots mediates the relationship between functional barriers and resistance behavioral tendency.

H6: Resistance intention toward health chatbots mediates the relationship between psychological barriers and resistance behavioral tendency.

H7: Resistance intention and resistance willingness toward health chatbots mediate the relationship between a negative prototype perception regarding health chatbots and resistance behavioral tendency.

3 Methodology

3.1 Sample and procedure

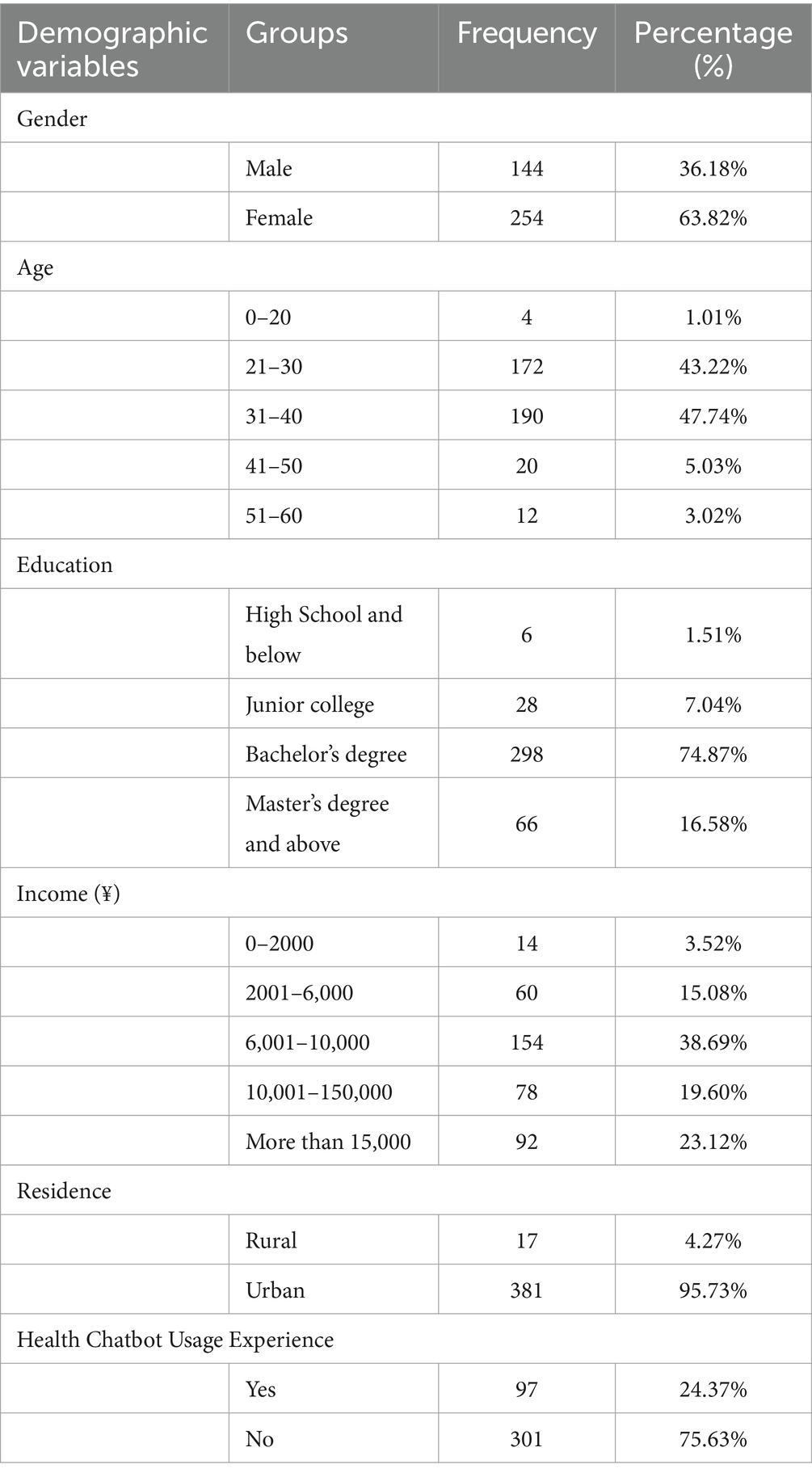

To examine the research hypotheses, data were collected using Credamo (www.credamo.com), a popular online questionnaire research platform in China; the respondents were provided extrinsic incentives to register for the survey (Zheng, 2023). By checking each participant’s IP address and limiting each device to a single response, the questionnaire system automatically ensured the validity of the answers. Following completion of the informed consent form, participants completed a self-report questionnaire about health chatbots through Credamo. To ensure that each participant was fully informed about health chatbots and their usage scenarios, the respondents were initially provided with a description of what a health chatbot is and how it functions. Following this step, a total of 28 items were presented in the main component of the questionnaire to evaluate respondents’ views concerning functional barriers, psychological barriers, prototype perceptions, resistance intentions, resistance willingness, and resistance behavioral tendency toward health chatbots. Finally, respondents were asked to provide demographic information including gender, age, education, income, residence, and experience with health chatbots. A total of 406 questionnaires were collected; eight respondents were excluded because they failed the attention check, leaving 398 participants in the final research sample. The Ethics Committee of the School of Journalism and Communication, Jinan University (China; JNUSJC-2023-018) provided ethics approval for this study.

The sample size was determined following the criteria recommended by Kline (2023), which suggests that the ratio of the number of measured items to the number of participants should be at least 1:10. The 398 participants whose data were used for our analysis exceeded this sample size estimation and satisfied the academic recommendations. Table 1 presents the demographic information of the participants.
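The sample size reasoning above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the item count, the number of collected questionnaires, and the number of exclusions come from the text, while the function name and constant names are assumptions introduced here.

```python
# Figures reported in Section 3.1; names below are illustrative assumptions.
N_ITEMS = 28                 # questionnaire items in the main component
PARTICIPANTS_PER_ITEM = 10   # rule of thumb: at least 10 participants per item
COLLECTED = 406              # questionnaires collected
FAILED_ATTENTION_CHECK = 8   # respondents excluded


def minimum_sample_size(n_items: int, per_item: int = PARTICIPANTS_PER_ITEM) -> int:
    """Smallest sample that satisfies the items-to-participants ratio."""
    return n_items * per_item


final_sample = COLLECTED - FAILED_ATTENTION_CHECK
required = minimum_sample_size(N_ITEMS)

print(f"final sample = {final_sample}, required = {required}, "
      f"adequate = {final_sample >= required}")
```

Under this rule of thumb, the 28 items call for at least 280 participants, which the final sample of 398 exceeds.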

3.2 Measures

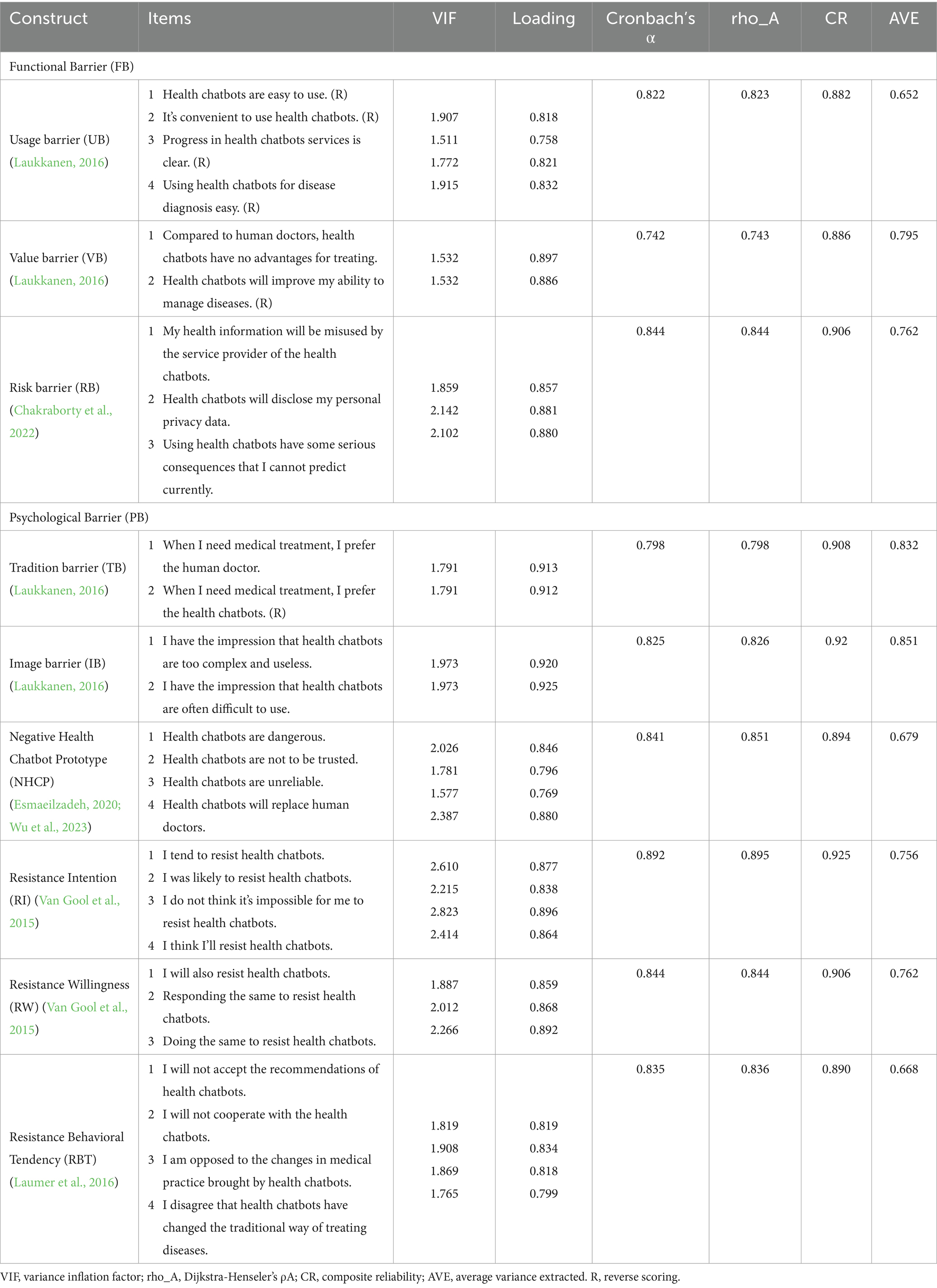

All measuring instruments utilized in this study were checked by two professionals in this research field, who jointly translated the instruments into Chinese after discussing and resolving differences to improve the questionnaires’ clarity, reliability, and content validity. Twenty-three respondents, including two experts, were included in a pretest to ensure the semantic content of the items and logical structure of the questionnaires. The questionnaires’ content and structure were modified based on their feedback, as necessary. All items (Table 2) were evaluated using a 5-point Likert scale ranging from “1 = completely disagree” to “5 = completely agree.”

3.2.1 Functional barriers

According to Ram and Sheth (1989), functional barriers are the constraints of innovative technologies that require changes in users’ established behavioral habits, norms, and traditions. They include three dimensions of individual perceptions relating to usage barriers, value barriers, and risk barriers regarding innovation. This study measured the perceived functional barriers of people’s resistance to health chatbots in terms of the three dimensions mentioned above.

Usage Barriers (UB): Usage barriers indicate the amount of effort required to comprehend and utilize innovative technologies, as well as the degree of change to existing usage routines and habits (Ram and Sheth, 1989). Four items derived from Laukkanen (2016) were used to evaluate individual assessments of usage barriers related to health chatbots; for example, “Health chatbots are easy to use” (Cronbach’s α = 0.823).

Value Barriers (VB): Value barriers are generated by inconsistencies between innovations and current value systems, particularly when there are inequitable advantages when adopting innovative technologies (Parasuraman and Grewal, 2000). Two items adapted from Laukkanen (2016) were used to evaluate individuals’ perceptions of value barriers related to health chatbots; for example, “Compared to human doctors, health chatbots have no advantages for treating” (Cronbach’s α = 0.742).

Risk Barriers (RB): Risk barriers are described as adoption barriers caused by users’ perceived uncertainty regarding innovative technologies (Marett et al., 2015). Three items derived from Chakraborty et al. (2022) were used to evaluate individuals’ risk perceptions of health chatbots; for example, “My health information will be misused by the service provider of the health chatbots” (Cronbach’s α = 0.844).

3.2.2 Psychological barriers

Innovation may lead to psychological contradictions for individuals in some aspects, such as the impact of individuals’ technology utilization on their traditions and norms, and the perceived image barriers of innovation, which include the two dimensions of tradition barriers and image barriers (Ram and Sheth, 1989; Yu and Chantatub, 2015).

Tradition Barriers (TB): Tradition barriers are characterized by changes in existing user routines, culture, and behaviors, as well as social pressures linked to the application of innovation (Sadiq et al., 2021). Two items adapted from Laukkanen (2016) were used to evaluate individuals’ perceptions of tradition barriers related to health chatbots; for example, “When I need medical treatment, I prefer the human doctor” (Cronbach’s α = 0.798).

Image Barriers (IB): Image barriers refer to individuals’ negative impressions of innovation, focusing primarily on perceptions of the level of complexity of innovation utilization (Lian and Yen, 2014). Two items adapted from Laukkanen (2016) were used to evaluate individuals’ perceptions of image barriers related to health chatbots; for example, “I have the impression that health chatbots are too complex and useless” (Cronbach’s α = 0.825).

3.2.3 Negative health chatbot prototype

In line with the prototype perception literature (Gibbons and Gerrard, 1995; Lazuras et al., 2019) and existing public perception research on medical AI (Esmaeilzadeh, 2020; Wu et al., 2023), the participants were asked to self-report their perceptions of the typical risk characteristics of health chatbots. An item example is as follows: “Health chatbots are dangerous” (Cronbach’s α = 0.841).

3.2.4 Resistance intention

Four items adapted from Van Gool et al. (2015) were used to evaluate individuals’ resistance intentions toward health chatbots. For example, an item is “I tend to resist health chatbots” (Cronbach’s α = 0.892).

3.2.5 Resistance willingness

Based on the prototype perception literature (Gibbons and Gerrard, 1995; Van Gool et al., 2015), resistance willingness toward health chatbots was measured by asking individuals about their experience in a given scenario: “If in real life and the online world, you found yourself surrounded by people who were resisting health chatbots, what would you do?” The participants were asked to self-report their resistance willingness in response to three items based on this scenario; for example, “I will also resist health chatbots” (Cronbach’s α = 0.844).

3.2.6 Resistance behavioral tendency

Three items adapted from Laumer et al. (2016) were utilized to evaluate individual resistance behavioral tendency of health chatbots. An item example is as follows: “I will not accept the recommendations of health chatbots” (Cronbach’s α = 0.835).

4 Results

Partial least squares structural equation modeling (PLS-SEM) was employed to examine the proposed research model. Compared to covariance-based structural equation modeling (CB-SEM), another important structural equation modeling method, PLS-SEM has flexibility in model construction, supports path estimation, and computes model parameters under non-normal distribution conditions (Hulland, 1999). PLS-SEM also maximizes the explanatory power of endogenous variables, making it more appropriate for small and medium samples, as well as for studies targeting causal inference and prediction (Hair et al., 2019). Generally, PLS-SEM consists of two components: a measurement model used to examine the relationships between observable and latent variables and a structural model used to examine the relationships between exogenous and endogenous latent variables.

4.1 Common method bias

Cross-sectional surveys based on respondents’ self-reports may have a common method bias (CMB) issue (Podsakoff et al., 2003). This study first employed Harman’s single-factor technique to examine possible CMB, and the results revealed that the single factor accounted for 33.29% of the total variance, which did not exceed the 50% threshold (Chang et al., 2020). Second, the potential marker method was used to evaluate CMB, utilizing age as the marker variable (Li et al., 2023); the results showed that the correlation coefficients between the marker variable and the other variables in our model did not exceed 0.3 (Lindell and Whitney, 2001). Finally, the collinearity diagnostics among the explanatory variables revealed that the variance inflation factor (VIF) values were less than 3.3 (Kock, 2015). Taken together, these statistical indicators suggest that CMB was not a serious concern in this study.

4.2 Measurement model assessment

First, the measurement model was tested to examine the validity and reliability of the survey instruments. Table 2 shows that for all the instruments, the Cronbach’s α, Dijkstra-Henseler’s ρA, and composite reliability (CR) exceed 0.7, indicating acceptable internal reliability of the tools (Fornell and Larcker, 1981; Dijkstra and Henseler, 2015). Furthermore, the factor loadings of the instruments were all higher than the expected value of 0.7, and the average variance extracted (AVE) varied from 0.652 to 0.851, which is higher than the threshold value of 0.5 (Fornell and Larcker, 1981). Consequently, the convergent validity of the instruments was verified.
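To make the convergent validity criteria concrete, the following sketch computes AVE and CR from standardized factor loadings using the standard Fornell and Larcker (1981) formulas. The loadings in the example are hypothetical placeholders, not values from Table 2, and the function names are assumptions introduced for illustration.

```python
from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def meets_convergent_criteria(loadings: Sequence[float]) -> bool:
    """Apply the thresholds cited in the text: loadings > 0.7, AVE > 0.5, CR > 0.7."""
    return (
        all(l > 0.7 for l in loadings)
        and average_variance_extracted(loadings) > 0.5
        and composite_reliability(loadings) > 0.7
    )


# Hypothetical loadings for a three-item construct (NOT taken from the study).
example_loadings = [0.80, 0.80, 0.80]
print(round(average_variance_extracted(example_loadings), 3))
print(round(composite_reliability(example_loadings), 3))
print(meets_convergent_criteria(example_loadings))
```

With three loadings of 0.80, the AVE is 0.64 and the CR is about 0.84, so this hypothetical construct would pass all three thresholds.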

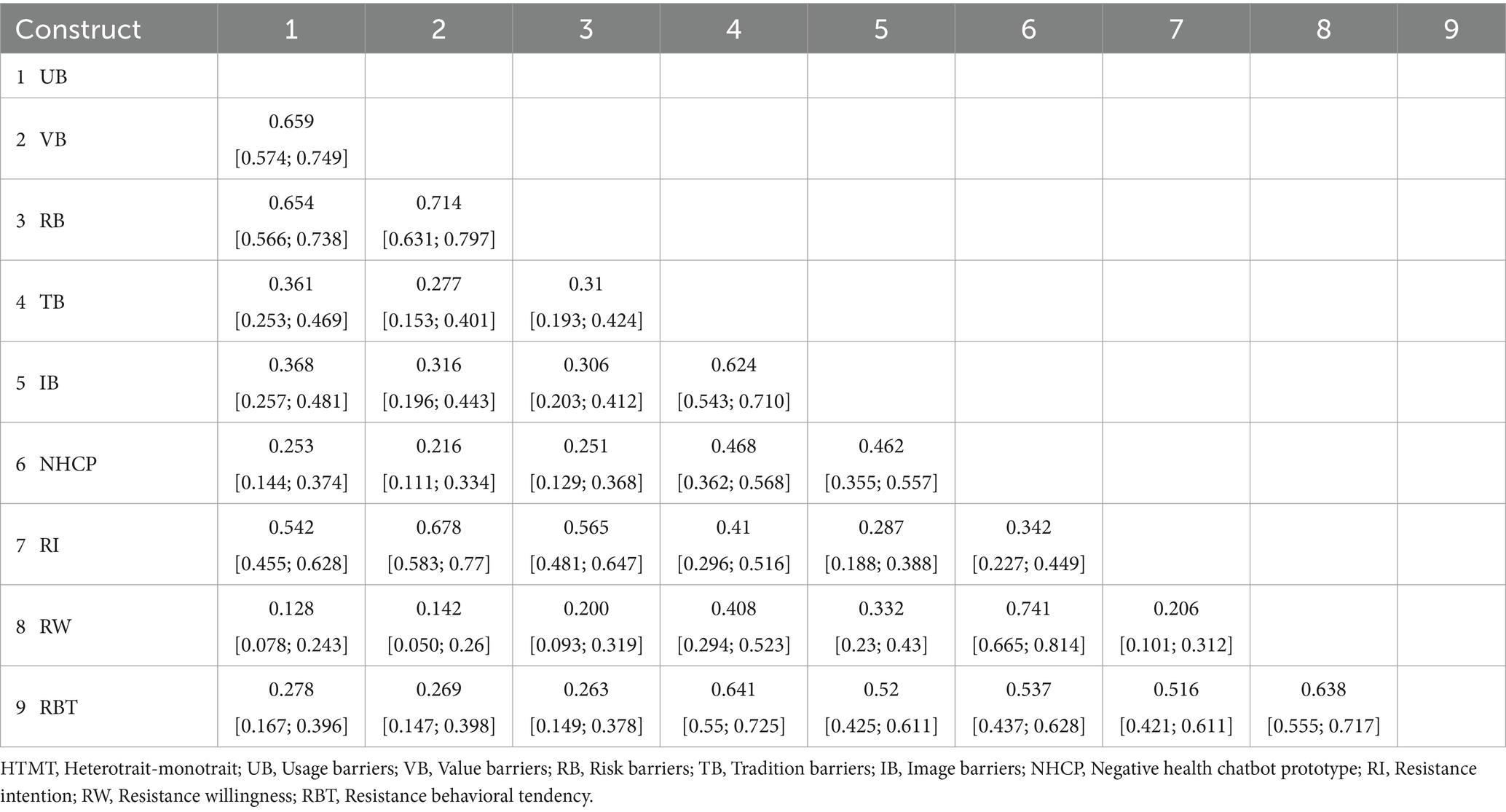

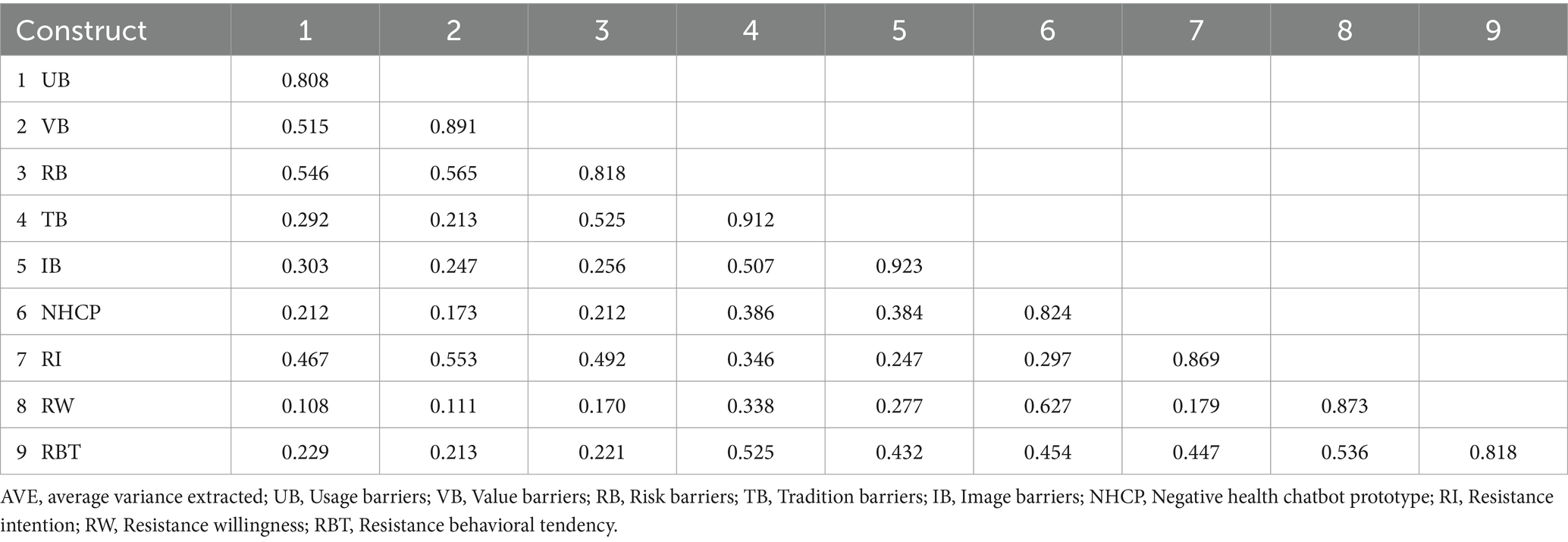

Second, the discriminant validity of the instruments was examined. As shown in Table 3, the HTMT ratio varied from 0.128 to 0.741 and did not exceed the 0.85 threshold, and the confidence interval of the HTMT ratio did not exceed 1.00 (Henseler et al., 2015). Table 4 indicates that the correlation coefficient between any two variables is less than 0.8, and the square root of the AVE of each variable exceeds its correlation coefficients with the other variables (Campbell and Fiske, 1959; Fornell and Larcker, 1981). These results suggest that the measurements passed the discriminant validity test.
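The Fornell-Larcker comparison described above can be expressed as a small check: for every pair of constructs, the correlation must be smaller than the square root of each construct's AVE. The sketch below assumes a simple dictionary layout, and all construct labels and numbers in the example are hypothetical, not values from Table 4.

```python
import math
from typing import Dict, Tuple


def fornell_larcker_ok(
    ave: Dict[str, float],
    correlations: Dict[Tuple[str, str], float],
) -> bool:
    """Return True if sqrt(AVE) of both constructs exceeds every pairwise correlation."""
    for (a, b), r in correlations.items():
        if abs(r) >= min(math.sqrt(ave[a]), math.sqrt(ave[b])):
            return False
    return True


# Hypothetical AVE values and correlation (illustration only).
example_ave = {"RI": 0.70, "RW": 0.65}
passing = {("RI", "RW"): 0.55}   # below sqrt(0.65), roughly 0.806
failing = {("RI", "RW"): 0.85}   # above sqrt(0.65)

print(fornell_larcker_ok(example_ave, passing))
print(fornell_larcker_ok(example_ave, failing))
```

This covers only the Fornell-Larcker criterion; the HTMT ratio is computed from item-level correlations and is not reproduced here.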

Finally, the standardized root mean square residual (SRMR) and d_ULS were assessed to evaluate the global model fit. The SRMR index of the proposed research model was 0.054, which is lower than the recommended threshold of 0.08. The d_ULS is expected to be lower than 0.95 (Tenenhaus et al., 2005), and the value for the proposed research model was 0.926. Because both indices fell below their recommended thresholds, the overall degree of model fit met the research requirements.

4.3 Structural model assessment

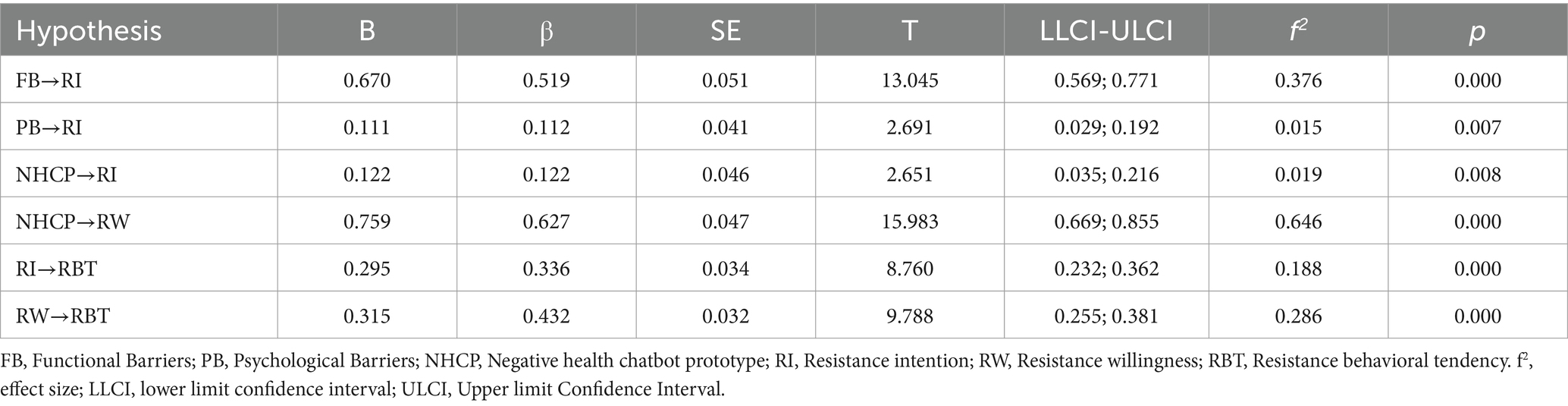

Using the PLS-SEM algorithm and bootstrapping resampling procedure, this study evaluated the path coefficients and significance of the proposed model. The explained variance (R²) and effect size (f²) were also estimated to test the model’s explanatory power and actual efficacy, respectively. The R² values for resistance intention, resistance willingness, and resistance behavioral tendency were 0.377, 0.391, and 0.429, respectively, each of which was higher than 0.19 (Purwanto, 2021). This indicated that the research model had good explanatory power. The f² value was used to estimate whether the latent variables had substantial effects on the endogenous variables. Table 5 indicates that the f² values range from 0.015 to 0.646; that is, two paths in the model have weak effects and four paths exceed the medium effect threshold (higher than 0.15; Cohen, 1988).
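For readers unfamiliar with the effect size statistic, Cohen's f² compares the R² of an endogenous variable with and without a given predictor. The sketch below shows the calculation and Cohen's (1988) conventional cut-offs (0.02, 0.15, 0.35). The R² of 0.429 is the reported value for resistance behavioral tendency, but the "excluded" R² of 0.300 is a hypothetical number chosen only to illustrate the formula.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """f^2 = (R^2 with predictor - R^2 without predictor) / (1 - R^2 with predictor)."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def effect_size_label(f2: float) -> str:
    """Classify f^2 using Cohen's (1988) conventional thresholds."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# 0.429 is reported in the text; 0.300 is a hypothetical R^2 after dropping one predictor.
example_f2 = cohens_f2(r2_included=0.429, r2_excluded=0.300)
print(round(example_f2, 3), effect_size_label(example_f2))

# The extremes of the f^2 range reported in the text.
print(effect_size_label(0.015), effect_size_label(0.646))
```

Note that under these cut-offs the lowest reported value (0.015) falls just below the conventional "small" boundary of 0.02.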

As shown in Table 5, the results of the path analysis indicate that functional barriers positively influenced resistance intention (β = 0.519, p < 0.001); thus, H1 was supported. Psychological barriers also had a significant positive effect on resistance intention (β = 0.112, p = 0.007); thus, H2 was supported. A negative prototype perception regarding health chatbots had a significant positive effect on both resistance intention (β = 0.122, p = 0.008) and resistance willingness (β = 0.627, p < 0.001); thus, H3a and H3b were supported. Finally, both resistance intention (β = 0.336, p < 0.001) and resistance willingness (β = 0.432, p < 0.001) were positive predictors of resistance behavioral tendency; thus, H4 was supported.

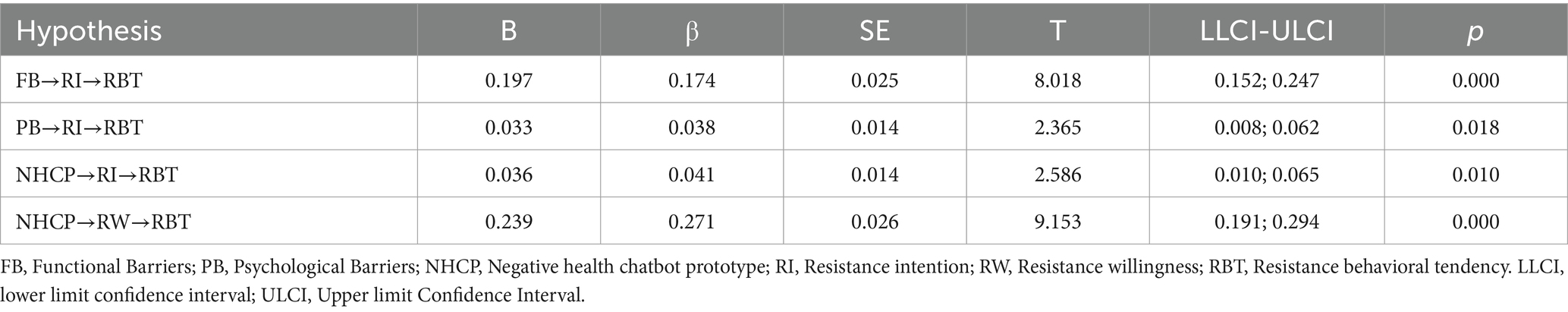

We also conducted a series of mediation analyses to examine the mediating role of resistance intention and resistance willingness between functional barriers, psychological barriers, a negative prototype perception regarding health chatbots, and resistance behavioral tendency. Table 6 indicates that resistance intention significantly mediated the link between functional barriers and resistance behavioral tendency (β = 0.174, CI [0.152; 0.247], p < 0.001). Thus, H5 was supported. Moreover, resistance intention (β = 0.038, CI [0.008; 0.062], p = 0.018) also had a significant mediating effect in the link between psychological barriers and resistance behavioral tendency. Thus, H6 was supported. Finally, resistance intention (β = 0.041, CI [0.010; 0.065], p = 0.010) and resistance willingness (β = 0.271, CI [0.191; 0.294], p < 0.001) also had significant mediating effects on the link between a negative prototype perception regarding health chatbots and resistance behavioral tendency. Thus, H7 was supported (see Figure 2).
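The reported indirect effects can be reproduced from the path coefficients, since in a mediation model each indirect effect is the product of the two paths that form it. The short sketch below uses the standardized coefficients reported in the path analysis; the construct abbreviations (FB, PB, NPP, RI, RW, RBT) and the helper function are introduced here for illustration and are not the authors’ notation. It does not reproduce the bootstrapped confidence intervals, which require the raw data.

```python
# Standardized path coefficients as reported in the path analysis.
# FB = functional barriers, PB = psychological barriers,
# NPP = negative prototype perception, RI = resistance intention,
# RW = resistance willingness, RBT = resistance behavioral tendency.
paths = {
    ("FB", "RI"): 0.519,
    ("PB", "RI"): 0.112,
    ("NPP", "RI"): 0.122,
    ("NPP", "RW"): 0.627,
    ("RI", "RBT"): 0.336,
    ("RW", "RBT"): 0.432,
}

def indirect_effect(predictor, mediator, outcome="RBT"):
    """Indirect effect = (predictor -> mediator) * (mediator -> outcome)."""
    return paths[(predictor, mediator)] * paths[(mediator, outcome)]

for predictor, mediator in [("FB", "RI"), ("PB", "RI"), ("NPP", "RI"), ("NPP", "RW")]:
    effect = indirect_effect(predictor, mediator)
    print(f"{predictor} -> {mediator} -> RBT: {effect:.3f}")
```

Rounded to three decimals, the products are 0.174, 0.038, 0.041, and 0.271, matching the mediation estimates above. The comparison also makes the central result concrete: the willingness route from negative prototype perception (0.271) is larger than any route through resistance intention.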

5 Discussion and implications

5.1 Discussion

This study aimed to examine the factors contributing to individuals’ resistance toward health chatbots, as well as the underlying psychological mechanisms, by constructing a parallel mediation model. Based on the theoretical frameworks of IRT and PWM, our results clarify the effects of functional barriers, psychological barriers, and negative prototype perceptions regarding health chatbots on resistance behavioral tendency, as well as the mediating roles played by resistance intention and resistance willingness between their linkages.

Consistent with prior studies conducted in the domain of innovation resistance (Sadiq et al., 2021; Cham et al., 2022; Friedman and Ormiston, 2022), this study revealed that perceived functional and psychological barriers exerted a significant positive influence on individuals’ resistance intention to health chatbots. Moreover, the path coefficients indicate that functional barriers to health chatbots have a greater positive impact on people’s resistance intention and behavior than psychological barriers. This conclusion is similar to that of prior studies, such as Kautish et al. (2023), who found that functional barriers to telemedicine apps play a more predictive role in users’ purchase resistance intentions. Furthermore, our results demonstrate that people’s negative prototype perceptions regarding health chatbots, such as their being “dangerous” and “untrustworthy,” significantly influence their resistance intention, resistance willingness, and resistance behavioral tendency. This suggests that people’s heuristic perceptions of the negative images and risk beliefs concerning health chatbots are important determinants of their resistance behavioral tendency. This finding responds to a previous research question on whether people’s negative psychological perceptions of healthcare AI affect their subsequent usage intentions and behaviors (Schepman and Rodway, 2020; Jussupow et al., 2022). Specifically, according to the path coefficients, a negative prototype perception regarding health chatbots had a greater impact on resistance behavioral tendency through resistance willingness than through resistance intention. This is consistent with previous research, which found that prototypical perceptions influence individual behaviors through behavioral willingness rather than behavioral intentions in behaviors such as smoking (Gerrard et al., 2005) and alcohol abuse (Davies et al., 2016; Gibbons et al., 2016).

Drawing on the PWM, this study reveals the dual rational/irrational mediating mechanisms underlying people’s resistance to health chatbots. In particular, this study demonstrates that individuals’ perceived functional and psychological barriers may significantly influence their resistance intention, thereby increasing the likelihood of subsequent resistance behavioral tendency. Similarly, negative prototypes regarding health chatbots may increase resistance behavioral tendency through resistance intention and resistance willingness. Importantly, our results indicate that negative prototype perceptions regarding health chatbots have a greater impact on individuals’ resistance willingness and their subsequent resistance behavioral tendency than functional and psychological barriers. In summary, when confronted with irrational factors such as social pressure and intuitive negative cues, people are more likely to reject health chatbots. This is consistent with previous research by Sun et al. (2023), who discovered that the presence of emotional disgust toward smartphone apps reduced individuals’ adoption intentions. This result reaffirms the prior finding that prototype perceptions have a greater influence through behavioral willingness, and thus impact individual behavior (Myklestad and Rise, 2007; Abedini et al., 2014; Elliott et al., 2017). Fiske et al. (2019) explained that since people do not integrate AI devices into their real lives, their ambiguous perceptions arising from their lack of specific knowledge can significantly affect the perceived risks of AI technologies (Dwivedi et al., 2021), and may lead to their refusal to utilize health chatbots.

5.2 Implications

5.2.1 Theoretical implications

By constructing a comprehensive model that includes rational and irrational psychological pathways to health chatbot resistance, this study contributes theoretically to the existing literature in the following ways. First, it enriches existing research on people’s acceptance behavior toward health chatbots. Previous studies have focused on investigating individuals’ attitudes toward health chatbots (Palanica et al., 2019), adoption motivations (Nadarzynski et al., 2019; Zhu et al., 2022), and psychological processes of adoption (Chang et al., 2022), with the aim of exploring ways to facilitate people’s adoption behavior in the context of medical AI technologies. However, identifying the factors that lead to people’s resistance to medical AI technology is a critical component in discovering ways to promote people’s adoption behaviors. This study systematically and empirically explored the factors and psychological mechanisms that influence people’s resistance to health chatbots by constructing a parallel mediation model. The study extends our understanding of individuals’ acceptance behaviors toward medical AI technologies from the perspective of their formative resistance behavioral tendency. Second, by combining the IRT and PWM, this study enriches existing literature on the antecedents and psychological pathways of individuals’ resistance to health chatbots. Prior research has primarily emphasized the impact of rational considerations such as acceptability (Boucher et al., 2021), perceived utility (Nadarzynski et al., 2019), and performance expectancy (Huang et al., 2021), on individuals’ health chatbot adoption behavior. 
In contrast, this study focused on the effect of individuals’ direct heuristic negative prototype perceptions regarding health chatbots on resistance willingness and subsequent behavior. It revealed that the irrational paths driven by negative prototype perceptions have a more profound influence on individuals’ resistance willingness and behavior toward health chatbots, which provides a valuable theoretical reference for future research on medical AI resistance behavior.

5.2.2 Practical implications

This study also reveals some practical insights that can contribute to the development of interventions for addressing people’s resistance to health chatbots. First, our findings suggest that individuals’ perceived functional barriers to health chatbots can significantly influence their resistance intentions and behaviors. Therefore, designing more convenient and relatively user-friendly health chatbots may be the way forward. As noted by Lee et al. (2020), improving the interactivity and entertainment of AI devices in healthcare may help reduce communication barriers between users and AI devices, thus increasing the acceptance of health chatbots. In addition, service feedback mechanisms for health chatbots should be established and adequately evaluated to optimize the devices, which in turn would reduce the perceived complexity of health chatbots and actual usage difficulty. Second, this study found that individuals’ psychological barriers to health chatbots also significantly impact resistance intention as well as subsequent resistance behavioral tendency. Thus, future designers of health chatbots should consider the important influence of psychological barriers on resistance behavioral tendency. Accordingly, health chatbot providers should design products and services that are more applicable to people’s daily lives and decrease the degree of disruption to their established routines. Furthermore, offline health chatbot experience programs should be established to enhance people’s sense of security in utilizing health chatbots and encourage the acceptance of innovative medical AI technologies. The necessary knowledge about health chatbots and their advantages should be increased to reverse the possible negative perception of health chatbots and reduce individuals’ psychological discomfort in their adoption process (Röth and Spieth, 2019). 
Finally, our findings highlighted the significant impact of individuals’ negative prototype perceptions regarding health chatbots on their resistance behavioral tendency. Therefore, it is crucial to eliminate people’s instinctive negative views of health chatbots for their social popularization. Health chatbot providers, in particular, should utilize influential media channels to continuously disseminate information regarding health chatbots’ scientific utility to address asymmetric perceptions and promote an objective understanding of this technology. Moreover, scientific facts about health chatbots, such as functioning principles, utilization scenarios, and essential precautions, should be popularized by media outlets to reverse negative prototypical perceptions about health chatbots and support rational views and assessments regarding this technology.

6 Limitations and future research

The present study had some limitations. First, the data for this study were derived from a cross-sectional survey based on self-reports; future research could use experimental methods to acquire causal insights or conduct a longitudinal tracking survey to construct a more dynamic model that explores the evolution of resistance attitudes and behaviors. Second, although this study has demonstrated the influence of factors such as functional barriers, psychological barriers, and negative prototype perceptions regarding health chatbots on resistance behavioral tendency, the influences of innovation resistance are commonly grounded in specific scenarios (Claudy et al., 2015). Thus, it would be valuable for future studies to incorporate in-depth interviews as well as qualitative research methodologies such as grounded theory to obtain more comprehensive results on the impact of health chatbots on individuals. Third, the measurement of resistance behavioral tendency in this study may not strictly represent actual resistance behavior. It is recommended that future research adopt more direct methods to measure people’s actual resistance behavior toward health chatbots. Fourth, consistent with prior research, the current study investigated resistance psychology and behavior primarily from the perspective of individual perceptions, attitudes, and behaviors. However, the factors influencing individual resistance to innovative technologies are diverse (Talwar et al., 2020; Dhir et al., 2021). For example, a recent study confirmed that user emotions impact innovation evaluation and subsequent resistance behavior (Castro et al., 2020). Therefore, future research should consider the effects of factors such as individual emotions, cultural context, and social circumstances on individuals’ resistance behaviors.

7 Conclusion

The popularization of AI in healthcare depends on the population’s acceptance of related technologies, and overcoming individual resistance to AI healthcare technologies such as health chatbots is crucial for their diffusion (Tran et al., 2019; Gaczek et al., 2023). Based on the IRT and PWM, this study investigated the effects of functional barriers, psychological barriers, and negative prototypical perceptions regarding health chatbots on resistance behavioral tendency and further identified the mediating roles of resistance intention and resistance willingness in these associations. The results indicated that resistance intention mediated the relationships of functional barriers and psychological barriers with resistance behavioral tendency. Furthermore, the relationship between negative prototype perceptions and resistance behavioral tendency was mediated by resistance intention and resistance willingness. Importantly, the present study found that negative prototypical perceptions were more predictive of resistance behavioral tendency than functional and psychological barriers. This study empirically demonstrates the influence of the dual psychological mechanisms of rationality and irrationality behind individuals’ resistance to health chatbots, expanding knowledge on resistance behaviors toward health chatbots and recommending ways to overcome this resistance through tailored interventions.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://osf.io/d8mvu/.

Ethics statement

The studies involving humans were approved by the Ethics Committee of the School of Journalism and Communication, Jinan University (China) (JNUSJC-2023-018). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

XZ: Conceptualization, Data curation, Formal analysis, Methodology, Supervision, Writing – original draft, Writing – review & editing. YN: Formal analysis, Writing – original draft, Writing – review & editing. KL: Data curation, Funding acquisition, Project administration, Writing – review & editing. GL: Conceptualization, Formal analysis, Funding acquisition, Methodology, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research is supported by the Fundamental Research Funds for the Central Universities (23JNQMX50).

Acknowledgments

The authors would like to express their appreciation to each person who took part in this online survey.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abedini, S., MorowatiSharifabad, M., Kordasiabi, M. C., and Ghanbarnejad, A. (2014). Predictors of non-hookah smoking among high-school students based on prototype/willingness model. Health Promot. Perspect. 4, 46–53. doi: 10.5681/hpp.2014.006

Aggarwal, A., Tam, C. C., Wu, D., Li, X., and Qiao, S. (2023). Artificial intelligence-based Chatbots for promoting health behavioral changes: systematic review. J. Med. Internet Res. 25:e40789. doi: 10.2196/40789

Amann, J., Blasimme, A., Vayena, E., Frey, D., and Madai, V. I. (2020). Explainability for artificial intelligence in healthcare: a multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 20, 310–319. doi: 10.1186/s12911-020-01332-6

Bao, Y. (2009). Organizational resistance to performance-enhancing technological innovations: a motivation-threat-ability framework. J. Bus. Ind. Mark. 24, 119–130. doi: 10.1108/08858620910931730

Baron, J. (1994). Nonconsequentialist decisions. Behav. Brain Sci. 17, 1–10. doi: 10.1017/s0140525x0003301x

Billing. (2020). Healthtech startup Your.MD raises €25m from FTSE 100 company. Sifted. Available at: https://sifted.eu/articles/healthtech-your-md-raises-25m (Accessed July 24, 2023).

Blanton, H., Gibbons, F. X., Gerrard, M., Conger, K. J., and Smith, G. E. (1997). Role of family and peers in the development of prototypes associated with substance use. J. Fam. Psychol. 11, 271–288. doi: 10.1037/0893-3200.11.3.271

Blanton, H., VandenEijnden, R. J., Buunk, B. P., Gibbons, F. X., Gerrard, M., and Bakker, A. (2001). Accentuate the negative: social images in the prediction and promotion of condom use. J. Appl. Soc. Psychol. 31, 274–295. doi: 10.1111/j.1559-1816.2001.tb00197.x

Boucher, E. M., Harake, N. R., Ward, H. E., Stoeckl, S. E., Vargas, J., Minkel, J., et al. (2021). Artificially intelligent chatbots in digital mental health interventions: a review. Expert Rev. Med. Devices 18, 37–49. doi: 10.1080/17434440.2021.2013200

Branley, D. B., and Covey, J. (2018). Risky behavior via social media: the role of reasoned and social reactive pathways. Comput. Hum. Behav. 78, 183–191. doi: 10.1016/j.chb.2017.09.036

Branley-Bell, D., Brown, R., Coventry, L., and Sillence, E. (2023). Chatbots for embarrassing and stigmatizing conditions: could chatbots encourage users to seek medical advice? Front. Commun. 8, 1–12. doi: 10.3389/fcomm.2023.1275127

Campbell, D. T., and Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychol. Bull. 56, 81–105. doi: 10.1037/h0046016

Castro, C. A., Zambaldi, F., and Ponchio, M. C. (2020). Cognitive and emotional resistance to innovations: concept and measurement. J. Prod. Brand. Manag. 29, 441–455. doi: 10.1108/JPBM-10-2018-2092

Chakraborty, D., Singu, H. B., and Patre, S. (2022). Fitness Apps’s purchase behaviour: amalgamation of stimulus-organism-behaviour-consequence framework (S–O–B–C) and the innovation resistance theory (IRT). J. Retail. Consum. Serv. 67:103033. doi: 10.1016/j.jretconser.2022.103033

Cham, T. H., Cheah, J. H., Cheng, B. L., and Lim, X. J. (2022). I am too old for this! Barriers contributing to the non-adoption of mobile payment. Int. J. Bank Mark. 40, 1017–1050. doi: 10.1108/IJBM-06-2021-0283

Chang, I. C., Shih, Y. S., and Kuo, K. M. (2022). Why would you use medical chatbots? Interview and survey. Int. J. Med. Inform. 165:104827. doi: 10.1016/j.ijmedinf.2022.104827

Chang, S. J., Van Witteloostuijn, A., and Eden, L. (2020). Common method variance in international business research. Res. Meth. Intl. Busi. 385, 385–398. doi: 10.1007/978-3-030-22112-6

Chen, C. C., Chang, C. H., and Hsiao, K. L. (2022). Exploring the factors of using mobile ticketing applications: perspectives from innovation resistance theory. J. Retail. Consum. Serv. 67:102974. doi: 10.1016/j.jretconser.2022.102974

Chen, S., Schreurs, L., Pabian, S., and Vandenbosch, L. (2019). Daredevils on social media: a comprehensive approach toward risky selfie behavior among adolescents. New Media Soc. 21, 2443–2462. doi: 10.1177/1461444819850112

Claudy, M. C., Garcia, R., and O’Driscoll, A. (2015). Consumer resistance to innovation—a behavioral reasoning perspective. J. Acad. Mark. Sci. 43, 528–544. doi: 10.1007/s11747-014-0399-0

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. New York, NY: Academic Press.

Davies, E. L., Martin, J., and Foxcroft, D. R. (2016). Age differences in alcohol prototype perceptions and willingness to drink in UK adolescents. Psychol. Health Med. 21, 317–329. doi: 10.1080/13548506.2015.1051556

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13:319. doi: 10.2307/249008

Deng, Z., Hong, Z., Ren, C., Zhang, W., and Xiang, F. (2018). What predicts patients’ adoption intention toward mHealth services in China: empirical study. JMIR Mhealth Uhealth 6:e9316. doi: 10.2196/mhealth.9316

Dhir, A., Koshta, N., Goyal, R. K., Sakashita, M., and Almotairi, M. (2021). Behavioral reasoning theory (BRT) perspectives on E-waste recycling and management. J. Clean. Prod. 280:124269. doi: 10.1016/j.jclepro.2020.124269

Dijkstra, T. K., and Henseler, J. (2015). Consistent partial least squares path modeling. MIS Q. 39, 297–316. doi: 10.25300/MISQ/2015/93.2.02

Dwivedi, Y. K., Hughes, L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., et al. (2021). Artificial intelligence (AI): multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 57:101994. doi: 10.1016/j.ijinfomgt.2019.08.002

Elliott, M. A., McCartan, R., Brewster, S. E., Coyle, D., Emerson, L., and Gibson, K. (2017). An application of the prototype willingness model to drivers’ speeding behaviour. Eur. J. Soc. Psychol. 47, 735–747. doi: 10.1002/ejsp.2268

Esmaeilzadeh, P. (2020). Use of AI-based tools for healthcare purposes: a survey study from consumers’ perspectives. BMC Med. Inform. Decis. Mak. 20, 170–119. doi: 10.1186/s12911-020-01191-1

Fan, X., Chao, D., Zhang, Z., Wang, D., Li, X., and Tian, F. (2021). Utilization of self-diagnosis health chatbots in real-world settings: case study. J. Med. Internet Res. 23:e19928. doi: 10.2196/19928

Fiske, A., Henningsen, P., and Buyx, A. (2019). Your robot therapist will see you now: ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. J. Med. Internet Res. 21:e13216. doi: 10.2196/13216

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.1177/002224378101800104

Friedman, N., and Ormiston, J. (2022). Blockchain as a sustainability-oriented innovation?: opportunities for and resistance to Blockchain technology as a driver of sustainability in global food supply chains. Technol. Forecast. Soc. Change. 175:121403. doi: 10.1016/j.techfore.2021.121403

Gaczek, P., Pozharliev, R., Leszczyński, G., and Zieliński, M. (2023). Overcoming consumer resistance to AI in general health care. J. Interact. Mark. 58, 321–338. doi: 10.1177/10949968221151061

Gao, S., He, L., Chen, Y., Li, D., and Lai, K. (2020). Public perception of artificial intelligence in medical care: content analysis of social media. J. Med. Internet Res. 22:e16649. doi: 10.2196/16649

Gerrard, M., Gibbons, F. X., Houlihan, A. E., Stock, M. L., and Pomery, E. A. (2008). A dual-process approach to health risk decision making: the prototype willingness model. Dev. Rev. 28, 29–61. doi: 10.1016/j.dr.2007.10.001

Gerrard, M., Gibbons, F. X., Stock, M. L., Lune, L. S. V., and Cleveland, M. J. (2005). Images of smokers and willingness to smoke among African American pre-adolescents: an application of the prototype/willingness model of adolescent health risk behavior to smoking initiation. J. Pediatr. Psychol. 30, 305–318. doi: 10.1093/jpepsy/jsi026

Gibbons, F. X., and Gerrard, M. (1995). Predicting young adults’ health risk behavior. J. Pers. Soc. Psychol. 69, 505–517. doi: 10.1037/0022-3514.69.3.505

Gibbons, F. X., Gerrard, M., Blanton, H., and Russell, D. W. (1998). Reasoned action and social reaction: willingness and intention as independent predictors of health risk. J. Pers. Soc. Psychol. 74, 1164–1180. doi: 10.1037/0022-3514.74.5.1164

Gibbons, F. X., Gerrard, M., Lando, H. A., and McGovern, P. G. (1991). Social comparison and smoking cessation: the role of the “typical smoker”. J. Exp. Soc. Psychol. 27, 239–258. doi: 10.1016/0022-1031(91)90014-W

Gibbons, F. X., Kingsbury, J. H., Wills, T. A., Finneran, S. D., Dal Cin, S., and Gerrard, M. (2016). Impulsivity moderates the effects of movie alcohol portrayals on adolescents’ willingness to drink. Psychol. Addict. Behav. 30, 325–334. doi: 10.1037/adb0000178

Gionet. (2018). Meet Tess: The mental health chatbot that thinks like a therapist. The Guardian. Available at: https://www.theguardian.com/society/2018/apr/25/meet-tess-the-mental-health-chatbot-that-thinks-like-a-therapist (Accessed July 23, 2023).

Guo, J., and Li, B. (2018). The application of medical artificial intelligence technology in rural areas of developing countries. Health. Equity. 2, 174–181. doi: 10.1089/heq.2018.0037

Hair, J. F., Sarstedt, M., and Ringle, C. M. (2019). Rethinking some of the rethinking of partial least squares. Eur. J. Mark. 53, 566–584. doi: 10.1108/EJM-10-2018-0665

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Hong, A., Nam, C., and Kim, S. (2020). What will be the possible barriers to consumers’ adoption of smart home services? Telecommun. Policy 44:101867. doi: 10.1016/j.telpol.2019.101867

Hoque, M. R., and Bao, Y. (2015). Cultural influence on adoption and use of e-health: evidence in Bangladesh. Telemed. J. E Health 21, 845–851. doi: 10.1089/tmj.2014.0128

Huang, C. Y., Yang, M. C., and Huang, C. Y. (2021). An empirical study on factors influencing consumer adoption intention of an AI-powered chatbot for health and weight management. Int. J. Performability. Eng. 17:422. doi: 10.23940/ijpe.21.05.p2.422432

Hulland, J. (1999). Use of partial least squares (PLS) in strategic management research: a review of four recent studies. Strateg. Manag. J. 20, 195–204. doi: 10.1002/(SICI)1097-0266(199902)20:2%3C195::AID-SMJ13%3E3.0.CO;2-7

Hyde, M. K., and White, K. M. (2010). Are organ donation communication decisions reasoned or reactive? A test of the utility of an augmented theory of planned behaviour with the prototype/willingness model. Br. J. Health Psychol. 15, 435–452. doi: 10.1348/135910709X468232

Jianxun, C., Arkorful, V. E., and Shuliang, Z. (2021). Electronic health records adoption: do institutional pressures and organizational culture matter? Technol. Soc. 65:101531. doi: 10.1016/j.techsoc.2021.101531

Joshi, K. (1991). A model of users’ perspective on change: the case of information systems technology implementation. MIS Q. 15:229. doi: 10.2307/249384

Jussupow, E., Spohrer, K., and Heinzl, A. (2022). Identity threats as a reason for resistance to artificial intelligence: survey study with medical students and professionals. JMIR. Form. Res. 6:e28750. doi: 10.2196/28750

Kaur, P., Dhir, A., Singh, N., Sahu, G., and Almotairi, M. (2020). An innovation resistance theory perspective on mobile payment solutions. J. Retail. Consum. Serv. 55:102059. doi: 10.1016/j.jretconser.2020.102059

Kautish, P., Siddiqui, M., Siddiqui, A., Sharma, V., and Alshibani, S. M. (2023). Technology-enabled cure and care: an application of innovation resistance theory to telemedicine apps in an emerging market context. Technol. Forecast. Soc. Change. 192:122558. doi: 10.1016/j.techfore.2023.122558

Khanijahani, A., Iezadi, S., Dudley, S., Goettler, M., Kroetsch, P., and Wise, J. (2022). Organizational, professional, and patient characteristics associated with artificial intelligence adoption in healthcare: a systematic review. Health. Policy. Technol. 11:100602. doi: 10.1016/j.hlpt.2022.100602

Klaver, N. S., Van de Klundert, J., and Askari, M. (2021). Relationship between perceived risks of using mHealth applications and the intention to use them among older adults in the Netherlands: cross-sectional study. JMIR Mhealth Uhealth 9:e26845. doi: 10.2196/26845

Kleijnen, M., Lee, N., and Wetzels, M. (2009). An exploration of consumer resistance to innovation and its antecedents. J. Econ. Psychol. 30, 344–357. doi: 10.1016/j.joep.2009.02.004

Kline, R. B. (2023). Principles and practice of structural equation modeling. New York, NY: Guilford publications.

Kock, N. (2015). Common method bias in PLS-SEM: a full collinearity assessment approach. Int. J. E-collab. 11, 1–10. doi: 10.4018/ijec.2015100101

Kuisma, T., Laukkanen, T., and Hiltunen, M. (2007). Mapping the reasons for resistance to internet banking: a means-end approach. Int. J. Inf. Manag. 27, 75–85. doi: 10.1016/j.ijinfomgt.2006.08.006

Lake, I. R., Colon-Gonzalez, F. J., Barker, G. C., Morbey, R. A., Smith, G. E., and Elliot, A. J. (2019). Machine learning to refine decision making within a syndromic surveillance service. BMC Public Health 19, 1–12. doi: 10.1186/s12889-019-6916-9

Lane, D. J., and Gibbons, F. X. (2007). Am I the typical student? Perceived similarity to student prototypes predicts success. Personal. Soc. Psychol. Bull. 33, 1380–1391. doi: 10.1177/0146167207304789

Laukkanen, T. (2016). Consumer adoption versus rejection decisions in seemingly similar service innovations: the case of the internet and mobile banking. J. Bus. Res. 69, 2432–2439. doi: 10.1016/j.jbusres.2016.01.013

Laukkanen, T., Sinkkonen, S., and Laukkanen, P. (2009). Communication strategies to overcome functional and psychological resistance to internet banking. Int. J. Inf. Manag. 29, 111–118. doi: 10.1016/j.ijinfomgt.2008.05.008

Laumer, S., Maier, C., Eckhardt, A., and Weitzel, T. (2016). User personality and resistance to mandatory information systems in organizations: a theoretical model and empirical test of dispositional resistance to change. J. Inform. Technol. 31, 67–82. doi: 10.1057/jit.2015

Lazuras, L., Brighi, A., Barkoukis, V., Guarini, A., Tsorbatzoudis, H., and Genta, M. L. (2019). Moral disengagement and risk prototypes in the context of adolescent cyberbullying: findings from two countries. Front. Psychol. 10:1823. doi: 10.3389/fpsyg.2019.01823

Lee, S., Lee, N., and Sah, Y. J. (2020). Perceiving a mind in a chatbot: effect of mind perception and social cues on co-presence, closeness, and intention to use. Int. J. Hum. Comput. Interact. 36, 930–940. doi: 10.1080/10447318.2019.1699748

Li, B., Chen, S., and Zhou, Q. (2023). Empathy with influencers? The impact of the sensory advertising experience on user behavioral responses. J. Retail. Consum. Serv. 72:103286. doi: 10.1016/j.jretconser.2023.103286

Lian, J., and Yen, D. C. (2013). To buy or not to buy experience goods online: perspective of innovation adoption barriers. Comput. Hum. Behav. 29, 665–672. doi: 10.1016/j.chb.2012.10.009

Lian, J. W., and Yen, D. C. (2014). Online shopping drivers and barriers for older adults: age and gender differences. Comput. Hum. Behav. 37, 133–143. doi: 10.1016/j.chb.2014.04.028

Lindell, M. K., and Whitney, D. J. (2001). Accounting for common method variance in cross-sectional research designs. J. Appl. Psychol. 86, 114–121. doi: 10.1037/0021-9010.86.1.114

Litt, D. M., and Lewis, M. A. (2016). Examining a social reaction model in the prediction of adolescent alcohol use. Addict. Behav. 60, 160–164. doi: 10.1016/j.addbeh.2016.04.009

Low, S. T., Sakhardande, P. G., Lai, Y. F., Long, A. D., and Kaur-Gill, S. (2021). Attitudes and perceptions toward healthcare technology adoption among older adults in Singapore: a qualitative study. Front. Public Health 9:588590. doi: 10.3389/fpubh.2021.588590

Ly, K. H., Ly, A., and Andersson, G. (2017). A fully automated conversational agent for promoting mental well-being: a pilot RCT using mixed methods. Internet Interv. 10, 39–46. doi: 10.1016/j.invent.2017.10.002

Marett, K., Pearson, A. W., Pearson, R. A., and Bergiel, E. (2015). Using mobile devices in a high risk context: the role of risk and trust in an exploratory study in Afghanistan. Technol. Soc. 41, 54–64. doi: 10.1016/j.techsoc.2014.11.002

Mehta, A., Niles, A. N., Vargas, J. H., Marafon, T., Couto, D. D., and Gross, J. J. (2021). Acceptability and effectiveness of artificial intelligence therapy for anxiety and depression (Youper): longitudinal observational study. J. Med. Internet Res. 23:e26771. doi: 10.2196/26771

Migliore, G., Wagner, R., Cechella, F. S., and Liébana-Cabanillas, F. (2022). Antecedents to the adoption of mobile payment in China and Italy: an integration of UTAUT2 and innovation resistance theory. Inf. Syst. Front. 24, 2099–2122. doi: 10.1007/s10796-021-10237-2

Myklestad, I., and Rise, J. (2007). Predicting willingness to engage in unsafe sex and intention to perform sexual protective behaviors among adolescents. Health Educ. Behav. 34, 686–699. doi: 10.1177/1090198106289571

Nadarzynski, T., Lunt, A., Knights, N., Bayley, J., and Llewellyn, C. (2023). “But can chatbots understand sex?” attitudes towards artificial intelligence chatbots amongst sexual and reproductive health professionals: an exploratory mixed-methods study. Int. J. STD AIDS 34, 809–816. doi: 10.1177/09564624231180777

Nadarzynski, T., Miles, O., Cowie, A., and Ridge, D. (2019). Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: a mixed-methods study. Digit. Health. 5, 1–12. doi: 10.1177/20552076198718

Norman, P., Armitage, C. J., and Quigley, C. (2007). The theory of planned behavior and binge drinking: assessing the impact of binge drinker prototypes. Addict. Behav. 32, 1753–1768. doi: 10.1016/j.addbeh.2006.12.009

Palanica, A., Flaschner, P., Thommandram, A., Li, M., and Fossat, Y. (2019). Physicians’ perceptions of chatbots in health care: cross-sectional web-based survey. J. Med. Internet Res. 21:e12887. doi: 10.2196/12887

Parasuraman, A. (2000). Technology readiness index (TRI): a multiple-item scale to measure readiness to embrace new technologies. J. Serv. Res. 2, 307–320. doi: 10.1177/109467050024001

Parasuraman, A., and Grewal, D. (2000). The impact of technology on the quality-value-loyalty chain: a research agenda. J. Acad. Mark. Sci. 28, 168–174. doi: 10.1177/0092070300281

Pennic. (2019). Global Chatbots in Healthcare Market to Reach $498M by 2029. HIT Consultant. Available at: https://hitconsultant.net/2019/09/23/global-chatbots-healthcare-market-report/ (Accessed July 24, 2023).

Pereira, J., and Díaz, Ó. (2019). Using health chatbots for behavior change: a mapping study. J. Med. Syst. 43:135. doi: 10.1007/s10916-019-1237-1

Piko, B. F., Bak, J., and Gibbons, F. X. (2007). Prototype perception and smoking: are negative or positive social images more important in adolescence? Addict. Behav. 32, 1728–1732. doi: 10.1016/j.addbeh.2006.12.003

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., and Podsakoff, N. P. (2003). Common method biases in behavioral research: a critical review of the literature and recommended remedies. J. Appl. Psychol. 88, 879–903. doi: 10.1037/0021-9010.88.5.879

Prakash, A. V., and Das, S. (2022). Explaining citizens’ resistance to use digital contact tracing apps: a mixed-methods study. Int. J. Inf. Manag. 63:102468. doi: 10.1016/j.ijinfomgt.2021.102468

Purwanto, A. (2021). Partial least squares structural equation modeling (PLS-SEM) analysis for social and management research: a literature review. J. Ind. Eng. Manag. Res. 2, 114–123. doi: 10.7777/jiemar.v2i4

PWC. (2017). Why AI and robotics will define New Health. Available at: https://www.pwc.com/gx/en/industries/healthcare/publications/ai-robotics-new-health.html (Accessed July 25, 2023).

Ram, S., and Sheth, J. N. (1989). Consumer resistance to innovations: the marketing problem and its solutions. J. Consum. Mark. 6, 5–14. doi: 10.1108/EUM0000000002542

Rho, M. J., Choi, I. Y., and Lee, J. (2014). Predictive factors of telemedicine service acceptance and behavioral intention of physicians. Int. J. Med. Inform. 83, 559–571. doi: 10.1016/j.ijmedinf.2014.05.005

Rogers, E. M., and Adhikarya, R. (1979). Diffusion of innovations: an up-to-date review and commentary. Ann. Int. Commun. Assoc. 3, 67–81. doi: 10.1080/23808985.1979.11923754

Röth, T., and Spieth, P. (2019). The influence of resistance to change on evaluating an innovation project’s innovativeness and risk: a sensemaking perspective. J. Bus. Res. 101, 83–92. doi: 10.1016/j.jbusres.2019.04.014

Sadiq, M., Adil, M., and Paul, J. (2021). An innovation resistance theory perspective on purchase of eco-friendly cosmetics. J. Retail. Consum. Serv. 59:102369. doi: 10.1016/j.jretconser.2020.102369

Schepman, A., and Rodway, P. (2020). Initial validation of the general attitudes towards artificial intelligence scale. Comput. Hum. Behav. Rep. 1:100014. doi: 10.1016/j.chbr.2020.100014

Schwalbe, N., and Wahl, B. (2020). Artificial intelligence and the future of global health. Lancet 395, 1579–1586. doi: 10.1016/S0140-6736(20)30226-9

Singh, A., and Pandey, J. (2024). Artificial intelligence adoption in extended HR ecosystems: enablers and barriers. An abductive case research. Front. Psychol. 14:1339782. doi: 10.3389/fpsyg.2023.1339782

Sun, Y., Feng, Y., Shen, X. L., and Guo, X. (2023). Fear appeal, coping appeal and mobile health technology persuasion: a two-stage scenario-based survey of the elderly. Inform. Technol. Peopl. 36, 362–386. doi: 10.1108/ITP-07-2021-0519

Szmigin, I., and Foxall, G. (1997). Three forms of innovation resistance: the case of retail payment methods. Technovation 18, 459–468. doi: 10.1016/S0166-4972(98)00030-3

Talwar, S., Dhir, A., Kaur, P., and Mäntymäki, M. (2020). Barriers toward purchasing from online travel agencies. Int. J. Hosp. Manag. 89:102593. doi: 10.1016/j.ijhm.2020.102593

Tavares, J., and Oliveira, T. (2018). New integrated model approach to understand the factors that drive electronic health record portal adoption: cross-sectional national survey. J. Med. Internet Res. 20:e11032. doi: 10.2196/11032

Tenenhaus, M., Vinzi, V. E., Chatelin, Y. M., and Lauro, C. (2005). PLS path modeling. Comput. Stat. Data. Anal. 48, 159–205. doi: 10.1016/j.csda.2004.03.005

Thornton, B., Gibbons, F. X., and Gerrard, M. (2002). Risk perception and prototype perception: independent processes predicting risk behavior. Personal. Soc. Psychol. Bull. 28, 986–999. doi: 10.1177/014616720202800711

Tian, W., Ge, J., and Zheng, X. (2024). AI Chatbots in Chinese higher education: adoption, perception, and influence among graduate students—an integrated analysis utilizing UTAUT and ECM models. Front. Psychol. 15:1268549. doi: 10.3389/fpsyg.2024.1268549

Todd, J., Kothe, E., Mullan, B., and Monds, L. (2016). Reasoned versus reactive prediction of behaviour: a meta-analysis of the prototype willingness model. Health Psychol. Rev. 10, 1–24. doi: 10.1080/17437199.2014.922895

Tran, V., Riveros, C., and Ravaud, P. (2019). Patients’ views of wearable devices and AI in healthcare: findings from the ComPaRe e-cohort. NPJ Digit. Med. 2, 1–8. doi: 10.1038/s41746-019-0132-y

Tsai, J., Cheng, M., Tsai, H., Hung, S., and Chen, Y. (2019). Acceptance and resistance of telehealth: the perspective of dual-factor concepts in technology adoption. Int. J. Inf. Manag. 49, 34–44. doi: 10.1016/j.ijinfomgt.2019.03.003

Tudor Car, L., Dhinagaran, D. A., Kyaw, B. M., Kowatsch, T., Joty, S., Theng, Y. L., et al. (2020). Conversational agents in health care: scoping review and conceptual analysis. J. Med. Internet Res. 22:e17158. doi: 10.2196/17158

Van Gool, E., Van Ouytsel, J., Ponnet, K., and Walrave, M. (2015). To share or not to share? Adolescents’ self-disclosure about peer relationships on Facebook: an application of the prototype willingness model. Comput. Hum. Behav. 44, 230–239. doi: 10.1016/j.chb.2014.11.036

Wang, H., Zhang, J., Luximon, Y., Qin, M., Geng, P., and Tao, D. (2022). The determinants of user acceptance of mobile medical platforms: an investigation integrating the TPB, TAM, and patient-centered factors. Int. J. Environ. Res. Public Health 19:10758. doi: 10.3390/ijerph191710758

Wills, T. A., Gibbons, F. X., Gerrard, M., Murry, V. M., and Brody, G. H. (2003). Family communication and religiosity related to substance use and sexual behavior in early adolescence: a test for pathways through self-control and prototype perceptions. Psychol. Addict. Behav. 17, 312–323. doi: 10.1037/0893-164X.17.4.312

Wu, C., Xu, H., Bai, D., Chen, X., Gao, J., and Jiang, X. (2023). Public perceptions on the application of artificial intelligence in healthcare: a qualitative meta-synthesis. BMJ Open 13:e066322. doi: 10.1136/bmjopen-2022-066322

Yang, B., and Lester, D. (2008). Reflections on rational choice—the existence of systematic irrationality. J. Socio-Econ. 37, 1218–1233. doi: 10.1016/j.socec.2007.08.006

Ye, T., Xue, J., He, M., Gu, J., Lin, H., Xu, B., et al. (2019). Psychosocial factors affecting artificial intelligence adoption in health care in China: cross-sectional study. J. Med. Internet Res. 21:e14316. doi: 10.2196/14316

Yokoi, R., Eguchi, Y., Fujita, T., and Nakayachi, K. (2021). Artificial intelligence is trusted less than a doctor in medical treatment decisions: influence of perceived care and value similarity. Int. J. Hum. Comput. Interact. 37, 981–990. doi: 10.1080/10447318.2020.1861763

Yu, C. S., and Chantatub, W. (2015). Consumers’ resistance to using mobile banking: evidence from Thailand and Taiwan. Int. J. Electron. Commer. Stud. 7, 21–38. doi: 10.7903/ijecs.1375

Yun, J., and Park, J. (2022). The effects of chatbot service recovery with emotion words on customer satisfaction, repurchase intention, and positive word-of-mouth. Front. Psychol. 13:922503. doi: 10.3389/fpsyg.2022.922503

Zheng, Q. (2023). Restoring trust through transparency: examining the effects of transparency strategies on police crisis communication in mainland China. Public Relat. Rev. 49:102296. doi: 10.1016/j.pubrev.2023.102296

Zhu, Y., Wang, R., and Pu, C. (2022). “I am chatbot, your virtual mental health adviser.” what drives citizens’ satisfaction and continuance intention toward mental health chatbots during the COVID-19 pandemic? An empirical study in China. Digit. Health. 8:20552076221090031. doi: 10.1177/20552076221090

Zimmermann, F., and Sieverding, M. (2010). Young adults’ social drinking as explained by an augmented theory of planned behaviour: the roles of prototypes, willingness, and gender. Br. J. Health Psychol. 15, 561–581. doi: 10.1348/135910709X476558

Zorgenablers. (2019). Molly: the virtual nurse. Available at: https://zorgenablers.nl/en/molly-the-virtual-nurse/ (Accessed July 23, 2023).

Keywords: health chatbots, resistance behavioral tendency, innovation resistance theory, prototype willingness model, parallel mediation analysis, partial least squares structural equation modeling

Citation: Zou X, Na Y, Lai K and Liu G (2024) Unpacking public resistance to health Chatbots: a parallel mediation analysis. Front. Psychol. 15:1276968. doi: 10.3389/fpsyg.2024.1276968

Edited by:

Xiaopeng Ren, Chinese Academy of Sciences (CAS), China

Reviewed by:

Amit Mittal, Chitkara University, India

Xiaopeng Du, Central University of Finance and Economics, China

Copyright © 2024 Zou, Na, Lai and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guan Liu, liuguan@jnu.edu.cn
