- 1 Minglou Street Community Health Service Center, Yinzhou, Ningbo, China

- 2 Shensu Science and Technology (Suzhou) Co., Ltd., Suzhou, Jiangsu, China

- 3 Ningbo Yinzhou District Center for Disease Control and Prevention, Ningbo, China

Introduction: Vaccines are essential for reducing the incidence of infectious diseases, but challenges such as limited public awareness and heavy healthcare workloads persist. This study aimed to evaluate how optimizing the conversational scripts of an intelligent consultation robot affects operational efficiency in vaccination clinics.

Methods: A pilot project was conducted at the vaccination clinic of Minglou Street Community Health Service Center in Ningbo’s Yinzhou District. The robot system, developed by Shensu Science and Technology, was implemented from January to May 2024 with four experimental phases. The study used a pre-post comparison framework to assess changes in labor costs, work efficiency, and user satisfaction.

Results: As the scripts evolved, there was a notable increase in automated response rates and a decrease in human support transfers. User satisfaction improved, particularly in the final phase. The robot became more effective at managing user inquiries, reducing reliance on manual services.

Discussion: Optimizing the robot’s conversational scripts significantly improved daily operational efficiency in the vaccination clinic. By automating routine consultation tasks, the robot reduced healthcare professionals’ workloads. Future research could explore further refinements to dialogue strategies and expand the robot’s applications in healthcare settings.

1 Introduction

Vaccines are an effective intervention for reducing the incidence and mortality of certain infectious diseases (1–3). However, implementing vaccination programs is not without challenges. In the current medical environment, healthcare professionals face numerous obstacles, particularly in preventive vaccination services. One of the primary issues is inadequate public understanding of the importance and benefits of vaccination (4, 5). This lack of awareness can lead to hesitancy and resistance, which in turn undermines the overall success of vaccination campaigns (6, 7). In China, limited public awareness and the absence of a systematic adult vaccination recommendation program have kept adult vaccination rates low (8, 9). Du et al. (10) emphasized the need to improve public access to specialized sources of vaccine-related information. Vaccination clinics, as primary venues for preventive services, handle daily tasks such as appointment scheduling, vaccine reminders, information inquiries, and post-vaccination guidance, which are both complex and repetitive (11). The heavy workload often leaves healthcare workers with limited time and capacity to convey critical vaccination information and its significance to patients (12). In addition, gaps in medical staff expertise can result in the transmission of misinformation, leading to unnecessary delays or missed vaccinations (13).

Effective vaccination services not only ensure that more individuals receive the necessary vaccinations but also enhance patient experience, thereby fostering trust in healthcare systems. In this context, the rapid development of artificial intelligence (AI), particularly speech recognition and voice interaction technologies, has driven significant advances in the healthcare sector (14, 15). These intelligent human-computer interaction technologies have the potential to automate routine tasks such as health consultations (16–18), auxiliary diagnosis (19, 20), and information collection (21). This progress is not only a global trend; it is also evident in China, where the integration of such technologies is transforming the delivery of healthcare services (22, 23). Studies have also attempted to improve vaccination clinic services through intelligent consultation robots, for example by addressing vaccine hesitancy (24) and enhancing reminders (25, 26). By taking over such tasks, these robots can free medical staff to concentrate on more complex tasks and direct patient care. However, existing studies predominantly emphasize robotic technology development and preliminary effectiveness evaluations. In-depth analysis of how the conversational scripts of intelligent consultation robots affect their performance in actual vaccination clinic settings is lacking.

This paper aims to fill this gap by reporting a pilot project of the intelligent consultation robot system at the vaccination clinic of Minglou Street Community Health Service Center in Yinzhou District, Ningbo City (Minglou Clinic). The study documents the iterative design of the robot’s dialogue scripts and presents a detailed analysis of user interaction logs. By analyzing call records from January to May 2024, it evaluates how the robot’s conversational scripts affect medical staff workload, workflow efficiency, and patient satisfaction. The results provide empirical support for applying intelligent consultation robots in vaccination clinics and offer references for further improving the quality and efficiency of preventive vaccination services.

2 Materials and methods

2.1 Intervention and study design

The intelligent consultation robot system, developed by Shensu Science and Technology (Suzhou) Co., Ltd., was designed for vaccination clinics to streamline consultation workflows, particularly by managing inbound calls from users inquiring about vaccination services. The system employs a scripted dialogue framework to interact with users, prompting them to categorize their inquiries (e.g., vaccine types, clinic schedules) and delivering tailored responses based on their input. The system comprises four core components: (1) a speech recognition module that transcribes voice inputs into text; (2) a natural language processing (NLP) engine that interprets user intent and generates responses; (3) a curated knowledge base containing vaccination schedules, clinic hours, and vaccine availability data; and (4) a dialogue management system that orchestrates interactions between modules to guide users through inquiry resolution.

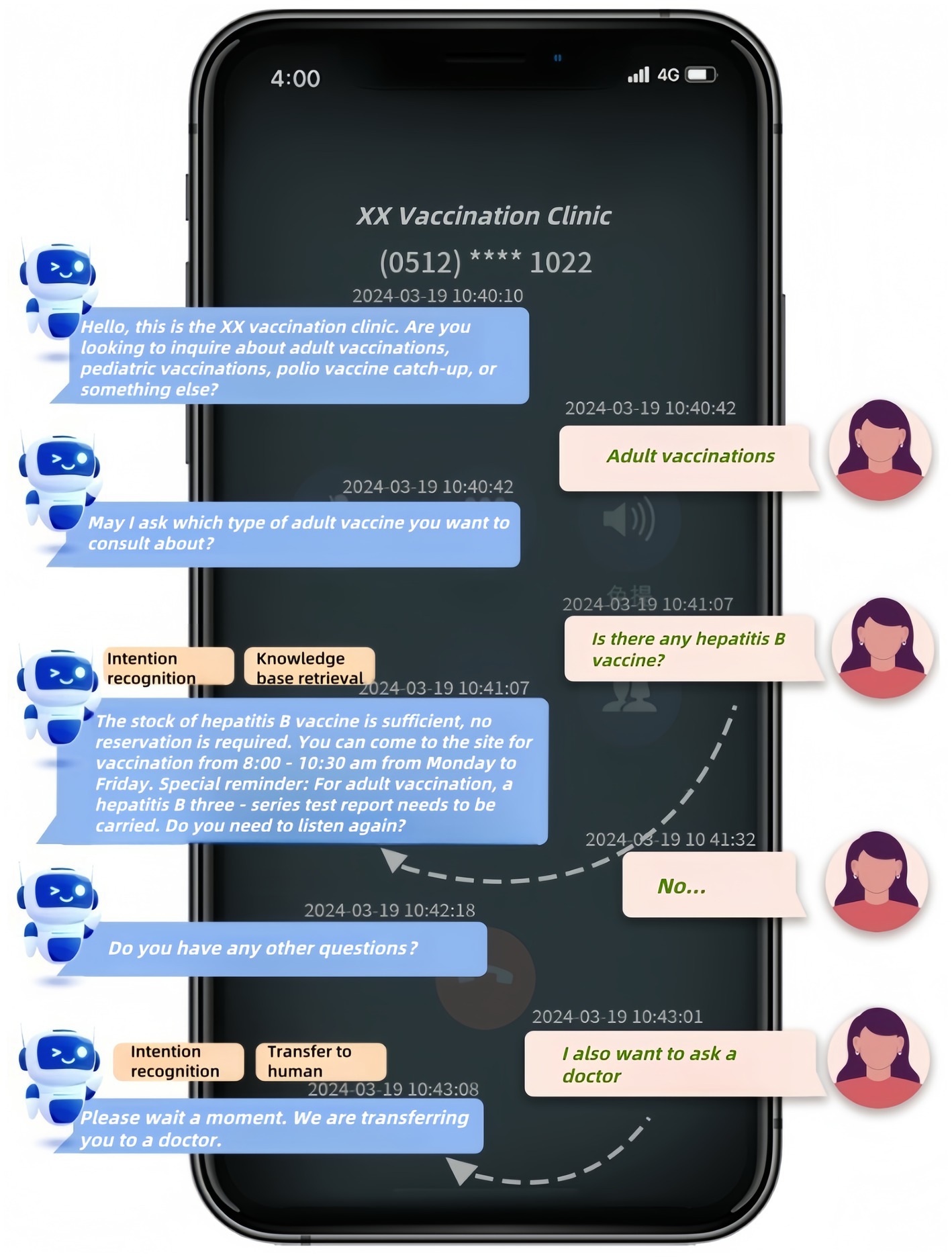

Figure 1 shows a sample dialogue flow between a user and the system (translated from Chinese to English for clarity), highlighting three key stages: intent recognition, knowledge base retrieval, and transfer to a human agent. The interaction begins with the robot greeting the user and asking them to specify their inquiry category (e.g., “adult vaccinations” or “pediatric vaccinations”). If the user’s input is ambiguous, the system enters a clarification loop to narrow the query scope before retrieving relevant information from its knowledge base. The dialogue continues until the user’s query is fully addressed or until a predefined threshold (e.g., three consecutive unrecognized inputs) is met, at which point the call is escalated to a human agent.

Figure 1. Example interaction with the intelligent consultation robot system in a vaccination clinic. Dialogue translated from Chinese for clarity.
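The clarification loop and escalation threshold described above can be sketched as a small simulation. This is an illustrative reading, not the vendor's implementation: `run_dialogue`, the toy `recognize` and `is_finished` helpers, and the rule that a recognized input resets the miss counter are assumptions; only the threshold of three consecutive unrecognized inputs comes from the text.

```python
from typing import Callable, Optional

# Example threshold quoted in the text; the deployed value may differ.
MAX_CONSECUTIVE_UNRECOGNIZED = 3


def run_dialogue(
    user_turns: list[str],
    recognize: Callable[[str], Optional[str]],
    is_finished: Callable[[str], bool],
) -> str:
    """Simulate one call and return 'resolved', 'escalated', or 'abandoned'."""
    misses = 0
    for turn in user_turns:
        if is_finished(turn):
            return "resolved"  # user signals the query has been addressed
        if recognize(turn) is None:
            misses += 1  # ambiguous input: stay in the clarification loop
            if misses >= MAX_CONSECUTIVE_UNRECOGNIZED:
                return "escalated"  # hand off to a human agent
        else:
            misses = 0  # a recognized input resets the consecutive-miss counter
    return "abandoned"  # caller left before resolution or escalation


def recognize(turn: str) -> Optional[str]:
    """Toy recognizer (hypothetical): only HPV questions are understood."""
    return "hpv_booking" if "hpv" in turn.lower() else None


def is_finished(turn: str) -> bool:
    return turn.lower().strip(" .!") in {"thanks", "thank you"}


print(run_dialogue(["HPV appointment", "Thank you"], recognize, is_finished))  # resolved
print(run_dialogue(["um", "what?", "hmm"], recognize, is_finished))           # escalated
print(run_dialogue(["um", "HPV", "er", "hmm"], recognize, is_finished))       # abandoned
```

The third call shows why a "consecutive" threshold matters: two misses separated by a recognized turn never trigger escalation, so a caller can leave without ever reaching a human.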

In this study, the robot system was customized exclusively for Minglou Clinic to optimize vaccination consultation workflows and address previously unmet challenges in preventive care services. The conversational scripts were developed by analyzing prevalent inquiry patterns at Minglou Clinic and drawing on NLP-driven intent classification frameworks and empirical insights from prior vaccination consultation projects. Historical consultation data from the clinic and feedback from medical staff were collected and analyzed to categorize prevalent inquiries and pinpoint essential information requirements. Scripts were revised iteratively through interdisciplinary team discussions, with each version optimized based on user interaction analytics.

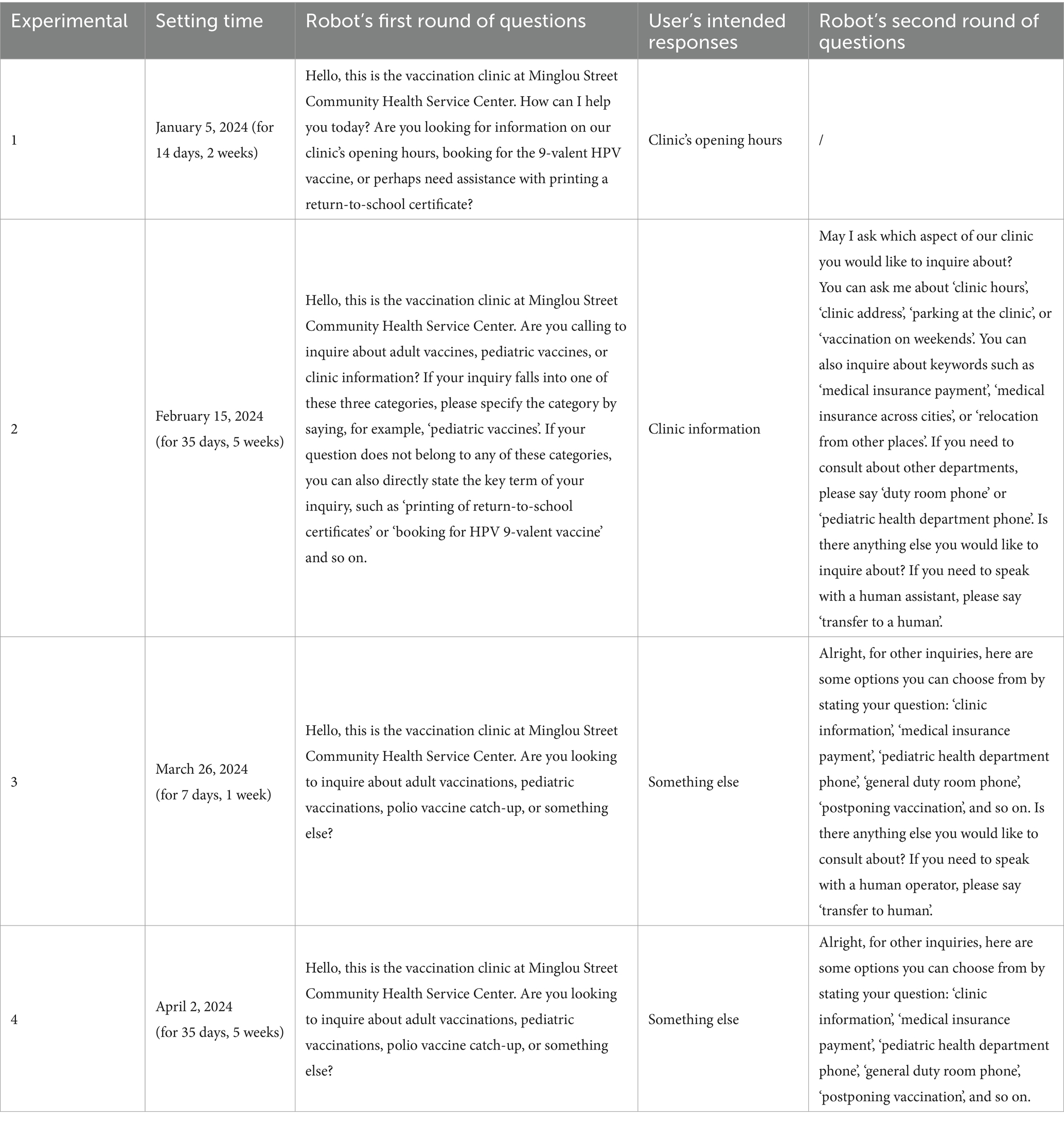

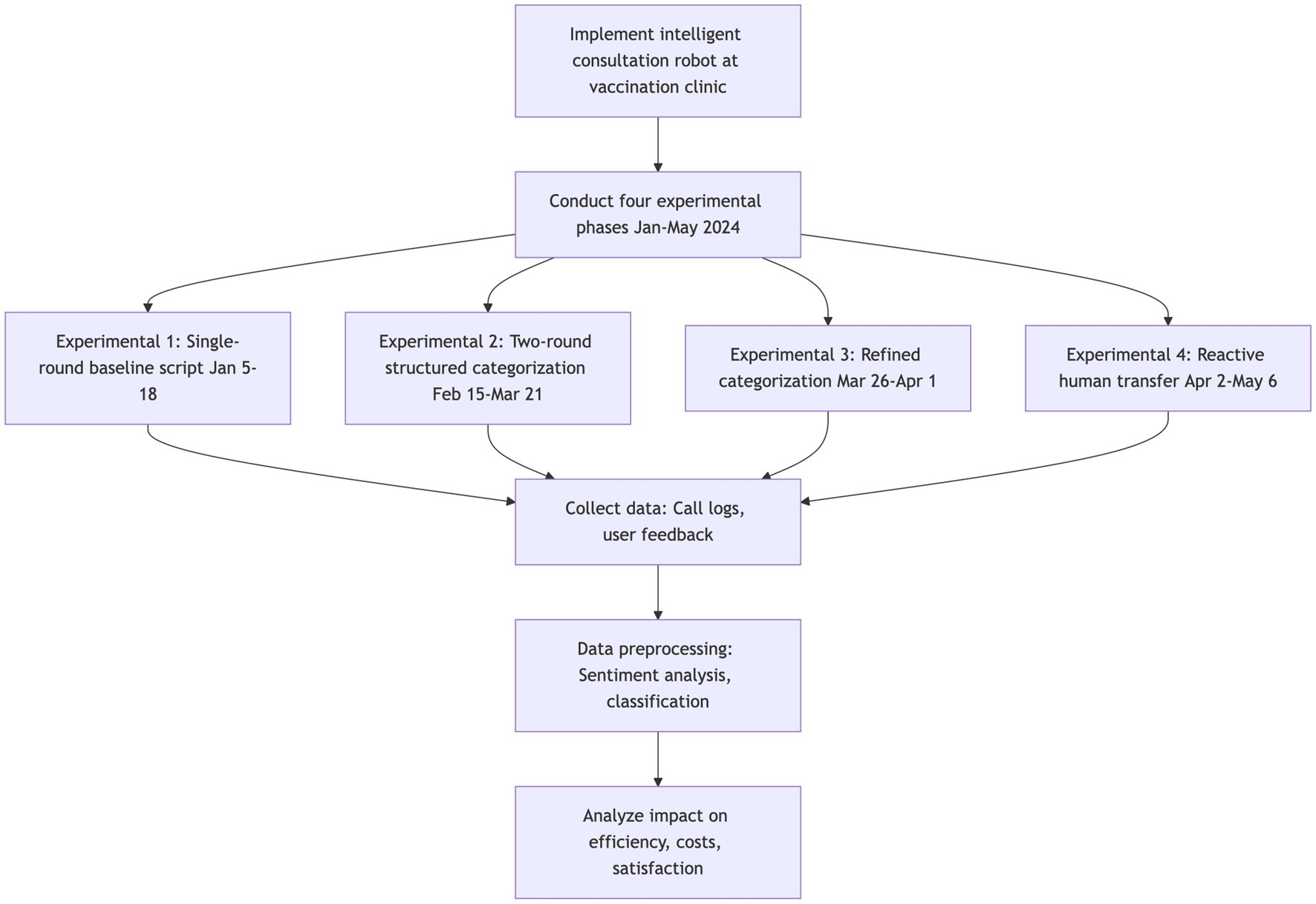

A modified pre-post comparison framework was adopted to assess iterative dialogue guidance strategies in vaccination clinic operations. All experimental phases (Experimental 1–4) were conducted between January and May 2024. Experimental 1 established a baseline post-deployment script, while Experimental 2–4 introduced optimized versions. Each phase targeted distinct operational metrics (efficiency, costs, satisfaction), with outcomes compared across phases to quantify incremental gains. Key features of the dialogue guidance strategies are summarized in Table 1 (complete Chinese scripts in Supplementary Table S1):

1. Experimental 1 (Baseline, Jan 5–18): Open-ended single-round questioning with example prompts

• In Experimental 1, the robot employs an open-ended approach to the initial round of guidance, allowing users to freely express their inquiries without being confined to specific categories. This method provides examples such as “outpatient clinic hours,” “HPV 9-valent vaccine appointments” and “printing of return-to-school certificates” to guide users in formulating their questions, yet it remains flexible to accommodate a wide range of user needs that may not be listed. However, this strategy could generate a wide array of diverse inputs, complicating the robot’s task of effectively categorizing and responding to user needs.

2. Experimental 2 (Feb 15–Mar 21): Two-round structured categorization (adult/child vaccines, clinic info)

• Experimental 2 introduces a more structured approach by sorting consultation questions into three main categories during the first round of guidance: adult vaccine issues, pediatric vaccine issues, and clinic information inquiries. Users are prompted to specify which predefined category their inquiry belongs to, such as “pediatric vaccines.” For inquiries that do not fit these main categories, users are prompted to state keywords directly, such as “printing of return-to-school certificates” or “HPV 9-valent vaccine appointments.”

3. Experimental 3 (Mar 26–Apr 1): Refined categorization emphasizing polio vaccine boosters

• Experimental 3 further refines the categorization by replacing “clinic information inquiries” with “polio vaccine boosters” during the first round of guidance, placing a greater emphasis on specific vaccination-related inquiries. In this version of the conversational script, users are prompted to select between “adult vaccination issues,” “pediatric vaccination issues,” “polio vaccine boosters,” or “other.” The guiding phrases have become more concise compared to the previous version. General clinic information inquiries are now included under the “other” category and are addressed in the second round of guidance.

4. Experimental 4 (Apr 2–May 6): Reactive human service transfer protocol

• In Experimental 4, the robot no longer proactively suggests transferring to human service during the second round of guidance; instead, it offers the option to transfer to human service immediately in two scenarios: when it cannot understand the user’s question or when the user explicitly requests a transfer to human service. This change represents a shift toward a more reactive approach, prioritizing user experience by providing immediate assistance when the robot’s understanding is challenged, rather than following a predetermined script.
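The four script versions can be summarized as a small configuration table plus a transfer-offer rule. This is a hypothetical encoding of the descriptions above (the complete scripts are in Supplementary Table S1): option labels are shortened, Experimental 4 is assumed to keep the Experimental 3 menu because only its transfer protocol is described as changing, and `offers_transfer` covers only Experimental 2–4, since the text does not say how Experimental 1 offered human service.

```python
# Hypothetical summary of the first-round menus described above.
FIRST_ROUND_OPTIONS: dict[int, tuple[str, ...]] = {
    1: (),  # open-ended question with example prompts, no fixed categories
    2: ("adult vaccines", "pediatric vaccines", "clinic information"),  # or state keywords
    3: ("adult vaccination", "pediatric vaccination", "polio vaccine boosters", "other"),
    # Assumed unchanged from Experimental 3:
    4: ("adult vaccination", "pediatric vaccination", "polio vaccine boosters", "other"),
}


def offers_transfer(version: int, round_no: int, understood: bool, user_requested: bool) -> bool:
    """Whether the robot offers human service at this point (assumed logic)."""
    if version not in (2, 3, 4):
        raise ValueError("transfer behaviour is only described for Experimental 2-4")
    if user_requested:
        return True  # assumed: explicit requests lead to transfer in every version
    if version == 4:
        return not understood  # reactive: offer only when the robot cannot understand
    return round_no == 2  # proactive: suggest human service during round two


print(offers_transfer(4, 1, understood=False, user_requested=False))  # True
print(offers_transfer(4, 2, understood=True, user_requested=False))   # False
print(offers_transfer(3, 2, understood=True, user_requested=False))   # True
```

Encoding the versions as data rather than branching code makes each phase's difference explicit, which is what a pre-post comparison between script versions relies on.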

Each script iteration underwent a 7–35 day field trial (total n = 1,076 calls), with system logs capturing full interaction transcripts, resolution pathways, and user feedback. Figure 2 presents the entire research process, from the implementation of the intelligent consultation robot system at the vaccination clinic, through the four experimental phases, to the data collection, processing, and final analysis.

Figure 2. Research flowchart: assessing the impact of conversational script optimization on operational efficiency in a vaccination clinic.

2.2 Data collection and processing

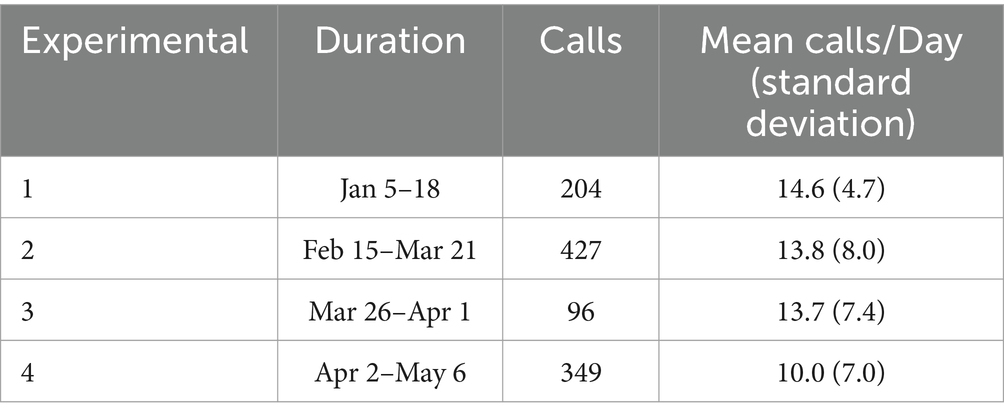

Call records were collected between January 5 and May 6, 2024, excluding two intermediate phases (beginning January 19 and March 22) that had insufficient valid user interactions (<50 calls). Only genuine user inquiries (excluding system-generated simulations) with complete metadata (timestamp, query type, and resolution status) were included. The dataset covers four experimental phases (Experimental 1–4), with sample sizes ranging from 96 to 427 annotated interactions, as detailed in Table 2.
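The inclusion rules above can be expressed as a two-step filter. This is a minimal sketch: `CallRecord` and its field names are hypothetical, and applying the record-level filters before counting calls per phase is an assumption about the order of operations.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

MIN_VALID_CALLS = 50  # phases below this were excluded in the study


@dataclass
class CallRecord:
    phase: str
    timestamp: Optional[str]
    query_type: Optional[str]
    resolution_status: Optional[str]
    simulated: bool = False  # system-generated test call


def is_eligible(record: CallRecord) -> bool:
    """Real user call with complete metadata."""
    complete = None not in (record.timestamp, record.query_type, record.resolution_status)
    return complete and not record.simulated


def select_calls(records: list[CallRecord]) -> list[CallRecord]:
    eligible = [r for r in records if is_eligible(r)]
    per_phase = Counter(r.phase for r in eligible)
    # Drop whole phases that end up with too few valid calls.
    return [r for r in eligible if per_phase[r.phase] >= MIN_VALID_CALLS]


# Usage with synthetic records (not study data):
records = [CallRecord("Exp1", "t", "q", "resolved") for _ in range(60)]
records += [CallRecord("Short", "t", "q", "resolved") for _ in range(10)]
records += [CallRecord("Exp1", "t", "q", "resolved", simulated=True) for _ in range(5)]
records += [CallRecord("Exp1", None, "q", "resolved") for _ in range(3)]
print(len(select_calls(records)))  # 60
```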

Data preprocessing included labeling each call according to the following criteria:

• Whether the call was hung up immediately after being answered

• Whether the caller was satisfied with the assistance

• Whether the call was directly transferred to human service

• Whether the call was fully handled by the robot without transfer

• The number of routine questions contained in the call

• The number of routine questions resolved by the robot during the call.
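The six labeling criteria map naturally onto one record per call. The dataclass below is a hypothetical schema (field names are invented for illustration); `satisfied` is optional because many calls carried no satisfaction signal, and the two consistency checks in `__post_init__` are assumptions rather than rules stated in the article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CallLabels:
    """One labeled call; field names are hypothetical."""

    hung_up_immediately: bool
    satisfied: Optional[bool]  # None when no satisfaction signal was recorded
    direct_transfer: bool
    fully_automated: bool
    routine_questions: int
    routine_resolved: int

    def __post_init__(self) -> None:
        # Sanity checks assumed here, not stated in the article.
        if not 0 <= self.routine_resolved <= self.routine_questions:
            raise ValueError("resolved count must lie between 0 and the number of questions")
        if self.direct_transfer and self.fully_automated:
            raise ValueError("a directly transferred call cannot be fully automated")


label = CallLabels(
    hung_up_immediately=False,
    satisfied=None,
    direct_transfer=False,
    fully_automated=True,
    routine_questions=2,
    routine_resolved=2,
)
```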

Two complementary methods were used to assess caller satisfaction: (1) direct validation through a post-conversation survey in which users rated their experience via button presses or voice commands, and (2) indirect inference through real-time conversational analysis that detected positive expressions (e.g., “Thank you,” “Very satisfied”) and negative cues (e.g., “It’s a mess,” “Do not talk anymore”).

Routine questions referred to inquiries about vaccination protocols and clinic information preloaded into the robot’s knowledge base.
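A rule-based version of the two satisfaction signals might look like the sketch below. The cue lists hold only the translated examples quoted above (the real system analyzes Chinese speech), giving the survey answer precedence over inferred cues is an assumption, and calls with no or conflicting signals are returned as `None`, matching the "no satisfaction assessment" category counted in Section 2.3.3.

```python
from typing import Optional

# Translated example cues from the text; a deployed system would use fuller lexicons.
POSITIVE_CUES = ("thank you", "very satisfied")
NEGATIVE_CUES = ("it's a mess", "do not talk anymore")


def infer_satisfaction(survey_answer: Optional[bool], transcript: str) -> Optional[bool]:
    """True/False for satisfied/unsatisfied, None when no clear signal exists."""
    if survey_answer is not None:
        return survey_answer  # direct validation takes precedence (assumption)
    text = transcript.lower().replace("\u2019", "'")  # normalize curly apostrophes
    positive = any(cue in text for cue in POSITIVE_CUES)
    negative = any(cue in text for cue in NEGATIVE_CUES)
    if positive != negative:
        return positive  # exactly one kind of cue was found
    return None  # no cues, or conflicting cues


print(infer_satisfaction(None, "OK, thank you!"))  # True
print(infer_satisfaction(None, "It’s a mess"))     # False
print(infer_satisfaction(None, "Hello"))           # None
```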

2.3 Outcome measures

This study examined the impact of conversational script iterations across three key dimensions: labor costs, work efficiency, and user satisfaction.

2.3.1 Labor costs

To assess the labor cost savings from the intelligent consultation robot, two primary indicators were used: the fully automated response rate and the direct human support transfer rate:

The fully automated response rate

$$R_{\text{auto}} = \frac{N_{\text{auto}}}{N} \times 100\%$$

The direct human support transfer rate

$$R_{\text{direct}} = \frac{N_{\text{direct}}}{N} \times 100\%$$

where $N_{\text{auto}}$ represents the number of calls fully handled by the robot, $N_{\text{direct}}$ represents the number of calls in which users chose to transfer directly to human service without following the robot’s guidance process, and $N$ is the total number of calls in the phase.
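Computed from per-call labels, the two labor cost indicators reduce to simple proportions. The sketch assumes both rates use all calls in a phase as the denominator, which is consistent with Section 3.1, where a 30% transfer rate and 12% immediate hang-ups leave about 58% of callers. The tuple layout and function name are hypothetical.

```python
def labor_cost_rates(calls: list[tuple[bool, bool]]) -> tuple[float, float]:
    """Return (fully automated response rate, direct transfer rate) as fractions.

    Each call is (fully_automated, direct_transfer); the tuple layout is hypothetical.
    """
    if not calls:
        raise ValueError("no calls in this phase")
    n_auto = sum(1 for automated, _ in calls if automated)
    n_direct = sum(1 for _, direct in calls if direct)
    return n_auto / len(calls), n_direct / len(calls)


calls = [(True, False), (False, True), (False, False), (True, False)]
print(labor_cost_rates(calls))  # (0.5, 0.25)
```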

2.3.2 Work efficiency

The study indirectly assessed the robot’s impact on the work efficiency of medical staff by monitoring two metrics: the average number of routine questions per call and the average number of routine questions resolved per call:

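The average number of routine questions per call

$$\bar{q} = \frac{Q}{N - N_{\text{hang}}}$$

The average number of routine questions resolved per call

$$\bar{r} = \frac{Q_{\text{res}}}{N - N_{\text{hang}}}$$

where $Q$ represents the number of routine questions, $Q_{\text{res}}$ represents the number of routine questions resolved by the robot in calls, $N_{\text{hang}}$ represents the number of calls that were hung up immediately, and $N$ is the total number of calls.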
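The role of the immediate hang-up count is easiest to see in code. This sketch assumes that calls hung up immediately are removed from the denominator of both averages, which is one reading of the definitions; the function name and tuple layout are hypothetical.

```python
def routine_question_averages(calls: list[tuple[bool, int, int]]) -> tuple[float, float]:
    """Return (avg routine questions per call, avg resolved per call).

    Each call is (hung_up_immediately, routine_questions, routine_resolved);
    immediate hang-ups are excluded from the denominator (assumed).
    """
    answered = [(q, r) for hung_up, q, r in calls if not hung_up]
    if not answered:
        raise ValueError("every call was an immediate hang-up")
    n = len(answered)
    return sum(q for q, _ in answered) / n, sum(r for _, r in answered) / n


calls = [(True, 0, 0), (False, 2, 1), (False, 1, 1), (False, 0, 0)]
print(routine_question_averages(calls))  # (1.0, 0.6666666666666666)
```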

2.3.3 User satisfaction

For user satisfaction, the number of satisfied calls and the number of unsatisfied calls were calculated for each experimental phase. Additionally, the number of calls that had no satisfaction assessment recorded was also calculated.

2.4 Statistical methods

The data analysis was conducted using Python 3.11.5 and R 4.3.2, employing a combination of statistical methods to evaluate the performance metrics and user satisfaction. For trend analysis, least squares smoothing was applied to the weekly metrics to identify and visualize underlying patterns and trends over time. To compare performance metrics across experimental phases, independent-samples t-tests were conducted. For the satisfaction analysis, Fisher’s exact test was applied to the categorical feedback data in a contingency table to assess differences in user satisfaction between experimental phases.
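The phase comparison can be reproduced with SciPy's `scipy.stats.ttest_ind`. This is a sketch under stated assumptions: the wrapper name `compare_phases` is hypothetical, the inputs are taken to be daily metric values (as in the boxplots of Figures 4 and 6), and because the text does not say whether equal variances were assumed, the default Student's test is used with a switch for Welch's variant. The sample values are placeholders, not study data.

```python
from scipy import stats


def compare_phases(
    daily_a: list[float], daily_b: list[float], welch: bool = False
) -> tuple[float, float]:
    """Independent-samples t-test on daily metric values from two phases.

    welch=False gives Student's test (equal variances, SciPy's default);
    which variant the study used is not stated.
    """
    result = stats.ttest_ind(daily_a, daily_b, equal_var=not welch)
    return float(result.statistic), float(result.pvalue)


# Placeholder daily rates for two phases (not study data):
t, p = compare_phases([0.10, 0.12, 0.11, 0.13], [0.30, 0.32, 0.31, 0.33])
print(p < 0.05)  # True
```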

3 Results

3.1 Labor costs

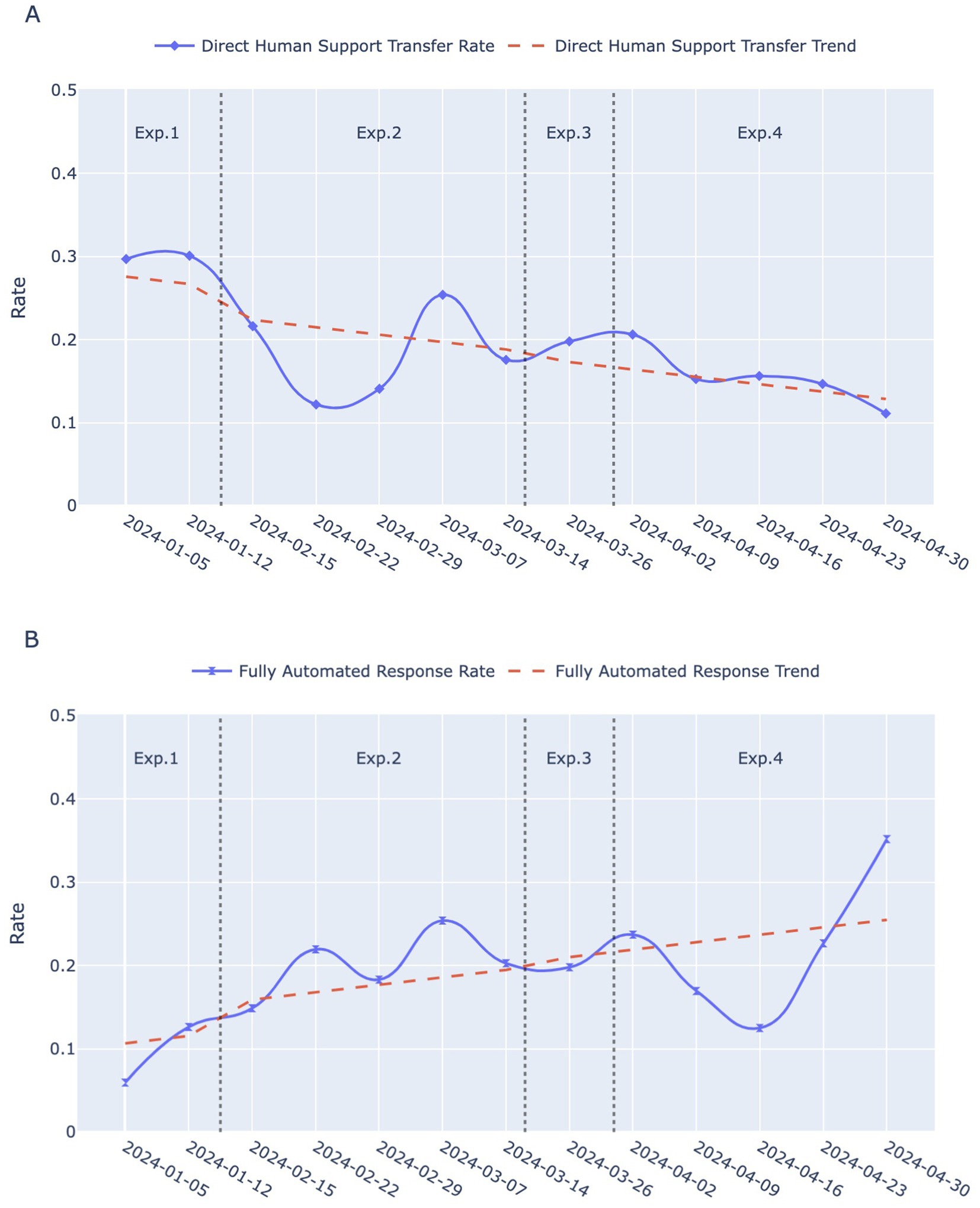

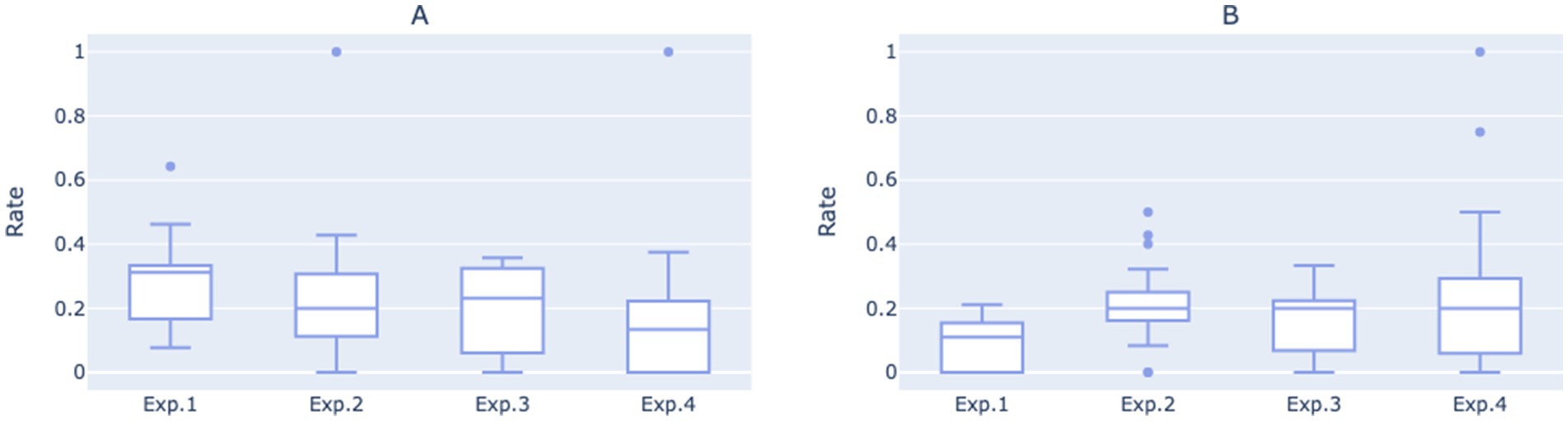

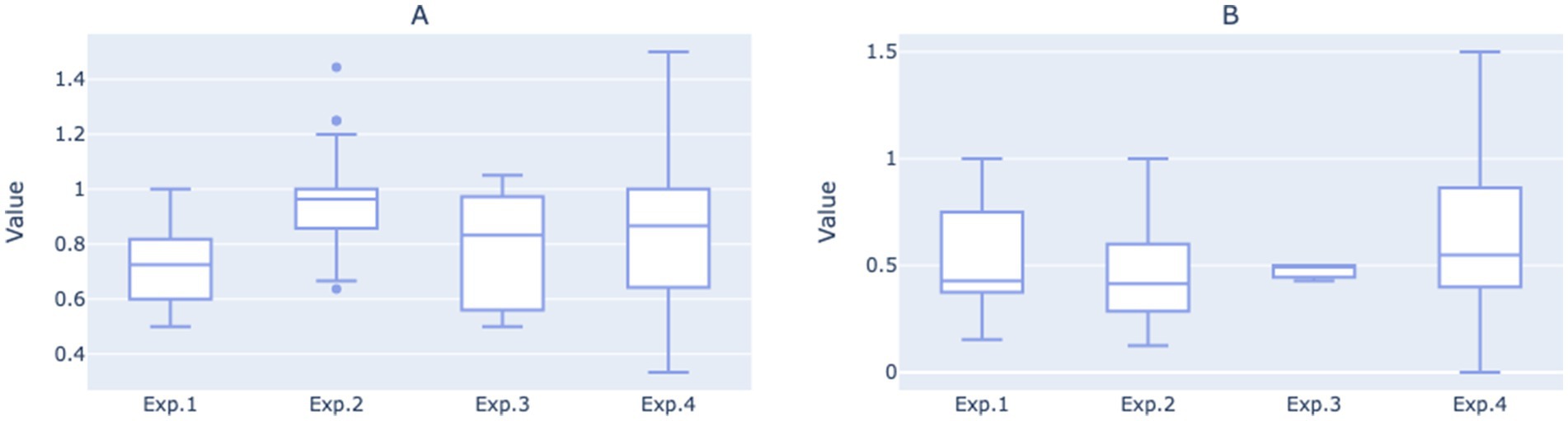

Figure 3 displays the fully automated response rate and direct human support transfer rates for each of the four experimental phases on a weekly basis, whereas Figure 4 presents boxplots of these rates for the same experimental phases, based on daily statistics. The least squares method was utilized to compute the trend lines of the data, shown as the red dashed lines in Figure 3. These lines indicate an overall declining trend in the direct human support transfer rate and an overall increasing trend in the fully automated response rate as the conversational script iterates.

Figure 3. Temporal trends in two indicators across Experimental 1–4. (A) Direct human support transfer rates over weeks for the four experimental phases. The blue solid line with diamond markers represents the weekly rates, while the red dashed line indicates the smoothed trend. (B) Fully automated response rate over weeks for the four experimental phases. The blue solid line with hourglass markers indicates the weekly rates, and the red dashed line represents the smoothed trend.

Figure 4. (A) Boxplot of direct human support transfer rates for each of the four experimental phases based on daily statistics. (B) Boxplot of fully automated response rate for each of the four experimental phases based on daily statistics.
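The article does not specify the exact form of the least-squares trend lines in Figure 3. The sketch below assumes the simplest case, an ordinary least-squares straight line fitted to the weekly values against week index, written in plain Python so the closed-form slope and intercept are visible; a higher-order or local least-squares smoother would be an equally valid reading of the text. A negative slope corresponds to the declining transfer-rate trend, a positive one to the rising automation trend.

```python
def linear_trend(weekly: list[float]) -> tuple[float, float, list[float]]:
    """Fit y = intercept + slope * week by ordinary least squares.

    Weeks are indexed 0, 1, 2, ...; returns (slope, intercept, fitted values).
    """
    n = len(weekly)
    if n < 2:
        raise ValueError("need at least two weeks")
    x_mean = (n - 1) / 2  # mean of 0..n-1
    y_mean = sum(weekly) / n
    s_xx = sum((x - x_mean) ** 2 for x in range(n))
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(weekly))
    slope = s_xy / s_xx
    intercept = y_mean - slope * x_mean
    return slope, intercept, [intercept + slope * x for x in range(n)]


slope, intercept, fitted = linear_trend([1.0, 3.0, 5.0, 7.0])
print(slope, intercept)  # 2.0 1.0
```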

In the early period of Experimental 1 (from Jan 5 to Jan 11), the direct human support transfer rate stood at 30%, and 12% of the calls were hung up immediately after being connected. Consequently, the open-ended, single-round guidance script effectively engaged only about 58% of users (100% − 30% − 12%) in interactions with the robot. The high variability in user questions also indicates that the robot struggled to address them effectively.

In Experimental 2, the direct human support transfer rate decreased, while the fully automated response rate, despite fluctuations, trended upward. An independent t-test showed a statistically significant improvement in the fully automated response rate compared with Experimental 1 (p = 0.0030), suggesting that rapid categorization through a structured Q&A model is better suited to vaccination clinic workflows.

Experimental 3 introduced a more concise guidance script, leading to a further increase in the fully automated response rates, while the median daily direct human support transfer rate showed a slight upward trend.

In Experimental 4, after the robot stopped explicitly prompting for human service, the direct human support transfer rate gradually dropped to 11% during the last week of the study (April 30 to May 6). The fully automated response rate, however, initially fell sharply. It did not continue to decline; instead, it began to rise in the week commencing April 23 and eventually reached 35%. This reversal might reflect users adapting to the implicit handoff mechanism: removing explicit prompts may have encouraged initial exploration of automated features before usage stabilized at a higher efficiency level. Notably, the improvements in both the direct human support transfer rate (p = 0.0070) and the fully automated response rate (p = 0.0047) from Experimental 1 to Experimental 4 were statistically significant.

3.2 Work efficiency

When the intelligent consultation robot handles simple inquiries effectively, medical staff can focus on resolving complex and unconventional issues. Medical personnel then no longer need to spend time explaining common questions, which is expected to shorten each telephone consultation. This helps ensure that the calls medical staff receive truly require their professional knowledge and skills, reducing time spent on the phone, improving work efficiency, and optimizing resource allocation. Furthermore, well-designed dialogue guidance helps users clarify their thoughts and define their issues before being transferred to human service. When users can express their needs and problems more accurately, communication after the transfer also becomes more efficient.

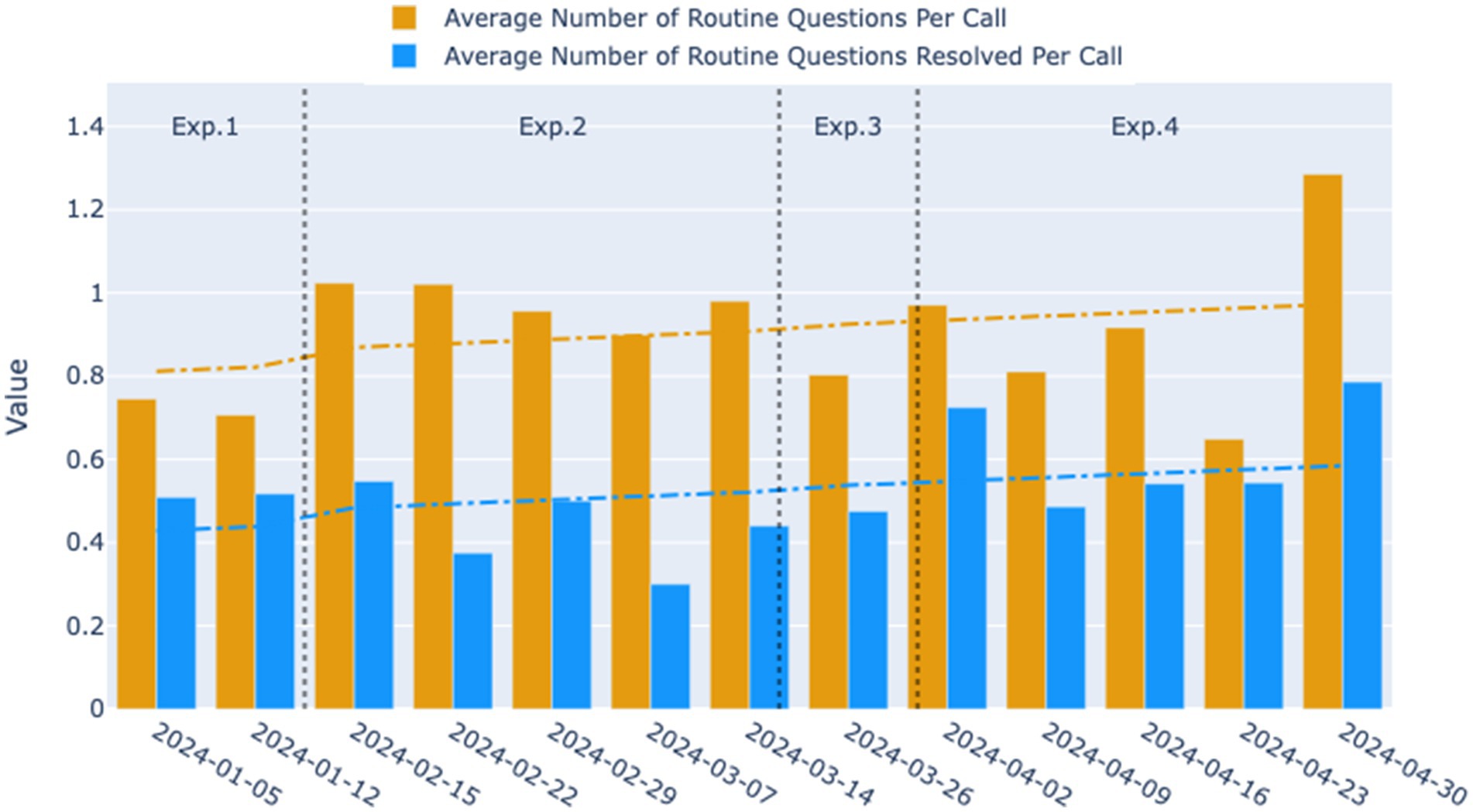

As shown in Figure 5, the average number of routine questions per call was approximately 0.75 during Experimental 1, indicating that the relatively open-ended mode was not adept at guiding users to pose routine questions or helping them clarify their inquiry objectives. Users’ inability to extract useful information from the initial prompts may have contributed to a higher direct human support transfer rate.

Figure 5. Weekly trends in the average number of routine questions per call and the average number of routine questions resolved per call for the four experimental phases. The yellow bars represent the average number of routine questions per call, and the blue bars indicate the average number of routine questions resolved per call across the observed weeks. The dashed lines represent the smoothed trend lines, illustrating the overall direction of the data over time.

After the transition to Experimental 2, the average number of routine questions per call initially rose to 1.02 and then gradually declined to 0.9. However, it remained significantly higher than in Experimental 1 (p = 0.0018), suggesting that the two-round dialogue guidance in Experimental 2 more effectively helped users clarify and articulate their questions. Despite this increase, the number of routine questions resolved per call did not improve significantly and instead showed a fluctuating decline. This trend is evident in the median values of the daily statistics for the two experimental phases (Figure 6). It suggests that during the second phase, although users raised more questions, the dialogue may not have been standardized or clear enough to ensure that all questions were adequately addressed during the phone interactions.

Figure 6. (A) Boxplot of average number of routine questions per call for each of the four experimental phases based on daily statistics. (B) Boxplot of average number of routine questions resolved per call for each of the four experimental phases based on daily statistics.

In the third and fourth iterations, the average number of routine questions resolved per call showed an upward trend, eventually reaching 0.79. The average number of routine questions posed per call in Experimental 4 was comparable to that in Experimental 2, but with greater variance, peaking at 1.29. This suggests that, under the structured two-round guidance system, as the dialogue script adopted more concise language, the robot became more effective at resolving users’ issues during phone interactions, thereby enhancing the overall work efficiency of medical staff.

Moreover, the overall growth trends of the two indicators show that the gap between them narrowed, especially in Experimental 4 compared with Experimental 2, suggesting that a higher proportion of user questions were resolved during each call.
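Why the narrowing gap points to a higher resolved share can be made explicit with a short derivation. Write $C$ for the number of calls not hung up immediately, $Q$ for the routine questions posed in those calls, $Q_{\text{res}}$ for those the robot resolved, and $\bar{q}$ and $\bar{r}$ for the average routine questions posed and resolved per call. Assuming both averages share the denominator $C$ (an assumption about how they were computed):

$$
\frac{\bar{r}}{\bar{q}} = \frac{Q_{\text{res}}/C}{Q/C} = \frac{Q_{\text{res}}}{Q},
\qquad
\bar{q} - \bar{r} = \frac{Q - Q_{\text{res}}}{C} = \bar{q}\left(1 - \frac{Q_{\text{res}}}{Q}\right).
$$

The second identity shows that a narrower gap implies a larger resolved share only if $\bar{q}$ has not fallen by a comparable amount. Because the average number of questions posed per call in Experimental 4 was comparable to Experimental 2, the narrower gap is consistent with a higher proportion of questions being resolved.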

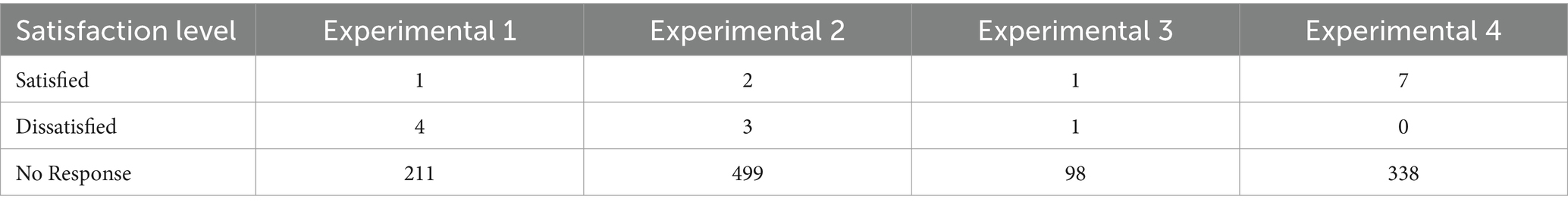

3.3 User satisfaction

Satisfaction feedback summaries for each experimental phase are displayed in Table 3. Because call records do not capture users’ emotions, text alone yielded only a limited amount of clearly positive or negative satisfaction data. Nevertheless, Experimental 4 received no negative satisfaction evaluations, in contrast to the four negative evaluations received in Experimental 1.

To further explore differences in satisfaction between experimental phases, Fisher’s exact test was applied to the data from Experimental 1 and Experimental 4. The test showed a significant difference in satisfaction between the two phases (p = 0.0097). This suggests that the robot’s concise and clear two-round guidance script has a positive effect on user satisfaction.
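For readers unfamiliar with the test, the sketch below computes a two-sided Fisher's exact test p-value for a 2×2 table using only the standard library. It is an illustration, not the authors' code: the function name is hypothetical, and it handles only 2×2 tables (for example, phase by satisfied/unsatisfied). If Table 3 also includes a "no assessment" column, an r×c exact test such as R's `fisher.test` would be needed instead. The example uses the classic tea-tasting counts, not study data.

```python
from math import comb


def fisher_exact_2x2(table: list[list[int]]) -> float:
    """Two-sided Fisher's exact test p-value for a 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the observed
    margins that are no more likely than the observed one.
    """
    (a, b), (c, d) = table
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    p_value = 0.0
    for x in range(max(0, col1 - row2), min(row1, col1) + 1):
        p_x = prob(x)
        if p_x <= p_obs * (1 + 1e-7):  # small tolerance for floating-point ties
            p_value += p_x
    return min(1.0, p_value)


print(round(fisher_exact_2x2([[3, 1], [1, 3]]), 4))  # 0.4857
```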

4 Discussion

In the scenario of incoming calls for consultation, the dialogue guidance strategy of the intelligent consultation robot is vital for resolving issues efficiently. If the guidance is not thorough, the robot may help users solve problems less efficiently, increasing the working time required from medical staff. Conversely, accurate and clear scripts can guide users to express their issues quickly and precisely, allowing the robot to provide prompt responses. This efficient interaction not only reduces waiting time for users but also improves the interaction experience between users and the robot, ultimately helping to increase overall user satisfaction.

This paper elaborates on the positive impact of the robot’s precise and clear conversational scripts on the daily operations of vaccination clinics in terms of labor cost savings, work efficiency enhancement, and user satisfaction. However, this study also reveals several potential directions for future research and system improvements:

4.1 User satisfaction data collection

Currently, the number of satisfaction evaluations provided by users is relatively low. Future work could focus on further optimizing the satisfaction evaluation mechanism and conversational scripts to stimulate users’ interest in providing feedback (27–29), thereby collecting a greater number of direct evaluations. Additionally, applying large language models (LLMs) to perform real-time sentiment analysis (30, 31) on user voice data can help judge and record users’ emotions during conversations, serving as indirect evaluations to assist in analyzing the overall satisfaction.

4.2 Trade-offs in reactive human service transfer protocol

The significant improvement in fully automated response rates during Experimental 4 suggests that removing explicit “transfer to human” prompts enhanced system efficiency by encouraging users to explore automated features first. However, this change risks creating “silent dropouts,” in which user issues go unresolved. Human service was triggered only by system confusion (after three repeated queries) or explicit user requests, so users might abandon the call before reaching the threshold for transfer (e.g., due to impatience or low tolerance for iterative interactions). To address this issue, future designs could integrate adaptive thresholds that adjust escalation triggers dynamically based on real-time user behavior (e.g., session duration, response latency) rather than fixed repetition counts. Future work should also prioritize user-centered metrics, such as issue resolution completeness and abandonment rate, to evaluate system effectiveness comprehensively.
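One possible shape for such an adaptive trigger is sketched below. Everything here is hypothetical: the rule of lowering the fixed threshold of three by one step for a long call and one step for slow caller replies, and the cutoffs of 90 s and 4 s, are illustrative placeholders rather than tuned or published values.

```python
from dataclasses import dataclass


@dataclass
class SessionState:
    unrecognized_streak: int     # consecutive inputs the robot could not interpret
    elapsed_s: float             # time since the call was answered
    mean_reply_latency_s: float  # how long the caller takes to respond


def should_escalate(
    state: SessionState,
    base_threshold: int = 3,      # the fixed count used in Experimental 4
    long_call_s: float = 90.0,    # hypothetical cutoff
    slow_reply_s: float = 4.0,    # hypothetical cutoff
) -> bool:
    """Lower the escalation threshold for long calls or hesitant callers (hypothetical rule)."""
    threshold = base_threshold
    if state.elapsed_s > long_call_s:
        threshold -= 1  # long calls may signal growing impatience
    if state.mean_reply_latency_s > slow_reply_s:
        threshold -= 1  # slow replies may signal confusion
    return state.unrecognized_streak >= max(1, threshold)


print(should_escalate(SessionState(2, 10.0, 1.0)))   # False
print(should_escalate(SessionState(1, 120.0, 5.0)))  # True
```

Any real thresholds would need to be calibrated against the abandonment rate, the user-centered metric suggested above.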

4.3 Post-transfer call recording and analysis

The system currently lacks the capability to record and save calls after users are transferred to human services. This limits comprehensive assessment of the user service experience and optimization of the dialogue guidance structure. With this feature, the system would be able to systematically collect and analyze the types, frequencies, complexities, and urgencies of the issues users raise after being transferred to human services. These data would help identify potential bottlenecks and areas for improvement in conversational script design. Furthermore, by analyzing the quality of the questions users pose to medical staff, it would be possible to assess more accurately whether the script iterations effectively help users clarify their inquiries and how these changes affect the daily operational efficiency of the vaccination clinic.

4.4 Addressing immediate call terminations

Another advantage of using an intelligent consultation robot is its ability to help the clinic filter out unwanted sales and nuisance calls, so that medical staff’s time is not wasted. During the study, about 12% of calls were hung up immediately after being connected. Some of these may have been nuisance calls, but others may have come from users who hung up upon realizing they were interacting with a robot (32, 33). On the one hand, optimizing the initial conversation script and adjusting the robot’s voice intonation could improve user acceptance, reducing hang-ups by genuine users and giving the robot more opportunities to address routine inquiries, thereby reducing the burden on on-site medical staff. On the other hand, caller-number recognition technology could be used in the future to intercept nuisance calls, reducing the likelihood of these calls being transferred to human services.

Data availability statement

The data analyzed in this study are subject to the following licenses/restrictions: the datasets analyzed during the study are not publicly available due to privacy concerns regarding the inclusion of personal identifiers such as telephone numbers, but de-identified datasets are available from the corresponding author and Minglou Street Community Health Service Center on reasonable request. Requests to access these datasets should be directed to Yinjun Hu, ssdshyj@163.com.

Author contributions

BZ: Writing – original draft, Writing – review & editing, Funding acquisition, Investigation, Project administration. YaX: Writing – original draft, Writing – review & editing, Data curation, Formal analysis, Investigation, Visualization. JS: Writing – review & editing, Project administration. YiX: Writing – review & editing. DC: Writing – review & editing. QY: Writing – review & editing. YH: Project administration, Supervision, Validation, Writing – review & editing, Conceptualization, Resources.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The work was supported by Ningbo Yinzhou District Health Science and Technology Project (Project NO.: 2024Y28).

Conflict of interest

YH was employed by Shensu Science and Technology (Suzhou) Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2025.1535270/full#supplementary-material

References

1. Andre, FE, Booy, R, Bock, HL, Clemens, J, Datta, SK, John, TJ, et al. Vaccination greatly reduces disease, disability, death and inequity worldwide. Bull World Health Organ. (2008) 86:140–6. doi: 10.2471/blt.07.040089

2. Carter, A, Msemburi, W, Sim, SY, Gaythorpe, KA, Lambach, P, Lindstrand, A, et al. Modeling the impact of vaccination for the immunization agenda 2030: deaths averted due to vaccination against 14 pathogens in 194 countries from 2021 to 2030. Vaccine. (2024) 42:S28–37. doi: 10.1016/j.vaccine.2023.07.033

3. Larson, HJ, Jarrett, C, Eckersberger, E, Smith, DM, and Paterson, P. Understanding vaccine hesitancy around vaccines and vaccination from a global perspective: a systematic review of published literature, 2007–2012. Vaccine. (2014) 32:2150–9. doi: 10.1016/j.vaccine.2014.01.081

4. Handy, LK, Maroudi, S, Powell, M, Nfila, B, Moser, C, Japa, I, et al. The impact of access to immunization information on vaccine acceptance in three countries. PLoS One. (2017) 12:e0180759. doi: 10.1371/journal.pone.0180759

5. Jones, AM, Omer, SB, Bednarczyk, RA, Halsey, NA, Moulton, LH, and Salmon, DA. Parents’ source of vaccine information and impact on vaccine attitudes, beliefs, and nonmedical exemptions. Adv Prev Med. (2012) 2012:932741:1–8. doi: 10.1155/2012/932741

6. Du, F, Chantler, T, Francis, MR, Sun, FY, Zhang, X, Han, K, et al. The determinants of vaccine hesitancy in China: a cross-sectional study following the Changchun Changsheng vaccine incident. Vaccine. (2020) 38:7464–71. doi: 10.1016/j.vaccine.2020.09.075

7. He, Y, Liu, Y, Dai, B, Zhao, L, Lin, J, Yang, J, et al. Assessing vaccination coverage, timeliness, and its temporal variations among children in a rural area in China. Hum Vaccin Immunother. (2021) 17:592–600. doi: 10.1080/21645515.2020.1772620

8. Wang, Q, Yue, N, Zheng, M, Wang, D, Duan, C, Yu, X, et al. Influenza vaccination coverage of population and the factors influencing influenza vaccination in mainland China: a meta-analysis. Vaccine. (2018) 36:7262–9. doi: 10.1016/j.vaccine.2018.10.045

9. Wang, L, Zhong, Y, and Di, J. Current experience in HPV vaccination in China. Indian J Gynecol Oncol. (2021) 19:1–5. doi: 10.1007/s40944-021-00535-7

10. Du, F, Chantler, T, Francis, MR, Sun, FY, Zhang, X, Han, K, et al. Access to vaccination information and confidence/hesitancy towards childhood vaccination: a cross-sectional survey in China. Vaccines. (2021) 9:201. doi: 10.3390/vaccines9030201

11. Zhou, L, Liang, J, Zhu, X, and Liang, L. The process, current situation and outlook of digitalization in China's vaccination clinics. Prev Med Trib. (2023) 29:152–156+160. doi: 10.16406/j.pmt.issn.1672-9153.2023.2.015

12. Wang, X, Pan, J, Liu, Z, and Wang, W. Optimization of vaccination clinics to improve staffing decisions for COVID-19: a time-motion study. Vaccines. (2022) 10:2045. doi: 10.3390/vaccines10122045

13. Ye, L, Mei, Q, Li, P, Feng, Y, Wu, X, and Yang, T. Knowledge and attitudes about contraindications and precautions to vaccination among healthcare professionals working in vaccination clinics in Ningbo, China: a cross-sectional survey. Vaccines. (2024) 12:632. doi: 10.3390/vaccines12060632

14. Sezgin, E, Huang, Y, Ramtekkar, U, and Lin, S. Readiness for voice assistants to support healthcare delivery during a health crisis and pandemic. NPJ Digit Med. (2020) 3:122. doi: 10.1038/s41746-020-00332-0

15. Ting, DS, Carin, L, Dzau, V, and Wong, TY. Digital technology and COVID-19. Nat Med. (2020) 26:459–61. doi: 10.1038/s41591-020-0824-5

16. Xu, L, Sanders, L, Li, K, and Chow, JC. Chatbot for health care and oncology applications using artificial intelligence and machine learning: systematic review. JMIR Cancer. (2021) 7:e27850. doi: 10.2196/27850

17. Jadczyk, T, Wojakowski, W, Tendera, M, Henry, TD, Egnaczyk, G, and Shreenivas, S. Artificial intelligence can improve patient management at the time of a pandemic: the role of voice technology. J Med Internet Res. (2021) 23:e22959. doi: 10.2196/22959

18. Laranjo, L, Dunn, AG, Tong, HL, Kocaballi, AB, Chen, J, Bashir, R, et al. Conversational agents in healthcare: a systematic review. J Am Med Inform Assoc. (2018) 25:1248–58. doi: 10.1093/jamia/ocy072

19. Mao, Y, and Zhang, L. Optimization of the medical service consultation system based on the artificial intelligence of the internet of things. IEEE Access. (2021) 9:98261–74. doi: 10.1109/ACCESS.2021.3096188

20. Zhang, J, Wu, J, Qiu, Y, Song, A, Li, W, Li, X, et al. Intelligent speech technologies for transcription, disease diagnosis, and medical equipment interactive control in smart hospitals: a review. Comput Biol Med. (2023) 153:106517. doi: 10.1016/j.compbiomed.2022.106517

21. Blackley, SV, Huynh, J, Wang, L, Korach, Z, and Zhou, L. Speech recognition for clinical documentation from 1990 to 2018: a systematic review. J Am Med Inform Assoc. (2019) 26:324–38. doi: 10.1093/jamia/ocy179

22. Huo, R, Ou, Y, Zhou, J, Liu, C, Li, A, and Chen, Q. Research progress on the effect evaluation of artificial intelligence voice technology in medical services. Chin J Mod Nurs. (2022) 28:6. doi: 10.3760/cma.j.cn115682-20210630-02866

23. Du, J, Zheng, Q, Luo, J, Nie, B, Xiong, W, Liu, Y, et al. Advances in pipelined dialogue system research and its application in medical field. Sci Technol Eng. (2024) 24:2187–200. doi: 10.12404/j.issn.1671-1815.2304501

24. Weeks, R, Cooper, L, Sangha, P, Sedoc, J, White, S, Toledo, A, et al. Chatbot-delivered COVID-19 vaccine communication message preferences of young adults and public health workers in urban American communities: qualitative study. J Med Internet Res. (2022) 24:e38418. doi: 10.2196/38781

25. Zhou, S, Silvasstar, J, Clark, C, Salyers, AJ, Chavez, C, and Bull, SS. An artificially intelligent, natural language processing chatbot designed to promote COVID-19 vaccination: a proof-of-concept pilot study. Digit Health. (2023) 9:20552076231155679. doi: 10.1177/20552076231155679

26. Wan, J, Pan, G, Chen, Y, Hu, B, and Chang, T. Application of intelligent voice automatic call system in vaccination. Anhui J Prev Med. (2022) 28:360–363+436. doi: 10.19837/j.cnki.ahyf.2022.05.004

27. Lin, CC, Wu, HY, and Chang, YF. The critical factors impact on online customer satisfaction. Procedia Comput Sci. (2011) 3:276–81. doi: 10.1016/j.procs.2010.12.047

28. Borghi, M, Mariani, MM, Vega, RP, and Wirtz, J. The impact of service robots on customer satisfaction online ratings: the moderating effects of rapport and contextual review factors. Psychol Mark. (2023) 40:2355–69. doi: 10.1002/mar.21903

29. Hsu, CL, and Lin, JC. Understanding the user satisfaction and loyalty of customer service chatbots. J Retail Consum Serv. (2023) 71:103211. doi: 10.1016/j.jretconser.2022.103211

30. Xu, H, Zhang, H, Han, K, Wang, Y, Peng, Y, and Li, X. Learning alignment for multimodal emotion recognition from speech. arXiv. (2019). doi: 10.48550/arXiv.1909.05645

31. Sun, X, Li, X, Zhang, S, Wang, S, Wu, F, Li, J, et al. Sentiment analysis through LLM negotiations. arXiv. (2023). doi: 10.48550/arXiv.2311.01876

32. Huang, D, Chen, Q, Huang, J, Kong, S, and Li, Z. Customer-robot interactions: understanding customer experience with service robots. Int J Hosp Manag. (2021) 99:103078. doi: 10.1016/j.ijhm.2021.103078

Keywords: intelligent consultation robot, vaccination services, conversational script impact, dialogue guidance strategy, work efficiency, patient satisfaction

Citation: Zhou B, Xu Y, Shi J, Xiang Y, Chen D, Yao Q and Hu Y (2025) Effectiveness of conversational script optimization by intelligent consultation robots on daily work efficiency in vaccination clinics. Front. Public Health. 13:1535270. doi: 10.3389/fpubh.2025.1535270

Edited by:

Amit Arora, University of the District of Columbia, United States

Reviewed by:

Suraj Singh Senjam, All India Institute of Medical Sciences, India

Vanessa Veronese, World Health Organization, Switzerland

Copyright © 2025 Zhou, Xu, Shi, Xiang, Chen, Yao and Hu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yinjun Hu, ssdshyj@163.com

†These authors have contributed equally to this work and share first authorship

Bei Zhou1†

Yan Xu

Yinjun Hu