- 1Department of Brain Sciences, Faculty of Medicine, Imperial College London, London, United Kingdom

- 2Keele University, Keele, United Kingdom

- 3School of Public Health, Faculty of Medicine, Imperial College London, London, United Kingdom

- 4Wolfson Institute of Population Health, Queen Mary University of London, London, United Kingdom

- 5Institute of Psychiatry, Psychology and Neuroscience, King's College London, London, United Kingdom

- 6Royal Borough of Kensington and Chelsea, London, United Kingdom

- 7Listen to Act, London, United Kingdom

Background: Early detection and intervention of dyslexia in children and young people (CYP) can help mitigate its negative impacts. Schools play a crucial role as a key point of contact for dyslexia screening.

Objective: In this review, we examined the range of screening tools used in school settings to identify CYP with dyslexia, together with their reported sensitivities and specificities, and explored variations in how tools captured the socio-demographic characteristics of the screened student groups.

Design: Narrative review.

Methods: We searched five electronic databases: EMBASE, MEDLINE, PsycINFO, Cochrane, and Scopus (2010–2023) to identify worldwide school-based dyslexia screening studies conducted in CYP aged 4–16 years. Three independent researchers screened the papers, and data were extracted on the sensitivity and specificity of the screening tools, the informants involved, the prevalence of dyslexia among those who screened positive, and the socio-demographic characteristics of the identified CYP.

Results: Sixteen of 6,041 articles met the eligibility criteria. The study population ranged from 95 to 9,964 participants. We identified 17 different types of school-based dyslexia screening tools. Most studies combined screening tools (mean number of 3.7, standard deviation = 2.7) concurrently to identify dyslexia. Three studies used a staged approach of two and three stages. Developmental Dyslexia and Dysorthographia and Raven Progressive Matrices were the most used tools. The percentage of cases screening positive for dyslexia ranged from 3.1 to 33.0%. Among CYP identified by screening with dyslexia, there were missing socio-demographic data on gender (50%) and socio-economic status (81%) and none on ethnicity.

Conclusion: A variety of screening tools are used to identify children and young people (CYP) with dyslexia in school settings. However, it is unclear whether this wide range of tools is necessary or reflects variations in definitions. Greater collaboration between researchers and front-line educators could help establish a solid evidence base for screening and reduce the inconsistencies in approach. In the meantime, a practical and beneficial approach may involve starting with a highly sensitive screening tool, followed by more specific tests to assess detailed deficits and their impact.

Introduction

Dyslexia is widely recognized as a learning disorder and neurodiverse condition that affects the accuracy and fluency of reading in ways that are atypical relative to an individual's age, education, and/or intellectual ability (1, 2). A child's phonological processing ability is considered one of the strongest predictors of literacy acquisition (3, 4). However, definitions of dyslexia vary across disciplines, regions, and contexts. For example, some frameworks view dyslexia as a distinct diagnostic disorder, while others treat it as a cluster of reading-related difficulties or symptoms. Although there is ongoing debate and variation in how dyslexia is defined across disciplines and contexts, we adopt the definition of dyslexia as a neurodevelopmental condition primarily characterized by difficulties with accurate and/or fluent word recognition, along with poor spelling and decoding abilities. This aligns with definitions provided by the Rose Report (5) and Snowling and Melby-Lervåg (6). Dyslexia may co-occur with other learning or emotional difficulties. It is one of the most prevalent neurodiverse conditions and commonly occurs alongside conditions such as dyspraxia, dyscalculia, Attention Deficit Hyperactivity Disorder (ADHD), and Autism Spectrum Disorder (ASD) (7).

Although global dyslexia prevalence among children and young people (CYP) is estimated to be 7.1% (8), figures can vary widely depending on criteria for diagnosis and language (9, 10). The long-term effects of dyslexia for CYP compared to those without the condition include social, emotional, and behavioral issues, school exclusion, increased likelihood of attending a youth offending institute, and overall poor educational outcomes (11–13).

Schools are an ideal setting to universally screen for children with dyslexia and mitigate harmful educational outcomes. Yet children with dyslexia are often diagnosed late due to a lack of awareness among teachers and parents regarding the signs and symptoms of the condition. Adopting a universal approach to screening in schools, where screening tools can be used even before school entry (14, 15), can help overcome barriers to identifying children with dyslexia that may relate to finance and other socio-demographic factors.

The accuracy of a school-based screening tool for dyslexia is important. Ideally, a tool should correctly identify a high percentage of children who are at risk (true positives; the tool's sensitivity) and a high percentage of children who are not at risk (true negatives; its specificity) (16). However, no single screening tool or set of screening tools can achieve 100% sensitivity and specificity; false positives and false negatives are therefore inherent to any tool (3), and choosing a tool involves a trade-off between sensitivity and specificity (16, 17). Additionally, screening methods for dyslexia in school settings can be broadly categorized into subjective (e.g., teacher or parent questionnaires, self-report tools) and objective methods (e.g., direct testing of phonological awareness, rapid automatized naming, or decoding skills). Each category carries its own strengths and limitations in terms of reliability, feasibility, and alignment with diagnostic criteria.
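To make the two definitions concrete, the following minimal sketch computes sensitivity and specificity from the four possible screening outcomes. The cohort counts are hypothetical and chosen only for illustration; they are not drawn from any study in this review.

```python
from dataclasses import dataclass


@dataclass
class ScreeningCounts:
    """Counts from comparing a screening result with a reference diagnosis."""
    true_pos: int   # screened positive, has dyslexia
    false_neg: int  # screened negative, has dyslexia
    true_neg: int   # screened negative, does not have dyslexia
    false_pos: int  # screened positive, does not have dyslexia


def sensitivity(c: ScreeningCounts) -> float:
    """Proportion of children with dyslexia whom the tool flags."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ScreeningCounts) -> float:
    """Proportion of children without dyslexia whom the tool clears."""
    return c.true_neg / (c.true_neg + c.false_pos)


# Hypothetical cohort of 1,000 pupils, 70 of whom have dyslexia.
example = ScreeningCounts(true_pos=63, false_neg=7, true_neg=744, false_pos=186)

print(f"sensitivity = {sensitivity(example):.2f}")
print(f"specificity = {specificity(example):.2f}")
```

In this invented cohort the tool misses 7 of 70 children with dyslexia and wrongly flags 186 of 930 children without it, which shows how a tool can be highly sensitive while still generating many false positives.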

Currently, many schools use different approaches to screen for CYP with dyslexia. This review was guided by answering the following research questions: (1) What dyslexia screening tools are most commonly used in school-based settings for children and young people aged 4–16 years? (2) How frequently are combinations of tools employed, and in what ways? (3) Which tools demonstrate the highest reported sensitivity and specificity? and (4) What gaps remain in the evidence regarding socio-demographic equity of school-based screening?

Methods

Context

Ethical approval was not sought for this study as it was an evidence synthesis of existing published research.

Patient and public involvement

The topic for this review was identified in consultation with a young people's advisory group in Northwest London, United Kingdom (https://www.arc-nwl.nihr.ac.uk/research/multimorbidity-and-mental-health/arc-outreach-alliance/young-peoples-advisory-group-ypag). The advisory group were first involved in the design of the study and advised on the neurodiverse conditions to study.

Search strategy

Information sources

We electronically searched five academic databases: EMBASE, MEDLINE, PsycINFO, Cochrane, and Scopus in September 2022. The reference lists of all included articles were screened for additional studies. An updated search was conducted in February 2024, which yielded one further article, and again in August 2024, which did not identify any additional articles. Search terms are available in Supplementary File 1.

Keywords and index terms from relevant articles were identified as part of an initial search of MEDLINE and PsycINFO and helped inform the development of a full search strategy. The terms were mapped using a PICO (population, intervention, control, and outcome) approach and cross-checked with clinical and academic colleagues. Further advice was sought from a medical librarian to ensure relevant terms were included. The search strategy was adapted for each database to reflect both school-based screening and dyslexia-related symptoms. As a narrative review, we did not perform a formal risk-of-bias assessment.

Eligibility criteria

We excluded all studies that were published before 2010, as research on how neurodiverse conditions are perceived has changed significantly since then. Supplementary Table 1 outlines the eligibility criteria. We excluded studies of children under 4 years and over 16 years, as full-time education is not compulsory outside this age range in many countries. We screened for articles that had dyslexia as a condition. Our inclusion criteria focused on screening tools used in real-world school settings, as our aim was to assess tools most commonly used in educational settings. Tools such as Test of Word Reading Efficiency (TOWRE) and York Assessment of Reading for Comprehension (YARC), while highly regarded in diagnostic contexts, were excluded unless explicitly used as part of school-wide screening initiatives, as our focus was on screening tools feasibly implemented at scale in educational settings. We excluded gray literature, defined here as unpublished theses, internal school evaluation reports, and non-peer-reviewed conference abstracts, to maintain a focus on peer-reviewed research.

We included studies that evaluated tools used in school settings to screen for dyslexia-related symptoms (e.g., phonological deficits, decoding difficulties) rather than tools designed solely for diagnostic purposes. Studies involving populations with concurrent conditions (e.g., ADHD) were included if the screening tool aimed to detect dyslexia symptoms independently. Although not designed to detect dyslexia, tools assessing emotional health (e.g., SDQ) or cognitive ability (e.g., Raven's Matrices) were also included when they were integrated into a school-based dyslexia screening protocol.

Study selection

Following the search, we collated all identified citations and uploaded these into Covidence systematic review software (18). Duplicates were removed. Titles and abstracts were screened by three independent reviewers for assessment against the review's eligibility criteria. The full text of selected citations was assessed by three independent reviewers. Reasons for exclusion of sources of evidence in full text were recorded. Any disagreements that arose between the reviewers at each stage of the selection process were resolved through discussion with the wider research team.

Data collection process and data items

Data were extracted by two independent reviewers into an Excel spreadsheet. We reported on the sensitivity and specificity of each screening tool, its informants (e.g., teachers, parents, and self-report), the associated prevalence of screen-positive cases of dyslexia, and socio-demographic characteristics. Any disagreements between the reviewers were resolved through discussion, involving additional authors where needed. Additional or missing data were not sought from the authors of included papers.

Types of sources

We included experimental and quasi-experimental study designs (randomized controlled trials, non-randomized controlled trials, before and after studies, and interrupted time-series studies) and analytical observational studies (prospective and retrospective cohort studies, case-control studies, and analytical cross-sectional studies). Case studies and opinion papers and non-school-based screening studies were excluded from this review.

Results

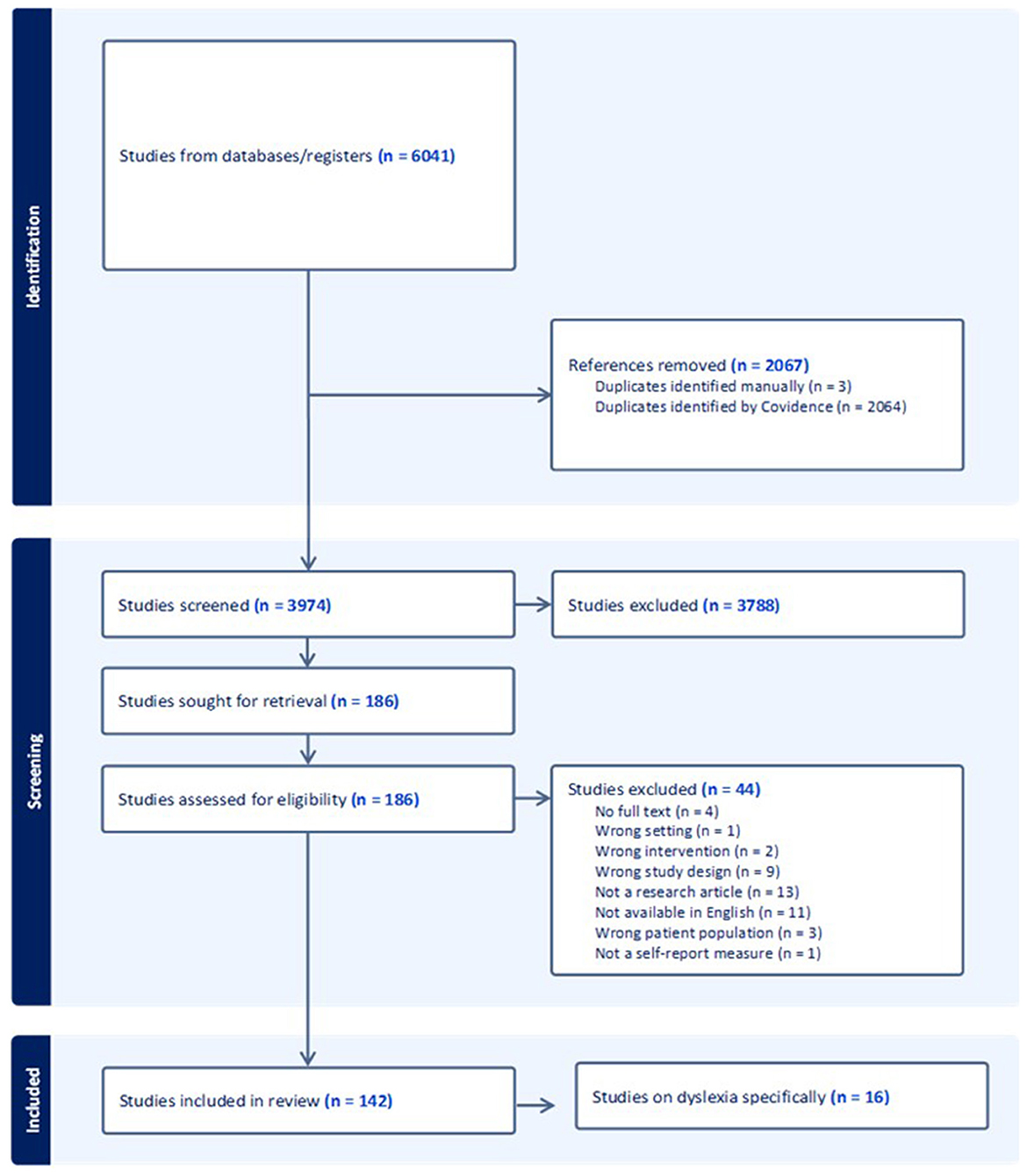

Figure 1 presents the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) diagram and details the search and selection process applied during the screening of articles.

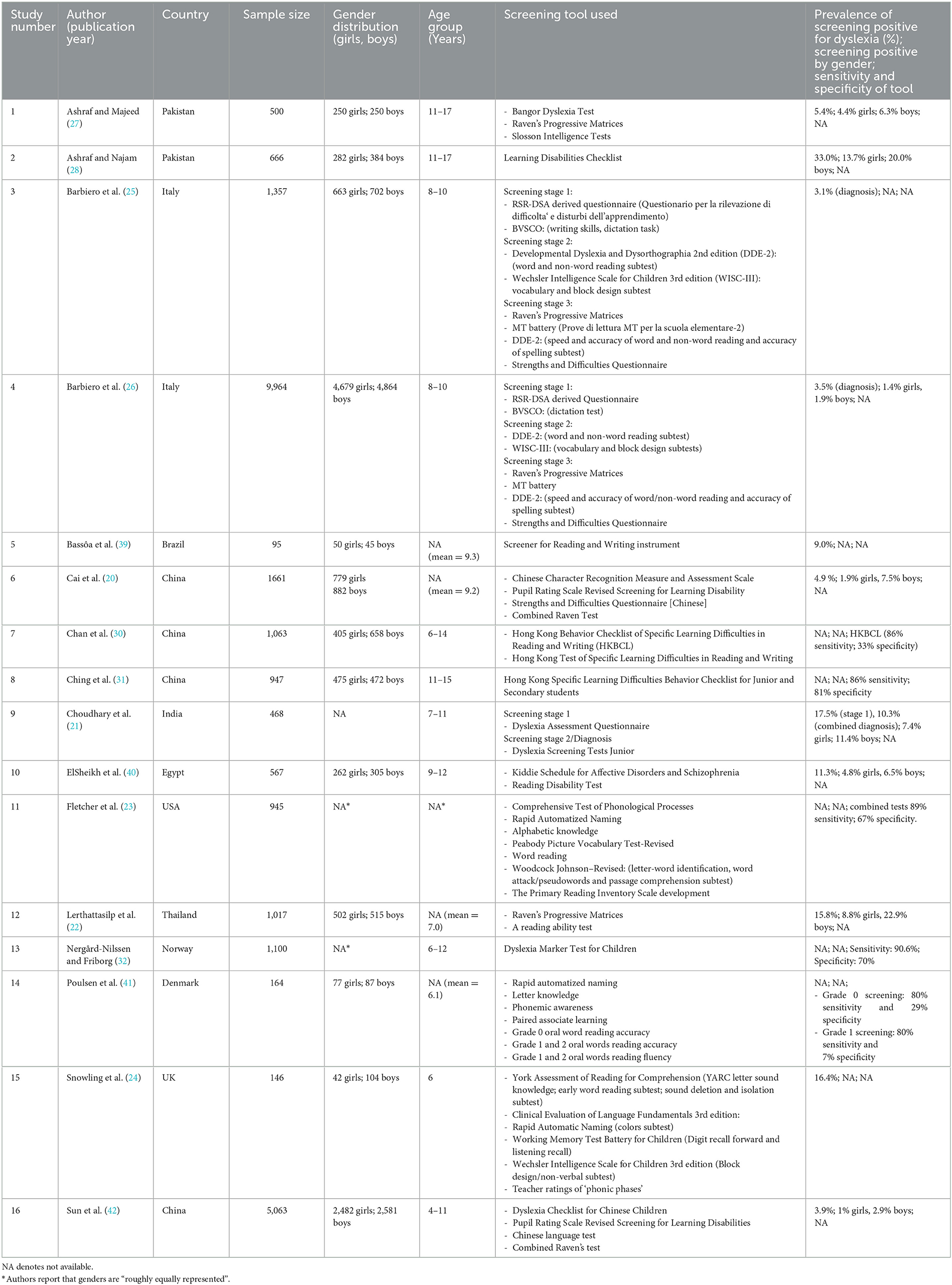

We identified 16 eligible studies of school-based screening for dyslexia in this review. Table 1 presents a summary of these studies including screening tools used and study outcomes (their informants, sensitivity, specificity, the language of administration and prevalence of CYP screening positive for dyslexia by gender and demographics) to improve transparency and facilitate comparison across different contexts. Studies were conducted across 11 countries and six World Health Organization regions (19): European (N = 5), Western Pacific (N = 4), the Americas (N = 2), Eastern Mediterranean (N = 2), Southeast Asia (N = 2), and African (N = 1). Screening tools were available in different languages including English, Mandarin, and Italian.

Table 1. Summary of included studies of screening tools used in identifying Children and Young People with dyslexia in school settings (N = 16).

Participants ranged in age from 4 to 16 years, and the study sample size ranged from 95 to 9,964 participants. In general, early primary school studies tended to emphasize phonological awareness and letter knowledge, whereas studies in older primary and secondary school students focused more on fluency, comprehension, and spelling. This suggests that the applicability of a tool may be age- or stage-dependent.

Seven studies recruited over 1,000 participants: China (N = 3), Italy (N = 2), Norway (N = 1), and Thailand (N = 1). Of these, four studies reported a similar prevalence of screening positive for dyslexia (3.1–4.9%). All eight studies that included a gender distribution for dyslexia screening prevalence reported higher figures among boys than girls.

Of the 16 studies identified in our review, seven reported the socio-economic status of the CYP screened. Of these seven, only three reported on the association between socio-economic status and screening positive for dyslexia (20–22). Socio-economic status was assessed using parental occupation and education, and monthly family income, alone or in combination. Cai et al. (20) found that high levels of parental education were associated with a lower likelihood of screening positive for dyslexia, while no significant association was found between family income and screening positive. Similarly, Lerthattasilp et al. (22) found that higher monthly incomes were associated with a lower likelihood of screening positive for dyslexia, and that low levels of parental education were associated with screening positive. However, an earlier study by Choudhary et al. (21) showed that socio-economic status did not differ between CYP with dyslexia and those without (control group). Socio-demographic factors such as parental education and family income were inconsistently reported, limiting conclusions regarding equity and generalizability.

No studies reported on the ethnicity of CYP who screened positive for dyslexia, and only one study, conducted in the USA (23), reported on the ethnicity of its study population, reflecting limited socio-demographic reporting. Other studies reported ethnicity in terms of nationality (e.g., Chinese) or made no mention of ethnic status.

Dyslexia screening tools were used in combination and concurrently in 12 studies; the mean number of tools used was 3.7 (standard deviation = 2.7), with four studies using only one tool and two studies using eight tools to screen, reflecting variability in methodological approaches. Three studies adopted a staged approach to screening for dyslexia, whereby further screening tests were administered if a certain cut-off was reached at the first stage. Different combinations of dyslexia screening tools were used, as well as 'other tools' that assessed the functional impact of dyslexia and other concurrent problems. Examples include the Strengths and Difficulties Questionnaire (20), an emotional and behavioral screening tool, and the Wechsler Intelligence Scale for Children (24). Supplementary Table 2 shows the other screening tools that were identified in this review.

The most used dyslexia-specific screening tool was the Developmental Dyslexia and Dysorthographia 2nd edition (DDE-2). Different subtests of the DDE-2 were used four times across two separate studies (25, 26). Among the other tools, the most used was the Raven Progressive Matrices, which was used in four studies (22, 25–27).

Five studies did not report the prevalence of screening positive for dyslexia, highlighting gaps in reporting and limiting cross-study comparisons. Among the remaining 11 studies, the reported figure ranged from 3.1% to 33%. The highest figure of 33% was observed in a study from Pakistan (28), where the authors used the Learning Disability Checklist, a test that is not specific to dyslexia; this may explain the high figure and suggests a high likelihood of false positives. High rates of prevalence may be attributed in part to the lack of early assessment and screening strategies at school level (28, 29). In general, reported difficulties in using these tools included time constraints, the need for trained personnel, and variability in teacher familiarity with the measures.

Additionally, 11 studies did not report the sensitivity or specificity of the screening tools used. In the five studies that did report these metrics, sensitivity was consistently higher than specificity across all combinations of tools administered. For example, Chan et al. (30) reported a sensitivity of 86% and a specificity of 33% for the Hong Kong Behavior Checklist of Specific Learning Difficulties. Nergård-Nilssen and Friborg (32) reported the highest sensitivity (90.6%) for their screening protocol, which used the Dyslexia Marker Test for Children. Fletcher et al. (23) combined seven tools to achieve the second-highest sensitivity (89%) when screening children aged approximately 5 years. These tools were: (1) Comprehensive Test of Phonological Processes, (2) Rapid Automatized Naming, (3) Alphabetic knowledge, (4) Peabody Picture Vocabulary Test-Revised, (5) Word reading, (6) Woodcock Johnson–Revised (WJR), and (7) Primary Reading Inventory Scale development.

The highest reported specificity (81%) came from a study by Ching et al. (31), in which students were screened using the Hong Kong Specific Learning Difficulties Behavior Checklist for Junior and Secondary. The Dyslexia Marker Test for Children, in the study by Nergård-Nilssen and Friborg (32), had the second-highest specificity (70%).
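To illustrate what these figures could mean in practice, the sketch below converts the two sensitivity and specificity pairs reported above into a positive predictive value (the share of screen-positive children who would actually have dyslexia). Applying the 7.1% global prevalence estimate cited in the Introduction (8) is our own illustrative assumption; none of the included studies reported this calculation, and true prevalence in any given school population may differ.

```python
def positive_predictive_value(sens: float, spec: float, prevalence: float) -> float:
    """Share of screen-positive children who truly have the condition (Bayes' rule)."""
    true_pos = sens * prevalence
    false_pos = (1.0 - spec) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Assumption: the global estimate of 7.1% (8) applies to the screened population.
PREVALENCE = 0.071

# (sensitivity, specificity) pairs as reported in the included studies.
reported = {
    "Chan et al. (30)": (0.86, 0.33),
    "Nergård-Nilssen and Friborg (32)": (0.906, 0.70),
}

for study, (sens, spec) in reported.items():
    ppv = positive_predictive_value(sens, spec, PREVALENCE)
    print(f"{study}: PPV = {ppv:.1%}")
```

Under this assumed prevalence, roughly 9% of children flagged by the first tool and roughly 19% of those flagged by the second would have dyslexia, which is why a low-specificity screen generally needs a confirmatory second step.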

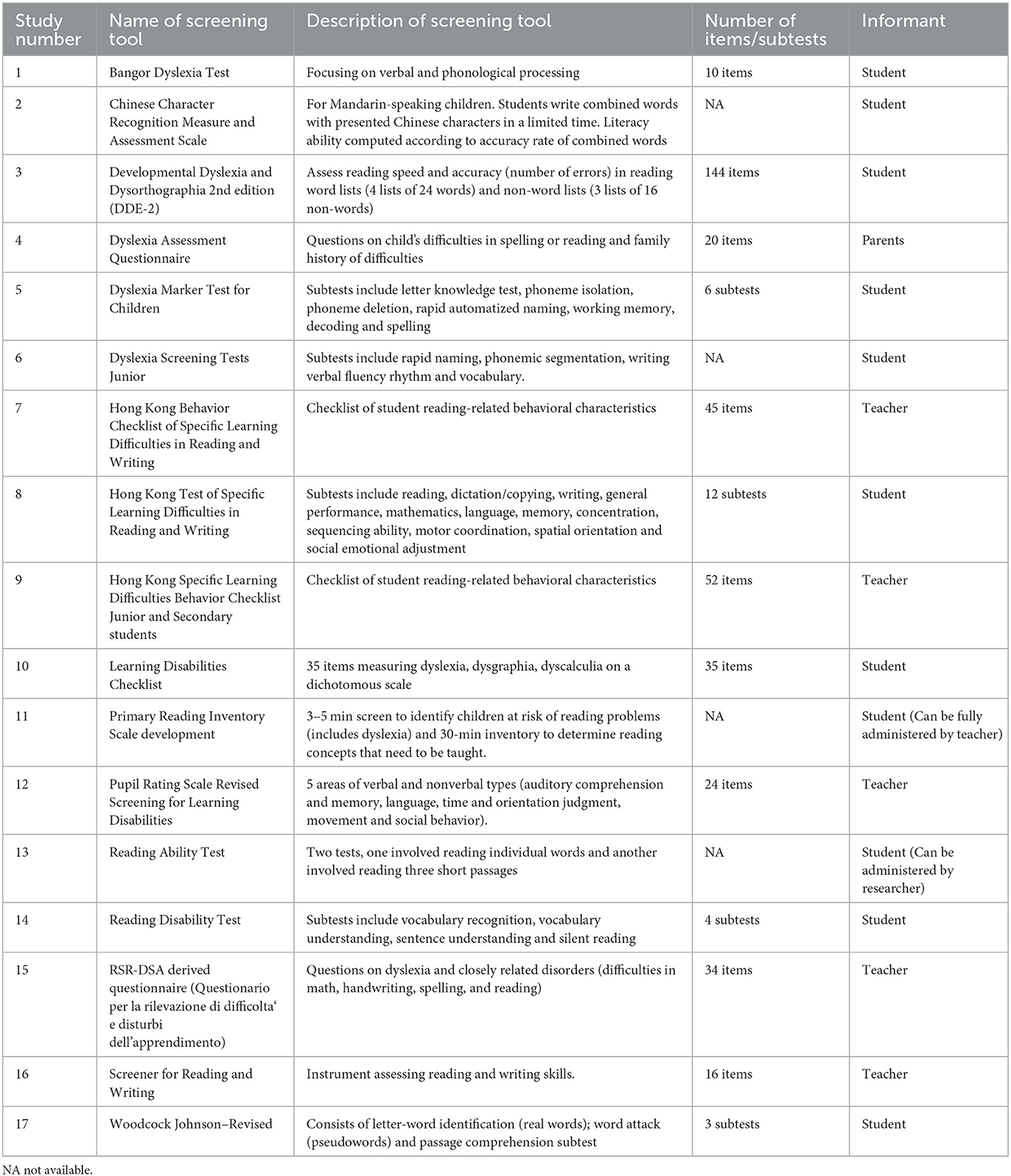

We identified 17 different school-based dyslexia screening tools used in the included studies (Table 2). Students were the main informant (N = 11), followed by teachers (N = 5); one tool was completed by parents (Dyslexia Assessment Questionnaire (21)).

Table 2. Summary of screening tools used for dyslexia and included in studies identified by the review (N = 17).

In undertaking dyslexia screening in schools, other tools were used to assess the impact of dyslexia and/or concurrent problems (Supplementary Table 2), showing how some protocols integrated cognitive and emotional measures alongside dyslexia-specific screening. We identified 23 such tools, which assessed features such as intelligence (Wechsler Intelligence Scale for Children, WISC), emotional and behavioral difficulties (Strengths and Difficulties Questionnaire), or symptoms of psychosis and mood (Kiddie Schedule for Affective Disorders and Schizophrenia). All of these other tools required completion by the young person, except one for which the parent was the informant.

Discussion

Our narrative systematic review reveals significant global differences in school-based dyslexia screening for children and young people (CYP) aged 4–16 years. The studies we included covered various countries, populations, and languages, making direct comparisons challenging. Additionally, few studies provided data on the sensitivity and specificity of the screening methods used. As a result, we were unable to determine whether school-based dyslexia screening should rely on a single tool, or a combination of tools used either together or in sequence. Our findings reinforce the need for clarity in how dyslexia is defined and operationalized in screening contexts. Tools used without consistent criteria or theoretical underpinning may contribute to under- or over-identification, especially in diverse populations.

The most commonly used dyslexia screening tool was the Developmental Dyslexia and Dysorthographia tool (second edition), which consists of eight subtests. Different subtests of this tool were used for screening across studies. It was developed based on norms from a sample of 1,200 children aged 7–14 years (33). The second most frequently used tool was the Raven Progressive Matrices, which was always combined with another screening tool. While standardized across various populations, this tool is designed to assess abstract reasoning and problem-solving abilities through pattern recognition (34), rather than specifically measuring dyslexia.

We identified several screening tools, such as the Strengths and Difficulties Questionnaire, that were not specifically designed for dyslexia screening but instead assessed the impact of dyslexia. For example, these tools measured the effects of dyslexia on an individual's psychosocial functioning, as well as emotional and behavioral difficulties. This type of supplementary screening could play an important role in school-based assessments, helping to identify children and young people with dyslexia who face the greatest challenges and guiding how resources are prioritized for them.

Research to date on dyslexia screening recommends the use of a tool with a sensitivity of 90% (35, 36). A highly sensitive tool is likely to identify nearly all children with dyslexia; additional tests are then used to confirm the diagnosis, reducing the number of children identified wrongly (false positives). In our review, one study, by Nergård-Nilssen and Friborg (32), used a tool (the Dyslexia Marker Test) that met this recommendation, reporting a sensitivity of 91%. This tool consisted of six subtests: letter knowledge test, phoneme isolation, phoneme deletion, RAN, working memory, decoding and spelling and covered three areas: ability, attainment and diagnostic. The only other study that nearly met this recommendation, by Fletcher et al. (23), reported a sensitivity of 89% and used seven screening tools.

The prevalence of children screening positive for dyslexia varied widely across studies, ranging from 3% to 33% of the sample population. The highest prevalence was reported in a Pakistani study (33%; 28), which used a Learning Disability Checklist. We also found that, among those who screened positive for dyslexia, only 50% of the studies reported on the gender of students, 19% on their socio-economic status, and none on their ethnicity. When considering educational stage, screening practices also diverged: tools for early years (ages 4–6) prioritized early phonological skills, rapid naming, and alphabet knowledge, whereas upper primary and secondary school tools often assessed fluency, comprehension, and spelling. Framing tools by school stage as well as age may help schools select the most developmentally appropriate instruments.

The wide variation in the dyslexia screening tools used in school-based research may reflect a lack of consensus on the best approach. A recent systematic review found that psychologists in English-speaking countries show little alignment in their methods for assessing dyslexia (37). Furthermore, the definition of dyslexia itself has evolved over time, with arbitrary cut-offs and the absence of clear diagnostic criteria (5, 6). This has led to differences in operational definitions and screening tools, each emphasizing different skills and characteristics (38). As a result, the lack of a unified approach to screening limits the ability to implement universal methods and complicates decisions within schools and the broader education system regarding which tool to use for dyslexia screening (38).

Limitations

Our search strategy involved five electronic databases with a broad approach that included terms related to other neurodiverse conditions, rather than focusing solely on dyslexia-specific terms. Additionally, we did not include gray literature in our search strategy, as the focus of this review was on effective school-based dyslexia screening tools, and it is unlikely that gray literature would have provided relevant articles.

Our review was limited to dyslexia screening tools that were specifically used and studied within school settings. While this focus is context-specific, it may have excluded tools that, although not used in schools, could still be effective for school-based screening. Additionally, the wide variety of screening tools identified made it difficult to generalize the findings or make direct comparisons. The studies also involved diverse school settings and educational systems, which may limit the applicability of the findings to different schools.

Many studies also lacked complete socio-demographic data on the children and young people (CYP) being screened. This absence of information limits our ability to determine whether the screening tools are suitable for use across different socio-demographic groups. Future research should aim to include a broader range of socio-demographic factors to address these gaps.

Strengths of review

This review has several methodological strengths. We conducted a systematic multi-database search using a protocol informed by PRISMA, with input from a young people's advisory group to ensure relevance to school settings. We also included a wide range of study designs and international settings, allowing us to highlight global variability in dyslexia screening approaches. The inclusion of both dyslexia-specific and supplementary tools (e.g., cognitive or emotional assessments) also provides a broader perspective on how schools operationalize screening in practice.

Recommendations for policy makers

Schools vary in their financial resources, student populations, technical infrastructure, and schedules. When choosing a dyslexia screening tool, they must consider factors such as the time required to complete the screening, its sensitivity and specificity, and the potential risks of identifying a high number of false positives (children who do not actually have dyslexia). These factors can influence their decision on which screening tool to use.

Given the lack of clear evidence from our review, we propose a practical two-stage approach for school-based dyslexia screening. The first stage would involve a simple and quick screening tool, such as the Dyslexia Marker Test for Children (32), which may have a higher rate of false positives. The second stage would involve a more detailed assessment to confirm the diagnosis. However, it's important to note that more specific assessments may come with higher costs. Further research into the factors influencing decision-makers' choice of screening tools could help identify key elements that support, or hinder, the implementation of school-based screening programs. Our recommendation for a two-stage screening model is therefore pragmatic: although evidence remains limited, this approach balances feasibility (quick initial screen) with accuracy (detailed follow-up), and reflects current practice in several educational systems.
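As a rough illustration of how a two-stage model trades sensitivity for specificity, the sketch below combines two tests in series, where only children who screen positive at stage one proceed to stage two. The stage-one figures are those reported for the Dyslexia Marker Test for Children (32); the stage-two figures are hypothetical placeholders, and the formula assumes that errors at the two stages are conditionally independent, which may not hold for tests that measure overlapping skills.

```python
from typing import Tuple


def serial_two_stage(
    sens1: float, spec1: float, sens2: float, spec2: float
) -> Tuple[float, float]:
    """Combined accuracy when a child is identified only if both stages are positive.

    Assumes the two stages make errors independently of each other.
    """
    # A true case must be caught at both stages to be identified.
    combined_sens = sens1 * sens2
    # A non-case is wrongly identified only if both stages give a false positive.
    combined_spec = 1.0 - (1.0 - spec1) * (1.0 - spec2)
    return combined_sens, combined_spec


stage1 = (0.906, 0.70)  # reported for the Dyslexia Marker Test for Children (32)
stage2 = (0.95, 0.95)   # hypothetical detailed follow-up assessment

sens, spec = serial_two_stage(*stage1, *stage2)
print(f"combined sensitivity = {sens:.3f}")
print(f"combined specificity = {spec:.3f}")
```

With these inputs, combined specificity rises well above that of the first stage alone, at the cost of some sensitivity, which is the balance between feasibility and accuracy that the two-stage recommendation aims for.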

We recommend adopting universal national screening guidelines to provide a structured approach to dyslexia screening in schools, helping to prevent potential harms. Additionally, there is an urgent need for a robust evidence base on dyslexia screening in schools, with ongoing monitoring to quickly address any biases or disparities in screening uptake and identification.

Conclusion

Various school-based screening tools are used to identify children with dyslexia, but there has been no consensus over time regarding the best approach. The wide range of dyslexia screening tools identified in this review raises questions about whether such diversity is necessary or reflects variation in definition. Increased collaboration between researchers and front-line educators could help address these differing approaches and establish an evidence-based screening method. In the meantime, an initial screening with a highly sensitive tool, followed by more specific tests to assess detailed deficits and their impact, may provide a beneficial approach.

Author contributions

RB: Supervision, Investigation, Conceptualization, Writing – review & editing, Formal analysis, Writing – original draft, Resources, Data curation, Validation, Methodology. NF: Visualization, Data curation, Validation, Conceptualization, Methodology, Writing – review & editing, Formal analysis. FA: Conceptualization, Writing – original draft, Formal analysis, Writing – review & editing, Methodology. DN: Conceptualization, Supervision, Writing – review & editing. DH: Conceptualization, Writing – review & editing, Supervision. AL: Writing – review & editing, Conceptualization. LM: Data curation, Methodology, Formal analysis, Investigation, Writing – review & editing. S-NG: Writing – review & editing, Methodology, Data curation. KN: Writing – review & editing, Conceptualization, Methodology. HK: Writing – review & editing, Investigation. AW: Conceptualization, Writing – review & editing. SG: Data curation, Methodology, Conceptualization, Supervision, Writing – review & editing, Formal analysis, Writing – original draft.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This article presents independent research commissioned by the National Institute for Health and Care Research (NIHR) under the Applied Research Collaboration (ARC) program for Northwest London (NWL). SG is funded by the NIHR School for Public Health Research. The NIHR School for Public Health Research (grant number: NIHR 204000) is a partnership between the Universities of Bristol, Cambridge, and Sheffield; Imperial; University College London; the London School of Hygiene and Tropical Medicine; LiLaC, a collaboration between the Universities of Liverpool and Lancaster; and Fuse, the Centre for Translational Research in Public Health, a collaboration between Newcastle, Durham, Northumbria, Sunderland, and Teesside Universities.

Acknowledgments

Special thanks go to the Young Advisors (Isra, Lukas, Kaden, James, Kiera, Civan, Sirad, Krishan, Merkesha, Lottie, Kawthar) of the Young People's Advisory Group, coordinated by Listen to Act. This group sits within the NIHR ARC Outreach Alliance mental health infrastructure program. We would like to express our gratitude to the Young Advisors for their time, diligence and input to steer and inform this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

The views expressed in this publication are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2025.1654470/full#supplementary-material

Keywords: school-based screening tools, learning disability, dyslexia screening, sensitivity and specificity, systematic review

Citation: Bakhti R, Fonseka N, Amati F, Nicholls DE, Hargreaves D, Lazzarino A, McCan L, Gardner S-N, Narayan K, Kerslake H, Weston A and Gnani S (2025) A narrative review of school-based screening tools for dyslexia among students. Front. Public Health 13:1654470. doi: 10.3389/fpubh.2025.1654470

Received: 27 June 2025; Accepted: 25 September 2025; Published: 23 October 2025.

Edited by:

Angela Jocelyn Fawcett, Swansea University, United Kingdom

Reviewed by:

Pin-Ju Chen, Ming Chuan University, Taiwan

Akanksha Dani, All India Institute of Medical Sciences Nagpur, India

Copyright © 2025 Bakhti, Fonseka, Amati, Nicholls, Hargreaves, Lazzarino, McCan, Gardner, Narayan, Kerslake, Weston and Gnani. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rinad Bakhti, r.bakhti@imperial.ac.uk
