- 1Department of Mathematics, Faculty of Science, UNAM Universidad Nacional Autonoma de Mexico, Ciudad de México, Mexico

- 2Autonomous Mobile Manipulation Laboratory, Centre for Applied Autonomous Sensor Systems, Orebro University, Orebro, Sweden

- 3PRISMA Laboratory, Department of Electrical Engineering and Information Technology, University of Naples Federico II, Naples, Italy

- 4Robotics, Learning and Perception Laboratory, Centre for Autonomous Systems, EECS, KTH Royal Institute of Technology, Stockholm, Sweden

- 5School of Computer Science, University of Birmingham, Birmingham, United Kingdom

Manipulation of deformable objects has given rise to an important set of open problems in the field of robotics. Application areas include robotic surgery, household robotics, manufacturing, logistics, and agriculture, to name a few. Related research problems span modeling and estimation of an object's shape, estimation of an object's material properties, such as elasticity and plasticity, object tracking and state estimation during manipulation, and manipulation planning and control. In this survey article, we start by providing a tutorial on foundational aspects of models of shape and shape dynamics. We then use this as the basis for a review of existing work on learning and estimation of these models and on motion planning and control to achieve desired deformations. We also discuss potential future lines of work.

1. Introduction

Robotic manipulation work tends to focus on rigid objects (Bohg et al., 2014; Billard and Kragic, 2019). However, most objects manipulated by animals and humans change shape upon contact. Manipulating a deformable object presents a quite different set of challenges from those that arise when manipulating a rigid object. For example, forces applied to a rigid object simply sum to determine the external wrench and, when integrated over time, result in a sequence of rigid body transformations in SE(3). This is relatively simple dynamic model, albeit still difficult to estimate for a given object, manipulator, and set of environment contacts.

Forces applied to a deformable body, by contrast, both move the object and change its shape. The exact combination of deformation and motion depends on the precise material composition. Thus, material properties become a critical part of the system dynamics, and consequently the underlying physics of deformation is complex and hard to capture. In addition, the dynamics models typically employed in high-fidelity mechanical modeling—such as finite element models—while precise, require detailed knowledge of the material properties, which would be unavailable to a robot in the wild. Yet such a lack of detailed physics knowledge does not prevent humans and other animals from performing dexterous manipulation of deformable objects. Consider the way a New Caledonian crow shapes a tool from a branch (Weir and Kacelnik, 2006) or how a pizzaiolo dexterously transforms a ball of dough into a pizza. Clearly, robots have a long way to go to match these abilities.

Even though a great deal of work has been done on developing solutions for each of these stages (Cootes et al., 1992; Montagnat et al., 2001; Nealen et al., 2006; Moore and Molloy, 2007), only a few combinations have actually been tried in robotics to date (Nadon et al., 2018; Sanchez et al., 2018). In contrast to earlier review articles, this paper consists of both a tutorial and a review of related work, aimed at newcomers to robotic modeling and manipulation of deformable objects who need a quick introduction to the basic methods, which are adopted from various other fields and backgrounds. We provide, in a single place, a menu of possible approaches motivated by computer vision, computer graphics, physics, machine learning, and other fields, in the hope that readers can find new material and inspiration for creatively developing their work. We then review how these methods are applied in practice. In this way, we take a more holistic approach than existing reviews. Since the literature in the fields we touch on is abundant, we cannot be exhaustive and will focus mostly on manipulation of volumetric solid objects.

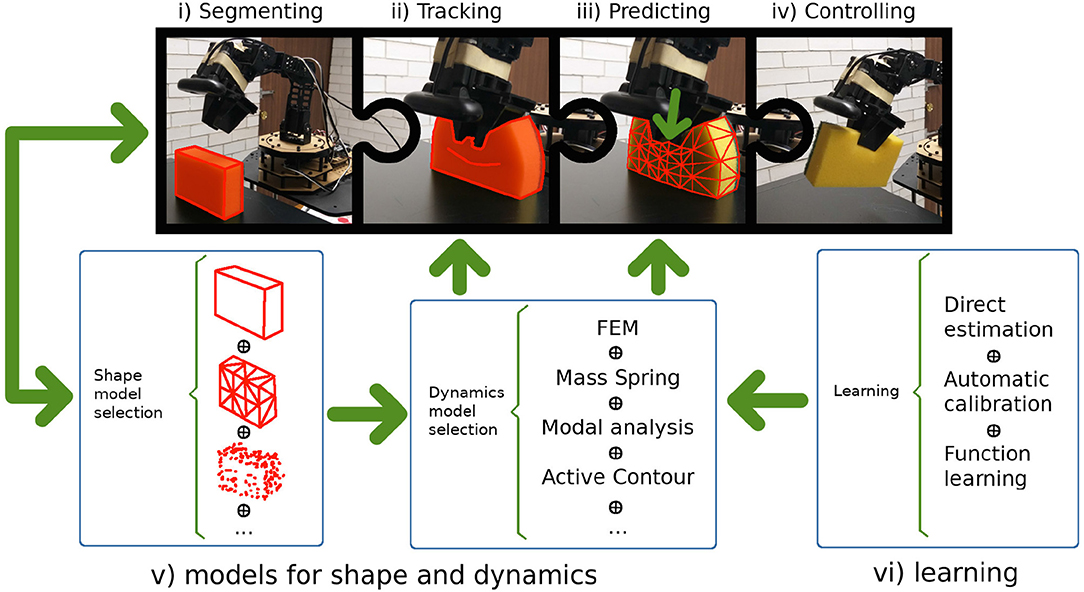

This review is motivated by a future goal in the form of an ideal scenario. In this scenario a general purpose robot would be capable of perceiving shape, dynamics, and necessary material properties (e.g., elasticity, plasticity) of deformable objects to implement manipulation strategies relying on planning and control methods. Currently no robot has all these capabilities. Therefore, we decompose the problem space into five main parts that work like pieces in a puzzle: Because representational choices are fundamental, we explain, in a tutorial style, (1) the modeling of shape (section 2) and (2) the modeling of deformation dynamics (section 3) to provide the reader with the necessary mathematical background; then we discuss, in survey form, (3) learning and estimation of the parameters of these models that are related to deformability of objects (e.g., material properties, such as elasticity, or shape properties, such as resolution of a mesh; section 4), (4) the application of the models to perception and prediction, and (5) planning and control of manipulation actions (section 5), since these topics build on the models explained in (1) and (2) and there is such a wide range of different approaches that it would be impossible to cover them all in depth. Figure 1 shows our guideline processing stream: (i) The robot perceives the object, segments it from its environment, and selects an adequate representation for its shape and intended task; the desired type of representation will determine which algorithms must be used to recognize the object. (ii) As the robot interacts with the object, it deforms the object and must register the deformation by modifying the shape representation accordingly; while doing so, it can make use of tracking/registration techniques or enhanced predictive tracking (which requires a model of the dynamics). (iii) A suitable model for the dynamics is selected to predict new configurations of the shape representation as the robot interacts with the object. (iv) Information from the previous stages is used to integrate the inputs and rules for the control strategies. Finally, all this information can be fed into learning algorithms capable of increasing the repertoire of known objects. At this stage, there are three types of strategies: (a) estimating new parameters directly (which is rarely viable); (b) calibrating known physics-based models automatically by allowing the robot to take some measurements that will help to determine the value of model parameters; and (c) approximating new functions that describe the dynamics, as is done with neural networks.

Figure 1. A general purpose robot must be capable of: (i) perceiving and segmenting the object from the scene; (ii) tracking the object's motion; (iii) predicting the object's behavior; (iv) planning and controlling new manipulation strategies based on the predictions; (v) selecting the appropriate model for each task, since all models for shape and dynamics have limitations; and (vi) learning new models for previously unknown shapes and materials.

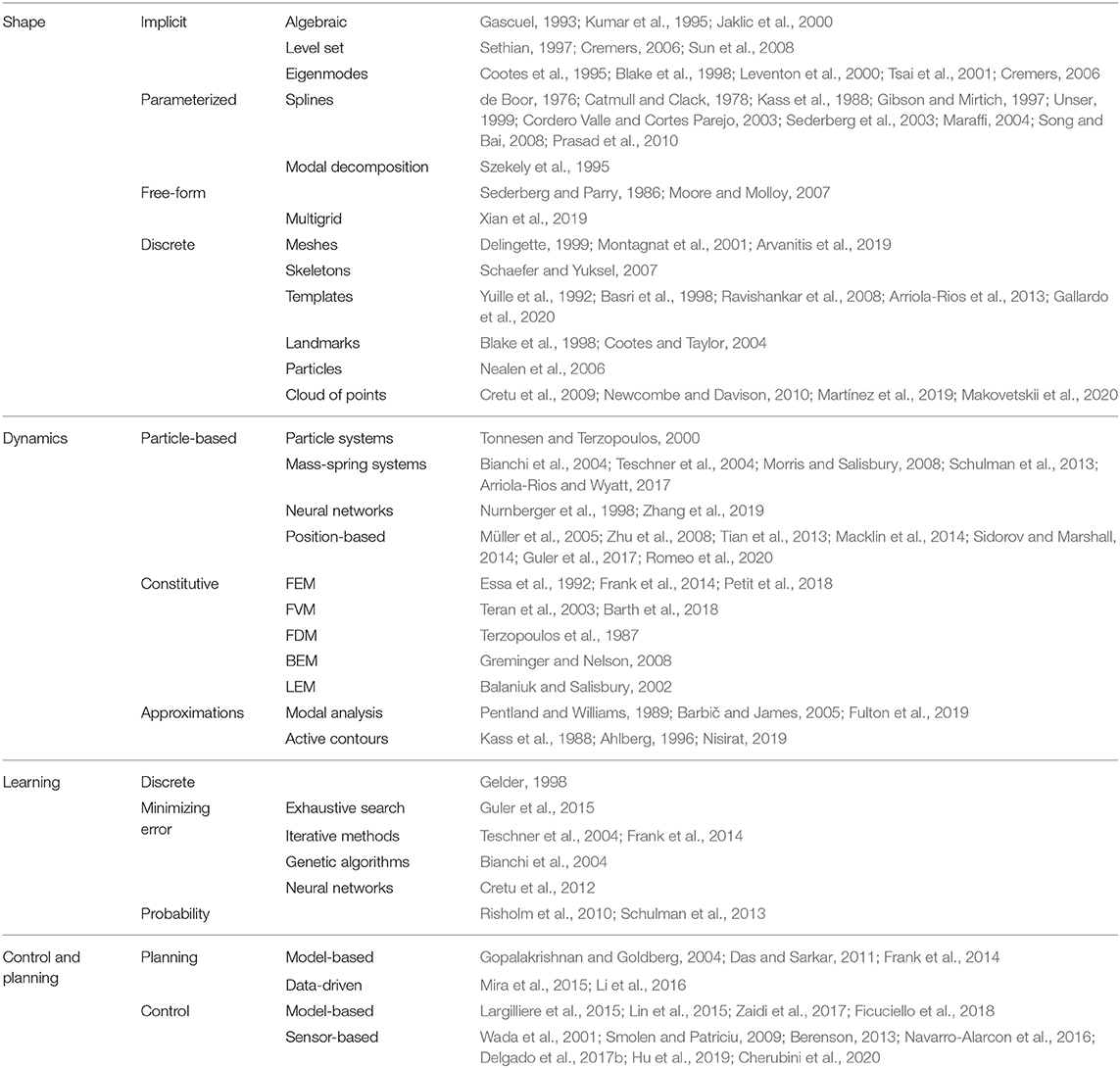

Figure 2 shows the connections between the different models covered in this review as they have been used in the publications mentioned. Table 1 gives a summary of the publications discussed in each section. The following describes the notation that will be used throughout the paper:

• Scalars: italic lower-case letters, e.g., x, y, z.

• Vectors: bold lower-case letters, e.g., p = {x, y, z}T.

• Matrices: bold upper-case letters, e.g., R.

• Scalar functions whose range is ℝ: italic lower-case letters followed by parentheses, e.g., f(·).

• Vector functions whose range is ℝn with n > 1: bold italic letters followed by parentheses, e.g., S(·).

• Sets: calligraphic letters, e.g., .

• Number sets: blackboard bold upper-case letters, e.g., ℝ, ℕ.

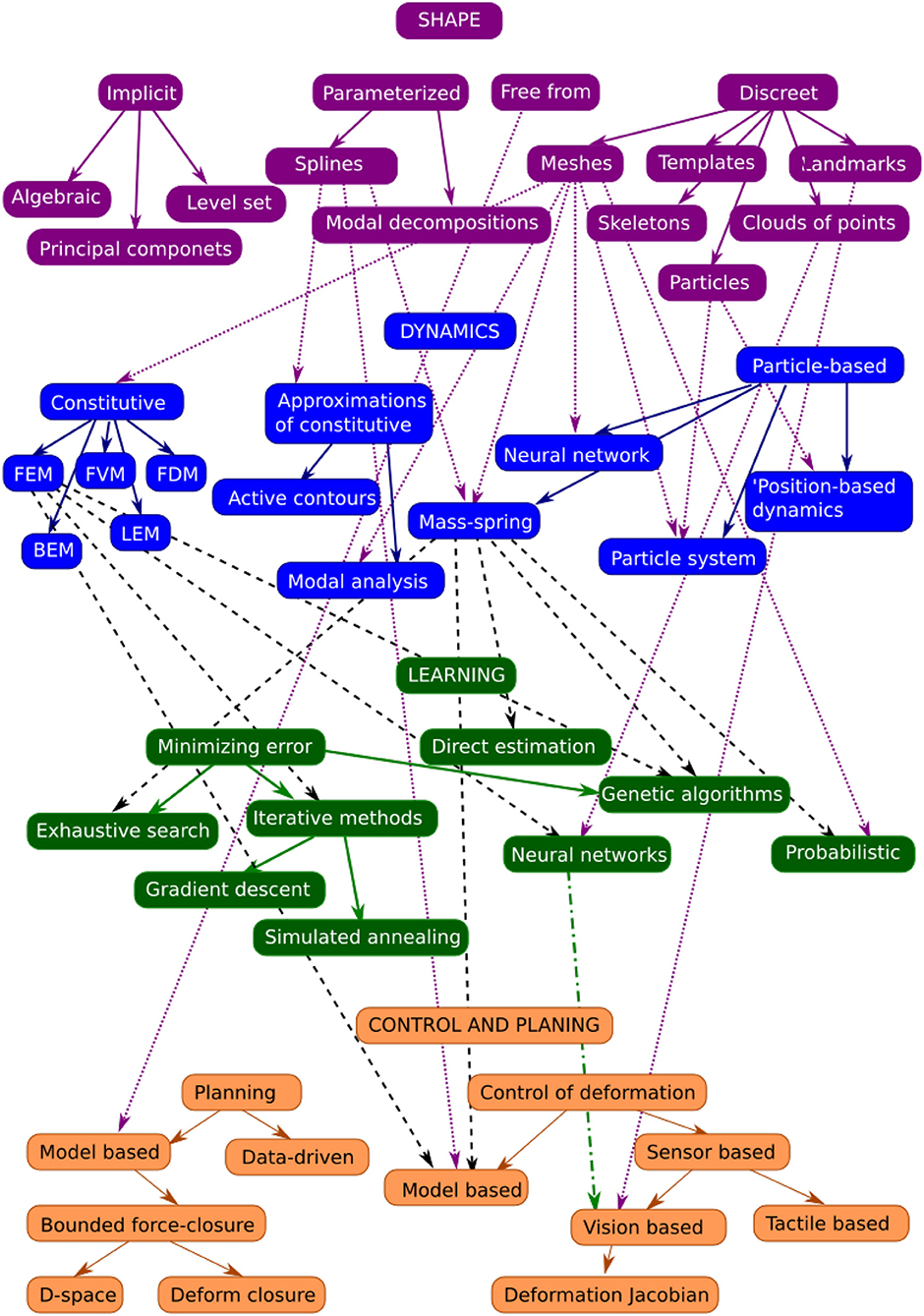

Figure 2. Relationships between shape, dynamics, learning models, and control methodologies as they have been used in the literature. Colored arrows indicate subcategories, while black arrows show when a methodology in one level has been used for the next one.

2. Representing Shape for Deformable Objects

The initial problem of manipulating a deformable object is to perceive and segment the object shape from the scene as it deforms (Figure 1i). The main difficulty with this problem is the number of degrees of freedom required to model the object shape. The expressiveness, accuracy, and flexibility of the model can ease the modeling of the dynamics or make it more difficult in different scenarios. For this reason, this section introduces a variety of models from mathematics, computer graphics, and computer vision for representation of the shape of deformable objects.

2.1. Implicit Curves and Surfaces

An implicit curve or surface of dimension n − 1 is generally defined as the zero set of a function f:ℝn → ℝ,

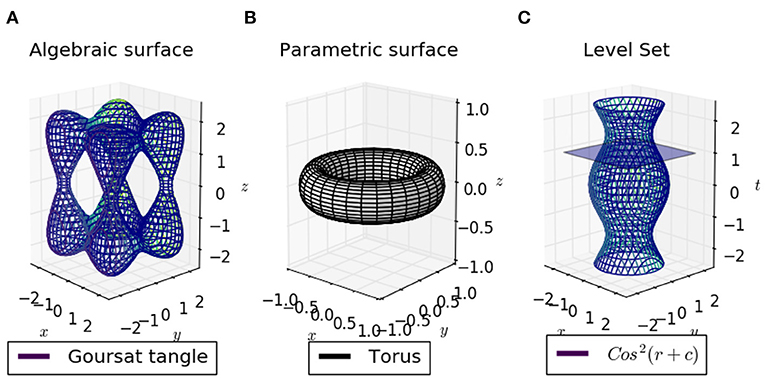

where p is a coordinate in an n-dimensional space in which the surface is embedded (Montagnat et al., 2001). Therefore, the set defines the surface formed by all points p in ℝn such that the function f, when evaluated at p, is equal to zero. The implicit function is used to locate surface points by solving the equation f (p) = 0 (Figure 3A). In robotics, n is usually 3 to represent Cartesian coordinates, but sometimes an extra dimension can be used to represent time. Representations that fall within this category are explained in the rest of this subsection.

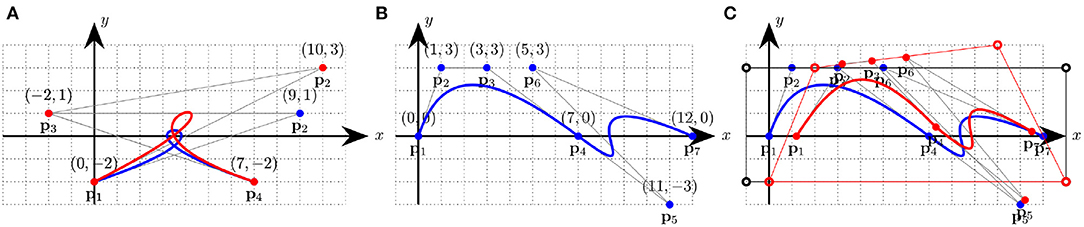

Figure 3. (A) Algebraic surfaces can represent basic and complex shapes, such as circles or the tangled cube; a limited but useful set of deformations is straightforward to define, while other deformations that are not so intuitive tend to be used in combination with skeletons and skinning techniques. (B) Parametric surfaces can represent a wider variety of shapes and their great flexibility allows deformation to be controlled with more intuitive parameters; therefore their use in connection with models of dynamics is considerable. (C) Level set curves can show the evolution of a 2D shape in time; the intersection of a time plane with the surface in 3D is a level curve, which represents the 2D contour shape of the object at a given time t. In this example cos2(r + c) is the mathematical representation of the 2D contour shape's evolution through time.

2.1.1. Algebraic Curves and Surfaces

Algebraic curves and surfaces satisfy (1) with f (p) being a polynomial. First-degree polynomials define planes and hyperplanes; second-degree polynomials define conics, which include circles, ellipses, parabolas, and hyperbolas, and quadrics, which include ellipsoids, paraboloids, hyperboloids, toroids, cones, and cylinders; their (n − 1)-dimensional extensions are surfaces in an n-dimensional space that satisfy the equation

where is a column vector, pT denotes the transpose of p (a row vector), A ∈ ℝn×n is a matrix, b ∈ ℝn is a row vector, and c is a scalar constant. Note that all the aforementioned shapes are included as particular cases of this definition. To define a particular shape, which satisfies a given set of constraints, the constant values in A, b, and c must be determined; for example, with n = 2, the constants that define a circle passing through a given set of three points can be found by solving the system of three equations where f (pi) = 0 for all i and f (pi) is a second-degree polynomial. In some contexts the same equation can be rewritten to facilitate this estimation; for example, it is easy to determine the circle centered at (xc, yc) with radius r if the second-degree polynomial is written as with p = {x, y}.

Superquadrics are defined by second-degree polynomials, while hyperquadrics are the most general form and allow the representation of complex non-symmetric shapes; they are given by the equation

where p = {x, y, z}T ∈ ℝ3, N is an arbitrary number of planes whose intersection surrounds the object, a, b, c, and d are shape parameters of these planes, and γi ∈ ℝ with γi ≥ 0 for all i. Kumar et al. (1995) presented a method for fitting hyperquadrics to very complex deformable shapes registered as range data. A model like (3) could be used as the base representation for a model of the dynamics if the deformations are small. It can be used in combination with local surface deformations to represent a wider set of surfaces. For example, if the superquadric is the set of points that satisfy the corresponding equation, a deformed model could be given by where c represents the inertial center of the superquadric, R is a rotation matrix, and d is a vectorial displacement field. Other types of deformation can be defined as well (Montagnat et al., 2001). For more information about superquadrics, see Jaklic et al. (2000).

Algebraic curves, surfaces, and volumes can be used as 1D, 2D, and 3D skeletons, or to represent objects of similar shapes and deformations (Gascuel, 1993). They are easy to deform in certain cases, but the types of deformations that are straightforward to apply are very limited, such as matrix transformations that bend, pitch, twist, stretch, or translate all the points on a curve or the space in which the curve is embedded. For this reason, algebraic curves and surfaces are more suited to representing articulated or semi-articulated objects. Objects can also be composed of several algebraic curves, where each component is easy to manipulate with this representation. To model more complex deformed shapes, Raposo and Gomes (2019) introduced products of primitive algebraic surfaces, such as spheres and cylinders, which enable both local and global deformations to be controlled more easily than in traditional algebraic shape models. Moreover, these models can be combined with skinning techniques to emulate soft deformable objects and also have parameterized representations.

2.1.2. Level Set Methods

In level set methods, the deformable model is embedded in a higher-dimensional space, where the extra dimension represents time (Sethian, 1997; Montagnat et al., 2001). A hypersurface Ψ is defined by Ψ(p, 0) = dist(p, S0), where S0 is the initial surface and dist can be the signed Euclidean distance between a point p and the surface. The distance is positive if the point lies outside the surface and negative otherwise. The evolution of the surface S is governed by the partial differential equation Ψt + |∇Ψ|F = 0 involving the function Ψ(p, t) and a speed function F, which determines the speed at which each point of the surface must be moved. Thus, the function Ψ evolves in time and the current shape corresponds to the surface given by Ψ(p, t) = 0 (Figure 3C). The function Ψ could have any form, such as a parameterized one, Ψ = Ψ(x (u, v), y (u, v), z (u, v), t), with the surface still defined in implicit form, Ψ(x (u, v), y (u, v), z (u, v), t) = 0. Unfortunately, it could also be that Ψ does not even have an algebraic expression and may need to be approximated with numerical methods. The main advantage of level set methods is that they allow changes of surface topology implicitly. The set may split into several connected components, or several distinct components may merge, while Ψ remains a function.

In computer vision applications, such as human tracking and medical imaging, level set methods have been used successfully in tracking deformable objects (Sethian, 1997; Cremers, 2006). For example, Sun et al. (2008) recursively segmented deformable objects across a sequence of frames using a low-dimensional modal representation and applied their technique to left ventricular segmentation across a cardiac cycle. The dynamics are represented using a distance level set function, whose representation is simplified using principal component analysis (PCA). Sun et al. used methods of particle-based smoothing as well as non-parametric belief propagation on a loopy graphical model capturing the temporal periodicity of the heart, with the objective being to estimate the current state of the object not only from the data observed at that instant but also from predictions based on past and future boundary estimates. Even though we did not find examples of this method being used in robotics, it seems a suitable candidate since it models the shape change through time implicitly and would thus allow the robot to keep track of the evolving shape of an object during manipulation.

2.1.3. Gaussian Principal Component Eigenmodes

This kind of representation is valid when the types of deformations can be described with a single mathematical formulation. Given a representative set of the types of surface deformation that objects can undergo, it is possible to use PCA to detect the main modes of deformation (i.e., the eigenmodes Φn) and thus re-express the shapes as a linear combination of those modes. Hence a new shape estimation can be done using

where Φn (n ≪ N) is the largest eigenmode of shape variations in SN, Sμ is the mean of the representative set of shapes SN, and α is a set of coefficients. Such an eigenmode representation is useful for dealing with missing or misleading information (e.g., noise or occlusions) coming from sensory data while constructing the shape of the object (Cootes et al., 1995; Blake et al., 1998; Cremers, 2006; Sinha et al., 2019).

Employing combinations of previously cited methods, Leventon et al. (2000) used eigenmode representation with level set curves to segment images, such as medical images of the femur and corpus callosum, by defining a probability distribution over the variances of a set of training shapes. The segmentation process embeds an initial curve as the zero level set of a higher-dimensional surface, and then evolves the surface such that the zero level set converges on the boundary of the object to be segmented. At each step of the surface evolution, the maximum a posteriori position and shape of the object in the image were estimated based on the prior shape information and the image information. The surface was then evolved globally toward the maximum a posteriori estimate and locally based on image gradients and curvature. The results were demonstrated on synthetic data and medical images, in both 2D and 3D. Tsai et al. (2001) further developed this idea.

2.2. Explicit Parameterized Representations

Explicit parameterized representations are evaluated directly from their functional definition (Figure 3B). In 3D, they are of the form C(u) = {x (u), y (u), z (u)}T for curves and S(u, v) = {x (u, v), y (u, v), z (u, v)}T for surfaces, where u and v are parameters. The curve or surface is traced out as the values of the parameters are varied. For example, if u ∈ [0, 1], the shape is traced out as u varies from 0 to 1, as happens with the circle

It is common practice to parameterize a curve by time t if it will represent a trajectory, or by arc length l1.

2.2.1. Splines

A mathematical spline S is a piecewise-defined real function, with k polynomial pieces si(u) parameterized by u ∈ [u0, uk], used to represent curves or surfaces (Cordero Valle and Cortes Parejo, 2003). The order n of the spline corresponds to the highest order of the polynomials. The values u0, u1, …, uk−1, uk where the polynomial pieces connect are called knots.

Frequently, for a spline of order n, S is required to be differentiable up to order n − 1, that is, to be Cn−1 at knots and C∞ everywhere else. However, it is also possible to reduce its differentiability to take into account discontinuities.

In general, any spline function S(u) of order n with knots u0, …, uk can be expressed as

where the coefficients pj are interpreted geometrically as the coordinates of the control points that determine the shape of the spline, and

constitute a basis for the space of all spline functions with knots u0, …, uk, called the power basis. This space of functions is a (k + (n + 1))-dimensional linear space. By using other bases, a large family of spline variations is generated (Gibson and Mirtich, 1997); the most important ones are the following.

Bezier splines have each segment being a Bezier curve given by

where each pi is a control point, the are the Bernstein polynomials of degree n, are the binomial coefficients, and u ∈ [0, 1]. The curve passes through its first and last control points, p0 and pn, and remains close to the control polygon obtained by joining all the control points, in order, with straight lines. Also, at its extremes it is tangent to the line segment defined by and . It is easy to add and remove control points from a Bezier curve, but displacing one causes the entire curve to change, which is why usually only third-degree polynomial segments are used (see Figure 4). A two-dimensional Bezier surface is obtained as the tensor product of two Bezier curves:

B-splines are more stable, since changes to the positions of control points induce only local changes around that control point, and the polynomials pass through the control points (de Boor, 1976). They are particularly suitable for 3D reconstructions. Song and Bai (2008) show how they can be used to fill holes and smooth surfaces originally captured as dense clouds of points, while producing a much more compact and manipulable representation.

Catmull-Clark surfaces approximate points lying on a mesh of arbitrary topology (Catmull and Clack, 1978).

Non-uniform rational B-splines (NURBS) are notable because they can represent circles, ellipses, spheres, and other curves which, in spite of their commonness and simplicity, cannot be represented by polynomial splines. They achieve this by introducing quotients of polynomials in the basis2.

T-splines and non-uniform rational Catmull-Clarck surfaces with T-junctions (T-NURCCs) allow for high-resolution representations of 3D deformable objects with a highly reduced number of faces and improved representations of joints between surface patches, by introducing connections with the shape of a T between edges of the shape (Sederberg et al., 2003).

Figure 4. (A) Two Bezier curves with control points; displacing one control point (e.g., p2) affects the entire curve. In this case, the line that joins the control points p1 and p2 is the tangent of the polynomial at p1; and the line that joins p3 and p4 is the tangent at p4. (B) Two enchained Bezier curves; by placing p3, p4, and p5 on a line it is possible to make the curve C1 at p4. (C) Free-form deformation of a spline; the black circles form a lattice in a new local coordinate system, the red circles show the deformed lattice, and the red spline is the result of shifting the blue spline in accordance with this deformation.

Splines are a very flexible tool for representing all sorts of deformable shapes. They are extremely useful for signal and image processing (Unser, 1999) as well as for computer animation (Maraffi, 2004) and shape reconstruction of 2D and 3D deformable objects (Song and Bai, 2008; Prasad et al., 2010). Active contours (see section 3.4.2 and Kass et al., 1988), also known as snakes, are splines governed by an energy function that introduces dynamic elements to the shape representation and are used for tasks, such as object segmentation (Marcos et al., 2018; Chen et al., 2019; Hatamizadeh et al., 2019).

The advantage of splines is that compact representations of deformable objects can be built on them in accordance with the complexity of their shape at each time. When new corners or points of high curvature appear, more control points can be added for an adequate representation, and if the shape becomes simplified these points can be removed. However, this flexibility also makes splines sensitive to noise and can lead to computation of erroneous deformations. Hence, learning the dynamics of such a representation is difficult with current learning algorithms, and a general solution remains an open problem.

2.2.2. Modal Decompositions

In modal decomposition, a curve or a surface is expressed as the sum of terms in a basis, whose elements correspond to frequency harmonics. The sum of the first modes constituting the surface gives a good rough approximation of its shape, which becomes more detailed as more modes are included (Montagnat et al., 2001). Among methods for modal decomposition, Fourier decomposition is in widespread use. A curve may be represented as a sum of sinusoidal terms and a surface as a combination of spherical harmonics which are explicitly parameterized:

where rl is a normalization factor for Y and the are constants. It is also possible to use other bases that may be more suitable for other shapes, such as surfaces homeomorphic to a sphere, torus, cylinder, or plane.

Modal decomposition has mostly been used in model-based segmentation and recognition of 2D and 3D medical images (Szekely et al., 1995). Its main advantage is that it creates a compact and easy-to-manipulate representation of objects whose shape can be described as a linear combination of a few dominant modes of deformation. The disadvantage of such methods is that it is easy for them to miss details in objects, such as small dents, because shapes are approximated by a limited number of terms.

2.3. Free-Forms

Free-form deformation is a method whereby the space in which a figure is embedded is deformed according to a set of control points of the deformation (Moore and Molloy, 2007; see Figure 4C). It can be used to deform primitives, such as planes, quadrics, parametric surface patches, or implicitly defined surfaces. The deformation can be applied either globally or locally. A local coordinate system is defined using a parallelpiped so that the coordinates inside it are p = {x1, x2, x3} with 0 < xi < 1 for all i. A set of control points pijk lie on a lattice. When they are displaced from their original positions, they define a deformation of the original space with new coordinates p′. The new position of any coordinate is interpolated by applying a transformation formula that maps p into p′. For some transformations it is enough to estimate the new coordinates of the nodes of a mesh or control points of a spline with respect to the new positions of the control points of the deformed space, and the rest of the shape will follow them, as in Sederberg and Parry (1986), where a trivariate tensor product Bernstein polynomial was proposed as the transformation function. Loosely related are multigrid representations, which also allow for local management of deformation (Xian et al., 2019).

2.4. Discrete Representations

Discrete representations contain only a finite fixed number of key elements describing them, mainly points and lines. Representations that fall into this category include the following:

Meshes are collections of vertices connected through edges that form a graph. Common shapes for their faces are triangles (triangulations), quadrilaterals, and hexagons for surfaces, and tetrahedrons for volumes. A special case consists of the simplex meshes, which have a constant vertex connectivity. This type of shape representation permits smooth deformations in a simple and efficient manner (Delingette, 1999; Montagnat et al., 2001). Therefore, meshes are used for various tasks, such as 3D object recognition (e.g., Madi et al., 2019) and simulation of the dynamics of deformable objects (see section 3.3.1) with efficient coding (Arvanitis et al., 2019).

Skeletons are made of rigid edges connected by joints that allow bending. The position and deformation of elements attached to the skeleton are defined with respect to their assigned bone. Skeletons tend to be used together with the method known as skinning, where a deformable surface is attached to the bone and softens the visual appearance of the articulated joints through interpolation techniques. By nature skeletons are designed to model articulated deformations. Schaefer and Yuksel (2007) proposed a method to automatically extract skeletons by detecting articulated deformations.

Deformable templates are parameterized representations of a shape that specify key values of salient features in an image. They deform with greater ease around these features. The features can be peaks and valleys in the image intensity, edges, and the intensity itself, as well as points of high curvature3 (sharp turns or bends; see Yuille et al., 1992; Basri et al., 1998). Deformable templates are mainly used for object recognition and object tracking (e.g., Ravishankar et al., 2008; Xia et al., 2019; Gallardo et al., 2020). They support the particular relevance of critical points in modeling deformations, which could make them key to developing a robot's ability to generate its own optimal representation of a deformable object. The use of deformable templates is explored and illustrated by experiments on natural and artificial agents in Arriola-Rios et al. (2013).

Landmark points are points that would remain stable across deformations. They can be corners, T-junctions, or points of high curvature. For example, when a rectangular sponge is pushed, its corners will still be corners after the deformation, while the point of contact with the external force will become a point of high curvature during the process and will remain as such; these are all stable points. In particular, landmark points can correspond to control points of splines (Blake et al., 1998). Methods for further processing, such as the application of deformations, can work more efficiently if they focus only (or mainly) on landmark points rather than on the whole representation (Cootes and Taylor, 2004).

Particles are idealized zero-dimensional dots. Their positions are specified as a vector function parameterized by time, P(t). They can store a set of attributes, such as mass, temperature, shape (for visualization purposes), age, lifetime, and so on. These attributes influence the dynamical behavior of the particles over time and are subject to change due to procedural stochastic processes. The particles can pass through three different phases during their lifetime: generation, dynamics, and death. However, manipulating them and maintaining constraints, such as boundaries between them can become non-trivial. For this reason, particles are used mainly to represent gases or visual effects in animations, where the interaction between them is very limited (Nealen et al., 2006).

Clouds of points are formed by large collections of coordinates that belong on a surface. They are frequently obtained from 3D scanned data and may include the color of each point. A typical problem consists in reconstructing 3D surfaces from such clouds (Newcombe and Davison, 2010; Makovetskii et al., 2020). Cretu et al. (2009) gives a comparative review of several methods for efficiently processing clouds of points and introduces the use of self-organizing neural gas networks for this purpose. Clouds of points can be structured and enriched with orientations of the 3D normals, as well as other feature descriptors for perceptual applications (Martínez et al., 2019).

3. Representing Dynamics for Deformable Objects

After an object's shape is defined as described in section 2, a suitable model of the dynamics can be used to register and predict deformations as a robot interacts with the object (Figures 1ii,iii). In this section, we introduce some of the most commonly used models from different fields (e.g., computer graphics; see Gibson and Mirtich, 1997; Nealen et al., 2006; Moore and Molloy, 2007; Bender et al., 2014) for predicting the dynamics of deformable objects. In robotics, the important features used to select an appropriate model are computational complexity (e.g., for real-time perception and manipulation), physical accuracy or visual plausibility, and simplicity or intuitiveness (i.e., the ability to implement simple cases easily and to be built on iteratively to accommodate more complex cases). Therefore, we divide the models into three classes: (1) particle-based models, which are usually computationally efficient and intuitive but physically not very accurate; (2) constitutive models, which are physically accurate but computationally complex and not very intuitive; and (3) approximations of constitutive models, which aim to decrease the computational complexity of constitutive models through approximations.

3.1. Background Knowledge of Deformation

First, we briefly review some background information about the physics and dynamics of deformation. Initially, the object is in a rest shape S0. In the discrete case it could be where N is the number of points constituting the shape of the object. Then, when an external force fext acts on the object, such as gravitational force or force applied by a manipulator, the object deforms and its points move to a new position pnew. In physics-based models, the resulting deformation is typically defined using a displacement vector field u = pnew − p0. From this displacement, the deformation can be computed through the stress σ (i.e., the force applied per area of the object shape) and the strain ϵ (i.e., the ratio of deformation to the original size of the object shape). The stress tensor σ is usually calculated for each point on the object shape using Hooke's law, σ = Eϵ, where ϵ can be calculated as with ∇u denoting the spatial derivative of the displacement field,

E is a tensor that is dependent on the real physical material properties of the object, such as Young's modulus E and Poisson's ratio υ. These properties are parameters in constitutive models (e.g., finite element models). Constitutive models describe strain-stress relationships as the response of materials (e.g., elastic or plastic) to different loads (e.g., forces applied) based on the material properties; they are commonly used to simulate deformation because of their high physical accuracy.

To simulate the dynamical behavior of deformation over time, Newton's second law of motion is employed. Let be the position of particle i at time t:

where mi, , , and vt ∈ ℝ3 are respectively the mass, external forces, acceleration, and velocity at time t, and and are first-order time derivatives of the position and velocity, respectively, which are approximated using finite differences. Then, according to these derivative approximations, in each time step Δt the points move according to a time integration scheme. The simplest such scheme is explicit Euler integration:

where and are the velocity and position of point i at time t. We remark that explicit Euler integration can cause problems, such as unrealistic deformation behavior (e.g., overshooting). There are other more stable integration schemes (e.g., implicit integration, Verlet, Runge-Kutta; see Hauth et al., 2003) that can be used.

Such a dynamical model can be represented simply as G (S0, fext, θ), where input to the model G includes the initial state of points of the object and the external forces fext; θ represents model parameters that could be related to material properties (e.g., E, υ), as in constitutive models, to simulate desired deformations. Then, within G, the deformation is computed and the points pt are iterated to time state t using an integration scheme, such as (14) and (15).

3.2. Particle-Based Models

3.2.1. Particle Systems

In a particle system, a solid object shape S is represented as a collection of N particles (see section 2.4). These particles are initially in an equilibrium position, , which can be regarded as the initial coordinates of each particle i ∈ {1, …, N} (Figure 5A). When an external force is applied, the object deforms and the particles move to new coordinates based on physics laws, in particular Newton's second law of motion (13), according to a time integration scheme, such as (14) and (15) (Figure 5B).

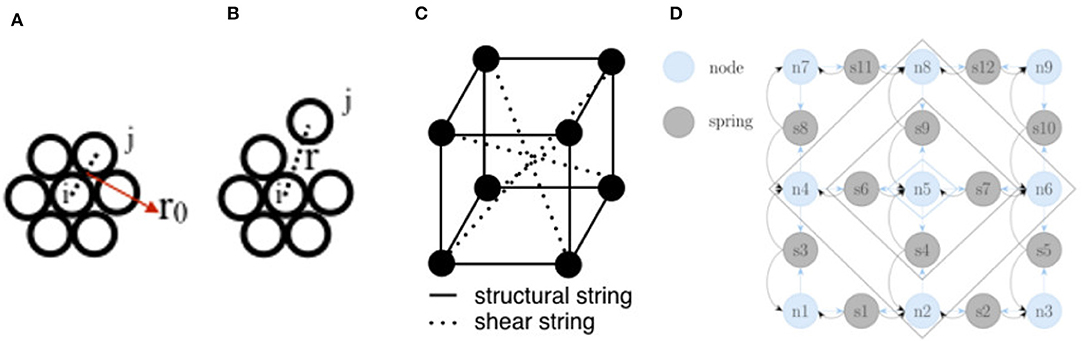

Figure 5. (A) A simple circular deformable object represented in 2D with N = 7 particles in the particle system; the particles are in static equilibrium (initial rest state). (B) When the distance r between the particles i and j increases, new forces exerted on i are calculated and the particles move. (C) A mass-spring model of a simple cubic deformable object with N = 8 particles in 3D; the particles are connected with structural and shear springs that enable the object to resist longitudinal and shear deformations. (D) In Nurnberger et al. (1998), the neurons of a recurrent neural network represent the mass nodes (light blue) and springs (gray) of a mass-spring model; the activation functions between the neurons of the network were devised to reproduce the mass-spring system equations.

Although particles are usually used to model objects, such as clouds or liquids, there are also particle frameworks for simulation of solids. These frameworks are based on so-called dynamically coupled particles that represent the volume of an object (Tonnesen and Terzopoulos, 2000). The advantage of particle systems is their simplicity, which allows simulation of a huge number of particles to represent complex scenes. A disadvantage of particle systems is that the surface is not explicitly defined. Therefore, maintaining the initial shape of the deforming object is difficult, and this can be problematic for applications, such as tracking the return of elastic objects to their original shape after deformation during robotic manipulation. Hence, for objects that are supposed to maintain a given structure, particle-based models with fixed particle couplings are more appropriate, such as models that employ meshes for shape representation.

3.2.2. Mass-Spring Systems

Mass-spring (MS) models use meshes for shape representation (see section 2.4). In such a model N particles are connected by a network of springs (Figure 5C). As in particle systems, particle motion is simulated using Newton's second law of motion (13). However, there are other forces between the connected particles, say i and j, that affect their motion, in particular the spring force , where ks is the spring's stiffness and lij is the rest length of the spring, and the damping force fd(p) = kd(vj − vi) of the spring, where kd is the damping coefficient. Then, the equation of motion (13) becomes

For the entire particle system, this can be expressed in matrix form as

where M ∈ ℝ3N×3N, D ∈ ℝ3N×3N, and K ∈ ℝ3N×3N are a diagonal mass matrix, a diagonal damping matrix, and a stiffness matrix for n = 3 dimensions. The MS system can be represented as a model GMS(S0, fext, θ), where input to GMS consists of the initial state of the mesh shape , the external forces fext, and the model parameters θ = {ks, kd}, which can be changed (tuned) to determine object deformability.

MS systems are a widely used type of physics-based model for predicting and tracking object states during robotic manipulation, since they are intuitive and computationally efficient (Schulman et al., 2013). However, the spring constants are difficult to tune according to the material properties to obtain the desired deformation behavior. One way to overcome this tuning problem is to use learning algorithms and reference solutions (Bianchi et al., 2004; Morris and Salisbury, 2008; Arriola-Rios and Wyatt, 2017). Another disadvantage of MS models is that they cannot directly simulate volumetric effects, such as volume conservation in its basic formulation. To simulate such effects, Teschner et al. (2004) introduced additional energy formulations. Also, the behavior of an MS model is affected by the directions in which the springs are placed; to deal with this issue, Bourguignon and Cani (2000) added virtual springs to compensate for this effect. In addition, Xu et al. (2018) proposed a new method by introducing extra elastic forces into the traditional MS model to integrate more complex mechanical behaviors, such as viscoelasticity, non-linearity, and incompressibility.

3.2.3. Neural Networks

Nurnberger et al. (1998) designed a method for controlling the dynamics of an MS model using a recurrent neural network (NN). Different types of neurons are used to represent the positions p, velocities v, and accelerations a of the mass points (nodes) and the springs (spring nodes) of the mesh shape S (Figure 5D). The differential equations governing the behavior of the MS system are codified in the structure of the network. The spring functions are used as activation functions for the corresponding neurons. The whole system poses the simulation as a problem of minimization of energy. The information is propagated to the neurons in stages, starting from the mass points where the applied force is greatest, and an equilibrium point must be reached to obtain the new configuration of the nodes at each time t. The training is carried out with gradient descent (backpropagation) for the NN. In addition, Zhang et al. (2019) employed a convolutional neural network (CNN) to model propagation of mechanical load using the Poisson equation rather than an MS model.

The advantage of using an NN to control deformation is the method's flexibility, such as being able to modify the network structure during simulation (e.g., by removing springs as in Nurnberger et al., 1998) and simulate large deformations efficiently (e.g., Zhang et al., 2019).

3.2.4. Position-Based Dynamics

Particle systems and MS models are force-based models where, based on given forces, the velocities and positions of particles are determined by a time integration scheme. In contrast, position-based dynamics (PBD) models compute the positions directly by applying geometrical constraints in each simulation step. PBD methods can be used for various purposes, such as simulating liquids, gases, and melting or visco-elastic objects undergoing topological changes (Bender et al., 2014). Here we focus on a special PBD method, called meshless shape matching (MSM; see Müller et al., 2005), that is used to simulate volumetric solid objects while preserving their topological shape.

In MSM, as in particle systems, an object is represented by a set of N particles without any connectivity (Figure 6A). Since there is no connectivity information between the particles, when they are disturbed by external forces (Figure 6B) they tend to adopt a configuration that does not respect the original shape p0 of the object. We call this disturbed configuration the intermediate deformed shape, , where . MSM calculates an optimal linear transformation, , between the initial shape p0 and the intermediate deformed shape that allows preservation of the original shape of the object; here and , with being the center of mass of the object (Figure 6B.1–3). Then, the linear transformation A is separated into rotational and symmetric parts: A = RS where R represents rigid behavior and S represents deformable behavior. Hence, to simulate rigid behavior, the goal (actual) position of the particles is

where t = c is the translation of the object. If the object is deformable, then S is also included and the goal position is

where β is a control parameter that determines the degree of deformation coming from the S matrix. If β = 0, (19) becomes (18). If β approaches 1, then the range of deformation increases. Subsequently, using the following integration scheme, the new position and velocity at time t are updated:

where α affects the stiffness of the model (similar to the MS model) and determines the speed of convergence of the intermediate positions to the goal positions. In simplest form this model can be represented as , where input to the model GMSM consists of the initial state S0, external forces fext, and model parameters θ = {β, α}, which can be tuned to decide the range of deformability and stiffness of the model.

Figure 6. (A) Meshless shape matching applied to a simple object consisting of N = 4 particles. (B).1 The method estimates the optimal linear transformation A that allows the particles to move to the actual deformed positions p with respect to the rest state p0, as in (C). (B).2 Then A is decomposed into a rotational (rigid) part R and a symmetric (deformation) part S. (B).3 The R transformation is used to simulate rigid motion; to simulate deformation, A and R are combined using a parameter, β.

The main advantages of PBD methods are their simplicity, computational and memory-wise (i.e., not needing a mesh model) efficiency, and scalability owing to their particle-based parallel nature. Also, they are able to calculate more visually plausible deformations than MS models. Hence, they have been used in a wide range of interactive graphical applications (Tian et al., 2013; Macklin et al., 2014), particularly for modeling the deformation of human body parts (Zhu et al., 2008; Sidorov and Marshall, 2014; Romeo et al., 2020), and robotic manipulation tasks (Caccamo et al., 2016; Guler et al., 2017). A disadvantage of PBD methods is that they simulate physical deformation less accurately than constitutive models, since they are geometrically motivated.

3.3. Constitutive Models

To simulate more physically accurate deformations, constitutive models, which incorporate real physical material properties, are used. In this subsection, we start by introducing the most commonly used constitutive models, namely finite element models, and then briefly mention other models that simplify finite element models to increase computational efficiency.

3.3.1. Finite Element Method

The finite element method (FEM) aims to approximate the true physical behavior of a deformable object by dividing its body into smaller and simpler parts called finite elements. These elements are connected through N nodes that make up an irregular grid mesh (Figure 7A). Thus, instead of particles, we work with node displacements. The mesh deformation is calculated through the displacement vector field u. For simulation, an equation of motion similar to (17) is used for an entire mesh. Usually, to decrease the computational complexity, the dynamical parts of the equation are skipped and the deformation is calculated for a static state in equilibrium (a = v = 0). Then, the relationship between a finite element e and its Ne nodes (e.g., Ne = 4 for a tetrahedron as in Figure 7A) can be expressed as

where contains the Ne nodal forces, is the displacement of an element between the actual and the deformed positions, and is the stiffness matrix of the element. The stiffness matrices of the different elements are assembled into a single matrix K ∈ ℝ3N×3N for an entire mesh with N nodes:

The matrix K relies on the nodal displacement u ∈ ℝ3 × N and the constitutive material properties (e.g., E and υ) to compute the nodal forces f ∈ ℝ3 × N of the entire mesh. Therefore, this huge matrix K should be calculated at every time step t.

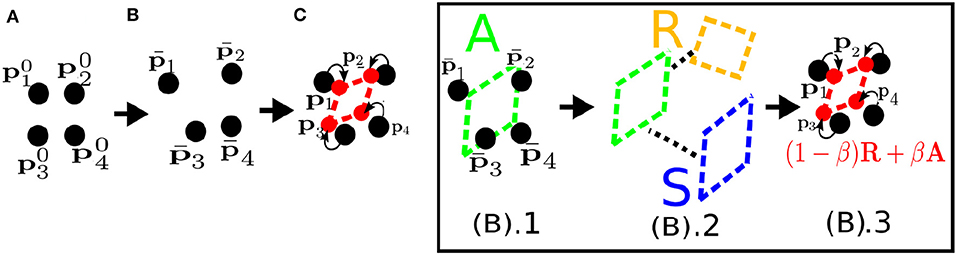

Figure 7. (A) An irregular grid as the mesh of a cubic deformed object in 3D using the finite element method (left) and an element e of the mesh with its Ne = 4 nodes (right); the arrows show the displacement fields u1 = {u1,x, u1,y, u1,z} at node i = 1, and ue is the nodal displacement vector of the element e. (B) An element with node j and forces applied on three adjacent faces in the finite volume model. (C) A regular discrete mesh to be used in calculations of the finite difference method.

The FEM can be represented as a model , which takes as input the initial state S0, external forces fext, and constitutive model parameters θ, such as E and υ. Then, within model GFEM, the new positions and velocities of the nodes are updated using a time integration scheme, such as (14) and (15).

The FEM can produce physically realistic simulations and model complex deformed configurations. Owing to these properties, FEM models have been used in many robotics applications, such as tracking (Essa et al., 1992; Petit et al., 2018; Sengupta et al., 2019) and planning manipulation around deformable objects (Frank et al., 2014). However, they can have a heavy computational burden due to re-evaluating K at each time step. This can be avoided by using linear FEM models where K in (24) stays constant4. The drawback is that this assumption limits the model to simulating only small deformations. Other methods that can decrease computational complexity, such as co-rotational FEM (Müller and Gross, 2004), can also be used.

3.3.2. Finite Volume Method

In the finite volume method (FVM), instead of calculating the nodal forces of the mesh shape S individually as in FEM, the force per unit area with respect to a certain plane orientation is calculated. This is done by using the constitutive law for the computation of the stress tensor σ (section 3.1). Then, the total force acting on face i of a finite element can be calculated using the formula

where Ai is a scalar representing the area of face i and ni is its normal vector. To calculate the nodal forces, the forces of surfaces adjacent to node j are summed and distributed evenly to each node (Teran et al., 2003; see Figure 7B):

The FVM model can be represented as , which takes as input the initial state S0, in which areas A and normals n can be calculated, the external forces fext, and the constitutive model parameters E and υ. Then, within model GFVM, the new positions p of nodes of S0 are updated at each time step t using a time integration scheme. Since this method is computationally more efficient than the FEM, it has been used in many computer graphics applications (Barth et al., 2018; Cardiff and Demirdžić, 2018). However, it restricts the types of deformation that can be simulated, such as the deformation of irregular meshes.

3.3.3. Finite Difference Method

In the finite difference method (FDM), the volume of the object is defined as a regular M × N × P discrete mesh of nodes with horizontal, vertical, and stacked inter-node spacings h1, h2, and h3, respectively (Figure 7C). The nodes are indexed as [m, n, p] where 1 ≤ m ≤ M (parallel to the x-axis), 1 ≤ n ≤ N (parallel to the y-axis), and 1 ≤ p ≤ P (parallel to the z-axis), and is the position of the node in 3D space. The object is deformed when an external force is applied. To calculate the nodal forces, a displacement vector u should be calculated using spatial derivatives. This is done by defining finite difference operators between the new node positions in the deformed mesh. For example, for pm,n,p the first-order finite difference operator along the x-axis can be defined as dx(pm,n,p) = (pm+1,n,p − pm,n,p)/h1. Using the finite difference operators, the nodal forces are calculated and the deformation of the object can be computed as in the FEM (section 3.3.1).

The FDM is one of the alternative methods suggested for decreasing the computational complexity of the FEM (Terzopoulos et al., 1987). A disadvantage of this method is that it is more difficult to approximate the boundaries of objects using a regular grid for the mesh (Nealen et al., 2006), and hence the accuracy is decreased.

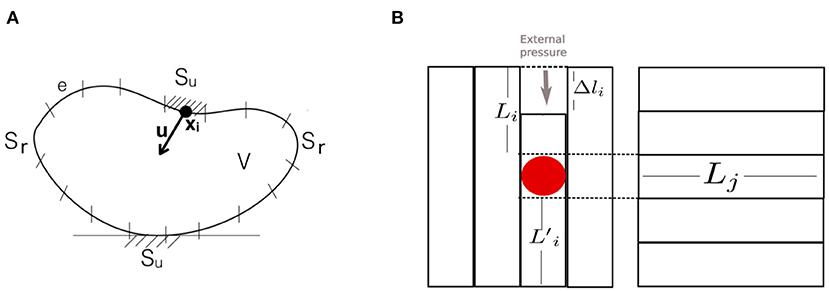

3.3.4. Boundary Element Method

The boundary element method (BEM) computes the deformation of S by calculating the equation of motion (17) over a surface rather than over a volume as in the FEM. The boundary (surface) S is discretized into a set of N non-overlapping elements (e.g., mesh elements) e, whose node coordinates pi, i = 1, …, N, are the centroids of the elements. These elements represent displacements and tractions, and Su and Sr are surface parts where the displacement and traction boundary conditions are defined, respectively.

The BEM provides a significant speedup compared to the FEM because it requires fewer nodes and elements. However, it only works for objects whose interior consists of homogeneous material. It has been used in the ArtDefo System (James and Pai, 1999) to simulate volumetric models in real-time. Also, it has been used to improve tracking accuracy against occlusions and spurious edges in (Greminger and Nelson, 2008).

3.3.5. Long Elements Method

In the long elements method (LEM), a solid object is considered to be filled with incompressible fluid as in biological tissues. The volume of object shape S is discretized into Cartesian meshes (i.e., one mesh for each axis), and each mesh contains long elements (LEs) e ∈ {1, …, Ni}, where Ni is the number of elements in the mesh shape Si discretized parallel to axis i (Figure 8B). The crossings of the LEs of the different axes define cells, each of which contains a particle. By calculating the state of these particles (e.g., position p and velocity v), the deformation of the object is simulated.

Figure 8. (A) In the boundary element method, the deformable object's shape S is discretized into a set of elements e; S is disturbed by a force that displaces the centroid node coordinates p, and the deformation is calculated based on the displacement and traction boundary conditions defined on Su and Sr, respectively. (B) A virtual object discretized as Cartesian meshes according to the long elements method. Here, for the sake of simplicity, we show a 2D object with only two axes (i and j). On the left and right figures are meshes of the object discretized with long elements Li and Lj parallel to the i and j axes, respectively. External pressure is applied at a particular point on the surface of the object, and the resulting force f on the particle (red dot) is calculated using the deformations Δli of long elements crossing the particle.

The state of each particle is calculated using the laws of fluid mechanics (e.g., Pascal's law). Then, a system of linear equations for each e that fills the object volume is created. By solving this system of equations by numerical methods, the deformation of e, Δli, is calculated. From Δli the forces occurring due to deformation are computed. Here, the LE is regarded as a spring attached to a particle with known mass. As an example, in Figure 8B, pressure is applied to an object. As a result of this pressure, a force fi acts on the particle along the ith axis and is calculated using the displacements of the crossing LEs attached to the particle:

where k is the spring constant. Therefore, an LEM model can be represented as GLEM(Si, Sj, fext, θ), which takes as input meshes of axes i and j for 2D space, external forces fext, and model parameters, such as spring constants that determine object deformability. Subsequently, the force obtained is used to calculate the velocities and positions of the particles along each axis.

The LEM was developed for modeling soft tissues, especially for surgical simulation (Balaniuk and Salisbury, 2002). It uses a smaller number of elements than tetrahedral (e.g., FEM) and cubic (e.g., FD) meshing, so the computational complexity of the model is reduced as well. It is therefore capable of interactive real-time soft tissue simulation for haptic and graphic applications, such as robotic surgery. However, it provides only an approximation of real physical deformation and so presents a trade-off between physical accuracy and computational efficiency.

3.4. Approximations of Constitutive Models

3.4.1. Modal Analysis

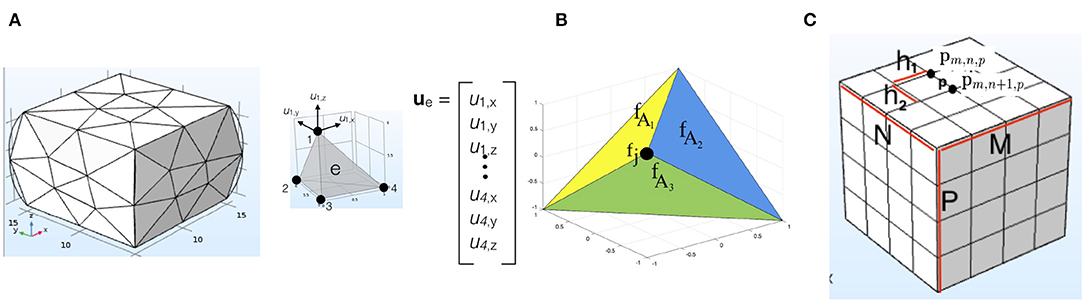

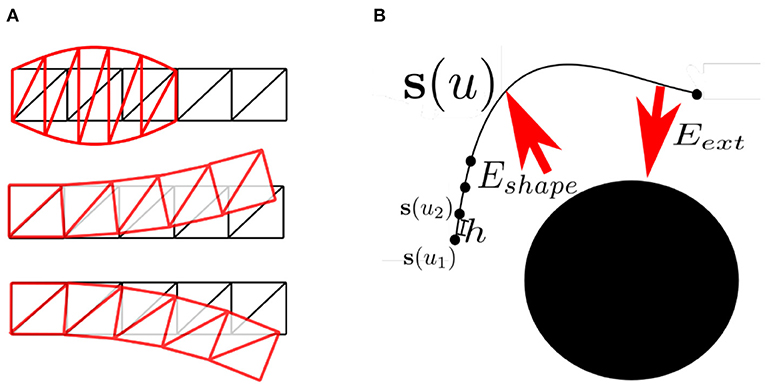

What makes constitutive models, such as the FEM expensive is calculation of the motion with large matrices M, D, and K in Equation (17); for example, with N = 20 nodal points of a mesh shape p = (x, y, z), the calculation would involve three matrices of size 60 × 60. Pentland and Williams (1989) proposed a way of reducing this computational complexity based on a method called modal analysis. Modal analysis is used for identifying an object's vibrational modes (Figure 9A) by decoupling (17). This is done by using linear algebraic formulations (Nealen et al., 2006). Below, we outline the steps of modal analysis using these formulations, while skipping the detailed derivations.

Figure 9. (A) A simple 2D rectangular object in different deformation modes in modal analysis: the upper shape is a deformation mode in response to compression, and the middle and lower shapes are deformation modes in response to bending forces from different directions. (B) Active contour S(u) controlled by external (Eext) and shape (Eshape) energies that attract or repel according to the shape of the object (e.g., in an image); as the object deforms, the position of S(u) is updated iteratively.

First, the matrices are diagonalized by solving the following eigenvalue problem (i.e., whitening transition):

where Λ and Φ are matrices containing the eigenvalues and eigenvectors of MK−1. Then, the eigenvectors of Φ are used to transform the displacement vector u:

By substituting (29) into (17) and multiplying by ΦT, the following system of equations is constructed:

where ∇M, ∇D, and ∇K are all diagonal matrices. This generates 3N independent equations of motion for the modes:

Then, (32) can be solved analytically for qi to compute the motion of each mode i ∈ {1, 2, …, N}. The matrix Φ contains a different mode shape in each of its columns (Figure 9A), i.e., Φ = [Φ1, Φ2, …, ΦN]. Hence, by analyzing the eigenvalues in Λ, high-frequency modes in Φ can be eliminated so that only the most dominant modes are updated using (32). This reduces the number of equations in (32) and hence lowers the computational cost significantly.

In modal analysis, as in constitutive models, taking K to be constant can increase the computational efficiency. However, this assumption is valid only when simulating small linear deformations and leads to errors when dynamically simulating large deformations. To overcome this problem, some methods use different formulations of the strain tensor (e.g., the Green strain as in Barbič and James, 2005) to enable simulation of larger non-linear deformations (An et al., 2008; Pan and Manocha, 2018), or adopt more data-driven approaches (e.g., by employing CNNs as in Fulton et al., 2019).

3.4.2. Active Contours

Active contour (AC) models are approximations of constitutive models, such as the FEM. They were first introduced by Kass et al. (1988) in the form of the snakes model. In their simplest form they can be described as a function of a spline shape (section 2.2.1), S(u) ∈ ℝn for u ∈ [0, 1], in an n-dimensional space, for example S(u) = {x(u), y(u)} ∈ ℝ2 in an image I:ℝ2 → ℝ (Figure 9B). This spline is fitted to the shape of an object in the image by minimizing the following energy formulation:

Here Eext depends on the contour position with respect to an attractor function f:

where in the n = 2 case the f (x, y) function could be the image intensity I (x, y), which would attract the snake to the brightest regions, or an edge detector, which would attract the snake to the edges (Moore and Molloy, 2007).

In (33), Eshape is the internal energy of the contour, which depends on the shape of the contour:

The first-order derivative |S′(u)2| controls the length of the contour, and the goal is to minimize the total length. The second-order derivative |S″(u)|2 controls the smoothness of the contour; this term enables the contour to resist stretching or bending by external forces due to f (S′(u)) and is used to regularize the contour. The weight parameters α and β determine elasticity and rigidity, respectively.

The energy Esnake can be discretized into N parts as si(u) where the ui = ih, for i ∈ {1, …, N}, are knots and :

Then, from the discretization, the derivatives in Eshape can be approximated using finite difference operators:

To find the contour that minimizes the total energy, is minimized. The resulting expression is then put into matrix form and used to update the position of the contour iteratively in time by using a time integration scheme as demonstrated in Kass et al. (1988). To represent an AC in 3D, some additional parameters are included in the shape energy formulation: the elasticity parameter β is defined along the third axis as well, and an extra parameter is added to control the resistance to twisting (Ahlberg, 1996).

AC models have been widely used, especially in medical imaging, for motion tracking and shape registration tasks (Williams and Shah, 1992; Leventon et al., 2000; Das and Banerjee, 2004), and they can also be combined with constitutive models to achieve greater physical accuracy (Luo and Nelson, 2001). The main disadvantage of AC models is their reliance on a good initialization of the snake contour near the desired shape in the image. To overcome this drawback, attractor functions other than image intensity, such as edge maps, have been proposed in recent years (e.g., Nisirat, 2019).

This concludes our tutorial-style description of models to provide some technical grounding in the basic mathematical approaches to deformable object modeling. The content of sections 4 (learning and estimation) and 5 (planning and control) builds on the models we have described. These sections are written in the form of broad surveys, as there is such a wide range of different approaches that we cannot cover them all in depth.

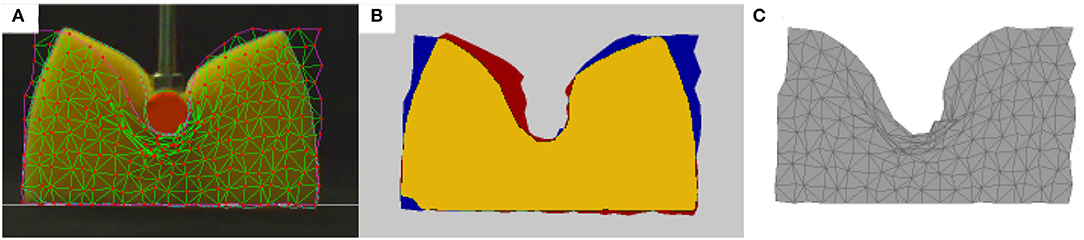

4. Learning and Estimation of Model Parameters

In the previous sections, we introduced computational models of deformable objects that have numerous applications. However, for the models to be useful, several parameters must be known beforehand (Figure 1vi), so these models should be calibrated carefully. In this section we give an overview of some representative cases of applying various learning algorithms, which can make the calibration process autonomous (Figure 10). The methods we review can be grouped into three types of strategies: (a) estimating parameters directly, which is rarely feasible (section 4.1); (b) calibrating known physics-based models, G, automatically by allowing the robot to take some measurements that will help to determine the values of model parameters, θ (section 4.2.2); and (c) approximating new functions that describe the dynamics, as is done with neural networks (section 4.2.4).

Figure 10. The general schema for learning: (A) A type of model and ground truth are selected, such as 2D images used as ground truth to calibrate a mass-spring model. (B) If the model's parameters have not been calibrated correctly, there will be a difference between the observed ground truth and the simulation; the observed ground truth is shown in red, the simulation in blue, and their intersection in yellow. (C) When the model is properly calibrated, it should be able to predict the behavior of the object if given the corresponding interaction parameters, such as external forces (e.g., contact forces of the manipulator) or geometric constraints (e.g., not crossing the floor).

4.1. Direct Estimation

For some models, it is possible to derive a formula to directly calculate the parameters. For example, Gelder (1998) obtained a formula for the parameter ks of an MS model (section 3.2.2) in a static state, where the materials are non-uniform but isotropic. An isotropic material is a material whose local deformation in response to force is independent of the direction in which the force is applied. However, in a non-uniform material the response varies with the position where the force is applied. For 3D tetrahedral meshes, the spring constant ks can be obtained from the formula

where the sum is over the volume Ve of a triangular element e of a 3D mesh shape S0 on its edge c. Young's modulus, E, is chosen empirically to give the desired amount of elasticity.

Direct estimation is a computationally efficient method. However, often it is not possible to do such calculations for models that rely on complex constitutive material laws as in the FEM.

4.2. Minimizing Error

This group of methods relies on the definition of an error function that measures the difference (e.g., Euclidean distance) between the deformation of some ground truth and the simulated virtual deformable object position , where pc is the point of contact. The ground truth can be obtained from camera observations of a real-world deformable object or from another, more reliable, simulation, usually an FEM simulation. Then, pθ ∈ Sθ is simulated with various θ values and the same interaction parameters as in the ground truth observations, such as the contact forces fext and positions of the manipulator or geometric constraints (boundary conditions) like the object not crossing the bottom surface. The objective of the learning algorithm is to find a set of parameters θ for the model G that minimizes the error function with the given interaction parameters.

Error minimization methods are usually successful at calibrating models that are difficult to tune, such as non-constitutive models (e.g., MS models) for which there is no direct link between the model parameters (e.g., ks) and the material properties (e.g., E and υ). However, they require the simulations to be run multiple times with different parameter values, which can be computationally expensive. Therefore, most of the time, offline estimation is performed (e.g., Leizea et al., 2017). Thus, these methods are not adaptable for online or for more dynamic deformable object manipulations. Also, in cases where the error function is not exactly convex, the algorithm may get stuck at a local minimum and result in deformations that are physically not very accurate or visually implausible.

4.2.1. Exhaustive Searches

Guler et al. (2015) used exhaustive search to obtain the β value for the MSM model (section 3.2.4) . This parameter determines the degree of deformability of a material. Camera images of real-world objects are used as the ground truth, with being the points detected on the surface of the object shape in the images. For β, uniform samples are taken from the interval [0, 1], and the deformed shape Sβ is simulated for these different β values with the corresponding interaction parameters (e.g., fext and pc ∈ Sβ) coming from ground truth observations. The β value that gives the minimum error between and is selected as the deformation parameter that represents the ground truth deformation in the best way:

4.2.2. Iterative Methods

Iterative methods try to decrease by moving the parameters step by step through the error function space. Techniques that help to ease the finding of the global minimum, such as gradient descent and simulated annealing, are frequently used to minimize the error of the model being calibrated.

For example, Frank et al. (2014) used gradient descent to find the E and υ values required by an FEM model (section 3.3.1) . The applied forces fext are measured with a sensor located in the robotic manipulator; pc is identified based on the collision point of the manipulator and the object in camera observations. Then, S0 is deformed according to these interaction parameters. To evaluate the simulation, 3D point clouds obtained from real objects through a depth camera are used as the ground truth and compared with the simulated FEM mesh, pE,υ ∈ SE,υ. Since the error function involves a computationally complex FEM model, it is difficult to compute the gradient directly. It is therefore approximated numerically by carrying out a sequence of deformation simulations that fix the interaction parameters in GFEM and vary E and υ. The model thus obtained is good enough to be used for the planning of motion around deformable objects.

In contrast, Morris and Salisbury (2008) used simulated annealing to automatically calibrate an MS model and a mesh shape model based on Teschner's linear, planar, and volumetric spring energies (Teschner et al., 2004). An FEM simulation is used as the ground truth . The forces fext are applied at vertices of a 3D mesh S0 that represents the object, producing deformations and resulting in a new state of static equilibrium (in which the object does not move but its shape is deformed under the influence of the forces). The objective is to reconstruct the FEM result with the MS model. To obtain the right set of parameters (e.g., ks), pools of sets of parameters are generated by randomly assigning values to the parameters of the springs. The simulations are run with these parameter sets, and those whose behavior is unstable or which do not reach static equilibrium after a certain number of steps are eliminated. For every surviving set, an error function (e.g., ) is defined, which measures the distance between the nodes in the FEM simulation and the same nodes when displaced by MS simulation after a stable static state has been reached. Simulated annealing is used to minimize this error function.

4.2.3. Genetic Algorithms

Genetic and evolutive algorithms are optimization strategies inspired by natural evolution. Combinations of elements (e.g., the spring stiffness ks of an MS model) that constitute solutions to a defined problem (e.g., calibrating the MS model) are codified as individuals in a population. A fitness or cost function, such as , is defined to evaluate the potential solution represented by each individual; may come from an FEM simulation (Bianchi et al., 2004) or from camera images of a deforming object (Arriola-Rios and Wyatt, 2017). The objective of the algorithm is to find the individuals with the highest fitness value. To accomplish this, the population is evolved by means of genetic operators, such as mutation and crossover. Mutation applies random changes to each member of the population with a certain probability (e.g., sampling new ks values from a Gaussian distribution). Crossover creates offspring by selecting genes from a pair of individuals and combining them into a new one, also with a predefined probability (e.g., combining some ks values sampled in the previous generation with newly sampled values in the current generation). Different criteria can be used to select which members of the population are passed onto each new generation. Although genetic algorithms do not guarantee convergence to the global optimum, local optima that are attained can still be good approximations.

4.2.4. Neural Network Estimations

NNs can be used to estimate the parameters of different types of models (e.g., shape models S or dynamics models G). An NN is made up of several convolutional or fully connected layers. The weights of the connections between layers enable the observable parameters (e.g., the force fext applied by the external manipulator to deform the object) to be mapped to an output (e.g., the predicted deformed positions p ∈ S of points constituting the shape of the object).

In several studies, Cretu et al. used neural gas networks to segment and track the shape of deformed objects (Cretu et al., 2010, 2012; Tawbe and Cretu, 2017). The shape of the object is represented using a mesh and neural gas, which allows a more adaptable representation (e.g., by increasing the resolution of the mesh around the manipulator, which needs a higher accuracy in the deformation computation, and decreasing the resolution in the rest of the areas for computational efficiency). An NN model is used to predict the positions of the nodal points of the mesh p with respect to the applied force fext. The NN is trained with data captured through force sensors and a depth camera. There are also works in which an NN is used with an FEM model to estimate parameters, such as E and υ and to predict the deformed positions of nodal points of the mesh of an object (e.g., Wang et al., 2018). The NN is trained offline using reference deformations with known parameters obtained from an FEM simulation and then used to predict online the deformation of real-world objects observed through a depth camera.

The advantage of such methods is that NNs provide efficient estimation similar to direct estimation, via an offline-trained NN mapping the observed input (e.g., forces applied) to an output (e.g., deformation). However, NNs require a lot of annotated training data, which may not be available or possible to obtain for every type of object that a robot might encounter.

4.3. Probabilistic Methods

In probabilistic approaches, usually one attempts to characterize a posterior probability, such as or . The posterior probability represents the position p or the displacement u of the deformed shape with the highest probability relative to the erroneous (e.g., noisy or missing) observed deformation coming from a sensor, such as a depth camera. Shape and dynamics models are used to represent and calculate p or u. To compute the posterior probability, methods, such as expectation maximization (Schulman et al., 2013) or sampling techniques, such as Markov chain Monte Carlo (Risholm et al., 2010) are used.

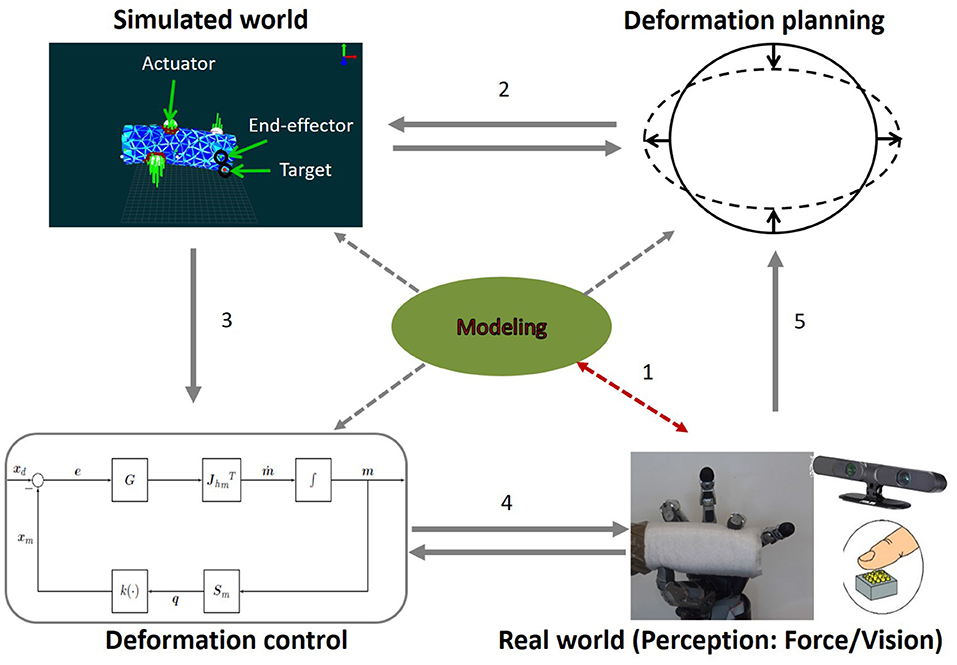

5. Manipulation Planning and Control

Accurate tracking and prediction of deformations, supported by learning models, are needed to control the actively changing shape and motion of objects during manipulation (Figure 1iv). Active control of shape and motion consists of five main modules (Figure 11). The core is (1) the modeling of deformation (i.e., shape representation, section 2) and dynamics (section 3) and the estimation of parameters (section 4), which contributes to (2) simulation, (3) planning, and (4) control, while receiving input from (5) perception. Developing a system that can perform precise information exchanges between all these modules is still an open research problem.

Figure 11. A block schema representing connections between different elements of a complex architecture for robotic manipulation of 3D deformable objects, namely modeling, perception, planning, simulation, and control. In this representation a cylindrical object and an anthropomorphic hand are taken as an example of a practical application.

Figure 11 shows an architecture for the control of deformable objects inspired by the work of Ficuciello et al. (2018), in which only some aspects of the manipulation problem are addressed. In the schema, the example of an anthropomorphic hand deforming a soft cylindrical object is considered. The manipulation is based primarily on modeling the deformation, with the model being refined and updated according to sensory perception that involves touch and vision (connection 1 in Figure 11). Then, based on the modeled shape S and dynamics G, planning consists in establishing the points/areas on S where concentrated/distributed external forces (called actuators) must be exerted by an end-effector to obtain desired (target) deformations. The choice of the points at which to exert the forces and the intensity of the forces are established during the planning phase, using G and a simulation that involves G and the object behavior, and this information is sent to the control (connection 3). Once the action is planned, the control module makes sure that the desired actions are correctly executed and that the expected deformations are achieved. To do this, it is necessary to consider a closed-loop control in which measurements of the state of the object and of the robot are used to correct errors (connection 4). Finally, based on the result of the action measured in the real world, a subsequent action can be planned (connection 5).

By taking into account this ideal architecture, in this section we survey the literature on robotic manipulation of deformable objects and organize the topic into two main categories, planning of object manipulation and control of deformation. The field of deformable object manipulation is still at an early stage of development. Therefore, as in Ficuciello et al. (2018), the works we review in the following cover only some of the modules depicted in Figures 1, 11 and do not describe a full system for complex deformation control of 3D objects.

5.1. Planning Object Manipulation

Only recently has the robotics community begun to publish results on manipulation of soft 3D objects. Most of the studies on motion planning for deformable object manipulation relate to deformable linear objects studied using mainly probabilistic roadmaps (Holleman et al., 1998; Moll and Kavraki, 2004; Saha and Isto, 2007) or deformable surgical tools and snake robots (Anshelevich et al., 2000; Bayazit et al., 2002; Teschner et al., 2004; Gayle et al., 2005). We identify two main bodies of work in the literature, namely work on deformable robots interacting with the environment and work on robots interacting with deformable environments. A few works concern planning in a complex deformable environment (Alterovitz et al., 2009; Maris et al., 2010; Patil et al., 2011).

As evidenced by the two blocks at the top of Figure 11, path planning for deformable object manipulation requires simulation of deformation, either when the deformation is the goal or when it is simply a side-effect of a planned action. In Jiménez (2012), a survey of model-based, offline planning strategies for deformable objects is presented and organized according to the type of manipulation, namely motion planning, folding/unfolding, topological modifications, and assembly. The paper is organized based on the geometric and physical characteristics of the object to be manipulated.

One of the methods adopting a model-based approach is that of Das and Sarkar (2011). In their method, three actuators are used to move the position of a point lying within the deformable object toward a goal location (target deformation). First an optimization technique, which minimizes the total force on the object to plan the location of the actuation points, is applied, and then a proportional-integral (PI) controller determines the motions of the actuators. The method uses a spline-like shape representation S (section 2.2.1) and an MS model (e.g., GMS) to simulate the dynamics of the deformable object (section 3.2.2).

Li et al. (2016) also used a model-based approach. However, rather than simulating the deformation using a dynamics model, they used a database of deformed shapes S simulated by a commercial physics simulator called Maya (Autodesk, INC., 2019). A predictive model-driven approach is used to generate a large number of instances that can be used for learning and to optimize trajectories for manipulation of deformable objects, so as to create a bridge between the simulated and real worlds. Another work that uses a database approach is Mira et al. (2015), but rather than shape models, their database contains previous grasps, which are used by their grasp planner to determine the contact points. The grasp planner was designed for flexible objects that can be grasped by exploiting their inherent flexibility. Due to similarities between the robot's hand and the human hand, the planner reproduces the action of the human hand.