- Faculty of Arts and Education, The University of Auckland, Auckland, New Zealand

Introduction: The emergence of generative artificial intelligence (AI) presents many opportunities and challenges for teaching and learning in higher education. However, compared to student- or administration-facing AI, little attention has been given to the impact of AI on faculty perspectives or on their curriculum, instruction, and assessment (CIA) practices.

Methods: To address this gap, we conducted a systematic review of articles published within the first nine months following the release of ChatGPT. After screening in accordance with the PRISMA statement guidelines, our review yielded 33 studies that met the inclusion criteria.

Results: Most of these studies (n = 17) were conducted in Asia, and simulation and modeling were the most frequently used methods (n = 15). Thematic analysis of the studies resulted in four themes about the impact of AI on the CIA triad: (a) generation of new material, (b) reduction of staff workload, (c) automation/optimization of evaluation, and (d) challenges for CIA.

Discussion: Overall, this review highlights the promising contribution of AI to higher education CIA practices as well as the potential challenges and problems it introduces. Implications for future research and practice are proposed.

Introduction

Large language models (LLMs) aim to simulate the natural language processing capabilities of human beings (Cascella et al., 2023), particularly understanding, translating, and generating texts or other content. The introduction of LLMs, such as ChatGPT and other generative artificial intelligence (AI), has created interesting possibilities and challenges for all educational systems. For instance, while AI can provide opportunities for instructors to personalize learning and give students more immediate feedback (Fauzi et al., 2023), it can also raise concerns about academic integrity and the propagation of biased or inaccurate information. Tensions over the legitimacy of AI in higher education have placed significant pressure on academics and students. Much of the extant research on AI has focused on students (e.g., Chan and Hu, 2023; Crompton and Burke, 2023) or administrators (e.g., Nagy and Molontay, 2024; Teng et al., 2023). However, how academics, in their role as educators, perceive, use, and adapt to AI tools is still under-researched, particularly given that many academics have reported insufficient AI literacy (Alexander et al., 2023).

Given that AI tools are increasingly being used in higher education with a strong potential to transform higher education teaching, learning, and assessment, it is important to systematically synthesize early empirical evidence regarding AI's impact, identify trends and patterns in the literature, and further inform AI policy, research, and practices. Therefore, this study aims to fill the gap through a systematic review driven by the overarching question: How has AI affected the teaching, curriculum design, or assessment practices of academics in higher education (HE)? Specifically, this systematic review aimed to explore what the first wave of research following the release of ChatGPT in November 2022 had focused on and found with respect to the impact of AI tools in HE. In particular, we wanted to understand how AI technologies were affecting curriculum, instruction, and assessment processes to identify pros and cons that might inform promising pathways as well as potential challenges and problems. To complement those insights, we also wanted to identify where this early research was being conducted, what methods were used by researchers, and which aspects of AI were of concern. We hope this contextual information helps readers better understand the applicability of results to their own jurisdictions or situations. By doing so, we provide an overview of how the field is handling these new technologies to change or adapt academics' work in terms of curriculum, instruction, and assessment.

The higher education curriculum-instruction-assessment (CIA) triad

All educational systems must make decisions concerning what they teach (i.e., curriculum), how they teach it (i.e., instruction), and how they evaluate student learning (i.e., assessment). Normally, curriculum decisions (e.g., what to teach and the order in which to teach it) lead to instructional decisions (e.g., how the material is to be introduced, and which methods might best help students learn it), and culminate in assessment and evaluation decisions (e.g., how many assessments of what type and when those assessments will take place). Thus, curriculum, instruction, and assessment comprise the essential triad of all educational practices (Pellegrino, 2006). Higher education systems give academics considerable autonomy over these decisions based on their higher research degrees and contribution to research outputs within their disciplines. While professional certifying bodies have some control over what must be covered, universities give academics responsibility for deciding how to organize, teach, and assess learning in their courses.

The CIA triad has been shown to be closely related to the quality of specific programs and of the college students they prepare for the future (Merchant et al., 2014; Sadler, 2016). However, HE settings are likely to shift considerably in the AI era: the curriculum might not just reflect the logic of specific disciplines but also include AI-related content; instructional practices may need to adapt to the co-existence of AI teachers; and assessment practices might include students' understanding and competencies related to AI use. In this light, understanding the benefits that AI brings to HE curriculum, instruction, and assessment could help academics make full use of the technology to reduce workloads (Holmes et al., 2023; Pereira et al., 2023) and improve productivity. Meanwhile, awareness of potential threats can help academics prepare for negative impacts on college students' engagement and learning.

Method

A systematic review of the literature was carried out by the first author in three databases: Scopus, Web of Science (WoS), and EBSCOhost. These are major research databases that vary in content coverage, disciplines, and languages (Stahlschmidt and Stephen, 2020), so they complement each other and together provide high-quality and relevant literature. To establish trustworthiness, the research team agreed on search terms and initial inclusion and exclusion criteria before the first author identified the literature. To answer the research question, search terms were trialed iteratively to retrieve relevant literature on how AI has influenced curriculum, instruction, and assessment in higher education (HE). Synonyms for “AI” (e.g., ChatGPT), “teaching” (e.g., instruction), “curriculum” (e.g., planning), or “assessment” (e.g., evaluation) were searched within the title, abstract, keywords, or anywhere in the record. Search terms were then finalized and used identically in each database: (“artificial intelligence” OR “generative artificial intelligence” OR “generative AI” OR “Gen-AI” OR “ChatGPT” OR “GPT*”) AND ((“higher education”) AND (“teaching” OR “assessment” OR “evaluation” OR “feedback” OR “curriculum” OR “instruction*” OR “lesson” OR “planning” OR “delivery” OR “implementation”)). A total of 2,810 articles were identified.
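The final search string is a conjunction of OR-groups of synonyms. As an illustration only (this is not the authors' tooling), the short Python sketch below assembles the same Boolean string from its three synonym groups, which makes the nesting easier to check and reuse. It assumes plain straight quotes; database-specific field codes and the exact behavior of the `*` truncation wildcard vary by database and are not modeled here.

```python
# Synonym groups copied from the search string reported in the Method section.
AI_TERMS = ["artificial intelligence", "generative artificial intelligence",
            "generative AI", "Gen-AI", "ChatGPT", "GPT*"]
CONTEXT_TERMS = ["higher education"]
CIA_TERMS = ["teaching", "assessment", "evaluation", "feedback", "curriculum",
             "instruction*", "lesson", "planning", "delivery", "implementation"]


def or_group(terms):
    """Quote each term and join the terms with OR inside one pair of parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query():
    """Mirror the reported nesting: (AI) AND ((context) AND (CIA terms))."""
    return (f"{or_group(AI_TERMS)} AND "
            f"({or_group(CONTEXT_TERMS)} AND {or_group(CIA_TERMS)})")


print(build_query())
```

Keeping the synonym lists as data means that a trial term can be added or dropped during iterative piloting without retyping the whole string.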

Filters were set to include only peer-reviewed journal articles published in English from December 2022 until the end of the search in August 2023. Limiting the window to these first 9 months captured the critical early phase, when educators and researchers started to publish their responses to newly released AI tools such as ChatGPT. Restricting the search to peer-reviewed journal articles helped ensure the quality of the literature in the search phases. The time frame was chosen to return the earliest possible explorations of the impact of AI, immediately following the release of a demo of ChatGPT on 30 November 2022.

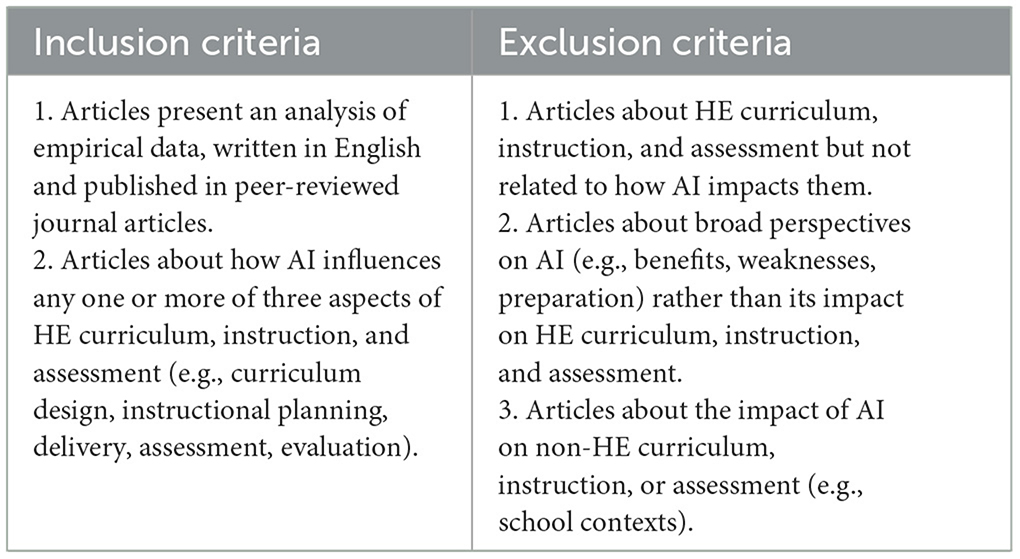

Moreover, articles in this review were limited to empirical articles on AI's impact on HE curriculum, instruction, and assessment (see Table 1). To be included, articles had to report a relationship between AI and one or more of the three aspects of HE curriculum, instruction, or assessment. Articles regarding the impact of AI on curriculum, instruction, and assessment in non-HE contexts were excluded.

Search process

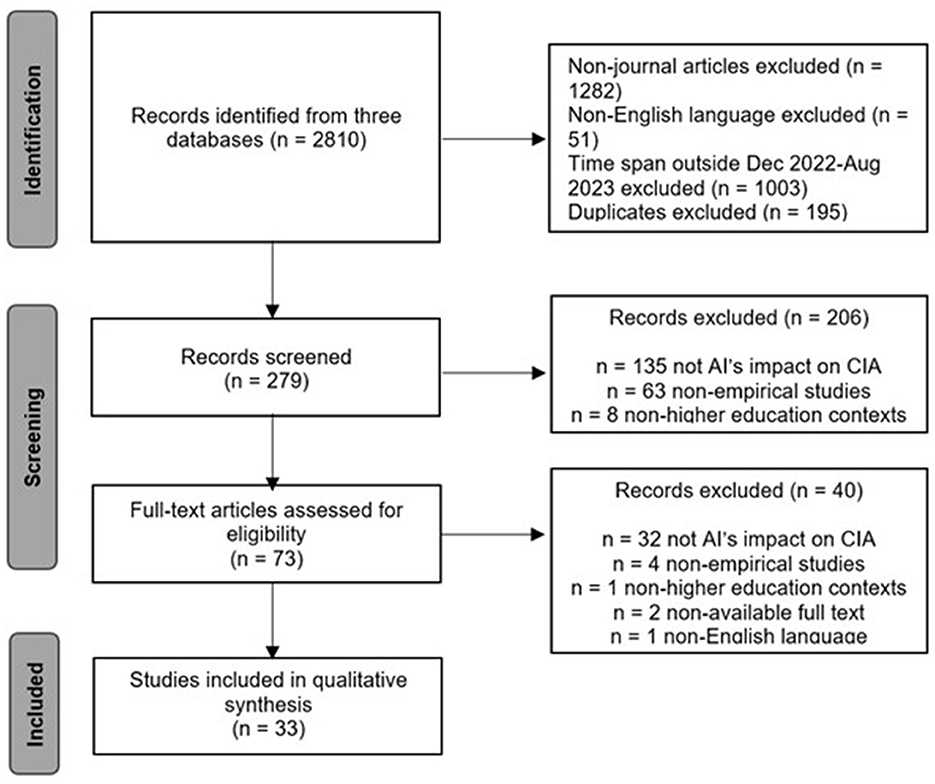

After removing duplicates, 279 records were obtained for screening following the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) guidelines (see Figure 1; Moher et al., 2009). PRISMA guidelines provide a structured framework for searching, identifying, and selecting articles, as well as extracting, analyzing, and synthesizing data to address specific research questions. These guidelines help researchers ensure the quality of the review, minimize bias, and maintain transparency and replicability (Moher et al., 2009).

Specifically, the screening process involved title and abstract screening followed by full-text screening. The titles and abstracts of these records were assessed using the agreed inclusion and exclusion criteria (see Table 1), resulting in the exclusion of 206 records. These records were excluded because their titles and abstracts showed that (a) they did not investigate how AI affected HE curriculum, instruction, and assessment (n = 135), (b) they lacked empirical evidence (n = 63), or (c) they did not focus on university contexts (n = 8).

The remaining 73 records were downloaded for full-text screening. The articles were read and evaluated against the inclusion and exclusion criteria, and those that did not meet the inclusion criteria were removed. Specifically, studies that introduced AI or HE curriculum, instruction, and assessment but did not actually explore the relationship between them were excluded (n = 32). Other articles were removed because they (a) did not have empirical evidence (n = 4), (b) were in a non-HE context (n = 1), (c) were not available as full text (n = 2), or (d) were not in English (n = 1). Consequently, a total of 33 articles were included for review.
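The screening counts reported above can be checked with simple arithmetic: 279 − (135 + 63 + 8) = 73 records reach full-text screening, and 73 − (32 + 4 + 1 + 2 + 1) = 33 studies are included. The Python sketch below encodes that PRISMA tally; the function and dictionary names are illustrative only, and the numbers are exactly those reported in this section.

```python
# Exclusion counts as reported in the Search process section.
TITLE_ABSTRACT_EXCLUSIONS = {
    "no AI-CIA relationship investigated": 135,
    "no empirical evidence": 63,
    "not a university context": 8,
}
FULL_TEXT_EXCLUSIONS = {
    "AI-CIA relationship not explored": 32,
    "no empirical evidence": 4,
    "non-HE context": 1,
    "full text unavailable": 2,
    "not in English": 1,
}


def prisma_flow(screened, title_abstract_exclusions, full_text_exclusions):
    """Return (records passed to full-text screening, studies finally included)."""
    full_text_pool = screened - sum(title_abstract_exclusions.values())
    included = full_text_pool - sum(full_text_exclusions.values())
    return full_text_pool, included


pool, included = prisma_flow(279, TITLE_ABSTRACT_EXCLUSIONS, FULL_TEXT_EXCLUSIONS)
print(f"Full-text screening: {pool}; included: {included}")
# prints "Full-text screening: 73; included: 33"
```

Recording each exclusion reason with its count, rather than only the totals, is what lets the numbers in a PRISMA flow diagram be reconciled stage by stage.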

During the screening stage, whenever either author was unsure whether a specific article should be included, its content was discussed against the research question and focus of this review. These discussions led to refinement of the inclusion and exclusion criteria and to a consensus on the included articles.

Data extraction and analysis

Due to the exploratory nature of this research, an inductive thematic analysis (Braun and Clarke, 2006) was conducted to identify key patterns of the impact of AI on HE curriculum, instruction, and assessment. The first author read the 33 articles thoroughly and extracted key information from each paper, including citations, context, sample size, data collection method, measurement, and the impact on HE curriculum, instruction, and assessment. With an eye to finding answers to the research question, meaningful segments, such as “AI tools allow educators to/provide students with…” and “the challenge is,” were used to identify descriptive codes regarding how AI influences HE curriculum, instruction, and assessment.

Twenty-five initial descriptive codes (e.g., improve teaching effectiveness, challenge the role of educators, assess teaching effect) were captured. Then, the similarities and differences between codes were iteratively compared to identify higher-level categories. For instance, codes such as “challenge instructors' AI teaching competencies,” “ethical consideration,” and “lack of support in AI teaching” were integrated into a category named “challenge existing teaching.” Based on the raw data, research questions, and conceptual framework, similar categories were further reviewed and merged into four key themes. Articles could be assigned to more than one theme when they addressed multiple themes. Please see Appendix A for complete details of themes, categories, and codes.

During the data extraction and analysis stage, the first author coded the key information from each study to address the research questions. The other authors critically read and reviewed the coding results, final synthesis, and interpretation of the themes. Any uncertainty about internal homogeneity and external heterogeneity (Patton, 2003) among codes, categories, and potential themes was discussed at regular meetings.

Results

Nature of studies

Table 2 shows the regions where the 33 studies were conducted, as well as the methods used to explore the impact of AI on HE curriculum, instruction, and assessment. Details of which papers are in each category are provided in Appendix B. Sixteen countries contributed to this field. Asia, predominantly China, accounted for 17 of the 33 studies. As Table 2 shows, the remaining 16 studies were distributed widely across the world.

Regarding research methods, 15 of the studies used modeling or simulation methods to design, implement, and test the accuracy and effect of AI tools. For instance, Shi (2023) designed a teaching mode based on a neural network model to provide students with personalized resources and assignments in moral education. This intelligent mode was then tested by simulating different teaching scenarios, and its accuracy and practical effect were confirmed. Each of the following methods was used in six or seven studies: (a) experimental designs comparing an AI intervention group with a control group, (b) surveys, and (c) interviews. For instance, Farazouli et al. (2024) conducted blinded Turing test experiments by inviting instructors to examine AI-generated texts and student-written texts, and interviewed instructors about their perceptions of the quality of the assessed texts and whether they were worried that AI had written them. A small number of studies used one of a set of diverse methods (e.g., case study, workshop, observation, discussion).

Three distinct foci of AI were examined. The most common focus, in 16 studies, was the technological dimension of AI, such as designing and modeling an AI tool for HE curriculum, instruction, and assessment and testing the accuracy of the tool itself. Computer science and engineering researchers tended to focus on these technological aspects. The human experience of AI was the focus of 10 studies, mostly in social science research; these articles examined how university teachers perceived the impact of AI on their curriculum, instruction, and assessment. The remaining seven studies highlighted how AI supported curriculum, instruction, and assessment.

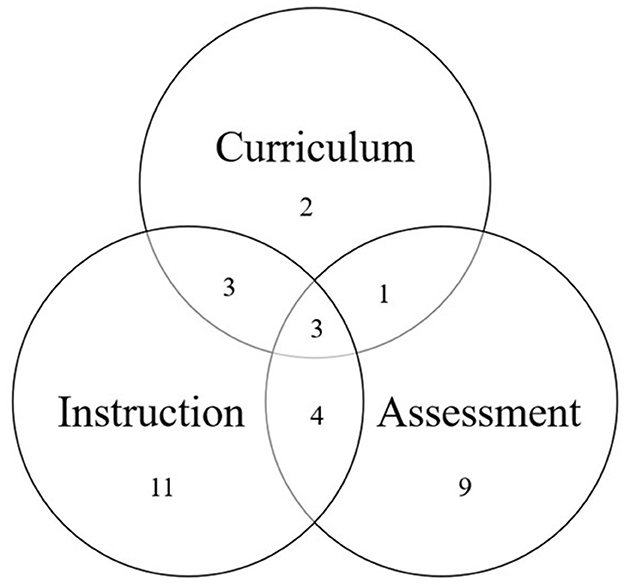

The focus of AI in higher education was classified according to the CIA triad. As shown in Figure 2, 22 of the studies addressed just one of the three aspects, mostly instruction or assessment. Only 11 studies attempted to integrate two or more of the three aspects. Of the 33 studies, taking into account all overlapping categories, 21 (64%) dealt with instruction, about half dealt with assessment (17, 52%), and about a quarter focused on curriculum (9, 27%).
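Because a study addressing several aspects is counted under each of them, the per-aspect counts (21 + 17 + 9 = 47) exceed the 33 studies, and each percentage is a share of all 33 studies rather than of the 47 codings. The Python sketch below reproduces the reported percentages from the counts in this paragraph; the names are illustrative and no data beyond those counts is assumed.

```python
TOTAL_STUDIES = 33
SINGLE_ASPECT, MULTI_ASPECT = 22, 11          # studies covering one vs. two or more aspects
ASPECT_COUNTS = {"instruction": 21, "assessment": 17, "curriculum": 9}


def share(n, total=TOTAL_STUDIES):
    """Percentage of all reviewed studies, rounded to the nearest whole number."""
    return round(100 * n / total)


# Single- and multi-aspect studies partition the review set.
assert SINGLE_ASPECT + MULTI_ASPECT == TOTAL_STUDIES

for aspect, n in ASPECT_COUNTS.items():
    print(f"{aspect}: {n} ({share(n)}%)")     # 64%, 52%, 27%

# Multi-aspect studies are counted once per aspect, so the codings exceed the studies.
print(sum(ASPECT_COUNTS.values()), ">", TOTAL_STUDIES)
```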

Thematic analysis

Based on thematic analysis of the articles (their purposes and findings), four key themes were identified: (a) generation of new material, (b) reduction of staff workload, (c) automation/optimization of evaluation, and (d) challenges for curriculum, instruction, and assessment. Although we identify specific aspects for analytical purposes, studies that mention curriculum, instruction, or assessment separately are often connected to one or more of the other aspects. For example, references to curriculum usually relate to how instruction could be delivered, while references to assessment are linked with how AI resources can be used for instruction or curriculum, and so on.

Generation of new material

Ten studies described the new material AI provides for curriculum preparation and instructional delivery. Attributes mentioned include providing various resources and generating new teaching content, building an immersive learning environment, and improving or replacing existing teaching modes with a new teaching approach (Al-Shanfari et al., 2023; Chen et al., 2023; Guo, 2023; Pisica et al., 2023; Pretorius, 2023; Shi, 2023; Wang, 2023; Yang, 2023; Li and Zhang, 2023; Zhu, 2023).

Generate new curriculum content

Two studies examined how academics perceived the influence of AI on specific subject-related curricula and teaching, one in data science and one in English translation (Chen et al., 2023; Wang, 2023). Both studies conducted focus group interviews and revealed that, at the curriculum level, AI could provide instructors and students with new, rich, and personalized materials, contributing to curriculum design and development and facilitating course preparation. According to Pisica et al. (2023), 18 academics from Romanian universities reported benefits of AI for the curriculum, including generating new content for existing courses and developing new curricula or disciplines.

Provide an immersive learning environment

AI technology, such as smart classrooms, enables the simulation of the atmosphere of a “real” classroom, practicum, or internship, in which students can better understand and practice what they have learned (Wang, 2023; Zhang et al., 2023). For instance, Wang (2023) stated that AI could make teaching content visual, allowing students to practice key communication competencies in a virtual community of practice, which improves teaching efficiency. Additionally, Zhang et al. (2023) designed and tested an intelligent classroom for English language and literature courses in China, and found that this AI tool provided the experimental group with a good learning environment and enhanced students' language proficiency.

Offer a new teaching mode

Most of these studies designed and implemented an AI tool (e.g., speech recognition, ChatGPT) in HE teaching, providing a new teaching mode with good accuracy and effectiveness (Al-Shanfari et al., 2023; Chen et al., 2023; Guo, 2023; Pisica et al., 2023; Pretorius, 2023; Shi, 2023; Yang, 2023; Zhu, 2023; Li and Zhang, 2023). Guo's (2023) study, conducted in the Chinese context, showed that a newly designed speech recognition method based on a recurrent neural network algorithm had a better accuracy rate and faster convergence, and could replace the previous method and effectively address the low speech recognition rates caused by noisy environments. In addition, two studies in multimedia teaching and moral education (Shi, 2023; Yang, 2023) conducted simulation experiments, suggesting that the new AI-powered teaching mode stimulated students' multiple senses, improved learning and teaching efficiency, and appeared to be much more effective than traditional teaching modes, which to some extent hindered students' originality and interest in learning. The simulation results also suggested that the AI-powered teaching mode had the potential to be implemented in real classrooms.

Reduction of staff workload

Ten studies have demonstrated that AI could support staff in curriculum, instruction, and assessment, by reducing their logistical workloads, especially in terms of labor related to curriculum design, interactions with students, delivering personalized instruction, and preparing adapted or personalized assignments (e.g., Holmes et al., 2023; Pereira et al., 2023; Sajja et al., 2023; Devi and Rroy, 2023).

Work as a curriculum assistant

AI could work as a virtual curriculum assistant that answers students' time-consuming and repetitive questions about the curriculum (e.g., content, time, deadlines), reduces instructors' logistical workloads, and gives them more time to improve teaching quality and support students' development (Sajja et al., 2023). For example, Sajja et al. (2023) used the syllabus and other teaching materials to design a curriculum-oriented intelligent assistant and found that this virtual TA effectively provided accurate course information and improved students' course engagement.

Additionally, AI has been shown to help instructors reflect on curriculum and content difficulty. One study investigated using an AI toolkit to collect students' assessment data and support teachers' reflections on curriculum design (Phillips et al., 2023). The study evaluated the reading demand (using skip-gram word embeddings) of passages in assessments (e.g., exams) against the demand of texts and lectures used in instruction, on the assumption that reading in an assessment should not be harder than that used in instruction. The AI tool predicted the difficulty of course materials, including recorded lectures and assessment materials, in a way similar to lecturers' self-reported material difficulty. This tool could not only help ensure the alignment of assessment reading materials with course reading materials but also provide validity evidence for the assessment materials.

Personalized instruction

Applying AI technologies can facilitate the analysis of students' learning processes, performance, and needs, providing instructors with timely feedback and helping them deliver adaptive instruction. Consequently, teaching and learning outcomes were somewhat improved (Al-Shanfari et al., 2023; Firat, 2023; Kohnke et al., 2023; Li L. et al., 2023; Li Q. et al., 2023; Pisica et al., 2023; Wang, 2023; Li and Wu, 2023). By implementing embedded glasses in real classrooms, Li L. et al. (2023) showed that this device helped instructors recognize and process students' real-time images and emotions and keep abreast of their learning status, and this information provided timely feedback that instructors used to change their teaching strategies. Compared to the control group, the teaching effect in the experimental group increased by 9.44%, and students reported greater satisfaction with teaching. Similarly, a new piano teaching mode powered by a vocal music singing learning system proved relatively successful: it not only made piano teaching more personalized and intelligent and increased teaching efficacy by 7.31% compared to the traditional teaching mode, but also motivated students to engage more in piano practice and classroom participation (Li Q. et al., 2023).

Prepare personalized assignments

A new assessment method driven by AI tools could help instructors prepare personalized assignments. Pereira et al. (2023) described how an emerging recommender system generated equivalent questions for assignments and exams, increasing the variation of assignments, supporting instructors in preparing individualized assignments, and minimizing plagiarism. They also reported that the system's accuracy was confirmed when instructors evaluated the equivalence (e.g., interchangeability, topic, and coding effort) of the AI-created questions to the questions the instructors had provided.

Automation/optimization of evaluation

Many scholars have investigated the potential of using AI in HE assessment and evaluation.

Assess students' learning process and outcomes

AI has been found to accurately assess students' learning processes and outcomes, and further to determine teaching effect (Novais et al., 2023; Saad and Tounkara, 2023; Wang et al., 2025; Zhu et al., 2023). For instance, Archibald et al. (2023) showed that an AI-enabled discussion platform accurately calculated students' curiosity scores to represent their engagement in discussion, reducing teachers' assessment workload and facilitating their intervention based on the quality of the posts students wrote. A new assessment method driven by AI tools (i.e., a backpropagation neural network) could automatically evaluate teaching, learning, and grading in an experiential online course in agriculture (Kumar et al., 2023).

Using small-sample experiments, Zhu et al. (2023) developed an AI tool in China to predict students' performance based on their classroom behavior and previous performance. They suggested that this tool could be used to adjust instructors' teaching strategies and improve teaching quality. Similarly, Tang et al. (2023) discussed how an intelligent evaluation system could better recognize voices, faces, postures, and teaching skills in microteaching skill training, accurately assess preservice teachers' teaching performance, and provide accurate guidance. Moreover, Saad and Tounkara (2023) used students' information in distance learning, including class participation frequency and quality, absence rate, contribution to online group work, and use of learning resources, to establish a preference model that helped instructors quickly recognize students at risk of dropping out and leader students who could help their peers. They found that this model assigned 85% of students to the correct clusters (i.e., at risk or leader) and assisted instructors in making correct decisions.

Besides evaluating students' cognitive outcomes, researchers have also used AI to assess students' non-cognitive outcomes (e.g., emotions, attitudes, and values). For instance, Novais et al. (2023) designed a fuzzy expert evaluation system and employed it to build profiles of students' soft skills (e.g., communication and innovation skills, management skills, and social skills). AI-generated scores were compared with actual scores, providing reliable feedback to instructors and students.

Assess teaching effect

Wang et al. (2025) combined human-computer interaction and deep learning algorithms to design an intelligent evaluation system for innovation and entrepreneurship education. The system could detect students' attitudes and behaviors and assess teachers' teaching preparation, language expression, content mastery, and teaching design. The operability of this system was further supported by assessing the teaching quality and effect of two classes; the AI results showed that both classes' teaching quality scored almost 7 out of 10, suggesting room for improvement.

Challenges for CIA

Beyond these advantages, six studies described challenges that AI poses for HE curricula, instruction, and assessment.

Challenge existing curricula

AI has been found to pose many challenges for curriculum developers and existing curricula, especially in deciding what content is most valuable, how to integrate AI into the current curriculum, and how to equip students with digital literacy. To address these questions, Lopezosa et al. (2023) interviewed 32 journalism faculty members from Spain and Latin America about how they perceived this new technology; however, no consensus on whether to integrate AI into the curriculum emerged. Although most faculty members embraced AI technology and suggested establishing AI as a standalone subject, some stated that the challenges, limitations, and uncertainties of AI in education should be thoroughly researched before incorporating it into the curriculum. Others suggested a compromise: integrating AI into communication subjects as a preliminary step (Lopezosa et al., 2023).

Challenge existing instruction

There are some concerns about using AI in HE instruction, including challenges to teachers' AI teaching competencies, ethical considerations, and lack of teaching support. Chan (2023) indicated that AI may cause overdependence on technology and weaken social connections between teachers and students. In this light, Firat (2023) indicated that implementing AI may require educators to change their role from instructors to guides or facilitators. Furthermore, based on interviews with 12 university teachers in Hong Kong, Kohnke et al. (2023) found that AI challenged participants' competencies in teaching students how to judge AI-generated text critically, use AI tools ethically, and foster digital citizenship.

Ethical concerns in instruction include incorrect or fabricated information, accessibility, and algorithmic bias (Firat, 2023). In a teaching reflection, Pretorius (2023), an educator at Monash University, described teaching postgraduate students how to use generative AI effectively by giving them examples of communicating with generative AI to brainstorm and design research questions. Her course subsequently received positive teaching feedback. However, Pretorius realized that incorrect or biased information produced by ChatGPT, as well as unequal access to AI arising from differences in socioeconomic status, required educators to shift their focus toward preparing students with the AI literacy needed to use AI professionally and ethically. Firat (2023) also mentioned over-reliance on AI, data privacy, and unequal access to AI tools as challenges.

Another concern centers on inadequate technical support and training for integrating AI into teaching. For instance, Al-Shanfari et al. (2023) conducted a mixed-methods study to understand how aware, prepared, and challenged instructors in Omani universities were in integrating intelligent tutoring systems (ITS). They found that most participants considered ITS effective in providing customized instruction; however, the lack of support and guidance in using ITS posed substantial challenges for the instructors. As one participant said, “Teaching approaches at my university are not supporting the use of ITS” (p. 956). Similarly, Chen et al. (2023) interviewed 16 faculty members in data science and revealed that inconsistent definitions of data science, inadequate team support, and a lack of collaboration platforms were major challenges.

Challenge existing assessment methods and strategies

While AI offers various opportunities for HE assessment, several challenges exist and need to be addressed. The most frequently mentioned challenge is that AI has been shown to pass many examinations and assignments. Consequently, some students may use it to cheat or plagiarize. For instance, Chan (2023) stated that new concerns in HE assessment have emerged, as most students and teachers are worried that some students use AI tools to cheat and plagiarize, and that teachers cannot reliably identify such dishonesty. Similarly, Kohnke et al. (2023) found that AI challenged the current assessment system, as instructors were worried that AI tools are so convenient that they make it easy for students to cheat rather than work independently.

Moreover, it is hard for humans or AI detectors to identify AI-generated texts or assignments, which in turn challenges existing assessment practices and strategies. A case study conducted in an Australian Master's program in Geographic Systems and Science found that ChatGPT, acting as a fictional student, effectively completed most assignments (e.g., coding; Stutz et al., 2023). Although AI detectors identified the AI-generated work, lecturers did not recognize that AI had generated the answers and gave a grade of “satisfactory.” Stutz et al. (2023) also discussed the challenge ChatGPT poses to traditional evaluation methods and called on researchers and practitioners to rethink learning objectives, content, and assessment approaches. Assessments relying on oral exams or video conferences were suggested as alternatives more resistant to AI-enabled dishonesty. In a similar study, both AI-generated and student-written texts were assessed by AI detectors and by six English as a Second Language (ESL) lecturers from Cyprus (Alexander et al., 2023). AI detectors identified AI-generated texts more effectively than humans, and AI, to some extent, challenged lecturers' previous evaluation criteria and strategies. Lecturers appeared to adopt deficit-oriented assessment strategies and characterized AI-generated texts as having fewer grammatical errors and more accurate expressions. Therefore, the authors recommended improving instructors' digital literacy and rethinking assessment policies and practices in the AI era. Similar findings were reported in Sweden, where Farazouli et al. (2024) conducted a Turing test among 24 university teachers in the humanities and social sciences. They found that teachers tended to be critical of students' texts, underestimated students' performance, and suspected that some student texts had been written by GPT. These concerns negatively affected the trust relationship between teachers and students.

Discussion

This study examined how AI influences HE curriculum, instruction, and assessment by reviewing 33 recent articles. We summarize the review using a SWOT analysis (Gurl, 2017) to provide a structured account of the strengths, weaknesses, opportunities, and threats of AI for higher education curriculum, instruction, and assessment.

Benefits of AI in higher education

The analysis of 33 recent studies provides evidence about the geographical distribution of research, research methods, research foci, and the impact of AI on the CIA triad in higher education. Our results showed that most research was conducted in Asia, Europe, or North America. Consistent with findings indicating rapid growth in Chinese research on AI in higher education (Crompton and Burke, 2023), China accounted for the largest share of studies in this review. One possible reason is that AI has been made a priority on the Chinese government's agenda (State Council of PRC, 2017) and is therefore strongly emphasized in education. This review also indicated that simulation and modeling were the most frequently used methods for assessing the potential impact of AI in the HE context (e.g., Phillips et al., 2023; Saad and Tounkara, 2023; Sajja et al., 2023; Shi, 2023). This finding might be related to research foci, as more attention has been given to testing the effectiveness of AI tools than to academics' perceptions and real-world practices with AI tools.

Several benefits were identified in this review: generating new material, reducing staff workload, and automating or optimizing evaluation (e.g., Kumar et al., 2023; Pretorius, 2023; Shi, 2023). First, this review shows that AI can create new courses and resources, promote curriculum development, take over time-consuming curriculum-related tasks (e.g., answering questions about syllabi, schedules, and deadlines), and evaluate the difficulty and quality of materials (Chen et al., 2023; Lopezosa et al., 2023; Pisica et al., 2023; Wang, 2023). These results reinforce earlier findings that AI (e.g., ChatGPT) can help generate lesson plans and course objectives (Kiryakova and Angelova, 2023; Rahman and Watanobe, 2023) and assess general resources and textbooks (Koć-Januchta et al., 2022). AI has also been found to provide an immersive learning environment and a new teaching mode, in which instructors guide students to apply trial-and-error strategies and practice specific competencies in simulated scenarios (e.g., Wang, 2023; Zhang et al., 2023). Meanwhile, AI acting as a virtual teacher could take on routine workloads (e.g., reinforcing students' mastery of key concepts), giving instructors time and energy to provide personalized instruction that meets students' distinct needs (Al-Shanfari et al., 2023; Firat, 2023; Kohnke et al., 2023). These findings are in line with previous studies showing that AI, in most cases, worked well in sharing instructors' tutoring tasks, providing students with immediate and individualized feedback, and reducing instructors' workload (Chou et al., 2011; Zawacki-Richter et al., 2019).

Additionally, AI seems to benefit assessment by generating personalized assignments (Pereira et al., 2023), assessing and predicting students' academic achievement (Wang et al., 2025) and non-cognitive outcomes (e.g., soft skills; Novais et al., 2023), identifying disadvantaged students (Saad and Tounkara, 2023), and assessing teaching effectiveness (Wang et al., 2025). This evidence is consistent with prior work indicating that AI-empowered assessment can effectively evaluate both students' learning and teachers' teaching (Hooda et al., 2022; Zawacki-Richter et al., 2019).

Thus, AI has been found to bring benefits to HE curriculum, instruction, and assessment, including generating new materials, alleviating faculty workloads, and automating or optimizing assessment, in line with earlier literature (Chou et al., 2011; Rahman and Watanobe, 2023). These findings pave the way for future studies to establish whether these promising early results generalize and to identify the conditions under which the benefits actually occur. The benefits identified here suggest directions for HE policy, provided that academics are given appropriate infrastructure and training.

Weaknesses in the research

This early research, however, is potentially problematic because of its narrowness. Specifically, many regions, especially developing countries, are poorly represented. The currently available research has been conducted largely in Western, Educated, Industrialized, Rich, and Democratic (WEIRD; Henrich et al., 2010) societies. This biases what we can know, because participants from other regions of the world are excluded. To the degree that cultural, historical, and developmental factors shape the practice of higher education, more work with such populations is needed. Such research would enhance our understanding of how academics perceive the threats and opportunities of AI.

Another gap in the literature is the scarcity of research into real-world higher education practice, including classroom pedagogical activities, course development, and assessment design. Comparatively few studies have focused on the human experience of using AI, especially in classrooms (e.g., Al-Shanfari et al., 2023; Archibald et al., 2023; Farazouli et al., 2024). Related to this is the lack of cross-disciplinary collaborative research between computer scientists and social scientists. If AI tools are meant to make a difference to classroom teaching, learning, and evaluation, researchers from different backgrounds will need to collaborate in exploring how AI technology could be used in educational practice.

Based on this review, future research will need to explore the following questions:

• How does AI influence the teaching, curriculum design, or assessment practices of academics in higher education in Global South contexts? How does this differ from research conducted in the Global North? How can AI tools, policies, and practices become more culturally sensitive based on this comparison?

• What are the best practices of academics in teaching students to use AI ethically and responsibly?

Opportunities of AI in higher education

The presence of AI seems to create opportunities for academics to revise existing courses and to free up time to focus on improving the quality of curriculum, instruction, and assessment. These opportunities point to the development of interdisciplinary courses with the help of AI, especially in terms of course content and assessment design. One way to implement interdisciplinary approaches would be to integrate the ethical considerations of using or relying on AI into philosophy or research methods courses. Another way is to use AI to bridge the intersections of different disciplines (e.g., Arts-Arts, Science-Science, and Arts-Sciences intersections). An example at the Science-Science intersection could be using AI to predict how air pollution (environmental science) affects health outcomes (healthcare).

Given the benefits AI brings to academics' instruction by providing an immersive learning environment and a new teaching mode, it may be feasible to establish a collaborative teaching system in which virtual teachers (i.e., AI) share intensive and repetitive teaching workloads (e.g., immediate feedback, knowledge reinforcement), while human teachers attend to students' personal, emotional, and developmental needs and conduct one-to-one adaptive instruction. For instance, AI teachers could automatically grade work and continuously offer students targeted practice, thereby providing adaptive support to teachers. Consequently, developing AI-empowered student and teacher assessment models could be an important direction for research and practice.

Additionally, we propose that student-facing AI assessment models could be implemented in three steps. Before class, AI can be used to diagnose students' prior knowledge and help instructors better understand students' learning preferences, motivations, and needs. During class, AI techniques (e.g., speech recognition, facial recognition) can be combined to collect data on students' facial expressions, emotions, gestures, classroom dialogue, and so on, and to promptly analyze their learning engagement, behaviors, strategies, and difficulties. This information can alert instructors to students in need, suggest possible changes in teaching strategies, and indicate early where to intervene. After class, AI, working as a teaching assistant, could provide students with targeted assignments, facilitate individualized learning, and predict future performance from current performance. Similarly, data on instructors' work (e.g., lesson preparation and teaching) could be compiled into a digital profile for each instructor, informing assessments of their teaching performance, abilities, and professional development needs, and in turn informing faculty professional development programs. Nevertheless, caution is needed when relying on AI-generated assessment results, as some indicators (e.g., instructors' professional ethics) cannot be assessed effectively by AI or, depending on its programming, could even be overlooked. Therefore, AI-generated and human-based assessments need to be combined in ways that respect human values and educational principles. Students' unsanctioned use of AI within assessment processes will also require higher education institutions to find valid ways of implementing or managing AI.

Threats AI brings to higher education

One important threat AI poses to education is the expectation that all teaching and learning take place in an ICT environment, which could be seen as antithetical to the human dimension of learning (Brown, 2020). While AI appears able to do many things, it is ultimately programming and thus not human.

The literature reviewed here makes clear that AI poses substantial challenges to curriculum, instruction, and assessment. Despite the importance of curriculum, this review found less research on AI's integration into HE curriculum than on the other two aspects of the CIA triad. Regarding existing curricula, there is considerable debate about what students need to be taught about or with AI and how it could be integrated (Lopezosa et al., 2023). AI raises the possibility that skill with large language models (e.g., to analyze data or to compose communication) is what students might need in the future. Considerable enthusiasm exists for integrating AI skills with other graduate attributes such as the 4C skills (i.e., communication, collaboration, critical thinking, and creativity). This extends the long-standing argument advanced by technologists that the best way to prepare future citizens and workers is to ensure they develop generic competencies rather than discipline-specific knowledge and ability (Chickering and Ehrmann, 1996; Cuban, 2001). Consequently, faculty members need to consider the intersection of disciplinary structure with AI's affordances and constraints when integrating contemporary capabilities with long-standing traditions of knowledge.

AI also threatens instructors' roles and teaching abilities. Most academics have little understanding of how AI tools are designed and what large language models can do. Thus, few have thought constructively about how to integrate AI into their teaching. The question is how AI tools, with their capacity to translate text, analyze it, and compose fluent but potentially meaningless text, can or should be integrated into diverse fields such as engineering, medicine, studio art, and laboratory science. Application within the humanities may be more feasible given the current capacities of GenAI, but academics still have to learn how AI can serve as an adjunct to teaching rather than as a potential substitute for the instructor's knowledge and skill. Technologists' enthusiasm for using machines to replace human labor (Brown, 2020) is clearly a threat to keeping a human in the loop. This is all the more important because current AI cannot identify fabrications or errors in the text it assembles.

The most important challenge centers on the assessment and evaluation of learning. Because students have free access to powerful AI language models, it is difficult to ensure that the work they submit is their own genuine intellectual contribution. The fear and possibility of undetectable academic dishonesty will require substantial effort to ensure the integrity and social warrant (Brown, 2022) of course grades and academic qualifications. One possible response to generative AI capabilities is to impose invigilated in-person examinations without access to digital resources and without bring-your-own-device arrangements. Another way to ensure the integrity of evaluation is to require students to take an oral examination of their learning, a solution with a large impact on workloads, efficiency, validity of sampling, and accuracy of scoring. Generative AI will clearly force academics to rethink the purpose of assessment (e.g., student-centered or knowledge-based learning), the content and format of what is assessed, the design of assessments (e.g., process evaluation, outcome evaluation, or value-added evaluation), and the formative use of assessed performances.

Although curriculum, instruction, and assessment are interactive and integrated processes, there is little research on AI's impact at their intersection. Indeed, only three papers attempted to address all three legs of the CIA triad. Future research will need to examine AI's impact on the triad as an integrated whole rather than studying each aspect in isolation.

Limitations

Although this review searched three major education databases to minimize selection bias, the included articles were all published in English rather than in other languages, such as Chinese and Spanish. Therefore, these findings should be generalized to non-English contexts with caution. Considering that Asia accounted for a large number of studies and that a growing number of studies were conducted in South America and the Middle East, multilingual or culturally responsive studies should be conducted in the future. More importantly, this review was limited to the first 9 months following the release of ChatGPT on 30 November 2022; hence, it is very much a preliminary exploration of how AI has impacted higher education. Because AI systems are being developed and changed so quickly, new research is being published constantly. Hence, the findings presented in this review have probably already been superseded.

Conclusion

This review contributes to a better understanding of the benefits and threats of AI that recent research has identified in the higher education context. It also identifies challenging opportunities for higher education institutions and faculty members. This paper offers a first step toward understanding the impact of AI on the CIA triad in higher education. While the future remains uncertain, several of the trends found in this study are likely to continue for some time. In particular, it seems very likely that China will continue to lead in research output and that studies using simulation/modeling will remain the most common, perhaps because they are relatively easy to conduct. It is also likely that meaningfully integrating AI into curriculum, instruction, and assessment will remain difficult for years to come.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

JL: Conceptualization, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. JS: Conceptualization, Writing – review & editing. GB: Supervision, Writing – review & editing, Funding acquisition.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The authors would like to acknowledge financial assistance from the University of Auckland Open Access Support Fund.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1522841/full#supplementary-material

References

*Alexander, K., Savvidou, C., and Alexander, C. (2023). Who wrote this essay? Detecting AI-generated writing in second language education in higher education. Teach. Engl. Technol. 23, 25–43. doi: 10.56297/BUKA4060/XHLD5365

*Al-Shanfari, L., Abdullah, S., Fstnassi, T., and Al-Kharusi, S. (2023). Instructors' perceptions of intelligent tutoring systems and their implications for studying computer programming in Omani higher education institutions. Int. J. Membr. Sci. Technol. 10, 947–967. doi: 10.15379/ijmst.v10i2.1395

*Archibald, A., Hudson, C., Heap, T., Thompson, R. R., Lin, L., DeMeritt, J., et al. (2023). A validation of AI-enabled discussion platform metrics and relationships to student efforts. TechTrends 67, 285–293. doi: 10.1007/s11528-022-00825-7

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Brown, G. T. L. (2020). Schooling beyond Covid-19: an unevenly distributed future. Front. Educ. 5:82. doi: 10.3389/feduc.2020.00082

Brown, G. T. L. (2022). The past, present and future of educational assessment: a transdisciplinary perspective. Front. Educ. 7:1060633. doi: 10.3389/feduc.2022.1060633

Cascella, M., Montomoli, J., Bellini, V., and Bignami, E. (2023). Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J. Med. Syst. 47, 1–5. doi: 10.1007/s10916-023-01925-4

*Chan, C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. Higher Educ. 20. doi: 10.1186/s41239-023-00408-3

Chan, C. K. Y., and Hu, W. (2023). Students' voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. Higher Educ. 20:43. doi: 10.1186/s41239-023-00411-8

*Chen, H., Wang, Y., Li, Y., Lee, Y., Petri, A., and Cha, T. (2023). Computer science and non-computer science faculty members' perception on teaching data science via an experiential learning platform. Educ. Inf. Technol. 28, 4093–4108. doi: 10.1007/s10639-022-11326-8

Chickering, A. W., and Ehrmann, S. C. (1996). Implementing the seven principles: technology as lever. AAHE Bull. 49, 3–6.

Chou, C.-Y., Huang, B.-H., and Lin, C.-J. (2011). Complementary machine intelligence and human intelligence in virtual teaching assistant for tutoring program tracing. Comput. Educ. 57, 2303–2312. doi: 10.1016/j.compedu.2011.06.005

Crompton, H., and Burke, D. (2023). Artificial intelligence in higher education: the state of the field. Int. J. Educ. Technol. Higher Educ. 20:22. doi: 10.1186/s41239-023-00392-8

Cuban, L. (2001). Oversold and Underused: Computers in the Classroom. Cambridge, MA: Harvard University Press.

*Devi, D., and Rroy, A. D. (2023). Role of artificial intelligence (AI) in sustainable education of higher education institutions in Guwahati City: teacher's perception. Int. Manage. Rev. 111–116.

*Farazouli, A., Cerratto-Pargman, T., Bolander-Laksov, K., and McGrath, C. (2024). Hello GPT! Goodbye home examination? An exploratory study of AI chatbots impact on university teachers' assessment practices. Assess. Eval. Higher Educ. 49, 363–375. doi: 10.1080/02602938.2023.2241676

Fauzi, F., Tuhuteru, L., Sampe, F., Ausat, A., and Hatta, H. (2023). Analysing the role of ChatGPT in improving student productivity in higher education. J. Educ. 5, 14886–14891. doi: 10.31004/joe.v5i4.2563

*Firat, M. (2023). What ChatGPT means for universities: perceptions of scholars and students. J. Appl. Learn. Teach. 6, 57–63. doi: 10.37074/jalt.2023.6.1.22

*Guo, J. (2023). Innovative application of sensor combined with speech recognition technology in college English education in the context of artificial intelligence. J. Sens. 2023:9281914. doi: 10.1155/2023/9281914

Henrich, J., Heine, S. J., and Norenzayan, A. (2010). The weirdest people in the world? Behav. Brain Sci. 33, 61–135. doi: 10.1017/S0140525X0999152X

*Holmes, W., Iniesto, F., Anastopoulou, S., and Boticario, J. G. (2023). Stakeholder perspectives on the ethics of AI in distance-based higher education. Int. Rev. Res. Open Distance Learn. 24, 96–117. doi: 10.19173/irrodl.v24i2.6089

Hooda, M., Rana, C., Dahiya, O., Rizwan, A., and Hossain, M. S. (2022). Artificial intelligence for assessment and feedback to enhance student success in higher education. Math. Probl. Eng. 2022, 1–19. doi: 10.1155/2022/5215722

Kiryakova, G., and Angelova, N. (2023). ChatGPT—a challenging tool for the university professors in their teaching practice. Educ. Sci. 13:1056. doi: 10.3390/educsci13101056

Koć-Januchta, M. M., Schönborn, K. J., Roehrig, C., Chaudhri, V. K., Tibell, L. A., and Heller, H. C. (2022). “Connecting concepts helps put main ideas together”: cognitive load and usability in learning biology with an AI-enriched textbook. Int. J. Educ. Technol. Higher Educ. 19:11. doi: 10.1186/s41239-021-00317-3

*Kohnke, L., Moorhouse, B. L., and Zou, D. (2023). Exploring generative artificial intelligence preparedness among university language instructors: a case study. Comput. Educ. Artif. Intell. 5:100156. doi: 10.1016/j.caeai.2023.100156

*Kumar, M. G. V., Veena, N., Cepová, L., Raja, M. A. M., Balaram, A., and Elangovan, M. (2023). Evaluation of the quality of practical teaching of agricultural higher vocational courses based on BP neural network. Appl. Sci. 13:1180. doi: 10.3390/app13021180

*Li, F., and Zhang, X. (2023). Artificial intelligence facial recognition and voice anomaly detection in the application of English MOOC teaching system. Soft Comput. 27, 6855–6867. doi: 10.1007/s00500-023-08119-7

*Li, L., Chen, C. P., Wang, L., Liang, K., and Bao, W. (2023). Exploring artificial intelligence in smart education: real-time classroom behavior analysis with embedded devices. Sustainability 15:7940. doi: 10.3390/su15107940

*Li, Q., Liu, H., and Zhao, X. (2023). IoT networks-aided perception vocal music singing learning system and piano teaching with edge computing. Mob. Inf. Syst. 2023, 1–9. doi: 10.1155/2023/2074890

*Li, Y., and Wu, F. (2023). Design and application research of embedded voice teaching system based on cloud computing. Wireless Commun. Mob. Comput. 2023, 1–10. doi: 10.1155/2023/7873715

*Lopezosa, C., Codina, L., Pont-Sorribes, C., and Vállez, M. (2023). Use of generative artificial intelligence in the training of journalists: challenges, uses and training proposal. El Profes. Inf. 32, 1–12. doi: 10.3145/epi.2023.jul.08

Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., and Davis, T. J. (2014). Effectiveness of virtual reality-based instruction on students' learning outcomes in K-12 and higher education: a meta-analysis. Comput. Educ. 70, 29–40. doi: 10.1016/j.compedu.2013.07.033

Moher, D., Liberati, A., Tetzlaff, J., and Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6:e1000097. doi: 10.1371/journal.pmed.1000097

Nagy, M., and Molontay, R. (2024). Interpretable dropout prediction: towards XAI-based personalized intervention. Int. J. Artif. Intell. Educ. 34, 274–300.

*Novais, A. S. d., Matelli, J. A., and Silva, M. B. (2023). Fuzzy soft skills assessment through active learning sessions. Int. J. Artif. Intell. Educ. 34, 416–451. doi: 10.1007/s40593-023-00332-7

Pellegrino, J. W. (2006). Rethinking and Redesigning Curriculum, Instruction and Assessment: What Contemporary Research and Theory Suggests. Chicago, IL: Commission on the Skills of the American Workforce, 1–15.

*Pereira, F. D., Rodrigues, L., Henklain, M. H. O., Freitas, H., Oliveira, D. F., Cristea, A. I., et al. (2023). Toward human–AI collaboration: a recommender system to support CS1 instructors to select problems for assignments and exams. IEEE Trans. Learn. Technol. 16, 457–472. doi: 10.1109/TLT.2022.3224121

*Phillips, T. M., Saleh, A., and Ozogul, G. (2023). An AI toolkit to support teacher reflection. Int. J. Artif. Intell. Educ. 33, 635–658. doi: 10.1007/s40593-022-00295-1

*Pisica, A. I., Edu, T., Zaharia, R. M., and Zaharia, R. (2023). Implementing artificial intelligence in higher education: pros and cons from the perspectives of academics. Societies 13:118. doi: 10.3390/soc13050118

*Pretorius, L. (2023). Fostering AI literacy: a teaching practice reflection. J. Acad. Lang. Learn. 17, T1–T8. Available online at: https://journal.aall.org.au/index.php/jall/article/view/891

Rahman, M. M., and Watanobe, Y. (2023). ChatGPT for education and research: opportunities, threats, and strategies. Appl. Sci. 13:5783. doi: 10.3390/app13095783

*Saad, I., and Tounkara, T. (2023). Artificial intelligence-based group decision making to improve knowledge transfer: the case of distance learning in higher education. J. Decis. Syst. 1–16. doi: 10.1080/12460125.2022.2161734

Sadler, D. R. (2016). Three in-course assessment reforms to improve higher education learning outcomes. Assess. Eval. Higher Educ. 41, 1081–1099. doi: 10.1080/02602938.2015.1064858

*Sajja, R., Sermet, Y., Cwiertny, D., and Demir, I. (2023). Platform-independent and curriculum-oriented intelligent assistant for higher education. Int. J. Educ. Technol. Higher Educ. 20:42. doi: 10.1186/s41239-023-00412-7

*Shi, X. (2023). Exploring an innovative moral education cultivation model in higher education through neural network perspective: a preliminary study. Appl. Artif. Intell. 37:2214767. doi: 10.1080/08839514.2023.2214767

Stahlschmidt, S., and Stephen, D. (2020). Comparison of Web of Science, Scopus and Dimensions databases. KB Forschungspoolprojekt 2020:37.

State Council of PRC (2017). Notice of the State Council on Issuing the Development Plan for the New Generation of Artificial Intelligence. In Chinese. Available online at: https://www.gov.cn/zhengce/content/2017-07/20/content_5211996.htm (accessed March 1, 2025).

*Stutz, P., Elixhauser, M., Grubinger-Preiner, J., Linner, V., Reibersdorfer-Adelsberger, E., Traun, C., et al. (2023). Ch(e)atGPT? An anecdotal approach addressing the impact of ChatGPT on teaching and learning GIScience. GI_Forum 11, 140–147. doi: 10.1553/giscience2023_01_s140

*Tang, J., Zhang, P., and Zhang, J. (2023). Design and implementation of intelligent evaluation system based on pattern recognition for microteaching skills training. Int. J. Innov. Comput. Inf. Control 19, 153–162. doi: 10.24507/ijicic.19.01.153

Teng, Y., Zhang, J., and Sun, T. (2023). Data-driven decision-making model based on artificial intelligence in higher education system of colleges and universities. Expert Syst. 40:e12820. doi: 10.1111/exsy.12820

*Wang, D., Han, L., Cong, L., Zhu, H., and Liu, Y. (2025). Practical evaluation of human-computer interaction and artificial intelligence deep learning algorithm in innovation and entrepreneurship teaching evaluation. Int. J. Hum. Comput. Interact. 41, 1742–1750. doi: 10.1080/10447318.2023.2199632

*Wang, Y. (2023). Artificial intelligence technologies in college English translation teaching. J. Psycholinguistic Res. 52, 1525–1544. doi: 10.1007/s10936-023-09960-5

*Yang, X. (2023). Higher education multimedia teaching system based on the artificial intelligence model and its improvement. Mob. Inf. Syst. 2023:8215434. doi: 10.1155/2023/8215434

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education–where are the educators? Int. J. Educ. Technol. Higher Educ. 16, 1–27. doi: 10.1186/s41239-019-0171-0

*Zhang, X., Sun, J., and Deng, Y. (2023). Design and application of intelligent classroom for English language and literature based on artificial intelligence technology. Appl. Artif. Intell. 37. doi: 10.1080/08839514.2023.2216051

*Zhu, K. (2023). Application of multimedia service based on artificial intelligence and real-time communication in higher education. Comput. Aided Design Appl. 20, 116–131. doi: 10.14733/cadaps.2023.S12.116-131

*Zhu, L., Liu, G., Lv, S., Chen, D., Chen, Z., and Li, X. (2023). An intelligent boosting and decision-tree-regression-based score prediction (BDTR-SP) method in the reform of tertiary education teaching. Information 14:317. doi: 10.3390/info14060317

* Included in the systematic review.

Keywords: artificial intelligence, large language models, curriculum, instruction, assessment, systematic review

Citation: Liang J, Stephens JM and Brown GTL (2025) A systematic review of the early impact of artificial intelligence on higher education curriculum, instruction, and assessment. Front. Educ. 10:1522841. doi: 10.3389/feduc.2025.1522841

Received: 05 November 2024; Accepted: 31 March 2025;

Published: 25 April 2025.

Edited by:

Xinyue Ren, Old Dominion University, United States

Reviewed by:

Lina Montuori, Universitat Politècnica de València, Spain

Dennis Arias-Chávez, Continental University, Peru

Copyright © 2025 Liang, Stephens and Brown. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jingjing Liang, jingjing.liang@auckland.ac.nz

Jingjing Liang, Jason M. Stephens, and Gavin T. L. Brown