- Department of Logic and Cognitive Science, Faculty of Psychology and Cognitive Science, Adam Mickiewicz University, Poznan, Poland

Artificial intelligence (AI), particularly generative technologies, and large language models are transforming modern education. On the one hand, these tools can automate certain aspects of teaching, personalize educational materials, and improve learning efficiency. However, concerns are emerging regarding the impact of AI on the quality of education, the mental health of students, and fundamental academic values. This article reviews the scientific literature on the applications of artificial intelligence in education, with a special emphasis on its impact on student mental health. Based on an analysis of 120 scientific articles, the study automatically extracted data on AI implementation's potential opportunities and threats. A novel aspect of this work is identifying factors that can be considered both opportunities and threats depending on context. In addition, a frequency analysis of keywords and phrases uncovered many opportunities and challenges. All identified aspects are characterized in the article. The article also highlights key barriers to using large language models (LLMs) for detecting student mental health issues, such as underestimating suicide risk, difficulties with interpreting subtle language, biases in training data, lack of cultural sensitivity, and unresolved ethical concerns. These challenges illustrate why generative AI is not yet reliable for supporting student mental health, especially in high-risk situations. One of the key conclusions is that the use of generative AI to support student mental health is seldom addressed in existing review articles, likely due to the current unreliability of this technology.

1 Introduction

Contemporary education is undergoing a dynamic transformation driven by artificial intelligence (AI) development, particularly generative technologies such as ChatGPT, Gemini, Claude, and DeepSeek. AI is increasingly transforming how students acquire knowledge, process information, and participate in the educational process. Automating certain teaching aspects, personalizing educational materials, and providing easy access to AI tools can improve learning efficiency. However, alongside numerous benefits, some significant challenges and risks may affect the quality of education, student mental health, and fundamental academic values.

One of the key aspects related to the use of generative artificial intelligence (GAI) in education is its impact on academic integrity. As generative AI tools gain popularity, concerns arise regarding student autonomy in completing assignments (such as homework, essays, and exams) and the potential for AI to be used to circumvent traditional methods of knowledge assessment. Another primary concern is the influence of AI on critical thinking and creativity, as readily available responses generated by language models may lead to superficial knowledge acquisition and limit students' ability to analyze and interpret information independently.

At the same time, there is growing interest in the effects of AI on student mental health. On the one hand, Large Language Models (LLMs) and tools such as ChatGPT can serve as psychoeducational support, facilitating access to mental health information and providing initial coping strategies for stress and anxiety. On the other hand, excessive reliance on AI may reduce social interactions, deepen isolation, and lead to dependency on AI as the primary source of answers.

This study aims to analyze the opportunities and risks associated with using generative artificial intelligence in education and its impact on student mental health. This article examines the most commonly occurring opportunities and threats based on an extensive collection of review articles. This review also identifies the contexts in which student mental health issues are framed as opportunities or threats and how frequently they appear compared to other key challenges and benefits in the analyzed literature.

2 Materials and methods

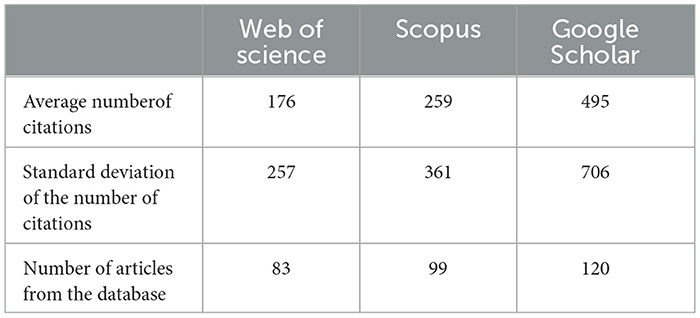

This review utilized a dataset of 120 PDF files containing scientific articles manually retrieved from three sources: Scopus, Web of Science, and Google Scholar. For all sources, publications were identified using relevant keywords and sorted in descending order by the number of citations. Only the most frequently cited articles were selected for further analysis; in the case of Google Scholar, only articles with at least 50 citations were included. Descriptive statistics of the selected articles from the three databases are presented in Table 1. The selection process focused on publications whose titles or abstracts included the following phrases: “ChatGPT and education”, “Generative Artificial Intelligence and education”, and “Large Language Models and education”. A key qualification criterion was that each selected article be classified as a review paper or an empirical research study. To avoid author-related duplication bias, when an author appeared in multiple articles, only that author's most cited publication was retained and the others were excluded from the dataset. After collecting all documents, the GPT model's application programming interface (API) (version gpt-4o-mini) was employed to automatically extract data regarding opportunities and threats associated with implementing these technologies in education and teaching. The prompt used for this process is presented in Box 1. As a result of this procedure, a dataset was developed comprising approximately 986 positive aspects (opportunities) and about 887 negative aspects (threats).
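The author-based exclusion rule described above can be illustrated with a short sketch. It is not the procedure actually used by the authors, who performed the selection manually; the `Article` record, its field names, and the greedy reading of the rule (visit articles from most to least cited and drop any article that shares an author with one already kept) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    # Hypothetical record; field names are illustrative, not taken from the study.
    title: str
    authors: tuple
    citations: int


def keep_most_cited_per_author(articles):
    """Greedy filter: walk from most to least cited and keep an article
    only if none of its authors already appears in a kept article."""
    kept = []
    seen_authors = set()
    for article in sorted(articles, key=lambda a: a.citations, reverse=True):
        if seen_authors.isdisjoint(article.authors):
            kept.append(article)
            seen_authors.update(article.authors)
    return kept
```

Under this reading, an article with several authors is excluded as soon as any one of them already appears in a more highly cited retained article.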

Table 1. Descriptive statistics of the selected articles from three databases: average number of citations, standard deviation, and number of articles.

Box 1 A structured prompt was provided to the language model to identify and expand upon opportunities and threats within a given text. The model was instructed to ignore previous context and return results in JSON format.

Forget previous analyses. Now analyze this new text thoroughly:

{text}

Your task is:

1. Identify all possible opportunities and threats present in the text.

2. Be as comprehensive and detailed as possible, extracting every relevant opportunity and threat without omitting any.

3. Avoid unnecessary repetition or irrelevant information.

4. Provide answers strictly in English.

5. Ensure consistent, repeatable, and objective results.

IMPORTANT: For each opportunity and threat, create a short, descriptive label based on the content and use it as the key in the JSON object. This label should be a natural phrase or short sentence summarizing the opportunity or threat – not a slug, camelCase, or one-word identifier.

Each value must be an object with two fields:

- “file”: the exact filename from which this information comes, e.g. “filename”,

- “context”: a fragment of text from the source that supports the identified opportunity or threat.

IMPORTANT: Respond ONLY with valid JSON. Do NOT include any explanation, commentary, or extra text outside the JSON structure.

Respond with JSON enclosed strictly in triple backticks like this:

```json
{{"opportunities": {{
/* your generated labels and values here */ }},
"threats": {{
/* your generated labels and values here */ }}
}}
```

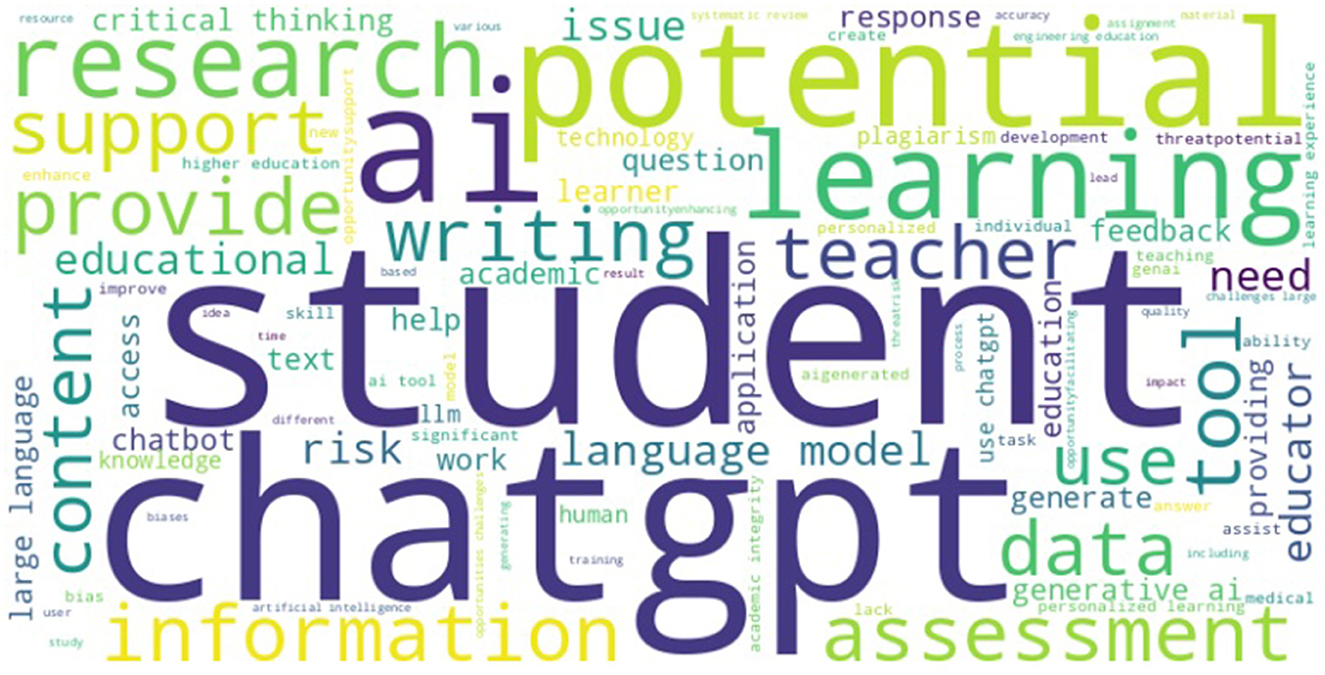

The designed prompt made it possible to export to the resulting CSV file not only the file name and the opportunities or threats identified by the language model, but also the textual context from which the model inferred each opportunity or threat related to the use of generative AI in education. This made it possible to manually verify whether each identified opportunity or threat had been correctly recognized within the article. The next stage of the analysis examined the frequency of individual words in the collected data. Examples of the most frequently occurring words and phrases are presented in Figure 1.
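As a minimal sketch of how a reply in the format requested in Box 1 could be turned into CSV rows, consider the following. The script used in the study is not reproduced in the article, so everything here is an assumption: the template is an abbreviated stand-in for the full prompt, and `parse_reply`, `to_rows`, and `rows_to_csv` are hypothetical helper names. The API call itself is omitted; the functions operate on the reply text only.

```python
import csv
import io
import json
import re

FENCE = "`" * 3  # literal triple backtick, built this way to keep the listing readable

# Abbreviated stand-in for the Box 1 prompt (not the full wording).
# Doubled braces are str.format escapes and come out as single braces.
PROMPT_TEMPLATE = (
    "Forget previous analyses. Now analyze this new text thoroughly:\n\n"
    "{text}\n\n"
    "Respond ONLY with valid JSON shaped like "
    '{{"opportunities": {{}}, "threats": {{}}}}'
)

FENCED_JSON = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)


def parse_reply(reply):
    """Return the JSON object found inside a fenced reply (or a bare JSON string)."""
    match = FENCED_JSON.search(reply)
    payload = match.group(1) if match else reply
    return json.loads(payload)


def to_rows(parsed):
    """Flatten the parsed object into (kind, label, file, context) tuples."""
    rows = []
    for kind in ("opportunities", "threats"):
        for label, value in parsed.get(kind, {}).items():
            rows.append((kind, label, value.get("file", ""), value.get("context", "")))
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["kind", "label", "file", "context"])
    writer.writerows(rows)
    return buffer.getvalue()
```

Keeping the supporting context in its own column is what allows each extracted label to be checked by hand against the source article.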

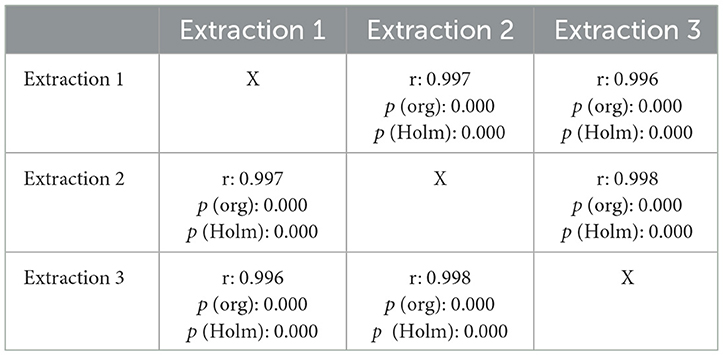

To examine the consistency of results generated by the GPT-4o mini model (gpt-4o-mini), an analysis was conducted to verify whether the list of opportunities and threats obtained for a given article remains similar across multiple runs. While the lists are not expected to be identical due to the model's inherent paraphrasing variability, they should contain the same key terms. To assess this, the keyword extraction procedure was executed three times. Subsequently, the consistency of the generated results was evaluated using Pearson's correlation coefficient. Because multiple comparisons were made, p-values were adjusted using Holm's correction to control for Type I error. The results of the analysis are presented in Table 2. The results obtained in successive runs exhibit very high consistency, indicating that the GPT model generates nearly identical sets of opportunities and threats in each script execution.

Table 2. Pearson correlation coefficients for the lists of opportunities and threats obtained in three independent runs of GPT-4o mini (gpt-4o-mini), along with p-values adjusted using Holm's correction.
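The two statistical steps named above can be sketched in plain Python. The article does not state how each run was turned into a numeric vector; the sketch assumes that every run is summarized as keyword counts over a shared vocabulary, and that the raw p-values come from a statistics package. Both function names are illustrative.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def holm_adjust(p_values):
    """Holm step-down adjustment; returns adjusted p-values in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted value never falls below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

With three runs there are three pairwise comparisons (run 1 vs. 2, 1 vs. 3, and 2 vs. 3), so `holm_adjust` would receive three p-values.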

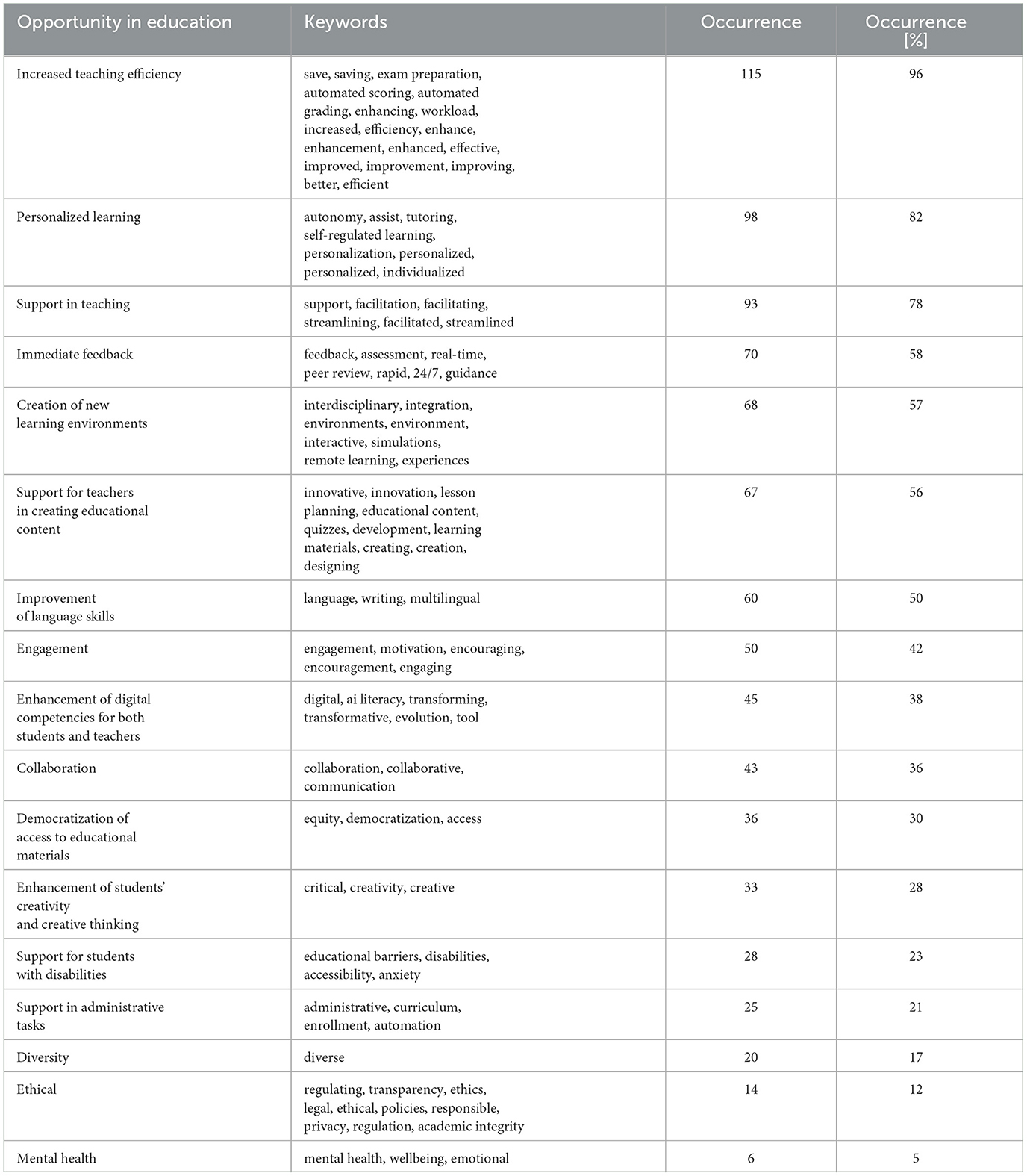

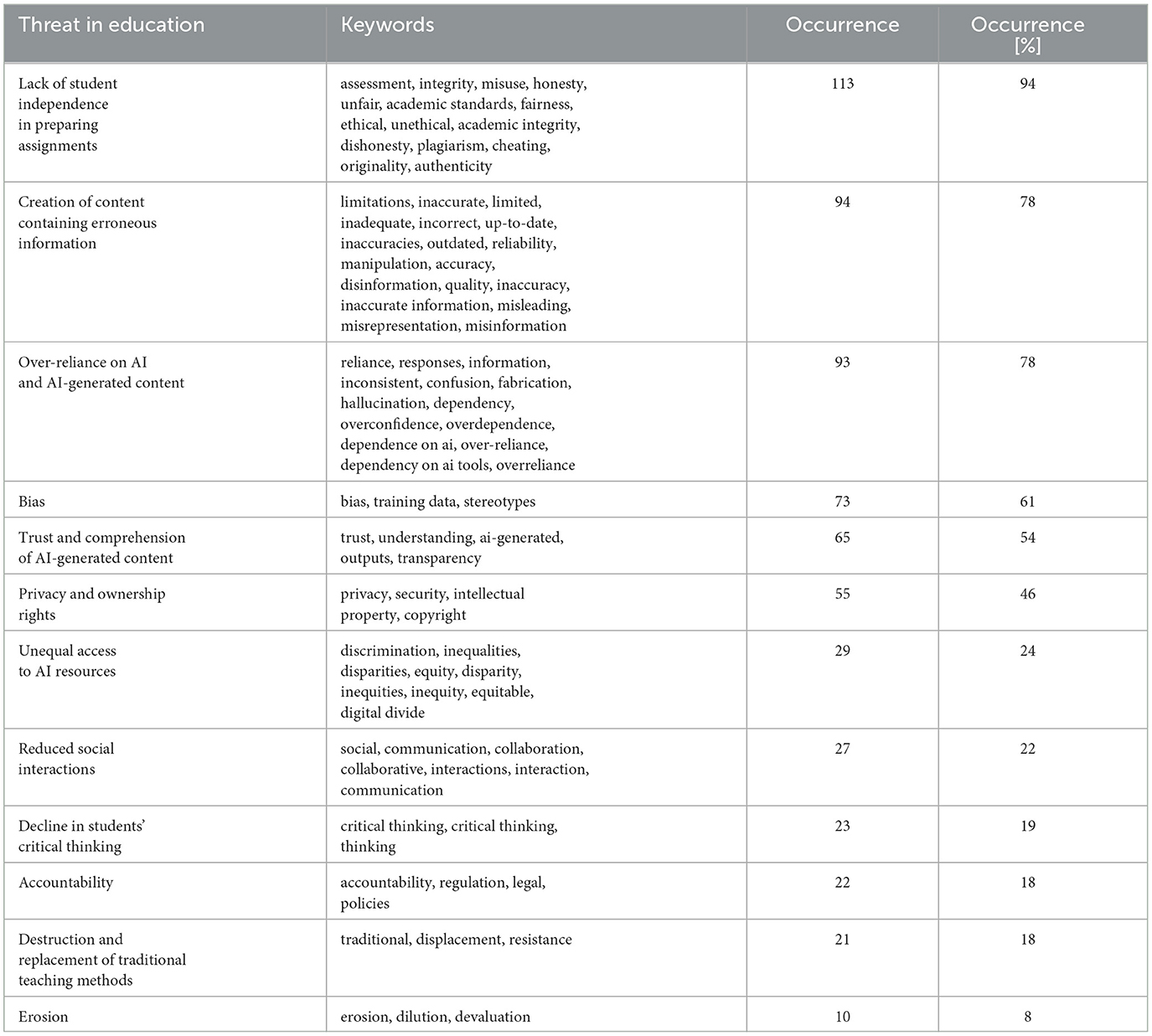

Based on a word frequency analysis, including consideration of synonyms and closely related concepts, Tables 3 and 4 were developed. In these tables, the first column provides a general description of each opportunity or threat; the second column lists the keywords used to search for the corresponding concept or issue in the database; and the third column presents the percentage result, illustrating the frequency with which the given opportunity or threat appeared in the analyzed dataset.

Table 3. Compilation of identified opportunities based on word frequency analysis including their synonyms and closely related concepts.

Table 4. Compilation of identified threats based on word frequency analysis including their synonyms and closely related concepts.
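A keyword-based frequency count of the kind summarized in Tables 3 and 4 might look like the sketch below. The article does not specify the unit of the percentage, so the sketch assumes it is the share of extracted labels that contain at least one of a category's keywords. The function names and the idea of matching lowercase keyword stems as substrings are likewise assumptions.

```python
def category_share(labels, categories):
    """Percentage of labels containing at least one keyword, per category.

    `categories` maps a category name to a list of lowercase keyword stems.
    """
    lowered = [label.lower() for label in labels]
    shares = {}
    for name, keywords in categories.items():
        hits = sum(any(kw in text for kw in keywords) for text in lowered)
        shares[name] = 100.0 * hits / len(lowered) if lowered else 0.0
    return shares


def shared_categories(opportunity_shares, threat_shares):
    """Categories with a non-zero share among both opportunities and threats."""
    return sorted(
        name
        for name, share in opportunity_shares.items()
        if share > 0 and threat_shares.get(name, 0) > 0
    )
```

Running `category_share` once on the opportunity labels and once on the threat labels, and then passing both results to `shared_categories`, gives the categories that occur on both sides.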

Subsequent sections of this work are divided into three main chapters corresponding to the categories of the analyzed phenomena: Opportunities, Opportunities and Threats, and Threats. The Opportunities chapter focuses on the positive aspects of the issues under discussion, presenting solutions and technologies that may support the development of education and enhance teaching quality. Although the title of the Opportunities and Threats chapter might appear counterintuitive, its structure is dictated by the specificity of the analyzed data. All elements from Tables 3 and 4 that appeared in both positive and negative contexts have been compiled in this chapter. This indicates that some aspects, depending on the researchers' approach and the context of their analysis, were characterized as both opportunities and threats. An attempt is made to systematize and interpret these discrepancies, highlighting the conditions under which a given phenomenon may benefit education and when it may represent a challenge or risk. The final chapter, Threats, concentrates on the risks and potential negative consequences of the discussed phenomena. It contains an analysis of the difficulties associated with implementing new technologies, the impact of AI on education, and possible issues that may result from improper or excessive use.

3 Opportunities

This chapter discusses the elements of Table 3. The first thematic area concerns personalization in education and the creation of new learning environments, including “personalization of learning” and “creation of new learning environments”. The second key issue is the effective management of the teaching and administrative process. This section comprises “support in the creation of educational content”, “immediate feedback”, and “support in administrative tasks”. The third area focuses on language support, including technologies that assist in learning foreign languages, improving language accuracy, and working with the native language. Another part addresses support for individuals with disabilities, i.e., accessibility and assistance for people with diverse needs. The final subsection concerns mental health. Moreover, the first subsection, on positive educational changes, includes all phrases that refer generally to improving teaching quality, such as efficiency, diversity, collaboration, engagement, and support in teaching.

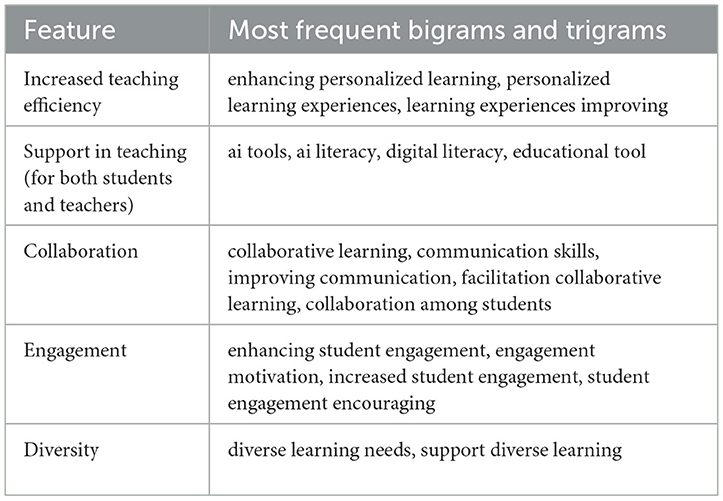

3.1 Positive transformation of education

To better understand the issues discussed in this subsection, Table 5 provides additional information regarding the most frequently occurring bigrams and trigrams (that is, pairs and triplets of consecutive words) that appeared in the context of the analyzed phrases. This helps capture word co-occurrence in the dataset under study.

Table 5. Most frequent bigrams and trigrams in the context of analyzed phrases classified as opportunities.
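Counting bigrams and trigrams of this kind takes only a few lines of Python. The sketch below is illustrative rather than the authors' script: the tokenization rule (lowercase runs of letters and apostrophes) and the function names are assumptions, and no stop-word filtering is applied.

```python
import re
from collections import Counter


def ngrams(tokens, n):
    """Yield consecutive n-token windows as tuples."""
    return zip(*(tokens[i:] for i in range(n)))


def top_ngrams(texts, n, k=5):
    """Return the k most frequent n-grams across all texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(" ".join(gram) for gram in ngrams(tokens, n))
    return counts.most_common(k)
```

N-grams are counted within each text separately, so a window never spans the boundary between two extracted phrases.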

In the context of “efficiency”, the presented possibilities for applying large language models in education can be classified into several key categories. First, these technologies significantly increase the accessibility of educational resources for both students and teachers (Samala et al., 2024), thereby contributing to enhanced inclusiveness and support for disadvantaged groups (Rudolph et al., 2024). Second, LLMs support the development of personalized learning (Gill et al., 2024; Al Murshidi et al., 2024), enabling the adaptation of educational materials and the pace of learning to the individual needs of students, which in turn enhances the overall efficiency of the educational process. Another aspect is the intensification of collaboration among students and teaching staff (Gill et al., 2024), which promotes improving teaching methods and exchanging interdisciplinary knowledge. Finally, immediate feedback—which appears to be the cornerstone of self-regulated learning (Mao et al., 2024; Chang et al., 2023)—is also emphasized. In terms of developing the competencies of students and teaching staff, these technologies support, among others, the enhancement of critical thinking skills (Gill et al., 2024; Al Murshidi et al., 2024), creativity (Samala et al., 2024), and communication (Gill et al., 2024).

The application of artificial intelligence contributes to an increased diversity of teaching methods. It enables the customization of educational content to the individual needs of learners, taking into account their diverse cognitive styles, learning paces, and specific needs arising from linguistic, cultural, or mental factors (Sok and Heng, 2023; Castro et al., 2024).

These technologies also offer mechanisms that support language learning (Limna et al., 2023), including translation, interactive language assistance, and real-time support (Michel-Villarreal et al., 2023), which can be particularly significant in the context of multilingual education.

Another crucial aspect is the support provided to teaching and administrative staff (Dempere et al., 2023). LLMs contribute to improving lesson planning, creating educational content, and designing interactive curricula (Mao et al., 2024; Michel-Villarreal et al., 2023; García-López et al., 2025).

Collaboration encompasses interactions among teachers, students, and researchers, which can facilitate knowledge exchange, develop joint educational projects, and enhance communication skills. In the academic context, AI-based tools can support cooperative processes by encouraging communication and access to information, as well as by streamlining the organization of group work (Michel-Villarreal et al., 2023; AlAfnan et al., 2023; Silva et al., 2024). One of the key aspects of collaboration is its impact on developing interpersonal skills, such as the ability to argue effectively, communicate efficiently, and work collaboratively in teams (Xu et al., 2024b). Joint activities among students and their interactions with instructors foster an academic community conducive to exchanging ideas and developing innovative solutions. Moreover, international and interdisciplinary collaboration significantly broadens participants' educational horizons and enhances their ability to adapt to the dynamically changing academic and professional environment (Michel-Villarreal et al., 2023; Xu et al., 2024a; Abujaber et al., 2023).

The increase in student engagement results from the interactivity and customization of content to their needs. Immediate feedback, personalized suggestions, and automatic progress assessment boost motivation for learning. Artificial intelligence fosters student activation through gamification, personalized educational pathways, and dynamic evaluation systems (Vargas-Murillo et al., 2023; Xiao and Zhi, 2023).

3.2 Personalization of education and interactive learning environments

Personalization of instruction is an issue that appears in nearly all the analyzed articles. Unlike concepts such as “increased teaching efficiency” or “support in teaching”, which are presented in general terms, personalization of learning is typically detailed with specific proposals for implementation. While increasing efficiency or supporting the educational process refers to broad, imprecise concepts, issues related to personalization are more frequently addressed practically, highlighting particular methods and tools that enable its implementation.

Generative artificial intelligence enables adapting educational materials to the individual needs of students (Diab Idris et al., 2024). In every group, some individuals quickly master new concepts, and others require more detailed explanations. GAI can assist the latter by providing more precise explanations and emphasizing or highlighting key segments of the material that have not been fully assimilated (Castillo et al., 2023).

Additionally, artificial intelligence can serve as a virtual assistant that explains misunderstood topics and conducts quizzes or exercises to reinforce learning. For example, after class, a student may request a chatbot to prepare a personalized set of questions to assess the extent of their comprehension (Castillo et al., 2023; Jeyaraman et al., 2023; Limo et al., 2023; Hung and Chen, 2023).

In particular, generative artificial intelligence can provide significant support in programming. A student working from home might submit a programming assignment for review, and the chatbot would offer immediate feedback, indicating which aspects are correct and which require improvement. It could also explain the errors made, propose specific ways to correct them, and suggest topics that should be revisited for further practice (Tian et al., 2023; Tayan et al., 2024).

Such a solution could enhance learning efficiency by allowing the student to receive real-time support without requiring direct involvement from a human instructor. Immediate assistance in the form of explanations and suggestions is critical, as it facilitates the prompt resolution of issues and the reinforcement of new information.

3.3 Effective management of the teaching and administrative process

Interactive artificial intelligence can prove extremely useful for tasks that teachers, regardless of the level of education, often dislike, for example, preparing documentation such as lesson plans or syllabi (Davis and Lee, 2024). In the case of these documents, it is sufficient to provide generative artificial intelligence with a general lesson plan, a conceptual outline, or even a syllabus template. The GAI can populate all required tables and sections based on the provided information, producing a complete document.

Moreover, generative artificial intelligence can assist in the preparation of tests. For this purpose, one can input an entire chapter from a textbook and then request the GAI to generate questions that assess comprehension of the material (Onal and Kulavuz-Onal, 2024). Such a solution benefits not only teachers: students can also use GAI to create questions to prepare for exams or tests independently (Li, 2025; Lucas et al., 2024). Kusuma et al. (2024) and Jeon and Lee (2023) describe how this tool generates a general test or assignment template for an entire class in one step, followed by the rapid creation of specific versions for individual students. This approach ensures that each student receives individualized tasks, thereby minimizing the risk of adjacent students solving identical tests. Similarly, the GAI allows the generation of multiple versions of the same test. By paraphrasing content, altering test questions, or modifying answers, the tool creates tests that differ while maintaining the same structure and difficulty level. It can also modify incorrect answers, paraphrase correct ones, or generate new questions, ensuring the tests remain unique.

Generative artificial intelligence also facilitates the creation of various test formats. For example, a teacher can provide an open-ended question, and the GAI can modify it by expanding the content or transforming it into a single-choice or multiple-choice question. Such functionality significantly saves time for teachers and lecturers while streamlining the preparation of educational materials (Kıyak and Emekli, 2024).

Additionally, generative artificial intelligence can generate true or false questions and fill-in-the-blank exercises on a given topic, in quantities approaching “bulk” production. It is sufficient to provide the tool with a topic or a specific issue, and the LLM will generate the appropriate questions or exercises. One can also supply several problems simultaneously, and the tool will create questions or exercises for each of them (Kusuma et al., 2024; Jeon and Lee, 2023).

Another practical application of LLMs is the creation of dialogues for conversational exercises. They can generate entire dialogues or partial ones, which students can then complete during class. Moreover, the generated materials can be adapted to the level of the group or even to individual students. A properly formulated prompt indicating the desired difficulty level of the tasks is all that is required (Kusuma et al., 2024; Jeon and Lee, 2023). This versatility makes GAI an extremely valuable tool that relieves teachers and enables more effective and personalized instruction.

Another practical application of GAI is the automatic grading of assignments. Jukiewicz (2024) examined the potential use of ChatGPT as a tool to support the automatic grading of student programming assignments. The research demonstrated that ChatGPT exhibits high consistency in grading, meaning that the scores generated by the tool for the same assignment were largely coherent and stable. Moreover, these results aligned with the grades awarded by teachers, indicating a similar assessment trend. The study's findings suggest that ChatGPT can be a valuable tool for evaluating student work, particularly in generating feedback and identifying coding errors. Particular value was attributed to using this tool as an application supporting teachers. Manually grading hundreds of assignments and preparing detailed feedback is time-consuming and often requires many work hours. In such cases, ChatGPT can significantly reduce the time needed to perform these tasks by delivering results quickly and efficiently.

In another study (Qureshi, 2023), the differences between two groups of students participating in algorithms and data structures classes were investigated. One group used only traditional educational materials, while the other group had access to these conventional materials and the opportunity to use ChatGPT, particularly during programming tasks or when preparing code. The study results showed that the group with access to ChatGPT achieved higher scores and required less time to solve the tasks than the group without access to the tool. However, the study noted a critical aspect: students using ChatGPT committed more (albeit minor) errors in their solutions. The analysis of these errors revealed that ChatGPT is particularly useful in generating the structure and basic framework of the code. Still, it encounters difficulties with more advanced programming tasks that require deeper analysis or a specific approach.

3.4 Technologies supporting linguistic accuracy: correction, translation, and mistakes analysis

Language support provided by generative artificial intelligence can be understood in two ways, depending on whether it pertains to the native language or a foreign language (Imran and Almusharraf, 2023).

In the case of the native language, generative AI can assist in correcting grammatical, orthographic, and stylistic errors in written texts. It can also suggest improved (more precise, more expressive, and clarifying) formulations that enhance the readability and comprehensibility of the text. Moreover, GAI can adapt the language level to suit the audience's needs, for example, by simplifying complex scientific texts into accessible popular science articles (Imran and Almusharraf, 2023) or by employing a more colloquial style, depending on the context and user requirements.

Additionally, GAI can enrich texts by generating synonyms, metaphors, and other stylistic devices that enhance content quality. These capabilities benefit students and educators who aim to produce more professional, explicit, and refined materials (Imran and Almusharraf, 2023; Kohnke et al., 2023).

Concerning supporting the learning or use of a foreign language, generative AI offers a range of advantages. Primarily, GAI facilitates the translation of texts into multiple languages, handling both literal translations and those that consider the cultural nuances of the target language while also providing explanations for more challenging passages (Kohnke et al., 2023).

Similarly to its application in native language support, GAI can correct grammatical, orthographic, and stylistic errors in texts written in a foreign language, enabling users to produce more accurate, professional, and coherent writing (Imran and Almusharraf, 2023).

Furthermore, generative AI can be utilized in language learning by creating interactive exercises that assist in practicing grammar, vocabulary, and communication skills. GAI can simulate conversations in a foreign language, allowing users to practice fluency, intonation, and pronunciation. It also aids in learning grammatical rules and the correct usage of words or expressions in specific contexts, thus fostering a deeper understanding of the structure and logic of the language (Kohnke et al., 2023; Xiao and Zhi, 2023; Ma et al., 2024; Klimova et al., 2024).

3.5 Accessibility and support for individuals with diverse needs

The literature identifies support for individuals with disabilities as one of the positive opportunities for using generative artificial intelligence in higher education. In particular, it is emphasized that such technologies can assist students with ADHD, dyslexia, dyspraxia, or autism (Ayala, 2023; Bhuvan Botchu and Botchu, 2024; Zhao et al., 2024; Rakap and Balikci, 2024). These individuals often struggle with constructing a coherent text structure, organizing their thoughts, and applying appropriate grammatical and stylistic rules. In such cases, GAI-based tools, such as ChatGPT, can help edit texts and eliminate errors. Additionally, they can facilitate information synthesis, making the text more accessible and comprehensible (Zhao et al., 2024).

Generative AI technologies can also support individuals with sensory disabilities, including the visually impaired and those with low vision. In this context, speech recognition and synthesis systems play a crucial role by enabling natural voice interactions. These systems allow visually impaired individuals to easily “converse” with a chatbot that converts text to speech, thereby assisting in reading notes and accessing textual educational resources (Kuzdeuov et al., 2024). Moreover, such technologies can be integrated with existing communication platforms, enabling visually impaired or low-vision users to access educational materials.

Such solutions enhance the autonomy of individuals with disabilities and actively support their participation in educational, academic, and professional environments. Generative AI in these areas can be a significant step toward greater inclusivity and equality in educational access for people with disabilities.

3.6 Mental health

GAI offers a range of opportunities to support mental health in education. It can provide emotional support, facilitate access to educational materials, and reduce barriers to seeking psychological assistance. At the same time, its implementation requires a conscious approach: understanding the potential limitations of these tools and the necessity of supervising content generated in the mental health domain is essential. This is also emphasized by research findings: as noted by Dergaa et al. (2024), ChatGPT is not yet ready to be used independently as a diagnostic or therapeutic tool, as its responses may be inadequate or even dangerous. The model shows significant shortcomings in understanding and interpreting patients' issues, especially in complex clinical cases. Nevertheless, the literature suggests that in the future, GAI may partially support such processes.

3.6.1 The use of large language models in mental health care

One of the main reasons for the growing interest in GAI in mental health is its wide accessibility and low barriers to entry. For many individuals, ChatGPT has become an easily accessible source of knowledge and preliminary support—users can quickly obtain information about symptoms, therapies, or self-help options at any time. Moreover, conversation with a chatbot often provides a “safe space” to share one's concerns and thoughts. For this reason, ChatGPT is often used as a free and widely available tool to supplement psychotherapy, frequently by those who have not yet decided to contact a professional (Raile, 2024). At the same time, it is important to acknowledge significant limitations: ChatGPT cannot replace a therapist, as it lacks access to the full context of an individual's situation and may reinforce simplifications or biases (Raile, 2024).

An increasing number of publications confirm the potential of generative artificial intelligence in the field of mental health and psychiatry (Sai et al., 2024; Xu et al., 2024c; Cheng et al., 2023). Particular hopes are associated with the use of natural language processing, which enables the analysis of vast text corpora and the identification of patterns indicative of mental disorders–often more quickly and efficiently than traditional methods (Cheng et al., 2023; Sai et al., 2024).

However, this promising perspective is tempered by the specific limitations of AI models, which become clearly evident when compared to actual therapeutic practice. For example, the study by De Duro et al. (2025) showed that while ChatGPT, Claude-3, and LLaMAntino can mimic the structure of therapeutic conversations and language use, they are unable to replicate the emotional complexity of human interactions, which highlights crucial boundaries for the use of artificial intelligence in psychotherapy.

Other research focuses on the potential of ChatGPT and similar models in mental health analysis through natural language processing. Lamichhane (2023) demonstrated that ChatGPT is fairly effective at identifying symptoms of depression and anxiety based on social media content analysis, although its effectiveness in recognizing suicidal ideation remains limited. Similar observations were reported in a practical study by Shin et al. (2024), where the GPT-3.5 model analyzed emotional diaries and identified individuals with depression with very high accuracy (about 90%), surpassing previously used tools.

Large language models also prove effective in the analysis of extended narratives, both in psychological tests and in spontaneous statements. A notable example is the study by Lho et al. (2025), where GPT-4 and other LLMs were successfully used to detect depression and suicide risk among psychiatric patients, based on Sentence Completion Test results. However, the authors emphasize the need for extensive validation and further refinement of methods before their implementation in clinical practice.

Interestingly, in some studies, ChatGPT-3.5 outperformed humans in assessing emotional awareness based on text, which demonstrates the potential of these tools for new forms of mental health support (Elyoseph et al., 2024). Additionally, including paralinguistic features in prompts (such as speech rate or rhythm) significantly improved the classification of depression and anxiety by language models (Tao et al., 2023).

Simultaneously, the literature indicates that ChatGPT can serve as a tool supporting psychoeducation. Maurya et al. (2025) found that the model's responses are precise, clear, and consistent with ethical standards, though further studies on its long-term effectiveness are needed. Similarly, Qiu et al. (2023) presented the SMILECHAT system, which enables more complex patient dialogues, but also emphasized that AI should only supplement, not replace, professional therapy. According to Spallek et al. (2023), ChatGPT can also support the creation of educational materials on mental health and addictions, as well as promote healthy habits and assist in the development of educational content aligned with well-being guidelines. Moreover, Singh (2023) suggests that AI chatbots can act as companions, providing support to people with limited access to specialized help. Ultimately, however, the use of AI in psychiatry should be subject to regulation and oversight to ensure the safety and effectiveness of these tools.

3.6.2 Key challenges for the use of LLMs in detecting mental health problems

At this point, it is worth gathering and summarizing the main reasons why using large language models for detecting mental health problems, especially among students, is not an ideal solution. Although generative artificial intelligence theoretically holds potential in suicide prevention, its clinical effectiveness in this area remains unproven (Elyoseph and Levkovich, 2023; Levkovich and Elyoseph, 2023). A review of the literature highlights several key challenges that make this task particularly difficult:

1. Underestimation of suicide risk by LLMs. Studies comparing assessments made by ChatGPT and mental health professionals have shown that ChatGPT consistently underestimates the likelihood of suicide attempts in the analyzed scenarios. The model is also less sensitive to important factors such as perceived burden and lack of belonging, and its evaluation of psychological resilience is typically lower than expert norms. As a result, users relying on ChatGPT may receive an overly optimistic and inaccurate risk assessment, potentially overlooking actual danger (Elyoseph and Levkovich, 2023; Levkovich and Elyoseph, 2023).

2. Ambiguity and subtlety of suicidal language. Individuals with suicidal thoughts often do not communicate their intentions directly; they may use metaphors, allusions, and sarcasm or indirectly express psychological pain. Even advanced GAI models may struggle to recognize these subtle signals, which can be apparent to an experienced human professional (Ghanadian et al., 2024).

3. Limitations of training data and generalization issues. The reliability of ChatGPT's assessments depends heavily on the quality and representativeness of its training data. Demographic imbalances, biases, or insufficient inclusivity in the data can result in incorrect predictions and exacerbate existing health disparities (Levkovich and Elyoseph, 2023).

4. Lack of understanding of cultural and demographic nuances. Language models are trained on large, often generic datasets and do not account for the specifics of certain groups, such as adolescents in a particular cultural context, autistic individuals, or patients with schizophrenia. Suicide risk is complex and influenced by many factors: personal history, current circumstances, social support, mental and physical health, access to means, etc. GAI analyzes only the provided text and lacks access to broader context such as body language, tone of voice, history of previous interactions, or real-world data, unless such access has been specially implemented while maintaining privacy (Elyoseph and Levkovich, 2023; Levkovich and Elyoseph, 2023).

5. Tendency to generate plausible but superficial responses. Large language models can produce answers that sound professional and supportive but, upon closer examination, prove too general or diagnostically inaccurate. These responses are often based on general language patterns rather than a genuine assessment of the user's mental state. There is also a risk of “hallucinations”, that is, fabricated information that may mislead the user. It is important to remember that GAI does not “understand” concepts in the human sense and lacks empathy; its responses solely reflect its training data. In crisis situations, where authentic and empathetic responses are crucial, GAI is unlikely to provide fully individualized support (Kalam et al., 2024; Cheng et al., 2023).

6. Ethical and accountability issues. A significant challenge remains the question of responsibility: who is accountable if GAI fails to detect suicide risk or responds inappropriately? Excessive reliance on technology in such a sensitive domain can foster a false sense of security or delay seeking professional help.

4 Opportunities and threats

During the analysis, the researchers classified specific terms as both opportunities and threats. This duality does not arise from the inherent nature of the term but from the adopted perspective. In the following paragraphs, I analyze the factors determining this duality and present the consequences stemming from both interpretations.

This chapter is organized into three subsections, each addressing significant aspects of contemporary education. The first subsection concerns the role of creative and critical thinking. It discusses both the “enhancement of students' creativity and creative thinking” (as shown in Table 3), which appeared as an opportunity in 33% of the analyzed articles, and the issue of the “decline in students' critical thinking” (Table 4), mentioned as a threat in 19% of the publications. The second subsection focuses on developing digital competencies and the lack of understanding of the technology in use. This section draws on data concerning the “enhancement of digital competencies for both students and teachers” (Table 3), identified as an opportunity in 38% of the articles, as well as issues related to “trust and comprehension of AI-generated content” and “the destruction and replacement of traditional teaching methods” (Table 4), which were recognized as threats in 54% and 18% of the articles, respectively. The final subsection addresses access to educational materials. Here, two key aspects are considered: the “democratization of access to educational materials” (Table 3), identified as an opportunity in 30% of the articles, and the “inequality of access to AI resources” (Table 4), reported as a threat in 24% of the cases.

4.1 Development of critical and creative thinking

Critical and creative thinking are best discussed together in education because they complement each other and are essential for effective learning, problem-solving, and preparing students for the future. Critical thinking allows for the analysis of information, the evaluation of its credibility, and the detection of logical fallacies. In contrast, creativity facilitates the generation of new ideas and the ability to break away from established patterns.

Reimer (2024) emphasizes in their article that appropriately designed AI tools can support the critical thinking process by simulating complex problem situations that require analysis and decision-making. AI can ask guiding questions to provoke students to think more deeply and reflect on the presented data. Similar conclusions are drawn by Ahmed (2025), who notes that personalizing educational materials via AI allows content to be tailored to the student's level, thereby supporting active knowledge acquisition and a deeper understanding of the issues.

In addition to fostering analytical thinking, generative AI also significantly impacts creativity in the educational process. Ali et al. (2024) indicate that AI tools can inspire users by proposing unconventional solutions and generating innovative ideas, encouraging students to explore new concepts and creatively seek alternative ways of thinking.

In the context of “threats”, mechanical information processing may result in a decline in analytical abilities. Instead of independently analyzing data, students might more frequently accept AI-generated answers as ready-made solutions, leading to a “superficial” approach to learning where they rely on AI analyses without engaging in more profound reflection on the problem. Moreover, AI may contribute to the homogenization of thought processes. Algorithms based on repetitive patterns might generate content that students accept uncritically, thus losing their ability to evaluate and challenge the presented results independently. Consequently, this could limit cognitive diversity and hinder the development of intellectual autonomy (Ahmed et al., 2024; Mohammadreza Farrokhnia and Wals, 2024). Since these systems provide instant responses, students may not feel compelled to engage in independent research and analysis, weakening their ability to solve complex problems and seek new, innovative approaches.

4.2 Development of digital competencies and (lack of) understanding of technology

Developing digital competencies in education is undoubtedly an added value that better prepares students for functioning in the digital world. In the context of generative artificial intelligence, these competencies are a significant advantage, provided that they are accompanied by a deep and conscious understanding of the underlying technology. Merely possessing the skills to operate AI tools is insufficient if users do not understand the mechanisms, limitations, and potential consequences of their application.

Some argue that generative AI, similar to the Industrial Revolution in the 19th century, may lead to the obsolescence of certain professions or a significant reduction in the number of workers needed for specific tasks (AbuMusab, 2024). However, this is not solely a negative phenomenon, as many experts believe that generative AI will also create new professions and job opportunities. To prepare society for these changes, it is necessary to develop modern digital competencies.

In this context, it is also essential to consider the challenges associated with ensuring equal access to the discussed technologies. An example can be drawn from the COVID-19 pandemic, when the rapid implementation of new software for remote education, which enabled the transfer of classes online, exacerbated digital inequalities. Difficulties in accessing appropriate hardware and software and the lack of teacher training significantly impacted part of the educational community, limiting their ability to participate in the educational process. Similarly, in the case of generative AI, ensuring effective global development of digital competencies will depend on providing real access to this technology. It should be emphasized that such access should not be limited to theoretical availability that requires specialized knowledge from the user.

A key element is a comprehensive understanding of the technology behind generative AI, which allows for its responsible and effective use. The full potential of this technology can only be exploited when its workings are clearly understood. In parallel, education must place emphasis on the ethical aspects of using AI to promote responsible usage and avoid issues such as plagiarism or the misuse of its capabilities. Only such an approach will enable the harmonious implementation of generative AI in society and the economy.

Currently, the use of generative AI is often limited to posing questions and awaiting answers, reducing it to a substitute for a web browser (Shibani et al., 2024). This approach involves quickly obtaining ready-made solutions to various problems, yet it does not utilize the full potential of this technology. Unfortunately, in most cases, users, including students and educators, are neither aware of nor employ the more advanced features offered by GAI tools. Some lecturers may be reluctant to introduce GAI due to a lack of knowledge or fears of losing their role (Zhai, 2024).

Therefore, it seems extremely important to organize training sessions that enhance the digital competencies of both teachers and students, acquainting them with the possibilities of generative AI and teaching them how to use these technologies effectively. Such training could help academic staff develop new teaching methods and integrate GAI to support the educational process. At the same time, students could learn how to use GAI effectively, for instance, to deepen their knowledge, develop analytical skills, or create their own projects.

It is crucial to understand that generative AI can significantly support the educational process, but this requires proper preparation and familiarity with the specific functions of these systems. Only then will it be possible to fully exploit GAI's potential in teaching and independent learning.

4.3 Democratization of access to AI systems and resources

Democratization of access to knowledge has been classified as both an opportunity and a threat. The opportunity arises from the possibility of providing students with continuous, unrestricted access to educational content and academic support. Reducing dependence on traditional forms of education, which require the constant presence of a teacher and access to physical materials, enables a more egalitarian approach to teaching, particularly in regions with limited academic infrastructure or among groups with restricted access to high-quality educational resources. On the other hand, the threat is that students (e.g., in some areas of the world) may become even more digitally and educationally excluded if they do not have access to generative AI systems (Memarian and Doleck, 2023; Diab Idris et al., 2024; Hadi Mogavi et al., 2024).

5 Threats

The elements discussed in this chapter were prepared based on Table 4, excluding those already described in the previous chapter entitled “Opportunities and Threats”. Two key issues reflected in Table 4 are presented in detail. The first is the unethical use of generative artificial intelligence. The second concerns “the creation of content containing erroneous information”, “over-reliance on AI and AI-generated content”, and “bias”. Before turning to these issues, and as in the chapter on opportunities, the chapter opens with the potentially harmful effects of the transformation of education, examining aspects such as the erosion of accountability, a decline in engagement, reduced social interactions, and the deterioration of communication quality.

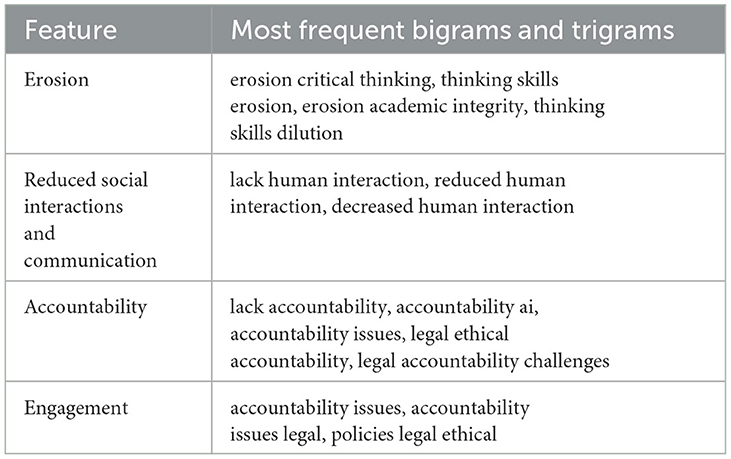

5.1 Negative transformation of education

As in the subsection on positive transformation of education, Table 6 provides additional information on the most frequently occurring bigrams and trigrams, this time related to the discussed threats.

Table 6. Most frequent bigrams and trigrams in the context of analyzed phrases classified as threats.
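The kind of bigram and trigram counting that underlies such a table can be illustrated with a minimal Python sketch. It is not the author's extraction pipeline, whose preprocessing is not described in this section: the tokenizer, the function names, and the three example phrases are hypothetical and serve only to show how n-gram frequencies are obtained.

```python
from collections import Counter
import re


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def ngram_counts(phrases, n):
    """Count n-grams (tuples of n consecutive tokens) across all phrases."""
    counts = Counter()
    for phrase in phrases:
        tokens = tokenize(phrase)
        # Zipping n shifted views of the token list yields consecutive n-grams.
        counts.update(zip(*(tokens[i:] for i in range(n))))
    return counts


# Hypothetical threat phrases, invented for illustration only.
threat_phrases = [
    "erosion of academic integrity",
    "erosion of critical thinking",
    "threat to academic integrity",
]

bigrams = ngram_counts(threat_phrases, 2)
trigrams = ngram_counts(threat_phrases, 3)

# Print the most frequent bigrams and trigrams with their counts.
for ngram, count in bigrams.most_common(3):
    print(" ".join(ngram), count)
for ngram, count in trigrams.most_common(3):
    print(" ".join(ngram), count)
```

In this toy input, "erosion of" and "academic integrity" each occur twice, which mirrors on a very small scale how recurring word pairs surface as dominant themes.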

In the context of negative transformations in education, “erosion” is most frequently mentioned. The analyzed articles usually refer to the degradation of skills acquired by students, as discussed earlier in this work. The second key aspect is the erosion of academic integrity. Academic integrity, understood as the fundamental principle regulating the process of learning, research, and educational work, is based on ethics, reliability, and transparency. It requires fair practices in writing assignments, conducting research, and grading, necessitating the avoidance of plagiarism, accurate citation of sources, and independent content creation. It also demands transparency in conducting research, avoiding data manipulation or result falsification, and adhering to the principles of fair assessment. Academic integrity is crucial for ensuring the quality of education and scientific research and protecting the value and credibility of degrees and scholarly publications. It fosters responsibility and ethical behavior in academia and future professional work, preventing misinformation and unsound research practices (Debby et al., 2024; Currie, 2023; Bin-Nashwan et al., 2023). This threat is directly connected to the content of the following subsection.

In the context of the negative impact on social interactions, students increasingly use AI instead of consulting with peers, lecturers, or mentors as their first source of information and problem-solving. As a result, the need to engage in academic discussions, collaborative problem-solving, or idea exchange diminishes. Additionally, generative AI can reduce the number of interactions during group work. In traditional projects, students must collaborate, brainstorm, and negotiate joint solutions. However, GAI allows for the rapid generation of ready-made content, which can lead to situations where individual group members stop actively participating in the creative process. Instead of engaging in dialogue, they may rely on AI to automate most tasks, thereby limiting the number of interpersonal interactions (Kim et al., 2024; Lai et al., 2023).

Table 6 also refers to the issue of “responsibility”, understood as legal accountability for any breaches of academic integrity and for false data or responses generated by generative artificial intelligence systems. This threat is directly linked to the content of the following two subsections.

The decline in student engagement may stem from the excessive automation of the teaching process and the reduction of interpersonal interactions. Using instant feedback, automatic hints, and AI-based assessment systems may lead to the passive consumption of content instead of active participation in the learning process. Rather than motivating independent work, these tools might weaken students' initiative by limiting the need for critical thinking and active engagement (Vargas-Murillo et al., 2023; Xiao and Zhi, 2023).

5.2 Unethical use of generative artificial intelligence

The unethical use of artificial intelligence is one of the most prominent and intuitive problems that may arise when working with GAI in education at all levels and in everyday work. This issue affects not only students but also various professional and educational applications. The problem primarily stems from generative AI's intended purpose and proficiency in generating texts and communicating using sentences and words. This functionality enables the automatic writing of essays, solving tasks from various fields, or preparing complete documents such as theses.

Why is this problematic? First and foremost, such an application of GAI makes it challenging to assess students' actual skills, thereby fostering academic dishonesty. As a result, individuals submitting work generated by GAI attribute competencies to themselves that they do not possess. Moreover, detecting work that lacks originality becomes challenging for traditional plagiarism detection tools, which are not designed to analyze content generated by GAI (Perkins et al., 2024; Uzun, 2023; Weber-Wulff et al., 2023).

Another significant problem is the potential for dependency on the technology. Excessive use of generative AI may lead to a deterioration in critical thinking abilities and the capacity for independent problem-solving (Darwin et al., 2024). This, in turn, may negatively impact its users' intellectual and academic development.

It is also important to mention the automation of grading and the automated creation of educational materials, which, although increasing efficiency, may lead to a decline in the quality control of these processes (Farazouli, 2024). In the long term, this may negatively affect the level of education, diminishing the value of education and its ability to develop key competencies in students.

5.3 Over-reliance on generative artificial intelligence and erroneous information

Like any advanced technology, generative AI carries certain limitations and threats that may negatively affect the educational process. Understanding the challenges associated with implementing AI is essential to harness its potential responsibly and thoughtfully.

One of the fundamental problems associated with generative AI is its inherent biases (Bolukbasi et al., 2016; Caliskan et al., 2017; Nosek et al., 2002, 2009; Bender et al., 2021; Zhao et al., 2018; Kotek et al., 2023). This issue has already been described earlier in this work. The problem is that GAI's knowledge is based on training data, which often contain hidden inequalities or stereotypes. For example, if the training data predominantly feature tall, white males with blue eyes as protagonists, there is a high probability that GAI, when generating a character (for a story, for instance), will produce a character with such features. Similar biases may concern race, gender, language, or stereotypes associated with social groups, nationalities, or professions. GAI, learning from data predominantly in English, may also transfer specific cultural characteristics inherent to English to content generated in other languages.

A second significant problem, although less common, is the occurrence of factual errors in the generated texts. Generative AI produces answers even when it “does not know” the correct response. Instead of admitting a lack of knowledge, it often creates content regardless of accuracy, leading to the dissemination of erroneous information. These errors are usually called AI hallucinations (Siontis et al., 2023; Barassi, 2024).

Another difficulty associated with GAI is its lack of creativity. The generated texts are usually correct and well-structured, but they are often devoid of originality and creative depth (Davis et al., 2024; de Vicente-Yagüe-Jara et al., 2023). This is due to the inherent nature of GAI, which, when processing text, predicts subsequent words based on patterns from the training data. As a result, it cannot go beyond the information contained in its training set, making its responses competent but uninspired and lacking creative expression.
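The idea of predicting subsequent words from patterns in the training data can be made concrete with a deliberately simplified Python sketch. It is only an illustrative assumption: real GAI systems are large neural networks operating on sub-word tokens, not word-pair frequency tables, and the corpus, function names, and example words below are hypothetical. The toy model nevertheless shows how a predictor of this kind reproduces the skew of its data and has nothing to offer for input it has never seen.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Map each word to a Counter of the words that follow it in the corpus."""
    model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical, deliberately skewed toy corpus (not real training data).
corpus = [
    "the hero is tall",
    "the hero is tall",
    "the hero is brave",
]

model = train_bigram_model(corpus)
# The dominant continuation in the corpus is chosen for a seen word.
print(predict_next(model, "is"))
# A word absent from the corpus yields no prediction at all.
print(predict_next(model, "villain"))
```

Because "tall" follows "is" twice and "brave" only once, the sketch always continues with "tall", a small-scale analogue of both the bias and the limited originality discussed in this subsection.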

The final problem is the risk of diminishing creative thinking among students, and possibly even among teachers (Guo and Lee, 2023). GAI may negatively impact students' ability to think critically and solve problems independently. Excessive reliance on this technology can gradually erode analytical skills, creativity, and reflective thinking, which are the foundations of academic education (Guo and Lee, 2023). If students begin to use GAI to create assignment texts or other works, there is a risk that they will not acquire the key competencies necessary for their future professional and intellectual lives.

6 Discussion

The analysis results indicate a broad spectrum of opportunities and threats in applying generative artificial intelligence in education. The presented data confirm that these tools can significantly support the educational process by offering personalized instruction, democratizing access to knowledge, and fostering the development of digital competencies. At the same time, studies point to significant challenges, such as the erosion of academic integrity, reduced social interactions, and an overreliance on AI in cognitive processes.

An analysis of the content of the collected articles revealed that generative AI can positively impact various aspects of education. The personalization of educational content enables adapting instructional materials to students' needs, enhancing their engagement and learning effectiveness. Research findings suggest that immediate feedback and dynamic assessment systems facilitate self-regulation in the learning process. At the same time, interactive educational environments based on GAI promote the development of critical thinking and creativity. Furthermore, the availability of AI as a supportive learning tool allows students easier access to educational resources, particularly in regions where traditional methods of instruction may be limited. In this context, an important aspect is the support provided to students with disabilities, for whom generative AI can serve as a valuable compensatory tool.

However, despite the numerous benefits, the threat analysis revealed that using ChatGPT and similar systems is also associated with various challenges. One of the primary issues is academic integrity. Studies indicate that students often use generative AI to automatically produce content, which can lead to plagiarism and breaches of scholarly rigor. AI tools enable the rapid creation of academic work, rendering traditional methods of assessing student competencies less effective. Consequently, universities and educational institutions must seek new methods of evaluating knowledge that account for both the potential of AI and the necessity of maintaining academic standards.

Another significant threat is the reduction of social interactions among students. Generative AI, due to its accessibility and efficiency, may lead to a decrease in direct interpersonal contact—both among students and between students and instructors. Research shows that students increasingly regard ChatGPT as their primary source of information, forgoing traditional learning methods such as academic discussions, consultations with instructors, or group work. This phenomenon may result in a decline in interpersonal and argumentative skills, crucial for higher education and later professional life.

Overreliance on AI may also contribute to the erosion of critical thinking and creativity. Because GAI offers ready-made solutions, there is a risk that students will be less engaged in independent analysis and problem-solving. The learning process may become superficial, and users might lose the ability to critically question information and develop their own original ideas. Moreover, frequent reliance on AI-generated answers may lead to a homogenization of thought, with students more inclined to accept generated content as definitive and objective, potentially limiting cognitive diversity and creativity in the learning process.

An interesting aspect that emerged in the analyzed articles is the issue of access to AI tools. Although generative AI has the potential to democratize education, there is a risk that it may also exacerbate educational inequalities. Not all students have equal access to modern technologies, which can lead to further digital exclusion. This problem is particularly evident in countries with limited technological infrastructure, where a lack of access to AI may pose a significant barrier to education.

Based on the analyses presented in this article, issues related to students' mental health and the use of generative artificial intelligence and large language models to support students with their mental health challenges rarely appear in review articles. They can even be considered sporadic compared to other opportunities and threats discussed in the literature. This article presents possible reasons for this phenomenon. A key reason may be the current unreliability of this technology for mental health improvement. However, this does not preclude its future potential.

In terms of future research directions, it seems crucial to develop a strategy for integrating generative AI in education in a controlled and responsible manner. Both the potential benefits, from personalized teaching to improved access to knowledge, and the risks associated with the erosion of critical thinking, reduced social interactions, and breaches of academic integrity must be considered. It will be essential to implement appropriate regulations and develop assessment methods that enable the effective use of AI without negative consequences for the quality of education.

7 Conclusion

This study analyzed the impact of generative artificial intelligence, particularly ChatGPT, on education and students' mental health. A literature review indicates that AI-based tools can potentially transform the teaching process by offering personalized content, immediate feedback, and enhanced access to educational resources. They may also serve as valuable psychoeducational tools by supporting access to mental health information and preliminary diagnostics. At the same time, this technology poses significant challenges that could negatively affect academic integrity, social interactions, and the development of critical thinking and creativity.

Concerning future research and the evolution of academic policy, it will be essential to develop regulations and evaluation methodologies that enable the effective use of AI in education while minimizing its negative impacts. Further investigation is warranted into the long-term consequences of employing generative AI in the context of students' mental health and its influence on their capacity for independent and creative thinking.

In summary, generative AI offers substantial educational benefits, but its implementation requires a conscious and responsible approach. Appropriate regulations, education on the ethical use of AI, and the development of alternative methods of knowledge assessment can help harness the potential of this technology without compromising the quality of education or students' mental health. In the future, they may also help achieve a balance between the integration of modern AI tools and the preservation of traditional academic values such as critical thinking, creativity, and academic integrity.

Author contributions

MJ: Software, Funding acquisition, Writing – original draft, Formal analysis, Resources, Writing – review & editing, Investigation, Methodology, Data curation, Visualization, Project administration, Conceptualization, Supervision, Validation.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abujaber, A., Al-Qudimat, A., and Nashwan, A. (2023). A strengths, weaknesses, opportunities, and threats (SWOT) analysis of ChatGPT integration in nursing education. Cureus 15:48643. doi: 10.7759/cureus.48643

AbuMusab, S. (2024). Generative AI and human labor: who is replaceable? AI & Society 39, 3051–3053. doi: 10.1007/s00146-023-01773-3

Ahmed, A. R. (2025). Navigating the integration of generative artificial intelligence in higher education: Opportunities, challenges, and strategies for fostering ethical learning. Adv. Biomed. Health Sci. 4:24. doi: 10.4103/abhs.abhs_122_24

Ahmed, Z., Shanto, S. S., Rime, M. H. K., Morol, M. K., Fahad, N., Hossen, M. J., et al. (2024). The generative AI landscape in education: Mapping the terrain of opportunities, challenges, and student perception. IEEE Access 12, 147023–147050. doi: 10.1109/ACCESS.2024.3461874

Al Murshidi, G., Shulgina, G., Kapuza, A., and Costley, J. (2024). How understanding the limitations and risks of using ChatGPT can contribute to willingness to use. Smart Learn. Environm. 11:36. doi: 10.1186/s40561-024-00322-9

AlAfnan, M. A., Dishari, S., Jovic, M., and Lomidze, K. (2023). ChatGPT as an educational tool: Opportunities, challenges, and recommendations for communication, business writing, and composition courses. J. Artif. Intellig. Technol. 3, 60–68. doi: 10.37965/jait.2023.0184

Ali, M. M., Wafik, H. A., Mahbub, S., and Das, J. (2024). Gen Z and generative AI: shaping the future of learning and creativity. Cognizance J. Multidiscipl. Stud. 4, 1–18. doi: 10.47760/cognizance.2024.v04i10.001

Ayala, S. (2023). ChatGPT as a universal design for learning tool supporting college students with disabilities. Educ. Renaissance 12, 22–41.

Barassi, V. (2024). Toward a theory of AI errors: making sense of hallucinations, catastrophic failures, and the fallacy of generative AI. Harvard Data Sci. Rev. 1–16. doi: 10.1162/99608f92.ad8ebbd4

Bender, E. M., Gebru, T., McMillan-Major, A., and Shmitchell, S. (2021). “On the dangers of stochastic parrots: can language models be too big?,” in Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 610–623.

Bhuvan Botchu, K. P. I., and Botchu, R. (2024). Can ChatGPT empower people with dyslexia? Disab. Rehabilitat. Assist. Technol. 19, 2131–2132. doi: 10.1080/17483107.2023.2256805

Bin-Nashwan, S. A., Sadallah, M., and Bouteraa, M. (2023). Use of ChatGPT in academia: Academic integrity hangs in the balance. Technol. Soc. 75:102370. doi: 10.1016/j.techsoc.2023.102370

Bolukbasi, T., Chang, K.-W., Zou, J., Saligrama, V., and Kalai, A. (2016). “Man is to computer programmer as woman is to homemaker? Debiasing word embeddings,” in Proceedings of the 30th International Conference on Neural Information Processing Systems, NIPS'16 (Red Hook, NY: Curran Associates Inc), 4356–4364.

Caliskan, A., Bryson, J. J., and Narayanan, A. (2017). Semantics derived automatically from language corpora contain human-like biases. Science 356, 183–186. doi: 10.1126/science.aal4230

Castillo, A., Silva, G., Arocutipa, J., Berrios, H., Marcos Rodriguez, M., Reyes, G., et al. (2023). Effect of Chat GPT on the digitized learning process of university students. J. Namibian Stud. History Polit. Cult. 33, 1–15. doi: 10.59670/jns.v33i.411

Castro, R. A. G., Cachicatari, N. A. M., Aste, W. M. B., and Medina, M. P. L. (2024). Exploration of ChatGPT in basic education: Advantages, disadvantages, and its impact on school tasks. Contemp. Educ. Technol. 16:ep511. doi: 10.30935/cedtech/14615

Chang, D. H., Lin, M. P.-C., Hajian, S., and Wang, Q. Q. (2023). Educational design principles of using AI chatbot that supports self-regulated learning in education: Goal setting, feedback, and personalization. Sustainability 15:12921. doi: 10.3390/su151712921

Cheng, S.-W., Chang, C.-W., Chang, W.-J., Wang, H.-W., Liang, C.-S., Kishimoto, T., et al. (2023). The now and future of ChatGPT and GPT in psychiatry. Psychiatry Clin. Neurosci. 77, 592–596. doi: 10.1111/pcn.13588

Currie, G. M. (2023). Academic integrity and artificial intelligence: is ChatGPT hype, hero or heresy? Semin. Nucl. Med. 53, 719–730. doi: 10.1053/j.semnuclmed.2023.04.008

Darwin, R., Mukminatien, D., Suryati, N., Laksmi, N., and Marzuki, E. D. (2024). Critical thinking in the AI era: an exploration of EFL students' perceptions, benefits, and limitations. Cogent Educ. 11:2290342. doi: 10.1080/2331186X.2023.2290342

Davis, J., Van Bulck, L., Durieux, B. N., Lindvall, C., et al. (2024). The temperature feature of ChatGPT: modifying creativity for clinical research. JMIR Human Fact. 11:e53559. doi: 10.2196/53559

Davis, R. O., and Lee, Y. J. (2024). Prompt: ChatGPT, create my course, please! Educ. Sci. 14:24. doi: 10.3390/educsci14010024

De Duro, E. S., Improta, R., and Stella, M. (2025). Introducing CounseLLMe: A dataset of simulated mental health dialogues for comparing LLMs like Haiku, LLaMAntino and ChatGPT against humans. Emerg. Trends Drugs, Addict. Health 5:100170. doi: 10.1016/j.etdah.2025.100170

de Vicente-Yagüe-Jara, M.-I., López-Martínez, O., Navarro-Navarro, V., and Cuéllar-Santiago, F. (2023). Writing, creativity, and artificial intelligence: ChatGPT in the university context. Comunicar: Media Educ. Res. J. 31, 45–54. doi: 10.3916/C77-2023-04

Debby, R. E., Cotton, P. A. C., and Shipway, J. R. (2024). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovat. Educ. Teach. Int. 61, 228–239. doi: 10.1080/14703297.2023.2190148

Dempere, J., Modugu, K., Hesham, A., and Ramasamy, L. K. (2023). The impact of ChatGPT on higher education. Front. Educ. 8:1206936. doi: 10.3389/feduc.2023.1206936

Dergaa, I., Fekih-Romdhane, F., Hallit, S., Loch, A. A., Glenn, J. M., Fessi, M. S., et al. (2024). ChatGPT is not ready yet for use in providing mental health assessment and interventions. Front. Psychiatry 14:1277756. doi: 10.3389/fpsyt.2023.1277756

Diab Idris, M., Feng, X., and Dyo, V. (2024). Revolutionizing higher education: unleashing the potential of large language models for strategic transformation. IEEE Access 12, 67738–67757. doi: 10.1109/ACCESS.2024.3400164

Elyoseph, Z., and Levkovich, I. (2023). Beyond human expertise: the promise and limitations of ChatGPT in suicide risk assessment. Front. Psychiatry 14:1213141. doi: 10.3389/fpsyt.2023.1213141

Elyoseph, Z., Refoua, E., Asraf, K., Lvovsky, M., Shimoni, Y., and Hadar-Shoval, D. (2024). Capacity of generative AI to interpret human emotions from visual and textual data: pilot evaluation study. JMIR Mental Health 11:e54369. doi: 10.2196/54369

Farazouli, A. (2024). “Automation and assessment: exploring ethical issues of automated grading systems from a relational ethics approach,” in Framing Futures in Postdigital Education: Critical Concepts for Data-driven Practices, eds. A. Buch, Y. Lindberg, and T. Cerratto Pargman (Cham: Springer Nature Switzerland), 209–226.

Farrokhnia, M., Banihashem, S. K., Noroozi, O., and Wals, A. (2024). A SWOT analysis of ChatGPT: implications for educational practice and research. Innovat. Educ. Teach. Int. 61, 460–474. doi: 10.1080/14703297.2023.2195846

García-López, I. M., González González, C. S., Ramírez-Montoya, M.-S., and Molina-Espinosa, J.-M. (2025). Challenges of implementing ChatGPT on education: Systematic literature review. Int. J. Educ. Res. Open 8:100401. doi: 10.1016/j.ijedro.2024.100401

Ghanadian, H., Nejadgholi, I., and Al Osman, H. (2024). Socially aware synthetic data generation for suicidal ideation detection using large language models. IEEE Access 12, 14350–14363. doi: 10.1109/ACCESS.2024.3358206

Gill, S. S., Xu, M., Patros, P., Wu, H., Kaur, R., Kaur, K., et al. (2024). Transformative effects of ChatGPT on modern education: emerging era of AI chatbots. Intern. Things Cyber-Physi. Syst. 4, 19–23. doi: 10.1016/j.iotcps.2023.06.002

Guo, Y., and Lee, D. (2023). Leveraging ChatGPT for enhancing critical thinking skills. J. Chem. Educ. 100, 4876–4883. doi: 10.1021/acs.jchemed.3c00505

Hadi Mogavi, R., Deng, C., Juho Kim, J., Zhou, P., Kwon, Y. D., Hosny Saleh Metwally, A., et al. (2024). ChatGPT in education: A blessing or a curse? A qualitative study exploring early adopters' utilization and perceptions. Comp. Human Behav.: Artif. Humans 2:100027. doi: 10.1016/j.chbah.2023.100027

Hung, J., and Chen, J. (2023). The benefits, risks and regulation of using ChatGPT in Chinese academia: a content analysis. Soc. Sci. 12:380. doi: 10.3390/socsci12070380

Imran, M., and Almusharraf, N. (2023). Analyzing the role of ChatGPT as a writing assistant at higher education level: a systematic review of the literature. Contemp. Educ. Technol. 15:ep464. doi: 10.30935/cedtech/13605

Jeon, J., and Lee, S. (2023). Large language models in education: a focus on the complementary relationship between human teachers and ChatGPT. Educ. Inform. Technol. 28, 15873–15892. doi: 10.1007/s10639-023-11834-1

Jeyaraman, M., Jeyaraman, N., Nallakumarasamy, A., Yadav, S., Bondili, S. K., et al. (2023). ChatGPT in medical education and research: a boon or a bane? Cureus 15:44316. doi: 10.7759/cureus.44316

Jukiewicz, M. (2024). The future of grading programming assignments in education: The role of ChatGPT in automating the assessment and feedback process. Think. Skills Creat. 52:101522. doi: 10.1016/j.tsc.2024.101522

Kalam, K. T., Rahman, J. M., Islam, M. R., and Dewan, S. M. R. (2024). ChatGPT and mental health: friends or foes? Health Sci. Rep. 7:e1912. doi: 10.1002/hsr2.1912

Kim, H. K., Nayak, S., Roknaldin, A., Zhang, X., Twyman, M., and Lu, S. (2024). Exploring the impact of ChatGPT on student interactions in computer-supported collaborative learning. arXiv [preprint] arXiv:2403.07082. doi: 10.48550/arXiv.2403.07082

Kıyak, Y. S., and Emekli, E. (2024). ChatGPT prompts for generating multiple-choice questions in medical education and evidence on their validity: a literature review. Postgrad Med. J. 100, 858–865. doi: 10.1093/postmj/qgae065

Klimova, B., Pikhart, M., and Al-Obaydi, L. H. (2024). Exploring the potential of ChatGPT for foreign language education at the university level. Front. Psychol. 15:1269319. doi: 10.3389/fpsyg.2024.1269319

Kohnke, L., Moorhouse, B. L., and Zou, D. (2023). ChatGPT for language teaching and learning. RELC J. 54, 537–550. doi: 10.1177/00336882231162868

Kotek, H., Dockum, R., and Sun, D. (2023). “Gender bias and stereotypes in large language models,” in CI '23: Proceedings of The ACM Collective Intelligence Conference (New York: ACM).

Kusuma, I. P. I., Roni, M., Dewi, K. S., and Mahendrayana, G. (2024). Revealing the potential of ChatGPT for English language teaching: EFL preservice teachers' teaching practicum experience. Stud. English Lang. Educ. 11, 650–670. doi: 10.24815/siele.v11i2.34748

Kuzdeuov, A., Mukayev, O., Nurgaliyev, S., Kunbolsyn, A., and Varol, H. A. (2024). “ChatGPT for visually impaired and blind,” in 2024 International Conference on Artificial Intelligence in Information and Communication (ICAIIC) (Osaka: IEEE), 722–727.

Lai, T., Xie, C., Ruan, M., Wang, Z., Lu, H., and Fu, S. (2023). Influence of artificial intelligence in education on adolescents' social adaptability: the mediatory role of social support. PLoS ONE 18:e0283170. doi: 10.1371/journal.pone.0283170

Lamichhane, B. (2023). Evaluation of ChatGPT for NLP-based mental health applications. arXiv [preprint]. doi: 10.48550/arXiv.2303.15727

Levkovich, I., and Elyoseph, Z. (2023). Suicide risk assessments through the eyes of ChatGPT-3.5 versus ChatGPT-4: vignette study. JMIR Mental Health 10:e51232. doi: 10.2196/51232

Lho, S. K., Park, S.-C., Lee, H., Oh, D. Y., Kim, H., Jang, S., et al. (2025). Large language models and text embeddings for detecting depression and suicide in patient narratives. JAMA Netw. Open 8:e2511922. doi: 10.1001/jamanetworkopen.2025.11922

Li, M. (2025). The impact of ChatGPT on teaching and learning in higher education: Challenges, opportunities, and future scope. Encyclopedia of Information Science and Technology, Sixth Edition (Hershey, PA), 1–20.

Limna, P., Kraiwanit, T., Jangjarat, K., Klayklung, P., and Chocksathaporn, P. (2023). The use of ChatGPT in the digital era: perspectives on chatbot implementation. J. Appl. Learn. Teach. 6, 64–74. doi: 10.37074/jalt.2023.6.1.32

Limo, F. A. F., Tiza, D. R. H., Roque, M. M., Herrera, E. E., Murillo, J. P. M., Huallpa, J. J., et al. (2023). Personalized tutoring: ChatGPT as a virtual tutor for personalized learning experiences. Przestrzeń Społeczna 23, 293–312.

Lucas, H. C., Upperman, J. S., and Robinson, J. R. (2024). A systematic review of large language models and their implications in medical education. Med. Educ. 58, 1276–1285. doi: 10.1111/medu.15402

Ma, Q., Crosthwaite, P., Sun, D., and Zou, D. (2024). Exploring ChatGPT literacy in language education: a global perspective and comprehensive approach. Comp. Educ.: Artif. Intellig. 7:100278. doi: 10.1016/j.caeai.2024.100278

Mao, J., Chen, B., and Liu, J. C. (2024). Generative artificial intelligence in education and its implications for assessment. TechTrends 68, 58–66. doi: 10.1007/s11528-023-00911-4

Maurya, R. K., Montesinos, S., Bogomaz, M., and DeDiego, A. C. (2025). Assessing the use of ChatGPT as a psychoeducational tool for mental health practice. Counsel. Psychother. Res. 25:e12759. doi: 10.1002/capr.12759

Memarian, B., and Doleck, T. (2023). ChatGPT in education: Methods, potentials, and limitations. Comp. Human Behav.: Artif. Humans 1:100022. doi: 10.1016/j.chbah.2023.100022

Michel-Villarreal, R., Vilalta-Perdomo, E., Salinas-Navarro, D. E., Thierry-Aguilera, R., and Gerardou, F. S. (2023). Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 13:856. doi: 10.3390/educsci13090856

Nosek, B., Banaji, M., and Greenwald, A. (2002). Harvesting implicit group attitudes and beliefs from a demonstration web site. Group Dynam.: Theory, Res. Pract. 6, 101–115. doi: 10.1037/1089-2699.6.1.101

Nosek, B. A., Smyth, F. L., Sriram, N., Lindner, N. M., Devos, T., Ayala, A., et al. (2009). National differences in gender–science stereotypes predict national sex differences in science and math achievement. Proc. Nat. Acad. Sci. 106, 10593–10597. doi: 10.1073/pnas.0809921106

Onal, S., and Kulavuz-Onal, D. (2024). A cross-disciplinary examination of the instructional uses of ChatGPT in higher education. J. Educ. Technol. Syst. 52, 301–324. doi: 10.1177/00472395231196532

Perkins, M., Roe, J., Postma, D., McGaughran, J., and Hickerson, D. (2024). Detection of GPT-4 generated text in higher education: combining academic judgement and software to identify generative AI tool misuse. J. Acad. Ethics 22, 89–113. doi: 10.1007/s10805-023-09492-6

Qiu, H., He, H., Zhang, S., Li, A., and Lan, Z. (2023). SMILE: Single-turn to multi-turn inclusive language expansion via ChatGPT for mental health support. arXiv [preprint] arXiv:2305.00450. doi: 10.18653/v1/2024.findings-emnlp.34

Qureshi, B. (2023). Exploring the use of ChatGPT as a tool for learning and assessment in undergraduate computer science curriculum: opportunities and challenges. arXiv [preprint] arXiv:2304.11214. doi: 10.48550/arXiv.2304.11214

Raile, P. (2024). The usefulness of ChatGPT for psychotherapists and patients. Humanit. Soc. Sci. Commun. 11, 1–8. doi: 10.1057/s41599-023-02567-0

Rakap, S., and Balikci, S. (2024). Enhancing IEP goal development for preschoolers with autism: a preliminary study on ChatGPT integration. J. Autism Dev. Disord. 2024:1–6. doi: 10.1007/s10803-024-06343-0