- 1Department of Nutrition Science, East Carolina University, Greenville, NC, United States

- 2Department of Nutrition and Dietetics, University of North Florida, Jacksonville, FL, United States

- 3Academy of Nutrition and Dietetics, Chicago, IL, United States

- 4James A. Haley Veterans’ Hospital, Tampa, FL, United States

Objective: To develop and validate an abbreviated screening tool to screen Nutrition Care Process (NCP) proficiency.

Methods: The questionnaire was developed using existing literature. All iterations were reviewed by subject matter experts. The questionnaire underwent several methods of testing, including content validity, face validity, internal consistency reliability, and test–retest reliability. Questions were scored based on answer selection, and participants were categorized by observed levels of proficiency.

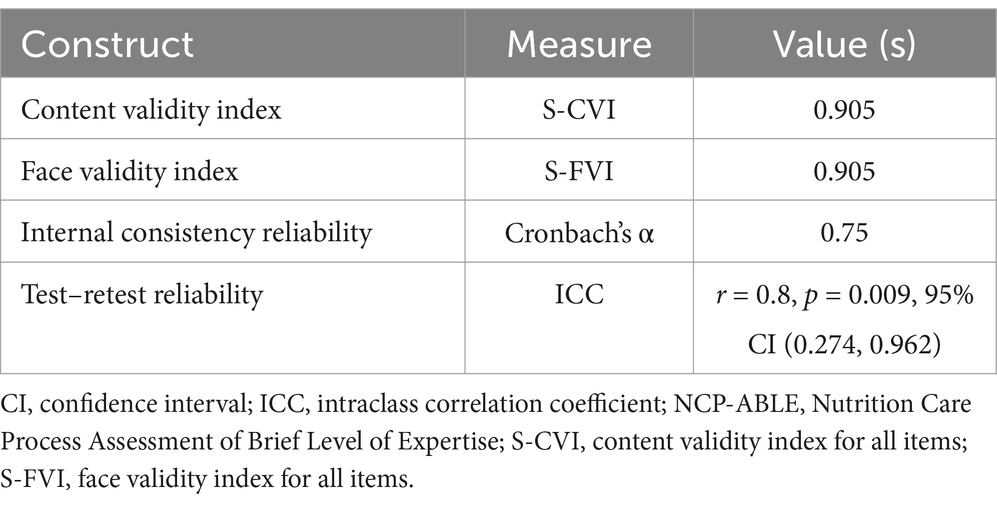

Results: Internal consistency reliability testing indicated removal of two items, creating a 3-item questionnaire (the NCP Assessment of Brief Level of Expertise, or NCP-ABLE). All items met content (S-CVI = 0.94) and face (S-FVI = 0.94) validity and internal consistency (α = 0.75) and test–retest (r = 0.8, p = 0.009, 95% CI: 0.274, 0.962) reliability thresholds. Six (85.7%) of the subject matter experts reported higher degrees of proficiency with scores of 3 (highest quartile placement), whereas one expert demonstrated lower levels of proficiency through the score of 1 (second quartile placement).

Conclusion: The NCP-ABLE met the established validity and reliability thresholds. This supports its utilization as a screen for NCP proficiency, particularly to identify individuals demonstrating lower levels of NCP knowledge proficiency. The NCP-ABLE may be effective for the screening of clinicians, educators, preceptors, and students for educational intervention or quality improvement initiatives. Future investigations may aim to validate the NCP-ABLE in other languages. Further research is needed to determine the relationship between NCP-ABLE scores and NCP implementation, possibly by comparing NCP-ABLE results and scores from robust assessments of NCP practice.

Introduction

Since the adoption of the Nutrition Care Process (NCP) by the Academy of Nutrition and Dietetics in 2003, the NCP has continued to evolve (1–4). The 4-step model (assessment and reassessment, diagnosis, intervention, and monitoring and evaluation), composed of six clinical judgments across two care phases (problem identification and problem solving), has become a practice standard internationally (4). Among the benefits suggested by Splett and Myers (5) in their original proposal are improvements in consistency and quality of care. These advantages have been partially credited to the structure and guidance provided by NCP chains, or individual relationships between each of the clinical judgments (evidence, diagnosis, etiology, goal, intervention, and outcome) (6). The continued development of the NCP and advancements in informatics, terminology (in the form of the Nutrition Care Process Terminology, or NCPT), and data management methodology have established the theory behind the model’s use (4, 7).

While sound in theory, years would pass before sufficient evidence for NCP implementation would surface. Investigation teams led by Lewis et al. (8) and Mujlli et al. (9) would take advantage of an NCP chart audit tool (known as the Diet-NCP-Audit) developed by Lövestam et al. (10) to support NCP use. In one Veterans Health Administration facility, Lewis et al. (8) observed a 38% increased odds of problem resolution for every point increase on the overall audit score (scaling from 0 to 26). Furthermore, findings suggested that the presence of the etiology-intervention chain link in documentation elicited a 51 times higher problem improvement rate (8).

At the same time, Mujlli et al. (9) were conducting a similar study in Saudi Arabia with the aim of evaluating the impact of registered dietitian nutritionist (RDN) NCP/T use and documentation on non-alcoholic fatty liver disease and metabolic syndrome in a pediatric population. Audit scores were significantly and inversely correlated with changes in body mass index over the 6-to-12-month follow-up period and with alkaline phosphatase measurements (9). Despite the usage of NCP in different countries and in dissimilar contexts, improved Diet-NCP-Audit scores were found to be significantly associated with improved patient outcomes (8, 9).

Colin et al. (11) continued to expand the field with a secondary analysis of the 2017–2019 diabetes registry cases compiled via The Academy of Nutrition and Dietetics Health Informatics Infrastructure (ANDHII) Dietetics Outcome Registry (DOR). The DOR, one of the prominent tools available in ANDHII, allows for aggregation of de-identified encounter data submitted by nutrition and dietetics professionals (12). While the linkage associated with problem resolution varied from previous investigations, the NCP implementation in the presence of the evidence-diagnosis link was a significant predictor of diagnosis resolution. These findings were among the most notable to support the effective use of NCP/T (and its underlying clinical judgments as measured by chain links) for the purpose of improvements in quality of nutrition documentation.

The body of literature suggests that increased NCP knowledge and quality implementation are associated with greater improvements in the original NCP benefits introduced by Splett and Myers (5). Despite preceding these findings, the Academy of Nutrition and Dietetics Practice paper (13) discussing analytical skills needed for effective nutrition assessment and diagnosis was among the first works describing the characteristics associated with NCP proficiency. This was later captured in the first NCP update paper (3), which suggests that proficiency involves thorough understanding of the model (and underlying critical judgments), effective organization of data, prioritization of actionable problems, and recognition of patterns between care-related constructs. The qualities expressed at the level of proficiency are particularly essential in the problem identification phase, which involves critically evaluating nutrition assessment findings to identify the presence of nutrition problems and their most influential etiologies (2, 6, 14). This surpasses prerequisite stages of understanding that focus on recognition of model steps, ability to structure care, selection of assessment tools and procedures, and differentiation of problems, etiologies, and symptomologies (3, 13). Collectively, NCP proficiency can be described as having theoretical knowledge that exceeds competency and allows for higher-level nutrition assessment and diagnosis (including recognition of patterns between constructs such as problems, etiologies, and symptomologies) and prioritization of actionable nutrition interventions.

The most widely practiced form of individual NCP assessment involves a retrospective approach using documentation audit tools. Tools such as the aforementioned Diet-NCP-Audit and the NCP Quality Evaluation and Standardization Tool (NCP QUEST) can effectively evaluate NCP implementation (10, 15). NCP QUEST in particular has been endorsed for its high content validity and potential for teaching and elevating outcomes management (15); however, chart auditing methods may introduce additional workloads for managers who are unfamiliar with or disinterested in these tools. These audits are also retrospective in nature, meaning that their items cannot be proactively administered in advance of care encounters unless used in resource-demanding educational simulations. A prospective approach in the form of knowledge screens may yield immediate data to recognize individuals or populations at risk of low NCP knowledge, allowing for educational intervention, quality improvement, and investigatory purposes. Assessments of comprehension have been developed, such as the NCP knowledge quiz in Module 4 of the International Nutrition Care Process Implementation Survey (INIS) (16). Although the INIS is recognized as the most comprehensive web-based instrument for evaluation of NCP knowledge, attitudes, perceptions, and implementation, its questions focus on basic NCP knowledge scaled to levels below proficiency (16). To the knowledge of the authors, a concise tool for evaluating the level of proficiency had not been validated. The purpose of this investigation was to develop and validate an electronic questionnaire to screen NCP proficiency in nutrition and dietetics professionals.

Methods

Questionnaire development

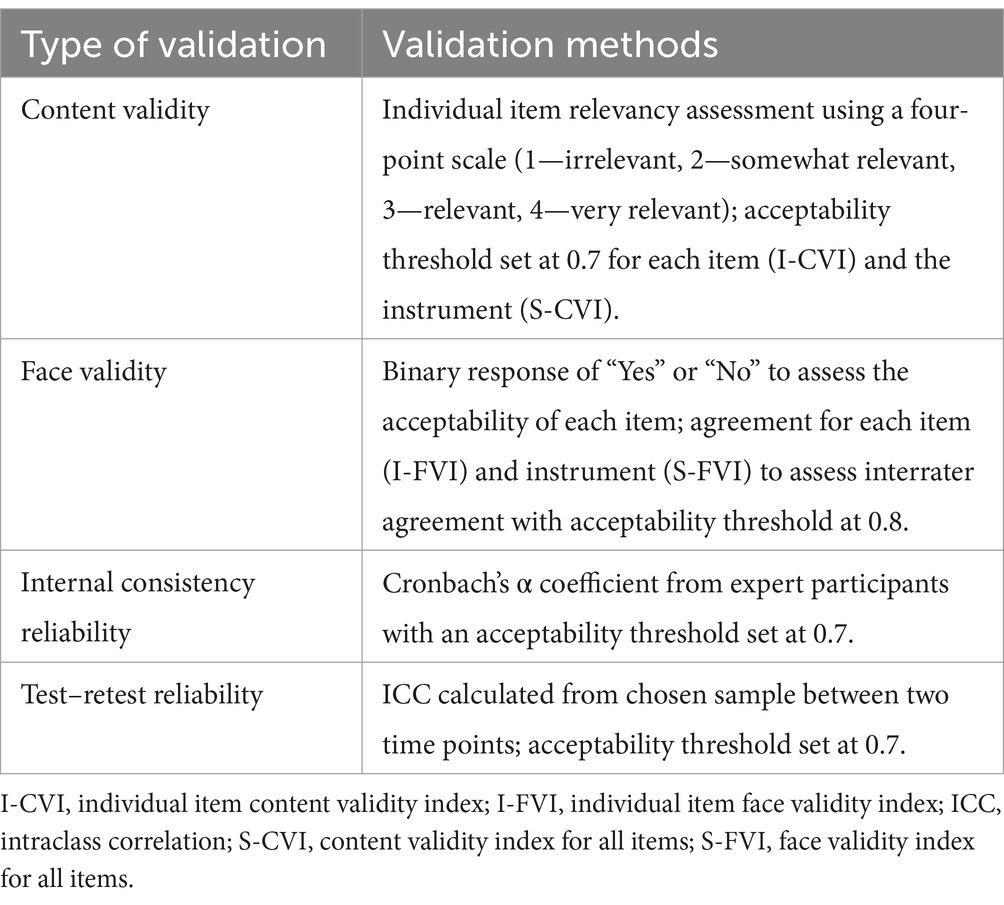

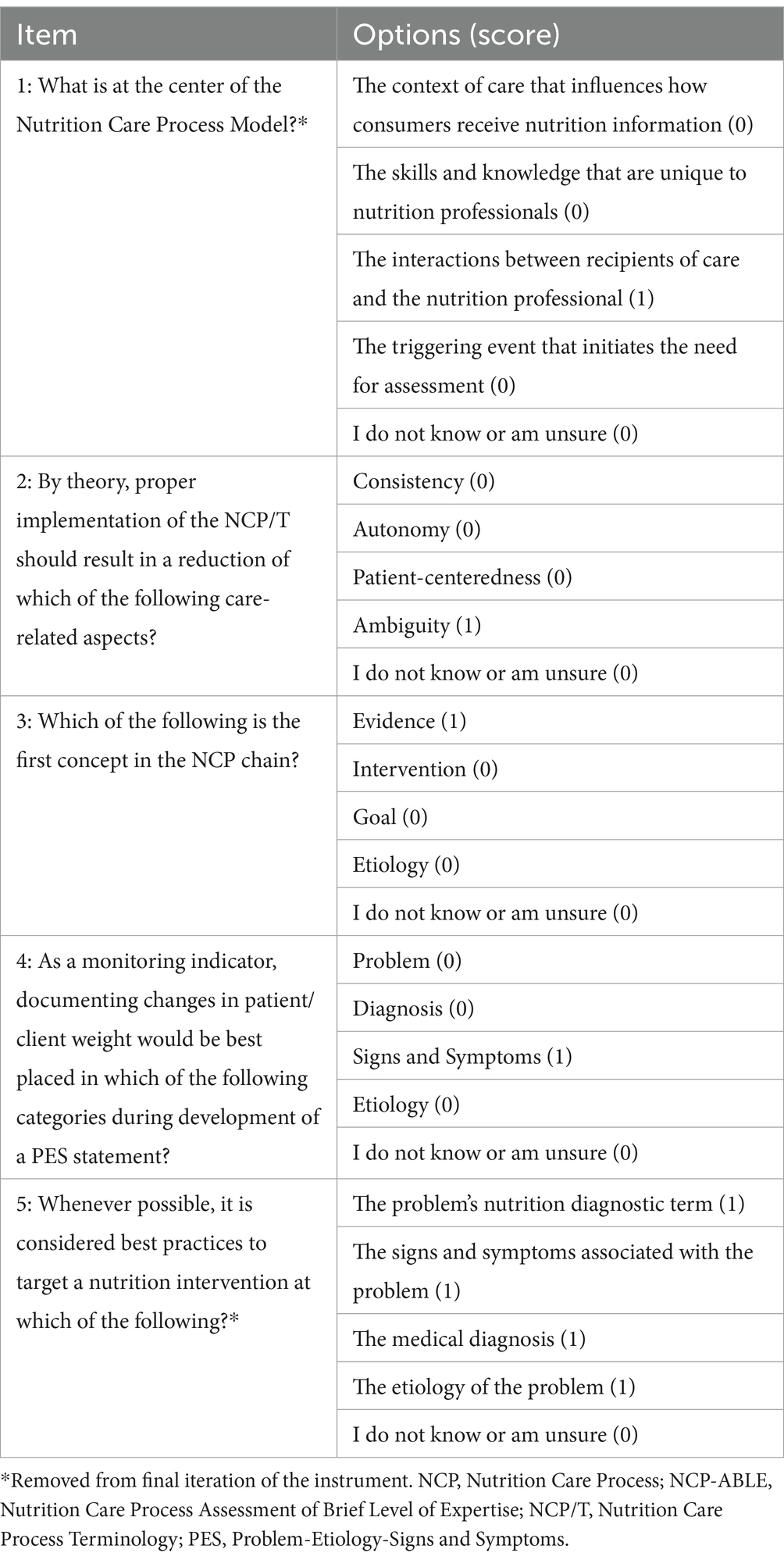

The authors aimed to include no more than five items in the final revision for concision. Four fully original items (items 1, 2, 3, and 5) were developed from key points identified in literature. The NCP update papers (3, 4) were reviewed as a broad foundation for question development. The works from Charney and Peterson (13) and Thompson et al. (6) were reviewed for improved context, as these support the qualities stated by Swan et al. (3). Another item (item 4) was modeled on the concept introduced by the eighth item within the NCP Knowledge Quiz of the INIS (16) and reworded for context. The draft instrument was developed by the PI with subsequent review by the authors for revision. Formal assessment, involving multiple rounds of adjustment, followed with the assistance of seven subject matter experts. Qualtrics, version March 2024 (Qualtrics) (17) hosted the survey for the investigation team and disseminated iterations to experts. Each round of revision included evaluation of expert feedback, reliability and validity testing, and discussion among the authors (Table 1).

Subject matter expert criteria

Eligibility for experts included maintenance of registered dietitian nutritionist (RDN) registration through the Commission on Dietetic Registration, self-perception of NCP proficiency, and at least 3 years of practice- or education-based NCP usage. Expert recruitment involved convenience sampling, as 11 eligible peers of the investigation team across academic, clinical, and research institutions were invited to participate in validation. After confirming eligibility and interest, prospective experts received an email containing the background information on the study, a copy of the draft instrument, and an invitation link to participate in a modified copy of the survey for assessment.

Content validity

The draft questions approved by the authors underwent content validity assessments endorsed by authors from the nursing discipline (18, 19). After each draft question was answered by subject matter experts, an item assessing relevancy using a four-point Likert scale (one being irrelevant and four being extremely relevant) followed (18). Upon completion of each question and prompt, a text box was provided for anonymous feedback. Each item receiving a score of three or four on the Likert scale yielded one point, with a score of one or two receiving zero points. Content validity index (CVI) was calculated for each individual question (I-CVI) and the entire instrument (S-CVI). All items with an I-CVI of less than 0.7 were identified for revision. A lower threshold of 0.7 was implemented due to broadness and concision of the questionnaire, as values less than 0.7 necessitate removal (18, 20). Feedback provided for these items and the collective instrument was considered, and experts were asked to repeat the process for the newly revised questions.
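The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code: the ratings are hypothetical, the item names are placeholders, and averaging the item-level indexes to obtain S-CVI is an assumption, since the text does not state which S-CVI variant was used.

```python
from statistics import mean

# Hypothetical relevancy ratings (1-4) from seven experts for three items.
# These numbers are illustrative only and are not the study's data.
ratings = {
    "item_a": [4, 4, 3, 4, 3, 4, 4],
    "item_b": [4, 3, 2, 4, 3, 3, 4],
    "item_c": [2, 3, 1, 4, 2, 3, 2],
}

REVISION_THRESHOLD = 0.7  # I-CVI below this value flags the item for revision


def item_cvi(scores):
    """Proportion of experts who rated the item 3 or 4 (one point each)."""
    return sum(1 for s in scores if s >= 3) / len(scores)


def scale_cvi(all_ratings):
    """Mean of the item-level indexes (assumed averaging approach)."""
    return mean(item_cvi(scores) for scores in all_ratings.values())


i_cvi = {name: item_cvi(scores) for name, scores in ratings.items()}
s_cvi = scale_cvi(ratings)
flagged = [name for name, value in i_cvi.items() if value < REVISION_THRESHOLD]

for name, value in i_cvi.items():
    print(f"{name}: I-CVI = {value:.2f}")
print(f"S-CVI = {s_cvi:.2f}; flagged for revision: {flagged}")
```

Dichotomizing the four-point scale before averaging means that an item rated 3 by every expert scores the same as an item rated 4 by every expert; the index captures agreement on relevance, not its strength.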

Face validity

Face validity was assessed with the goal of ensuring that the items are in alignment with research aims. The experts were determined to be an appropriate sample for this assessment, as they represent the target audience and can offer both expert and participant perspectives. A binary selection (“Yes” or “No”) followed each individual content validity item, requesting each expert to indicate their agreement on the question’s appropriateness for the intended aims. Experts who disagreed were encouraged to leave feedback in the text box mentioned during content validity assessment. Interrater agreement scores, expressed as face validity indexes (FVI), were calculated for each item (I-FVI) and averaged across the entire block (S-FVI), with an acceptability threshold of 0.8 (indicating acceptable scale agreement) (21). Agreement below 0.8 indicated revision based on the feedback provided.
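For illustration, the agreement index can be computed directly from the binary responses. The sketch below uses hypothetical answers and placeholder item names; it assumes that I-FVI is the proportion of "Yes" responses for an item and that S-FVI is the mean of the item-level values, as the paragraph above describes.

```python
# Hypothetical "Yes"/"No" responses from seven experts (not the study's data).
responses = {
    "item_a": ["Yes", "Yes", "Yes", "Yes", "Yes", "Yes", "Yes"],
    "item_b": ["Yes", "Yes", "No", "Yes", "Yes", "No", "Yes"],
}

ACCEPTABILITY_THRESHOLD = 0.8  # agreement below this value indicates revision


def item_fvi(answers):
    """Proportion of experts who agreed the item suits the intended aims."""
    return sum(1 for a in answers if a == "Yes") / len(answers)


i_fvi = {name: item_fvi(answers) for name, answers in responses.items()}
s_fvi = sum(i_fvi.values()) / len(i_fvi)
needs_revision = [n for n, v in i_fvi.items() if v < ACCEPTABILITY_THRESHOLD]

print(i_fvi, round(s_fvi, 2), needs_revision)
```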

Internal consistency reliability

Internal consistency reliability evaluation was conducted after content validity. Each item in this block was measured against the remaining items using Cronbach’s α (22). Questions presenting a score less than 0.7 were considered unreliable and identified for revision (22). Feedback provided during the open-text portion of each item was considered. Possible outcomes were revision or removal of the identified questions, with removal potentially resulting in the generation of a new item for assessment. These outcomes were considered when administering the survey in following iterations.
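As a rough illustration of this step, the sketch below computes Cronbach's α from item-level scores and then recomputes it with each item dropped in turn. The response matrix is hypothetical and the function names are illustrative. The "alpha if item deleted" screen is an assumption about how items that weaken consistency might be spotted; it is not a description of the authors' statistical software or procedure.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores)
    totals = [sum(per_respondent) for per_respondent in zip(*item_scores)]
    sum_item_var = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def alpha_if_deleted(item_scores):
    """Alpha recomputed with each item removed in turn, in item order."""
    return [
        cronbach_alpha(item_scores[:i] + item_scores[i + 1:])
        for i in range(len(item_scores))
    ]


# Hypothetical 0/1 item scores: five items (rows) by seven respondents (columns).
scores = [
    [1, 1, 0, 1, 1, 0, 1],
    [1, 1, 1, 1, 0, 0, 1],
    [1, 1, 1, 1, 0, 0, 1],
    [1, 1, 1, 0, 0, 0, 1],
    [0, 1, 1, 0, 1, 1, 0],
]

print(f"alpha (all items): {cronbach_alpha(scores):.2f}")
for index, value in enumerate(alpha_if_deleted(scores), start=1):
    print(f"alpha without item {index}: {value:.2f}")
```

An item whose removal raises α is a candidate for revision or removal, which mirrors the decision reported in the Results.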

Test–retest reliability

Upon conclusion of the last satisfactory distribution for content validity, face validity, and internal consistency reliability, item scores were collected. Subject matter experts were encouraged to provide candid answers with each attempt to ensure that the first point for test–retest reliability assessment was valid. Retest items were distributed 1 week after each item passed previous validity and reliability assessment and remained available for 1 week. Intraclass correlation coefficients (ICC) were calculated from total scores (22). Assessments yielding an r value less than 0.7 would be considered unreliable and indicate revision (22).
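The text does not state which ICC form was used. Purely as an illustration, the sketch below assumes the two-way random-effects, absolute-agreement, single-measure form, often written ICC(2,1), and computes it from the usual analysis-of-variance mean squares. The test and retest totals are hypothetical, and the function name is illustrative.

```python
def icc_2_1(scores):
    """ICC(2,1) for rows of respondents and columns of administrations.

    Assumed form: two-way random effects, absolute agreement, single measure.
    """
    n = len(scores)          # respondents
    k = len(scores[0])       # administrations (test, retest)
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical total scores (test, retest) for seven respondents.
test_retest = [[3, 3], [3, 3], [2, 3], [3, 2], [1, 1], [3, 3], [2, 2]]
icc = icc_2_1(test_retest)
print(f"ICC(2,1) = {icc:.2f}; meets 0.7 threshold: {icc >= 0.7}")
```

Other ICC forms (for example, consistency rather than absolute agreement) can give different values for the same data, so the form should be reported alongside the coefficient.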

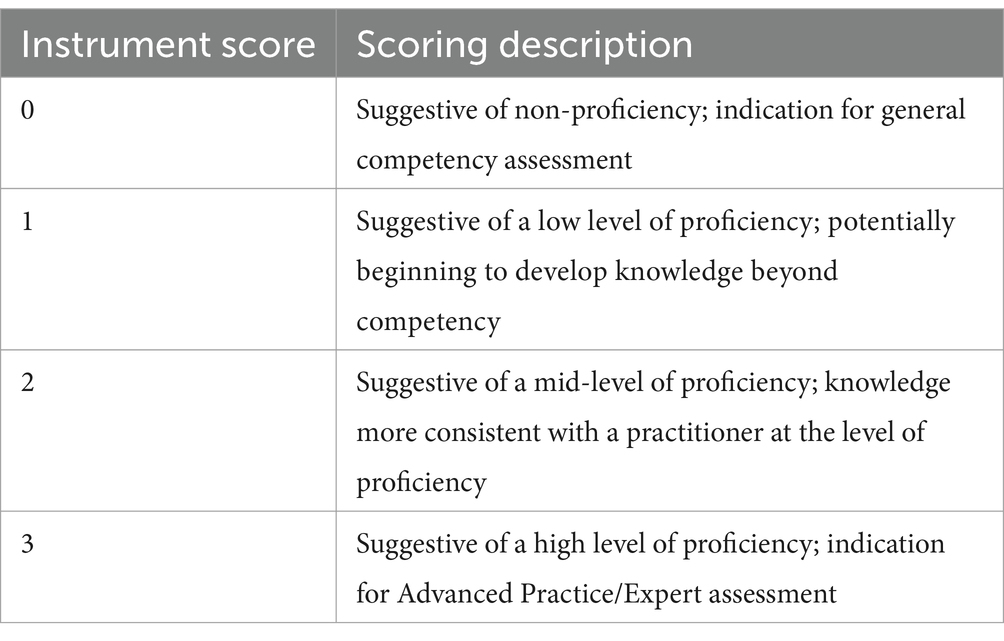

Scoring

A proficiency score (PS) was assigned to the first attempt based on item responses. A correct response awarded one point, and all incorrect responses yielded an item score of zero. Participants were categorized based on the total number of correct answers, with proficiency categories equal to the total number of included items in addition to a category for no correct answer selection (or score of 0). Higher scores indicated a stronger degree of proficiency, whereas lower scores suggested lesser levels of proficiency. Table 2 lists the scoring stratification descriptions.
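The scoring rule can be expressed as a short function. The sketch below is illustrative only: the answer key, response options, and item labels are hypothetical placeholders and do not reproduce the instrument's actual items or keyed answers.

```python
# Hypothetical answer key for a three-item screen (not the instrument's key).
ANSWER_KEY = {"item_2": "B", "item_3": "D", "item_4": "A"}


def proficiency_score(responses, answer_key=ANSWER_KEY):
    """One point per correct response; incorrect or missing responses score zero."""
    return sum(
        1 for item, correct in answer_key.items() if responses.get(item) == correct
    )


# One category per possible total, including a category for a score of 0.
categories = list(range(len(ANSWER_KEY) + 1))

first_attempt = {"item_2": "B", "item_3": "C", "item_4": "A"}
print(proficiency_score(first_attempt), categories)
```

With three included items, this yields four categories (scores 0 through 3), matching the rule that the number of categories equals the number of items plus one.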

Results

Subject matter experts

The five-item draft was accepted by the investigation team to undergo the following testing. Nine of the 11 (81.8%) solicited peers accepted the invitation, with seven (63.6%) completing all subsequent steps of the validation process. Three of the seven (42.9%) participants held terminal degrees in dietetics, and all but one of the experts (six of seven, 85.7%) had experience as educators or preceptors in Accreditation Council for Education in Nutrition and Dietetics (ACEND)-accredited programs.

Validity and reliability testing

The original 5-item questionnaire with validation items underwent three rounds of revision. Four (80%) of the items (1, 2, 3, and 5) passed acceptability thresholds for content and face validity. The third iteration of item 4 met the threshold. All five items met test–retest reliability thresholds; however, the inclusion of items 1 and 5 weakened the collective internal consistency reliability. Removal of these items increased Cronbach’s alpha to 0.75 and met the established threshold (Table 3). The final iteration of the proficiency questionnaire containing items 2, 3, and 4, referred to as the Nutrition Care Process Assessment of Brief Level of Expertise (NCP-ABLE), received no further feedback from the investigation team (Table 4).

Subject matter expert scoring

The distribution of collected scores was reviewed. When stratified in quartiles by score (0–3), six of the experts (85.7%) scored three points and displayed high levels of proficiency. One expert (14.3%) scored one point, suggesting a low degree of proficiency.

Discussion

As the use of the NCP and NCPT becomes more widely accepted and supported for clinical outcomes, practitioner skill and quality implementation warrant investigation. This focus is currently accomplished through chart evaluation using tools such as the NCP QUEST (15) and the Diet-NCP-Audit (10). These instruments are effective at evaluating NCP implementation and can be used for education (from peer-to-peer or clinician-to-student views) (15); however, their implementation may be seen as taxing by stakeholders and unnecessary by those with poorer NCP attitudes or reduced NCP knowledge. These perceptions, in addition to the retrospective nature of chart reviews, may serve as barriers to timely assessment of clinician performance and care outcomes. Since these tools assess documentation of the care provided, rather than measuring prerequisite NCP knowledge or skills, their items are difficult to administer in advance of care provision. Development of prospective predictors of NCP implementation quality and care outcomes through screening tools like NCP-ABLE has the potential to minimize barriers and augment current practices.

NCP-ABLE has demonstrated potential as a screening questionnaire, in particular to identify low collective NCP proficiency. This purpose may be markedly useful for various populations, including educators and clinicians. Those involved in education may see value in administering NCP-ABLE to students at various points in their pre-registration preparation, such as following completion of didactic programming or preceding clinical supervised practice. Likewise, educational administration can implement NCP-ABLE to identify support needs for active preceptors. A clinical preceptor with low NCP proficiency may require educational intervention to become more familiar with current practice expectations before providing practice-based education and overseeing interns’ tasks. Moreover, clinical nutrition managers may use NCP-ABLE to screen current and future RDNs being supervised. This use is especially relevant to supporting improvements in quality of care, as the recent literature strongly supports the relationship between NCP implementation and care outcomes (8, 9, 11). Low proficiency scores may suggest poorer implementation, necessitating in-depth chart audits using NCP QUEST (15) or educational intervention.

A key consideration of this investigation involved defining NCP proficiency. To ensure that the intended construct was being measured, the NCP update papers (3, 4) served as the basis for item development since they express the formally accepted working qualities for NCP proficiency. The works from Charney and Peterson (13) and Thompson et al. (6) allowed for further conceptualization of questions since these were credited by the update papers (3, 4) when describing critical thinking qualities necessary for proficiency. Furthermore, the findings from Lewis et al. (8) and Colin et al. (11) suggest that the evidence-diagnosis and etiology-intervention links (both associated with proficiency) are crucial for problem resolution. These assumptions related to the NCP chain described by Thompson et al. (6), along with the validated items developed by Lövestam et al. (16) for the INIS, support the NCP-ABLE items’ relationship to proficiency. This literature collectively suggests that a relationship between NCP proficiency and care outcomes exists, and more sophisticated prospective tools must continue to be developed.

Despite the theoretical basis for the instrument’s development, limitations of this investigation must be addressed. One notable consideration from this investigation relates to the experts involved. Four of the 11 invited peers (36.4%) declined participation or did not complete the entire process. This dropout was double what had been anticipated. Additionally, one expert scored in the second quartile of the instrument and may not have the same level of proficiency as their peers. Regardless, the minimum number of experts was still met per the protocol suggested by Rubio and colleagues (23) for the validation of similar constructs. The use of experts for test–retest reliability is recognized as a limitation. A sample of dietetics practitioners unfamiliar with NCP-ABLE would have been better indicated; however, the expert sample was utilized for prompt validation (allowing for timely pilot deployment as part of a more robust questionnaire).

Another possible weakness is the number of items in the NCP-ABLE. Since the aim of the investigation was directed at creating a minimal burden screen (serving as a complement to other instruments) to broadly screen for proficiency, the concision of the NCP-ABLE was desirable. While originally developed to include five items, the final iteration totaled three. While this may reduce scoring variability and survey fatigue among participants, a decrease in extensiveness may reduce content validity and limit the ability to thoroughly identify individual NCP/T deficiencies. Each question, while focusing on a different aspect of NCP/T proficiency, may provide an indication of deficiency but cannot confidently determine this in isolation. Furthermore, subsequent administration of this short questionnaire to the same population may introduce a degree of learning bias and overestimate practitioner knowledge. This suggests that short-duration follow-up screening may be contraindicated.

Future investigations are needed to support the use of NCP-ABLE for prediction of proficient NCP application. Experimentally comparing NCP-ABLE results against robust practice-based assessments that may measure care outcomes (such as the NCP QUEST) may further validate its use across settings (15). This proposed investigation can assess the accuracy of the instrument and provide data to support the use of NCP-ABLE items in questionnaires that predict general categorization of NCP knowledge. Furthermore, continued validation across various dietetics populations (including those practicing in different languages or countries) is indicated to ensure validity and widespread usage.

Conclusion

The NCP-ABLE met the established validity and reliability thresholds, suggesting appropriateness for its use as a screening tool of NCP knowledge proficiency. Various populations, such as educators and clinical nutrition managers, may find value from its screening potential to identify individuals with low collective NCP proficiency. While this investigation does not support its use as a predictor of NCP implementation quality or patient outcomes, future studies comparing the NCP-ABLE against more rigorous assessment methods that directly measure implementation may support broader use or revision of NCP-ABLE in its current iteration.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the University of North Florida Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

LL: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Writing – original draft, Writing – review & editing. CP: Conceptualization, Funding acquisition, Writing – review & editing. SL: Conceptualization, Funding acquisition, Writing – review & editing. CC: Conceptualization, Funding acquisition, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Funding for this study was provided by the Academy of Nutrition and Dietetics Foundation through the 2024 Commission on Dietetic Registration Emerging Researcher Grant.

Acknowledgments

The authors would like to acknowledge the efforts of peer experts from East Carolina University, the University of North Florida, the Veterans Health Administration, and the Academy of Nutrition and Dietetics. The volunteer efforts from these peers were necessary for the validation and scoring of this instrument.

Conflict of interest

CP is an employee of the Academy of Nutrition and Dietetics, which has a financial interest in the Nutrition Care Process Terminology described here.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Lacey, K, and Pritchett, E. Nutrition care process and model: ADA adopts road map to quality care and outcomes management. J Am Diet Assoc. (2003) 103:1061–72. doi: 10.1016/S0002-8223(03)00971-4

2. Hakel-Smith, N, and Lewis, NM. A standardized nutrition care process and language are essential components of a conceptual model to guide and document nutrition care and patient outcomes. J Am Diet Assoc. (2004) 104:1878–84. doi: 10.1016/j.jada.2004.10.015

3. Swan, WI, Vivanti, A, Hakel-Smith, NA, Hotson, B, Orrevall, Y, Trostler, N, et al. Nutrition care process and model update: toward realizing people-centered care and outcomes management. J Acad Nutr Diet. (2017) 117:2003–14. doi: 10.1016/j.jand.2017.07.015

4. Swan, WI, Pertel, DG, Hotson, B, Lloyd, L, Orrevall, Y, Trostler, N, et al. Nutrition care process (NCP) update part 2: developing and using the NCP terminology to demonstrate efficacy of nutrition care and related outcomes. J Acad Nutr Diet. (2019) 119:840–55. doi: 10.1016/j.jand.2018.10.025

5. Splett, P, and Myers, EF. A proposed model for effective nutrition care. American dietetic association. J Am Diet Assoc. (2001) 101:357–63. doi: 10.1016/S0002-8223(01)00093-1

6. Thompson, KL, Davidson, P, Swan, WI, Hand, RK, Rising, C, Dunn, AV, et al. Nutrition care process chains: the “missing link” between research and evidence-based practice. J Acad Nutr Diet. (2015) 115:1491–8. doi: 10.1016/j.jand.2015.04.014

7. Kight, CE, Bouche, JM, Curry, A, Frankenfield, D, Good, K, Guenter, P, et al. Consensus recommendations for optimizing electronic health records for nutrition care. Nutr Clin Pract. (2020) 35:12–23. doi: 10.1002/ncp.10433

8. Lewis, SL, Wright, L, Arikawa, AY, and Papoutsakis, C. Etiology intervention link predicts resolution of nutrition diagnosis: a nutrition care process outcomes study from a Veterans' health care facility. J Acad Nutr Diet. (2021) 121:1831–40. doi: 10.1016/j.jand.2020.04.015

9. Mujlli, G, Aldisi, D, Aljuraiban, GS, and Abulmeaty, MMA. Impact of nutrition care process documentation in obese children and adolescents with metabolic syndrome and/or non-alcoholic fatty liver disease. Healthcare. (2021) 9:188. doi: 10.3390/healthcare9020188

10. Lövestam, E, Orrevall, Y, Koochek, A, Karlström, B, and Andersson, A. Evaluation of a nutrition care process-based audit instrument, the diet-NCP-audit, for documentation of dietetic care in medical records. Scand J Caring Sci. (2014) 28:390–7. doi: 10.1111/scs.12049

11. Colin, C, Arikawa, A, Lewis, S, Cooper, M, Lamers-Johnson, E, Wright, L, et al. Documentation of the evidence-diagnosis link predicts nutrition diagnosis resolution in the academy of nutrition and Dietetics' diabetes mellitus registry study: a secondary analysis of nutrition care process outcomes. Front Nutr. (2023) 10:1011958. doi: 10.3389/fnut.2023.1011958

12. Murphy, WJ, Yadrick, MM, Steiber, AL, Mohan, V, and Papoutsakis, C. Academy of nutrition and dietetics health informatics infrastructure (ANDHII): a pilot study on the documentation of the nutrition care process and the usability of ANDHII by registered dietitian nutritionists. J Acad Nutr Diet. (2018) 118:1966–74. doi: 10.1016/j.jand.2018.03.013

13. Charney, P, and Peterson, SJ. Practice paper of the academy of nutrition and dietetics abstract: critical thinking skills in nutrition assessment and diagnosis. J Acad Nutr Diet. (2013) 113:1545. doi: 10.1016/j.jand.2013.09.006

14. Hakel-Smith, N, Lewis, NM, and Eskridge, KM. Orientation to nutrition care process standards improves nutrition care documentation by nutrition practitioners. J Am Diet Assoc. (2005) 105:1582–9. doi: 10.1016/j.jada.2005.07.004

15. Lewis, SL, Miranda, LS, Kurtz, J, Larison, LM, Brewer, WJ, and Papoutsakis, C. Nutrition care process quality evaluation and standardization tool: the next frontier in quality evaluation of documentation. J Acad Nutr Diet. (2022) 122:650–60. doi: 10.1016/j.jand.2021.07.004

16. Lövestam, E, Vivanti, A, Steiber, A, Boström, A, Devine, A, Haughey, O, et al. The international nutrition care process and terminology implementation survey: towards a global evaluation tool to assess individual practitioner implementation in multiple countries and languages. J Acad Nutr Diet. (2019) 119:242–60. doi: 10.1016/j.jand.2018.09.004

18. Polit, DF, and Beck, CT. The content validity index: are you sure you know what's being reported? Critique and recommendations. Res Nurs Health. (2006) 29:489–97. doi: 10.1002/nur.20147

19. Lynn, MR. Determination and quantification of content validity. Nurs Res. (1986) 35:382–5. doi: 10.1097/00006199-198611000-00017

20. Yusoff, MSB. ABC of response process validation and face validity index calculation. Educ Med J. (2019) 11:55–61. doi: 10.21315/eimj2019.11.3.6

21. Drummond, KE, Reyes, A, Goodell, LS, Cooke, NK, and Stage, VC. Nutrition research: Concepts and applications. 1st ed. Burlington: Jones & Bartlett Learning (2022).

Keywords: Nutrition Care Process, validation, proficiency, nutrition education, questionnaire development, screening

Citation: LaBonte LR, Papoutsakis C, Lewis S and Colin C (2025) Development and validation of the Nutrition Care Process Assessment of Brief Level of Expertise screening tool. Front. Nutr. 12:1572181. doi: 10.3389/fnut.2025.1572181

Edited by:

Alessandra Durazzo, Council for Agricultural Research and Economics, Italy

Reviewed by:

Chengsi Ong, KK Women’s and Children’s Hospital, Singapore

Ludivine Soguel, University of Applied Sciences and Arts Western, Switzerland

Copyright © 2025 LaBonte, Papoutsakis, Lewis and Colin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luc R. LaBonte, labontel17@ecu.edu
