- ¹Adult Literacy Research Center, College of Education and Human Development, Georgia State University, Atlanta, GA, United States

- ²Department of Psychology, Georgia State University, Atlanta, GA, United States

- ³Data Science and Analytics Program, Georgetown University, Washington, DC, United States

Introduction: Although adults with lower literacy skills need to take and succeed on high-stakes computer-administered assessments (e.g., GED, HiSET), little is known about how they engage with digital literacy assessments.

Methods: This study analyzed process data, specifically time allocation data, from the Program for the International Assessment of Adult Competencies (PIAAC) to investigate adult respondents’ patterns of engagement on nine digital literacy items across all proficiency levels. We used cluster analysis to identify distinct groups with similar time allocation patterns among adults scoring lower on the digital literacy assessment. Finally, we employed logistic regression to examine whether the groups varied by individual factors (e.g., race/ethnicity, age) and contextual factors (e.g., skills-use at home).

Results: Adults with lower literacy skills spent significantly less time on many of the items than adults with higher literacy skills. Among adults with lower literacy skills, two groups of time allocation patterns emerged: one group (Cluster 1) exhibited significantly longer engagement times, whereas the other group (Cluster 2) demonstrated comparatively shorter durations. Finally, adults with a higher probability of Cluster 1 membership (spending more time) had relatively higher literacy scores and higher self-reported engagement in writing skills at home, were older, were unemployed, and self-identified as Black.

Discussion: These findings emphasize differences in digital literacy engagement among adults with varying proficiency levels. Additionally, this study provides insights for the development of targeted interventions aimed at improving digital literacy assessment outcomes for adults with lower literacy skills.

Introduction

One in five adults in the U.S. (19%) perform at the lowest levels of literacy, and an additional 33% are nearing but not quite at proficient literacy levels (NCES, 2019). Many of these adults attend literacy programs to achieve adequate reading, writing, and math skills necessary for attaining a high school equivalency degree, joining the workforce (or elevating their career), or continuing into postsecondary education. Adult literacy students must oftentimes engage in high-stakes assessments for various reasons. For example, adult literacy students who enroll to attain a high school equivalency degree must take and ultimately pass assessments, such as the GED and HiSET. Additionally, adult literacy programs use information from high-stakes assessments to monitor progress, make funding decisions, and determine student educational levels (Lesgold and Welch-Ross, 2012).

One important challenge related to understanding the performance of adults with lower reading skills on high-stakes assessments is that they demonstrate diversity across an array of individual differences, including demographics, educational attainment, and foundational reading skills (Lesgold and Welch-Ross, 2012; MacArthur et al., 2012; Talwar et al., 2020; Tighe et al., 2023a). An added challenge is that high-stakes assessments have become increasingly digital (e.g., GED, HiSET). Some adults with lower literacy skills demonstrate stronger basic information and communication technology (ICT) skills than others (Olney et al., 2017), suggesting that this population is heterogeneous in terms of their technology skills and experiences.

Previous literature has considered heterogeneity in ICT performance amongst adults who struggle with literacy skills; however, little attention has been paid to how adults with lower literacy skills respond to and engage with digital assessments. Understanding how adults interact with digital assessments is crucial because challenges with reading and processing information in addition to knowing and adapting to technological features may influence their performance on these assessments. Process data (log files) from large-scale datasets could provide insight into various testing behaviors in a digital testing environment, such as the time spent on items (e.g., He and von Davier, 2016; He et al., 2019; Liao et al., 2019), which may offer a more nuanced understanding of the challenges that adults with lower literacy skills face within the context of taking digital assessments. It is also anticipated that such testing behaviors may vary across different individual and contextual factors with this population, including self-reported ICT skills-use, level of education, age, race/ethnicity, native language status, self-reported learning disability status, and literacy proficiency levels (Lesgold and Welch-Ross, 2012; Wicht et al., 2021). The purpose of this study was to use extant data from a large-scale digital assessment, Program for the International Assessment of Adult Competencies (PIAAC), to understand the time allocation patterns of adults of varying literacy levels on digital literacy performance and whether this varies by individual and contextual factors.

Digital assessment performance of adults with lower literacy skills

Recent literature has proposed a framework which posits that ICT skills on digital assessments vary amongst adults as a function of numerous individual factors (e.g., literacy skills, education, and other socio-demographic characteristics) and contextual factors (i.e., the environments in which adults engage in and are exposed to ICT skills; Wicht et al., 2021).

Adults with lower literacy skills tend to struggle with basic ICT skills, which are vital to engaging with assessments in digital environments (Olney et al., 2017), yet they may also demonstrate variability in skills within the realm of technology (Lesgold and Welch-Ross, 2012). For example, Olney et al. (2017) reported that adult literacy students reading between third- and eighth-grade levels had the most difficulty on tasks that involved simple typing (e.g., signing into email, composing emails) and right-clicking. However, the authors also found that the students demonstrated varying degrees of difficulty with tasks ranging from basic computer skills to using web features in a simulated email environment.

Individual factors

Adults who struggle with literacy skills represent a diverse population and therefore may exhibit differences in assessment performance across a range of literacy levels and educational and demographic factors (Lesgold and Welch-Ross, 2012). Preliminary evidence from paper-based reading assessments with adults who exhibit lower literacy skills has reported different profiles of literacy performance that also varied by age and native language status (e.g., MacArthur et al., 2012; Talwar et al., 2020). In addition, Tighe et al. (2022) reported that adults with lower literacy levels demonstrated the lowest performance on a paper-based literacy assessment when they had lower educational attainment, self-reported a learning disability, were non-native English speakers, self-reported fair/poor health status, had lower or unknown parental education levels, and identified as Hispanic.

Literacy assessments have increasingly become digital, which places higher demands on adults with lower literacy skills because they must have the reading and writing skills to succeed on the assessment as well as the technological skills necessary to meet the demands of the varying item types (Brinkley-Etzkorn and Ishitani, 2016; Graesser et al., 2019). Previous literature posits that literacy skills, specifically the ability to decode and comprehend written language, are a precursor to adults’ ICT skills (Coiro, 2003; van Deursen and van Dijk, 2016; Iñiguez-Berrozpe and Boeren, 2020; Wicht et al., 2021). Specific to digital assessment performance, Wicht et al. (2021) found that adults’ literacy skills were positively associated with their performance on a digital assessment that required various literacy, ICT, and problem-solving skills. Few studies to date have focused on the relation of literacy skills to adults’ ICT skills and ICT use (de Haan, 2003; van Deursen and van Dijk, 2016; Wicht et al., 2021). Previous evidence with adults who exhibit lower literacy skills suggests that their performance on literacy assessments varies (Talwar et al., 2020); thus, additional work is needed to understand the relation between literacy levels and engagement with digital assessments in this population.

Contextual factors

Practice engagement theory (PET) emphasizes that adults learn by engaging in day-to-day activities in reading, writing, and numeracy at home and at work (Reder, 2009a, 2010). This view of learning is particularly relevant to adults with lower literacy skills because they must read for various purposes in daily life (e.g., reading news stories, writing emails, filling out forms), and may encounter different types of texts, including texts in digital formats (OECD, 2013; Trawick, 2019). PET also posits that social practices play an integral role in the development of ICT skills for current cohorts of adults, especially older adults, because many of them did not formally receive ICT skills-training (Wicht et al., 2021). Instead, many of them depend on “learning-by-doing” in both home and work environments (Helsper and Eynon, 2010; Wicht et al., 2021).

Moreover, some evidence suggests that ICT skills-use in daily life varies amongst adults who have lower literacy skills (Feinberg et al., 2019), which is consistent with the heterogeneous nature of this population (Lesgold and Welch-Ross, 2012). For example, Feinberg et al. (2019) found a strong relation between performance on the PIAAC literacy assessment and self-reported frequencies of writing emails at home and at work, such that adults with relatively higher literacy scores were also more inclined to engage in ICT skills-use (e.g., writing emails) in daily life.

Using behavioral data to understand digital assessment performance

In addition to individual differences and contextual factors, it is critical to understand the behaviors of adults with lower literacy skills as they engage with digital assessments, which may reveal additional information regarding the strategies that they use or the challenges that they may face throughout the task (Cromley and Azevedo, 2006). As such, these behaviors may provide additional information about their performance beyond their overall, global score on a digital literacy assessment. Unlike individual factors (e.g., race/ethnicity, age, native speaker status), engagement in educational tasks and settings is not a static trait of the individual and may change in-the-moment for various reasons, such as the nature or difficulty of the task (Hadwin et al., 2001), the reader’s perceived value of the task, and/or the reader’s self-efficacy while engaged in the task (Dietrich et al., 2016; Shernoff et al., 2016).

In particular, understanding the length of time adults with lower literacy skills spend immersed in a digital assessment may reveal additional insights regarding their performance. Broadly, research with children suggests that reading amount, or time spent reading, is related to students’ intrinsic motivation (Wang and Guthrie, 2004; Durik et al., 2006). Moreover, sustained engagement is important to successfully integrating and coordinating multiple cognitive processes, such as decoding, vocabulary, making inferences, and comprehension monitoring (Guthrie and Wigfield, 2017). Specific to adults with lower literacy skills, evidence from eye-tracking data suggests that readers who spent more time engaging with and reading key areas of the text were more likely to answer reading comprehension questions correctly and were better at summarizing and explaining key information in the text (Tighe et al., 2023a). In addition, Fang et al. (2018) found different performance clusters of adults with low literacy skills based on accuracy and timing data from their interactions with a digital learning environment.

Process data (log files) can provide important behavioral information about respondents’ engagement in a digital assessment (Cromley and Azevedo, 2006; He and von Davier, 2015, 2016; Fang et al., 2018; He et al., 2019; Liao et al., 2019). Process data is informative for understanding how respondents engage in a digital assessment because it provides the amount of time spent performing specific actions, including the amount of time spent until the respondent initiated an action on an item (e.g., highlighting, choosing/clicking on an answer) or the amount of time that occurred between different actions (e.g., time it took between choosing an answer [last action] and moving onto the next item). Such timing data offers nuanced information regarding the amount of time adults with lower reading skills spent reading and processing the information in the test item before choosing an answer or submitting their response. Moreover, previous research with PIAAC data suggests that these behaviors are linked to response accuracy on digital problem-solving items, and that differences exist across certain individual and contextual factors (He and von Davier, 2015, 2016; He et al., 2019; Liao et al., 2019). For example, Liao et al. (2019) found that adults who were well-educated, had higher ICT skills-use, and reported more numeracy skills at work spent more time on digital assessment items that required higher reading skills, planning, and problem-solving. Similarly, adults with lower literacy skills are heterogeneous in terms of individual (e.g., educational attainment, age, learning disability status) and contextual (e.g., ICT, reading, and writing skills-use) characteristics, which may relate to time they spend on a digital assessment. Moreover, the literature reports mixed results regarding whether more time on digital assessments is beneficial for adult test-takers. 
Some research suggests that test-takers with higher skills need less time to successfully respond to assessment items (e.g., van Der Linden, 2007; Klein Entink, 2009; Wang and Xu, 2015; Fox and Marianti, 2016); however, other evidence suggests that test-takers can benefit from spending more time (Klein Entink et al., 2009). Therefore, additional work with adults who have lower literacy skills is needed to understand the relation between digital literacy performance and time spent on the assessment.
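The three timing measures described above can be derived from an item's event log. The following is a minimal sketch, assuming a simplified log in which each event is a (timestamp in milliseconds, event type) pair and the hypothetical event types `START` and `END` mark entering and leaving an item; every other event type is treated as a respondent action. The actual PIAAC log-file schema differs and is not reproduced here.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical simplified log event: (timestamp in ms, event type).
# "START"/"END" mark entering/leaving an item; anything else is an action.
Event = Tuple[int, str]


def timing_variables(events: List[Event]) -> Dict[str, Optional[float]]:
    """Derive time to first action, time for last action, and total time (s)."""
    events = sorted(events)  # order events by timestamp
    start = next(t for t, e in events if e == "START")
    end = next(t for t, e in reversed(events) if e == "END")
    actions = [t for t, e in events if e not in ("START", "END")]
    if not actions:
        # No action was taken, so first/last-action times are undefined.
        return {"first_action": None, "last_action": None,
                "total": (end - start) / 1000}
    return {
        "first_action": (actions[0] - start) / 1000,  # item onset -> first action
        "last_action": (end - actions[-1]) / 1000,    # last action -> leaving item
        "total": (end - start) / 1000,                # item onset -> leaving item
    }


log = [(0, "START"), (4500, "HIGHLIGHT"), (9000, "CLICK"), (12000, "END")]
print(timing_variables(log))
```

Here "time for last action" follows the definition in the text (the interval between the last action, such as choosing an answer, and moving on to the next item); a real pipeline would also need rules for revisited items and interrupted sessions.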

Current study

The current study used demographic and process data from the PIAAC to investigate three research questions. First, we examined whether adults with lower literacy skills (Levels 2 and below on the literacy scale) demonstrated different patterns of time allocation on a digital literacy assessment (i.e., time to first action, time for last action, and total time) from adults who demonstrate higher literacy skills. Because previous studies suggest that more time is indicative of higher literacy scores (e.g., Liao et al., 2019), we anticipated that, overall, adults with higher literacy skills would show patterns of increased time on digital literacy items. Second, this study investigated whether adult respondents with lower literacy skills (who scored Levels 2 and below on the literacy scale) were heterogeneous in terms of the amount of time they spent on a digital literacy assessment. Previous studies with adults who have lower literacy skills suggest that this population is diverse in terms of their reading skills and various individual, demographic characteristics (e.g., MacArthur et al., 2012; Talwar et al., 2020; Tighe et al., 2022); thus, we anticipated that adults with lower literacy skills may also reveal different patterns of time spent on a digital assessment. Specifically, we expected that some of the respondents would spend substantially more time on digital literacy items than others. Finally, we examined whether different groups of individuals who demonstrated distinct patterns of time allocation varied according to multiple individual (self-reported learning disability status, employment status, educational attainment, age, native English-speaking status, race/ethnicity, literacy scores) and contextual (ICT, reading, writing, and numeracy skills-use) factors.
We expected that adults with lower literacy skills who spent substantially more time on the digital literacy items would also score higher on the literacy scale and report higher skills-use. We specifically asked the following research questions:

1. Do adults with lower literacy skills on the PIAAC literacy scale (Levels 2 and below) demonstrate different patterns of time allocation on digital literacy items compared to adults who scored higher on the literacy scale?

2. Can we identify distinct clusters, or groups, of adults with lower literacy skills that emerge from the time allocation data?

3. Do individual and contextual factors predict identified cluster timing membership for adults with lower literacy skills?

Methods

This study analyzed behavioral data from the PIAAC public log files, specifically focusing on the computer-based version of the PIAAC literacy domain assessment. We investigated respondents’ timing on stage 1 of the literacy assessment, focusing primarily on those who took the easiest items (Testlet L11), across three timing variables: time to first action, time for last action, and total time. For individuals scoring at Levels 2 and below, we used cluster analysis to examine potential groupings on the nine items across all three timing variables. Finally, we used demographic characteristics to predict cluster membership, including skills-use (ICT, reading, writing, numeracy), age, native English speaker status, self-reported learning disability status, educational attainment, employment status, and race/ethnicity.

Participants

Behavioral data (time to the first action, time for last action, total time) were available on 2,697 U.S. adults (ages 16–65) from the public PIAAC log files (process data; OECD, 2017). Depending on the specific testlet, 843–967 adults were available. Across testlets L11, L12, and L13, the sample consisted of 44.7% males, 87.1% native English speakers, and 70.4% of the sample self-identified as White. Adults with lower literacy skills (Levels 2 and below) represented 38 to 43% of the respondents across the three testlets.

Measures

General PIAAC survey

The PIAAC survey was developed to assess the proficiency of cognitive and workplace competencies of adults. Participants completed an extensive background questionnaire and assessments in the literacy and numeracy domains. Some participants also received a problem-solving in technology-rich environments (PSTRE) domain and a paper-based reading components supplement (OECD, 2013). For this study, we focus only on the literacy domain and on the demographic and skills-use variables (ICT, reading, writing, and numeracy) from the background questionnaire.

Literacy domain

Content and questions for the literacy domain were derived from previous large-scale international assessments that were administered to adults [e.g., International Adult Literacy Survey (IALS)]. This domain tapped underlying cognitive skills and applied literacy skills that were deemed necessary to meet the demands of adults living and working during the 21st century (PIAAC Literacy Expert Group, 2009).

The PIAAC literacy domain included a range of texts (e.g., electronic and narrative texts across different media). Assessment scores are reported on a 500-point scale and fall into six proficiency levels, each characterized by different reading skills. At the lowest level, Below Level 1 (0–175 points), respondents can locate specific information in brief, familiar texts with minimal competing information. Basic vocabulary skills are sufficient, and literacy tasks are not specific to digital text features. In Level 1 (176–225 points), respondents can read short texts in various formats to locate information, and basic vocabulary skills are essential. In Level 2 (226–275 points), respondents can match text information, make low-level inferences, and sometimes navigate texts presented in a digital medium. In Level 3 (276–325 points), respondents can understand dense or lengthy texts, make inferences, and navigate complex digital texts. In Level 4 (326–375 points), respondents can perform multi-step operations, interpret complex information, and handle competing data. In Level 5 (376–500 points), respondents are able to perform tasks that demand integrating information across multiple dense texts, constructing syntheses, and evaluating evidence-based arguments.
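The score bands above amount to a lookup from a scale score to a proficiency level. A minimal sketch follows, assuming a single literacy score per respondent and treating each band's lower bound (176, 226, 276, 326, 376) as the cut point, so that a fractional score such as 175.6 falls Below Level 1; how non-integer scores are handled at the boundaries is our assumption, not a statement of the PIAAC procedure.

```python
from bisect import bisect_right

# Lower bounds of Levels 1-5 on the 0-500 literacy scale (from the bands above).
CUTS = [176, 226, 276, 326, 376]
LABELS = ["Below Level 1", "Level 1", "Level 2", "Level 3", "Level 4", "Level 5"]


def proficiency_level(score: float) -> str:
    """Map a literacy scale score to its proficiency level label."""
    if not 0 <= score <= 500:
        raise ValueError("literacy scores lie on a 0-500 scale")
    # bisect_right counts how many cut points are <= score.
    return LABELS[bisect_right(CUTS, score)]


print([proficiency_level(s) for s in (150, 230.5, 276, 410)])
```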

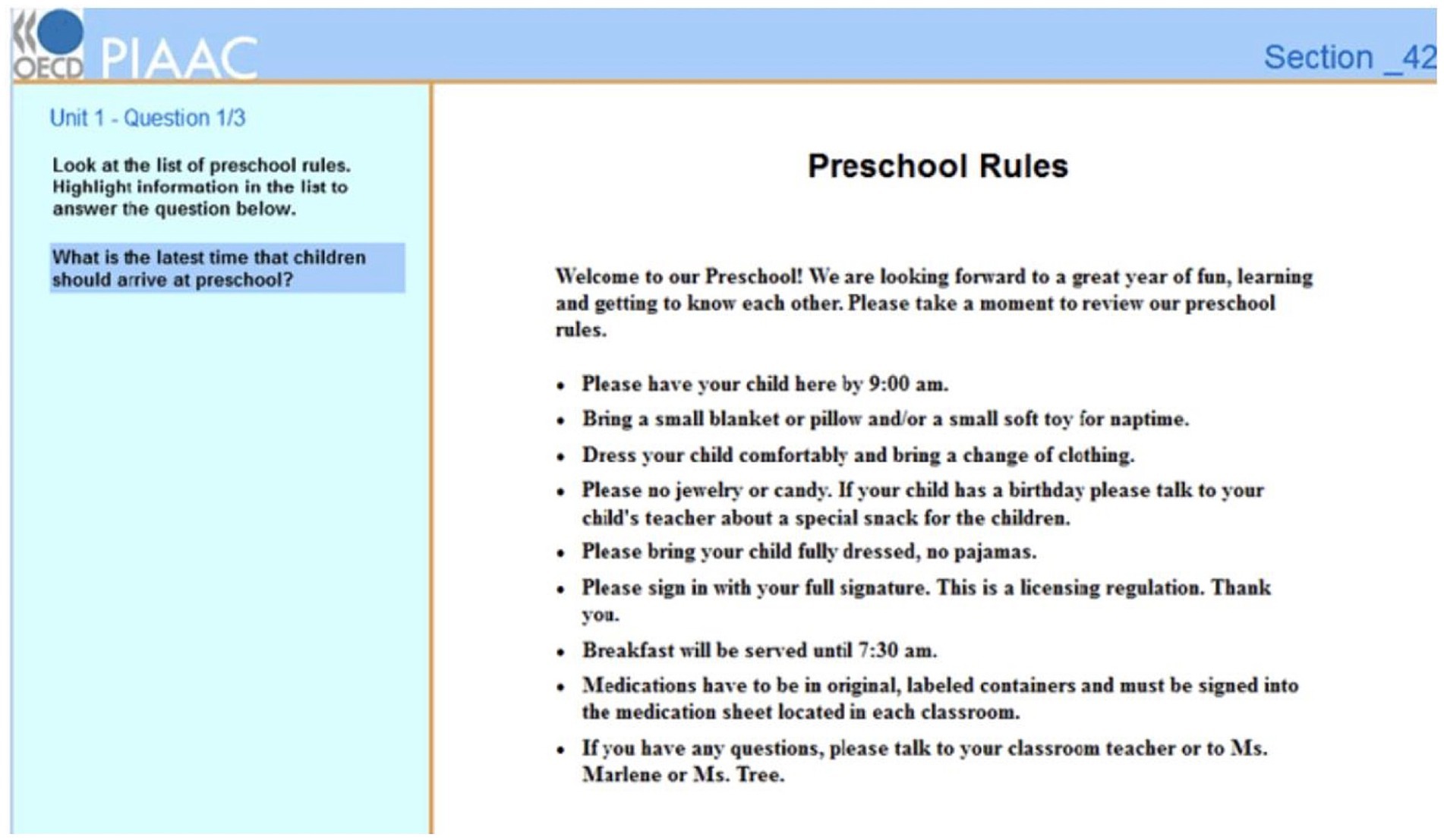

Item assignment on the literacy domain assessment followed a multistage adaptive testing rule. Each literacy module consisted of 20 items distributed across two stages: nine items in stage 1 and 11 items in stage 2 (see Figure 1 for a sample literacy item). Some participants took a paper-based literacy assessment and thus were not included in the analysis for this study.

Figure 1. Sample Level 3 Literacy Item. This sample item was available from the technical report of the survey of adult skills (PIAAC, OECD, 2013).

In total, 18 unique literacy items were included in stage 1 of the PIAAC literacy assessment. These 18 items were assigned to three testlets (L11, L12, and L13) following an integrated block design,¹ so that each testlet consisted of nine items (OECD, 2017). Details of item assignment in the three testlets are reported in Supplementary Table S1. The three testlets varied in difficulty: easy (L11), medium (L12), and difficult (L13), with average difficulty levels of −0.218, 0.485, and 0.972, respectively.²

The assignment of each respondent to a testlet was determined by three variables: (a) education level (EdLevel3), categorized as low, medium, or high; (b) native versus nonnative speaker status, with respondents considered native speakers if their first language was one of the assessment languages; and (c) the CBA-Core Stage 2 screening score. These variables were structured into a matrix, yielding two threshold numbers (refer to Supplementary Table S2 for a sample of the matrix design for testlet selection). Following the assignment rules, respondents with a low education level, nonnative speaker status, and a low score on the CBA-Core Stage 2 screening were more likely to be assigned to the easiest testlet, Testlet L11.

Despite these assignment criteria, the distribution of respondents with lower literacy levels (Levels 2 and below) remained notably similar across the testlets. Specifically, in Testlet L11, identified as the easiest testlet, 43% of respondents were categorized as Levels 2 and below. Comparatively, Testlets L12 and L13 had 44 and 38% of respondents at Levels 2 and below, respectively (see Supplementary Table S3 for a detailed breakdown of the frequency of respondents across levels in each testlet).

For research questions 2 and 3 of the present study, which primarily target respondents scoring Levels 2 and below, we focused more closely on Testlet L11. The items in Testlet L11 are acknowledged as the simplest items within the PIAAC literacy domain and were deliberately chosen to correspond with the proficiency levels of respondents who were more likely to score lower on the assessment. Consequently, conducting a thorough examination of timing in Testlet L11, with its easier items, enabled us to offer a more precise and focused analysis of time allocation patterns associated with individuals at lower literacy levels.

Background questionnaire

The background questionnaire included several demographic items. For this project, we used data on age, native English speaker status, self-reported learning disability status, employment status, race/ethnicity, and educational attainment. Age was categorized into “younger,” “middle,” and “older” groups. The “younger” group consisted of respondents aged 34 and younger (the 24-and-younger and 25–34 age bands), the “middle” group included respondents aged 35 to 44, and the “older” group comprised respondents aged 45 and older (the 45–54 and 55-and-above age bands).
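The age grouping above collapses five age bands into three analysis groups. A minimal sketch of that recode follows; the band labels are hypothetical and may not match the coding of the age variable in the public-use file.

```python
# Hypothetical labels for the five age bands (ages 16-65); the actual
# coding of the PIAAC age variable may differ.
AGE_BAND_TO_GROUP = {
    "24 or less": "younger",
    "25-34": "younger",
    "35-44": "middle",
    "45-54": "older",
    "55 plus": "older",
}


def age_group(band: str) -> str:
    """Collapse a five-category age band into the three analysis groups."""
    return AGE_BAND_TO_GROUP[band]


print([age_group(b) for b in ("24 or less", "35-44", "55 plus")])
```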

The background questionnaire also contained several items pertaining to engagement in ICT, reading, writing, and numeracy skills-use at home and at work. Scaling indices of the skills-use variables have been derived with item response theory modeling on the related categorical variables and are available for analysis (He et al., 2021). We used the numeric skills-use index variables to investigate associations between the timing variables and respondents’ skills-use at home and at work.

Many of the adults who were assigned Testlet L11 and scored at Level 2 or below (N = 359) did not respond to the skills-use at work items (51, 22, 36, and 35% missing data for ICT, reading, writing, and numeracy skills at work, respectively). Therefore, for research question 3, we included the at-home, but not the at-work, skills-use variables.
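Missing-data rates like those reported above are the share of respondents without a value for each variable. The sketch below computes them, assuming each respondent is a dictionary with `None` for a missing response; the variable names (`ict_home`, `ict_work`) are hypothetical placeholders rather than PIAAC variable names.

```python
from typing import Dict, List, Optional


def percent_missing(rows: List[Dict[str, Optional[float]]],
                    columns: List[str]) -> Dict[str, float]:
    """Percentage of respondents with a missing (None) value in each column."""
    n = len(rows)
    # Summing booleans counts the rows where the value is missing.
    return {c: 100 * sum(r.get(c) is None for r in rows) / n for c in columns}


# Hypothetical respondents with skills-use index scores.
respondents = [
    {"ict_home": 1.2, "ict_work": None},
    {"ict_home": 0.8, "ict_work": 2.0},
    {"ict_home": 1.0, "ict_work": None},
    {"ict_home": 2.1, "ict_work": 1.1},
]
print(percent_missing(respondents, ["ict_home", "ict_work"]))
```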

Analytic approach

Initially, we described the demographic and skills-use variables across each literacy proficiency level (Below Level 1, Level 1, 2, 3, 4, and 5). Means were computed for the skills-use variables, while frequency distributions were generated for the demographic variables.

To address our first research question, we conducted a descriptive analysis of Testlets L11 through L13. We specifically examined the mean time allocated to each of the nine items across the three timing variables (time to first action, time for last action, and total time) by literacy proficiency level. We focused on comparing the timing among individuals categorized as Below Level 1, Level 1, and Level 2 against those at Levels 3 and above. Furthermore, we descriptively explored and visually represented these trends for Testlets L12 and L13 to discern their consistency among individuals scoring at Level 2 or below on the more challenging items.

We also conducted one-way ANOVA significance tests for Testlets L11 through L13. These tests assessed potential differences in time allocation on the nine items across literacy levels for each of the three timing variables. Additionally, we performed one-way ANOVAs across all testlets to investigate total mean differences on the nine items by literacy proficiency level for each of the three timing variables.
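For a single item and timing variable, a one-way ANOVA compares between-level to within-level variability in seconds spent. The sketch below computes the unweighted F statistic and its degrees of freedom from groups of timing values keyed by proficiency level; it does not compute the p-value (which requires the F distribution) or the pairwise comparisons, and whether the original analysis applied survey weights is not stated here.

```python
from typing import Dict, List, Tuple


def one_way_anova(groups: Dict[str, List[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for an unweighted one-way ANOVA."""
    values = [x for g in groups.values() for x in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = {name: sum(g) / len(g) for name, g in groups.items()}
    # Between-group sum of squares: group size times squared mean deviation.
    ss_between = sum(len(g) * (means[name] - grand_mean) ** 2
                     for name, g in groups.items())
    # Within-group sum of squares: deviations from each group's own mean.
    ss_within = sum((x - means[name]) ** 2
                    for name, g in groups.items() for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical total times (s) on one item for three literacy groups.
print(one_way_anova({"L1 and below": [20, 25, 30],
                     "L2": [35, 40, 45],
                     "L3": [38, 42, 46]}))
```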

To address our second research question, we conducted a K-means cluster analysis to explore distinct patterns of time allocation among adult participants in Testlet L11 who scored Level 2 or below on the PIAAC literacy scale. We employed the ‘kmeans’ clustering function from the stats package, a core package distributed with R (R Core Team, 2021), to investigate clustering of the nine items across time to first action, time for last action, and total time spent. In total, the clustering used 27 variables (nine items × three timing variables). For clarity and ease of interpretation, we converted these timing variables from milliseconds to seconds.
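The clustering step treats each respondent as a point in 27-dimensional space (nine items × three timing variables, in seconds). The sketch below illustrates the idea with Lloyd's algorithm, a simpler variant than the Hartigan–Wong algorithm that R's `kmeans` uses by default, so results need not match R's exactly; the random initialization and the absence of any variable scaling are assumptions of this sketch.

```python
import random
from typing import List, Tuple

Vector = List[float]


def ms_to_s(row_ms: Vector) -> Vector:
    """Convert one respondent's timing variables from milliseconds to seconds."""
    return [v / 1000 for v in row_ms]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(data: List[Vector], k: int, n_iter: int = 100,
           seed: int = 0) -> Tuple[List[int], List[Vector]]:
    """Lloyd's k-means: returns (cluster label per respondent, centroids)."""
    rng = random.Random(seed)
    centroids = [list(c) for c in rng.sample(data, k)]  # random initial centers
    labels: List[int] = []
    for _ in range(n_iter):
        # Assignment step: nearest centroid by squared Euclidean distance.
        new_labels = [min(range(k), key=lambda j: sq_dist(x, centroids[j]))
                      for x in data]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for j in range(k):
            members = [x for x, lab in zip(data, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids
```

In use, each row would hold a respondent's 27 timing values passed through `ms_to_s`, and `kmeans(rows, k)` would be run for each candidate number of clusters.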

To gauge the effectiveness of the clustering, we calculated silhouette scores using the cluster package in R (Maechler et al., 2021) for numbers of clusters ranging from 2 to 8. Silhouette scores range from −1 to 1 and indicate how clearly separated the clusters are: higher values mean that observations lie closer to their own cluster than to the nearest other cluster. We also evaluated the clusters conceptually against the timing results from the first research question.
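The silhouette width of an observation compares its mean distance to its own cluster (a) with its mean distance to the nearest other cluster (b), as (b − a) / max(a, b). A minimal sketch of the average silhouette width with Euclidean distances follows; it mirrors the standard definition rather than the cluster package's internals, and it assigns a width of 0 to members of singleton clusters, a common convention.

```python
import math
from typing import List, Sequence


def silhouette(data: List[Sequence[float]], labels: List[int]) -> float:
    """Mean silhouette width over all observations (needs >= 2 clusters)."""
    n = len(data)
    scores = []
    for i in range(n):
        own = [j for j in range(n) if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # singleton cluster convention
            continue
        # a: mean distance to the other members of i's own cluster.
        a = sum(math.dist(data[i], data[j]) for j in own) / len(own)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(math.dist(data[i], data[j]) for j in range(n) if labels[j] == c)
            / labels.count(c)
            for c in set(labels) if c != labels[i]
        )
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return sum(scores) / n
```

Comparing this average across solutions for k = 2 to 8 favors the k with the highest value, although, as described above, the final choice also weighed how interpretable the clusters were.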

To address the third research question, we used regression in R to investigate the relation of key individual and contextual factors to membership in the clusters that emerged from the data in research question 2. We planned to use the ‘glm’ function from the stats package (with a binomial family) to conduct logistic regression if two clusters emerged, or a multinomial logistic regression if more than two clusters emerged. Predictors of cluster membership included total literacy scale score, age, native English speaker status, self-reported learning disability, employment status, race/ethnicity, educational attainment, and skills-use at home (ICT, reading, writing, and numeracy).
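With two clusters, the outcome is binary (e.g., 1 for Cluster 1, 0 for Cluster 2), and logistic regression models the log-odds of Cluster 1 membership as a linear function of the predictors. The sketch below fits the coefficients by batch gradient ascent on the log-likelihood; R's `glm` instead uses iteratively reweighted least squares, and this sketch omits standard errors and p-values. Categorical predictors such as race/ethnicity would first need dummy coding, and the data in the test are made up for illustration.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def fit_logistic(X: List[List[float]], y: List[int],
                 lr: float = 0.1, n_iter: int = 5000) -> List[float]:
    """Maximum-likelihood logistic regression; returns [intercept, b1, ..., bp]."""
    n, p = len(X), len(X[0])
    beta = [0.0] * (p + 1)
    for _ in range(n_iter):
        grad = [0.0] * (p + 1)
        for xi, yi in zip(X, y):
            z = beta[0] + sum(b * x for b, x in zip(beta[1:], xi))
            resid = yi - sigmoid(z)  # observed minus predicted probability
            grad[0] += resid
            for j, x in enumerate(xi, start=1):
                grad[j] += resid * x
        # Step uphill on the average log-likelihood gradient.
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta
```

Exponentiating a fitted slope gives an odds ratio: a value above 1 means that higher values of that predictor are associated with higher odds of membership in the cluster coded 1.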

Results

Preliminary analyses

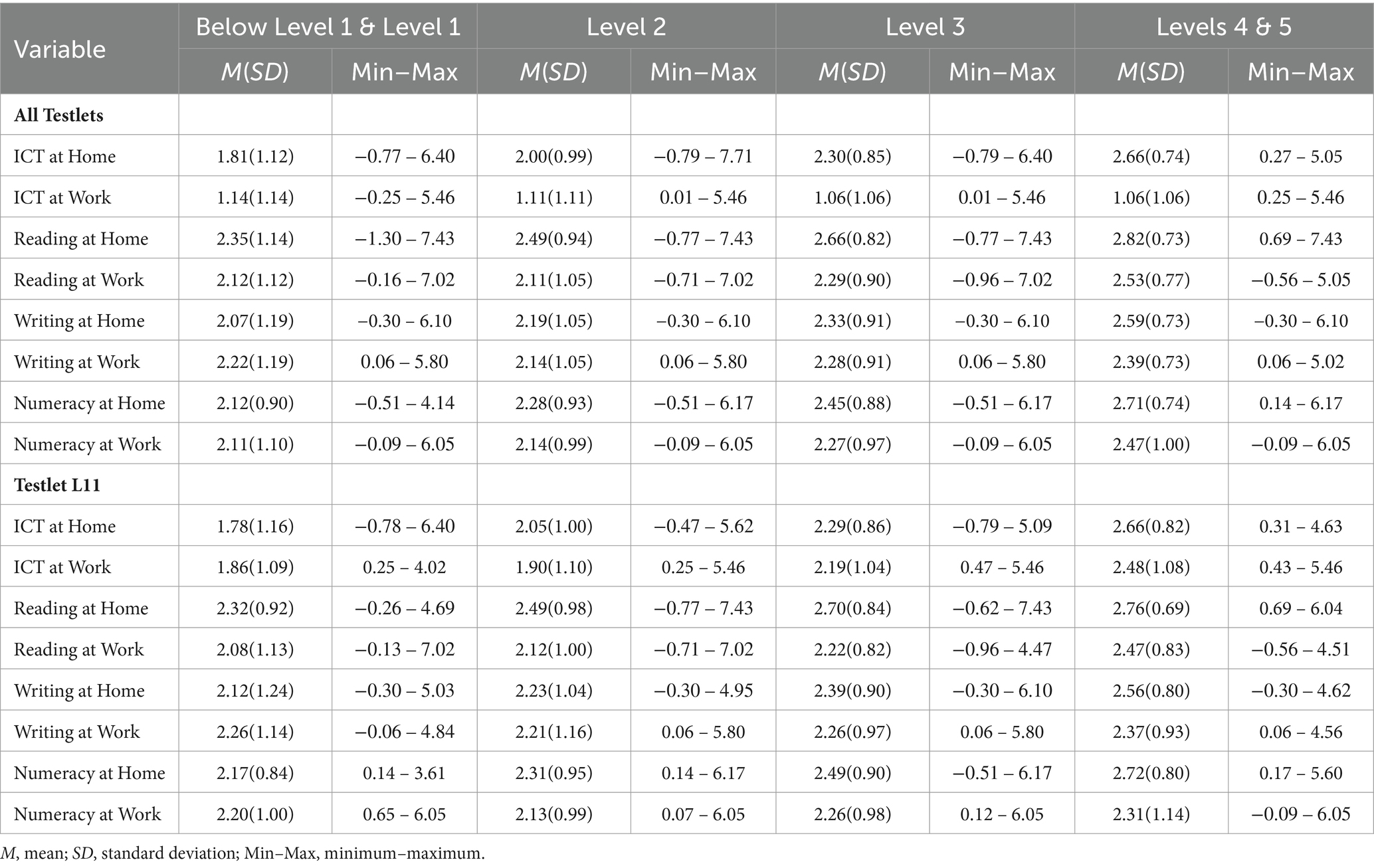

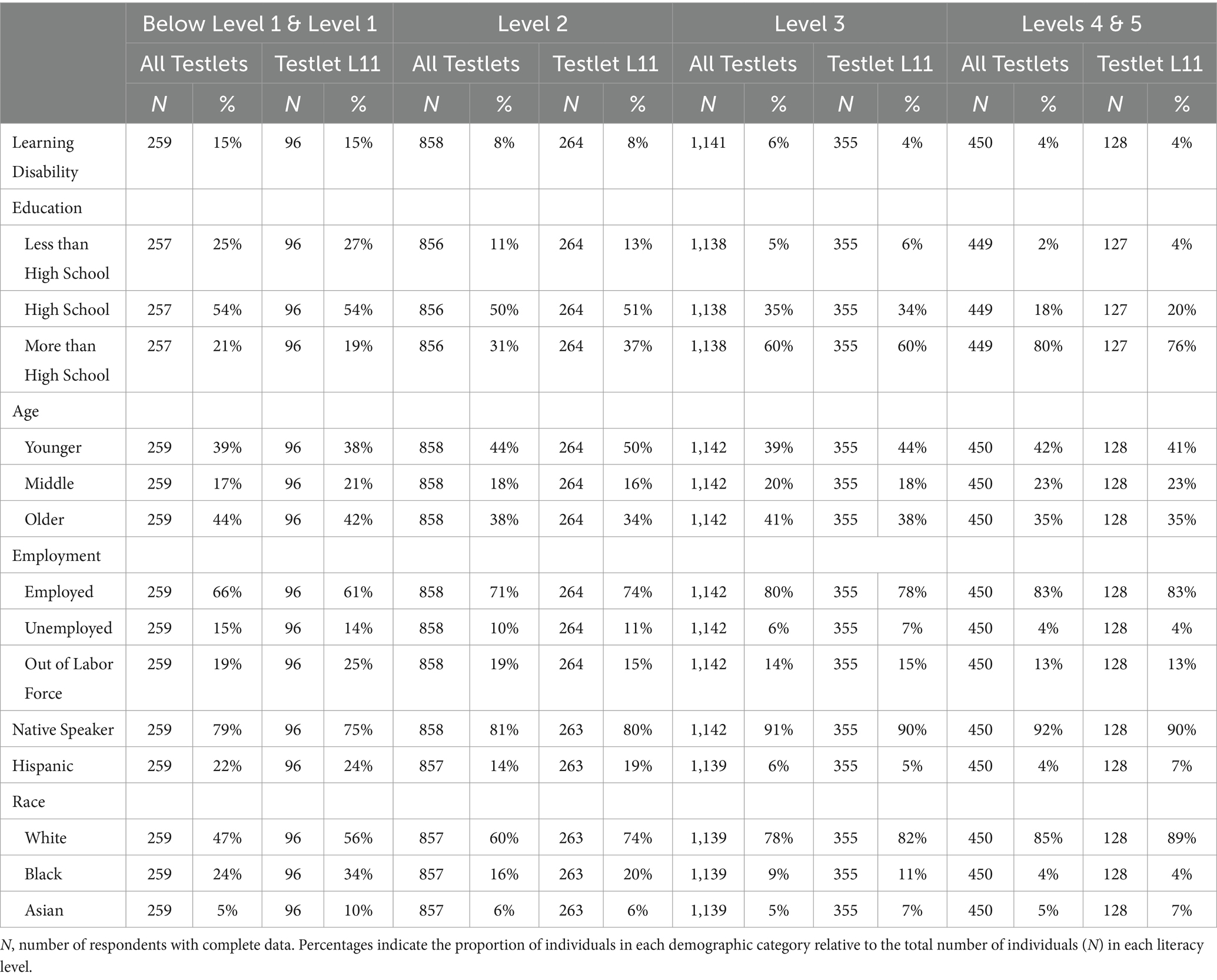

Table 1 presents mean skills-use (ICT, reading, writing, and numeracy) across the literacy levels for all testlets and for Testlet L11 alone. Table 2 includes demographic frequencies across the literacy levels for all testlets and for Testlet L11 alone. The Below Level 1 and Level 5 groups had substantially smaller sample sizes (Ns = 24 and 29, respectively); thus, we combined the Below Level 1 with the Level 1 group and the Level 4 with the Level 5 group. Across all testlets, respondents in the lower-scoring literacy groups (Levels 1 and below and Level 2) reported lower skills-use for ICT at home and for all reading, writing, and numeracy domains than the Level 3 and Levels 4 and 5 groups. Additionally, respondents in the lower literacy groups reported lower educational attainment than those at Level 3 and Levels 4 and 5. Respondents in the lowest literacy group (Levels 1 and below) more frequently reported being in the oldest age group, self-identifying as Black, and self-identifying as Hispanic. Respondents in the higher literacy groups reported the highest frequencies of being native English speakers and being employed. Similar patterns were observed for Testlet L11.

Time allocation patterns across adults with lower compared to higher literacy skills

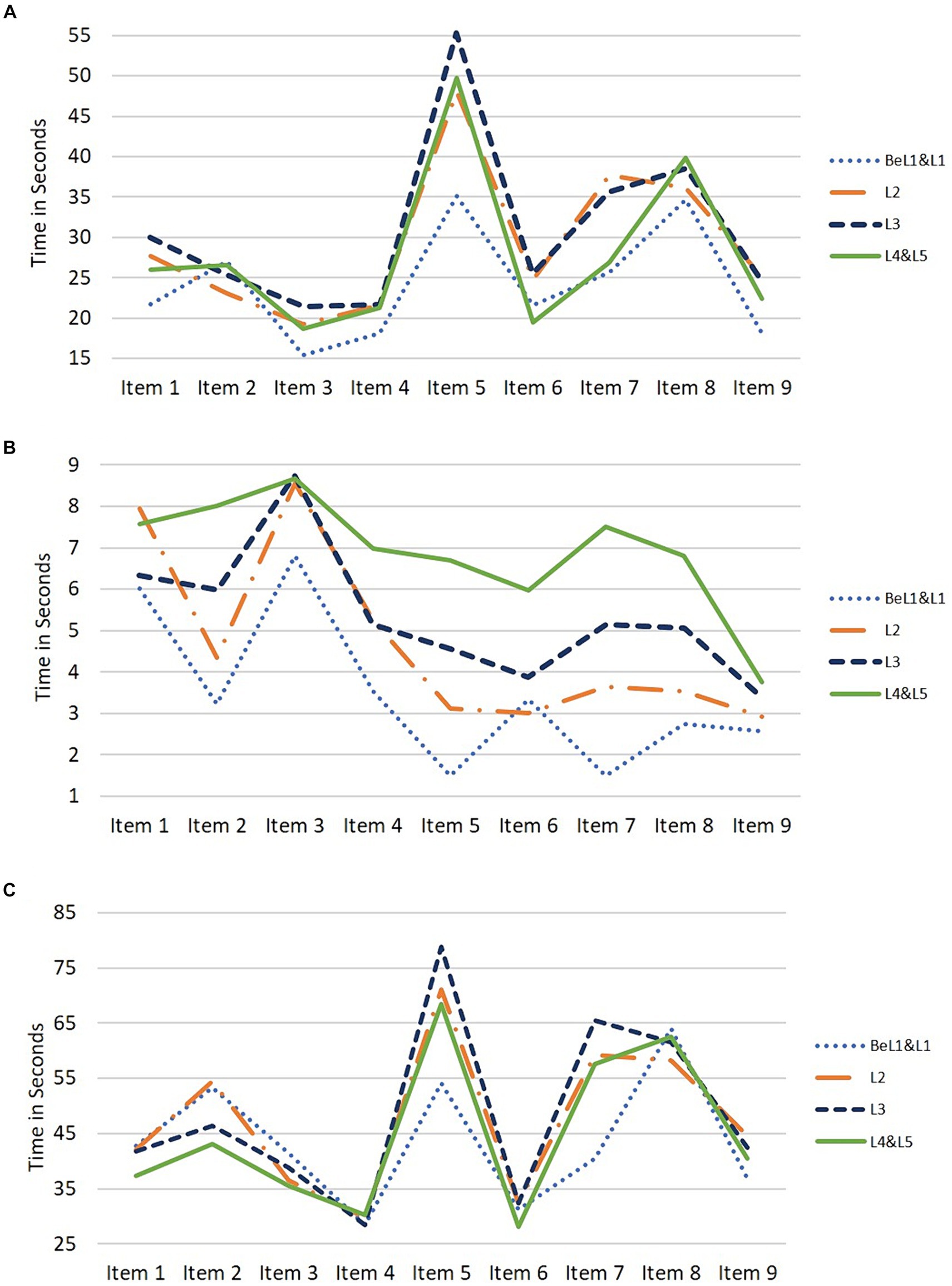

To address the first research question, we first examined patterns of time allocation in Testlets L11 through L13 on each of the nine items separately for each timing variable (time to first action, time for last action, total time) by literacy proficiency level (Levels 1 and below, Level 2, Level 3, Levels 4/5). These findings are visualized in Figure 2 for Testlet L11. Generally, respondents scoring at Level 1 and Below Level 1 on the PIAAC literacy domain allocated less time to items across all three timing variables than those scoring at Level 2 or above. Respondents at Level 2 showed time allocation patterns similar to those of Levels 3 and above for time to first action and total time; however, they appeared to spend less time on the last action than respondents at the higher levels.

Figure 2. Timing variables by literacy proficiency group. (A) Mean time to first action. (B) Mean time for last action. (C) Mean total time.

The time allocation patterns for the nine items, categorized by literacy level, were also visualized for respondents assigned to Testlets L12 and L13 (refer to Supplementary Figures S1, S2). Across the three timing variables, respondents scoring at Level 1 and Below Level 1 on Testlets L12 and L13 appeared to allocate the least time on a larger number of items than their counterparts on Testlet L11. Similarly, respondents scoring at Level 2 on these testlets appeared to allocate less time than those at higher literacy levels on more items than in Testlet L11.

Next, we tested for significant differences in the timing variables across the literacy proficiency levels for each of the nine items in Testlet L11. Supplementary Table S4 presents ANOVA results along with pairwise comparisons, while Supplementary Table S5 displays the mean time spent in seconds for each item across the different literacy levels. Respondents scoring at Levels 1 and below demonstrated significantly shorter time to first action on items three, five, seven, and nine than those with higher literacy scores (ps < 0.05). Respondents scoring at Level 2 spent more time before their first action on item seven than those at Levels 4 and 5 (p < 0.01). In terms of time for the last action, respondents at Levels 1 and below spent significantly less time on items two, four, five, six, seven, and eight than individuals with higher literacy scores (ps < 0.05). Respondents at Level 2 also exhibited shorter time for last action than higher-scoring respondents on items two, five, six, seven, and eight (ps < 0.05). Regarding total time spent, respondents at Levels 1 and below spent significantly less time than those with higher scores on items five and seven; however, no differences were noted between respondents at Level 2 and those who achieved a higher literacy level.

Significance tests were also conducted across literacy levels for each of the nine items in Testlets L12 and L13; ANOVA results with pairwise comparisons are reported in Supplementary Tables S6, S7. In both testlets, across the three timing variables, respondents who scored at Levels 1 and below spent the least time on a larger number of items than in Testlet L11. Similarly, respondents who scored at Level 2 on Testlets L12 and L13 allocated less time than those at the higher literacy levels on more items than respondents on Testlet L11. These findings suggest that Testlets L12 and L13 posed greater difficulty than Testlet L11 for respondents at lower literacy levels.

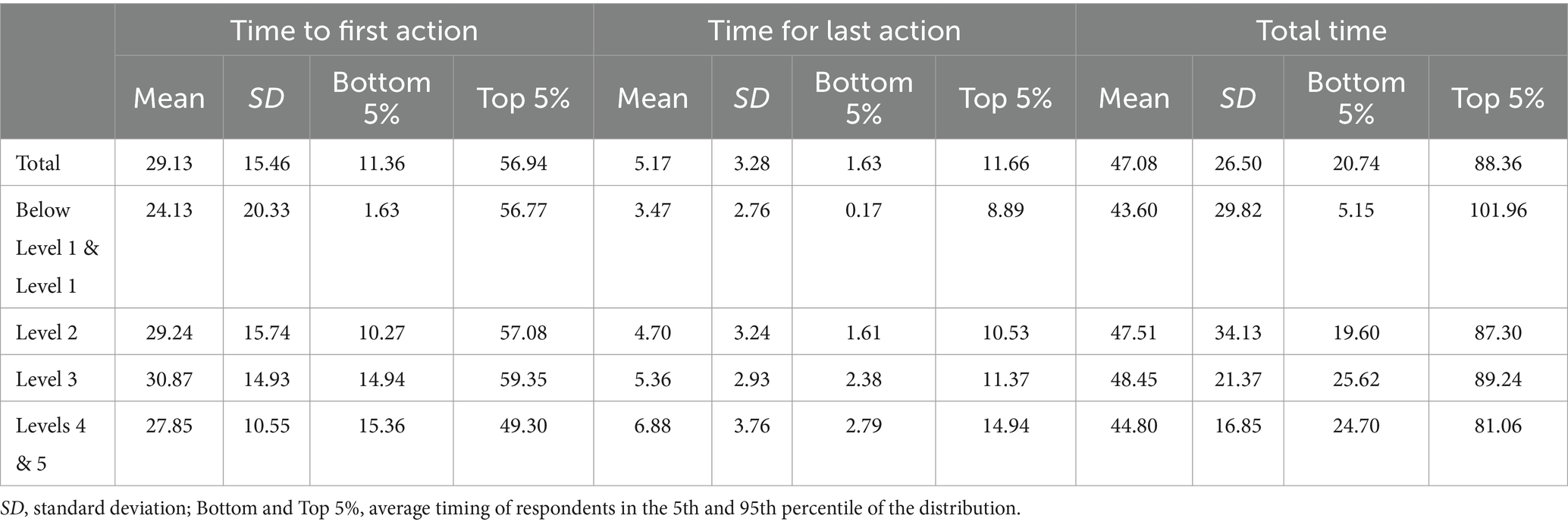

Finally, we averaged each timing variable across items within each of the three testlets and conducted one-way ANOVAs to test for differences by literacy level. In Testlet L11, individuals at Levels 1 and below took significantly less time to first action than those at Levels 2 and 3 (p < 0.01 and p < 0.001, respectively). No significant differences were observed between Level 2 and higher-scoring respondents. For time for the last action, respondents at Levels 1 and below spent significantly less time than those at Levels 2 and above (ps < 0.001), and those at Level 2 spent significantly less time than those at Levels 4/5 (p < 0.001). However, for total time, no significant differences were found between Levels 2 or below and the higher literacy levels. Descriptive statistics for these averages for Testlet L11 are presented in Table 3, and Supplementary Table S8 contains ANOVA results with pairwise comparisons for Testlets L11 through L13.
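The one-way ANOVA used above compares between-group and within-group variability. As a minimal sketch (not the authors' code, which is not described here), the F statistic and its degrees of freedom can be computed from raw samples as follows; the toy groups are illustrative only, and the post hoc pairwise method the authors used is not shown.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of samples, one per group."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Between-group sum of squares: group size times squared deviation of its mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: squared deviations from each group's own mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

For example, `one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]])` gives F = 27 on (2, 6) degrees of freedom. In practice, `scipy.stats.f_oneway` returns the same F together with a p-value.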

Table 3. Means and standard deviations of timing variables across literacy proficiency groups for Testlet L11.

Supplementary Table S9 contains descriptive statistics for Testlets L12 and L13 across literacy proficiency groups. Participants at Levels 1 and below on Testlets L12 and L13 had significantly shorter total times than respondents at higher literacy levels, a result not observed for Testlet L11. This difference likely reflects the greater item difficulty of Testlets L12 and L13: as testlet difficulty increased, individuals scoring from Below Level 1 through Level 2 allocated less time than those at higher literacy levels on more of the items. Consequently, we conducted the cluster analysis using Testlet L11 exclusively, as it contained the easiest items and was therefore the most appropriate for individuals who scored at the lower literacy levels (Levels 2 and below).

Clusters of adults with lower skills by time allocation

To address the second research question, we used K-means cluster analysis based on all timing variables (nine items × three timing variables = 27 variables) to examine whether there were distinct groups of respondents with lower literacy skills (at or below Level 2) on Testlet L11. We initially selected K = 2, based on the findings for research question one, which indicated that adults at Level 2 spent more time on the tasks than adults at Level 1 and Below Level 1; we therefore anticipated at least two clusters of adults based on time spent. A silhouette analysis across K = 2 through 8 showed that the highest silhouette score was obtained for K = 2, supporting this solution as the best fit to our data (see Supplementary Figure S3). Therefore, we retained two clusters as the optimal solution.
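The K-selection procedure described above (fit K-means for each K from 2 to 8, keep the K with the highest mean silhouette width) can be sketched in plain Python. This is an illustration, not the authors' code: the software they used is not named here, and the restart count, iteration cap, random seed, and use of unstandardized inputs are our assumptions. In the study, each point would be one respondent's 27-element timing vector.

```python
import math
import random


def _sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _nearest(p, centers):
    return min(range(len(centers)), key=lambda c: _sq_dist(p, centers[c]))


def kmeans(points, k, n_init=10, max_iter=100, seed=0):
    """Lloyd's K-means with random restarts; returns labels of the lowest-inertia run."""
    rng = random.Random(seed)
    best_labels, best_inertia = None, math.inf
    for _ in range(n_init):
        centers = [list(p) for p in rng.sample(points, k)]
        labels = None
        for _ in range(max_iter):
            new_labels = [_nearest(p, centers) for p in points]  # assignment step
            if new_labels == labels:
                break
            labels = new_labels
            for c in range(k):  # update step; an empty cluster keeps its old center
                members = [p for p, lab in zip(points, labels) if lab == c]
                if members:
                    centers[c] = [sum(col) / len(members) for col in zip(*members)]
        inertia = sum(_sq_dist(p, centers[lab]) for p, lab in zip(points, labels))
        if inertia < best_inertia:
            best_labels, best_inertia = labels, inertia
    return best_labels


def silhouette(points, labels):
    """Mean silhouette width (Euclidean); points alone in their cluster score 0."""
    clusters = sorted(set(labels))
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for i, p in enumerate(points):
        dists = {c: [] for c in clusters}
        for j, q in enumerate(points):
            if i != j:
                dists[labels[j]].append(math.dist(p, q))
        own = dists[labels[i]]
        if not own:
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # mean distance within own cluster
        b = min(sum(d) / len(d) for c, d in dists.items() if c != labels[i])
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


def choose_k(points, k_range=range(2, 9)):
    """Score each K by mean silhouette width; return (best K, {K: score})."""
    scores = {k: silhouette(points, kmeans(points, k)) for k in k_range}
    return max(scores, key=scores.get), scores
```

On two well-separated synthetic blobs, `choose_k` selects K = 2. In practice, scikit-learn's `KMeans` and `silhouette_score` provide the same computations with more efficient initialization.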

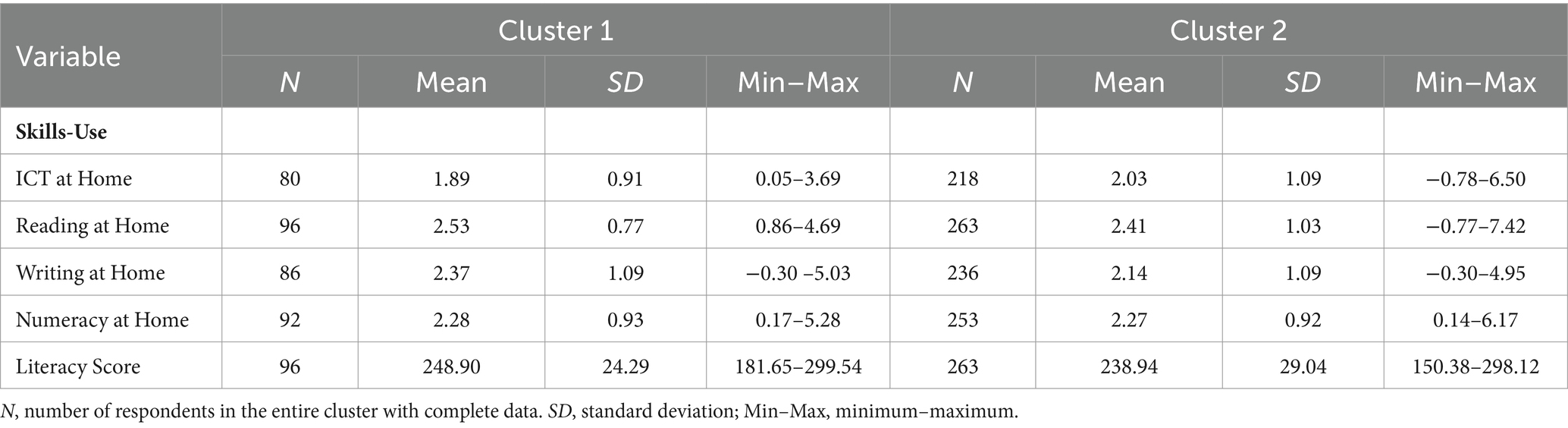

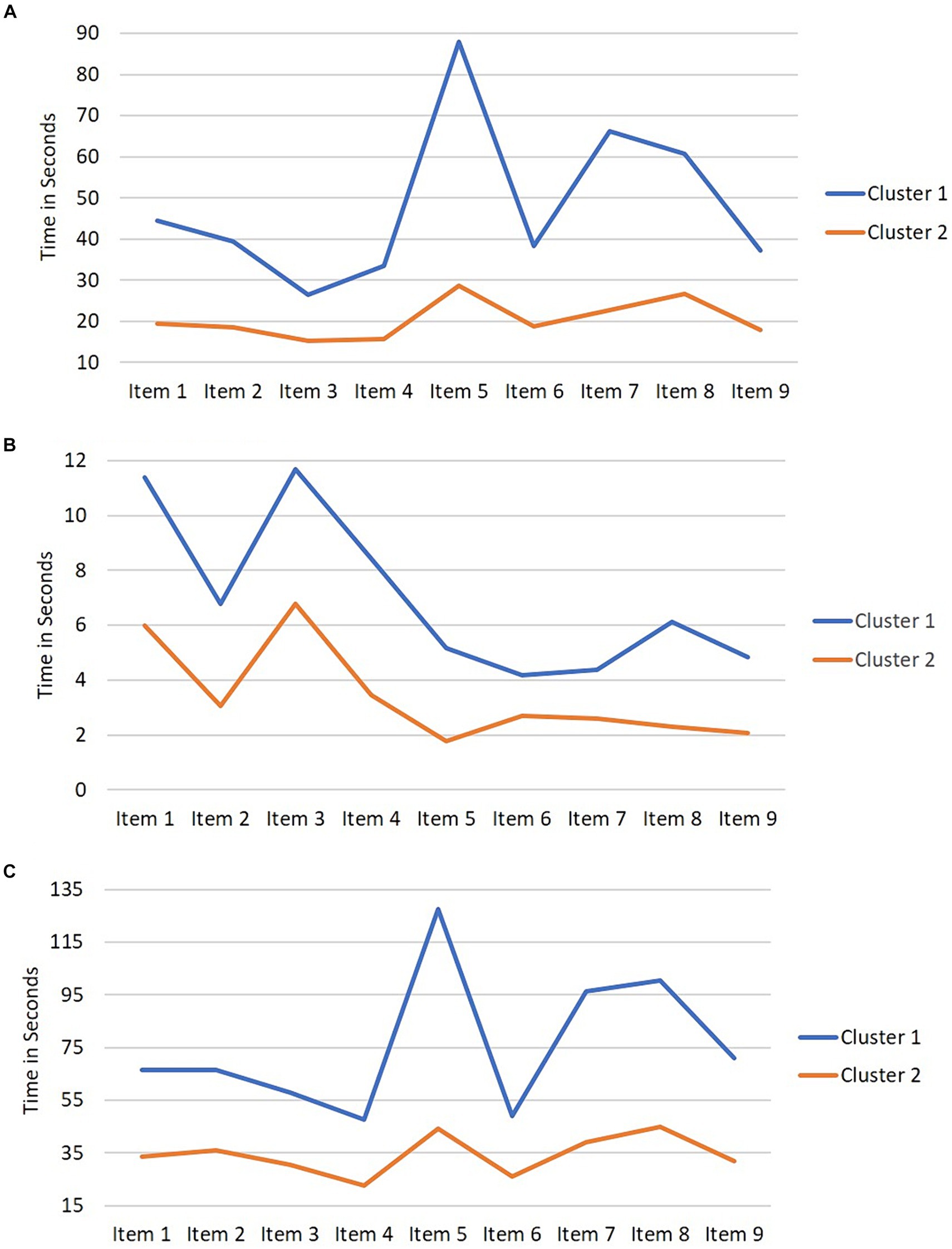

The visual representation of the 2-cluster solution is depicted in Figure 3. The findings indicate that Cluster 1 allocated more time than Cluster 2 across all nine items for the time to first action, time for last action, and total time variables. Additionally, we conducted t-tests to determine the significance of these differences across the nine items. Cluster 1 spent significantly more time than Cluster 2 on all nine items across all three timing variables (ps < 0.001). Supplementary Table S10 includes descriptive statistics for time spent in seconds on each item by cluster.

Figure 3. Timing variables by cluster. (A) Mean time to first action. (B) Mean time for last action. (C) Mean total time.

Next, we conducted one-way ANOVAs comparing Clusters 1 and 2 on each timing variable averaged across the nine items. For Cluster 1, the mean (SD) time to first action, time for last action, and total time were 48.19 (18.06), 7.00 (3.60), and 75.90 (22.14) seconds, respectively; for Cluster 2, they were 20.39 (8.85), 3.42 (2.36), and 34.22 (13.23) seconds, respectively. The two clusters differed significantly in time to first action (F(1, 357) = 377, p < 0.001), time for last action (F(1, 357) = 119.7, p < 0.001), and total time (F(1, 357) = 472, p < 0.001), indicating that respondents in Cluster 1 spent considerably more time on the items than respondents in Cluster 2.
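With only two groups, the one-way ANOVA is equivalent to a pooled-variance t-test (F = t²), and its degrees of freedom are (k − 1, N − k) = (1, 357) for N = 359. The sketch below computes F from summary statistics to show this. The means and SDs are the reported time-to-first-action values, but the cluster sizes are HYPOTHETICAL placeholders (they are not given in this section), so the resulting F will not reproduce the reported F = 377. Whether the earlier item-level t-tests used pooled or Welch variances is not stated; the pooled form is assumed here.

```python
import math


def anova_f_from_summary(means, sds, ns):
    """One-way ANOVA F from group means, SDs, and sizes; returns (F, (df_b, df_w))."""
    k, n_total = len(means), sum(ns)
    grand_mean = sum(n * m for n, m in zip(ns, means)) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    ss_within = sum((n - 1) * s ** 2 for n, s in zip(ns, sds))
    df = (k - 1, n_total - k)
    return (ss_between / df[0]) / (ss_within / df[1]), df


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's t with a pooled variance (equal-variance assumption)."""
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(sp2 * (1 / n1 + 1 / n2))


# Reported time-to-first-action summaries; n1 and n2 are HYPOTHETICAL sizes summing to 359.
n1, n2 = 150, 209
f_stat, df = anova_f_from_summary([48.19, 20.39], [18.06, 8.85], [n1, n2])
t_stat = pooled_t(48.19, 18.06, n1, 20.39, 8.85, n2)
```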

Predictors of cluster membership

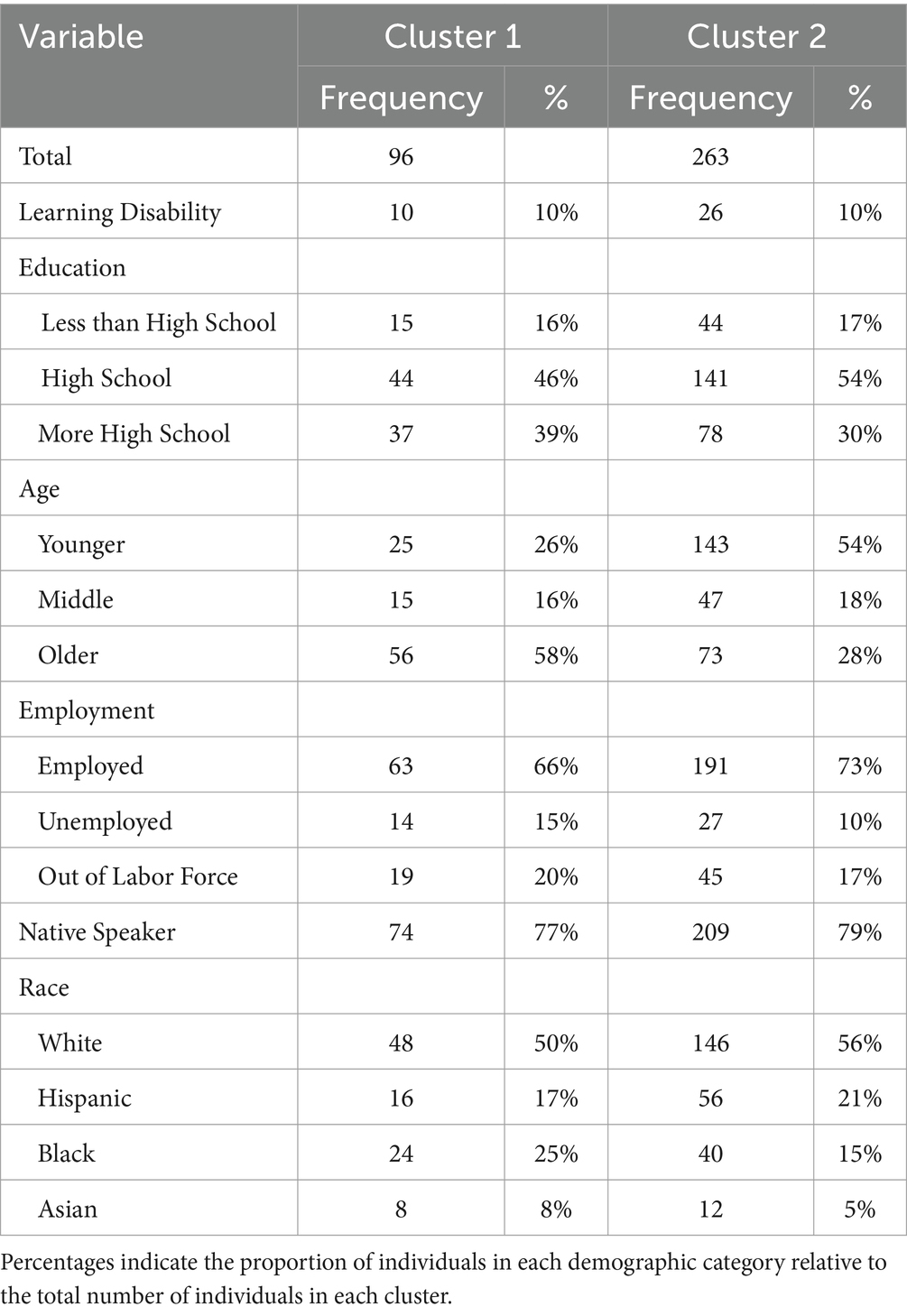

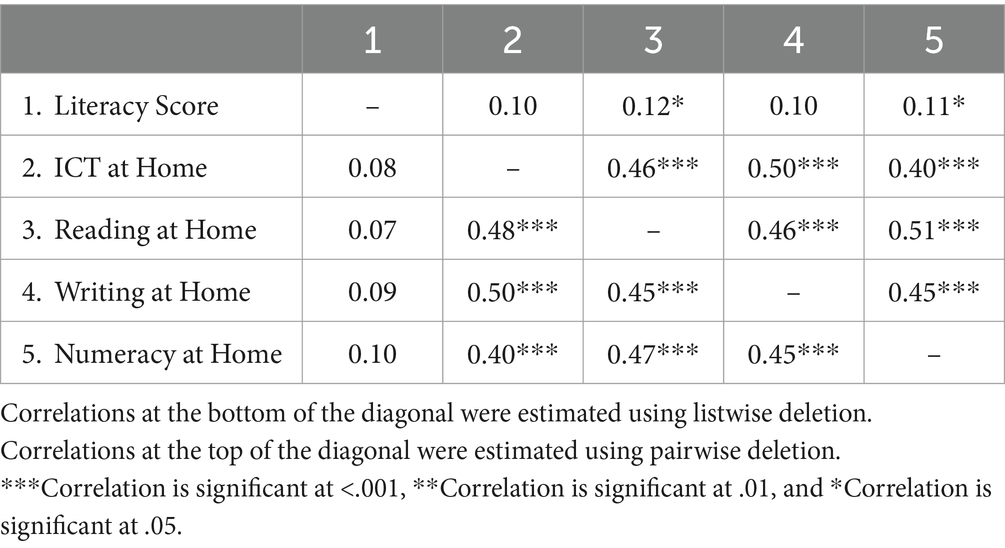

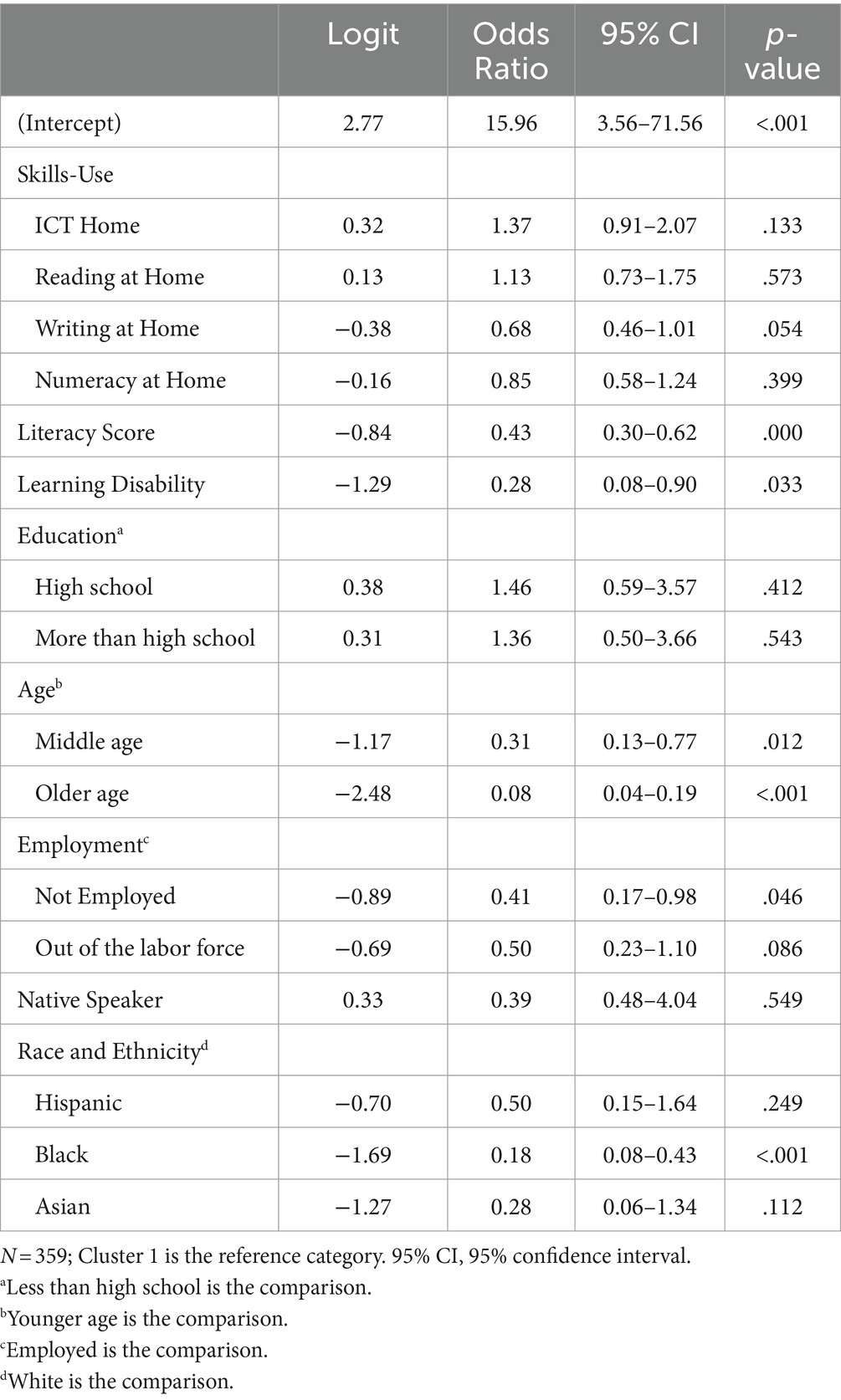

To address the third research question, logistic regression was employed to investigate whether various individual factors (such as literacy scores, educational attainment, and presence of a learning disability) along with contextual factors (ICT, reading, writing, and numeracy activities at home) predicted the clusters derived from respondents’ timing behaviors as explored in research question two. Table 4 reports the means of the skills-use and literacy scores and Table 5 contains demographic frequencies across Clusters 1 and 2. Table 6 reports correlations between the continuous variables (literacy score with ICT, reading, writing, and numeracy skills-use at home).

We first evaluated missing data across all variables. Most missing data occurred on the skills-use at home variables: of the 359 respondents, 17%, 10%, and 4% did not provide responses for ICT, writing, and numeracy skills-use at home, respectively. To assess whether the missingness pattern was consistent with Missing at Random (MAR), that is, related to observed variables, we performed a logistic regression in which the literacy and demographic variables from research question three predicted missingness on the skills-use at home variables. The demographic frequencies of respondents with missing and complete skills-use data are reported in Supplementary Table S11. The mean (SD) literacy domain scores for individuals with missing and complete skills-use data were 231.99 (32.26) and 244.68 (26.04), respectively. The parameter estimates of the model predicting missingness are reported in Supplementary Table S12, which displays the estimated coefficients, odds ratios (OR), 95% confidence intervals (95% CI), and p-values.
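The missingness check is a logistic regression with a 0/1 missingness indicator as the outcome. The sketch below fits a single-predictor version by gradient ascent on the mean log-likelihood; the study's model had several predictors, and the data here are HYPOTHETICAL standardized scores and indicators. Note that a relationship between missingness and observed variables rules out missing completely at random, but MAR itself cannot be confirmed from the observed data alone.

```python
import math


def fit_logistic(x, y, lr=0.1, n_iter=5000):
    """Fit P(y=1) = 1 / (1 + exp(-(b0 + b1*x))) by gradient ascent on the mean log-likelihood."""
    b0 = b1 = 0.0
    n = len(x)
    for _ in range(n_iter):
        g0 = g1 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            g0 += (yi - p) / n
            g1 += (yi - p) * xi / n
        b0 += lr * g0
        b1 += lr * g1
    return b0, b1


# HYPOTHETICAL standardized literacy scores and missingness indicators (1 = item missing).
literacy_z = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
missing = [1, 1, 1, 0, 1, 0, 0, 1, 0]
b0, b1 = fit_logistic(literacy_z, missing)
odds_ratio = math.exp(b1)  # multiplicative change in odds of missingness per 1-unit increase
```

An OR below 1, as in this toy example, corresponds to the pattern reported above: higher literacy scores, lower odds of missing skills-use data. Standard tools such as R's `glm(..., family = binomial)` fit the same model with standard errors.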

According to the findings, respondents with higher literacy scores were significantly less likely to have missing data on the skills-use at home variables (OR = 0.58, 95% CI = 0.50–0.81, p < 0.001). Furthermore, individuals who reported being unemployed were significantly less likely than those who were employed to have missing data on these variables (OR = 0.17, 95% CI = 0.05–0.44, p < 0.001). Because missingness depended on observed variables, we treated the skills-use at home data as MAR and handled the missing values using multiple imputation with the mice and miceadds packages in R (van Buuren and Groothuis-Oudshoorn, 2011; Robitzsch and Grund, 2023). We also report results from a complete case analysis (i.e., listwise deletion) to evaluate whether the findings remained consistent when cases with missing data were excluded. Supplementary Table S13 presents descriptive statistics for individuals with complete data, including average skills-use and literacy scores by cluster membership, and Supplementary Table S14 provides demographic frequencies by cluster membership.
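After multiple imputation, a model is fit to each imputed dataset and the results are combined. The mice package's `pool()` function implements Rubin's rules for this step (adding a Barnard–Rubin small-sample degrees-of-freedom adjustment, not shown). A minimal sketch of the core rules follows; the number of imputations used in the study is not stated here, and the inputs below are toy values.

```python
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool m per-imputation estimates and squared SEs with Rubin's rules."""
    m = len(estimates)
    q_bar = mean(estimates)       # pooled point estimate
    w = mean(variances)           # within-imputation variance
    b = variance(estimates)       # between-imputation variance (m - 1 denominator)
    t = w + (1 + 1 / m) * b       # total variance
    return q_bar, t, t ** 0.5     # estimate, total variance, pooled SE
```

The between-imputation term is what distinguishes the pooled standard error from that of a single-dataset analysis: it widens intervals to reflect uncertainty about the missing values.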

Table 7 displays the estimated coefficients, ORs, 95% CIs, and p-values from the logistic regression analysis of all 359 respondents. Writing skills-use at home approached significance, suggesting that more frequent writing at home was associated with lower odds of belonging to Cluster 2 (OR = 0.68, 95% CI = 0.46–1.01, p = 0.054). Higher scores on the literacy domain assessment were significantly associated with lower odds of being in Cluster 2 (OR = 0.43, 95% CI = 0.30–0.62, p < 0.001). Reporting a learning disability was also associated with lower odds of Cluster 2 membership (OR = 0.28, 95% CI = 0.08–0.90, p = 0.033). Age was negatively associated with Cluster 2 membership: middle-aged and older individuals had significantly lower odds of belonging to Cluster 2 than younger individuals (OR = 0.31, 95% CI = 0.13–0.77, p = 0.012, for middle-aged individuals; OR = 0.08, 95% CI = 0.04–0.19, p < 0.001, for older individuals). Moreover, individuals who were unemployed (OR = 0.41, 95% CI = 0.17–0.98, p = 0.046) and those who identified as Black (OR = 0.18, 95% CI = 0.08–0.43, p < 0.001) had significantly lower odds of belonging to Cluster 2 than employed individuals and White respondents, respectively.
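Each OR in Table 7 is the exponentiated logistic coefficient, and a Wald 95% CI is exp(β ± 1.96·SE). The sketch below, assuming Wald intervals (the CI method is not stated), backs out the standard error from the reported literacy interval and reconstructs the CI; because reported values are rounded, the round trip is approximate. Because the outcome is coded as Cluster 2 membership, the reciprocal 1/OR expresses the same effect as odds of Cluster 1 membership.

```python
import math

Z_95 = 1.959964  # two-sided 95% standard normal critical value


def odds_ratio_ci(beta, se, z=Z_95):
    """Odds ratio and Wald CI from a logistic coefficient and its standard error."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def se_from_ci(lower, upper, z=Z_95):
    """Back out the standard error of log(OR) from a reported 95% CI."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# Literacy score predicting Cluster 2 membership, as reported: OR = 0.43, 95% CI 0.30-0.62.
beta = math.log(0.43)
se = se_from_ci(0.30, 0.62)
or_, lo, hi = odds_ratio_ci(beta, se)
or_cluster1 = 1 / or_  # the same effect expressed as odds of Cluster 1 membership
```

Here `or_cluster1` is about 2.33, i.e., a one-unit higher literacy score is associated with roughly 2.3 times the odds of Cluster 1 membership, on whatever scale the literacy predictor was entered.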

Supplementary Table S15 contains the parameter estimates from the complete case analysis (N = 272 participants with complete data). Findings from the logistic regression utilizing complete case analysis to address missing data were largely similar to those from the analysis using multiple imputation, with two notable exceptions. Specifically, the utilization of writing skills at home emerged as a significant predictor of cluster membership in the complete case analysis (OR = 0.58, 95% CI = 0.36–0.91, p = 0.020); however, writing skills-use was not significant after including all 359 participants and imputing the missing data (OR = 0.68, 95% CI = 0.46–1.01, p = 0.054). In the model with 272 participants with complete data, the association with learning disability approached significance (OR = 0.29, 95% CI = 0.08–1.13, p = 0.065), yet was significant in the model with imputed data (OR = 0.28, 95% CI = 0.08–0.90, p = 0.033). Nevertheless, the direction and magnitude of the effects from the complete case analysis remained highly consistent with the model involving 359 participants that employed multiple imputation methods to handle missing data. Discrepancies in significance values may be attributed to the reduced sample size in the complete case analysis model.
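A rough back-of-the-envelope check supports the sample-size explanation. Standard errors shrink approximately with the square root of the sample size, and a CI's width on the log-odds scale is proportional to the SE. The sketch below compares the reported complete-case and imputed CI widths with √(359/272). This is only approximate: imputed SEs also include between-imputation variance, and the reported CIs are rounded.

```python
import math


def log_ci_width(lower, upper):
    """Width of a reported odds-ratio CI on the log scale (proportional to the SE)."""
    return math.log(upper) - math.log(lower)


# Reported 95% CIs: complete case (N = 272) divided by multiply imputed (N = 359).
writing_ratio = log_ci_width(0.36, 0.91) / log_ci_width(0.46, 1.01)
disability_ratio = log_ci_width(0.08, 1.13) / log_ci_width(0.08, 0.90)
# If only sample size differed, SEs would scale roughly with sqrt(359 / 272).
expected_ratio = math.sqrt(359 / 272)
```

The observed ratios (about 1.18 for writing and 1.09 for learning disability) bracket the expected ratio of about 1.15, which is consistent with the wider complete-case intervals stemming largely from the smaller sample.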

Discussion

The purpose of the current study was threefold. First, we explored the time allocation patterns (time to first action, time for last action, total time) of adults across all proficiency levels on the PIAAC digital literacy items. Second, we examined whether distinct time allocation patterns (i.e., clusters) could be identified among adults who demonstrated lower literacy levels (Levels 2 and below). Finally, we examined whether individual and contextual factors predicted cluster membership within this group of adults. Results suggested that adults with the lowest literacy levels (Level 1 and Below Level 1) spent less time on many of the nine literacy items, for both time to first action and time for last action, than adults who achieved Level 2 and higher. Within the group of respondents with lower literacy skills (Levels 2 and below), we identified two distinct clusters: respondents in Cluster 1 spent significantly more time on the digital literacy items than respondents in Cluster 2. Finally, we found that adults with lower literacy skills who reported having a learning disability, were older, were unemployed, identified as Black, and had relatively higher literacy scores had a higher probability of Cluster 1 membership (spending more time). Engagement in writing activities approached significance (p = 0.054), suggesting that individuals who reported writing more frequently at home were more likely to be members of Cluster 1. These findings have educational implications for adults with lower literacy skills as well as implications for future research with this population.

Time allocation patterns

Across all testlets, adults with higher literacy levels (Levels 3 and 4/5) demonstrated an overall pattern of spending more time across the items than adults with lower literacy levels (Level 2 and Levels 1 and below). This is consistent with evidence from work with proficient adults, which suggests that time spent on digital assessments is related to better performance (Liao et al., 2019). Similarly, some emerging work with adults with lower literacy skills suggests that more time spent on digital tasks may indicate better performance for some learners (e.g., Fang et al., 2018).

Interestingly, time allocation patterns varied among adults who demonstrated lower literacy skills (Levels 2 and below). Specifically, respondents on Testlet L11 with a Level 2 proficiency score spent less time overall for the last action than those at Levels 3–5. However, their time to first action resembled that of respondents at higher proficiency levels on eight of the nine items; on item 7, those who scored at Level 2 spent more time to first action than those at Levels 3 and above. Adults at Levels 1 and below, on average, spent less time than those at Levels 2 and above on many of the items for both time to first action and time for last action. These patterns are echoed in our finding that two distinct clusters emerged within the group of respondents who scored at Levels 2 and below, and that the lowest literacy scores were associated with less time spent interacting with the items. These findings raise questions about the challenges respondents with lower skills face when interacting with digital test items. For example, respondents with lower literacy skills may become fatigued over time and/or be unable to manage the cognitive (i.e., reading) and/or technology demands of the digital environment. Future research should explore the nature of the challenges that adults with lower skills face while interacting with digital assessments, as this may inform testing strategies and reading skills that could be taught in adult education programs to improve students’ performance.

Individual and contextual predictors

Generally, individual differences and contextual factors were similar across the two clusters, with the exceptions of age, race, employment status, learning disability, literacy, and writing skills-use at home. In particular, younger adults (aged 34 and younger) were more likely to be in Cluster 2 (less time spent overall). Although the higher literacy levels (3 through 5) appear to include a higher representation of respondents who are younger and have higher ICT skills (see Tables 1, 2), our results for Levels 2 and below emphasize that younger adults facing literacy challenges may not always engage adequately with digital literacy assessments. This finding aligns with Fang et al. (2018), who identified four clusters of adults based on timing and accuracy in a digital reading educational program. One of these clusters was a group of under-engaged readers who were fast but relatively inaccurate on the digital tasks. The authors did not report individual and contextual factors (e.g., age, race, educational attainment, skills-use) for each profile; however, our findings suggest that younger adults with lower literacy skills may tend to be under-engaged readers.

Adults with lower literacy skills, particularly those who are older, self-identify as Black, report having a learning disability, demonstrate relatively higher literacy performance, and write more frequently at home, show a higher likelihood of belonging to Cluster 1 and spending more time on tasks. This aligns with previous research indicating that individuals with learning difficulties (e.g., Ziegler et al., 2006) and older adults (e.g., Comings et al., 1999; Sabatini et al., 2011; Greenberg et al., 2013) tend to show better attendance in adult literacy intervention studies and educational classes. Moreover, daily exposure to printed materials among adult literacy students is correlated with greater persistence and engagement in adult education (Greenberg et al., 2013), which is consistent with the connection we found between frequent writing at home and longer time spent on the digital literacy assessment. Additionally, recent research suggests that adults with low literacy skills who identify as Black tend to show better attendance in adult literacy programs across the state of Georgia than those who identify as White (Tighe et al., 2023b). Taken together, our results and prior literature suggest that these groups exhibit higher levels of persistence, despite being disproportionately represented among adults with lower literacy skills. These findings underscore the need for further research on the factors and barriers affecting these adults’ performance on digital literacy assessments. Such insights could inform tailored instructional strategies for these groups, potentially leading to improved outcomes on digital literacy assessments.

Educational implications

A few educational implications can be drawn from the results of this study. First, adults with lower literacy skills, particularly those at Levels 1 and below, spent considerably less time on the items, suggesting a potential need for support in sustaining their engagement with digital literacy assessments. Second, within the group scoring at Levels 2 and below, individuals in Cluster 1 spent more time on the digital literacy items and were more likely to score higher than those in Cluster 2, who spent much less time. However, Cluster 1 also spent more total time on average (M = 75.90 s) than respondents at higher literacy proficiency levels (Levels 3 and above; Ms = 44.80–48.50 s). Similarly, Fang et al. (2018) identified a group of adults who spent more time on digital literacy tasks but were less accurate than those who spent less time. Therefore, sustained engagement may benefit adults with lower literacy skills, but its impact has limits. These results suggest that respondents may require additional support with foundational literacy skills, either to sustain engagement (for respondents in Cluster 2, who spent much less time) or to become better, more efficient readers (as for respondents in Cluster 1).
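Expressed as ratios of mean total time (using the Cluster 1 and Cluster 2 means reported for the cluster comparison and the Levels 3 and above range quoted here, all for Testlet L11), Cluster 1 took roughly 1.6 to 1.7 times as long as higher-proficiency respondents, whereas Cluster 2 took roughly three quarters as long:

```latex
\frac{\bar{t}_{\text{Cluster 1}}}{\bar{t}_{\text{Levels 3+}}}
  = \frac{75.90}{48.50} \approx 1.56 \;\text{ to }\; \frac{75.90}{44.80} \approx 1.69,
\qquad
\frac{\bar{t}_{\text{Cluster 2}}}{\bar{t}_{\text{Levels 3+}}}
  = \frac{34.22}{48.50} \approx 0.71 \;\text{ to }\; \frac{34.22}{44.80} \approx 0.76
```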

To date, a few intervention studies have focused on targeting the foundational reading skills of adults with lower literacy skills (e.g., Alamprese et al., 2011; Greenberg et al., 2011; Hock and Mellard, 2011; Sabatini et al., 2011). However, many of these studies reported minimal to no gains in learners (e.g., Greenberg et al., 2011; Sabatini et al., 2011). More recently, intervention work has explored a digital learning tool called AutoTutor to teach comprehension strategies to adults with lower literacy skills (Graesser et al., 2021; for an example, see www.arcweb.us). Recent evidence suggests that this tool may be a valuable supplement to traditional instruction (Hollander et al., 2021), potentially leading to improvements in the foundational reading skills needed (e.g., decoding, vocabulary) to perform better on literacy assessments, particularly digital literacy assessments.

Furthermore, respondents in the current study who scored at Levels 2 or below, particularly those at Levels 1 and below, might have displayed diminished engagement (i.e., less time) on specific items because of limited use of ICT skills. These indicators of reduced engagement align with the skill profiles linked to Levels 2 and below in the PIAAC literacy domain, which indicate that individuals at these levels often lack skills for interacting with digital texts. Our findings underscore the ongoing importance of exposing adult literacy learners to digital texts. In doing so, it may be valuable to prioritize the fundamental ICT skills essential for interacting effectively with these texts, such as scrolling, right-clicking, and using web features (Vanek et al., 2020). Integrating technology into adult literacy classrooms has the potential to enhance performance on digital literacy assessments, but technology should be integrated thoughtfully into classroom instruction (Vanek et al., 2020). Specifically, digital literacy activities should align with academic learning objectives and focus on helping adult literacy learners engage with digital texts relevant to high-stakes testing situations (e.g., GED, HiSET).

Moreover, our results from the broader sample of adult respondents suggest that respondents with higher literacy proficiency levels (Levels 3 and above) tend to also have higher engagement in skills-use overall at home and at work (see Table 1). Specific to individuals who scored at Levels 2 and below, respondents who scored higher on the literacy assessment also tended to report higher writing skills-use at home. Previous findings have suggested that literacy instruction in adult education programs that focuses on skills-use can lead to better outcomes (Reder, 2009b, 2019). Thus, instruction that is situated in daily, authentic scenarios that require reading and writing may help adults with lower literacy skills achieve higher gains and become more efficient when taking digital literacy assessments. Specifically, our findings emphasize the importance of adopting a “literacy-in-use” framework, which can help adults with lower literacy skills improve their reading and writing in different types of contexts and situations, some of which may occur in a digital environment, such as reading memos, emails, and interpreting instructions, diagrams, and/or maps (Trawick, 2019). This type of framework transcends instruction that is only focused on the foundational skills of reading (e.g., decoding, vocabulary knowledge), and emphasizes other higher-order skills that are necessary for adults with lower literacy skills (Tighe et al., 2023a,c), including making inferences, relating different pieces of a text or multiple texts to each other, and evaluating the quality of information in texts (Trawick, 2019).

Limitations and future directions

There were a few limitations to this study. First, this study focused on the digital literacy items, which are part of the computer-based PIAAC assessment. Thus, we were unable to capture the performance of adults with lower literacy skills who may have opted out of the computer-based assessment or may not have qualified to take it because of limited or no technology use. This suggests that some adults with lower literacy skills have virtually no technology experience and would benefit from increased attention to ICT skills in adult education programs, especially as high-stakes assessments such as the GED and HiSET become increasingly digital.

A second limitation of this study stems from the race and ethnicity item in the PIAAC dataset, which combines race and ethnicity in a single item, even though identifying as Hispanic denotes an ethnicity rather than a race. Consequently, our estimates for the race and ethnicity categories offer a less precise interpretation of their effects on cluster membership. For instance, we designated individuals identifying as White as the reference group and created dummy codes for the Black, Hispanic, and Asian categories. Under this coding, individuals who identified as Hispanic (who may also identify as White or Black) are contrasted only with those identifying as White, non-Hispanic, whereas ideally they would be compared with all other non-Hispanic individuals in the sample. Unfortunately, the PIAAC dataset did not allow respondents to distinguish ethnicity from race.
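The reference-group coding described above can be sketched as follows. The category labels are simplified and hypothetical (they are not PIAAC's variable codes), and how respondents in any other categories were handled is not described here, so this sketch simply rejects them. Because each respondent carries exactly one category, a Hispanic respondent cannot also be coded as Black or White, which is the source of the limitation.

```python
REFERENCE = "White"  # reference group: all dummies are 0
DUMMY_CATEGORIES = ("Black", "Hispanic", "Asian")  # hypothetical simplified labels


def dummy_code(race_ethnicity):
    """Return one {category: 0/1} row per respondent, with White as the reference."""
    allowed = {REFERENCE, *DUMMY_CATEGORIES}
    rows = []
    for value in race_ethnicity:
        if value not in allowed:
            raise ValueError(f"unexpected category: {value!r}")
        rows.append({cat: int(value == cat) for cat in DUMMY_CATEGORIES})
    return rows
```

Each dummy's logistic coefficient then contrasts that group with the White reference group only, not with all other respondents.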

A third constraint relates to the observed discrepancy between the logistic regression models employed in research question 3—multiple imputation and complete case analysis—to handle missing data. In our analysis, we found that writing at home significantly predicted cluster membership in the complete case analysis (p = 0.020), yet only approached significance post-imputation (p = 0.054). Similarly, the learning disability variable approached significance in the complete case analysis (p = 0.065), but was statistically significant following imputation with the augmented sample size (p = 0.033). The consistent direction and magnitude of effects between the complete case and imputed models suggest that this inconsistency could primarily be attributed to the larger sample size represented in the imputed model. Nevertheless, caution is warranted in the interpretation of findings related to the learning disability and writing at home variables due to this discrepancy.

Finally, this study used the PIAAC public-use process data and was therefore constrained to the three timing variables available (time to first action, time for last action, and total time). Timing data are informative for understanding test-taker behavior (Cromley and Azevedo, 2006) and provide important information about the engagement or efficiency of adults with lower literacy skills on digital literacy tasks (e.g., Fang et al., 2018); however, we could not examine challenges specific to the ICT skills needed to navigate a digital literacy assessment (e.g., using a mouse to highlight or click through the items). A future direction of our work is to use the restricted-access PIAAC process data to map specific actions (e.g., highlighting, clicking) and sequences of actions on individual literacy items, which will help us better understand the challenges adults with low literacy skills may face on a digital assessment and how these may influence item performance. Previous work using PIAAC PSTRE items suggests that different sequences of actions differ in how efficiently they solve the items (e.g., He and von Davier, 2015, 2016). However, the items examined in these studies involved higher-order executive function and problem-solving skills and involved fewer adults with lower literacy skills. Action sequences have thus not been examined for PIAAC literacy items specifically, which require more basic ICT skills (e.g., the sample item in Figure 1) of adults facing literacy challenges.

Conclusion

This study provides important insights into how adults with literacy challenges perform on digital literacy items. The time allocation patterns revealed a stark differentiation between literacy proficiency levels and, in particular, emphasized the heterogeneity of time allocation among adults facing challenges on digital literacy assessments (Levels 2 and below). Overall, the findings underscore the need for tailored interventions to support adults with lower literacy skills on digital literacy assessments, recognizing that engagement may vary according to skills-use in everyday life, literacy proficiency, and a diverse set of demographic factors.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found at: https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2014045REV and https://search.gesis.org/research_data/ZA6712?doi=10.4232/1.12955.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and the institutional requirements.

Author contributions

GK: Conceptualization, Formal analysis, Methodology, Visualization, Writing – original draft, Writing – review & editing. ET: Conceptualization, Funding acquisition, Supervision, Writing – original draft, Writing – review & editing. QH: Conceptualization, Data curation, Formal analysis, Funding acquisition, Methodology, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The research reported here is supported by the Institute of Education Sciences, U.S. Department of Education, through Grants R305A210344 and R305A180299 (awarded to Georgia State University). The opinions expressed are those of the authors and do not represent views of the Institute or the U.S. Department of Education.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1338014/full#supplementary-material

Footnotes

1. ^In the integrated block design, the 18 unique items were assigned to four blocks: block A1 (4 items), block B1 (5 items), block C1 (4 items), and block D1 (5 items). Each testlet consisted of two blocks: L11 (block A1 and B1), L12 (block B1 and C1), and L13 (block C1 and D1). The block B1 and C1 functioned as linking blocks among the three testlets.
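The block design in this footnote can be written down as a small data structure, which makes its properties easy to check: each testlet contains nine items (matching the nine items analyzed per testlet), the four blocks hold 18 unique items, and B1 and C1 are the blocks shared across testlets. The names below are our own illustrative encoding of the design described here.

```python
BLOCKS = {"A1": 4, "B1": 5, "C1": 4, "D1": 5}  # number of items per block
TESTLETS = {"L11": ("A1", "B1"), "L12": ("B1", "C1"), "L13": ("C1", "D1")}


def items_per_testlet(blocks, testlets):
    """Total items in each testlet (sum of its two blocks)."""
    return {name: sum(blocks[b] for b in pair) for name, pair in testlets.items()}


def linking_blocks(testlets):
    """Blocks that appear in more than one testlet."""
    counts = {}
    for pair in testlets.values():
        for b in pair:
            counts[b] = counts.get(b, 0) + 1
    return sorted(b for b, c in counts.items() if c > 1)
```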

2. ^PIAAC used the two-parameter logistic (2PL) item response theory model and the Generalized Partial Credit Model (GPCM) to calibrate item parameters in literacy, numeracy, and problem-solving in technology-rich environments (PSTRE). Each item has two parameters: a difficulty parameter and a slope parameter. The difficulty parameter locates the item on the latent ability scale (higher values indicate harder items). The slope parameter indicates the item’s discrimination, that is, how sharply the item distinguishes between respondents at different latent trait levels.
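For a dichotomous item, the 2PL model gives the probability of a correct response as 1 / (1 + exp(−a(θ − b))), where θ is ability, b is difficulty, and a is the slope. A minimal sketch follows; some formulations multiply the exponent by a scaling constant D of about 1.7, which is omitted here, and this is not a claim about PIAAC's exact parameterization. The GPCM for polytomous items is not shown.

```python
import math


def p_correct_2pl(theta, a, b):
    """2PL item response function: probability of a correct response at ability theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))
```

At θ = b the probability is exactly 0.5, which is why b is read as the item's location on the ability scale; a larger a makes the curve steeper around that point, so the item separates respondents just below and just above b more sharply.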

References

Alamprese, J. A., MacArthur, C. A., Price, C., and Knight, D. (2011). Effects of a structured decoding curriculum on adult literacy learners' reading development. J. Res. Educ. Effect. 4, 154–172. doi: 10.1080/19345747.2011.555294

Brinkley-Etzkorn, K. E., and Ishitani, T. T. (2016). Computer-based GED testing: implications for students, programs, and practitioners. J. Res. Practice Adult Liter. Sec. Basic Educ. 5, 28–48.

Coiro, J. (2003). Reading comprehension on the internet: expanding our understanding of reading comprehension to encompass new literacies. Read. Teach. 56, 458–464.

Comings, J. P., Parrella, A., and Soricone, L. (1999). Persistence among adult basic education students in pre-GED classes (NCSALL-R-12). Cambridge, MA: National Center for the Study of Adult Learning and Literacy, Harvard Graduate School of Education.

Cromley, J. G., and Azevedo, R. (2006). Self-report of reading comprehension strategies: what are we measuring? Metacogn. Learn. 1, 229–247. doi: 10.1007/s11409-006-9002-5

Dietrich, J., Viljaranta, J., Moeller, J., and Kracke, B. (2016). Situational expectancies and task values: associations with students’ effort. Learn. Instr. 47, 53–64. doi: 10.1016/j.learninstruc.2016.10.009

Durik, A. M., Vida, M., and Eccles, J. S. (2006). Task values and ability beliefs as predictors of high school literacy choices: a developmental analysis. J. Educ. Psychol. 98, 382–393. doi: 10.1037/0022-0663.98.2.382

Fang, Y., Shubeck, K., Lippert, A., Cheng, Q., Shi, G., Feng, S., et al. (2018). Clustering the learning patterns of adults with low literacy skills interacting with an intelligent tutoring system. Proceedings of the 11th international conference on educational data mining, 348–354.

Feinberg, I., Tighe, E. L., Talwar, A., and Greenberg, D. (2019). Writing behaviors relation to literacy and problem solving in technology-rich environments: results from the 2012 and 2014 U.S. PIAAC study. Washington, DC. Retrieved from the PIAAC Gateway website. Available at: https://www.piaacgateway.com

Fox, J.-P., and Marianti, S. (2016). Joint modeling of ability and differential speed using responses and response times. Multivar. Behav. Res. 51, 540–553. doi: 10.1080/00273171.2016.1171128

Graesser, A. C., Greenberg, D., Frijters, J. C., and Talwar, A. (2021). Using AutoTutor to track performance and engagement in a reading comprehension intervention for adult literacy students. Revista Signos. Estudios de Linguistica 54, 1089–1114. doi: 10.4067/S0718-09342021000301089

Graesser, A. C., Greenberg, D., Olney, A., and Lovett, M. W. (2019). Educational technologies that support reading comprehension for adults who have low literacy skills. In D. Perin (Ed.), The Wiley handbook of adult literacy (pp. 471–493). Wiley-Blackwell.

Greenberg, D., Wise, J. C., Frijters, J. C., Morris, R., Fredrick, L. D., Rodrigo, V., et al. (2013). Persisters and nonpersisters: identifying the characteristics of who stays and who leaves from adult literacy interventions. Read. Writ. 26, 495–514. doi: 10.1007/s11145-012-9401-8

Greenberg, D., Wise, J. C., Morris, R., Fredrick, L. D., Rodrigo, V., Nanda, A. O., et al. (2011). A randomized control study of instructional approaches for struggling adult readers. J. Res. Educ. Effect. 4, 101–117. doi: 10.1080/19345747.2011.555288

Guthrie, J. T., and Wigfield, A. (2017). Literacy engagement and motivation: rationale, research, teaching, and assessment. In D. Lapp and D. Fisher (Eds.), Handbook of research on teaching the English language arts (pp. 57–84). Routledge.

Hadwin, A., Winne, P., Stockley, D., Nesbit, J., and Woszczyna, C. (2001). Context moderates students’ self-reports about how they study. J. Educ. Psychol. 93, 477–487. doi: 10.1037/0022-0663.93.3.477

He, Q., Borgonovi, F., and Paccagnella, M. (2021). Leveraging process data to assess adults’ problem-solving skills: identifying generalized behavioral patterns with sequence mining. Comput. Educ. 166:104170. doi: 10.1016/j.compedu.2021.104170

He, Q., Liao, D., and Jiao, H. (2019). Clustering behavioral patterns using process data in PIAAC problem-solving items. In B. P. Veldkamp and C. Sluijter (Eds.), Theoretical and practical advances in computer-based educational measurement (pp. 10). Methodology of Educational Measurement and Assessment.

He, Q., and von Davier, M. (2015). Identifying feature sequences from process data in problem-solving items with n-grams. In A. van der Ark, D. Bolt, S. Chow, J. Douglas, and W. Wang (Eds.), Quantitative psychology research: Proceedings of the 79th annual meeting of the psychometric society (pp. 173–190). New York, NY: Springer.

He, Q., and von Davier, M. (2016). Analyzing process data from problem-solving items with n-grams: insights from a computer-based large-scale assessment. In Handbook of research on technology tools for real-world skill development (pp. 29–56).

Helsper, E. J., and Eynon, R. (2010). Digital natives: where is the evidence? Br. Educ. Res. J. 36, 503–520. doi: 10.1080/01411920902989227

Hock, M. F., and Mellard, D. F. (2011). Efficacy of learning strategies instruction in adult education. J. Res. Educ. Effect. 4, 134–153. doi: 10.1080/19345747.2011.555291

Hollander, J., Sabatini, J., and Graesser, A. C. (2021). An intelligent tutoring system for improving adult literacy skills in digital environments. COABE J. 10, 59–64.

Iñiguez-Berrozpe, T., and Boeren, E. (2020). Twenty-first century skills for all: adults and problem solving in technology rich environments. Technol. Knowl. Learn. 25, 929–951. doi: 10.1007/s10758-019-09403-y

Klein Entink, R. H. (2009). Statistical models for responses and response times. Doctoral dissertation. Enschede: University of Twente.

Klein Entink, R. H., Fox, J. P., and van der Linden, W. J. (2009). A multivariate multilevel approach to the modeling of accuracy and speed of test takers. Psychometrika 74, 21–48. doi: 10.1007/s11336-008-9075-y

Lesgold, A. M., and Welch-Ross, M. (Eds.) (2012). Improving adult literacy instruction: Options for practice and research. Washington, DC: National Academies Press.

Liao, D., He, Q., and Jiao, H. (2019). Mapping background variables with sequential patterns in problem-solving environments: an investigation of United States adults’ employment status in PIAAC. Front. Psychol. 10:646. doi: 10.3389/fpsyg.2019.00646

MacArthur, C. A., Konold, T. R., Glutting, J. J., and Alamprese, J. A. (2012). Subgroups of adult basic education learners with different profiles of reading skills. Read. Writ. 25, 587–609. doi: 10.1007/s11145-010-9287-2

Maechler, M., Rousseeuw, P., Struyf, A., Hubert, M., and Hornik, K. (2021). Cluster: cluster analysis basics and extensions. R package version 2.1.2. Available at: https://CRAN.R-project.org/package=cluster

NCES (2019). Highlights of the 2017 U.S. PIAAC results web report (NCES 2020-777). Washington, DC: U.S. Department of Education. Retrieved from: https://nces.ed.gov/surveys/piaac/current_results.asp

OECD (2013). Technical report of the survey of adult skills (PIAAC). OECD Publishing, Paris. Available at: https://www.oecd.org/skills/piaac/_Technical%20Report_17OCT13.pdf

OECD (2017). Programme for the international assessment of adult competencies (PIAAC), log files. Cologne: GESIS Data Archive ZA6712 Data file Version 2.0.0.

Olney, A. M., Bakhtiari, D., Greenberg, D., and Graesser, A. (2017). Assessing computer literacy of adults with low literacy skills. Paper presented at the 10th International Conference on Educational Data Mining (EDM), Wuhan, China, June 25–28, 2017. International Educational Data Mining Society. Retrieved from ERIC database (ERIC number: ED596577).

PIAAC Literacy Expert Group (2009). PIAAC literacy: a conceptual framework. OECD Education Working Paper No. 34. Paris: OECD Publishing.

R Core Team (2021). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Available at: https://www.R-project.org/

Reder, S. (2009a). Scaling up and moving in: Connecting social practices views to policies and programs in adult education. Literacy and numeracy studies: An international journal in the education and training of adults, 16(2) & 17(1), 1276.

Reder, S. (2009b). The development of literacy and numeracy in adult life. In S. Reder and J. Bynner (Eds.), Tracking adult literacy and numeracy skills: findings from longitudinal research (pp. 59–84). New York/London: Routledge.

Reder, S. (2010). Participation, life events and the perception of basic skills improvement. In J. Derrick, U. Howard, J. Field, P. Lavender, S. Meyer, E. N. von Rein, et al. (Eds.), Remaking adult learning: essays on adult education in honour of Alan Tuckett (pp. 31–55). London, UK: Institute of Education.

Reder, S. (2019). Developmental trajectories of adult education students: implications for policy, research, and practice. In D. Perin (Ed.), The Wiley handbook of adult literacy (pp. 429–450). Hoboken, NJ: Wiley Blackwell.

Robitzsch, A., and Grund, S. (2023). miceadds: some additional multiple imputation functions, especially for “mice”. R package version 3.16-18. Available at: https://CRAN.R-project.org/package=miceadds

Sabatini, J. P., Shore, J., Holtzman, S., and Scarborough, H. S. (2011). Relative effectiveness of reading intervention programs for adults with low literacy. J. Res. Educ. Effect. 4, 118–133. doi: 10.1080/19345747.2011.555290

Shernoff, D. J., Ruzek, E. A., and Sinha, S. (2016). The influence of the high school classroom environment on learning as mediated by student engagement. Sch. Psychol. Int. 38, 201–218. doi: 10.1177/0143034316666413

Talwar, A., Greenberg, D., and Li, H. (2020). Identifying profiles of struggling adult readers: relative strengths and weaknesses in lower-level and higher-level competencies. Read. Writ. 33, 2155–2171. doi: 10.1007/s11145-020-10038-0

Tighe, E. L., Hendrick, R. C., Greenberg, D., and Tio, R. (2023b). Exploring site-, teacher-, and student-level factors that influence retention rates and student gains in adult education programs. Paper presented at the 48th annual Association for Education Finance and Policy (AEFP) conference, Denver, Colorado.

Tighe, E. L., Kaldes, G., and McNamara, D. S. (2023c). The role of inferencing in struggling adult readers' comprehension of different texts: a mediation analysis. Learn. Individ. Differ. 102:102268. doi: 10.1016/j.lindif.2023.102268

Tighe, E. L., Kaldes, G., Talwar, A., Crossley, S. A., Greenberg, D., and Skalicky, S. (2023a). Do struggling adult readers monitor their reading? Understanding the role of online and offline comprehension monitoring processes during reading. J. Learn. Disabil. 56, 25–42. doi: 10.1177/00222194221081473

Tighe, E. L., Reed, D. K., Kaldes, G., Talwar, A., and Doan, C. (2022). Examining individual differences in PIAAC literacy performance: reading components and demographic characteristics of low-skilled adults from the U.S. prison and household samples. American Institutes for Research. Available at: https://static1.squarespace.com/static/51bb74b8e4b0139570ddf020/t/62790f61c142da17ba54bc21/1652100962180/AIR_PIAAC+Prison_Final_2022.pdf

Trawick, A. R. (2019). The PIAAC literacy framework and adult reading instruction. Adult Literacy Educ. 1, 37–52.

van Buuren, S., and Groothuis-Oudshoorn, K. (2011). mice: multivariate imputation by chained equations in R. J. Stat. Softw. 45, 1–67. doi: 10.18637/jss.v045.i03

van der Linden, W. J. (2007). A hierarchical framework for modeling speed and accuracy on test items. Psychometrika 72, 287–308. doi: 10.1007/s11336-006-1478-z

van Deursen, A. J. A. M., and van Dijk, J. A. G. M. (2016). Modeling traditional literacy, internet skills, and internet usage: an empirical study. Interact. Comput. 28, 13–26. doi: 10.1093/iwc/iwu027

Vanek, J. B., Harris, K., and Belzer, A. (2020). Digital literacy and technology integration in adult basic skills education: A review of the research. Syracuse, New York: ProLiteracy. Available at: https://proliteracy.org/Portals/0/pdf/Research/Briefs/ProLiteracy-Research-Brief-02_Technology-2020-06.pdf

Wang, J. H.-Y., and Guthrie, J. T. (2004). Modeling the effects of intrinsic motivation, extrinsic motivation, amount of reading, and past reading achievement on text comprehension between U.S. and Chinese students. Read. Res. Q. 39, 162–186. doi: 10.1598/RRQ.39.2.2

Wang, C., and Xu, G. (2015). A mixture hierarchical model for response times and response accuracy. Br. J. Math. Stat. Psychol. 68, 456–477. doi: 10.1111/bmsp.12054

Wicht, A., Reder, S., and Lechner, C. M. (2021). Sources of individual differences in adults’ ICT skills: a large-scale empirical test of a new guiding framework. PLoS One 16:e0249574. doi: 10.1371/journal.pone.0249574

Keywords: adult literacy, PIAAC, digital literacy, behavioral process data, assessment

Citation: Kaldes G, Tighe EL and He Q (2024) It’s about time! Exploring time allocation patterns of adults with lower literacy skills on a digital assessment. Front. Psychol. 15:1338014. doi: 10.3389/fpsyg.2024.1338014

Edited by:

Marcin Szczerbinski, University College Cork, Ireland

Reviewed by:

Ciprian Marius Ceobanu, Alexandru Ioan Cuza University, Romania

Freda Paulino, Saint Louis University, Philippines

Tomasz Żółtak, Polish Academy of Sciences, Poland

Copyright © 2024 Kaldes, Tighe and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gal Kaldes, gkaldes1@gsu.edu

†ORCID: Gal Kaldes, orcid.org/0000-0002-5429-8194

Elizabeth L. Tighe, orcid.org/0000-0002-0593-0720

Qiwei He, orcid.org/0000-0001-8942-2047
