- 1Department of Computer Science & Engineering, School of Computing & IT (SoCIT), Taylor's University, Subang Jaya, Malaysia

- 2Department of Restorative Dentistry, Faculty of Dentistry, University of Malaya, Kuala Lumpur, Malaysia

- 3Charles Perkins Centre, Faculty of Medicine and Health, University of Sydney, Darlington, NSW, Australia

- 4Future Technology Research Centre, College of Future, National Yunlin University of Science and Technology, Yunlin, Taiwan

- 5BioMedical Machine Learning Lab (BML), The Graduate School of Biomedical Engineering, University of New South Wales (UNSW) Sydney, Kensington, NSW, Australia

- 6UNSW Data Science Hub, The University of New South Wales, UNSW Sydney, Kensington, NSW, Australia

- 7Health Data Analytics Program, AI-enabled Processes (AIP) Research Centre, Macquarie University, Macquarie Park, NSW, Australia

- 8Department of Computer Engineering, College of Computer and Information Technology, Taif University, Taif, Saudi Arabia

Orthopantomography (OPG) is a dental radiographic imaging technique that physicians and pathologists use for disease identification and in legal matters. Examples include estimating the post-mortem interval, detecting child abuse and drug trafficking, and identifying unknown bodies. Recent automated image processing models have improved the precision of age estimation to approximately ± 1 year. This precision is often accepted as accurate, but age estimation should be as precise as possible in serious matters such as homicide. Current age estimation techniques depend heavily on manual, time-consuming image processing, even though age estimation is often time-sensitive. Machine learning-based methods have reduced image processing time, but their accuracy still needs improvement. We propose an ensemble of image classifiers that refines the precision of OPG-based age estimation from 1 year to intervals of 6, 3, and 1 month(s). This hybrid model (HCNN-KNN) combines convolutional neural networks (CNN) and K-nearest neighbors (KNN). We used it to analyse 1,922 panoramic dental radiographs of patients aged 15 to 23, obtained from various teaching institutes and private dental clinics in Malaysia. To reduce the risk of overfitting, we applied principal component analysis (PCA) and eliminated highly correlated features. To further improve performance, we performed systematic image pre-processing and trained the model on a series of classification tasks. We demonstrate that combining these approaches improved classification and segmentation, and thus the age estimation performance of the model.
Our findings suggest that, to the best of our knowledge, our model is the first to estimate age at 1-year, 6-month, 3-month, and 1-month intervals, with accuracies of 99.98%, 99.96%, 99.87%, and 98.78%, respectively.

Introduction

Age estimation in living and deceased individuals has long been important in pediatrics, the study of pathological complications, and forensic medicine (1, 2). Human bone age can be estimated from the bones of the foot, shoulder, ankle, hip, elbow, and cervical spine, as well as from teeth (3–5). The accuracy of an age calculated from a bone specimen depends on genetic and environmental factors such as age, race, smoking, lifestyle, famine, and natural disasters (6). Current methods, for example, the Greulich–Pyle or Tanner–Whitehouse method, can accurately estimate age from radiological examination of the left hand in children. However, age estimation becomes considerably more complex when distinguishing young adults from adolescents, and the absence of formal age documentation complicates the process further. Although recent anthropometric studies have demonstrated the importance of other bones, such as the pelvis, in determining bone age (3, 4), OPGs (dental radiographs) remain the primary focus in forensic practice (7).

Orthopantomography, also known as OPG or dental panoramic radiography, provides a wide panoramic view of the lower facial bones (8). An OPG displays all the teeth of both jaws, together with the jawbone and the temporomandibular joint (TMJ), on the same radiographic film. Dental OPG images have been suggested to be more effective for age estimation than X-ray images of other bones of the body. Teeth are long-lasting and resistant to high temperatures (2) and to the organisms that decompose a dead body (9). The Malaysian Institute of Forensic Medicine reported that dental X-ray images are the primary and most used method for estimating age (10, 11). However, the traditional image processing approach to OPG age estimation is still relatively expensive and laborious, and it requires long-term monitoring, which increases potential radiation exposure (12).

Traditional image processing for dental age measurement is a manual method that may include several steps, such as image pre-processing, segmentation, feature extraction, and classification or regression. Each of these steps is error-prone and can introduce variation in the outcome. For example, within an identical age range, a dry bone will appear different from a wet bone in a radiographic scan. In recent years, machine learning and deep learning techniques have been widely used to identify patterns in complex data such as clinical imaging, genomics, bioimaging, and phenotypic data (13–17). Machine learning algorithms, for example, neural networks (NN), support vector machines (SVM), K-nearest neighbors (KNN), and decision trees, have shown promising ability in prediction and classification (18–28), including bone age estimation (29, 30). Medical and biological datasets are growing rapidly, and artificial intelligence and machine learning algorithms have become the most popular tools for analysing such large, complex data (31–41). It is therefore important to develop novel techniques to uncover medical and biological patterns; in particular, machine learning and deep learning techniques have been widely used to analyse imaging data (23, 37, 42).

Deep learning-based methods such as convolutional neural networks, also known as end-to-end learning methods, operate directly on the input images and generate the desired output without intermediate steps such as segmentation and feature extraction. These methods can be either unsupervised or supervised. Supervised learning (SL) models learn a function from example input-output pairs. The supervised approach compares the radiographic image of each subject with an existing reference (i.e., labeled images) that includes gender and age information (43). However, most of these methods require a large amount of labeled data.

Collecting and labeling large datasets is usually expensive and time-consuming, and sometimes impossible. Semi-supervised learning (SSL), which leverages both labeled and unlabeled data, has therefore emerged in recent years and has proved to be a practical approach. Transfer learning (TL), a related approach, aims to use the knowledge gained from training on one dataset to analyse another, for example within a few-shot classification framework (44, 45). Moreover, unprocessed image data fed directly to conventional ML or DL models are prone to inaccurate class recognition, and extracting features that accurately represent behavior under different environmental conditions is time-consuming (11, 46). Hence, choosing a classifier that can discriminate behaviors given the diversity within and between classes is one of the main challenges in behavior recognition. Systematically converting raw data into feature vectors with effective, engineered feature extractors is therefore required for proper classification (47–49).

Despite these improvements, developing and training deep neural networks remains challenging and time-consuming. Transfer learning, which uses a pre-trained deep network to perform data classification, has therefore attracted increasing attention (50, 51). In this article, we propose an innovative automated machine learning approach to bone age estimation based on a deep convolutional neural network (52). We improved the accuracy of age determination from dental panoramic images at intervals of approximately 6 months. The proposed method estimates age in the range of 15 to 23 years, divided into nine age groups, and each age group includes subcategories of images at 1-year, 6-month, 3-month, and 1-month intervals. The method combines feature extraction by convolutional neural networks with nearest neighbor classification to analyse and classify the information and features in dental images.

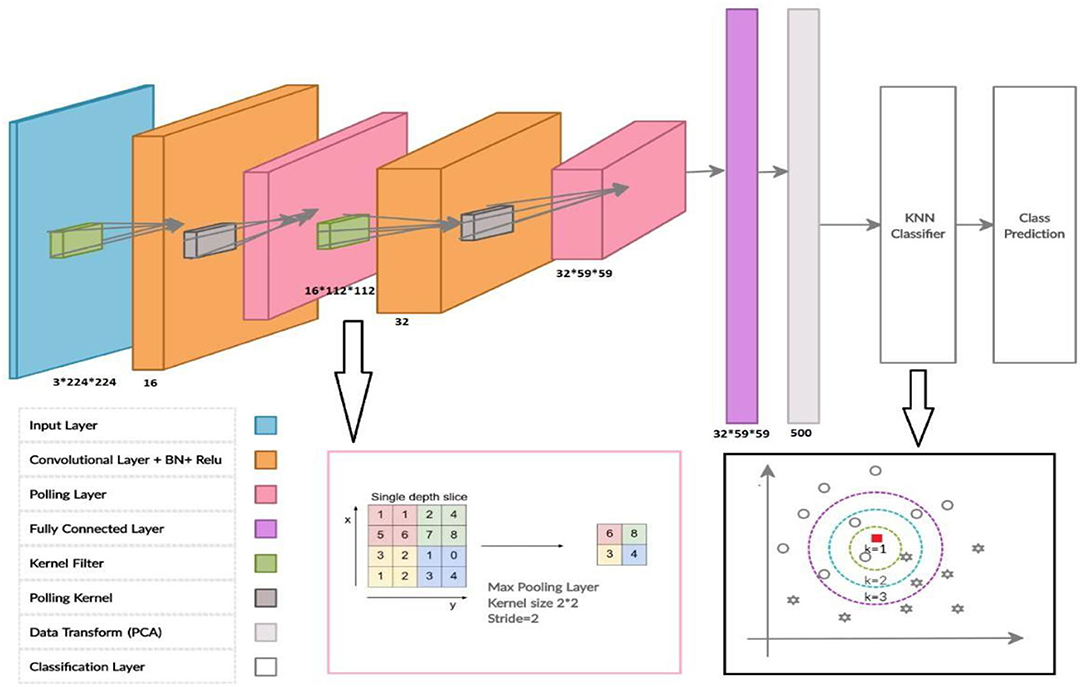

More specifically, the proposed HCNN-KNN architecture consists of four steps (Figure 1). First, a convolutional neural network (CNN) is trained on OPG images. Second, the output of the CNN's fully connected layer is fed into principal component analysis (PCA). Third, PCA performs data transformation and dimensionality reduction. Finally, the data are classified using the KNN algorithm. The class prediction process is detailed in the following sections.

Related Literature

Age estimation from dental X-ray images remains a critical field of development. Teeth are highly durable and can survive more than 20 years of post-mortem decomposition, which makes them an essential part of forensic investigation (53). Although the number of bone age estimation models has increased, most remain at the research stage, with slow or no transition to industry. Computerized methods for bone age estimation from OPG images are currently limited to ± 6 months precision (52). Deep neural network algorithms currently used to estimate age from dental X-ray images include AlexNet and ResNet, and training them requires large imaging datasets. For instance, Houssein et al. used a dataset of 1,429 dental X-ray images to achieve acceptable performance (11).

In another study, Avuçlu et al. (54) used 1,315 dental images in 162 different dental classes to predict age and gender morphologically from dental X-ray images with 95% accuracy (54, 55). Farhadian et al. (30) applied transfer learning, using a neural network trained on dental data, to age assessment. The study covered ages 14 to 60, with a total sample of 300 images. With a mean absolute error (MAE) of 4.12 years, the neural network produced reasonably accurate estimates (30). Its errors were smaller than those of the regression model, which on the test set had a root mean square error (RMSE) of 10.26 years and an MAE of 8.17 years.

Alkaabi et al. (8) examined several convolutional neural network architectures (AlexNet, VGGNet, and ResNet) for age estimation. They applied these common CNN architectures to automatic age estimation in forensic dentistry without modification. Age estimation from dental images was also performed with Capsule-Net, which achieved 36% higher accuracy than the CNN models. The transfer learning-based Capsule-Net model reached a cumulative accuracy of 76% at ± 1 year precision on OPG images.

Tao et al. (29) introduced a multilayer perceptron neural network to estimate dental age. Their experiments were carried out on a dataset of 1,636 samples, and they confirmed experimentally that their feature set made dental age estimation more reliable for OPG images at ± 1 year precision.

Kim et al. (56) applied a CNN-based deep learning algorithm to X-ray images of 9,435 cases (4,963 male, 4,472 female), sorted into three age groups. Evaluated on a database of panoramic dental radiographs, their approach achieved good accuracy.

Banar et al. (57) also implemented a fully automatic method that leveraged the full capacity of deep learning, using a dataset of 400 OPG X-ray images with 20 OPGs per category and per gender. To overcome the limitations of a small dataset, Banar et al. employed transfer learning with pre-trained CNNs, together with data augmentation. Their method substantially reduced image assessment time compared with the conventional method; the entire automatic workflow took 2.72 s on average. Given the small dataset, this pilot study demonstrated the strength of transfer learning and suggested that a completely automated solution can achieve results not inferior to manual image assessment.

Tuan et al. (58) introduced a semi-supervised fuzzy clustering algorithm for pre-processing and segmentation of dental X-ray images, and showed that it achieves higher accuracy than the original semi-supervised fuzzy clustering algorithm and several related approaches.

In the Department of Dentistry of Universiti Sains Islam Malaysia, an age estimation approach was tested on Malaysian children and adolescents aged 1 to 17. First, the first to the third teeth were segmented, and deformation-invariant features were then extracted with a deep learning method. The designed deep CNN (DCNN) model extracted a broad range of features in its hierarchical layers, for example, features invariant to size, rotation, and deformation, for OPG images at ± 1 year precision.

Materials and Methods

Image Pre-processing and Data Augmentation

Before feeding images to our model, we pre-processed them: we adjusted the contrast and enhanced the images, removed extra margins by cropping, and normalized pixel values to the range 0 to 1. Data augmentation was performed by mirroring and by duplicating images with rotation.
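The pre-processing and augmentation steps above can be sketched with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: the crop margin (`margin`) and rotation angles (`angles`) are hypothetical values, contrast adjustment is approximated by min-max stretching, and images are assumed to be single-channel arrays.

```python
import numpy as np
from scipy import ndimage


def preprocess(image, margin=10):
    """Crop a fixed border, then min-max stretch pixel values to [0, 1].

    `margin` is a hypothetical crop width; the paper does not state it.
    """
    img = image.astype(np.float64)
    if margin > 0:
        img = img[margin:-margin, margin:-margin]  # remove extra margins
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image: avoid division by zero
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)  # linear contrast stretch to [0, 1]


def augment(image, angles=(-5.0, 5.0)):
    """Return the image, its left-right mirror, and rotated copies.

    The rotation angles are assumptions for illustration.
    """
    out = [image, np.fliplr(image)]
    for angle in angles:
        rotated = ndimage.rotate(image, angle, reshape=False, mode="nearest")
        out.append(np.clip(rotated, 0.0, 1.0))  # spline interpolation can overshoot
    return out


# Usage on a random stand-in for a grayscale OPG.
rng = np.random.default_rng(0)
opg = rng.integers(0, 256, size=(120, 200))
clean = preprocess(opg)
batch = augment(clean)
print(clean.shape, len(batch))
```

Keeping `reshape=False` preserves the image size after rotation, so all augmented copies can be stacked into one batch.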

Before training, the data were split into two subsets: training data and validation data. We evaluated 50–50, 70–30, and 20–80 splits; for example, the 20–80 split used 20% of the data for validation and 80% for training. Obtaining acceptable results across these splits indicates that the proposed model performs correctly. To further validate the model, we also evaluated the trained model on a dataset it had not seen before, which included OPGs from individuals of different races, genders, and ages.
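A minimal sketch of these train-validation splits with scikit-learn's `train_test_split`, using random stand-in data (`X` and `y` are hypothetical). Stratifying by class keeps class proportions similar in both subsets; the text does not say whether the authors stratified, so this is an assumption. Following the example above, "20–80" is read as 20% validation.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-ins: 100 feature vectors with 9 year-level labels.
rng = np.random.default_rng(42)
X = rng.normal(size=(100, 8))
y = np.arange(100) % 9

# Fraction of the data held out for validation in each split.
validation_fractions = {"50-50": 0.5, "70-30": 0.3, "20-80": 0.2}

splits = {}
for name, valid_fraction in validation_fractions.items():
    X_tr, X_va, y_tr, y_va = train_test_split(
        X, y, test_size=valid_fraction, stratify=y, random_state=0
    )
    splits[name] = (len(X_tr), len(X_va))  # (training size, validation size)

print(splits)
```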

The Architecture of the Proposed HCNN-KNN Model

In this study, we propose a hybrid CNN-KNN (HCNN-KNN) model. Figure 2 depicts the steps of our hybrid model for image pre-processing and dental classification.

Figure 2. The conceptual framework for our hybrid model, dental image processing and classification processes.

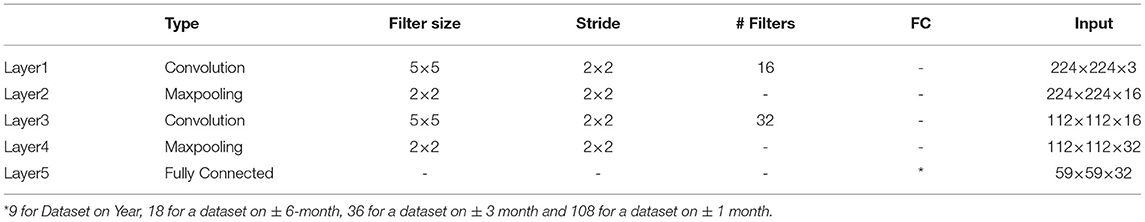

The proposed HCNN-KNN architecture comprises an input layer, two convolutional layers, a fully connected layer, a PCA data transformation layer, and a KNN classification layer (Figure 1). Each RGB input image (224 × 224 pixels) was fed to the first convolutional layer (i.e., the second layer of the network), which contains 16 convolutional filters with a kernel size of 5 and a padding of 2 and extracts low-level features such as edges, blobs, and shapes. The convolution was followed by batch normalization over the 16 channels and a rectified linear unit (ReLU) activation. This convolutional layer is termed “conv1”. A max-pooling layer then downsampled the feature maps with a stride of 2 and a padding of 2.
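The spatial sizes quoted in this section follow the standard output-size formula for convolution and pooling, floor((n + 2p - k) / s) + 1, for input size n, kernel k, stride s, and padding p. The sketch below checks them. The pooling kernel size is not stated, so a kernel of 5 (consistent with stride 2 and padding 2) is assumed; a 2 × 2 kernel with stride 2 and no padding would give the same sizes.

```python
def output_size(n, kernel, stride=1, padding=0):
    """Output width/height of a convolution or pooling layer (floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


size = 224  # input images are 224 x 224
sizes = []
for _ in range(2):  # two blocks: convolution, then max pooling
    size = output_size(size, kernel=5, stride=1, padding=2)  # conv keeps the size
    sizes.append(size)
    size = output_size(size, kernel=5, stride=2, padding=2)  # pooling halves it (kernel assumed)
    sizes.append(size)

flat_features = size * size * 32  # 32 channels after the second block
print(sizes, flat_features)
```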

The max-pooling layer thus reduced the spatial dimensions to 112 × 112 pixels, giving an output of 112 × 112 × 16. This output was fed to a second convolutional block with the same structure as the first, except that it has 32 filters (kernel size 5, padding 2), resulting in an output of 56 × 56 × 32. Fully connected layers then connected all neurons between layers (Table 1), with 50% dropout between fully connected layers to limit the propagation of less important features. We then stratified all cases in the cohort of individuals aged 15 to 23 at intervals of 1 year, ± 6 months, ± 3 months, and ± 1 month. This resulted in 9 categories by year, 18 categories at 6-month intervals, 36 categories at 3-month intervals (quarters of each year), and 108 categories at 1-month intervals (12 per year). Accordingly, the last fully connected layer was modified for each classification task, with parameters such as the weight learn rate factor and the bias learn rate factor.

Figure 1: Illustration of the CNN Network elements.
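One possible PyTorch rendering of the two convolutional blocks, the fully connected layer, and the 50% dropout described above. It is a hedged sketch, not the authors' code: the class name `HCNNFeatureExtractor`, the hidden size of the fully connected layer (`hidden`), and the max-pooling kernel size of 5 are assumptions. `num_classes` would be 9, 18, 36, or 108 depending on the interval width, and `return_features=True` exposes the fully connected activations that a PCA and KNN stage could consume.

```python
import torch
from torch import nn


class HCNNFeatureExtractor(nn.Module):
    """Hypothetical two-block CNN matching the layer sizes in the text."""

    def __init__(self, num_classes=9, hidden=1024):
        super().__init__()

        def block(c_in, c_out):
            # conv (kernel 5, padding 2) -> batch norm -> ReLU -> max pool (stride 2)
            return nn.Sequential(
                nn.Conv2d(c_in, c_out, kernel_size=5, padding=2),
                nn.BatchNorm2d(c_out),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=5, stride=2, padding=2),  # kernel size assumed
            )

        # 224x224x3 -> 112x112x16 -> 56x56x32
        self.features = nn.Sequential(block(3, 16), block(16, 32))
        self.fc = nn.Sequential(
            nn.Flatten(),
            nn.Linear(32 * 56 * 56, hidden),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),  # 50% dropout between fully connected layers
        )
        self.classifier = nn.Linear(hidden, num_classes)  # replaced per task

    def forward(self, x, return_features=False):
        feats = self.fc(self.features(x))
        return feats if return_features else self.classifier(feats)
```

For the HCNN-KNN variant, the `classifier` output would be used only while training the CNN; at inference, the `return_features=True` path would supply the inputs to PCA.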

In this step, KNN is combined with the CNN and used as the classifier in place of the SoftMax probability layer. Before the data from the fully connected layer enter the KNN layer, they are transformed by principal component analysis into a lower-dimensional space that retains more precise information; we call this step “Fully_PCA”. The neurons of the fully connected layer, after PCA transformation and reduction, therefore serve as the input to the KNN classification layer, which receives 500 features.
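The Fully_PCA and KNN stage can be sketched with scikit-learn, assuming the fully connected activations have already been extracted into a feature matrix. The data here are random, well-separated stand-ins (hypothetical), and the 1,024-dimensional feature size is an assumption; only the 500 PCA components, the Euclidean metric, and k = 1 come from the text.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# Hypothetical stand-ins for fully connected layer activations:
# 9 year-level classes, 80 images each, 1,024 features per image.
rng = np.random.default_rng(0)
centers = rng.normal(scale=5.0, size=(9, 1024))
y = np.repeat(np.arange(9), 80)
X = centers[y] + rng.normal(size=(y.size, 1024))
X_tr, X_va, y_tr, y_va = train_test_split(X, y, test_size=0.2, stratify=y, random_state=0)

# "Fully_PCA": project features onto 500 principal components, then classify
# with 1-NN under the Euclidean metric instead of a SoftMax layer.
fully_pca_knn = make_pipeline(
    PCA(n_components=500, random_state=0),
    KNeighborsClassifier(n_neighbors=1, metric="euclidean"),
)
fully_pca_knn.fit(X_tr, y_tr)
train_acc = fully_pca_knn.score(X_tr, y_tr)
valid_acc = fully_pca_knn.score(X_va, y_va)
print(train_acc, valid_acc)
```

PCA requires `n_components` to be no larger than min(n_samples, n_features), so reducing to 500 components needs at least 500 training images and at least 500 fully connected features.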

The proposed HCNN-KNN algorithm estimates bone age from dental images. Its KNN classifier uses the Euclidean distance metric with k values of 1, 2, and 3. The model is trained with a stochastic gradient descent with momentum (SGDM) optimizer at a learning rate of 0.0001 for 10 epochs with a mini-batch size of 16 images. After training and classification, the loss is recorded to evaluate the proposed network over successive training epochs.
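A minimal PyTorch training loop using SGD with momentum, a learning rate of 0.0001, 10 epochs, and mini-batches of 16, as stated above. The tiny linear model, random data, cross-entropy loss, and momentum of 0.9 are assumptions for illustration; the text gives neither the momentum value nor the loss function.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical stand-ins: a tiny linear classifier and 64 random 8x8 RGB
# "images" labeled with 9 year-level classes.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 9))
dataset = TensorDataset(torch.randn(64, 3, 8, 8), torch.randint(0, 9, (64,)))
loader = DataLoader(dataset, batch_size=16, shuffle=True)  # mini-batch size 16

# SGD with momentum at learning rate 0.0001; momentum=0.9 is an assumed value.
optimizer = torch.optim.SGD(model.parameters(), lr=1e-4, momentum=0.9)
loss_fn = nn.CrossEntropyLoss()  # assumed loss function

epoch_losses = []
for epoch in range(10):  # 10 epochs
    model.train()
    running_loss = 0.0
    for images, labels in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(images), labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item() * images.size(0)
    # Mean training loss per epoch, used to monitor learning across epochs.
    epoch_losses.append(running_loss / len(dataset))
```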

Evaluating the Proposed Model on Validation Data

After the training step, the optimal weights and biases of the network neurons were determined. The validation data, segmented according to Figure 2 (step 2), were then classified by the CNN with these optimal parameters. The components of the classification algorithms were determined by the training process and by the criteria that allow the data to pass through the CNN-PCA-KNN layers. Finally, the identification rate was evaluated using accuracy derived from the confusion matrix: the sum TP + TN divided by the sum TP + FP + TN + FN. Here, TP (true positives) is the number of times the class is present and correctly detected; FP (false positives) is the number of times the class is predicted but absent; TN (true negatives) is the number of times the class is absent and correctly not predicted; and FN (false negatives) is the number of times the class is present but not recognized.
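The accuracy definition above can be checked on a toy example. The labels below are hypothetical, and `binary_counts` is an illustrative helper (not from the paper) that counts TP, FP, TN, and FN for one class treated as positive (one-vs-rest). For multiclass problems, overall accuracy is usually computed as the trace of the confusion matrix divided by the total count, which is also shown.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix


def binary_counts(y_true, y_pred, positive):
    """One-vs-rest TP, FP, TN, FN counts for the class `positive` (hypothetical helper)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    actual, predicted = y_true == positive, y_pred == positive
    tp = int(np.sum(actual & predicted))    # present and detected
    fp = int(np.sum(~actual & predicted))   # predicted but absent
    tn = int(np.sum(~actual & ~predicted))  # absent and not predicted
    fn = int(np.sum(actual & ~predicted))   # present but missed
    return tp, fp, tn, fn


# Hypothetical year-level labels (ages in years) for eight images.
y_true = [18, 18, 18, 19, 19, 20, 20, 20]
y_pred = [18, 18, 19, 19, 19, 20, 20, 18]

tp, fp, tn, fn = binary_counts(y_true, y_pred, positive=18)
per_class_accuracy = (tp + tn) / (tp + fp + tn + fn)

# Multiclass accuracy: correct predictions (diagonal) over all predictions.
cm = confusion_matrix(y_true, y_pred)
overall_accuracy = np.trace(cm) / cm.sum()
print(per_class_accuracy, overall_accuracy)
```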

Validating the Proposed Model on a Test Dataset

To further assess the accuracy of the proposed model, we used test data that the model had not seen before and that was not part of the training dataset. This dataset was collected separately from the training data and had different properties: it combines X-ray OPG images from individuals of other races, different genders, and different ages. These data were passed through conv1, pooling1, conv2, pooling2, the fully connected layer, the PCA layer, and the KNN layer for classification. Achieving acceptable results on such varied test data indicates satisfactory performance of the proposed model.

Computational Hardware Requirement

For this study, we used an NVIDIA GeForce GTX 1080 GPU (8 GB), 8 GB of memory, and an Intel Core i7 CPU at 3.40 GHz. We performed the analyses with the Python libraries NumPy, Matplotlib, scikit-learn (sklearn), Metrics, PyTorch, and Torchvision.

Result and Discussion

In the following, we describe the dataset, tools, and architecture used in this article, and then evaluate the proposed model on the dataset.

Datasets Description

A dental OPG is a panoramic radiograph that scans the upper and lower jaws and presents a two-dimensional view of a semicircle from ear to ear. The images used in this study are dental OPGs collected from dental teaching institutes and private dental clinics in Malaysia. Because the collected images varied in size, all were resized to 600 × 1,024 pixels before being imported into the proposed model.

Subjects were randomly selected from 1,922 patients aged 15 to 23 years. This age range is relevant for determining whether an individual is a minor, and restricting the data to it could help the model find more specific features and train more accurately, particularly at smaller time intervals. To further validate the model, we used an additional dataset of 130 random images that had not been presented to the model during training or initial validation. The model was trained on four dataset states defined by the width of the age interval: by year, ± 6 months, ± 3 months, and ± 1 month. This allowed us to evaluate the proposed method on a separate test database (further details are provided in the experimental results, Table 1, Figure 3).
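A sketch of how ages could be mapped to class labels for the four dataset states. It assumes ages are recorded in months and that the ± intervals are implemented as contiguous, non-overlapping bins starting at 15 years; both are assumptions, and the function names are hypothetical. The class counts it yields (9, 18, 36, and 108) agree with the category counts stated for the model.

```python
MIN_AGE_MONTHS = 15 * 12  # youngest age in the cohort (15 years)
NUM_YEARS = 9             # ages 15 to 23 inclusive -> 9 one-year groups


def num_classes(interval_months):
    """Number of classes for an interval width of 12, 6, 3, or 1 month(s)."""
    return NUM_YEARS * 12 // interval_months


def age_to_class(age_months, interval_months):
    """Map an age in months to a class index (hypothetical binning scheme)."""
    offset = age_months - MIN_AGE_MONTHS
    if not 0 <= offset < NUM_YEARS * 12:
        raise ValueError("age is outside the 15 to 23 year range")
    return offset // interval_months


# Example: an individual aged 18 years and 7 months (223 months).
labels = {m: age_to_class(223, m) for m in (12, 6, 3, 1)}
print(labels)
```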

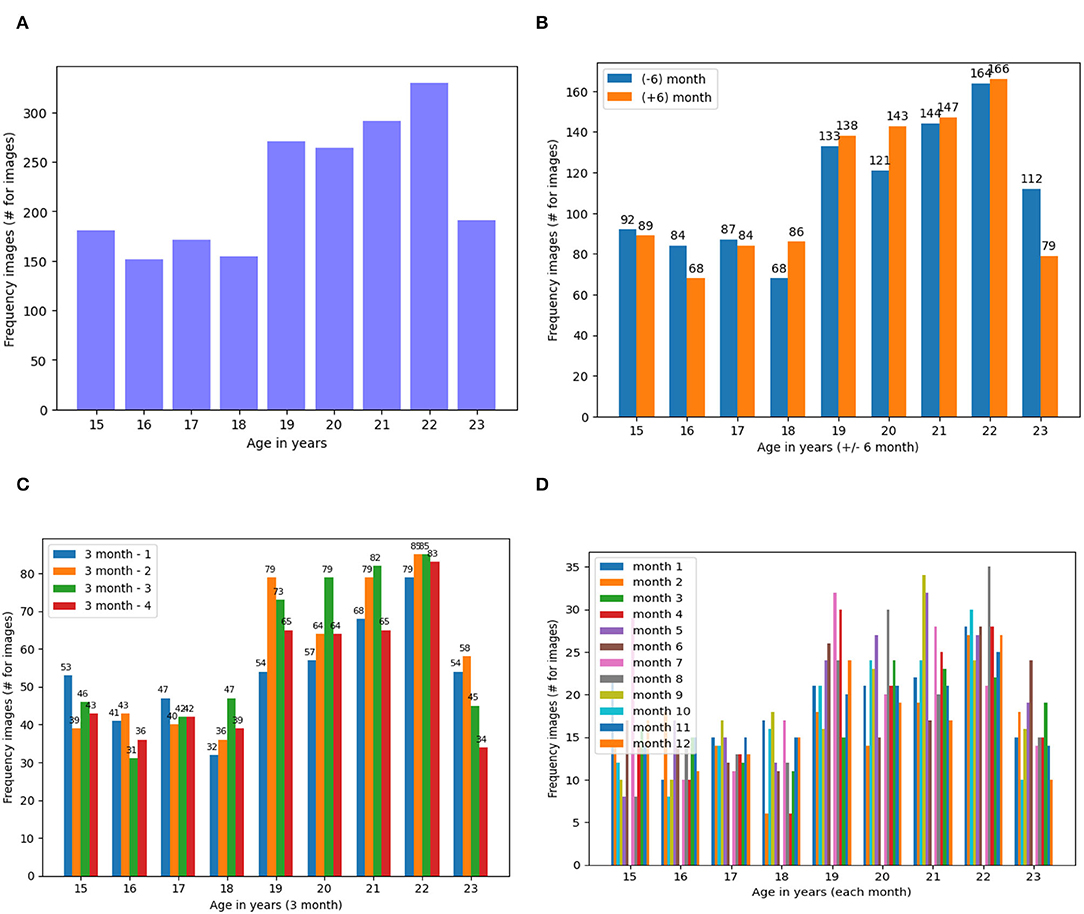

Figure 4 shows the frequency of dataset images after data augmentation, which produced more than 150, 68, 32, and 7 images in the 1-year, 6-month, 3-month, and 1-month categories, respectively.

Figure 4. Frequency of Dataset Images Based on the Four States of the Dataset: (A) Dataset on Year, (B) On ± 6 Months, (C) On ± 3 Months, and (D) On ± 1 Month. (A) Frequency of dental images for the year dataset. (B) Frequency of dental images for the ± 6 months dataset. (C) Frequency of dental images for the ± 3 months dataset. (D) Frequency of dental images for the ± 1 month dataset.

CNN Model Initial Accuracy on Four Different States of the Dataset

As a first experiment, we applied a CNN model to two dataset states. In stage one, training and validation accuracy were evaluated on the original dataset without any pre-processing or data augmentation. In stage two, training and validation accuracy were assessed after pre-processing and data augmentation (Table 2).

We observed that pre-processing and data augmentation operations consistently improved the accuracy by approximately 20% and 40% for training and validation, respectively (Table 2).

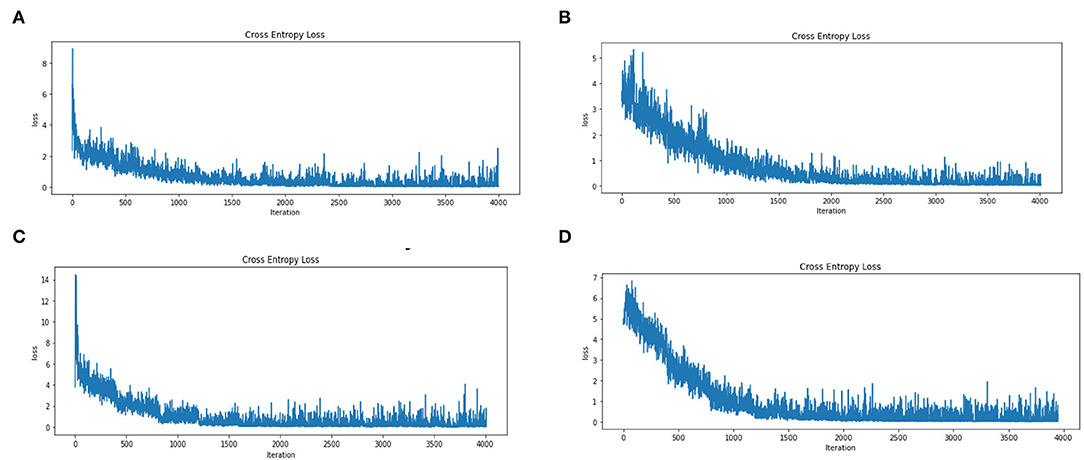

We then evaluated the loss reduction for each of the four dataset states (1 year, ± 6 months, ± 3 months, and ± 1 month) over 4,000 iterations (10 epochs of about 400 mini-batches of 16 images). These results were obtained with an 80% training and 20% validation split and with segmentation applied to the dataset images. The loss decreased sharply for the 1 year and ± 3 months datasets, whereas the ± 6 months and ± 1 month datasets showed a more gradual decrease (Figure 5).
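Reading the stated figures as 10 epochs of about 400 mini-batches of 16 images, the iteration count can be reproduced as below; it implies roughly 6,400 training images per epoch. The helper names are hypothetical, and `drop_last` mirrors the PyTorch `DataLoader` option of discarding a final partial batch.

```python
import math


def iterations_per_epoch(num_images, batch_size=16, drop_last=False):
    """Mini-batches per epoch; a final partial batch counts unless dropped."""
    if drop_last:
        return num_images // batch_size
    return math.ceil(num_images / batch_size)


def total_iterations(num_images, batch_size=16, epochs=10, drop_last=False):
    """Total optimizer steps over all epochs."""
    return epochs * iterations_per_epoch(num_images, batch_size, drop_last)


print(total_iterations(6400))
```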

Figure 5. Training Error Loss of the CNN Model on the Four States of the Augmented Dataset: (A) Dataset on Year, (B) On ± 6 Months, (C) On ± 3 Months, and (D) On ± 1 Month. (A) Error loss for the year dataset. (B) Error loss for the ± 6 months dataset. (C) Error loss for the ± 3 months dataset. (D) Error loss for the ± 1 month dataset.

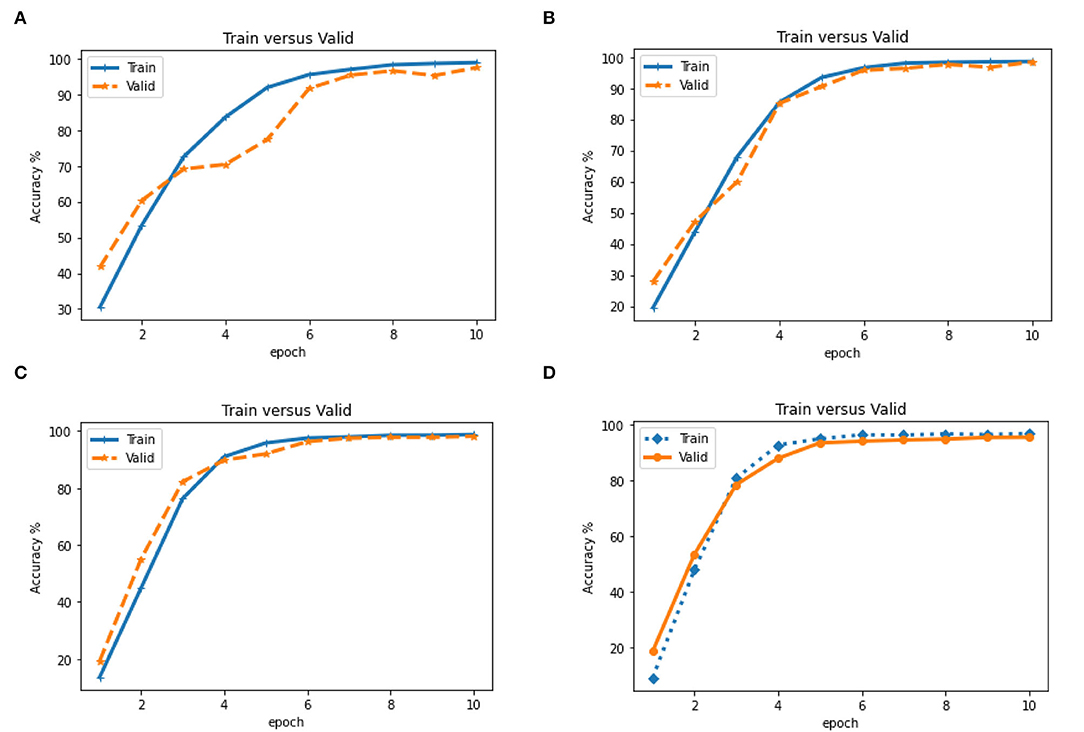

We also evaluated the accuracy of the proposed model on training and validation data over 10 epochs. Training accuracy was generally slightly higher than validation accuracy (Figure 6), and the upward trend in accuracy over the 10 epochs indicates that the CNN continued to learn.

Figure 6. Evaluation of accuracy (train vs. validation) across epochs for the CNN model on the four states of the dataset: (A) dataset on year, (B) on ± 6 months, (C) on ± 3 months, and (D) on ± 1 month. (A) Train vs. validation for the year dataset. (B) Train vs. validation for the ± 6 months dataset. (C) Train vs. validation for the ± 3 months dataset. (D) Train vs. validation for the ± 1 month dataset.

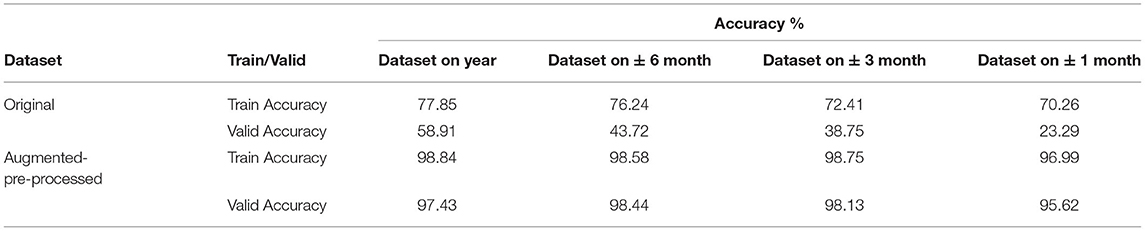

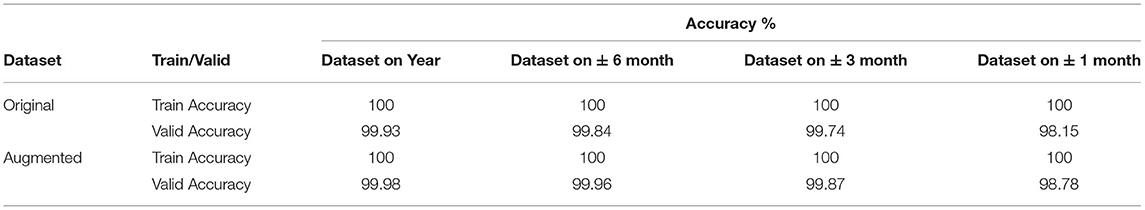

Combining HCNN-KNN Enhanced the Accuracy of the Model

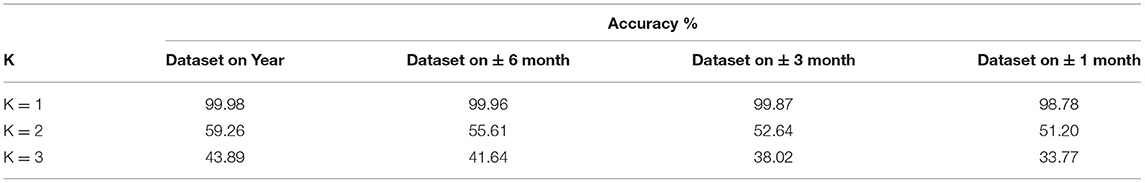

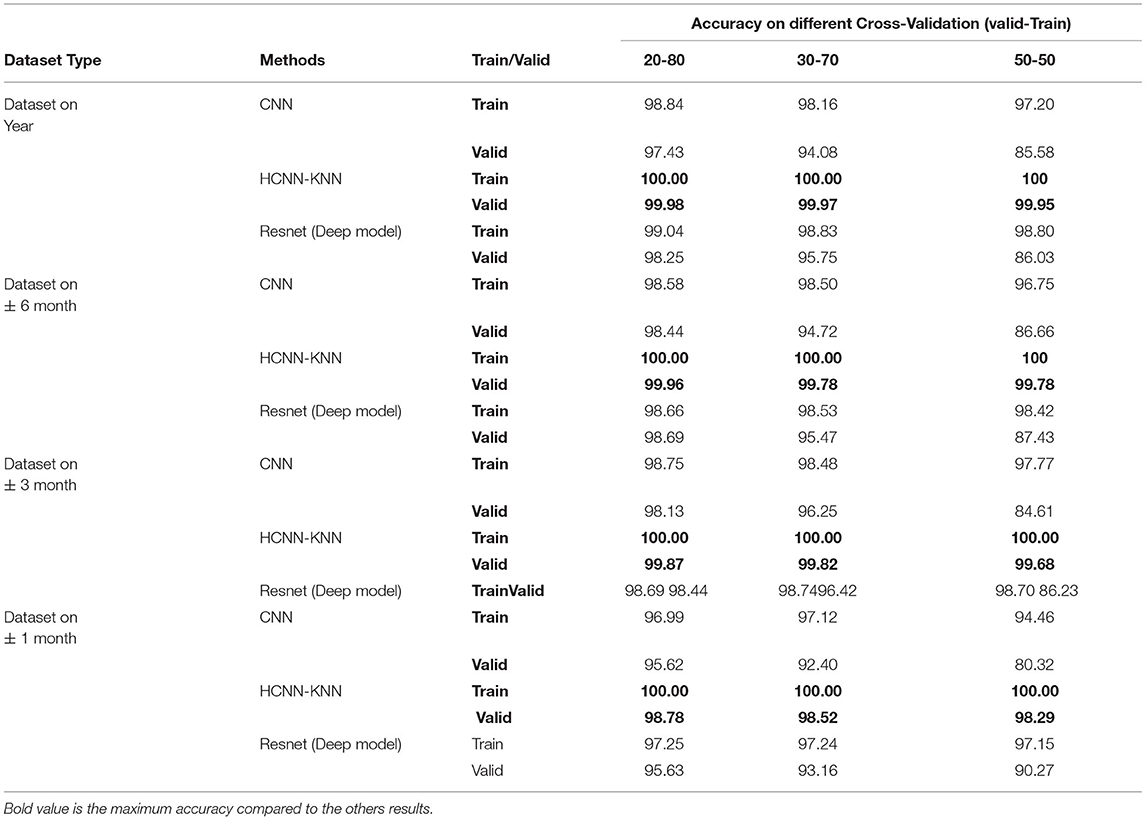

To further enhance the model's accuracy, we combined convolutional neural networks with nearest neighbor classification. The resulting hybrid model (HCNN-KNN) has three main components: CNN, PCA, and KNN. KNN classification with 500 features selected by “Fully_PCA” (PCA transformation applied after the CNN's fully connected layer) raised the accuracy on the original data to approximately the level obtained with augmented data, reaching 99.98%, 99.96%, 99.87%, and 98.78% for the 1 year, ± 6 months, ± 3 months, and ± 1 month datasets, respectively. Accuracy on the training data was 100% (Table 3), indicating that the model fully fit the training dataset. These results were obtained with k = 1 in the HCNN-KNN hybrid model; we also evaluated k values of 2 and 3 (Table 4). Model validation is essential for building a model and is susceptible to common pitfalls when splitting the training dataset (59, 60). To evaluate possible overfitting arising from the split, particularly for small k (e.g., k = 1–3), we further validated the model on a separate dataset of dental images that was not used for initial training and validation and that had its own distinctive features: the images come from individuals of different races and genders, selected within the age range of 15 to 23. Obtaining acceptable results on these data indicates that the KNN classifier performs properly even with k = 1.
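A sketch of comparing k = 1, 2, and 3 for a Euclidean KNN classifier with scikit-learn on random stand-in features (hypothetical data). It also illustrates why training accuracy with k = 1 is expected to be 100%: each training point is its own nearest neighbor at distance zero, so held-out data are needed to judge generalization.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical stand-in features: 9 classes x 40 samples, 50 dimensions.
rng = np.random.default_rng(1)
centers = rng.normal(scale=4.0, size=(9, 50))
y = np.repeat(np.arange(9), 40)
X = centers[y] + rng.normal(size=(y.size, 50))
X_tr, X_va, y_tr, y_va = train_test_split(X, y, test_size=0.2, stratify=y, random_state=0)

results = {}
for k in (1, 2, 3):
    knn = KNeighborsClassifier(n_neighbors=k, metric="euclidean").fit(X_tr, y_tr)
    # (training accuracy, validation accuracy) for this k
    results[k] = (knn.score(X_tr, y_tr), knn.score(X_va, y_va))

print(results)
```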

Evaluation of Different Cross-Validation

We evaluated our method with different train-validation splits of the dataset (50–50, 70–30, and 20–80; Table 5).

Evaluation of Proposed Model on Second Dataset (Test Dataset)

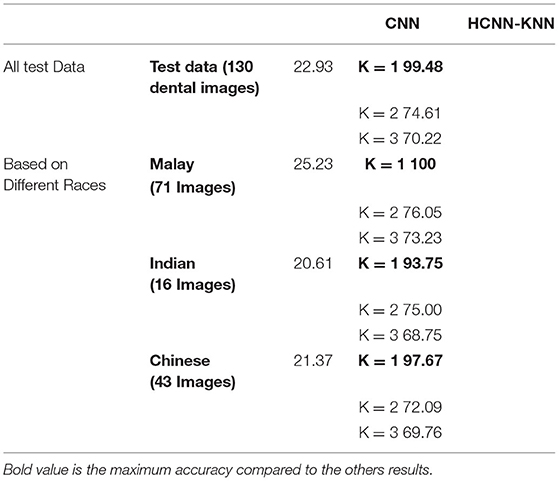

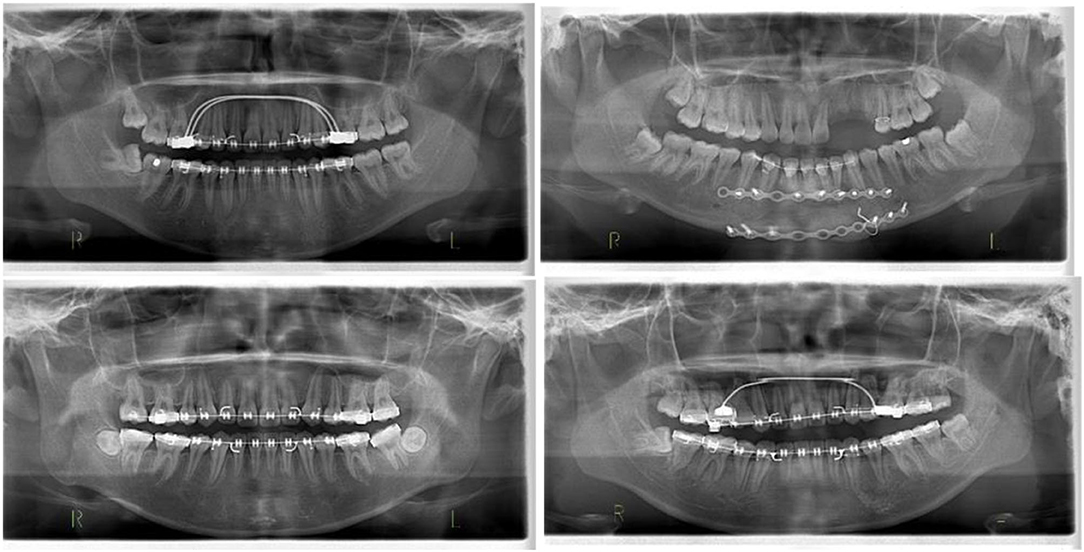

A key feature of this study is an additional validation step in which images from a diverse cohort were used to further validate the proposed model. These images were presented to the model only after initial training and validation were complete. The dataset comprised 130 X-ray OPG images from individuals of different races (e.g., Malay, Indian, and Chinese), with distinct features and various ages, and included a typical mix of ordinary dental, orthodontic, and malignant cases (Figure 7).

Figure 7. Examples of test images, including orthodontic and malignant cases, used to evaluate the proposed model.

As Table 6 shows, the proposed HCNN-KNN model obtained superior results on the test data. Satisfactory results on a new dataset outside the training images indicate strong performance of the proposed model. We also tested the HCNN-KNN model with k = 1, 2, and 3 (Table 6), and the model performed well with k = 1. As k increases, more distant neighbors contribute to the vote, which weakens the KNN principle that the closest neighbors share the same class. Because the classes in our dataset lie very close to each other and the images were pre-processed with noise reduction, k = 1 yields acceptable accuracy.

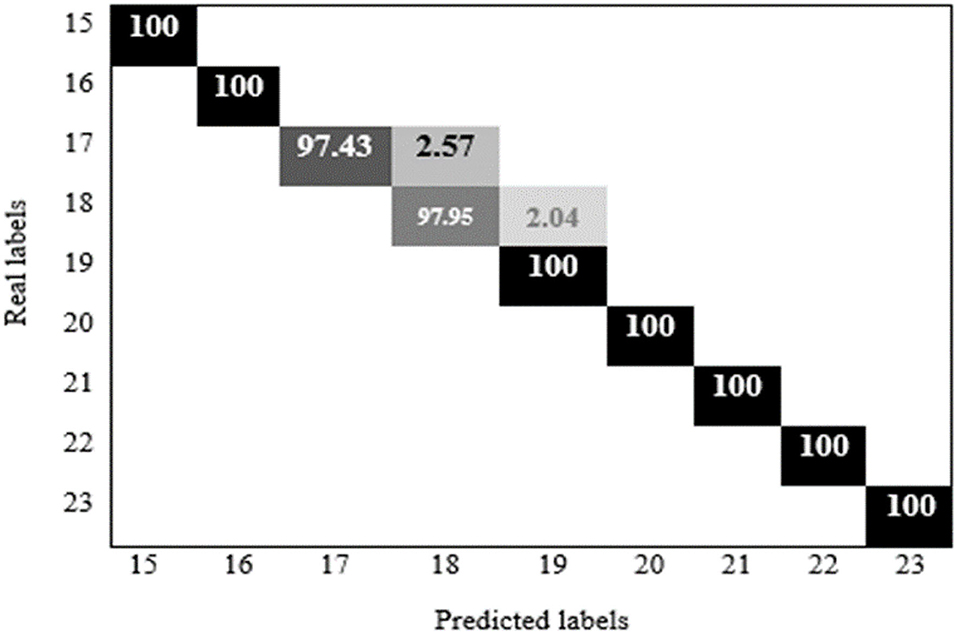

The confusion matrix in Figure 8 shows that 97.95% of images in the 18-year age group were correctly estimated, while 2.04% were incorrectly identified as 19 years old.
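Per-class percentages such as those in Figure 8 correspond to a row-normalized confusion matrix, in which each row sums to 100%. A minimal scikit-learn sketch with hypothetical predictions for three year-level classes (the labels and errors below are invented for illustration):

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical toy predictions: ten images per year-level class.
y_true = np.array([18] * 10 + [19] * 10 + [20] * 10)
y_pred = y_true.copy()
y_pred[0] = 19   # one 18-year-old image misclassified as 19
y_pred[15] = 20  # one 19-year-old image misclassified as 20

# normalize="true" divides each row by the number of true samples in that class.
cm_pct = 100 * confusion_matrix(y_true, y_pred, labels=[18, 19, 20], normalize="true")
print(cm_pct)
```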

Model Accuracy

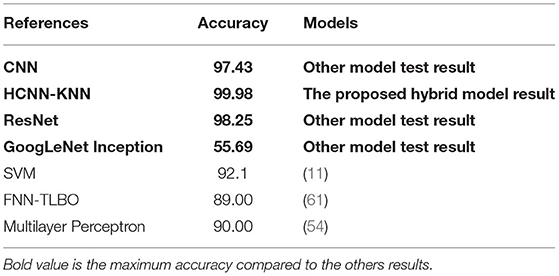

We compared the proposed model on the collected data with other models such as ResNet, a CNN, and GoogLeNet Inception (Table 7). The hybrid HCNN-KNN model achieved much higher accuracy than the other classification algorithms tested.

Table 7. Comparison between the proposed HCNN-KNN model and other studies of bone age measurement (BAM) based on dental images.

The novelty of our study is that, to the best of our knowledge, no other study has reported age estimation at ± 6 months, ± 3 months, or ± 1 month; previous studies report accuracy only by year. We therefore compared the results of the proposed model with previous studies in terms of accuracy by year. For example, Avuçlu used a multilayer perceptron model with 1,315 dental OPG images, and the highest accuracy, by year only, was 90%. In 2020, Houssein used an SVM algorithm on 1,429 dental radiographs and achieved only 92% accuracy by year. As Table 7 shows, the accuracies by year reported in these comparative studies remain below the 99.98% achieved in this study.

Conclusions

For the first time, with precision within ± 6 months, this implementation developed an HCNN-KNN model for bone age measurement (BAM) to increase the accuracy of existing models and prevent CNN overload. We considered more specific cases of bone age measurement from dental X-ray OPG images, determining age within the range of 15 to 23 years by year, ± 6 months, ± 3 months, and ± 1 month. We integrated convolutional neural networks (for extraction and analysis of information and features in dental images) with the nearest neighbor classification method. The primary purpose of the proposed model is to use KNN instead of SoftMax after the fully connected layer to improve the performance of convolutional networks in the classification phase. Principal component analysis is applied to the fully connected layer as a data transformation and feature dimensionality reduction step before KNN classification (Fully_PCA); its purpose is to transfer the data to a space with more specific information and thereby improve classification performance.

Our proposed model achieved accuracies of 99.98%, 99.96%, 99.87%, and 98.78% for the 1 year, ± 6 months, ± 3 months, and ± 1 month ranges, respectively. We evaluated the proposed method with different train-validation splits of the dataset (50–50, 70–30, and 20–80). Evaluating the proposed model on a new dataset with different races also demonstrated its superior performance. Benchmarking against existing models showed that the HCNN-KNN model outperformed the other models tested for bone age measurement.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

FS and SB designed the study. FS wrote the manuscript, collected the data, carried out the analyses (including the statistical analyses and the implementation of the machine learning methods), and generated all figures and tables. FS, SB, PO, and HA-R edited the manuscript. HA-R and PO were not involved in any analysis. All authors have read and approved the final version of the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors thank Taif University Researchers Supporting Project number (TURSP-2020/239), Taif University, Taif, Saudi Arabia, for its support. We are grateful to the School of Computer Science and Engineering (SCE), Taylor's University, for providing the scholarship that enabled these studies. HA-R has been supported by the UNSW Scientia Program Fellowship.

References

1. Mohammad N, Yusof MYPM, Ahmad R, Muad AM. Region-based segmentation and classification of mandibular first molar tooth based on Demirjian's method. J Phys Conf Ser. (2020) 1502:012046. doi: 10.1088/1742-6596/1502/1/012046

2. Kim S, Lee YH, Noh YK, Park FC, Auh Q. Age-group determination of living individuals using first molar images based on artificial intelligence. Sci Rep. (2021) 11:1–11. doi: 10.1038/s41598-020-80182-8

3. Wik EH, Martínez-Silván D, Farooq A, Cardinale M, Johnson A, Bahr R. Skeletal maturation and growth rates are related to bone and growth plate injuries in adolescent athletics. Scand J Med Sci Sports. (2020) 30:894–903. doi: 10.1111/sms.13635

5. Dahlberg PS, Mosdøl A, Ding Y, Bleka Ø, Rolseth V, Straumann GH, et al. A systematic review of the agreement between chronological age and skeletal age based on the Greulich and Pyle atlas. Eur Radiol. (2019) 29:2936–48. doi: 10.1007/s00330-018-5718-2

6. Gurpiner F, Kaya H, Dibeklioglu H, Salah AA. Kernel ELM and CNN based facial age estimation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Las Vegas, NV: IEEE (2016). p. 80–6.

7. Mohammad N, Muad AM, Ahmad R, Yusof MYPM. Reclassification of Demirjian's mandibular premolars staging for age estimation based on semi-automated segmentation of deep convolutional neural network. Forensic Imag. (2021) 24:200440. doi: 10.1016/j.fri.2021.200440

8. Alkaabi S, Yussof S, Al-Mulla S. Evaluation of convolutional neural network based on dental images for age estimation. ICECTA. (2019) 2019:1–5. doi: 10.1109/ICECTA48151.2019.8959665

9. Ezhil I, Jagannathan N, Kumar MP. Estimation of age from physiological changes of teeth. Drug Invent Today. (2018).

10. Cular L, Tomaić M, Subašić M, Šarić T, Sajković V, Vodanović M. Dental age estimation from panoramic X-ray images using statistical models. In: Proceedings of the 10th International Symposium on Image and Signal Processing and Analysis. Ljubljana: IEEE (2017). p. 25–30. doi: 10.1109/ISPA.2017.8073563

11. Houssein EH, Mualla N, Hassan M. Dental age estimation based on X-ray images. Computers Materials Continua. (2020) 2:591–605. doi: 10.32604/cmc.2020.08580

12. Shi W, Yan G, Li Y, Li H, Liu T, Sun C, et al. Fetal brain age estimation and anomaly detection using attention-based deep ensembles with uncertainty. Neuroimage. (2020) 223:117316. doi: 10.1016/j.neuroimage.2020.117316

13. Alinejad-Rokny H. Proposing on Optimized Homolographic Motif Mining Strategy Based on Parallel Computing for Complex Biological Networks. J Med Imaging & Health Infor. (2016) 6:416–24. doi: 10.1166/jmihi.2016.1707

14. Javanmard A, Montanari A. State evolution for general approximate message passing algorithms, with applications to spatial coupling. Inf Inference. (2013) 2:115–44. doi: 10.1093/imaiai/iat004

15. Ahmadinia M, Meybodi MR, Esnaashari M. Energy-efficient and multi-stage clustering algorithm in wireless sensor networks using cellular learning automata. IETE J Res. (2013) 59:774–82. doi: 10.4103/0377-2063.126958

16. Mahmoudi MR, Heydari MH, Qasem SN, Mosavi A, Band SS. Principal component analysis to study the relations between the spread rates of COVID-19 in high risks countries. Alex Eng J. (2021) 60:457–64. doi: 10.1016/j.aej.2020.09.013

17. Hosseinpoor M, Koura BIO, Yahia A. New methodology to evaluate the Reynolds dilatancy of self-consolidating concrete using 3D image analysis-coupled effect of characteristics of fine mortar and granular skeleton. Cem Concr Compos. (2020) 108:103547. doi: 10.1016/j.cemconcomp.2020.103547

18. Esmaeili L, Minaei-Bidgoli B, Alinejad-Rokny H, Nasiri M. Hybrid recommender system for joining virtual communities. Res J Appl Sci Eng Technol. (2012) 4:500–509.

19. Hussein IJ, Burhanuddin MA, Mohammed MA, Benameur N, Maashi MS. Fully-automatic identification of gynaecological abnormality using a new adaptive frequency filter and histogram of oriented gradients (HOG). Expert Systems. (2021) 1:e12789. doi: 10.1111/exsy.12789

20. Khan AH, Iskandar DA, Al-Asad JF, El-Nakla S, Alhuwaidi SA. Statistical feature learning through enhanced Delaunay clustering and ensemble classifiers for skin lesion segmentation and classification. J Theor Appl Inf Technol. (2021) 99:5.

21. Mahmoudi MR, Akbarzadeh H, Parvin H, Nejatian S, Rezaie V. Consensus function based on cluster-wise two level clustering. Artificial Intelligence Review. (2021) 54:639–65. doi: 10.1007/s10462-020-09862-1

22. Niu H, Khozouie N, Parvin H, Beheshti A, Mahmoudi MR. An ensemble of locally reliable cluster solutions. Appl Sci. (2020) 10:1891. doi: 10.3390/app10051891

23. Parvin H, Minaei B, Alizadeh H, Beigi A. A novel classifier ensemble method based on class weightening in huge dataset. International Symposium on Neural Networks. (2011) 2011:144–50. doi: 10.1007/978-3-642-21090-7_17

24. Parvin H, Parvin S. A classifier ensemble of binary classifier ensembles. IJLMS. (2013) 1:37–47. doi: 10.12785/ijlms/010204

25. Parvin H, MirnabiBaboli M, Alinejad-Rokny H. Proposing a classifier ensemble framework based on classifier selection and decision tree. Eng Appl Artif Intell. (2015) 1:34–42. doi: 10.1016/j.engappai.2014.08.005

26. Alinejad-Rokny H, Pourshaban H, Orimi AG, Baboli M. Network motifs detection strategies and using for bioinformatic networks. J Bionanoscience. (2014) 8:353–9. doi: 10.1166/jbns.2014.1245

27. Bahrani P, Minaei-Bidgoli B, Parvin H, Mirzarezaee M, Keshavarz A. User and item profile expansion for dealing with cold start problem. J Intell Fuzzy Syst. (2020) 38:4471–83. doi: 10.3233/JIFS-191225

28. Sharifrazi D, Alizadehsani R, Hassannataj Joloudari J, Shamshirband S, Hussain S, Alizadeh Sani Z, et al. CNN-KCL: Automatic myocarditis diagnosis using convolutional neural network combined with k-means clustering. Math Biosci Eng. (2021) 3:2381–402. doi: 10.20944/preprints202007.0650.v1

29. Dental age estimation in East Asian population with least squares regression. In: International Conference on Advanced Machine Learning Technologies and Applications Cham: Springer (2018). p. 653–60.

30. Farhadian M, Salemi F, Saati S, Nafisi N. Dental age estimation using the pulp-to-tooth ratio in canines by neural networks. Imaging Sci Dent. (2019) 49:19–26. doi: 10.5624/isd.2019.49.1.19

31. Alinejad-Rokny H, Anwar F, Waters S, Davenport M, Ebrahimi D. Source of CpG depletion in the HIV-1 genome. Mol Biol Evol. (2016) 33:3205–12. doi: 10.1093/molbev/msw205

32. Alinejad-Rokny H, Sadroddiny E, Scaria V. Machine learning and data mining techniques for medical complex data analysis. Neurocomputing. (2018) 276:1. doi: 10.1016/j.neucom.2017.09.027

33. Bayati M, Rabiee HR, Mehrbod M, Vafaee F, Ebrahimi D, Forrest AR, et al. a user-friendly and robust tool for identification and classification of mutational signatures and patterns in cancer genomes. Sci Rep. (2020) 10:1–11. doi: 10.1038/s41598-020-58107-2

34. Dashti H, Dehzangi A, Bayati M, Breen J, Lovell N, Ebrahimi D. Integrative analysis of mutated genes and mutational processes reveals seven colorectal cancer subtypes. BMC Bioinformatics. (2022) 23:1–24. doi: 10.1186/s12859-022-04652-8

35. Ghareyazi A, Mohseni A, Dashti H, Beheshti A, Dehzangi A, Rabiee HR. Whole-genome analysis of de novo somatic point mutations reveals novel mutational biomarkers in pancreatic cancer. Cancers. (2021) 13:4376. doi: 10.3390/cancers13174376

36. Hosseinpoor M, Parvin H, Nejatian S, Rezaie V, Bagherifard K, Dehzangi A. Proposing a novel community detection approach to identify cointeracting genomic regions. Math Biosci Eng. (2020) 3:2193–217. doi: 10.3934/mbe.2020117

37. Javanmard R, JeddiSaravi K. Proposed a new method for rules extraction using artificial neural network and artificial immune system in cancer diagnosis. J Bionanoscience. (2013) 7:665–72. doi: 10.1166/jbns.2013.1160

38. Rajaei P, Jahanian KH, Beheshti A, Band SS, Dehzangi A. VIRMOTIF: A user-friendly tool for viral sequence analysis. Genes. (2021) 12:186. doi: 10.3390/genes12020186

39. Alinejad-Rokny H, Pedram MM, Shirgahi H. Discovered motifs with using parallel Mprefixspan method. Sci Res Essays. (2011) 20:4220–6. doi: 10.5897/SRE11.212

40. Ahmadinia M, Alinejad-Rokny H, Ahangarikiasari H. Data aggregation in wireless sensor networks based on environmental similarity: A learning automata approach. J Networks. (2014) 9:2567. doi: 10.4304/jnw.9.10.2567-2573

41. Parvin H, Asadi M. An ensemble based approach for feature selection. Res J Appl Sci. (2011) 9:33–43.

42. Niu H, Xu W, Akbarzadeh H, Parvin H, Beheshti A. Deep feature learnt by conventional deep neural network. Comput Electr Eng. (2020) 84:106656. doi: 10.1016/j.compeleceng.2020.106656

43. Hemalatha B, Rajkumar N. A modified machine learning classification for dental age assessment with effectual ACM-JO based segmentation. Int J Bio-Inspir Com. (2021) 2:95–104. doi: 10.1504/IJBIC.2021.114089

44. Ouali Y, Hudelot C, Tami M. An overview of deep semi-supervised learning. arXiv[Preprint]. (2020). arXiv: 2006.05278. Available online at: https://arxiv.org/pdf/2006.05278.pdf

45. Goldberg AB. New Directions in Semi-Supervised Learning. Doctoral dissertation. Madison: University of Wisconsin (2010).

46. Spampinato C, Palazzo S, Giordano D, Aldinucci M, Leonardi R. Deep learning for automated skeletal bone age assessment in X-ray images. Med Image Anal. (2017) 36:41–51. doi: 10.1016/j.media.2016.10.010

47. Kalantari A, Kamsin A, Shamshirband S, Gani A, Alinejad-Rokny H, Chronopoulos AT. Computational intelligence approaches for classification of medical data: state-of-the-art, future challenges and research directions. Neurocomputing. (2018) 276:2–22. doi: 10.1016/j.neucom.2017.01.126

48. Shamshirband S, Rabczuk T, Chau KW. A survey of deep learning techniques: application in wind and solar energy resources. IEEE Access. (2019) 7:164650–66. doi: 10.1109/ACCESS.2019.2951750

49. Shamshirband S, Fathi M, Dehzangi A, Chronopoulos AT. A review on deep learning approaches in healthcare systems: taxonomies, challenges, and open issues. J Biomed Inform. (2021) 113:103627. doi: 10.1016/j.jbi.2020.103627

50. Qummar S, Khan FG, Shah S, Khan A, Shamshirband S, Rehman ZU, et al. A deep learning ensemble approach for diabetic retinopathy detection. IEEE Access. (2019) 7:150530–9. doi: 10.1109/ACCESS.2019.2947484

51. Li J, Koyamada S, Ye Q, Liu G, Wang C, Yang R, et al. Suphx: Mastering mahjong with deep reinforcement learning. arXiv[Preprint]. (2020). arXiv: 2003.13590. Available online at: https://arxiv.org/pdf/2003.13590.pdf

52. Sharifonnasabi F, Jhanjhi N, John J, Alaboudi A, Nambiar P. Review on automated bone age measurement based on dental OPG images. Int J Eng Res. (2020) 132:5408–22.

53. Blatt SH, Shields JR, Michael AR. Dental calculus reveals life history of decedents in forensic cases: An anthropological perspective on human identification. Forensic Genom. (2022) 2:5–16. doi: 10.1089/forensic.2022.0003

54. Avuçlu E, Başçiftçi F. The determination of age and gender by implementing new image processing methods and measurements to dental X-ray images. Measurement. (2020) 149:106985. doi: 10.1016/j.measurement.2019.106985

55. Fatih H, Catalin G. Recreation and physical activity of young girls with intellectual disability. Sci Mov Health. (2020) 20:237–41.

56. Kim JH, Kim BG, Roy PP, Jeong DM. Efficient facial expression recognition algorithm based on hierarchical deep neural network structure. IEEE Access. (2019) 7:41273–85.

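57. Banar BB. Pengembangan media pembelajaran mobile learning dengan aplikasi berbasis android untuk meningkatkan hasil belajar siswa (studi pada peserta didik kelas XI Program Keahlian Otomatisasi Tata Kelola Perkantoran SMK Negeri 1 Pogalan) [Development of mobile learning media with an Android-based application to improve student learning outcomes (study of grade XI students in the Office Management Automation Expertise Program, SMK Negeri 1 Pogalan)]. Doctoral dissertation, Universitas Negeri Malang.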

58. Tuan TM. Dental segmentation from X-ray images using semi-supervised fuzzy clustering with spatial constraints. Eng Appl Artif Intell. (2017) 59:186–95. doi: 10.1016/j.engappai.2017.01.003

60. Greener JG, Kandathil SM, Moffat L, Jones DT. A guide to machine learning for biologists. Nat Rev Mol Cell Biol. (2022) 23:40–55. doi: 10.1038/s41580-021-00407-0

Keywords: dental age, estimation, Orthopantomogram, convolutional neural network, k-nearest neighbor, Biomedical machine learning

Citation: Sharifonnasabi F, Jhanjhi NZ, John J, Obeidy P, Band SS, Alinejad-Rokny H and Baz M (2022) Hybrid HCNN-KNN Model Enhances Age Estimation Accuracy in Orthopantomography. Front. Public Health 10:879418. doi: 10.3389/fpubh.2022.879418

Received: 19 February 2022; Accepted: 22 April 2022;

Published: 30 May 2022.

Edited by:

Ali Kashif Bashir, Manchester Metropolitan University, United Kingdom

Reviewed by:

Ayse Oktay, Yıldız Technical University, Turkey

Dirk Vandermeulen, University Hospitals Leuven, Belgium

Mohd Yusmiaidil Putera Mohd Yusof, Universiti Teknologi MARA, Malaysia

Ehsan Kozegar, University of Guilan, Iran

Copyright © 2022 Sharifonnasabi, Jhanjhi, John, Obeidy, Band, Alinejad-Rokny and Baz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shahab S. Band, shamshirbands@yuntech.edu.tw; Noor Zaman Jhanjhi, NoorZaman.Jhanjhi@taylors.edu.my
