- ¹Oakland University William Beaumont School of Medicine, Rochester, MI, United States

- ²Department of Foundational Medical Studies, Oakland University William Beaumont School of Medicine, Rochester, MI, United States

Background: YouTube is becoming an increasingly popular platform for health education; however, its reliability for surgical patient education remains largely unexplored. Given the global prevalence of preoperative anxiety, it becomes essential to ensure accurate information online.

Objectives: The objective is to assess tools/instruments used to evaluate YouTube videos on surgical procedures created to educate patients or health consumers.

Methods: In June 2023, a comprehensive literature search was conducted on PubMed, PsycINFO, CINAHL, and Scopus. Primary studies with empirical data that evaluated English-language YouTube videos intended to educate patients about surgical procedures in any specialty were included. Two reviewers independently completed title/abstract screening, full-text screening, and data extraction in duplicate. The data extracted included the number of videos evaluated, assessment tools, outcomes of significance, specific objectives, and features examined.

Results: A total of 41 studies were included in the review. The most commonly used evaluation tools were DISCERN (21 studies), the Global Quality Scale (11 studies), and the JAMA benchmark criteria (11 studies). Notably, 23 studies used a unique assessment instrument, and several studies employed more than one tool concurrently. Of the total studies included, 88% of the articles determined that patients were not adequately educated by YouTube videos per the ratings of the assessment tools, and 19 out of 41 articles mentioned that videos from professional sources were most useful.

Conclusions: This systematic review suggests that the educational qualities in YouTube videos are substandard. Patients should be cautious when relying solely on YouTube videos for medical guidance. Surgeons and medical institutions are encouraged to direct patients to high-quality patient education sources and create accessible medical content. As there is variability in the quality assessment tools used for evaluation, a standardized approach to creating and assessing online medical videos would improve patient education.

Introduction

In 2024, the number of active users on YouTube exceeded 2.56 billion (1). More than 500 hours of content are uploaded to YouTube every minute (2). Approximately 25% of adults in the United States stated that they rely on YouTube as a regular source of news (3).

In recent years, YouTube has emerged as a popular platform for patient and health consumer education. In 2020, 40.8% of U.S. adults used YouTube to watch health-related videos (4). Recent literature published in 2022 has shown that YouTube is not a reliable source of medical and health-related information (5), and only one systematic review has investigated the reliability of YouTube as a source of knowledge for surgical patients (6). With the increasing availability of surgical videos on YouTube, it is crucial to assess the tools or instruments used to evaluate the quality and educational value of such content.

Surgical education plays a vital role in empowering patients to make informed decisions about their healthcare and enhance their understanding of complex medical interventions. YouTube offers an easily accessible and visually engaging platform to deliver such educational content. As preoperative anxiety remains a critical issue, occurring in ~48% of surgical patients globally (7), it is vital to ensure that accessible information online on surgical procedures is accurate and regulated to prevent unnecessary confusion.

The purpose of this systematic review is to assess the tools or instruments employed for evaluating YouTube videos focused on surgical procedures with the intent of educating patients or health consumers. The findings of this review will have implications for healthcare providers, educators, and content creators involved in patient education. Understanding the strengths and weaknesses of existing evaluation tools will facilitate the development of standardized guidelines and best practices for assessing the quality and educational impact of YouTube videos on surgical procedures. Ultimately, this systematic review aims to contribute to the improvement of patient education materials available on YouTube, ensuring that patients and health consumers have access to reliable, accurate, and informative content that enhances their surgical knowledge and decision-making abilities.

Methods

Literature search

A comprehensive literature search was conducted (MM) in PubMed, PsycINFO, CINAHL, and Scopus from each database's inception to June 6, 2023. A combination of index terms and keywords was used to represent the key concepts of “patient education,” “YouTube video,” “psychometrics,” “quality assessment,” and “surgical procedure” (see Appendix A for a sample search strategy for PubMed). The reference lists of all identified studies were hand-searched for additional studies.

Eligibility criteria

Articles were selected based on specific inclusion criteria. The review included original, full-text primary studies published in the English language that provided empirical data evaluating English-language YouTube videos created for patient and health consumer education. Videos encompassed information regarding surgical procedures in all surgical specialties. Reviews, duplicate articles, comments, editorials, letters, and abstracts without full-text articles were excluded. Studies analyzing videos in other languages, videos from other social media sites, or videos targeting health professional education were also excluded.

Data selection

All search results were imported into Covidence for screening and data extraction. Covidence is a web-based software for managing and streamlining systematic reviews. Two reviewers (MP and AL) first screened titles and abstracts against the selection criteria, followed by full text screening done in duplicate and independently. Any discrepancies in screening by the two reviewers were discussed and resolved to reach consensus. The third author (MM) assessed any variances and determined their inclusion.

Data extraction

A standardized data collection form was created on the Covidence platform, and the authors (MP and AL) completed data extraction in duplicate and independently. All discrepancies were discussed and resolved with the third author (MM). The parameters consisted of study aims, surgeries evaluated, type of quality tools used, number of videos analyzed, primary source of videos, types of video characteristics studied, educational quality based on the article authors' judgment, study limitations, future recommendations, and video sources deemed the most useful. The video sources were divided into four categories: commercials, patients, professional entities (e.g., created by physicians, hospitals), and educational institutions (association, organization, society, and others). A rating scale was developed to assess the educational quality of the videos as “poor,” “moderate,” or “good” based on the articles' direct analyses, and the reasoning was noted.
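The extraction form described above can be pictured as a simple record type. The following is a minimal sketch, not the form actually built in Covidence: the class names, field names, and example values are hypothetical and chosen only to mirror the parameters listed in the text, and the comparison helper illustrates how duplicate extractions by two reviewers might be checked for discrepancies.

```python
from dataclasses import dataclass, fields, replace
from enum import Enum
from typing import List, Optional, Set


class VideoSource(Enum):
    # The four source categories named in the text.
    COMMERCIAL = "commercial"
    PATIENT = "patient"
    PROFESSIONAL = "professional entity"
    EDUCATIONAL = "educational institution"


class QualityRating(Enum):
    # The three-level rating scale named in the text.
    POOR = "poor"
    MODERATE = "moderate"
    GOOD = "good"


@dataclass
class ExtractionRecord:
    # Hypothetical field names mirroring the extraction parameters.
    study_aims: str
    surgeries_evaluated: List[str]
    quality_tools: List[str]
    videos_analyzed: int
    video_sources: Set[VideoSource]
    video_characteristics: List[str]
    educational_quality: QualityRating
    rating_rationale: str
    limitations: str = ""
    future_recommendations: str = ""
    most_useful_source: Optional[VideoSource] = None


def find_discrepancies(a: ExtractionRecord, b: ExtractionRecord) -> List[str]:
    """Return the names of fields on which two reviewers' records differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Illustrative values only; they do not describe any included study.
reviewer_a = ExtractionRecord(
    study_aims="Assess video quality",
    surgeries_evaluated=["example procedure"],
    quality_tools=["DISCERN", "GQS"],
    videos_analyzed=50,
    video_sources={VideoSource.PROFESSIONAL, VideoSource.PATIENT},
    video_characteristics=["views", "duration"],
    educational_quality=QualityRating.POOR,
    rating_rationale="Low mean scores reported by the article",
)
reviewer_b = replace(reviewer_a, educational_quality=QualityRating.MODERATE)
discrepancies = find_discrepancies(reviewer_a, reviewer_b)
print(discrepancies)  # ['educational_quality']
```

Fields flagged by such a comparison would be the ones discussed and resolved with the third author.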

Results

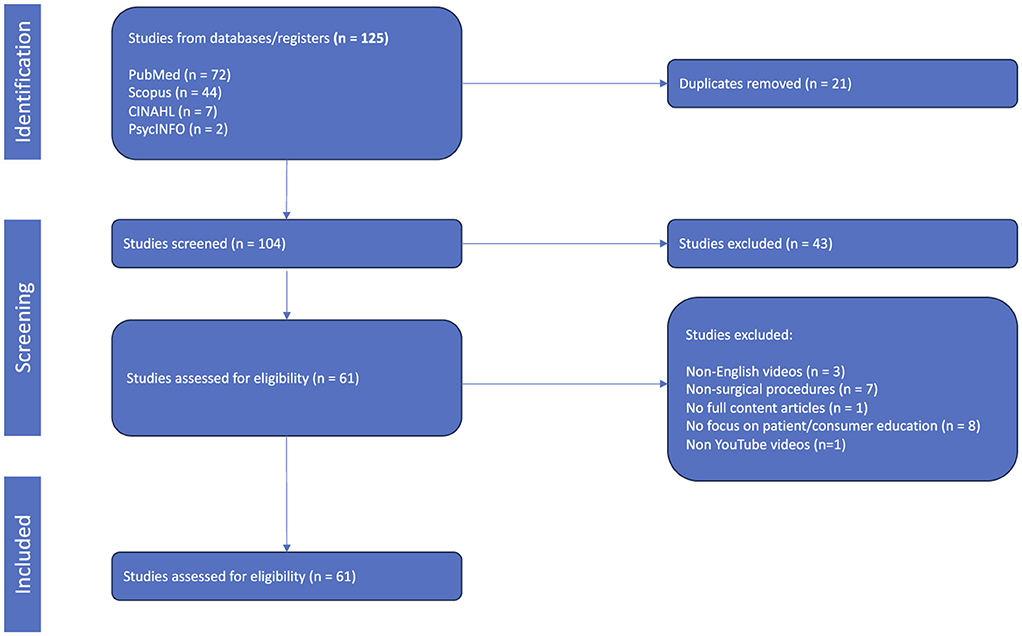

Using an initial dataset of 125 studies, articles were excluded based on specific inclusion criteria (Figure 1). Ultimately, 41 studies remained in the review for data extraction and analysis.

Video information data

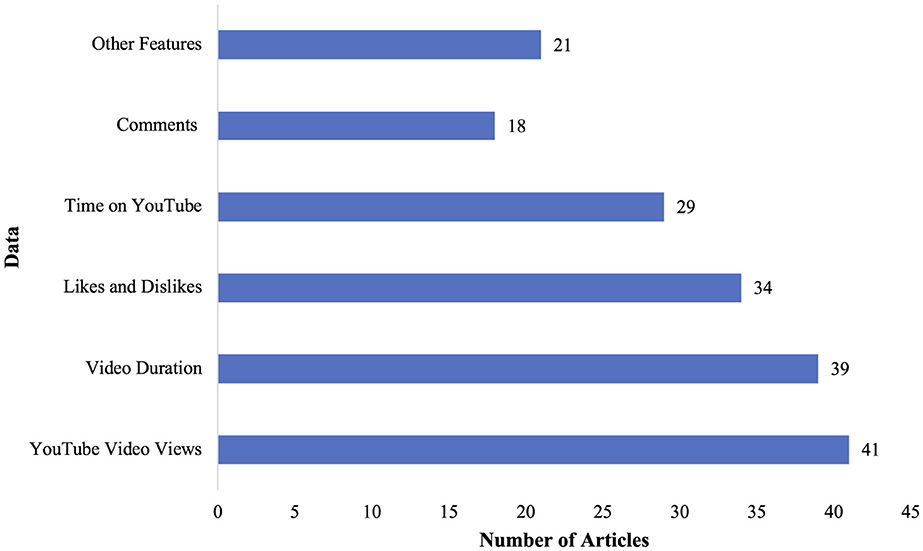

The 41 included articles assessed an average of 98.8 videos per study (range, 16 to 523). The parameters analyzed by the quality assessment tools included YouTube video views, video duration, likes and dislikes, time on YouTube, comments, and other features (Figure 2). Studies were published from 2013 to 2023, with a median publication year of 2021 and a notable increase after 2020. The growing number of research articles evaluating the quality of YouTube videos reflects increasing reliance on social media and digital platforms for patient education. Videos covered surgical procedures across surgical specialties, including general surgery, oral and maxillofacial surgery, cardiac surgery, orthopedic surgery, dental and endodontic surgery, obstetric surgery, gynecology, urology, ophthalmology, neurosurgery, plastic and reconstructive surgery, neonatal surgery, and colorectal surgery.

Figure 2. Data assessed by quality assessment tools. “Other Features” include video title, uniform resource locator, number of channel subscribers, video power index, country of origin, percentage positivity (likes as a proportion of total likes plus dislikes), presence of subtitles, viewer interaction index, target audience, presence of animation, video/audio quality, and daily viewing rate.
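Several of the engagement features named in the caption are simple ratios. The sketch below computes percentage positivity as defined in the caption. The other three formulas are assumptions: the review does not define them, and the versions here follow definitions that are common in the YouTube quality literature but vary between studies. All function names are illustrative.

```python
def percentage_positivity(likes: int, dislikes: int) -> float:
    """Likes as a percentage of total likes plus dislikes (caption definition)."""
    total = likes + dislikes
    return 100.0 * likes / total if total else 0.0


def daily_viewing_rate(views: int, days_online: int) -> float:
    """Assumed definition: total views divided by days since upload."""
    if days_online <= 0:
        raise ValueError("days_online must be positive")
    return views / days_online


def viewer_interaction_index(likes: int, dislikes: int, views: int) -> float:
    """Assumed definition: (likes - dislikes) / views, as a percentage."""
    return 100.0 * (likes - dislikes) / views if views else 0.0


def video_power_index(likes: int, dislikes: int, views: int, days_online: int) -> float:
    """Assumed definition: percentage positivity x daily viewing rate / 100."""
    return (
        percentage_positivity(likes, dislikes)
        * daily_viewing_rate(views, days_online)
        / 100.0
    )


# Illustrative numbers only.
print(percentage_positivity(90, 10))         # 90.0
print(daily_viewing_rate(1000, 10))          # 100.0
print(viewer_interaction_index(90, 10, 1000))  # 8.0
print(video_power_index(90, 10, 1000, 10))   # 90.0
```

Because individual studies may define these indices differently, values computed this way are comparable only across studies that share the same definition.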

Video source characteristics

All of the included studies evaluated YouTube videos from professional sources and creators, such as physicians, hospitals, educational institutions, and societies. Most studies (68%) also included videos featuring patients and their testimonials, 59% included commercial content, and 73% included videos from other diverse sources. Of the 23 studies that reported which sources were most useful for patient and health consumer education, 20 identified professional sources, two identified patient sources, and one identified commercials. These findings suggest a strong association between source professionalism and perceived educational value.

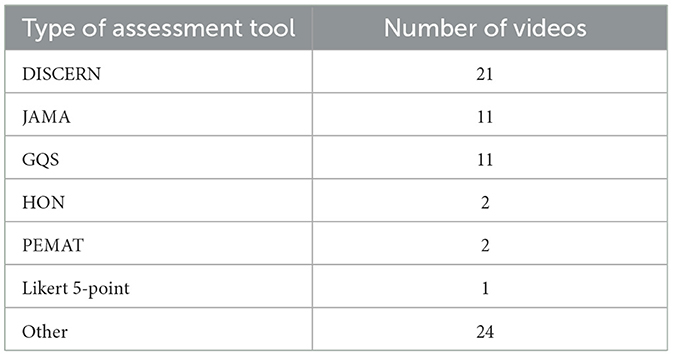

Use of quality assessment tools

The DISCERN reliability instrument was the predominant tool for video evaluation (8–16, 18–29). Other prevalent tools were the Journal of the American Medical Association (JAMA) Benchmark Criteria (8–18), the Global Quality Scale (GQS) Criteria (8–10, 14, 17–19, 26, 27, 30, 31), the Health on the Net (HON) Code of Conduct (12, 13), and the Patient Education Materials Assessment Tool (PEMAT) and the Usefulness Scoring System (20, 29). A notable portion of the articles used standardized reliability instruments developed by the authors or drawn from previous studies, physicians, and medical organizations (10, 15–17, 27, 30–47). The YouTube Video Assessment Criteria and the Ensuring Quality Information for Patients Score were utilized less frequently (Table 1). While standardized tools provided a consistent framework, variation in implementation and scoring limited comparability. Notably, all studies that reported adequate patient education or moderate quality based on article author analysis used multiple assessment tools (e.g., DISCERN + JAMA + GQS).
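The citation ranges in this paragraph can be expanded to recover the number of studies per tool reported in the abstract. The following is a small sketch for checking that arithmetic; the dictionary labels and function name are illustrative, and only the citation strings come from the text.

```python
from typing import Dict, Set


def expand_citations(spec: str) -> Set[int]:
    """Expand a citation string such as '8–16, 18–29' into reference numbers."""
    refs: Set[int] = set()
    for part in spec.split(","):
        part = part.strip().replace("–", "-")  # accept en dash or hyphen
        if "-" in part:
            start, end = part.split("-")
            refs.update(range(int(start), int(end) + 1))
        else:
            refs.add(int(part))
    return refs


# Citation strings copied from the paragraph above.
TOOL_CITATIONS: Dict[str, str] = {
    "DISCERN": "8–16, 18–29",
    "JAMA Benchmark Criteria": "8–18",
    "GQS": "8–10, 14, 17–19, 26, 27, 30, 31",
    "HON Code of Conduct": "12, 13",
    "Author-developed or adapted instruments": "10, 15–17, 27, 30–47",
}

counts = {tool: len(expand_citations(spec)) for tool, spec in TOOL_CITATIONS.items()}
for tool, n in counts.items():
    print(f"{tool}: {n} studies")
```

The tallies for DISCERN (21), JAMA (11), GQS (11), and author-developed or adapted instruments (23) match the counts stated in the abstract.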

Educational value

Educational quality, as rated by the respective quality assessment instruments, was predominantly low. Based on the articles' analyses of the educational quality of the YouTube videos, 33 studies rated the videos as poor quality, seven as moderate quality, and one as good quality. Of the seven studies that reported moderate quality, five utilized GQS, four used DISCERN, three used JAMA, and four used other types of assessment tools, suggesting some consistency among these tools in identifying informative videos (8–10, 26, 30, 46, 48). The article that reported good-quality videos used JAMA, GQS, and its own quality assessment tool (17).

Three studies concluded that surgical YouTube videos adequately educated patients, and 36 yielded contrasting data. Many articles (44%) mentioned that YouTube videos were missing vital information regarding surgical procedures, such as treatment alternatives and potential risks. Others (27%) noted that the scores associated with the reliability instruments were low, and 20% of the articles saw an increasing prevalence of source bias in the videos. Several studies (15%) described notable issues with videography, such as problems with music, overall quality, and narration. The three studies that reported adequate education noted high scores on the reliability instruments (9), reputable sources creating and distributing content (10), and easily understandable videos with adequate procedure descriptions, which are not systematically captured by existing assessment instruments (8). Interestingly, among the five studies related to ophthalmology, two reported that the videos adequately educated patients.

Common deficiencies in video content

Several recurring deficiencies in YouTube surgical video content were identified across the included studies. Most notably, eighteen studies (44%) reported that videos often omitted critical information such as treatment alternatives, potential risks, or post-operative expectations, limiting their utility for comprehensive patient education. Additionally, eleven studies (27%) documented consistently low scores across validated reliability instruments, including DISCERN and JAMA, reflecting concerns about content accuracy and trustworthiness. Eight studies (20%) highlighted the presence of source bias or overt promotional messaging, which may compromise the objectivity of the information presented. Furthermore, six studies (15%) described production-related limitations, such as poor narration quality, distracting background music, or inadequate video resolution, which could detract from viewer comprehension.
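The percentages in this paragraph follow directly from the study counts and the 41 included studies. The short sketch below reproduces that arithmetic; the category labels are paraphrased and the names are illustrative.

```python
TOTAL_STUDIES = 41

# Study counts as reported in the paragraph above.
DEFICIENCY_COUNTS = {
    "omitted critical information": 18,
    "low reliability scores": 11,
    "source bias or promotional messaging": 8,
    "production-related limitations": 6,
}


def percent_of_studies(count: int, total: int = TOTAL_STUDIES) -> int:
    """Share of included studies, rounded to the nearest whole percent."""
    return round(100 * count / total)


percentages = {label: percent_of_studies(n) for label, n in DEFICIENCY_COUNTS.items()}
for label, pct in percentages.items():
    print(f"{label}: {pct}%")
```

The four counts sum to 43, which exceeds 41, so the categories are not mutually exclusive: at least some studies reported more than one type of deficiency.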

Discussion

Patient and health consumer education is rapidly evolving, with digital platforms and social media resources becoming increasingly prominent. This shift requires a reevaluation of how surgical patients receive information about their conditions and procedures. Validated evaluation tools are essential for assessing the quality and accuracy of educational videos to identify videos that provide clear information and align with current medical standards.

Video sources and content

Since all 41 articles evaluated YouTube videos that included professional sources, the content is likely to be credible and created with expertise. However, despite this professional endorsement, the majority of the videos were deemed to provide poor educational quality. The prevalence of patient testimonials and commercial content contributes to further complications as these types of videos often prioritize personal experiences and promotional content over comprehensive educational information.

Quality assessment tools and quality analysis

To assess video quality, the authors predominantly utilized the DISCERN reliability instrument, along with other established tools such as the JAMA Benchmark Criteria and the GQS Criteria.

The DISCERN tool is a validated questionnaire designed to assess the quality of written consumer health information on treatment choices, consisting of 15 items rated on a 5-point scale and culminating in an overall quality score. Though comprehensive, DISCERN can be time-consuming and requires training for consistent use (49). In the context of video content, DISCERN has been adapted by some researchers to evaluate scripted narration or on-screen information, but its written-format origins may limit applicability to visual, interactive, or audiovisual cues that influence viewer perception and comprehension.

The JAMA Benchmark Criteria provide a more objective evaluation of online health information, assessing four elements: authorship, attribution, disclosure, and currency. While useful for gauging source credibility, the binary scoring system does not assess content accuracy, completeness, or audiovisual clarity—factors highly relevant in video-based media. As such, the JAMA criteria are often used as a supplemental tool rather than a standalone measure when evaluating videos (50).

The GQS uses a 5-point Likert scale to assess the overall quality, flow, and usefulness of online content, especially videos, ranging from poor (1) to excellent (5). Though fast and intuitive, GQS is subjective and lacks detailed evaluative criteria, limiting its diagnostic utility (51). The HONcode certification, developed by the Health On the Net Foundation, was another credibility-focused tool that evaluated websites based on eight ethical principles: authority, complementarity, privacy, attribution, justifiability, transparency, financial disclosure, and advertising policy. While HONcode was useful for identifying trustworthy health websites, it did not assess content depth, accuracy, or readability. As of December 15, 2022, the HONcode certification service has been discontinued, limiting its utility for future website evaluations (52, 53).
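The scoring structure of DISCERN, JAMA, and GQS as described above can be summarized in a few lines. This is a simplified sketch under stated assumptions: it treats the DISCERN result as the sum of the 15 item ratings (a total of 15 to 75), which is one common way studies report it, and it scores each JAMA criterion as met or not met. Function names are illustrative and do not come from any of the instruments.

```python
from typing import Dict, List

JAMA_CRITERIA = ("authorship", "attribution", "disclosure", "currency")


def discern_total(item_scores: List[int]) -> int:
    """Sum 15 DISCERN item ratings, each on a 1-5 scale (assumed summed total)."""
    if len(item_scores) != 15:
        raise ValueError("expected 15 item scores")
    if any(not 1 <= s <= 5 for s in item_scores):
        raise ValueError("each item must be rated 1 to 5")
    return sum(item_scores)


def jama_score(criteria_met: Dict[str, bool]) -> int:
    """Count how many of the four JAMA benchmark criteria are met (0-4)."""
    return sum(bool(criteria_met.get(c, False)) for c in JAMA_CRITERIA)


def validate_gqs(rating: int) -> int:
    """Check that a GQS rating is a whole number from 1 (poor) to 5 (excellent)."""
    if rating not in range(1, 6):
        raise ValueError("GQS rating must be between 1 and 5")
    return rating


# Illustrative ratings for a single hypothetical video.
print(discern_total([3] * 15))                            # 45
print(jama_score({"authorship": True, "currency": True}))  # 2
print(validate_gqs(4))                                     # 4
```

The sketch makes the limitation noted in the text concrete: none of these scores has an input for audiovisual qualities such as narration clarity or image quality.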

Eighty percent of the articles rated the educational quality of the videos as poor, suggesting a need to improve video quality. Although DISCERN, JAMA, and GQS are established standardized tools, they were not designed to assess medical videos. These gaps suggest that the current tools are not entirely sufficient for ensuring high-quality educational content in dynamic online environments like YouTube (7). Therefore, a standardized tool should be created to assess video quality and ensure consistency across video evaluations.

In comparison to DISCERN, JAMA, and GQS, specialized frameworks such as the Instructional Videos in Otorhinolaryngology by YO-IFOS (IVORY) and LAParoscopic surgery Video Educational GuidelineS (LAP-VEGaS) guidelines have been developed to evaluate surgical videos intended for healthcare professional training (54, 55). These tools are more rigorous and procedure-specific in that they incorporate detailed criteria related to surgical technique, anatomical accuracy, intraoperative decision-making, step-by-step procedural clarity, video speed, camera angles, presentation clarity, and audio-visual delivery. Although these tools are designed for surgical training, they could be adapted to enhance the evaluation of surgical videos intended for patient education.

While most of the assessment tools utilized by the articles indicated poor overall video quality, the articles also highlighted other problems, including the omission of vital information (such as treatment alternatives and potential risks), low reliability scores, and increasing source bias. Several studies also pointed out technical issues, such as poor videography, suboptimal audio quality, and ineffective narration, which further detract from the educational value of the videos. Moreover, some studies identified misinformation and outdated content as critical problems, emphasizing the need for continuous updating and verification of online medical content. These deficiencies highlight another gap in the current use of YouTube as an educational tool for patients and suggest that many videos fail to provide the comprehensive information that is essential for informed patient decision-making.

Contrasting findings

Interestingly, three studies (8–10) rated the YouTube videos as adequate educational tools, citing high scores on quality assessment instruments, reputable sources, and clear, understandable content. This discrepancy indicates that while the general trend points toward inadequate educational quality, there are exceptions where videos meet high standards. These positive examples can serve as benchmarks for creating better educational content in the future.

Limitations

This review is subject to several limitations. First, the included studies were assessed from the perspective of patients and healthcare consumers. While this approach is relevant to understanding public accessibility and perceived educational value, it may not fully reflect clinical accuracy or high educational quality. Additionally, it is important to acknowledge that YouTube functions primarily as an entertainment and social media platform rather than a formal educational resource. Consequently, many of the videos uploaded may not be intended for, or suitable as, educational content, limiting the platform's suitability for patient and health consumer education. Another limitation is the exclusion of studies published in languages other than English, which could introduce selection bias and lead to incomplete or inaccurate conclusions, as studies published in other languages may contain crucial information that is not available in English-language sources.

Future recommendations

This review highlights the need for improved standards in the creation of surgical educational videos on YouTube. A new standardized tool should be developed that incorporates the strengths of widely used current tools, while addressing the unique challenges of assessing medical videos. Key criteria should account for dynamic audiovisual elements (e.g., clarity of narration, visual accuracy of demonstrations, use of animations or overlays), content accuracy, source credibility, and viewer engagement strategies. It should also consider accessibility features such as closed captions, language simplicity, and cultural sensitivity.

Advancements in artificial intelligence (AI), particularly natural language processing and deep learning, present promising opportunities for moderating health-related video content. For example, real-time misinformation detection using machine learning has proven effective during the COVID-19 pandemic (56). To implement these innovations, we propose a multidisciplinary task force, composed of clinicians, AI researchers, digital media experts, public health officials, and patient advocates, to develop validated scoring systems and collaborate directly with platforms, such as YouTube. Integration strategies may include voluntary quality tagging by verified content creators, peer-review-based content badges, and platform-endorsed health information panels. These features may help elevate trustworthy content while guiding users toward evidence-based information in an increasingly decentralized and saturated media landscape.

By directing patients to high-quality educational resources, surgeons can significantly enhance patient understanding and preparedness for surgical procedures. Given the overwhelming amount of online medical information, surgeons must guide patients toward reputable websites, vetted educational videos, and institutionally approved resources. They should also be aware of the quality assessment tools available to evaluate the quality of video content, ensuring that the materials they endorse are of the highest standard.

Conclusions

Though YouTube has indubitably transformed patient and health consumer education, the reliability and educational quality of its patient education videos remain a concern, particularly for surgical procedures. This systematic review finds that, according to quality assessment tools, many videos from professional sources offer limited educational value despite their perceived credibility. With improved patient education materials, the medical community can improve health consumer education, ultimately enhancing patient understanding, reducing anxiety, and potentially improving clinical outcomes in surgical settings.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

MP: Data curation, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. AL: Data curation, Formal analysis, Investigation, Methodology, Writing – review & editing. MM: Conceptualization, Project administration, Software, Supervision, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2025.1575801/full#supplementary-material

Abbreviations

GQS, Global Quality Scale; HON, Health on the Net; JAMA, Journal of the American Medical Association; PEMAT, Patient Education Materials Assessment Tool.

References

1. Ceci L. YouTube - Statistics and Facts. Statista. February 16, 2024. Available online at: https://www.statista.com/topics/2019/youtube/#editorsPicks (accessed September 19, 2024).

2. Oxford Economics. The State of the Creator Economy. Assessing the Economic, Cultural, and Societal Impact of YouTube in the US in 2022. Available online at: https://kstatic.googleusercontent.com/files/721e9ce19a4fe59b40135ad7b06a61d589468bf7df4b2d654c1be32aca225939b6dac1e16d1de1c0d0fe5bb496e82f5920afa7eceb7dc867e4f71751bc233261 (accessed June 10, 2023).

3. Pew Research Center. Social Media and News Fact Sheet. Pew Research Center; September 20, (2022). Available online at: https://www.pewresearch.org/journalism/fact-sheet/social-media-and-news-fact-sheet/ (accessed June 10, 2023).

4. Lee J, Turner K, Xie Z, Kadhim B, Hong YR. Association between health information-seeking behavior on YouTube and physical activity among U.S. adults. AJPM Focus. (2022) 2:100035. doi: 10.1016/j.focus.2022.100035

5. Osman W, Mohamed F, Elhassan M, Shoufan A. Is YouTube a reliable source of health-related information? A systematic review. BMC Med Educ. (2022) doi: 10.21203/rs.3.rs-1047141/v1

6. Javidan A, Nelms MW, Li A, Lee Y, Zhou F, Kayssi A, et al. Evaluating YouTube as a source of education for patients undergoing surgery: a systematic review. Ann Surg. (2023) 278:e712–e718. doi: 10.1097/SLA.0000000000005892

7. Guler MA, Aydin EO. Development and validation of a tool for evaluating YouTube-based medical videos. Ir J Med Sci. (2022) 191:1985–1990. doi: 10.1007/s11845-021-02864-0

8. Heisinger S, Huber D, Matzner MP. TLIF online videos for patient education-evaluation of comprehensiveness, quality, and reliability. Int J Environ Res Public Health. (2023) 20:4626. doi: 10.3390/ijerph20054626

9. Karataş ME, Karataş G. Evaluating the reliability and quality of the upper eyelid blepharoplasty videos on YouTube. Aesthetic Plast Surg. (2022) 46:754–759. doi: 10.1007/s00266-021-02504-z

10. Kalayci M, Cetinkaya E, Suren E, Yigit K, Erol MK. Are YouTube videos useful in informing patients about keratoplasty? Semin Ophthalmol. (2021) 36:469–474. doi: 10.1080/08820538.2021.1890145

11. Yasin S, Altunisik E. Quality analysis of YouTube videos on mechanical thrombectomy for acute ischemic stroke. J Stroke Cerebrovasc Dis. (2023) 32:106914. doi: 10.1016/j.jstrokecerebrovasdis.2022.106914

12. Noll D, Green L, Asokan G. Is YouTube™ a good source of information for patients to understand laparoscopic fundoplication? Ann R Coll Surg Engl. (2023) 105:365–371. doi: 10.1308/rcsann.2022.0039

13. Gupta AK, Kovoor JG, Ovenden CD, Cullen HC. Paradigm shift: Beyond the COVID-19 era, is YouTube the future of education for CABG patients? J Card Surg. (2022) 37:2292–2296. doi: 10.1111/jocs.16617

14. Sayin O, Altinkaynak H, Adam M, Dirican E, Agca O. Reliability of YouTube videos in vitreoretinal surgery. Ophthalmic Surg Lasers Imaging Retina. (2021) 52:478–483. doi: 10.3928/23258160-20210817-01

15. Ng MK, Emara AK, Molloy RM, Krebs VE, Mont M, Piuzzi NS. YouTube as a source of patient information for total knee/hip arthroplasty: quantitative analysis of video reliability, quality, and content. J Am Acad Orthop Surg. (2021) 29:e1034-e1044. doi: 10.5435/JAAOS-D-20-00910

16. Cassidy JT, Fitzgerald E, Cassidy ES. YouTube provides poor information regarding anterior cruciate ligament injury and reconstruction. Knee Surg Sports Traumatol Arthrosc. (2018) 26:840–845. doi: 10.1007/s00167-017-4514-x

17. Jadhav G, Mittal P, Bajaj NC, Banerjee S. Are YouTube videos on regenerative endodontic procedure reliable source for patient edification? Saudi Endodontic J. (2021) 12:43–49. doi: 10.4103/sej.sej_111_21

18. Ozturkmen C, Berhuni M. YouTube as a source of patient information for pterygium surgery. Ther Adv Ophthalmol. (2023) 15:25158414231174143. doi: 10.1177/25158414231174143

19. Zaliznyak M, Masterson JM, Duel B. YouTube as a source for information on newborn male circumcision: is YouTube a reliable patient resource? J Pediatr Urol. (2022) 18:678.e1-678.e7. doi: 10.1016/j.jpurol.2022.07.011

20. Lang JJ, Giffen Z, Hong S. Assessing vasectomy-related information on YouTube: an analysis of the quality, understandability, and actionability of information. Am J Mens Health. (2022) 6:15579883221094716. doi: 10.1177/15579883221094716

21. Er N, Çanakçi FG. Temporomandibular joint arthrocentesis videos on YouTube: are they a good source of information? J Stomatol Oral Maxillofac Surg. (2022) 123:e310-e315. doi: 10.1016/j.jormas.2022.03.011

22. Thomas C, Westwood J, Butt GF. Qualitative assessment of YouTube videos as a source of patient information for cochlear implant surgery. J Laryngol Otol. (2021) 135:671–674. doi: 10.1017/S0022215121001390

23. Ward M, Abraham ME, Craft-Hacherl C. Neuromodulation, deep brain stimulation, and spinal cord stimulation on YouTube: a content-quality analysis of search terms. World Neurosurg. (2021) 151:e156–e162. doi: 10.1016/j.wneu.2021.03.151

24. Tripathi S, ReFaey K, Stein R. The reliability of Deep Brain Stimulation YouTube videos. J Clin Neurosci. (2020) 74:202–204. doi: 10.1016/j.jocn.2020.02.015

25. Ward M, Ward B, Abraham M. The educational quality of neurosurgical resources on YouTube. World Neurosurg. (2019) 130:e660–e665. doi: 10.1016/j.wneu.2019.06.184

26. Oremule B, Patel A, Orekoya O, Advani R, Bondin D. Quality and reliability of YouTube videos as a source of patient information on rhinoplasty. JAMA Otolaryngol Head Neck Surg. (2019) 145:282–283. doi: 10.1001/jamaoto.2018.3723

27. Bayazit S, Ege B, Koparal M. Is the YouTube™ a useful resource of information about orthognathic surgery? A cross-sectional study. J Stomatol Oral Maxillofac Surg. (2022) 123:e981–e987. doi: 10.1016/j.jormas.2022.09.001

28. Safa A, De Biase G, Gassie K, Garcia D, Abode-lyamah K, Chen S. Reliability of YouTube videos on robotic spine surgery for patient education. J Clin Neurosci. (2023) 109:6–10. doi: 10.1016/j.jocn.2022.12.014

29. Roberts B, Kobritz M, Nofi C. Social media, misinformation, and online patient education in emergency general surgical procedures. J Surg Res. (2023) 287:16–23. doi: 10.1016/j.jss.2023.01.009

30. Coban G, Buyuk SK. YouTube as a source of information for craniofacial distraction osteogenesis. J Craniofac Surg. (2021) 32:2005–2007. doi: 10.1097/SCS.0000000000007478

31. Sader N, Kulkarni AV, Eagles ME, Ahmed S, Koschnitzky JE, Riva-Cambrin J. The quality of YouTube videos on endoscopic third ventriculostomy and endoscopic third ventriculostomy with choroid plexus cauterization procedures available to families of patients with pediatric hydrocephalus. J Neurosurg Pediatr. (2020) 25:607–614. doi: 10.3171/2019.12.PEDS19523

32. Lee KN, Son GH, Park SH, Kim Y, Park ST. YouTube as a source of information and education on hysterectomy. J Korean Med Sci. (2020) 35:e196. doi: 10.3346/jkms.2020.35.e196

33. Özdal Zincir Ö, Bozkurt AP, Gaş S. Potential patient education of YouTube videos related to wisdom tooth surgical removal. J Craniofac Surg. (2019) 30:e481–e484. doi: 10.1097/SCS.0000000000005573

34. Jain N, Abboudi H, Kalic A, Gill F, Al-Hasani H. YouTube as a source of patient information for transrectal ultrasound-guided biopsy of the prostate. Clin Radiol. (2019) 74:79.e11–79.e14. doi: 10.1016/j.crad.2018.09.004

35. Camm CF, Russell E, Ji Xu A, Rajappan K. Does YouTube provide high-quality resources for patient education on atrial fibrillation ablation? Int J Cardiol. (2018) 272:189–193. doi: 10.1016/j.ijcard.2018.08.066

36. Bae SS, Baxter S. YouTube videos in the English language as a patient education resource for cataract surgery. Int Ophthalmol. (2018) 38:1941–1945. doi: 10.1007/s10792-017-0681-5

37. Tan ML, Kok K, Ganesh V, Thomas SS. Patient information on breast reconstruction in the era of the world wide web. A snapshot analysis of information available on youtube.com. Breast. (2014) 23:33–37. doi: 10.1016/j.breast.2013.10.003

38. Strychowsky JE, Nayan S, Farrokhyar F, MacLean J. YouTube: a good source of information on pediatric tonsillectomy? Int J Pediatr Otorhinolaryngol. (2013) 77:972–975. doi: 10.1016/j.ijporl.2013.03.023

39. Sorensen JA, Pusz MD, Brietzke SE. YouTube as an information source for pediatric adenotonsillectomy and ear tube surgery. Int J Pediatr Otorhinolaryngol. (2014) 78:65–70. doi: 10.1016/j.ijporl.2013.10.045

40. Larouche M, Geoffrion R, Lazare D. Mid-urethral slings on YouTube: quality information on the internet? Int Urogynecol J. (2016) 27:903–908. doi: 10.1007/s00192-015-2908-1

41. Nason K, Donnelly A, Duncan HF. YouTube as a patient-information source for root canal treatment. Int Endod J. (2016) 49:1194–1200. doi: 10.1111/iej.12575

42. Hegarty E, Campbell C, Grammatopoulos E, DiBiase AT, Sherriff M, Cobourne MT. YouTube™ as an information resource for orthognathic surgery. J Orthod. (2017) 44:90–96. doi: 10.1080/14653125.2017.1319010

43. Gray MC, Gemmiti A, Ata A, et al. Can you trust what you watch? An assessment of the quality of information in aesthetic surgery videos on YouTube. Plast Reconstr Surg. (2020) 146:329e–336e. doi: 10.1097/PRS.0000000000007213

44. Castillo J, Wassef C, Wassef A, Stormes K, Berry AE. YouTube as a source of patient information for prenatal repair of myelomeningocele. Am J Perinatol. (2021) 38:140–144. doi: 10.1055/s-0039-1694786

45. Cho HY, Park SH. Evaluation of the quality and influence of YouTube as a source of information on robotic myomectomy. J Pers Med. (2022) 12:1779. doi: 10.3390/jpm12111779

46. Azer SA, AlKhawajah NM, Alshamlan YA. Critical evaluation of YouTube videos on colostomy and ileostomy: can these videos be used as learning resources? Patient Educ Couns. (2022) 105:383–389. doi: 10.1016/j.pec.2021.05.023

47. Lee KN, Joo YJ, Choi SY, et al. Content analysis and quality evaluation of cesarean delivery-related videos on YouTube: cross-sectional study. J Med Internet Res. (2021) 23:e24994. doi: 10.2196/24994

48. Brooks FM, Lawrence H, Jones A, McCarthy MJ. YouTube™ as a source of patient information for lumbar discectomy. Ann R Coll Surg Engl. (2014) 96:144–146. doi: 10.1308/003588414X13814021676396

49. Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. (1999) 53:105–111. doi: 10.1136/jech.53.2.105

50. Mac OA, Thayre A, Tan S, Dodd RH. Web-based health information following the renewal of the cervical screening program in Australia: evaluation of readability, understandability, and credibility. J Med Internet Res. (2020) 22:e16701. doi: 10.2196/16701

51. Gudapati JD, Franco AJ, Tamang S, et al. A study of global quality scale and reliability scores for chest pain: an Instagram-post analysis. Cureus. (2023) 15:e45629. doi: 10.7759/cureus.45629

52. Boyer C, Selby M, Scherrer JR, Appel RD. The Health On the Net Code of Conduct for medical and health web sites. Comput Biol Med. (1998) 28:603–610. doi: 10.1016/S0010-4825(98)00037-7

53. Tan DJY, Ko TK, Fan KS. The readability and quality of web-based patient information on nasopharyngeal carcinoma: quantitative content analysis. JMIR Form Res. (2023) 7:e47762. doi: 10.2196/47762

54. Simon F, Peer S, Michel J, Bruce IA, Cherkes M, Denoyelle F, et al. IVORY Guidelines (Instructional Videos in Otorhinolaryngology by YO-IFOS): a consensus on surgical videos in ear, nose, and throat. Laryngoscope. (2021) 131:E732–E737. doi: 10.1002/lary.29020

55. Celentano V, Smart N, Cahill RA, Spinelli A, Giglio MC, et al. Development and validation of a recommended checklist for assessment of surgical videos quality: the LAParoscopic surgery Video Educational GuidelineS (LAP-VEGaS) video assessment tool. Surg Endosc. (2021) 35:1362–1369. doi: 10.1007/s00464-020-07517-4

Keywords: patient education, surgical procedure, social media, YouTube videos, quality assessment, psychometrics, health education

Citation: Pavuloori M, Lin A and Mi M (2025) Tools/instruments for assessing YouTube videos on surgical procedures for patient/consumer health education: a systematic review. Front. Public Health 13:1575801. doi: 10.3389/fpubh.2025.1575801

Received: 13 February 2025; Accepted: 20 June 2025; Published: 10 July 2025.

Edited by:

Feng Guo, Tianjin University, China

Reviewed by:

Mohammad Ainul Maruf, Universitas Muhammadiyah Jakarta, Indonesia

Mitat Selçuk Bozhöyük, Bitlis Tatvan State Hospital, Türkiye

Copyright © 2025 Pavuloori, Lin and Mi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Manasa Pavuloori, mspavuloori@oakland.edu
