Cutting through fast-churn science: How Frontiers raised the bar

'Fast-churn' science, meaning manuscripts built from quick queries of public datasets, has become an industry-wide concern. These rapidly executed studies can flood journals with low-quality, redundant findings. The preprint "Dramatic increase in redundant publications in the Generative AI era" highlights the scale of the problem.

The preprint shows how quickly new research integrity challenges emerge, underscoring the need for agile, professional research integrity teams. In 2024, when the authors recorded a 400% jump in redundant papers, adequate editorial checks for article redundancy did not yet exist. The surge, amplified by generative AI, remains a serious challenge for publishers.

Frontiers’ response

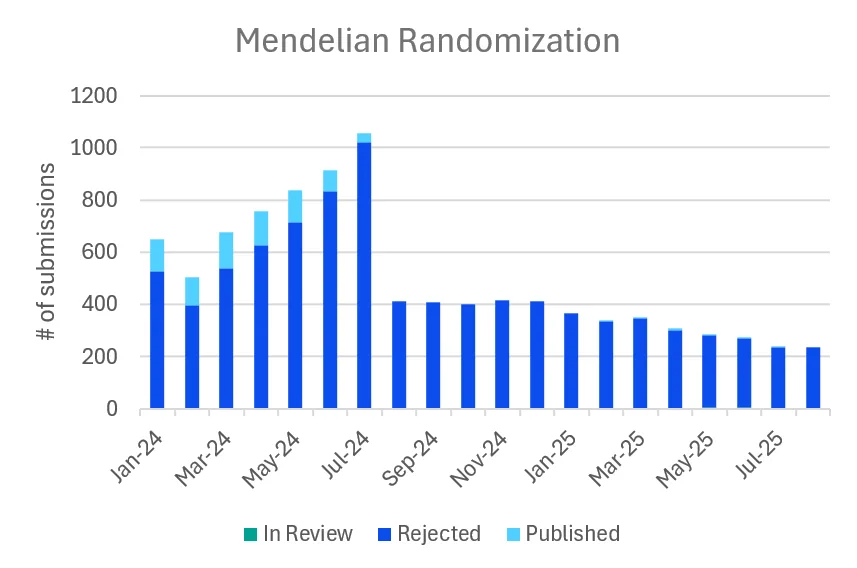

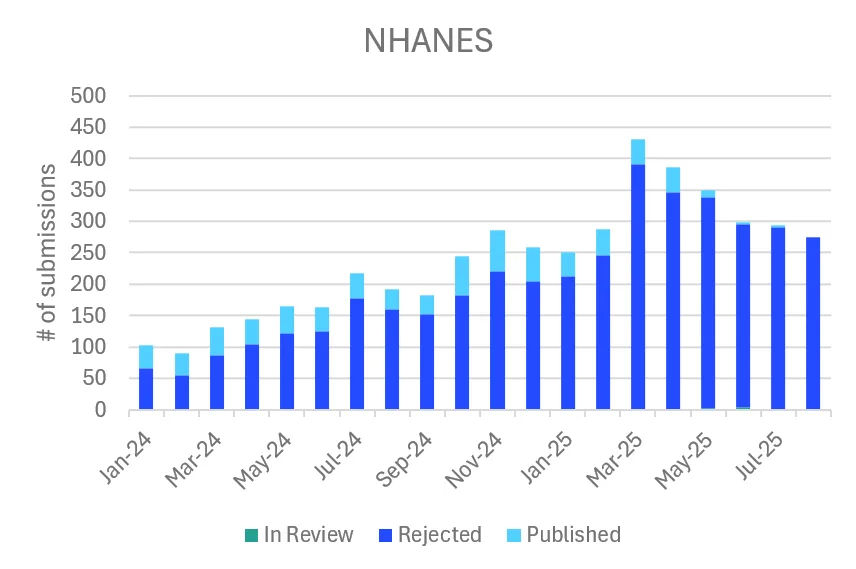

Frontiers acted early. By mid-2024 we saw a spike in Mendelian Randomization (MR) submissions, followed by a wave of papers using the US National Health and Nutrition Examination Survey (NHANES). In a February 2025 editorial, Dr Arch G. Mainous III, Professor and Vice Chair of Research at the University of Florida and Field Chief Editor for Frontiers in Family Medicine and Primary Care, described the core risk: large datasets allow countless combinations of variables, so correlations mined without a guiding hypothesis can appear 'statistically significant' purely by chance.

“These databases have been the basis for many important studies and prevalence assessments. However, because of the large number of variables that are available it seems that enterprising groups can build a matrix of variables and simply correlate the variables looking for statistically significant relationships. What is missing in these analyses is a hypothesis with clear outcomes. Further, the hypothesis would need to consider contextual factors that are the underpinnings of the NHANES data set or other US data set and the need for cultural adaptation in other global cultural domains.”

As Dr Mainous noted, these databases have supported many important studies, but without a clear research question and contextual understanding, authors can simply hunt for patterns.

Frontiers moved to ensure that every such submission is anchored in a testable, evidence-based hypothesis. Our policy requires authors to provide new experimental validation or additional data from their own institution, so that each paper adds unique value beyond what widely used datasets already offer. All papers cited in the Spick et al. preprint predate this policy and would not meet current standards.

A clear line in the sand

In July 2024, Frontiers became one of the first publishers to require independent external validation for all MR studies based on health datasets. MR manuscripts lacking new validation or institutional data were declined at desk review. The effect was immediate: MR submissions fell by 61% in the first month and by more than 70% to date.

Robust screening also curbed NHANES submissions, but they continued to grow into 2025. Early this year, we became the first publisher to make the external validation requirement explicit for all papers based on simple queries of public data, including NHANES articles, clarifying for authors and reviewers that each manuscript must contribute unique scientific value. Since adopting these policies, we have rejected:

- 5,513 Mendelian Randomization studies (since July 2024)
- 1,382 NHANES-based submissions (since May 2025)

The figures accompanying this article show the impact of these measures.

Integrity in an AI age: technology + expertise

Frontiers welcomes the preprint published today and is in contact with its authors to exchange insights. In the same February 2025 editorial, Dr Mainous described how papermills could exploit large public datasets such as NHANES, manipulating data to produce fraudulent articles for sale in volumes that overwhelm editors and reviewers:

“Unfortunately, these scientifically dubious but statistically significant relationships can be used as the basis for manuscripts to be sold by papermills. By having statistically significant relationships the likelihood of publication is enhanced. It is important to remember that if enough comparisons are computed some will be statistically significant even if it is purely by chance.

This creates a deluge of papers that can be sent to journals overwhelming editors and reviewers.”

Dr Mainous's point is that vast datasets can yield apparently 'significant' correlations that may be meaningless, and only careful scrutiny can tell the difference.
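
To put the multiple-comparisons point in numbers, here is a minimal Python sketch. It is an illustration only, not a Frontiers screening tool or the method used in the Spick et al. preprint. It assumes a hypothetical analyst who correlates every pair of k variables, tests each pair at a significance threshold of alpha = 0.05, and treats the tests as independent with no true associations present. Under those simplifying assumptions, k variables give m = k(k-1)/2 tests, about 0.05 × m of them are expected to come out 'significant' by chance, and the probability of at least one spurious hit is 1 - (1 - 0.05)^m.

```python
from math import comb

# Simplifying assumptions (hypothetical, for illustration only):
# every test uses the same threshold ALPHA, tests are independent,
# and every null hypothesis is true (no real associations exist).
ALPHA = 0.05


def count_pairwise_tests(k: int) -> int:
    """Number of distinct variable pairs in a k-by-k correlation matrix."""
    return comb(k, 2)


def expected_false_positives(m: int, alpha: float = ALPHA) -> float:
    """Expected number of 'significant' results among m tests of true nulls."""
    return m * alpha


def prob_at_least_one_false_positive(m: int, alpha: float = ALPHA) -> float:
    """Family-wise error rate for m independent tests of true nulls."""
    return 1.0 - (1.0 - alpha) ** m


if __name__ == "__main__":
    for k in (5, 20, 50):
        m = count_pairwise_tests(k)
        print(
            f"{k} variables: {m} pairwise tests, "
            f"{expected_false_positives(m):.1f} expected false positives, "
            f"P(at least one) = {prob_at_least_one_false_positive(m):.3f}"
        )
```

Under these assumptions, even 5 variables (10 tests) carry roughly a 40% chance of at least one false positive, and with 20 variables (190 tests) a spurious 'significant' result is close to certain. Real survey variables are correlated, so actual rates will differ, but the chance of a spurious hit cannot shrink as more comparisons are added.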

Frontiers addresses this threat on several fronts. Our artificial intelligence assistant, AIRA, automatically screens every submission for more than twenty integrity indicators, including a dedicated papermill check that flags over ten distinct data points for human investigation. These signals trigger detailed evaluation by a team of more than sixty research integrity experts and by specialist editors and peer reviewers, who judge each paper on the strength of its science, not just on statistical probabilities. We also partner with two leading external providers of problematic content detection, integrating their tools into our standard editorial workflow.

Frontiers’ policy goes beyond filtering. Each rejection includes clear feedback to help authors meet rigorous standards, and our research integrity team shares intelligence with other publishers and funders to strengthen community safeguards.

As Research Integrity Portfolio Manager Simone Ragavooloo notes:

“This trend isn’t only about papermills; it likely also includes well-meaning authors trying to stay within the rules of a system that values output and visibility. The result can be technically compliant but low-quality work that chips away at trust. Publishers must respond with clear policies and education that reassert what good science looks like.”

We also caution against blaming AI itself for redundancy. Our forthcoming survey shows widespread, legitimate use of AI by researchers. The priority is to support ethical use of AI while ensuring that no problematic article, AI-generated or not, reaches publication.

Through robust policies, advanced technology, and active education, Frontiers protects the scientific record and keeps open data truly open, demonstrating that strong safeguards need not discourage legitimate analysis.