- 1 Quantitative Imaging and Medical Physics Team, Center for Medical Physics and Biomedical Engineering, Medical University of Vienna, Vienna, Austria

- 2 Department of Clinical Physiology, Nuclear Medicine and Positron Emission Tomography, Rigshospitalet, University of Copenhagen, Copenhagen, Denmark

- 3 Department of Radiology and Nuclear Medicine, Vrije Universiteit Medical Center, Amsterdam, Netherlands

- 4 Department of Nuclear Medicine and Molecular Imaging, University Medical Center Groningen, Groningen, Netherlands

- 5 Service Hospitalier Frédéric Joliot, IMIV, CEA, INSERM, CNRS, Université Paris-Sud, Orsay, France

- 6 King's College London & Guy's and St. Thomas' Positron Emission Tomography Center, School of Biomedical Engineering and Imaging Sciences, King's College London, London, United Kingdom

- 7 Nuclear Medicine and Molecular Imaging, University Hospitals Leuven, Katholieke Universiteit Leuven, Leuven, Belgium

- 8 Medical Physics, Guy's and St. Thomas' National Health Service Foundation Trust, London, United Kingdom

- 9 Department of Radiology, Universitair Ziekenhuis Gasthuisberg, Leuven, Belgium

- 10 Department of Nuclear Medicine, University Hospital Tuebingen, Tuebingen, Germany

- 11 Department of Nuclear Medicine, Klinikum rechts der Isar, Munich, Germany

Quality control (QC) of medical imaging devices is essential to ensure their proper function and to gain accurate and quantitative results. Therefore, several international bodies have published QC guidelines and recommendations for a wide range of imaging modalities to ensure adequate performance of the systems. Hybrid imaging systems such as positron emission tomography/computed tomography (PET/CT) or PET/magnetic resonance imaging (PET/MRI), in particular, present additional challenges caused by differences between the combined modalities. However, despite the increasing use of this hybrid imaging modality in recent years, there are no dedicated QC recommendations for PET/MRI. Therefore, this work aims at collecting information on QC procedures across a European PET/MRI network, presenting quality assurance procedures implemented by PET/MRI vendors and achieving a consensus on PET/MRI QC procedures across imaging centers. Users of PET/MRI systems at partner sites involved in the HYBRID consortium were surveyed about local frequencies of QC procedures for PET/MRI. Although all sites indicated that they perform vendor-specific daily QC procedures, significant variations across the centers were observed for other QC tests and testing frequencies. Likewise, variations in available recommendations and guidelines and the QC procedures implemented by vendors were found. Based on the available information and our clinical expertise within this consortium, we were able to propose a minimum set of PET/MRI QC recommendations including the daily QC, cross-calibration tests, and an image quality (IQ) assessment for PET and coil checks and MR image quality tests for MRI. Together with regular checks of the PET–MRI alignment, proper PET/MRI performance can be ensured.

Introduction

With the introduction of clinical PET/MRI systems in 2010 [1, 2], a novel hybrid PET system became available, in addition to PET/CT and SPECT/CT. The combination of PET with MRI has several advantages; it offers high soft tissue contrast by MRI, together with a reduced radiation burden compared to CT [3]. Moreover, the high-resolution anatomical images from MRI can be used for accurate partial volume correction (PVC) [4] and fast MRI sequences can be used to correct for motion in PET examinations [5]. Further, a broad spectrum of available MRI sequences offers a variety of multi-parametric information, which bears high potential to improve disease characterization through radiomics and machine learning (ML) approaches [6].

Nonetheless, the typically low number of PET/MRI datasets available for a specific disease at a single center makes it challenging to systematically evaluate possible advantages over other modalities and to use PET/MRI data for ML approaches. Therefore, pooling of PET/MRI data across multiple imaging centers is desirable.

However, comparability of hybrid PET imaging data is often hampered by differences in local imaging protocols and quality control standards [7–9]. In addition to variations in imaging protocols driven by local preferences of the physicians in charge, variations in QC procedures and imaging protocols, as demonstrated for PET/CT operations [7], can be widely attributed to differences in system design between vendors and system generations (mainly using different reconstruction algorithms or settings). However, in contrast to PET/CT, there are only three main PET/MRI systems on the market, all introduced between 2010 and 2015. Thus, all systems have implemented state-of-the-art reconstruction algorithms as well as similar technological characteristics. Therefore, a smaller variation in QC procedures and IQ parameters of PET/MRI compared with PET/CT could be expected. However, first findings indicate that this is not the case. Boellaard et al. [10] reported high accuracy of phantom-based QC for three PET/MRI systems, but only when using dedicated phantom acquisition and processing protocols; when using clinical protocols, significant variances between the systems were reported. Further, in a previous multi-center trial, the inter-site variability of NEMA image quality evaluations was reported to be similar to previous reports for PET/CT systems [11]. These findings indicate that variabilities in PET/MRI operation, including basic quality control standards, exist that are similar to those shown for PET/CT.

Currently, there are several recommendations on quality assurance procedures for PET, PET/CT, and MRI systems [12–16] and guidelines for standardized imaging protocols [12, 13, 15, 17, 18]. For PET/MRI, one report exists describing the implementation of a simultaneous hybrid PET/MRI system in an integrated research and clinical setting [19]. Further, two guidelines for oncological whole-body [18F]-FDG-PET/MRI [20, 21] have been published. However, there is currently no recommendation dedicated to QC procedures for PET/MRI systems.

This work aims at (a) summarizing relevant guidelines and recommendations by international bodies on PET and MRI quality control programs, (b) assessing variations of local QC procedures at European hybrid imaging sites, (c) summarizing PET/MRI QC procedures implemented by system manufacturers, and (d) developing a consensus recommendation of a minimal set of QC measures for PET/MRI throughout the HYBRID (Healthcare Yearns for Bright Researchers for Imaging Data) consortium.

Materials and Methods

HYBRID is an innovative training network project funded by the European Commission (MSCA No. 764458). It aims at promoting the field of non-invasive disease characterization in the light of personalized medicine by enhancing the information gained from molecular and hybrid imaging technologies. The HYBRID consortium brings together international academic, industrial and non-governmental partners in a cross-specialty network including eight partner sites with extensive clinical experience in PET/MRI as well as three vendors of currently available PET/MRI systems.

Summary of Existing Recommendations

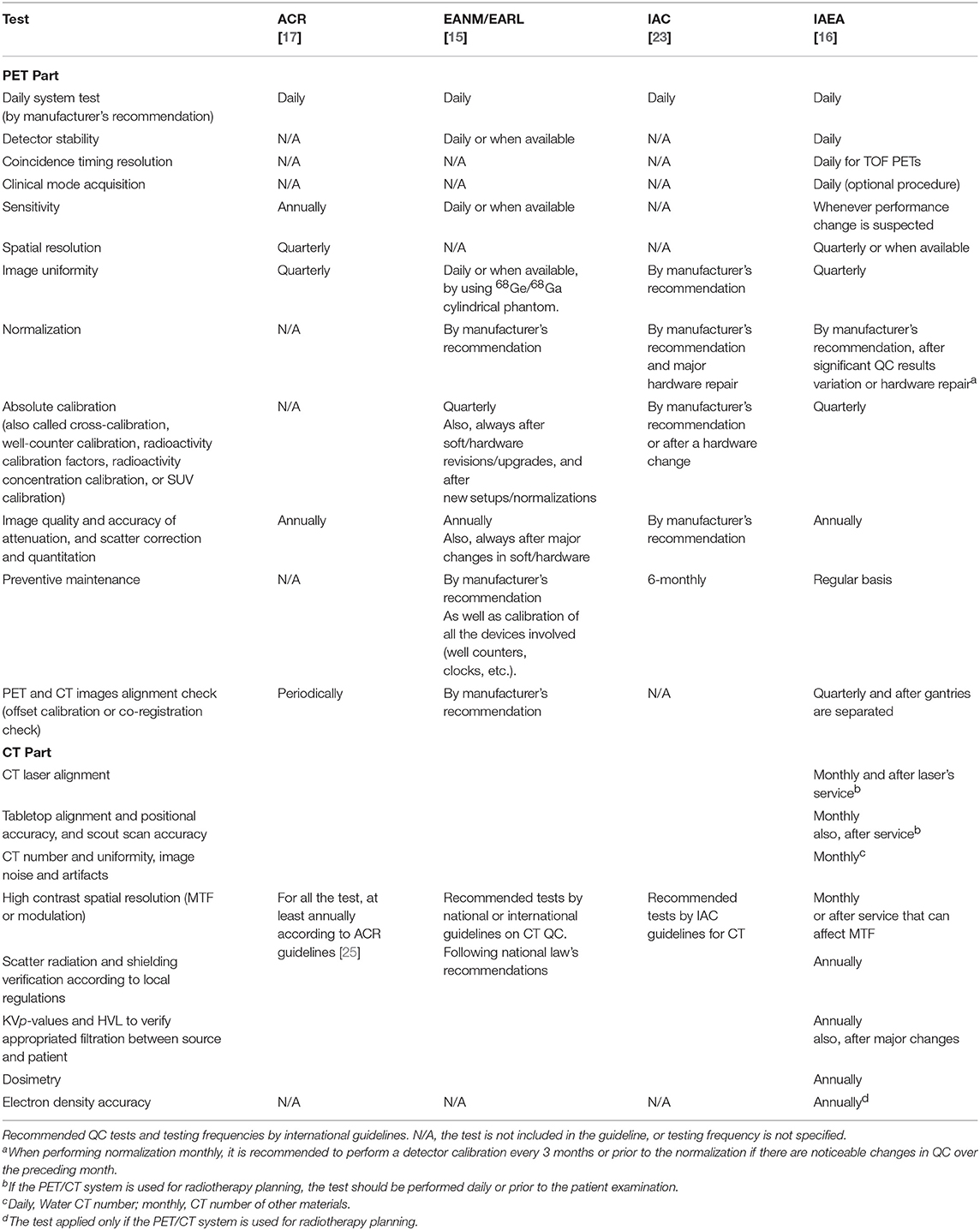

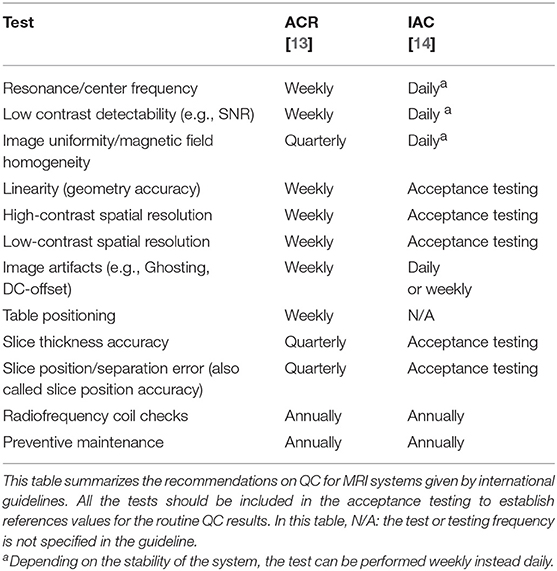

To get an overview of the existing and recommended QC tests for PET and MRI systems, we summarized QC guidelines and recommendations published by all major international bodies related to the field of medical imaging. For PET and PET/CT these included suggestions by the American College of Radiology (ACR) [17], the Society of Nuclear Medicine and Molecular Imaging (SNMMI), and the European Association of Nuclear Medicine (EANM) through the EANM Research Ltd (EARL) initiative [15, 22], the International Atomic Energy Agency (IAEA) [16] and the Intersocietal Accreditation Commission (IAC) [23]. For MRI, we gathered information from the ACR [13] and the IAC [14]. All the information was grouped in a table to have a complete overview of the differences and similarities between different recommendations.

State of Implementation of QC Procedures

Based on international QC guidelines and recommendations, we designed a survey to collect information about the QC tests and testing frequencies from all eight users of PET/MRI systems within the HYBRID consortium.

The survey form contained two tables: one listing the QC tests for the PET part of the systems, and a second listing commonly used MRI QC tests. For each test, the eight participating sites were asked to report the frequency at which they perform it. Further, the form permitted the addition of information regarding phantoms and tools used or any other free-text comments regarding the implemented procedures.

To increase the response rate from the participants, the document included an introduction to the topic, the aim of the survey and contact details for support during the time of evaluation. All documents, including the survey form, were sent by email to the imaging centers during summer-autumn 2018. Responses were grouped by PET/MRI system (one per center) in a single table and served as the basis for a consensus on PET/MRI QC.

QC Procedures Implemented by the Vendor

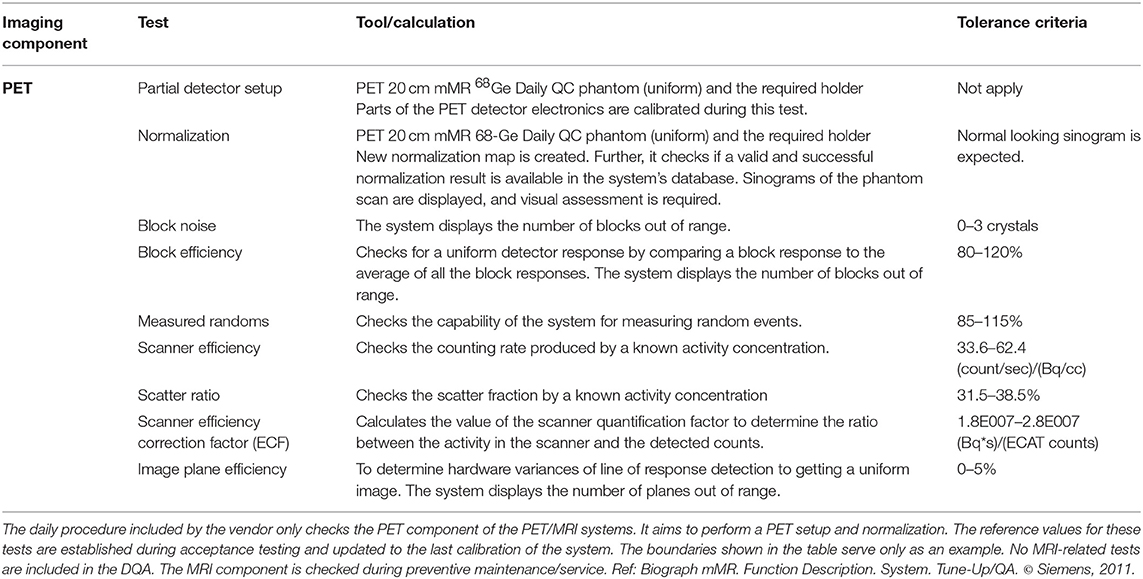

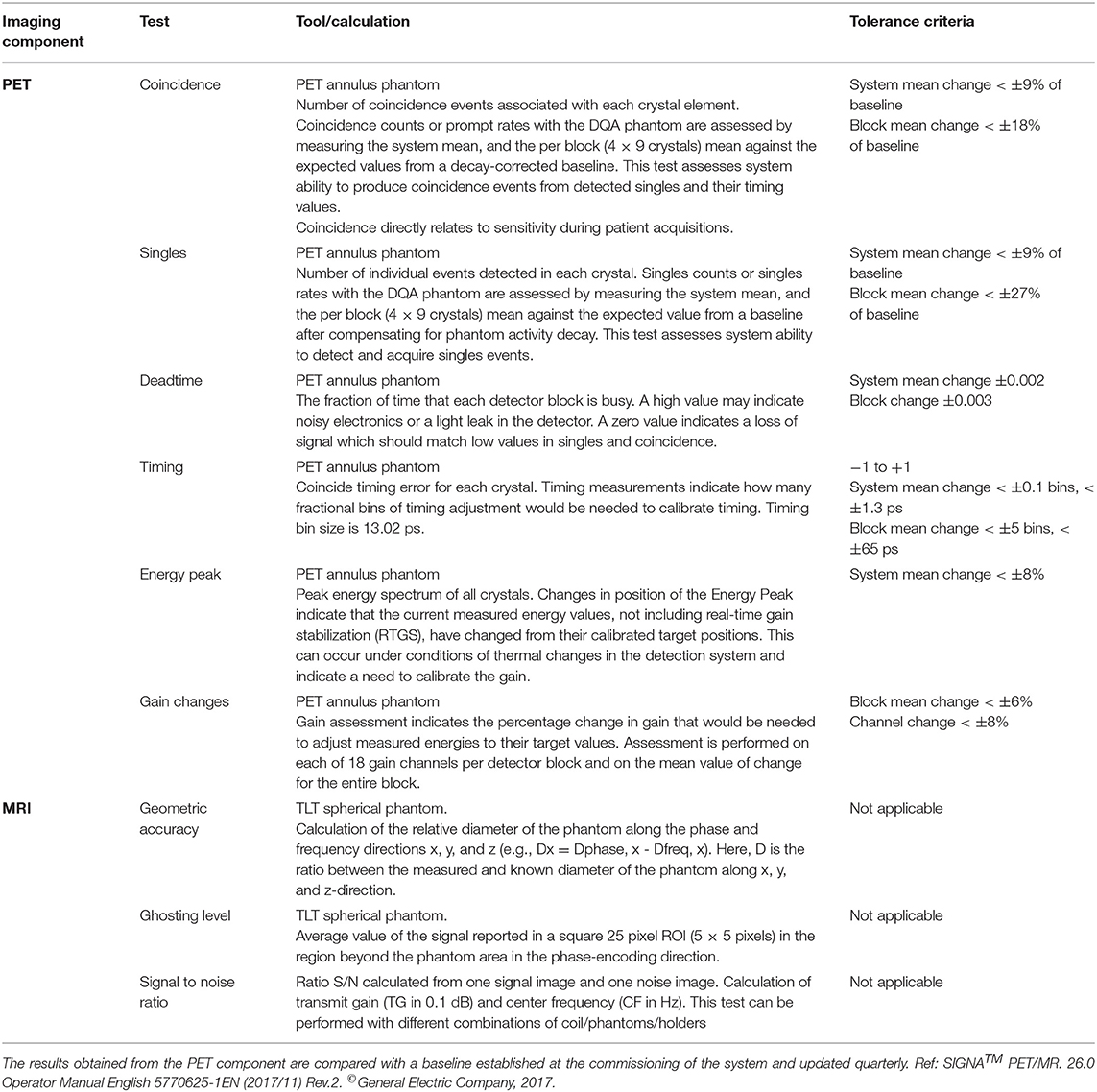

As supporting data for discussions on routine PET/MRI QC procedures, the quality control procedures implemented in three available PET/MRI systems (the Siemens Biograph mMR [2], the GE SIGNA PET/MR [24], and the Philips Ingenuity TF PET/MR [1]) were summarized. These included daily quality assurance (DQA) procedures and additional regular tests. The information was collected from the user manuals of the systems and information provided directly by the vendors.

Consensus Recommendation on QC for PET/MR

Based on the available recommendations for stand-alone PET(/CT) and MRI systems, the responses to the survey on QC procedures, and the quality assurance measures implemented by the vendors, recommendations for PET/MRI QC were drafted. Findings were presented to and discussed with the participants of the survey, which included experienced clinical PET/MRI users, to achieve a consensus on a minimum set of PET/MRI QC procedures from the HYBRID consortium.

Results

Existing Recommendations for PET and MRI Modalities

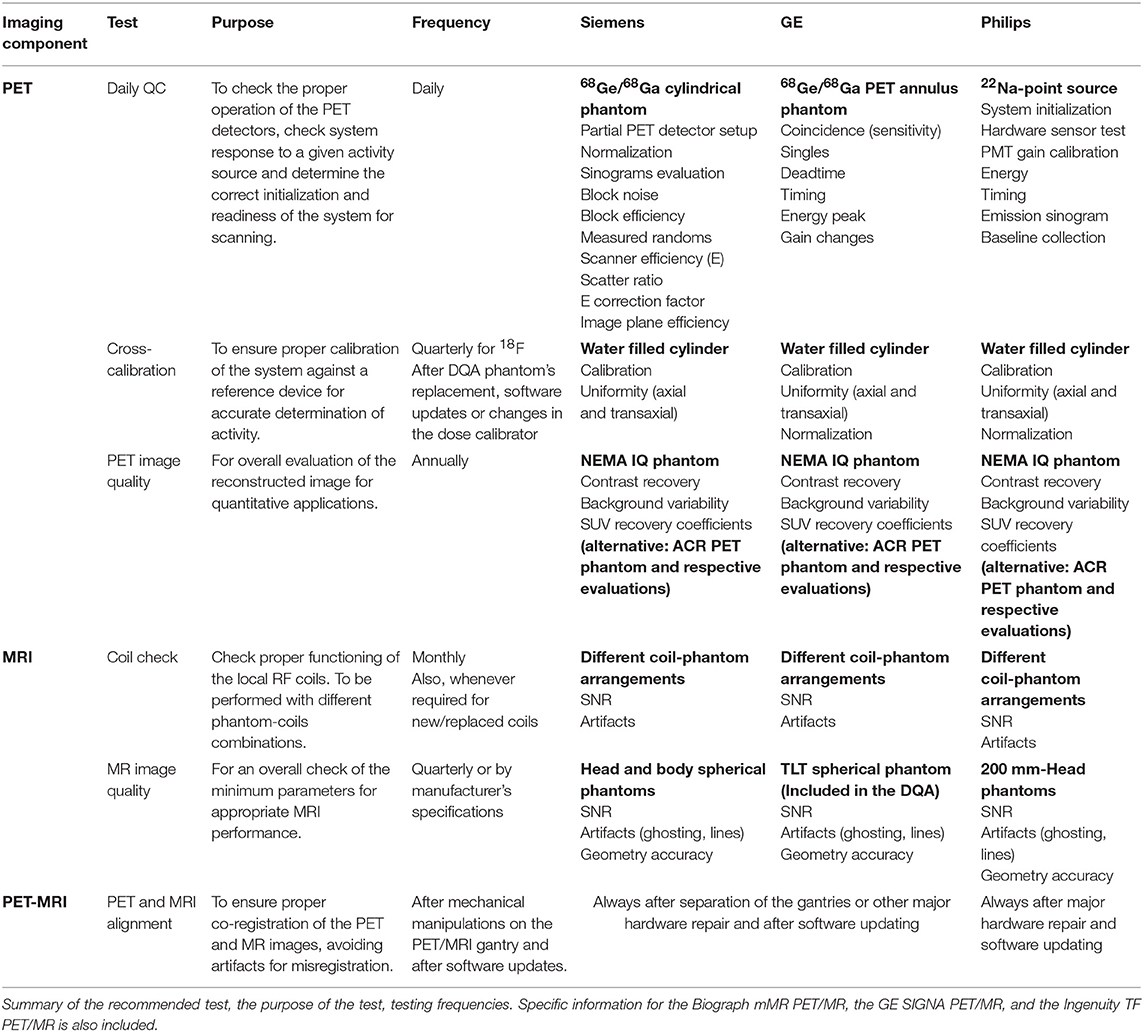

Recommended QC tests and testing frequencies for PET(/CT) and MRI reported by international organizations are summarized in Tables 1 and 2, respectively. For PET, all guidelines included the daily QC (as implemented by the vendor) and a test for image uniformity. However, other suggested tests and testing frequencies differ between the guidelines. For example, for sensitivity, spatial resolution, and image uniformity, a high variability of testing frequencies, tools, and calculation methods was observed. Likewise, for MRI systems, the testing frequencies for most of the tests, such as image uniformity, linearity, spatial resolution, table positioning, slice thickness, and slice positioning, were highly variable.

State of Implementation of QC Procedures

All of the eight participating centers completed the form for the available PET/MRI systems on-site resulting in reported QC procedures for five Siemens Biograph mMR PET/MR, two GE SIGNA PET/MR, and one Philips Ingenuity TF PET/MR. Of note, the GE and Philips systems have PET time-of-flight (TOF) capabilities. Survey forms were mainly completed by the on-site medical physics expert.

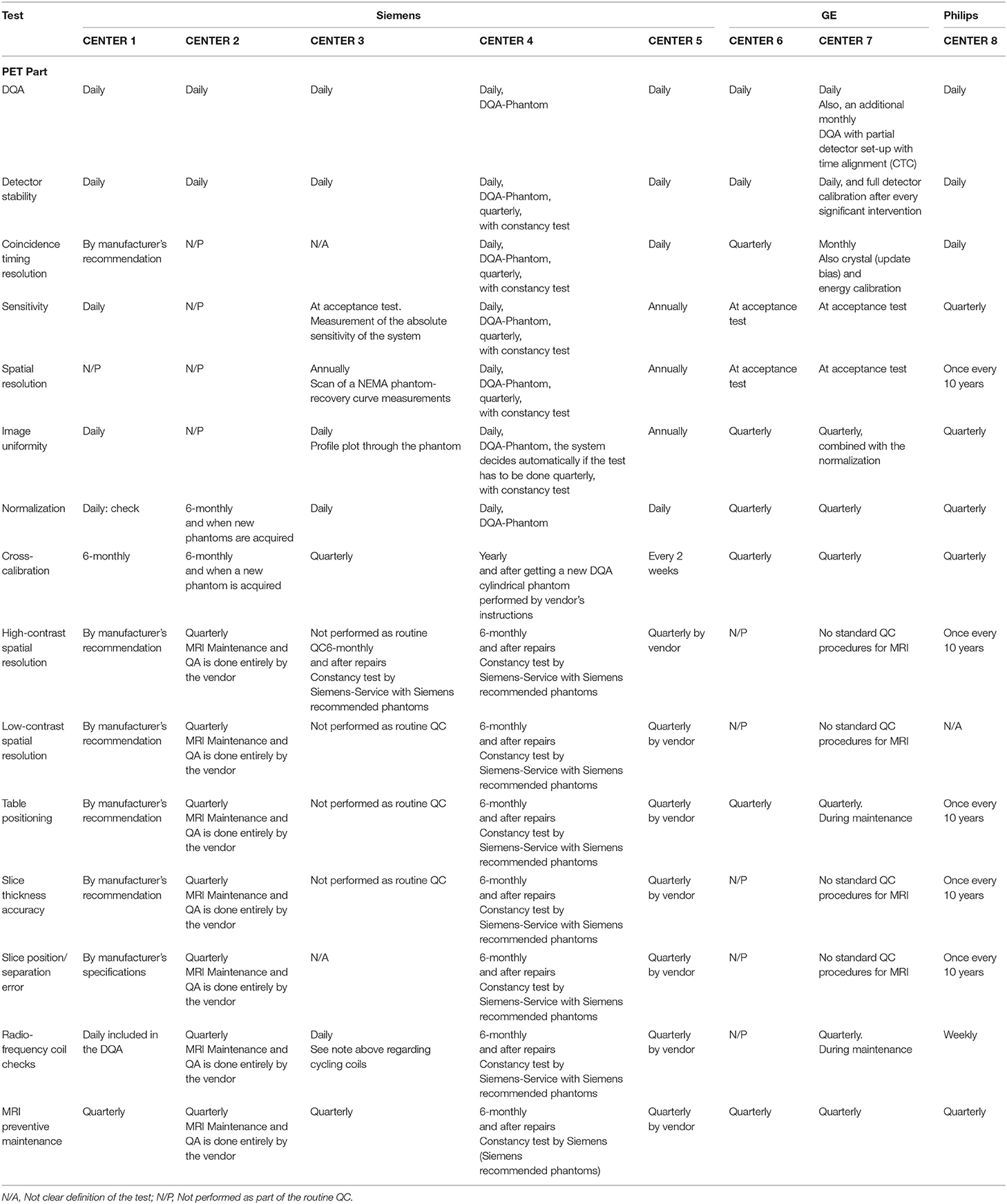

The DQA protocol provided by the vendors was indicated to be performed daily for all systems. However, the testing frequencies of other evaluated tests were highly variable (Table 3).

Table 3. Performed QC tests and testing frequencies for PET/MRI systems across the participant sites.

Tests and Testing Frequencies for the PET Component

DQA procedure

All PET/MRI centers indicated that they perform the DQA as implemented by the manufacturers, including the assessment of detector stability. It is performed automatically or semi-automatically with phantoms provided by vendors, after the routine system initialization or start-up. One of the GE sites (center 7) indicated they perform a daily check of the PET system readiness monitor and an additional monthly DQA, including a partial detector set-up with coincidence timing calibration (CTC).

Timing resolution

At 3/8 of the centers (two Siemens and one Philips), the test was indicated to be performed daily. Variations across the answers for other Siemens systems ranged from performing the test “by manufacturer's recommendations” to “not performed as part of the routine QC.” Responses for the GE systems varied between monthly and quarterly.

Sensitivity

The testing frequency for this test varied widely across the centers. The answers were: daily, quarterly, annually, or at the commissioning of the system (during the acceptance test). One of the Siemens centers indicated not performing the test as part of the routine QC.

Spatial resolution

Two of the involved centers did not report any specific testing frequency for this test, Center 4 indicated performing it daily, and the remaining answers (62.5% of the systems) ranged from yearly to once every 10 years.

Image uniformity

Three of the eight centers indicated a daily testing frequency; another three indicated they perform the test every 3 months, one center reported the test to be done annually, and one reported not to perform it as part of the routine QC.

Normalization

A daily testing frequency was reported for four Siemens PET/MRI systems. For three of the sites (GE and Philips systems), the test was indicated to be done every 3 months. Only one of the centers indicated performing the normalization test every 6 months.

Image quality and attenuation accuracy. Scatter correction and quantitation

Three centers indicated performing the test annually; one of them specified using the NEMA IQ phantom. One center reported performing it every 10 years, two at acceptance testing, and one reported not performing it as part of the routine QC.

Tests and Testing Frequencies for the MRI Component

Responses on the QC tests and testing frequencies for the MRI component varied significantly across partner sites. A summary of all the results of the QC survey can be found in Table 3. Results can be summarized in two statements: (I) there are no standard QC procedures for MRI or (II) the QC procedures are performed entirely by the vendors, using vendor-provided phantoms. Different responses were obtained only for some specific tests. The resonance frequency test for one of the Siemens Biograph mMR systems (center 3) was indicated to be performed daily. For the Philips Ingenuity TF PET/MR, four of the surveyed tests [resonance frequency, image uniformity, linearity, and radiofrequency (RF) coils check tests] are performed weekly.

In addition to the performance tests for the PET and MRI components of the system, the alignment of PET and MR images was reported to be checked with frequencies that varied between centers. Answers ranged from checks at acceptance testing, after an engineering service, or according to the manufacturer's recommendation, to regular checks at intervals between 3 and 12 months.

The frequency of the preventive maintenance was reported to be every 3 months in most of the centers, with just two centers indicating intervals of 6 months and 1 year, respectively.

QC Procedures Implemented by the Vendor

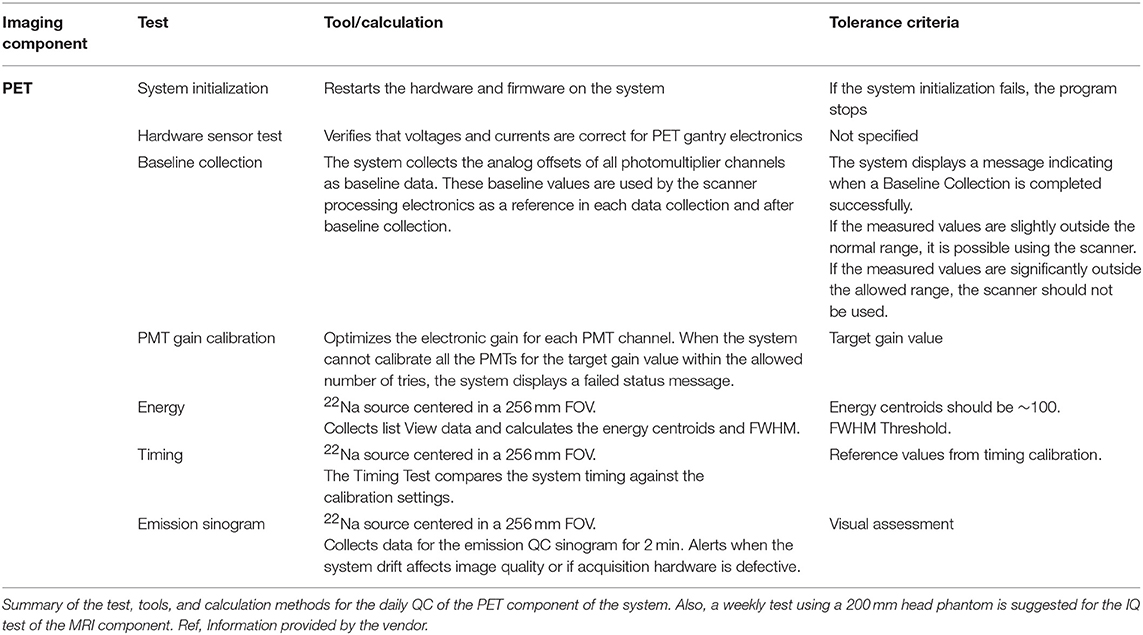

Tables 4–6 present tests and testing frequencies included in the DQA of the Siemens, GE and Philips PET/MRI systems. A detector stability test is included within all DQA procedures. However, other tests vary between vendors. For the PET component of GE and Siemens systems, the DQA by the vendors is based on a 68Ge/68Ga PET Annulus Phantom (a hollow cylinder with a thick radioactive wall) and a cylindrical 68Ge/68Ga phantom, respectively. The DQA by Siemens includes testing the correct function of the detectors and electric components of the system. It includes an assessment of block noise, block efficiency, system efficiency, and image plane efficiency. Further, it includes a measurement of randoms and scatter ratio, a normalization check, an inspection of the sinograms, and it performs a partial detector setup. For the GE PET/MRI systems, the DQA includes an assessment of the collected coincidences and singles, and an assessment of deadtime, energy peak, and gain changes. In contrast, the DQA of the Philips systems is based on a 22Na point source. The DQA includes the inspection of the sinograms and an assessment of the energy resolution. For the GE SIGNA PET/MR and Philips Ingenuity TF PET/MR, systems with TOF capabilities, the DQA by vendors also includes a timing resolution check.

Differences across the QC protocols implemented by the vendors were also found for the MRI part of the PET/MRI systems.

Within the Siemens DQA, a coil check is available. Further, the preventive maintenance usually includes an image quality test using the head and body spherical phantoms (180 and 300 mm outer diameter, respectively) provided by the vendor. This test allows an assessment of signal-to-noise ratio (SNR) and artifacts. Also, procedures to check each configured local coil individually based on evaluation of SNR and image intensity are available and done usually during preventive maintenance to verify satisfactory coil operation.

For GE systems, at least one specific phantom is provided to check the MRI component; the TLT spherical phantom is used to test geometry accuracy, ghosting level, and SNR. The test is available in the DQA implemented by the vendor, but the system's user can decide to perform it or not.

For Philips systems, the DQA does not include any test for the MRI component. However, Philips provides a 200 mm head phantom to check the MRI component of the Ingenuity TF PET/MRI through an MRI IQ evaluation. This procedure is recommended to be done weekly and can be performed automatically by the system. However, it is recommended to visually check the images for artifacts (e.g., ghosting and lines).

Consensus Recommendation on QC for PET/MRI

The minimum consensus on PET/MRI QC includes the following tests for all systems. For the PET component: the DQA implemented by the vendor, a quarterly cross-calibration (CC) test, and a yearly IQ test. For the MRI component: a monthly coil check and an MR image quality test to be performed at least quarterly. Further, the check of the PET-MRI alignment should be performed after mechanical manipulations on the PET/MRI gantry and after software updates. Table 7 summarizes the proposed recommendations, including the purpose of the test, testing frequency, and additional comments for the implementation on three of the commercially available PET/MRI systems.

Discussion

In this work, existing QC guidelines for PET and MRI systems were summarized, and the variability of implemented QC protocols for PET/MRI systems was assessed with the ultimate goal of reaching a consensus on a minimum set of QC procedures. The results revealed considerable variations between the existing recommendations and the QC measures implemented in different centers. The reported variations of local PET and MRI QC procedures and testing frequencies are assumed to partly reflect the variations seen in the existing guidelines for the single modalities and the absence of specific recommendations for PET/MRI. However, in part, the variability in the reporting seems to be caused by differences in the definition of the specific tests between the centers and a lack of in-depth knowledge about the QC measures included in the DQA and the tests performed by the vendor during the preventive maintenance. For example, the sensitivity of a PET system reflects how many events can be detected for a given activity within the field of view (FOV). A change in sensitivity can be caused by malfunctioning detectors or by changes in the energy resolution or the coincidence timing window. The NEMA NU2 protocols give clear testing procedures [26] to measure sensitivity in a reproducible and standardized way. However, to monitor sensitivity and to assess potential changes, multiple methods can be used. For example, the sensitivity can be monitored using the long half-life sources used for DQA (e.g., 68Ge/68Ga). These differences in methods to measure sensitivity can lead to differences in local definitions of this test, and thus, to differences in implementation and reporting. This can be seen in the results from the survey, where the answers indicate that some of the centers seem to refer to the specific NEMA NU2 protocol as the test for sensitivity, and thus, report this test to be done only during acceptance testing.

QC, as part of a quality assurance program, aims at monitoring if a system works as expected. For proper performance of the PET part, the detectors and measurement electronics need to work correctly. Checking the detector stability and sensitivity allows early detection of any sudden changes (e.g., failures of detector modules). Normalization is needed to correct for variations of the efficiency of individual detectors and is essential for uniformity in reconstructed PET images. Further, the spatial resolution of a PET system, mainly dependent on the detector geometry and the set reconstruction protocol, is relevant for lesion detectability and quantitative readings of small structures. In TOF PET systems, the measurement of the timing resolution is needed to ensure TOF precision. Furthermore, the cross-calibration of the PET with the dose calibrators needs to be ensured for accurate quantitative measurements like SUVs. With the proposed recommendation, including the DQA, a quarterly cross-calibration test, and a yearly IQ assessment, proper PET performance can be ensured.

The DQA provided by the vendors covers most of the tests mentioned before. For all systems, the correct functionality of the detectors is checked, and the stability of sensitivity is assessed by the counts collected from a known activity source (68Ge/68Ga cylinder phantom for Siemens, 68Ge/68Ga PET Annulus Phantom for GE and 22Na point source for Philips). Further, for systems with TOF capabilities, a timing resolution test is included in the DQA, and for the Siemens PET/MRI system, the normalization map of the detectors is renewed. Within the DQA, all these parameters are compared to a baseline established at the commissioning of the system and updated during the last calibration or after replacement of the DQA source.

In addition to the DQA, cross-calibration is required to ensure proper quantification. The CC test is done using a homogeneously filled water cylinder, usually provided by the vendor together with the respective attenuation correction protocol. A change in cross-calibration is unlikely to be caused by a change in PET system stability [27]. Therefore, changes are expected to be mostly related to replacement of the 68Ge/68Ga phantom (for GE and Siemens DQA), which is also used for PET calibration, changes in the calibration of the dose calibrators, or modified cross-calibration factors after software updates. To cover all these potential influencing factors, a cross-calibration check for 18F at least quarterly is recommended. Furthermore, CC checks and, where necessary, CC adjustments have to be performed after replacements of the DQA phantoms, software updates, or changes in the dose calibrators used for patient injection dose measurements. In addition to the CC, the CC phantom measurements can be used for the evaluation of the axial and transaxial uniformity, e.g., using the method published by the IAEA [16], and they are used for the normalization of the Philips PET/MRI system.
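The arithmetic behind such a CC check can be sketched as follows. This is an illustrative example under stated assumptions, not the vendors' procedure: the function names are hypothetical, the net activity is assumed to be the dose-calibrator reading already corrected for syringe residual, the 18F half-life is taken as 109.77 min, and the 10% tolerance is a placeholder that each site would replace with its own acceptance limit.

```python
F18_HALF_LIFE_MIN = 109.77  # physical half-life of 18F in minutes


def expected_concentration_kbq_ml(net_activity_mbq, elapsed_min, volume_ml,
                                  half_life_min=F18_HALF_LIFE_MIN):
    """Dose-calibrator activity decayed to scan start, per mL of phantom."""
    decayed_mbq = net_activity_mbq * 2.0 ** (-elapsed_min / half_life_min)
    return decayed_mbq * 1000.0 / volume_ml  # MBq -> kBq


def cross_calibration_ratio(pet_kbq_ml, net_activity_mbq, elapsed_min,
                            volume_ml, half_life_min=F18_HALF_LIFE_MIN):
    """Ratio of PET-measured to expected activity concentration (ideal: 1.0)."""
    expected = expected_concentration_kbq_ml(
        net_activity_mbq, elapsed_min, volume_ml, half_life_min)
    return pet_kbq_ml / expected


def within_tolerance(ratio, tolerance=0.10):
    """True if the ratio deviates from 1.0 by no more than the tolerance.

    The default of 10% is an illustrative assumption, not a value taken
    from the text.
    """
    return abs(ratio - 1.0) <= tolerance


if __name__ == "__main__":
    # Hypothetical numbers: 60 MBq net activity in a 6,000 mL cylinder,
    # scanned 30 min after the dose-calibrator measurement.
    ratio = cross_calibration_ratio(8.1, 60.0, 30.0, 6000.0)
    print(f"CC ratio: {ratio:.3f}, within tolerance: {within_tolerance(ratio)}")
```

The PET value would be the mean activity concentration in a large volume of interest placed in the reconstructed cylinder image, using the vendor's attenuation correction protocol for the phantom.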

In general, a PET system uses a single CC factor, usually based on 18F. In theory, this should allow a correct quantification of any PET isotope, given the correct set of correction factors for differences in positron branching ratios and half-life in the PET software and, in case of non-pure positron emitters, a proper prompt gamma correction [28]. However, previous studies have reported deviations in CC for isotopes other than 18F [29]. These deviations were attributed to incorrect calibration factors within the dose calibrators [30] or effects resulting from the use of different isotope containers between calibration and clinical routine [31]. To account for these issues, a check of the CC for all PET isotopes in clinical use is recommended to be done at least once. In case of deviations in CC for additional isotopes, it is recommended to adjust the dose calibrator settings accordingly. In cases where this is not possible (e.g., due to legal restrictions concerning the calibration of the dose calibrator), the deviation in quantification needs to be communicated to the respective personnel and has to be taken into account if quantitative readings are used for comparison between different PET systems. Further, as a regular QC, it is recommended to check the dose calibrator settings for all used isotopes at least yearly (e.g., following a procedure as described in Logan et al. [32]) and, in case of doubt, perform an additional CC test.

With the DQA and the cross-calibration measurements, the proper function of the PET system is essentially ensured. However, in compliance with the EARL initiative, a yearly IQ test is additionally recommended to assess the overall system performance regarding image quality and, because contrast recovery and recovery coefficients depend on the spatial resolution, also spatial resolution. To do so, a protocol as described by NEMA NU2 [33] or the EARL accreditation program [34] can be used. Further, the IQ phantom acquisition can be used to assess uniformity by evaluating the standard deviation and mean values of background regions-of-interest [35]. In case the NEMA IQ phantom is not available on-site, the alternative use of the ACR PET Phantom can be considered to assess overall image quality. Procedures for the measurements and image analysis of the ACR PET Phantom are described in Difilippo et al. [36] and by the American College of Radiology [37, 38]. However, corresponding valid attenuation correction maps are required when using PET phantoms for QC of PET/MRI systems [10, 39, 40]. Therefore, when adopting the ACR Phantom for IQ assessments, dedicated protocols (e.g., using CT-based templates for AC) must be available.
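As a rough illustration of the background-based uniformity assessment mentioned above, the mean and standard deviation of the background ROI values can be combined into a coefficient of variation. This is a minimal sketch assuming that the mean value of each background ROI has already been extracted from the image; the function name and the choice of the coefficient of variation as summary metric are assumptions and may differ from the exact method in [35].

```python
import statistics


def background_uniformity(roi_means):
    """Summarize background ROI means of an IQ phantom acquisition.

    Returns (mean, sample standard deviation, coefficient of variation in %).
    roi_means holds one mean activity concentration per background ROI.
    """
    mean = statistics.fmean(roi_means)
    sd = statistics.stdev(roi_means)
    return mean, sd, 100.0 * sd / mean


if __name__ == "__main__":
    # Hypothetical background ROI means in kBq/mL.
    mean, sd, cov = background_uniformity([5.1, 4.9, 5.0, 5.2, 4.8])
    print(f"mean = {mean:.2f} kBq/mL, SD = {sd:.2f}, CoV = {cov:.1f}%")
```

Tracking this value over successive yearly IQ tests gives a simple trend indicator, provided the acquisition and reconstruction protocol is kept fixed.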

On top of the tests mentioned above, the synchronization of the clocks of all relevant devices (e.g., PET/MRI, dose calibrator, well-counter) needs to be ensured for accurate quantitative PET readings [41].
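The size of the error introduced by unsynchronized clocks follows directly from the decay law: a clock offset of Δt between the dose calibrator and the PET/MRI system biases the decay-corrected activity by a factor of 2^(Δt/T½). The sketch below illustrates this relation; the function name is hypothetical and the half-life of 109.77 min for 18F is an assumed input.

```python
def decay_correction_bias(clock_offset_min, half_life_min):
    """Relative bias in decay-corrected activity caused by a clock offset.

    A positive offset means the elapsed time is overestimated by that many
    minutes, so the decay-corrected activity is overestimated by the
    returned fraction.
    """
    return 2.0 ** (clock_offset_min / half_life_min) - 1.0


if __name__ == "__main__":
    # Assumed 18F half-life of 109.77 min and a hypothetical 5 min offset.
    bias = decay_correction_bias(5.0, 109.77)
    print(f"Bias for a 5 min offset with 18F: {100.0 * bias:.1f}%")
```

For isotopes with shorter half-lives, the same offset produces a proportionally larger bias, which is why clock checks matter most for short-lived tracers.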

For the MRI component of the PET/MRI systems, we recommend at least an evaluation of the image quality by means of SNR, geometric accuracy, and artifacts (e.g., ghosting, lines). With an overall IQ test, which includes the parameters mentioned, distortions in resonance frequency, static B0 field, and gradient field can also be detected. For example, magnetic field homogeneity has a direct impact on the SNR. Accordingly, GE has implemented a DQA for MRI that includes an IQ assessment, Philips recommends a weekly IQ assessment, and Siemens systems allow the general performance of the MRI component to be tested with the head and body spherical phantoms, so that an IQ assessment through artifacts and SNR calculation in the phantom images is feasible. In general, we suggest performing the MR image quality test by using the procedure and phantoms specified by the vendor. If no specifications are provided, the MRI IQ can be tested as described by the AAPM and ACR [42, 43]. The ACR suggests performing an MRI IQ test annually. However, it has been demonstrated that a higher testing frequency makes it possible to detect failures (e.g., in image intensity uniformity) [44]. Therefore, we suggest performing an MRI IQ test quarterly.
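Where no vendor tool is available, a basic SNR estimate from a phantom image can be sketched as below. This is one common two-ROI approach and not necessarily the method used by the vendors or in [42, 43]: the signal is the mean of an ROI inside the phantom, the noise is the standard deviation of an ROI in the surrounding air, and the factor of about 0.655 corrects for the Rayleigh distribution of noise in magnitude images, which is an assumption that holds for single-channel reconstructions only. Function and parameter names are hypothetical.

```python
import math
import statistics

# Correction for Rayleigh-distributed background noise in magnitude images
# (about 0.655); assumed valid for single-channel reconstructions only.
RAYLEIGH_FACTOR = math.sqrt(2.0 - math.pi / 2.0)


def phantom_snr(signal_pixels, air_pixels, magnitude_correction=True):
    """Two-ROI SNR estimate: mean phantom signal over SD of air background."""
    signal = statistics.fmean(signal_pixels)
    noise_sd = statistics.stdev(air_pixels)
    snr = signal / noise_sd
    return RAYLEIGH_FACTOR * snr if magnitude_correction else snr


if __name__ == "__main__":
    # Hypothetical pixel values from a phantom ROI and an air ROI.
    phantom_roi = [1010.0, 995.0, 1002.0, 998.0, 1005.0]
    air_roi = [4.0, 7.0, 5.0, 9.0, 6.0, 3.0]
    print(f"SNR = {phantom_snr(phantom_roi, air_roi):.1f}")
```

For multi-channel coils with parallel imaging, the background noise is spatially varying and this estimate becomes unreliable; in that case the vendor procedure or a difference-image method is the safer choice.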

It is also recommended to check the function and quality of additional hardware such as coils. Checking the coil performance permits the detection of issues with the coils before these affect clinical scans and clinical image quality. This test is particularly important when using flexible coils, which are more susceptible to deterioration. The procedure for coil performance testing may be provided by the coil or MRI system manufacturer, but can also be done by analyzing the image SNR and artifacts for specific phantom-coil arrangements, as described by the American College of Radiology [43]. International guidelines for dedicated MRI systems suggest performing an annual coil check; however, PET/MRI system vendors such as Siemens suggest a daily test of the primary coils in use. Therefore, for easy implementation, we suggest performing a monthly coil check of the most used coils on-site, and whenever new/replacement coils need to be accepted.

Finally, no significant interference between the PET and MRI components has been observed for combined clinical 3T PET/MRI systems [2, 45–47], and therefore, QC tests performed simultaneously on PET and MRI might not be required. However, the spatial alignment of both components needs to be ensured. Checking the image co-registration for different sequences used clinically may help to reduce the effect of misalignment artifacts, such as incorrect interpretation of fused images due to improperly localized lesions in oncological PET/MRI studies [48]. The test should always be performed after separation of the gantries and after software updates, since the offset calibration values can be overwritten.

Considerations for Specific Applications

It should be mentioned that the QC for MRI covers only a basic evaluation of the most critical parameters for proper performance of the imaging modality. Therefore, specific MRI techniques and sequences may require higher standards concerning the stability of specific parameters. For example, MRI applications such as ultrafast imaging (echo-planar imaging, EPI) will be more sensitive to field inhomogeneities and thus may require more stringent testing [49]. Inhomogeneities in the B0 magnetic field produce blurring, distortion, and signal loss at tissue interfaces, particularly at the edge of the field of view. These image distortions are relevant as they may cause errors in tissue segmentation on anatomical images and attenuation correction [50]. Likewise, distortions in the image scale (geometry) may cause significant bias in radiotherapy planning [51]. Therefore, it is recommended to include additional QC procedures tailored to the specific purpose and depending on the MRI application.

As an example, one of the centers involved in this consensus has created three specific QC procedures to monitor the MRI scanner's behavior for specific applications frequently performed on-site. MR spectroscopy QC is performed every week. The procedure entails a single voxel acquisition on the manufacturer-provided spectroscopy phantom containing water, acetone, and lactate. The water and acetone peaks are monitored for peak frequency, peak SNR, and peak width. Furthermore, an MRI diffusion QC is performed weekly. In this case, apparent diffusion coefficient (ADC) and fractional anisotropy (FA) maps are acquired in the manufacturer-provided doped water bottle phantom. Average ADC and FA within the bottle are monitored. A monthly QC procedure was implemented to monitor potential RF artifacts created by a blood-sampling pump which operates within the scanner room. The pump is switched on while a manufacturer-created RF noise scan is performed. The peaks created by the pump are monitored for location and SNR, so that action can be taken if the peak amplitude or position shifts away from normal.
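Monitoring of this kind amounts to comparing each new measurement with a baseline. A minimal sketch of such a check is given below, assuming control limits of the baseline mean plus or minus k standard deviations; the function names, the default of k = 3, and the example values are illustrative assumptions and do not describe the procedure used at the center.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Lower and upper limits as baseline mean -/+ k sample standard deviations."""
    mean = statistics.fmean(baseline)
    sd = statistics.stdev(baseline)
    return mean - k * sd, mean + k * sd


def out_of_control(value, baseline, k=3.0):
    """True if a new QC value falls outside the baseline control limits."""
    low, high = control_limits(baseline, k)
    return not (low <= value <= high)


if __name__ == "__main__":
    # Hypothetical weekly peak-width readings (Hz) forming the baseline.
    baseline_width_hz = [10.0, 10.2, 9.8, 10.0]
    for reading in (10.1, 12.0):
        flag = out_of_control(reading, baseline_width_hz)
        print(f"peak width {reading} Hz -> out of control: {flag}")
```

The same check can be applied to any monitored quantity (peak frequency, peak SNR, mean ADC, or mean FA), each with its own baseline series.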

Conclusion

Existing QC guidelines and recommendations for PET and MR imaging modalities vary in both detail and range. Likewise, variations in local PET/MRI QC measures across European hybrid imaging sites were reported. However, these variations can partly be attributed to differences in the definitions of the tests and a lack of in-depth knowledge of the tests performed during DQA.

Based on these observations, a recommendation for a minimum set of QC procedures was developed in consensus, to ensure the proper functioning of whole-body PET/MRI systems. This recommendation includes the DQA, a cross-calibration measurement and an assessment of IQ for PET, a regular IQ test for MRI as well as a regular coil check, and checks of the PET and MRI alignment.

Data Availability Statement

All datasets generated for this study are included in the manuscript/supplementary files.

Author Contributions

AV, TB, and IR contributed to the conception and design of the study and performed the analysis. AV organized the database and wrote the first draft of the manuscript. TB, IR, and FP contributed sections of the manuscript. All authors contributed to the collection of information and to manuscript revision, and read and approved the submitted version.

Funding

This project has received funding from the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 764458. The work reflects only the author's view, and the Agency is not responsible for any use that may be made of the information it contains.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank the PhD students of the HYBRID consortium and imaging physicists across the sites who participated in this study for their contributions.

References

1. Zaidi H, Ojha N, Morich M, Griesmer J, Hu Z, Maniawski P, et al. Design and performance evaluation of a whole-body ingenuity TF PET-MRI system. Phys Med Biol. (2011) 56:3091–106. doi: 10.1088/0031-9155/56/10/013

2. Delso G, Fürst S, Jakoby B, Ladebeck R, Ganter C, Nekolla SG, et al. Performance measurements of the siemens mMR integrated whole-body PET/MR scanner. J Nucl Med. (2011) 52:1914–22. doi: 10.2967/jnumed.111.092726

3. Muzic RF Jr, DiFilippo FP. Positron emission tomography-magnetic resonance imaging: technical review. Semin Roentgenol. (2014) 49:242–54. doi: 10.1053/j.ro.2014.10.001

4. Erlandsson K, Dickson J, Arridge S, Atkinson D, Ourselin S, Hutton BF. MR imaging–guided partial volume correction of PET data in PET/MR imaging. PET Clin. (2016) 11:161–77. doi: 10.1016/j.cpet.2015.09.002

5. Keller SH, Hansen C, Hansen C, Andersen FL, Ladefoged C, Svarer C, et al. Motion correction in simultaneous PET/MR brain imaging using sparsely sampled MR navigators: a clinically feasible tool. EJNMMI Phys. (2015) 2:14. doi: 10.1186/s40658-015-0126-z

6. Huang SY, Franc BL, Harnish RJ, Liu G, Mitra D, Copeland TP, et al. Exploration of PET and MRI radiomic features for decoding breast cancer phenotypes and prognosis. NPJ Breast Cancer. (2018) 4:24. doi: 10.1038/s41523-018-0078-2

7. Rausch I, Bergmann H, Geist B, Schaffarich M, Hirtl A, Hacker M, et al. Variation of system performance, quality control standards and adherence to international FDG-PET/CT imaging guidelines. Nuklearmedizin. (2014) 53:242–8. doi: 10.3413/Nukmed-0665-14-05

8. Beyer T, Czernin J, Freudenberg LS. Variations in clinical PET/CT operations: results of an international survey of active PET/CT users. J Nucl Med. (2011) 52:303–10. doi: 10.2967/jnumed.110.079624

9. Kaalep A, Sera T, Oyen W, Krause BJ, Chiti A, Liu Y, et al. EANM/EARL FDG-PET/CT accreditation - summary results from the first 200 accredited imaging systems. Eur J Nucl Med Mol Imaging. (2018) 45:412–22. doi: 10.1007/s00259-017-3853-7

10. Boellaard R, Rausch I, Beyer T, Delso G, Yaqub M, Quick HH, et al. Quality control for quantitative multicenter whole-body PET/MR studies: a NEMA image quality phantom study with three current PET/MR systems. Med Phys. (2015) 42:5961–9. doi: 10.1118/1.4930962

11. Annual Congress of the European Association of Nuclear Medicine, October 13–17, 2018, Düsseldorf, Germany. Eur J Nucl Med Mol Imaging. (2018) 45:1–844. doi: 10.1007/s00259-018-4148-3

12. American College of Radiology. Nuclear Medicine Accreditation Program Requirements. Reston, VA (2018).

13. American College of Radiology. Manual of Procedures Part C: MRI Technical Procedures. Philadelphia, PA (2013).

14. Intersocietal Accreditation Commission. The IAC Standards and Guidelines for MRI Accreditation. Ellicott City, MD (2017).

15. Boellaard R, Delgado-Bolton R, Oyen WJ, Giammarile F, Tatsch K, Eschner W, et al. FDG PET/CT: EANM procedure guidelines for tumour imaging: version 2.0. Eur J Nucl Med Mol Imaging. (2014) 42:328–54. doi: 10.1007/s00259-014-2961-x

16. International Atomic Energy Agency. Quality Assurance for PET and PET/CT Systems. Vienna (2012).

17. American College of Radiology and the American Association of Physicists in Medicine. ACR–SPR Practice Parameters for Performing FDG-PET/CT in Oncology. Reston, VA (2016).

18. Lasnon C, Desmonts C, Quak E, Gervais R, Do P, Dubos-Arvis C, et al. Harmonizing SUVs in multicentre trials when using different generation PET systems: prospective validation in non-small cell lung cancer patients. Eur J Nucl Med Mol Imaging. (2013) 40:985–96. doi: 10.1007/s00259-013-2391-1

19. Sattler B, Jochimsen T, Barthel H, Sommerfeld K, Stumpp P, Hoffmann KT, et al. Physical and organizational provision for installation, regulatory requirements and implementation of a simultaneous hybrid PET/MR-imaging system in an integrated research and clinical setting. Magn Reson Mater Phys Biol Med. (2013) 26:159–71. doi: 10.1007/s10334-012-0347-2

20. Tateishi U, Nakamoto Y, Murakami K, Kaneta T, Toriihara A. Guidelines for the Clinical Use of 18F-FDG-PET/MRI 2012 (Ver 1.0): Part 2. Yokohama (2012).

21. Umutlu L, Beyer T, Grueneisen JS, Rischpler C, Quick HH, Veit-Haibach P, et al. Whole-body [18F]-FDG-PET/MRI for oncology: a consensus recommendation. Nuklearmedizin. (2019) 58:68–76. doi: 10.1055/a-0830-4453

22. EANM-SNMMI. Quality Control of Nuclear Medicine Instrumentation and Protocol Standardisation. Vienna (2017).

23. Intersocietal Accreditation Commission. The IAC Standards and Guidelines for Nuclear/PET Accreditation. Ellicott City, MD (2017).

24. Maisonobe J-A, Soret M, Kas A. 14. Quantitative performance of a Signa PET/MR based on the NEMA NU 2-2007 standard. Phys Med. (2016) 32:347–8. doi: 10.1016/j.ejmp.2016.11.065

25. Intersocietal Accreditation Commission. The IAC Standards and Guidelines for CT Accreditation. (2017). Available online at: https://www.intersocietal.org/ct/ (accessed June 4, 2018).

26. National Electrical Manufacturers Association. NEMA NU 2-2012: Performance Measurements of Positron Emission Tomographs. Rosslyn, VA (2012).

27. Keller SH, Jakoby B, Svalling S, Kjaer A, Højgaard L, Klausen TL. Cross-calibration of the Siemens mMR: easily acquired accurate PET phantom measurements, long-term stability and reproducibility. EJNMMI Phys. (2016) 3:11. doi: 10.1186/s40658-016-0146-3

28. Conti M, Eriksson L. Physics of pure and non-pure positron emitters for PET: a review and a discussion. EJNMMI Phys. (2016) 3:8. doi: 10.1186/s40658-016-0144-5

29. Wooten AL, Lewis BC, Szatkowski DJ, Sultan DH, Abdin KI, Voller TF, et al. Calibration setting numbers for dose calibrators for the PET isotopes 52Mn, 64Cu, 76Br, 86Y, 89Zr, 124I. Appl Radiat Isot. (2016) 113:89–95. doi: 10.1016/j.apradiso.2016.04.025

30. Bailey DL, Hofman MS, Forwood NJ, O'Keefe GJ, Scott AM, van Wyngaardt WM, et al. Accuracy of dose calibrators for 68Ga PET imaging: unexpected findings in a multicenter clinical pretrial assessment. J Nucl Med. (2018) 59:636–8. doi: 10.2967/jnumed.117.202861

31. Bauwens M, Pooters I, Cobben R, Visser M, Schnerr R, Mottaghy F, et al. A comparison of four radionuclide dose calibrators using various radionuclides and measurement geometries clinically used in nuclear medicine. Phys Med. (2019) 60:14–21. doi: 10.1016/j.ejmp.2019.03.012

32. Logan WK, Blondeau KL, Widmer DJ, Holmes RA. Accuracy testing of dose calibrators. J Nucl Med Technol. (1985) 13:215–7.

33. National Electrical Manufacturers Association. Performance Measurements of Positron Emission Tomographs (PET). (2018). Available online at: https://www.nema.org/Standards/Pages/Performance-Measurements-of-Positron-Emission-Tomographs.aspx (accessed March 16, 2019).

34. European Association of Nuclear Medicine. EANM Research Ltd (EARL). New EANM FDG PET/CT Accreditation Specifications for SUV Recovery Coefficients. Available online at: http://earl.eanm.org/cms/website.php?id=/en/projects/fdg_pet_ct_accreditation/accreditation_specifications.htm (accessed March 16, 2019).

35. Deller TW, Khalighi MM, Jansen FP, Glover GH. PET imaging stability measurements during simultaneous pulsing of aggressive MR sequences on the SIGNA PET/MR system. J Nucl Med. (2017) 59:167–72. doi: 10.2967/jnumed.117.194928

36. Difilippo FP, Patel M, Patel S. Automated quantitative analysis of ACR PET phantom images. J Nucl Med Technol. (2019). doi: 10.2967/jnmt.118.221317

37. American College of Radiology. American College of Radiology PET Accreditation Program Testing Instructions. (2019). Available online at: https://www.acraccreditation.org/-/media/ACRAccreditation/Documents/NucMed-PET/PET-Forms/PET-Testing-Instructions.pdf?la=en (accessed July 30, 2019).

38. American College of Radiology. PET Phantom Instructions for Evaluation of PET Image Quality. (2010). Available online at: https://www.aapm.org/meetings/amos2/pdf/49-14437-10688-860.pdf (accessed July 30, 2019).

39. Ziegler S, Jakoby BW, Braun H, Paulus DH, Quick HH. NEMA image quality phantom measurements and attenuation correction in integrated PET/MR hybrid imaging. EJNMMI Phys. (2015) 2:18. doi: 10.1186/s40658-015-0122-3

40. Eldib M, Oesingmann N, Faul DD, Kostakoglu L, Knešaurek K, Fayad ZA. Optimization of yttrium-90 PET for simultaneous PET/MR imaging: a phantom study. Med Phys. (2016) 43:4768–74. doi: 10.1118/1.4958958

41. Boellaard R. Standards for PET image acquisition and quantitative data analysis. J Nucl Med. (2009) 50:11S−20S. doi: 10.2967/jnumed.108.057182

42. Price RR, Axel L, Morgan T, Newman R, Perman W, Schneiders N. AAPM Report No. 28: Quality Assurance Methods and Phantoms for Magnetic Resonance Imaging. New York, NY (1990).

43. American College of Radiology. ACR Magnetic Resonance Imaging (MRI) Quality Control Manual. (2015). Available online at: https://shop.acr.org/Default.aspx?TabID=55&ProductId=731499117 (accessed May 18, 2019).

44. Chen C-C, Wan Y-L, Wai Y-Y, Liu H-L. Quality assurance of clinical MRI scanners using ACR MRI phantom: preliminary results. J Digit Imaging. (2004) 17:279–84. doi: 10.1007/s10278-004-1023-5

45. Levin CS, Maramraju SH, Khalighi MM, Deller TW, Delso G, Jansen F. Design features and mutual compatibility studies of the time-of-flight PET capable GE SIGNA PET/MR system. IEEE Trans Med Imaging. (2016) 35:1907–14. doi: 10.1109/TMI.2016.2537811

46. Hong SJ, Kang HG, Ko GB, Song IC, Rhee JT, Lee JS. SiPM-PET with a short optical fiber bundle for simultaneous PET-MR imaging. Phys Med Biol. (2012) 57:3869–83. doi: 10.1088/0031-9155/57/12/3869

47. Vargas MI, Becker M, Garibotto V, Heinzer S, Loubeyre P, Gariani J, et al. Approaches for the optimization of MR protocols in clinical hybrid PET/MRI studies. Magn Reson Mater Phys Biol Med. (2013) 26:57–69. doi: 10.1007/s10334-012-0340-9

48. Roy P, Lee JK, Sheikh A, Lin W. Quantitative comparison of misregistration in abdominal and pelvic organs between PET/MRI and PET/CT: effect of mode of acquisition and type of sequence on different organs. Am J Roentgenol. (2015) 205:1295–305. doi: 10.2214/AJR.15.14450

49. AAPM REPORT NO. 100 Acceptance Testing and Quality Assurance Procedures for Magnetic Resonance Imaging Facilities. (2010). Available online at: https://www.aapm.org/pubs/reports/RPT_100.pdf (accessed May 2, 2019).

50. Exhibit E, Mallia A, Bashir U, Stirling J, Joemon J, MacKewn J, et al. Artifacts and diagnostic pitfalls in PET/MRI. ECR. (2016) C.0773:1–21. doi: 10.1594/ecr2016/C-0773

Keywords: hybrid imaging, consensus, recommendations, quality control, PET/MRI

Citation: Valladares A, Ahangari S, Beyer T, Boellaard R, Chalampalakis Z, Comtat C, DalToso L, Hansen AE, Koole M, Mackewn J, Marsden P, Nuyts J, Padormo F, Peeters R, Poth S, Solari E and Rausch I (2019) Clinically Valuable Quality Control for PET/MRI Systems: Consensus Recommendation From the HYBRID Consortium. Front. Phys. 7:136. doi: 10.3389/fphy.2019.00136

Received: 28 May 2019; Accepted: 05 September 2019;

Published: 24 September 2019.

Edited by:

Claudia Kuntner, Austrian Institute of Technology (AIT), Austria

Reviewed by:

Michael D. Noseworthy, McMaster University, Canada

Abass Alavi, University of Pennsylvania, United States

Copyright © 2019 Valladares, Ahangari, Beyer, Boellaard, Chalampalakis, Comtat, DalToso, Hansen, Koole, Mackewn, Marsden, Nuyts, Padormo, Peeters, Poth, Solari and Rausch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ivo Rausch, ivo.rausch@meduniwien.ac.at
