- ¹Chongqing Key Laboratory of Spatial Data Mining and Big Data Integration for Ecology and Environment, Rongzhi College of Chongqing Technology and Business University, Chongqing, China

- ²College of Medical Information Engineering, Shandong First Medical University & Shandong Academy of Medical Sciences, Tai'an, China

With the rapid development of vehicle-mounted communication technology, on-board GPS data have become an effective basis for predicting vehicle trajectories on the road. Position prediction from GPS-oriented vehicle-mounted data is currently an active research topic and an effective means of realizing intelligent transportation. In this paper, an improvement scheme is proposed to address the tendency of the basic teaching-learning-based optimization (TLBO) algorithm to fall into local optima. An interference operator is used to disturb the teacher, which enhances the ability of the population to jump out of local optima. The performance of the GA, PSO, TLBO, and improved TLBO (ITLBO) algorithms is compared on four test functions, and the results show that ITLBO has efficient optimization performance and good generalization ability. Finally, experiments on vehicle GPS data show that the ITLBO-ELM algorithm achieves the best prediction effect among the compared algorithms.

Introduction

As a relatively new swarm intelligence algorithm, Teaching-Learning-Based Optimization (TLBO) simulates the process of teachers teaching students and of students learning from each other; through both, the students' performance improves. Because TLBO has few parameters, rests on a simple idea, is easy to understand, and is strongly robust [1–4], it has attracted the attention of many scholars since it was proposed and has been applied in many fields, such as reactive power optimization of power systems [5], LQR controller optimization [6], IIR digital filter design [7], steelmaking and continuous casting scheduling [8], PID controller parameter optimization [9, 10], feature selection [11], HVDC optimization of voltage source converters [12], extension of global optimization technology to constrained optimization [13], analysis of financial time series data [14], and neural network optimization [15]. Compared with existing swarm intelligence algorithms, TLBO obtains better results in these applications. In this paper, the basic TLBO algorithm is first introduced, then the improved TLBO (ITLBO) algorithm is studied, and the performance of ITLBO is tested on four test functions, F1–F4. Finally, GPS data are used to verify the advantages of the ITLBO-ELM algorithm.

Improved Teaching and Learning Optimization Algorithm

Basic TLBO Algorithm

TLBO is an optimization algorithm inspired by the social activities of teaching, in which teachers teach and students learn. Both teachers and students are candidate solutions in the TLBO population. Assuming that there are N individuals in the class, the individual with the best academic performance, that is, the best fitness, is regarded as the teacher, and the rest are students. The specific implementation process of TLBO is described as follows:

A. Population initialization

Teachers and students are individuals in the class. Assuming that the solution space of the optimization problem is S-dimensional, any individual Xi in the population is initialized randomly:

$$X_i = L + r \cdot (U - L) \tag{1}$$

In the formula, U = (u1, u2, …, us) and L = (l1, l2, …, ls) are the vectors formed by the upper and lower bounds of each variable, respectively; r is a random number in [0, 1].
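The initialization step can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' code: the function name `init_population` is hypothetical, and drawing a separate random number for every dimension (instead of one per individual) is an assumption.

```python
import numpy as np

def init_population(n, lower, upper, rng):
    """Draw n individuals uniformly inside the box [lower, upper]."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # Assumption: an independent r in [0, 1) for every individual and dimension.
    r = rng.random((n, lower.size))
    return lower + r * (upper - lower)

rng = np.random.default_rng(0)
pop = init_population(5, [-10, -10, -10], [10, 10, 10], rng)
print(pop.shape)
```

Each row of `pop` is one individual of the class, and every coordinate stays between its lower and upper bound.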

B. Teaching stage

The best individual in the population is the teacher, and the students improve their performance through the difference between the teacher and the class average. The i-th student Xi generates a new individual according to Equation (2):

$$X_i^{\mathrm{new}} = X_i + D \tag{2}$$

where $X_i^{\mathrm{new}}$ is the updated individual. $X_i^{\mathrm{new}}$ is accepted, i.e., $X_i = X_i^{\mathrm{new}}$, only if its fitness is better than that of Xi; otherwise Xi is kept unchanged. D is the difference between the teacher Xt and the student average Xm, which is described as follows:

$$D = r \cdot (X_t - T_f \cdot X_m) \tag{3}$$

where Xt is the teacher; Xm is the mean individual of the population; r is a random number in [0, 1]. The teaching factor Tf takes only the value 0 or 1, which means that students may have learned all the knowledge of the teacher, or they may have learned nothing from the teacher. As a result, D does not always transfer the teacher's knowledge. Tf is generated by Equation (4):

Where Round represents the rounding function.
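The teaching stage can be sketched as follows. This is a hedged illustration, not the authors' implementation: `sphere` is a stand-in fitness function, minimization is assumed, the class mean is computed once per stage, and the teaching factor follows the standard TLBO literature (a value of 1 or 2) rather than the binary description given above.

```python
import numpy as np

def sphere(x):
    # Stand-in fitness function (minimization); not taken from the paper.
    return float(np.sum(x ** 2))

def teaching_stage(pop, fitness, f, rng):
    """One pass of the teaching stage over the whole class."""
    teacher = pop[np.argmin(fitness)].copy()   # best individual X_t
    mean = pop.mean(axis=0)                    # mean individual X_m
    for i in range(len(pop)):
        tf = rng.integers(1, 3)                # ASSUMPTION: T_f is 1 or 2 (standard TLBO)
        d = rng.random(pop.shape[1]) * (teacher - tf * mean)
        candidate = pop[i] + d
        fc = f(candidate)
        if fc < fitness[i]:                    # greedy acceptance
            pop[i], fitness[i] = candidate, fc
    return pop, fitness

rng = np.random.default_rng(1)
pop = rng.uniform(-5, 5, size=(10, 3))
fit = np.array([sphere(x) for x in pop])
before = fit.copy()
pop, fit = teaching_stage(pop, fit, sphere, rng)
```

Because a candidate is only accepted when it improves on the current student, no individual's fitness can get worse during this stage.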

C. Learning Stage

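In addition to learning from the teacher, students also communicate with each other and learn from each other's strong points. A student Xk (k ≠ i) is randomly selected from the class, and communication learning is carried out according to Equation (5):

$$X_i^{\mathrm{new}} = \begin{cases} X_i + r \cdot (X_i - X_k), & \text{if } f(X_i) \text{ is better than } f(X_k) \\ X_i + r \cdot (X_k - X_i), & \text{otherwise} \end{cases} \tag{5}$$

where r is a random number in [0, 1]; f(·) is the fitness function; $X_i^{\mathrm{new}}$ is accepted only if its fitness is better than that of Xi.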
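A minimal sketch of the learning stage is given below, assuming the standard TLBO learner update and a minimization problem. The names `learning_stage` and `sphere` are illustrative and not from the paper.

```python
import numpy as np

def sphere(x):
    # Stand-in fitness function (minimization); not taken from the paper.
    return float(np.sum(x ** 2))

def learning_stage(pop, fitness, f, rng):
    """Each student learns from one randomly chosen classmate."""
    n, s = pop.shape
    for i in range(n):
        k = rng.choice([j for j in range(n) if j != i])   # partner X_k, k != i
        r = rng.random(s)
        if fitness[i] < fitness[k]:
            candidate = pop[i] + r * (pop[i] - pop[k])    # move away from the worse partner
        else:
            candidate = pop[i] + r * (pop[k] - pop[i])    # move toward the better partner
        fc = f(candidate)
        if fc < fitness[i]:                               # greedy acceptance
            pop[i], fitness[i] = candidate, fc
    return pop, fitness

rng = np.random.default_rng(3)
pop = rng.uniform(-5, 5, size=(8, 2))
fit = np.array([sphere(x) for x in pop])
before = fit.copy()
pop, fit = learning_stage(pop, fit, sphere, rng)
```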

ITLBO Algorithm

To address the shortcomings of the original TLBO, which easily falls into local optima and has low precision during optimization, an improved TLBO algorithm, denoted ITLBO, is proposed. A remedial period for the worst student is added to the TLBO optimization process: the teacher tutors the student with the worst academic performance in the class individually to quickly improve that student's knowledge. On this basis, an interference operator is used to disturb the teacher, which enhances the ability of the population to jump out of local optima. The improvements are described below:

D. Remedial period

Assuming that the worst student in the class is Xw, the remedial process follows Equation (6):

where r1 and r2 are random numbers in [0, 1]. The individual updated from Xw replaces Xw only if it is better than Xw; otherwise, a reverse solution is generated to replace the original Xw according to Equation (7):

Tutoring or reverse learning for the worst student Xw is used to speed up the convergence of TLBO and improve its convergence accuracy. The strategy targets Xw because coaching the worst student directly raises the average of the whole class, whereas coaching the best students does little to improve the class average.
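The control flow of the remedial period (tutor first, fall back to a reverse solution) can be illustrated as below. The concrete update rules are assumptions made only for this sketch: the tutoring rule pulls Xw toward the teacher and the class mean using r1 and r2, and the reverse solution uses the common opposition-based form L + U − Xw. Neither is claimed to match Equations (6) and (7) of the paper, and all function names are hypothetical.

```python
import numpy as np

def sphere(x):
    # Stand-in fitness function (minimization); not taken from the paper.
    return float(np.sum(x ** 2))

def remedial_step(x_w, x_t, x_m, lower, upper, f, rng):
    """Tutor the worst student; use a reverse solution if tutoring does not help."""
    r1 = rng.random(x_w.size)
    r2 = rng.random(x_w.size)
    # ASSUMED tutoring rule: move toward the teacher and the class mean.
    candidate = x_w + r1 * (x_t - x_w) + r2 * (x_m - x_w)
    candidate = np.clip(candidate, lower, upper)
    if f(candidate) < f(x_w):
        return candidate, False
    # ASSUMED reverse solution: opposition-based point L + U - X_w.
    return lower + upper - x_w, True

rng = np.random.default_rng(2)
lower, upper = np.full(2, -5.0), np.full(2, 5.0)
x_w = np.array([4.0, 4.0])    # worst student
x_t = np.array([0.0, 0.0])    # teacher
x_m = np.array([1.0, 1.0])    # class mean
new_x_w, used_reverse = remedial_step(x_w, x_t, x_m, lower, upper, sphere, rng)
```

The returned flag makes it explicit which branch was taken, which is convenient when checking how often the reverse solution is actually used.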

When the TLBO algorithm solves complex multi-dimensional optimization problems, it easily falls into local optima within a finite number of iterations. To further improve the global optimization capability of TLBO, an interference operator pert(t) is added to the search process, Equation (8):

The effect of the interference operator pert(t) on the teacher Xt is given by Equation (9):

where r3 is a random number in [0, 1] and pert(t) is the disturbance coefficient of the t-th iteration. As t increases, pert(t) gradually decreases, and so does the degree to which the interference operator disturbs the teacher. In the early stage of the search, pert(t) is large, which strongly disturbs the update of the teacher's position, increases population diversity, and effectively helps Xt jump out of local optima. In the later stage of the search, pert(t) gradually decreases to 0 so that it does not affect the local search and convergence of the algorithm.
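The qualitative behavior described above (a large disturbance early, none at the end) can be illustrated with a toy schedule. Both the linear decay of `pert` and the additive perturbation rule are assumptions chosen for this sketch; they are not the paper's Equations (8) and (9), and the `scale` parameter is hypothetical.

```python
import numpy as np

def pert(t, t_max):
    # ASSUMED schedule: linear decay from about 1 at the start to 0 at t_max.
    return 1.0 - t / t_max

def perturb_teacher(x_t, t, t_max, scale, rng):
    """Disturb the teacher with an amplitude that shrinks as t grows."""
    r3 = rng.random(x_t.size)
    # ASSUMED rule: symmetric additive noise in [-scale, scale], damped by pert(t).
    return x_t + pert(t, t_max) * (2.0 * r3 - 1.0) * scale

rng = np.random.default_rng(4)
x_t = np.array([1.0, -2.0, 0.5])
early = perturb_teacher(x_t, 1, 1000, 0.5, rng)
late = perturb_teacher(x_t, 1000, 1000, 0.5, rng)
```

With this schedule the teacher is left unchanged in the final iteration, which mirrors the requirement that the operator must not interfere with late-stage convergence.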

The implementation process of the ITLBO algorithm is shown in the following steps.

Step 1: Initialize the parameters: class size n, dimension s, maximum number of iterations tmax, and t = 1; initialize the population according to Equation (1).

Step 2: Determine the teacher Xt and the mean individual Xm, calculate D according to Equation (3), calculate the new individual according to Equation (2), and update Xi.

Step 3: Randomly select an individual Xk, calculate the new individual according to Equation (5), and update Xi. Calculate the tutored individual of Xw according to Equation (6) and the reverse solution of Xw according to Equation (7).

Step 4: Update Xw with the new individual or the reverse solution. Select the optimal individual Xt, calculate the interference operator according to Equation (8), and update Xt according to Equation (9).

Step 5: If t < tmax, set t = t + 1 and go to Step 2; otherwise, output the optimal solution.

Extreme Learning Machine (ELM)

ELM has the advantages of a simple structure, fast operation, and strong generalization ability, and it avoids the problem of local optima. In both theoretical research and practical application, ELM has attracted the attention of many machine learning researchers. However, ELM still has some difficult open problems. The input weights and thresholds of ELM are generated randomly, so there is no guarantee that they are the optimal model parameters. An appropriate number of hidden layer nodes and a suitable hidden layer activation function ensure the generalization ability and running speed of ELM, but choosing the number of hidden layer nodes and the activation function is difficult.

The Extreme Learning Machine (ELM) is a learning algorithm for single-hidden-layer feedforward neural networks (SLFNs). Traditional neural network learning methods (such as the BP neural network) require complicated parameter design for the training model and easily fall into local optima. In contrast, ELM only requires a reasonable number of hidden layer neurons to be set, and its execution does not need to iterate over the hidden layer. The input weights and hidden layer thresholds are randomly generated and do not depend on the training samples. The weight matrix of the output layer is obtained through a one-step analytical calculation, which avoids the repeated iterative computation of traditional neural networks and greatly improves the training speed of the network.

For N arbitrary samples (xi, ti), where n is the number of input layer nodes, m is the number of hidden layer nodes, and g(x) is the hidden layer excitation function, the mathematical model is as follows:

where Xj and oj are the input and output of the extreme learning machine, respectively; here the input is the geographic location information. W and β are both connection weight matrices; g(x) is the excitation function; b is the bias matrix, b = [b1, b2, …, bL], and bi is the bias of the i-th neuron.

To minimize the output error, the learning objective is defined as:

Through learning and training, the model parameters are obtained; see Equation (14).

Equation (14) is equivalent to Equation (15).

The training process amounts to solving a linear system, and it is realized by computing the output weights:

where H is the hidden layer output matrix and H* is the Moore-Penrose generalized inverse of H.
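The one-step training described above can be sketched with NumPy as follows. This is a generic ELM illustration under stated assumptions (sigmoid excitation function, normally distributed random input weights and biases, a toy sine-fitting task); it does not reproduce the ITLBO-ELM coupling, and the function names `elm_fit` and `elm_predict` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def elm_fit(X, T, m, rng):
    """Train an ELM: random hidden layer, output weights by pseudo-inverse."""
    n = X.shape[1]                       # number of input nodes
    W = rng.standard_normal((n, m))      # random input weights (never trained)
    b = rng.standard_normal(m)           # random hidden biases (never trained)
    H = sigmoid(X @ W + b)               # hidden layer output matrix
    beta = np.linalg.pinv(H) @ T         # least-squares output weights
    return W, b, beta

def elm_predict(X, W, b, beta):
    return sigmoid(X @ W + b) @ beta

rng = np.random.default_rng(0)
X = np.linspace(-3, 3, 50).reshape(-1, 1)   # toy inputs
T = np.sin(X)                               # toy targets
W, b, beta = elm_fit(X, T, m=20, rng=rng)
mse = float(np.mean((elm_predict(X, W, b, beta) - T) ** 2))
print(mse)
```

Since only `beta` is computed from the data, the quality of the model depends on the random draw of `W` and `b`, which is the motivation for tuning them with an optimizer such as ITLBO.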

Simulation Test and Algorithm Comparison

Benchmark Test Function

In this paper, four benchmark functions are selected to test the algorithm.

The test functions are as follows:

A. Ackley's function f1:

The optimal value is: min(f(X*)) = f(0, 0, ..., 0) = 0.

The function has only one global optimal value, but its surface is uneven, with many local optima.

B. Schwefel's Problem 1.2 function f2:

The optimal value is: min(f(X*)) = f(0, 0, ..., 0) = 0.

The function is a unimodal function, and its surface is smooth near the optimal value.

C. Generalized Schwefel's Problem 2.26 function f3:

The optimal value is:

The function is a multimodal function with multiple local optimal values and an uneven surface.

D. Generalized Rastrigin's function f4:

The optimal value is: min(f(X*)) = f(0, 0, ..., 0) = 0.

The function is a multimodal function with multiple local optimal values and an uneven surface.
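For readers who want to reproduce the tests, the four benchmark functions can be written as below. The definitions follow the forms commonly used in the evolutionary computation literature and are an assumption about the exact variants used here; in particular, `schwefel_2_26` is the variant without a constant offset, whose minimum is roughly −418.98 per dimension near x = 420.97.

```python
import numpy as np

def ackley(x):
    x = np.asarray(x, dtype=float)
    n = x.size
    return float(-20.0 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / n))
                 - np.exp(np.sum(np.cos(2.0 * np.pi * x)) / n)
                 + 20.0 + np.e)

def schwefel_1_2(x):
    # Sum of squared partial sums: sum_i (sum_{j<=i} x_j)^2
    x = np.asarray(x, dtype=float)
    return float(np.sum(np.cumsum(x) ** 2))

def schwefel_2_26(x):
    # Variant without offset (assumption).
    x = np.asarray(x, dtype=float)
    return float(-np.sum(x * np.sin(np.sqrt(np.abs(x)))))

def rastrigin(x):
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x) + 10.0))

origin = np.zeros(5)
print(ackley(origin), schwefel_1_2(origin), rastrigin(origin))
```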

Test Results

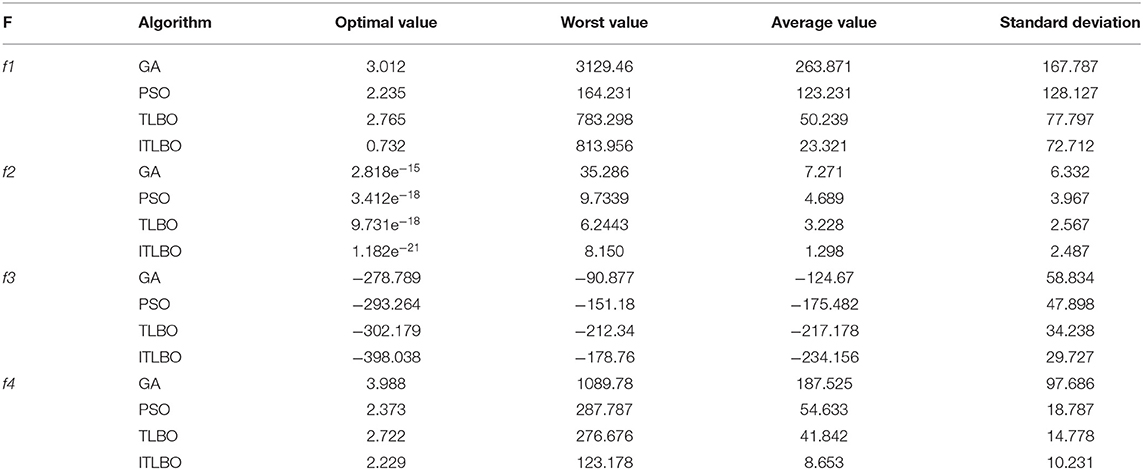

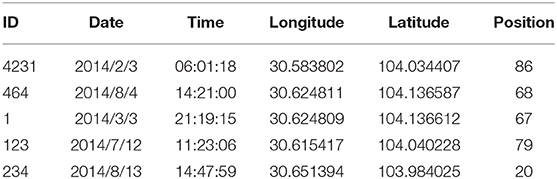

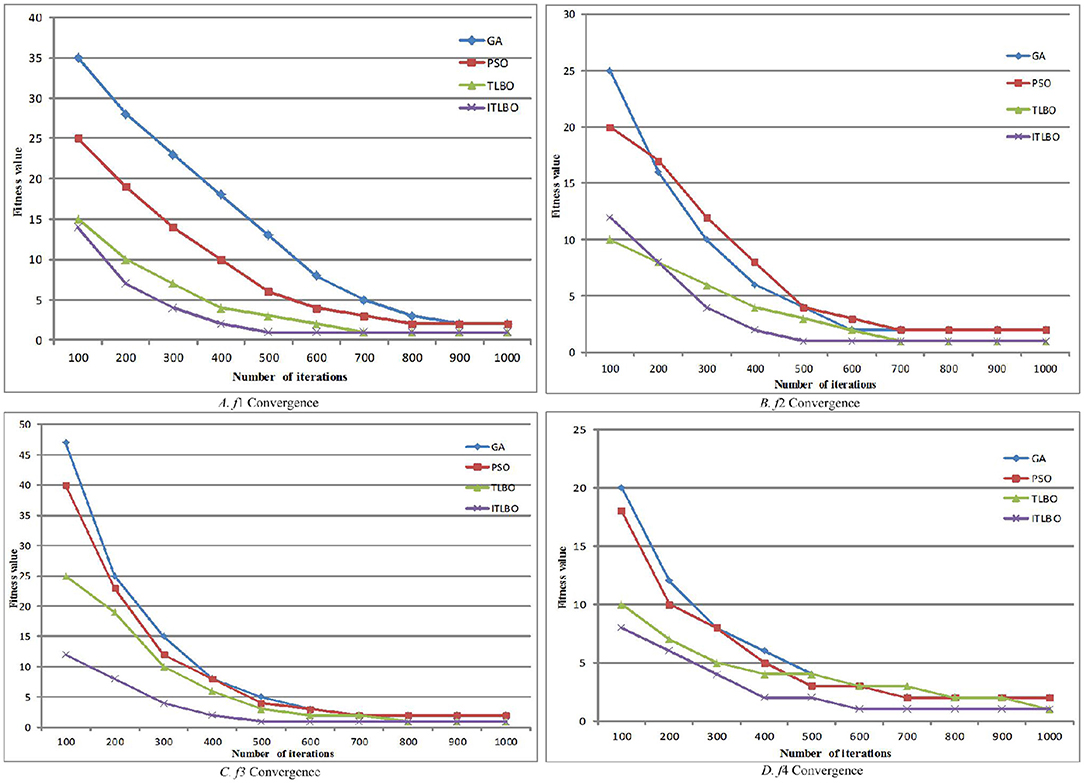

To verify the performance of the proposed algorithm, the algorithms were implemented in Python on a Dell T610 server running 64-bit Ubuntu, with two X5650 CPUs (2.6 GHz, 12 cores and 24 threads) and 64 GB of memory. Each algorithm is run independently 30 times on the four commonly used benchmark functions. The maximum number of iterations is 1,000, and the average fitness values obtained at 100, 200, …, 1,000 iterations are recorded to draw the fitness curves. The algorithms involved in the comparison are ITLBO and three commonly used algorithms: GA, PSO, and TLBO. The items compared include the average fitness value, the best result, the worst result, and the standard deviation. The tested data are listed in detail in the test result comparison table. To compare the convergence performance of the four algorithms more clearly, the convergence curves of the four algorithms on the selected test functions are drawn. The test results are shown in Table 1:
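The four reported statistics can be computed from the final fitness values of the independent runs as sketched below. The helper name `summarize_runs` is hypothetical, minimization is assumed (so the best result is the smallest value), the population standard deviation is an assumption, and the three input values are made-up placeholders rather than results from the paper.

```python
import numpy as np

def summarize_runs(final_values):
    """Mean, best, worst and standard deviation over independent runs."""
    v = np.asarray(final_values, dtype=float)
    return {
        "mean": float(v.mean()),
        "best": float(v.min()),    # minimization: smaller is better
        "worst": float(v.max()),
        "std": float(v.std()),     # population standard deviation (assumption)
    }

# Placeholder values standing in for the final fitness of three runs.
stats = summarize_runs([1.0, 2.0, 3.0])
print(stats)
```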

The test results and comparison tables show that, on functions f4 and f1, the optimal values found by ITLBO are the best and its curves are relatively smooth; TLBO is second best and its curves fluctuate little, while PSO and GA perform poorly. The standard deviation of TLBO is similar to that of ITLBO, while the standard deviations of GA and PSO are larger, which shows that the TLBO-based algorithms have better convergence stability. On f3, ITLBO finds the best optimal value with a smoother curve and a lower standard deviation; TLBO also has a smooth curve and a low standard deviation, but its converged value is not accurate enough. The best values found by PSO and GA fluctuate greatly, and their standard deviations are higher. On f2, ITLBO again shows better optimization accuracy and convergence stability than the other three algorithms.

On the four functions tested, ITLBO shows good convergence stability, high optimization accuracy, and good optimization ability. The accuracy of the optimal solution found by the basic TLBO algorithm is not high enough, but its stability is good. The genetic algorithm and the particle swarm optimization algorithm perform worst in finding the optimal values of the four functions.

The convergence graphs show that, as the number of iterations increases, the optimization results of each algorithm tend to become stable. The stable values reached by the genetic algorithm and the particle swarm optimization algorithm are clearly higher than those of the other algorithms. The curve of TLBO is relatively smooth, but its solutions are not very accurate. ITLBO has advantages in optimization speed, accuracy, and stability compared with the other three algorithms.

The fitness values are recorded at 100, 200, …, 1,000 iterations to examine the relationship between the fitness value and the number of iterations; see Figure 1.

Figure 1. Convergence comparison of four functions. (A) f1 convergence. (B) f2 convergence. (C) f3 convergence. (D) f4 convergence.

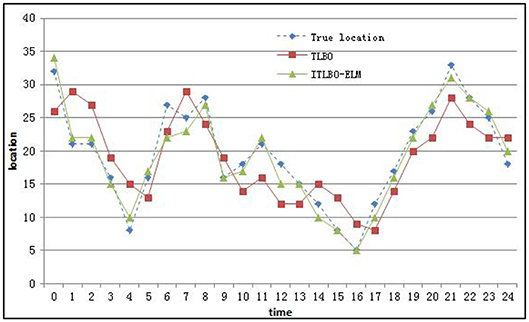

Comparative Study on GPS Vehicle Data Position Prediction

The data studied in this paper come from on-board GPS devices. The data include road number, vehicle ID, time, longitude, latitude, speed, and position number. The data set contains 15,000 on-board GPS records collected from August 1, 2014 to August 31, 2014. The data format is shown in Table 2 below:

Location numbering is based on the location range represented by longitude and latitude. Different numbers correspond to different geographical locations. When the longitudes and latitudes of two geographical positions map to the same number, the two positions are treated as similar or identical, i.e., the correct position is predicted. Based on this grid division of the map, different geographic locations are assigned to the same or similar geographic spaces. The size of the grid cells also determines the accuracy of the prediction. When the cells are relatively large, the prediction effect is ideal. When the map is divided into many small cells, the prediction effect may not be ideal, because two nearby positions may fall into different cells. As a result, the grid size also affects the accuracy of the experiment.
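The effect of grid size on position numbering can be illustrated with a small sketch. The numbering scheme below (row-major cell index on a regular longitude/latitude grid) is an assumption for illustration only; the function name `grid_id`, the grid origin, the cell sizes, and the two sample coordinates are all hypothetical and are not taken from the paper's data set.

```python
import math

def grid_id(lon, lat, lon0, lat0, cell_deg, n_cols):
    """Map a coordinate to a cell number on a regular grid (row-major order)."""
    col = math.floor((lon - lon0) / cell_deg)
    row = math.floor((lat - lat0) / cell_deg)
    return row * n_cols + col

# Two nearby hypothetical positions.
a = (106.5012, 29.5012)
b = (106.5068, 29.5068)

# Coarse grid: 0.01 degree cells, 100 columns.
coarse_a = grid_id(a[0], a[1], 106.0, 29.0, 0.01, 100)
coarse_b = grid_id(b[0], b[1], 106.0, 29.0, 0.01, 100)

# Fine grid: 0.005 degree cells, 200 columns.
fine_a = grid_id(a[0], a[1], 106.0, 29.0, 0.005, 200)
fine_b = grid_id(b[0], b[1], 106.0, 29.0, 0.005, 200)

print(coarse_a == coarse_b, fine_a == fine_b)
```

On the coarse grid the two positions share a number and would count as the same predicted position, while on the fine grid they receive different numbers, which is why the grid size influences the measured accuracy.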

After processing and analyzing the GPS data, this paper compares the prediction results of the TLBO and ITLBO-ELM algorithms, predicting for different vehicles on different road sections the number of the next position or related area, as shown in Figure 2.

Geographical locations are described by numerical codes, and different area numbers represent different locations. The closer the numbers are, the shorter the distance between the locations. As can be seen from Figure 2, when predictions at different positions and time points are compared, ITLBO-ELM is closer to the real position than the TLBO prediction method. ITLBO is combined with the ELM algorithm mainly to improve the convergence speed and accuracy of the algorithm. As can be seen from Figure 2, the ITLBO-ELM algorithm has obvious advantages over the other algorithms.

Geographic location information is encoded with specific area numbers, and different area numbers represent different geographic locations. Different longitudes and latitudes may share the same area number, in which case the locations are treated as similar. Judging from the actual and predicted positions in Figure 2, there are obvious differences between the actual positions and the TLBO prediction results, whereas over the whole predicted track the actual area numbers are consistent with, or close to, the ITLBO-ELM prediction results. The experimental results show that ITLBO-ELM performs well overall.

Conclusion

To address the poor accuracy of existing geographic location prediction algorithms, a prediction method based on the improved teaching-learning-based optimization algorithm and the ELM algorithm is proposed. The results show that the ITLBO-ELM algorithm predicts positions with high accuracy. The proposed method performs well in position prediction and takes into account the representation of position information under different coordinates. Further research will predict position information from different coordinates so as to reduce the prediction errors caused by coordinate differences. Future work will also consider additional tests to further validate the method and will focus on the convergence speed and position accuracy of the algorithm.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

All authors participated in the preparation and presentation of the manuscript.

Funding

This work was supported by the Science and Technology Research Program of Chongqing Municipal Education Commission (Grant No. KJZD-K201902101).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Jiang W, Carter DR, Fu HL, Jacobson MG, Zipp KY, Jin J, et al. The impact of the biomass crop assistance program on the United States forest products market: an application of the global forest products model. Forests. (2019) 10:1–12. doi: 10.3390/f10030215

2. Liu JB, Zhao J, Zhu ZX. On the number of spanning trees and normalized Laplacian of linear octagonal-quadrilateral networks. Int J Quantum Chem. (2019) 119:1–21. doi: 10.1002/qua.25971

3. Liu JB, Zhao J, Cai Z. On the generalized adjacency, Laplacian and signless Laplacian spectra of the weighted edge corona networks. Physica A. (2020) 540:1–11. doi: 10.1016/j.physa.2019.123073

4. Wei L, Li J, Ren L, Xu J. Exploring livelihood resilience and its impact on livelihood strategy in rural China. Social Indicat Res. (2020). doi: 10.1007/s11205-020-02347-2

5. Ghasemi M, Taghizadeh M, Ghavidel S, Aghaei J, Abbasian A. Solving optimal reactive power dispatch problem using a novel teaching-learning-based optimization algorithm. Eng Appl Artif Intell. (2015) 39:100–8. doi: 10.1016/j.engappai.2014.12.001

6. Lee J, Chang H-J. Analysis of explicit model predictive control for path-following control. PLoS ONE. (2018) 13:e0194110. doi: 10.1371/journal.pone.0194110

7. Singh R, Verma HK. Teaching–learning-based Optimization Algorithm for Parameter Identification in the Design of IIR Filters. J Inst Eng. (2013) 94:285–94. doi: 10.1007/s40031-013-0063-y

8. Pan Q-K. An effective co-evolutionary artificial bee colony algorithm for steelmaking-continuous casting scheduling. Eur J Operat Res. (2016) 250:702–14. doi: 10.1016/j.ejor.2015.10.007

9. Dib F, Boumhidi I. Hybrid algorithm DE-TLBO for optimal and PID control for multi-machine power system. Int J Syst Assur Eng Manage. (2017) 8:S925–36. doi: 10.1007/s13198-016-0550-z

10. Luo Z, Guo Q, Zhao J, Xu S. Tuning of a PID controller using modified dynamic group based TLBO algorithm. Int J Comput Appl. (2017) 157:17–23. doi: 10.5120/ijca2017912593

11. Satapathy SC, Naik A, Parvathi K. Unsupervised feature selection using rough set and teaching learning-based optimisation. Int J Artif Intell Soft Comput. (2013) 3:244. doi: 10.1504/IJAISC.2013.053401

12. Yang B, Shu HC, Zhang RY, Huang LN, Zhang XS, Yu T. Interactive teaching-learning optimization for VSC-HVDC systems. Kongzhi yu Juece/Control Decis. (2019) 34:325–34. doi: 10.13195/j.kzyjc.2017.1080

13. Wang BC, Li HX, Feng Y. An improved teaching-learning-based optimization for constrained evolutionary optimization. Information Sci. (2018) 456:131–44. doi: 10.1016/j.ins.2018.04.083

14. Das SP, Padhy S. A novel hybrid model using teaching-learning-based optimization and a support vector machine for commodity futures index forecasting. Int J Mach Learn Cybernet. (2018) 9:97–111. doi: 10.1007/s13042-015-0359-0

Keywords: GPS, learning optimization algorithm, ITLBO algorithms, ELM, prediction method

Citation: Yang Z and Sun Z (2020) Research on Geographic Location Prediction Algorithm Based on Improved Teaching and Learning Optimization ELM. Front. Phys. 8:259. doi: 10.3389/fphy.2020.00259

Received: 15 April 2020; Accepted: 10 June 2020;

Published: 24 July 2020.

Edited by:

Jia-Bao Liu, Anhui Jianzhu University, China

Reviewed by:

Hanliang Fu, Illinois State University, United States

Li Shijin, Yunnan University of Finance and Economics, China

Shaohui Wang, Louisiana College, United States

Copyright © 2020 Yang and Sun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zengwu Sun

Zhen Yang, Zengwu Sun