- ¹Department of Cardiovascular Surgery, West China Hospital, Sichuan University, Chengdu, Sichuan, China

- ²Department of Anesthesia, West China Hospital, Sichuan University, Chengdu, Sichuan, China

- ³West China School of Medicine, Sichuan University, Chengdu, Sichuan, China

- ⁴West China School of Public Health, Sichuan University, Chengdu, Sichuan, China

Background: Video platforms are currently filled with low-quality, unvetted scientific videos, and patients who use the Internet to learn about specific diseases are vulnerable to being misled and may delay treatment as a result. A large-sample survey of the content quality and popularity of online scientific videos is therefore of great significance for future targeted reforms.

Objective: This study applied a normalization-based data analysis method and a basic assessment scale, offering a new approach for future large-sample research across multiple platforms and for the development of video content quality assessment scales.

Methods: This cross-sectional study analyzed a sample of 331 atrial fibrillation videos retrieved from YouTube, Bilibili, TikTok, and Douyin on June 13, 2024. Comprehensive interaction metrics and a basic scoring scale were used to examine associations between platforms, creators, and the popularity and content quality of the videos. Data analysis employed principal component analysis, data normalization, non-parametric tests, paired t-tests, and negative binomial regression.

Results: Analysis of user engagement data with a composite index showed that videos from publishers with a medical background were significantly more popular (z = −4.285, p < 0.001). Among platforms, only Bilibili differed significantly from the others. As for content quality, although publishers with a medical background posted roughly twice as many videos as those without (229:102), they posted only 1.47 times as many qualified videos (47:32), and this imbalance was even more pronounced among the top 30% most popular videos. Notably, the overall content quality of videos from publishers without a medical background was significantly higher (z = −2.299, p = 0.02).

Conclusion: This analysis of atrial fibrillation information on multiple social media platforms found that people prefer videos from publishers with a medical background. However, it appeared that these publishers did not sufficiently create high-quality, suitable videos for the public, and the platforms seemed to lack a rigorous censorship system and policy support for high-quality content. Moreover, the normalized data processing method and the basic assessment scale that we attempted to use in this study provided new ideas for future large-sample surveys and content quality review.

1 Introduction

Atrial fibrillation (AF) is the most common clinical arrhythmia, affecting approximately 59 million individuals worldwide (1). Patients with AF face a 2-fold increased risk of myocardial infarction (2) and a 5-fold increased risk of heart failure (3). Additionally, they have a 5-fold higher risk of ischemic stroke, which rises to 20-fold in the presence of mitral stenosis (4, 5). According to Lin et al., 28.6% of AF patients are asymptomatic and often hospitalized for other primary conditions (6). Therefore, educating patients about the health risks associated with AF may improve adherence to treatment more effectively than focusing solely on medication dosages and routine follow-ups.

Traditional medical education methods, such as posters and manuals, are often limited in their reach due to accessibility and geographic constraints (7). This restricts the dissemination of disease-related information to both chronic disease patients and the public (6, 8, 9). Today, with the widespread use of smartphones and personal computers, individuals can access health information anytime and anywhere. This shift has made online video platforms a powerful tool for distributing medical education and raising awareness (10–12). Many healthcare institutions on platforms like YouTube have obtained certification and are actively publishing videos (13). Lee et al. found that 40.8% of U.S. adults use YouTube to search for health-related information, leading to a 30% increase in physical activity (14). However, several meta-analyses of educational videos on online platforms revealed that there is still considerable room for improvement, particularly in content accuracy and regulatory oversight on platforms like YouTube (15–17). Platforms demonstrate inadequate content moderation for user-uploaded videos, enabling virtually any user to disseminate disease-related articles or videos without rigorous vetting. Coupled with the real-time nature of social media, which facilitates viral propagation, this means that misinformation, unverified claims, and pseudoscientific content become freely accessible through platform search algorithms (15, 18, 19). In addition, insufficient creator-audience interaction diminishes the signature dialogic nature of social media platforms (20), effectively rendering them functionally equivalent to traditional didactic health broadcasts.

Current research on the quality of educational videos predominantly consists of single-platform, single-disease studies with small sample sizes, and video quality evaluations rely on side-by-side comparisons of isolated unidimensional metrics (e.g., view counts, likes) without in-depth exploration of correlations between indicators. There is a notable lack of cross-platform comparative analyses of quality and of disease-specific quality assessment tools, with most studies depending on generic scales such as DISCERN or JAMA. In this study, AF educational videos were retrieved from four platforms: YouTube, Bilibili, TikTok, and Douyin. We experimentally applied a normalization method to unify interaction data from different platforms for statistical analysis and used a ‘basic and essential’ professional rating scale to assess the content quality of sampled videos. This study provides a new perspective and method for future large-scale data analysis and content quality evaluation across different platforms.

2 Methods

2.1 Study design

This study was a cross-sectional analysis. On June 13, 2024, we performed keyword searches for “Atrial Fibrillation,” “AF,” and “AF + Management” across the YouTube, Bilibili, TikTok, and Douyin platforms. For each video identified, we recorded the URL and the numbers of views, likes, comments, and replies on the same day to mitigate potential changes over time. The content quality of the videos was then evaluated over the subsequent month.

2.2 Measures

The keyword searches were independently conducted by two reviewers using a web browser with a cleared cache. The top 50 results for each search term were selected. Exclusion criteria included duplicate videos, audio-only content, videos with titles that did not match the content, and videos in languages other than English or Chinese. In cases where the two reviewers disagreed, a third reviewer was consulted to make the final decision. After applying these criteria, a total of 331 videos were included in the analysis.

2.3 Data collection

2.3.1 Interaction metrics

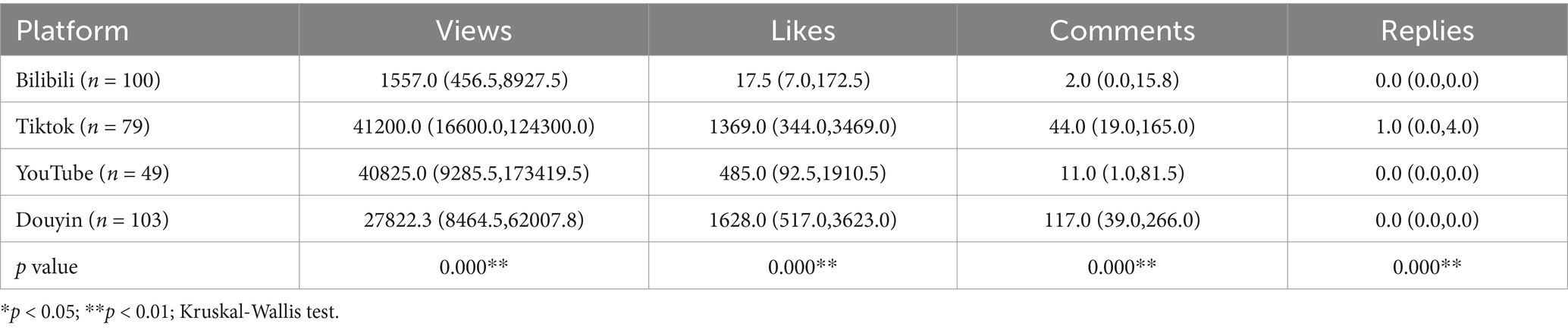

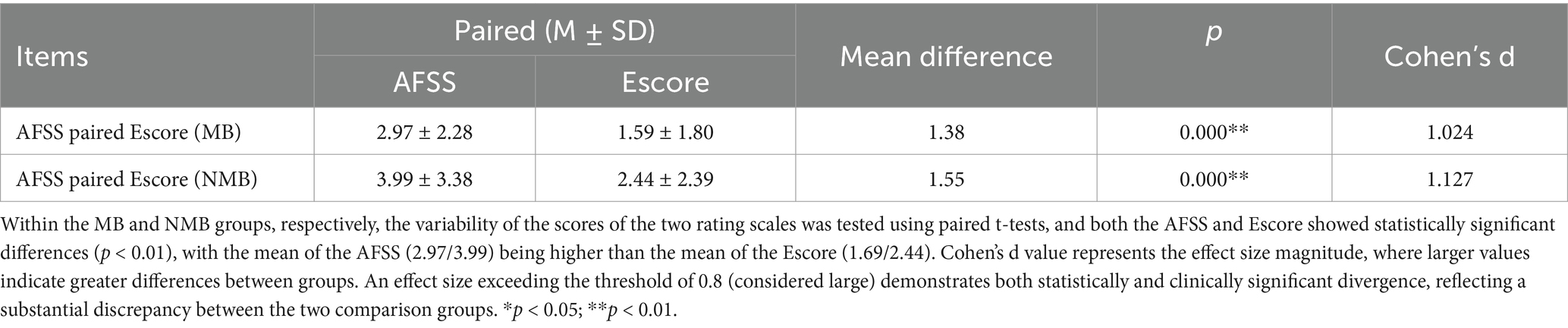

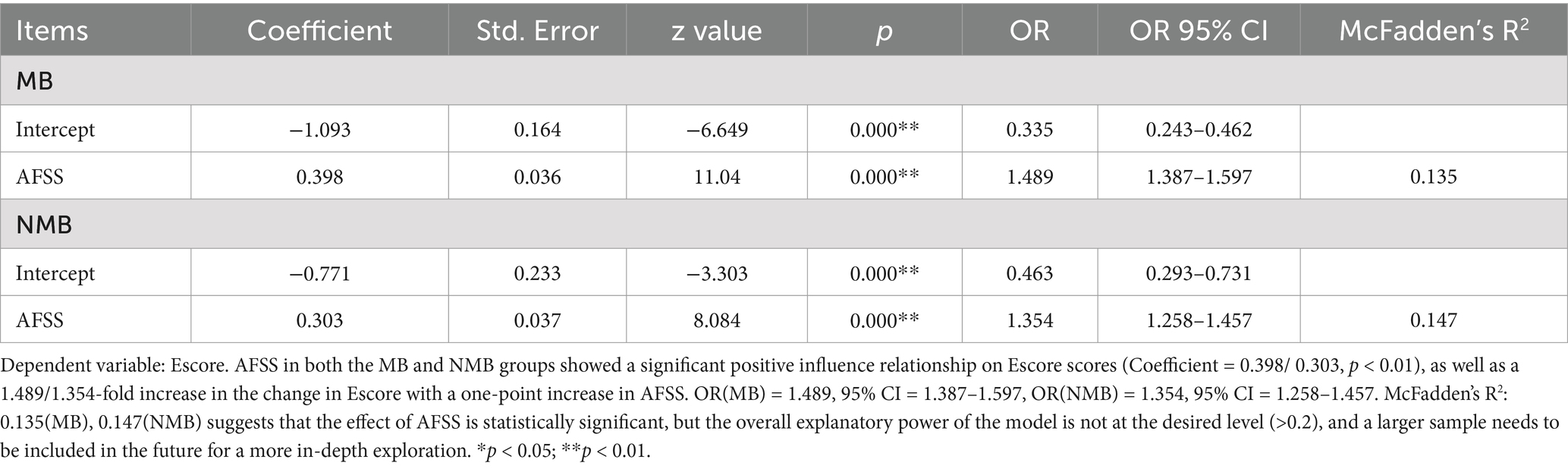

The interaction metrics collected for each video were view count and the numbers of likes, comments, and replies. To avoid potential bias, we matched 1:1 after data collection in rows stratified by a reply/comment ratio of 3:1. Metrics between different platforms were compared using Kruskal–Wallis tests (Table 1A). Principal Component Analysis (PCA) and weighted scoring were employed to preprocess the interaction metrics data [Kaiser-Meyer-Olkin (KMO) = 0.741; Bartlett’s test of sphericity, p < 0.001]. Based on the PC1 loadings, the Heatscore, a composite index, was obtained using a normalization method (Figure 1; Table 1B).

Figure 1. Interaction metrics PCA 2D scatter plot. The horizontal and vertical coordinates are the principal component (PC1 and PC2) axes and principal component contributions. PC1 explains 66.19% of the variance in the dataset. Specific data for each principal component loading can be found in Table 1B. Blue dots represent each sample included in the analysis.
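The text states that the Heatscore was derived from PC1 loadings and a normalization step but does not give the exact formula. The following is a minimal sketch of one plausible construction, not the authors' implementation. It assumes that the weights are the absolute PC1 loadings rescaled to sum to 1 and that each metric is min-max normalized before the weighted sum. The function name `heatscore` and the toy data are hypothetical.

```python
import numpy as np


def heatscore(metrics):
    """Sketch of a PC1-weighted composite index (assumed construction).

    metrics: array of shape (n_videos, n_metrics), e.g. views, likes,
    comments, replies. Assumes no column is constant.
    Returns (scores in [0, 1], weights, share of variance explained by PC1).
    """
    X = np.asarray(metrics, dtype=float)

    # PCA via eigen-decomposition of the correlation matrix, which is
    # equivalent to PCA on z-score standardized columns.
    corr = np.corrcoef(X, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)  # eigenvalues in ascending order
    pc1 = eigvecs[:, -1]                     # loading vector of the first component
    explained = eigvals[-1] / eigvals.sum()

    # Assumed weighting rule: absolute PC1 loadings rescaled to sum to 1.
    weights = np.abs(pc1) / np.abs(pc1).sum()

    # Min-max normalize each metric to [0, 1], then take the weighted sum.
    span = X.max(axis=0) - X.min(axis=0)
    X_norm = (X - X.min(axis=0)) / np.where(span == 0, 1.0, span)
    return X_norm @ weights, weights, explained


# Hypothetical toy data: columns are views, likes, comments, replies.
toy = np.array([
    [1000, 50, 10, 0],
    [5000, 300, 40, 2],
    [200, 5, 1, 0],
    [12000, 900, 120, 5],
    [800, 20, 3, 1],
], dtype=float)

scores, weights, explained = heatscore(toy)
print(np.round(scores, 3), np.round(weights, 3), round(float(explained), 3))
```

Because every metric is rescaled to the same [0, 1] range before weighting, a video that tops all four metrics scores 1 and one that is lowest on all four scores 0, regardless of how large the raw view counts are on a given platform.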

2.3.2 Specialized metrics

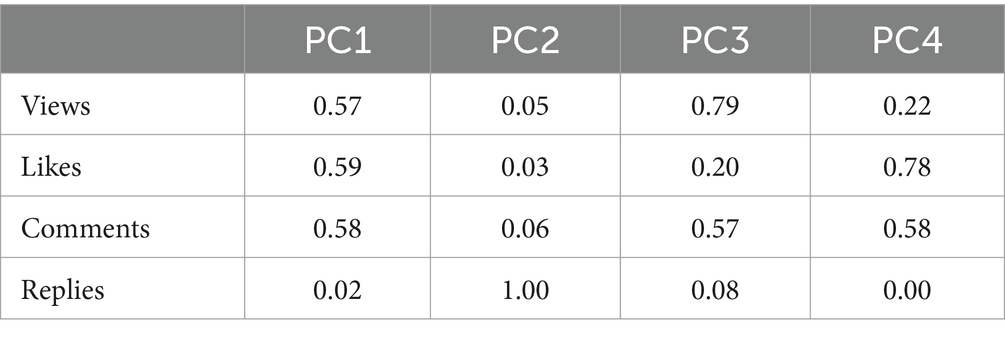

The DISCERN and JAMA scales have been widely used to evaluate the accuracy of information sources. However, although these scales can assess the reliability of the information sources cited in a video, they have limited capacity to evaluate the richness of specialized content. This is consistent with previous studies that have expressed reservations about applying the DISCERN and JAMA scales (21–23). Therefore, classification criteria developed by clinicians were used in this study. A scoring system, the Atrial Fibrillation Specific Score (AFSS), was designed based on existing literature and expert guidelines (24, 25). However, using such a complex and precise scale to guide the creation of educational videos may result in content that is too advanced for viewers to comprehend, limiting their ability to engage in shared decision-making (26). In routine practice, we have observed that patients tend to show limited interest in pathogenesis and prefer to ask about the treatment options and the differences between them. During outpatient consultations, however, patients often struggle to comprehend and absorb a large volume of disease-related information within a limited time. This underscores the need for a scoring scale that quantifies the quality of popular science video content, with the aim of improving public comprehension of medical information. Such a scale could support platform content review, and we hope that video authors can also use it when producing videos so that the public can better understand and absorb disease-related knowledge before and after treatment. To address this, we developed the Essential Score (Escore) as a simplified version of the AFSS framework. We attempted to remove from the AFSS framework the items on diagnosis and management that may require a medical background to understand, and to focus on knowledge of greater interest to the general public, such as the course of the disease, its associated dangers, methods for self-diagnosis, and available treatment options. Five specialized cardiac surgeons from our hospital were consulted to evaluate the content validity of both the AFSS and Escore rating scales. After multiple rounds of adjustment and assessment, the final criteria are presented in Table 2. Each video was scored for content quality independently by three authors; if their scores differed by more than three, the corresponding author scored the video. The reliability, validity, and content validity indexes confirmed that these scales are appropriate for evaluating the content quality of the videos analyzed in this study (Table 2).

In this study, the content quality of the sample videos was evaluated using the Escore. One point was awarded for each item fulfilled in the Diagnosis and Management section, whereas the Treatment section was scored slightly differently. Once a chronic disease has been clearly diagnosed, the emphasis is mostly on long-term treatment and regular follow-up (27–29), and it is important for patients to have a thorough understanding of the treatments for their condition (30, 31); therefore, a missing item in the Treatment section of the Escore was worth zero points. For video quality grading, videos scoring below 3 were classified as disqualification, scores of 3–4 as eligibility, and scores of 5–7 as adequacy.
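The grading thresholds above can be expressed as a small lookup. This is an illustrative sketch only: the function name `escore_grade` is hypothetical, the 0–7 range is inferred from the stated 5–7 upper band, and the item-level scoring rules of Table 2 (including the special handling of the Treatment section) are not reproduced here.

```python
def escore_grade(escore: int) -> str:
    """Map a total Escore to the quality grade described in the text.

    Assumes the total score lies in 0..7 (inferred from the 5-7 band).
    """
    if not 0 <= escore <= 7:
        raise ValueError(f"Escore out of assumed range 0-7: {escore}")
    if escore < 3:
        return "Disqualification"
    if escore <= 4:
        return "Eligibility"
    return "Adequacy"


for s in range(8):
    print(s, escore_grade(s))
```

Under this mapping, the "qualified" videos reported in the Results (Escore ≥ 3) are those graded Eligibility or Adequacy.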

2.4 Statistical analysis

All videos were categorized based on publishing platform and author type. Categorical variables were summarized using frequencies and relative frequencies, whereas continuous variables were summarized using median values. Python, with the Pandas and NumPy libraries, was used for data processing, PCA, and calculation of the Heatscore index. IBM SPSS Statistics version 26.0 was used for statistical analysis and the computation of the metrics. According to the Kolmogorov–Smirnov test, the data in this study were not normally distributed, necessitating the use of non-parametric tests to evaluate differences between groups.
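The text does not name the specific non-parametric test used for two-group comparisons. As an illustration only, the sketch below assumes a Mann-Whitney U test with the tie-corrected normal approximation, which yields a z statistic of the kind reported in the Results. The function names `mann_whitney_z` and `two_sided_p` and the sample data are hypothetical; the actual analysis was run in SPSS.

```python
import math

import numpy as np


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal z statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def mann_whitney_z(a, b):
    """z statistic (tie-corrected normal approximation) and two-sided p."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n1, n2 = len(a), len(b)
    n = n1 + n2

    # Average ranks over the pooled sample, sharing ranks among ties.
    _, inverse, counts = np.unique(
        np.concatenate([a, b]), return_inverse=True, return_counts=True
    )
    first_rank = np.cumsum(counts) - counts + 1
    ranks = (first_rank + (counts - 1) / 2.0)[inverse]

    # U statistic for the first group and its null mean and variance.
    u1 = ranks[:n1].sum() - n1 * (n1 + 1) / 2.0
    tie_term = (counts ** 3 - counts).sum() / (n * (n - 1))
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term)
    z = (u1 - n1 * n2 / 2.0) / math.sqrt(var_u)
    return z, two_sided_p(z)


# Hypothetical example: the first group ranks entirely below the second.
z, p = mann_whitney_z([1, 2, 3], [4, 5, 6])
print(round(z, 3), round(p, 3))

# Converting a z statistic of the size reported in the text to a p-value.
print(round(two_sided_p(-2.299), 4))
```

The sign of z depends only on which group is listed first, so a negative value such as z = −2.299 indicates the direction of the difference relative to that ordering, and its two-sided p-value under the normal approximation is roughly 0.02.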

3 Results

Out of the initial pool of 600 videos, 269 were excluded due to duplication, lack of audio, or irrelevance. This left 331 videos for analysis, with 31% from non-medical backgrounds (NMB), such as self-media and public accounts, and 69% from medical backgrounds (MB), including doctors and hospital media.

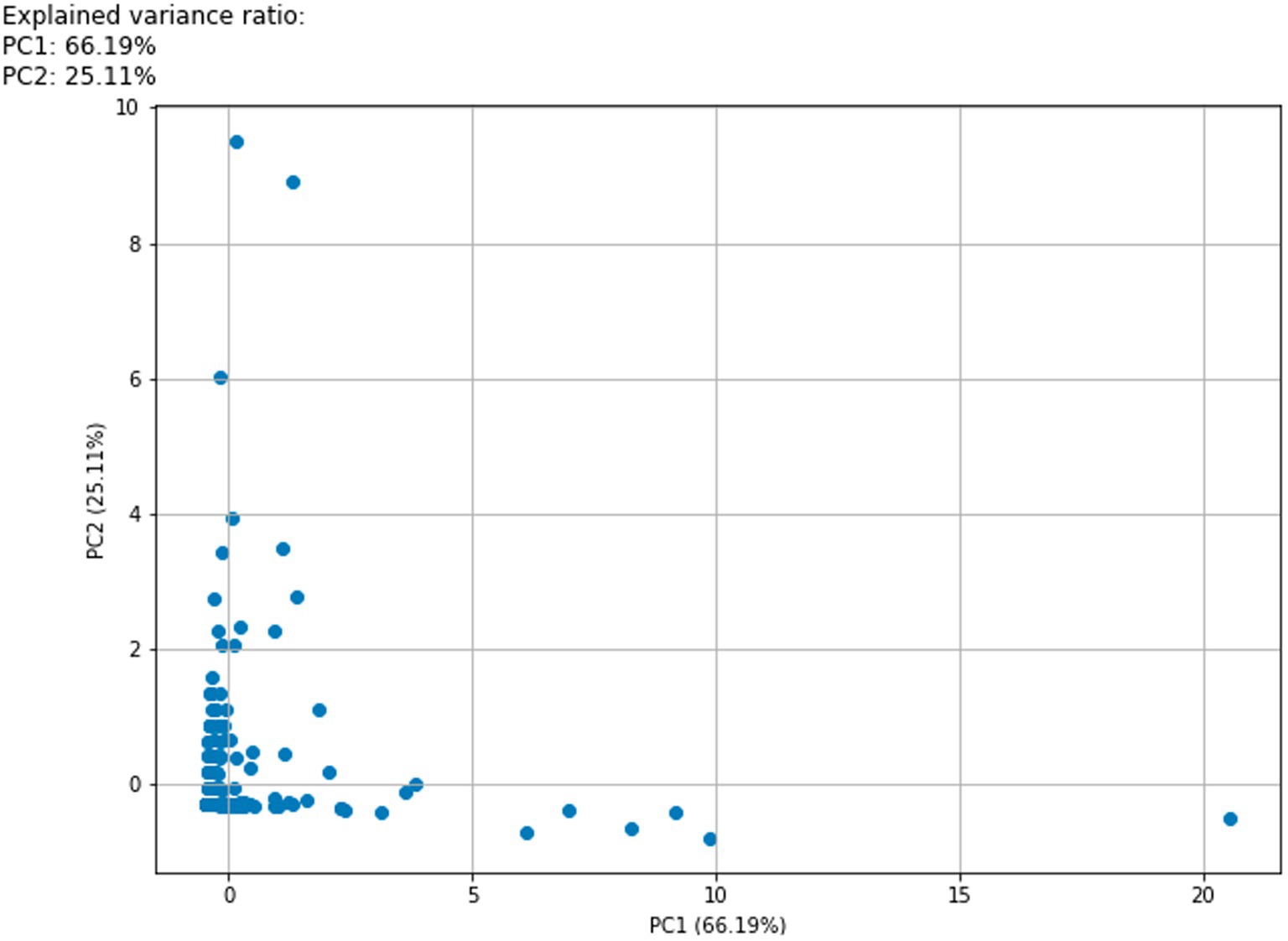

In the first phase, we analyzed interaction metrics (views, likes, comments, and replies) and specialized metrics (AFSS and Escore) based on video platform and author type. In the interaction metrics section, after processing through PCA and weighted scoring, we found that the Heatscore still showed significant differences among various video platforms. A subsequent Nemenyi post-hoc test revealed that the source of the difference was solely the video data from the Bilibili platform (Figure 2a). The MB group had higher Heatscore than the NMB group (z = −4.285, p < 0.001; Figure 2b).

Figure 2. (a) Analysis of between-group differences in Heatscore categorized by platform. Pairwise comparisons based on the Nemenyi test showed statistically significant differences between Bilibili and each of the other three platforms (TikTok, YouTube, and Douyin; p < 0.001). Comparisons among the other platforms were not statistically significant: TikTok vs. YouTube (p = 0.983), TikTok vs. Douyin (p = 0.405), and YouTube vs. Douyin (p = 0.779). (b) Analysis of between-group differences in Heatscore categorized by author type. The MB group had a higher Heatscore than the NMB group (z = −4.285, p < 0.001).

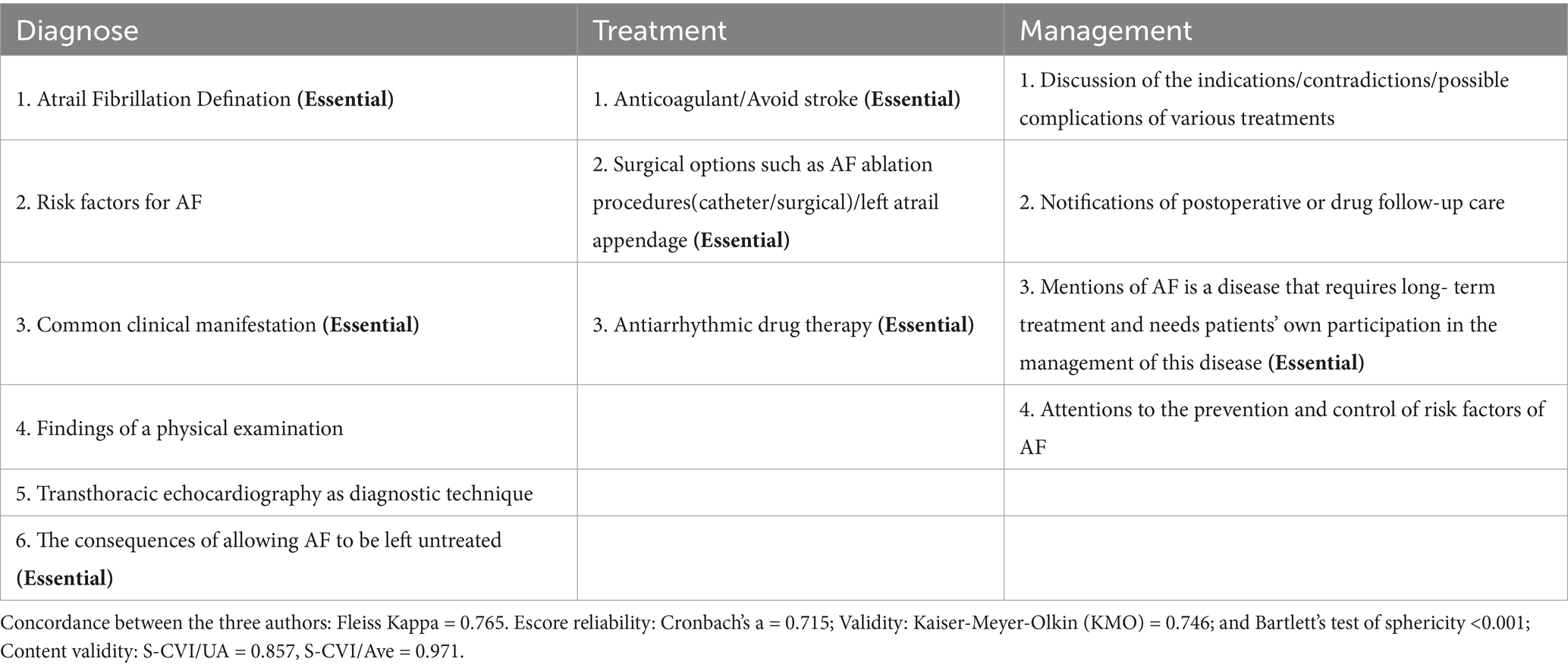

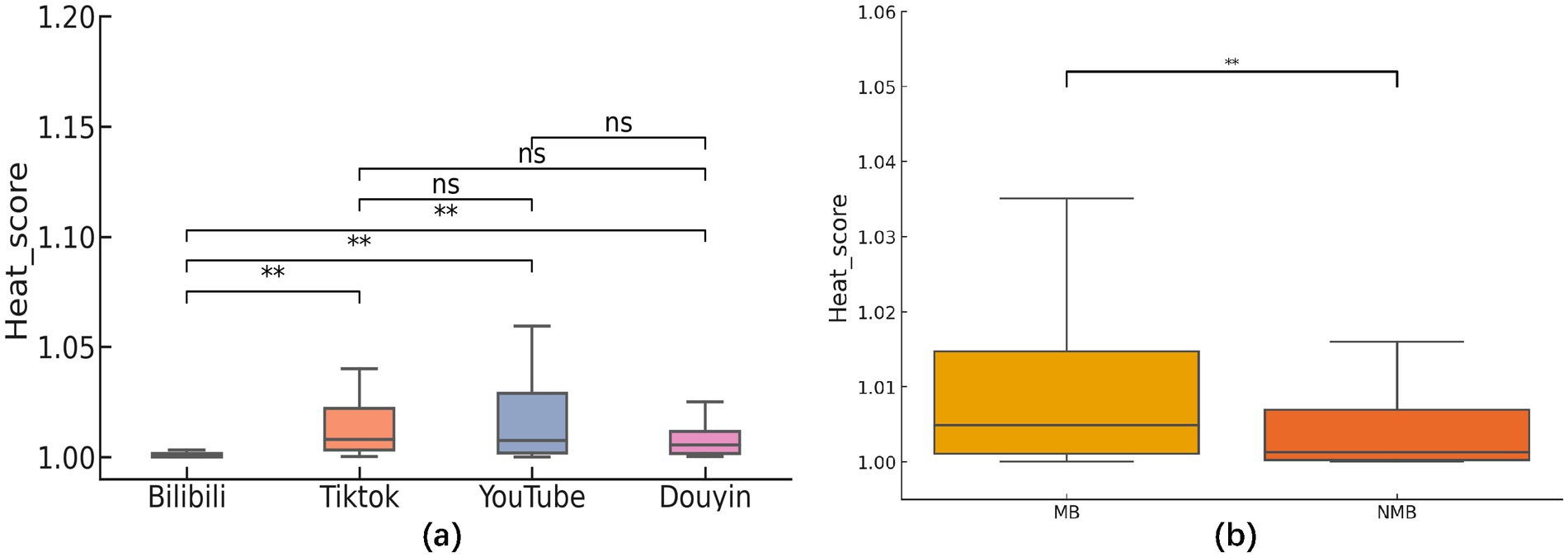

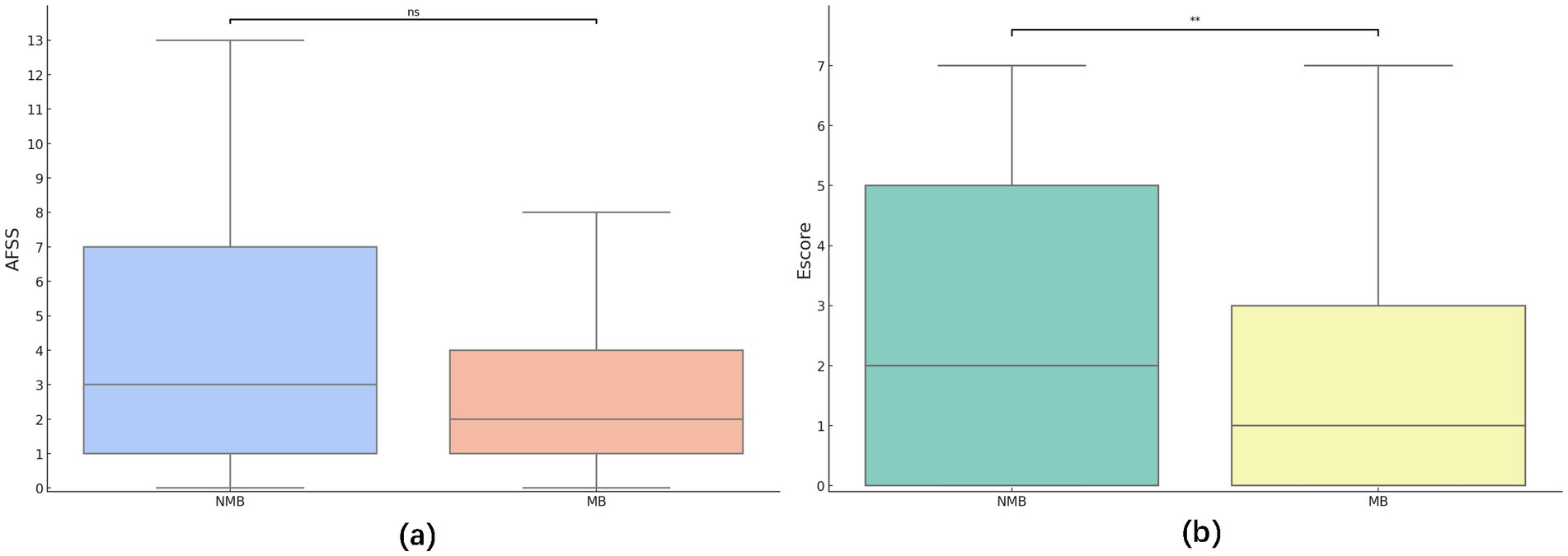

In terms of specialized metrics, there was no significant difference in AFSS scores between the MB and NMB groups, but the Escore was notably higher in the NMB group (z = −2.299, p = 0.02; Figures 3a,b). Paired t-tests showed that AFSS scores were significantly higher than Escore values in both groups (t = 17.051 and 10.814, both p < 0.001; Table 3A). In a negative binomial regression with AFSS score as the independent variable and Escore as the dependent variable, AFSS scores had a significant positive effect on Escore (Table 3B).

Figure 3. (a) Analysis of between-group differences in AFSS categorized by author type. No statistically significant difference in AFSS scores was seen between the MB and NMB groups (z = −1.529, p = 0.126). (b) Analysis of between-group differences in Escore categorized by author type. There was a statistically significant difference in Escore between the MB and NMB groups (z = −2.299, p = 0.022).

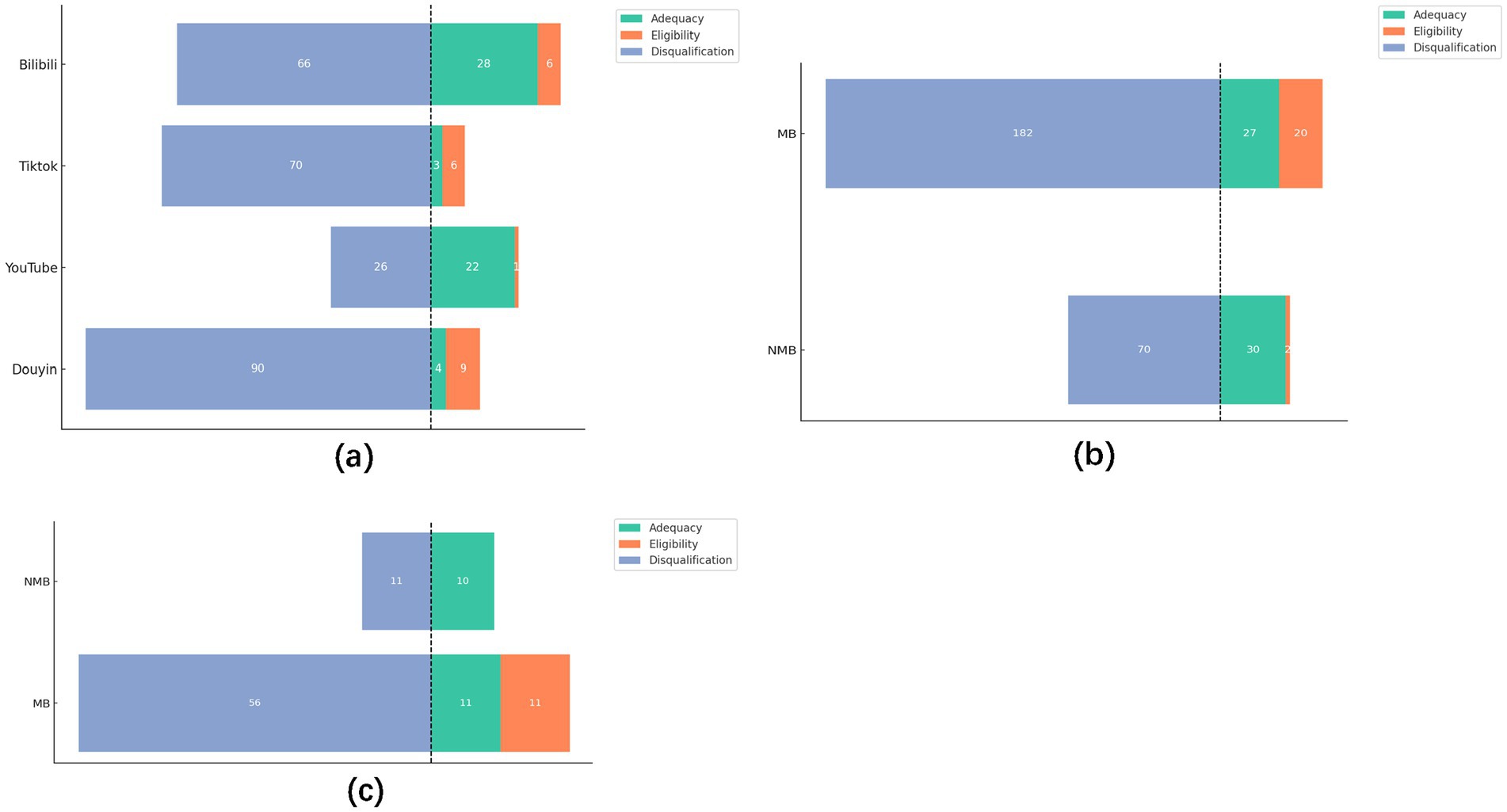

In the second part, we observed that most adequate scientific videos (50/57, 88%) originated from Bilibili and YouTube. At the same time, all video platforms were inundated with disqualified videos (252/331, 76%). When categorized by author type, MB creators produced roughly twice as many videos as NMB creators (229:102). Yet the ratio of qualified videos (Escore ≥ 3) was only 1.47:1 (47:32), while disqualified videos were 2.6 times more numerous in the MB group (182:70; Figures 4a,b). Among high-popularity videos (top 30% in Heatscore), the MB group again had more videos (78:21), while the ratio of disqualified to qualified videos in this subset was approximately 2:1.

Figure 4. (a) Video quality datasets between the platforms. (b) Video quality datasets between the author types. (c) Video dataset (top 30% in Heatscore) between the author types.
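The fold differences quoted above follow directly from the reported counts. The short check below recomputes them; it uses only numbers stated in the text, and the per-group qualified rates are derived values rather than figures reported by the authors.

```python
# Counts reported in the text (MB = medical background, NMB = non-medical background).
totals = {"MB": 229, "NMB": 102}
qualified = {"MB": 47, "NMB": 32}  # Escore >= 3
disqualified = {g: totals[g] - qualified[g] for g in totals}

ratio_total = totals["MB"] / totals["NMB"]
ratio_qualified = qualified["MB"] / qualified["NMB"]
ratio_disqualified = disqualified["MB"] / disqualified["NMB"]
share_disqualified = sum(disqualified.values()) / sum(totals.values())
qualified_rate = {g: qualified[g] / totals[g] for g in totals}

print(f"MB:NMB total videos      {ratio_total:.2f}")
print(f"MB:NMB qualified videos  {ratio_qualified:.2f}")
print(f"MB:NMB disqualified      {ratio_disqualified:.2f}")
print(f"Disqualified overall     {share_disqualified:.1%}")
for group, rate in qualified_rate.items():
    print(f"Qualified rate in {group}: {rate:.1%}")
```

Expressed as within-group rates, about 20.5% of MB videos and 31.4% of NMB videos reach an Escore of 3 or more, which is the same imbalance the 2.25:1 versus 1.47:1 comparison describes.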

4 Discussion

4.1 Interaction metrics

In previous similar reports, data from different platforms were analyzed by directly profiling the numbers of plays, likes, and replies of the sample videos (32–34). However, different platforms have diverse user bases and content strategies, and the resulting disparity in raw data across platforms complicates the interpretation of results (Table 1A). Lookingbil et al. used a randomized permutation test to analyze user engagement (i.e., the number of views, likes, comments, and shares) for videos on a single platform and, through linear regression, found a significant correlation between publishers’ follower counts, video likes, and the level of user engagement; this approach can be viewed as an attempt at integrative analysis (23). We believe that direct analysis of data from different platforms can explain differences in audience populations and policy support across platforms, but a composite indicator may be needed to judge the popularity of the video itself. Therefore, our study introduced PCA and normalization techniques to further process the interaction metrics (Table 1B). Importantly, the two statistical approaches yielded notably divergent results (Table 1A; Figure 2a).

The above analysis showed that although YouTube and TikTok outperform Douyin and Bilibili in raw metrics, there is little difference in Heatscore (popularity) among YouTube, TikTok, and Douyin. Bilibili consistently trails behind these platforms in engagement. With respect to author type, our findings align closely with similar studies in the literature, in which videos posted by individuals with medical backgrounds (MB group) tend to garner higher popularity among viewers (35–37) (Figure 2b).

However, the findings are not cause for celebration. PCA revealed that, among the variables examined, the numbers of comments and replies accounted for 58% and 2% of the total variance in the raw data, respectively. Notably, the median number of replies across all platforms was zero, indicating a widespread lack of publisher responsiveness to viewer inquiries. Moreover, although TikTok had the highest average view count at 41,200, this figure pales in comparison to the nearly 59 million individuals worldwide affected by atrial fibrillation (AF) (1). This disparity underscores the challenge of effectively reaching and engaging with the expansive AF community through current social media platforms.

4.2 Specialized metrics

Several previous studies have evaluated content quality using established scales such as DISCERN, JAMA, PEMAT-A/V, and GQS (32, 33, 38, 39), alongside self-constructed scales based on disease-specific guidelines (35, 40–43). Established scales have been extensively validated, which makes their conclusions more reliable, but they are less applicable to a given disease because their items lack disease-specific content; self-constructed scales have the opposite strengths and weaknesses. Given that video platforms are overwhelmed with low-quality and misleading content (41, 44, 45), specialized scales might be more advantageous for evaluating the quality of disease-related video content, and their disadvantages could be mitigated through repeated validation. In this study, the AFSS scale was created to cover diagnosis, treatment, and management in accordance with the European and US guidelines for the diagnosis and management of AF. Subsequently, we introduced the Escore scale, designed to prioritize essential and fundamental aspects, reflecting a more necessity-oriented approach. This section had two purposes: first, to investigate the potential use of a “more basic necessity” rating scale; and second, to evaluate whether the content quality of the sample videos was satisfactory.

In developing the AFSS scale, it was found that the detailed scoring criteria, while professional enough, could overwhelm viewers seeking concise information from scientific videos. Hence, the Escore scale was crafted to distill essential elements more comprehensibly.

To assess the Escore scale’s efficacy, we conducted paired t-tests within the MB and NMB groups. Escore values were significantly lower than AFSS scores, suggesting that the Escore was not a parallel scale to the AFSS (Table 3A). Moreover, negative binomial regression analyses indicated a strong positive association between AFSS and Escore scores (Table 3B). These results indicated that the “basic necessity” Escore scale maintained a high level of consistency and validity with the “complex and comprehensive” AFSS scale. The similar OR values suggested that Escore performance was consistent across the two datasets, with no significant variations, supporting the stability of the Escore. As such, the Escore was qualified and potentially useful as a “more basic necessity” scale.

4.3 Satisfaction with content quality

In recent years, online video sites and apps have become a common way for the public to learn about diseases (46–48). Tan et al. found that videos featuring medical professionals, highlighted by titles or attire, tend to attract more engagement (49). However, several studies have reported unfavorable findings on the quality of video content from professional authors (50–52).

Both publishers and platforms are involved in ensuring the quality of the videos uploaded to platforms. This study assessed the publishers’ role by rating the content quality of the sample videos using the AFSS and Escore scales. Surprisingly, the median AFSS scores were only 2.0 and 3.0 out of a total of 13, and there was no statistically significant difference in scores between the two groups (Figure 3a). This indicates that the overall content quality of current streaming videos is low and that the MB group, despite its higher Heatscore (popularity), does not produce higher-quality videos. On the one hand, the MB group may be more readily believed by the general public because of platform accreditation or the title of medical practitioner, but the quality of their existing videos is not sufficient to provide adequate information to the public. On the other hand, regardless of whether publishers have a medical background, the overall quality of video content is substandard, and the incomplete presentation of treatments for atrial fibrillation may leave patients with a biased understanding of treatment. This may lead to compliance problems in long-term treatment. These shortcomings were even more prominent in the Escore results, where publishers in the NMB group, who did not have a medical background, appeared better able to create “more basic and necessary” scientific videos (z = −2.299, p = 0.022; Figure 3b). NMB creators may find it difficult to comprehend the intricate ECG manifestations, electrophysiological changes, and disease management of AF. Consequently, they may tend to produce video content that focuses on more readily comprehensible aspects of the disease, such as its manifestations and treatments, which are more closely aligned with Escore scoring. Conversely, creators in the MB group received a traditional medical education that may dedicate several hours to teaching a single disease comprehensively, so when producing videos they are forced to focus on only some aspects of a condition. This limitation stems from three critical constraints: their ingrained educational paradigms, video duration limits, and personal energy reserves. These factors collectively contribute to their difficulty in crafting high-quality videos as judged by the Escore scale. However, of greater concern to us was the fact that, as with the findings from the interaction metrics, the overall quality of content in both groups remained low.

Next, we categorized content quality as Adequacy, Eligibility, or Disqualification according to the Escore range, to determine whether the platforms had reviewed content quality. The results are consistent with the concerns expressed in related studies (41, 44, 45). Although platforms have already introduced medical certification measures (53–55), they were still overflowing with disqualified videos (252/331, 76%). The MB group contributed roughly twice as many sample videos as the NMB group, yet it had only 14 more eligible videos (1.47-fold) but 2.6-fold more disqualified videos (Figures 4a,b). We then analyzed the top 30% of videos by Heatscore to determine whether platforms are grading and supporting high-quality videos. Even among highly popular MB videos, a large proportion were disqualified (56/78, 72%). In this subset, the MB group had 3.7-fold as many videos as the NMB group, with 5.1-fold as many disqualified videos but only 2-fold as many qualified videos (Figure 4c). Combined with the previous finding that the Heatscore of videos in the MB group was significantly higher than that of the NMB group (Figure 2b), we infer that platforms lacked a sound process for re-assessing content quality while providing support to publishers with medical backgrounds, so that MB videos were highly popular yet many of them were disqualified.

In the context of Internet globalization, social media platforms have been shown to be faster and more convenient than traditional means of publicity and to possess unique social attributes. This provides fertile ground for the widespread dissemination of disease-related knowledge, but it also breeds ‘bacteria’. It is incumbent upon platforms to provide creators with guidelines for uploading videos, establish a comprehensive background and content review mechanism to eliminate defective and shoddy works, provide traffic support for well-produced videos with reliable and detailed content, and dynamically monitor the view data of popular science videos so that searchers can find newer and better-quality videos. For creators, it is first essential to adhere to rigorous standards in medical science video production, ensuring that treatments are not presented selectively or for utilitarian purposes. Second, the content should be presented in a manner that is more accessible to the general public, with less text and simpler forms of expression such as images or animations.

5 Limitation

First, we only included videos ranked in the top 50 search results for three search terms, and this strategy may not fully cover the search terms used by the public. Second, although the Heatscore and Escore were developed based on previous literature and authoritative guidelines, their reliability needs to be explored in subsequent studies.

6 Conclusion

In this study assessing the quality of scientific videos on AF knowledge across four video platforms, there appeared to be insufficient support for high-quality videos and no rigorous process for reviewing content quality. Despite having medical backgrounds, creators in the MB group did not consistently produce higher-quality videos. Furthermore, this study introduced a normalization method to analyze data, which revealed significant differences between groups and yielded insights distinct from those obtained through raw data analysis. This methodological innovation offers a new approach for future studies with larger sample sizes and across multiple platforms. In terms of content quality evaluation, this study pioneered the validation of a “basic and essential” scoring system designed to better suit public consumption. This approach offers a fresh perspective for future content reviews on video platforms.

The prevailing tendency among the general public to seek information regarding diseases from online sources has become increasingly pervasive. It is incumbent upon platforms to develop vetting standards, optimize recommendation algorithms and establish dynamic monitoring. Creators should consider forming interdisciplinary teams that integrate physicians (to ensure content authority), media scholars (to refine narrative structure), and visual designers (to achieve cognitive load reduction).

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material, further inquiries can be directed to the corresponding authors.

Ethics statement

Ethical approval was not required for the study involving human data in accordance with the local legislation and institutional requirements. Written informed consent was not required, for either participation in the study or for the publication of potentially/indirectly identifying information, in accordance with the local legislation and institutional requirements. The social media data was accessed and analyzed in accordance with the platform’s terms of use and all relevant institutional/national regulations.

Author contributions

CL: Data curation, Formal analysis, Investigation, Methodology, Writing – original draft. XQ: Data curation, Formal analysis, Writing – original draft. XX: Data curation, Formal analysis, Investigation, Methodology, Project administration, Writing – original draft. JG: Data curation, Investigation, Writing – original draft. YW: Data curation, Formal analysis, Investigation, Writing – original draft. WL: Project administration, Resources, Supervision, Validation, Visualization, Writing – review & editing. ZW: Project administration, Resources, Supervision, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by the Natural Science Foundation of Sichuan Province (2023NSFSC1648).

Acknowledgments

We thank the staff from the West China Hospital of Sichuan University, Sichuan, China, for acquiring and analyzing the data for this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2025.1507776/full#supplementary-material

Abbreviations

AF, Atrial fibrillation; PCA, Principal Component Analysis; AFSS, Atrial Fibrillation Specific Score; Escore, Essential score; NMB, Non-medical backgrounds; MB, Medical backgrounds.

Keywords: patient education, atrial fibrillation, social media platform, author type, video content quality

Citation: Luo C, Qin X, Xie X, Gao J, Wu Y, Liang W and Wu Z (2025) Cross-platform analysis of atrial fibrillation scientific videos: using composite index and a basic assessment scale. Front. Public Health. 13:1507776. doi: 10.3389/fpubh.2025.1507776

Edited by:

Feng Guo, Tianjin University, China

Reviewed by:

Tianyi Wang, Wuhan University, China

Maneeth Mylavarapu, Independent Researcher, Birmingham, United States

Copyright © 2025 Luo, Qin, Xie, Gao, Wu, Liang and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Weitao Liang, 1023444366@qq.com; Zhong Wu, wuzhong71@scu.edu.cn

†These authors have contributed equally to this work and share first authorship
